Contour Based Approaches for Visual Object Recognition

Jamie Shotton, University of Cambridge

Joint work with Roberto Cipolla and Andrew Blake

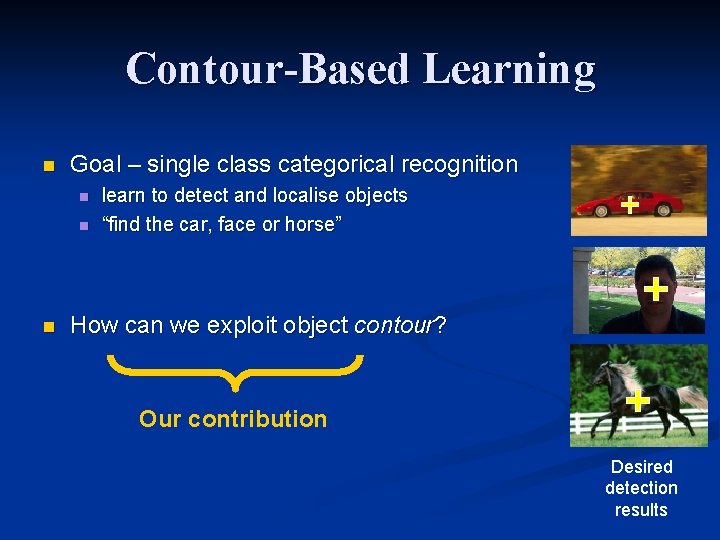

Contour-Based Learning

- Goal: single-class categorical recognition
  - learn to detect and localise objects: "find the car, face or horse"
- How can we exploit object contour?

Figure labels: Our contribution; Desired detection results

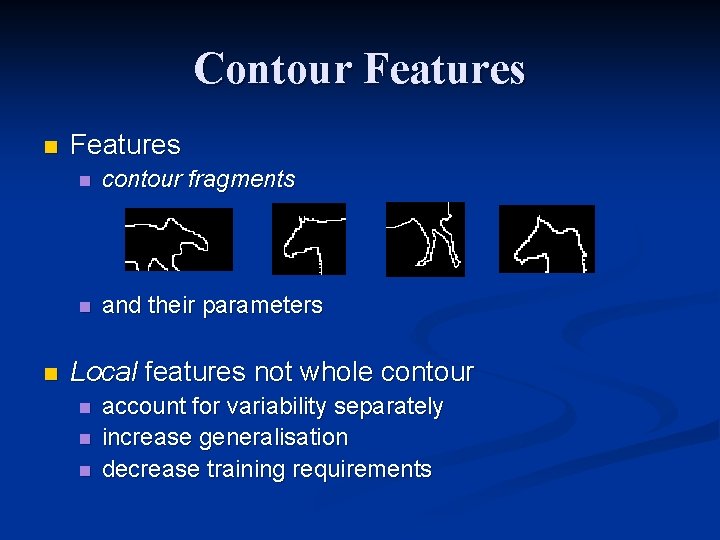

Contour Features

- Features
  - contour fragments
  - and their parameters
- Local features, not whole contour
  - account for variability separately
  - increase generalisation
  - decrease training requirements

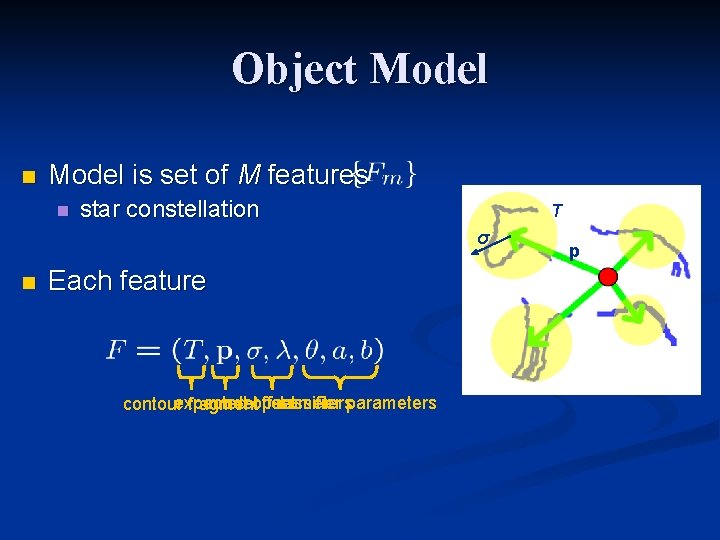

Object Model

- Model is a set of M features
  - star constellation
- Each feature has
  - a contour fragment
  - an expected offset
  - model parameters
  - classifier parameters

Figure symbols: T, σ, p

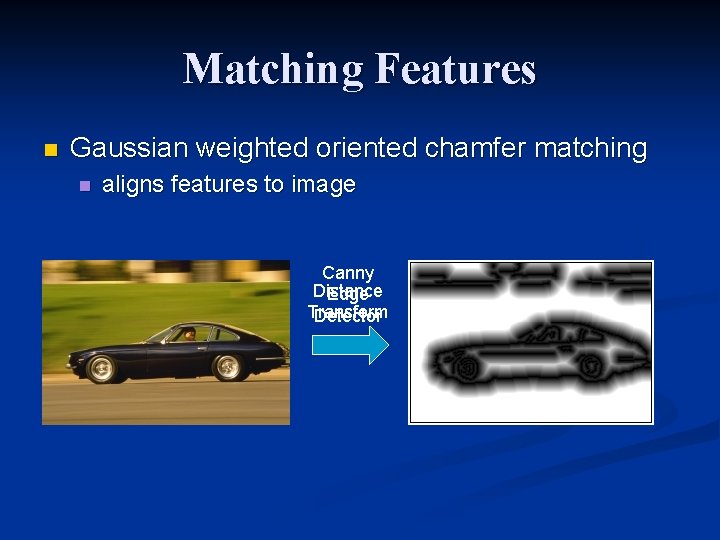

Matching Features

- Gaussian weighted oriented chamfer matching
  - aligns features to image

Figure pipeline: Canny Edge Detector → Distance Transform

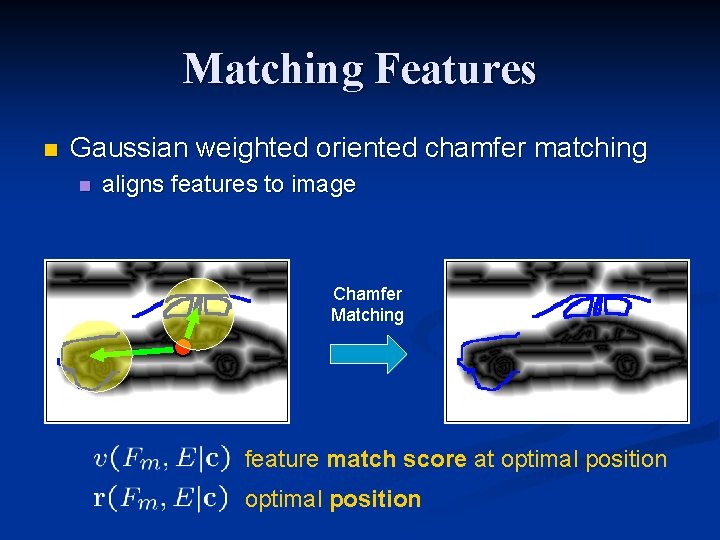

Matching Features

- Gaussian weighted oriented chamfer matching
  - aligns features to image

Figure pipeline: Chamfer Matching → feature match score at optimal position
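
The matching pipeline on these two slides can be sketched in a few lines. This is a minimal illustration only, assuming plain (unoriented, unweighted) chamfer matching on a toy edge map; the Gaussian weighting and the orientation term named on the slide are left out, and all function names are hypothetical.

```python
import math

def distance_transform(edges, height, width):
    """Brute-force distance transform: distance from each pixel to the nearest edge pixel."""
    return [[min(math.hypot(r - er, c - ec) for er, ec in edges)
             for c in range(width)]
            for r in range(height)]

def chamfer_score(dt, fragment, offset):
    """Mean distance-transform value under the fragment points placed at `offset`."""
    orow, ocol = offset
    return sum(dt[r + orow][c + ocol] for r, c in fragment) / len(fragment)

def best_match(dt, fragment, height, width):
    """Slide the fragment over the image; return (score, offset) with the lowest score."""
    max_r = max(r for r, _ in fragment)
    max_c = max(c for _, c in fragment)
    candidates = [(chamfer_score(dt, fragment, (orow, ocol)), (orow, ocol))
                  for orow in range(height - max_r)
                  for ocol in range(width - max_c)]
    return min(candidates)

# Toy edge map (assumed, standing in for Canny output): a vertical stroke at column 4.
edges = [(2, 4), (3, 4), (4, 4)]
dt = distance_transform(edges, 8, 8)

# Toy contour fragment: three vertically stacked points.
fragment = [(0, 0), (1, 0), (2, 0)]
score, offset = best_match(dt, fragment, 8, 8)
```

Here a lower score means a better alignment, so the fragment lands exactly on the stroke. A real system would replace the brute-force transform with an efficient one.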

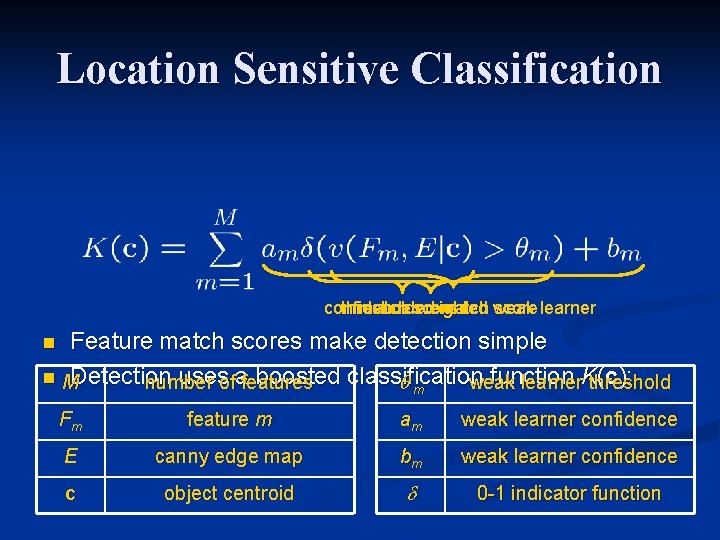

Location Sensitive Classification

- Feature match scores make detection simple
- Detection uses a boosted classification function K(c), built from one weak learner per feature

Equation annotations: weak learner; confidence; thresholded match score; weighted match score

Symbols:

- M: number of features
- F_m: feature m
- threshold of weak learner m
- a_m: weak learner confidence
- b_m: weak learner confidence
- E: Canny edge map
- c: object centroid
- 0-1 indicator function
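
A small sketch may help make the symbol list concrete. It assumes the common decision-stump form, in which each weak learner adds a_m when the match score of feature m exceeds its threshold and always adds b_m; the exact equation did not survive on this slide, so this form, the name `theta`, and the example numbers are assumptions.

```python
def weak_learner(score, theta, a, b):
    """Assumed stump: a * [score > theta] + b, with [.] the 0-1 indicator function."""
    return a * (1.0 if score > theta else 0.0) + b

def classify(scores, learners):
    """Assumed K(c): sum of the M weak learner outputs at one candidate centroid c.

    scores[m] is the match score of feature m at c; learners[m] is (theta, a, b).
    """
    return sum(weak_learner(s, theta, a, b)
               for s, (theta, a, b) in zip(scores, learners))

# Hypothetical parameters for M = 2 features (chamfer-like scores: lower is better,
# so a is negative and a good match keeps the full confidence b).
learners = [(0.5, -1.0, 0.5), (0.3, -2.0, 1.0)]

good = classify([0.2, 0.1], learners)   # both scores below threshold
bad = classify([0.9, 0.8], learners)    # both scores above threshold
```

With these numbers a well-matched centroid scores positive and a poorly matched one scores negative, which is what thresholding K(c) relies on.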

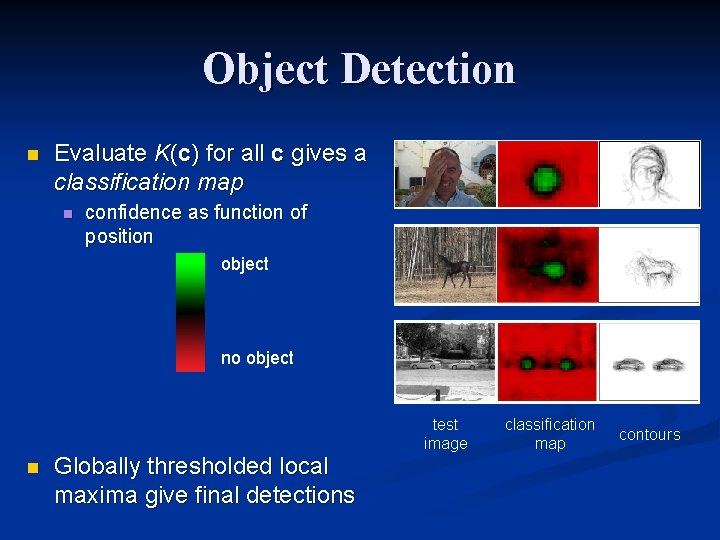

Object Detection

- Evaluating K(c) for all c gives a classification map
  - confidence as a function of position
- Globally thresholded local maxima give final detections

Figure labels: test image; classification map (object / no object); contours
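
The detection step can be illustrated as follows. This is a sketch under simple assumptions: the classification map is a small 2D list, a local maximum is a pixel strictly greater than its 8 neighbours, and the function name and threshold value are hypothetical.

```python
def detect(cmap, threshold):
    """Return (row, col, confidence) for local maxima of `cmap` above a global threshold."""
    h, w = len(cmap), len(cmap[0])
    detections = []
    for r in range(h):
        for c in range(w):
            v = cmap[r][c]
            if v <= threshold:
                continue  # global threshold
            neighbours = [cmap[rr][cc]
                          for rr in range(max(0, r - 1), min(h, r + 2))
                          for cc in range(max(0, c - 1), min(w, c + 2))
                          if (rr, cc) != (r, c)]
            if all(v > n for n in neighbours):
                detections.append((r, c, v))  # strict local maximum
    return detections

# Toy classification map with one strong peak and one weak peak.
cmap = [
    [0.0, 0.1, 0.0, 0.0],
    [0.1, 0.9, 0.2, 0.0],
    [0.0, 0.2, 0.1, 0.4],
    [0.0, 0.0, 0.3, 0.1],
]
detections = detect(cmap, 0.5)
```

Lowering the threshold to 0.35 would also return the weaker peak at (2, 3), which shows how the global threshold trades recall against precision.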

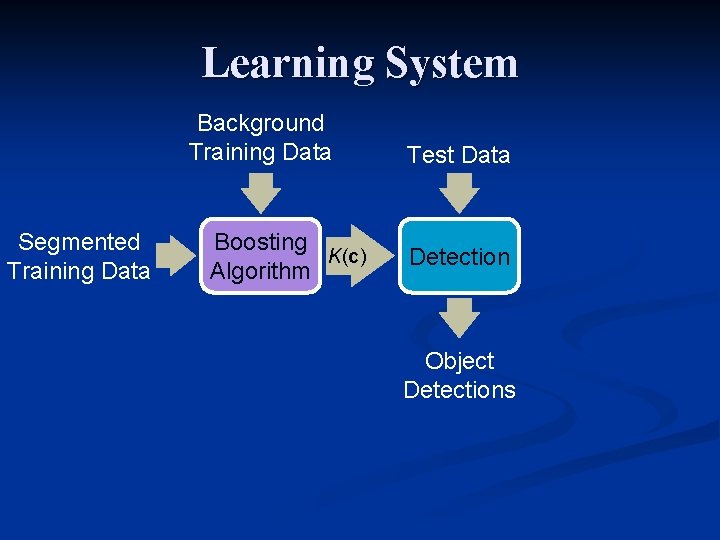

Learning System

- Training: Background Training Data + Segmented Training Data → Boosting Algorithm → K(c)
- Testing: Test Data + K(c) → Detection → Object Detections

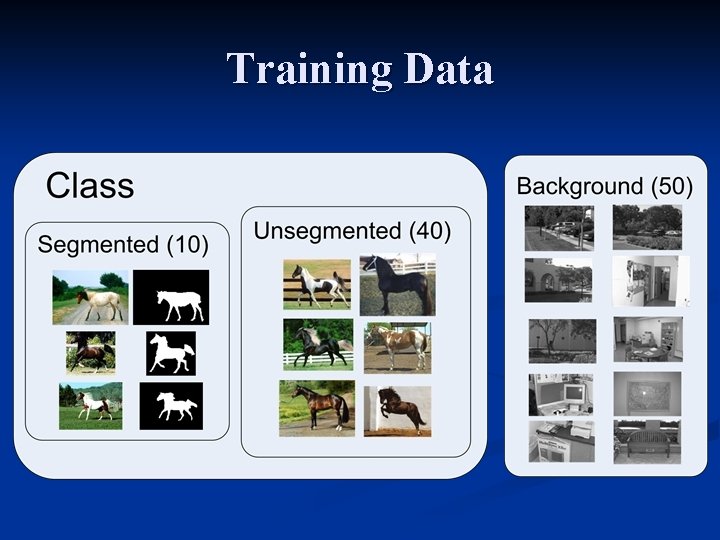

Training Data

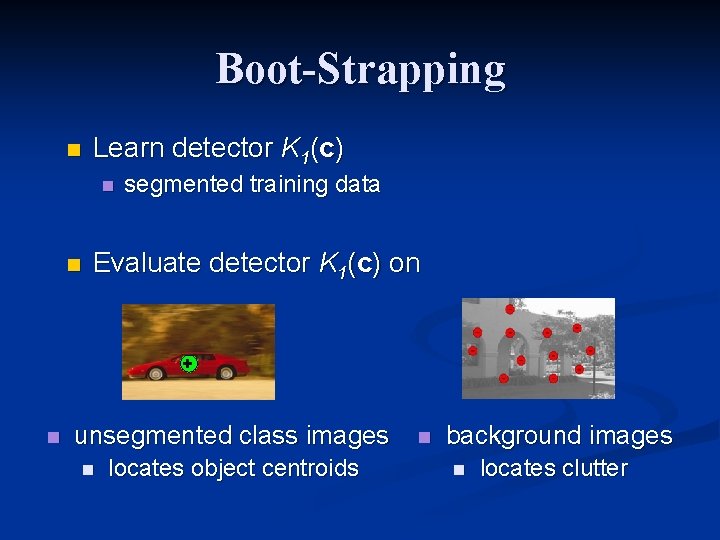

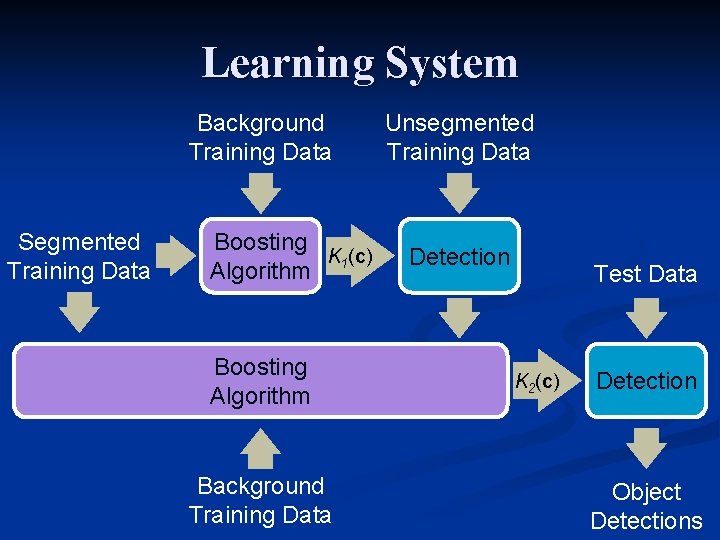

Boot-Strapping

- Learn detector K_1(c) from
  - segmented training data
- Evaluate detector K_1(c) on
  - unsegmented class images: locates object centroids
  - background images: locates clutter

Figure marker: +

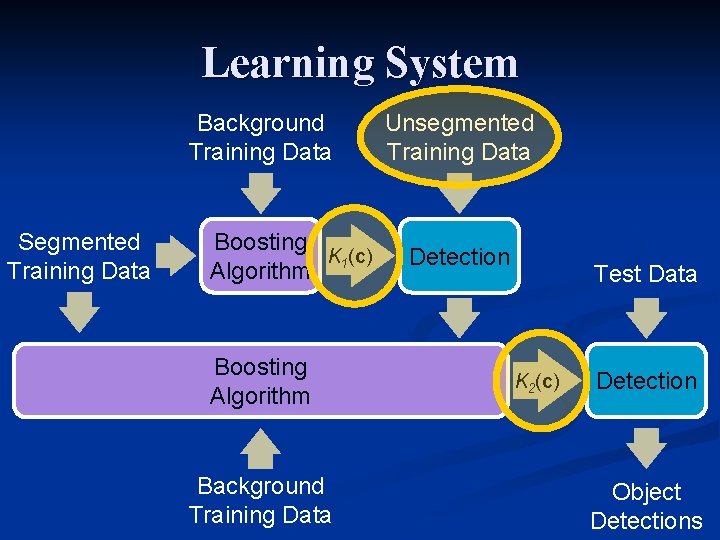

Learning System

- Stage 1: Background Training Data + Segmented Training Data → Boosting Algorithm → K_1(c)
- Stage 2: K_1(c) → Detection on Background Training Data + Unsegmented Training Data → Boosting Algorithm → K_2(c)
- Testing: Test Data + K_2(c) → Detection → Object Detections

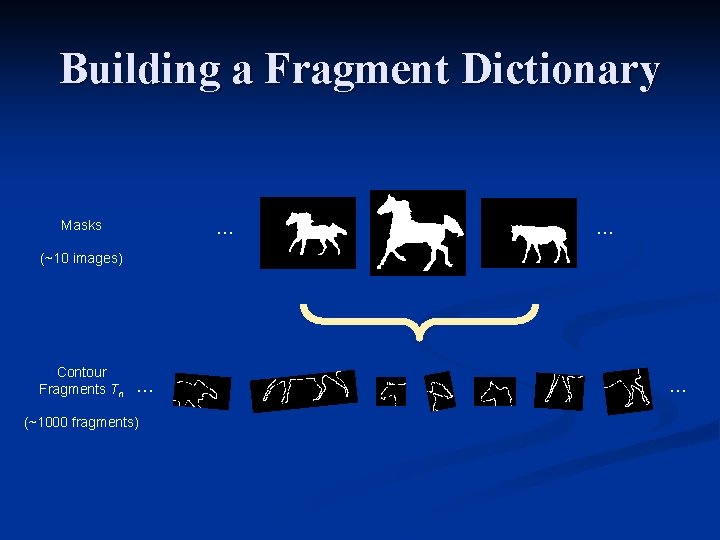

Building a Fragment Dictionary

Figure pipeline: Masks (~10 images) → Contour Fragments T_n (~1000 fragments)
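
One way to picture the dictionary construction is sketched below. The slide only states that fragments come from masks, so the details here are assumptions: the mask contour is taken as the foreground pixels touching background, and a fragment is the part of that contour inside a random window. The function names and window size are hypothetical.

```python
import random

def boundary_pixels(mask):
    """Foreground pixels with at least one 4-neighbour that is background or off-image."""
    h, w = len(mask), len(mask[0])

    def is_fg(r, c):
        return 0 <= r < h and 0 <= c < w and mask[r][c] == 1

    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    return [(r, c) for r in range(h) for c in range(w)
            if mask[r][c] == 1
            and not all(is_fg(r + dr, c + dc) for dr, dc in steps)]

def random_fragment(contour, size, rng):
    """Contour pixels inside a size x size window centred on a random contour pixel."""
    cr, cc = rng.choice(contour)
    half = size // 2
    return [(r, c) for r, c in contour
            if abs(r - cr) <= half and abs(c - cc) <= half]

# Toy segmentation mask: a 3x3 square object in a 5x5 image.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
contour = boundary_pixels(mask)
rng = random.Random(0)  # seeded so the sketch is repeatable
dictionary = [random_fragment(contour, 3, rng) for _ in range(5)]
```

Repeating the sampling over about 10 masks until roughly 1000 fragments are collected would match the scale stated on the slide.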

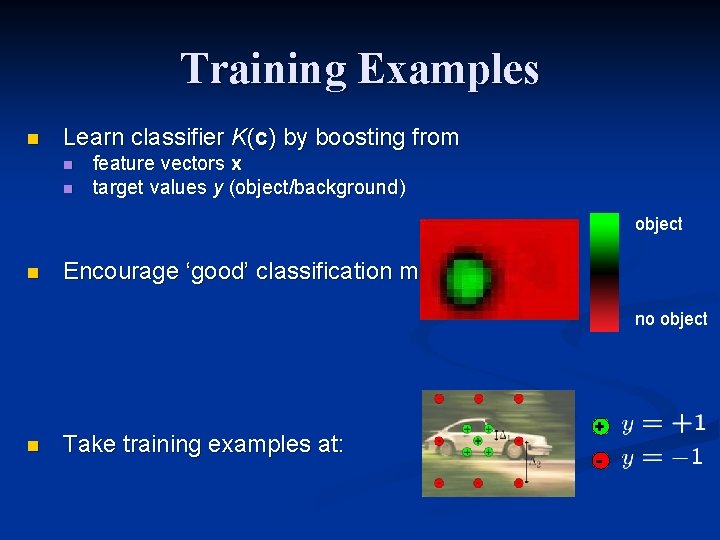

Training Examples

- Learn classifier K(c) by boosting from
  - feature vectors x
  - target values y (object/background)
- Encourage a 'good' classification map (object / no object)
- Take training examples at the positions marked + and - in the figure

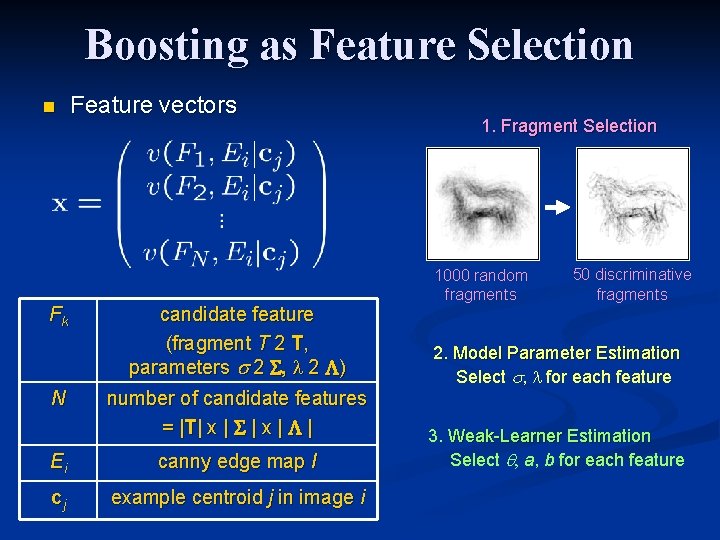

Boosting as Feature Selection

- Feature vectors

Symbols:

- F_k: candidate feature (fragment T ∈ 𝒯, with model parameters drawn from a parameter set)
- N: number of candidate features = |𝒯| × (size of the parameter set)
- E_i: Canny edge map of image i
- c_j: example centroid j in image i

Steps:

1. Fragment Selection: 1000 random fragments → 50 discriminative fragments
2. Model Parameter Estimation: select the model parameters for each feature
3. Weak-Learner Estimation: select the threshold, a and b for each feature
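
The idea that each boosting round picks one feature can be sketched as below. The slides only say "boosting", so the GentleBoost-style regression stumps, the reweighting rule, and every name here are assumptions, and the feature vectors are toy numbers rather than real match scores.

```python
import math

def fit_stump(values, targets, weights, theta):
    """Weighted least-squares fit of a * [v > theta] + b on one feature column."""
    hi = [(y, w) for v, y, w in zip(values, targets, weights) if v > theta]
    lo = [(y, w) for v, y, w in zip(values, targets, weights) if v <= theta]
    if not hi or not lo:
        return None  # threshold does not split the examples
    mean_hi = sum(w * y for y, w in hi) / sum(w for _, w in hi)
    mean_lo = sum(w * y for y, w in lo) / sum(w for _, w in lo)
    a, b = mean_hi - mean_lo, mean_lo
    err = sum(w * (y - (a * (v > theta) + b)) ** 2
              for v, y, w in zip(values, targets, weights))
    return err, a, b

def boost(X, y, rounds):
    """Each round selects the (feature, threshold, a, b) with lowest weighted error."""
    n = len(X)
    weights = [1.0 / n] * n
    model = []
    for _ in range(rounds):
        best = None
        for k in range(len(X[0])):
            column = [row[k] for row in X]
            for theta in sorted(set(column)):
                fit = fit_stump(column, y, weights, theta)
                if fit is not None and (best is None or fit[0] < best[0]):
                    best = (fit[0], k, theta, fit[1], fit[2])
        if best is None:
            break
        _, k, theta, a, b = best
        model.append((k, theta, a, b))
        # Down-weight examples the new weak learner already classifies well.
        weights = [w * math.exp(-t * (a * (row[k] > theta) + b))
                   for w, t, row in zip(weights, y, X)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return model

# Toy data: 4 examples, 3 candidate features; only feature 1 separates the classes.
X = [[0.9, 0.1, 0.5], [0.2, 0.2, 0.4], [0.8, 0.8, 0.6], [0.1, 0.9, 0.5]]
y = [1, 1, -1, -1]  # +1 object, -1 background
model = boost(X, y, rounds=1)
```

The first round selects feature 1, the only discriminative column, which mirrors how boosting reduces a large pool of random fragments to a small discriminative set.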

Contour Experiments

- Datasets:
  - Weizmann Horses
  - UIUC Cars
  - Caltech Faces
  - Caltech Motorbikes
  - Caltech Background
- Each category evaluated in turn
  - 10 segmented training images
  - 40 unsegmented training images
  - 50 background images
  - single scale evaluation

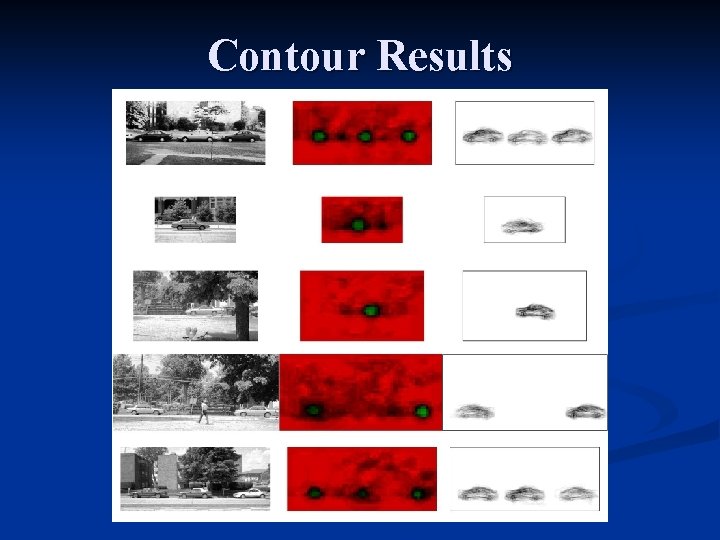

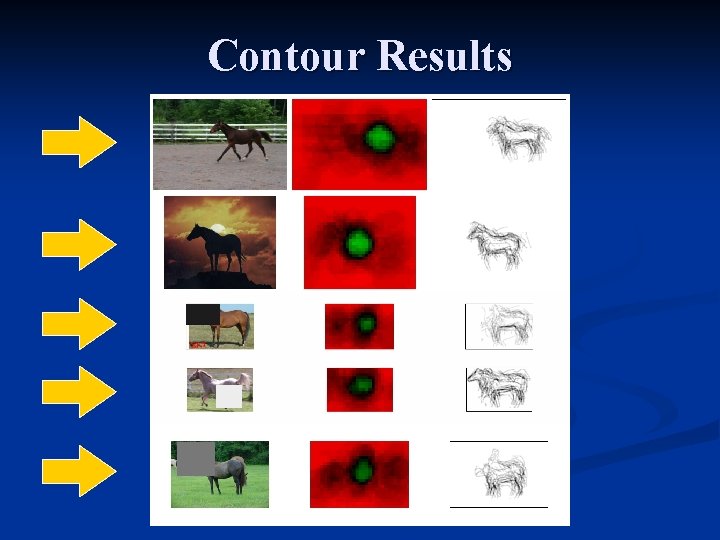

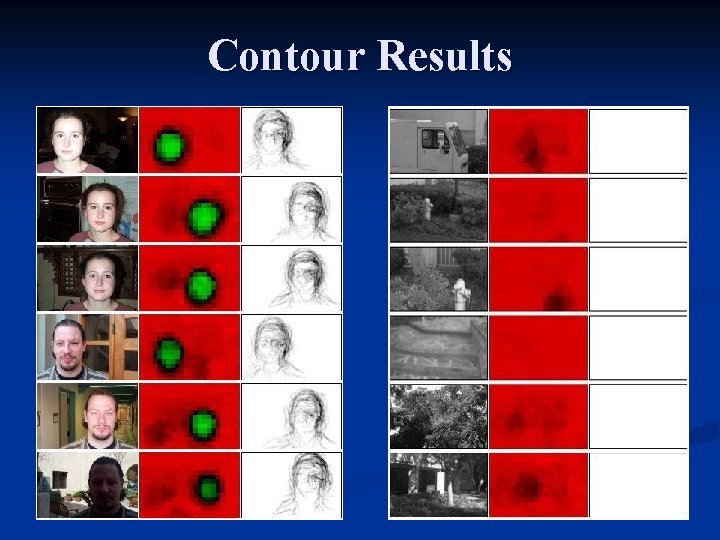

Contour Results

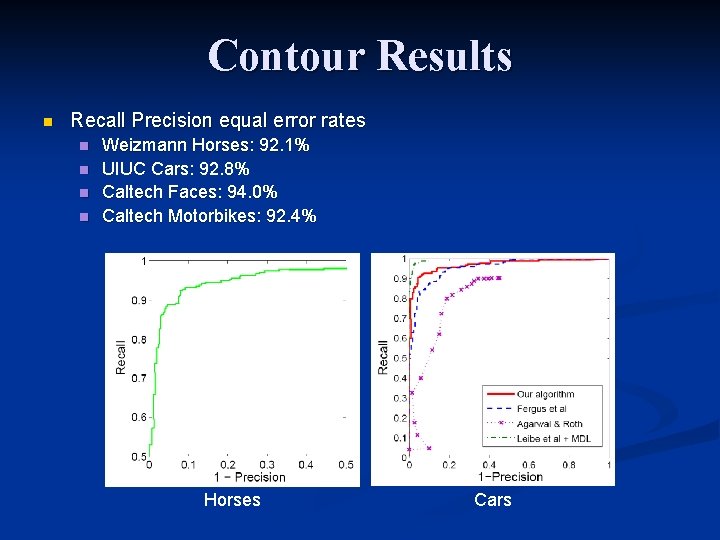

Contour Results

- Recall-precision equal error rates
  - Weizmann Horses: 92.1%
  - UIUC Cars: 92.8%
  - Caltech Faces: 94.0%
  - Caltech Motorbikes: 92.4%

Figure panels: Horses; Cars
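
For readers unfamiliar with the metric, the sketch below shows one way a recall-precision equal error rate can be computed from ranked detections. It assumes each detection has already been marked as a true or false positive; the function name and the toy data are hypothetical and unrelated to the reported figures.

```python
def equal_error_rate(detections, num_objects):
    """Recall at the operating point where recall and precision are closest.

    detections: list of (confidence, is_true_positive) pairs.
    num_objects: number of ground-truth objects in the test set.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    best_gap, best_recall = None, 0.0
    true_positives = 0
    # Lowering the threshold admits one more detection per step.
    for n, (_, is_tp) in enumerate(ranked, start=1):
        true_positives += 1 if is_tp else 0
        recall = true_positives / num_objects
        precision = true_positives / n
        gap = abs(recall - precision)
        if best_gap is None or gap < best_gap:
            best_gap, best_recall = gap, recall
    return best_recall

# Toy ranked detections against 4 ground-truth objects.
toy = [(0.9, True), (0.8, True), (0.7, False), (0.6, True), (0.5, False)]
eer = equal_error_rate(toy, num_objects=4)
```

Recall and precision coincide when the number of accepted detections equals the number of ground-truth objects, which here happens after the fourth detection.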

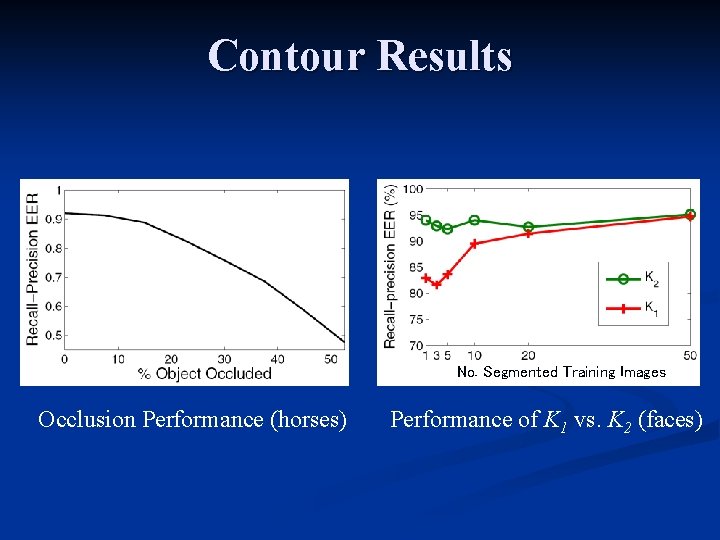

Contour Results

Figure panels: No. Segmented Training Images; Occlusion Performance (horses); Performance of K_1 vs. K_2 (faces)

Conclusions

- Contour is a very powerful cue
- Boot-strapping improves results
- Future directions
  - extend to multiple classes, scales, views
  - segmentation
