Continuous Runahead: Transparent Hardware Acceleration for Memory Intensive Workloads


Continuous Runahead: Transparent Hardware Acceleration for Memory Intensive Workloads

Milad Hashemi, Onur Mutlu, Yale N. Patt

UT Austin/Google, ETH Zürich, UT Austin

October 19th, 2016

2 Continuous Runahead Outline

- Overview of Runahead
- Limitations
- Continuous Runahead Dependence Chains
- Continuous Runahead Engine
- Continuous Runahead Evaluation
- Conclusions

4 Runahead Execution Overview

- Runahead dynamically expands the instruction window when the pipeline is stalled [Mutlu et al., 2003]
- The core checkpoints architectural state
- The result of the memory operation that caused the stall is marked as poisoned in the physical register file
- The core continues to fetch and execute instructions
- Operations are discarded instead of retired
- The goal is to generate new independent cache misses
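The steps on this slide can be sketched as a toy software model. This is only an illustration under stated assumptions, not the hardware described in the talk: the `Instr` type, the `runahead` function, the two-opcode instruction set, and all register and memory values are hypothetical. Treating a load that misses during runahead as poisoning its own destination is also an assumption of the sketch.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    op: str      # "LD" loads from the address in srcs[0]; "ADD" sums srcs
    dst: str
    srcs: tuple


def runahead(instrs, regs, memory, cached, stalled_dst):
    """Pre-execute instrs past a stalling load and return prefetch addresses."""
    checkpoint = dict(regs)       # checkpoint architectural state
    poisoned = {stalled_dst}      # result of the stalling load is poisoned
    prefetches = []
    for ins in instrs:            # keep fetching and executing
        if any(src in poisoned for src in ins.srcs):
            poisoned.add(ins.dst)         # depends on missing data: skip it
            continue
        poisoned.discard(ins.dst)
        if ins.op == "LD":
            addr = regs[ins.srcs[0]]
            if addr in cached:
                regs[ins.dst] = memory[addr]
            else:
                prefetches.append(addr)   # new independent cache miss
                poisoned.add(ins.dst)     # assumption: its data is not back yet
        else:
            regs[ins.dst] = sum(regs[src] for src in ins.srcs)
    regs.clear()
    regs.update(checkpoint)       # results are discarded instead of retired
    return prefetches


# Hypothetical example: the load that stalled the pipeline writes R3.
regs = {"R1": 100, "R2": 8, "R3": 0}
memory = {100: 200}
cached = {100}
program = [
    Instr("ADD", "R4", ("R1", "R2")),
    Instr("LD", "R5", ("R4",)),        # independent miss
    Instr("ADD", "R6", ("R3", "R1")),  # depends on the poisoned R3
    Instr("LD", "R7", ("R6",)),        # dependent miss, cannot be issued
    Instr("LD", "R8", ("R1",)),        # cache hit
    Instr("LD", "R9", ("R8",)),        # independent miss
]
prefetches = runahead(program, regs, memory, cached, stalled_dst="R3")
```

In this example only the two loads whose addresses do not depend on the poisoned register produce prefetches, and the register state afterwards is the checkpointed one.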

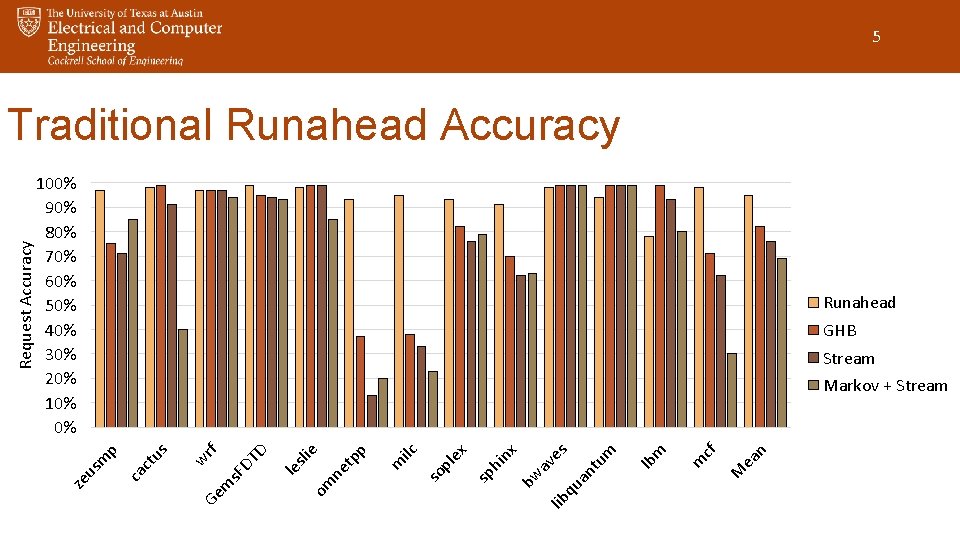

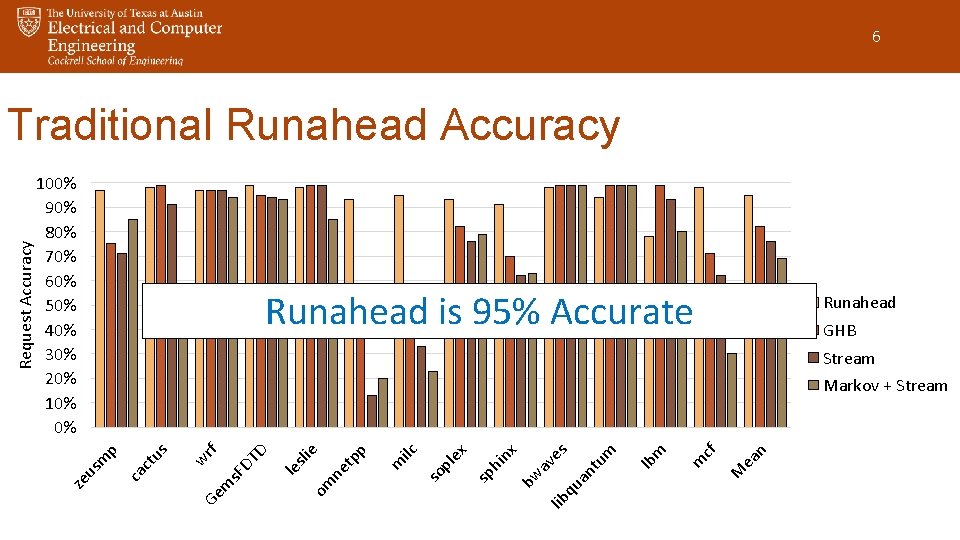

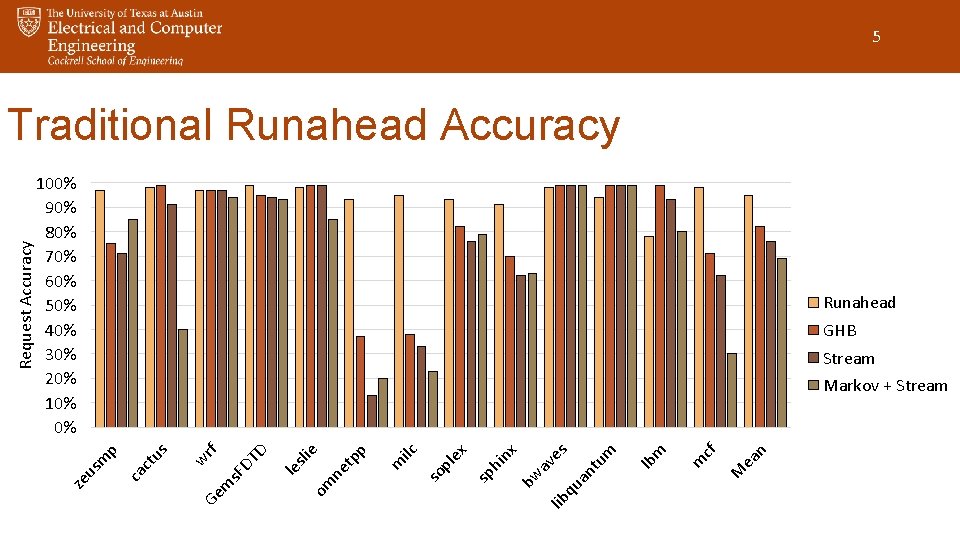

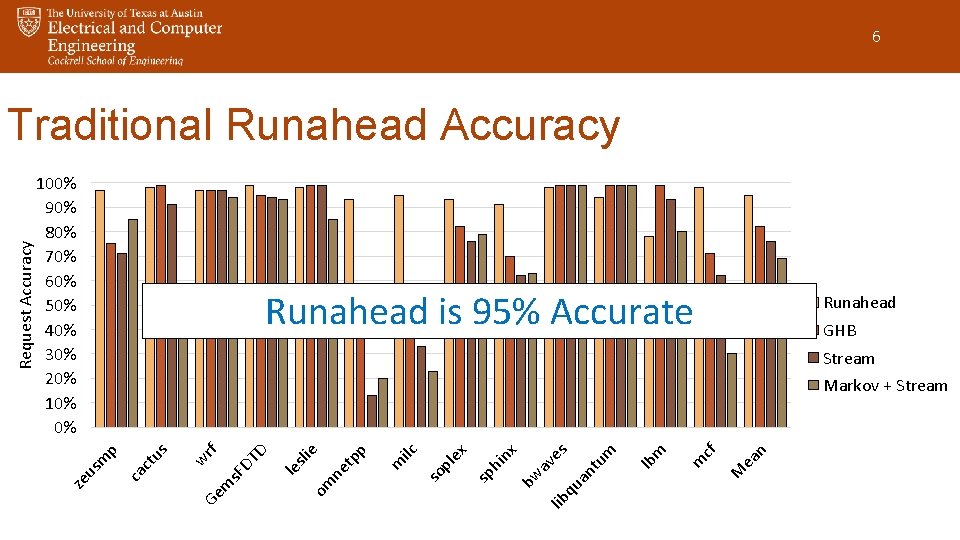

5 100% 90% 80% 70% 60% 50% 40% 30% 20% 10% 0% Runahead GHB Stream ea n M m cf m lb an tu m es qu av lib bw nx hi sp ex ilc m pl so om ne tp p sli e le m s. F DT D rf w Ge us ca ct us m p Markov + Stream ze Request Accuracy Traditional Runahead Accuracy

6 100% 90% 80% 70% 60% 50% 40% 30% 20% 10% 0% Runahead is 95% Accurate Runahead GHB Stream ea n M m cf m lb an tu m es qu av lib bw nx hi sp ex ilc m pl so om ne tp p sli e le m s. F DT D rf w Ge us ca ct us m p Markov + Stream ze Request Accuracy Traditional Runahead Accuracy

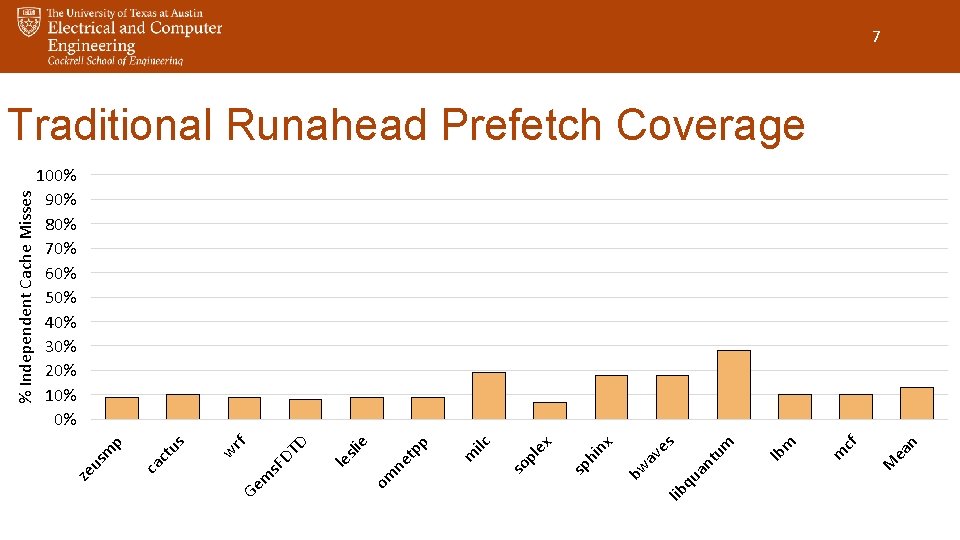

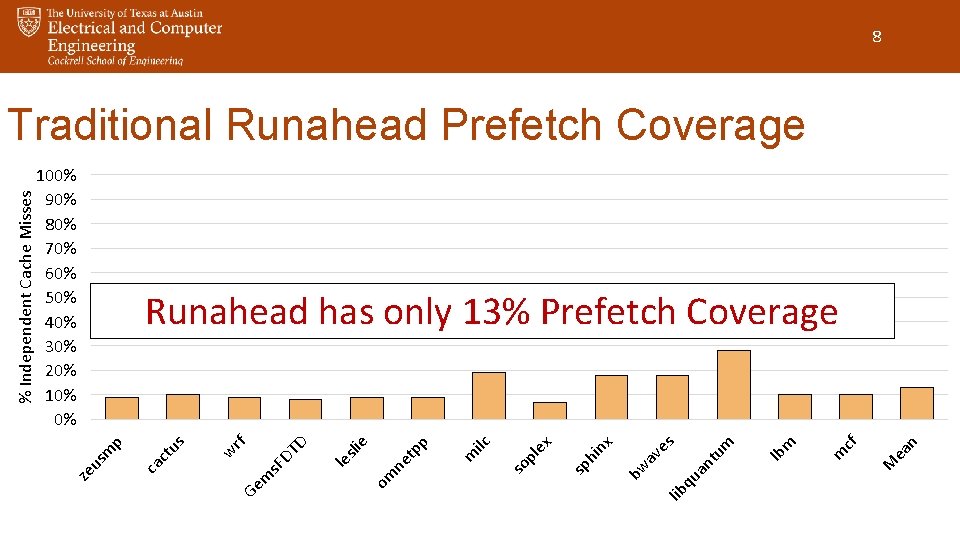

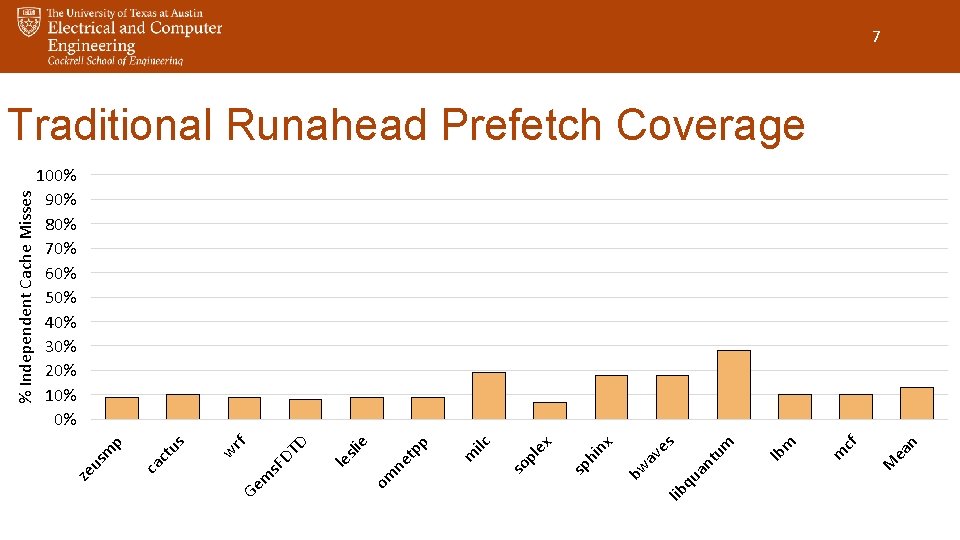

lib qu m ea n M m cf lb an tu m es av bw ex nx hi sp pl so p ilc m tp ne om sli e le D rf DT m s. F Ge w us ca ct p m us ze % Independent Cache Misses 7 Traditional Runahead Prefetch Coverage 100% 90% 80% 70% 60% 50% 40% 30% 20% 10% 0%

8 100% 90% 80% 70% 60% 50% 40% 30% 20% 10% 0% lib qu ea n M m cf m lb an tu m es av bw nx hi sp ex pl so ilc m ne tp p sli e om m s. F Ge le DT D rf w us ca ct us m p Runahead has only 13% Prefetch Coverage ze % Independent Cache Misses Traditional Runahead Prefetch Coverage

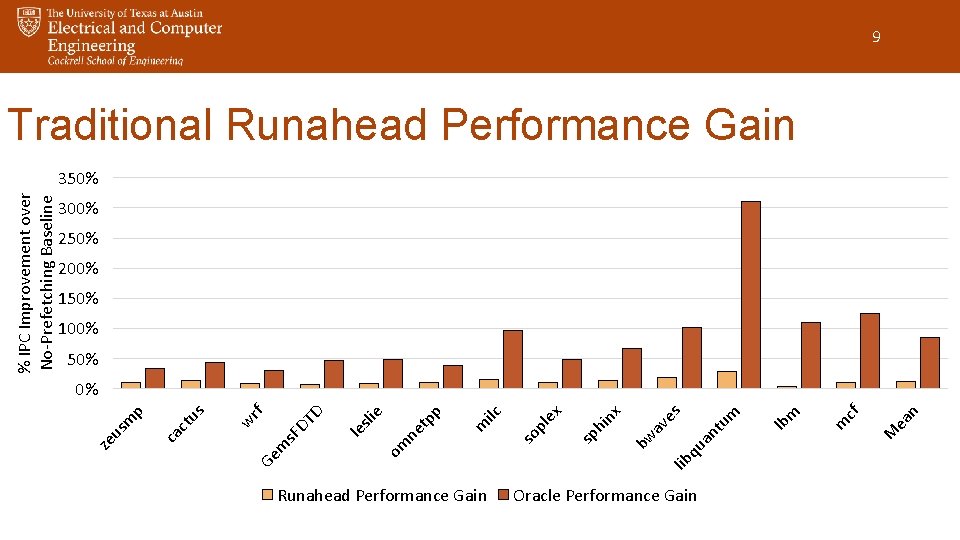

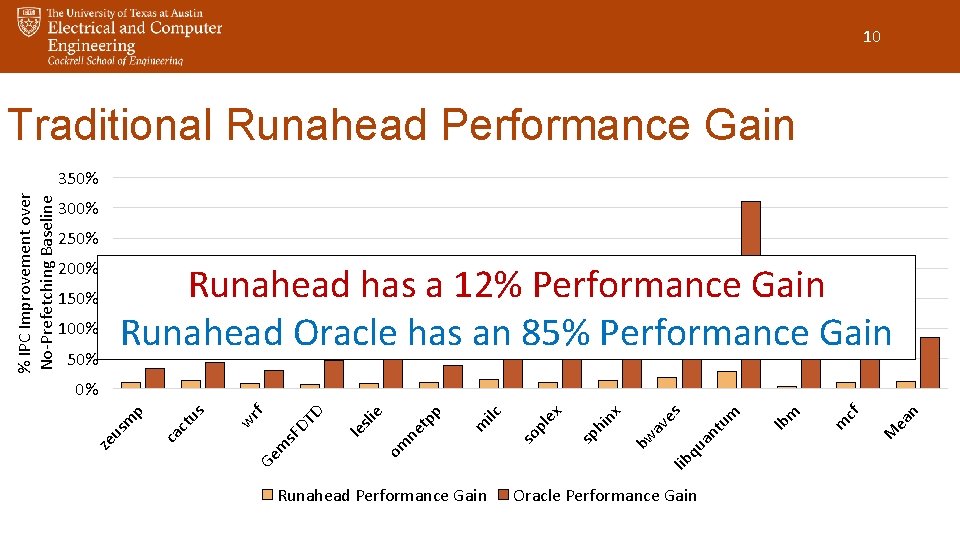

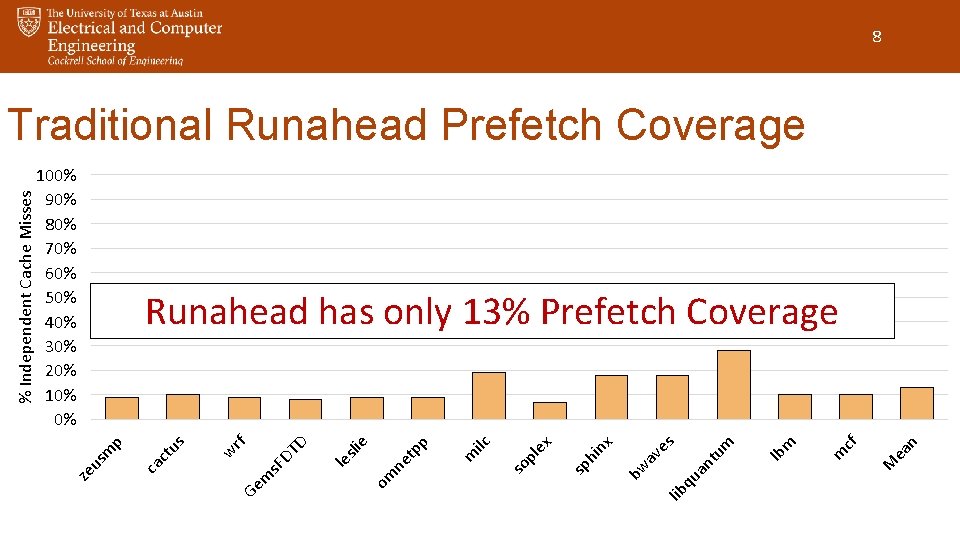

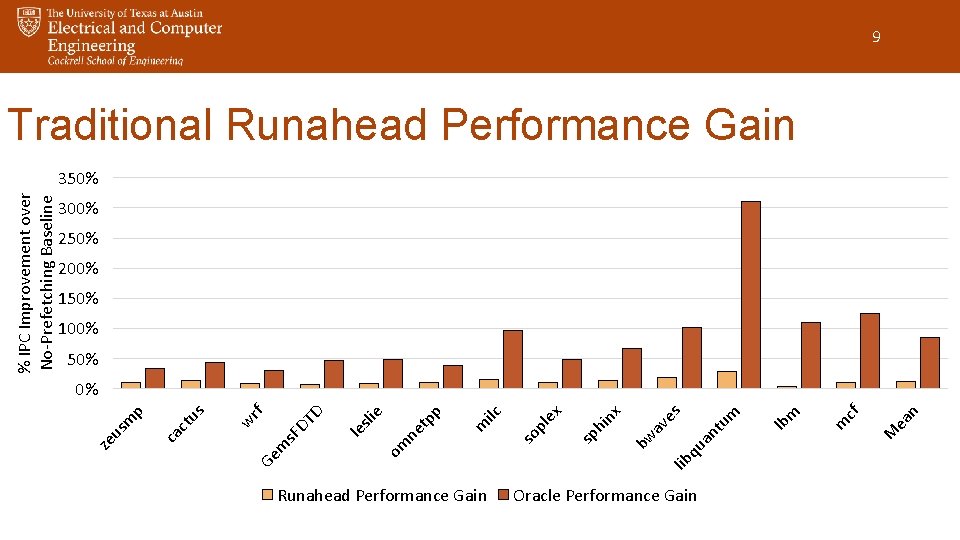

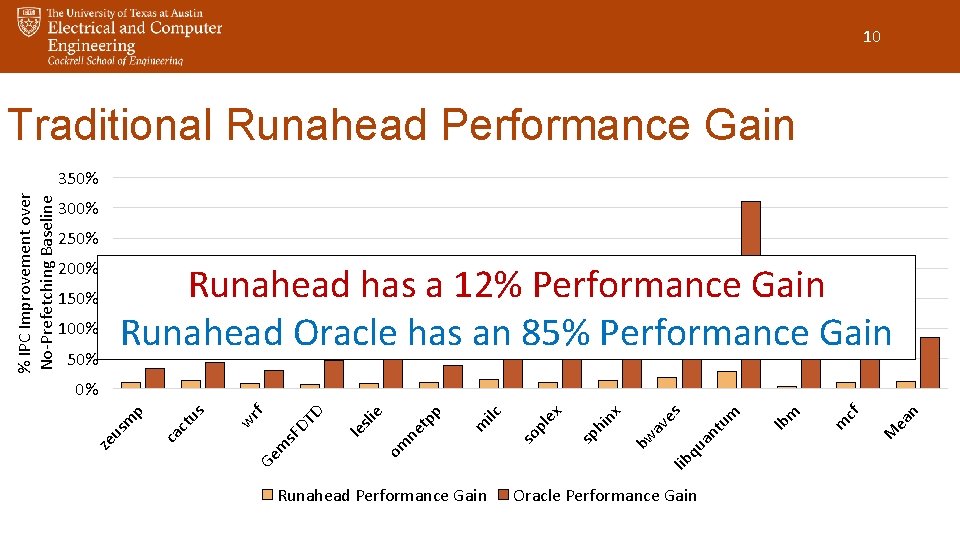

Runahead Performance Gain lib qu Oracle Performance Gain m ea n M m cf lb an tu m es av bw ex nx hi sp pl so ilc m p tp ne om sli e le D rf DT m s. F Ge w us ca ct p m us ze % IPC Improvement over No-Prefetching Baseline 9 Traditional Runahead Performance Gain 350% 300% 250% 200% 150% 100% 50% 0%

10 Traditional Runahead Performance Gain 300% 250% 200% Runahead has a 12% Performance Gain Runahead Oracle has an 85% Performance Gain 150% 100% 50% Runahead Performance Gain lib qu Oracle Performance Gain ea n M m cf m lb an tu m es av bw nx hi sp ex pl so ilc m ne tp p sli e om m s. F Ge le DT D rf w us ca ct us m p 0% ze % IPC Improvement over No-Prefetching Baseline 350%

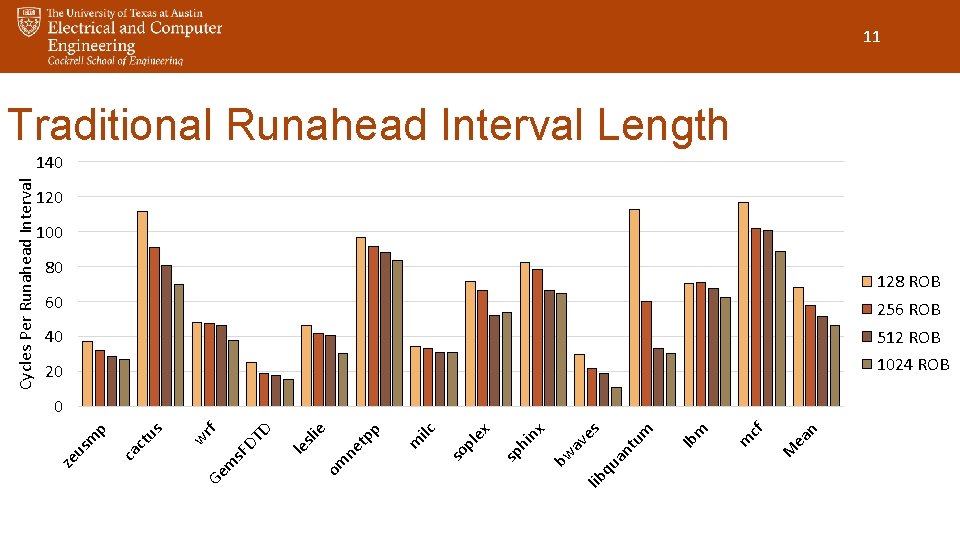

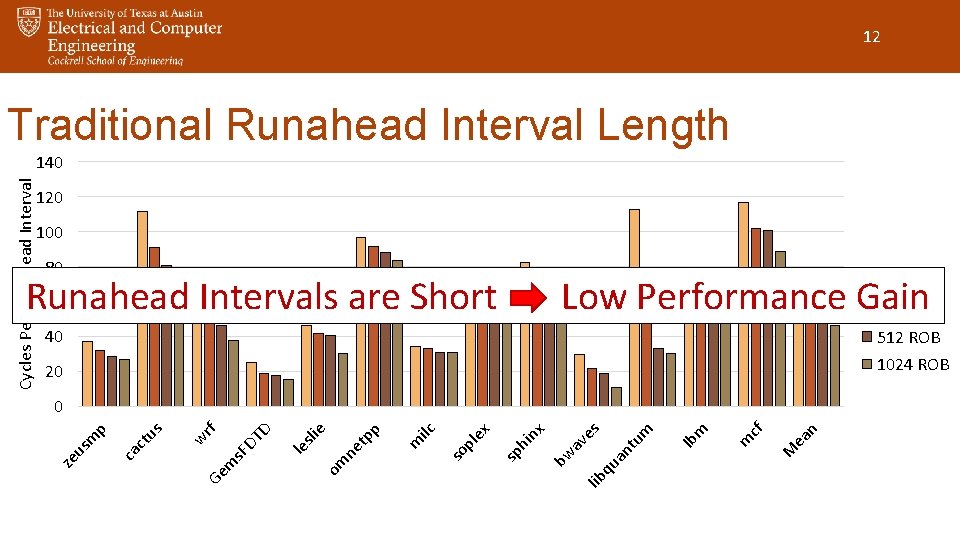

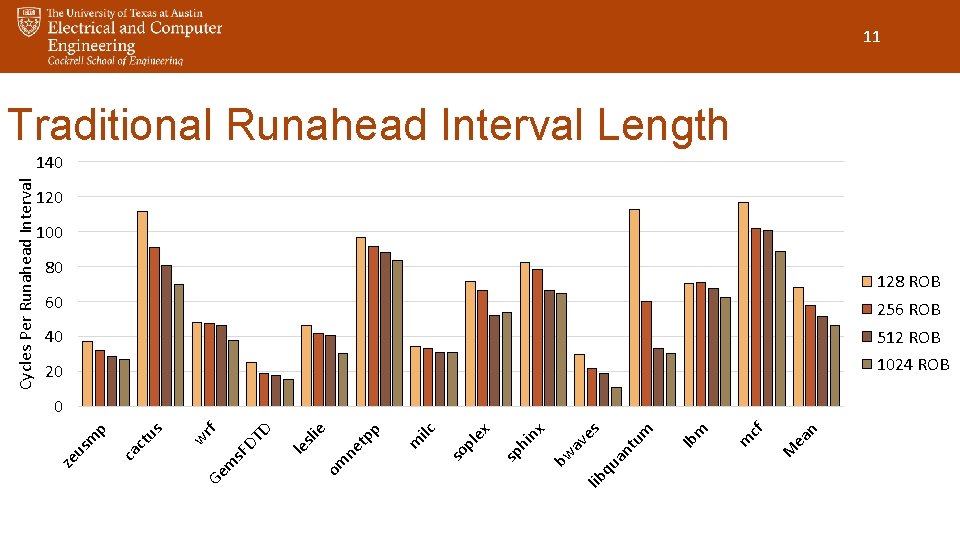

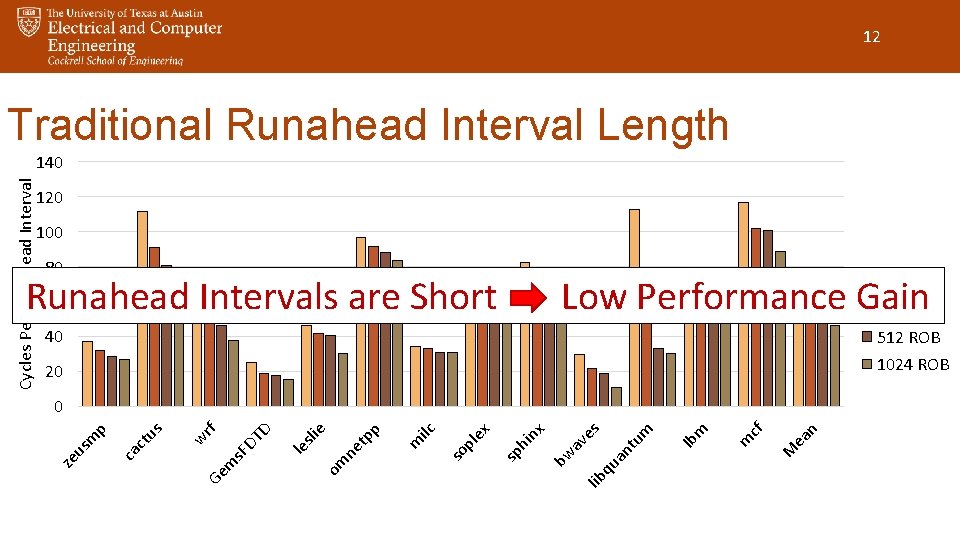

lib qu ea n M m cf m lb um an t es av bw ex nx hi sp pl so p ilc m tp sli e le ne om rf DT D m s. F Ge w us ca ct p m us ze Cycles Per Runahead Interval 11 Traditional Runahead Interval Length 140 120 100 80 60 128 ROB 256 ROB 40 512 ROB 20 1024 ROB 0

12 Traditional Runahead Interval Length 120 100 80 128 ROB 60 Runahead Intervals are Short Low Performance Gain 256 ROB 40 512 ROB 20 1024 ROB qu ea n M m cf m lb an t um es lib bw nx hi sp ex pl so ilc m p tp ne le sli e om Ge m s. F DT D rf w us ca ct ze us m p 0 av Cycles Per Runahead Interval 140

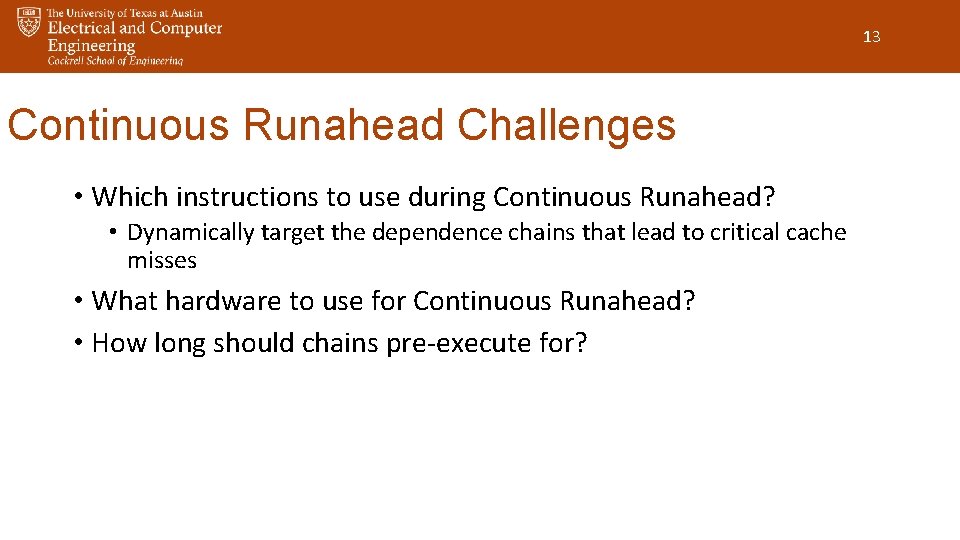

13 Continuous Runahead Challenges

- Which instructions to use during Continuous Runahead?
  - Dynamically target the dependence chains that lead to critical cache misses
- What hardware to use for Continuous Runahead?
- How long should chains pre-execute for?


14 Dependence Chains

- LD [R3] -> R5
- ADD R4, R5 -> R9
- ADD R9, R1 -> R6
- LD [R6] -> R8 (Cache Miss)
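To make the chain on this slide concrete, the following sketch executes only those four operations and reports the address of the final load, which is the address a runahead engine would want to prefetch. The tuple encoding, the helper names `live_ins` and `miss_address`, and every register and memory value are assumptions chosen for illustration.

```python
# The chain from the slide, encoded as (op, src_a, src_b, dst).
CHAIN = [
    ("LD", "R3", None, "R5"),
    ("ADD", "R4", "R5", "R9"),
    ("ADD", "R9", "R1", "R6"),
    ("LD", "R6", None, "R8"),   # the load that misses in the cache
]


def live_ins(chain):
    """Registers the chain reads before any chain instruction writes them."""
    produced, needed = set(), []
    for _, a, b, dst in chain:
        for src in (a, b):
            if src is not None and src not in produced and src not in needed:
                needed.append(src)
        produced.add(dst)
    return needed


def miss_address(chain, regs, memory):
    """Execute everything but the last load and return that load's address."""
    regs = dict(regs)
    for op, a, b, dst in chain[:-1]:
        if op == "LD":
            regs[dst] = memory[regs[a]]
        elif op == "ADD":
            regs[dst] = regs[a] + regs[b]
        else:
            raise ValueError(f"unsupported op {op}")
    return regs[chain[-1][1]]


# Hypothetical live-in values and memory contents.
example_regs = {"R1": 4, "R3": 0x100, "R4": 0x1000}
example_memory = {0x100: 0x20}
address = miss_address(CHAIN, example_regs, example_memory)
```

Only the three live-in registers and the four chain operations are needed to compute the miss address; the rest of the program is not executed.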

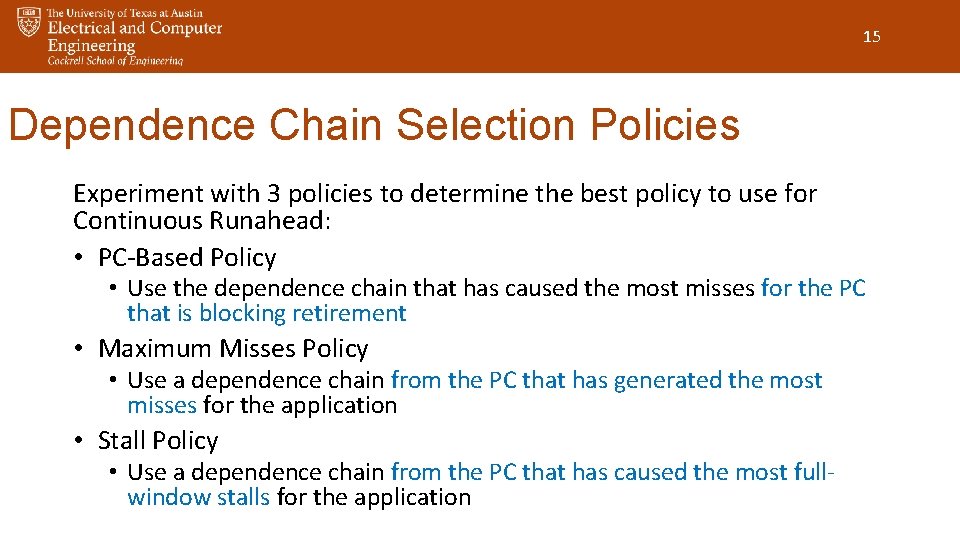

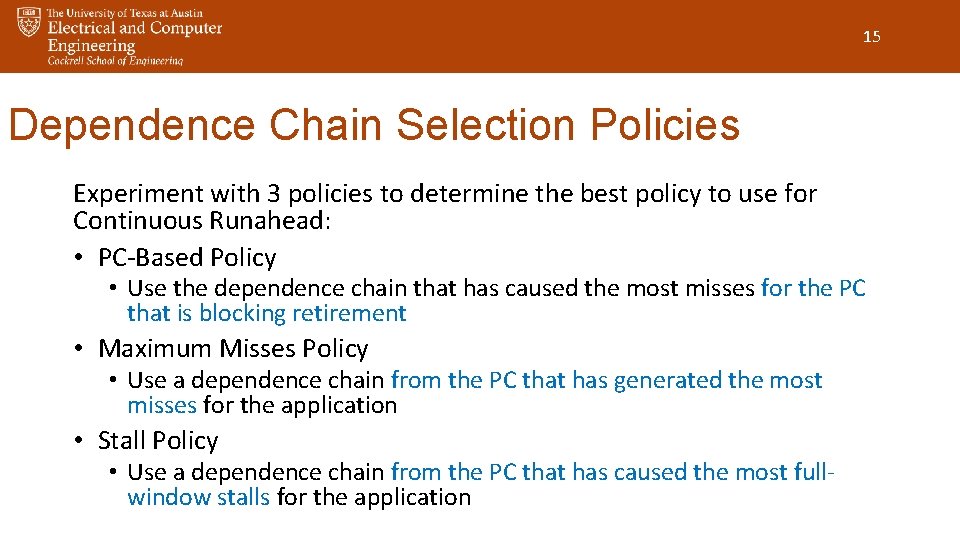

15 Dependence Chain Selection Policies

Experiment with 3 policies to determine the best policy to use for Continuous Runahead:

- PC-Based Policy
  - Use the dependence chain that has caused the most misses for the PC that is blocking retirement
- Maximum Misses Policy
  - Use a dependence chain from the PC that has generated the most misses for the application
- Stall Policy
  - Use a dependence chain from the PC that has caused the most full-window stalls for the application
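The three policies differ only in which counter they consult. A minimal sketch, assuming per-PC counters are kept in `collections.Counter` objects; the function names, the event list, and the chain identifiers are hypothetical, and the slides do not specify how ties are broken.

```python
from collections import Counter


def pc_based_policy(blocking_pc, misses_by_chain):
    """Chain with the most misses for the PC that is blocking retirement."""
    chain, _ = misses_by_chain[blocking_pc].most_common(1)[0]
    return chain


def maximum_misses_policy(miss_counts):
    """PC that has generated the most misses for the application."""
    pc, _ = miss_counts.most_common(1)[0]
    return pc


def stall_policy(stall_counts):
    """PC that has caused the most full-window stalls for the application."""
    pc, _ = stall_counts.most_common(1)[0]
    return pc


# Hypothetical miss events: (pc, caused_full_window_stall).
events = [(0x40, False)] * 5 + [(0x80, True)] * 3 + [(0x40, True)]
miss_counts = Counter(pc for pc, _ in events)
stall_counts = Counter(pc for pc, stalled in events if stalled)

# Hypothetical per-PC chain statistics for the PC-based policy.
misses_by_chain = {0x80: Counter({"chain_a": 2, "chain_b": 1})}
```

With these made-up counts the Maximum Misses policy would pick PC 0x40 while the Stall policy would pick PC 0x80, which shows how a frequently missing PC need not be the one that stalls the window most often.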

16 Dependence Chain Selection Policies 100 60 Runahead Buffer PC-Policy 40 Maximum-Misses Policy 20 Stall Policy m cf M ea n m lb an tu m es qu av lib bw nx sp hi ex pl m ilc so om ne tp p sli e le rf m s. F DT D w Ge us ca ct us -20 m p 0 ze % IPC Improvement 80

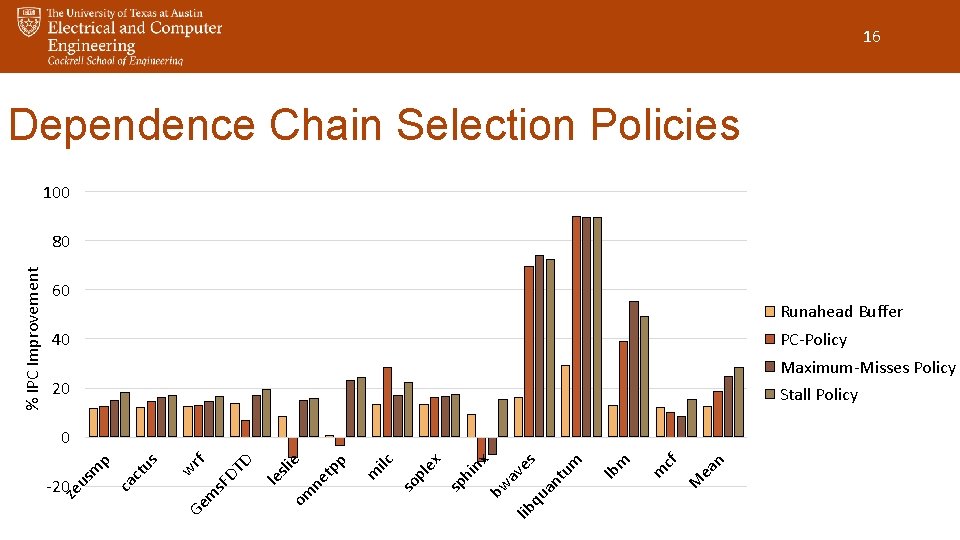

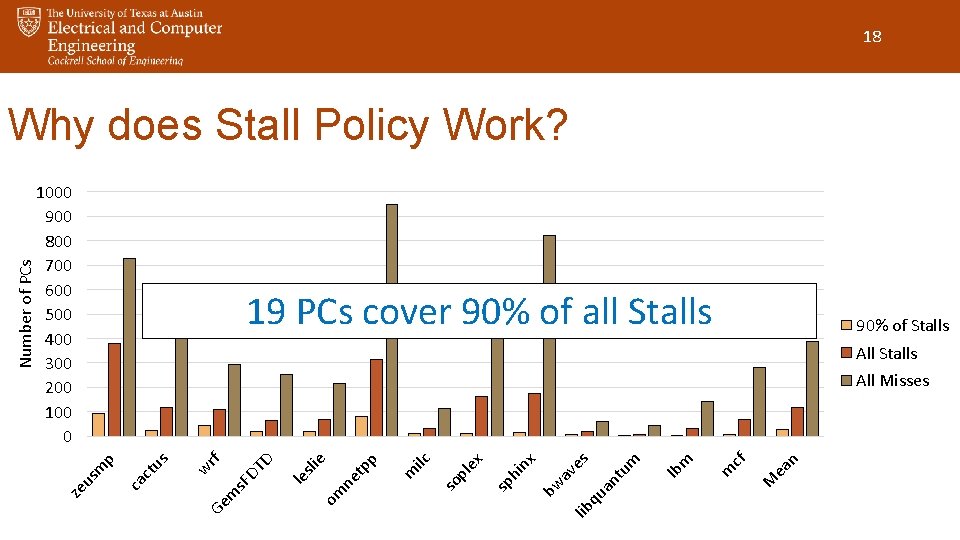

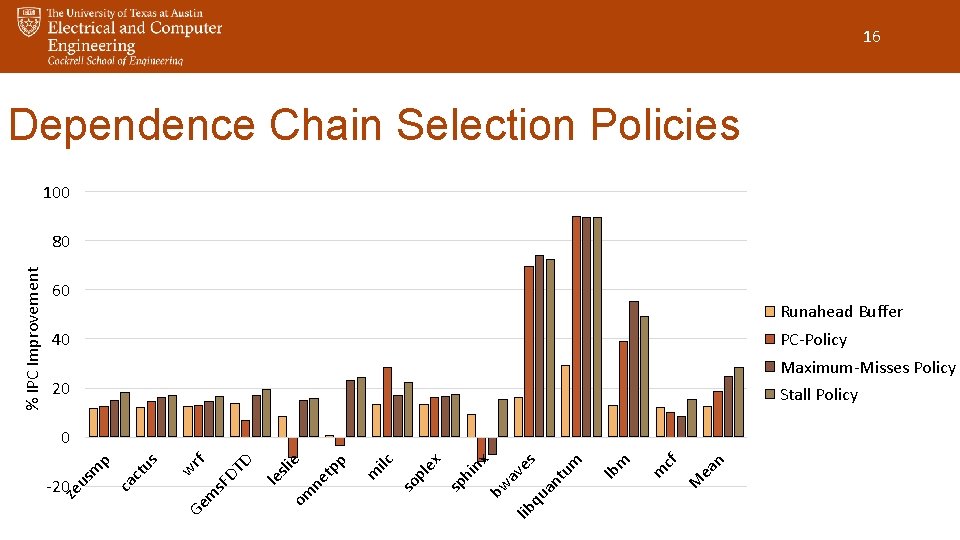

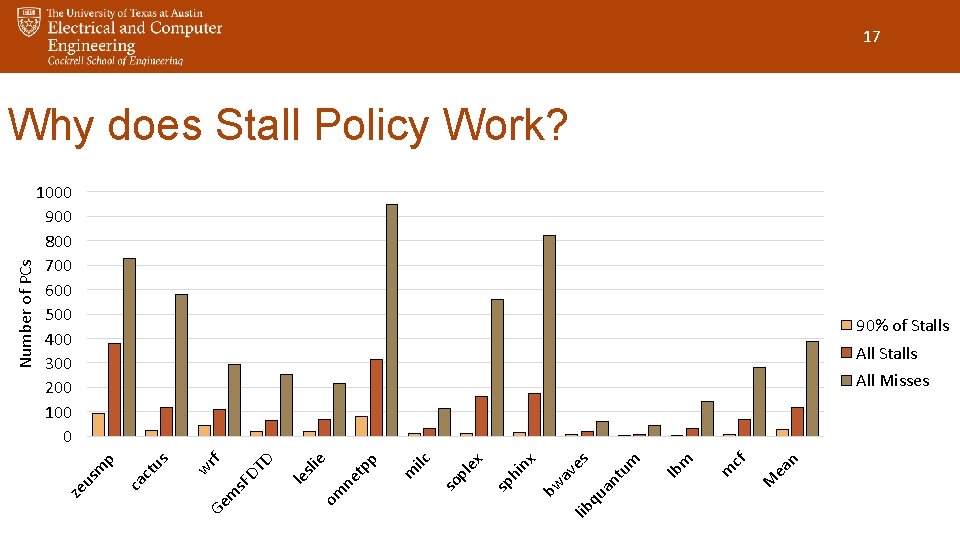

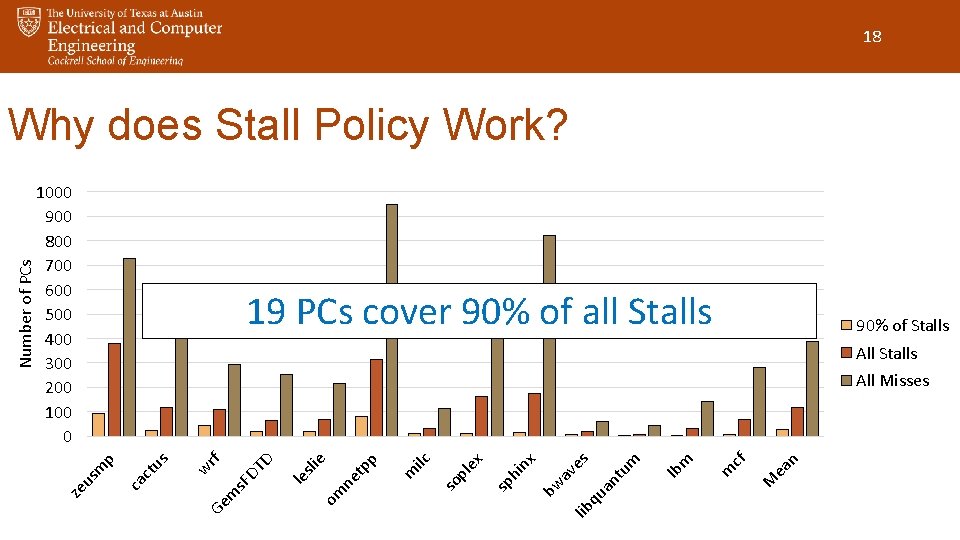

17 1000 900 800 700 600 500 400 300 200 100 0 90% of Stalls All Stalls lib qu ea n M m cf m lb an tu m es av bw nx hi sp ex ilc m pl so om ne tp p sli e le rf Ge m s. F DT D w us ca ct us m p All Misses ze Number of PCs Why does Stall Policy Work?

18 1000 900 800 700 600 500 400 300 200 100 0 19 PCs cover 90% of all Stalls 90% of Stalls All Stalls lib qu ea n M m cf m lb an tu m es av bw nx hi sp ex ilc m pl so om ne tp p sli e le rf Ge m s. F DT D w us ca ct us m p All Misses ze Number of PCs Why does Stall Policy Work?

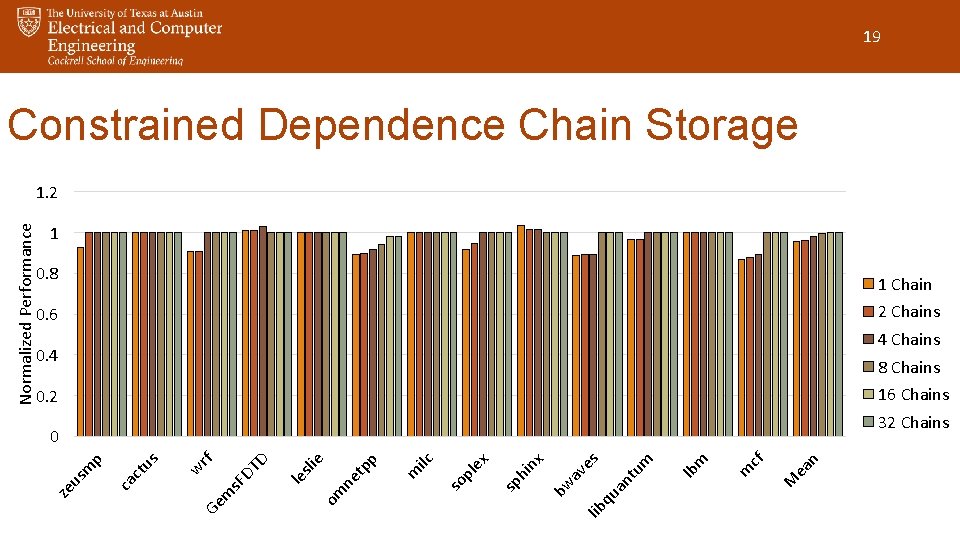

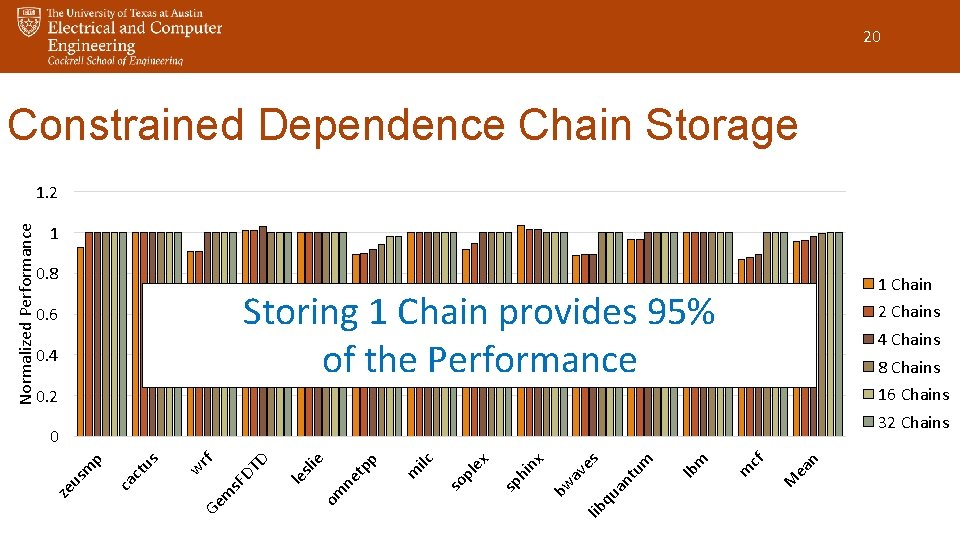

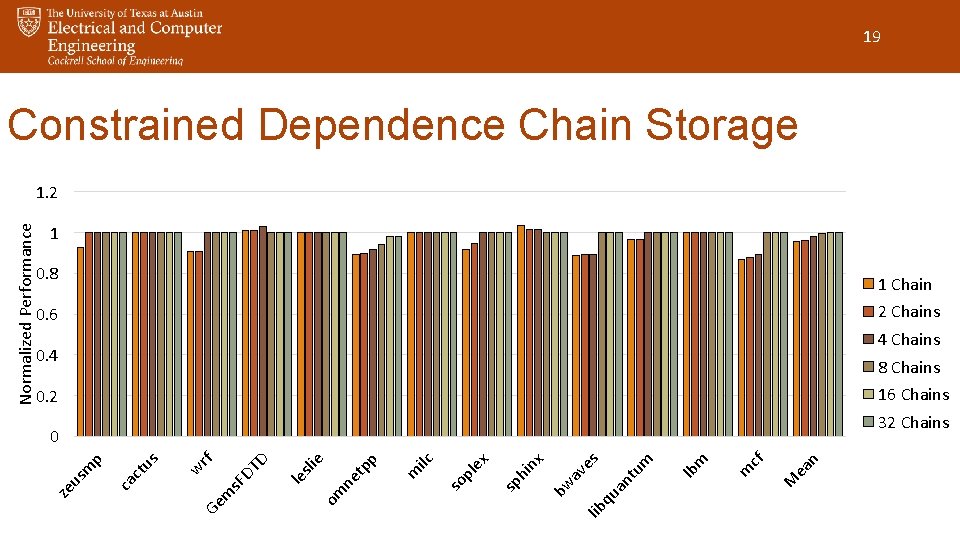

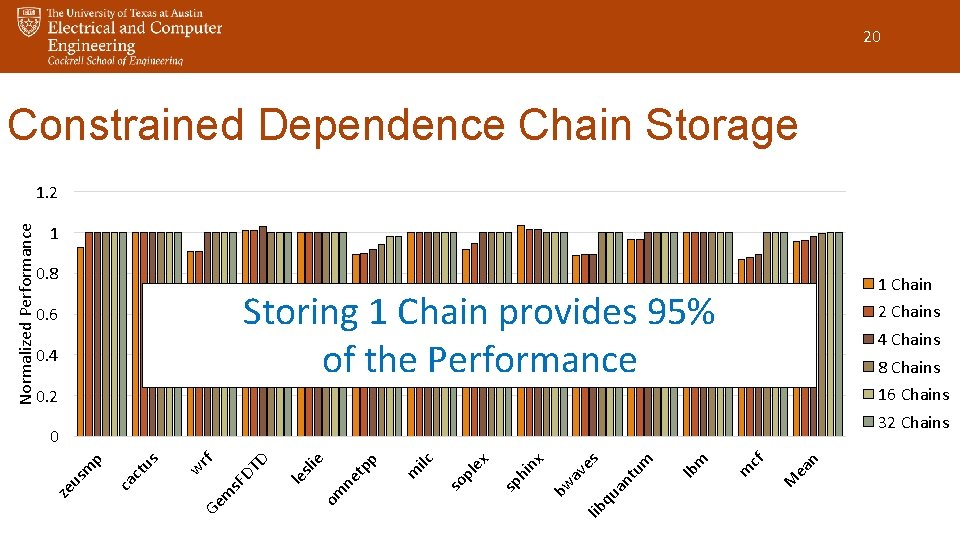

19 Constrained Dependence Chain Storage 1 0. 8 1 Chain 2 Chains 0. 6 4 Chains 0. 4 8 Chains 16 Chains 0. 2 32 Chains lib qu ea n M m cf m lb an t um es av bw nx hi sp ex ilc m pl so om ne tp p sli e le w rf Ge m s. F DT D us ca ct us m p 0 ze Normalized Performance 1. 2

20 Constrained Dependence Chain Storage 1 0. 8 1 Chain Storing 1 Chain provides 95% of the Performance 0. 6 0. 4 2 Chains 4 Chains 8 Chains 16 Chains 0. 2 32 Chains lib qu ea n M m cf m lb an t um es av bw nx hi sp ex ilc m pl so om ne tp p sli e le w rf Ge m s. F DT D us ca ct us m p 0 ze Normalized Performance 1. 2

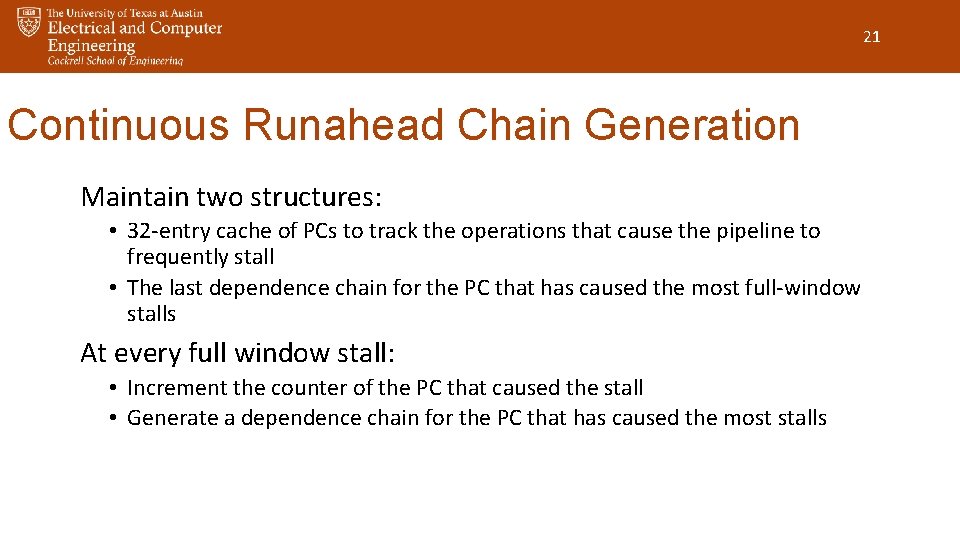

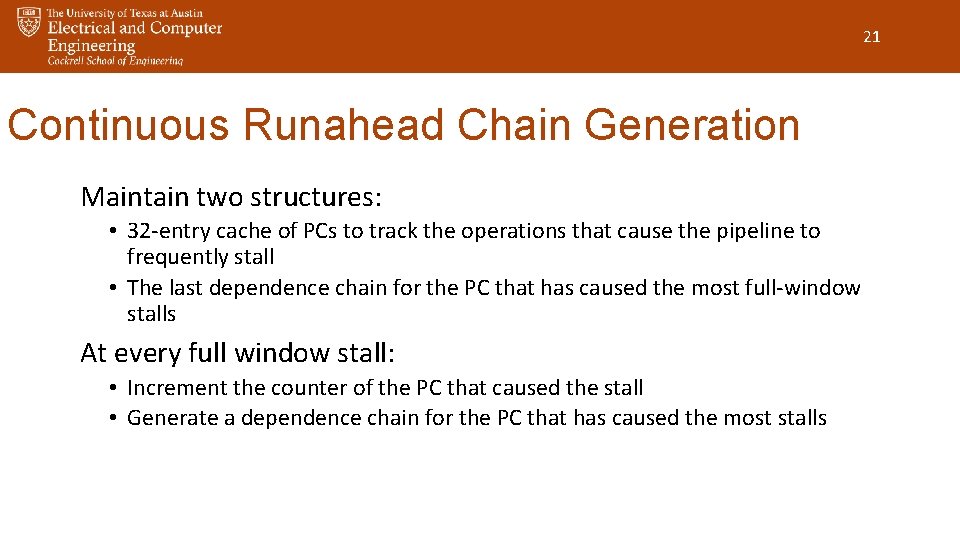

21 Continuous Runahead Chain Generation

Maintain two structures:

- 32-entry cache of PCs to track the operations that cause the pipeline to frequently stall
- The last dependence chain for the PC that has caused the most full-window stalls

At every full-window stall:

- Increment the counter of the PC that caused the stall
- Generate a dependence chain for the PC that has caused the most stalls
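The two structures and the per-stall update could be modeled roughly as below. The class name `StallTracker`, the `generate_chain` callback, and the replacement rule (evict the entry with the lowest count when the PC cache is full) are assumptions; the slide does not say how entries are replaced.

```python
class StallTracker:
    """Toy model of the PC cache plus the single stored dependence chain."""

    def __init__(self, capacity=32):
        self.capacity = capacity   # 32-entry cache of PCs
        self.counts = {}           # pc -> number of full-window stalls
        self.hot_pc = None
        self.hot_chain = None      # last chain for the most-stalling PC

    def on_full_window_stall(self, pc, generate_chain):
        if pc not in self.counts and len(self.counts) >= self.capacity:
            # Assumption: evict the PC with the fewest recorded stalls.
            victim = min(self.counts, key=self.counts.get)
            del self.counts[victim]
        self.counts[pc] = self.counts.get(pc, 0) + 1
        self.hot_pc = max(self.counts, key=self.counts.get)
        self.hot_chain = generate_chain(self.hot_pc)
        return self.hot_chain


# Hypothetical usage with a tiny 2-entry cache and a stand-in chain generator.
tracker = StallTracker(capacity=2)
for stalled_pc in (0x10, 0x20, 0x20, 0x30):
    tracker.on_full_window_stall(stalled_pc, lambda pc: f"chain@{pc:#x}")
```

Keeping a single chain matches the storage result reported earlier in the deck, where storing one chain provides 95% of the performance.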

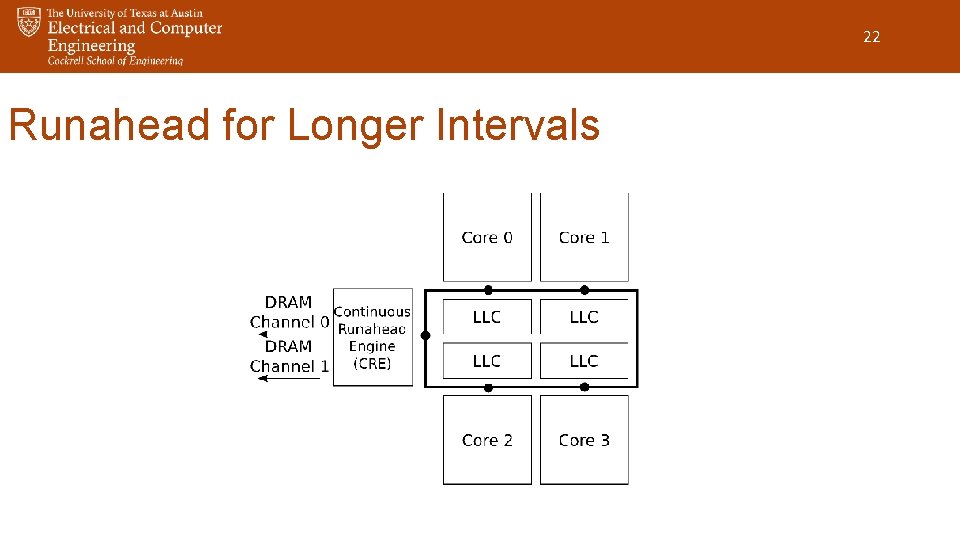

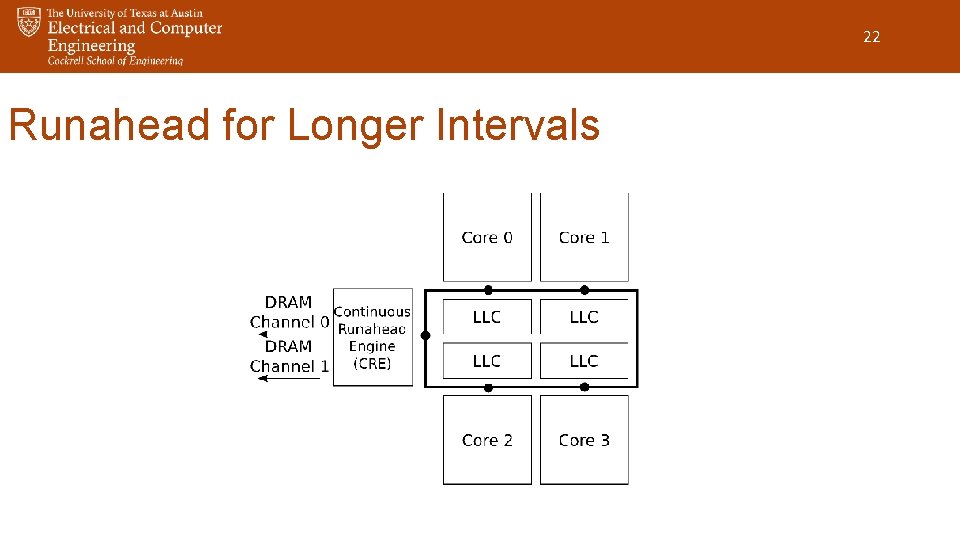

22 Runahead for Longer Intervals

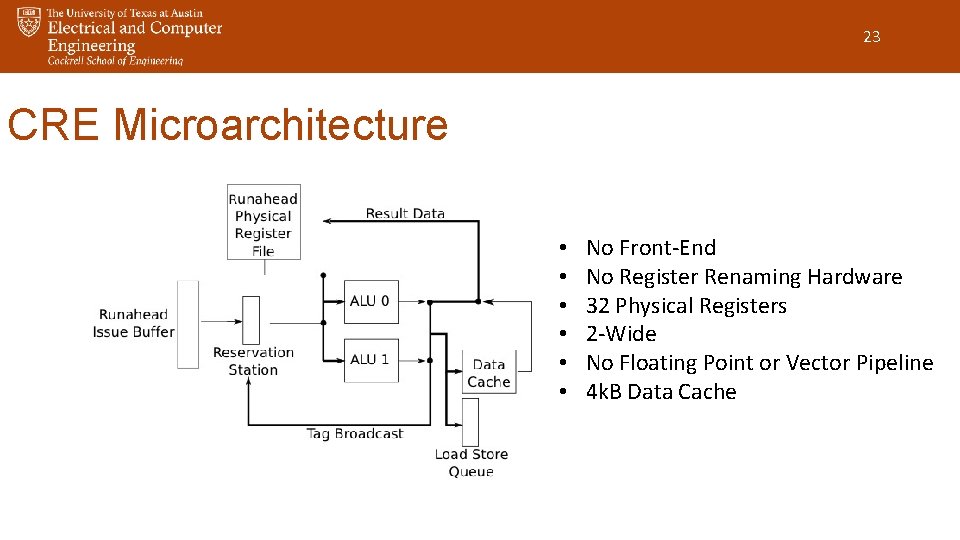

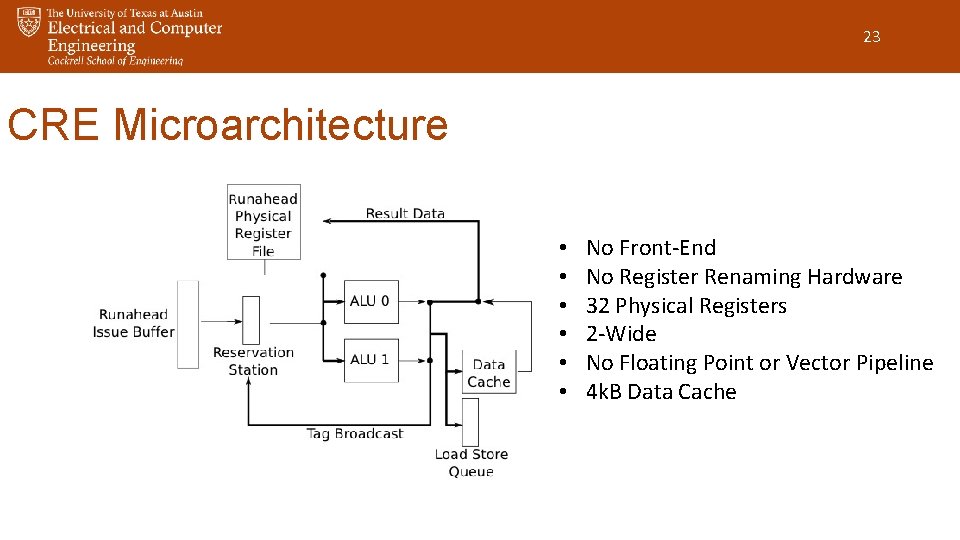

23 CRE Microarchitecture

- No Front-End
- No Register Renaming Hardware
- 32 Physical Registers
- 2-Wide
- No Floating Point or Vector Pipeline
- 4 kB Data Cache

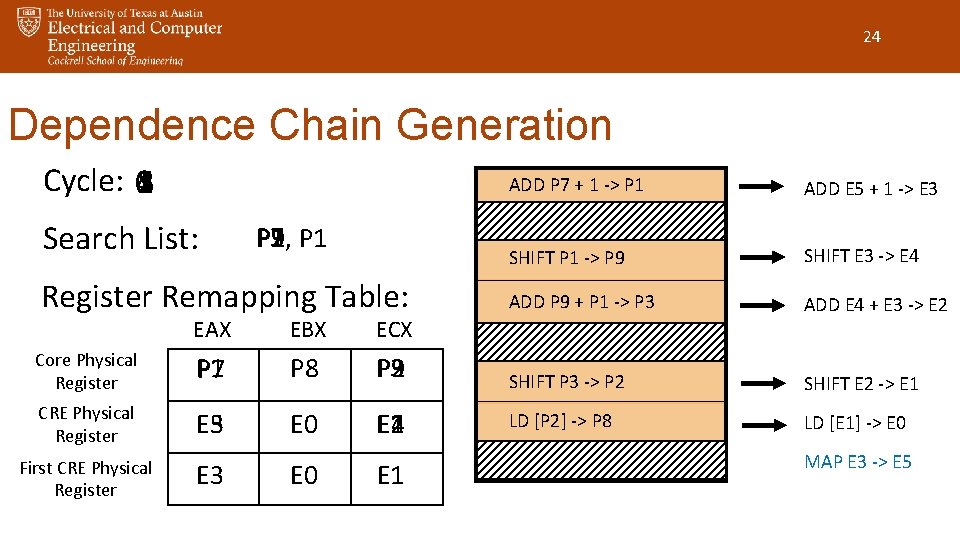

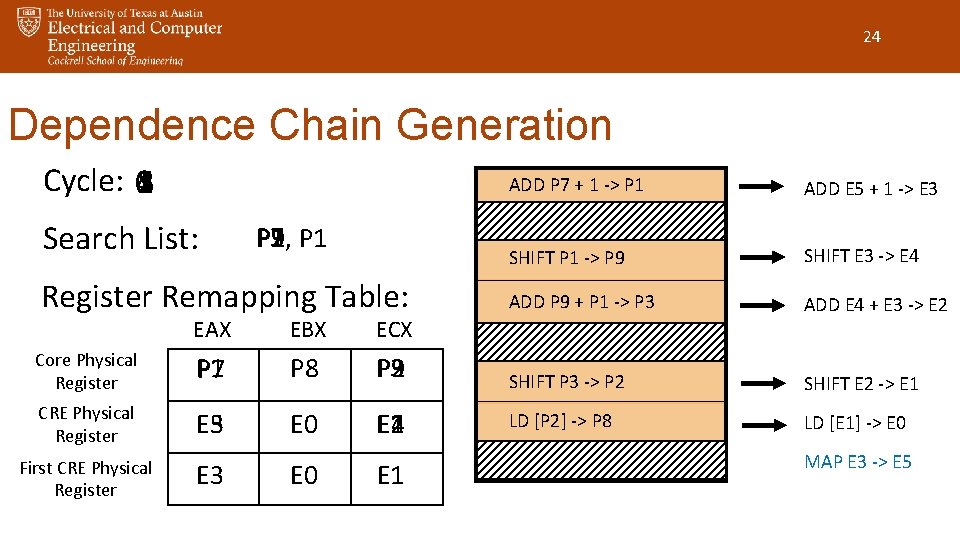

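24 Dependence Chain Generation

[Animated figure, cycles 0 to 5: a dependence chain is read out of the core and remapped onto CRE physical registers.]

| Core instruction | CRE instruction |
| --- | --- |
| ADD P7 + 1 -> P1 | ADD E5 + 1 -> E3 |
| SHIFT P1 -> P9 | SHIFT E3 -> E4 |
| ADD P9 + P1 -> P3 | ADD E4 + E3 -> E2 |
| SHIFT P3 -> P2 | SHIFT E2 -> E1 |
| LD [P2] -> P8 | LD [E1] -> E0 |
| | MAP E3 -> E5 |

Register Remapping Table (columns EAX, EBX, ECX; rows Core Physical Register, CRE Physical Register, First CRE Physical Register). Values shown over the animation: Core Physical Register P1, P7, P8, P3, P2, P9; CRE Physical Register E3, E5, E0, E2, E1, E4; First CRE Physical Register E3, E0, E1.

Search List values shown over the animation: P7; P9, P1; P3; P2.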
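One way to read this slide is as a backward walk from the missing load: a search list holds the registers whose producers are still needed, and every register that enters the chain is given a CRE register. The sketch below follows that reading under several assumptions: the tuple encoding, the `generate_chain` name, the extra `MUL` instruction (added only to show that unrelated work is filtered out), and the right-to-left allocation order for sources, which is chosen so that the numbering lines up with the slide. The final `MAP E3 -> E5` step is not modeled.

```python
def generate_chain(rob):
    """rob: (op, dst, srcs) tuples, oldest first; the last one is the missing load."""
    remap = {}                                 # core register -> CRE register

    def cre(reg):
        if reg not in remap:
            remap[reg] = f"E{len(remap)}"      # allocate the next CRE register
        return remap[reg]

    def rename(instr):
        op, dst, srcs = instr
        cre(dst)
        for src in reversed(srcs):             # assumption: right-to-left order
            if isinstance(src, str):
                cre(src)
        new_srcs = tuple(remap[s] if isinstance(s, str) else s for s in srcs)
        return (op, remap[dst], new_srcs)

    load = rob[-1]
    chain = [rename(load)]
    search = [s for s in load[2] if isinstance(s, str)]
    for instr in reversed(rob[:-1]):           # walk from youngest to oldest
        _, dst, srcs = instr
        if dst not in search:
            continue                           # not a producer we are looking for
        search.remove(dst)
        chain.append(rename(instr))
        for src in srcs:
            if isinstance(src, str) and src not in search:
                search.append(src)
    chain.reverse()                            # back to program order
    return chain, remap, [remap[r] for r in search]


ROB = [
    ("ADD", "P1", ("P7", 1)),
    ("SHIFT", "P9", ("P1",)),
    ("ADD", "P3", ("P9", "P1")),
    ("MUL", "P5", ("P4", "P6")),               # hypothetical unrelated instruction
    ("SHIFT", "P2", ("P3",)),
    ("LD", "P8", ("P2",)),
]
cre_chain, remap_table, chain_live_ins = generate_chain(ROB)
```

With this input the renamed chain contains the five CRE instructions listed in the table and leaves E5, the counterpart of P7, as the only live-in.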

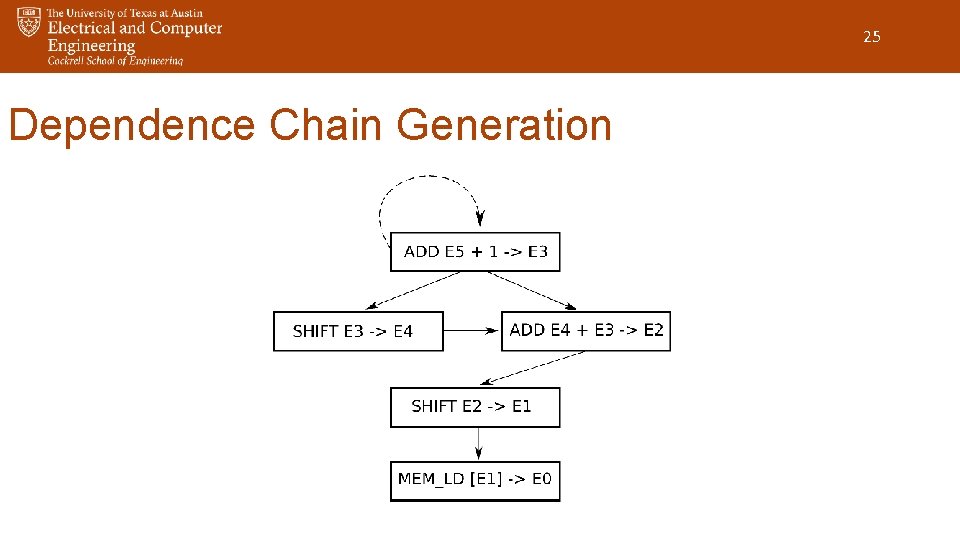

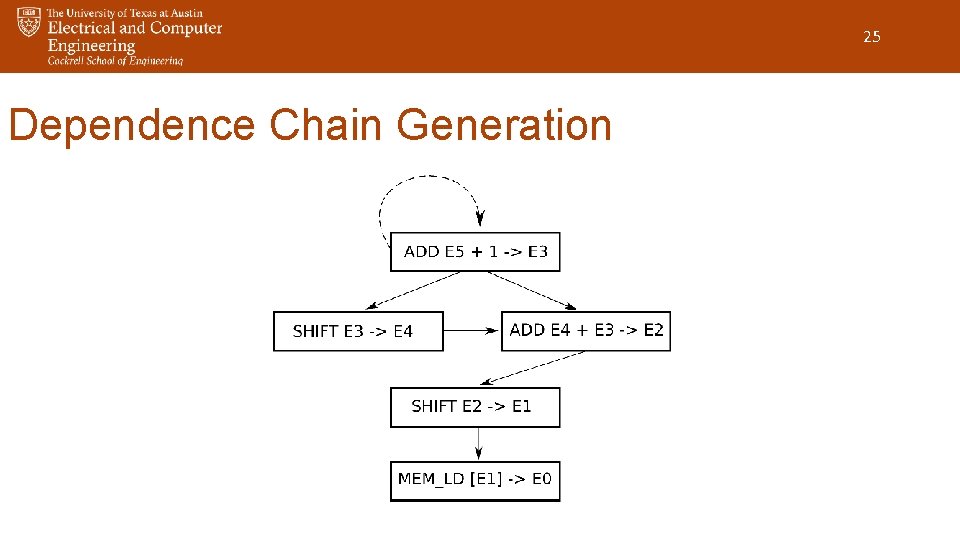

25 Dependence Chain Generation

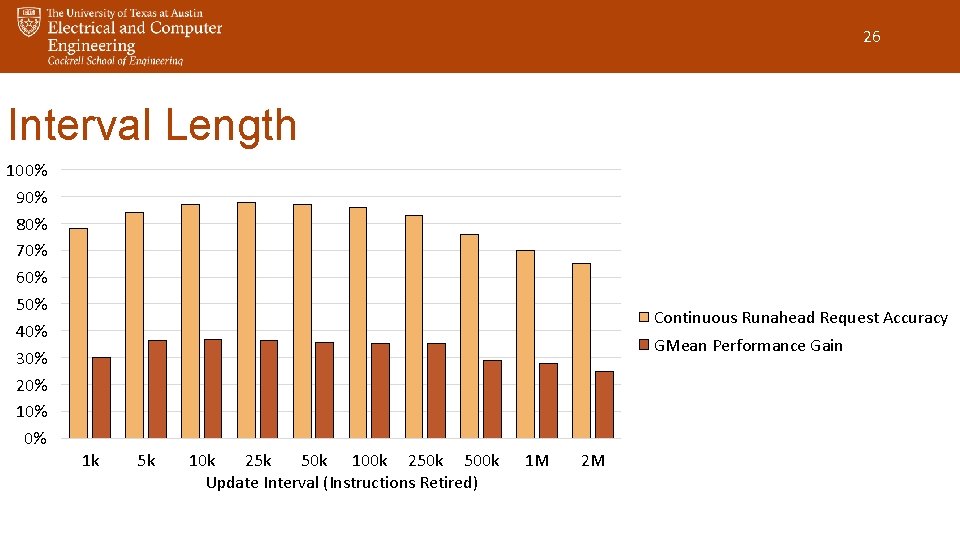

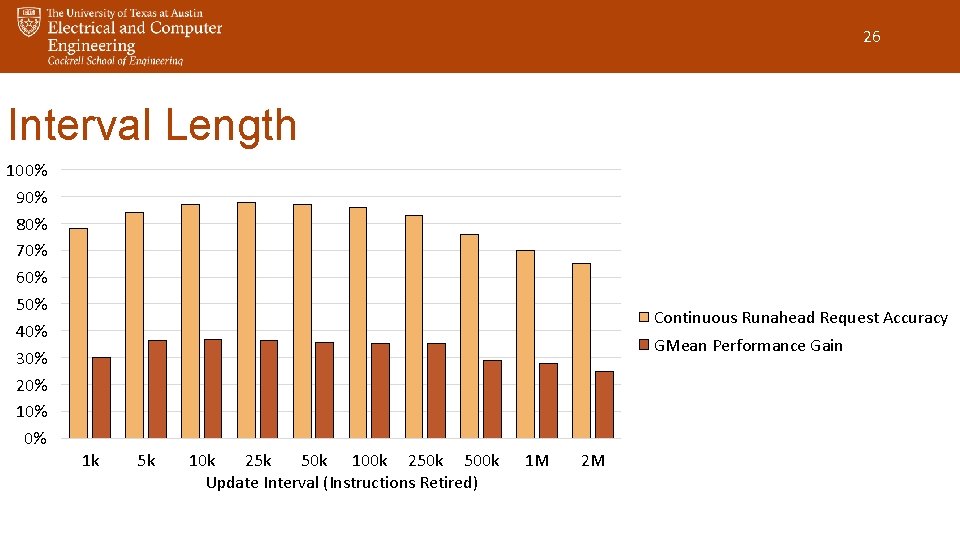

26 Interval Length 100% 90% 80% 70% 60% 50% 40% 30% 20% 10% 0% Continuous Runahead Request Accuracy GMean Performance Gain 1 k 5 k 10 k 25 k 50 k 100 k 250 k 500 k Update Interval (Instructions Retired) 1 M 2 M

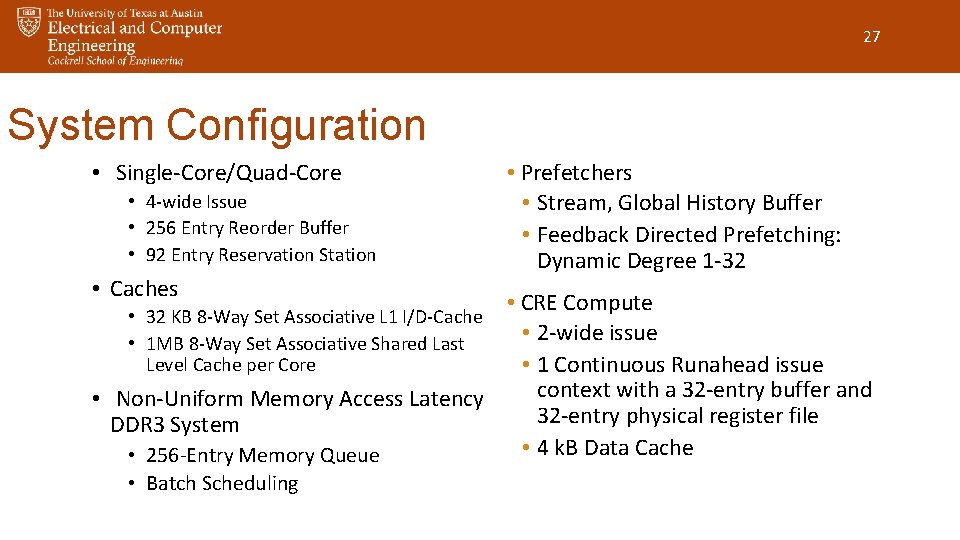

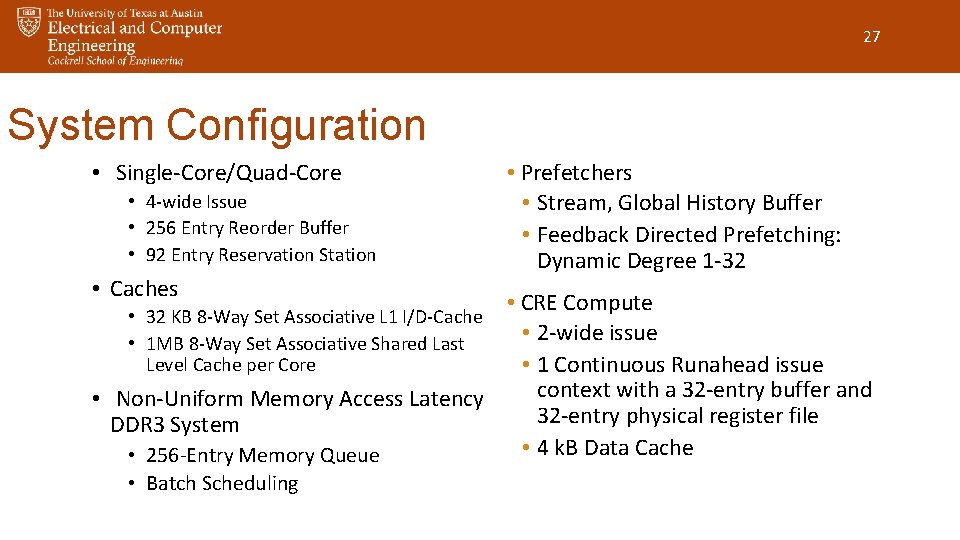

27 System Configuration

- Single-Core/Quad-Core
  - 4-wide Issue
  - 256 Entry Reorder Buffer
  - 92 Entry Reservation Station
- Caches
  - 32 KB 8-Way Set Associative L1 I/D-Cache
  - 1 MB 8-Way Set Associative Shared Last Level Cache per Core
- Non-Uniform Memory Access Latency DDR3 System
  - 256-Entry Memory Queue
  - Batch Scheduling
- Prefetchers
  - Stream, Global History Buffer
  - Feedback Directed Prefetching: Dynamic Degree 1-32
- CRE Compute
  - 2-wide issue
  - 1 Continuous Runahead issue context with a 32-entry buffer and 32-entry physical register file
  - 4 kB Data Cache

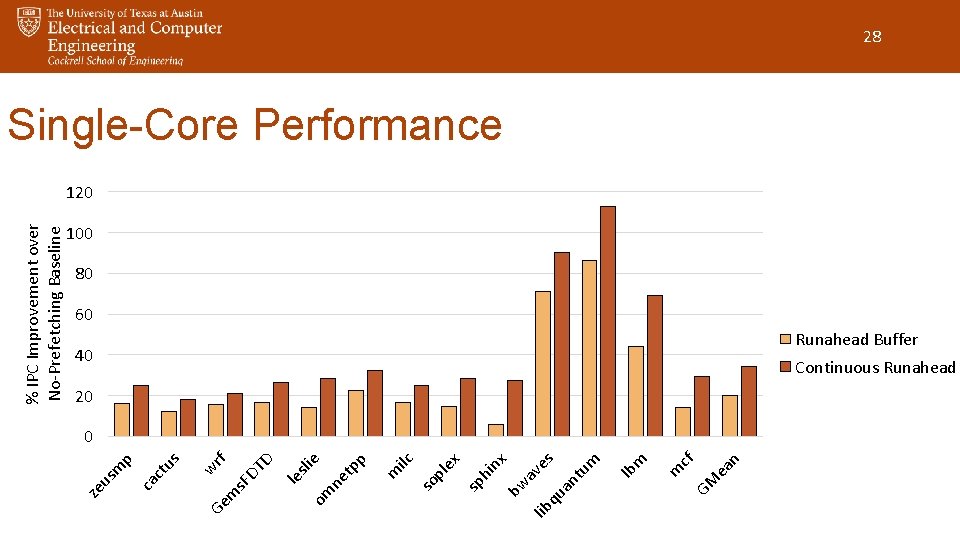

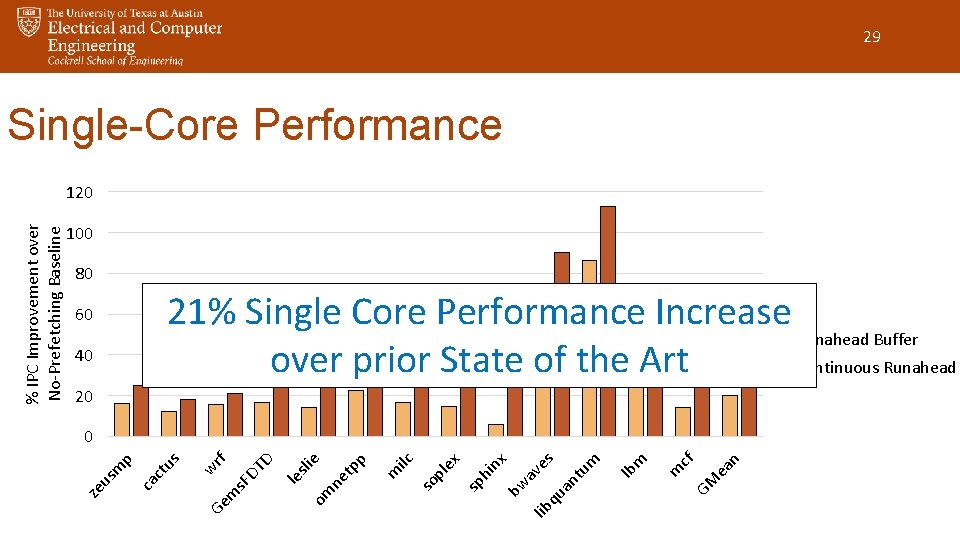

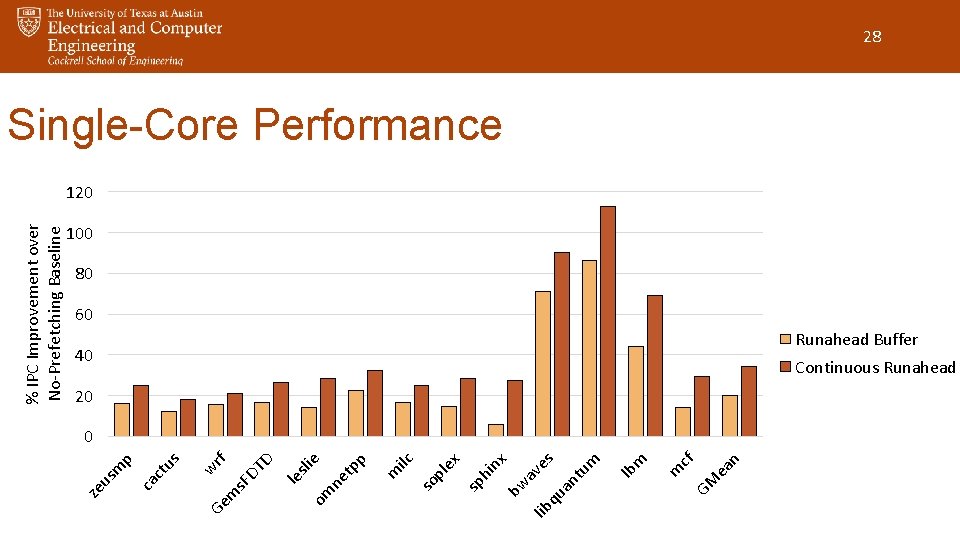

lib m lb um an t es m cf GM ea n qu av bw ex nx hi sp pl so p ilc m tp ne om sli e le Ge wrf m s. F DT D us ca ct m p us ze % IPC Improvement over No-Prefetching Baseline 28 Single-Core Performance 120 100 80 60 40 Runahead Buffer Continuous Runahead 20 0

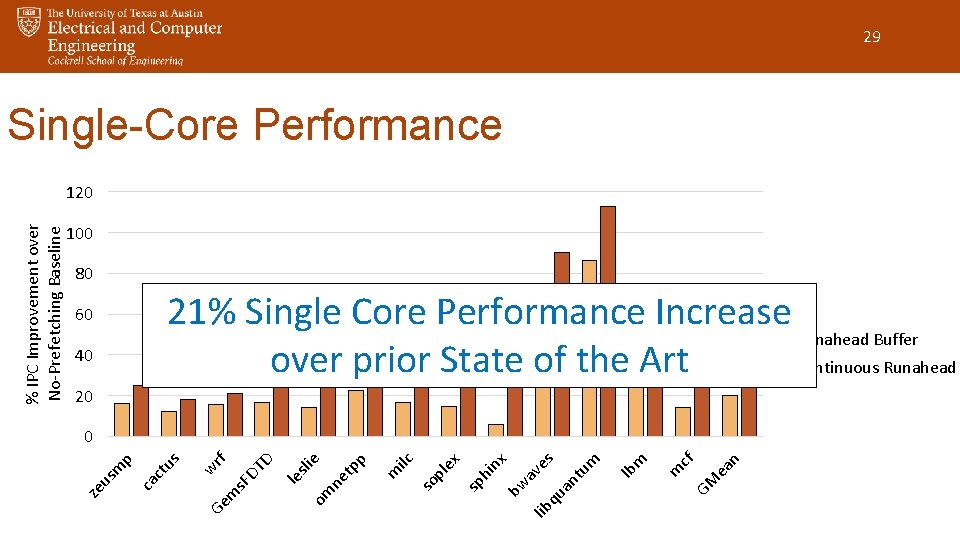

29 Single-Core Performance 100 80 21% Single Core Performance Increase Runahead Buffer over prior State of the Art Continuous Runahead 60 40 20 m cf GM ea n m lb um an t es qu av lib bw nx sp hi ex pl m ilc so om ne tp p sli e le Ge wrf m s. F DT D us ca ct us m p 0 ze % IPC Improvement over No-Prefetching Baseline 120

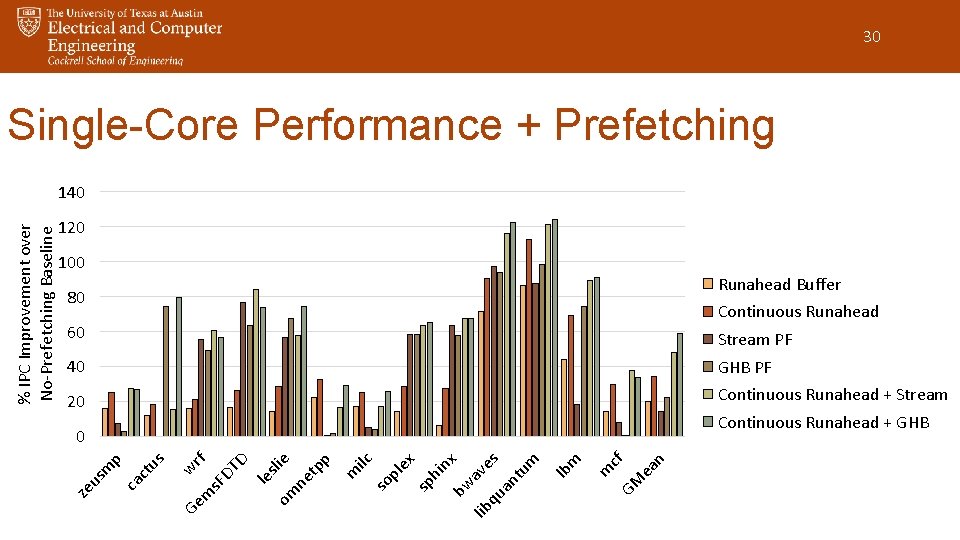

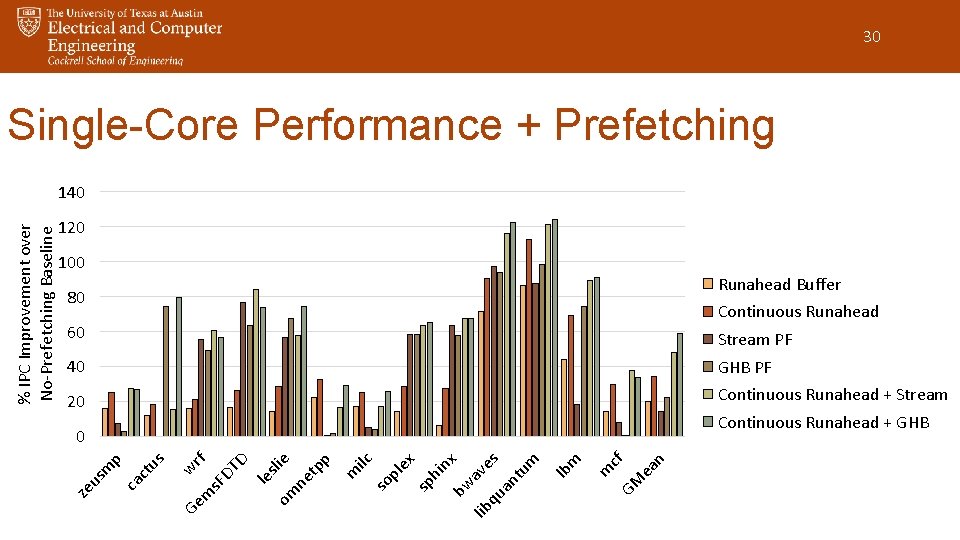

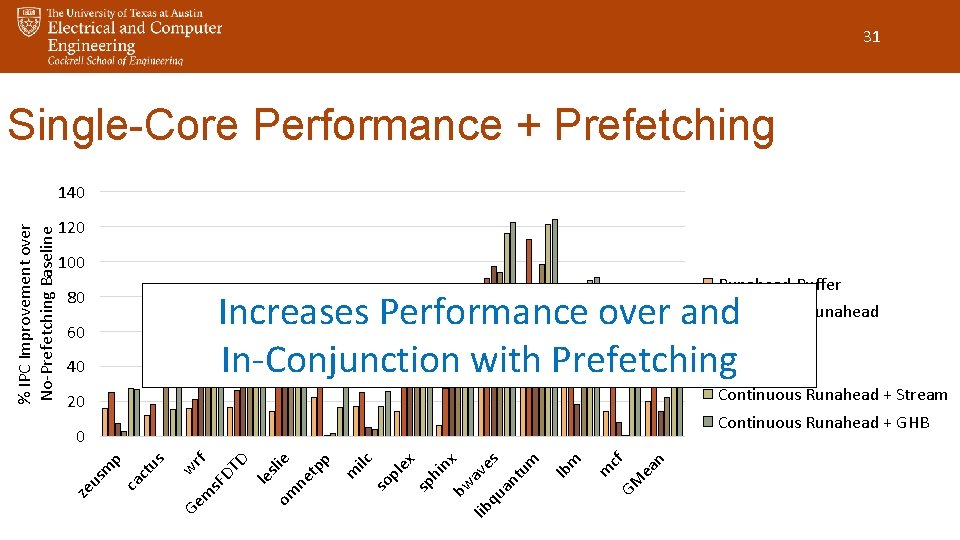

30 Single-Core Performance + Prefetching 120 100 Runahead Buffer 80 Continuous Runahead 60 Stream PF 40 GHB PF 20 Continuous Runahead + Stream Continuous Runahead + GHB m c GM f ea n m lb um an t es qu lib bw av nx sp hi ex so pl ilc m m rf s. F DT D le s om lie ne tp p w Ge us ca ct us m p 0 ze % IPC Improvement over No-Prefetching Baseline 140

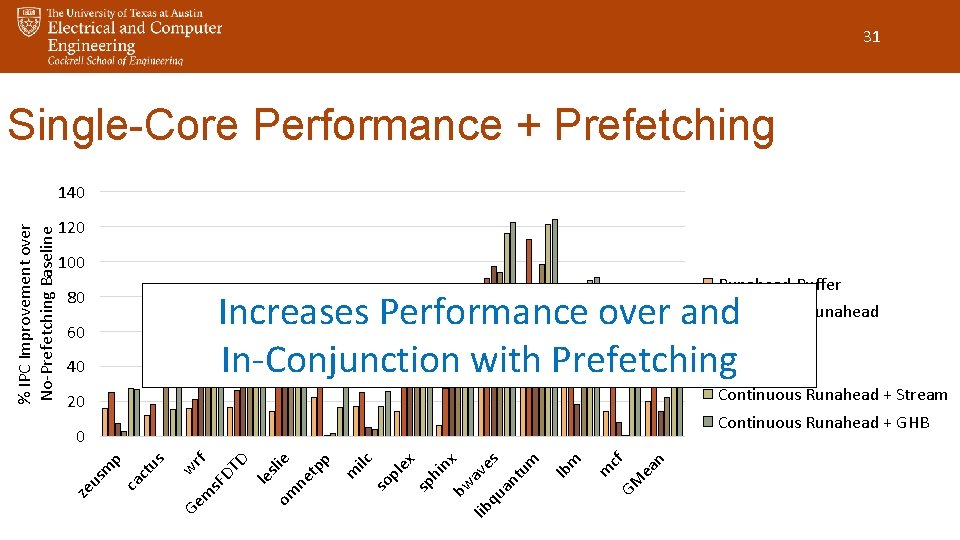

31 Single-Core Performance + Prefetching 120 100 Runahead Buffer 80 Continuous Runahead Increases Performance over and Stream PF In-Conjunction with Prefetching GHB PF 60 40 Continuous Runahead + Stream 20 Continuous Runahead + GHB m c GM f ea n m lb um an t es qu lib bw av nx sp hi ex so pl ilc m m rf s. F DT D le s om lie ne tp p w Ge us ca ct us m p 0 ze % IPC Improvement over No-Prefetching Baseline 140

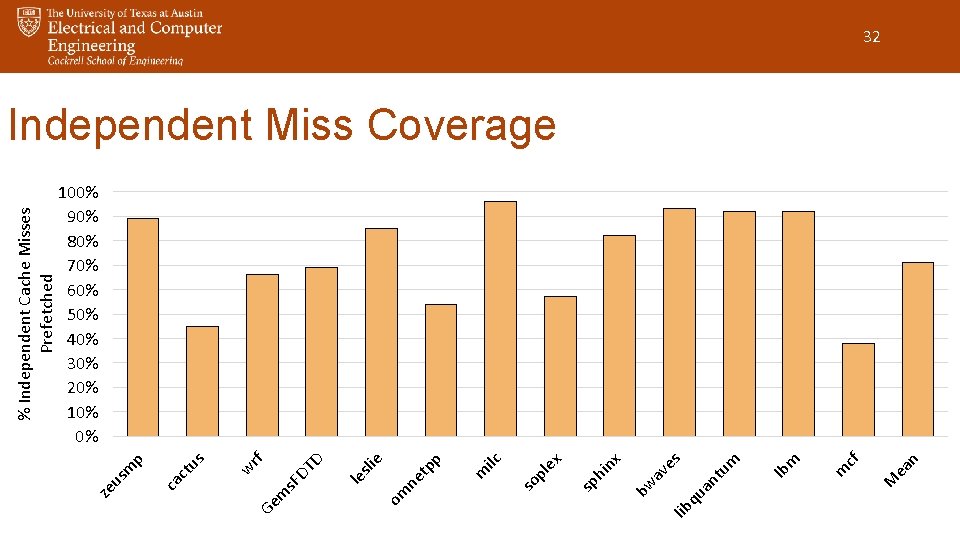

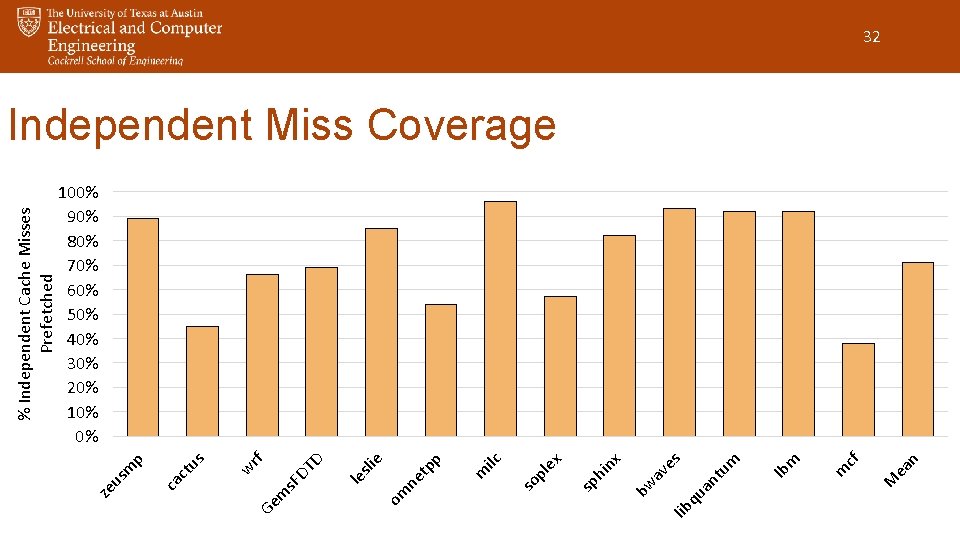

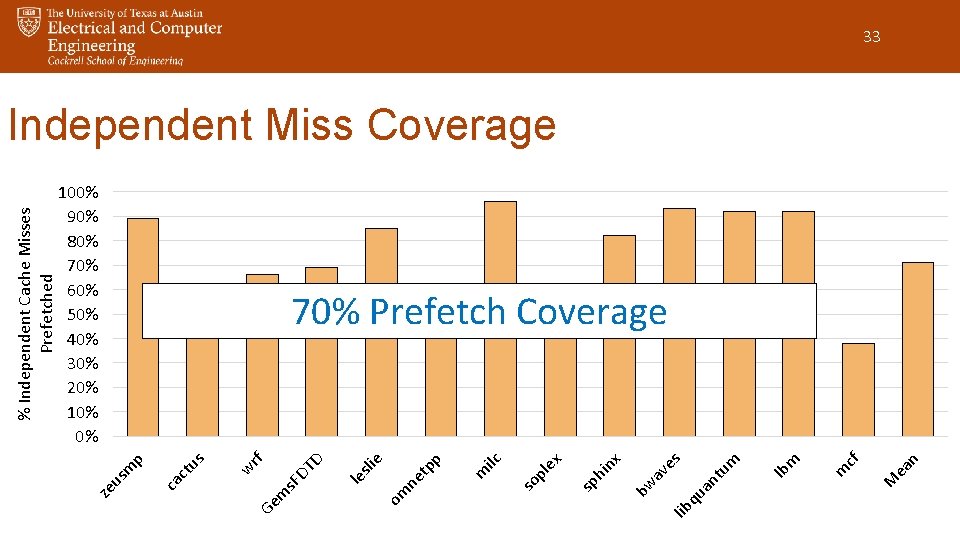

qu lib ea n M m cf m lb an tu m es av bw ex nx hi sp pl so p ilc m tp ne om sli e le rf Ge m s. F DT D w us ca ct p m us ze % Independent Cache Misses Prefetched 32 Independent Miss Coverage 100% 90% 80% 70% 60% 50% 40% 30% 20% 10% 0%

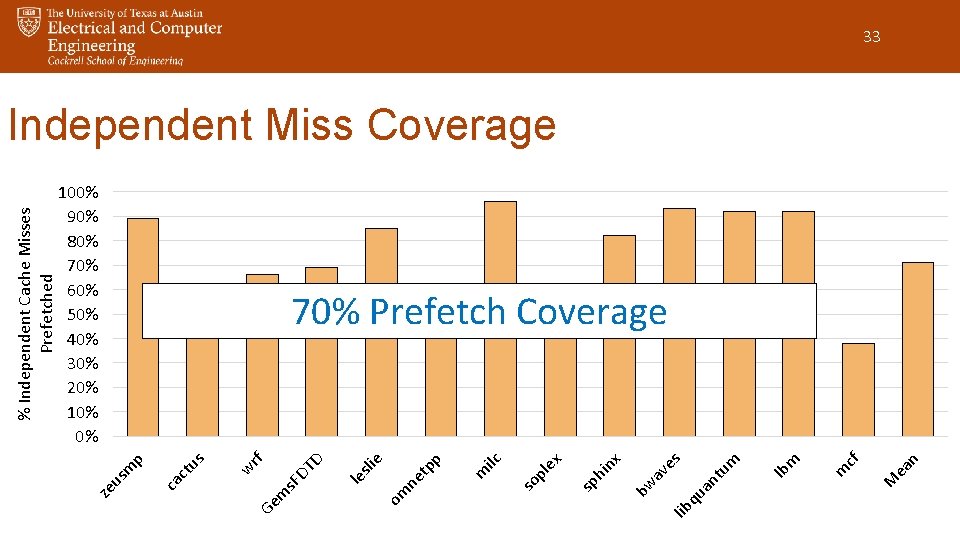

qu lib ea n M m cf m lb an tu m es av bw ex nx hi sp pl so p ilc m tp ne om sli e 100% 90% 80% 70% 60% 50% 40% 30% 20% 10% 0% le rf Ge m s. F DT D w us ca ct p m us ze % Independent Cache Misses Prefetched 33 Independent Miss Coverage 70% Prefetch Coverage

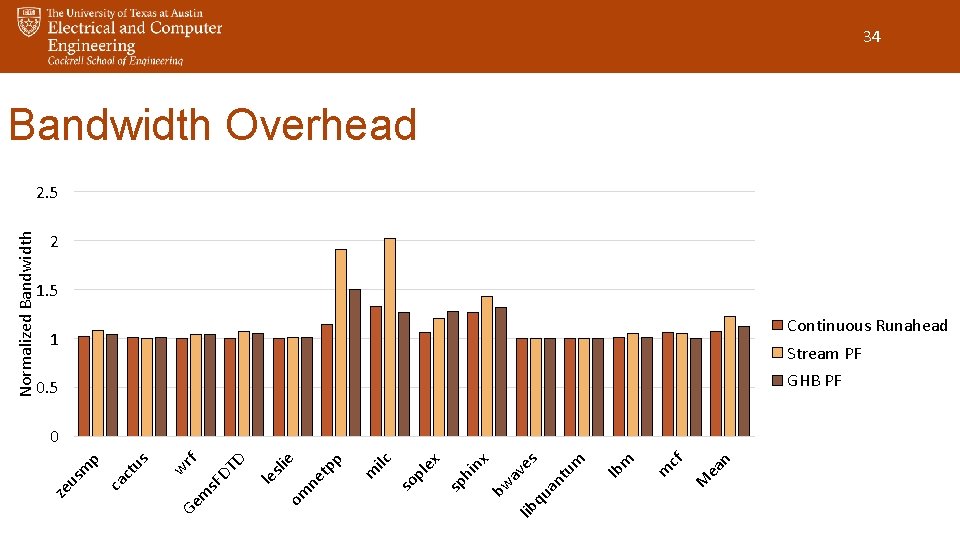

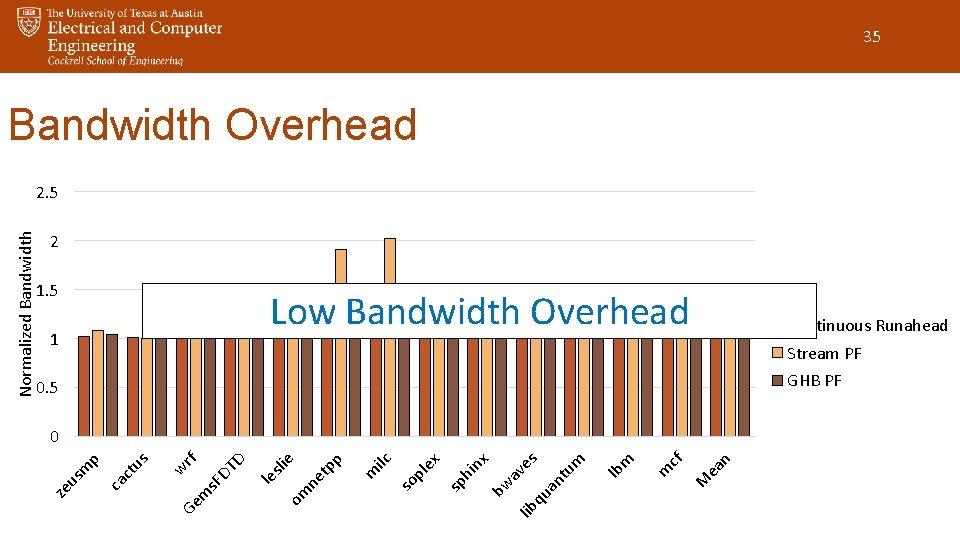

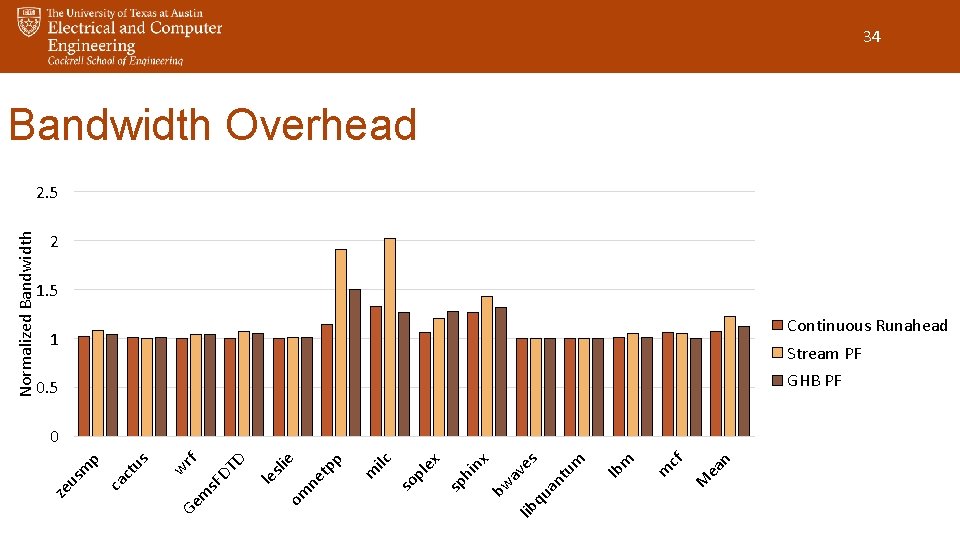

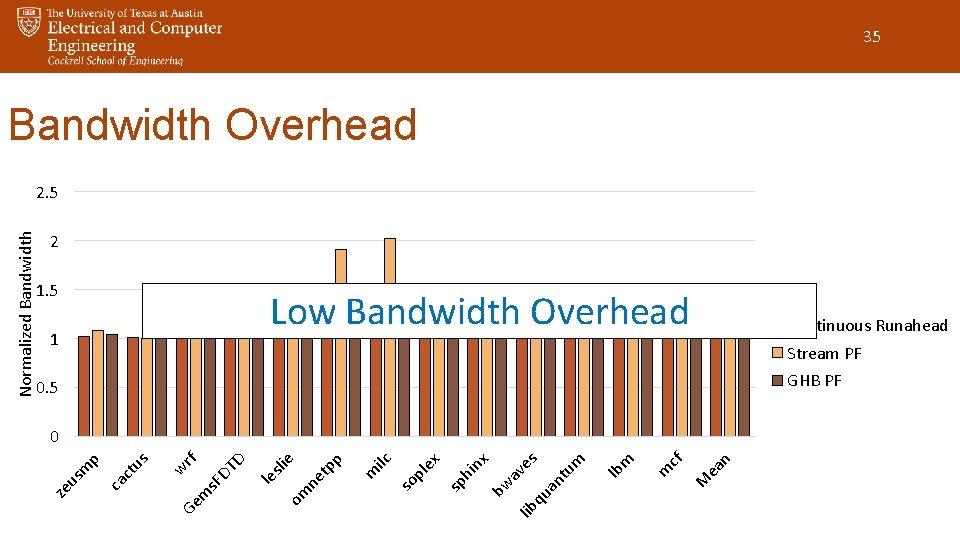

qu lib m ea n M m cf lb an tu m es av bw ex nx hi sp pl so p ilc m tp ne om sli e le Ge wrf m s. F DT D us ca ct p m us ze Normalized Bandwidth 34 Bandwidth Overhead 2. 5 2 1. 5 1 Continuous Runahead Stream PF 0. 5 GHB PF 0

qu lib m ea n M m cf lb an tu m es av bw ex nx hi sp pl so p ilc m tp ne om 1 sli e 1. 5 le Ge wrf m s. F DT D us ca ct p m us ze Normalized Bandwidth 35 Bandwidth Overhead 2. 5 2 Low Bandwidth Overhead Continuous Runahead Stream PF 0. 5 GHB PF 0

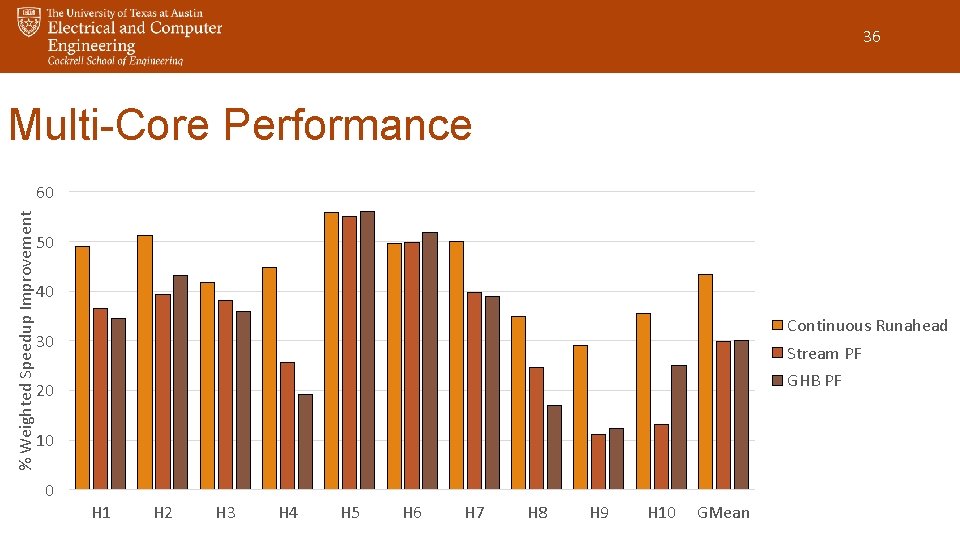

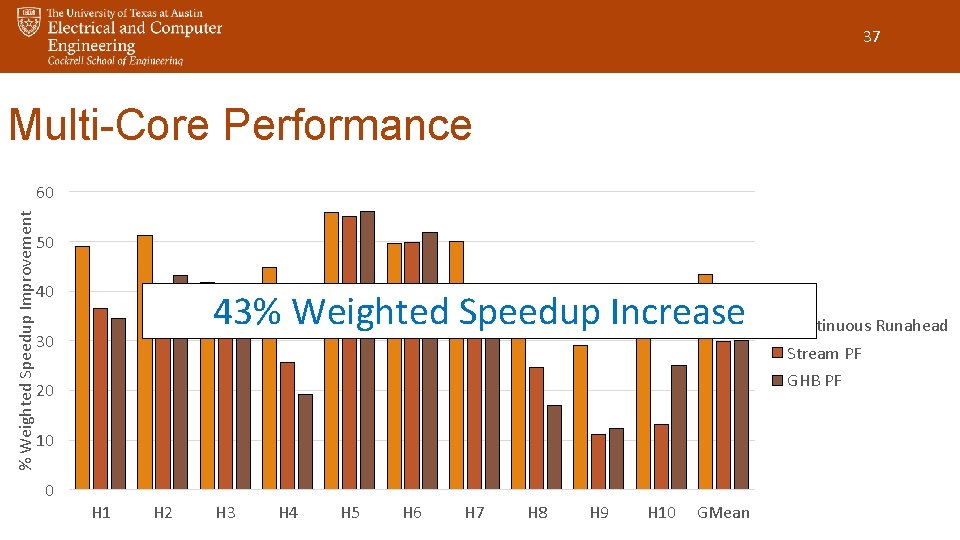

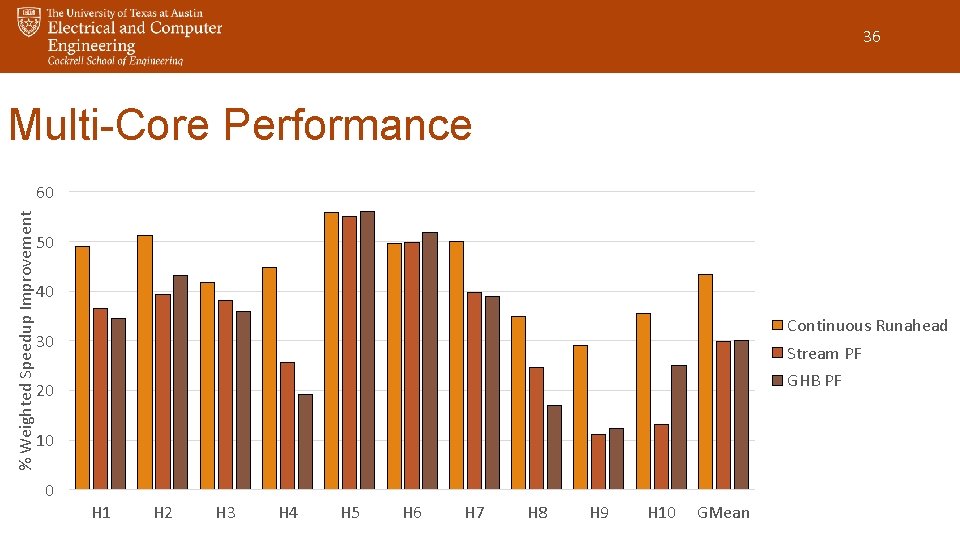

36 Multi-Core Performance % Weighted Speedup Improvement 60 50 40 Continuous Runahead 30 Stream PF GHB PF 20 10 0 H 1 H 2 H 3 H 4 H 5 H 6 H 7 H 8 H 9 H 10 GMean

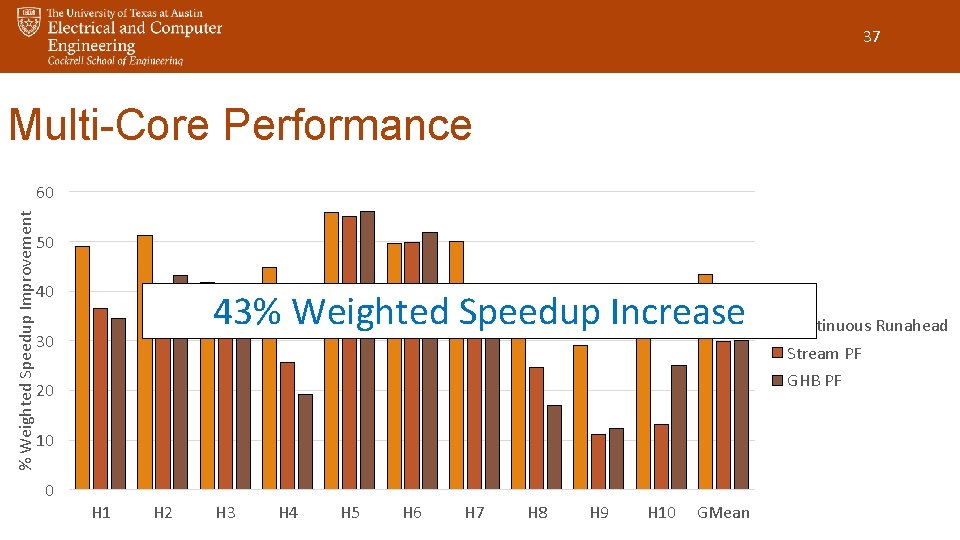

37 Multi-Core Performance % Weighted Speedup Improvement 60 50 40 43% Weighted Speedup Increase 30 Stream PF GHB PF 20 10 0 Continuous Runahead H 1 H 2 H 3 H 4 H 5 H 6 H 7 H 8 H 9 H 10 GMean

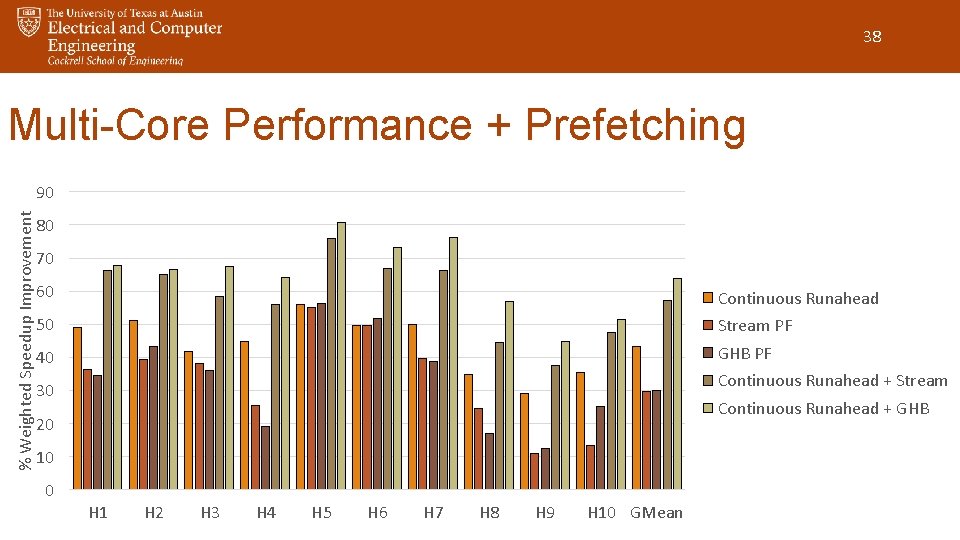

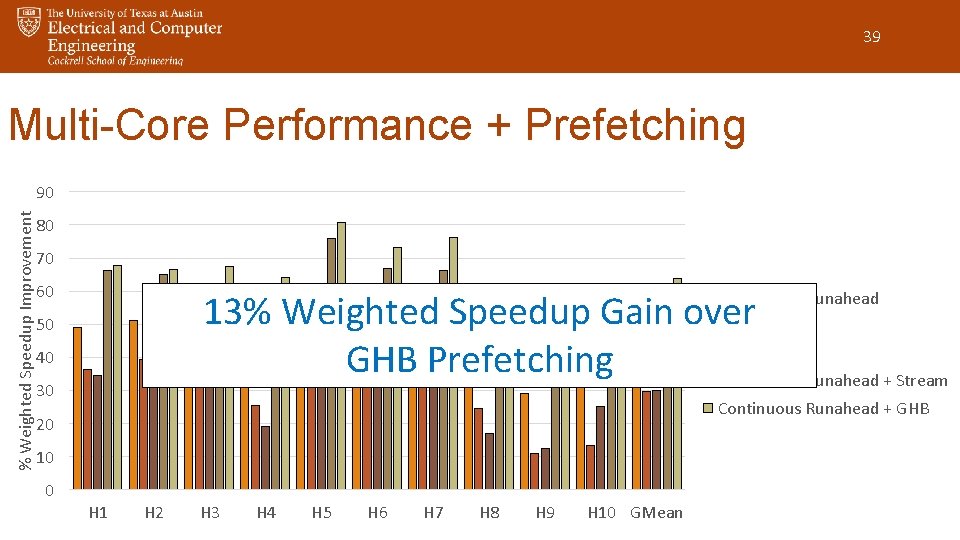

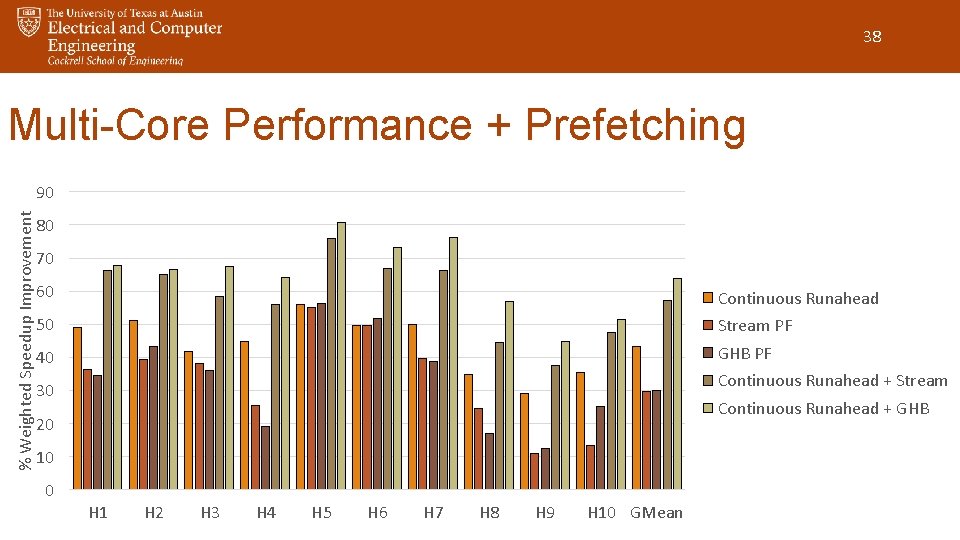

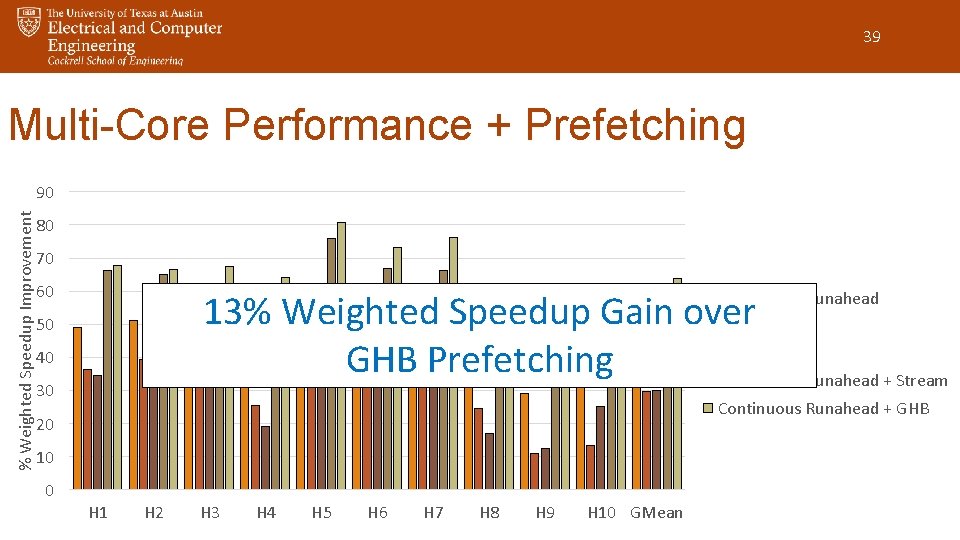

38 Multi-Core Performance + Prefetching % Weighted Speedup Improvement 90 80 70 60 Continuous Runahead 50 Stream PF 40 GHB PF Continuous Runahead + Stream 30 Continuous Runahead + GHB 20 10 0 H 1 H 2 H 3 H 4 H 5 H 6 H 7 H 8 H 9 H 10 GMean

39 Multi-Core Performance + Prefetching % Weighted Speedup Improvement 90 80 70 60 13% Weighted Speedup Gain over Stream PF GHB Prefetching Continuous Runahead + Stream Continuous Runahead 50 40 30 Continuous Runahead + GHB 20 10 0 H 1 H 2 H 3 H 4 H 5 H 6 H 7 H 8 H 9 H 10 GMean

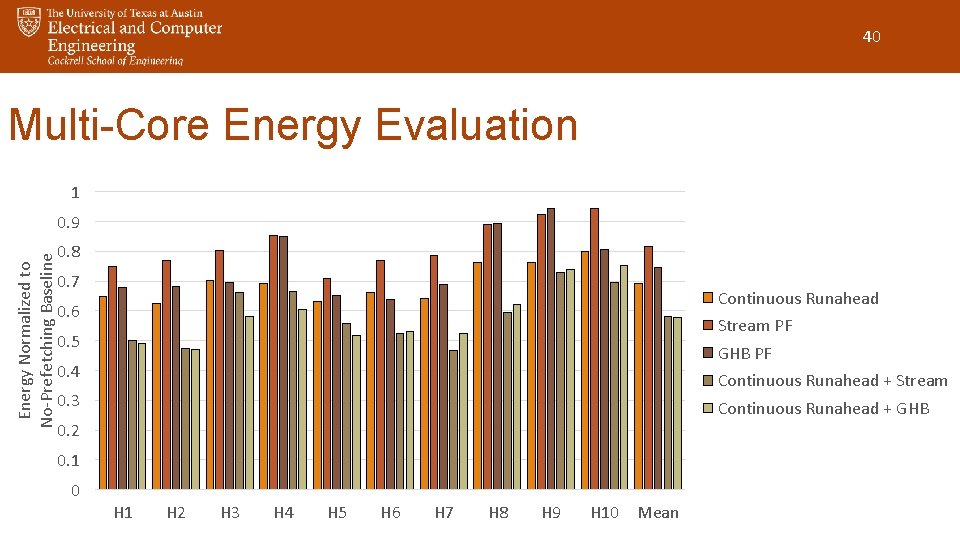

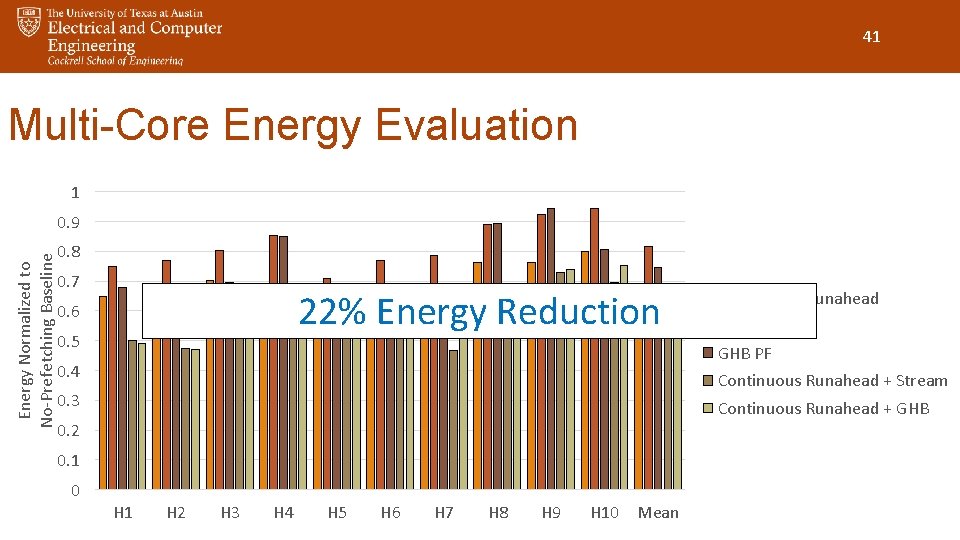

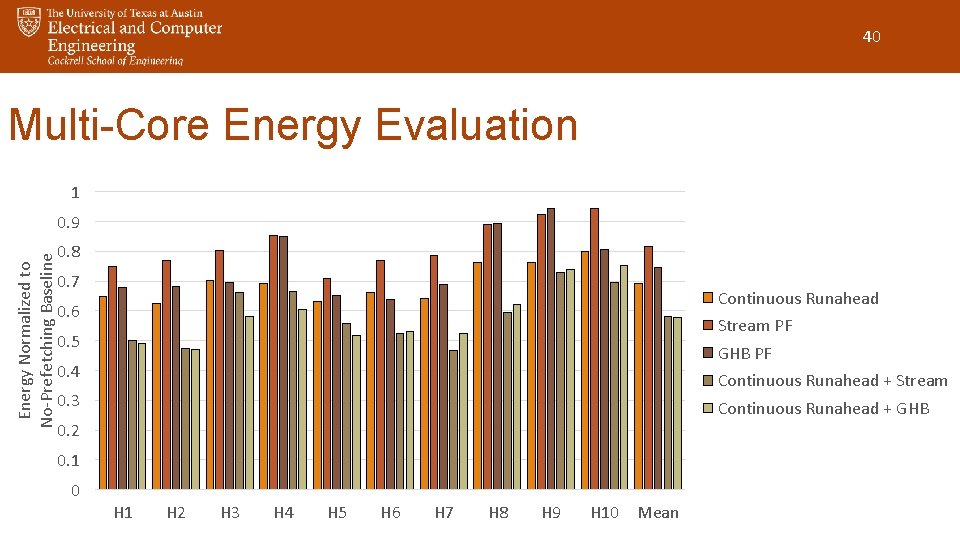

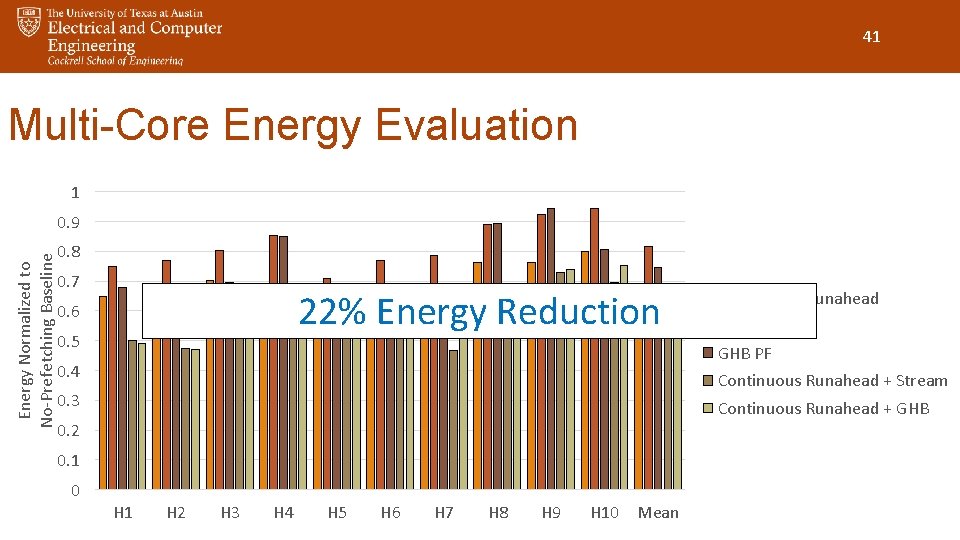

40 Multi-Core Energy Evaluation

[Figure: energy normalized to no-prefetching baseline (0 to 1) for workloads H1 to H10 and Mean, comparing Continuous Runahead, Stream PF, GHB PF, Continuous Runahead + Stream, and Continuous Runahead + GHB.]

41 Multi-Core Energy Evaluation

[Same figure with callout: 22% Energy Reduction]

42 Conclusions

- Runahead prefetch coverage is limited by the duration of each runahead interval
- To remove this constraint, we introduce the notion of Continuous Runahead
- We can dynamically identify the most critical LLC misses to target with Continuous Runahead by tracking the operations that cause the pipeline to frequently stall
- We migrate these dependence chains to the CRE where they are executed continuously in a loop

43 Conclusions

- Continuous Runahead greatly increases prefetch coverage
- Increases single-core performance by 34.4%
- Increases multi-core performance by 43.3%
- Synergistic with various types of prefetching
