Context Free Grammars Reading Chap 12 13 Jurafsky

![Subcategorization Sneeze: John sneezed Find: Please find [a flight to NY]NP Give: Give [me]NP[a Subcategorization Sneeze: John sneezed Find: Please find [a flight to NY]NP Give: Give [me]NP[a](https://slidetodoc.com/presentation_image_h/dab282fe505fd1dea3156a631ad51311/image-28.jpg)

![Movement • Core example (no movement yet) – [[My travel agent]NP [booked [the flight]NP]VP]S Movement • Core example (no movement yet) – [[My travel agent]NP [booked [the flight]NP]VP]S](https://slidetodoc.com/presentation_image_h/dab282fe505fd1dea3156a631ad51311/image-31.jpg)

- Slides: 49

Context Free Grammars Reading: Chap 12 -13, Jurafsky & Martin This slide set was adapted from J. Martin and Rada Mihalcea

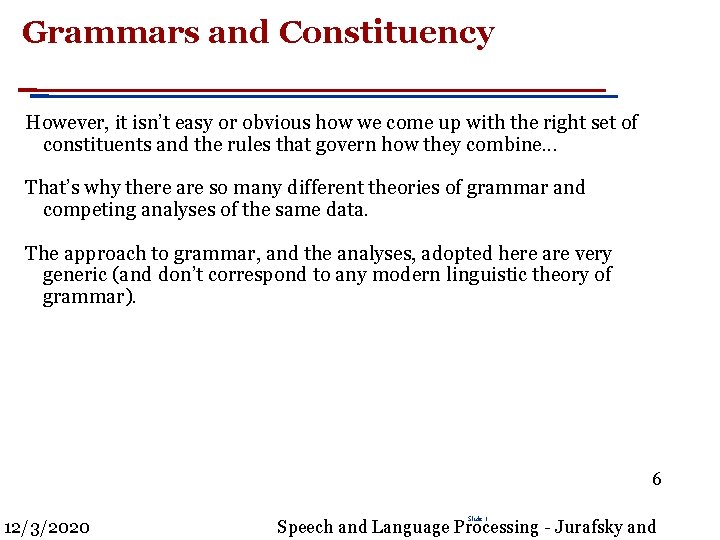

Syntax = rules describing how words can connect to each other * that and after year last I saw you yesterday colorless green ideas sleep furiously • the kind of implicit knowledge of your native language that you had mastered by the time you were 3 or 4 years old without explicit instruction • not necessarily the type of rules you were later taught in school. Slide 1

Syntax Why should you care? Grammar checkers Question answering Information extraction Machine translation Slide 1

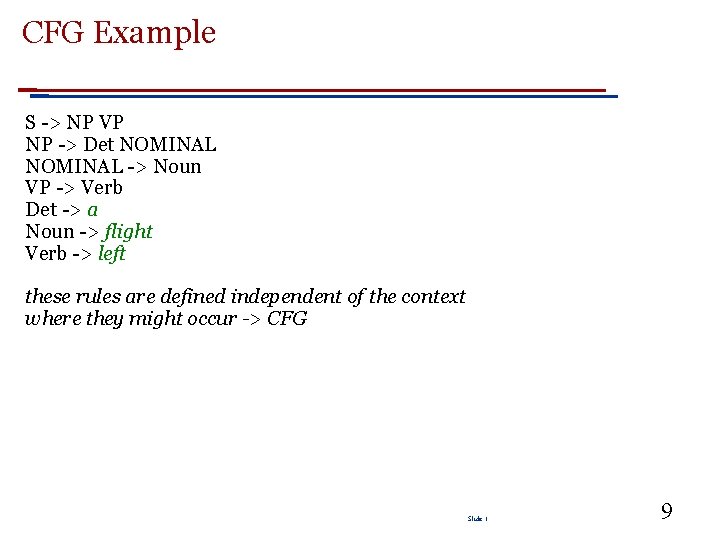

Constituency The basic idea here is that groups of words within utterances can be shown to act as single units. And in a given language, these units form coherent classes that can be be shown to behave in similar ways 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and 4

Constituency For example, it makes sense to the say that the following are all noun phrases in English. . . The person I ate dinner with yesterday The car that I drove in college Speech and Language Processing - Jurafsky and Martin 12/3/2020 Slide 1 5

Grammars and Constituency However, it isn’t easy or obvious how we come up with the right set of constituents and the rules that govern how they combine. . . That’s why there are so many different theories of grammar and competing analyses of the same data. The approach to grammar, and the analyses, adopted here are very generic (and don’t correspond to any modern linguistic theory of grammar). 6 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Context-Free Grammars Context-free grammars (CFGs) Also known as Phrase structure grammars Backus-Naur form Consist of Rules Terminals Non-terminals 7 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Context-Free Grammars Terminals We’ll take these to be words Non-Terminals The constituents in a language Rules Like noun phrase, verb phrase and sentence Rules are equations that consist of a single non-terminal on the left and any number of terminals and non-terminals on the right. 8 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

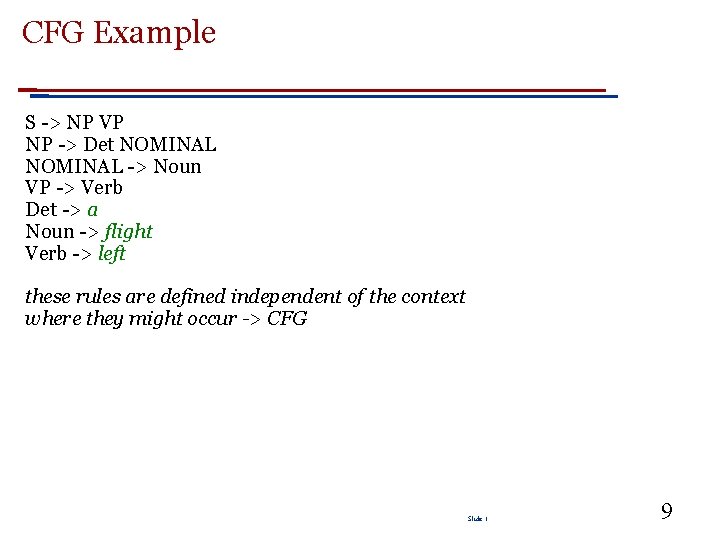

CFG Example S -> NP VP NP -> Det NOMINAL -> Noun VP -> Verb Det -> a Noun -> flight Verb -> left these rules are defined independent of the context where they might occur -> CFG Slide 1 9

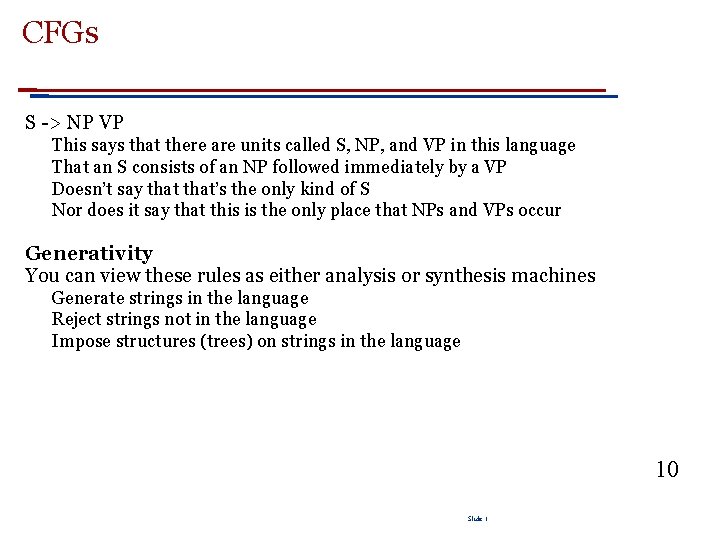

CFGs S -> NP VP This says that there are units called S, NP, and VP in this language That an S consists of an NP followed immediately by a VP Doesn’t say that’s the only kind of S Nor does it say that this is the only place that NPs and VPs occur Generativity You can view these rules as either analysis or synthesis machines Generate strings in the language Reject strings not in the language Impose structures (trees) on strings in the language 10 Slide 1

Parsing is the process of taking a string and a grammar and returning a (many) parse tree(s) for that string Other options Regular languages (expressions) Too weak – not expressive enough Context-sensitive Too powerful – parsing is not efficient Slide 1 11

Context? The notion of context in CFGs is not the same as the ordinary meaning of the word context in language. All it really means is that the non-terminal on the left-hand side of a rule is out there all by itself A -> B C Means that I can rewrite an A as a B followed by a C regardless of the context in which A is found Slide 1 12

Key Constituents (English) Sentences Noun phrases Verb phrases Prepositional phrases Slide 1 13

Some NP Rules Here are some rules for our noun phrases Together, these describe two kinds of NPs. One that consists of a determiner followed by a nominal And another that says that proper names are NPs. The third rule illustrates two things An explicit disjunction Two kinds of nominals A recursive definition Same non-terminal on the right and left-side of the rule 14 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

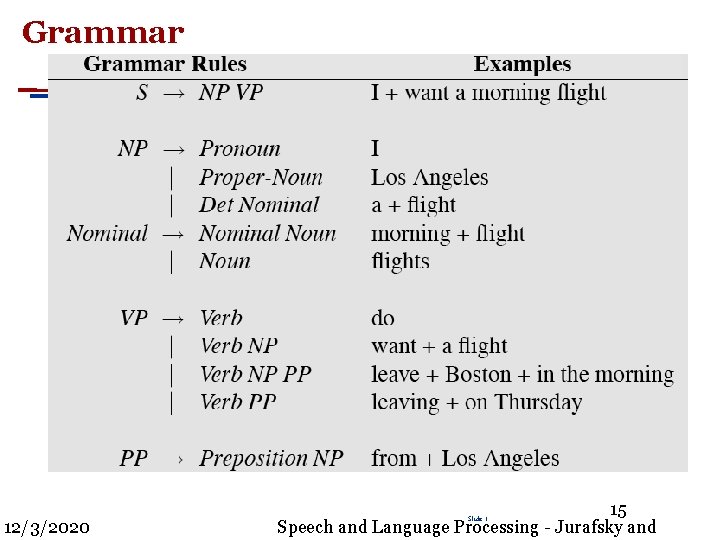

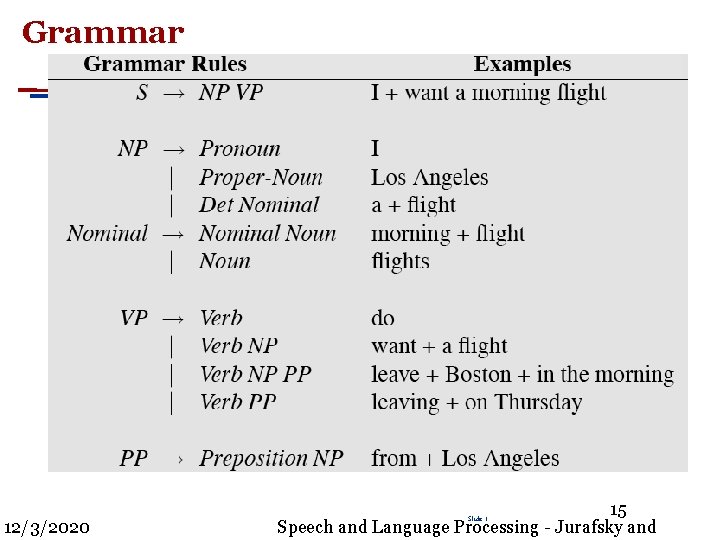

Grammar 12/3/2020 15 Speech and Language Processing - Jurafsky and Slide 1

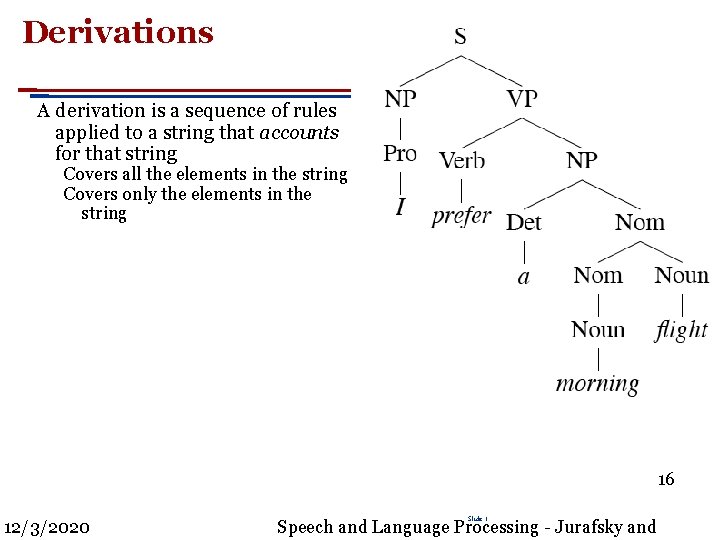

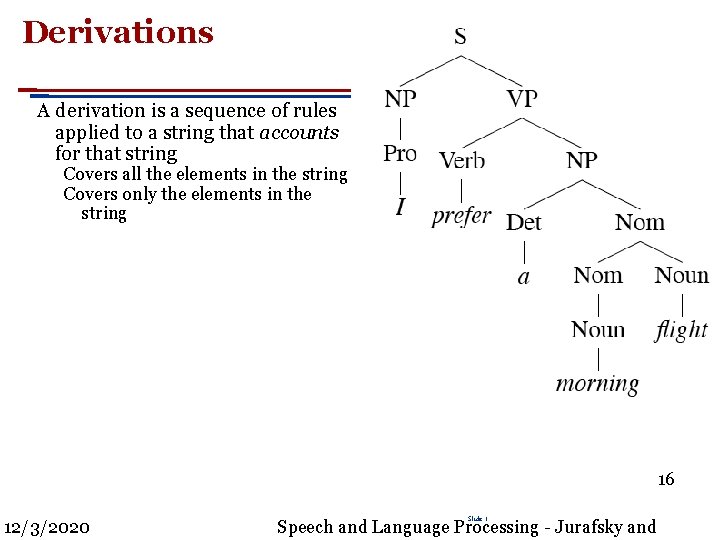

Derivations A derivation is a sequence of rules applied to a string that accounts for that string Covers all the elements in the string Covers only the elements in the string 16 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Practice: Another Recursion Example The following where the non-terminal on the left also appears somewhere on the right (directly). NP -> NP PP [[The flight] [to Boston]] VP -> VP PP [[departed Miami] [at noon]] Slide 1 17

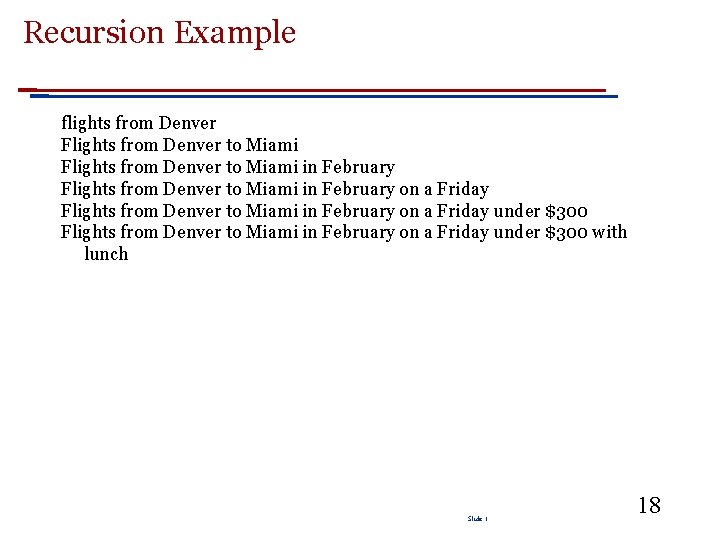

Recursion Example flights from Denver Flights from Denver to Miami in February on a Friday under $300 Flights from Denver to Miami in February on a Friday under $300 with lunch Slide 1 18

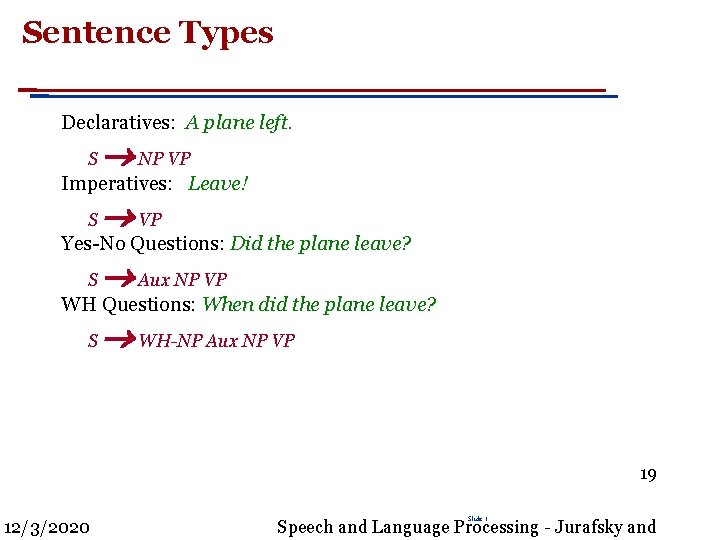

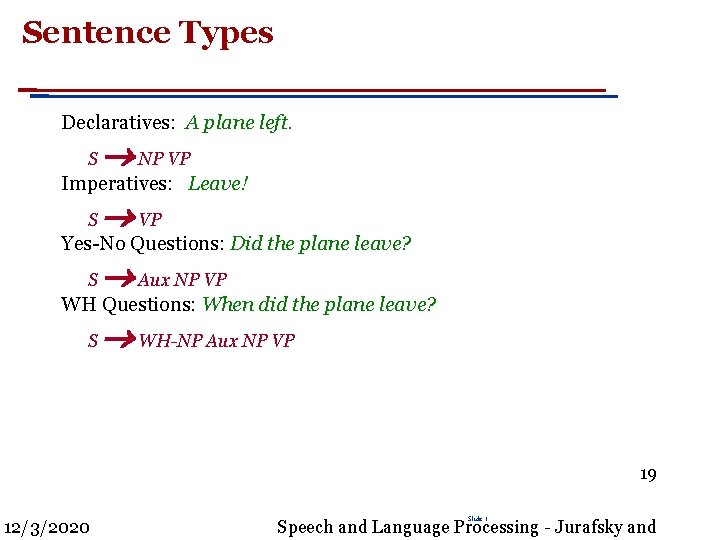

Sentence Types Declaratives: A plane left. S NP VP Imperatives: Leave! S VP Yes-No Questions: Did the plane leave? S Aux NP VP WH Questions: When did the plane leave? S WH-NP Aux NP VP 19 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

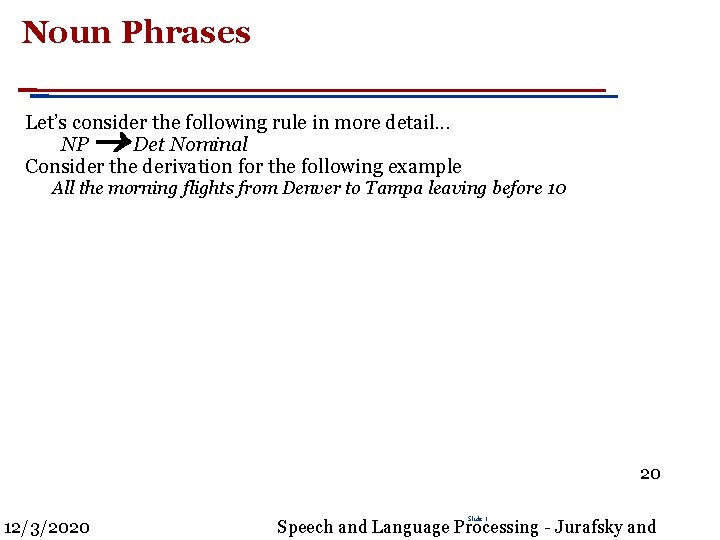

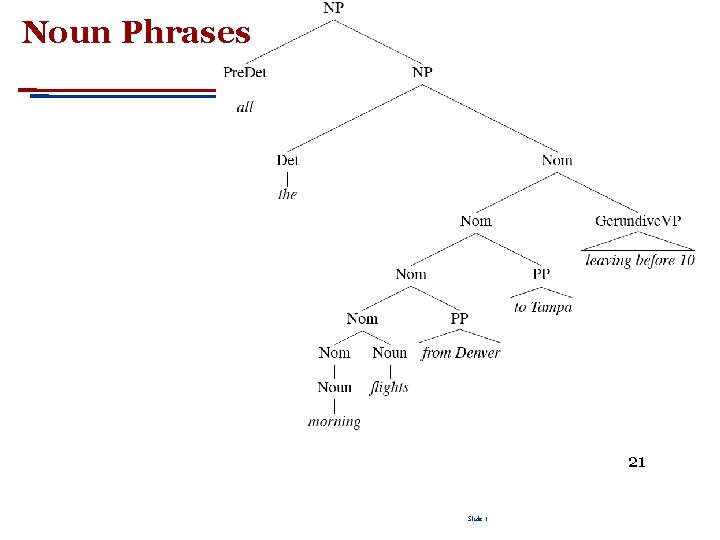

Noun Phrases Let’s consider the following rule in more detail. . . NP Det Nominal Consider the derivation for the following example All the morning flights from Denver to Tampa leaving before 10 20 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Noun Phrases 21 Slide 1

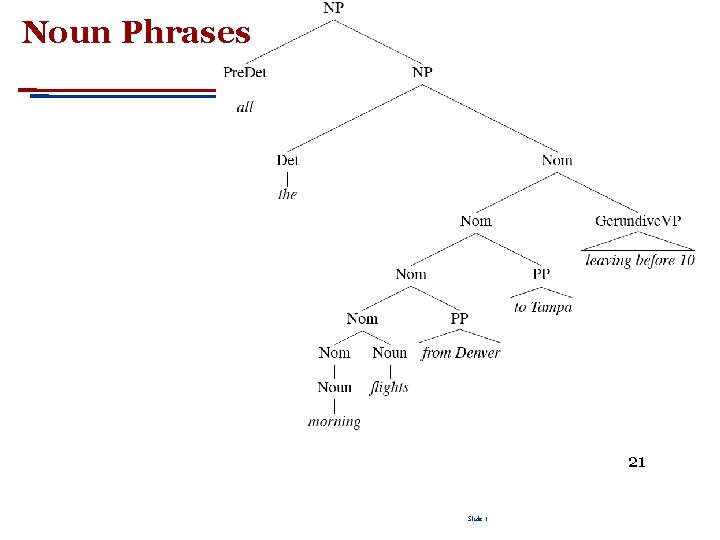

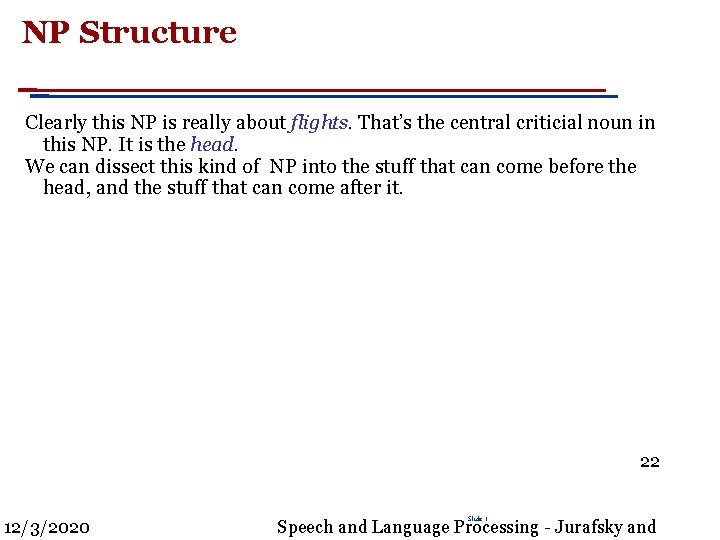

NP Structure Clearly this NP is really about flights. That’s the central criticial noun in this NP. It is the head. We can dissect this kind of NP into the stuff that can come before the head, and the stuff that can come after it. 22 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

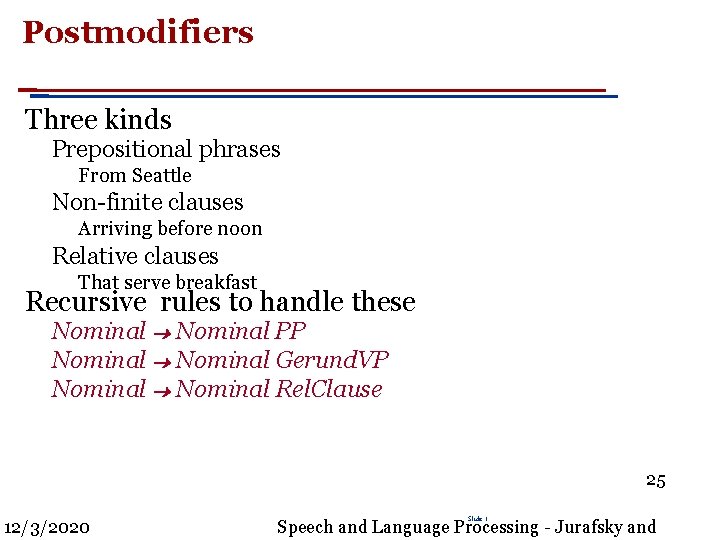

Determiners Noun phrases can start with determiners. . . Determiners can be Simple lexical items: the, this, a, an, etc. A car Or simple possessives John’s car Or complex recursive versions of that John’s sister’s husband’s son’s car 23 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Nominals Contains the head any pre- and post- modifiers of the head. Pre. Quantifiers, cardinals, ordinals. . . Three cars Adjectives large cars Note: there are ordering constraints Three large cars ? large three cars 24 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Postmodifiers Three kinds Prepositional phrases From Seattle Non-finite clauses Arriving before noon Relative clauses That serve breakfast Recursive rules to handle these Nominal PP Nominal Gerund. VP Nominal Rel. Clause 25 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

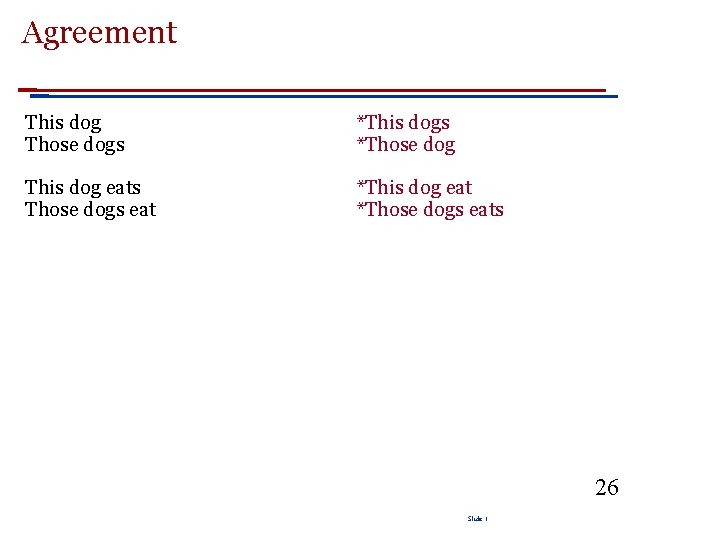

Agreement This dog Those dogs *This dogs *Those dog This dog eats Those dogs eat *This dog eat *Those dogs eats 26 Slide 1

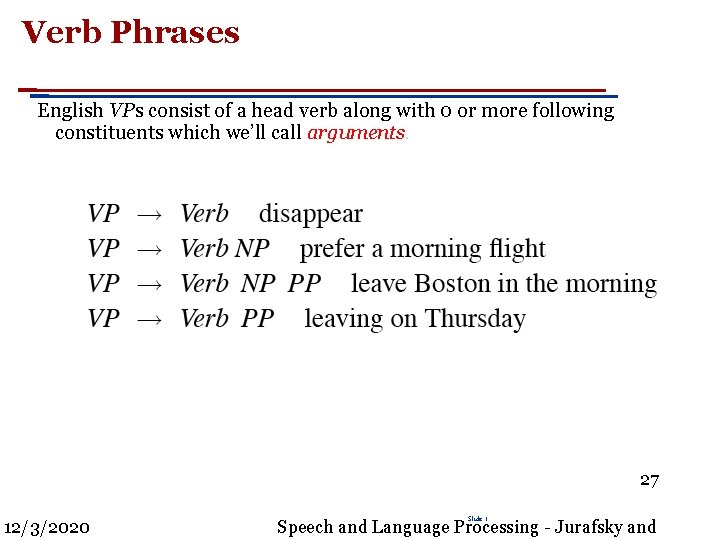

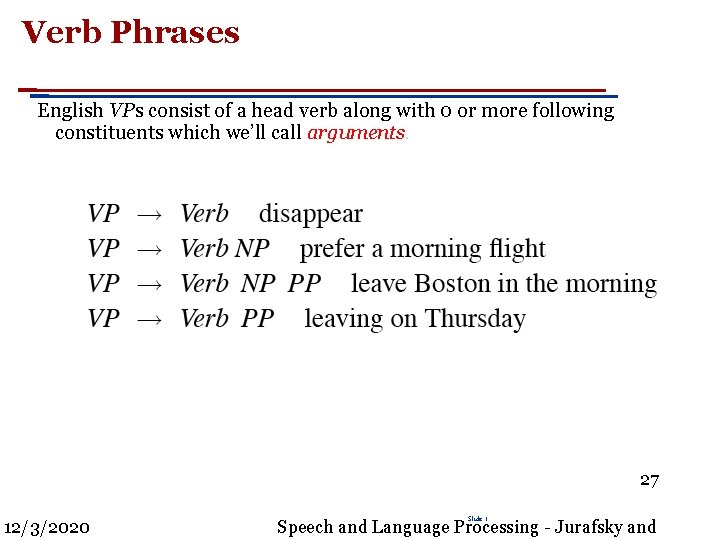

Verb Phrases English VPs consist of a head verb along with 0 or more following constituents which we’ll call arguments. 27 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

![Subcategorization Sneeze John sneezed Find Please find a flight to NYNP Give Give meNPa Subcategorization Sneeze: John sneezed Find: Please find [a flight to NY]NP Give: Give [me]NP[a](https://slidetodoc.com/presentation_image_h/dab282fe505fd1dea3156a631ad51311/image-28.jpg)

Subcategorization Sneeze: John sneezed Find: Please find [a flight to NY]NP Give: Give [me]NP[a cheaper fare]NP Help: Can you help [me]NP[with a flight]PP Prefer: I prefer [to leave earlier]TO-VP Told: I was told [United has a flight]S … *John sneezed the book *I prefer United has a flight *Give with a flight Subcat expresses the constraints that a predicate places on the number and type of the argument it wants to take 28 Slide 1

Overgeneration The various rules for VPs overgenerate. They permit the presence of strings containing verbs and arguments that don’t go together For example VP -> V NP therefore Sneezed the book is a VP since “sneeze” is a verb and “the book” is a valid NP In lecture: go over the grammar for assignment 3 Slide 1

Possible CFG Solution Possible solution for agreement. Can use the same trick for all the verb/VP classes. (Like propositionalizing a firstorder knowledge base – the KB gets very large, but the inference algorithms are very efficient) Sg. S -> Sg. NP Sg. VP Pl. S -> Pl. Np Pl. VP Sg. NP -> Sg. Det Sg. Nom Pl. NP -> Pl. Det Pl. Nom Pl. VP -> Pl. V NP Sg. VP ->Sg. V Np … 30 12/3/2020 Slide 1

![Movement Core example no movement yet My travel agentNP booked the flightNPVPS Movement • Core example (no movement yet) – [[My travel agent]NP [booked [the flight]NP]VP]S](https://slidetodoc.com/presentation_image_h/dab282fe505fd1dea3156a631ad51311/image-31.jpg)

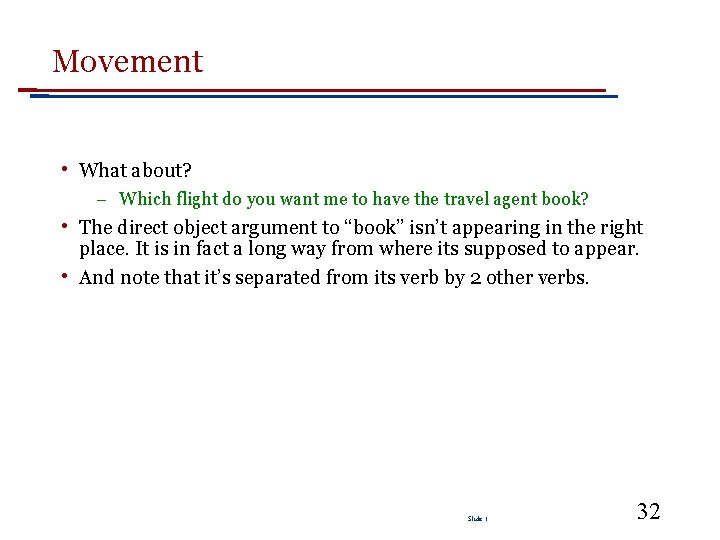

Movement • Core example (no movement yet) – [[My travel agent]NP [booked [the flight]NP]VP]S • I. e. “book” is a straightforward transitive verb. It expects a single NP arg within the VP as an argument, and a single NP arg as the subject. Slide 1

Movement • What about? – Which flight do you want me to have the travel agent book? • The direct object argument to “book” isn’t appearing in the right place. It is in fact a long way from where its supposed to appear. • And note that it’s separated from its verb by 2 other verbs. Slide 1 32

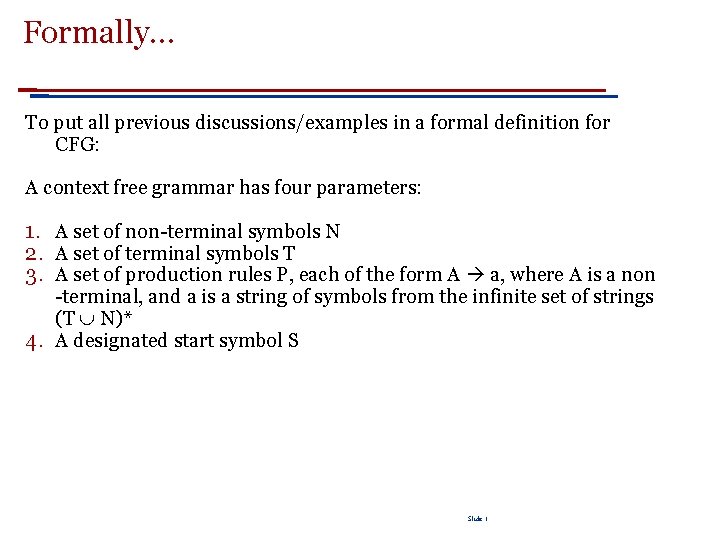

Formally… To put all previous discussions/examples in a formal definition for CFG: A context free grammar has four parameters: 1. A set of non-terminal symbols N 2. A set of terminal symbols T 3. A set of production rules P, each of the form A a, where A is a non -terminal, and a is a string of symbols from the infinite set of strings (T N)* 4. A designated start symbol S Slide 1

Grammar equivalence and normal form Strong equivalence: – two grammars are strongly equivalent if: • • they generate/accept the same set of strings they assign the same phrase structure to each sentence – two grammars are weakly equivalent if: • • they generate/accept the same set of strings they do not assign the same phrase structure to each sentence Normal form – Restrict the form of productions – Chomsky Normal Form (CNF) – Right hand side of the productions has either one or two terminals or nonterminals – e. g. A -> BC A -> a – Any grammar can be translated into a weakly equivalent CNF – A -> B C D <=> A-> B X X -> C D Slide 1 34

Treebanks are corpora in which each sentence has been paired with a parse tree (presumably the right one). These are generally created By first parsing the collection with an automatic parser And then having human annotators correct each parse as necessary. This generally requires detailed annotation guidelines that provide a POS tagset, a grammar and instructions for how to deal with particular grammatical constructions. 35 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Penn Treebank Penn Tree. Bank is a widely used treebank. §Most well known is the Wall Street Journal section of the Penn Tree. Bank. § 1 M words from the 1987 -1989 Wall Street Journal. 36 12/3/2020 Slide 1

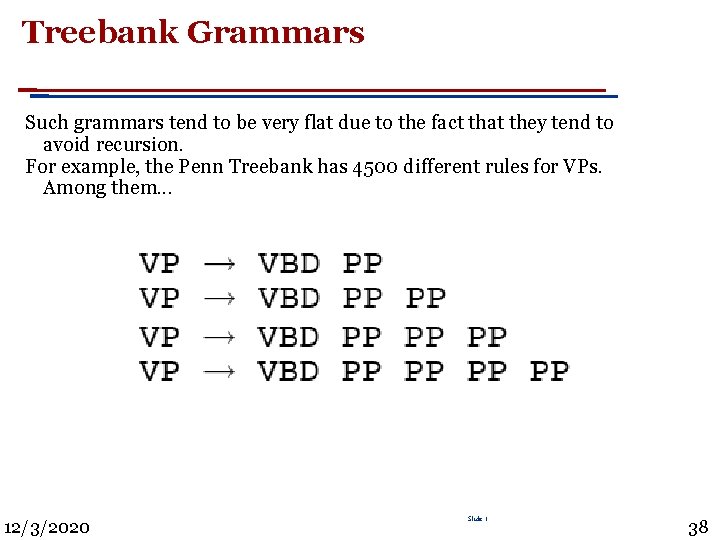

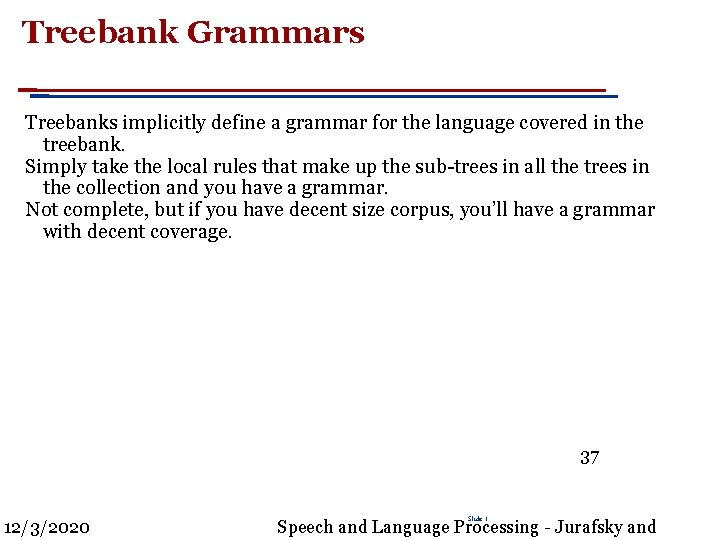

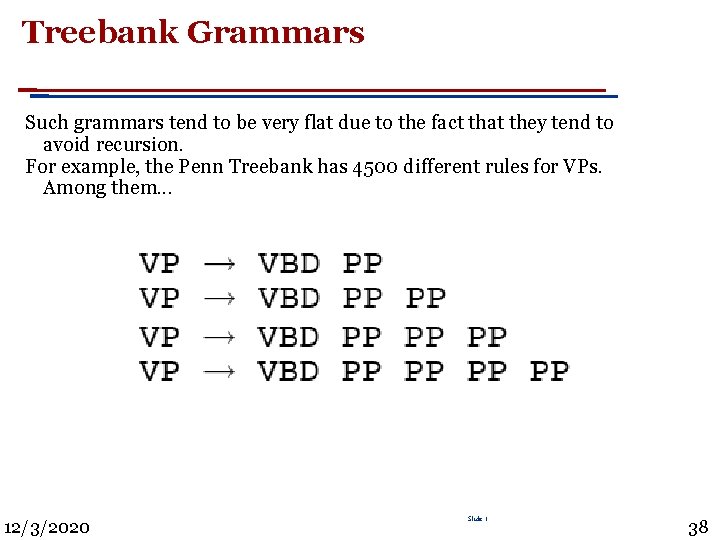

Treebank Grammars Treebanks implicitly define a grammar for the language covered in the treebank. Simply take the local rules that make up the sub-trees in all the trees in the collection and you have a grammar. Not complete, but if you have decent size corpus, you’ll have a grammar with decent coverage. 37 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Treebank Grammars Such grammars tend to be very flat due to the fact that they tend to avoid recursion. For example, the Penn Treebank has 4500 different rules for VPs. Among them. . . 12/3/2020 Slide 1 38

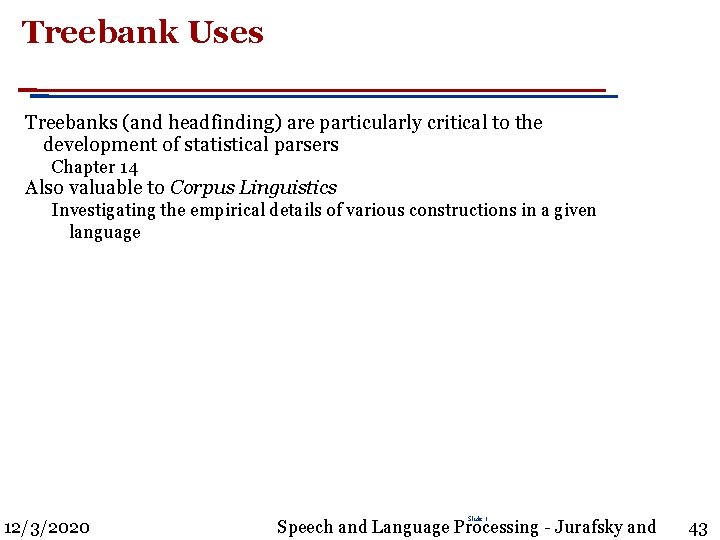

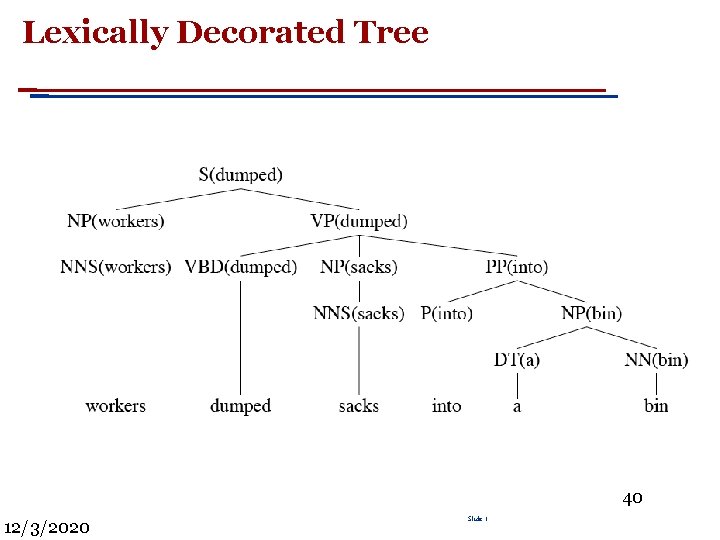

Heads in Trees Finding heads in treebank trees is a task that arises frequently in many applications. Particularly important in statistical parsing We can visualize this task by annotating the nodes of a parse tree with the heads of each corresponding node. 39 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Lexically Decorated Tree 40 12/3/2020 Slide 1

Head Finding The standard way to do head finding is to use a simple set of tree traversal rules specific to each non-terminal in the grammar. 41 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Noun Phrases 42 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Treebank Uses Treebanks (and headfinding) are particularly critical to the development of statistical parsers Chapter 14 Also valuable to Corpus Linguistics Investigating the empirical details of various constructions in a given language 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and 43

Dependency Grammars In CFG-style phrase-structure grammars the main focus is on constituents. But it turns out you can get a lot done with just binary relations among the words in an utterance. In a dependency grammar framework, a parse is a tree where the nodes stand for the words in an utterance The links between the words represent dependency relations between pairs of words. Relations may be typed (labeled), or not. 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and 44

Dependency Relations 45 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Dependency Parse See the Stanford parser on line They hid the letter on the shelf 46 12/3/2020 Slide 1

Dependency Parsing The dependency approach has a number of advantages over full phrasestructure parsing. Deals well with free word order languages where the constituent structure is quite fluid Parsing is much faster than CFG-bases parsers Dependency structure often captures the syntactic relations needed by later applications CFG-based approaches often extract this same information from trees anyway. 47 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Dependency Parsing There are two modern approaches to dependency parsing Optimization-based approaches that search a space of trees for the tree that best matches some criteria Shift-reduce approaches that greedily take actions based on the current word and state. 48 12/3/2020 Slide 1 Speech and Language Processing - Jurafsky and

Summary Context-free grammars can be used to model various facts about the syntax of a language. When paired with parsers, such grammars consititute a critical component in many applications. Constituency is a key phenomena easily captured with CFG rules. But agreement and subcategorization do pose significant problems Treebanks pair sentences in corpus with their corresponding trees. 49 12/3/2020 Slide 1