- Slides: 29

CONTENTS 1 ASM algorithm 2 Face image texture synthesis 3 effect 4 references

1 PART 01 ASM algorithm

ASM algorithm ASM (Active Shape Model) is an algorithm based on the point distribution model (PDM). In a PDM, the geometry of objects with similar shapes, such as human faces, hands, hearts, and lungs, is represented by concatenating the coordinates of several key feature points in a fixed order to form a shape vector. The ASM algorithm first requires the training set to be annotated manually; a shape model is then obtained through training, and specific objects are matched by matching the key points. The advantage of ASM is that it constrains the shape parameters according to the training data, so that shape variation stays within a reasonable range.

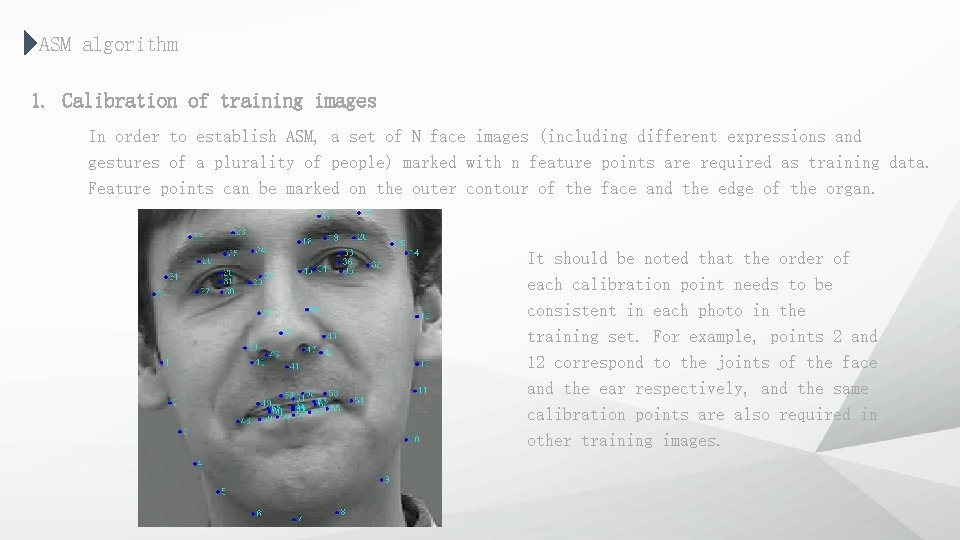

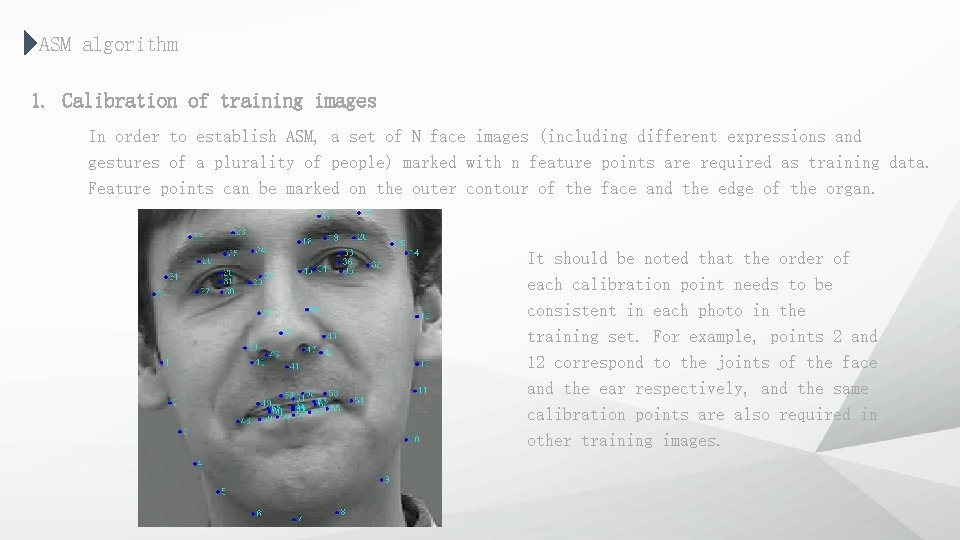

ASM algorithm 1. Calibration of training images To build an ASM, a set of N face images (covering different expressions and poses of several people), each marked with n feature points, is required as training data. Feature points can be marked on the outer contour of the face and on the edges of the facial organs. Note that the order of the calibration points must be the same in every image of the training set. For example, if points 2 and 12 mark the junctions of the face and the ears, they must mark the same locations in all other training images.

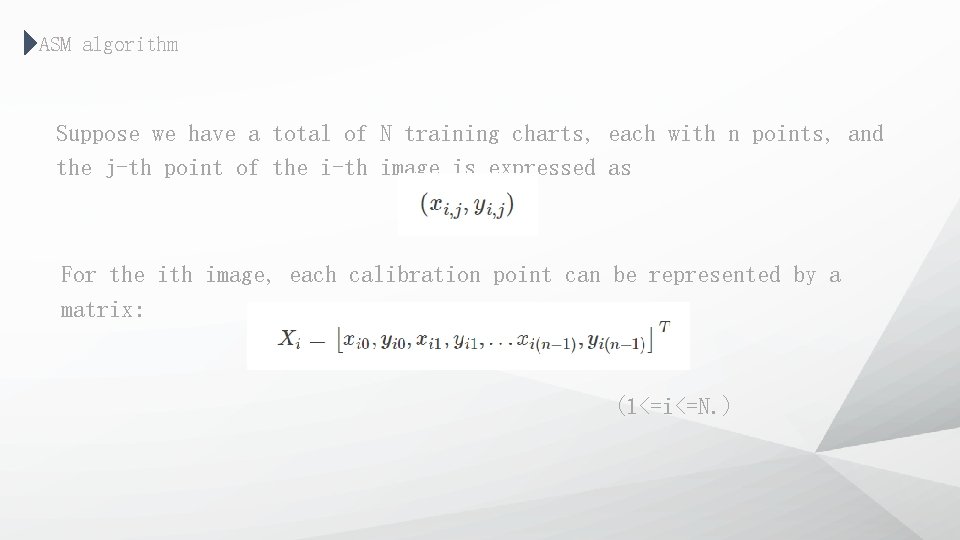

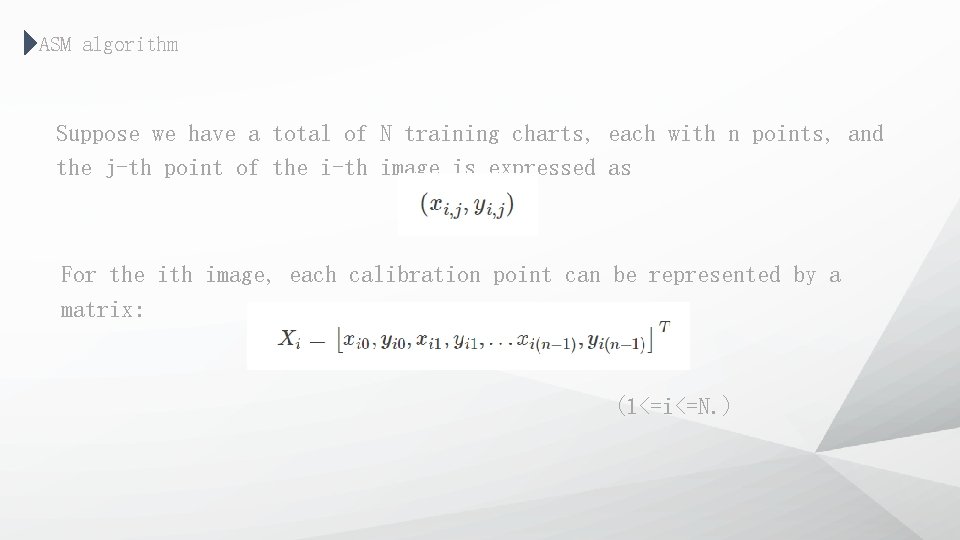

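ASM algorithm Suppose we have a total of N training images, each with n points, and the j-th point of the i-th image is expressed as $(x_{ij}, y_{ij})$. For the i-th image, the calibration points can be collected into a single matrix (a column vector): $X_i = (x_{i1}, y_{i1}, x_{i2}, y_{i2}, \dots, x_{in}, y_{in})^T$, with $1 \le i \le N$.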

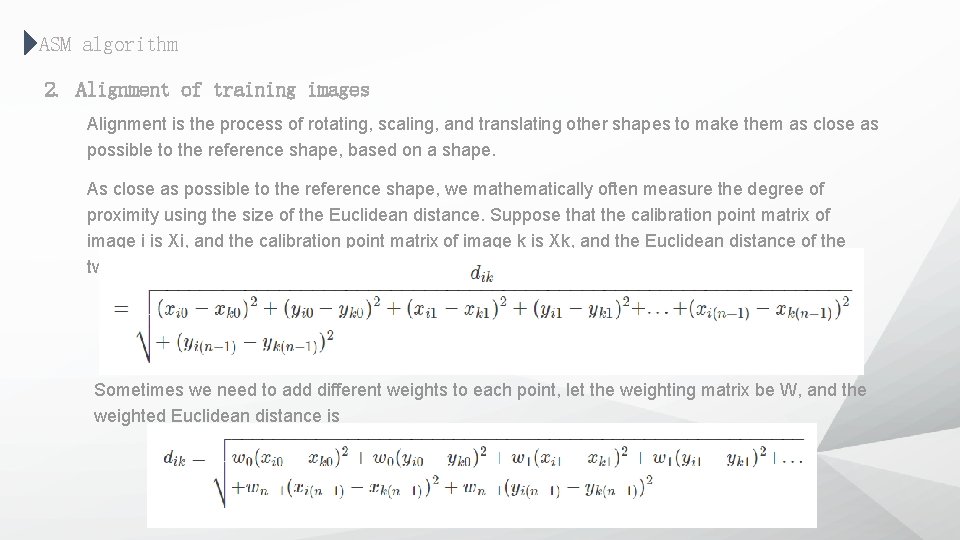

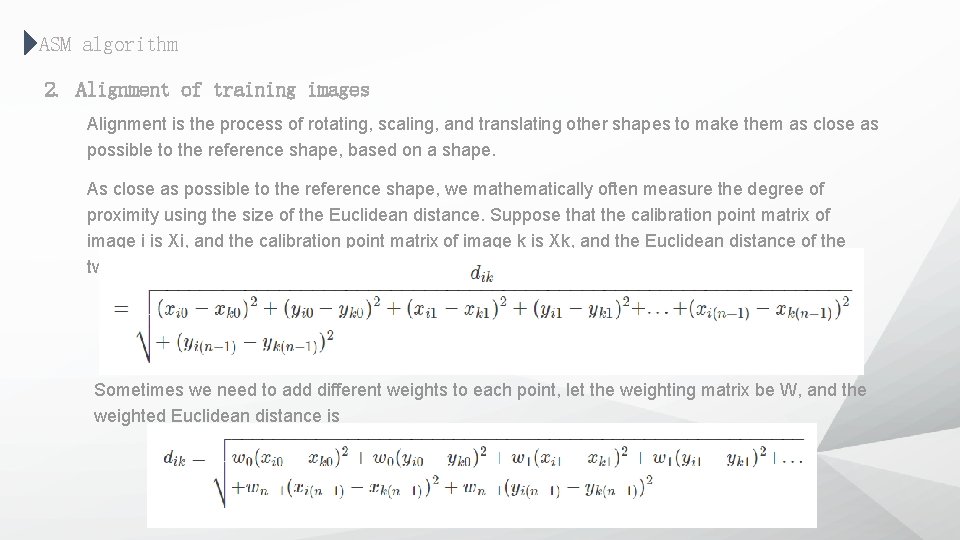
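A minimal sketch of how the calibration points of one image could be stacked into a shape vector. The interleaved ordering (x1, y1, ..., xn, yn) and the helper name `to_shape_vector` are assumptions made for illustration; any ordering works as long as it is the same for every training image.

```python
import numpy as np

def to_shape_vector(points):
    """Flatten an (n, 2) array of landmarks into (x1, y1, ..., xn, yn)."""
    points = np.asarray(points, dtype=float)
    return points.reshape(-1)

# Three hypothetical landmarks of one training image.
landmarks = np.array([[10.0, 20.0], [30.0, 40.0], [50.0, 60.0]])
shape_vec = to_shape_vector(landmarks)
```

Stacking the N shape vectors row by row gives the (N, 2n) data matrix used in the later alignment and PCA steps.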

ASM algorithm 2. Alignment of training images Alignment is the process of rotating, scaling, and translating the other shapes, taking one shape as the reference, so that they are as close as possible to the reference shape. Closeness is usually measured by the Euclidean distance. Suppose that the calibration point matrix of image i is $X_i$ and that of image k is $X_k$; the squared Euclidean distance between the two is $d_{ik}^2 = (X_i - X_k)^T (X_i - X_k)$. Sometimes different weights need to be assigned to each point; let the weighting matrix be W, and the squared weighted Euclidean distance is $d_{ik}^2 = (X_i - X_k)^T W (X_i - X_k)$.

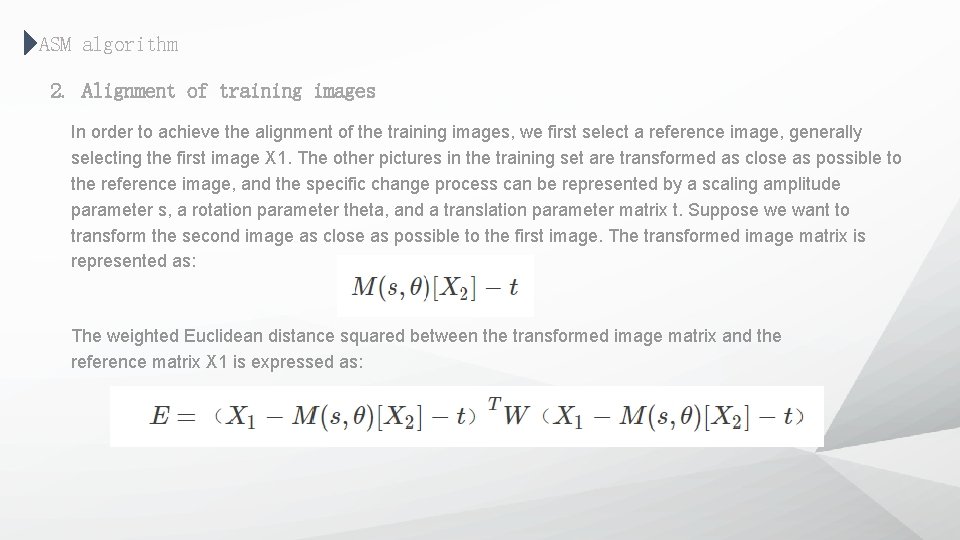

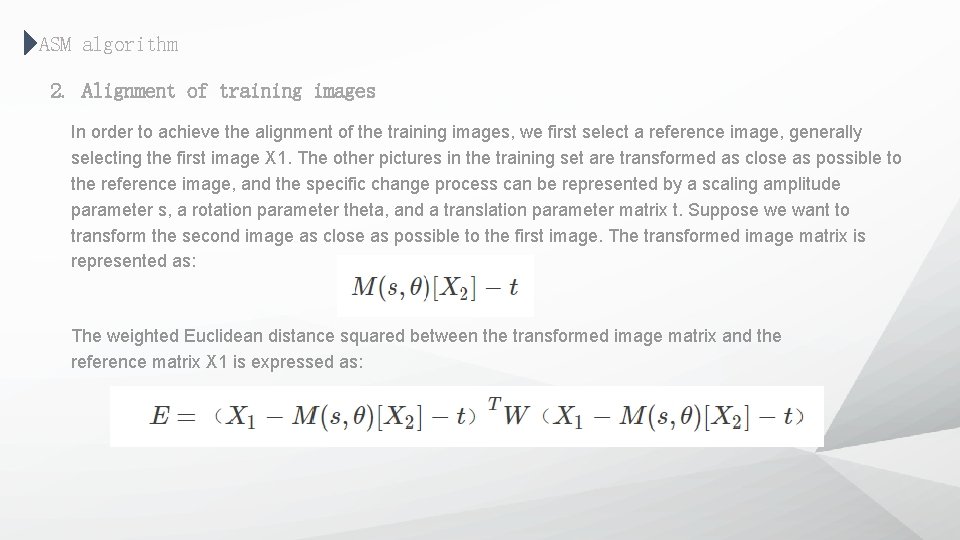

ASM algorithm 2. Alignment of training images To align the training images, we first select a reference image, generally the first image $X_1$. The other images in the training set are transformed to be as close as possible to the reference image. The transformation is described by a scaling parameter s, a rotation parameter θ, and a translation vector t. Suppose we want to transform the second image $X_2$ to be as close as possible to the first image. The transformed image matrix is represented as $M(s, \theta)[X_2] + t$, where $M(s, \theta)$ rotates each point by θ and scales it by s. The squared weighted Euclidean distance between the transformed image matrix and the reference matrix $X_1$ is expressed as $E = (X_1 - M(s, \theta)[X_2] - t)^T W (X_1 - M(s, \theta)[X_2] - t)$.

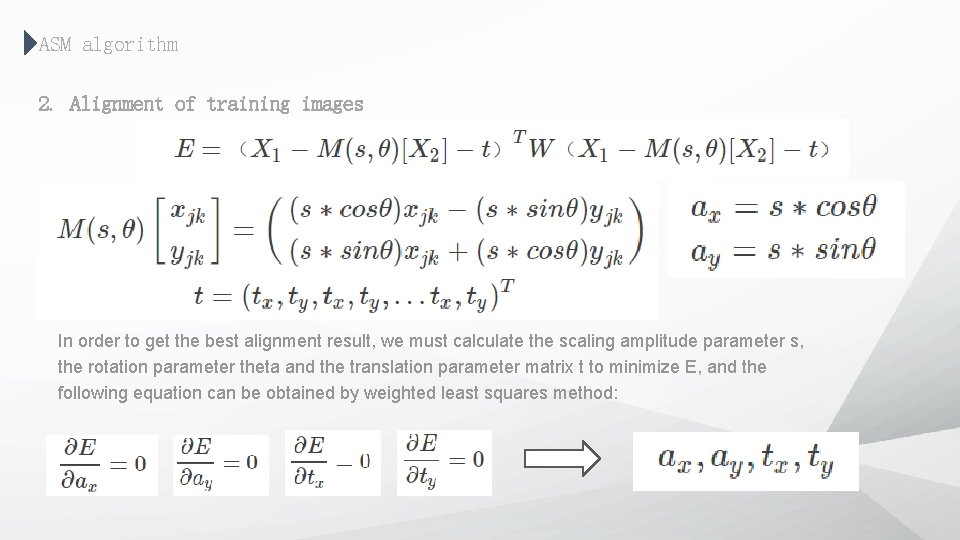

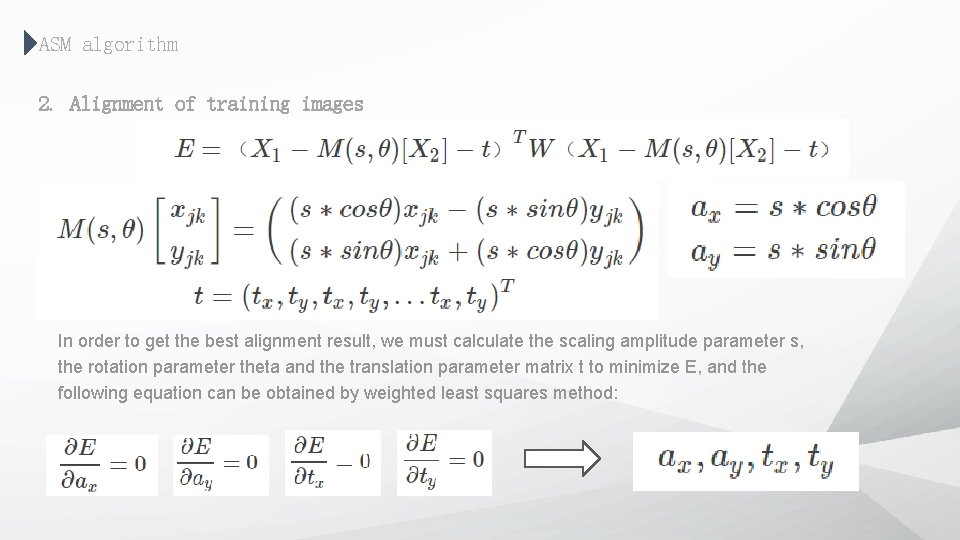

ASM algorithm 2. Alignment of training images To obtain the best alignment, we must find the scaling parameter s, the rotation parameter θ, and the translation vector t that minimize E. The following equation can be obtained by the weighted least squares method:
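The alignment step can be sketched numerically as follows. This is an illustrative sketch, not the deck's own derivation: it assumes W is diagonal (one weight per point) and substitutes a = s·cos θ, b = s·sin θ so that the problem becomes linear in (a, b, tx, ty). The function name `align_shape` is hypothetical.

```python
import numpy as np

def align_shape(ref, shape, weights=None):
    """Weighted least-squares similarity alignment of `shape` onto `ref`.

    ref, shape: (n, 2) arrays of corresponding landmarks.
    weights: optional (n,) per-point weights (assumed diagonal of W).
    Returns (s, theta, t, aligned).
    """
    ref = np.asarray(ref, dtype=float)
    shape = np.asarray(shape, dtype=float)
    n = shape.shape[0]
    w = np.ones(n) if weights is None else np.asarray(weights, dtype=float)

    x, y = shape[:, 0], shape[:, 1]
    # Unknowns: a = s*cos(theta), b = s*sin(theta), tx, ty, with
    #   x' = a*x - b*y + tx   and   y' = b*x + a*y + ty
    A = np.zeros((2 * n, 4))
    A[0::2] = np.column_stack([x, -y, np.ones(n), np.zeros(n)])
    A[1::2] = np.column_stack([y, x, np.zeros(n), np.ones(n)])
    rhs = ref.reshape(-1)

    # Scaling rows by sqrt(w) turns ordinary into weighted least squares.
    sw = np.sqrt(np.repeat(w, 2))
    a, b, tx, ty = np.linalg.lstsq(A * sw[:, None], rhs * sw, rcond=None)[0]

    s = np.hypot(a, b)
    theta = np.arctan2(b, a)
    R = np.array([[a, -b], [b, a]])  # scaled rotation matrix
    t = np.array([tx, ty])
    aligned = shape @ R.T + t
    return s, theta, t, aligned

# Synthetic check: ref is shape scaled by 2, rotated by 0.3 rad, shifted.
rng = np.random.default_rng(0)
shape = rng.normal(size=(5, 2))
c, sn = np.cos(0.3), np.sin(0.3)
ref = 2.0 * shape @ np.array([[c, -sn], [sn, c]]).T + np.array([1.0, -2.0])
s, theta, t, aligned = align_shape(ref, shape)
```

On this synthetic example the recovered parameters should match the ones used to generate `ref`, since the data are noise-free.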

ASM algorithm 3. PCA analysis of images Once all the images in the training set have been aligned, principal component analysis (PCA) is performed on the aligned data to obtain the trained shape model. PCA is a technique for analyzing and simplifying data sets. It is often used to reduce the dimensionality of a data set while retaining the features that contribute most to its variance. This is done by keeping the low-order principal components and discarding the higher-order ones. The low-order components tend to retain the most important aspects of the data, although this is not guaranteed and depends on the specific application. Because PCA relies on the given data, the accuracy of the data has a great impact on the analysis results.
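A minimal sketch of building a shape model with PCA from aligned shape vectors. The 95% variance threshold, the ±3 standard deviation limit on the shape parameters, and the function names are assumptions for illustration (the limit is a common convention for keeping shapes plausible, not something stated on the slide).

```python
import numpy as np

def build_shape_model(shapes, var_kept=0.95):
    """PCA on aligned shape vectors.

    shapes: (N, 2n) array, one aligned shape vector per row.
    Returns (mean, P, eigvals): mean shape, (2n, k) matrix of principal
    directions, and the k corresponding variances.
    """
    shapes = np.asarray(shapes, dtype=float)
    mean = shapes.mean(axis=0)
    centered = shapes - mean
    # SVD of the centered data: sing**2 / (N - 1) are the covariance
    # eigenvalues, the rows of vt are the eigenvectors.
    _, sing, vt = np.linalg.svd(centered, full_matrices=False)
    eigvals = sing ** 2 / (shapes.shape[0] - 1)
    ratio = np.cumsum(eigvals) / eigvals.sum()
    k = int(np.searchsorted(ratio, var_kept) + 1)
    return mean, vt[:k].T, eigvals[:k]

def constrain(b, eigvals, limit=3.0):
    """Clip shape parameters to +/- limit * sqrt(eigenvalue)."""
    bound = limit * np.sqrt(eigvals)
    return np.clip(b, -bound, bound)

# Synthetic training set with a single mode of variation.
rng = np.random.default_rng(0)
direction = np.array([1.0, 0.0, -1.0, 0.0, 0.5, 0.5])
shapes = 10.0 + np.outer(rng.normal(size=20), direction)
mean, P, eigvals = build_shape_model(shapes)

# Any shape is approximated as mean + P @ b.
b = P.T @ (shapes[0] - mean)
approx = mean + P @ b
```

Clipping `b` with `constrain` before reconstructing is one way to keep a fitted shape within the range seen in the training data.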

2 PART 02 Face image texture synthesis

Face image texture synthesis 1. Face feature point extraction 2. Color conversion 3. Seamless blending of faces and eyebrow restoration 4. 3D face reconstruction

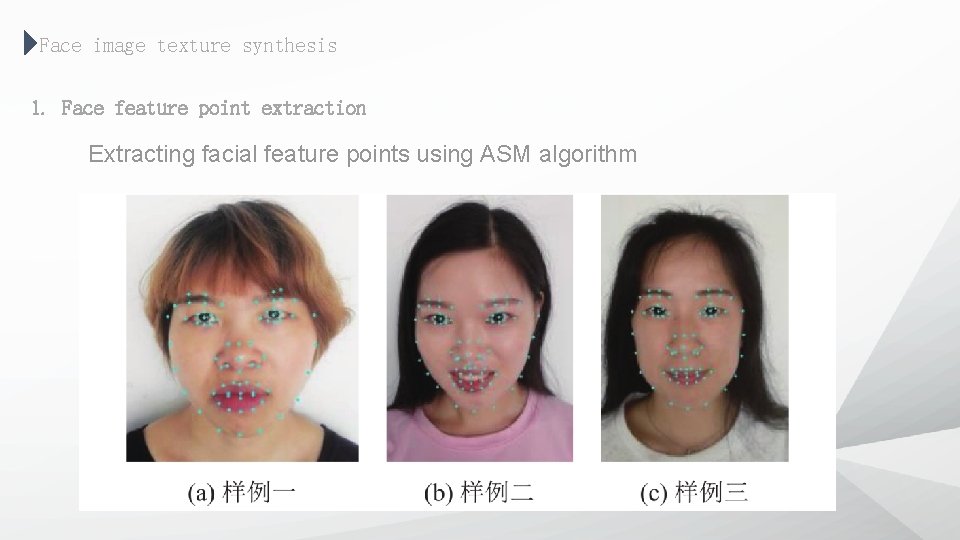

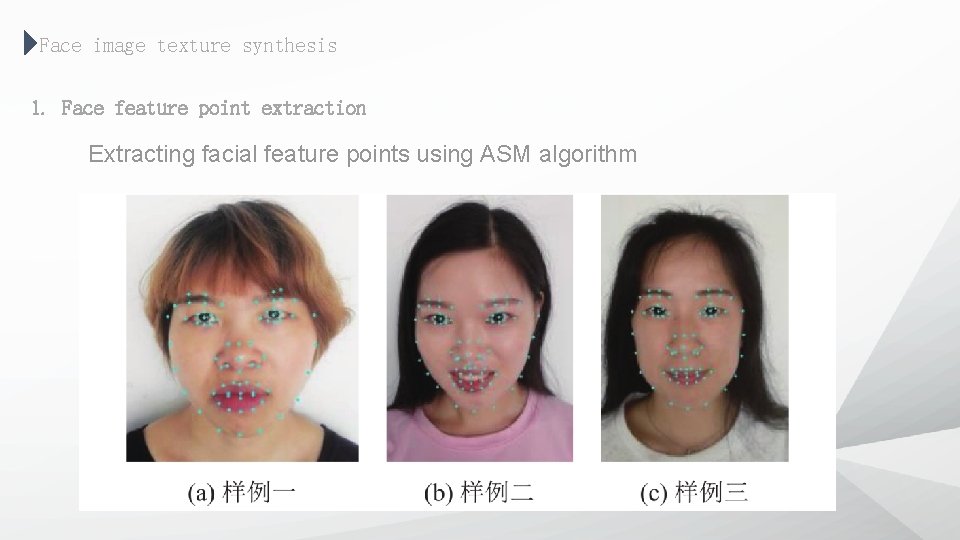

Face image texture synthesis 1. Face feature point extraction Facial feature points are extracted using the ASM algorithm.

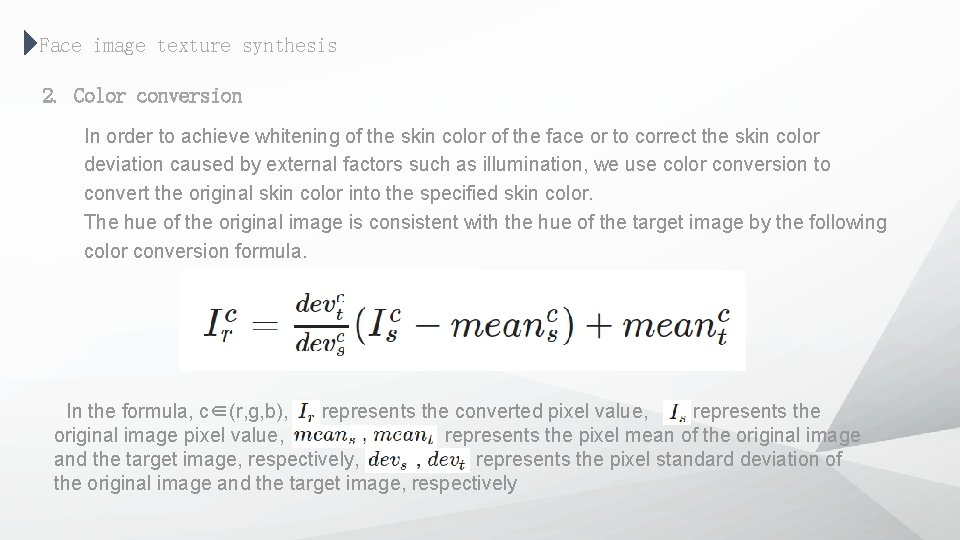

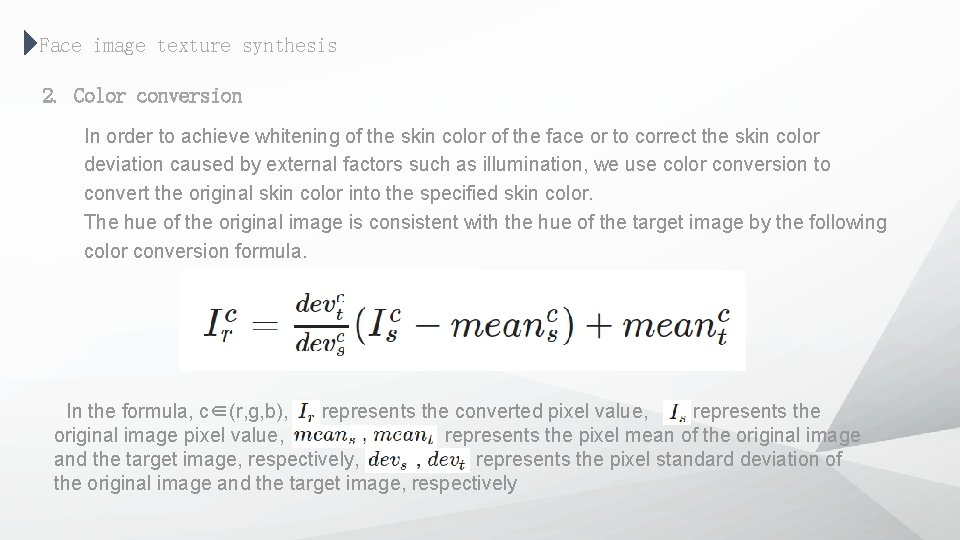

Face image texture synthesis 2. Color conversion To whiten the skin color of the face, or to correct skin color deviations caused by external factors such as illumination, we use color conversion to convert the original skin color into a specified skin color. The hue of the original image is made consistent with the hue of the target image by the following color conversion formula: $I'_c = \frac{\sigma_{t,c}}{\sigma_{s,c}} (I_c - \mu_{s,c}) + \mu_{t,c}$. In the formula, $c \in \{r, g, b\}$, $I'_c$ represents the converted pixel value, $I_c$ represents the original image pixel value, $\mu_{s,c}$ and $\mu_{t,c}$ represent the pixel means of the original image and the target image, respectively, and $\sigma_{s,c}$ and $\sigma_{t,c}$ represent the pixel standard deviations of the original image and the target image, respectively.
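A minimal sketch of a global mean and standard deviation color transfer of the kind described above. It assumes the common form "subtract the source mean, rescale by the ratio of standard deviations, add the target mean", applied per RGB channel; the function name `transfer_color` and the [0, 255] value range are illustrative assumptions.

```python
import numpy as np

def transfer_color(source, target):
    """Match the per-channel mean and std of `source` to those of `target`.

    source, target: (H, W, 3) arrays with values in [0, 255].
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    mu_s = source.mean(axis=(0, 1))
    mu_t = target.mean(axis=(0, 1))
    sigma_s = source.std(axis=(0, 1))
    sigma_t = target.std(axis=(0, 1))
    # Guard against division by zero on flat channels.
    scale = sigma_t / np.maximum(sigma_s, 1e-8)
    out = (source - mu_s) * scale + mu_t
    return np.clip(out, 0.0, 255.0)

# Synthetic images: a darker source and a brighter target.
rng = np.random.default_rng(0)
src = rng.uniform(50.0, 100.0, size=(8, 8, 3))
tgt = rng.uniform(100.0, 150.0, size=(8, 8, 3))
converted = transfer_color(src, tgt)
```

Because the statistics are computed over the whole image, this kind of transfer changes the global tone only, which is the limitation the next slide addresses.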

Face image texture synthesis 2. Color conversion This algorithm works reasonably well for global tone changes of an image, but it has problems with local color conversion, such as the color conversion of a face. Methods used: local linear embedding (LLE) algorithm, edit propagation. Face color conversion is realized by an edit propagation method based on the local linear embedding algorithm. Through color conversion, we eliminate the adverse effects of the shooting environment, such as overexposure and underexposure, on the face photo.

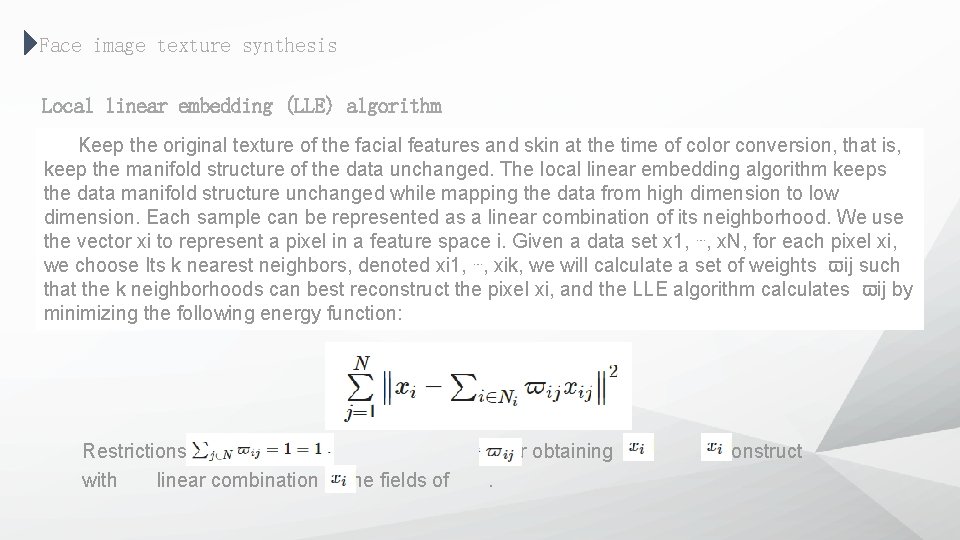

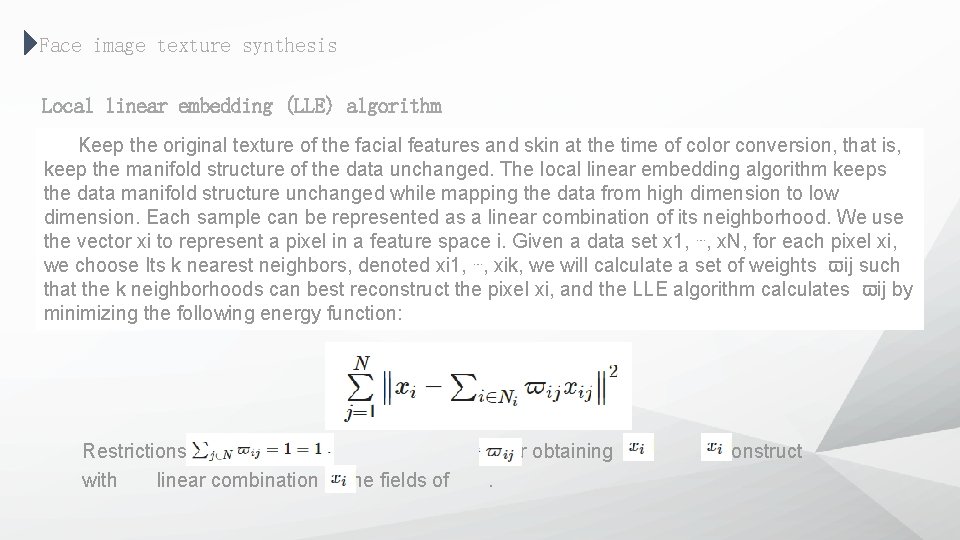

Face image texture synthesis Local linear embedding (LLE) algorithm The original texture of the facial features and skin should be kept during color conversion, that is, the manifold structure of the data should remain unchanged. The LLE algorithm keeps the manifold structure of the data unchanged while mapping the data from a high dimension to a low dimension. Each sample can be represented as a linear combination of its neighbors. We use the vector $x_i$ to represent pixel i in a feature space. Given a data set $x_1, \dots, x_N$, for each pixel $x_i$ we choose its k nearest neighbors, denoted $x_{i1}, \dots, x_{ik}$, and compute a set of weights $w_{ij}$ such that the k neighbors best reconstruct the pixel $x_i$. The LLE algorithm calculates $w_{ij}$ by minimizing the following energy function: $\sum_{i=1}^{N} \| x_i - \sum_{j=1}^{k} w_{ij} x_{ij} \|^2$, subject to the constraint $\sum_{j=1}^{k} w_{ij} = 1$. After obtaining $w_{ij}$, $x_i$ can be reconstructed as a linear combination of its neighbors.

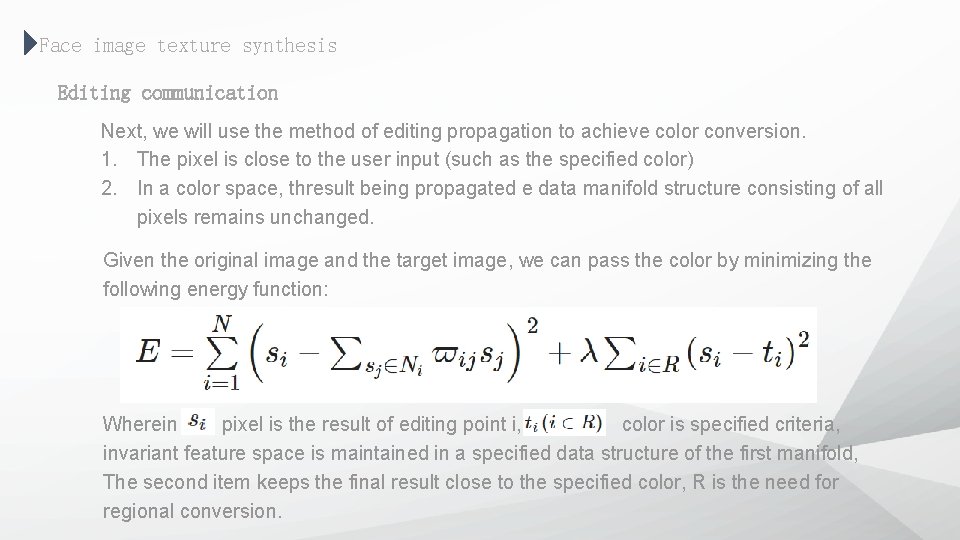

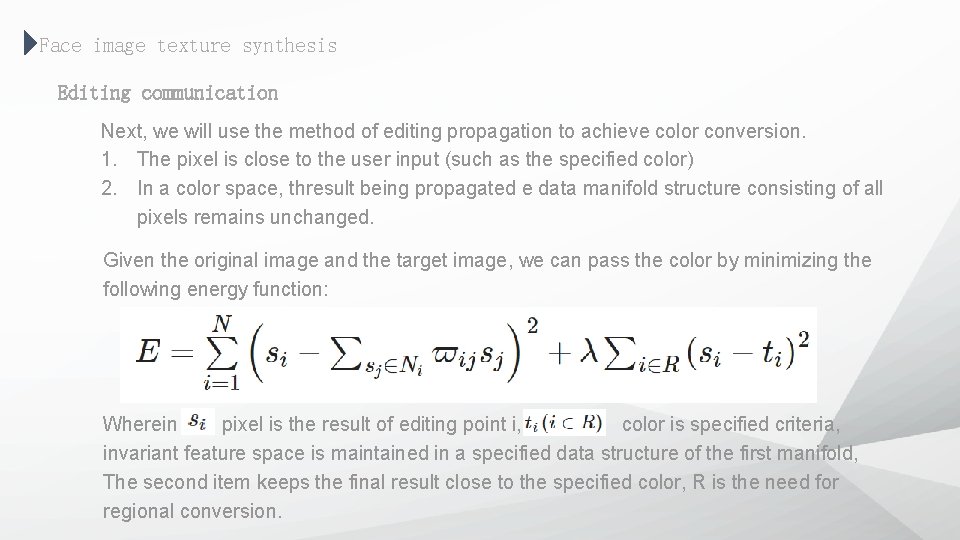
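A minimal sketch of computing the LLE reconstruction weights for a single pixel from its neighbors, using the standard closed form via the local Gram matrix. The small regularization term `reg` and the function name `lle_weights` are assumptions added for numerical stability and illustration; the neighbor search itself is not shown.

```python
import numpy as np

def lle_weights(x, neighbors, reg=1e-3):
    """Weights w (summing to 1) that minimise ||x - sum_j w_j * neighbors[j]||^2.

    x: (d,) feature vector of one pixel.
    neighbors: (k, d) feature vectors of its k nearest neighbors.
    """
    x = np.asarray(x, dtype=float)
    neighbors = np.asarray(neighbors, dtype=float)
    diff = neighbors - x                 # (k, d) offsets from x
    gram = diff @ diff.T                 # (k, k) local Gram matrix
    k = gram.shape[0]
    # The Gram matrix can be singular (e.g. k > d), so regularise it.
    gram = gram + reg * max(np.trace(gram), 1e-12) * np.eye(k) / k
    w = np.linalg.solve(gram, np.ones(k))
    return w / w.sum()                   # enforce the sum-to-one constraint

# A pixel exactly halfway between its two neighbors.
w = lle_weights([1.0, 1.0, 1.0], [[0.0, 0.0, 0.0], [2.0, 2.0, 2.0]])
```

In this symmetric example the two neighbors receive equal weights. Repeating the computation for every pixel gives the sparse weight matrix used by the propagation step.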

Face image texture synthesis Edit propagation Next, we use edit propagation to achieve color conversion. The propagated result should satisfy two requirements: 1. Each pixel is close to the user input (such as the specified color). 2. In the color space, the manifold structure of the data formed by all pixels remains unchanged. Given the original image and the target image, we can transfer the color by minimizing the following energy function: $E = \sum_{i} \left( z_i - \sum_{j} w_{ij} z_{ij} \right)^2 + \sum_{i \in R} (z_i - g_i)^2$, where $z_i$ is the edited result at pixel i and $g_i$ is the specified color. The first term preserves the manifold structure of the feature space, the second term keeps the final result close to the specified color, and R is the region that needs conversion.

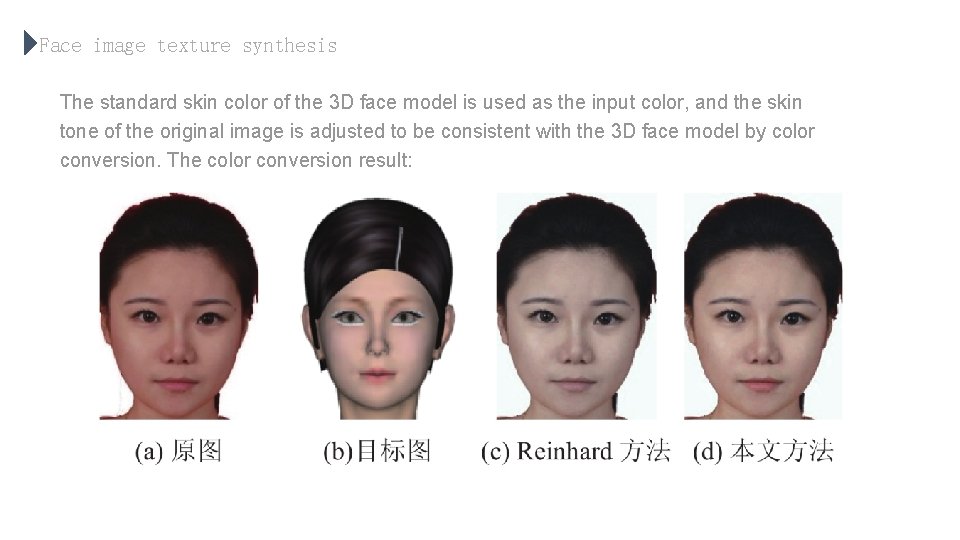

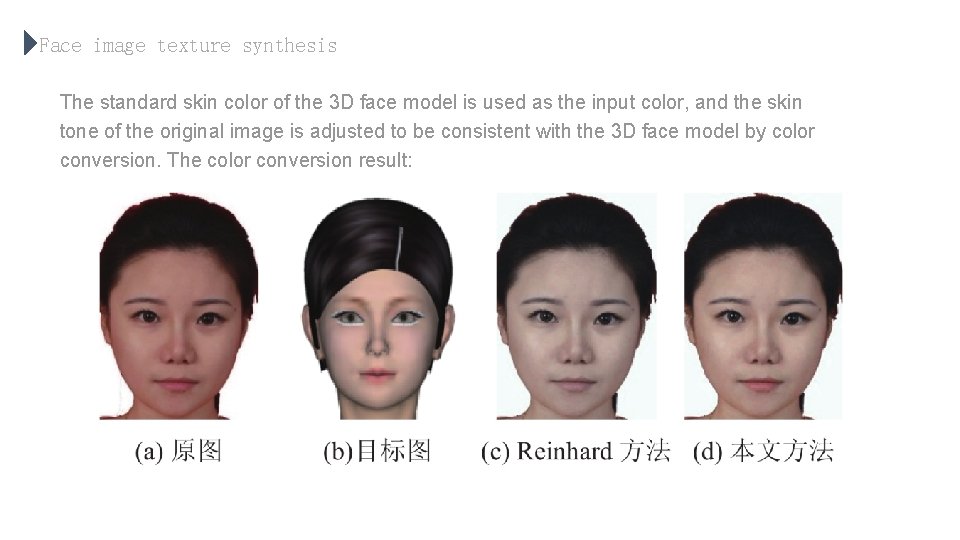
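A minimal sketch of manifold-preserving edit propagation on a tiny example. It assumes the energy has a manifold term built from LLE weights plus a data term restricted to the region R, and that the minimizer is found by solving the normal equations with a dense solver. The trade-off weight `lam` and the function name `propagate_edits` are assumptions; a real image would need a sparse solver.

```python
import numpy as np

def propagate_edits(W, g, mask, lam=1.0):
    """Minimise sum_i (z_i - sum_j W[i, j] z_j)^2 + lam * sum_{i in R} (z_i - g_i)^2.

    W: (N, N) LLE weight matrix (row i holds the weights of pixel i).
    g: (N,) specified values; only entries where mask is True are used.
    mask: (N,) boolean array marking the region R.
    """
    W = np.asarray(W, dtype=float)
    g = np.asarray(g, dtype=float)
    D = np.diag(np.asarray(mask, dtype=float))
    M = np.eye(W.shape[0]) - W
    # Setting the gradient to zero gives (M^T M + lam * D) z = lam * D g.
    A = M.T @ M + lam * D
    return np.linalg.solve(A, lam * (D @ g))

# Three pixels on a line in feature space: each one is an affine
# combination of the other two, and the two ends are edited by the user.
W = np.array([[0.0, 2.0, -1.0],
              [0.5, 0.0, 0.5],
              [-1.0, 2.0, 0.0]])
g = np.array([0.0, 0.0, 10.0])
mask = np.array([True, False, True])
z = propagate_edits(W, g, mask)
```

Here the unedited middle pixel ends up halfway between the two specified values, because that is the only result that keeps the linear relationships encoded in `W`.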

Face image texture synthesis The standard skin color of the 3D face model is used as the input color, and the skin tone of the original image is adjusted by color conversion to be consistent with the 3D face model. The color conversion result:

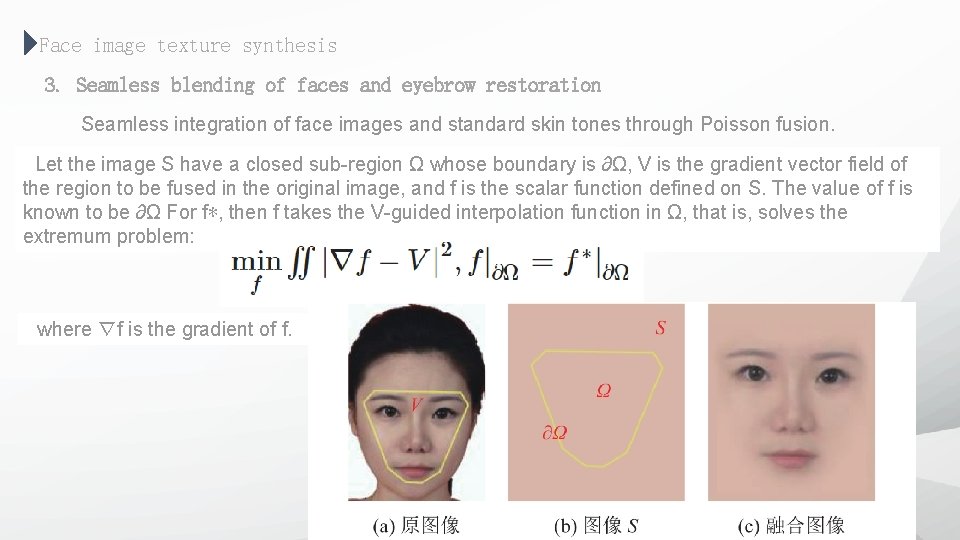

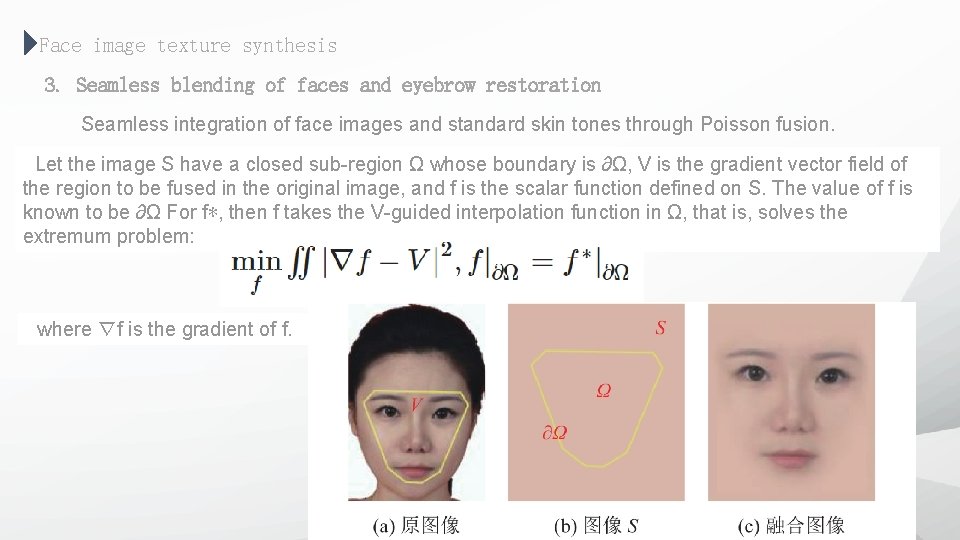

Face image texture synthesis 3. Seamless blending of faces and eyebrow restoration The face image and the standard skin tone are blended seamlessly through Poisson fusion. Let the image S have a closed sub-region Ω with boundary ∂Ω, let V be the gradient vector field of the region to be fused in the original image, and let f be a scalar function defined on S. The value of f on ∂Ω is known to be f∗. Then f is the V-guided interpolation function in Ω, that is, the solution of the extremum problem $\min_f \iint_\Omega |\nabla f - V|^2$ with $f|_{\partial\Omega} = f^*|_{\partial\Omega}$, where ∇f is the gradient of f.

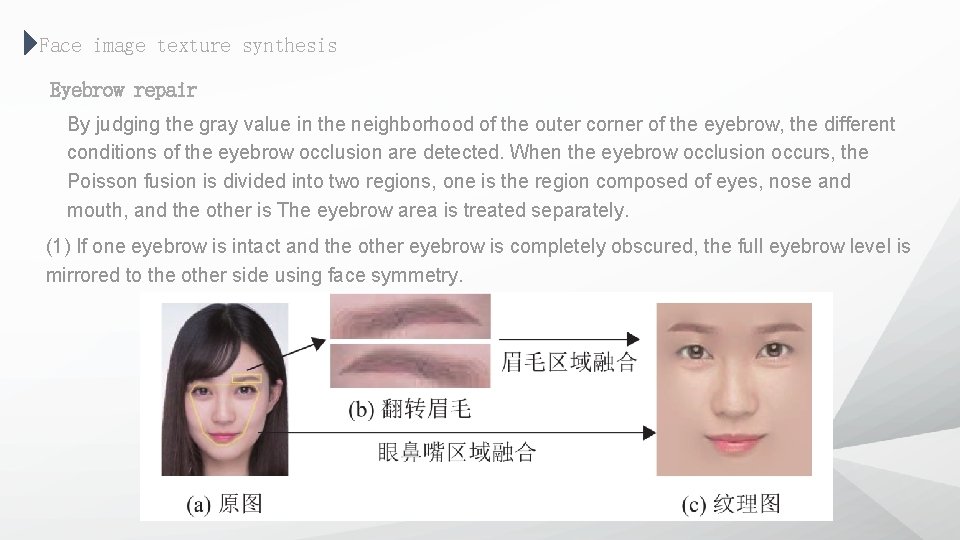

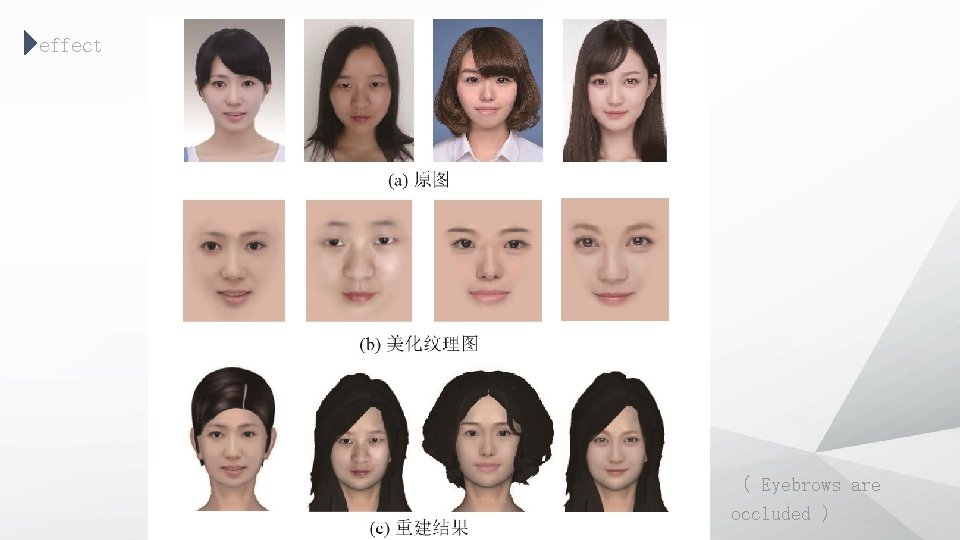

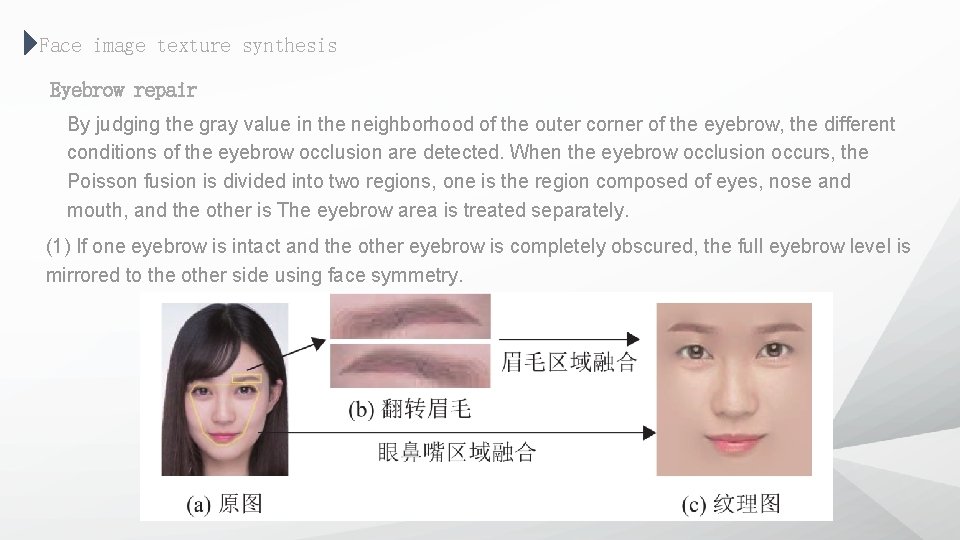
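A minimal sketch of discrete Poisson fusion on a single-channel image, using the 4-neighbor Laplacian and a dense solver. It assumes the guidance field V is the finite-difference gradient of the source image and that the mask does not touch the image border; the function name `poisson_blend` is hypothetical, and a practical implementation would use a sparse solver and process each color channel.

```python
import numpy as np

def poisson_blend(source, target, mask):
    """Blend `source` into `target` inside `mask`.

    source, target: 2-D float arrays of the same shape.
    mask: boolean array, True inside Omega (must not touch the border).
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    ys, xs = np.nonzero(mask)
    index = {(y, x): i for i, (y, x) in enumerate(zip(ys, xs))}
    n = len(index)
    A = np.zeros((n, n))
    b = np.zeros(n)
    for (y, x), i in index.items():
        A[i, i] = 4.0
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            q = (y + dy, x + dx)
            # Guidance term: gradient of the source between p and q.
            b[i] += source[y, x] - source[q]
            if q in index:
                A[i, index[q]] = -1.0   # unknown neighbor inside Omega
            else:
                b[i] += target[q]       # known boundary value f*
    result = target.copy()
    result[ys, xs] = np.linalg.solve(A, b)
    return result

# Sanity check: a source that differs from the target only by a constant
# offset has the same gradients, so blending should reproduce the target.
rng = np.random.default_rng(0)
tgt_img = rng.uniform(0.0, 1.0, size=(6, 6))
src_img = tgt_img + 10.0
region = np.zeros((6, 6), dtype=bool)
region[2:4, 2:4] = True
blended = poisson_blend(src_img, tgt_img, region)
```

The check illustrates why the blend is seamless: only gradients are taken from the source, while absolute intensities are fixed by the boundary values.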

Face image texture synthesis Eyebrow repair Different cases of eyebrow occlusion are detected by examining the gray values in the neighborhood of the outer corner of each eyebrow. When an eyebrow is occluded, Poisson fusion is applied to two regions separately: the region composed of the eyes, nose, and mouth, and the eyebrow region. (1) If one eyebrow is intact and the other eyebrow is completely occluded, the intact eyebrow is mirrored horizontally to the other side using face symmetry.

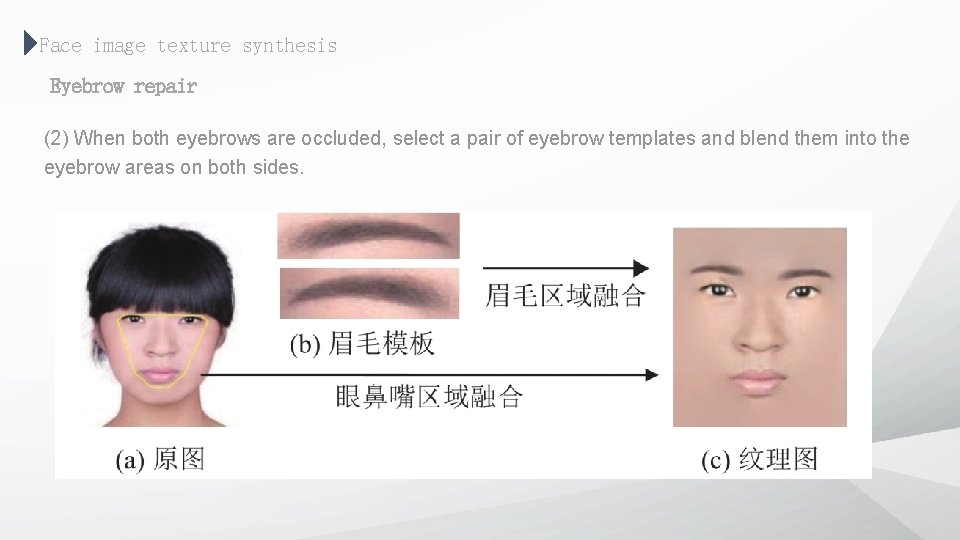

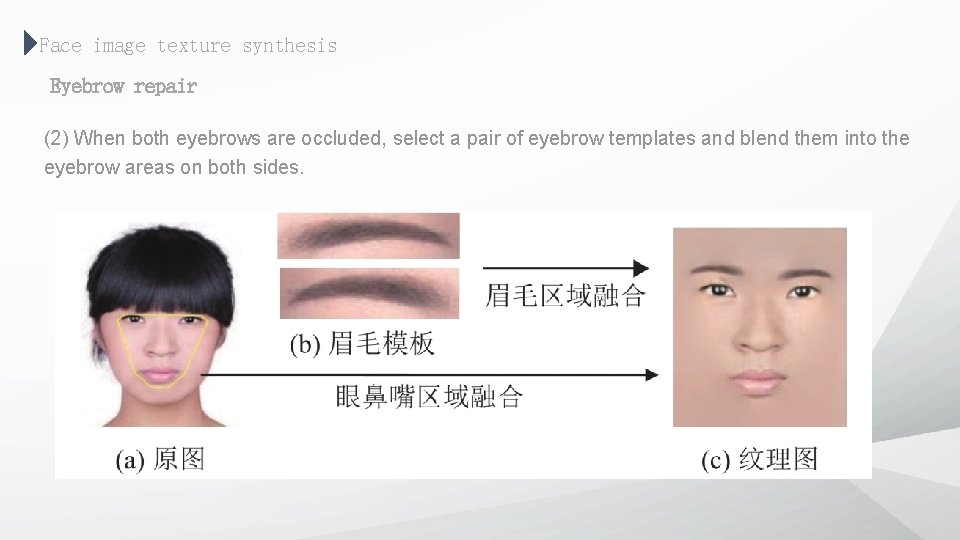

Face image texture synthesis Eyebrow repair (2) When both eyebrows are occluded, select a pair of eyebrow templates and blend them into the eyebrow areas on both sides.

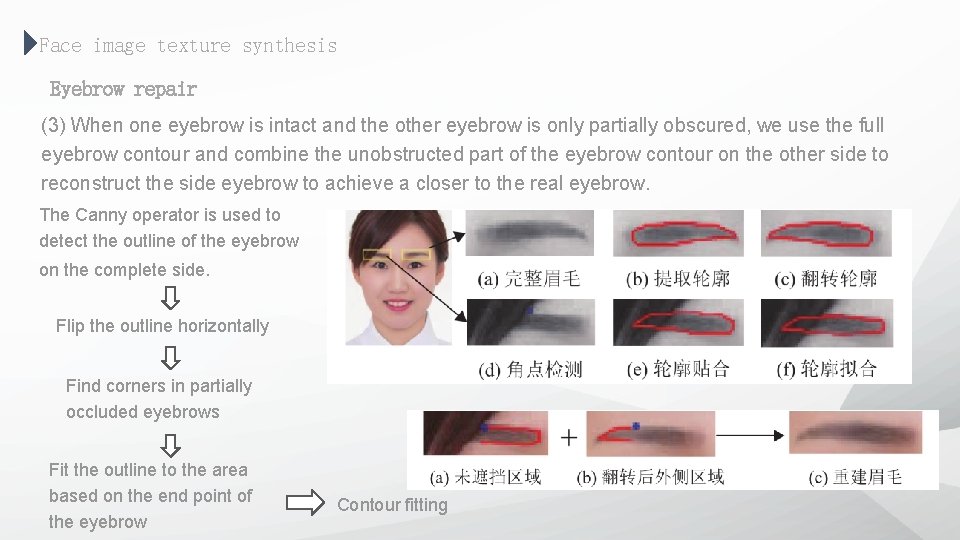

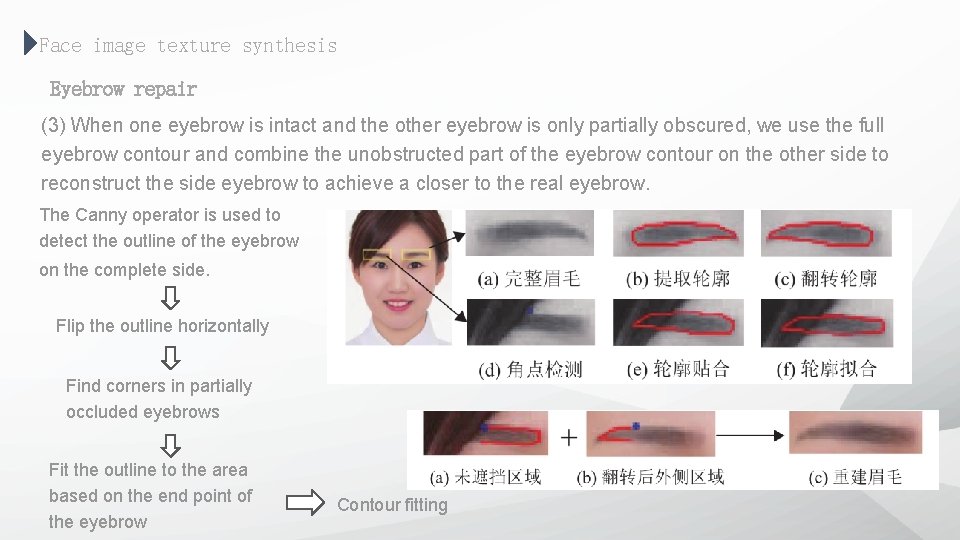

Face image texture synthesis Eyebrow repair (3) When one eyebrow is intact and the other eyebrow is only partially occluded, we combine the contour of the intact eyebrow with the unoccluded part of the contour on the other side to reconstruct the occluded eyebrow, so that the result is closer to the real eyebrow. The steps are: 1. Detect the outline of the eyebrow on the intact side with the Canny operator. 2. Flip the outline horizontally. 3. Find corners in the partially occluded eyebrow. 4. Fit the outline to the area based on the end point of the eyebrow (contour fitting).

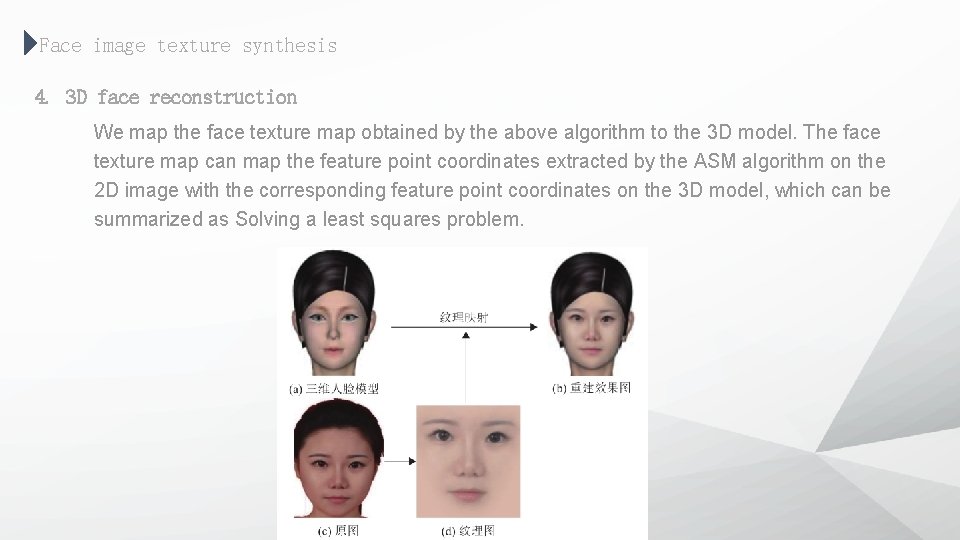

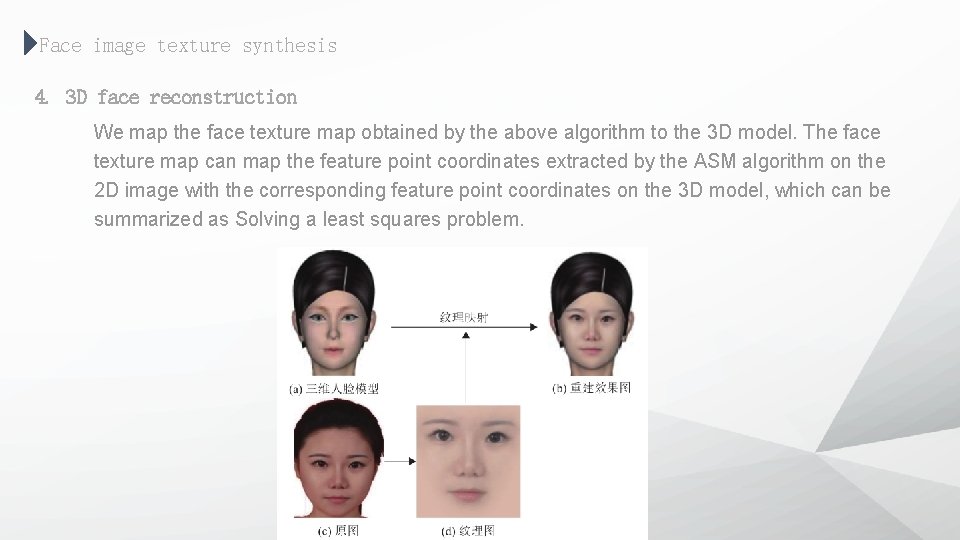

Face image texture synthesis 4. 3D face reconstruction We map the face texture map obtained by the above algorithm onto the 3D model. The feature point coordinates extracted by the ASM algorithm on the 2D image are matched with the corresponding feature point coordinates on the 3D model, which reduces to solving a least squares problem.
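The slide does not state the exact form of the least squares problem. As one possible illustration, the sketch below assumes an affine camera: a 2x4 matrix P is fitted so that the 3D model feature points project as closely as possible onto the 2D feature points. The affine model and the function names are assumptions, not the deck's stated method.

```python
import numpy as np

def _homogeneous(points_3d):
    """Append a column of ones to an (n, 3) array."""
    X = np.asarray(points_3d, dtype=float)
    return np.hstack([X, np.ones((X.shape[0], 1))])

def fit_affine_projection(points_3d, points_2d):
    """Least-squares 2x4 matrix P with points_2d ~= [X Y Z 1] @ P.T."""
    Xh = _homogeneous(points_3d)
    x = np.asarray(points_2d, dtype=float)
    P_t, *_ = np.linalg.lstsq(Xh, x, rcond=None)
    return P_t.T

def project(P, points_3d):
    """Project 3D points to 2D with the fitted affine map."""
    return _homogeneous(points_3d) @ P.T

# Synthetic correspondences generated from a known projection.
rng = np.random.default_rng(0)
model_pts = rng.normal(size=(8, 3))
P_true = np.array([[1.0, 0.2, 0.0, 5.0],
                   [0.0, 0.9, 0.1, -3.0]])
image_pts = project(P_true, model_pts)
P_est = fit_affine_projection(model_pts, image_pts)
```

Once such a map is known, each model vertex can be projected into the image to look up its texture coordinate.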

3 PART 03 effect

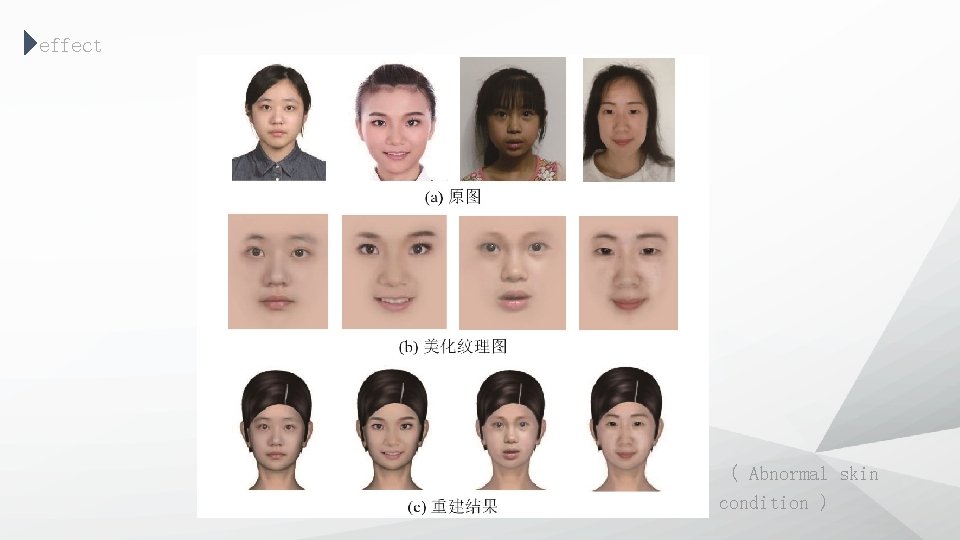

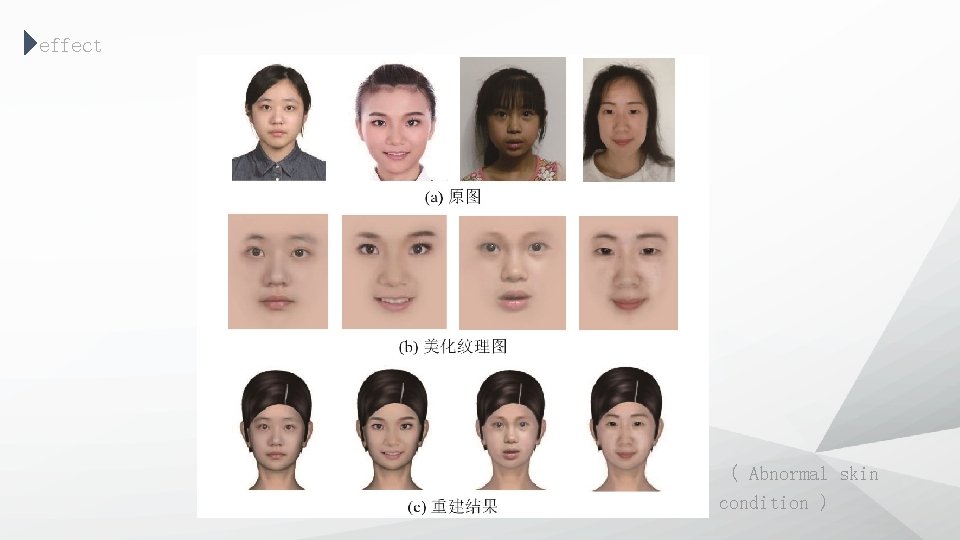

effect (Abnormal skin condition)

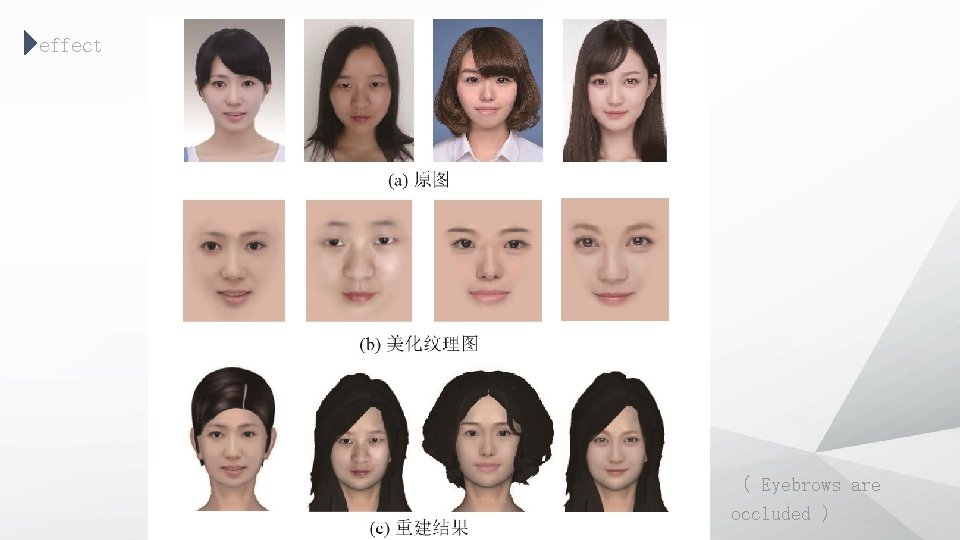

effect (Eyebrows are occluded)

4 PART 04 references

references 1. ASM algorithm https://blog.csdn.net/carson2005/article/details/8194317 https://blog.csdn.net/cbl709/article/details/46239571 2. Face texture synthesis http://www.c-s-a.org.cn/html/2019/5/6878.html#Figure2 3. Manifold structure https://www.zhihu.com/question/24015486

DOWNLOADS at http://vcc.szu.edu.cn Thank You!