Constructive Computer Architecture: Caches and Store Buffers

Arvind
Computer Science & Artificial Intelligence Lab, Massachusetts Institute of Technology
October 23, 2017
http://csg.csail.mit.edu/6.175

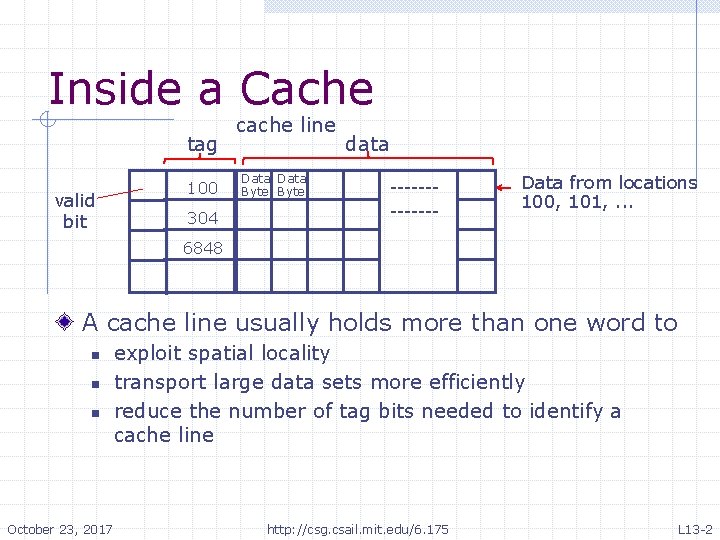

Inside a Cache

Figure: each cache entry holds a valid bit, an address tag (e.g. 100, 304, 6848), and a cache line of data bytes (e.g. the data from locations 100, 101, ...).

A cache line usually holds more than one word to:
- exploit spatial locality
- transport large data sets more efficiently
- reduce the number of tag bits needed to identify a cache line

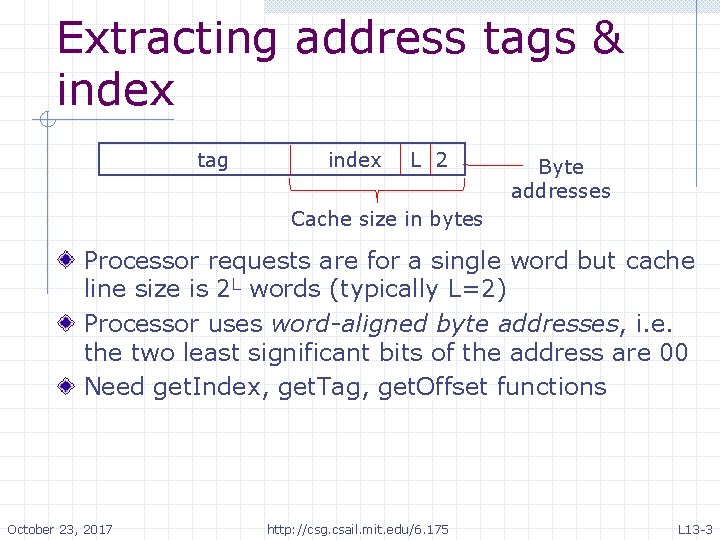

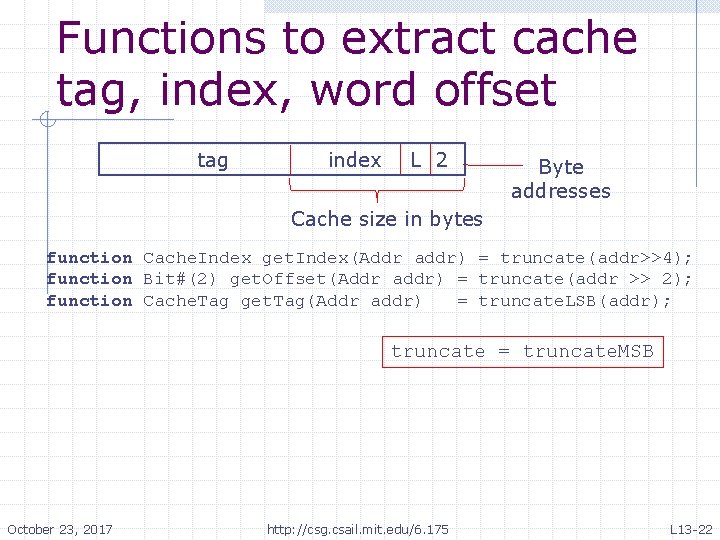

Extracting address tags & index

Byte address layout, from most to least significant bits: tag | index | L | 2. The index, L, and 2-bit fields together span the cache size in bytes.

- Processor requests are for a single word, but the cache line size is 2^L words (typically L = 2)
- The processor uses word-aligned byte addresses, i.e. the two least significant bits of the address are 00
- Need getIndex, getTag, getOffset functions

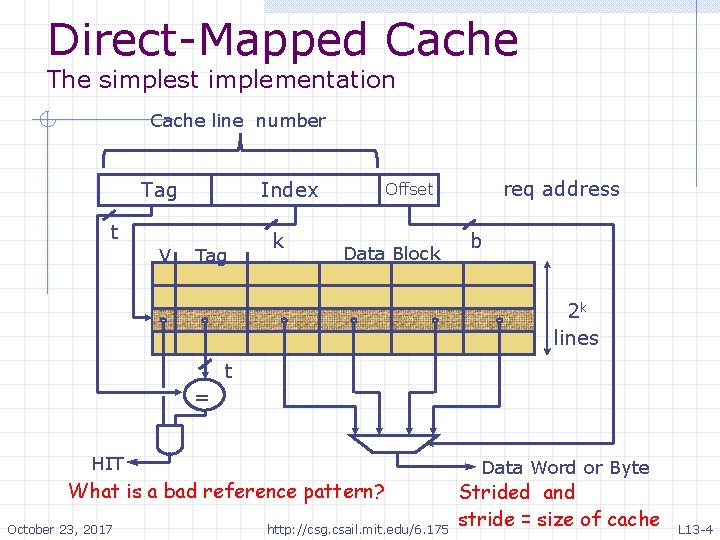

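Direct-Mapped Cache

The simplest implementation.

Figure: the request address is split into a tag (t bits), an index (k bits), and an offset (b bits). The index selects one of 2^k cache lines, each holding a valid bit (V), a tag, and a data block. The stored tag is compared with the request tag; a match on a valid line is a HIT. The offset selects the data word or byte within the block.

What is a bad reference pattern? A strided one, with stride = size of cache.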
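The bad reference pattern can be reproduced with a small behavioral model. The following Python sketch is illustrative only: the function name `count_hits` and the sizes (8 lines of 16 bytes) are assumptions, not values from the slides.

```python
def count_hits(addresses, num_lines=8, line_bytes=16):
    """Count hits in a direct-mapped cache; the sizes are illustrative assumptions."""
    tags = [None] * num_lines          # None plays the role of an invalid line
    hits = 0
    for addr in addresses:
        line_addr = addr // line_bytes
        idx = line_addr % num_lines    # index bits select the line
        tag = line_addr // num_lines   # remaining upper bits form the tag
        if tags[idx] == tag:
            hits += 1
        else:
            tags[idx] = tag            # miss: replace the resident line
    return hits

CACHE_BYTES = 8 * 16                   # 128-byte cache under the assumptions above

# Two passes over four addresses spaced one cache size apart: all map to index 0.
strided = [i * CACHE_BYTES for i in range(4)] * 2
# Two passes over four consecutive lines: each address gets its own index.
sequential = [i * 16 for i in range(4)] * 2

print(count_hits(strided))     # 0
print(count_hits(sequential))  # 4
```

With a stride equal to the cache size every address has the same index and a different tag, so each access evicts the line the next pass needs.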

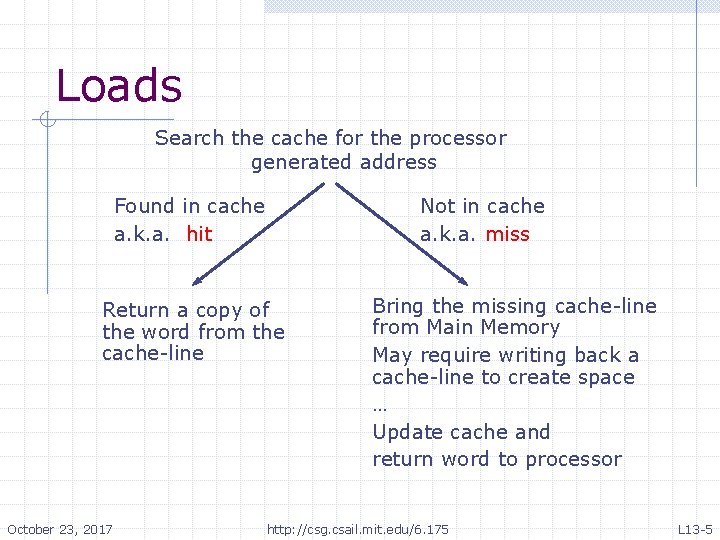

Loads

Search the cache for the processor-generated address.
- Found in cache (a.k.a. hit): return a copy of the word from the cache line
- Not in cache (a.k.a. miss): bring the missing cache line from main memory (this may require writing back a cache line to create space), then update the cache and return the word to the processor

Stores

- On a write hit: write only to the cache, and update the next-level memory when the line is evacuated
- On a write miss: allocate. Because of multiword lines, we first fetch the line and then update a word in it

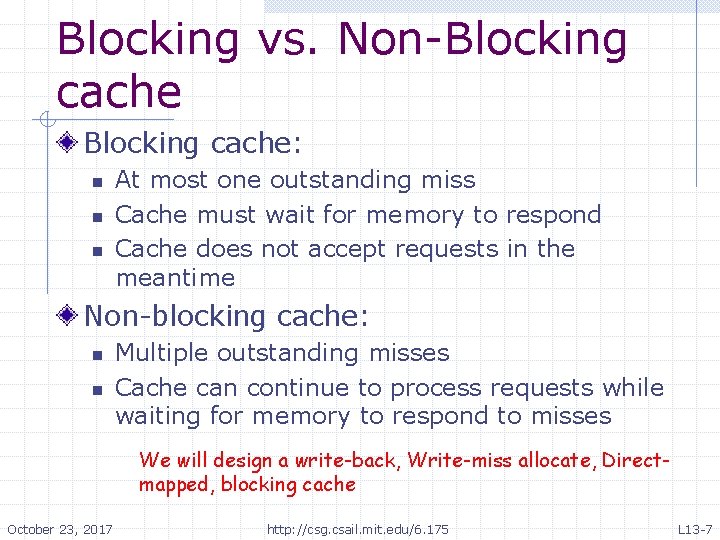

Blocking vs. Non-Blocking cache

Blocking cache:
- At most one outstanding miss
- Cache must wait for memory to respond
- Cache does not accept requests in the meantime

Non-blocking cache:
- Multiple outstanding misses
- Cache can continue to process requests while waiting for memory to respond to misses

We will design a write-back, write-miss allocate, direct-mapped, blocking cache.

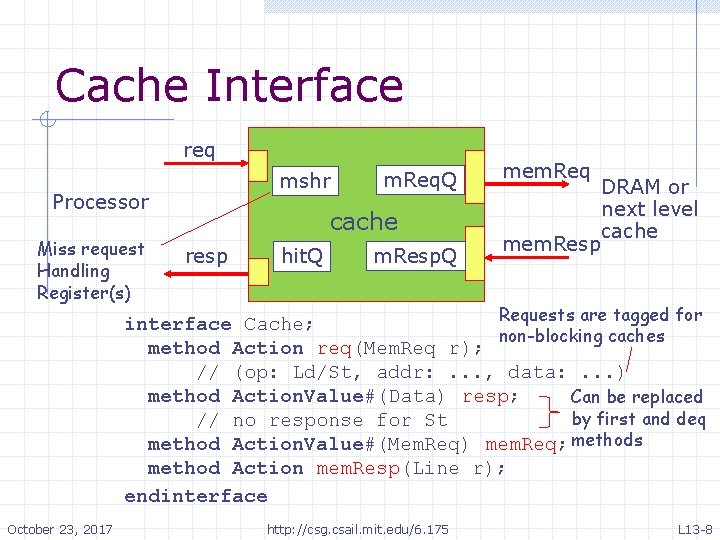

Cache Interface

Figure: the processor talks to the cache through req and resp. Inside the cache, mshr (Miss request Handling Register(s)) tracks the miss and hitQ holds responses. mReqQ and mRespQ connect the cache, through memReq and memResp, to DRAM or the next-level cache. Requests are tagged for non-blocking caches.

    interface Cache;
        method Action req(MemReq r);          // (op: Ld/St, addr: ..., data: ...)
        method ActionValue#(Data) resp;       // no response for St
        method ActionValue#(MemReq) memReq;
        method Action memResp(Line r);
    endinterface

The resp method can be replaced by first and deq methods.

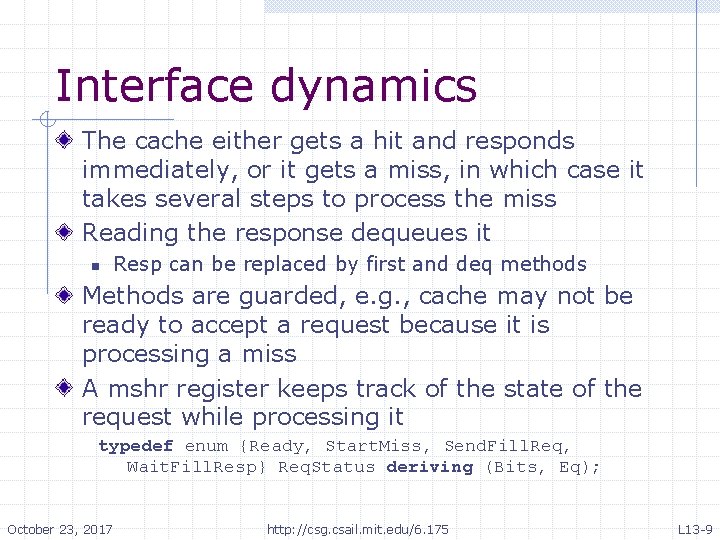

Interface dynamics

- The cache either gets a hit and responds immediately, or it gets a miss, in which case it takes several steps to process the miss
- Reading the response dequeues it (resp can be replaced by first and deq methods)
- Methods are guarded, e.g., the cache may not be ready to accept a request because it is processing a miss
- An mshr register keeps track of the state of the request while processing it

    typedef enum {Ready, StartMiss, SendFillReq, WaitFillResp} ReqStatus deriving (Bits, Eq);

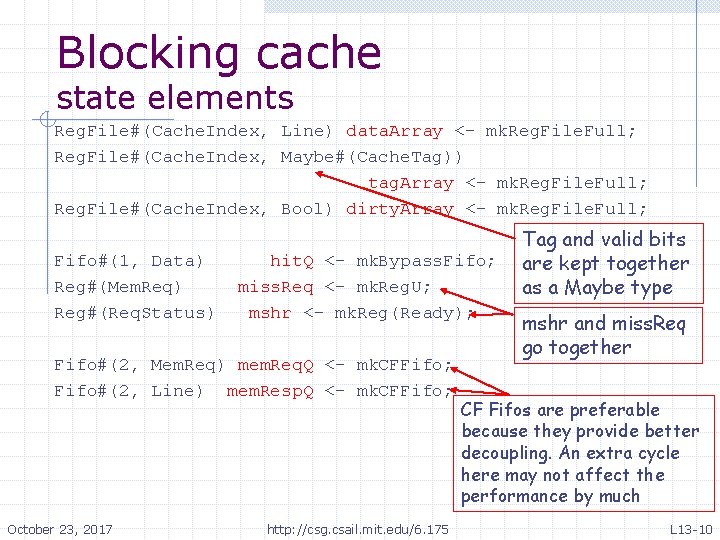

Blocking cache state elements

    RegFile#(CacheIndex, Line) dataArray <- mkRegFileFull;
    RegFile#(CacheIndex, Maybe#(CacheTag)) tagArray <- mkRegFileFull;
    RegFile#(CacheIndex, Bool) dirtyArray <- mkRegFileFull;

    Fifo#(1, Data) hitQ <- mkBypassFifo;
    Reg#(MemReq) missReq <- mkRegU;
    Reg#(ReqStatus) mshr <- mkReg(Ready);

    Fifo#(2, MemReq) memReqQ <- mkCFFifo;
    Fifo#(2, Line) memRespQ <- mkCFFifo;

- Tag and valid bits are kept together as a Maybe type
- mshr and missReq go together
- CF Fifos are preferable because they provide better decoupling. An extra cycle here may not affect the performance by much

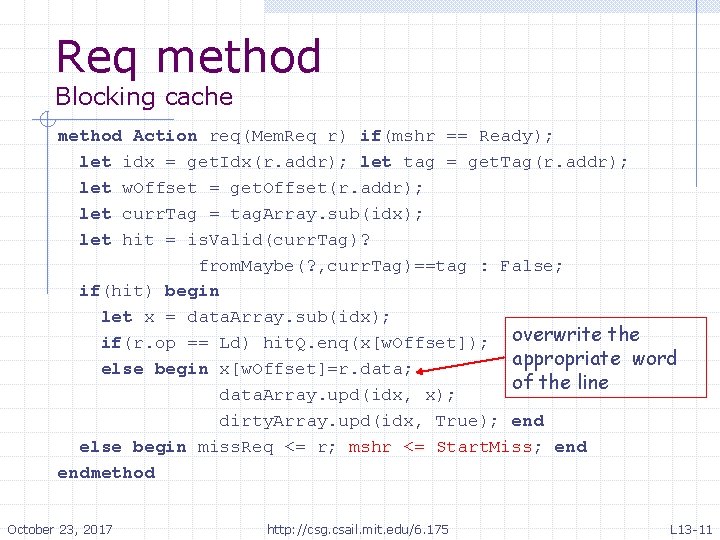

Req method (blocking cache)

    method Action req(MemReq r) if(mshr == Ready);
        let idx = getIdx(r.addr);
        let tag = getTag(r.addr);
        let wOffset = getOffset(r.addr);
        let currTag = tagArray.sub(idx);
        let hit = isValid(currTag) ? fromMaybe(?, currTag)==tag : False;
        if(hit) begin
            let x = dataArray.sub(idx);
            if(r.op == Ld) hitQ.enq(x[wOffset]);
            else begin
                // overwrite the appropriate word of the line
                x[wOffset] = r.data;
                dataArray.upd(idx, x);
                dirtyArray.upd(idx, True);
            end
        end
        else begin
            missReq <= r;
            mshr <= StartMiss;
        end
    endmethod

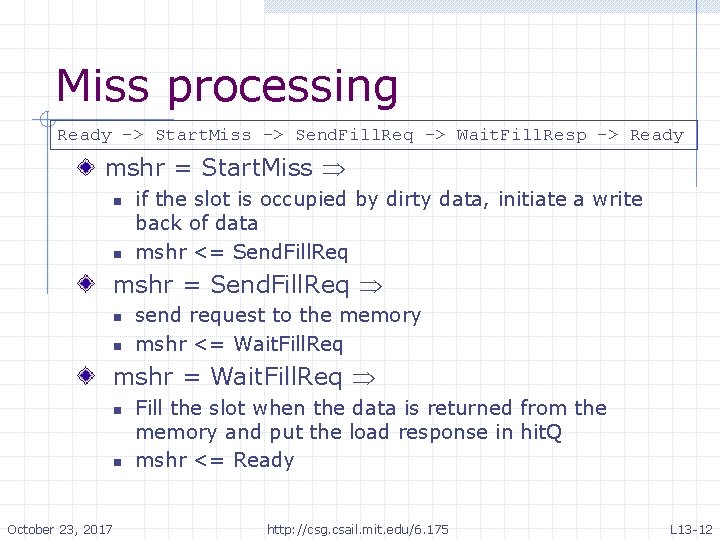

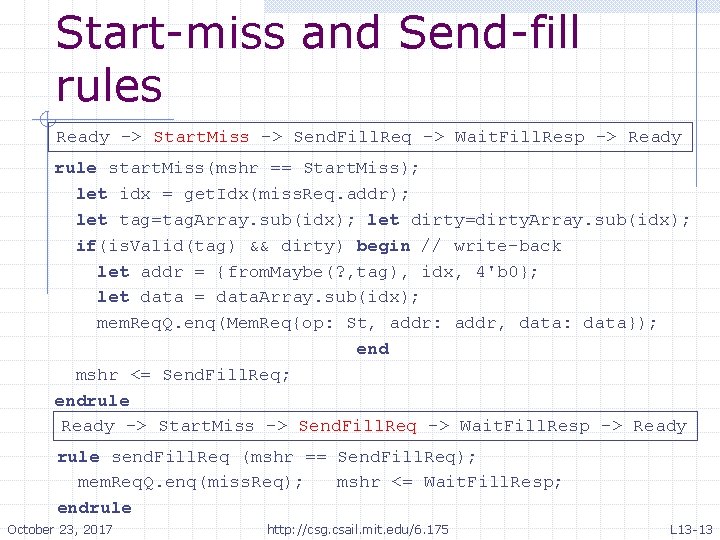

Miss processing

Ready -> StartMiss -> SendFillReq -> WaitFillResp -> Ready

mshr = StartMiss
- if the slot is occupied by dirty data, initiate a write back of data
- mshr <= SendFillReq

mshr = SendFillReq
- send request to the memory
- mshr <= WaitFillResp

mshr = WaitFillResp
- fill the slot when the data is returned from the memory and put the load response in hitQ
- mshr <= Ready

Start-miss and Send-fill rules

Ready -> StartMiss -> SendFillReq -> WaitFillResp -> Ready

    rule startMiss(mshr == StartMiss);
        let idx = getIdx(missReq.addr);
        let tag = tagArray.sub(idx);
        let dirty = dirtyArray.sub(idx);
        if(isValid(tag) && dirty) begin // write-back
            let addr = {fromMaybe(?, tag), idx, 4'b0};
            let data = dataArray.sub(idx);
            memReqQ.enq(MemReq{op: St, addr: addr, data: data});
        end
        mshr <= SendFillReq;
    endrule

    rule sendFillReq(mshr == SendFillReq);
        // the fill is a load of the line, even when missReq is a store
        memReqQ.enq(MemReq{op: Ld, addr: missReq.addr, data: ?});
        mshr <= WaitFillResp;
    endrule

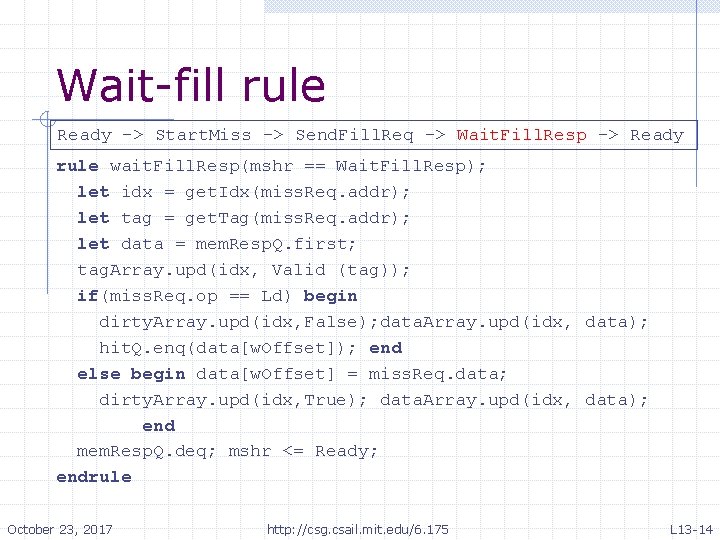

Wait-fill rule

Ready -> StartMiss -> SendFillReq -> WaitFillResp -> Ready

    rule waitFillResp(mshr == WaitFillResp);
        let idx = getIdx(missReq.addr);
        let tag = getTag(missReq.addr);
        let wOffset = getOffset(missReq.addr);
        let data = memRespQ.first;
        tagArray.upd(idx, Valid (tag));
        if(missReq.op == Ld) begin
            dirtyArray.upd(idx, False);
            dataArray.upd(idx, data);
            hitQ.enq(data[wOffset]);
        end
        else begin
            data[wOffset] = missReq.data;
            dirtyArray.upd(idx, True);
            dataArray.upd(idx, data);
        end
        memRespQ.deq;
        mshr <= Ready;
    endrule
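The req method and the three miss rules can be exercised together in a software model. The Python sketch below is a behavioral approximation under stated assumptions, not the Bluespec design: it uses word addresses, 4 words per line, 4 lines, a dict as memory, and the hypothetical helpers `run_memory` and `access` to play the role of the memory and the processor.

```python
from collections import deque

LINE_WORDS = 4  # assumed: 2^L words per line with L = 2

class BlockingCache:
    """Behavioral model: write-back, write-miss allocate, direct-mapped, blocking."""

    def __init__(self, num_lines=4):
        self.n = num_lines
        self.data = [[0] * LINE_WORDS for _ in range(num_lines)]
        self.tag = [None] * num_lines      # None = invalid, like Maybe#(CacheTag)
        self.dirty = [False] * num_lines
        self.mshr = "Ready"
        self.miss_req = None
        self.hit_q, self.mem_req_q, self.mem_resp_q = deque(), deque(), deque()

    def _split(self, word_addr):
        # word address -> (tag, index, word offset)
        line = word_addr // LINE_WORDS
        return line // self.n, line % self.n, word_addr % LINE_WORDS

    def req(self, op, addr, value=None):
        assert self.mshr == "Ready"        # the method guard
        tag, idx, off = self._split(addr)
        if self.tag[idx] == tag:           # hit
            if op == "Ld":
                self.hit_q.append(self.data[idx][off])
            else:
                self.data[idx][off] = value
                self.dirty[idx] = True
        else:                              # miss: hand over to the rules
            self.miss_req = (op, addr, value)
            self.mshr = "StartMiss"

    def step(self):
        """Fire the one miss-handling rule whose guard holds, if any."""
        if self.mshr == "StartMiss":
            _, idx, _ = self._split(self.miss_req[1])
            if self.tag[idx] is not None and self.dirty[idx]:
                base = (self.tag[idx] * self.n + idx) * LINE_WORDS
                self.mem_req_q.append(("St", base, list(self.data[idx])))
            self.mshr = "SendFillReq"
        elif self.mshr == "SendFillReq":
            base = self.miss_req[1] - self.miss_req[1] % LINE_WORDS
            self.mem_req_q.append(("Ld", base, None))
            self.mshr = "WaitFillResp"
        elif self.mshr == "WaitFillResp" and self.mem_resp_q:
            op, addr, value = self.miss_req
            tag, idx, off = self._split(addr)
            line = list(self.mem_resp_q.popleft())
            if op == "Ld":
                self.hit_q.append(line[off])
            else:
                line[off] = value
            self.tag[idx], self.data[idx], self.dirty[idx] = tag, line, op == "St"
            self.mshr = "Ready"

def run_memory(cache, memory):
    """Serve queued memory requests from a dict of word address -> value."""
    while cache.mem_req_q:
        op, base, line = cache.mem_req_q.popleft()
        if op == "St":
            for i, w in enumerate(line):
                memory[base + i] = w
        else:
            cache.mem_resp_q.append([memory.get(base + i, 0) for i in range(LINE_WORDS)])

def access(cache, memory, op, addr, value=None):
    cache.req(op, addr, value)
    while cache.mshr != "Ready":
        cache.step()
        run_memory(cache, memory)
    return cache.hit_q.popleft() if op == "Ld" else None

memory = {5: 55, 21: 77}
c = BlockingCache()
print(access(c, memory, "Ld", 5))   # 55: miss, fill, then respond
access(c, memory, "St", 5, 99)      # write hit, line becomes dirty
print(access(c, memory, "Ld", 21))  # 77: same index as address 5, forces a write-back
print(memory[5])                    # 99: the dirty line reached memory
```

Addresses 5 and 21 were chosen because they share an index under these sizes, so the second load walks through all three miss states including the write-back.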

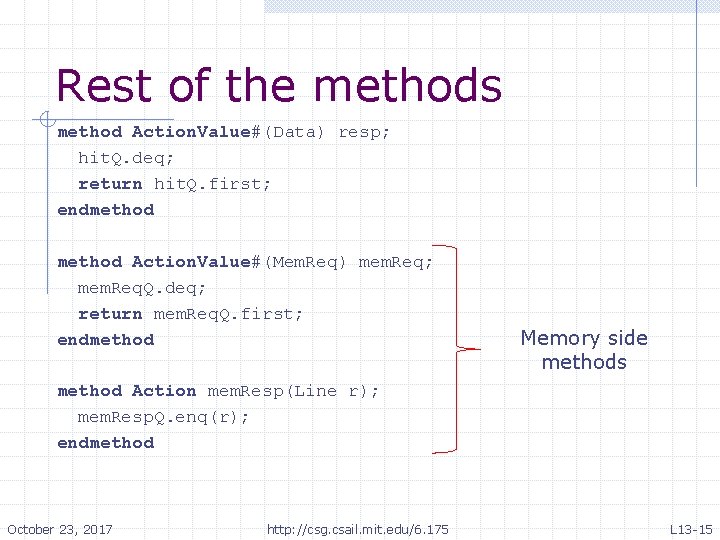

Rest of the methods

    method ActionValue#(Data) resp;
        hitQ.deq;
        return hitQ.first;
    endmethod

Memory side methods:

    method ActionValue#(MemReq) memReq;
        memReqQ.deq;
        return memReqQ.first;
    endmethod

    method Action memResp(Line r);
        memRespQ.enq(r);
    endmethod

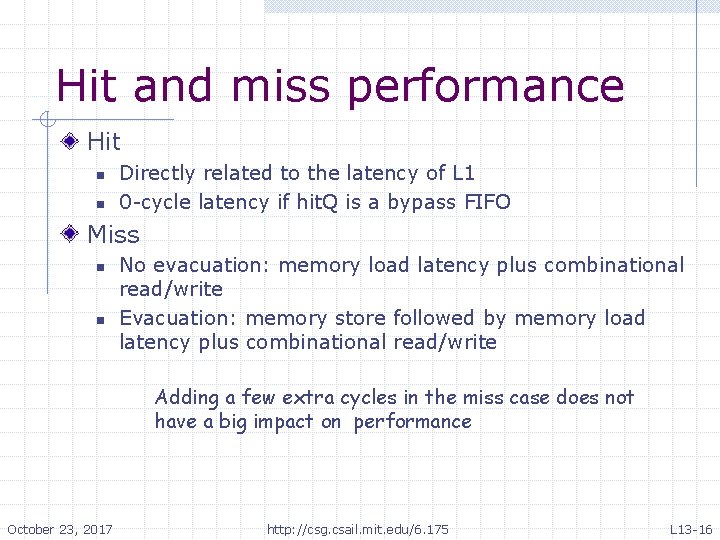

Hit and miss performance

Hit
- Directly related to the latency of L1
- 0-cycle latency if hitQ is a bypass FIFO

Miss
- No evacuation: memory load latency plus combinational read/write
- Evacuation: memory store followed by memory load latency plus combinational read/write

Adding a few extra cycles in the miss case does not have a big impact on performance.
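A quick calculation shows why extra miss cycles matter little while extra hit cycles matter a lot. This is a sketch with made-up numbers: the 95% hit rate, 1-cycle hit, and 100-cycle miss are assumptions for illustration, not measurements from the course.

```python
def average_latency(hit_rate, hit_cycles, miss_cycles):
    """Average cycles per access for a given hit rate."""
    return hit_rate * hit_cycles + (1 - hit_rate) * miss_cycles

# All numbers below are illustrative assumptions.
base = average_latency(0.95, 1, 100)
slower_miss = average_latency(0.95, 1, 103)   # three extra cycles on every miss
slower_hit = average_latency(0.95, 2, 100)    # one extra cycle on every hit

print(f"{base:.2f} {slower_miss:.2f} {slower_hit:.2f}")  # 5.95 6.10 6.90
```

Under these assumptions three extra miss cycles cost 0.15 cycles per access on average, while a single extra hit cycle costs 0.95.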

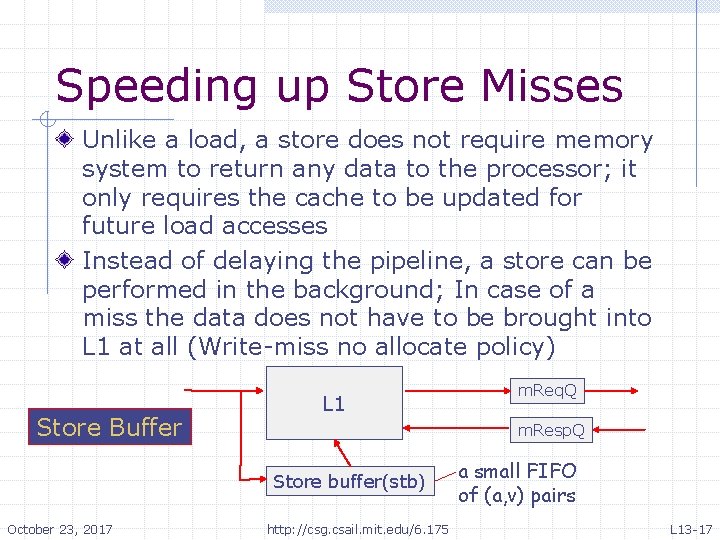

Speeding up Store Misses

- Unlike a load, a store does not require the memory system to return any data to the processor; it only requires the cache to be updated for future load accesses
- Instead of delaying the pipeline, a store can be performed in the background. In case of a miss the data does not have to be brought into L1 at all (write-miss no-allocate policy)

Figure: a store buffer (stb), a small FIFO of (a, v) pairs, sits alongside L1; L1 connects to memory through mReqQ and mRespQ.

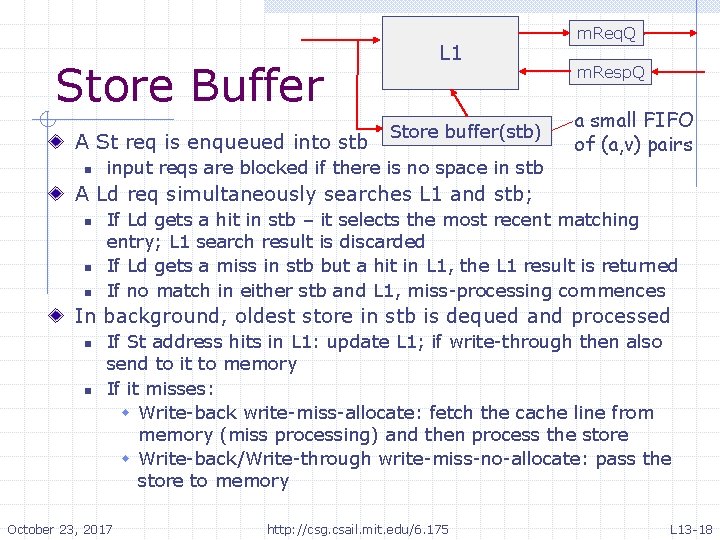

Store Buffer

Figure: a store buffer (stb), a small FIFO of (a, v) pairs, sits alongside L1; L1 connects to memory through mReqQ and mRespQ.

A St req is enqueued into stb
- input reqs are blocked if there is no space in stb

A Ld req simultaneously searches L1 and stb
- If Ld gets a hit in stb, it selects the most recent matching entry; the L1 search result is discarded
- If Ld gets a miss in stb but a hit in L1, the L1 result is returned
- If there is no match in either stb or L1, miss processing commences

In the background, the oldest store in stb is dequeued and processed
- If the St address hits in L1: update L1; if write-through, then also send it to memory
- If it misses:
  - Write-back write-miss-allocate: fetch the cache line from memory (miss processing) and then process the store
  - Write-back/write-through write-miss-no-allocate: pass the store to memory
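The load lookup order described above can be sketched in a few lines. This Python model is an assumption-laden illustration: the `StoreBuffer` class, its capacity of 4, and the use of a plain dict in place of the L1 lookup are all hypothetical stand-ins.

```python
from collections import deque

class StoreBuffer:
    """Small FIFO of (address, value) pairs; the capacity is an assumed parameter."""

    def __init__(self, capacity=4):
        self.capacity = capacity
        self.entries = deque()

    def can_enq(self):
        return len(self.entries) < self.capacity

    def enq(self, addr, value):
        assert self.can_enq()          # requests block when there is no space
        self.entries.append((addr, value))

    def search(self, addr):
        # Scan from youngest to oldest so the most recent matching store wins.
        for a, v in reversed(self.entries):
            if a == addr:
                return v
        return None                    # plays the role of Invalid

    def deq(self):
        return self.entries.popleft()  # the oldest store drains first

def load(stb, l1, addr):
    """Return (source, value); l1 is a plain dict standing in for the L1 lookup."""
    v = stb.search(addr)
    if v is not None:
        return ("stb", v)              # the L1 result is discarded
    if addr in l1:
        return ("L1", l1[addr])
    return ("miss", None)              # miss processing would start here

stb = StoreBuffer()
l1 = {8: 1, 12: 2}
stb.enq(8, 10)
stb.enq(8, 11)
print(load(stb, l1, 8))    # ('stb', 11): youngest matching store
print(load(stb, l1, 12))   # ('L1', 2)
print(load(stb, l1, 16))   # ('miss', None)
print(stb.deq())           # (8, 10): oldest store leaves first
```

Searching youngest-first while draining oldest-first keeps loads consistent with program order even when several stores to the same address are pending.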

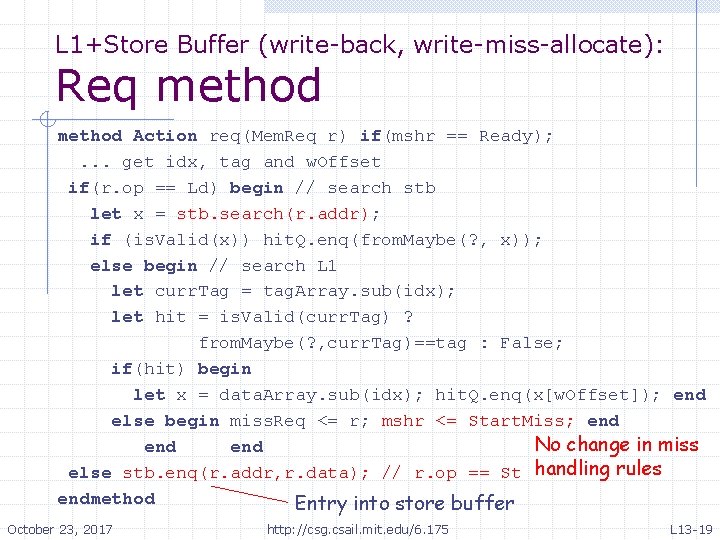

L1 + Store Buffer (write-back, write-miss-allocate): Req method

    method Action req(MemReq r) if(mshr == Ready);
        // ... get idx, tag and wOffset
        if(r.op == Ld) begin
            // search stb
            let x = stb.search(r.addr);
            if (isValid(x)) hitQ.enq(fromMaybe(?, x));
            else begin
                // search L1
                let currTag = tagArray.sub(idx);
                let hit = isValid(currTag) ? fromMaybe(?, currTag)==tag : False;
                if(hit) begin
                    let line = dataArray.sub(idx);
                    hitQ.enq(line[wOffset]);
                end
                else begin
                    missReq <= r;
                    mshr <= StartMiss;
                end
            end
        end
        else stb.enq(r.addr, r.data); // r.op == St: entry into store buffer
    endmethod

No change in the miss handling rules.

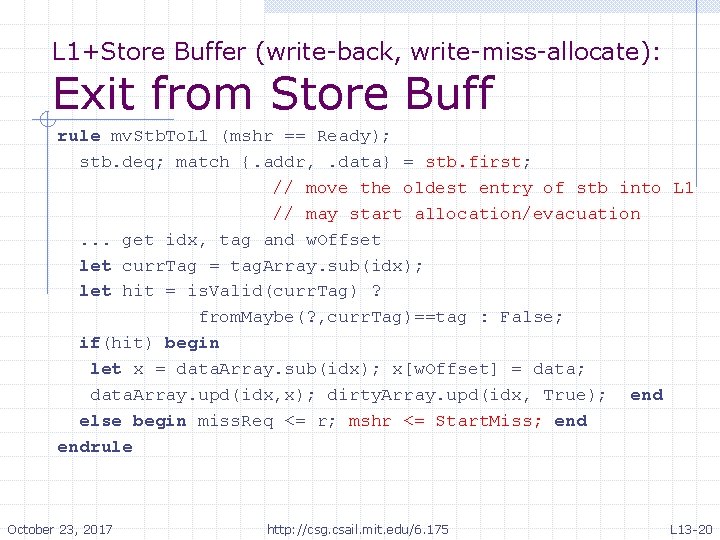

L1 + Store Buffer (write-back, write-miss-allocate): Exit from Store Buffer

    rule mvStbToL1 (mshr == Ready);
        // move the oldest entry of stb into L1
        // may start allocation/evacuation
        stb.deq;
        match {.addr, .data} = stb.first;
        // ... get idx, tag and wOffset
        let currTag = tagArray.sub(idx);
        let hit = isValid(currTag) ? fromMaybe(?, currTag)==tag : False;
        if(hit) begin
            let x = dataArray.sub(idx);
            x[wOffset] = data;
            dataArray.upd(idx, x);
            dirtyArray.upd(idx, True);
        end
        else begin
            // rebuild the store request from the dequeued (addr, data) pair
            missReq <= MemReq{op: St, addr: addr, data: data};
            mshr <= StartMiss;
        end
    endrule

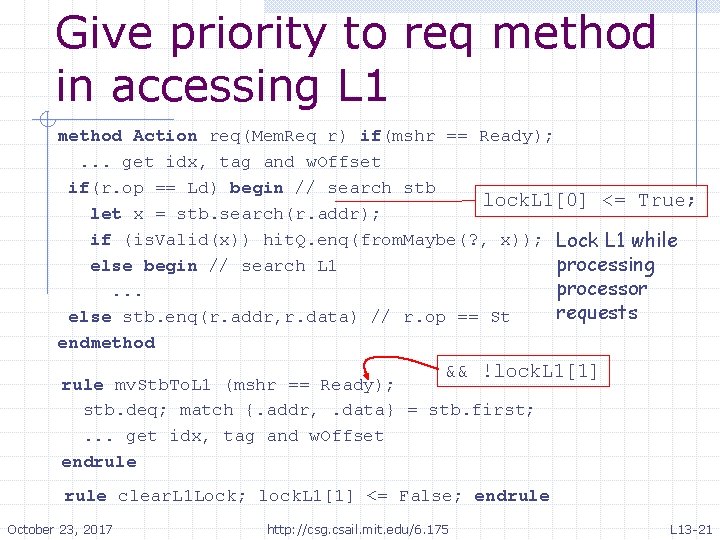

Give priority to req method in accessing L1

Lock L1 while processing processor requests:

    method Action req(MemReq r) if(mshr == Ready);
        // ... get idx, tag and wOffset
        if(r.op == Ld) begin
            // search stb
            lockL1[0] <= True;
            let x = stb.search(r.addr);
            if (isValid(x)) hitQ.enq(fromMaybe(?, x));
            else begin
                // search L1
                ...
            end
        end
        else stb.enq(r.addr, r.data); // r.op == St
    endmethod

    rule mvStbToL1 (mshr == Ready && !lockL1[1]);
        stb.deq;
        match {.addr, .data} = stb.first;
        // ... get idx, tag and wOffset
    endrule

    rule clearL1Lock;
        lockL1[1] <= False;
    endrule

Functions to extract cache tag, index, word offset

Byte address layout, from most to least significant bits: tag | index | L | 2. The index, L, and 2-bit fields together span the cache size in bytes.

    function CacheIndex getIndex(Addr addr) = truncate(addr >> 4);
    function Bit#(2) getOffset(Addr addr) = truncate(addr >> 2);
    function CacheTag getTag(Addr addr) = truncateLSB(addr);

truncate drops the most significant bits and keeps the low-order bits; truncateLSB drops the least significant bits and keeps the high-order bits.
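The same bit slicing can be checked numerically. In this Python sketch the 32-bit address width and the 8-bit index width are assumptions (the slides leave CacheIndex and CacheTag unspecified); the shifts by 4 and 2 follow the functions above.

```python
ADDR_BITS = 32                            # assumed address width
INDEX_BITS = 8                            # assumed width of CacheIndex
TAG_BITS = ADDR_BITS - INDEX_BITS - 4     # whatever remains above index and 4 low bits

def get_offset(addr):
    return (addr >> 2) & 0b11                        # truncate(addr >> 2) to 2 bits

def get_index(addr):
    return (addr >> 4) & ((1 << INDEX_BITS) - 1)     # truncate(addr >> 4)

def get_tag(addr):
    return addr >> (ADDR_BITS - TAG_BITS)            # truncateLSB keeps the top bits

addr = 0x00012348
print(hex(get_tag(addr)), hex(get_index(addr)), get_offset(addr))  # 0x12 0x34 2

# Concatenating the fields, as in the write-back address {tag, idx, 4'b0},
# recovers the address once the word offset is put back.
rebuilt = (get_tag(addr) << 12) | (get_index(addr) << 4) | (get_offset(addr) << 2)
print(rebuilt == addr)  # True
```

The rebuilt address matches only because the input is word-aligned, which is the alignment the processor is stated to use.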
