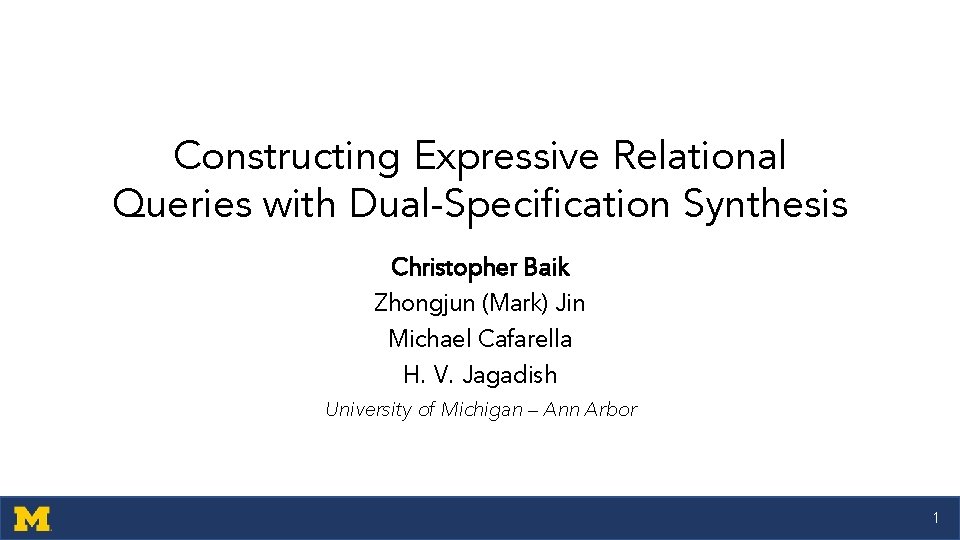

Constructing Expressive Relational Queries with DualSpecification Synthesis Christopher

![Algorithm Overview NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] + movies before Algorithm Overview NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] + movies before](https://slidetodoc.com/presentation_image_h2/c8267f85490edccb3cffcc1a076a30bd/image-17.jpg)

![Algorithm Overview NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] + movies before Algorithm Overview NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] + movies before](https://slidetodoc.com/presentation_image_h2/c8267f85490edccb3cffcc1a076a30bd/image-18.jpg)

![Pruning: Minimize Number of Verifications NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] Pruning: Minimize Number of Verifications NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994]](https://slidetodoc.com/presentation_image_h2/c8267f85490edccb3cffcc1a076a30bd/image-19.jpg)

![Pruning: Minimize Number of Verifications NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] Pruning: Minimize Number of Verifications NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994]](https://slidetodoc.com/presentation_image_h2/c8267f85490edccb3cffcc1a076a30bd/image-20.jpg)

![Pruning: Minimize Per-Query Verification Time PBE: [Forrest Gump, 1994] [Big, 1988] Intuition: If cheap Pruning: Minimize Per-Query Verification Time PBE: [Forrest Gump, 1994] [Big, 1988] Intuition: If cheap](https://slidetodoc.com/presentation_image_h2/c8267f85490edccb3cffcc1a076a30bd/image-21.jpg)

- Slides: 31

Constructing Expressive Relational Queries with Dual-Specification Synthesis Christopher Baik Zhongjun (Mark) Jin Michael Cafarella H. V. Jagadish University of Michigan – Ann Arbor 1

Agenda • Motivation • Approach • Experiments • Conclusion 2

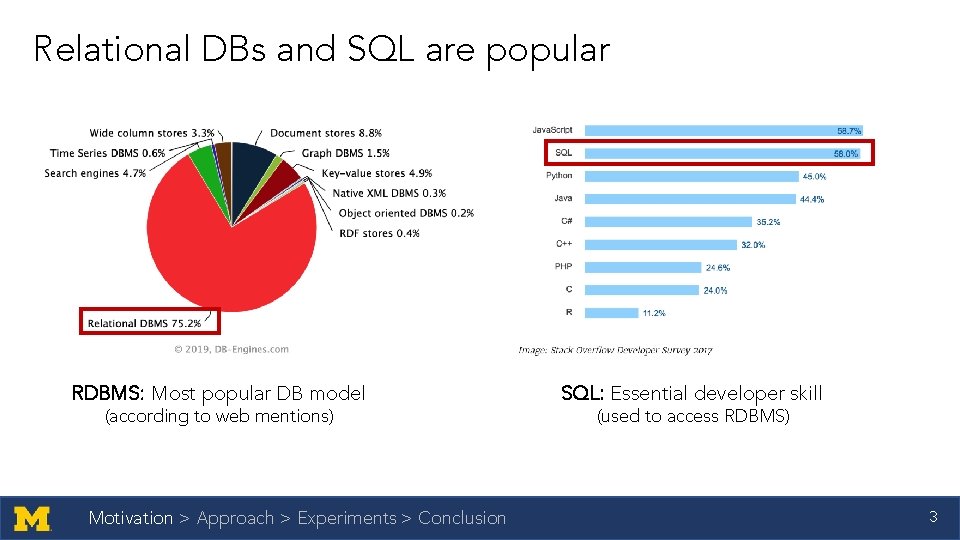

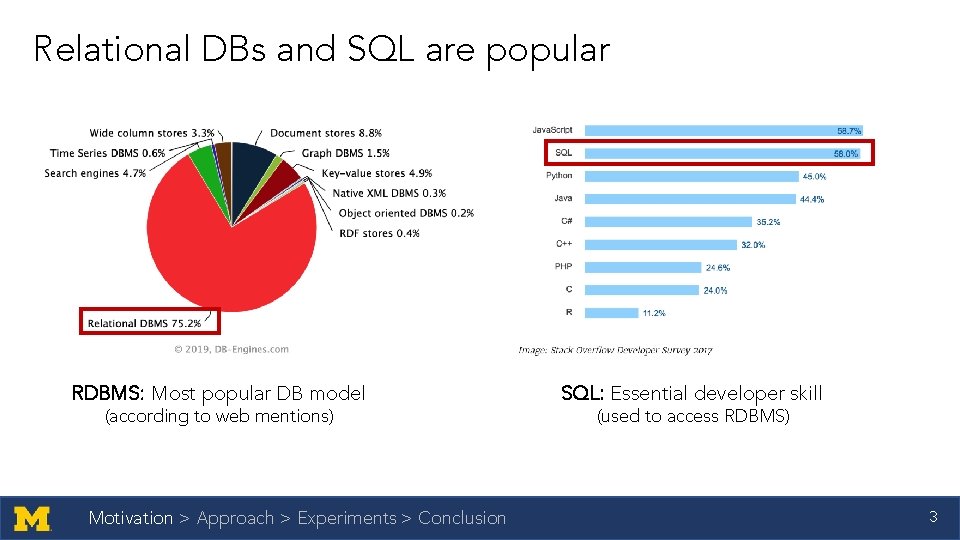

Relational DBs and SQL are popular RDBMS: Most popular DB model (according to web mentions) Motivation > Approach > Experiments > Conclusion SQL: Essential developer skill (used to access RDBMS) 3

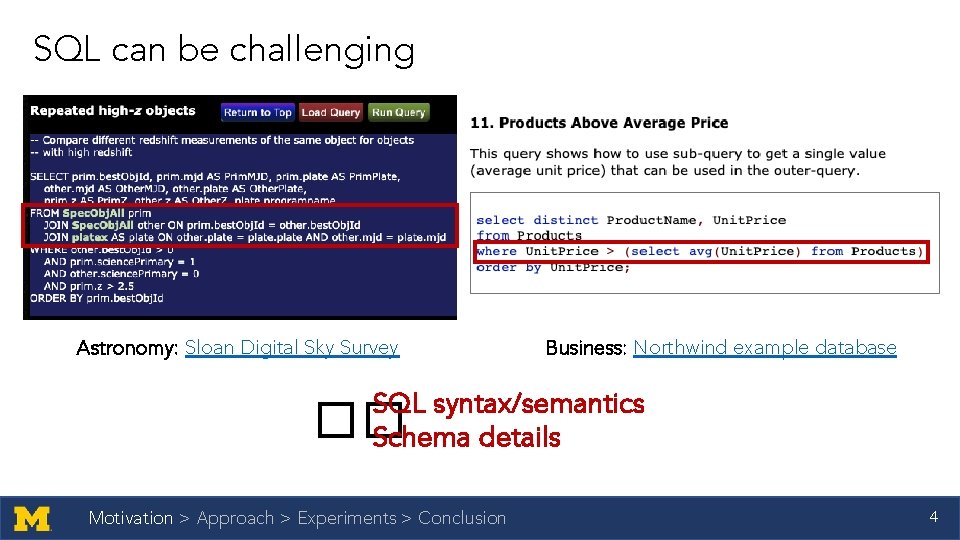

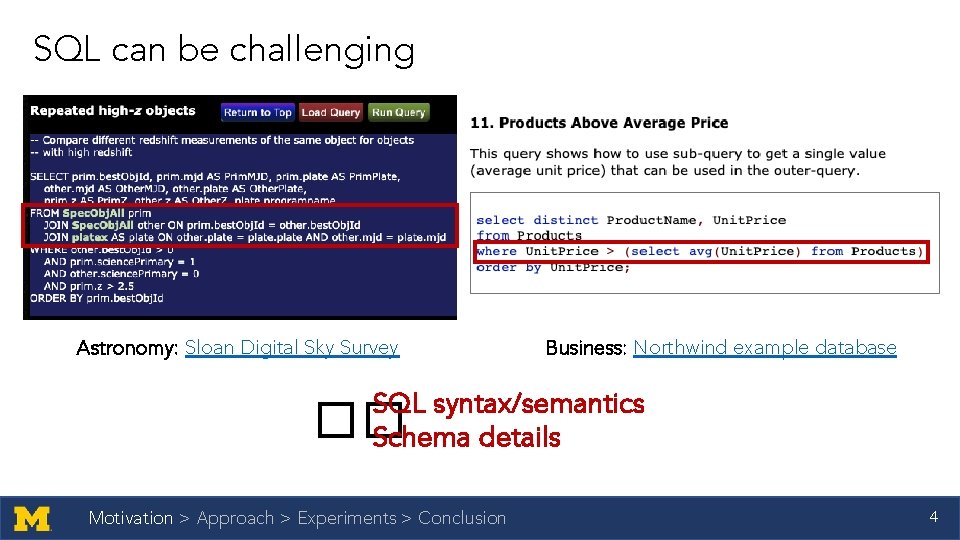

SQL can be challenging Astronomy: Sloan Digital Sky Survey Business: Northwind example database SQL syntax/semantics Schema details �� Motivation > Approach > Experiments > Conclusion 4

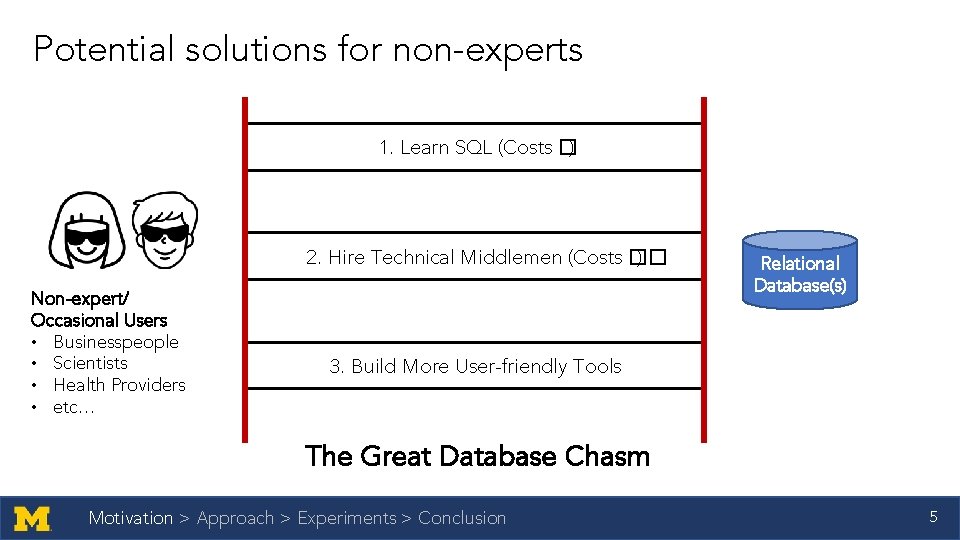

Potential solutions for non-experts 1. Learn SQL (Costs �) 2. Hire Technical Middlemen (Costs �� ) Non-expert/ Occasional Users • Businesspeople • Scientists • Health Providers • etc… Relational Database(s) 3. Build More User-friendly Tools The Great Database Chasm Motivation > Approach > Experiments > Conclusion 5

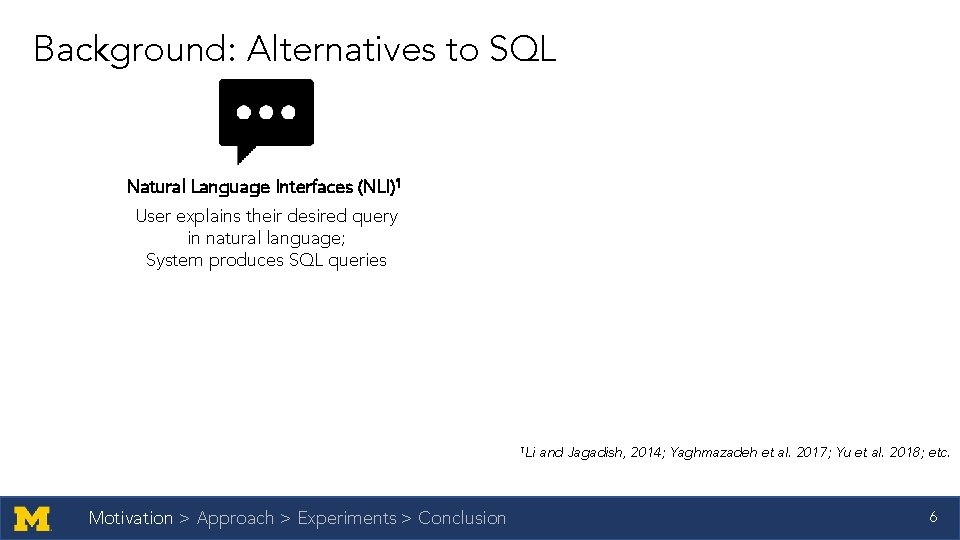

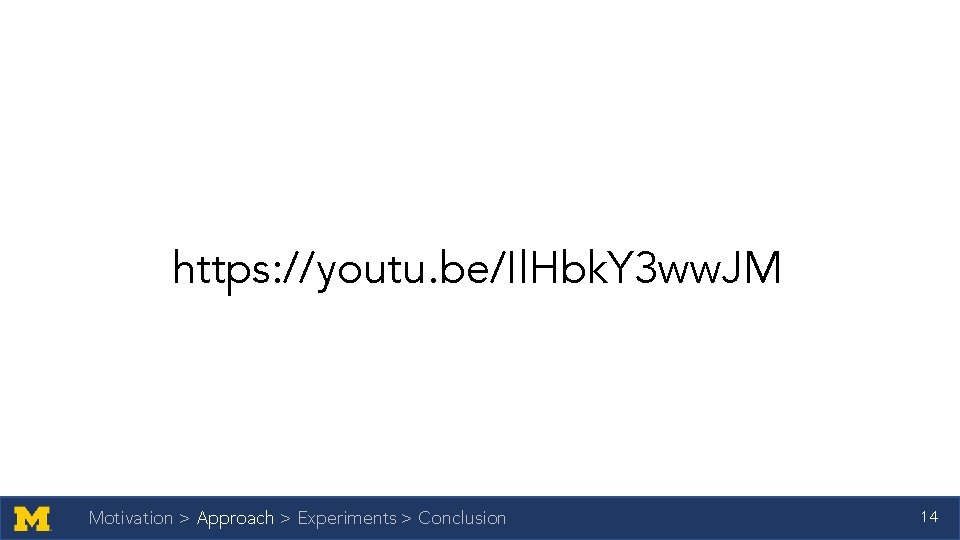

Background: Alternatives to SQL Natural Language Interfaces (NLI)1 User explains their desired query in natural language; System produces SQL queries 1 Li Motivation > Approach > Experiments > Conclusion and Jagadish, 2014; Yaghmazadeh et al. 2017; Yu et al. 2018; etc. 6

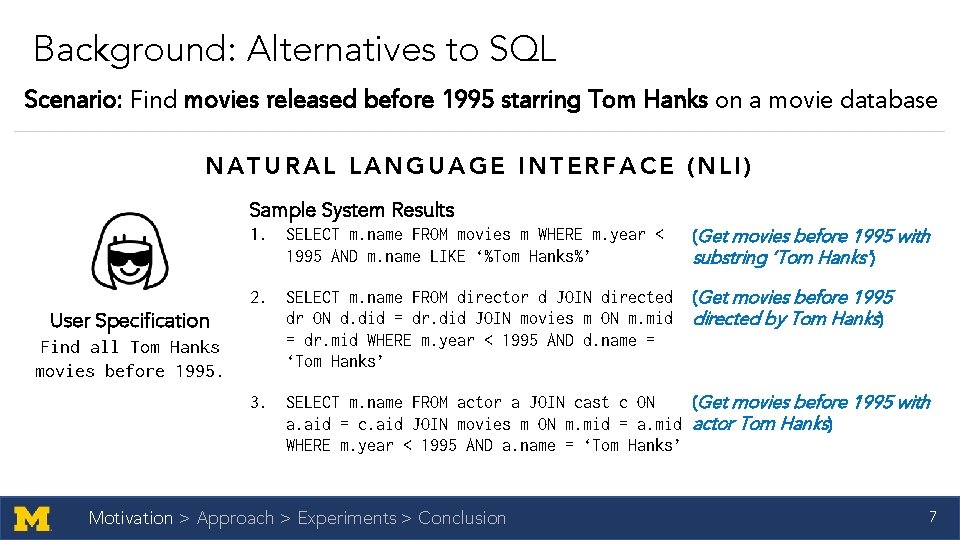

Background: Alternatives to SQL Scenario: Find movies released before 1995 starring Tom Hanks on a movie database NATURAL LANGUAGE INTERFACE (NLI) Sample System Results User Specification Find all Tom Hanks movies before 1995. 1. SELECT m. name FROM movies m WHERE m. year < 1995 AND m. name LIKE ‘%Tom Hanks%’ (Get movies before 1995 with substring ‘Tom Hanks’) 2. SELECT m. name FROM director d JOIN directed dr ON d. did = dr. did JOIN movies m ON m. mid = dr. mid WHERE m. year < 1995 AND d. name = ‘Tom Hanks’ (Get movies before 1995 directed by Tom Hanks) (Get movies before 1995 with 3. SELECT m. name FROM actor a JOIN cast c ON a. aid = c. aid JOIN movies m ON m. mid = a. mid actor Tom Hanks) WHERE m. year < 1995 AND a. name = ‘Tom Hanks’ Motivation > Approach > Experiments > Conclusion 7

Background: Alternatives to SQL Natural Language Interfaces (NLI)1 User explains their desired query in natural language; System produces SQL queries Expressive, but not accurate • Users can describe expressive queries, including aggregates • Inherent ambiguity • State-of-the-art: Top-1 accuracy only ~60% on prominent benchmark 2 1 Li and Jagadish, 2014; Yaghmazadeh et al. 2017; Yu et al. 2018; etc. 2 https: //yale-lily. github. io/spider Motivation > Approach > Experiments > Conclusion 8

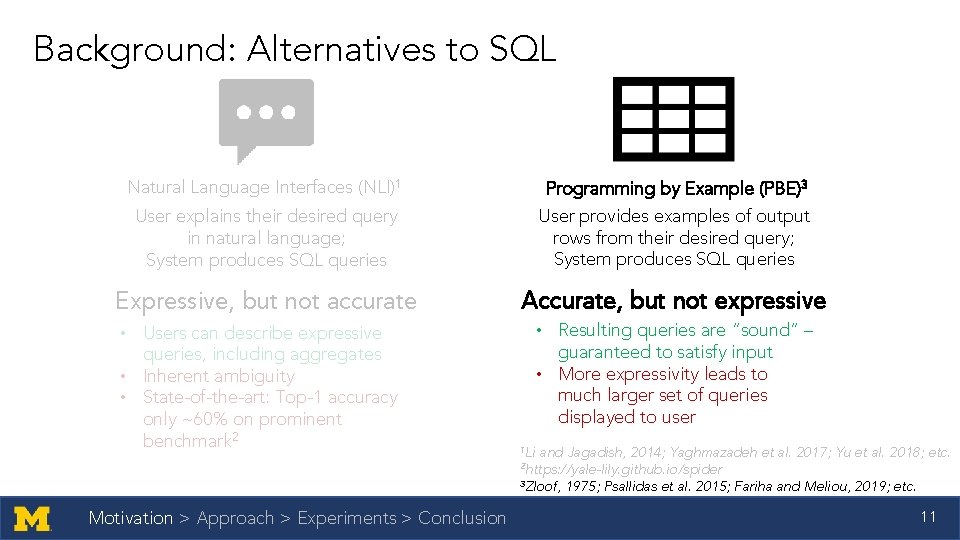

Background: Alternatives to SQL Natural Language Interfaces (NLI)1 Programming by Example (PBE)3 User explains their desired query in natural language; System produces SQL queries User provides examples of output rows from their desired query; System produces SQL queries Expressive, but not accurate • Users can describe expressive queries, including aggregates • Inherent ambiguity • State-of-the-art: Top-1 accuracy only ~60% on prominent benchmark 2 1 Li and Jagadish, 2014; Yaghmazadeh et al. 2017; Yu et al. 2018; etc. 2 https: //yale-lily. github. io/spider 3 Zloof, Motivation > Approach > Experiments > Conclusion 1975; Psallidas et al. 2015; Fariha and Meliou, 2019; etc. 9

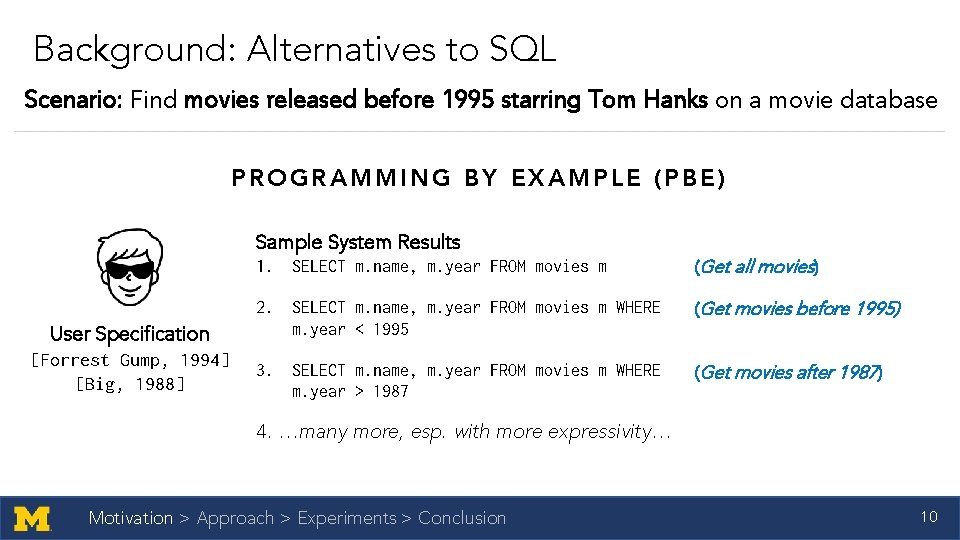

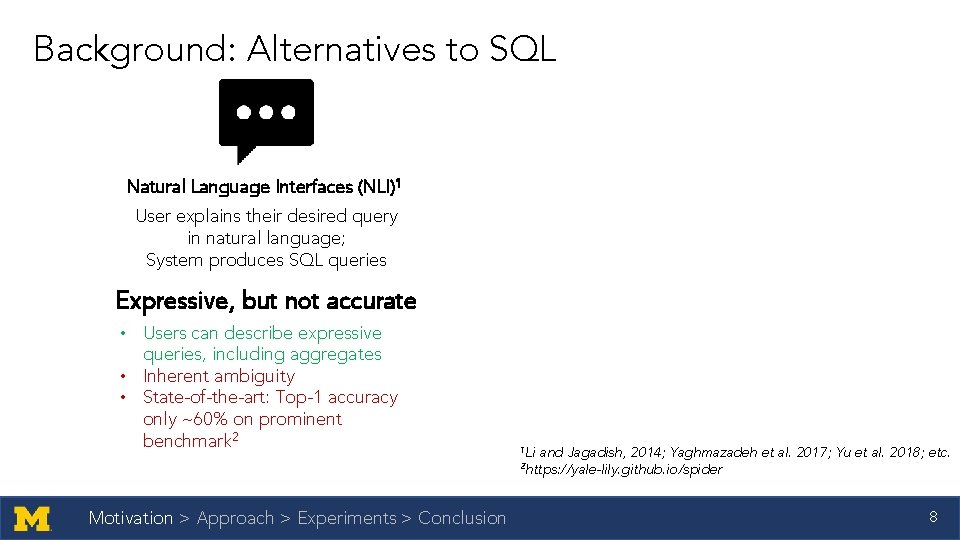

Background: Alternatives to SQL Scenario: Find movies released before 1995 starring Tom Hanks on a movie database PROGRAMMING BY EXAMPLE (PBE) Sample System Results User Specification [Forrest Gump, 1994] [Big, 1988] 1. SELECT m. name, m. year FROM movies m (Get all movies) 2. SELECT m. name, m. year FROM movies m WHERE m. year < 1995 (Get movies before 1995) 3. SELECT m. name, m. year FROM movies m WHERE m. year > 1987 (Get movies after 1987) 4. …many more, esp. with more expressivity… Motivation > Approach > Experiments > Conclusion 10

Background: Alternatives to SQL Natural Language Interfaces (NLI)1 Programming by Example (PBE)3 User explains their desired query in natural language; System produces SQL queries User provides examples of output rows from their desired query; System produces SQL queries Expressive, but not accurate Accurate, but not expressive • Users can describe expressive queries, including aggregates • Inherent ambiguity • State-of-the-art: Top-1 accuracy only ~60% on prominent benchmark 2 • Resulting queries are “sound” – guaranteed to satisfy input • More expressivity leads to much larger set of queries displayed to user 1 Li and Jagadish, 2014; Yaghmazadeh et al. 2017; Yu et al. 2018; etc. 2 https: //yale-lily. github. io/spider 3 Zloof, Motivation > Approach > Experiments > Conclusion 1975; Psallidas et al. 2015; Fariha and Meliou, 2019; etc. 11

Our research question… Natural Language Interfaces (NLI) Expressive, but not accurate + Programming by Example (PBE) Accurate, but not expressive Can we get the best of both worlds: expressive and accurate? Motivation > Approach > Experiments > Conclusion 12

Agenda • Motivation • Approach • Demo • Algorithm Details • Experiments • Conclusion 13

https: //youtu. be/Il. Hbk. Y 3 ww. JM Motivation > Approach > Experiments > Conclusion 14

Agenda • Motivation • Approach • Demo • Algorithm Details • Experiments • Conclusion 15

Algorithm Goals 1. Accuracy Synthesize the user’s desired query in top-n results Motivation > Approach > Experiments > Conclusion 2. Efficiency Operate in interactive time 16

![Algorithm Overview NLQ Find all Tom Hanks PBE Forrest Gump 1994 movies before Algorithm Overview NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] + movies before](https://slidetodoc.com/presentation_image_h2/c8267f85490edccb3cffcc1a076a30bd/image-17.jpg)

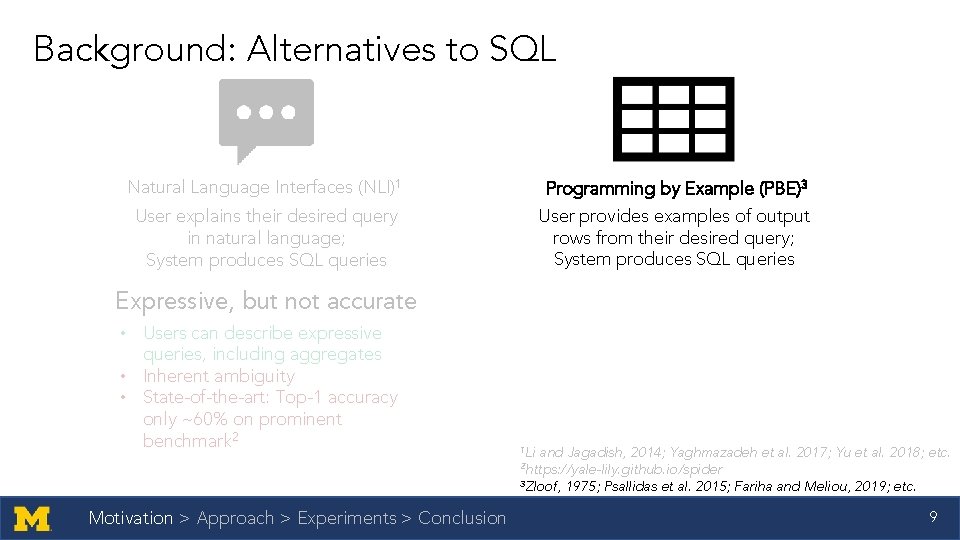

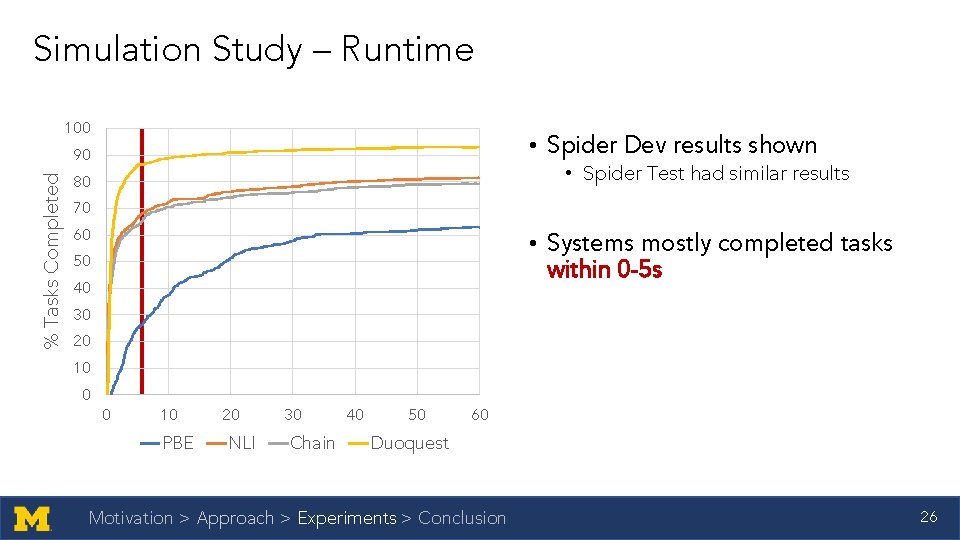

Algorithm Overview NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] + movies before 1995. [Big, 1988] ∅ • Each node is a partial query SELECT ? FROM ? WHERE ? SELECT name, ? FROM actor WHERE ? SELECT title, year FROM movie WHERE year < 1995 … SELECT title, ? FROM movie WHERE ? SELECT title, year FROM movie WHERE year > 1995 • Lower layers are more “concrete” versions of previous layers … (Note: figure is simplified) … Motivation > Approach > Experiments > Conclusion • Leaf nodes are executable queries 17

![Algorithm Overview NLQ Find all Tom Hanks PBE Forrest Gump 1994 movies before Algorithm Overview NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] + movies before](https://slidetodoc.com/presentation_image_h2/c8267f85490edccb3cffcc1a076a30bd/image-18.jpg)

Algorithm Overview NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] + movies before 1995. [Big, 1988] ∅ • O(cn) space, n is # cols in schema SELECT ? FROM ? WHERE ? SELECT name, ? FROM actor WHERE ? SELECT title, year FROM movie WHERE year < 1995 • Which branches do we explore first? • How do we prune search space? … SELECT title, ? FROM movie WHERE ? SELECT title, year FROM movie WHERE year > 1995 • Super naïve approach: Breadth-first search, execute each leaf node • Our approach: Guided partial query enumeration (GPQE) … … Motivation > Approach > Experiments > Conclusion • Guidance: deep learning + NLQ • Pruning: use partial queries to prune early 18

![Pruning Minimize Number of Verifications NLQ Find all Tom Hanks PBE Forrest Gump 1994 Pruning: Minimize Number of Verifications NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994]](https://slidetodoc.com/presentation_image_h2/c8267f85490edccb3cffcc1a076a30bd/image-19.jpg)

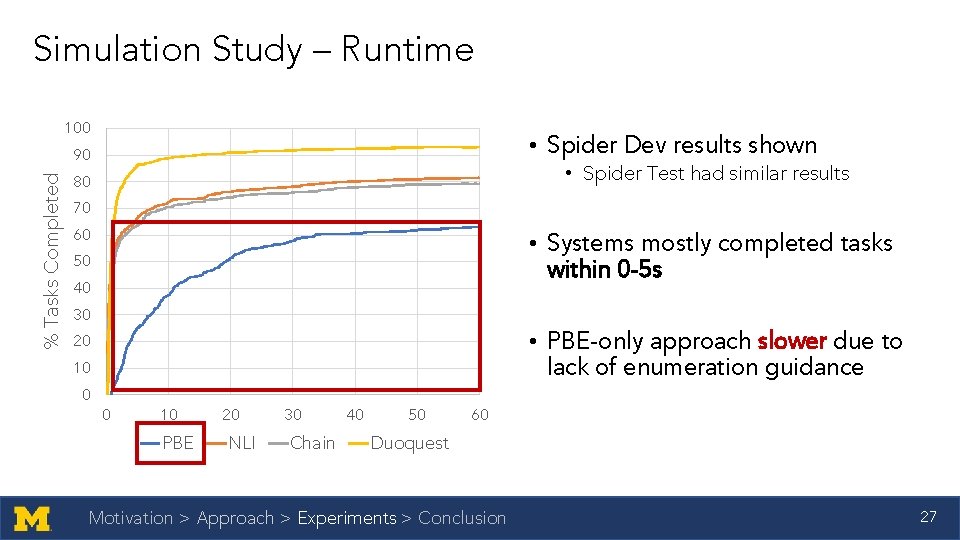

Pruning: Minimize Number of Verifications NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] + movies before 1995. [Big, 1988] ∅ SELECT ? FROM ? … • Why? Verifications cost � SELECT ? FROM ? WHERE ? … … … • Naïve: Independently execute and verify each leaf node • Solution: Avoid redundant verifications, test partial queries SELECT m. genre, m. year FROM SELECT m. genre, m. year … WHERE m. year <= 1995 AND FROM … WHERE m. year < 1995 AND a. name = ‘Tom Hanks’ Motivation > Approach > Experiments > Conclusion 19

![Pruning Minimize Number of Verifications NLQ Find all Tom Hanks PBE Forrest Gump 1994 Pruning: Minimize Number of Verifications NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994]](https://slidetodoc.com/presentation_image_h2/c8267f85490edccb3cffcc1a076a30bd/image-20.jpg)

Pruning: Minimize Number of Verifications NLQ: Find all Tom Hanks PBE: [Forrest Gump, 1994] + movies before 1995. [Big, 1988] ∅ SELECT ? FROM ? … • Why? Verifications cost � SELECT ? FROM ? WHERE ? … … SELECT m. genre, ? FROM ? WHERE ? • Naïve: Independently execute and verify each leaf node • Solution: Avoid redundant verifications, test partial queries SELECT m. genre, m. year FROM SELECT m. genre, m. year … WHERE m. year <= 1995 AND FROM … WHERE m. year < 1995 AND a. name = ‘Tom Hanks’ Motivation > Approach > Experiments > Conclusion 20

![Pruning Minimize PerQuery Verification Time PBE Forrest Gump 1994 Big 1988 Intuition If cheap Pruning: Minimize Per-Query Verification Time PBE: [Forrest Gump, 1994] [Big, 1988] Intuition: If cheap](https://slidetodoc.com/presentation_image_h2/c8267f85490edccb3cffcc1a076a30bd/image-21.jpg)

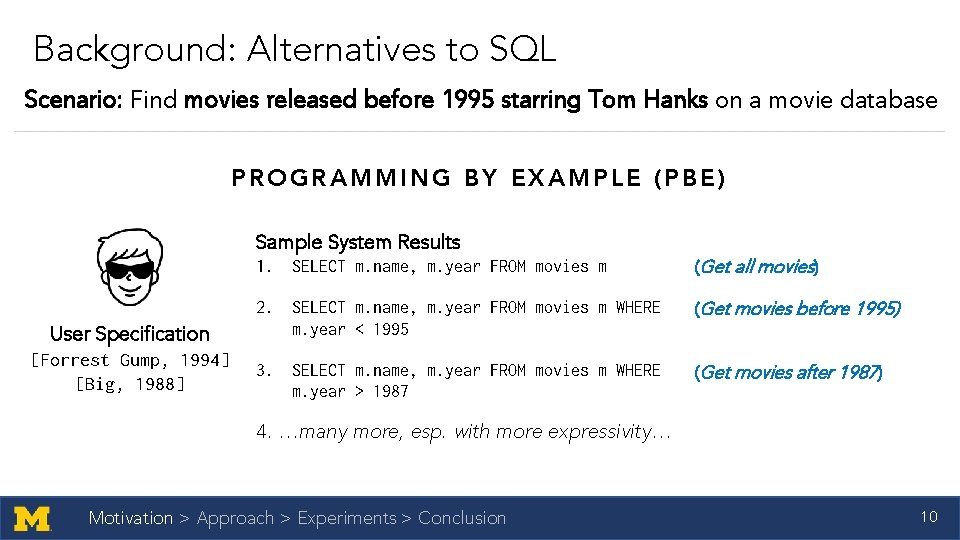

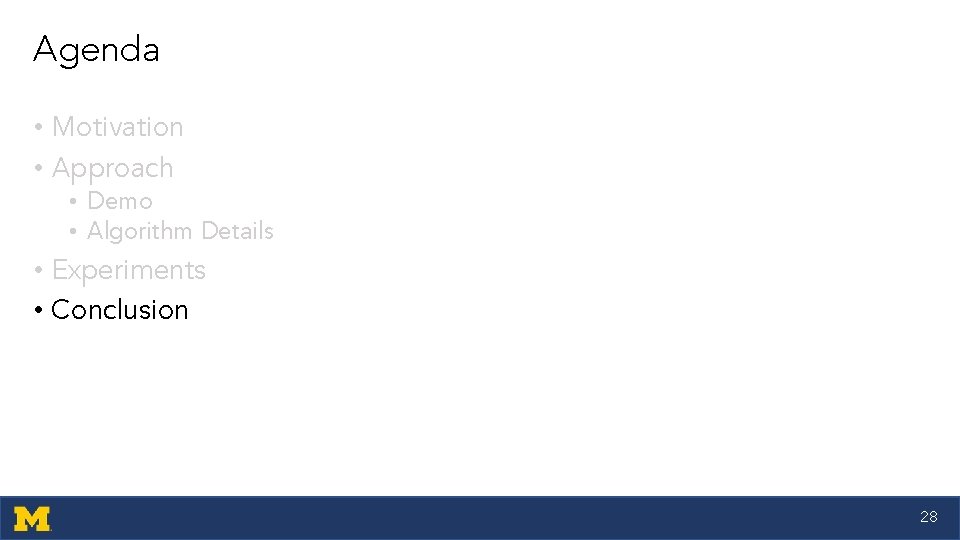

Pruning: Minimize Per-Query Verification Time PBE: [Forrest Gump, 1994] [Big, 1988] Intuition: If cheap verification fails, no need for costly verification • Semantic validity 2. SELECT genre, ? FROM movie • Cheap, join-less query for partial queries with incomplete SELECT 3. SELECT title, year FROM movie m JOIN cast c ON c. mid = m. mid JOIN actor a WHERE a. name = ‘Tom Hanks’ • More expensive query for row-wise match SELECT 1 FROM movie WHERE genre = ‘Forrest Gump’ SELECT 1 FROM movie m JOIN cast c ON c. mid = m. mid JOIN actor a ON c. aid = a. aid WHERE m. title = ‘Forrest Gump’ AND m. year = 1994 AND a. name = ‘Tom Hanks’ Motivation > Approach > Experiments > Conclusion Verification Cost Candidate (Partial) Queries 1. SELECT AVG(title), ? FROM movie 21

Agenda • Motivation • Approach • Demo • Algorithm Details • Experiments • Conclusion 22

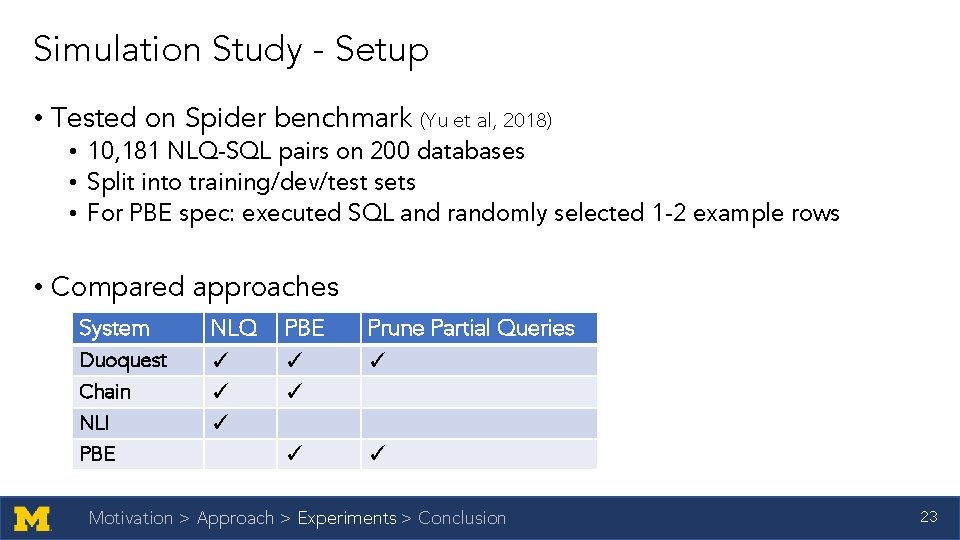

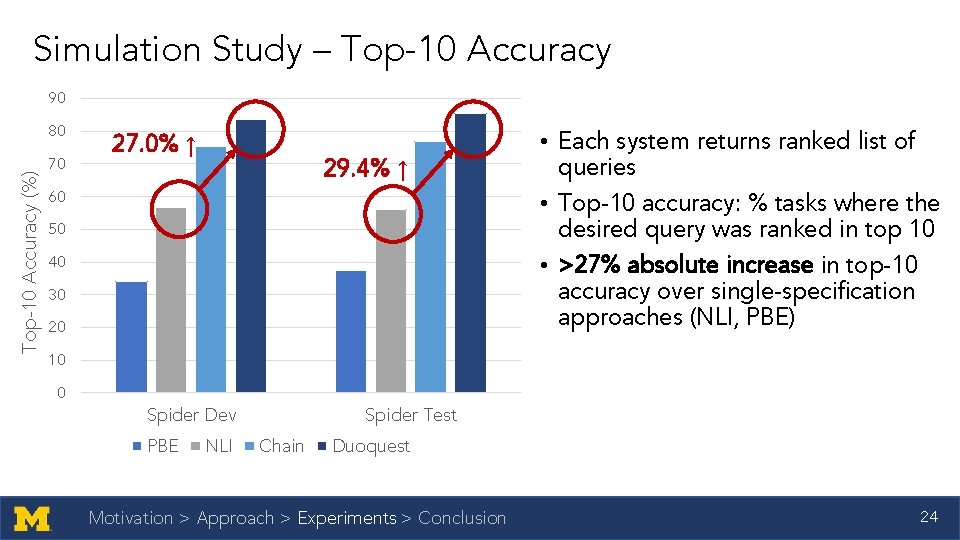

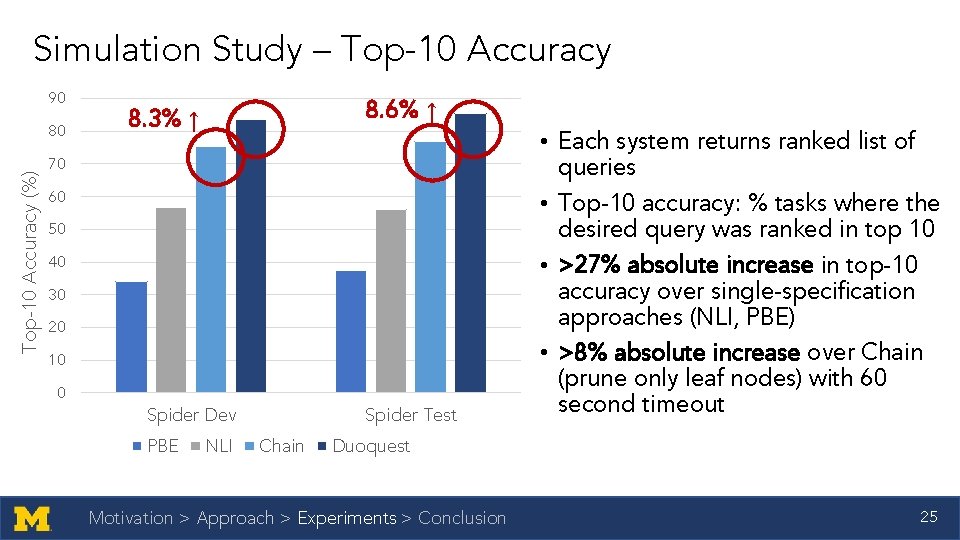

Simulation Study - Setup • Tested on Spider benchmark (Yu et al, 2018) • 10, 181 NLQ-SQL pairs on 200 databases • Split into training/dev/test sets • For PBE spec: executed SQL and randomly selected 1 -2 example rows • Compared approaches System NLQ PBE Prune Partial Queries Duoquest ✓ ✓ ✓ Chain ✓ ✓ NLI ✓ PBE ✓ ✓ Motivation > Approach > Experiments > Conclusion 23

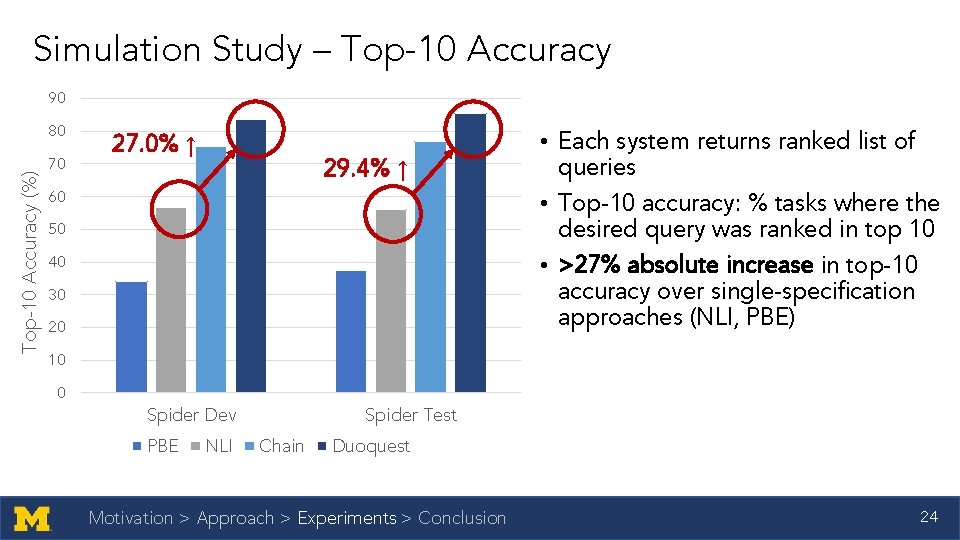

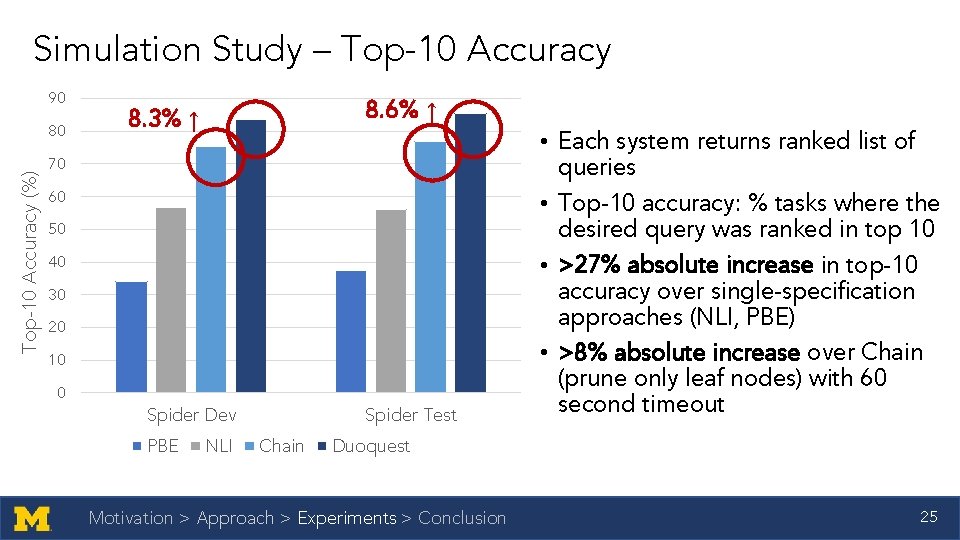

Simulation Study – Top-10 Accuracy 90 Top-10 Accuracy (%) 80 70 27. 0% ↑ 29. 4% ↑ 60 50 40 30 20 • Each system returns ranked list of queries • Top-10 accuracy: % tasks where the desired query was ranked in top 10 • >27% absolute increase in top-10 accuracy over single-specification approaches (NLI, PBE) 10 0 Spider Dev PBE NLI Spider Test Chain Duoquest Motivation > Approach > Experiments > Conclusion 24

Simulation Study – Top-10 Accuracy 90 Top-10 Accuracy (%) 80 8. 6% ↑ 8. 3% ↑ 70 60 50 40 30 20 10 0 Spider Dev PBE NLI Spider Test Chain • Each system returns ranked list of queries • Top-10 accuracy: % tasks where the desired query was ranked in top 10 • >27% absolute increase in top-10 accuracy over single-specification approaches (NLI, PBE) • >8% absolute increase over Chain (prune only leaf nodes) with 60 second timeout Duoquest Motivation > Approach > Experiments > Conclusion 25

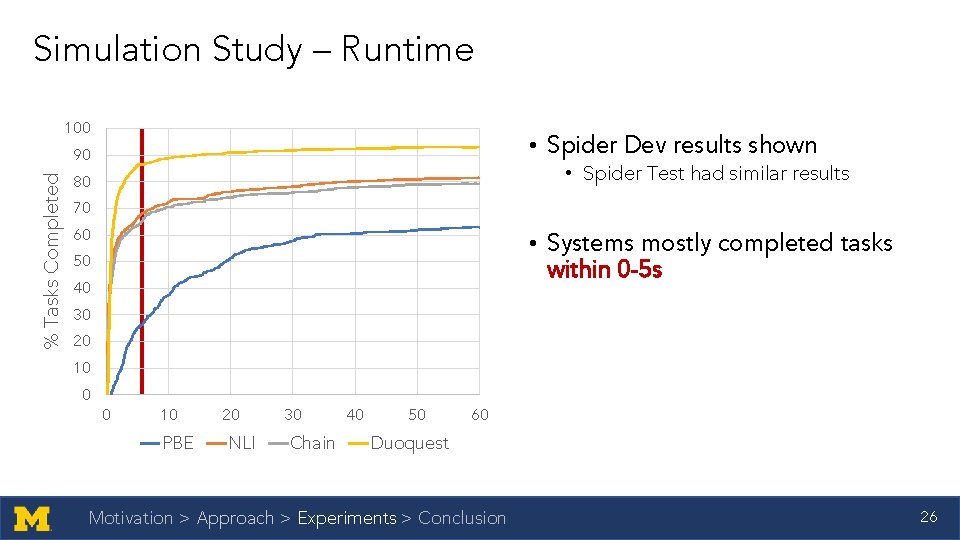

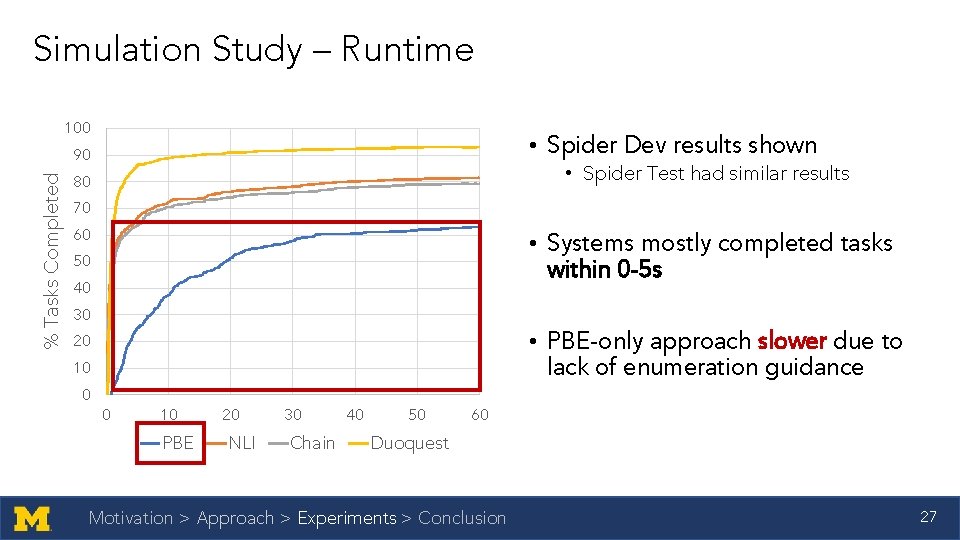

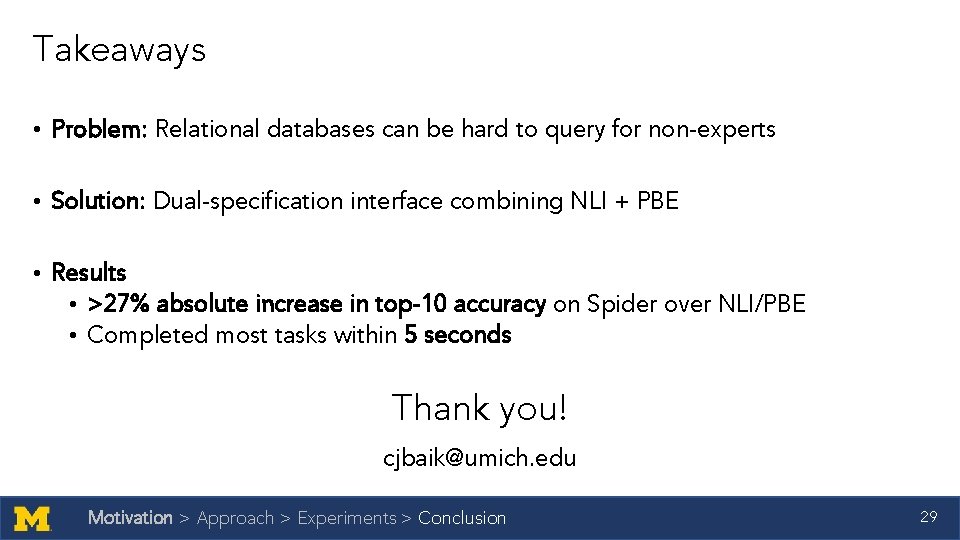

Simulation Study – Runtime 100 • Spider Dev results shown % Tasks Completed 90 • Spider Test had similar results 80 70 60 • Systems mostly completed tasks within 0 -5 s 50 40 30 20 10 0 0 10 PBE 20 NLI 30 Chain 40 50 60 Duoquest Motivation > Approach > Experiments > Conclusion 26

Simulation Study – Runtime 100 • Spider Dev results shown % Tasks Completed 90 • Spider Test had similar results 80 70 60 • Systems mostly completed tasks within 0 -5 s 50 40 30 • PBE-only approach slower due to lack of enumeration guidance 20 10 0 0 10 PBE 20 NLI 30 Chain 40 50 60 Duoquest Motivation > Approach > Experiments > Conclusion 27

Agenda • Motivation • Approach • Demo • Algorithm Details • Experiments • Conclusion 28

Takeaways • Problem: Relational databases can be hard to query for non-experts • Solution: Dual-specification interface combining NLI + PBE • Results • >27% absolute increase in top-10 accuracy on Spider over NLI/PBE • Completed most tasks within 5 seconds Thank you! cjbaik@umich. edu Motivation > Approach > Experiments > Conclusion 29

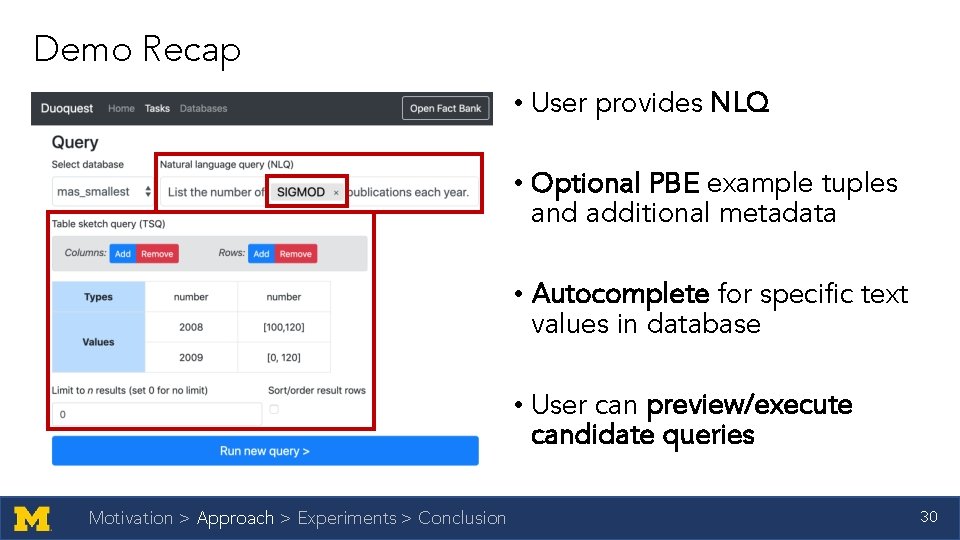

Demo Recap • User provides NLQ • Optional PBE example tuples and additional metadata • Autocomplete for specific text values in database • User can preview/execute candidate queries Motivation > Approach > Experiments > Conclusion 30

Icons • Aom. Am from the Noun Project Motivation > Approach > Experiments > Conclusion 31