Consistency An estimator is a consistent estimator of

- Slides: 13

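Consistency
• An estimator $\hat{\theta}_n$ is a consistent estimator of θ if $\hat{\theta}_n$ converges in probability to θ, i.e., if for every $\varepsilon > 0$, $\lim_{n \to \infty} P(|\hat{\theta}_n - \theta| > \varepsilon) = 0$.
week 3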

Theorem
• An unbiased estimator $\hat{\theta}_n$ for θ is a consistent estimator of θ if $\lim_{n \to \infty} \operatorname{Var}(\hat{\theta}_n) = 0$.
• Proof:
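The proof itself did not survive on the slide. One standard argument, which may or may not be the one intended, applies Chebyshev's inequality to an unbiased estimator, written here as $\hat{\theta}_n$ (notation assumed):

```latex
% Sketch under the assumption E[\hat{\theta}_n] = \theta (unbiasedness).
% Chebyshev's inequality bounds the tail probability by the variance.
\[
  P\bigl(|\hat{\theta}_n - \theta| > \varepsilon\bigr)
  \;\le\; \frac{\operatorname{Var}(\hat{\theta}_n)}{\varepsilon^{2}}
  \;\xrightarrow[n \to \infty]{}\; 0
  \qquad \text{for every fixed } \varepsilon > 0 .
\]
```

So a variance that vanishes as n grows forces the estimator to converge in probability to θ.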

Example
• Suppose $X_1, X_2, \dots, X_n$ are i.i.d. Poisson(λ). Let … then…
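The estimator used in this example is missing from the slide. As a rough illustration only, the sketch below assumes it is the sample mean and estimates by simulation how often the mean misses λ by more than a tolerance. The values λ = 2 and ε = 0.25, the helper names, and the seed are all assumptions made for the demonstration.

```python
import math
import random

def poisson_draw(lam, rng):
    """Draw one Poisson(lam) variate using Knuth's multiplication method."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1

def miss_frequency(lam, n, eps, reps, rng):
    """Monte Carlo estimate of P(|sample mean - lam| > eps) for sample size n."""
    misses = 0
    for _ in range(reps):
        mean = sum(poisson_draw(lam, rng) for _ in range(n)) / n
        if abs(mean - lam) > eps:
            misses += 1
    return misses / reps

rng = random.Random(0)  # fixed seed so the run is repeatable
lam, eps = 2.0, 0.25    # hypothetical values chosen for illustration
freqs = {n: miss_frequency(lam, n, eps, reps=500, rng=rng) for n in (10, 100, 1000)}
for n, f in freqs.items():
    print(f"n={n:5d}  estimated P(|mean - lambda| > {eps}) = {f:.3f}")
```

The estimated miss frequency should shrink toward zero as n grows, which is what convergence in probability describes.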

Important comment
• Consistency is an asymptotic property, so a consistent estimator may be biased as long as it is asymptotically unbiased.
• Example: Uniform example above.
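The Uniform example is not reproduced in these slides. A common instance of a biased but consistent estimator, assumed here purely for illustration, is the sample maximum of a Uniform(0, θ) sample. Its mean and variance have closed forms, so the sketch below just evaluates them; the function names are hypothetical.

```python
def max_estimator_bias(theta, n):
    """Bias of max(X_1..X_n) for i.i.d. Uniform(0, theta): n*theta/(n+1) - theta."""
    return n * theta / (n + 1) - theta

def max_estimator_variance(theta, n):
    """Variance of max(X_1..X_n) for i.i.d. Uniform(0, theta)."""
    return n * theta**2 / ((n + 1) ** 2 * (n + 2))

theta = 1.0  # hypothetical true parameter value
for n in (1, 10, 100, 1000):
    bias = max_estimator_bias(theta, n)
    var = max_estimator_variance(theta, n)
    print(f"n={n:5d}  bias={bias:+.5f}  variance={var:.7f}")
```

The bias is nonzero for every finite n but tends to zero, and so does the variance, so the estimator is asymptotically unbiased and consistent.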

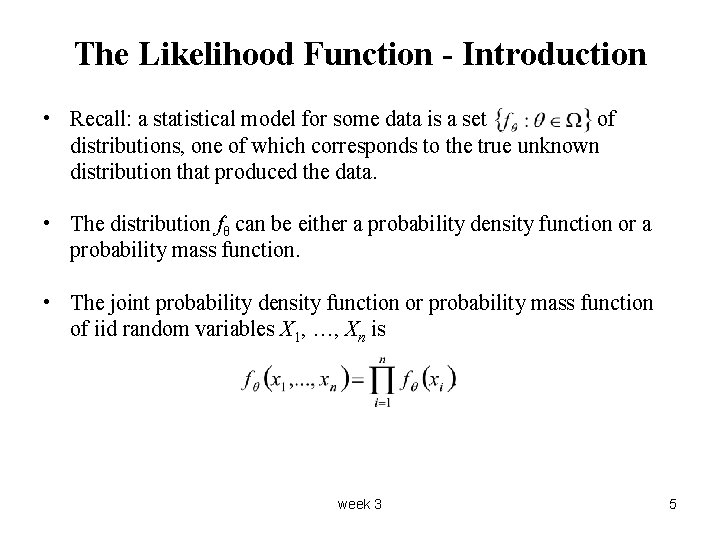

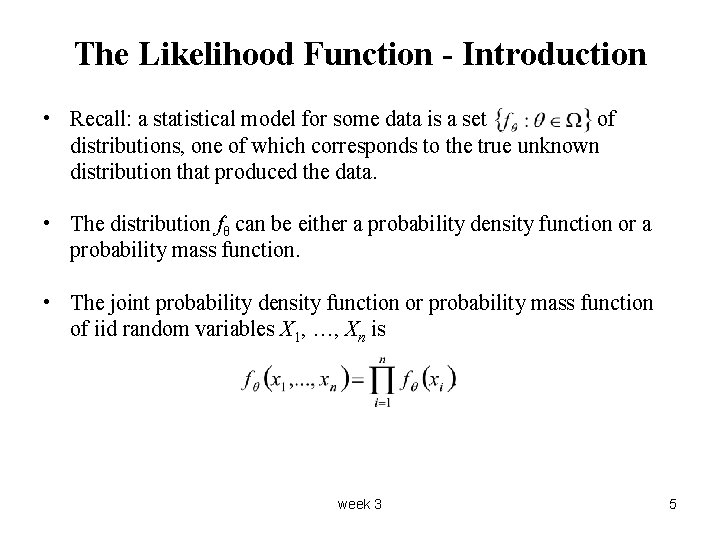

The Likelihood Function - Introduction
• Recall: a statistical model for some data is a set of distributions $\{f_\theta : \theta \in \Omega\}$, one of which corresponds to the true unknown distribution that produced the data.
• The distribution $f_\theta$ can be either a probability density function or a probability mass function.
• The joint probability density function or probability mass function of i.i.d. random variables $X_1, \dots, X_n$ is $f_\theta(x_1, \dots, x_n) = \prod_{i=1}^{n} f_\theta(x_i)$.

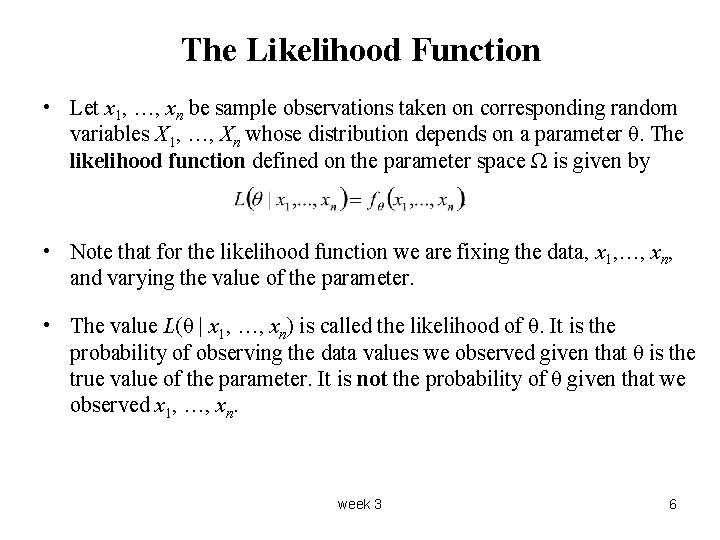

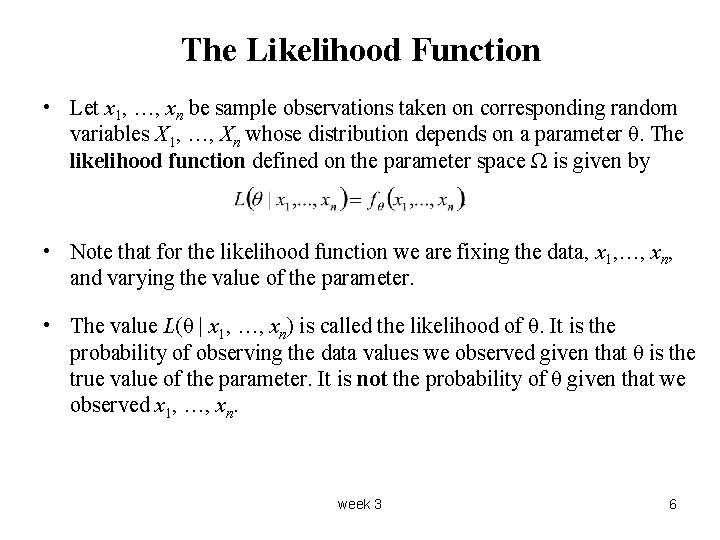

The Likelihood Function
• Let $x_1, \dots, x_n$ be sample observations taken on corresponding random variables $X_1, \dots, X_n$ whose distribution depends on a parameter θ. The likelihood function defined on the parameter space Ω is given by $L(\theta \mid x_1, \dots, x_n) = f_\theta(x_1, \dots, x_n)$.
• Note that for the likelihood function we are fixing the data, $x_1, \dots, x_n$, and varying the value of the parameter.
• The value $L(\theta \mid x_1, \dots, x_n)$ is called the likelihood of θ. In the discrete case it is the probability of observing the data values we observed given that θ is the true value of the parameter; in the continuous case it is the joint density evaluated at the observed data. It is not the probability of θ given that we observed $x_1, \dots, x_n$.

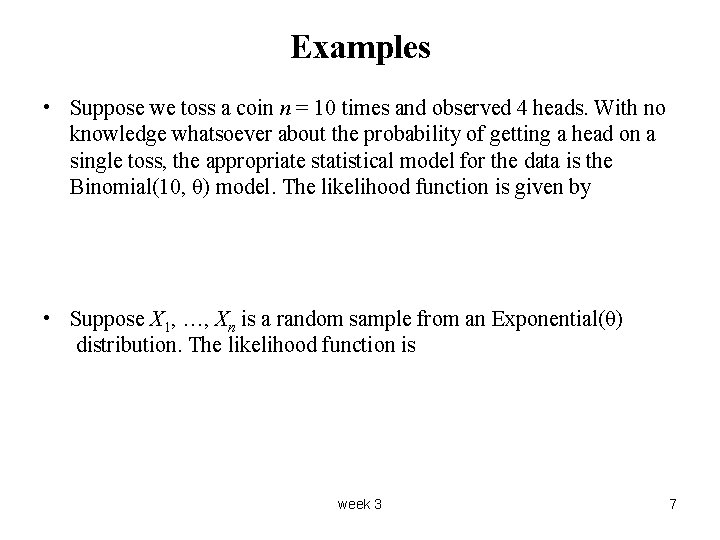

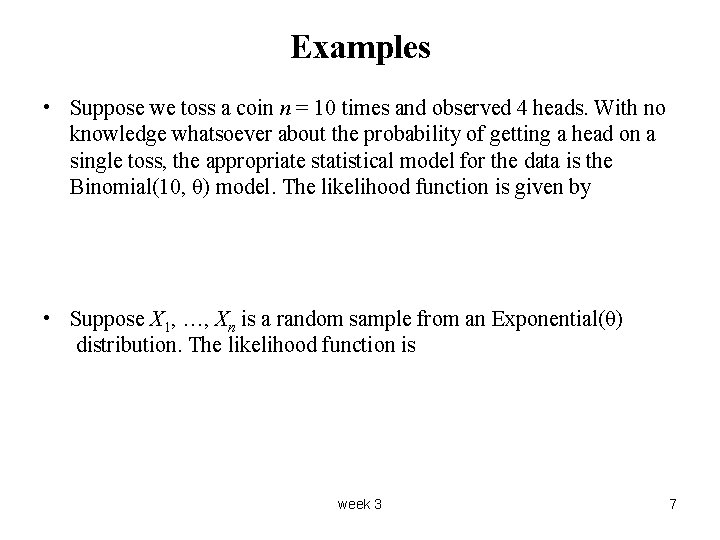

Examples
• Suppose we toss a coin n = 10 times and observe 4 heads. With no knowledge whatsoever about the probability of getting a head on a single toss, the appropriate statistical model for the data is the Binomial(10, θ) model. The likelihood function is given by $L(\theta \mid 4) = \binom{10}{4} \theta^{4} (1 - \theta)^{6}$ for $\theta \in [0, 1]$.
• Suppose $X_1, \dots, X_n$ is a random sample from an Exponential(θ) distribution. The likelihood function is …
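To make "fix the data, vary the parameter" concrete, here is a minimal sketch that evaluates both likelihoods numerically. The slides do not say whether Exponential(θ) is parameterized by rate or by mean; the code assumes the rate form $f_\theta(x) = \theta e^{-\theta x}$, and the function names and sample values are hypothetical.

```python
import math

def binomial_likelihood(theta, n=10, heads=4):
    """Likelihood of theta after observing `heads` heads in `n` tosses."""
    return math.comb(n, heads) * theta**heads * (1 - theta) ** (n - heads)

def exponential_likelihood(theta, xs):
    """Likelihood of theta for an i.i.d. sample xs.

    ASSUMPTION: rate parameterization, f(x) = theta * exp(-theta * x).
    """
    return theta ** len(xs) * math.exp(-theta * sum(xs))

# The data stay fixed; only theta varies.
for theta in (0.2, 0.3, 0.4, 0.5, 0.6):
    print(f"theta={theta:.1f}  L(theta | 4 heads in 10) = {binomial_likelihood(theta):.4f}")

sample = [0.5, 1.0]  # made-up observations for illustration
print(exponential_likelihood(2.0, sample))
```

Among the values tried, the binomial likelihood is largest at θ = 0.4, the observed proportion of heads.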

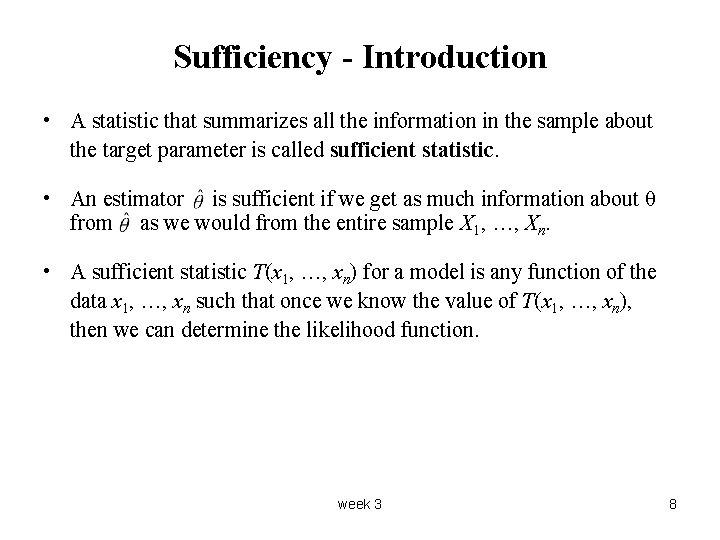

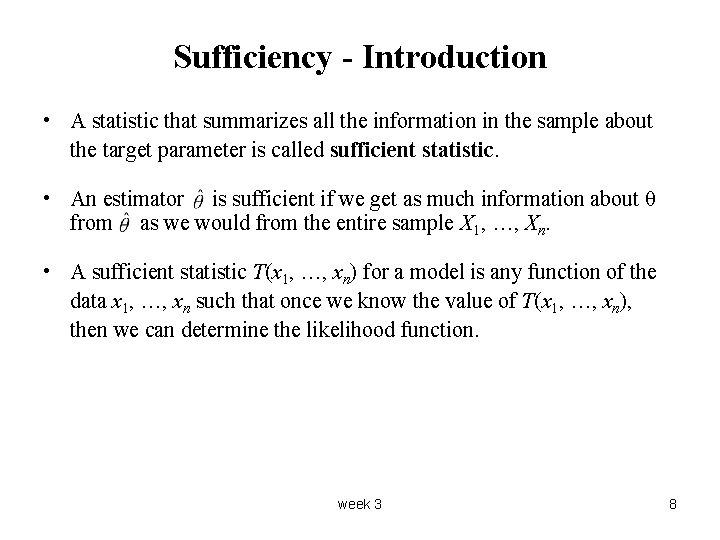

Sufficiency - Introduction
• A statistic that summarizes all the information in the sample about the target parameter is called a sufficient statistic.
• An estimator $\hat{\theta}$ is sufficient if we get as much information about θ from $\hat{\theta}$ as we would from the entire sample $X_1, \dots, X_n$.
• A sufficient statistic $T(x_1, \dots, x_n)$ for a model is any function of the data $x_1, \dots, x_n$ such that once we know the value of $T(x_1, \dots, x_n)$, we can determine the likelihood function.

Sufficient Statistic
• A sufficient statistic is a function $T(x_1, \dots, x_n)$ defined on the sample space such that whenever $T(x_1, \dots, x_n) = T(y_1, \dots, y_n)$, then $L(\theta \mid x_1, \dots, x_n) = c \, L(\theta \mid y_1, \dots, y_n)$ for all θ, for some constant c > 0.
• Typically, $T(x_1, \dots, x_n)$ will be of lower dimension than $x_1, \dots, x_n$, so we can consider replacing $x_1, \dots, x_n$ by $T(x_1, \dots, x_n)$ as a data reduction, and this simplifies the analysis.
• Example…
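The example on this slide is elided. As a hypothetical illustration of the definition, the sketch below takes a Poisson(λ) model with T equal to the sample sum and checks that two different samples with the same sum have likelihoods that differ only by a constant factor. The model, the samples, and the function name are assumptions.

```python
import math

def poisson_likelihood(lam, xs):
    """Likelihood of lam for an i.i.d. Poisson sample xs."""
    n = len(xs)
    denom = math.prod(math.factorial(x) for x in xs)
    return lam ** sum(xs) * math.exp(-n * lam) / denom

x = [0, 3]  # two made-up samples with the same sum T = 3
y = [1, 2]

# If the sum is sufficient, this ratio must not depend on lam.
ratios = [poisson_likelihood(lam, x) / poisson_likelihood(lam, y)
          for lam in (0.5, 1.0, 2.0, 5.0)]
print(ratios)
```

Here the constant is c = (1! · 2!) / (0! · 3!) = 1/3 for every λ, so the two samples carry the same information about λ.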

Minimal Sufficient Statistics
• A minimal sufficient statistic T for a model is any sufficient statistic such that once we know a likelihood function $L(\theta \mid x_1, \dots, x_n)$ for the model and data, we can determine $T(x_1, \dots, x_n)$.
• A relevant likelihood function can always be obtained from the value of any sufficient statistic T, but if T is minimal sufficient as well, then we can also obtain the value of T from any likelihood function.
• It can be shown that a minimal sufficient statistic gives the maximal reduction of the data.
• Example…

Alternative Definition of Sufficient Statistic
• Let $X_1, \dots, X_n$ be a random sample from a distribution with unknown parameter θ. The statistic $T(x_1, \dots, x_n)$ is said to be sufficient for θ if the conditional distribution of $X_1, \dots, X_n$ given T does not depend on θ.
• This definition is much harder to work with, as the conditional distribution of the sample $X_1, \dots, X_n$ given the sufficient statistic T is often hard to derive.
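In small discrete models the conditional distribution can be enumerated directly. The sketch below, a hypothetical illustration with i.i.d. Bernoulli(θ) data and T equal to the number of successes, computes the conditional distribution of the sample given T for two different values of θ. The model and the function name are assumptions.

```python
from itertools import product

def conditional_given_sum(theta, n, s):
    """P(X = x | sum(X) = s) for i.i.d. Bernoulli(theta), over all x with sum s."""
    joint = {
        x: theta ** sum(x) * (1 - theta) ** (n - sum(x))
        for x in product((0, 1), repeat=n)
        if sum(x) == s
    }
    total = sum(joint.values())  # this is P(sum(X) = s)
    return {x: p / total for x, p in joint.items()}

for theta in (0.2, 0.7):
    print(theta, conditional_given_sum(theta, n=3, s=2))
```

Both values of θ give the same conditional distribution, uniform over the three arrangements with two successes, which is what the definition requires of a sufficient statistic.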

Factorization Theorem
• Let T be a statistic based on a random sample $X_1, \dots, X_n$. Then T is a sufficient statistic for θ if $L(\theta \mid x_1, \dots, x_n) = g(T(x_1, \dots, x_n), \theta) \, h(x_1, \dots, x_n)$, i.e., if the likelihood function can be factored into two nonnegative functions, one that depends on $T(x_1, \dots, x_n)$ and θ and one that depends only on the data $x_1, \dots, x_n$.
• Proof:
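As an illustration of how such a factorization looks in practice, consider an i.i.d. Poisson(λ) sample. This model is chosen here as an assumption and is not necessarily the example used in the lecture; the labels g and h follow the wording of the theorem.

```latex
% Hypothetical worked example: i.i.d. Poisson(\lambda) sample, T = \sum_i x_i.
\[
  L(\lambda \mid x_1, \dots, x_n)
  = \prod_{i=1}^{n} \frac{e^{-\lambda} \lambda^{x_i}}{x_i!}
  = \underbrace{\lambda^{\sum_{i=1}^{n} x_i} \, e^{-n\lambda}}_{g(T(x_1, \dots, x_n),\, \lambda)}
    \cdot
    \underbrace{\frac{1}{\prod_{i=1}^{n} x_i!}}_{h(x_1, \dots, x_n)} .
\]
```

The first factor involves the data only through the sum, and the second does not involve λ, so under this model the sample sum is sufficient for λ.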

Examples