
Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks
Alex Graves, Santiago Fernández, Faustino Gomez, Jürgen Schmidhuber
Presented by Ashiq Imran

Outline
• Recurrent Neural Network (RNN)
  ◦ RNN for Sequence Modeling
• Encoder-Decoder Model
• Connectionist Temporal Classification (CTC)
  ◦ CTC Problem Definition
  ◦ CTC Description
  ◦ CTC Objective
  ◦ How CTC collapsing works
  ◦ CTC Objective and Gradient
  ◦ Experiments
  ◦ Results
  ◦ Summary

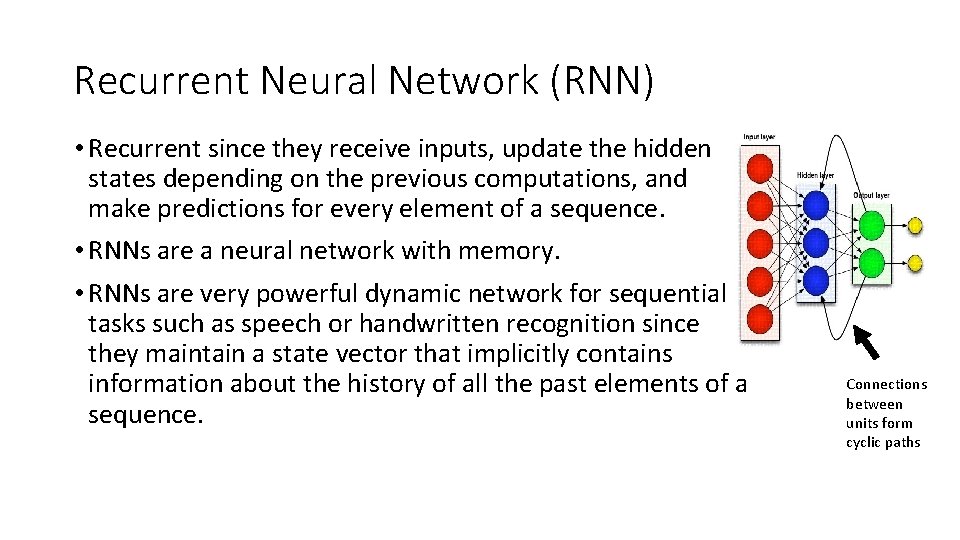

Recurrent Neural Network (RNN)
• Recurrent because they receive inputs, update their hidden state depending on the previous computations, and make a prediction for every element of a sequence.
• RNNs are neural networks with memory.
• RNNs are powerful dynamic networks for sequential tasks such as speech or handwriting recognition, since they maintain a state vector that implicitly contains information about the history of all past elements of the sequence.
• Connections between units form cyclic paths.
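The recurrence described in this slide can be sketched in a few lines. This is only an illustration under stated assumptions: it uses a simple Elman-style update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b), which the slides do not specify, and the names `rnn_step`, `run_rnn` and the toy weights are hypothetical.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b):
    """One recurrent update: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    h = []
    for i in range(len(b)):
        s = b[i]
        s += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        s += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h.append(math.tanh(s))
    return h

def run_rnn(xs, W_xh, W_hh, b):
    """Feed a sequence through the cell, returning one hidden state per element."""
    h = [0.0] * len(b)          # initial state: no history yet
    states = []
    for x in xs:
        h = rnn_step(x, h, W_xh, W_hh, b)
        states.append(h)
    return states

# Toy weights (illustrative values only): 1 input feature, 2 hidden units.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.1]
states = run_rnn([[1.0], [0.0], [0.0]], W_xh, W_hh, b)
```

Only the first input is non-zero here, yet the later states still differ from those of an all-zero input sequence, which is the "memory" the slide refers to.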

RNN for Sequential Modeling
• https://www.researchgate.net/publication/314124036_Candidate_Oil_Spill_Detection_in_SLAR_Data_A_Recurrent_Neural_Network-based_Approach/figures?lo=1

Encoder-Decoder Model
• Most commonly used framework for sequence modeling with neural networks.
• The encoder maps the input sequence X into a hidden representation H.
• The decoder consumes the hidden representation and produces a distribution over the outputs.
  H = encode(X)
  P(Y | X) = decode(H)
• The encode(·) and decode(·) functions are typically RNNs.
• Examples: machine translation, speech recognition, and so on.
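The two-stage structure H = encode(X), P(Y | X) = decode(H) can be illustrated with a toy sketch. Everything inside the functions is an assumption made for brevity: a running average stands in for the encoder RNN, and a per-step softmax over hypothetical `weights` stands in for the decoder RNN. Only the function names `encode` and `decode` come from the slide.

```python
import math

def encode(X):
    """Stand-in encoder: a running average plays the role of the RNN state."""
    H, total = [], 0.0
    for t, x in enumerate(X, start=1):
        total += x
        H.append(total / t)
    return H

def softmax(scores):
    """Turn arbitrary scores into a probability distribution."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def decode(H, weights):
    """Stand-in decoder: one distribution over output labels per hidden state."""
    return [softmax([w * h for w in weights]) for h in H]

X = [0.2, 1.0, -0.5]
H = encode(X)                              # H = encode(X)
P = decode(H, weights=[1.0, -1.0, 0.5])    # P(Y | X) = decode(H)
```

A real decoder would usually also condition on its own previous outputs. The point of the sketch is only the separation between a hidden representation and the output distribution computed from it.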

Connectionist Temporal Classification (CTC)
• Scenario:
  ◦ Many real-world problems require predicting a label sequence from unsegmented or noisy input data, for example speech recognition and handwriting recognition.
• Problem:
  ◦ Standard approaches require pre-segmentation of the input sequence.
  ◦ They also require post-processing to transform the network outputs into a label sequence.
• Proposed method:
  ◦ Train an RNN directly so that it takes unsegmented input sequences and produces output label sequences.
Graves, Alex, et al. "Connectionist temporal classification: labelling unsegmented sequence data with recurrent neural networks." Proceedings of the 23rd International Conference on Machine Learning. ACM, 2006.

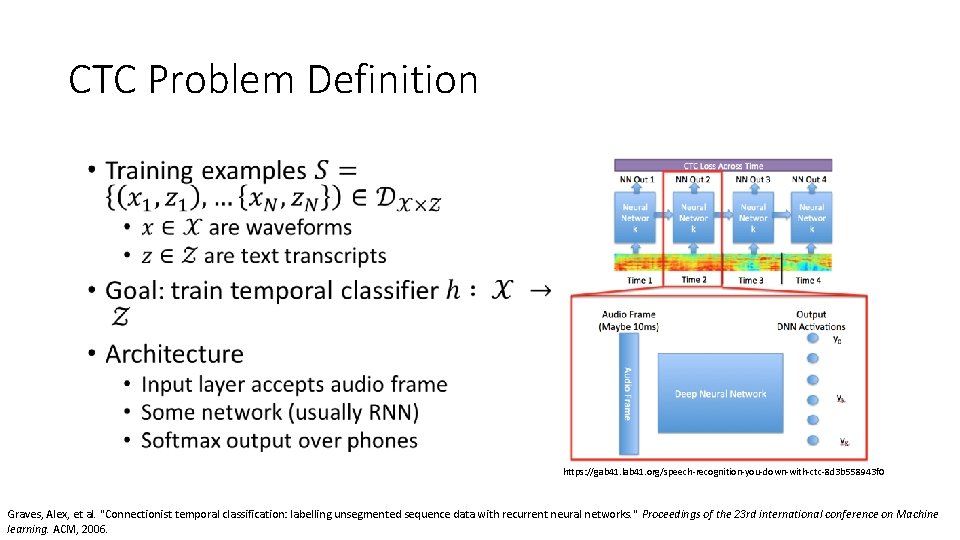

Connectionist Temporal Classification (CTC)
• https://gab41.lab41.org/speech-recognition-you-down-with-ctc-8d3b558943f0, ftp://ftp.idsia.ch/pub/juergen/icml2006.pdf

CTC Problem Definition
• https://gab41.lab41.org/speech-recognition-you-down-with-ctc-8d3b558943f0, ftp://ftp.idsia.ch/pub/juergen/icml2006.pdf

CTC Description

CTC Description: Output Activations
• https://gab41.lab41.org/speech-recognition-you-down-with-ctc-8d3b558943f0, ftp://ftp.idsia.ch/pub/juergen/icml2006.pdf

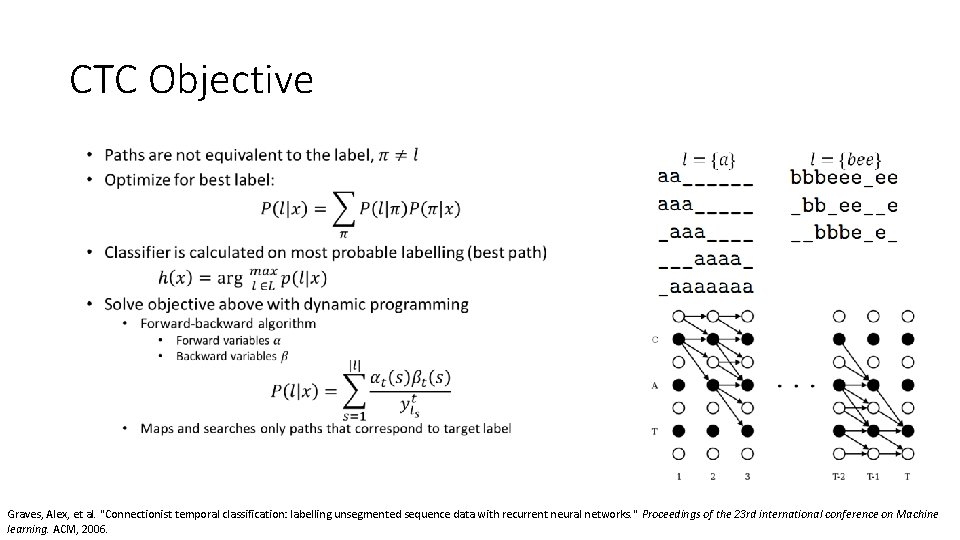

CTC Objective
• https://github.com/yiwangbaidu/notes/blob/master/CTC.pdf, ftp://ftp.idsia.ch/pub/juergen/icml2006.pdf
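A sketch of the objective follows, with notation assumed from the cited paper rather than from this slide. Here $y^t_k$ is the network output for label $k$ at time $t$, $\pi$ is a length-$T$ path over the labels plus blank, $\mathcal{B}$ is the collapsing map from paths to label sequences, and $S$ is the training set of input/target pairs $(\mathbf{x}, \mathbf{z})$.

```latex
p(\pi \mid \mathbf{x}) = \prod_{t=1}^{T} y^{t}_{\pi_t}, \qquad
p(\mathbf{l} \mid \mathbf{x}) = \sum_{\pi \in \mathcal{B}^{-1}(\mathbf{l})} p(\pi \mid \mathbf{x}), \qquad
O = -\sum_{(\mathbf{x}, \mathbf{z}) \in S} \ln p(\mathbf{z} \mid \mathbf{x})
```

Minimising $O$ maximises the log-likelihood of the target labellings, summed over every path that collapses to each target.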

How CTC Collapsing Works
• https://distill.pub/2017/ctc/, https://github.com/yiwangbaidu/notes/blob/master/CTC.pdf, ftp://ftp.idsia.ch/pub/juergen/icml2006.pdf
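The collapsing step named in this slide can be sketched as below. It assumes the mapping described in the cited paper: first merge repeated symbols, then remove blanks. The blank symbol "-" and the function name `collapse` are choices made for this illustration only.

```python
from itertools import groupby

BLANK = "-"  # assumed blank symbol for this sketch

def collapse(path, blank=BLANK):
    """Apply the CTC mapping: merge repeated symbols, then remove blanks."""
    merged = [symbol for symbol, _ in groupby(path)]
    return "".join(s for s in merged if s != blank)

examples = ["hh-e-l-ll-oo", "h-ello", "--hel-lo--", "helo"]
collapsed = [collapse(p) for p in examples]
```

The first and third paths collapse to "hello", while "h-ello" collapses to "helo". A doubled letter in the output therefore needs a blank between its two occurrences in the path.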

CTC Objective and Gradient
• ftp://ftp.idsia.ch/pub/juergen/icml2006.pdf
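As a rough sketch of how the objective can be evaluated, the code below computes the probability of a labelling in two ways: by summing over every path (the definition, exponential in sequence length) and with a forward recursion over the blank-extended label sequence. The function names, the choice of index 0 for blank, and the toy probabilities are assumptions for illustration. The gradient computation is not covered, and a practical implementation would rescale or work in log space to avoid underflow on long sequences.

```python
import math
from itertools import groupby, product

def ctc_brute_force(probs, labels, blank=0):
    """Sum the probability of every path that collapses to `labels`."""
    T, K = len(probs), len(probs[0])
    total = 0.0
    for path in product(range(K), repeat=T):
        merged = [k for k, _ in groupby(path)]
        if [k for k in merged if k != blank] == list(labels):
            total += math.prod(probs[t][k] for t, k in enumerate(path))
    return total

def ctc_forward(probs, labels, blank=0):
    """Same quantity via dynamic programming; probs[t][k] is the output at frame t."""
    # Extended sequence: a blank before, between and after the labels.
    ext = [blank]
    for label in labels:
        ext += [label, blank]
    T, S = len(probs), len(ext)

    # A path may start with the leading blank or with the first label.
    alpha = [0.0] * S
    alpha[0] = probs[0][ext[0]]
    if S > 1:
        alpha[1] = probs[0][ext[1]]

    for t in range(1, T):
        new = [0.0] * S
        for s in range(S):
            total = alpha[s]
            if s >= 1:
                total += alpha[s - 1]
            # Skipping a blank is allowed only between two different labels.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                total += alpha[s - 2]
            new[s] = total * probs[t][ext[s]]
        alpha = new

    # A valid path ends in the last label or in the trailing blank.
    return alpha[-1] + (alpha[-2] if S > 1 else 0.0)

# Toy outputs: 4 frames, 3 classes (index 0 is blank); each row sums to 1.
probs = [
    [0.6, 0.3, 0.1],
    [0.2, 0.5, 0.3],
    [0.1, 0.2, 0.7],
    [0.5, 0.1, 0.4],
]
p = ctc_forward(probs, [1, 2])
loss = -math.log(p)   # per-example contribution to the objective
```

The brute-force version is kept only as a check on small inputs. The recursion gives the same value at a cost linear in the number of frames.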

Experiments
• Dataset: TIMIT speech corpus.
• It contains recordings of prompted English speech, accompanied by manually segmented phonetic transcripts.
• CTC was compared with both an HMM and an HMM-RNN hybrid.
• A bidirectional LSTM (BLSTM) was used for both CTC and the HMM-RNN hybrid model.
• BRNN and unidirectional RNN architectures gave worse results.

Experimental Results
Label Error Rate (LER) on TIMIT.

| System | LER |
|---|---|
| Context-independent HMM | 38.85% |
| Context-dependent HMM | 35.21% |
| BLSTM/HMM | |
| Weighted error BLSTM/HMM | |
| CTC (best path) | |
| CTC (prefix search) | |

• With prefix search decoding, CTC outperforms both the HMM and the HMM-RNN hybrid methods.
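To make the terms in this slide concrete, here is a minimal sketch of best path decoding and of a label error rate for a single example. It assumes that best path decoding takes the most active output at each frame and then collapses the result, and that the error rate is the edit distance divided by the target length. How the rate is aggregated over a test set is not stated in the slides. Prefix search decoding is not shown. All function names and the toy outputs are hypothetical.

```python
from itertools import groupby

def best_path_decode(probs, blank=0):
    """Pick the most active label at every frame, merge repeats, drop blanks."""
    path = [max(range(len(frame)), key=frame.__getitem__) for frame in probs]
    return [k for k, _ in groupby(path) if k != blank]

def edit_distance(a, b):
    """Levenshtein distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        cur = [i]
        for j, y in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,              # delete x
                           cur[j - 1] + 1,           # insert y
                           prev[j - 1] + (x != y)))  # substitute or match
        prev = cur
    return prev[-1]

def label_error_rate(hypothesis, target):
    """Edit distance normalised by the target length, for one example."""
    return edit_distance(hypothesis, target) / len(target)

# Toy outputs: 5 frames, 3 classes (index 0 is blank).
probs = [
    [0.10, 0.80, 0.10],
    [0.20, 0.70, 0.10],
    [0.90, 0.05, 0.05],
    [0.10, 0.10, 0.80],
    [0.60, 0.20, 0.20],
]
hypothesis = best_path_decode(probs)          # frame-wise argmax is 1, 1, 0, 2, 0
ler = label_error_rate(hypothesis, [1, 2, 2])
```

Best path decoding is cheap but considers only the single most probable path, not the total probability of all paths that collapse to a labelling.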

Discussions
• CTC does not explicitly segment its input sequences.
• Instead, it allows the network to be trained directly for sequence labelling.
• Without task-specific knowledge, it outperforms both the HMM and the HMM-RNN hybrid model.
• For tasks where a segmentation is required (e.g. protein secondary structure prediction), it may not give good results.

CTC Summary


Thank you • Any Questions?
