Congestion Control EE 122 Intro to Communication Networks

- Slides: 49

Congestion Control
EE 122: Intro to Communication Networks
Fall 2010 (MW 4-5:30 in 101 Barker)
Scott Shenker
TAs: Sameer Agarwal, Sara Alspaugh, Igor Ganichev, Prayag Narula
http://inst.eecs.berkeley.edu/~ee122/
Materials with thanks to Jennifer Rexford, Ion Stoica, Vern Paxson and other colleagues at Princeton and UC Berkeley

Goals of Today’s Lecture
• Congestion Control: Overview
  – End system adaptation controlling network load
• Congestion Control: Some Details
  – Additive-increase, multiplicative-decrease (AIMD)
  – How to begin transmitting: Slow Start (really “fast start”)
• Why AIMD?
• Listing of Additional Congestion Control Topics
Will repeat myself several times in this lecture. Easy to think you understand congestion control. Trust me, you don’t… because I don’t either!

Course So Far…
We know:
• How to process packets in a switch
• How to route packets in the network
• How to send packets reliably
• How to not overload a receiver
• How to split infinitives…
We don’t know:
• How to not overload the network!

Congestion Control Overview

It’s Not Just The Sender & Receiver
• Flow control keeps one fast sender from overwhelming a slow receiver
• Congestion control keeps a set of senders from overloading the network
• Huge academic literature on congestion control
  – Advances in mid-80s by Jacobson “saved” the Internet
  – Well-defined performance question: easy to write papers
  – Less of a focus now (bottlenecked access links?)…
  – …but still far from academically settled
    o E.g., battle over “fairness” with Bob Briscoe…

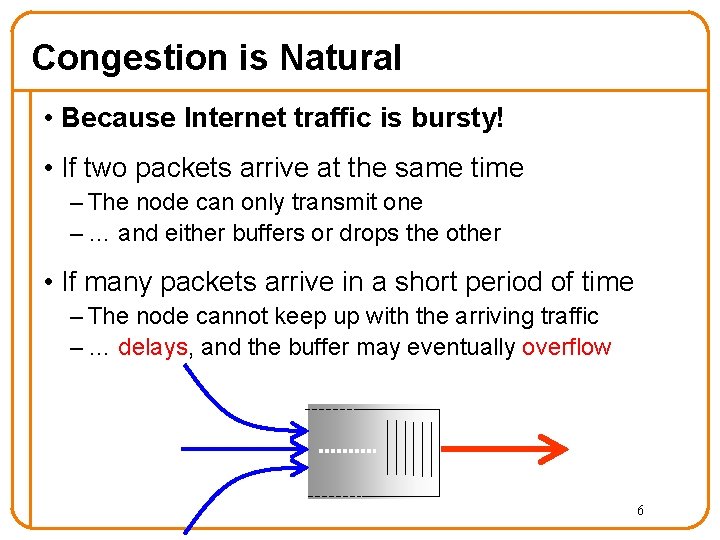

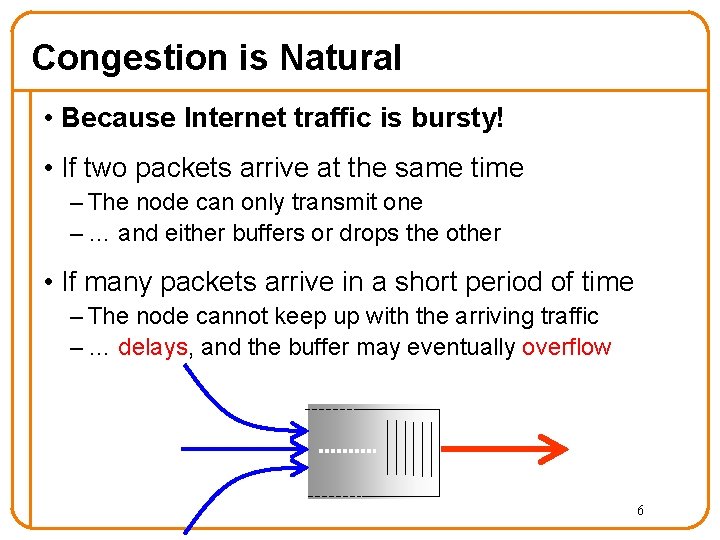

Congestion is Natural
• Because Internet traffic is bursty!
• If two packets arrive at the same time
  – The node can only transmit one
  – … and either buffers or drops the other
• If many packets arrive in a short period of time
  – The node cannot keep up with the arriving traffic
  – … delays grow, and the buffer may eventually overflow

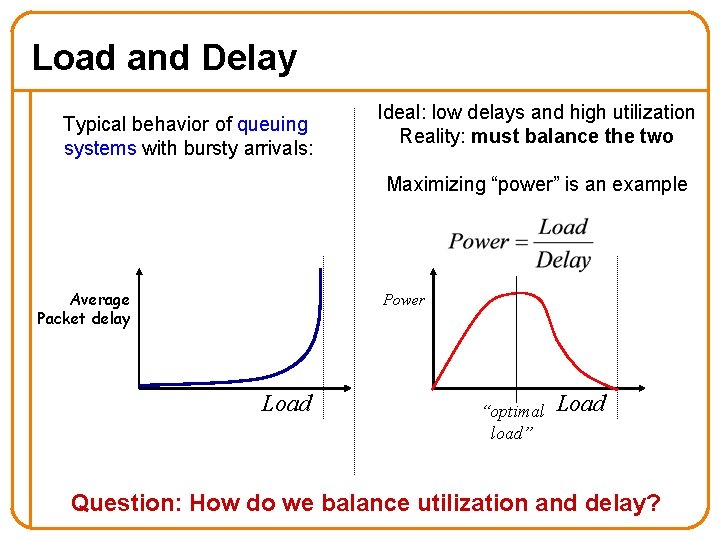

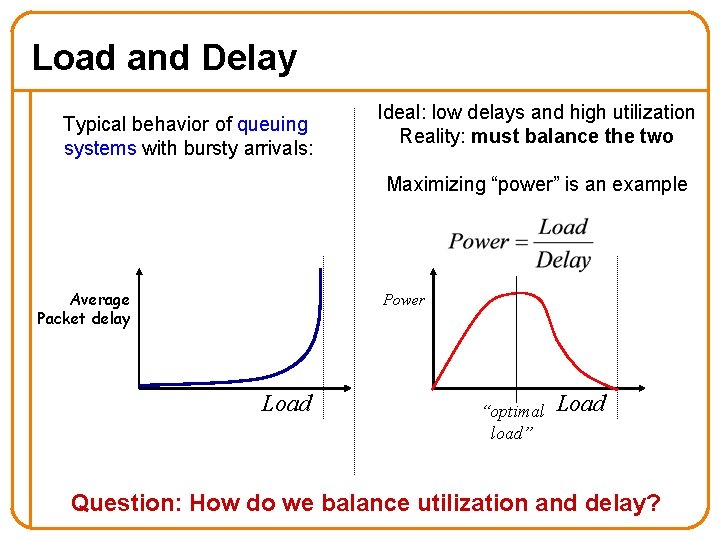

Load and Delay
Typical behavior of queuing systems with bursty arrivals:
Ideal: low delays and high utilization
Reality: must balance the two
Maximizing “power” is an example
[Figure: average packet delay vs. load, and power vs. load with the “optimal load” marked]
Question: How do we balance utilization and delay?
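A minimal sketch of the “power” trade-off, under assumptions the slide does not state: power is taken to be throughput divided by delay, and the node is modeled as an M/M/1 queue. The names `mm1_delay` and `power` are made up for illustration.

```python
# Sketch only: the M/M/1 model and power = throughput / delay are assumptions.

def mm1_delay(load: float, service_rate: float = 1.0) -> float:
    """Mean time a packet spends in an M/M/1 system at the given utilization."""
    if not 0.0 <= load < 1.0:
        raise ValueError("load must be in [0, 1)")
    return 1.0 / (service_rate * (1.0 - load))


def power(load: float, service_rate: float = 1.0) -> float:
    """Throughput divided by mean delay."""
    throughput = load * service_rate
    return throughput / mm1_delay(load, service_rate)


# Scan loads 0.00, 0.01, ..., 0.99 and pick the one with the highest power.
loads = [i / 100 for i in range(100)]
best_load = max(loads, key=power)
```

Under these assumptions power is proportional to load × (1 − load), so the scan peaks at a load of 0.5. Other queueing models would put the “optimal load” elsewhere.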

Dynamic Adjustment by End Systems
• Probe network to test level of congestion
  – Speed up when no congestion
  – Slow down when congestion
• Drawbacks:
  – Suboptimal (always above or below optimal point)
  – Messy dynamics
  – Relies on end system cooperation
• Might seem complicated to implement
  – But algorithms are pretty simple (Jacobson/Karels ’88)
  – Understanding global behavior is not simple…

Basics of TCP Congestion Control
• Each source determines the available capacity
  – … so it knows how many packets to have in flight
• Congestion window (CWND)
  – Maximum # of unacknowledged bytes to have in flight
  – Congestion-control equivalent of receiver window
  – Max. Window = min{congestion window, receiver window}
  – Send at the rate of the slowest component
• Adapting the congestion window
  – Decrease upon detecting congestion
  – Increase upon lack of congestion: optimistic exploration
• Note: TCP congestion control is done only by end systems, not by mechanisms inside the network
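The window rule above can be sketched as a small helper. This is illustrative only; `usable_window` and its parameter names are not from the lecture.

```python
def usable_window(cwnd: int, rwnd: int, bytes_in_flight: int) -> int:
    """Bytes the sender may still transmit right now.

    cwnd            -- congestion window in bytes (sender's estimate)
    rwnd            -- receiver window in bytes (advertised by the receiver)
    bytes_in_flight -- bytes sent but not yet acknowledged
    """
    max_window = min(cwnd, rwnd)          # the slower component wins
    return max(0, max_window - bytes_in_flight)
```

For example, with `cwnd=8000`, `rwnd=4000` and 1000 bytes in flight, the receiver window is the limit and 3000 more bytes may be sent.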

Detecting Congestion
• How can a TCP sender determine that the network is under stress?
• Network could tell it (ICMP Source Quench)
  – Risky, because during times of overload the signal itself could be dropped (and add to congestion)!
• Packet delays go up (knee of load-delay curve)
  – Tricky: noisy signal (delay often varies considerably)
• Packet loss
  – Fail-safe signal that TCP already has to detect
  – Complication: non-congestive loss (checksum errors)

How to Adjust CWND?
• Increase linearly, decrease multiplicatively (AIMD)
  – Consequences of an over-sized window are much worse than those of an under-sized window
    o Over-sized window: packets dropped and retransmitted
    o Under-sized window: somewhat lower throughput
  – Will come back to this later
• Additive increase
  – On success for last window of data, increase linearly
    o TCP uses an increase of one packet (MSS) per RTT
• Multiplicative decrease
  – On loss of packet, TCP divides congestion window in half
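A minimal per-RTT sketch of the AIMD rule above. The MSS value and the name `aimd_update` are assumptions for illustration; a real TCP stack applies these updates per ACK and per loss event.

```python
MSS = 1460  # bytes; assumed segment size for illustration


def aimd_update(cwnd: int, loss_detected: bool) -> int:
    """Return the congestion window (bytes) to use for the next RTT."""
    if loss_detected:
        # Multiplicative decrease: halve, but never go below one segment.
        return max(MSS, cwnd // 2)
    # Additive increase: one more segment per RTT.
    return cwnd + MSS
```

Calling this once per round trip, with `loss_detected` set whenever a drop occurred, produces the sawtooth pattern discussed next.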

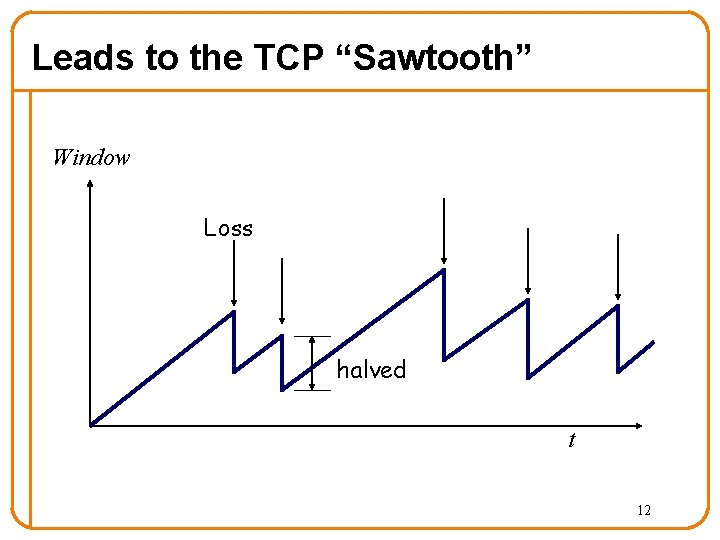

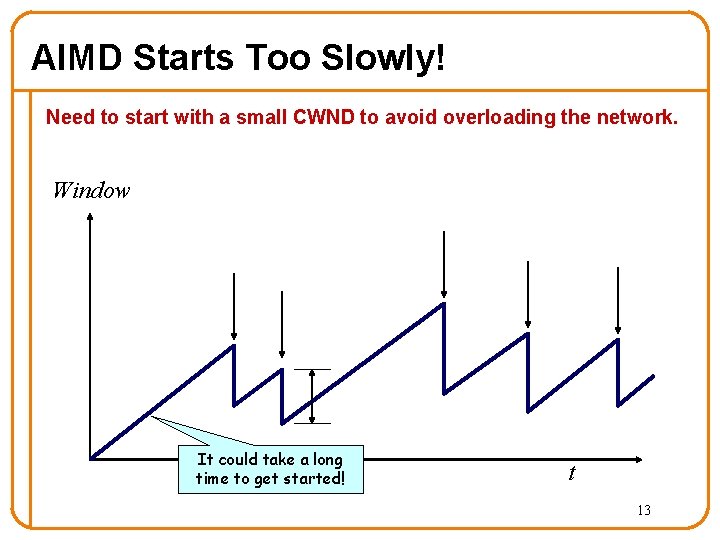

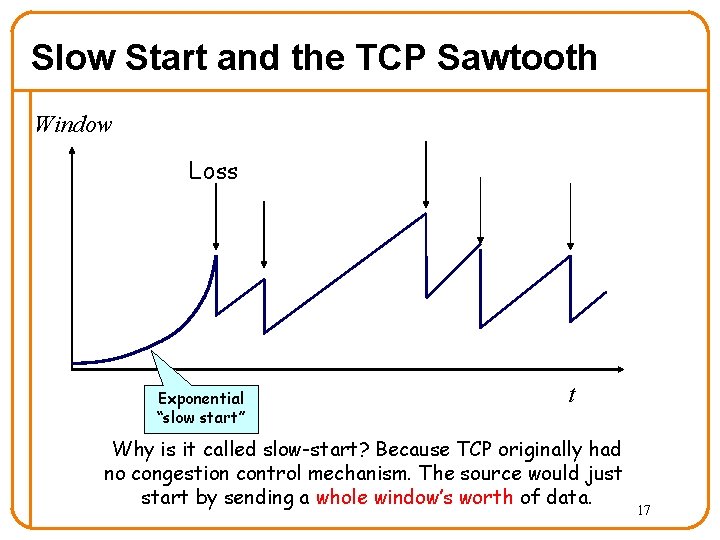

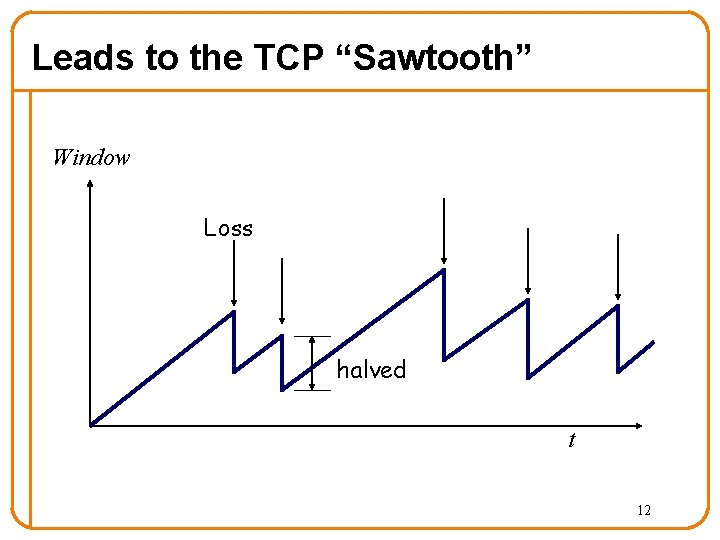

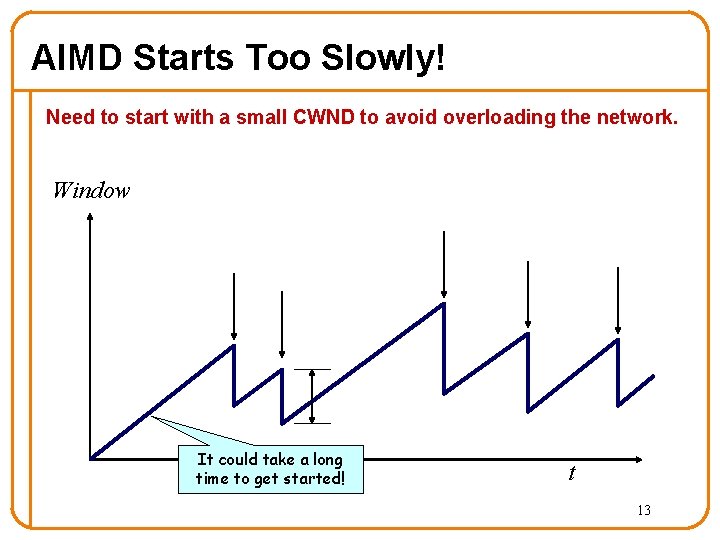

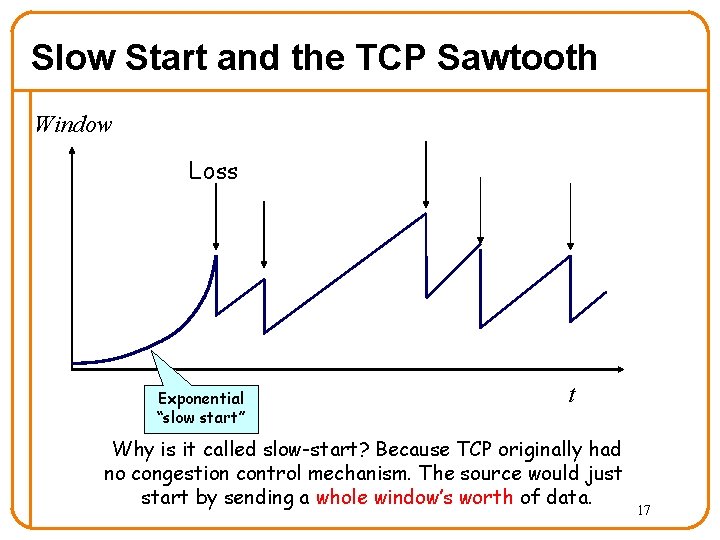

Leads to the TCP “Sawtooth”
[Figure: window vs. time t; the window is halved at each loss]

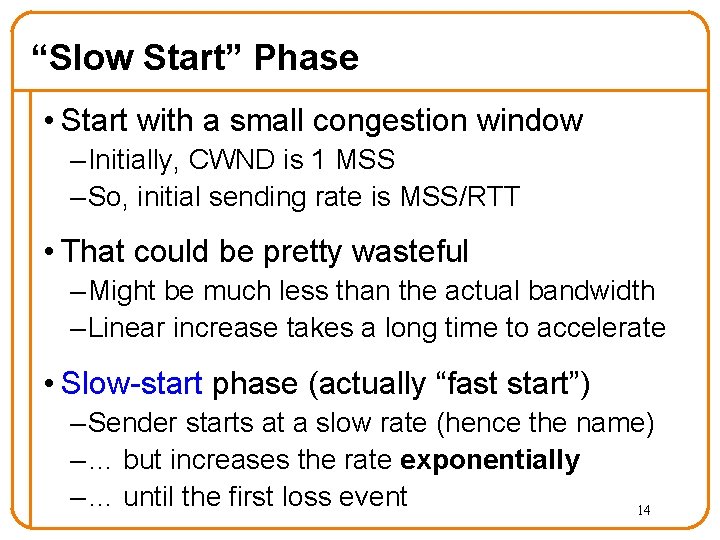

AIMD Starts Too Slowly!
Need to start with a small CWND to avoid overloading the network.
It could take a long time to get started!
[Figure: window vs. time t]

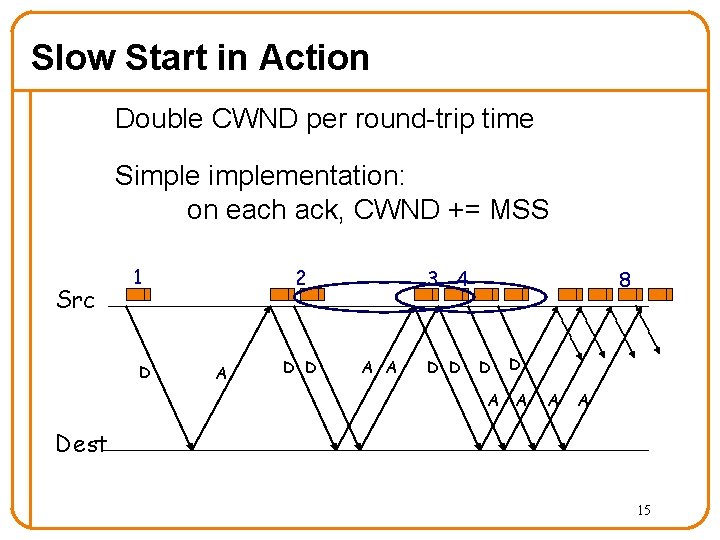

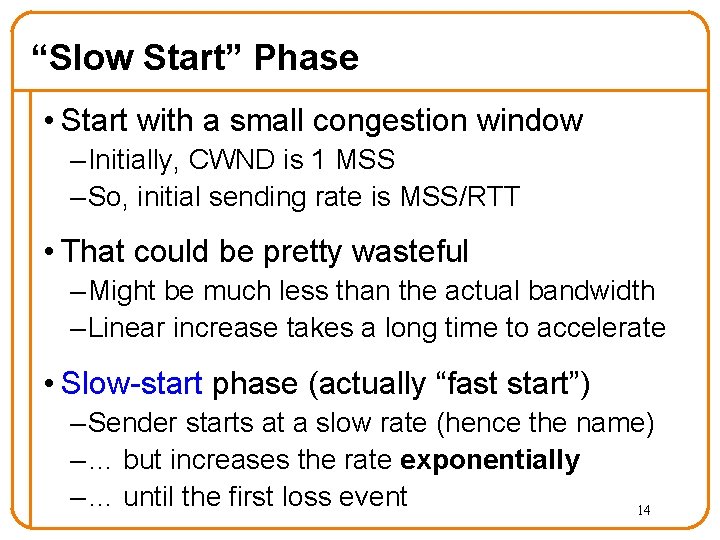

“Slow Start” Phase
• Start with a small congestion window
  – Initially, CWND is 1 MSS
  – So, initial sending rate is MSS/RTT
• That could be pretty wasteful
  – Might be much less than the actual bandwidth
  – Linear increase takes a long time to accelerate
• Slow-start phase (actually “fast start”)
  – Sender starts at a slow rate (hence the name)
  – … but increases the rate exponentially
  – … until the first loss event

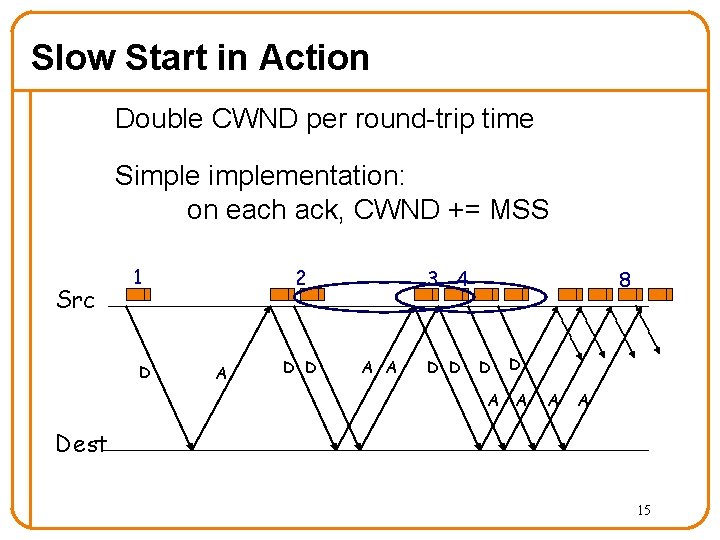

Slow Start in Action
Double CWND per round-trip time
Simple implementation: on each ACK, CWND += MSS
[Figure: Src/Dest timeline of data packets (D) and ACKs (A); the number of packets sent grows each round trip]
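To see why “CWND += MSS on each ACK” doubles the window every round trip, here is a small sketch. It assumes one ACK per segment (no delayed ACKs) and no loss; `slow_start_rounds` and the MSS value are illustrative.

```python
MSS = 1460  # bytes; assumed segment size for illustration


def slow_start_rounds(rounds: int, cwnd: int = MSS) -> list:
    """Return the congestion window (bytes) at the start of each round trip."""
    history = [cwnd]
    for _ in range(rounds):
        acks = cwnd // MSS        # one ACK comes back per segment in flight
        for _ in range(acks):
            cwnd += MSS           # the per-ACK rule from the slide
        history.append(cwnd)
    return history
```

`slow_start_rounds(3)` yields windows of 1, 2, 4 and 8 segments: each ACK adds one segment, and a window of n segments produces n ACKs.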

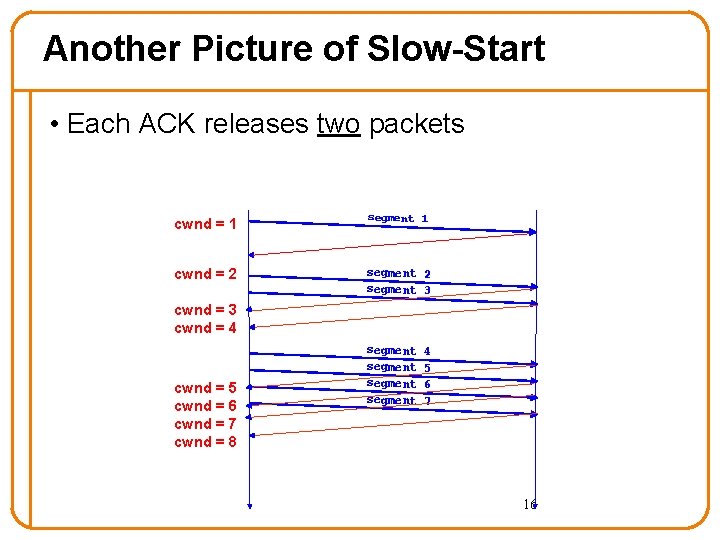

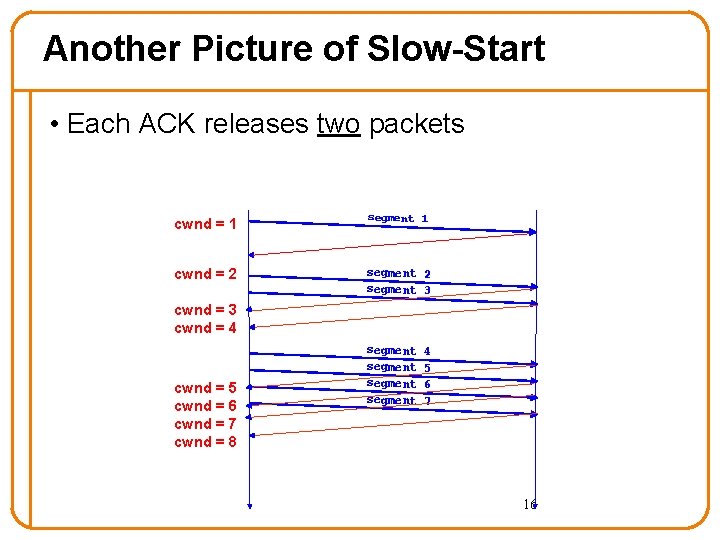

Another Picture of Slow-Start
• Each ACK releases two packets
[Figure: segment/ACK timeline with cwnd growing from 1 to 8 segments]

Slow Start and the TCP Sawtooth
[Figure: window vs. time t; exponential “slow start” up to the first loss, followed by the sawtooth]
Why is it called slow-start? Because TCP originally had no congestion control mechanism. The source would just start by sending a whole window’s worth of data.

This has been incredibly successful
• Leads to theoretical puzzle: If TCP congestion control is the answer, then what was the question?
• Not about optimizing, but about robustness
  – Hard to capture…

Congestion Control Details

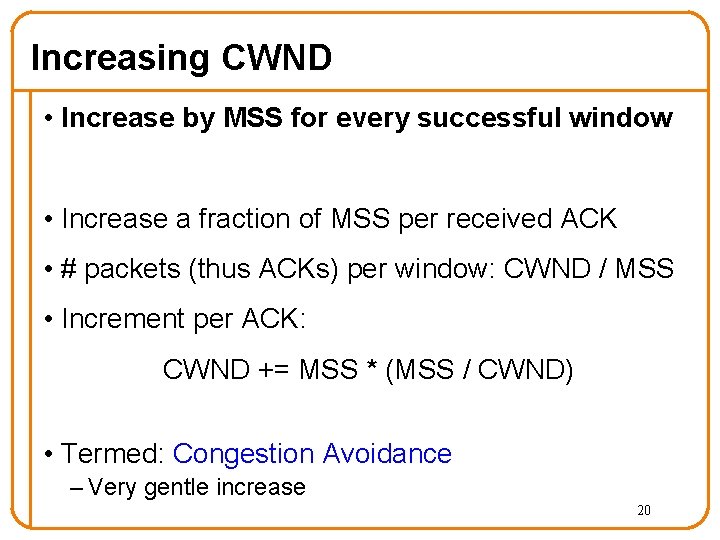

Increasing CWND
• Goal: increase by MSS for every successfully acknowledged window
• Implementation: increase by a fraction of MSS per received ACK
• # packets (thus ACKs) per window: CWND / MSS
• Increment per ACK: CWND += MSS * (MSS / CWND)
• Termed: Congestion Avoidance
  – Very gentle increase
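A quick check that the per-ACK increment adds up to roughly one MSS per window. This sketch assumes one ACK per segment and no loss; `congestion_avoidance_rtt` and the MSS value are illustrative.

```python
MSS = 1460  # bytes; assumed segment size for illustration


def congestion_avoidance_rtt(cwnd: float) -> float:
    """Apply the per-ACK congestion-avoidance rule for one window of ACKs."""
    acks = int(cwnd // MSS)             # ACKs expected for this window
    for _ in range(acks):
        cwnd += MSS * (MSS / cwnd)      # fraction of an MSS per ACK
    return cwnd
```

Starting from 10 segments, one round of ACKs grows the window by slightly less than one MSS, because cwnd itself rises a little with every ACK in the round.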

Detecting Packet Loss
• If retransmitting, already assuming packet loss
  – Two criteria for retransmissions
• Packet can time out (RTO timer expires)
  – But RTO is often ~500 msec
• Fast retransmit
  – When the same packet gets acked 4 times (3 dup. ACKs)
• Congestion control treats these events differently

Fast Retransmission
• Packet n is lost, but packets n+1, n+2, …, arrive
• On each arrival of a packet not in sequence, receiver generates an ACK
  – ACK is for seq. no. just beyond highest in-sequence
• So as n+1, n+2, … arrive, receiver generates repeated ACKs for seq. no. n
  – “duplicate” acknowledgments since they look the same
• Sender sees 3 of these dup. ACKs and immediately retransmits packet n (and only n)
• Multiplicative decrease and keep going
  – CWND halved
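A minimal sketch of the sender-side duplicate-ACK counter described above. The class name `DupAckDetector` and its fields are hypothetical, and ACK numbers are treated as plain integers.

```python
DUP_ACK_THRESHOLD = 3  # number of duplicate ACKs that triggers fast retransmit


class DupAckDetector:
    """Counts repeated ACKs for the same sequence number."""

    def __init__(self) -> None:
        self.last_ack = None
        self.dup_count = 0

    def on_ack(self, ack_no: int) -> bool:
        """Return True exactly when this ACK should trigger a fast retransmit."""
        if ack_no == self.last_ack:
            self.dup_count += 1
            # Fire once, on the third duplicate (the fourth ACK overall).
            return self.dup_count == DUP_ACK_THRESHOLD
        # ACK for new data: remember it and reset the duplicate counter.
        self.last_ack = ack_no
        self.dup_count = 0
        return False
```

Feeding it the ACK sequence 2, 3, 4, 4, 4, 4 returns True only on the last one, at which point the sender would retransmit packet 4 and halve CWND.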

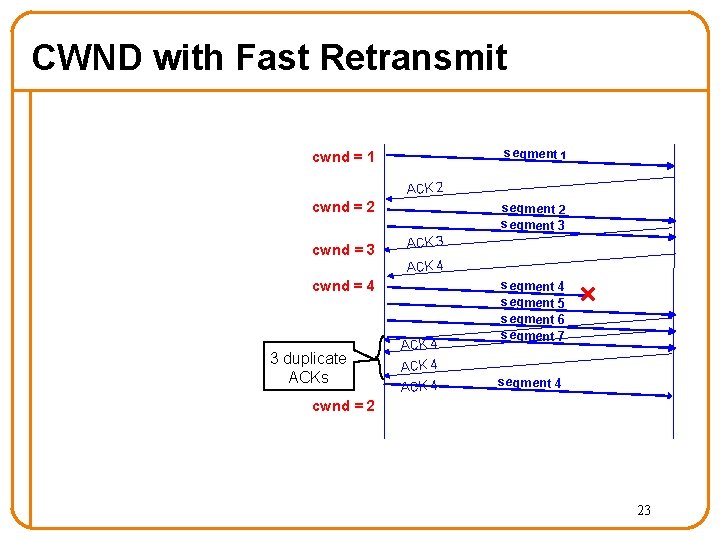

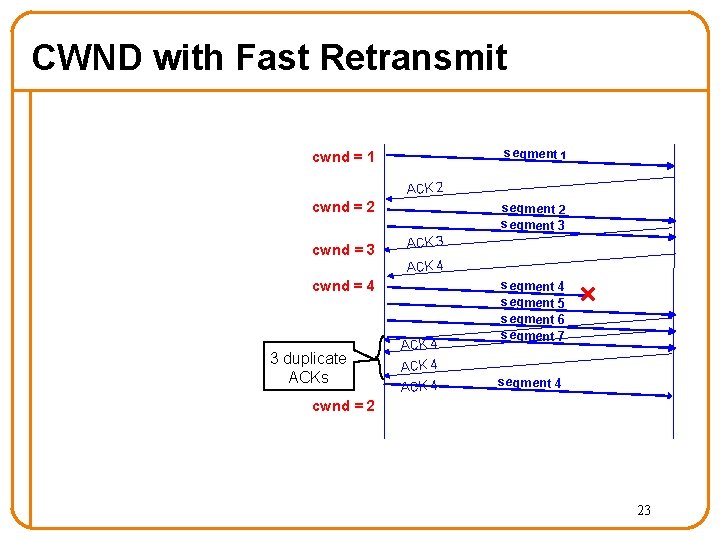

CWND with Fast Retransmit
[Figure: segment/ACK timeline; three duplicate ACK 4s trigger retransmission of segment 4, and cwnd drops from 4 to 2]

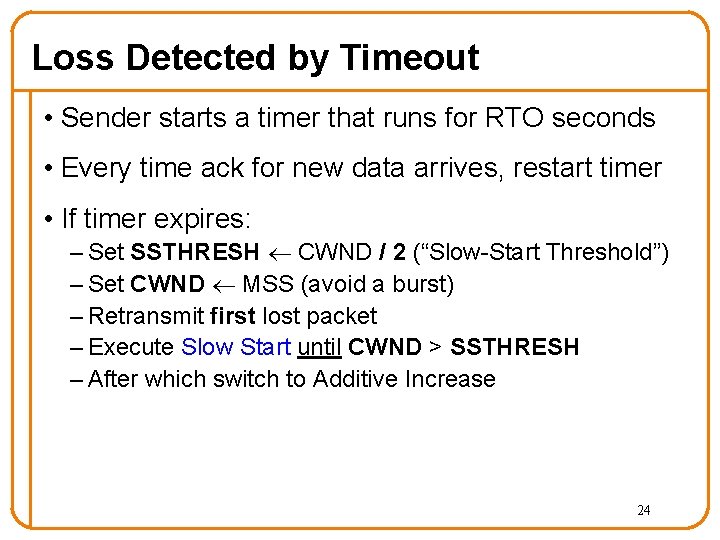

Loss Detected by Timeout
• Sender starts a timer that runs for RTO seconds
• Every time an ack for new data arrives, restart timer
• If timer expires:
  – Set SSTHRESH ← CWND / 2 (“Slow-Start Threshold”)
  – Set CWND ← MSS (avoid a burst)
  – Retransmit first lost packet
  – Execute Slow Start until CWND > SSTHRESH
  – After which switch to Additive Increase

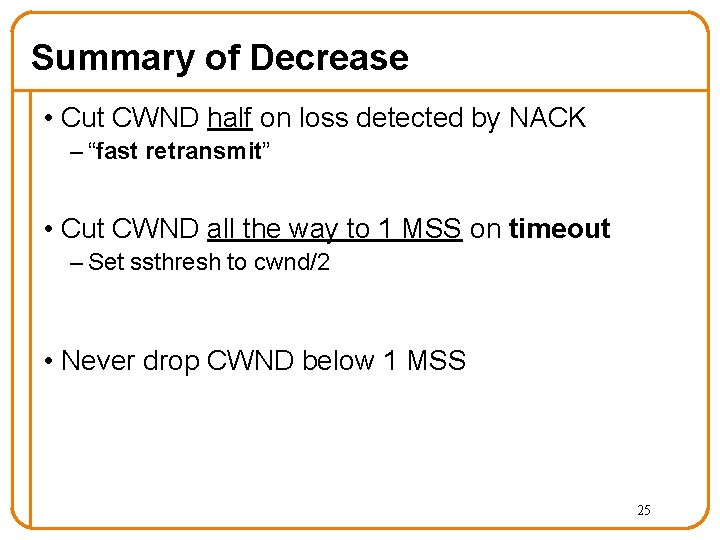

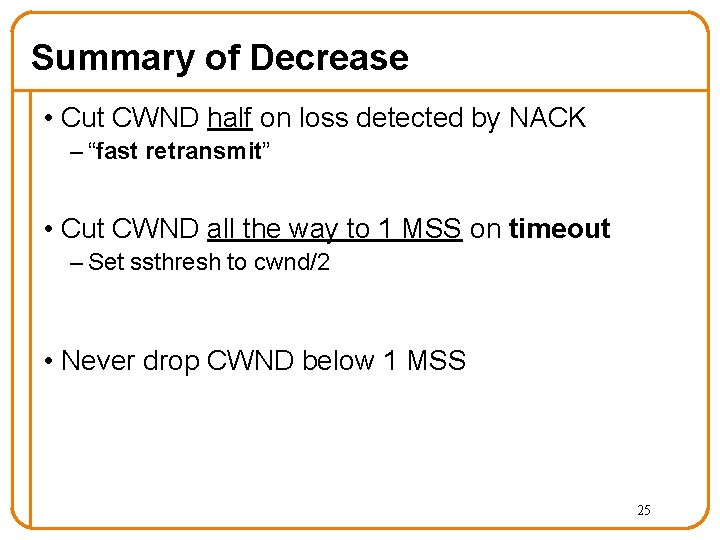

Summary of Decrease
• Cut CWND in half on loss detected by duplicate ACKs
  – “fast retransmit”
• Cut CWND all the way to 1 MSS on timeout
  – Set ssthresh to cwnd/2
• Never drop CWND below 1 MSS
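The two decrease rules can be sketched side by side. This follows the summary above only; `on_loss`, the `kind` strings, and the MSS value are illustrative choices, and deployed TCP variants differ in detail.

```python
MSS = 1460  # bytes; assumed segment size for illustration


def on_loss(cwnd: int, ssthresh: int, kind: str) -> tuple:
    """Return the new (cwnd, ssthresh) after a loss event."""
    if kind == "dupacks":
        # Fast retransmit: halve the window, but keep at least one segment.
        return max(MSS, cwnd // 2), ssthresh
    if kind == "timeout":
        # Timeout: remember half the old window, restart from one segment.
        return MSS, cwnd // 2
    raise ValueError("kind must be 'dupacks' or 'timeout'")
```

With a 16-segment window, duplicate ACKs leave the sender at 8 segments, while a timeout drops it to 1 segment with ssthresh at 8.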

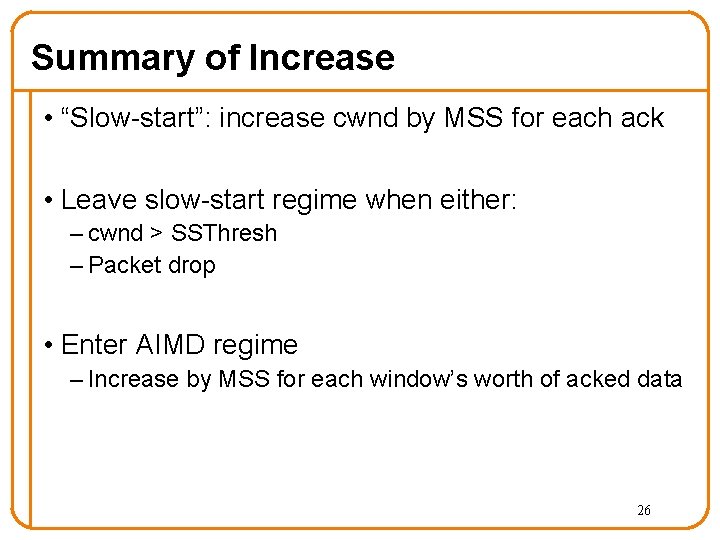

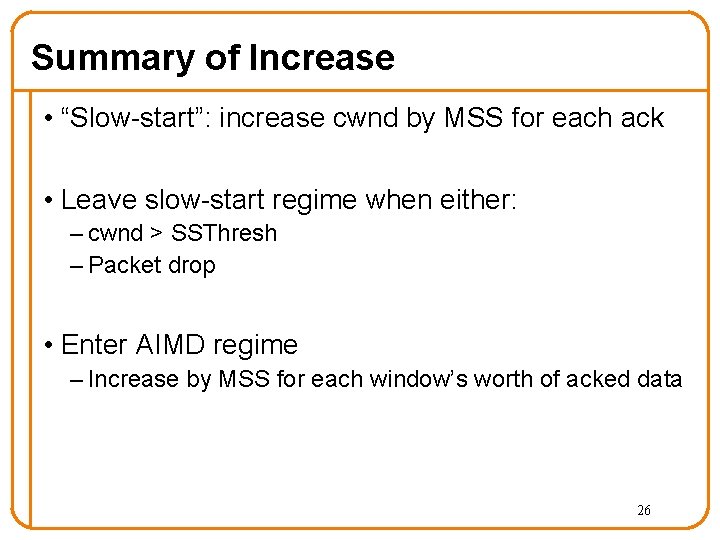

Summary of Increase
• “Slow-start”: increase cwnd by MSS for each ack
• Leave slow-start regime when either:
  – cwnd > SSThresh
  – Packet drop
• Enter AIMD regime
  – Increase by MSS for each window’s worth of acked data

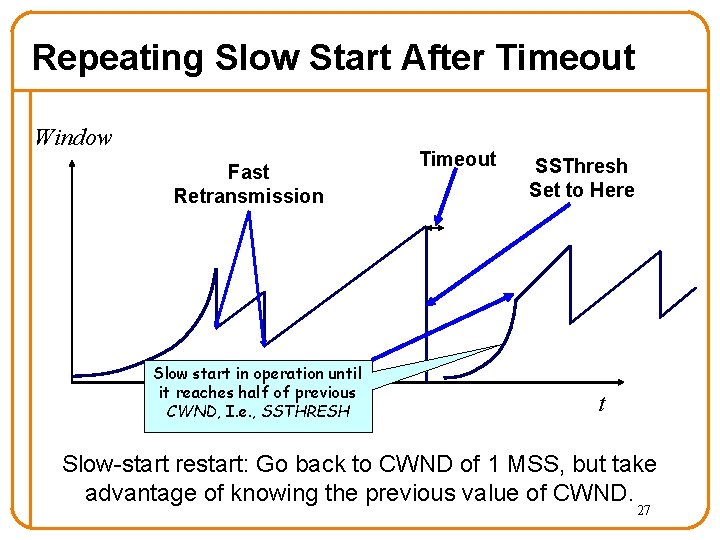

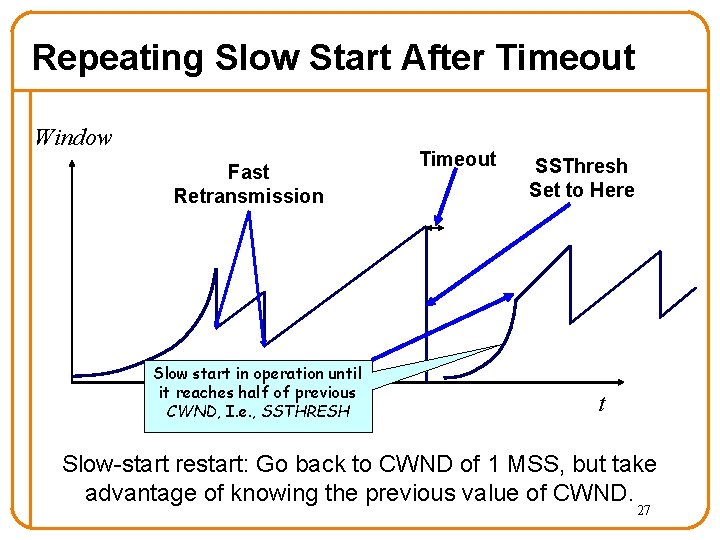

Repeating Slow Start After Timeout
[Figure: window vs. time t, marking a fast retransmission, a timeout, and the level SSTHRESH is set to; slow start is in operation until it reaches half of the previous CWND, i.e., SSTHRESH]
Slow-start restart: Go back to CWND of 1 MSS, but take advantage of knowing the previous value of CWND.

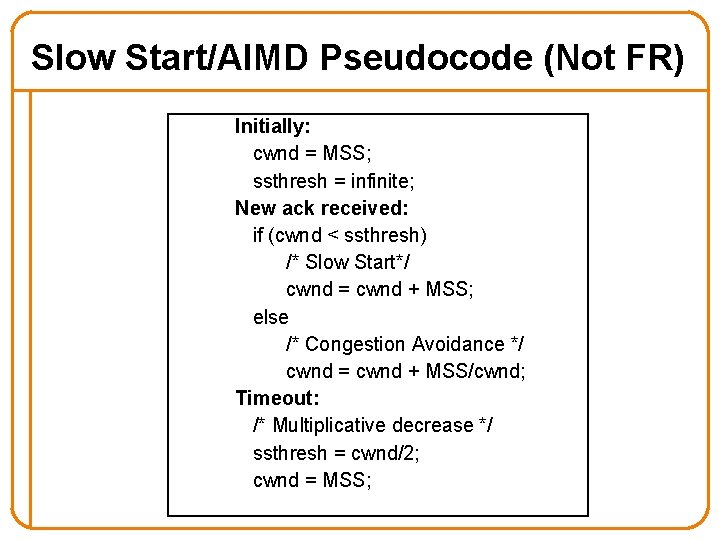

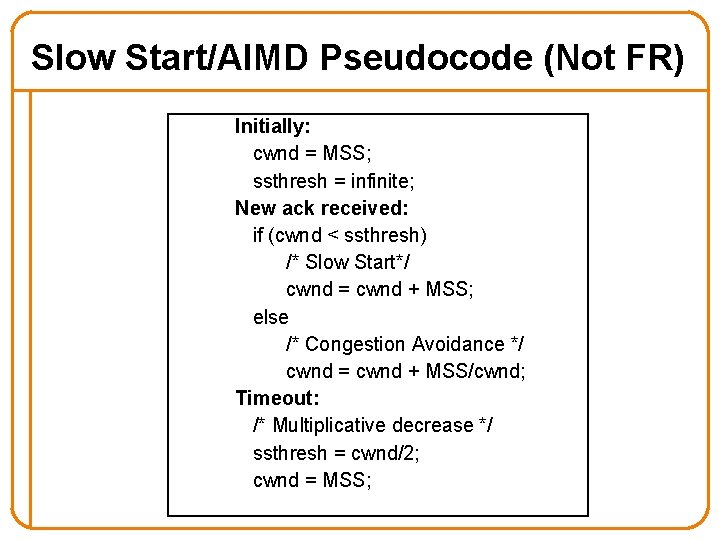

Slow Start/AIMD Pseudocode (Not FR)
    Initially:
        cwnd = MSS;
        ssthresh = infinite;
    New ack received:
        if (cwnd < ssthresh)
            /* Slow Start */
            cwnd = cwnd + MSS;
        else
            /* Congestion Avoidance: MSS * (MSS / cwnd) per ack */
            cwnd = cwnd + MSS * MSS / cwnd;
    Timeout:
        /* Multiplicative decrease */
        ssthresh = cwnd/2;
        cwnd = MSS;
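A runnable Python rendering of the pseudocode above (still without fast retransmit). The class name `SlowStartAimdSender` and the MSS value are assumptions; the congestion-avoidance step uses the MSS * (MSS / cwnd) increment given on the “Increasing CWND” slide so that cwnd stays in bytes.

```python
import math

MSS = 1460  # bytes; assumed segment size for illustration


class SlowStartAimdSender:
    """Tracks cwnd and ssthresh (both in bytes) across ACKs and timeouts."""

    def __init__(self) -> None:
        self.cwnd = float(MSS)
        self.ssthresh = math.inf

    def on_new_ack(self) -> None:
        if self.cwnd < self.ssthresh:
            # Slow start: one full segment per ACK.
            self.cwnd += MSS
        else:
            # Congestion avoidance: about one segment per window of ACKs.
            self.cwnd += MSS * MSS / self.cwnd

    def on_timeout(self) -> None:
        # Remember half the old window, then restart from one segment.
        self.ssthresh = self.cwnd / 2
        self.cwnd = float(MSS)
```

After three ACKs the window is 4 segments; a timeout then sets ssthresh to 2 segments and cwnd to 1, and the next ACKs slow-start back up to ssthresh before switching to the gentle increase.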

5 Minute Break
Questions Before We Proceed?

Announcements
• No office hours today
• HW 3a grades released
• On Monday Igor will review Networking Libraries
  – For 45 minutes
• Then I will cover Overlays and P2P…

Why AIMD?
In what follows, cwnd is expressed in units of MSS

Three Congestion Control Challenges
• Single flow adjusting to bottleneck bandwidth
  – Without any a priori knowledge
  – Could be a Gbps link; could be a modem
• Single flow adjusting to variations in bandwidth
  – When bandwidth decreases, must lower sending rate
  – When bandwidth increases, must increase sending rate
• Multiple flows sharing the bandwidth
  – Must avoid overloading network
  – And share bandwidth “fairly” among the flows

Problem #1: Single Flow, Fixed BW
• Want to get a first-order estimate of the available bandwidth
  – Assume bandwidth is fixed
  – Ignore presence of other flows
• Want to start slow, but rapidly increase rate until packet drop occurs (“slow-start”)
• Adjustment:
  – cwnd initially set to 1 (MSS)
  – cwnd++ upon receipt of ACK

Problems with Slow-Start
• Slow-start can result in many losses
  – Roughly the size of cwnd ~ BW*RTT
• Example:
  – At some point, cwnd is enough to fill “pipe”
  – After another RTT, cwnd is double its previous value
  – All the excess packets are dropped!
• Need a more gentle adjustment algorithm once we have a rough estimate of bandwidth
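The overshoot in the example can be put in numbers with a small sketch. It assumes cwnd is counted in packets, the pipe holds exactly `pipe_pkts` packets, and routers have no buffering; `slow_start_overshoot` is an illustrative name.

```python
def slow_start_overshoot(pipe_pkts: int) -> tuple:
    """Return (cwnd, dropped) for the first window that exceeds the pipe."""
    cwnd = 1
    while cwnd <= pipe_pkts:
        cwnd *= 2                    # slow start doubles the window every RTT
    dropped = cwnd - pipe_pkts       # excess packets, assuming no buffering
    return cwnd, dropped
```

For a pipe of 64 packets the window jumps from 64 to 128 and 64 packets are lost, i.e. a full BW*RTT worth. For a pipe of 50 packets the first oversized window is 64 and the excess is 14.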

Problem #2: Single Flow, Varying BW
Want to track available bandwidth
• Oscillate around its current value
• If you never send more than your current rate, you won’t know if more bandwidth is available
Possible variations: (in terms of change per RTT)
• Multiplicative increase or decrease: cwnd × a or cwnd / a
• Additive increase or decrease: cwnd + b or cwnd − b

Four alternatives
• AIAD: gentle increase, gentle decrease
• AIMD: gentle increase, drastic decrease
• MIAD: drastic increase, gentle decrease
  – too many losses: eliminate
• MIMD: drastic increase and decrease

Why AIMD?
• What’s wrong with AIAD?
• What’s wrong with MIMD?

Problem #3: Multiple Flows
• Want steady state to be “fair”
• Many notions of fairness, but here just require two identical flows to end up with the same bandwidth
• This eliminates MIMD and AIAD
  – As we shall see…
• AIMD is the only remaining solution!
  – Not really, but close enough…

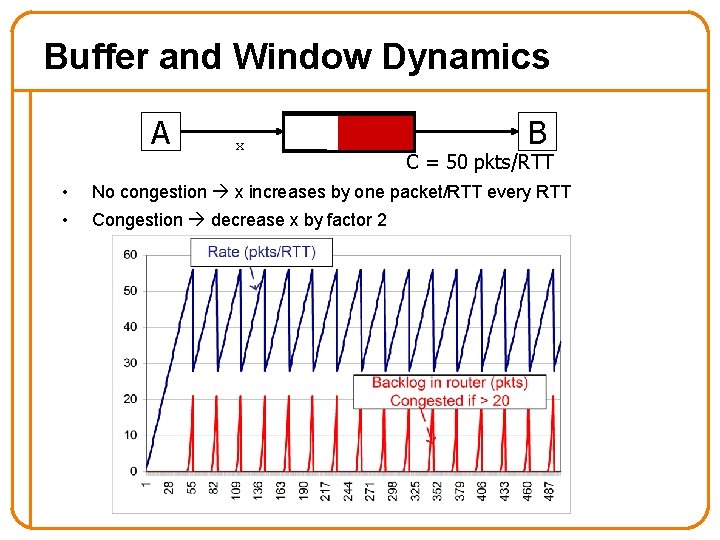

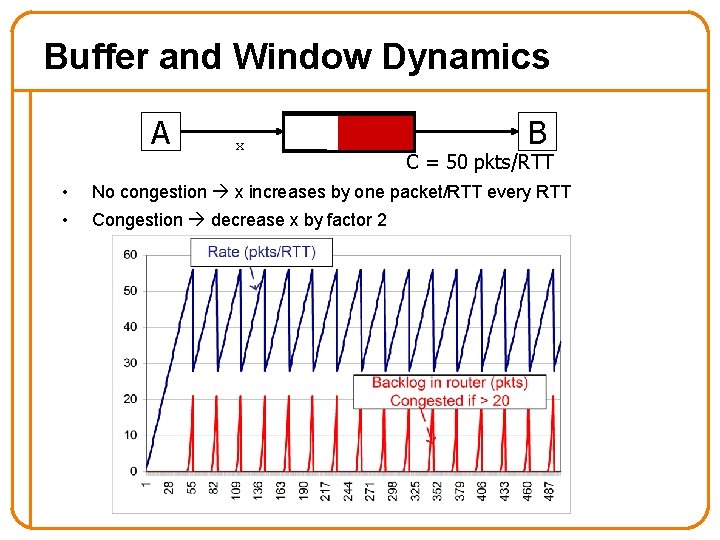

Buffer and Window Dynamics
[Figure: A sends to B at rate x over a link of capacity C = 50 pkts/RTT]
• No congestion → x increases by one packet/RTT every RTT
• Congestion → decrease x by factor 2

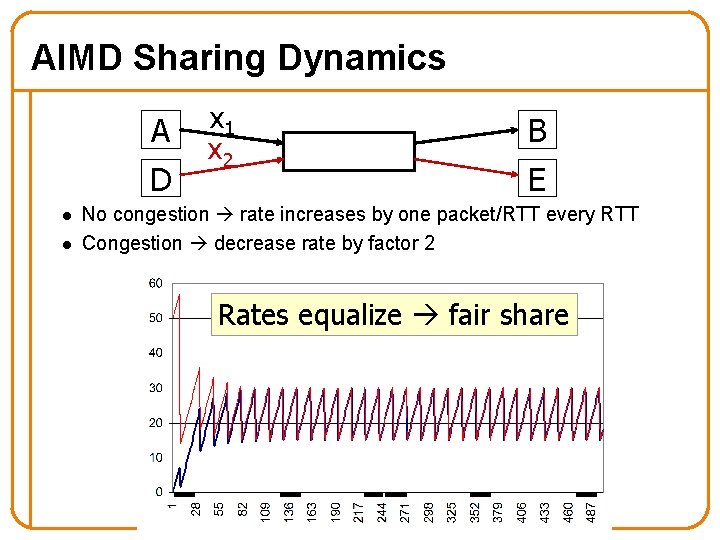

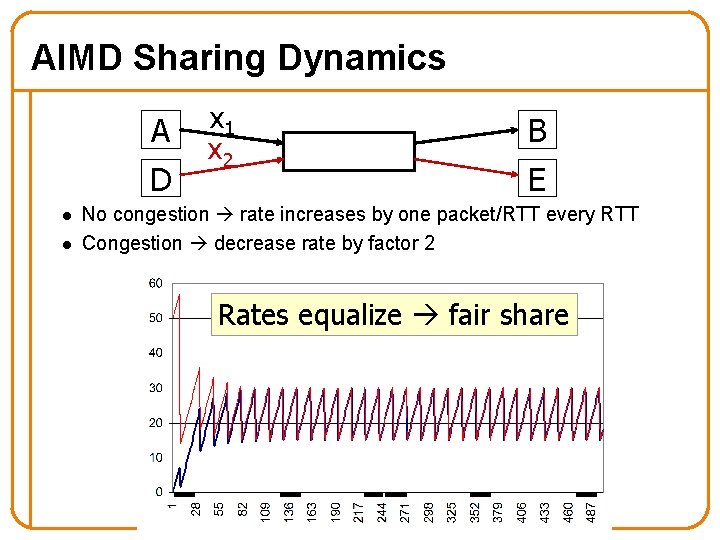

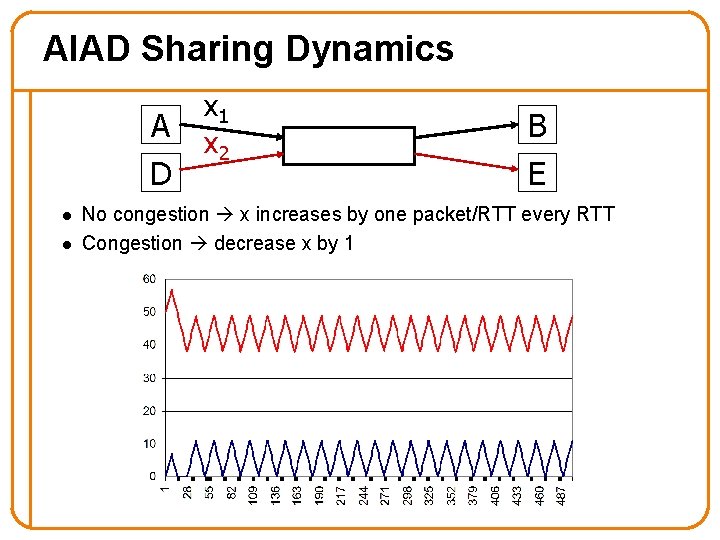

AIMD Sharing Dynamics
[Figure: two flows, A to B at rate $x_1$ and D to E at rate $x_2$, sharing a bottleneck]
• No congestion → rate increases by one packet/RTT every RTT
• Congestion → decrease rate by factor 2
Rates equalize → fair share

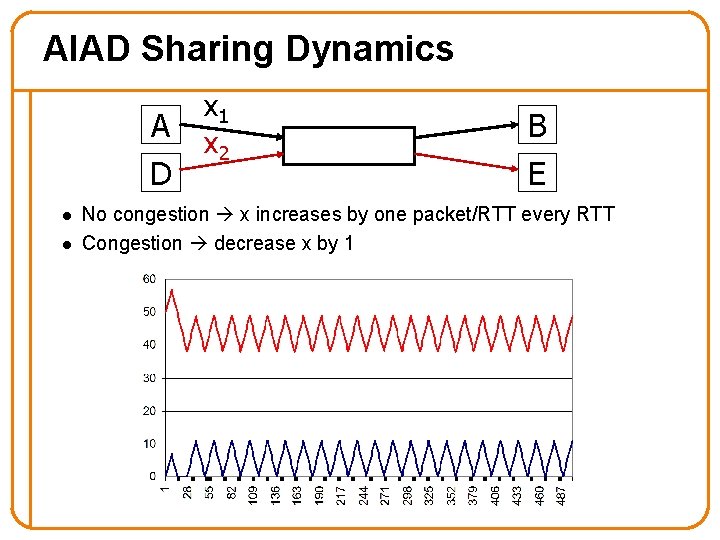

AIAD Sharing Dynamics
[Figure: two flows, A to B at rate $x_1$ and D to E at rate $x_2$, sharing a bottleneck]
• No congestion → x increases by one packet/RTT every RTT
• Congestion → decrease x by 1

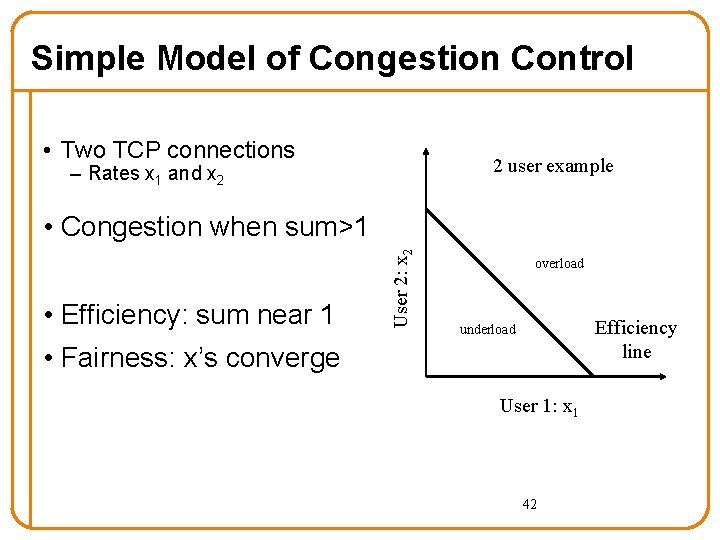

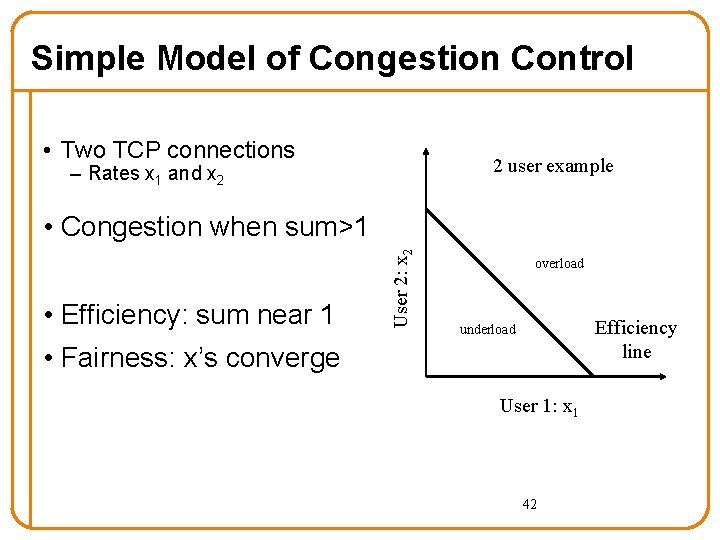

Simple Model of Congestion Control
• Two TCP connections
  – Rates $x_1$ and $x_2$
• Congestion when sum > 1
• Efficiency: sum near 1
• Fairness: x’s converge
[Figure: 2-user example; User 2’s rate $x_2$ plotted against User 1’s rate $x_1$, with the efficiency line separating underload from overload]

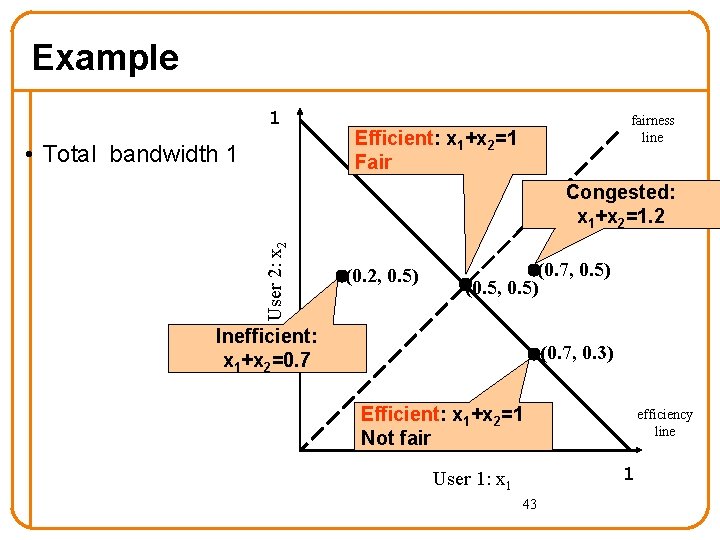

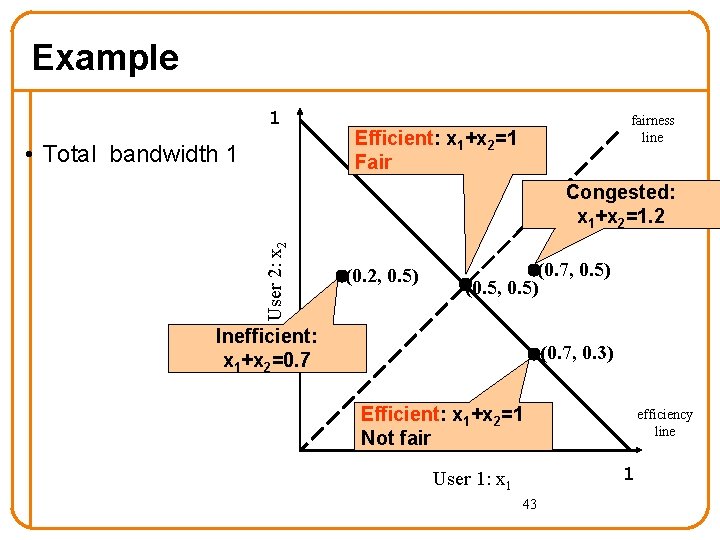

Example 1
• Total bandwidth 1
[Figure: User 2’s rate $x_2$ vs. User 1’s rate $x_1$, with the fairness line, the efficiency line, and four marked points]
• (0.2, 0.5): Inefficient: $x_1 + x_2 = 0.7$
• (0.7, 0.5): Congested: $x_1 + x_2 = 1.2$
• (0.5, 0.5): Efficient: $x_1 + x_2 = 1$, fair
• (0.7, 0.3): Efficient: $x_1 + x_2 = 1$, not fair

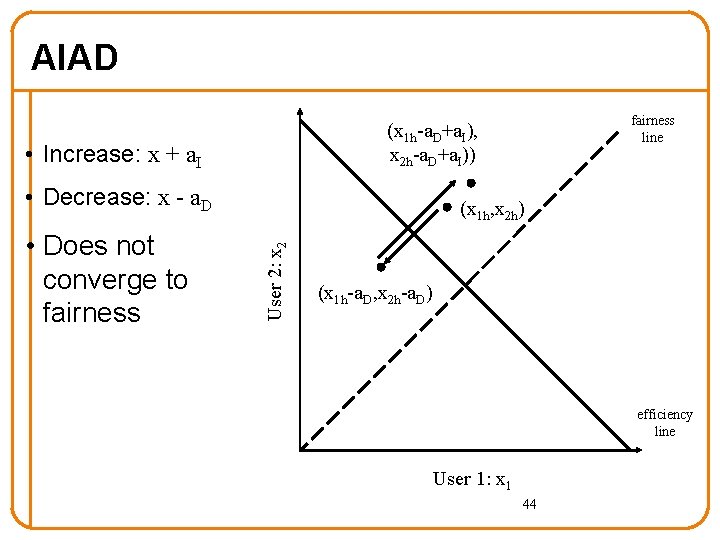

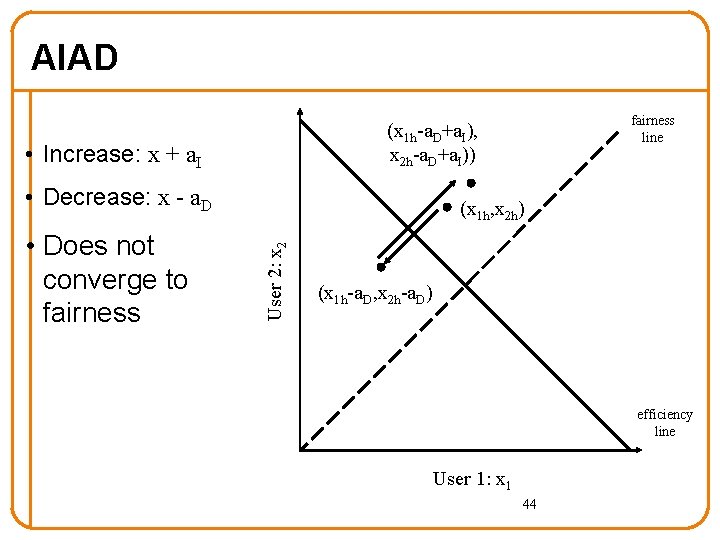

AIAD
• Increase: $x + a_I$
• Decrease: $x - a_D$
• Does not converge to fairness line
[Figure: $x_2$ vs. $x_1$ with the efficiency line; trajectory $(x_{1h}, x_{2h})$ → $(x_{1h} - a_D,\ x_{2h} - a_D)$ → $(x_{1h} - a_D + a_I,\ x_{2h} - a_D + a_I)$]

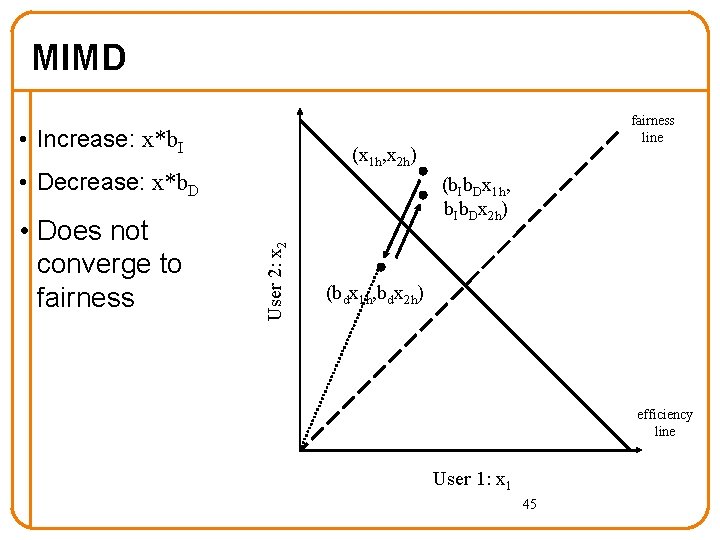

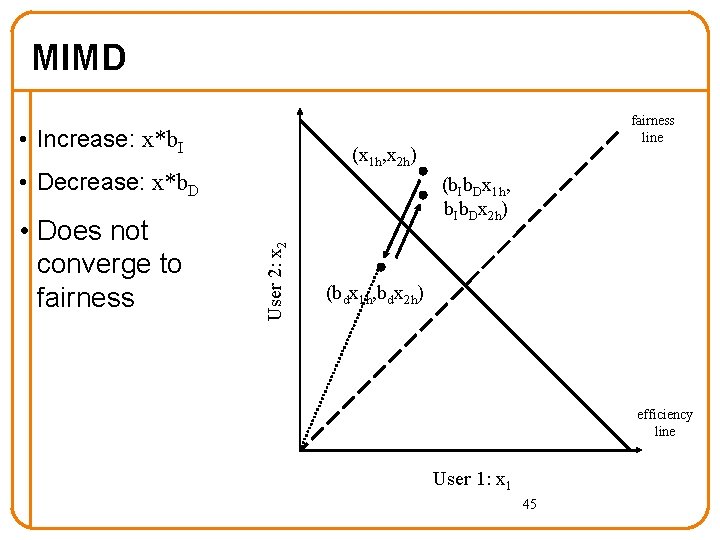

MIMD
• Increase: $x \cdot b_I$
• Decrease: $x \cdot b_D$
• Does not converge to fairness line
[Figure: $x_2$ vs. $x_1$ with the efficiency line; trajectory $(x_{1h}, x_{2h})$ → $(b_D x_{1h},\ b_D x_{2h})$ → $(b_I b_D x_{1h},\ b_I b_D x_{2h})$]

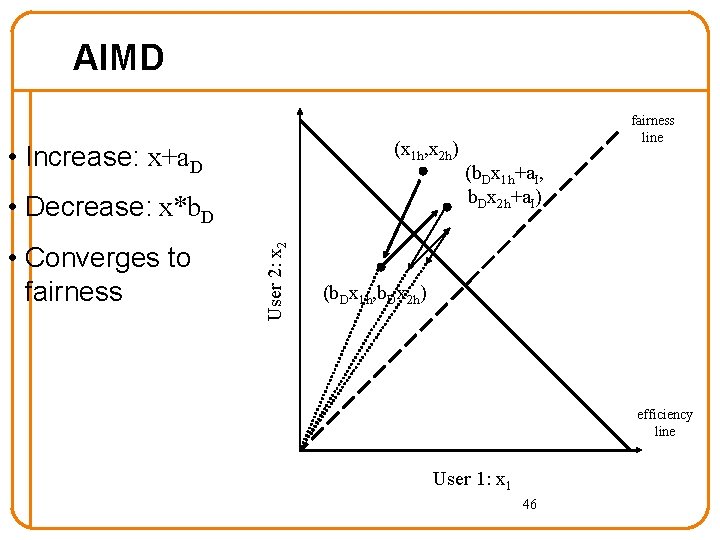

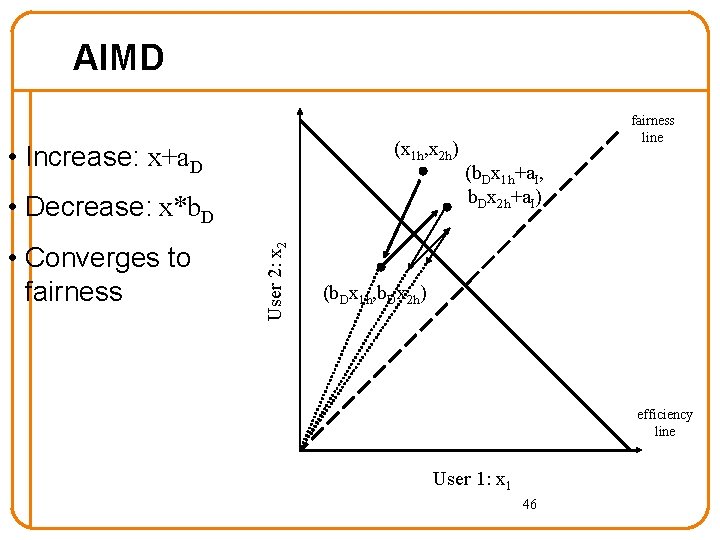

AIMD
• Increase: $x + a_I$
• Decrease: $x \cdot b_D$
• Converges to fairness
[Figure: $x_2$ vs. $x_1$ with the fairness line and the efficiency line; trajectory $(x_{1h}, x_{2h})$ → $(b_D x_{1h},\ b_D x_{2h})$ → $(b_D x_{1h} + a_I,\ b_D x_{2h} + a_I)$]
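The three phase plots can be reproduced numerically with a small sketch of the two-user model. The step sizes, starting rates, and function names below are arbitrary illustrative choices; both users are assumed to see the same congestion signal at the same time.

```python
def simulate(x1, x2, increase, decrease, capacity=1.0, steps=500):
    """Run the two-user model; both users react identically each step."""
    for _ in range(steps):
        if x1 + x2 > capacity:                 # congestion: both back off
            x1, x2 = decrease(x1), decrease(x2)
        else:                                  # no congestion: both speed up
            x1, x2 = increase(x1), increase(x2)
    return x1, x2


A_I, A_D = 0.01, 0.01   # additive step sizes (assumed values)
B_I, B_D = 1.1, 0.5     # multiplicative factors (assumed values)


def add_inc(x): return x + A_I
def add_dec(x): return x - A_D
def mul_inc(x): return x * B_I
def mul_dec(x): return x * B_D


start = (0.1, 0.6)      # deliberately unfair starting point
aimd = simulate(*start, add_inc, mul_dec)
aiad = simulate(*start, add_inc, add_dec)
mimd = simulate(*start, mul_inc, mul_dec)
```

AIAD preserves the difference x2 − x1 and MIMD preserves the ratio x2 / x1, so neither moves toward the fairness line. Under AIMD each decrease halves the difference while increases leave it unchanged, so the rates end up nearly equal.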

Other Congestion Control Topics

Additional Congestion Control Topics
• TCP compatibility
  – Everyone has to use congestion control that shares fairly
• Equation-based congestion control [ r ~ 1/sqrt(d) ]
• Extending TCP to higher speeds
• Signaling congestion without packet drops (ECN)
• Router involvement with congestion control
  – Ensuring fairness (no need for single standard)
  – Better than AIMD
• Economic models (Kelly)
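One common way to see where r ~ 1/sqrt(d) comes from is the idealized sawtooth argument, sketched below. The assumptions are not in the slides: the window (in packets) oscillates between W/2 and W, exactly one packet is dropped per cycle, d is the drop rate, and RTT is constant.

```latex
% Idealized sawtooth: window climbs from W/2 to W by one packet per RTT,
% then one drop halves it. Symbols W and RTT are assumptions for this sketch.
\begin{align*}
\text{RTTs per cycle} &\approx \frac{W}{2} \\
\text{packets per cycle} &\approx \frac{W}{2}\cdot\frac{1}{2}\left(\frac{W}{2}+W\right) = \frac{3}{8}W^{2} \\
d &= \frac{1}{\tfrac{3}{8}W^{2}} \;\Rightarrow\; W = \sqrt{\frac{8}{3d}} \\
r &= \frac{\text{average window}}{RTT} = \frac{3W}{4\,RTT}
   = \frac{1}{RTT}\sqrt{\frac{3}{2d}} \;\propto\; \frac{1}{\sqrt{d}}
\end{align*}
```

Here r is in packets per unit time. Halving the rate therefore corresponds to roughly four times the drop rate.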

Summary
• Congestion is natural
  – Internet does not reserve resources in advance
  – TCP actively tries to grab capacity
• Congestion control critically important
  – AIMD: Additive Increase, Multiplicative Decrease
  – Congestion detected via packet loss (fail-safe)
  – Slow start to find initial sending rate & to restart after timeout
• Next class:
  – Igor: Networking Libraries
  – Scott: Overlays and P2P