Congestion Control Algorithms

- Slides: 46

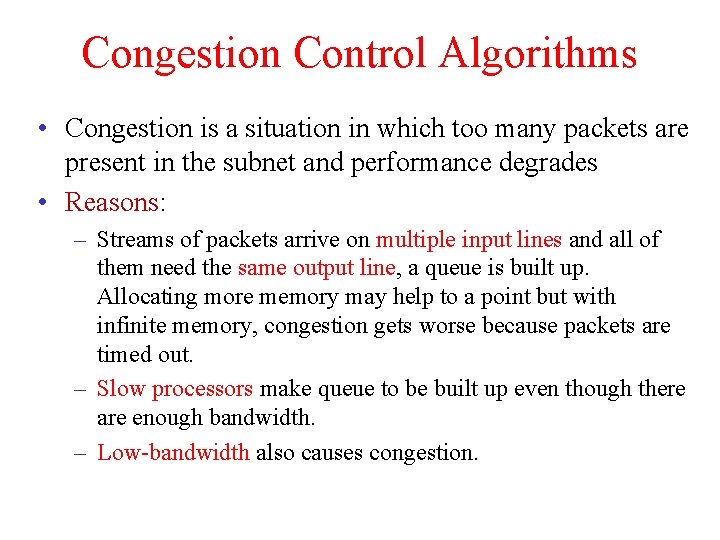

Congestion Control Algorithms

- Congestion is a situation in which too many packets are present in the subnet and performance degrades.
- Reasons:
  - Streams of packets arrive on multiple input lines and all need the same output line, so a queue builds up. Allocating more memory may help up to a point, but even with infinite memory congestion gets worse, because packets time out while they wait in the queue.
  - Slow processors cause queues to build up even when there is enough bandwidth.
  - Low-bandwidth lines also cause congestion.

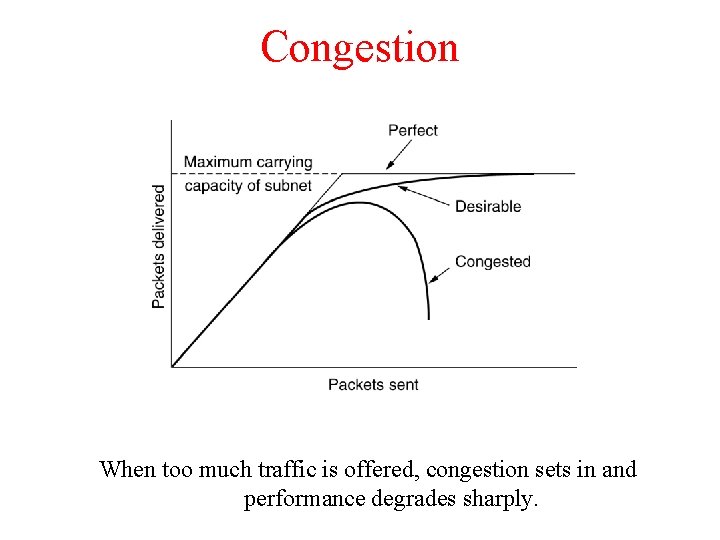

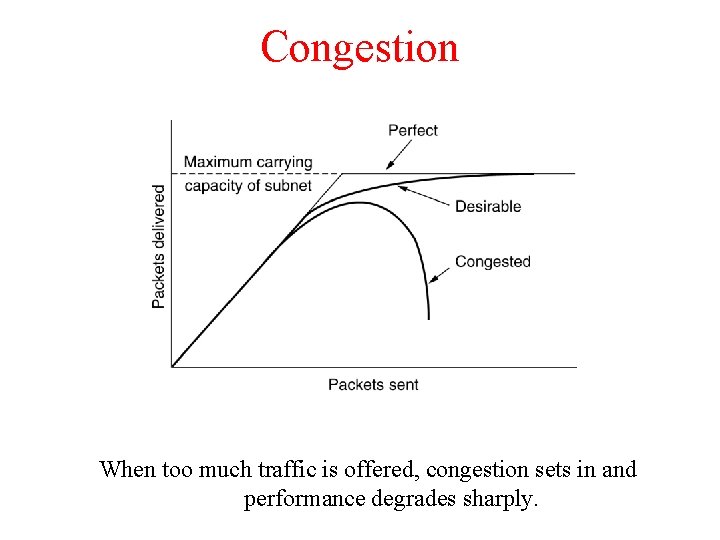

Congestion

When too much traffic is offered, congestion sets in and performance degrades sharply.

Congestion Control vs. Flow Control

- Congestion control is a global issue, involving all hosts, routers, and other factors.
- Flow control relates to the point-to-point traffic between a given sender and a given receiver, making sure a fast sender does not swamp a slow receiver.

Congestion Control Approaches

- Open loop: solve the problem by good design.
  - Deciding when to accept new traffic
  - Deciding when to discard packets
  - Making scheduling decisions at various points in the network
- Closed loop: solve the problem based on feedback.
  - Monitor the system to detect when and where congestion occurs.
  - Pass information to where action can be taken.
  - Adjust system operation to correct the problem.

General Principles of Congestion Control

- The presence of congestion means that the load is greater than the resources can handle.
- Two solutions:
  - Increase the resources:
    - Increase the bandwidth
    - Split the traffic over multiple routes
    - Put spare routers on-line
  - Decrease the traffic:
    - Deny service to new users (like a hiring freeze)
    - Degrade service to some or all users
    - Drop some users (like a layoff)

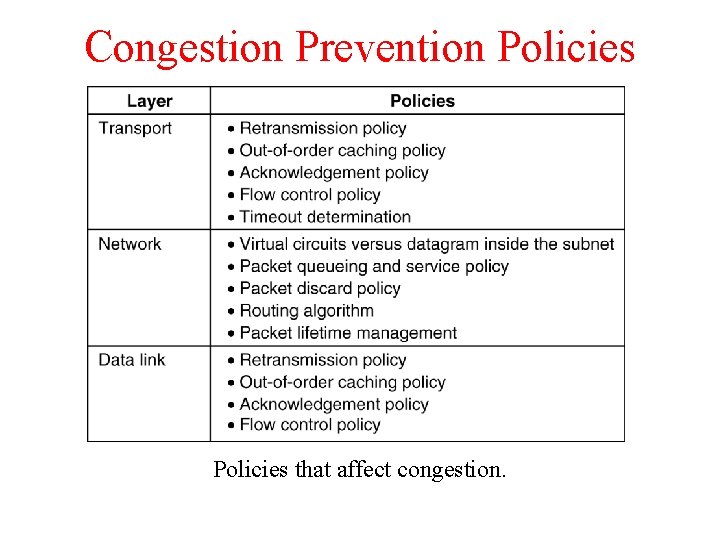

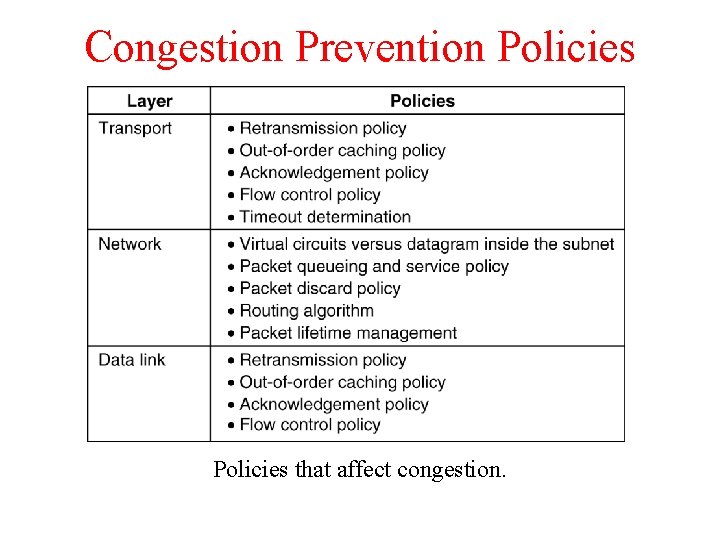

Congestion Prevention Policies

Policies that affect congestion.

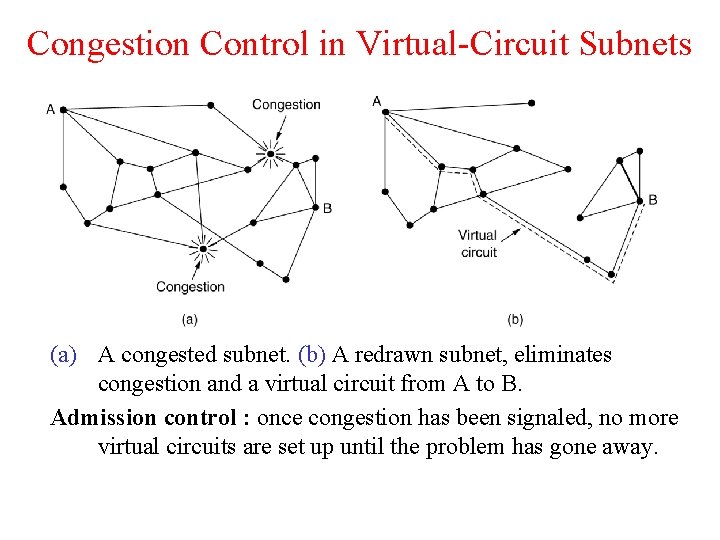

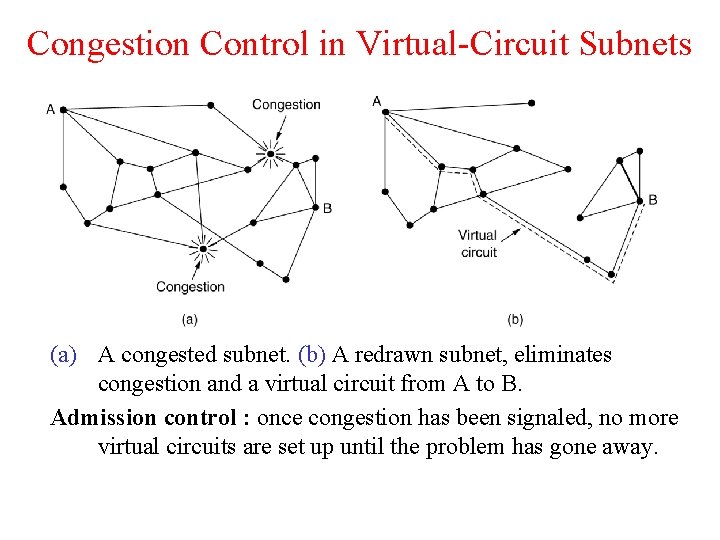

Congestion Control in Virtual-Circuit Subnets

(a) A congested subnet. (b) A redrawn subnet that eliminates the congestion, with a virtual circuit from A to B.

Admission control: once congestion has been signaled, no more virtual circuits are set up until the problem has gone away.

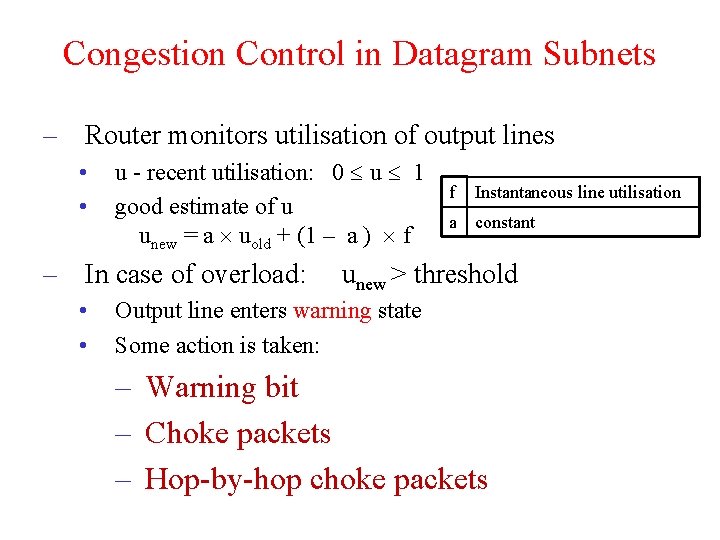

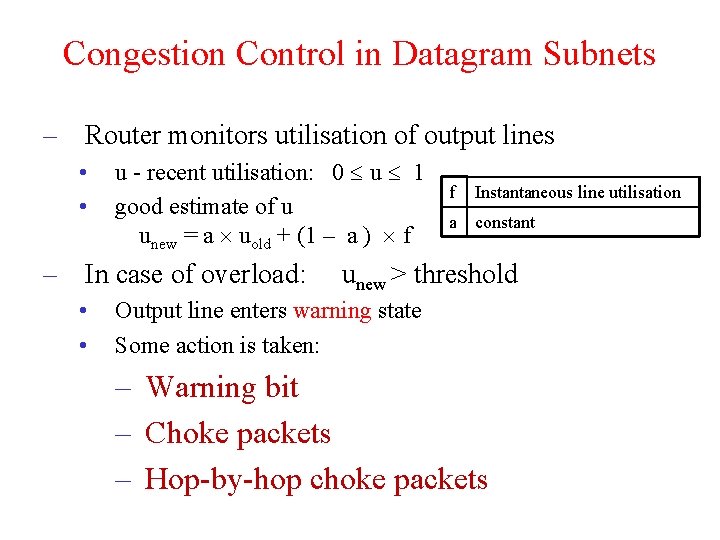

Congestion Control in Datagram Subnets

- Each router monitors the utilisation of its output lines.
  - $u$ is the recent utilisation of a line, with $0 \le u \le 1$.
  - A good estimate of $u$ is maintained with the update $u_{\text{new}} = a\,u_{\text{old}} + (1 - a)\,f$, where $f$ is the instantaneous line utilisation and $a$ is a constant.
- In case of overload:
  - When $u_{\text{new}} > \text{threshold}$, the output line enters a warning state.
  - Some action is taken:
    - Warning bit
    - Choke packets
    - Hop-by-hop choke packets
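The utilisation update and the warning-state check can be sketched in a few lines of Python. This is a minimal illustration, not a router implementation: the function names, the sample sequence, the value of the constant a (0.5), and the threshold (0.8) are all assumptions chosen for the example.

```python
def update_utilisation(u_old: float, f: float, a: float) -> float:
    """Return u_new = a * u_old + (1 - a) * f."""
    return a * u_old + (1 - a) * f


def in_warning_state(u: float, threshold: float) -> bool:
    """The output line is in the warning state when u exceeds the threshold."""
    return u > threshold


# Hypothetical samples of the instantaneous utilisation f (1 = busy, 0 = idle).
samples = [1, 1, 1, 0, 1, 1]

u = 0.0
history = []
for f in samples:
    u = update_utilisation(u, f, a=0.5)  # a = 0.5 is an assumed constant
    history.append(u)

# Assumed threshold of 0.8 for this example.
warnings = [in_warning_state(value, threshold=0.8) for value in history]
```

A larger a makes the estimate forget old samples more slowly, so a single idle or busy interval moves u less.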

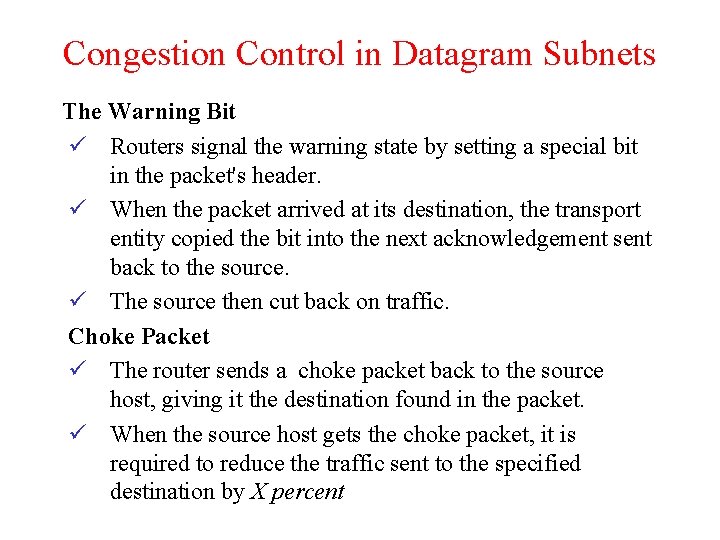

Congestion Control in Datagram Subnets

- The Warning Bit
  - Routers signal the warning state by setting a special bit in the packet's header.
  - When the packet arrives at its destination, the transport entity copies the bit into the next acknowledgement sent back to the source.
  - The source then cuts back on traffic.
- Choke Packet
  - The router sends a choke packet back to the source host, giving it the destination found in the packet.
  - When the source host gets the choke packet, it is required to reduce the traffic sent to the specified destination by X percent.

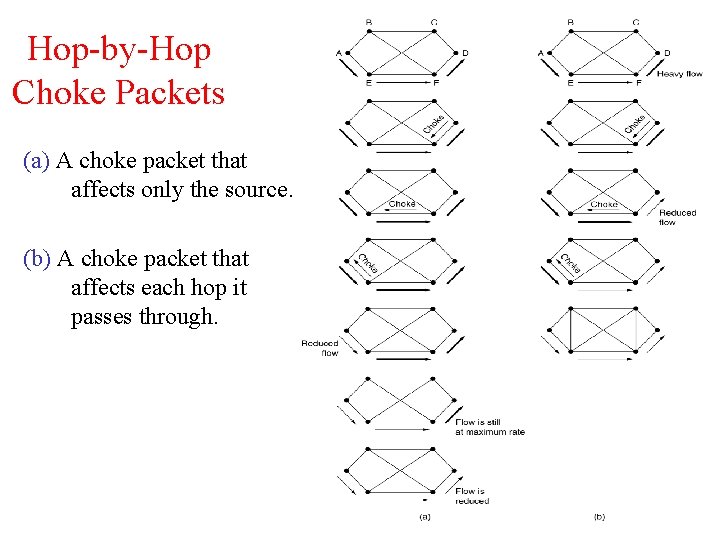

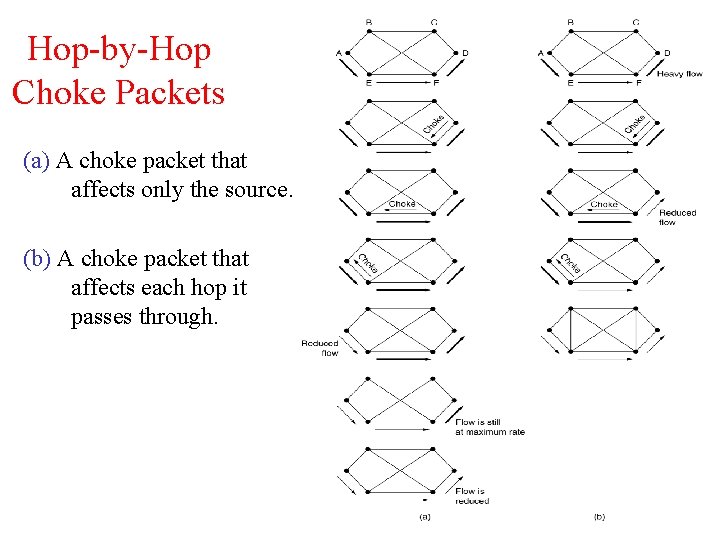

Hop-by-Hop Choke Packets

(a) A choke packet that affects only the source. (b) A choke packet that affects each hop it passes through.

Load Shedding

- Throw away packets that cannot be handled.
- Packet selection?
  - Random
  - Based on application
    - File transfer: discard the new packet
    - Multimedia: discard the old packet
  - Let the sender indicate the importance of packets
    - Low/high priority
- Random Early Detection (RED)
  - Discard packets before all buffer space is exhausted
  - Routers maintain a running average of queue lengths
  - When the average exceeds a threshold, select a packet at random and discard it
  - Inform the source?
    - Send a choke packet? That adds more load.
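The early-discard idea can be sketched as follows. This is a deliberately simplified illustration, not the full RED algorithm: the class name, the averaging weight, the single threshold, and the choice to discard exactly one randomly selected queued packet per arrival are assumptions made for the example.

```python
import random


class EarlyDropQueue:
    """Simplified early-discard queue (hypothetical names and parameters)."""

    def __init__(self, capacity, threshold, weight=0.5, seed=0):
        self.capacity = capacity      # hard buffer limit
        self.threshold = threshold    # average length above which we discard early
        self.weight = weight          # weight given to the old average
        self.avg = 0.0                # running average of the queue length
        self.queue = []
        self.dropped = []
        self.rng = random.Random(seed)

    def enqueue(self, packet):
        if len(self.queue) >= self.capacity:
            # Buffer exhausted: nothing left to do but drop the arrival.
            self.dropped.append(packet)
            return
        self.queue.append(packet)
        # Update the running average of the queue length.
        self.avg = self.weight * self.avg + (1 - self.weight) * len(self.queue)
        if self.avg > self.threshold:
            # Discard a randomly selected packet before the buffer fills up.
            victim = self.queue.pop(self.rng.randrange(len(self.queue)))
            self.dropped.append(victim)


q = EarlyDropQueue(capacity=10, threshold=3)
for packet_id in range(8):
    q.enqueue(packet_id)
```

In this run the average crosses the threshold on the fourth arrival, so packets start being discarded while most of the ten buffer slots are still free.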

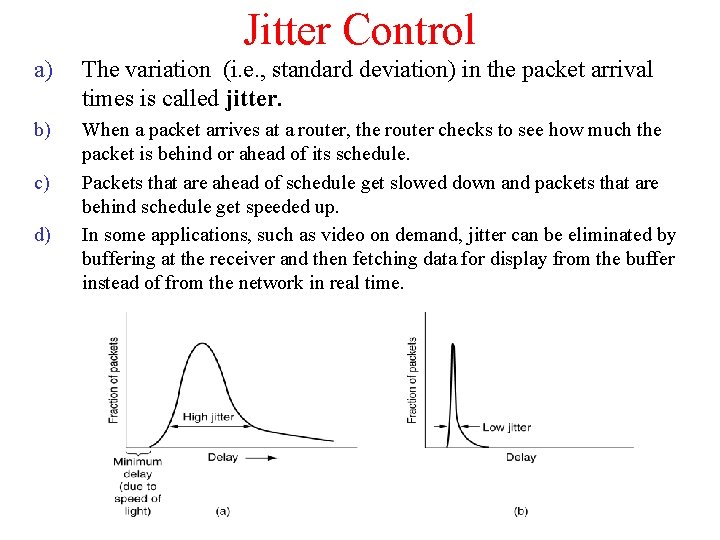

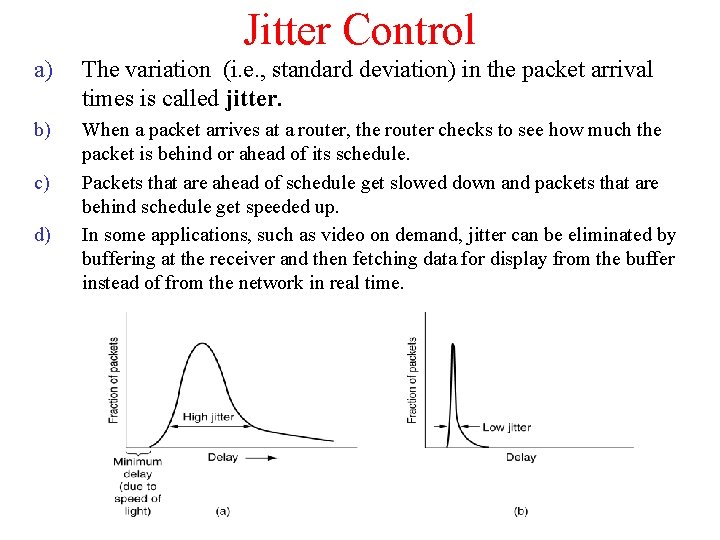

Jitter Control

- The variation (i.e., standard deviation) in the packet arrival times is called jitter.
- When a packet arrives at a router, the router checks to see how much the packet is behind or ahead of its schedule.
- Packets that are ahead of schedule get slowed down and packets that are behind schedule get speeded up.
- In some applications, such as video on demand, jitter can be eliminated by buffering at the receiver and then fetching data for display from the buffer instead of from the network in real time.
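As a small worked example of the definition, jitter can be computed as the standard deviation of per-packet transit times. The function name and the transit times (in milliseconds) below are made up for illustration.

```python
import statistics


def jitter(transit_times_ms):
    """Population standard deviation of the packet transit times."""
    return statistics.pstdev(transit_times_ms)


# Hypothetical measurements: both flows have the same mean delay (20 ms).
steady = jitter([20, 20, 20, 20])   # every packet takes the same time
uneven = jitter([10, 30, 10, 30])   # delay alternates around the mean
```

Both flows have the same average delay, but only the second one has jitter, which is why delay and jitter are treated as separate parameters.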

Quality of Service

- A stream of packets from a source to a destination is called a flow.
- QoS (Quality of Service) is characterized by four primary parameters: reliability, delay, jitter, and bandwidth.
- To achieve high reliability:
  - A checksum is used to verify the packet at the destination.
  - If a packet is damaged in transit, it is not acknowledged and will eventually be retransmitted.

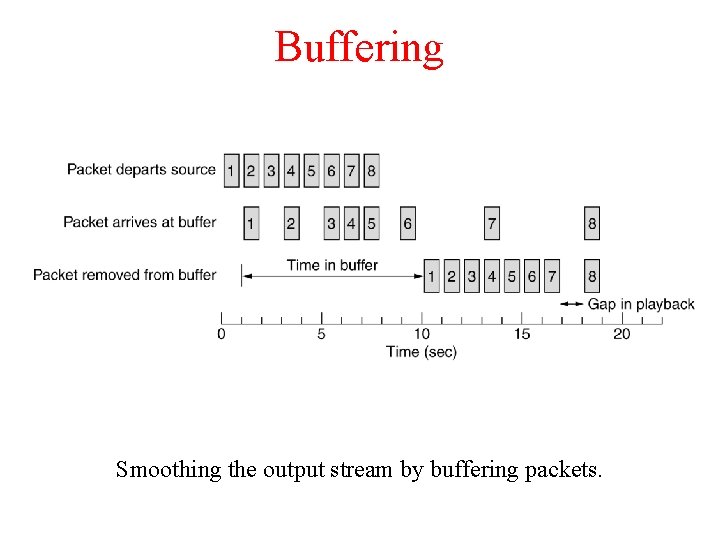

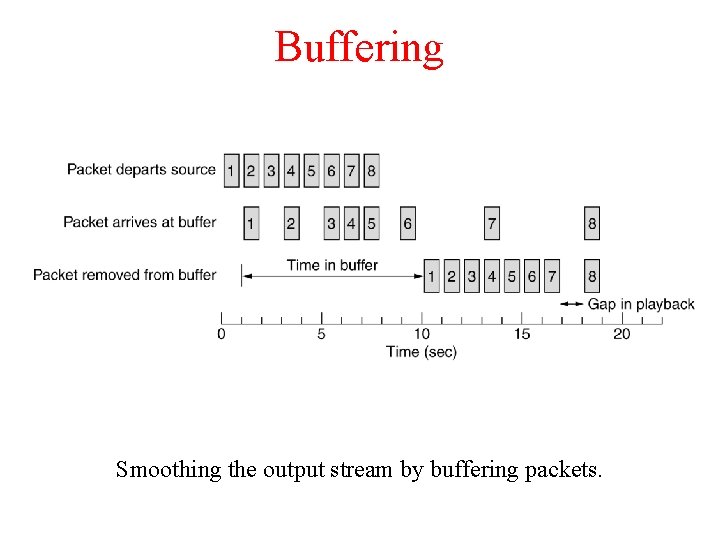

Techniques for Achieving Good Quality of Service

- Over-Provisioning
  - An easy solution is to provide so much router capacity, buffer space, and bandwidth that the packets just fly through easily.
  - Expensive.
- Buffering
  - Flows can be buffered on the receiving side before being delivered.
  - Buffering does not affect the reliability or bandwidth, and it increases the delay, but it smooths out the jitter.
- Traffic Shaping
  - Traffic shaping is about regulating the average rate (and burstiness) of data transmission.
  - When a connection is set up, the user and the subnet (i.e., the customer and the carrier) agree on a certain traffic pattern (i.e., shape) for that circuit.
  - Sometimes this is called a service level agreement.
  - Monitoring a traffic flow is called traffic policing.

Buffering

Smoothing the output stream by buffering packets.

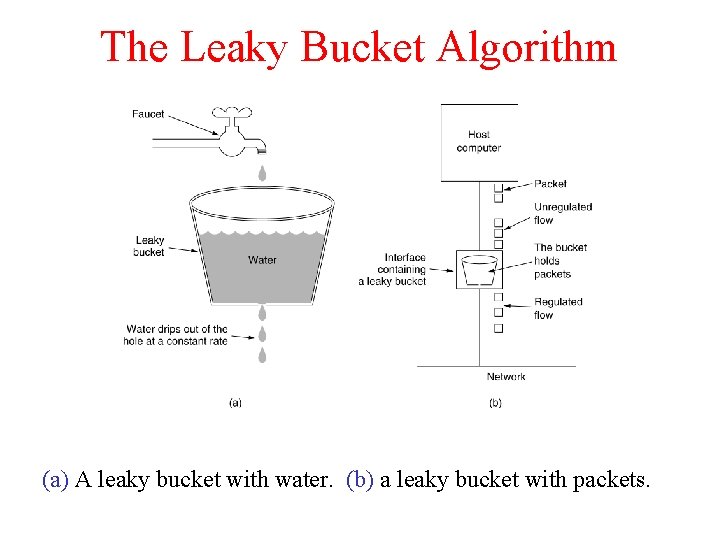

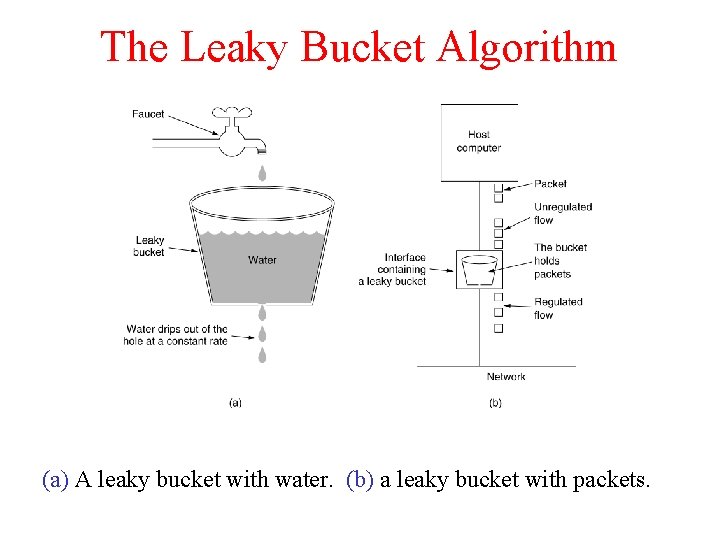

The Leaky Bucket Algorithm

(a) A leaky bucket with water. (b) A leaky bucket with packets.

Leaky Bucket Algorithm

- Each host is connected to the network by an interface containing a leaky bucket, that is, a finite internal queue.
- When a packet arrives, if there is room on the queue it is appended to the queue; otherwise, it is discarded.
- At every clock tick, one packet is transmitted (unless the queue is empty).
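The three rules above map directly onto a bounded queue. The sketch below is one minimal way to express them; the class and method names and the capacity of 3 are illustrative assumptions.

```python
from collections import deque


class LeakyBucket:
    """Finite queue that releases one packet per clock tick."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()

    def arrive(self, packet):
        """Append the packet if there is room; otherwise discard it."""
        if len(self.queue) < self.capacity:
            self.queue.append(packet)
            return True
        return False

    def tick(self):
        """Transmit one packet per tick, or nothing if the queue is empty."""
        return self.queue.popleft() if self.queue else None


bucket = LeakyBucket(capacity=3)
# A burst of five packets arrives between two clock ticks.
accepted = [bucket.arrive(p) for p in ["p1", "p2", "p3", "p4", "p5"]]
# The output is a steady one packet per tick, however bursty the input was.
sent = [bucket.tick() for _ in range(4)]
```

Here the last two packets of the burst are discarded because the queue is full, and the fourth tick sends nothing because the queue has drained.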

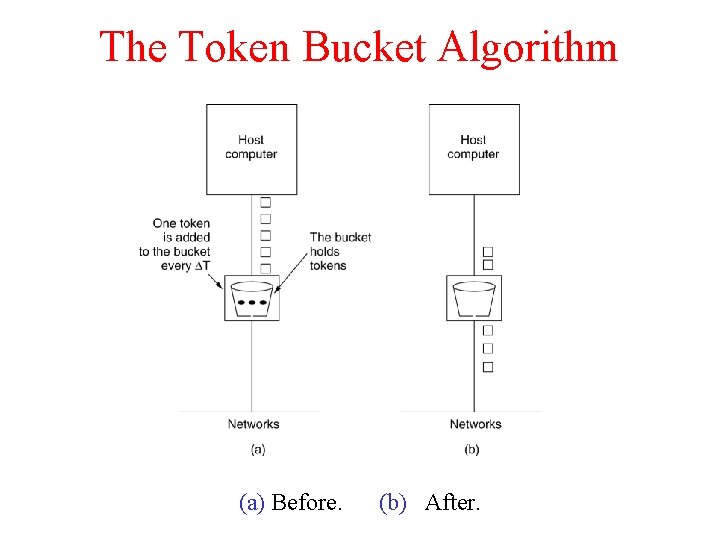

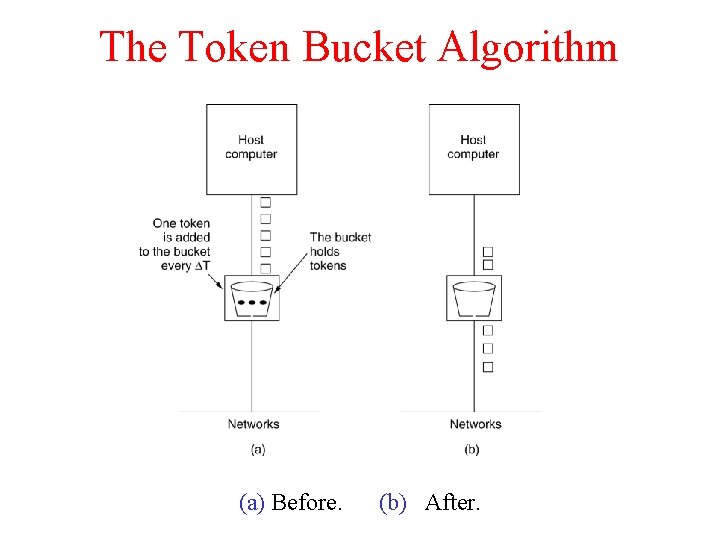

The Token Bucket Algorithm

(a) Before. (b) After.

Token Bucket Algorithm

- The token bucket algorithm provides a different kind of traffic shaping than the leaky bucket algorithm.
- The token bucket holds tokens, generated by a clock at the rate of one token every ΔT sec.
- For a packet to be transmitted, it must capture and destroy one token.
- The token bucket algorithm allows tokens to be saved up, up to the maximum size of the bucket, n.
- This property means that bursts of up to n packets can be sent at once, allowing some burstiness in the output stream and giving faster response to sudden bursts of input.
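A minimal sketch of the token bucket, assuming one call to `tick()` per ΔT and counting packets rather than bytes. The class and method names and the bucket size n = 4 are illustrative assumptions.

```python
class TokenBucket:
    """Bucket that saves up to n tokens; each packet sent destroys one token."""

    def __init__(self, n):
        self.n = n          # maximum number of tokens that can be saved
        self.tokens = 0

    def tick(self):
        """Called once every ΔT: add one token unless the bucket is full."""
        self.tokens = min(self.n, self.tokens + 1)

    def send(self, backlog):
        """Send as many of the `backlog` waiting packets as tokens allow."""
        sent = min(backlog, self.tokens)
        self.tokens -= sent
        return sent


bucket = TokenBucket(n=4)
for _ in range(10):                 # host is idle for 10 ticks
    bucket.tick()                   # only n = 4 tokens are saved

burst = bucket.send(backlog=6)      # a burst of 6 packets arrives
bucket.tick()                       # one more token is generated
after = bucket.send(backlog=2)      # the 2 packets left over try again
```

In contrast to the leaky bucket, the idle period is rewarded: four packets of the burst leave at once, and the rest go out at the token rate.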

Integrated Services

- Architecture for streaming multimedia.
- Also called flow-based algorithms.
- It was aimed at both unicast and multicast applications.
  - Unicast example: a single user streaming a video clip from a news site.
  - Multicast example: digital television stations broadcasting their programs as streams of IP packets to many receivers at various locations.

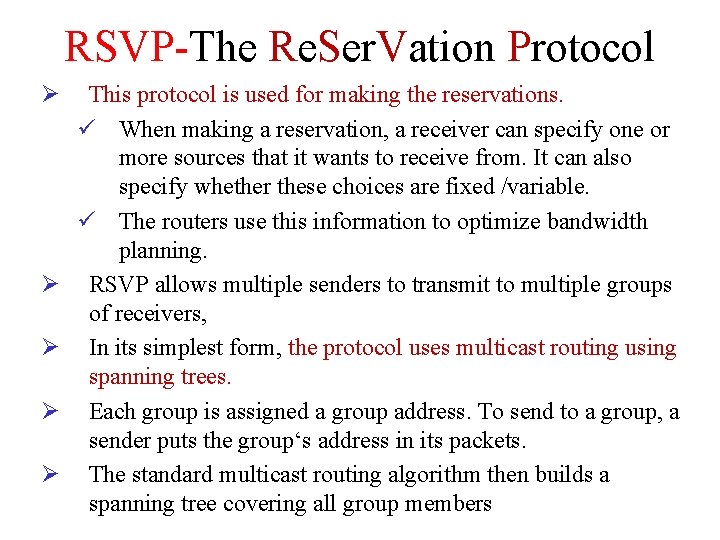

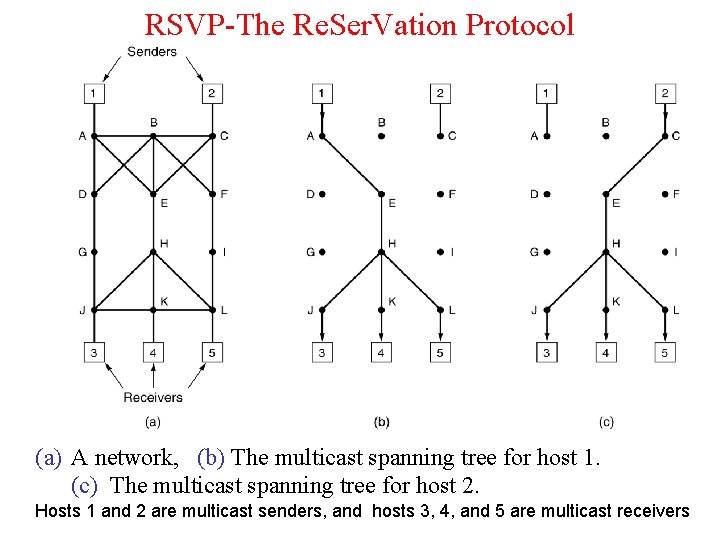

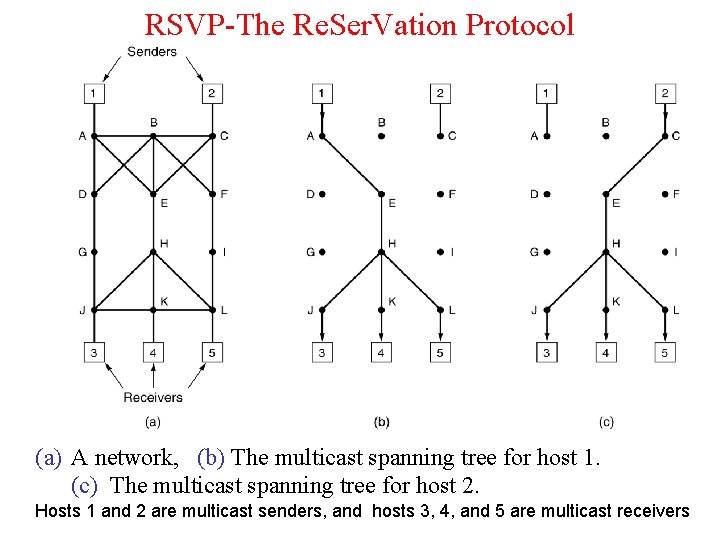

RSVP: The Resource reSerVation Protocol

- This protocol is used for making the reservations.
  - When making a reservation, a receiver can specify one or more sources that it wants to receive from. It can also specify whether these choices are fixed or variable.
  - The routers use this information to optimize bandwidth planning.
- RSVP allows multiple senders to transmit to multiple groups of receivers.
- In its simplest form, the protocol uses multicast routing using spanning trees.
  - Each group is assigned a group address.
  - To send to a group, a sender puts the group's address in its packets.
  - The standard multicast routing algorithm then builds a spanning tree covering all group members.

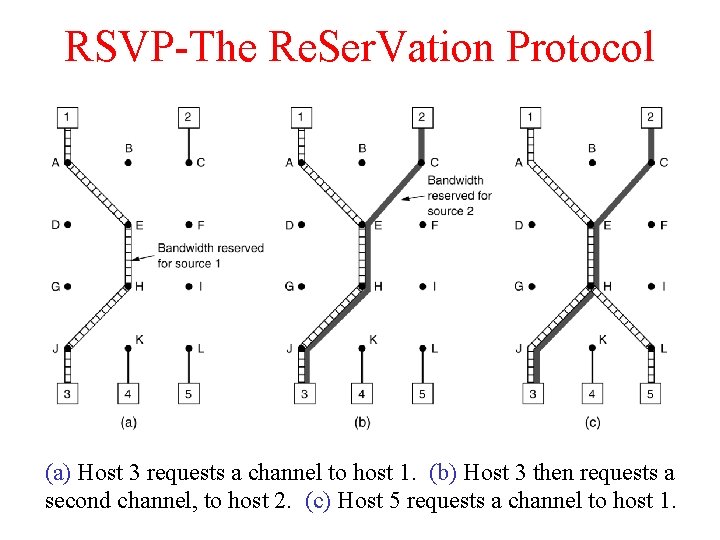

RSVP: The Resource reSerVation Protocol

(a) A network. (b) The multicast spanning tree for host 1. (c) The multicast spanning tree for host 2. Hosts 1 and 2 are multicast senders, and hosts 3, 4, and 5 are multicast receivers.

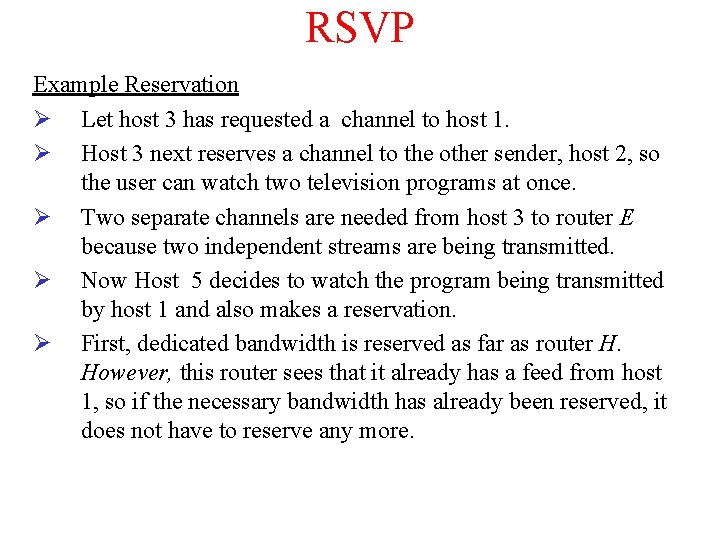

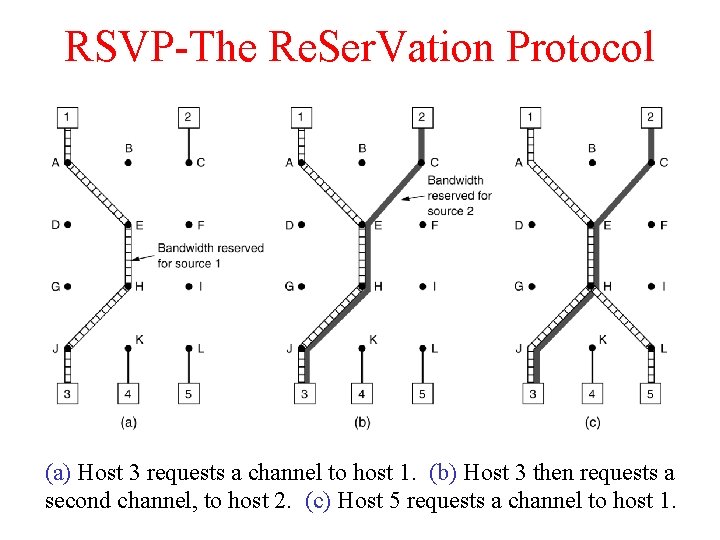

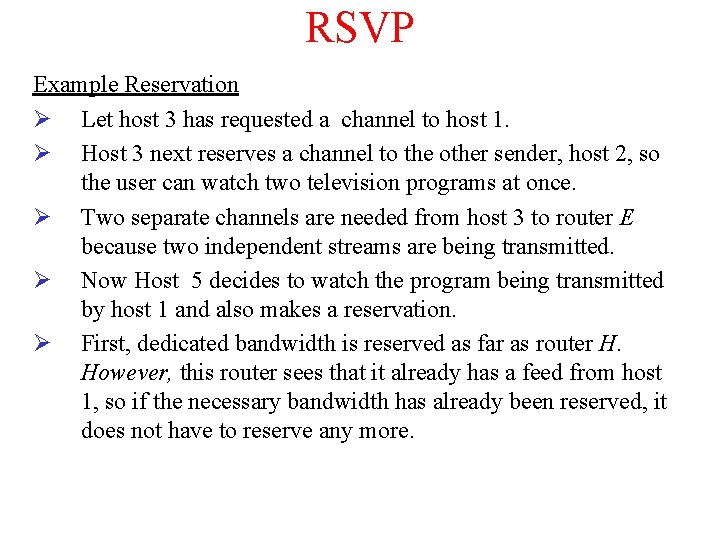

RSVP Example Reservation

- Suppose host 3 has requested a channel to host 1.
- Host 3 next reserves a channel to the other sender, host 2, so the user can watch two television programs at once.
- Two separate channels are needed from host 3 to router E because two independent streams are being transmitted.
- Now host 5 decides to watch the program being transmitted by host 1 and also makes a reservation.
- First, dedicated bandwidth is reserved as far as router H. However, this router sees that it already has a feed from host 1, so if the necessary bandwidth has already been reserved, it does not have to reserve any more.

RSVP: The Resource reSerVation Protocol

(a) Host 3 requests a channel to host 1. (b) Host 3 then requests a second channel, to host 2. (c) Host 5 requests a channel to host 1.

Differentiated Services

- Class-based algorithms.
- A simpler approach to quality of service, one that can be largely implemented locally in each router without advance setup and without having the whole path involved.
- Differentiated services (DS) can be offered by a set of routers forming an administrative domain (e.g., an ISP).
  - Expedited Forwarding
  - Assured Forwarding
- To make the difference between flow-based quality of service and class-based quality of service clearer, consider an example: Internet telephony.
  - With a flow-based scheme, each telephone call gets its own resources and guarantees.
  - With a class-based scheme, all the telephone calls together get the resources reserved for the class telephony.

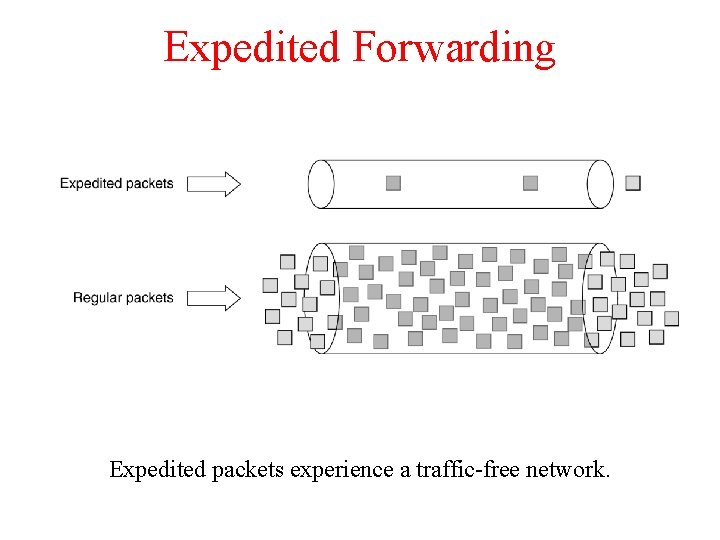

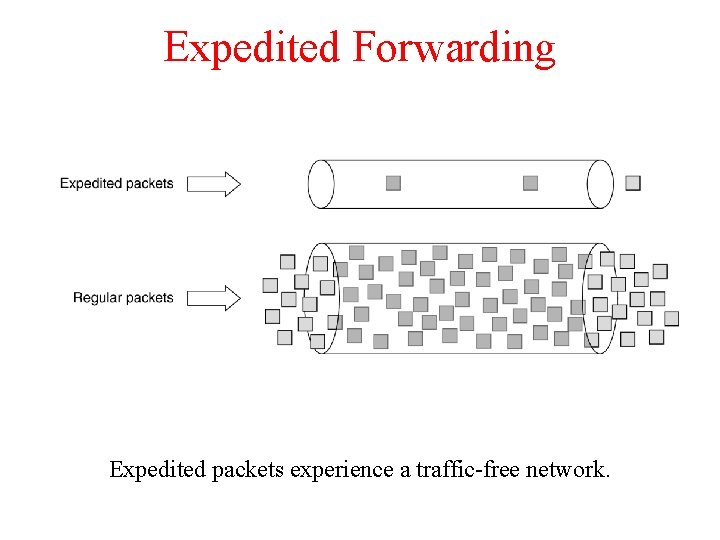

Expedited Forwarding

Expedited packets experience a traffic-free network.

Expedited Forwarding

- The idea behind expedited forwarding is very simple. Two classes of service are available:
  - Regular
  - Expedited
- The vast majority of the traffic is expected to be regular, but a small fraction of the packets are expedited.
- The expedited packets should be able to transit the subnet as though no other packets were present.
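One way to picture the two classes is an output line with two queues. The sketch below uses strict priority between the queues, which is an assumption made for simplicity (the slides do not say how the two classes are scheduled), and all names are hypothetical.

```python
from collections import deque


class TwoClassOutputLine:
    """Output line with one queue per service class (strict priority assumed)."""

    def __init__(self):
        self.expedited = deque()
        self.regular = deque()

    def enqueue(self, packet, expedited=False):
        (self.expedited if expedited else self.regular).append(packet)

    def dequeue(self):
        """Serve expedited packets first; regular packets only when none wait."""
        if self.expedited:
            return self.expedited.popleft()
        if self.regular:
            return self.regular.popleft()
        return None


line = TwoClassOutputLine()
for name in ["r1", "r2", "r3"]:
    line.enqueue(name)                 # regular traffic is already queued
line.enqueue("e1", expedited=True)     # an expedited packet arrives last

order = [line.dequeue() for _ in range(4)]
```

The expedited packet leaves first even though it arrived last, which approximates a network with no other traffic as long as expedited packets remain a small fraction of the load.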

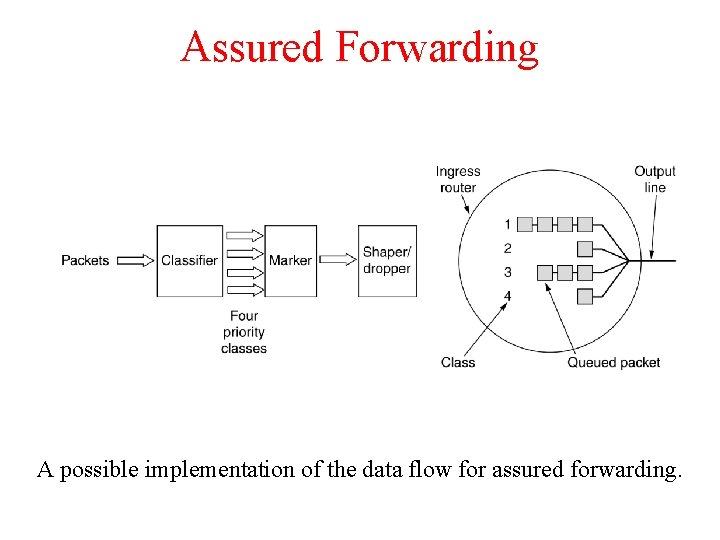

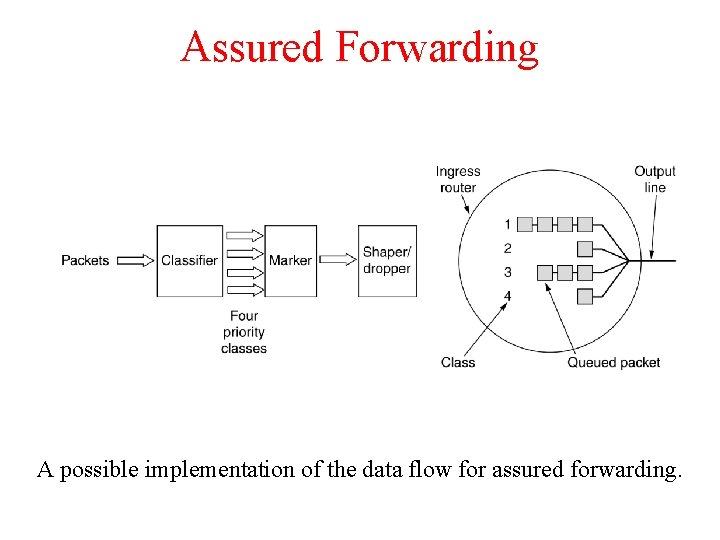

Assured Forwarding

A possible implementation of the data flow for assured forwarding.

Assured Forwarding

- Step 1 is to classify the packets into one of the four priority classes.
- Step 2 is to mark the packets according to their class.
- Step 3 is to pass the packets through a shaper/dropper filter that may delay or drop some of them to shape the four streams into acceptable forms, for example, by using leaky or token buckets.
- Note: if there are too many packets, some of them may be discarded here, by discard category.
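The three steps can be sketched as a small pipeline. Everything specific here is an assumption for illustration: the mapping from application to class, the numeric discard categories (higher is dropped first), and the dropper, which simply enforces a per-class packet budget instead of running a leaky or token bucket.

```python
from dataclasses import dataclass

# Hypothetical mapping from application type to one of four priority classes.
CLASS_OF_APP = {"voice": 1, "video": 2, "web": 3, "bulk": 4}


@dataclass
class Packet:
    app: str
    discard: int        # assumed discard category: higher is dropped first
    priority: int = 0   # 0 means "not yet marked"


def classify(packet):
    """Step 1: choose a priority class (unknown applications get class 4)."""
    return CLASS_OF_APP.get(packet.app, 4)


def mark(packet, priority):
    """Step 2: record the class in the packet itself."""
    packet.priority = priority
    return packet


def shape(packets, budget_per_class):
    """Step 3 (simplified dropper): keep at most budget_per_class packets per
    class, discarding the highest discard category first."""
    dropped = set()
    for cls in range(1, 5):
        idx = [i for i, p in enumerate(packets) if p.priority == cls]
        excess = len(idx) - budget_per_class
        if excess > 0:
            idx.sort(key=lambda i: packets[i].discard, reverse=True)
            dropped.update(idx[:excess])
    # Surviving packets keep their arrival order.
    return [p for i, p in enumerate(packets) if i not in dropped]


arrivals = [Packet("voice", 1), Packet("web", 3), Packet("web", 1),
            Packet("web", 2), Packet("bulk", 2)]
marked = [mark(p, classify(p)) for p in arrivals]
forwarded = shape(marked, budget_per_class=2)
```

With a budget of two packets per class, only the web class is over budget, and the packet it loses is the one in the highest discard category.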

Internetworking

- How Networks Differ
- How Networks Can Be Connected
- Concatenated Virtual Circuits
- Connectionless Internetworking
- Tunneling
- Internetwork Routing
- Fragmentation

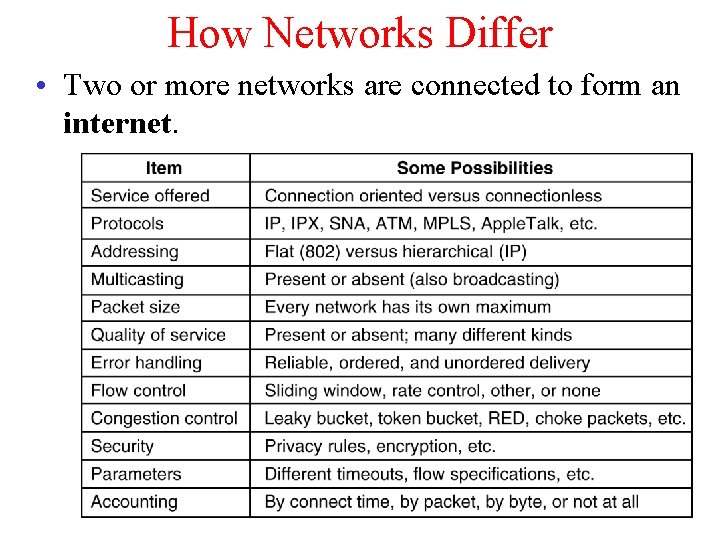

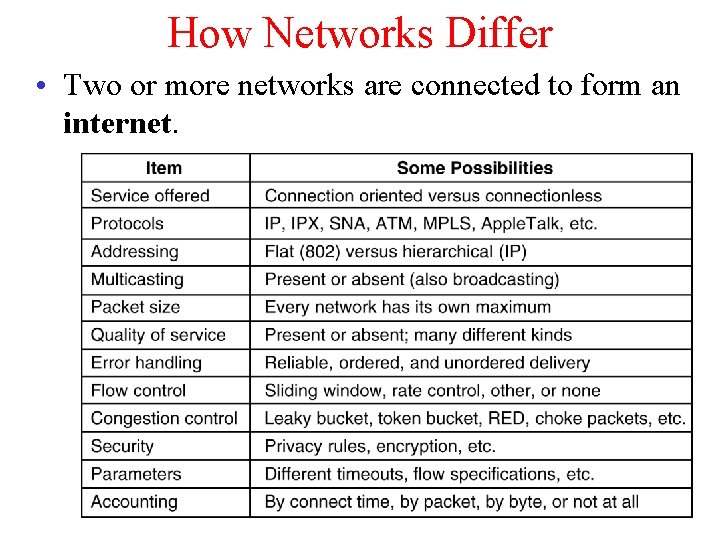

How Networks Differ

- Two or more networks are connected to form an internet.

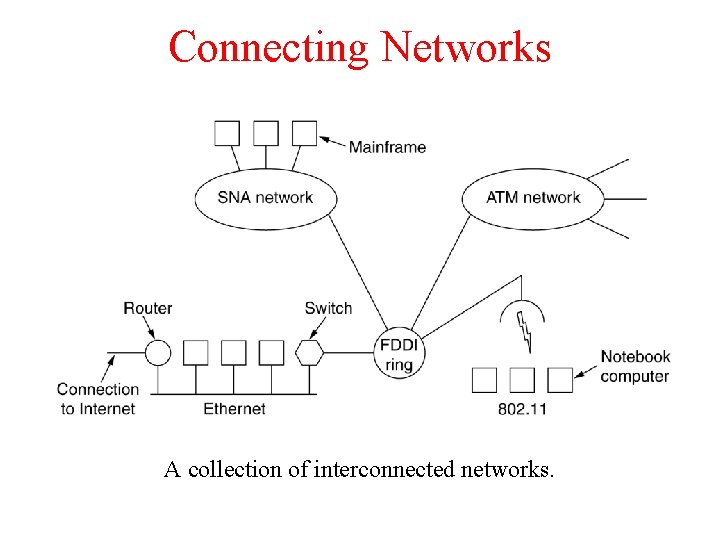

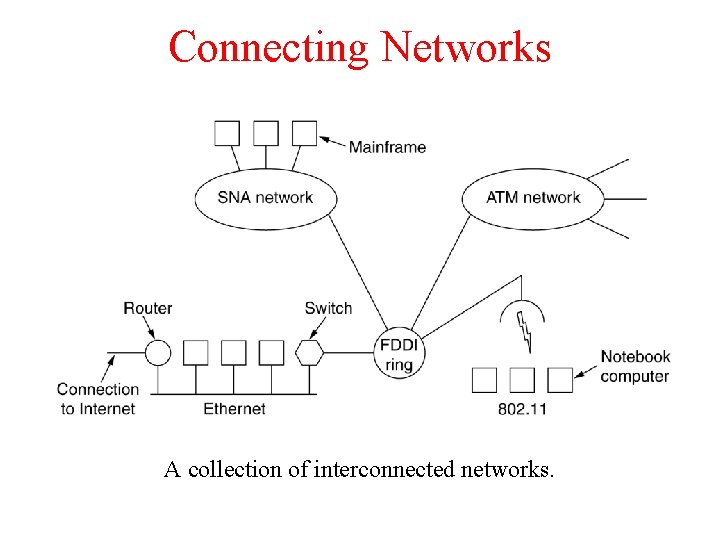

Connecting Networks

A collection of interconnected networks.

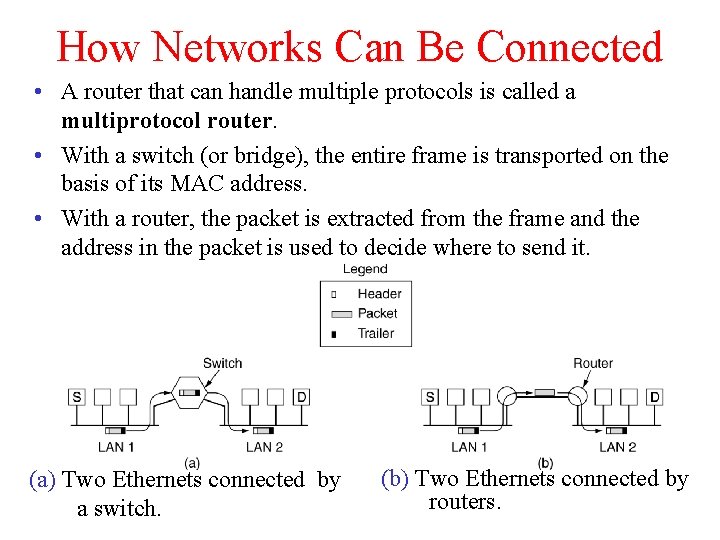

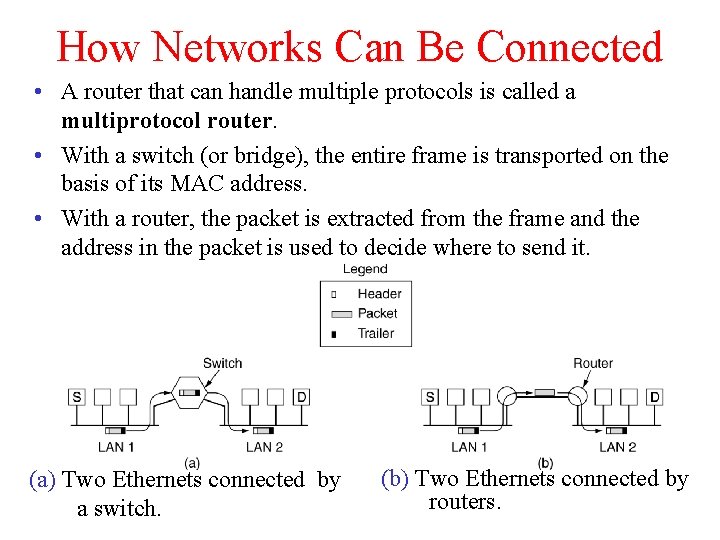

How Networks Can Be Connected

- A router that can handle multiple protocols is called a multiprotocol router.
- With a switch (or bridge), the entire frame is transported on the basis of its MAC address.
- With a router, the packet is extracted from the frame and the address in the packet is used to decide where to send it.

(a) Two Ethernets connected by a switch. (b) Two Ethernets connected by routers.

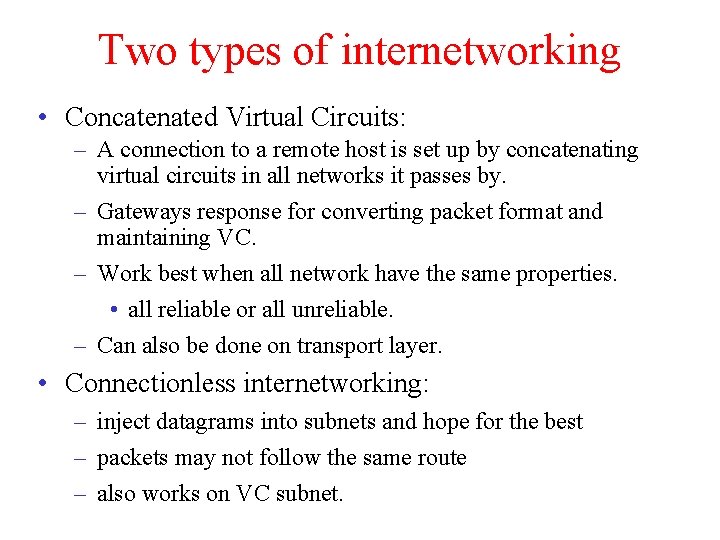

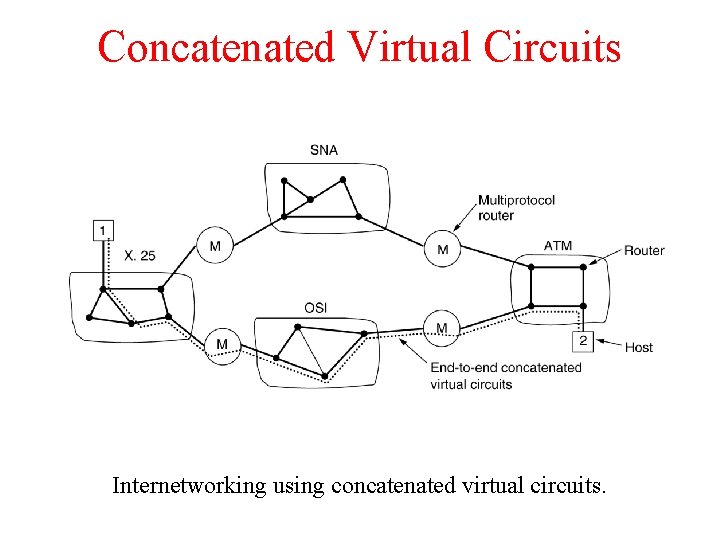

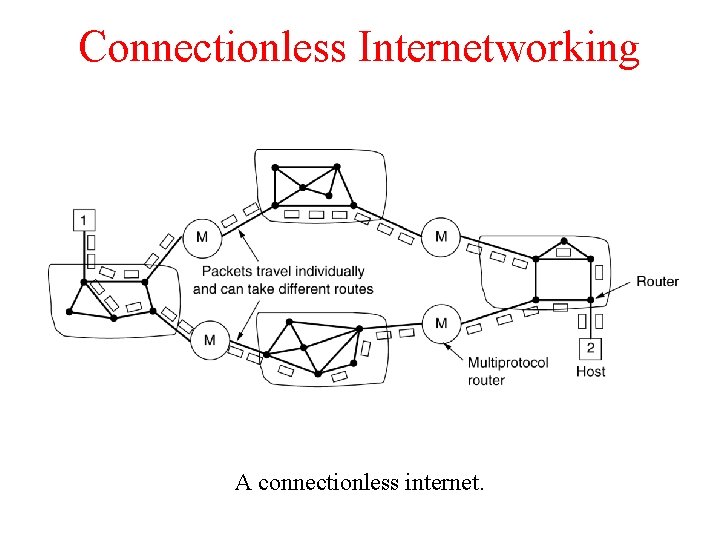

Two Types of Internetworking

- Concatenated virtual circuits:
  - A connection to a remote host is set up by concatenating virtual circuits in all the networks it passes through.
  - Gateways are responsible for converting packet formats and maintaining the virtual circuits.
  - Works best when all networks have the same properties (all reliable or all unreliable).
  - Can also be done at the transport layer.
- Connectionless internetworking:
  - Inject datagrams into the subnets and hope for the best.
  - Packets may not follow the same route.
  - Also works over virtual-circuit subnets.

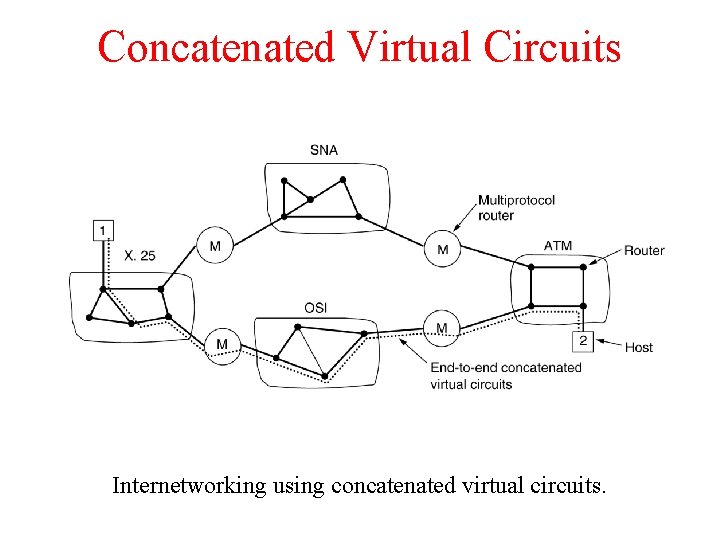

Concatenated Virtual Circuits

Internetworking using concatenated virtual circuits.

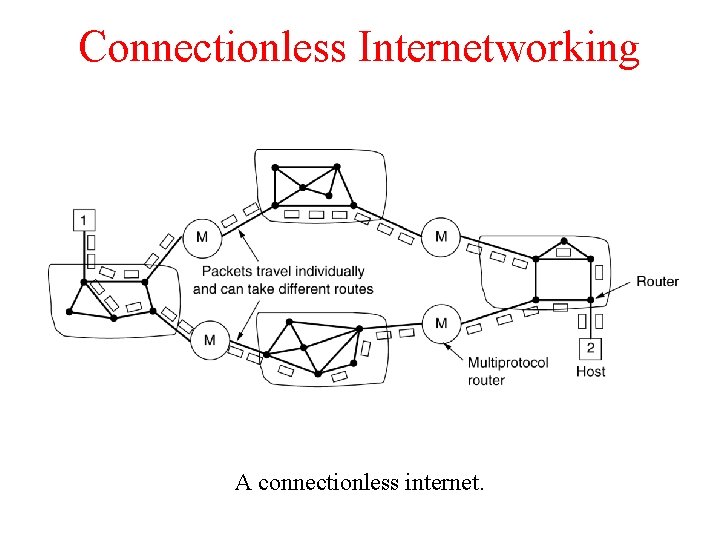

Connectionless Internetworking

A connectionless internet.

Concatenated Virtual Circuits

- Advantages:
  - Buffers can be reserved.
  - Sequencing can be guaranteed.
  - Shorter headers can be used.
  - Troubles caused by delayed duplicate packets can be avoided.
- Disadvantages:
  - Table space is required in the router for each open connection.
  - No alternate routing to avoid congested areas.
  - Vulnerability to router failure along the path.
  - Difficult to implement if one of the networks is an unreliable datagram network.

Connectionless Internetworking

- Advantages:
  - More potential for adapting to congestion.
  - Robustness in the face of router failures.
  - Various adaptive routing algorithms are possible.
  - It can be used over subnets that do not use virtual circuits inside.
- Disadvantages:
  - More potential for congestion.
  - Longer headers are needed.

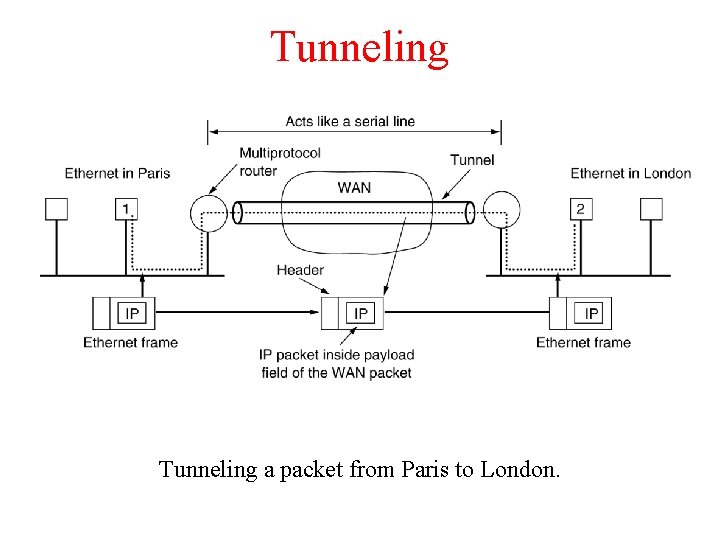

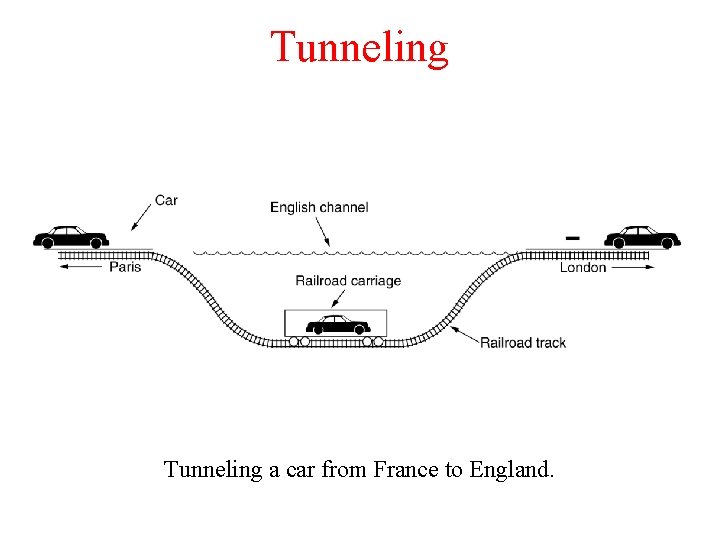

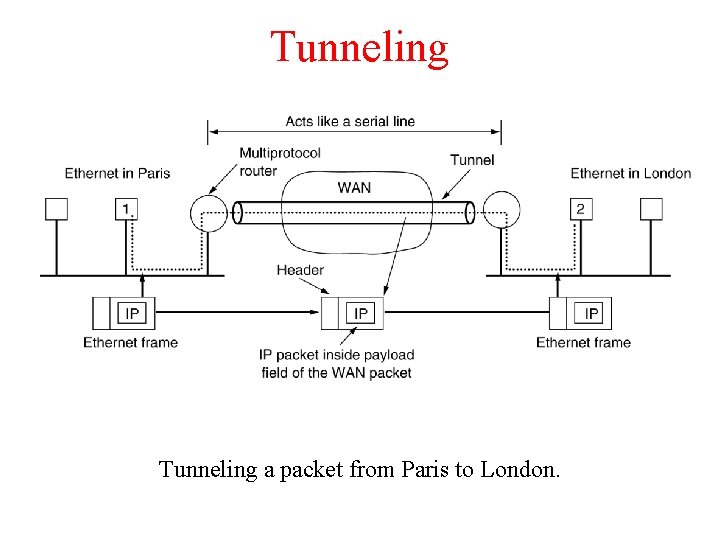

Tunneling

- Internetworking for the general case is extremely difficult.
  - Common case: the source and destination are on the same type of network, but a different type of network lies in between.
  - Tunneling is the transmission of data in such a way that the routing nodes in the intermediate network are unaware that the transmission comes from a different network.
  - How does it work?
    - The source sends packets to an intermediate gateway.
    - The intermediate gateway puts the whole packet into the payload field of a packet of the intermediate network, without interpreting it.
    - The destination will understand the packet once it has been taken out of the payload again.

Tunneling a packet from Paris to London.

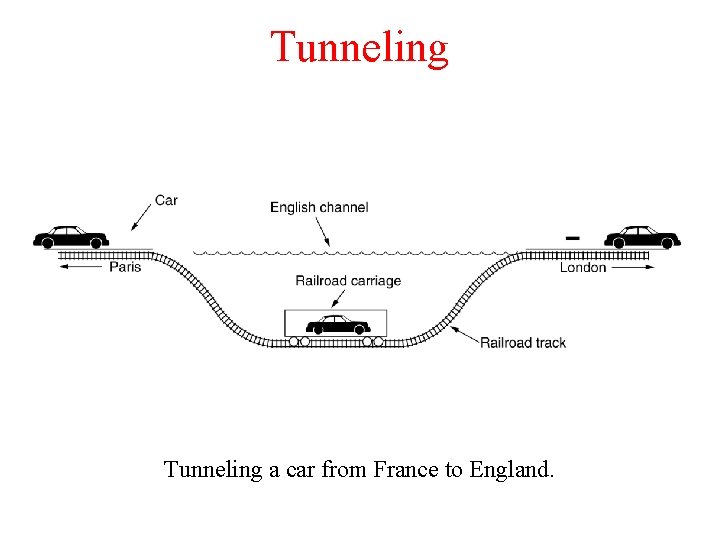

Tunneling a car from France to England.
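The encapsulation step of tunneling can be sketched with two nested packet types. The type names, field names, and host and gateway names are hypothetical and chosen only to mirror the Paris-to-London example; no real protocol format is implied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InnerPacket:
    """Packet in the format used by the source and destination networks."""
    src: str
    dst: str
    data: bytes


@dataclass(frozen=True)
class OuterPacket:
    """Packet of the intermediate network; the payload is carried opaquely."""
    src_gateway: str
    dst_gateway: str
    payload: InnerPacket


def encapsulate(inner, entry_gw, exit_gw):
    """Entry gateway: wrap the whole packet without interpreting it."""
    return OuterPacket(entry_gw, exit_gw, inner)


def decapsulate(outer):
    """Exit gateway: take the original packet out of the payload field."""
    return outer.payload


original = InnerPacket("paris-host", "london-host", b"hello")
tunnelled = encapsulate(original, "gw-paris", "gw-london")
delivered = decapsulate(tunnelled)
```

Routers inside the tunnel only ever look at the outer gateway addresses, which is why they never need to understand the inner packet format.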

Fragmentation

- Each network imposes some maximum size on its packets. These limits have various causes:
  - Hardware (e.g., the size of an Ethernet frame).
  - Operating system (e.g., all buffers are 512 bytes).
  - Protocols (e.g., the number of bits in the packet length field).
  - Compliance with some (inter)national standard.
  - Desire to reduce error-induced retransmissions to some level.
  - Desire to prevent one packet from occupying the channel too long.

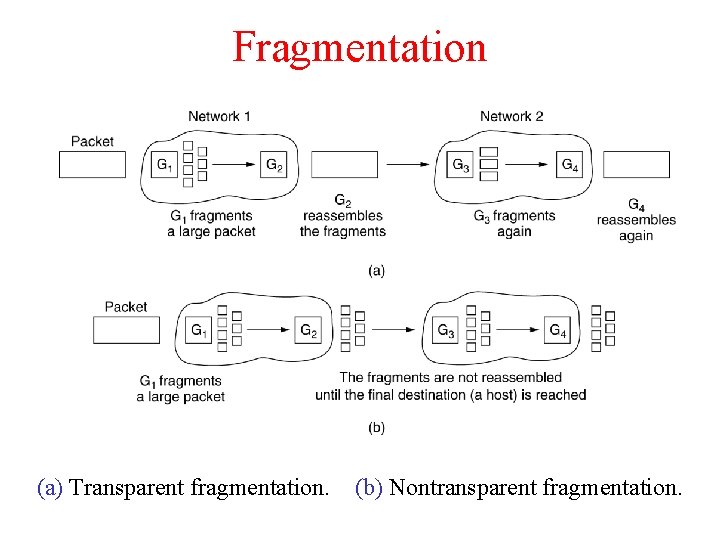

Fragmentation

- The maximum packet size differs from network to network.
- Fragmentation deals with the case in which a large packet must cross a network whose maximum packet size is smaller.
- Solutions:
  - Use a routing algorithm that avoids sending packets through networks that cannot handle them. This is infeasible if the destination network itself cannot handle large packets.
  - Chop the large packet into small fragments and send each fragment as a separate internet packet.

Fragmentation

- Reassemble at gateways (e.g., ATM):
  - Transparent to other networks.
  - High overhead: all fragments must pass through the same exit gateway.
- Reassemble at the destination (e.g., IP):
  - Every fragment is treated as an internet packet.
  - Requires smart end hosts (which may not always be the case).
  - Fragments must be numbered.
  - Retransmission overhead (can be complicated).

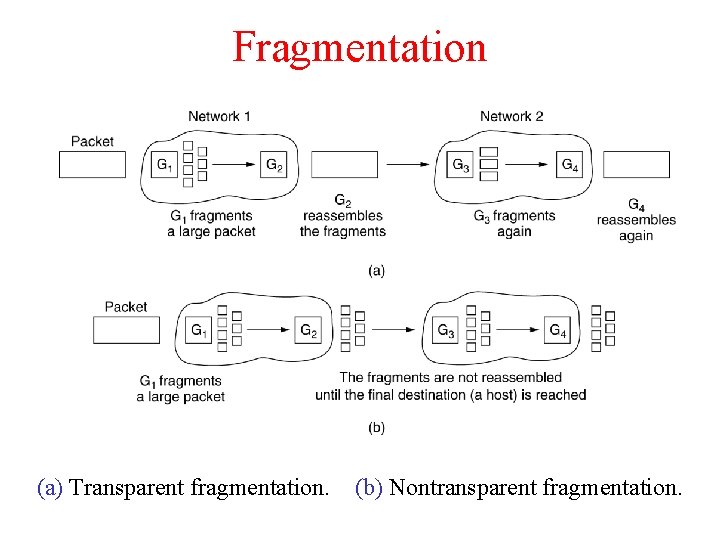

Fragmentation

(a) Transparent fragmentation. (b) Nontransparent fragmentation.

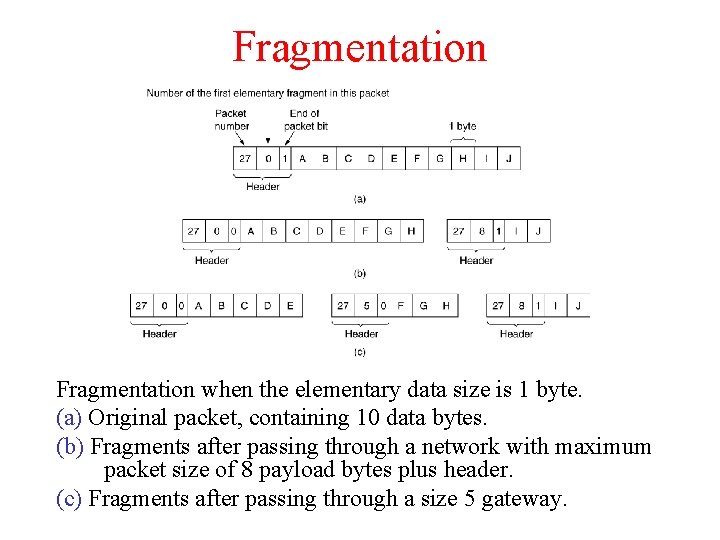

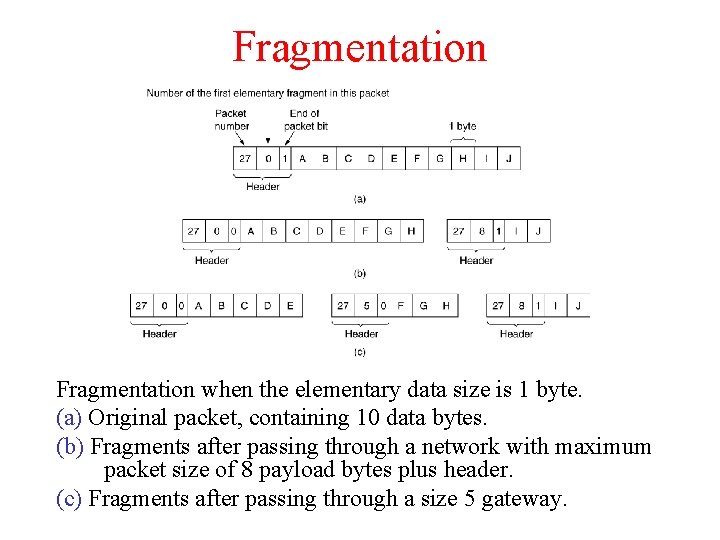

Fragmentation when the elementary data size is 1 byte. (a) Original packet, containing 10 data bytes. (b) Fragments after passing through a network with maximum packet size of 8 payload bytes plus header. (c) Fragments after passing through a size 5 gateway.
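The numbering scheme in this figure can be reproduced with a short sketch in which every fragment carries the packet number, the offset of its first byte, and an end-of-packet bit. The tuple layout, the function names, the packet number 27, and the ten data bytes are assumptions for illustration.

```python
def fragment(packet_id, offset, data, end_bit, max_payload):
    """Split a packet (or an existing fragment) into pieces of at most
    max_payload bytes. Each piece is (packet_id, offset, end_bit, data)."""
    pieces = []
    for start in range(0, len(data), max_payload):
        chunk = data[start:start + max_payload]
        is_last = start + max_payload >= len(data)
        # Only the final piece of the final fragment keeps the end bit set.
        pieces.append((packet_id, offset + start, end_bit and is_last, chunk))
    return pieces


def reassemble(pieces):
    """Put fragments back in order using the byte offsets."""
    return b"".join(p[3] for p in sorted(pieces, key=lambda p: p[1]))


data = b"ABCDEFGHIJ"                      # (a) original packet, 10 data bytes

# (b) after a network with a maximum of 8 payload bytes
after_network = fragment(27, 0, data, True, max_payload=8)

# (c) after a size-5 gateway: each fragment is fragmented again
after_gateway = [piece
                 for (pid, off, end, chunk) in after_network
                 for piece in fragment(pid, off, chunk, end, max_payload=5)]
```

Because offsets count elementary units of 1 byte from the start of the original packet, fragments can be split repeatedly and still be reassembled in one pass at the destination.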