



Configuration and Programming of Heterogeneous Multiprocessors on a Multi-FPGA System Using TMD-MPI

by Manuel Saldaña, Daniel Nunes, Emanuel Ramalho, and Paul Chow

University of Toronto, Department of Electrical and Computer Engineering

3rd International Conference on ReConFigurable Computing and FPGAs (ReConFig'06), San Luis Potosi, Mexico

Manuel Saldaña, September 2006

Agenda

- Motivation
- Background
  - TMD-MPI
  - Classes of HPC
  - Design Flow
- New Developments
- Example Application
  - Heterogeneity test
  - Scalability test
- Conclusions

1/17/2022 Manuel Saldaña 2

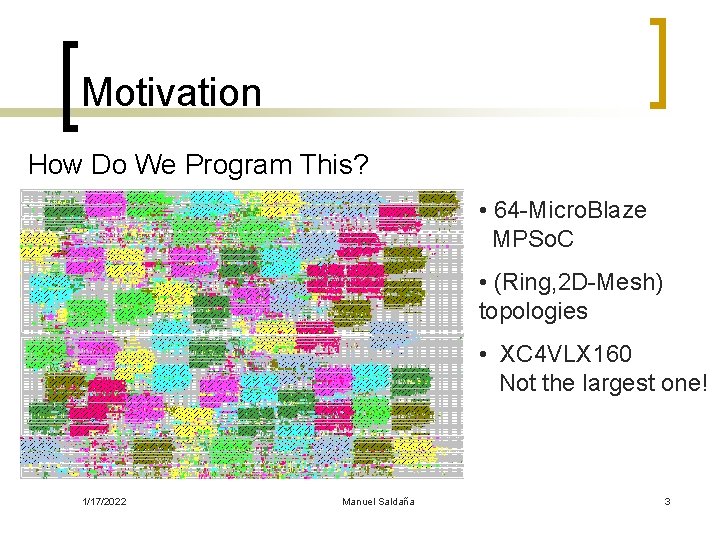

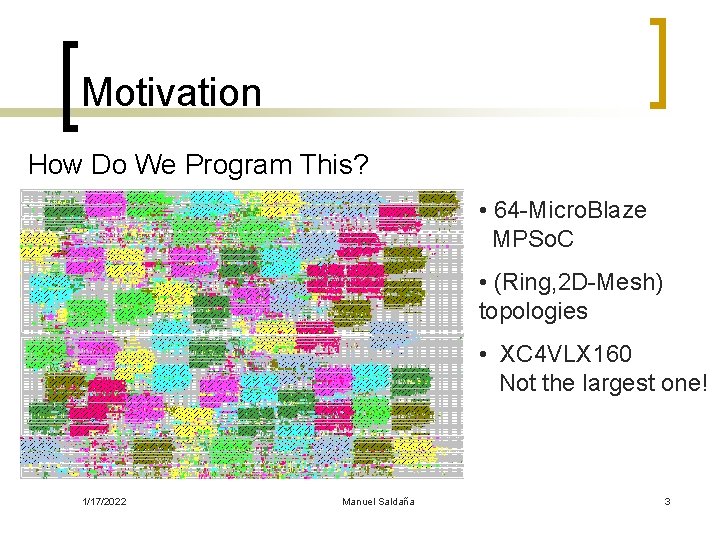

Motivation: How Do We Program This?

- 64-MicroBlaze MPSoC
- Ring and 2D-mesh topologies
- XC4VLX160 (not the largest one!)

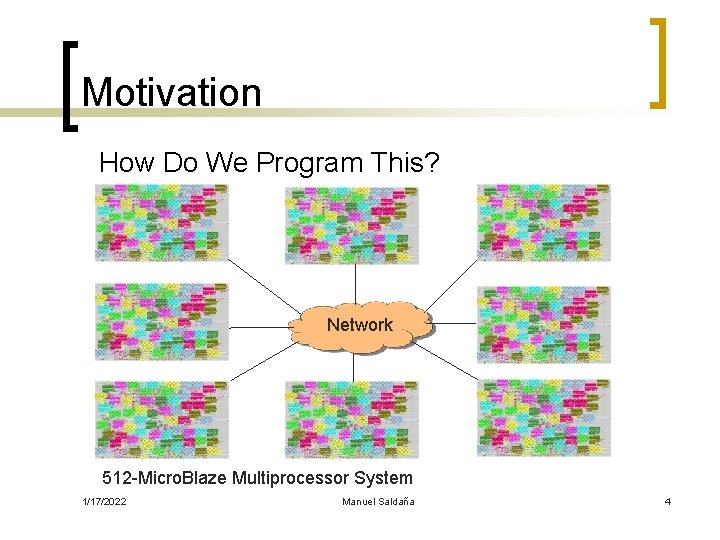

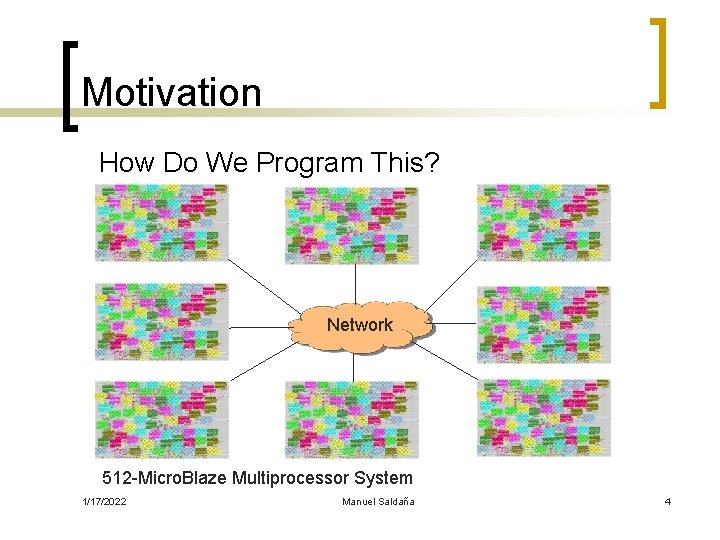

Motivation: How Do We Program This?

A 512-MicroBlaze multiprocessor system connected by a network.

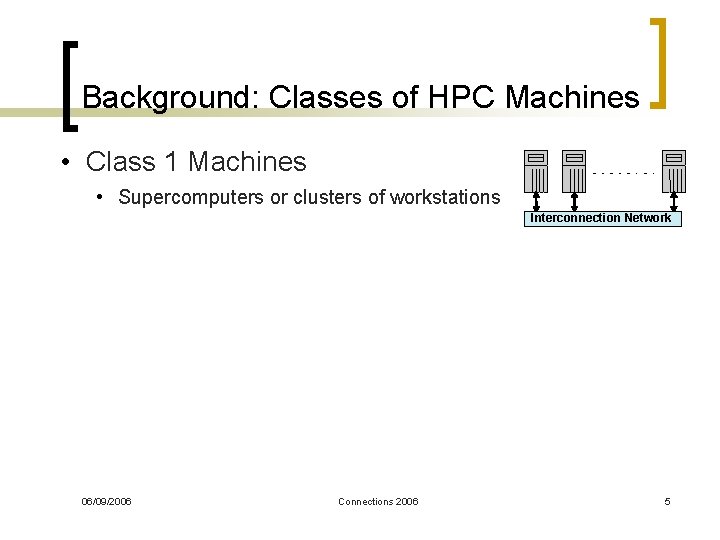

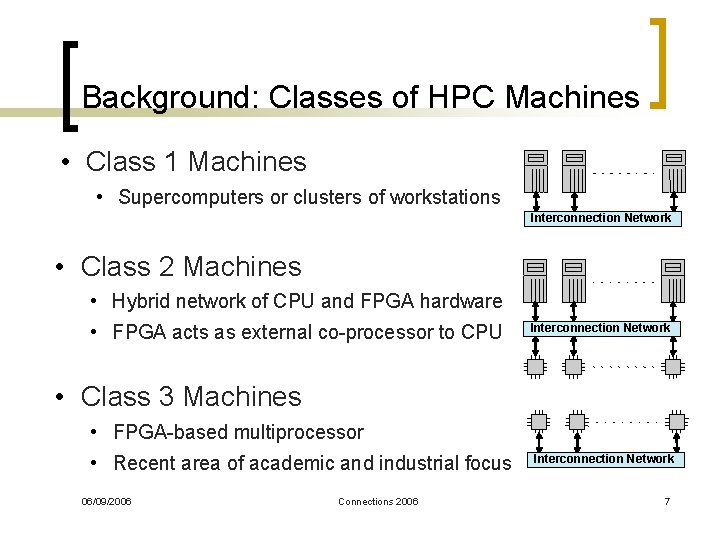


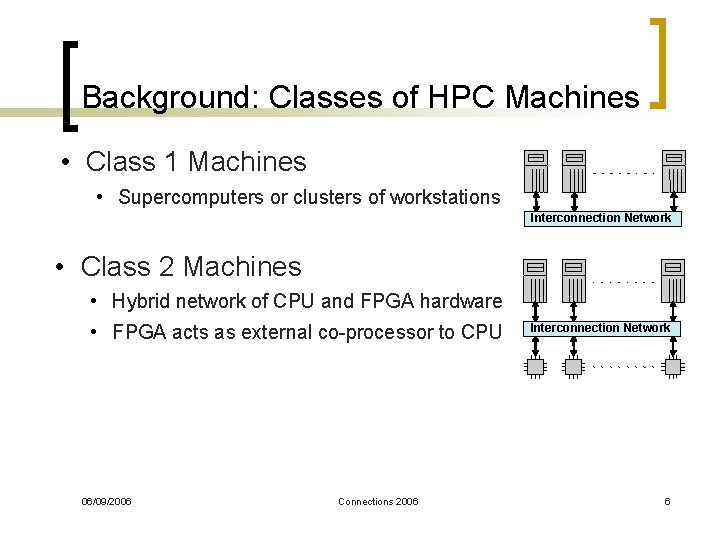

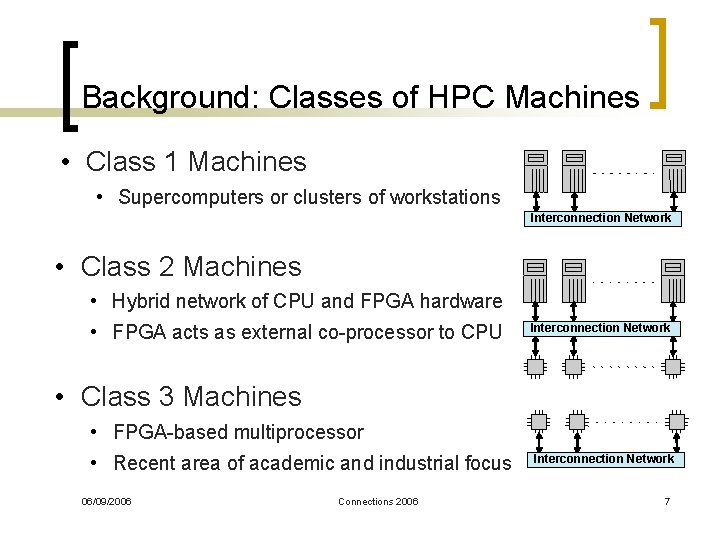


Background: Classes of HPC Machines

- Class 1 machines
  - Supercomputers or clusters of workstations
- Class 2 machines
  - Hybrid network of CPU and FPGA hardware
  - FPGA acts as an external co-processor to the CPU
- Class 3 machines
  - FPGA-based multiprocessor
  - Recent area of academic and industrial focus

06/09/2006 Connections 2006

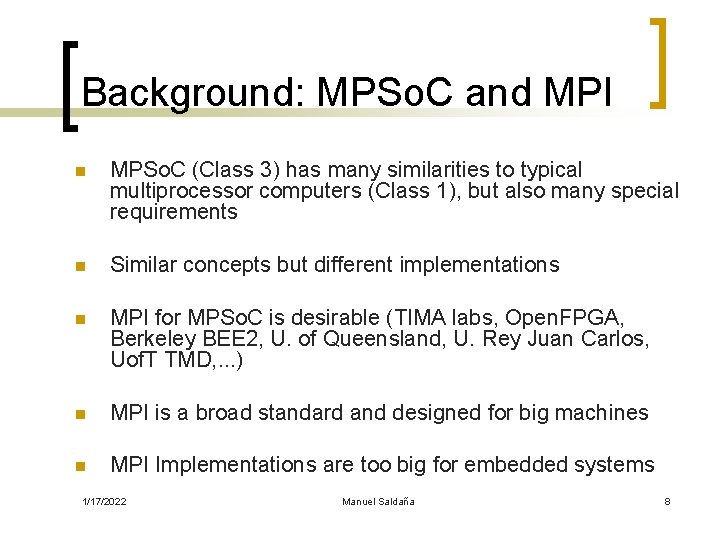

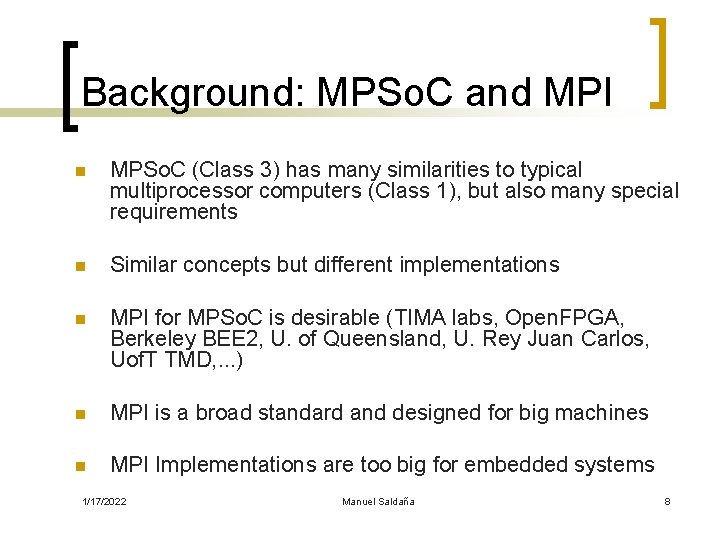

Background: MPSoC and MPI

- MPSoC (Class 3) has many similarities to typical multiprocessor computers (Class 1), but also many special requirements
- Similar concepts but different implementations
- MPI for MPSoC is desirable (TIMA Labs, OpenFPGA, Berkeley BEE2, U. of Queensland, U. Rey Juan Carlos, UofT TMD, ...)
- MPI is a broad standard designed for big machines
- MPI implementations are too big for embedded systems

Background: TMD-MPI

The same code runs on an MPSoC using TMD-MPI and on a Linux cluster using MPICH; in both, microprocessors (μP) communicate over a network.

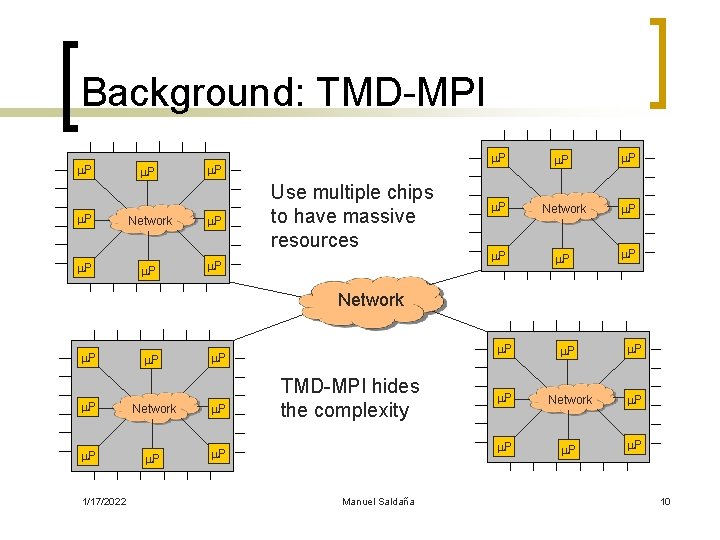

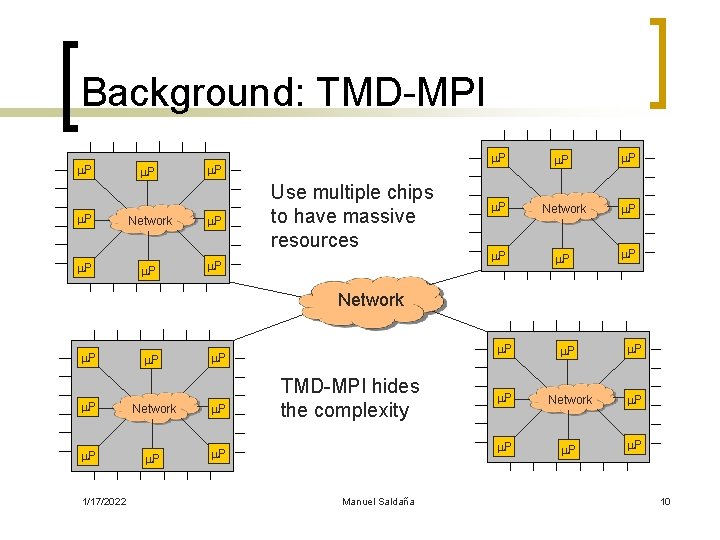

Background: TMD-MPI

- Use multiple chips to obtain massive resources: several groups of microprocessors (μP), each on its own network
- TMD-MPI hides the complexity

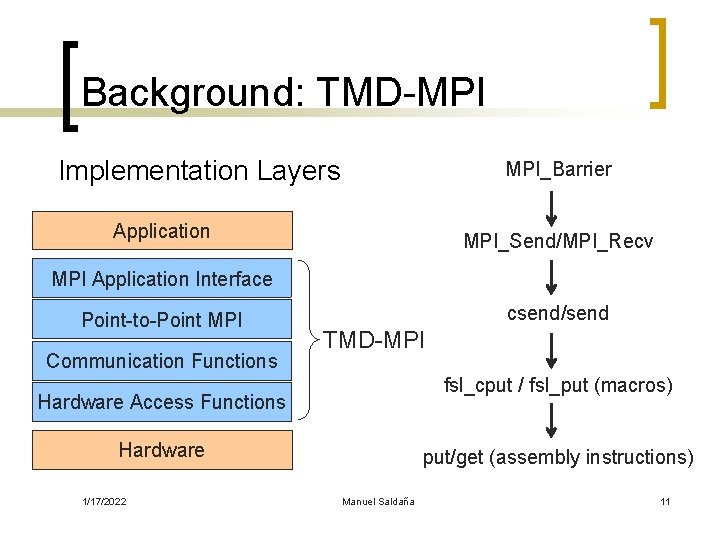

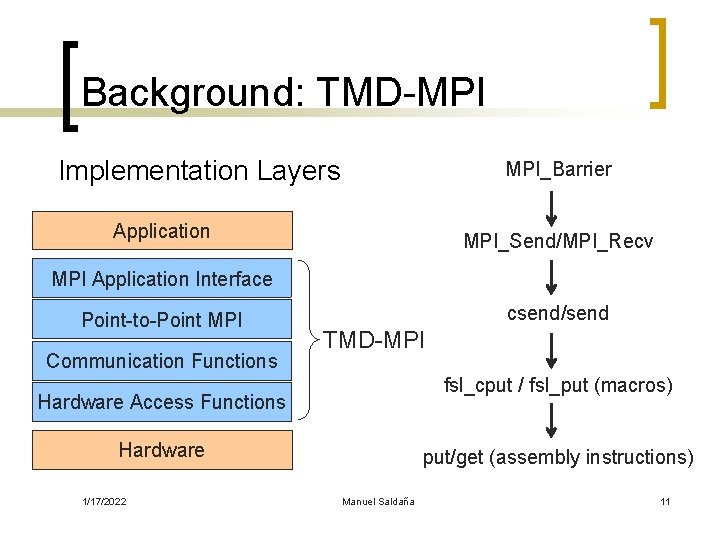

Background: TMD-MPI Implementation Layers

From top to bottom, with example functions at each layer:

- Application
- MPI Application Interface: MPI_Barrier
- Point-to-Point MPI: MPI_Send/MPI_Recv
- Communication Functions: csend/send
- Hardware Access Functions: fsl_cput/fsl_put (macros)
- Hardware: put/get (assembly instructions)
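The layering can be illustrated with a small C sketch. Everything in it is an assumption made for illustration: the FIFO is a software stand-in for a hardware link, and `link_put`, `comm_send`, and `p2p_send` are hypothetical names playing the roles of the put/get instructions (behind `fsl_put`), `send`, and `MPI_Send`; the header format is invented too. It only shows how each layer builds on the one below it.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical software model of one FSL-style FIFO link. On a MicroBlaze the
   bottom layer would be the put/get instructions wrapped by fsl_put-style macros. */
#define LINK_DEPTH 64

typedef struct {
    uint32_t word[LINK_DEPTH];
    size_t head, count;
} link_t;

/* Hardware-access layer (stand-in for fsl_put/fsl_get). Returns 0 on success,
   -1 where real hardware would stall instead (FIFO full or empty). */
static int link_put(link_t *l, uint32_t w) {
    if (l->count == LINK_DEPTH) return -1;
    l->word[(l->head + l->count) % LINK_DEPTH] = w;
    l->count++;
    return 0;
}

static int link_get(link_t *l, uint32_t *w) {
    if (l->count == 0) return -1;
    *w = l->word[l->head];
    l->head = (l->head + 1) % LINK_DEPTH;
    l->count--;
    return 0;
}

/* Communication-function layer (in the role of send): an assumed header of
   {source, tag, length} followed by the payload words. A failure part-way
   leaves a partial message in the FIFO; this sketch does not roll back. */
static int comm_send(link_t *l, uint32_t src, uint32_t tag,
                     const uint32_t *buf, uint32_t len) {
    if (link_put(l, src) || link_put(l, tag) || link_put(l, len)) return -1;
    for (uint32_t i = 0; i < len; i++)
        if (link_put(l, buf[i])) return -1;
    return 0;
}

static int comm_recv(link_t *l, uint32_t *src, uint32_t *tag,
                     uint32_t *buf, uint32_t max, uint32_t *len) {
    if (link_get(l, src) || link_get(l, tag) || link_get(l, len)) return -1;
    if (*len > max) return -1;
    for (uint32_t i = 0; i < *len; i++)
        if (link_get(l, &buf[i])) return -1;
    return 0;
}

/* Point-to-point layer (in the role of MPI_Send): maps a destination rank to
   a link through a hypothetical routing table, then calls the layer below. */
static int p2p_send(link_t *routes[], int nranks, int my_rank, int dest,
                    int tag, const uint32_t *buf, uint32_t count) {
    assert(dest >= 0 && dest < nranks);
    return comm_send(routes[dest], (uint32_t)my_rank, (uint32_t)tag, buf, count);
}
```

Because only `link_put`/`link_get` touch the link, retargeting a stack like this to another link type would mean replacing the bottom layer while the MPI-level calls stay unchanged, which is in the spirit of the deck's claim that TMD-MPI hides the complexity of heterogeneous links.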

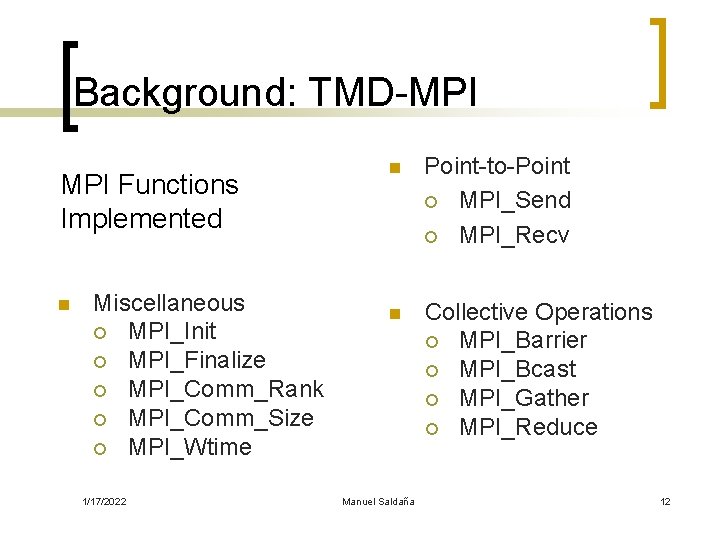

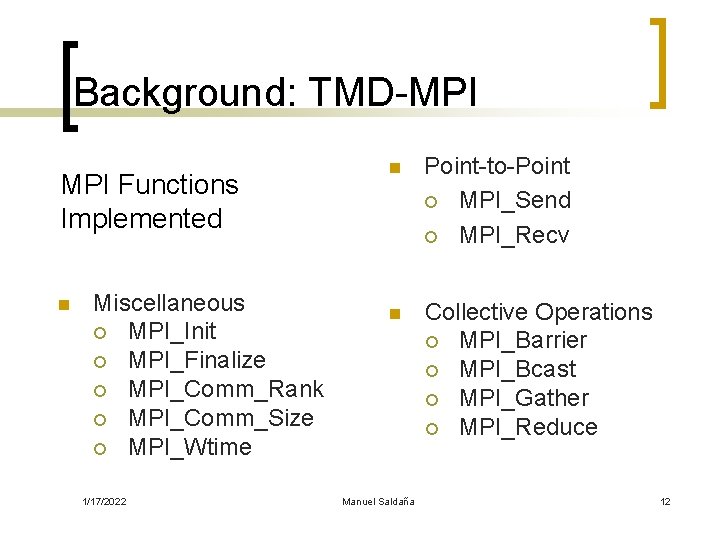

Background: TMD-MPI Functions Implemented

- Miscellaneous: MPI_Init, MPI_Finalize, MPI_Comm_rank, MPI_Comm_size, MPI_Wtime
- Point-to-point: MPI_Send, MPI_Recv
- Collective operations: MPI_Barrier, MPI_Bcast, MPI_Gather, MPI_Reduce
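Collective operations such as MPI_Bcast can be layered on MPI_Send and MPI_Recv. The slides do not say which algorithm TMD-MPI uses; one common choice is a binomial tree, sketched below as a pure scheduling function. The names `bcast_parent` and `bcast_children` are hypothetical. Each rank would receive the data from its parent with MPI_Recv and then MPI_Send it to each of its children.

```c
#include <assert.h>

/* Rank that forwards the broadcast to `rank` in a binomial tree, or -1 for the root. */
static int bcast_parent(int rank, int root, int size) {
    assert(size > 0 && rank >= 0 && rank < size && root >= 0 && root < size);
    int rel = (rank - root + size) % size;   /* rank relative to the root */
    if (rel == 0) return -1;
    int mask = 1;
    while (!(rel & mask)) mask <<= 1;        /* lowest set bit of rel */
    return (rel - mask + root) % size;
}

/* Ranks that `rank` forwards the data to; stores them in out[], returns how many.
   Children are rel + mask for each power of two below rel's lowest set bit.
   Serving them from the last entry to the first (largest subtree first) lets
   the whole tree finish in ceil(log2(size)) rounds. */
static int bcast_children(int rank, int root, int size, int out[]) {
    assert(size > 0 && rank >= 0 && rank < size && root >= 0 && root < size);
    int rel = (rank - root + size) % size;
    int n = 0;
    for (int mask = 1; mask < size && !(rel & mask); mask <<= 1)
        if (rel + mask < size)
            out[n++] = (rel + mask + root) % size;
    return n;
}
```

For eight ranks rooted at 0, rank 0 forwards to 1, 2, and 4, rank 4 forwards to 5 and 6, and rank 6 forwards to 7. The broadcast then takes three rounds instead of the seven sequential sends a linear scheme from the root would need.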

![Background: Design Flow, Flexible Hardware-Software Co-design Flow, Previous work: Patel et al. [1]](https://slidetodoc.com/presentation_image_h2/7a3d15e2370357075f7d8e16ef4e8359/image-13.jpg)

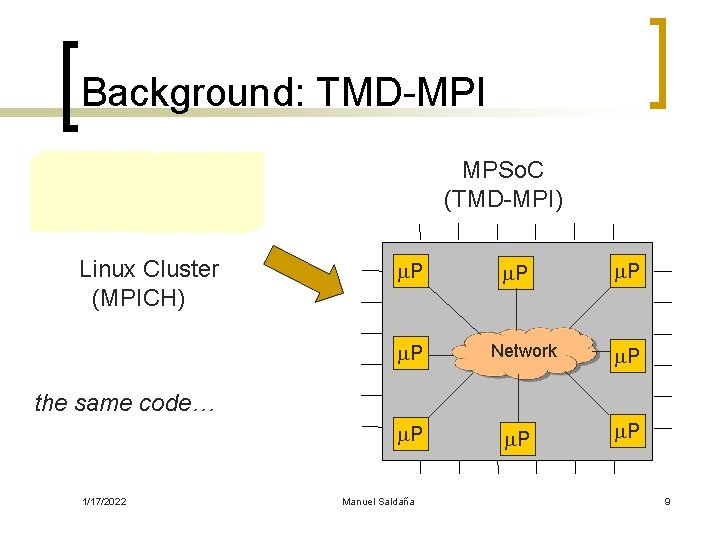

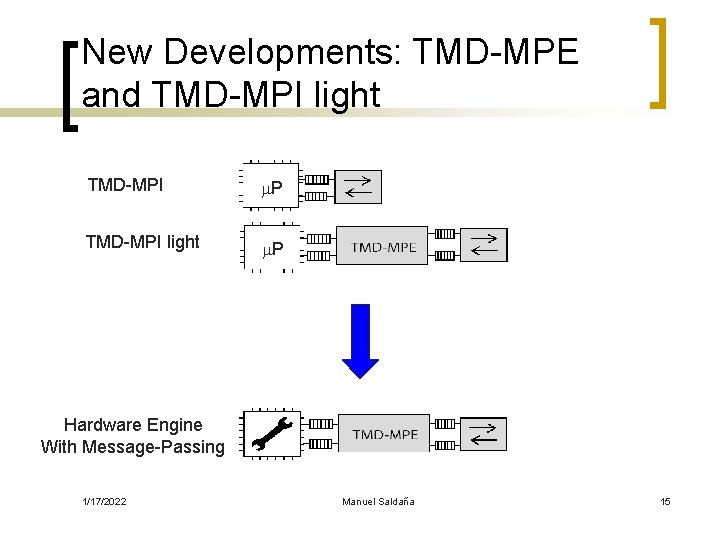

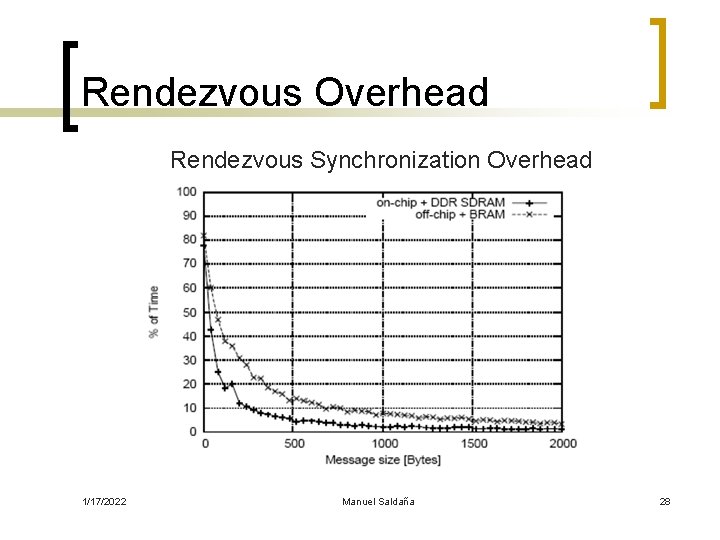

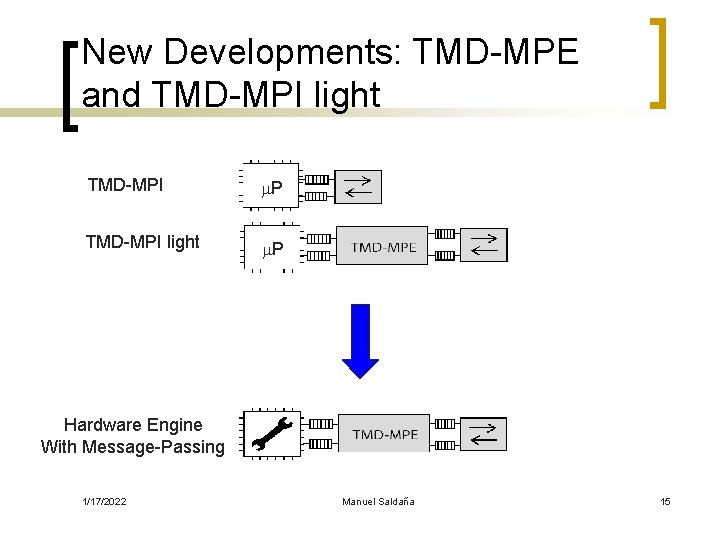

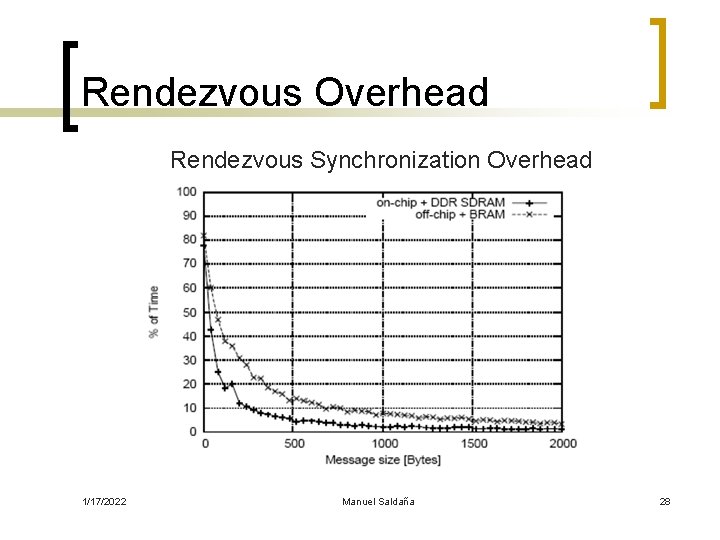

Background: Design Flow

Flexible hardware-software co-design flow. Previous work:

- Patel et al. [1] (FCCM 2006)
- Saldaña et al. [2] (FPL 2006)

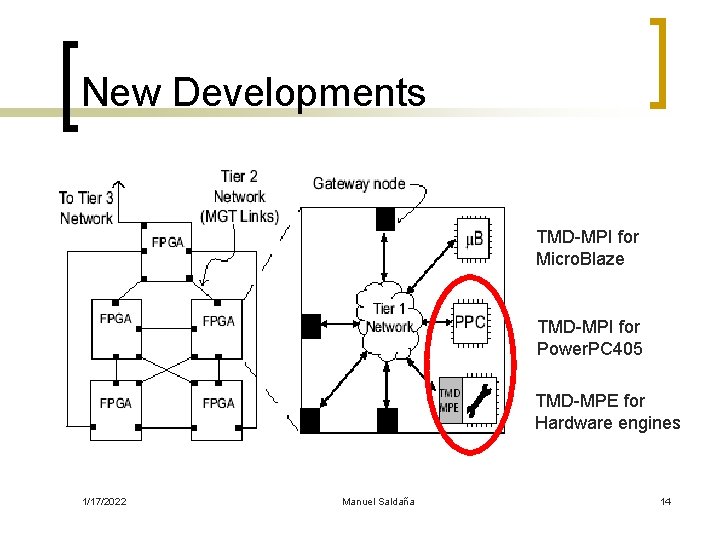

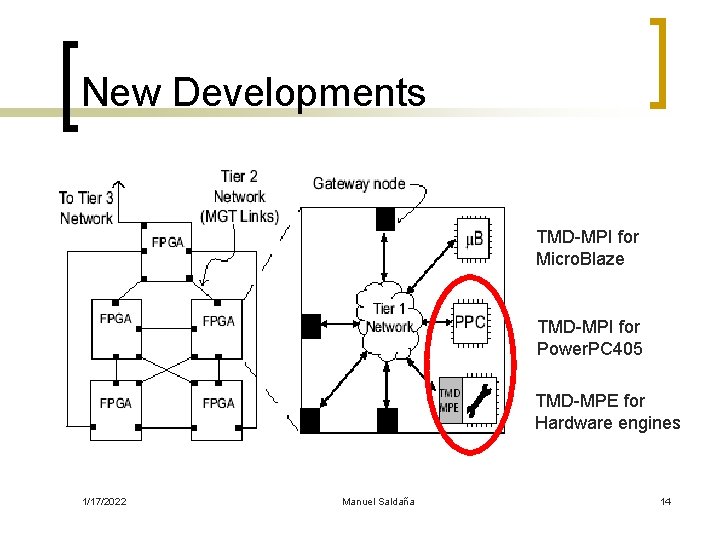

New Developments

- TMD-MPI for MicroBlaze
- TMD-MPI for PowerPC 405
- TMD-MPE for hardware engines

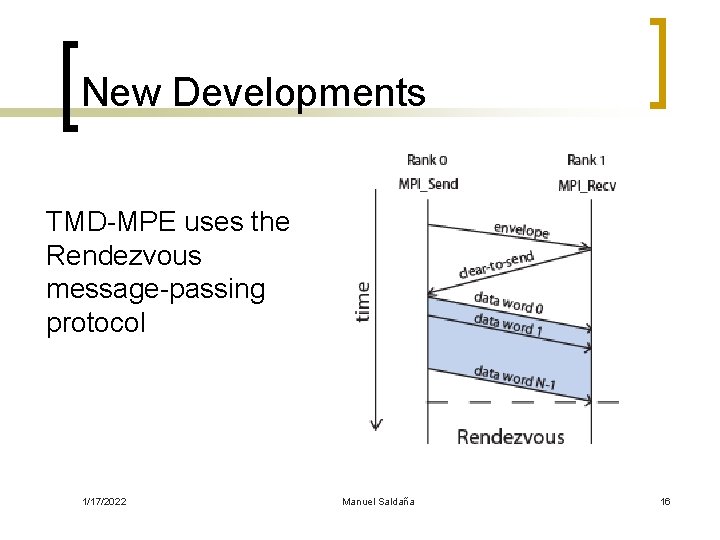

New Developments: TMD-MPE and TMD-MPI light

- Microprocessor (μP): TMD-MPI
- Hardware engine with message passing: TMD-MPI light

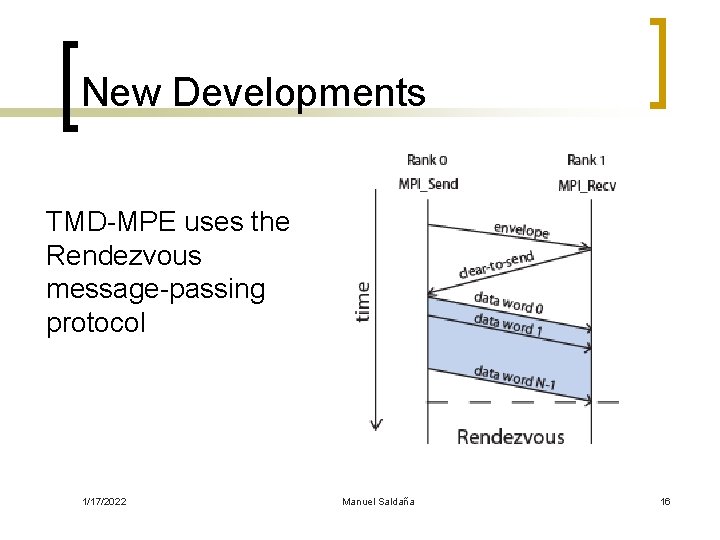

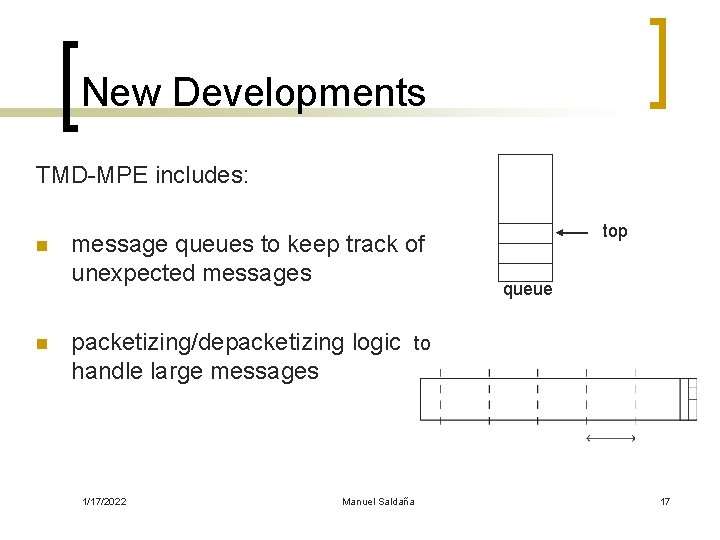

New Developments

TMD-MPE uses the rendezvous message-passing protocol.
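In a rendezvous protocol, the sender does not push the payload until the receiver confirms that a matching receive has been posted. The C sketch below models that handshake as two small state machines exchanging a request-to-send, a clear-to-send, and the data. The packet names, the one-step link delay, and the lock-step simulation are assumptions made for illustration, not the TMD-MPE packet format.

```c
#include <assert.h>

/* Control/data packets in this sketch; the names are hypothetical. */
typedef enum { PKT_NONE, PKT_REQ_TO_SEND, PKT_CLEAR_TO_SEND, PKT_DATA } pkt_t;
typedef enum { SND_START, SND_WAIT_CTS, SND_DONE } snd_state_t;
typedef enum { RCV_WAIT_REQ, RCV_WAIT_POST, RCV_WAIT_DATA, RCV_DONE } rcv_state_t;

/* Sender: announce the message, wait for clearance, then ship the payload. */
static pkt_t sender_step(snd_state_t *s, pkt_t in) {
    switch (*s) {
    case SND_START:
        *s = SND_WAIT_CTS;
        return PKT_REQ_TO_SEND;
    case SND_WAIT_CTS:
        if (in != PKT_CLEAR_TO_SEND) return PKT_NONE;   /* still blocked */
        *s = SND_DONE;
        return PKT_DATA;
    default:
        return PKT_NONE;
    }
}

/* Receiver: grants clearance only once a matching receive has been posted. */
static pkt_t receiver_step(rcv_state_t *s, pkt_t in, int recv_posted) {
    switch (*s) {
    case RCV_WAIT_REQ:
        if (in != PKT_REQ_TO_SEND) return PKT_NONE;
        *s = RCV_WAIT_POST;
        return receiver_step(s, PKT_NONE, recv_posted); /* may grant at once */
    case RCV_WAIT_POST:
        if (!recv_posted) return PKT_NONE;
        *s = RCV_WAIT_DATA;
        return PKT_CLEAR_TO_SEND;
    case RCV_WAIT_DATA:
        if (in == PKT_DATA) *s = RCV_DONE;
        return PKT_NONE;
    default:
        return PKT_NONE;
    }
}

/* Lock-step simulation: each packet takes one step to cross the link, and the
   application posts its receive at step `post_step`. Returns the step at which
   the payload arrives, or -1 if it has not arrived after `max_steps`. */
static int simulate_rendezvous(int post_step, int max_steps) {
    assert(max_steps > 0);
    snd_state_t s = SND_START;
    rcv_state_t r = RCV_WAIT_REQ;
    pkt_t to_rcv = PKT_NONE, to_snd = PKT_NONE;
    for (int step = 0; step < max_steps; step++) {
        pkt_t out_s = sender_step(&s, to_snd);
        pkt_t out_r = receiver_step(&r, to_rcv, step >= post_step);
        to_rcv = out_s;
        to_snd = out_r;
        if (r == RCV_DONE) return step;
    }
    return -1;
}
```

With the receive posted immediately, the payload arrives at step 3; posting it at step 5 delays arrival to step 7, because the sender sits blocked in `SND_WAIT_CTS`. The receiver never has to buffer a payload it was not expecting, which is a plausible reason to prefer rendezvous in a hardware engine; the cost is an extra control round trip on every message.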

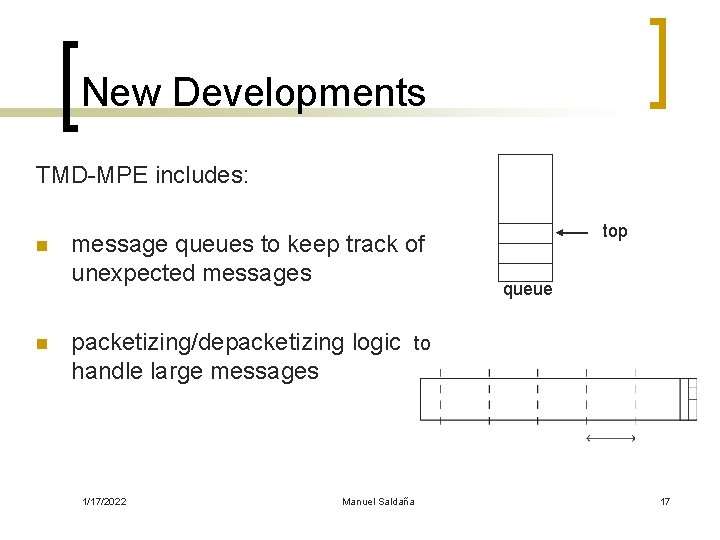

New Developments

TMD-MPE includes:

- message queues to keep track of unexpected messages
- packetizing/depacketizing logic to handle large messages
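Both mechanisms can be sketched in software. In the C code below, `packetize`/`depacketize` split a long message into fixed-size packets and reassemble it, and a small queue records the (source, tag) pairs of messages that arrived before a matching receive was posted, matching the oldest entry first so that messages from the same sender are not reordered. The packet size, queue capacity, packet fields, and function names are all assumptions; TMD-MPE implements this logic in hardware.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MAX_PAYLOAD 4   /* words per packet: an assumed, deliberately small limit */
#define UMQ_LEN 8       /* capacity of the unexpected-message queue (assumed) */

typedef struct {
    int src, tag;
    uint32_t seq, nwords;            /* packet index and payload words used */
    uint32_t payload[MAX_PAYLOAD];
} packet_t;

/* Splits n words into packets; returns the packet count, or 0 if out[] is too small. */
static size_t packetize(int src, int tag, const uint32_t *msg, size_t n,
                        packet_t *out, size_t max_pkts) {
    size_t count = 0;
    for (size_t off = 0; off < n; off += MAX_PAYLOAD, count++) {
        if (count == max_pkts) return 0;
        size_t len = (n - off < MAX_PAYLOAD) ? n - off : MAX_PAYLOAD;
        out[count].src = src;
        out[count].tag = tag;
        out[count].seq = (uint32_t)count;
        out[count].nwords = (uint32_t)len;
        memcpy(out[count].payload, msg + off, len * sizeof *msg);
    }
    return count;
}

/* Reassembles packets that arrive in order; returns the number of words written. */
static size_t depacketize(const packet_t *pkts, size_t npkts,
                          uint32_t *msg, size_t max_words) {
    size_t n = 0;
    for (size_t i = 0; i < npkts; i++) {
        assert(pkts[i].seq == i);    /* this sketch assumes in-order delivery */
        if (n + pkts[i].nwords > max_words) return 0;
        memcpy(msg + n, pkts[i].payload, pkts[i].nwords * sizeof *msg);
        n += pkts[i].nwords;
    }
    return n;
}

/* Unexpected-message queue: (source, tag) pairs of messages that arrived
   before a matching receive was posted, kept in arrival order. */
typedef struct { int src[UMQ_LEN], tag[UMQ_LEN]; size_t count; } umq_t;

static int umq_push(umq_t *q, int src, int tag) {
    if (q->count == UMQ_LEN) return -1;        /* queue full */
    q->src[q->count] = src;
    q->tag[q->count] = tag;
    q->count++;
    return 0;
}

/* Removes the oldest entry matching (src, tag); returns 1 if one was found. */
static int umq_match(umq_t *q, int src, int tag) {
    for (size_t i = 0; i < q->count; i++) {
        if (q->src[i] == src && q->tag[i] == tag) {
            for (size_t j = i + 1; j < q->count; j++) {   /* close the gap */
                q->src[j - 1] = q->src[j];
                q->tag[j - 1] = q->tag[j];
            }
            q->count--;
            return 1;
        }
    }
    return 0;
}
```

Under a rendezvous protocol an unexpected arrival is only a request-to-send, so a queue like this would need to remember the message envelope rather than the payload.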

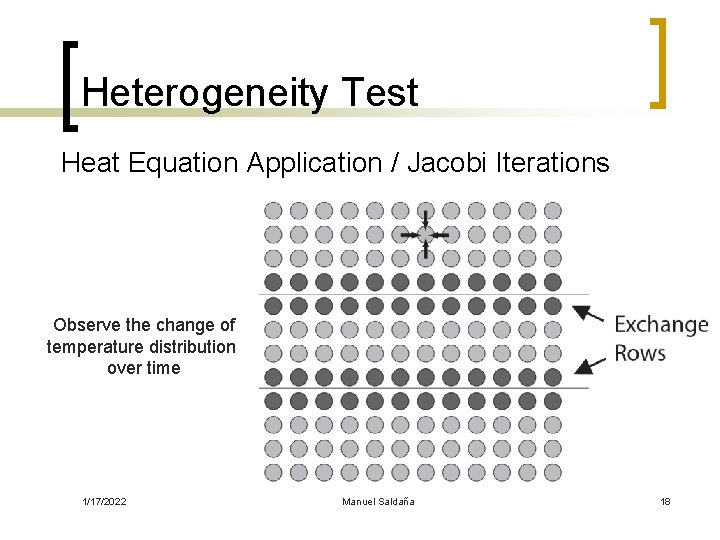

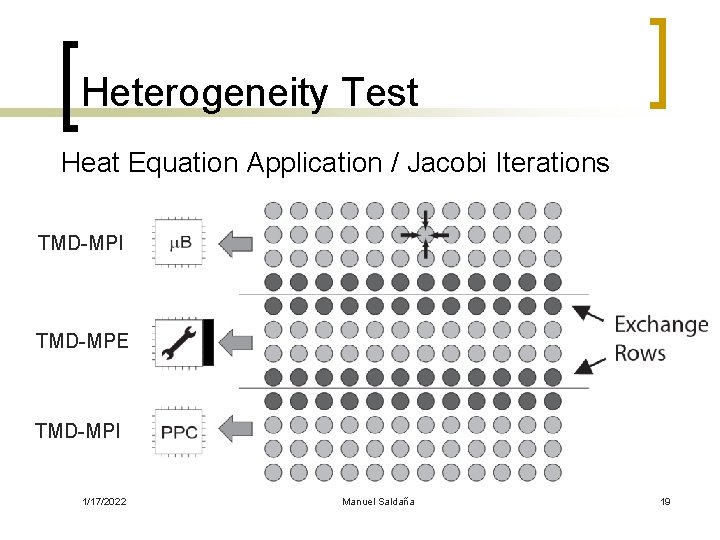

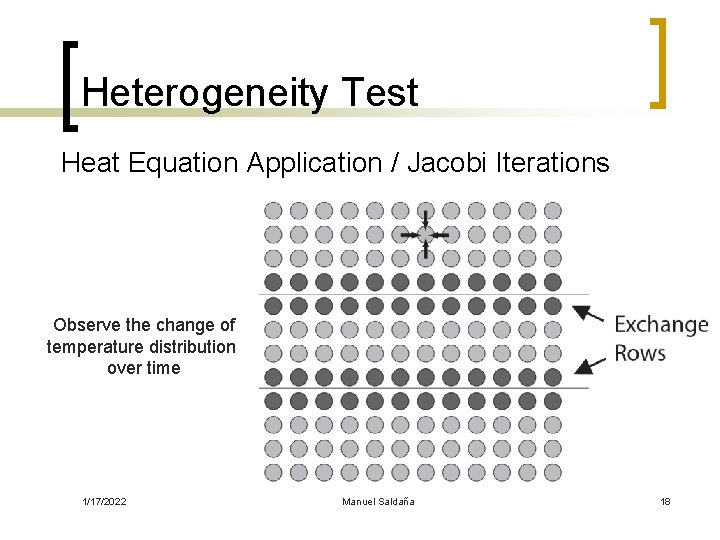

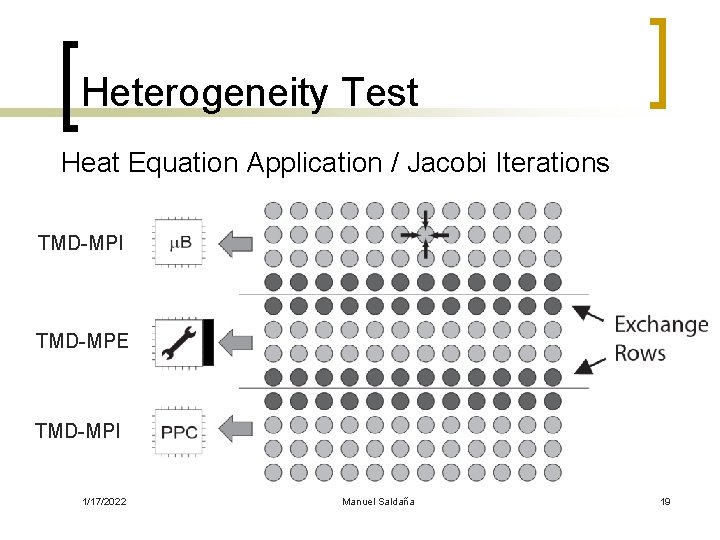

Heterogeneity Test: Heat Equation Application / Jacobi Iterations

Observe the change of the temperature distribution over time. The computing nodes use either TMD-MPI or TMD-MPE.

Heterogeneity Test: Heat Equation Application / Jacobi Iterations (continued; nodes using TMD-MPI and TMD-MPE)
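The slides do not give the discretization. Assuming the common five-point stencil on a 2D grid with fixed boundary temperatures, one Jacobi iteration replaces each interior value with the average of its four neighbours, $T^{(k+1)}_{i,j} = \tfrac14\big(T^{(k)}_{i-1,j} + T^{(k)}_{i+1,j} + T^{(k)}_{i,j-1} + T^{(k)}_{i,j+1}\big)$, reading only values from the previous iteration, so every point can be updated independently. A minimal sequential C sketch (the function names are hypothetical):

```c
#include <assert.h>
#include <stddef.h>

/* One Jacobi sweep over an nx-by-ny grid stored row-major. Interior points take
   the average of their four neighbours; boundary points are held fixed.
   Returns the largest change in any point, for use as a convergence test. */
static double jacobi_sweep(const double *t, double *t_new, size_t nx, size_t ny) {
    assert(nx >= 3 && ny >= 3 && t != t_new);
    double max_delta = 0.0;
    for (size_t i = 0; i < ny; i++) {
        for (size_t j = 0; j < nx; j++) {
            size_t k = i * nx + j;
            if (i == 0 || j == 0 || i == ny - 1 || j == nx - 1) {
                t_new[k] = t[k];                 /* fixed boundary temperature */
                continue;
            }
            t_new[k] = 0.25 * (t[k - nx] + t[k + nx] + t[k - 1] + t[k + 1]);
            double d = t_new[k] - t[k];
            if (d < 0) d = -d;
            if (d > max_delta) max_delta = d;
        }
    }
    return max_delta;
}

/* Sweeps until no point changes by more than tol or max_iter is reached.
   a and b are two grids of the same size (a holds the initial state); the
   latest values end up in *result. Returns the number of sweeps performed. */
static int jacobi_solve(double *a, double *b, size_t nx, size_t ny,
                        double tol, int max_iter, double **result) {
    int it = 0;
    double delta = tol + 1.0;
    while (it < max_iter && delta > tol) {
        delta = jacobi_sweep(a, b, nx, ny);
        double *tmp = a; a = b; b = tmp;         /* newest values are now in a */
        it++;
    }
    *result = a;
    return it;
}
```

In a message-passing version, each processing element would typically own a band of rows and exchange its edge rows with its neighbours (for example with MPI_Send/MPI_Recv) before every sweep; that decomposition is an assumption, as the slides do not describe it.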

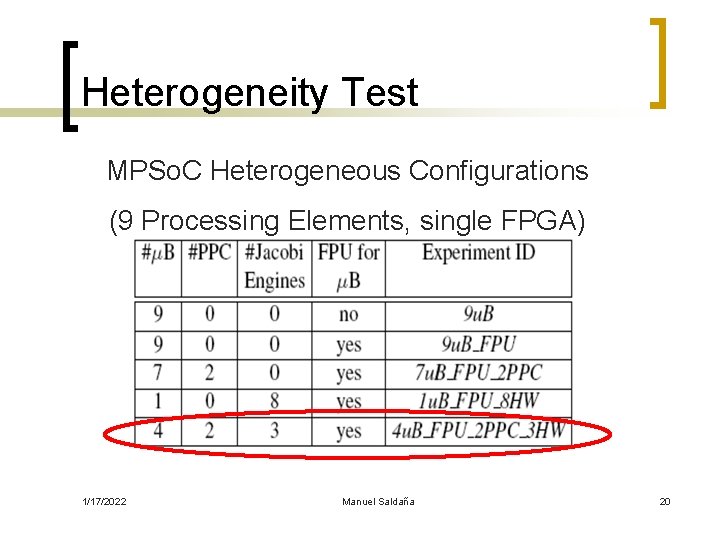

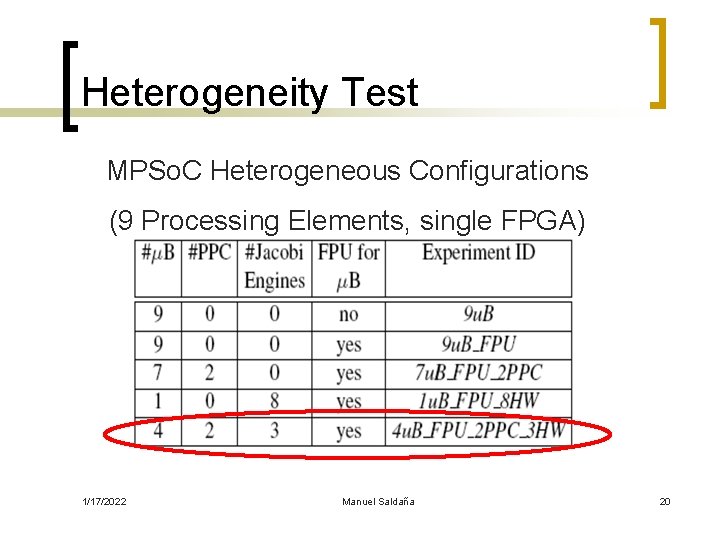

Heterogeneity Test: MPSoC Heterogeneous Configurations (9 processing elements, single FPGA)

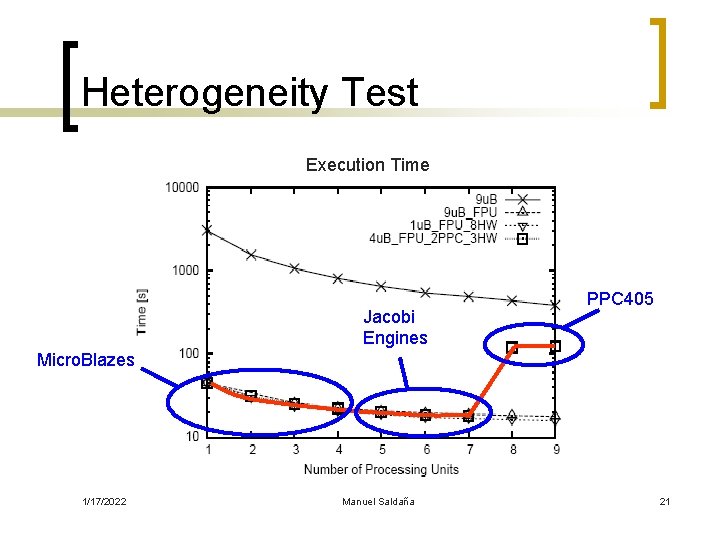

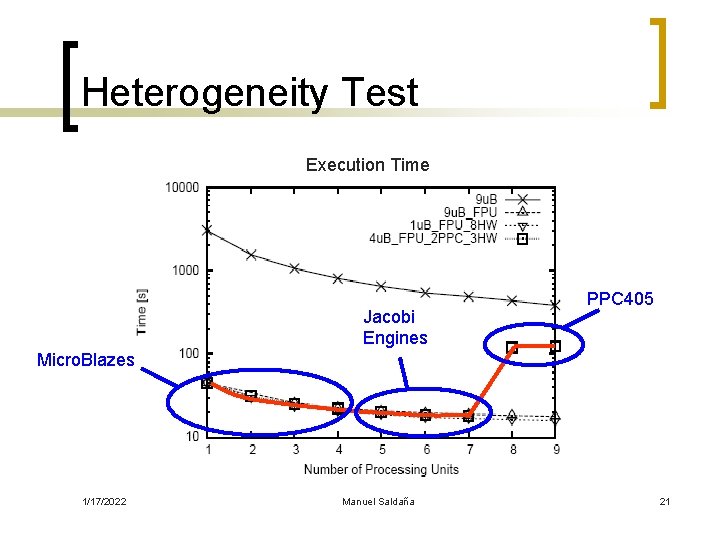

Heterogeneity Test: Execution Time

Figure: execution time of the configurations built from Jacobi engines, PPC405s, and MicroBlazes.

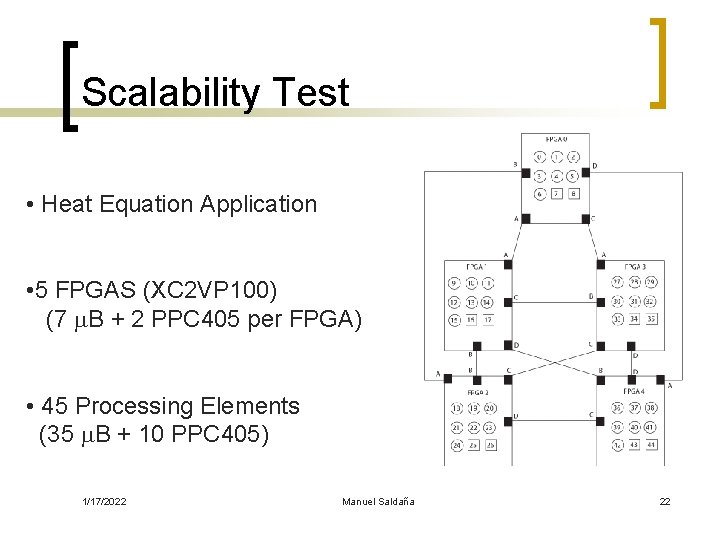

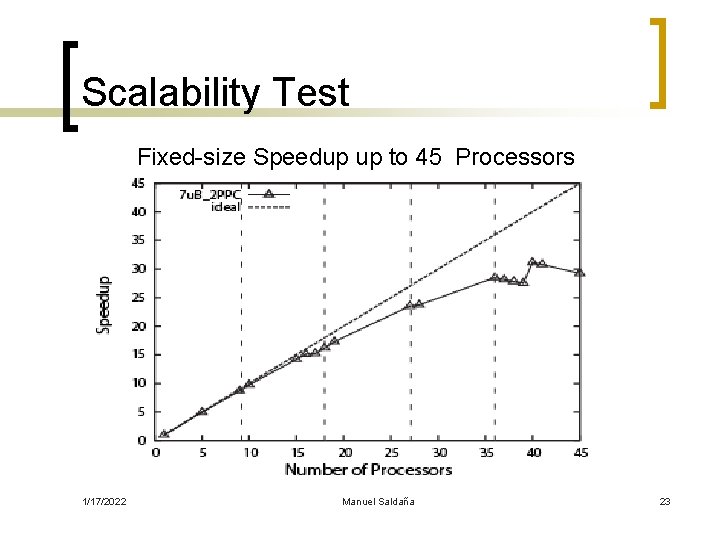

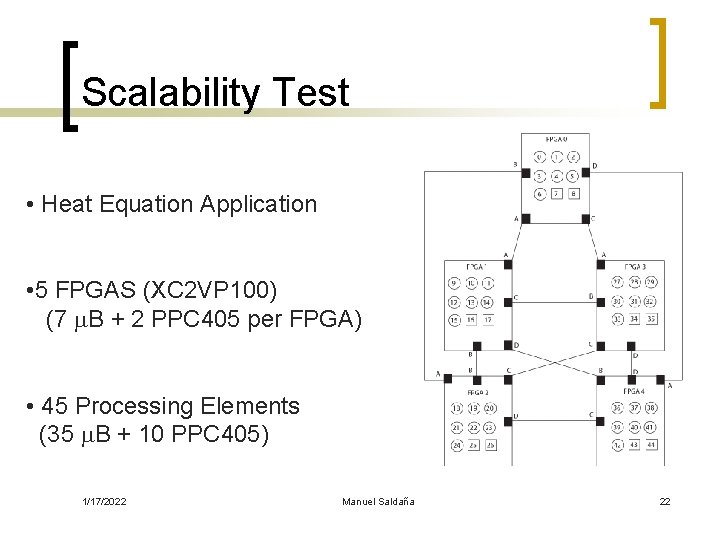

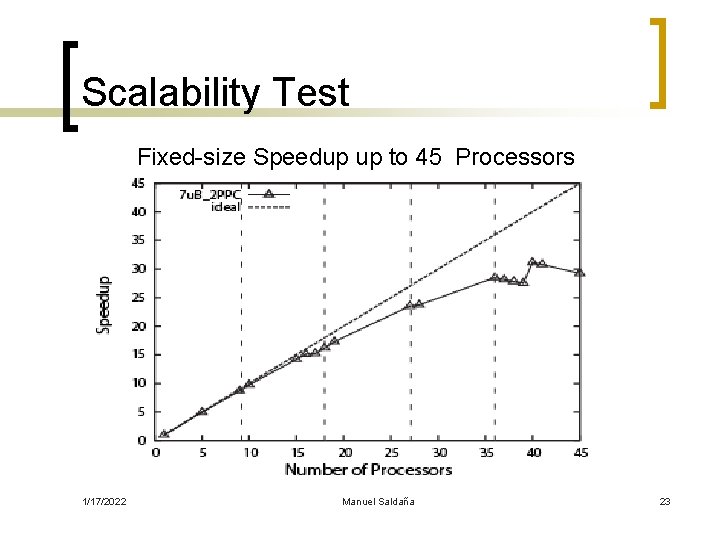

Scalability Test

- Heat equation application
- 5 FPGAs (XC2VP100), each with 7 MicroBlazes (μB) and 2 PPC405s
- 45 processing elements in total (35 μB + 10 PPC405)

Scalability Test: Fixed-Size Speedup up to 45 Processors
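Fixed-size speedup (strong scaling) keeps the problem size constant while processing elements are added. With $T(p)$ the execution time on $p$ processing elements, the standard definitions of speedup and parallel efficiency are:

$$
S(p) = \frac{T(1)}{T(p)}, \qquad E(p) = \frac{S(p)}{p}
$$

Ideal speedup is $S(p) = p$, that is $E(p) = 1$. The slides do not state which processing element provides the $T(1)$ baseline; since this system mixes MicroBlazes and PPC405s, that choice affects how the measured curve compares with the ideal line.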

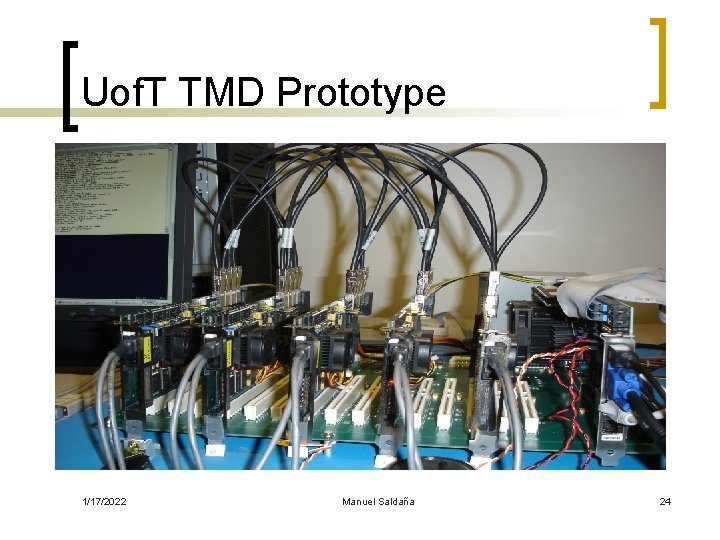

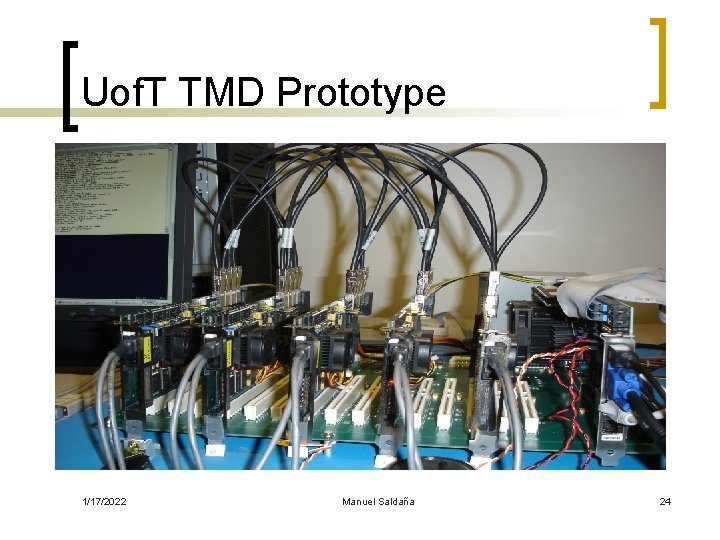

UofT TMD Prototype

Conclusions

- TMD-MPI and TMD-MPE enable the parallel programming of heterogeneous MPSoCs across multiple FPGAs, including hardware engines
- TMD-MPI hides the complexity of using heterogeneous links
- The heat equation application code was executed on a Linux cluster and on our multi-FPGA system with minimal changes
- TMD-MPI can be adapted to a particular architecture
- The TMD prototype is a good platform for further research on MPSoCs

![References: [1] Arun Patel, Christopher Madill, Manuel Saldaña, Christopher Comis, Régis Pomès, and Paul Chow](https://slidetodoc.com/presentation_image_h2/7a3d15e2370357075f7d8e16ef4e8359/image-26.jpg)

References

[1] Arun Patel, Christopher Madill, Manuel Saldaña, Christopher Comis, Régis Pomès, and Paul Chow. A Scalable FPGA-based Multiprocessor. In IEEE Symposium on Field-Programmable Custom Computing Machines (FCCM'06), April 2006.

[2] Manuel Saldaña and Paul Chow. TMD-MPI: An MPI Implementation for Multiple Processors across Multiple FPGAs. In IEEE International Conference on Field-Programmable Logic and Applications (FPL 2006), August 2006.

Thank you! (¡Gracias!)

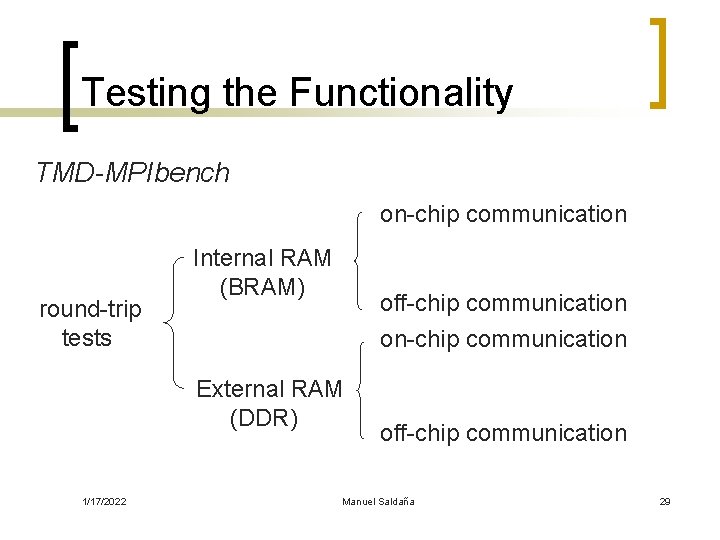

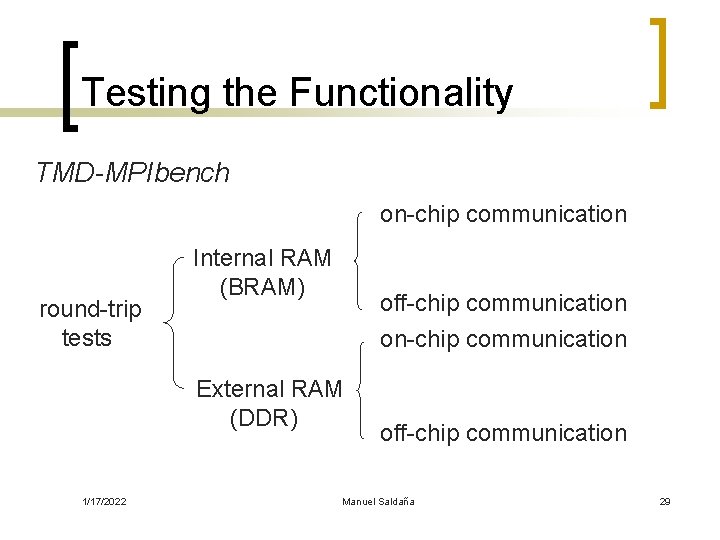

Rendezvous Overhead

Figure: rendezvous synchronization overhead.
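A simple first-order model helps read this overhead; it is an assumption, not taken from the slides. If sending a control packet across the link costs $t_c$ and transferring an $n$-word payload costs $t_d(n)$, a rendezvous transfer pays for a request-to-send and a clear-to-send before the data, while an eager transfer sends the data directly, $T_{\text{eager}}(n) \approx t_d(n)$:

$$
T_{\text{rdv}}(n) \approx 2t_c + t_d(n), \qquad
\frac{T_{\text{rdv}}(n) - T_{\text{eager}}(n)}{T_{\text{rdv}}(n)} \approx \frac{2t_c}{2t_c + t_d(n)}
$$

Because $t_d(n)$ grows with $n$ while $2t_c$ stays fixed, the relative synchronization overhead in this model is largest for short messages and shrinks as messages grow.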

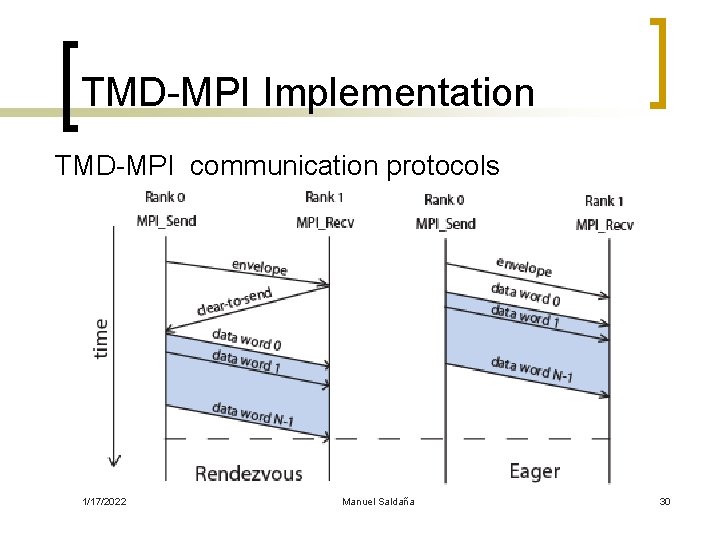

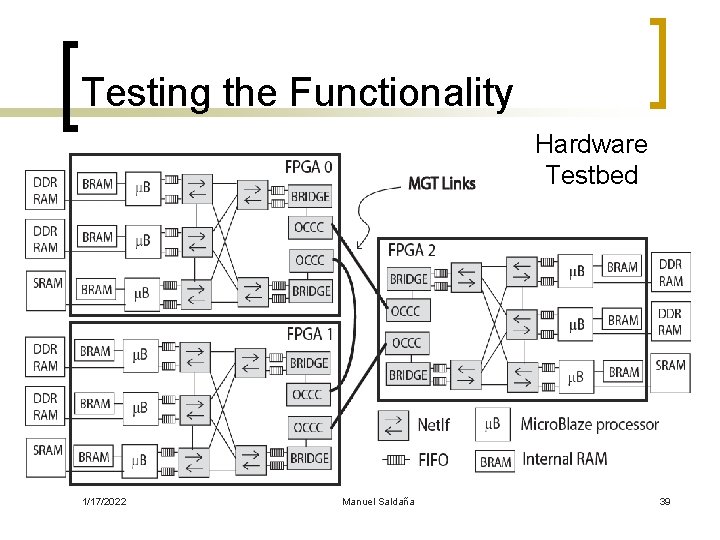

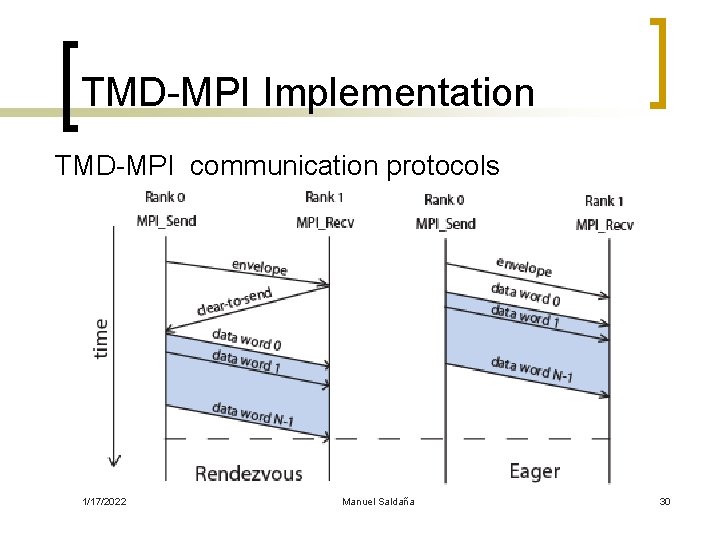

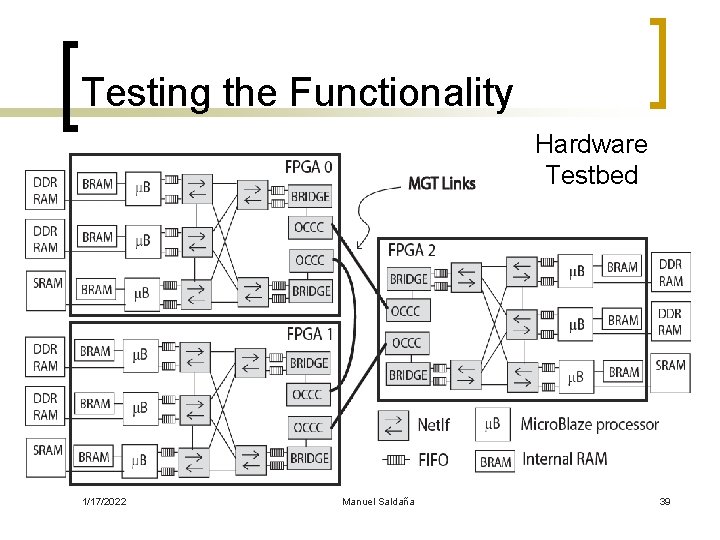

Testing the Functionality

TMD-MPIbench round-trip tests:

- On-chip and off-chip communication with internal RAM (BRAM)
- On-chip and off-chip communication with external RAM (DDR)
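A round-trip (ping-pong) test sends a message to a peer, which immediately sends it back; timing many iterations and halving the mean round trip gives a one-way latency estimate. The helpers below sketch only that post-processing, under the assumption that both directions of a link are symmetric; how TMD-MPIbench actually reduces its timings is not shown in the slides, and the function names are hypothetical. Times are in whatever unit the caller's timer returns (for example, seconds from MPI_Wtime).

```c
#include <assert.h>
#include <stddef.h>

/* One-way latency estimated as half the mean round-trip time
   (assumes the two directions of the link are symmetric). */
static double one_way_latency(const double *rtt, size_t n) {
    assert(n > 0);
    double sum = 0.0;
    for (size_t i = 0; i < n; i++) sum += rtt[i];
    return sum / (double)n / 2.0;
}

/* Effective one-direction bandwidth when `bytes` travel each way in a round
   trip of duration `rtt`: bytes divided by half the round-trip time. */
static double round_trip_bandwidth(size_t bytes, double rtt) {
    assert(rtt > 0.0);
    return (double)bytes / (rtt / 2.0);
}
```

Halving is only valid if the return path costs the same as the outbound one; a path whose two directions differ (for example, different memories on each end) would bias the estimate.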

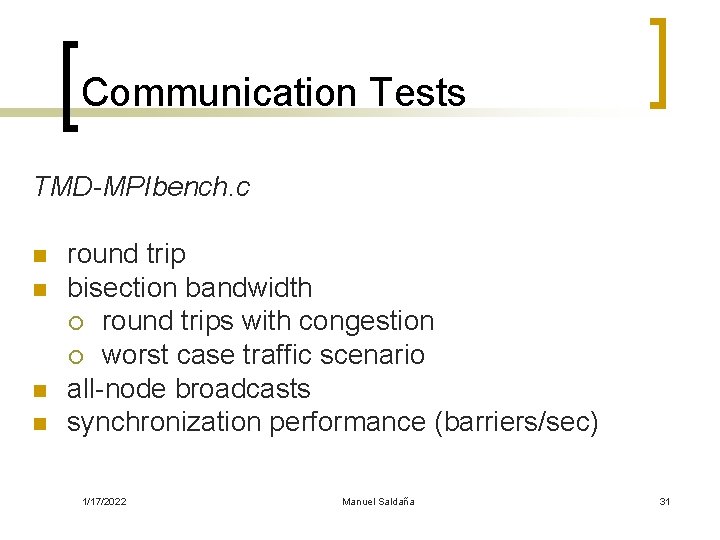

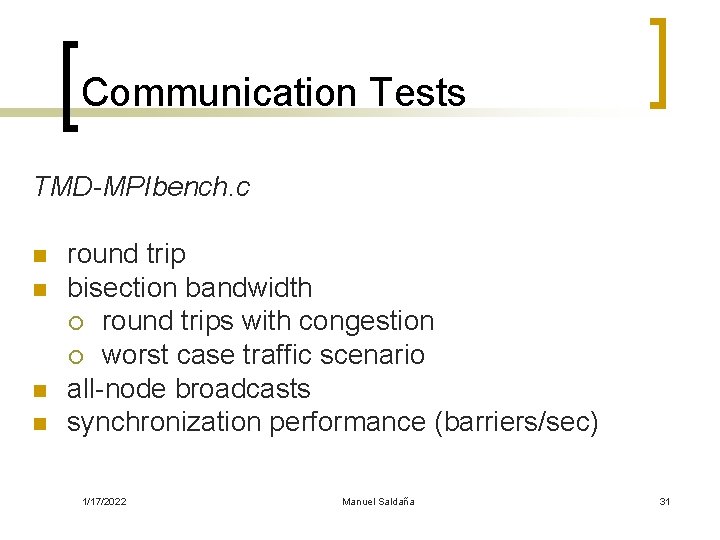

TMD-MPI Implementation: TMD-MPI Communication Protocols

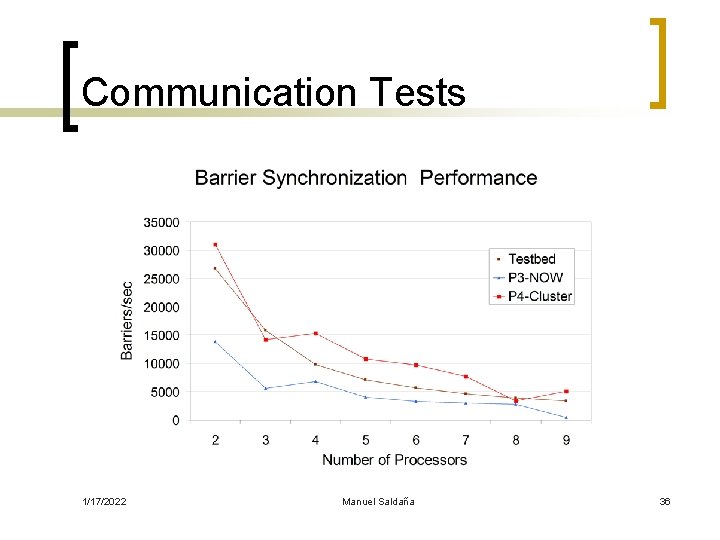

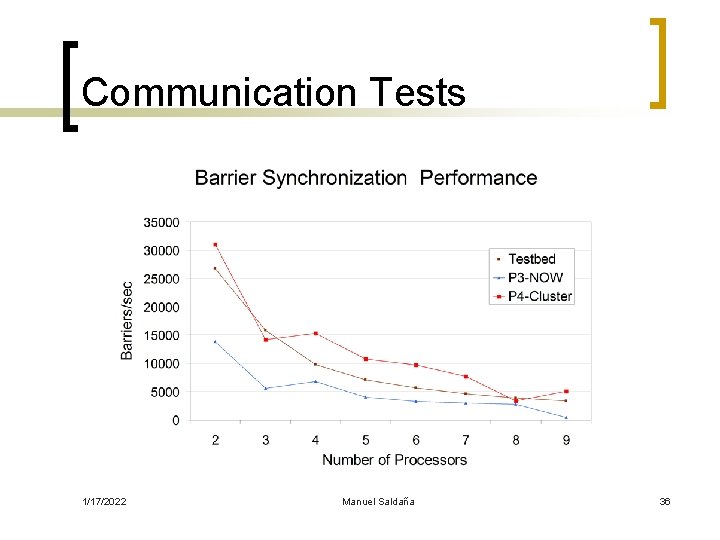

Communication Tests: TMD-MPIbench.c

- Round trip
- Bisection bandwidth
  - Round trips with congestion
  - Worst-case traffic scenario
- All-node broadcasts
- Synchronization performance (barriers/sec)
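Two of these tests reduce to simple arithmetic once the timings are in. The C sketch below shows a worst-case pairing for a bisection test, where every rank in one half talks to a rank in the other half so that all traffic crosses the cut, and the barriers-per-second rate. The pairing assumes ranks are numbered so that the two halves sit on opposite sides of the network; TMD-MPIbench's actual pairing is not given in the slides, and the function names are hypothetical.

```c
#include <assert.h>

/* Worst-case bisection pairing: split ranks 0..size-1 into two halves and pair
   rank i with rank i + size/2, so every message crosses the bisection. */
static int bisection_partner(int rank, int size) {
    assert(size >= 2 && size % 2 == 0 && rank >= 0 && rank < size);
    int half = size / 2;
    return rank < half ? rank + half : rank - half;
}

/* Synchronization performance: barriers completed per second of elapsed time. */
static double barriers_per_second(long barriers, double elapsed_seconds) {
    assert(elapsed_seconds > 0.0);
    return (double)barriers / elapsed_seconds;
}
```

Because the pairing is symmetric (`bisection_partner(bisection_partner(r, n), n) == r`), each pair can run round trips concurrently, which is what creates the congestion the test is meant to expose.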

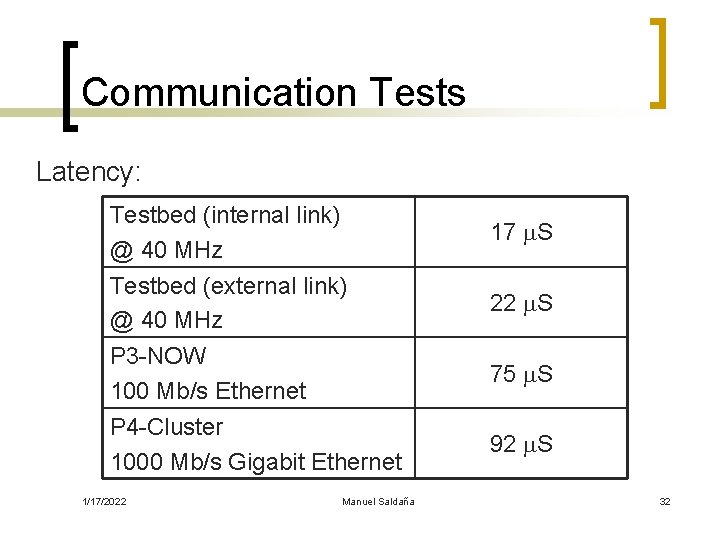

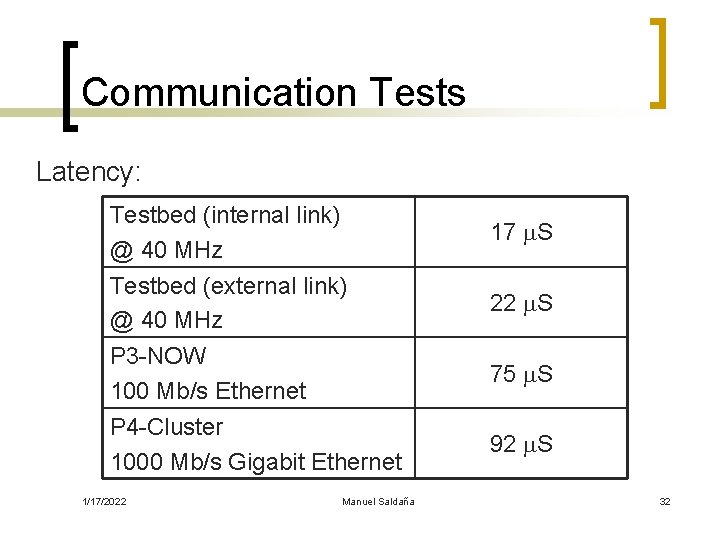

Communication Tests: Latency

| System | Link | Latency |
|---|---|---|
| Testbed @ 40 MHz | Internal link | 17 μs |
| Testbed @ 40 MHz | External link | 22 μs |
| P3-NOW | 100 Mb/s Ethernet | 75 μs |
| P4-Cluster | 1000 Mb/s Gigabit Ethernet | 92 μs |

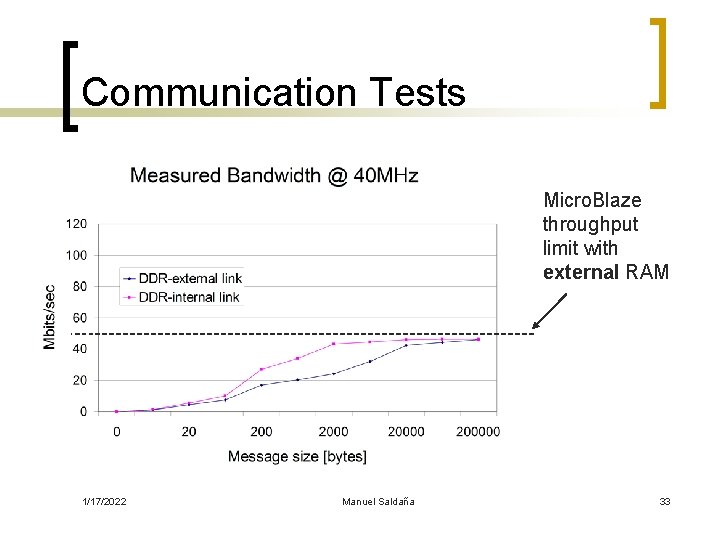

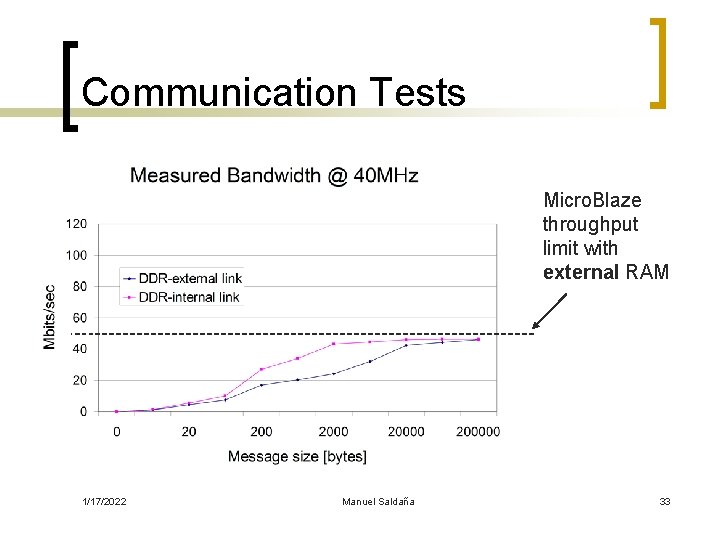

Communication Tests: MicroBlaze Throughput Limit with External RAM

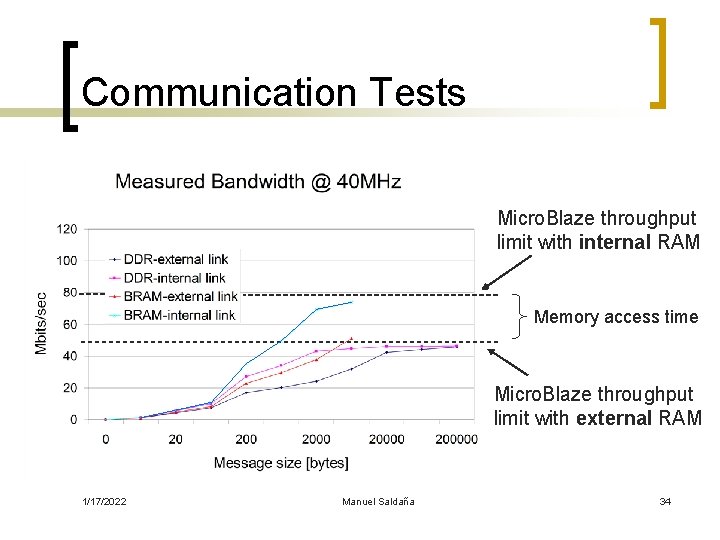

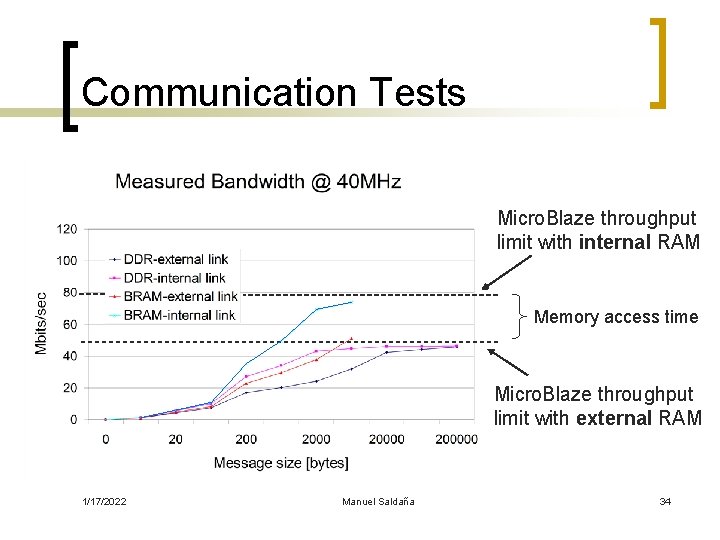

Communication Tests

Figure: MicroBlaze throughput limits with internal RAM and with external RAM, with the memory access time marked.

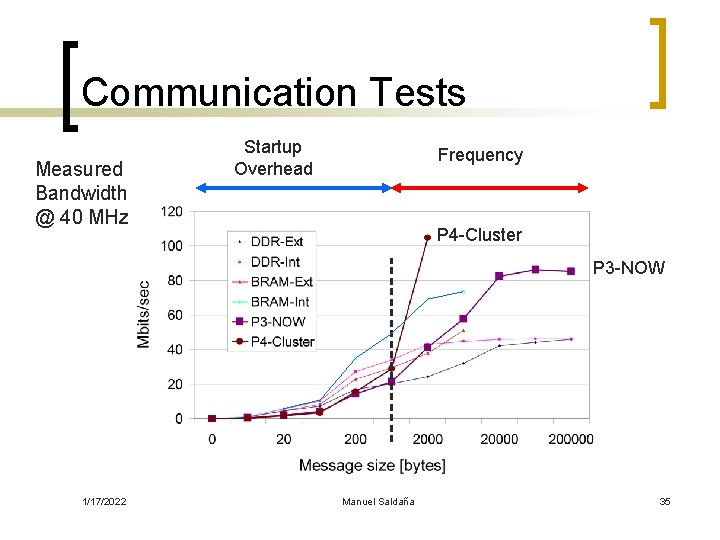

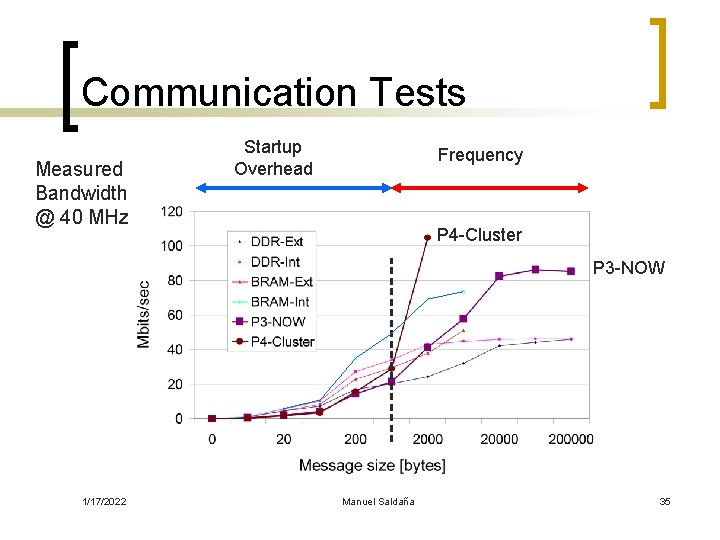

Communication Tests: Measured Bandwidth @ 40 MHz

Figure: measured bandwidth of the testbed compared with the P4-Cluster and P3-NOW, annotated with startup overhead and frequency.
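A common way to read such bandwidth curves is Hockney's linear latency-bandwidth model; the slides do not say which model, if any, was fitted, so this is offered as a reading aid only. Sending $n$ bytes takes

$$
T(n) = t_0 + \frac{n}{B_\infty}, \qquad
B(n) = \frac{n}{T(n)} = \frac{B_\infty}{1 + t_0 B_\infty / n}
$$

where $t_0$ is the startup overhead and $B_\infty$ the asymptotic bandwidth. The measured bandwidth $B(n)$ reaches half of $B_\infty$ at $n_{1/2} = t_0 B_\infty$ and approaches $B_\infty$ only for $n \gg n_{1/2}$, so startup overhead dominates the bandwidth seen for short messages.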

Communication Tests

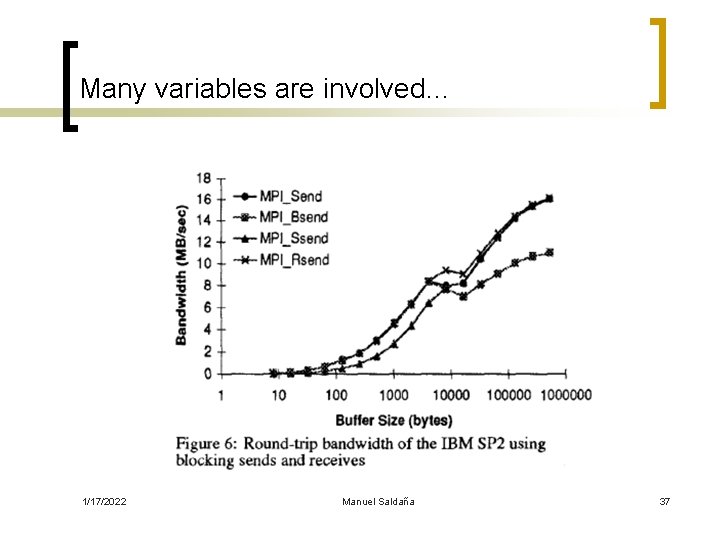

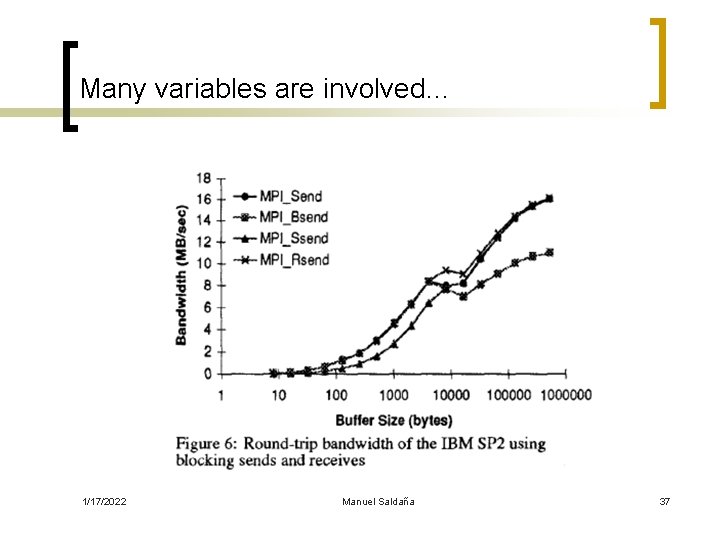

Many variables are involved…

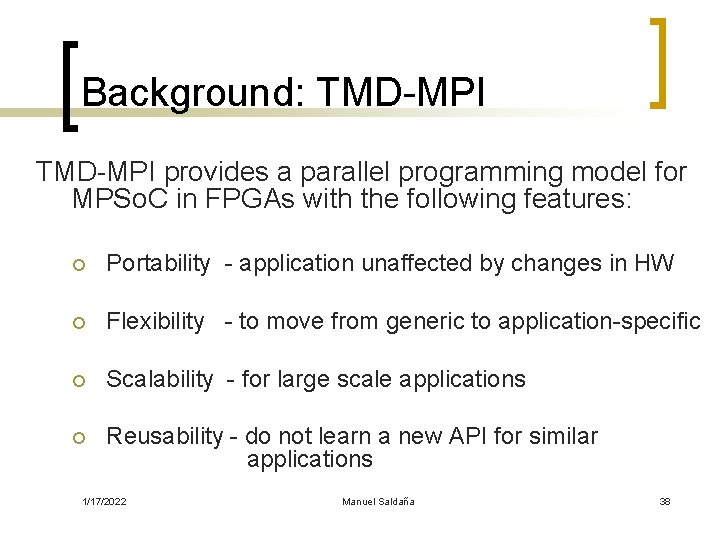

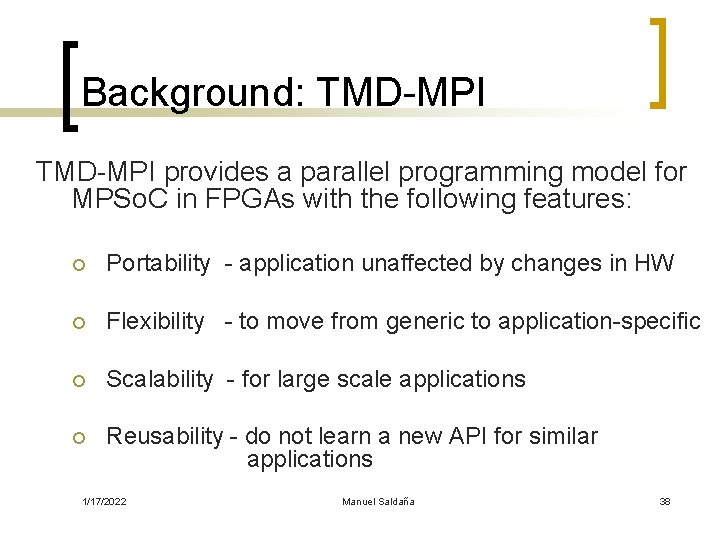

Background: TMD-MPI provides a parallel programming model for MPSoCs in FPGAs with the following features:

- Portability: the application is unaffected by changes in the hardware
- Flexibility: to move from generic to application-specific implementations
- Scalability: for large-scale applications
- Reusability: no need to learn a new API for similar applications

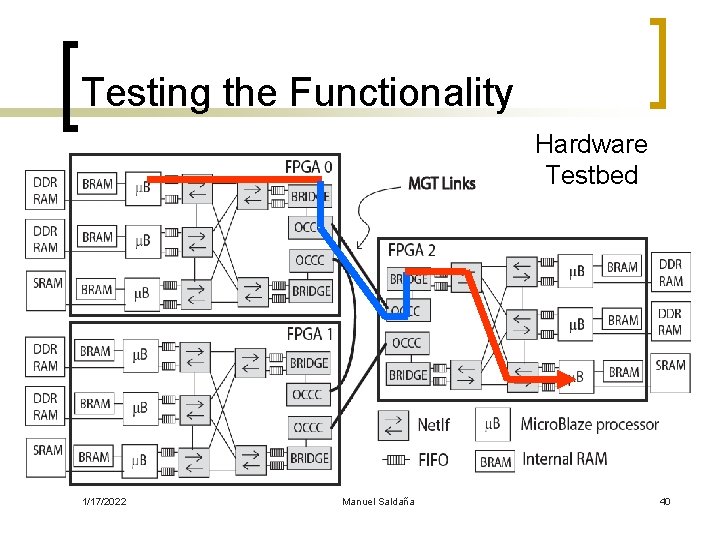

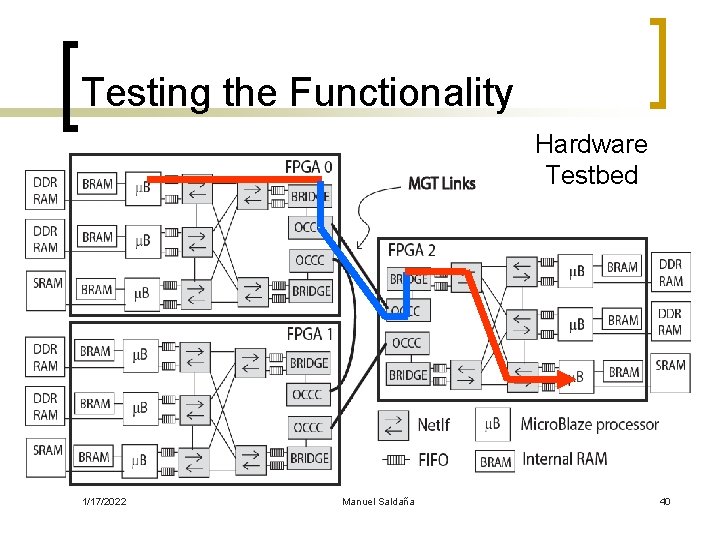

Testing the Functionality: Hardware Testbed

Testing the Functionality: Hardware Testbed

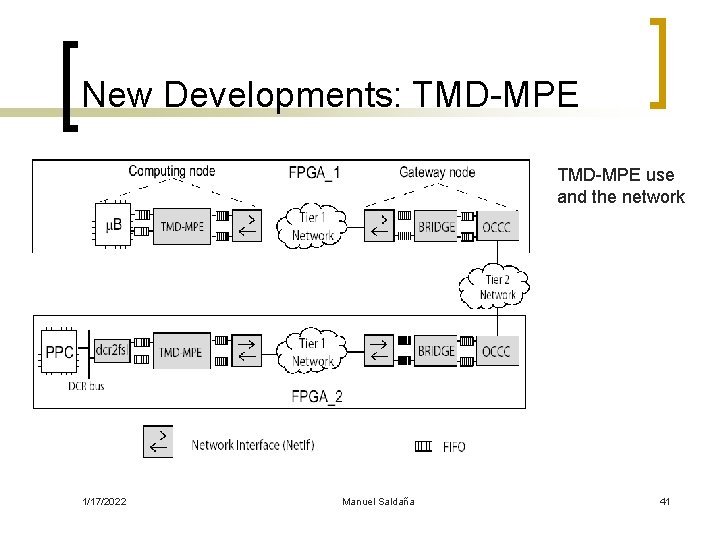

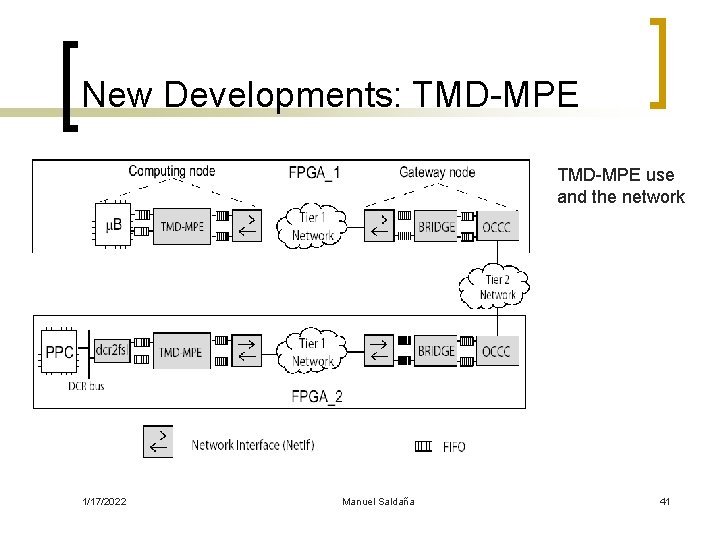

New Developments: TMD-MPE Use and the Network

Background: TMD-MPI

TMD-MPI:

- is a lightweight subset of the MPI standard
- is tailored to a particular application
- does not require an operating system
- has a small memory footprint (~8.7 KB)
- uses a simple protocol

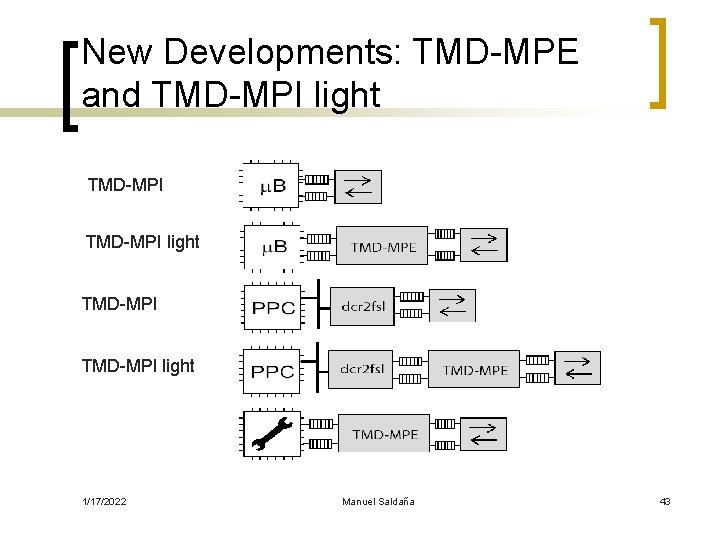

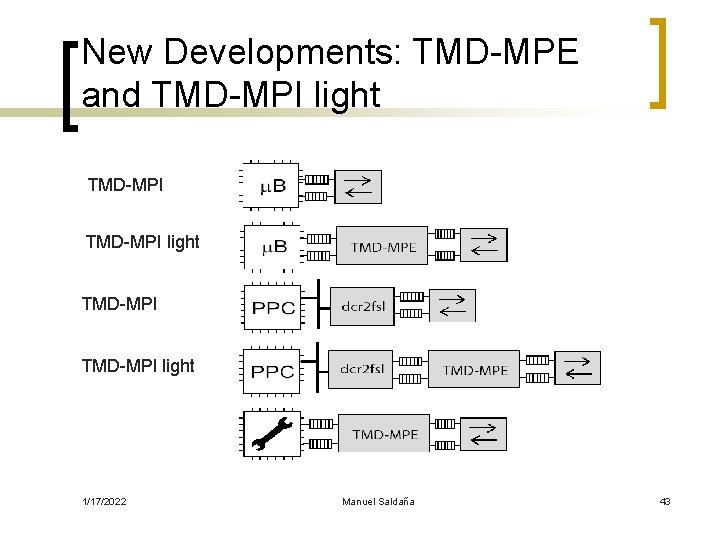

New Developments: TMD-MPE and TMD-MPI light