Concurrent Programming in Java


(http://flic.kr/p/7HT8qT)

What are you going to learn about today?
• Concurrency in Java
  – What
  – How
  – Why
• Problems with concurrency
• … and some solutions!
(http://flic.kr/p/8JpkTg)

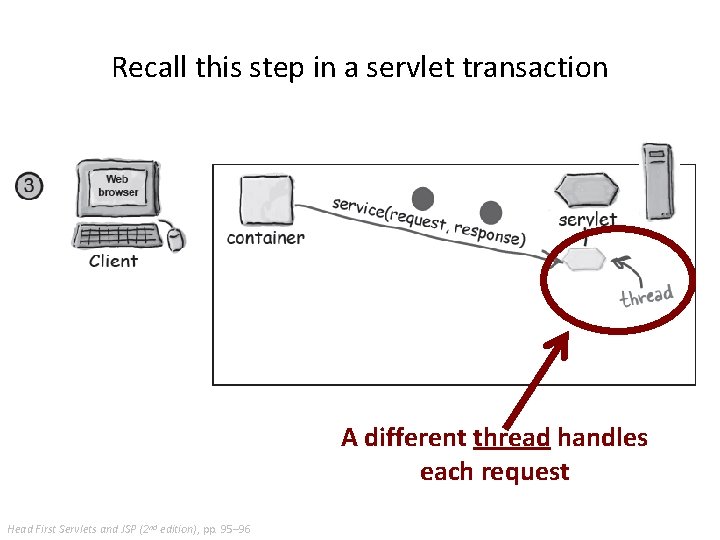

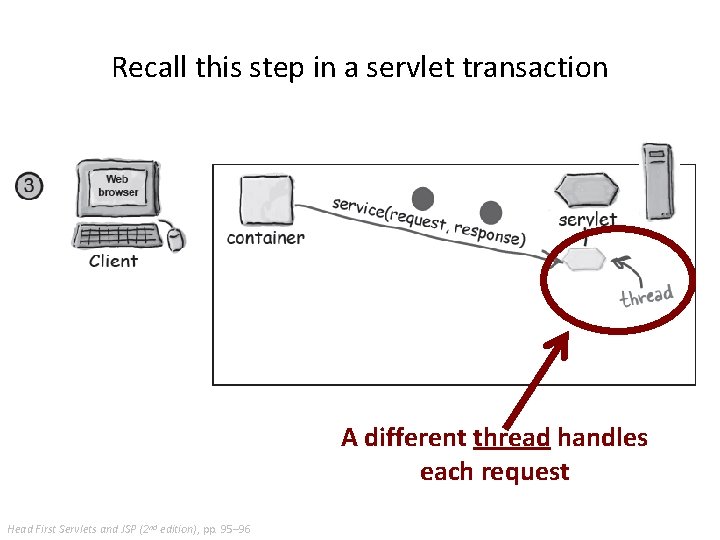

Recall this step in a servlet transaction: a different thread handles each request. (Head First Servlets and JSP, 2nd edition, pp. 95–96)

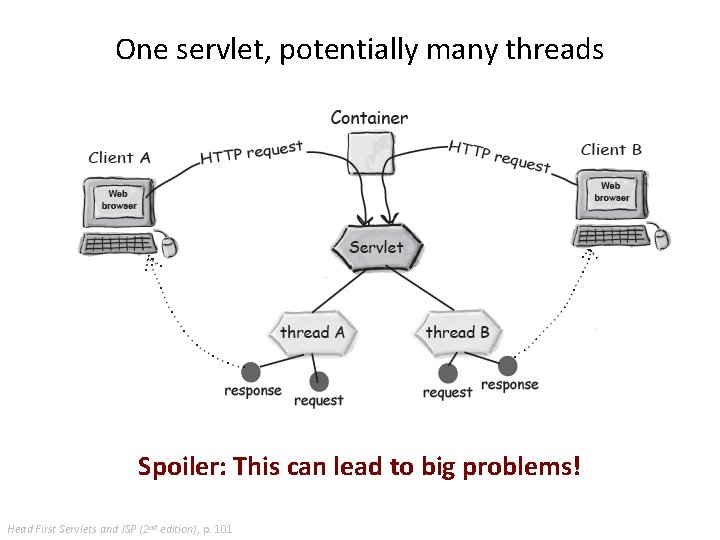

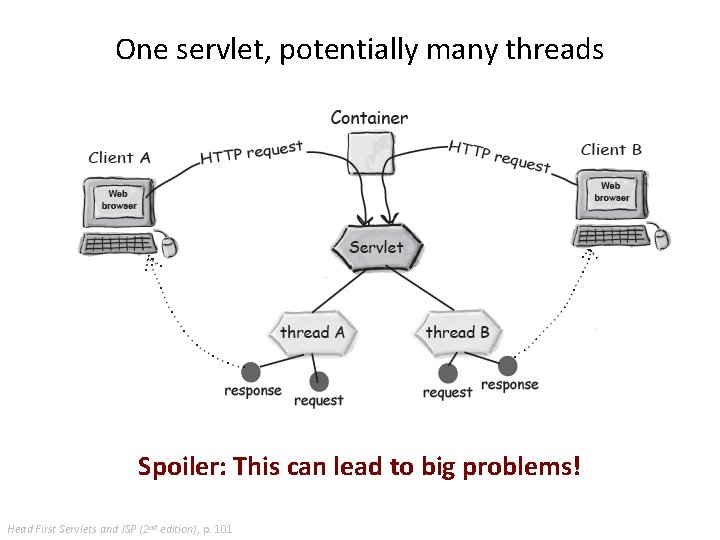

One servlet, potentially many threads. Spoiler: this can lead to big problems! (Head First Servlets and JSP, 2nd edition, p. 101)
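To make "one servlet instance, many request threads" concrete without a servlet container, here is a minimal sketch. It assumes a hypothetical `RequestHandler` class standing in for the servlet and a fixed-size `ExecutorService` standing in for the container's request threads; a real container manages its threads differently. Every task calls `handle()` on the same object, so its fields are shared by all threads. The counter is an `AtomicInteger` so the result stays correct; with a plain `int` field, concurrent increments could be lost.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class OneServletManyThreads {
    // Submits `requests` tasks to a pool of `poolSize` threads; every task uses the SAME handler.
    static RequestHandler simulate(int requests, int poolSize) throws InterruptedException {
        RequestHandler servlet = new RequestHandler();          // one shared instance
        ExecutorService container = Executors.newFixedThreadPool(poolSize);
        for (int i = 0; i < requests; i++) {
            container.execute(servlet::handle);                // each request runs on some pool thread
        }
        container.shutdown();                                  // accept no new requests
        container.awaitTermination(10, TimeUnit.SECONDS);      // wait for in-flight requests
        return servlet;
    }

    public static void main(String[] args) throws InterruptedException {
        RequestHandler servlet = simulate(100, 4);
        System.out.println("requests handled: " + servlet.handledCount());  // prints 100
        System.out.println("distinct threads: " + servlet.threadsUsed());   // at most 4
    }
}

// Hypothetical stand-in for a servlet: its fields are shared by every request thread.
class RequestHandler {
    private final AtomicInteger handled = new AtomicInteger();     // thread-safe counter
    private final Set<String> threadNames = ConcurrentHashMap.newKeySet();

    void handle() {
        threadNames.add(Thread.currentThread().getName());         // record which thread ran us
        handled.incrementAndGet();
    }

    int handledCount() { return handled.get(); }

    int threadsUsed() { return threadNames.size(); }
}
```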

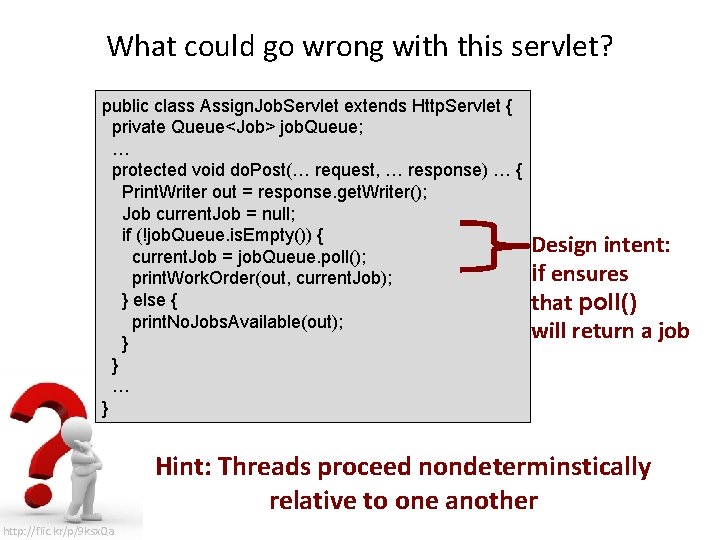

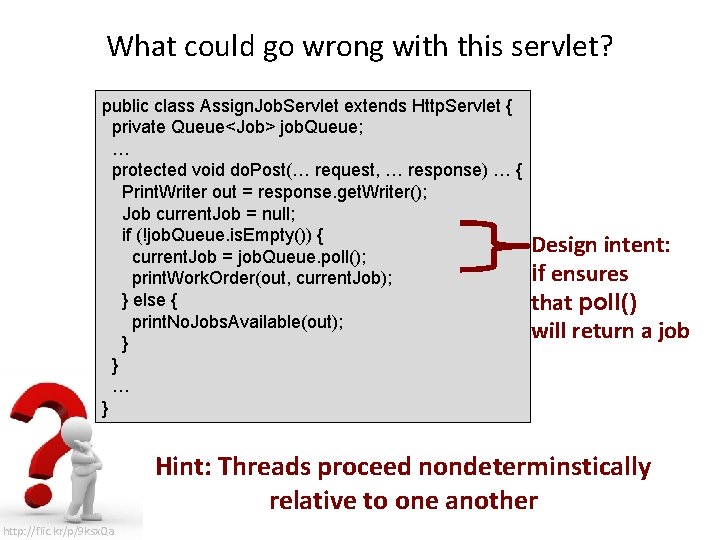

What could go wrong with this servlet?

```java
public class AssignJobServlet extends HttpServlet {
    private Queue<Job> jobQueue;
    …
    protected void doPost(… request, … response) … {
        PrintWriter out = response.getWriter();
        Job currentJob = null;
        if (!jobQueue.isEmpty()) {
            currentJob = jobQueue.poll();
            printWorkOrder(out, currentJob);
        } else {
            printNoJobsAvailable(out);
        }
    }
    …
}
```

Design intent: the `if` ensures that poll() will return a job.

Hint: threads proceed nondeterministically relative to one another. (http://flic.kr/p/9ksxQa)

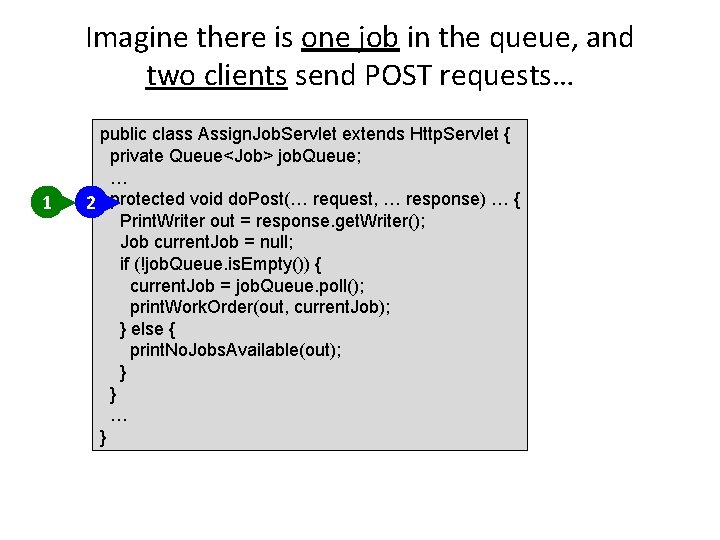

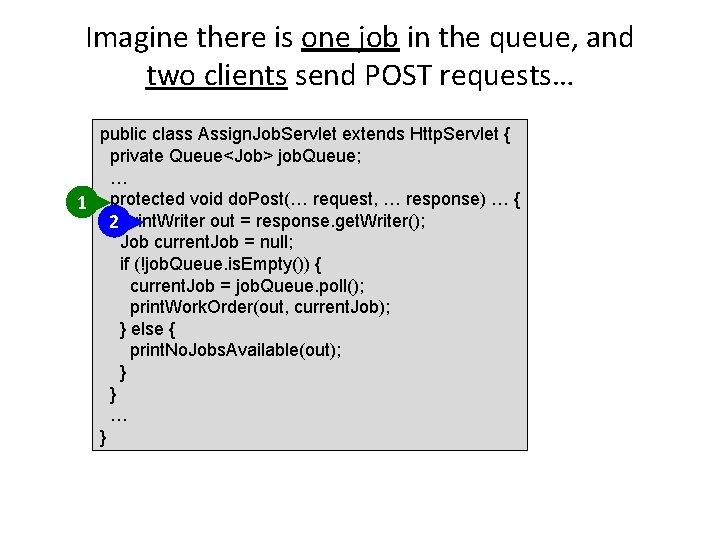

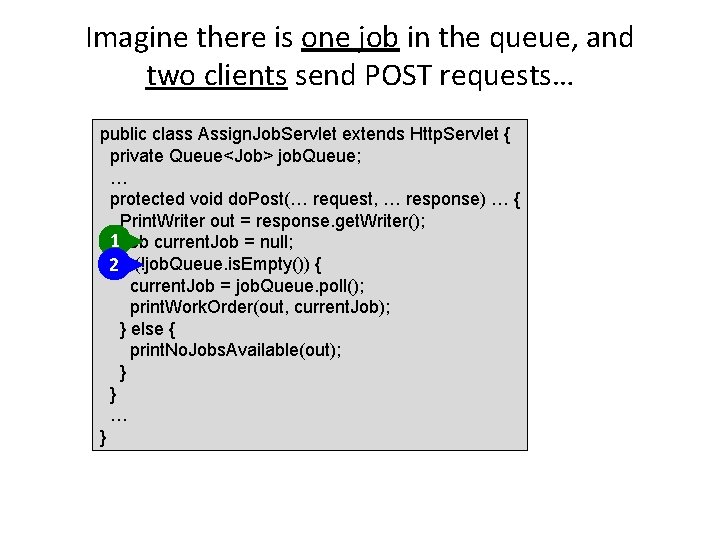

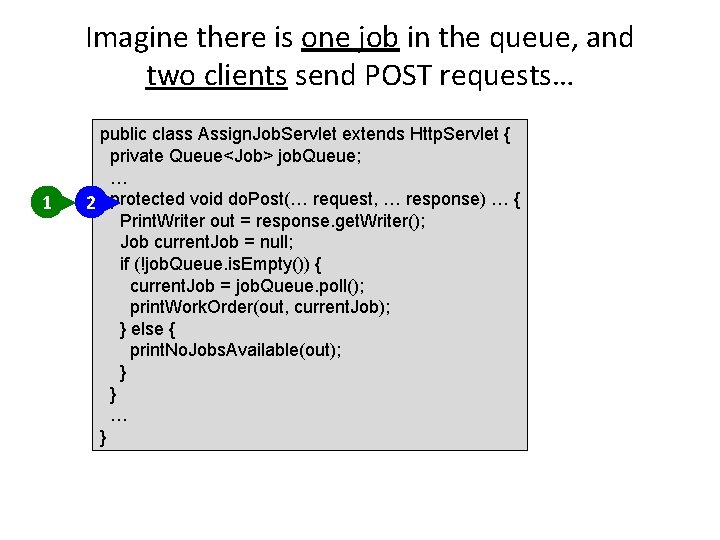

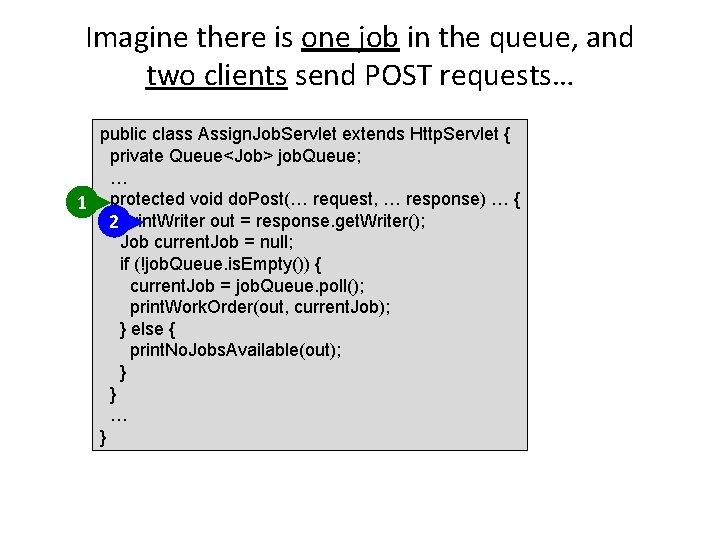

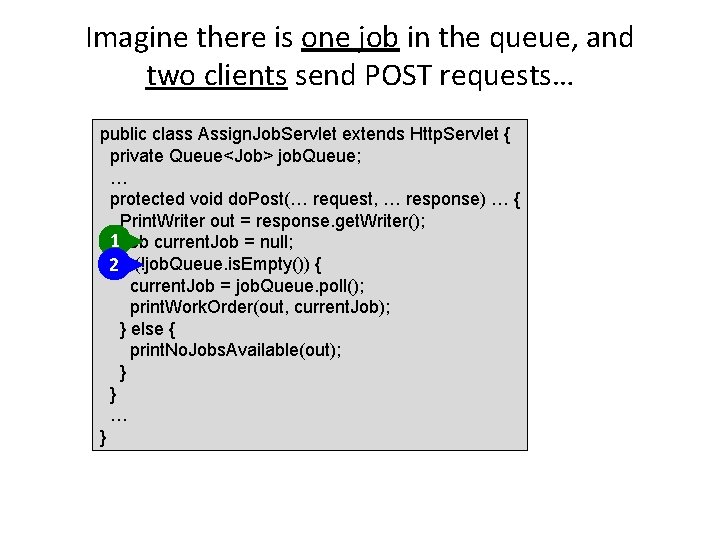

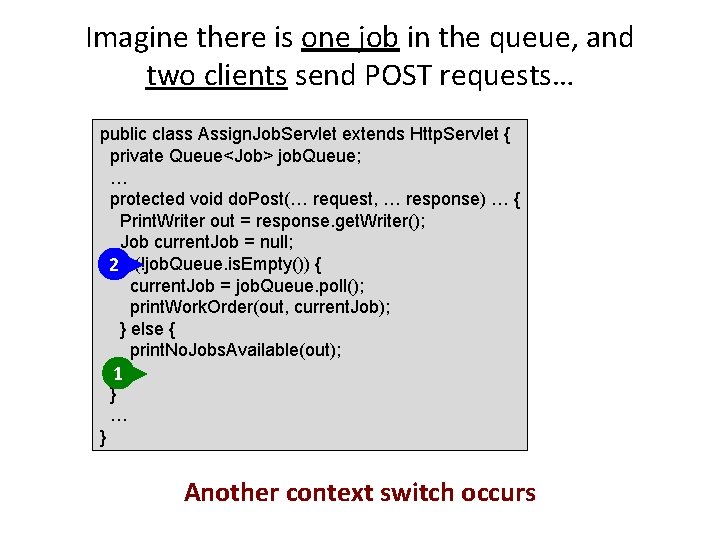

Imagine there is one job in the queue, and two clients send POST requests to AssignJobServlet… Client 1's request has not reached doPost() yet; Client 2's request is about to enter doPost().

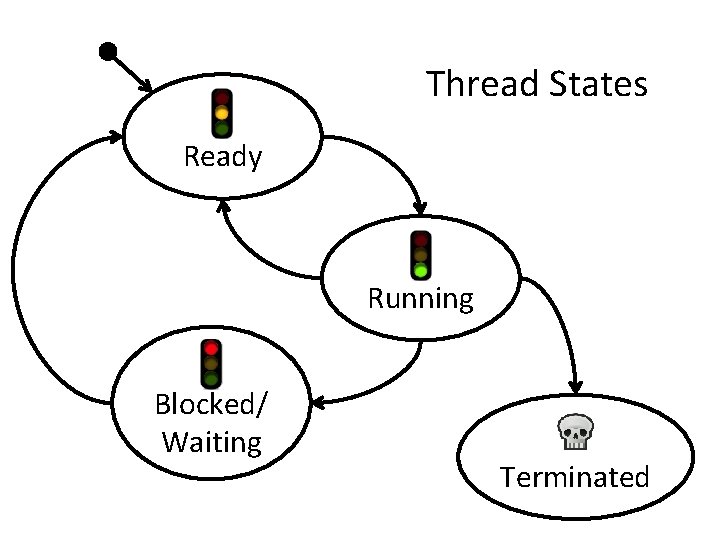

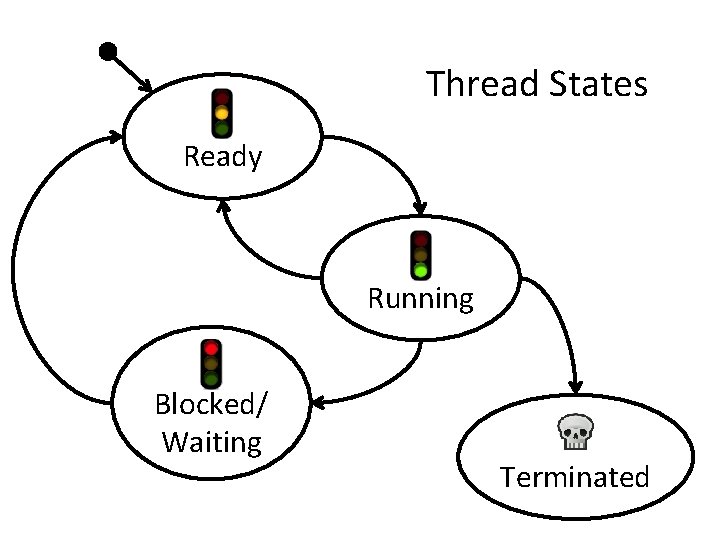

Thread States: Ready, Running, Blocked/Waiting, Terminated
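Java exposes these states through `Thread.getState()`, which returns a `Thread.State` constant: NEW, RUNNABLE, BLOCKED, WAITING, TIMED_WAITING, or TERMINATED. Roughly, the slide's Ready and Running both correspond to RUNNABLE, and Blocked/Waiting to BLOCKED, WAITING, or TIMED_WAITING. A minimal sketch that observes three of these states; the `CountDownLatch` used to park the worker in WAITING is an illustrative choice, not something from the slides.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;

class ThreadStatesDemo {
    // Returns the states observed at three points in a worker thread's life.
    static List<Thread.State> observe() throws InterruptedException {
        List<Thread.State> seen = new ArrayList<>();
        CountDownLatch go = new CountDownLatch(1);
        Thread worker = new Thread(() -> {
            try {
                go.await();                        // parks the worker: WAITING
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        seen.add(worker.getState());               // NEW: created, not started
        worker.start();
        while (worker.getState() != Thread.State.WAITING) {
            Thread.onSpinWait();                   // spin until the worker parks in await()
        }
        seen.add(worker.getState());               // WAITING
        go.countDown();                            // let the worker finish
        worker.join();
        seen.add(worker.getState());               // TERMINATED: run() has returned
        return seen;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(observe());             // prints [NEW, WAITING, TERMINATED]
    }
}
```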

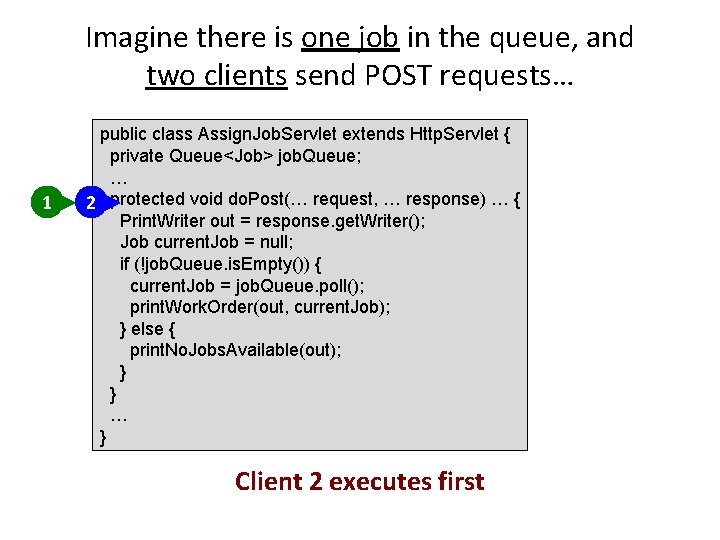

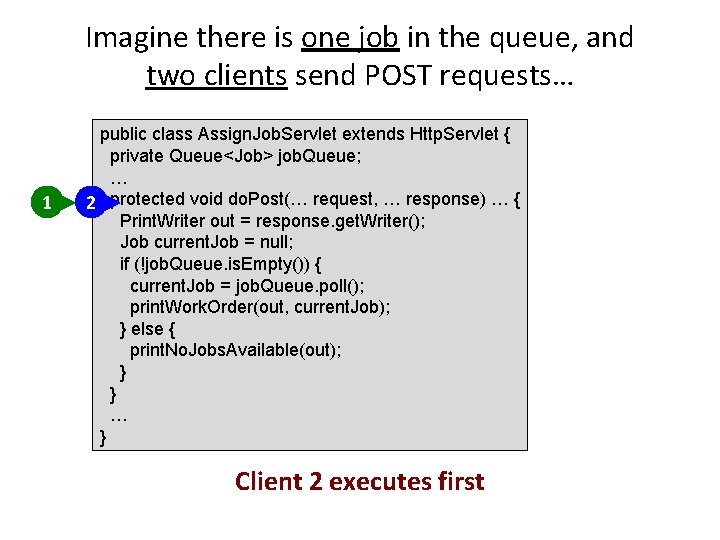

Client 2 executes first: it is about to enter doPost(), while Client 1 has not started yet.

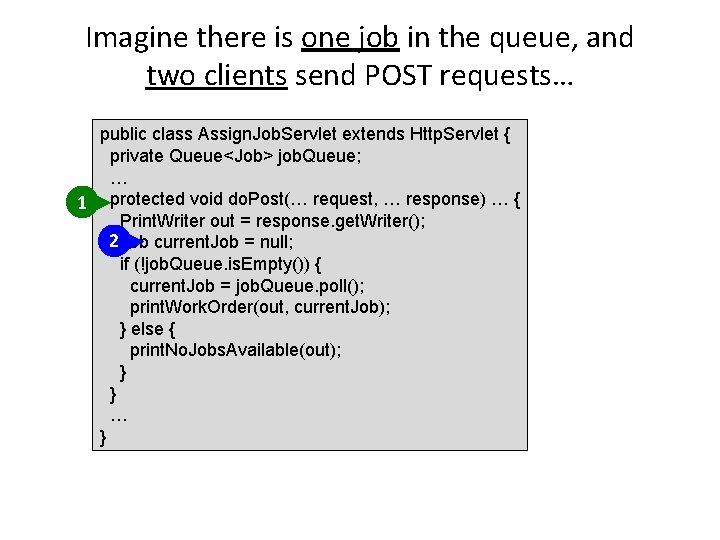

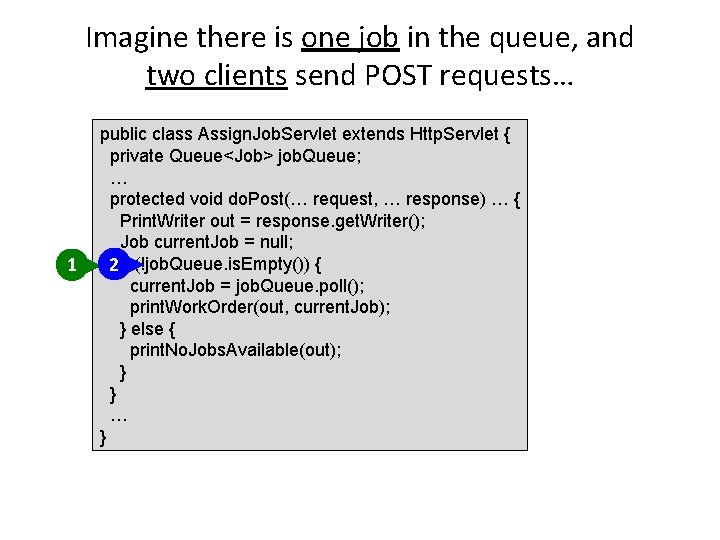

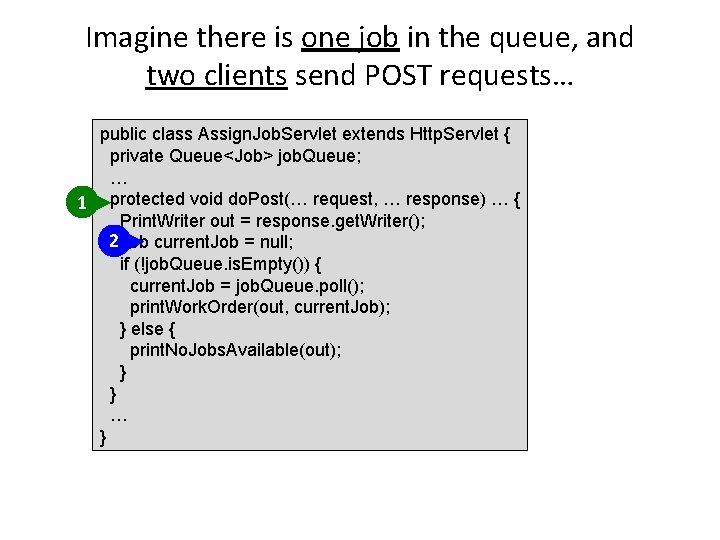

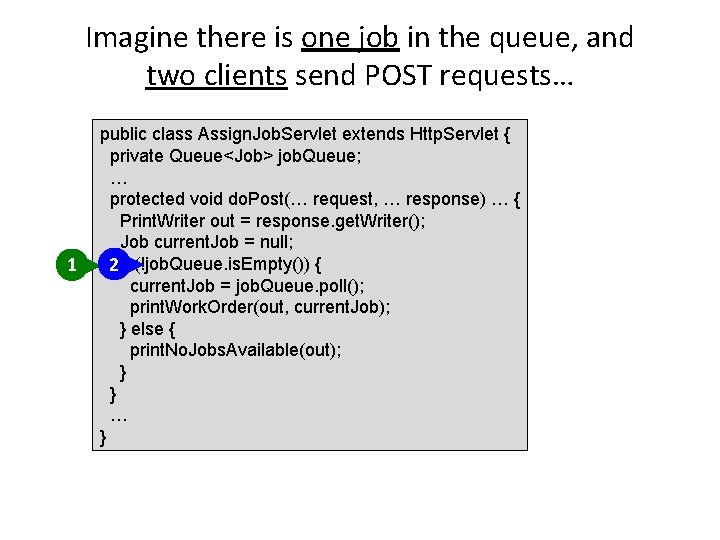

Client 2 reaches `PrintWriter out = response.getWriter();` while Client 1 is at the start of doPost().

Client 2 reaches `Job currentJob = null;`; Client 1 is still at the start of doPost().

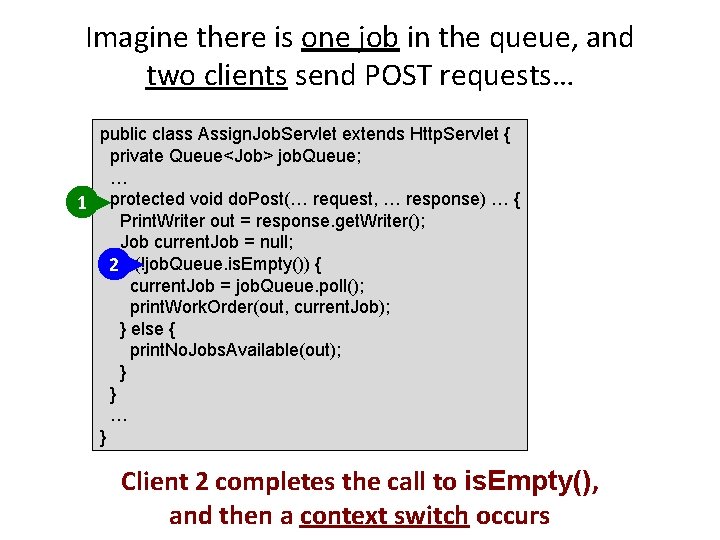

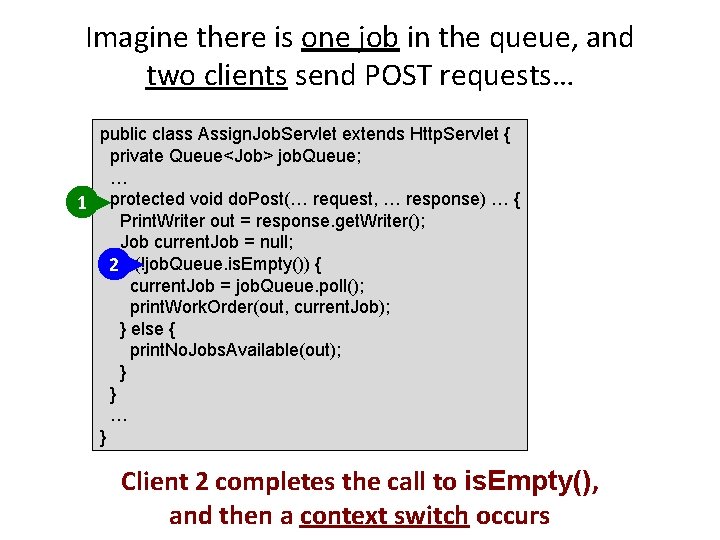

Client 2 reaches `if (!jobQueue.isEmpty())`. It completes the call to isEmpty(), which returns false because the queue holds one job, and then a context switch occurs.

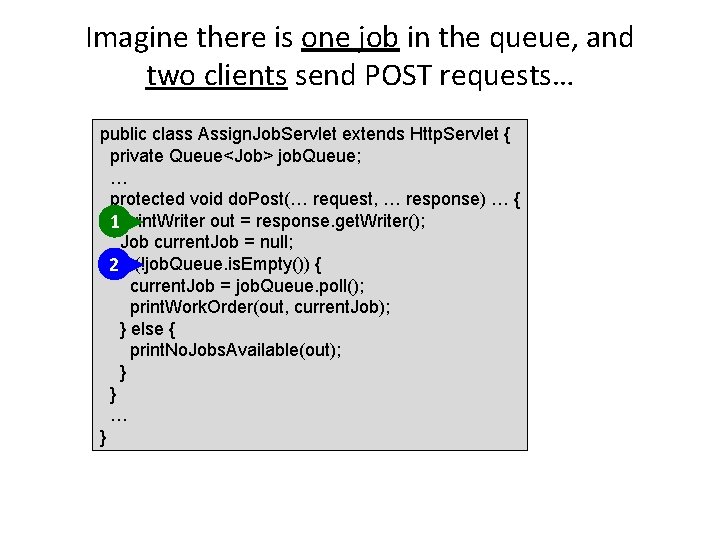

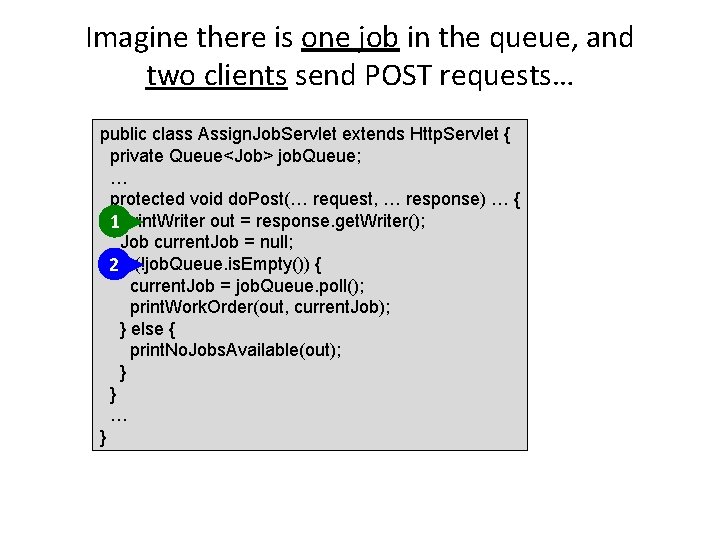

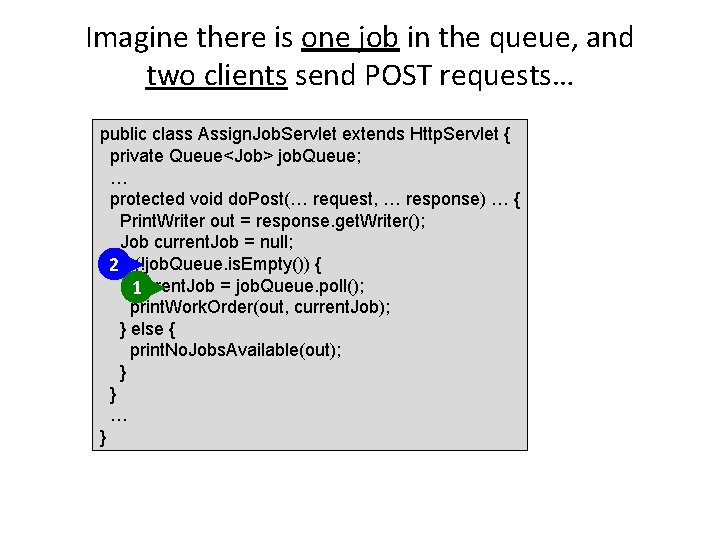

Client 1 now runs and reaches `PrintWriter out = response.getWriter();`. Client 2 stays paused at the `if` check.

Client 1 reaches `Job currentJob = null;`; Client 2 is still paused at the `if` check.

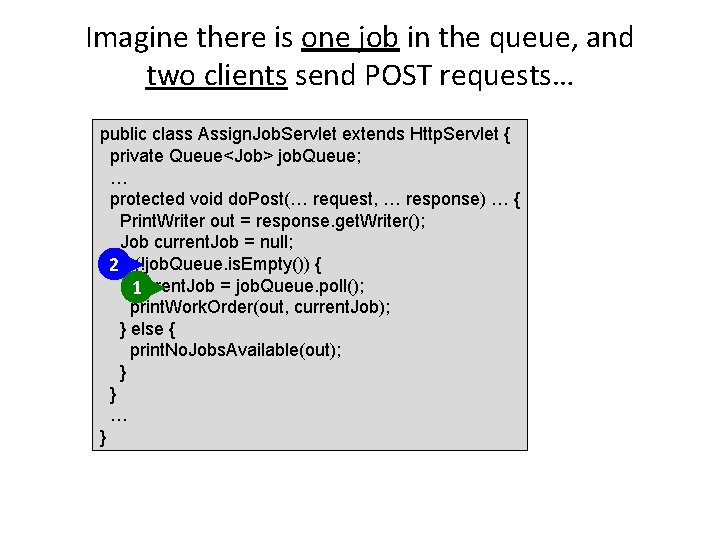

Client 1 reaches the same `if (!jobQueue.isEmpty())` check. The queue still holds one job, so isEmpty() returns false for Client 1 as well.

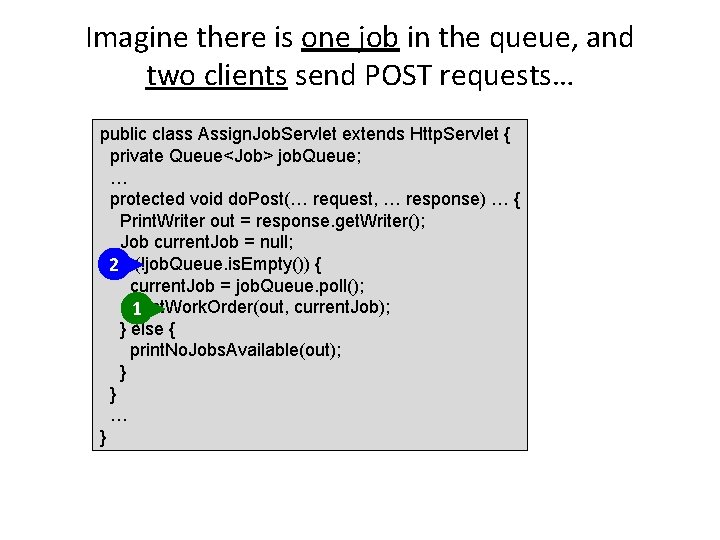

Client 1 calls poll(), which removes the only job, and reaches `printWorkOrder(out, currentJob);`. Client 2 is still paused after its isEmpty() check.

Client 1 finishes printWorkOrder() and leaves the if branch.

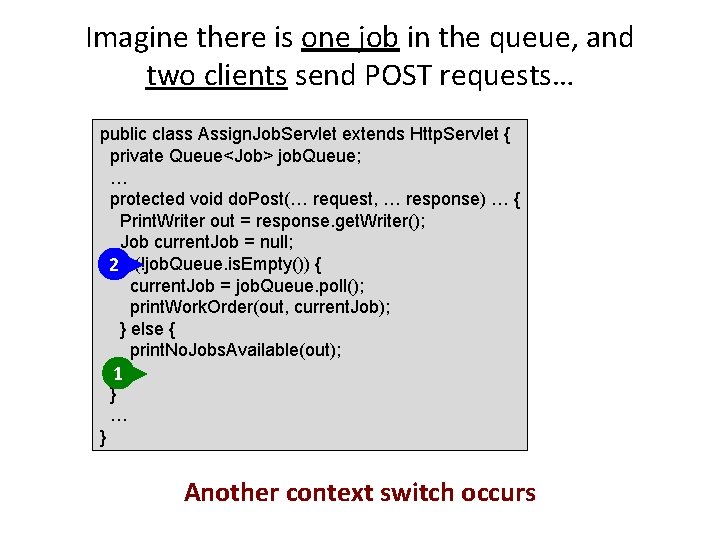

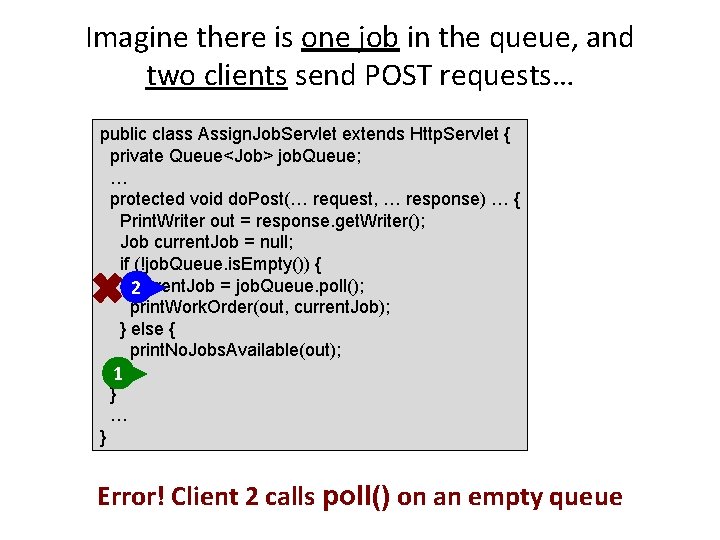

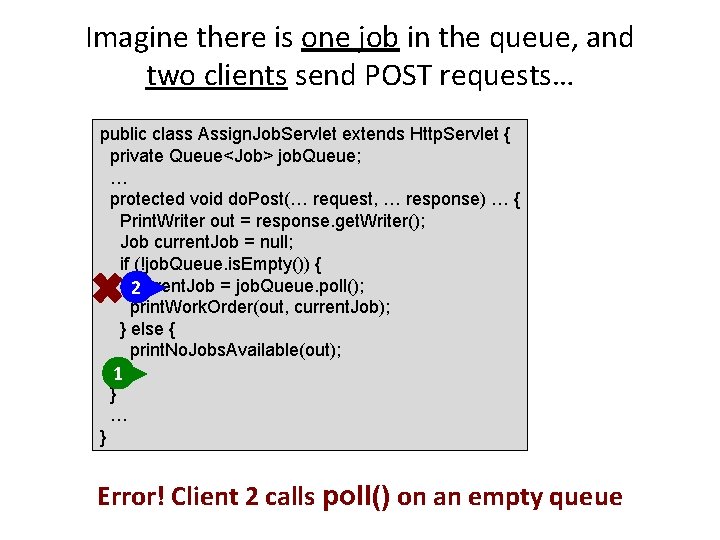

Client 1 reaches the end of doPost(). Another context switch occurs.

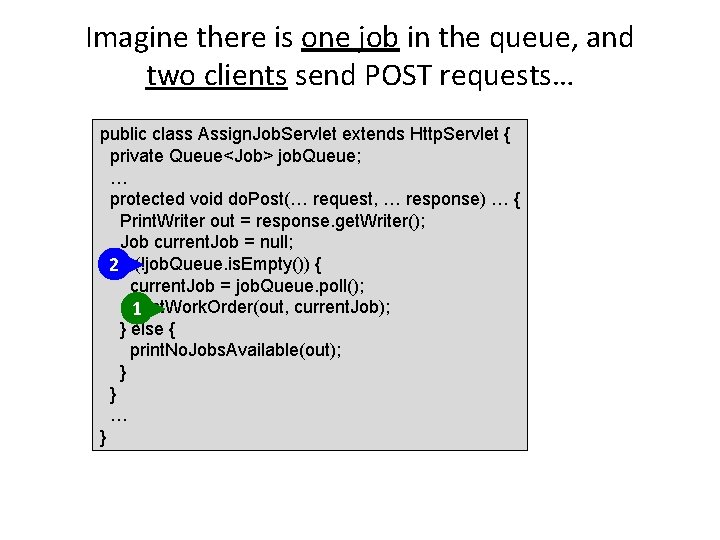

Error! Client 2 resumes inside the if branch and calls poll() on the now-empty queue. Because Queue.poll() returns null when the queue is empty, printWorkOrder() is called with a null job.
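Because this interleaving depends on an unlucky context switch, it rarely shows up in testing. A minimal sketch that forces the same schedule, assuming a plain `Queue<String>` in place of the servlet's `Queue<Job>` and two `CountDownLatch`es (hypothetical names `checked` and `client1Done`) that pause Client 2 between its check and its poll. The latches make the schedule deterministic; in the real servlet the scheduler decides.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.CountDownLatch;

class ToctouReplay {
    static String client1Job, client2Job;

    // Replays: Client 2 checks, Client 1 runs to completion, then Client 2 polls.
    static void replay() throws InterruptedException {
        client1Job = null;
        client2Job = null;
        Queue<String> jobQueue = new ArrayDeque<>();
        jobQueue.offer("job-1");                        // one job in the queue
        CountDownLatch checked = new CountDownLatch(1);
        CountDownLatch client1Done = new CountDownLatch(1);

        Thread client2 = new Thread(() -> {
            if (!jobQueue.isEmpty()) {                  // time of check: one job present
                checked.countDown();
                try {
                    client1Done.await();                // forced "context switch"
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                client2Job = jobQueue.poll();           // time of use: queue is empty, returns null
            }
        });
        Thread client1 = new Thread(() -> {
            try {
                checked.await();                        // run only after Client 2 has checked
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            if (!jobQueue.isEmpty()) {
                client1Job = jobQueue.poll();           // takes the only job
            }
            client1Done.countDown();
        });
        client2.start();
        client1.start();
        client1.join();
        client2.join();
    }

    public static void main(String[] args) throws InterruptedException {
        replay();
        System.out.println("Client 1 got: " + client1Job);  // prints "Client 1 got: job-1"
        System.out.println("Client 2 got: " + client2Job);  // prints "Client 2 got: null"
    }
}
```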

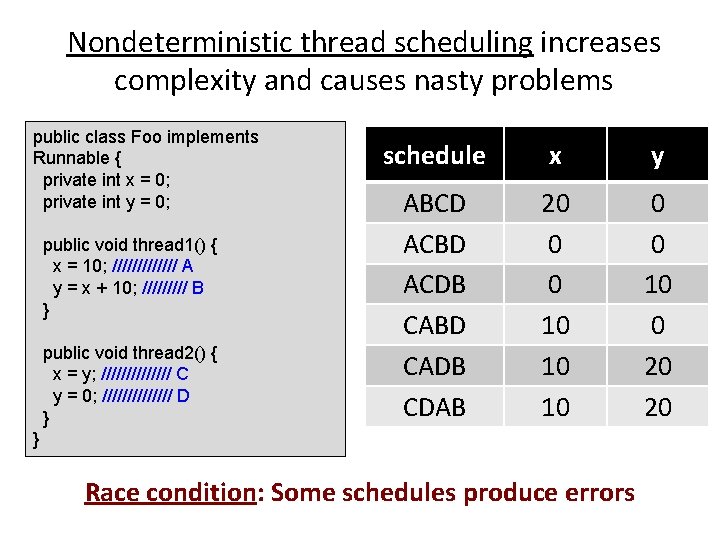

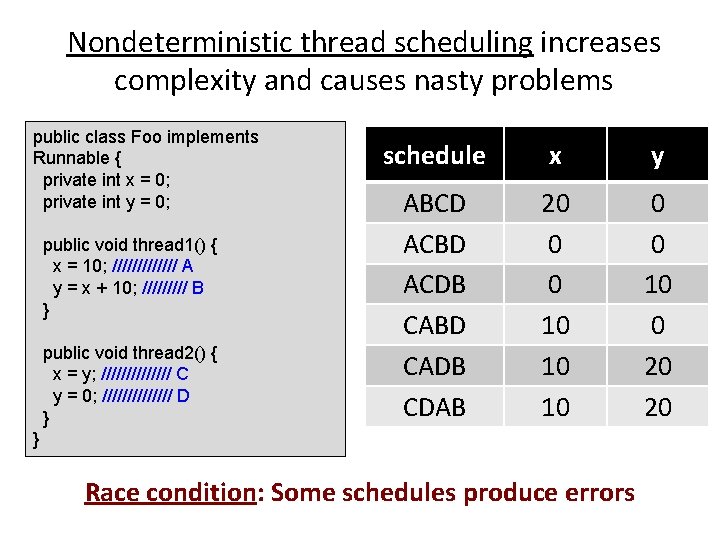

Nondeterministic thread scheduling increases complexity and causes nasty problems

```java
public class Foo {
    private int x = 0;
    private int y = 0;

    public void thread1() {
        x = 10;       // A
        y = x + 10;   // B
    }

    public void thread2() {
        x = y;        // C
        y = 0;        // D
    }
}
```

| Schedule | x  | y  |
|----------|----|----|
| ABCD     | 20 | 0  |
| ACBD     | 0  | 0  |
| ACDB     | 0  | 10 |
| CABD     | 10 | 0  |
| CADB     | 10 | 20 |
| CDAB     | 10 | 20 |

Race condition: some schedules produce errors.
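The table can be checked mechanically. A minimal sketch, assuming (as the table does) that each of the steps A to D runs atomically and that each thread's steps stay in program order. Rather than running real threads, it enumerates every interleaving of thread1's A, B with thread2's C, D and replays each one from x = 0, y = 0. The names `ScheduleExplorer`, `interleavings`, and `run` are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

class ScheduleExplorer {
    // Foo's four steps, acting on the state s = {x, y}.
    static final Map<Character, Consumer<int[]>> STEPS = Map.of(
        'A', s -> s[0] = 10,          // x = 10
        'B', s -> s[1] = s[0] + 10,   // y = x + 10
        'C', s -> s[0] = s[1],        // x = y
        'D', s -> s[1] = 0);          // y = 0

    // All interleavings of t1 and t2 that preserve each thread's own order.
    static List<String> interleavings(String t1, String t2) {
        List<String> out = new ArrayList<>();
        if (t1.isEmpty()) { out.add(t2); return out; }
        if (t2.isEmpty()) { out.add(t1); return out; }
        for (String rest : interleavings(t1.substring(1), t2)) out.add(t1.charAt(0) + rest);
        for (String rest : interleavings(t1, t2.substring(1))) out.add(t2.charAt(0) + rest);
        return out;
    }

    // Replays one schedule from x = 0, y = 0 and returns the final {x, y}.
    static int[] run(String schedule) {
        int[] s = {0, 0};
        for (char step : schedule.toCharArray()) STEPS.get(step).accept(s);
        return s;
    }

    public static void main(String[] args) {
        for (String sched : interleavings("AB", "CD")) {
            int[] s = run(sched);
            System.out.println(sched + ": x=" + s[0] + " y=" + s[1]);
        }
    }
}
```

The six schedules come out in the same order as the table (ABCD, ACBD, ACDB, CABD, CADB, CDAB), with the same final values.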

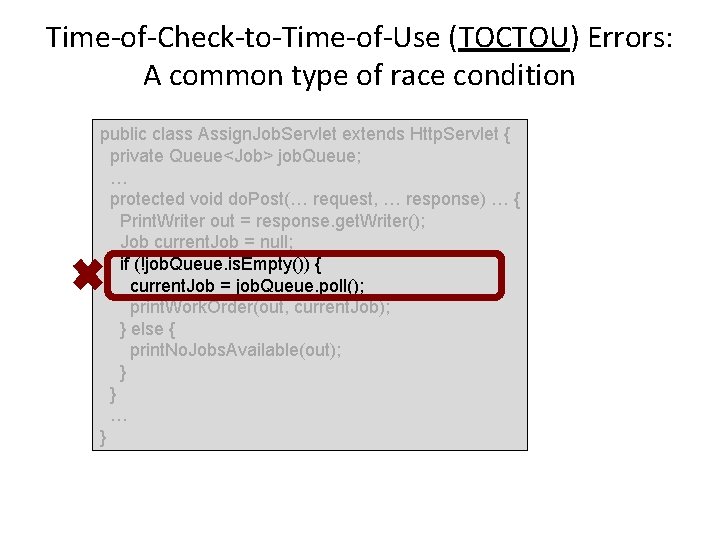

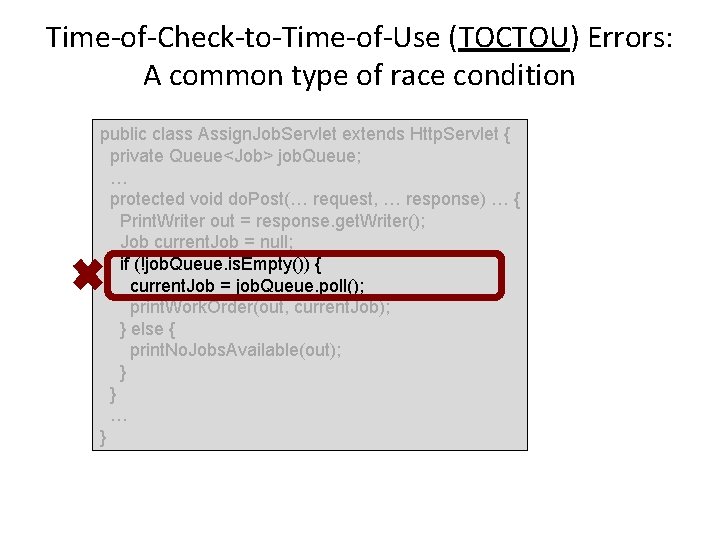

Time-of-Check-to-Time-of-Use (TOCTOU) errors: a common type of race condition. In AssignJobServlet, the time of check is `!jobQueue.isEmpty()` and the time of use is `jobQueue.poll()`; another thread can empty the queue in between, so the check no longer holds when the job is used.

So why would you want concurrency anyway? Performance: to increase responsiveness, to do more work in less time, and to increase throughput. (http://flic.kr/p/9ksxQa)

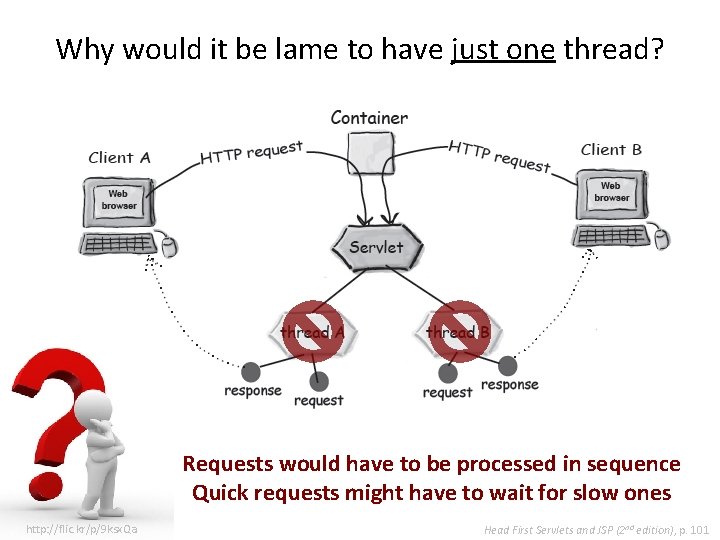

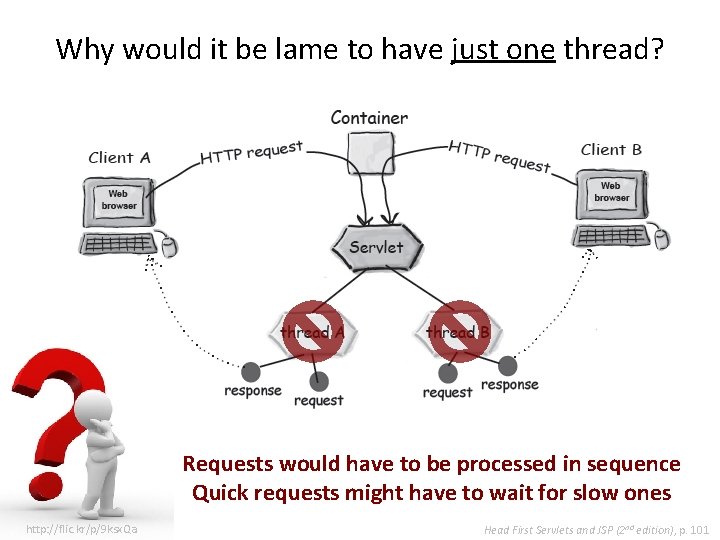

Why would it be lame to have just one thread? Requests would have to be processed in sequence, so quick requests might have to wait for slow ones. (http://flic.kr/p/9ksxQa; Head First Servlets and JSP, 2nd edition, p. 101)

How do we prevent race conditions? Synchronization! Java provides two basic mechanisms:
• Mutex locks
  – For enforcing mutually exclusive access to shared data
• Condition variables
  – For enabling threads to wait for application-specific conditions to become true
(http://flic.kr/p/aGcqRp)

Mutex locks
• States:
  – unlocked
  – locked by exactly 1 thread, with 0 or more waiting threads
• Operations:
  – lock() (aka acquire)
  – unlock() (aka release)
• Operations are atomic
• Threads that call lock() on a held lock must wait
(http://flic.kr/p/678N6L)
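`java.util.concurrent.locks.ReentrantLock` lets you observe these states through query methods such as `isLocked()`, `isHeldByCurrentThread()`, `hasQueuedThread(Thread)`, and `getQueueLength()`. A minimal sketch (the class and method names are hypothetical): the main thread holds the lock, a second thread blocks in `lock()` and shows up as a waiter, and after `unlock()` the waiter acquires and releases the lock.

```java
import java.util.concurrent.locks.ReentrantLock;

class MutexStatesDemo {
    // Returns true if: main held the lock, exactly one thread waited, and the lock ended unlocked.
    static boolean demo() throws InterruptedException {
        ReentrantLock mutex = new ReentrantLock();
        mutex.lock();                                  // locked by exactly one thread (main)
        boolean heldByMain = mutex.isHeldByCurrentThread();

        Thread waiter = new Thread(() -> {
            mutex.lock();                              // lock is held, so this thread must wait
            try {
                // critical section would go here
            } finally {
                mutex.unlock();
            }
        });
        waiter.start();
        while (!mutex.hasQueuedThread(waiter)) {
            Thread.onSpinWait();                       // wait until the waiter is queued on the lock
        }
        int waiting = mutex.getQueueLength();          // one waiting thread
        mutex.unlock();                                // release: the waiter can now acquire
        waiter.join();
        return heldByMain && waiting == 1 && !mutex.isLocked();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo());                    // prints true
    }
}
```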

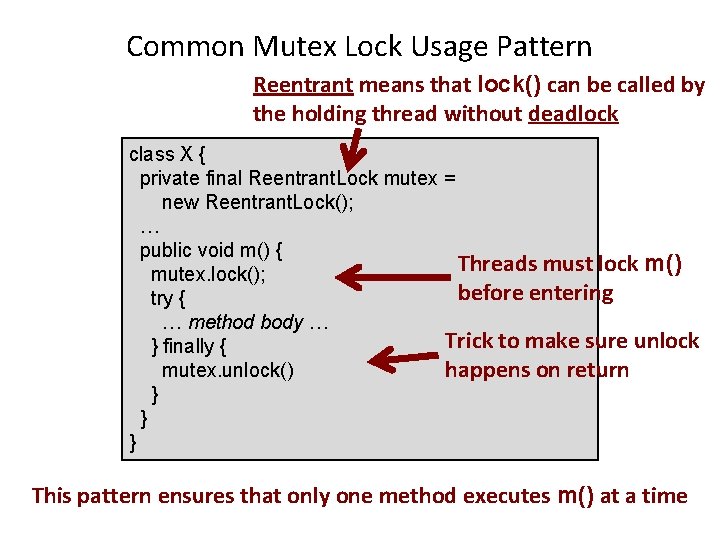

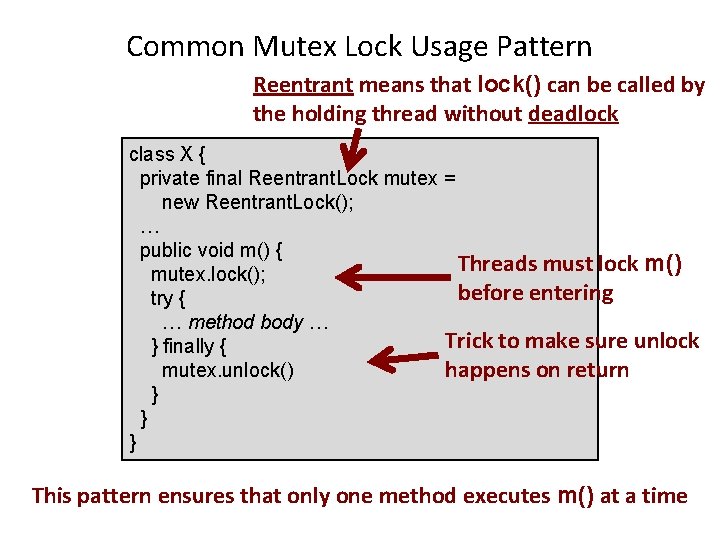

Common Mutex Lock Usage Pattern

```java
import java.util.concurrent.locks.ReentrantLock;

class X {
    private final ReentrantLock mutex = new ReentrantLock();
    …
    public void m() {
        mutex.lock();          // Threads must lock before entering m()
        try {
            … method body …
        } finally {
            mutex.unlock();    // Trick to make sure unlock happens on return
        }
    }
}
```

Reentrant means that lock() can be called again by the thread that already holds the lock without deadlock. This pattern ensures that only one thread executes m() at a time.
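A minimal runnable instance of the pattern, assuming a hypothetical `LockedCounter` whose "method body" is a `count++` (a read-modify-write that would lose updates without the lock). `incrementTwice()` calls `increment()` while already holding the lock, which shows reentrancy: the holding thread re-acquires the same lock instead of deadlocking.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

class LockPatternDemo {
    // Starts `threads` threads, each calling incrementTwice() `perThread` times.
    static int run(int threads, int perThread) throws InterruptedException {
        LockedCounter counter = new LockedCounter();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) counter.incrementTwice();
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) w.join();
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(4, 10_000));   // prints 80000 (4 threads x 10,000 calls x 2)
    }
}

class LockedCounter {
    private final ReentrantLock mutex = new ReentrantLock();
    private int count = 0;

    public void increment() {
        mutex.lock();              // acquire before touching shared state
        try {
            count++;               // read-modify-write, now mutually exclusive
        } finally {
            mutex.unlock();        // always released, even if the body throws
        }
    }

    public void incrementTwice() {
        mutex.lock();
        try {
            increment();           // reentrant: hold count goes to 2, no deadlock
            increment();
        } finally {
            mutex.unlock();
        }
    }

    public int get() {
        mutex.lock();
        try {
            return count;
        } finally {
            mutex.unlock();
        }
    }
}
```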

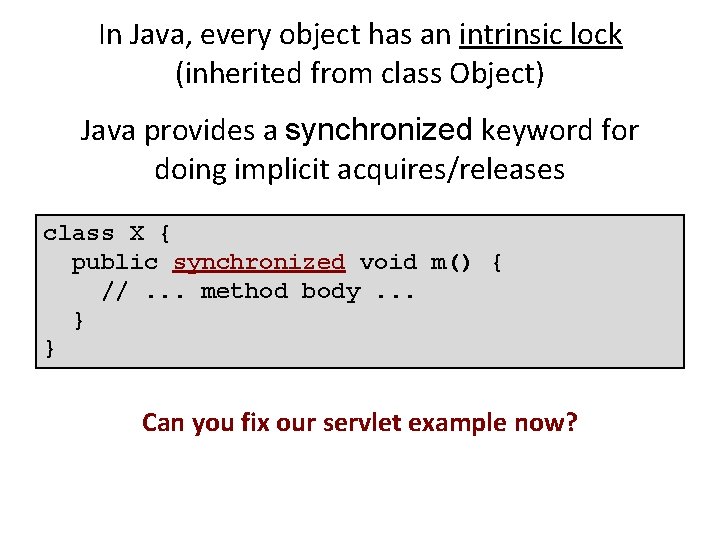

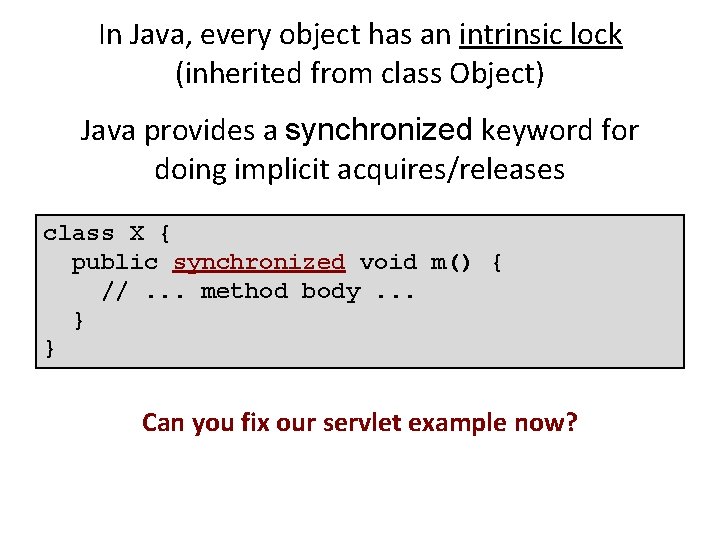

In Java, every object has an intrinsic lock (inherited from class Object). Java provides the synchronized keyword for doing implicit acquires/releases:

```java
class X {
    public synchronized void m() {
        // ... method body ...
    }
}
```

Can you fix our servlet example now?
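A small sketch of what `synchronized` does under the hood, assuming a class shaped like the slide's `X` with an extra synchronized method `n()` added for illustration. A synchronized instance method acquires the intrinsic lock of `this` on entry and releases it on return; `Thread.holdsLock(Object)` reports whether the current thread holds an object's intrinsic lock. Like `ReentrantLock`, intrinsic locks are reentrant, so `m()` can call `n()` on the same object without deadlocking.

```java
class IntrinsicLockDemo {
    public static void main(String[] args) {
        X x = new X();
        System.out.println(Thread.holdsLock(x));   // prints false: not inside a synchronized method
        System.out.println(x.m());                 // prints true: lock held inside n()
    }
}

class X {
    // Equivalent to wrapping the body in synchronized (this) { ... }
    public synchronized boolean m() {
        return n();                                // re-acquires the lock this thread already holds
    }

    public synchronized boolean n() {
        return Thread.holdsLock(this);             // true while inside a synchronized method of this object
    }
}
```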

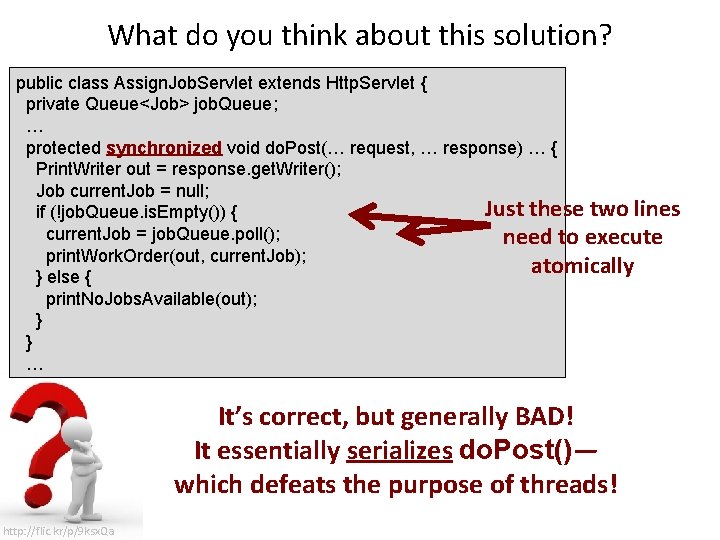

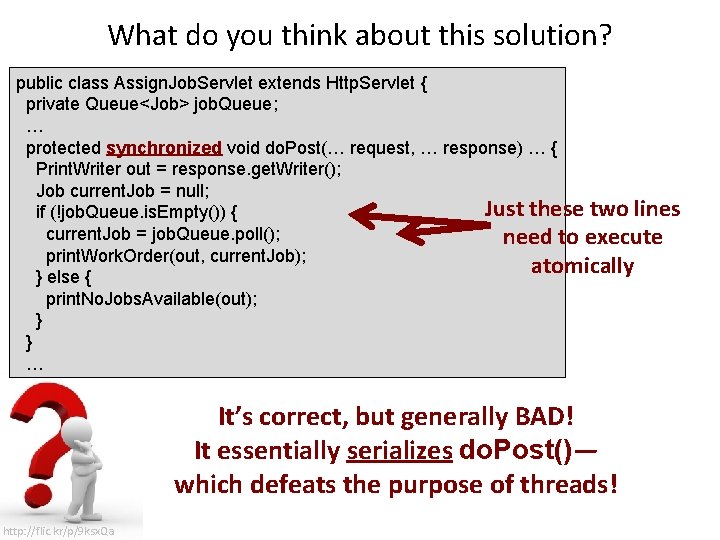

What do you think about this solution?

```java
public class AssignJobServlet extends HttpServlet {
    private Queue<Job> jobQueue;
    …
    protected synchronized void doPost(… request, … response) … {
        PrintWriter out = response.getWriter();
        Job currentJob = null;
        if (!jobQueue.isEmpty()) {             // Just these two lines
            currentJob = jobQueue.poll();      // need to execute atomically
            printWorkOrder(out, currentJob);
        } else {
            printNoJobsAvailable(out);
        }
    }
    …
}
```

It’s correct, but generally BAD! It essentially serializes doPost(), which defeats the purpose of threads! (http://flic.kr/p/9ksxQa)

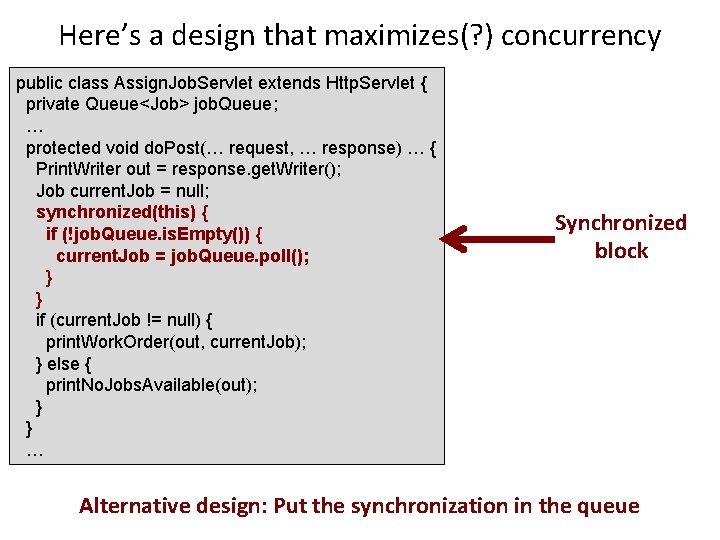

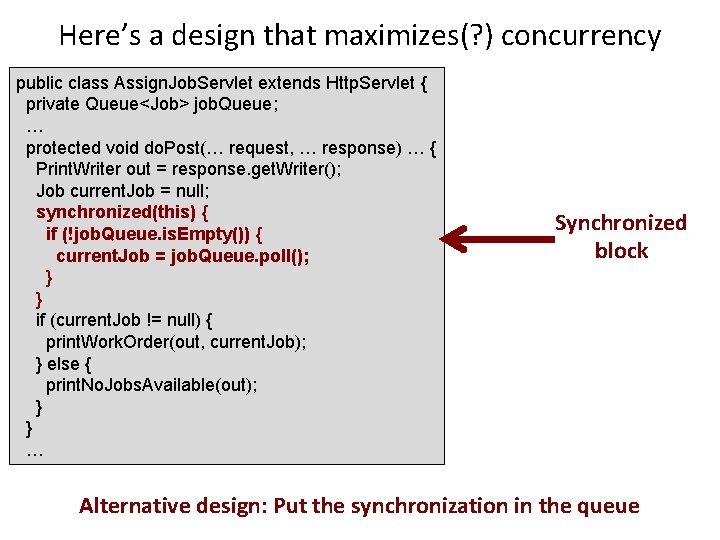

Here’s a design that maximizes(?) concurrency:

```java
public class AssignJobServlet extends HttpServlet {
    private Queue<Job> jobQueue;
    …
    protected void doPost(… request, … response) … {
        PrintWriter out = response.getWriter();
        Job currentJob = null;
        synchronized (this) {                  // Synchronized block
            if (!jobQueue.isEmpty()) {
                currentJob = jobQueue.poll();
            }
        }
        if (currentJob != null) {
            printWorkOrder(out, currentJob);
        } else {
            printNoJobsAvailable(out);
        }
    }
    …
}
```

Alternative design: put the synchronization in the queue.
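A servlet-free sketch of the synchronized-block design, assuming a hypothetical `JobAssigner` with `String` jobs in place of `Job`. Only the check-then-poll runs inside `synchronized (this)`; the rest (where printing would happen) runs outside the lock, so other requests are not serialized behind it. With 5 jobs and 8 concurrent clients, exactly 5 distinct jobs are handed out and 3 clients get "no jobs".

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

class SyncBlockDemo {
    // Runs `clients` concurrent requests against `jobs` queued jobs; returns the number of distinct jobs handed out.
    static int run(int jobs, int clients) throws InterruptedException {
        JobAssigner servlet = new JobAssigner(jobs);
        Set<String> assigned = ConcurrentHashMap.newKeySet();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < clients; i++) {
            Thread t = new Thread(() -> {
                String job = servlet.assign();
                if (job != null) {
                    assigned.add(job);         // stands in for printWorkOrder(out, currentJob)
                }                              // else: printNoJobsAvailable(out)
            });
            threads.add(t);
            t.start();
        }
        for (Thread t : threads) t.join();
        return assigned.size();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(5, 8));         // prints 5
    }
}

// Hypothetical servlet-free version of AssignJobServlet.
class JobAssigner {
    private final Queue<String> jobQueue = new ArrayDeque<>();

    JobAssigner(int jobs) {
        for (int i = 0; i < jobs; i++) jobQueue.offer("job-" + i);
    }

    String assign() {
        String currentJob = null;
        synchronized (this) {                  // only the check-then-poll is atomic
            if (!jobQueue.isEmpty()) {
                currentJob = jobQueue.poll();
            }
        }
        return currentJob;                     // slower output work would happen here, outside the lock
    }
}
```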

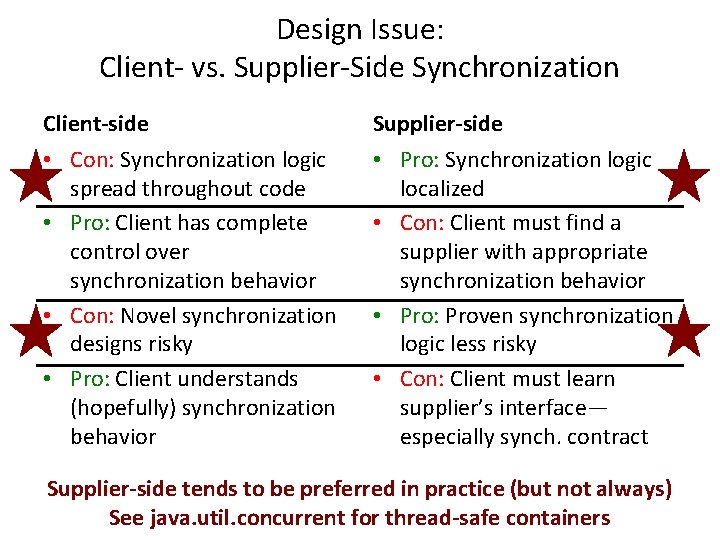

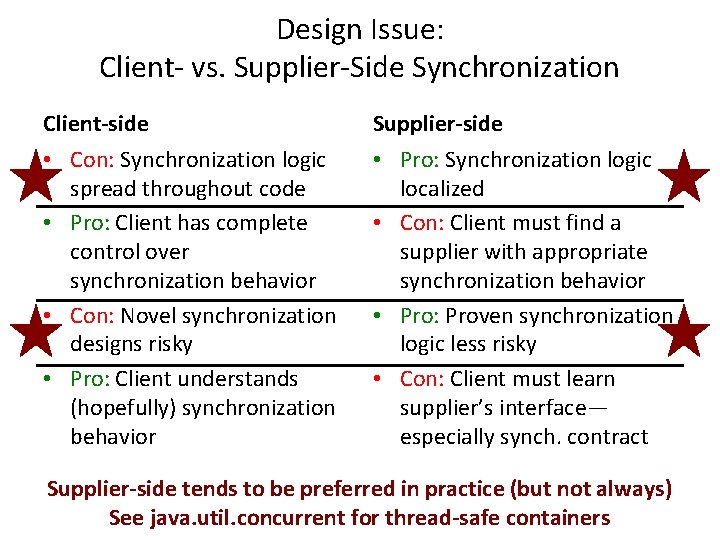

Design Issue: Client- vs. Supplier-Side Synchronization

Client-side:
• Con: Synchronization logic is spread throughout the code
• Pro: Client has complete control over synchronization behavior
• Con: Novel synchronization designs are risky
• Pro: Client understands (hopefully) the synchronization behavior

Supplier-side:
• Pro: Synchronization logic is localized
• Con: Client must find a supplier with appropriate synchronization behavior
• Pro: Proven synchronization logic is less risky
• Con: Client must learn the supplier’s interface, especially its synchronization contract

Supplier-side tends to be preferred in practice (but not always). See java.util.concurrent for thread-safe containers.
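As one illustration of supplier-side synchronization, `java.util.concurrent.ConcurrentLinkedQueue` is a thread-safe queue whose `poll()` atomically removes the head or returns null if the queue is empty. The client then needs no lock of its own: it calls `poll()` once and checks for null, so there is no separate `isEmpty()` check and no TOCTOU window. The price is the one the list names: the client must know that contract (null means "empty"). A minimal sketch with `String` jobs; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;

class SupplierSideDemo {
    // Runs `clients` concurrent requests against `jobs` queued jobs; returns how many were served a job.
    static int run(int jobs, int clients) throws InterruptedException {
        Queue<String> jobQueue = new ConcurrentLinkedQueue<>();   // supplier handles synchronization
        for (int i = 0; i < jobs; i++) jobQueue.offer("job-" + i);
        AtomicInteger served = new AtomicInteger();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < clients; i++) {
            Thread t = new Thread(() -> {
                String job = jobQueue.poll();      // one atomic "remove if present"
                if (job != null) {
                    served.incrementAndGet();      // would be printWorkOrder(...)
                }                                  // else: would be printNoJobsAvailable(...)
            });
            threads.add(t);
            t.start();
        }
        for (Thread t : threads) t.join();
        return served.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(5, 8));             // prints 5
    }
}
```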

Monitor Pattern: the most common way to implement supplier-side synchronization
• Monitor object: one whose methods are executed in mutual exclusion
  – All public methods are synchronized
(http://flic.kr/p/7ZkGEH)

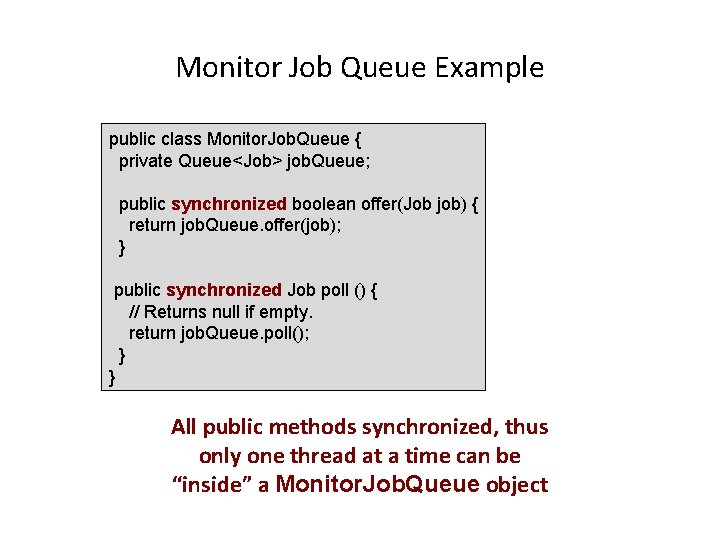

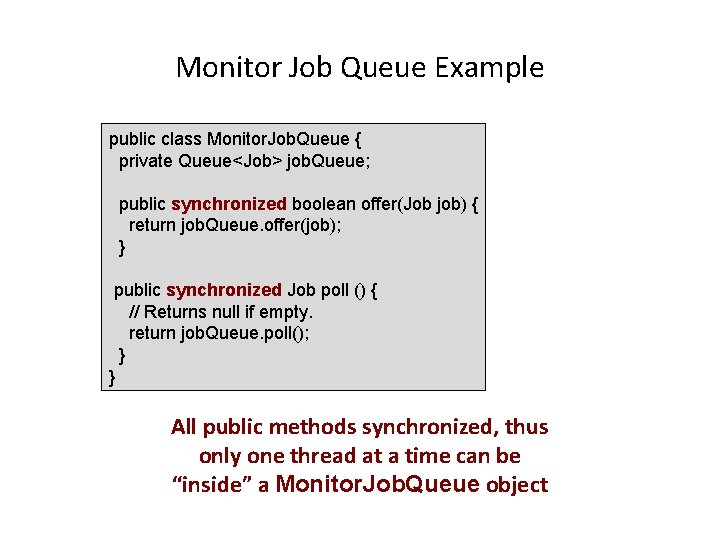

Monitor Job Queue Example

```java
import java.util.ArrayDeque;
import java.util.Queue;

public class MonitorJobQueue {
    private final Queue<Job> jobQueue = new ArrayDeque<>();

    public synchronized boolean offer(Job job) {
        return jobQueue.offer(job);
    }

    public synchronized Job poll() {   // Returns null if empty.
        return jobQueue.poll();
    }
}
```

All public methods are synchronized, so only one thread at a time can be “inside” a MonitorJobQueue object.

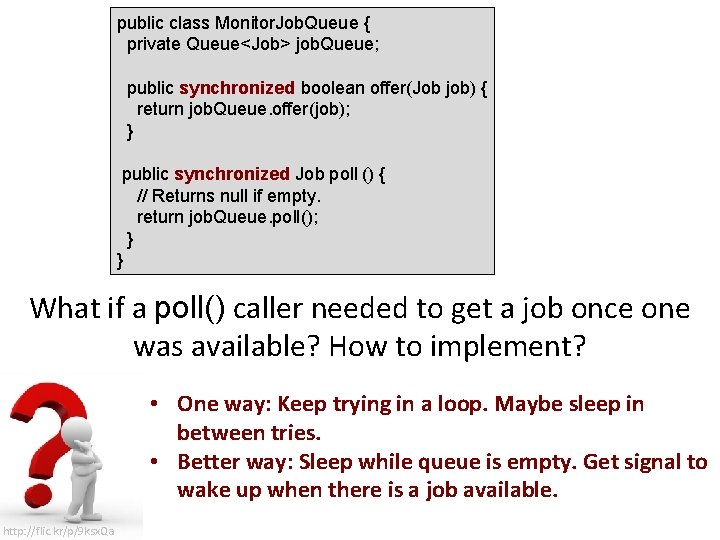

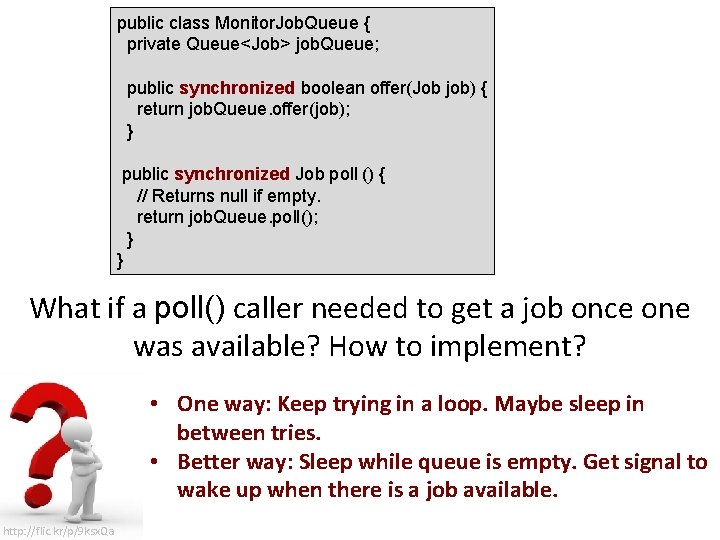

What if a poll() caller needed to get a job once one was available? How would you implement that?
• One way: keep trying in a loop, maybe sleeping in between tries.
• Better way: sleep while the queue is empty, and get a signal to wake up when a job is available.
(http://flic.kr/p/9ksxQa)
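The "one way" option can be sketched on top of a monitor queue like MonitorJobQueue. This minimal sketch assumes `String` jobs and hypothetical names (`SimpleMonitorQueue`, `pollWaiting`, `retryMillis`). `offer()` and `poll()` are synchronized as in the monitor pattern; `pollWaiting()` deliberately is not, so the lock is free while the caller sleeps and a producer can get in.

```java
import java.util.ArrayDeque;
import java.util.Queue;

class PollingDemo {
    public static void main(String[] args) throws InterruptedException {
        SimpleMonitorQueue queue = new SimpleMonitorQueue();
        Thread producer = new Thread(() -> {
            try {
                Thread.sleep(50);                       // the job shows up a little later
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            queue.offer("job-1");
        });
        producer.start();
        System.out.println(queue.pollWaiting(10));      // prints job-1
        producer.join();
    }
}

// Monitor-style queue: offer() and poll() are synchronized.
class SimpleMonitorQueue {
    private final Queue<String> jobQueue = new ArrayDeque<>();

    public synchronized boolean offer(String job) { return jobQueue.offer(job); }

    public synchronized String poll() { return jobQueue.poll(); }   // null if empty

    // "One way": retry poll() in a loop, sleeping between tries (lock not held while sleeping).
    public String pollWaiting(long retryMillis) throws InterruptedException {
        String job;
        while ((job = poll()) == null) {
            Thread.sleep(retryMillis);                  // wasted wake-ups plus up to retryMillis of extra latency
        }
        return job;
    }
}
```

The drawbacks are visible in the loop: every retry takes the lock even when nothing has changed, and a newly offered job can sit unnoticed for up to `retryMillis`. Waiting on a condition variable avoids both.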

Condition variables
• Object for enabling waiting for some condition
  – Always associated with a particular mutex
  – Mutex must be held while invoking operations
• Operations
  – await() (aka wait)
  – signal() (aka notify)
  – signalAll() (aka notifyAll; aka broadcast)

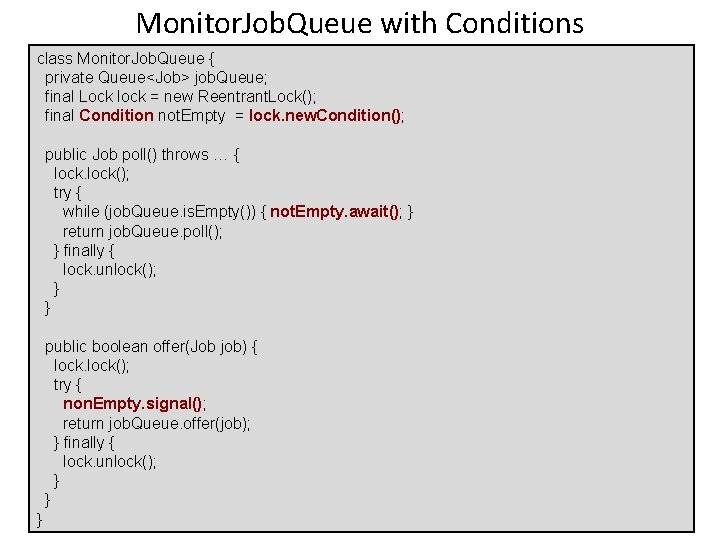

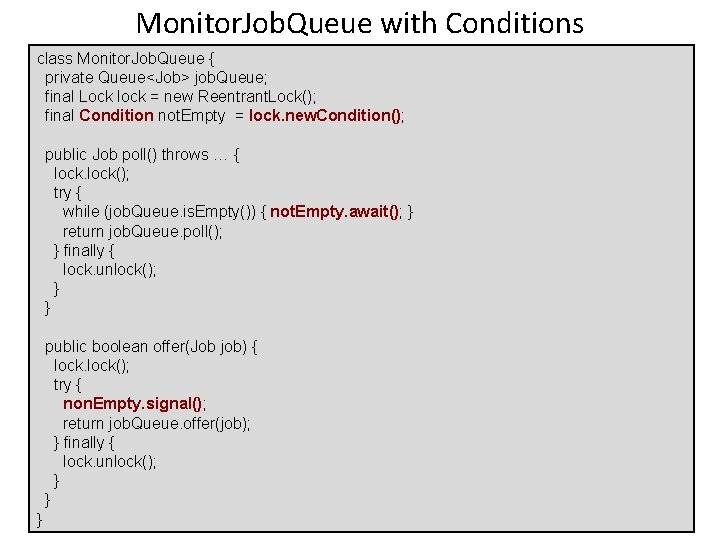

MonitorJobQueue with Conditions

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class MonitorJobQueue {
    private final Queue<Job> jobQueue = new ArrayDeque<>();
    final Lock lock = new ReentrantLock();
    final Condition notEmpty = lock.newCondition();

    public Job poll() throws … {
        lock.lock();
        try {
            while (jobQueue.isEmpty()) {
                notEmpty.await();
            }
            return jobQueue.poll();
        } finally {
            lock.unlock();
        }
    }

    public boolean offer(Job job) {
        lock.lock();
        try {
            notEmpty.signal();
            return jobQueue.offer(job);
        } finally {
            lock.unlock();
        }
    }
}
```
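A runnable variant of the class above, as a sketch: it assumes `String` jobs instead of `Job`, declares `InterruptedException` (the checked exception `Condition.await()` throws), and uses the hypothetical name `BlockingJobQueue`. The `while` loop matters: `await()` releases the lock while waiting and re-acquires it before returning, and wake-ups can be spurious, so the condition must be re-checked every time.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class ConditionQueueDemo {
    public static void main(String[] args) throws InterruptedException {
        BlockingJobQueue queue = new BlockingJobQueue();
        Thread consumer = new Thread(() -> {
            try {
                System.out.println("got " + queue.poll());   // prints "got job-1" (blocks until offered)
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        queue.offer("job-1");
        consumer.join();
    }
}

class BlockingJobQueue {
    private final Queue<String> jobQueue = new ArrayDeque<>();
    private final Lock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition();  // always used with `lock` held

    public String poll() throws InterruptedException {
        lock.lock();
        try {
            while (jobQueue.isEmpty()) {     // re-check after every wake-up
                notEmpty.await();            // releases `lock` while waiting, re-acquires before returning
            }
            return jobQueue.poll();
        } finally {
            lock.unlock();
        }
    }

    public boolean offer(String job) {
        lock.lock();
        try {
            boolean added = jobQueue.offer(job);
            notEmpty.signal();               // wake one waiting poll() caller
            return added;
        } finally {
            lock.unlock();
        }
    }
}
```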

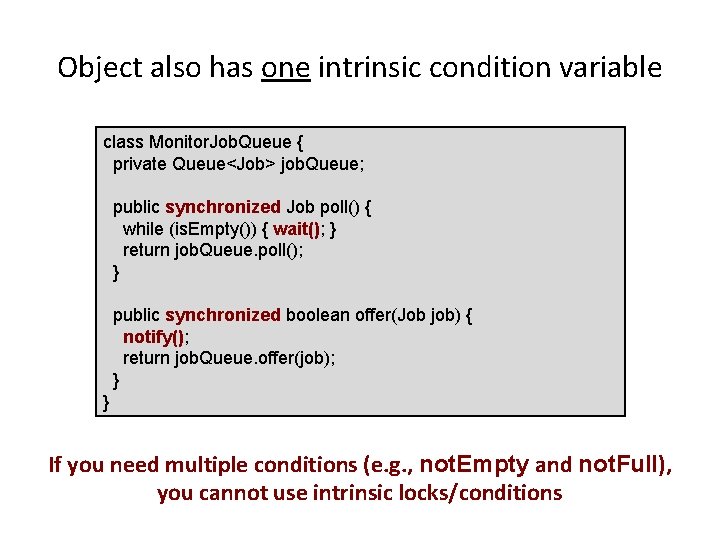

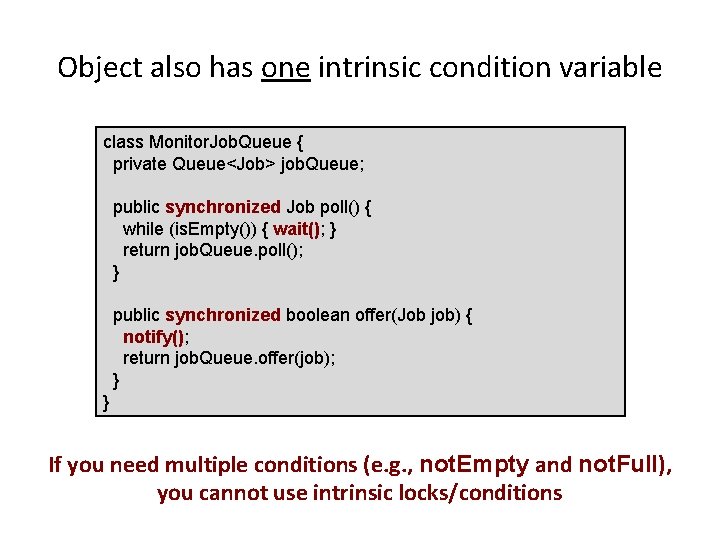

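Object also has one intrinsic condition variable

```java
import java.util.ArrayDeque;
import java.util.Queue;

class MonitorJobQueue {
    private final Queue<Job> jobQueue = new ArrayDeque<>();

    public synchronized Job poll() throws InterruptedException {
        while (jobQueue.isEmpty()) {
            wait();          // releases this object's intrinsic lock while waiting
        }
        return jobQueue.poll();
    }

    public synchronized boolean offer(Job job) {
        notify();            // wakes one thread waiting in poll()
        return jobQueue.offer(job);
    }
}
```

If you need multiple conditions (e.g., notEmpty and notFull), you cannot use intrinsic locks/conditions, because each object has only one intrinsic condition; use an explicit Lock with one Condition per predicate instead.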
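A minimal sketch of the multiple-conditions case: a bounded queue with one `ReentrantLock` and two `Condition`s, so producers wait on `notFull` and consumers wait on `notEmpty`, and each `signal()` wakes only a thread that can actually make progress. The names `BoundedJobQueue`, `put`, and `take` are hypothetical; in practice `java.util.concurrent.ArrayBlockingQueue` already provides this behavior and is built the same way (one lock, `notEmpty` and `notFull` conditions).

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

class BoundedQueueDemo {
    // One producer puts 0..items-1 through a queue of the given capacity; the caller takes them all.
    static List<Integer> transfer(int capacity, int items) throws InterruptedException {
        BoundedJobQueue<Integer> queue = new BoundedJobQueue<>(capacity);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < items; i++) queue.put(i);          // blocks while full
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        List<Integer> received = new ArrayList<>();
        for (int i = 0; i < items; i++) received.add(queue.take());    // blocks while empty
        producer.join();
        return received;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(transfer(2, 5));   // prints [0, 1, 2, 3, 4]
    }
}

class BoundedJobQueue<T> {
    private final ArrayDeque<T> items = new ArrayDeque<>();
    private final int capacity;
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition();   // takers wait here
    private final Condition notFull = lock.newCondition();    // putters wait here

    BoundedJobQueue(int capacity) { this.capacity = capacity; }

    public void put(T item) throws InterruptedException {
        lock.lock();
        try {
            while (items.size() == capacity) notFull.await();
            items.addLast(item);
            notEmpty.signal();        // a taker can now make progress
        } finally {
            lock.unlock();
        }
    }

    public T take() throws InterruptedException {
        lock.lock();
        try {
            while (items.isEmpty()) notEmpty.await();
            T item = items.removeFirst();
            notFull.signal();         // a putter can now make progress
            return item;
        } finally {
            lock.unlock();
        }
    }
}
```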

Summary
• Java threads
• Concurrency is for performance
• Race conditions
• Mutex locks
• Condition variables
• Monitor pattern
(http://flic.kr/p/YSY3X)