

- Slides: 27

Concept learning

Overview
- What do we mean by a concept?
- Why is concept learning tricky to understand?
- Connectionist nets as a simple model of concept learning
- Some features of natural concept learning that make the picture less simple:
  - Role of existing background knowledge
  - Qualitative shifts during conceptual development
  - Conceptual essence: an innate expectation?
  - Domain specificity: different types of learning for different kinds of concept?
  - Social learning

Explaining concept learning is hard because…
- In principle, the number of possible concepts is infinite, or at least very large: all the combinations of all possible features
- So brute force, trial and error, would face a huge computational task
- Almost all realistic models seek to constrain the space of candidate concepts that is considered
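
To get a feel for how quickly the space of candidate concepts grows, here is a minimal sketch. It assumes (for illustration only, not something the slides specify) that objects are described by binary features and that a "concept" is any way of sorting the possible objects into members and non-members. The function name is hypothetical.

```python
def count_boolean_concepts(n_features: int) -> int:
    """Count the candidate concepts over objects built from binary features.

    Assumption: every feature is simply present or absent, and a concept
    is any subset of the possible objects.
    """
    n_objects = 2 ** n_features   # each feature is present or absent
    return 2 ** n_objects         # each object is either in or out of the concept


for n in (1, 2, 3, 4, 5):
    print(n, count_boolean_concepts(n))
```

With just 5 binary features this already gives 4294967296 candidate concepts, which is why an unconstrained trial and error search is not a plausible account of learning.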

“Classic” view of concepts
- Adult concepts have defining features
- Children's are "complexive"
- Concepts stand alone
- Concepts are static

Alternatively
- Adults’ concepts are not always classical either, and sometimes children's are classical
- Concepts are embedded in "theories"
- Concepts are radically context dependent
- Concepts may be convenient fictions

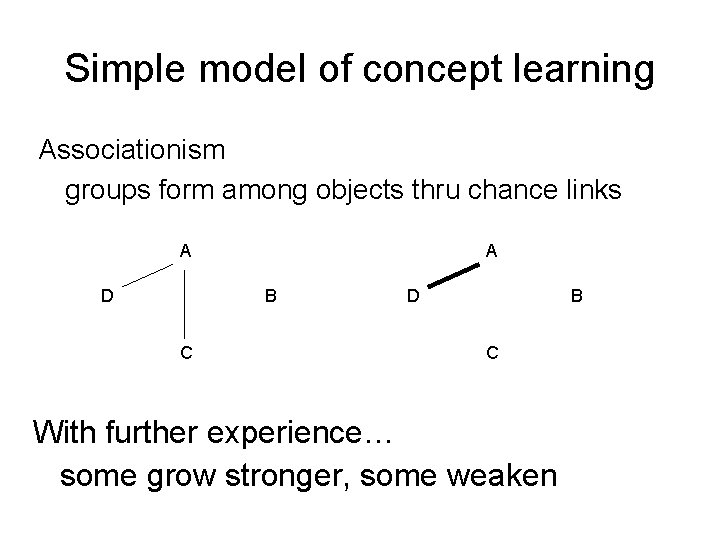

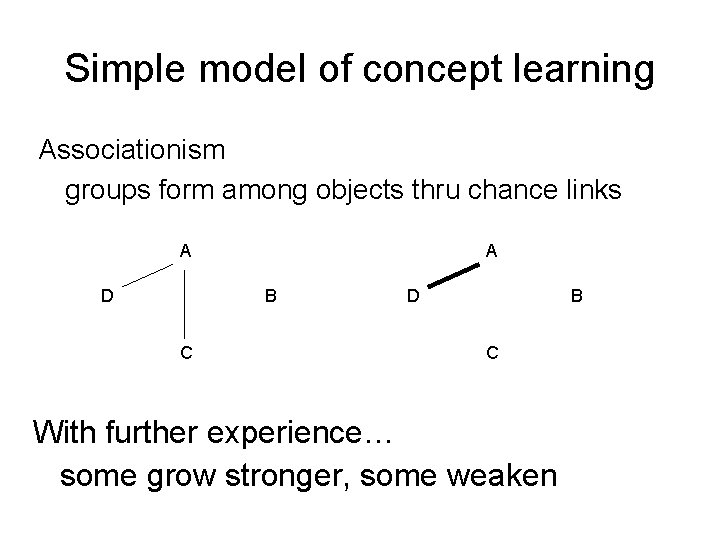

Simple model of concept learning
- Associationism: groups form among objects through chance links
- [Diagram: objects A, B, C and D joined by links]
- With further experience, some links grow stronger and some weaken

… it’s not so simple
- Ach: determining tendency
- Barsalou: ad hoc categories
- Vygotsky: qualitative shifts in concept learning tactics at different developmental stages: contingent associations, complexes, “scientific concepts”

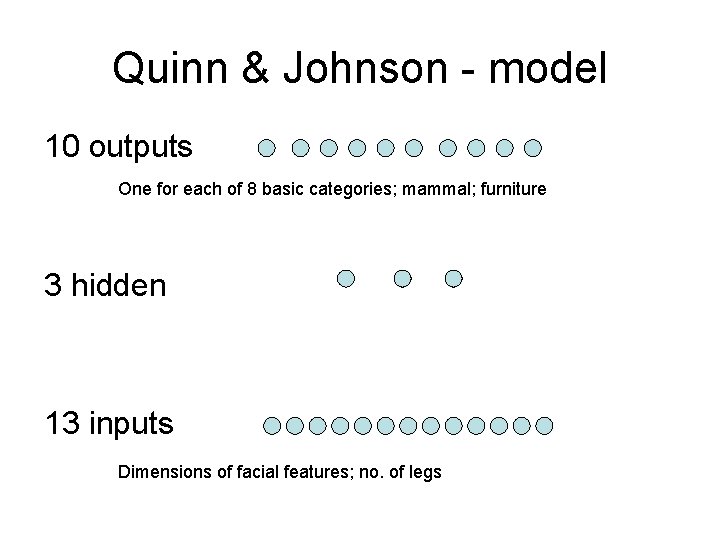

Quinn & Johnson (1997)
- Previous developmental research: children at 3 to 4 months old can form category representations
  - ‘Basic’ category, e.g. CAT: show a few cats and then switch to another animal, and they notice
  - ‘Global’ [superordinate] category, e.g. MAMMAL: show a few mammals and then switch to a fish, or a chair, and they notice

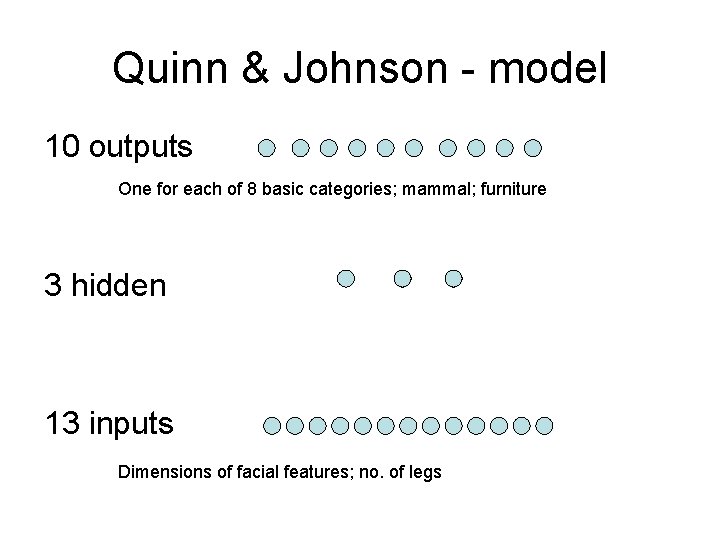

Quinn & Johnson - model
- 13 inputs: dimensions of facial features; no. of legs
- 3 hidden units
- 10 outputs: one for each of 8 basic categories, plus mammal and furniture
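
The layer sizes above can be sketched as a small feed-forward network. This is only an illustration of the 13-3-10 shape: the sigmoid activation, the random starting weights, the bias units and the placeholder input pattern are all assumptions, not details taken from the slides or the original paper.

```python
import math
import random

N_INPUTS, N_HIDDEN, N_OUTPUTS = 13, 3, 10   # layer sizes from the slide


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_layer(n_in, n_out, rng):
    # One row of weights per receiving unit; the last entry is a bias weight (assumed).
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]


def forward(layer, activations):
    extended = activations + [1.0]           # constant 1.0 feeds the bias weight
    return [sigmoid(sum(w * a for w, a in zip(unit, extended))) for unit in layer]


rng = random.Random(0)
hidden_layer = make_layer(N_INPUTS, N_HIDDEN, rng)
output_layer = make_layer(N_HIDDEN, N_OUTPUTS, rng)

pattern = [rng.random() for _ in range(N_INPUTS)]   # placeholder, not real stimulus data
hidden = forward(hidden_layer, pattern)             # 3 hidden activations
output = forward(output_layer, hidden)              # 10 output activations

# 3 * (13 + 1) + 10 * (3 + 1) = 82 adjustable weights in this sketch
n_weights = sum(len(u) for u in hidden_layer) + sum(len(u) for u in output_layer)
print(len(hidden), len(output), n_weights)
```

The point of the sketch is the bottleneck: everything the 10 outputs can say about a stimulus has to pass through just 3 hidden values.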

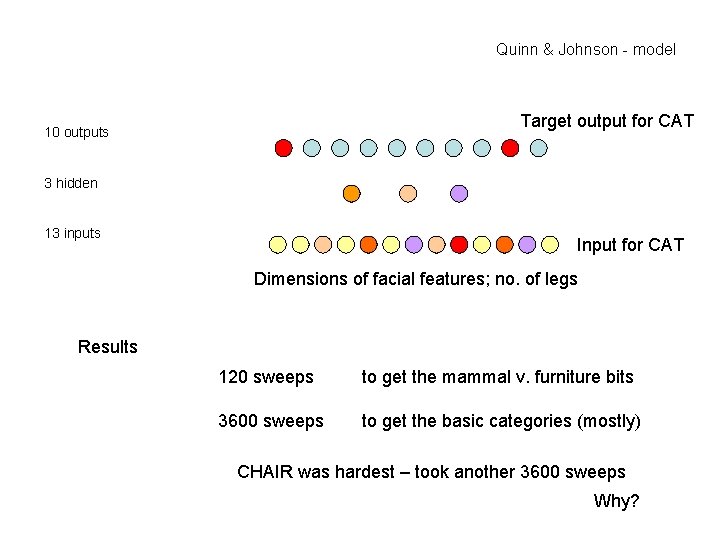

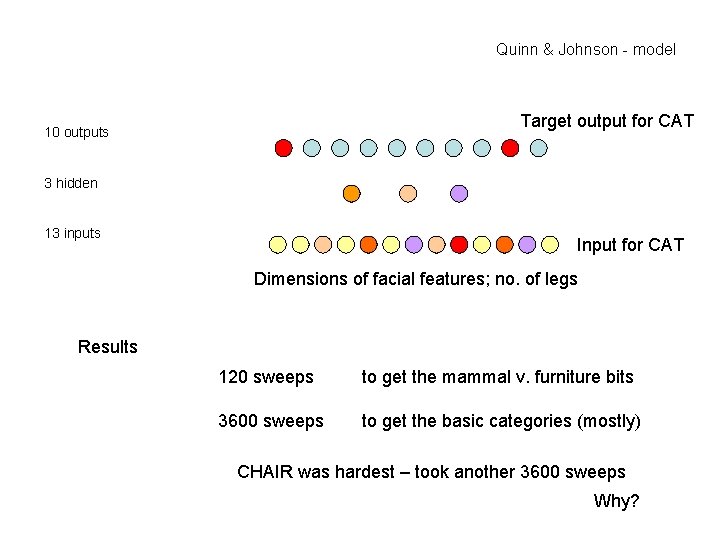

Quinn & Johnson - model
- Architecture: 13 inputs, 3 hidden units, 10 outputs
- [Diagram: input pattern for CAT (dimensions of facial features; no. of legs) and target output for CAT]
- Results:
  - 120 sweeps to get the mammal v. furniture bits
  - 3600 sweeps to get the basic categories (mostly)
  - CHAIR was hardest: it took another 3600 sweeps. Why?

Quinn & Johnson - model: example questions for critical evaluation
- Does the “‘global’ first, then later ‘basic’” order correspond to developmental data?
- Is one test pattern for testing generalisation enough?
- How does the network perform the categorisation, i.e. what does it learn?
- Do the results critically depend on the values chosen for learning rate or momentum?
- Answers to most of these questions can be gleaned from the original paper

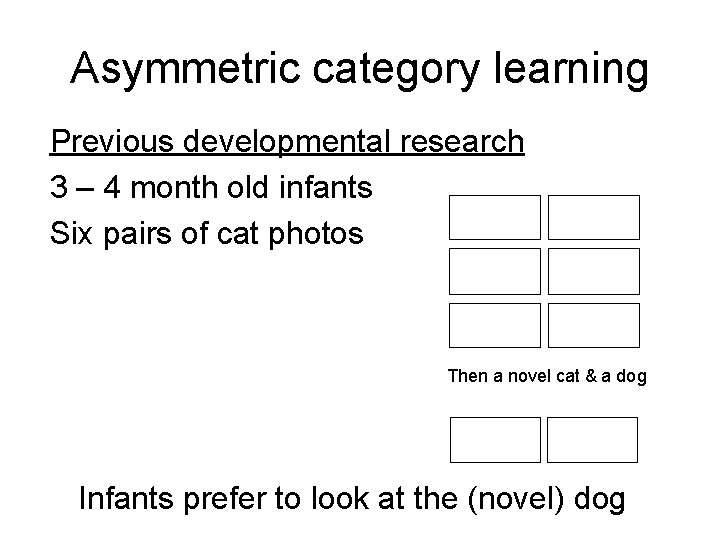

Asymmetric category learning
- Previous developmental research with 3 to 4 month old infants
- Six pairs of CAT photos
- Then a novel cat and a dog
- Infants prefer to look at the (novel) dog

Asymmetric category learning
- Six pairs of DOG photos
- Then a novel cat and a dog
- Infants do not show a significant preference for the (novel) CAT

Mareschal, French, & Quinn (2000)
- Why?
- Possible explanation: the category CAT is “tighter”, with less variability than DOG
- The cat exemplars are more similar to one another than the dog exemplars are
- So the novel CAT is more likely to fall right inside the learned CAT category

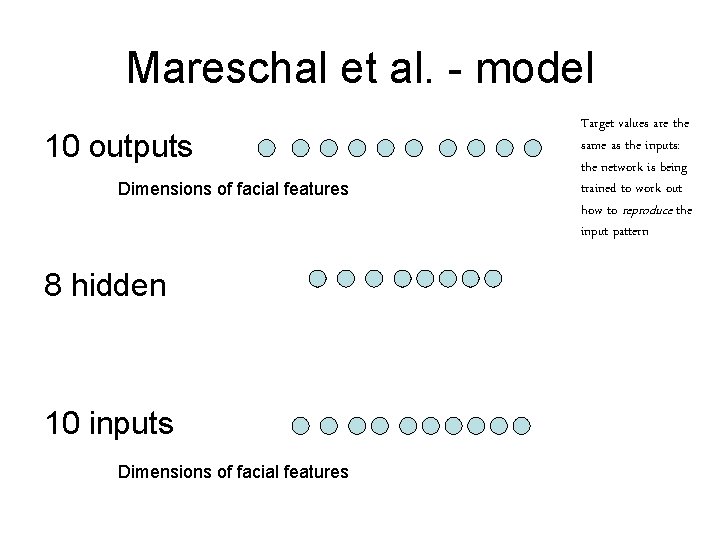

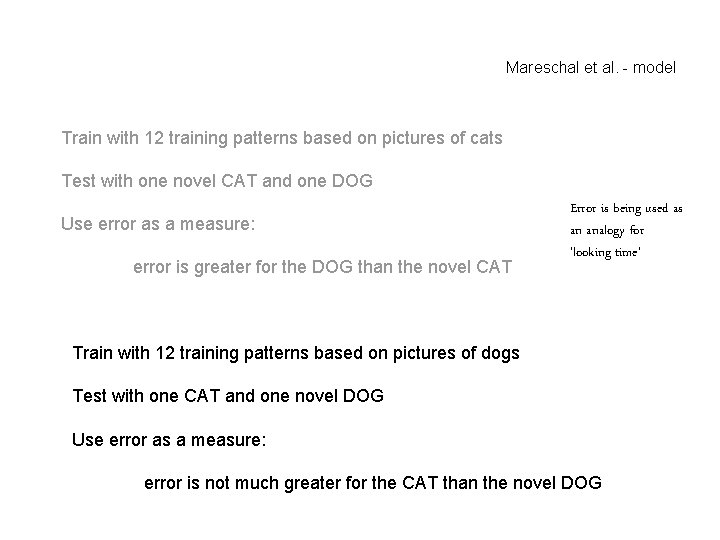

Mareschal et al. - model
- 10 inputs: dimensions of facial features
- 8 hidden units
- 10 outputs: dimensions of facial features
- Target values are the same as the inputs: the network is being trained to reproduce the input pattern

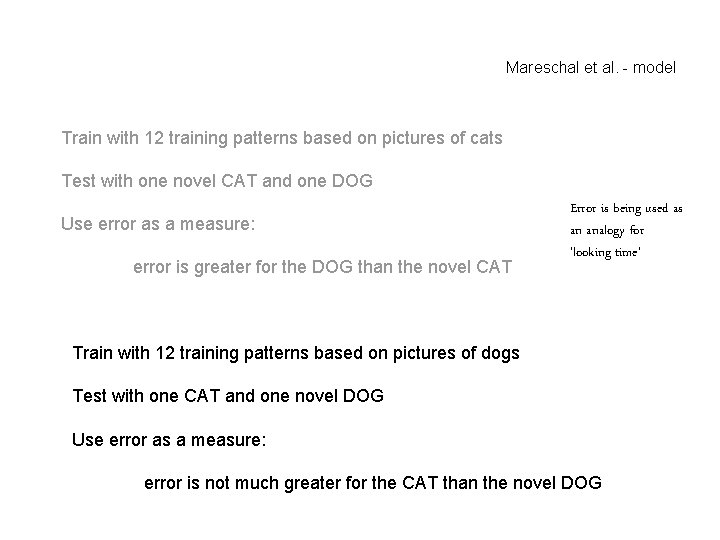

Mareschal et al. - model
- Train with 12 training patterns based on pictures of cats
  - Test with one novel CAT and one DOG
  - Use error as a measure: error is greater for the DOG than the novel CAT
- Error is being used as an analogy for ‘looking time’
- Train with 12 training patterns based on pictures of dogs
  - Test with one CAT and one novel DOG
  - Use error as a measure: error is not much greater for the CAT than the novel DOG
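
The idea of using reconstruction error as a stand-in for looking time can be sketched with a small auto-associator of the same 10-8-10 shape. Everything beyond the layer sizes and the 12 training patterns is an assumption made for illustration: the sigmoid units, the plain back-propagation update, the learning rate, the number of sweeps, and above all the made-up "tight" and "broad" exemplars, which are not the published stimulus measurements.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sum_squared_error(target, output):
    return sum((t - o) ** 2 for t, o in zip(target, output))


class Autoencoder:
    """Network trained to reproduce its own input (target = input)."""

    def __init__(self, n_in, n_hidden, rng):
        # Last weight in each row is a bias weight (an assumption of this sketch).
        self.w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
                         for _ in range(n_hidden)]
        self.w_output = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
                         for _ in range(n_in)]

    def forward(self, pattern):
        hidden = [sigmoid(sum(w * a for w, a in zip(unit, pattern + [1.0])))
                  for unit in self.w_hidden]
        output = [sigmoid(sum(w * a for w, a in zip(unit, hidden + [1.0])))
                  for unit in self.w_output]
        return hidden, output

    def train_step(self, pattern, rate):
        hidden, output = self.forward(pattern)
        # Error signal at each output unit; the target is the input itself.
        delta_out = [(t - o) * o * (1 - o) for t, o in zip(pattern, output)]
        # Pass the error back to the hidden units, using the weights before updating.
        delta_hid = [h * (1 - h) * sum(d * unit[j] for d, unit in zip(delta_out, self.w_output))
                     for j, h in enumerate(hidden)]
        for unit, d in zip(self.w_output, delta_out):
            for j, a in enumerate(hidden + [1.0]):
                unit[j] += rate * d * a
        for unit, d in zip(self.w_hidden, delta_hid):
            for j, a in enumerate(pattern + [1.0]):
                unit[j] += rate * d * a

    def error(self, pattern):
        # Reconstruction error: the proxy for 'looking time'.
        return sum_squared_error(pattern, self.forward(pattern)[1])


def make_exemplars(centre, spread, n, rng):
    # Hypothetical exemplars: feature values scattered around a centre, clipped to [0, 1].
    return [[min(1.0, max(0.0, rng.gauss(c, spread))) for c in centre] for _ in range(n)]


rng = random.Random(1)
centre = [0.5] * 10
tight = make_exemplars(centre, 0.05, 12, rng)   # low-variability category (made up)
broad = make_exemplars(centre, 0.25, 12, rng)   # high-variability category (made up)

net = Autoencoder(10, 8, rng)
for _ in range(500):                            # training sweeps (arbitrary choice)
    for p in tight:
        net.train_step(p, 0.5)

novel_tight = make_exemplars(centre, 0.05, 1, rng)[0]
print("novel item from trained category:", net.error(novel_tight))
print("item from the other category:    ", net.error(broad[0]))
```

Swapping `tight` and `broad` in the training loop gives the other condition. Whether this toy data reproduces the asymmetry depends on the made-up distributions, so it should be read as a way of seeing how the error measure is computed, not as a replication.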

Some features of natural concept learning that make the picture less simple
- Role of existing background knowledge
- Qualitative shifts during conceptual development
- Conceptual essence: an innate expectation?
- Domain specificity: different types of learning for different kinds of concept?
- Social learning

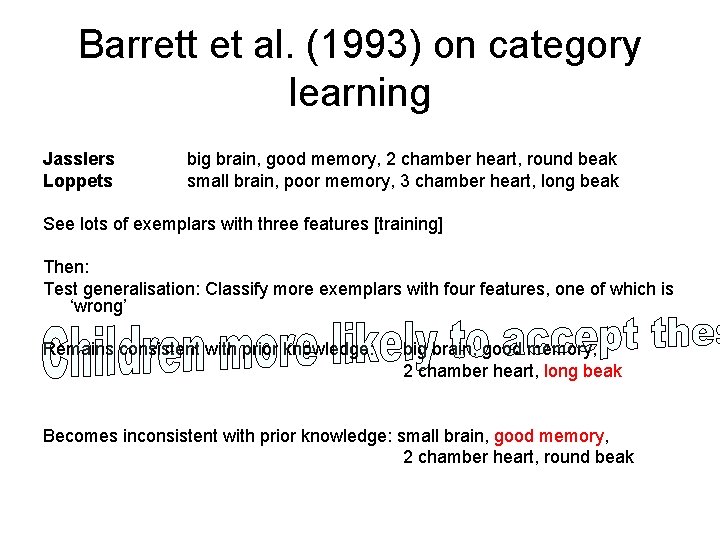

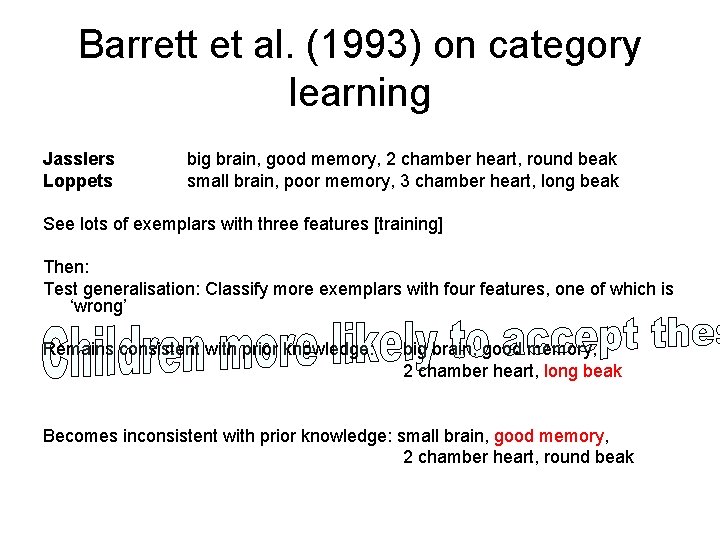

Barrett et al. (1993) on category learning
- Jasslers: big brain, good memory, 2 chamber heart, round beak
- Loppets: small brain, poor memory, 3 chamber heart, long beak
- Training: see lots of exemplars with three features
- Then test generalisation: classify more exemplars with four features, one of which is ‘wrong’
  - Remains consistent with prior knowledge: big brain, good memory, 2 chamber heart, long beak
  - Becomes inconsistent with prior knowledge: small brain, good memory, 2 chamber heart, round beak

![Gelman and Markman (1986): Conceptual knowledge trumps appearance. Training and test generalisation: brontosaurus, rhinoceros, triceratops](https://slidetodoc.com/presentation_image/46d34cca27a3a0cad370d63e337c09eb/image-19.jpg)

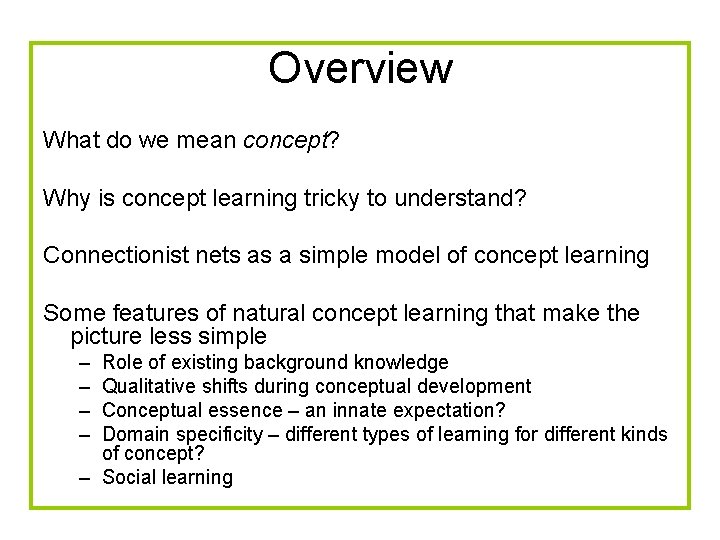

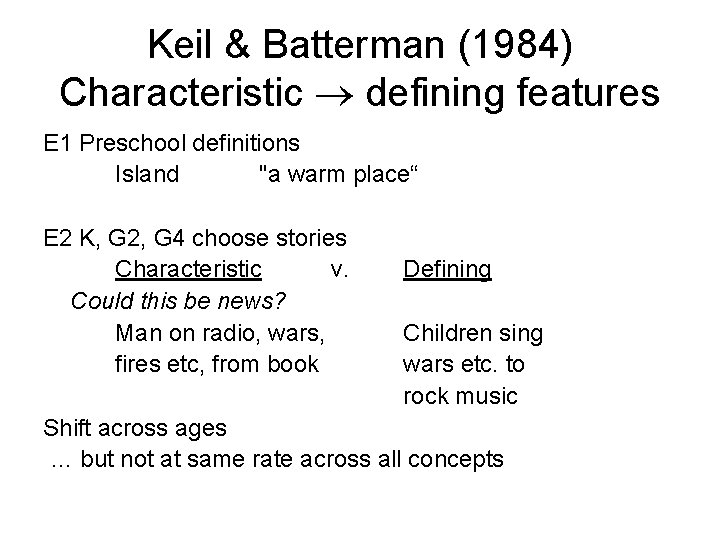

Gelman and Markman (1986): conceptual knowledge trumps appearance
- Training: [brontosaurus], labelled "dinosaur": cold blood
- Training: [rhinoceros], labelled "rhinoceros": warm blood
- Test generalisation: [triceratops], labelled "dinosaur": ?
- 2 ½ years old: "cold blood"

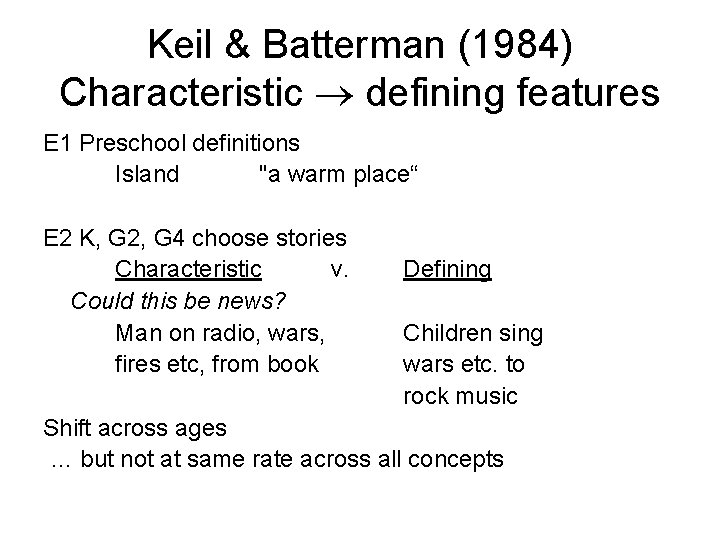

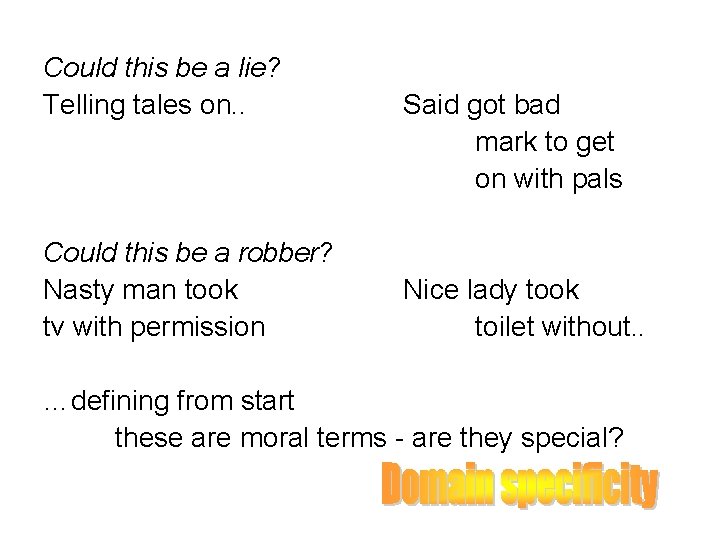

Keil & Batterman (1984): characteristic to defining features
- E1: preschool definitions, e.g. island: "a warm place"
- E2: K, G2, G4 choose stories, characteristic v. defining
- Could this be news?
  - Characteristic: man on radio, wars, fires etc., from book
  - Defining: children sing wars etc. to rock music
- Shift across ages … but not at the same rate across all concepts

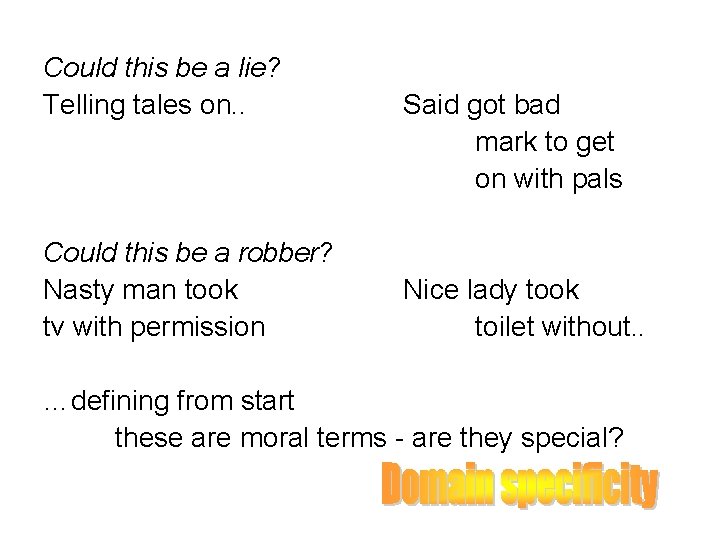

- Could this be a lie?
  - Telling tales on. .
  - Said got bad mark to get on with pals
- Could this be a robber?
  - Nasty man took tv with permission
  - Nice lady took toilet without. .
- … defining from the start: these are moral terms. Are they special?

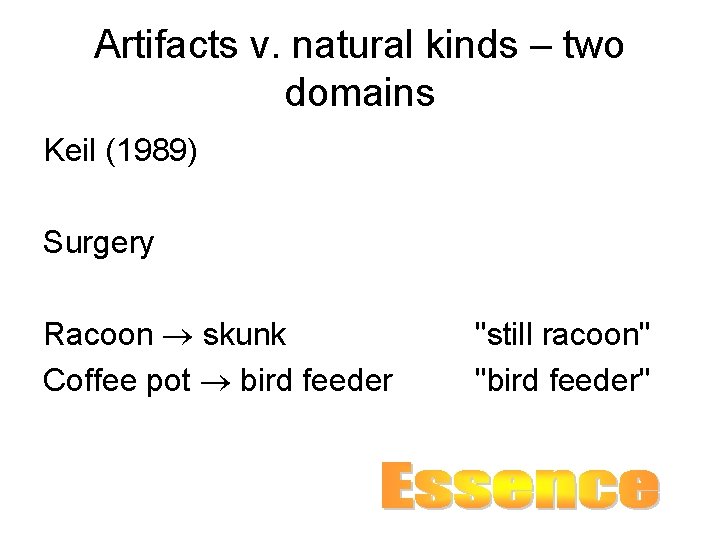

Artifacts v. natural kinds: two domains, Keil (1989)
- Surgery, natural kind: racoon made to look like a skunk: "still racoon"
- Surgery, artifact: coffee pot made into a bird feeder: "bird feeder"

Summary
- The terms concept/conceptual are used in different ways
- Some uses are highly simplified
- Concept learning involves wide ranging background knowledge, and may involve qualitative changes and re-organisations
- Computationally, working out how to bring to bear just the relevant knowledge, quickly, is key

Training and testing generalisation: training
- Present a set of patterns
- Allow the ‘training algorithm’ to learn from these patterns
- On each training sweep…
  - it gets to see what output the current weights would produce for the input pattern
  - it can compare that to the ‘correct answer’
  - it can modify the weights towards being more correct
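
The three steps of a training sweep can be sketched with the simplest possible learner. This sketch assumes a single linear output unit trained with the delta rule, a hand-picked learning rate, and a made-up task (the target is the sum of the two inputs). The networks discussed in the lecture have hidden layers and will use a more elaborate rule, so treat this only as an illustration of the see, compare, modify cycle.

```python
def predict(weights, pattern):
    # Output of a single linear unit; the final weight is a bias.
    return sum(w * x for w, x in zip(weights, pattern + [1.0]))


def training_sweep(weights, patterns, rate):
    """One pass through the training patterns; returns the summed squared error."""
    total_error = 0.0
    for pattern, target in patterns:
        output = predict(weights, pattern)        # 1. see what the current weights produce
        error = target - output                   # 2. compare with the 'correct answer'
        for i, x in enumerate(pattern + [1.0]):   # 3. nudge each weight towards being more correct
            weights[i] += rate * error * x
        total_error += error ** 2
    return total_error


# Made-up training set: the 'correct answer' is simply the sum of the two inputs.
patterns = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 2.0)]
weights = [0.0, 0.0, 0.0]
errors = [training_sweep(weights, patterns, 0.1) for _ in range(200)]

print("error on first sweep:", errors[0])
print("error on last sweep: ", errors[-1])
print("learned weights:     ", weights)
```

The error shrinks from sweep to sweep and the weights settle close to 1, 1 and 0, which is the rule that generated the targets.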

Training and testing generalisation: testing generalisation
- Present a new set of patterns (the training process has not had any chance to try these out)
- The network will perform well only if the weights learned during training abstracted a general rule that works for instances generally, not merely the specific items in the training set
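
The difference between abstracting a rule and merely storing the training items can be sketched by comparing two hypothetical learners on patterns they have never seen. Both learners, the task (target is the sum of the inputs) and the novel test patterns are assumptions made for illustration.

```python
def fit_linear_unit(patterns, rate=0.1, sweeps=200):
    """Delta-rule training of a single linear unit (assumed learner, with a bias weight)."""
    weights = [0.0] * (len(patterns[0][0]) + 1)
    for _ in range(sweeps):
        for pattern, target in patterns:
            inputs = pattern + [1.0]
            error = target - sum(w * x for w, x in zip(weights, inputs))
            for i, x in enumerate(inputs):
                weights[i] += rate * error * x
    return weights


def mean_squared_error(model, patterns):
    return sum((t - model(p)) ** 2 for p, t in patterns) / len(patterns)


training = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 2.0)]
novel = [([0.5, 0.5], 1.0), ([0.2, 0.9], 1.1)]    # never shown during training

weights = fit_linear_unit(training)


def rule_learner(pattern):
    return sum(w * x for w, x in zip(weights, pattern + [1.0]))


lookup = {tuple(p): t for p, t in training}


def memoriser(pattern):
    # Stores the training items verbatim; answers 0.0 for anything unseen.
    return lookup.get(tuple(pattern), 0.0)


for name, model in (("rule learner", rule_learner), ("memoriser", memoriser)):
    print(name,
          "training error:", mean_squared_error(model, training),
          "novel error:", mean_squared_error(model, novel))
```

Both learners do well on the training set, but only the one that captured the underlying rule keeps its error low on the novel patterns. That gap is what a generalisation test is designed to expose.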

Modelling project Guidelines

Next week’s lecture will look at learning word meanings. Quite similar issues are involved in learning concepts and learning word meanings.