Concept Learning and the General-to-Specific Ordering

- Slides: 31

Concept Learning and the General-to-Specific Ordering
이종우, Natural Language Processing Lab

Concept Learning
• Concepts or categories
  – “birds”
  – “car”
  – “situations in which I should study more in order to pass the exam”
• Concept
  – some subset of objects or events defined over a larger set, or a boolean-valued function defined over this larger set
• Learning
  – inducing general functions from specific training examples
• Concept learning
  – acquiring the definition of a general category given a sample of positive and negative training examples of the category

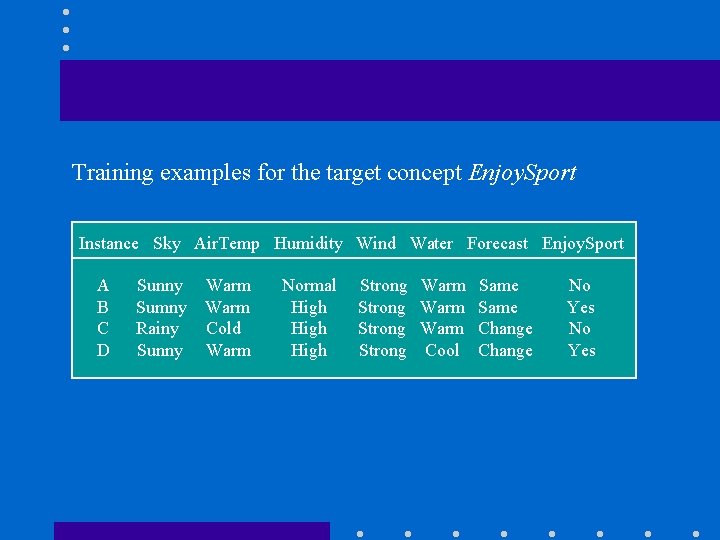

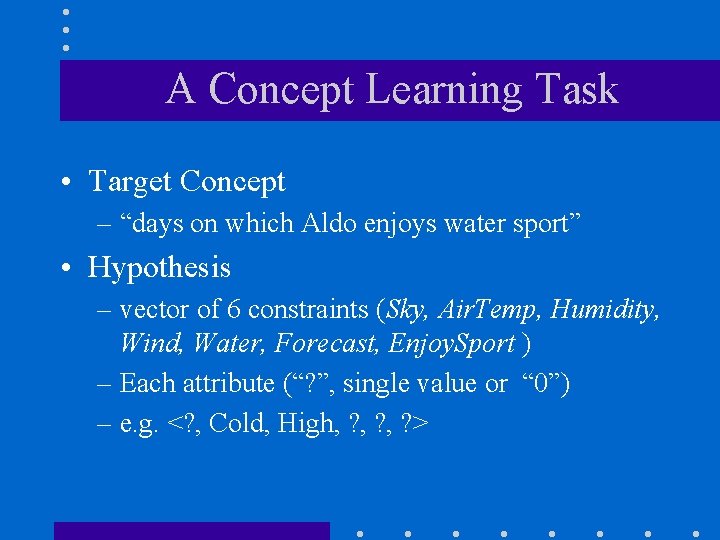

A Concept Learning Task
• Target concept
  – “days on which Aldo enjoys water sport”
• Hypothesis
  – a conjunction of constraints on the 6 attributes Sky, AirTemp, Humidity, Wind, Water, Forecast (EnjoySport is the target attribute, not a constraint)
  – each constraint is “?” (any value is acceptable), a single required value, or “Ø” (no value is acceptable)
  – e.g. <?, Cold, High, ?, ?, ?>

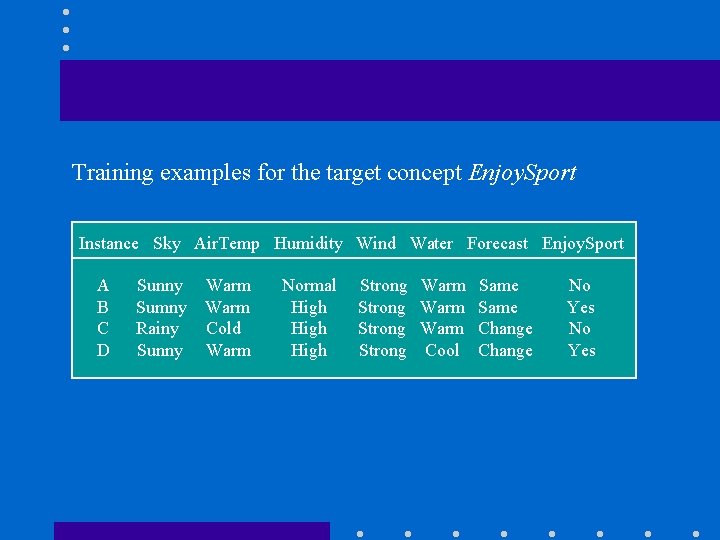

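Training examples for the target concept EnjoySport

| Instance | Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|---|---|---|---|---|---|---|---|
| A | Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| B | Sunny | Warm | High | Strong | Warm | Same | Yes |
| C | Rainy | Cold | High | Strong | Warm | Change | No |
| D | Sunny | Warm | High | Strong | Cool | Change | Yes |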

• Given:
  – instances X: the set of items over which the concept is defined
  – target concept c: c : X → {0, 1}
  – training examples (positive/negative): <x, c(x)>
  – training set D: the available training examples
  – H: the set of all possible hypotheses
• Determine:
  – a hypothesis h in H such that h(x) = c(x) for all x in X

Inductive Learning Hypothesis
• Any hypothesis found to approximate the target function well over a sufficiently large set of training examples will also approximate the target function well over unseen examples.

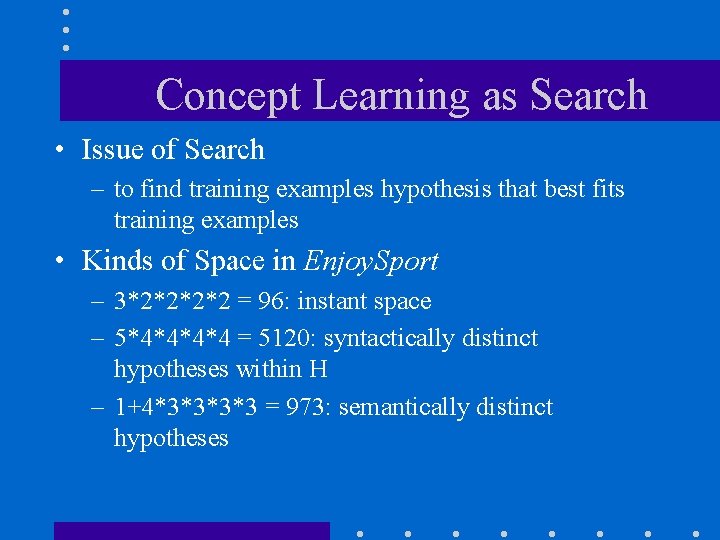

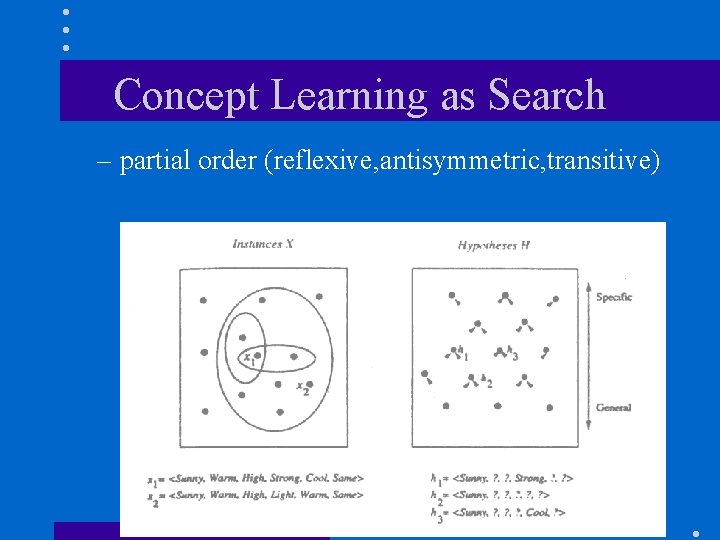

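Concept Learning as Search
• Issue of search
  – find the hypothesis in H that best fits the training examples
• Sizes of the spaces in EnjoySport (Sky has 3 values, the other five attributes have 2)
  – 3·2·2·2·2·2 = 96 distinct instances (instance space X)
  – 5·4·4·4·4·4 = 5120 syntactically distinct hypotheses within H (each constraint is a value, “?” or “Ø”)
  – 1 + 4·3·3·3·3·3 = 973 semantically distinct hypotheses (every hypothesis containing “Ø” classifies all instances as negative, so they count as one)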

• Search problem
  – efficient search in a (finite or infinite) hypothesis space
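The three counts can be reproduced with a few lines of Python. This is a small sketch; the dictionary `DOMAIN_SIZES` is an illustrative name holding the number of values per EnjoySport attribute (3 for Sky, 2 for the rest).

```python
from math import prod

# Number of values each EnjoySport attribute can take.
DOMAIN_SIZES = {"Sky": 3, "AirTemp": 2, "Humidity": 2, "Wind": 2, "Water": 2, "Forecast": 2}

# Distinct instances: one value per attribute.
n_instances = prod(DOMAIN_SIZES.values())

# Syntactically distinct hypotheses: each constraint is a value, "?" or "Ø".
n_syntactic = prod(k + 2 for k in DOMAIN_SIZES.values())

# Semantically distinct hypotheses: all hypotheses containing "Ø" reject every
# instance, so they collapse into one; the rest use a value or "?" per attribute.
n_semantic = 1 + prod(k + 1 for k in DOMAIN_SIZES.values())

print(n_instances, n_syntactic, n_semantic)  # 96 5120 973
```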

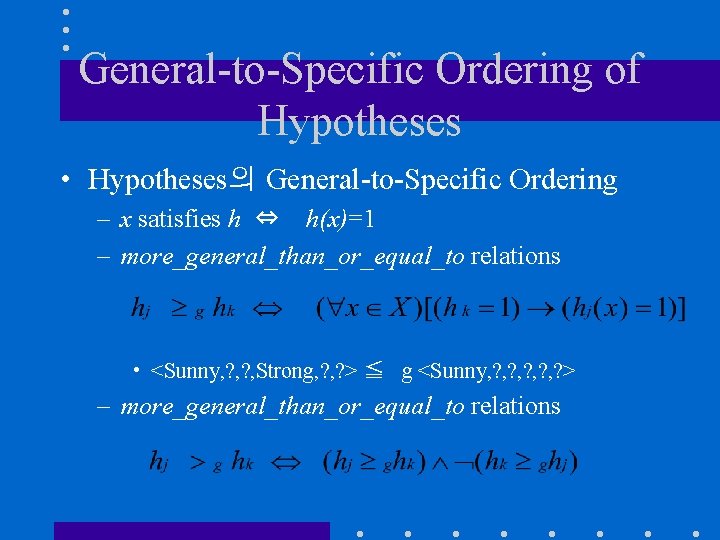

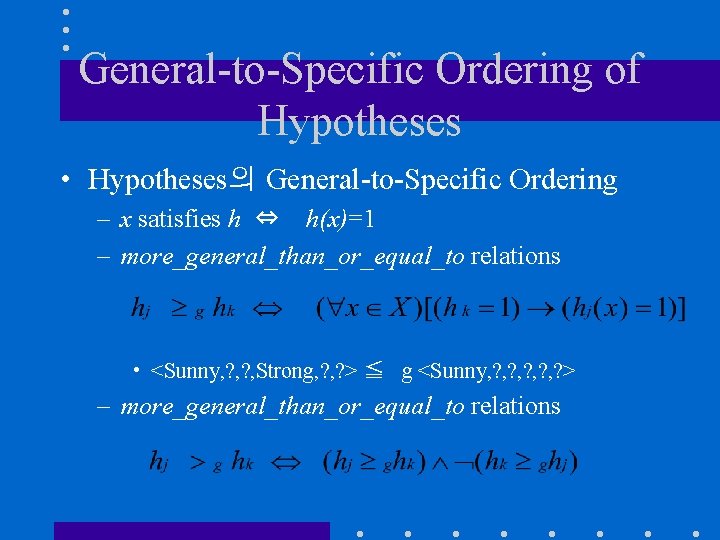

General-to-Specific Ordering of Hypotheses
• x satisfies h ⇔ h(x) = 1
• more_general_than_or_equal_to relation: $h_j \ge_g h_k \iff (\forall x \in X)\,[(h_k(x) = 1) \rightarrow (h_j(x) = 1)]$
  – e.g. <Sunny, ?, ?, Strong, ?, ?> ≤g <Sunny, ?, ?, ?, ?, ?>
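For conjunctive hypotheses the relation can be checked attribute by attribute instead of quantifying over all of X. A minimal sketch, assuming hypotheses are Python tuples whose entries are an attribute value, "?" or "Ø"; the names `satisfies` and `more_general_or_equal` are illustrative.

```python
ANY, EMPTY = "?", "Ø"

def satisfies(x, h):
    """x satisfies h <=> h(x) = 1: every constraint is '?' or equals x's value ('Ø' never matches)."""
    return all(c == ANY or c == v for c, v in zip(h, x))

def more_general_or_equal(hj, hk):
    """hj >=_g hk: every instance that satisfies hk also satisfies hj."""
    if EMPTY in hk:  # hk covers no instance, so the implication holds vacuously
        return True
    # Each constraint of hj must be '?' or identical to the constraint of hk.
    return all(cj == ANY or cj == ck for cj, ck in zip(hj, hk))

h1 = ("Sunny", ANY, ANY, "Strong", ANY, ANY)
h2 = ("Sunny", ANY, ANY, ANY, ANY, ANY)
print(more_general_or_equal(h2, h1))  # True
print(more_general_or_equal(h1, h2))  # False
```

The attribute-wise test is valid only because every attribute has at least two values, so "?" is never equivalent to a single required value.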

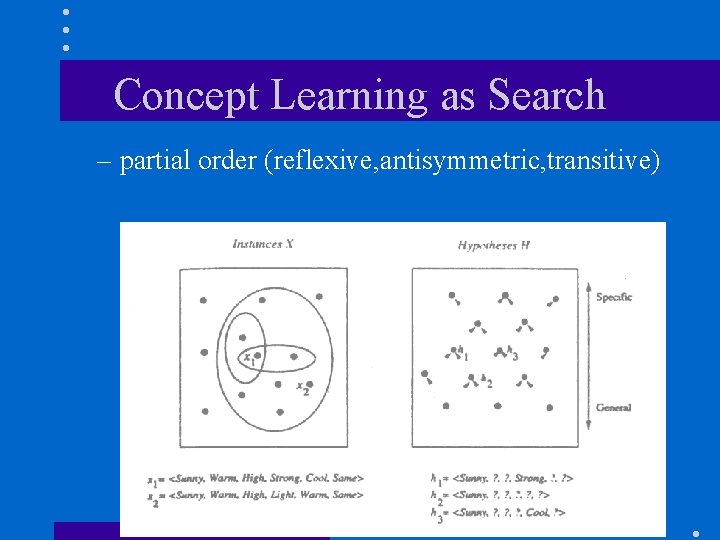

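Concept Learning as Search
• The ≥g relation defines a partial order over H (reflexive, antisymmetric, transitive); some pairs of hypotheses, e.g. <Sunny, ?, ?, ?, ?, ?> and <?, Warm, ?, ?, ?, ?>, are incomparable.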

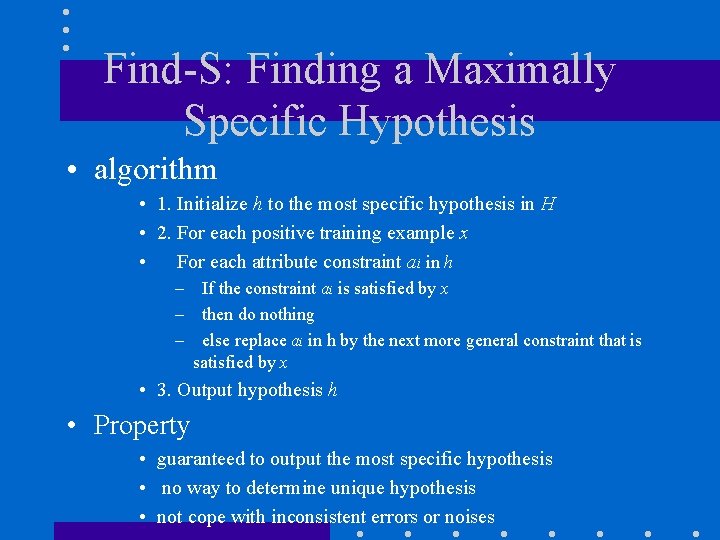

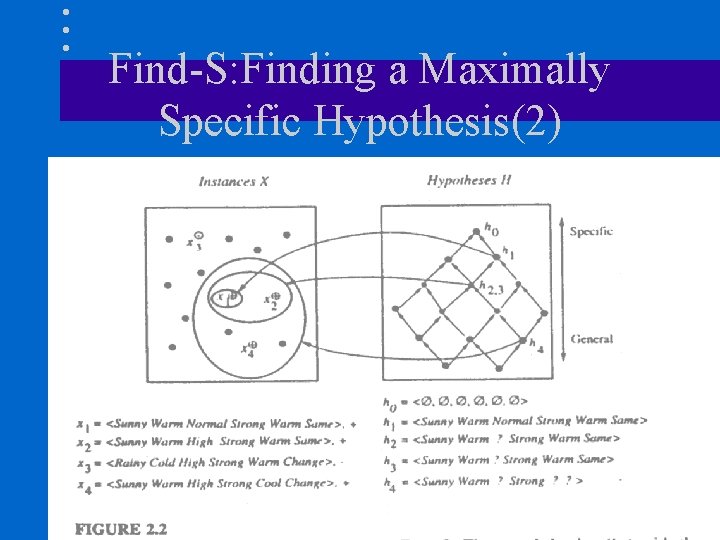

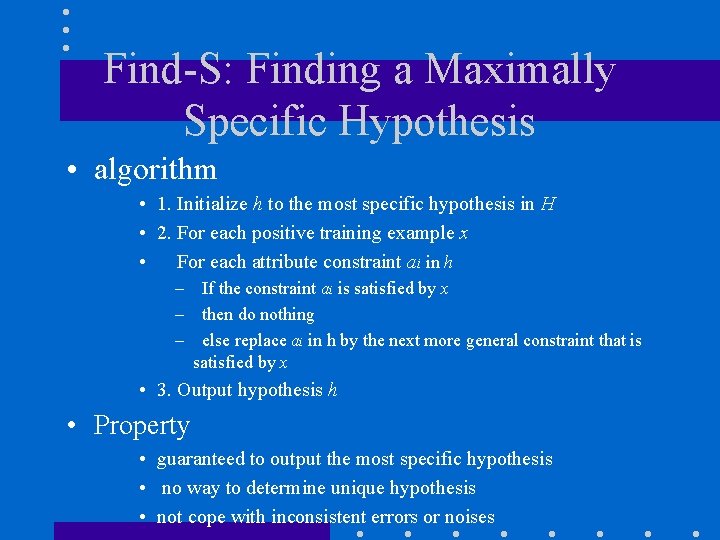

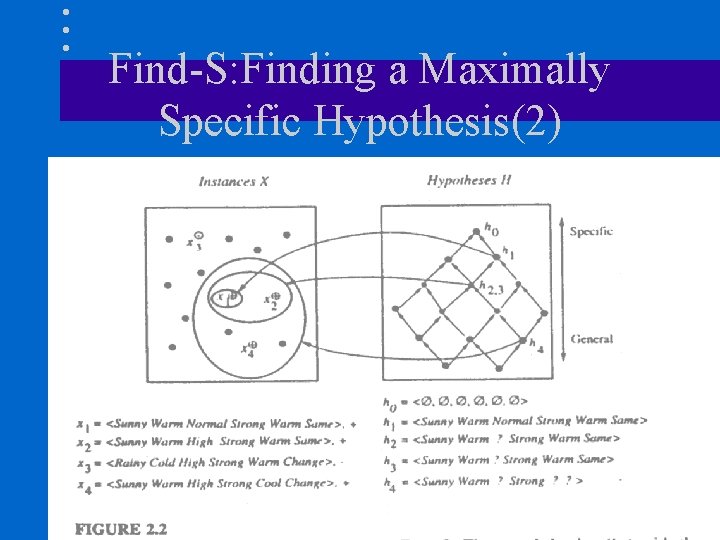

Find-S: Finding a Maximally Specific Hypothesis
• Algorithm
  1. Initialize h to the most specific hypothesis in H
  2. For each positive training example x
     – For each attribute constraint a_i in h
       – If the constraint a_i is satisfied by x, then do nothing
       – Else replace a_i in h by the next more general constraint that is satisfied by x
  3. Output hypothesis h
• Properties
  – guaranteed to output the most specific hypothesis in H that is consistent with the positive training examples (and with the negative ones too, provided c ∈ H and the data are error-free)
  – cannot tell whether it has found the unique target concept, since other consistent hypotheses may exist
  – cannot detect or cope with inconsistent training data (errors or noise)
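A minimal sketch of Find-S for the conjunctive EnjoySport hypotheses, assuming tuples of values, "?" and "Ø". In this language the "next more general constraint" of "Ø" is the observed value, and that of a mismatching value is "?". The function name `find_s` and the list `TRAINING` are illustrative.

```python
ANY, EMPTY = "?", "Ø"

def find_s(examples, n_attributes=6):
    """Start from <Ø, ..., Ø> and minimally generalize on each positive example.
    Negative examples are ignored."""
    h = [EMPTY] * n_attributes
    for x, positive in examples:
        if not positive:
            continue
        for i, value in enumerate(x):
            if h[i] == EMPTY:                    # first positive example: adopt its value
                h[i] = value
            elif h[i] != ANY and h[i] != value:  # constraint violated: relax it to "?"
                h[i] = ANY
    return tuple(h)

# EnjoySport training examples: (Sky, AirTemp, Humidity, Wind, Water, Forecast), label.
TRAINING = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]

print(find_s(TRAINING))  # ('Sunny', 'Warm', '?', 'Strong', '?', '?')
```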

Find-S: Finding a Maximally Specific Hypothesis (2)

Version Spaces and the Candidate-Elimination Algorithm
• Candidate-Elimination
  – outputs all hypotheses consistent with the training examples
  – performs poorly with noisy training data
• Representation
  – $\mathrm{Consistent}(h, D) \iff (\forall \langle x, c(x) \rangle \in D)\; h(x) = c(x)$
  – $VS_{H,D} \equiv \{h \in H \mid \mathrm{Consistent}(h, D)\}$
• List-Then-Eliminate algorithm
  – lists all hypotheses in H, then removes every hypothesis inconsistent with a training example
  – applicable only to finite H
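Because the EnjoySport H has only 973 semantically distinct hypotheses, List-Then-Eliminate can be run directly. This sketch assumes Wind takes the values Strong and Weak, keeps one representative for all "Ø" hypotheses, and uses illustrative function names.

```python
from itertools import product

ANY, EMPTY = "?", "Ø"

# Attribute values in the order Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),
    ("Warm", "Cold"),
    ("Normal", "High"),
    ("Strong", "Weak"),
    ("Warm", "Cool"),
    ("Same", "Change"),
]

TRAINING = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]

def satisfies(x, h):
    """h(x) = 1 iff every constraint is '?' or equals x's value ('Ø' never matches)."""
    return all(c == ANY or c == v for c, v in zip(h, x))

def enumerate_hypotheses():
    # One all-'Ø' representative plus every conjunction of values and '?'.
    return [(EMPTY,) * len(DOMAINS)] + list(product(*(vals + (ANY,) for vals in DOMAINS)))

def list_then_eliminate(examples):
    version_space = enumerate_hypotheses()
    for x, label in examples:
        # Keep only hypotheses whose prediction matches the training label.
        version_space = [h for h in version_space if satisfies(x, h) == label]
    return version_space

vs = list_then_eliminate(TRAINING)
print(len(enumerate_hypotheses()), len(vs))  # 973 6
for h in vs:
    print(h)
```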

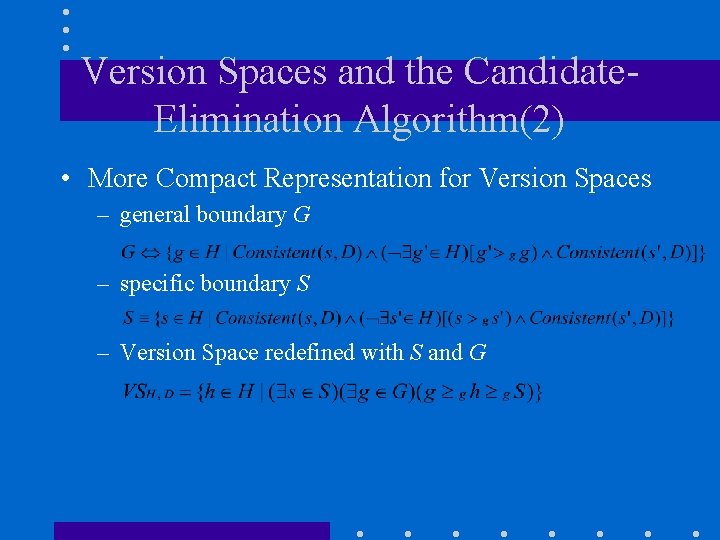

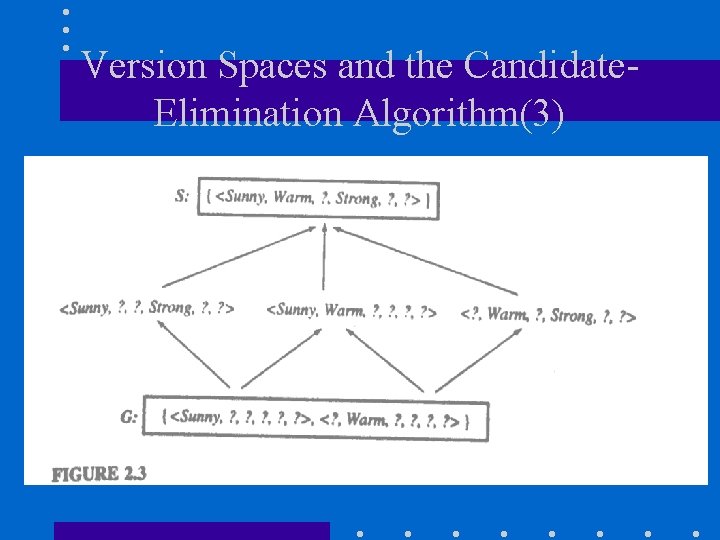

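Version Spaces and the Candidate-Elimination Algorithm (2)
• More compact representation for version spaces
  – general boundary G: the set of maximally general members of H consistent with D
  – specific boundary S: the set of maximally specific members of H consistent with D
  – version space redefined with S and G: $VS_{H,D} = \{h \in H \mid (\exists s \in S)(\exists g \in G)\; g \ge_g h \ge_g s\}$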
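The boundary representation can be checked by brute force: compute the version space, extract its maximally general and maximally specific members, and rebuild the version space from those two sets alone. A sketch under the same assumptions as before (Wind ∈ {Strong, Weak}); the all-"Ø" hypothesis is left out because any positive example eliminates it, and the helper `geq` is an illustrative name.

```python
from itertools import product

ANY = "?"

# Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),
    ("Warm", "Cold"),
    ("Normal", "High"),
    ("Strong", "Weak"),
    ("Warm", "Cool"),
    ("Same", "Change"),
]

TRAINING = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]

def satisfies(x, h):
    return all(c == ANY or c == v for c, v in zip(h, x))

def geq(hj, hk):
    """hj >=_g hk (valid here because no hypothesis contains 'Ø')."""
    return all(cj == ANY or cj == ck for cj, ck in zip(hj, hk))

H = list(product(*(vals + (ANY,) for vals in DOMAINS)))
VS = [h for h in H if all(satisfies(x, h) == y for x, y in TRAINING)]

# Boundaries: members of VS with no strictly more general (G) / more specific (S) member.
G = [g for g in VS if not any(h != g and geq(h, g) for h in VS)]
S = [s for s in VS if not any(h != s and geq(s, h) for h in VS)]

# Rebuild the version space from S and G alone: g >=_g h >=_g s.
rebuilt = [h for h in H if any(geq(g, h) and geq(h, s) for g in G for s in S)]

print("S =", S)
print("G =", G)
print(sorted(rebuilt) == sorted(VS))  # True
```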

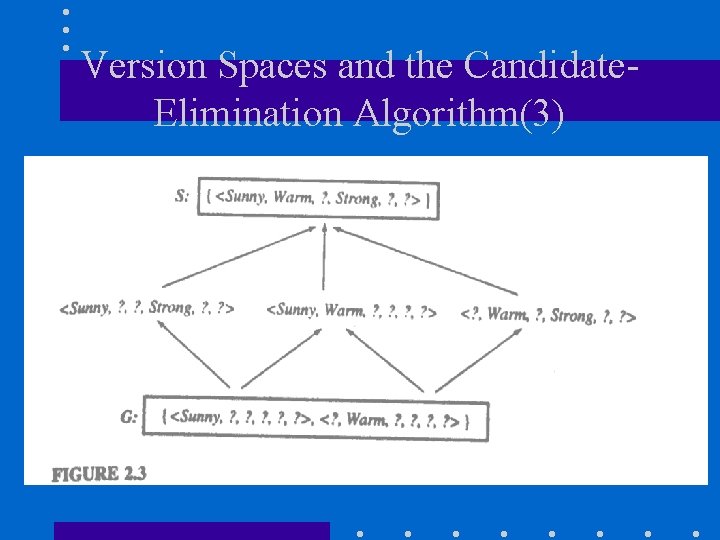

Version Spaces and the Candidate-Elimination Algorithm (3)

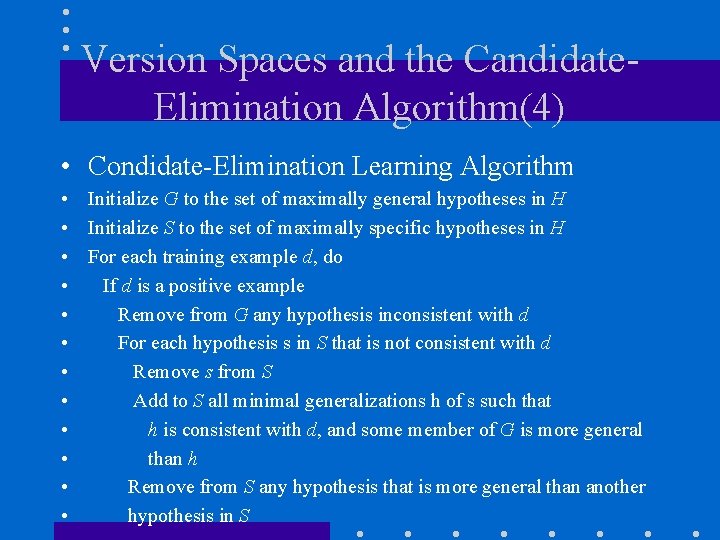

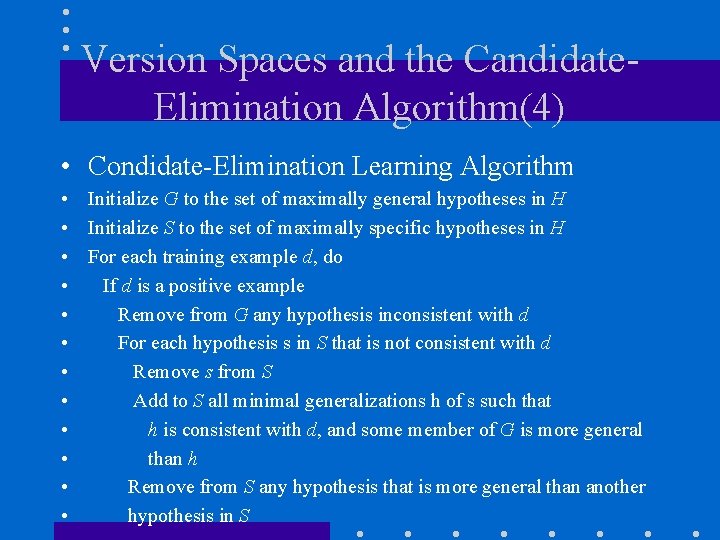

Version Spaces and the Candidate-Elimination Algorithm (4)
• Candidate-Elimination learning algorithm
  – Initialize G to the set of maximally general hypotheses in H
  – Initialize S to the set of maximally specific hypotheses in H
  – For each training example d, do
    • If d is a positive example
      – Remove from G any hypothesis inconsistent with d
      – For each hypothesis s in S that is not consistent with d
        – Remove s from S
        – Add to S all minimal generalizations h of s such that h is consistent with d, and some member of G is more general than h
      – Remove from S any hypothesis that is more general than another hypothesis in S

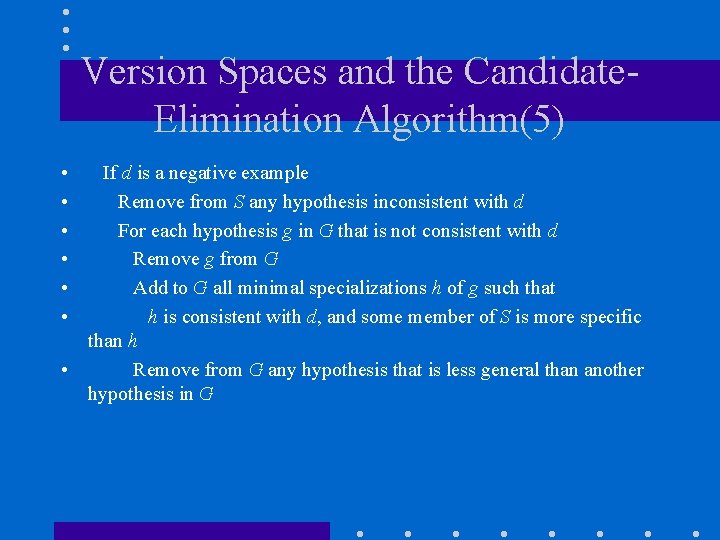

Version Spaces and the Candidate-Elimination Algorithm (5)
    • If d is a negative example
      – Remove from S any hypothesis inconsistent with d
      – For each hypothesis g in G that is not consistent with d
        – Remove g from G
        – Add to G all minimal specializations h of g such that h is consistent with d, and some member of S is more specific than h
      – Remove from G any hypothesis that is less general than another hypothesis in G
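The two cases above can be sketched for conjunctive hypotheses as follows. Assumptions: hypotheses are tuples of values, "?" and "Ø"; Wind takes Strong and Weak; in this language each s has a unique minimal generalization covering a positive x, and the minimal specializations of g that exclude a negative x replace one "?" by a different value. All function names are illustrative.

```python
ANY, EMPTY = "?", "Ø"

# Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [("Sunny", "Cloudy", "Rainy"), ("Warm", "Cold"), ("Normal", "High"),
           ("Strong", "Weak"), ("Warm", "Cool"), ("Same", "Change")]

TRAINING = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]

def satisfies(x, h):
    return all(c == ANY or c == v for c, v in zip(h, x))

def more_general_or_equal(hj, hk):
    if EMPTY in hk:  # hk covers nothing
        return True
    return all(cj == ANY or cj == ck for cj, ck in zip(hj, hk))

def min_generalization(s, x):
    """Minimal generalization of s that covers x: 'Ø' -> x's value, mismatch -> '?'."""
    return tuple(v if c == EMPTY else (c if c == v else ANY) for c, v in zip(s, x))

def min_specializations(g, x):
    """Minimal specializations of g that exclude x: fix one '?' to a value unlike x's."""
    return [g[:i] + (value,) + g[i + 1:]
            for i, c in enumerate(g) if c == ANY
            for value in DOMAINS[i] if value != x[i]]

def candidate_elimination(examples):
    n = len(DOMAINS)
    G, S = {(ANY,) * n}, {(EMPTY,) * n}
    for x, positive in examples:
        if positive:
            G = {g for g in G if satisfies(x, g)}
            for s in [s for s in S if not satisfies(x, s)]:
                S.discard(s)
                h = min_generalization(s, x)
                if any(more_general_or_equal(g, h) for g in G):
                    S.add(h)
            # Drop members of S that are more general than another member.
            S = {s for s in S if not any(t != s and more_general_or_equal(s, t) for t in S)}
        else:
            S = {s for s in S if not satisfies(x, s)}
            for g in [g for g in G if satisfies(x, g)]:
                G.discard(g)
                for h in min_specializations(g, x):
                    if any(more_general_or_equal(h, s) for s in S):
                        G.add(h)
            # Drop members of G that are less general than another member.
            G = {g for g in G if not any(t != g and more_general_or_equal(t, g) for t in G)}
    return S, G

S, G = candidate_elimination(TRAINING)
print("S =", S)
print("G =", sorted(G))
```

On the four EnjoySport examples this should end with S = {<Sunny, Warm, ?, Strong, ?, ?>} and G = {<Sunny, ?, ?, ?, ?, ?>, <?, Warm, ?, ?, ?, ?>}, the same boundaries obtained by enumerating the version space.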

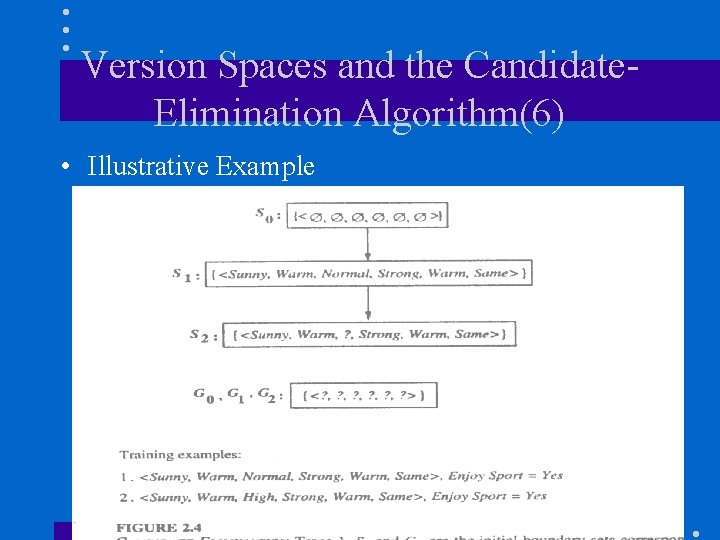

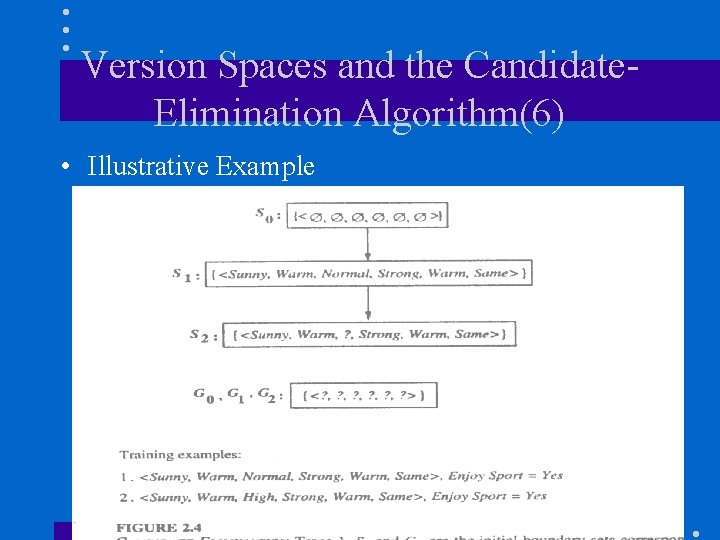

Version Spaces and the Candidate-Elimination Algorithm (6)
• Illustrative example

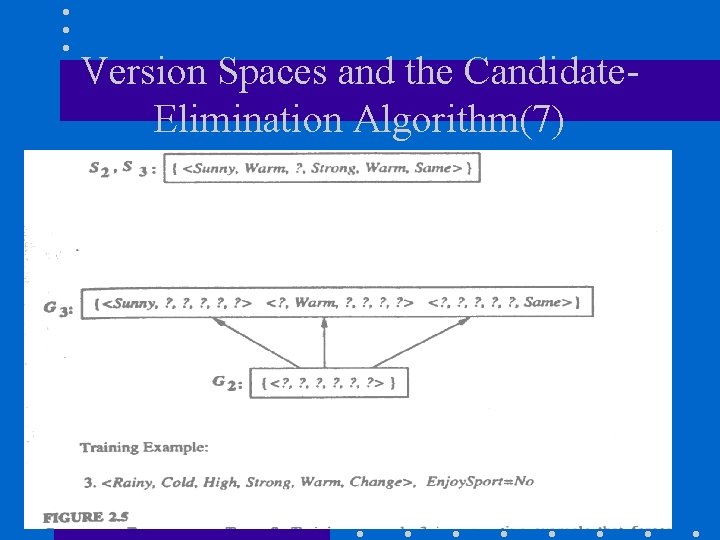

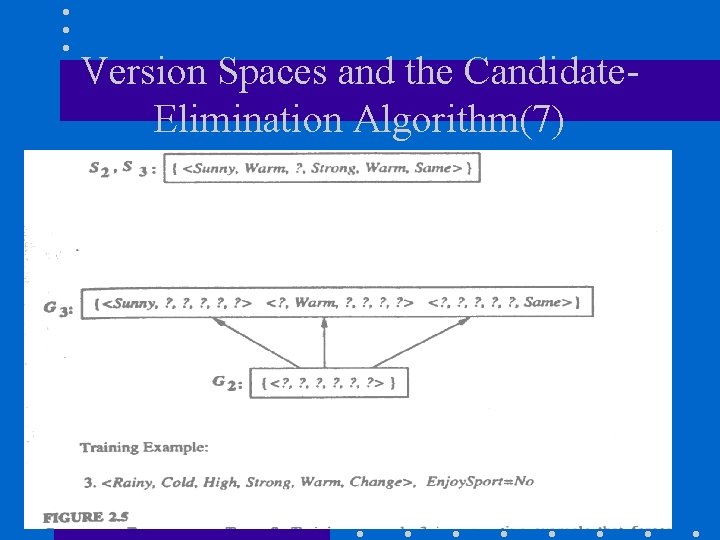

Version Spaces and the Candidate-Elimination Algorithm (7)

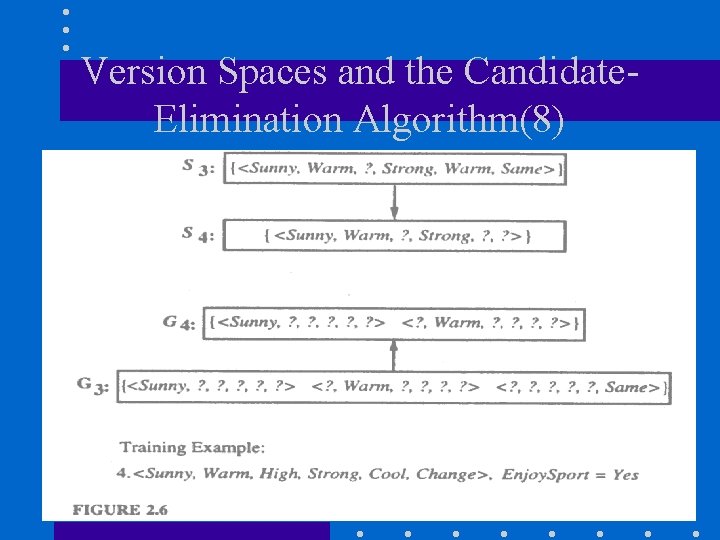

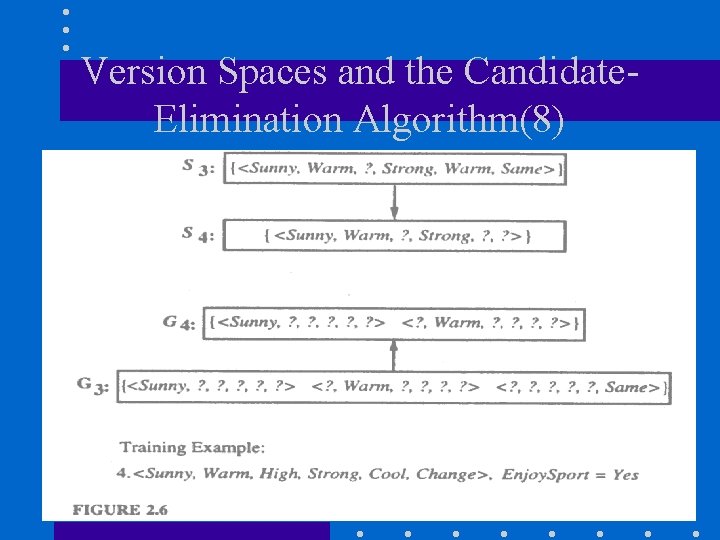

Version Spaces and the Candidate-Elimination Algorithm (8)

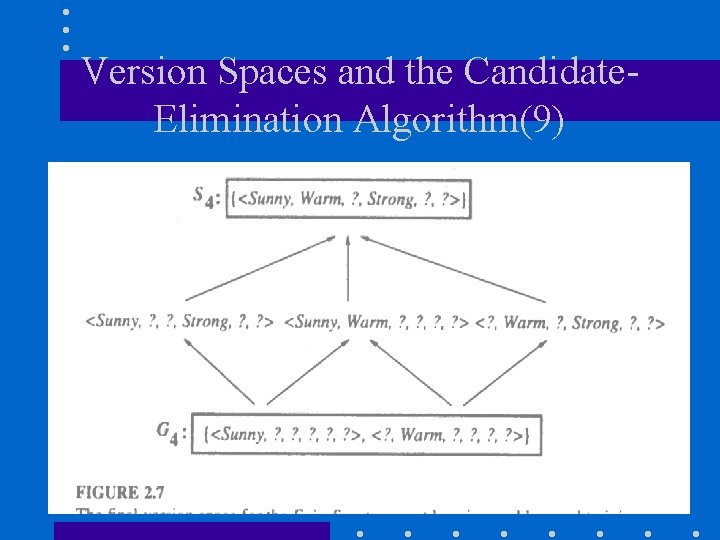

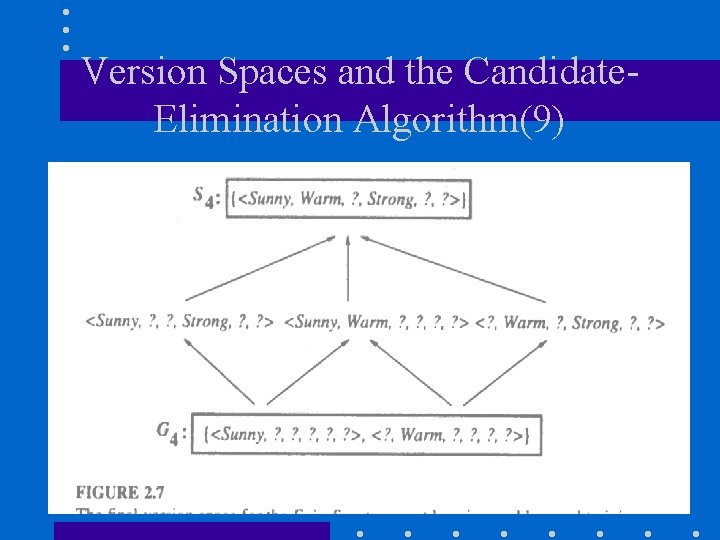

Version Spaces and the Candidate-Elimination Algorithm (9)

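Remarks on Version Spaces and Candidate-Elimination
• Will the Candidate-Elimination algorithm converge to the correct hypothesis?
  – Prerequisites: (1) the training examples contain no errors; (2) H contains a hypothesis that correctly describes the target concept c(x)
  – The target concept is learned exactly when S and G converge to a single, identical hypothesis
  – If the S and G boundary sets converge to an empty set, no hypothesis in H is consistent with the observed examples
• What training example should the learner request next?
  – A negative example specializes G; a positive example generalizes S
  – The optimal query is an instance satisfied by exactly half of the hypotheses in the version space; if such queries are always available, ⌈log2 |VS|⌉ queries suffice to find the target concept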
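To make the query-selection remark concrete, this sketch scores each of the 96 instances by how evenly it splits the current version space. It assumes Wind takes Strong and Weak, enumerates only hypotheses made of values and "?" (the all-"Ø" hypothesis is already eliminated by the positive examples), and `split_score` is a hypothetical helper name.

```python
from itertools import product

ANY = "?"

# Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [("Sunny", "Cloudy", "Rainy"), ("Warm", "Cold"), ("Normal", "High"),
           ("Strong", "Weak"), ("Warm", "Cool"), ("Same", "Change")]

TRAINING = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]

def satisfies(x, h):
    return all(c == ANY or c == v for c, v in zip(h, x))

H = list(product(*(vals + (ANY,) for vals in DOMAINS)))
VS = [h for h in H if all(satisfies(x, h) == y for x, y in TRAINING)]

def split_score(x):
    """Distance of x from a perfect 50/50 split of the version space (0 is ideal)."""
    positives = sum(satisfies(x, h) for h in VS)
    return abs(2 * positives - len(VS))

X = list(product(*DOMAINS))  # all 96 instances
best = min(X, key=split_score)
print(len(VS), best, sum(satisfies(best, h) for h in VS))
```

Whatever the true label of `best` turns out to be, it removes half of the six hypotheses; an instance that all six classify the same way would remove none.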

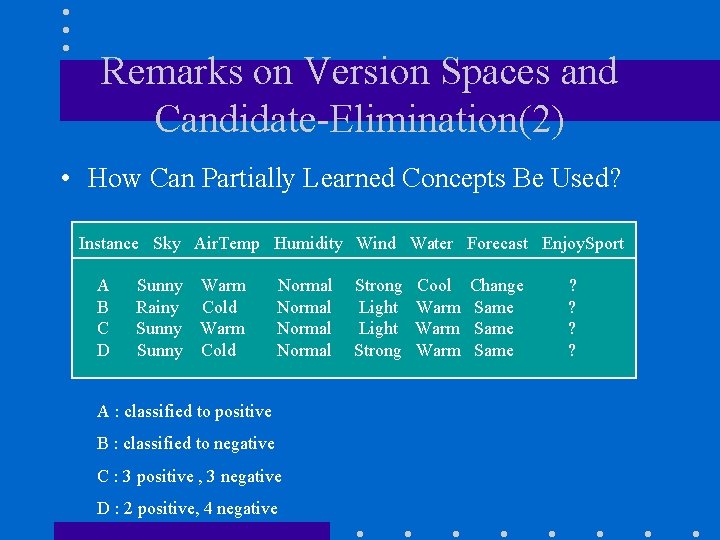

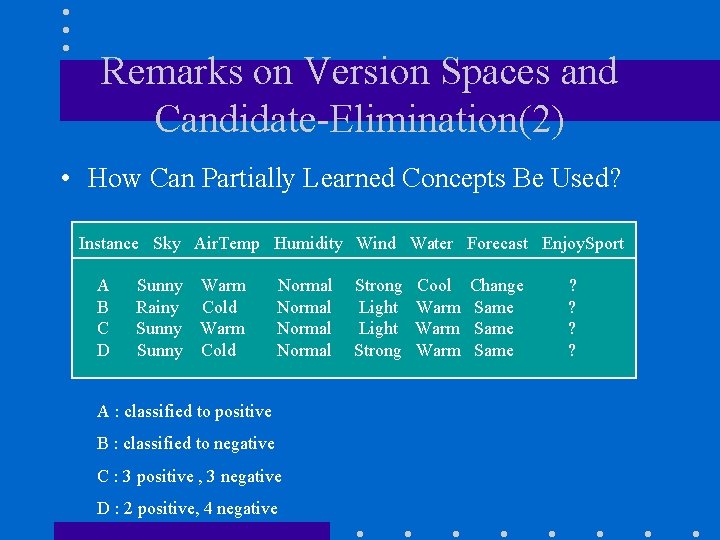

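Remarks on Version Spaces and Candidate-Elimination (2)
• How can partially learned concepts be used?

| Instance | Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|---|---|---|---|---|---|---|---|
| A | Sunny | Warm | Normal | Strong | Cool | Change | ? |
| B | Rainy | Cold | Normal | Light | Warm | Same | ? |
| C | Sunny | Warm | Normal | Light | Warm | Same | ? |
| D | Sunny | Cold | Normal | Strong | Warm | Same | ? |

  – A: classified as positive (all 6 hypotheses in the version space vote positive)
  – B: classified as negative (all 6 vote negative)
  – C: 3 positive, 3 negative
  – D: 2 positive, 4 negative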
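The vote counts for A to D can be reproduced by enumerating the version space and letting every member vote. A sketch under the same conjunctive-hypothesis assumptions; the table uses "Light" for Wind while the enumeration assumes Strong/Weak, which does not matter here because "Light" simply fails any "Strong" constraint. `classify` is an illustrative name, and non-unanimous instances are reported rather than forced into a class.

```python
from itertools import product

ANY = "?"

# Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [("Sunny", "Cloudy", "Rainy"), ("Warm", "Cold"), ("Normal", "High"),
           ("Strong", "Weak"), ("Warm", "Cool"), ("Same", "Change")]

TRAINING = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]

NEW = {
    "A": ("Sunny", "Warm", "Normal", "Strong", "Cool", "Change"),
    "B": ("Rainy", "Cold", "Normal", "Light", "Warm", "Same"),
    "C": ("Sunny", "Warm", "Normal", "Light", "Warm", "Same"),
    "D": ("Sunny", "Cold", "Normal", "Strong", "Warm", "Same"),
}

def satisfies(x, h):
    return all(c == ANY or c == v for c, v in zip(h, x))

H = list(product(*(vals + (ANY,) for vals in DOMAINS)))
VS = [h for h in H if all(satisfies(x, h) == y for x, y in TRAINING)]

def classify(x):
    """Unanimous vote -> class label; otherwise report the split."""
    positives = sum(satisfies(x, h) for h in VS)
    if positives == len(VS):
        return "positive"
    if positives == 0:
        return "negative"
    return f"undecided ({positives} positive, {len(VS) - positives} negative)"

votes = {name: sum(satisfies(x, h) for h in VS) for name, x in NEW.items()}
for name, x in NEW.items():
    print(name, classify(x))
```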

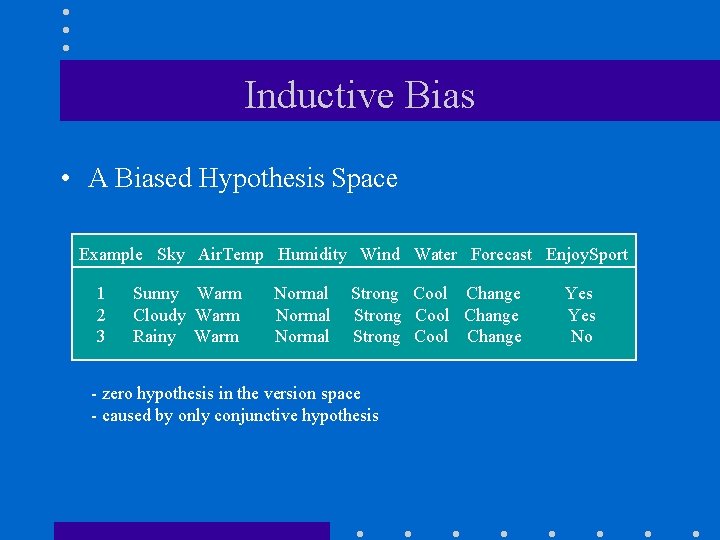

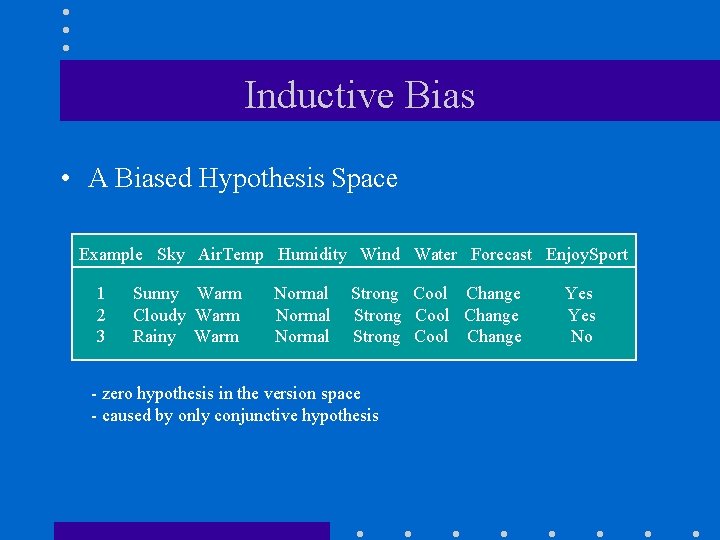

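Inductive Bias
• A biased hypothesis space

| Example | Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|---|---|---|---|---|---|---|---|
| 1 | Sunny | Warm | Normal | Strong | Cool | Change | Yes |
| 2 | Cloudy | Warm | Normal | Strong | Cool | Change | Yes |
| 3 | Rainy | Warm | Normal | Strong | Cool | Change | No |

  – zero hypotheses remain in the version space
  – caused by restricting H to conjunctive hypotheses: the most specific conjunction covering examples 1 and 2 is <?, Warm, Normal, Strong, Cool, Change>, which also covers the negative example 3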
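A brute-force check of the claim: no conjunctive hypothesis fits these three examples, while the disjunction "Sky is Sunny or Cloudy" fits all of them. A sketch assuming the same attribute domains as before (Wind ∈ {Strong, Weak}); `sunny_or_cloudy` is an illustrative name for a hypothesis outside the conjunctive H.

```python
from itertools import product

ANY = "?"

# Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [("Sunny", "Cloudy", "Rainy"), ("Warm", "Cold"), ("Normal", "High"),
           ("Strong", "Weak"), ("Warm", "Cool"), ("Same", "Change")]

EXAMPLES = [
    (("Sunny", "Warm", "Normal", "Strong", "Cool", "Change"), True),
    (("Cloudy", "Warm", "Normal", "Strong", "Cool", "Change"), True),
    (("Rainy", "Warm", "Normal", "Strong", "Cool", "Change"), False),
]

def satisfies(x, h):
    return all(c == ANY or c == v for c, v in zip(h, x))

# Conjunctive hypotheses (the all-'Ø' one is omitted: it rejects the positives).
H = list(product(*(vals + (ANY,) for vals in DOMAINS)))
consistent = [h for h in H if all(satisfies(x, h) == y for x, y in EXAMPLES)]
print(len(consistent))  # 0

def sunny_or_cloudy(x):
    """A disjunctive hypothesis, not expressible as a single conjunction."""
    return x[0] in ("Sunny", "Cloudy")

print(all(sunny_or_cloudy(x) == y for x, y in EXAMPLES))  # True
```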

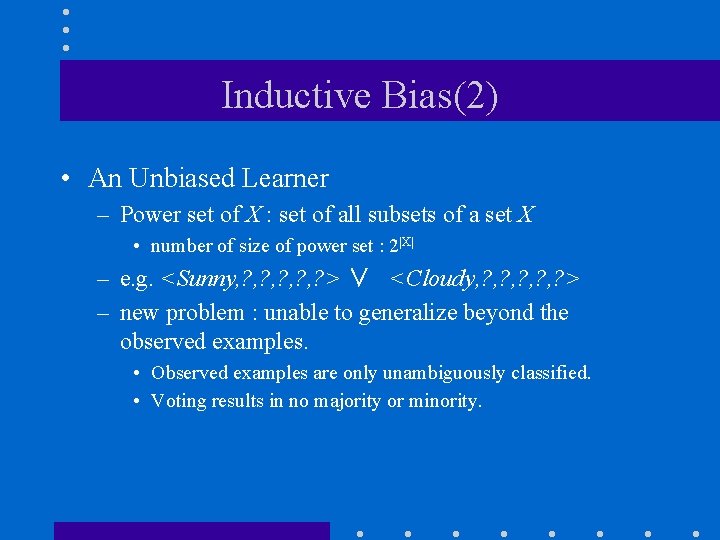

Inductive Bias (2)
• An unbiased learner
  – H = power set of X: the set of all subsets of X
  – size of the power set: $2^{|X|}$ (for EnjoySport, $2^{96}$ possible target concepts)
  – e.g. <Sunny, ?, ?, ?, ?, ?> ∨ <Cloudy, ?, ?, ?, ?, ?>
  – new problem: the learner is unable to generalize beyond the observed examples
    • only the observed training examples are classified unambiguously
    • for every unseen instance, the version space votes split exactly in half, so there is no majority
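The "votes split exactly in half" effect can be seen on a toy instance space small enough to enumerate its power set. The four instances `x1` to `x4` are hypothetical, chosen only for illustration: for every unseen instance, each consistent subset that contains it is paired with one that does not.

```python
from itertools import combinations

# Hypothetical toy instance space with four instances.
X = ["x1", "x2", "x3", "x4"]

# Unbiased hypothesis space: every subset of X (the power set), 2**len(X) hypotheses.
H = [frozenset(c) for r in range(len(X) + 1) for c in combinations(X, r)]

# Observed training examples: x1 is positive, x2 is negative.
TRAINING = {"x1": True, "x2": False}

# Version space: subsets that contain every positive and no negative example.
VS = [h for h in H if all((x in h) == label for x, label in TRAINING.items())]

# Number of version-space hypotheses voting "positive" for each instance.
votes = {x: sum(x in h for h in VS) for x in X}
print(len(H), len(VS), votes)  # 16 4 {'x1': 4, 'x2': 0, 'x3': 2, 'x4': 2}
```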

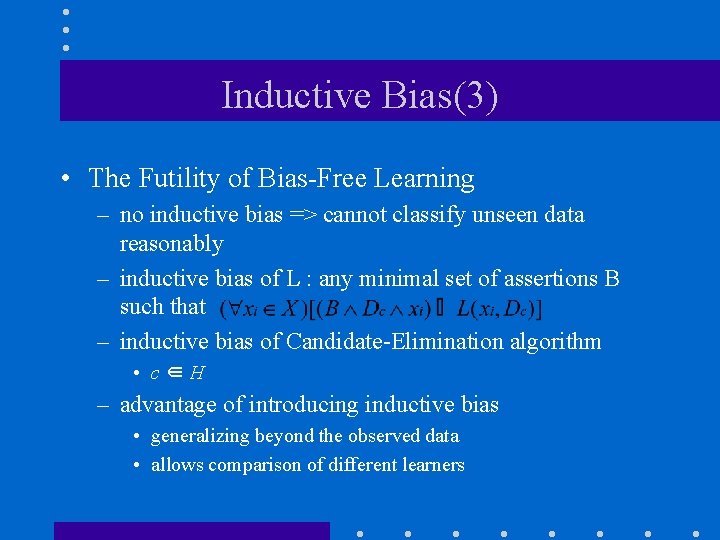

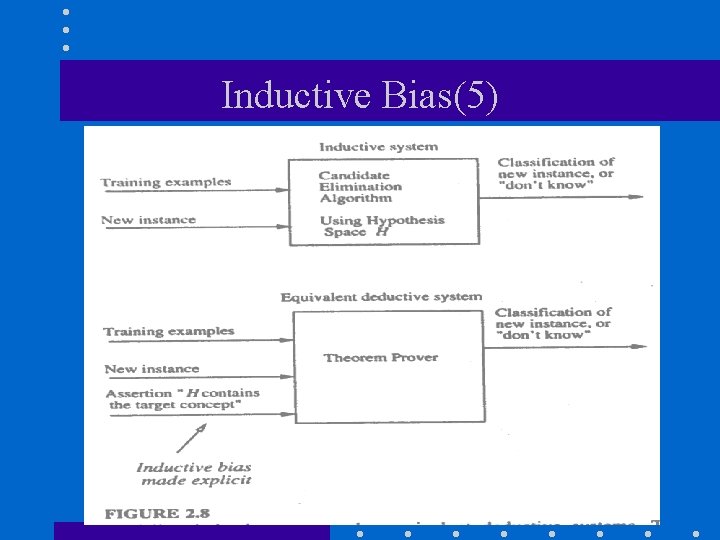

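Inductive Bias (3)
• The futility of bias-free learning
  – with no inductive bias, a learner cannot classify unseen instances in any reasonable way
  – inductive bias of a learner L: any minimal set of assertions B such that, for any target concept c and corresponding training examples $D_c$, $(\forall x_i \in X)\,[(B \wedge D_c \wedge x_i) \vdash L(x_i, D_c)]$
  – inductive bias of the Candidate-Elimination algorithm: c ∈ H
  – advantages of making the inductive bias explicit
    • it characterizes how the learner generalizes beyond the observed data
    • it allows different learners to be compared by the strength of their bias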

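Inductive Bias (4)
• e.g.
  – Rote-learner: no inductive bias (classifies only instances that appear in the training data)
  – Candidate-Elimination algorithm: c ∈ H, a stronger bias
  – Find-S: c ∈ H, and additionally that every instance is negative unless the training data entail that it is positive (the strongest of the three)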
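A sketch comparing the three learners on instances A to D from the earlier table. It hard-codes the S and G boundaries that the illustrative example reaches after the four EnjoySport training examples (on this data Find-S returns the single member of S). Candidate-Elimination classifies x as positive when x satisfies every member of S and as negative when it satisfies no member of G; the function names are illustrative.

```python
ANY = "?"

TRAINING = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]

# Boundaries after the four examples (hard-coded from the illustrative example).
S = [("Sunny", "Warm", ANY, "Strong", ANY, ANY)]
G = [("Sunny", ANY, ANY, ANY, ANY, ANY), (ANY, "Warm", ANY, ANY, ANY, ANY)]
FIND_S_H = S[0]

NEW = {
    "A": ("Sunny", "Warm", "Normal", "Strong", "Cool", "Change"),
    "B": ("Rainy", "Cold", "Normal", "Light", "Warm", "Same"),
    "C": ("Sunny", "Warm", "Normal", "Light", "Warm", "Same"),
    "D": ("Sunny", "Cold", "Normal", "Strong", "Warm", "Same"),
}

def satisfies(x, h):
    return all(c == ANY or c == v for c, v in zip(h, x))

def rote_learner(x):
    """No inductive bias: only instances seen in training get a label."""
    for seen, label in TRAINING:
        if seen == x:
            return label
    return None

def candidate_elimination_vote(x):
    """Bias c in H: answer only when the whole version space agrees."""
    if all(satisfies(x, s) for s in S):      # covered by every s, hence by every h in VS
        return True
    if not any(satisfies(x, g) for g in G):  # rejected by every g, hence by every h in VS
        return False
    return None

def find_s_classify(x):
    """Stronger bias: anything not covered by the Find-S hypothesis is negative."""
    return satisfies(x, FIND_S_H)

for name, x in NEW.items():
    print(name, rote_learner(x), candidate_elimination_vote(x), find_s_classify(x))
```

The stronger the bias, the more of A to D receive a definite label: the rote learner labels none, Candidate-Elimination labels A and B, and Find-S labels all four.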

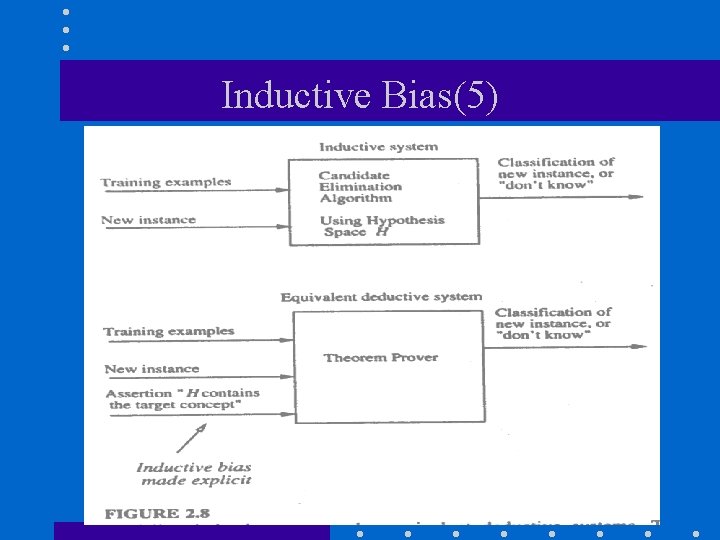

Inductive Bias (5)

Summary
• Concept learning can be cast as a search through a large, predefined space of potential hypotheses.
• The general-to-specific partial ordering of hypotheses provides a useful structure for organizing the search.
• The Find-S algorithm performs a specific-to-general search to find the most specific hypothesis consistent with the training examples.
• The Candidate-Elimination algorithm computes the version space by incrementally computing the sets of maximally specific (S) and maximally general (G) hypotheses.
• S and G delimit the entire set of hypotheses consistent with the data.

• Version spaces and the Candidate-Elimination algorithm provide a useful conceptual framework for studying concept learning.
• The Candidate-Elimination algorithm is not robust to noisy data or to situations where the unknown target concept is not expressible in the provided hypothesis space.
• The inductive bias of the Candidate-Elimination algorithm is the assumption that the target concept is contained in H.
• If the hypothesis space is enriched so that it contains every possible hypothesis, the learner loses the ability to classify any instance beyond the observed examples.