COMS 4705 Natural Language Processing Fall 2010 Machine

- Slides: 47

COMS 4705: Natural Language Processing Fall 2010 Machine Translation Dr. Nizar Habash Center for Computational Learning Systems Columbia University

Why (Machine) Translation? Languages in the world • 6,800 living languages • 600 with written tradition • 95% of world population speaks 100 languages Translation Market • $26 Billion Global Market (2010) • Doubling every five years (Donald Barabé, invited talk, MT Summit 2003)

Machine Translation Science Fiction • Star Trek Universal Translator an "extremely sophisticated computer program" which functions by "analyzing the patterns" of an unknown foreign language, starting from a speech sample of two or more speakers in conversation. The more extensive the conversational sample, the more accurate and reliable is the "translation matrix"….

Machine Translation Reality http://www.medialocate.com/

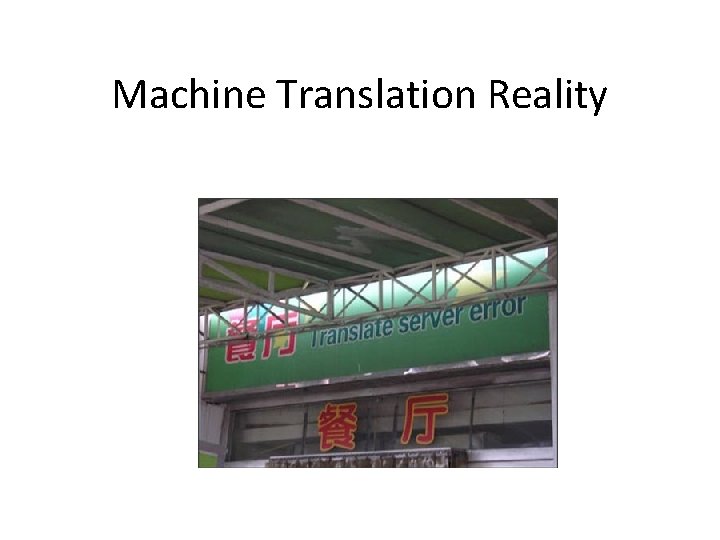

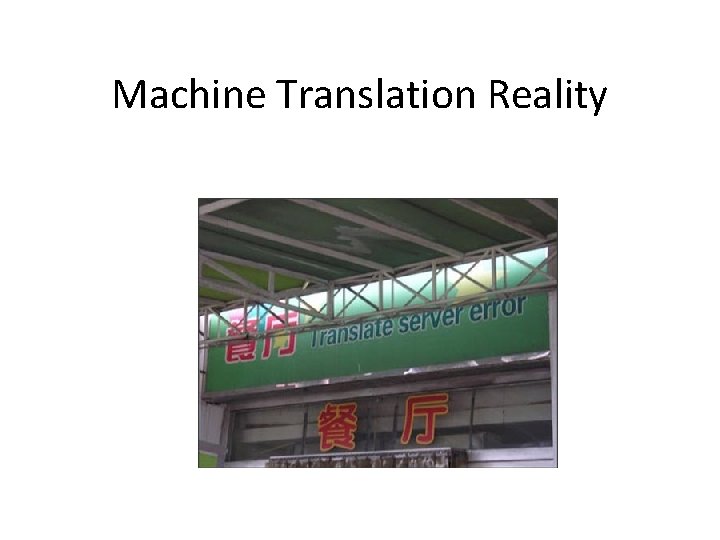

Machine Translation Reality

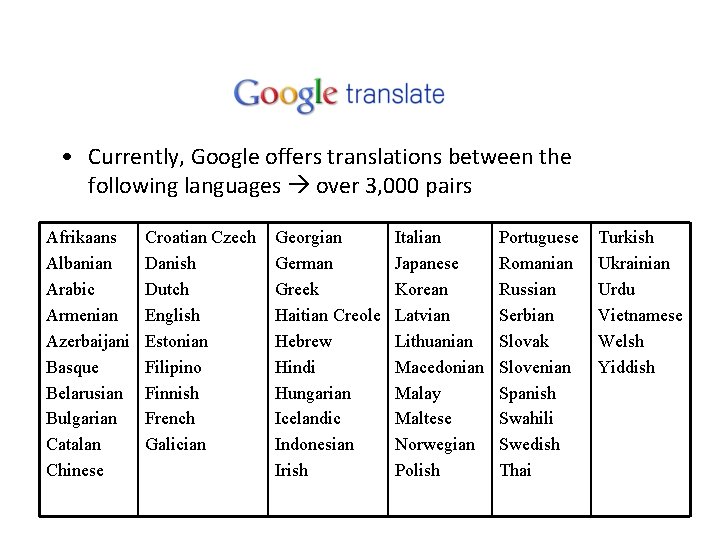

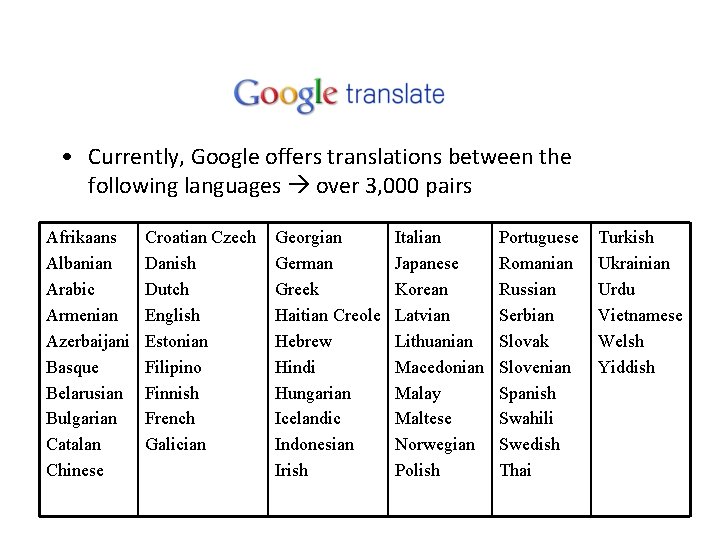

• Currently, Google offers translations between the following languages (over 3,000 pairs): Afrikaans Albanian Arabic Armenian Azerbaijani Basque Belarusian Bulgarian Catalan Chinese Croatian Czech Danish Dutch English Estonian Filipino Finnish French Galician Georgian German Greek Haitian Creole Hebrew Hindi Hungarian Icelandic Indonesian Irish Italian Japanese Korean Latvian Lithuanian Macedonian Malay Maltese Norwegian Polish Portuguese Romanian Russian Serbian Slovak Slovenian Spanish Swahili Swedish Thai Turkish Ukrainian Urdu Vietnamese Welsh Yiddish

“BBC found similar support”!!!

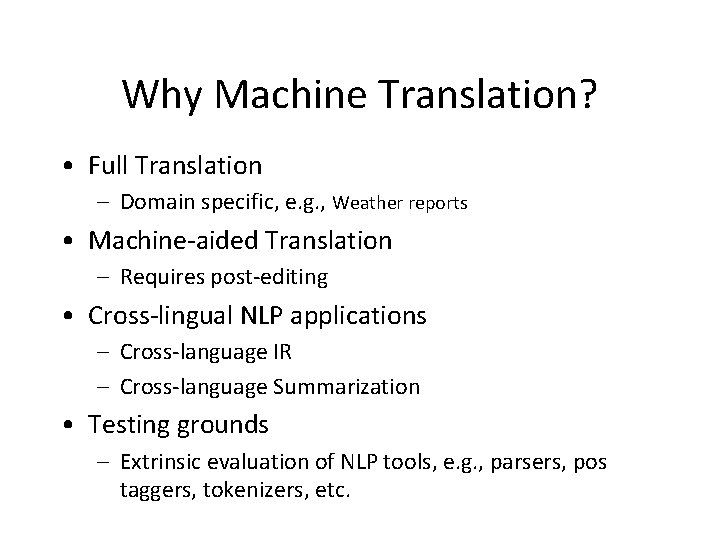

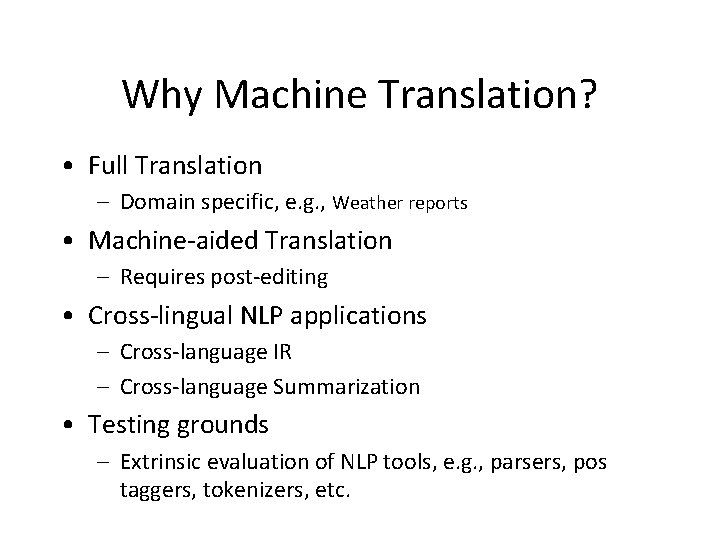

Why Machine Translation? • Full Translation – Domain specific, e.g., weather reports • Machine-aided Translation – Requires post-editing • Cross-lingual NLP applications – Cross-language IR – Cross-language Summarization • Testing grounds – Extrinsic evaluation of NLP tools, e.g., parsers, POS taggers, tokenizers, etc.

Road Map • Multilingual Challenges for MT • MT Approaches • MT Evaluation

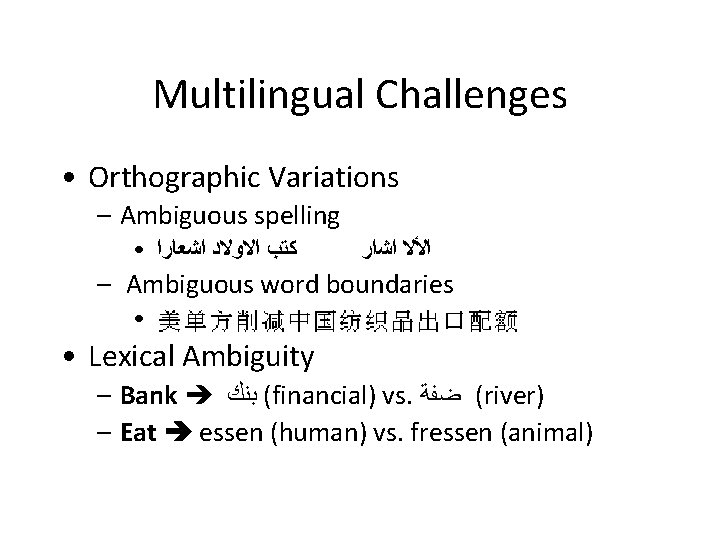

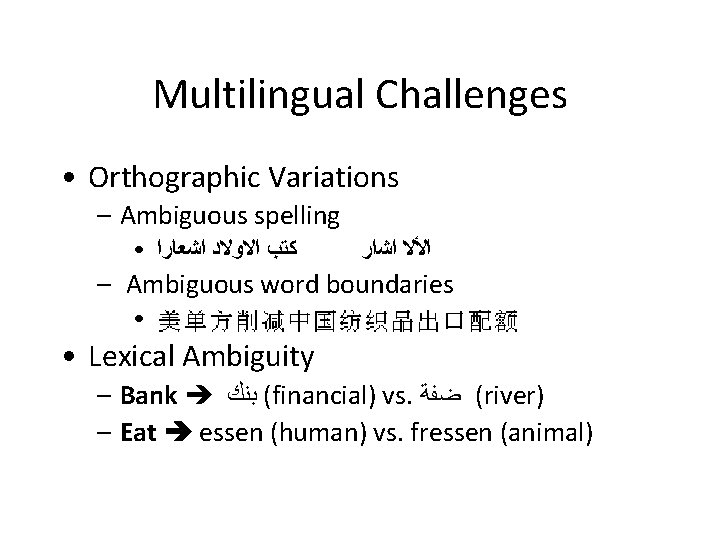

Multilingual Challenges • Orthographic Variations – Ambiguous spelling • ﻛﺘﺐ ﺍﻻﻭﻻﺩ ﺍﺷﻌﺎﺭﺍ ﺍﻷﻻ ﺍﺷﺍﺭ – Ambiguous word boundaries • • Lexical Ambiguity – Bank ( ﺑﻨﻚ financial) vs. ( ﺿﻔﺔ river) – Eat essen (human) vs. fressen (animal)

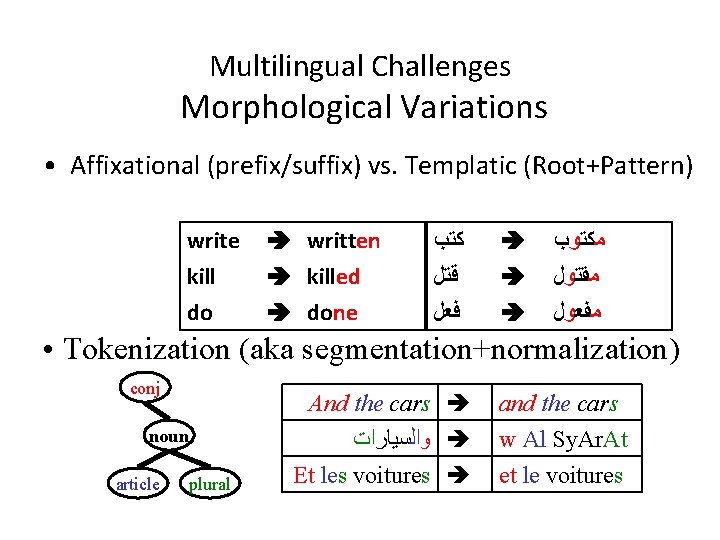

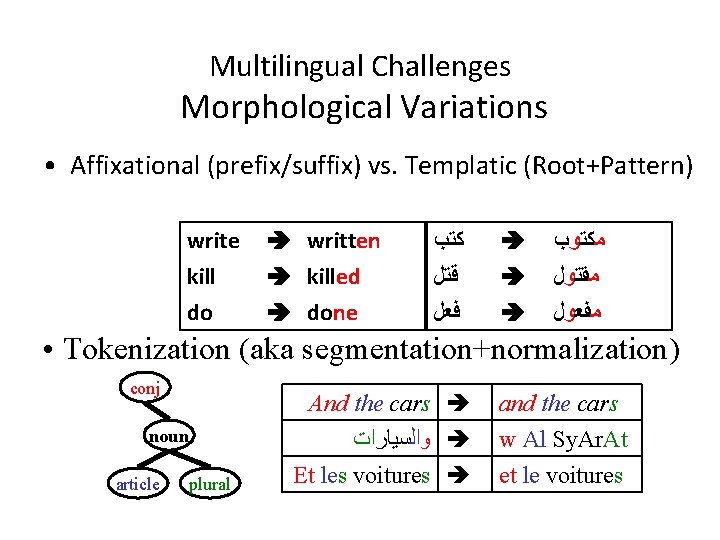

Multilingual Challenges: Morphological Variations
• Affixational (prefix/suffix) vs. Templatic (Root+Pattern)
– English: write / written, kill / killed, do / done
– Arabic: ﻛﺘﺐ / ﻣﻜﺘﻮﺏ, ﻗﺘﻞ / ﻣﻘﺘﻮﻝ, ﻓﻌﻞ / ﻣﻔﻌﻮﻝ
• Tokenization (aka segmentation + normalization)
– Parts: conj, noun, article, plural
– English: And the cars → and the cars
– Arabic: ﻭﺍﻟﺴﻴﺎﺭﺍﺕ → w Al SyArAt
– French: Et les voitures → et le voitures

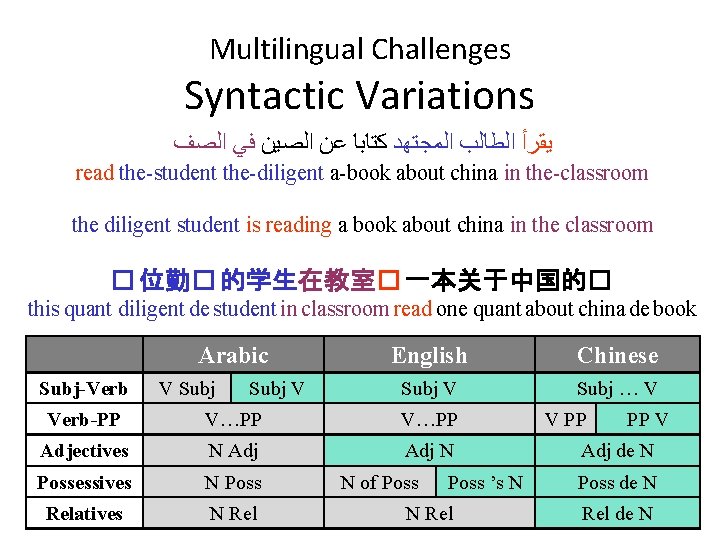

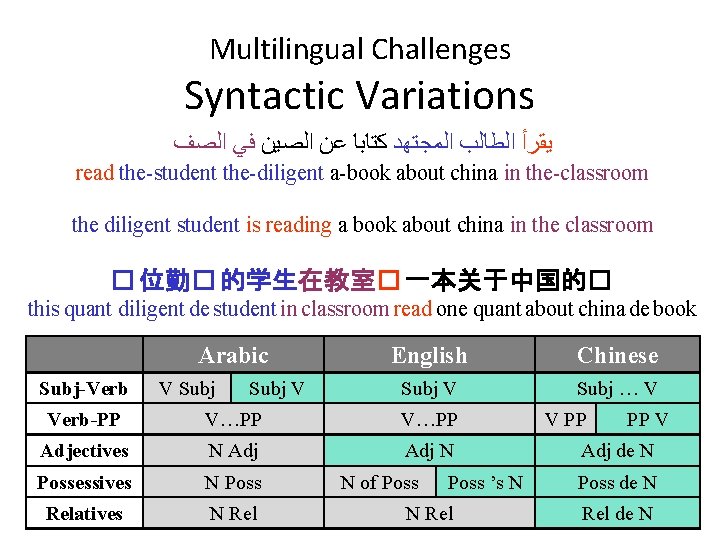

Multilingual Challenges: Syntactic Variations
• Arabic: ﻳﻘﺮﺃ ﺍﻟﻄﺎﻟﺐ ﺍﻟﻤﺠﺘﻬﺪ ﻛﺘﺎﺑﺎ ﻋﻦ ﺍﻟﺼﻴﻦ ﻓﻲ ﺍﻟﺼﻒ
– Gloss: read the-student the-diligent a-book about china in the-classroom
• English: the diligent student is reading a book about china in the classroom
• Chinese: 这位勤奋的学生在教室读一本关于中国的书
– Gloss: this quant diligent de student in classroom read one quant about china de book

Word order by construction (Arabic / English / Chinese):
• Subj-Verb: V Subj or Subj V / Subj V / Subj … V
• Verb-PP: V … PP / V PP / PP V
• Adjectives: N Adj / Adj N / Adj de N
• Possessives: N Poss / N of Poss or Poss ’s N / Poss de N
• Relatives: N Rel / N Rel / Rel de N

Road Map • Multilingual Challenges for MT • MT Approaches • MT Evaluation

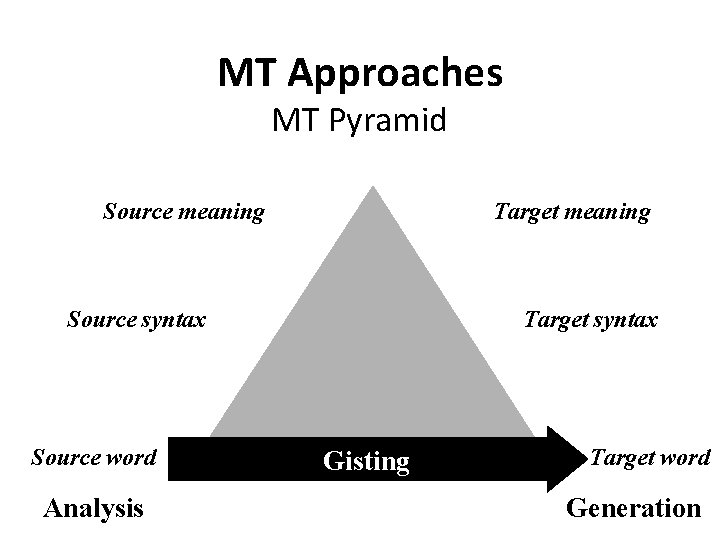

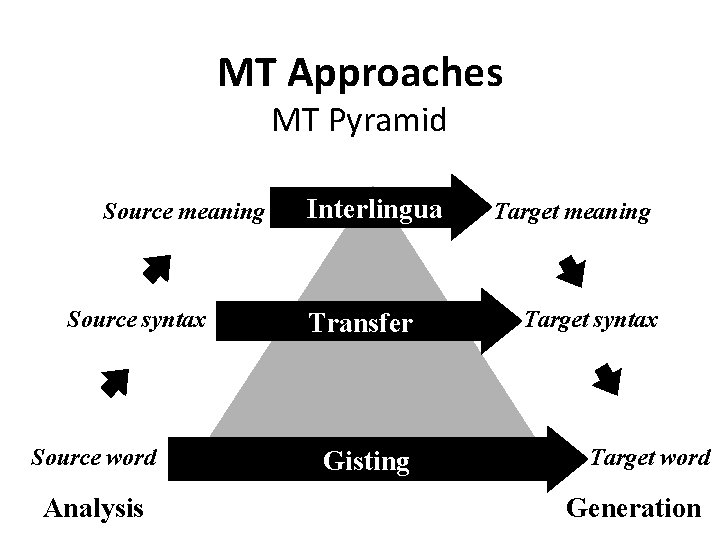

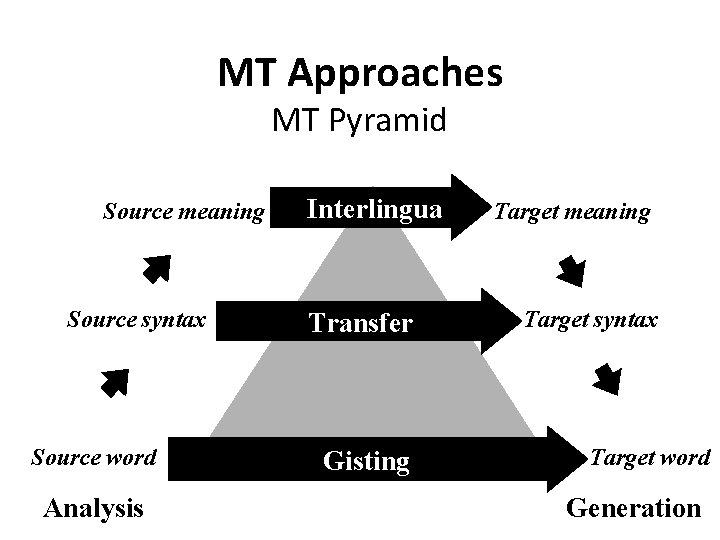

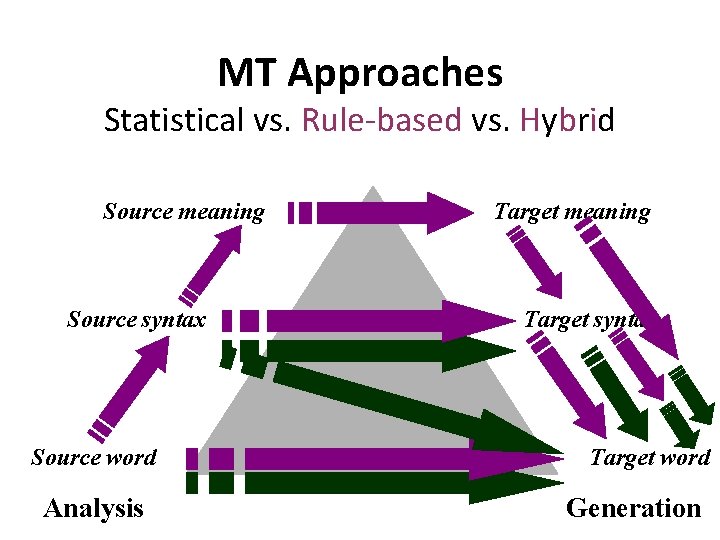

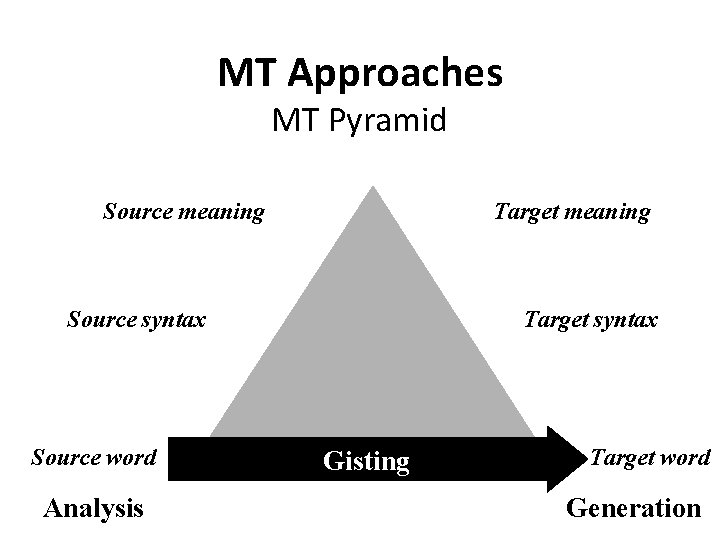

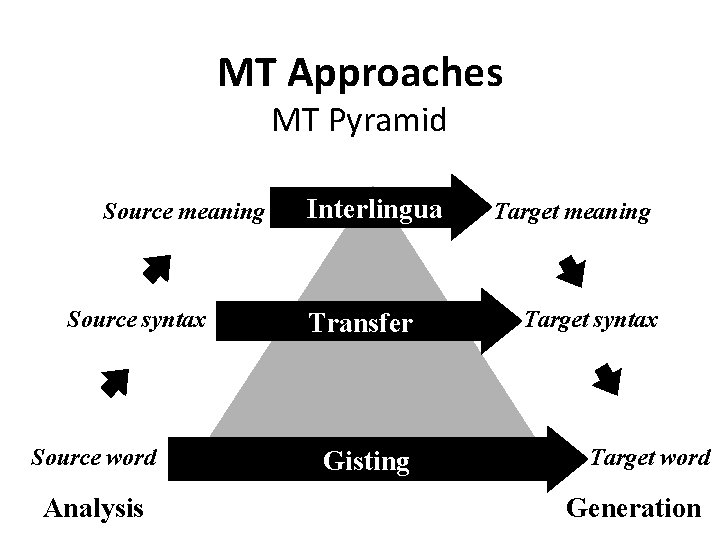

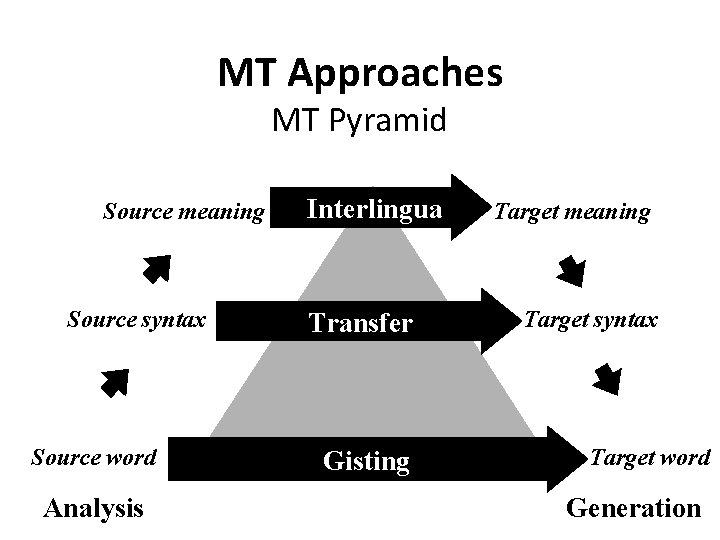

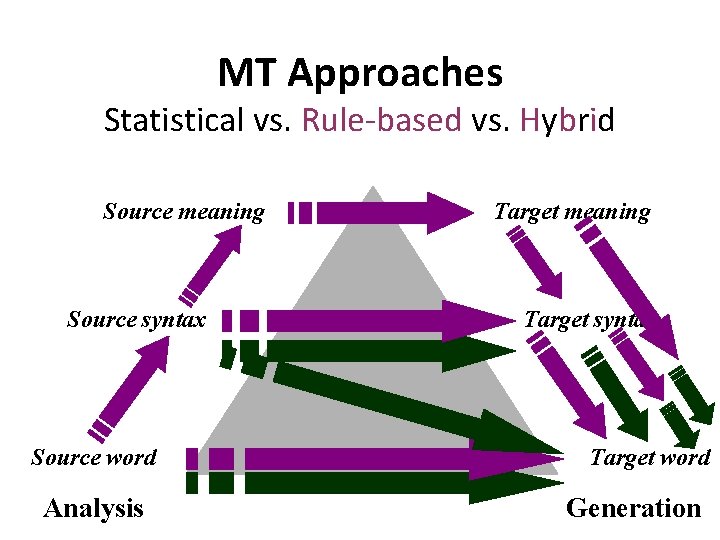

MT Approaches: MT Pyramid (figure). Analysis side: source word, source syntax, source meaning. Generation side: target meaning, target syntax, target word. Direct path: Gisting (source word to target word).

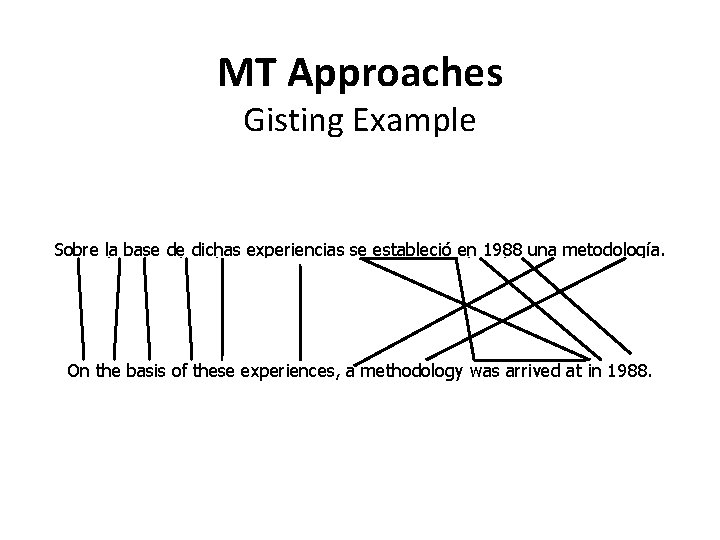

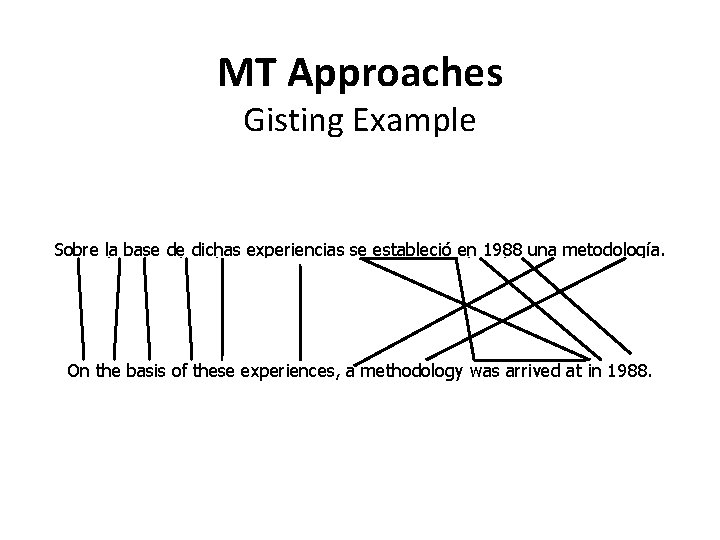

MT Approaches: Gisting Example
• Source: Sobre la base de dichas experiencias se estableció en 1988 una metodología.
• Gisting (word for word): Envelope her basis out speak experiences them settle at 1988 one methodology.
• Reference: On the basis of these experiences, a methodology was arrived at in 1988.
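A minimal sketch of gisting as context-free, word-for-word lookup. The `LEXICON` table and the `gist` function are hypothetical names introduced here for illustration; the glosses are simply the ones that appear in the slide's gisted output, not entries from a real dictionary.

```python
# Hypothetical word-for-word lexicon: one gloss per source word, chosen
# without looking at context (glosses copied from the slide's output).
LEXICON = {
    "sobre": "envelope", "la": "her", "base": "basis", "de": "out",
    "dichas": "speak", "experiencias": "experiences", "se": "them",
    "estableció": "settle", "en": "at", "una": "one",
    "metodología": "methodology",
}

def gist(sentence: str) -> str:
    """Replace each token by its single gloss; unknown tokens pass through."""
    tokens = sentence.rstrip(".").split()
    glosses = [LEXICON.get(tok.lower(), tok) for tok in tokens]
    text = " ".join(glosses)
    if not text:
        return text
    # Restore sentence casing and the final period.
    return text[0].upper() + text[1:] + "."

source = "Sobre la base de dichas experiencias se estableció en 1988 una metodología."
print(gist(source))
```

Because every word is translated in isolation and source word order is kept, the output shows the typical gisting failures: wrong senses ("sobre" as a noun, "de" as "out") and no reordering.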

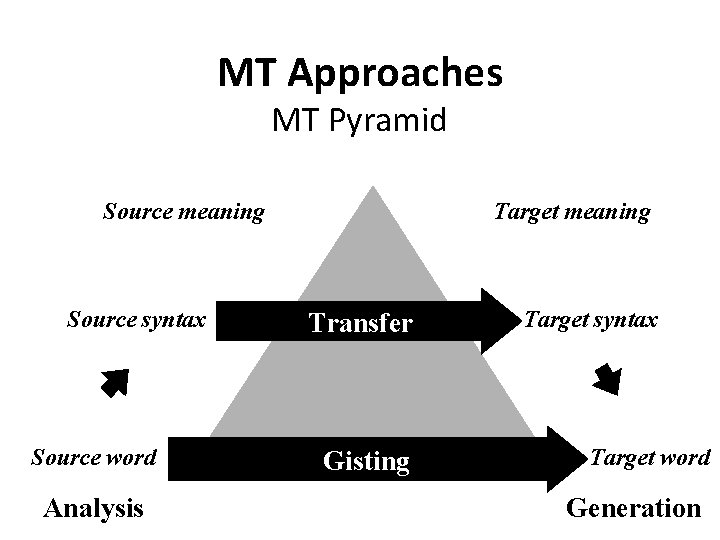

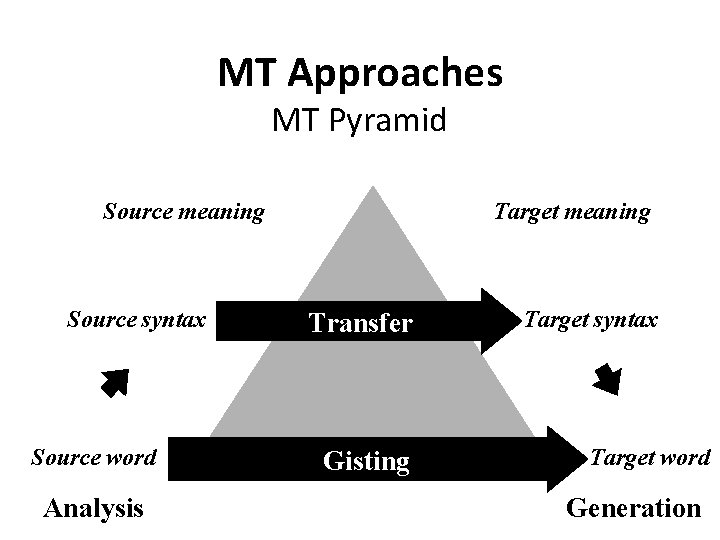

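MT Approaches: MT Pyramid (figure). Analysis side: source word, source syntax, source meaning. Generation side: target meaning, target syntax, target word. Paths across: Gisting (source word to target word) and Transfer (source syntax to target syntax).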

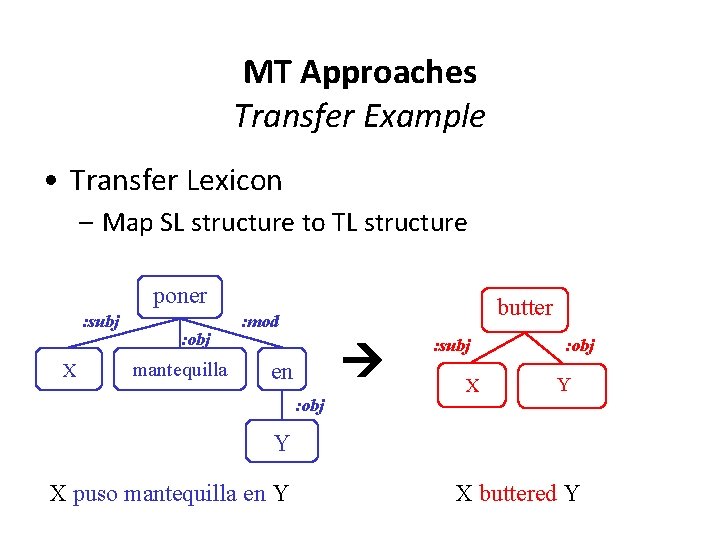

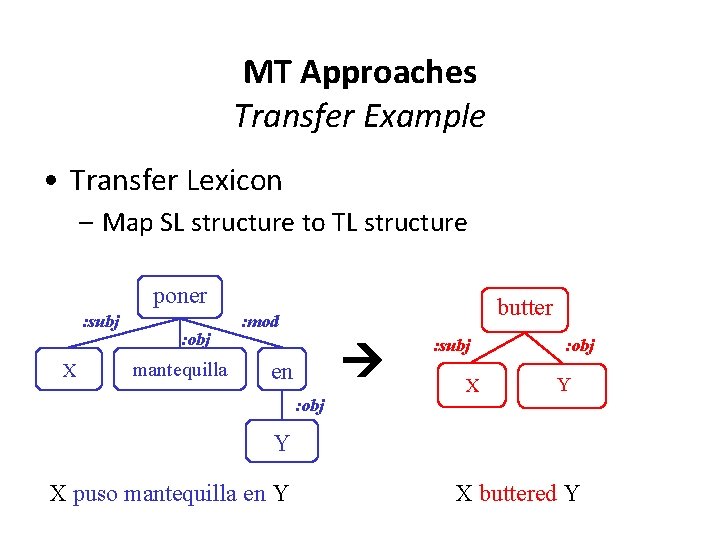

MT Approaches: Transfer Example
• Transfer Lexicon – Map SL structure to TL structure
– SL structure: poner (:subj X, :obj mantequilla, :mod en (:obj Y))
– TL structure: butter (:subj X, :obj Y)
– X puso mantequilla en Y → X buttered Y
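The transfer-lexicon entry above can be sketched as a single structural rewrite rule. This is an illustrative assumption, not the representation used by any particular system: trees are plain dicts with a `head` key plus role keys, leaves are strings, and `transfer` is a hypothetical function name.

```python
def transfer(node):
    """Apply one hypothetical transfer-lexicon rule to a source-language tree.

    Trees are dicts with a 'head' plus role keys; leaves are plain strings.
    """
    if (isinstance(node, dict)
            and node.get("head") == "poner"
            and node.get("obj") == "mantequilla"
            and isinstance(node.get("mod"), dict)
            and node["mod"].get("head") == "en"):
        # poner X mantequilla en Y  ->  butter X Y
        return {"head": "butter",
                "subj": node.get("subj"),
                "obj": node["mod"].get("obj")}
    return node  # no rule matched; leave the structure unchanged

source = {"head": "poner", "subj": "X", "obj": "mantequilla",
          "mod": {"head": "en", "obj": "Y"}}
target = transfer(source)
print(target)
```

The rule matches on syntactic structure rather than on word strings, which is what lets three source words collapse into one target verb.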

MT Approaches: MT Pyramid (figure). Analysis side: source word, source syntax, source meaning. Generation side: target meaning, target syntax, target word. Paths across: Gisting (word level), Transfer (syntax level), Interlingua (meaning level).

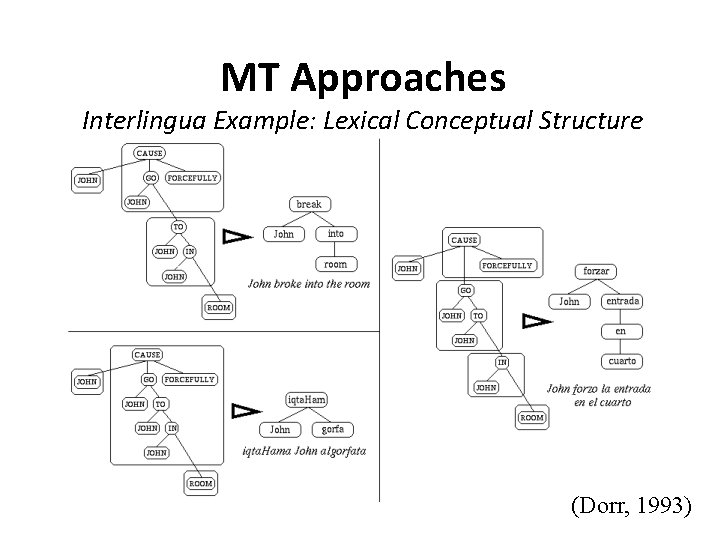

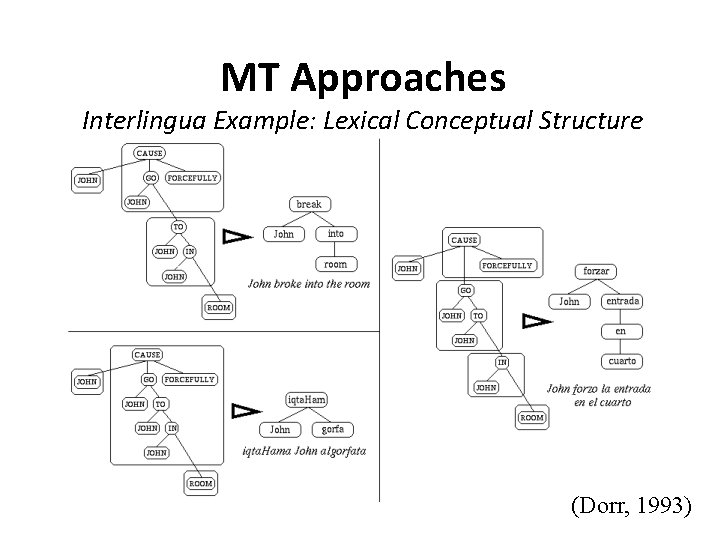

MT Approaches Interlingua Example: Lexical Conceptual Structure (Dorr, 1993)

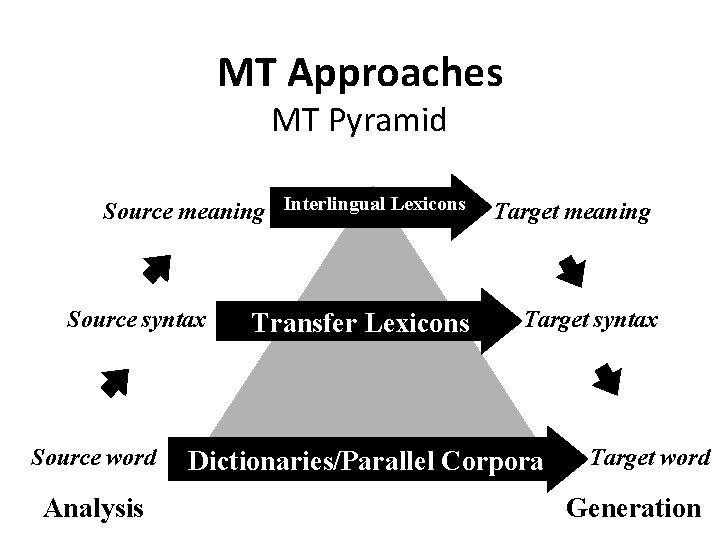

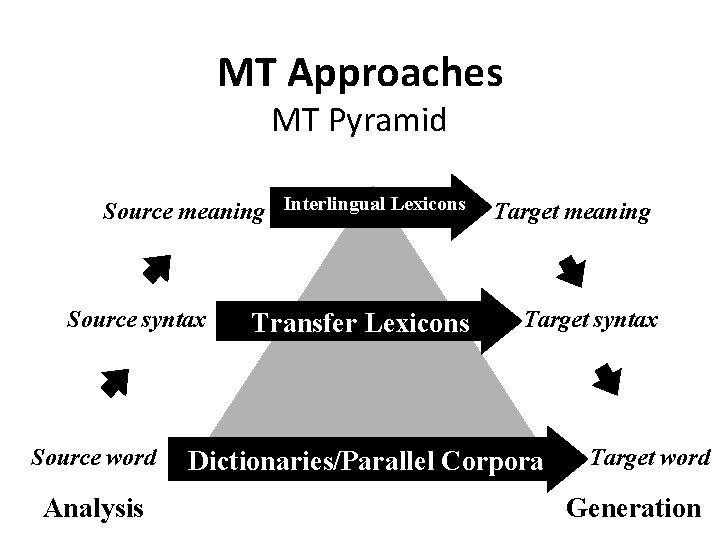

MT Approaches: MT Pyramid (figure). Analysis side: source word, source syntax, source meaning. Generation side: target meaning, target syntax, target word. Resources by level: Interlingual Lexicons (meaning), Transfer Lexicons (syntax), Dictionaries/Parallel Corpora (word).

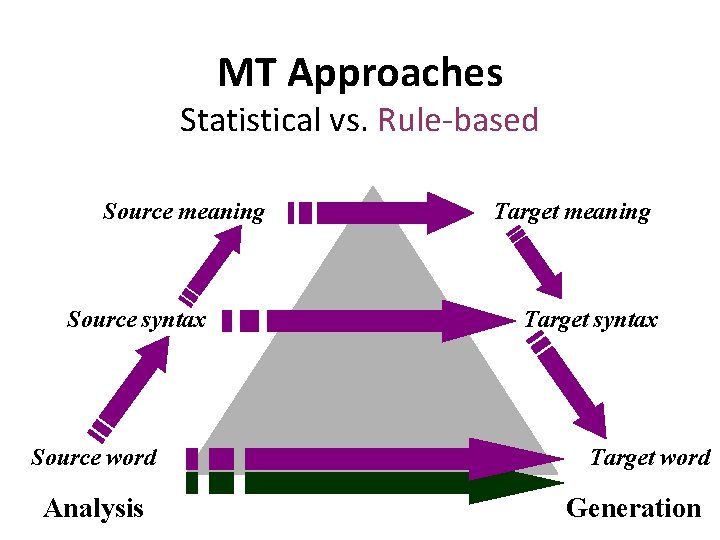

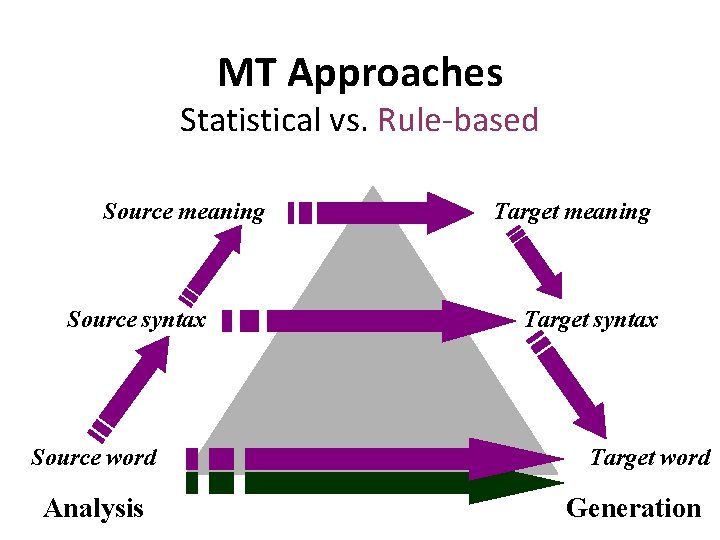

MT Approaches: Statistical vs. Rule-based (figure: the MT pyramid, with Analysis over source word, source syntax, source meaning and Generation over target meaning, target syntax, target word).

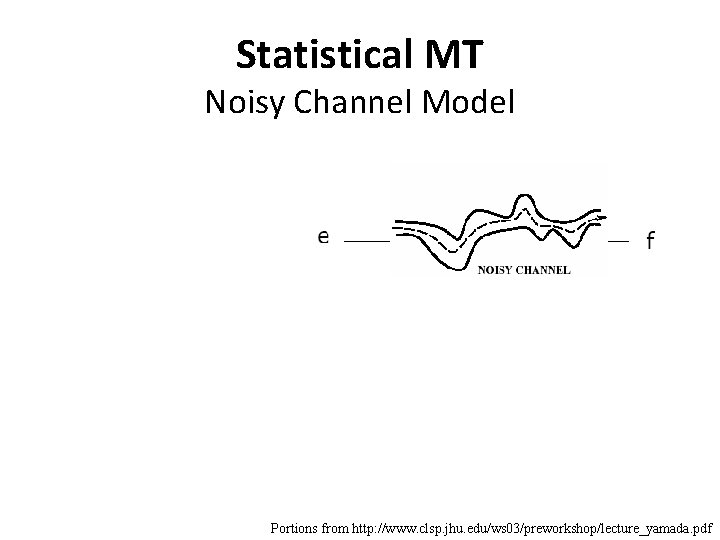

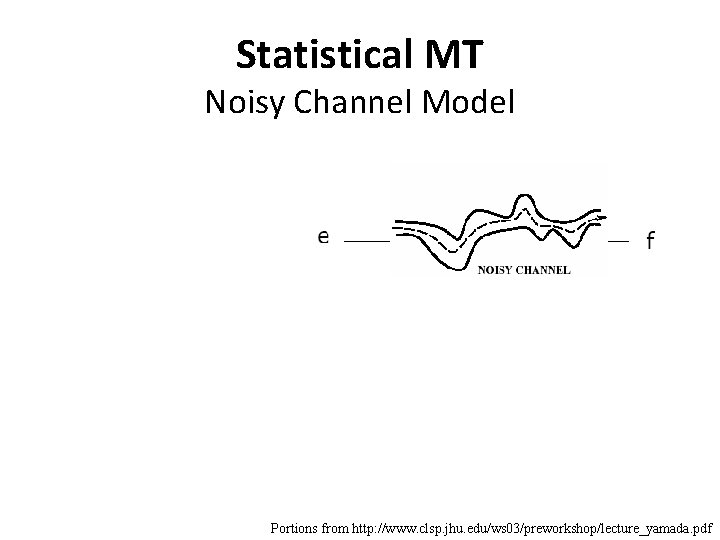

Statistical MT: Noisy Channel Model. Portions from http://www.clsp.jhu.edu/ws03/preworkshop/lecture_yamada.pdf
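For reference, the noisy channel formulation is usually written as follows. The symbols are assumptions introduced here, not taken from the slide: $f$ is the observed foreign (source) sentence and $e$ a candidate English (target) sentence.

```latex
\hat{e} \;=\; \arg\max_{e} P(e \mid f)
        \;=\; \arg\max_{e} \frac{P(f \mid e)\, P(e)}{P(f)}
        \;=\; \arg\max_{e} P(f \mid e)\, P(e)
```

The last step drops $P(f)$ because it does not depend on $e$. $P(f \mid e)$ is the translation model and $P(e)$ is the language model.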

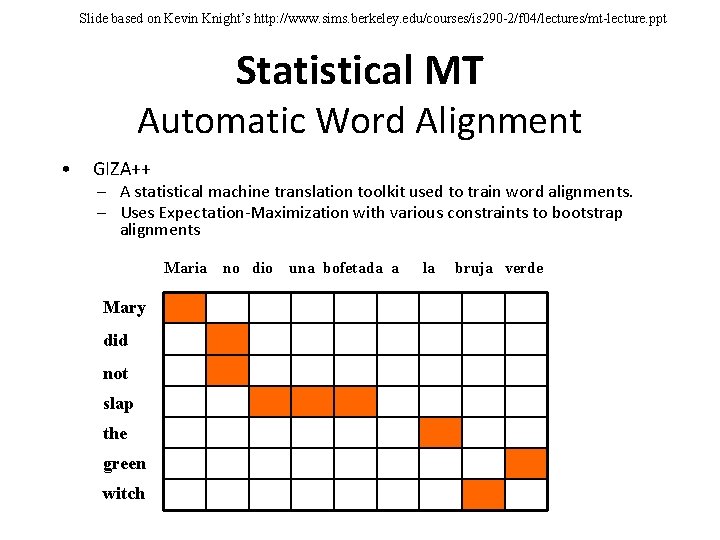

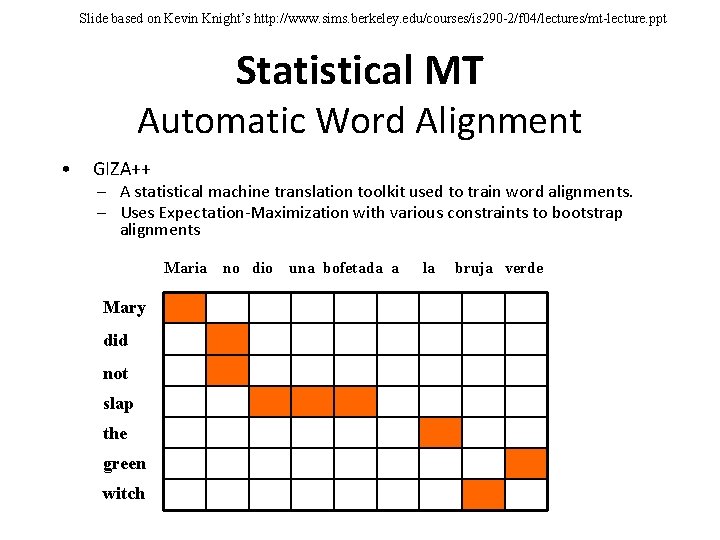

Slide based on Kevin Knight’s http://www.sims.berkeley.edu/courses/is290-2/f04/lectures/mt-lecture.ppt
Statistical MT: Automatic Word Alignment
• GIZA++
– A statistical machine translation toolkit used to train word alignments.
– Uses Expectation-Maximization with various constraints to bootstrap alignments
• Example sentence pair
– Mary did not slap the green witch
– Maria no dio una bofetada a la bruja verde
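To make the Expectation-Maximization idea concrete, here is a minimal sketch of EM for a word-translation table in the style of IBM Model 1. It is a deliberate simplification and not GIZA++ itself, which trains richer alignment models; there is no NULL word, and the function name `train_model1` and the two-sentence toy corpus are assumptions made for illustration.

```python
from collections import defaultdict

def train_model1(corpus, iterations=10):
    """corpus: list of (foreign_words, english_words) pairs.

    Returns t, where t[(e, f)] estimates P(e | f).
    """
    e_vocab = {e for _, es in corpus for e in es}
    # Start from a uniform table: every pairing is equally likely.
    t = defaultdict(lambda: 1.0 / len(e_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected count of (e, f) links
        total = defaultdict(float)  # expected count of links leaving f
        for fs, es in corpus:
            for e in es:
                # E-step: split one unit of credit for e among the
                # foreign words of this sentence, proportionally to t.
                norm = sum(t[(e, f)] for f in fs)
                for f in fs:
                    frac = t[(e, f)] / norm
                    count[(e, f)] += frac
                    total[f] += frac
        # M-step: renormalise expected counts into probabilities.
        t = defaultdict(float,
                        {(e, f): c / total[f] for (e, f), c in count.items()})
    return t

# Hypothetical toy corpus: "casa" co-occurs with "house" in both pairs.
corpus = [
    ("casa verde".split(), "green house".split()),
    ("la casa".split(), "the house".split()),
]
t = train_model1(corpus)
print(t[("house", "casa")], t[("green", "verde")])
```

No alignment is given as input; the table sharpens only because "casa" and "house" co-occur in both sentence pairs, which in turn pushes "verde" toward "green" and "la" toward "the".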

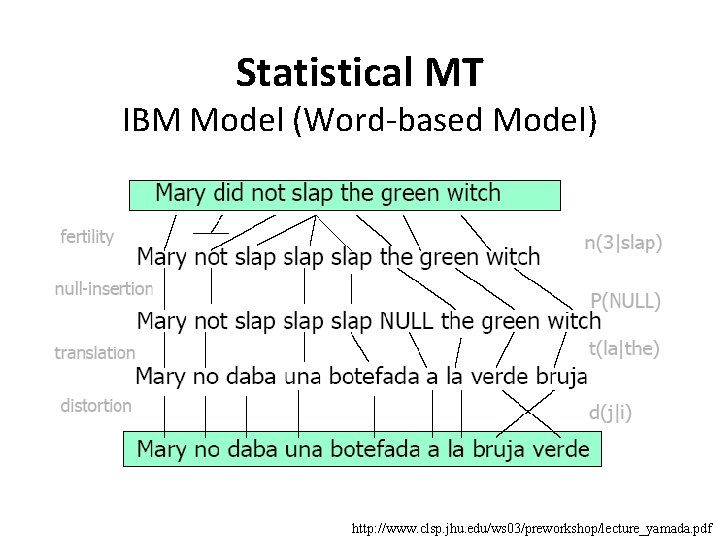

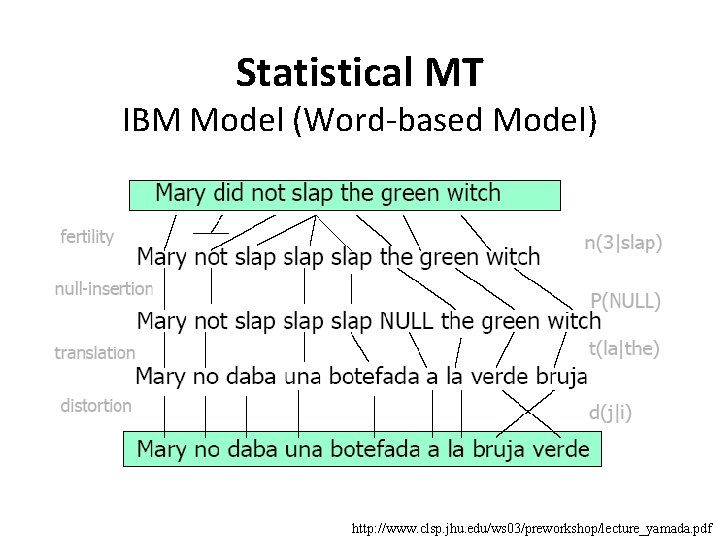

Statistical MT: IBM Model (Word-based Model) http://www.clsp.jhu.edu/ws03/preworkshop/lecture_yamada.pdf

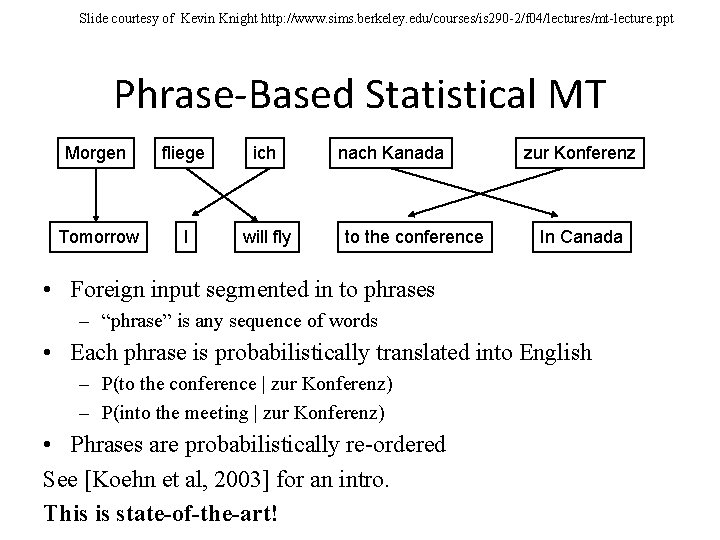

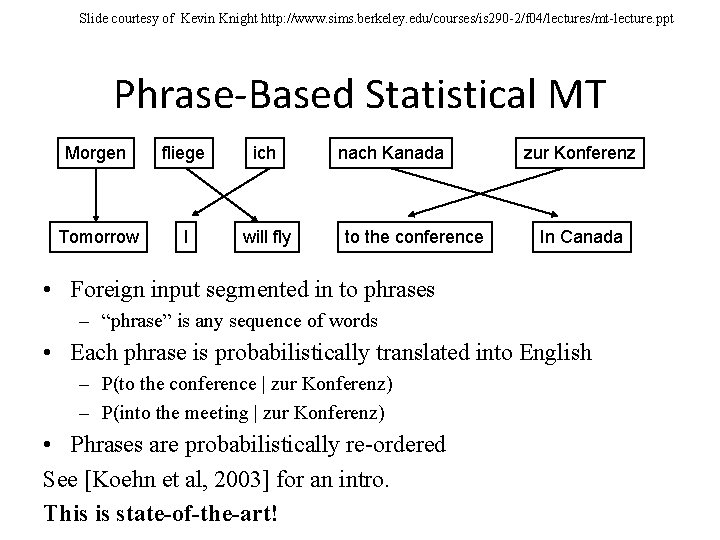

Slide courtesy of Kevin Knight http://www.sims.berkeley.edu/courses/is290-2/f04/lectures/mt-lecture.ppt
Phrase-Based Statistical MT
German: [Morgen] [fliege ich] [nach Kanada] [zur Konferenz]
English: [Tomorrow] [I will fly] [to the conference] [in Canada]
• Foreign input is segmented into phrases
– a “phrase” is any sequence of words
• Each phrase is probabilistically translated into English
– P(to the conference | zur Konferenz)
– P(into the meeting | zur Konferenz)
• Phrases are probabilistically re-ordered
See [Koehn et al, 2003] for an intro. This is state-of-the-art!
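One common way to obtain phrase translation probabilities such as P(to the conference | zur Konferenz) is relative frequency over extracted phrase pairs. The sketch below assumes that estimator; the function name `phrase_probs` and the counts in `observed` are invented for illustration and are not data from the lecture.

```python
from collections import Counter

def phrase_probs(pairs):
    """Estimate P(english | foreign) by relative frequency.

    pairs: list of (foreign_phrase, english_phrase) occurrences.
    """
    pair_counts = Counter(pairs)
    foreign_counts = Counter(f for f, _ in pairs)
    return {(f, e): c / foreign_counts[f]
            for (f, e), c in pair_counts.items()}

# Hypothetical extraction counts: three occurrences of one translation,
# one occurrence of the other.
observed = ([("zur Konferenz", "to the conference")] * 3
            + [("zur Konferenz", "into the meeting")])
probs = phrase_probs(observed)
print(probs[("zur Konferenz", "to the conference")])
```

With these invented counts the two candidate translations receive 0.75 and 0.25; a decoder would combine such scores with reordering and language-model scores.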

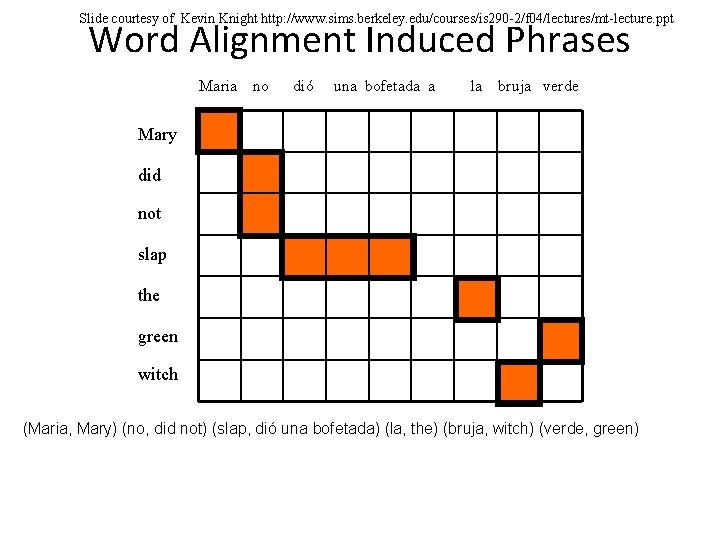

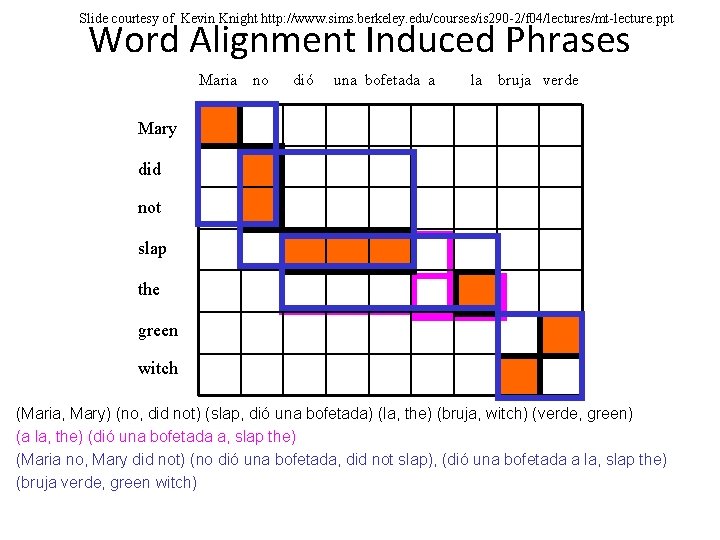

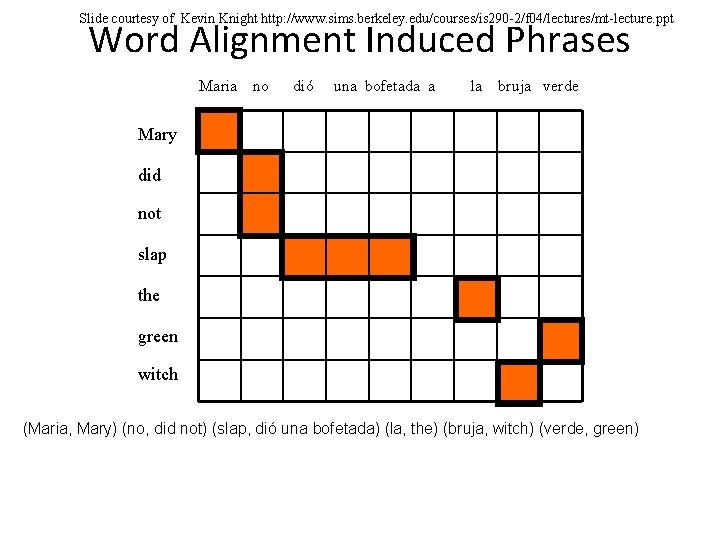

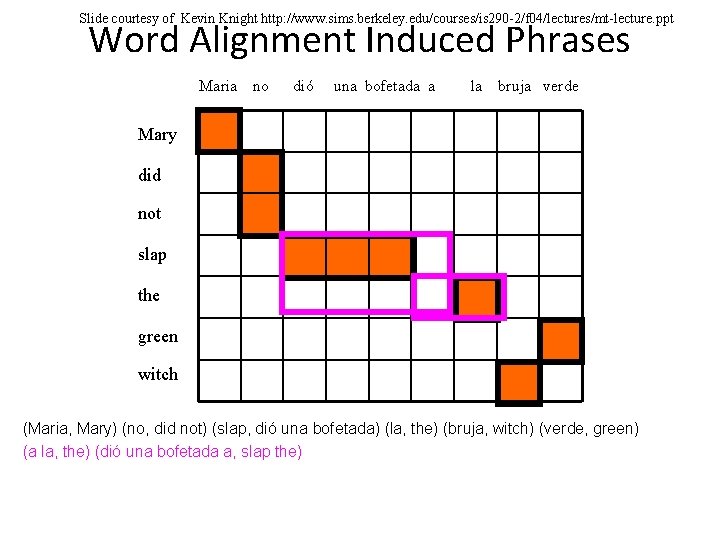

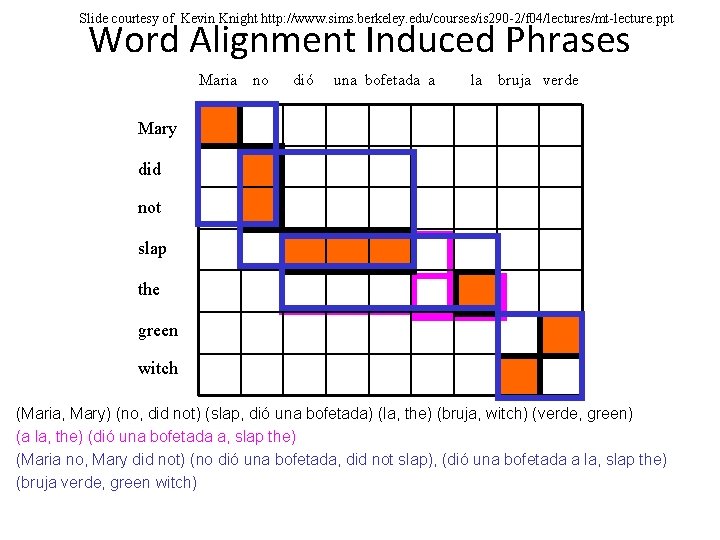

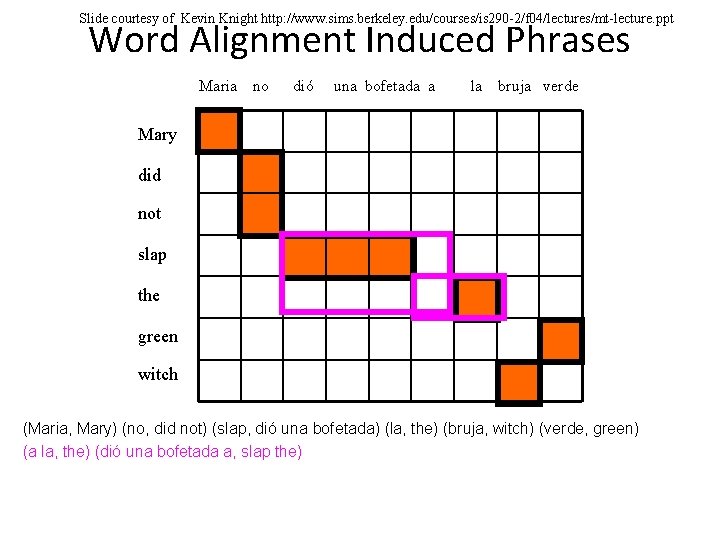

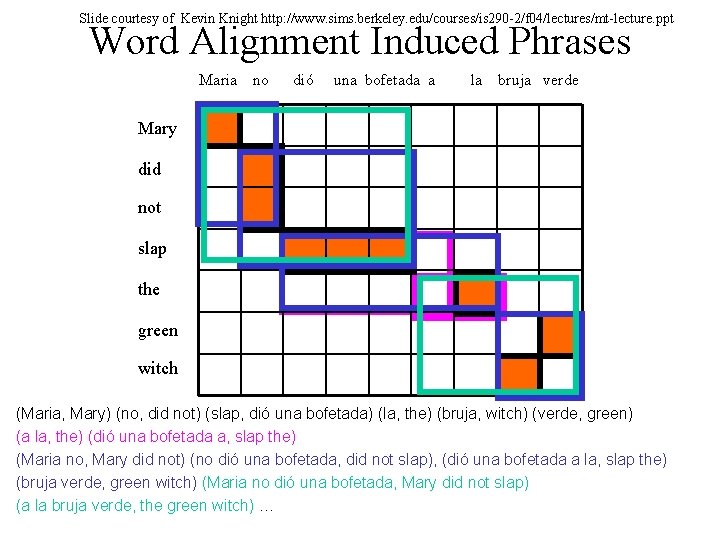

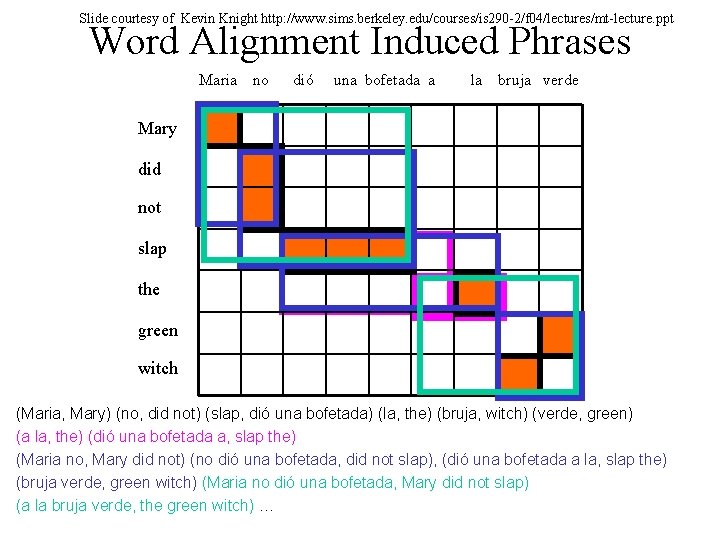

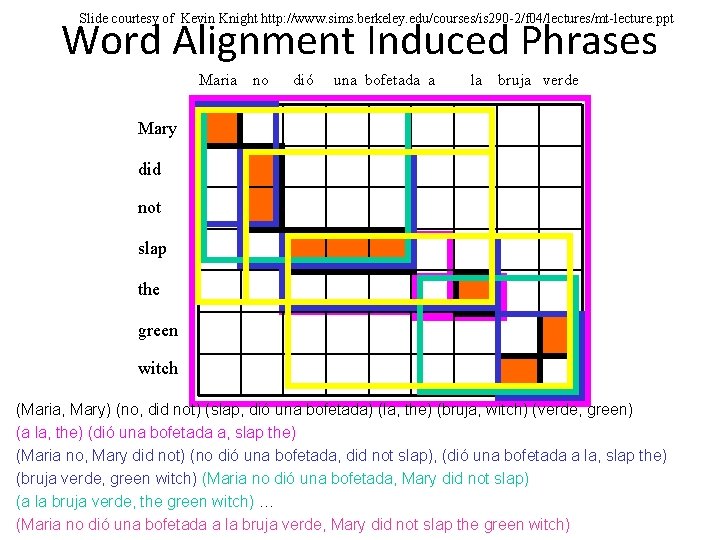

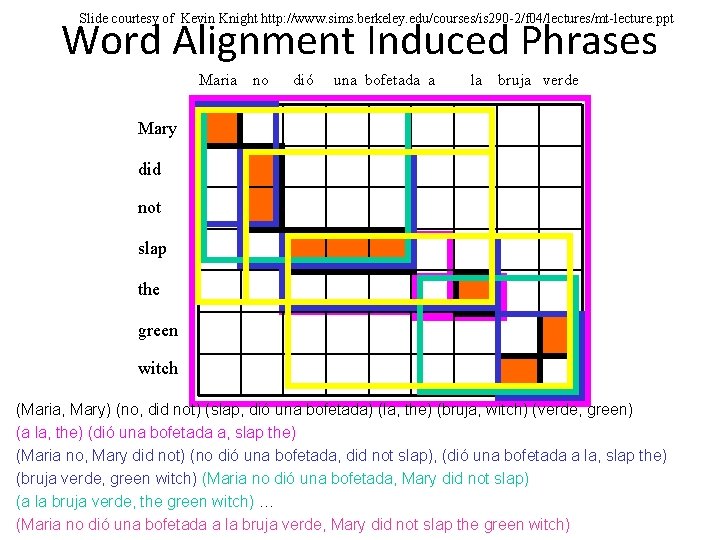

Slide courtesy of Kevin Knight http://www.sims.berkeley.edu/courses/is290-2/f04/lectures/mt-lecture.ppt
Word Alignment Induced Phrases
Maria no dió una bofetada a la bruja verde
Mary did not slap the green witch
• (Maria, Mary) (no, did not) (dió una bofetada, slap) (la, the) (bruja, witch) (verde, green)
• (a la, the) (dió una bofetada a, slap the)
• (Maria no, Mary did not) (no dió una bofetada, did not slap) (dió una bofetada a la, slap the) (bruja verde, green witch)
• (Maria no dió una bofetada, Mary did not slap) (a la bruja verde, the green witch) …
• (Maria no dió una bofetada a la bruja verde, Mary did not slap the green witch)
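A minimal sketch of how phrase pairs can be read off a word alignment: keep every pair of spans that contains at least one alignment link and has no link leaving the box on either side. The function name `extract_phrases` and the alignment below are assumptions made for illustration (here both "a" and "la" are linked to "the"), so the output need not match every pair listed on the slide.

```python
def extract_phrases(f_words, e_words, alignment, max_len=9):
    """Return all phrase pairs consistent with the word alignment.

    alignment: set of (foreign_index, english_index) links.
    """
    pairs = set()
    nf, ne = len(f_words), len(e_words)
    for f1 in range(nf):
        for f2 in range(f1, min(nf, f1 + max_len)):
            for e1 in range(ne):
                for e2 in range(e1, min(ne, e1 + max_len)):
                    inside = crossing = False
                    for i, j in alignment:
                        f_in = f1 <= i <= f2
                        e_in = e1 <= j <= e2
                        if f_in and e_in:
                            inside = True    # link fully inside the box
                        elif f_in or e_in:
                            crossing = True  # link leaves the box
                    if inside and not crossing:
                        pairs.add((" ".join(f_words[f1:f2 + 1]),
                                   " ".join(e_words[e1:e2 + 1])))
    return pairs

f = "Maria no dió una bofetada a la bruja verde".split()
e = "Mary did not slap the green witch".split()
# Assumed links (foreign index, English index); not given on the slide.
links = {(0, 0), (1, 1), (1, 2), (2, 3), (3, 3), (4, 3),
         (5, 4), (6, 4), (7, 6), (8, 5)}
phrases = extract_phrases(f, e, links)
print(sorted(phrases, key=len)[:5])
```

The brute-force loop over all span pairs is fine for a single short sentence; real extractors prune the search, but the consistency test is the same idea.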

Slide courtesy of Kevin Knight http://www.sims.berkeley.edu/courses/is290-2/f04/lectures/mt-lecture.ppt Advantages of Phrase-Based SMT • Many-to-many mappings can handle non-compositional phrases • Local context is very useful for disambiguating – “Interest rate” … – “Interest in” … • The more data, the longer the learned phrases – Sometimes whole sentences

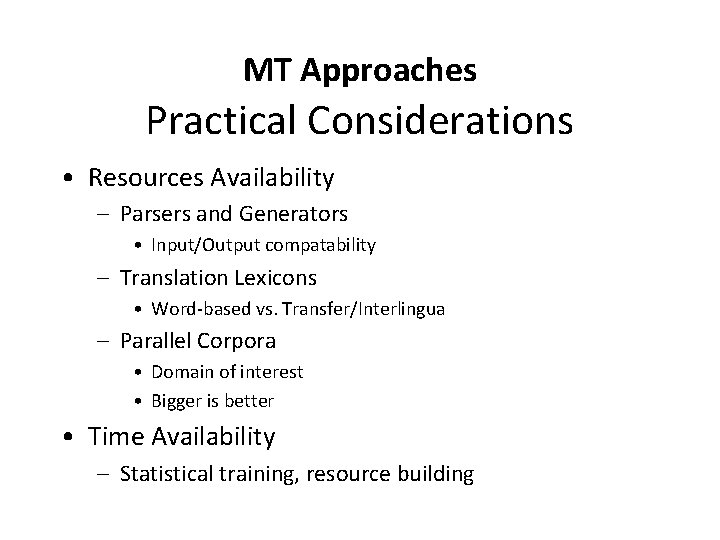

MT Approaches: Statistical vs. Rule-based vs. Hybrid (figure: the MT pyramid, with Analysis over source word, source syntax, source meaning and Generation over target meaning, target syntax, target word).

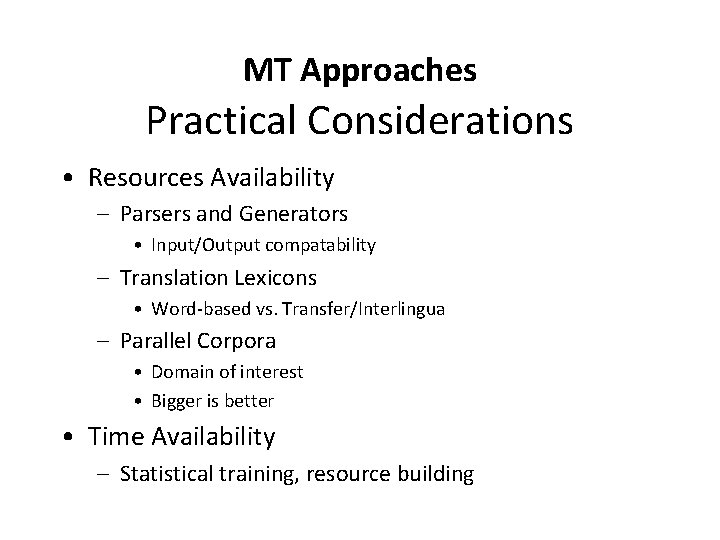

MT Approaches: Practical Considerations • Resources Availability – Parsers and Generators • Input/Output compatibility – Translation Lexicons • Word-based vs. Transfer/Interlingua – Parallel Corpora • Domain of interest • Bigger is better • Time Availability – Statistical training, resource building

Road Map • Multilingual Challenges for MT • MT Approaches • MT Evaluation

MT Evaluation • More art than science • Wide range of Metrics/Techniques – interface, …, scalability, …, faithfulness, …, space/time complexity, …, etc. • Automatic vs. Human-based – Dumb Machines vs. Slow Humans

Human-based Evaluation Example Accuracy Criteria

Human-based Evaluation Example Fluency Criteria

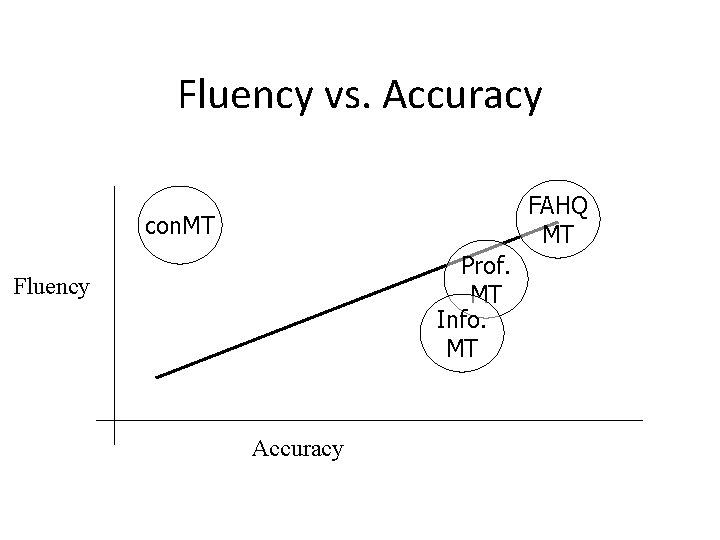

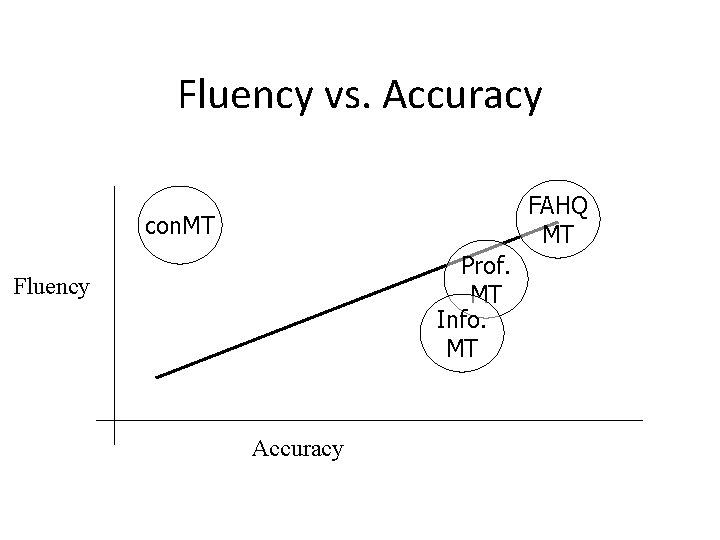

Fluency vs. Accuracy (figure: FAHQ MT, con. MT, Prof. MT and Info. MT placed on Fluency and Accuracy axes)

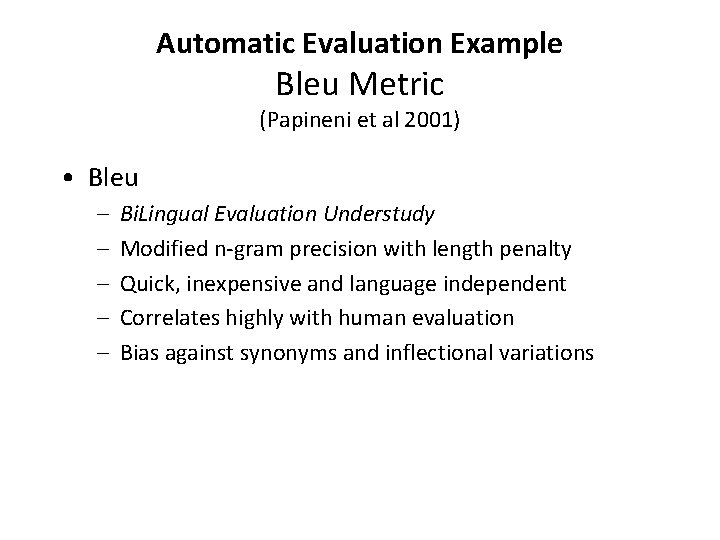

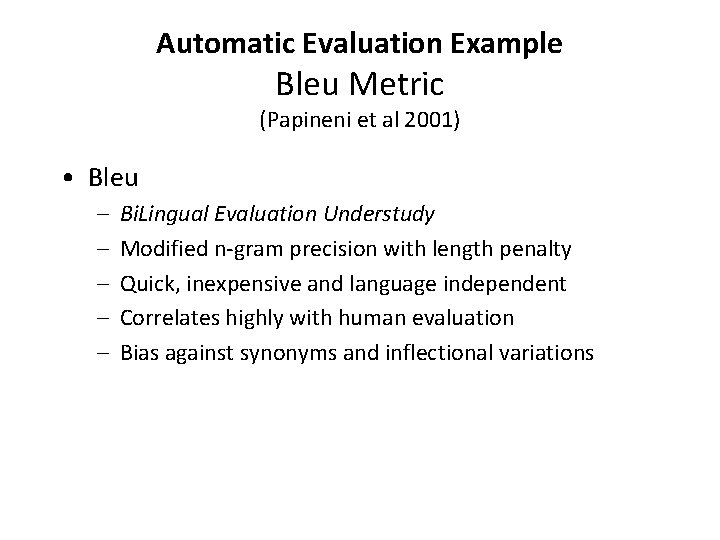

Automatic Evaluation Example: Bleu Metric (Papineni et al 2001) • Bleu – BiLingual Evaluation Understudy – Modified n-gram precision with length penalty – Quick, inexpensive and language independent – Correlates highly with human evaluation – Bias against synonyms and inflectional variations

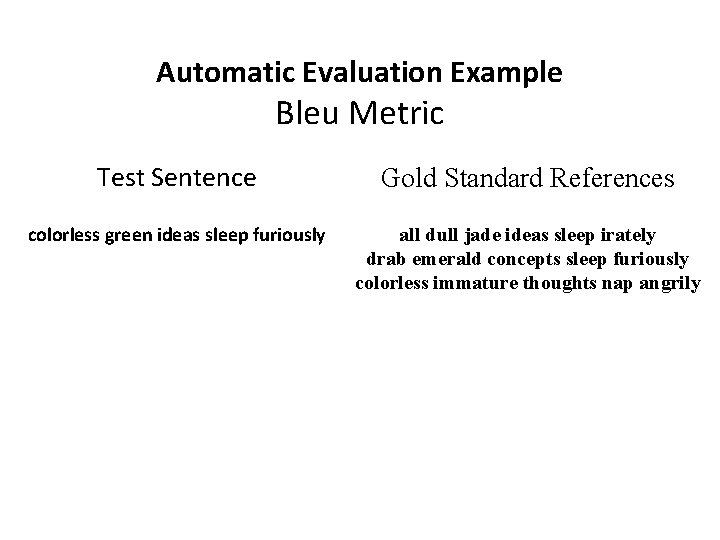

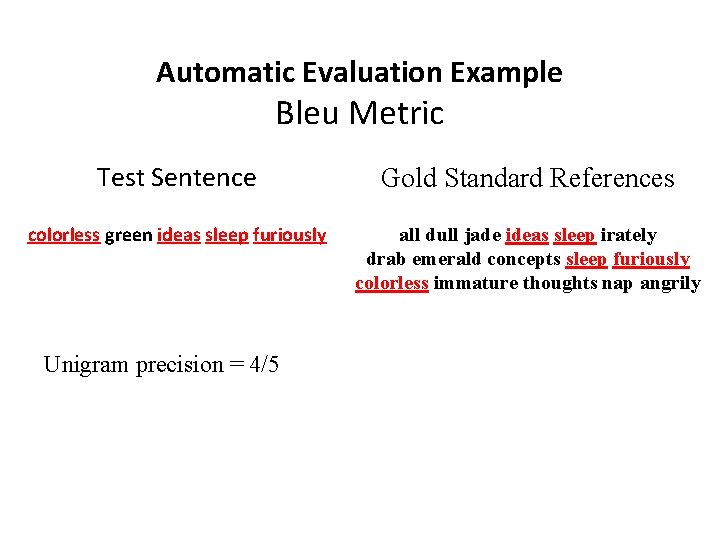

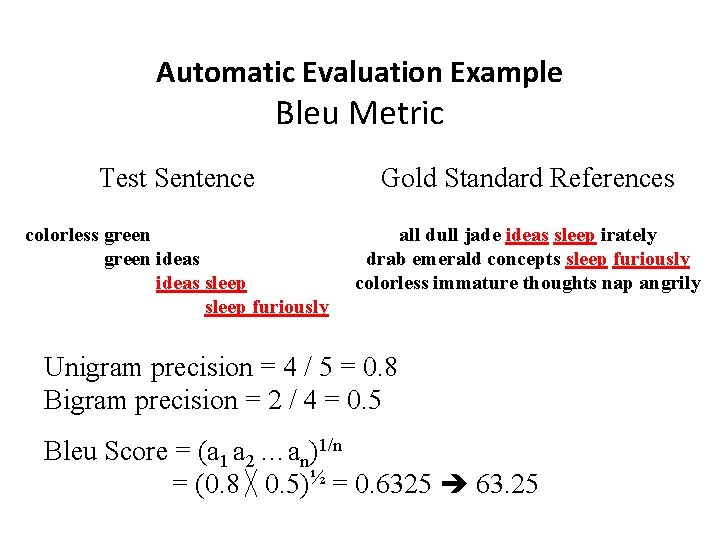

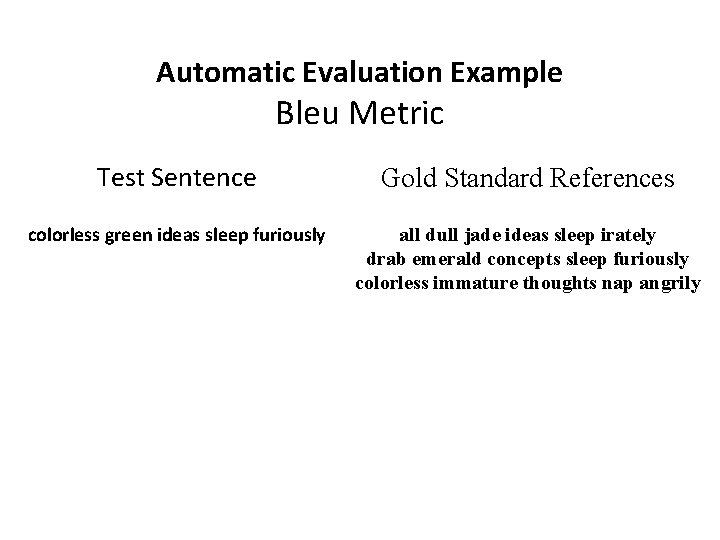

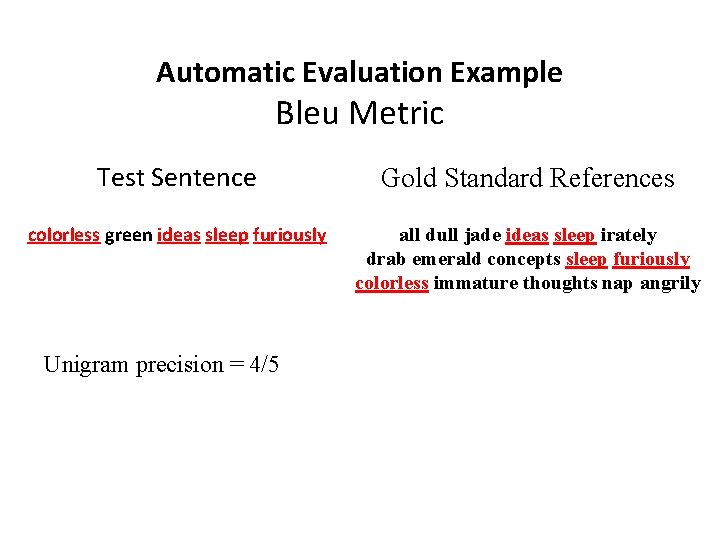

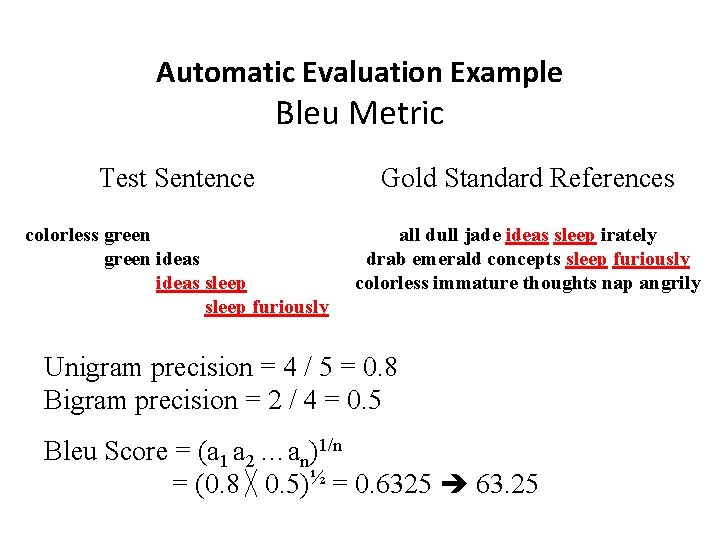

Automatic Evaluation Example: Bleu Metric
Test Sentence: colorless green ideas sleep furiously
Gold Standard References:
• all dull jade ideas sleep irately
• drab emerald concepts sleep furiously
• colorless immature thoughts nap angrily
Unigram precision = 4 / 5 = 0.8
Bigram precision = 2 / 4 = 0.5
Bleu Score = (a1 × a2 × … × an)^(1/n) = (0.8 × 0.5)^(1/2) = 0.6325 (63.25 on a 100-point scale)
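The worked example can be reproduced with a short sketch of modified n-gram precision and the geometric mean used on the slide. The function names are assumptions, and the length (brevity) penalty is left out for simplicity; it would equal 1 here because one reference has the same length as the test sentence.

```python
from collections import Counter
from math import prod

def ngrams(words, n):
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]

def modified_precision(candidate, references, n):
    """Clipped n-gram precision of the candidate against all references."""
    cand_counts = Counter(ngrams(candidate, n))
    # Each candidate n-gram is credited at most as many times as it
    # occurs in the single reference where it is most frequent.
    max_ref = Counter()
    for ref in references:
        for gram, c in Counter(ngrams(ref, n)).items():
            max_ref[gram] = max(max_ref[gram], c)
    matched = sum(min(c, max_ref[gram]) for gram, c in cand_counts.items())
    return matched / sum(cand_counts.values())

def bleu(candidate, references, max_n=2):
    """Geometric mean of the 1..max_n precisions (no length penalty)."""
    precisions = [modified_precision(candidate, references, n)
                  for n in range(1, max_n + 1)]
    return prod(precisions) ** (1 / max_n)

test = "colorless green ideas sleep furiously".split()
refs = [
    "all dull jade ideas sleep irately".split(),
    "drab emerald concepts sleep furiously".split(),
    "colorless immature thoughts nap angrily".split(),
]
score = bleu(test, refs)
print(round(score, 4))
```

"green" is the only unigram found in no reference, and only "ideas sleep" and "sleep furiously" match as bigrams, which gives the 4/5 and 2/4 on the slide.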

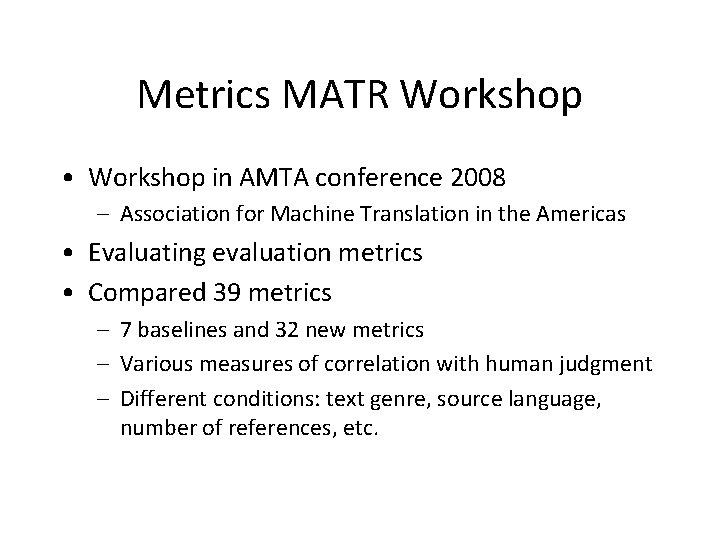

Metrics MATR Workshop • Workshop in AMTA conference 2008 – Association for Machine Translation in the Americas • Evaluating evaluation metrics • Compared 39 metrics – 7 baselines and 32 new metrics – Various measures of correlation with human judgment – Different conditions: text genre, source language, number of references, etc.

Interested in MT?? • Contact me (habash@cs.columbia.edu) • Research courses, projects • Languages of interest: – English, Arabic, Hebrew, Chinese, Urdu, Spanish, Russian, … • Topics – Statistical, Hybrid MT • Phrase-based MT with linguistic extensions • Component improvements or full-system improvements – MT Evaluation – Multilingual computing

Thank You