Computer-Assisted Text Analysis (CATA): Content Analysis Scoring of Structured Interview Questions

- Slides: 28

Computer-Assisted Text Analysis (CATA): Content Analysis Scoring of Structured Interview Questions
James F. Johnson, Ph.D.
United States Air Force
19 Nov 2020

What is CATA?

• Computer-Assisted Text Analysis
• CATA is a technique for identifying/summarizing topics, themes, or other dimensions of interest in a text corpus using varying levels of automated assistance
• CATA can provide narrative summaries or quantitative values like counts, composites, regression estimates, or other indices
• Results can be used to summarize narrative data or develop metrics to correlate with/predict other variables or outcomes
• Reduces the subjectivity historically associated with qualitative data analysis; analyses are more transparent, standardized, and scientific

Why is CATA Important?

• Increased availability of textual data (“big data”), which has historically been underutilized within the I/O field
• Computer software now more readily available, accessible, and approachable to I/O Psychologists
• Provides capability to (a) discover new constructs, (b) analyze new domains, and (c) develop new applications not possible with traditional numeric data
• Has potential application in any topic area where textual information can be generated and measured, including personnel selection

Types of CATA methods can be categorized by Level of Automation and Level of Human Intervention

• Automation: Degree to which the analysis is automated
• Human Intervention: Degree of human intervention required to impart meaning

[Figure: 2×2 grid. Vertical axis: Low Automation to High Automation. Horizontal axis: Low Human Intervention to High Human Intervention. High Automation row: Unsupervised Machine Learning, Supervised Machine Learning. Low Automation row: Dictionary-based, Categorization-based.]

Overview

Question: Can CATA assist in performance ratings of structured interview responses? How do CATA-derived ratings compare to those of human raters?

1. Describe development of structured interview questions for Air Force (AF) Officer Training School (OTS) candidate application process
2. Describe study examining CATA-derived versus human ratings of interview response quality on modified OTS interview questions
3. Examine the relative accuracy of CATA-based versus human raters
4. Discuss potential applications of CATA in the AF structured interview process

Background

• Officer Training School (OTS) Line Officer (LO) applicants, as part of a competitive selection process, must complete an interview with an Air Force recruiter
• Air Force Recruiting Service (AFRS) revising application process to potentially incorporate structured recruiter interviews
  • Ensures competencies measured are relevant to effective officer performance, and are defined in terms of specific, observable behaviors [1, 2, 3]
  • Standardizes questions and interview practices across interviewers
• Contractor-developed situational and behavioral structured interview questions using standard S-A-O format and 3-point (L/M/H) Behaviorally Anchored Rating Scales (BARS)

OTS Officership and Air Force Needs Analysis

• OTS structured interview project still in development, but early feedback possible via enlisted trainees at Lackland Air Force Base
• Significant overlap between OTS Officership and AF-derived Needs Analysis competencies [4]; relevant to both officers and enlisted Airmen during initial selection
• Due to testing constraints, interview questions for six AF competencies were chosen using the following criteria:
  1. Identified as “hard to train” and “trouble if ignored during selection” by OTS and Basic Military Training (BMT) instructors
  2. Relevant/applicable to both enlisted recruits and officer candidates
  3. Balanced between situational and behavioral questions

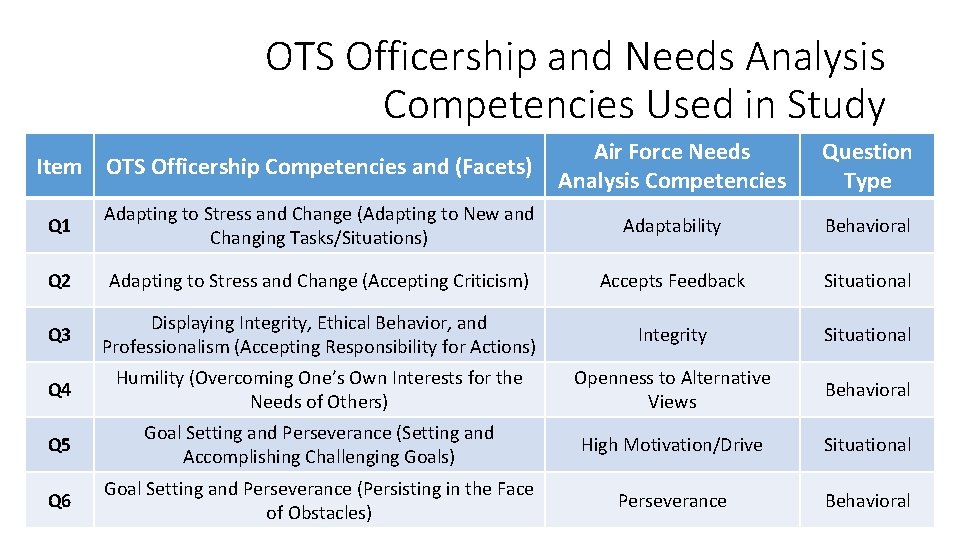

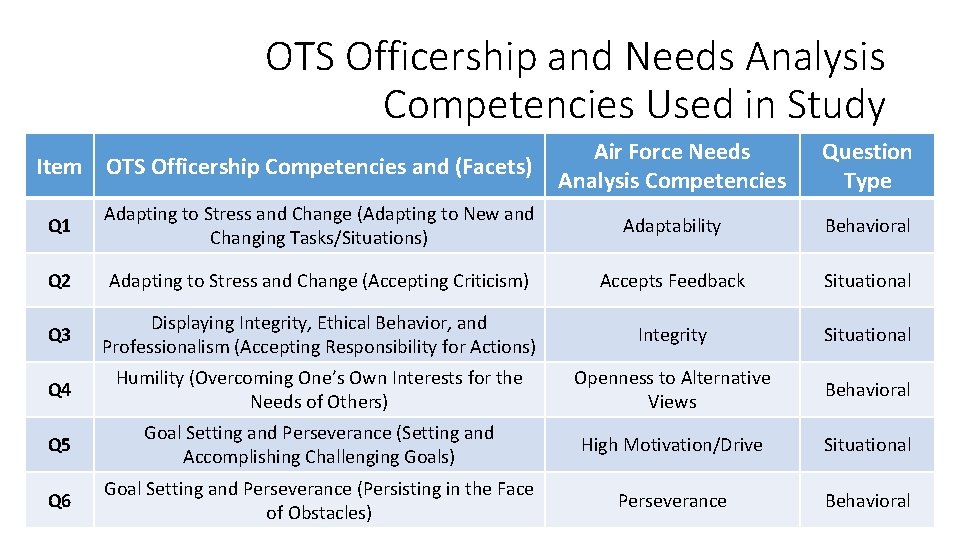

OTS Officership and Needs Analysis Competencies Used in Study

| Item | OTS Officership Competencies and (Facets) | Air Force Needs Analysis Competencies | Question Type |
|---|---|---|---|
| Q1 | Adapting to Stress and Change (Adapting to New and Changing Tasks/Situations) | Adaptability | Behavioral |
| Q2 | Adapting to Stress and Change (Accepting Criticism) | Accepts Feedback | Situational |
| Q3 | Displaying Integrity, Ethical Behavior, and Professionalism (Accepting Responsibility for Actions) | Integrity | Situational |
| Q4 | Humility (Overcoming One’s Own Interests for the Needs of Others) | Openness to Alternative Views | Behavioral |
| Q5 | Goal Setting and Perseverance (Setting and Accomplishing Challenging Goals) | High Motivation/Drive | Situational |
| Q6 | Goal Setting and Perseverance (Persisting in the Face of Obstacles) | Perseverance | Behavioral |

Interview Question Modifications

• OTS structured interview questions and probes adapted for use in typed open-ended response question format
  • “Tell me about a time when…” → “Describe a time when…”
• Situational prompts for Integrity item modified to reflect ethical sensemaking [5] process
  • Item modified to assess quality of decision-making process instead of overall decision ethicality, as the latter would likely have little to no response variance
• Behavioral question stems and prompts adapted to prompt enlisted trainees about “work, school, or personal life” experiences
  • Maximizes potential response variance by enlisted trainees on questions originally developed for older/more experienced officer candidates
  • Officer: Older (22+) with at least a Bachelor’s degree
  • Enlisted: Younger (18-19) with a GED, HS Diploma, or 1-2 years of college study

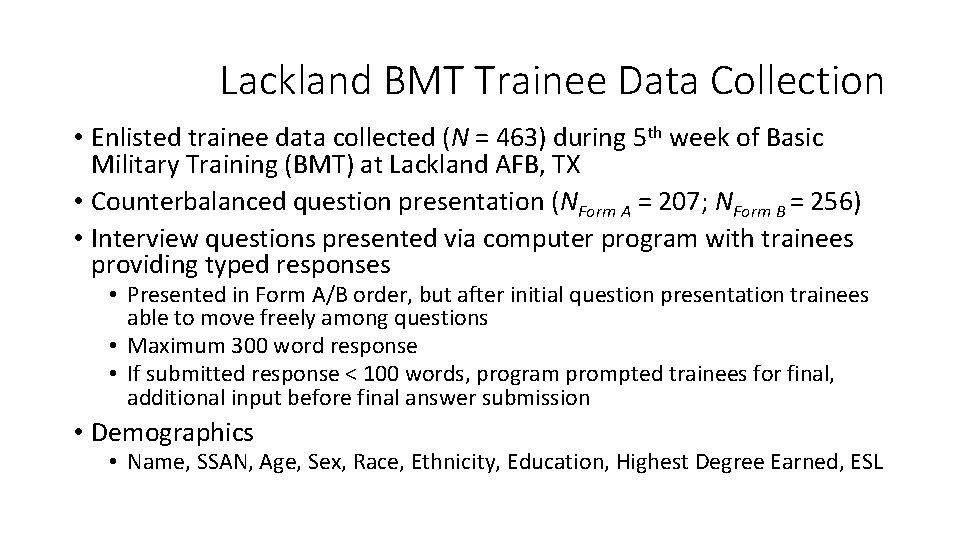

Lackland BMT Trainee Data Collection

• Enlisted trainee data collected (N = 463) during 5th week of Basic Military Training (BMT) at Lackland AFB, TX
• Counterbalanced question presentation (Form A N = 207; Form B N = 256)
• Interview questions presented via computer program with trainees providing typed responses
  • Presented in Form A/B order, but after initial question presentation trainees able to move freely among questions
  • Maximum 300-word response
  • If submitted response < 100 words, program prompted trainees for final, additional input before final answer submission
• Demographics
  • Name, SSAN, Age, Sex, Race, Ethnicity, Education, Highest Degree Earned, ESL

Generating Human Performance Ratings

• Trainee response quality rated by two Air Force I-O Psychologists
• Rater Training Process
  • Provided standardized coding manual with L/M/H trainee-derived exemplar responses for all competency questions
  • Raters completed initial Frame of Reference [6] training as well as two rounds of consensus meetings
  • Raters regularly revisited coding manual during rating activities to avoid potential rater drift
• Final ratings indicated a high level of inter-rater agreement
  • Percentage Agreement = 78.0% to 84.9%; mean agreement = 81.87%
  • Intra-Class Correlation (ICC) = .778 to .887; mean ICC = .838
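As a rough illustration of the percentage agreement statistic reported for the two raters, the sketch below counts how often two raters assign the same L/M/H rating. The function name and the ratings are hypothetical and are not taken from the study data; the ICC is not covered here because the deck does not state which ICC form was used.

```python
def percent_agreement(rater_a, rater_b):
    """Percentage of responses on which two raters gave the same rating."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("rating lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


# Hypothetical L/M/H ratings for ten responses (illustration only).
rater_1 = ["L", "M", "H", "M", "M", "H", "L", "M", "H", "L"]
rater_2 = ["L", "M", "H", "H", "M", "H", "L", "L", "H", "L"]

agreement = percent_agreement(rater_1, rater_2)
print(f"Percentage agreement: {agreement:.1f}%")
```

Percentage agreement does not correct for chance agreement, which is one reason a second index such as the ICC is usually reported alongside it.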

Generating CATA Performance Ratings

• Text mining categories extracted for each question using SPSS Modeler Premium 18.2.1
• “Trained” model successively to asymptotic level, then converted remaining uncategorized concepts into categories
• Categories retained if they appeared in ≥ 1% of trainee responses and had a zero-order correlation with the criterion (human ratings) of r ≥ |.05|
• Consecutive analyses determined that a unit-weighted composite of the Category Count and Word Count variables was most predictive of human performance ratings
  • Category Count: # of retained text-mined categories present in trainee response
    • Trainee response contains content relevant to overall response performance
  • Word Count: Length of trainee typed response
    • Relationship between amount of words written and propensity to provide content related to response performance
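The retention rule and the composite can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the SPSS Modeler procedure: category presence is supplied as ready-made 0/1 flags rather than extracted from text, the correlation criterion is read as an absolute value of at least .05, and the composite is taken as the mean of the two z-scores. All names and data are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation; returns 0.0 when either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def retain_categories(flags, human, min_prevalence=0.01, min_abs_r=0.05):
    """Keep categories present in >= 1% of responses with |r| >= .05 (assumed reading)."""
    kept = []
    for name, present in flags.items():
        prevalence = sum(present) / len(present)
        if prevalence >= min_prevalence and abs(pearson_r(present, human)) >= min_abs_r:
            kept.append(name)
    return kept


def z_scores(values):
    """Standardize using the sample standard deviation (n - 1)."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    if sd == 0:
        raise ValueError("cannot standardize a constant variable")
    return [(v - mean) / sd for v in values]


def unit_weighted_composite(category_counts, word_counts):
    """Equal-weight composite: mean of the two z-scored predictors (assumption)."""
    zc, zw = z_scores(category_counts), z_scores(word_counts)
    return [(a + b) / 2 for a, b in zip(zc, zw)]


# Hypothetical data for six responses (illustration only).
human = [1, 2, 3, 2, 3, 1]                    # human ratings, L/M/H coded 1-3
word_counts = [90, 140, 210, 150, 180, 100]   # length of each typed response
flags = {                                     # 1 = category present in response
    "seeks_feedback": [0, 1, 1, 1, 1, 0],
    "off_topic": [1, 0, 1, 0, 0, 0],          # unrelated to the human ratings
    "never_present": [0, 0, 0, 0, 0, 0],      # fails the 1% prevalence rule
}

kept = retain_categories(flags, human)
category_counts = [sum(flags[c][i] for c in kept) for i in range(len(human))]
composite = unit_weighted_composite(category_counts, word_counts)
print(kept, [round(c, 2) for c in composite])
```

Because both predictors are standardized before averaging, neither dominates the composite simply because it is measured on a larger scale.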

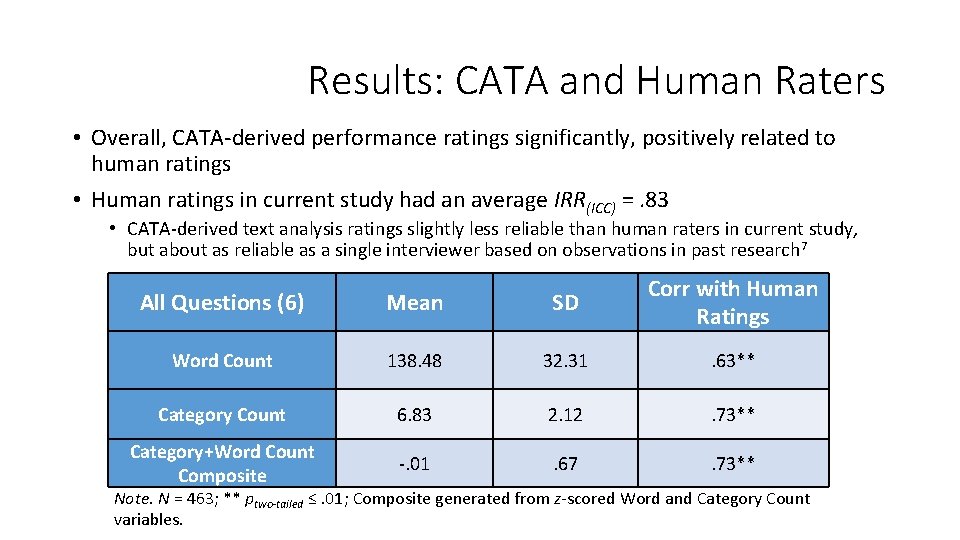

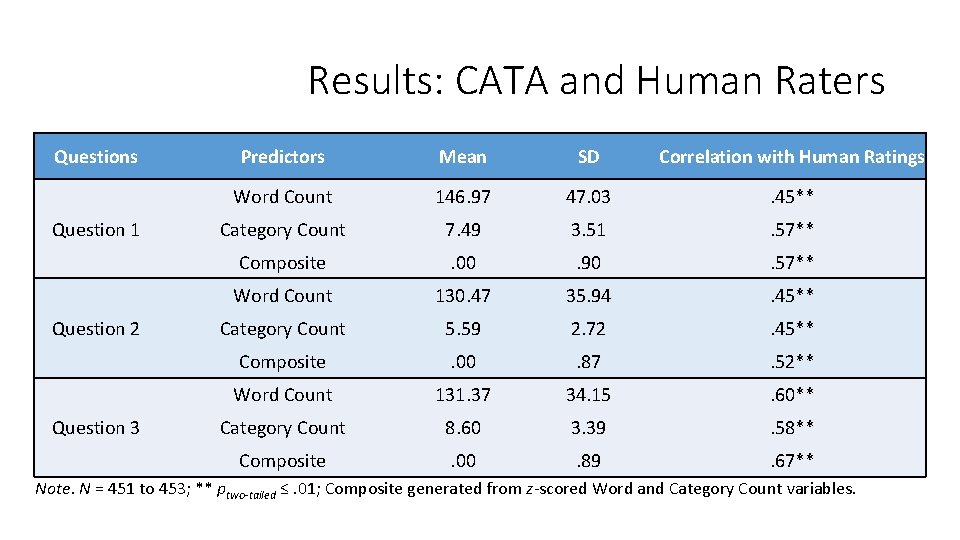

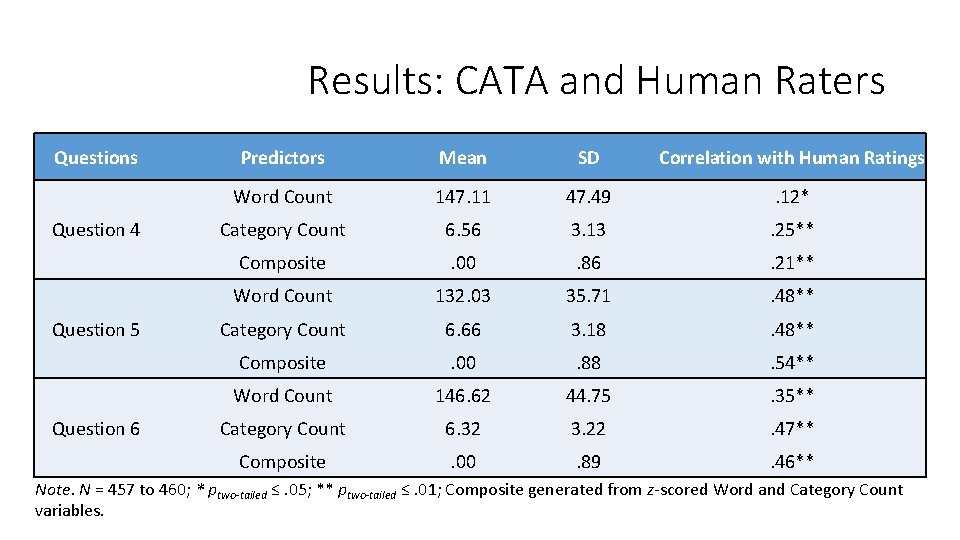

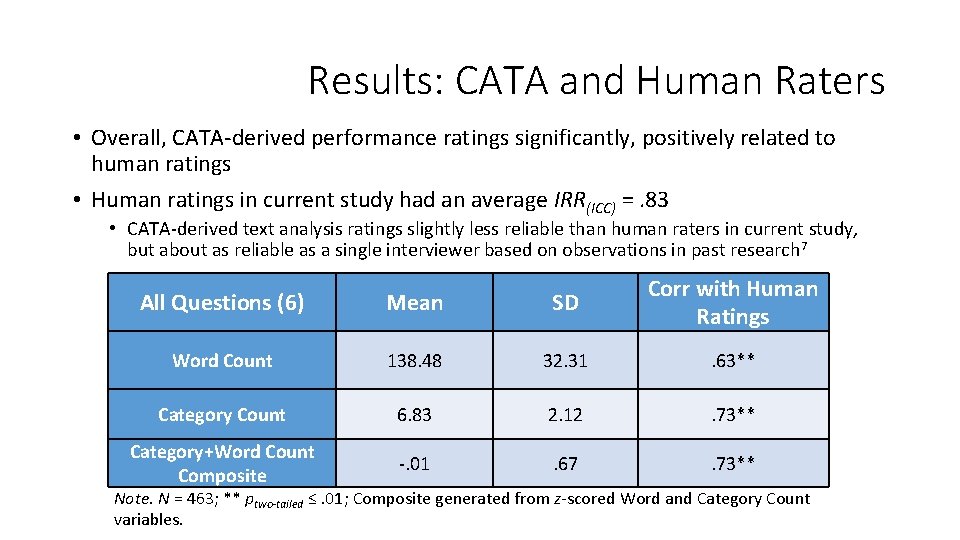

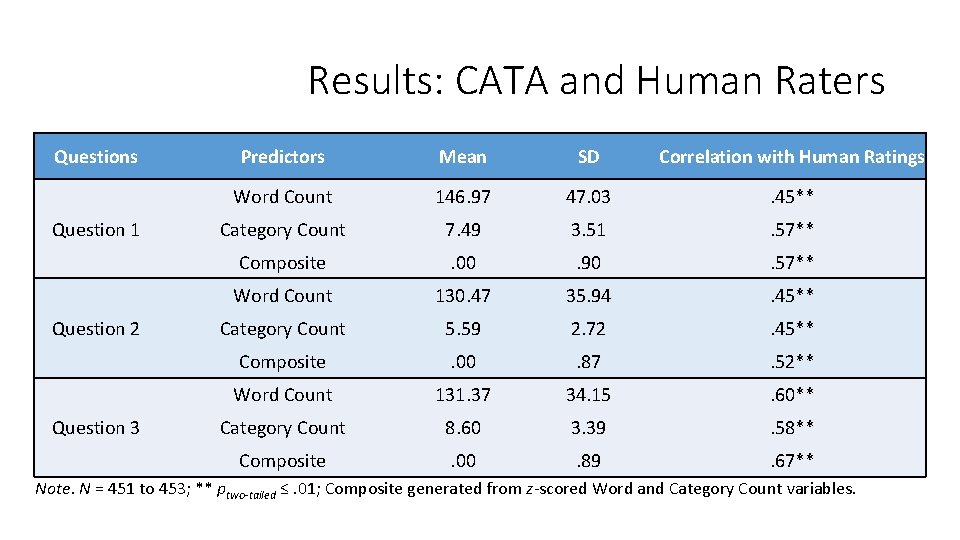

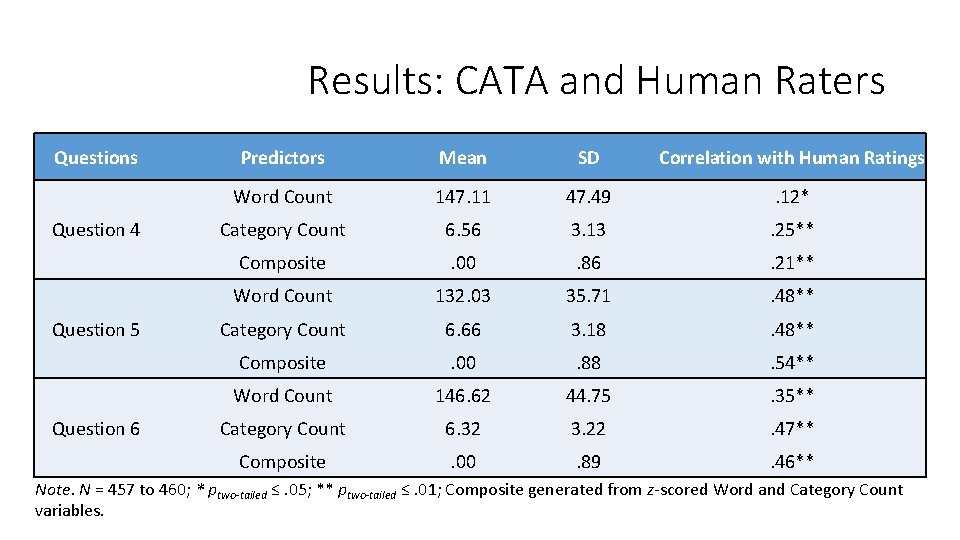

Results: CATA and Human Raters

• Overall, CATA-derived performance ratings were significantly and positively related to human ratings
• Human ratings in current study had an average IRR (ICC) = .83
• CATA-derived text analysis ratings slightly less reliable than human raters in current study, but about as reliable as a single interviewer based on observations in past research [7]

| All Questions (6) | Mean | SD | Corr with Human Ratings |
|---|---|---|---|
| Word Count | 138.48 | 32.31 | .63** |
| Category Count | 6.83 | 2.12 | .73** |
| Category+Word Count Composite | -.01 | .67 | .73** |

Note. N = 463; ** p (two-tailed) ≤ .01; Composite generated from z-scored Word and Category Count variables.

CATA Model Cross-Validation

• For all six questions, the sample was randomly split into two subsamples
• For each subsample, CATA was used to extract text categories that were then refined to meet the previously mentioned prescreening criteria
  1. Validities were calculated for each subsample to determine similarity to the human ratings criterion
  2. Categories extracted for one subsample were then applied to the other subsample
  3. Cross-validities were calculated for each subsample to determine generalizability of results to the other subsample
• Overall, validities and cross-validities were stable and demonstrated low levels of shrinkage
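The split-half procedure described above can be sketched as follows. This is an illustrative outline only: the study extracted categories with a text-mining tool, whereas this sketch assumes category presence is already available as 0/1 flags and only re-applies the prescreening rule within each subsample. All function names and the synthetic data are hypothetical.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation; returns 0.0 when either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return 0.0 if sxx == 0 or syy == 0 else sxy / math.sqrt(sxx * syy)


def split_half(n, seed=0):
    """Randomly assign row indices 0..n-1 to two subsamples."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    half = (n + 1) // 2
    return sorted(idx[:half]), sorted(idx[half:])


def derive_categories(flags, human, rows, min_prevalence=0.01, min_abs_r=0.05):
    """Prescreen categories using only the rows of one subsample."""
    kept = []
    for name, present in flags.items():
        p = [present[i] for i in rows]
        h = [human[i] for i in rows]
        if sum(p) / len(p) >= min_prevalence and abs(pearson_r(p, h)) >= min_abs_r:
            kept.append(name)
    return kept


def validity(flags, human, rows, kept):
    """Correlation between Category Count and human ratings within `rows`."""
    counts = [sum(flags[c][i] for c in kept) for i in rows]
    return pearson_r(counts, [human[i] for i in rows])


def cross_validate(flags, human, seed=0):
    """Derive categories on each half, then test them on the other half."""
    s1, s2 = split_half(len(human), seed)
    results = {}
    for label, own, other in (("subsample 1", s1, s2), ("subsample 2", s2, s1)):
        kept = derive_categories(flags, human, own)
        v = validity(flags, human, own, kept)
        cv = validity(flags, human, other, kept)
        results[label] = {"n_categories": len(kept), "validity": v,
                          "cross_validity": cv, "shrinkage": v - cv}
    return results


# Synthetic data (illustration only): one informative and one noise category.
rng = random.Random(1)
human = [rng.choice([1, 2, 3]) for _ in range(463)]
flags = {
    "signal": [1 if h >= 2 and rng.random() < 0.8 else 0 for h in human],
    "noise": [rng.randint(0, 1) for _ in human],
}
results = cross_validate(flags, human)
for label, row in results.items():
    print(label, {k: round(v, 3) for k, v in row.items()})
```

Here shrinkage is taken as the validity in the derivation subsample minus the cross-validity in the other subsample; values near zero indicate that the retained categories generalize.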

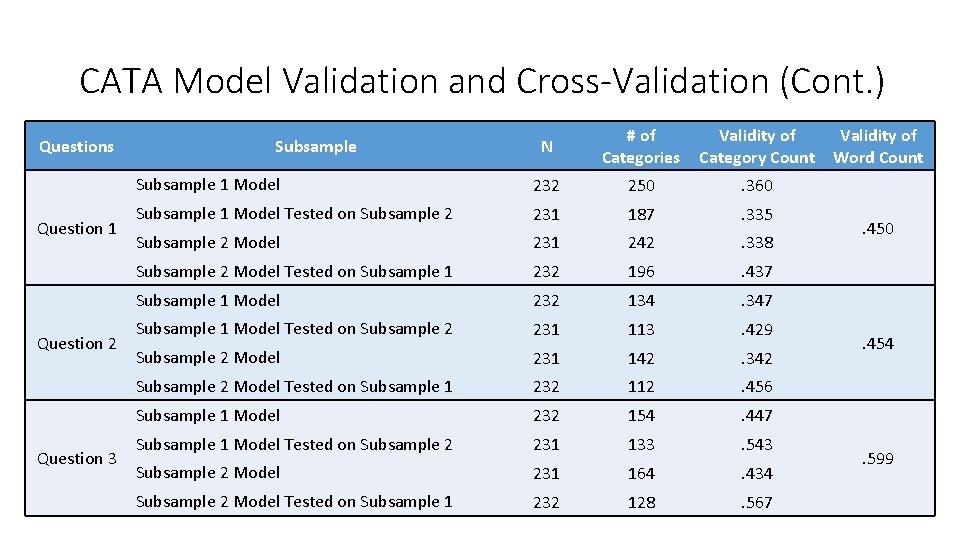

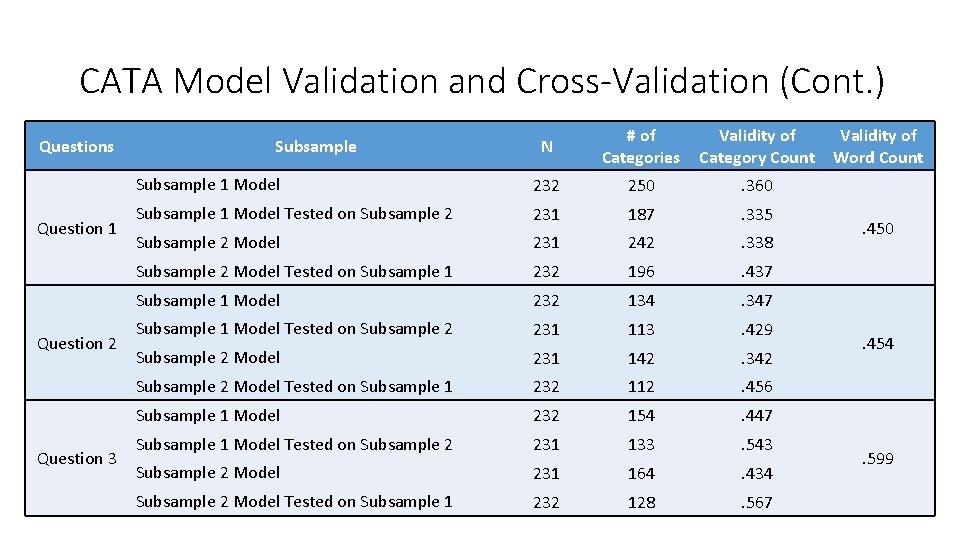

CATA Model Validation and Cross-Validation (Cont.)

| Question | Subsample | N | # of Categories | Validity of Category Count |
|---|---|---|---|---|
| Question 1 | Subsample 1 Model | 232 | 250 | .360 |
| Question 1 | Subsample 1 Model Tested on Subsample 2 | 231 | 187 | .335 |
| Question 1 | Subsample 2 Model | 231 | 242 | .338 |
| Question 1 | Subsample 2 Model Tested on Subsample 1 | 232 | 196 | .437 |
| Question 2 | Subsample 1 Model | 232 | 134 | .347 |
| Question 2 | Subsample 1 Model Tested on Subsample 2 | 231 | 113 | .429 |
| Question 2 | Subsample 2 Model | 231 | 142 | .342 |
| Question 2 | Subsample 2 Model Tested on Subsample 1 | 232 | 112 | .456 |
| Question 3 | Subsample 1 Model | 232 | 154 | .447 |
| Question 3 | Subsample 1 Model Tested on Subsample 2 | 231 | 133 | .543 |
| Question 3 | Subsample 2 Model | 231 | 164 | .434 |
| Question 3 | Subsample 2 Model Tested on Subsample 1 | 232 | 128 | .567 |

Validity of Word Count: Question 1 = .450; Question 2 = .454; Question 3 = .599

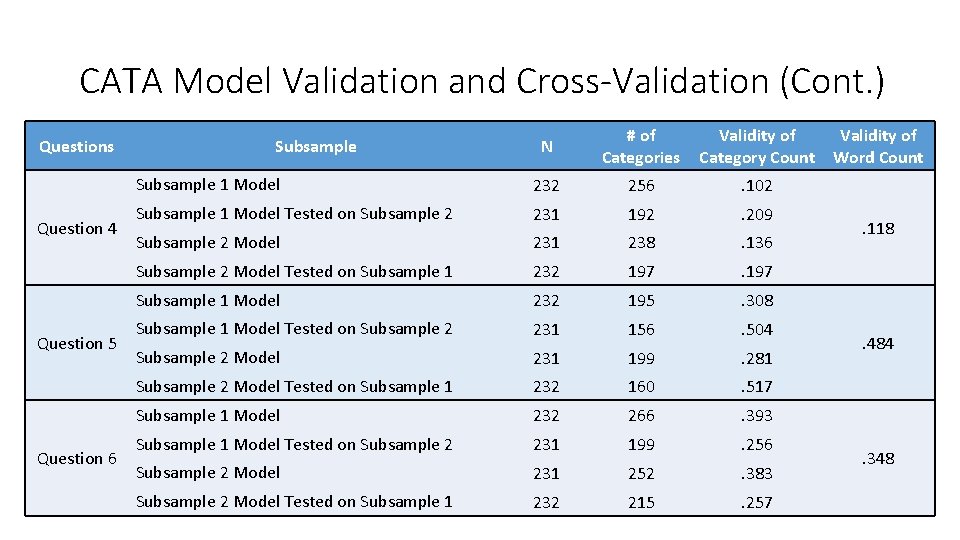

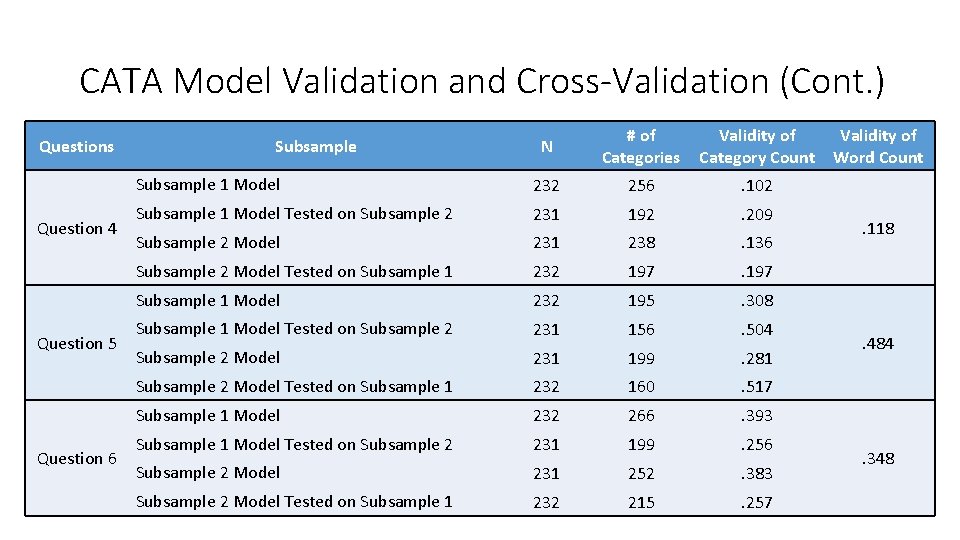

CATA Model Validation and Cross-Validation (Cont.)

| Question | Subsample | N | # of Categories | Validity of Category Count |
|---|---|---|---|---|
| Question 4 | Subsample 1 Model | 232 | 256 | .102 |
| Question 4 | Subsample 1 Model Tested on Subsample 2 | 231 | 192 | .209 |
| Question 4 | Subsample 2 Model | 231 | 238 | .136 |
| Question 4 | Subsample 2 Model Tested on Subsample 1 | 232 | 197 | |
| Question 5 | Subsample 1 Model | 232 | 195 | .308 |
| Question 5 | Subsample 1 Model Tested on Subsample 2 | 231 | 156 | .504 |
| Question 5 | Subsample 2 Model | 231 | 199 | .281 |
| Question 5 | Subsample 2 Model Tested on Subsample 1 | 232 | 160 | .517 |
| Question 6 | Subsample 1 Model | 232 | 266 | .393 |
| Question 6 | Subsample 1 Model Tested on Subsample 2 | 231 | 199 | .256 |
| Question 6 | Subsample 2 Model | 231 | 252 | .383 |
| Question 6 | Subsample 2 Model Tested on Subsample 1 | 232 | 215 | .257 |

Validity of Word Count: Question 4 = .118; Question 5 = .484; Question 6 = .348

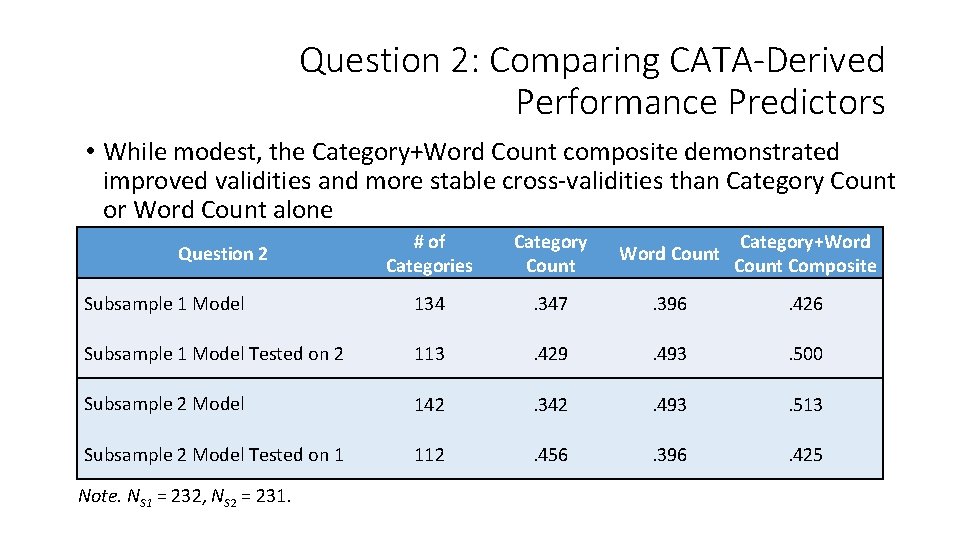

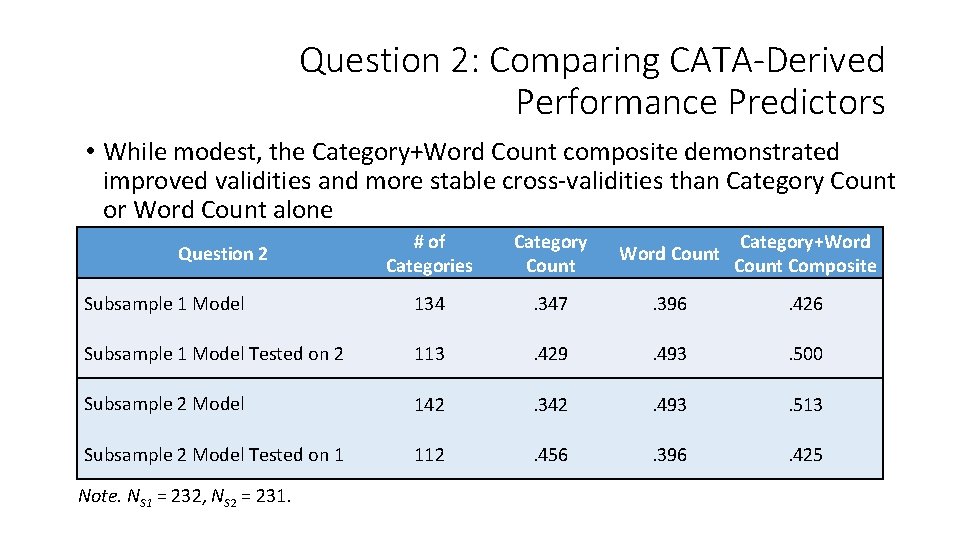

Question 2: Comparing CATA-Derived Performance Predictors

• Although the gains were modest, the Category+Word Count composite demonstrated higher validities and more stable cross-validities than Category Count or Word Count alone

| Question 2 | # of Categories | Category Count | Word Count | Category+Word Count Composite |
|---|---|---|---|---|
| Subsample 1 Model | 134 | .347 | .396 | .426 |
| Subsample 1 Model Tested on 2 | 113 | .429 | .493 | .500 |
| Subsample 2 Model | 142 | .342 | .493 | .513 |
| Subsample 2 Model Tested on 1 | 112 | .456 | .396 | .425 |

Note. N (Subsample 1) = 232, N (Subsample 2) = 231.

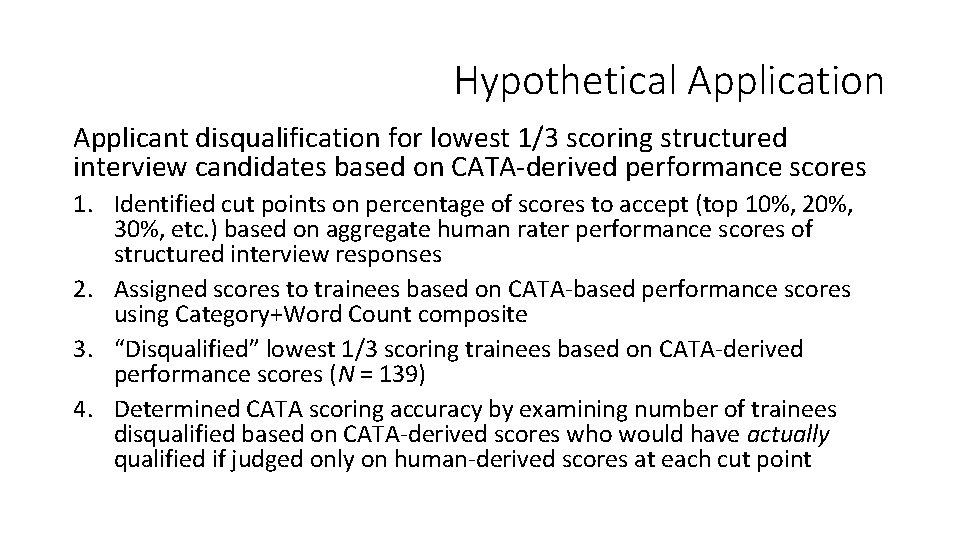

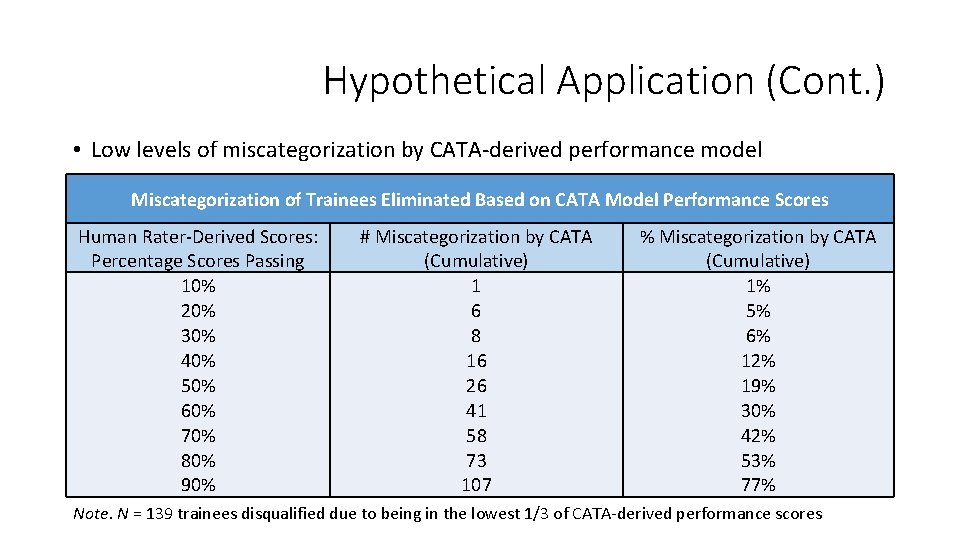

Hypothetical Application

Applicant disqualification for lowest 1/3 scoring structured interview candidates based on CATA-derived performance scores

1. Identified cut points on percentage of scores to accept (top 10%, 20%, 30%, etc.) based on aggregate human rater performance scores of structured interview responses
2. Assigned scores to trainees based on CATA-based performance scores using Category+Word Count composite
3. “Disqualified” lowest 1/3 scoring trainees based on CATA-derived performance scores (N = 139)
4. Determined CATA scoring accuracy by examining number of trainees disqualified based on CATA-derived scores who would have actually qualified if judged only on human-derived scores at each cut point
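The four steps above amount to comparing two sets of trainees: those in the lowest third on the CATA-derived score and those who would pass at a given human-score cut point. The sketch below is one possible way to count the overlap. The function names, the rounding of group sizes, the tie handling, and the scores are all assumptions for illustration and are not taken from the study.

```python
def lowest_third(cata_scores):
    """Indices of the lowest-scoring third on the CATA-derived score.

    Assumption: group size is floor(n / 3); ties are broken by original order.
    """
    n = len(cata_scores)
    order = sorted(range(n), key=lambda i: cata_scores[i])
    return set(order[: n // 3])


def passers_at_cut(human_scores, pass_percent):
    """Indices of the top `pass_percent` percent of trainees on human ratings."""
    n = len(human_scores)
    k = n * pass_percent // 100  # assumption: round group size down
    order = sorted(range(n), key=lambda i: human_scores[i], reverse=True)
    return set(order[:k])


def miscategorized(cata_scores, human_scores, pass_percent):
    """Count trainees disqualified by CATA who would pass on human ratings."""
    disqualified = lowest_third(cata_scores)
    would_pass = passers_at_cut(human_scores, pass_percent)
    return len(disqualified & would_pass)


# Hypothetical scores for nine trainees (illustration only).
cata = [0.9, -1.2, 0.1, -0.8, 1.4, -0.3, 0.5, -1.5, 0.2]
human = [2.8, 1.2, 2.1, 2.6, 3.0, 1.9, 2.3, 1.0, 1.5]

for cut in (30, 50, 90):
    print(f"top {cut}% pass: {miscategorized(cata, human, cut)} miscategorized")
```

Because the set of passers grows as the cut point becomes more lenient, the miscategorization count is cumulative across cut points, which matches how the deck reports it.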

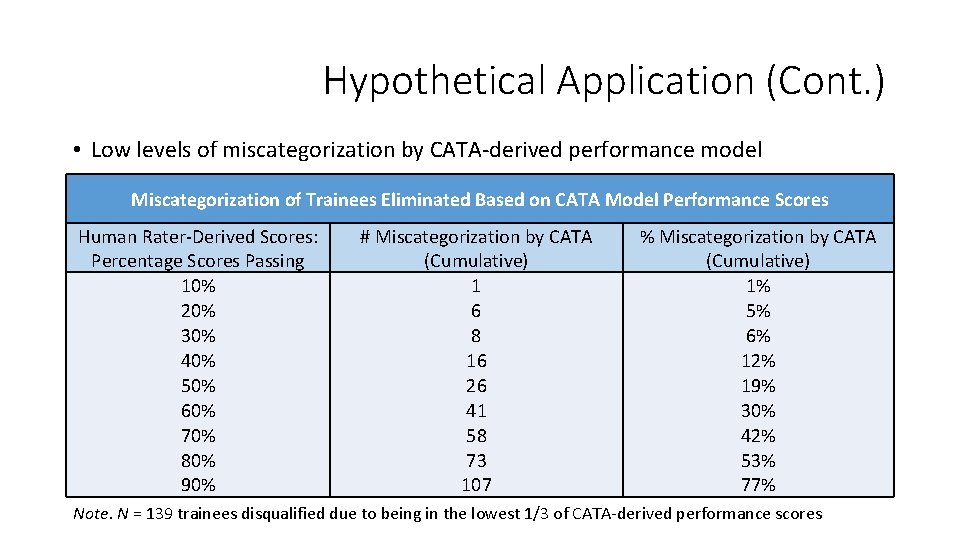

Hypothetical Application (Cont.)

• Low levels of miscategorization by CATA-derived performance model

Miscategorization of Trainees Eliminated Based on CATA Model Performance Scores

| Human Rater-Derived Scores: Percentage Scores Passing | 10% | 20% | 30% | 40% | 50% | 60% | 70% | 80% | 90% |
|---|---|---|---|---|---|---|---|---|---|
| # Miscategorization by CATA (Cumulative) | 1 | 6 | 8 | 16 | 26 | 41 | 58 | 73 | 107 |
| % Miscategorization by CATA (Cumulative) | 1% | 5% | 6% | 12% | 19% | 30% | 42% | 53% | 77% |

Note. N = 139 trainees disqualified due to being in the lowest 1/3 of CATA-derived performance scores

Summary of Findings

• Overall, CATA was able to adequately approximate the accuracy of human raters in scoring overall performance on written structured interview responses
• Unit-weighted Category Count, Word Count, and a Category + Word Count composite were the best, most stable predictors of structured interview performance scores
• CATA-derived performance scores result in minimal levels of miscategorization of human-rater approved trainees

Practical Implications

• CATA-based models are able to approximate human raters on competency-based structured interview questions
• Text-mining models may have utility for making selection decisions in high-volume organizations like the Air Force
  • Systematically identify applicants with desirable KSAOs
  • Quickly reduce number of applications in highly competitive selection scenarios by removing poor candidates early
• Ability to quickly score open-ended responses may improve assessment of KSAOs not easily assessed via traditional Likert-based or multiple-choice selection test formats

Practical Implications and Application

• Current study serves as an initial Air Force “proof of concept” for CATA-based selection models
• Potential future Air Force studies, steps, and applications:
  • Validation of text analysis-based performance scoring on transcribed in-person OTS structured interviews
  • Development of text analysis models to provide OTS board members supplementary metrics of candidate quality
  • OTS board workload reduction by removing applications with poor CATA-derived metrics of future AF officer “potential”
  • OTS graduation, technical training success, post-training competencies, promotions and awards

References

1. Lentz, E., Horgen, K. E., Borman, W. C., Dullaghan, T. R., Smith, T., Schwartz, K. L., & Weissmuller, J. J. (2009). Air Force officership survey volume I: Survey development and analyses, AFCAPS-FR-20100010. Randolph AFB, TX: Air Force Personnel Center, Strategic Research and Assessment Branch.
2. Borman, W. C., & Brush, D. H. (1993). More progress toward a taxonomy of managerial performance requirements. Human Performance, 6(1), 1-21.
3. Tett, R. P., Guterman, H. A., Bleier, A., & Murphy, P. J. (2000). Development and content validation of a "hyperdimensional" taxonomy of managerial competence. Human Performance, 13(3), 205-251.
4. Air Force Manual (AFMAN) 36-2647. Institutional Competency Development and Management, 15 Sept 2016.
5. MacDougall, A. E., Bagdasarov, Z., Johnson, J. F., & Mumford, M. D. (2015). Managing workplace ethics: An extended conceptualization of ethical sensemaking and the facilitative role of human resources. Research in Personnel and Human Resources Management, 33, 121-189.
6. Bernardin, H. J., & Buckley, M. R. (1981). Strategies in rater training. Academy of Management Review, 6(2), 205-212.
7. Conway, J. M., Jako, R. A., & Goodman, D. F. (1995). A meta-analysis of interrater and internal consistency reliability of selection interviews. Journal of Applied Psychology, 80(5), 565.

Further Questions:

James F. Johnson, USAF AFPC/DSYX
james.johnson.271@us.af.mil

Full CATA technical report to include this study will be available upon request once published in the Defense Technical Information Center (DTIC) repository

Back-Up Slides

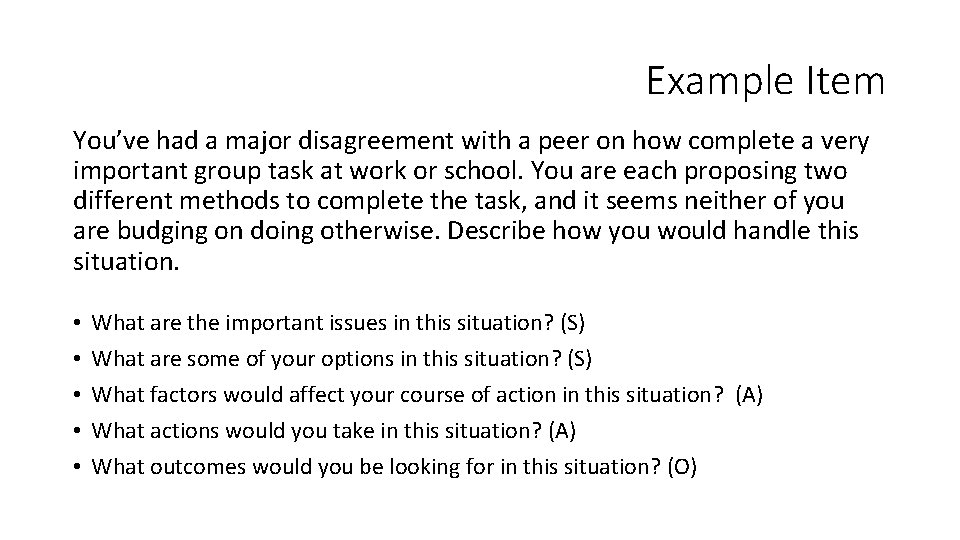

Example Item

You’ve had a major disagreement with a peer on how to complete a very important group task at work or school. You are each proposing a different method to complete the task, and it seems neither of you is budging. Describe how you would handle this situation.

• What are the important issues in this situation? (S)
• What are some of your options in this situation? (S)
• What factors would affect your course of action in this situation? (A)
• What actions would you take in this situation? (A)
• What outcomes would you be looking for in this situation? (O)

Results: CATA and Human Raters

| Question | Predictors | Mean | SD | Correlation with Human Ratings |
|---|---|---|---|---|
| Question 1 | Word Count | 146.97 | 47.03 | .45** |
| Question 1 | Category Count | 7.49 | 3.51 | .57** |
| Question 1 | Composite | .00 | .90 | .57** |
| Question 2 | Word Count | 130.47 | 35.94 | .45** |
| Question 2 | Category Count | 5.59 | 2.72 | .45** |
| Question 2 | Composite | .00 | .87 | .52** |
| Question 3 | Word Count | 131.37 | 34.15 | .60** |
| Question 3 | Category Count | 8.60 | 3.39 | .58** |
| Question 3 | Composite | .00 | .89 | .67** |

Note. N = 451 to 453; ** p (two-tailed) ≤ .01; Composite generated from z-scored Word and Category Count variables.

Results: CATA and Human Raters

| Question | Predictors | Mean | SD | Correlation with Human Ratings |
|---|---|---|---|---|
| Question 4 | Word Count | 147.11 | 47.49 | .12* |
| Question 4 | Category Count | 6.56 | 3.13 | .25** |
| Question 4 | Composite | .00 | .86 | .21** |
| Question 5 | Word Count | 132.03 | 35.71 | .48** |
| Question 5 | Category Count | 6.66 | 3.18 | .48** |
| Question 5 | Composite | .00 | .88 | .54** |
| Question 6 | Word Count | 146.62 | 44.75 | .35** |
| Question 6 | Category Count | 6.32 | 3.22 | .47** |
| Question 6 | Composite | .00 | .89 | .46** |

Note. N = 457 to 460; * p (two-tailed) ≤ .05; ** p (two-tailed) ≤ .01; Composite generated from z-scored Word and Category Count variables.