Computer Vision: Computer Vision = Image Processing + Artificial Intelligence

- Slides: 53

Computer Vision: clear at a glance, understood at first sight.
Eyes ↔ image; brain ↔ intelligence.
Computer Vision = Image Processing + Artificial Intelligence

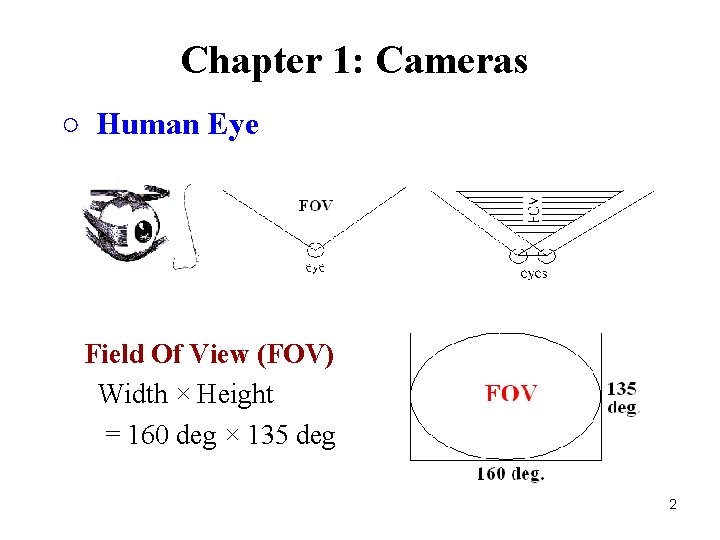

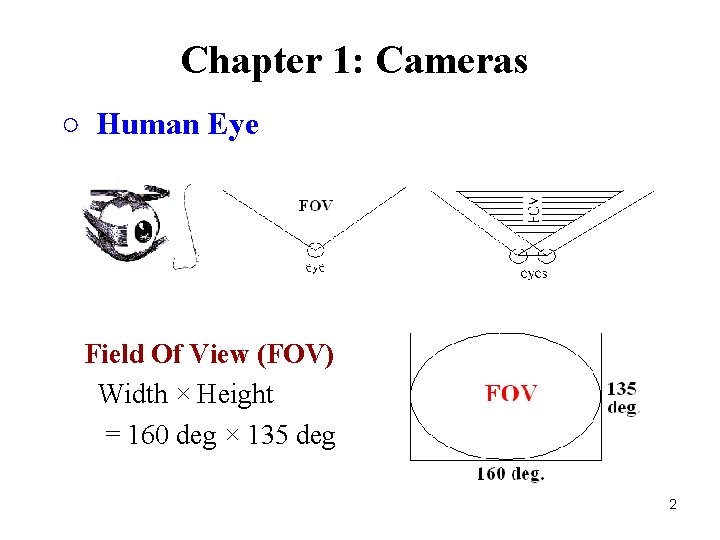

Chapter 1: Cameras

○ Human eye field of view (FOV): width × height = 160° × 135°

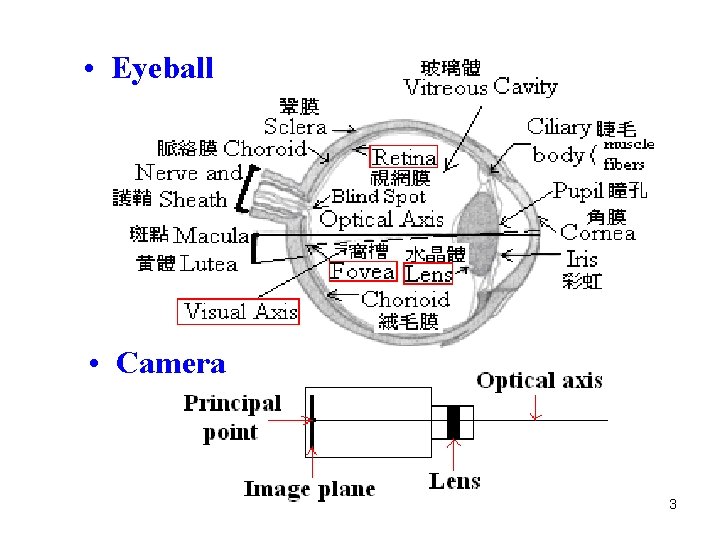

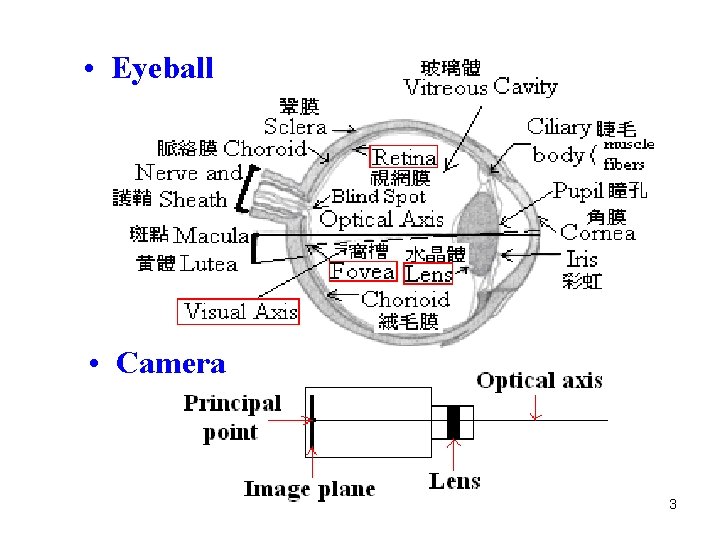

• Eyeball
• Camera

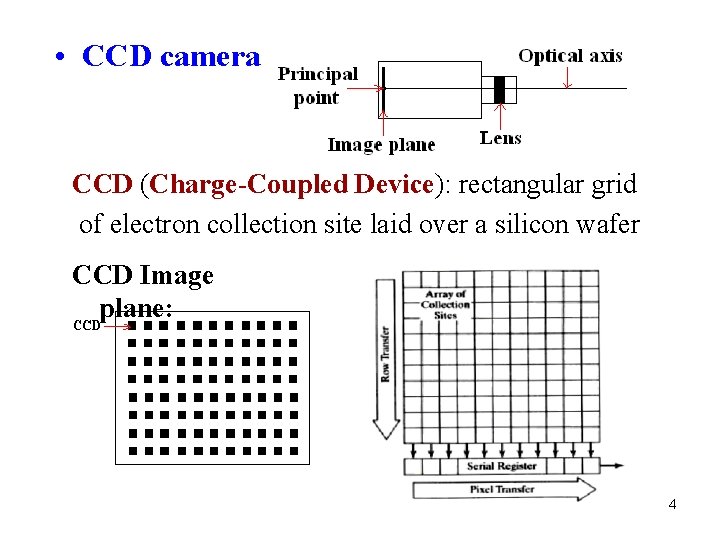

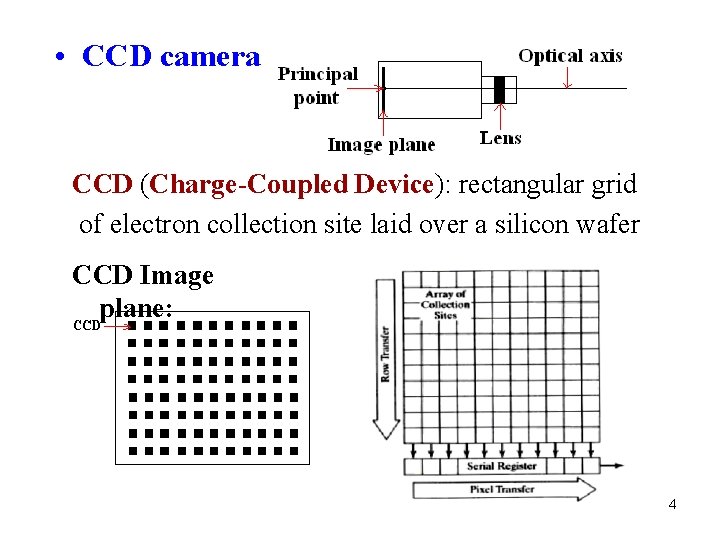

• CCD camera
CCD (Charge-Coupled Device): a rectangular grid of electron-collection sites laid over a silicon wafer.
CCD image plane:

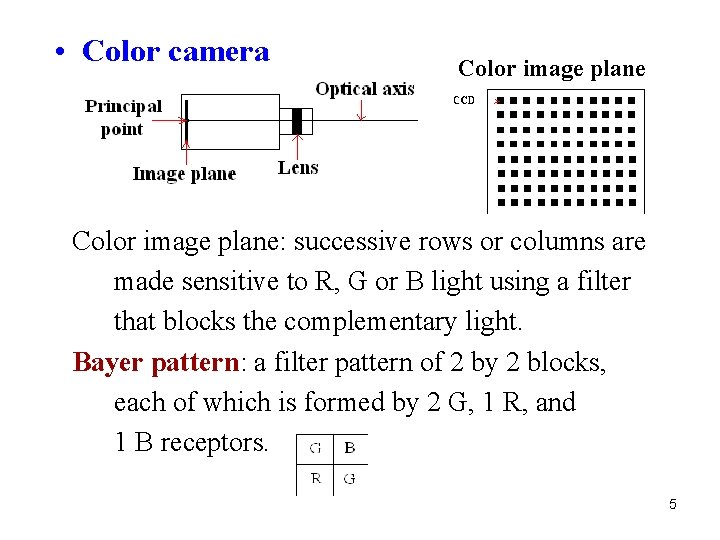

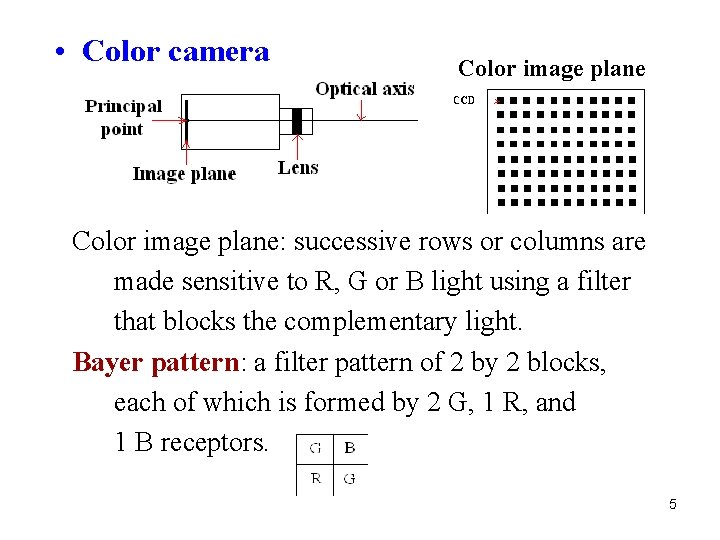

• Color camera
Color image plane: successive rows or columns are made sensitive to R, G, or B light by a filter that blocks the complementary light.
Bayer pattern: a filter pattern of 2 × 2 blocks, each formed by 2 G, 1 R, and 1 B receptors.
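To make the Bayer layout concrete, here is a minimal NumPy sketch that samples an RGB image through a 2 × 2 filter pattern. The RGGB ordering (R at top-left, B at bottom-right) and the function names are assumptions for illustration; real sensors may use GRBG, BGGR, or another phase of the same pattern.

```python
import numpy as np

def bayer_channel_map(h: int, w: int) -> np.ndarray:
    """Channel index (0 = R, 1 = G, 2 = B) seen by each site of an RGGB mosaic."""
    cmap = np.ones((h, w), dtype=int)   # green everywhere by default
    cmap[0::2, 0::2] = 0                # red: even rows, even columns
    cmap[1::2, 1::2] = 2                # blue: odd rows, odd columns
    return cmap

def bayer_mosaic(rgb: np.ndarray) -> np.ndarray:
    """Keep, at each site, only the channel its colour filter passes."""
    h, w, _ = rgb.shape
    rows, cols = np.indices((h, w))
    return rgb[rows, cols, bayer_channel_map(h, w)]

rgb = np.arange(4 * 4 * 3).reshape(4, 4, 3)   # toy 4 x 4 colour image
raw = bayer_mosaic(rgb)                        # single-channel sensor output
counts = np.bincount(bayer_channel_map(4, 4).ravel(), minlength=3)
print(counts)   # R, G, B site counts -> [4 8 4]
```

Green gets twice as many sites as red or blue, matching the "2 G, 1 R, 1 B" description of each block.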

○ Visual sensors
Animal eyes: birds, bats, snakes, fishes, insects (fly, bee, locust, grasshopper, cricket, cicada)
Cameras: fish-eye, panoramic, omnidirectional, PTZ (pan-tilt-zoom)
Imaging devices: telescopes, microscopes

○ Imaging surfaces: planar, spherical, cylindrical
○ Signals
-- Single value (gray images)
-- A few values (color images)
-- Many values (multispectral images)

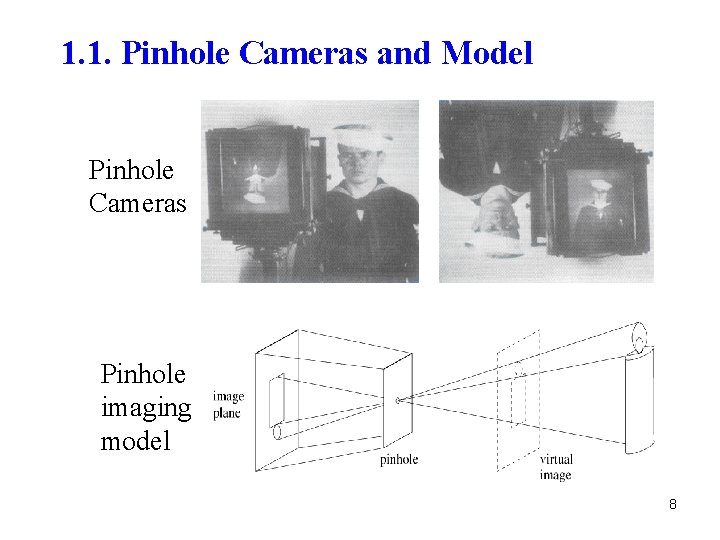

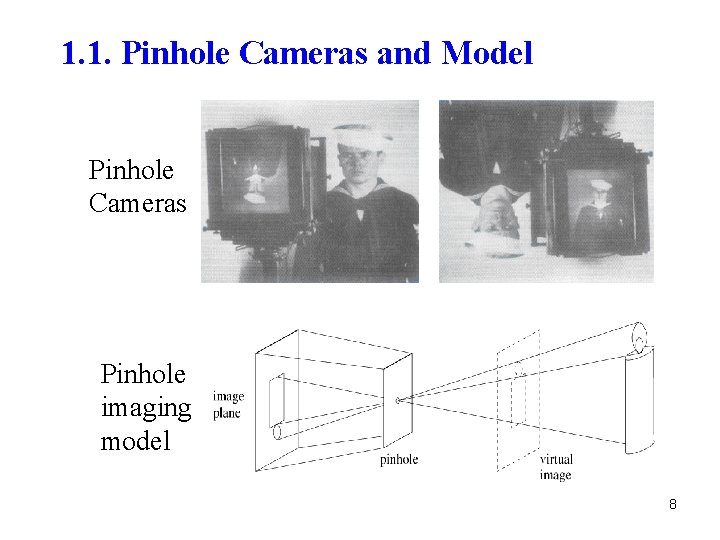

1.1. Pinhole Cameras and Model
Pinhole cameras; pinhole imaging model

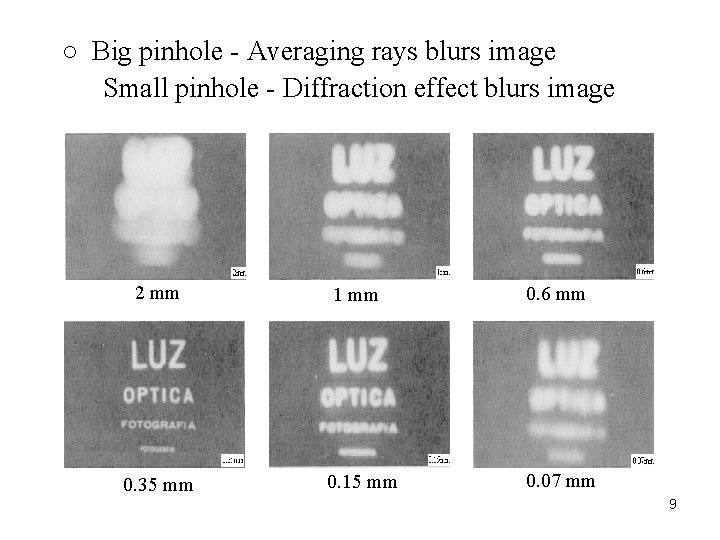

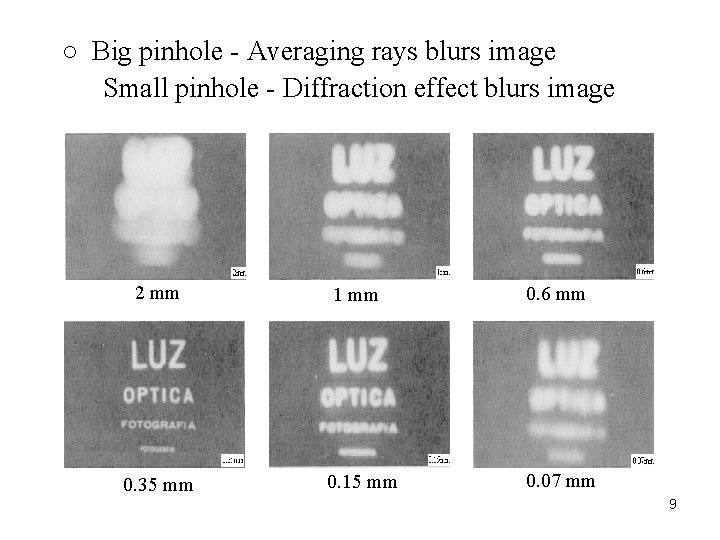

○ Big pinhole: averaging of rays blurs the image. Small pinhole: diffraction blurs the image.
(Figure: images taken with pinhole diameters of 2 mm, 1 mm, 0.6 mm, 0.35 mm, 0.15 mm, and 0.07 mm.)
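The trade-off between the two blurs can be made concrete with a crude model, which is an assumption of this sketch rather than the slide's own: geometric blur ≈ the pinhole diameter d, and diffraction blur ≈ the Airy-disk diameter 2.44 λ f / d for a pinhole-to-screen distance f. Minimizing their sum gives d* = √(2.44 λ f). The distance f = 100 mm and wavelength λ = 550 nm are hypothetical.

```python
import math

def blur_diameter_mm(d_mm, f_mm, wavelength_mm=550e-6):
    """Crude total blur: geometric spot (~d) plus Airy-disk diameter 2.44*lambda*f/d."""
    geometric = d_mm
    diffraction = 2.44 * wavelength_mm * f_mm / d_mm
    return geometric + diffraction

def best_pinhole_mm(f_mm, wavelength_mm=550e-6):
    """Minimiser of d + k/d, i.e. d* = sqrt(k) with k = 2.44*lambda*f."""
    return math.sqrt(2.44 * wavelength_mm * f_mm)

f = 100.0                                       # hypothetical pinhole-to-screen distance (mm)
diameters = (2.0, 1.0, 0.6, 0.35, 0.15, 0.07)   # diameters listed in the figure (mm)
for d in diameters:
    print(f"d = {d:4.2f} mm -> blur ~ {blur_diameter_mm(d, f):.3f} mm")
print(f"best d ~ {best_pinhole_mm(f):.3f} mm")
```

Under these assumptions the 0.35 mm pinhole gives the smallest blur of the listed sizes; larger holes are dominated by ray averaging and smaller ones by diffraction.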

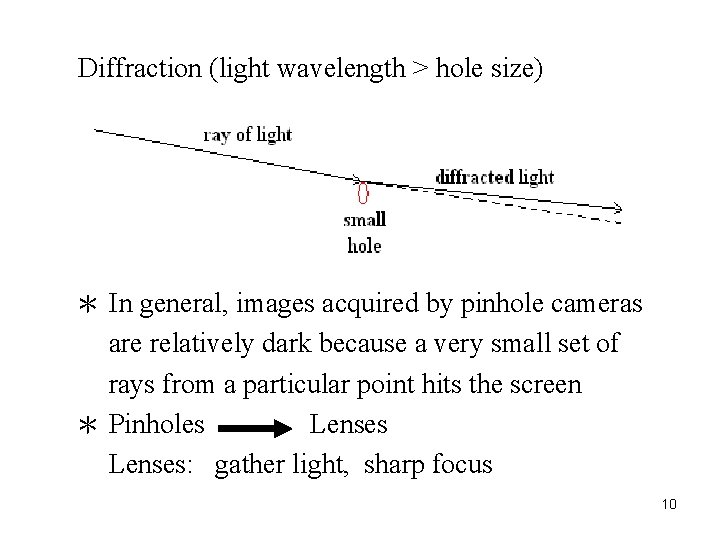

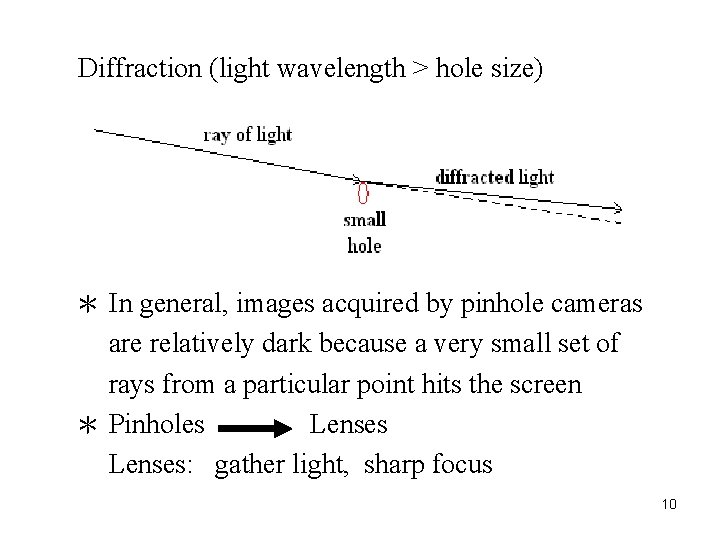

Diffraction (significant when the hole size is comparable to, or smaller than, the light wavelength)
* In general, images acquired by pinhole cameras are relatively dark, because only a very small set of rays from a particular point hits the screen.
* From pinholes to lenses: lenses gather light and give sharp focus.

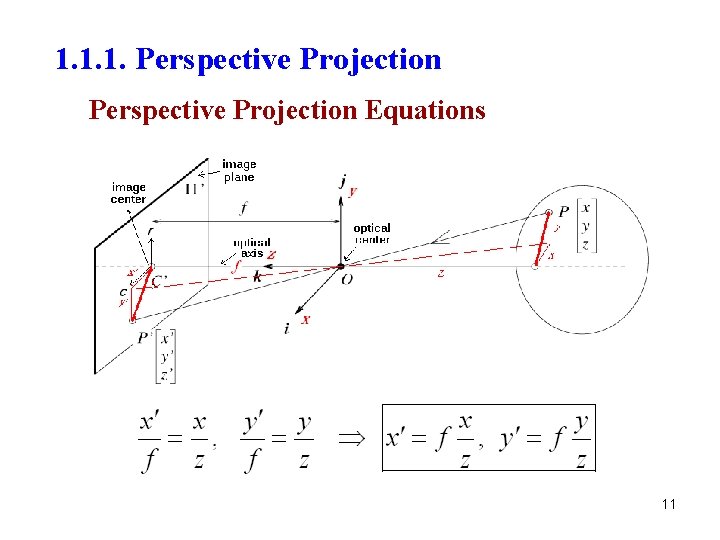

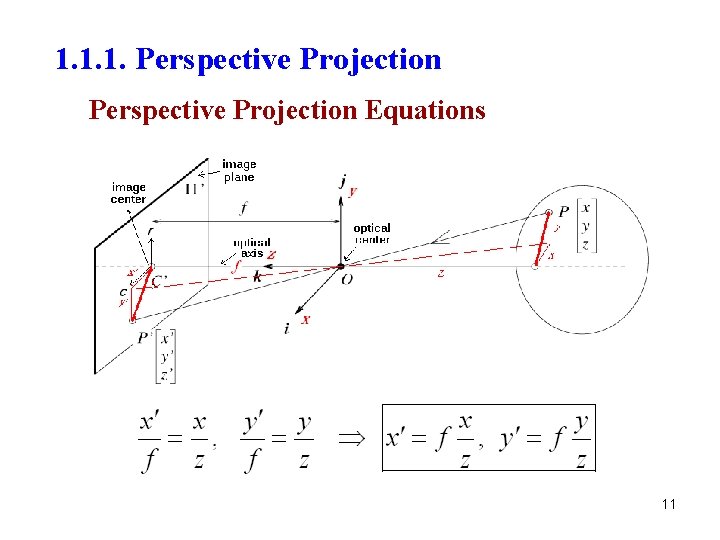

1.1.1. Perspective Projection Equations

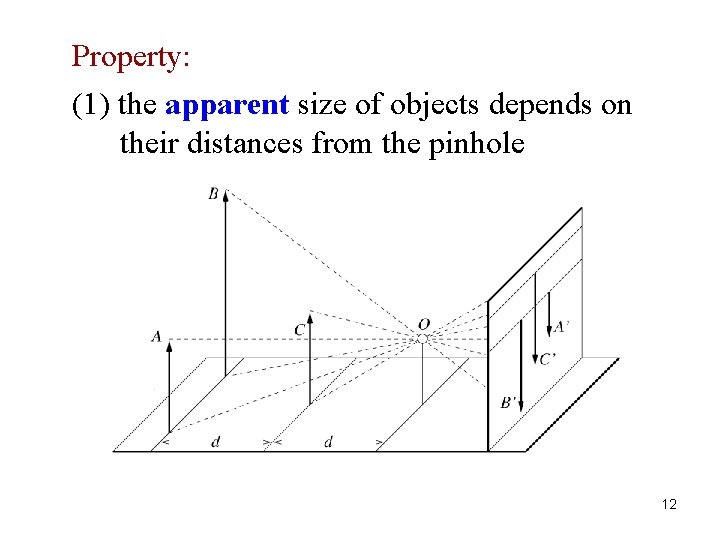

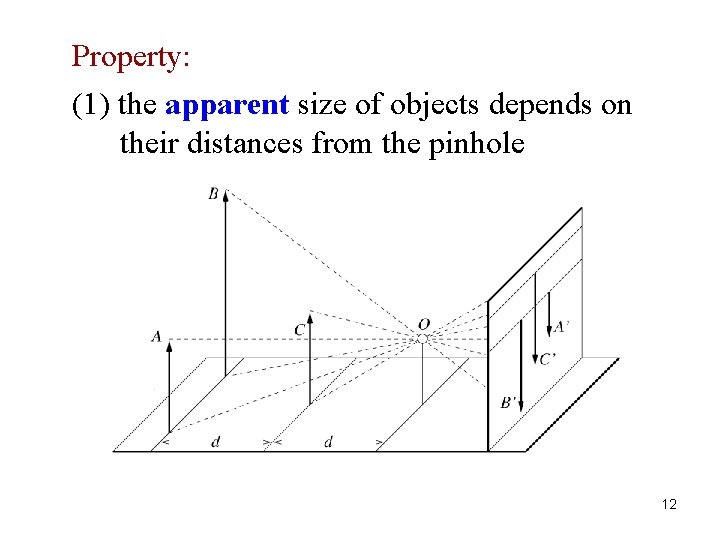

Property (1): the apparent size of an object depends on its distance from the pinhole; it is inversely proportional to that distance.
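Property (1) follows directly from the perspective projection equations. The sketch below assumes the common form x' = f' x / z, y' = f' y / z, with a virtual image plane in front of the pinhole so there is no sign flip. The 50 mm focal distance and the 1.8 m object are hypothetical.

```python
import math

def project(point, f_prime):
    """Perspective projection x' = f' x / z, y' = f' y / z (z > 0 in front of the pinhole)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("the point must lie in front of the pinhole (z > 0)")
    return f_prime * x / z, f_prime * y / z

def apparent_height(height, distance, f_prime):
    """Image height of a vertical segment of the given height at the given depth."""
    _, y_top = project((0.0, height, distance), f_prime)
    _, y_bottom = project((0.0, 0.0, distance), f_prime)
    return y_top - y_bottom

f_prime = 0.05                      # hypothetical 50 mm focal distance (metres)
near = apparent_height(1.8, 10.0, f_prime)
far = apparent_height(1.8, 20.0, f_prime)
print(near, far, near / far)        # doubling the distance halves the image size
```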

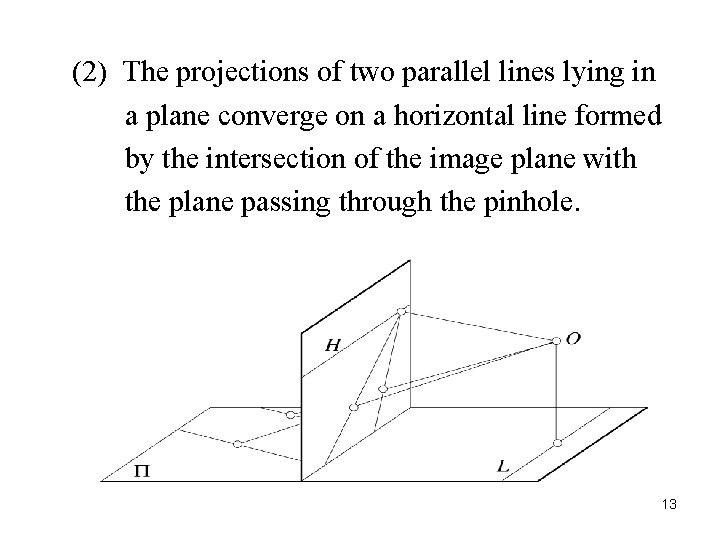

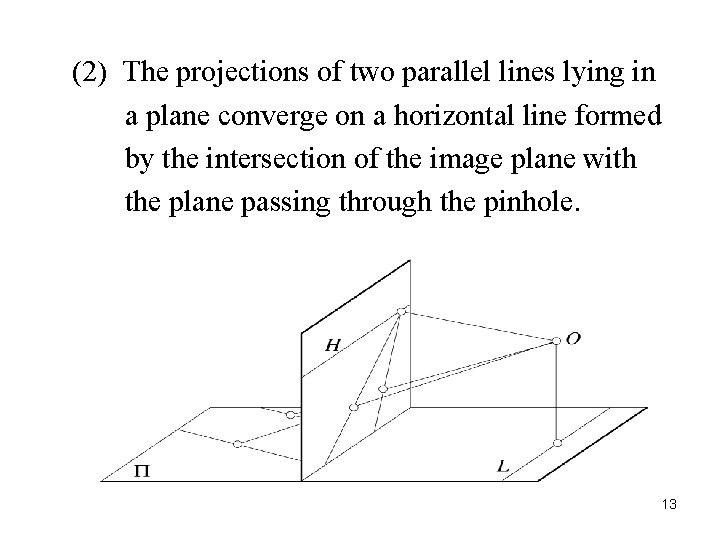

Property (2): the projections of two parallel lines lying in a plane Π converge on a horizontal line formed by the intersection of the image plane with the plane that is parallel to Π and passes through the pinhole.
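Property (2) can be checked numerically with the same projection, assumed here to be x' = f' x / z, y' = f' y / z. The camera frame (x right, y up, z along the optical axis, image plane perpendicular to the ground) and the ground plane y = -1.5 are hypothetical choices for this sketch. Points far along two parallel ground lines approach one image point, and that point lies on y' = 0, the horizon line.

```python
import numpy as np

def project(p, f_prime=1.0):
    """Perspective projection (x, y, z) -> (f' x / z, f' y / z)."""
    x, y, z = p
    return np.array([f_prime * x / z, f_prime * y / z])

camera_height = 1.5                          # hypothetical; ground plane is y = -1.5
direction = np.array([1.0, 0.0, 2.0])        # shared direction of both lines, inside the ground plane
start_1 = np.array([-1.0, -camera_height, 4.0])
start_2 = np.array([2.0, -camera_height, 5.0])

for t in (1.0, 10.0, 1e3, 1e6):              # walk further along both lines
    p1 = project(start_1 + t * direction)
    p2 = project(start_2 + t * direction)
    print(f"t = {t:>9.0f}: line 1 -> {p1}, line 2 -> {p2}")

vanishing_point = project(direction)         # f' * (d_x / d_z, d_y / d_z)
print("vanishing point:", vanishing_point)   # y' = 0, i.e. on the horizon
```

Changing `direction` to any other vector with zero y component moves the vanishing point along the line y' = 0 but never off it.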

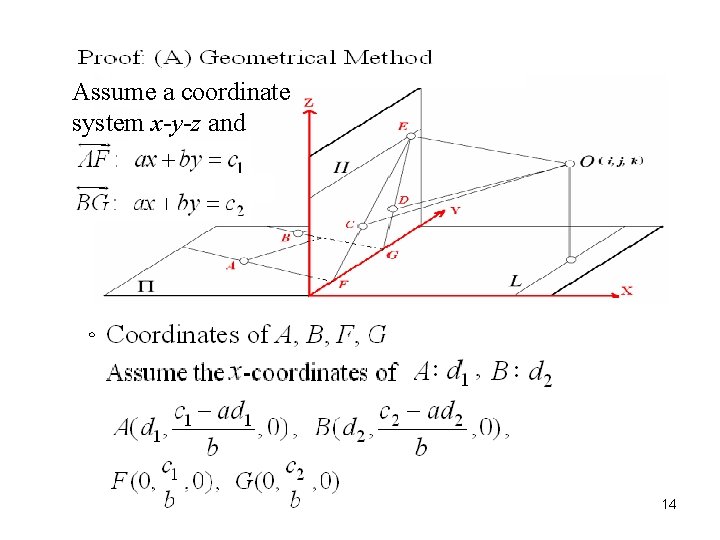

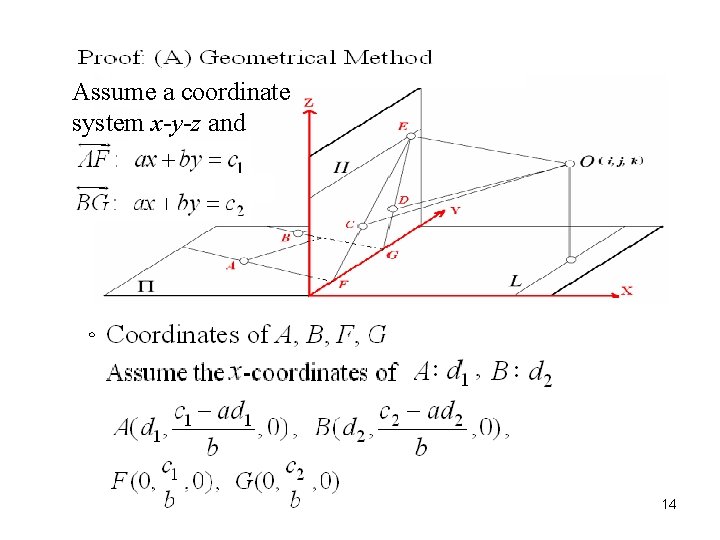

Assume a coordinate system x-y-z and

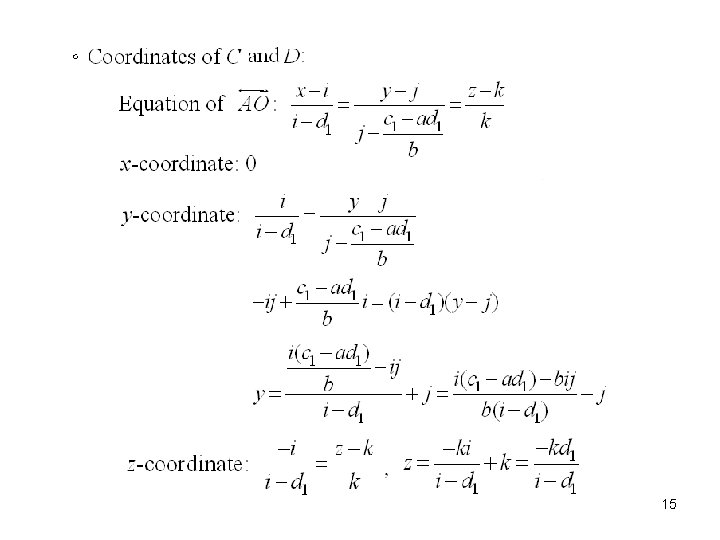

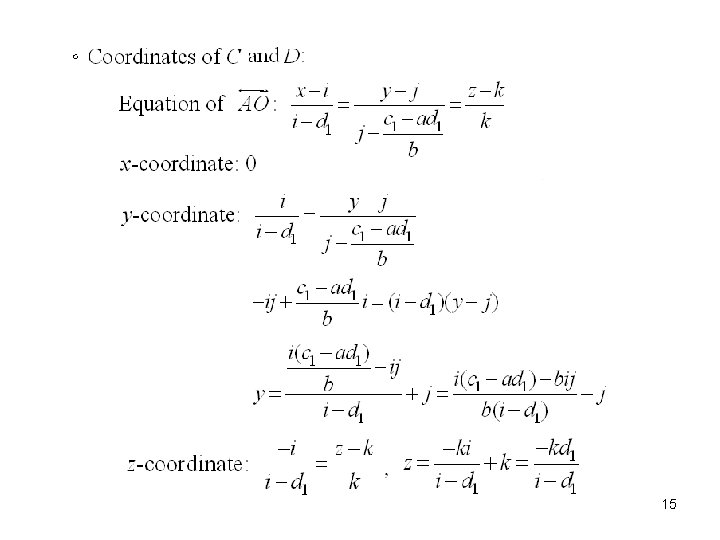


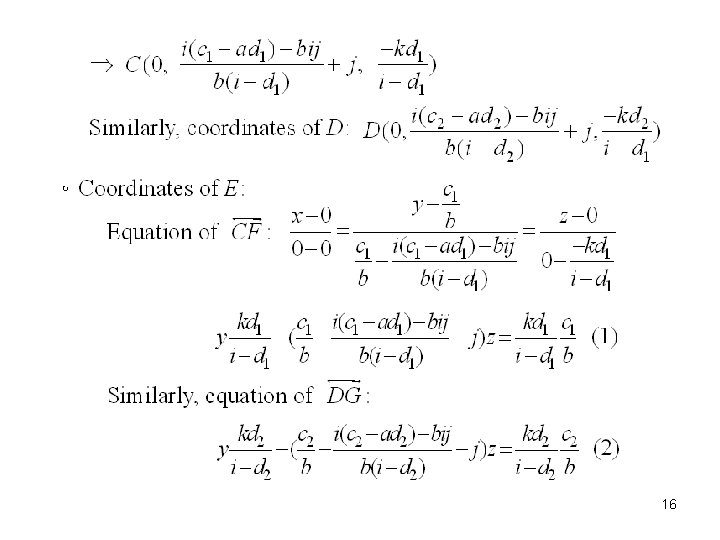

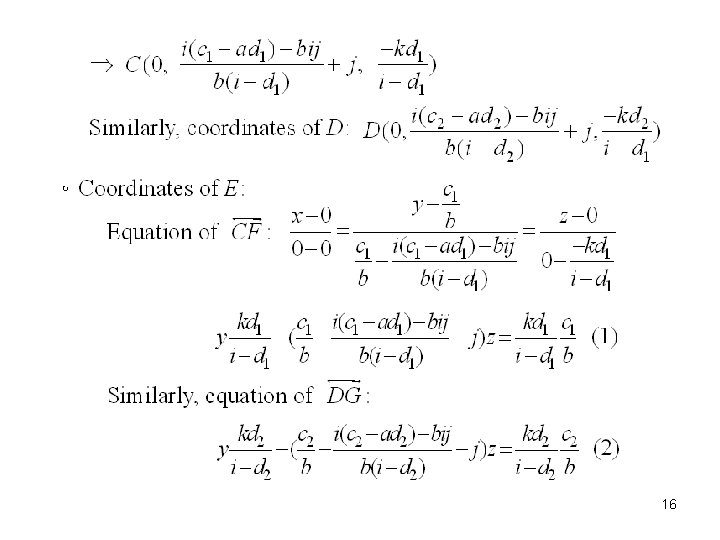


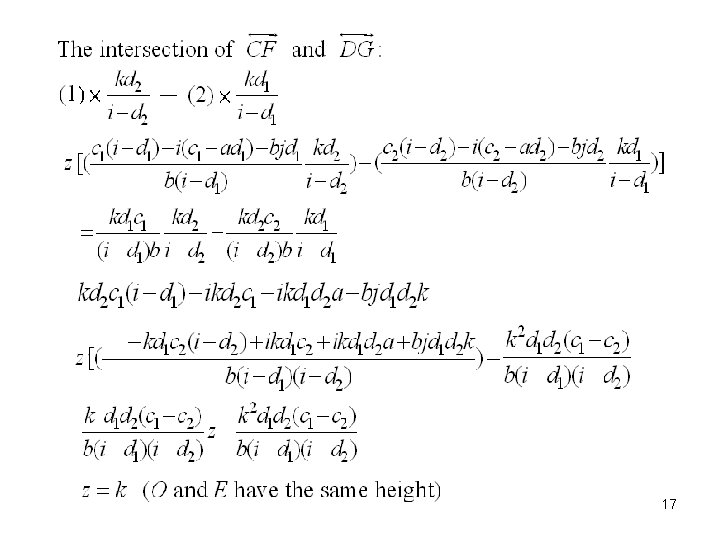

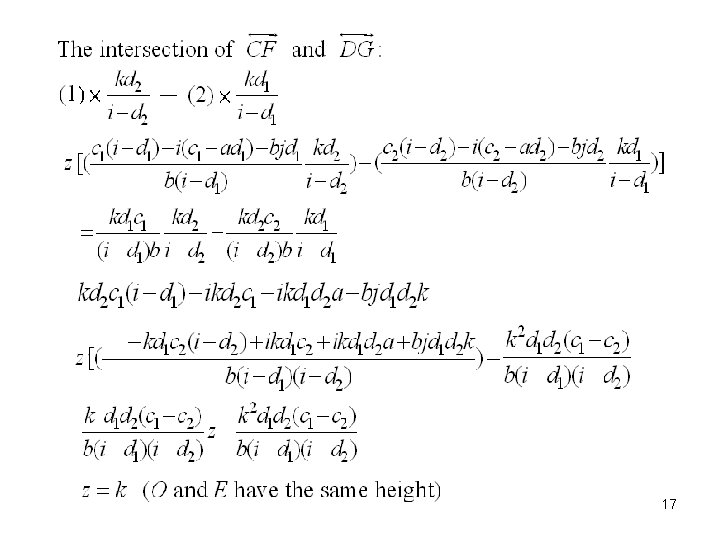


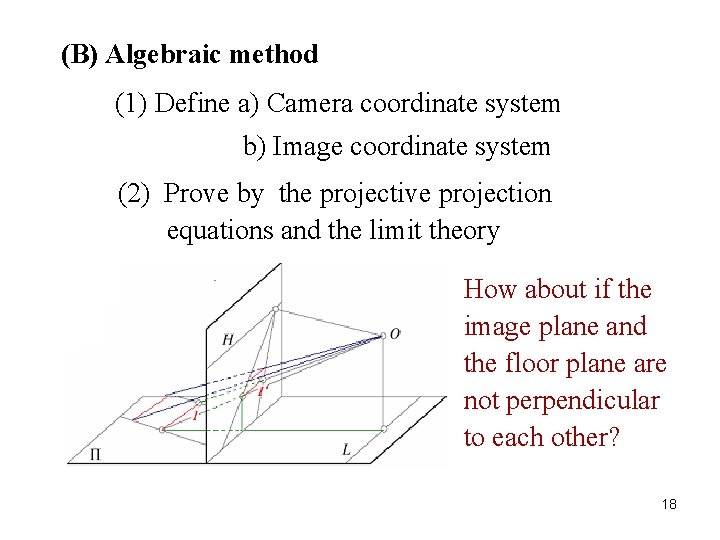

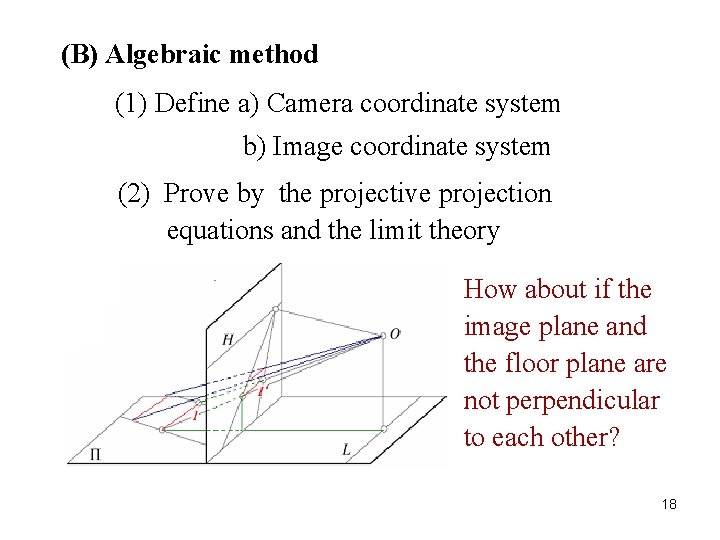

(B) Algebraic method
(1) Define
a) the camera coordinate system
b) the image coordinate system
(2) Prove the property using the perspective projection equations and limits.
What if the image plane and the floor plane are not perpendicular to each other?
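One way to carry out step (2), sketched under the assumed projection x' = f' x / z, y' = f' y / z: parameterize a line by a point P₀ and a direction d, then let the parameter go to infinity. The last line addresses the closing question. When the image plane is not perpendicular to the floor, the vanishing points of all floor directions still lie on one line, n_x x' + n_y y' + n_z f' = 0, where n is the floor normal expressed in camera coordinates (a notation introduced here). In the perpendicular case, n = (0, 1, 0) and this line reduces to y' = 0.

```latex
% Assumed projection: x' = f' x / z,  y' = f' y / z
P(t) = P_0 + t\,\mathbf{d}
     = (x_0 + t\,d_x,\; y_0 + t\,d_y,\; z_0 + t\,d_z), \qquad d_z \neq 0

x'(t) = f'\,\frac{x_0 + t\,d_x}{z_0 + t\,d_z}, \qquad
y'(t) = f'\,\frac{y_0 + t\,d_y}{z_0 + t\,d_z}

\lim_{t \to \infty} \bigl(x'(t),\, y'(t)\bigr)
  = \left( f'\,\frac{d_x}{d_z},\; f'\,\frac{d_y}{d_z} \right)

% The limit does not depend on P_0, so every line with direction d
% (in particular l_1 and l_2) converges to the same image point.
% Floor directions satisfy n . d = 0, hence their vanishing points obey
n_x\,x' + n_y\,y' + n_z\,f' = \frac{f'}{d_z}\,(\mathbf{n}\cdot\mathbf{d}) = 0
```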

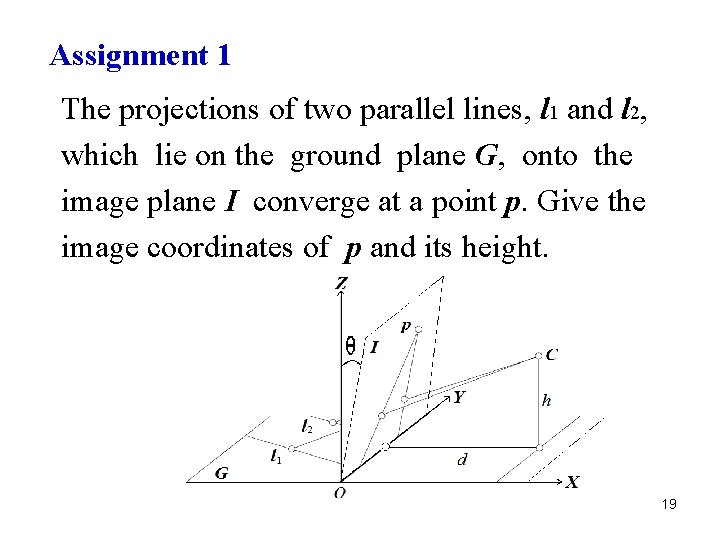

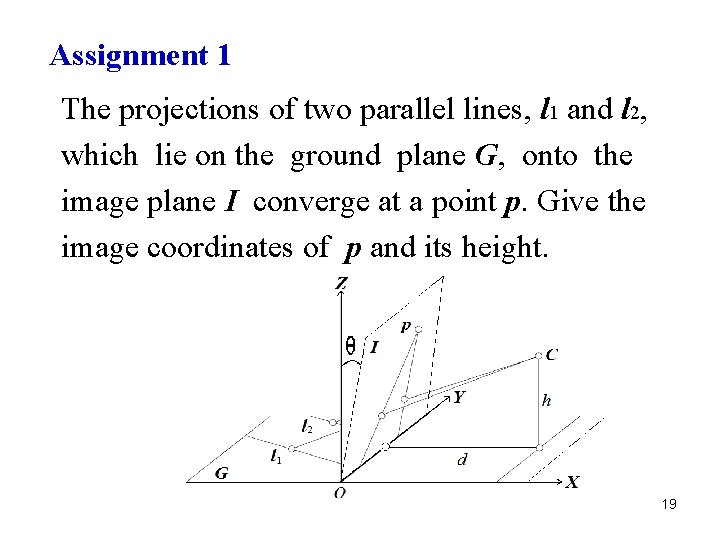

Assignment 1: The projections of two parallel lines, l₁ and l₂, which lie on the ground plane G, onto the image plane I converge at a point p. Give the image coordinates of p and its height.

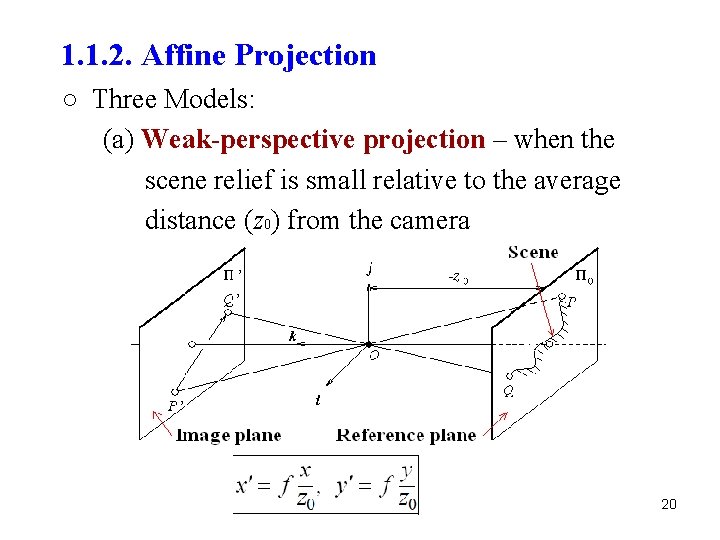

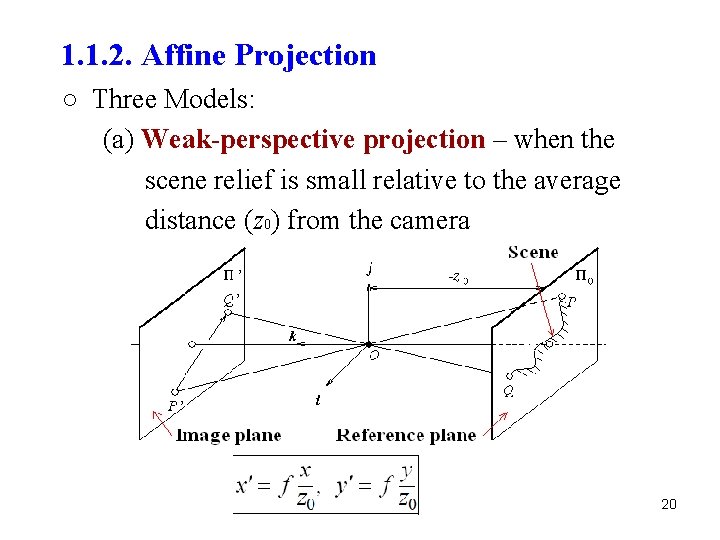

1.1.2. Affine Projection
○ Three models:
(a) Weak-perspective projection: used when the scene relief is small relative to the average distance z₀ from the camera

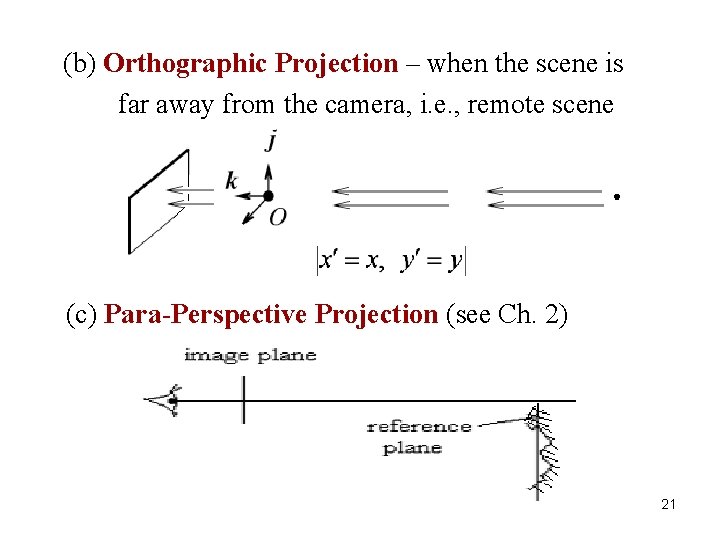

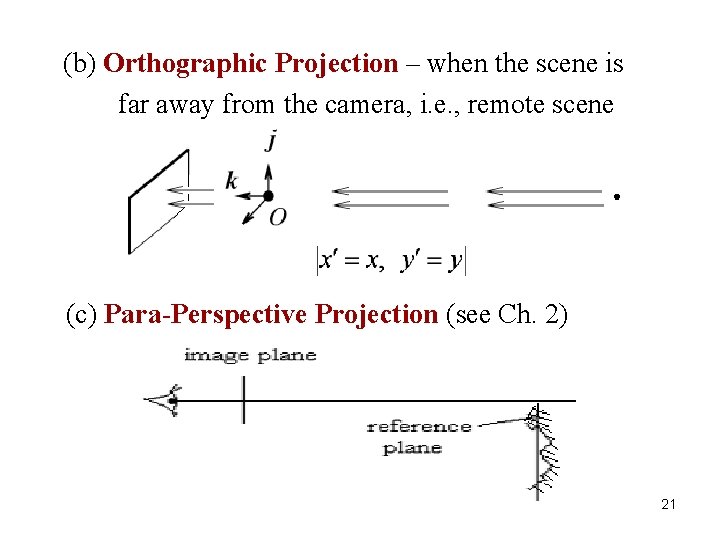

(b) Orthographic projection: used when the scene is far away from the camera, i.e., a remote scene
(c) Para-perspective projection (see Ch. 2)
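The affine models can be compared against full perspective numerically. In this sketch, weak perspective uses one magnification m = f'/z₀ for the whole scene, with z₀ taken as the mean scene depth, and orthographic projection simply drops z (x' = x, y' = y after rescaling). Both forms are assumptions here, not taken from the slides, and the random scenes are hypothetical.

```python
import numpy as np

def perspective(points, f_prime):
    pts = np.asarray(points, float)
    return f_prime * pts[:, :2] / pts[:, 2:3]       # per-point division by depth z

def weak_perspective(points, f_prime, z0=None):
    pts = np.asarray(points, float)
    z0 = pts[:, 2].mean() if z0 is None else z0     # reference (average) depth
    return (f_prime / z0) * pts[:, :2]              # one magnification for all points

def orthographic(points):
    return np.asarray(points, float)[:, :2].copy()  # simply drop z

def relative_error(points, f_prime=1.0):
    exact = perspective(points, f_prime)
    approx = weak_perspective(points, f_prime)
    return np.abs(exact - approx).max() / np.abs(exact).max()

rng = np.random.default_rng(0)
xy = rng.uniform(-1.0, 1.0, size=(50, 2))
relief = rng.uniform(-0.5, 0.5, size=(50, 1))
far_scene = np.hstack([xy, 100.0 + relief])   # relief << distance
near_scene = np.hstack([xy, 2.0 + relief])    # relief comparable to distance
far_err, near_err = relative_error(far_scene), relative_error(near_scene)
print(far_err, near_err)
```

The far scene stays within about relief/distance of full perspective, while the near scene does not, which is the condition stated for model (a).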

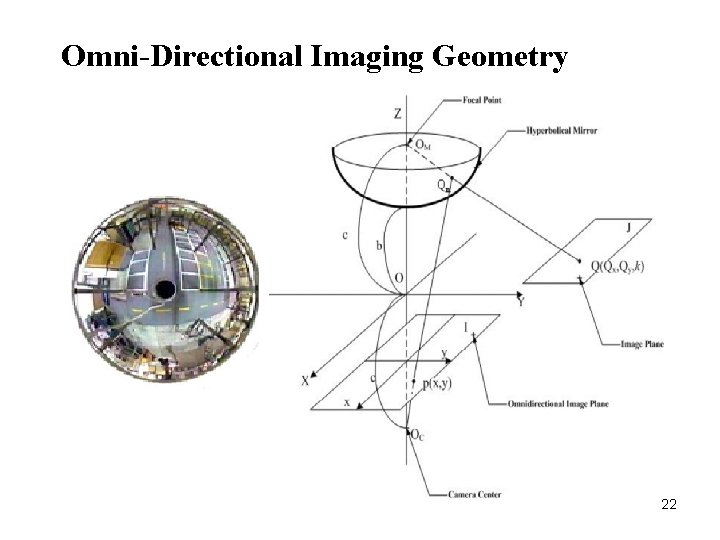

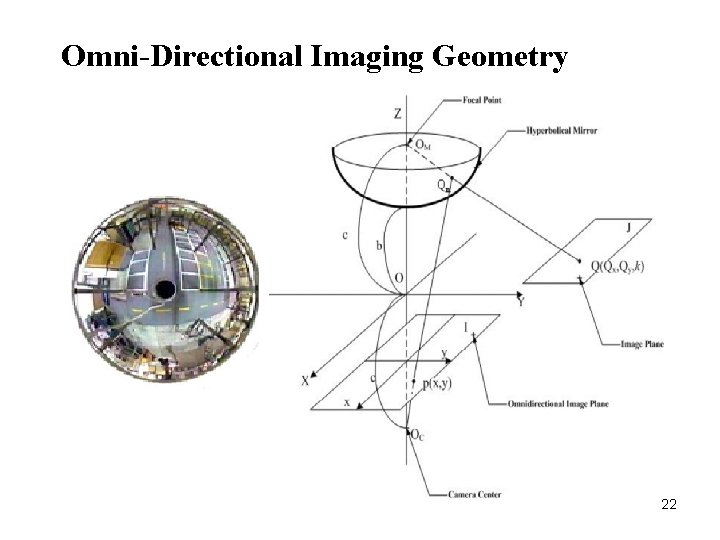

Omni-Directional Imaging Geometry

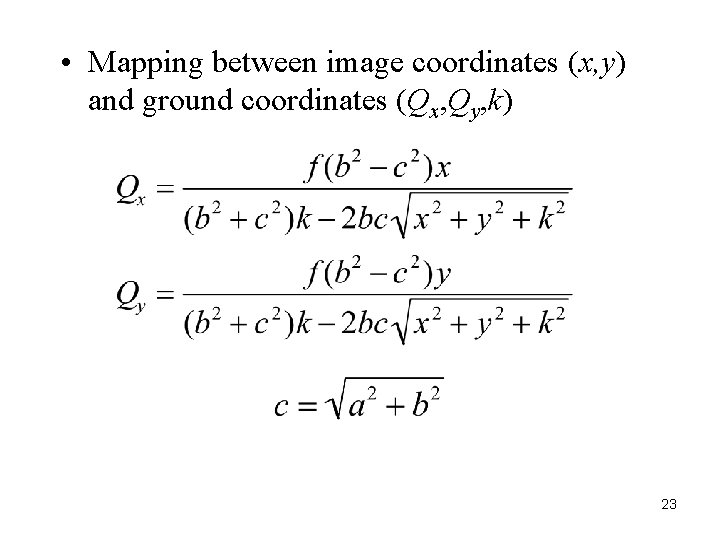

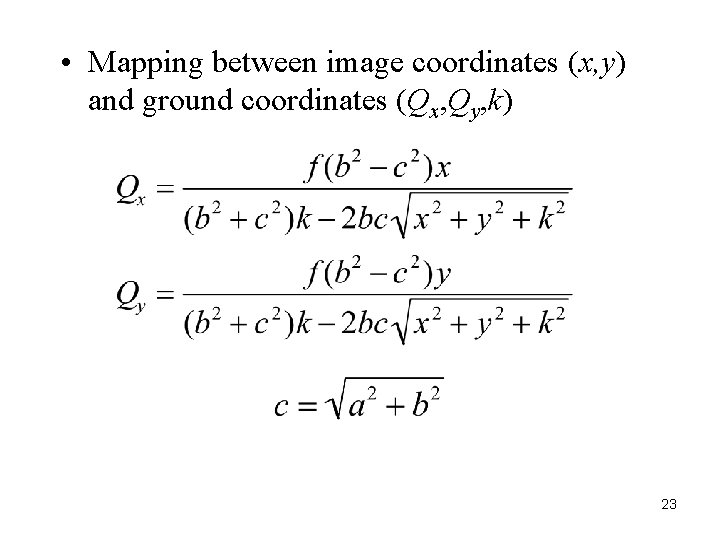

• Mapping between image coordinates (x, y) and ground coordinates (Qx, Qy, k)

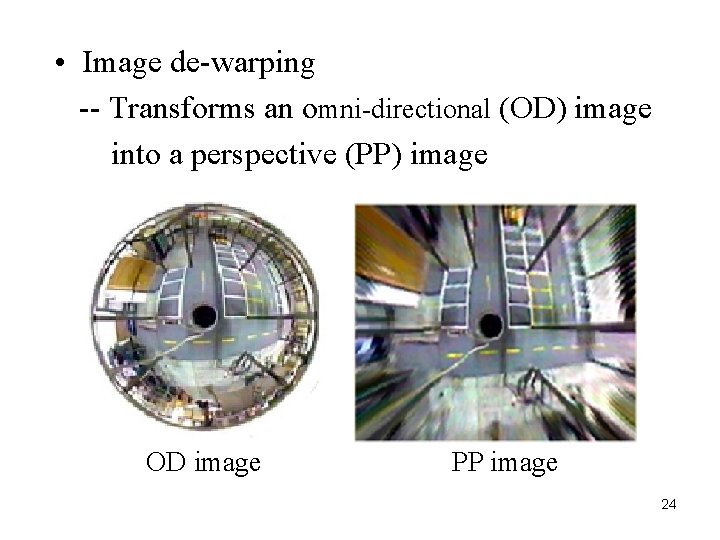

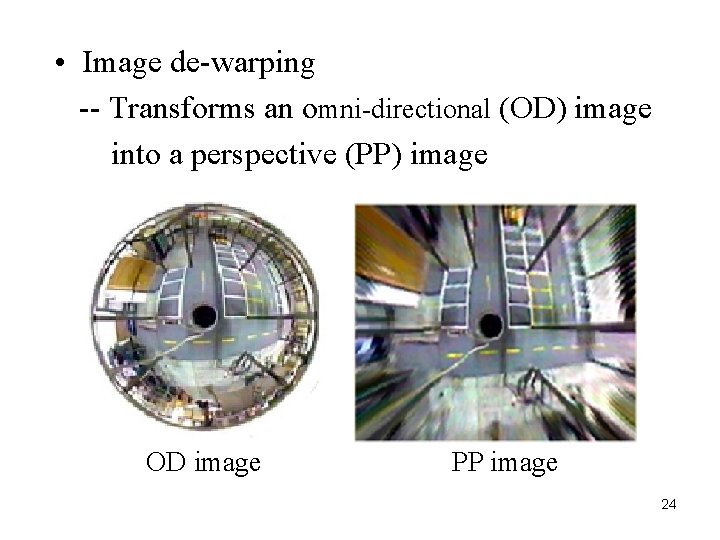

• Image de-warping: transforms an omnidirectional (OD) image into a perspective (PP) image.
(Figure: OD image → PP image.)

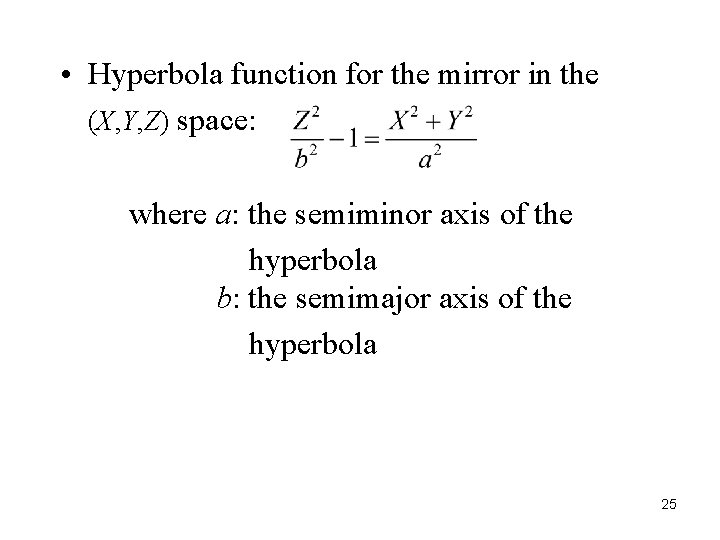

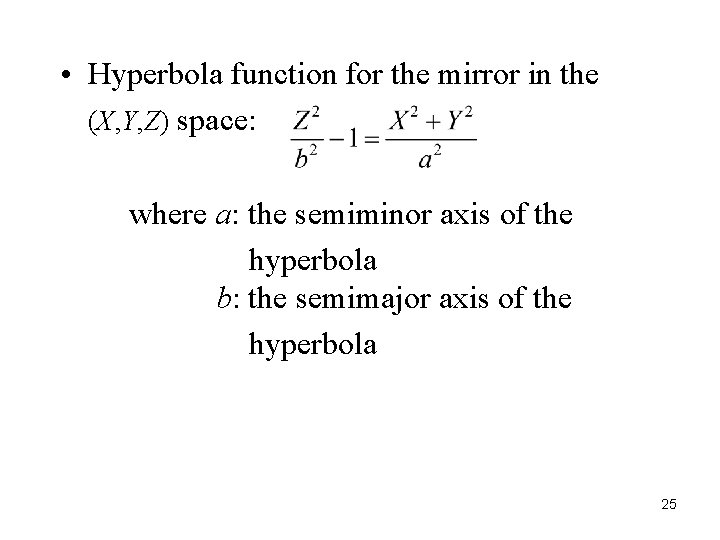

• Hyperbola function for the mirror in (X, Y, Z) space, where
a: the semiminor axis of the hyperbola
b: the semimajor axis of the hyperbola
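The mirror equation itself is in the figure. A form often used for hyperbolic omnidirectional mirrors, assumed here, is (X² + Y²)/a² − Z²/b² = −1. Its foci lie at (0, 0, ±c) with c = √(a² + b²), and placing the camera's pinhole at one focus gives a single effective viewpoint at the other. The sketch evaluates the upper mirror sheet and checks points against the equation; a = 3 and b = 4 are hypothetical.

```python
import math

def mirror_z(X, Y, a, b):
    """Upper sheet of the assumed mirror surface (X^2 + Y^2)/a^2 - Z^2/b^2 = -1."""
    return b * math.sqrt(1.0 + (X * X + Y * Y) / (a * a))

def on_mirror(X, Y, Z, a, b, tol=1e-9):
    """True if (X, Y, Z) satisfies the assumed hyperboloid equation."""
    return abs((X * X + Y * Y) / (a * a) - (Z * Z) / (b * b) + 1.0) < tol

def focal_distance(a, b):
    """Foci of the hyperboloid lie at (0, 0, +c) and (0, 0, -c)."""
    return math.sqrt(a * a + b * b)

a, b = 3.0, 4.0                      # hypothetical mirror parameters
print(focal_distance(a, b))          # 5.0
print(mirror_z(0.0, 0.0, a, b))      # 4.0, the mirror apex
print(on_mirror(1.0, 2.0, mirror_z(1.0, 2.0, a, b), a, b))  # True
```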

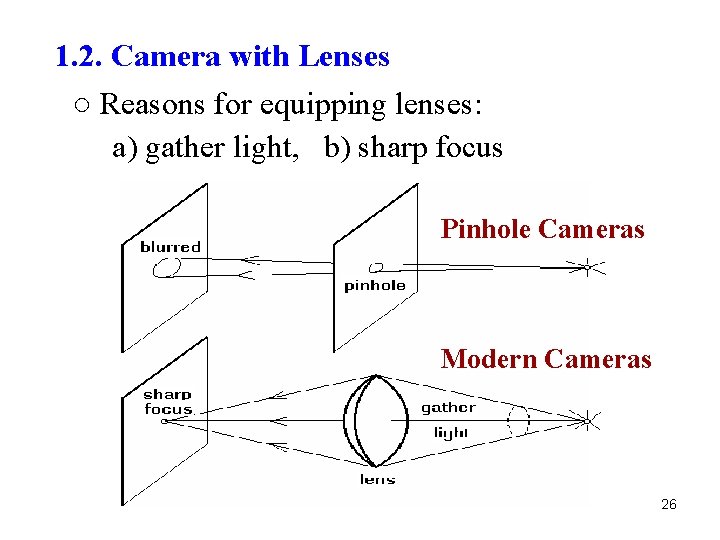

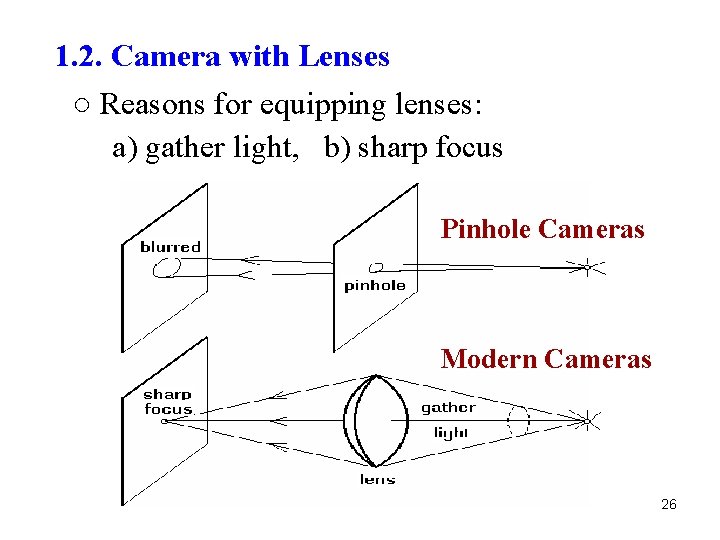

1.2. Cameras with Lenses
○ Reasons for equipping cameras with lenses: (a) to gather light; (b) to achieve sharp focus.
(Figure: pinhole cameras vs. modern cameras.)

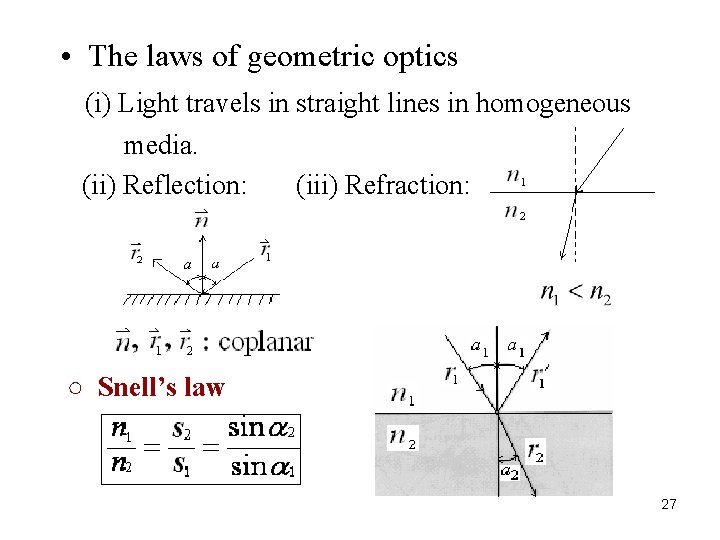

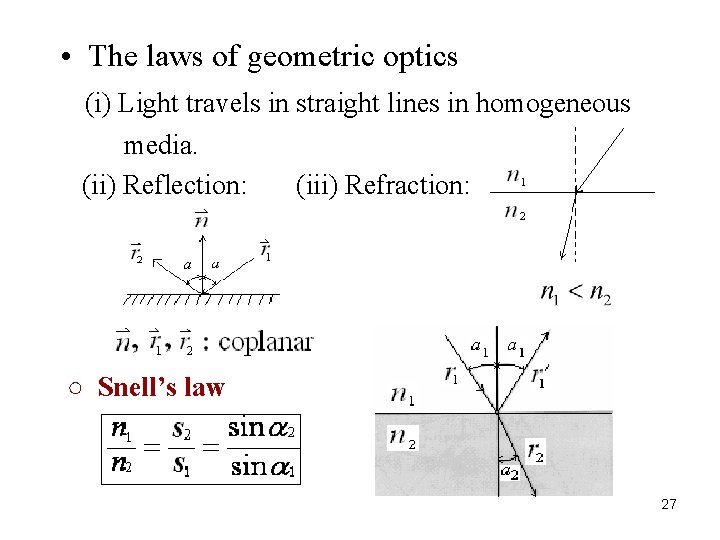

• The laws of geometric optics
(i) Light travels in straight lines in homogeneous media.
(ii) Reflection: the angle of reflection equals the angle of incidence.
(iii) Refraction: Snell's law, n₁ sin α₁ = n₂ sin α₂.
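Snell's law can be applied directly to compute the refracted direction. This sketch also flags total internal reflection, which occurs when n₁ sin α₁ / n₂ exceeds 1. The indices 1.0 (air) and 1.5 (glass) are typical approximate values chosen for illustration.

```python
import math

def refraction_angle(alpha1, n1, n2):
    """Return alpha2 from n1 sin(alpha1) = n2 sin(alpha2), or None for total internal reflection."""
    s = n1 * math.sin(alpha1) / n2
    if abs(s) > 1.0:
        return None
    return math.asin(s)

AIR, GLASS = 1.0, 1.5                                     # approximate indices
a2 = refraction_angle(math.radians(30.0), AIR, GLASS)
print(math.degrees(a2))                                   # bends toward the normal
print(refraction_angle(math.radians(60.0), GLASS, AIR))   # None: total internal reflection
print(math.degrees(math.asin(AIR / GLASS)))               # critical angle, glass -> air
```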

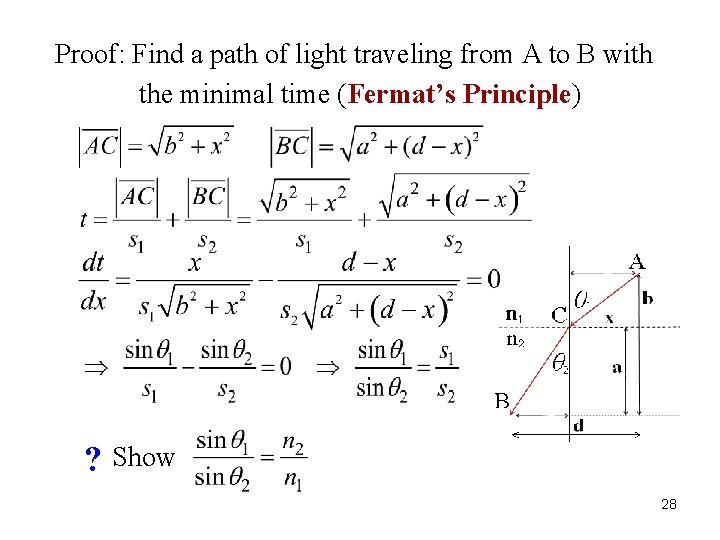

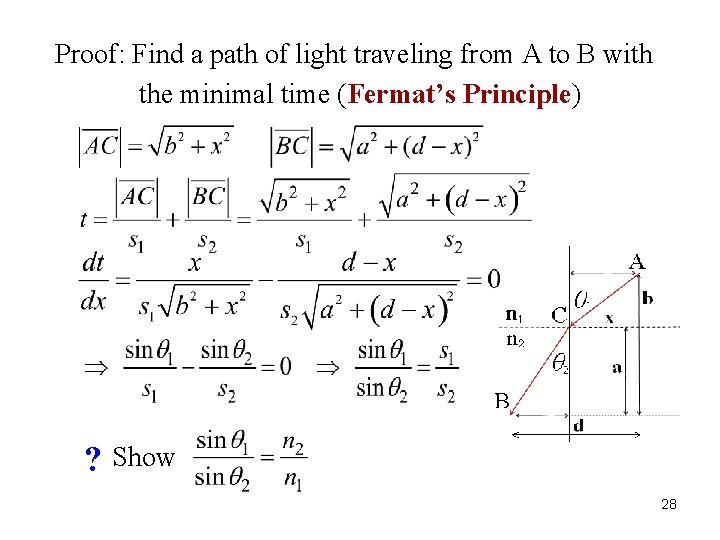

Proof: find the path that light takes from A to B in minimal time (Fermat's principle), and show that it obeys Snell's law.
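Fermat's principle can be checked numerically. The sketch minimizes the optical path length n₁|AX| + n₂|XB| over the crossing point X on the interface; this quantity is proportional to travel time, since the speed of light in a medium is c/n. It then verifies that the minimizer satisfies n₁ sin α₁ = n₂ sin α₂. The geometry (A at height h₁ above a flat interface, B at depth h₂ below it, horizontal separation L) and the use of ternary search on this convex function are choices made for this sketch.

```python
import math

def optical_path(x, h1, h2, L, n1, n2):
    # n1*|AX| + n2*|XB| with A = (0, h1), X = (x, 0), B = (L, -h2)
    return n1 * math.hypot(x, h1) + n2 * math.hypot(L - x, h2)

def fermat_crossing(h1, h2, L, n1, n2, iters=200):
    """Ternary search for the crossing point that minimises travel time."""
    lo, hi = 0.0, L
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if optical_path(m1, h1, h2, L, n1, n2) < optical_path(m2, h1, h2, L, n1, n2):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2.0

h1, h2, L, n1, n2 = 1.0, 1.0, 2.0, 1.0, 1.5    # hypothetical air-to-glass geometry
x = fermat_crossing(h1, h2, L, n1, n2)
sin1 = x / math.hypot(x, h1)                   # sin of incidence angle
sin2 = (L - x) / math.hypot(L - x, h2)         # sin of refraction angle
print(x, n1 * sin1, n2 * sin2)                 # the last two agree: Snell's law
```

The crossing point lands past the midpoint (x > L/2), so the ray spends less distance in the slower medium.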

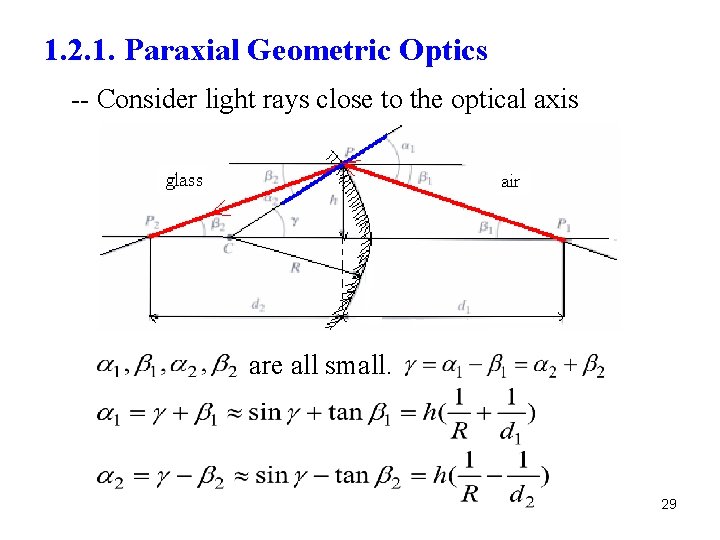

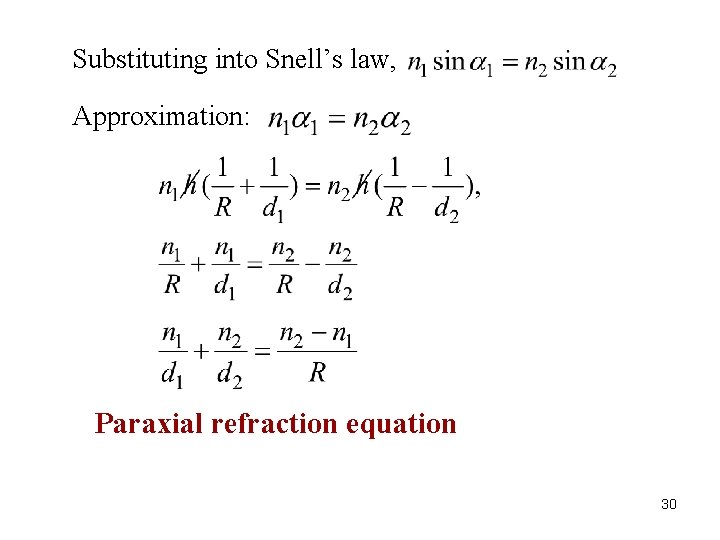

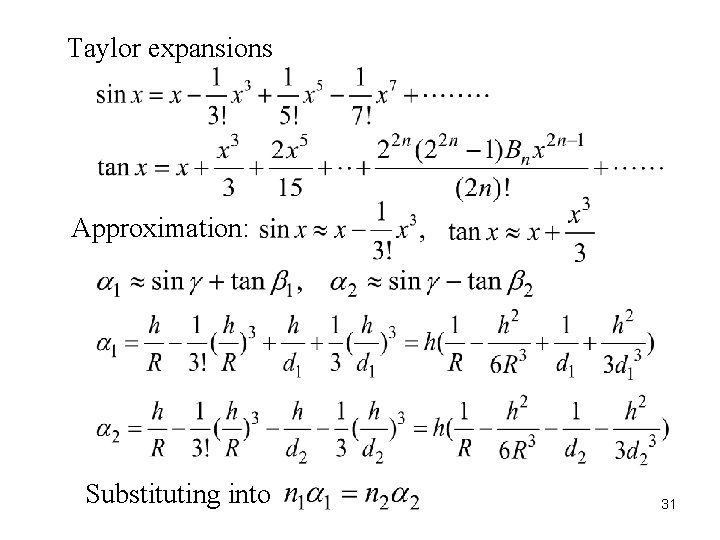

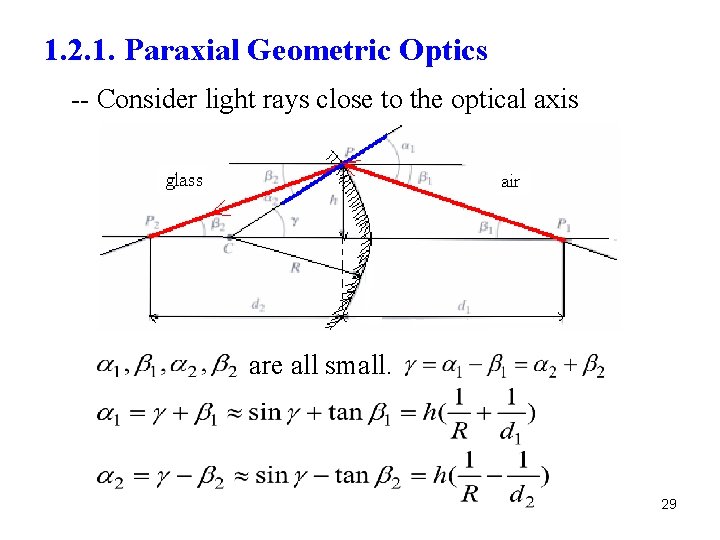

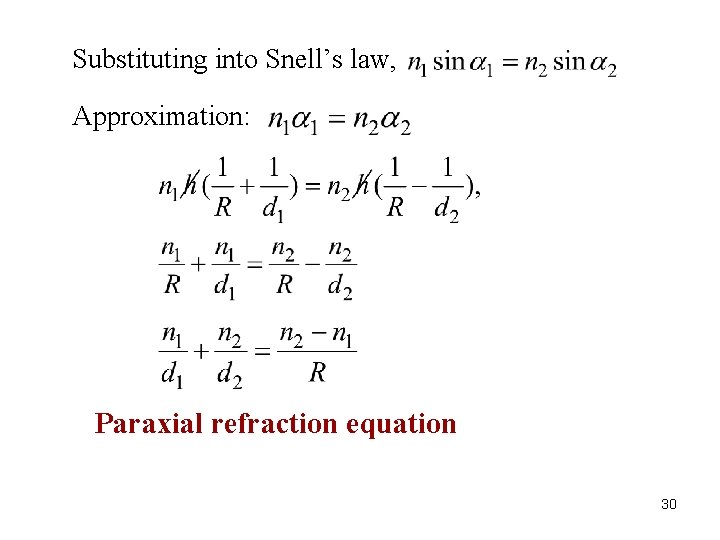

1.2.1. Paraxial Geometric Optics: consider only light rays close to the optical axis, so that all the angles involved are small.

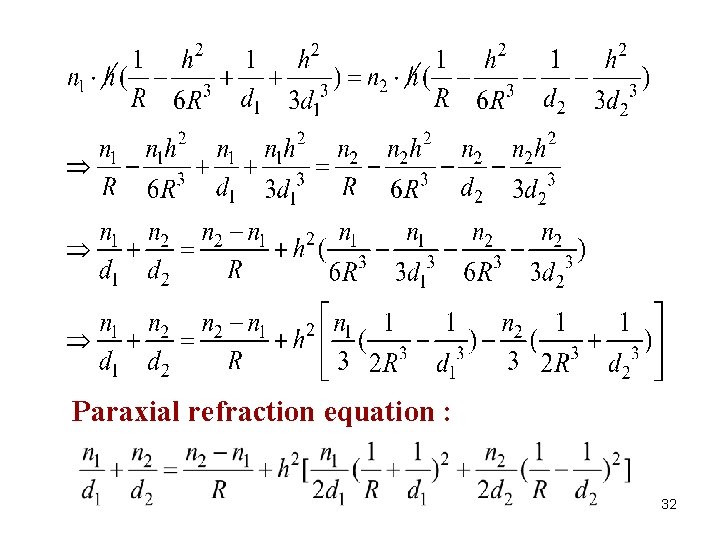

Substituting into Snell's law and applying the small-angle approximation gives the paraxial refraction equation.

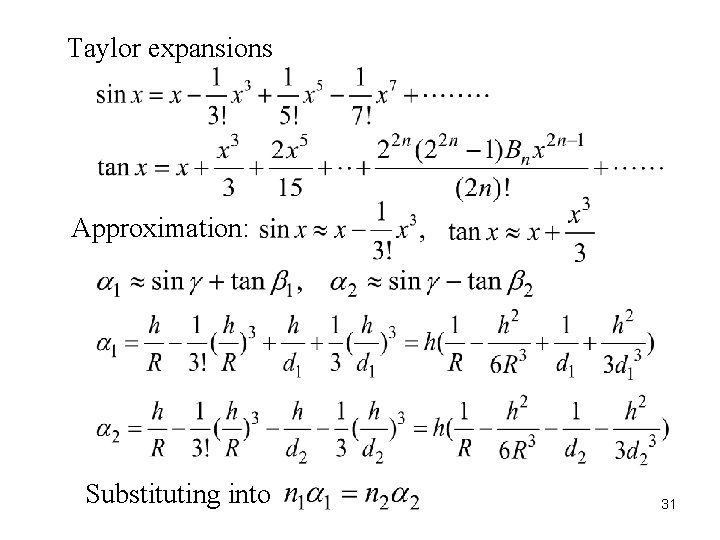

Taylor expansions give the approximation (to first order, sin θ ≈ θ and tan θ ≈ θ for small θ); substitute these into the relations above.

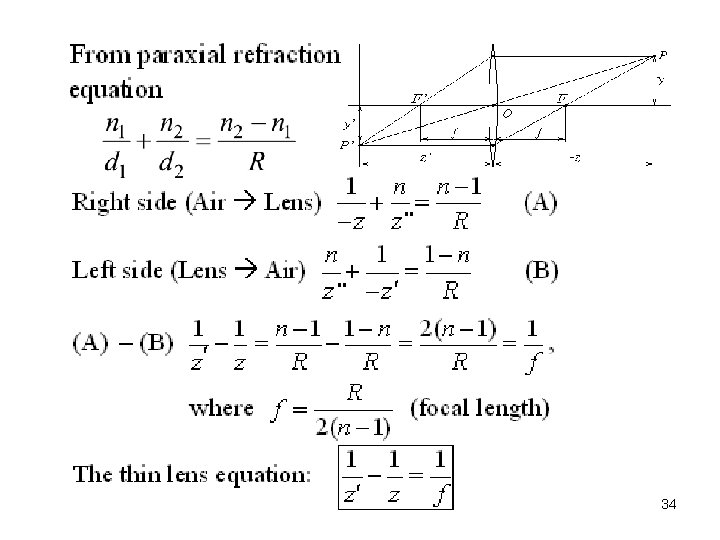

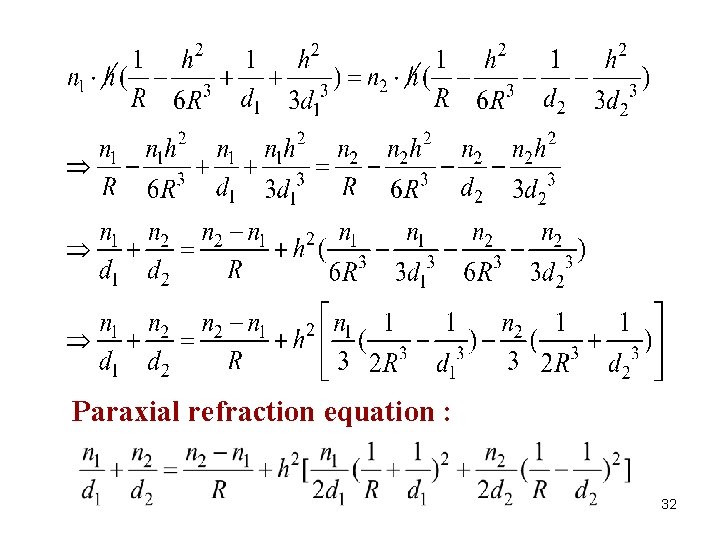

Paraxial refraction equation:
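The equation itself appears in the figure. For a single spherical interface of radius R separating media n₁ and n₂, the paraxial refraction equation is commonly written n₁/d₁ + n₂/d₂ = (n₂ − n₁)/R, where d₁ and d₂ are the object and image distances from the surface; this sign convention is assumed below. The sketch solves for d₂ and also shows how small the error of sin θ ≈ θ is at modest angles. The surface parameters are hypothetical.

```python
import math

def paraxial_image_distance(d1, n1, n2, R):
    """Solve n1/d1 + n2/d2 = (n2 - n1)/R for d2 (assumed convention)."""
    rhs = (n2 - n1) / R - n1 / d1    # n1/inf evaluates to 0.0, so d1 = math.inf works
    return math.inf if rhs == 0 else n2 / rhs

def small_angle_error(theta_deg):
    """Relative error of the paraxial approximation sin(theta) ~ theta."""
    theta = math.radians(theta_deg)
    return (theta - math.sin(theta)) / math.sin(theta)

# hypothetical air-to-glass surface, R = 10 (arbitrary length units)
print(paraxial_image_distance(40.0, 1.0, 1.5, 10.0))      # object at distance 40
print(paraxial_image_distance(math.inf, 1.0, 1.5, 10.0))  # object at infinity: focal point
for deg in (1, 5, 10, 20):
    print(deg, f"{small_angle_error(deg):.2e}")
```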

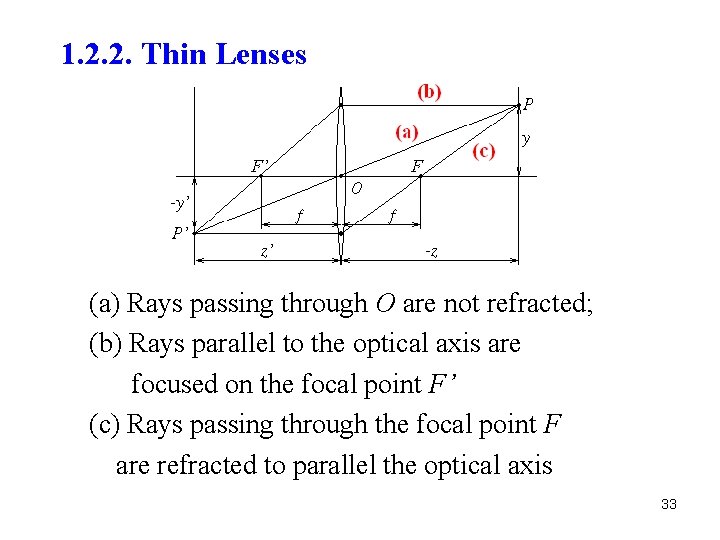

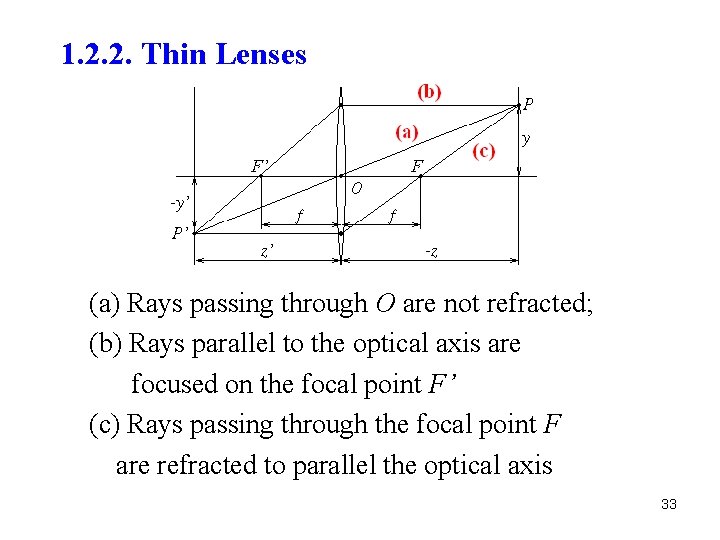

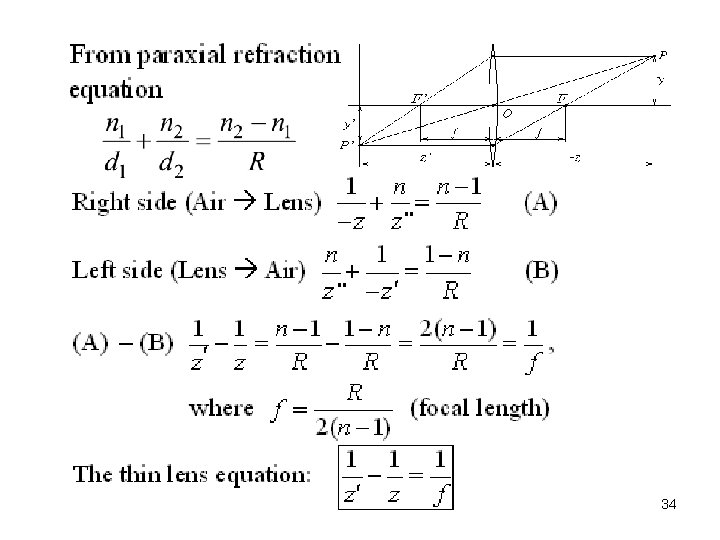

1.2.2. Thin Lenses
(a) Rays passing through the lens center O are not refracted.
(b) Rays parallel to the optical axis are focused on the focal point F'.
(c) Rays passing through the focal point F are refracted parallel to the optical axis.
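Rules (a) to (c) lead to the thin-lens equation. This sketch uses the Gaussian form 1/d_o + 1/d_i = 1/f with positive distances for a real object and image. That form is equivalent to 1/z' − 1/z = 1/f when the object coordinate z is negative. It also uses the lensmaker result f = R / (2(n − 1)) for a symmetric biconvex thin lens with surface radii ±R. Both are standard results assumed here rather than read from the slide, and the lens values are hypothetical.

```python
import math

def thin_lens_image(d_o, f):
    """Image distance and lateral magnification from 1/d_o + 1/d_i = 1/f."""
    if math.isclose(d_o, f):
        return math.inf, math.inf   # object at F: refracted rays leave parallel (rule c)
    d_i = 1.0 / (1.0 / f - 1.0 / d_o)
    return d_i, -d_i / d_o          # negative magnification = inverted image

def symmetric_lens_focal_length(R, n):
    """Focal length of a thin biconvex lens with radii +R and -R and index n."""
    return R / (2.0 * (n - 1.0))

f = symmetric_lens_focal_length(R=50.0, n=1.5)   # hypothetical lens
print(f)                           # 50.0
print(thin_lens_image(200.0, f))   # object 200 units in front of the lens
print(thin_lens_image(1e12, f))    # very distant object: image at about f (rule b)
```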


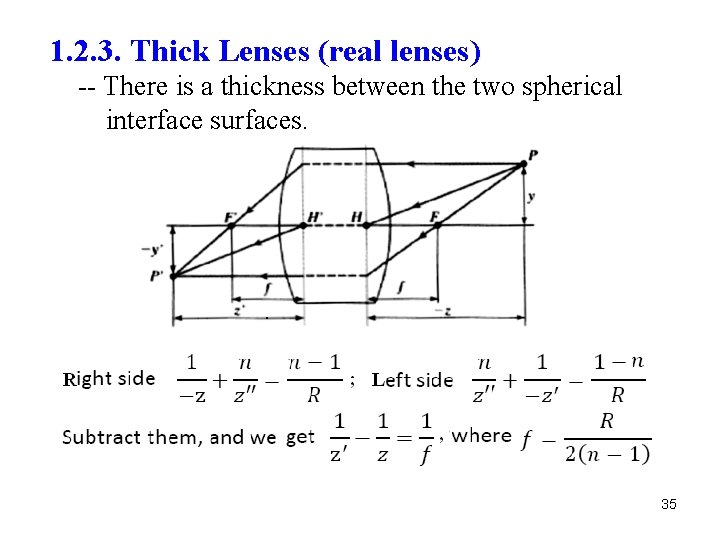

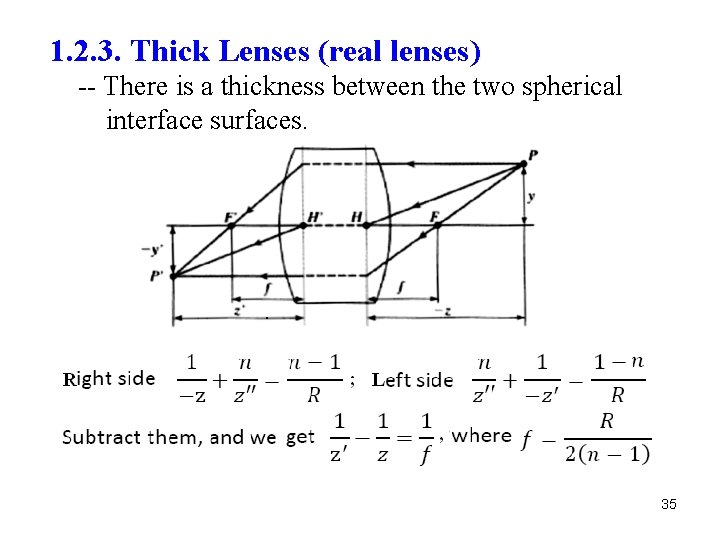

1.2.3. Thick Lenses (real lenses): the two spherical refracting surfaces are separated by a non-negligible thickness.

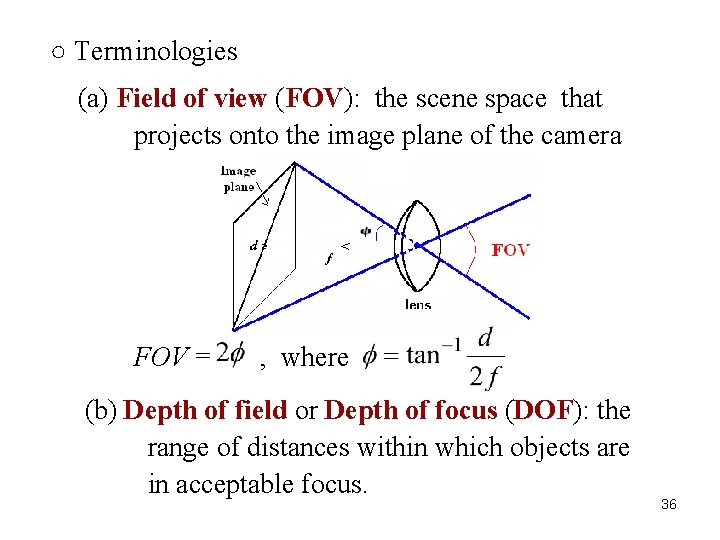

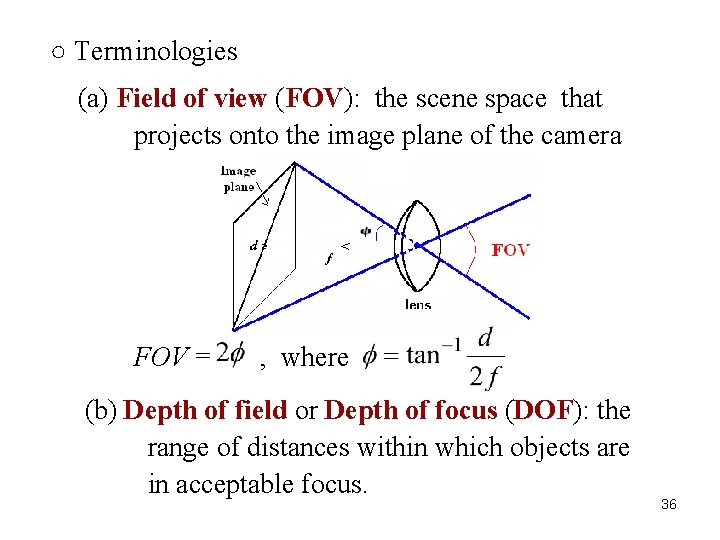

○ Terminology
(a) Field of view (FOV): the portion of scene space that projects onto the image plane of the camera; the FOV formula is given in the figure below.
(b) Depth of field, or depth of focus (DOF): the range of distances within which objects are in acceptable focus.
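A standard expression for the field of view, assumed here, is FOV = 2φ with φ = arctan(d / (2f)), where d is the sensor extent (for example, its diagonal) and f the focal length. The f-number N = f / D, with D the aperture diameter, also appears in Assignment 2. The 36 mm × 24 mm sensor is a hypothetical example.

```python
import math

def field_of_view_deg(sensor_extent_mm, focal_length_mm):
    """FOV = 2*phi with phi = arctan(d / (2 f)), in degrees."""
    return math.degrees(2.0 * math.atan(sensor_extent_mm / (2.0 * focal_length_mm)))

def f_number(focal_length_mm, aperture_diameter_mm):
    """N = f / D; a larger N means a smaller aperture."""
    return focal_length_mm / aperture_diameter_mm

diagonal = math.hypot(36.0, 24.0)   # hypothetical 36 mm x 24 mm sensor, ~43.3 mm diagonal
for f in (24.0, 50.0, 200.0):
    print(f"f = {f:5.1f} mm -> diagonal FOV ~ {field_of_view_deg(diagonal, f):.1f} deg")
print(f_number(50.0, 25.0))         # 2.0
```

Longer focal lengths narrow the field of view, which is why telephoto lenses see less of the scene.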

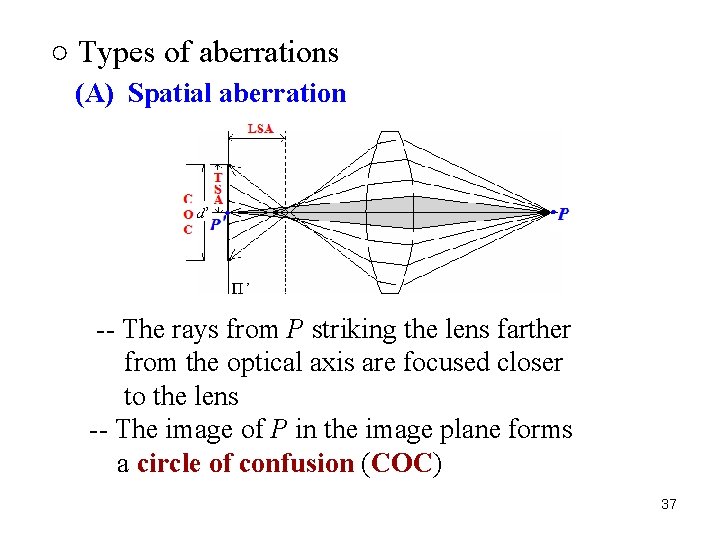

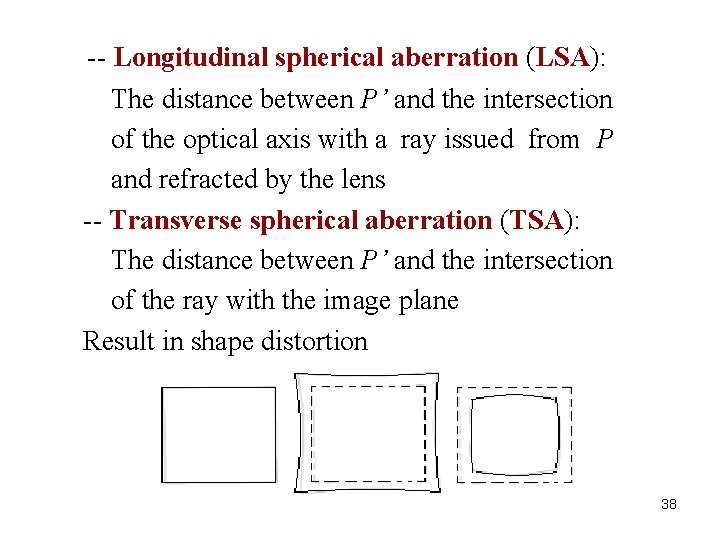

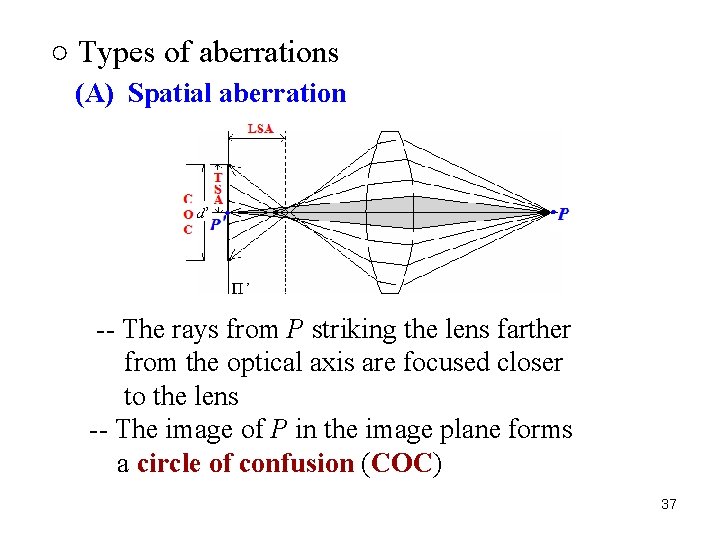

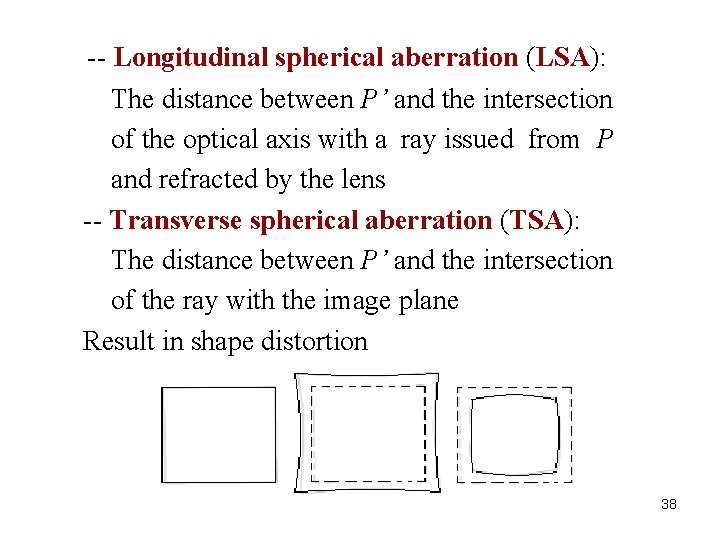

○ Types of aberrations
(A) Spherical aberration
-- Rays from P that strike the lens farther from the optical axis are focused closer to the lens.
-- The image of P in the image plane therefore forms a circle of confusion (COC).

-- Longitudinal spherical aberration (LSA): the distance between P' and the point where a ray issued from P and refracted by the lens intersects the optical axis.
-- Transverse spherical aberration (TSA): the distance between P' and the point where that ray intersects the image plane.
Aberrations result in shape distortion.
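LSA and TSA can be measured by exact ray tracing. This sketch traces rays parallel to the axis (an object point P at infinity) through a single spherical refracting surface, a simplification of a full lens assumed for illustration. It compares each axis crossing with the paraxial focus P' at n₂R/(n₂ − n₁). The surface parameters are hypothetical.

```python
import math

def axis_crossing(h, R, n1, n2):
    """Exact trace of a ray parallel to the axis at height h through one spherical surface.

    Surface vertex at z = 0, centre of curvature at z = R > 0, medium n1 on the left.
    Returns (z where the refracted ray meets the axis, z of the hit point, ray slope).
    """
    z_hit = R - math.sqrt(R * R - h * h)
    theta1 = math.asin(h / R)                        # incidence angle w.r.t. the normal
    theta2 = math.asin(n1 * math.sin(theta1) / n2)   # Snell's law
    slope = math.tan(theta1 - theta2)                # downward tilt of the refracted ray
    return z_hit + h / slope, z_hit, slope

R, n1, n2 = 10.0, 1.0, 1.5                  # hypothetical air-to-glass surface
paraxial_focus = n2 * R / (n2 - n1)         # P' for an object point at infinity (here 30)
lsa = {}
for h in (0.5, 1.0, 2.0, 4.0):
    z_cross, z_hit, slope = axis_crossing(h, R, n1, n2)
    lsa[h] = paraxial_focus - z_cross                  # longitudinal spherical aberration
    tsa = abs(h - (paraxial_focus - z_hit) * slope)    # ray height at the plane of P'
    print(f"h = {h}: axis crossing at {z_cross:.3f}, LSA = {lsa[h]:.3f}, TSA = {tsa:.3f}")
```

Rays at larger heights h cross the axis closer to the surface, so LSA grows with h, matching the description of spherical aberration above.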

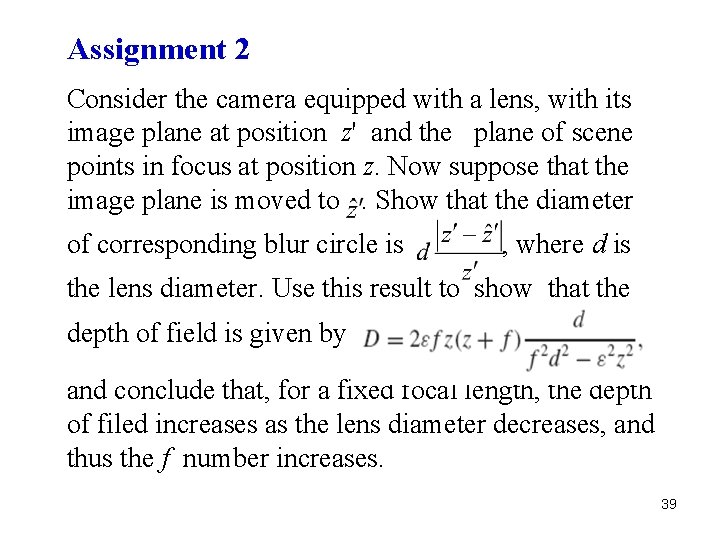

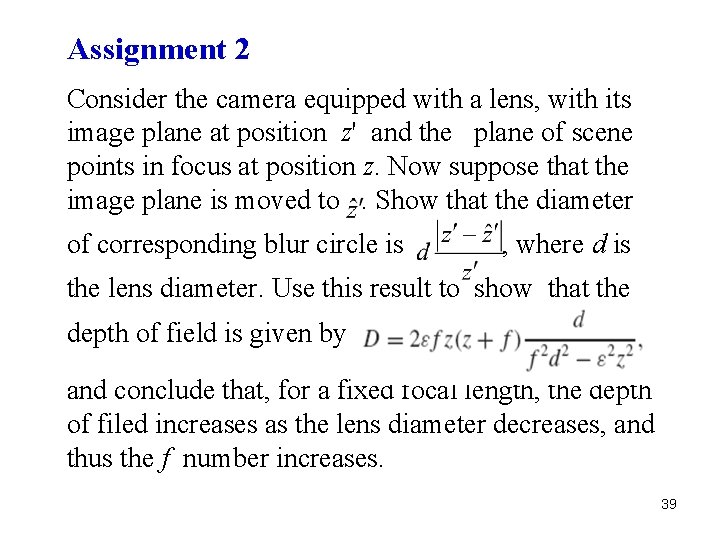

Assignment 2: Consider a camera equipped with a lens, with its image plane at position z' and the plane of scene points in focus at position z. Now suppose that the image plane is moved to … . Show that the diameter of the corresponding blur circle is …, where d is the lens diameter. Use this result to show that the depth of field is given by …, and conclude that, for a fixed focal length, the depth of field increases as the lens diameter decreases, that is, as the f-number increases.

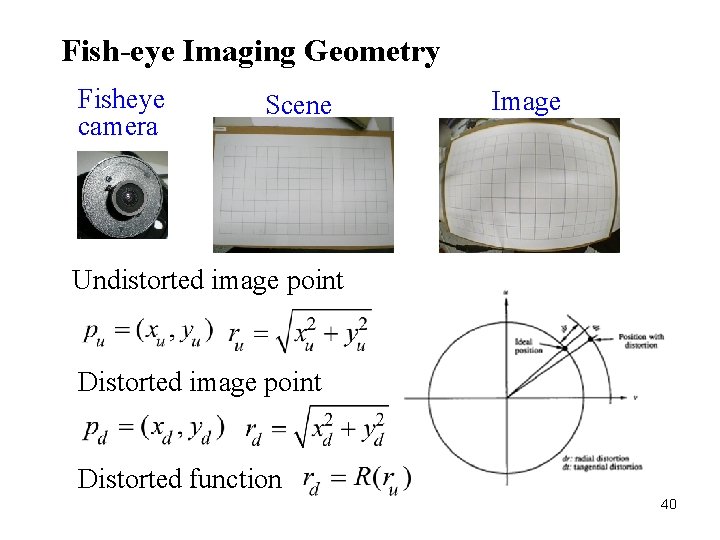

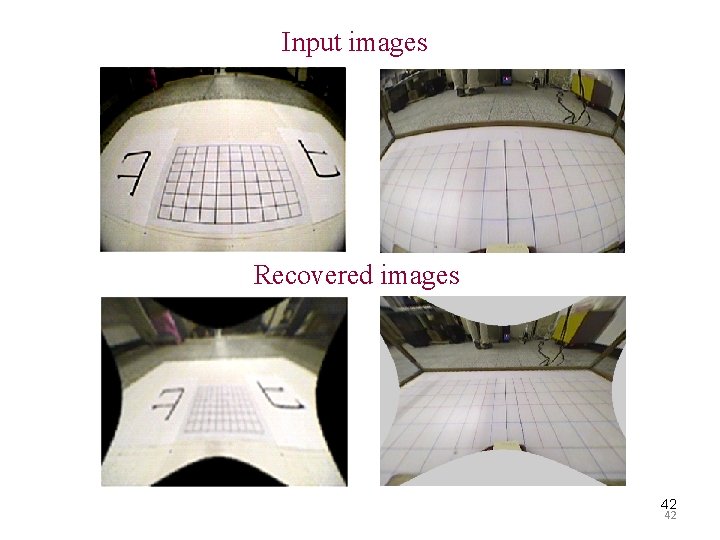

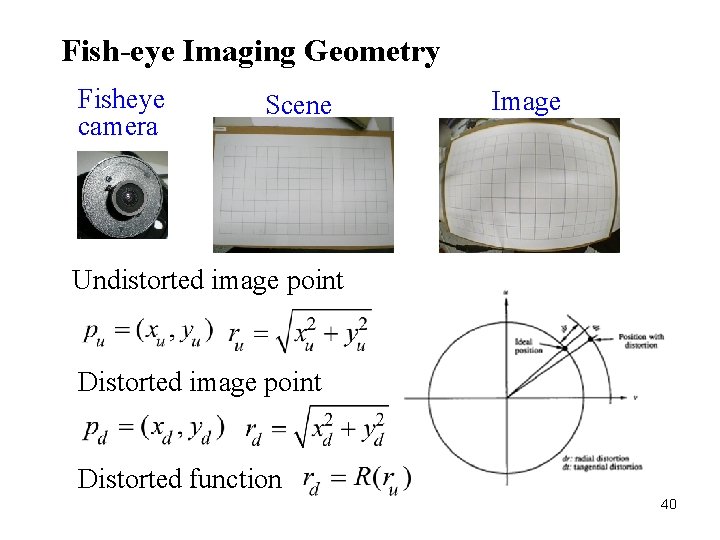

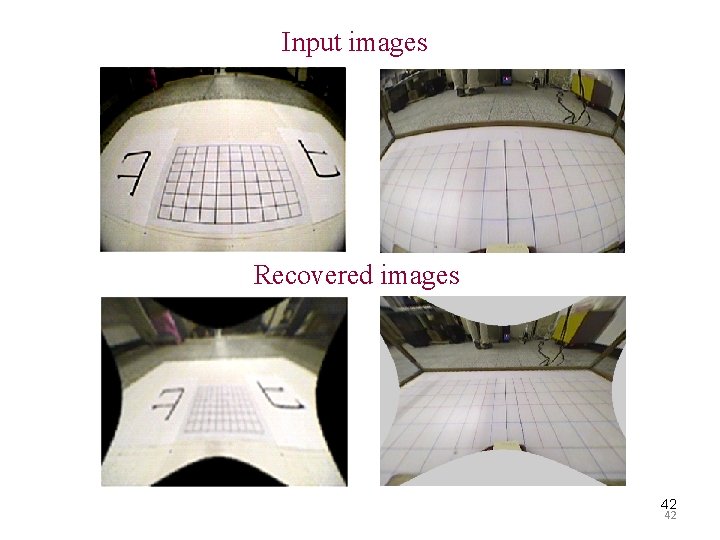

Fish-eye Imaging Geometry
(Figure: fisheye camera, scene, and image; undistorted image point; distortion function.)

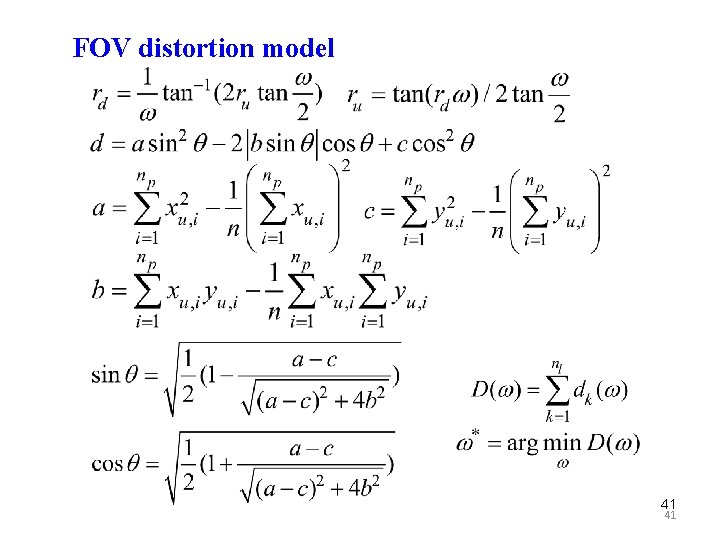

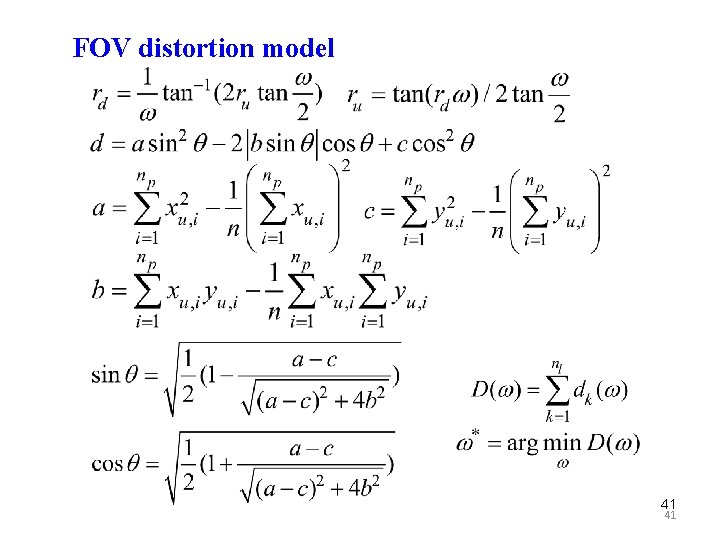

FOV distortion model
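The FOV model itself is shown only in the figure. Assuming it is the widely used field-of-view model of Devernay and Faugeras, the distorted radius r_d and the undistorted radius r_u are related by r_d = arctan(2 r_u tan(ω/2)) / ω. Inversely, r_u = tan(r_d ω) / (2 tan(ω/2)), where ω is the model's single parameter. Both radii are measured from the distortion center in normalized coordinates. The sketch undistorts a point; ω = 1.0 and the center (0, 0) are hypothetical.

```python
import math

def fov_distort_radius(r_u, omega):
    """Undistorted -> distorted radius: r_d = arctan(2 r_u tan(omega/2)) / omega."""
    return math.atan(2.0 * r_u * math.tan(omega / 2.0)) / omega

def fov_undistort_radius(r_d, omega):
    """Distorted -> undistorted radius: r_u = tan(r_d omega) / (2 tan(omega/2)); needs r_d*omega < pi/2."""
    return math.tan(r_d * omega) / (2.0 * math.tan(omega / 2.0))

def undistort_point(x_d, y_d, omega, cx=0.0, cy=0.0):
    """Move a distorted point radially so its distance from (cx, cy) becomes r_u."""
    dx, dy = x_d - cx, y_d - cy
    r_d = math.hypot(dx, dy)
    if r_d == 0.0:
        return x_d, y_d                 # the centre is not displaced
    scale = fov_undistort_radius(r_d, omega) / r_d
    return cx + scale * dx, cy + scale * dy

omega = 1.0                             # hypothetical distortion parameter
r_d = fov_distort_radius(0.8, omega)
print(r_d, fov_undistort_radius(r_d, omega))   # second value recovers 0.8
```

Applying `undistort_point` to every pixel (usually via an inverse lookup table) produces recovered images like those in the figure.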

(Figure: input images and recovered images.)

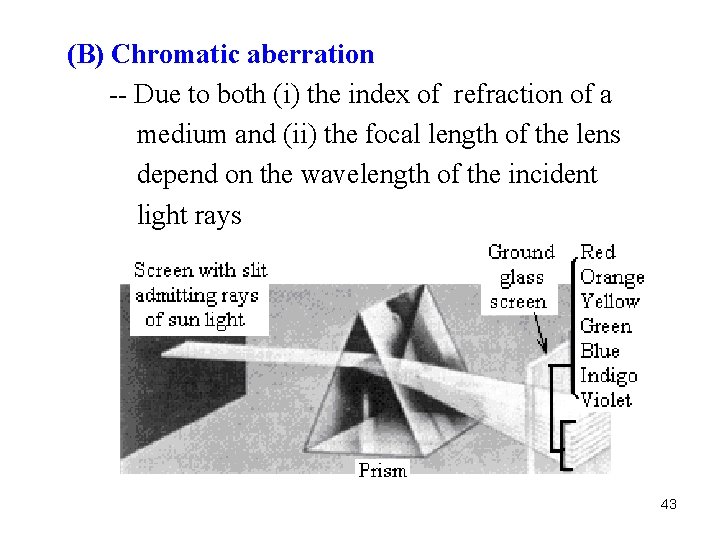

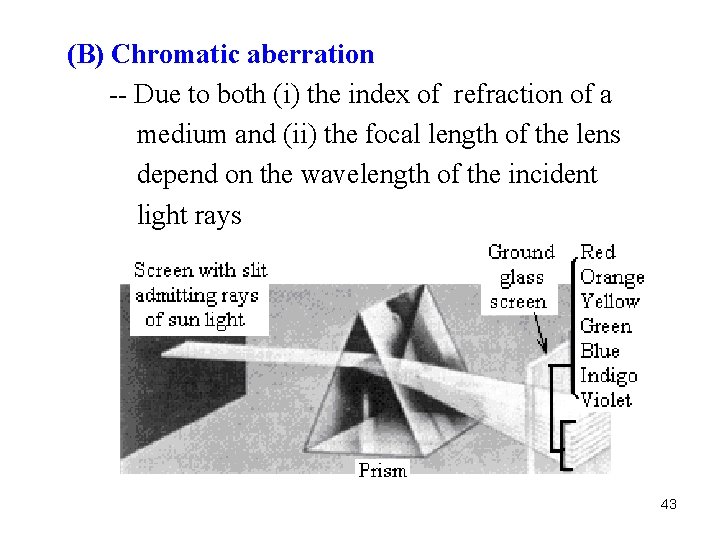

(B) Chromatic aberration: arises because (i) the index of refraction of a medium, and hence (ii) the focal length of the lens, depend on the wavelength of the incident light rays.
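The effect can be quantified by combining a dispersion model with the thin-lens focal length. This sketch assumes the Cauchy equation n(λ) = A + B/λ², with approximate coefficients A ≈ 1.5046 and B ≈ 0.00420 µm² for a crown-type glass. It also assumes a symmetric thin lens with f = R / (2(n − 1)). All values, including R = 100 mm, are illustrative.

```python
def cauchy_index(wavelength_um, A=1.5046, B=0.00420):
    """Cauchy dispersion n = A + B / lambda^2 (lambda in micrometres)."""
    return A + B / wavelength_um ** 2

def symmetric_lens_focal_length(R, n):
    """Thin biconvex lens with radii +R and -R."""
    return R / (2.0 * (n - 1.0))

R = 100.0   # hypothetical surface radius in mm
focal = {}
for name, lam in (("blue 486 nm", 0.4861), ("yellow 589 nm", 0.5893), ("red 656 nm", 0.6563)):
    n = cauchy_index(lam)
    focal[name] = symmetric_lens_focal_length(R, n)
    print(f"{name}: n = {n:.4f}, f = {focal[name]:.2f} mm")
shift = focal["red 656 nm"] - focal["blue 486 nm"]
print(f"longitudinal chromatic shift ~ {shift:.2f} mm")
```

Blue light sees a higher index and therefore focuses closer to the lens than red light.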

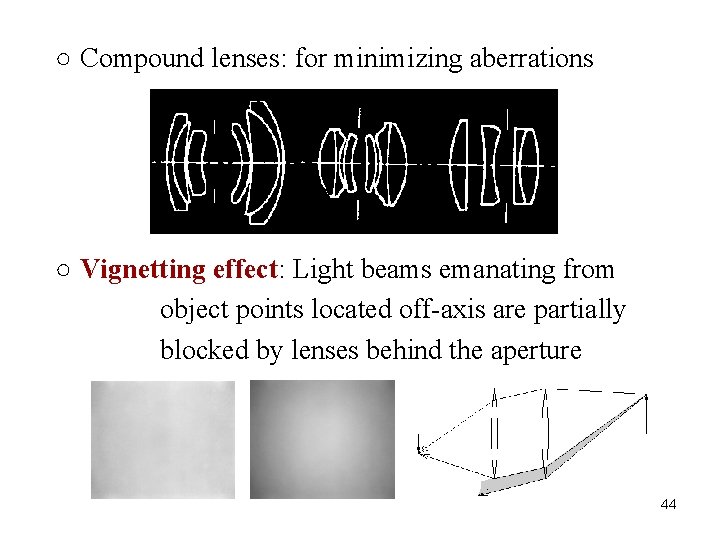

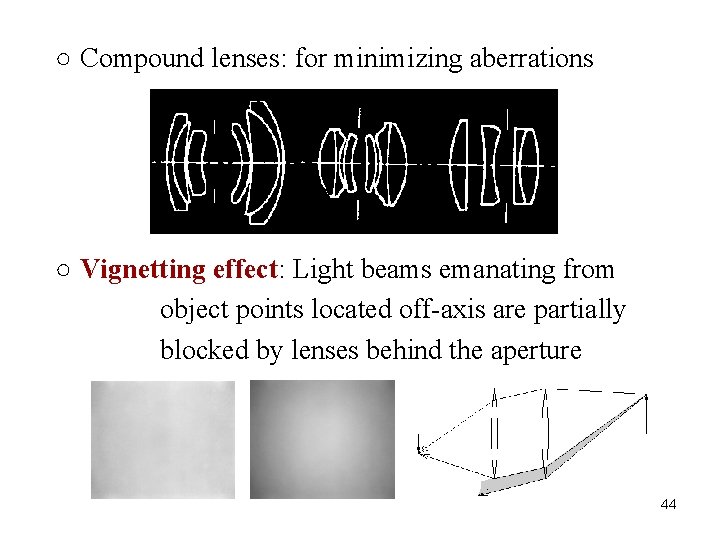

○ Compound lenses: used to minimize aberrations.
○ Vignetting effect: light beams emanating from off-axis object points are partially blocked by lenses behind the aperture.

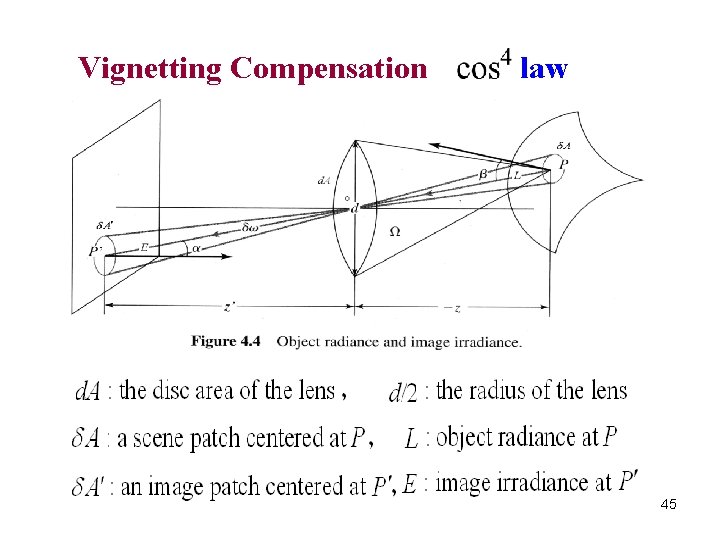

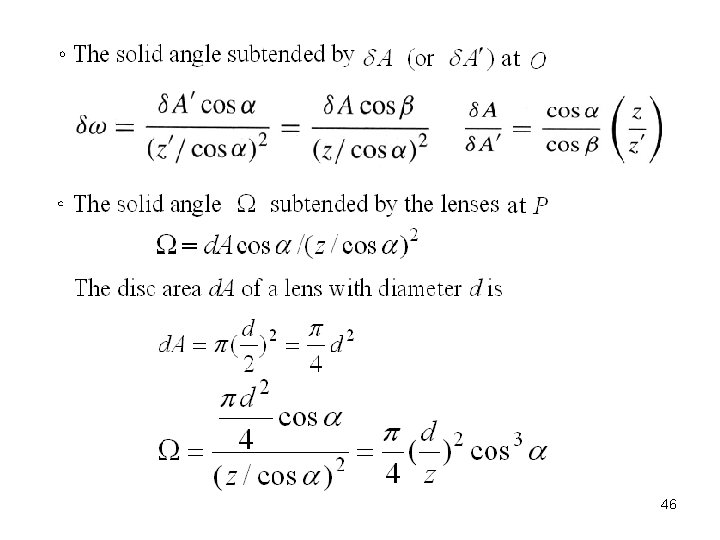

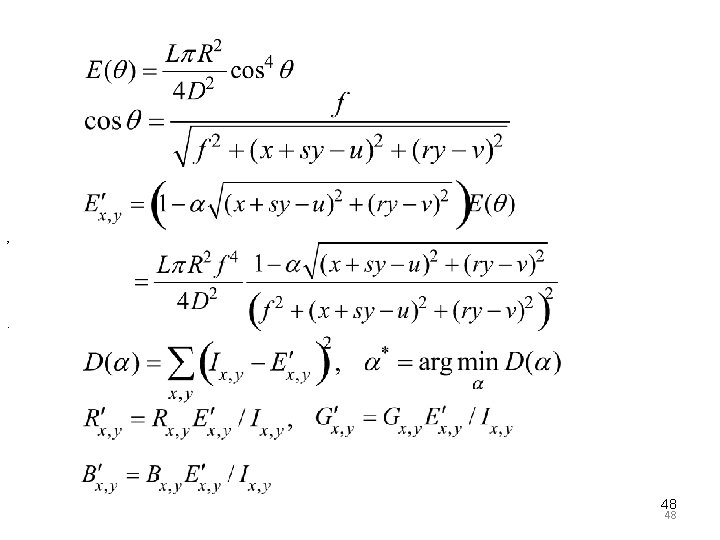

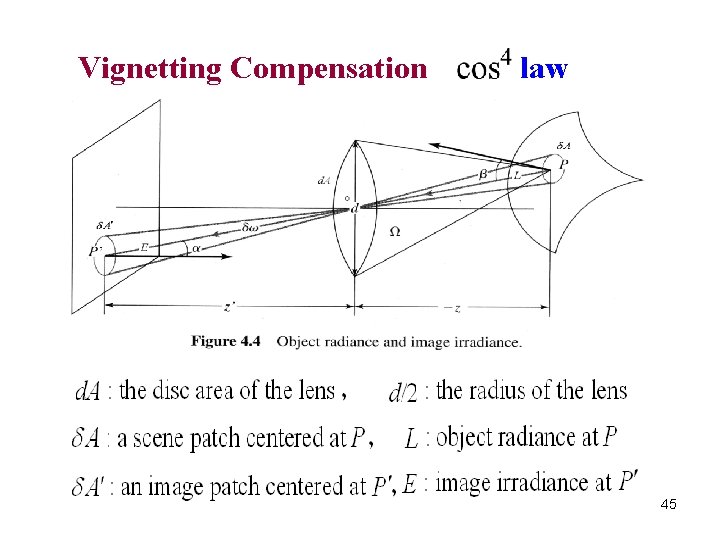

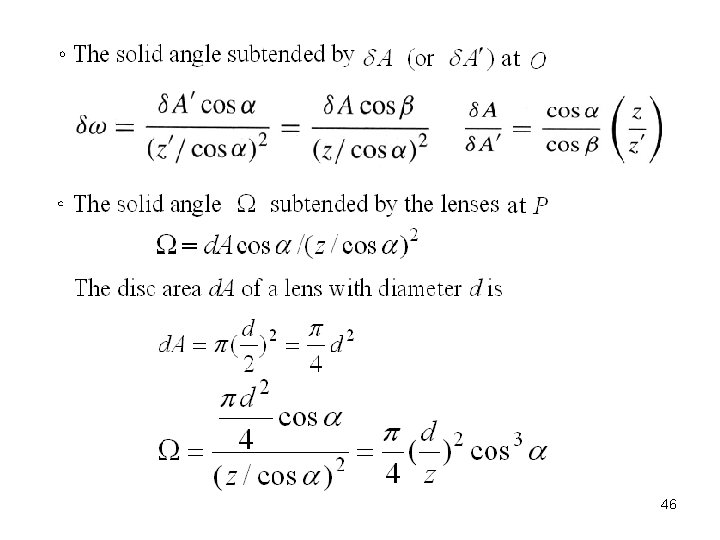

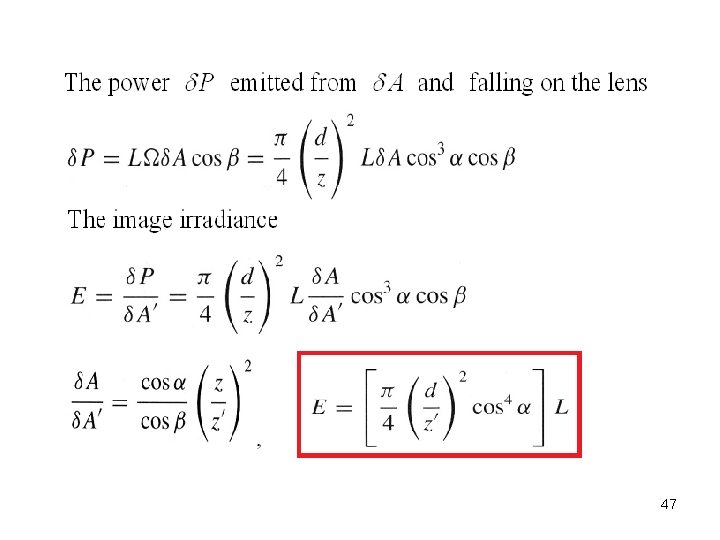

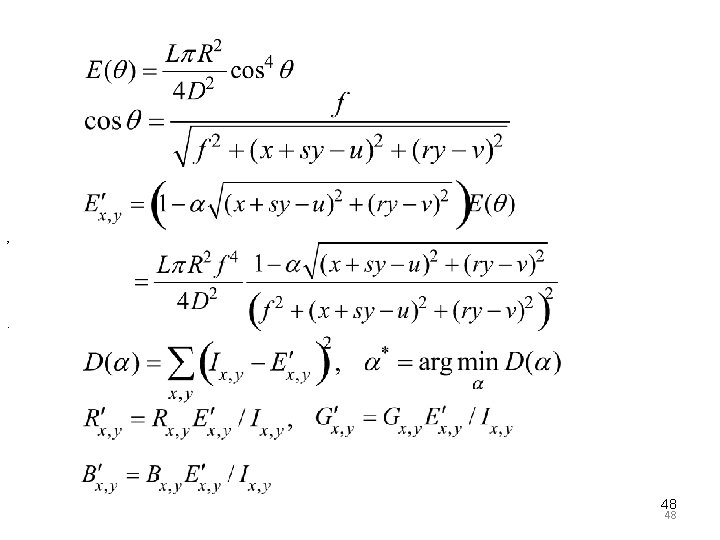

Vignetting compensation law
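The compensation law itself is in the figure. A common model, assumed here, is the cos⁴ law. The irradiance at an image point whose ray makes angle α with the optical axis falls off as cos⁴ α, with cos α = f / √(f² + r²) for a point at distance r from the image center (f in pixels). Compensation then multiplies each pixel by 1 / cos⁴ α. This models off-axis falloff only; mechanical vignetting by lens elements, as described above, generally needs a calibrated gain map instead. The focal length and image size are hypothetical.

```python
import numpy as np

def cos4_falloff(h, w, f_pixels, cx=None, cy=None):
    """Relative irradiance cos^4(alpha) for every pixel of an h x w image."""
    cx = (w - 1) / 2.0 if cx is None else cx
    cy = (h - 1) / 2.0 if cy is None else cy
    ys, xs = np.indices((h, w), dtype=float)
    r2 = (xs - cx) ** 2 + (ys - cy) ** 2          # squared distance from the image centre
    cos_alpha = f_pixels / np.sqrt(f_pixels ** 2 + r2)
    return cos_alpha ** 4

def compensate(image, f_pixels):
    """Divide out the cos^4 falloff (gain 1 / cos^4 alpha per pixel)."""
    image = np.asarray(image, dtype=float)
    gain = 1.0 / cos4_falloff(image.shape[0], image.shape[1], f_pixels)
    return image * gain if image.ndim == 2 else image * gain[..., np.newaxis]

flat_scene = np.ones((5, 5))
observed = flat_scene * cos4_falloff(5, 5, f_pixels=4.0)   # darker toward the corners
restored = compensate(observed, f_pixels=4.0)
print(observed[0, 0], observed[2, 2], restored.max() - restored.min())
```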

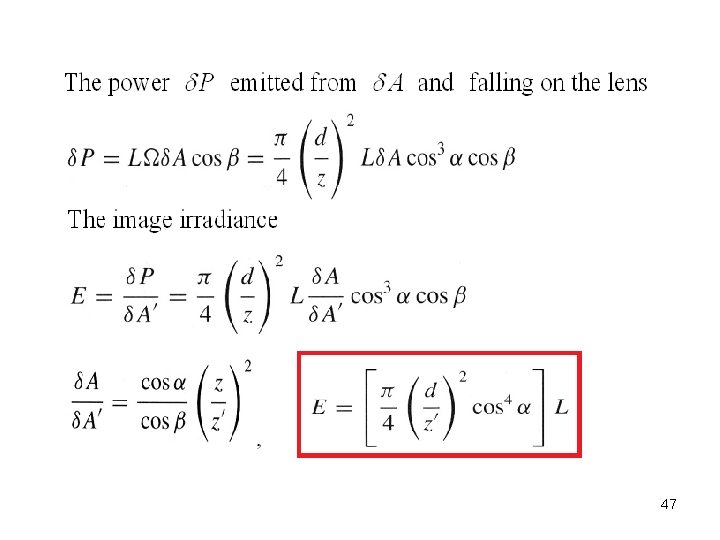




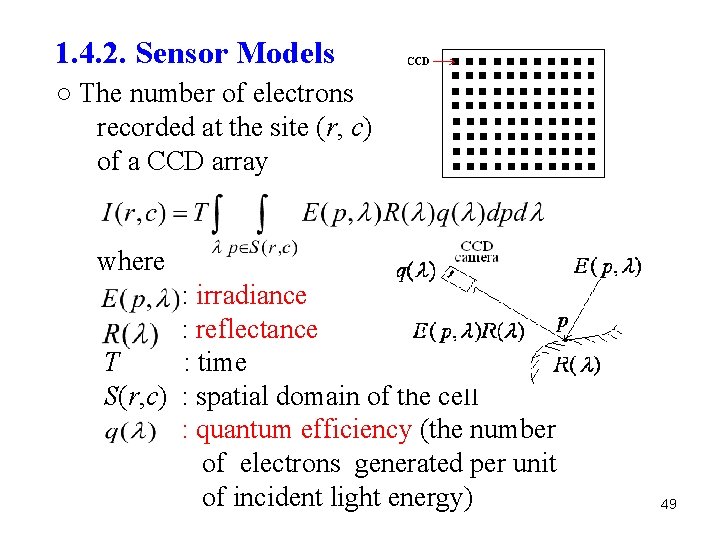

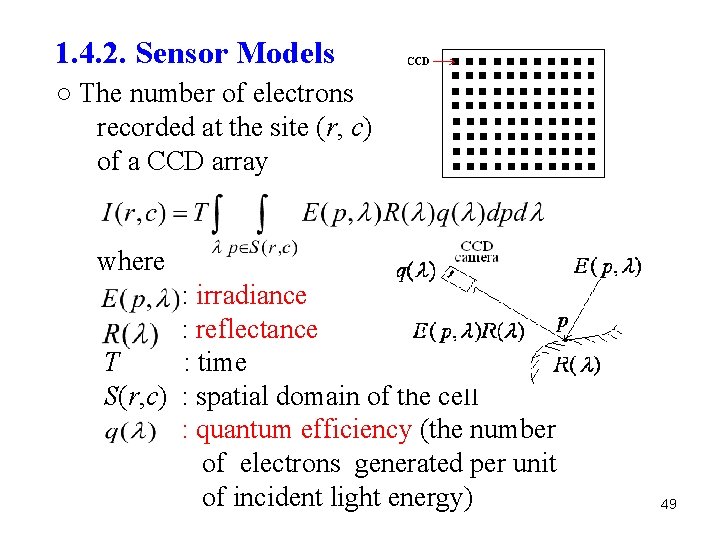

1.4.2. Sensor Models
○ The number of electrons recorded at site (r, c) of a CCD array, where the terms are:
-- irradiance
-- reflectance
-- T: exposure time
-- S(r, c): spatial domain of the cell
-- quantum efficiency (the number of electrons generated per unit of incident light energy)
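The integral itself is in the figure. Assuming it has the form "T times the integral, over the cell S(r, c) and over wavelength, of irradiance × reflectance × quantum efficiency", a Riemann-sum sketch looks like this. The array layout, sampling scheme, and numbers are hypothetical.

```python
import numpy as np

def electrons_at_site(E, R, q, T, cell_area, d_lambda):
    """Riemann-sum sketch of T * (integral over S(r, c) and wavelength of E * R * q).

    E, R: arrays (n_spatial_samples, n_wavelengths), sampled uniformly over the cell
    q:    array (n_wavelengths,), quantum efficiency at each wavelength sample
    """
    E = np.asarray(E, dtype=float)
    R = np.asarray(R, dtype=float)
    q = np.asarray(q, dtype=float)
    dA = cell_area / E.shape[0]              # area represented by one spatial sample
    return T * np.sum(E * R * q[np.newaxis, :]) * dA * d_lambda

# hypothetical cell: 4 spatial samples x 3 wavelength bins, constant inputs
E = np.full((4, 3), 2.0)
R = np.full((4, 3), 0.5)
q = np.full(3, 0.25)
print(electrons_at_site(E, R, q, T=0.1, cell_area=1.0, d_lambda=10.0))
```

With constant inputs the sum reduces to T · E · R · q · area · (spectral width), a quick sanity check on the discretization.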

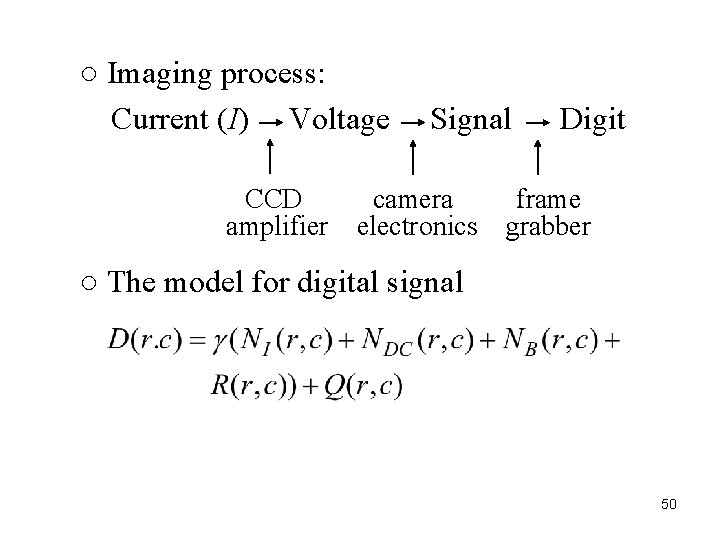

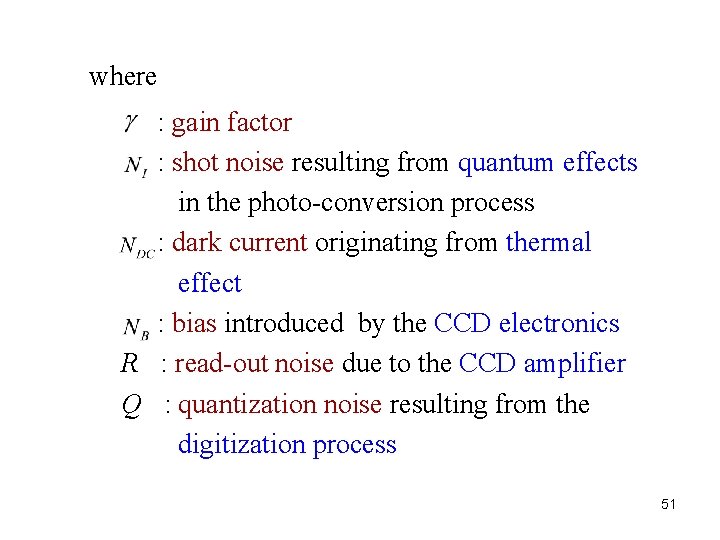

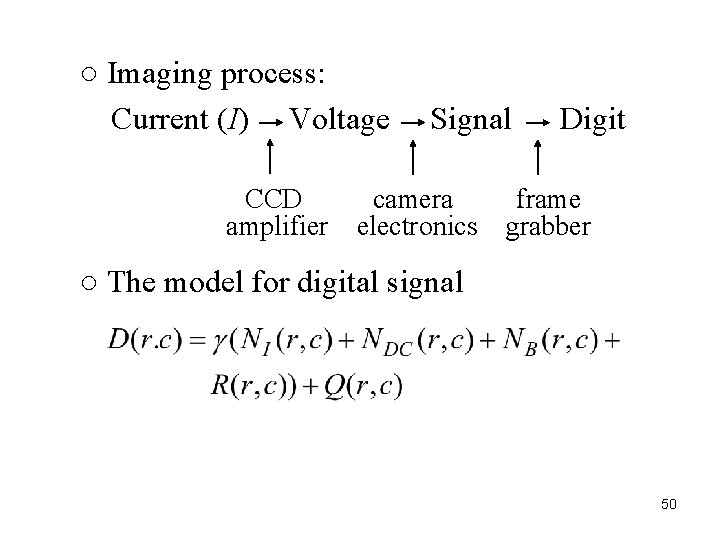

○ Imaging process: current (I) → voltage (CCD amplifier) → signal (camera electronics) → digital values (frame grabber)
○ The model for the digital signal:

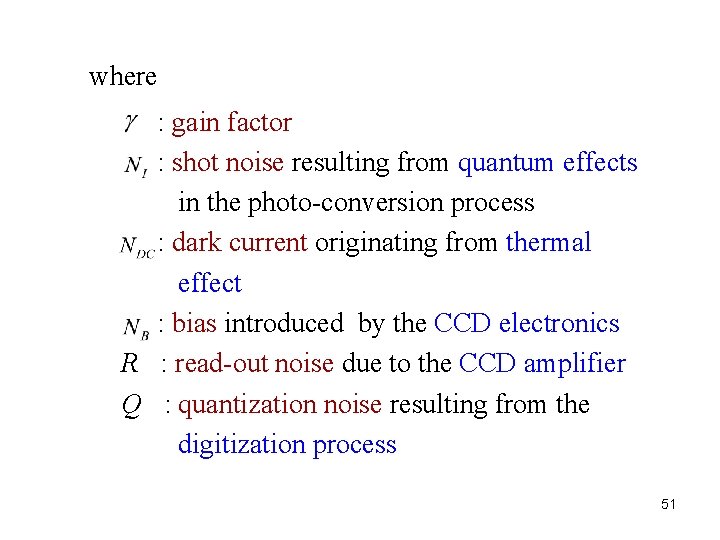

where the terms are:
-- gain factor
-- shot noise, resulting from quantum effects in the photoconversion process
-- dark current, originating from thermal effects
-- bias, introduced by the CCD electronics
-- R: read-out noise, due to the CCD amplifier
-- Q: quantization noise, resulting from the digitization process
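The equation itself is in the figure. The simulation below assumes one common arrangement, D = γ (I + N_S + N_C + B + R) + Q, with Poisson shot noise on the signal, Poisson dark current, a constant bias, Gaussian read-out noise, gain γ, and rounding as the quantization step. Names and parameter values are hypothetical, and real cameras may apply gain and read-out noise in a different order.

```python
import numpy as np

def simulate_digital_signal(I, gain, dark_mean, bias, read_sigma, rng):
    """Toy version of D = gain * (I + N_S + N_C + B + R) + Q (assumed arrangement)."""
    I = np.asarray(I, dtype=float)
    shot = rng.poisson(I) - I                          # N_S: zero-mean, variance I (electrons)
    dark = rng.poisson(dark_mean, size=I.shape)        # N_C: thermally generated electrons
    read = rng.normal(0.0, read_sigma, size=I.shape)   # R: amplifier read-out noise
    analog = gain * (I + shot + dark + bias + read)
    return np.round(analog)                            # rounding to integers adds Q

rng = np.random.default_rng(1)
I = np.full(100_000, 1000.0)                           # uniform illumination, 1000 e- per site
D = simulate_digital_signal(I, gain=0.5, dark_mean=10.0, bias=100.0, read_sigma=5.0, rng=rng)
print(D.mean(), D.std())
```

The mean should sit near γ(I + dark + bias) = 555. The spread should be near γ√(I + dark + σ_R²) ≈ 16.1, dominated by shot noise at this signal level.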

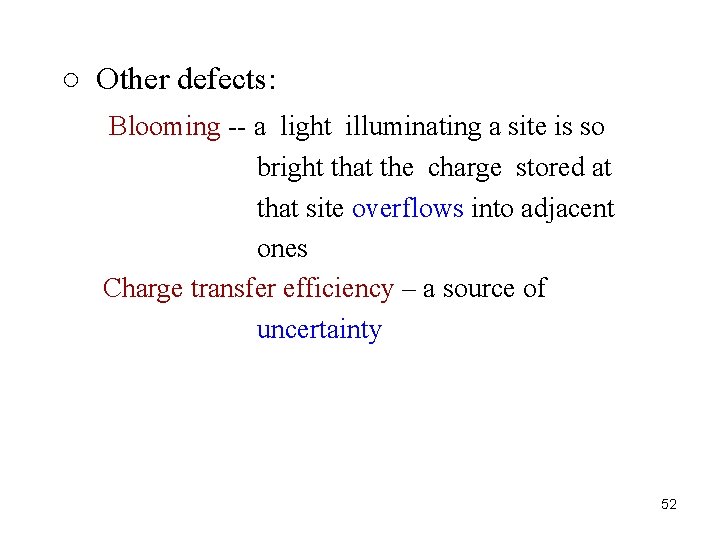

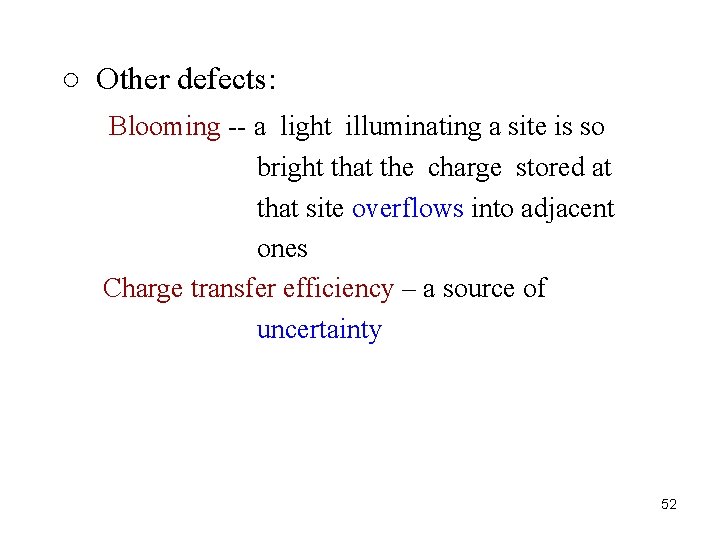

○ Other defects
-- Blooming: a light illuminating a site is so bright that the charge stored at that site overflows into adjacent sites.
-- Charge transfer efficiency: imperfect transfer of charge between sites during read-out is another source of uncertainty.
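Both defects can be illustrated with toy models, each an assumption made for this sketch. Blooming is modeled as charge above a site's full-well capacity spilling equally into its two neighbours in a single row, with charge pushed past either end lost. Charge transfer efficiency (CTE) is modeled as the fraction of charge kept per transfer, so a packet shifted n times keeps CTEⁿ of its charge. The function names and numbers are hypothetical.

```python
def bloom_row(charges, capacity, max_iters=1000):
    """Spill charge above `capacity` equally into the two neighbours until no site overflows."""
    cap = float(capacity)
    q = [float(c) for c in charges]
    for _ in range(max_iters):
        overflowing = [i for i, c in enumerate(q) if c > cap]
        if not overflowing:
            break
        for i in overflowing:
            excess = q[i] - cap
            q[i] = cap
            for j in (i - 1, i + 1):
                if 0 <= j < len(q):             # charge spilled past either end is lost
                    q[j] += excess / 2.0
    return q

def transferred_fraction(cte, n_transfers):
    """Fraction of a charge packet left after n transfers with efficiency `cte` each."""
    return cte ** n_transfers

print(bloom_row([0, 0, 250, 0, 0], capacity=100))   # [0.0, 75.0, 100.0, 75.0, 0.0]
print(transferred_fraction(0.99999, 1000))          # about 0.990
```

Even a CTE of 0.99999 loses about 1% of the charge over 1000 transfers, which is why large arrays need very high transfer efficiency.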

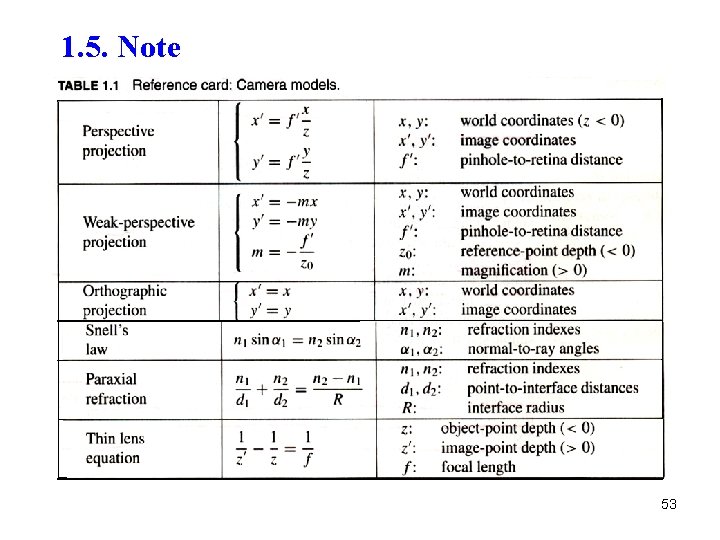

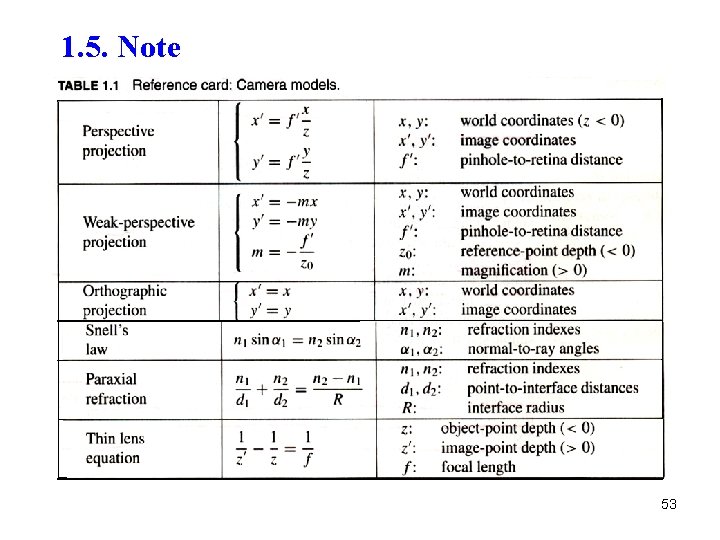

1.5. Note