
Computer Science 425: Distributed Systems
CS 425 / CSE 424 / ECE 428, Fall 2010
Indranil Gupta (Indy)
August 31, 2010
Lecture 3
2010, I. Gupta

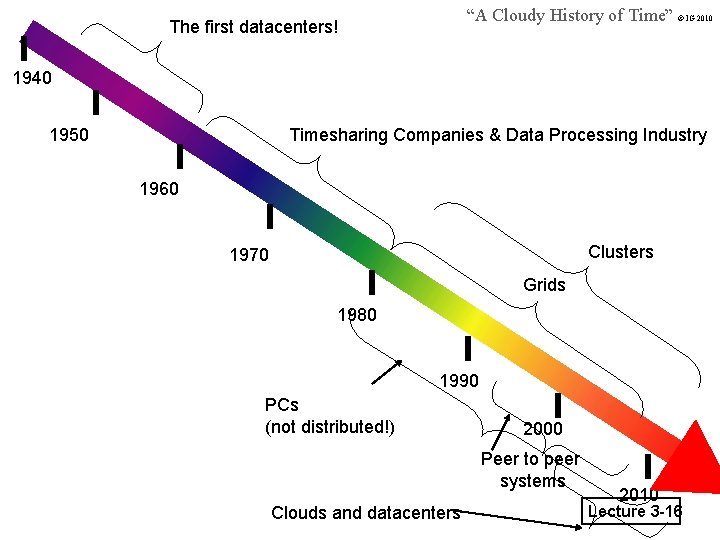

Recap
• Last Thursday’s Lecture
  – Clouds vs. Clusters
    » At least 3 differences
  – Types of Clouds
  – Economics of Clouds
  – A Cloudy History of Time
    » Clouds are the latest in a long generation of distributed systems
• Today’s Lecture
  – Cloud Programming: MapReduce (the heart of Hadoop)
  – Grids

Programming Cloud Applications - New Parallel Programming Paradigms: MapReduce
• Highly-Parallel Data-Processing
• Originally designed by Google (OSDI 2004 paper)
• Open-source version called Hadoop, by Yahoo!
  – Hadoop written in Java. Your implementation could be in Java, or any executable
• Google (MapReduce)
  – Indexing: a chain of 24 MapReduce jobs
  – ~200K jobs processing 50 PB/month (in 2006)
• Yahoo! (Hadoop + Pig)
  – WebMap: a chain of 100 MapReduce jobs
  – 280 TB of data, 2500 nodes, 73 hours
• Annual Hadoop Summit: 2008 had 300 attendees, 2009 had 700 attendees

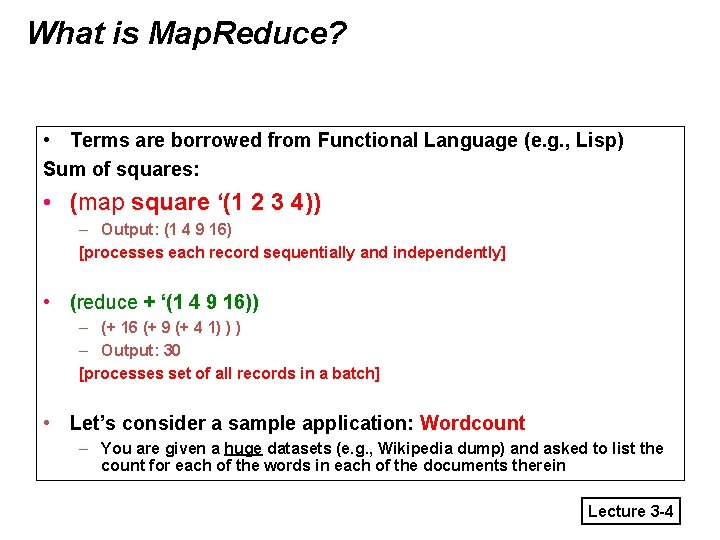

What is MapReduce?
• Terms are borrowed from functional languages (e.g., Lisp)
Sum of squares:
• (map square '(1 2 3 4))
  – Output: (1 4 9 16) [processes each record sequentially and independently]
• (reduce + '(1 4 9 16))
  – (+ 16 (+ 9 (+ 4 1)))
  – Output: 30 [processes set of all records in a batch]
• Let’s consider a sample application: Wordcount
  – You are given a huge dataset (e.g., a Wikipedia dump) and asked to list the count for each of the words in each of the documents therein
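The Lisp sum-of-squares example can be mirrored in Python as a minimal sketch. The helper name `square` and the variable names are illustrative choices, not part of the lecture.

```python
from functools import reduce
from operator import add


def square(x):
    """Map function: applied to each record independently."""
    return x * x


numbers = [1, 2, 3, 4]

# map: processes each record sequentially and independently
squares = list(map(square, numbers))

# reduce: folds the whole batch of records into a single value
total = reduce(add, squares)

print(squares)  # [1, 4, 9, 16]
print(total)    # 30
```
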

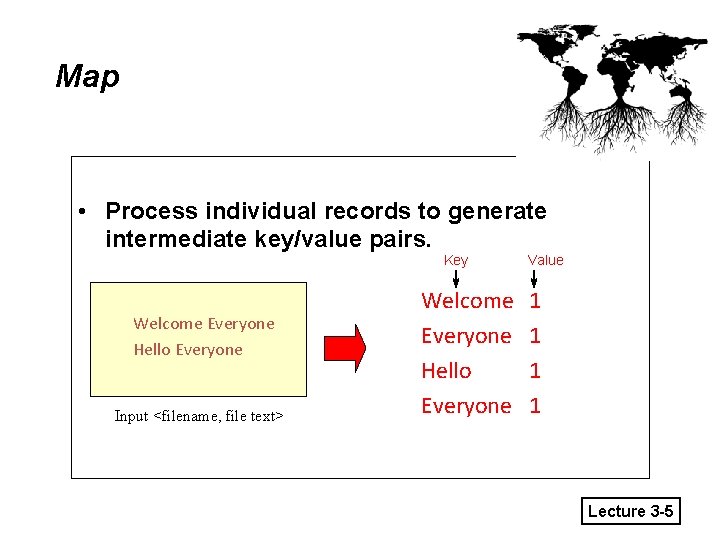

Map
• Process individual records to generate intermediate key/value pairs.
Input <filename, file text>: Welcome Everyone; Hello Everyone
Output <Key, Value>: <Welcome, 1>, <Everyone, 1>, <Hello, 1>, <Everyone, 1>

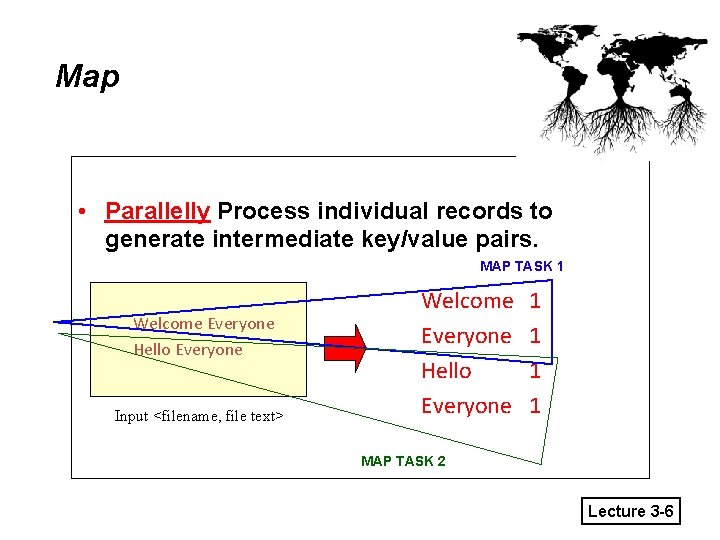

Map
• Process individual records in parallel to generate intermediate key/value pairs.
Input <filename, file text>: Welcome Everyone; Hello Everyone
MAP TASK 1: <Welcome, 1>, <Everyone, 1>
MAP TASK 2: <Hello, 1>, <Everyone, 1>

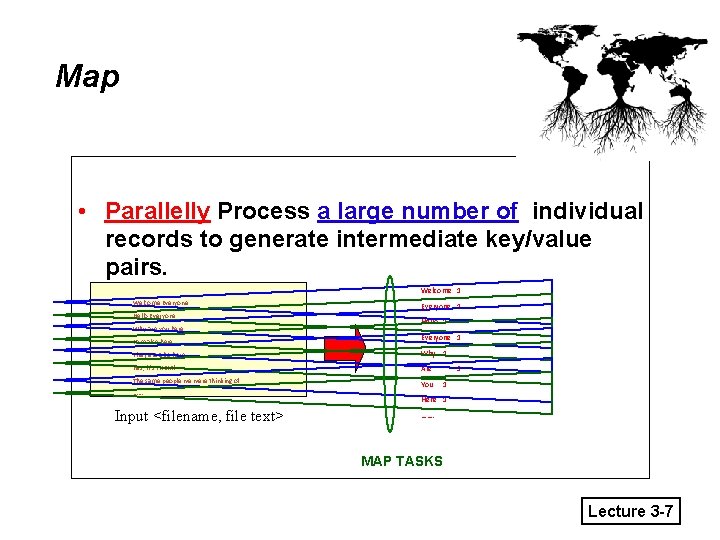

Map
• Process a large number of individual records in parallel to generate intermediate key/value pairs.
Input <filename, file text>: Welcome Everyone; Hello Everyone; Why are you here; I am also here; They are also here; Yes, it’s THEM!; The same people we were thinking of; …….
MAP TASKS
Output <Key, Value>: <Welcome, 1>, <Everyone, 1>, <Hello, 1>, <Everyone, 1>, <Why, 1>, <Are, 1>, <You, 1>, <Here, 1>, …….
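A minimal sketch of the Map phase for Wordcount, assuming each input record is a `<filename, file text>` pair. The filenames, the function name `map_wordcount`, and the use of a thread pool to stand in for parallel map tasks are all illustrative assumptions; a real MapReduce or Hadoop deployment schedules map tasks across machines.

```python
from concurrent.futures import ThreadPoolExecutor


def map_wordcount(record):
    """Map: turn one <filename, file text> record into <word, 1> pairs."""
    filename, text = record  # filename is unused in Wordcount
    return [(word, 1) for word in text.split()]


# Hypothetical input records; the filenames are made up for illustration.
records = [
    ("doc1.txt", "Welcome Everyone"),
    ("doc2.txt", "Hello Everyone"),
]

# Each record is independent, so the map calls can run in parallel.
with ThreadPoolExecutor(max_workers=2) as pool:
    per_task_output = list(pool.map(map_wordcount, records))

# Flatten the per-task outputs into one list of intermediate pairs.
intermediate = [pair for output in per_task_output for pair in output]
print(intermediate)
# [('Welcome', 1), ('Everyone', 1), ('Hello', 1), ('Everyone', 1)]
```
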

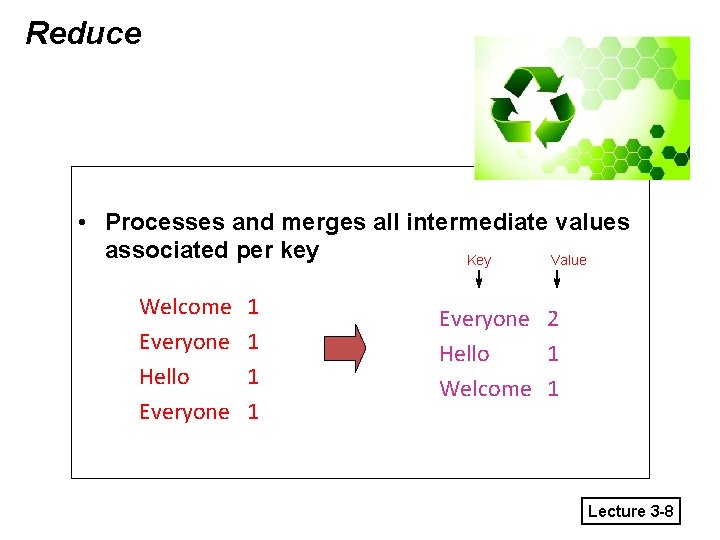

Reduce
• Processes and merges all intermediate values associated with each key
Input <Key, Value>: <Welcome, 1>, <Everyone, 1>, <Hello, 1>, <Everyone, 1>
Output <Key, Value>: <Everyone, 2>, <Hello, 1>, <Welcome, 1>
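A minimal sketch of the Reduce phase for Wordcount. The grouping step that collects all values for a key is done here with an in-memory dictionary, which is an assumption made for illustration; the name `reduce_wordcount` is hypothetical.

```python
from collections import defaultdict


def reduce_wordcount(key, values):
    """Reduce: merge all intermediate values for one key into a count."""
    return key, sum(values)


intermediate = [("Welcome", 1), ("Everyone", 1), ("Hello", 1), ("Everyone", 1)]

# Group the intermediate values by key before reducing.
grouped = defaultdict(list)
for key, value in intermediate:
    grouped[key].append(value)

# Apply the reduce function once per key (sorted only for stable output).
result = dict(reduce_wordcount(k, v) for k, v in sorted(grouped.items()))
print(result)  # {'Everyone': 2, 'Hello': 1, 'Welcome': 1}
```
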

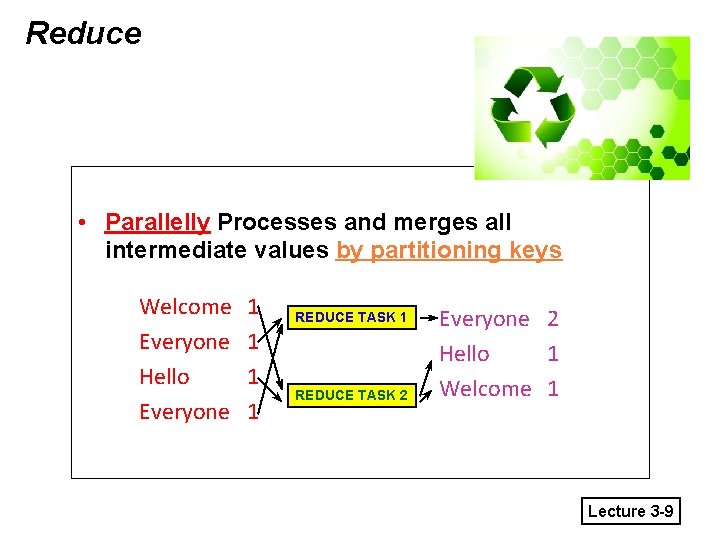

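Reduce
• Processes and merges all intermediate values in parallel by partitioning keys
Input <Key, Value>: <Welcome, 1>, <Everyone, 1>, <Hello, 1>, <Everyone, 1>
Each reduce task (for example, REDUCE TASK 2) handles one partition of the keys
Output <Key, Value>: <Everyone, 2>, <Hello, 1>, <Welcome, 1>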

Some Other Applications of MapReduce
• Distributed Grep:
  – Input: large set of files
  – Map: emits a line if it matches the supplied pattern
  – Reduce: copies the intermediate data to output
• Count of URL access frequency
  – Input: Web access log, e.g., from proxy server
  – Map: processes web log and outputs <URL, 1>
  – Reduce: emits <URL, total count>
• Reverse Web-Link Graph
  – Input: Web graph (page → page)
  – Map: processes each link in the web graph and outputs <target, source>
  – Reduce: emits <target, list(source)>
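The Reverse Web-Link Graph application can be sketched as follows, assuming the web graph is given as (source, target) edges. The page names and the function names `map_links` and `reduce_links` are hypothetical, and the grouping is done in memory purely for illustration.

```python
from collections import defaultdict


def map_links(edge):
    """Map: for a link source -> target, emit <target, source>."""
    source, target = edge
    return target, source


def reduce_links(target, sources):
    """Reduce: emit <target, list(source)>."""
    return target, sorted(sources)


# Hypothetical web graph: each tuple is (source page, target page).
web_graph = [
    ("a.html", "b.html"),
    ("c.html", "b.html"),
    ("a.html", "c.html"),
]

# Group the <target, source> pairs by target, then reduce per target.
grouped = defaultdict(list)
for target, source in map(map_links, web_graph):
    grouped[target].append(source)

reverse_graph = dict(reduce_links(t, s) for t, s in grouped.items())
print(reverse_graph)
# {'b.html': ['a.html', 'c.html'], 'c.html': ['a.html']}
```
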

Programming MapReduce
• Externally: For user
  1. Write a Map program (short), write a Reduce program (short)
  2. Submit job; wait for result
  3. Need to know nothing about parallel/distributed programming!
• Internally: For the cloud (and for us distributed systems researchers)
  1. Parallelize Map
  2. Transfer data from Map to Reduce
  3. Parallelize Reduce
  4. Implement Storage for Map input, Map output, Reduce input, and Reduce output

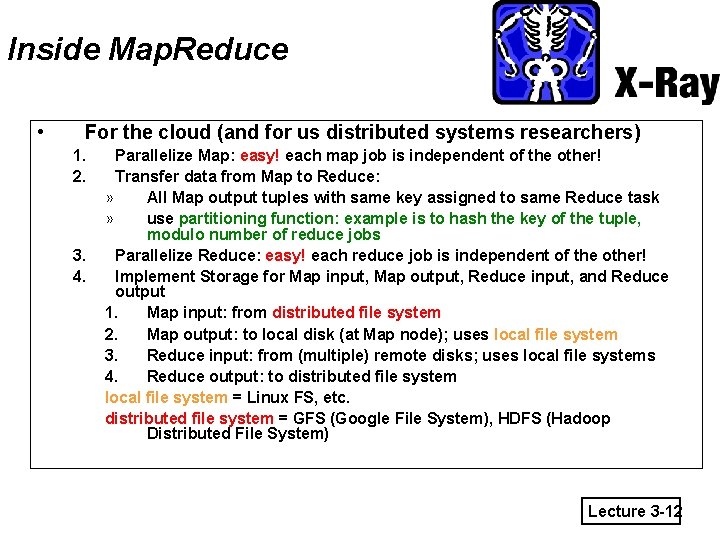

Inside MapReduce
• For the cloud (and for us distributed systems researchers)
  1. Parallelize Map: easy! Each map job is independent of the others!
  2. Transfer data from Map to Reduce:
     » All Map output tuples with the same key are assigned to the same Reduce task
     » Use a partitioning function: for example, hash the key of the tuple, modulo the number of reduce jobs
  3. Parallelize Reduce: easy! Each reduce job is independent of the others!
  4. Implement Storage for Map input, Map output, Reduce input, and Reduce output
     1. Map input: from distributed file system
     2. Map output: to local disk (at Map node); uses local file system
     3. Reduce input: from (multiple) remote disks; uses local file systems
     4. Reduce output: to distributed file system
local file system = Linux FS, etc.
distributed file system = GFS (Google File System), HDFS (Hadoop Distributed File System)
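The partitioning step (hash the key, modulo the number of reduce jobs) can be sketched as below. The choice of `zlib.crc32` as the hash is an assumption made here so that the result is stable across processes (Python's built-in `hash` for strings is randomized per process); the lecture does not name a specific hash function, and `partition` is a hypothetical name.

```python
import zlib


def partition(key, num_reduce_tasks):
    """Pick the reduce task for a key: hash(key) mod number of reduce tasks."""
    return zlib.crc32(key.encode("utf-8")) % num_reduce_tasks


pairs = [("Welcome", 1), ("Everyone", 1), ("Hello", 1), ("Everyone", 1)]
num_reduce_tasks = 2

# One bucket of intermediate pairs per reduce task.
buckets = {r: [] for r in range(num_reduce_tasks)}
for key, value in pairs:
    buckets[partition(key, num_reduce_tasks)].append((key, value))

for task_id, bucket in buckets.items():
    print(task_id, bucket)
```

Because the function depends only on the key, both `("Everyone", 1)` pairs land in the same bucket, which is what lets each reduce task work independently.
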

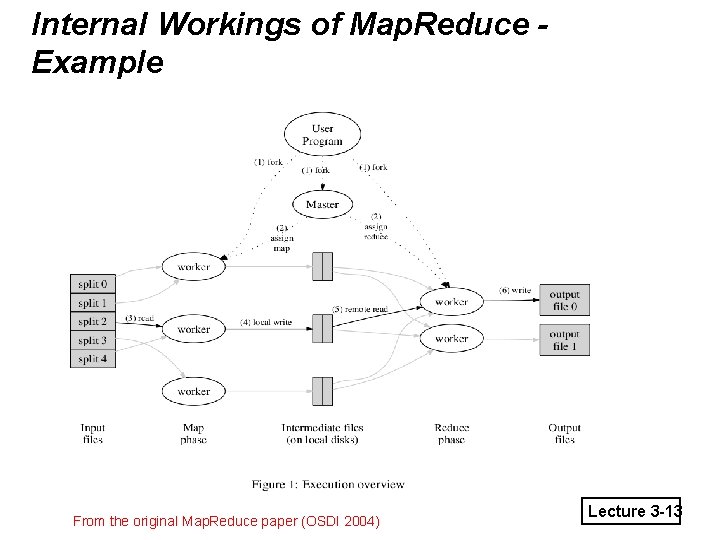

Internal Workings of MapReduce: Example
From the original MapReduce paper (OSDI 2004)

Questions for You to Ponder
• What happens when there are failures?
• What about bottlenecks within the datacenter?
  – Think of the routers and switches.

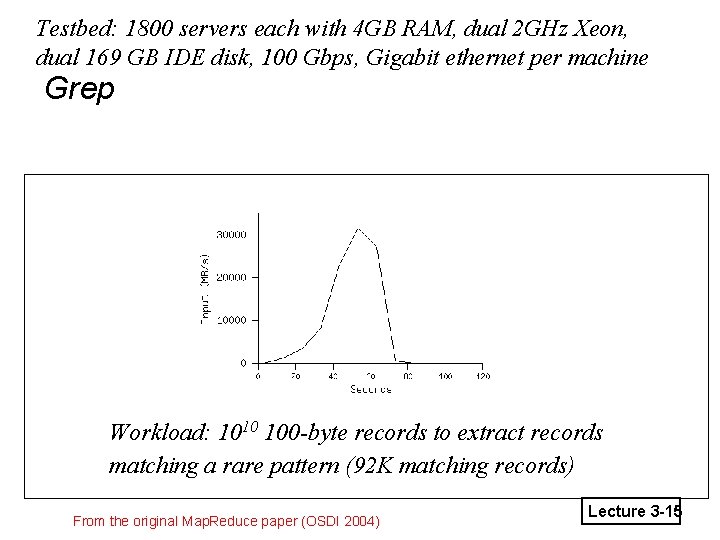

Testbed: 1800 servers, each with 4 GB RAM, dual 2 GHz Xeon, dual 160 GB IDE disks, and Gigabit Ethernet per machine; 100 Gbps aggregate bandwidth
Grep workload: 10^10 100-byte records, scanned to extract records matching a rare pattern (92K matching records)
From the original MapReduce paper (OSDI 2004)
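As a quick back-of-the-envelope check on the grep workload, the figures above imply roughly 1 TB of input and a very small fraction of matching records. This sketch treats "92K" as 92,000, which is an approximation, and uses decimal terabytes.

```python
records = 10**10            # number of input records
record_size_bytes = 100     # size of each record
matching_records = 92_000   # "92K" from the slide, treated as approximate

total_bytes = records * record_size_bytes
total_terabytes = total_bytes / 10**12       # decimal TB
selectivity = matching_records / records     # fraction of records that match

print(total_terabytes)  # 1.0
print(selectivity)      # 9.2e-06
```
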

“A Cloudy History of Time” © IG 2010
Timeline, 1940 to 2010: The first datacenters!; Timesharing Companies & Data Processing Industry; Clusters; Grids; PCs (not distributed!); Peer to peer systems; Clouds and datacenters

Before clouds there were… Grids!
What is a Grid?

Example: Rapid Atmospheric Modeling System, Colo. State U
• Hurricane Georges, 17 days in Sept 1998
  – “RAMS modeled the mesoscale convective complex that dropped so much rain, in good agreement with recorded data”
  – Used 5 km spacing instead of the usual 10 km
  – Ran on 256+ processors
• Computation-intensive application rather than data-intensive
• Can one run such a program without access to a supercomputer?

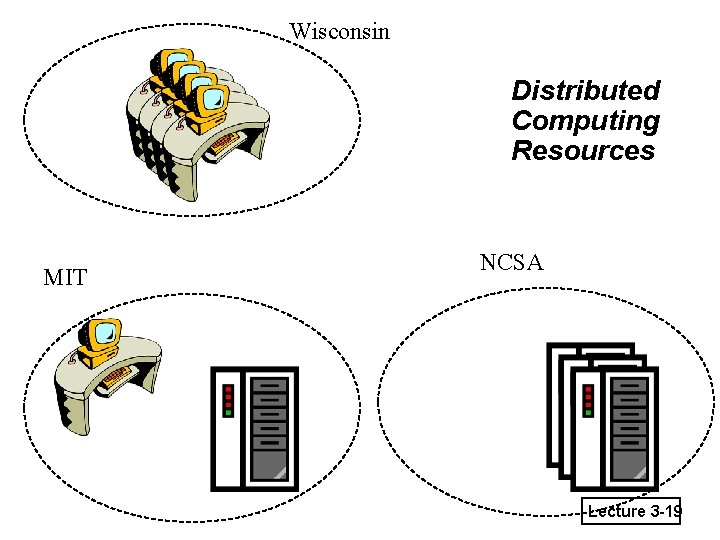

Distributed Computing Resources
Sites: Wisconsin, MIT, NCSA

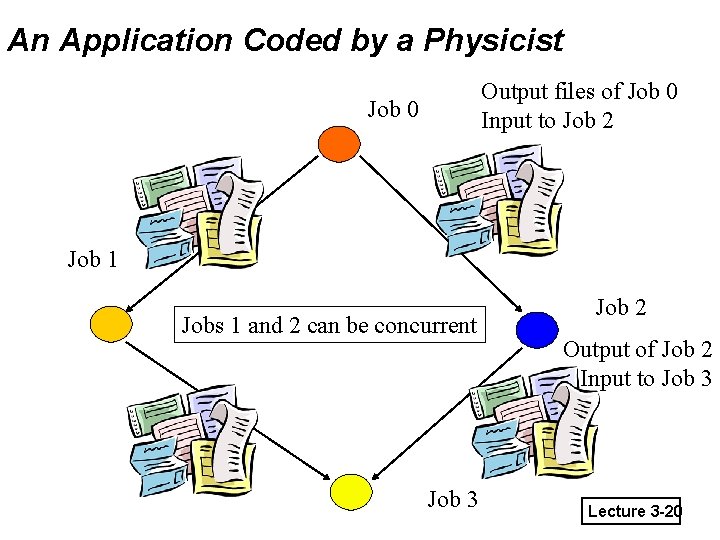

An Application Coded by a Physicist
Jobs: Job 0, Job 1, Job 2, Job 3
• Output files of Job 0 are the input to Job 2
• Jobs 1 and 2 can be concurrent
• Output of Job 2 is the input to Job 3
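The job dependencies above form a small DAG, and a scheduler can derive which jobs may run concurrently from it. The sketch below uses Python's standard `graphlib` module (Python 3.9+). The edges Job 0 → Job 2 and Job 2 → Job 3 come from the slide; the edges Job 0 → Job 1 and Job 1 → Job 3 are assumptions added for illustration.

```python
from graphlib import TopologicalSorter

# Map each job to the set of jobs whose output it needs.
dependencies = {
    "Job 0": set(),
    "Job 1": {"Job 0"},           # assumption: Job 1 also reads Job 0's output
    "Job 2": {"Job 0"},           # from the slide
    "Job 3": {"Job 1", "Job 2"},  # Job 2 from the slide; Job 1 is an assumption
}

sorter = TopologicalSorter(dependencies)
sorter.prepare()

# Collect "waves" of jobs; jobs in the same wave can run concurrently.
waves = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())
    waves.append(ready)
    sorter.done(*ready)

print(waves)  # [['Job 0'], ['Job 1', 'Job 2'], ['Job 3']]
```
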

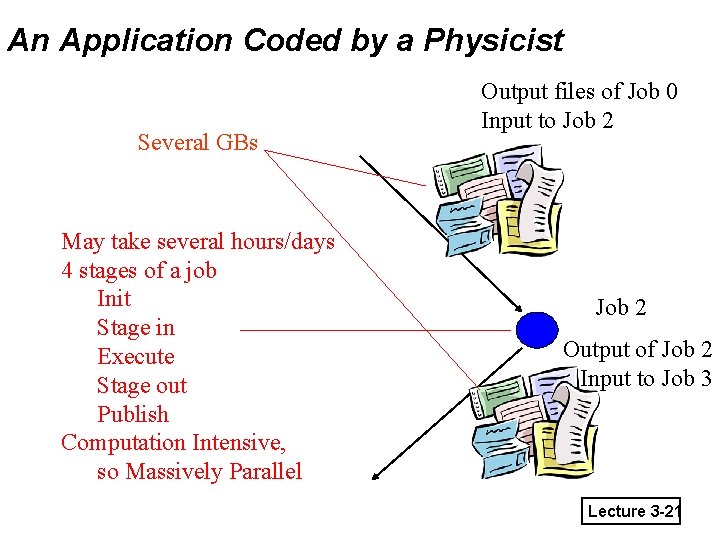

An Application Coded by a Physicist
• Output files of Job 0 are the input to Job 2; output of Job 2 is the input to Job 3
• Files: several GBs
• A job may take several hours/days
• 4 stages of a job: Init, Stage in, Execute, Stage out, Publish
• Computation intensive, so massively parallel

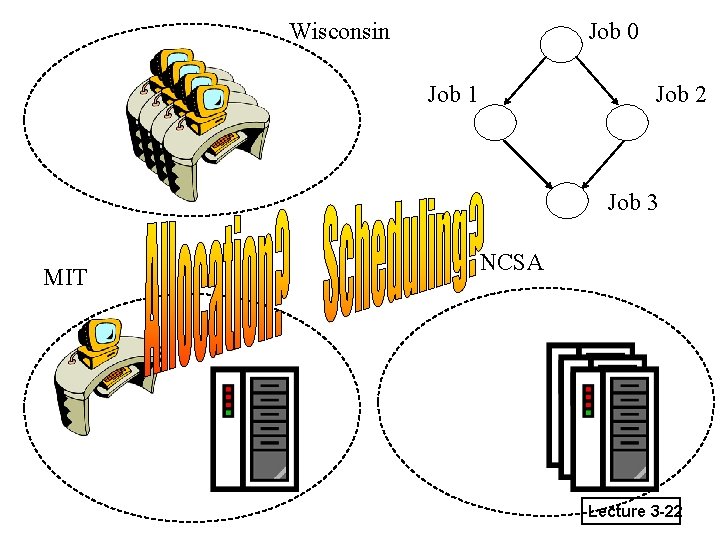

Sites: Wisconsin, MIT, NCSA
Jobs: Job 0, Job 1, Job 2, Job 3

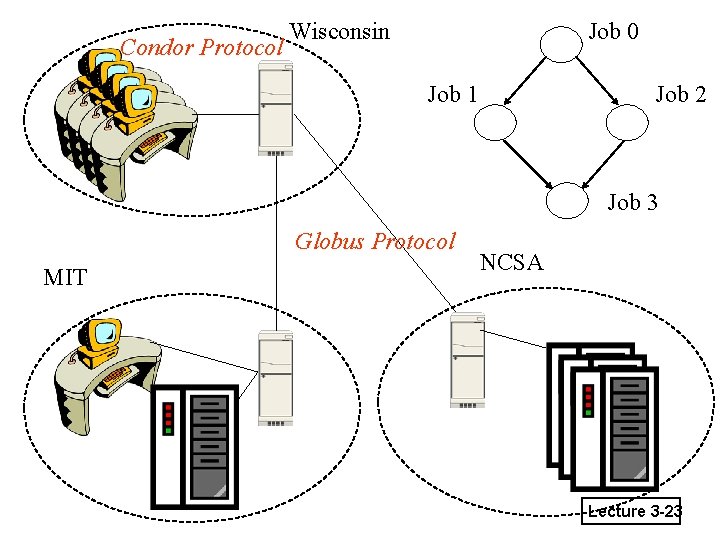

Sites: Wisconsin, MIT, NCSA
Jobs: Job 0, Job 1, Job 2, Job 3
Protocols: Condor Protocol, Globus Protocol

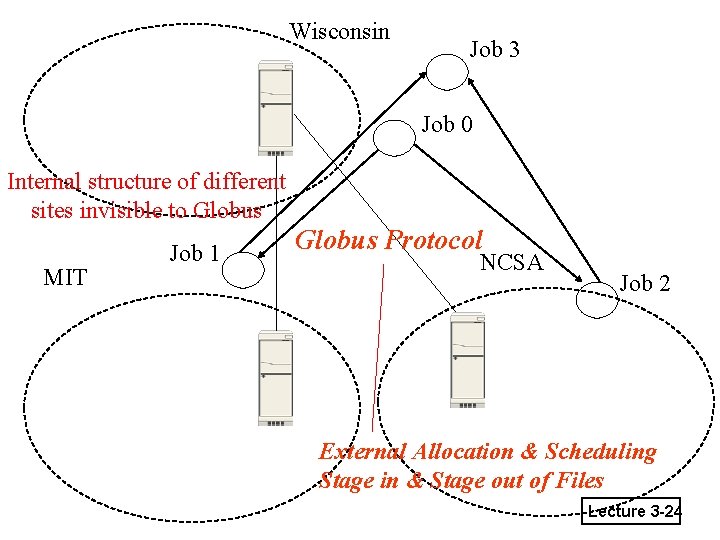

Globus Protocol
Sites: Wisconsin (Job 0, Job 3), MIT (Job 1), NCSA (Job 2)
• Internal structure of different sites invisible to Globus
• External Allocation & Scheduling
• Stage in & Stage out of Files

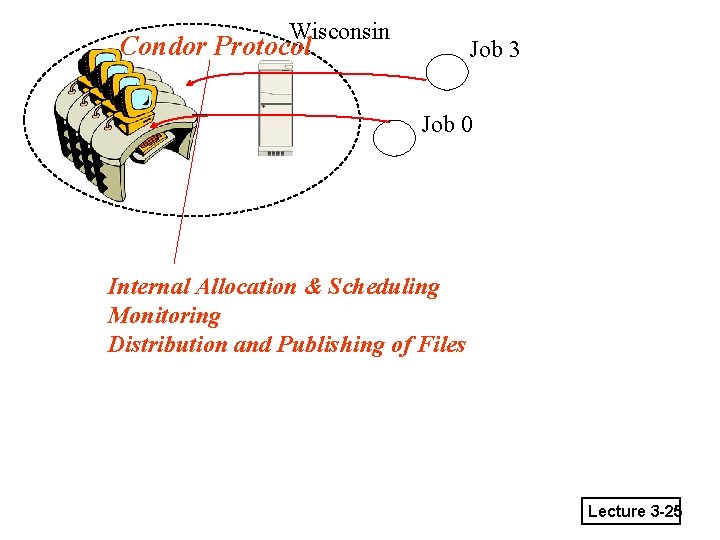

Condor Protocol
Site: Wisconsin (Job 0, Job 3)
• Internal Allocation & Scheduling
• Monitoring
• Distribution and Publishing of Files

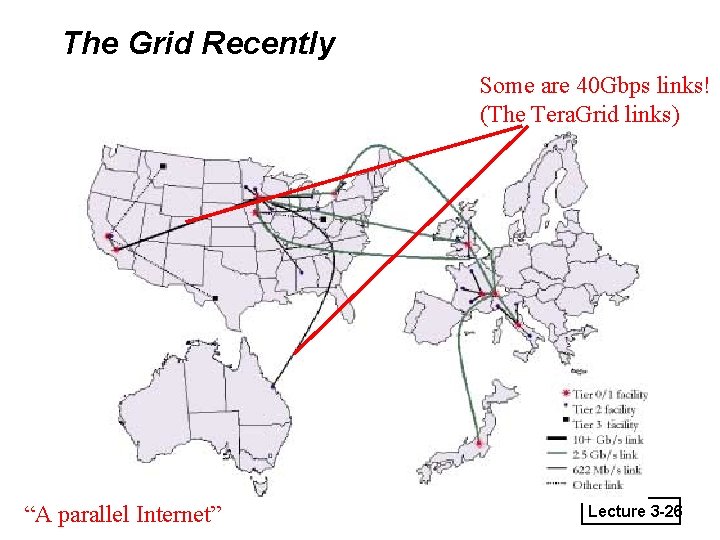

The Grid Recently
• Some are 40 Gbps links! (The TeraGrid links)
• “A parallel Internet”

Question to Ponder
• Cloud computing vs. Grid computing: what are the differences?

Announcements
• HW 1 out today, due Sep 9th
  – Please read instructions carefully!
• MP 1 out tomorrow, due end of the month
  – Please read instructions carefully!
  – Groups of 3 students

Readings
• For next lecture
  – Readings: Section 12.1, parts of Section 2.3.2
• We will discuss a topic relevant to MP 1!
