computer architecture Operating Systems and Architecture UFCFCU30 1


<computer architecture> Operating Systems and Architecture (UFCFCU-30-1)

<outcomes> • After completing this section of the module you will be able to: • Describe and explain the purposes and implementation of operating systems: • Dispatch • Scheduling • Concurrency • Synchronisation • "When two trains approach each other at a crossing, both shall come to a full stop and neither shall start up again until the other has gone." (Statute passed by the Kansas State Legislature, early in the 20th century)

<basics> • In this section we will use process and thread interchangeably • A dispatcher gives control of the CPU to the process selected by the scheduler • The scheduler chooses the next jobs to enter the system and the next process to run • Concurrency is where several processes are executed during overlapping time periods • Synchronisation ensures that two or more concurrent processes do not simultaneously access a shared resource

<dispatch> • The dispatcher gives control of the CPU to the process selected by the scheduler • It receives control in kernel mode as the result of an interrupt or system call • At this point the context (registers, stack, etc.) of the previously executing process is still intact (Where is this context held?) • The dispatcher saves this state to memory • It then restores the entire saved state of the new process to be run • Finally, it jumps to the program counter value saved for that process, whose full context has now been re-established • The dispatcher should be as fast as possible (Why?)
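The save/restore sequence above can be sketched as a small simulation in C. This is not real kernel code: `context_t`, `pcb_t`, `cpu_t` and `dispatch` are hypothetical stand-ins for the hardware registers and for the per-process record (a process control block) in which an OS typically keeps a saved context.

```c
#include <string.h>

#define NUM_REGS 8

/* Hypothetical saved CPU context: general registers, stack pointer, program counter. */
typedef struct {
    unsigned long regs[NUM_REGS];
    unsigned long sp;
    unsigned long pc;
} context_t;

/* Hypothetical, minimal process control block. */
typedef struct {
    int pid;
    context_t saved;   /* the context is kept here while the process is not running */
} pcb_t;

/* The simulated CPU's live registers. */
typedef struct {
    context_t live;
} cpu_t;

/* Save the outgoing process's context into its PCB, restore the incoming
   process's registers and stack pointer, then "jump" by loading its pc last. */
void dispatch(cpu_t *cpu, pcb_t *outgoing, pcb_t *incoming)
{
    if (outgoing != NULL)
        outgoing->saved = cpu->live;                 /* store state in memory */
    memcpy(cpu->live.regs, incoming->saved.regs, sizeof cpu->live.regs);
    cpu->live.sp = incoming->saved.sp;
    cpu->live.pc = incoming->saved.pc;               /* resume where it left off */
}
```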

<scheduler> • There are three schedulers: • Short term scheduler – decides which of the ready, in-memory processes is run next • Medium term scheduler – decides which processes are swapped out of, or back into, memory • Long term scheduler – decides which jobs are admitted to the system and put on the ready queue

<scheduler objectives> • A scheduler should aim to: • Maximise the number of users receiving acceptable response times • Run a program in a consistent time regardless of system load • Maximise the number of processes completed in a fixed time (throughput) • Utilise the CPU effectively • Treat processes fairly • Observe any process priorities • Avoid starvation, where a process is overlooked indefinitely by the scheduler • Balance the use of resources • It is not possible to optimise all of these simultaneously

<strategies> • Schedulers can be pre-emptive or non-pre-emptive • A pre-emptive scheduler can interrupt the running process and return it to the ready queue • A non-pre-emptive scheduler waits for the running process to terminate or to block (switch to the waiting state) • Priority scheduling • There is a ready queue for each priority level • Each time interval, the scheduler takes a process from the highest-priority non-empty queue • What is the problem with this?
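A minimal sketch of the priority selection rule, assuming four priority levels (0 is the highest) and small fixed-size FIFO queues. `make_ready` and `pick_next` are hypothetical names, and the code does not check for queue overflow.

```c
#define NUM_PRIORITIES 4
#define QUEUE_CAP 16

/* One circular FIFO queue of process ids per priority level. */
typedef struct {
    int pids[QUEUE_CAP];
    int head, count;
} fifo_t;

static fifo_t ready[NUM_PRIORITIES];

/* Append a process to the back of its priority level's queue
   (assumes the queue never holds more than QUEUE_CAP entries). */
void make_ready(int pid, int priority)
{
    fifo_t *q = &ready[priority];
    q->pids[(q->head + q->count) % QUEUE_CAP] = pid;
    q->count++;
}

/* Remove and return the front process of the highest-priority non-empty
   queue, or -1 if every queue is empty. */
int pick_next(void)
{
    for (int p = 0; p < NUM_PRIORITIES; p++) {
        fifo_t *q = &ready[p];
        if (q->count > 0) {
            int pid = q->pids[q->head];
            q->head = (q->head + 1) % QUEUE_CAP;
            q->count--;
            return pid;
        }
    }
    return -1;
}
```

Note that `pick_next` only looks at a lower level when every higher level is empty, so a steady stream of new level-0 processes keeps the lower levels waiting.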

<strategies> • First come first served (FCFS, or FIFO) • As each process becomes ready it joins the ready queue • The process that has been in the queue the longest is selected to run • The problem with this is that it can lead to inefficient use of both the processor and the I/O devices • Throughput can be low because short processes may have to wait behind long ones • But it can work well in conjunction with priority scheduling
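A worked example of FCFS waiting times, assuming all processes arrive at time 0 in array order and their burst times are known. With bursts of 24, 3 and 3 units the average wait is (0 + 24 + 27) / 3 = 17; had the two short processes been first in the queue it would fall to (0 + 3 + 6) / 3 = 3. The function name is hypothetical.

```c
/* First come first served: each process runs to completion in queue order.
   Fills wait[i] with the time process i spends in the ready queue and
   returns the average wait. Assumes every process arrives at time 0. */
double fcfs_average_wait(const int burst[], int n, int wait[])
{
    int clock = 0;
    long total = 0;
    for (int i = 0; i < n; i++) {
        wait[i] = clock;      /* waits for every process ahead of it */
        total += clock;
        clock += burst[i];    /* no pre-emption: runs its whole burst */
    }
    return n > 0 ? (double)total / n : 0.0;
}
```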

<strategies> • Round robin • This is a time-slicing procedure • On a clock interrupt, the current process is moved to the back of the ready queue and the next ready process is selected • If the clock interval is short, short processes complete quickly, but the extra context switches add overhead • An I/O-bound process often blocks before using all of its time slice, while a CPU-bound process uses the whole slice • This favours processor-bound processes
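The sketch below computes round robin completion times, assuming all processes arrive at time 0 (so cycling through the array in order behaves like a FIFO ready queue), every burst is positive, and context switches cost nothing. With bursts of 24, 3 and 3 and a quantum of 4, the two short processes finish at times 7 and 10 instead of 27 and 30 as under FCFS. The function name is hypothetical.

```c
#define MAX_PROCS 16

/* Round robin with a fixed quantum; fills finish[i] with the completion
   time of process i. Assumes 0 < n <= MAX_PROCS and burst[i] > 0. */
void round_robin(const int burst[], int n, int quantum, int finish[])
{
    int remaining[MAX_PROCS];
    int left = n, clock = 0;
    for (int i = 0; i < n; i++)
        remaining[i] = burst[i];
    while (left > 0) {
        for (int i = 0; i < n; i++) {
            if (remaining[i] == 0)
                continue;                          /* already finished */
            int slice = remaining[i] < quantum ? remaining[i] : quantum;
            clock += slice;                        /* run for (at most) one quantum */
            remaining[i] -= slice;
            if (remaining[i] == 0) {
                finish[i] = clock;
                left--;
            }                                      /* else: pre-empted, back of queue */
        }
    }
}
```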

<strategies> • Shortest process next • The process with the shortest expected execution time is selected next • It does not use pre-emption • The OS has to use an algorithm to predict the execution time • It can use statistics gathered from previous runs • Possibility of starvation for longer jobs
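The slide does not say which prediction algorithm is used; one common choice is exponential averaging, where the next prediction is α × (last actual burst) + (1 − α) × (last prediction), with 0 ≤ α ≤ 1 weighting recent behaviour against history. The sketch pairs it with a non-pre-emptive selection function; both function names are hypothetical. With α = 0.5, an initial guess of 10 and actual bursts of 6, 4, 6 and 4, the predictions go 8, 6, 6, 5.

```c
/* Exponential averaging: blend the most recent actual burst with the
   previous prediction. */
double predict_next_burst(double alpha, double last_actual, double last_prediction)
{
    return alpha * last_actual + (1.0 - alpha) * last_prediction;
}

/* Shortest process next: among the ready processes, pick the one with the
   smallest predicted burst. Only called when the CPU becomes free, since
   the policy is non-pre-emptive. Returns -1 if nothing is ready. */
int spn_pick(const double predicted[], const int is_ready[], int n)
{
    int best = -1;
    for (int i = 0; i < n; i++)
        if (is_ready[i] && (best < 0 || predicted[i] < predicted[best]))
            best = i;
    return best;
}
```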

<strategies> • Shortest remaining time • A pre-emptive version of shortest process next: whenever a process arrives whose expected remaining time is shorter than that of the running process, the running process is pre-empted • The OS has to use an algorithm to predict the execution time • It can use statistics gathered from previous runs • Longer jobs can still starve
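A tick-by-tick simulation of shortest remaining time, assuming burst times are known exactly (a real OS only has predictions) and ties go to the lower-numbered process. With arrivals at times 0, 1, 2 and 3 and bursts of 8, 4, 9 and 5, the first process is pre-empted at time 1 by the newly arrived 4-unit job. The function name is hypothetical.

```c
#define MAX_PROCS 16

/* Shortest remaining time, simulated one time unit at a time: at every tick
   the arrived process with the least remaining work runs. Fills finish[i]
   with completion times. Assumes 0 < n <= MAX_PROCS and burst[i] > 0. */
void srt(const int arrival[], const int burst[], int n, int finish[])
{
    int remaining[MAX_PROCS];
    int done = 0, clock = 0;
    for (int i = 0; i < n; i++)
        remaining[i] = burst[i];
    while (done < n) {
        int pick = -1;
        for (int i = 0; i < n; i++)
            if (arrival[i] <= clock && remaining[i] > 0 &&
                (pick < 0 || remaining[i] < remaining[pick]))
                pick = i;
        clock++;                       /* one time unit passes */
        if (pick < 0)
            continue;                  /* CPU idle: nothing has arrived yet */
        if (--remaining[pick] == 0) {
            finish[pick] = clock;
            done++;
        }
    }
}
```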

<strategies> • Feedback • A pre-emptive scheme using multiple priority queues • On first entry a process is put into RQ0 (ready queue 0, where 0 is the highest priority) • Each subsequent time it is pre-empted, it is moved to the next lower-priority ready queue (RQ1, then RQ2, etc.) • Within each queue a first come first served strategy is used • Short processes complete quickly • Longer processes move towards the lower-priority queues • Once a process reaches the lowest queue it stays there and is handled by round robin • What is the problem with this scheme?
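A sketch of the feedback scheme above, assuming three ready queues, a quantum of one time unit at every level and all processes arriving at time 0. `final_queue` records which queue each process finished from; all names are hypothetical.

```c
#define NUM_QUEUES 3
#define MAX_PROCS 16

typedef struct { int items[MAX_PROCS]; int head, count; } queue_t;

static void push(queue_t *q, int pid)
{
    q->items[(q->head + q->count) % MAX_PROCS] = pid;
    q->count++;
}

static int pop(queue_t *q)
{
    int pid = q->items[q->head];
    q->head = (q->head + 1) % MAX_PROCS;
    q->count--;
    return pid;
}

/* Assumes 0 < n <= MAX_PROCS and burst[i] > 0. */
void feedback(const int burst[], int n, int finish[], int final_queue[])
{
    queue_t rq[NUM_QUEUES] = {0};
    int remaining[MAX_PROCS];
    int done = 0, clock = 0;
    for (int i = 0; i < n; i++) {
        remaining[i] = burst[i];
        push(&rq[0], i);                          /* first entry: RQ0 */
    }
    while (done < n) {
        int lvl = 0;
        while (rq[lvl].count == 0)                /* highest-priority non-empty queue */
            lvl++;
        int pid = pop(&rq[lvl]);                  /* FCFS within the queue */
        clock++;                                  /* run for one quantum */
        if (--remaining[pid] == 0) {
            finish[pid] = clock;
            final_queue[pid] = lvl;
            done++;
        } else {
            /* Pre-empted: demote one level, or stay in the lowest queue,
               where cycling like this is round robin. */
            push(&rq[lvl + 1 < NUM_QUEUES ? lvl + 1 : lvl], pid);
        }
    }
}
```

With bursts of 5, 1 and 2, the 1-unit process finishes from RQ0 at time 2, the 2-unit process from RQ1 at time 5, and the 5-unit process from RQ2 at time 8.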

<strategies> • Feedback • The starvation problem can be minimised by varying the time slices • A process from RQ0 gets one time slice • A process from RQ1 gets two time slices • And so on • If a process has been in the lowest-priority queue for a long time, it can be promoted back up the priorities • Windows and macOS use multilevel feedback queues • Linux instead uses the Completely Fair Scheduler (CFS)
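A worked count of why longer slices in lower queues help. It assumes the quantum doubles at each level (1, 2, 4, 8, ...), which is one reading of "and so on" (the slide could equally mean 1, 2, 3, ...), ignores competition from other processes, and does not model promotion. A process needing 20 units is dispatched 5 times with four queues (slices of 1 + 2 + 4 + 8 + 8) rather than 20 times with a fixed quantum of 1, so it has to wait for its turn far less often. The function names are hypothetical.

```c
/* Quantum for a given queue level: doubling (1, 2, 4, ...) or fixed at 1. */
static int quantum_at(int level, int doubling)
{
    return doubling ? 1 << level : 1;
}

/* How many times a process needing `burst` units is dispatched before it
   completes, if it is demoted after each full quantum and stays in the
   lowest queue (num_queues - 1) once it gets there. */
int dispatches_needed(int burst, int num_queues, int doubling)
{
    int level = 0, count = 0;
    while (burst > 0) {
        burst -= quantum_at(level, doubling);
        count++;
        if (level < num_queues - 1)
            level++;
    }
    return count;
}
```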

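<strategies> • Completely Fair Scheduler • This doesn't use any of the previous strategies • It keeps runnable tasks in a red-black tree (a self-balancing binary search tree) ordered by virtual runtime, roughly the CPU time each task has received, so it won't be covered at this level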

<strategies> • The previous strategies are used by short term schedulers • Long term schedulers may use first come first served to select jobs • Medium term schedulers are part of the swapping process, discussed in the Operating System Principles resources (day 9)

<concurrency> • In concurrent computing, the executions of several processes overlap in time • On multiprocessor systems this can mean true parallel execution • On single-processor systems the processes are interleaved: each is time-sliced, so only one is actually executing at any instant • Managing concurrency is therefore an issue for both multiprogramming and multiprocessor systems

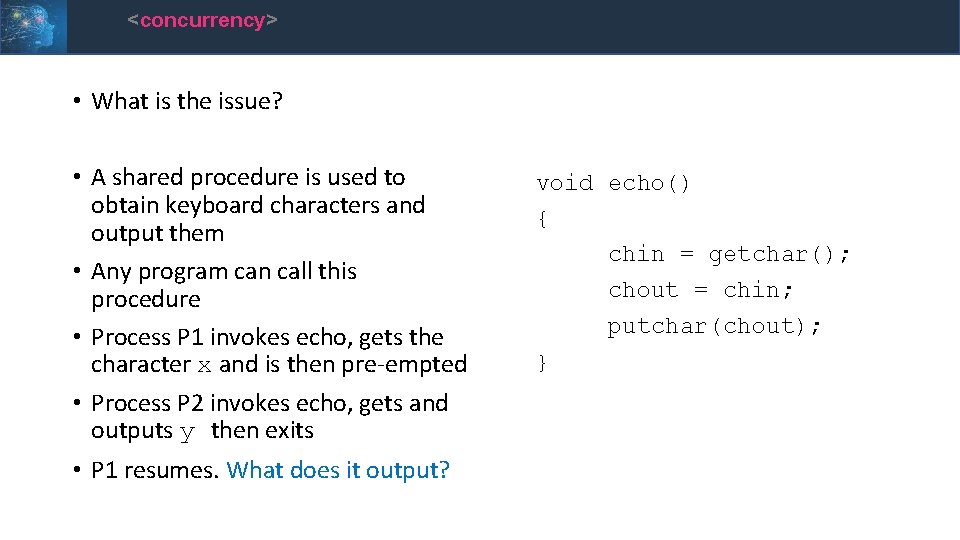

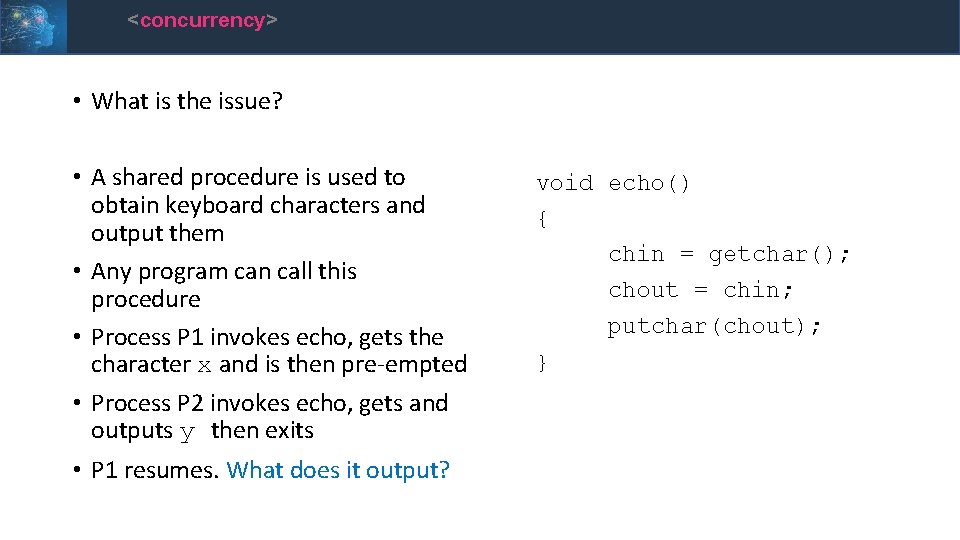

<concurrency> • What is the issue? • A shared procedure, echo, reads a keyboard character and outputs it • Any program can call this procedure • Process P1 invokes echo, gets the character x and is then pre-empted • Process P2 invokes echo, gets and outputs y, then exits • P1 resumes. What does it output?

```c
#include <stdio.h>

char chin, chout;   /* shared globals: every caller of echo() uses the same two variables */

void echo(void)
{
    chin = getchar();   /* read a character from the keyboard */
    chout = chin;
    putchar(chout);     /* write it to the screen */
}
```
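To see what goes wrong, the interleaving described above can be replayed statement by statement in a single thread. The sketch is illustrative: `replay_echo_race`, `put` and the `screen` buffer are hypothetical stand-ins for the two processes and the terminal.

```c
static char chin, chout;          /* shared, exactly as in echo() */
static char screen[8];            /* captured output instead of the terminal */
static int screen_len;

static void put(char c)
{
    screen[screen_len++] = c;
    screen[screen_len] = '\0';
}

const char *replay_echo_race(char p1_input, char p2_input)
{
    screen_len = 0;
    screen[0] = '\0';
    chin = p1_input;   /* P1: chin = getchar();  then P1 is pre-empted */
    chin = p2_input;   /* P2: chin = getchar();  overwrites P1's character */
    chout = chin;      /* P2: chout = chin; */
    put(chout);        /* P2: putchar(chout);  P2 exits */
    chout = chin;      /* P1 resumes: chout = chin;  but chin now holds P2's character */
    put(chout);        /* P1: putchar(chout); */
    return screen;
}
```

P1's character is lost and P2's is printed twice, because both calls share `chin` and `chout`.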

<concurrency> • Another issue is the race condition: • If two processes running on two processors both update a global variable, the final value is whichever was written last, i.e. by the process that "loses" the race • Global resources must therefore be protected and access to them controlled
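The lost update behind a race condition can be replayed step by step. The sketch assumes `counter++` is carried out as a separate load, add and store (typical, but not guaranteed by C) and forces the worst-case interleaving by hand so the result is deterministic; the function name is hypothetical.

```c
static int counter;   /* the shared global variable */

/* Two processors, A and B, each increment counter once. Each has its own
   register; only counter is shared. */
int replay_lost_update(void)
{
    counter = 0;
    int reg_a, reg_b;
    reg_a = counter;      /* A loads 0 */
    reg_b = counter;      /* B loads 0 before A has stored its result */
    reg_a = reg_a + 1;    /* A computes 1 */
    reg_b = reg_b + 1;    /* B computes 1 */
    counter = reg_a;      /* A stores 1 */
    counter = reg_b;      /* B stores 1 last, overwriting A's update */
    return counter;       /* 1, not the expected 2 */
}
```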

<critical section> • A critical section is code that must not be executed by more than one process at a time • When a thread requires access to a shared resource, it must enter a critical section • Within the critical section, mutual exclusion is achieved by locking the resource for the duration of the access • This can be done in hardware by disabling interrupts. Is this a good idea?
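User programs cannot disable interrupts, so in practice they protect a critical section with a lock provided by the OS or thread library. A minimal sketch using a POSIX threads mutex (compile with `-pthread`); the shared counter and function names are hypothetical.

```c
#include <pthread.h>

static long shared_counter;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;
static int iterations;

static void *worker(void *arg)
{
    (void)arg;
    for (int i = 0; i < iterations; i++) {
        pthread_mutex_lock(&counter_lock);     /* enter the critical section */
        shared_counter++;                      /* only one thread at a time here */
        pthread_mutex_unlock(&counter_lock);   /* leave the critical section */
    }
    return NULL;
}

/* Runs two threads that each increment the counter n times. */
long run_two_workers(int n)
{
    pthread_t t1, t2;
    shared_counter = 0;
    iterations = n;
    pthread_create(&t1, NULL, worker, NULL);
    pthread_create(&t2, NULL, worker, NULL);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return shared_counter;   /* always 2 * n with the lock in place */
}
```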

<compare and swap> • x86 and other processors have a compare and swap instruction • This atomically compares a value in memory against a test value • If they match, the memory value is replaced by a new value and the process is allowed into the critical section • If they don't match, the process busy-waits, repeatedly re-checking the value until the swap succeeds • While simple, this has issues: • CPU cycles are wasted while busy-waiting • Starvation and deadlock can occur
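A minimal spinlock built on C11's `atomic_compare_exchange_strong` from `<stdatomic.h>`, which compilers usually implement on x86 with a locked `cmpxchg` instruction. The lock-word convention (0 free, 1 held) and the function names are assumptions for illustration.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* One compare-and-swap attempt: if *lock is 0 (free), atomically set it to
   1 (held) and return true; otherwise leave it unchanged and return false. */
bool spin_trylock(atomic_int *lock)
{
    int expected = 0;   /* the test value */
    return atomic_compare_exchange_strong(lock, &expected, 1);
}

/* Busy-wait until the compare-and-swap succeeds. The loop burns CPU cycles
   and gives no fairness guarantee, so a waiter can starve. */
void spin_lock(atomic_int *lock)
{
    while (!spin_trylock(lock))
        ;
}

void spin_unlock(atomic_int *lock)
{
    atomic_store(lock, 0);
}
```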

<software solutions> • Semaphores • A semaphore is a variable that indicates whether a resource is available • A process wanting to enter a critical section checks the semaphore • For instance, if the semaphore is 0 the resource is locked; if it is 1 the process can enter the critical section • Counting semaphores can be used where a limited number of processes may access a resource at once. How would this work?
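A sketch of the counting-semaphore idea using POSIX unnamed semaphores (`sem_init`, `sem_trywait`, `sem_post`). Compile with `-pthread`; unnamed semaphores are not supported on some platforms, such as macOS. The count starts at the number of processes allowed in at once. `demo_counting_semaphore` is a hypothetical helper that uses non-blocking waits so it can run in a single thread.

```c
#include <semaphore.h>

/* Makes `requests` non-blocking attempts to enter a resource that allows
   `capacity` users at once and returns how many got in. Then one user
   leaves and *after_release reports whether another could enter. */
int demo_counting_semaphore(unsigned capacity, int requests, int *after_release)
{
    sem_t places;
    if (sem_init(&places, 0, capacity) != 0)   /* 0: shared between threads of one process */
        return -1;
    int admitted = 0;
    for (int i = 0; i < requests; i++)
        if (sem_trywait(&places) == 0)         /* decrement if count > 0, else fail at once */
            admitted++;
    sem_post(&places);                          /* one user leaves: increment */
    *after_release = (sem_trywait(&places) == 0);
    sem_destroy(&places);
    return admitted;
}
```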

<software solutions> • Semaphores are powerful and flexible; however, calls to them may be scattered throughout the code, making it difficult to check that they operate correctly • An alternative solution is the monitor (supported in C# and Java) • This is code that controls access to the resource • Only one process at a time may be inside the monitor • Other processes must wait in a queue to enter the monitor • When a process exits the monitor, another enters from the queue • If a process inside the monitor has to wait for a condition, it must release the monitor and is only put back in the queue once the condition has been signalled
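C has no built-in monitor construct, so the sketch below emulates one with a POSIX mutex (only one thread inside at a time) and a condition variable (a thread waits there, having released the mutex, until its condition is signalled). Java's `synchronized` methods with `wait()`/`notifyAll()` follow the same pattern. The shared bank account and all names are hypothetical; compile with `-pthread`.

```c
#include <pthread.h>

typedef struct {
    pthread_mutex_t lock;        /* entry lock: one thread in the monitor */
    pthread_cond_t funds_added;  /* condition queue for waiting withdrawers */
    long balance;                /* only touched while holding lock */
} account_monitor;

void account_init(account_monitor *m, long opening_balance)
{
    pthread_mutex_init(&m->lock, NULL);
    pthread_cond_init(&m->funds_added, NULL);
    m->balance = opening_balance;
}

void account_deposit(account_monitor *m, long amount)
{
    pthread_mutex_lock(&m->lock);
    m->balance += amount;
    pthread_cond_broadcast(&m->funds_added);   /* wake waiters to re-check */
    pthread_mutex_unlock(&m->lock);
}

void account_withdraw(account_monitor *m, long amount)
{
    pthread_mutex_lock(&m->lock);
    while (m->balance < amount)                        /* condition not yet true */
        pthread_cond_wait(&m->funds_added, &m->lock);  /* release monitor and wait */
    m->balance -= amount;
    pthread_mutex_unlock(&m->lock);
}

long account_balance(account_monitor *m)
{
    pthread_mutex_lock(&m->lock);
    long b = m->balance;
    pthread_mutex_unlock(&m->lock);
    return b;
}
```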

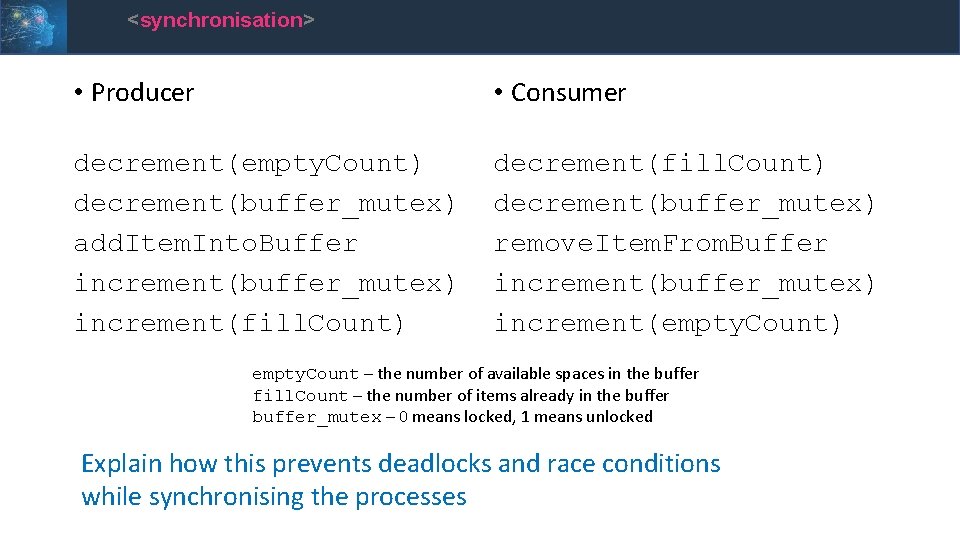

<synchronisation> • The previous methods show how the OS can achieve synchronisation, ensuring that concurrent processes can co-operate • A classic example is the producer/consumer model, where one process puts data into a buffer and another takes it out • Buffer operations must not overlap: only one process can access the buffer at a time • The producer must not try to add data to a full buffer • The consumer must not try to take data from an empty buffer

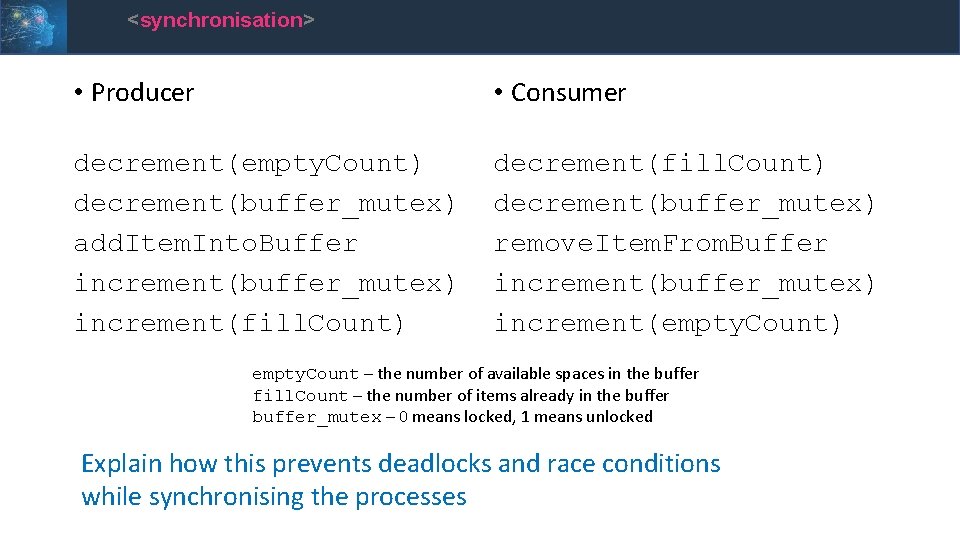

<synchronisation> • Semaphores are used, including a special mutex semaphore (Note: the example ignores the empty/full condition) • A mutex is owned by the process that locks it: only that process can unlock it again, so every other process is locked out until it does • The mutex is initialised to 1 • Semaphores used: • emptyCount – the number of available spaces in the buffer • fillCount – the number of items already in the buffer • buffer_mutex – 0 means locked, 1 means unlocked

<synchronisation>

Producer:

```
decrement(emptyCount)
decrement(buffer_mutex)
addItemIntoBuffer
increment(buffer_mutex)
increment(fillCount)
```

Consumer:

```
decrement(fillCount)
decrement(buffer_mutex)
removeItemFromBuffer
increment(buffer_mutex)
increment(emptyCount)
```

• Explain how this prevents deadlocks and race conditions while synchronising the processes
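The producer/consumer pseudocode can be turned into a runnable C sketch with POSIX semaphores and threads (compile with `-pthread`; unnamed semaphores are not available on some platforms, such as macOS). The buffer size, the use of integers as items and the function names are illustrative assumptions; each semaphore call is commented with the pseudocode step it implements.

```c
#include <pthread.h>
#include <semaphore.h>

#define BUFFER_SIZE 8                /* hypothetical buffer capacity */

static int buffer[BUFFER_SIZE];
static int in_pos, out_pos;          /* next slot to fill / to empty */
static sem_t empty_count;            /* free slots in the buffer */
static sem_t fill_count;             /* items currently in the buffer */
static sem_t buffer_mutex;           /* binary semaphore: 1 = unlocked */
static int items_to_move;
static long consumed_sum;

static void *producer(void *arg)
{
    (void)arg;
    for (int item = 1; item <= items_to_move; item++) {
        sem_wait(&empty_count);              /* decrement(emptyCount): wait for space */
        sem_wait(&buffer_mutex);             /* decrement(buffer_mutex): lock buffer */
        buffer[in_pos] = item;               /* addItemIntoBuffer */
        in_pos = (in_pos + 1) % BUFFER_SIZE;
        sem_post(&buffer_mutex);             /* increment(buffer_mutex): unlock */
        sem_post(&fill_count);               /* increment(fillCount): one more item */
    }
    return NULL;
}

static void *consumer(void *arg)
{
    (void)arg;
    for (int i = 0; i < items_to_move; i++) {
        sem_wait(&fill_count);               /* decrement(fillCount): wait for an item */
        sem_wait(&buffer_mutex);             /* decrement(buffer_mutex): lock buffer */
        int item = buffer[out_pos];          /* removeItemFromBuffer */
        out_pos = (out_pos + 1) % BUFFER_SIZE;
        sem_post(&buffer_mutex);             /* increment(buffer_mutex): unlock */
        sem_post(&empty_count);              /* increment(emptyCount): one more space */
        consumed_sum += item;                /* use the item outside the lock */
    }
    return NULL;
}

/* Moves the items 1..n from one producer thread to one consumer thread and
   returns the consumer's total, which should be n * (n + 1) / 2. */
long run_producer_consumer(int n)
{
    pthread_t prod, cons;
    items_to_move = n;
    in_pos = out_pos = 0;
    consumed_sum = 0;
    sem_init(&empty_count, 0, BUFFER_SIZE);
    sem_init(&fill_count, 0, 0);
    sem_init(&buffer_mutex, 0, 1);
    pthread_create(&prod, NULL, producer, NULL);
    pthread_create(&cons, NULL, consumer, NULL);
    pthread_join(prod, NULL);
    pthread_join(cons, NULL);
    sem_destroy(&empty_count);
    sem_destroy(&fill_count);
    sem_destroy(&buffer_mutex);
    return consumed_sum;
}
```

The ordering matters: each side waits on its counting semaphore before taking `buffer_mutex`, so neither ever holds the buffer lock while waiting for space or for an item.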

<conclusion> • The dispatcher and scheduler allow multiprogramming on both single-processor and multiprocessor systems • Problems caused by threads and processes using shared resources concurrently are managed with hardware support (such as compare and swap) and programming techniques (such as semaphores and monitors) • Synchronisation between processes can be achieved using semaphores • That ends all the sessions for this module