Computer Architecture Lecture 9 GPUs and GPGPU Programming

![How Can You Exploit Parallelism Here? for (i=0; i < N; i++) C[i] = How Can You Exploit Parallelism Here? for (i=0; i < N; i++) C[i] =](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-6.jpg)

![for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 1: for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 1:](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-7.jpg)

![for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 2: for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 2:](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-8.jpg)

![for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 3: for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 3:](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-9.jpg)

![for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 3: for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 3:](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-10.jpg)

![Multithreading of Warps n n n for (i=0; i < N; i++) C[i] = Multithreading of Warps n n n for (i=0; i < N; i++) C[i] =](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-15.jpg)

![Warp Execution (Recall the Slide) 32 -thread warp executing ADD A[tid], B[tid] C[tid] Execution Warp Execution (Recall the Slide) 32 -thread warp executing ADD A[tid], B[tid] C[tid] Execution](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-19.jpg)

![Indexing and Memory Access n Image layout in memory q q Row-major layout Image[j][i] Indexing and Memory Access n Image layout in memory q q Row-major layout Image[j][i]](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-52.jpg)

![Data Reuse n Shared memory tiling __shared__ int l_data[(L_SIZE+2)*(L_SIZE+2)]; … Load tile into shared Data Reuse n Shared memory tiling __shared__ int l_data[(L_SIZE+2)*(L_SIZE+2)]; … Load tile into shared](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-67.jpg)

![Vector Reduction n Naïve mapping __shared__ float partial. Sum[] unsigned int t = thread. Vector Reduction n Naïve mapping __shared__ float partial. Sum[] unsigned int t = thread.](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-75.jpg)

![Vector Reduction n Divergence-free mapping __shared__ float partial. Sum[] unsigned int t = thread. Vector Reduction n Divergence-free mapping __shared__ float partial. Sum[] unsigned int t = thread.](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-77.jpg)

- Slides: 88

Computer Architecture Lecture 9: GPUs and GPGPU Programming Prof. Onur Mutlu ETH Zürich Fall 2017 19 October 2017

Agenda for Today n GPUs n Introduction to GPU Programming Digitaltechnik (Spring 2017) You. Tube videos Lecture 21: GPUs https: //youtu. be/MUPTdxl 3 JKs? t=23 m 17 s 2

GPUs (Graphics Processing Units)�

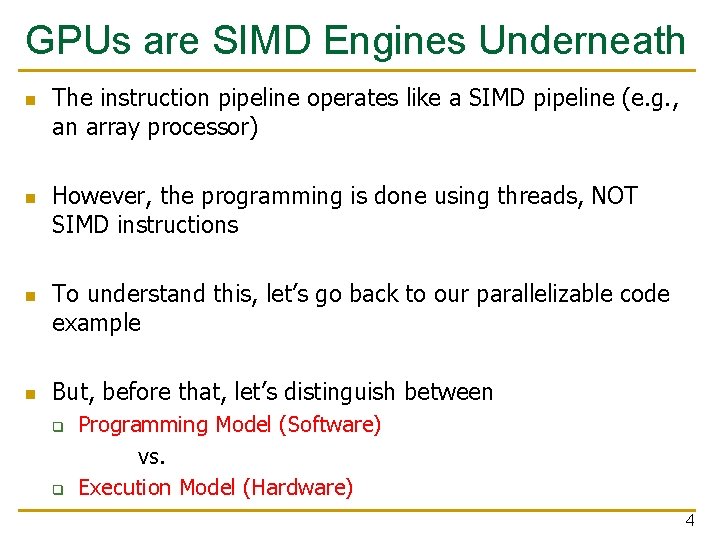

GPUs are SIMD Engines Underneath n n The instruction pipeline operates like a SIMD pipeline (e. g. , an array processor) However, the programming is done using threads, NOT SIMD instructions To understand this, let’s go back to our parallelizable code example But, before that, let’s distinguish between q q Programming Model (Software) vs. Execution Model (Hardware) 4

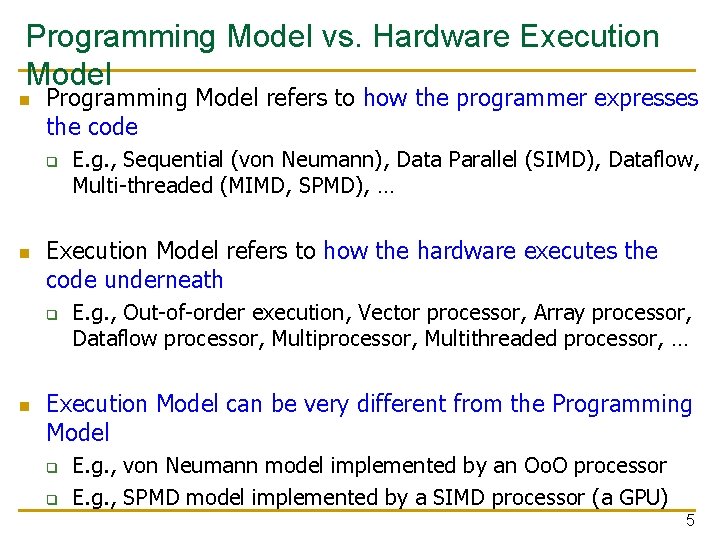

Programming Model vs. Hardware Execution Model n Programming Model refers to how the programmer expresses the code q n Execution Model refers to how the hardware executes the code underneath q n E. g. , Sequential (von Neumann), Data Parallel (SIMD), Dataflow, Multi-threaded (MIMD, SPMD), … E. g. , Out-of-order execution, Vector processor, Array processor, Dataflow processor, Multithreaded processor, … Execution Model can be very different from the Programming Model q q E. g. , von Neumann model implemented by an Oo. O processor E. g. , SPMD model implemented by a SIMD processor (a GPU) 5

![How Can You Exploit Parallelism Here for i0 i N i Ci How Can You Exploit Parallelism Here? for (i=0; i < N; i++) C[i] =](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-6.jpg)

How Can You Exploit Parallelism Here? for (i=0; i < N; i++) C[i] = A[i] + B[i]; Scalar Sequential Code load Iter. 1 add Let’s examine three programming options to exploit instruction-level parallelism present in this sequential code: store 1. Sequential (SISD) load Iter. 2 2. Data-Parallel (SIMD) add store 3. Multithreaded (MIMD/SPMD) 6

![for i0 i N i Ci Ai Bi Prog Model 1 for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 1:](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-7.jpg)

for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 1: Sequential (SISD) Scalar Sequential Code n load Iter. 1 n n Can be executed on a: Pipelined processor Out-of-order execution processor add q store q load Iter. 2 add store q n Independent instructions executed when ready Different iterations are present in the instruction window and can execute in parallel in multiple functional units In other words, the loop is dynamically unrolled by the hardware Superscalar or VLIW processor q Can fetch and execute multiple instructions per cycle 7

![for i0 i N i Ci Ai Bi Prog Model 2 for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 2:](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-8.jpg)

for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 2: Data Parallel (SIMD) Vector Instruction Scalar Sequential Code load load Iter. 1 load Vectorized Code VLD A V 1 VLD B V 2 add add VADD store VST load Iter. 2 1 add store load V 1 + V 2 V 3 C Iter. Realization: Each iteration is independent 2 Idea: Programmer or compiler generates a SIMD instruction to execute the same instruction from all iterations across different data Best executed by a SIMD processor (vector, array) 8

![for i0 i N i Ci Ai Bi Prog Model 3 for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 3:](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-9.jpg)

for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 3: Multithreaded Scalar Sequential Code load load Iter. 1 load add add store load Iter. 2 1 add store load Iter. Realization: Each iteration is independent 2 Idea: Programmer or compiler generates a thread to execute each iteration. Each thread does the same thing (but on different data) Can be executed on a MIMD machine 9

![for i0 i N i Ci Ai Bi Prog Model 3 for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 3:](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-10.jpg)

for (i=0; i < N; i++) C[i] = A[i] + B[i]; Prog. Model 3: Multithreaded load Iter. 1 load add store Iter. Realization: Each iteration is independent 2 Idea: This Programmer compiler generates a thread particularormodel is also called: to execute each iteration. Each thread does the SPMD: Single Multiple Data same thing (but on Program different data) be on machine Can be executed on a a SIMT SIMD machine Can be executed on a MIMD machine Single Instruction Multiple Thread 10

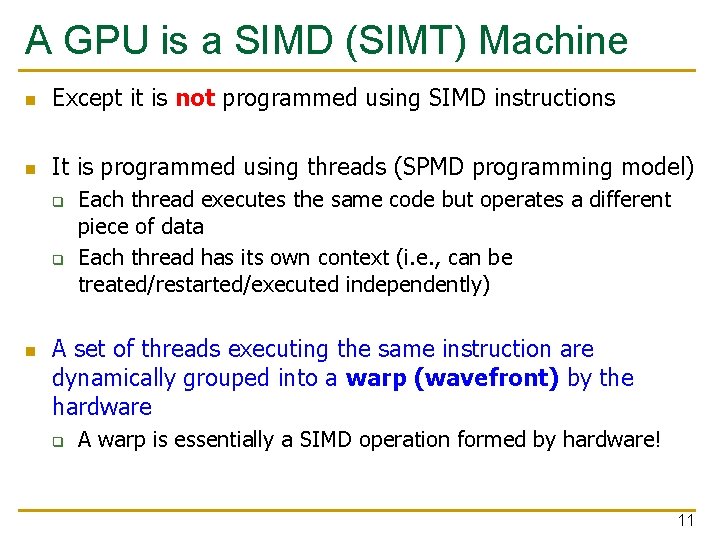

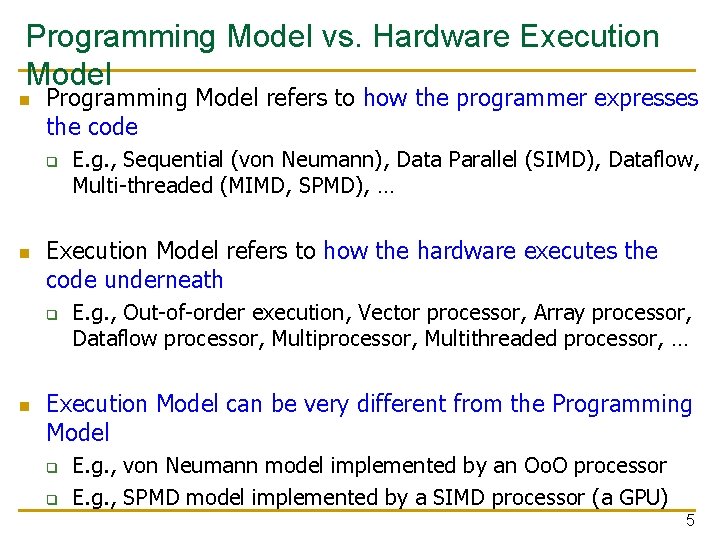

A GPU is a SIMD (SIMT) Machine n Except it is not programmed using SIMD instructions n It is programmed using threads (SPMD programming model) q q n Each thread executes the same code but operates a different piece of data Each thread has its own context (i. e. , can be treated/restarted/executed independently) A set of threads executing the same instruction are dynamically grouped into a warp (wavefront) by the hardware q A warp is essentially a SIMD operation formed by hardware! 11

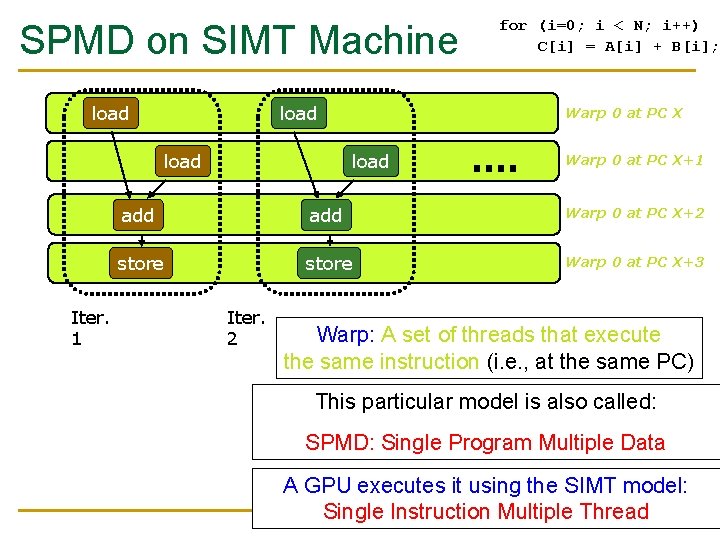

SPMD on SIMT Machine load Warp 0 at PC X load Iter. 1 for (i=0; i < N; i++) C[i] = A[i] + B[i]; load Warp 0 at PC X+1 add Warp 0 at PC X+2 store Warp 0 at PC X+3 Iter. 2 Warp: A set of threads that execute Realization: Each iteration is independent the same instruction (i. e. , at the same PC) Idea: This Programmer compiler generates a thread particularormodel is also called: to execute each iteration. Each thread does the SPMD: Multiple Data same thing Single (but on Program different data) A GPU executes the SIMT model: Can be executed on a SIMD machine Can be executed onitausing MIMD machine Single Instruction Multiple Thread 12

Graphics Processing Units SIMD not Exposed to Programmer (SIMT)

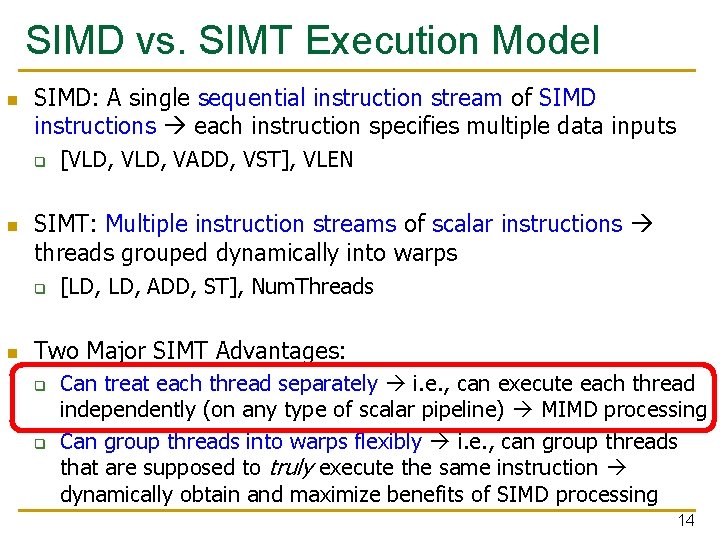

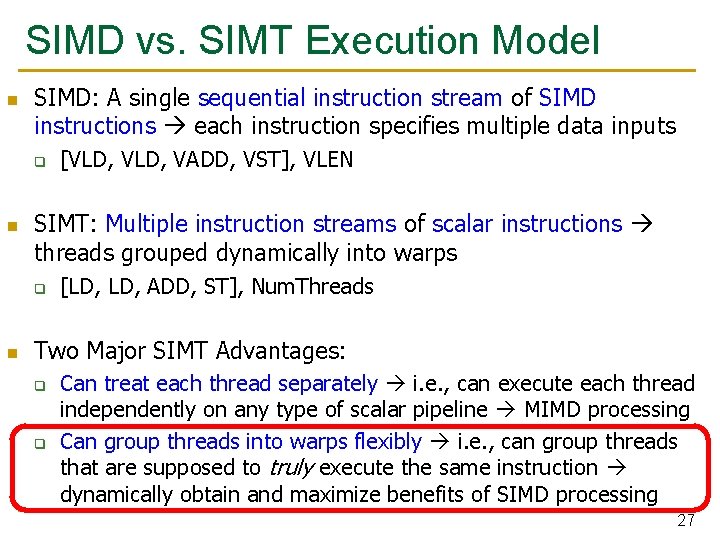

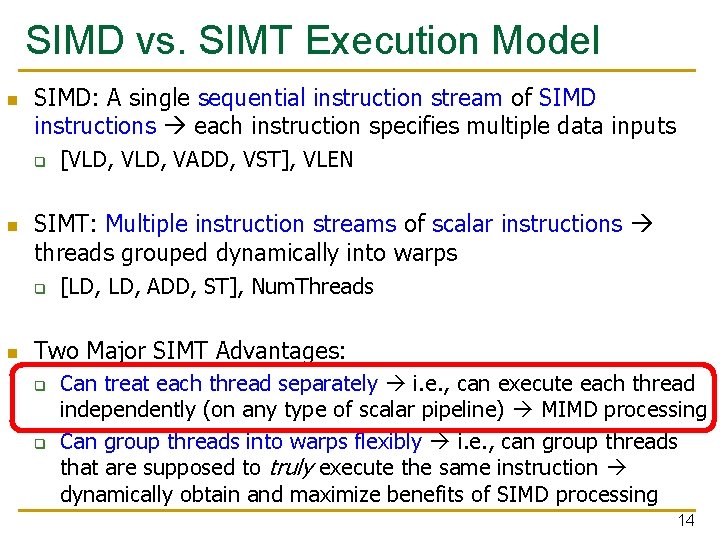

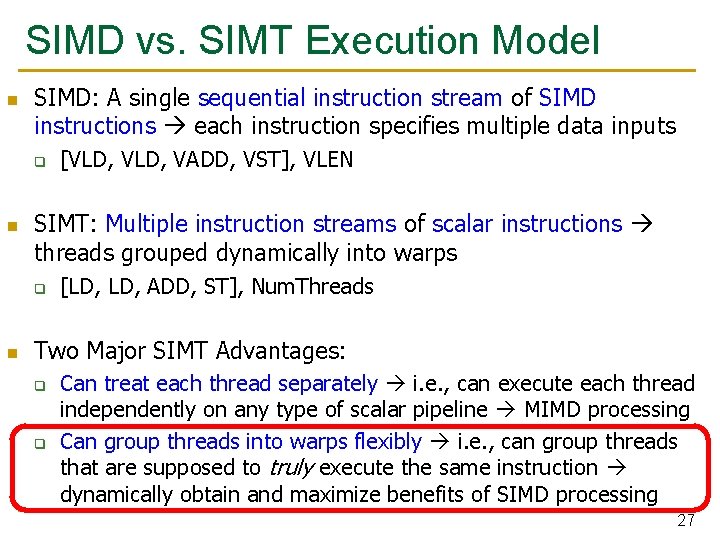

SIMD vs. SIMT Execution Model n SIMD: A single sequential instruction stream of SIMD instructions each instruction specifies multiple data inputs q n SIMT: Multiple instruction streams of scalar instructions threads grouped dynamically into warps q n [VLD, VADD, VST], VLEN [LD, ADD, ST], Num. Threads Two Major SIMT Advantages: q q Can treat each thread separately i. e. , can execute each thread independently (on any type of scalar pipeline) MIMD processing Can group threads into warps flexibly i. e. , can group threads that are supposed to truly execute the same instruction dynamically obtain and maximize benefits of SIMD processing 14

![Multithreading of Warps n n n for i0 i N i Ci Multithreading of Warps n n n for (i=0; i < N; i++) C[i] =](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-15.jpg)

Multithreading of Warps n n n for (i=0; i < N; i++) C[i] = A[i] + B[i]; Assume a warp consists of 32 threads If you have 32 K iterations, and 1 iteration/thread 1 K warps Warps can be interleaved on the same pipeline Fine grained multithreading of warps load add store Iter. 1 33 20*32 + 1 0 at PC X Warp 1 Warp 20 at PC X+2 Iter. 2 34 20*32 +2 15

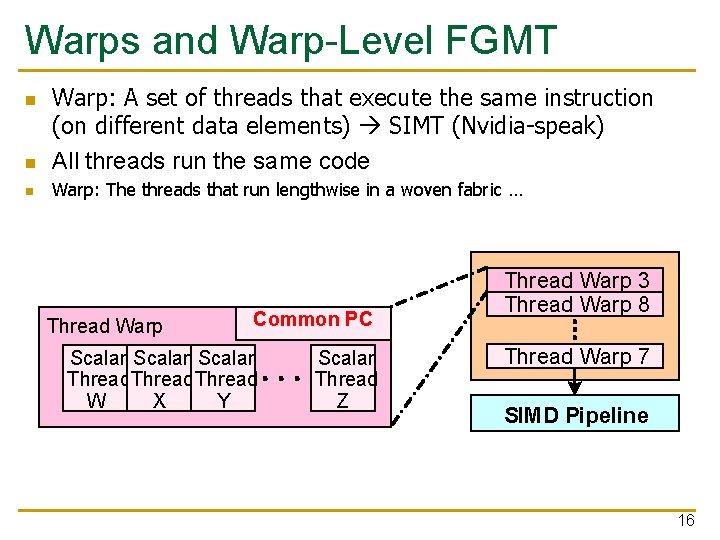

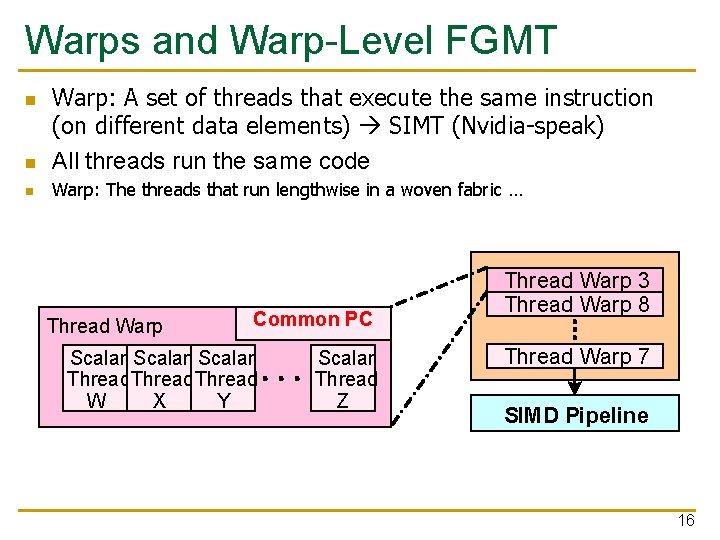

Warps and Warp-Level FGMT n Warp: A set of threads that execute the same instruction (on different data elements) SIMT (Nvidia-speak) All threads run the same code n Warp: The threads that run lengthwise in a woven fabric … n Thread Warp Common PC Scalar Thread W X Y Scalar Thread Z Thread Warp 3 Thread Warp 8 Thread Warp 7 SIMD Pipeline 16

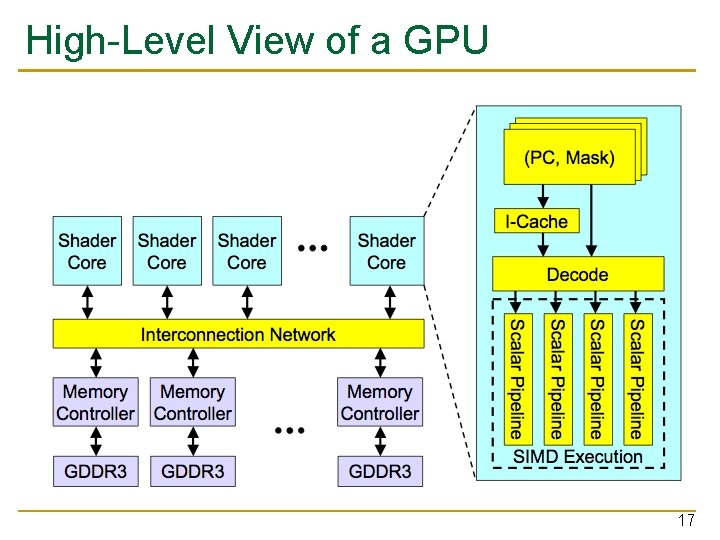

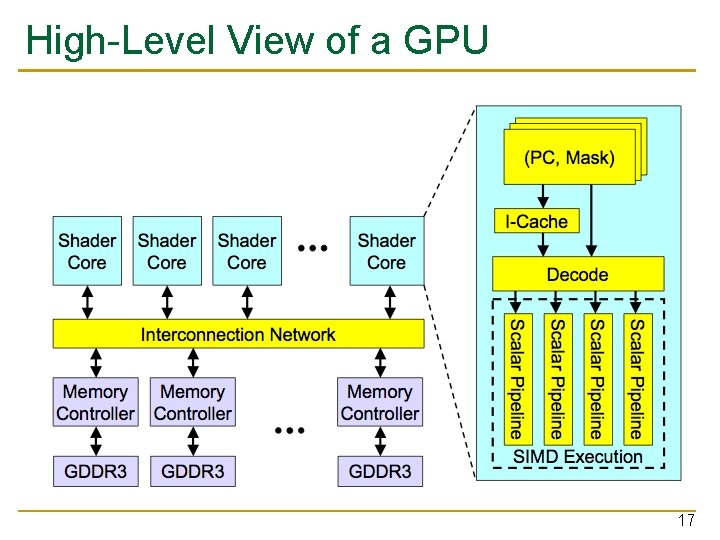

High-Level View of a GPU 17

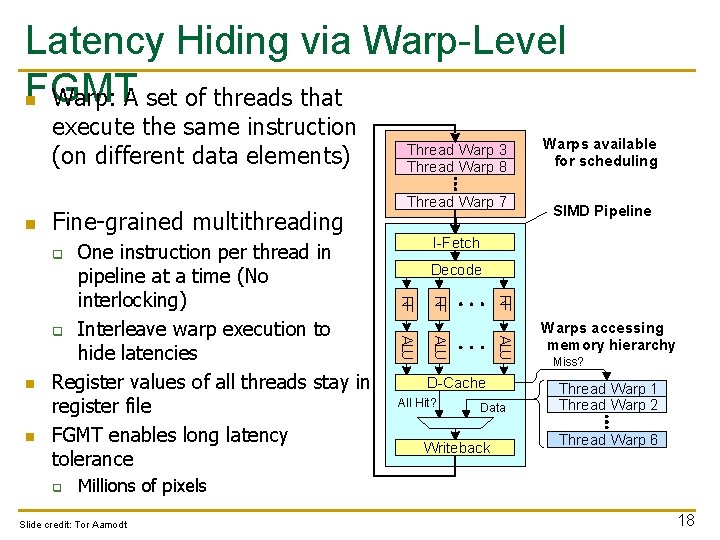

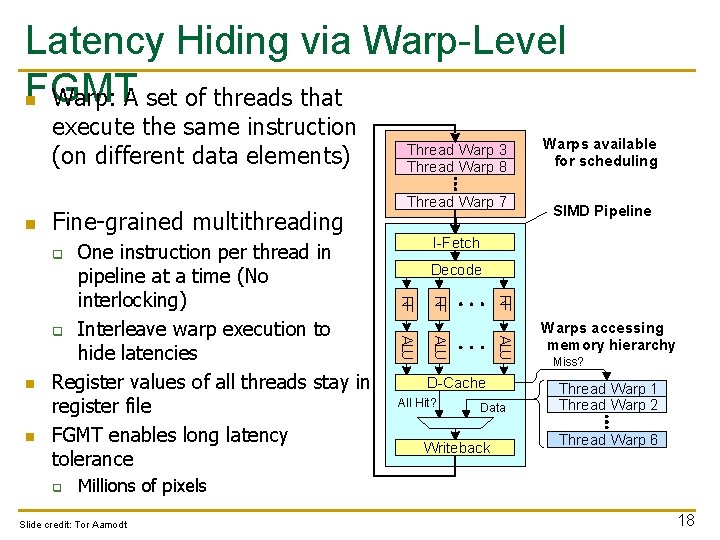

Latency Hiding via Warp-Level FGMT n Warp: A set of threads that execute the same instruction (on different data elements) n Fine-grained multithreading Thread Warp 7 RF ALU q ALU n SIMD Pipeline Decode RF n Warps available for scheduling I-Fetch q RF One instruction per thread in pipeline at a time (No interlocking) q Interleave warp execution to hide latencies Register values of all threads stay in register file FGMT enables long latency tolerance Thread Warp 3 Thread Warp 8 D-Cache All Hit? Data Writeback Warps accessing memory hierarchy Miss? Thread Warp 1 Thread Warp 2 Thread Warp 6 Millions of pixels Slide credit: Tor Aamodt 18

![Warp Execution Recall the Slide 32 thread warp executing ADD Atid Btid Ctid Execution Warp Execution (Recall the Slide) 32 -thread warp executing ADD A[tid], B[tid] C[tid] Execution](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-19.jpg)

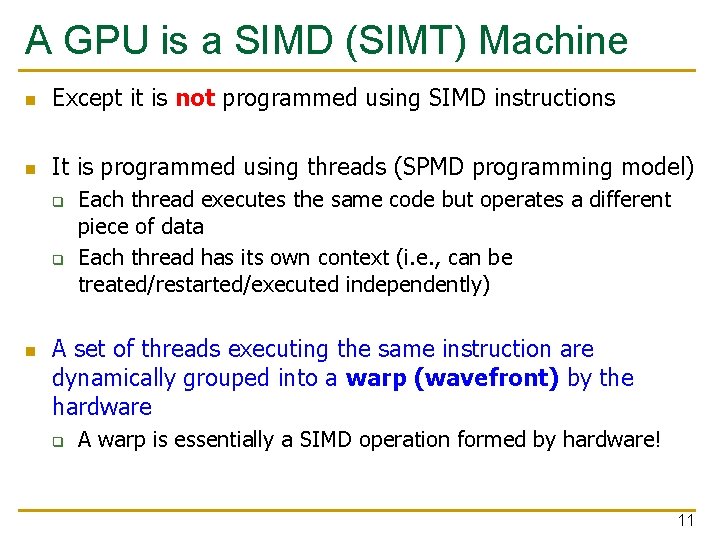

Warp Execution (Recall the Slide) 32 -thread warp executing ADD A[tid], B[tid] C[tid] Execution using one pipelined functional unit Execution using four pipelined functional units A[6] B[6] A[24] B[24] A[25] B[25] A[26] B[26] A[27] B[27] A[5] B[5] A[20] B[20] A[21] B[21] A[22] B[22] A[23] B[23] A[4] B[4] A[16] B[16] A[17] B[17] A[18] B[18] A[19] B[19] A[3] B[3] A[12] B[12] A[13] B[13] A[14] B[14] A[15] B[15] C[2] C[8] C[9] C[10] C[11] C[4] C[5] C[6] C[7] C[0] C[1] C[2] C[3] Slide credit: Krste Asanovic 19

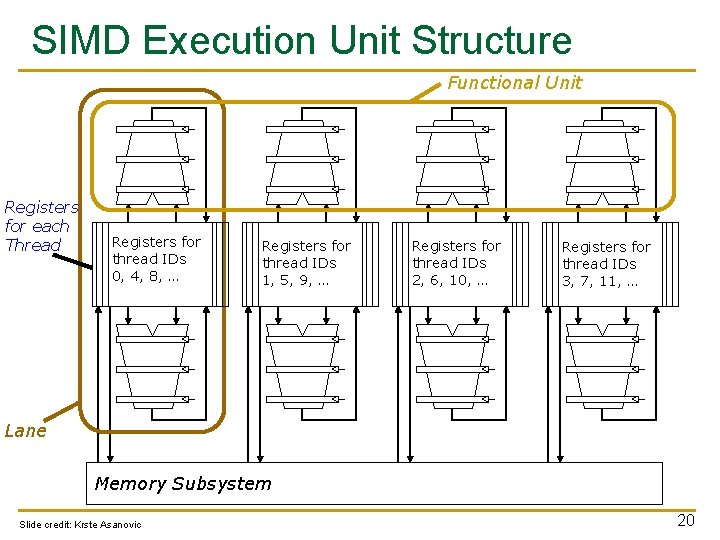

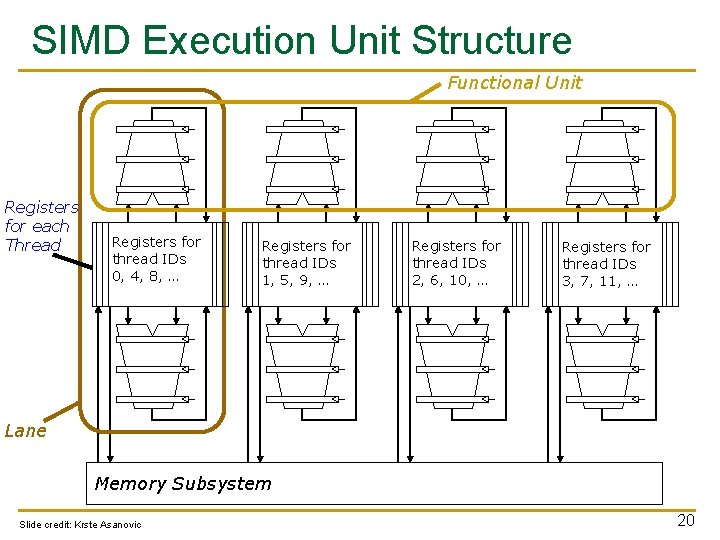

SIMD Execution Unit Structure Functional Unit Registers for each Thread Registers for thread IDs 0, 4, 8, … Registers for thread IDs 1, 5, 9, … Registers for thread IDs 2, 6, 10, … Registers for thread IDs 3, 7, 11, … Lane Memory Subsystem Slide credit: Krste Asanovic 20

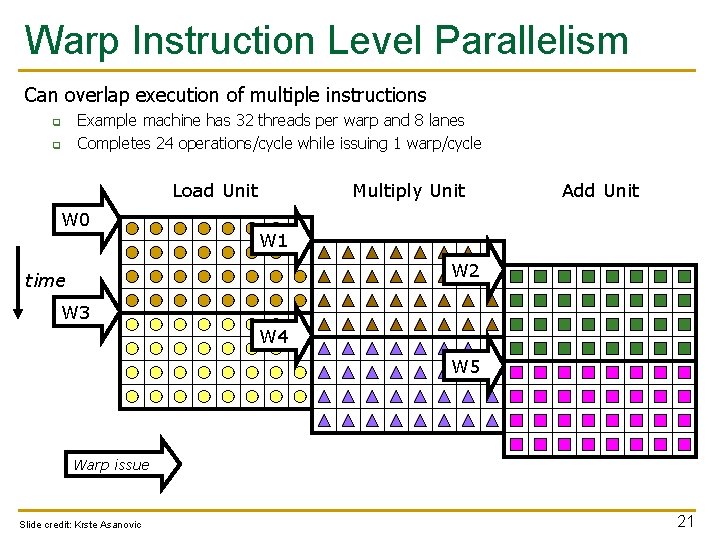

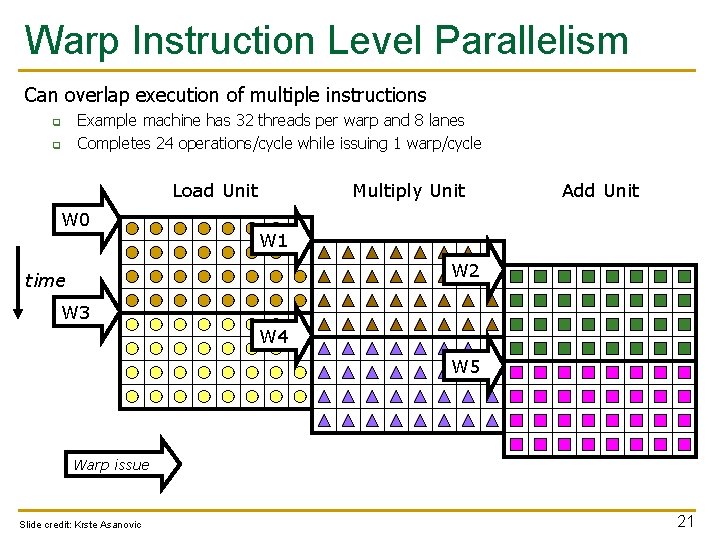

Warp Instruction Level Parallelism Can overlap execution of multiple instructions q q Example machine has 32 threads per warp and 8 lanes Completes 24 operations/cycle while issuing 1 warp/cycle Load Unit W 0 Multiply Unit Add Unit W 1 W 2 time W 3 W 4 W 5 Warp issue Slide credit: Krste Asanovic 21

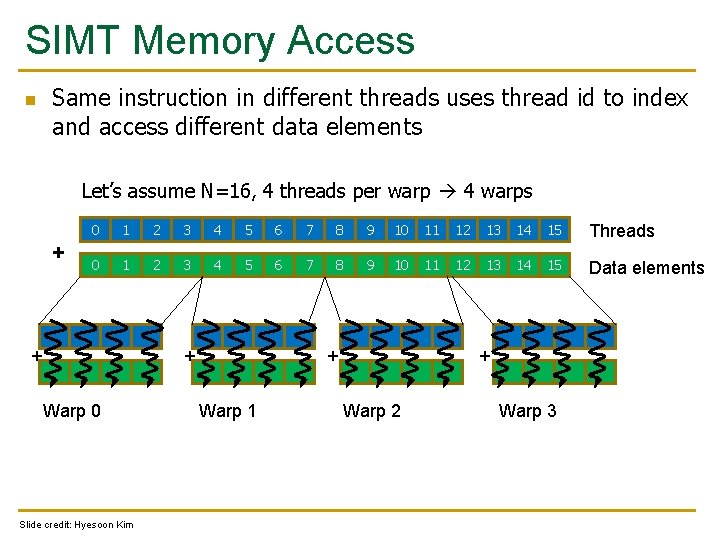

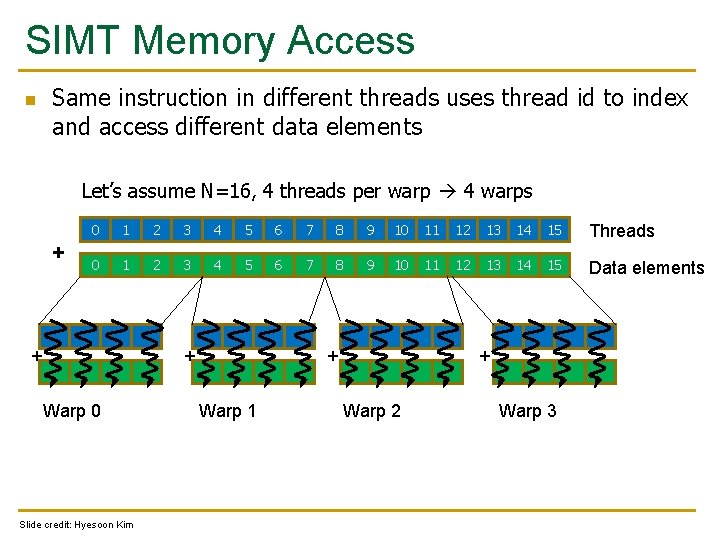

SIMT Memory Access Same instruction in different threads uses thread id to index and access different data elements n Let’s assume N=16, 4 threads per warp 4 warps + 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Threads 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Data elements + Warp 0 Slide credit: Hyesoon Kim + + Warp 1 + Warp 2 Warp 3

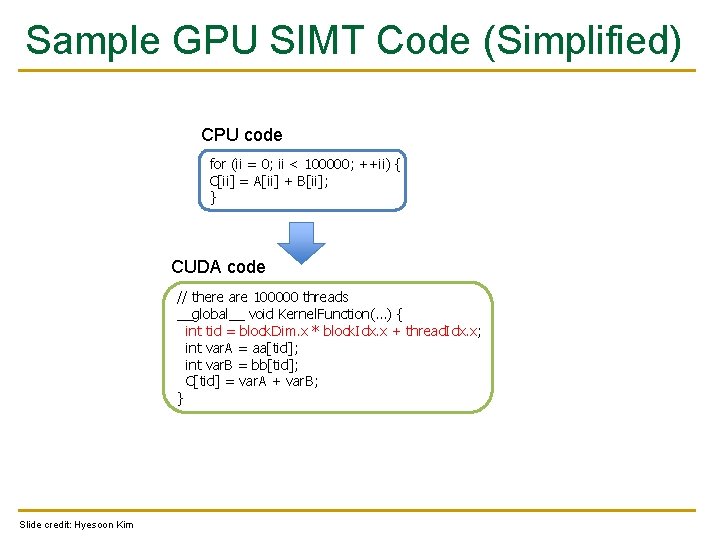

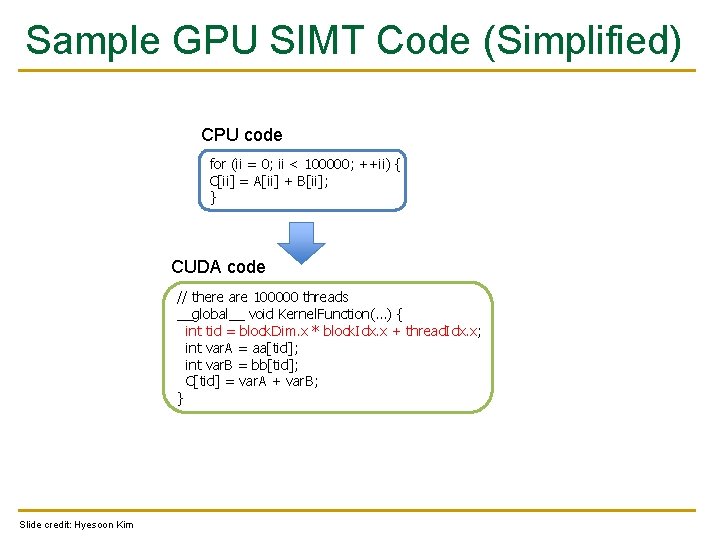

Sample GPU SIMT Code (Simplified) CPU code for (ii = 0; ii < 100000; ++ii) { C[ii] = A[ii] + B[ii]; } CUDA code // there are 100000 threads __global__ void Kernel. Function(…) { int tid = block. Dim. x * block. Idx. x + thread. Idx. x; int var. A = aa[tid]; int var. B = bb[tid]; C[tid] = var. A + var. B; } Slide credit: Hyesoon Kim

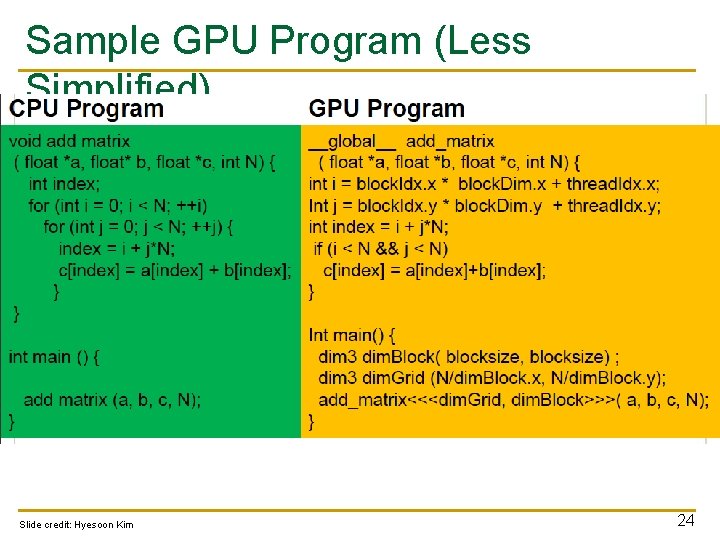

Sample GPU Program (Less Simplified) Slide credit: Hyesoon Kim 24

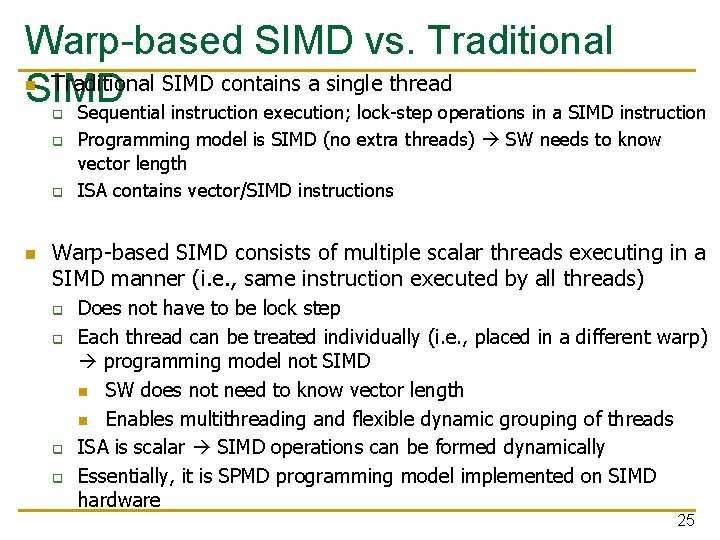

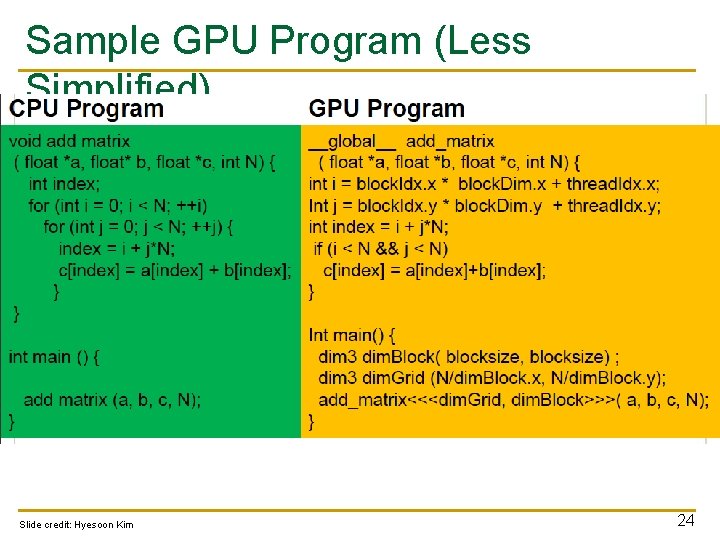

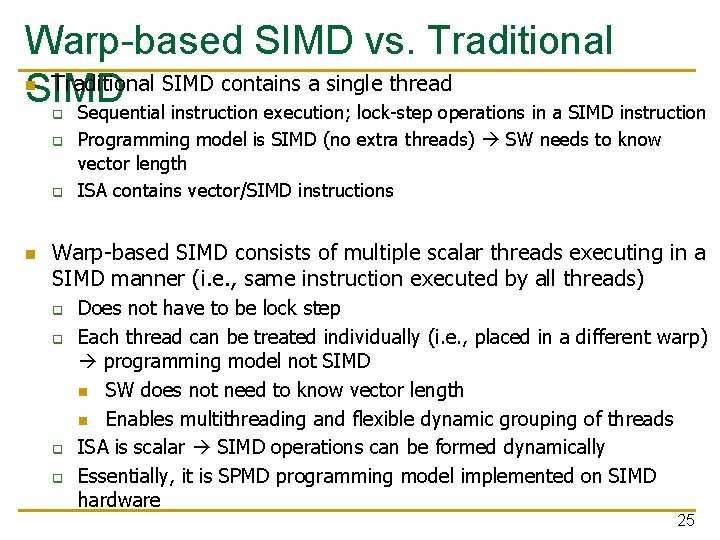

Warp-based SIMD vs. Traditional SIMD contains a single thread SIMD Sequential instruction execution; lock-step operations in a SIMD instruction n q q q n Programming model is SIMD (no extra threads) SW needs to know vector length ISA contains vector/SIMD instructions Warp-based SIMD consists of multiple scalar threads executing in a SIMD manner (i. e. , same instruction executed by all threads) q q Does not have to be lock step Each thread can be treated individually (i. e. , placed in a different warp) programming model not SIMD n SW does not need to know vector length n Enables multithreading and flexible dynamic grouping of threads ISA is scalar SIMD operations can be formed dynamically Essentially, it is SPMD programming model implemented on SIMD hardware 25

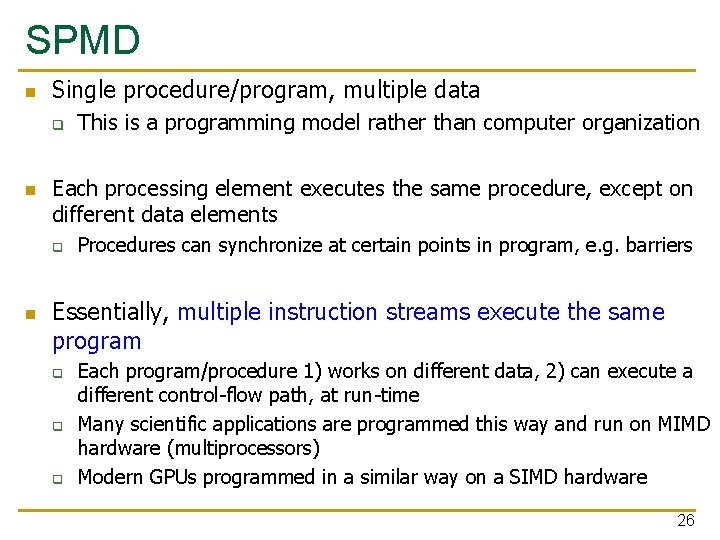

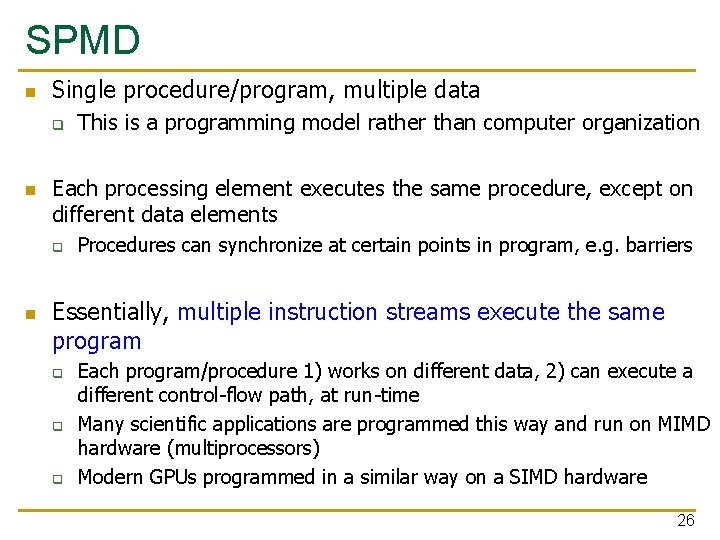

SPMD n Single procedure/program, multiple data q n Each processing element executes the same procedure, except on different data elements q n This is a programming model rather than computer organization Procedures can synchronize at certain points in program, e. g. barriers Essentially, multiple instruction streams execute the same program q q q Each program/procedure 1) works on different data, 2) can execute a different control-flow path, at run-time Many scientific applications are programmed this way and run on MIMD hardware (multiprocessors) Modern GPUs programmed in a similar way on a SIMD hardware 26

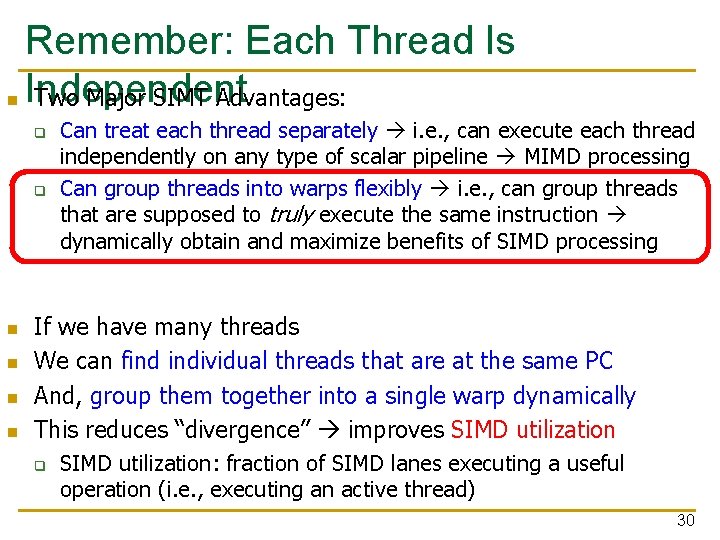

SIMD vs. SIMT Execution Model n SIMD: A single sequential instruction stream of SIMD instructions each instruction specifies multiple data inputs q n SIMT: Multiple instruction streams of scalar instructions threads grouped dynamically into warps q n [VLD, VADD, VST], VLEN [LD, ADD, ST], Num. Threads Two Major SIMT Advantages: q q Can treat each thread separately i. e. , can execute each thread independently on any type of scalar pipeline MIMD processing Can group threads into warps flexibly i. e. , can group threads that are supposed to truly execute the same instruction dynamically obtain and maximize benefits of SIMD processing 27

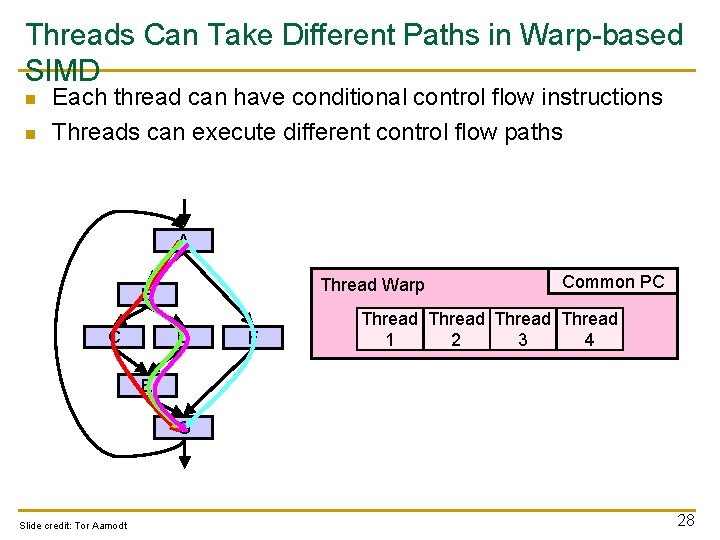

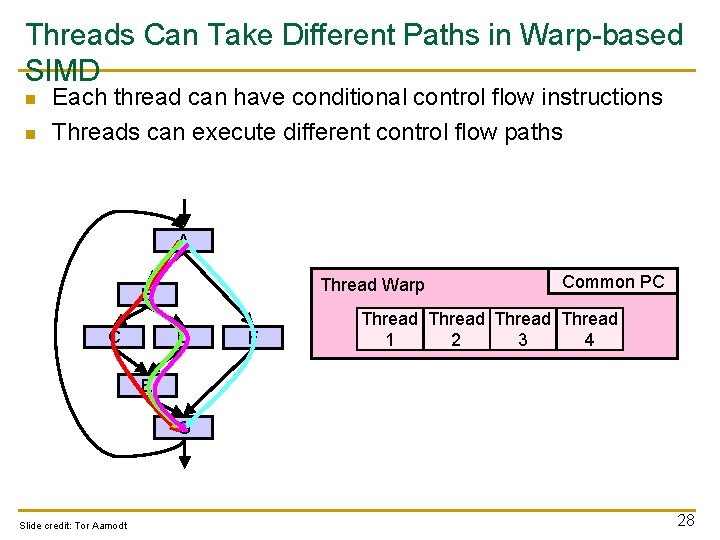

Threads Can Take Different Paths in Warp-based SIMD n n Each thread can have conditional control flow instructions Threads can execute different control flow paths A Thread Warp B C D F Common PC Thread 1 2 3 4 E G Slide credit: Tor Aamodt 28

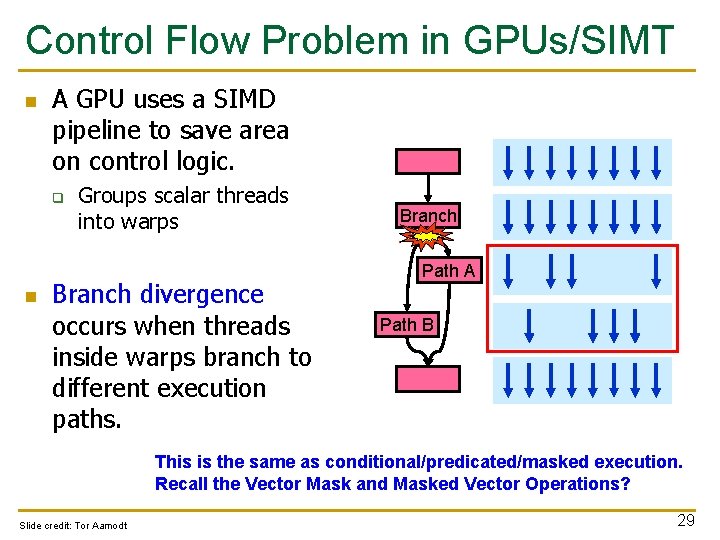

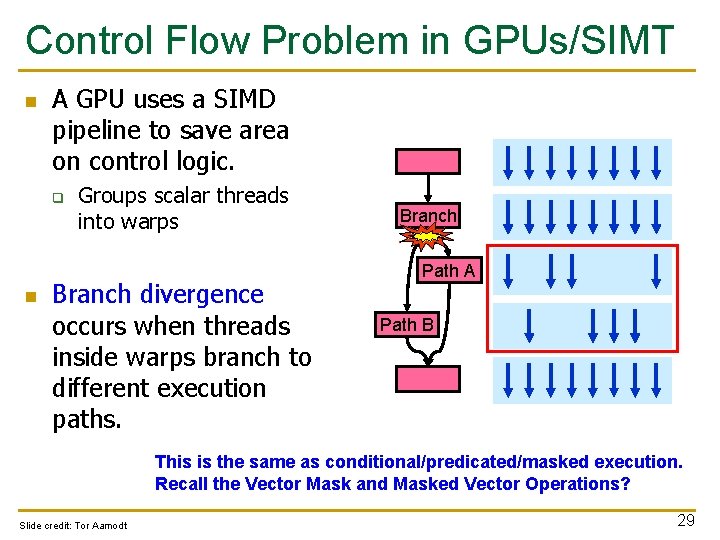

Control Flow Problem in GPUs/SIMT n A GPU uses a SIMD pipeline to save area on control logic. q n Groups scalar threads into warps Branch divergence occurs when threads inside warps branch to different execution paths. Branch Path A Path B This is the same as conditional/predicated/masked execution. Recall the Vector Mask and Masked Vector Operations? Slide credit: Tor Aamodt 29

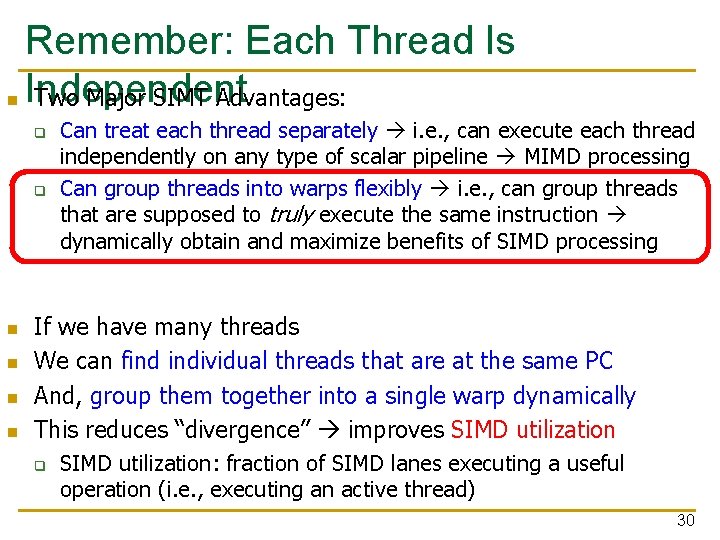

Remember: Each Thread Is n Independent Two Major SIMT Advantages: q q n n Can treat each thread separately i. e. , can execute each thread independently on any type of scalar pipeline MIMD processing Can group threads into warps flexibly i. e. , can group threads that are supposed to truly execute the same instruction dynamically obtain and maximize benefits of SIMD processing If we have many threads We can find individual threads that are at the same PC And, group them together into a single warp dynamically This reduces “divergence” improves SIMD utilization q SIMD utilization: fraction of SIMD lanes executing a useful operation (i. e. , executing an active thread) 30

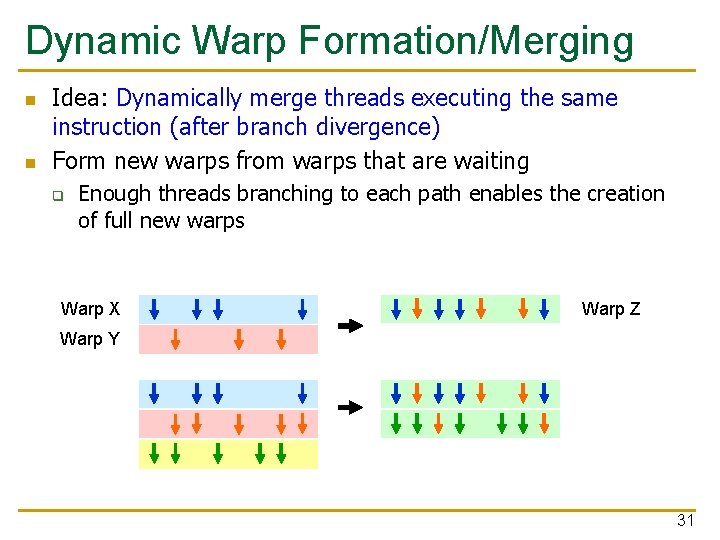

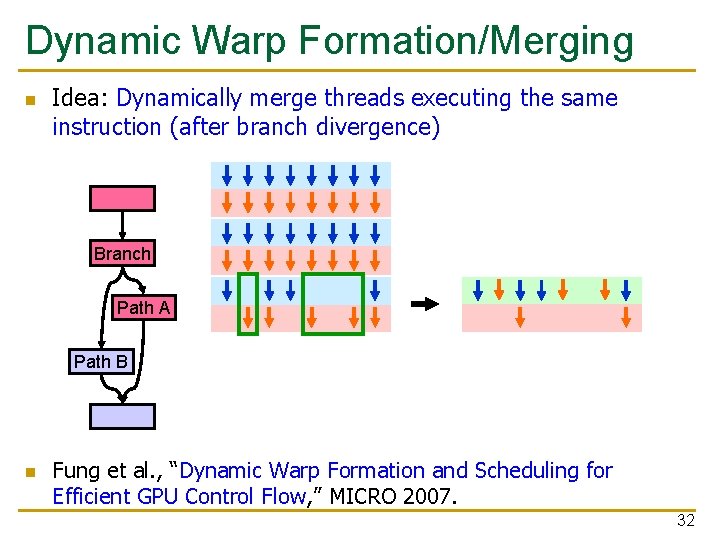

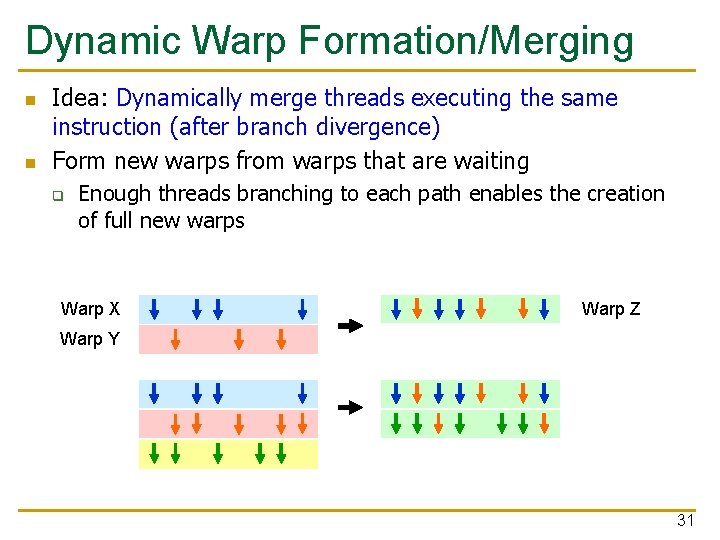

Dynamic Warp Formation/Merging n n Idea: Dynamically merge threads executing the same instruction (after branch divergence) Form new warps from warps that are waiting q Enough threads branching to each path enables the creation of full new warps Warp X Warp Z Warp Y 31

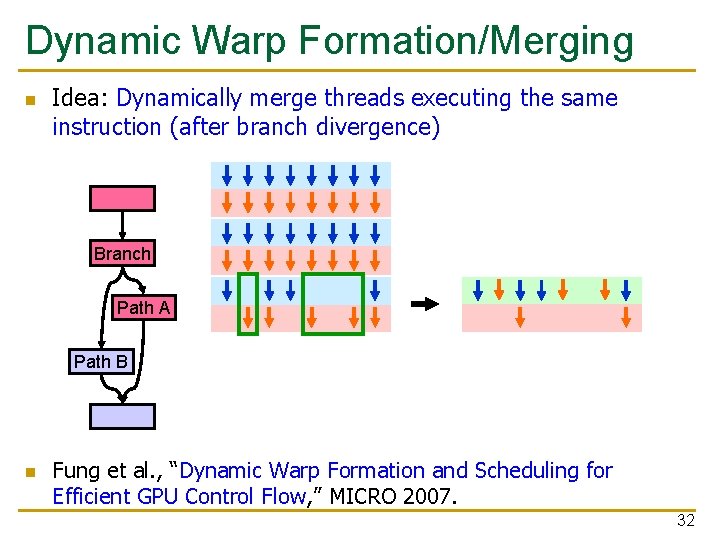

Dynamic Warp Formation/Merging n Idea: Dynamically merge threads executing the same instruction (after branch divergence) Branch Path A Path B n Fung et al. , “Dynamic Warp Formation and Scheduling for Efficient GPU Control Flow, ” MICRO 2007. 32

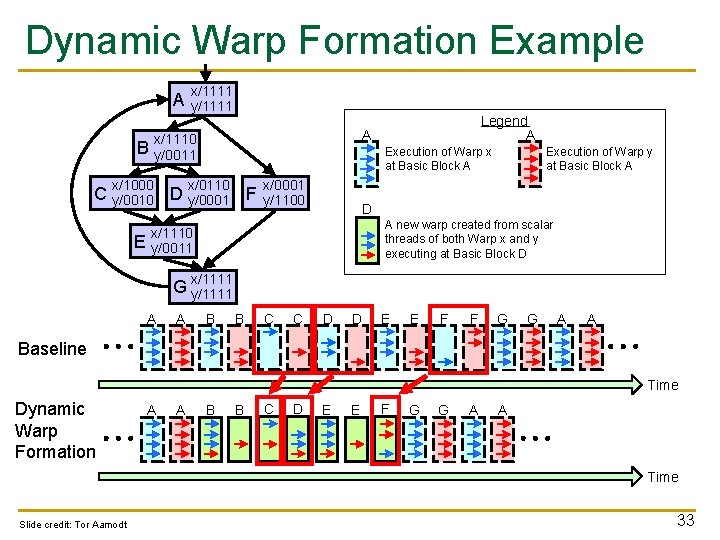

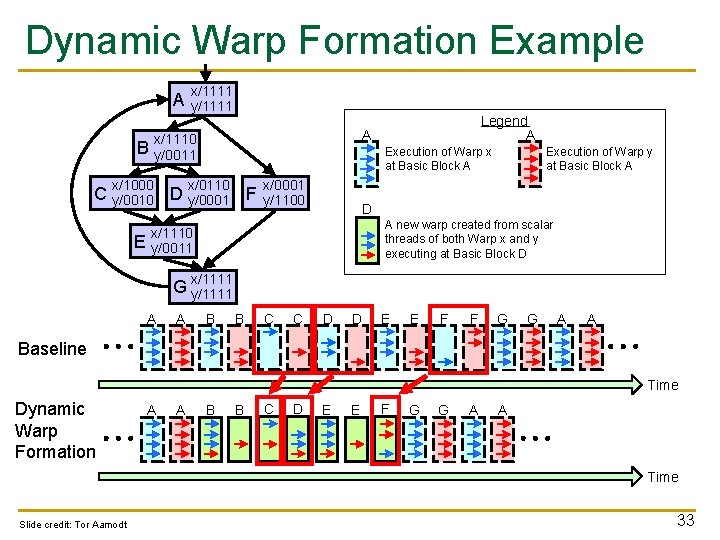

Dynamic Warp Formation Example A x/1111 y/1111 A x/1110 y/0011 B x/1000 Execution of Warp x at Basic Block A x/0110 C y/0010 D y/0001 F E Legend A x/0001 y/1100 Execution of Warp y at Basic Block A D A new warp created from scalar threads of both Warp x and y executing at Basic Block D x/1110 y/0011 x/1111 G y/1111 A A B B C C D D E E F F G G A A Baseline Time Dynamic Warp Formation A A B B C D E E F G G A A Time Slide credit: Tor Aamodt 33

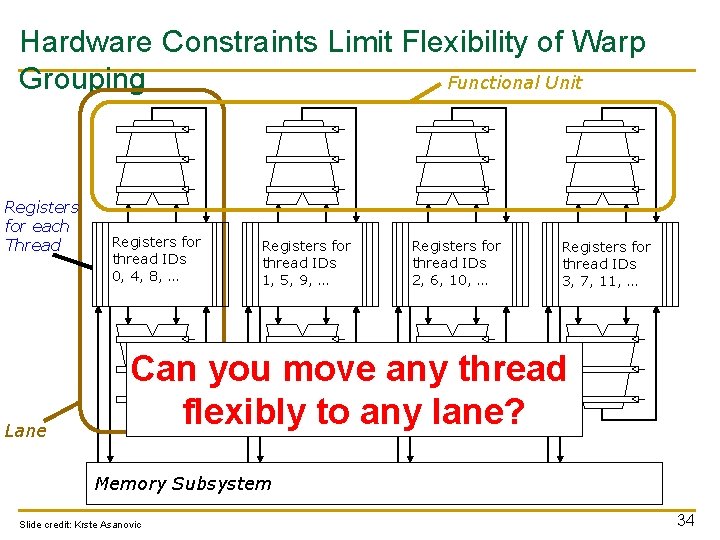

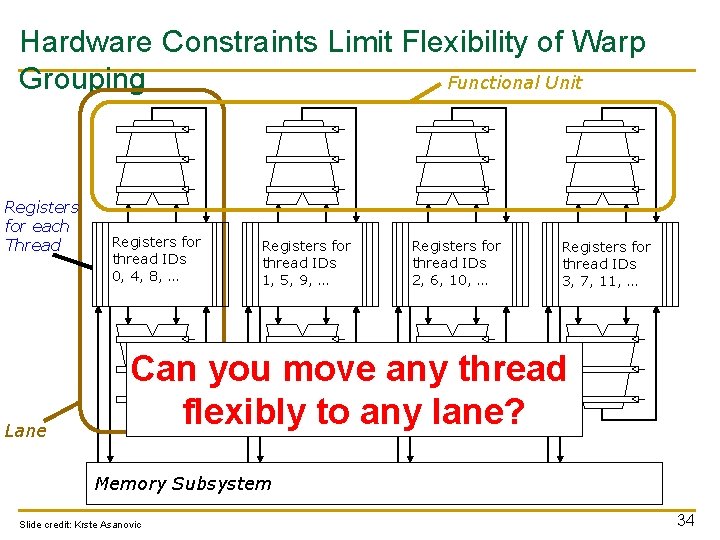

Hardware Constraints Limit Flexibility of Warp Functional Unit Grouping Registers for each Thread Lane Registers for thread IDs 0, 4, 8, … Registers for thread IDs 1, 5, 9, … Registers for thread IDs 2, 6, 10, … Registers for thread IDs 3, 7, 11, … Can you move any thread flexibly to any lane? Memory Subsystem Slide credit: Krste Asanovic 34

An Example GPU

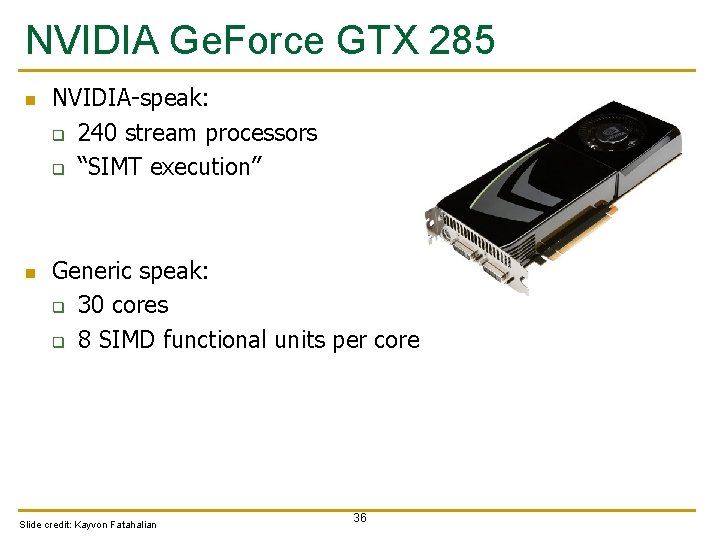

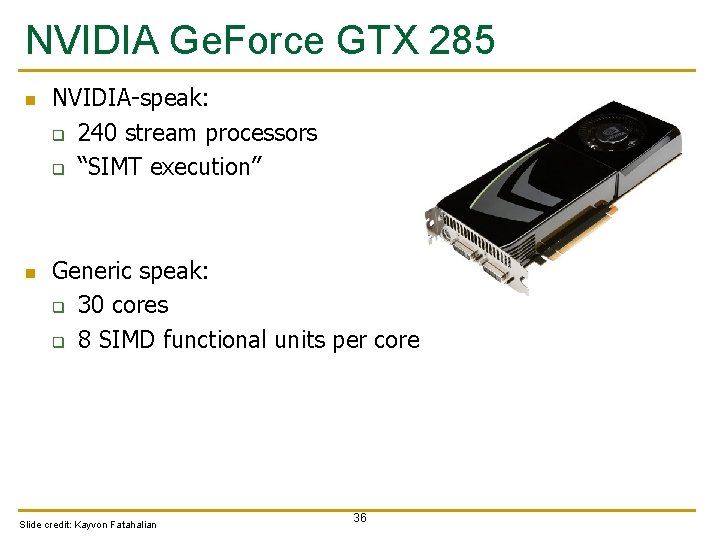

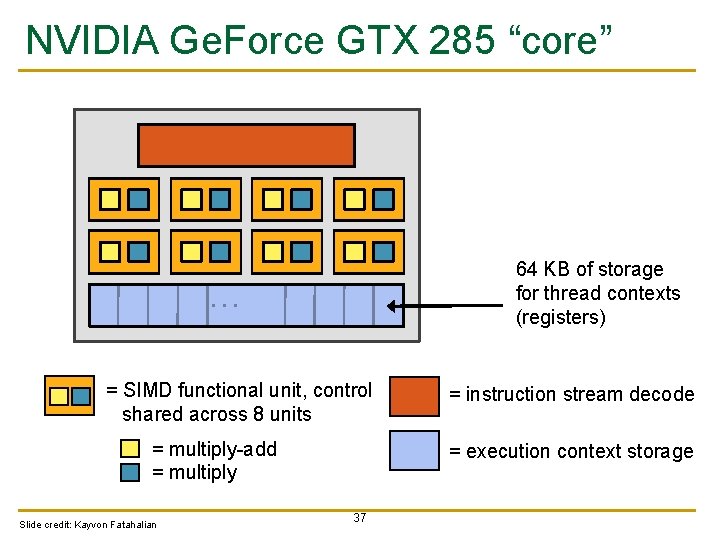

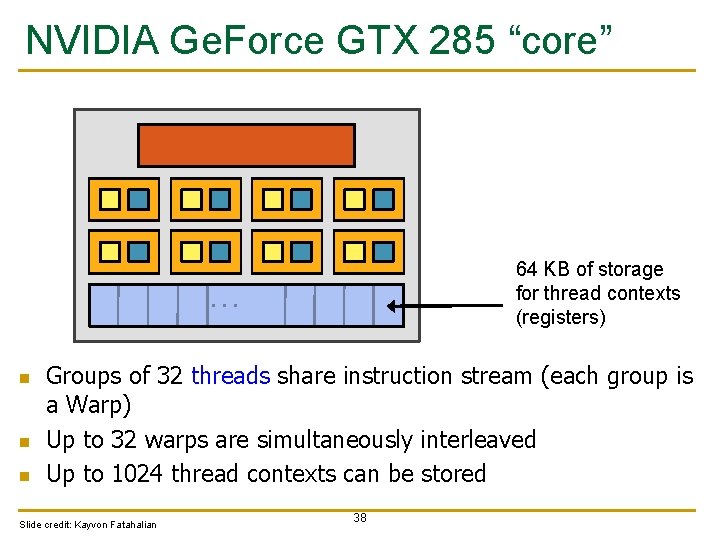

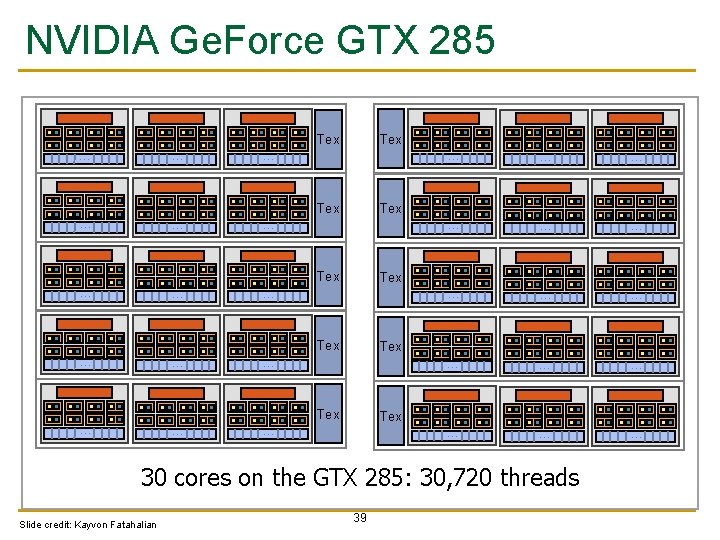

NVIDIA Ge. Force GTX 285 n n NVIDIA-speak: q 240 stream processors q “SIMT execution” Generic speak: q 30 cores q 8 SIMD functional units per core Slide credit: Kayvon Fatahalian 36

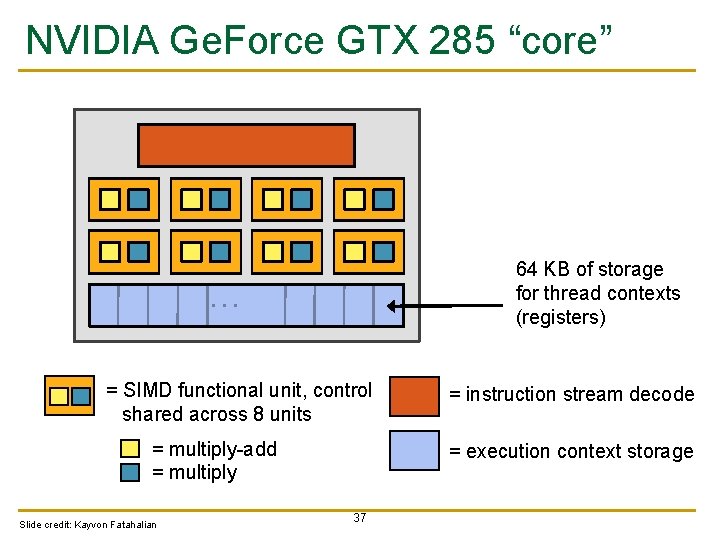

NVIDIA Ge. Force GTX 285 “core” 64 KB of storage for thread contexts (registers) … = SIMD functional unit, control shared across 8 units = multiply-add = multiply Slide credit: Kayvon Fatahalian = instruction stream decode = execution context storage 37

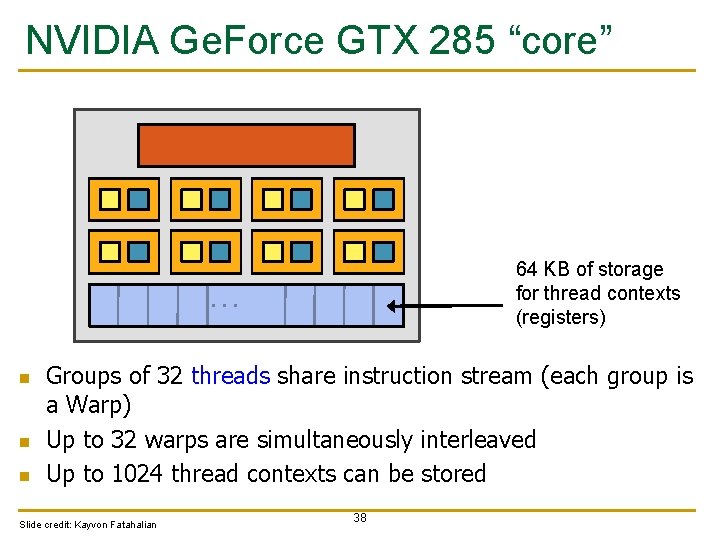

NVIDIA Ge. Force GTX 285 “core” 64 KB of storage for thread contexts (registers) … n n n Groups of 32 threads share instruction stream (each group is a Warp) Up to 32 warps are simultaneously interleaved Up to 1024 thread contexts can be stored Slide credit: Kayvon Fatahalian 38

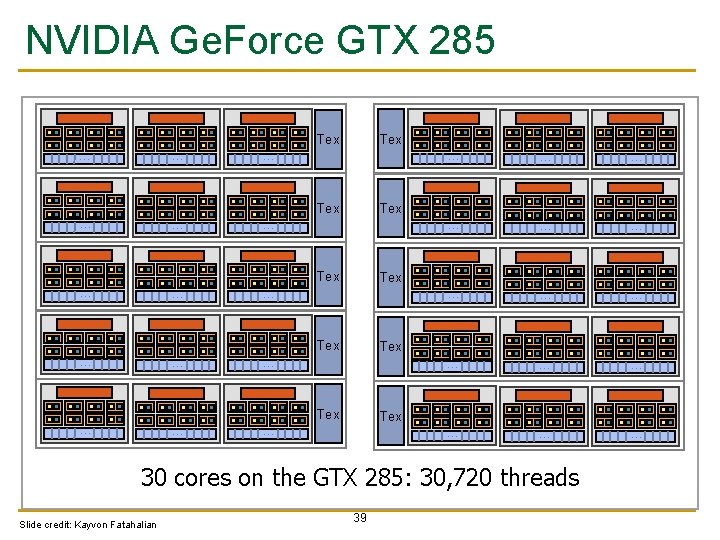

NVIDIA Ge. Force GTX 285 Tex … … … … … … … Tex … … Tex … 30 cores on the GTX 285: 30, 720 threads Slide credit: Kayvon Fatahalian 39

Introduction to GPGPU Programming ETH Zürich Fall 2017 19 October 2017

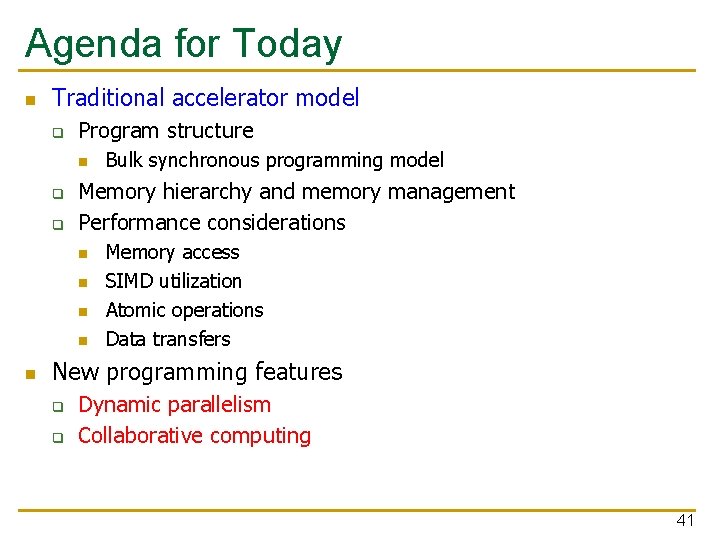

Agenda for Today n Traditional accelerator model q Program structure n q q Memory hierarchy and memory management Performance considerations n n n Bulk synchronous programming model Memory access SIMD utilization Atomic operations Data transfers New programming features q q Dynamic parallelism Collaborative computing 41

General Purpose Processing on GPUs have democratized HPC q n n n However, this is not for free q New programming model q New challenges Algorithms need to be re-implemented and rethought Many workloads exhibit inherent parallelism q q n Great FLOP/$, massively parallel chip on a commodity PC Matrices Image processing Main bottlenecks q CPU-GPU data transfers (PCIe, NVLINK) q DRAM memory (GDDR 5, HBM 2) 42

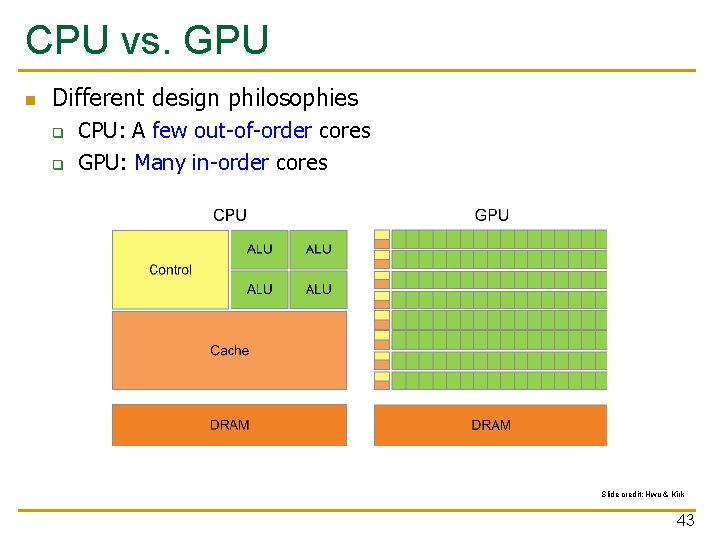

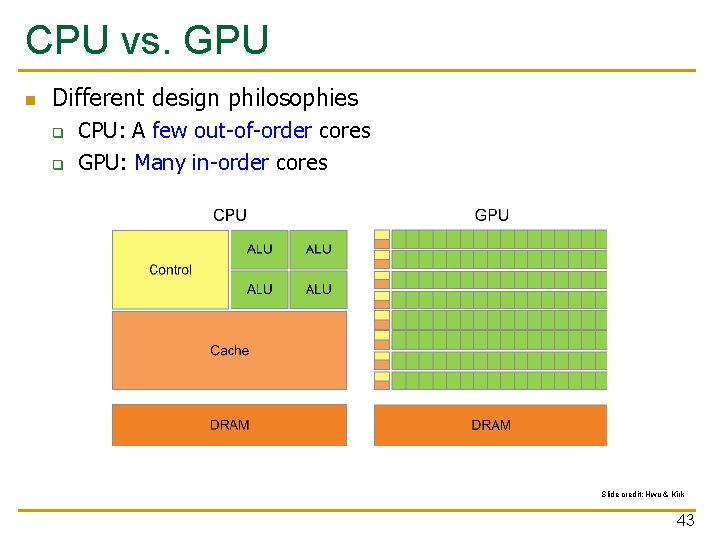

CPU vs. GPU n Different design philosophies q q CPU: A few out-of-order cores GPU: Many in-order cores Slide credit: Hwu & Kirk 43

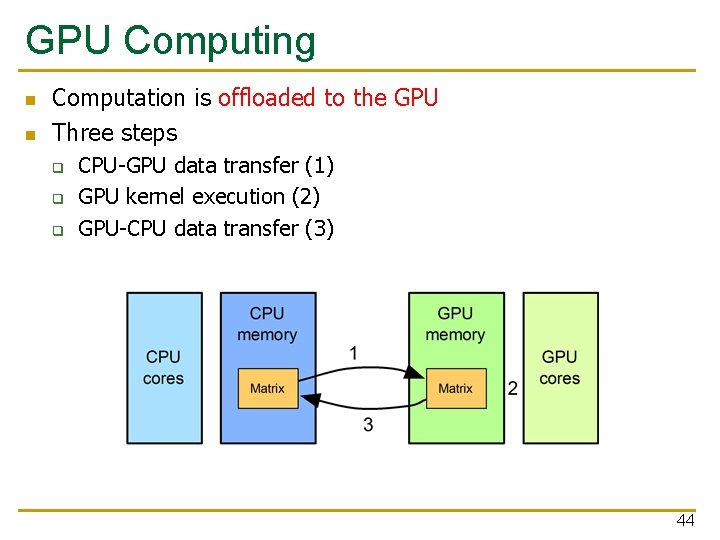

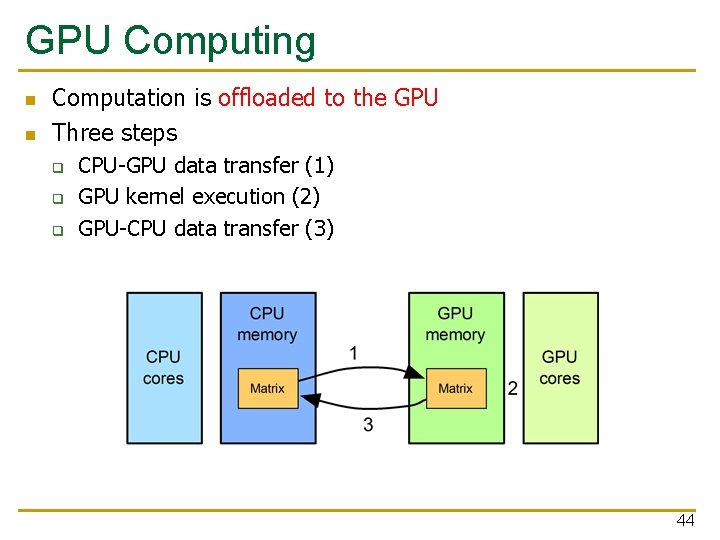

GPU Computing n n Computation is offloaded to the GPU Three steps q q q CPU-GPU data transfer (1) GPU kernel execution (2) GPU-CPU data transfer (3) 44

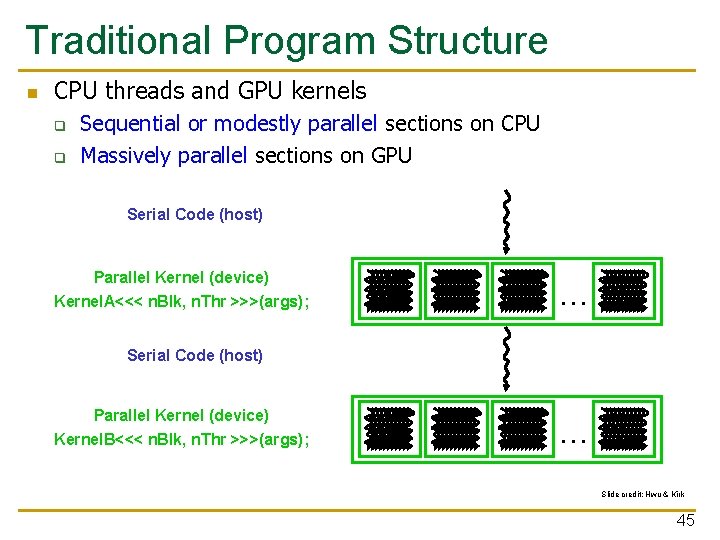

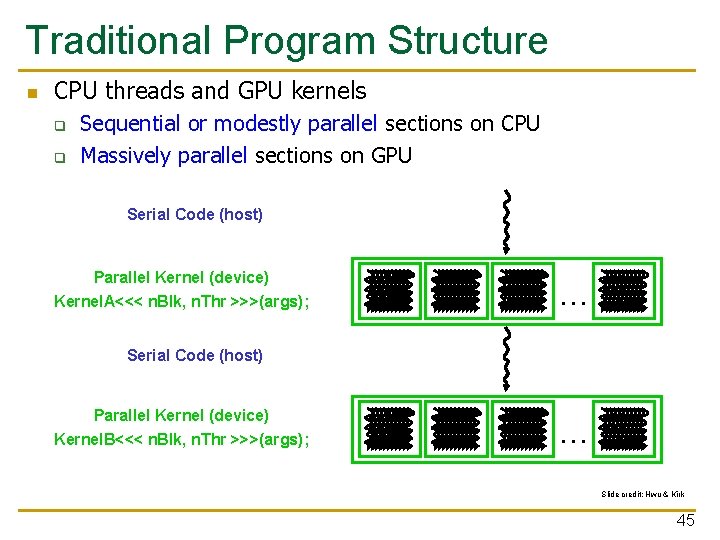

Traditional Program Structure n CPU threads and GPU kernels q q Sequential or modestly parallel sections on CPU Massively parallel sections on GPU Serial Code (host) Parallel Kernel (device) Kernel. A<<< n. Blk, n. Thr >>>(args); . . . Serial Code (host) Parallel Kernel (device) Kernel. B<<< n. Blk, n. Thr >>>(args); . . . Slide credit: Hwu & Kirk 45

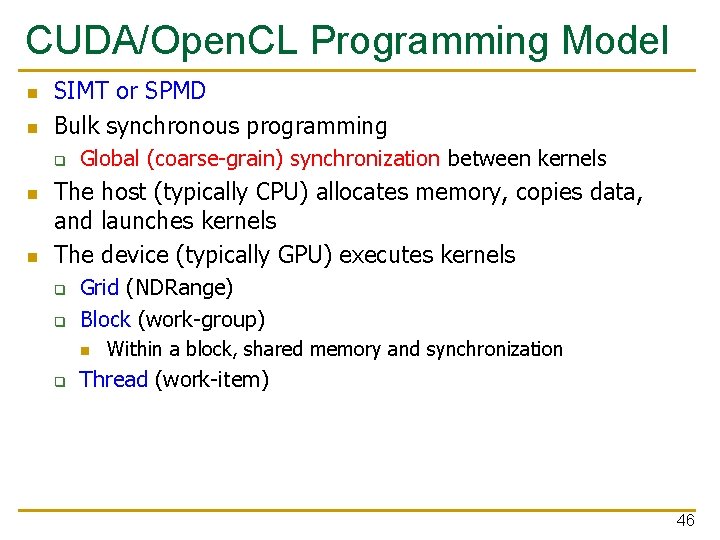

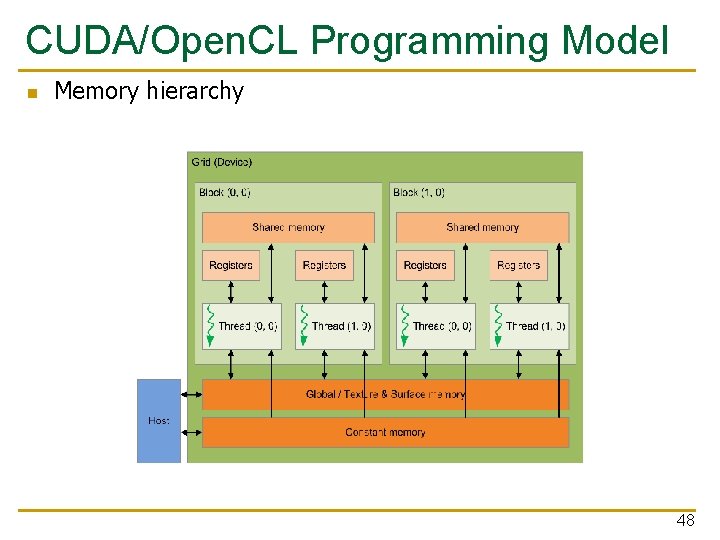

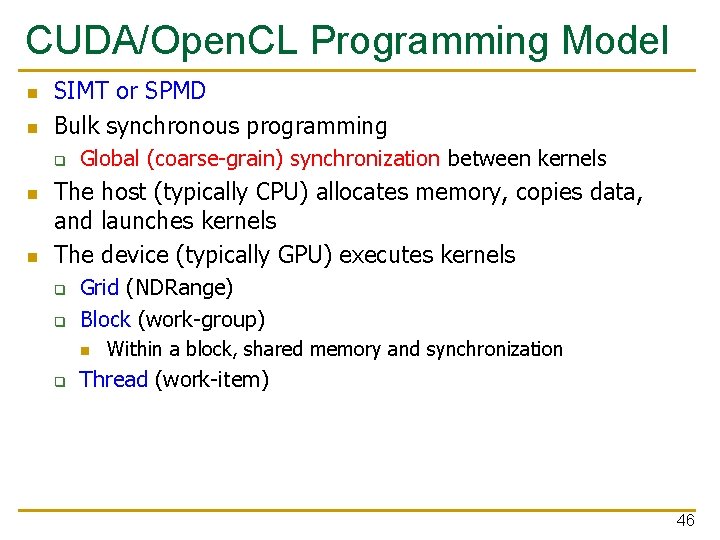

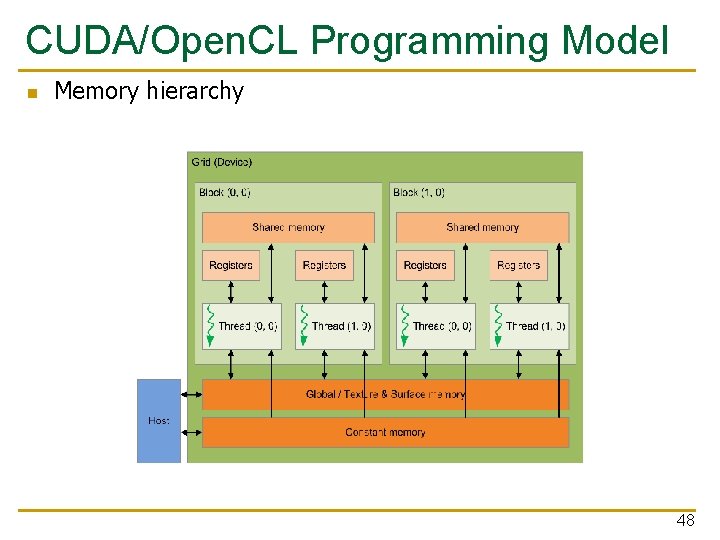

CUDA/Open. CL Programming Model n n SIMT or SPMD Bulk synchronous programming q n n Global (coarse-grain) synchronization between kernels The host (typically CPU) allocates memory, copies data, and launches kernels The device (typically GPU) executes kernels q q Grid (NDRange) Block (work-group) n q Within a block, shared memory and synchronization Thread (work-item) 46

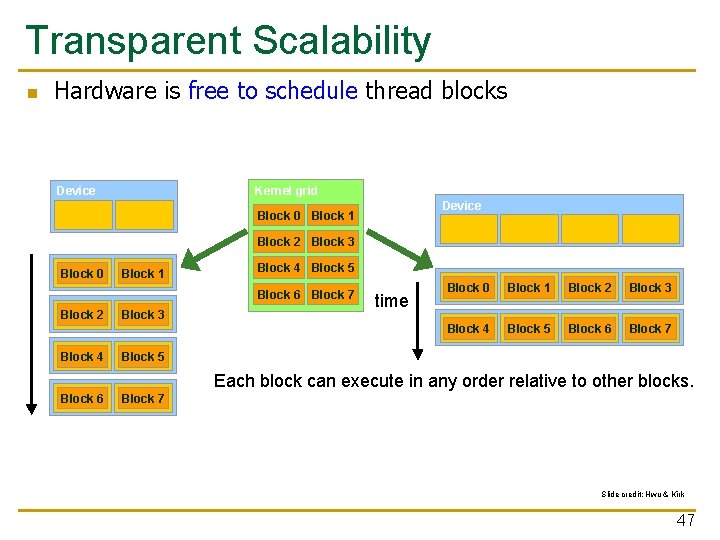

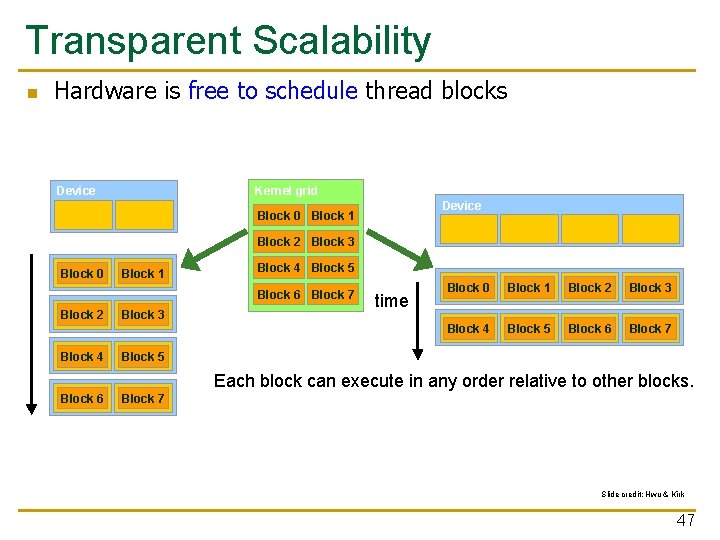

Transparent Scalability n Hardware is free to schedule thread blocks Kernel grid Device Block 0 Block 1 Block 2 Block 3 Block 0 Block 1 Block 4 Block 5 Block 6 Block 7 Block 2 Block 4 Block 3 time Block 0 Block 1 Block 2 Block 3 Block 4 Block 5 Block 6 Block 7 Block 5 Each block can execute in any order relative to other blocks. Block 6 Block 7 Slide credit: Hwu & Kirk 47

CUDA/Open. CL Programming Model n Memory hierarchy 48

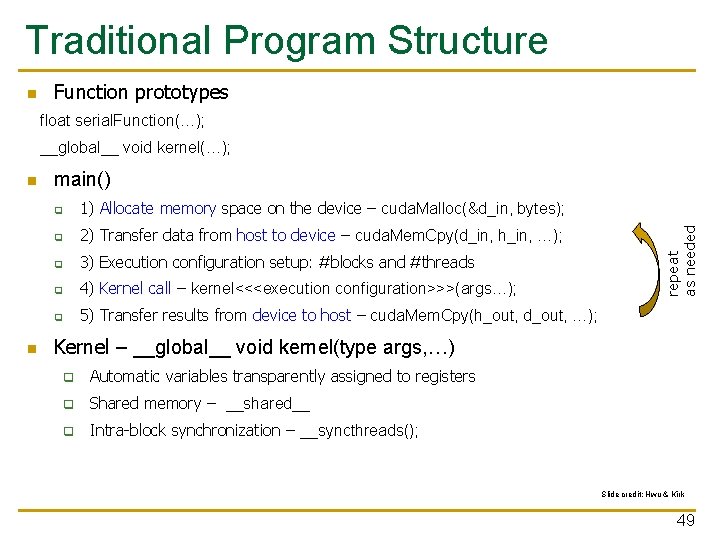

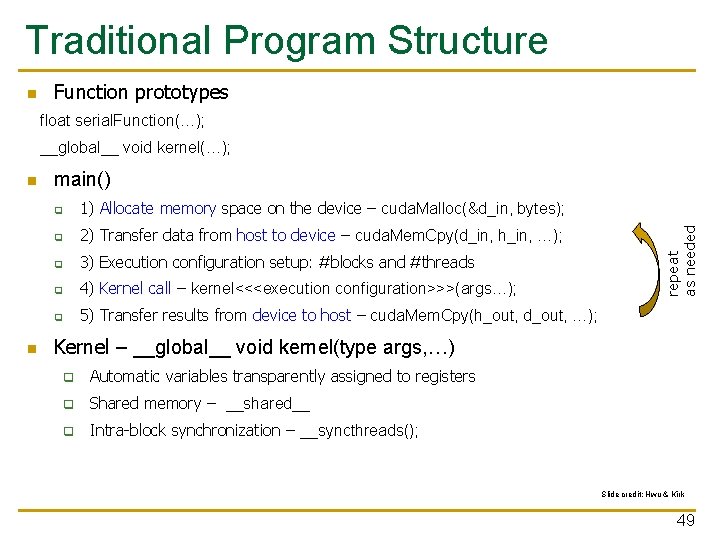

Traditional Program Structure n Function prototypes float serial. Function(…); __global__ void kernel(…); n main() q 1) Allocate memory space on the device – cuda. Malloc(&d_in, bytes); q 2) Transfer data from host to device – cuda. Mem. Cpy(d_in, h_in, …); q 3) Execution configuration setup: #blocks and #threads q 4) Kernel call – kernel<<<execution configuration>>>(args…); q 5) Transfer results from device to host – cuda. Mem. Cpy(h_out, d_out, …); repeat as needed n Kernel – __global__ void kernel(type args, …) q Automatic variables transparently assigned to registers q Shared memory – __shared__ q Intra-block synchronization – __syncthreads(); Slide credit: Hwu & Kirk 49

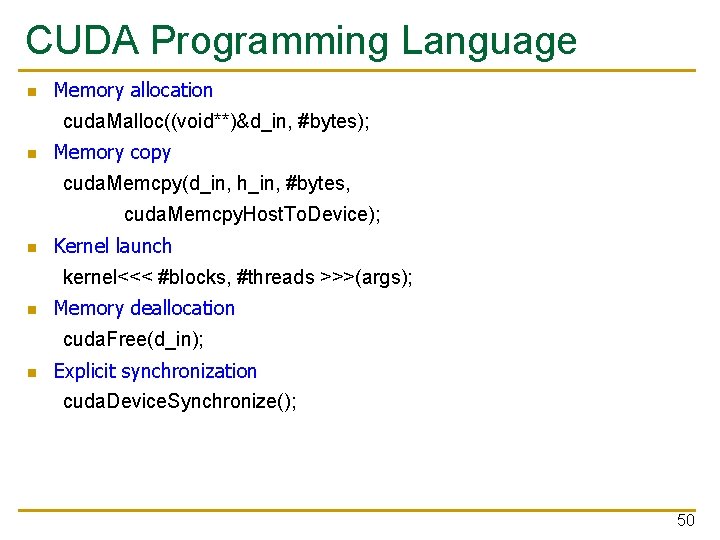

CUDA Programming Language n Memory allocation cuda. Malloc((void**)&d_in, #bytes); n Memory copy cuda. Memcpy(d_in, h_in, #bytes, cuda. Memcpy. Host. To. Device); n Kernel launch kernel<<< #blocks, #threads >>>(args); n Memory deallocation cuda. Free(d_in); n Explicit synchronization cuda. Device. Synchronize(); 50

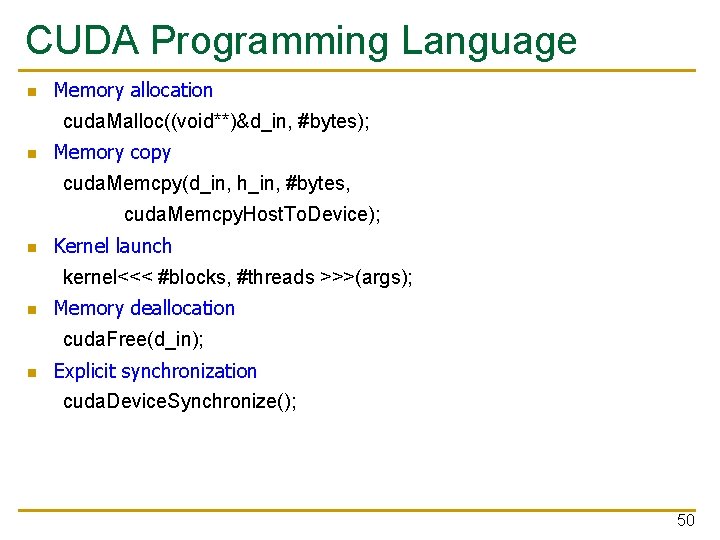

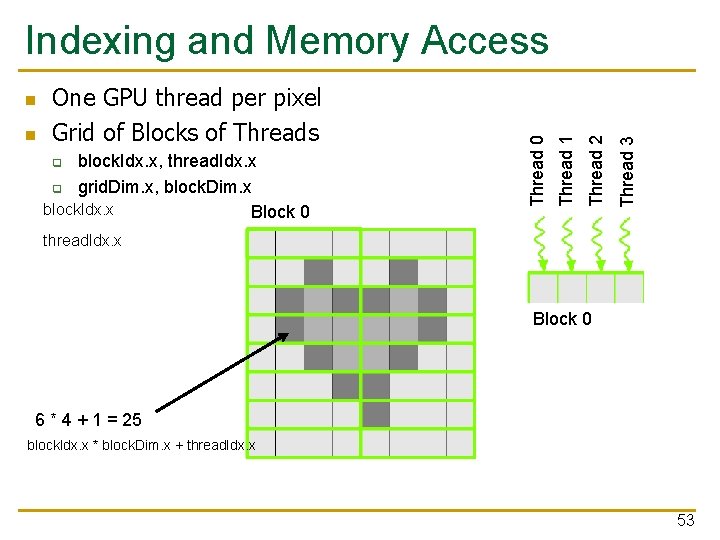

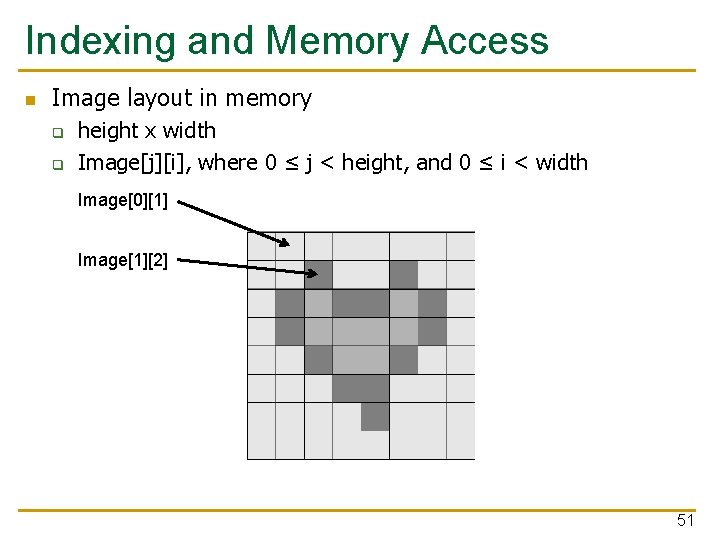

Indexing and Memory Access n Image layout in memory q q height x width Image[j][i], where 0 ≤ j < height, and 0 ≤ i < width Image[0][1] Image[1][2] 51

![Indexing and Memory Access n Image layout in memory q q Rowmajor layout Imageji Indexing and Memory Access n Image layout in memory q q Row-major layout Image[j][i]](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-52.jpg)

Indexing and Memory Access n Image layout in memory q q Row-major layout Image[j][i] = Image[j x width + i] Image[0][1] = Image[0 x 8 + 1] Image[1][2] = Image[1 x 8 + 2] 52

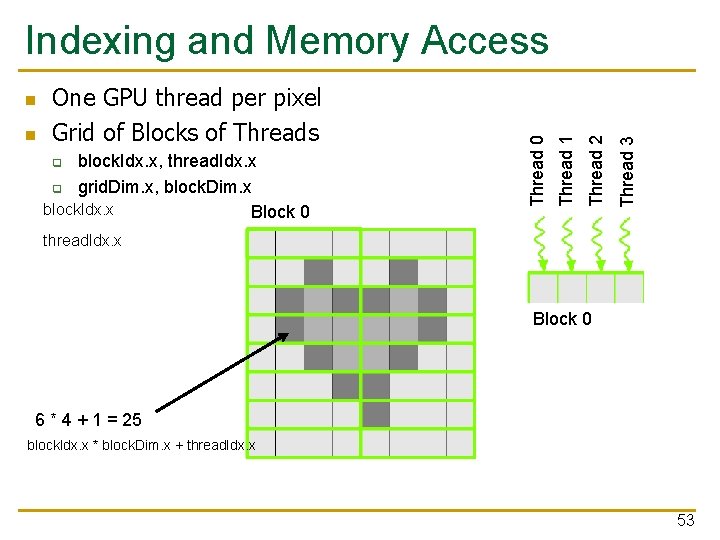

Thread 3 block. Idx. x, thread. Idx. x q grid. Dim. x, block. Dim. x block. Idx. x Block 0 q Thread 2 n One GPU thread per pixel Grid of Blocks of Threads Thread 1 n Thread 0 Indexing and Memory Access thread. Idx. x Block 0 6 * 4 + 1 = 25 block. Idx. x * block. Dim. x + thread. Idx. x 53

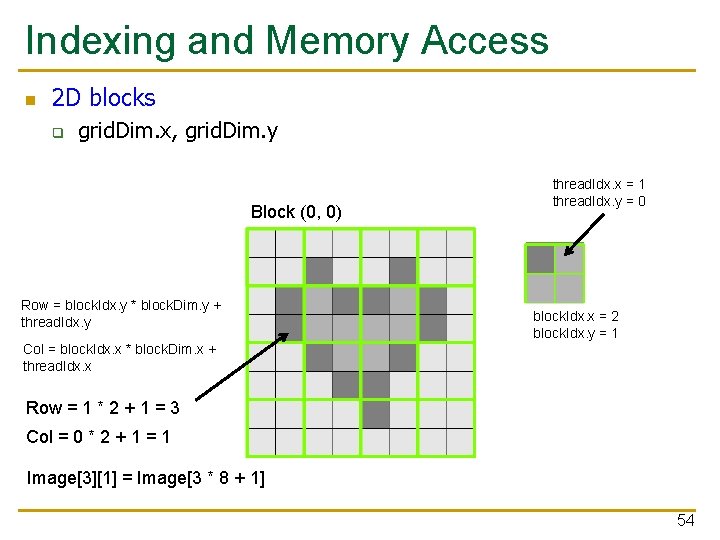

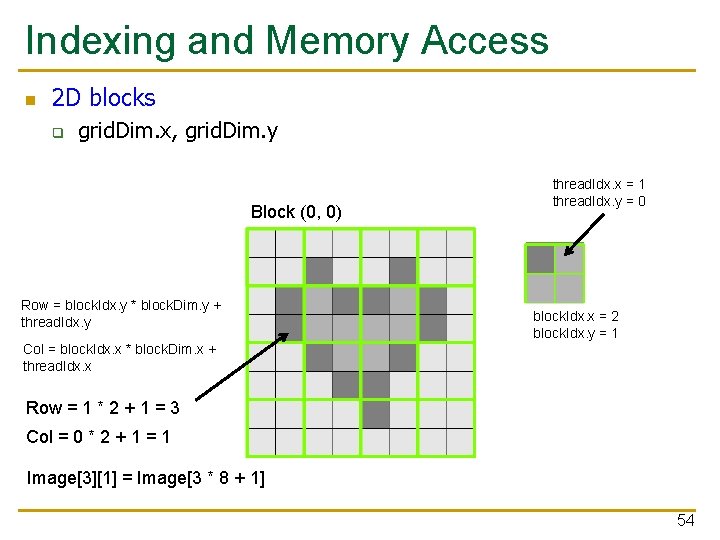

Indexing and Memory Access n 2 D blocks q grid. Dim. x, grid. Dim. y Block (0, 0) Row = block. Idx. y * block. Dim. y + thread. Idx. y thread. Idx. x = 1 thread. Idx. y = 0 block. Idx. x = 2 block. Idx. y = 1 Col = block. Idx. x * block. Dim. x + thread. Idx. x Row = 1 * 2 + 1 = 3 Col = 0 * 2 + 1 = 1 Image[3][1] = Image[3 * 8 + 1] 54

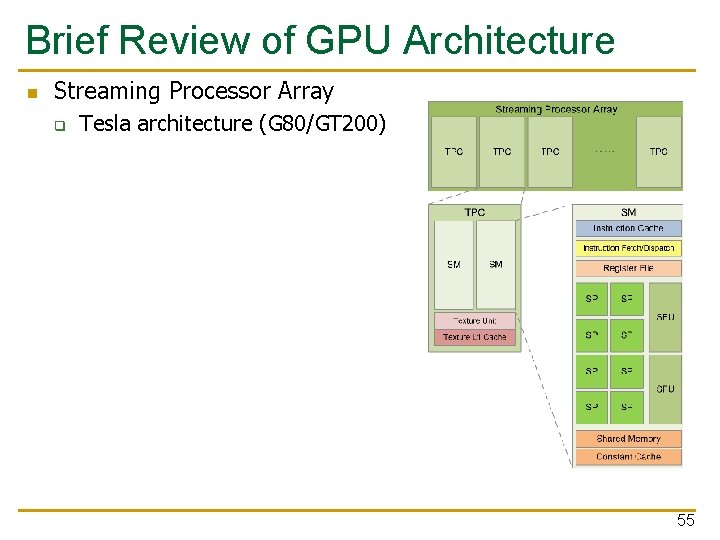

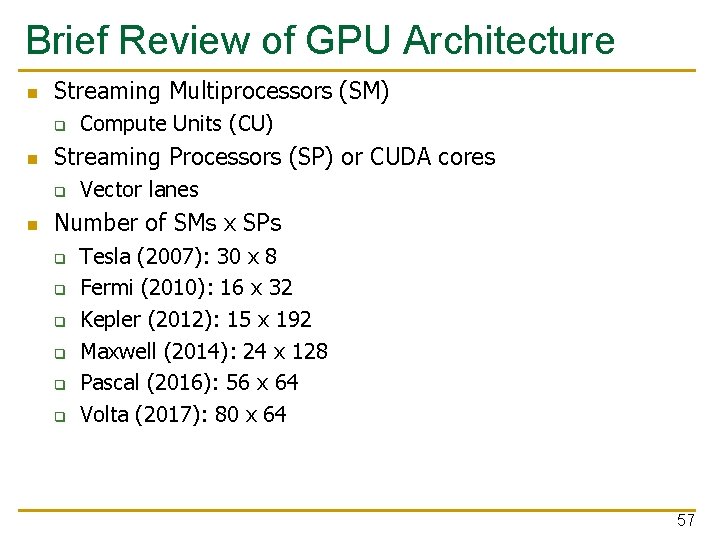

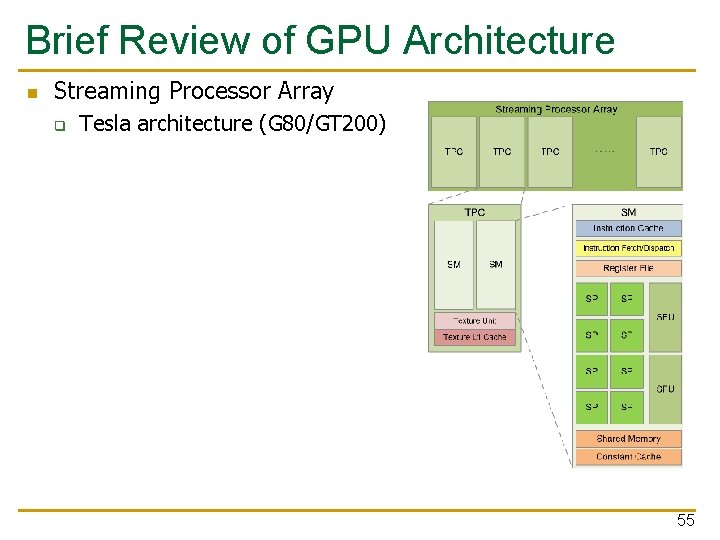

Brief Review of GPU Architecture n Streaming Processor Array q Tesla architecture (G 80/GT 200) 55

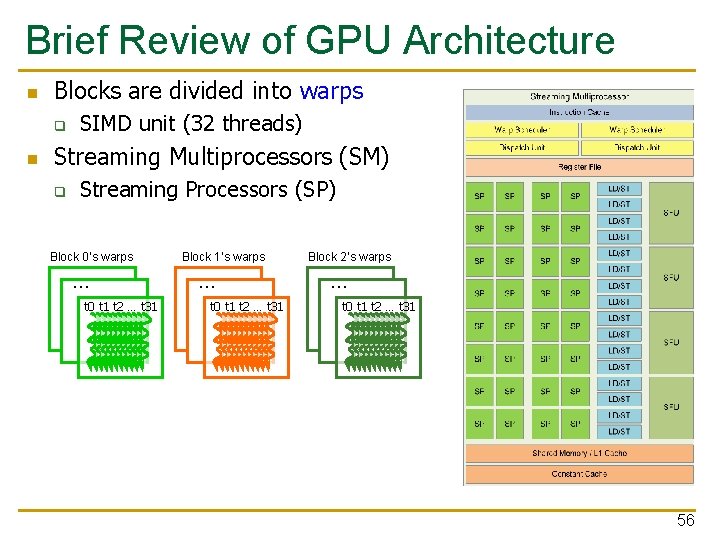

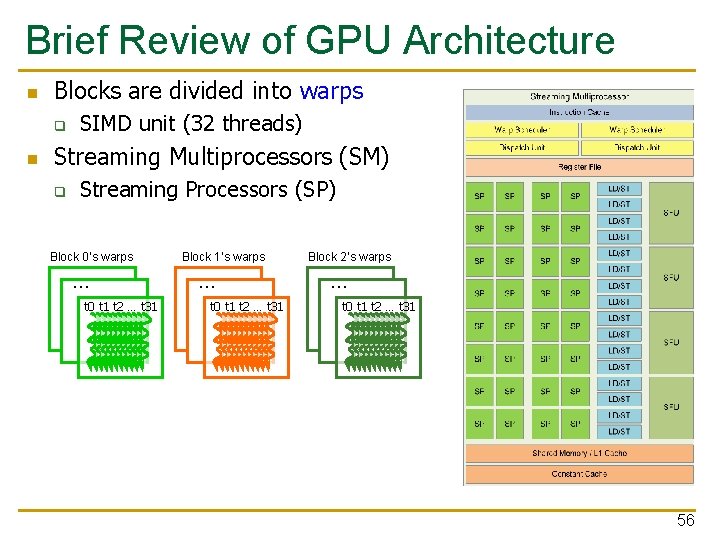

Brief Review of GPU Architecture n Blocks are divided into warps q n SIMD unit (32 threads) Streaming Multiprocessors (SM) q Streaming Processors (SP) Block 0’s warps … Block 1’s warps … t 0 t 1 t 2 … t 31 … Block 2’s warps … t 0 t 1 t 2 … t 31 … 56

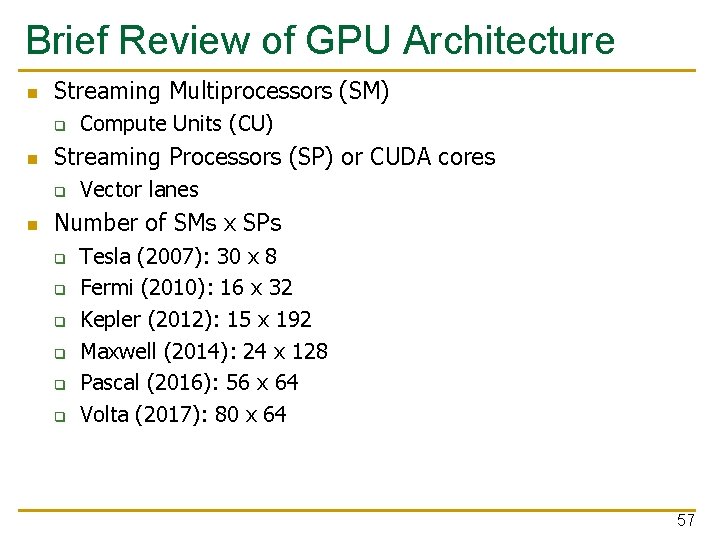

Brief Review of GPU Architecture n Streaming Multiprocessors (SM) q n Streaming Processors (SP) or CUDA cores q n Compute Units (CU) Vector lanes Number of SMs x SPs q q q Tesla (2007): 30 x 8 Fermi (2010): 16 x 32 Kepler (2012): 15 x 192 Maxwell (2014): 24 x 128 Pascal (2016): 56 x 64 Volta (2017): 80 x 64 57

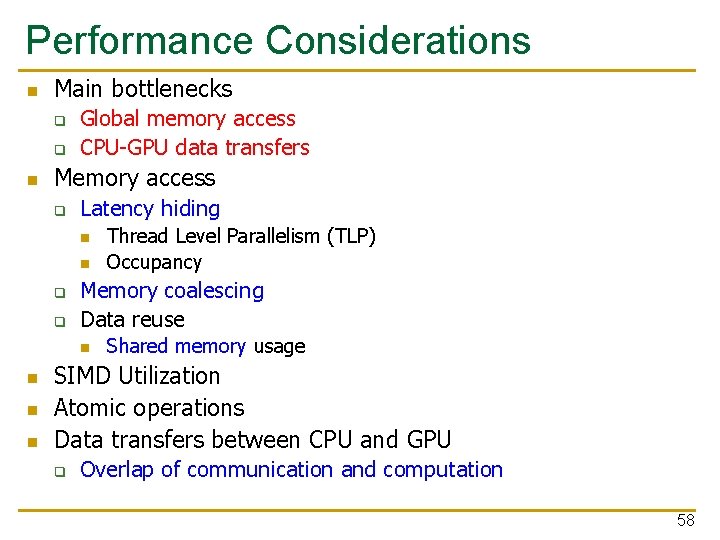

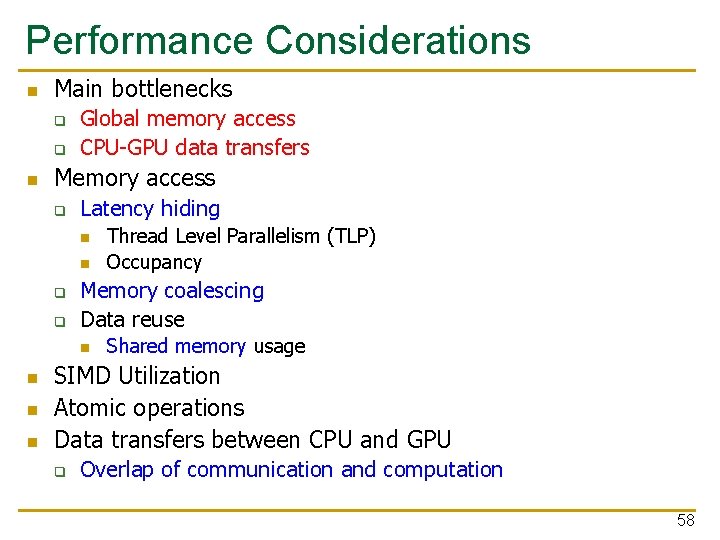

Performance Considerations n Main bottlenecks q q n Global memory access CPU-GPU data transfers Memory access q Latency hiding n n q q Memory coalescing Data reuse n n Thread Level Parallelism (TLP) Occupancy Shared memory usage SIMD Utilization Atomic operations Data transfers between CPU and GPU q Overlap of communication and computation 58

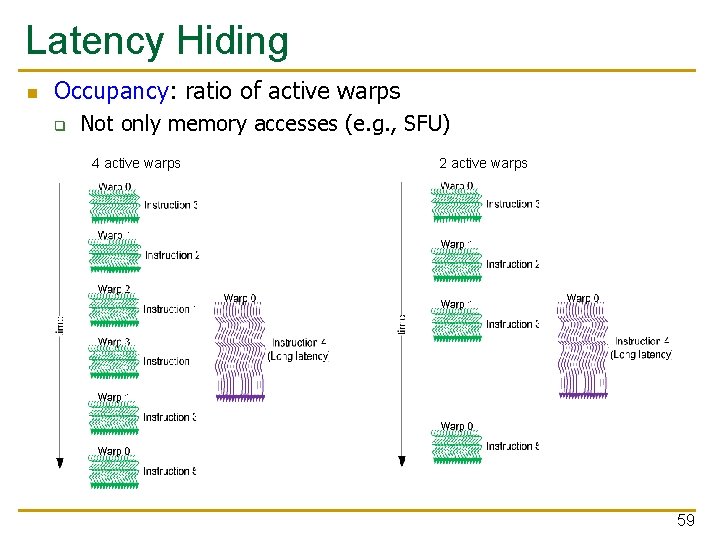

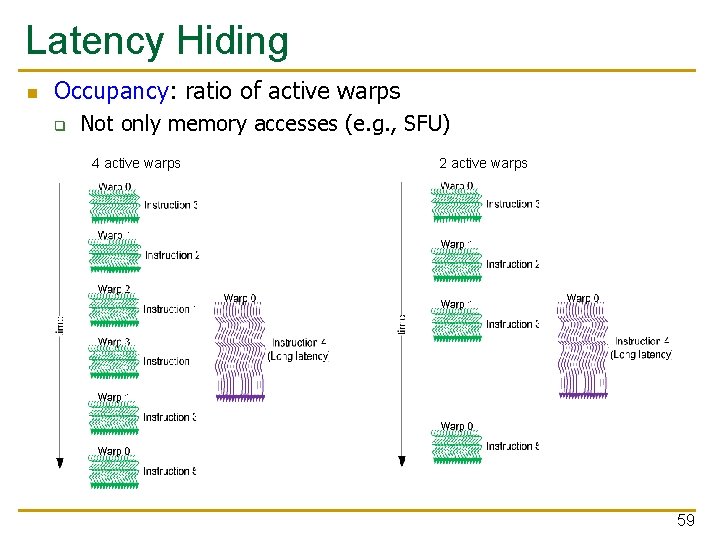

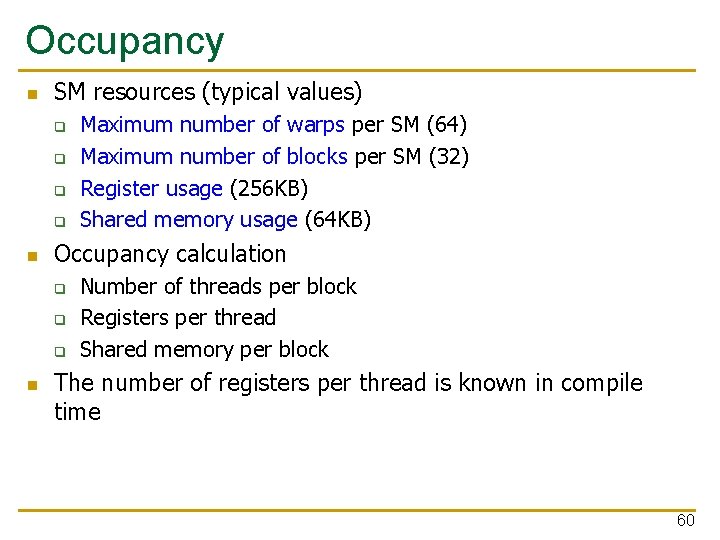

Latency Hiding n Occupancy: ratio of active warps q Not only memory accesses (e. g. , SFU) 4 active warps 2 active warps 59

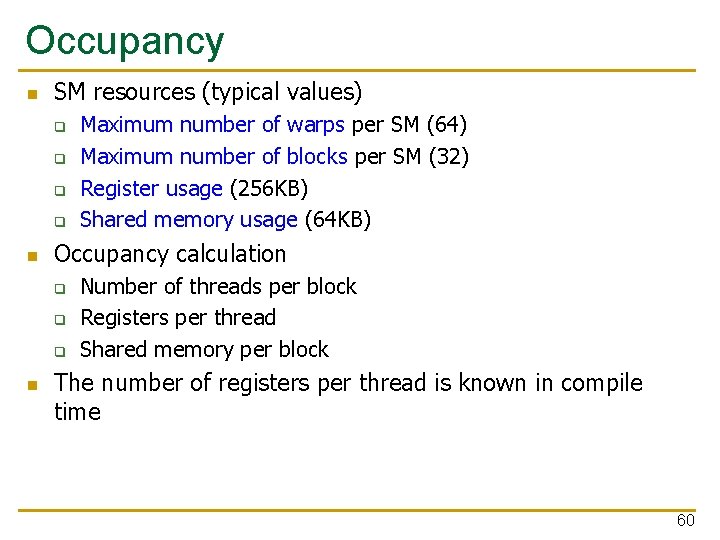

Occupancy n SM resources (typical values) q q n Occupancy calculation q q q n Maximum number of warps per SM (64) Maximum number of blocks per SM (32) Register usage (256 KB) Shared memory usage (64 KB) Number of threads per block Registers per thread Shared memory per block The number of registers per thread is known in compile time 60

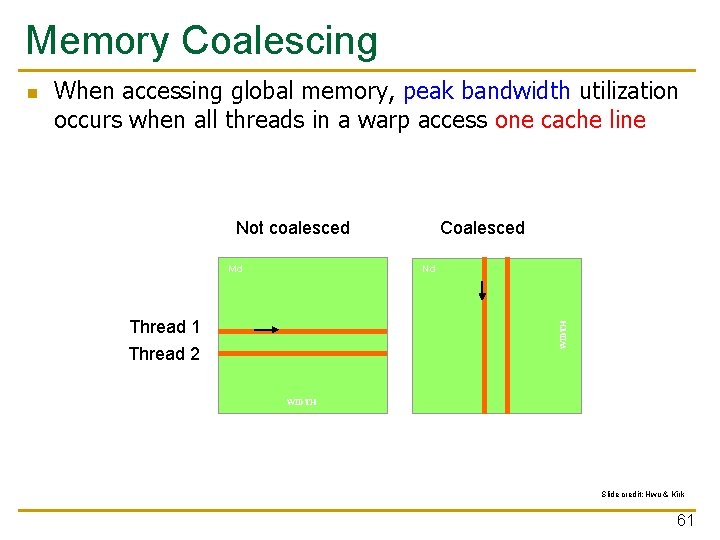

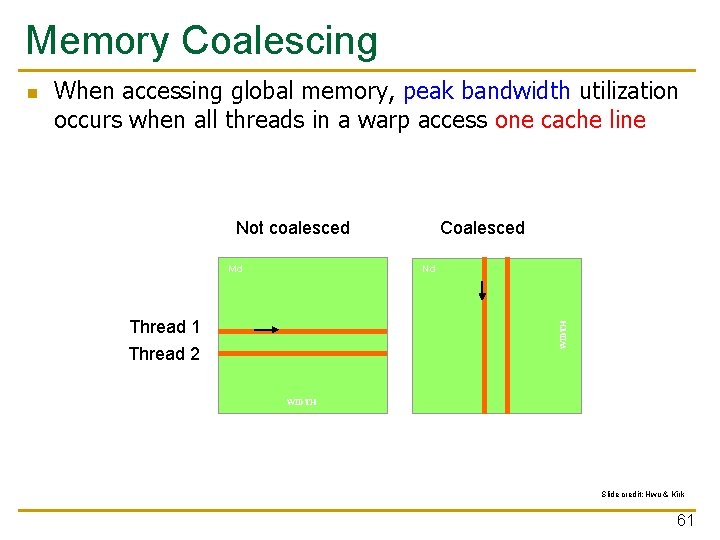

Memory Coalescing When accessing global memory, peak bandwidth utilization occurs when all threads in a warp access one cache line Not coalesced Md Coalesced Nd Thread 1 WIDTH n Thread 2 WIDTH Slide credit: Hwu & Kirk 61

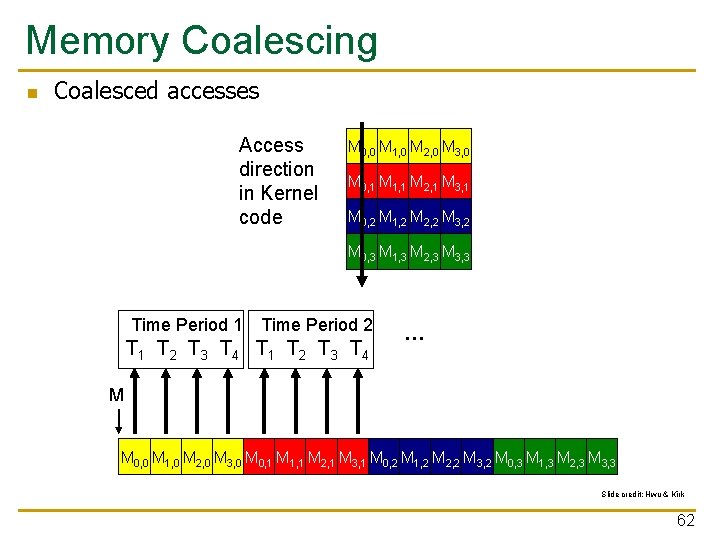

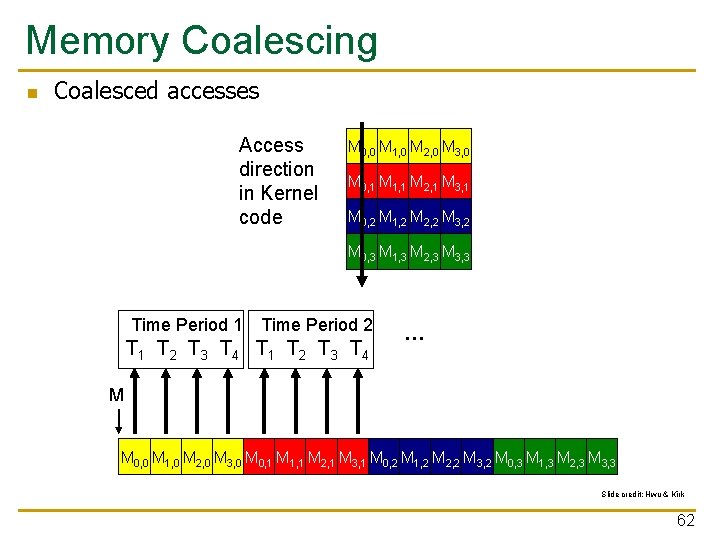

Memory Coalescing n Coalesced accesses Access direction in Kernel code M 0, 0 M 1, 0 M 2, 0 M 3, 0 M 0, 1 M 1, 1 M 2, 1 M 3, 1 M 0, 2 M 1, 2 M 2, 2 M 3, 2 M 0, 3 M 1, 3 M 2, 3 M 3, 3 Time Period 1 Time Period 2 T 1 T 2 T 3 T 4 … M M 0, 0 M 1, 0 M 2, 0 M 3, 0 M 0, 1 M 1, 1 M 2, 1 M 3, 1 M 0, 2 M 1, 2 M 2, 2 M 3, 2 M 0, 3 M 1, 3 M 2, 3 M 3, 3 Slide credit: Hwu & Kirk 62

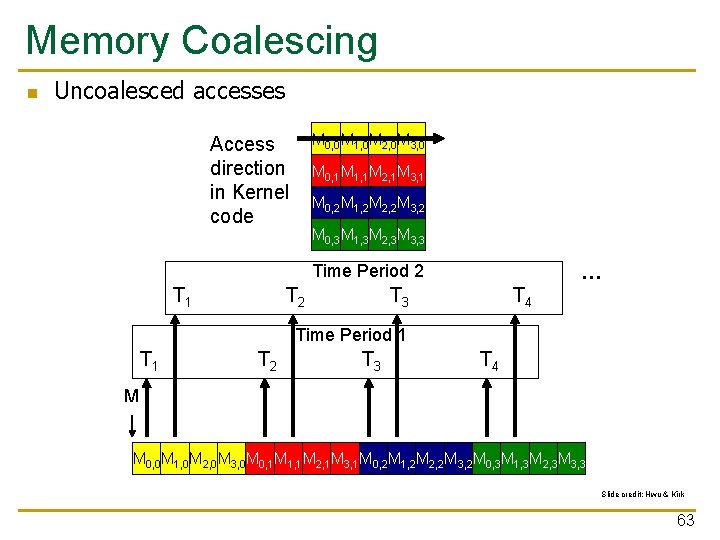

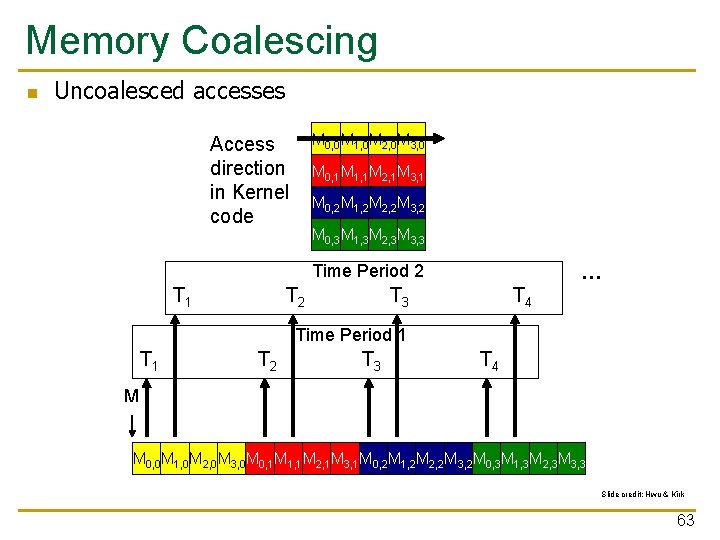

Memory Coalescing n Uncoalesced accesses M 0, 0 M 1, 0 M 2, 0 M 3, 0 Access direction in Kernel code M 0, 1 M 1, 1 M 2, 1 M 3, 1 M 0, 2 M 1, 2 M 2, 2 M 3, 2 M 0, 3 M 1, 3 M 2, 3 M 3, 3 Time Period 2 T 1 T 2 … T 3 T 4 Time Period 1 T 2 T 3 T 4 M M 0, 0 M 1, 0 M 2, 0 M 3, 0 M 0, 1 M 1, 1 M 2, 1 M 3, 1 M 0, 2 M 1, 2 M 2, 2 M 3, 2 M 0, 3 M 1, 3 M 2, 3 M 3, 3 Slide credit: Hwu & Kirk 63

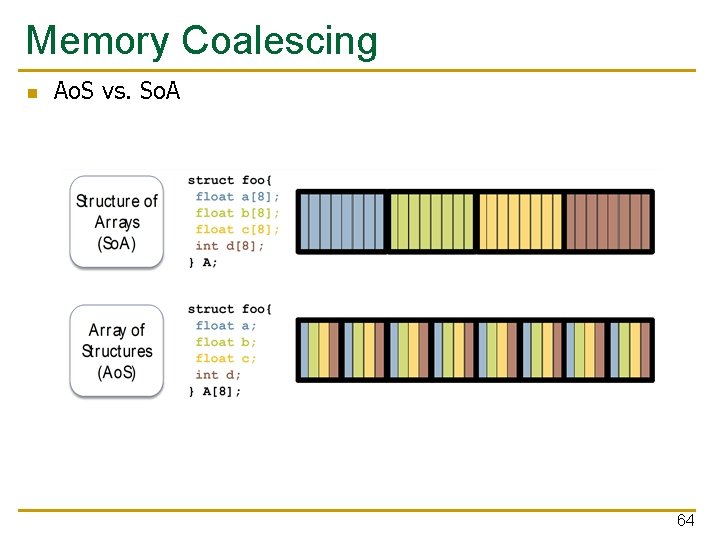

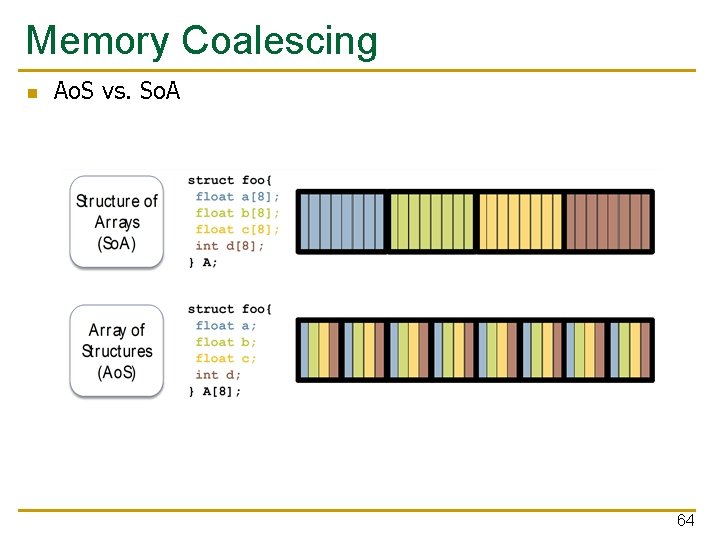

Memory Coalescing n Ao. S vs. So. A 64

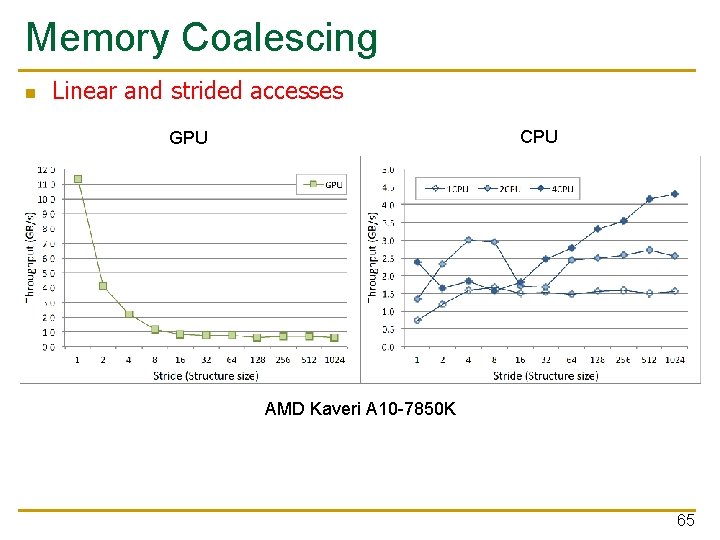

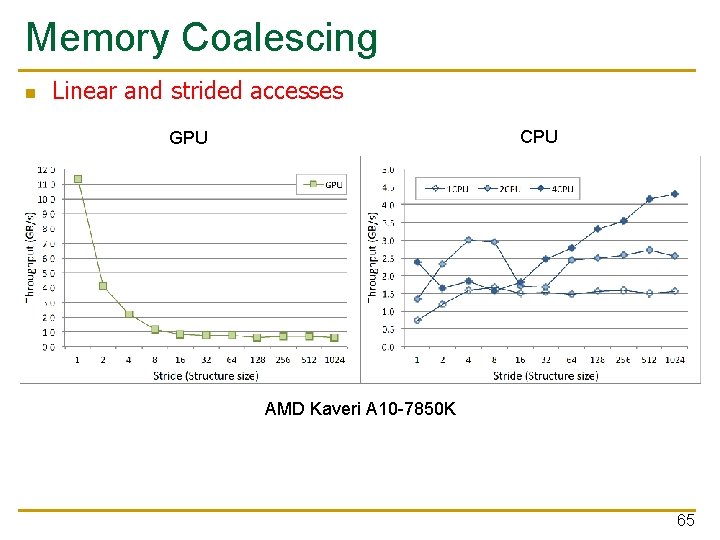

Memory Coalescing n Linear and strided accesses CPU GPU AMD Kaveri A 10 -7850 K 65

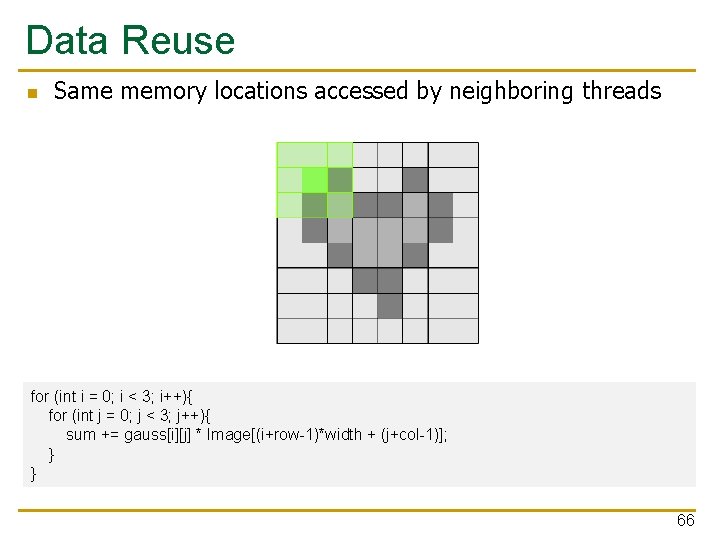

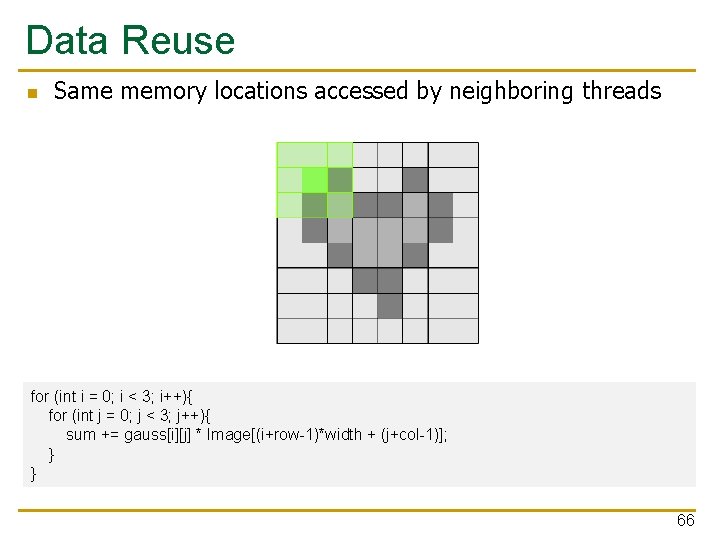

Data Reuse n Same memory locations accessed by neighboring threads for (int i = 0; i < 3; i++){ for (int j = 0; j < 3; j++){ sum += gauss[i][j] * Image[(i+row-1)*width + (j+col-1)]; } } 66

![Data Reuse n Shared memory tiling shared int ldataLSIZE2LSIZE2 Load tile into shared Data Reuse n Shared memory tiling __shared__ int l_data[(L_SIZE+2)*(L_SIZE+2)]; … Load tile into shared](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-67.jpg)

Data Reuse n Shared memory tiling __shared__ int l_data[(L_SIZE+2)*(L_SIZE+2)]; … Load tile into shared memory __syncthreads(); for (int i = 0; i < 3; i++){ for (int j = 0; j < 3; j++){ sum += gauss[i][j] * l_data[(i+l_row-1)*(L_SIZE+2)+j+l_col-1]; } } 67

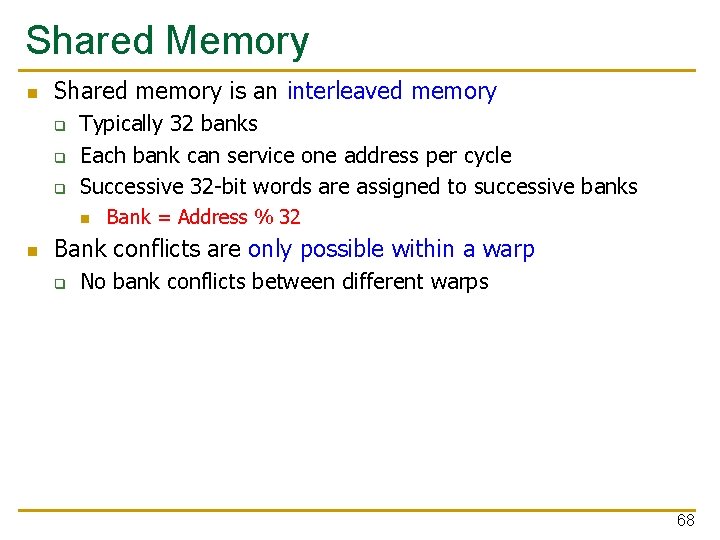

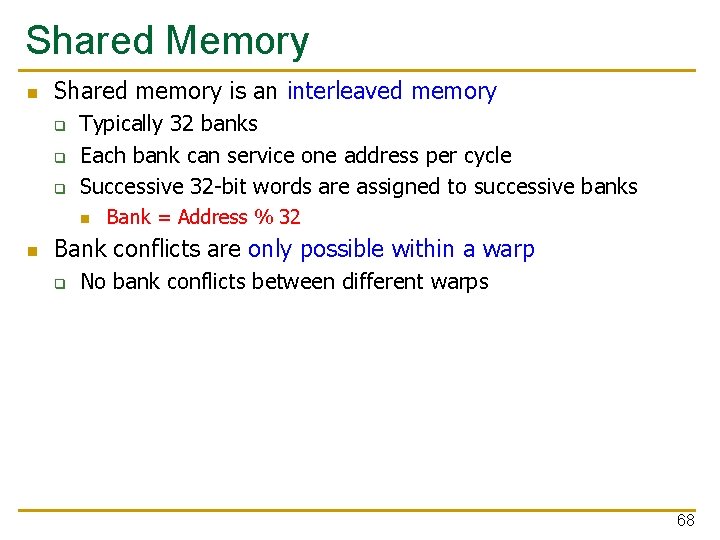

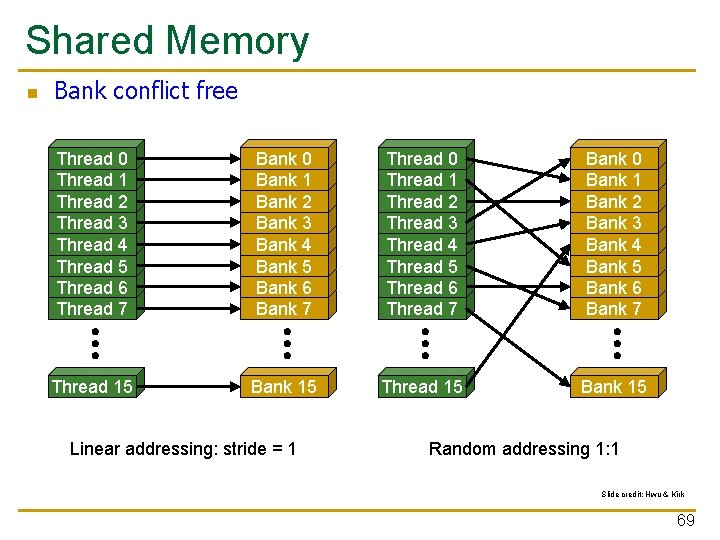

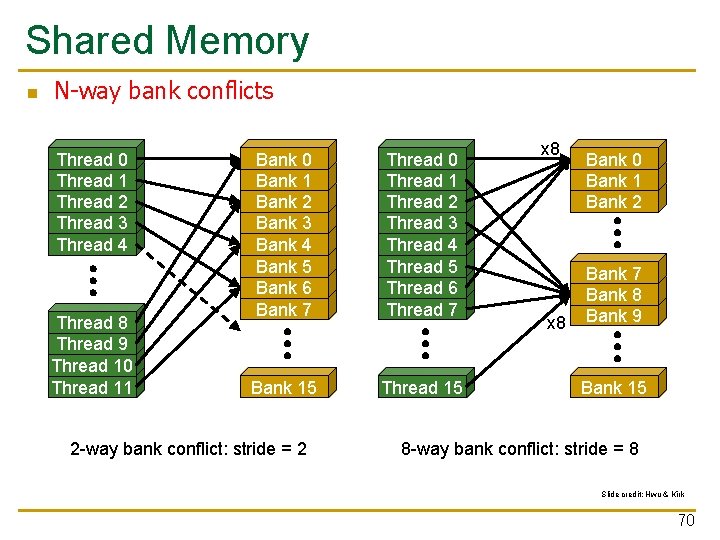

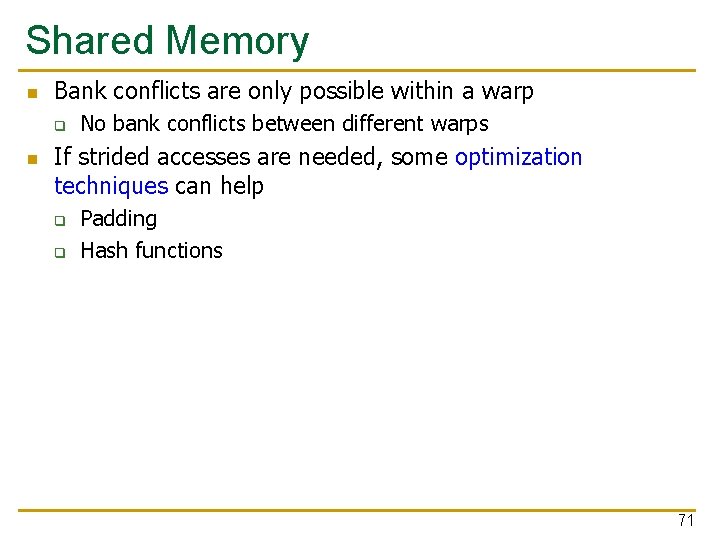

Shared Memory n Shared memory is an interleaved memory q q q Typically 32 banks Each bank can service one address per cycle Successive 32 -bit words are assigned to successive banks n n Bank = Address % 32 Bank conflicts are only possible within a warp q No bank conflicts between different warps 68

Shared Memory n Bank conflict free Thread 0 Thread 1 Thread 2 Thread 3 Thread 4 Thread 5 Thread 6 Thread 7 Bank 0 Bank 1 Bank 2 Bank 3 Bank 4 Bank 5 Bank 6 Bank 7 Thread 15 Bank 15 Linear addressing: stride = 1 Random addressing 1: 1 Slide credit: Hwu & Kirk 69

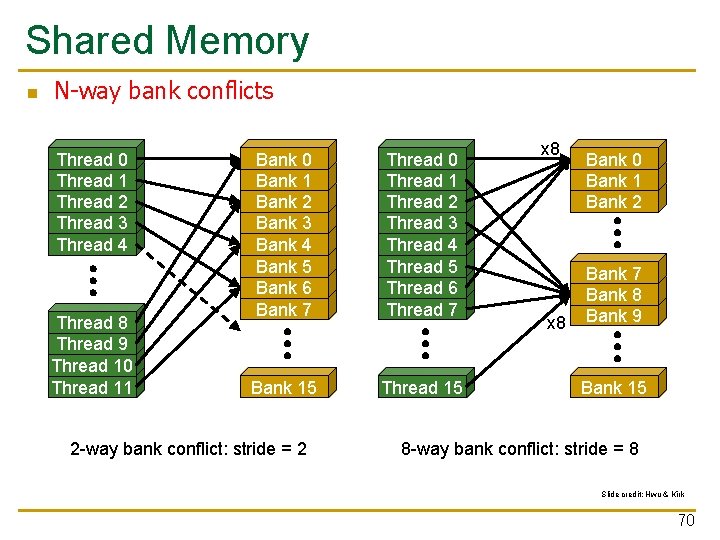

Shared Memory n N-way bank conflicts Thread 0 Thread 1 Thread 2 Thread 3 Thread 4 Thread 8 Thread 9 Thread 10 Thread 11 Bank 0 Bank 1 Bank 2 Bank 3 Bank 4 Bank 5 Bank 6 Bank 7 Thread 0 Thread 1 Thread 2 Thread 3 Thread 4 Thread 5 Thread 6 Thread 7 Bank 15 Thread 15 2 -way bank conflict: stride = 2 x 8 Bank 0 Bank 1 Bank 2 Bank 7 Bank 8 Bank 9 Bank 15 8 -way bank conflict: stride = 8 Slide credit: Hwu & Kirk 70

Shared Memory n Bank conflicts are only possible within a warp q n No bank conflicts between different warps If strided accesses are needed, some optimization techniques can help q q Padding Hash functions 71

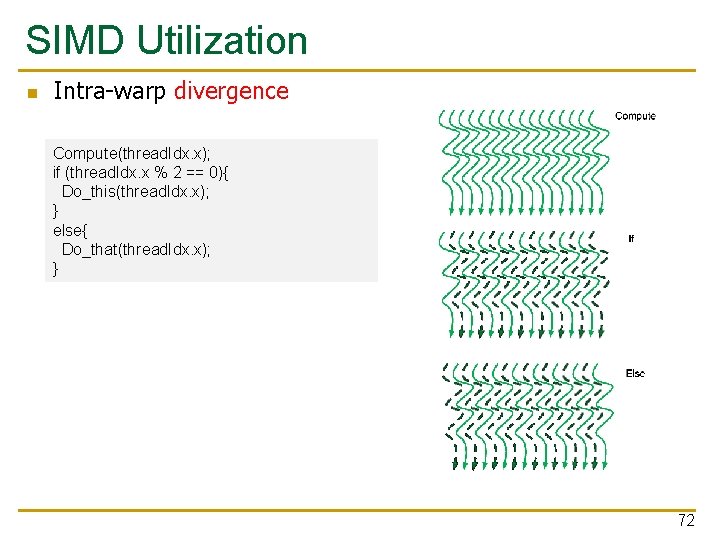

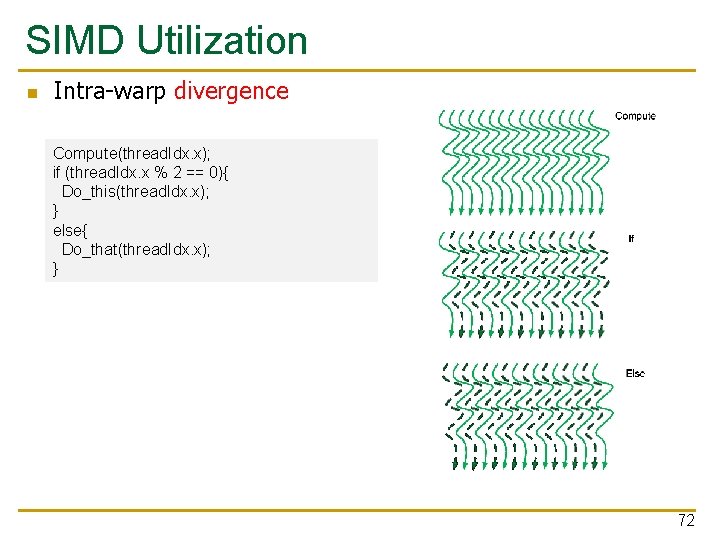

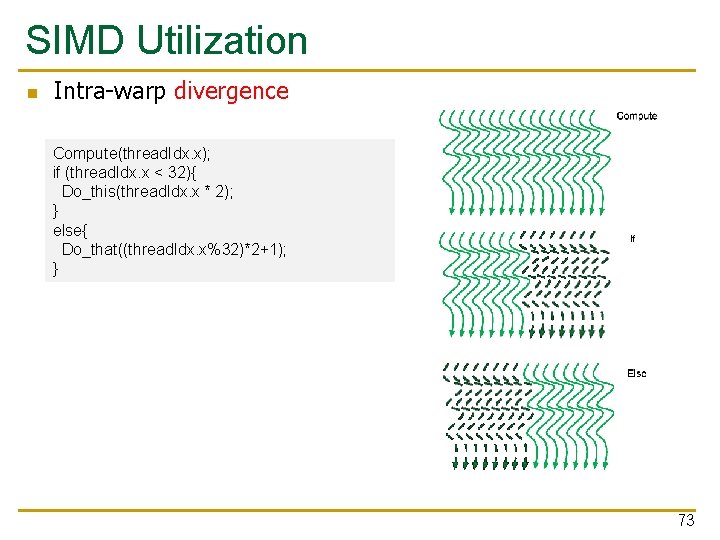

SIMD Utilization n Intra-warp divergence Compute(thread. Idx. x); if (thread. Idx. x % 2 == 0){ Do_this(thread. Idx. x); } else{ Do_that(thread. Idx. x); } 72

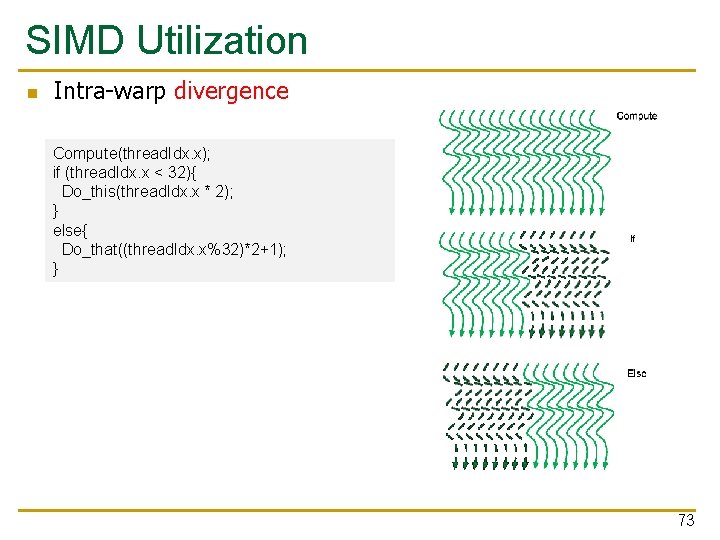

SIMD Utilization n Intra-warp divergence Compute(thread. Idx. x); if (thread. Idx. x < 32){ Do_this(thread. Idx. x * 2); } else{ Do_that((thread. Idx. x%32)*2+1); } 73

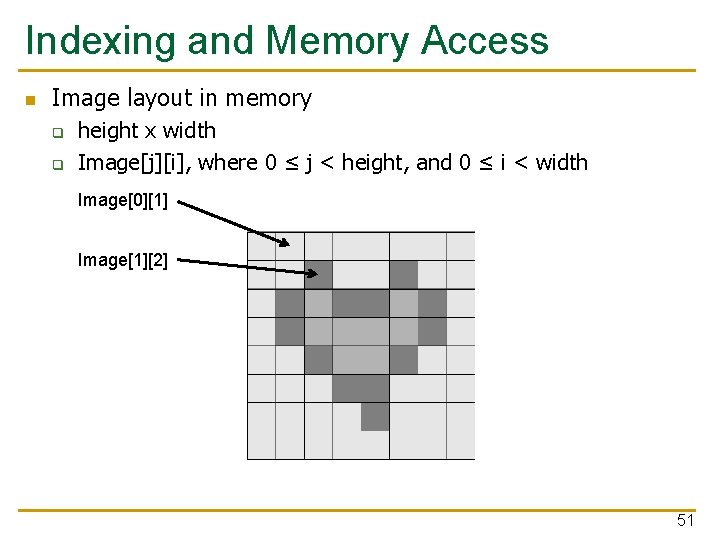

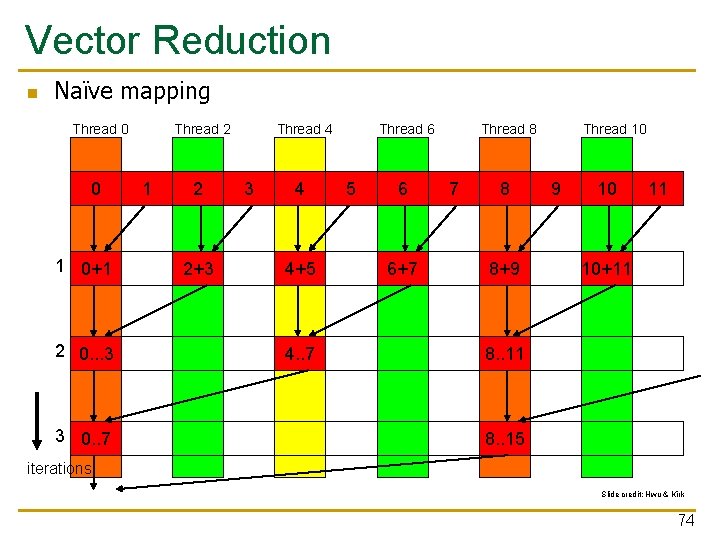

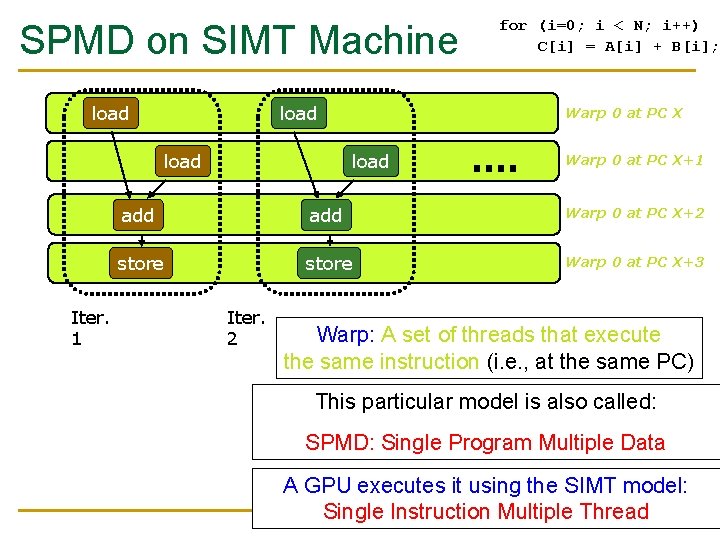

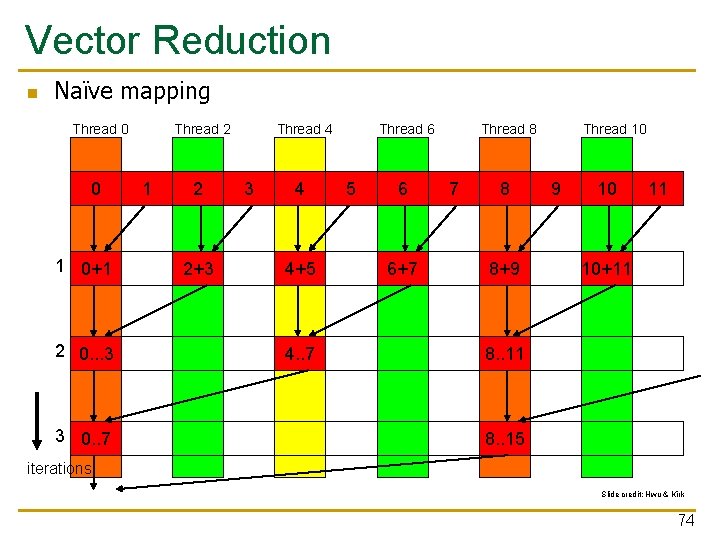

Vector Reduction n Naïve mapping Thread 0 0 1 0+1 2 0. . . 3 3 0. . 7 Thread 2 1 2 2+3 Thread 4 3 4 4+5 4. . 7 Thread 6 5 6 6+7 Thread 8 7 8 8+9 Thread 10 9 10 11 10+11 8. . 15 iterations Slide credit: Hwu & Kirk 74

![Vector Reduction n Naïve mapping shared float partial Sum unsigned int t thread Vector Reduction n Naïve mapping __shared__ float partial. Sum[] unsigned int t = thread.](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-75.jpg)

Vector Reduction n Naïve mapping __shared__ float partial. Sum[] unsigned int t = thread. Idx. x; for (int stride = 1; stride < block. Dim. x; stride *= 2) { __syncthreads(); if (t % (2*stride) == 0) partial. Sum[t] += partial. Sum[t + stride]; } 75

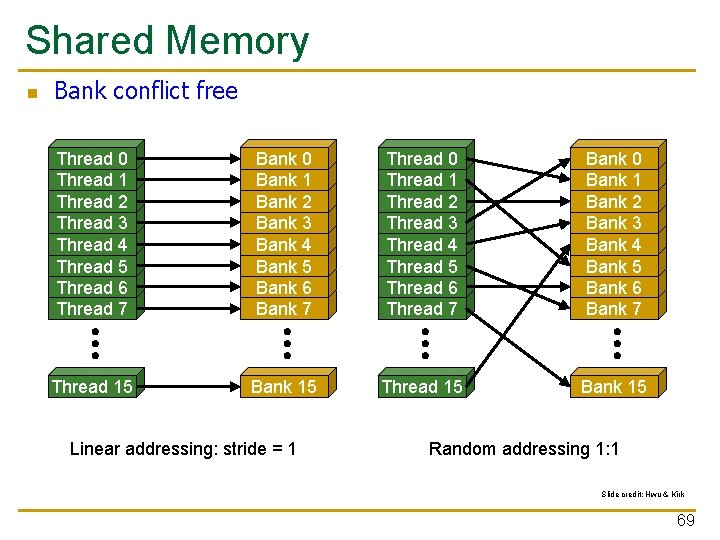

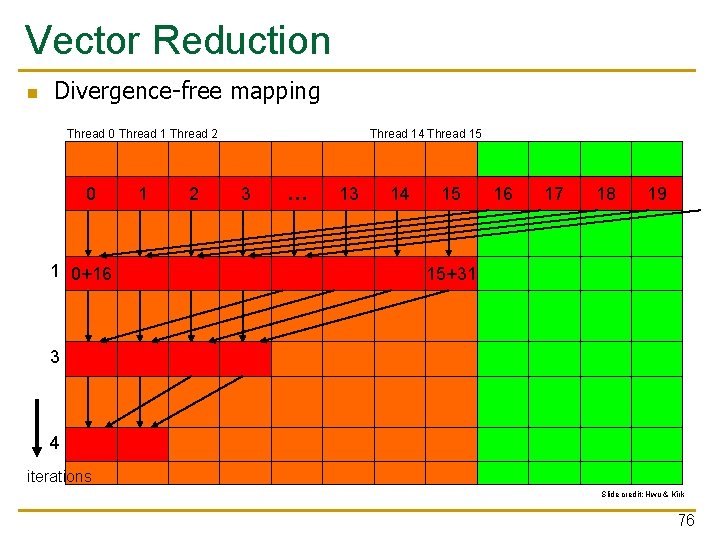

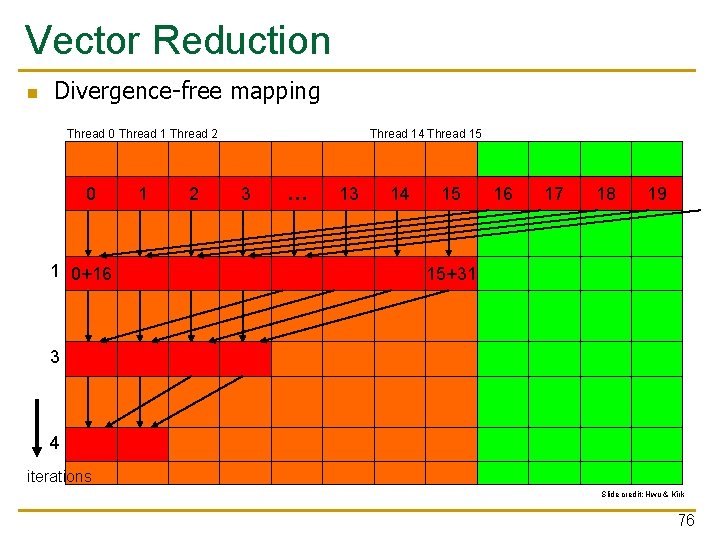

Vector Reduction n Divergence-free mapping Thread 0 Thread 1 Thread 2 0 1 0+16 1 2 Thread 14 Thread 15 3 … 13 14 15 16 17 18 19 15+31 3 4 iterations Slide credit: Hwu & Kirk 76

![Vector Reduction n Divergencefree mapping shared float partial Sum unsigned int t thread Vector Reduction n Divergence-free mapping __shared__ float partial. Sum[] unsigned int t = thread.](https://slidetodoc.com/presentation_image/27879eddad8bb27d1cd1ed2657d55312/image-77.jpg)

Vector Reduction n Divergence-free mapping __shared__ float partial. Sum[] unsigned int t = thread. Idx. x; for (int stride = block. Dim. x; stride > 1; stride >> 1){ __syncthreads(); if (t < stride) partial. Sum[t] += partial. Sum[t + stride]; } 77

We did not cover the following slides in lecture. These are for your preparation for the next lecture.

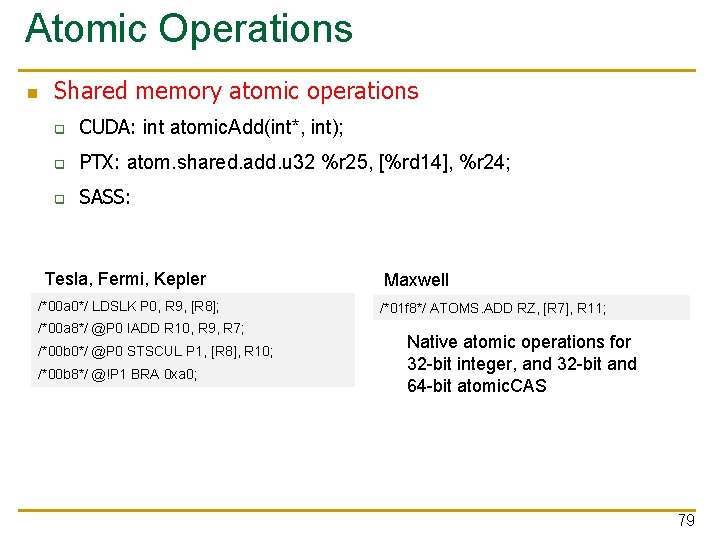

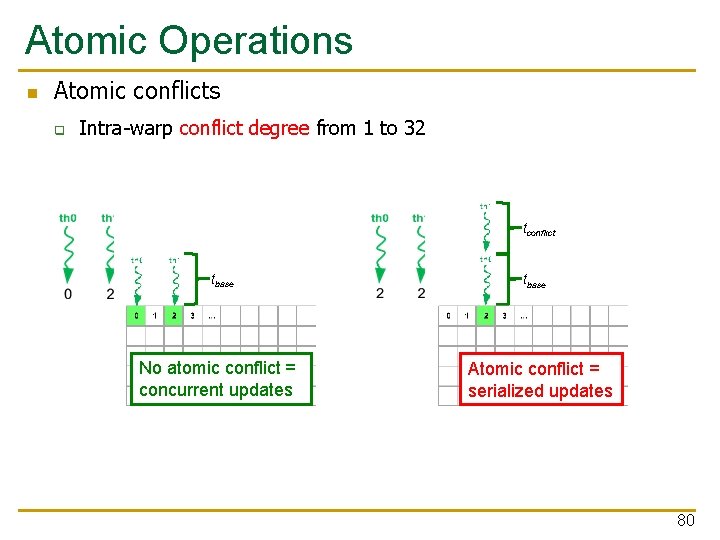

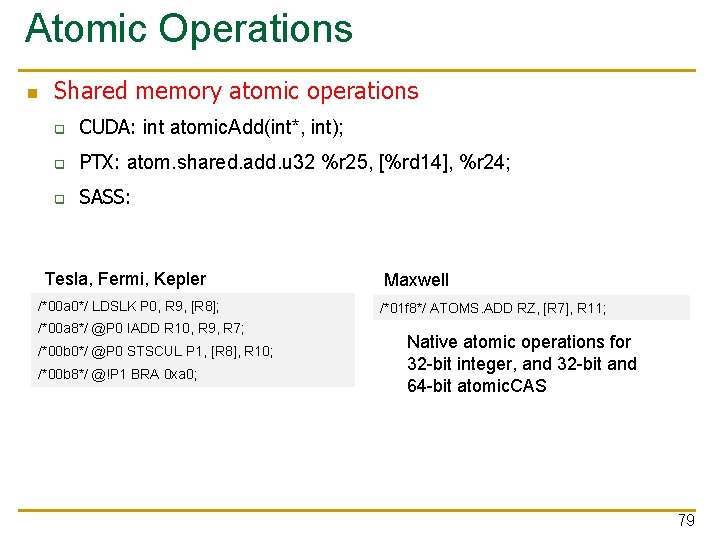

Atomic Operations n Shared memory atomic operations q CUDA: int atomic. Add(int*, int); q PTX: atom. shared. add. u 32 %r 25, [%rd 14], %r 24; q SASS: Tesla, Fermi, Kepler /*00 a 0*/ LDSLK P 0, R 9, [R 8]; /*00 a 8*/ @P 0 IADD R 10, R 9, R 7; /*00 b 0*/ @P 0 STSCUL P 1, [R 8], R 10; /*00 b 8*/ @!P 1 BRA 0 xa 0; Maxwell /*01 f 8*/ ATOMS. ADD RZ, [R 7], R 11; Native atomic operations for 32 -bit integer, and 32 -bit and 64 -bit atomic. CAS 79

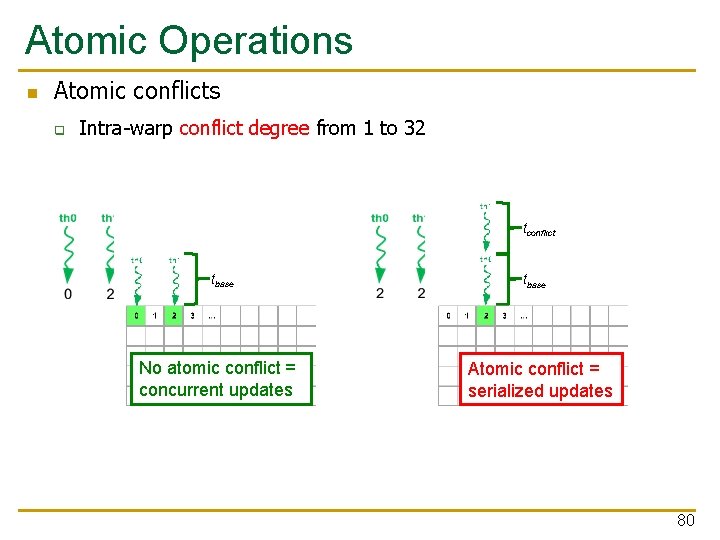

Atomic Operations n Atomic conflicts q Intra-warp conflict degree from 1 to 32 tconflict tbase No atomic conflict = concurrent updates Shared memory tbase Atomic conflict = serialized updates Shared memory 80

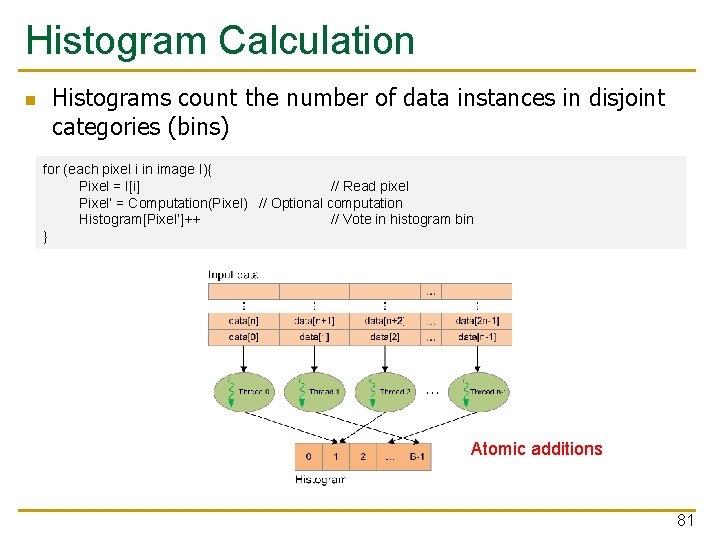

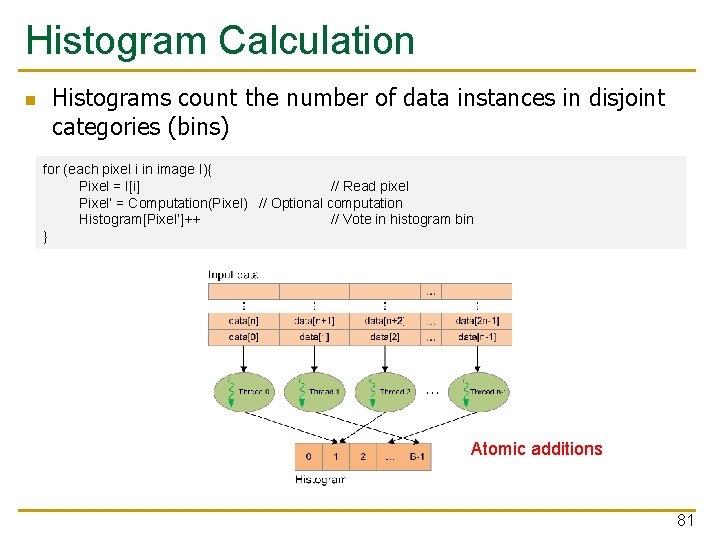

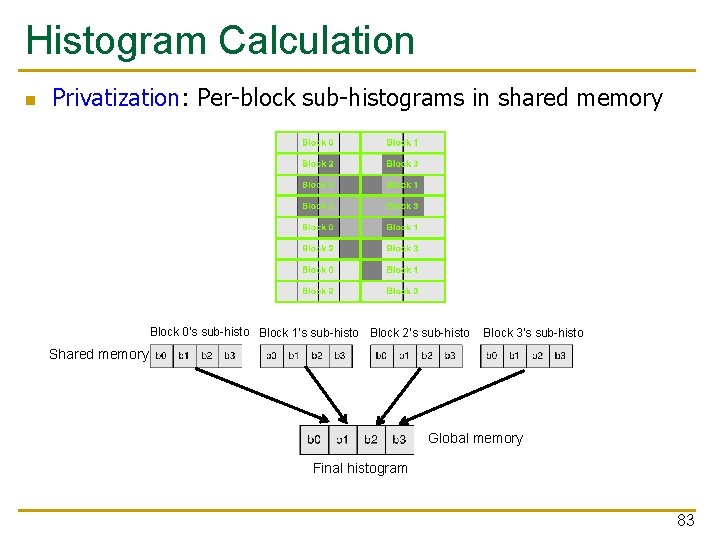

Histogram Calculation n Histograms count the number of data instances in disjoint categories (bins) for (each pixel i in image I){ Pixel = I[i] // Read pixel Pixel’ = Computation(Pixel) // Optional computation Histogram[Pixel’]++ // Vote in histogram bin } Atomic additions 81

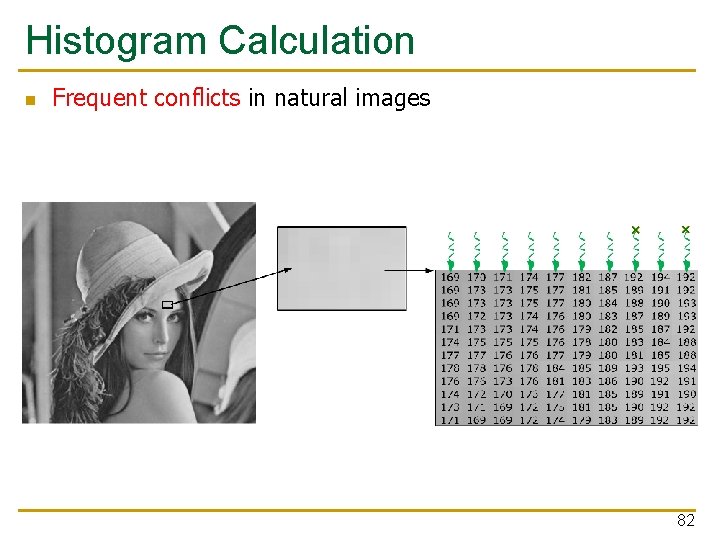

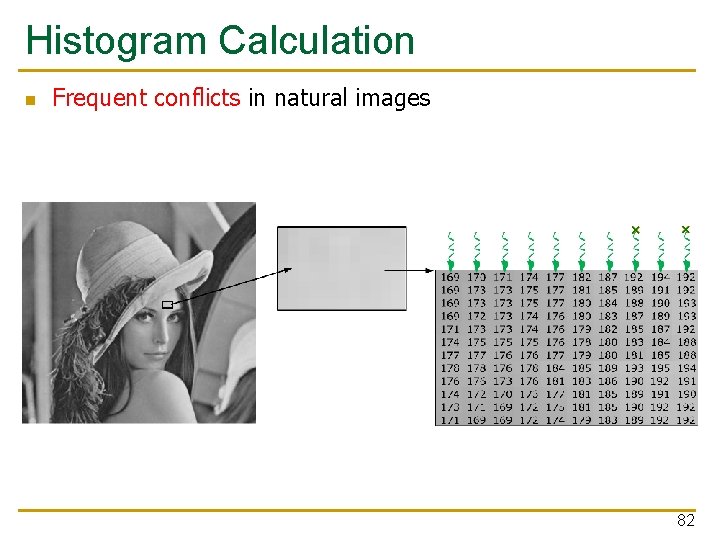

Histogram Calculation n Frequent conflicts in natural images 82

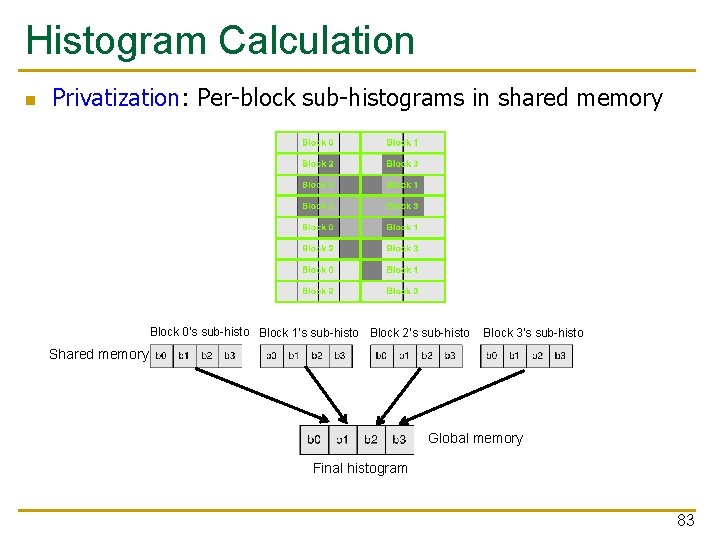

Histogram Calculation n Privatization: Per-block sub-histograms in shared memory Block 0’s sub-histo Block 1’s sub-histo Block 2’s sub-histo Block 3’s sub-histo Shared memory Global memory Final histogram 83

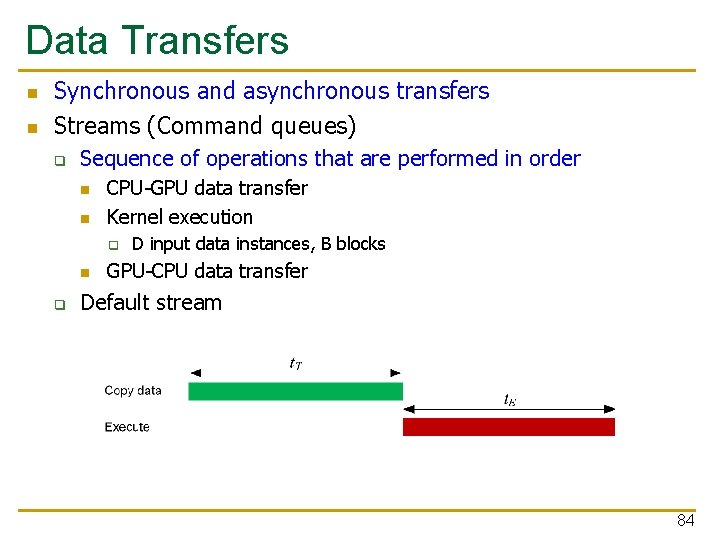

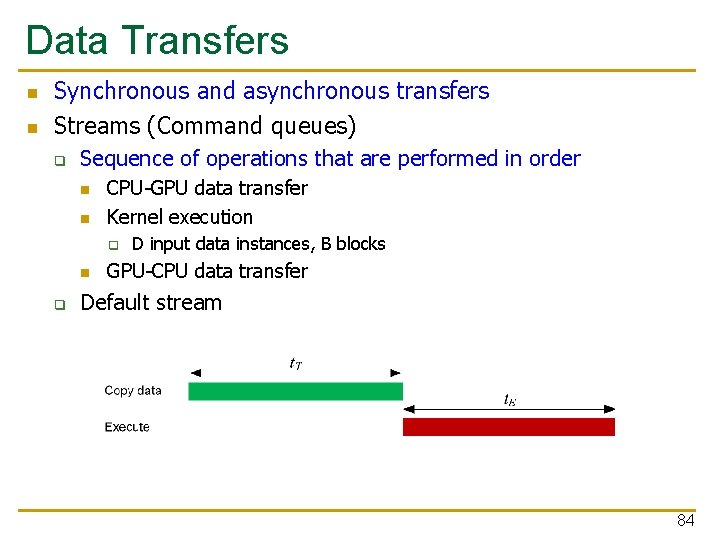

Data Transfers n n Synchronous and asynchronous transfers Streams (Command queues) q Sequence of operations that are performed in order n n CPU-GPU data transfer Kernel execution q D input data instances, B blocks GPU-CPU data transfer Default stream 84

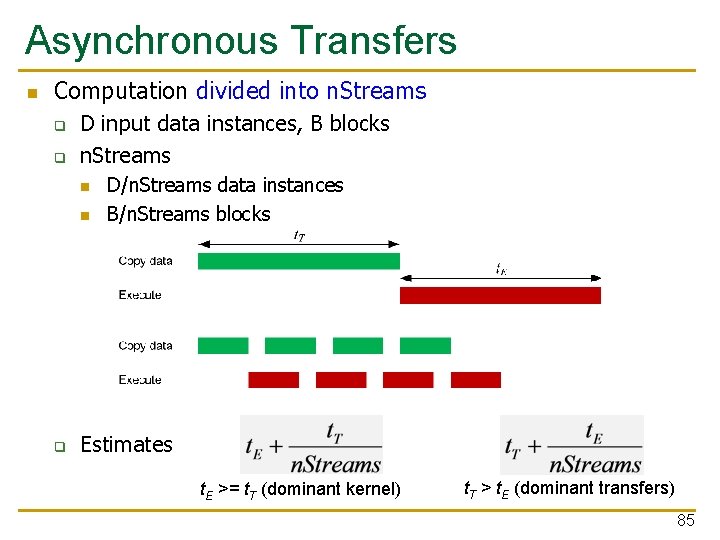

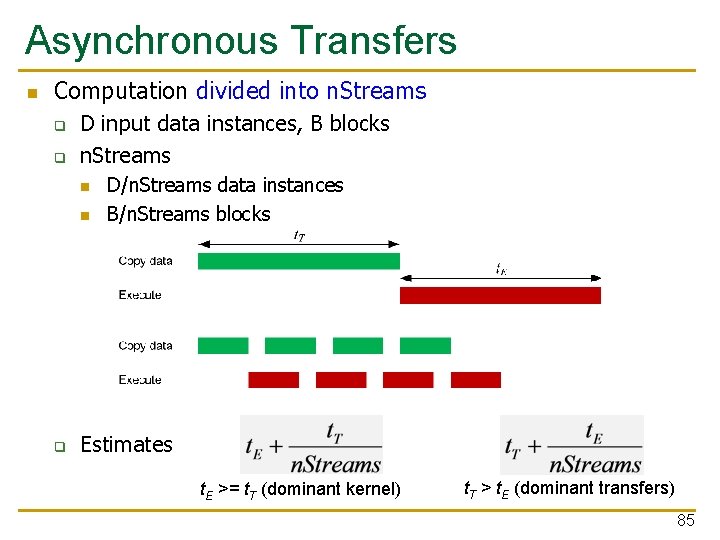

Asynchronous Transfers n Computation divided into n. Streams q q D input data instances, B blocks n. Streams n n q D/n. Streams data instances B/n. Streams blocks Estimates t. E >= t. T (dominant kernel) t. T > t. E (dominant transfers) 85

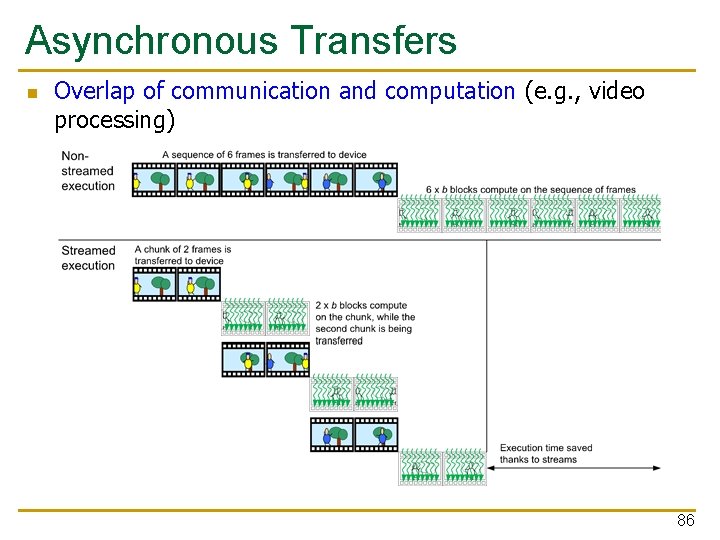

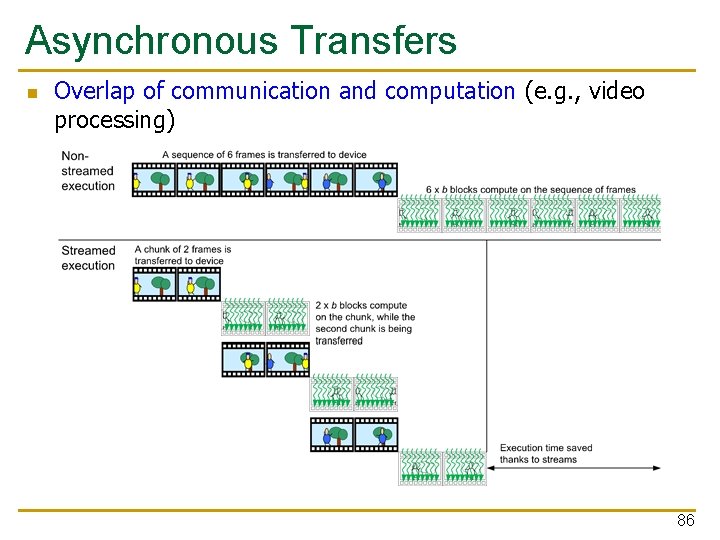

Asynchronous Transfers n Overlap of communication and computation (e. g. , video processing) 86

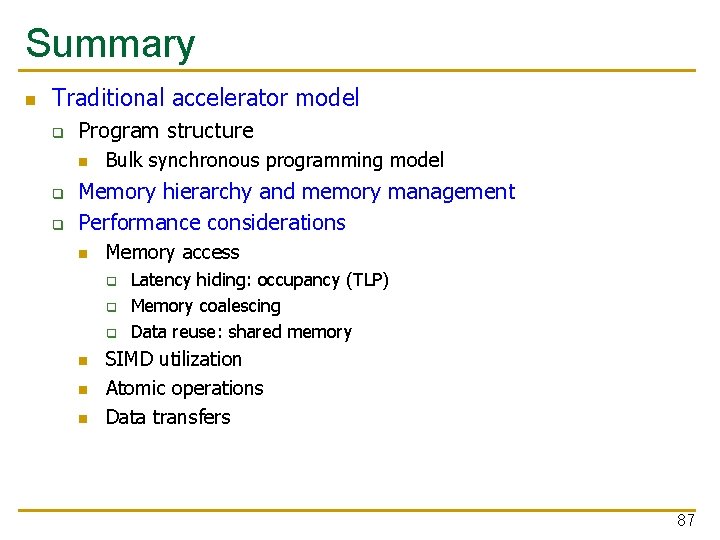

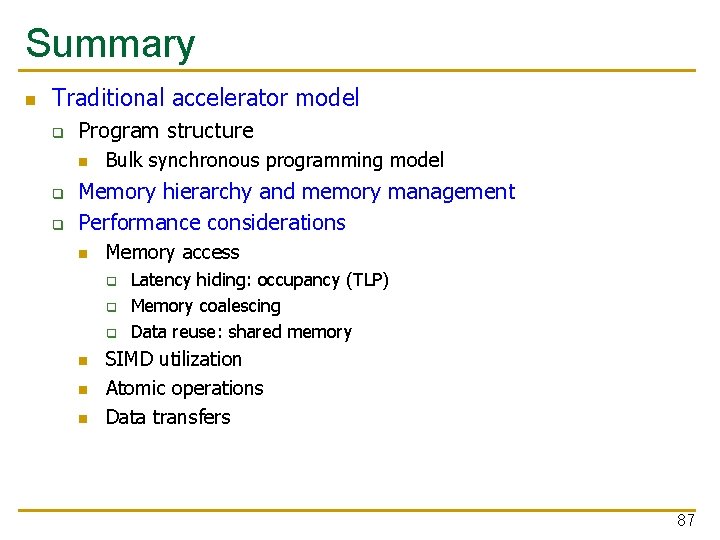

Summary n Traditional accelerator model q Program structure n q q Bulk synchronous programming model Memory hierarchy and memory management Performance considerations n Memory access q q q n n n Latency hiding: occupancy (TLP) Memory coalescing Data reuse: shared memory SIMD utilization Atomic operations Data transfers 87

Computer Architecture Lecture 9: GPUs and GPGPU Programming Prof. Onur Mutlu ETH Zürich Fall 2017 19 October 2017