Computational Argumentation 2020/2021, HC 10: Dynamics of Argumentation

- Slides: 39

Computational Argumentation 2020/2021, HC 10: Dynamics of Argumentation (2), Argumentation as Dialogue (1). Henry Prakken, October 14, 2020.

Overview

- Resolving argumentation frameworks
  - Structured
- Argumentation as dialogue (1)

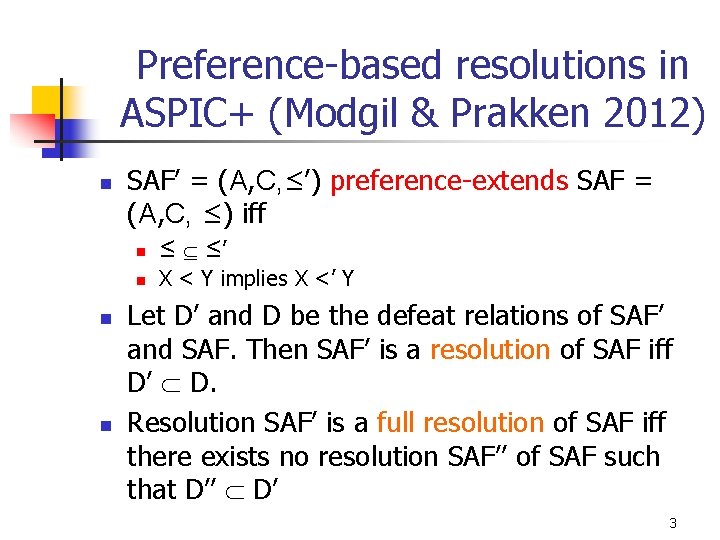

Preference-based resolutions in ASPIC+ (Modgil & Prakken 2012)

- SAF’ = (A, C, ≤’) preference-extends SAF = (A, C, ≤) iff
  - ≤ ⊆ ≤’
  - X < Y implies X <’ Y
- Let D’ and D be the defeat relations of SAF’ and SAF. Then SAF’ is a resolution of SAF iff D’ ⊂ D.
- Resolution SAF’ is a full resolution of SAF iff there exists no resolution SAF’’ of SAF such that D’’ ⊂ D’.
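The definitions on this slide can be tried out on small finite examples. The sketch below is only an illustration under stated assumptions: the relation symbols lost in the slide text are read as ⊆ (for the orderings) and ⊂ (for the defeat relations), and defeat is simplified so that an attack from X on Y succeeds unless X < Y. Full ASPIC+ compares the attacker with the attacked sub-argument and also has preference-independent attacks. All function names are hypothetical.

```python
def strict_part(leq):
    """Strict part of an ordering: X < Y iff X <= Y and not Y <= X."""
    return {(x, y) for (x, y) in leq if (y, x) not in leq}


def preference_extends(leq_new, leq_old):
    """<= is contained in <=' and every strict preference is preserved."""
    return leq_old <= leq_new and strict_part(leq_old) <= strict_part(leq_new)


def defeats(attacks, leq):
    """Simplifying assumption: an attack (X, Y) is a defeat unless X < Y."""
    lt = strict_part(leq)
    return {(x, y) for (x, y) in attacks if (x, y) not in lt}


def is_resolution(leq_new, leq_old, attacks):
    """Preference extension whose defeat relation is a proper subset."""
    return (preference_extends(leq_new, leq_old)
            and defeats(attacks, leq_new) < defeats(attacks, leq_old))


def is_full_resolution(leq_new, leq_old, attacks, candidates):
    """No candidate resolution of the original has strictly fewer defeats."""
    if not is_resolution(leq_new, leq_old, attacks):
        return False
    d_new = defeats(attacks, leq_new)
    return not any(
        is_resolution(leq_c, leq_old, attacks)
        and defeats(attacks, leq_c) < d_new
        for leq_c in candidates
    )


# Two arguments that attack each other, initially incomparable.
attacks = {("A", "B"), ("B", "A")}
leq_old = {("A", "A"), ("B", "B")}
leq_a_below_b = leq_old | {("A", "B")}          # A < B
leq_b_below_a = leq_old | {("B", "A")}          # B < A
leq_equal = leq_old | {("A", "B"), ("B", "A")}  # A and B equally preferred

candidates = [leq_old, leq_a_below_b, leq_b_below_a, leq_equal]
print(defeats(attacks, leq_a_below_b))
print(is_full_resolution(leq_a_below_b, leq_old, attacks, candidates))
```

In this toy case, preferring B over A removes the defeat from A on B, and no further preference extension can remove the remaining defeat, so the result is a full resolution.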

Properties of preference-based resolutions

- Grounded semantics still satisfies LtoR sceptical (but only for finitary AFs)
- Preferred now fails RtoL sceptical

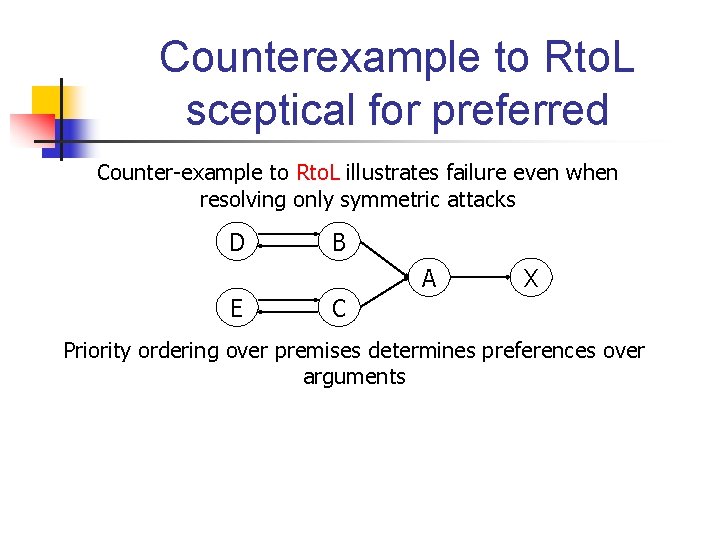

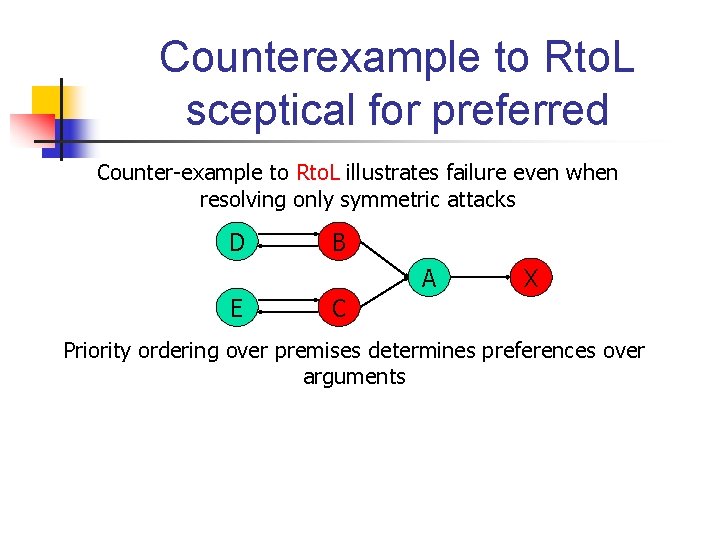

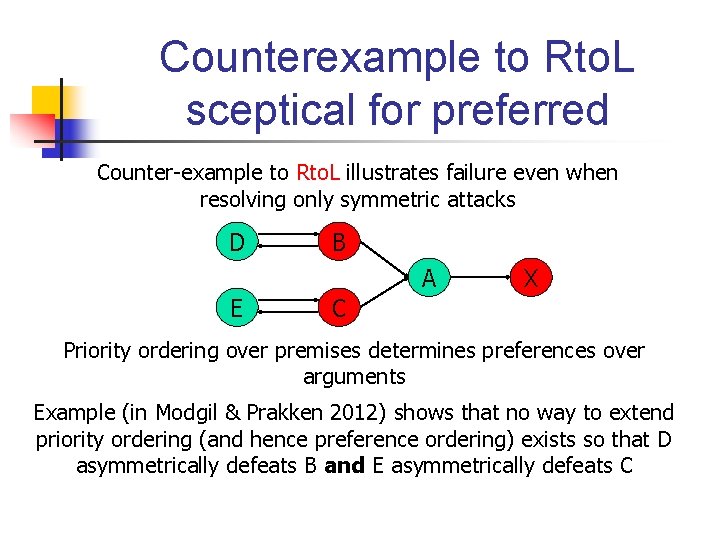

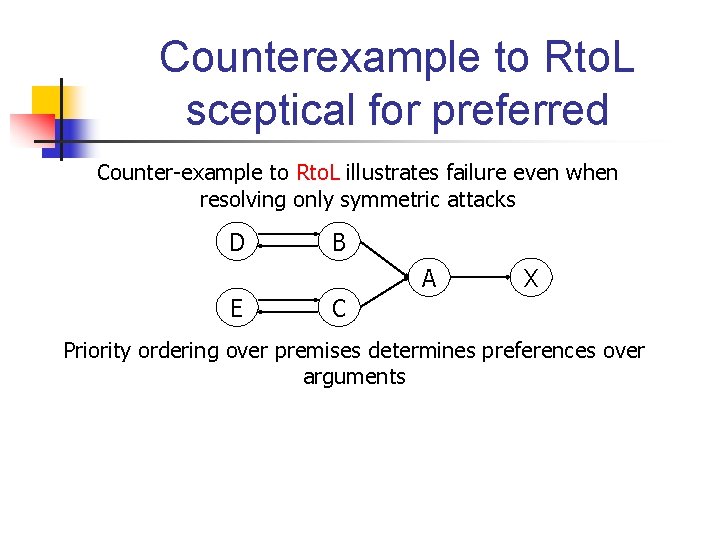

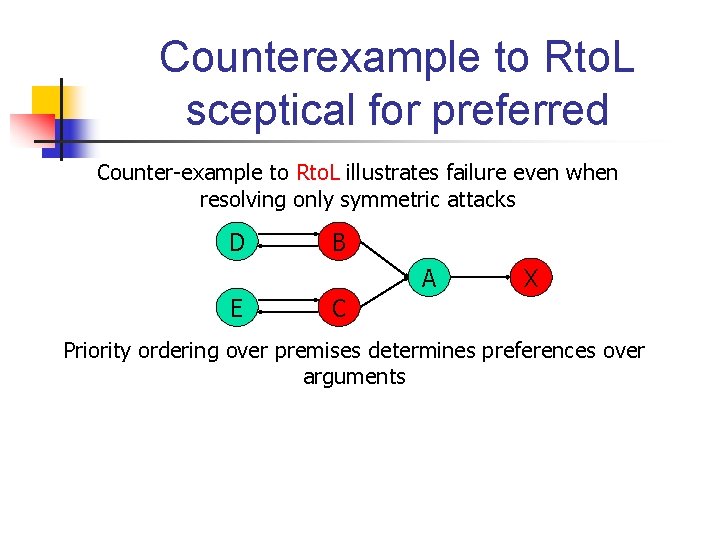

Counterexample to RtoL sceptical for preferred

- The counterexample to RtoL illustrates failure even when resolving only symmetric attacks
- Priority ordering over premises determines preferences over arguments

(Figure: attack graph over the arguments A, B, C, D, E and X.)

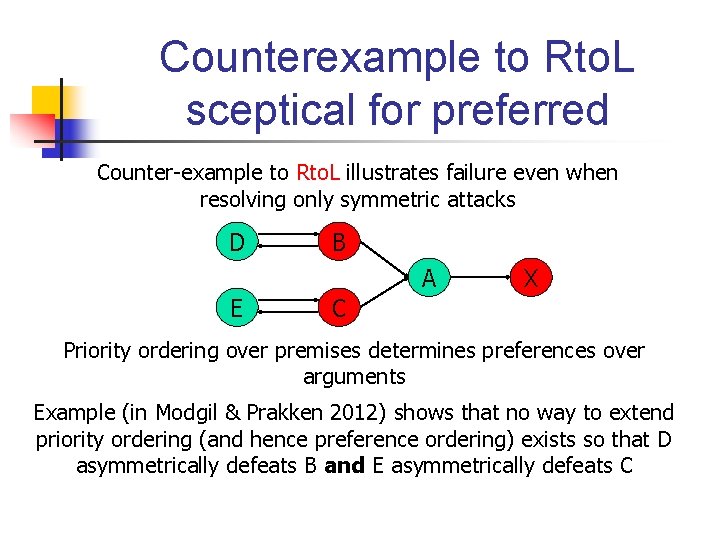

Counterexample to RtoL sceptical for preferred (continued)

The example (in Modgil & Prakken 2012) shows that there is no way to extend the priority ordering (and hence the preference ordering) so that D asymmetrically defeats B and E asymmetrically defeats C.

Argumentation as dialogue (1)

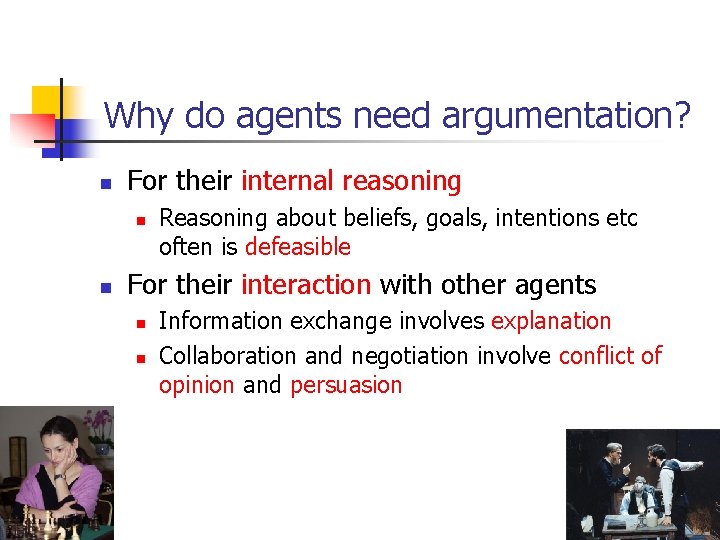

Why do agents need argumentation?

- For their internal reasoning
  - Reasoning about beliefs, goals, intentions etc. is often defeasible
- For their interaction with other agents
  - Information exchange involves explanation
  - Collaboration and negotiation involve conflict of opinion and persuasion

Overview

- Dialogue systems for argumentation
  - Inference vs. dialogue
  - Use of argumentation in MAS
  - General ideas

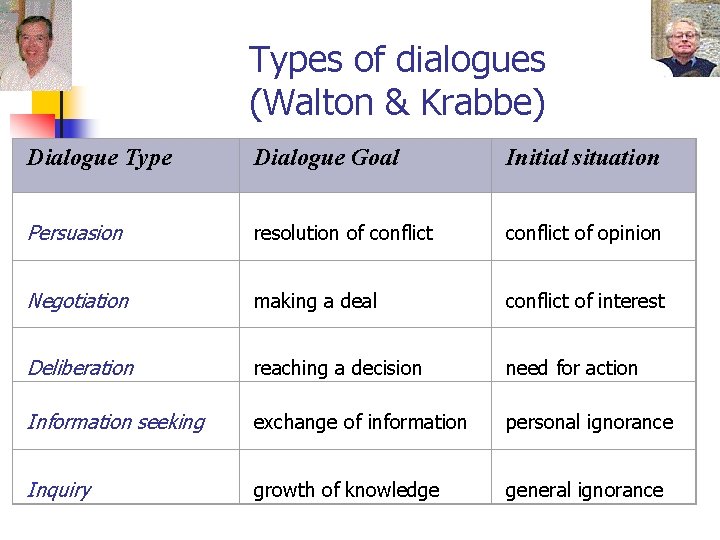

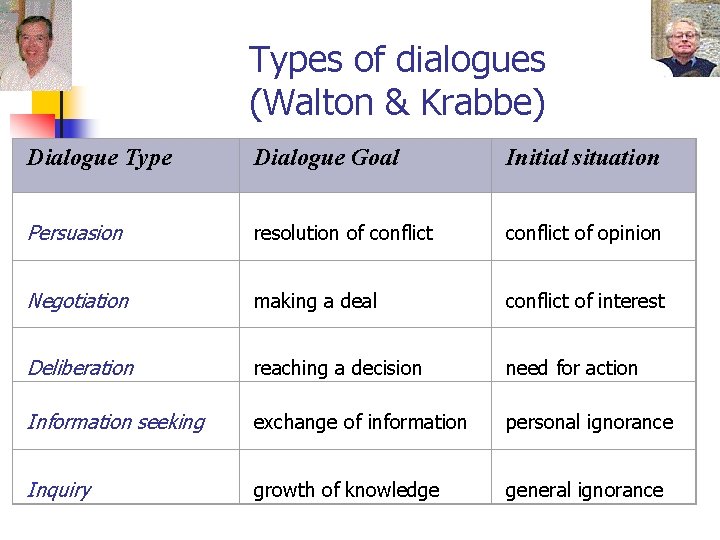

Types of dialogues (Walton & Krabbe)

| Dialogue type | Dialogue goal | Initial situation |
| --- | --- | --- |
| Persuasion | resolution of conflict | conflict of opinion |
| Negotiation | making a deal | conflict of interest |
| Deliberation | reaching a decision | need for action |
| Information seeking | exchange of information | personal ignorance |
| Inquiry | growth of knowledge | general ignorance |

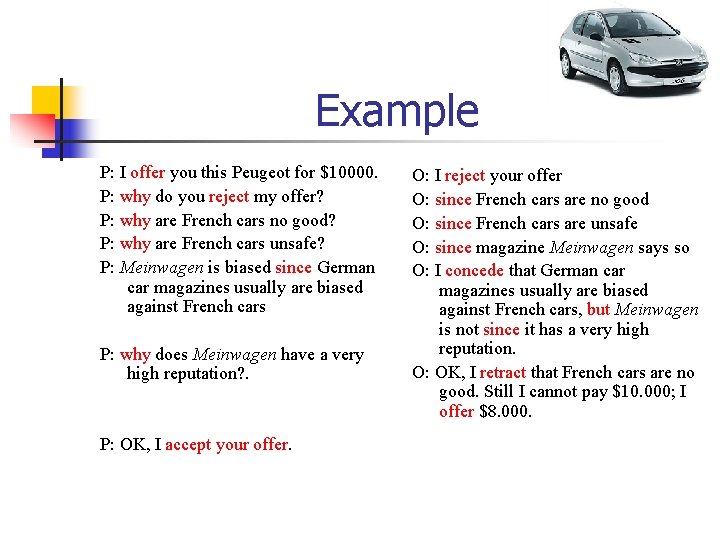

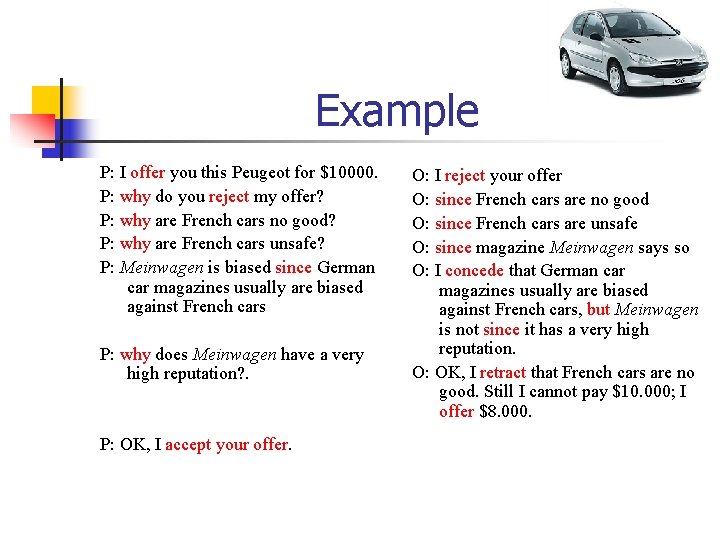

Example

- P: I offer you this Peugeot for $10,000.
- O: I reject your offer.
- P: Why do you reject my offer?
- O: Since French cars are no good.
- P: Why are French cars no good?
- O: Since French cars are unsafe.
- P: Why are French cars unsafe?
- O: Since magazine Meinwagen says so.
- P: Meinwagen is biased, since German car magazines usually are biased against French cars.
- O: I concede that German car magazines usually are biased against French cars, but Meinwagen is not, since it has a very high reputation.
- P: Why does Meinwagen have a very high reputation?
- O: OK, I retract that French cars are no good. Still, I cannot pay $10,000; I offer $8,000.
- P: OK, I accept your offer.

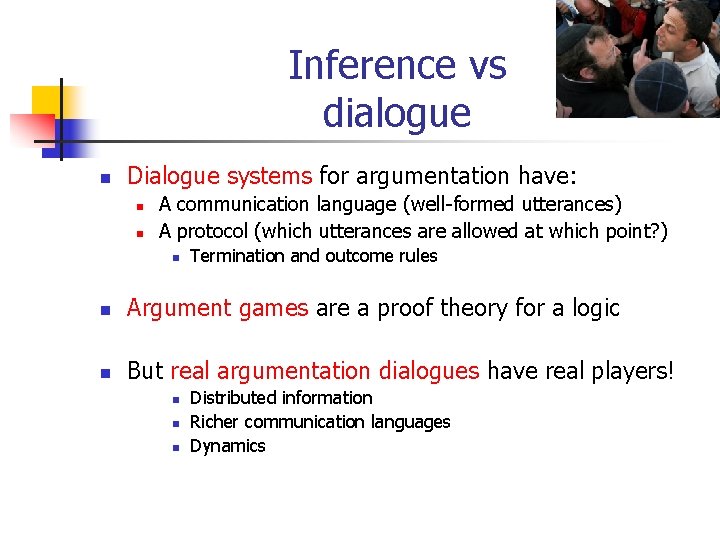

Inference vs dialogue

- Dialogue systems for argumentation have:
  - A communication language (well-formed utterances)
  - A protocol (which utterances are allowed at which point?)
  - Termination and outcome rules
- Argument games are a proof theory for a logic
- But real argumentation dialogues have real players!
  - Distributed information
  - Richer communication languages
  - Dynamics

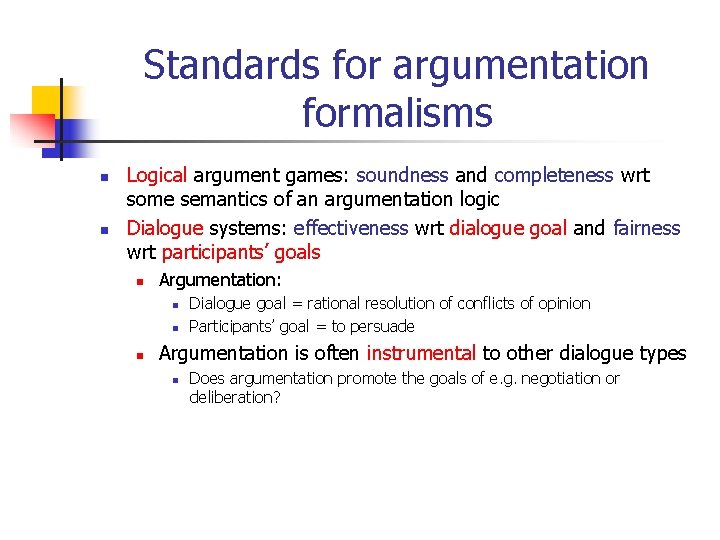

Standards for argumentation formalisms

- Logical argument games: soundness and completeness wrt some semantics of an argumentation logic
- Dialogue systems: effectiveness wrt dialogue goal and fairness wrt participants’ goals
- Argumentation:
  - Dialogue goal = rational resolution of conflicts of opinion
  - Participants’ goal = to persuade
- Argumentation is often instrumental to other dialogue types
  - Does argumentation promote the goals of e.g. negotiation or deliberation?

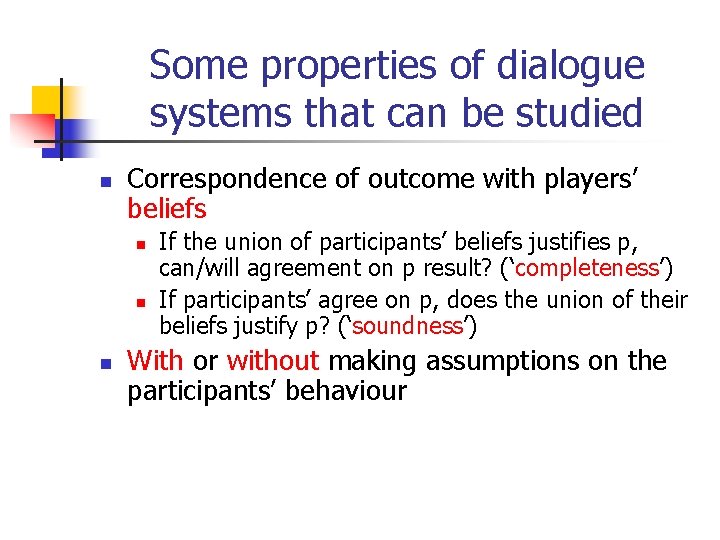

Some properties of dialogue systems that can be studied

- Correspondence of outcome with players’ beliefs
  - If the union of participants’ beliefs justifies p, can/will agreement on p result? (‘completeness’)
  - If participants agree on p, does the union of their beliefs justify p? (‘soundness’)
  - With or without making assumptions on the participants’ behaviour

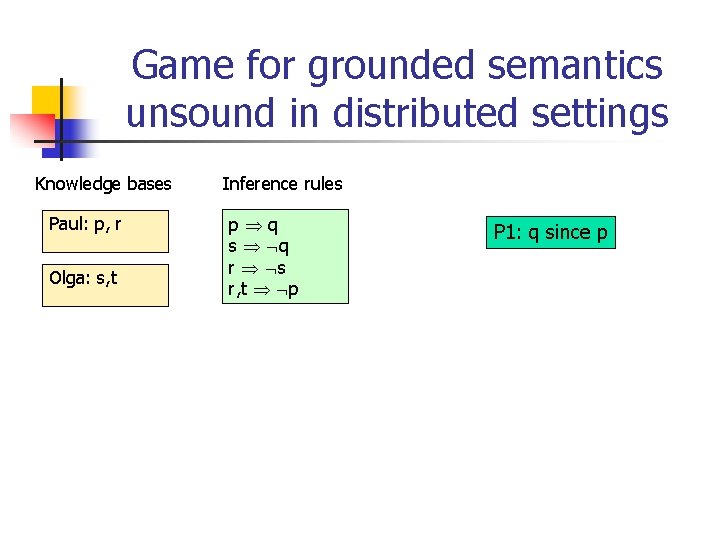

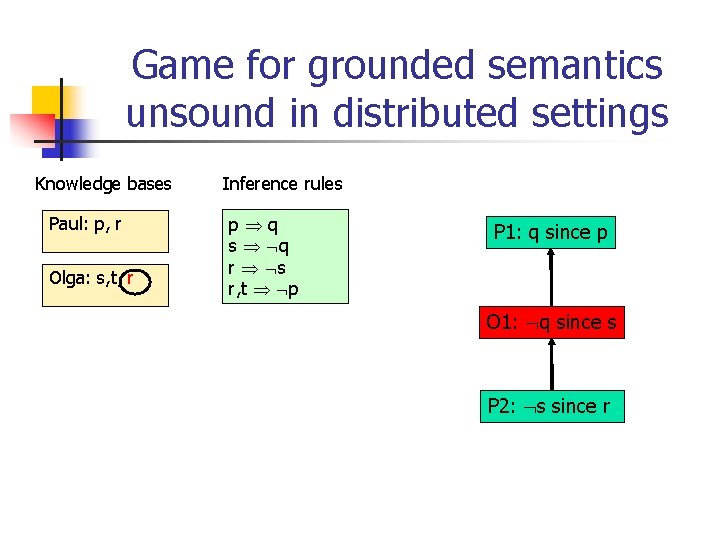

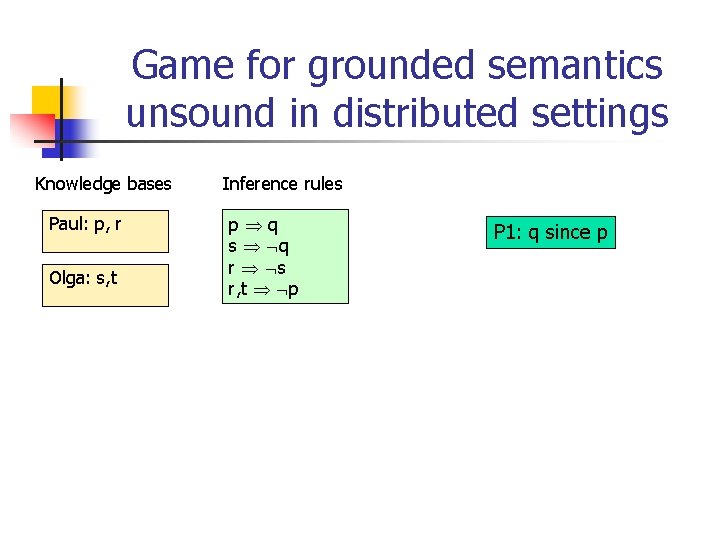

Game for grounded semantics unsound in distributed settings Knowledge bases Paul: p, r Olga: s, t Inference rules p q s q r s r, t p P 1: q since p

Game for grounded semantics unsound in distributed settings Knowledge bases Paul: p, r Olga: s, t Inference rules p q s q r s r, t p P 1: q since p O 1: q since s

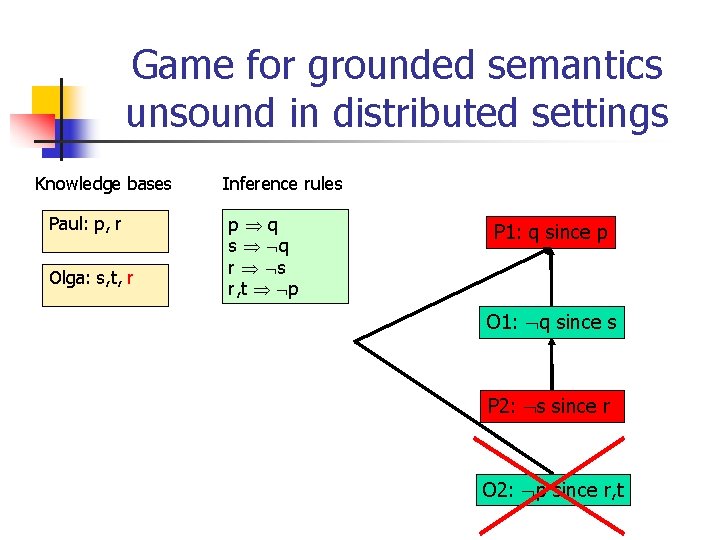

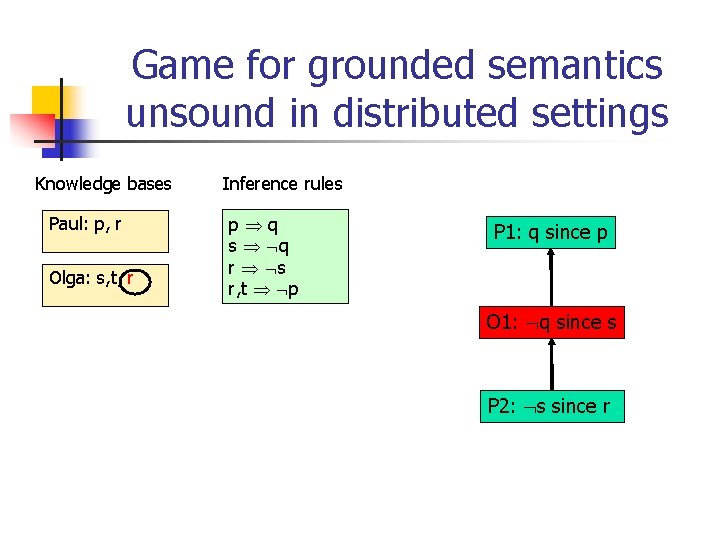

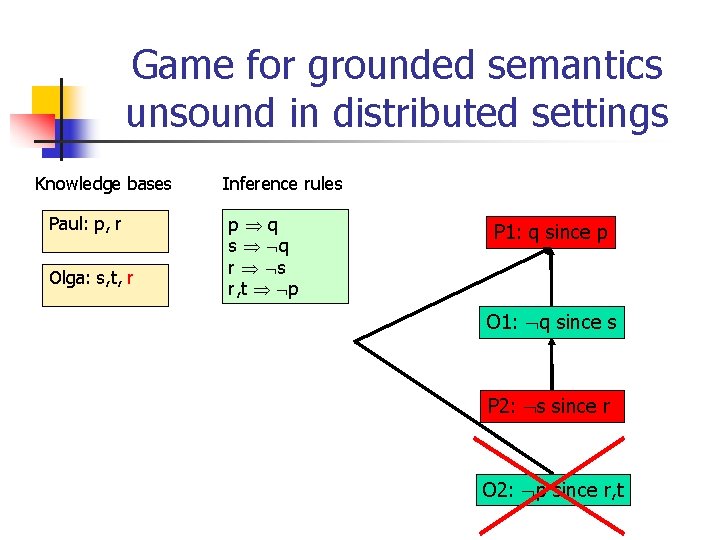

Game for grounded semantics unsound in distributed settings Knowledge bases Paul: p, r Olga: s, t, r Inference rules p q s q r s r, t p P 1: q since p O 1: q since s P 2: s since r

Game for grounded semantics unsound in distributed settings Knowledge bases Paul: p, r Olga: s, t, r Inference rules p q s q r s r, t p P 1: q since p O 1: q since s P 2: s since r O 2: p since r, t
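The effect of the last move can be checked by computing grounded extensions before and after O2 becomes available. The sketch below is a simplification under stated assumptions: each of the four moves P1, O1, P2, O2 is treated as one abstract argument, and each reply is treated as a one-directional defeat on its target. The function name `grounded_extension` is hypothetical.

```python
def grounded_extension(arguments, defeats):
    """Least fixed point of the characteristic function, iterated from the empty set."""
    extension = set()
    while True:
        # An argument is acceptable if every defeater of it is itself
        # defeated by some member of the current extension.
        acceptable = {
            a for a in arguments
            if all(
                any((defender, attacker) in defeats for defender in extension)
                for (attacker, target) in defeats
                if target == a
            )
        }
        if acceptable == extension:
            return extension
        extension = acceptable


# Assumption: moves as abstract arguments, replies as asymmetric defeats.
before_o2 = grounded_extension(
    {"P1", "O1", "P2"},
    {("O1", "P1"), ("P2", "O1")},
)
after_o2 = grounded_extension(
    {"P1", "O1", "P2", "O2"},
    {("O1", "P1"), ("P2", "O1"), ("O2", "P1")},
)
print(sorted(before_o2))
print(sorted(after_o2))
```

Under these assumptions P1 is in the grounded extension as long as only Paul's and Olga's initial arguments are considered, and drops out once Olga can build O2 from the premise r that Paul himself introduced.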

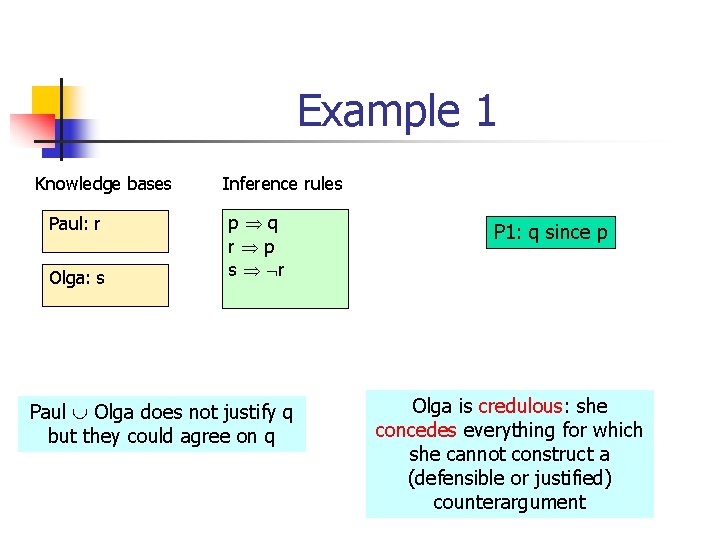

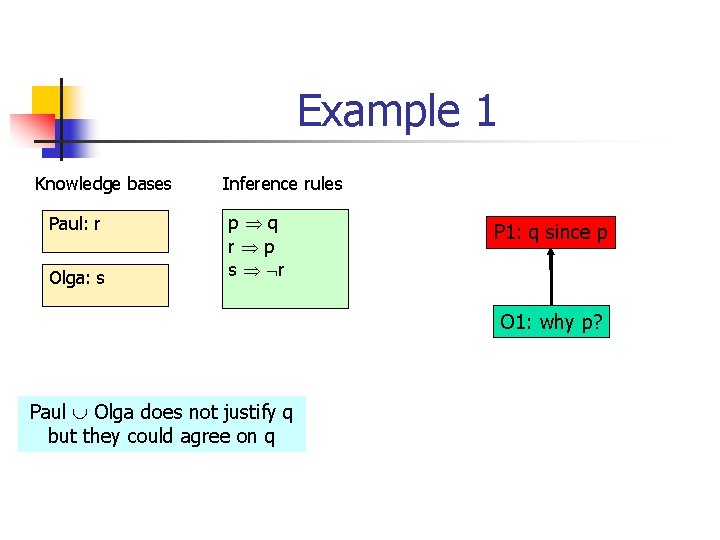

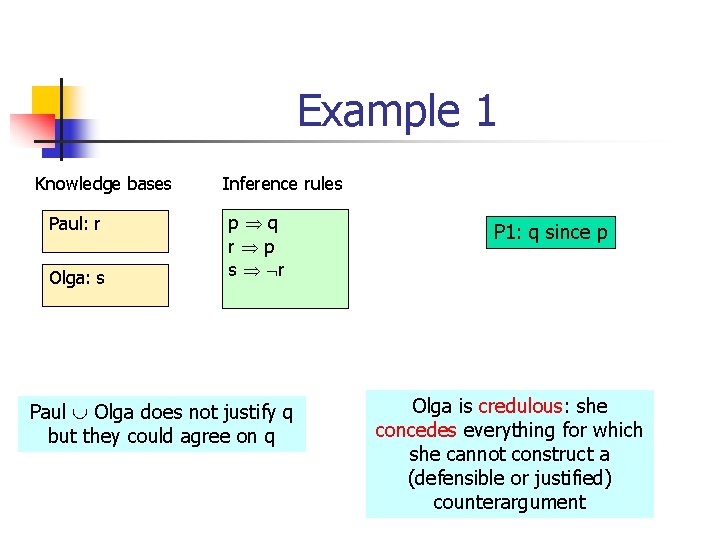

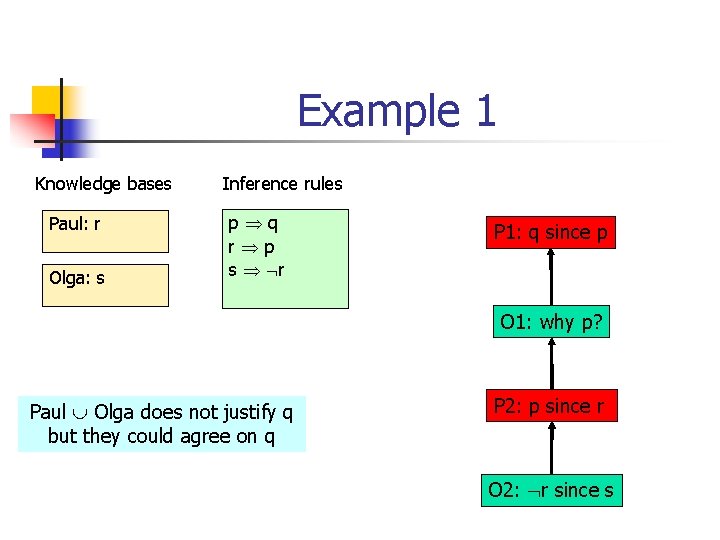

Example 1

Knowledge bases:

- Paul: r
- Olga: s

Inference rules: p ⇒ q; r ⇒ p; s ⇒ ¬r

Paul ∪ Olga does not justify q, but they could agree on q.

Olga is credulous: she concedes everything for which she cannot construct a (defensible or justified) counterargument.

- P1: q since p
- O1: concede p, q

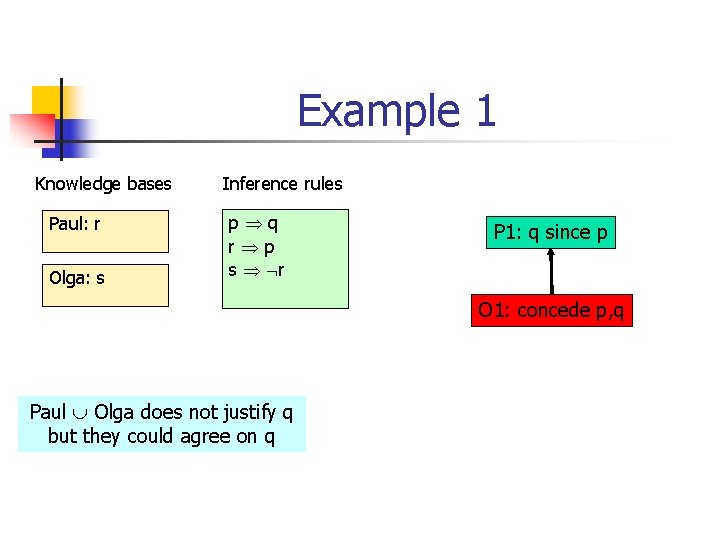

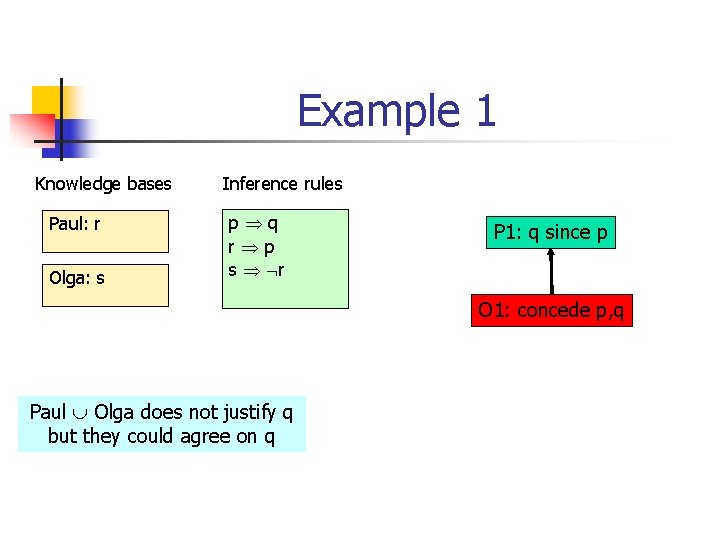

Example 1 Knowledge bases Paul: r Olga: s Inference rules p q r p s r Paul Olga does not justify q but they could agree on q P 1: q since p Olga is sceptical: she challenges everything for which she cannot construct a (defensible or justified) argument

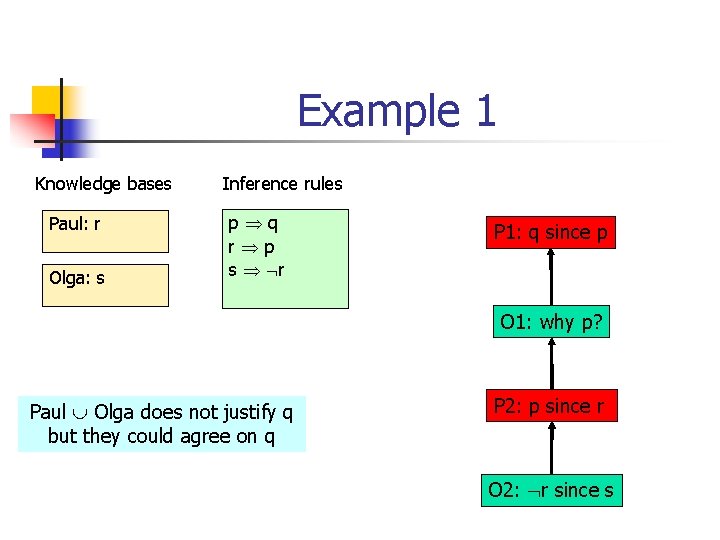

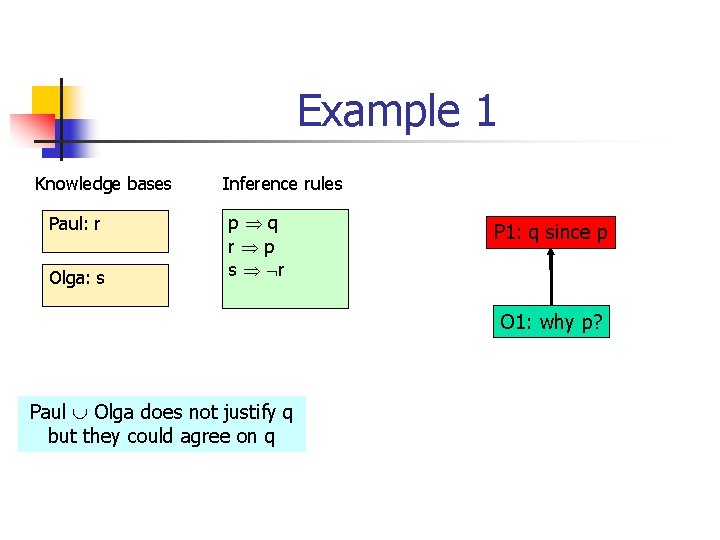

Example 1 Knowledge bases Paul: r Olga: s Inference rules p q r p s r P 1: q since p O 1: why p? Paul Olga does not justify q but they could agree on q

Example 1 Knowledge bases Paul: r Olga: s Inference rules p q r p s r P 1: q since p O 1: why p? Paul Olga does not justify q but they could agree on q P 2: p since r

Example 1 Knowledge bases Paul: r Olga: s Inference rules p q r p s r P 1: q since p O 1: why p? Paul Olga does not justify q but they could agree on q P 2: p since r O 2: r since s

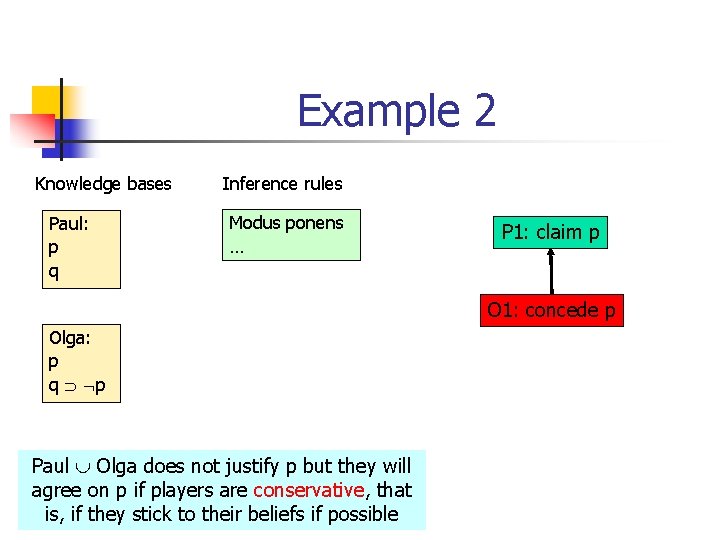

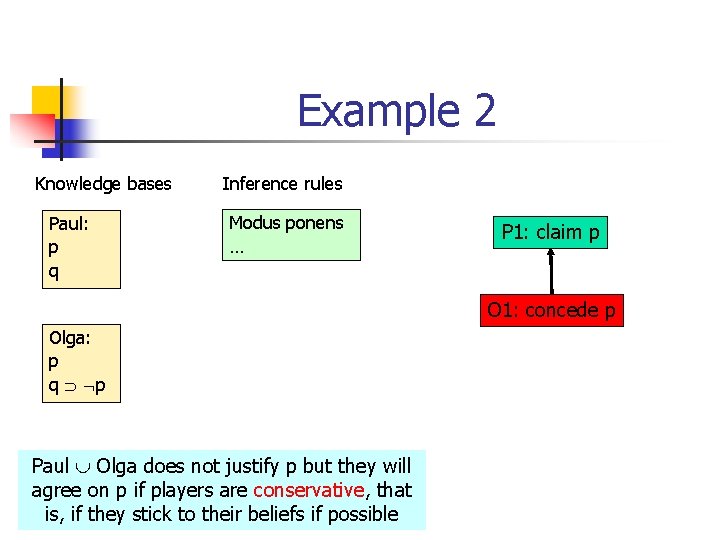

Example 2

Knowledge bases:

- Paul: p, q
- Olga: p, q → ¬p

Inference rules: modus ponens, …

Paul ∪ Olga does not justify p, but they will agree on p if players are conservative, that is, if they stick to their beliefs if possible.

- P1: claim p
- O1: concede p

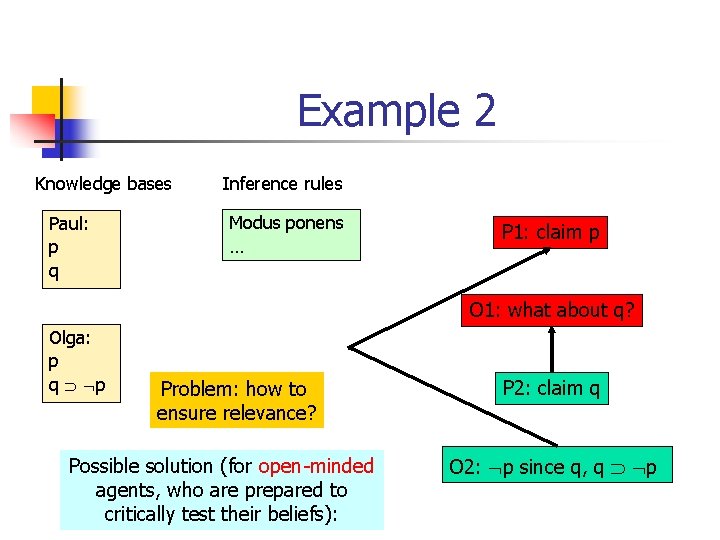

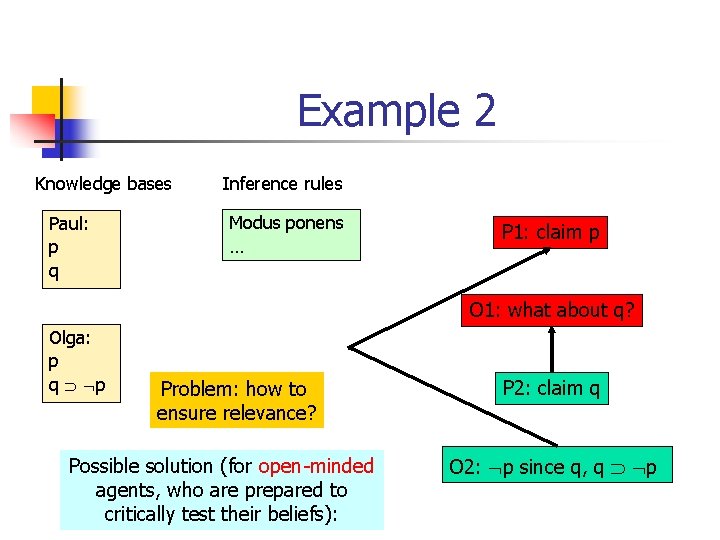

Example 2 (continued)

Knowledge bases:

- Paul: p, q
- Olga: p, q → ¬p

Inference rules: modus ponens, …

Possible solution (for open-minded agents, who are prepared to critically test their beliefs):

- P1: claim p
- O1: what about q?
- P2: claim q
- O2: ¬p since q, q → ¬p

Problem: how to ensure relevance?

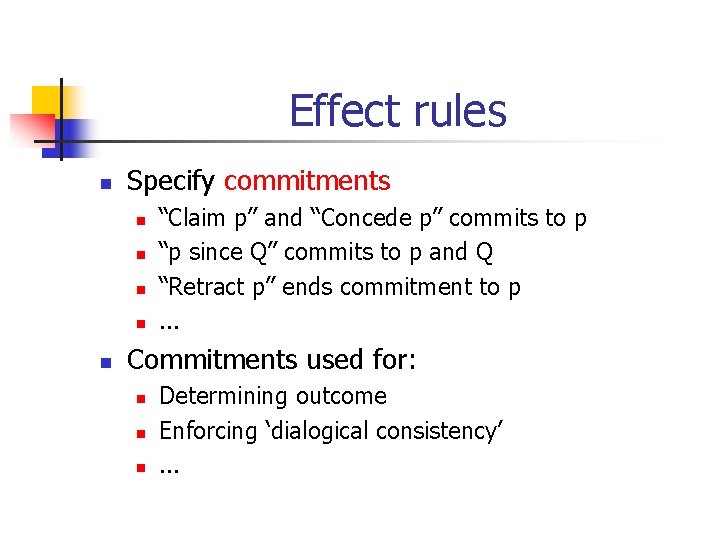

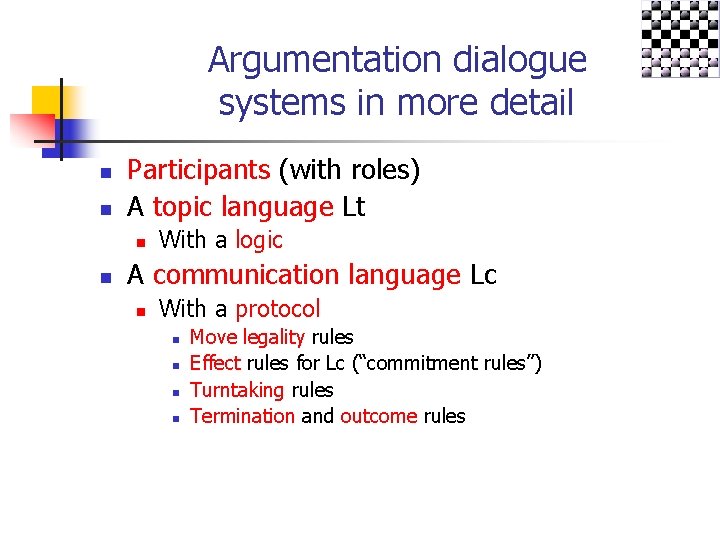

Argumentation dialogue systems in more detail

- Participants (with roles)
- A topic language Lt
  - With a logic
- A communication language Lc
  - With a protocol
    - Move legality rules
    - Effect rules for Lc (“commitment rules”)
    - Turntaking rules
    - Termination and outcome rules

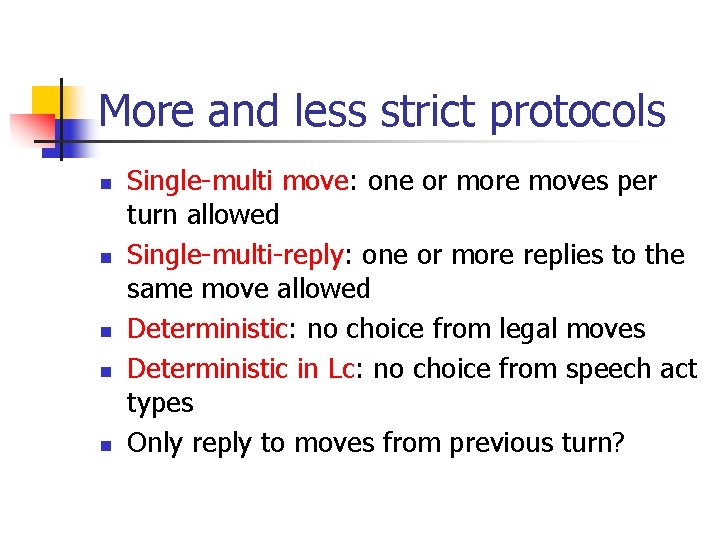

Effect rules

- Specify commitments
  - “Claim p” and “Concede p” commit to p
  - “p since Q” commits to p and Q
  - “Retract p” ends commitment to p
  - ...
- Commitments used for:
  - Determining outcome
  - Enforcing ‘dialogical consistency’
  - ...
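The three effect rules listed on this slide can be sketched as updates to a per-participant commitment store. This is a minimal illustration, not a protocol from the lecture: the class `CommitmentStore`, the act names, and the encoding of "p since Q" as a pair of a conclusion and a list of premises are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class CommitmentStore:
    """Publicly declared standpoints of one participant (hypothetical class)."""
    commitments: set = field(default_factory=set)

    def apply(self, act, content):
        """Update the store for one speech act and return the store."""
        if act in ("claim", "concede"):
            # "Claim p" and "Concede p" commit to p.
            self.commitments.add(content)
        elif act == "since":
            # "p since Q" commits to p and to every premise in Q.
            conclusion, premises = content
            self.commitments.add(conclusion)
            self.commitments.update(premises)
        elif act == "retract":
            # "Retract p" ends the commitment to p.
            self.commitments.discard(content)
        return self


store = CommitmentStore()
store.apply("claim", "p")
store.apply("since", ("q", ["p", "r"]))
store.apply("retract", "p")
print(sorted(store.commitments))
```

Acts without an effect rule on the slide (for example a "why" challenge) leave the store unchanged in this sketch.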

Public semantics for dialogue protocols

- Public semantics: can protocol compliance be externally observed?
- Commitments are a participant’s publicly declared standpoints, so not the same as beliefs!
- Only commitments and dialogical behaviour should count for move legality:
  - “Claim p is allowed only if you believe p” vs.
  - “Claim p is allowed only if you are not committed to p and have not challenged p”
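The second legality rule on this slide can be checked by an outside observer, because it refers only to what has been said. The sketch below illustrates this with a hypothetical referee function that reads a transcript of (speaker, act, proposition) triples; the act names "claim", "concede" and "why" and the function name are assumptions, and no belief base appears anywhere in the check.

```python
from collections import defaultdict


def first_illegal_claim(moves):
    """Return the index of the first illegal claim, or None if all are legal.

    A claim of p is legal only if the speaker is not committed to p and
    has not challenged p. Both conditions depend on the transcript alone.
    """
    commitments = defaultdict(set)
    challenged = defaultdict(set)
    for index, (speaker, act, p) in enumerate(moves):
        if act == "claim":
            if p in commitments[speaker] or p in challenged[speaker]:
                return index
            commitments[speaker].add(p)
        elif act == "concede":
            commitments[speaker].add(p)
        elif act == "why":
            challenged[speaker].add(p)
    return None


legal = [("P", "claim", "p"), ("O", "concede", "p")]
illegal = [("P", "claim", "p"), ("O", "why", "p"), ("O", "claim", "p")]
print(first_illegal_claim(legal))
print(first_illegal_claim(illegal))
```

A rule of the first kind ("only if you believe p") could not be written this way, since the referee would need access to each participant's private beliefs.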

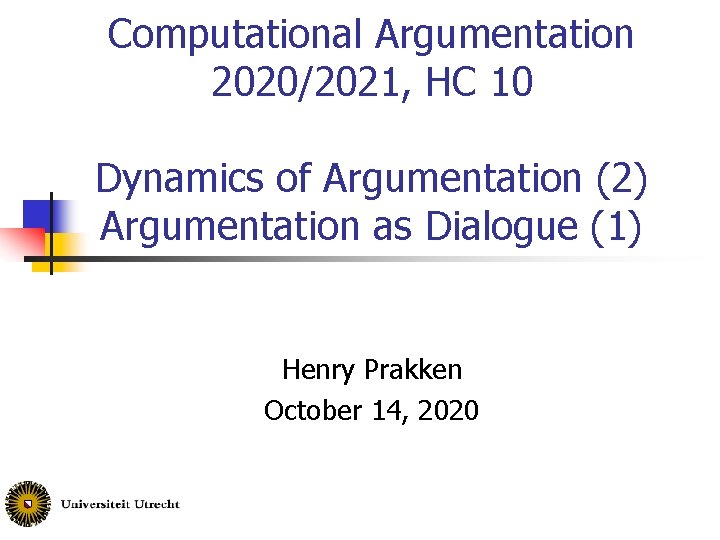

More and less strict protocols

- Single-multi move: one or more moves per turn allowed
- Single-multi-reply: one or more replies to the same move allowed
- Deterministic: no choice from legal moves
- Deterministic in Lc: no choice from speech act types
- Only reply to moves from previous turn?
