Computational and Statistical Issues in Data-Mining, Yoav Freund

- Slides: 42

Computational and Statistical Issues in Data-Mining
Yoav Freund, Banter Inc.
2/20/2021

Plan of talk
• Two large-scale classification problems.
• Generative versus predictive modeling.
• Boosting.
• Applications of boosting.
• Computational issues in data-mining.

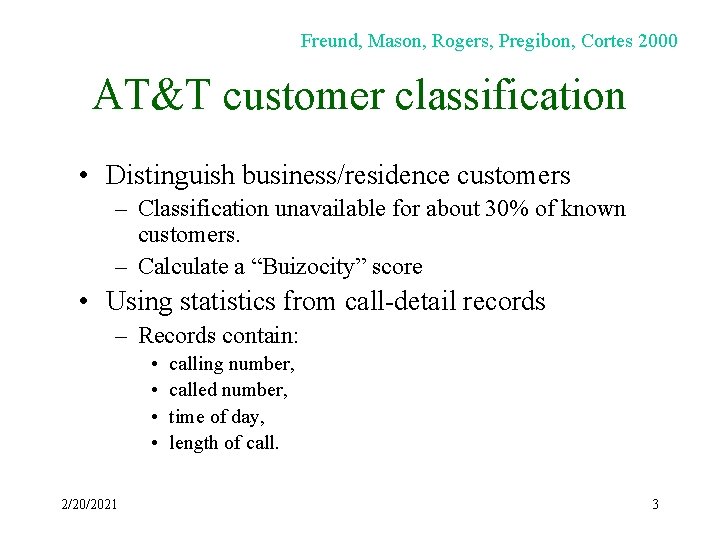

AT&T customer classification (Freund, Mason, Rogers, Pregibon, Cortes 2000)
• Distinguish business/residence customers.
  – Classification unavailable for about 30% of known customers.
  – Calculate a “Buizocity” score.
• Using statistics from call-detail records.
  – Records contain: calling number, called number, time of day, length of call.

Massive datasets
• 260 million calls/day.
• 230 million telephone numbers to be classified.

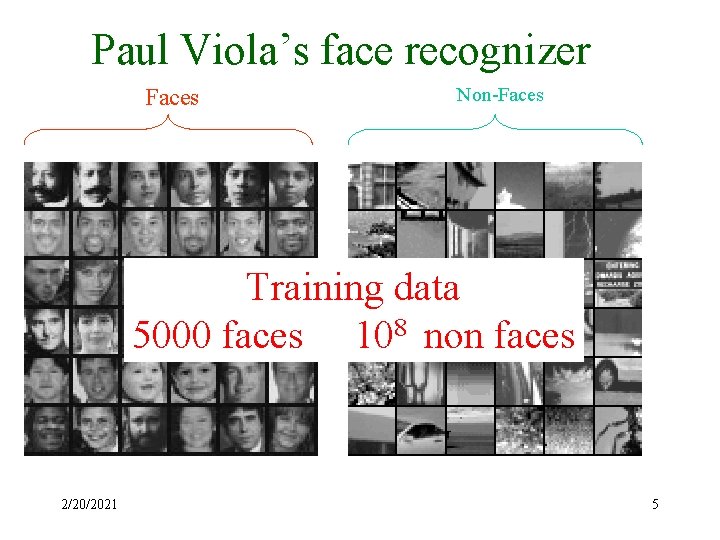

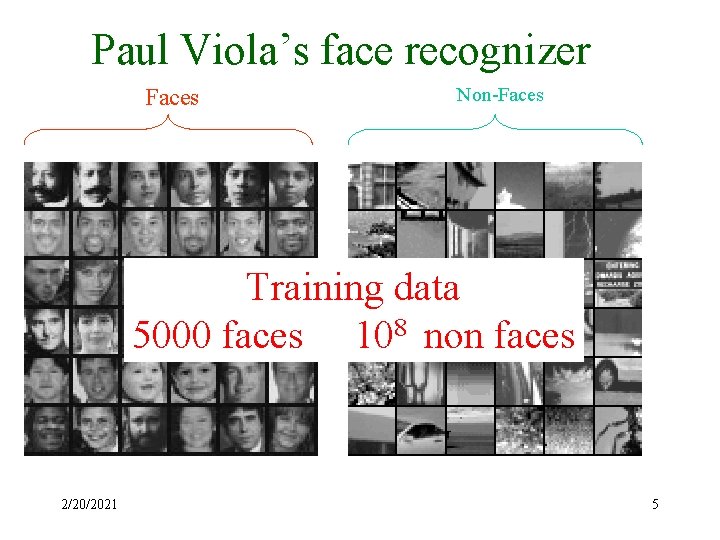

Paul Viola’s face recognizer
Training data: 5000 faces and 10^8 non-faces.

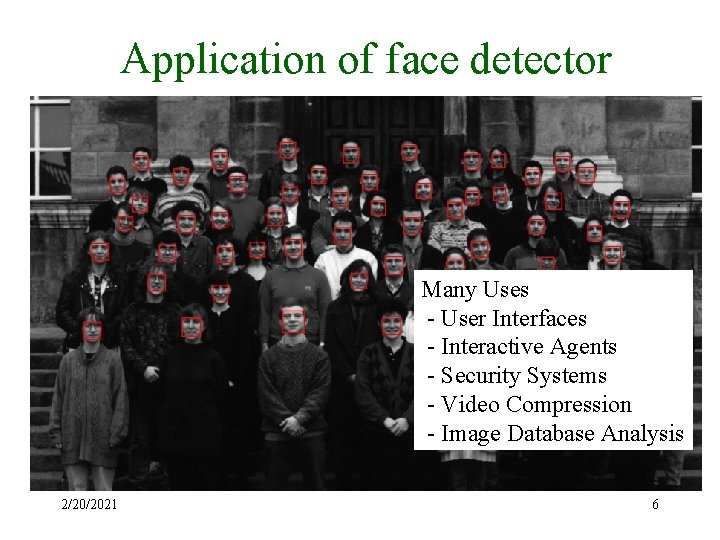

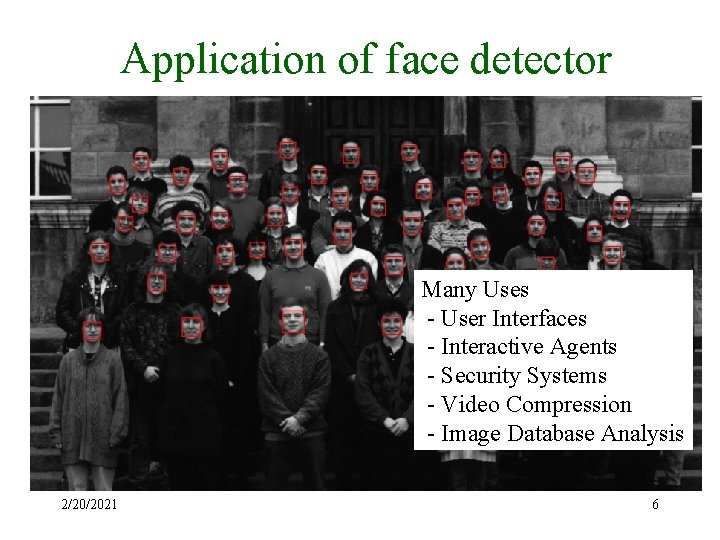

Applications of the face detector
Many uses:
• User interfaces
• Interactive agents
• Security systems
• Video compression
• Image database analysis

Generative vs. Predictive models

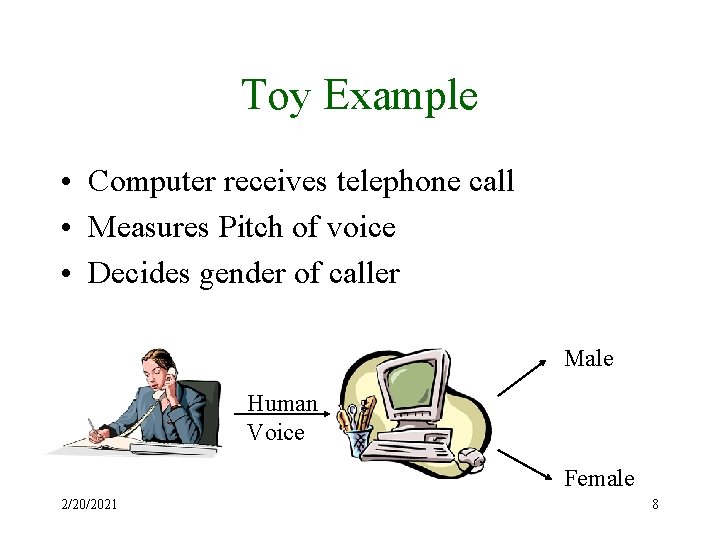

Toy Example
• Computer receives a telephone call.
• Measures the pitch of the voice.
• Decides the gender of the caller.
[Figure: human voice pitch, male vs. female.]

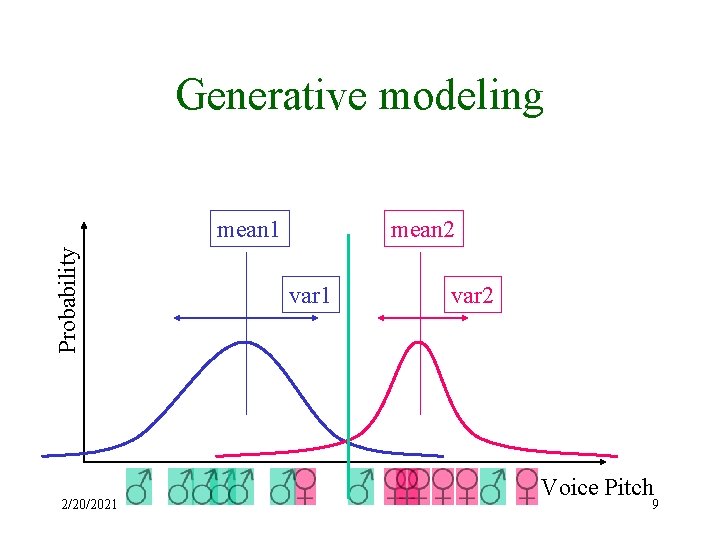

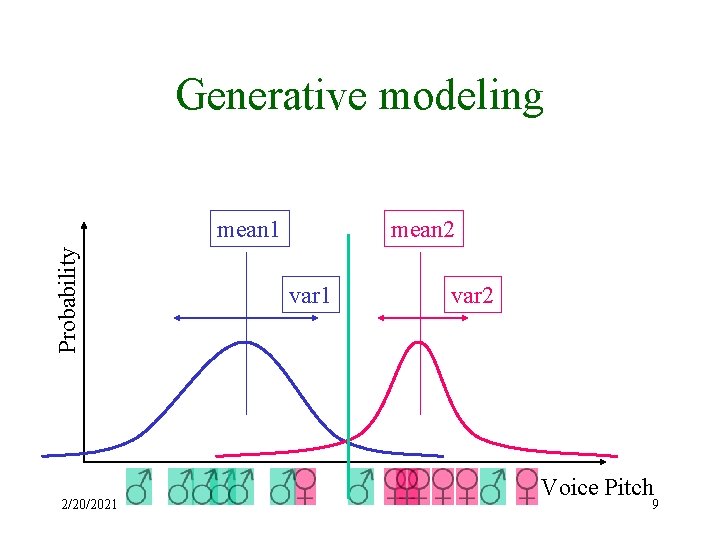

Generative modeling
[Figure: probability vs. voice pitch; one distribution per class, with parameters (mean 1, var 1) and (mean 2, var 2).]
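The generative approach can be sketched in a few lines: fit a prior and a Gaussian (mean, variance) to each class, then pick the class with the larger prior times likelihood. This is a minimal sketch; the function names and the pitch values are hypothetical, not data from the talk.

```python
import math
from statistics import mean, pvariance

def fit_generative(pitches_by_class):
    """Fit a prior and a 1-D Gaussian (mean, variance) to each class."""
    total = sum(len(v) for v in pitches_by_class.values())
    return {label: (len(v) / total, mean(v), pvariance(v))
            for label, v in pitches_by_class.items()}

def log_density(x, mu, var):
    """Log of the Gaussian density N(x; mu, var)."""
    return -0.5 * math.log(2 * math.pi * var) - (x - mu) ** 2 / (2 * var)

def classify(model, x):
    """Return the class maximizing log prior + log likelihood."""
    return max(model, key=lambda c: math.log(model[c][0])
               + log_density(x, model[c][1], model[c][2]))

# Hypothetical voice-pitch samples (Hz), for illustration only.
model = fit_generative({"male": [100, 110, 120, 130],
                        "female": [190, 200, 210, 220]})
print(classify(model, 140), classify(model, 180))
```

Because the decision boundary comes from the fitted means and variances, a few outliers far from the boundary can still move it, which is the ill-behaved case discussed below.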

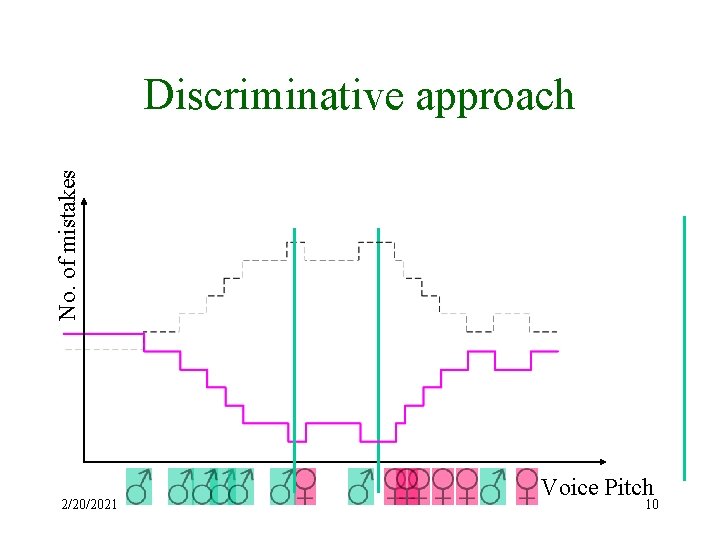

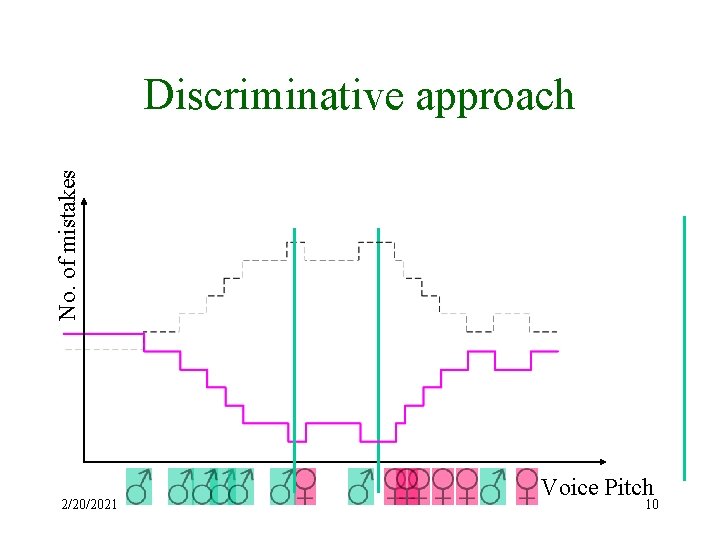

Discriminative approach
[Figure: number of mistakes vs. voice pitch threshold.]
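The discriminative approach skips density estimation and directly picks the pitch threshold with the fewest training mistakes. A minimal brute-force sketch with hypothetical names and data (it is O(n²); a single sweep over the sorted pitches would bring it to O(n log n)):

```python
def best_threshold(pitches, labels):
    """Return (threshold, direction, mistakes) minimizing training mistakes for the
    rule: predict +1 if direction * (pitch - threshold) > 0, else -1."""
    xs = sorted(set(pitches))
    # Candidates: below the minimum, midpoints between neighbours, above the maximum.
    candidates = [xs[0] - 1] + [(a + b) / 2 for a, b in zip(xs, xs[1:])] + [xs[-1] + 1]
    best = None
    for t in candidates:
        for d in (1, -1):
            mistakes = sum(1 for x, y in zip(pitches, labels)
                           if (1 if d * (x - t) > 0 else -1) != y)
            if best is None or mistakes < best[2]:
                best = (t, d, mistakes)
    return best

# Hypothetical data: label -1 = male, +1 = female.
pitches = [100, 110, 120, 130, 190, 200, 210, 220]
labels = [-1, -1, -1, -1, 1, 1, 1, 1]
print(best_threshold(pitches, labels))
```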

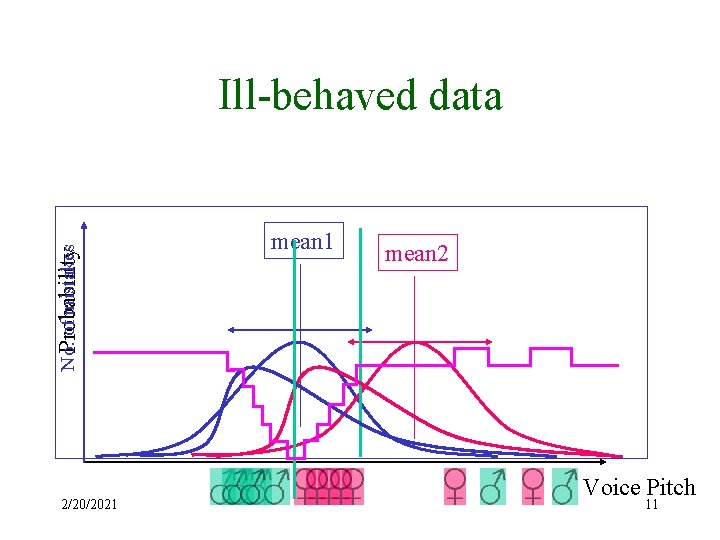

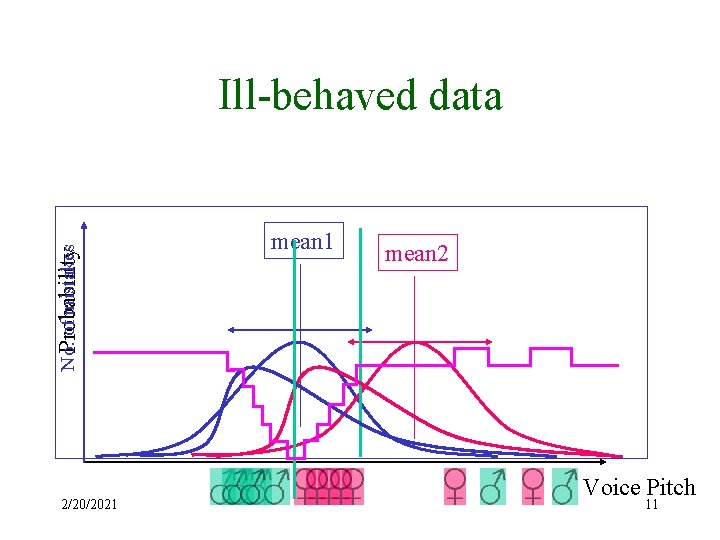

Ill-behaved data
[Figure: probability and number of mistakes vs. voice pitch; mean 2 marked.]

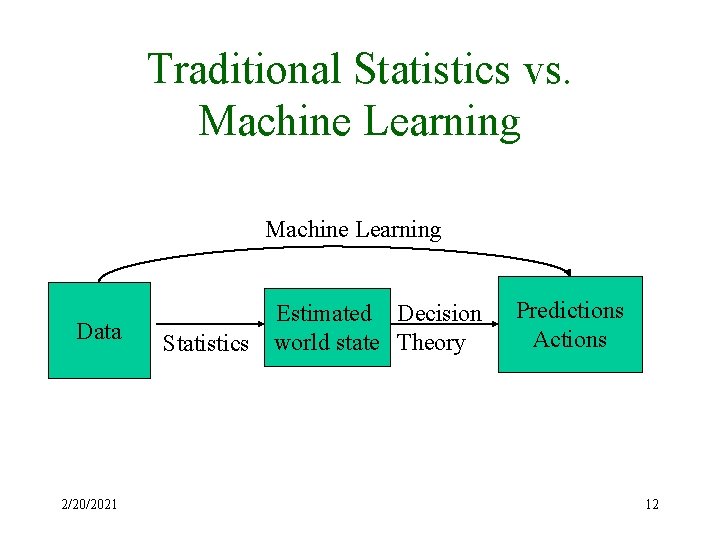

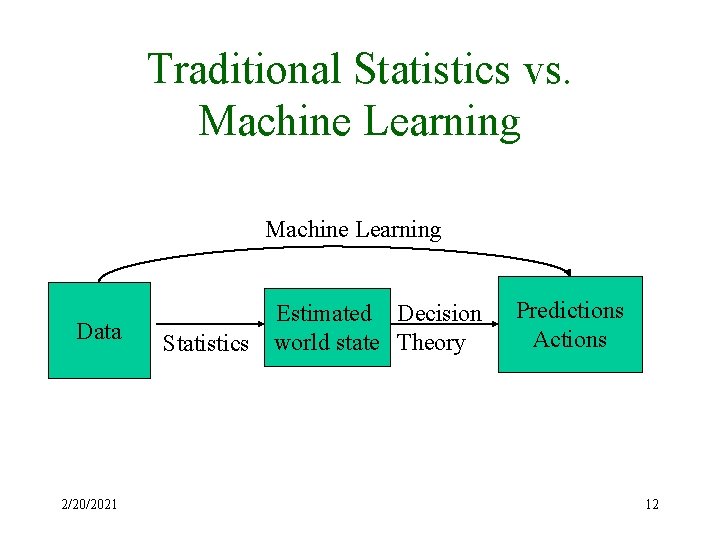

Traditional Statistics vs. Machine Learning
[Figure: Data → Statistics → Estimated world state → Decision Theory → Actions; machine learning goes from data directly to predictions and actions.]

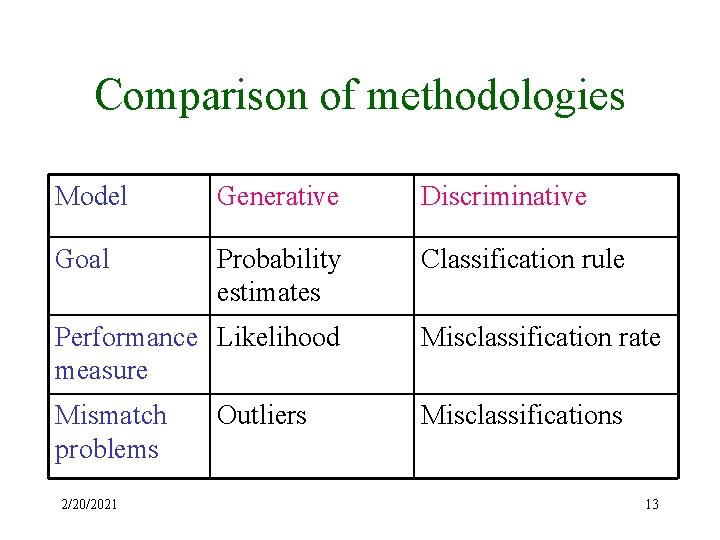

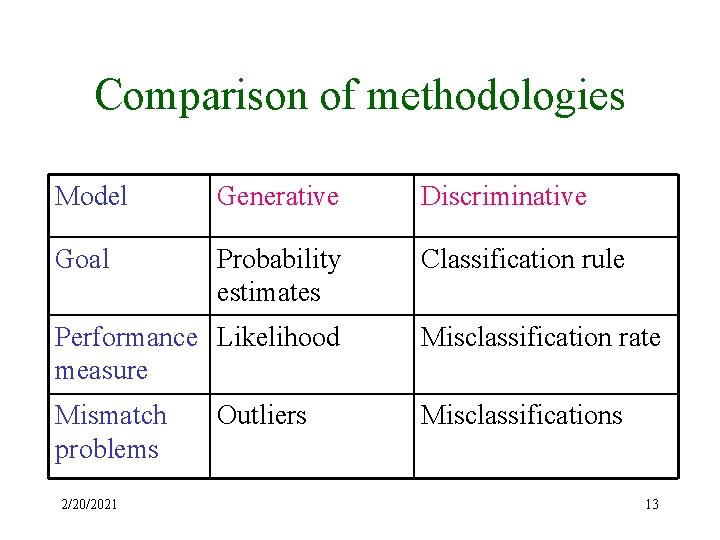

Comparison of methodologies

| Model | Generative | Discriminative |
|---|---|---|
| Goal | Probability estimates | Classification rule |
| Performance measure | Likelihood | Misclassification rate |
| Mismatch problems | Outliers | Misclassifications |

Boosting

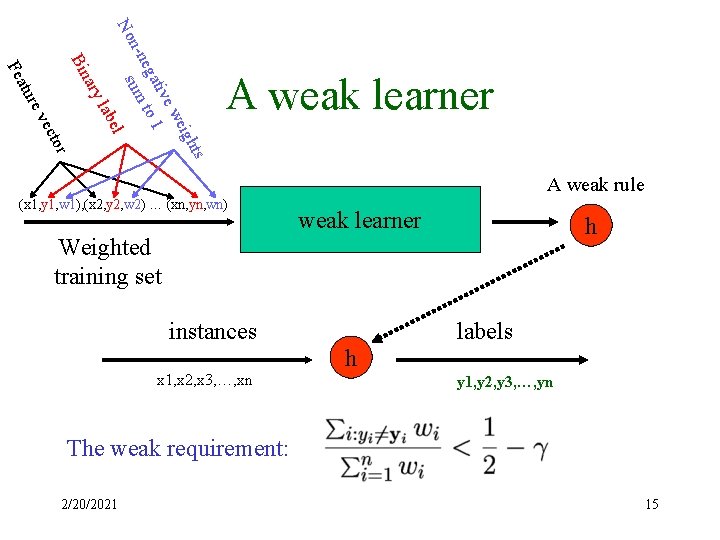

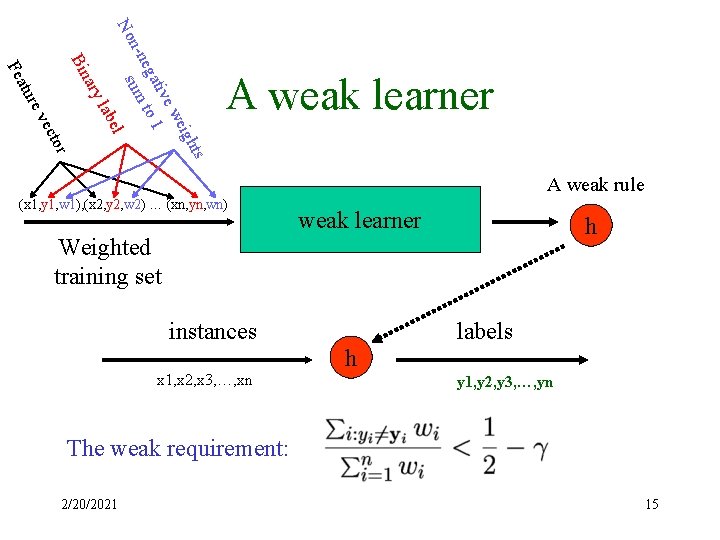

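A weak learner
A weighted training set $(x_1, y_1, w_1), (x_2, y_2, w_2), \ldots, (x_n, y_n, w_n)$, with feature vectors $x_i$, binary labels $y_i \in \{-1, +1\}$ and non-negative weights $w_i$ that sum to 1, is given to the weak learner, which returns a weak rule $h$. Applied to the instances $x_1, x_2, \ldots, x_n$, the rule $h$ predicts labels that are compared with $y_1, y_2, \ldots, y_n$.
The weak requirement: the weighted error of $h$ must be noticeably better than random guessing, $\sum_{i=1}^{n} w_i \, \mathbf{1}[h(x_i) \neq y_i] \le \frac{1}{2} - \gamma$ for some $\gamma > 0$.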
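For ±1-valued rules, the weak requirement can be checked directly on the weighted training set. A minimal sketch, assuming the error form of the requirement (weighted error at most 1/2 − γ); the helper names and the tiny data set are hypothetical:

```python
def weighted_error(h, xs, ys, ws):
    """Weighted fraction of the training set that the rule h gets wrong."""
    return sum(w for x, y, w in zip(xs, ys, ws) if h(x) != y) / sum(ws)

def meets_weak_requirement(h, xs, ys, ws, gamma):
    """True if h beats random guessing by at least gamma: error <= 1/2 - gamma."""
    return weighted_error(h, xs, ys, ws) <= 0.5 - gamma

# Hypothetical weighted training set; the weights are non-negative and sum to 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [-1, -1, 1, 1]
ws = [0.1, 0.4, 0.4, 0.1]
h = lambda x: 1 if x > 1.5 else -1   # wrong only on x = 2.0, which has weight 0.4
print(weighted_error(h, xs, ys, ws), meets_weak_requirement(h, xs, ys, ws, gamma=0.05))
```

The unweighted error of `h` is 0.25, but its weighted error is 0.4: the same rule can satisfy the requirement for one weighting and fail it for another, which is what forces the weak learner to find a different rule in each boosting round.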

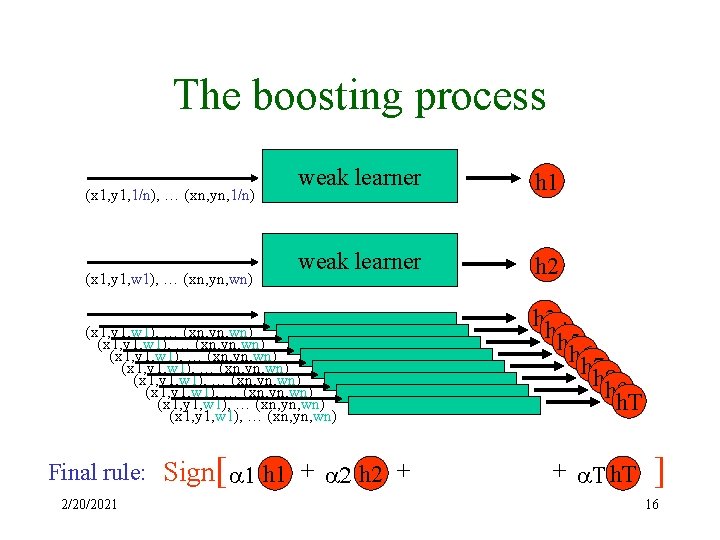

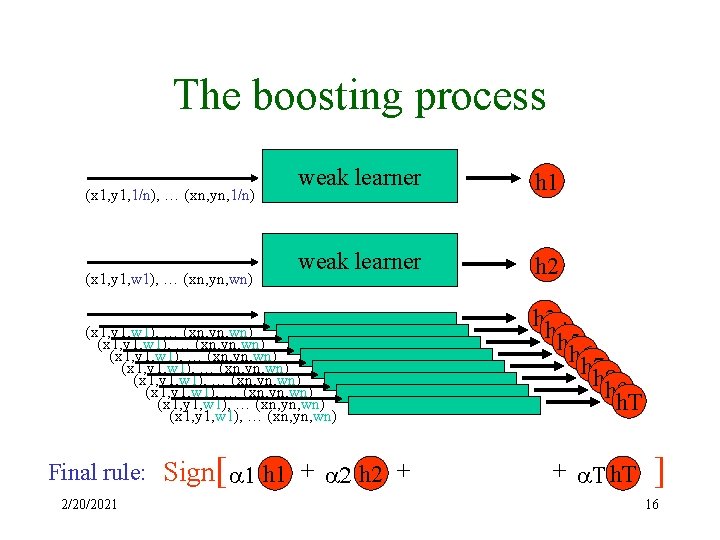

The boosting process
• $(x_1, y_1, 1/n), \ldots, (x_n, y_n, 1/n)$ → weak learner → $h_1$
• $(x_1, y_1, w_1), \ldots, (x_n, y_n, w_n)$ → weak learner → $h_2$
• $(x_1, y_1, w_1), \ldots, (x_n, y_n, w_n)$ → weak learner → $h_3$
• … → $h_4, h_5, \ldots, h_T$
Final rule: $\mathrm{sign}\left[\alpha_1 h_1 + \alpha_2 h_2 + \cdots + \alpha_T h_T\right]$
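The boosting loop can be sketched as follows, assuming one-feature threshold rules ("decision stumps") as the weak learner, found by exhaustive search, and the standard AdaBoost weight $\alpha_t = \frac{1}{2}\ln\frac{1-\epsilon_t}{\epsilon_t}$, where $\epsilon_t$ is the weighted error of $h_t$. This is an illustrative sketch, not the implementation used in the talk; all names and data are hypothetical.

```python
import math

def train_stump(xs, ys, ws):
    """Exhaustively search (feature, threshold, sign) for the lowest weighted error."""
    best = None
    for f in range(len(xs[0])):
        for t in sorted({x[f] for x in xs}):
            for s in (1, -1):
                err = sum(w for x, y, w in zip(xs, ys, ws)
                          if (s if x[f] > t else -s) != y)
                if best is None or err < best[0]:
                    best = (err, f, t, s)
    return best

def stump(f, t, s, x):
    """Predict s when feature f exceeds threshold t, otherwise -s."""
    return s if x[f] > t else -s

def adaboost(xs, ys, rounds):
    n = len(xs)
    ws = [1.0 / n] * n                          # first round: uniform weights 1/n
    ensemble = []                               # list of (alpha_t, f, t, s)
    for _ in range(rounds):
        err, f, t, s = train_stump(xs, ys, ws)
        err = min(max(err, 1e-10), 1 - 1e-10)   # keep log() finite
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, f, t, s))
        # Increase the weight of mistakes, decrease the weight of correct answers.
        ws = [w * math.exp(-alpha * y * stump(f, t, s, x))
              for x, y, w in zip(xs, ys, ws)]
        total = sum(ws)
        ws = [w / total for w in ws]            # renormalize to sum to 1
    return ensemble

def predict(ensemble, x):
    """Final rule: sign of the alpha-weighted vote."""
    vote = sum(a * stump(f, t, s, x) for a, f, t, s in ensemble)
    return 1 if vote >= 0 else -1

# Hypothetical 2-feature training set with labels in {-1, +1}.
xs = [(1, 5), (2, 4), (3, 7), (4, 1), (5, 2), (6, 6)]
ys = [-1, -1, -1, 1, 1, 1]
model = adaboost(xs, ys, rounds=5)
print([predict(model, x) for x in xs])
```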

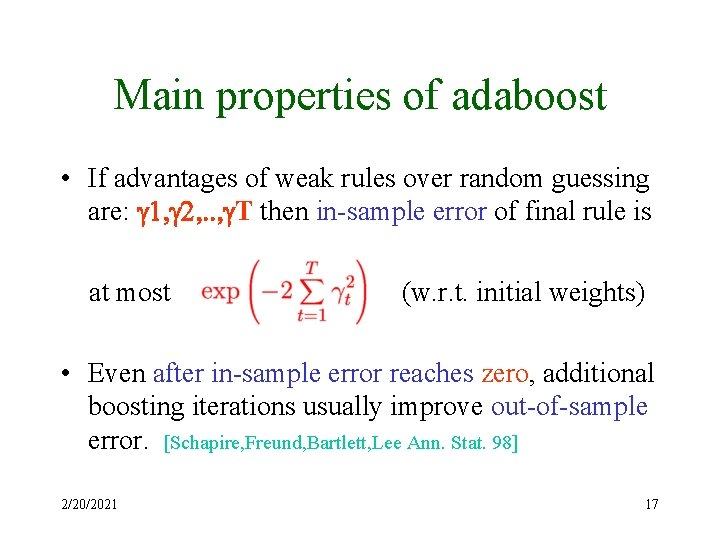

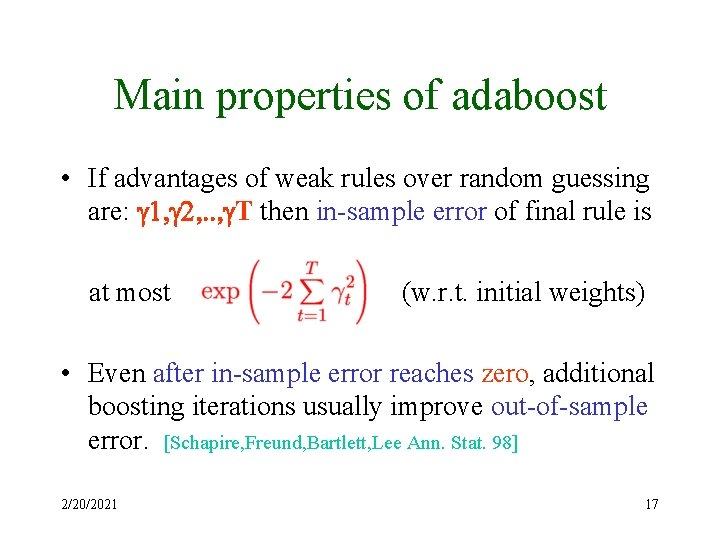

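Main properties of AdaBoost
• If the advantages of the weak rules over random guessing are $\gamma_1, \gamma_2, \ldots, \gamma_T$ (the weighted error of rule $h_t$ is $\frac{1}{2} - \gamma_t$), then the in-sample error of the final rule (w.r.t. the initial weights) is at most $\prod_{t=1}^{T} \sqrt{1 - 4\gamma_t^2} \le \exp\left(-2 \sum_{t=1}^{T} \gamma_t^2\right)$.
• Even after the in-sample error reaches zero, additional boosting iterations usually improve the out-of-sample error. [Schapire, Freund, Bartlett, Lee, Ann. Stat. 98]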
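Assuming the standard AdaBoost analysis, in which rule $h_t$ has weighted error $\frac{1}{2} - \gamma_t$, the in-sample error is bounded by $\prod_{t=1}^{T}\sqrt{1-4\gamma_t^2} \le \exp\left(-2\sum_{t=1}^{T}\gamma_t^2\right)$. Evaluating both sides numerically shows how even a small constant advantage drives the bound down exponentially in $T$ (the helper name is hypothetical):

```python
import math

def training_error_bounds(gammas):
    """Return (product bound, exponential bound) for advantages gamma_t in (0, 1/2)."""
    product = math.prod(math.sqrt(1 - 4 * g * g) for g in gammas)
    exponential = math.exp(-2 * sum(g * g for g in gammas))
    return product, exponential

# 100 rounds, each weak rule with weighted error 0.4 (advantage 0.1).
product, exponential = training_error_bounds([0.1] * 100)
print(f"{product:.4f} <= {exponential:.4f}")
```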

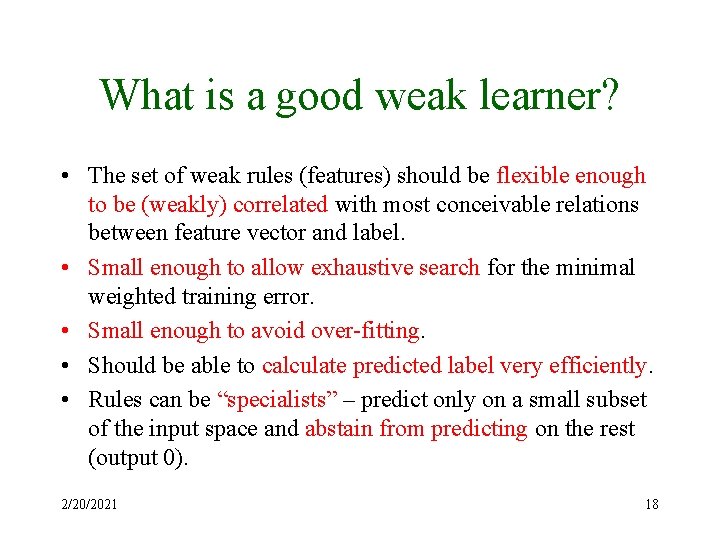

What is a good weak learner?
• The set of weak rules (features) should be flexible enough to be (weakly) correlated with most conceivable relations between the feature vector and the label.
• Small enough to allow exhaustive search for the minimal weighted training error.
• Small enough to avoid over-fitting.
• Predicted labels should be very cheap to calculate.
• Rules can be “specialists”: predict only on a small subset of the input space and abstain from predicting on the rest (output 0).

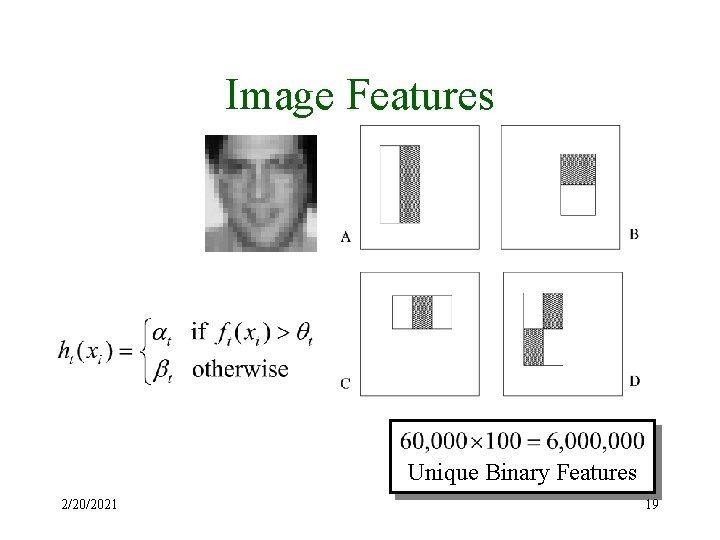

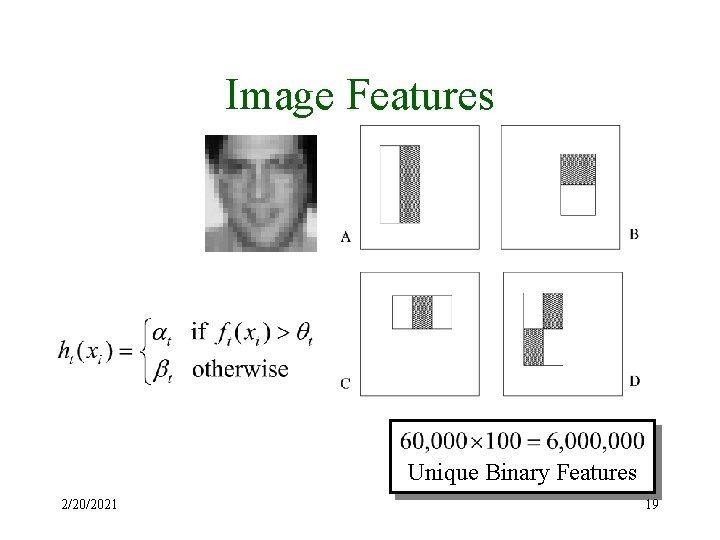

Image Features
[Figure: unique binary features.]
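Rectangle features of the kind used in the face detector can be evaluated in constant time with an integral image, the technique introduced by Viola and Jones. A minimal sketch; the function names and the left-minus-right two-rectangle layout are illustrative assumptions, and a weak rule would then threshold the feature value:

```python
def integral_image(img):
    """ii[r][c] = sum of img[0..r-1][0..c-1], with an extra row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        row_sum = 0
        for c in range(w):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle using four lookups, whatever its size."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]

def two_rect_feature(ii, top, left, height, width):
    """Hypothetical feature: left half minus right half (width must be even)."""
    half = width // 2
    return (rect_sum(ii, top, left, height, half)
            - rect_sum(ii, top, left + half, height, half))

img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
ii = integral_image(img)
print(rect_sum(ii, 1, 1, 2, 2), two_rect_feature(ii, 0, 0, 3, 2))
```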

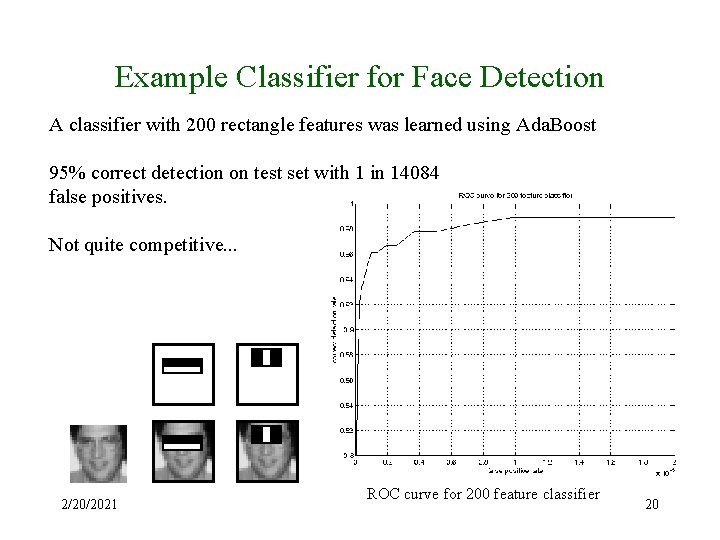

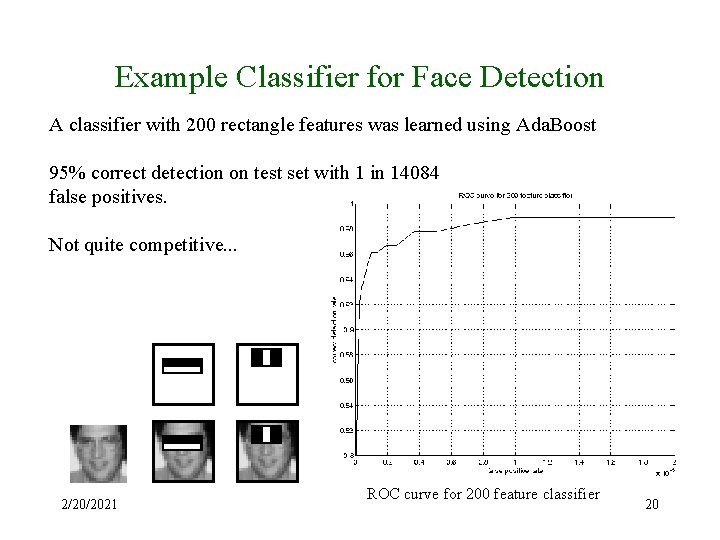

Example Classifier for Face Detection
A classifier with 200 rectangle features was learned using AdaBoost: 95% correct detection on the test set with 1 in 14084 false positives. Not quite competitive...
[Figure: ROC curve for the 200-feature classifier.]

Alternating Trees
Joint work with Llew Mason

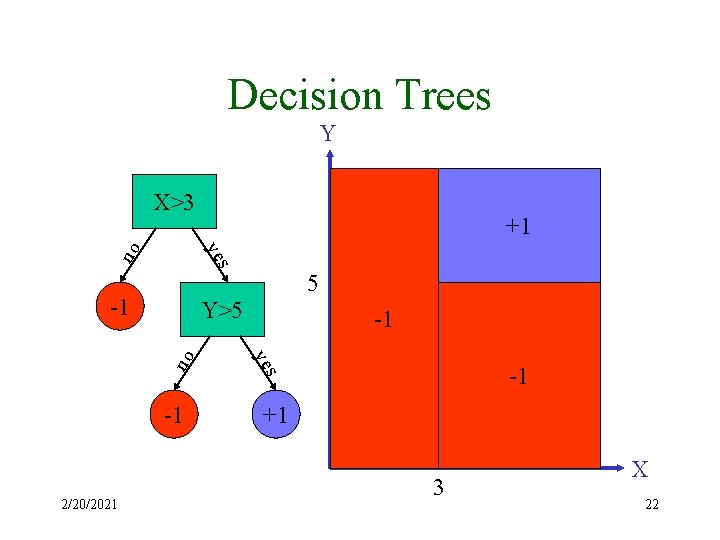

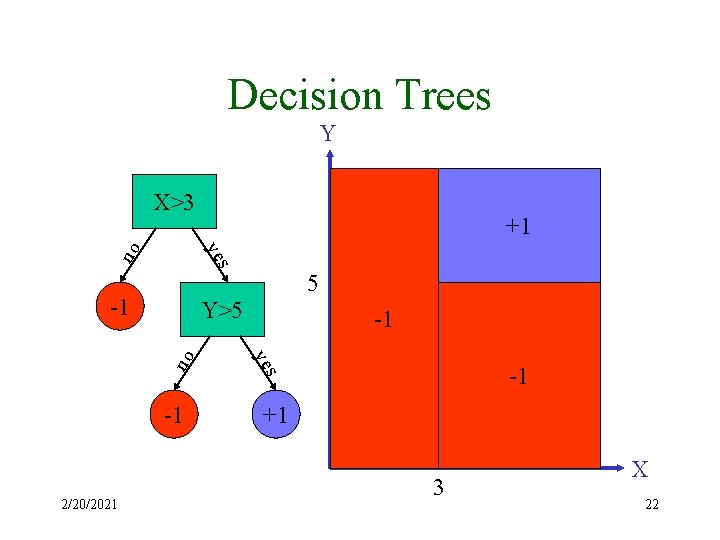

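Decision Trees
[Figure: a decision tree that first tests X>3 (no → −1) and, on the yes branch, tests Y>5 (yes → −1, no → +1), shown next to the induced partition of the X–Y plane at X = 3 and Y = 5.]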

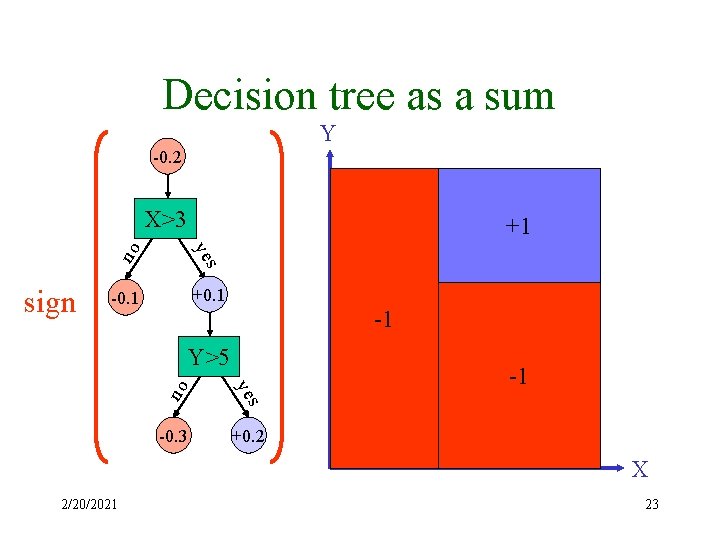

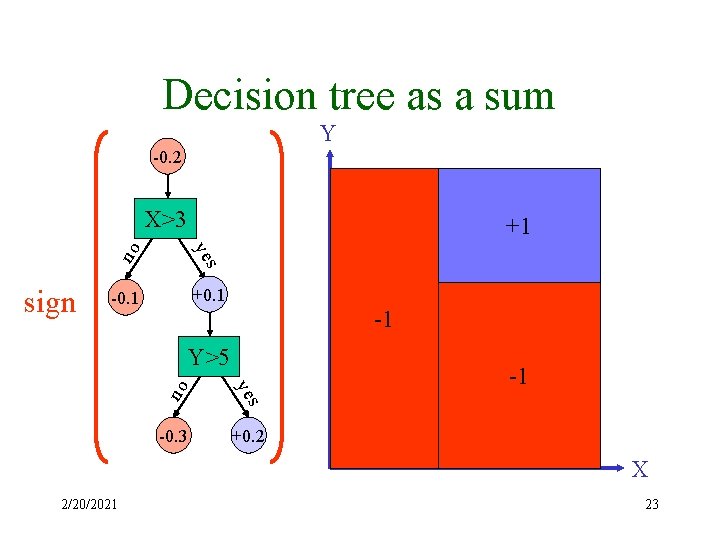

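Decision tree as a sum
[Figure: the same tree rewritten as a sum of real-valued predictions: root −0.2; X>3 adds +0.1 (yes) or −0.1 (no); below X>3 = yes, Y>5 adds −0.3 (yes) or +0.2 (no). The classification is the sign of the sum along the path, giving the same −1/+1 regions of the X–Y plane.]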

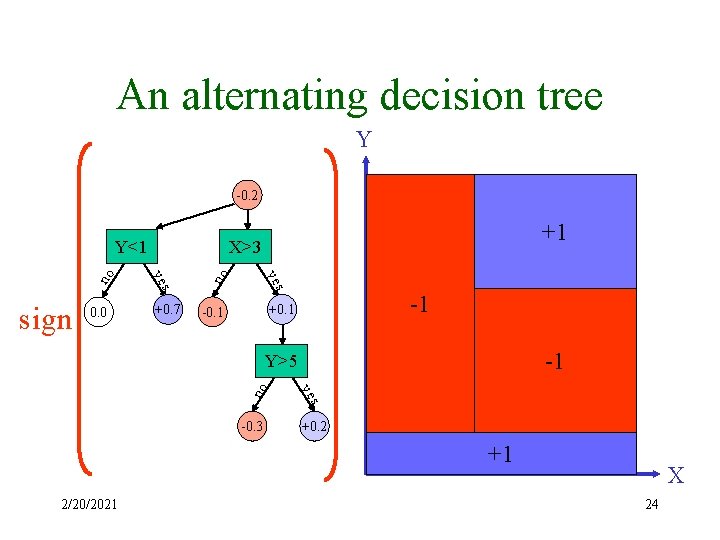

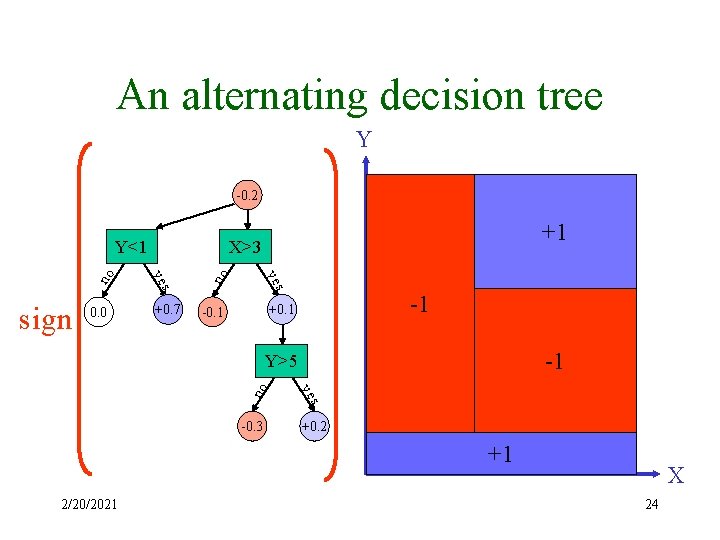

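An alternating decision tree
[Figure: the sum-form tree extended with a second decision node, Y<1, attached directly to the root, with predictions +0.7 and 0.0 on its two branches. An instance adds up the predictions on every path whose conditions it satisfies, and the classification is the sign of the total; the X–Y plot shows the resulting +1/−1 regions.]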
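Evaluating an alternating tree means summing the prediction values on every path the instance satisfies, not just one root-to-leaf path. A minimal sketch; the class and function names are hypothetical, the tree values are those of the "decision tree as a sum" example as read from the slide figure, and the assignment of +0.7 to the yes branch of the extra Y<1 node is an assumption:

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Instance = Dict[str, float]

@dataclass
class PredictionNode:
    value: float
    splitters: List["Splitter"] = field(default_factory=list)

@dataclass
class Splitter:
    test: Callable[[Instance], bool]
    yes: PredictionNode
    no: PredictionNode

def score(node: PredictionNode, x: Instance) -> float:
    """This node's value plus, for every splitter below it, the branch x follows."""
    total = node.value
    for s in node.splitters:
        total += score(s.yes if s.test(x) else s.no, x)
    return total

def classify(root: PredictionNode, x: Instance) -> int:
    return 1 if score(root, x) > 0 else -1

# "Decision tree as a sum": root -0.2, then X>3, then Y>5 under X>3 = yes.
tree = PredictionNode(-0.2, [
    Splitter(lambda x: x["X"] > 3,
             yes=PredictionNode(0.1, [
                 Splitter(lambda x: x["Y"] > 5,
                          yes=PredictionNode(-0.3), no=PredictionNode(0.2))]),
             no=PredictionNode(-0.1)),
])
# A second splitter hanging off the root makes the tree "alternating"
# (assumed: +0.7 on the yes branch of Y<1, 0.0 on the no branch).
tree.splitters.append(Splitter(lambda x: x["Y"] < 1,
                               yes=PredictionNode(0.7), no=PredictionNode(0.0)))

print([classify(tree, p) for p in ({"X": 5, "Y": 2}, {"X": 1, "Y": 0})])
```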

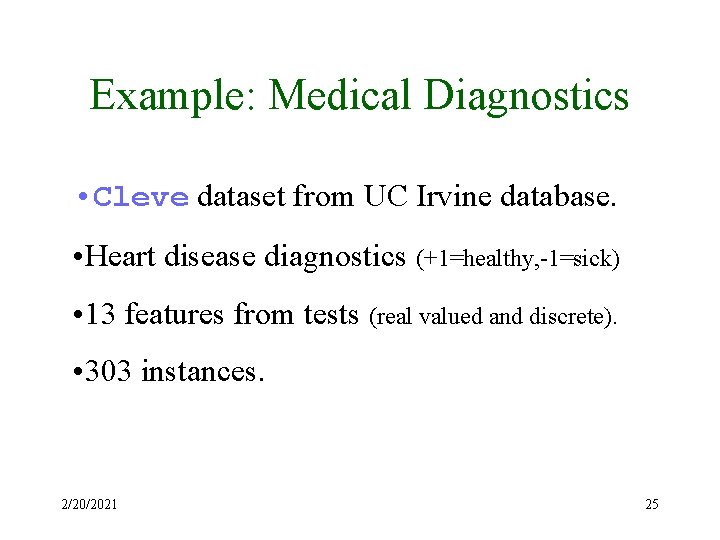

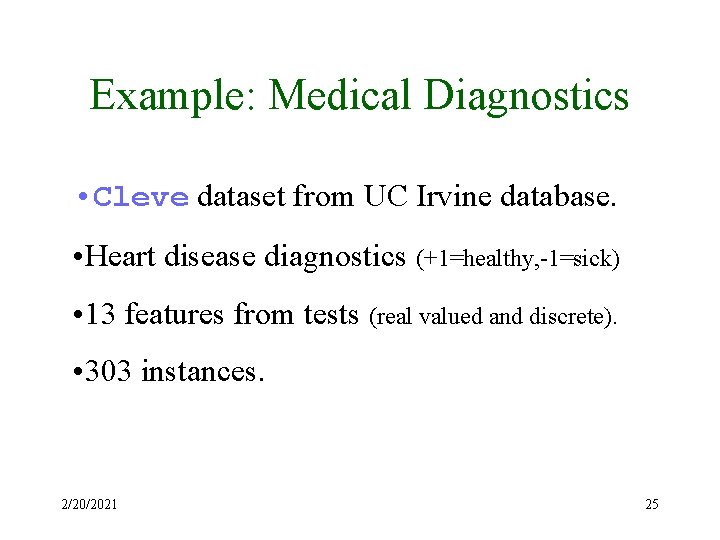

Example: Medical Diagnostics
• Cleve dataset from the UC Irvine database.
• Heart disease diagnostics (+1 = healthy, −1 = sick).
• 13 features from tests (real-valued and discrete).
• 303 instances.

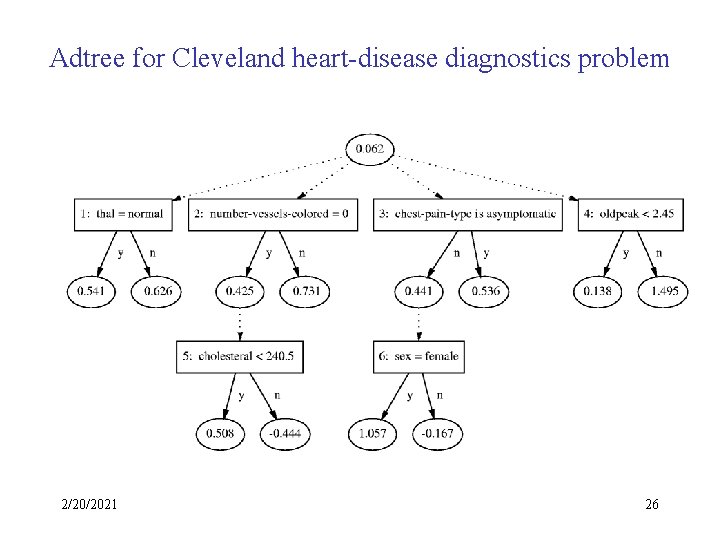

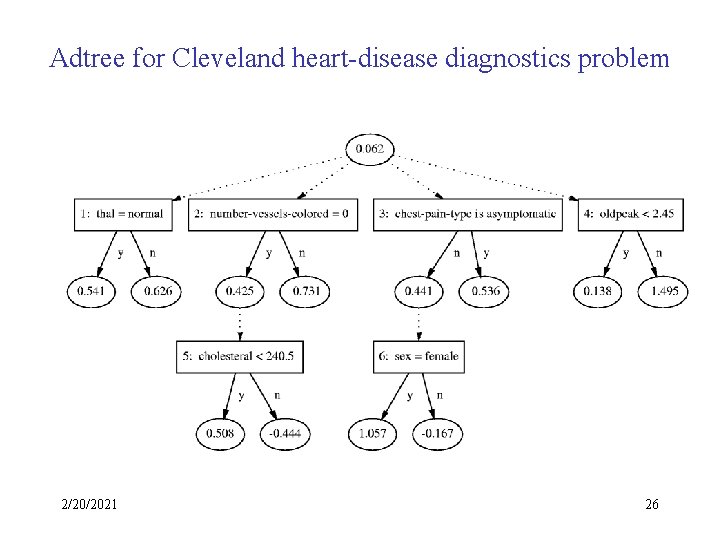

ADtree for the Cleveland heart-disease diagnostics problem

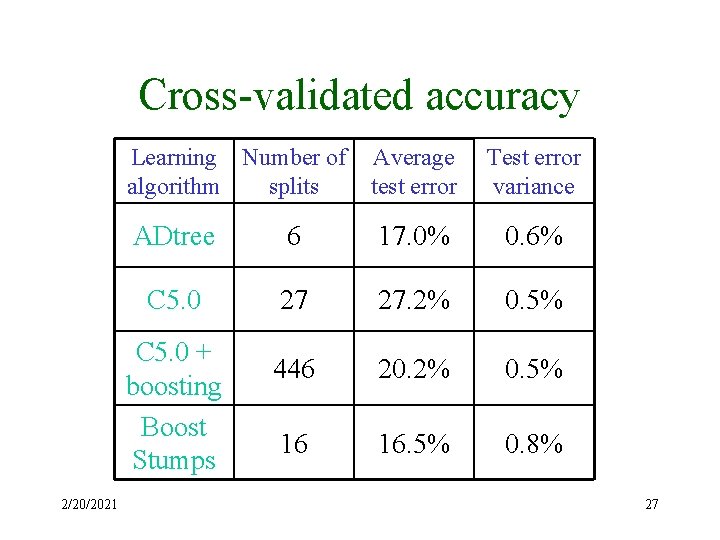

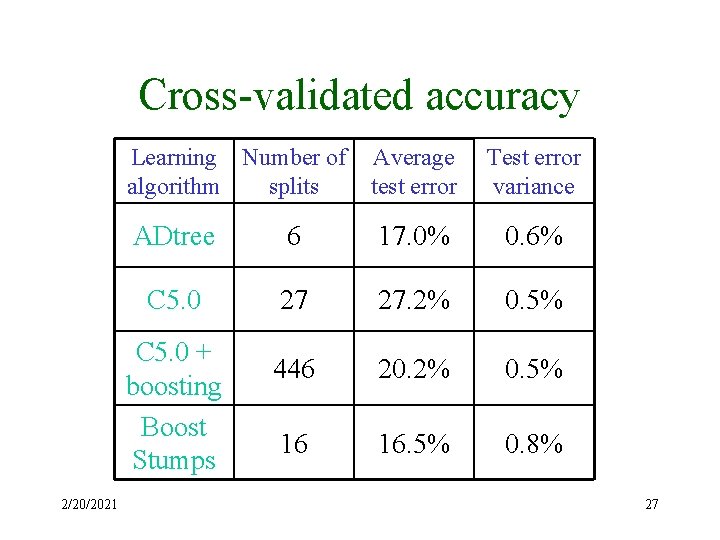

Cross-validated accuracy

| Learning algorithm | Number of splits | Average test error | Test error variance |
|---|---|---|---|
| ADtree | 6 | 17.0% | 0.6% |
| C5.0 | 27 | 27.2% | 0.5% |
| C5.0 + boosting | 446 | 20.2% | 0.5% |
| Boost Stumps | 16 | 16.5% | 0.8% |

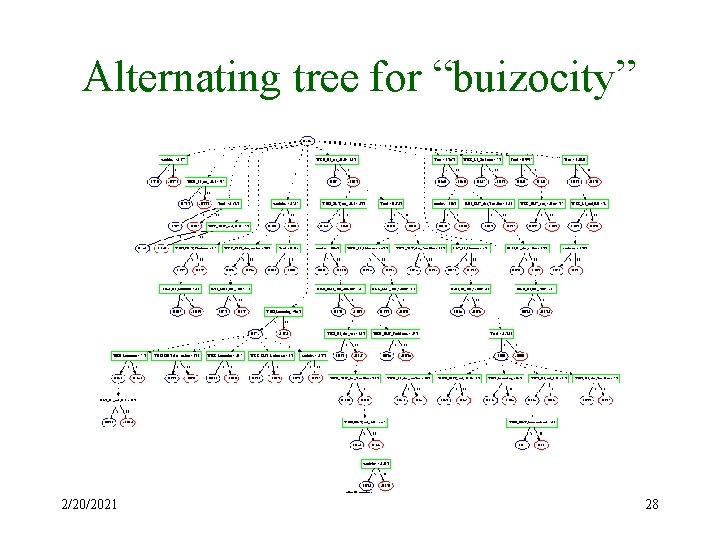

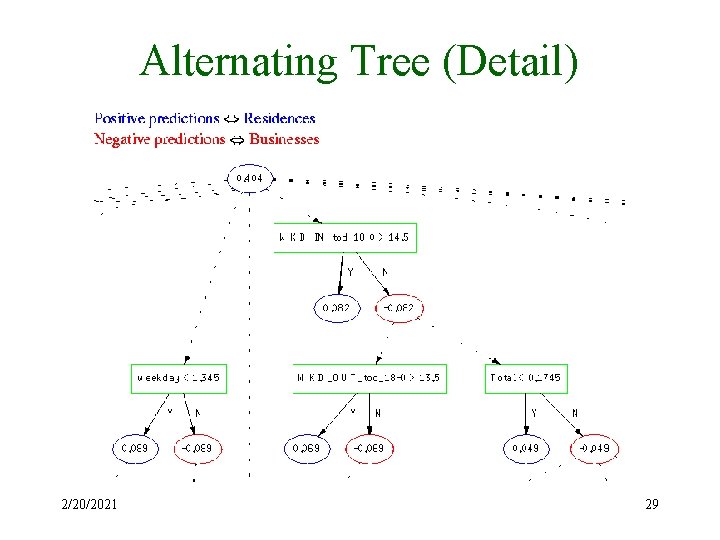

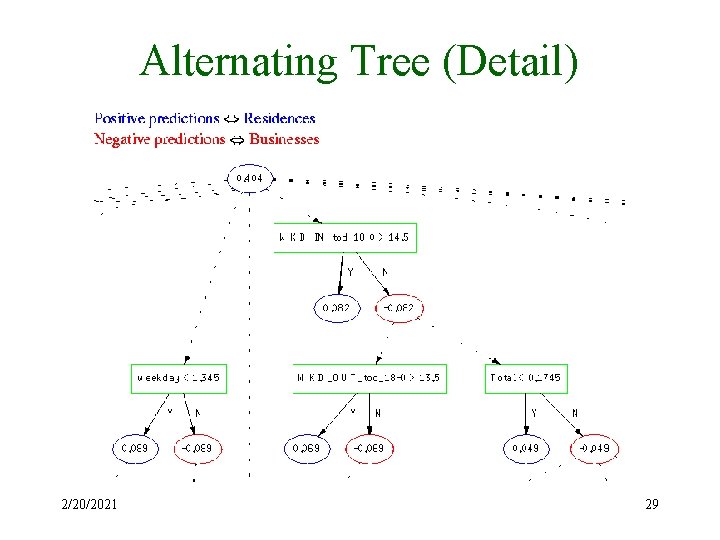

Alternating tree for “buizocity”

Alternating Tree (Detail)

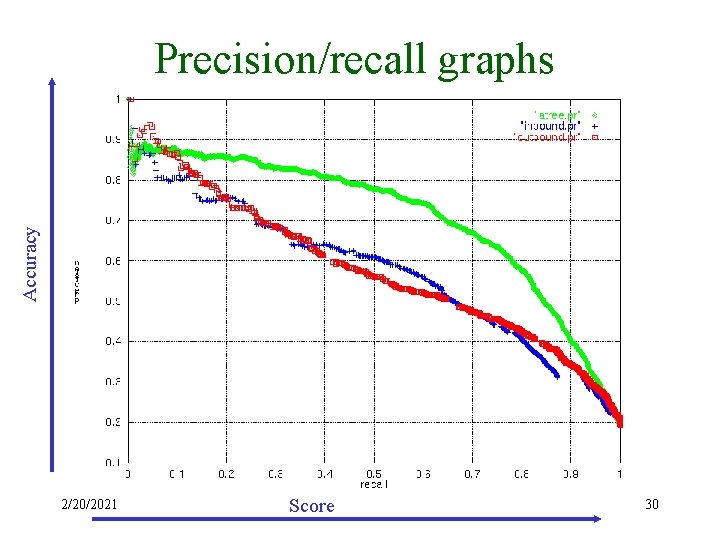

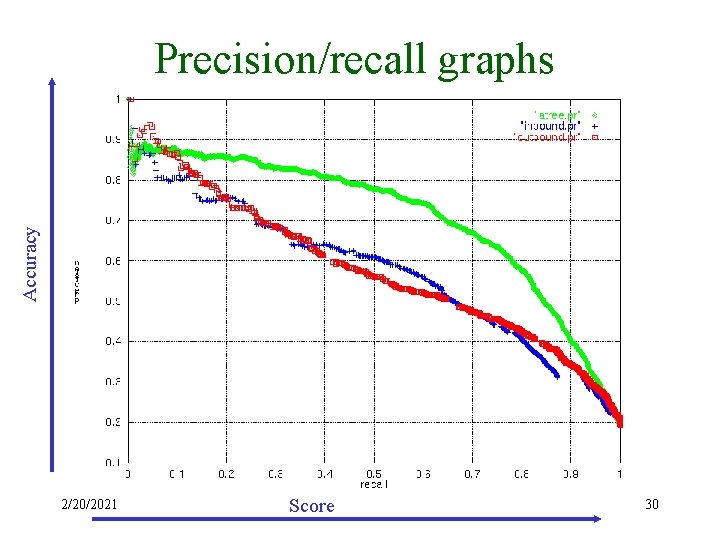

Accuracy
[Figure: precision/recall graphs; x-axis: score.]
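A precision/recall graph over a score is obtained by sweeping a threshold down the sorted scores. A minimal sketch, assuming label 1 marks the positive class (say, business) and that instances with score ≥ threshold are predicted positive; names and data are hypothetical:

```python
def precision_recall_curve(scores, labels):
    """Return (threshold, precision, recall) for every distinct score, highest first.
    An instance is predicted positive (label 1) when its score >= threshold."""
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    total_pos = sum(1 for _, y in pairs if y == 1)
    curve, tp, fp, i = [], 0, 0, 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Absorb all instances tied at this score before emitting a point.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / total_pos if total_pos else 0.0
        curve.append((threshold, tp / (tp + fp), recall))
    return curve

# Hypothetical scores; label 1 = business, -1 = residence.
curve = precision_recall_curve([0.9, 0.8, 0.7, 0.6], [1, -1, 1, -1])
for point in curve:
    print(point)
```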

“Drinking out of a fire hose” Allan Wilks, 1997

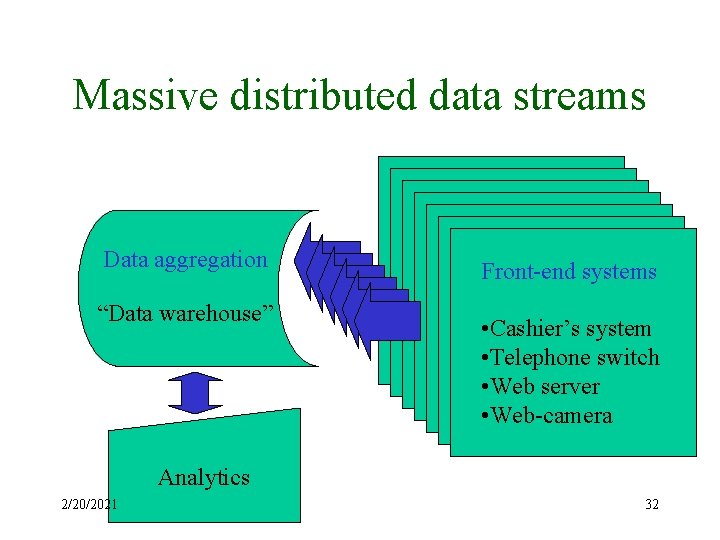

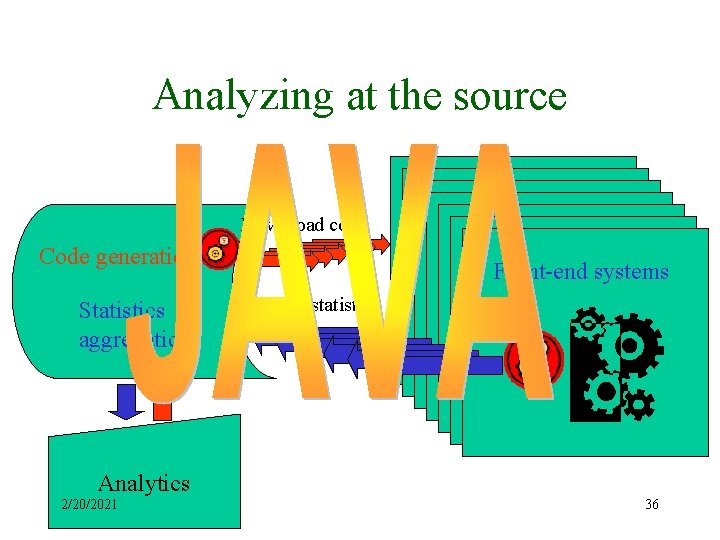

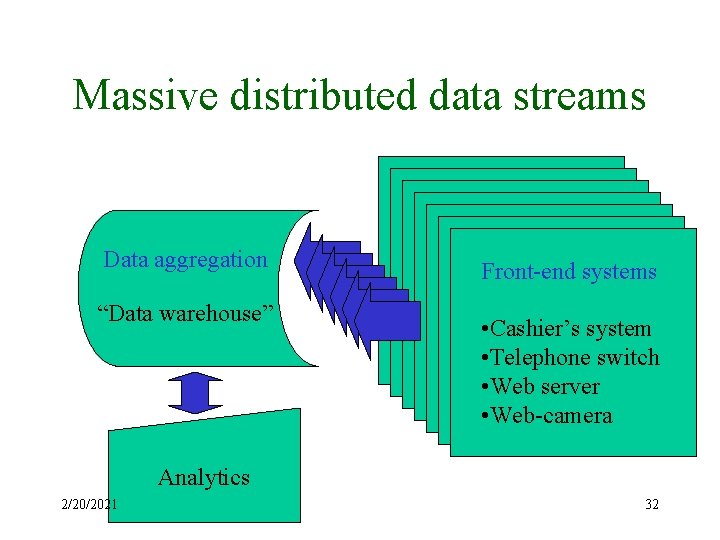

Massive distributed data streams
[Figure: front-end systems (cashier’s system, telephone switch, web server, web-camera) feed data aggregation into a “data warehouse”, which in turn feeds analytics.]

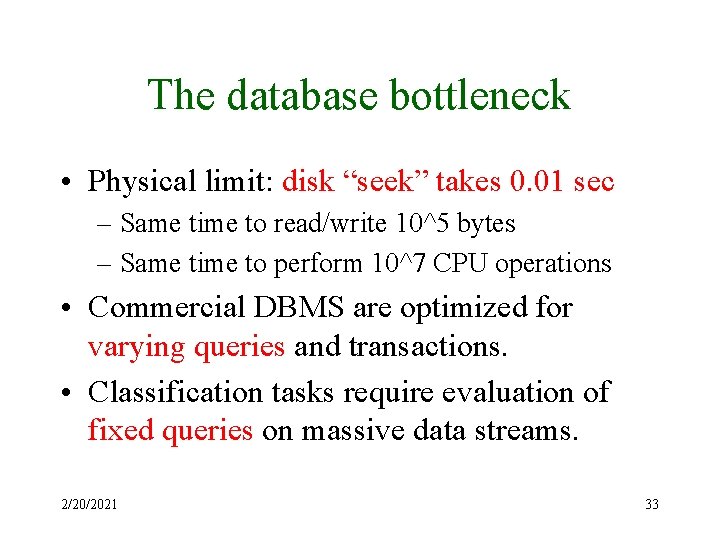

The database bottleneck
• Physical limit: a disk “seek” takes 0.01 sec.
  – Same time as reading/writing 10^5 bytes.
  – Same time as performing 10^7 CPU operations.
• Commercial DBMSs are optimized for varying queries and transactions.
• Classification tasks require evaluating fixed queries on massive data streams.
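The seek figures translate into throughputs and, assuming one random seek per call record (a deliberately pessimistic access pattern), into the cost of touching a single day of call records at 260 million calls/day:

$$
\frac{10^{5}\ \text{bytes}}{0.01\ \text{s}} = 10^{7}\ \text{bytes/s}, \qquad
\frac{10^{7}\ \text{operations}}{0.01\ \text{s}} = 10^{9}\ \text{operations/s},
$$

$$
2.6 \times 10^{8}\ \text{seeks} \times 0.01\ \text{s} = 2.6 \times 10^{6}\ \text{s} \approx 30\ \text{days}.
$$

Random access of this kind cannot keep up with one day of data, which is why sequential passes over large flat files are preferred.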

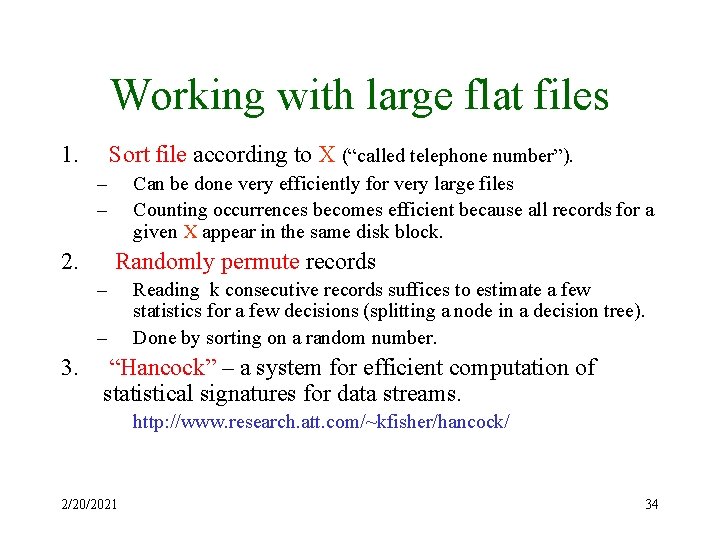

Working with large flat files
1. Sort the file according to X (“called telephone number”).
   – Can be done very efficiently for very large files.
   – Counting occurrences becomes efficient because all records for a given X appear in the same disk block.
2. Randomly permute the records.
   – Reading k consecutive records suffices to estimate a few statistics for a few decisions (splitting a node in a decision tree).
   – Done by sorting on a random number.
3. “Hancock”: a system for efficient computation of statistical signatures for data streams. http://www.research.att.com/~kfisher/hancock/
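Both tricks rely only on sorting. The sketch below is an in-memory stand-in for the file operations (a real multi-gigabyte file would go through an external merge sort such as Unix `sort`); the function names and the records are hypothetical:

```python
import random
from itertools import groupby

def count_by_key(records, key):
    """Sort on the key, then count each key in one sequential pass.
    After sorting, all records for a key are adjacent (on disk: in the same block)."""
    ordered = sorted(records, key=key)
    return {k: sum(1 for _ in group) for k, group in groupby(ordered, key=key)}

def random_permutation(records, seed=None):
    """Permute records by sorting them on a random number attached to each one."""
    rng = random.Random(seed)
    # The index i breaks (very unlikely) ties so records are never compared.
    tagged = sorted((rng.random(), i, r) for i, r in enumerate(records))
    return [r for _, _, r in tagged]

# Hypothetical call-detail records: (called number, call length in seconds).
calls = [("555-0102", 30), ("555-0199", 12), ("555-0102", 305), ("555-0102", 41)]
print(count_by_key(calls, key=lambda r: r[0]))
sample = random_permutation(calls, seed=1)[:2]   # read k = 2 consecutive records
```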

Working with data streams
• “You get to see each record only once.”
• Example problem: identify the 10 most popular items for each retail-chain customer over the last 12 months.
• To learn more: Stanford’s Stream Dream Team, http://www-db.stanford.edu/sdt/
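One standard way to answer the top-10 question in a single pass with bounded memory is the Space-Saving heavy-hitters algorithm of Metwally, Agrawal and El Abbadi; the slide does not name a method, so this is a swapped-in technique. A minimal sketch keeping a fixed number of counters per customer (counts are exact while a customer has no more distinct items than counters, and may overestimate after evictions); the 12-month window is not handled, and all names are hypothetical:

```python
from collections import defaultdict

class SpaceSaving:
    """Approximate frequent items using at most `capacity` counters."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.counts = {}

    def add(self, item):
        if item in self.counts:
            self.counts[item] += 1
        elif len(self.counts) < self.capacity:
            self.counts[item] = 1
        else:
            # Evict the smallest counter; the newcomer inherits its count plus one.
            victim = min(self.counts, key=self.counts.get)
            self.counts[item] = self.counts.pop(victim) + 1

    def top(self, k):
        return sorted(self.counts.items(), key=lambda kv: -kv[1])[:k]

def top_items_per_customer(stream, k=10, capacity=50):
    """Single pass over (customer, item) records; each record is seen once."""
    summaries = defaultdict(lambda: SpaceSaving(capacity))
    for customer, item in stream:
        summaries[customer].add(item)
    return {c: [item for item, _ in s.top(k)] for c, s in summaries.items()}

# Hypothetical purchase stream.
stream = ([("alice", "milk")] * 5 + [("alice", "bread")] * 3
          + [("alice", "eggs")] + [("bob", "tea")] * 2)
print(top_items_per_customer(stream, k=2, capacity=5))
```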

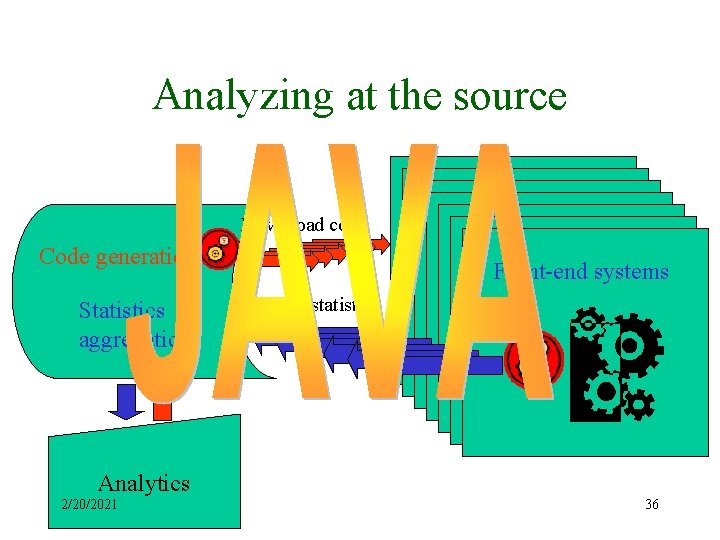

Analyzing at the source
[Figure: analytics generates code and downloads it to the front-end systems, which aggregate statistics locally and upload them back to analytics.]

Learn Slowly, Predict Fast!
• Buizocity:
  – 10,000 instances are sufficient for learning.
  – 300,000 have to be labeled (weekly).
  – Generate the ADTree classifier in C, compile it, and run it using Hancock.
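Generating a classifier as C source is straightforward for a flat sum of threshold rules. A hypothetical sketch: the input format and function names are assumptions, and a generator for a full ADTree would additionally nest each rule inside the conditions of its parent splitter. The emitted function can be compiled with any C compiler:

```python
def stumps_to_c(stumps, func_name="classify"):
    """Emit C source computing sign(sum of stump outputs) over a feature array x.
    Each stump is (feature_index, threshold, value_if_above, value_otherwise)."""
    lines = [f"int {func_name}(const double *x) {{", "    double score = 0.0;"]
    for f, t, above, otherwise in stumps:
        # repr() of a finite Python float is also a valid C double literal.
        lines.append(f"    score += (x[{f}] > {t!r}) ? {above!r} : {otherwise!r};")
    lines.append("    return score > 0.0 ? 1 : -1;")
    lines.append("}")
    return "\n".join(lines)

# Hypothetical learned rules; the feature meanings are not taken from the talk.
src = stumps_to_c([(0, 3.0, 0.1, -0.1), (1, 5.0, -0.3, 0.2)])
print(src)
```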

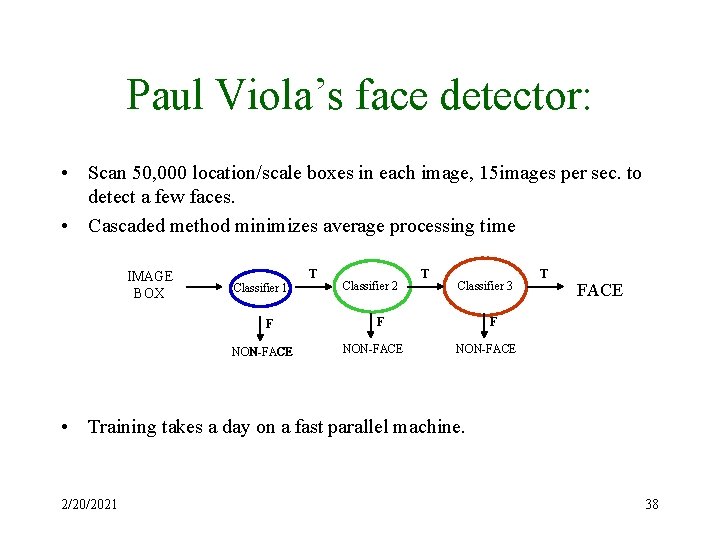

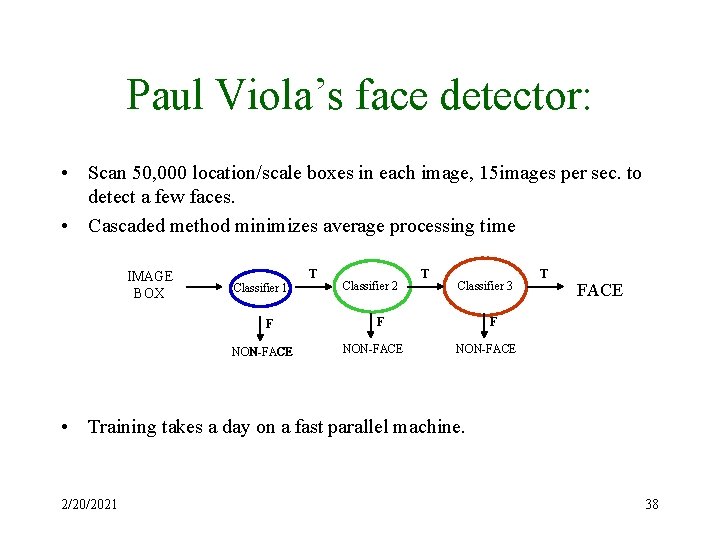

Paul Viola’s face detector:
• Scan 50,000 location/scale boxes in each image, 15 images per sec., to detect a few faces.
• A cascaded method minimizes the average processing time: each image box goes to Classifier 1; F → NON-FACE, T → Classifier 2; F → NON-FACE, T → Classifier 3; F → NON-FACE, T → FACE.
• Training takes a day on a fast parallel machine.
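The cascade saves time because most boxes are rejected by the first, cheap stages and never reach the expensive ones. A minimal sketch; the stage functions, thresholds and box attributes are hypothetical placeholders for trained classifiers:

```python
def cascade(box, stages):
    """Run the stages in order; reject the box as soon as one stage says F."""
    for stage_score, threshold in stages:
        if stage_score(box) < threshold:
            return False          # NON-FACE: the remaining, costlier stages are skipped
    return True                   # every stage said T: FACE

def detect(boxes, stages):
    return [box for box in boxes if cascade(box, stages)]

# Hypothetical stages: a cheap score first, an expensive score last.
evaluated = {"expensive": 0}

def cheap(box):
    return box["contrast"]

def expensive(box):
    evaluated["expensive"] += 1   # count how often the costly stage actually runs
    return box["symmetry"]

stages = [(cheap, 0.5), (expensive, 0.8)]
boxes = [{"id": 1, "contrast": 0.9, "symmetry": 0.9},
         {"id": 2, "contrast": 0.1, "symmetry": 0.95},
         {"id": 3, "contrast": 0.7, "symmetry": 0.2}]
faces = detect(boxes, stages)
print([b["id"] for b in faces], evaluated)
```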

Summary
• Generative vs. predictive methodology
• Boosting
• Alternating trees
• The database bottleneck
• Learning slowly, predicting fast

Other work 1
• Specialized data compression:
  – When data is collected in small bins, most bins are empty.
  – Smart compression that avoids storing the zeros dramatically reduces data size.
• Model averaging:
  – Boosting and bagging make classifiers more stable.
  – We need theory that does not rely on Bayesian assumptions.
  – Closely related to the margin-based analysis of boosting and of SVMs.
• Zipf’s law:
  – The distribution of words in free text is extremely skewed.
  – Methods should scale exponentially in entropy rather than linearly in the number of words.
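The simplest way to avoid storing the zeros is a sparse encoding that keeps only the non-empty bins. A minimal sketch (the compression referred to in the talk may be considerably smarter; the names and the 168-bin hour-of-week histogram are hypothetical):

```python
def to_sparse(bins):
    """Store only the non-empty bins, as (bin index, count) pairs."""
    return [(i, c) for i, c in enumerate(bins) if c != 0]

def from_sparse(pairs, n_bins):
    """Rebuild the dense histogram from its non-empty bins."""
    bins = [0] * n_bins
    for i, c in pairs:
        bins[i] = c
    return bins

# Hypothetical histogram: calls per hour of the week (168 bins) for one number.
bins = [0] * 168
bins[10], bins[100] = 3, 1
packed = to_sparse(bins)
print(packed, f"{len(packed)} of {len(bins)} bins stored")
```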

Other work 2
• Online methods:
  – The data distribution changes with time.
  – Online refinement of the feature set.
  – Long-term learning.
• Effective label collection:
  – Selective sampling to label only hard cases.
  – Comparing labels from different people to estimate reliability.
  – Co-training: different channels train each other (Blum, Mitchell, McCallum).

Contact me!
• Yoav@banter.com
• http://www.cs.huji.ac.il/~yoavf