Compression Suresh Gandhi Compression Methods around The data

- Slides: 25

Compression Suresh Gandhi

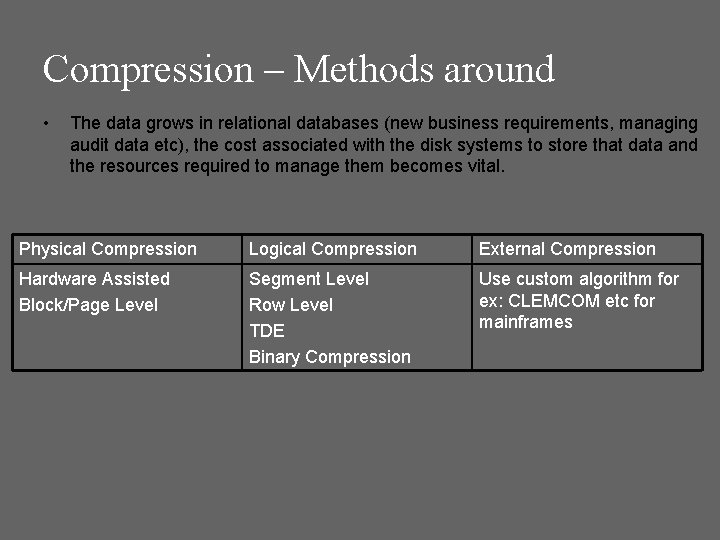

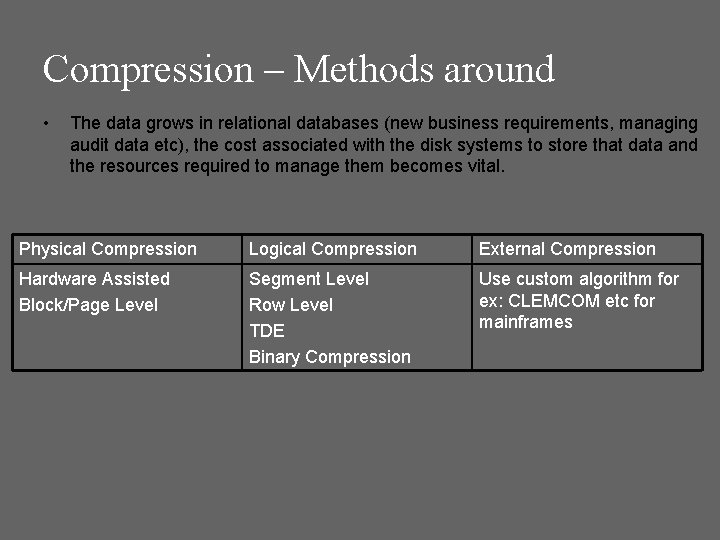

Compression – Methods around • The data grows in relational databases (new business requirements, managing audit data etc), the cost associated with the disk systems to store that data and the resources required to manage them becomes vital. Physical Compression Logical Compression External Compression Hardware Assisted Block/Page Level Segment Level Row Level TDE Binary Compression Use custom algorithm for ex: CLEMCOM etc for mainframes

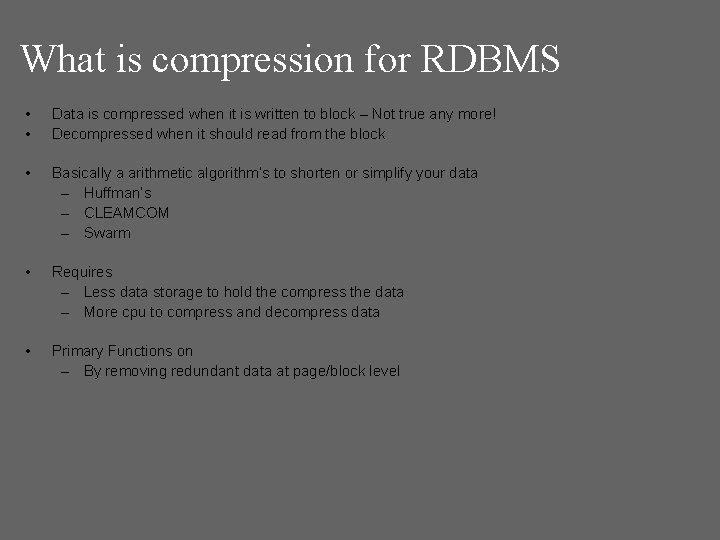

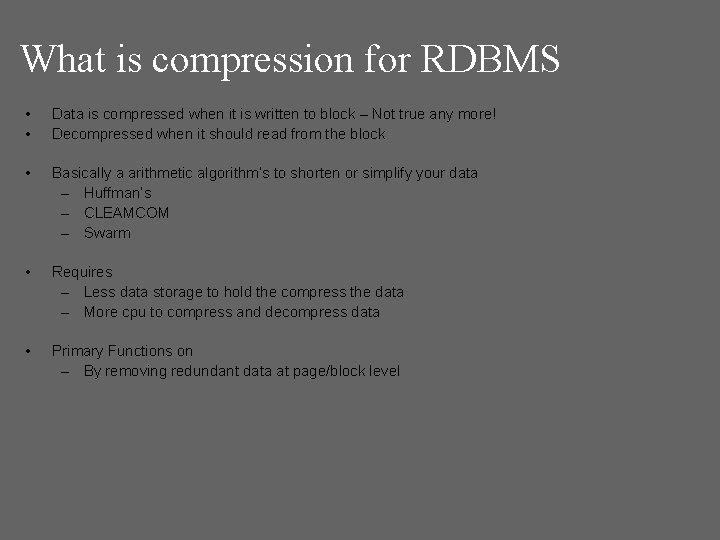

What is compression for RDBMS • • Data is compressed when it is written to block – Not true any more! Decompressed when it should read from the block • Basically a arithmetic algorithm’s to shorten or simplify your data – Huffman’s – CLEAMCOM – Swarm • Requires – Less data storage to hold the compress the data – More cpu to compress and decompress data • Primary Functions on – By removing redundant data at page/block level

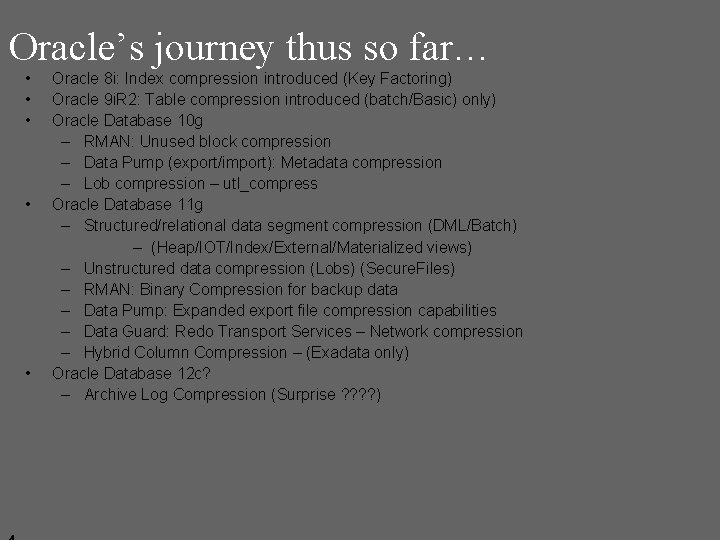

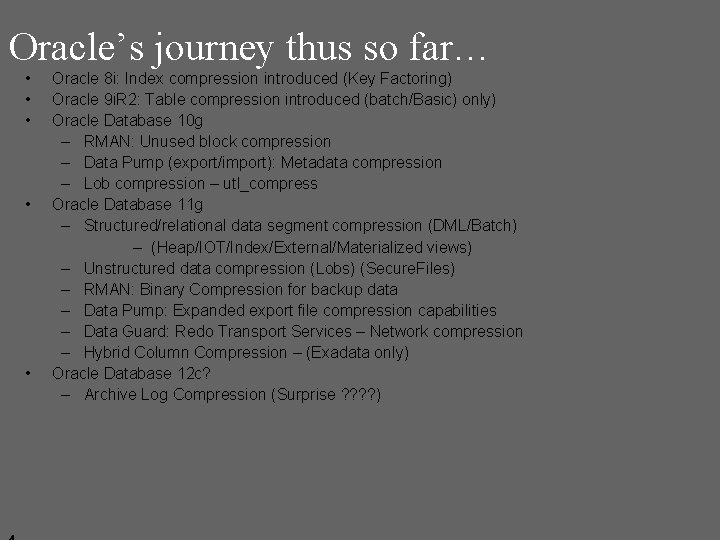

Oracle’s journey thus so far… • • • Oracle 8 i: Index compression introduced (Key Factoring) Oracle 9 i. R 2: Table compression introduced (batch/Basic) only) Oracle Database 10 g – RMAN: Unused block compression – Data Pump (export/import): Metadata compression – Lob compression – utl_compress Oracle Database 11 g – Structured/relational data segment compression (DML/Batch) – (Heap/IOT/Index/External/Materialized views) – Unstructured data compression (Lobs) (Secure. Files) – RMAN: Binary Compression for backup data – Data Pump: Expanded export file compression capabilities – Data Guard: Redo Transport Services – Network compression – Hybrid Column Compression – (Exadata only) Oracle Database 12 c? – Archive Log Compression (Surprise ? ? )

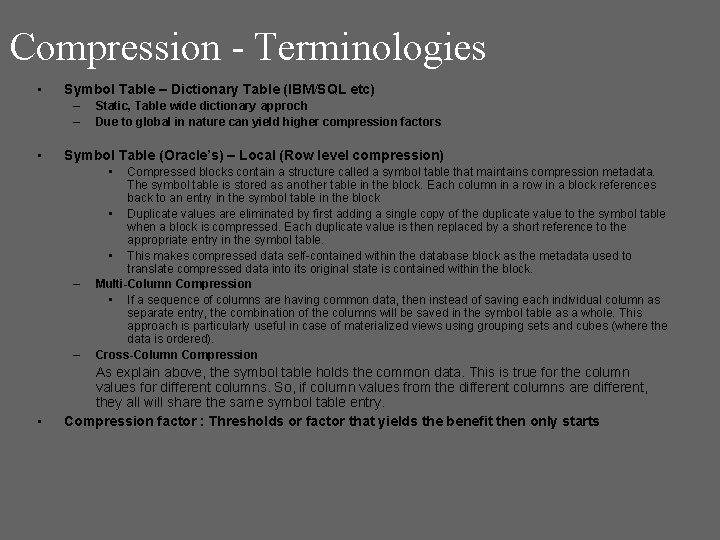

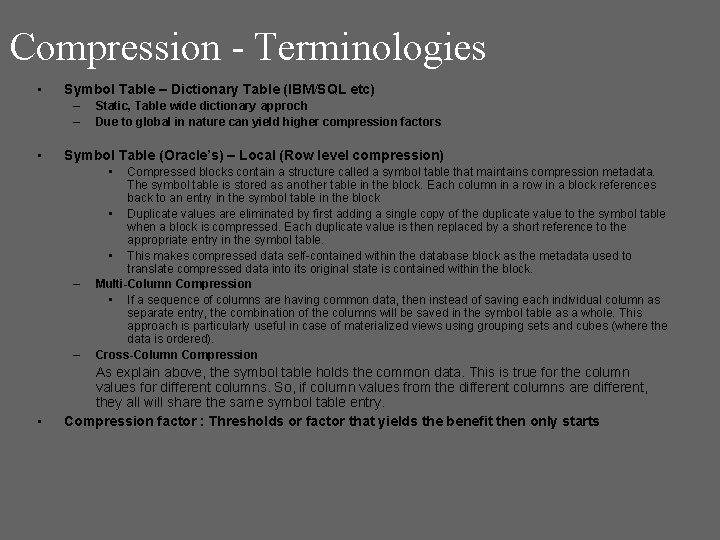

Compression - Terminologies • Symbol Table – Dictionary Table (IBM/SQL etc) – – • Static, Table wide dictionary approch Due to global in nature can yield higher compression factors Symbol Table (Oracle’s) – Local (Row level compression) • – – • Compressed blocks contain a structure called a symbol table that maintains compression metadata. The symbol table is stored as another table in the block. Each column in a row in a block references back to an entry in the symbol table in the block • Duplicate values are eliminated by first adding a single copy of the duplicate value to the symbol table when a block is compressed. Each duplicate value is then replaced by a short reference to the appropriate entry in the symbol table. • This makes compressed data self-contained within the database block as the metadata used to translate compressed data into its original state is contained within the block. Multi-Column Compression • If a sequence of columns are having common data, then instead of saving each individual column as separate entry, the combination of the columns will be saved in the symbol table as a whole. This approach is particularly useful in case of materialized views using grouping sets and cubes (where the data is ordered). Cross-Column Compression As explain above, the symbol table holds the common data. This is true for the column values for different columns. So, if column values from the different columns are different, they all will share the same symbol table entry. Compression factor : Thresholds or factor that yields the benefit then only starts

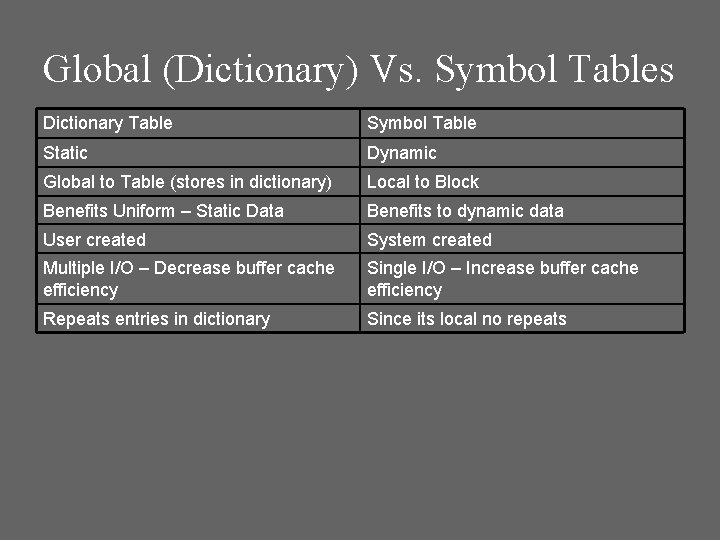

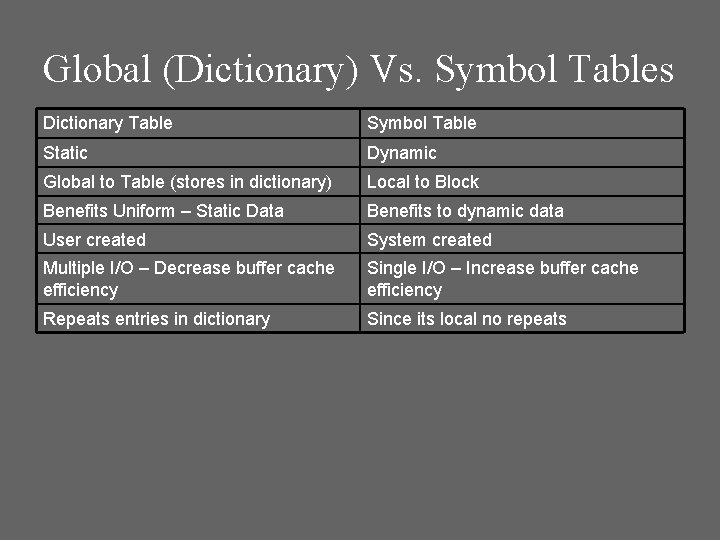

Global (Dictionary) Vs. Symbol Tables Dictionary Table Symbol Table Static Dynamic Global to Table (stores in dictionary) Local to Block Benefits Uniform – Static Data Benefits to dynamic data User created System created Multiple I/O – Decrease buffer cache efficiency Single I/O – Increase buffer cache efficiency Repeats entries in dictionary Since its local no repeats

Example Symbols’ Table – How its look like in our block_row_dump: tab 0, row 0, @0 x 1 f 66 tl: 13 fb: --H-FL-- lb: 0 x 0 col 0: [ 3] 53 59 53 col 1: [ 5] 49 4 e 44 45 58 cc: 2 SYS bindmp: 00 d 4 02 cb 53 59 53 cd 49 4 e 44 45 58 tab 0, row 1, @0 x 1 f 73 tl: 13 fb: --H-FL-- lb: 0 x 0 col 0: [ 3] 53 59 53 col 1: [ 5] 54 41 42 4 c 45 tab 1, row 0, @0 x 1 f 5 b col 0: [ 3] 53 59 53 col 1: [ 5] 54 41 42 4 c 45 col 2: [ 5] 49 43 4 f 4 c 24 cc: 3 bindmp: 2 c 00 02 02 01 cd 49 43 4 f 4 c 24 tab 1, row 1, @0 x 1 f 4 e tl: 13 fb: --H-FL-- lb: 0 x 0 Symbols’ table is tab 0 cc: 2 bindmp: 00 b 6 02 cb 53 59 53 cd 54 41 42 4 c 45 tl: 11 fb: --H-FL-- lb: 0 x 0 INDEX Actual data: tab 1 Row 1: SYS ICOL$ TABLE Row 2: SYS I_USER 1 INDEX Row 3: SYS CON$ TABLE … cc: 3 col 0: [ 3] 53 59 53 col 1: [ 5] 49 4 e 44 45 58 col 2: [ 7] 49 5 f 55 53 45 52 31 Uncompressed data bindmp: 2 c 00 02 02 00 cf 49 5 f 55 53 45 52 31 Actual binary dump of row tab 1, row 2, @0 x 1 f 44 tl: 10 fb: --H-FL-- lb: 0 x 0 col 0: [ 3] 53 59 53 col 1: [ 5] 54 41 42 4 c 45 col 2: [ 4] 43 4 f 4 e 24 cc: 3 bindmp: 2 c 00 02 02 01 cc 43 4 f 4 e 24 7

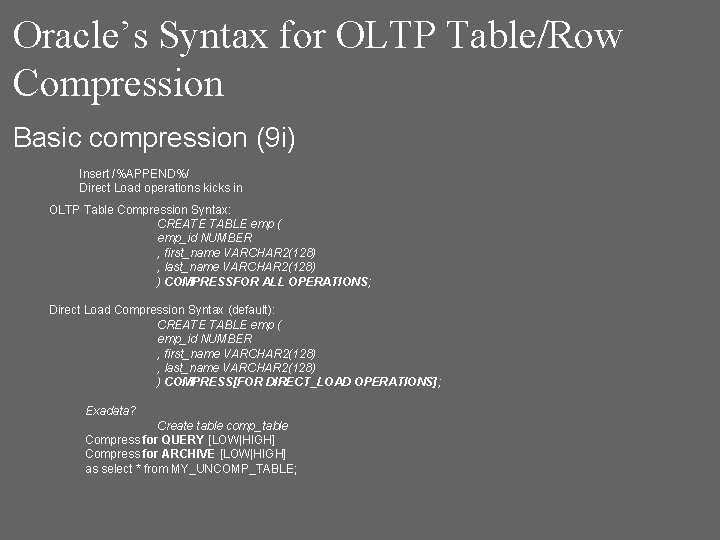

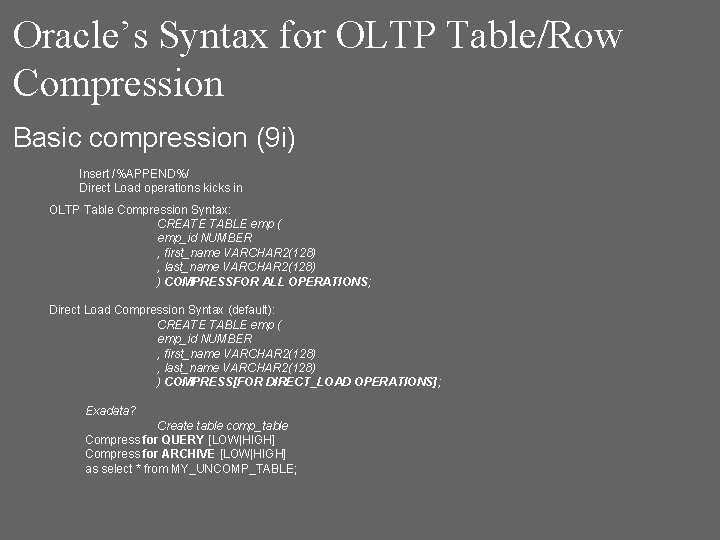

Oracle’s Syntax for OLTP Table/Row Compression Basic compression (9 i) Insert /%APPEND%/ Direct Load operations kicks in OLTP Table Compression Syntax: CREATE TABLE emp ( emp_id NUMBER , first_name VARCHAR 2(128) , last_name VARCHAR 2(128) ) COMPRESSFOR ALL OPERATIONS; Direct Load Compression Syntax (default): CREATE TABLE emp ( emp_id NUMBER , first_name VARCHAR 2(128) , last_name VARCHAR 2(128) ) COMPRESS[FOR DIRECT_LOAD OPERATIONS]; Exadata? Create table comp_table Compress for QUERY [LOW|HIGH] Compress for ARCHIVE [LOW|HIGH] as select * from MY_UNCOMP_TABLE;

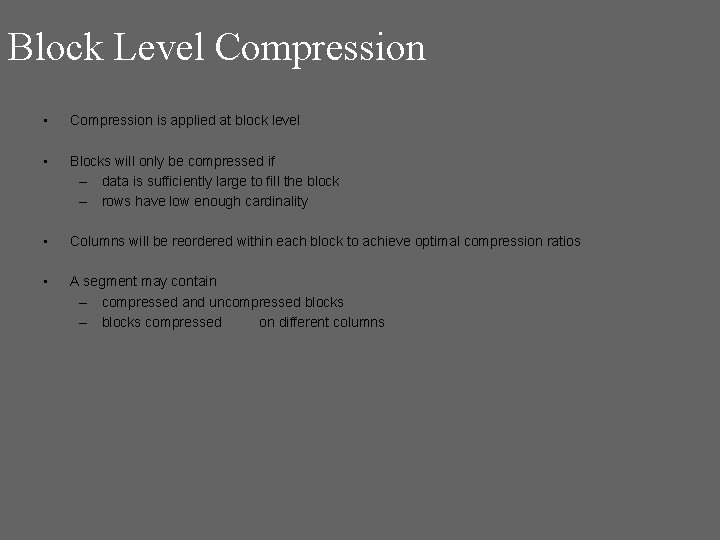

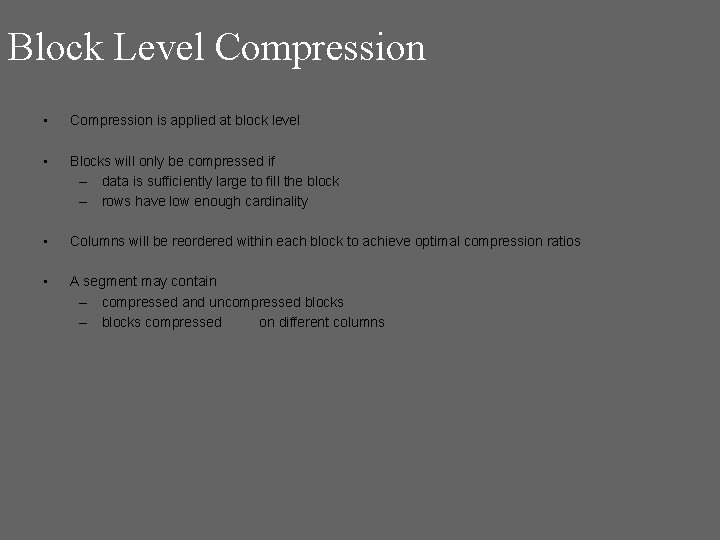

Block Level Compression • Compression is applied at block level • Blocks will only be compressed if – data is sufficiently large to fill the block – rows have low enough cardinality • Columns will be reordered within each block to achieve optimal compression ratios • A segment may contain – compressed and uncompressed blocks – blocks compressed on different columns

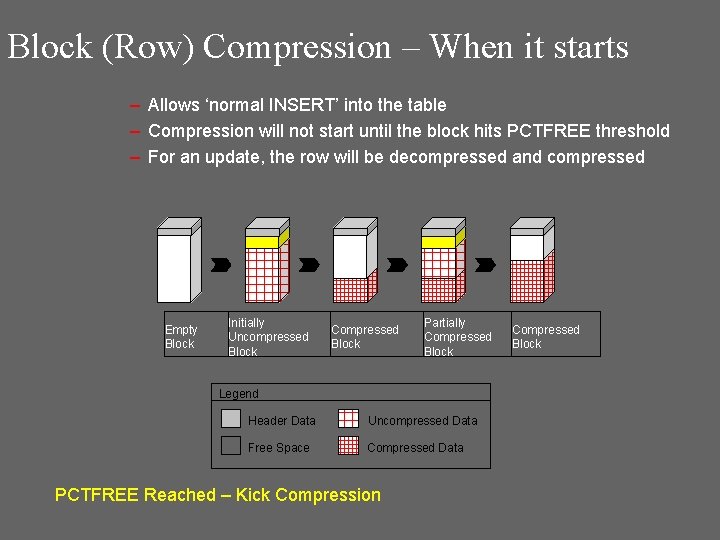

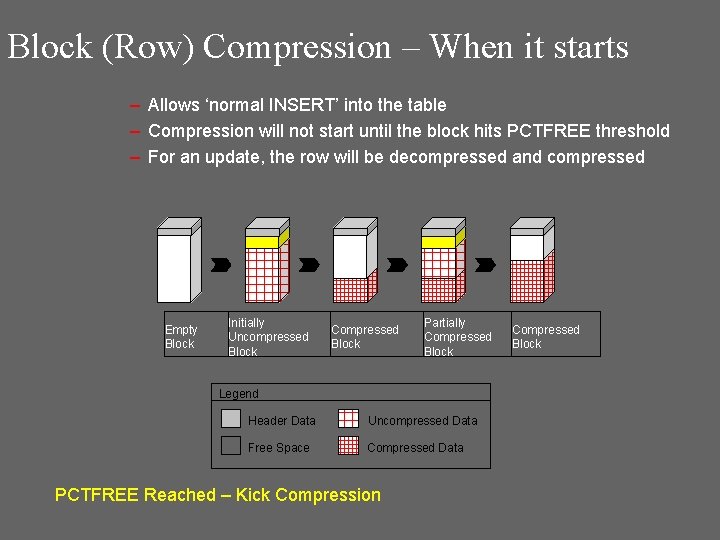

Block (Row) Compression – When it starts – Allows ‘normal INSERT’ into the table – Compression will not start until the block hits PCTFREE threshold – For an update, the row will be decompressed and compressed Empty Block Initially Uncompressed Block Compressed Block Partially Compressed Block Legend Header Data Uncompressed Data Free Space Compressed Data PCTFREE Reached – Kick Compression Compressed Block

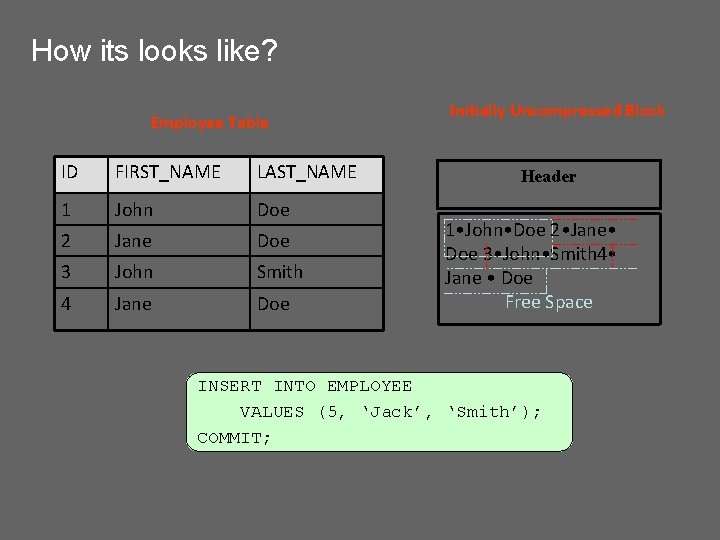

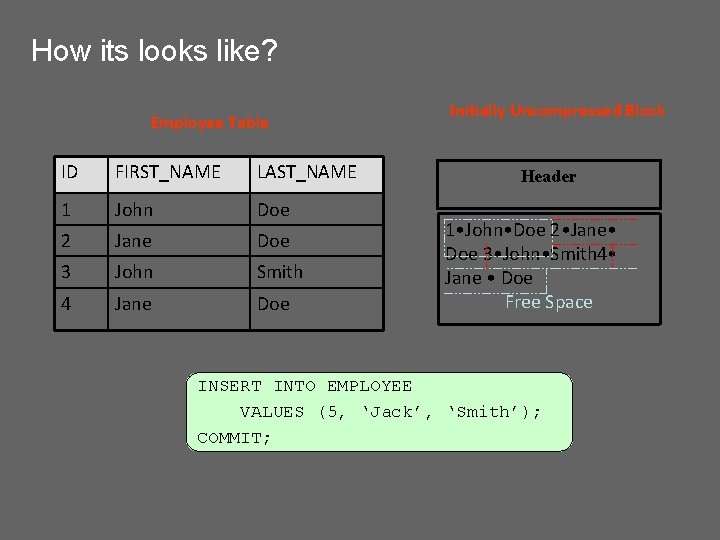

How its looks like? Employee Table ID FIRST_NAME LAST_NAME 1 John Doe 2 Jane Doe 3 John Smith 4 Jane Doe Initially Uncompressed Block Header 1 • John • Doe 2 • Jane • Doe 3 • John • Smith 4 • Jane • Doe Free Space INSERT INTO EMPLOYEE VALUES (5, ‘Jack’, ‘Smith’); COMMIT;

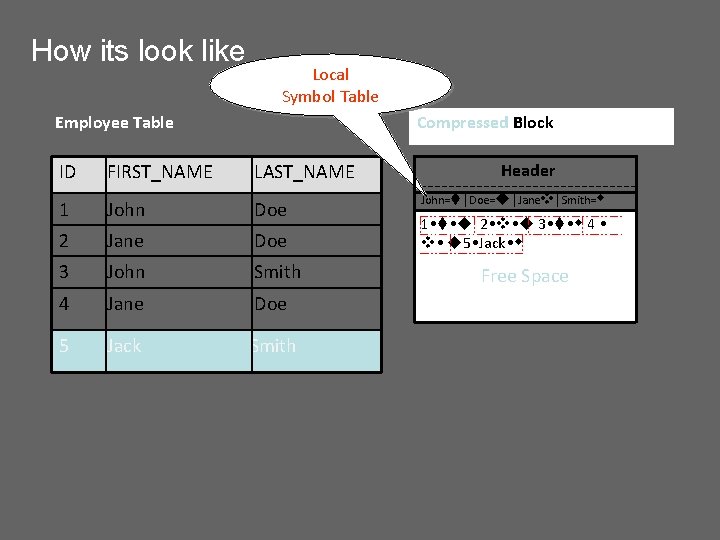

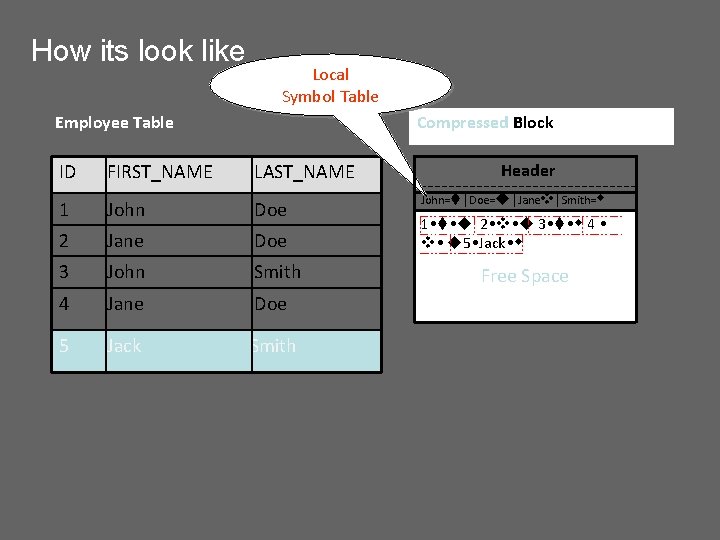

How its look like Local Symbol Table Employee Table Compressed Block ID FIRST_NAME LAST_NAME 1 John Doe 2 Jane Doe 3 John Smith 4 Jane Doe 5 Jack Smith Header John= |Doe= |Jane |Smith= 1 • • 2 • • 2 • Jane • 3 • • 4 • 1 • John • Doe • 5 • Jack • Doe 3 • John • Smith 4 • Jane • Doe Free Space

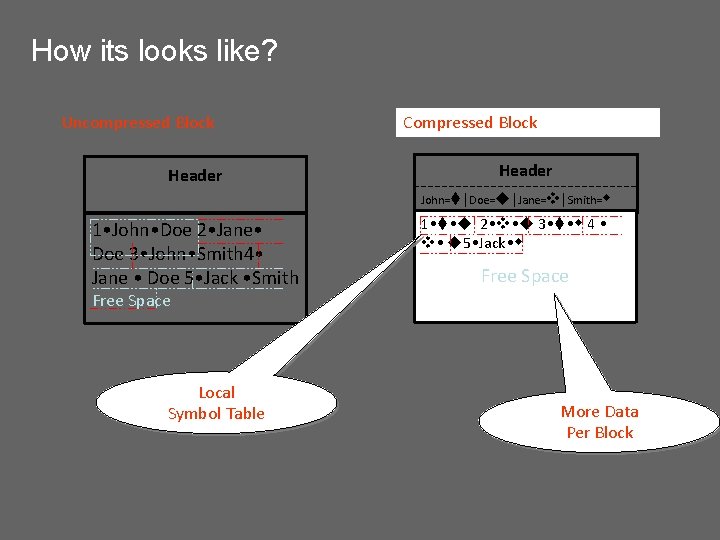

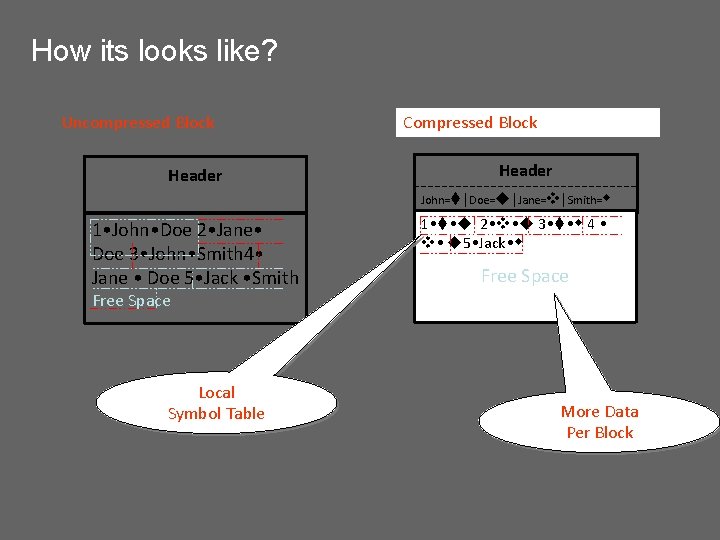

How its looks like? Uncompressed Block Header Compressed Block Header John= |Doe= |Jane= |Smith= 1 • John • Doe 2 • Jane • Doe 3 • John • Smith 4 • Jane • Doe 5 • Jack • Smith Free Space Local Symbol Table 1 • • 2 • • 2 • Jane • 3 • • 4 • 1 • John • Doe • 5 • Jack • Doe 3 • John • Smith 4 • Jane • Doe Free Space More Data Per Block

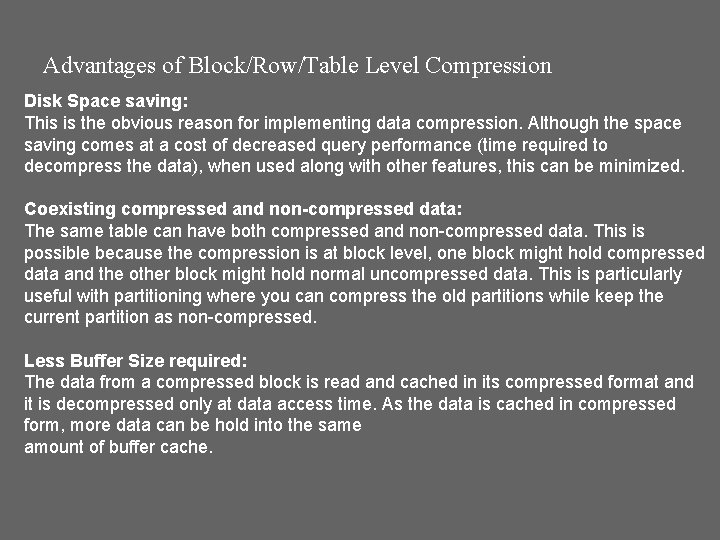

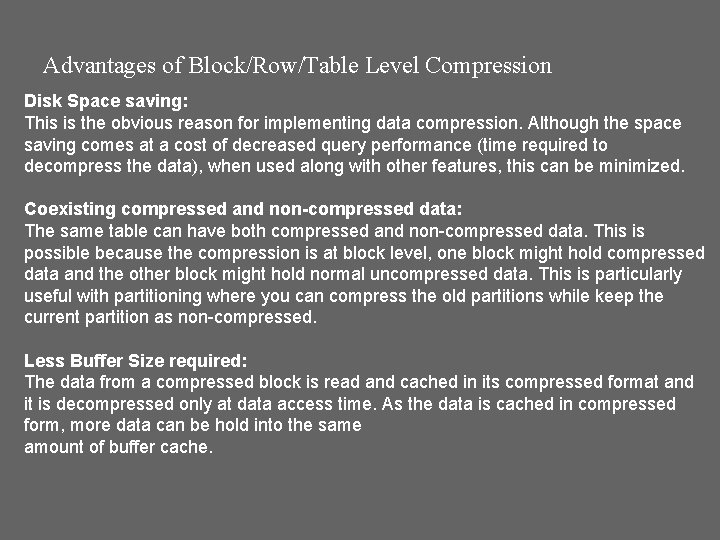

Advantages of Block/Row/Table Level Compression Disk Space saving: This is the obvious reason for implementing data compression. Although the space saving comes at a cost of decreased query performance (time required to decompress the data), when used along with other features, this can be minimized. Coexisting compressed and non-compressed data: The same table can have both compressed and non-compressed data. This is possible because the compression is at block level, one block might hold compressed data and the other block might hold normal uncompressed data. This is particularly useful with partitioning where you can compress the old partitions while keep the current partition as non-compressed. Less Buffer Size required: The data from a compressed block is read and cached in its compressed format and it is decompressed only at data access time. As the data is cached in compressed form, more data can be hold into the same amount of buffer cache.

’ • Not an free – License is required (except Basic 9 i method) , see how much you are saving before to use, since price is high • Do not capacity it while comparing to disks – rather look into max IOPS • RAM Demands: Since many blocks now demand want more time to be in buffer • Must know which is suitable under available options – Performance impacts – Resource availability

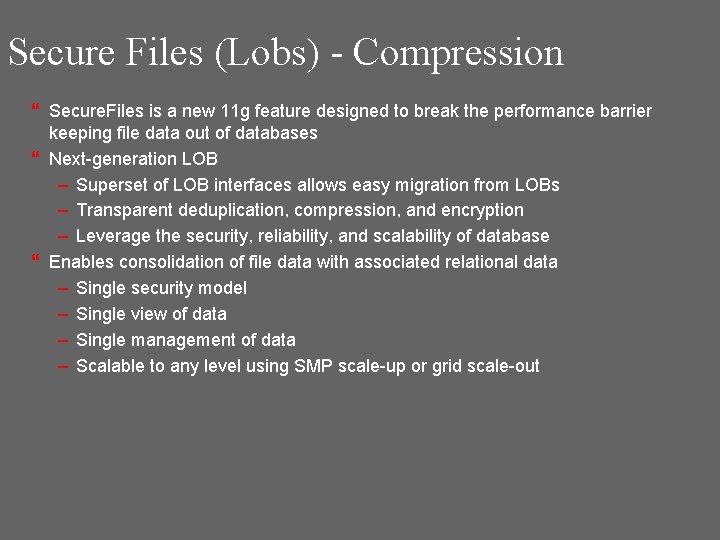

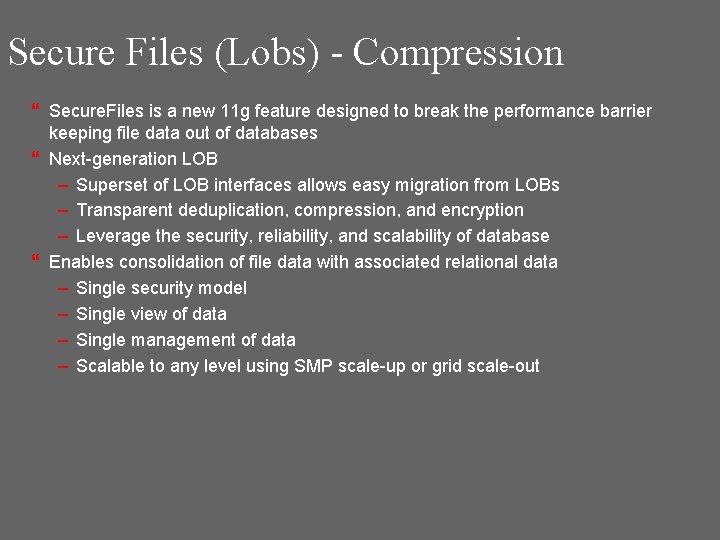

Secure Files (Lobs) - Compression } Secure. Files is a new 11 g feature designed to break the performance barrier keeping file data out of databases } Next-generation LOB – Superset of LOB interfaces allows easy migration from LOBs – Transparent deduplication, compression, and encryption – Leverage the security, reliability, and scalability of database } Enables consolidation of file data with associated relational data – Single security model – Single view of data – Single management of data – Scalable to any level using SMP scale-up or grid scale-out

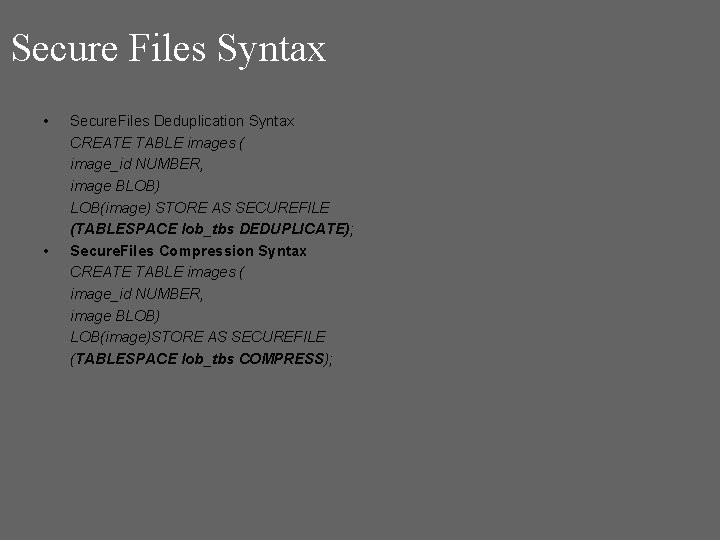

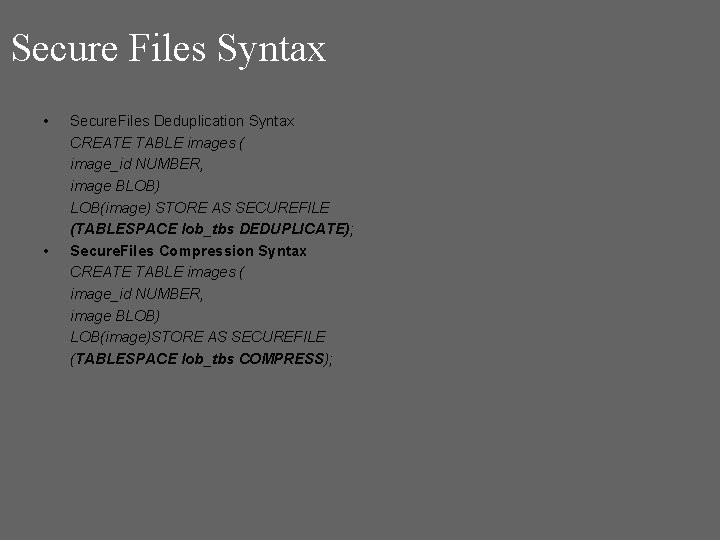

Secure Files Syntax • • Secure. Files Deduplication Syntax CREATE TABLE images ( image_id NUMBER, image BLOB) LOB(image) STORE AS SECUREFILE (TABLESPACE lob_tbs DEDUPLICATE); Secure. Files Compression Syntax CREATE TABLE images ( image_id NUMBER, image BLOB) LOB(image)STORE AS SECUREFILE (TABLESPACE lob_tbs COMPRESS);

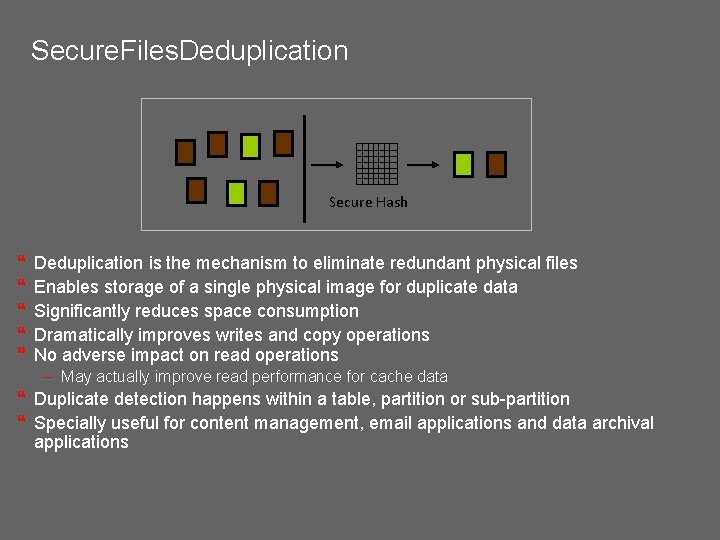

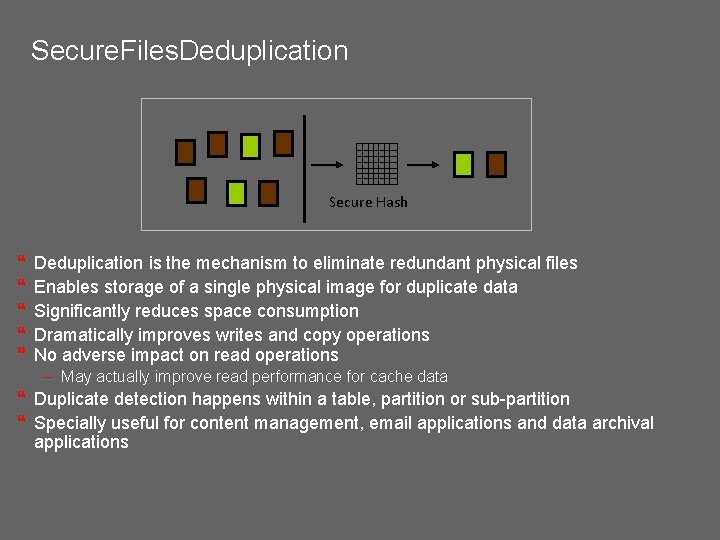

Secure. Files. Deduplication Secure Hash } } } Deduplication is the mechanism to eliminate redundant physical files Enables storage of a single physical image for duplicate data Significantly reduces space consumption Dramatically improves writes and copy operations No adverse impact on read operations – May actually improve read performance for cache data } Duplicate detection happens within a table, partition or sub-partition } Specially useful for content management, email applications and data archival applications

Data Pump Compression } Metadata compression available since Oracle Database 10 g } Oracle Database 11 g extends compression to table data during exports – No need to decompress before import } Single step compression of both data and metadata – Compressed data directly hits disk resulting in reduced disk space requirements – Internal tests reduced dump file size up to 75% } Compression factor comparable to GNU gzip utility } Application transparent – Complete Data Pump functionality available on compressed files COMPRESSION = ALL|METADATA_ONLY|NONE Caveats } Performance cost: ~10% overhead } Compression Ratio: comparable to gzip

Backup Compression } Compression features in the RMAN utility: – Sinception: Null compression – does not backup data blocks that have never been allocated – Since Oracle Database 10 g Release 2: Unused block compression – RMAN skips blocks that currently do not contain data – Since Oracle Database 11 g: Binary compression (or backup set compression) – applies a compression algorithm to the blocks as they are backed up, if configured/requested – All RMAN compression techniques are totally transparent during restore operations ØFast RMAN Compression: Compresses the backup set contents before writing them to disk or tape No extra decompression steps are required during recovery when you use High performance, industry standard compression algorithm 40% faster backup compression versus Oracle Database 10 g Suitable for fast, incremental daily backups Reduces network usage

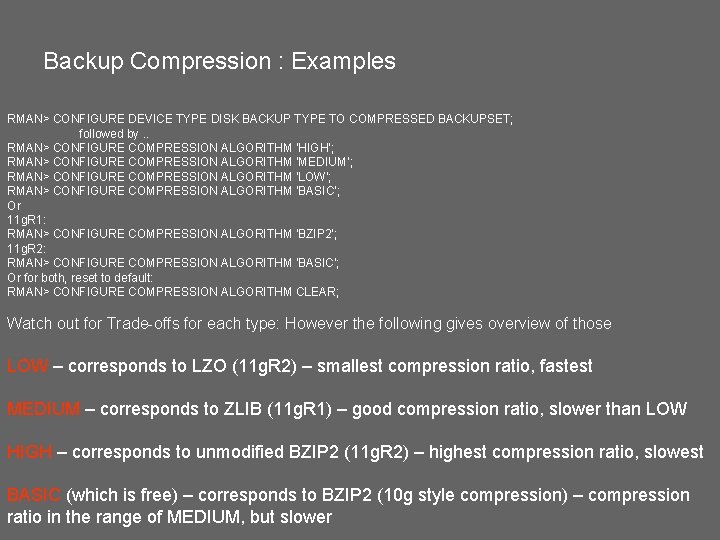

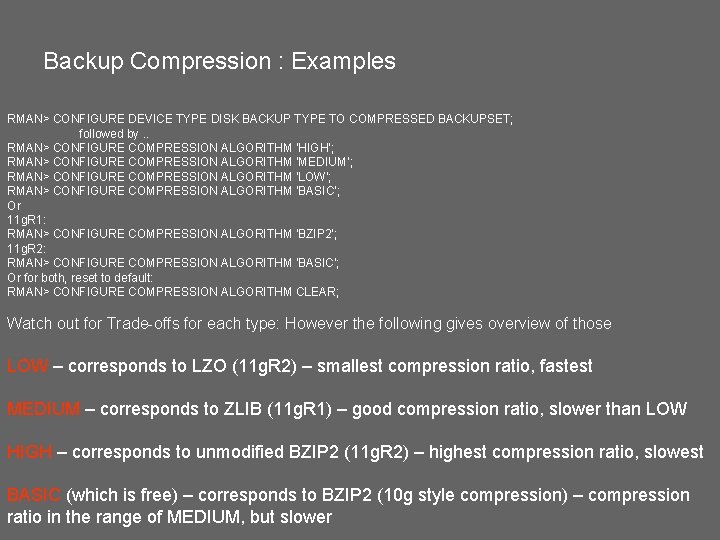

Backup Compression : Examples RMAN> CONFIGURE DEVICE TYPE DISK BACKUP TYPE TO COMPRESSED BACKUPSET; followed by. . RMAN> CONFIGURE COMPRESSION ALGORITHM ‘HIGH’; RMAN> CONFIGURE COMPRESSION ALGORITHM ‘MEDIUM’; RMAN> CONFIGURE COMPRESSION ALGORITHM ‘LOW’; RMAN> CONFIGURE COMPRESSION ALGORITHM ‘BASIC’; Or 11 g. R 1: RMAN> CONFIGURE COMPRESSION ALGORITHM 'BZIP 2'; 11 g. R 2: RMAN> CONFIGURE COMPRESSION ALGORITHM 'BASIC'; Or for both, reset to default: RMAN> CONFIGURE COMPRESSION ALGORITHM CLEAR; Watch out for Trade-offs for each type: However the following gives overview of those LOW – corresponds to LZO (11 g. R 2) – smallest compression ratio, fastest MEDIUM – corresponds to ZLIB (11 g. R 1) – good compression ratio, slower than LOW HIGH – corresponds to unmodified BZIP 2 (11 g. R 2) – highest compression ratio, slowest BASIC (which is free) – corresponds to BZIP 2 (10 g style compression) – compression ratio in the range of MEDIUM, but slower

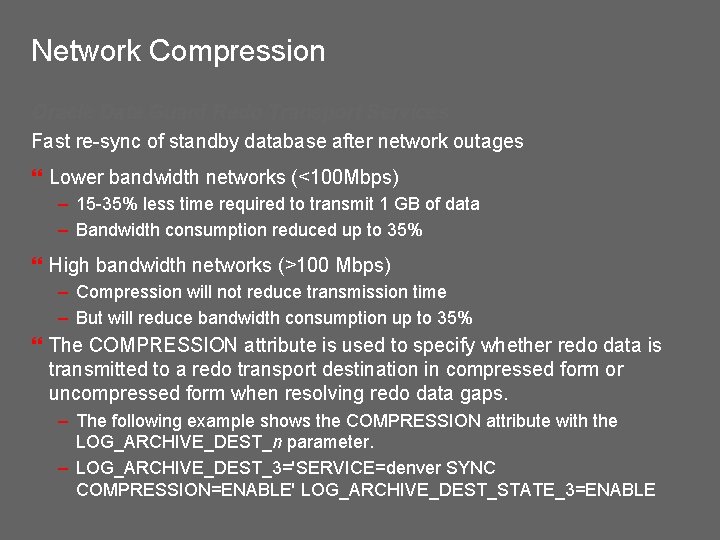

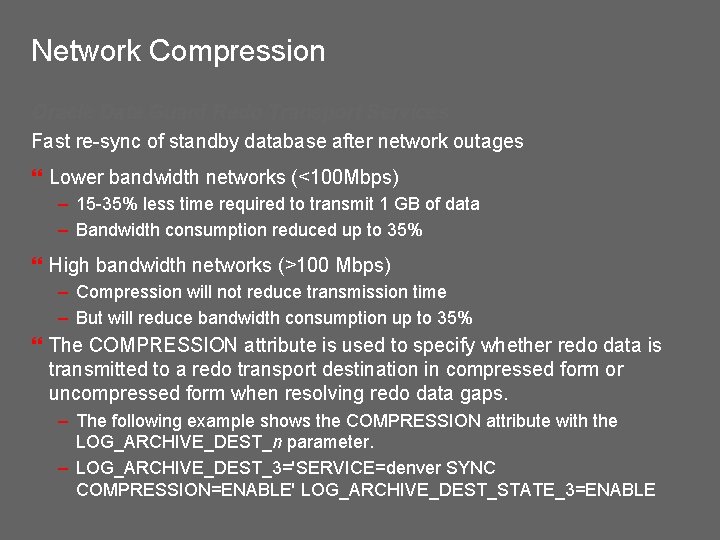

Network Compression Oracle Data Guard Redo Transport Services Fast re-sync of standby database after network outages } Lower bandwidth networks (<100 Mbps) – 15 -35% less time required to transmit 1 GB of data – Bandwidth consumption reduced up to 35% } High bandwidth networks (>100 Mbps) – Compression will not reduce transmission time – But will reduce bandwidth consumption up to 35% } The COMPRESSION attribute is used to specify whether redo data is transmitted to a redo transport destination in compressed form or uncompressed form when resolving redo data gaps. – The following example shows the COMPRESSION attribute with the LOG_ARCHIVE_DEST_n parameter. – LOG_ARCHIVE_DEST_3='SERVICE=denver SYNC COMPRESSION=ENABLE' LOG_ARCHIVE_DEST_STATE_3=ENABLE

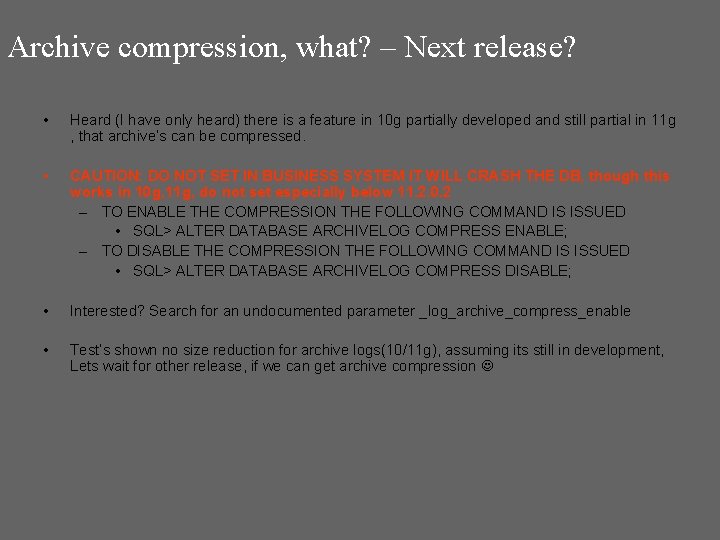

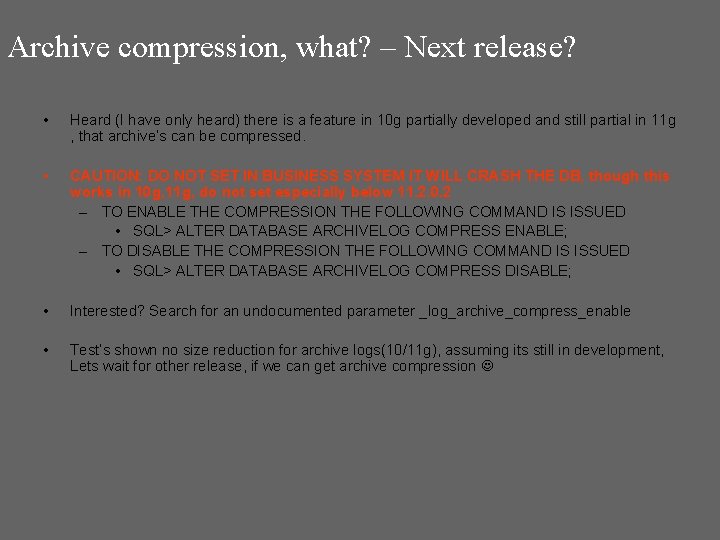

Archive compression, what? – Next release? • Heard (I have only heard) there is a feature in 10 g partially developed and still partial in 11 g , that archive’s can be compressed. • CAUTION: DO NOT SET IN BUSINESS SYSTEM IT WILL CRASH THE DB, though this works in 10 g, 11 g, do not set especially below 11. 2. 0. 2 – TO ENABLE THE COMPRESSION THE FOLLOWING COMMAND IS ISSUED • SQL> ALTER DATABASE ARCHIVELOG COMPRESS ENABLE; – TO DISABLE THE COMPRESSION THE FOLLOWING COMMAND IS ISSUED • SQL> ALTER DATABASE ARCHIVELOG COMPRESS DISABLE; • Interested? Search for an undocumented parameter _log_archive_compress_enable • Test’s shown no size reduction for archive logs(10/11 g), assuming its still in development, Lets wait for other release, if we can get archive compression

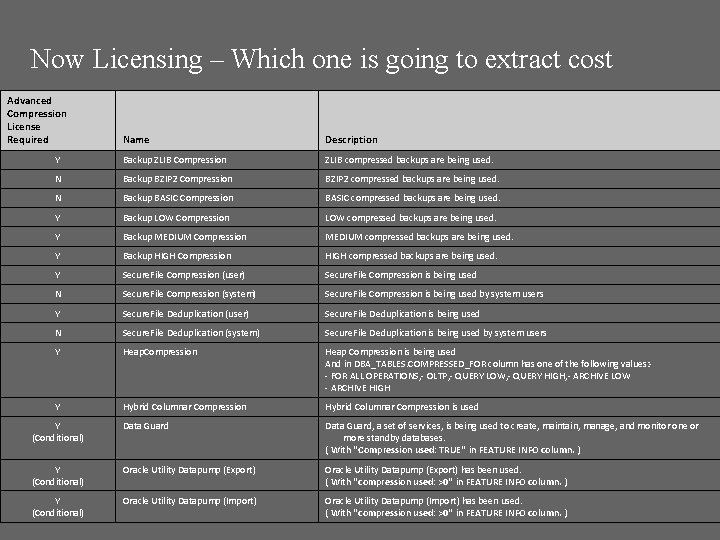

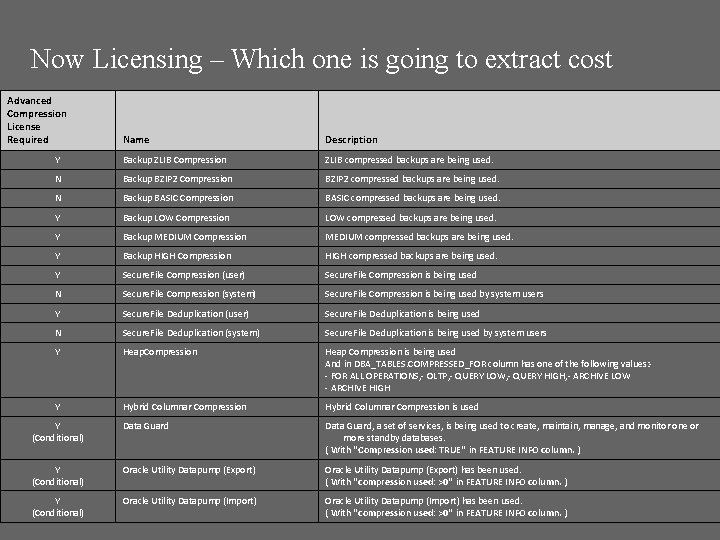

Now Licensing – Which one is going to extract cost Advanced Compression License Required Name Description Y Backup ZLIB Compression ZLIB compressed backups are being used. N Backup BZIP 2 Compression BZIP 2 compressed backups are being used. N Backup BASIC Compression BASIC compressed backups are being used. Y Backup LOW Compression LOW compressed backups are being used. Y Backup MEDIUM Compression MEDIUM compressed backups are being used. Y Backup HIGH Compression HIGH compressed backups are being used. Y Secure. File Compression (user) Secure. File Compression is being used N Secure. File Compression (system) Secure. File Compression is being used by system users Y Secure. File Deduplication (user) Secure. File Deduplication is being used N Secure. File Deduplication (system) Secure. File Deduplication is being used by system users Y Heap. Compression Heap Compression is being used And in DBA_TABLES. COMPRESSED_FOR column has one of the following values: - FOR ALL OPERATIONS, - OLTP, - QUERY LOW, - QUERY HIGH, - ARCHIVE LOW - ARCHIVE HIGH Y Hybrid Columnar Compression is used Y (Conditional) Data Guard, a set of services, is being used to create, maintain, manage, and monitor one or more standby databases. ( With “Compression used: TRUE” in FEATURE INFO column. ) Y (Conditional) Oracle Utility Datapump (Export) has been used. ( With “compression used: >0” in FEATURE INFO column. ) Y (Conditional) Oracle Utility Datapump (Import) has been used. ( With “compression used: >0” in FEATURE INFO column. )

Thank you! Q&A