Complexity-Effective Memory Access Scheduling for Many-Core Accelerator Architectures

- Slides: 36

Complexity-Effective Memory Access Scheduling for Many-Core Accelerator Architectures

George L. Yuan, Ali Bakhoda and Tor M. Aamodt
Electrical and Computer Engineering, University of British Columbia
December 14th, 2009 (MICRO 2009)

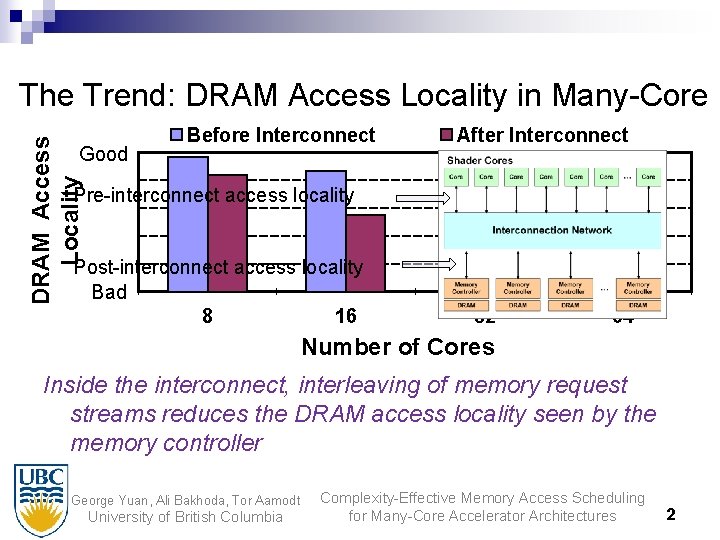

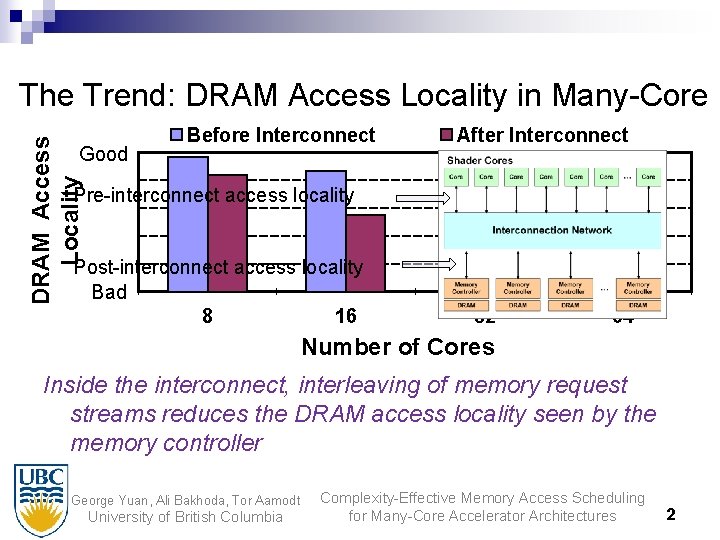

The Trend: DRAM Access Locality in Many-Core

[Figure: DRAM access locality (y-axis, from bad to good) versus number of cores (x-axis: 8, 16, 32, 64), with one series for pre-interconnect access locality and one for post-interconnect access locality.]

Inside the interconnect, interleaving of memory request streams reduces the DRAM access locality seen by the memory controller.

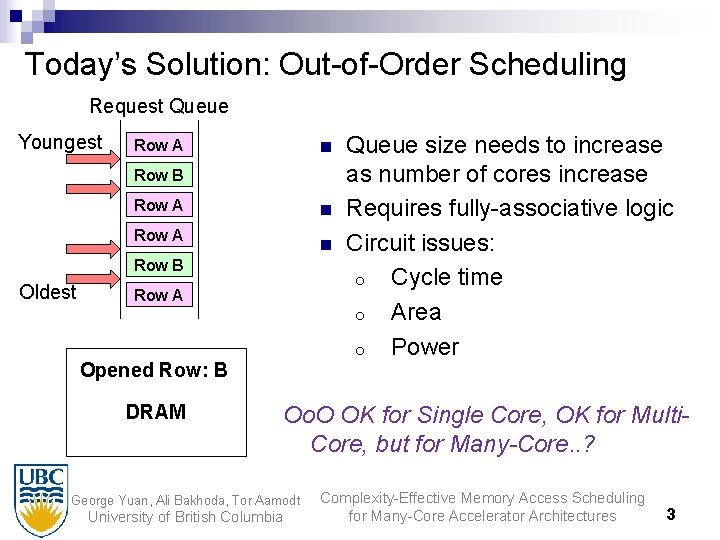

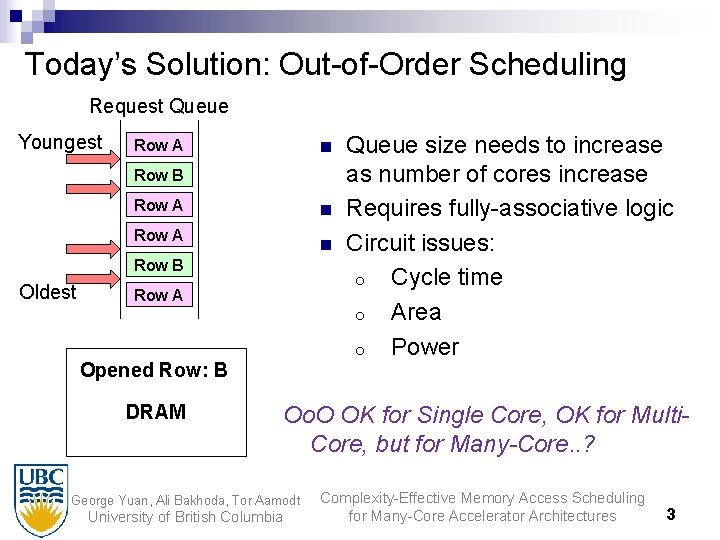

Today’s Solution: Out-of-Order Scheduling

[Figure: a request queue, ordered from oldest to youngest, holding requests to Row A and Row B in front of the DRAM. Labels: "Opened Row: A", "Switching Row: Row B".]

- Queue size needs to increase as the number of cores increases
- Requires fully-associative logic
- Circuit issues:
  - Cycle time
  - Area
  - Power

Out-of-order (OoO) scheduling is OK for single-core and OK for multi-core, but for many-core...?
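The out-of-order policy on this slide can be illustrated with a minimal sketch of first-ready, first-come first-serve selection. The `Request` type, the `frfcfs_pick` and `service_all` names, and the single-queue model are assumptions made for illustration; they are not taken from the talk or from any simulator. The scan over every queue entry stands in for the fully-associative comparison that the slide identifies as costly.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Request:
    bank: int
    row: int


def frfcfs_pick(queue: List[Request], open_rows: Dict[int, int]) -> Optional[int]:
    """Return the index of the request to service next, or None if empty.

    queue is ordered oldest first; open_rows maps bank -> currently open row.
    """
    # First-ready: the oldest request that hits an already open row wins.
    for i, req in enumerate(queue):
        if open_rows.get(req.bank) == req.row:
            return i
    # Otherwise fall back to first-come first-serve: the oldest request.
    return 0 if queue else None


def service_all(queue: List[Request]) -> List[Request]:
    """Drain the queue with FR-FCFS and return the service order."""
    pending = list(queue)
    open_rows: Dict[int, int] = {}
    order: List[Request] = []
    while pending:
        i = frfcfs_pick(pending, open_rows)
        req = pending.pop(i)
        open_rows[req.bank] = req.row  # a row miss opens the requested row
        order.append(req)
    return order
```

With an interleaved queue of rows A, B, A, B, A in one bank, this sketch services all three Row A requests before switching to Row B.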

Related Work

- Rixner, Dally, et al.
  - First-Ready First-Come First-Serve (FR-FCFS)
- Patents by Intel, Nvidia, etc.
- Mutlu & Moscibroda
  - Stall-time Fair Memory
  - Parallelism-Aware Batch Scheduling
- No prior work for memory access scheduling for 10,000+ threads

Our Contributions

- Show request stream interleaving in interconnect
- First paper that considers problem of DRAM scheduling for tens of thousands of threads
- Integration of DRAM scheduling in interconnect, allowing for more complexity-effective design
- Achieves 91% of performance of out-of-order scheduling with in-order scheduling for memory-limited applications

Outline

- Introduction
- Background on DRAM
- The Request Interleaving Problem
- Hold-Grant Interconnect Arbitration
- Experimental Results
- Conclusion

Example of many-core accelerator? GPUs

- High FLOP capacity for high-resolution graphics
- Nvidia’s GTX 285: 30 8-wide multiprocessors
- 10,000s of concurrent threads
- Demand on memory system extremely high

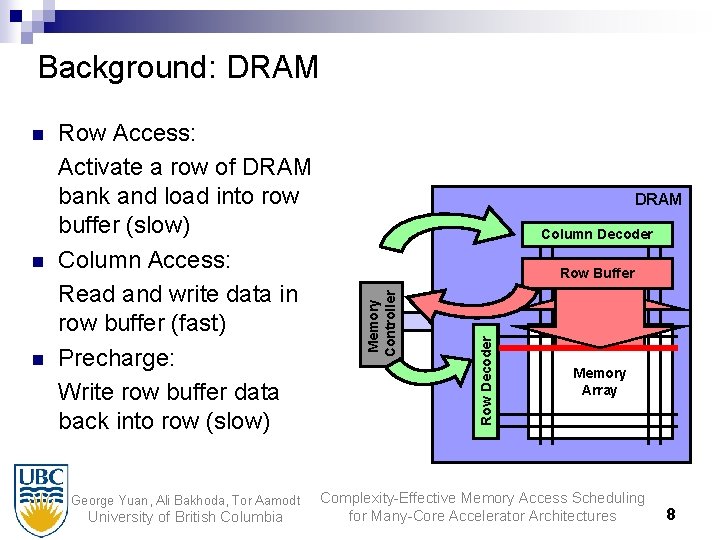

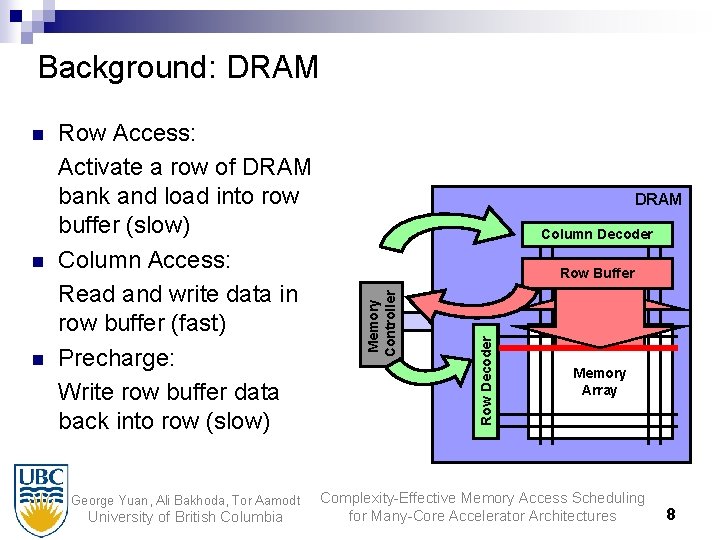

Background: DRAM

[Figure: a memory controller connected to a DRAM, which consists of a memory array, row decoder, row buffer, and column decoder.]

- Row access: Activate a row of a DRAM bank and load it into the row buffer (slow)
- Column access: Read and write data in the row buffer (fast)
- Precharge: Write row buffer data back into the row (slow)

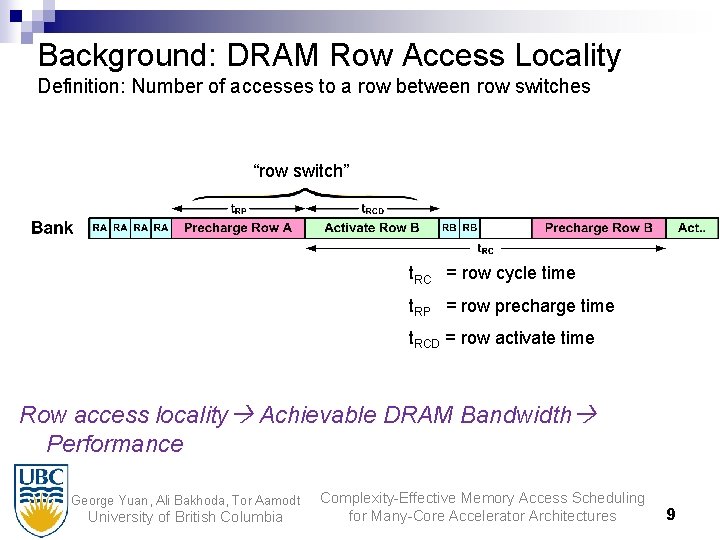

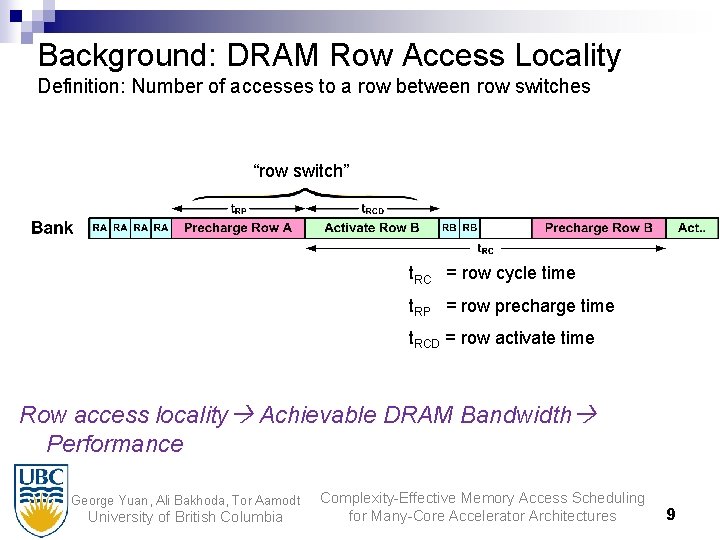

Background: DRAM Row Access Locality

Definition: Number of accesses to a row between row switches

[Figure: DRAM timing diagram marking a “row switch”, with tRC = row cycle time, tRP = row precharge time, tRCD = row activate time.]

Row access locality → Achievable DRAM bandwidth → Performance
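One way to put a number on this definition is to divide the accesses in a serviced request stream by the row activations they cause. The sketch below does that; the function name and the `(bank, row)` tuple encoding are assumptions for illustration, and the exact metric used in the talk may differ.

```python
from typing import Dict, Iterable, Tuple


def row_access_locality(accesses: Iterable[Tuple[int, int]]) -> float:
    """Average number of accesses serviced per row activation.

    accesses is the order in which (bank, row) pairs reach the DRAM.
    """
    open_rows: Dict[int, int] = {}
    total = 0
    activations = 0
    for bank, row in accesses:
        total += 1
        if open_rows.get(bank) != row:
            # The bank has a different row (or no row) open: a row switch.
            activations += 1
            open_rows[bank] = row
    return total / activations if activations else 0.0
```

For the same four requests, an interleaved order such as A, B, A, B gives a locality of 1.0, while the grouped order A, A, B, B gives 2.0.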

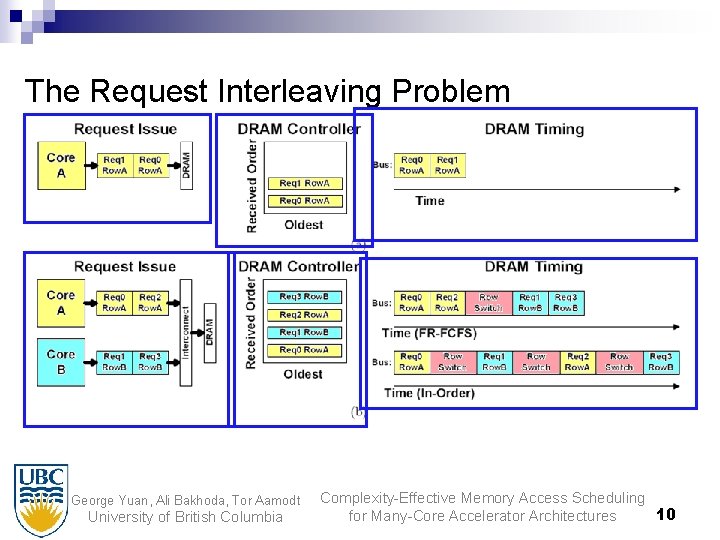

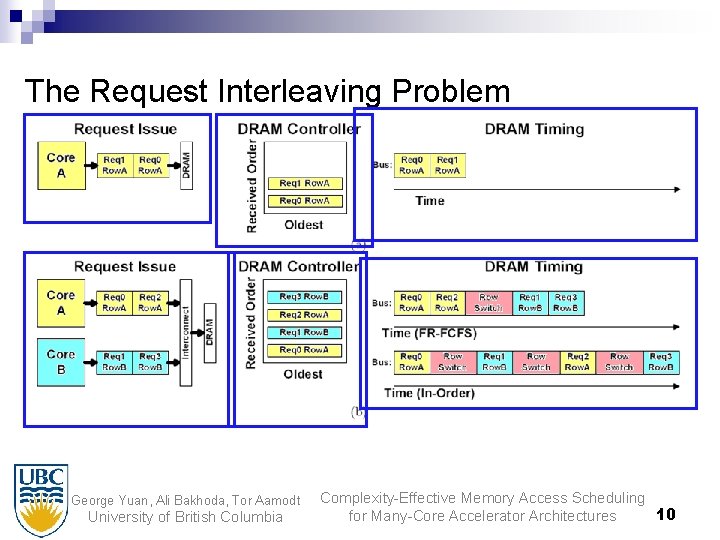

The Request Interleaving Problem

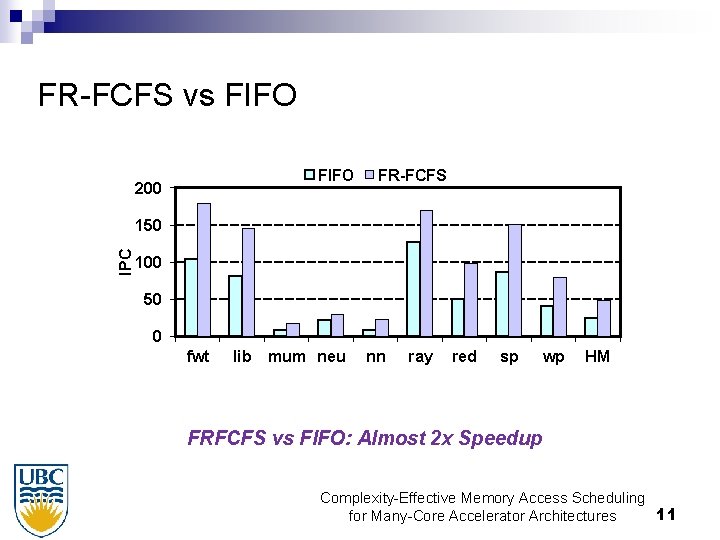

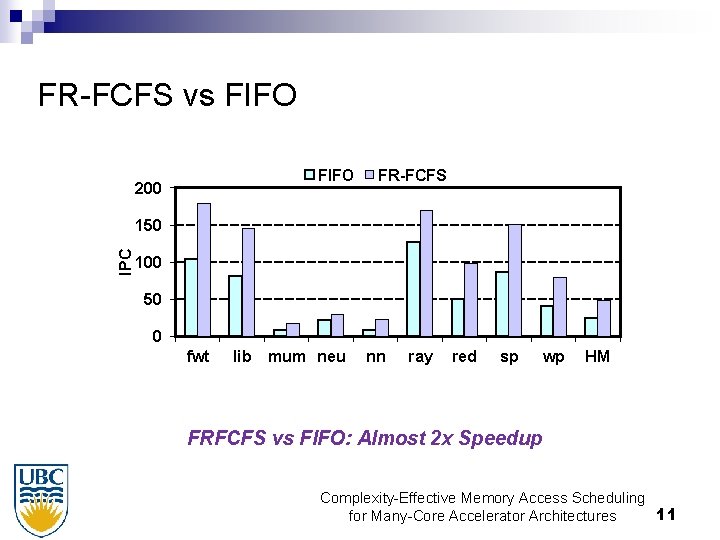

FR-FCFS vs FIFO

[Figure: bar chart of IPC (y-axis, 0 to 200) per benchmark (fwt, lib, mum, neu, nn, ray, red, sp, wp, HM); legend includes FR-FCFS.]

FR-FCFS vs FIFO: almost 2x speedup

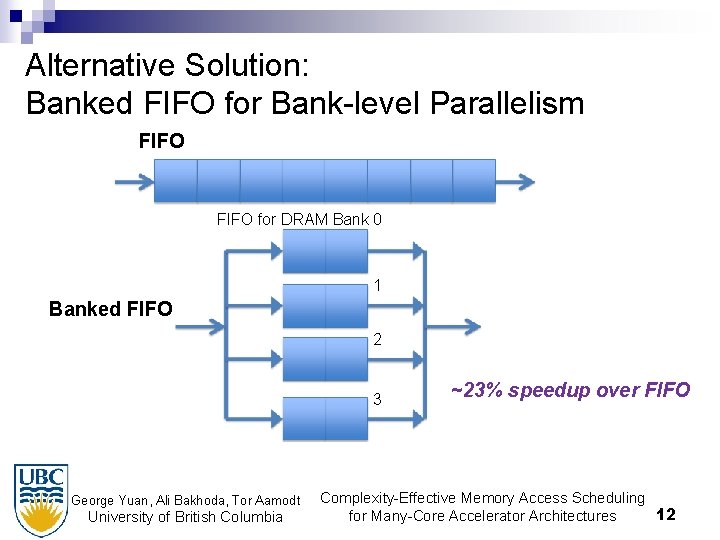

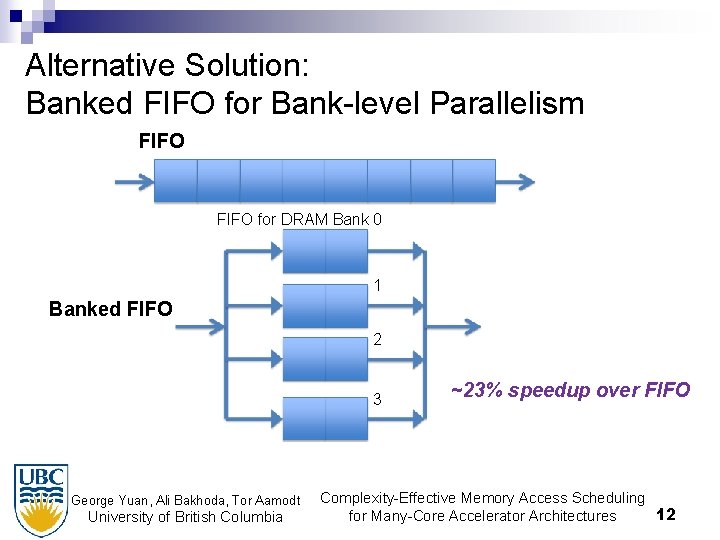

Alternative Solution: Banked FIFO for Bank-Level Parallelism

[Figure: a single FIFO compared with a banked FIFO that has a separate FIFO for each DRAM bank (banks 0 to 3).]

~23% speedup over FIFO
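A banked FIFO can be sketched as one in-order queue per DRAM bank, so that a request to an idle bank is not stuck behind requests to a busy one. The `BankedFifo` class and its round-robin choice among banks are assumptions for illustration; the slide does not say how the controller picks between banks.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple


class BankedFifo:
    """One in-order queue per DRAM bank (hypothetical sketch)."""

    def __init__(self, num_banks: int) -> None:
        self.queues: List[Deque[Tuple[int, int]]] = [
            deque() for _ in range(num_banks)
        ]
        self.next_bank = 0  # round-robin pointer across banks (assumption)

    def push(self, bank: int, row: int) -> None:
        """Append a request to the queue of its target bank."""
        self.queues[bank].append((bank, row))

    def pop(self) -> Optional[Tuple[int, int]]:
        """Issue the head of the next non-empty bank queue, round-robin."""
        n = len(self.queues)
        for offset in range(n):
            bank = (self.next_bank + offset) % n
            if self.queues[bank]:
                self.next_bank = (bank + 1) % n
                return self.queues[bank].popleft()
        return None
```

Requests within a bank stay in arrival order, so no associative search is needed; only the queue heads are examined.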

Our Solution

Hold-grant interconnection arbitration policies:

- “Hold Grant” (HG): Previously granted input has highest priority
- “Row-Matching Hold Grant” (RMHG): Previously granted input has highest priority if requested row matches previously requested row
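The two policies can be sketched as a single grant decision for one router output. The `arbitrate` function, its arguments, and the round-robin fallback used when the hold condition does not apply are assumptions for illustration rather than the authors' implementation.

```python
from typing import Dict, Optional


def arbitrate(requests: Dict[int, int], last_input: Optional[int],
              last_row: Optional[int], num_inputs: int,
              policy: str = "HG") -> Optional[int]:
    """Pick which input port is granted the output this cycle.

    requests maps input port -> DRAM row of the request at that port's head.
    policy is "RR" (plain round-robin), "HG", or "RMHG".
    """
    if not requests:
        return None
    if last_input in requests:
        # HG: the previously granted input keeps the grant.
        if policy == "HG":
            return last_input
        # RMHG: it keeps the grant only if it targets the same row again.
        if policy == "RMHG" and requests[last_input] == last_row:
            return last_input
    # Fallback (assumption): round-robin starting after the last grant.
    start = 0 if last_input is None else last_input + 1
    for offset in range(num_inputs):
        port = (start + offset) % num_inputs
        if port in requests:
            return port
    return None
```

Only the previously granted port and one stored row are compared, in contrast to a search over every entry of an out-of-order request queue.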

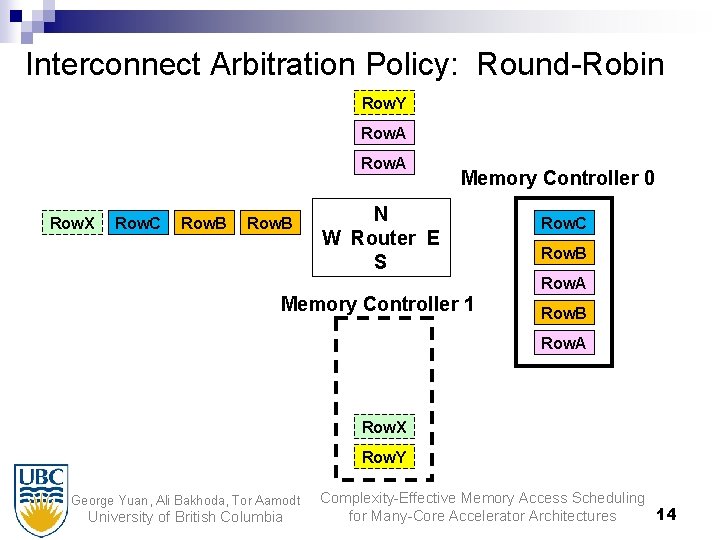

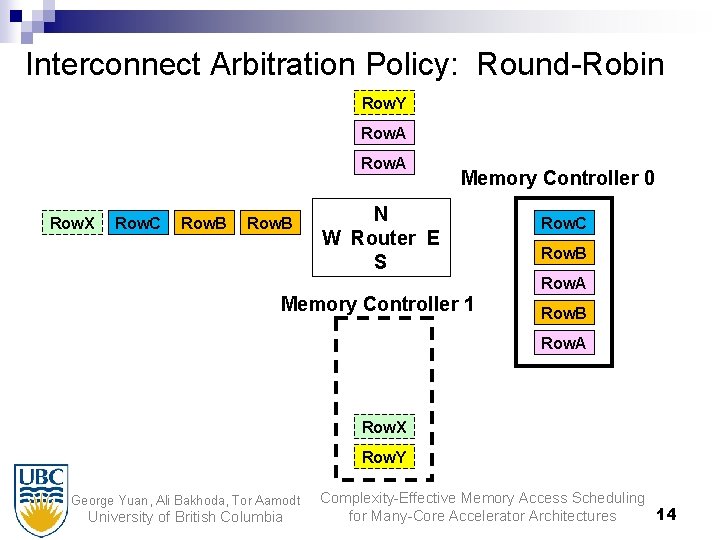

Interconnect Arbitration Policy: Round-Robin

[Figure: animation of a router (ports N, E, S, W) forwarding requests for Rows A, B, C, X and Y to Memory Controller 0 and Memory Controller 1 under round-robin arbitration.]

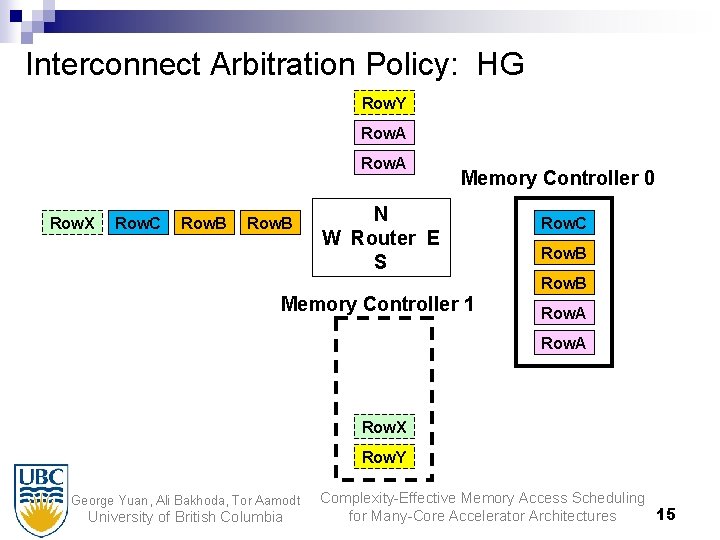

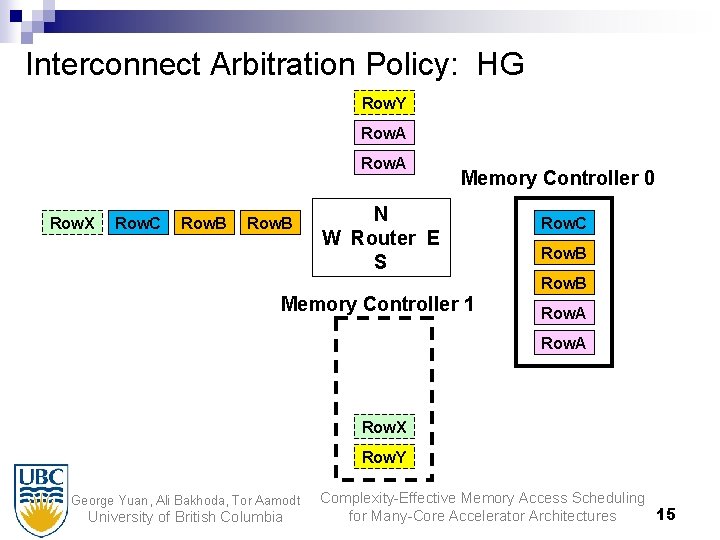

Interconnect Arbitration Policy: HG

[Figure: animation of a router (ports N, E, S, W) forwarding requests for Rows A, B, C, X and Y to Memory Controller 0 and Memory Controller 1 under Hold Grant arbitration.]

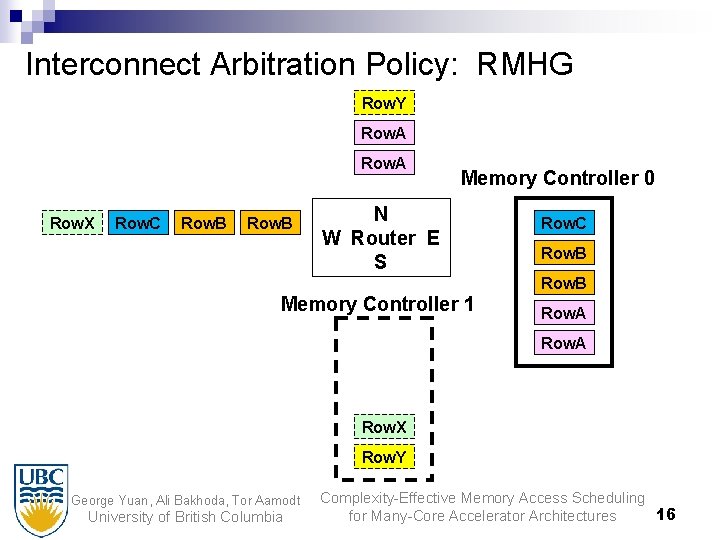

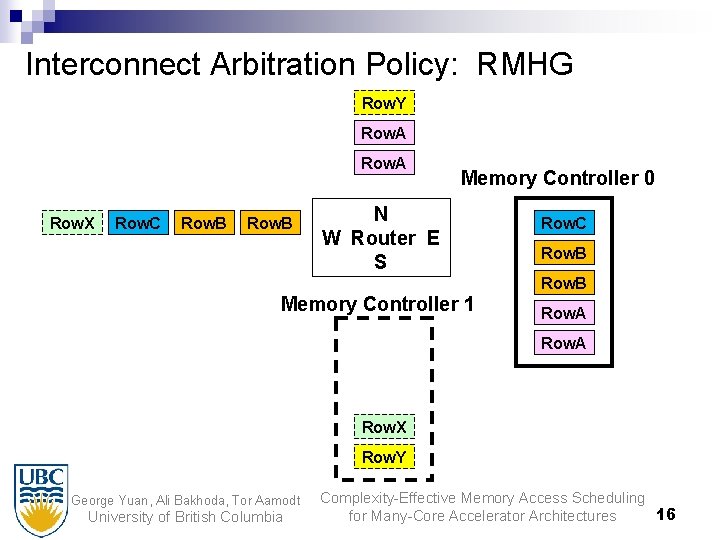

Interconnect Arbitration Policy: RMHG

[Figure: animation of a router (ports N, E, S, W) forwarding requests for Rows A, B, C, X and Y to Memory Controller 0 and Memory Controller 1 under Row-Matching Hold Grant arbitration.]

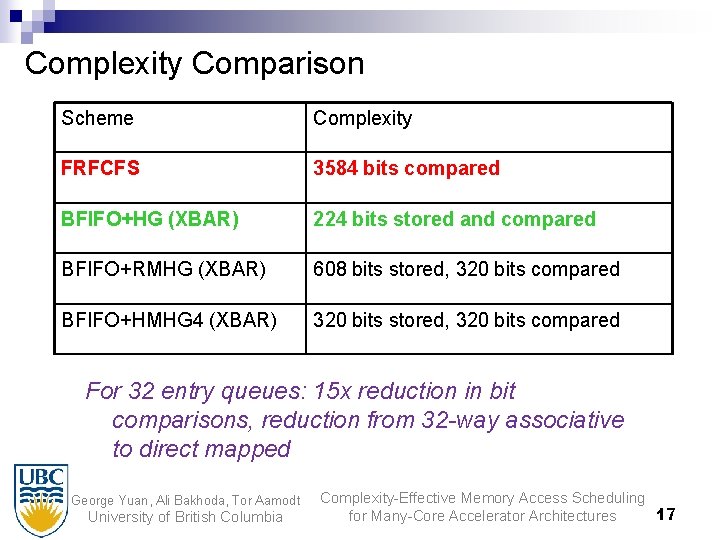

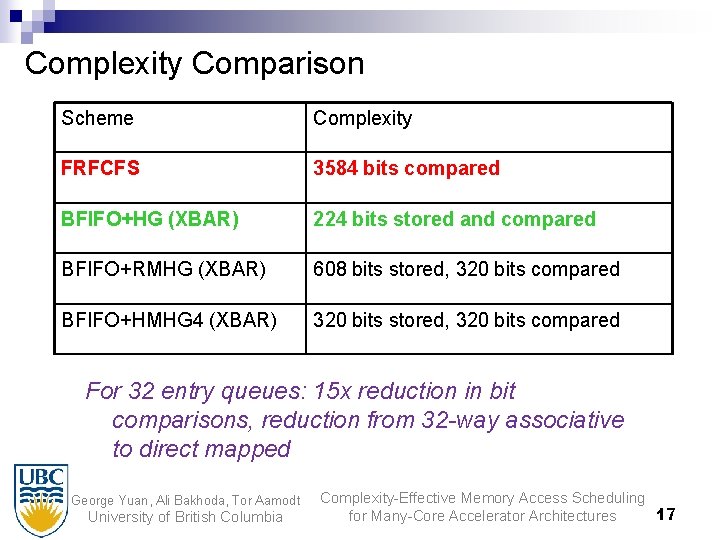

Complexity Comparison

| Scheme | Complexity |
| --- | --- |
| FR-FCFS | 3584 bits compared |
| BFIFO+HG (XBAR) | 224 bits stored and compared |
| BFIFO+RMHG (XBAR) | 608 bits stored, 320 bits compared |
| BFIFO+HMHG4 (XBAR) | 320 bits stored, 320 bits compared |

For 32-entry queues: 15x reduction in bit comparisons, reduction from 32-way associative to direct mapped

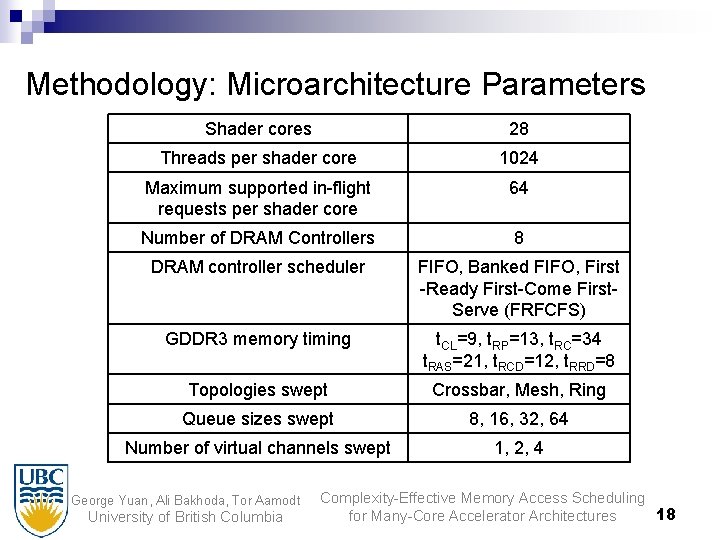

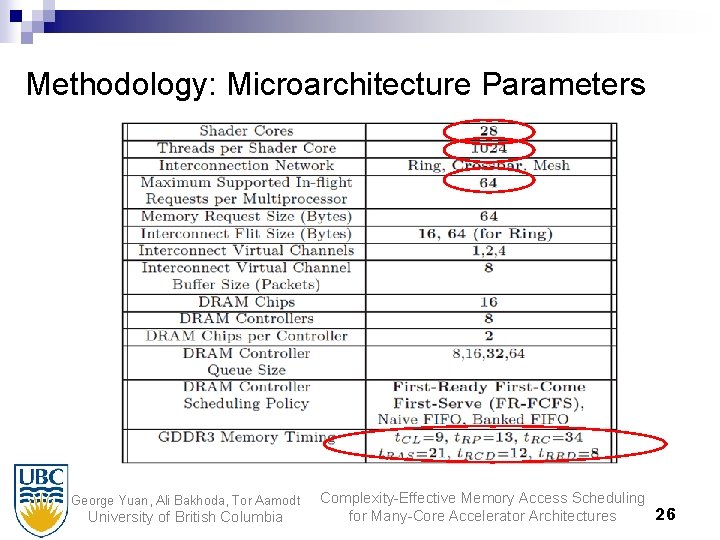

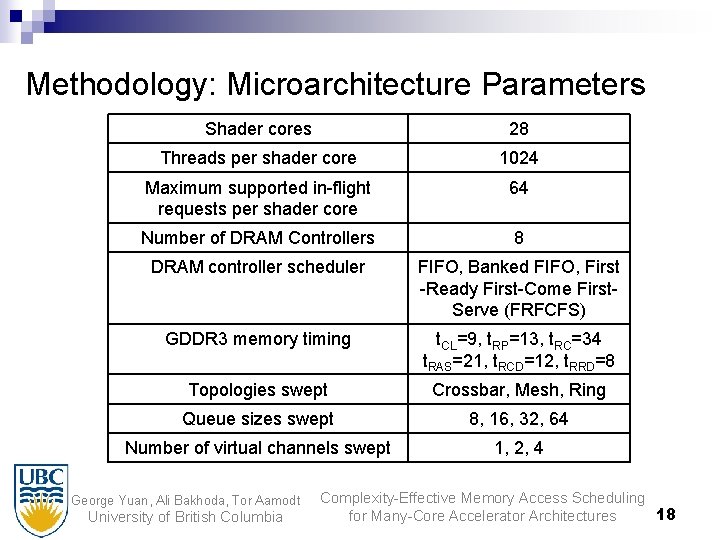

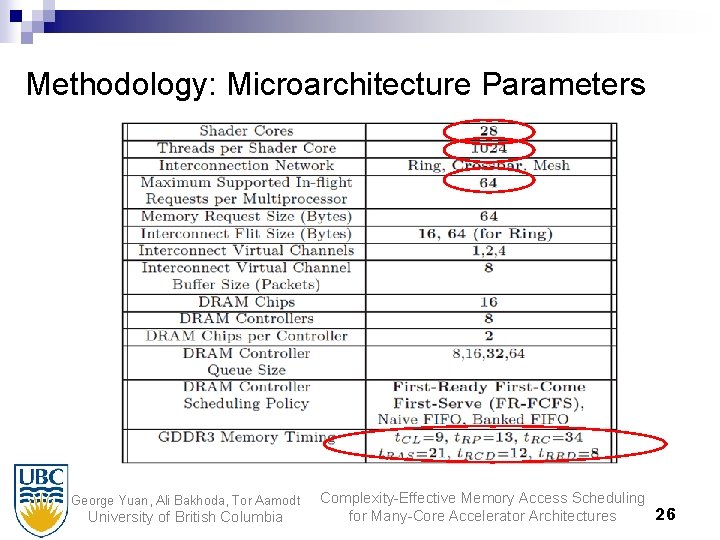

Methodology: Microarchitecture Parameters

| Parameter | Value |
| --- | --- |
| Shader cores | 28 |
| Threads per shader core | 1024 |
| Maximum supported in-flight requests per shader core | 64 |
| Number of DRAM controllers | 8 |
| DRAM controller scheduler | FIFO, Banked FIFO, First-Ready First-Come First-Serve (FR-FCFS) |
| GDDR3 memory timing | tCL=9, tRP=13, tRC=34, tRAS=21, tRCD=12, tRRD=8 |
| Topologies swept | Crossbar, Mesh, Ring |
| Queue sizes swept | 8, 16, 32, 64 |
| Number of virtual channels swept | 1, 2, 4 |
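As a quick consistency check on the GDDR3 timing values listed here, the standard DRAM relation between row cycle time, row active time, and precharge time holds for these numbers. The second line is an illustrative estimate only: it assumes a row switch costs one precharge followed by one activate before the first column access, in the same (unstated) units as the slide.

```latex
\begin{align*}
t_{RC} &= t_{RAS} + t_{RP} = 21 + 13 = 34 \\
t_{RP} + t_{RCD} &= 13 + 12 = 25
\end{align*}
```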

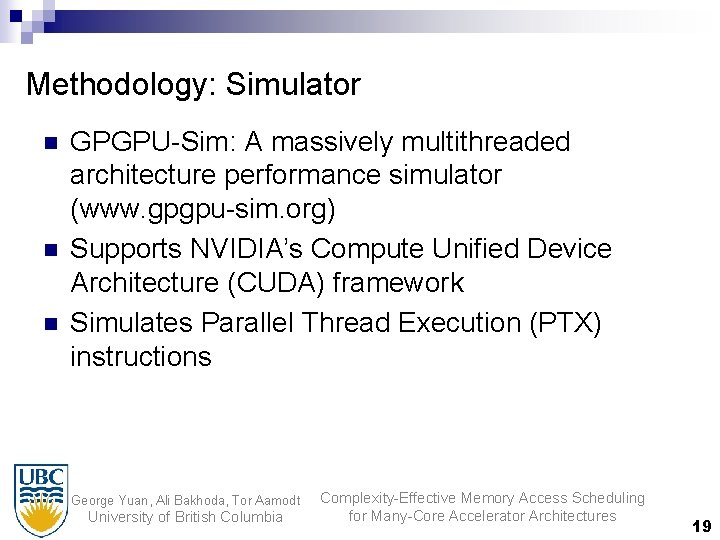

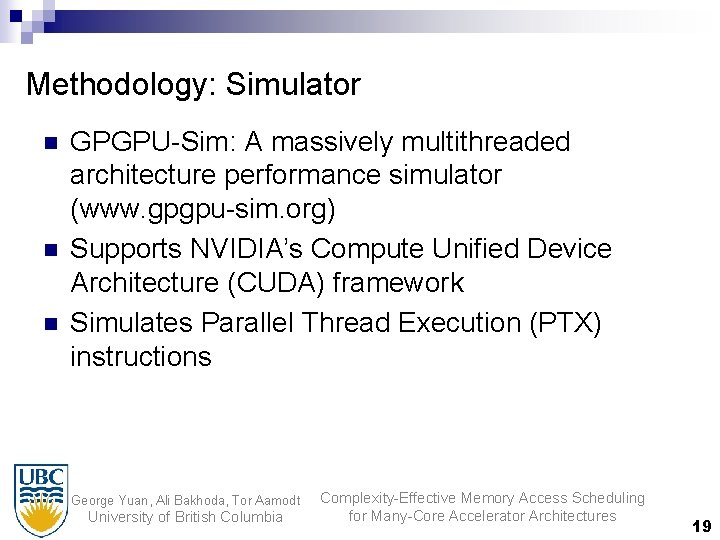

Methodology: Simulator

- GPGPU-Sim: A massively multithreaded architecture performance simulator (www.gpgpu-sim.org)
- Supports NVIDIA’s Compute Unified Device Architecture (CUDA) framework
- Simulates Parallel Thread Execution (PTX) instructions

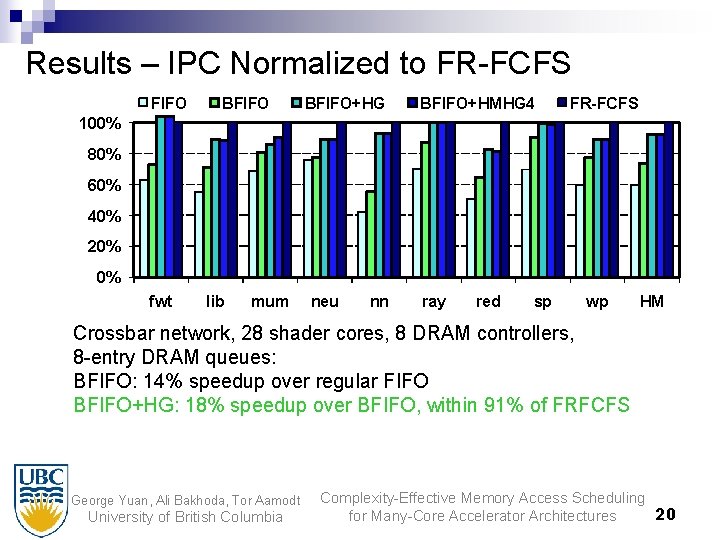

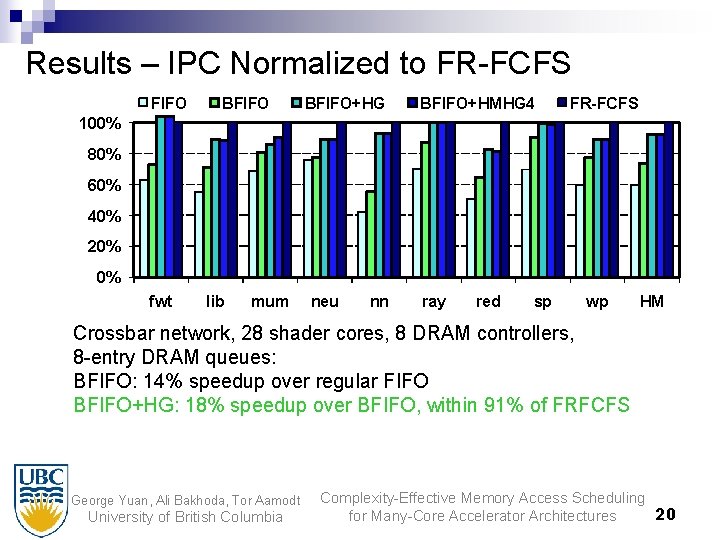

Results – IPC Normalized to FR-FCFS

[Figure: bar chart of IPC normalized to FR-FCFS (y-axis, 0% to 100%) per benchmark (fwt, lib, mum, neu, nn, ray, red, sp, wp, HM); legend includes FIFO, BFIFO+HG, BFIFO+HMHG4, FR-FCFS.]

Crossbar network, 28 shader cores, 8 DRAM controllers, 8-entry DRAM queues:

- BFIFO: 14% speedup over regular FIFO
- BFIFO+HG: 18% speedup over BFIFO, reaching 91% of FR-FCFS performance

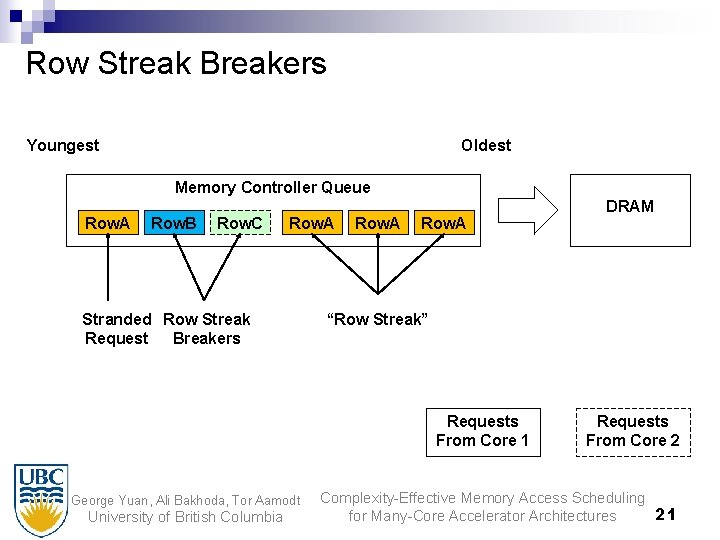

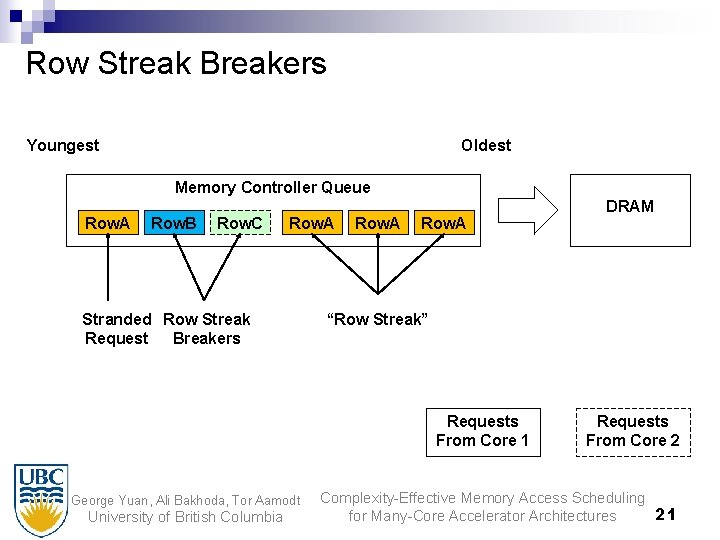

Row Streak Breakers

[Figure: a memory controller queue, ordered from oldest to youngest, in front of the DRAM, holding requests from Core 1 and Core 2 to Rows A, B and C. Labels: “Row Streak”, “Row Streak Breakers”, “Stranded Request”.]
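A simplified way to count row streak breakers in an in-order queue is sketched below. It treats any request that targets a different row than the request just ahead of it as a breaker, and labels it by whether the two requests came from the same core. This definition, the function name, and the `(core, row)` encoding are assumptions for illustration and may not match the exact classification used in the talk.

```python
from collections import Counter
from typing import List, Tuple


def classify_breakers(queue: List[Tuple[int, str]]) -> Counter:
    """Count row streak breakers in an in-order queue for one bank.

    queue is ordered oldest first; each entry is (core, row).
    """
    counts: Counter = Counter()
    for (prev_core, prev_row), (core, row) in zip(queue, queue[1:]):
        if row != prev_row:
            # This request ends the streak started by the one before it.
            kind = "same_core" if core == prev_core else "different_core"
            counts[kind] += 1
    return counts
```

For a queue holding Row A from core 1, then Rows B and C from core 2, then Row A from core 1 again, the sketch reports two different-core breakers and one same-core breaker.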

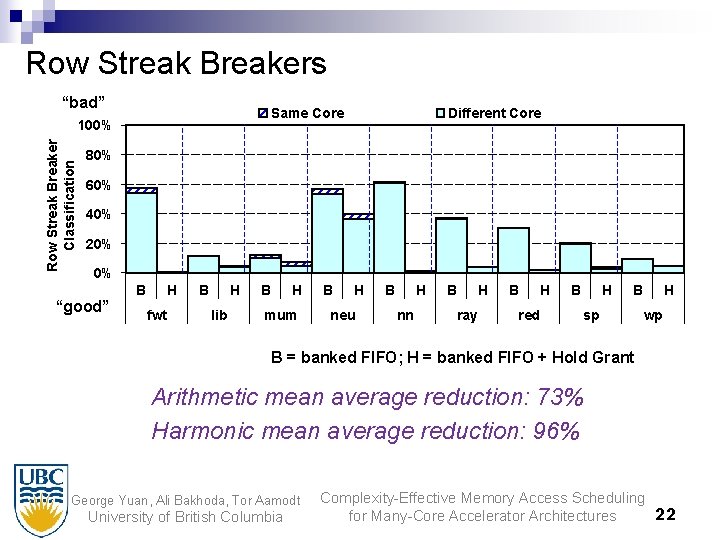

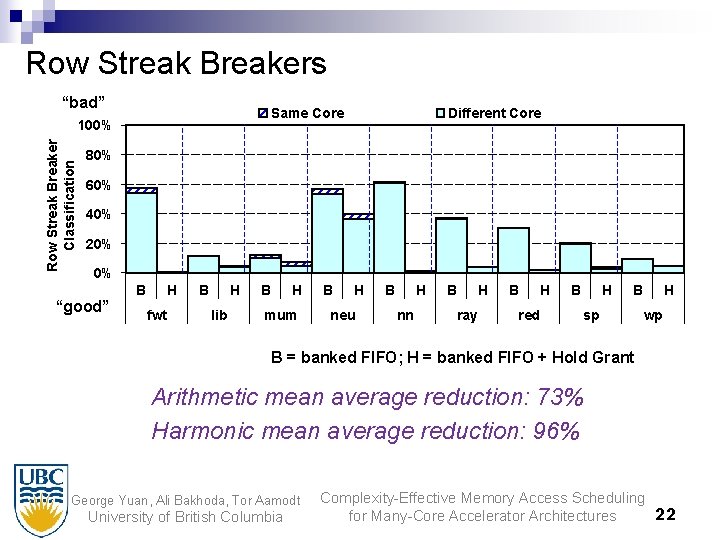

Row Streak Breakers

[Figure: row streak breaker classification. Stacked bars (0% to 100%, annotated “good” and “bad”) split row streak breakers into same-core and different-core, with a B bar and an H bar for each benchmark (fwt, lib, mum, neu, nn, ray, red, sp, wp).]

B = banked FIFO; H = banked FIFO + Hold Grant

- Arithmetic mean average reduction: 73%
- Harmonic mean average reduction: 96%

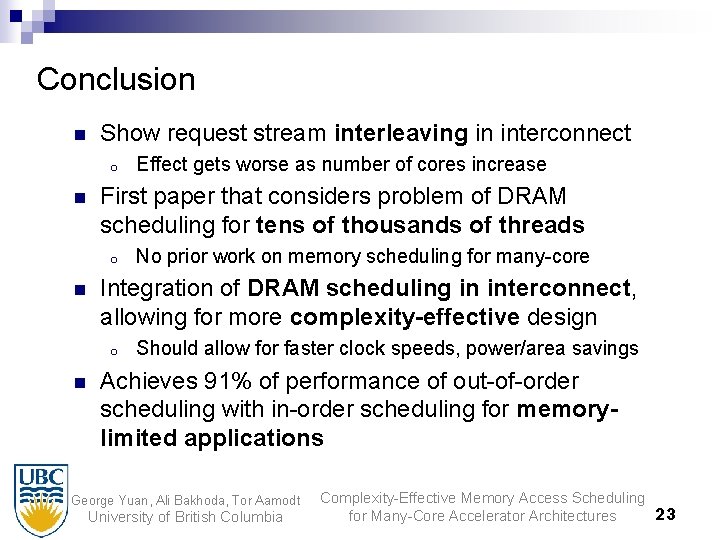

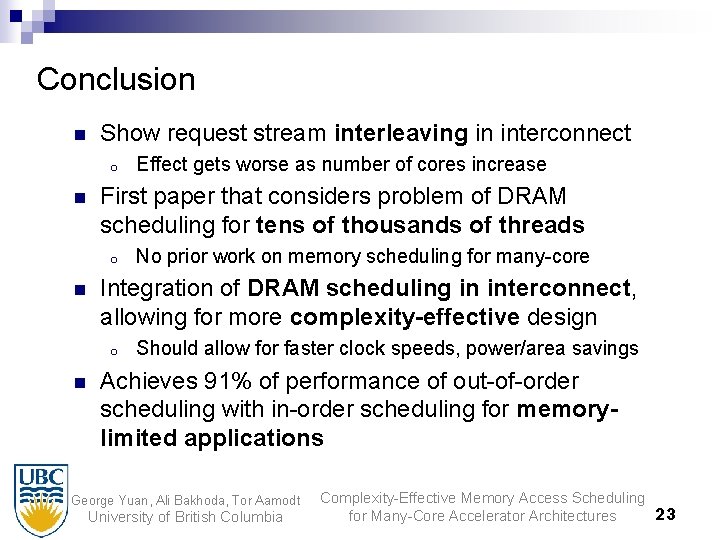

Conclusion

- Show request stream interleaving in interconnect
  - Effect gets worse as number of cores increases
- First paper that considers problem of DRAM scheduling for tens of thousands of threads
  - No prior work on memory scheduling for many-core
- Integration of DRAM scheduling in interconnect, allowing for more complexity-effective design
  - Should allow for faster clock speeds, power/area savings
- Achieves 91% of performance of out-of-order scheduling with in-order scheduling for memory-limited applications

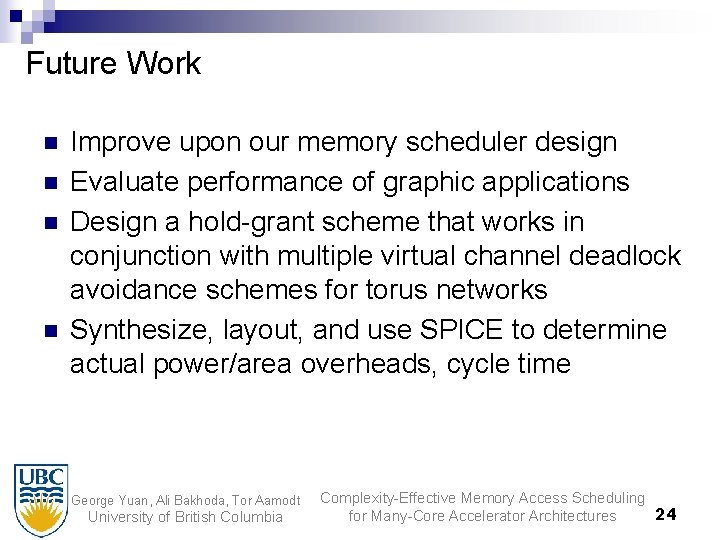

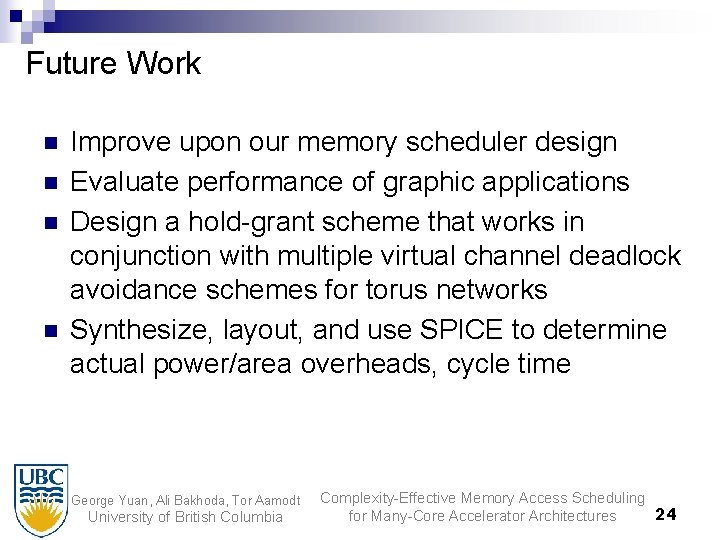

Future Work

- Improve upon our memory scheduler design
- Evaluate performance of graphics applications
- Design a hold-grant scheme that works in conjunction with multiple-virtual-channel deadlock avoidance schemes for torus networks
- Synthesize, lay out, and use SPICE to determine actual power/area overheads and cycle time

Thank you

Methodology: Microarchitecture Parameters

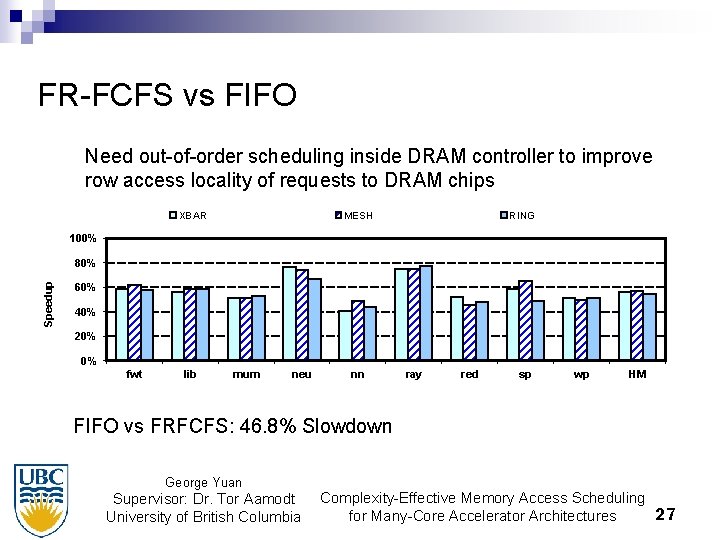

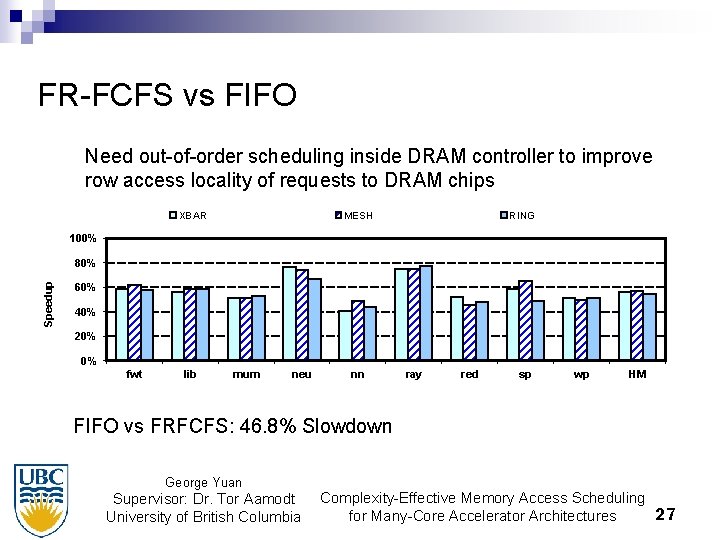

FR-FCFS vs FIFO

Need out-of-order scheduling inside DRAM controller to improve row access locality of requests to DRAM chips

[Figure: bar chart of speedup (y-axis, 0% to 100%) per benchmark (fwt, lib, mum, neu, nn, ray, red, sp, wp, HM) for the XBAR, MESH and RING topologies.]

FIFO vs FR-FCFS: 46.8% slowdown

George Yuan (Supervisor: Dr. Tor Aamodt), University of British Columbia

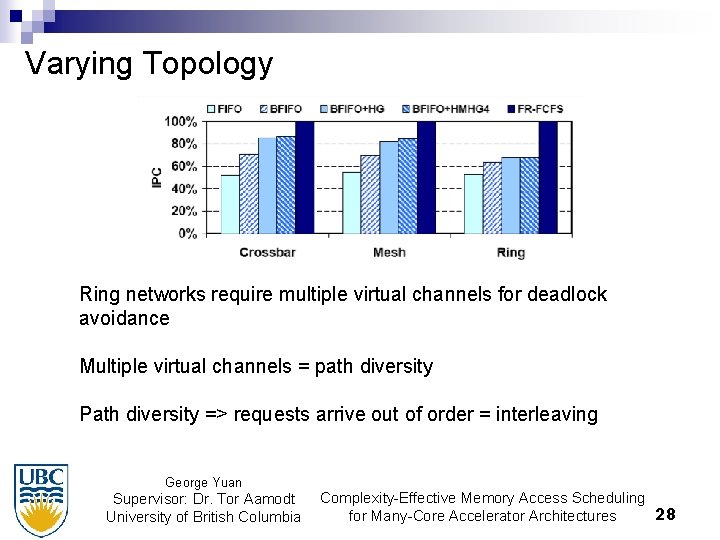

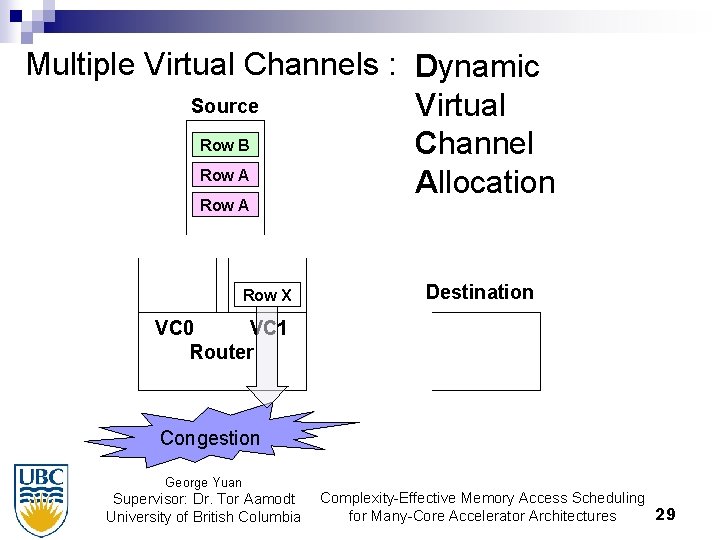

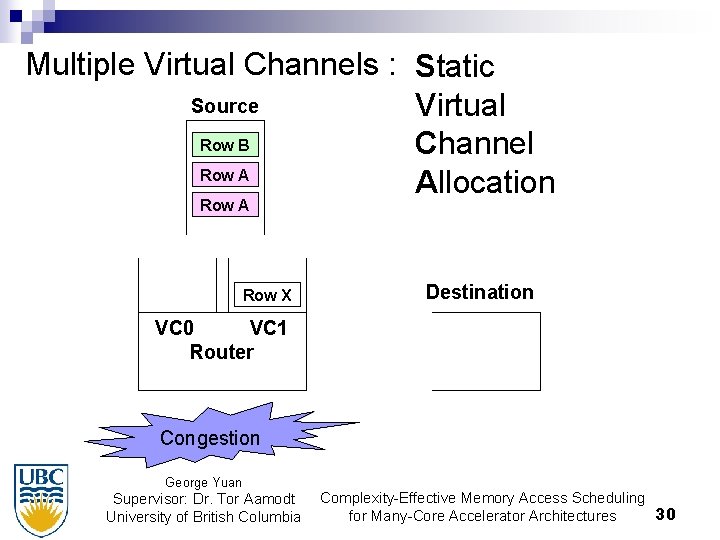

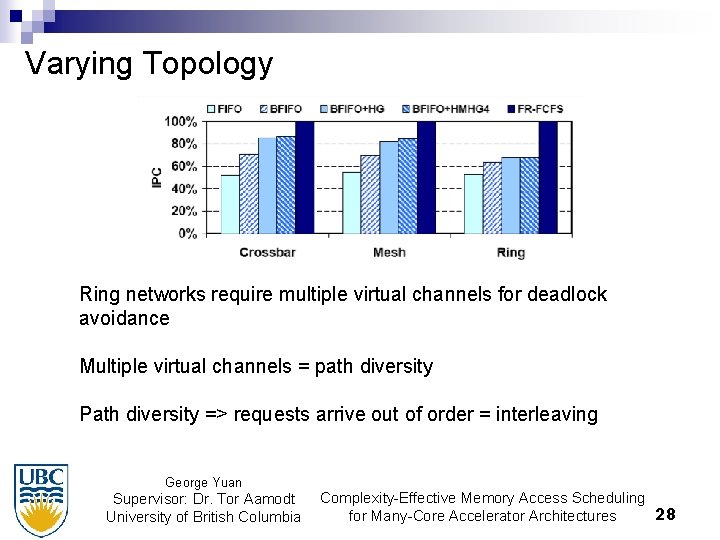

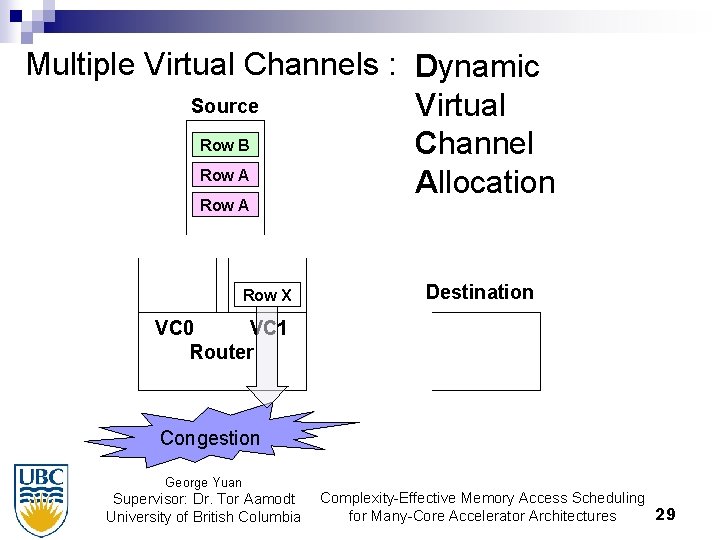

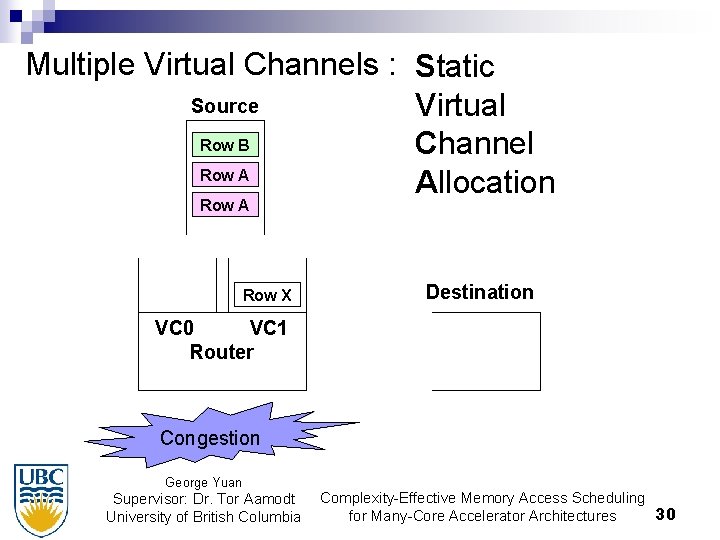

Varying Topology

- Ring networks require multiple virtual channels for deadlock avoidance
- Multiple virtual channels = path diversity
- Path diversity => requests arrive out of order = interleaving

Multiple Virtual Channels: Dynamic Virtual Channel Allocation

[Figure: requests for Rows A, B and X travelling from a source through a router with two virtual channels (VC 0, VC 1) to a destination, with congestion in the network.]

Multiple Virtual Channels: Static Virtual Channel Allocation

[Figure: requests for Rows A, B and X travelling from a source through a router with two virtual channels (VC 0, VC 1) to a destination, with congestion in the network.]
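The difference between the two allocation styles can be sketched in a few lines. Mapping the virtual channel statically from the destination with a modulo is an assumption made for illustration; the slides do not state which field the static assignment is keyed on. The function names are likewise hypothetical.

```python
import random
from typing import List


def static_vc(destination: int, num_vcs: int) -> int:
    """Static allocation: the VC is a fixed function of the destination."""
    return destination % num_vcs


def dynamic_vc(free_vcs: List[int], rng: random.Random) -> int:
    """Dynamic allocation: take any currently free VC."""
    return rng.choice(free_vcs)
```

Under this static mapping every request to a given destination uses the same virtual channel, so such requests cannot overtake one another on different channels. With dynamic allocation, two back-to-back requests may land on different channels and arrive out of order when one channel is congested.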

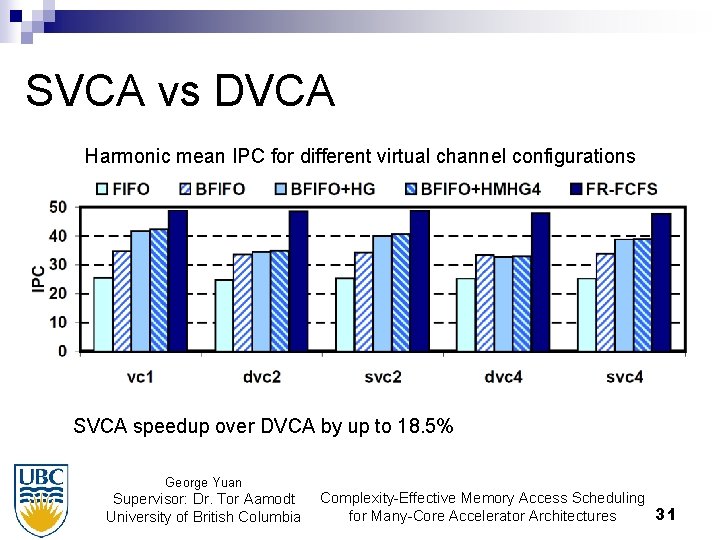

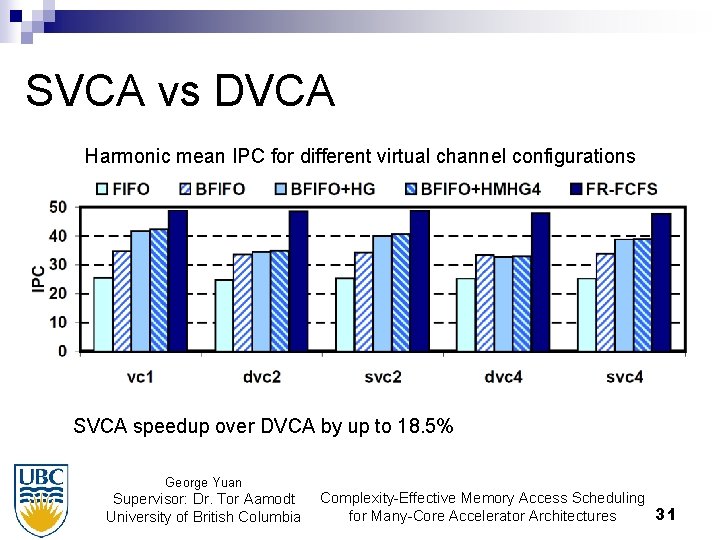

SVCA vs DVCA

[Figure: harmonic mean IPC for different virtual channel configurations.]

Static virtual channel allocation (SVCA) achieves a speedup of up to 18.5% over dynamic virtual channel allocation (DVCA)

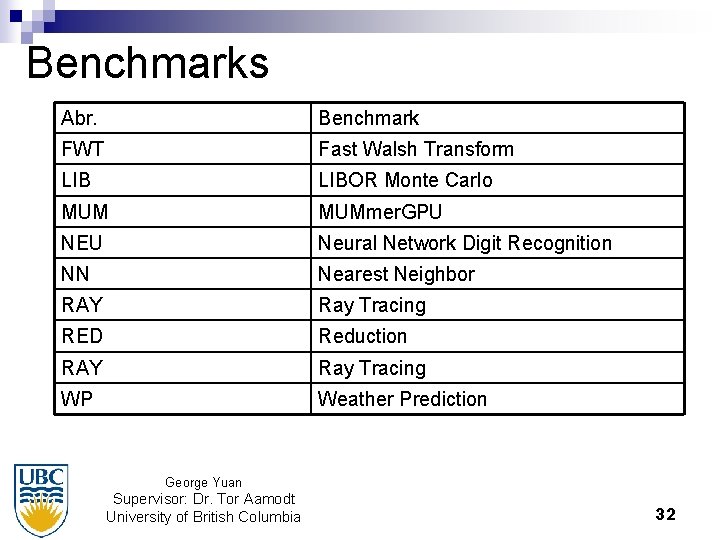

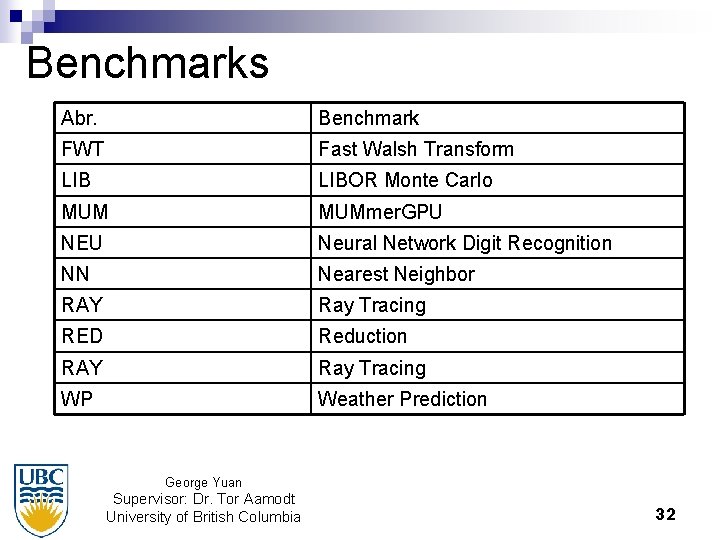

Benchmarks

| Abr. | Benchmark |
| --- | --- |
| FWT | Fast Walsh Transform |
| LIB | LIBOR Monte Carlo |
| MUM | MUMmerGPU |
| NEU | Neural Network Digit Recognition |
| NN | Nearest Neighbor |
| RAY | Ray Tracing |
| RED | Reduction |
| WP | Weather Prediction |

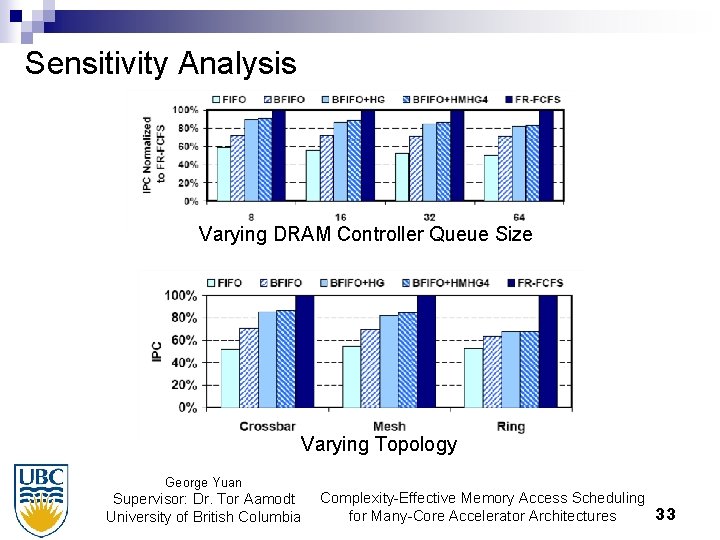

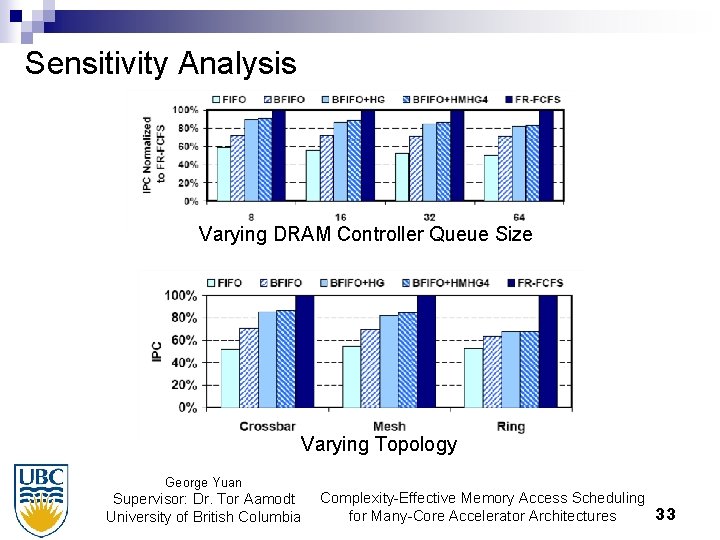

Sensitivity Analysis

- Varying DRAM Controller Queue Size
- Varying Topology

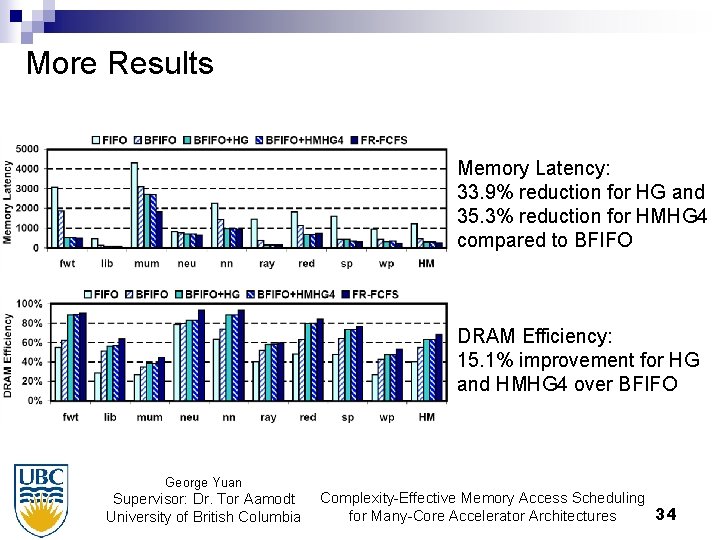

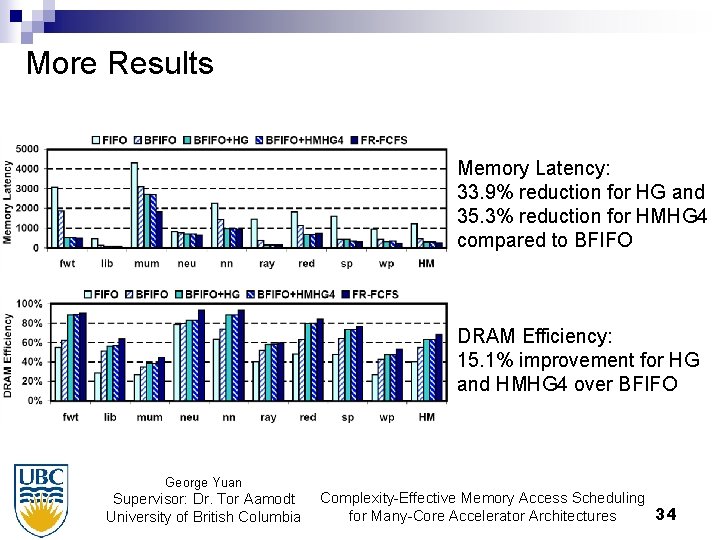

More Results

- Memory latency: 33.9% reduction for HG and 35.3% reduction for HMHG4 compared to BFIFO
- DRAM efficiency: 15.1% improvement for HG and HMHG4 over BFIFO

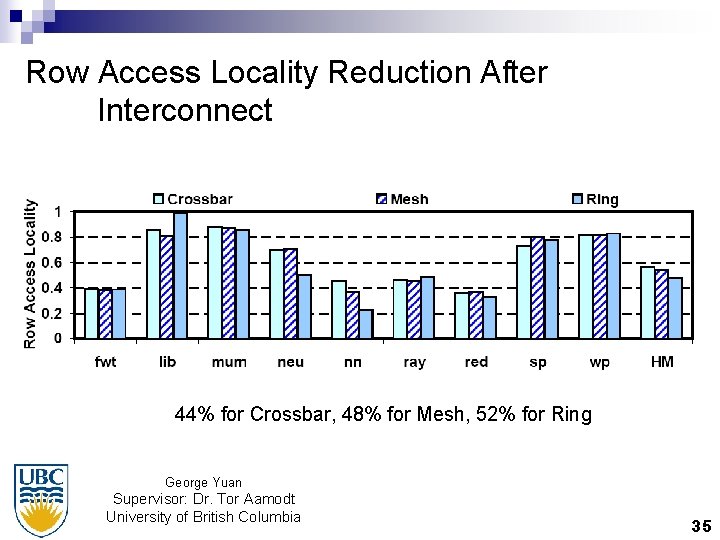

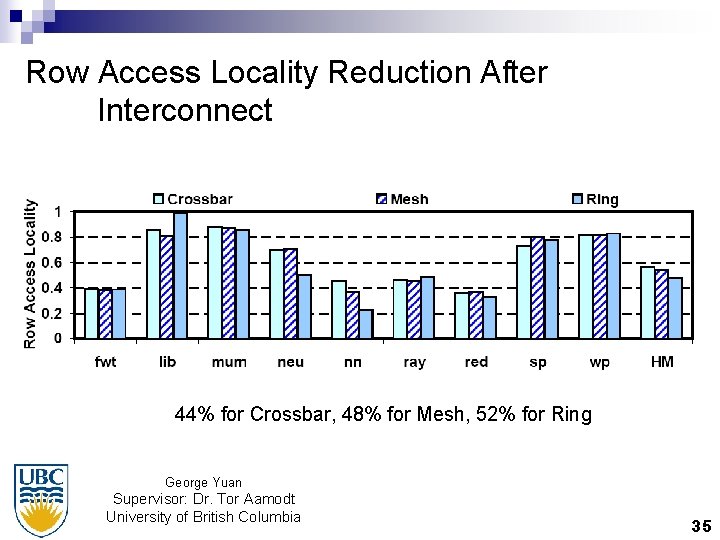

Row Access Locality Reduction After Interconnect

44% for Crossbar, 48% for Mesh, 52% for Ring

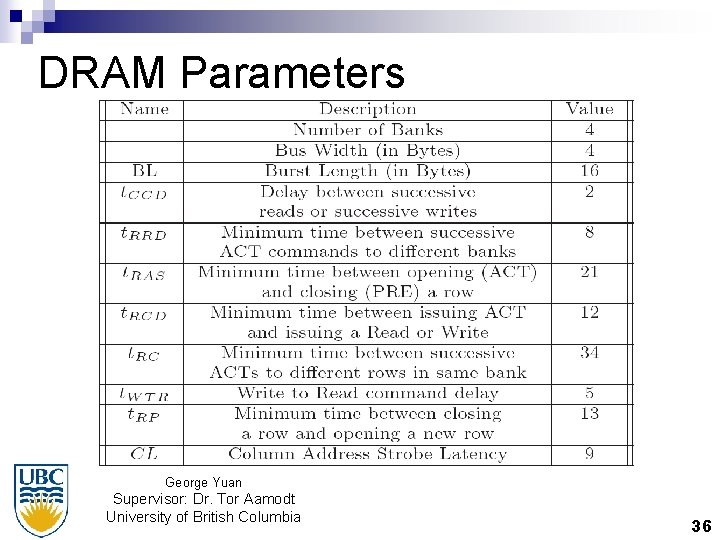

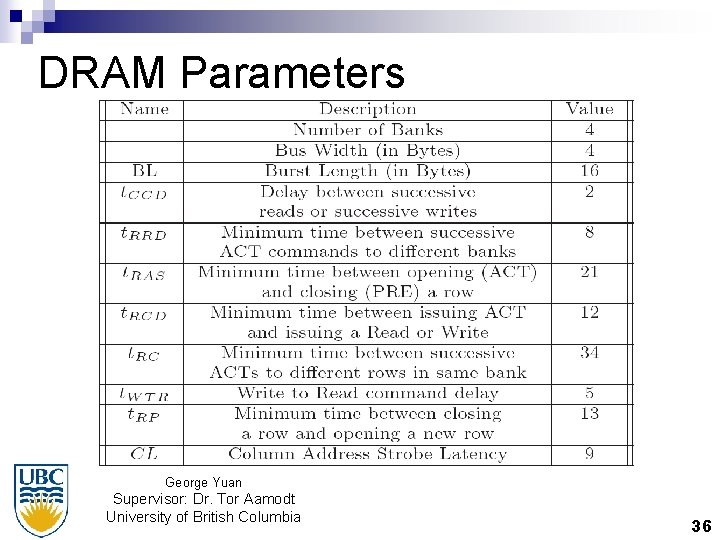

DRAM Parameters