Compiling for Vector-Thread Architectures

Mark Hampton and Krste Asanović
MIT Computer Science and Artificial Intelligence Laboratory
University of California at Berkeley
April 9, 2008

Vector-thread (VT) architectures efficiently encode parallelism in a variety of applications
• A VT architecture unifies the vector and multithreaded execution models
• The Scale VT architecture exploits DLP, TLP, and ILP (with clustering) simultaneously
• Previous work [Krashinsky 04] has shown the ability of Scale to take advantage of the parallelism available in several different types of loops
  – However, that evaluation relied on mapping code to Scale using handwritten assembly

This work presents a back-end code generator for the Scale architecture
• The compiler infrastructure is relatively immature, as much of the work to this point consisted of getting all the pieces to run together
• We prioritized taking advantage of Scale's unique features to support "difficult" types of loops rather than focusing on optimizations
  – The compiler can parallelize loops with internal control flow, outer loops, and loops with cross-iteration dependences
  – However, the compiler does not currently handle while loops
• Despite the lack of optimizations, the compiler is still able to produce some significant speedups

Talk outline
• Vector-thread architecture background
• Compiler overview
  – Emphasis on how code is mapped to Scale
• Performance evaluation
• Conclusions

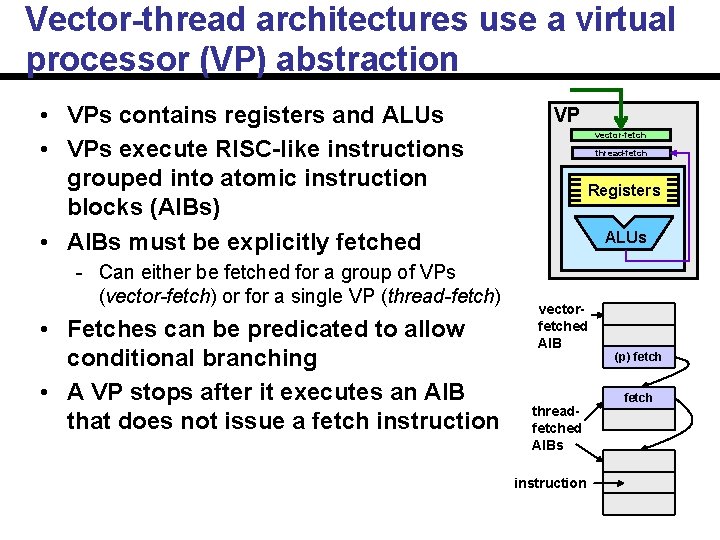

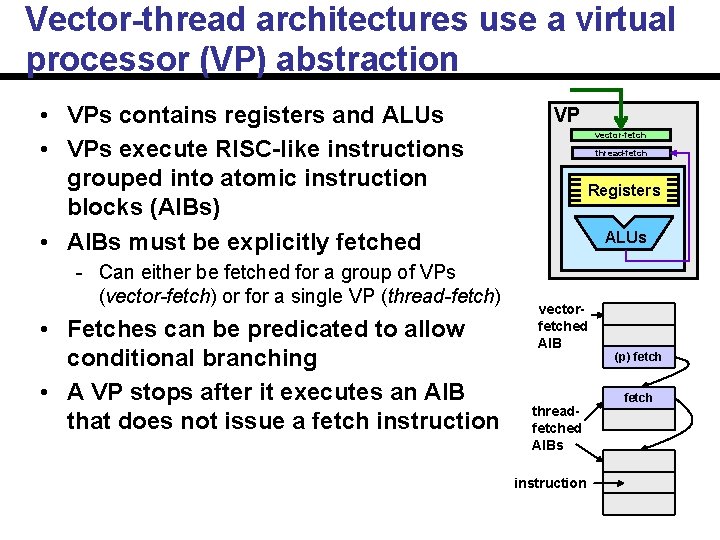

Vector-thread architectures use a virtual processor (VP) abstraction
• VPs contain registers and ALUs
• VPs execute RISC-like instructions grouped into atomic instruction blocks (AIBs)
• AIBs must be explicitly fetched
  – They can be fetched either for a group of VPs (vector-fetch) or for a single VP (thread-fetch)
• Fetches can be predicated to allow conditional branching
• A VP stops after it executes an AIB that does not issue a fetch instruction
[Figure: a VP (registers, ALUs) executes a vector-fetched AIB, then thread-fetched AIBs reached through a predicated "(p) fetch" instruction]
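The fetch rules above can be captured in a small C model, offered only as a sketch. Each AIB is a function that runs atomically on one VP's state and returns the index of the next AIB to thread-fetch, or `NO_FETCH` when it issues no fetch, at which point the VP stops. All names (`vp_state`, `aib_fn`, `run_vp`, `vector_fetch`) and the two example AIBs are hypothetical; this is not Scale's ISA or simulator, and it runs VPs one after another where the hardware would interleave them.

```c
#include <assert.h>

/* Hypothetical VP state: a few private registers and one predicate. */
typedef struct {
    int r[4];
    int p;
} vp_state;

/* An AIB returns the index of the AIB it fetches next, or NO_FETCH. */
#define NO_FETCH (-1)
typedef int (*aib_fn)(vp_state *vp);

/* AIB 0 (hypothetical): clear the accumulator, then thread-fetch AIB 1. */
static int aib_init(vp_state *vp) {
    vp->r[1] = 0;
    return 1;                            /* unconditional fetch */
}

/* AIB 1 (hypothetical): add r0 into r1, decrement r0, and fetch itself
   again only while r0 > 0 (a predicated fetch). */
static int aib_accumulate(vp_state *vp) {
    vp->r[1] += vp->r[0];
    vp->r[0] -= 1;
    vp->p = vp->r[0] > 0;                /* set the predicate */
    return vp->p ? 1 : NO_FETCH;         /* (p) fetch; no fetch means stop */
}

static const aib_fn aib_table[] = { aib_init, aib_accumulate };
#define NUM_AIBS ((int)(sizeof aib_table / sizeof aib_table[0]))

/* Run one VP from `first_aib` until an AIB issues no fetch.
   Returns the number of AIBs executed. */
int run_vp(vp_state *vp, int first_aib) {
    int executed = 0;
    int next = first_aib;
    while (next != NO_FETCH) {
        assert(next >= 0 && next < NUM_AIBS);
        next = aib_table[next](vp);      /* whole AIB runs, then its fetch takes effect */
        executed++;
    }
    return executed;
}

/* Vector-fetch: every VP in the group starts from the same AIB and then
   follows its own thread-fetches. */
void vector_fetch(vp_state *vps, int nvps, int aib) {
    for (int i = 0; i < nvps; i++)
        run_vp(&vps[i], aib);
}
```

With `r0 = 3`, a VP executes the vector-fetched AIB once and then thread-fetches the accumulating AIB three times before stopping, leaving `r1 = 6`.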

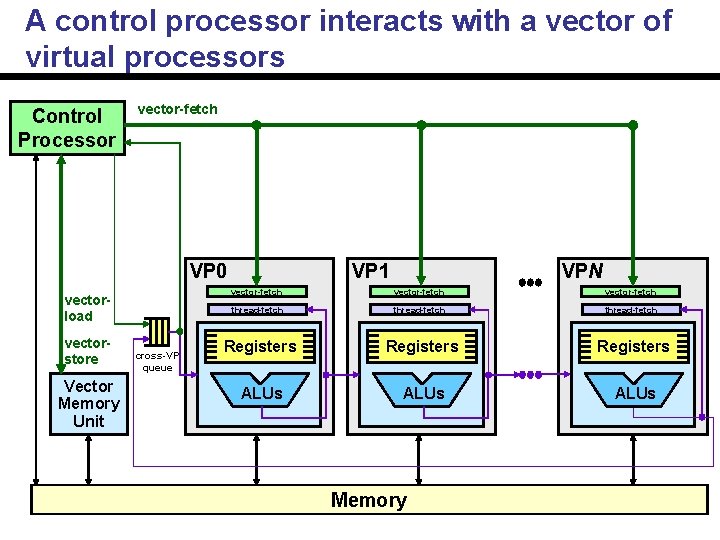

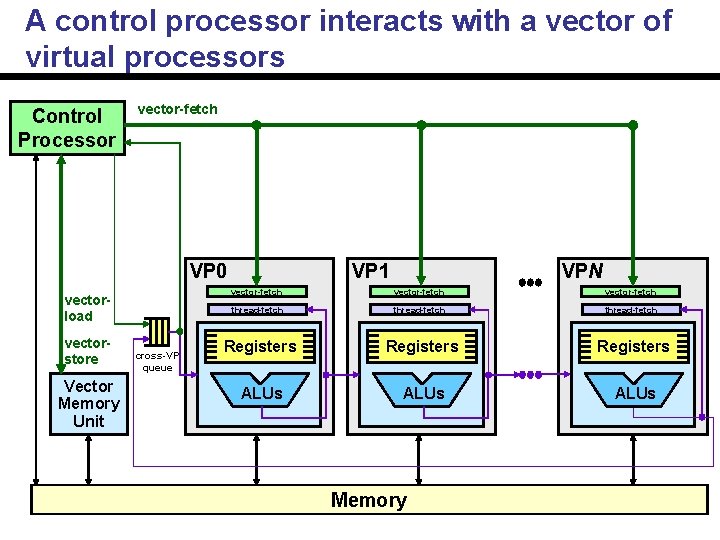

A control processor interacts with a vector of virtual processors
[Figure: the control processor issues vector-fetches to VP 0 through VP N, each with registers and ALUs and able to thread-fetch; a vector memory unit performs vector loads and stores between memory and the VPs; a cross-VP queue connects neighboring VPs]

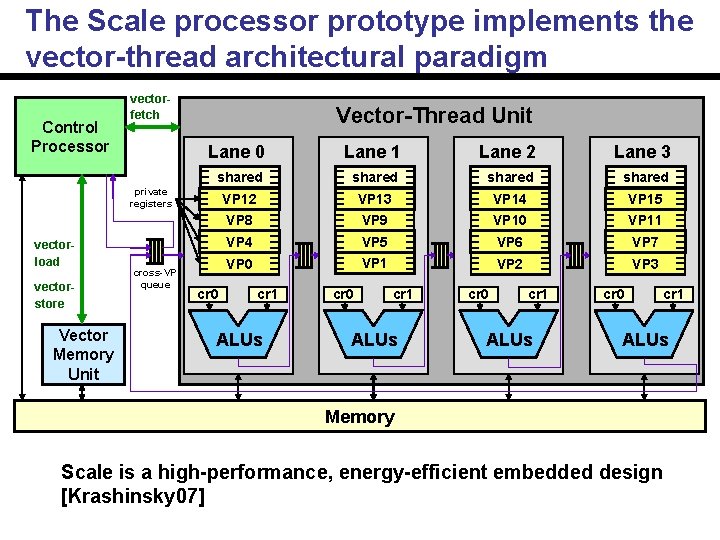

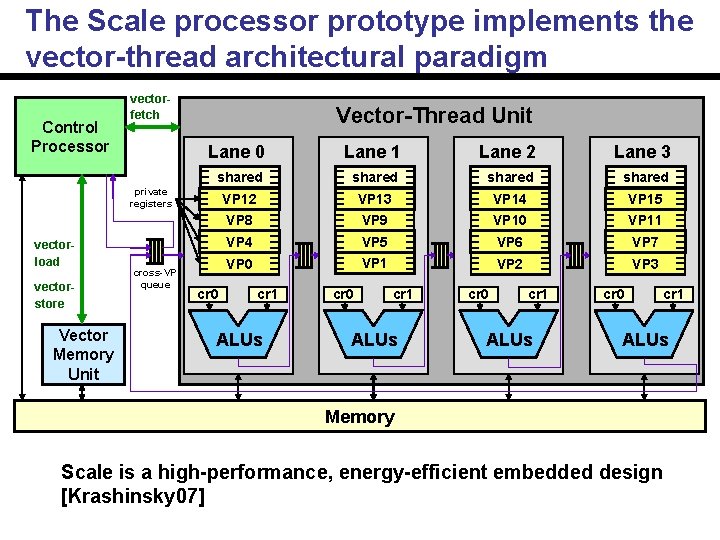

The Scale processor prototype implements the vector-thread architectural paradigm
[Figure: the control processor vector-fetches to the vector-thread unit, which has four lanes (Lane 0 to Lane 3) holding VP 0 to VP 15 (VP 0, 4, 8, 12 on Lane 0; VP 1, 5, 9, 13 on Lane 1; and so on), with shared and private registers, chain registers cr0 and cr1, and ALUs; a vector memory unit handles vector loads and stores to memory; a cross-VP queue passes values between VPs]
• Scale is a high-performance, energy-efficient embedded design [Krashinsky 07]
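Assuming VPs are striped round-robin across lanes, as the figure's layout suggests (VP 0, 4, 8, 12 on Lane 0), the VP-to-lane mapping can be sketched in C. The helper names are hypothetical, and the lane count is a parameter here rather than a claim about every Scale configuration.

```c
#include <assert.h>

/* Hypothetical helper: lane holding virtual processor `vp` when VPs are
   striped round-robin across `nlanes` lanes. */
int lane_of(int vp, int nlanes) {
    assert(vp >= 0 && nlanes > 0);
    return vp % nlanes;
}

/* Position of `vp` within its lane (0 = first VP on that lane). */
int slot_of(int vp, int nlanes) {
    assert(vp >= 0 && nlanes > 0);
    return vp / nlanes;
}

/* Fill `out` with the VPs that live on `lane` when `nvps` VPs are active;
   returns how many were written. */
int vps_on_lane(int lane, int nvps, int nlanes, int out[]) {
    assert(lane >= 0 && lane < nlanes);
    int n = 0;
    for (int vp = lane; vp < nvps; vp += nlanes)
        out[n++] = vp;
    return n;
}
```

One consequence of striping is that consecutive VPs, and therefore consecutive loop iterations, land on different lanes, so a single vector-fetch spreads work across all four lanes.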

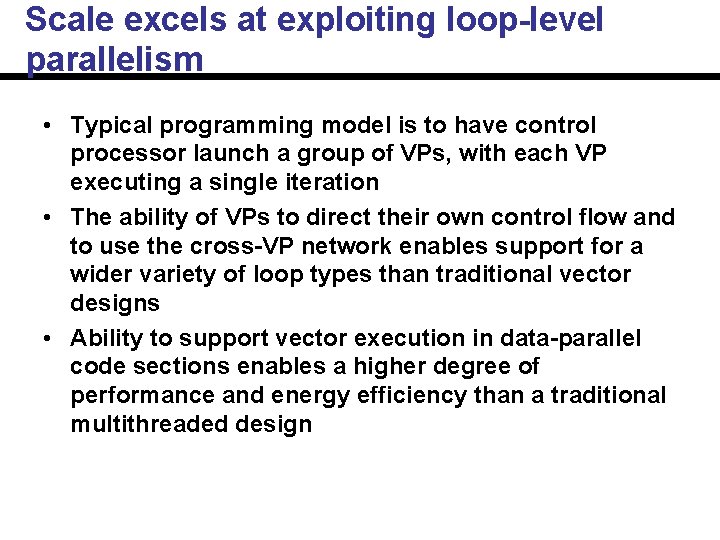

Scale excels at exploiting loop-level parallelism
• The typical programming model is for the control processor to launch a group of VPs, with each VP executing a single iteration
• The ability of VPs to direct their own control flow and to use the cross-VP network enables support for a wider variety of loop types than traditional vector designs
• The ability to use vector execution in data-parallel code sections enables higher performance and energy efficiency than a traditional multithreaded design

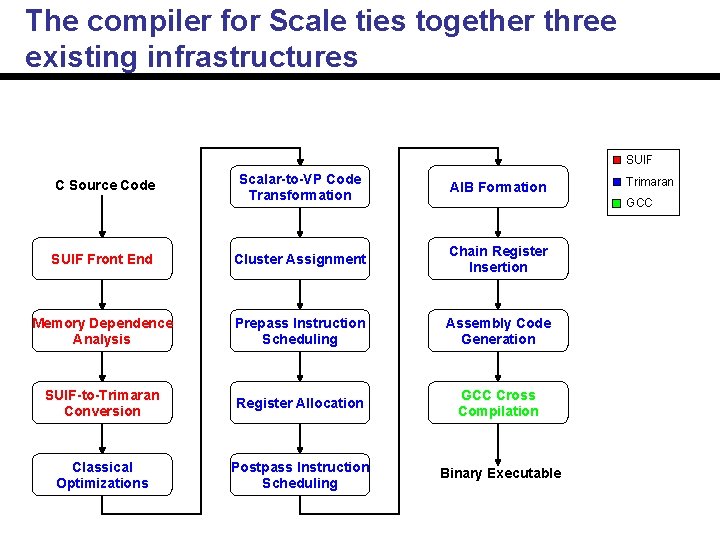

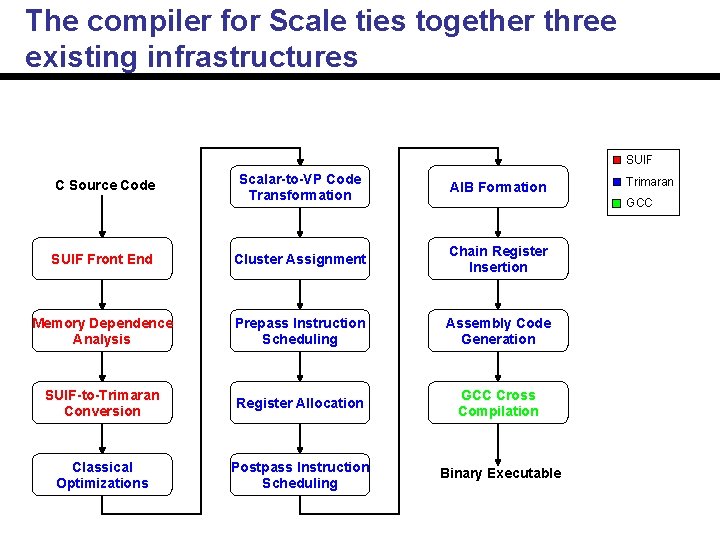

The compiler for Scale ties together three existing infrastructures: SUIF, Trimaran, and GCC
[Figure: compiler flow]
C source code → SUIF front end → memory dependence analysis → SUIF-to-Trimaran conversion → classical optimizations → scalar-to-VP code transformation → cluster assignment → prepass instruction scheduling → register allocation → postpass instruction scheduling → AIB formation → chain register insertion → assembly code generation → GCC cross compilation → binary executable

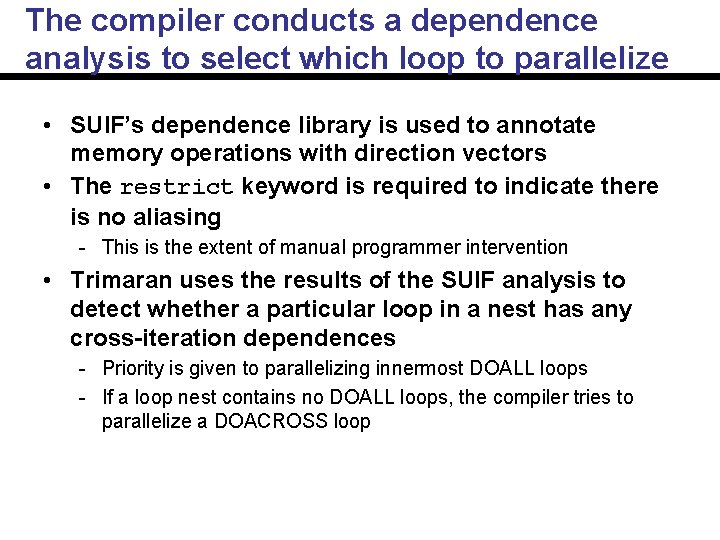

The compiler conducts a dependence analysis to select which loop to parallelize
• SUIF's dependence library is used to annotate memory operations with direction vectors
• The restrict keyword is required to indicate there is no aliasing
  – This is the extent of manual programmer intervention
• Trimaran uses the results of the SUIF analysis to detect whether a particular loop in a nest has any cross-iteration dependences
  – Priority is given to parallelizing innermost DOALL loops
  – If a loop nest contains no DOALL loops, the compiler tries to parallelize a DOACROSS loop
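The selection policy above can be sketched in C on top of direction vectors. A dependence may be carried by the loop at level k when every outer entry is `=` (or unknown, `*`) and entry k is not `=`; a loop that carries no dependence is DOALL. This sketch assumes the direction vectors are already available (in the real compiler they come from SUIF's dependence library), treats `*` conservatively, and, because the slides do not say which DOACROSS loop is chosen, assumes the innermost one. Without `restrict`, possible aliasing would add conservative dependences to this list. The names `dependence` and `select_loop` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_DEPTH 8

/* One dependence in a loop nest: dir[k] is '<', '=', '>' or '*' (unknown)
   for loop level k, where level 0 is the outermost loop. */
typedef struct {
    char dir[MAX_DEPTH];
} dependence;

/* True if the dependence may be carried by the loop at `level`: every outer
   level is '=' or unknown, and the entry at `level` is not '='. */
static bool carried_by(const dependence *d, int level) {
    for (int k = 0; k < level; k++)
        if (d->dir[k] != '=' && d->dir[k] != '*')
            return false;                /* already carried by an outer loop */
    return d->dir[level] != '=';
}

/* Pick the loop level to parallelize in a nest of `depth` loops.
   Prefers the innermost DOALL loop; otherwise falls back to a DOACROSS loop
   (assumed innermost). Sets *is_doall to say which case applied. */
int select_loop(const dependence deps[], size_t ndeps, int depth, bool *is_doall) {
    assert(depth > 0 && depth <= MAX_DEPTH);
    for (int level = depth - 1; level >= 0; level--) {
        bool carries = false;
        for (size_t i = 0; i < ndeps && !carries; i++)
            carries = carried_by(&deps[i], level);
        if (!carries) {
            *is_doall = true;
            return level;                /* innermost DOALL loop wins */
        }
    }
    *is_doall = false;
    return depth - 1;                    /* no DOALL loop: try a DOACROSS loop */
}
```

In the outer-loop example later in the talk, the reduction into `sum` behaves like a dependence with direction vector `(=, <)`: it is carried by the inner loop, so the outer loop is the innermost DOALL loop and gets selected.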

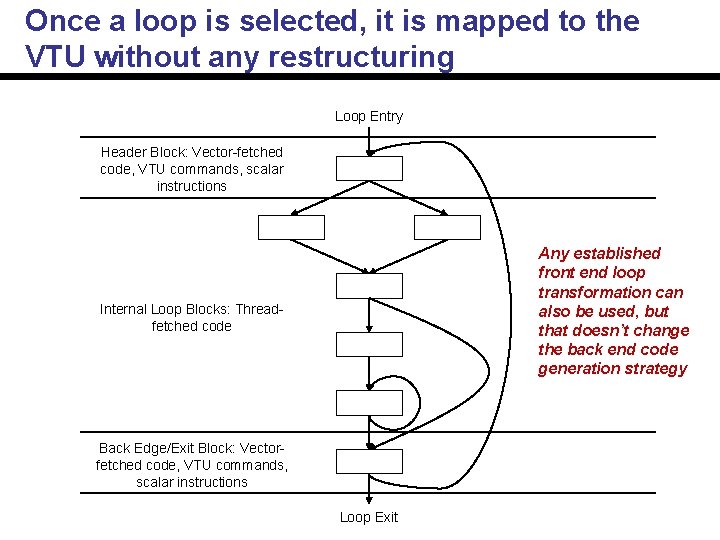

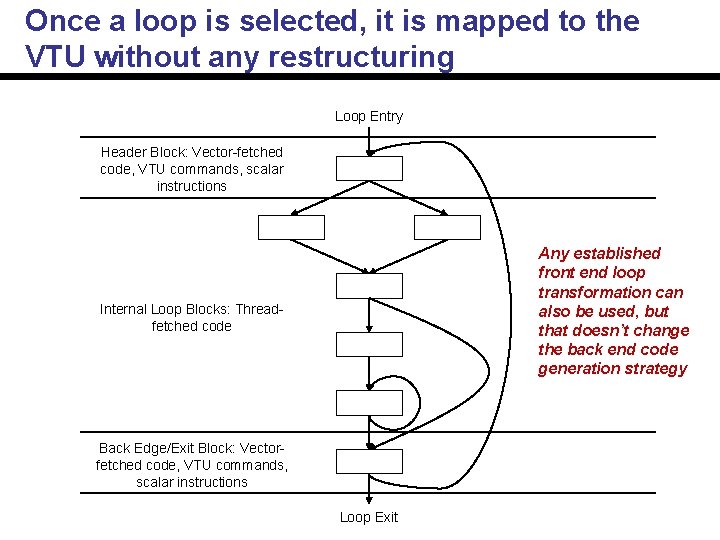

Once a loop is selected, it is mapped to the VTU without any restructuring
• Any established front-end loop transformation can also be used, but that does not change the back-end code generation strategy
[Figure: a selected loop, from loop entry to loop exit, divided into three kinds of blocks]
• Header block: vector-fetched code, VTU commands, scalar instructions
• Internal loop blocks: thread-fetched code
• Back-edge/exit block: vector-fetched code, VTU commands, scalar instructions
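A minimal C sketch of this block classification, assuming a loop whose basic blocks are numbered `0..nblocks-1` with one header block and one back-edge/exit block (a single-block loop may use the same block for both). The `loop_shape` type and the function names are hypothetical.

```c
#include <assert.h>

typedef enum { VECTOR_FETCHED, THREAD_FETCHED } fetch_kind;

/* Hypothetical loop description: blocks 0..nblocks-1, one header block and
   one back-edge/exit block. */
typedef struct {
    int nblocks;
    int header;
    int backedge_exit;
} loop_shape;

/* Header and back-edge/exit blocks become vector-fetched code (alongside VTU
   commands and scalar control-processor instructions); every other block in
   the loop becomes thread-fetched VP code. */
fetch_kind classify_block(const loop_shape *loop, int block) {
    assert(block >= 0 && block < loop->nblocks);
    if (block == loop->header || block == loop->backedge_exit)
        return VECTOR_FETCHED;
    return THREAD_FETCHED;
}

/* Number of blocks compiled as thread-fetched AIBs. */
int count_thread_fetched(const loop_shape *loop) {
    int n = 0;
    for (int b = 0; b < loop->nblocks; b++)
        if (classify_block(loop, b) == THREAD_FETCHED)
            n++;
    return n;
}
```

For the internal-control-flow example later in the talk (header `loop`, branch targets `b2` and `b3`, join block `b4`), only `b2` and `b3` become thread-fetched AIBs; a simple DOALL loop has no thread-fetched blocks at all.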

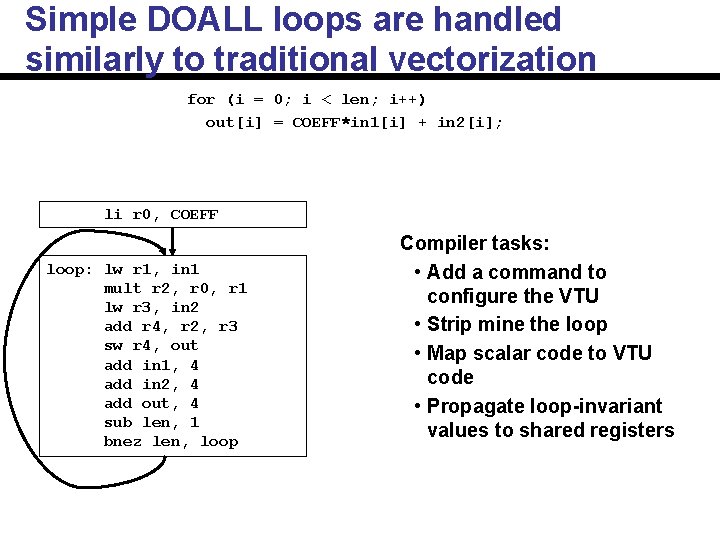

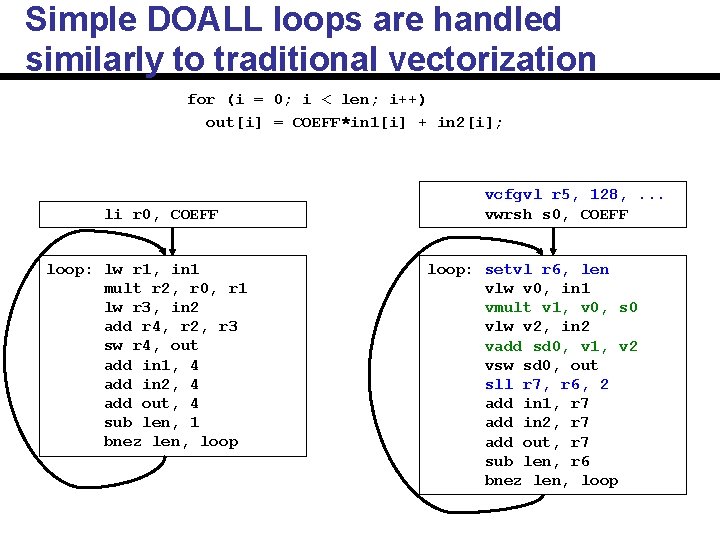

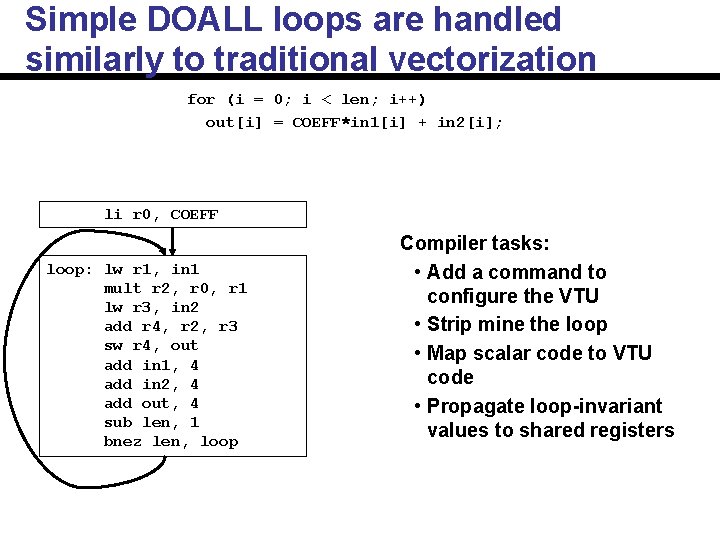

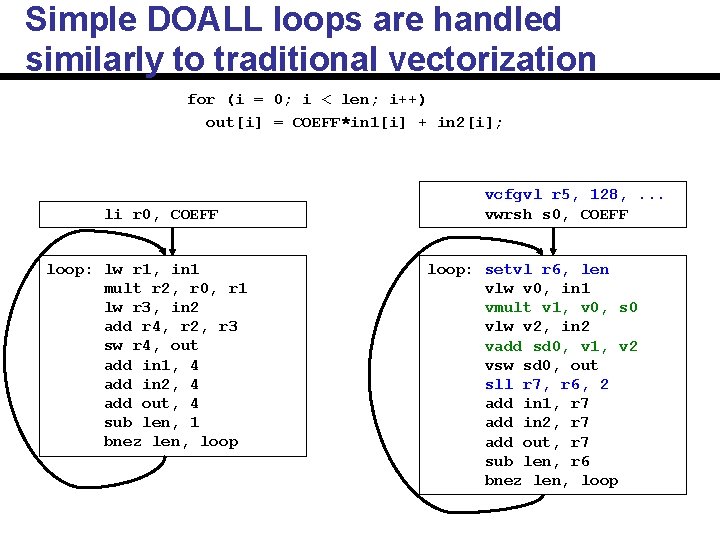

Simple DOALL loops are handled similarly to traditional vectorization

    for (i = 0; i < len; i++)
        out[i] = COEFF*in1[i] + in2[i];

Scalar code:

        li   r0, COEFF
    loop:
        lw   r1, in1
        mult r2, r0, r1
        lw   r3, in2
        add  r4, r2, r3
        sw   r4, out
        add  in1, 4
        add  in2, 4
        add  out, 4
        sub  len, 1
        bnez len, loop

Compiler tasks:
• Add a command to configure the VTU
• Strip-mine the loop
• Map scalar code to VTU code
• Propagate loop-invariant values to shared registers

After these steps, the simple DOALL loop becomes the following VT code:

        vcfgvl r5, 128, ...
        vwrsh  s0, COEFF
    loop:
        setvl  r6, len
        vlw    v0, in1
        vmult  v1, v0, s0
        vlw    v2, in2
        vadd   sd0, v1, v2
        vsw    sd0, out
        sll    r7, r6, 2
        add    in1, r7
        add    in2, r7
        add    out, r7
        sub    len, r6
        bnez   len, loop
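The strip-mined loop can be modeled in C as a sketch. Each pass of the `while` loop corresponds to one `setvl` and one vector-fetch, the inner `for` stands in for the VPs executing their iterations in parallel, and the loop-invariant coefficient is read once, as `vwrsh` writes it once to a shared register. `VLMAX` is a hypothetical maximum vector length (the slides elide how `vcfgvl` determines it), and `scaled_add` is an illustrative name.

```c
#include <assert.h>
#include <stddef.h>

#define VLMAX 128   /* hypothetical maximum vector length */

/* setvl: the vector length for the next strip is min(remaining, VLMAX). */
static size_t setvl(size_t remaining) {
    return remaining < VLMAX ? remaining : VLMAX;
}

/* Strip-mined model of
     for (i = 0; i < len; i++) out[i] = coeff*in1[i] + in2[i];
   Returns the number of strips (vector-fetches) executed. */
size_t scaled_add(int *restrict out, const int *restrict in1,
                  const int *restrict in2, int coeff, size_t len) {
    const int s0 = coeff;               /* loop invariant, like vwrsh s0, COEFF */
    size_t strips = 0;
    while (len > 0) {
        size_t vl = setvl(len);         /* setvl r6, len */
        assert(vl > 0);
        for (size_t vp = 0; vp < vl; vp++)      /* one iteration per VP */
            out[vp] = s0 * in1[vp] + in2[vp];   /* vlw, vmult, vlw, vadd, vsw */
        in1 += vl;                      /* pointer bumps by vl elements */
        in2 += vl;
        out += vl;
        len -= vl;                      /* sub len, r6 */
        strips++;
    }
    return strips;
}
```

With `len = 300` and `VLMAX = 128`, the loop runs three strips of 128, 128, and 44 iterations.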

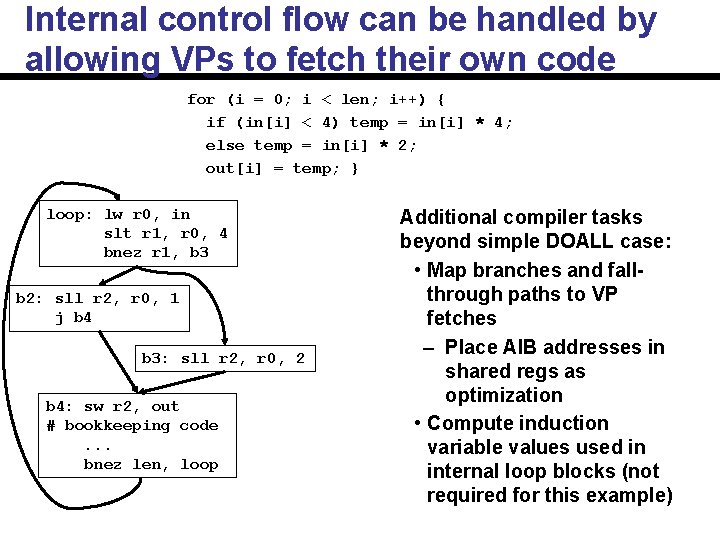

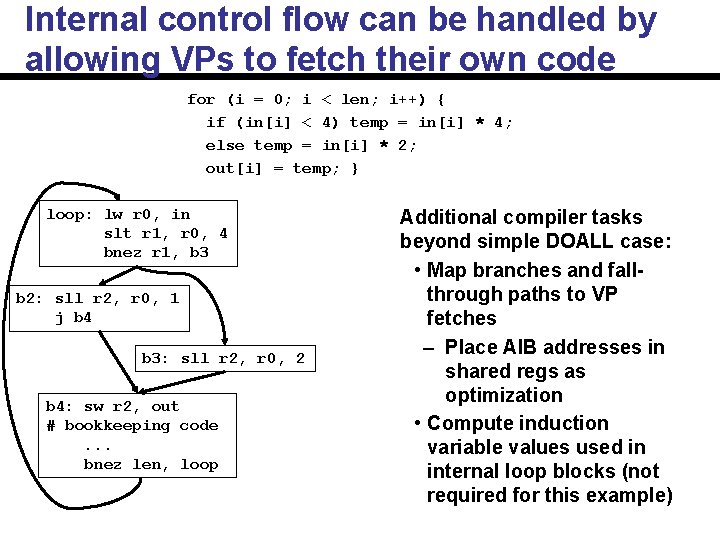

Internal control flow can be handled by allowing VPs to fetch their own code

    for (i = 0; i < len; i++) {
        if (in[i] < 4)
            temp = in[i] * 4;
        else
            temp = in[i] * 2;
        out[i] = temp;
    }

Scalar code:

    loop:
        lw   r0, in
        slt  r1, r0, 4
        bnez r1, b3
    b2:
        sll  r2, r0, 1
        j    b4
    b3:
        sll  r2, r0, 2
    b4:
        sw   r2, out
        # bookkeeping code...
        bnez len, loop

Additional compiler tasks beyond the simple DOALL case:
• Map branches and fallthrough paths to VP fetches
  – As an optimization, place AIB addresses in shared registers
• Compute induction variable values used in internal loop blocks (not required for this example)

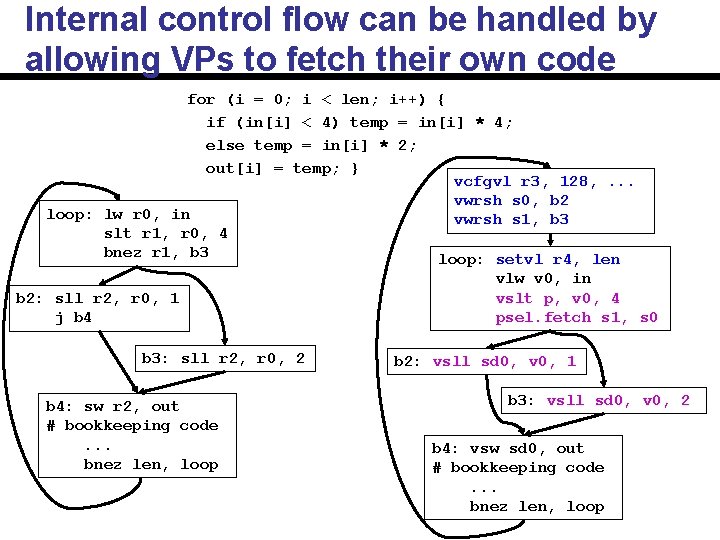

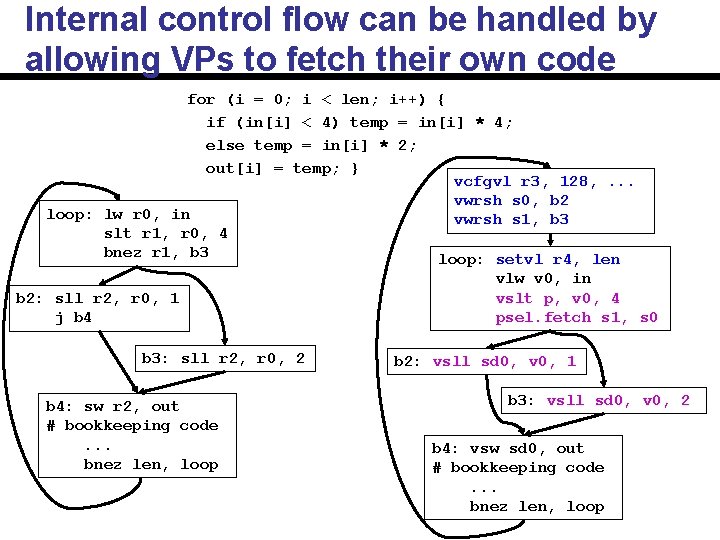

In the VT code, each VP computes a predicate and uses a predicated fetch (psel.fetch) to select the AIB for its side of the branch:

        vcfgvl r3, 128, ...
        vwrsh  s0, b2
        vwrsh  s1, b3
    loop:
        setvl  r4, len
        vlw    v0, in
        vslt   p, v0, 4
        psel.fetch s1, s0
    b2:
        vsll   sd0, v0, 1
    b3:
        vsll   sd0, v0, 2
    b4:
        vsw    sd0, out
        # bookkeeping code...
        bnez   len, loop

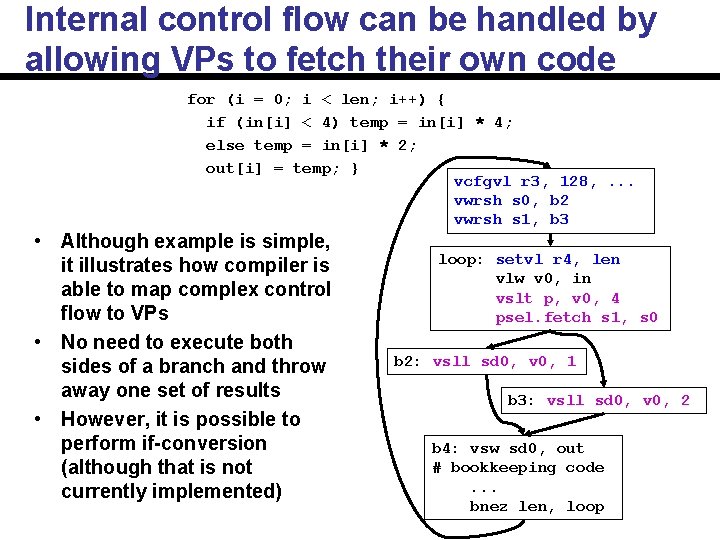

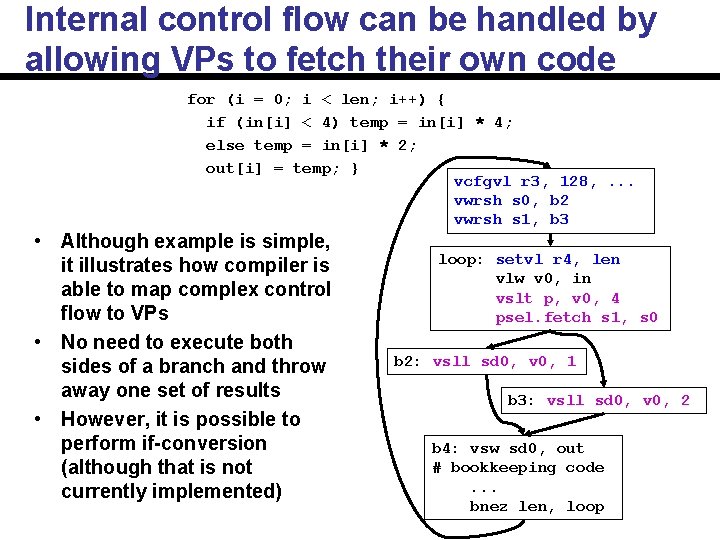

• Although the example is simple, it illustrates how the compiler is able to map complex control flow to VPs
• There is no need to execute both sides of a branch and throw away one set of results
• However, it is possible to perform if-conversion (although that is not currently implemented)
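The contrast between fetch-based branching and if-conversion can be sketched in C. In `branch_by_fetch`, each VP evaluates its predicate and runs only the AIB it selects, mirroring `psel.fetch s1, s0` with the AIB addresses held in shared registers; `branch_by_select` is the if-converted alternative, which computes both sides and discards one. The function names are hypothetical, and multiplication stands in for the shifts in the assembly.

```c
#include <assert.h>
#include <stddef.h>

typedef int (*aib_fn)(int x);

/* Hypothetical AIBs for the two sides of the branch. */
static int aib_b2(int x) { return x * 2; }   /* vsll sd0, v0, 1 */
static int aib_b3(int x) { return x * 4; }   /* vsll sd0, v0, 2 */

/* Fetch-based branching: each VP runs only the AIB chosen by its predicate.
   Writes the number of VPs that fetched b3 to *b3_count if non-NULL. */
void branch_by_fetch(int *restrict out, const int *restrict in, size_t n,
                     size_t *b3_count) {
    const aib_fn s0 = aib_b2, s1 = aib_b3;   /* AIB addresses in shared registers */
    size_t taken = 0;
    assert(n == 0 || (in != NULL && out != NULL));
    for (size_t vp = 0; vp < n; vp++) {
        int p = in[vp] < 4;                   /* vslt p, v0, 4 */
        aib_fn next = p ? s1 : s0;            /* psel.fetch s1, s0 */
        if (next == s1)
            taken++;
        out[vp] = next(in[vp]);               /* only the selected side executes */
    }
    if (b3_count != NULL)
        *b3_count = taken;
}

/* If-converted alternative: compute both sides, then select one result. */
void branch_by_select(int *restrict out, const int *restrict in, size_t n) {
    for (size_t vp = 0; vp < n; vp++) {
        int t2 = aib_b2(in[vp]);              /* else side, always computed */
        int t3 = aib_b3(in[vp]);              /* then side, always computed */
        out[vp] = in[vp] < 4 ? t3 : t2;       /* select discards one result */
    }
}
```

Both functions produce the same results; the difference is that the fetch-based version does work only on the taken side, while the if-converted version trades that extra work for straight-line code.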

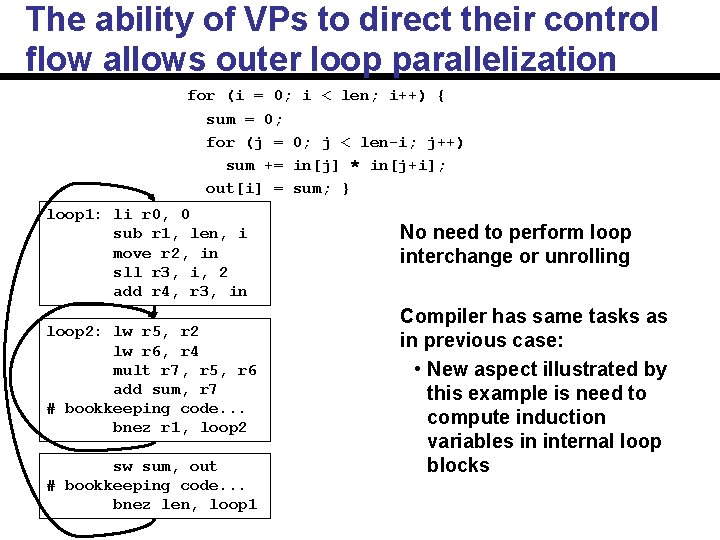

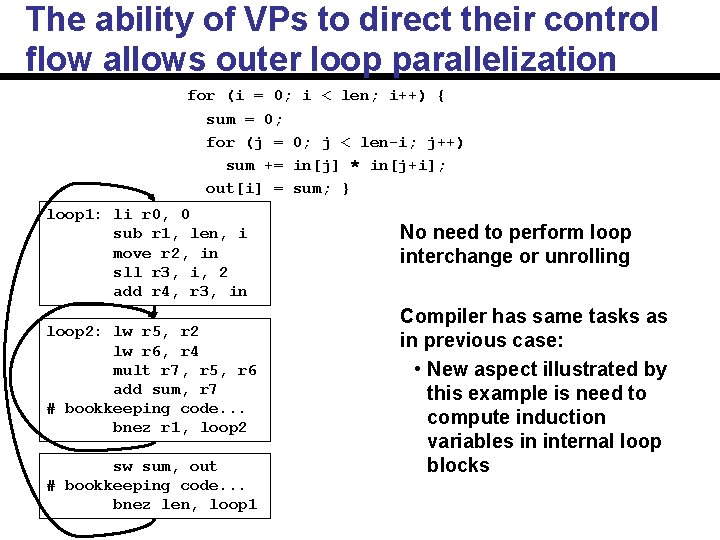

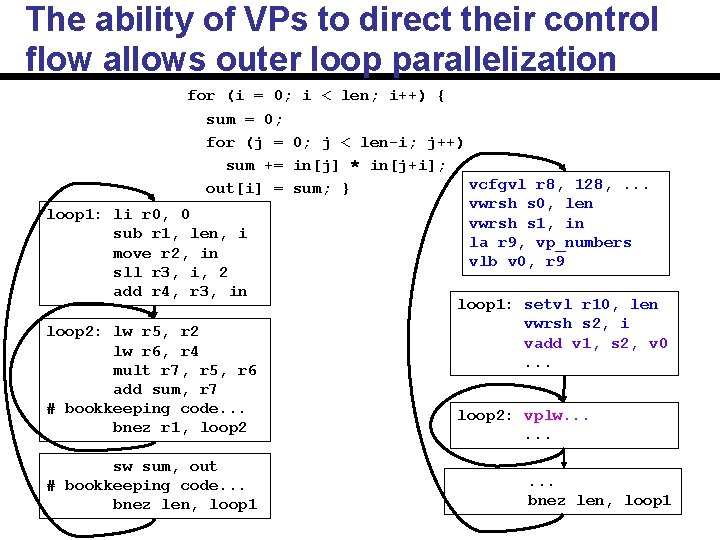

The ability of VPs to direct their control flow allows outer loop parallelization

    for (i = 0; i < len; i++) {
        sum = 0;
        for (j = 0; j < len-i; j++)
            sum += in[j] * in[j+i];
        out[i] = sum;
    }

Scalar code:

    loop1:
        li   r0, 0
        sub  r1, len, i
        move r2, in
        sll  r3, i, 2
        add  r4, r3, in
    loop2:
        lw   r5, r2
        lw   r6, r4
        mult r7, r5, r6
        add  sum, r7
        # bookkeeping code...
        bnez r1, loop2
        sw   sum, out
        # bookkeeping code...
        bnez len, loop1

• No need to perform loop interchange or unrolling
• The compiler has the same tasks as in the previous case
  – The new aspect illustrated by this example is the need to compute induction variables in internal loop blocks

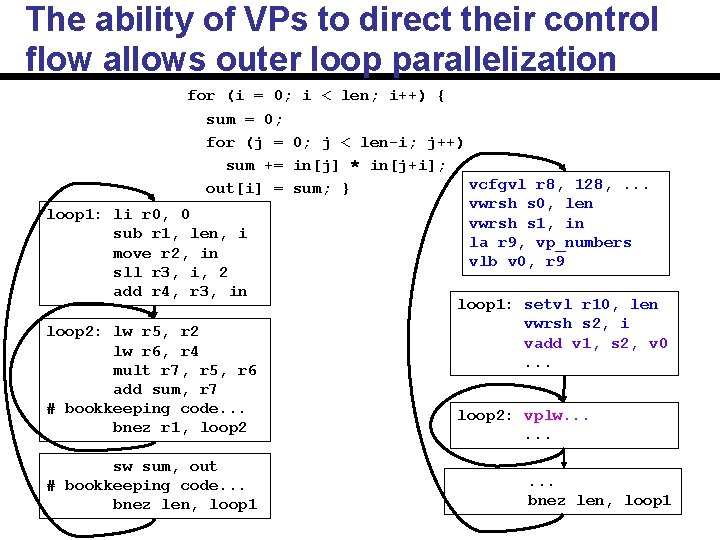

In the VT code, each VP computes its own outer-loop index by adding the strip's base value of i (in s2) to its VP number (loaded into v0 from vp_numbers):

        vcfgvl r8, 128, ...
        vwrsh  s0, len
        vwrsh  s1, in
        la     r9, vp_numbers
        vlb    v0, r9
    loop1:
        setvl  r10, len
        vwrsh  s2, i
        vadd   v1, s2, v0
        ...
    loop2:
        vplw ...
        bnez   len, loop1
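The outer-loop mapping can be sketched in C. Each VP derives its own outer index `i` as the strip base plus its VP number (the role of `vadd v1, s2, v0`) and then runs the entire inner loop itself, with a trip count that differs per VP; on Scale that inner loop is the thread-fetched `loop2` code. `VLMAX` and `autocorr_outer` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

#define VLMAX 128   /* hypothetical maximum vector length */

/* Outer-loop parallelization of
     for (i = 0; i < len; i++) {
         sum = 0;
         for (j = 0; j < len-i; j++) sum += in[j] * in[j+i];
         out[i] = sum;
     }
   Each VP executes one outer iteration, including its whole inner loop. */
void autocorr_outer(int *restrict out, const int *restrict in, size_t len) {
    size_t base = 0;                                   /* value written to s2 */
    while (base < len) {                               /* loop1: one strip per pass */
        size_t vl = len - base < VLMAX ? len - base : VLMAX;   /* setvl */
        assert(vl > 0);
        for (size_t vp = 0; vp < vl; vp++) {           /* VPs run in parallel on Scale */
            size_t i = base + vp;                      /* vadd v1, s2, v0 */
            int sum = 0;
            for (size_t j = 0; j < len - i; j++)       /* loop2: per-VP trip count */
                sum += in[j] * in[j + i];
            out[i] = sum;
        }
        base += vl;
    }
}
```

No loop interchange is needed: the inner loop's varying trip count (`len - i`) is handled naturally because each VP controls its own thread-fetches.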

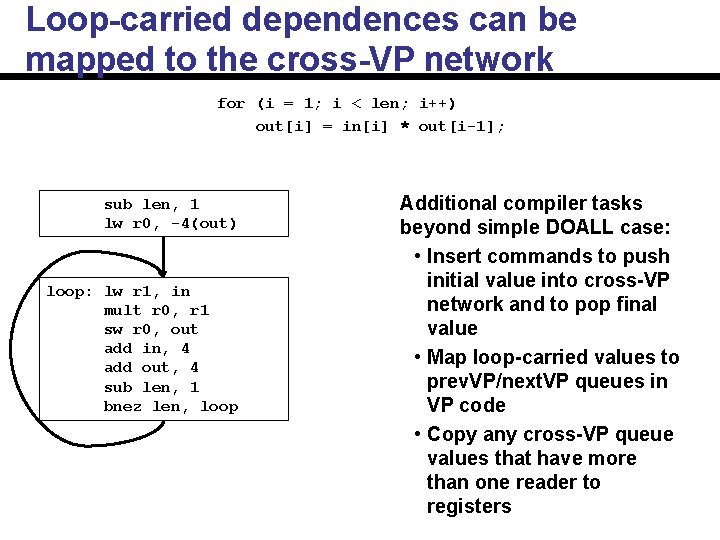

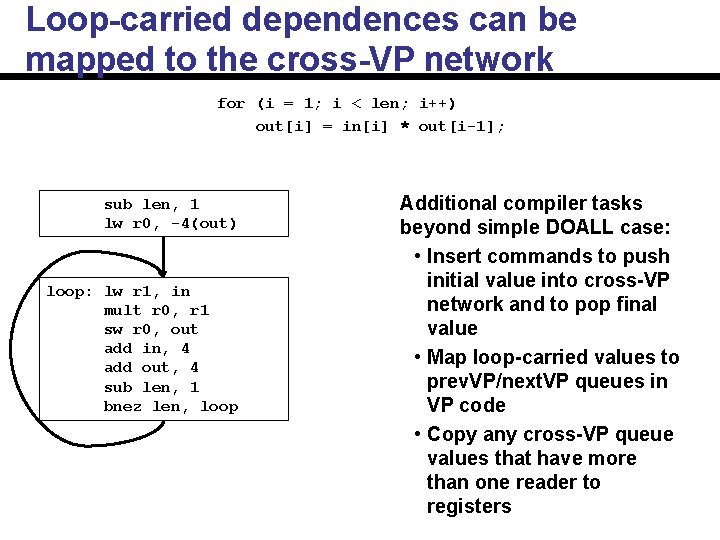

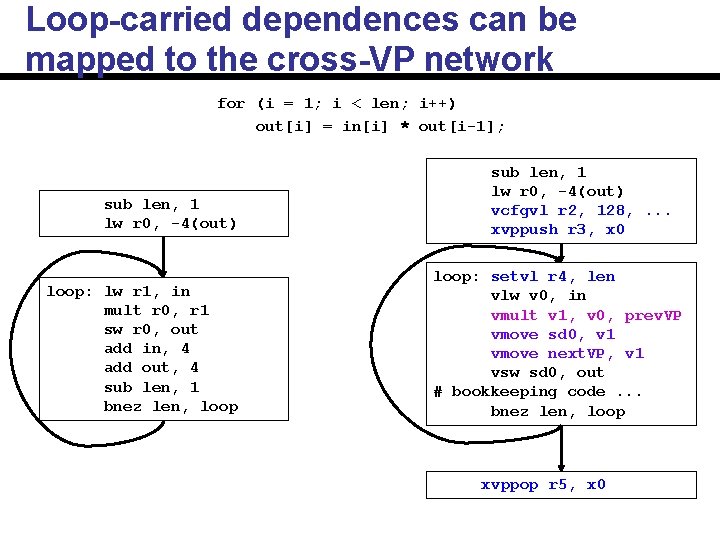

Loop-carried dependences can be mapped to the cross-VP network

    for (i = 1; i < len; i++)
        out[i] = in[i] * out[i-1];

Scalar code:

        sub  len, 1
        lw   r0, -4(out)
    loop:
        lw   r1, in
        mult r0, r1
        sw   r0, out
        add  in, 4
        add  out, 4
        sub  len, 1
        bnez len, loop

Additional compiler tasks beyond the simple DOALL case:
• Insert commands to push the initial value into the cross-VP network and to pop the final value
• Map loop-carried values to prevVP/nextVP queues in VP code
• Copy any cross-VP queue value that has more than one reader to a register

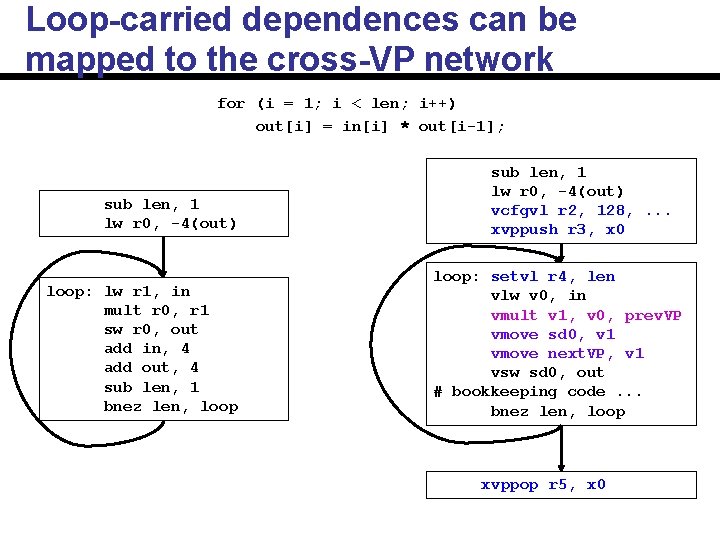

The resulting VT code:

        sub     len, 1
        lw      r0, -4(out)
        vcfgvl  r2, 128, ...
        xvppush r3, x0
    loop:
        setvl   r4, len
        vlw     v0, in
        vmult   v1, v0, prevVP
        vmove   sd0, v1
        vmove   nextVP, v1
        vsw     sd0, out
        # bookkeeping code...
        bnez    len, loop
        xvppop  r5, x0
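The cross-VP mapping can be sketched in C with a one-slot queue standing in for the network between neighboring VPs. The control processor seeds the network before the loop (`xvppush`), each VP pops its predecessor's value (`prevVP`), multiplies, stores, and pushes its result for its successor (`nextVP`), and the final value is popped after the loop (`xvppop`). The sketch assumes the value pushed by the last VP of one strip is received by the first VP of the next strip; `xvp_queue`, `recurrence_xvp`, and `VLMAX` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define VLMAX 128   /* hypothetical maximum vector length */

/* Hypothetical one-slot model of the cross-VP queue between neighboring VPs. */
typedef struct {
    int value;
    int full;
} xvp_queue;

static void xvp_push(xvp_queue *q, int v) {
    assert(!q->full);                   /* producer may not overrun the slot */
    q->value = v;
    q->full = 1;
}

static int xvp_pop(xvp_queue *q) {
    assert(q->full);                    /* consumer needs its predecessor's value */
    q->full = 0;
    return q->value;
}

/* Model of: for (i = 1; i < len; i++) out[i] = in[i] * out[i-1];
   Returns the value popped from the network after the loop (xvppop). */
int recurrence_xvp(int *restrict out, const int *restrict in, size_t len) {
    assert(len >= 1);
    xvp_queue q = { 0, 0 };
    xvp_push(&q, out[0]);               /* xvppush: seed the network */
    size_t remaining = len - 1;         /* sub len, 1 */
    size_t base = 1;
    while (remaining > 0) {
        size_t vl = remaining < VLMAX ? remaining : VLMAX;   /* setvl */
        for (size_t vp = 0; vp < vl; vp++) {
            size_t i = base + vp;
            int v1 = in[i] * xvp_pop(&q);   /* vmult v1, v0, prevVP */
            out[i] = v1;                    /* vmove sd0, v1; vsw sd0, out */
            xvp_push(&q, v1);               /* vmove nextVP, v1 */
        }
        base += vl;
        remaining -= vl;
    }
    return xvp_pop(&q);                 /* xvppop: drain the final value */
}
```

The recurrence still executes serially along the queue, but each VP's loads, stores, and independent work can overlap with its neighbors'.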

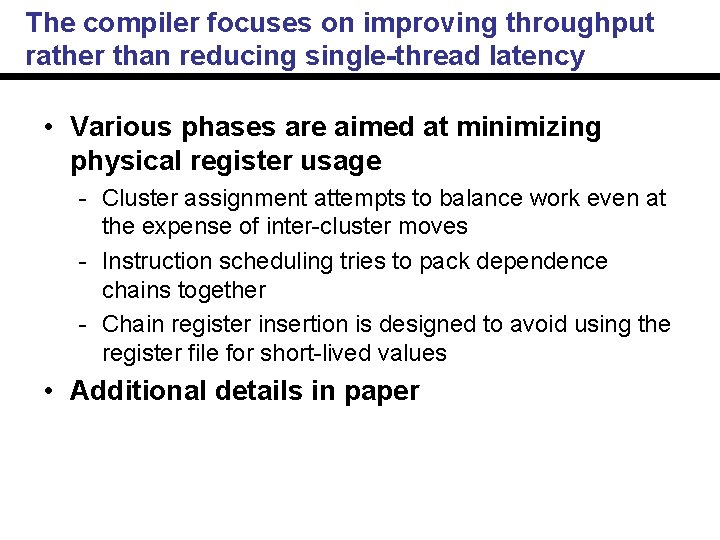

The compiler focuses on improving throughput rather than reducing single-thread latency
• Various phases are aimed at minimizing physical register usage
  – Cluster assignment attempts to balance work, even at the expense of inter-cluster moves
  – Instruction scheduling tries to pack dependence chains together
  – Chain register insertion is designed to avoid using the register file for short-lived values
• Additional details are in the paper

Evaluation methodology
• The Scale simulator uses detailed models for the VTU and cache, but a single-instruction-per-cycle latency for the control processor
  – This reduces the magnitude of the speedups for parallelized code
• Performance is evaluated across a limited number of EEMBC benchmarks
  – EEMBC benchmarks are difficult to parallelize automatically
  – Continued improvements to the compiler infrastructure (e.g., if-conversion, front-end loop transformations) would enable broader benchmark coverage

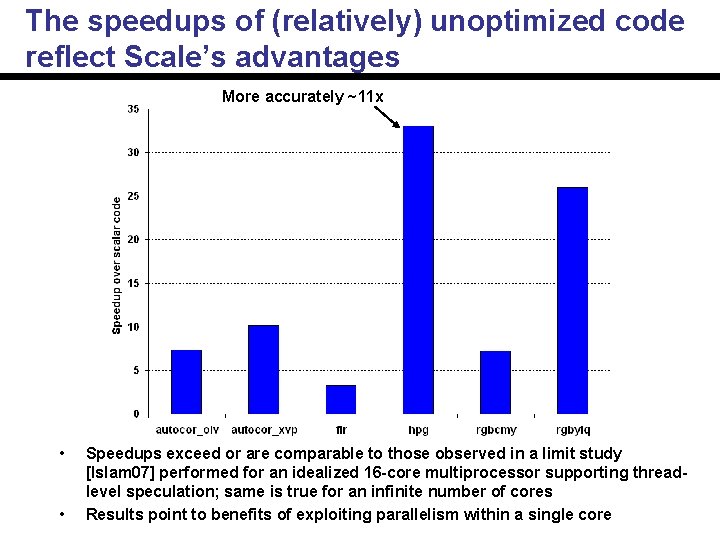

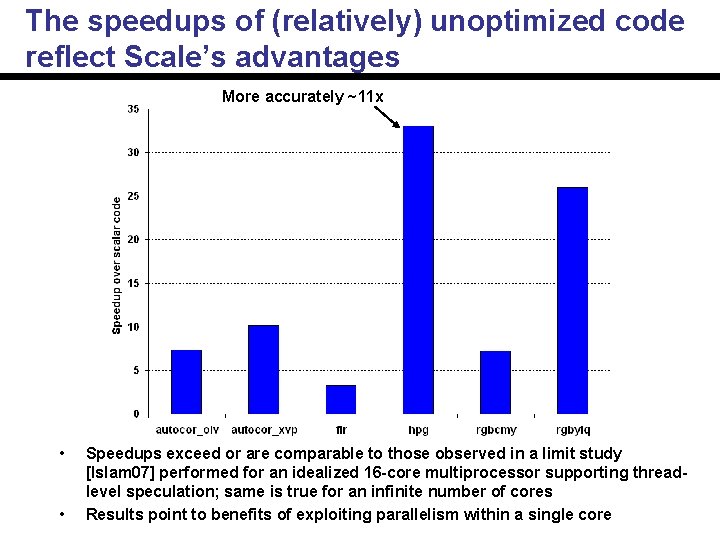

The speedups of (relatively) unoptimized code reflect Scale's advantages
[Figure: speedup chart; one result is annotated "More accurately ~11x"]
• Speedups exceed or are comparable to those observed in a limit study [Islam 07] performed for an idealized 16-core multiprocessor supporting thread-level speculation; the same is true when that study assumes an infinite number of cores
• These results point to the benefits of exploiting parallelism within a single core

There is a variety of related work
• TRIPS also exploits multiple forms of parallelism, but its compiler focuses on forming blocks of useful instructions and mapping instructions to ALUs
• Stream processing compilers share some similarities with our approach, but have somewhat different priorities, such as managing the utilization of the Stream Register File
• IBM's Cell compiler has to deal with issues such as alignment and branch hints, which are not present for Scale
• GPGPU designs (Nvidia's CUDA, AMD's Stream Computing) also have similarities with Scale, but differences in the programming models lead to different priorities in the compilers

Concluding remarks
• Vector-thread architectures exploit multiple forms of parallelism
• This work presented a compiler for the Scale vector-thread architecture
• The compiler can parallelize a variety of loop types
• Significant performance gains were achieved over a single-issue scalar processor

A comparison to handwritten code shows there is still significant room for improvement
• Several optimizations can be employed to narrow the performance gap