
- Slides: 120

Compilers 7. Code Generation

Chih-Hung Wang

References

1. C. N. Fischer, R. K. Cytron and R. J. LeBlanc. Crafting a Compiler. Pearson Education Inc., 2010.
2. D. Grune, H. Bal, C. Jacobs, and K. Langendoen. Modern Compiler Design. John Wiley & Sons, 2000.
3. Alfred V. Aho, Ravi Sethi, and Jeffrey D. Ullman. Compilers: Principles, Techniques, and Tools. Addison-Wesley, 1986. (2nd Ed. 2006)

Overview

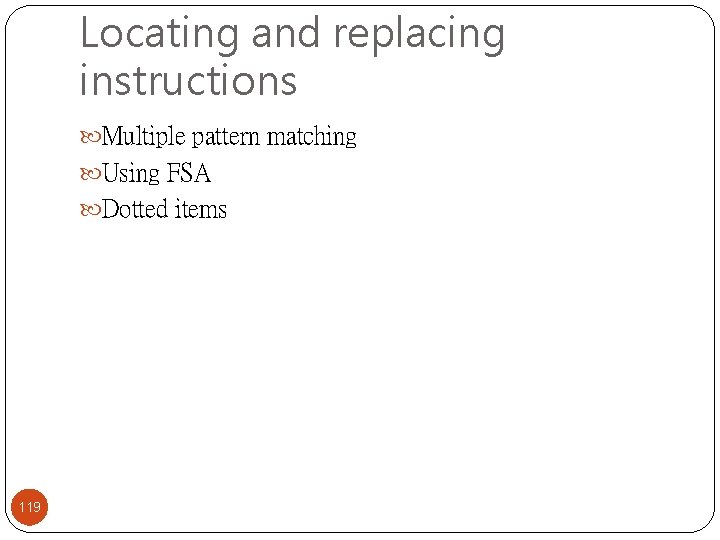

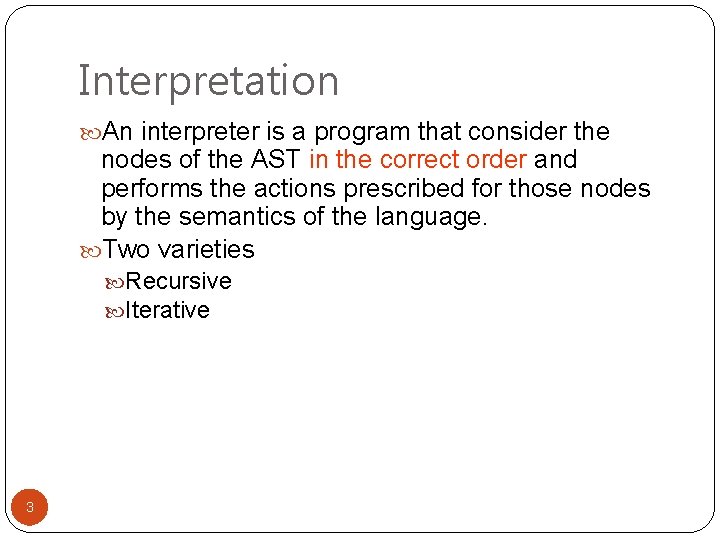

Interpretation

An interpreter is a program that considers the nodes of the AST in the correct order and performs the actions prescribed for those nodes by the semantics of the language.

Two varieties:

- Recursive
- Iterative

![Interpretation Recursive interpretation operates directly on the AST attribute grammar simple to write thorough Interpretation Recursive interpretation operates directly on the AST [attribute grammar] simple to write thorough](https://slidetodoc.com/presentation_image_h/00ee3ee18027e0e6e8ac3ff03a768bc2/image-4.jpg)

Interpretation

- Recursive interpretation
  - operates directly on the AST [attribute grammar]
  - simple to write
  - thorough error checks
  - very slow: about 1000 times slower than compiled code
- Iterative interpretation
  - operates on intermediate code
  - good error checking
  - slow: about 100 times slower than compiled code
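As an illustration of recursive interpretation, the following is a minimal sketch in C that evaluates an integer expression directly on its AST. The node layout (`Node`, `NodeKind`) and the function `eval` are hypothetical names chosen for this sketch; they do not come from the slides or from the demo compiler.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical AST node for integer expressions (assumption of this sketch). */
typedef enum { NODE_CONST, NODE_ADD, NODE_MUL } NodeKind;

typedef struct Node {
    NodeKind kind;
    int value;                 /* used by NODE_CONST only */
    const struct Node *left;   /* operands of NODE_ADD / NODE_MUL */
    const struct Node *right;
} Node;

/* Recursive interpretation: one routine call per AST node, operands first. */
int eval(const Node *n) {
    assert(n != NULL);         /* error checks are performed at run time */
    switch (n->kind) {
    case NODE_CONST: return n->value;
    case NODE_ADD:   return eval(n->left) + eval(n->right);
    case NODE_MUL:   return eval(n->left) * eval(n->right);
    }
    assert(0 && "unknown node kind");
    return 0;
}
```

Calling `eval` on the tree for 7*(1+5) visits every node once; the per-node call and dispatch overhead is one reason this style is slow compared with compiled code.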

Recursive Interpretation

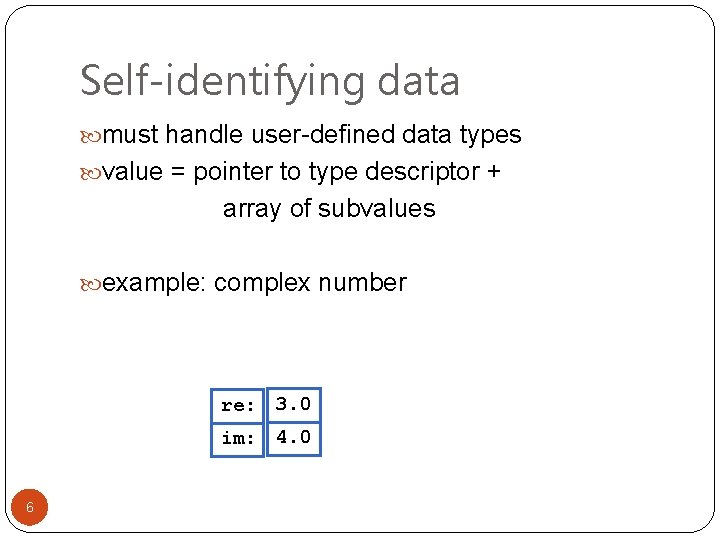

Self-identifying data

- must handle user-defined data types
- value = pointer to type descriptor + array of subvalues
- example: complex number with re: 3.0, im: 4.0

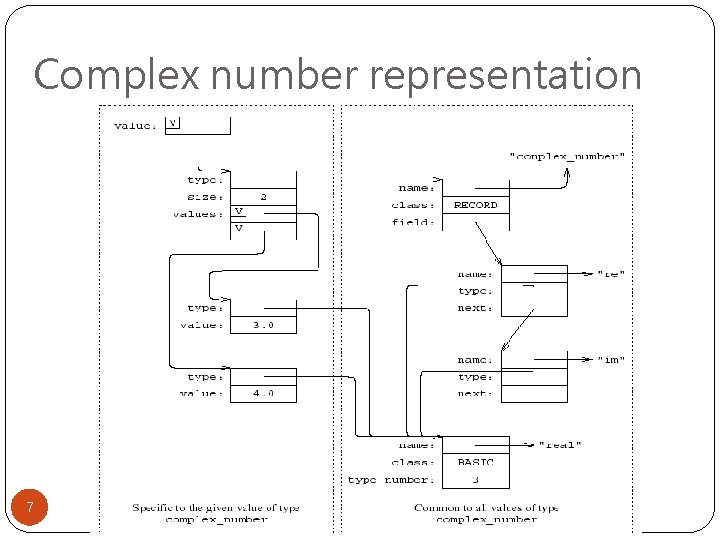

Complex number representation

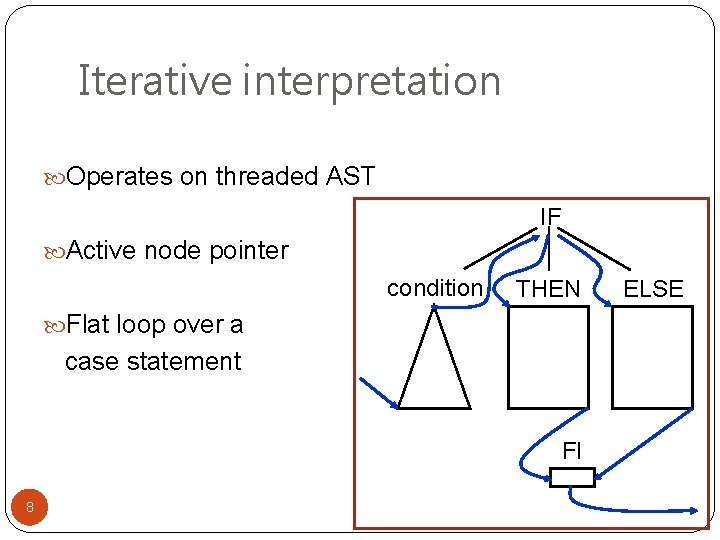

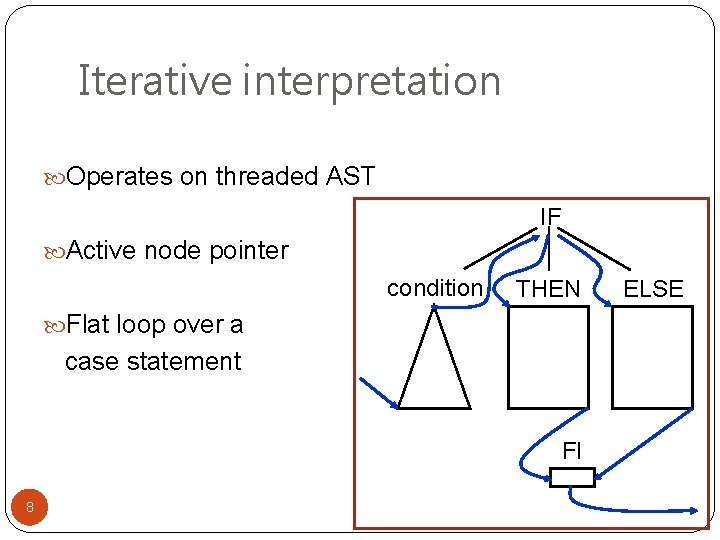

Iterative interpretation

- Operates on a threaded AST
- Active-node pointer
- Flat loop over a case statement

[Figure: threaded AST of an IF statement with condition, THEN, ELSE and FI nodes]
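The three ingredients named above (threaded AST, active-node pointer, flat loop over a case statement) can be sketched as follows. This is only an illustration under assumptions: the node type `TNode`, its `next` thread field, and the small operand stack are hypothetical and not taken from the slides.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { T_CONST, T_ADD, T_MUL } TKind;

/* Hypothetical threaded node: `next` is the successor in execution order. */
typedef struct TNode {
    TKind kind;
    int value;                  /* T_CONST only */
    const struct TNode *next;   /* the thread */
} TNode;

int run(const TNode *start) {
    int stack[64];
    int sp = 0;
    const TNode *active = start;          /* active-node pointer */
    while (active != NULL) {              /* flat loop ... */
        switch (active->kind) {           /* ... over a case statement */
        case T_CONST:
            assert(sp < 64);
            stack[sp++] = active->value;
            break;
        case T_ADD:
            assert(sp >= 2);
            sp--;
            stack[sp - 1] += stack[sp];
            break;
        case T_MUL:
            assert(sp >= 2);
            sp--;
            stack[sp - 1] *= stack[sp];
            break;
        }
        active = active->next;            /* follow the thread */
    }
    assert(sp == 1);
    return stack[0];
}
```

Unlike the recursive variant, there is no routine call per node: control moves along the thread inside a single loop.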

Sketch of the main loop

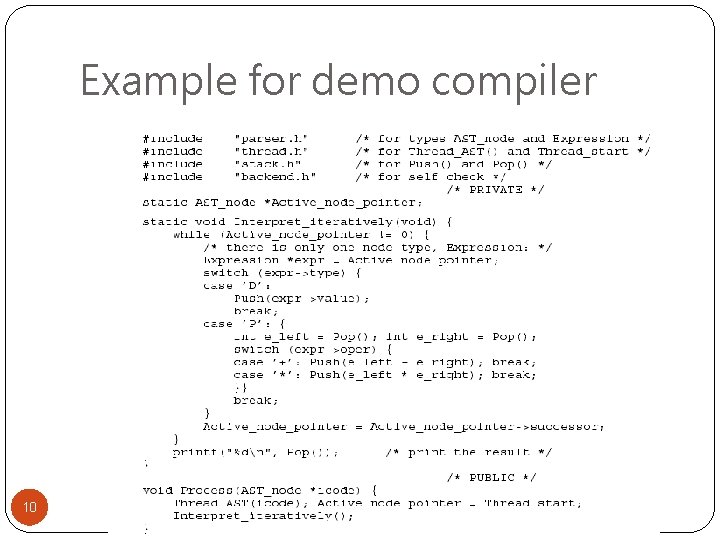

Example for demo compiler

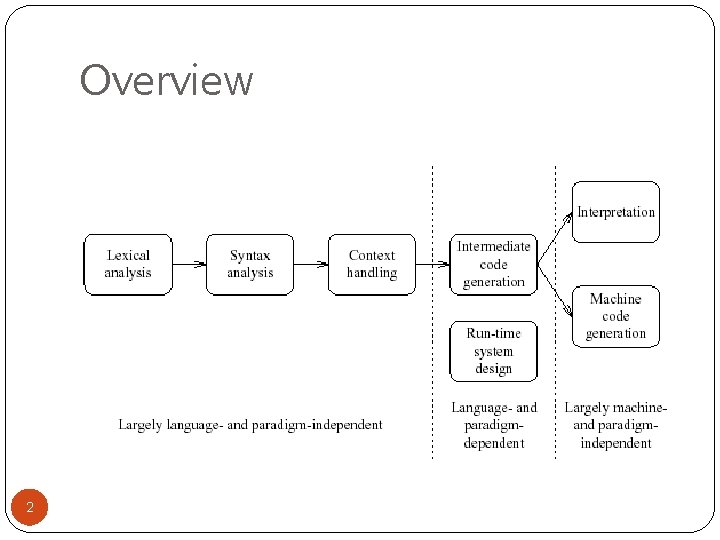

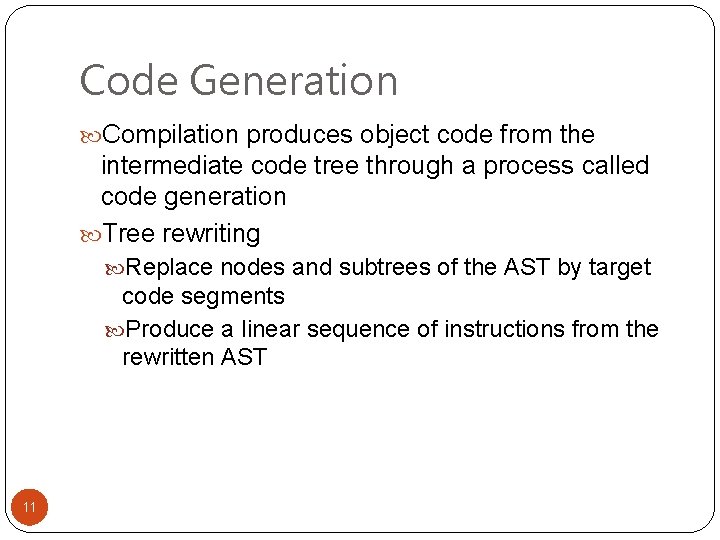

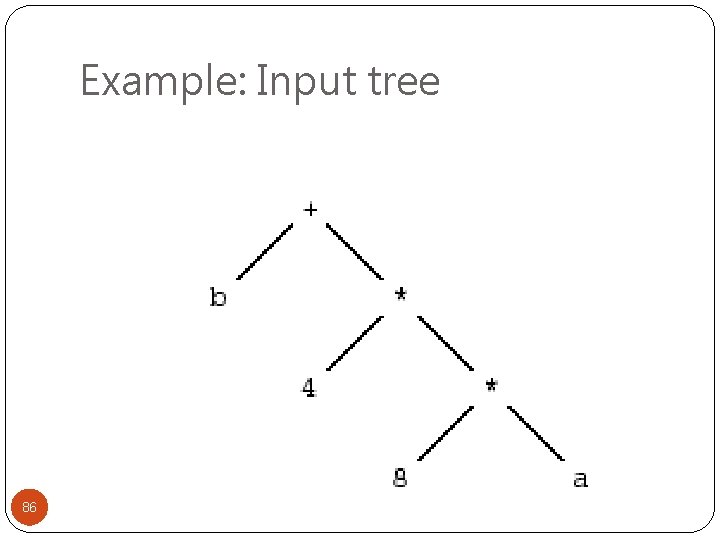

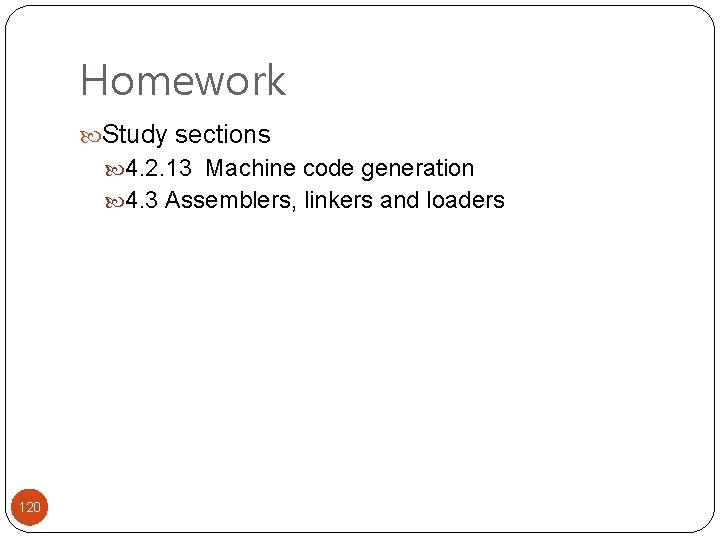

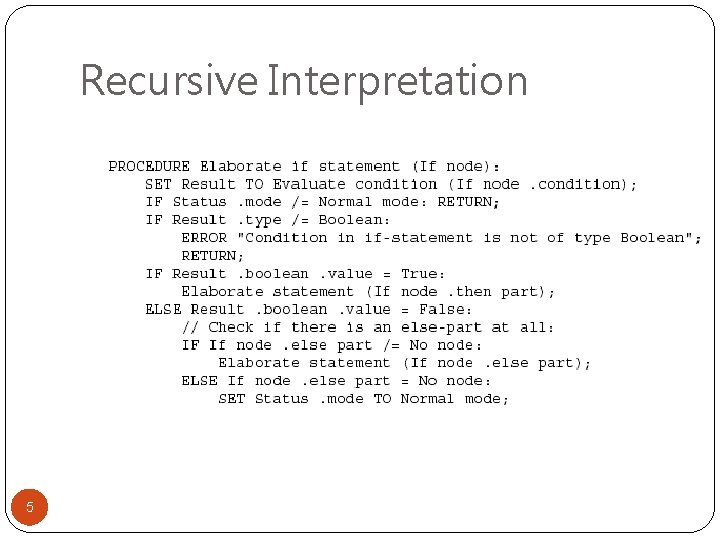

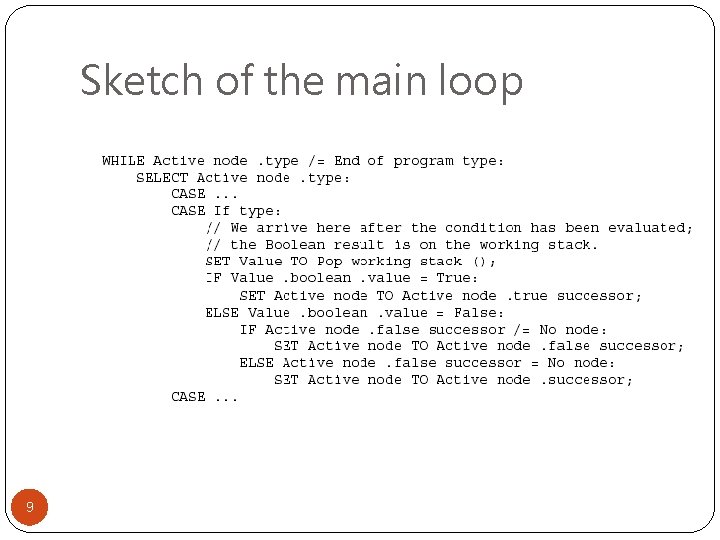

Code Generation

- Compilation produces object code from the intermediate code tree through a process called code generation
- Tree rewriting
  - Replace nodes and subtrees of the AST by target code segments
  - Produce a linear sequence of instructions from the rewritten AST

![Example of code generation a b4cd29 12 Example of code generation a: =(b[4*c+d]*2)+9; 12](https://slidetodoc.com/presentation_image_h/00ee3ee18027e0e6e8ac3ff03a768bc2/image-12.jpg)

Example of code generation

    a := (b[4*c+d]*2)+9;

![Machine instructions LoadAddr MRi C Rd Loads the address of the Rith element of Machine instructions Load_Addr M[Ri], C, Rd Loads the address of the Ri-th element of](https://slidetodoc.com/presentation_image_h/00ee3ee18027e0e6e8ac3ff03a768bc2/image-13.jpg)

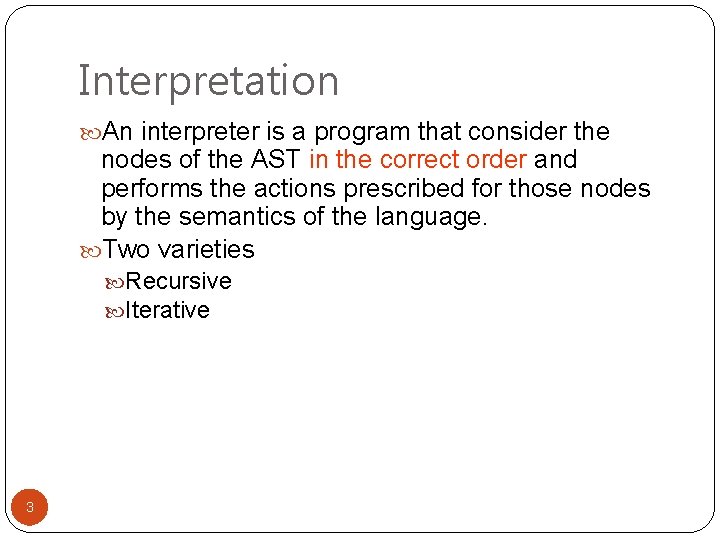

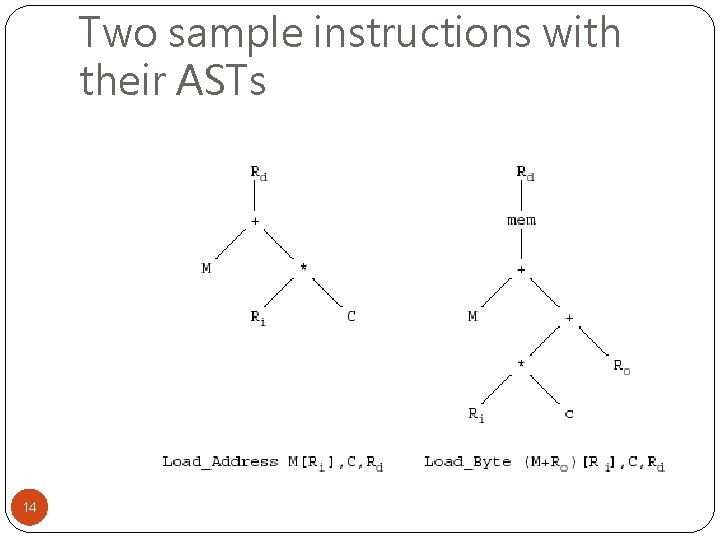

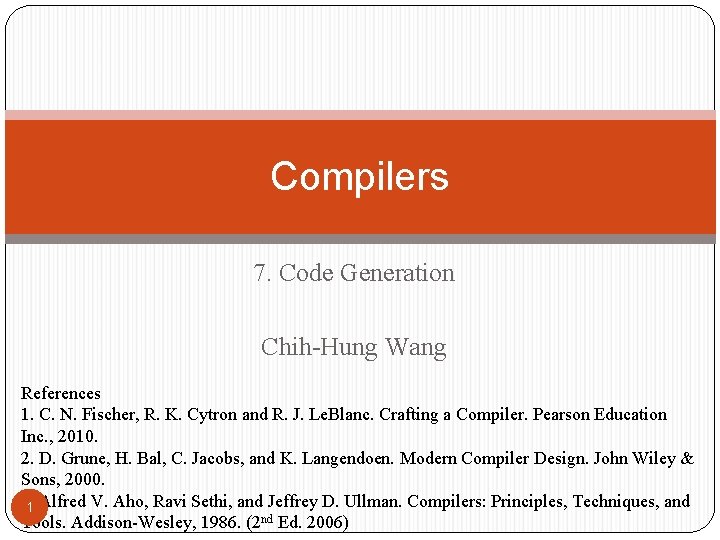

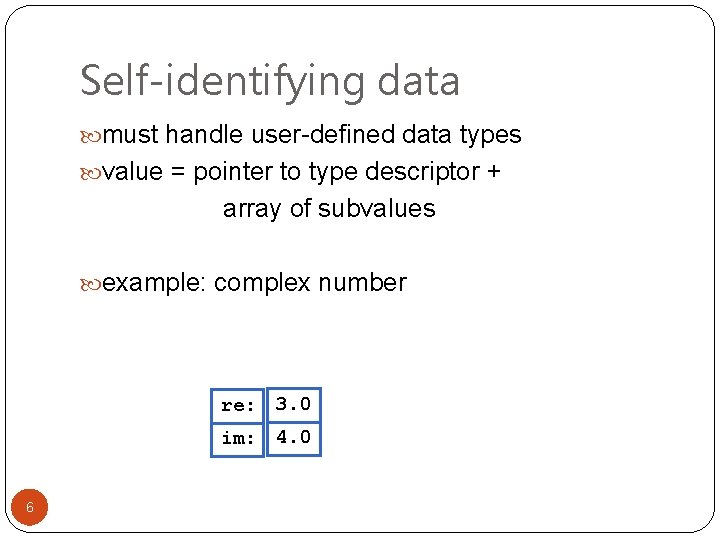

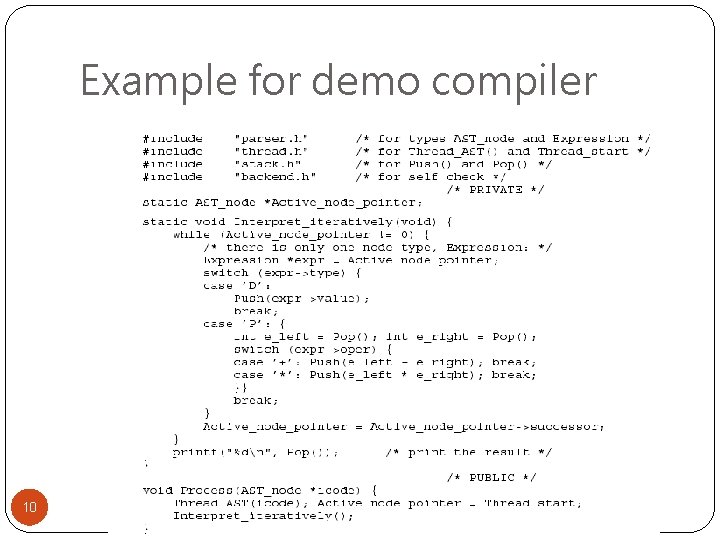

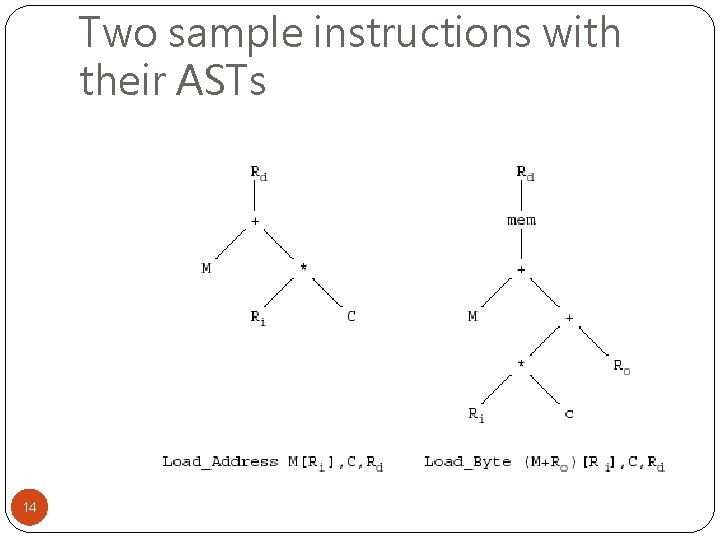

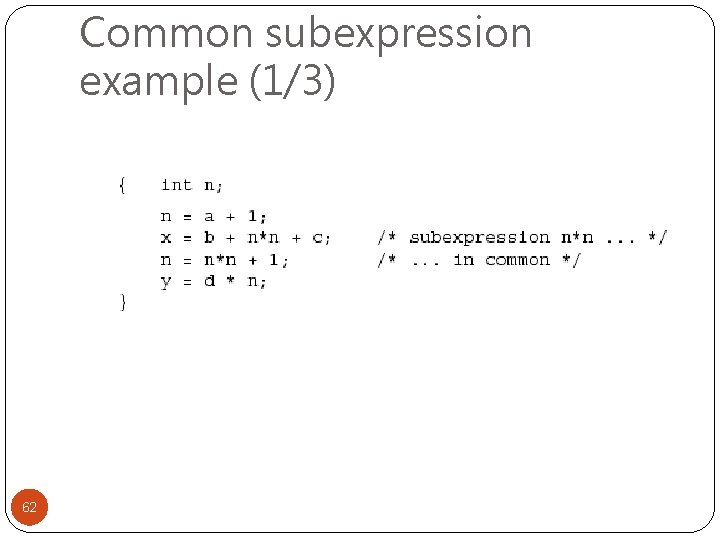

Machine instructions

- Load_Addr M[Ri], C, Rd: loads the address of the Ri-th element of the array at M into Rd, where the size of the elements of M is C bytes
- Load_Byte (M+Ro)[Ri], C, Rd: loads the byte contents of the Ri-th element of the array at M plus offset Ro into Rd, where the other parameters have the same meanings as above
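Read as address arithmetic, Load_Addr computes M + Ri * C and Load_Byte fetches the byte at M + Ro + Ri * C. The sketch below models this in C; the function names and the flat `memory` array are assumptions made for illustration, not part of the instruction set description.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Load_Addr M[Ri], C, Rd : Rd receives the address M + Ri * C. */
uintptr_t load_addr(uintptr_t m, uintptr_t ri, uintptr_t c) {
    return m + ri * c;
}

/* Load_Byte (M+Ro)[Ri], C, Rd : Rd receives the byte at M + Ro + Ri * C.
   `memory` is a hypothetical byte-addressed memory image of `size` bytes. */
uint8_t load_byte(const uint8_t *memory, size_t size,
                  size_t m, size_t ro, size_t ri, size_t c) {
    size_t address = m + ro + ri * c;
    assert(address < size);
    return memory[address];
}
```

Because Load_Addr is plain arithmetic, it can also be used for computations that have nothing to do with arrays: with M = 9 and C = 2 it yields Ri * 2 + 9, which is the shape of the outer part of the example expression (b[4*c+d]*2)+9.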

Two sample instructions with their ASTs

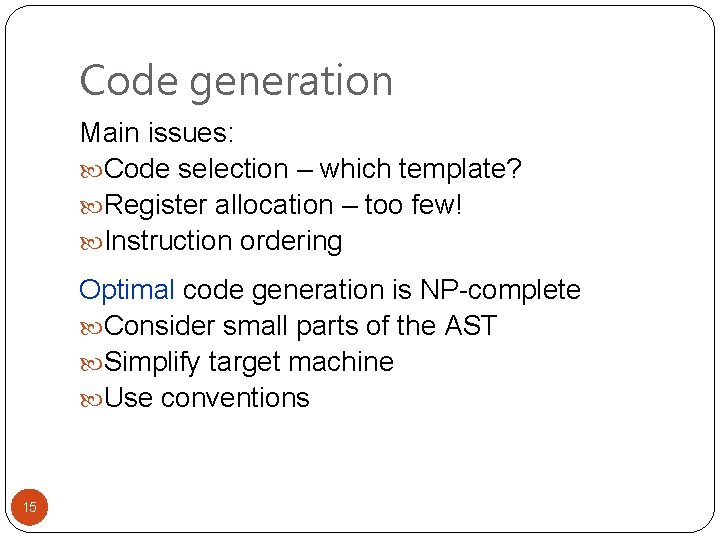

Code generation

Main issues:

- Code selection: which template?
- Register allocation: too few!
- Instruction ordering

Optimal code generation is NP-complete, so in practice:

- Consider small parts of the AST
- Simplify target machine
- Use conventions

![Object code sequence LoadByte bRdRc 4 Rt LoadAddr 9Rt 2 Ra 16 Object code sequence Load_Byte (b+Rd)[Rc], 4, Rt Load_Addr 9[Rt], 2, Ra 16](https://slidetodoc.com/presentation_image_h/00ee3ee18027e0e6e8ac3ff03a768bc2/image-16.jpg)

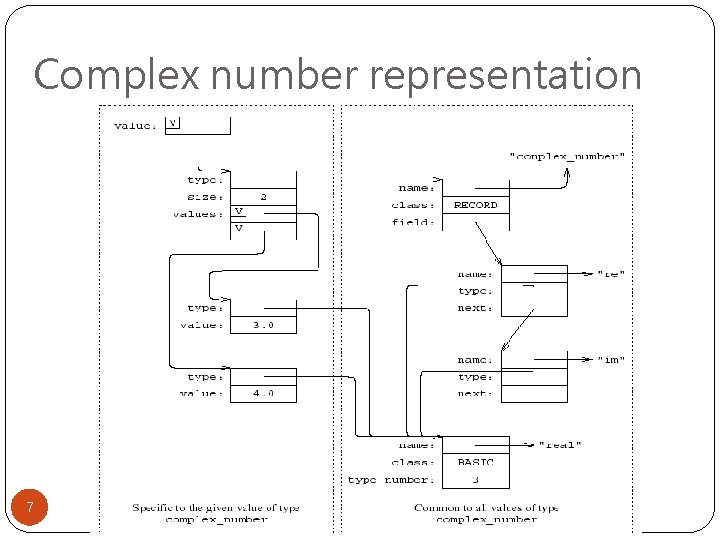

Object code sequence

    Load_Byte (b+Rd)[Rc], 4, Rt
    Load_Addr 9[Rt], 2, Ra

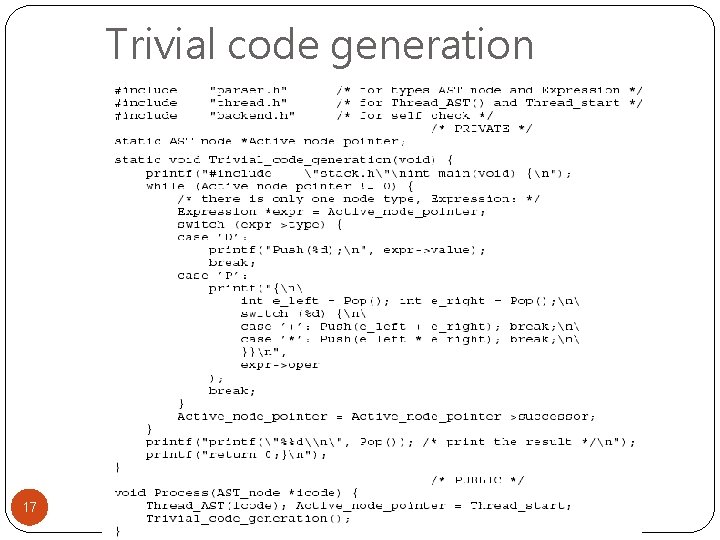

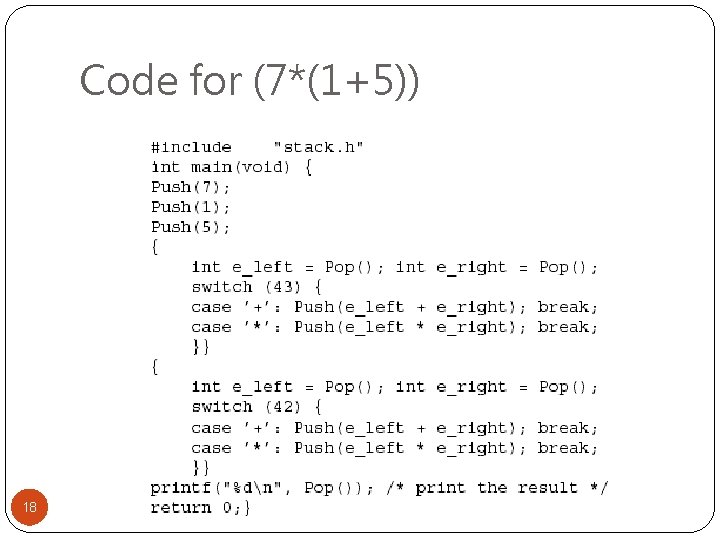

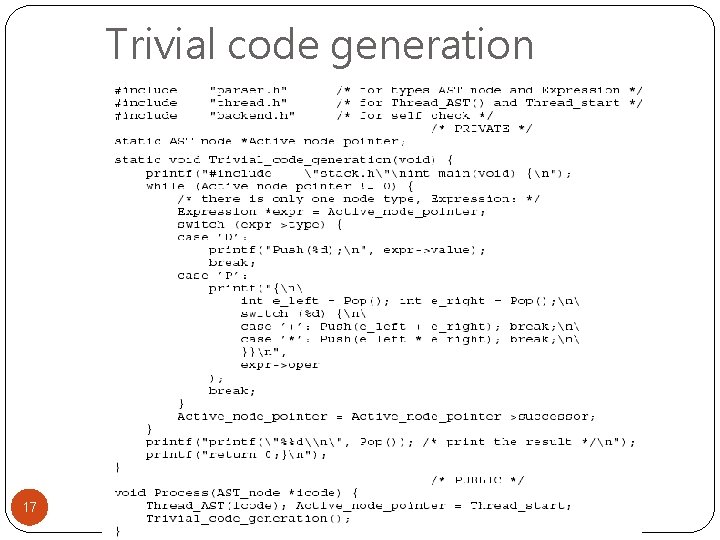

Trivial code generation

Code for (7*(1+5))

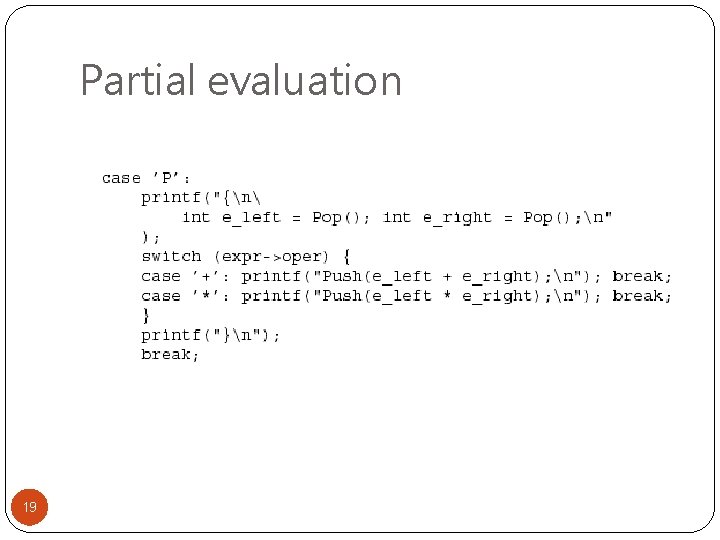

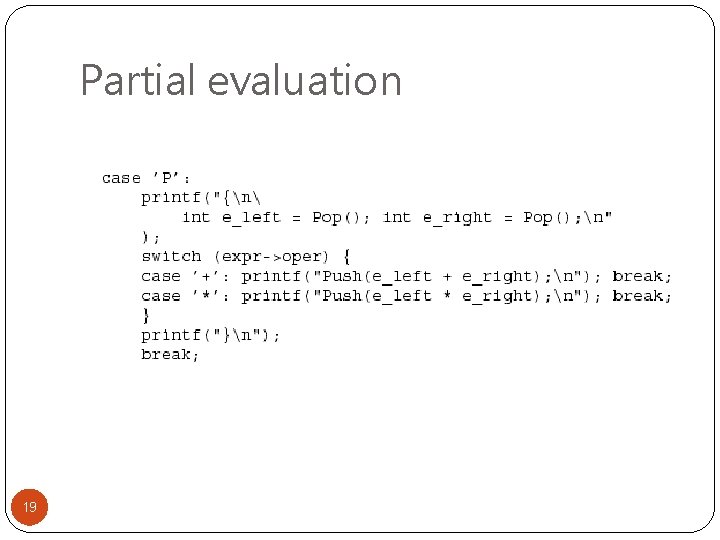

Partial evaluation

New Code

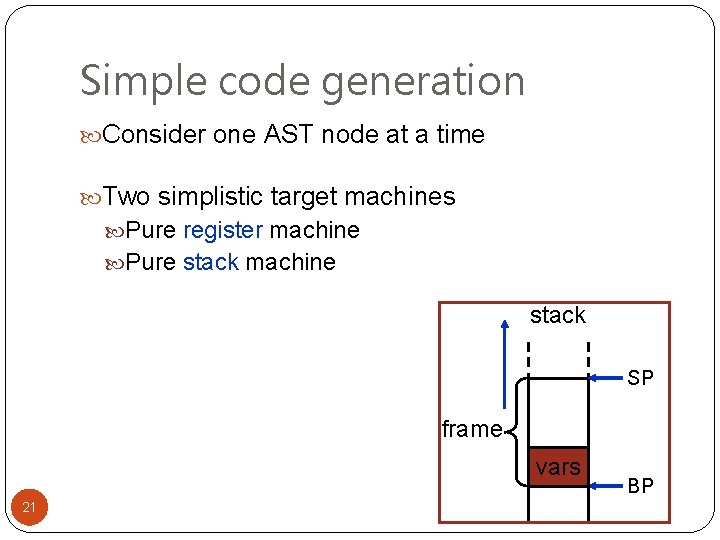

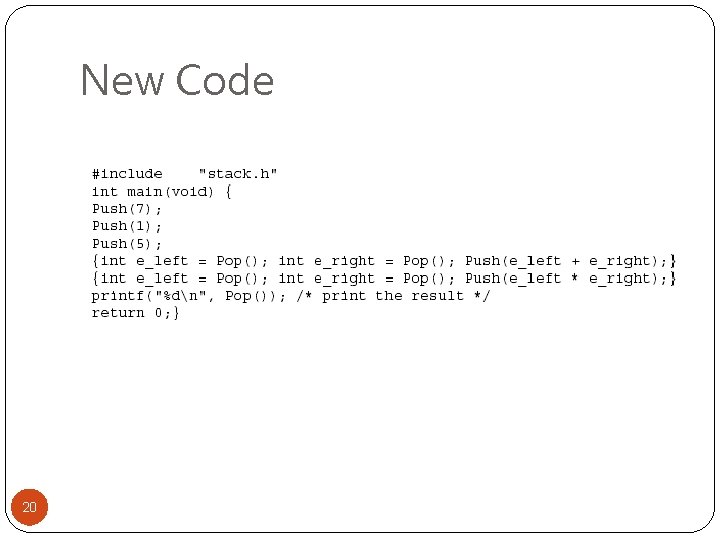

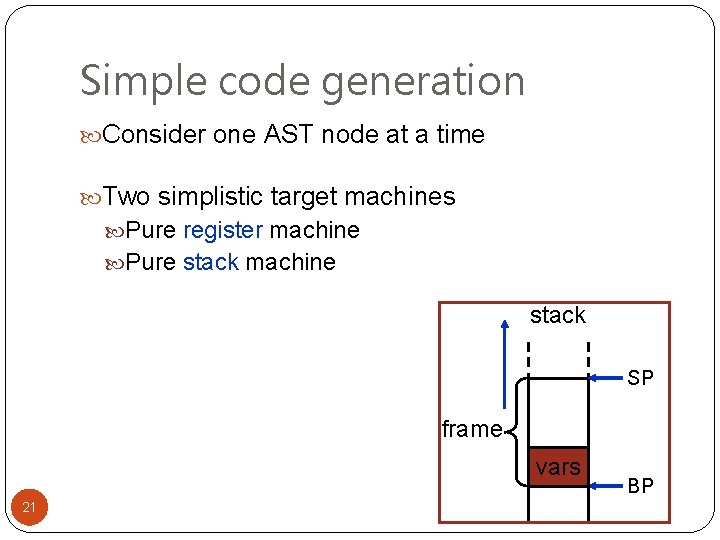

Simple code generation

- Consider one AST node at a time
- Two simplistic target machines
  - Pure register machine
  - Pure stack machine

[Figure: pure stack machine with stack pointer SP, base pointer BP, and the frame variables]

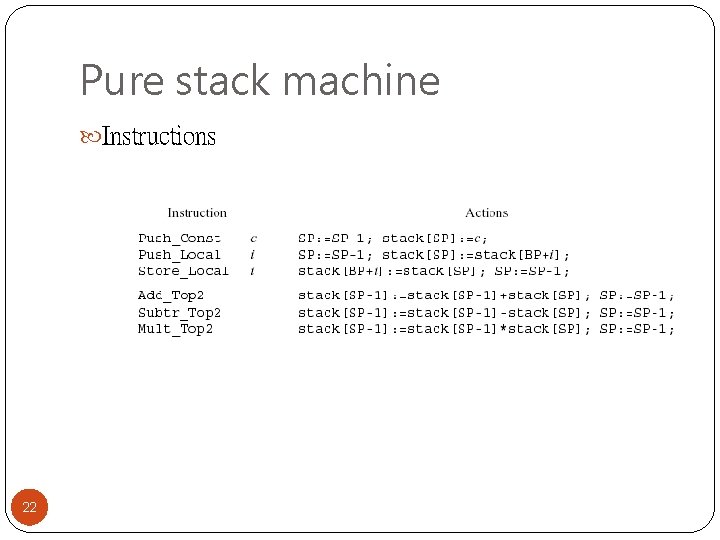

Pure stack machine

Instructions

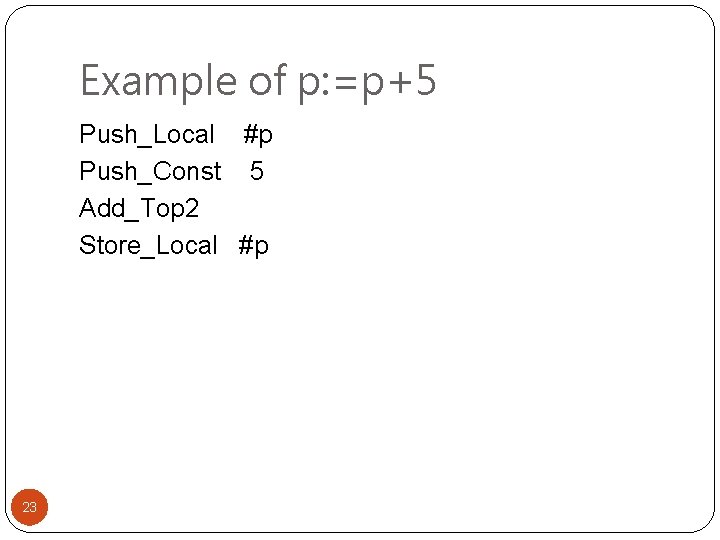

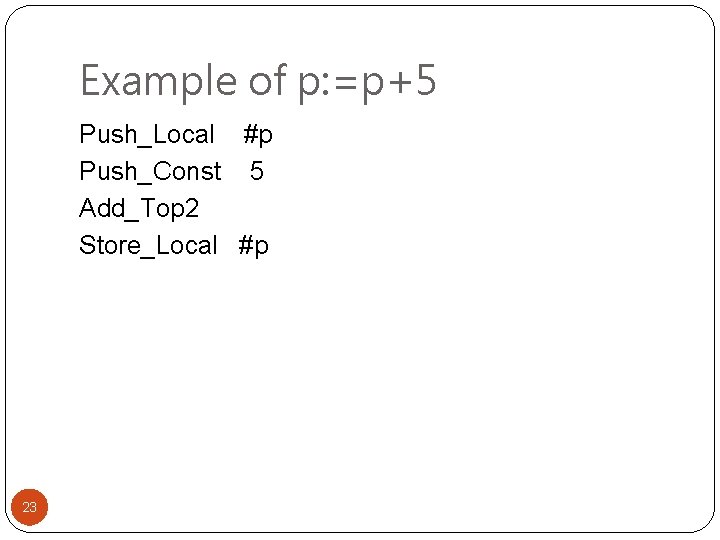

Example of p := p+5

    Push_Local #p
    Push_Const 5
    Add_Top2
    Store_Local #p
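To make the effect of these four instructions concrete, here is a minimal sketch of an emulator for just this subset of the pure stack machine. The encoding (`StackOp`, `StackInstr`) and the use of an integer index for the local variable are assumptions of the sketch.

```c
#include <assert.h>

typedef enum { PUSH_LOCAL, PUSH_CONST, ADD_TOP2, STORE_LOCAL } StackOp;

/* `arg` is a local-variable index or a constant, depending on `op`. */
typedef struct { StackOp op; int arg; } StackInstr;

/* Executes `count` instructions; `locals` models the frame variables. */
void run_stack_code(const StackInstr *code, int count, int *locals) {
    int stack[32];
    int sp = 0;
    for (int i = 0; i < count; i++) {
        switch (code[i].op) {
        case PUSH_LOCAL:
            assert(sp < 32);
            stack[sp++] = locals[code[i].arg];
            break;
        case PUSH_CONST:
            assert(sp < 32);
            stack[sp++] = code[i].arg;
            break;
        case ADD_TOP2:                       /* replace top two by their sum */
            assert(sp >= 2);
            sp--;
            stack[sp - 1] += stack[sp];
            break;
        case STORE_LOCAL:                    /* pop into a local variable */
            assert(sp >= 1);
            locals[code[i].arg] = stack[--sp];
            break;
        }
    }
}
```

Running the sequence for p := p+5 with p stored at index 0 leaves the stack empty again and p increased by 5.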

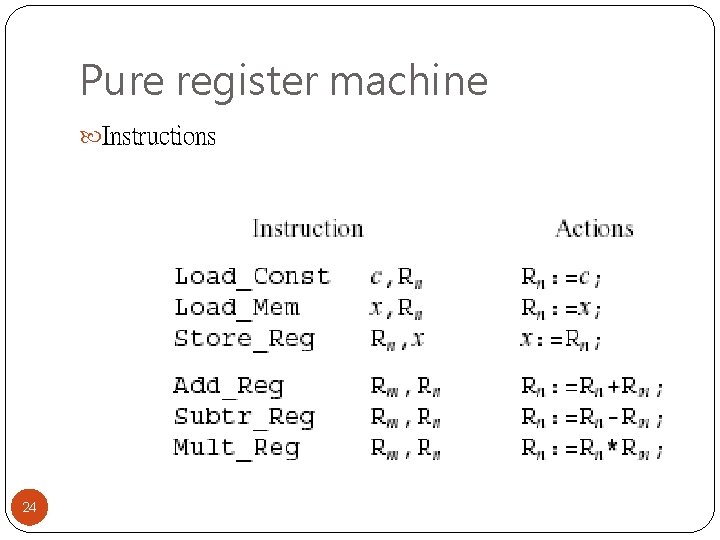

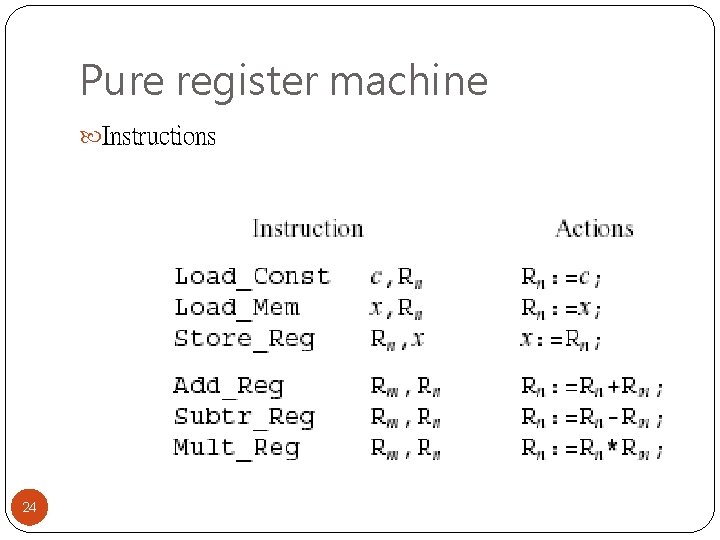

Pure register machine

Instructions

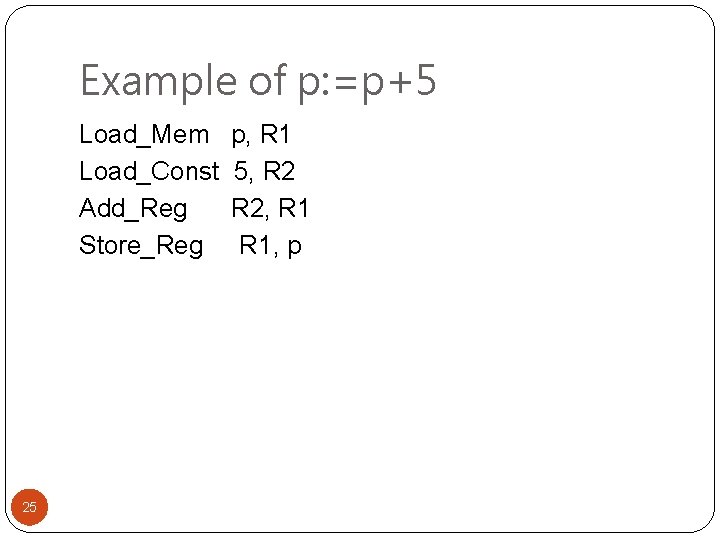

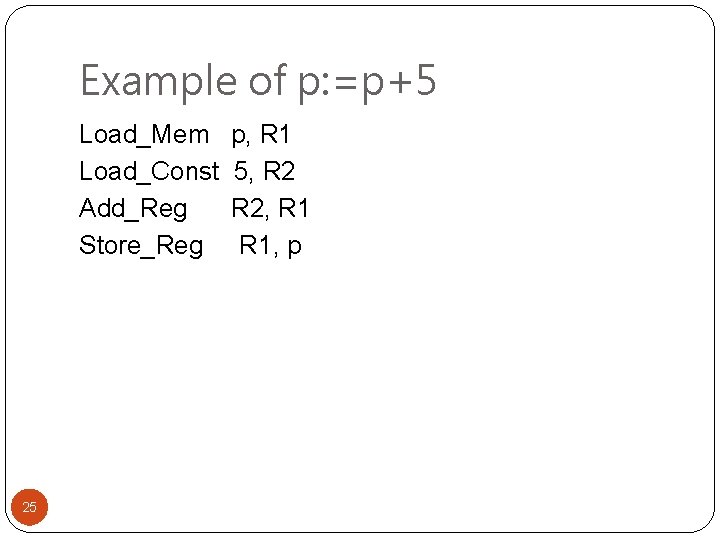

Example of p := p+5

    Load_Mem   p, R1
    Load_Const 5, R2
    Add_Reg    R2, R1
    Store_Reg  R1, p

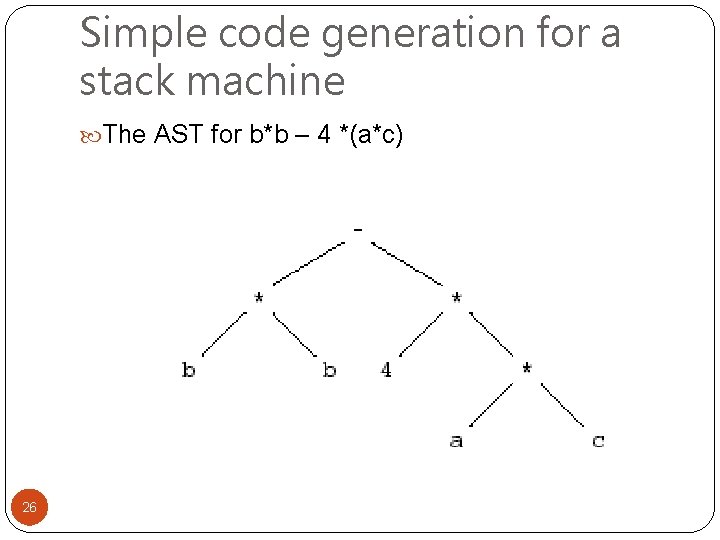

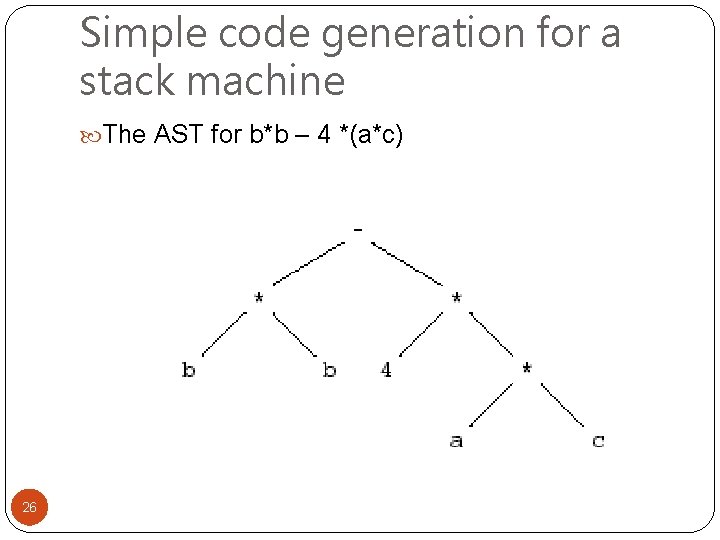

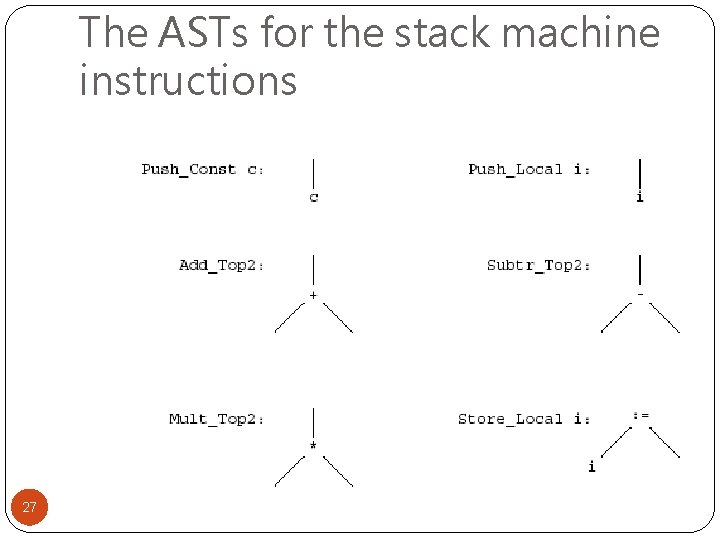

Simple code generation for a stack machine

The AST for b*b - 4*(a*c)

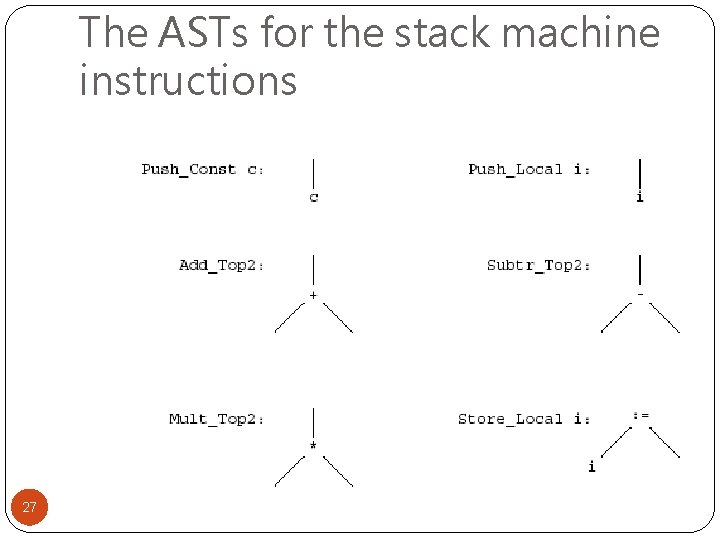

The ASTs for the stack machine instructions

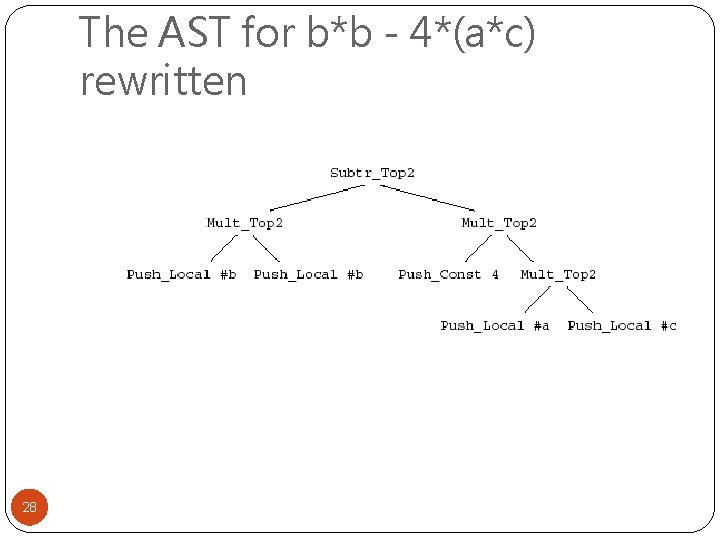

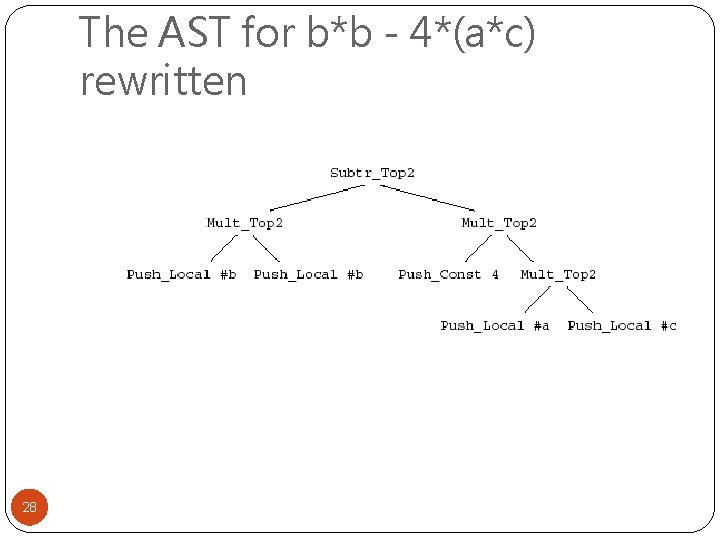

The AST for b*b - 4*(a*c) rewritten

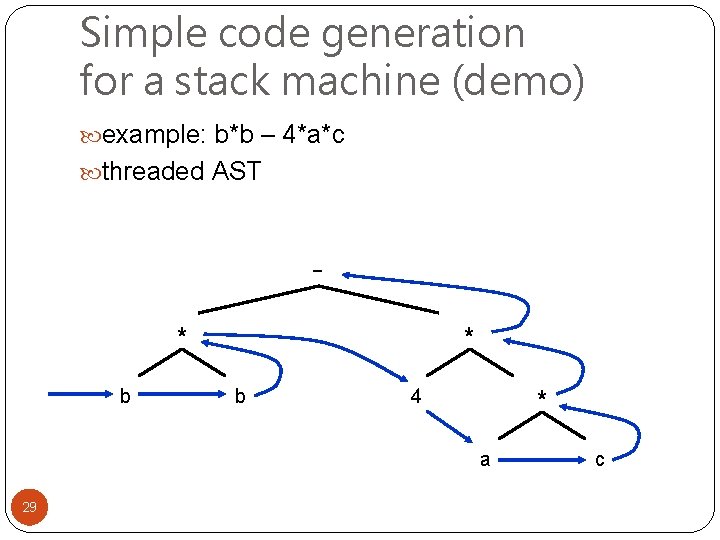

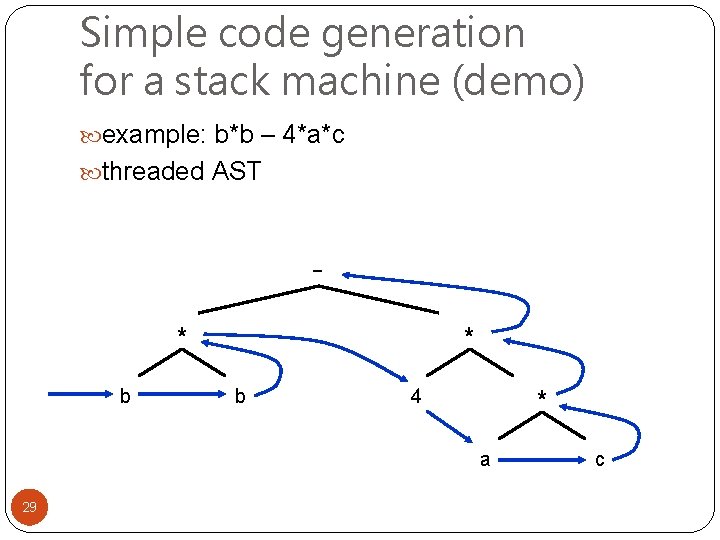

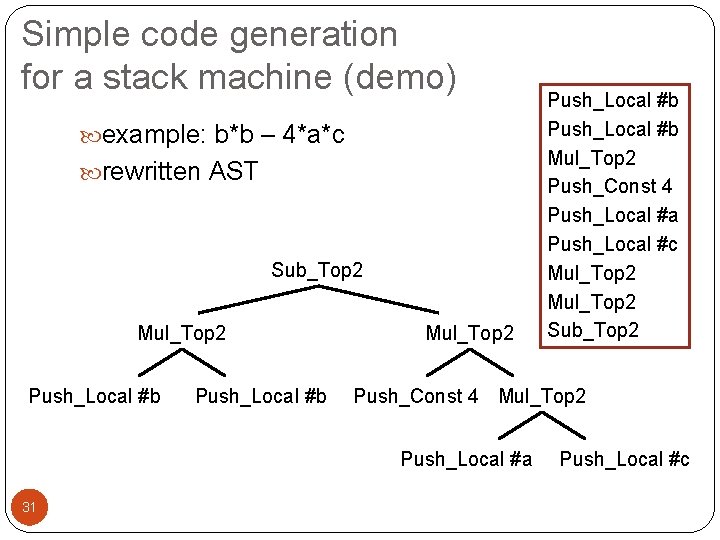

Simple code generation for a stack machine (demo)

Example: b*b - 4*a*c

[Figure: threaded AST with nodes -, *, b, 4, *, a, c]

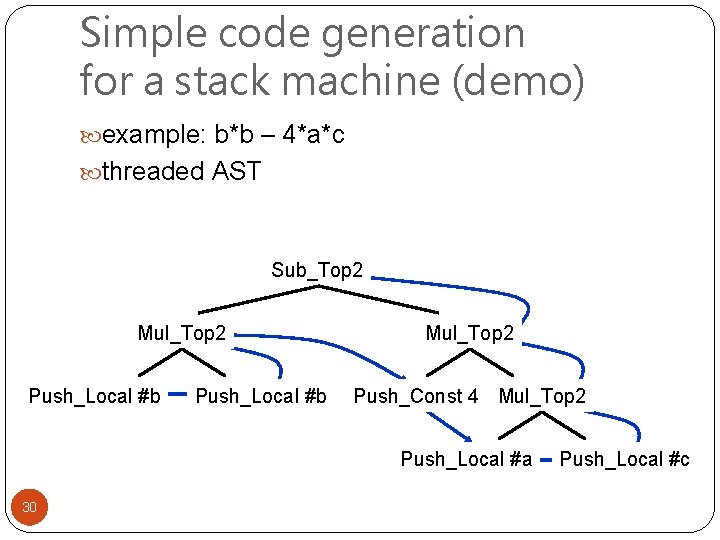

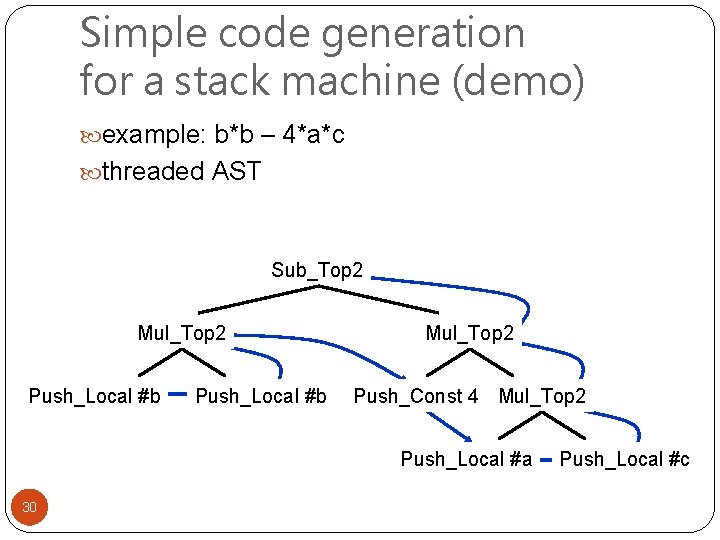

Simple code generation for a stack machine (demo)

Example: b*b - 4*a*c, threaded AST with each node annotated by its instruction:

- `-`: Sub_Top2
- `*`: Mul_Top2
- b: Push_Local #b
- 4: Push_Const 4
- a: Push_Local #a
- c: Push_Local #c

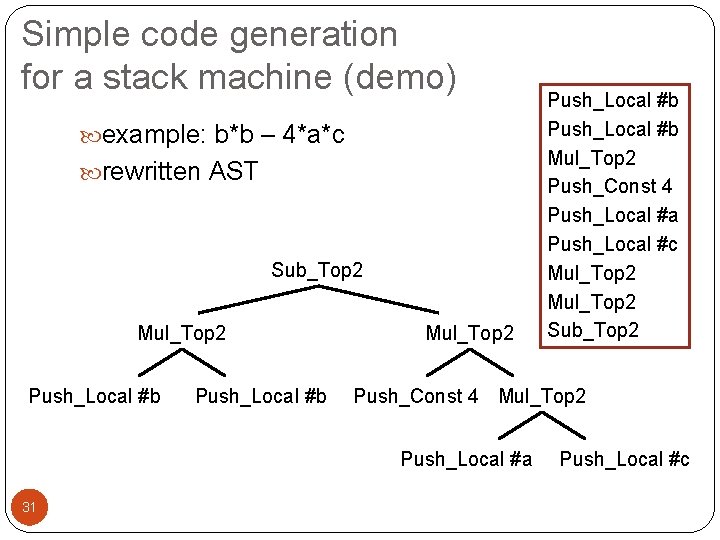

Simple code generation for a stack machine (demo)

Example: b*b - 4*a*c, rewritten AST and the code obtained by following the thread:

    Push_Local #b
    Push_Local #b
    Mul_Top2
    Push_Const 4
    Push_Local #a
    Push_Local #c
    Mul_Top2
    Mul_Top2
    Sub_Top2
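For a stack machine, following the thread amounts to a depth-first, left-to-right, postorder walk: operands first, then the operator. The sketch below emits the instruction names used in the demo (written here as Mul_Top2 and Sub_Top2) into a text buffer. The `Expr` type and the helper `emit` are hypothetical and only serve this illustration.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

typedef enum { E_LOCAL, E_CONST, E_MUL, E_SUB } ExprKind;

/* Hypothetical expression node (assumption of this sketch). */
typedef struct Expr {
    ExprKind kind;
    const char *name;                 /* E_LOCAL */
    int value;                        /* E_CONST */
    const struct Expr *left, *right;  /* E_MUL, E_SUB */
} Expr;

/* Appends one instruction line to `out`, which has capacity `cap`. */
static void emit(char *out, size_t cap, const char *text) {
    assert(strlen(out) + strlen(text) + 2 <= cap);
    strcat(out, text);
    strcat(out, "\n");
}

/* Depth-first code generation: left operand, right operand, operator. */
void gen_stack_code(const Expr *e, char *out, size_t cap) {
    char line[64];
    assert(e != NULL);
    switch (e->kind) {
    case E_LOCAL:
        snprintf(line, sizeof line, "Push_Local #%s", e->name);
        emit(out, cap, line);
        break;
    case E_CONST:
        snprintf(line, sizeof line, "Push_Const %d", e->value);
        emit(out, cap, line);
        break;
    case E_MUL:
    case E_SUB:
        gen_stack_code(e->left, out, cap);
        gen_stack_code(e->right, out, cap);
        emit(out, cap, e->kind == E_MUL ? "Mul_Top2" : "Sub_Top2");
        break;
    }
}
```

The caller passes an empty, zero-terminated buffer; for the tree of b*b - 4*(a*c) the walk visits b, b, *, 4, a, c, *, *, - in that order.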

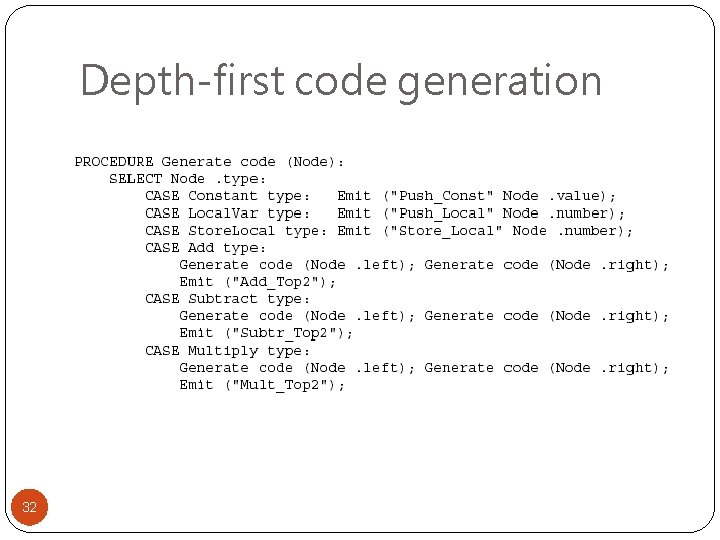

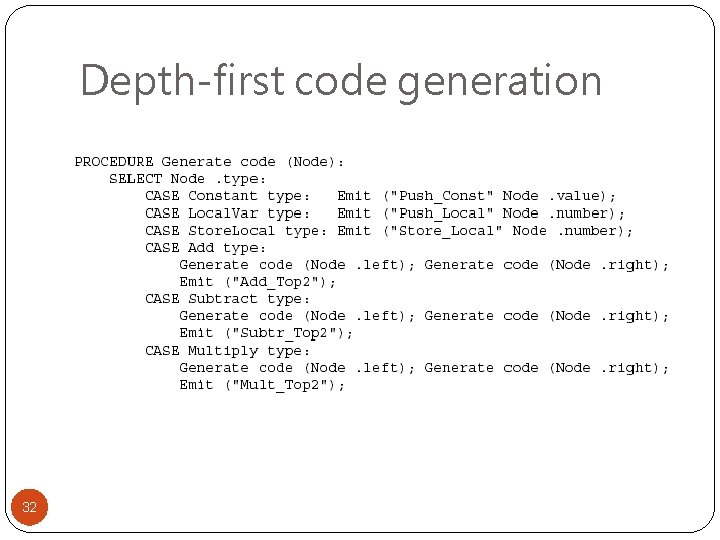

Depth-first code generation

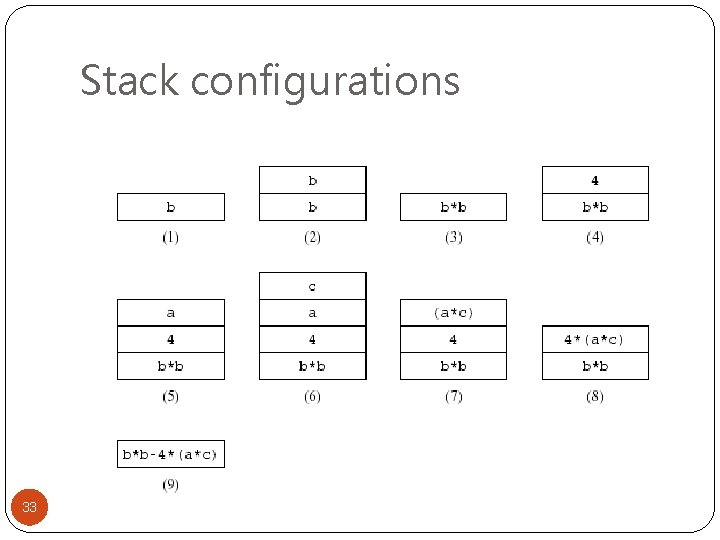

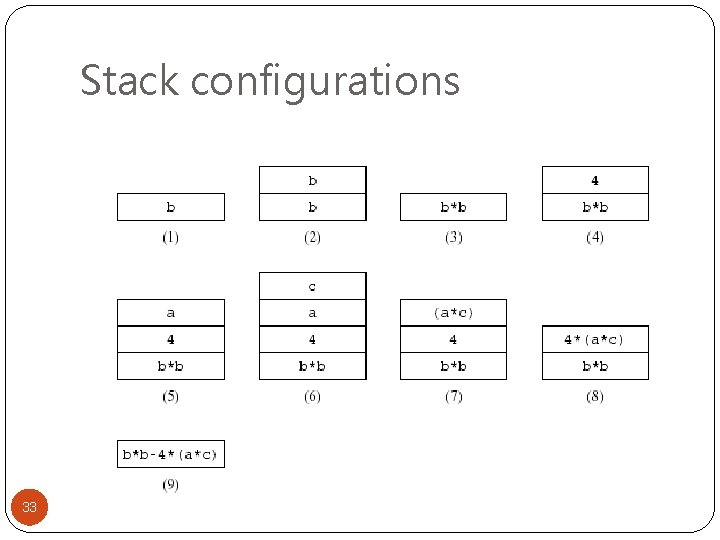

Stack configurations

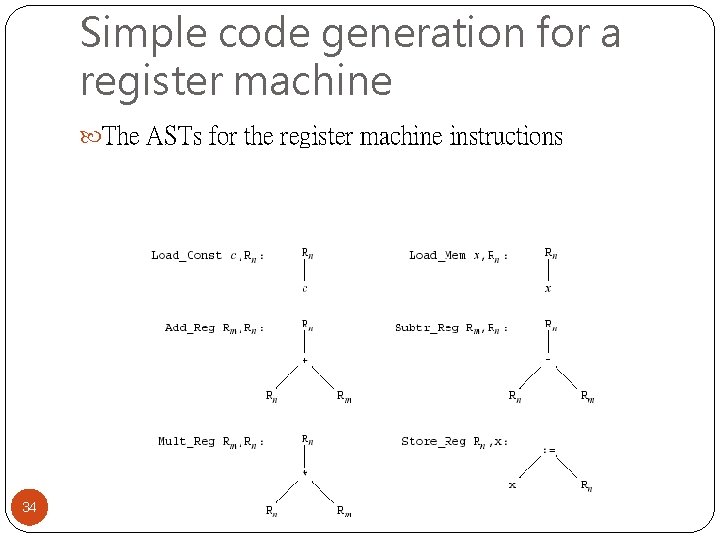

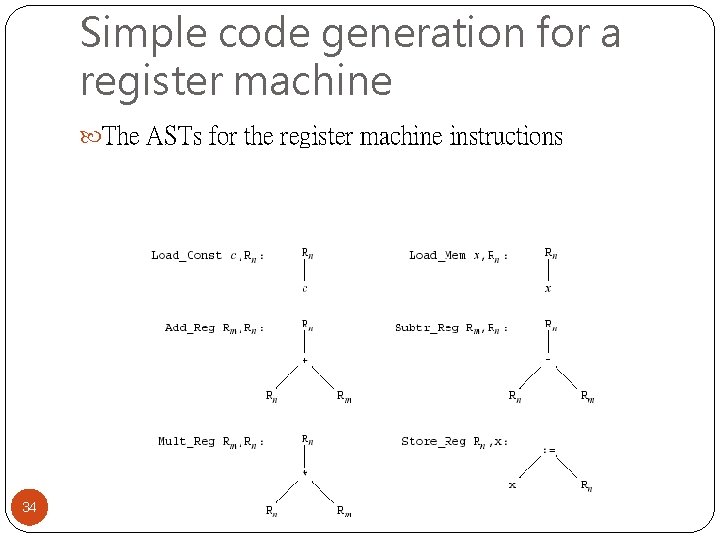

Simple code generation for a register machine

The ASTs for the register machine instructions

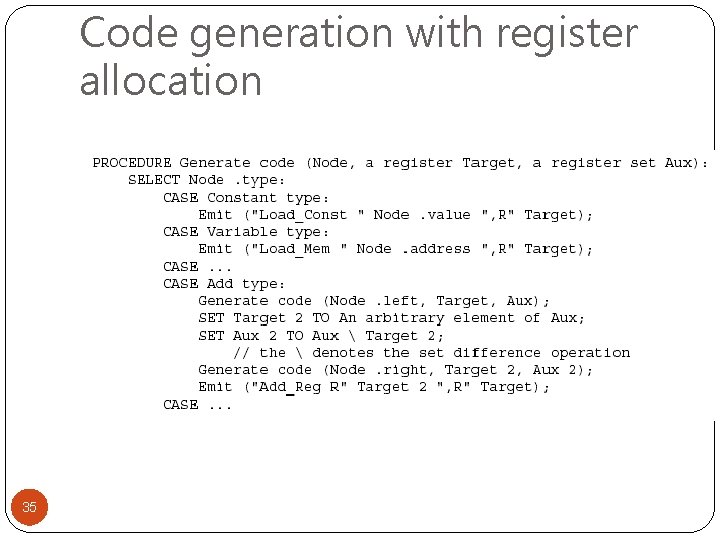

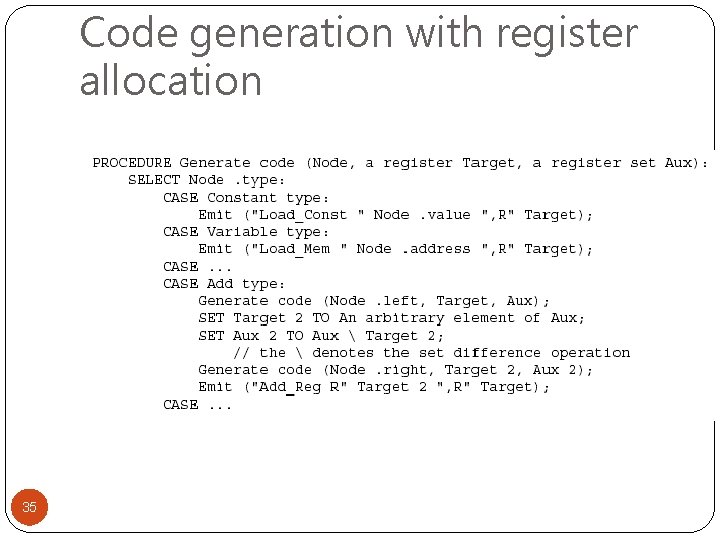

Code generation with register allocation

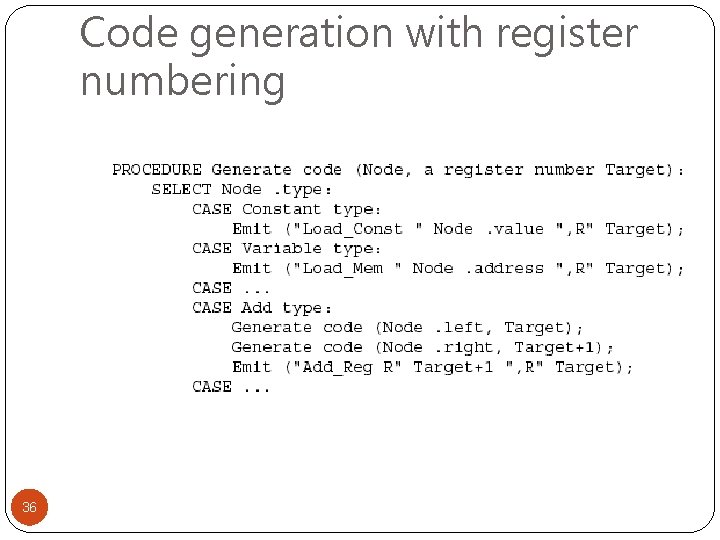

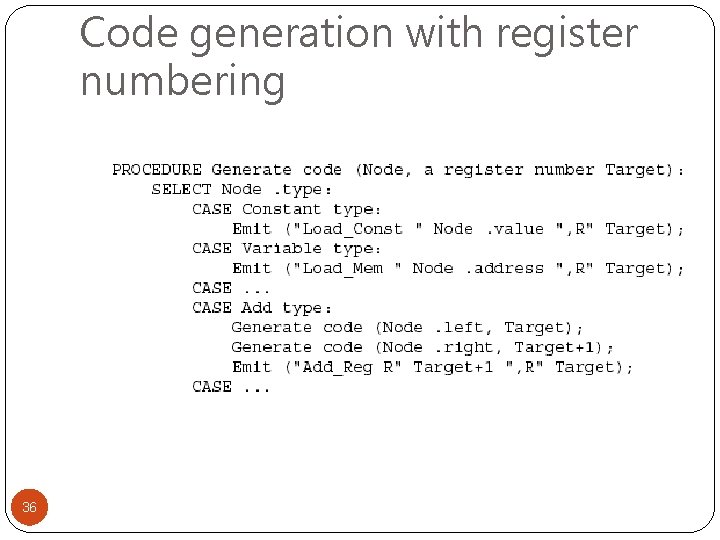

Code generation with register numbering

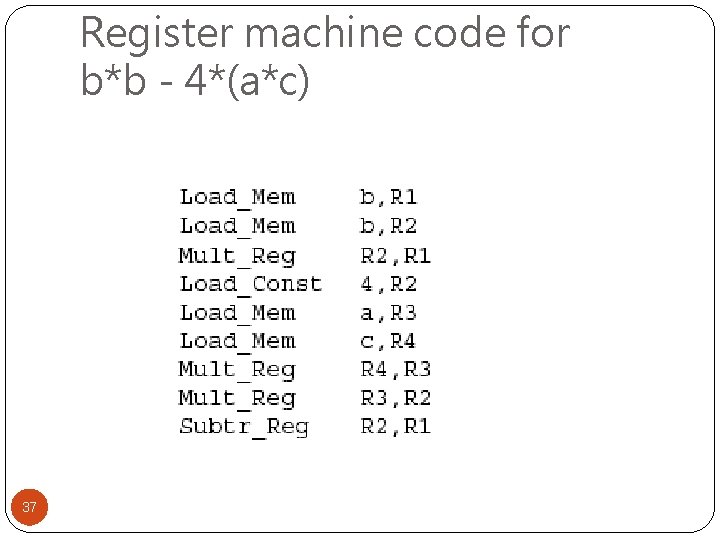

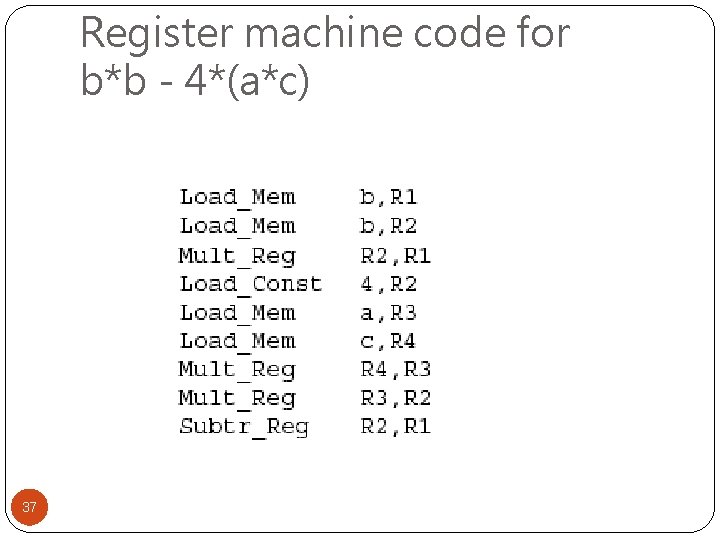

Register machine code for b*b - 4*(a*c)

Register contents

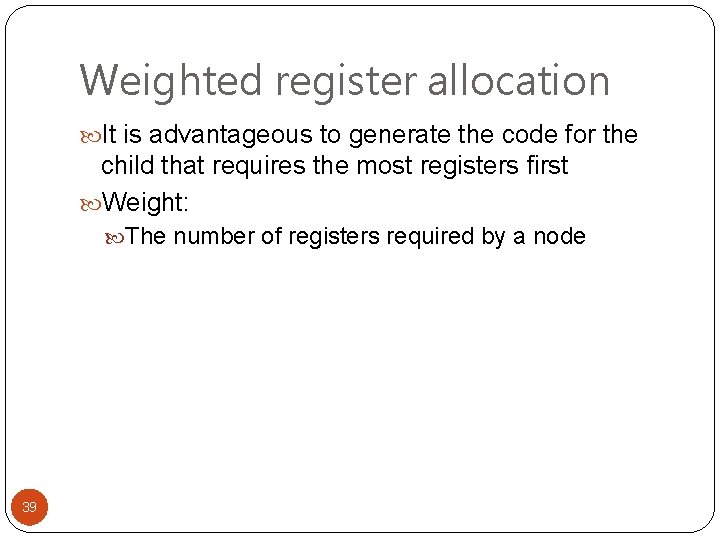

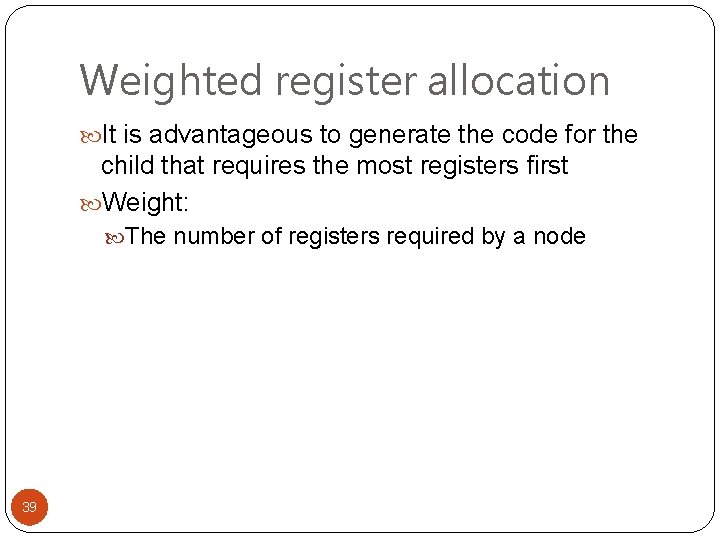

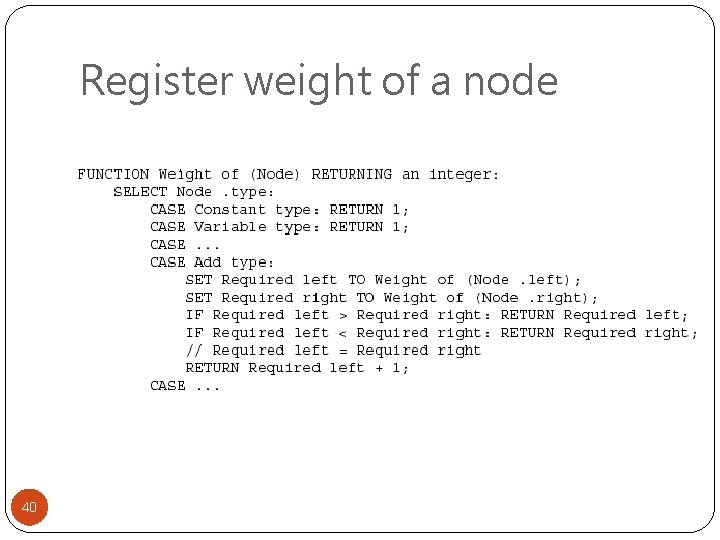

Weighted register allocation

- It is advantageous to generate the code for the child that requires the most registers first
- Weight: the number of registers required by a node
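A common way to compute such weights for binary expression trees on a pure register machine is the following rule, shown here as a hedged sketch: a leaf needs one register; an operator node needs the larger of its children's weights, or one more than that when both weights are equal. The `WNode` type and `register_weight` are names invented for this sketch, and the rule assumes every operand must first be loaded into a register.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical binary tree node: both pointers are NULL for a leaf operand. */
typedef struct WNode {
    const struct WNode *left, *right;
} WNode;

/* Number of registers needed to evaluate the subtree rooted at `n`,
   assuming the heavier child is evaluated first. */
int register_weight(const WNode *n) {
    assert(n != NULL);
    if (n->left == NULL && n->right == NULL) return 1;   /* leaf: one load */
    int wl = register_weight(n->left);
    int wr = register_weight(n->right);
    if (wl == wr) return wl + 1;   /* one result must be held during the other */
    return wl > wr ? wl : wr;      /* heavier child first, its result stays in one register */
}
```

For b*b - 4*(a*c) this gives weight 2 for b*b, 2 for a*c, 2 for 4*(a*c) and 3 for the root.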

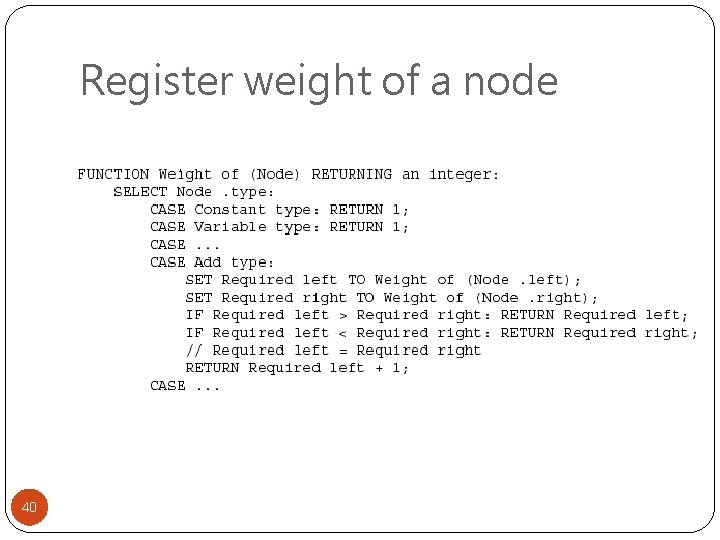

Register weight of a node

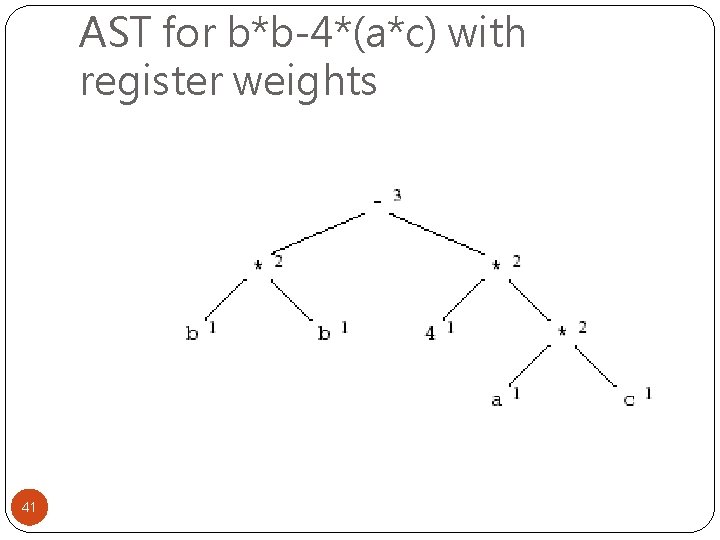

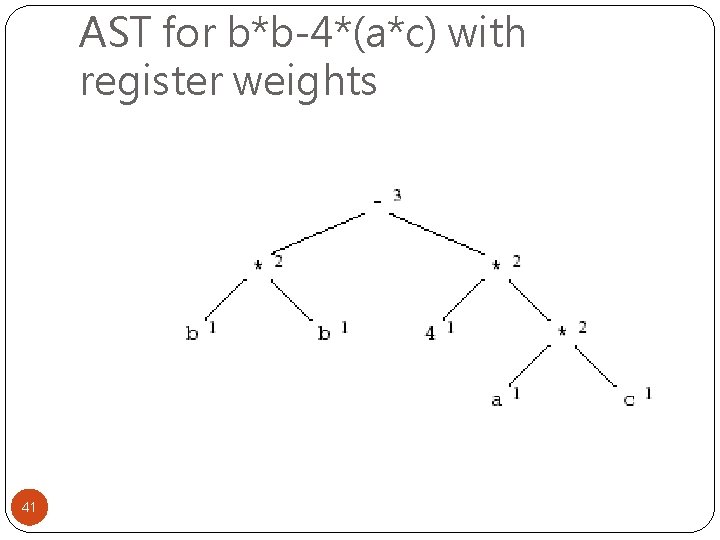

AST for b*b-4*(a*c) with register weights

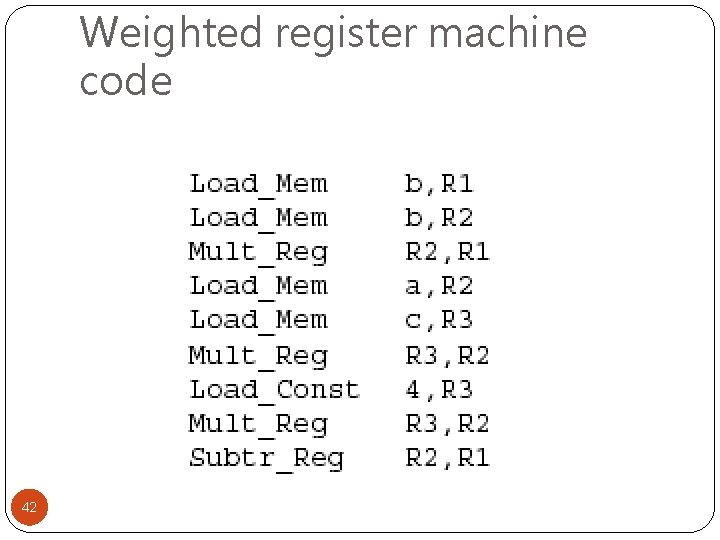

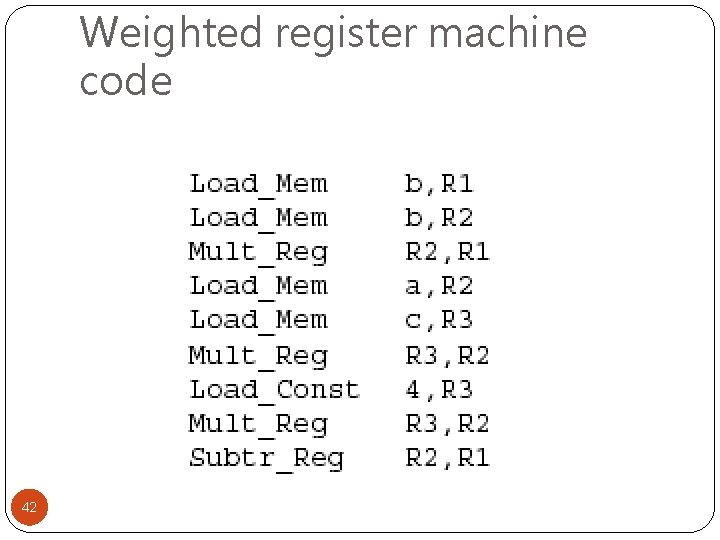

Weighted register machine code

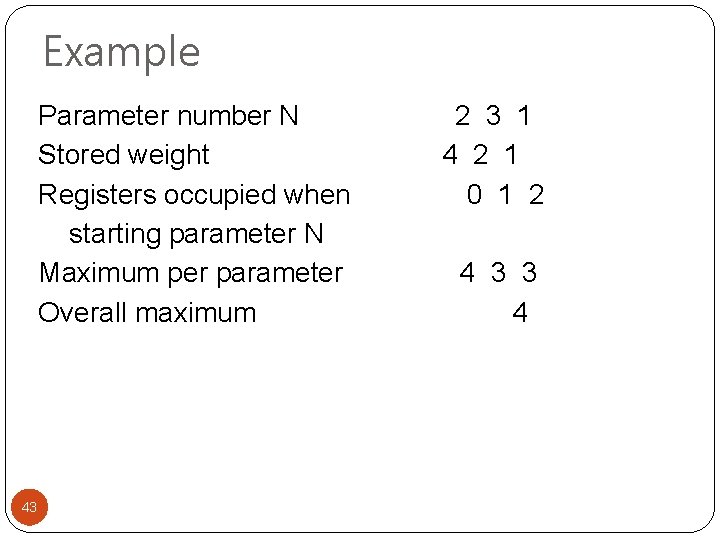

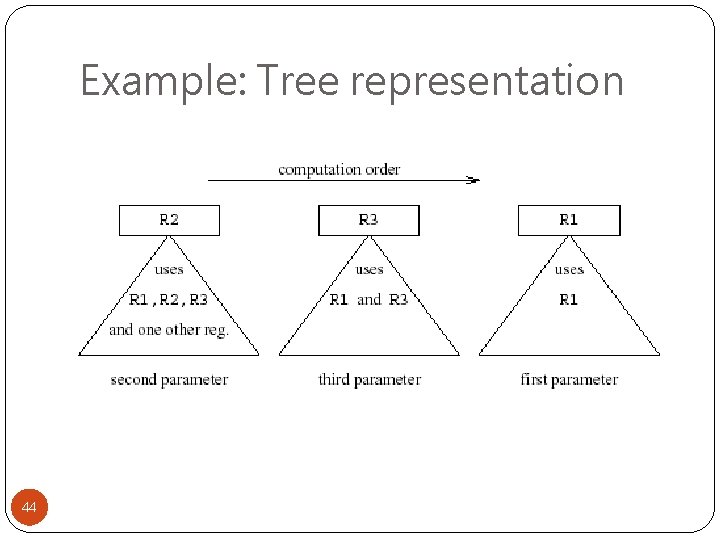

Example

| Parameter number N | 1 | 2 | 3 |
|---|---|---|---|
| Stored weight | 4 | 2 | 1 |
| Registers occupied when starting parameter N | 0 | 1 | 2 |
| Maximum per parameter | 4 | 3 | 3 |

Overall maximum: 4
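The rows of this example can be reproduced with a few lines of C, under the assumption (made for this sketch) that parameters are evaluated heaviest first and that each finished parameter keeps exactly one register occupied. The function name `registers_for_call` is hypothetical.

```c
#include <assert.h>

/* weights[] must be in decreasing order (heaviest parameter first).
   Returns the overall maximum number of registers in use. */
int registers_for_call(const int *weights, int count) {
    assert(count >= 0);
    int overall = 0;
    for (int i = 0; i < count; i++) {
        assert(i == 0 || weights[i - 1] >= weights[i]);
        int occupied = i;                    /* one register per finished parameter */
        int needed = weights[i] + occupied;  /* "maximum per parameter" */
        if (needed > overall) overall = needed;
    }
    return overall;
}
```

With stored weights 4, 2 and 1 the per-parameter maxima are 4 + 0, 2 + 1 and 1 + 2, so the call as a whole needs 4 registers.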

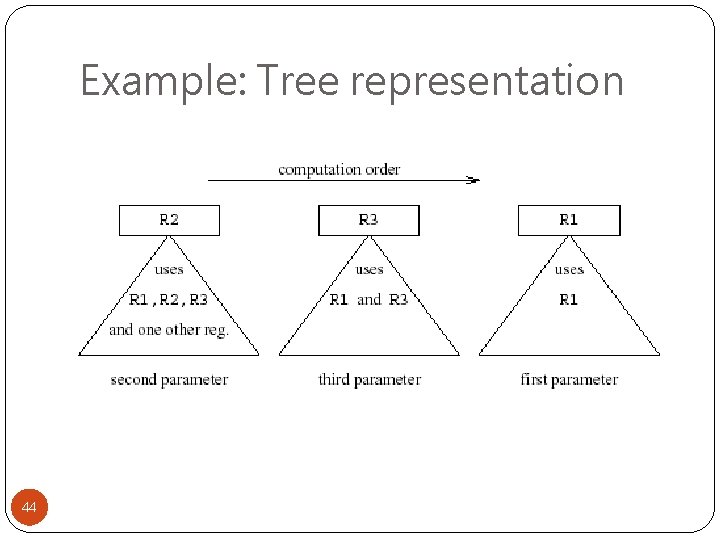

Example: Tree representation

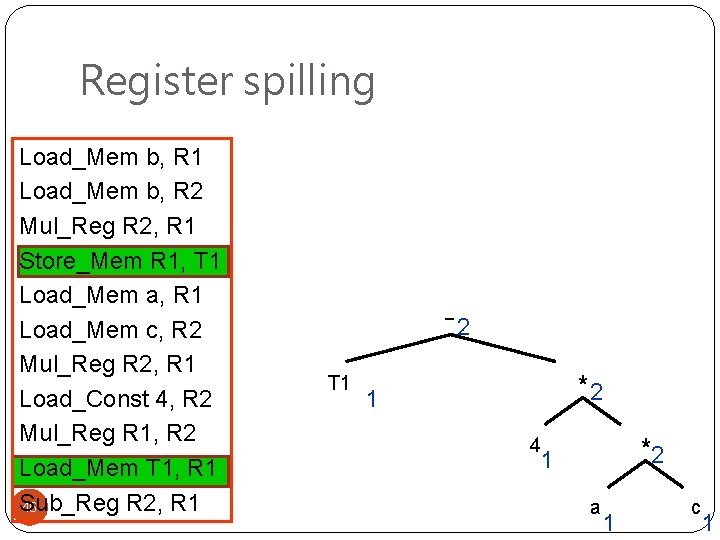

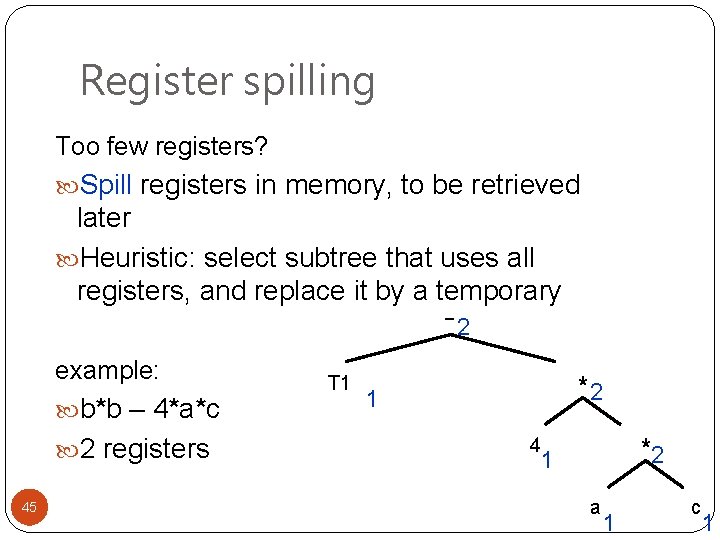

Register spilling

- Too few registers? Spill registers to memory, to be retrieved later
- Heuristic: select a subtree that uses all registers, and replace it by a temporary
- Example: b*b - 4*a*c with 2 registers

[Figure: register-weighted AST for b*b - 4*a*c; the root has weight 3 and a weight-2 subtree is replaced by the temporary T1]

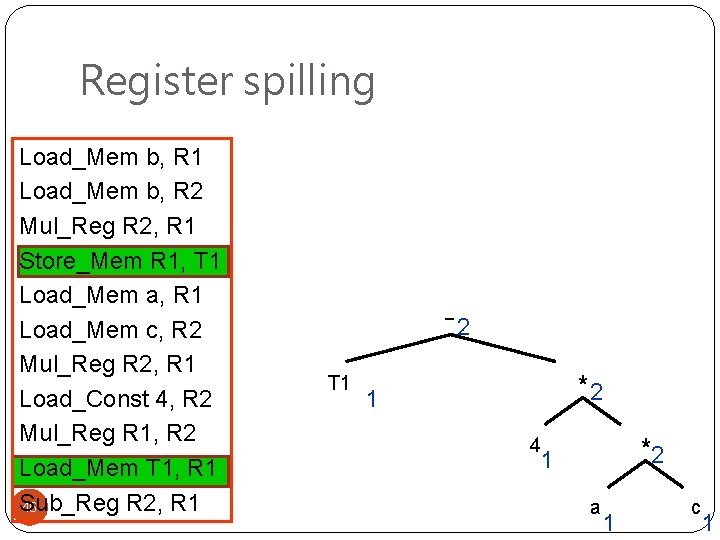

Register spilling

    Load_Mem   b, R1
    Load_Mem   b, R2
    Mul_Reg    R2, R1
    Store_Mem  R1, T1
    Load_Mem   a, R1
    Load_Mem   c, R2
    Mul_Reg    R2, R1
    Load_Const 4, R2
    Mul_Reg    R1, R2
    Load_Mem   T1, R1
    Sub_Reg    R2, R1

[Figure: the register-weighted AST with the spilled subtree marked T1]

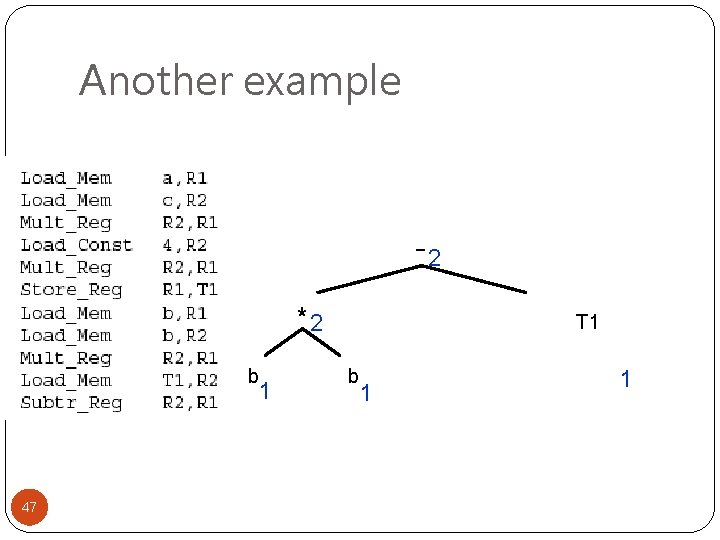

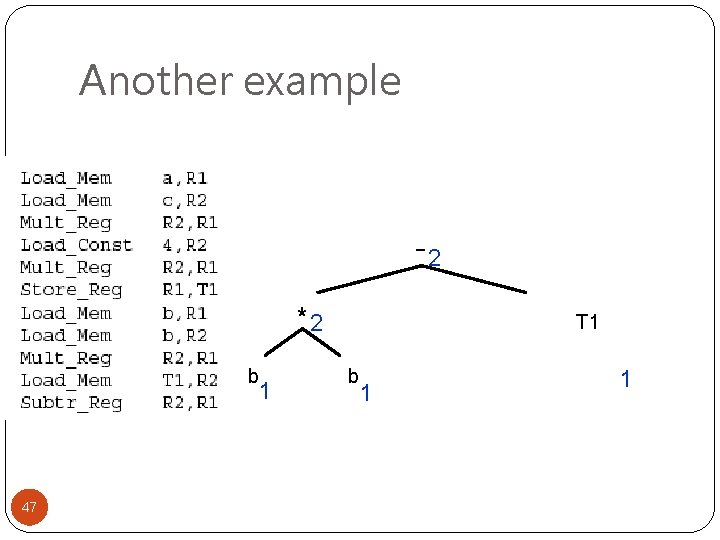

Another example

[Figure: register-weighted AST for b*b - 4*a*c with a subtree replaced by the temporary T1]

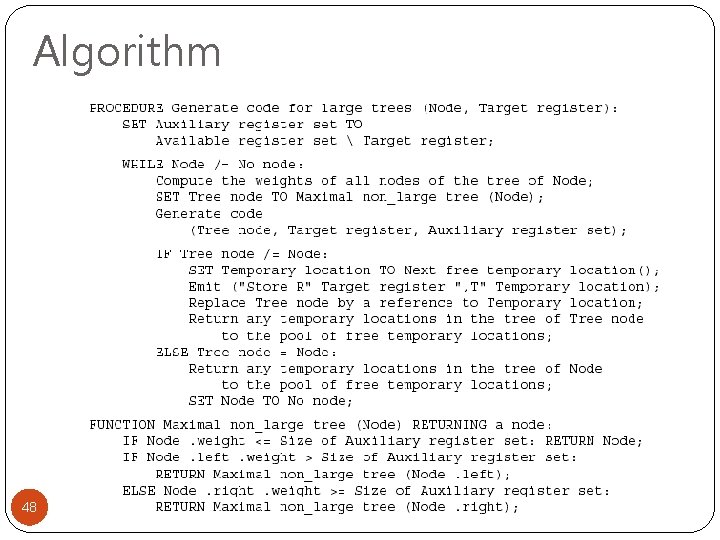

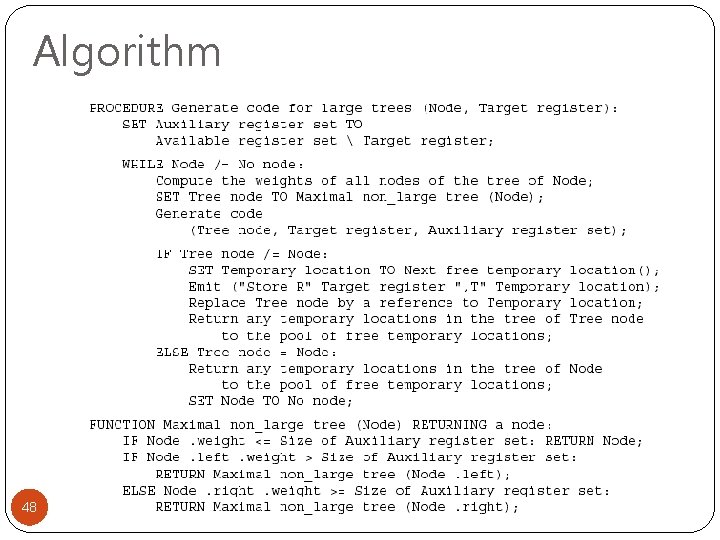

Algorithm

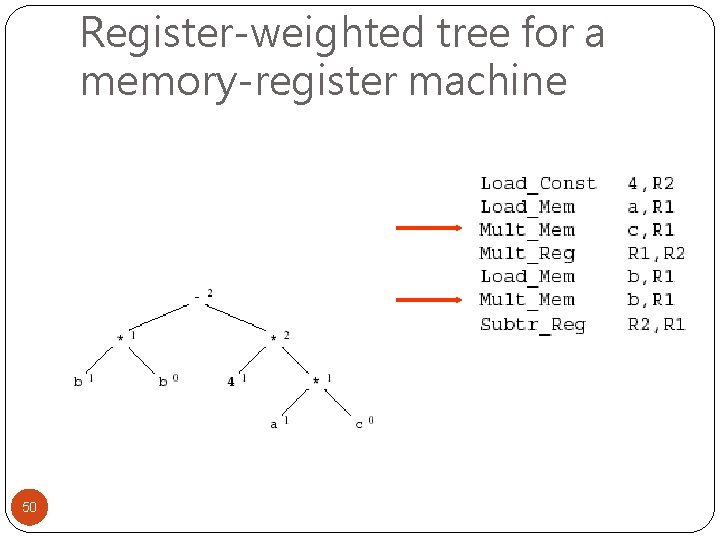

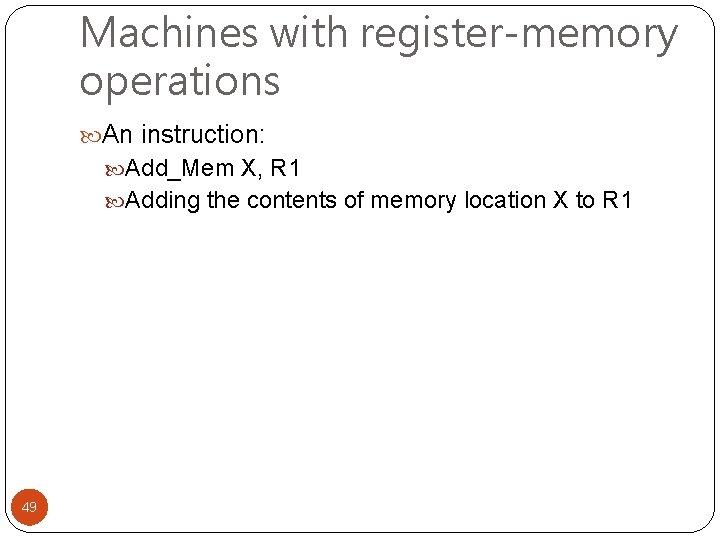

Machines with register-memory operations

An instruction such as Add_Mem X, R1 adds the contents of memory location X to R1.

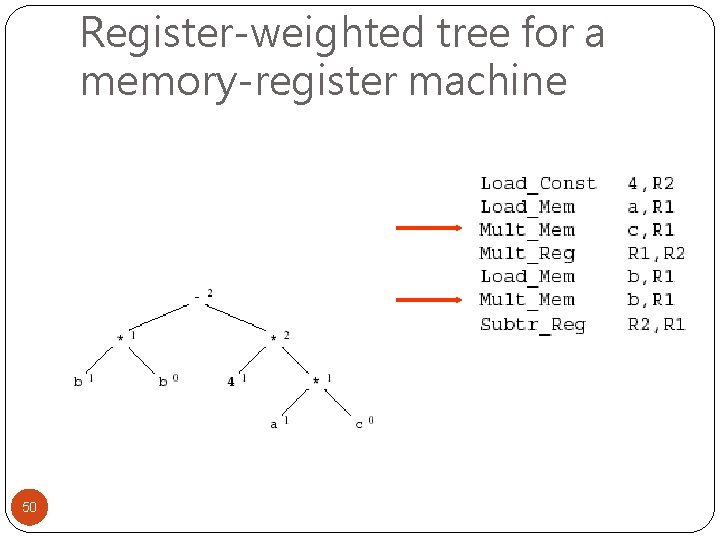

Register-weighted tree for a memory-register machine

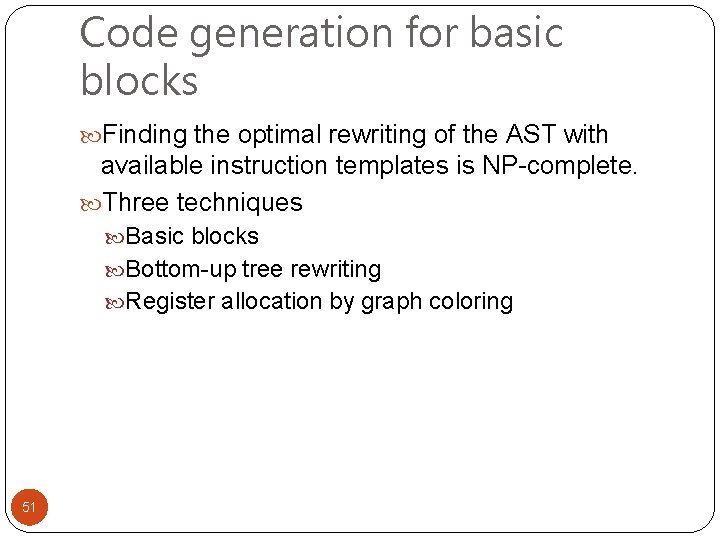

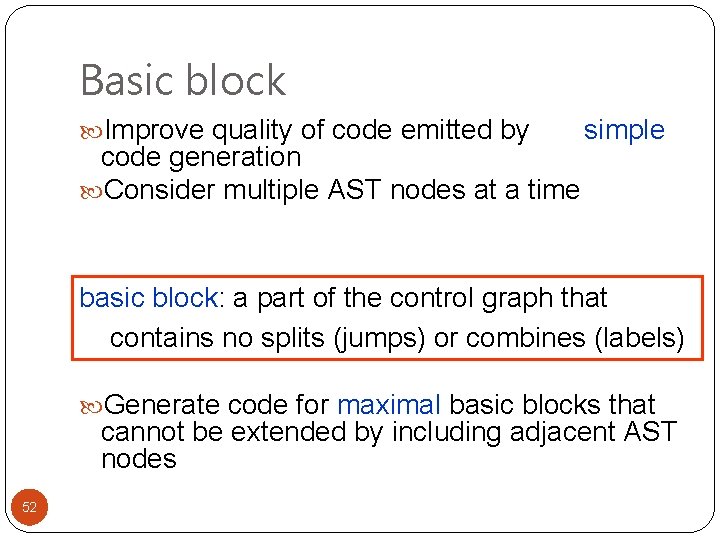

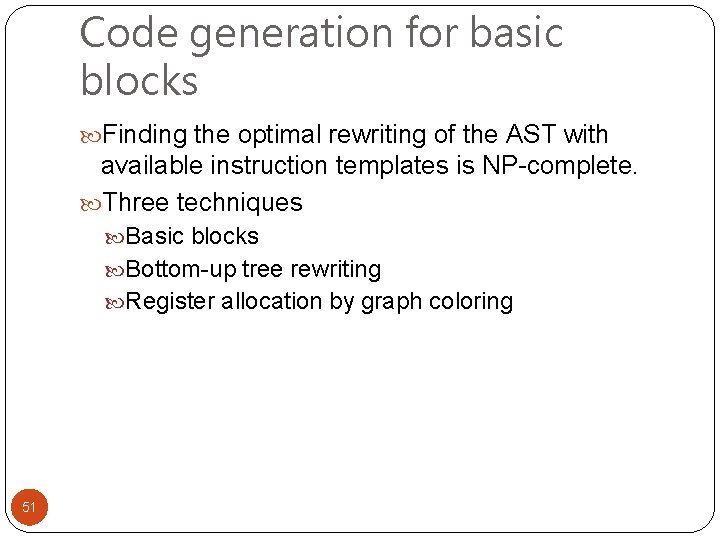

Code generation for basic blocks

Finding the optimal rewriting of the AST with available instruction templates is NP-complete.

Three techniques:

- Basic blocks
- Bottom-up tree rewriting
- Register allocation by graph coloring

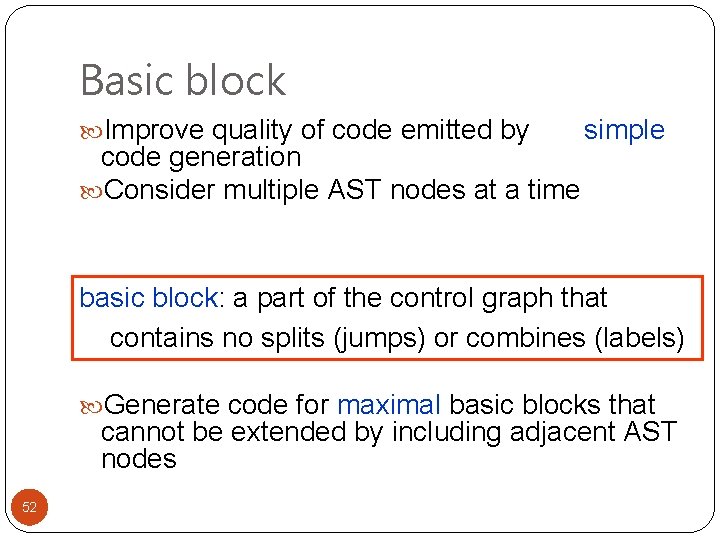

Basic block

- Improve quality of code emitted by code generation
- Consider multiple AST nodes at a time
- Simple basic block: a part of the control graph that contains no splits (jumps) or combines (labels)
- Generate code for maximal basic blocks that cannot be extended by including adjacent AST nodes

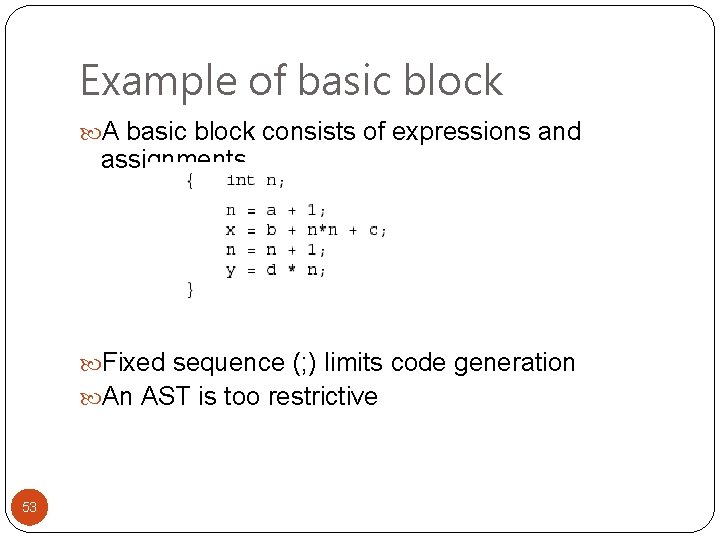

Example of basic block

- A basic block consists of expressions and assignments
- The fixed sequence (;) limits code generation
- An AST is too restrictive

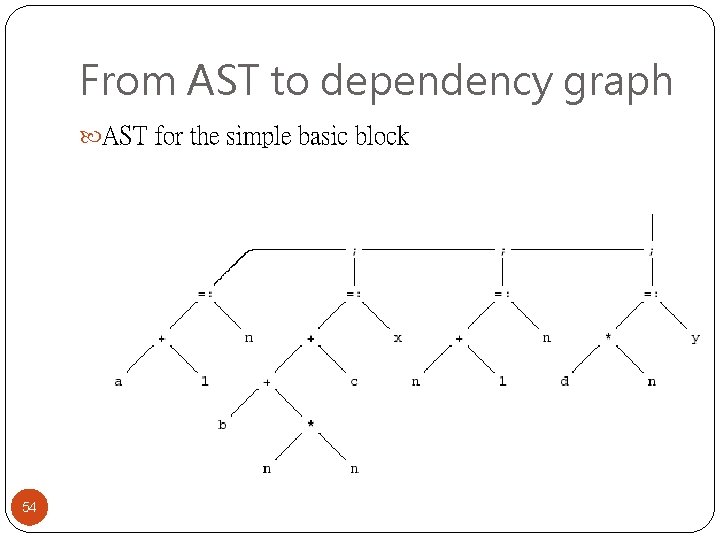

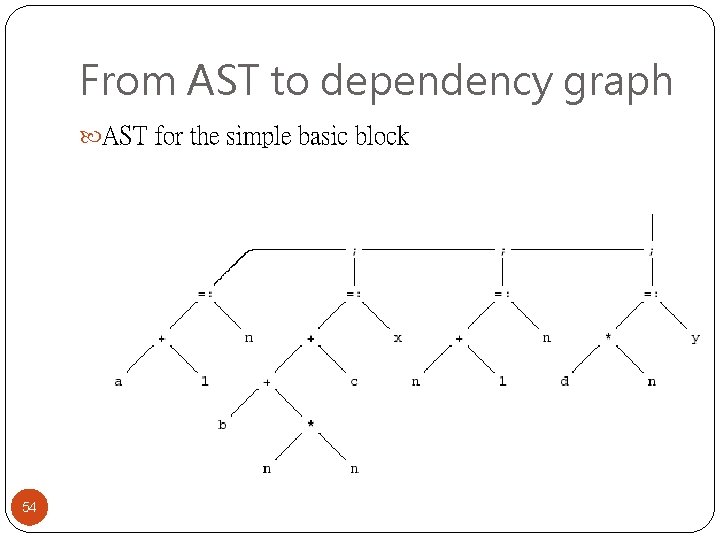

From AST to dependency graph

AST for the simple basic block

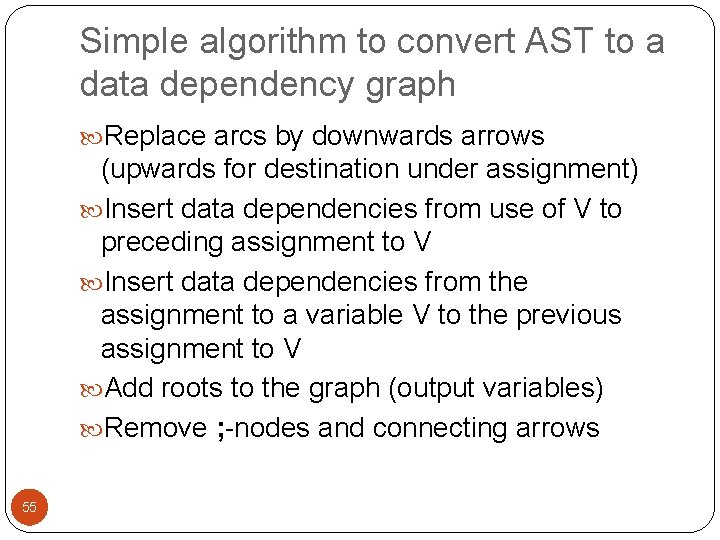

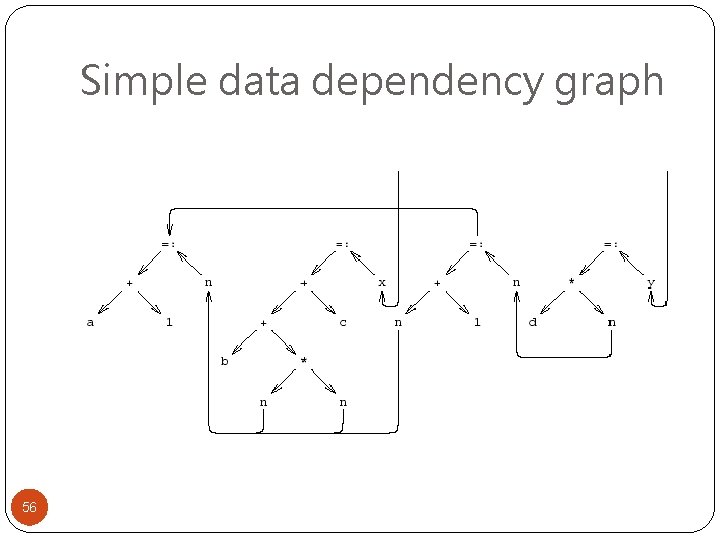

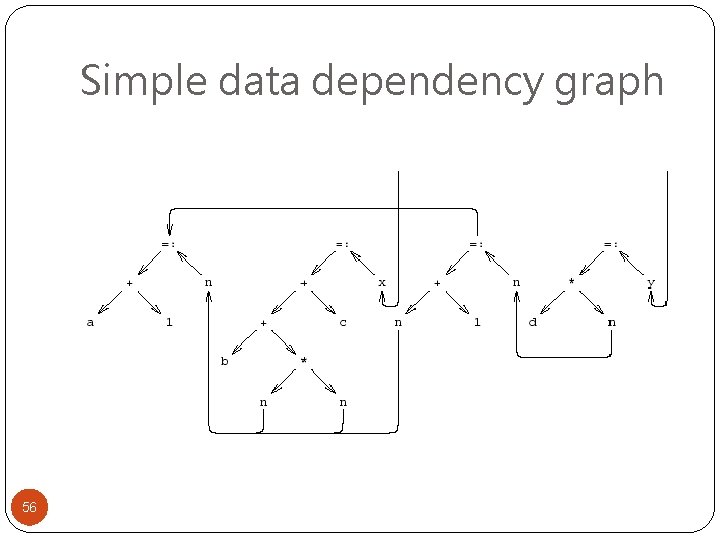

Simple algorithm to convert AST to a data dependency graph

- Replace arcs by downwards arrows (upwards for the destination under an assignment)
- Insert data dependencies from each use of V to the preceding assignment to V
- Insert data dependencies from the assignment to a variable V to the previous assignment to V
- Add roots to the graph (output variables)
- Remove ;-nodes and connecting arrows

Simple data dependency graph

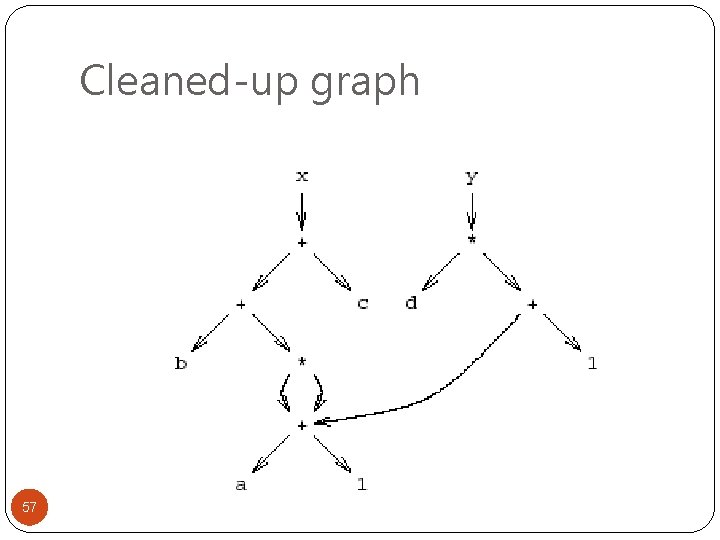

Cleaned-up graph

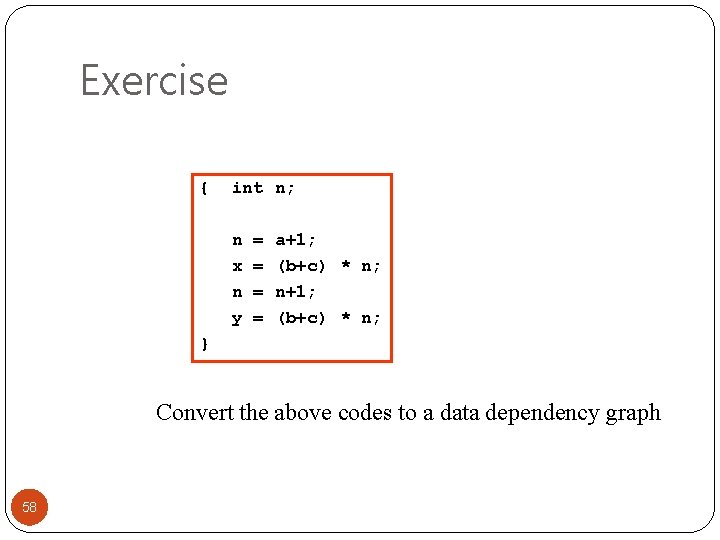

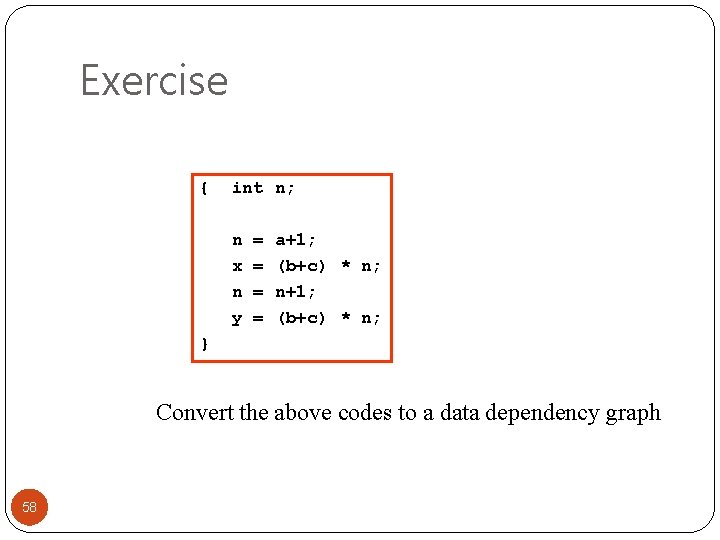

Exercise

    {
        int n;
        n = a+1;
        x = (b+c) * n;
        n = n+1;
        y = (b+c) * n;
    }

Convert the above code to a data dependency graph.

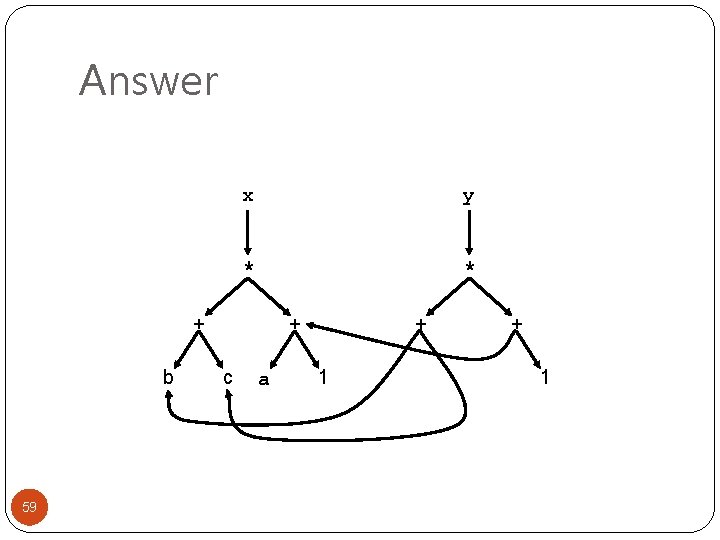

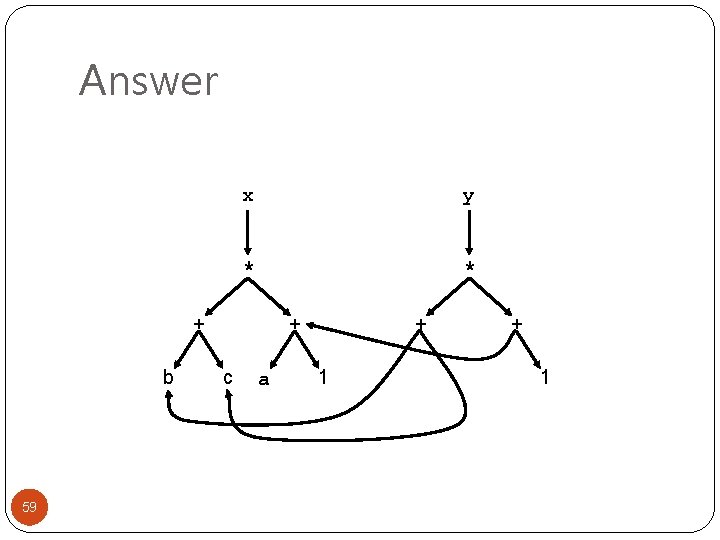

Answer

[Figure: data dependency graph with roots x and y over the nodes *, *, +, +, +, a, b, c and 1]

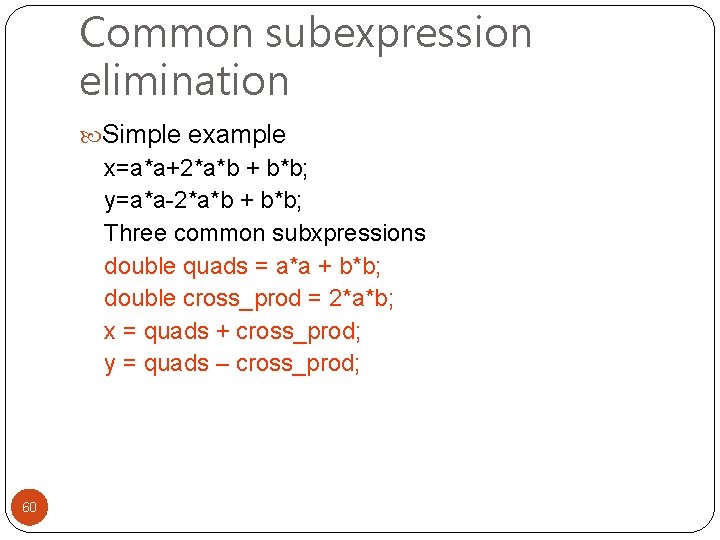

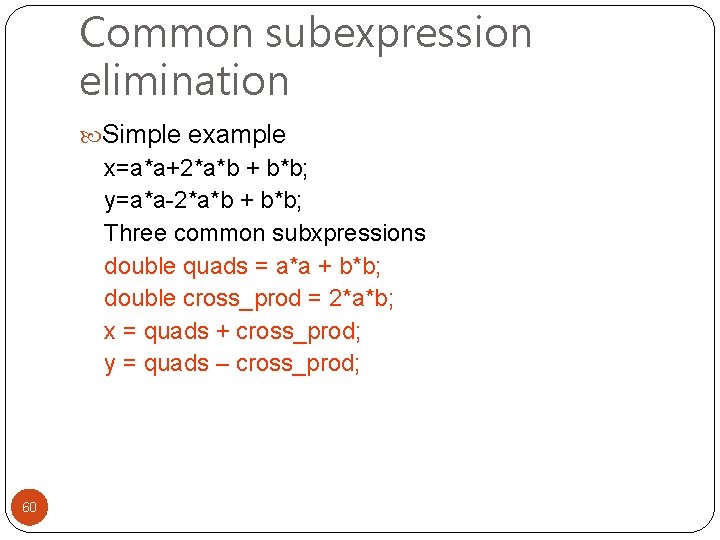

Common subexpression elimination

Simple example:

    x = a*a + 2*a*b + b*b;
    y = a*a - 2*a*b + b*b;

Three common subexpressions:

    double quads = a*a + b*b;
    double cross_prod = 2*a*b;
    x = quads + cross_prod;
    y = quads - cross_prod;

Common subexpression

Equal subexpressions in a basic block are not necessarily common subexpressions:

    x = a*a + 2*a*b + b*b;
    a = b = 0;
    y = a*a - 2*a*b + b*b;

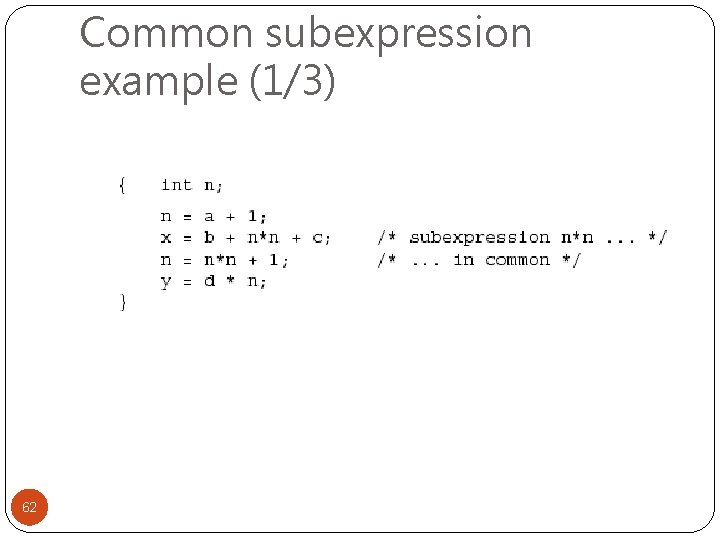

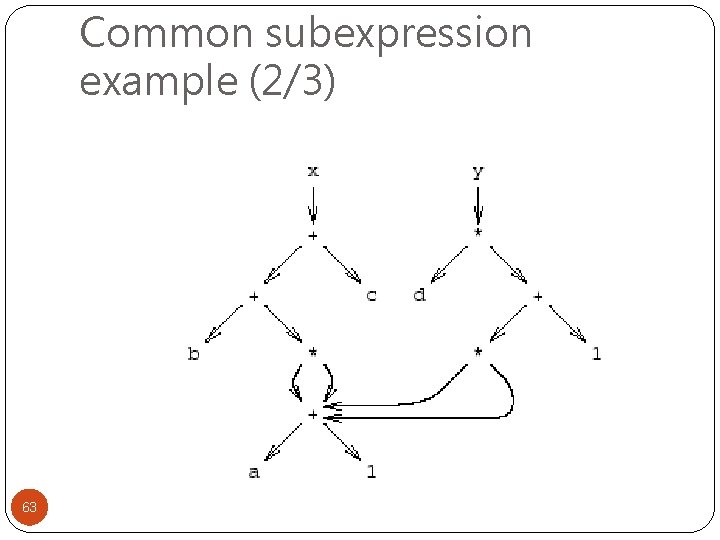

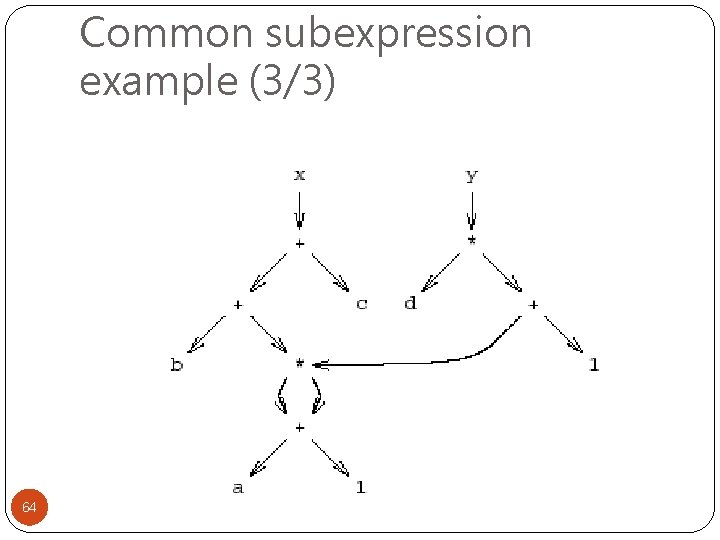

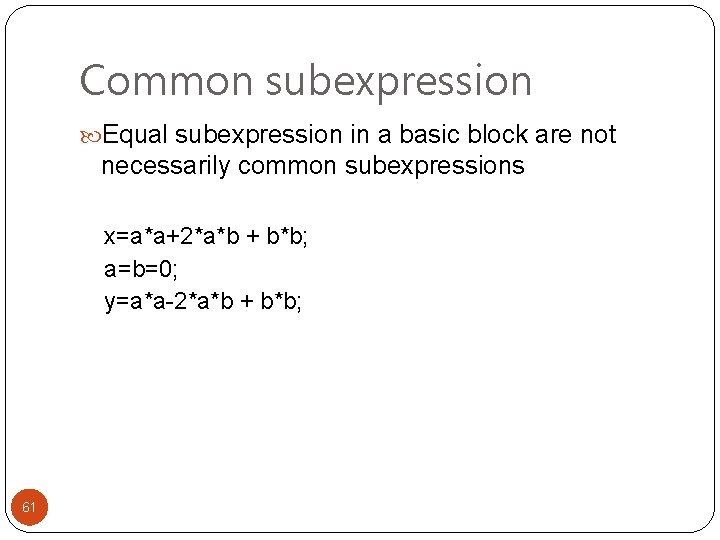

Common subexpression example (1/3)

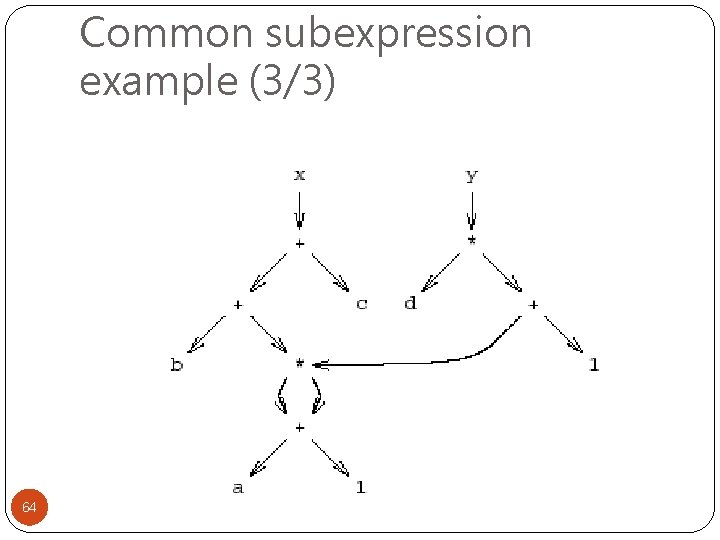

Common subexpression example (2/3)

Common subexpression example (3/3)

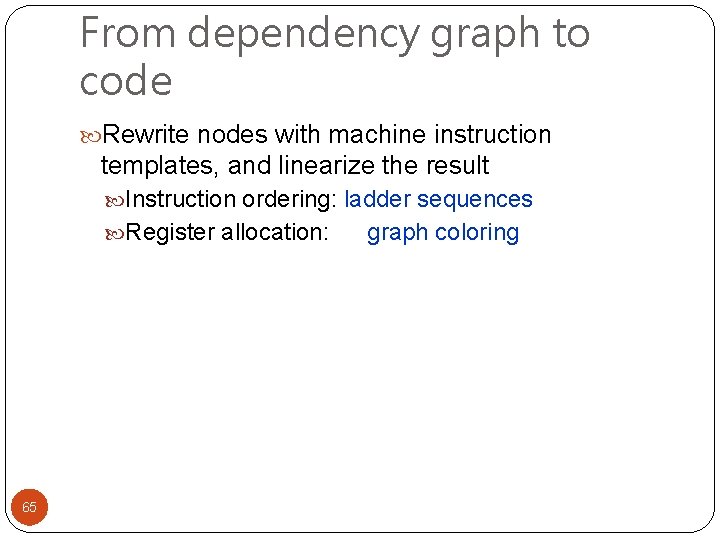

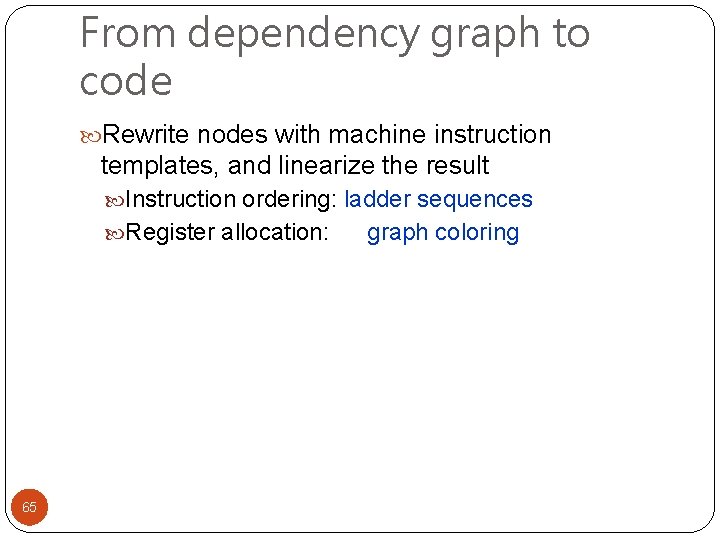

From dependency graph to code

- Rewrite nodes with machine instruction templates, and linearize the result
- Instruction ordering: ladder sequences
- Register allocation: graph coloring

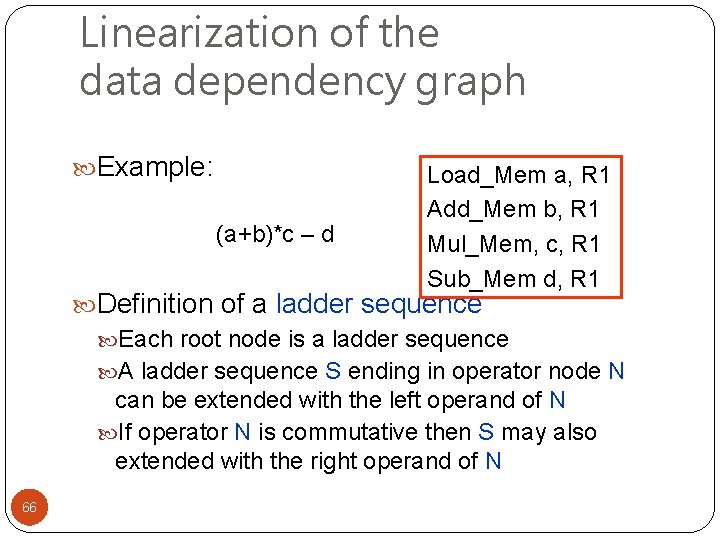

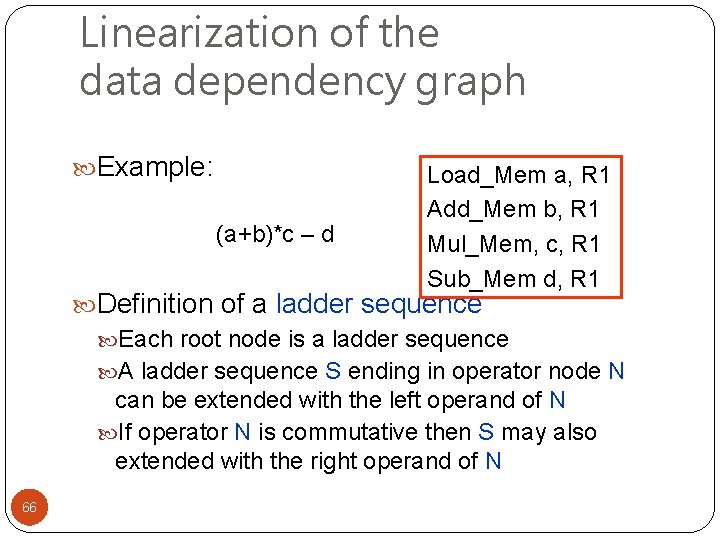

Linearization of the data dependency graph

Example: (a+b)*c - d

    Load_Mem a, R1
    Add_Mem  b, R1
    Mul_Mem  c, R1
    Sub_Mem  d, R1

Definition of a ladder sequence:

- Each root node is a ladder sequence
- A ladder sequence S ending in operator node N can be extended with the left operand of N
- If operator N is commutative, then S may also be extended with the right operand of N

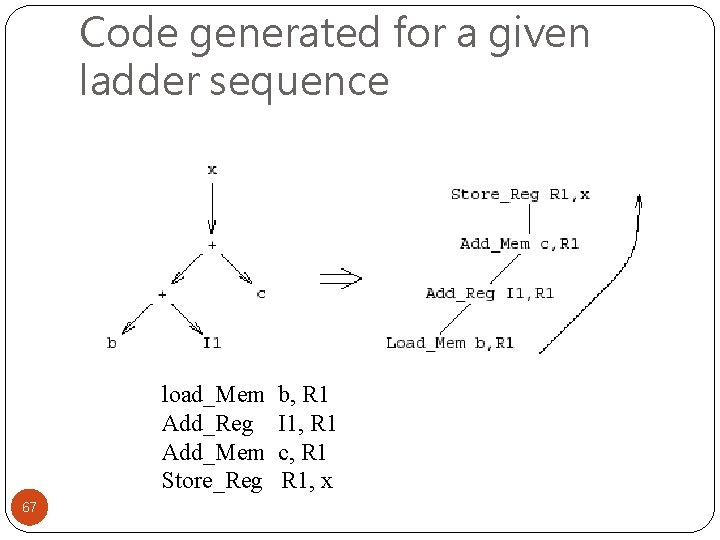

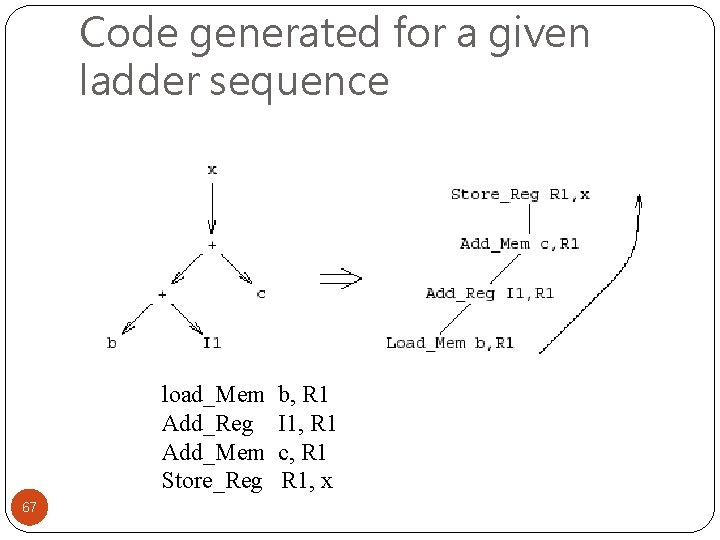

Code generated for a given ladder sequence

    Load_Mem  b, R1
    Add_Reg   I1, R1
    Add_Mem   c, R1
    Store_Reg R1, x

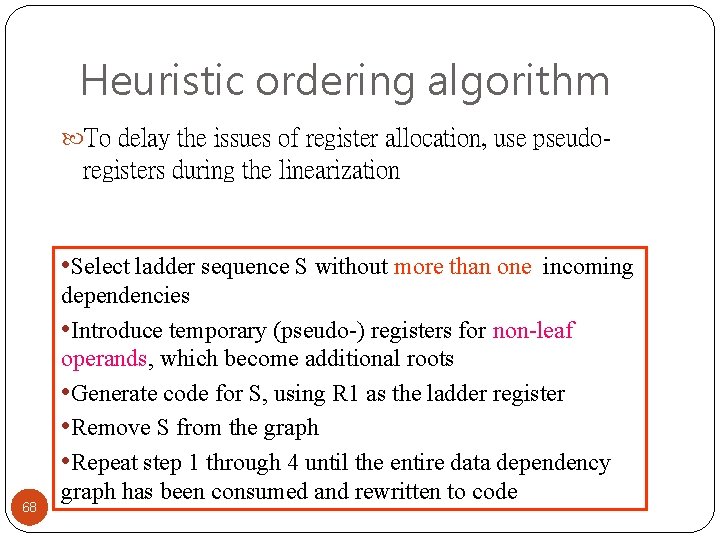

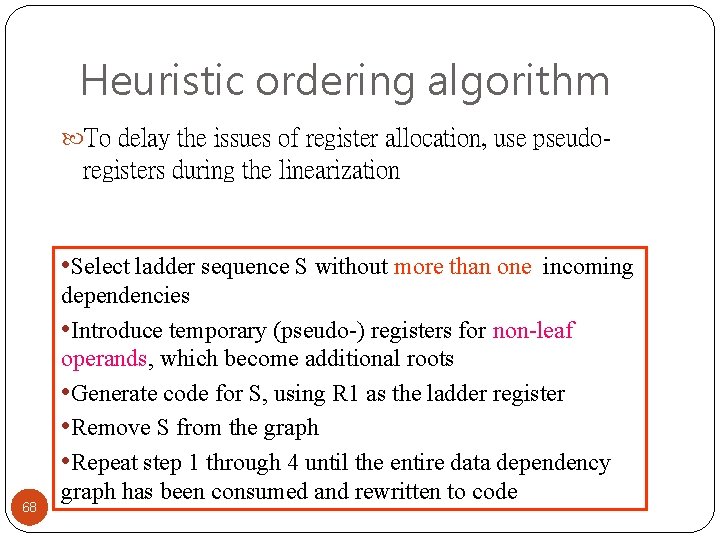

Heuristic ordering algorithm

To delay the issues of register allocation, use pseudo-registers during the linearization:

1. Select a ladder sequence S in which no node has more than one incoming dependency
2. Introduce temporary (pseudo-)registers for non-leaf operands, which become additional roots
3. Generate code for S, using R1 as the ladder register
4. Remove S from the graph
5. Repeat steps 1 through 4 until the entire data dependency graph has been consumed and rewritten to code

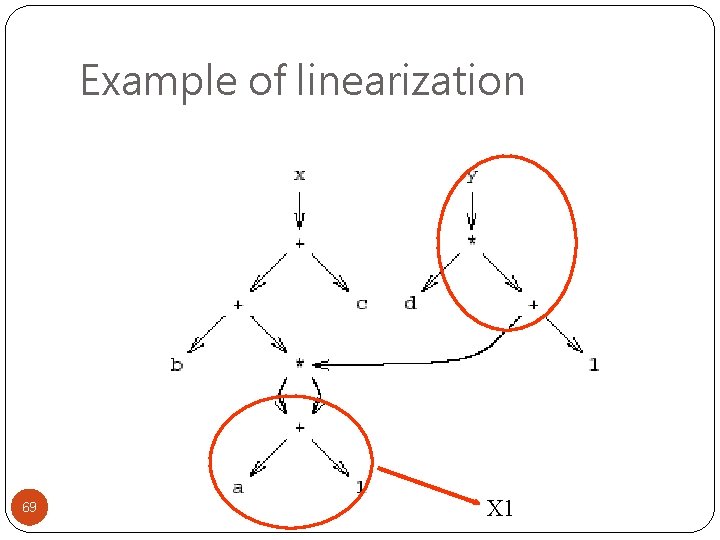

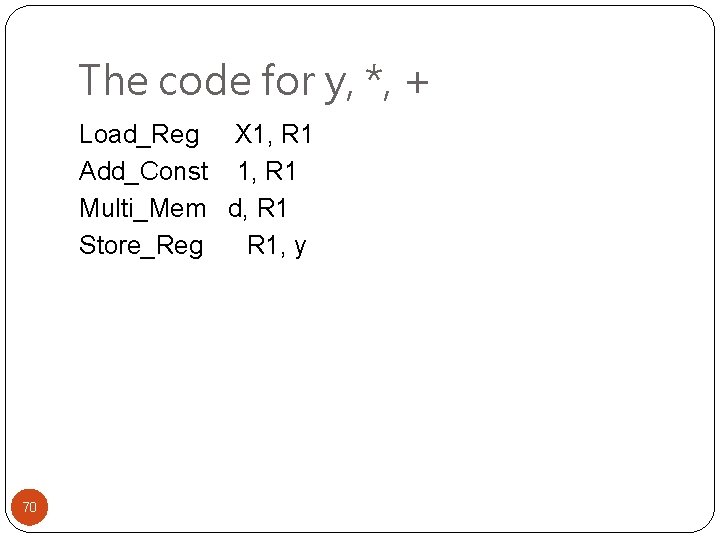

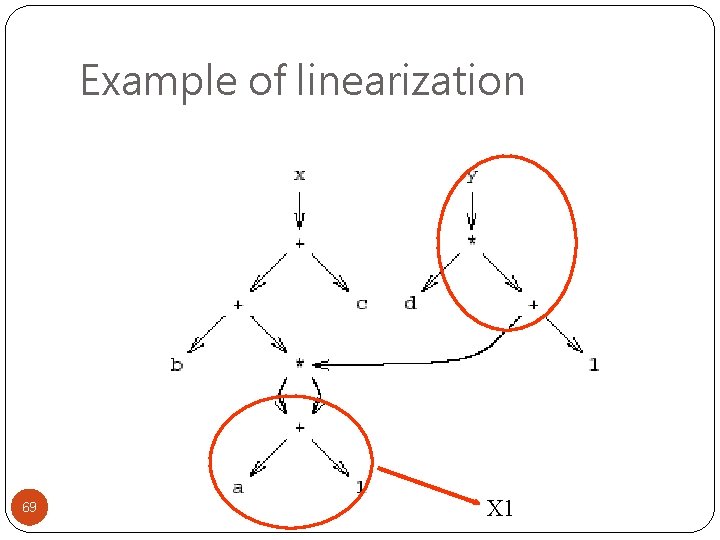

Example of linearization

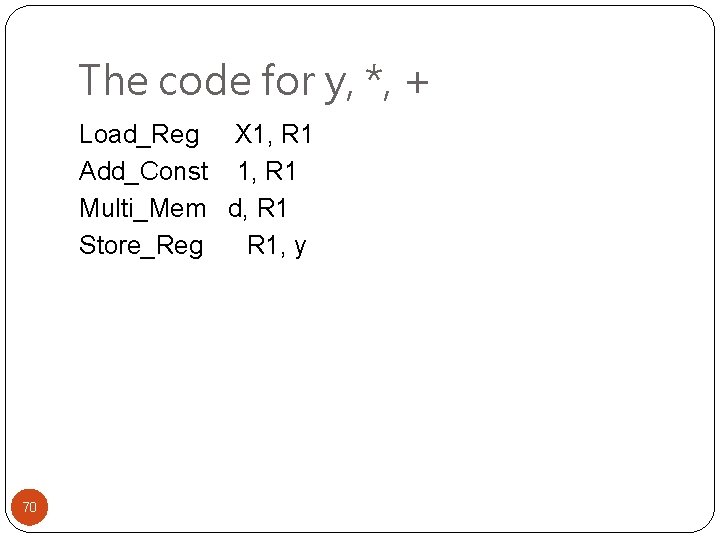

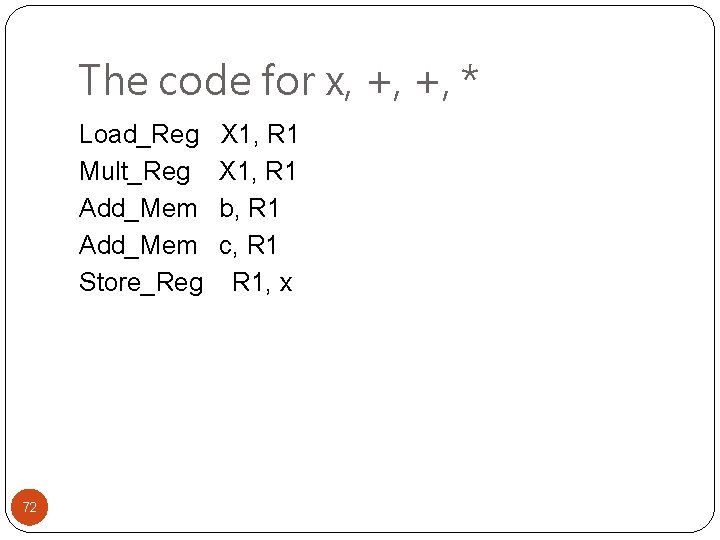

The code for y, *, +

    Load_Reg  X1, R1
    Add_Const 1, R1
    Mult_Mem  d, R1
    Store_Reg R1, y

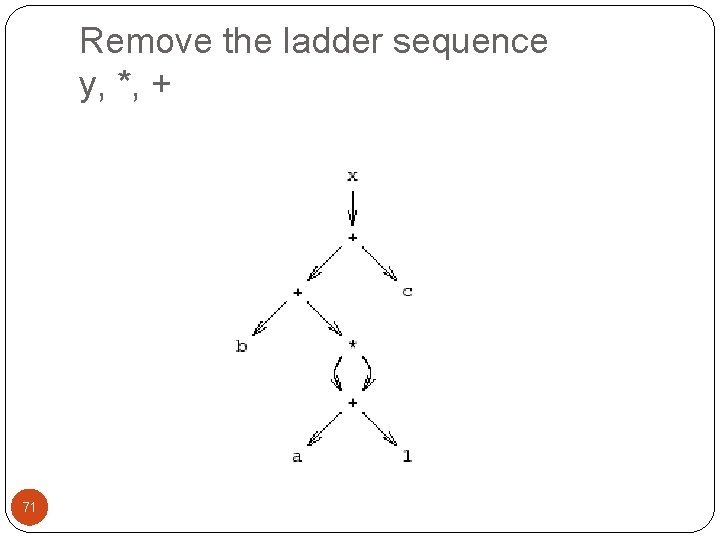

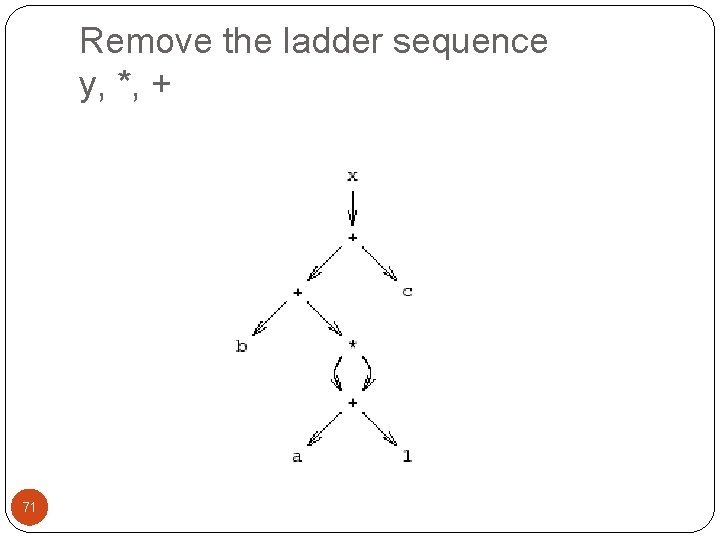

Remove the ladder sequence y, *, +

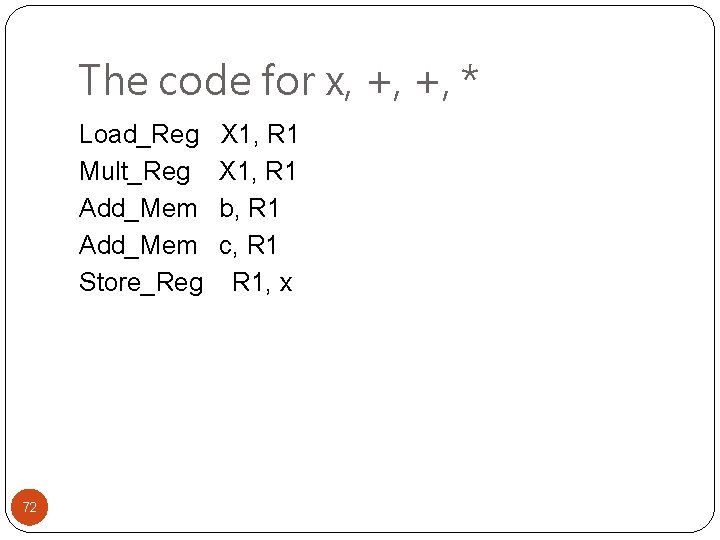

The code for x, +, +, *

    Load_Reg  X1, R1
    Mult_Reg  X1, R1
    Add_Mem   b, R1
    Add_Mem   c, R1
    Store_Reg R1, x

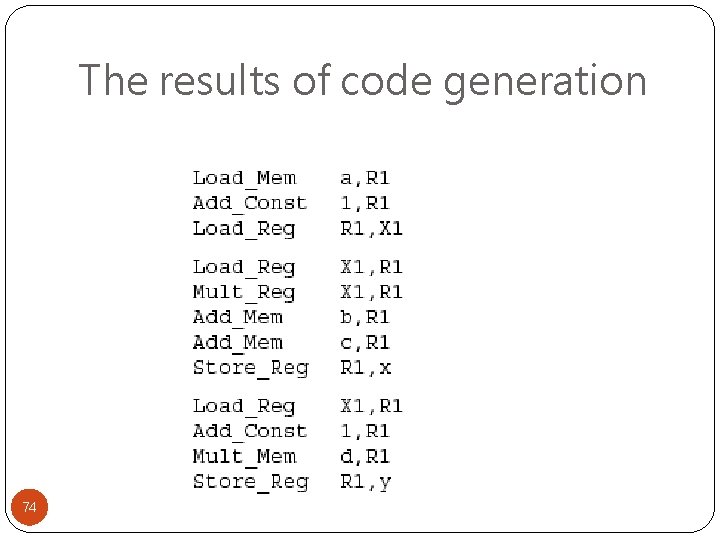

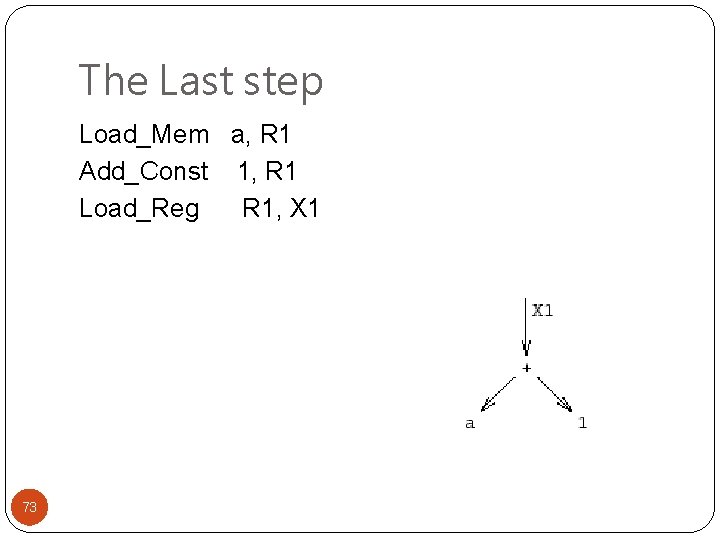

The last step

    Load_Mem  a, R1
    Add_Const 1, R1
    Load_Reg  R1, X1

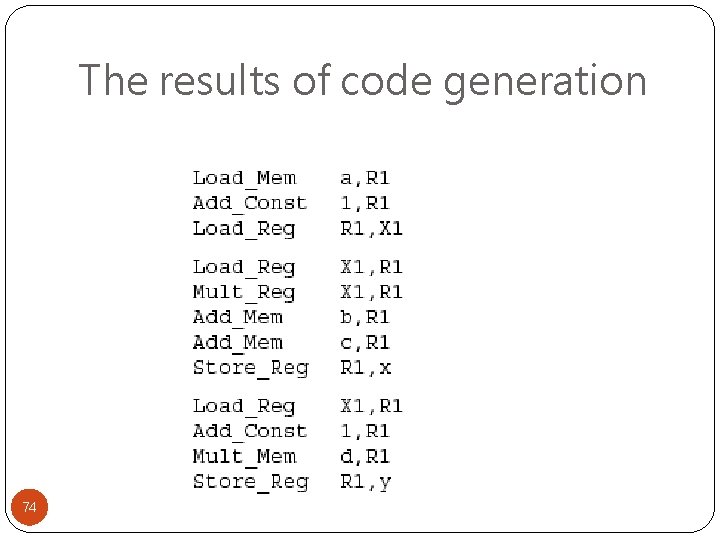

The results of code generation

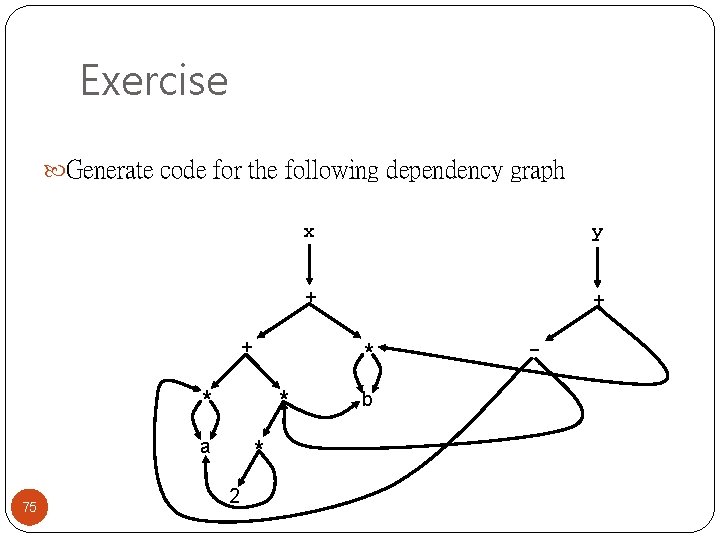

Exercise

Generate code for the following dependency graph.

[Figure: data dependency graph with roots x and y, built from +, - and * nodes over a, b and the constant 2]

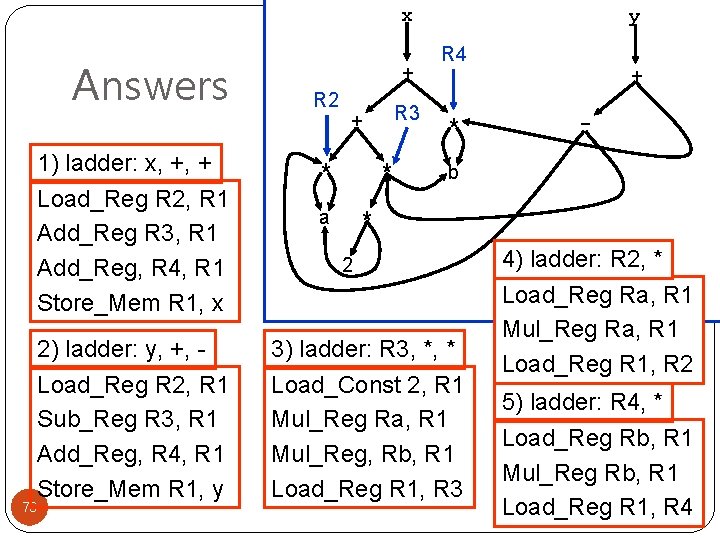

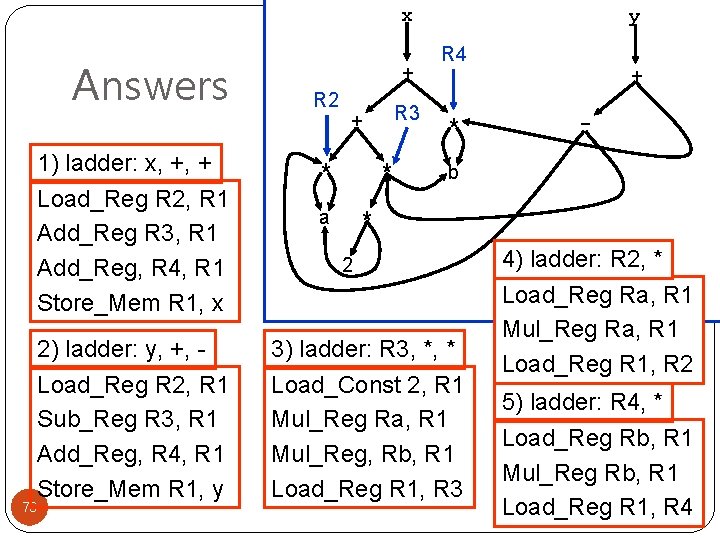

Answers

1) ladder: x, +, +

    Load_Reg  R2, R1
    Add_Reg   R3, R1
    Add_Reg   R4, R1
    Store_Mem R1, x

2) ladder: y, +, -

    Load_Reg  R2, R1
    Sub_Reg   R3, R1
    Add_Reg   R4, R1
    Store_Mem R1, y

3) ladder: R3, *, *

    Load_Const 2, R1
    Mul_Reg    Ra, R1
    Mul_Reg    Rb, R1
    Load_Reg   R1, R3

4) ladder: R2, *

    Load_Reg Ra, R1
    Mul_Reg  Ra, R1
    Load_Reg R1, R2

5) ladder: R4, *

    Load_Reg Rb, R1
    Mul_Reg  Rb, R1
    Load_Reg R1, R4

[Figure: the dependency graph with the pseudo-registers R2, R3 and R4 marked as additional roots]

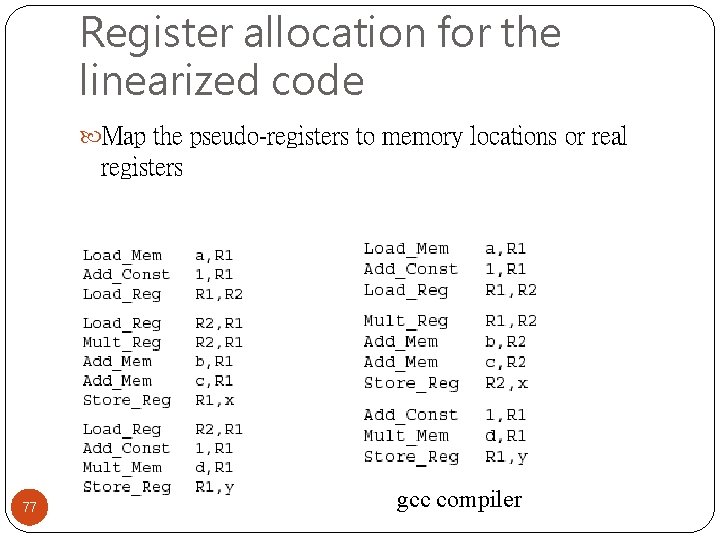

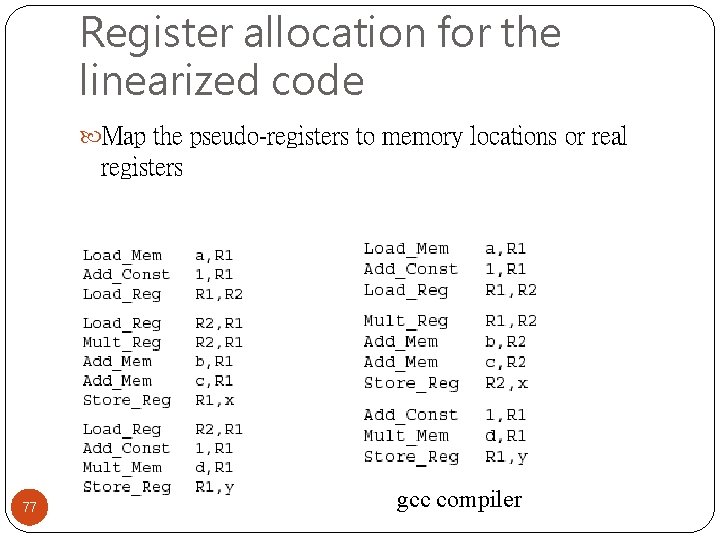

Register allocation for the linearized code

Map the pseudo-registers to memory locations or real registers.

[Figure: gcc compiler]

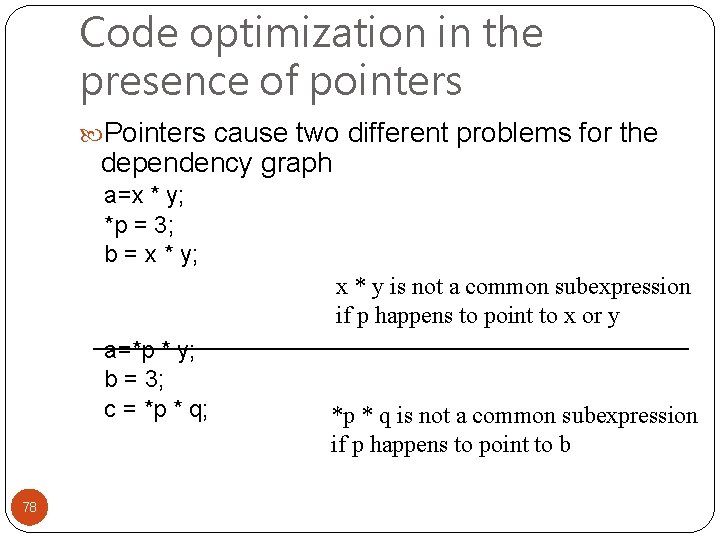

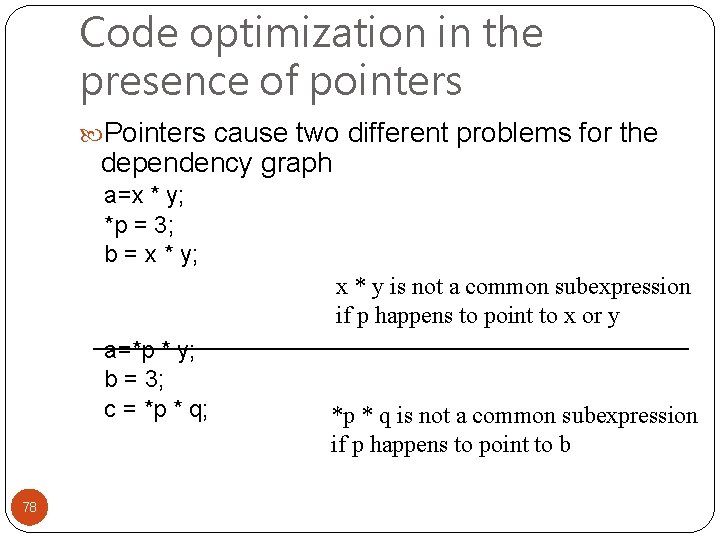

Code optimization in the presence of pointers

Pointers cause two different problems for the dependency graph.

    a = x * y;
    *p = 3;
    b = x * y;

Here x * y is not a common subexpression if p happens to point to x or y.

    a = *p * q;
    b = 3;
    c = *p * q;

Here *p * q is not a common subexpression if p happens to point to b.

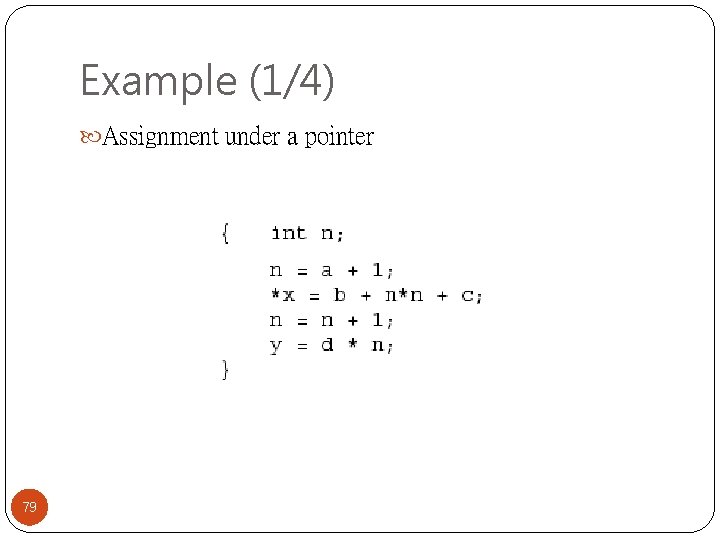

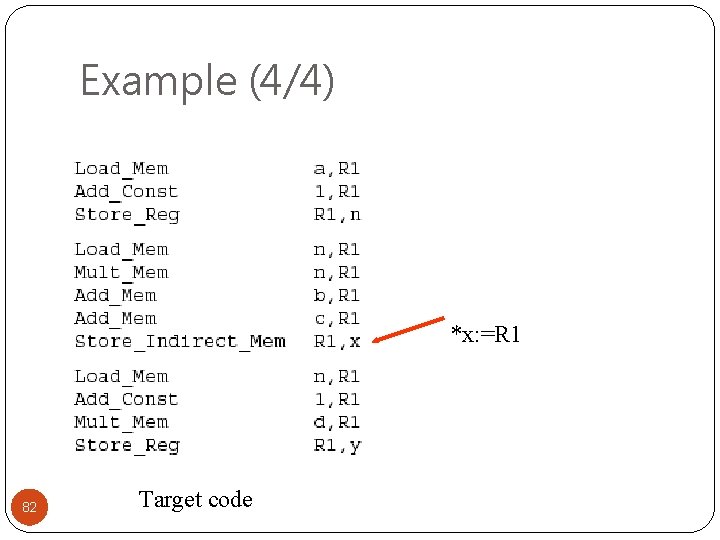

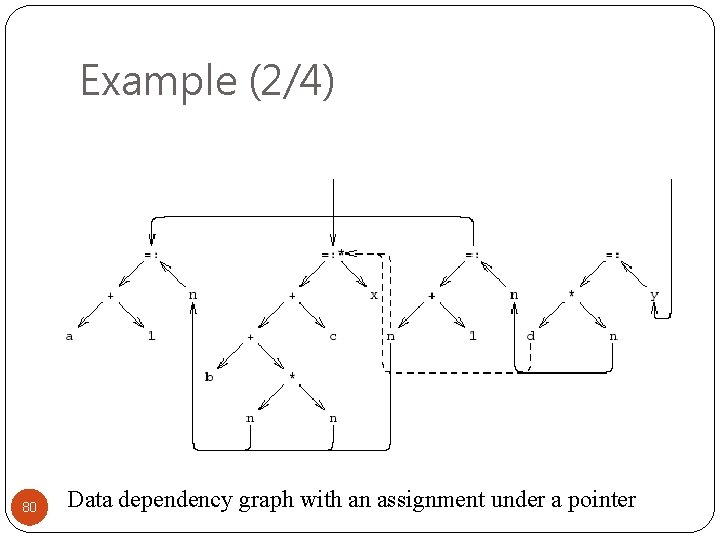

Example (1/4)

Assignment under a pointer

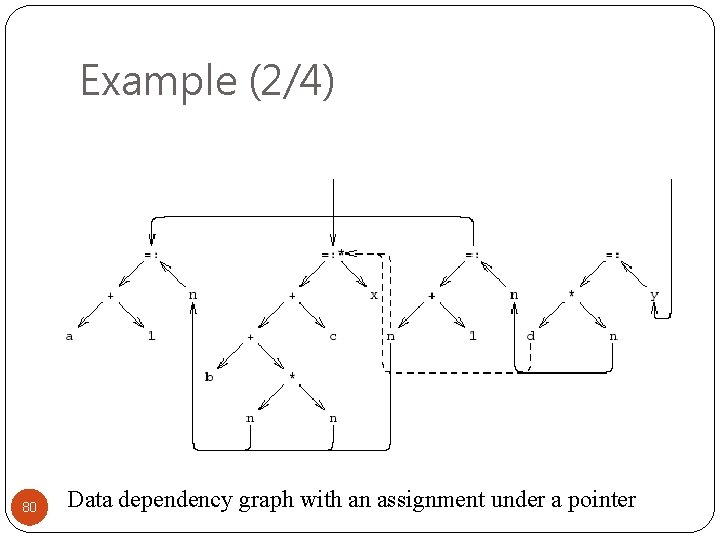

Example (2/4)

Data dependency graph with an assignment under a pointer

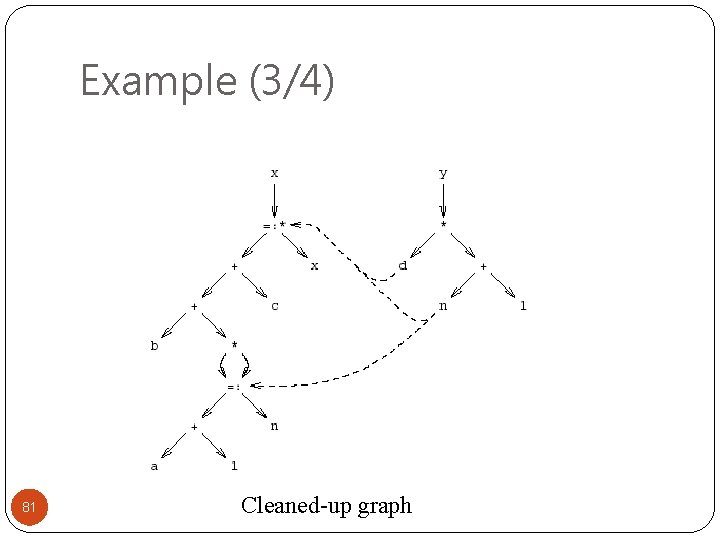

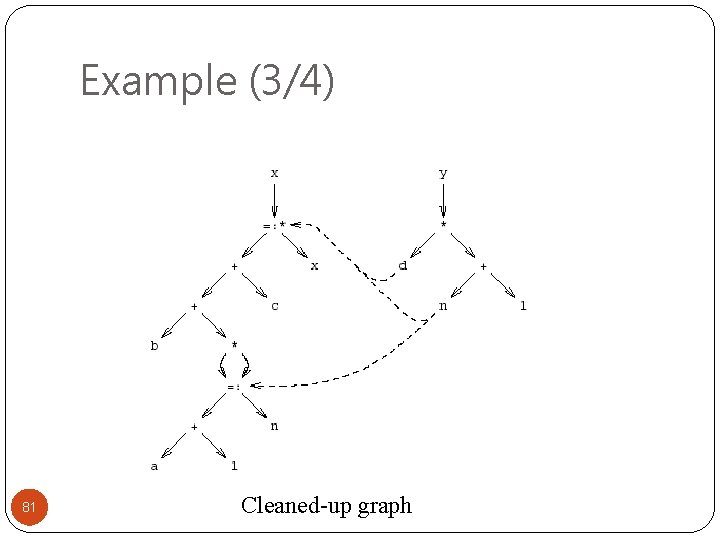

Example (3/4)

Cleaned-up graph

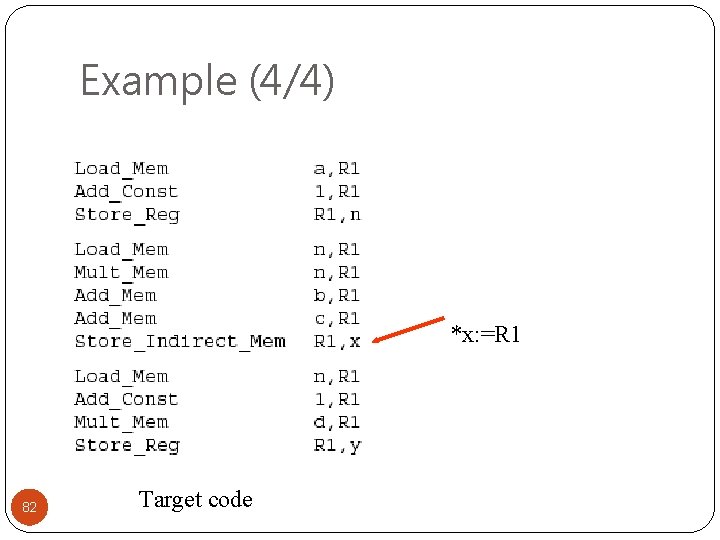

Example (4/4)

Target code

[Figure: target code, including the store *x := R1]

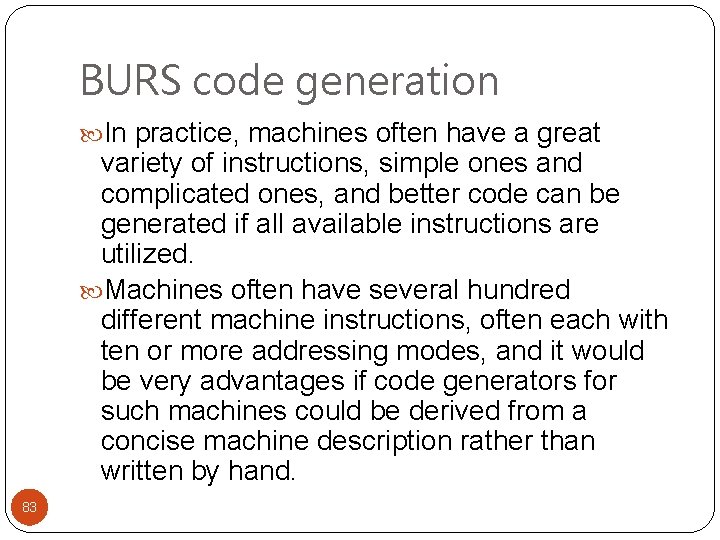

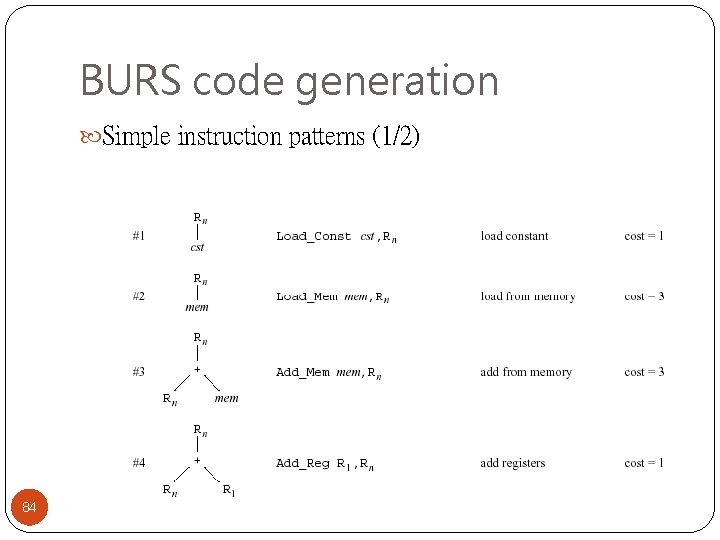

BURS code generation

In practice, machines often have a great variety of instructions, simple ones and complicated ones, and better code can be generated if all available instructions are utilized. Machines often have several hundred different machine instructions, often each with ten or more addressing modes, and it would be very advantageous if code generators for such machines could be derived from a concise machine description rather than written by hand.

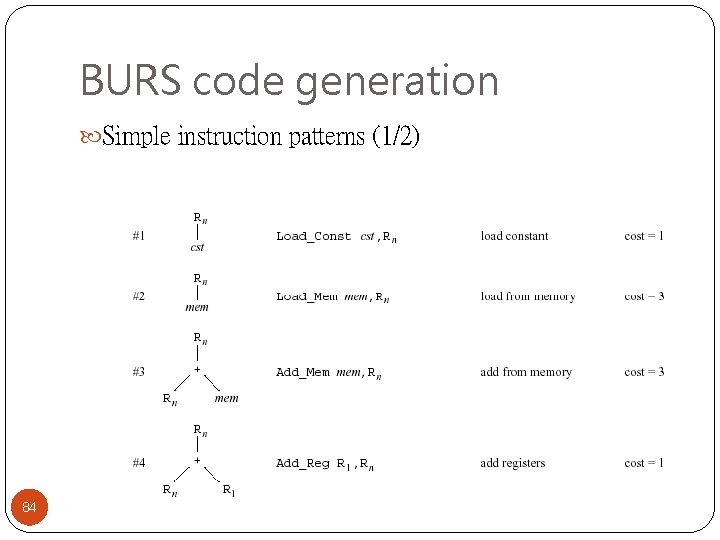

BURS code generation

Simple instruction patterns (1/2)

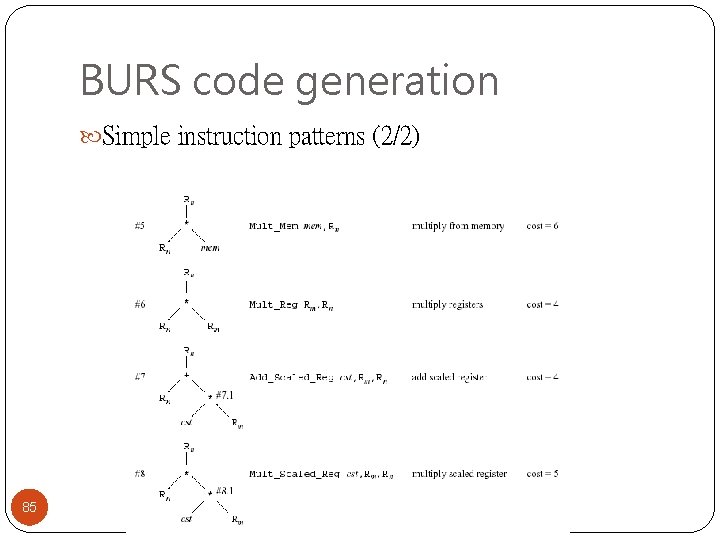

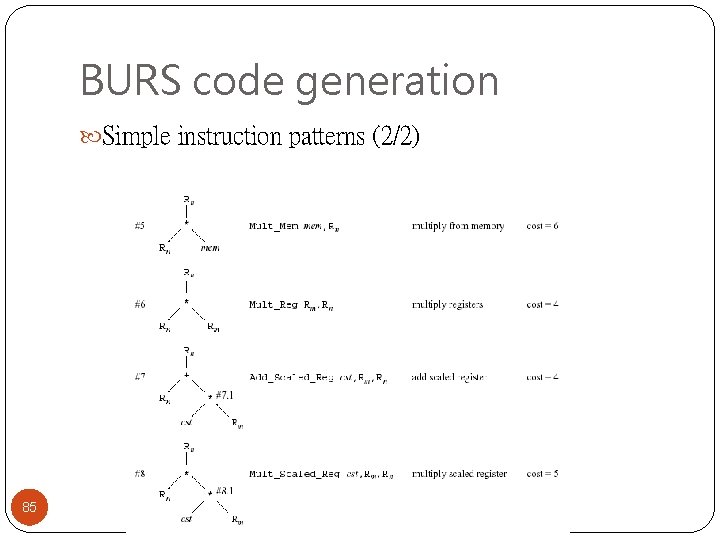

BURS code generation

Simple instruction patterns (2/2)

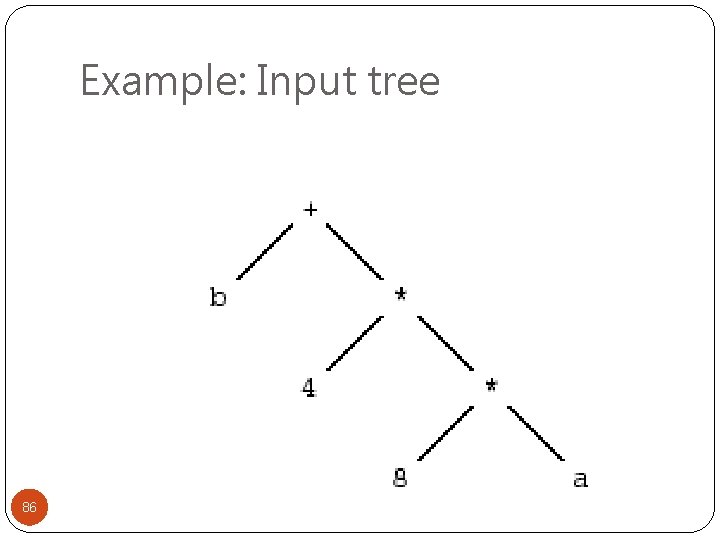

Example: Input tree

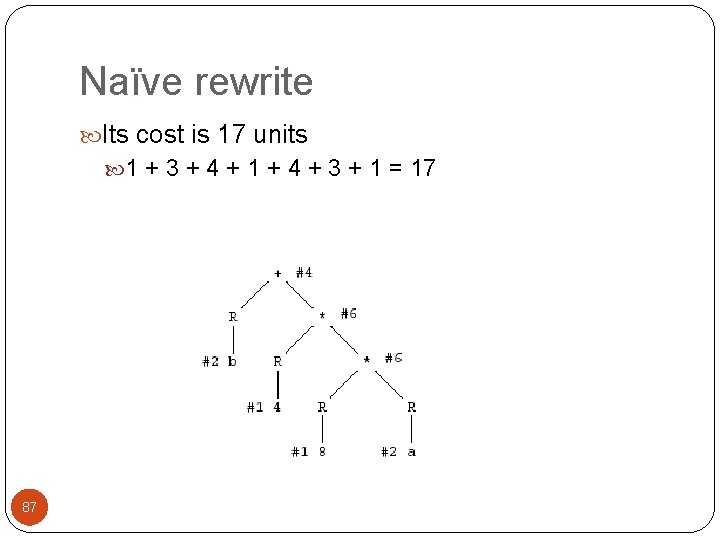

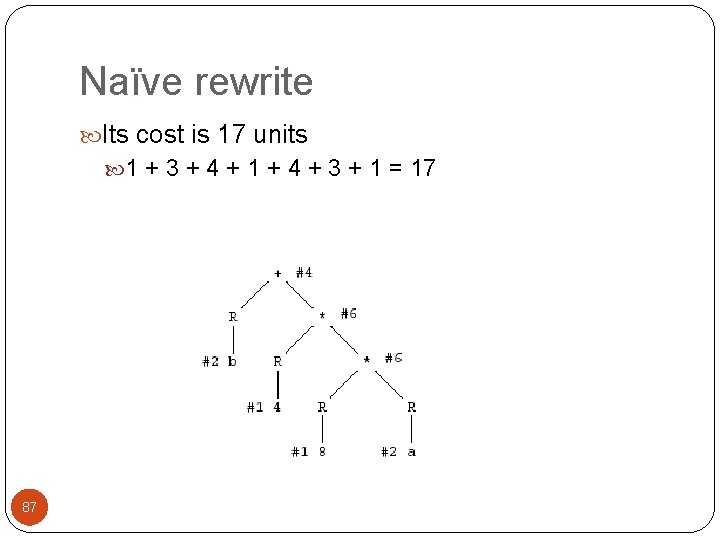

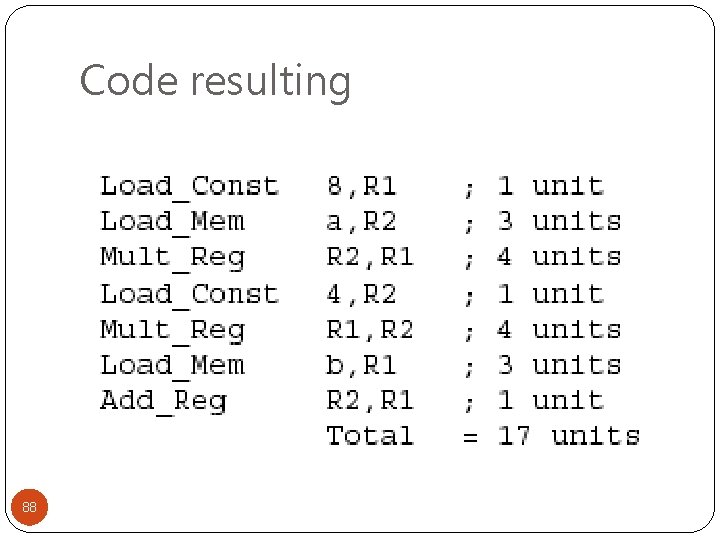

Naïve rewrite

Its cost is 17 units: 1 + 3 + 4 + 1 + 4 + 3 + 1 = 17

Code resulting

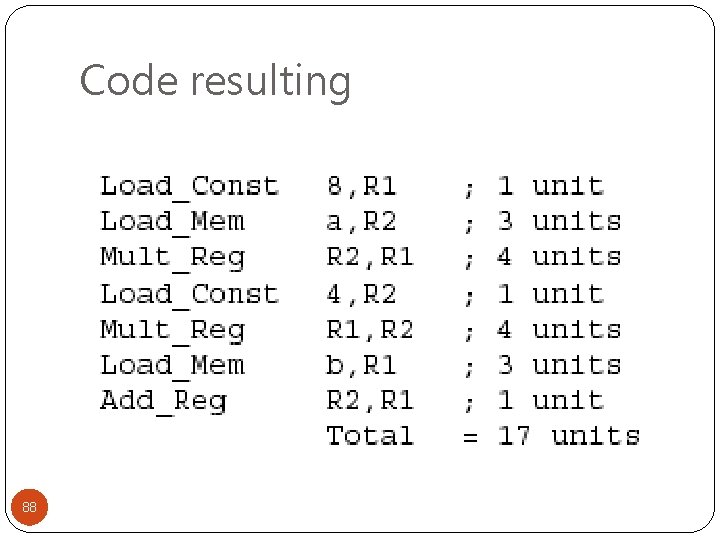

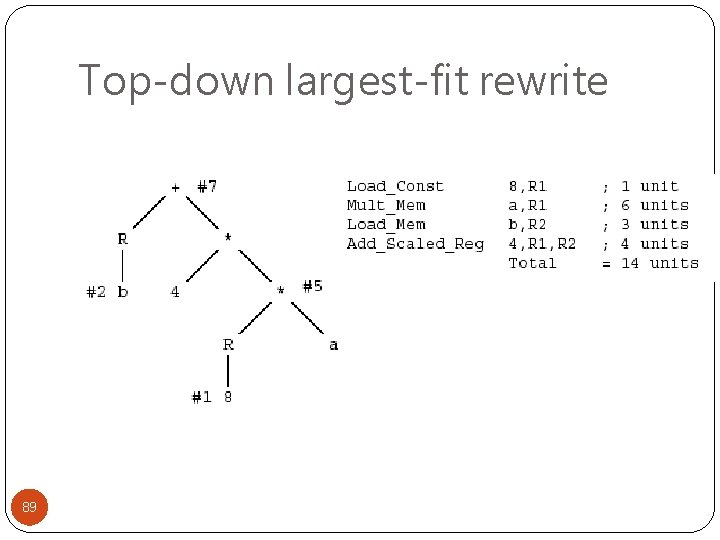

Top-down largest-fit rewrite

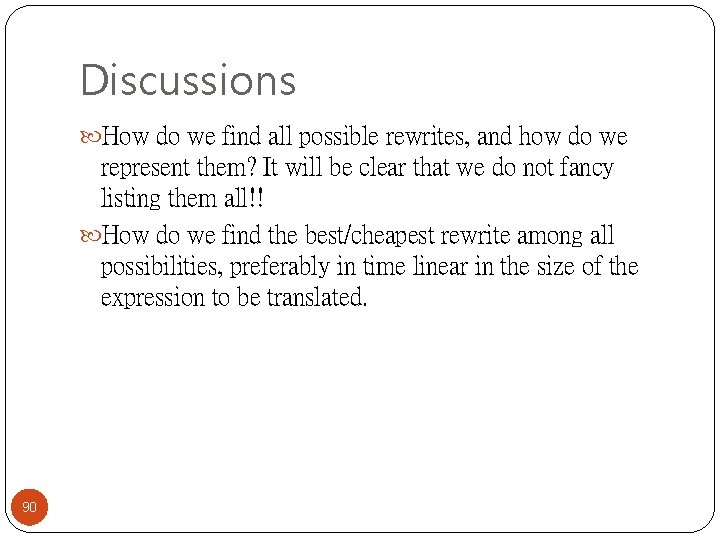

Discussions

- How do we find all possible rewrites, and how do we represent them? It will be clear that we do not fancy listing them all!
- How do we find the best/cheapest rewrite among all possibilities, preferably in time linear in the size of the expression to be translated?

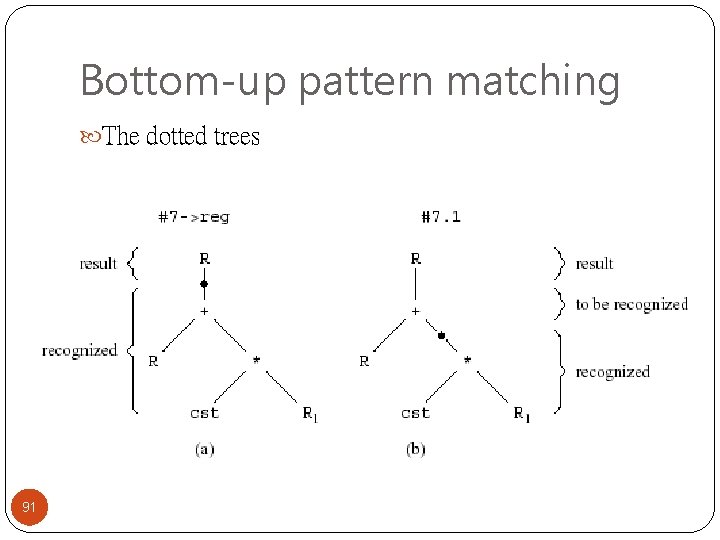

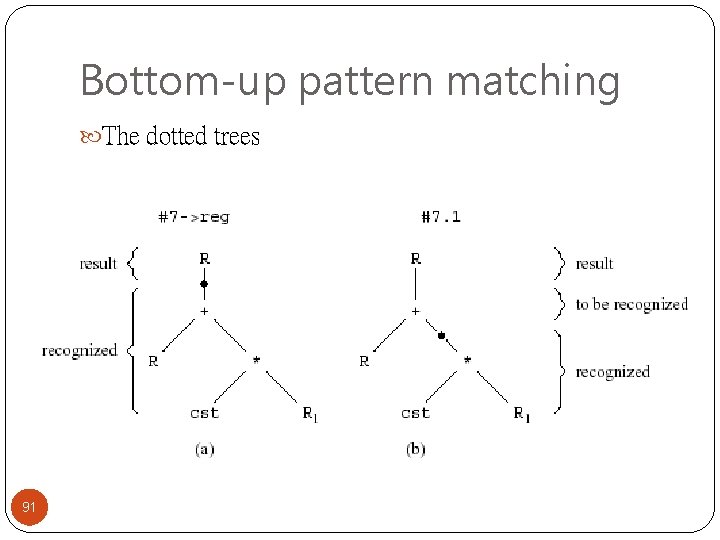

Bottom-up pattern matching

The dotted trees

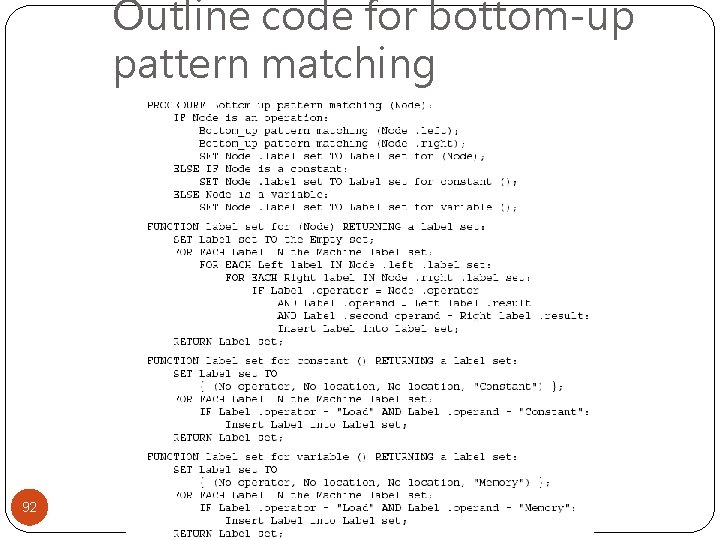

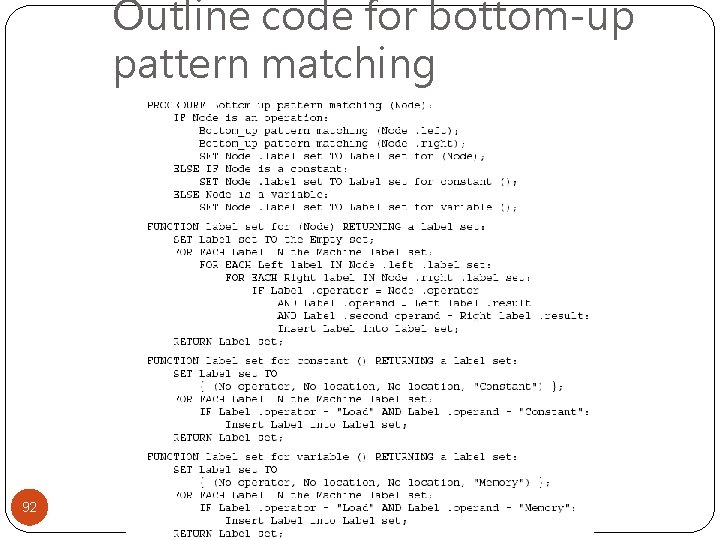

Outline code for bottom-up pattern matching

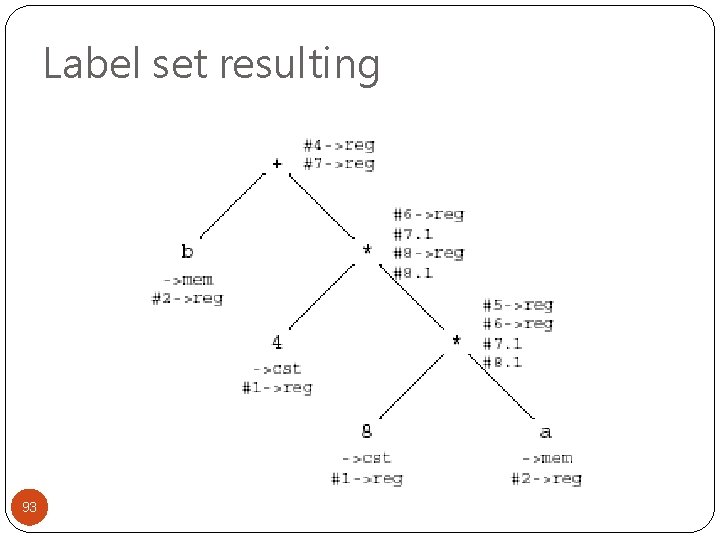

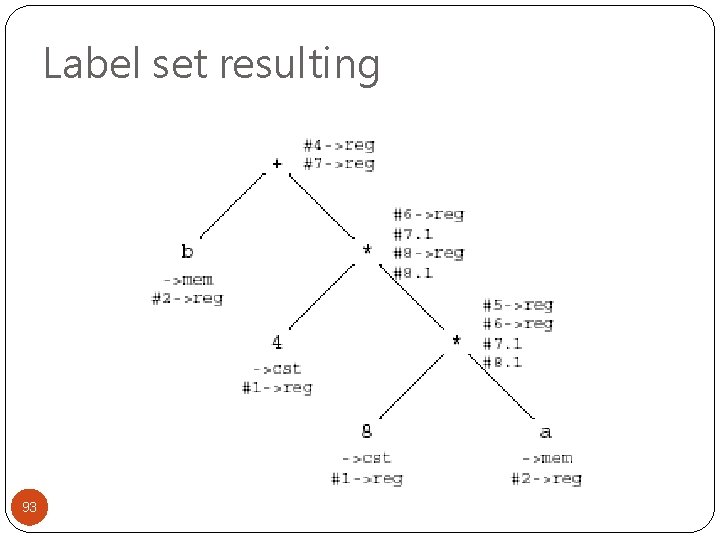

Label set resulting

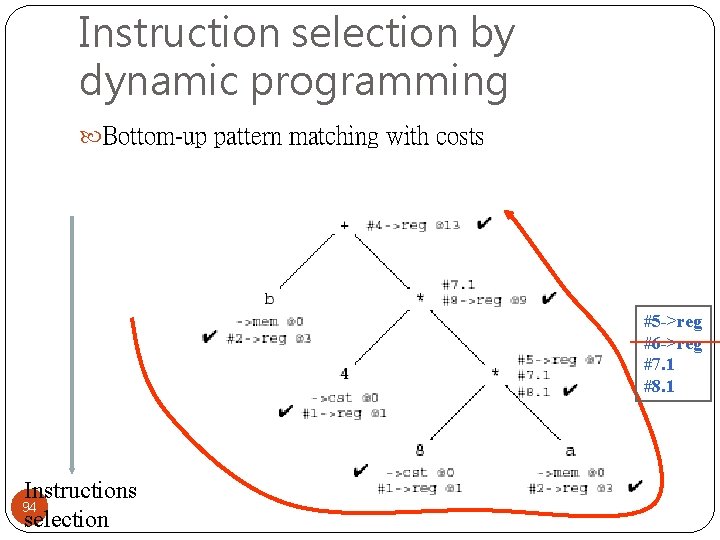

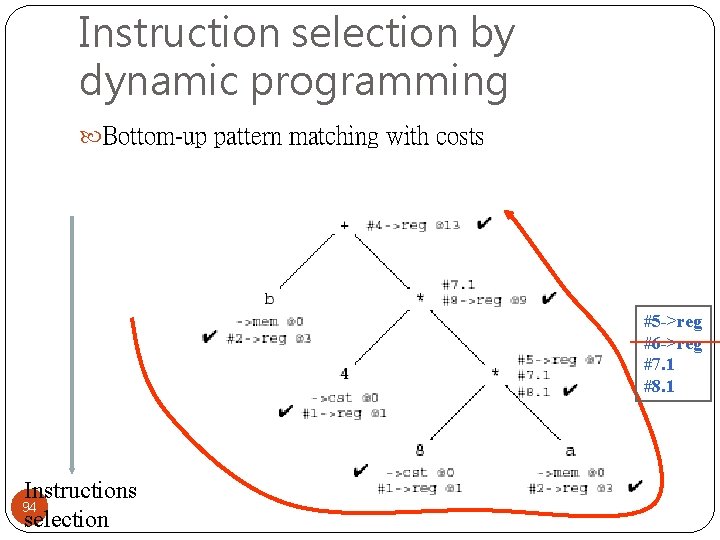

Instruction selection by dynamic programming

Bottom-up pattern matching with costs

[Figure: label sets with costs (#5->reg, #6->reg, #7.1, #8.1), followed by instruction selection]

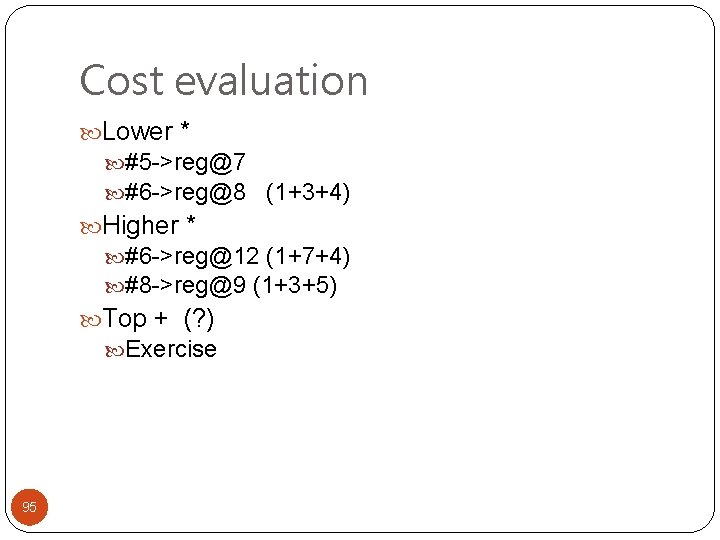

Cost evaluation

- Lower *
  - #5->reg@7
  - #6->reg@8 (1+3+4)
- Higher *
  - #6->reg@12 (1+7+4)
  - #8->reg@9 (1+3+5)
- Top + (?): exercise

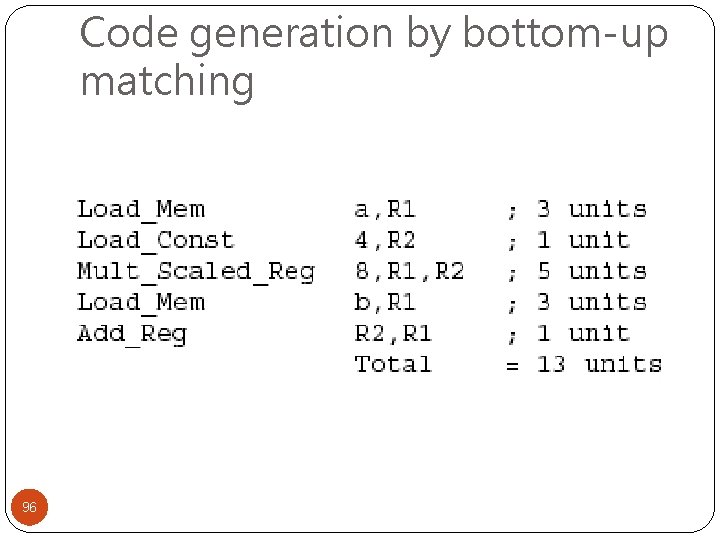

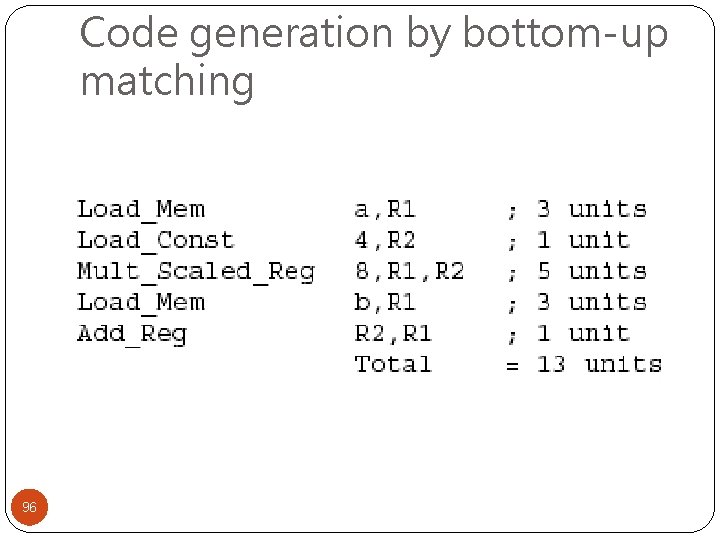

Code generation by bottom-up matching

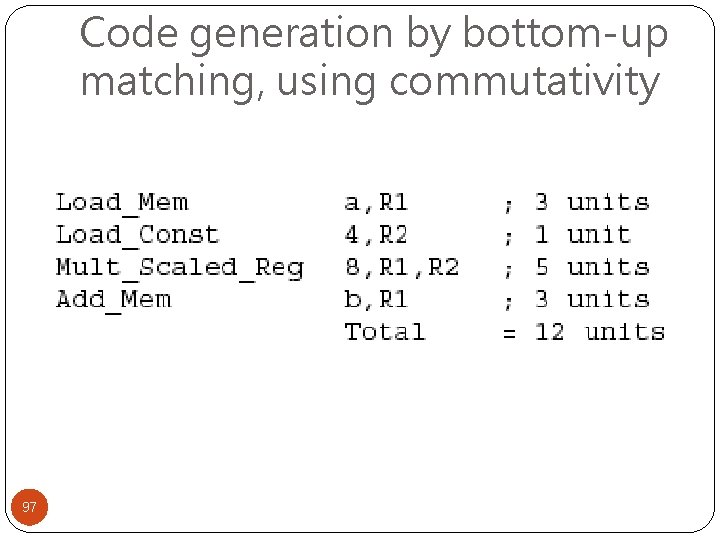

Code generation by bottom-up matching, using commutativity

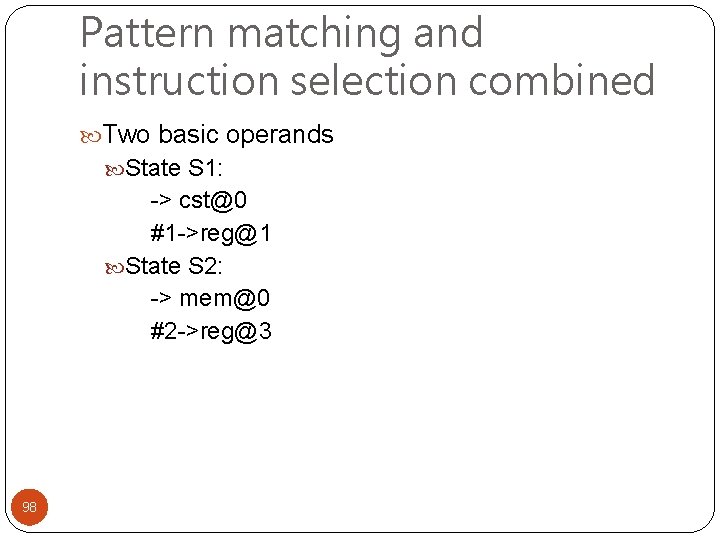

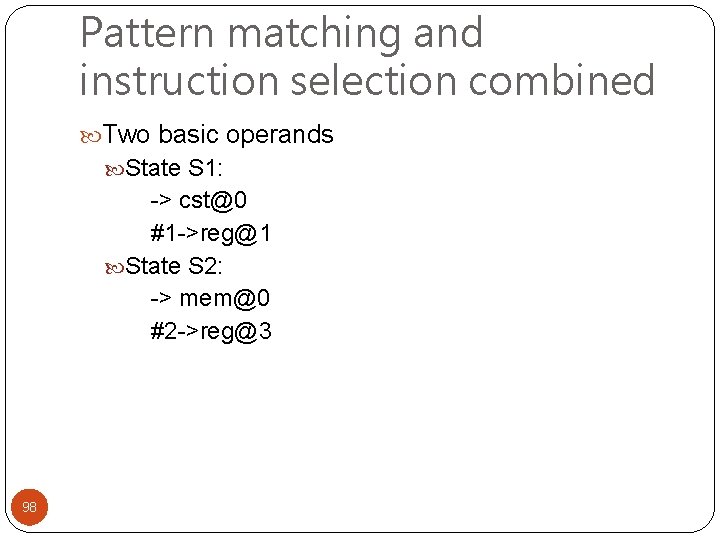

Pattern matching and instruction selection combined

Two basic operands:

- State S1: ->cst@0, #1->reg@1
- State S2: ->mem@0, #2->reg@3

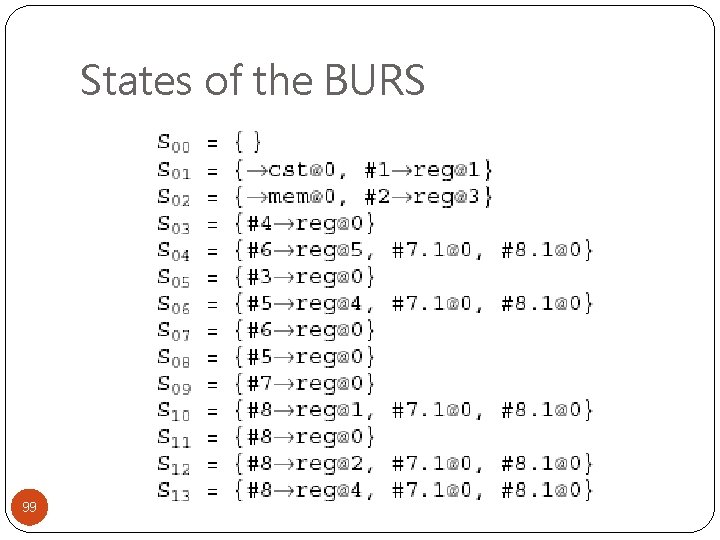

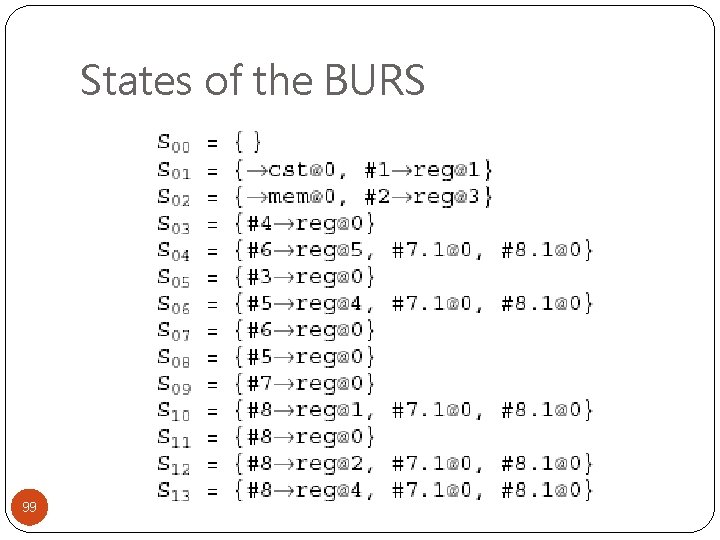

States of the BURS

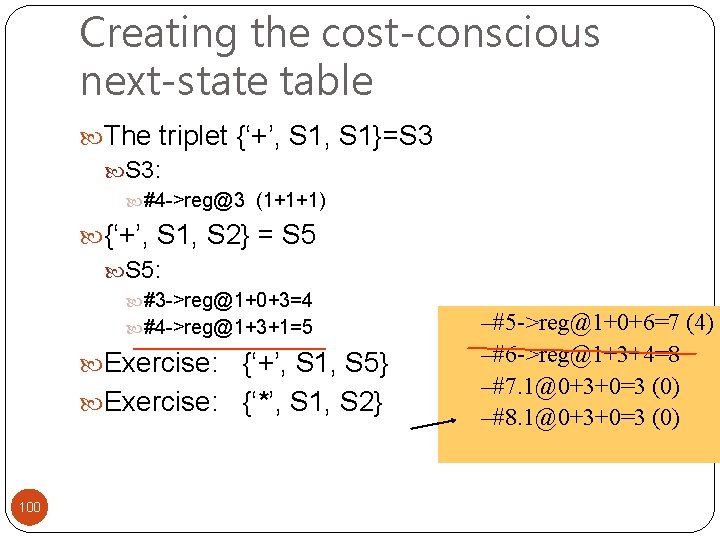

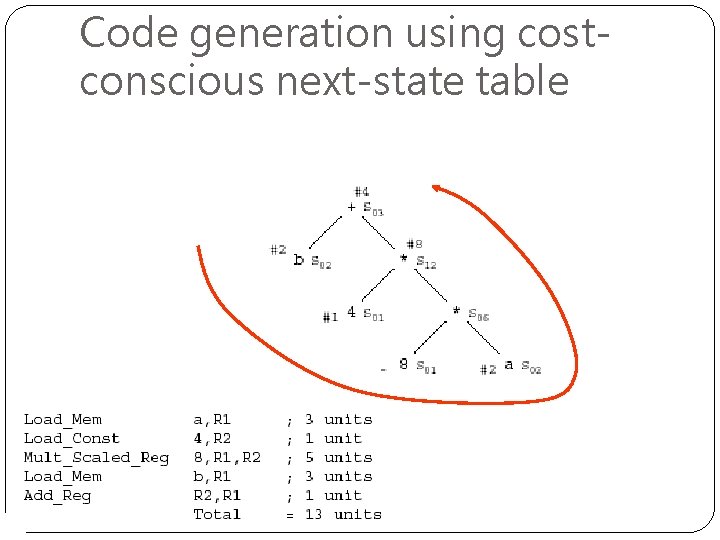

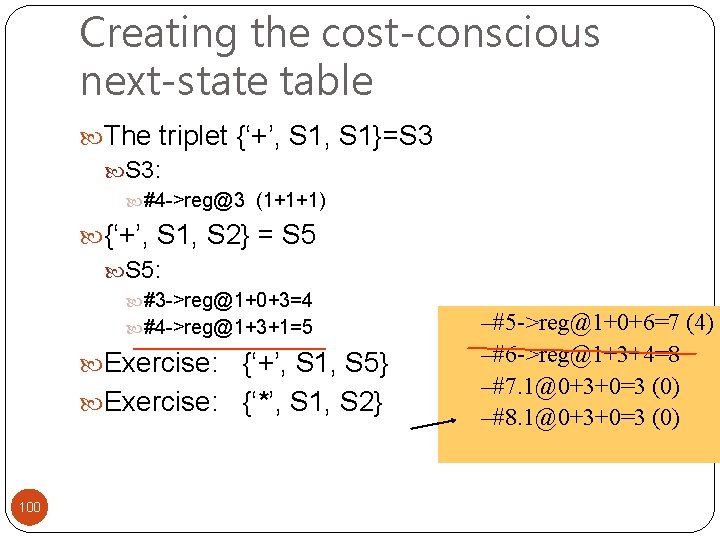

Creating the cost-conscious next-state table

- The triplet {'+', S1, S1} = S3
  - S3: #4->reg@3 (1+1+1)
- {'+', S1, S2} = S5
  - S5: #3->reg@1+0+3=4, #4->reg@1+3+1=5
- Exercise: {'+', S1, S5}
- Exercise: {'*', S1, S2}
  - #5->reg@1+0+6=7 (4)
  - #6->reg@1+3+4=8
  - #7.1@0+3+0=3 (0)
  - #8.1@0+3+0=3 (0)

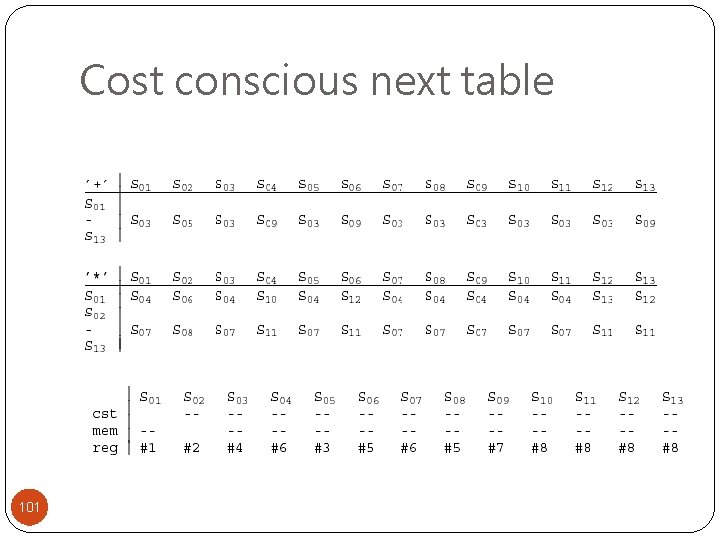

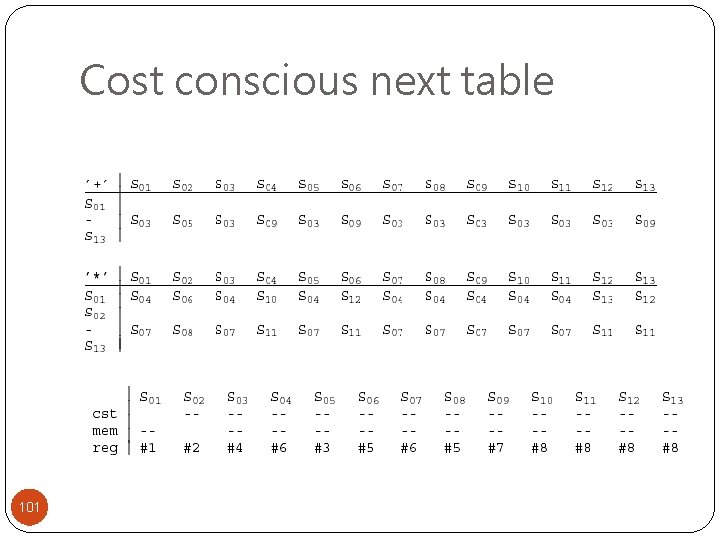

Cost-conscious next-state table

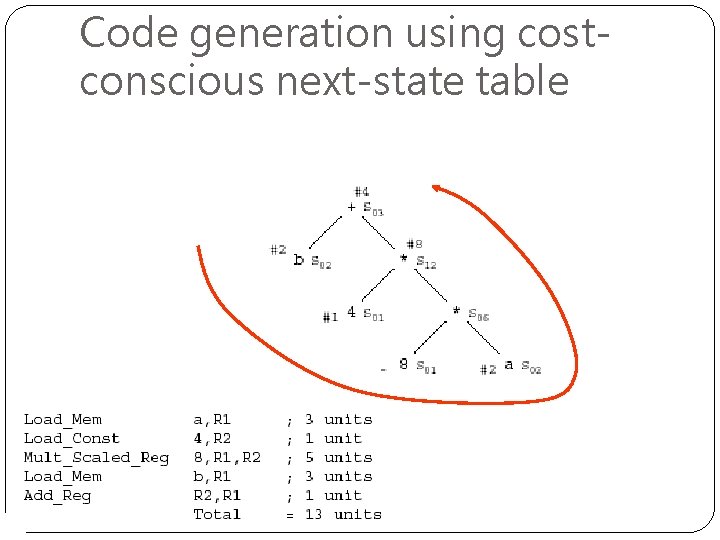

Code generation using cost-conscious next-state table

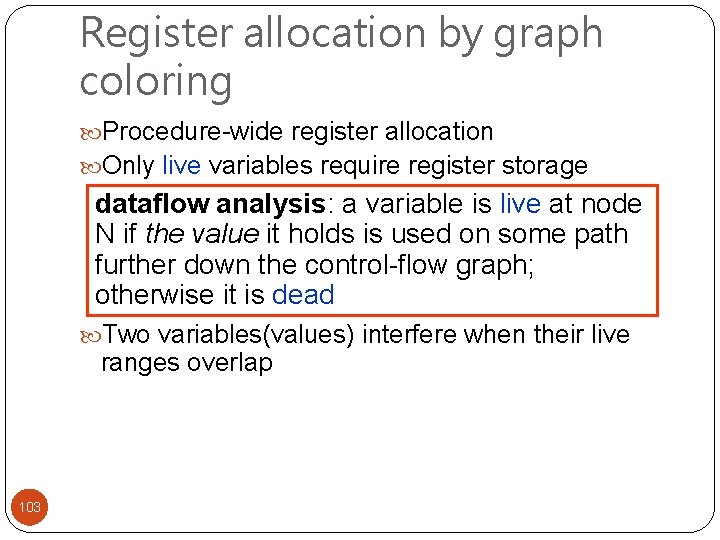

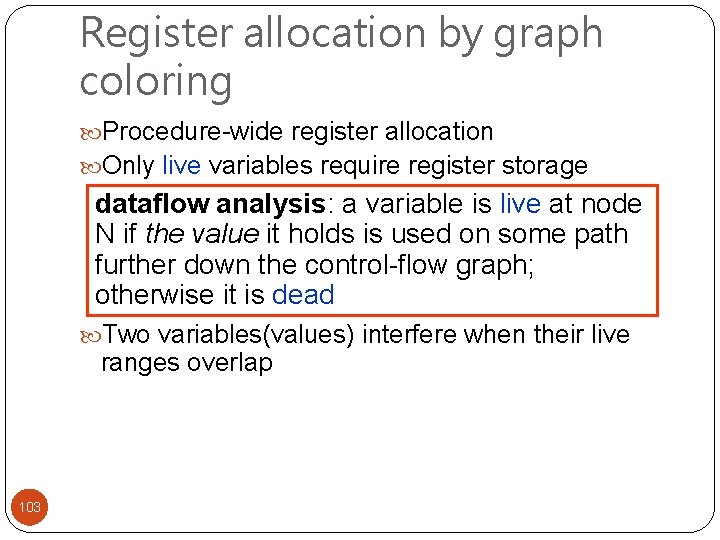

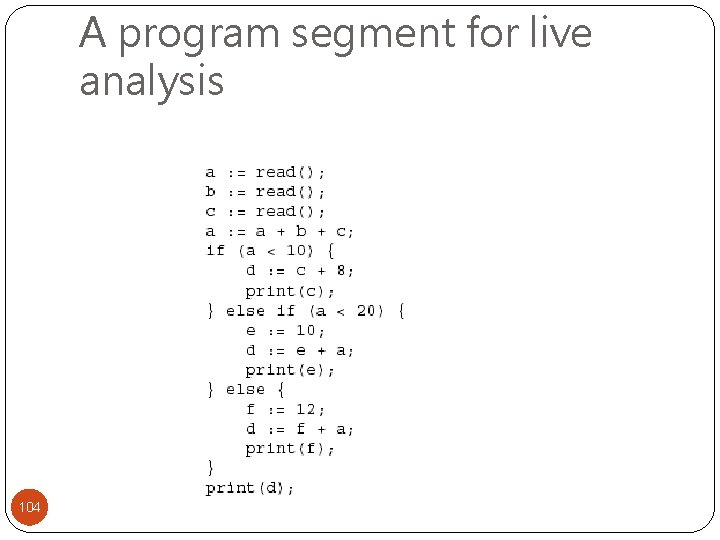

Register allocation by graph coloring

- Procedure-wide register allocation
- Only live variables require register storage
- Dataflow analysis: a variable is live at node N if the value it holds is used on some path further down the control-flow graph; otherwise it is dead
- Two variables (values) interfere when their live ranges overlap

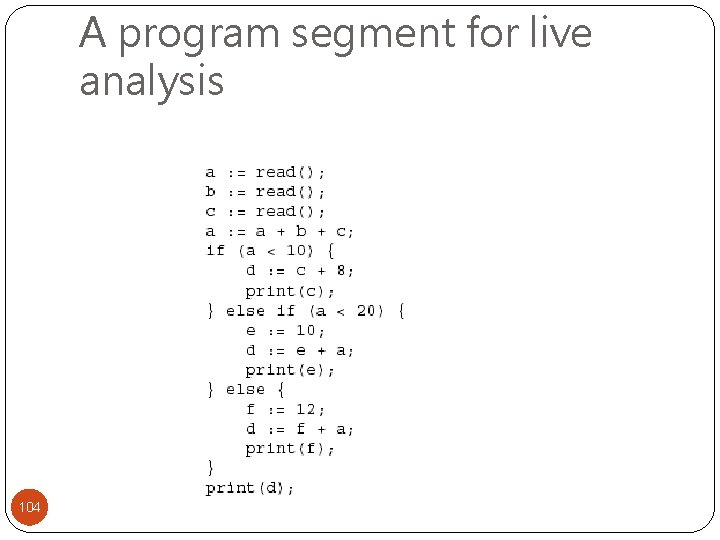

A program segment for live analysis

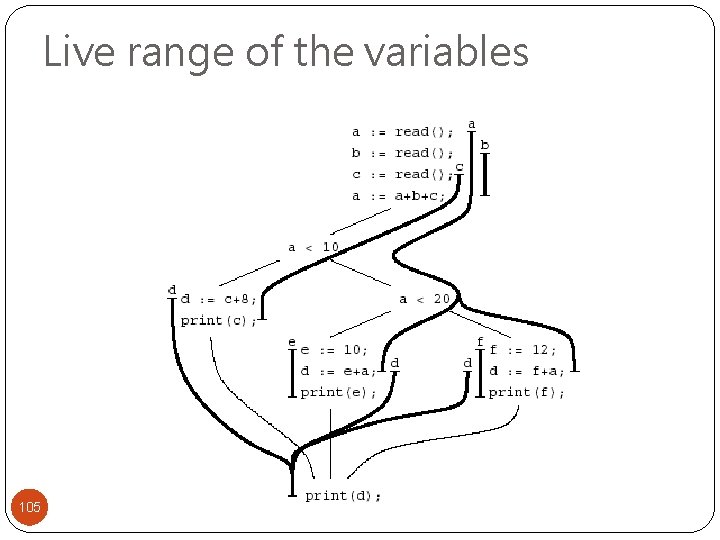

Live range of the variables

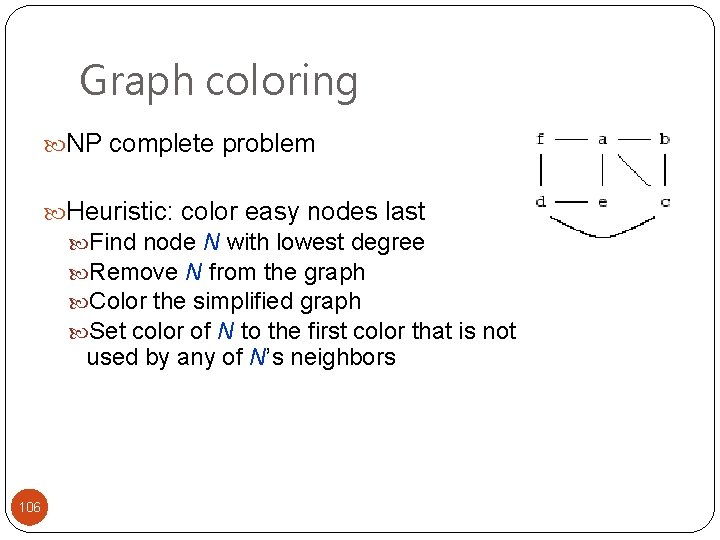

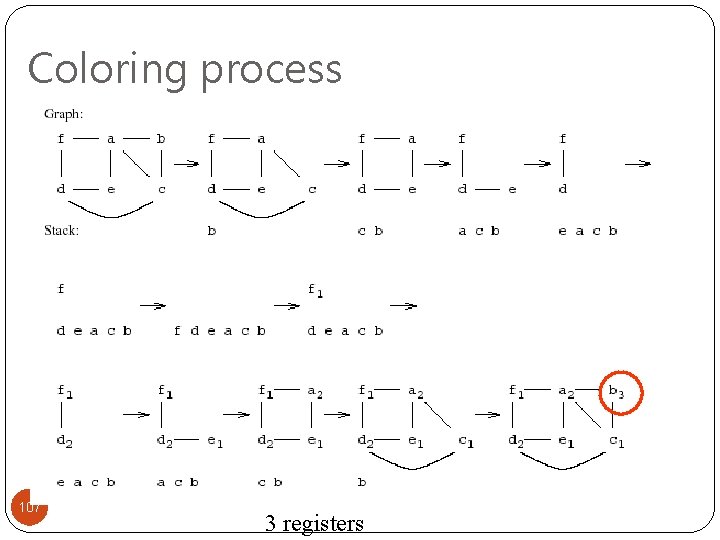

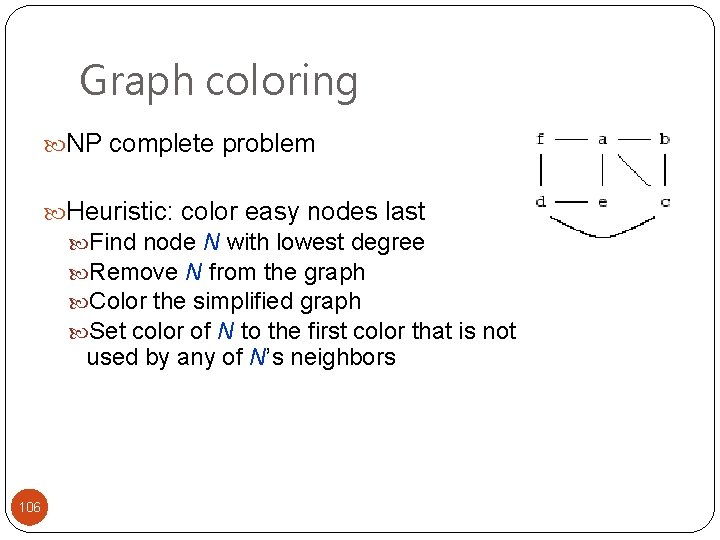

Graph coloring

- NP-complete problem
- Heuristic: color easy nodes last
  1. Find node N with lowest degree
  2. Remove N from the graph
  3. Color the simplified graph
  4. Set color of N to the first color that is not used by any of N's neighbors
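The four steps of the heuristic translate almost literally into a recursive routine. The sketch below uses an adjacency matrix for the interference graph; the names (`color_graph`, `color_rec`, `MAX_NODES`) and the representation are assumptions made for brevity. The routine does not limit the number of colors, so a result larger than the number of available registers would indicate that spilling is needed.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_NODES 16

/* adj is a symmetric interference matrix; color[i] is -1 while uncolored. */
static void color_rec(int n, bool adj[MAX_NODES][MAX_NODES],
                      bool removed[MAX_NODES], int color[MAX_NODES]) {
    int best = -1, best_degree = MAX_NODES + 1;
    for (int i = 0; i < n; i++) {            /* 1. find node N with lowest degree */
        if (removed[i]) continue;
        int degree = 0;
        for (int j = 0; j < n; j++)
            if (!removed[j] && adj[i][j]) degree++;
        if (degree < best_degree) { best = i; best_degree = degree; }
    }
    if (best < 0) return;                    /* nothing left to color */
    removed[best] = true;                    /* 2. remove N from the graph */
    color_rec(n, adj, removed, color);       /* 3. color the simplified graph */
    removed[best] = false;
    bool used[MAX_NODES] = { false };
    for (int j = 0; j < n; j++)
        if (adj[best][j] && color[j] >= 0) used[color[j]] = true;
    int c = 0;
    while (used[c]) c++;                     /* 4. first color unused by neighbors */
    color[best] = c;
}

/* Colors nodes 0..n-1 and returns the number of colors used. */
int color_graph(int n, bool adj[MAX_NODES][MAX_NODES], int color[MAX_NODES]) {
    assert(n >= 0 && n <= MAX_NODES);
    bool removed[MAX_NODES] = { false };
    for (int i = 0; i < n; i++) color[i] = -1;
    color_rec(n, adj, removed, color);
    int colors = 0;
    for (int i = 0; i < n; i++)
        if (color[i] + 1 > colors) colors = color[i] + 1;
    return colors;
}
```

Because a node is colored only after the rest of the graph, the nodes removed first (the "easy" low-degree ones) are indeed colored last.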

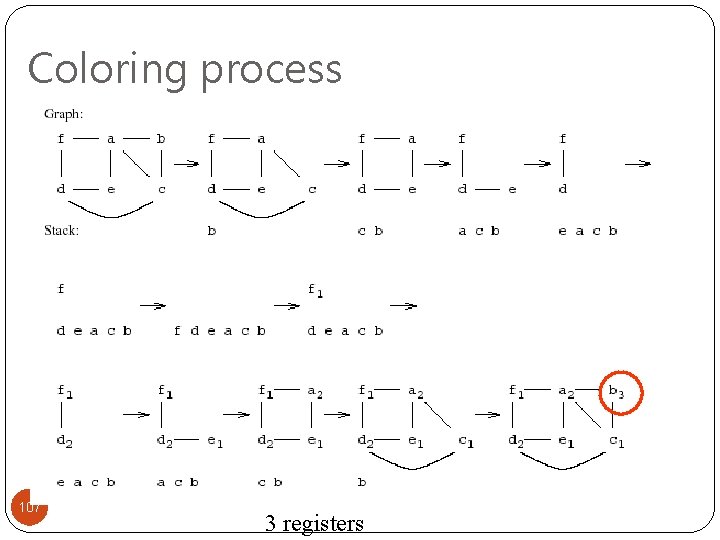

Coloring process (3 registers)

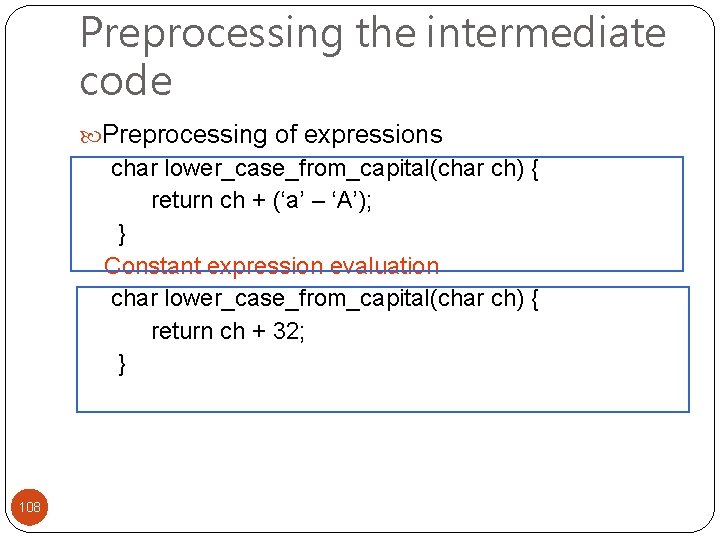

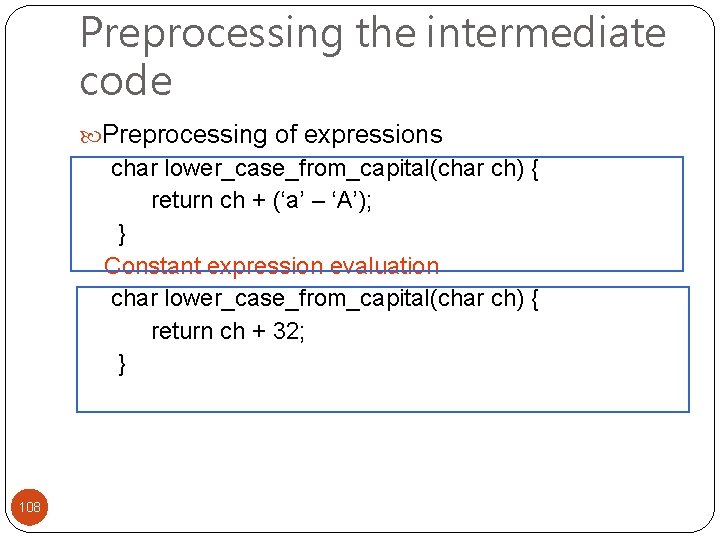

Preprocessing the intermediate code

Preprocessing of expressions:

    char lower_case_from_capital(char ch) {
        return ch + ('a' - 'A');
    }

Constant expression evaluation:

    char lower_case_from_capital(char ch) {
        return ch + 32;
    }

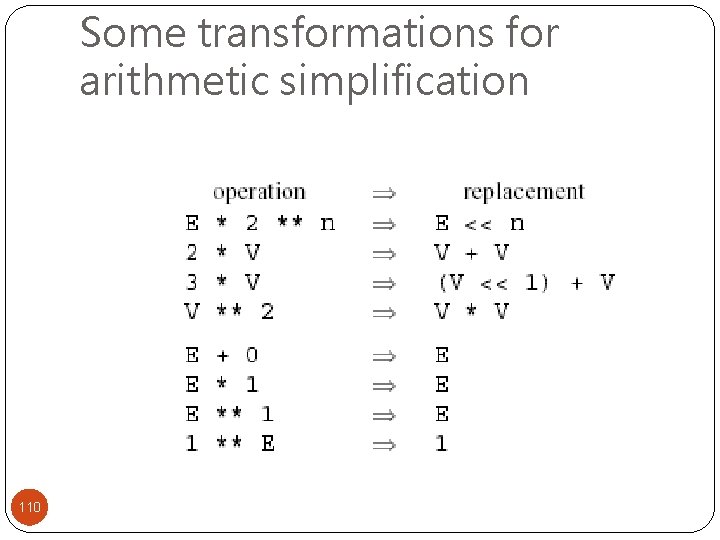

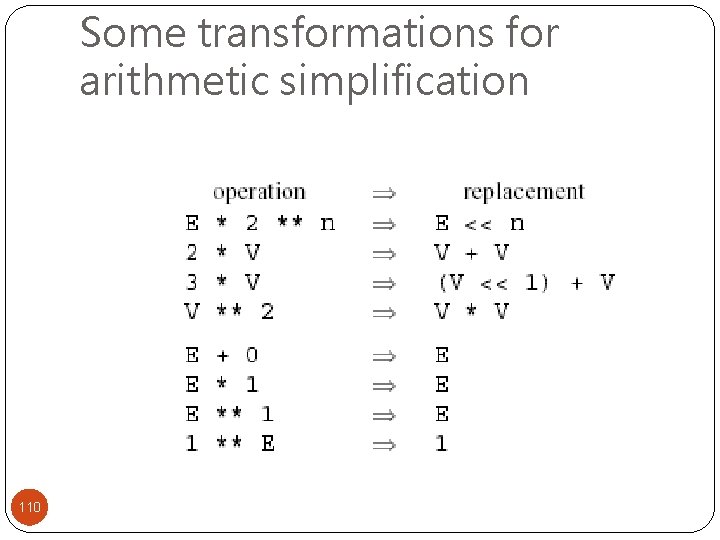

Arithmetic simplification

- Transformations that replace an operation by a simpler one are called strength reductions.
- Operations that can be removed completely are called null sequences.
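A few such transformations can be sketched for operations of the form "E op constant". The representation below (`BinOpConst`, with `OP_NONE` meaning that only E remains) is hypothetical, and the three rules shown (E + 0, E * 1, E * 2^n) are common textbook examples rather than a complete table.

```c
#include <assert.h>

typedef enum { OP_ADD, OP_MUL, OP_SHL, OP_NONE } Op;

/* Models "E op constant"; OP_NONE means the operation has disappeared. */
typedef struct { Op op; int constant; } BinOpConst;

BinOpConst simplify(BinOpConst in) {
    assert(in.op != OP_NONE);                  /* input must be a real operation */
    BinOpConst out = in;
    if ((in.op == OP_ADD && in.constant == 0) ||
        (in.op == OP_MUL && in.constant == 1)) {
        out.op = OP_NONE;                      /* null sequence: E + 0 => E, E * 1 => E */
        out.constant = 0;
    } else if (in.op == OP_MUL && in.constant > 1 &&
               (in.constant & (in.constant - 1)) == 0) {
        int shift = 0;                         /* strength reduction: E * 2^n => E << n */
        while ((1 << shift) < in.constant) shift++;
        out.op = OP_SHL;
        out.constant = shift;
    }
    return out;
}
```

Operations that match no rule, such as E * 6, are returned unchanged.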

Some transformations for arithmetic simplification

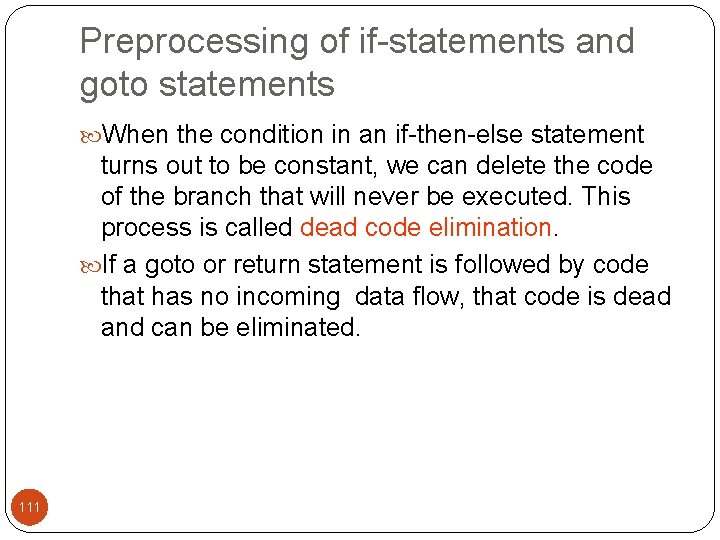

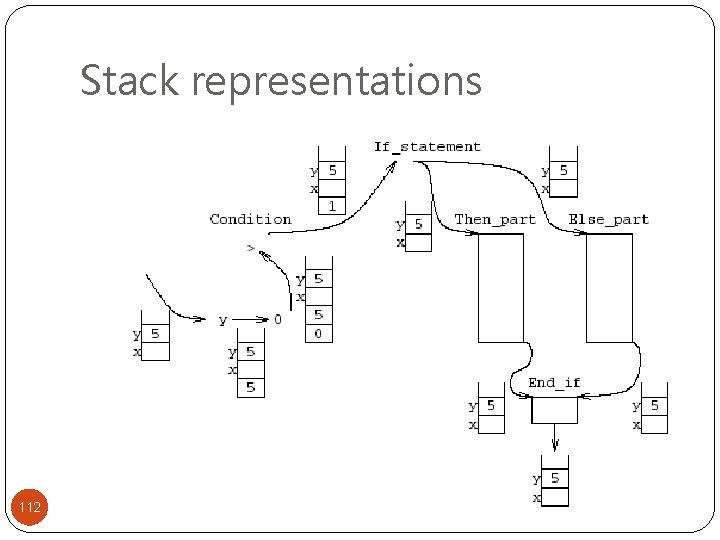

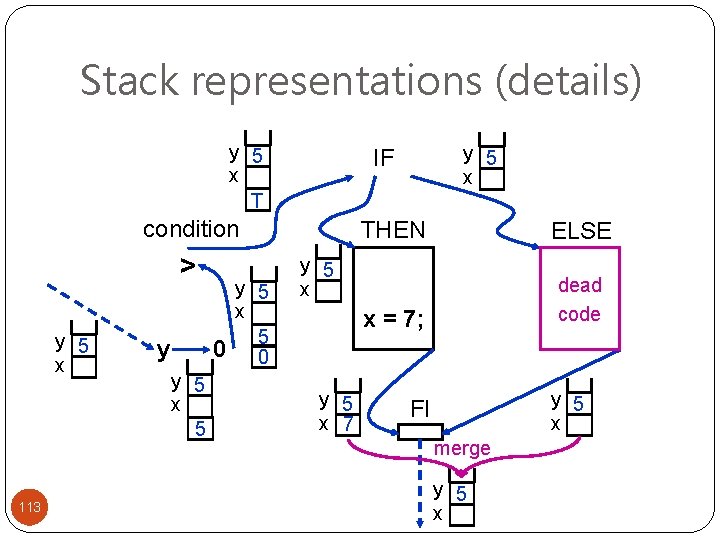

Preprocessing of if-statements and goto statements

- When the condition in an if-then-else statement turns out to be constant, we can delete the code of the branch that will never be executed. This process is called dead code elimination.
- If a goto or return statement is followed by code that has no incoming data flow, that code is dead and can be eliminated.

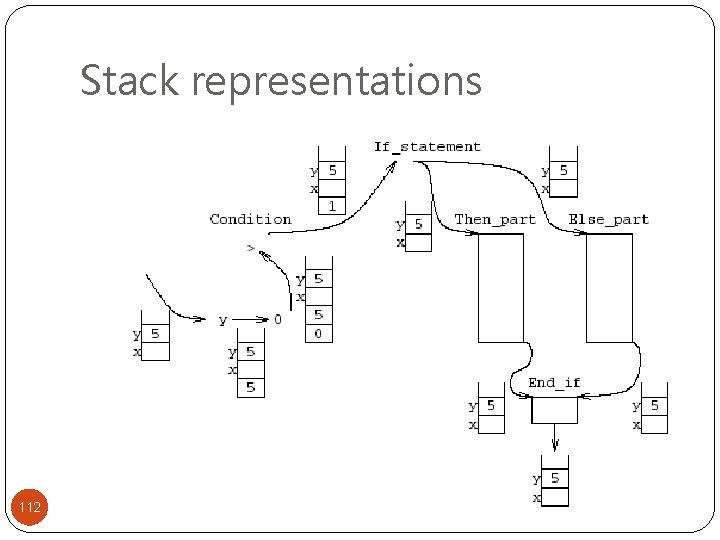

Stack representations

Stack representations (details)

[Figure: symbolic stack representations of the variables x and y through an IF statement: the condition, the THEN and ELSE branches, the assignment x = 7 marked as dead code, and the merge at FI]

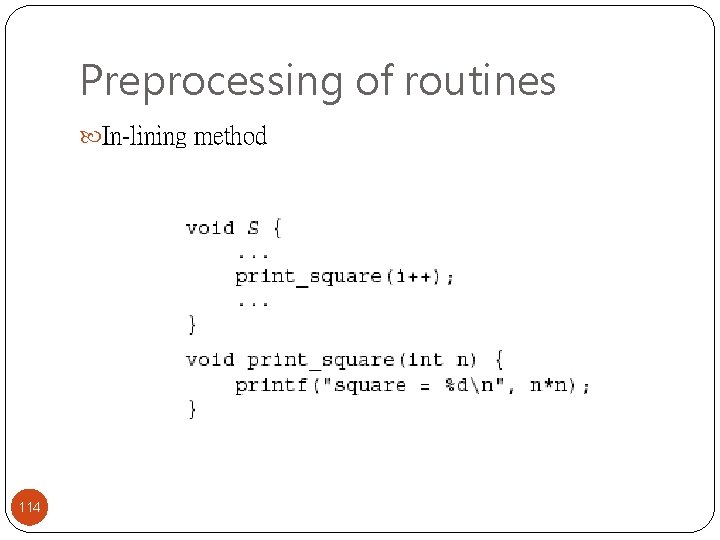

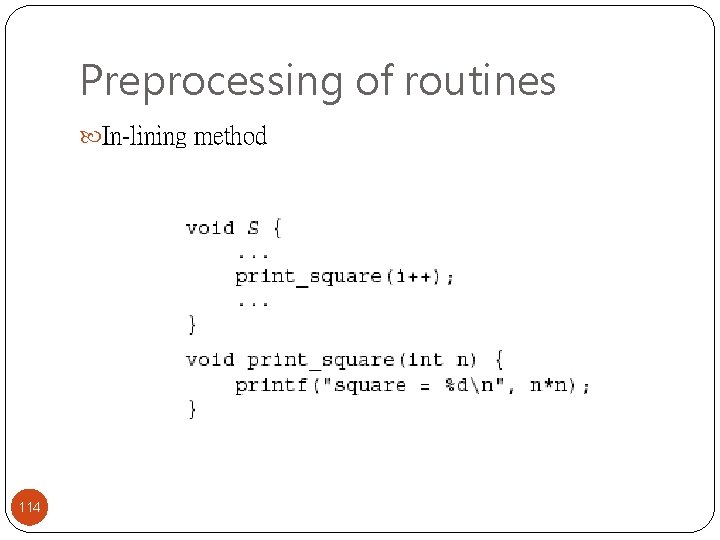

Preprocessing of routines

In-lining method

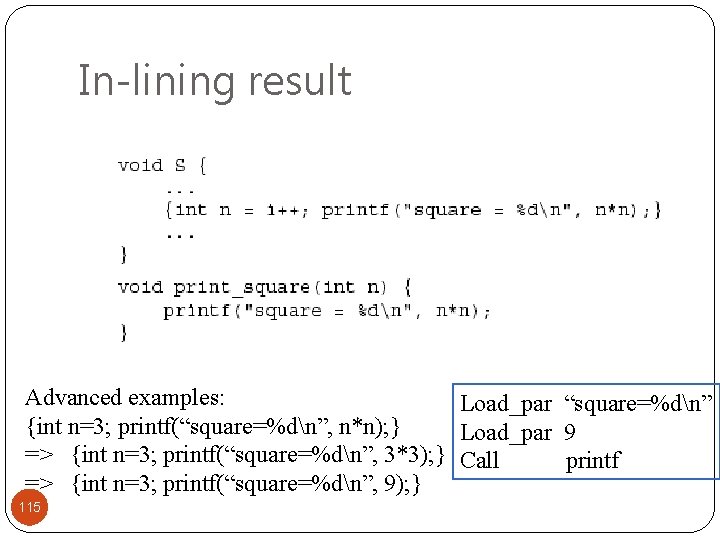

In-lining result

Advanced example:

    { int n = 3; printf("square=%d\n", n*n); }
    => { int n = 3; printf("square=%d\n", 3*3); }
    => { int n = 3; printf("square=%d\n", 9); }

Resulting code:

    Load_par "square=%d\n"
    Load_par 9
    Call printf

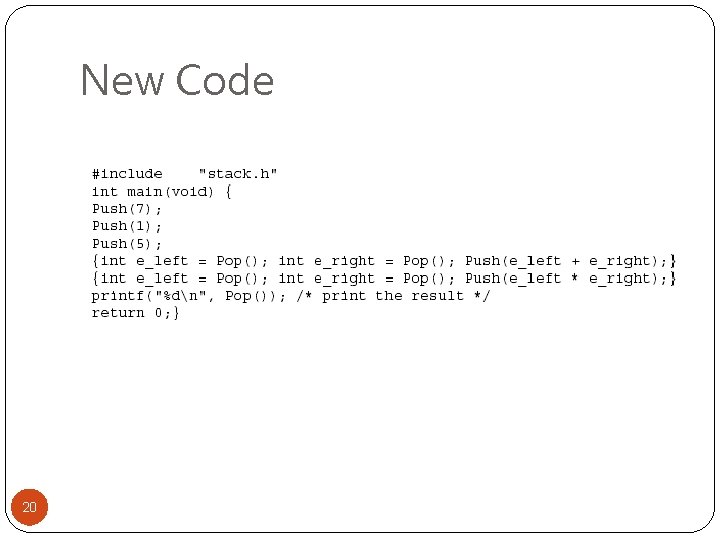

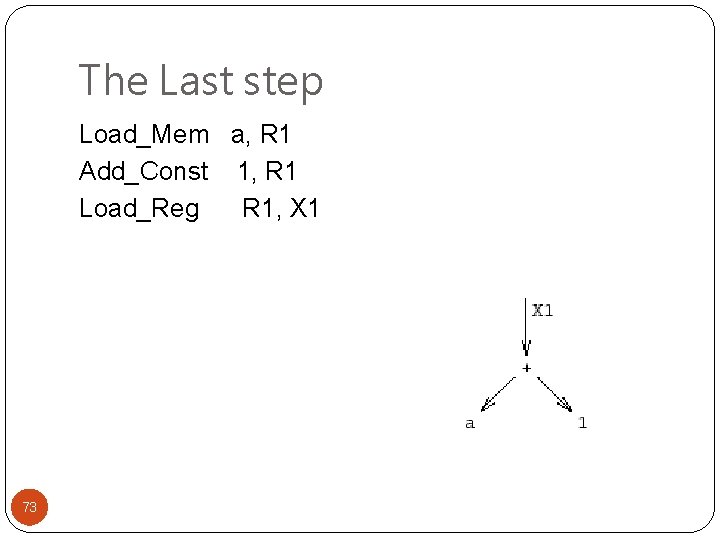

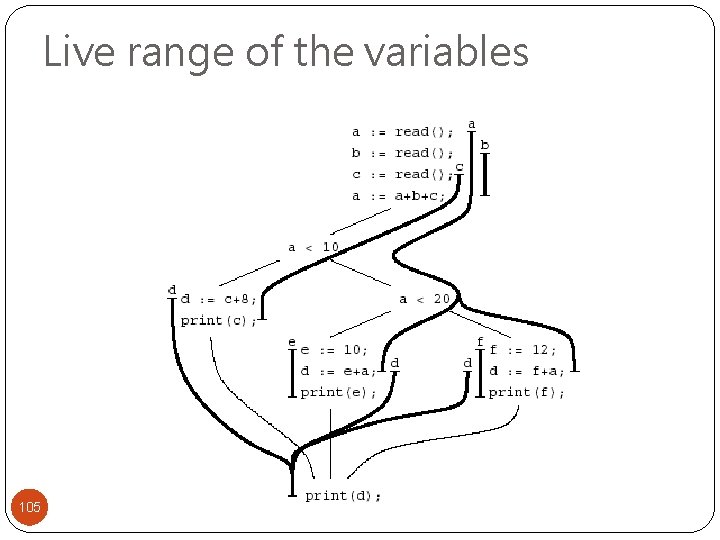

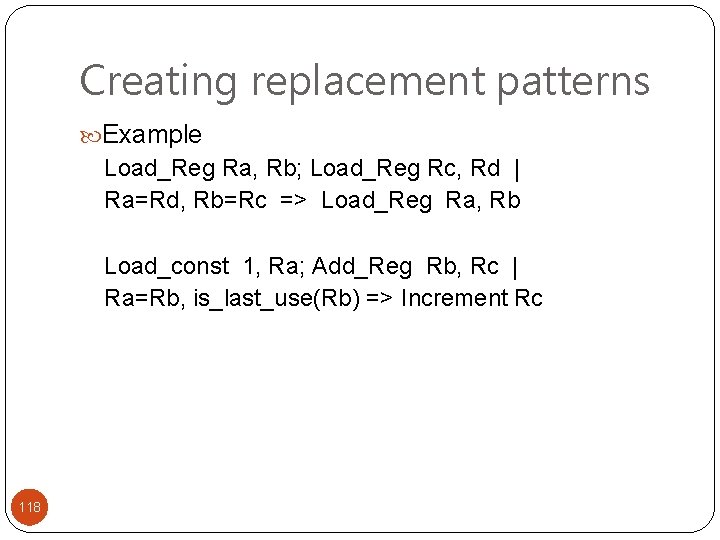

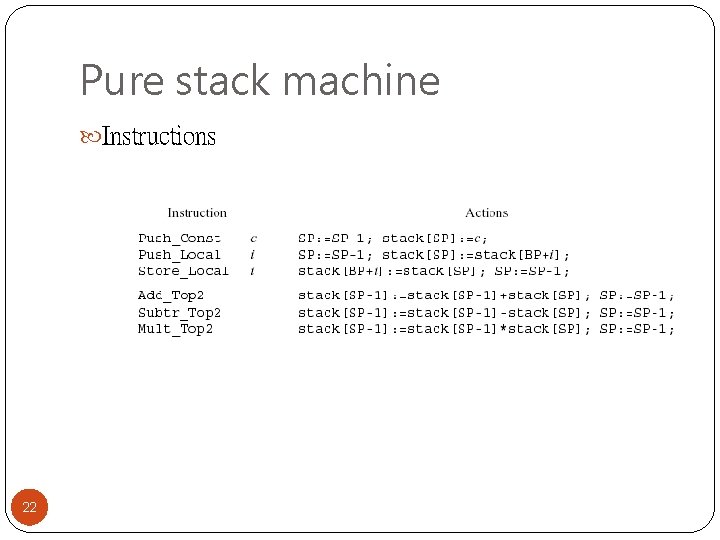

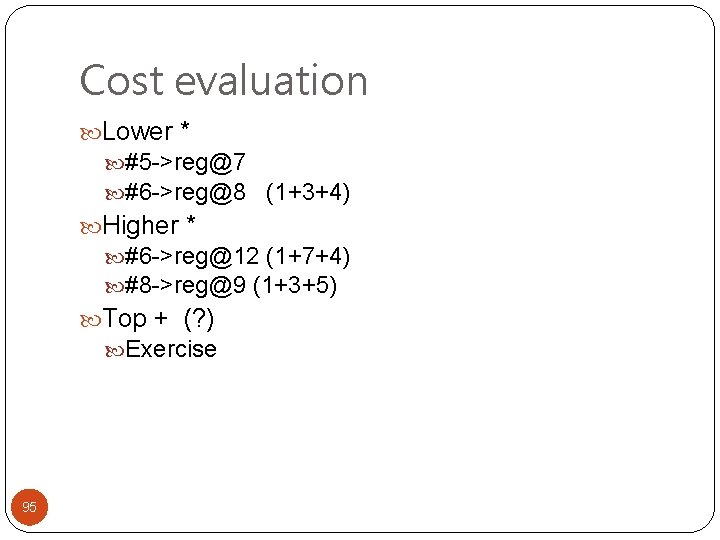

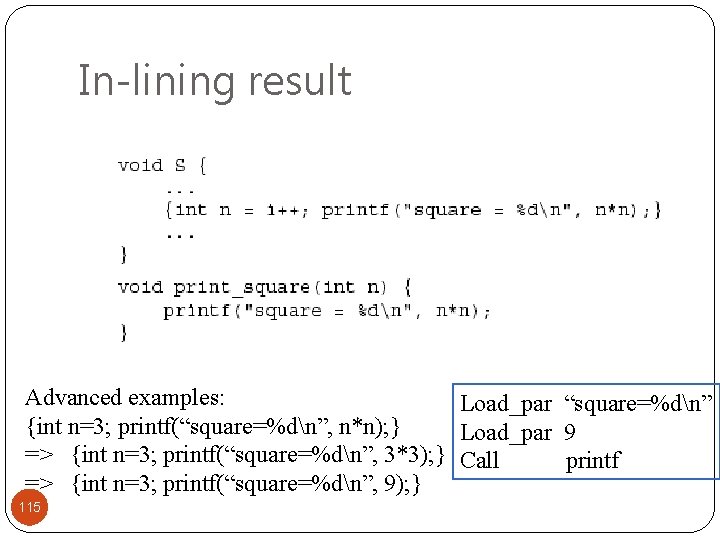

![Cloning Example double poewrseriesint n double a double x int p for p0 Cloning Example double poewr_series(int n, double a[], double x) { int p; for (p=0;](https://slidetodoc.com/presentation_image_h/00ee3ee18027e0e6e8ac3ff03a768bc2/image-116.jpg)

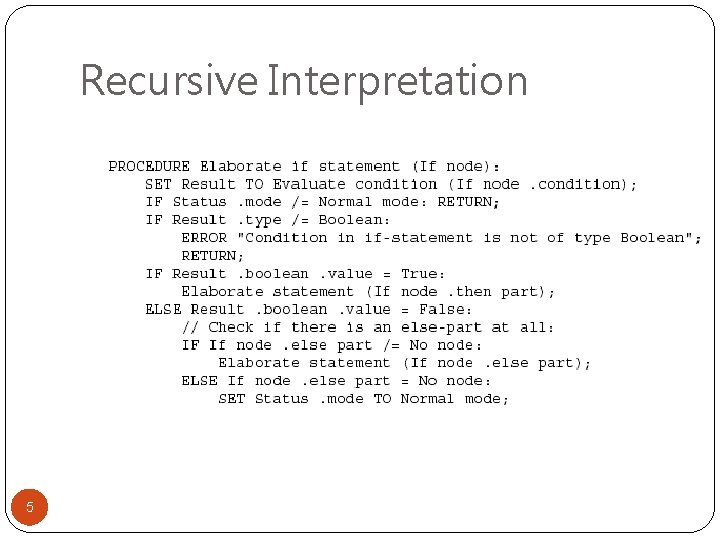

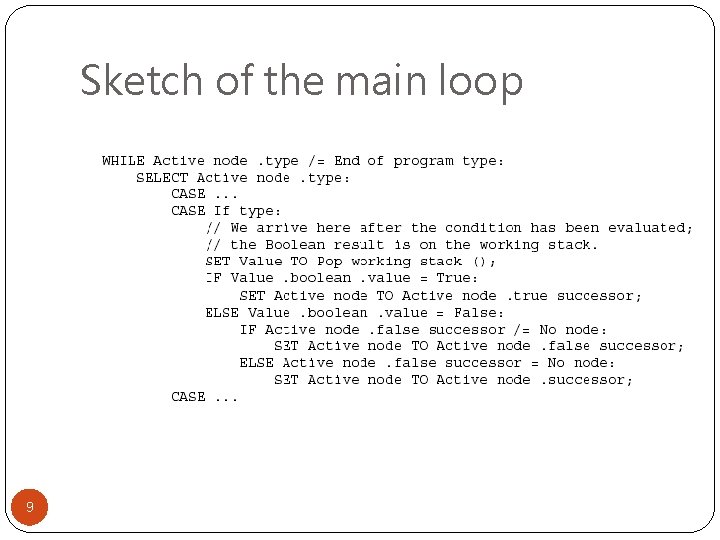

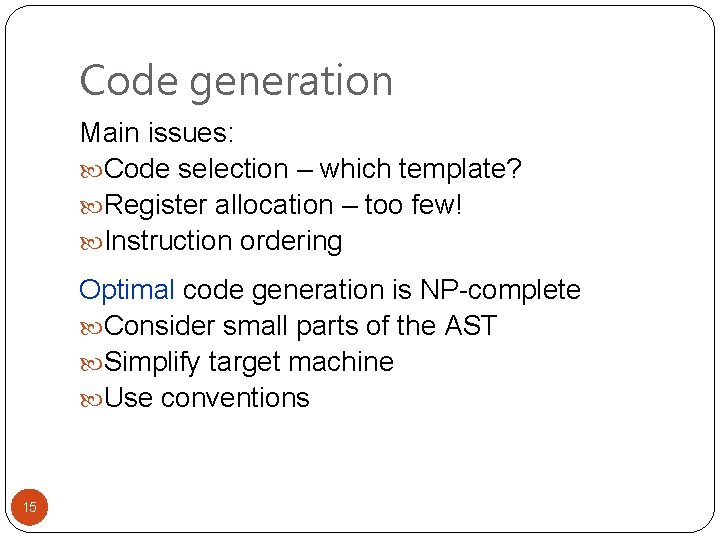

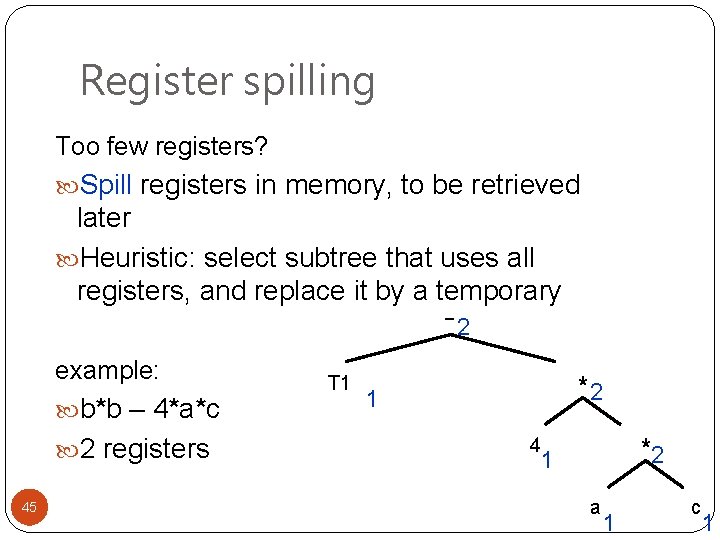

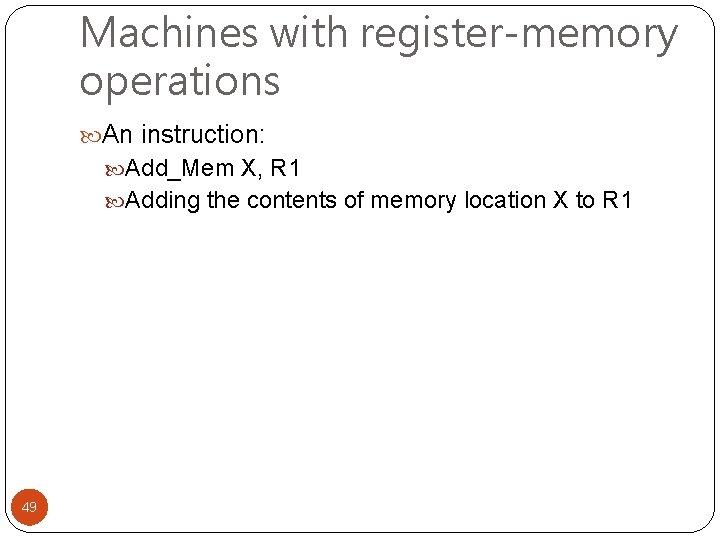

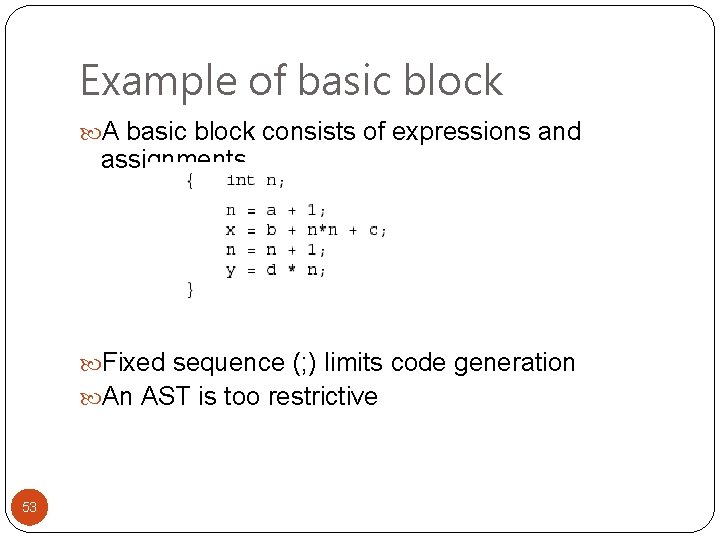

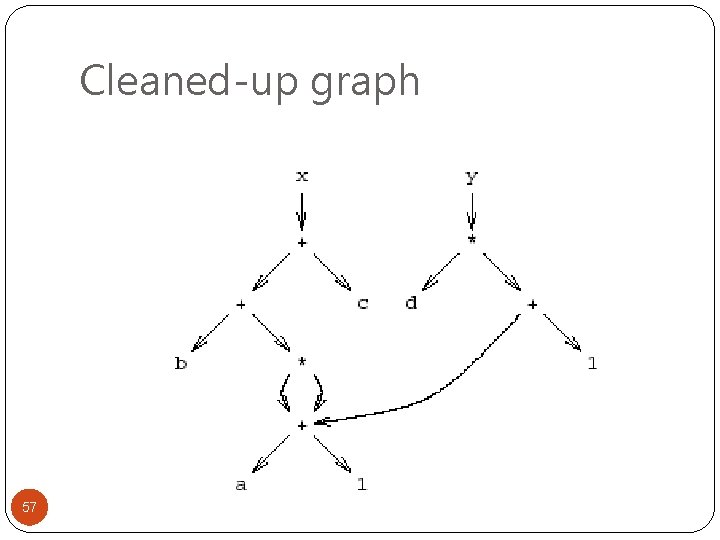

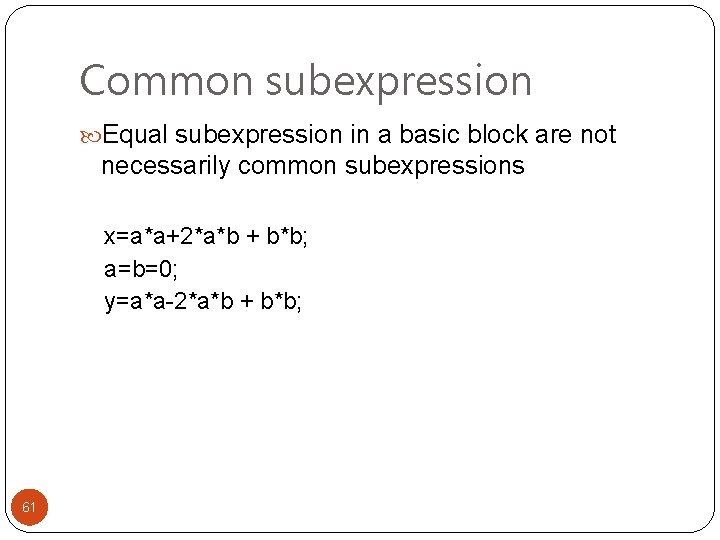

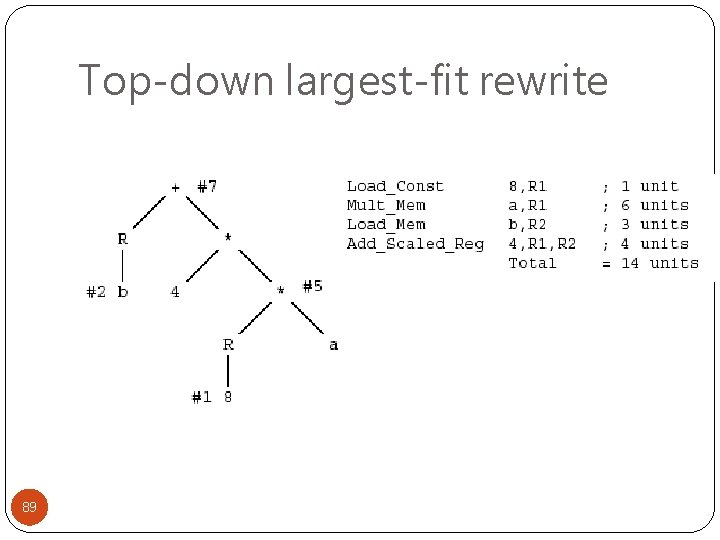

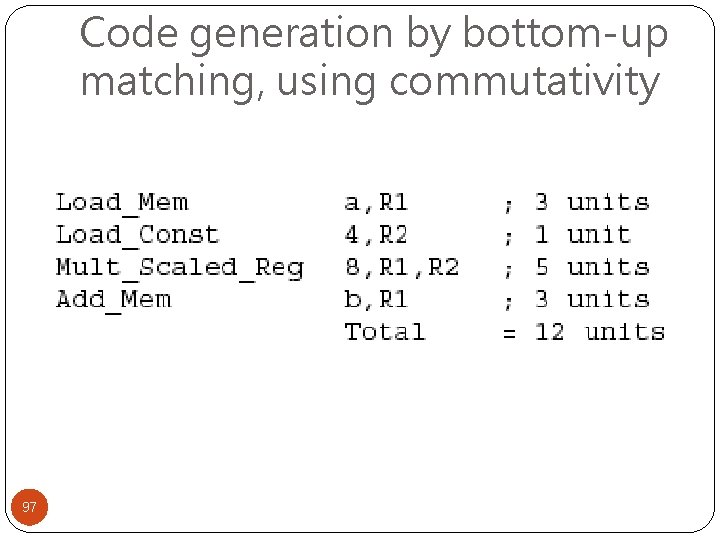

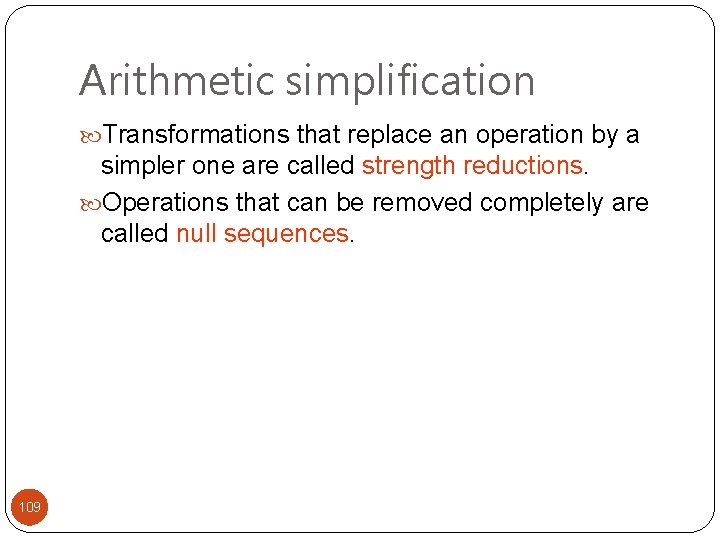

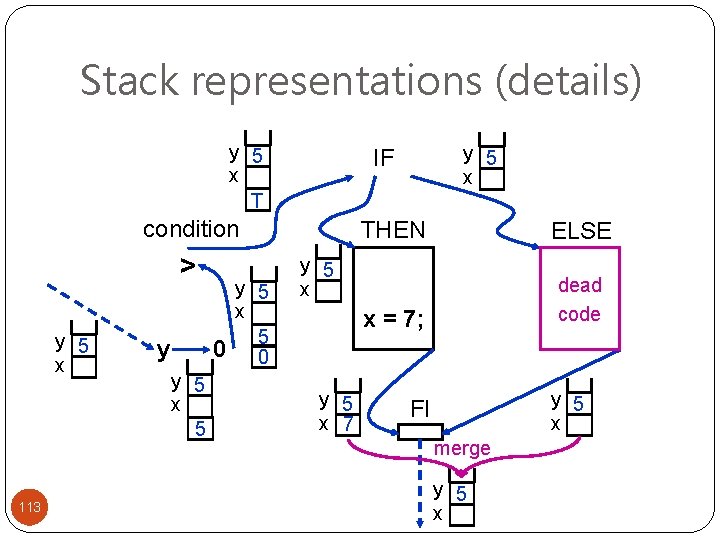

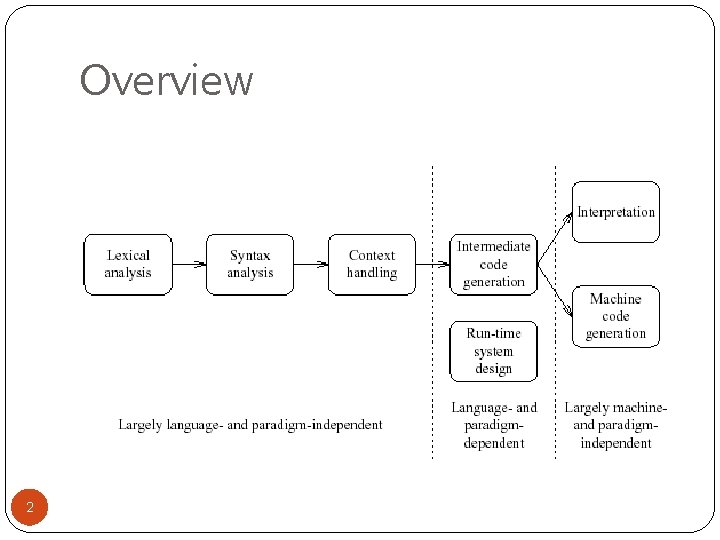

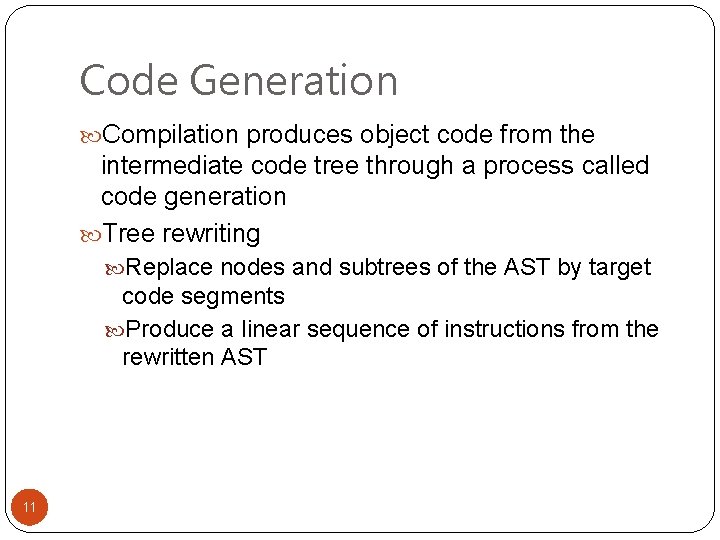

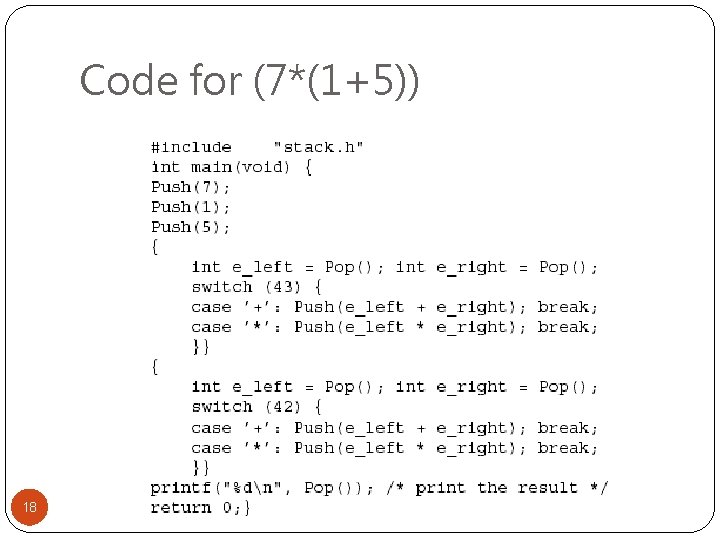

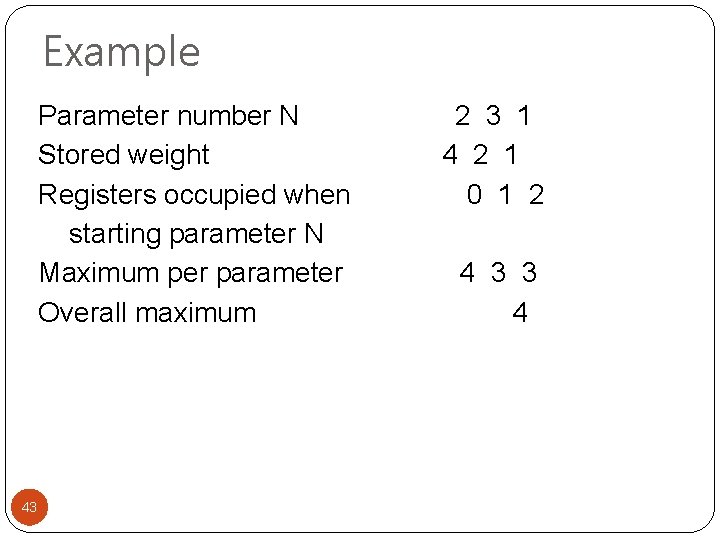

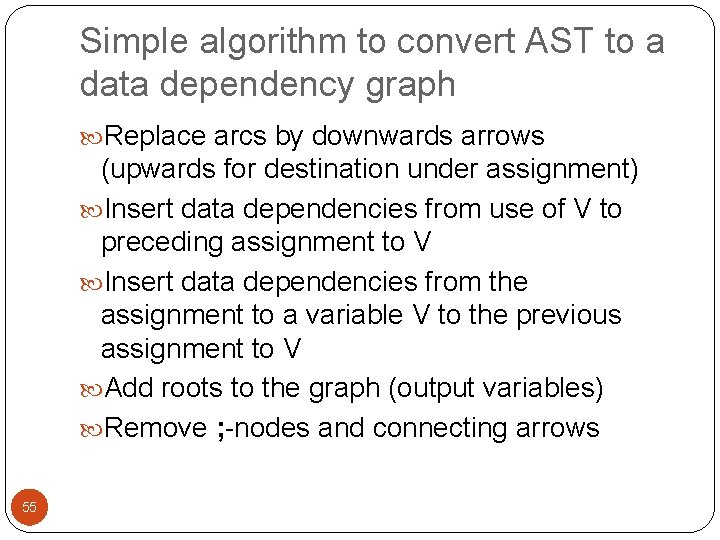

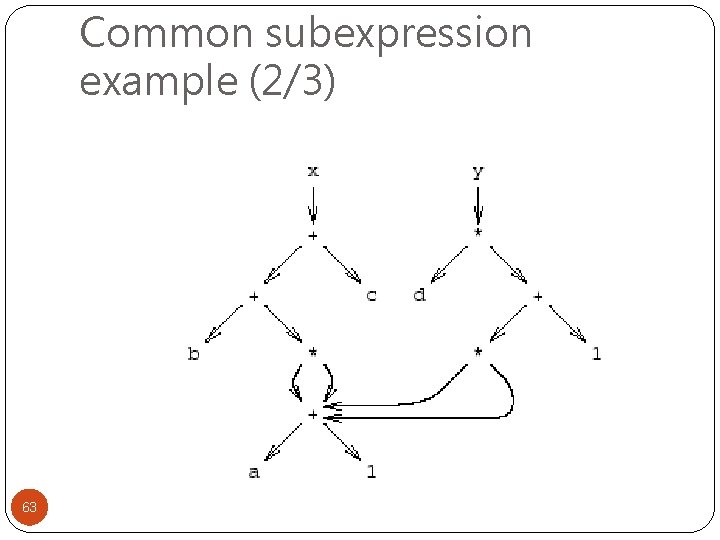

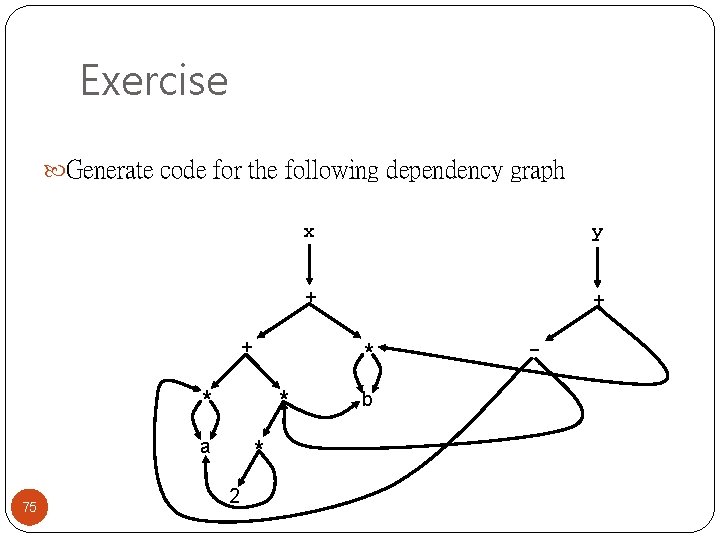

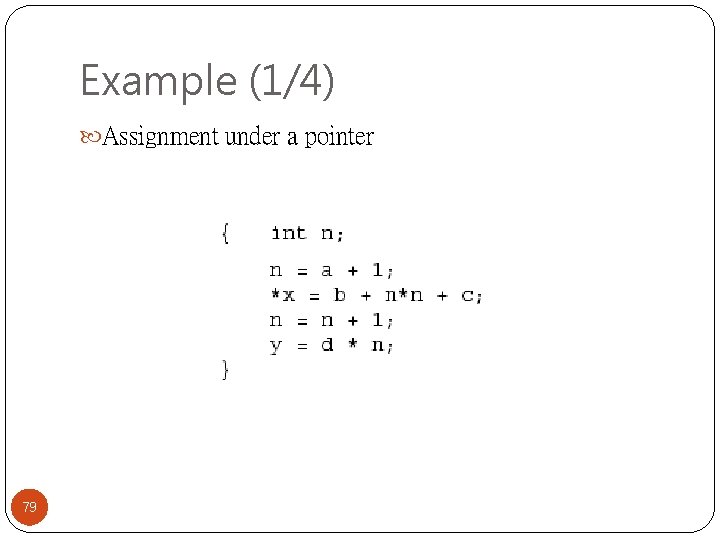

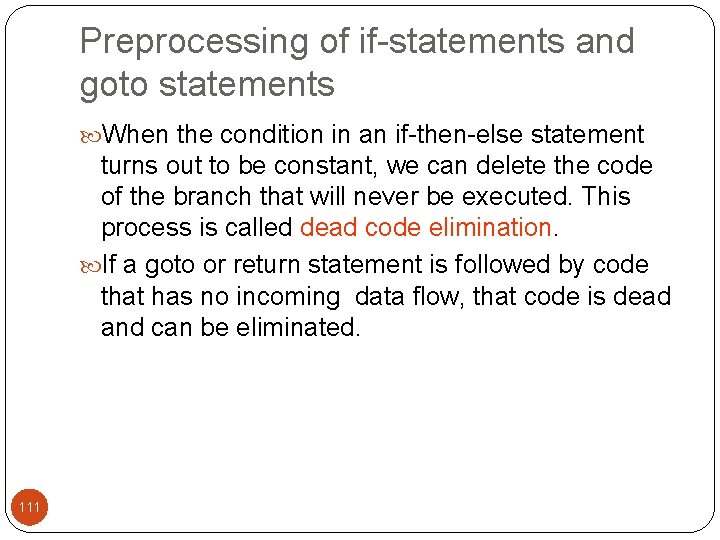

Cloning Example

    double power_series(int n, double a[], double x) {
        double result = 0.0;
        int p;
        /* ** denotes exponentiation (pseudo-C, not standard C) */
        for (p = 0; p < n; p++) result += a[p] * (x ** p);
        return result;
    }

Is called with x set to 1.0:

    double power_series(int n, double a[]) {
        double result = 0.0;
        int p;
        for (p = 0; p < n; p++) result += a[p] * (1.0 ** p);
        return result;
    }

After simplification:

    double power_series(int n, double a[]) {
        double result = 0.0;
        int p;
        for (p = 0; p < n; p++) result += a[p];
        return result;
    }

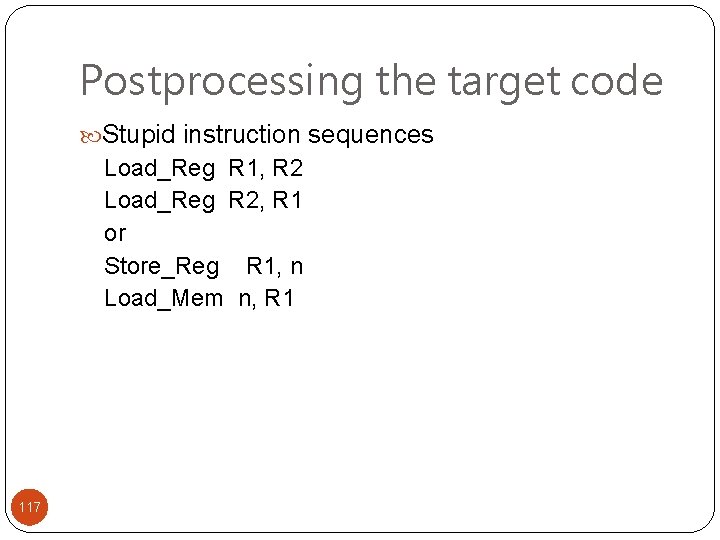

Postprocessing the target code

Stupid instruction sequences:

    Load_Reg R1, R2
    Load_Reg R2, R1

or

    Store_Reg R1, n
    Load_Mem  n, R1

Creating replacement patterns

Example:

    Load_Reg Ra, Rb; Load_Reg Rc, Rd | Ra=Rd, Rb=Rc  =>  Load_Reg Ra, Rb
    Load_Const 1, Ra; Add_Reg Rb, Rc | Ra=Rb, is_last_use(Rb)  =>  Increment Rc
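The first replacement pattern can be applied with a single pass over the instruction list. The following is a minimal sketch, not a general pattern matcher: the instruction encoding (`PeepInstr`, with `OTHER` standing for any instruction that is not a Load_Reg) is an assumption of this example, and only the pattern Load_Reg Ra, Rb; Load_Reg Rb, Ra is handled.

```c
#include <assert.h>

typedef enum { LOAD_REG, OTHER } PeepOp;

/* Load_Reg src, dst copies register src into register dst. */
typedef struct { PeepOp op; int src, dst; } PeepInstr;

/* Rewrites code[] in place and returns the new instruction count. */
int peephole(PeepInstr *code, int count) {
    assert(count >= 0);
    int out = 0;
    for (int i = 0; i < count; i++) {
        if (out > 0 &&
            code[out - 1].op == LOAD_REG && code[i].op == LOAD_REG &&
            code[out - 1].src == code[i].dst &&     /* Ra = Rd */
            code[out - 1].dst == code[i].src) {     /* Rb = Rc */
            continue;                               /* second load is redundant */
        }
        code[out++] = code[i];
    }
    return out;
}
```

Comparing against the last instruction kept, rather than the previous input instruction, lets the pass handle several redundant loads in a row.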

Locating and replacing instructions

- Multiple pattern matching
- Using FSA
- Dotted items

Homework

Study sections:

- 4.2.13 Machine code generation
- 4.3 Assemblers, linkers and loaders