Compiler Scheduling for a Wide Issue Multithreaded FPGABased

Compiler Scheduling for a Wide. Issue Multithreaded FPGA-Based Compute Engine Ilian Tili Kalin Ovtcharov, J. Gregory Steffan (University of Toronto) University of Toronto 1

What is an FPGA? • FPGA = Field Programmable Gate Array • Eg. , a large Altera Stratix IV: 40 nm, 2. 5 B transistors – 820 K logic elements (LEs), 3. 1 Mb block-RAMs, 1. 2 K multipliers – High-speed I/Os • Can be programmed to implement any circuit University of Toronto 2

IBM and FPGAs • Data. Power – FPGA-accelerated XML processing • Netezza – Data warehouse appliance; FPGAs accelerate DBMS • Algorithmics – Acceleration of financial algorithms • Lime (Liquid Metal) – Java synthesized to heterogeneous (CPUs, FPGAs) • HAL (Hardware Acceleration Lab) – IBM Toronto; FPGA-based acceleration • New: IBM Canada Research & Development Centre – One (of 5) thrust on “agile computing” • SURGE IN FPGA-BASED COMPUTING! University of Toronto 3

FPGA Programming • Requires expert hardware designer • Long compile times – up to a day for a large design -> Options for programming with high-level languages? University of Toronto 4

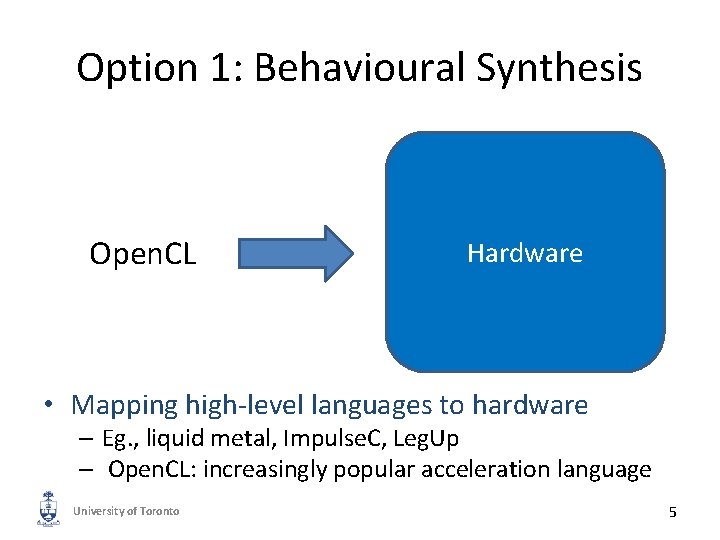

Option 1: Behavioural Synthesis Open. CL Hardware • Mapping high-level languages to hardware – Eg. , liquid metal, Impulse. C, Leg. Up – Open. CL: increasingly popular acceleration language University of Toronto 5

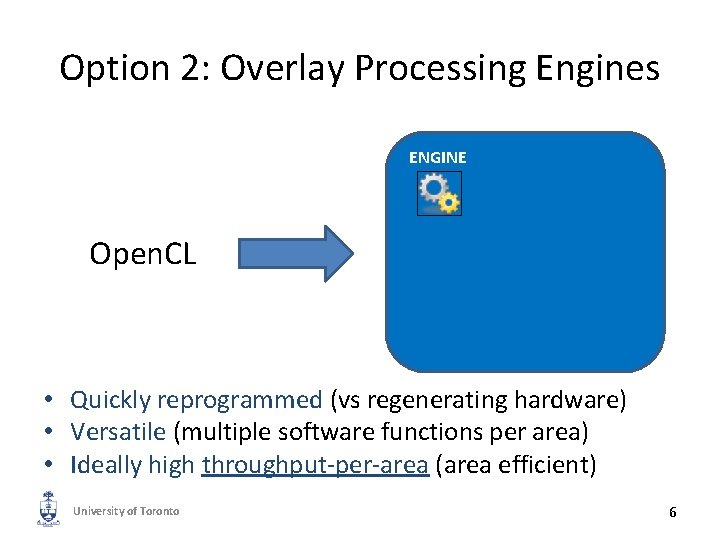

Option 2: Overlay Processing Engines ENGINE Open. CL • Quickly reprogrammed (vs regenerating hardware) • Versatile (multiple software functions per area) • Ideally high throughput-per-area (area efficient) University of Toronto 6

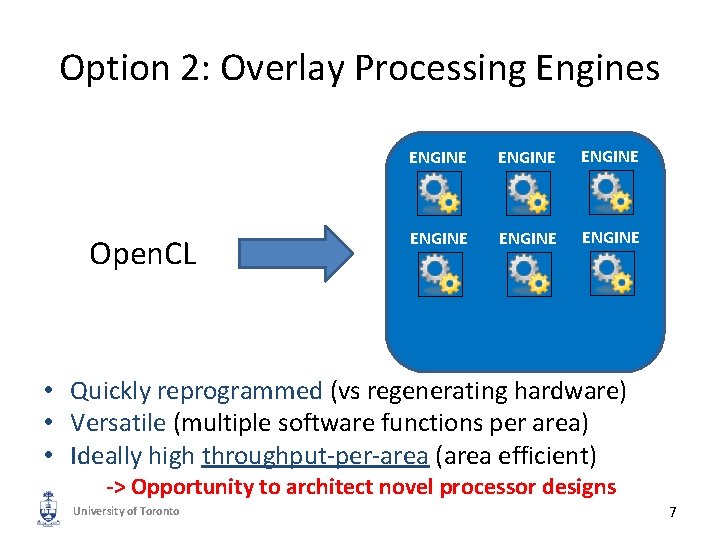

Option 2: Overlay Processing Engines Open. CL ENGINE ENGINE • Quickly reprogrammed (vs regenerating hardware) • Versatile (multiple software functions per area) • Ideally high throughput-per-area (area efficient) -> Opportunity to architect novel processor designs University of Toronto 7

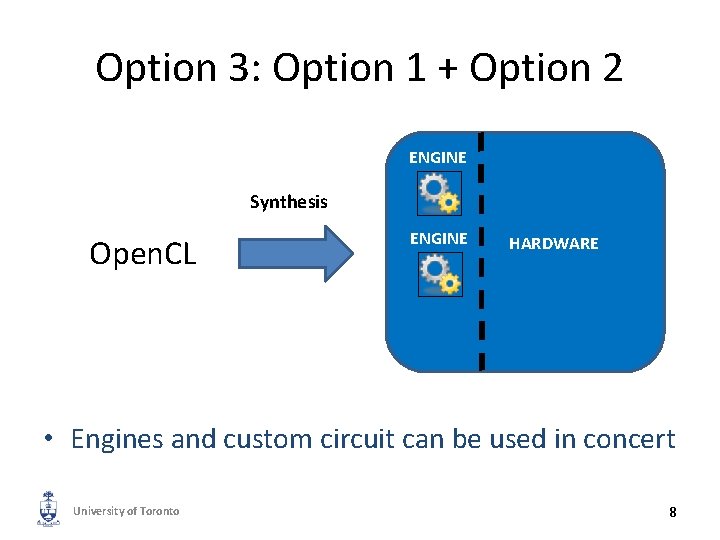

Option 3: Option 1 + Option 2 ENGINE Synthesis Open. CL ENGINE HARDWARE • Engines and custom circuit can be used in concert University of Toronto 8

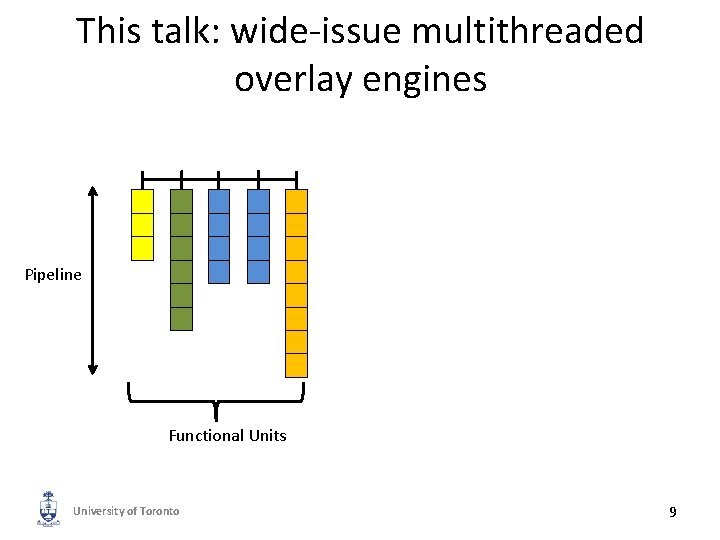

This talk: wide-issue multithreaded overlay engines Pipeline Functional Units University of Toronto 9

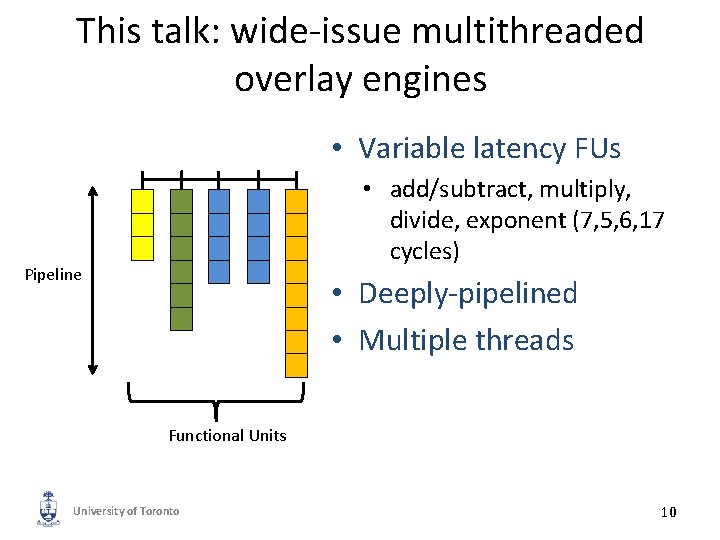

This talk: wide-issue multithreaded overlay engines • Variable latency FUs • add/subtract, multiply, divide, exponent (7, 5, 6, 17 cycles) Pipeline • Deeply-pipelined • Multiple threads Functional Units University of Toronto 10

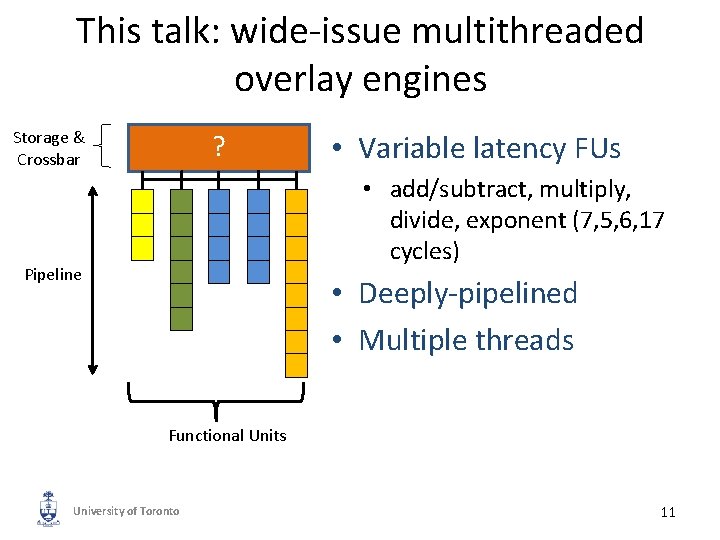

This talk: wide-issue multithreaded overlay engines Storage & Crossbar ? • Variable latency FUs • add/subtract, multiply, divide, exponent (7, 5, 6, 17 cycles) Pipeline • Deeply-pipelined • Multiple threads Functional Units University of Toronto 11

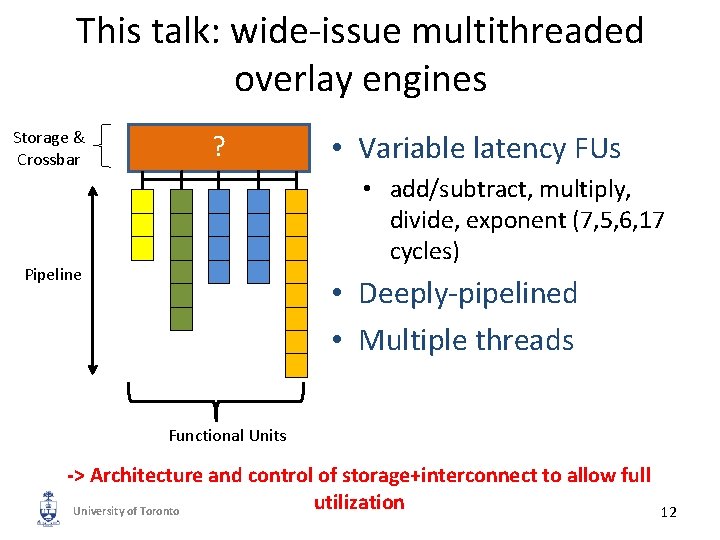

This talk: wide-issue multithreaded overlay engines Storage & Crossbar ? • Variable latency FUs • add/subtract, multiply, divide, exponent (7, 5, 6, 17 cycles) Pipeline • Deeply-pipelined • Multiple threads Functional Units -> Architecture and control of storage+interconnect to allow full utilization University of Toronto 12

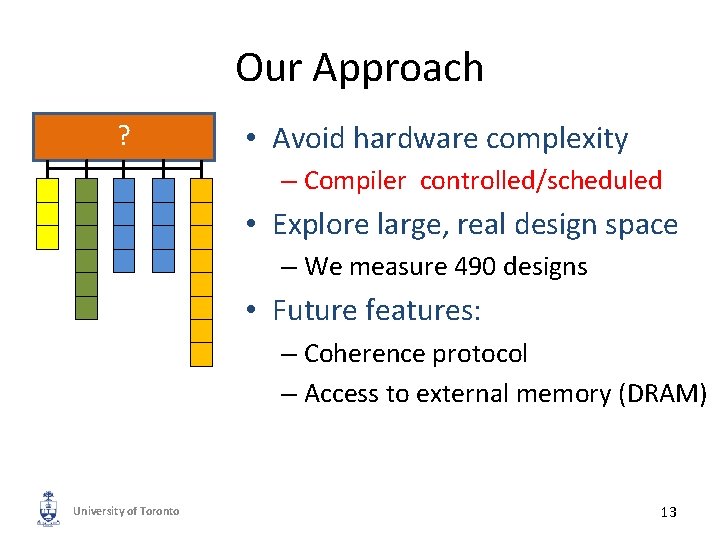

Our Approach ? • Avoid hardware complexity – Compiler controlled/scheduled • Explore large, real design space – We measure 490 designs • Future features: – Coherence protocol – Access to external memory (DRAM) University of Toronto 13

Our Objective Find Best Design 1. Fully utilizes datapath – Multiple ALUs of significant and varying pipeline depth. 2. Reduces FPGA area usage – Thread data storage – Connections between components • Exploring a very large design space University of Toronto 14

Hardware Architecture Possibilities University of Toronto 15

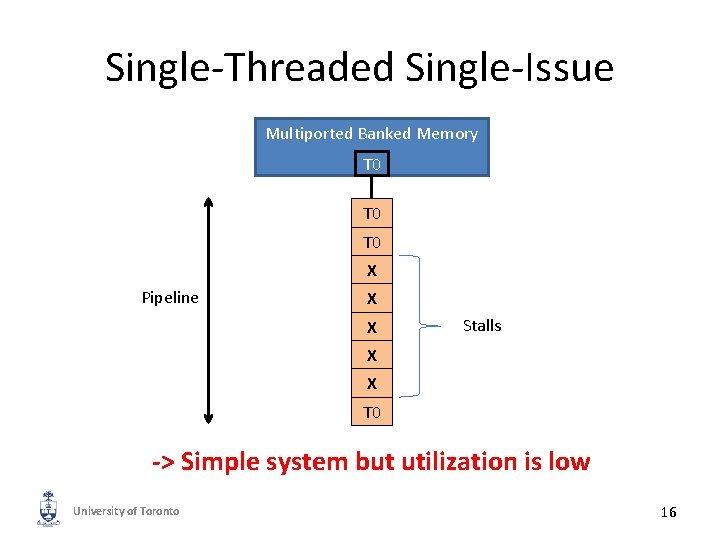

Single-Threaded Single-Issue Multiported Banked Memory T 0 T 0 X Pipeline X X Stalls X X T 0 -> Simple system but utilization is low University of Toronto 16

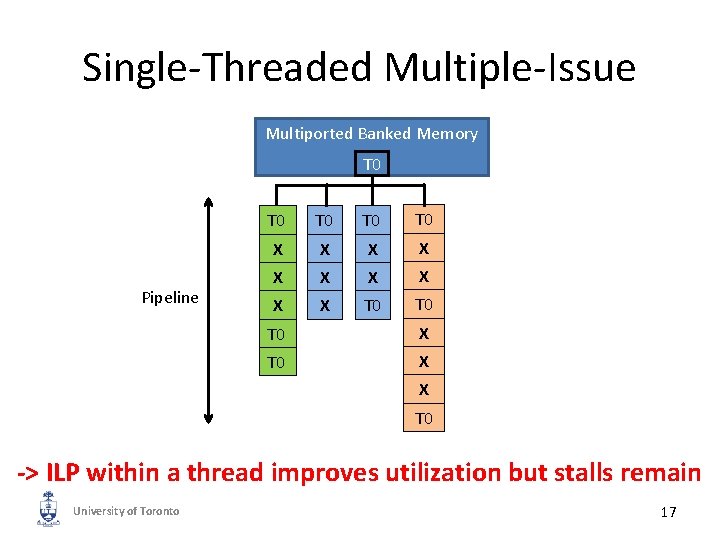

Single-Threaded Multiple-Issue Multiported Banked Memory T 0 Pipeline T 0 T 0 X X X X X T 0 T 0 X X T 0 -> ILP within a thread improves utilization but stalls remain University of Toronto 17

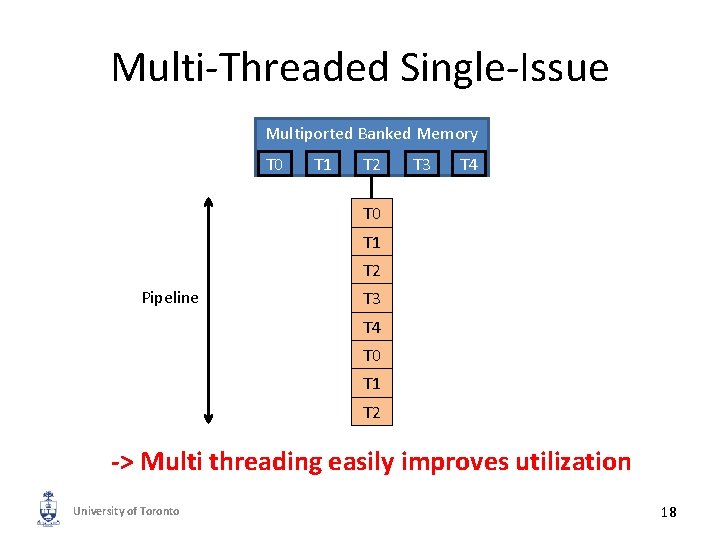

Multi-Threaded Single-Issue Multiported Banked Memory T 0 T 1 T 2 T 3 T 4 T 0 T 1 T 2 Pipeline T 3 T 4 T 0 T 1 T 2 -> Multi threading easily improves utilization University of Toronto 18

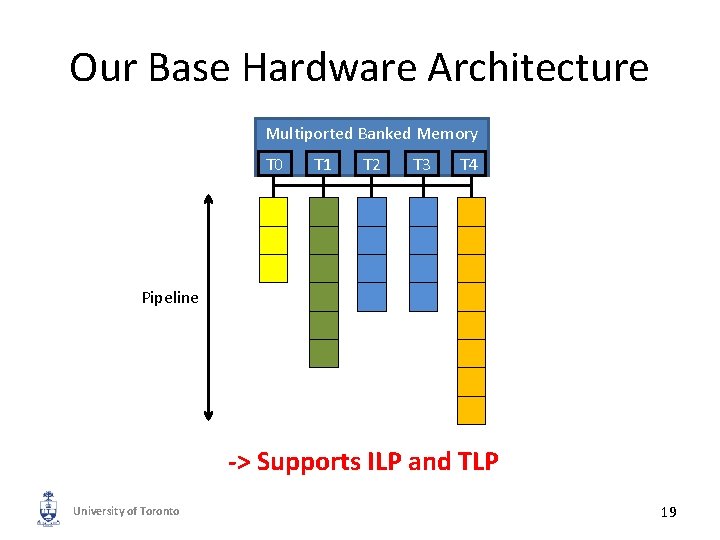

Our Base Hardware Architecture Multiported Banked Memory T 0 T 1 T 2 T 3 T 4 Pipeline -> Supports ILP and TLP University of Toronto 19

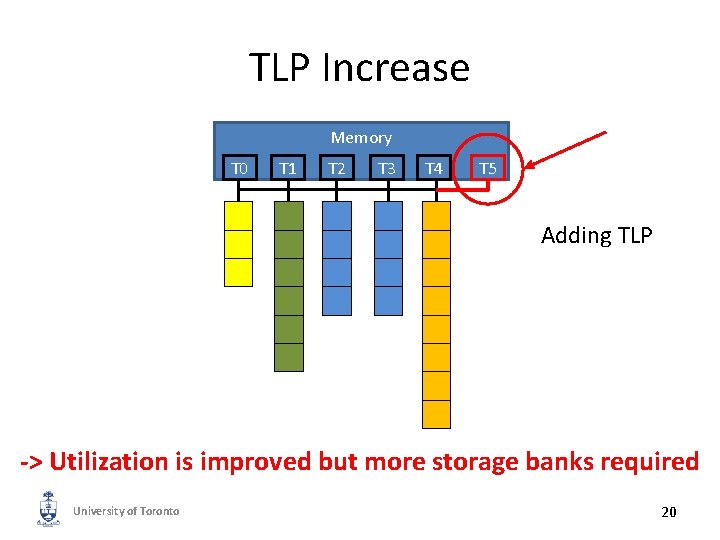

TLP Increase Memory T 0 T 1 T 2 T 3 T 4 T 5 Adding TLP -> Utilization is improved but more storage banks required University of Toronto 20

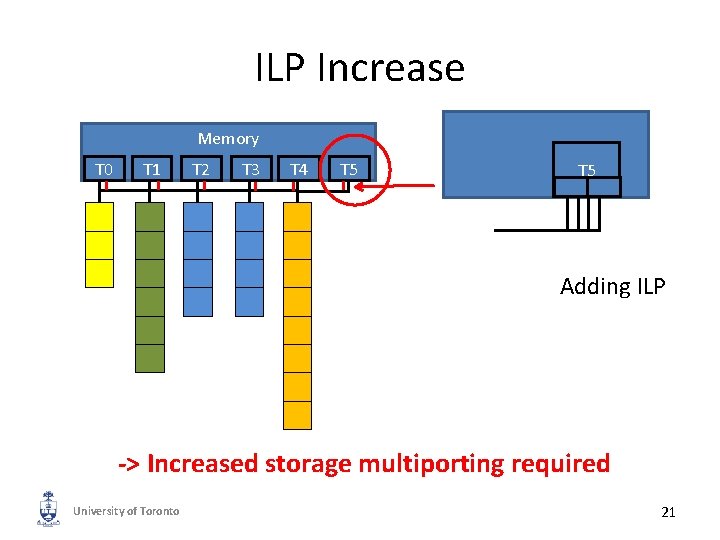

ILP Increase Memory T 0 T 1 T 2 T 3 T 4 T 5 Adding ILP -> Increased storage multiporting required University of Toronto 21

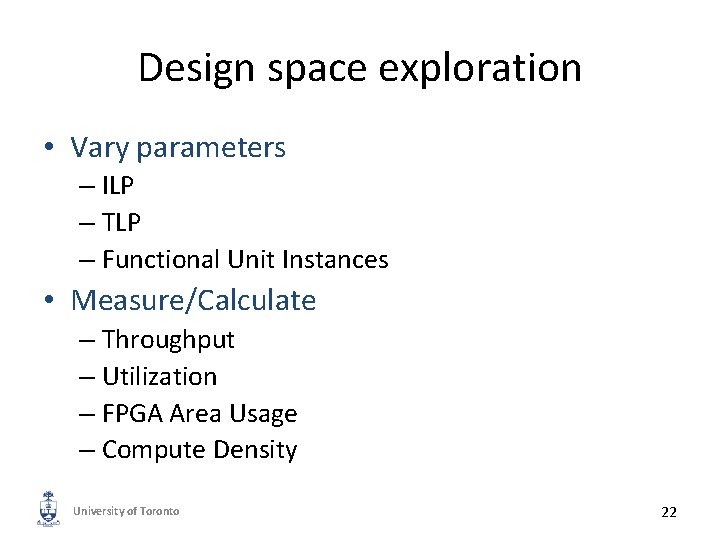

Design space exploration • Vary parameters – ILP – TLP – Functional Unit Instances • Measure/Calculate – Throughput – Utilization – FPGA Area Usage – Compute Density University of Toronto 22

Compiler Scheduling (Implemented in LLVM) University of Toronto 23

Compiler Flow C code University of Toronto 24

Compiler Flow C code M V L L 1 IR code University of Toronto 25

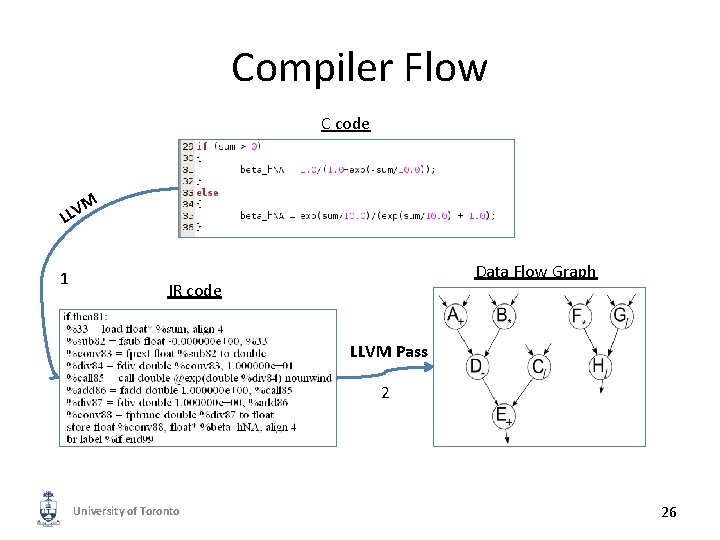

Compiler Flow C code M V L L 1 Data Flow Graph IR code LLVM Pass 2 University of Toronto 26

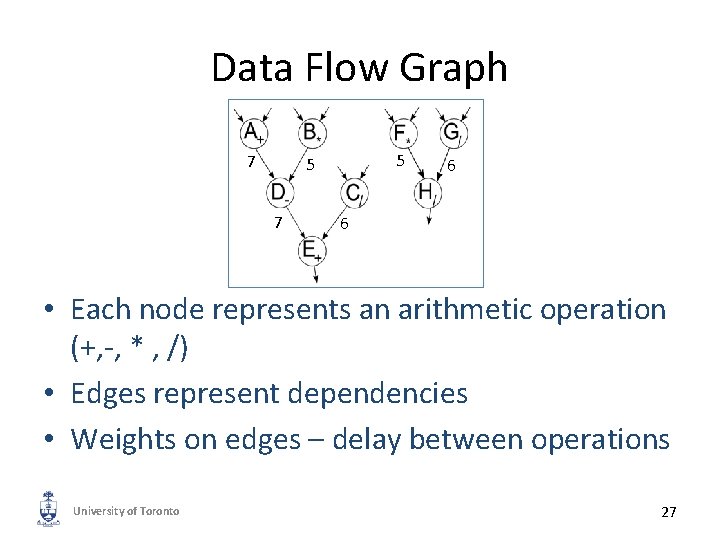

Data Flow Graph 7 5 5 7 6 6 • Each node represents an arithmetic operation (+, -, * , /) • Edges represent dependencies • Weights on edges – delay between operations University of Toronto 27

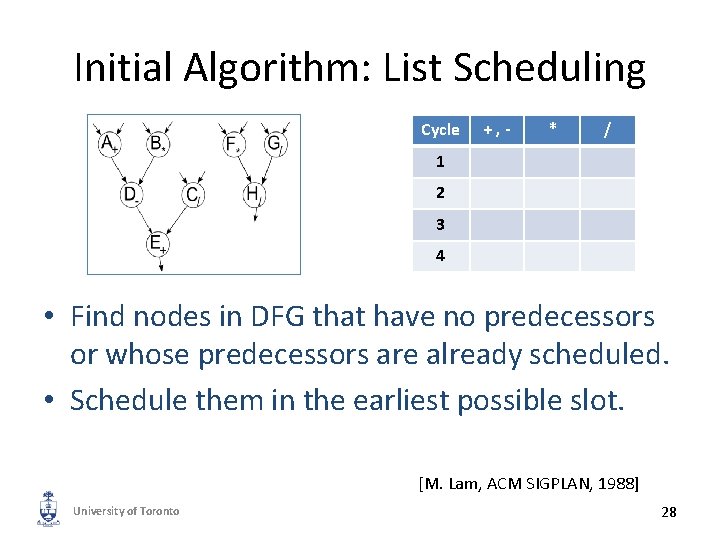

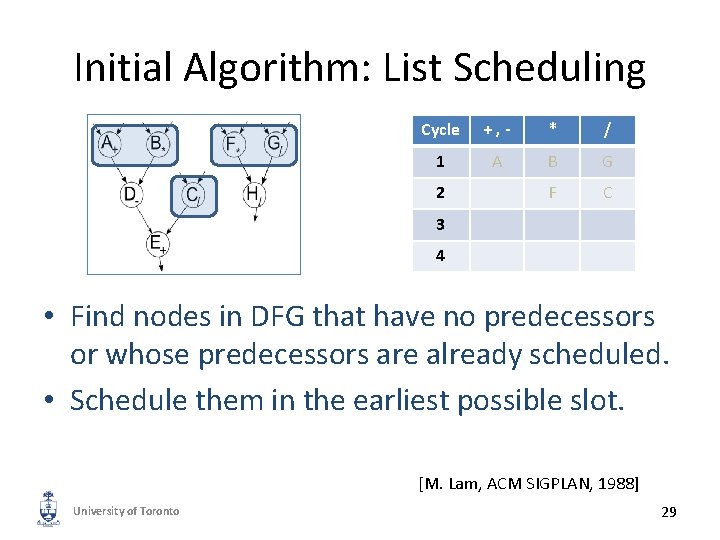

Initial Algorithm: List Scheduling Cycle +, - * / 1 2 3 4 • Find nodes in DFG that have no predecessors or whose predecessors are already scheduled. • Schedule them in the earliest possible slot. [M. Lam, ACM SIGPLAN, 1988] University of Toronto 28

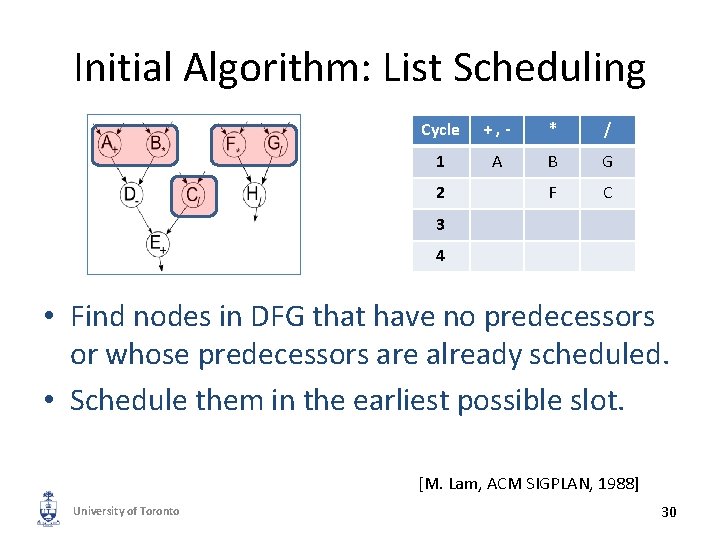

Initial Algorithm: List Scheduling Cycle +, - * / 1 A B G F C 2 3 4 • Find nodes in DFG that have no predecessors or whose predecessors are already scheduled. • Schedule them in the earliest possible slot. [M. Lam, ACM SIGPLAN, 1988] University of Toronto 29

Initial Algorithm: List Scheduling Cycle +, - * / 1 A B G F C 2 3 4 • Find nodes in DFG that have no predecessors or whose predecessors are already scheduled. • Schedule them in the earliest possible slot. [M. Lam, ACM SIGPLAN, 1988] University of Toronto 30

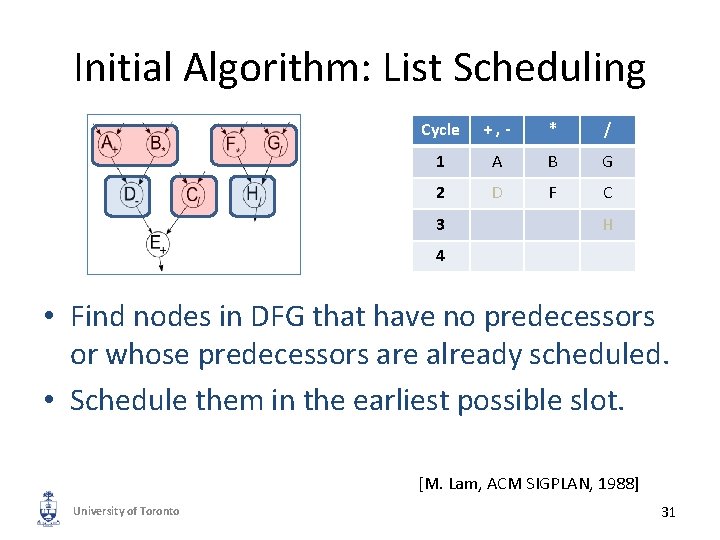

Initial Algorithm: List Scheduling Cycle +, - * / 1 A B G 2 D F C 3 H 4 • Find nodes in DFG that have no predecessors or whose predecessors are already scheduled. • Schedule them in the earliest possible slot. [M. Lam, ACM SIGPLAN, 1988] University of Toronto 31

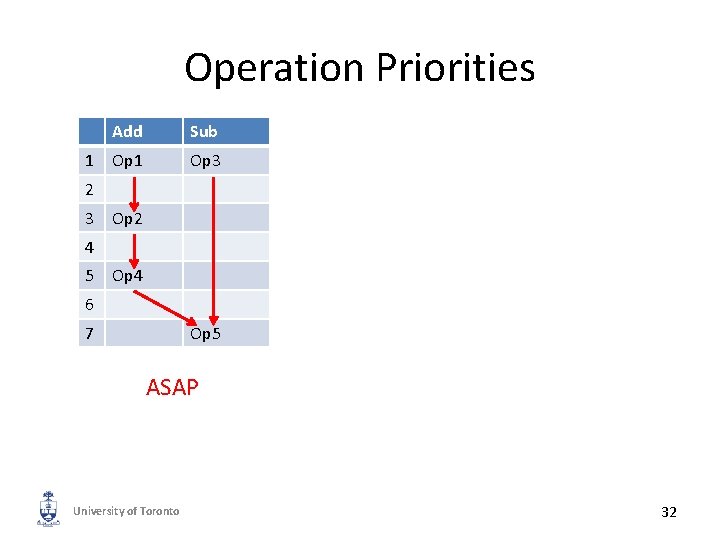

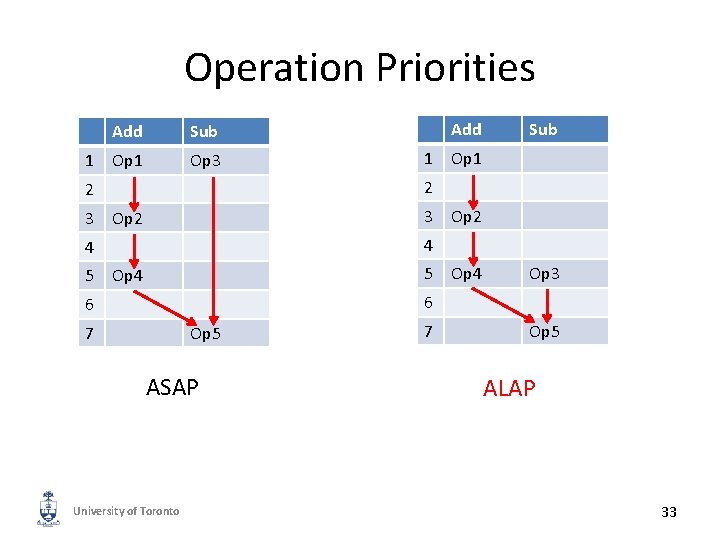

Operation Priorities 1 Add Sub Op 1 Op 3 2 3 Op 2 4 5 Op 4 6 7 Op 5 ASAP University of Toronto 32

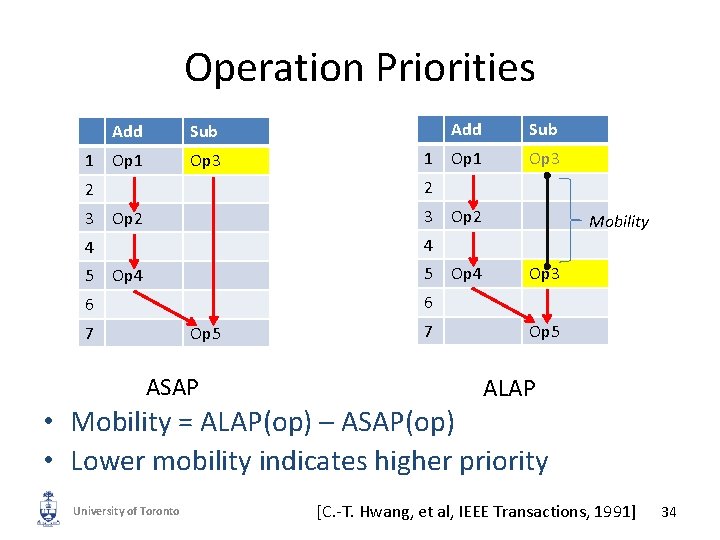

Operation Priorities 1 Add Sub Op 1 Op 3 3 Op 2 Op 1 Op 2 4 4 5 1 Sub 2 2 3 Add 5 Op 4 Op 3 6 6 7 Op 5 ASAP University of Toronto 7 Op 5 ALAP 33

Operation Priorities 1 Add Sub Op 1 Op 3 3 Op 2 Op 1 Op 3 Op 2 Mobility 4 4 5 Sub 2 2 3 1 Add 5 Op 4 Op 3 6 6 7 Op 5 ASAP 7 Op 5 ALAP • Mobility = ALAP(op) – ASAP(op) • Lower mobility indicates higher priority University of Toronto [C. -T. Hwang, et al, IEEE Transactions, 1991] 34

Scheduling Variations 1. 2. 3. 4. Greedy Mix Greedy with Variable Groups Longest Path University of Toronto 35

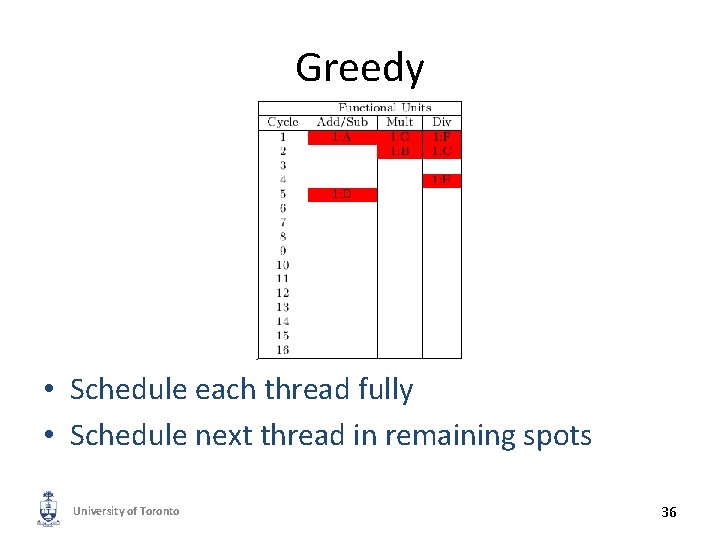

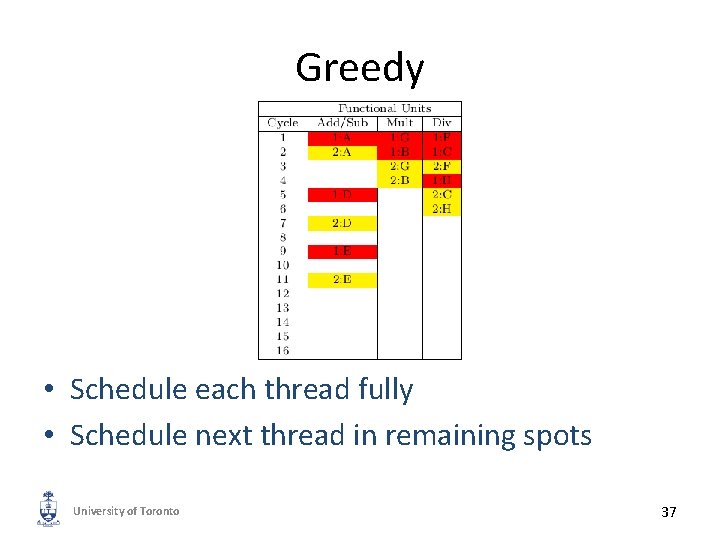

Greedy • Schedule each thread fully • Schedule next thread in remaining spots University of Toronto 36

Greedy • Schedule each thread fully • Schedule next thread in remaining spots University of Toronto 37

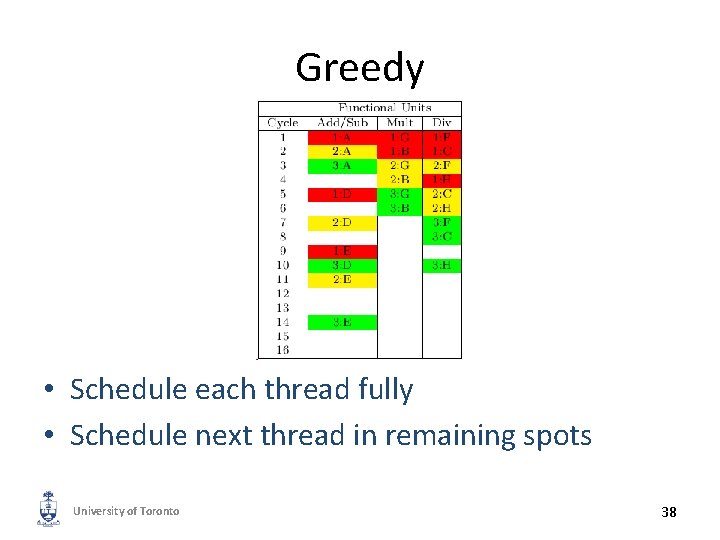

Greedy • Schedule each thread fully • Schedule next thread in remaining spots University of Toronto 38

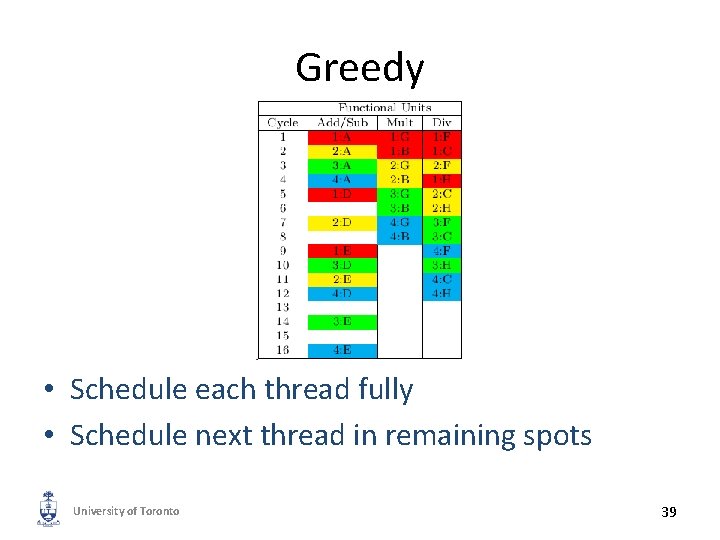

Greedy • Schedule each thread fully • Schedule next thread in remaining spots University of Toronto 39

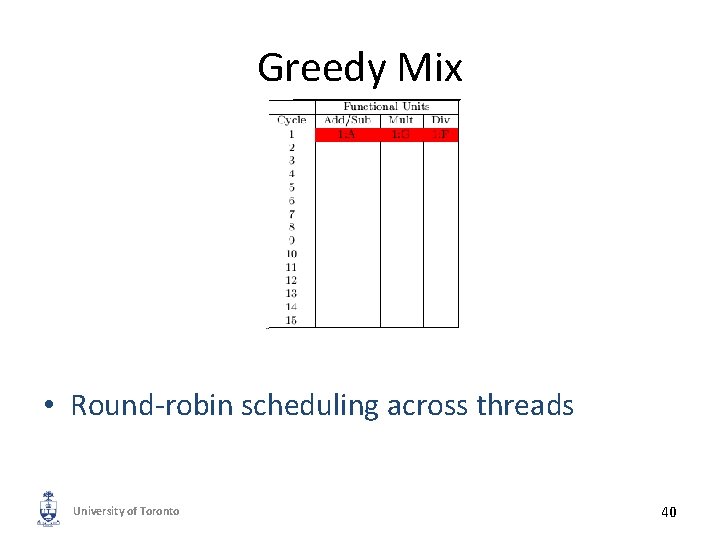

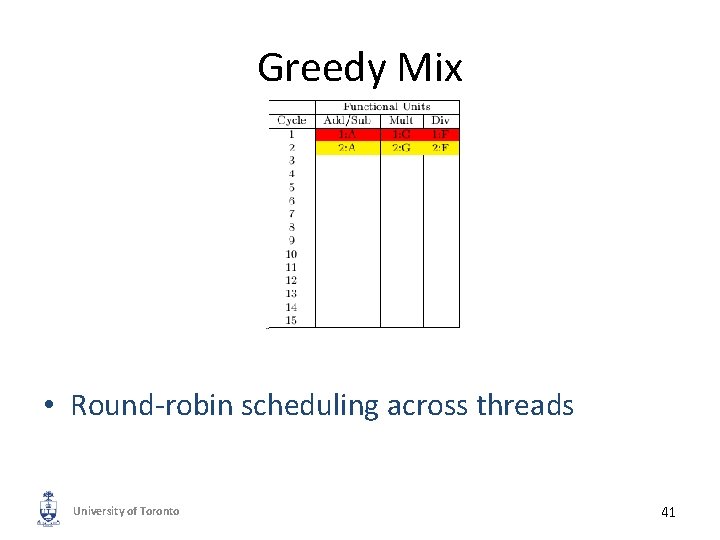

Greedy Mix • Round-robin scheduling across threads University of Toronto 40

Greedy Mix • Round-robin scheduling across threads University of Toronto 41

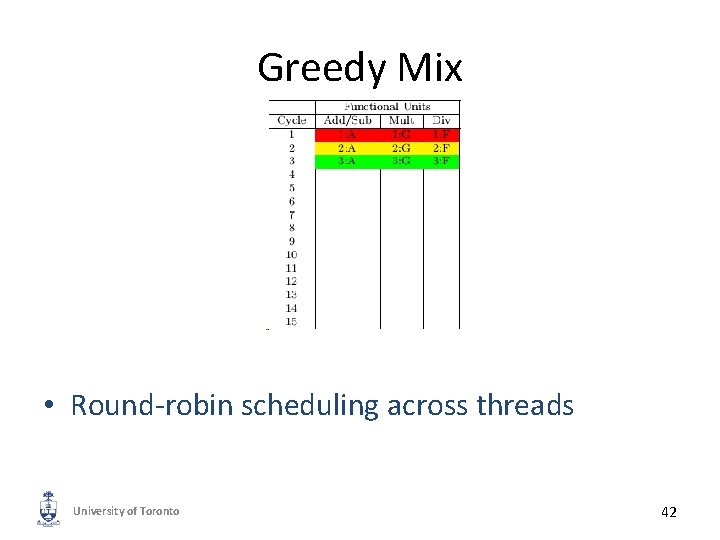

Greedy Mix • Round-robin scheduling across threads University of Toronto 42

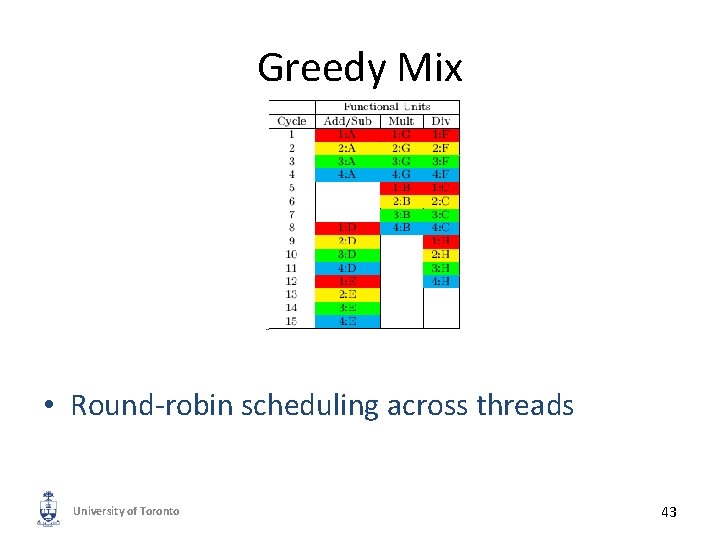

Greedy Mix • Round-robin scheduling across threads University of Toronto 43

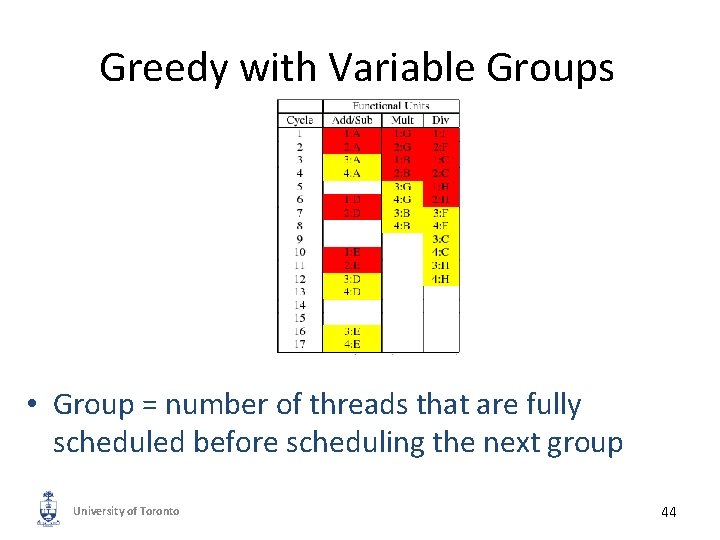

Greedy with Variable Groups • Group = number of threads that are fully scheduled before scheduling the next group University of Toronto 44

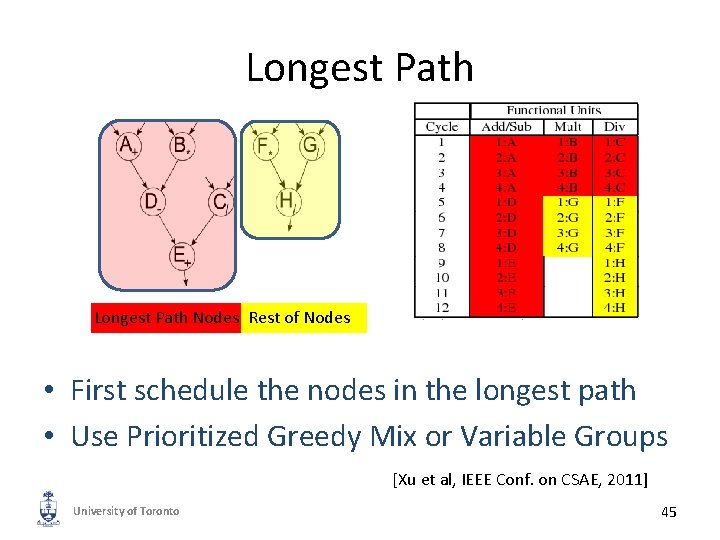

Longest Path Nodes Rest of Nodes • First schedule the nodes in the longest path • Use Prioritized Greedy Mix or Variable Groups [Xu et al, IEEE Conf. on CSAE, 2011] University of Toronto 45

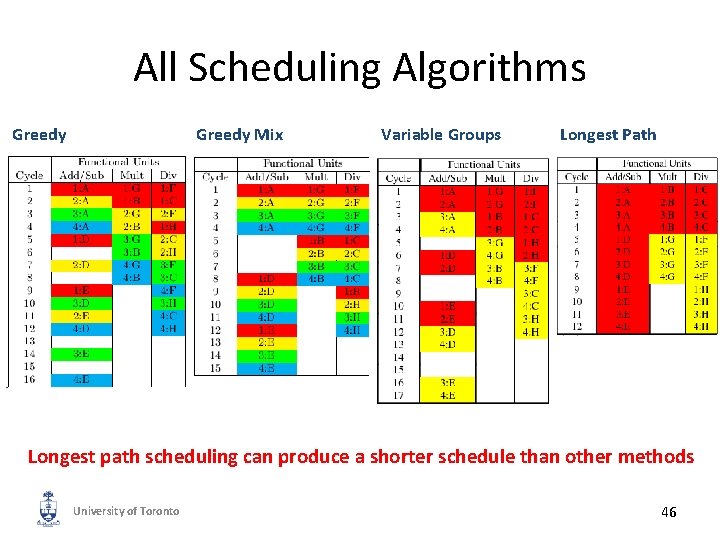

All Scheduling Algorithms Greedy Mix Variable Groups Longest Path Longest path scheduling can produce a shorter schedule than other methods University of Toronto 46

Compilation Results University of Toronto 47

Sample App: Neuron Simulation • • Hodgkin-Huxley Differential equations Computationally intensive Floating point operations: – Add, Subtract, Divide, Multiply, Exponent University of Toronto 48

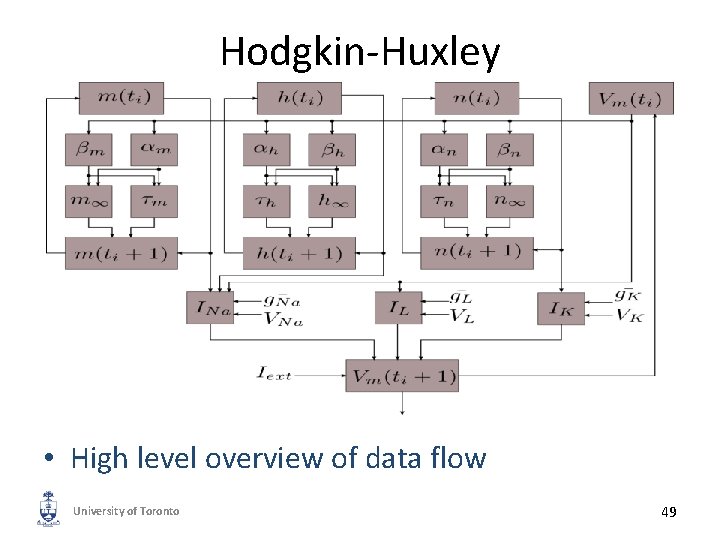

Hodgkin-Huxley • High level overview of data flow University of Toronto 49

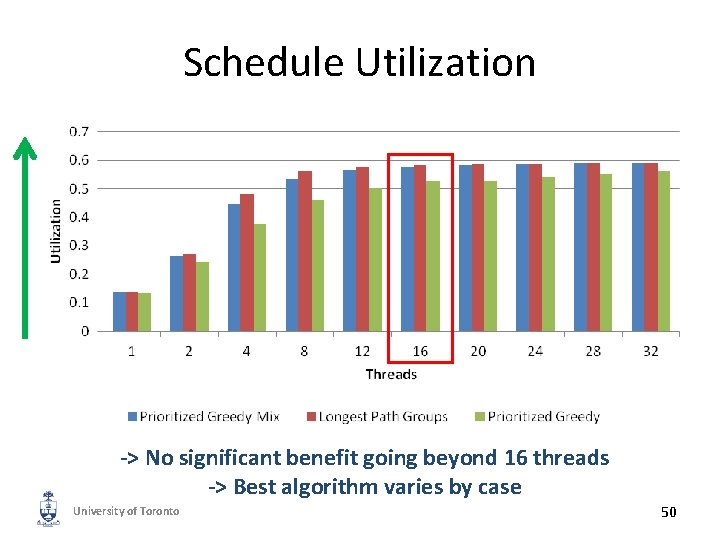

Schedule Utilization -> No significant benefit going beyond 16 threads -> Best algorithm varies by case University of Toronto 50

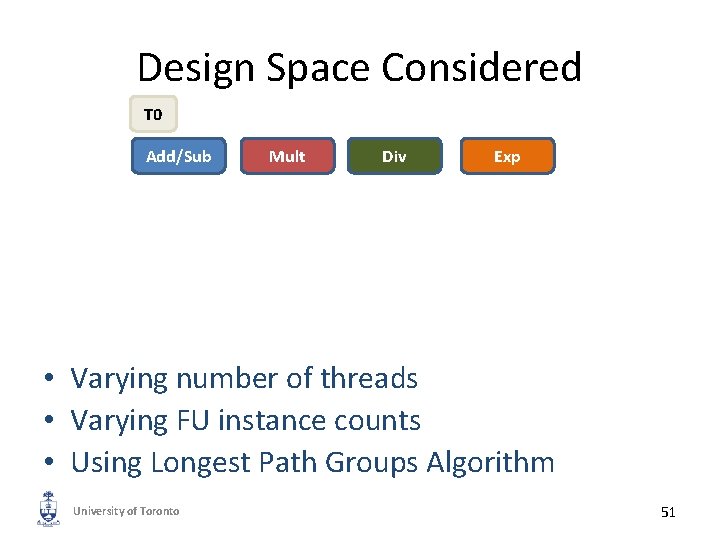

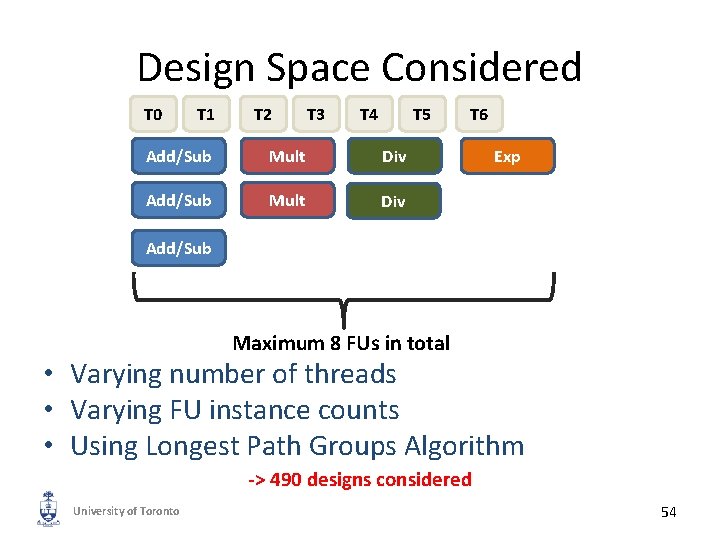

Design Space Considered T 0 Add/Sub Mult Div Exp • Varying number of threads • Varying FU instance counts • Using Longest Path Groups Algorithm University of Toronto 51

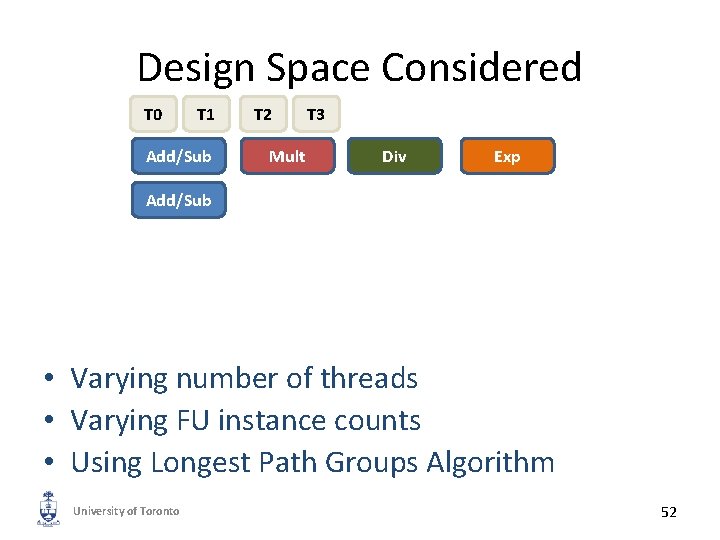

Design Space Considered T 0 T 1 Add/Sub T 2 Mult T 3 Div Exp Add/Sub • Varying number of threads • Varying FU instance counts • Using Longest Path Groups Algorithm University of Toronto 52

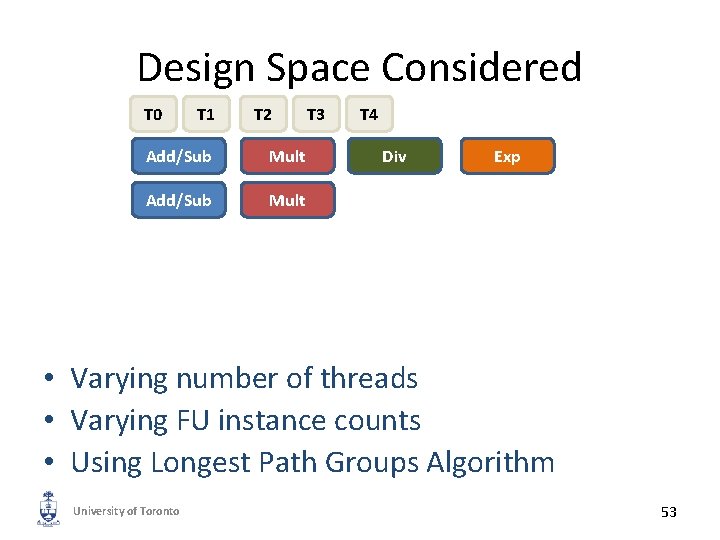

Design Space Considered T 0 T 1 T 2 Add/Sub Mult T 3 T 4 Div Exp • Varying number of threads • Varying FU instance counts • Using Longest Path Groups Algorithm University of Toronto 53

Design Space Considered T 0 T 1 T 2 T 3 T 4 T 5 Add/Sub Mult Div T 6 Exp Add/Sub Maximum 8 FUs in total • Varying number of threads • Varying FU instance counts • Using Longest Path Groups Algorithm -> 490 designs considered University of Toronto 54

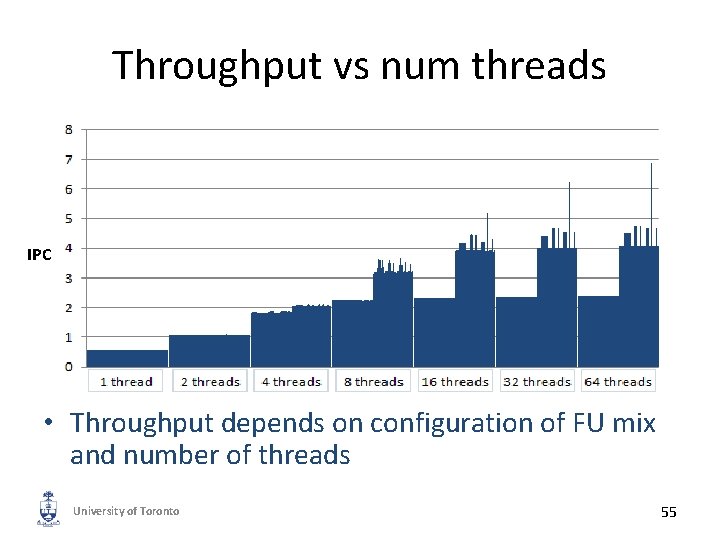

Throughput vs num threads IPC • Throughput depends on configuration of FU mix and number of threads University of Toronto 55

Throughput vs num threads 3 -add/2 -mul/2 -div/1 -exp IPC • Throughput depends on configuration of FU mix and number of threads University of Toronto 56

Real Hardware Results University of Toronto 57

Methodology • • Design built on FPGA Altera Stratix IV (EP 4 SGX 530) Quartus 12. 0 Area = equivalent ALMs – Takes into account BRAM (memory) requirement • IEEE-754 compliant floating point units – Clock Frequency at least 200 MHz University of Toronto 58

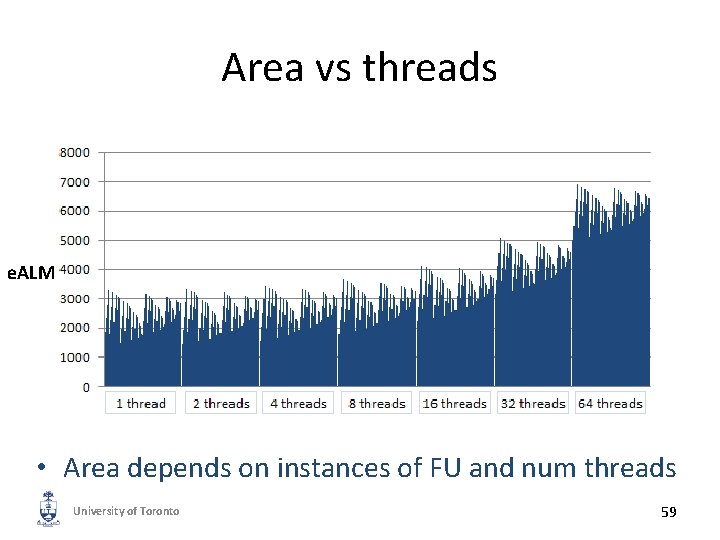

Area vs threads (e. ALM) e. ALM • Area depends on instances of FU and num threads University of Toronto 59

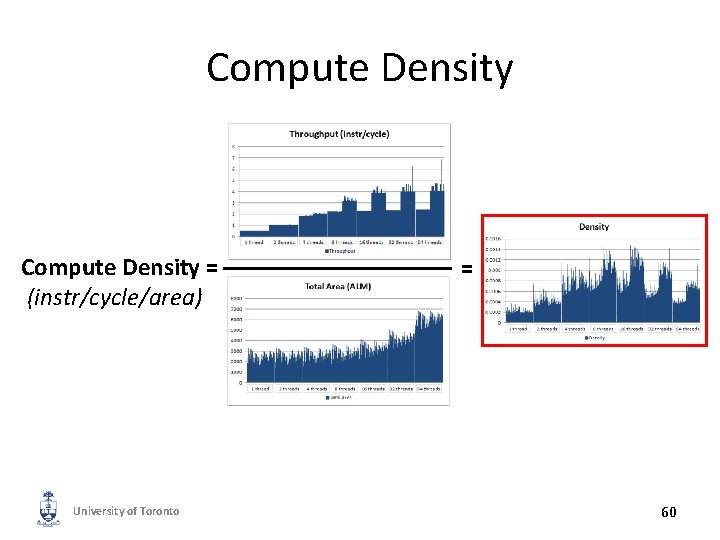

Compute Density = (instr/cycle/area) University of Toronto = 60

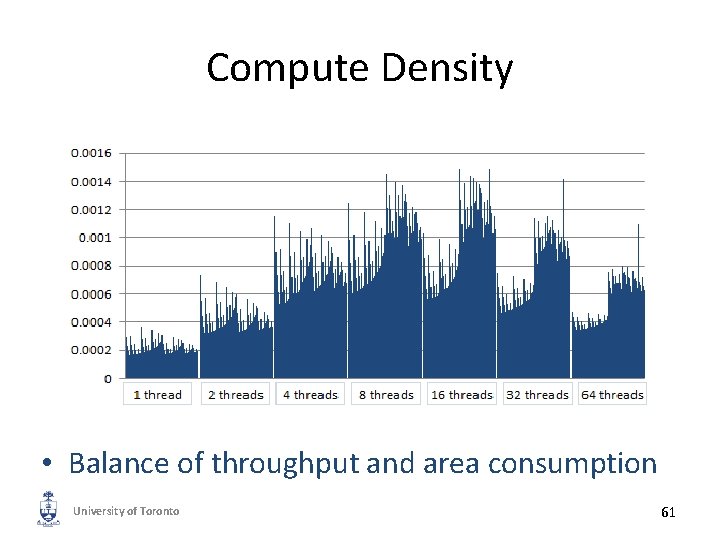

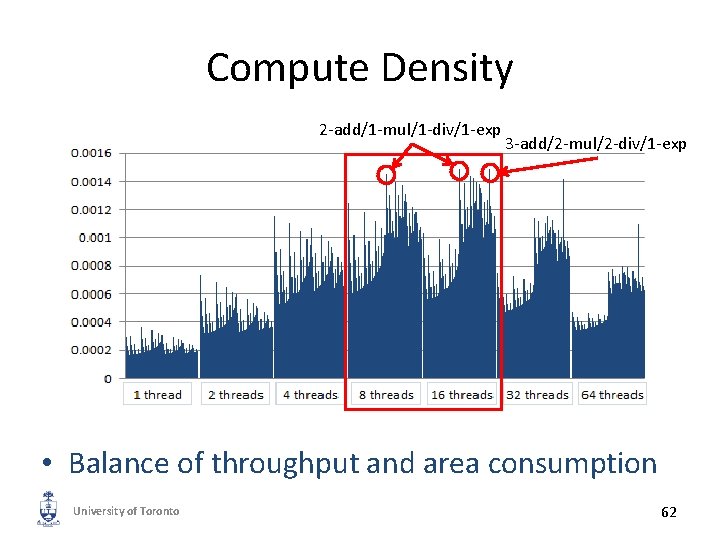

Compute Density • Balance of throughput and area consumption University of Toronto 61

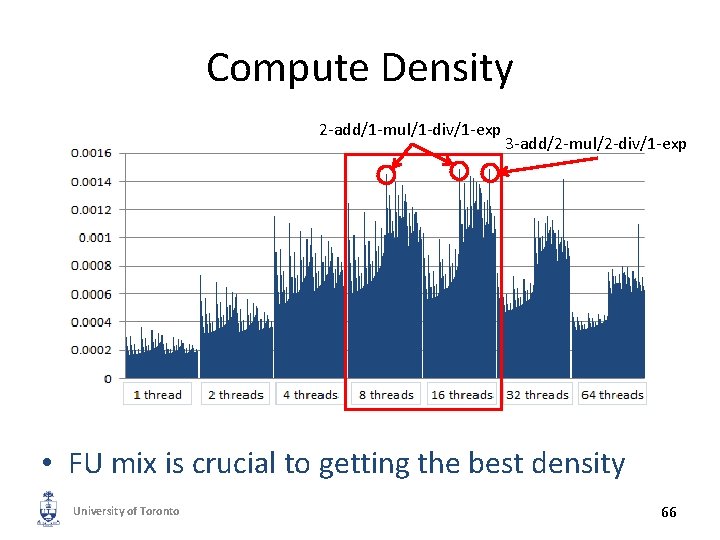

Compute Density 2 -add/1 -mul/1 -div/1 -exp 3 -add/2 -mul/2 -div/1 -exp • Balance of throughput and area consumption University of Toronto 62

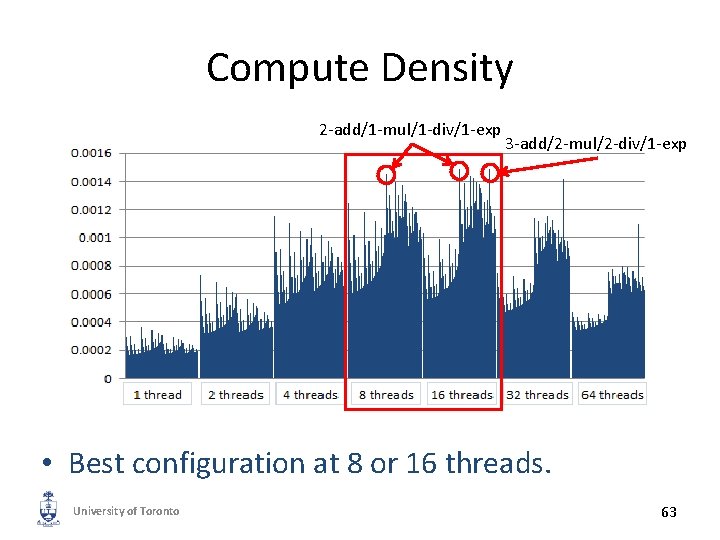

Compute Density 2 -add/1 -mul/1 -div/1 -exp 3 -add/2 -mul/2 -div/1 -exp • Best configuration at 8 or 16 threads. University of Toronto 63

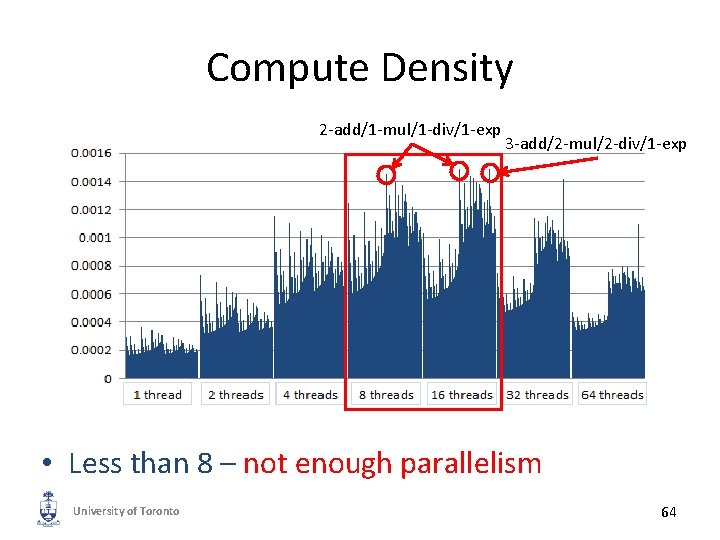

Compute Density 2 -add/1 -mul/1 -div/1 -exp 3 -add/2 -mul/2 -div/1 -exp • Less than 8 – not enough parallelism University of Toronto 64

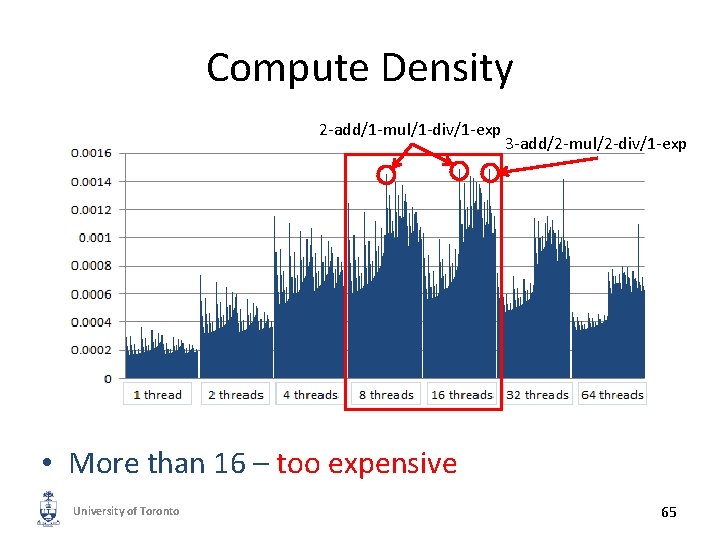

Compute Density 2 -add/1 -mul/1 -div/1 -exp 3 -add/2 -mul/2 -div/1 -exp • More than 16 – too expensive University of Toronto 65

Compute Density 2 -add/1 -mul/1 -div/1 -exp 3 -add/2 -mul/2 -div/1 -exp • FU mix is crucial to getting the best density University of Toronto 66

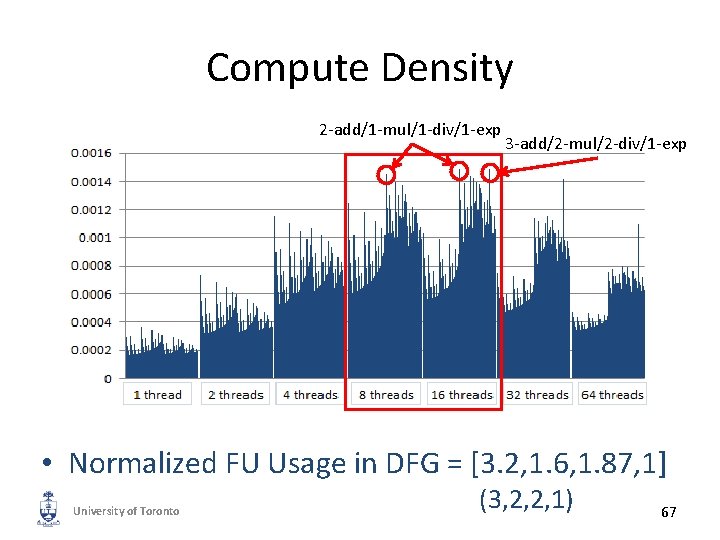

Compute Density 2 -add/1 -mul/1 -div/1 -exp 3 -add/2 -mul/2 -div/1 -exp • Normalized FU Usage in DFG = [3. 2, 1. 6, 1. 87, 1] University of Toronto (3, 2, 2, 1) 67

Conclusions • Longest Path Scheduling seems best – Highest utilization on average • Best compute density found through simulation – 8 and 16 threads give best compute densities – Best FU mix proportional to FU usage in DFG • Compiler finds best hardware configuration University of Toronto 68

- Slides: 68