
Compiler Construction: Back-End (Synthesis)

Virendra Singh, Associate Professor, Computer Architecture and Dependable Systems Lab, Department of Electrical Engineering, Indian Institute of Technology Bombay

http://www.ee.iitb.ac.in/~viren/

E-mail: viren@ee.iitb.ac.in

EE-717/453 Advanced Computing for Electrical Engineers, Lecture 26

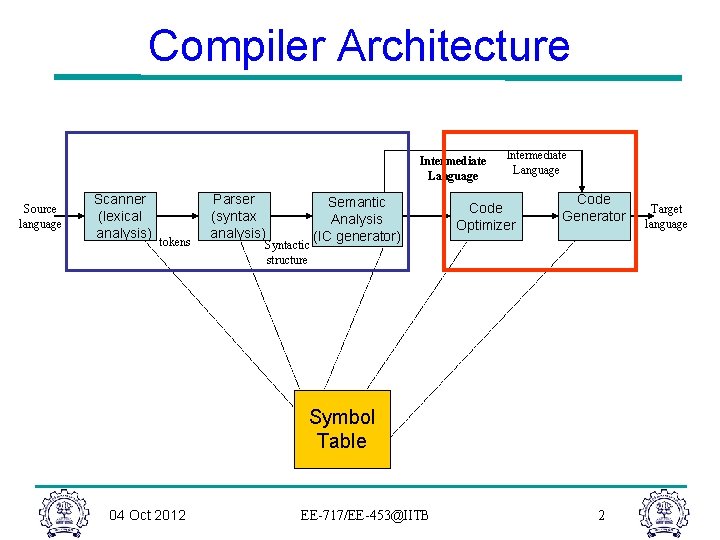

Compiler Architecture

(Figure: Source language, then Scanner (lexical analysis), then tokens, then Parser (syntax analysis), then syntactic structure, then Semantic Analysis (IC generator), then Intermediate Language, then Code Optimizer, then Intermediate Language, then Code Generator, then Target language. The Symbol Table sits alongside the phases.)

04 Oct 2012 EE-717/EE-453@IITB

Intermediate Code Generation

- Representation of the input program
  - Internal to the compiler
  - Encodes knowledge collected during compilation
  - Varied forms and levels
- Typically a compiler uses more than one kind of IR
- Desired properties
  - Easy to produce, manipulate, and translate into the target code

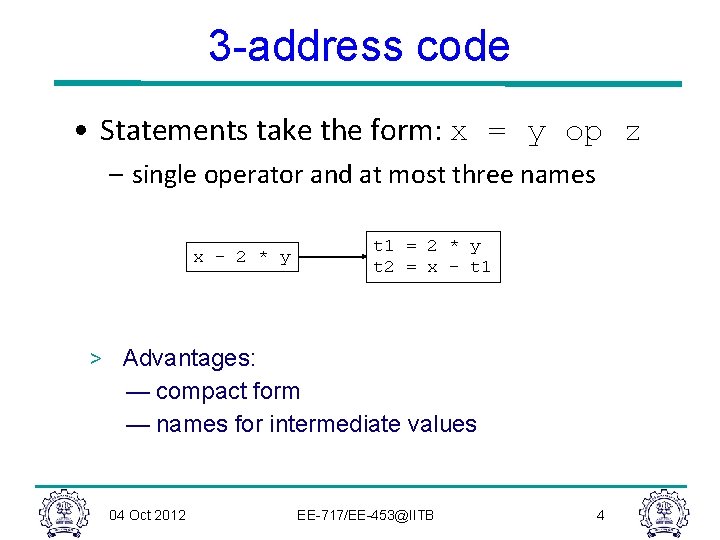

3-address code

- Statements take the form: x = y op z
  - A single operator and at most three names
- Example: x - 2 * y becomes
  - t1 = 2 * y
  - t2 = x - t1
- Advantages:
  - Compact form
  - Names for intermediate values
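The translation on this slide can be sketched in a few lines of Python. This is only an illustration: the tuple-based expression tree and the names `gen_tac` and `translate` are assumptions made for the example, not part of the lecture.

```python
import itertools

def gen_tac(expr, code, counter):
    """Emit three-address code for a nested tuple expression.

    expr is either a name or constant (str) or a tuple (op, left, right).
    Returns the name that holds the value of expr.
    """
    if isinstance(expr, str):
        return expr                      # a leaf is already a name
    op, left, right = expr
    l = gen_tac(left, code, counter)
    r = gen_tac(right, code, counter)
    temp = f"t{next(counter)}"           # fresh temporary for this result
    code.append(f"{temp} = {l} {op} {r}")
    return temp

def translate(expr):
    """Return the list of three-address statements for expr."""
    code = []
    gen_tac(expr, code, itertools.count(1))
    return code

# x - 2 * y written as a tree (hypothetical input format)
for line in translate(("-", "x", ("*", "2", "y"))):
    print(line)
```

Each statement has one operator and at most three names, and every intermediate value gets its own temporary.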

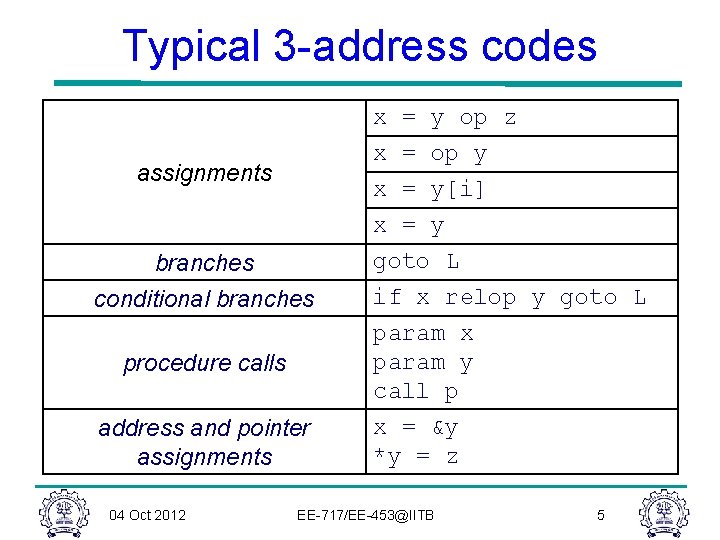

Typical 3-address codes

- Assignments: x = y op z, x = op y, x = y[i], x = y
- Branches: goto L
- Conditional branches: if x relop y goto L
- Procedure calls: param x, param y, call p
- Address and pointer assignments: x = &y, *y = z

Optimization: The Idea

- Transform the program to improve efficiency
  - Performance: faster execution
  - Size: smaller executable, smaller memory footprint
- Tradeoffs:
  1. Performance vs. size
  2. Compilation speed and memory

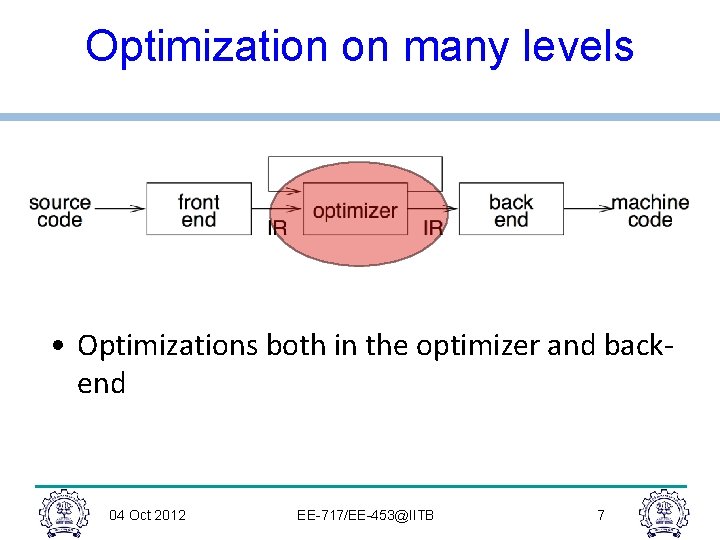

Optimization on many levels

- Optimizations take place both in the optimizer and in the back end

Examples for Optimizations

- Constant Folding / Propagation
- Copy Propagation
- Algebraic Simplifications
- Strength Reduction
- Dead Code Elimination
- Loop Optimizations
- Partial Redundancy Elimination
- Code Inlining
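As an illustration of the first item, here is a minimal sketch of constant propagation and folding over straight-line three-address code. The statement encoding `(dest, op, arg1, arg2)` and the function name `fold_constants` are assumptions for the example, and the sketch only handles a single basic block.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def fold_constants(tac):
    """Constant propagation and folding over one basic block.

    Each statement is (dest, op, arg1, arg2); a plain copy uses op None and
    arg2 None. Arguments are ints (constants) or strs (names).
    """
    known = {}                           # name -> constant value known so far
    out = []
    for dest, op, a, b in tac:
        # Propagate: replace names whose constant value is known.
        a = known.get(a, a) if isinstance(a, str) else a
        b = known.get(b, b) if isinstance(b, str) else b
        if op is None and isinstance(a, int):
            known[dest] = a
            out.append((dest, None, a, None))
        elif op in OPS and isinstance(a, int) and isinstance(b, int):
            value = OPS[op](a, b)        # fold: evaluate at compile time
            known[dest] = value
            out.append((dest, None, value, None))
        else:
            known.pop(dest, None)        # dest no longer holds a known constant
            out.append((dest, op, a, b))
    return out

block = [("n", None, 4, None), ("t1", "*", "n", 2), ("t2", "+", "x", "t1")]
for stmt in fold_constants(block):
    print(stmt)
```

After the pass, `t1` is a constant copy and `t2` adds a literal, which is the kind of input that later instruction selection can exploit.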

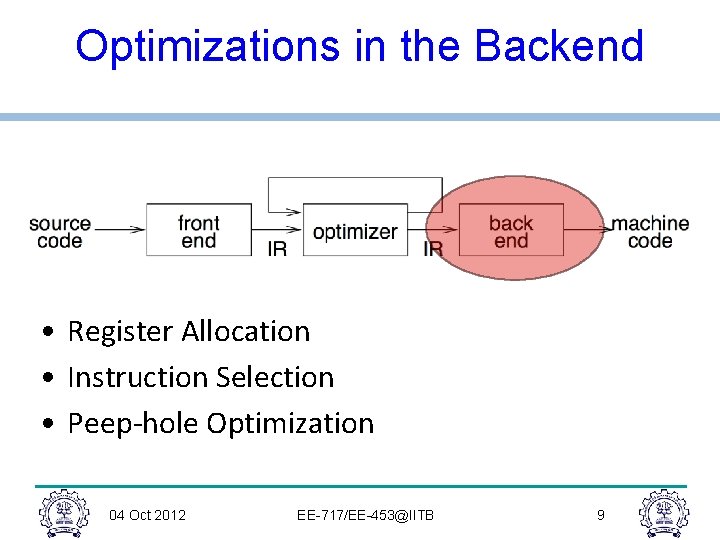

Optimizations in the Backend

- Register Allocation
- Instruction Selection
- Peep-hole Optimization

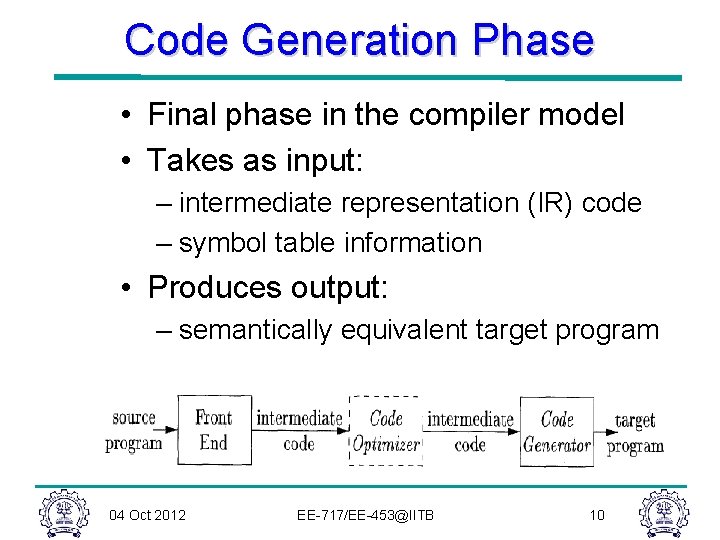

Code Generation Phase

- Final phase in the compiler model
- Takes as input:
  - Intermediate representation (IR) code
  - Symbol table information
- Produces output:
  - Semantically equivalent target program

Code Generation Phase

- Compilers need to produce efficient target programs
- Includes an optimization phase prior to code generation
- May make multiple passes over the IR before generating the target program

Target Program Code

- The back-end code generator of a compiler may generate different forms of code, depending on the requirements:
  - Absolute machine code (executable code)
  - Relocatable machine code (object files for linker)
  - Assembly language (facilitates debugging)
  - Byte code forms for interpreters (e.g. JVM)

Code Generation Phase

- The code generator has three primary tasks:
  - Instruction selection
  - Register allocation and assignment
  - Instruction ordering
- Instruction selection
  - Choose appropriate target-machine instructions to implement the IR statements
- Register allocation and assignment
  - Decide what values to keep in which registers

Code Generation Phase

- Instruction ordering
  - Decide in what order to schedule the execution of instructions
- The design of every code generator involves the above three tasks
- Details of code generation depend on the specifics of the IR, the target language, and the run-time system

The Target Machine

- Implementing code generation requires a thorough understanding of the target machine architecture and its instruction set
- Example: hypothetical machine
  - Byte-addressable (word = 4 bytes)
  - Has n general-purpose registers R0, R1, …, Rn-1
  - Two-address instructions of the form op source, destination
    - op: op-code
    - source, destination: data fields

The Target Machine: Op-codes

- Op-codes (op), for example:
  - MOV (move content of source to destination)
  - ADD (add content of source to destination)
  - SUB (subtract content of source from destination)
- There are also other ops

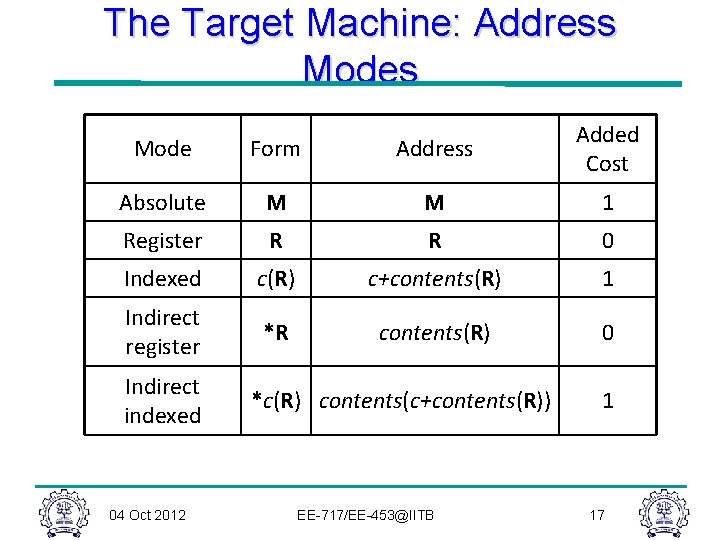

The Target Machine: Address Modes

| Mode | Form | Address | Added Cost |
|---|---|---|---|
| Absolute | M | M | 1 |
| Register | R | R | 0 |
| Indexed | c(R) | c+contents(R) | 1 |
| Indirect register | *R | contents(R) | 0 |
| Indirect indexed | *c(R) | contents(c+contents(R)) | 1 |

Instruction Costs

- The machine is a simple processor with fixed instruction costs
- Define the cost of an instruction = 1 + cost(source-mode) + cost(destination-mode)

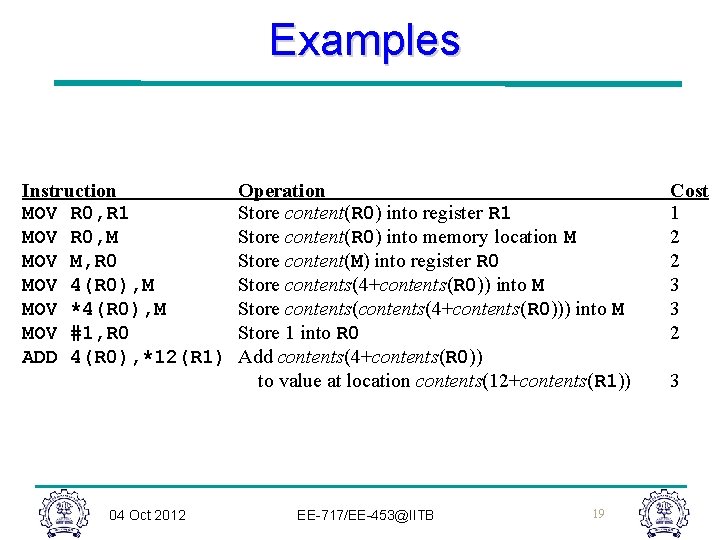

Examples

| Instruction | Operation | Cost |
|---|---|---|
| MOV R0, R1 | Store contents(R0) into register R1 | 1 |
| MOV R0, M | Store contents(R0) into memory location M | 2 |
| MOV M, R0 | Store contents(M) into register R0 | 2 |
| MOV 4(R0), M | Store contents(4+contents(R0)) into M | 3 |
| MOV *4(R0), M | Store contents(contents(4+contents(R0))) into M | 3 |
| MOV #1, R0 | Store 1 into R0 | 2 |
| ADD 4(R0), *12(R1) | Add contents(4+contents(R0)) to value at location contents(12+contents(R1)) | 3 |
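The cost rule can be checked against these examples with a short sketch. The operand parsing below is an assumption made for illustration (registers are written `R0`, `R1`, ...), and the literal form `#c` is assumed to add a cost of 1, which agrees with the cost of 2 listed for `MOV #1, R0` but does not appear in the address-mode table.

```python
import re

def mode_cost(operand):
    """Added cost of one operand under the address-mode table."""
    operand = operand.replace(" ", "")
    if re.fullmatch(r"\*?R\d+", operand):
        return 0                         # register R or indirect register *R
    if operand.startswith("#"):
        return 1                         # literal #c (assumed cost 1)
    if re.fullmatch(r"\*?-?\d+\(R\d+\)", operand):
        return 1                         # indexed c(R) or indirect indexed *c(R)
    return 1                             # anything else: absolute address M

def instruction_cost(instr):
    """cost = 1 + cost(source-mode) + cost(destination-mode)."""
    _, operands = instr.split(None, 1)
    return 1 + sum(mode_cost(arg) for arg in operands.split(","))

for instr in ["MOV R0, R1", "MOV R0, M", "MOV 4(R0), M", "ADD 4(R0), *12(R1)"]:
    print(instr, "->", instruction_cost(instr))
```

A one-operand instruction such as `INC a` is handled by the same formula, with only one mode cost added.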

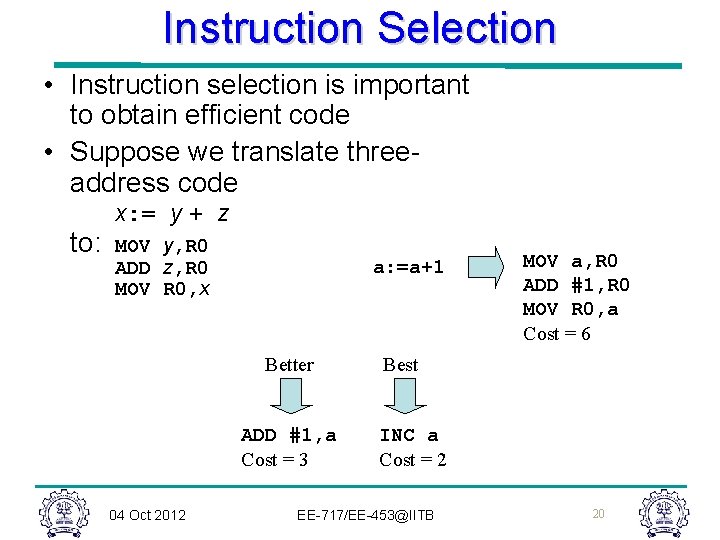

Instruction Selection

- Instruction selection is important to obtain efficient code
- Suppose we translate the three-address code x := y + z to:
  - MOV y, R0
  - ADD z, R0
  - MOV R0, x
- Then a := a + 1 becomes (cost = 6):
  - MOV a, R0
  - ADD #1, R0
  - MOV R0, a
- Better (cost = 3): ADD #1, a
- Best (cost = 2): INC a
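A selector that prefers the cheaper forms before falling back to the three-instruction template might look like the sketch below. The function names and the string-based operands are hypothetical, R0 is assumed to be free, and only + and - are handled.

```python
def operand(x):
    """Constants become literals (#c); names are used as memory operands."""
    return f"#{x}" if x.isdigit() else x

def select(dest, left, op, right):
    """Pick target instructions for `dest := left op right` (op is + or -)."""
    mnemonic = {"+": "ADD", "-": "SUB"}[op]
    if dest == left and op == "+" and right == "1":
        return [f"INC {dest}"]                         # best case: a := a + 1
    if dest == left:
        return [f"{mnemonic} {operand(right)}, {dest}"]  # update dest in place
    # General template: load, operate in R0, store.
    return [
        f"MOV {operand(left)}, R0",
        f"{mnemonic} {operand(right)}, R0",
        f"MOV R0, {dest}",
    ]

print(select("a", "a", "+", "1"))
print(select("x", "y", "+", "z"))
```

The special cases are checked first, so the general template is used only when no shorter form applies.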

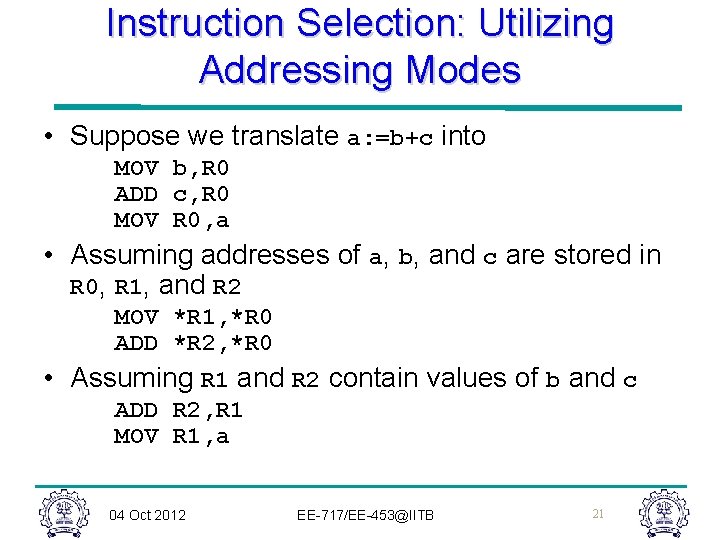

Instruction Selection: Utilizing Addressing Modes

- Suppose we translate a := b + c into:
  - MOV b, R0
  - ADD c, R0
  - MOV R0, a
- Assuming the addresses of a, b, and c are stored in R0, R1, and R2:
  - MOV *R1, *R0
  - ADD *R2, *R0
- Assuming R1 and R2 contain the values of b and c:
  - ADD R2, R1
  - MOV R1, a

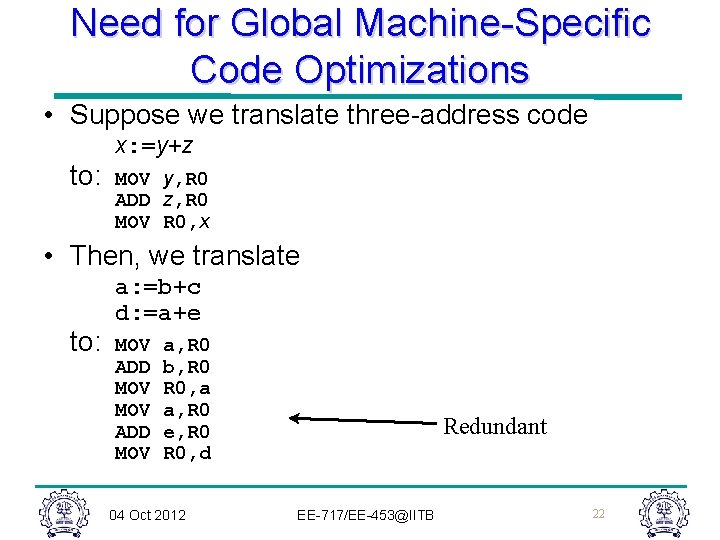

Need for Global Machine-Specific Code Optimizations

- Suppose we translate the three-address code x := y + z to:
  - MOV y, R0
  - ADD z, R0
  - MOV R0, x
- Then we translate a := b + c; d := a + e to:
  - MOV b, R0
  - ADD c, R0
  - MOV R0, a
  - MOV a, R0 (redundant)
  - ADD e, R0
  - MOV R0, d
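The redundant load can be removed by a small peephole pass. The sketch below is an assumption-laden illustration, not the lecture's method: it drops a MOV that merely reverses the MOV immediately before it, and it assumes that no label or jump target sits between the two instructions.

```python
def parse(instr):
    """Split 'OP a, b' into ('OP', ['a', 'b'])."""
    op, operands = instr.split(None, 1)
    return op, [s.strip() for s in operands.split(",")]

def drop_redundant_moves(code):
    """Remove a MOV whose operands reverse those of the preceding MOV."""
    out = []
    for instr in code:
        op, args = parse(instr)
        if out and op == "MOV":
            prev_op, prev_args = parse(out[-1])
            if prev_op == "MOV" and prev_args == args[::-1]:
                continue                 # value is already in place; skip it
        out.append(instr)
    return out

code = ["MOV b, R0", "ADD c, R0", "MOV R0, a",
        "MOV a, R0", "ADD e, R0", "MOV R0, d"]
for instr in drop_redundant_moves(code):
    print(instr)
```

On the sequence for a := b + c; d := a + e, the pass removes `MOV a, R0` and leaves five instructions.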

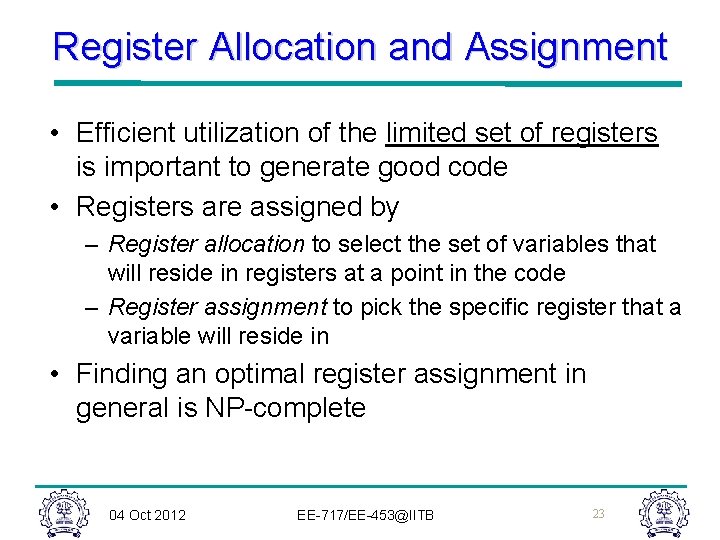

Register Allocation and Assignment

- Efficient utilization of the limited set of registers is important to generate good code
- Registers are assigned by:
  - Register allocation, to select the set of variables that will reside in registers at a point in the code
  - Register assignment, to pick the specific register that a variable will reside in
- Finding an optimal register assignment in general is NP-complete

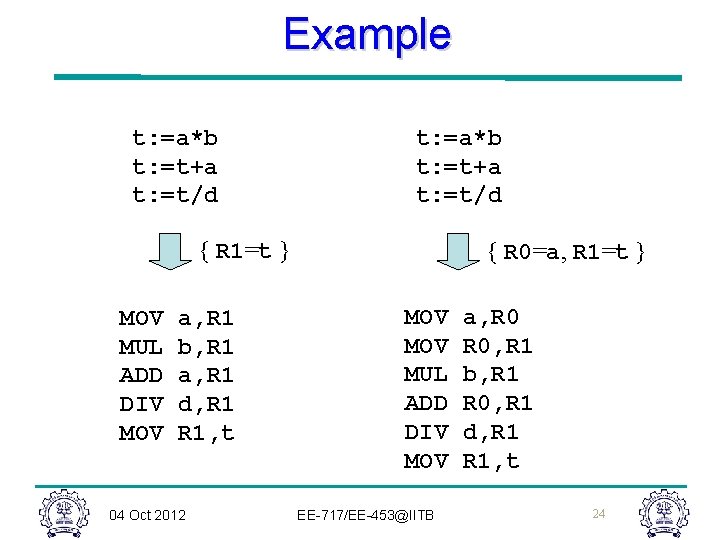

Example

- Three-address code: t := a * b; t := t + a; t := t / d
- With { R1 = t }:
  - MOV a, R1
  - MUL b, R1
  - ADD a, R1
  - DIV d, R1
  - MOV R1, t
- With { R0 = a, R1 = t }:
  - MOV a, R0
  - MOV R0, R1
  - MUL b, R1
  - ADD R0, R1
  - DIV d, R1
  - MOV R1, t

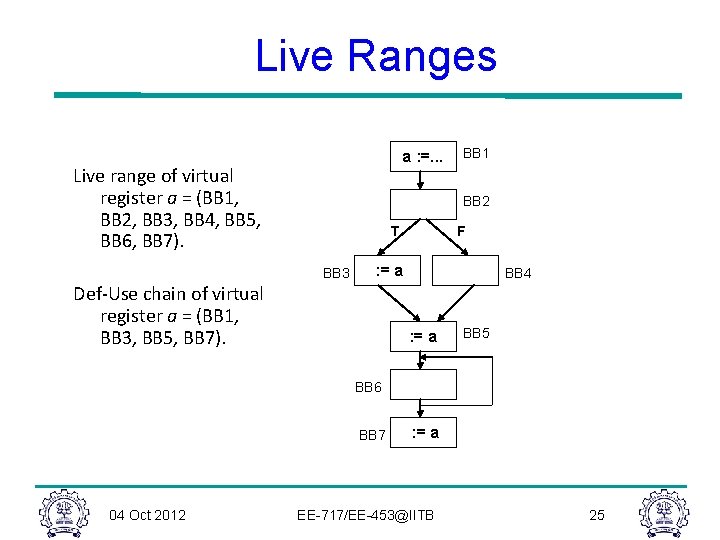

Live Ranges

(Figure: a control-flow graph with basic blocks BB1 to BB7 and a T/F branch. Virtual register a is defined in BB1 (a := ...) and used (:= a) in later blocks.)

- Live range of virtual register a = (BB1, BB2, BB3, BB4, BB5, BB6, BB7)
- Def-use chain of virtual register a = (BB1, BB3, BB5, BB7)

Computing Live Ranges

Using data flow analysis, we compute for each basic block:

- In the forward direction, the reaching attribute. A variable is reaching block i if a definition or use of the variable reaches the basic block along the edges of the CFG.
- In the backward direction, the liveness attribute. A variable is live at block i if there is a direct reference to the variable at block i or at some block j that succeeds i in the CFG, provided the variable in question is not redefined in the interval between i and j.

Computing Live Ranges

The live range of a variable is the intersection of two sets of basic blocks (CFG nodes): the set in which the variable is live, and the set which it can reach.
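The intersection can be sketched for a single variable as follows. The CFG, the block names, and the function names are hypothetical, and the sketch assumes the variable has one definition, so the "not redefined in between" condition never cuts a path short.

```python
def reachable(starts, edges):
    """All nodes reachable from `starts` (inclusive) by following `edges`."""
    seen, stack = set(starts), list(starts)
    while stack:
        node = stack.pop()
        for nxt in edges.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen

def live_range(refs, succ):
    """Blocks where the variable is both reaching and live.

    refs: blocks that define or use the variable.
    succ: CFG successor map {block: [successors]}.
    """
    pred = {}
    for node, targets in succ.items():
        for target in targets:
            pred.setdefault(target, []).append(node)
    reach = reachable(refs, succ)        # forward: a reference reaches the block
    live = reachable(refs, pred)         # backward: a reference lies at or after it
    return reach & live

# Hypothetical diamond CFG: the variable is defined in B1 and used in B3.
succ = {"B1": ["B2"], "B2": ["B3", "B4"], "B3": ["B5"], "B4": ["B5"]}
print(sorted(live_range({"B1", "B3"}, succ)))
```

In this CFG the variable reaches every block, but it is live only on the path from B1 to its use in B3, so B4 and B5 fall outside the live range.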

Local Register Allocation

- Perform register allocation one basic block (or super-block or hyper-block) at a time
- Must note virtual registers that are live on entry and live on exit of a basic block, and perform reconciliation later
- During allocation, spill out all live-outs and bring in all live-ins from memory
- Advantage: very fast

Local Register Allocation: Reconciliation Codes

- After completing allocation for all blocks, we need to reconcile differences in allocation
- If there are spare registers between two basic blocks, replace the spill-out and spill-in code with register moves

Linear Scan Register Allocation

- A simple local allocation algorithm
- Assume code is already scheduled
- Build a linear ordering of live ranges (also called live intervals or lifetimes)
- Scan the live ranges and assign registers until you run out of them, then spill

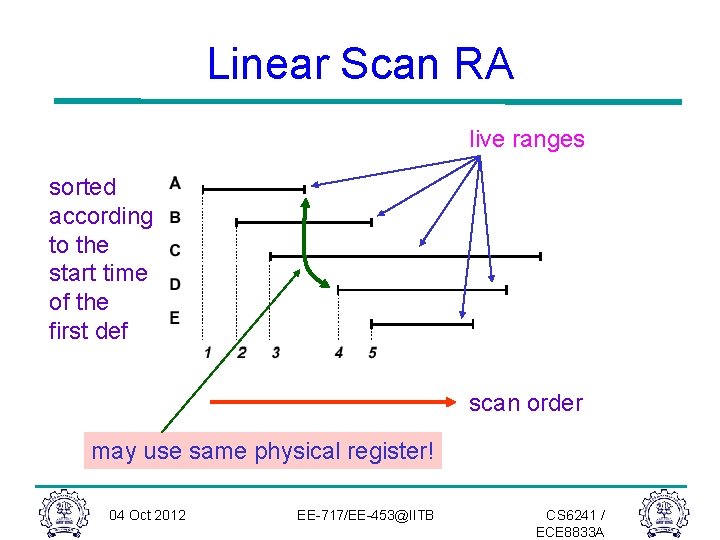

Linear Scan RA

(Figure: live ranges sorted according to the start time of the first def, with the scan order marked and the annotation "may use same physical register!")
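The scan can be sketched as below. This is a simplified illustration under stated assumptions: live intervals are given as `(start, end)` pairs, the function name is hypothetical, and when no register is free the current interval is spilled (published linear scan algorithms usually choose the spill victim more carefully).

```python
def linear_scan(intervals, num_regs):
    """intervals: {name: (start, end)}; returns ({name: reg}, [spilled names])."""
    free = [f"R{i}" for i in range(num_regs)][::-1]   # pop() hands out R0 first
    active = []                                       # (end, name) now in registers
    assignment, spilled = {}, []
    # Visit intervals in order of increasing start point.
    for name, (start, end) in sorted(intervals.items(), key=lambda kv: kv[1][0]):
        # Expire intervals that ended before this one starts; free their registers.
        for item in [it for it in active if it[0] < start]:
            active.remove(item)
            free.append(assignment[item[1]])
        if free:
            assignment[name] = free.pop()
            active.append((end, name))
        else:
            spilled.append(name)                      # out of registers: spill
    return assignment, spilled

intervals = {"a": (1, 4), "b": (2, 6), "c": (5, 8), "d": (3, 7)}
print(linear_scan(intervals, 2))
```

With two registers, `a` and `c` do not overlap and end up sharing a register, while `d` overlaps both active intervals and is spilled.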

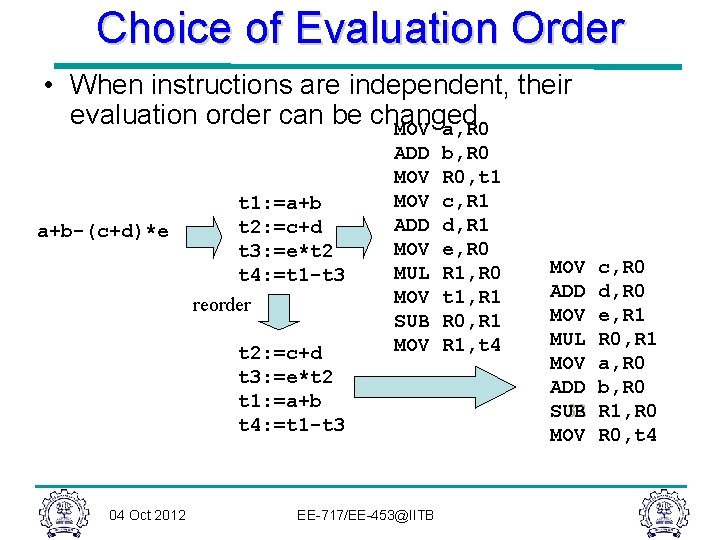

Choice of Evaluation Order

- When instructions are independent, their evaluation order can be changed
- Expression: a + b - (c + d) * e
- Original order: t1 := a + b; t2 := c + d; t3 := e * t2; t4 := t1 - t3
  - MOV a, R0
  - ADD b, R0
  - MOV R0, t1
  - MOV c, R1
  - ADD d, R1
  - MOV e, R0
  - MUL R1, R0
  - MOV t1, R1
  - SUB R0, R1
  - MOV R1, t4
- Reordered: t2 := c + d; t3 := e * t2; t1 := a + b; t4 := t1 - t3
  - MOV c, R0
  - ADD d, R0
  - MOV e, R1
  - MUL R0, R1
  - MOV a, R0
  - ADD b, R0
  - SUB R1, R0
  - MOV R0, t4
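Whether a reordering is legal depends on the data dependences between statements. The following sketch checks that; the statement encoding `(dest, (sources...))` and the function names are assumptions for the example, and statements are assumed to be distinct.

```python
def depends(first, second):
    """True if `second` must stay after `first` (RAW, WAR, or WAW dependence)."""
    d1, uses1 = first
    d2, uses2 = second
    return d1 in uses2 or d2 in uses1 or d1 == d2

def is_valid_reordering(original, reordered):
    """Check that `reordered` is a permutation that keeps every dependence."""
    if sorted(original) != sorted(reordered):
        return False                     # not the same set of statements
    position = {stmt: i for i, stmt in enumerate(reordered)}
    for i, first in enumerate(original):
        for second in original[i + 1:]:
            if depends(first, second) and position[first] > position[second]:
                return False             # a dependent pair was swapped
    return True

t1 = ("t1", ("a", "b"))
t2 = ("t2", ("c", "d"))
t3 = ("t3", ("e", "t2"))
t4 = ("t4", ("t1", "t3"))
print(is_valid_reordering([t1, t2, t3, t4], [t2, t3, t1, t4]))
print(is_valid_reordering([t1, t2, t3, t4], [t3, t2, t1, t4]))
```

The first call accepts the reordering from the slide, since t1 is independent of t2 and t3; the second call rejects an order that computes t3 before t2.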

Two Classes of Storage in Processor

- Registers
  - Fast access, but only a few of them
  - Address space not visible to programmer
    - Doesn't support pointer access!
- Memory
  - Slow access, but large
  - Supports pointers

4 Distinct Regions of Memory

- Code space
  - Instructions to be executed
  - Best if read-only
- Static (or Global)
  - Variables that retain their value over the lifetime of the program
- Stack
  - Variables that live only as long as the block within which they are defined (locals)
- Heap
  - Variables that are defined by calls to the system storage allocator (malloc, new)

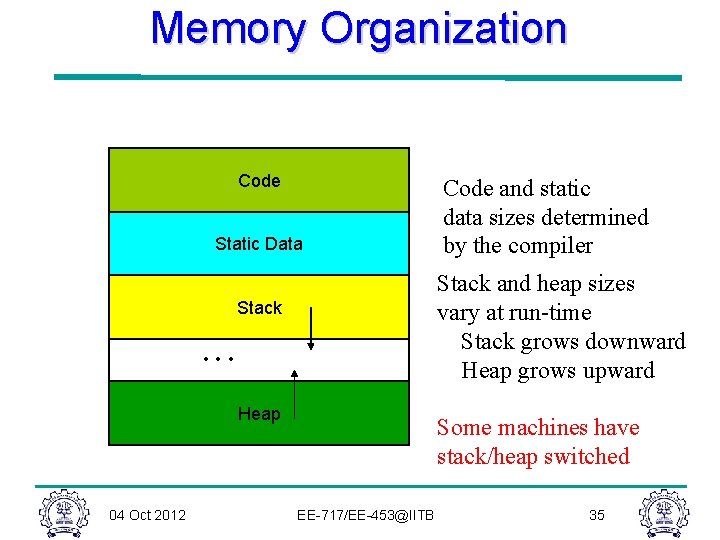

Memory Organization

(Figure: memory layout showing, in order, Code, Static Data, Stack, ..., Heap.)

- Code and static data sizes are determined by the compiler
- Stack and heap sizes vary at run-time
- Stack grows downward, heap grows upward
- Some machines have stack/heap switched

Storage Class Selection

- Standard (simple) approach
  - Globals/statics: memory
  - Locals
    - Composite types (structs, arrays, etc.): memory
    - Scalars
      - Preceded by the register keyword? Register
      - Rest: memory
- All-memory approach
  - Put all variables into memory
  - Compiler register allocation relocates some memory variables to registers later
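The standard approach amounts to a small decision procedure, sketched here with hypothetical parameter names and string labels chosen only for the example.

```python
def storage_class(scope, kind, has_register_keyword=False):
    """Standard (simple) approach: decide where a variable lives.

    scope: "global", "static", or "local"
    kind:  "composite" (structs, arrays, etc.) or "scalar"
    """
    if scope in ("global", "static"):
        return "memory"
    if kind == "composite":
        return "memory"
    # Local scalar: a register only when the programmer asked for one.
    return "register" if has_register_keyword else "memory"

print(storage_class("local", "scalar", has_register_keyword=True))
print(storage_class("local", "composite"))
```

Under the all-memory approach the function would return "memory" in every case, and the register allocator would promote variables later.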

Thank You
