
Comparison of Delivered Reliability of Branch, Data Flow, and Operational Testing: A Case Study

Phyllis G. Frankl, Yuetang Deng
Polytechnic University, Brooklyn, NY
ISSTA-2000, 8/23/00

Outline
• Measures of test effectiveness
• Delivered reliability
• Experiment design
• Subject program
• Results
• Threats to validity
• Conclusions

Measures of Test Effectiveness
• Probability of detecting at least one fault [DN 84, HT 90, FWey 93, FWei 93, …]
• Expected number of failures during test [FWey 93, CY 96]
• Number of faults detected [HFGO 94]
• Delivered reliability [FHLS 98]
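For the simplest case, random testing with n test cases drawn independently from the operational distribution, the first two measures have closed forms in terms of the program's failure rate theta. The sketch below assumes independent draws; the function names are illustrative only and do not come from the study.

```python
def prob_detect_at_least_one(theta: float, n: int) -> float:
    """P(at least one of n independent operational test cases fails).

    Each test case fails with probability theta, so all n pass
    with probability (1 - theta) ** n.
    """
    return 1.0 - (1.0 - theta) ** n


def expected_failures(theta: float, n: int) -> float:
    """Expected number of failing test cases among n independent draws."""
    return n * theta


# Example: a program with failure rate 0.05 tested with 50 random test cases.
print(prob_detect_at_least_one(0.05, 50))
print(expected_failures(0.05, 50))
```

Neither measure distinguishes which faults are found, which is the gap delivered reliability is meant to fill.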

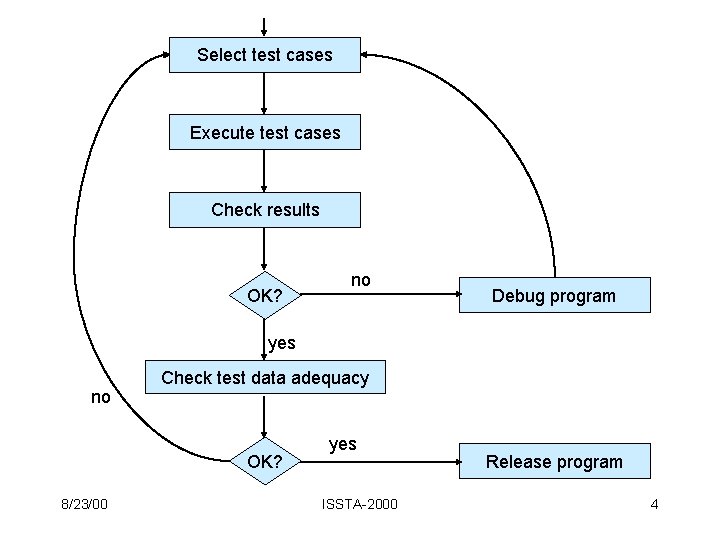

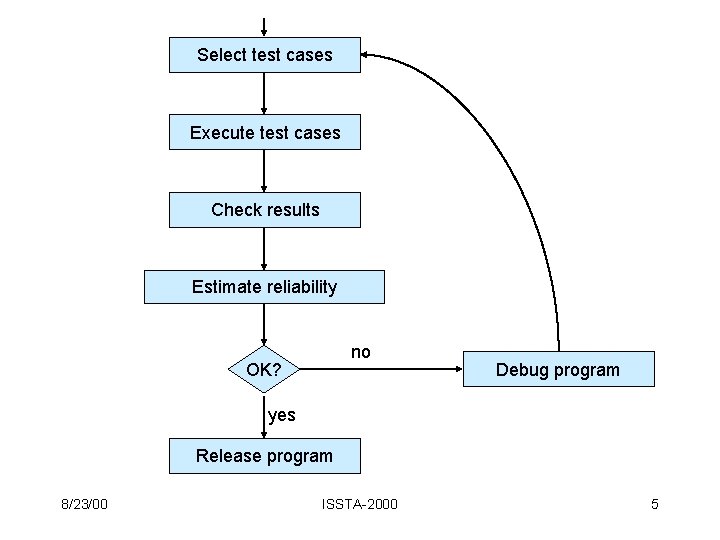

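Figure: testing process using a test data adequacy criterion. Select test cases, execute test cases, and check results (debug the program if the results are not OK); once the results are OK, check test data adequacy; if the test data are adequate, release the program.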

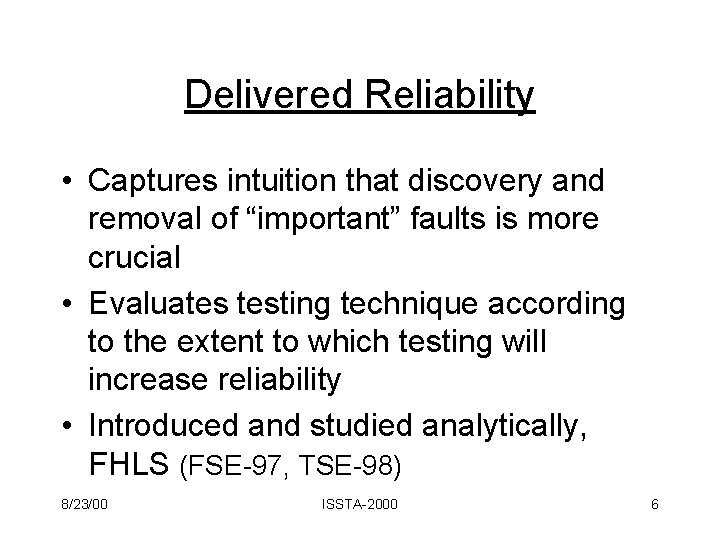

Figure: testing process using a reliability estimate. Steps: select test cases, execute test cases, check results, debug program, estimate reliability; if the reliability estimate is OK, release the program.

Delivered Reliability
• Captures the intuition that discovering and removing “important” faults is more crucial
• Evaluates a testing technique by the extent to which testing (and debugging) increases reliability
• Introduced and studied analytically by FHLS (FSE-97, TSE-98)

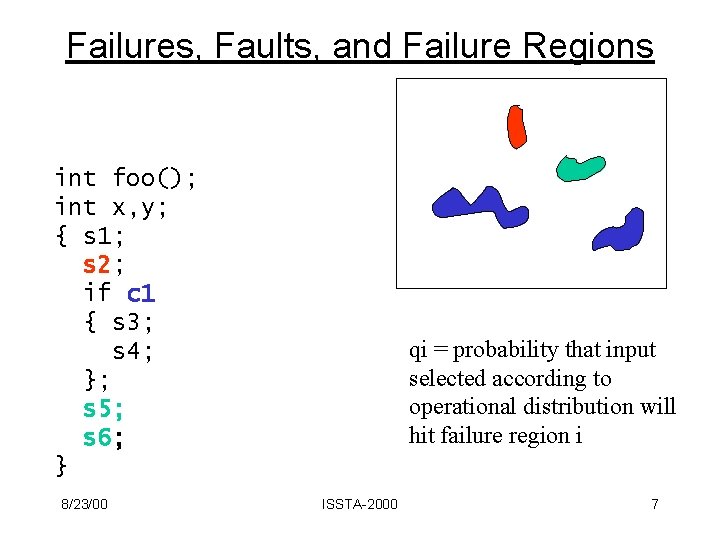

Failures, Faults, and Failure Regions

    int foo()
    int x, y;
    {
        s1;
        s2;
        if (c1) {
            s3;
            s4;
        }
        s5;
        s6;
    }

• q_i = probability that an input selected according to the operational distribution will hit failure region i
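To make q_i concrete, here is a minimal sketch over a hypothetical toy input domain (integers 0 to 9, uniform operational distribution) with two hypothetical failure regions. It computes each q_i and the overall failure rate, taking the union so that overlapping regions are not double-counted. All names and numbers are illustrative assumptions.

```python
from fractions import Fraction

# Hypothetical operational distribution over a toy input domain 0..9.
operational = {x: Fraction(1, 10) for x in range(10)}

# Hypothetical failure regions: the inputs on which each fault causes a failure.
failure_regions = {
    "fault_1": {2, 3},
    "fault_2": {7},
}


def region_probability(region, dist):
    """q_i: probability that an operationally selected input hits the region."""
    return sum(dist[x] for x in region)


q = {name: region_probability(r, operational) for name, r in failure_regions.items()}

# Failure rate of the whole program: probability of hitting any failure region.
overall = region_probability(set().union(*failure_regions.values()), operational)
print(q, overall)
```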

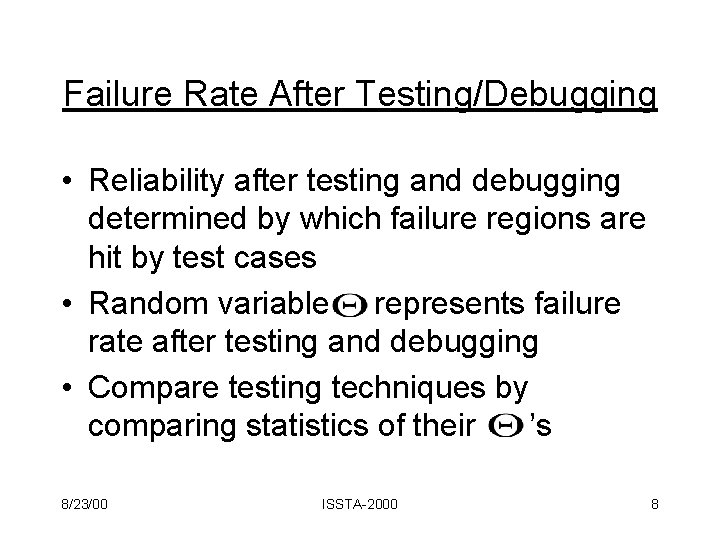

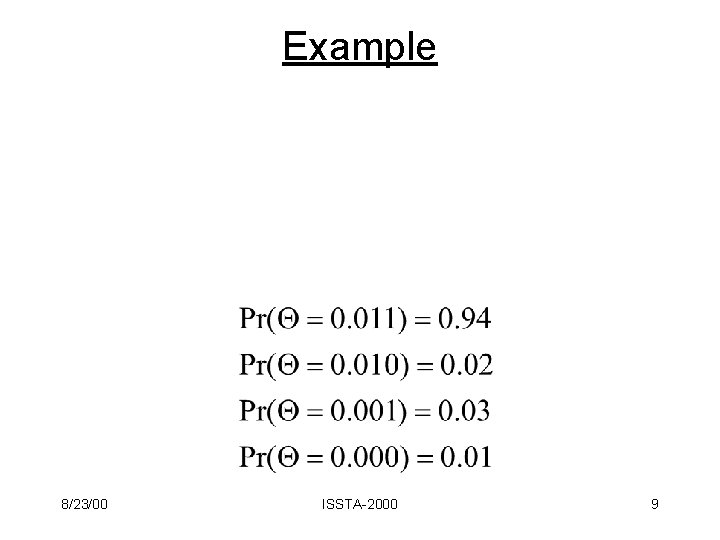

Failure Rate After Testing/Debugging
• Reliability after testing and debugging is determined by which failure regions are hit by test cases
• A random variable represents the failure rate after testing and debugging
• Compare testing techniques by comparing statistics of their post-testing failure rates
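A minimal sketch of this random variable, assuming disjoint failure regions and perfect debugging (every fault whose region is hit is removed and no new faults are introduced): the post-testing failure rate is the sum of q_i over regions that no test case hit. For n independent operational test cases, region i survives with probability (1 - q_i)^n, so by linearity of expectation the expected post-testing failure rate is the sum of q_i (1 - q_i)^n. A small Monte Carlo run checks the closed form; the q values are hypothetical.

```python
import random


def failure_rate_after(q, hit):
    """Sum of q_i over failure regions NOT hit by any test case.

    Assumes disjoint regions and perfect debugging.
    """
    return sum(qi for qi, was_hit in zip(q, hit) if not was_hit)


def expected_after_random(q, n):
    """Expected post-testing failure rate for n independent operational tests."""
    return sum(qi * (1.0 - qi) ** n for qi in q)


def simulate_random(q, n, trials, rng):
    """Monte Carlo estimate of the same expectation (sanity check)."""
    total = 0.0
    for _ in range(trials):
        hit = [False] * len(q)
        for _ in range(n):
            # Map a uniform draw onto consecutive, disjoint region intervals;
            # draws beyond sum(q) fall in no failure region.
            u = rng.random()
            acc = 0.0
            for i, qi in enumerate(q):
                acc += qi
                if u < acc:
                    hit[i] = True
                    break
        total += failure_rate_after(q, hit)
    return total / trials


q = [0.02, 0.01, 0.005]  # hypothetical region probabilities
print(expected_after_random(q, 50))
print(simulate_random(q, 50, 5000, random.Random(1)))
```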

Example

Testing Criteria Considered
• Various levels of coverage of
  – decision coverage (branch testing)
  – def-use coverage (all-uses data flow testing)
  – grouped into quartiles and deciles
• Random testing with no coverage criterion

Questions Investigated
• How do test sets that achieve high coverage levels (of branch testing or data flow testing) compare to those achieving lower coverage, according to:
  – expected improvement in reliability (the expected failure rate after testing and debugging)
  – probability of reaching a given reliability target (the probability that the failure rate after testing and debugging is at or below the target)
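Given a sample of post-testing failure rates for the test sets in each coverage group, both questions reduce to simple statistics: the sample mean estimates the expected failure rate, and the fraction of test sets at or below a target estimates the probability of reaching that target. The sketch below uses hypothetical numbers for two groups; it illustrates the statistics only and does not reproduce the study's data.

```python
from statistics import mean


def tail_probability(rates, target):
    """Fraction of test sets whose post-testing failure rate is <= target."""
    return sum(1 for r in rates if r <= target) / len(rates)


# Hypothetical post-testing failure rates for test sets in two coverage groups.
high_coverage = [0.0, 0.001, 0.001, 0.004, 0.010]
low_coverage = [0.001, 0.004, 0.010, 0.010, 0.020]

for name, rates in [("high", high_coverage), ("low", low_coverage)]:
    print(name, mean(rates), tail_probability(rates, 0.001))
```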

Subject Program
• “Space” program
• 10,000+ LOC C antenna design program, written by professional programmers, containing naturally occurring faults
• Test generator produces tests according to the operational distribution [Pasquini et al.]
• Considered 10 relatively hard-to-detect faults
• Failure rate: 0.05564

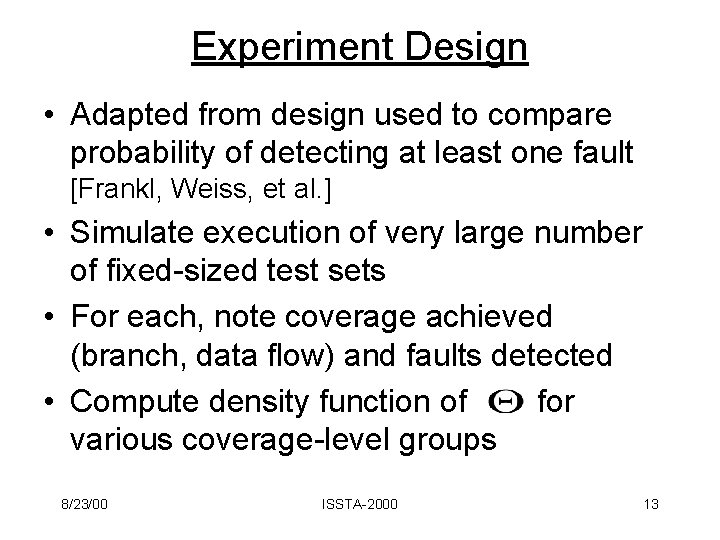

Experiment Design
• Adapted from the design used to compare the probability of detecting at least one fault [Frankl, Weiss, et al.]
• Simulate the execution of a very large number of fixed-size test sets
• For each test set, record the coverage achieved (branch, data flow) and the faults detected
• Compute the density function of the failure rate after testing and debugging for various coverage-level groups
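The simulation step can be sketched as follows, assuming a hypothetical universe in which each test case is stored with the coverage features (e.g. branches) it exercises and the faults it detects, and assuming test sets are drawn uniformly with replacement from that universe. Since the universe is generated according to the operational distribution, uniform draws from it approximate operational sampling. All data and names are illustrative.

```python
import random

# Hypothetical universe: each test case is a pair
# (coverage features it exercises, faults it detects).
UNIVERSE = [
    ({"b1", "b2"}, set()),
    ({"b1", "b3"}, {"f1"}),
    ({"b2", "b4"}, set()),
    ({"b3", "b4"}, {"f2"}),
    ({"b1"}, set()),
]
ALL_FEATURES = {"b1", "b2", "b3", "b4"}


def sample_test_sets(universe, all_features, size, count, rng):
    """Draw `count` test sets of `size` test cases each, uniformly with
    replacement from the universe, and record for each test set its coverage
    (fraction of features exercised) and the faults it detects."""
    results = []
    for _ in range(count):
        tests = [rng.choice(universe) for _ in range(size)]
        covered = set().union(*(features for features, _ in tests))
        detected = set().union(*(faults for _, faults in tests))
        results.append((len(covered) / len(all_features), frozenset(detected)))
    return results


for coverage, detected in sample_test_sets(UNIVERSE, ALL_FEATURES, 3, 5, random.Random(0)):
    print(f"coverage={coverage:.2f} detected={sorted(detected)}")
```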

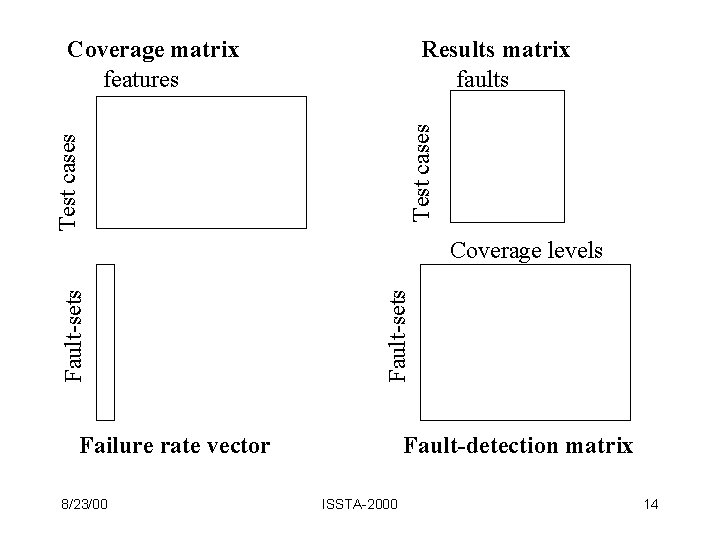

Figure: coverage matrix, fault-detection matrix, failure rate vector, and results matrix (axis labels: test cases, features, faults, fault-sets, coverage levels).
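One plausible way to combine these structures is sketched below, under the assumption (not stated on the slide) that the failure rate vector is indexed by fault-set, i.e. it gives the failure rate of the program when exactly that set of faults remains. A results matrix then counts test sets per (coverage level, fault-set left undetected), and each row yields an empirical distribution of the post-testing failure rate. Indexing by fault-set rather than summing per-fault rates also accommodates overlapping failure regions. All values are hypothetical.

```python
from collections import Counter, defaultdict

ALL_FAULTS = frozenset({"f1", "f2"})

# Hypothetical failure-rate vector indexed by the set of faults that remain.
# 0.013 < 0.010 + 0.004 models failure regions that overlap.
FAILURE_RATE = {
    frozenset(): 0.0,
    frozenset({"f1"}): 0.010,
    frozenset({"f2"}): 0.004,
    frozenset({"f1", "f2"}): 0.013,
}


def results_matrix(samples):
    """Count test sets per (coverage level, set of faults left undetected)."""
    matrix = defaultdict(Counter)
    for level, detected in samples:
        matrix[level][ALL_FAULTS - detected] += 1
    return matrix


def failure_rate_distribution(row):
    """Empirical distribution of the post-testing failure rate for one level."""
    total = sum(row.values())
    dist = Counter()
    for remaining, count in row.items():
        dist[FAILURE_RATE[remaining]] += count / total
    return dict(dist)


# Hypothetical (coverage level, faults detected) pairs for four test sets.
samples = [("Q4", frozenset({"f1", "f2"})), ("Q4", frozenset({"f1"})),
           ("Q1", frozenset()), ("Q1", frozenset({"f2"}))]
matrix = results_matrix(samples)
print(failure_rate_distribution(matrix["Q4"]))
```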

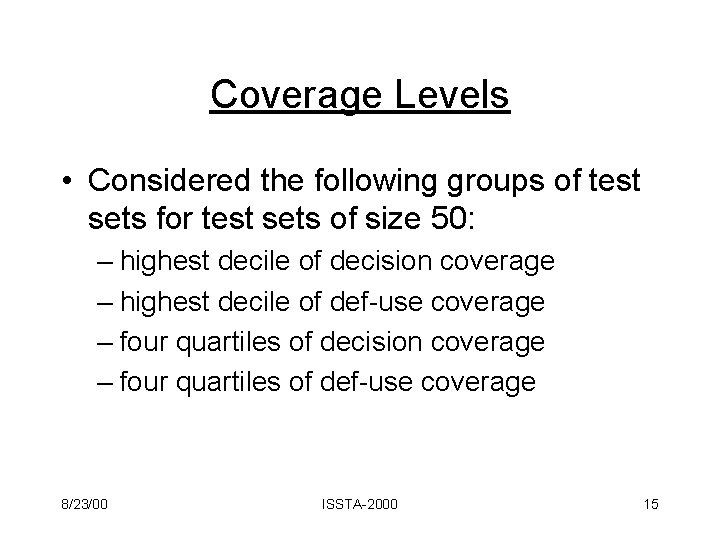

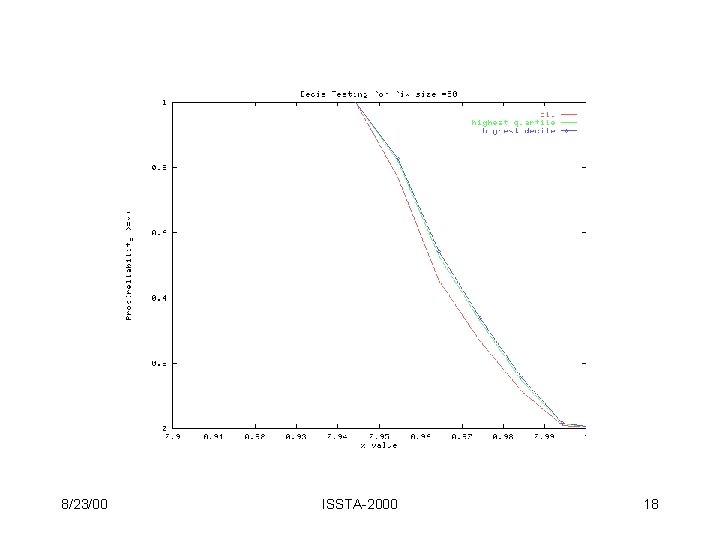

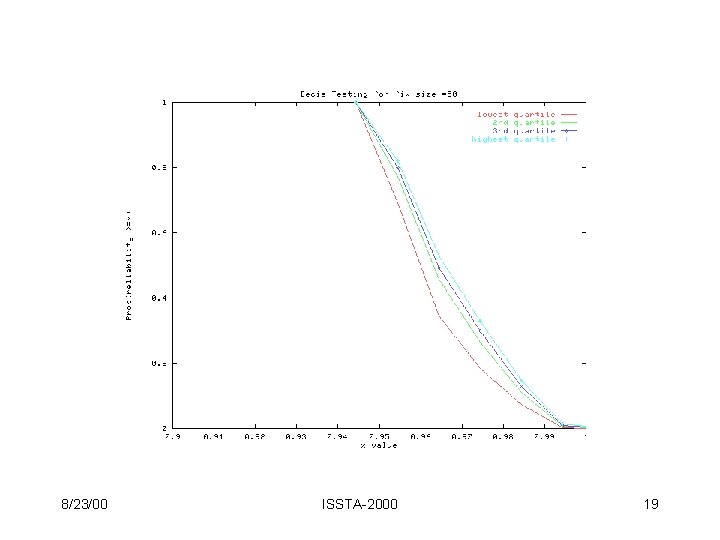

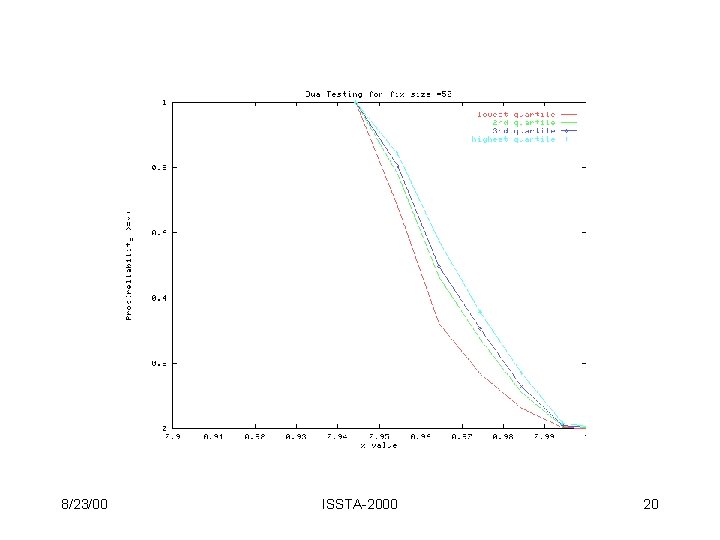

Coverage Levels
• Considered the following groups of test sets of size 50:
  – highest decile of decision coverage
  – highest decile of def-use coverage
  – four quartiles of decision coverage
  – four quartiles of def-use coverage
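The grouping can be sketched by ranking test sets by coverage and splitting the ranking into equal-sized groups. Rank-based splitting, and breaking ties by original order, are assumptions of this sketch; the slides do not say how boundaries or ties were handled. The coverage values are hypothetical.

```python
def quartile_groups(coverages):
    """Split test-set indices into four groups by coverage rank (lowest first)."""
    order = sorted(range(len(coverages)), key=lambda i: coverages[i])
    n = len(order)
    return [order[k * n // 4:(k + 1) * n // 4] for k in range(4)]


def top_decile(coverages):
    """Indices of the 10% of test sets with the highest coverage."""
    order = sorted(range(len(coverages)), key=lambda i: coverages[i], reverse=True)
    return order[: max(1, len(order) // 10)]


# Hypothetical coverage fractions for ten test sets.
coverages = [0.50, 0.90, 0.70, 0.60, 0.95, 0.80, 0.55, 0.85, 0.65, 0.75]
print(quartile_groups(coverages))
print(top_decile(coverages))
```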

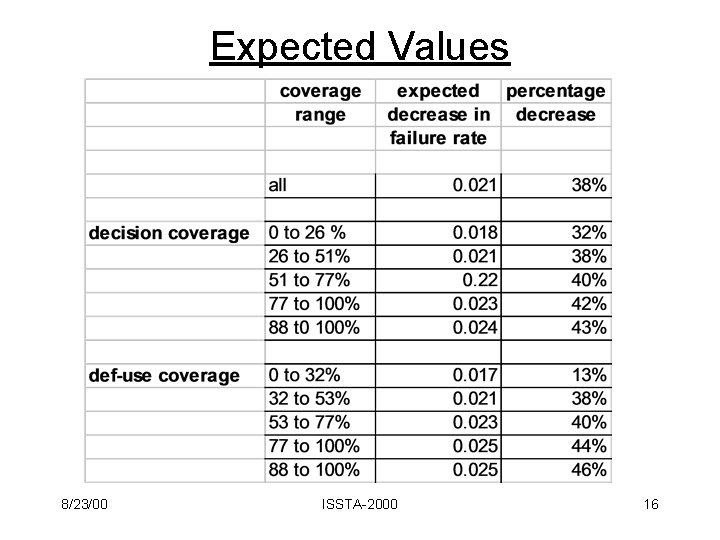

Expected Values

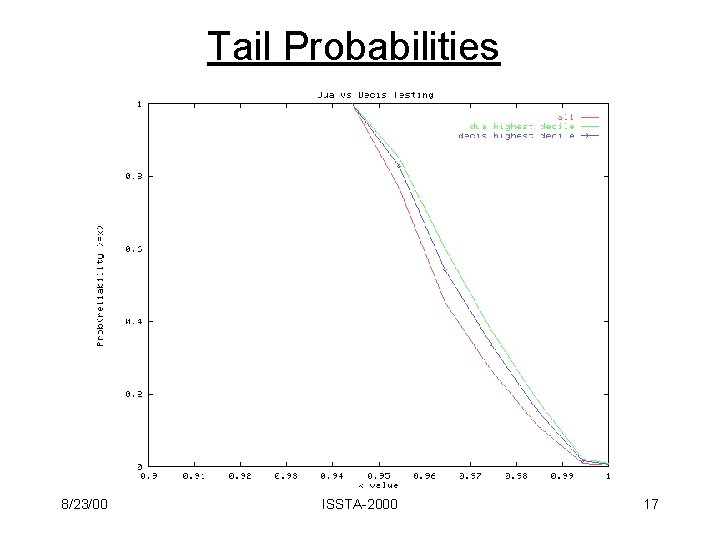

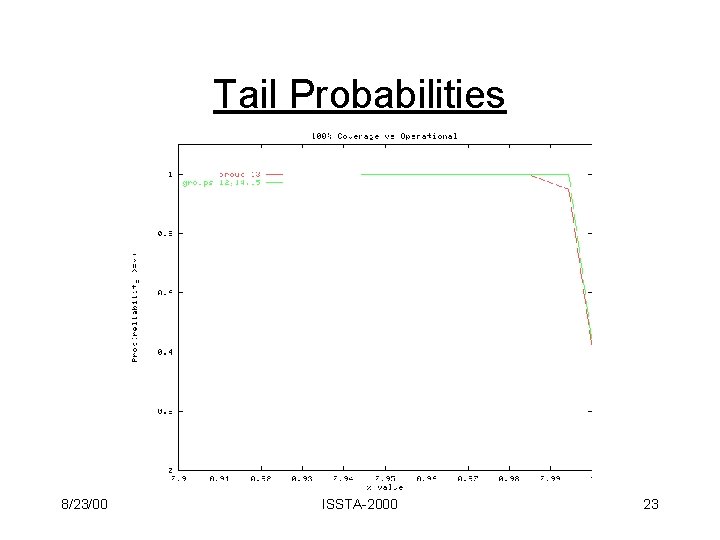

Tail Probabilities




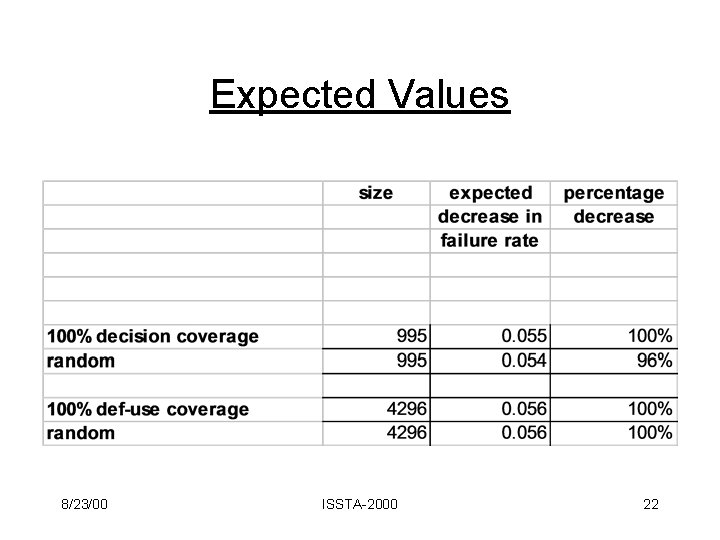

Idealized Test Generation Strategy
• Select one test case from each subdomain (independently, at random)
• Widely studied analytically
• Results in very large test sets for this subject program:
  – decision coverage: 995 test cases
  – def-use coverage: 4296 test cases
• Compared to large random test sets
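The standard analysis behind this strategy can be sketched as follows: if one test case is drawn independently from each subdomain, a failure region is missed only if every draw misses it, so its miss probability is the product over subdomains of (1 - h_j), where h_j is the probability that a test drawn from subdomain j lands in the region. The formula holds even when subdomains overlap, as coverage-based subdomains do. A random test set of the same size misses the region with probability (1 - q)^n; using the same size as the baseline is an assumption of this sketch. The example numbers are hypothetical.

```python
import math


def miss_prob_subdomain(hit_prob_per_subdomain):
    """P(failure region missed) when one test case is drawn independently from
    each subdomain; hit_prob_per_subdomain[j] is the probability that a test
    drawn from subdomain j lands in the failure region."""
    return math.prod(1.0 - h for h in hit_prob_per_subdomain)


def miss_prob_random(q, n):
    """P(failure region missed) by n independent operational test cases,
    where q is the region's probability under the operational distribution."""
    return (1.0 - q) ** n


# Hypothetical example: three subdomains; the failure region lies entirely in
# the second one, which has operational probability 0.01 and in which half of
# the inputs fail, so q = 0.005 overall.
print(miss_prob_subdomain([0.0, 0.5, 0.0]))  # one test per subdomain: 3 tests
print(miss_prob_random(0.005, 3))            # random test set of the same size
```

Such favorable cases depend on failure regions concentrating in rarely exercised subdomains; with thousands of subdomains, as here, the test sets become very large.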

Expected Values

Tail Probabilities

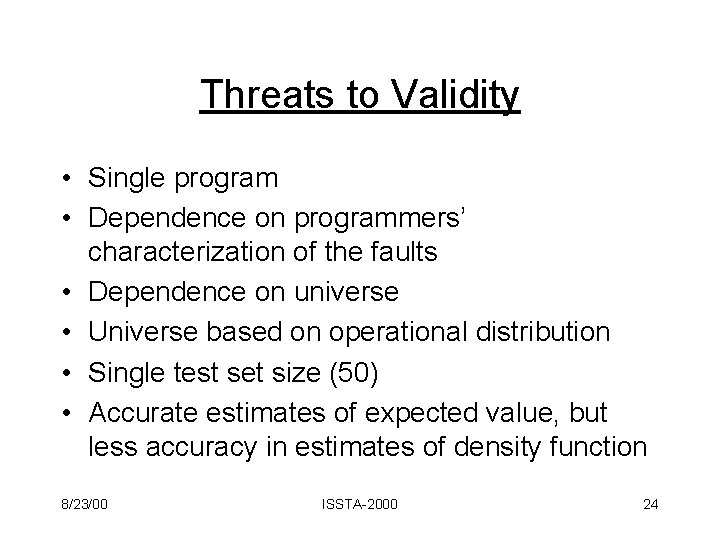

Threats to Validity
• Single program
• Dependence on the programmers' characterization of the faults
• Dependence on the universe (the pool of test cases)
• Universe based on the operational distribution
• Single test set size (50)
• Accurate estimates of expected values, but less accurate estimates of the density function

Conclusions
• Positive:
  – higher decision coverage yields a lower expected failure rate
  – higher def-use coverage yields a lower expected failure rate
  – higher coverage increases the likelihood of reaching a high reliability target (a low failure-rate target)

Conclusions (continued)
• Negative:
  – reliability gains from increased coverage are modest
    • cost-effectiveness is questionable
    • the economic significance of the gains depends on context
  – no silver bullet for ultra-reliability
