
Comparison of Classifiers for use in a Learning by Demonstration System for a Situated Agent
Michael W. Floyd and Babak Esfandiari
Carleton University
July 21, 2009

Outline
• Introduction and Motivation
• Sensory Stimuli
• Data Transformation
• Experiments and Results
• Conclusions and Future Work
mfloyd@sce.carleton.ca, July 21, 2009

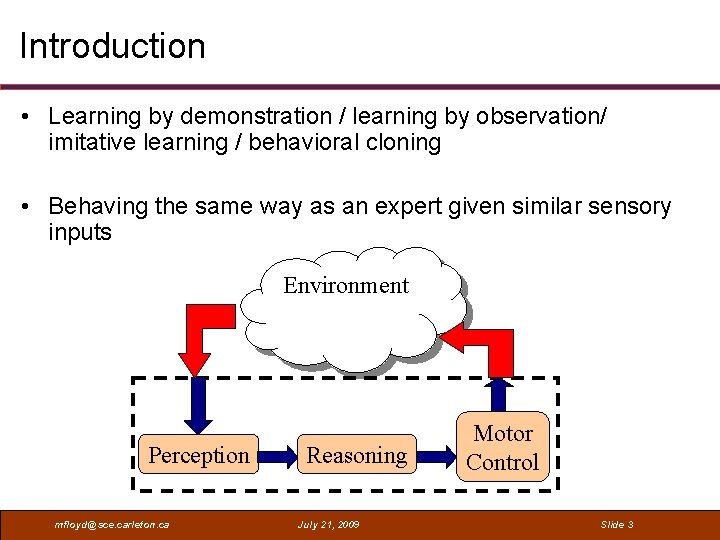

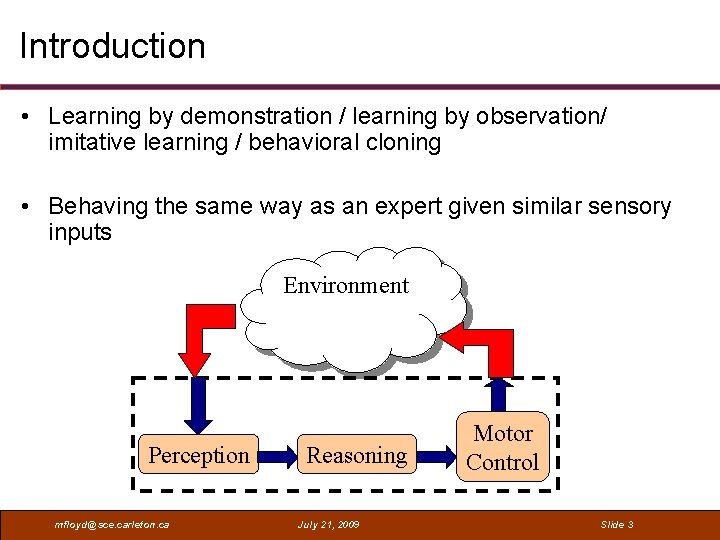

Introduction
• Learning by demonstration / learning by observation / imitative learning / behavioral cloning
• Behaving the same way as an expert when given similar sensory inputs
Figure labels: Environment, Perception, Reasoning, Motor Control

Learning by Demonstration in CBR
Several recent applications use case-based reasoning:
• Real-time strategy games
• Tetris
• Poker
• Space Invaders
• Chess
• Soccer

Current Work
• Our current work is in simulated RoboCup soccer
• Remember how the expert behaved in response to sensory inputs
• Behave similarly when presented with similar sensory inputs

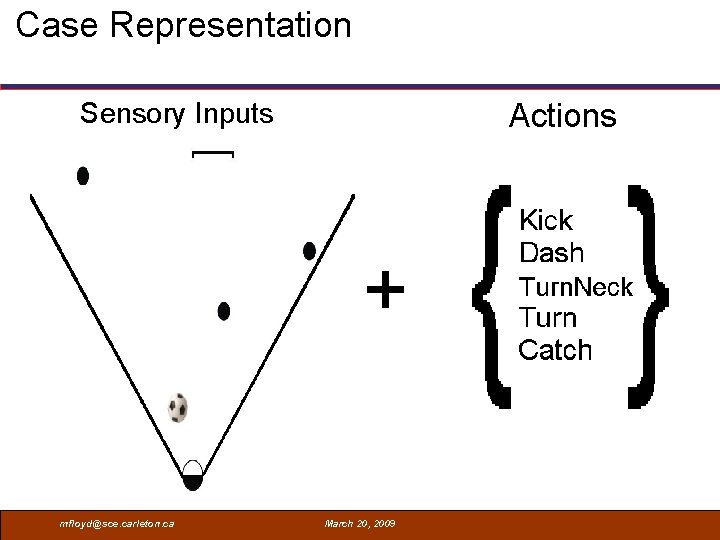

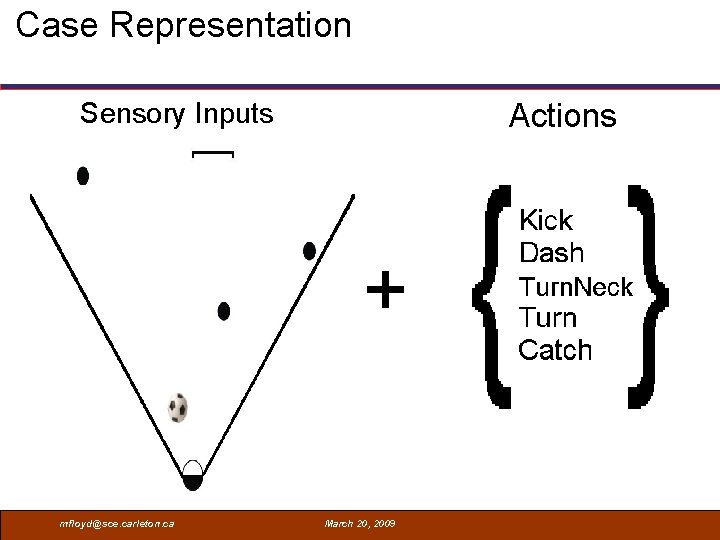

Case Representation
Sensory Inputs + Actions

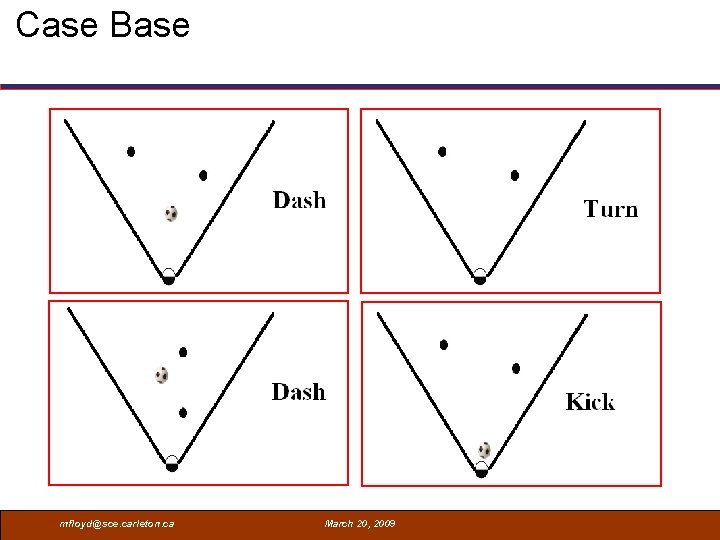

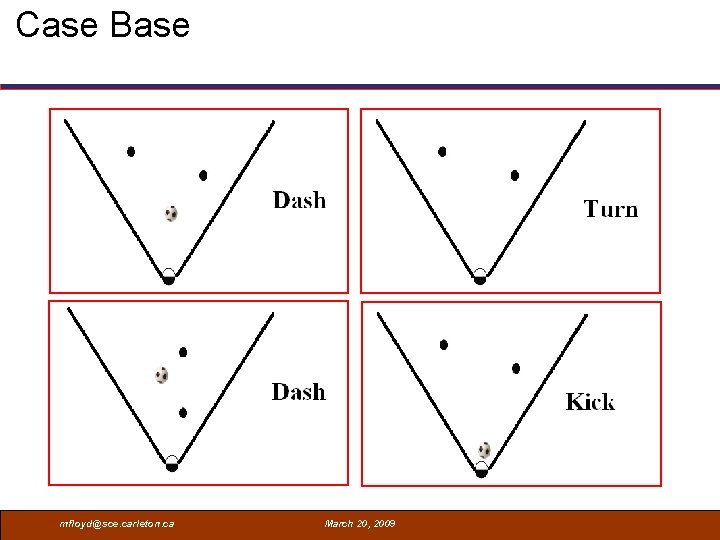

Case Base

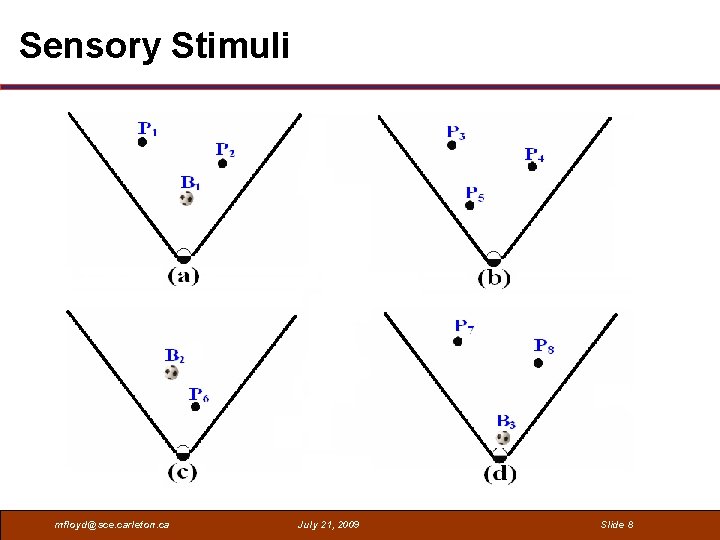

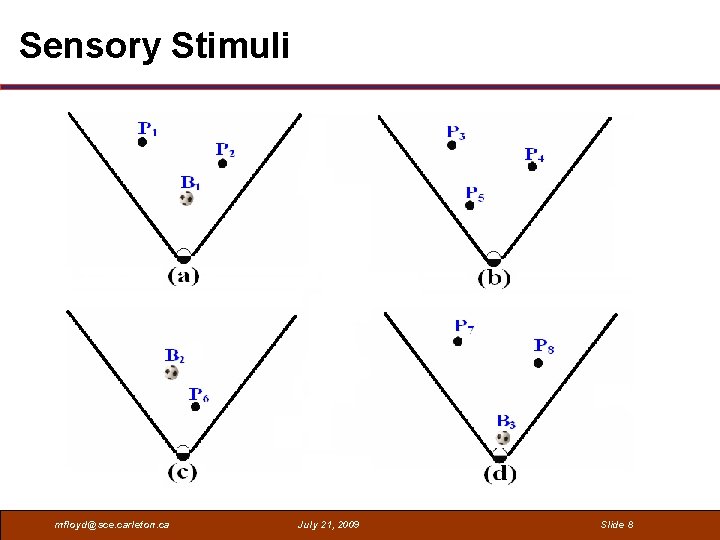
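A minimal sketch of the case representation and case base described above, written in Python because the slides contain no code. It assumes each sensory input is a list of hypothetical `(object_type, distance, direction)` tuples and that the agent reuses the action of the single most similar stored case. The `distance` function is a caller-supplied placeholder, not the similarity measure of the authors' CBR system, which the slides do not describe.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Hypothetical representation of one observed object:
# (object_type, distance, direction).
Obj = Tuple[str, float, float]


@dataclass
class Case:
    stimulus: List[Obj]  # sensory inputs the expert received
    action: str          # action the expert performed in response


def retrieve(case_base: Sequence[Case], query: List[Obj],
             distance: Callable[[List[Obj], List[Obj]], float]) -> str:
    """Return the action of the most similar stored case (1-nearest neighbour)."""
    best = min(case_base, key=lambda case: distance(case.stimulus, query))
    return best.action


# Illustrative data and a toy distance (difference in number of visible objects).
case_base = [
    Case([("ball", 5.0, 10.0)], "kick"),
    Case([("player", 10.0, 0.0), ("player", 5.0, 10.0)], "dash"),
]
query = [("player", 4.0, 0.0), ("player", 9.0, 5.0)]
print(retrieve(case_base, query, lambda a, b: abs(len(a) - len(b))))  # dash
```

Because the case base keeps the raw stimuli, any similarity measure can be plugged in at retrieval time without first forcing the data into a fixed-length vector.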

Sensory Stimuli

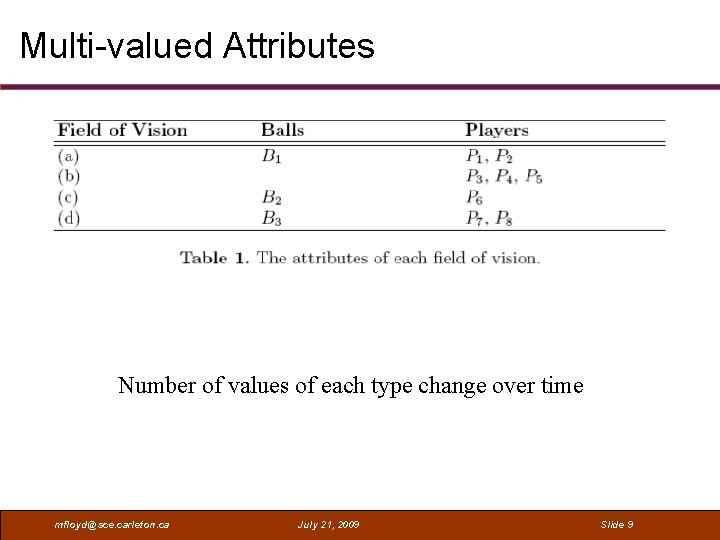

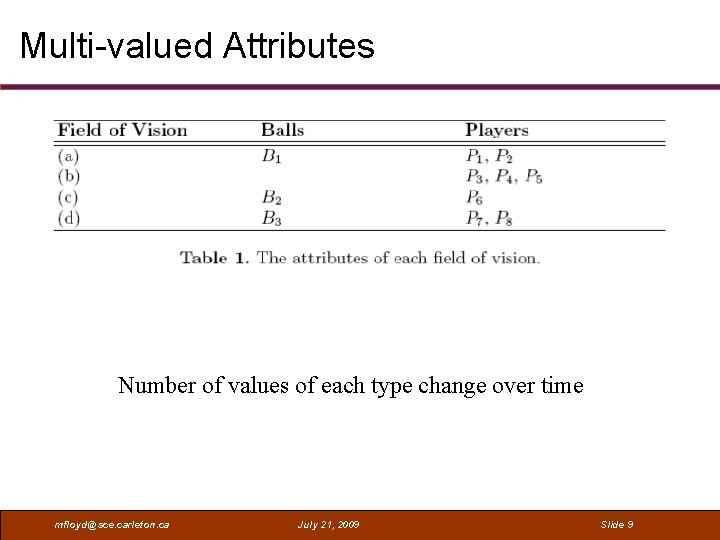

Multi-valued Attributes
• The number of values of each type changes over time

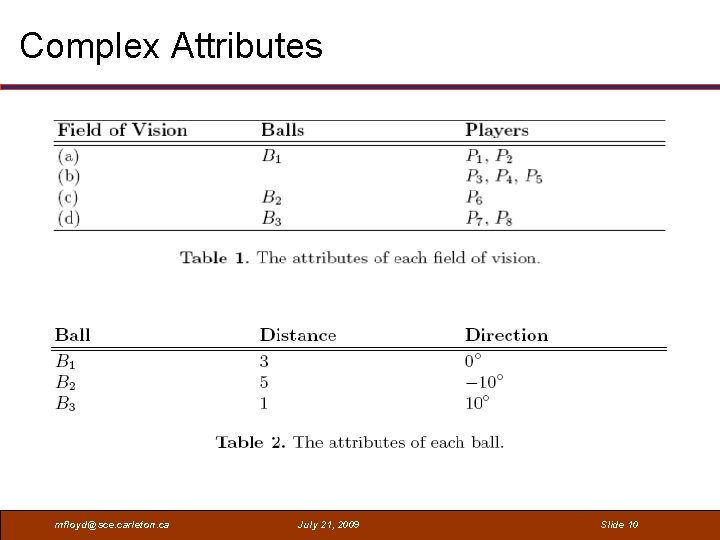

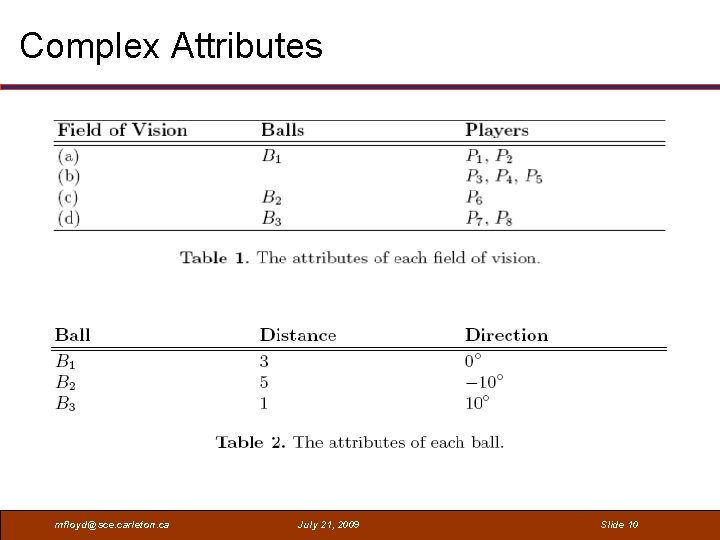

Complex Attributes

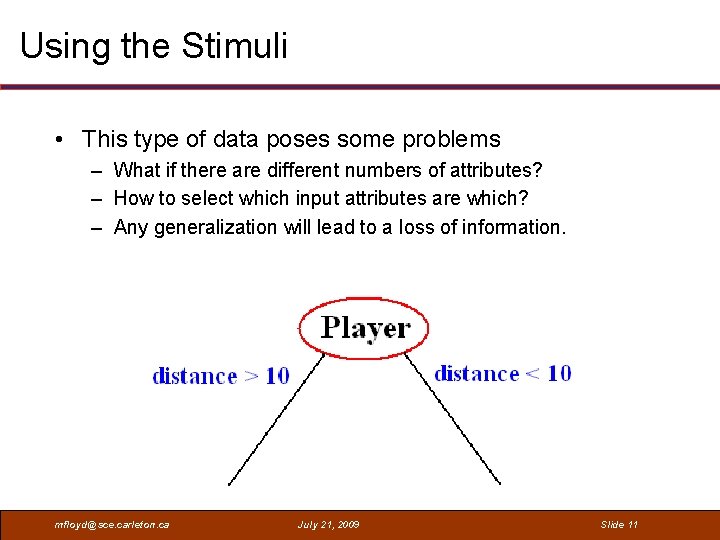

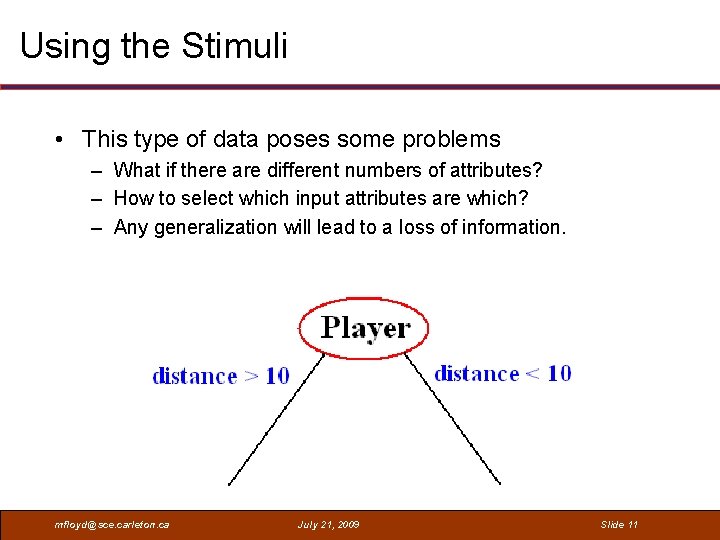

Using the Stimuli
• This type of data poses some problems:
– What if stimuli contain different numbers of attributes?
– How do we determine which input attribute corresponds to which?
– Any generalization will lead to a loss of information.

Other Learning by Demonstration
• Other approaches use expert knowledge to select the sensory features that are important to the learner
• They use a complete world model, or a partial world view with extensive expert knowledge or workarounds
• We are dealing with a raw, partial world view

Data Transformation
• Transform the data into a fixed-length feature vector
• Make the raw data usable by traditional classifiers
• Use the same biases as our CBR approach

Step 1: Feature Vector Length
• Make all sensory stimuli the same length
• Use the maximum size of each multi-valued attribute in the entire dataset
• Example:
1. There were at most 1 ball and 3 players in our example case base
2. Therefore, each feature vector has room for 1 ball and 3 players
3. Each object has a distance and a direction, so the feature vector is (1 + 3) × 2 = 8 features long

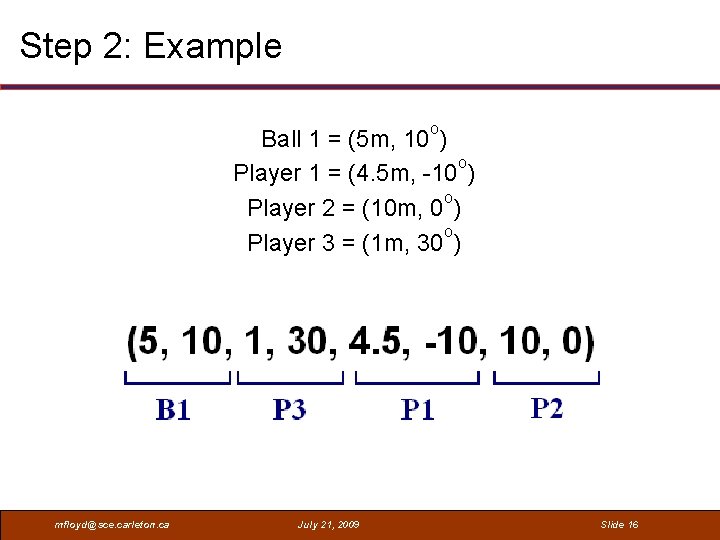
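The Step 1 computation can be sketched as follows. It assumes the same hypothetical `(object_type, distance, direction)` tuples for each stimulus; the two example stimuli reuse the objects listed on the Step 2 and Step 3 example slides.

```python
from collections import Counter

# Hypothetical representation: each stimulus is a list of
# (object_type, distance_m, direction_deg) tuples.
FEATURES_PER_OBJECT = 2  # distance and direction


def max_counts(dataset):
    """Largest number of objects of each type seen in any single stimulus."""
    maxima = Counter()
    for stimulus in dataset:
        counts = Counter(obj_type for obj_type, _, _ in stimulus)
        for obj_type, n in counts.items():
            maxima[obj_type] = max(maxima[obj_type], n)
    return dict(maxima)


def vector_length(maxima, features_per_object=FEATURES_PER_OBJECT):
    """Fixed feature-vector length: one slot per object, two features per slot."""
    return sum(maxima.values()) * features_per_object


dataset = [
    [("ball", 5.0, 10.0), ("player", 4.5, -10.0),
     ("player", 10.0, 0.0), ("player", 1.0, 30.0)],
    [("player", 5.0, 10.0), ("player", 10.0, 0.0)],
]
maxima = max_counts(dataset)
print(maxima)                 # {'ball': 1, 'player': 3}
print(vector_length(maxima))  # 8
```

The maxima are taken over the whole dataset, so a single crowded stimulus fixes the vector length for every instance.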

Step 2: Object Ordering
• Add a consistent ordering to the unordered multi-valued attributes
• Simple ordering based on the distance of each object from the agent

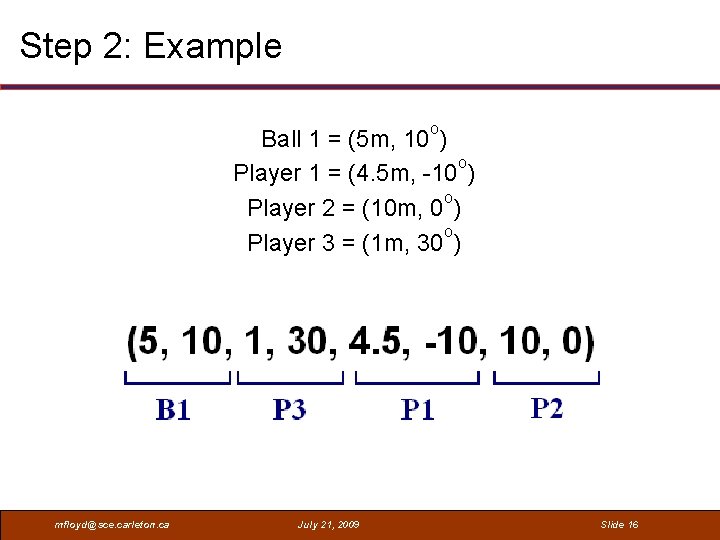

Step 2: Example
• Ball 1 = (5 m, 10°)
• Player 1 = (4.5 m, -10°)
• Player 2 = (10 m, 0°)
• Player 3 = (1 m, 30°)

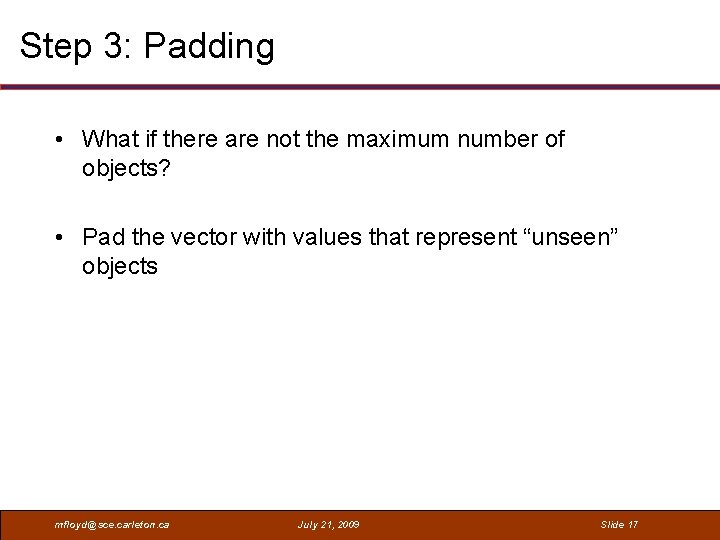
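A sketch of Step 2 applied to the example above. The slides only state that objects are ordered by distance from the agent; this sketch additionally assumes that ordering happens within each object type and that the types appear in a fixed, hypothetical order (ball first, then players).

```python
# Assumption: objects are ordered within each type by distance, and the
# types themselves appear in a fixed (hypothetical) order: ball, then players.
TYPE_ORDER = ("ball", "player")


def order_objects(stimulus, type_order=TYPE_ORDER):
    """Flatten a stimulus into [distance, direction, ...], nearest object first per type."""
    features = []
    for obj_type in type_order:
        objs = [(d, a) for t, d, a in stimulus if t == obj_type]
        for distance, direction in sorted(objs, key=lambda o: o[0]):
            features.extend([distance, direction])
    return features


stimulus = [("ball", 5.0, 10.0), ("player", 4.5, -10.0),
            ("player", 10.0, 0.0), ("player", 1.0, 30.0)]
print(order_objects(stimulus))
# [5.0, 10.0, 1.0, 30.0, 4.5, -10.0, 10.0, 0.0]
```

Player 3, the nearest player at 1 m, therefore fills the first player slot regardless of the order in which the objects were reported.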

Step 3: Padding
• What if fewer than the maximum number of objects are visible?
• Pad the vector with values that represent “unseen” objects

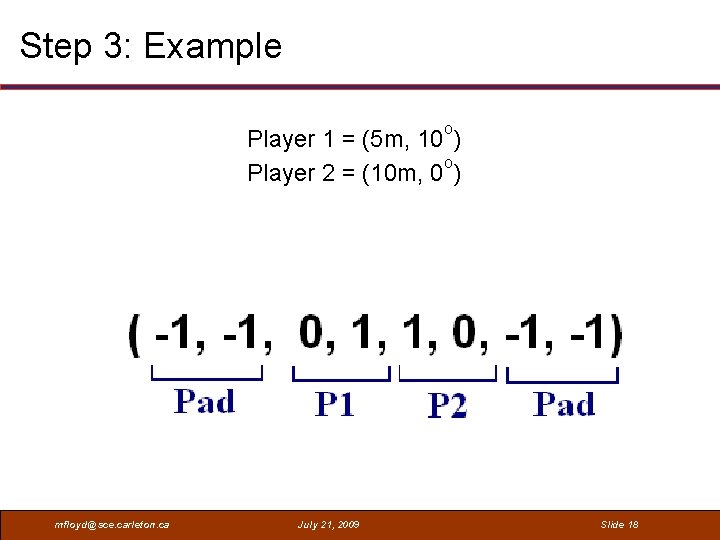

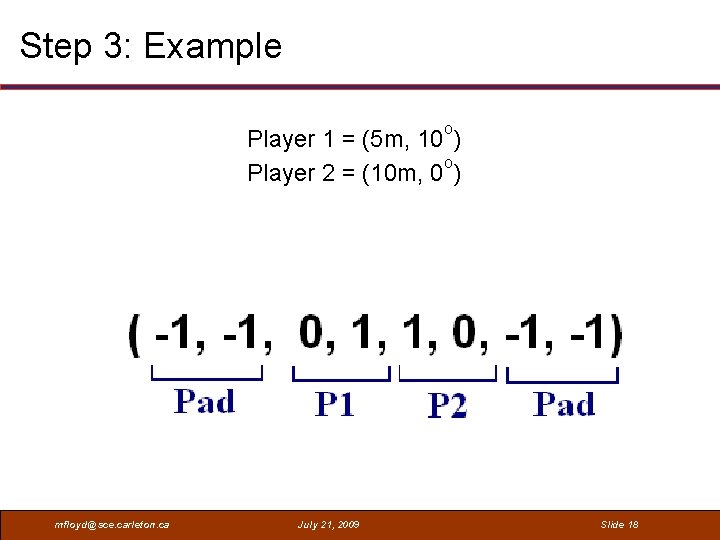

Step 3: Example
• Player 1 = (5 m, 10°)
• Player 2 = (10 m, 0°)

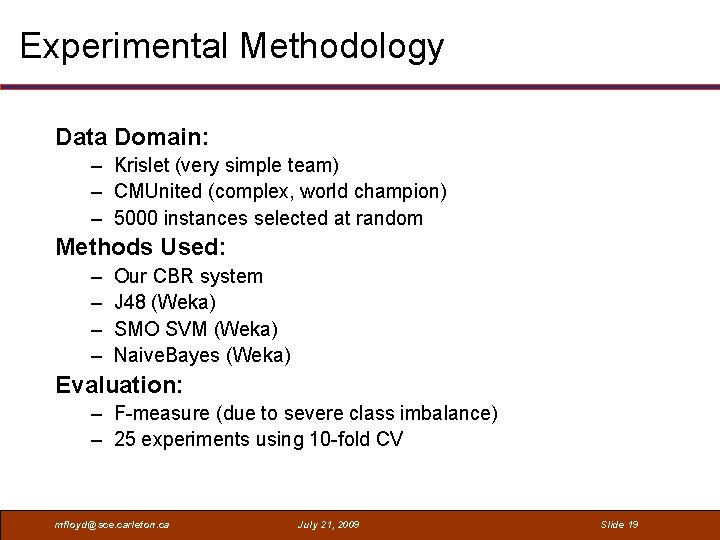

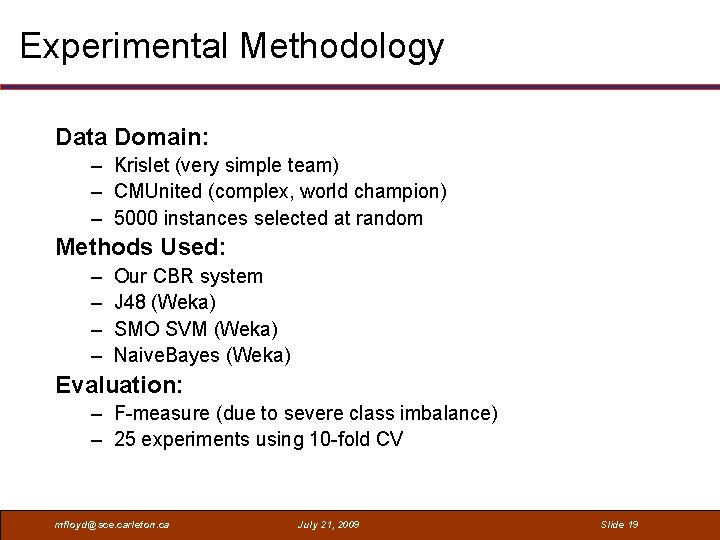

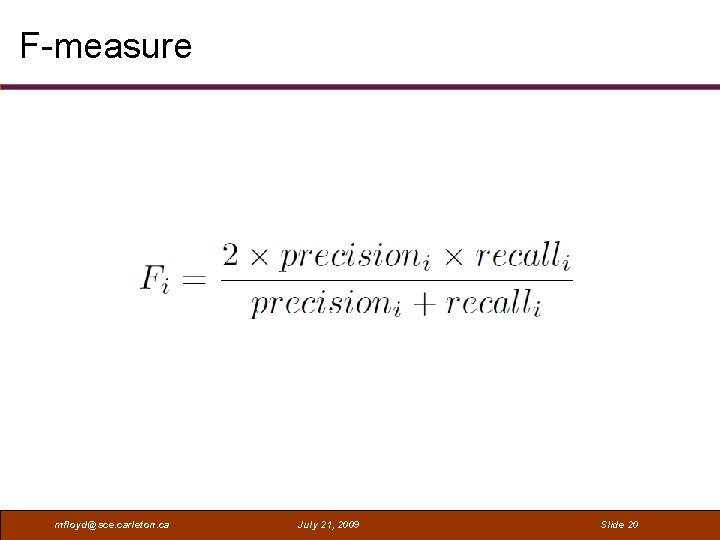
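Combining the three steps for the Step 3 example gives the sketch below. The values used for an “unseen” object are a hypothetical placeholder (the slides do not say which values were used), and padding is assumed to go after the visible objects of each type.

```python
# Hypothetical placeholder for an "unseen" object; the slides do not state
# which values the authors actually used.
UNSEEN = (-1.0, 0.0)
TYPE_ORDER = ("ball", "player")  # assumed fixed type order


def to_feature_vector(stimulus, maxima, type_order=TYPE_ORDER, unseen=UNSEEN):
    """Steps 1-3: fixed length, distance ordering, padding of missing objects."""
    vector = []
    for obj_type in type_order:
        # Step 2: nearest objects of this type first.
        objs = sorted(((d, a) for t, d, a in stimulus if t == obj_type),
                      key=lambda o: o[0])
        # Step 3: pad up to the dataset-wide maximum found in Step 1.
        objs += [unseen] * (maxima[obj_type] - len(objs))
        for distance, direction in objs:
            vector.extend([distance, direction])
    return vector


maxima = {"ball": 1, "player": 3}  # from the Step 1 example
stimulus = [("player", 5.0, 10.0), ("player", 10.0, 0.0)]
print(to_feature_vector(stimulus, maxima))
# [-1.0, 0.0, 5.0, 10.0, 10.0, 0.0, -1.0, 0.0]
```

The missing ball and the missing third player each occupy one padded slot, so the result has the same 8 features as a stimulus with every object visible.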

Experimental Methodology
Data Domain:
– Krislet (very simple team)
– CMUnited (complex, world champion)
– 5000 instances selected at random
Methods Used:
– Our CBR system
– J48 (Weka)
– SMO SVM (Weka)
– NaiveBayes (Weka)
Evaluation:
– F-measure (due to severe class imbalance)
– 25 experiments using 10-fold CV

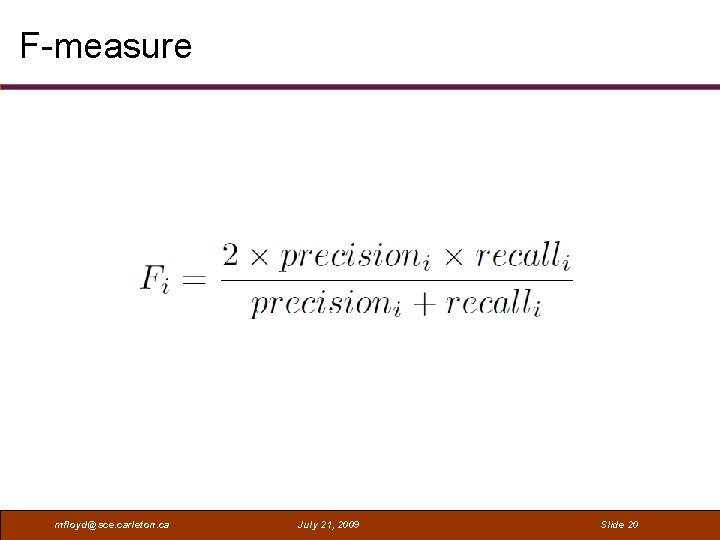
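The evaluation protocol (25 repetitions of 10-fold cross-validation over 5000 instances) can be sketched as an index generator. This is a plain, unstratified split with an arbitrary seed; the slides do not say whether the folds were stratified, and with severe class imbalance a stratified split may be preferable.

```python
import random


def repeated_kfold(n_instances, k=10, repeats=25, seed=0):
    """Yield (train_indices, test_indices) for `repeats` runs of k-fold CV.

    Each run reshuffles the instances and deals them into k folds of
    (nearly) equal size; every instance is tested exactly once per run.
    """
    rng = random.Random(seed)
    for _ in range(repeats):
        order = list(range(n_instances))
        rng.shuffle(order)
        folds = [order[i::k] for i in range(k)]
        for i, test in enumerate(folds):
            train = [j for f, fold in enumerate(folds) if f != i for j in fold]
            yield train, test


splits = list(repeated_kfold(5000))
print(len(splits))        # 250 train/test splits
print(len(splits[0][1]))  # 500 test instances per fold
```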

F-measure

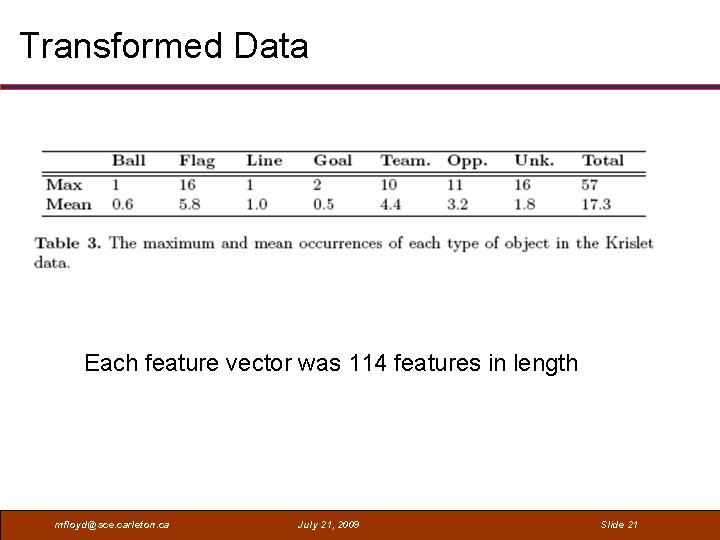

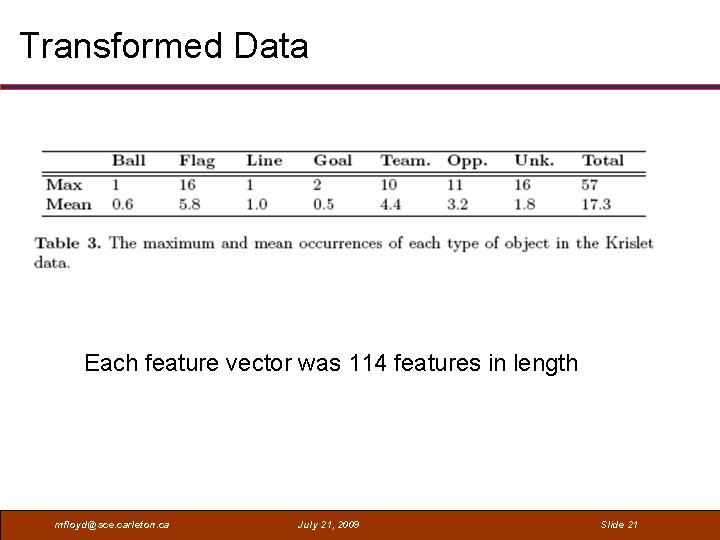
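The F-measure is the harmonic mean of precision P and recall R, F = 2PR / (P + R). Unlike accuracy, it is not dominated by the majority class, which is why it suits imbalanced action data. The slides do not say how per-class scores were combined, so this sketch assumes an unweighted (macro) average; the action labels below are illustrative only.

```python
from collections import Counter


def f_measures(actual, predicted):
    """Per-class F-measure, F = 2PR / (P + R), and its unweighted (macro) mean."""
    classes = sorted(set(actual) | set(predicted))
    true_pos = Counter(a for a, p in zip(actual, predicted) if a == p)
    n_predicted = Counter(predicted)
    n_actual = Counter(actual)
    scores = {}
    for c in classes:
        precision = true_pos[c] / n_predicted[c] if n_predicted[c] else 0.0
        recall = true_pos[c] / n_actual[c] if n_actual[c] else 0.0
        total = precision + recall
        scores[c] = 2 * precision * recall / total if total else 0.0
    macro = sum(scores.values()) / len(scores)
    return scores, macro


actual = ["kick", "dash", "dash", "turn"]
predicted = ["kick", "dash", "turn", "turn"]
scores, macro = f_measures(actual, predicted)
print(round(macro, 3))  # 0.778
```

A classifier that always predicted the majority action would get a high accuracy on skewed data but an F-measure of 0 for every minority action, which pulls the macro average down.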

Transformed Data
Each feature vector was 114 features long.

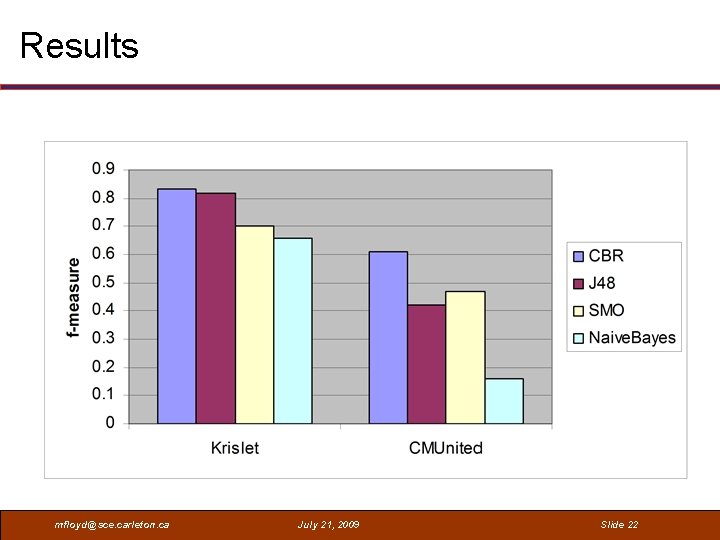

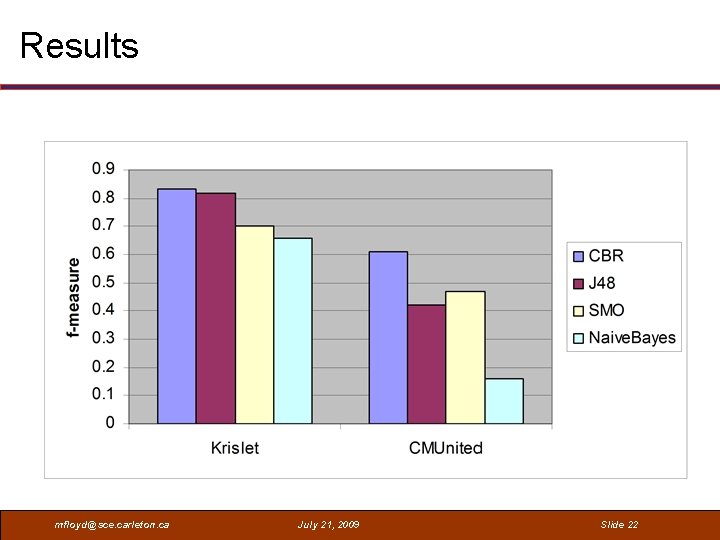

Results

Discussion
• Our CBR approach performed well compared to J48 on a decision-tree-based agent
• On the CMUnited data, J48, SMO and NaiveBayes struggle and learn rules specific to individual training instances.

Conclusions
• We compared our approach to popular classifiers, not state-of-the-art ones!
• This transformation becomes more complex when action parameters are taken into account.
• CBR keeps the actual instances, so it can allow for more complex ordering at run time.

Further Work
• Our CBR approach performed well when reasoning was:
– based on the number of visible objects
– based on the middle or furthest objects
• Our CBR approach is least beneficial when objects are uniquely distinguishable.
• Finding idealized parameters for the other classifiers did not significantly change the results.

Questions