COMP 791 A Statistical Language Processing Word Sense

- Slides: 63

COMP 791 A: Statistical Language Processing
Word Sense Disambiguation (Chap. 7)

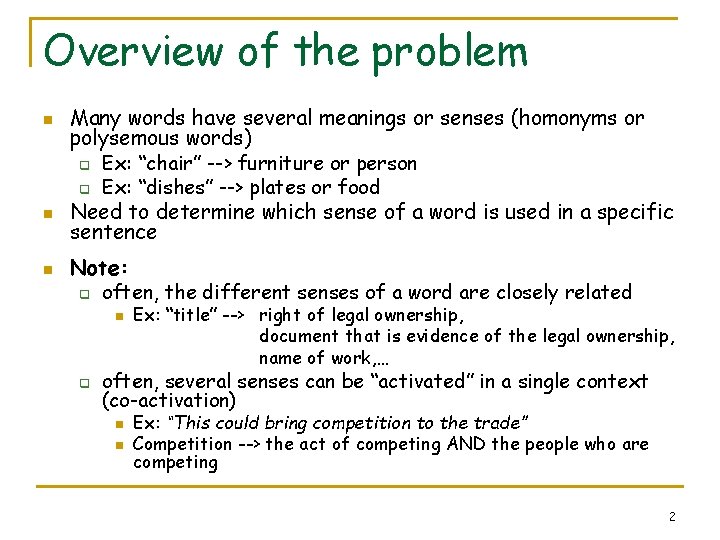

Overview of the problem
- Many words have several meanings or senses (homonyms or polysemous words)
  - Ex: “chair” --> furniture or person
  - Ex: “dishes” --> plates or food
- Need to determine which sense of a word is used in a specific sentence
- Note:
  - often, the different senses of a word are closely related
    - Ex: “title” --> right of legal ownership, document that is evidence of the legal ownership, name of work, …
  - often, several senses can be “activated” in a single context (co-activation)
    - Ex: “This could bring competition to the trade”
    - Competition --> the act of competing AND the people who are competing

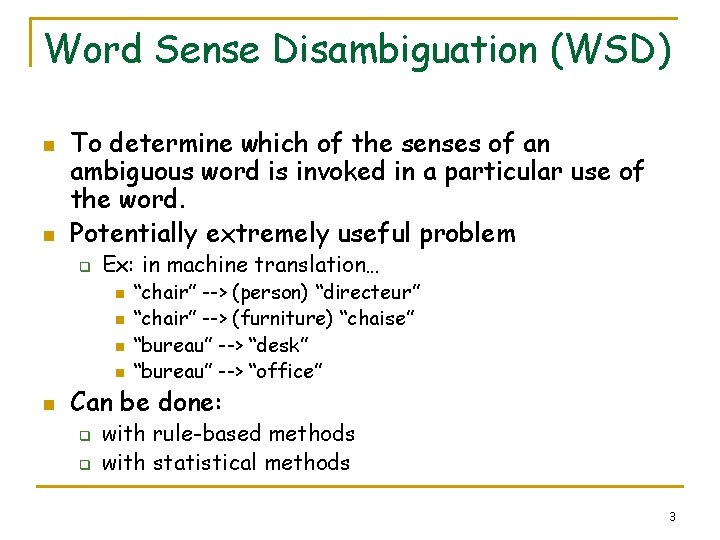

Word Sense Disambiguation (WSD)
- Goal: determine which of the senses of an ambiguous word is invoked in a particular use of the word.
- Solving this problem is potentially extremely useful
  - Ex: in machine translation…
    - “chair” --> (person) “directeur”
    - “chair” --> (furniture) “chaise”
    - “bureau” --> “desk”
    - “bureau” --> “office”
- Can be done:
  - with rule-based methods
  - with statistical methods

WordNet
- most widely-used lexical database for English
- free!
- G. Miller at Princeton: www.cogsci.princeton.edu/~wn
- used in many applications of NLP
- EuroWordNet
  - Dutch, Italian, Spanish, German, French, Czech and Estonian
- includes entries for open-class words only (nouns, verbs, adjectives & adverbs)

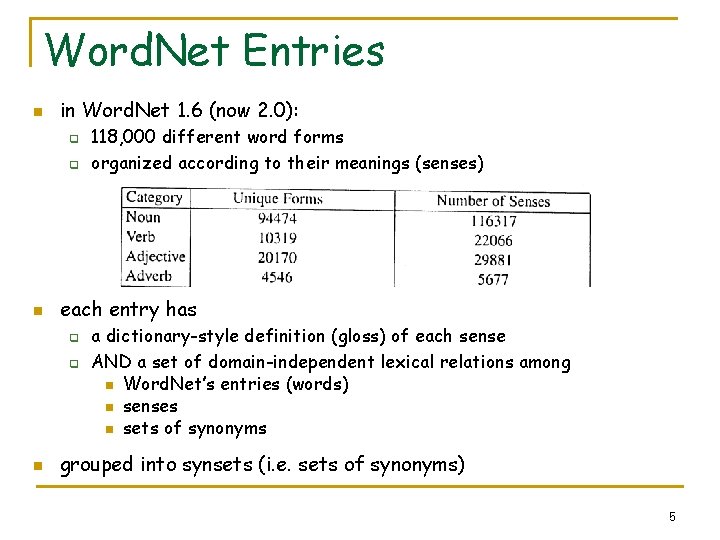

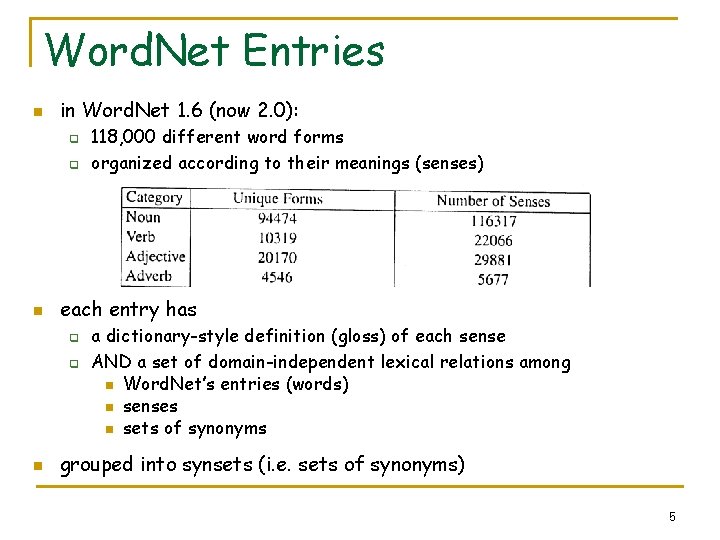

WordNet Entries
- in WordNet 1.6 (now 2.0):
  - 118,000 different word forms
  - organized according to their meanings (senses)
- each entry has
  - a dictionary-style definition (gloss) of each sense
  - AND a set of domain-independent lexical relations among
    - WordNet’s entries (words)
    - senses
    - sets of synonyms
- grouped into synsets (i.e. sets of synonyms)

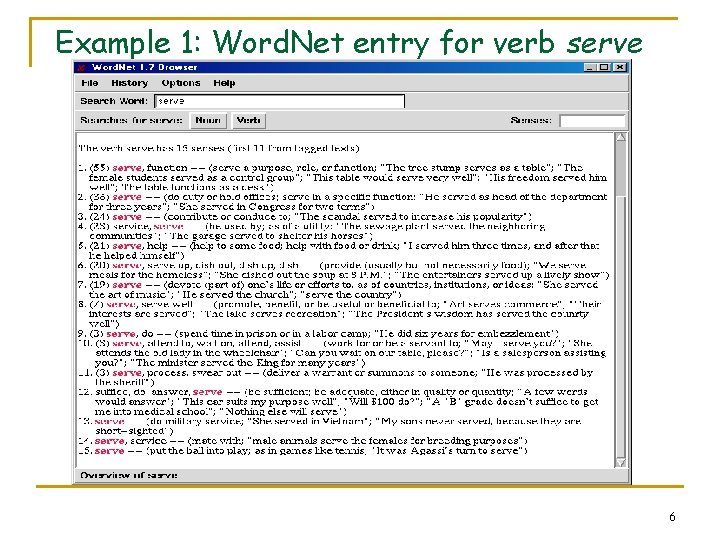

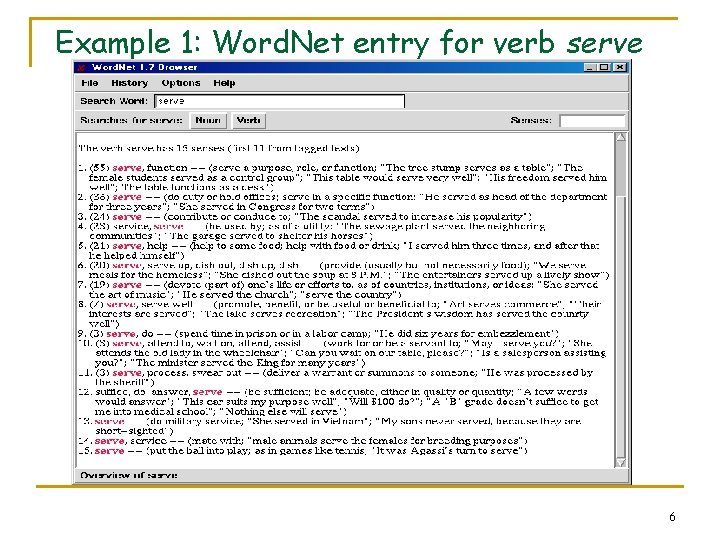

Example 1: WordNet entry for verb serve

Rule-based WSD
- They served green-lipped mussels from New Zealand.
- Which airlines serve Denver?
- semantic restrictions on the predicate of an argument
  - argument mussels: --> needs a predicate with the sense {provide-food} --> sense 6 of WordNet
  - argument Denver: --> needs a predicate with the sense {attend-to} --> sense 10 of WordNet

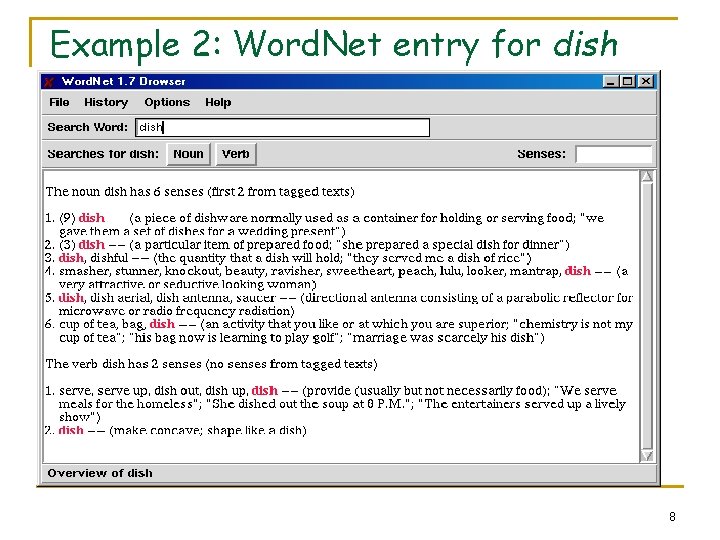

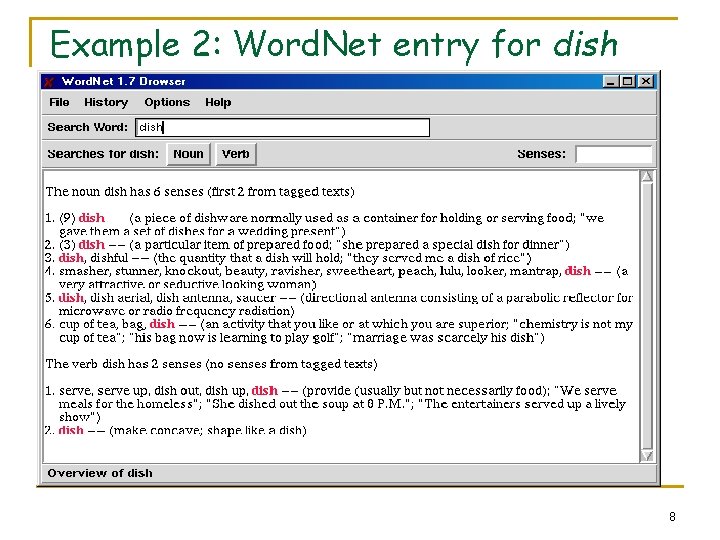

Example 2: WordNet entry for dish

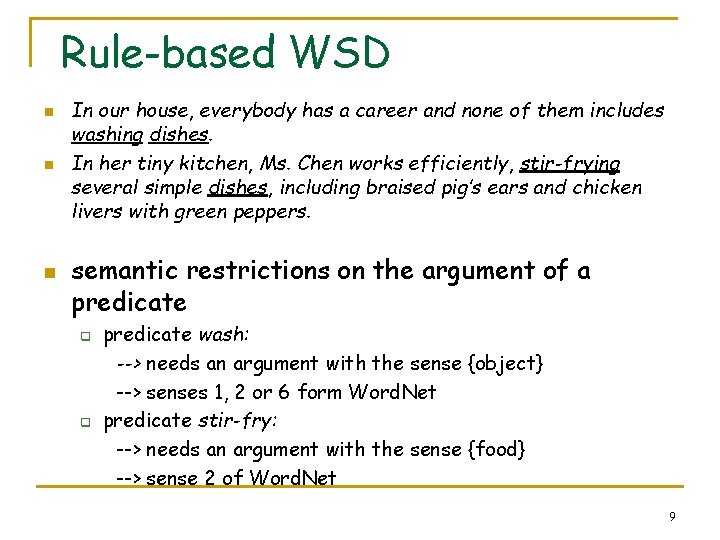

Rule-based WSD
- In our house, everybody has a career and none of them includes washing dishes.
- In her tiny kitchen, Ms. Chen works efficiently, stir-frying several simple dishes, including braised pig’s ears and chicken livers with green peppers.
- semantic restrictions on the argument of a predicate
  - predicate wash: --> needs an argument with the sense {object} --> senses 1, 2 or 6 from WordNet
  - predicate stir-fry: --> needs an argument with the sense {food} --> sense 2 of WordNet

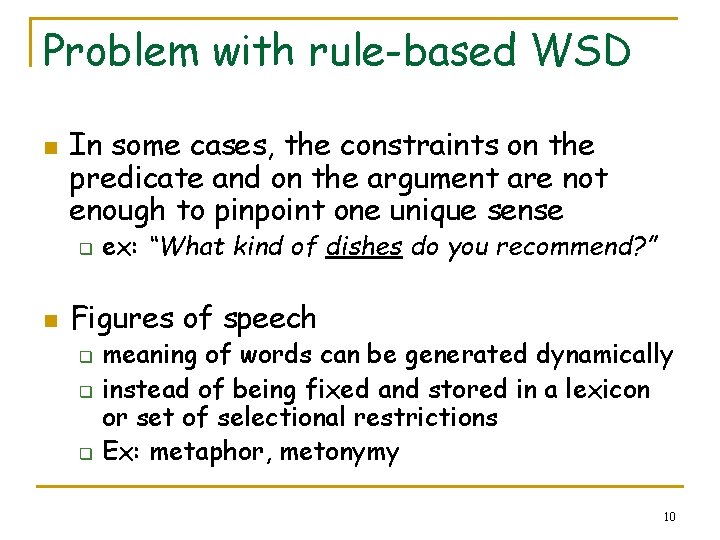

Problem with rule-based WSD
- In some cases, the constraints on the predicate and on the argument are not enough to pinpoint one unique sense
  - ex: “What kind of dishes do you recommend?”
- Figures of speech
  - meaning of words can be generated dynamically
  - instead of being fixed and stored in a lexicon or set of selectional restrictions
  - Ex: metaphor, metonymy

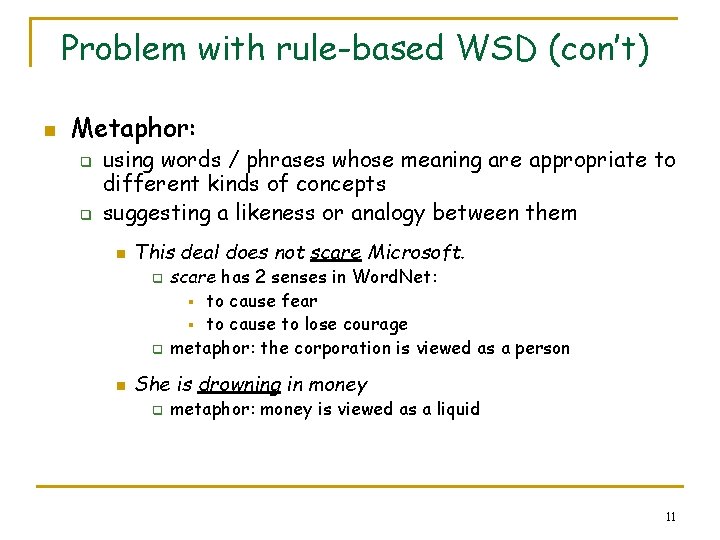

Problem with rule-based WSD (cont'd)
- Metaphor:
  - using words / phrases whose meanings are appropriate to different kinds of concepts
  - suggesting a likeness or analogy between them
- This deal does not scare Microsoft.
  - scare has 2 senses in WordNet:
    - to cause fear
    - to cause to lose courage
  - metaphor: the corporation is viewed as a person
- She is drowning in money
  - metaphor: money is viewed as a liquid

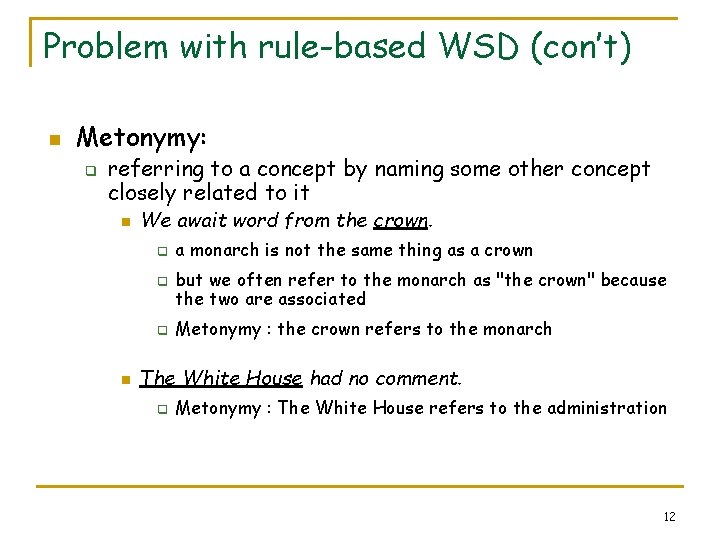

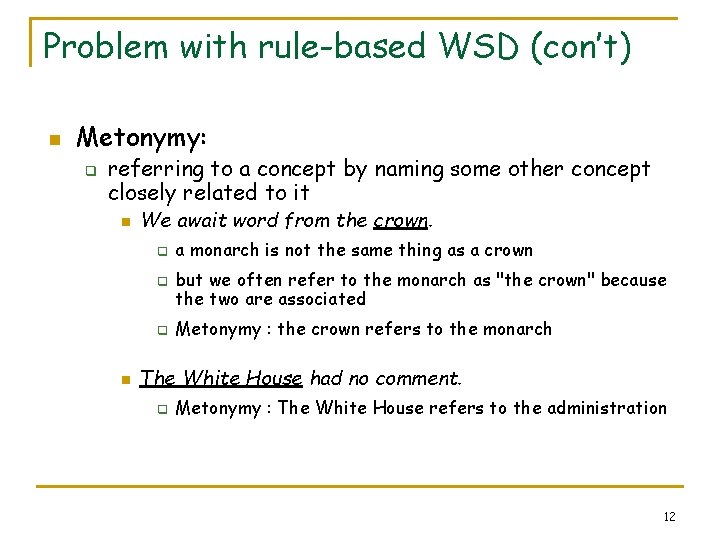

Problem with rule-based WSD (cont'd)
- Metonymy:
  - referring to a concept by naming some other concept closely related to it
- We await word from the crown.
  - a monarch is not the same thing as a crown
  - but we often refer to the monarch as "the crown" because the two are associated
  - Metonymy: the crown refers to the monarch
- The White House had no comment.
  - Metonymy: The White House refers to the administration

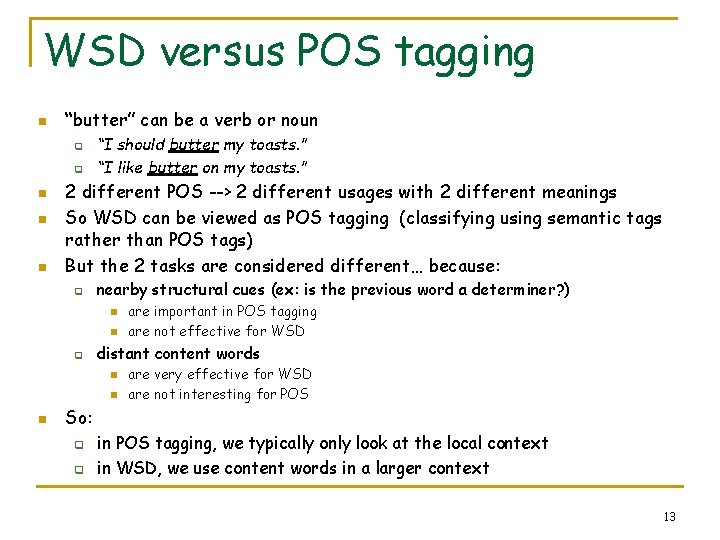

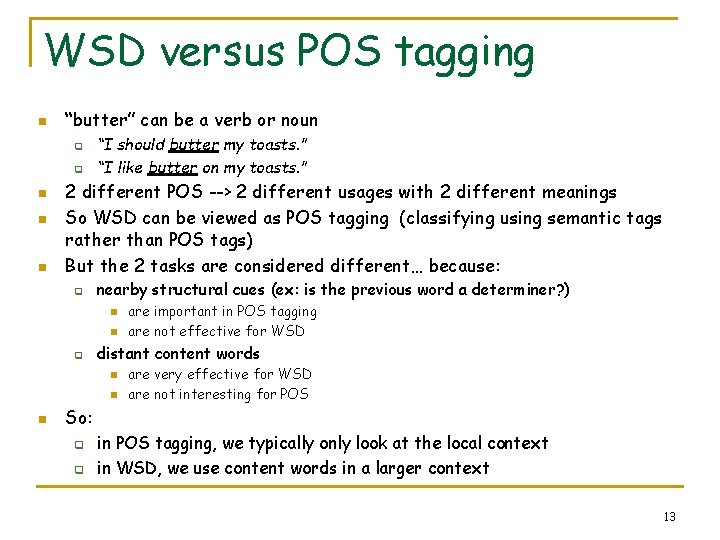

WSD versus POS tagging
- “butter” can be a verb or noun
  - “I should butter my toast.”
  - “I like butter on my toast.”
- 2 different POS --> 2 different usages with 2 different meanings
- So WSD can be viewed as POS tagging (classifying using semantic tags rather than POS tags)
- But the 2 tasks are considered different… because:
  - nearby structural cues (ex: is the previous word a determiner?)
    - are important in POS tagging
    - are not effective for WSD
  - distant content words
    - are very effective for WSD
    - are not interesting for POS
- So:
  - in POS tagging, we typically only look at the local context
  - in WSD, we use content words in a larger context

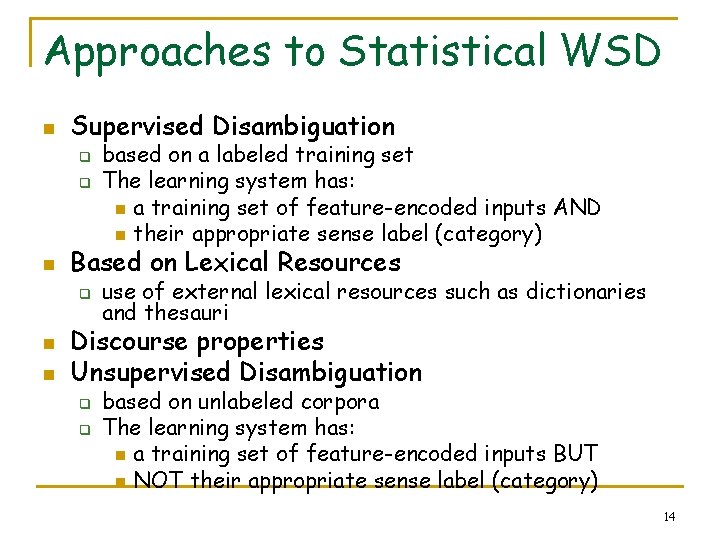

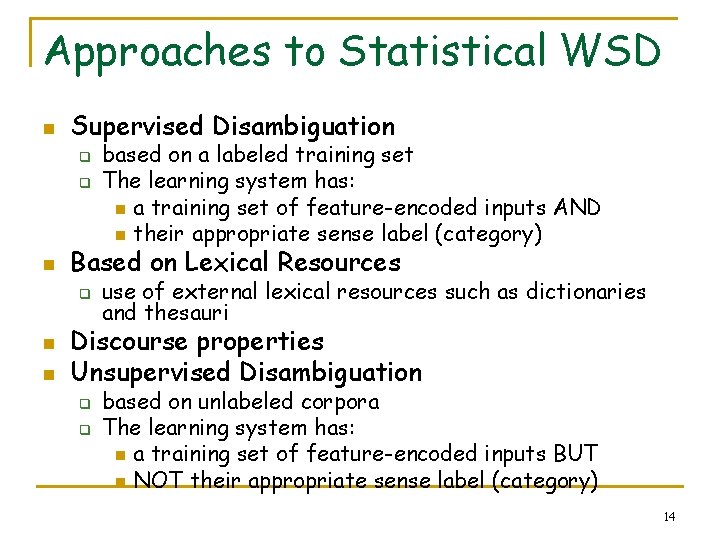

Approaches to Statistical WSD
- Supervised Disambiguation
  - based on a labeled training set
  - The learning system has:
    - a training set of feature-encoded inputs AND
    - their appropriate sense label (category)
- Based on Lexical Resources
  - use of external lexical resources such as dictionaries and thesauri
- Discourse properties
- Unsupervised Disambiguation
  - based on unlabeled corpora
  - The learning system has:
    - a training set of feature-encoded inputs BUT
    - NOT their appropriate sense label (category)

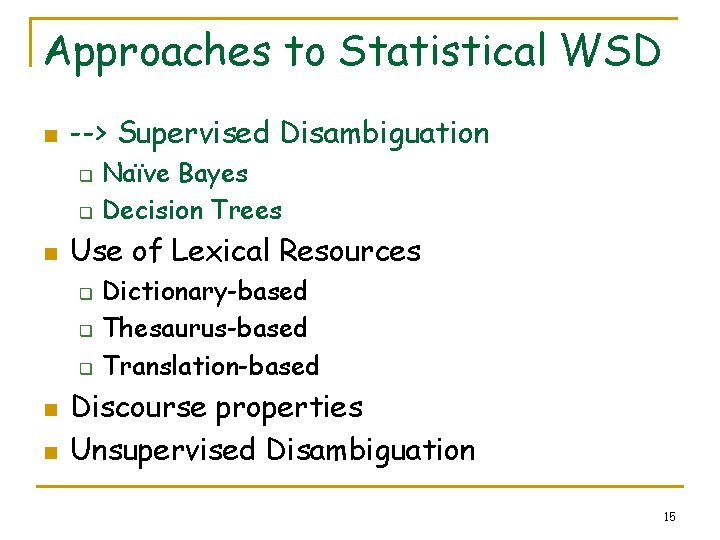

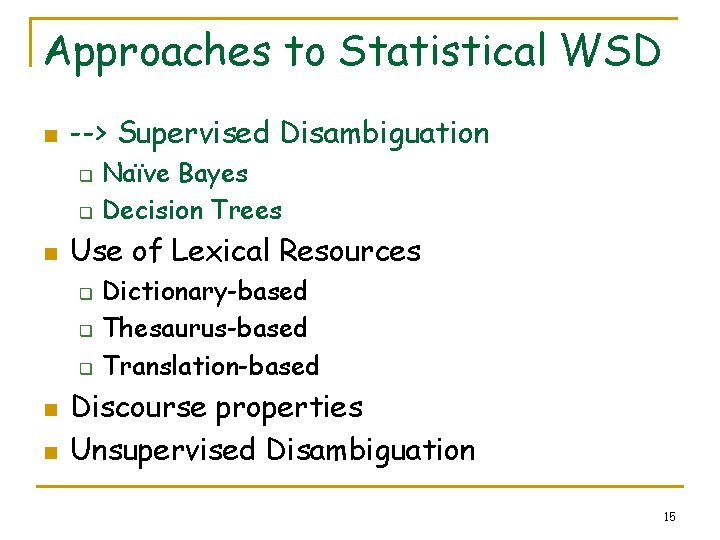

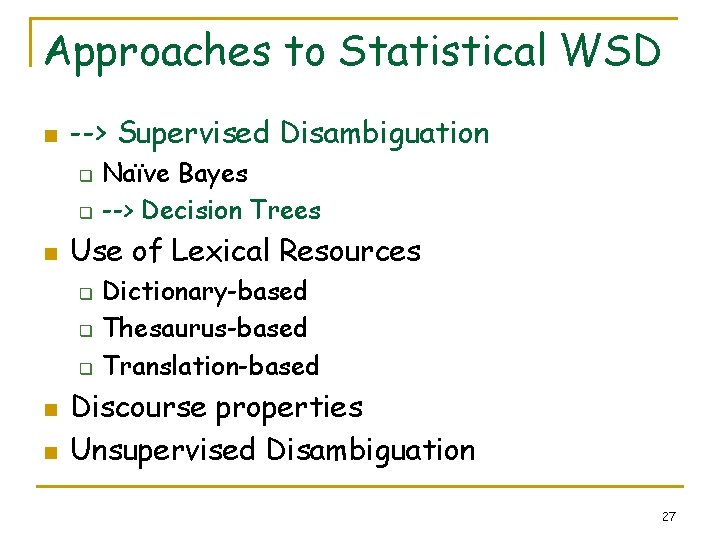

Approaches to Statistical WSD
- --> Supervised Disambiguation
  - Naïve Bayes
  - Decision Trees
- Use of Lexical Resources
  - Dictionary-based
  - Thesaurus-based
  - Translation-based
- Discourse properties
- Unsupervised Disambiguation

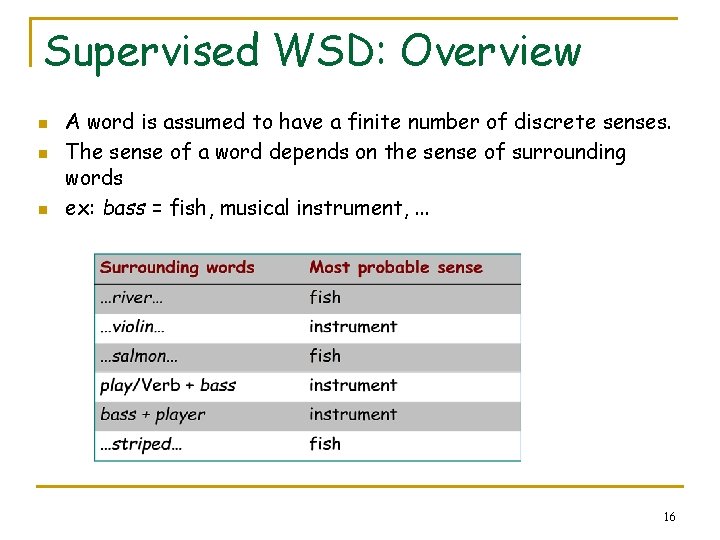

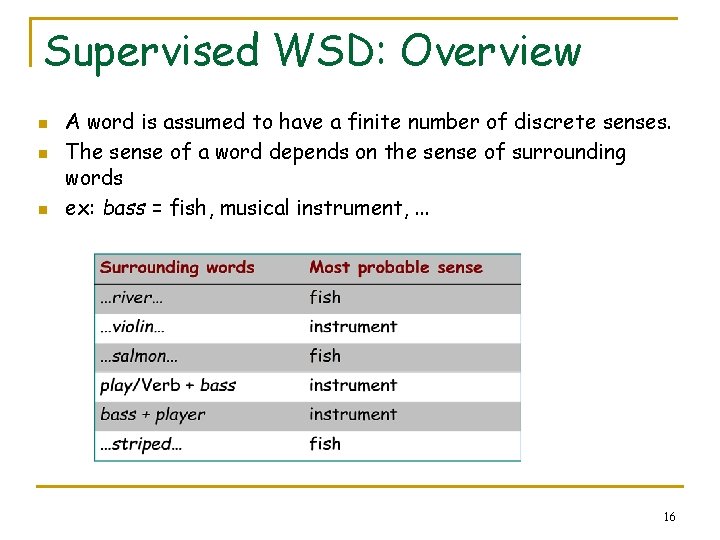

Supervised WSD: Overview
- A word is assumed to have a finite number of discrete senses.
- The sense of a word depends on the sense of surrounding words
- ex: bass = fish, musical instrument, ...

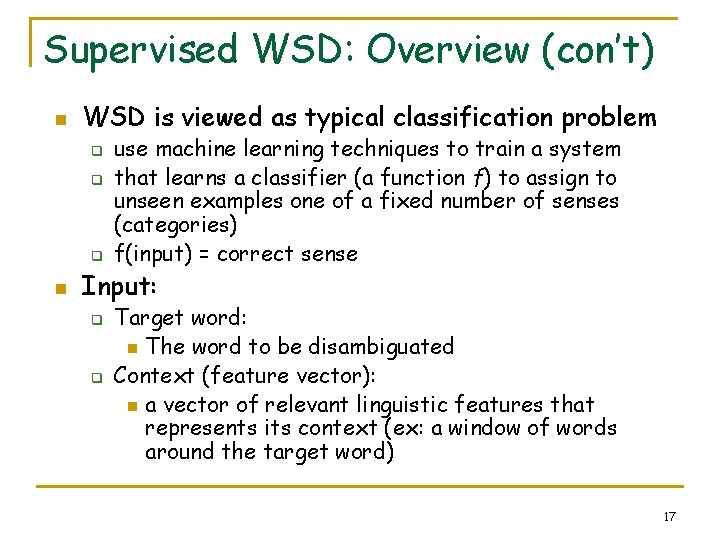

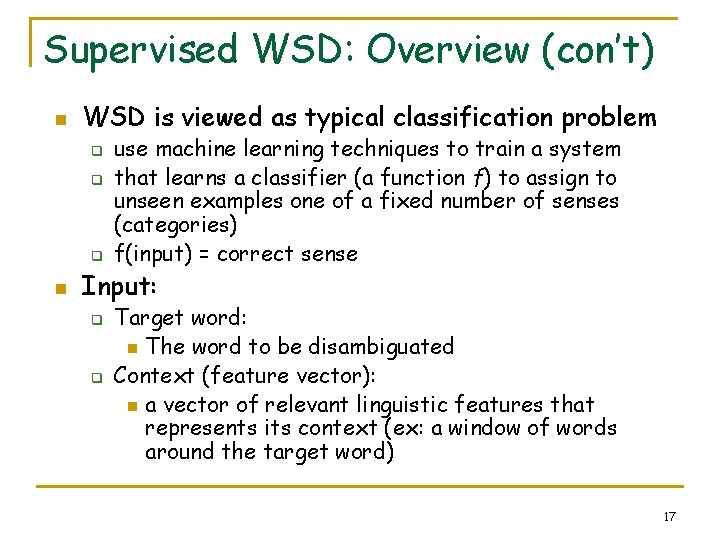

Supervised WSD: Overview (cont'd)
- WSD is viewed as a typical classification problem
  - use machine learning techniques to train a system
  - that learns a classifier (a function f) to assign to unseen examples one of a fixed number of senses (categories)
  - f(input) = correct sense
- Input:
  - Target word:
    - The word to be disambiguated
  - Context (feature vector):
    - a vector of relevant linguistic features that represents its context (ex: a window of words around the target word)

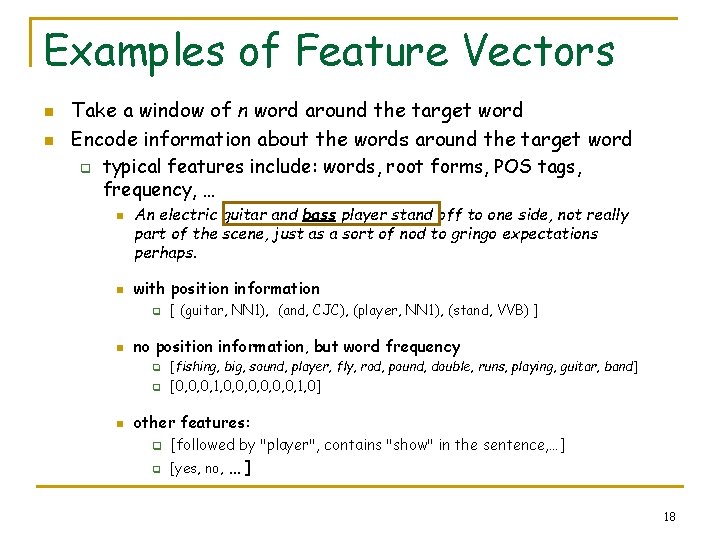

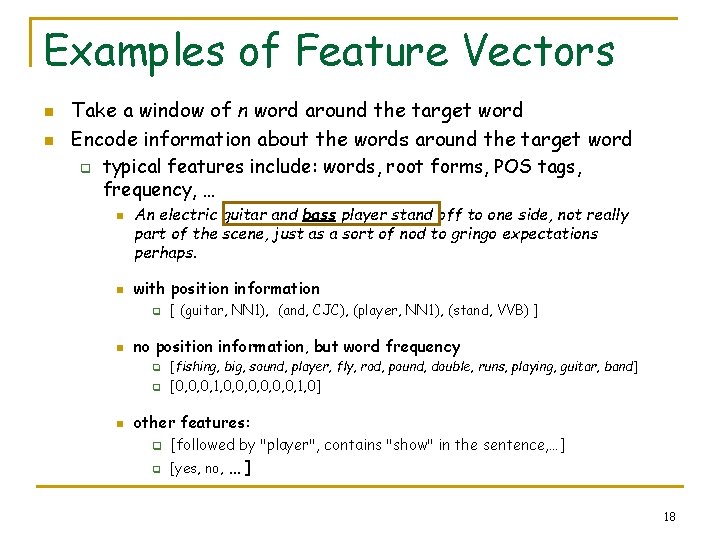

Examples of Feature Vectors
- Take a window of n words around the target word
- Encode information about the words around the target word
  - typical features include: words, root forms, POS tags, frequency, …
- Ex: An electric guitar and bass player stand off to one side, not really part of the scene, just as a sort of nod to gringo expectations perhaps.
- with position information
  - [ (guitar, NN1), (and, CJC), (player, NN1), (stand, VVB) ]
- no position information, but word frequency
  - [fishing, big, sound, player, fly, rod, pound, double, runs, playing, guitar, band]
  - [0, 0, 0, 1, 0]
- other features:
  - [followed by "player", contains "show" in the sentence, …]
  - [yes, no, … ]
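The "word frequency, no position" encoding can be sketched as follows. This is a minimal illustration, not the course's implementation: the function name `context_vector`, the whitespace tokenization and the window of 2 words on each side are assumptions made for the example.

```python
from collections import Counter

def context_vector(tokens, target_index, vocabulary, window=2):
    """Count how often each vocabulary word occurs within `window`
    tokens on either side of the target word (position is ignored)."""
    lo = max(0, target_index - window)
    hi = min(len(tokens), target_index + window + 1)
    context = [tok.lower()
               for i, tok in enumerate(tokens[lo:hi], start=lo)
               if i != target_index]          # skip the target word itself
    counts = Counter(context)
    return [counts[word] for word in vocabulary]

# Assumed toy input: the slide's sentence, split on whitespace.
tokens = "An electric guitar and bass player stand off to one side".split()
vocabulary = ["fishing", "big", "sound", "player", "fly", "rod",
              "pound", "double", "runs", "playing", "guitar", "band"]
vector = context_vector(tokens, tokens.index("bass"), vocabulary, window=2)
print(vector)
```

With this window, only "player" and "guitar" from the vocabulary fall inside the context, so only those two positions are non-zero.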

Supervised WSD
- Training corpus
  - Each occurrence of the ambiguous word w is annotated with a semantic label (its contextually appropriate sense sk).
- Several approaches from ML
  - Bayesian classification
  - Decision trees
  - Neural networks
  - K-nearest neighbor (kNN)
  - …

Approaches to Statistical WSD
- --> Supervised Disambiguation
  - --> Naïve Bayes
  - Decision Trees
- Use of Lexical Resources
  - Dictionary-based
  - Thesaurus-based
  - Translation-based
- Discourse properties
- Unsupervised Disambiguation

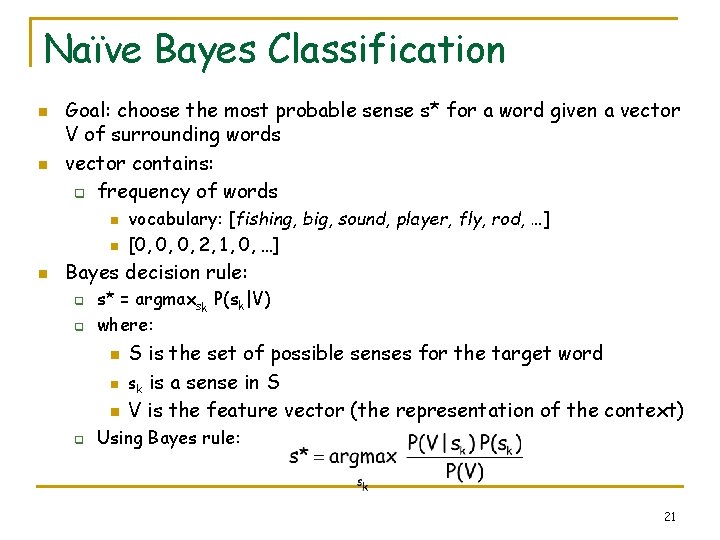

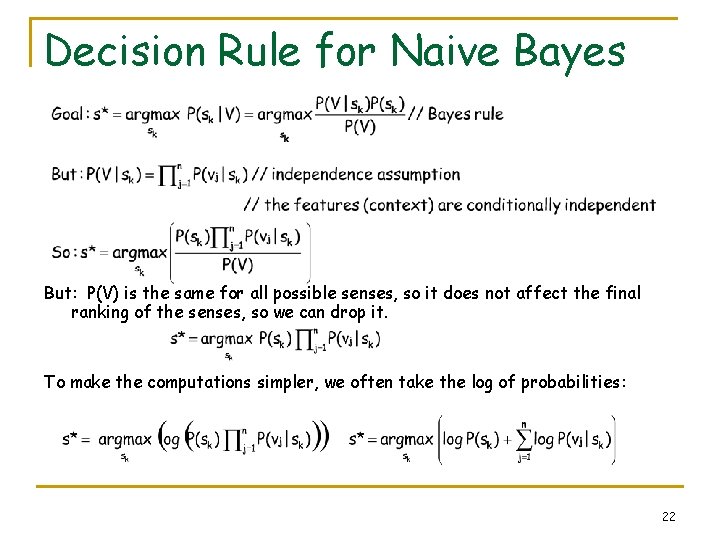

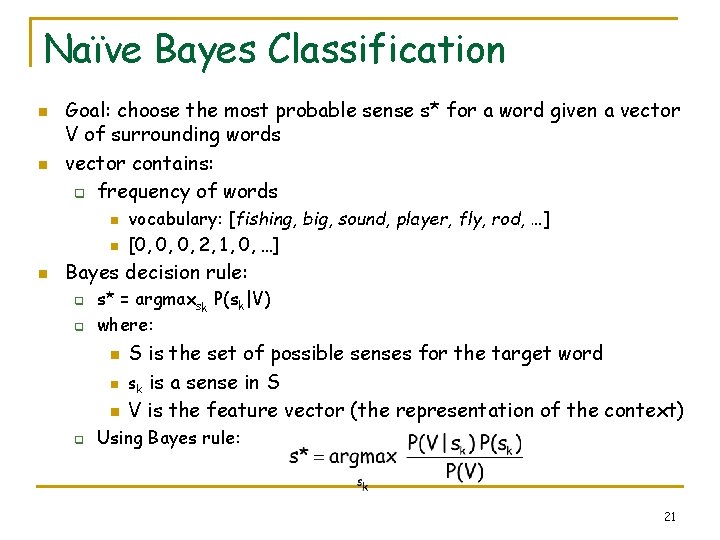

Naïve Bayes Classification
- Goal: choose the most probable sense s* for a word given a vector V of surrounding words
- vector contains:
  - frequency of words
    - vocabulary: [fishing, big, sound, player, fly, rod, …]
    - [0, 0, 0, 2, 1, 0, …]
- Bayes decision rule:
  - s* = argmax_sk P(sk|V)
  - where:
    - S is the set of possible senses for the target word
    - sk is a sense in S
    - V is the feature vector (the representation of the context)
  - Using Bayes rule: P(sk|V) = P(V|sk) P(sk) / P(V)

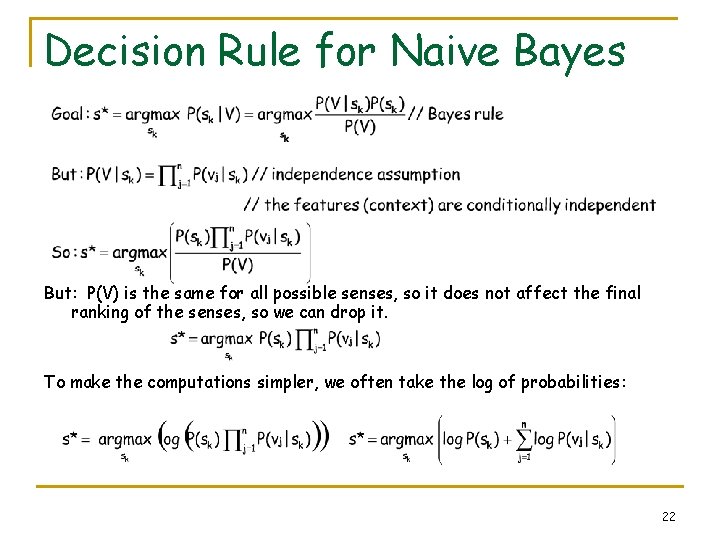

Decision Rule for Naïve Bayes
- But: P(V) is the same for all possible senses, so it does not affect the final ranking of the senses and we can drop it.
- To make the computations simpler, we often take the log of probabilities:
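The two remarks above correspond to the following chain of equalities. It is reconstructed from the standard Naïve Bayes formulation rather than copied from the slide; the last step assumes that the context words $v_j$ are conditionally independent given the sense.

$$
\begin{aligned}
s^* &= \arg\max_{s_k} P(s_k \mid V)
     = \arg\max_{s_k} \frac{P(V \mid s_k)\, P(s_k)}{P(V)} \\
    &= \arg\max_{s_k} P(V \mid s_k)\, P(s_k) \\
    &= \arg\max_{s_k} \Big[ \log P(s_k) + \sum_{v_j \in V} \log P(v_j \mid s_k) \Big]
\end{aligned}
$$

Taking logs turns the product into a sum and does not change which sense wins, because the logarithm is monotonically increasing.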

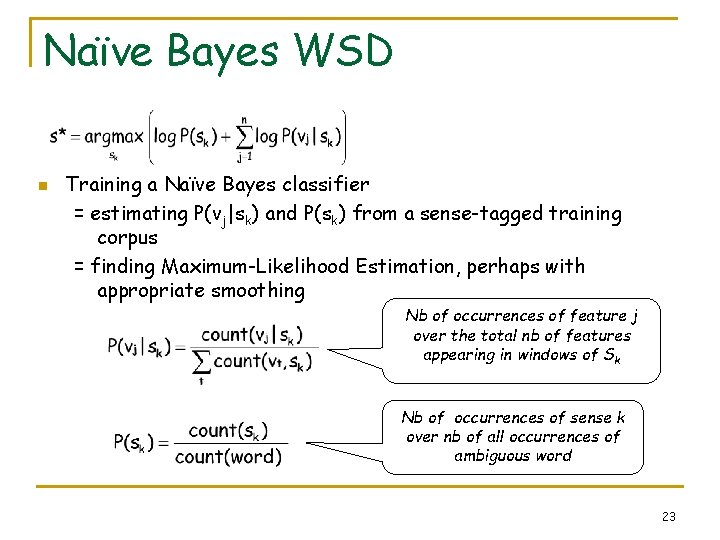

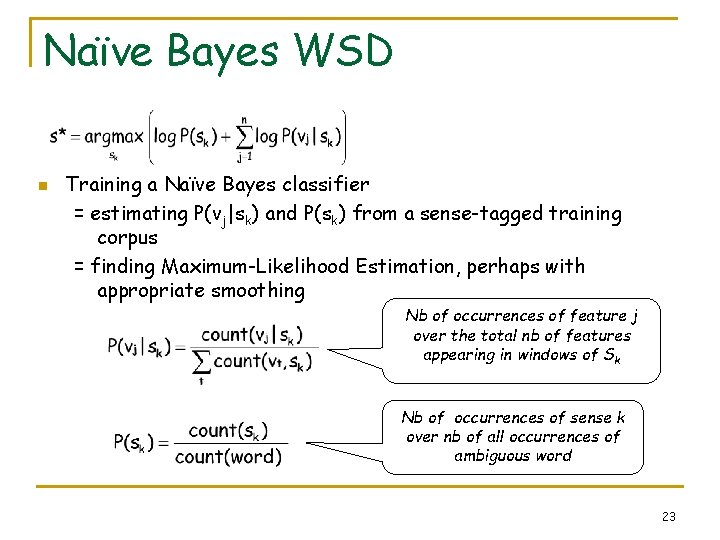

Naïve Bayes WSD
- Training a Naïve Bayes classifier = estimating P(vj|sk) and P(sk) from a sense-tagged training corpus = finding the Maximum-Likelihood Estimates, perhaps with appropriate smoothing
- P(vj|sk) = C(vj, sk) / Σt C(vt, sk): nb of occurrences of feature j over the total nb of features appearing in windows of sk
- P(sk) = C(sk) / C(w): nb of occurrences of sense k over nb of all occurrences of the ambiguous word

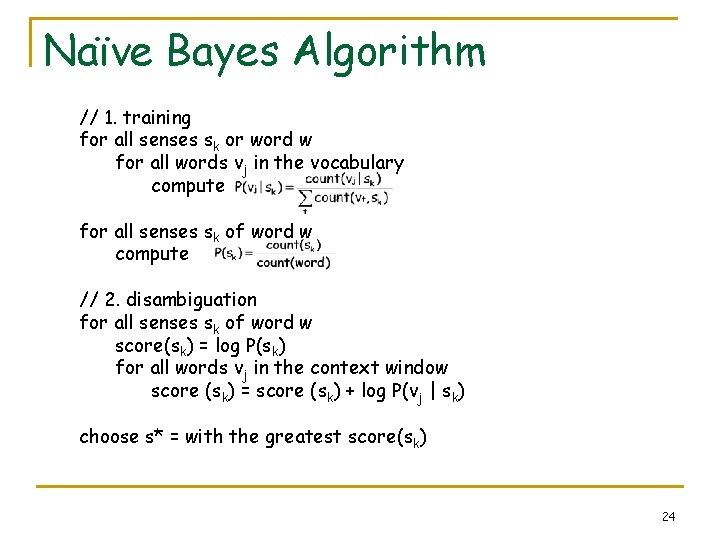

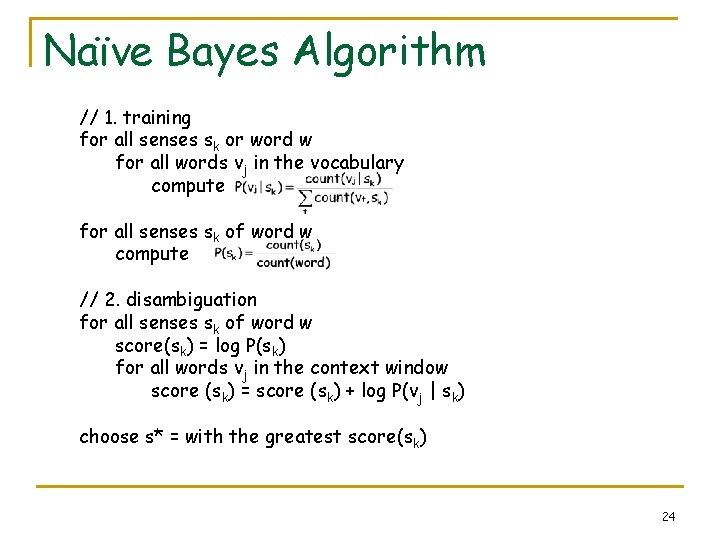

Naïve Bayes Algorithm

    // 1. training
    for all senses sk of word w
        for all words vj in the vocabulary
            compute P(vj | sk)
    for all senses sk of word w
        compute P(sk)

    // 2. disambiguation
    for all senses sk of word w
        score(sk) = log P(sk)
        for all words vj in the context window
            score(sk) = score(sk) + log P(vj | sk)
    choose s* = the sense sk with the greatest score(sk)
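A minimal sketch of the two phases above. Add-one smoothing is an assumption added here so that unseen words do not produce log(0); the function names and the toy training examples are illustrative only.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(examples):
    """examples: list of (sense, context_words) pairs from a tagged corpus."""
    sense_counts = Counter()
    word_counts = defaultdict(Counter)   # sense -> counts of context words
    vocabulary = set()
    for sense, words in examples:
        sense_counts[sense] += 1
        word_counts[sense].update(words)
        vocabulary.update(words)
    return sense_counts, word_counts, vocabulary

def disambiguate(context, sense_counts, word_counts, vocabulary):
    """Return the sense with the greatest log-probability score."""
    total = sum(sense_counts.values())
    best_sense, best_score = None, -math.inf
    for sense, count in sense_counts.items():
        score = math.log(count / total)                      # log P(sk)
        # Add-one smoothing (an assumption of this sketch).
        denom = sum(word_counts[sense].values()) + len(vocabulary)
        for word in context:
            if word in vocabulary:                           # skip unknown words
                score += math.log((word_counts[sense][word] + 1) / denom)
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense

# Hypothetical toy training data, not the slide's corpus.
examples = [
    ("BANK1", ["world", "and", "partners"]),
    ("BANK1", ["of", "america", "financial"]),
    ("BANK2", ["verdant", "carving", "river"]),
    ("BANK2", ["off", "the", "potomac"]),
]
model = train_naive_bayes(examples)
predicted = disambiguate(["the", "river", "potomac"], *model)
print(predicted)
```

Here both senses have the same prior, and every context word was seen only with BANK2, so BANK2 gets the higher score.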

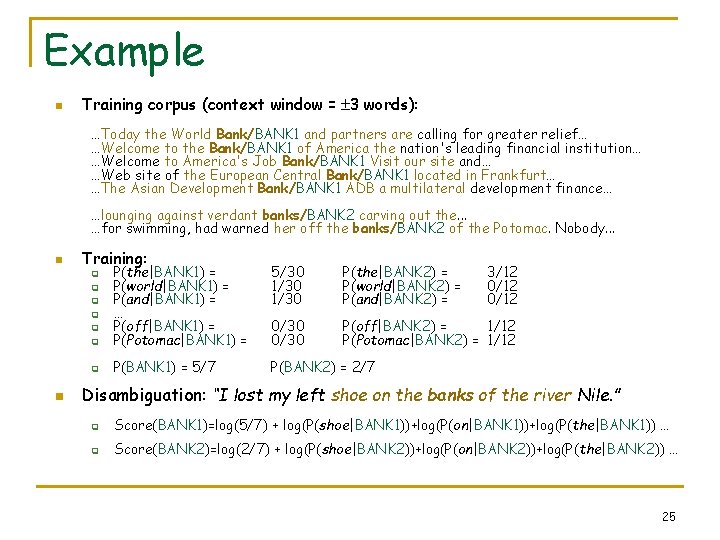

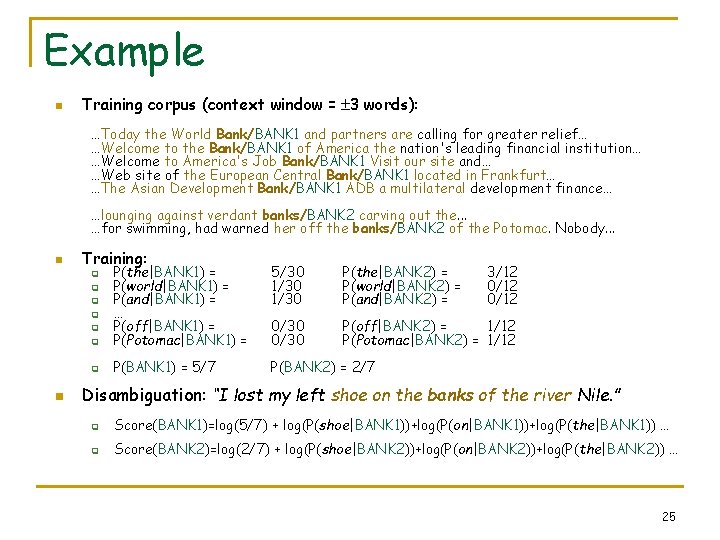

Example
- Training corpus (context window = 3 words):
  - …Today the World Bank/BANK1 and partners are calling for greater relief…
  - …Welcome to the Bank/BANK1 of America the nation's leading financial institution…
  - …Welcome to America's Job Bank/BANK1 Visit our site and…
  - …Web site of the European Central Bank/BANK1 located in Frankfurt…
  - …The Asian Development Bank/BANK1 ADB a multilateral development finance…
  - …lounging against verdant banks/BANK2 carving out the...
  - …for swimming, had warned her off the banks/BANK2 of the Potomac. Nobody...
- Training:
  - P(the|BANK1) = 5/30, P(the|BANK2) = 3/12
  - P(world|BANK1) = 1/30, P(world|BANK2) = 0/12
  - P(and|BANK1) = 1/30, P(and|BANK2) = 0/12
  - …
  - P(off|BANK1) = 0/30, P(off|BANK2) = 1/12
  - P(Potomac|BANK1) = 0/30, P(Potomac|BANK2) = 1/12
  - P(BANK1) = 5/7, P(BANK2) = 2/7
- Disambiguation: “I lost my left shoe on the banks of the river Nile.”
  - Score(BANK1) = log(5/7) + log(P(shoe|BANK1)) + log(P(on|BANK1)) + log(P(the|BANK1)) …
  - Score(BANK2) = log(2/7) + log(P(shoe|BANK2)) + log(P(on|BANK2)) + log(P(the|BANK2)) …

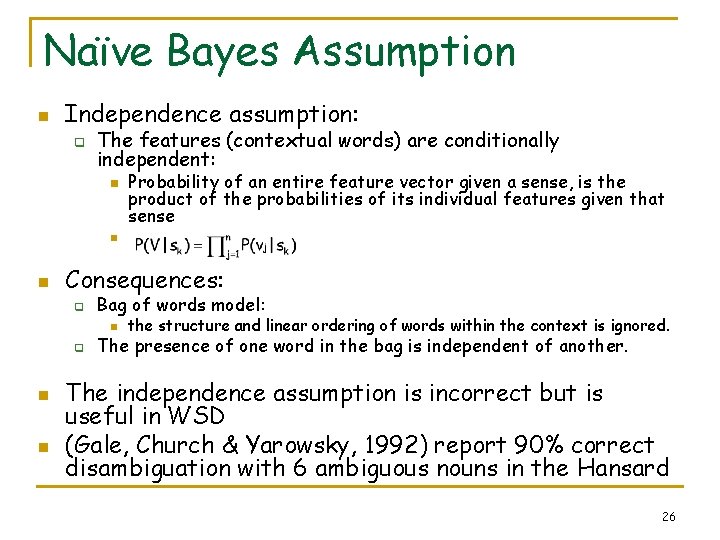

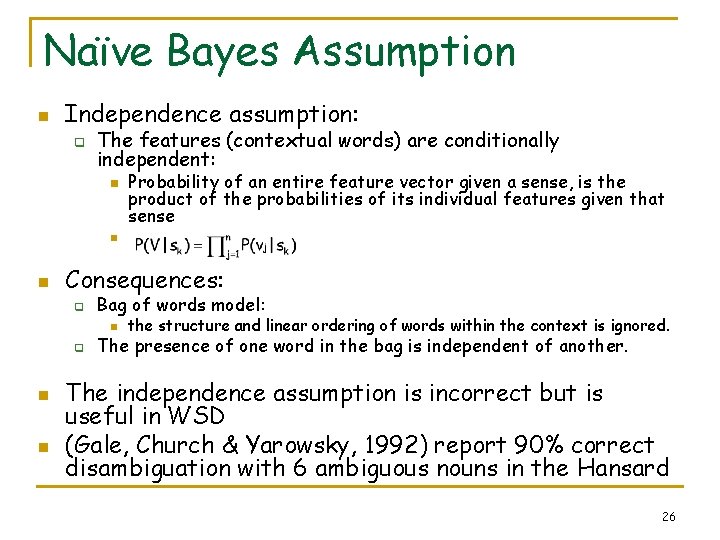

Naïve Bayes Assumption
- Independence assumption:
  - The features (contextual words) are conditionally independent:
    - Probability of an entire feature vector given a sense is the product of the probabilities of its individual features given that sense
- Consequences:
  - Bag of words model:
    - the structure and linear ordering of words within the context is ignored.
  - The presence of one word in the bag is independent of another.
- The independence assumption is incorrect but is useful in WSD
- (Gale, Church & Yarowsky, 1992) report 90% correct disambiguation with 6 ambiguous nouns in the Hansard

Approaches to Statistical WSD
- --> Supervised Disambiguation
  - Naïve Bayes
  - --> Decision Trees
- Use of Lexical Resources
  - Dictionary-based
  - Thesaurus-based
  - Translation-based
- Discourse properties
- Unsupervised Disambiguation

Decision Tree Classifier
- Bayes Classifier uses information from all words in the context window
- But some words are more reliable than others to indicate which sense is used…

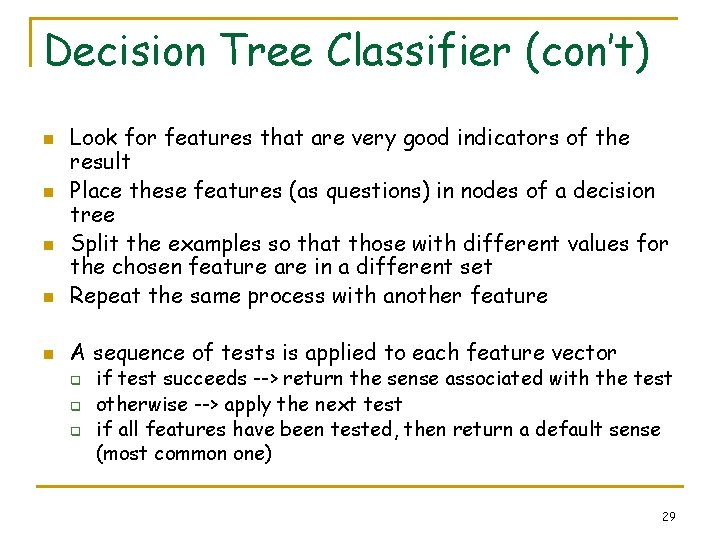

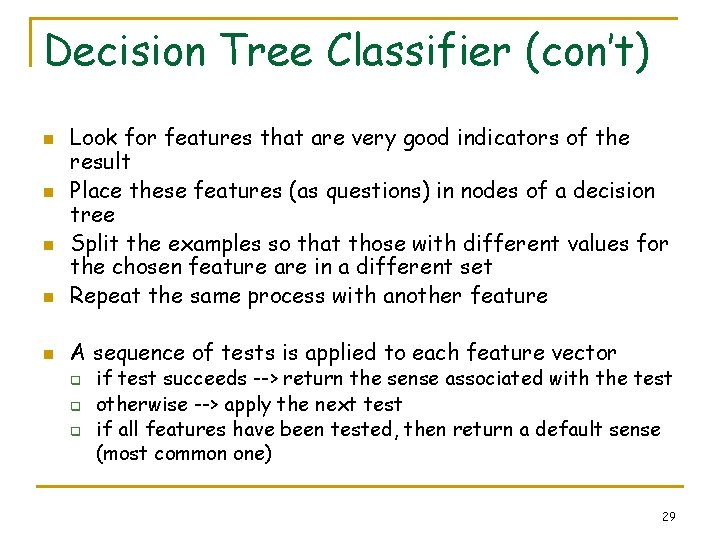

Decision Tree Classifier (cont'd)
- Look for features that are very good indicators of the result
- Place these features (as questions) in nodes of a decision tree
- Split the examples so that those with different values for the chosen feature are in a different set
- Repeat the same process with another feature
- A sequence of tests is applied to each feature vector
  - if test succeeds --> return the sense associated with the test
  - otherwise --> apply the next test
  - if all features have been tested, then return a default sense (most common one)

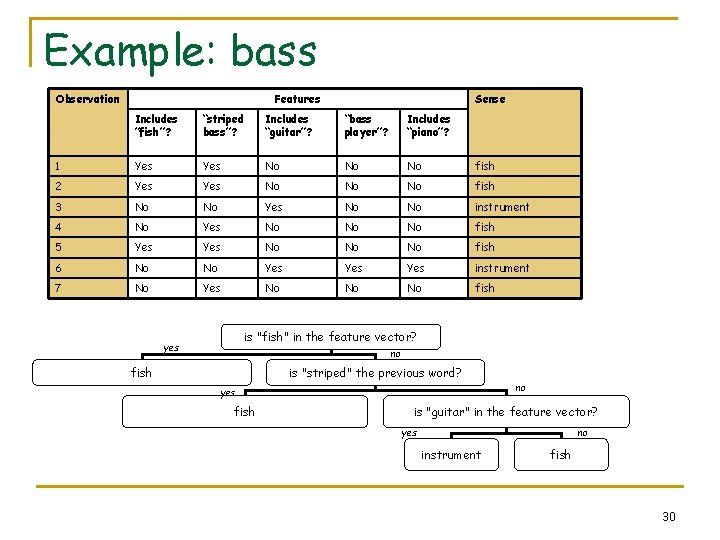

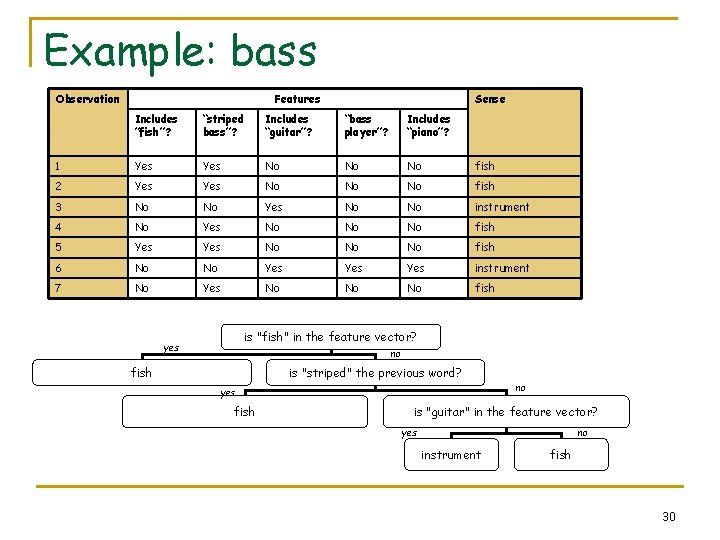

Example: bass

Features: Includes “fish”? / “striped bass”? / Includes “guitar”? / “bass player”? / Includes “piano”?

| Observation | Feature values | Sense |
|---|---|---|
| 1 | Yes No No No | fish |
| 2 | Yes No No No | fish |
| 3 | No No Yes No No | instrument |
| 4 | No Yes No No No | fish |
| 5 | Yes No No No | fish |
| 6 | No No Yes Yes | instrument |
| 7 | No Yes No No No | fish |

Resulting decision tree:
- is "fish" in the feature vector?
  - yes --> fish
  - no --> is "striped" the previous word?
    - yes --> fish
    - no --> is "guitar" in the feature vector?
      - yes --> instrument
      - no --> fish
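The tree in this example can be read as a sequence of tests applied in order, with a default sense at the end. The sketch below assumes a context given as a list of words plus the previous word; the function name `classify_bass` is hypothetical.

```python
def classify_bass(context_words, previous_word):
    """Apply the tests in order; the first test that succeeds decides the sense."""
    tests = [
        (lambda: "fish" in context_words, "fish"),
        (lambda: previous_word == "striped", "fish"),
        (lambda: "guitar" in context_words, "instrument"),
    ]
    for test, sense in tests:
        if test():
            return sense
    return "fish"   # default: the most common sense in the training examples

sense_a = classify_bass(["electric", "guitar", "player"], "and")
sense_b = classify_bass(["caught", "a", "large"], "striped")
sense_c = classify_bass(["nothing", "useful"], "the")
print(sense_a, sense_b, sense_c)
```

Ordering matters: a context containing both "fish" and "guitar" is labelled fish, because the "fish" test is applied first.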

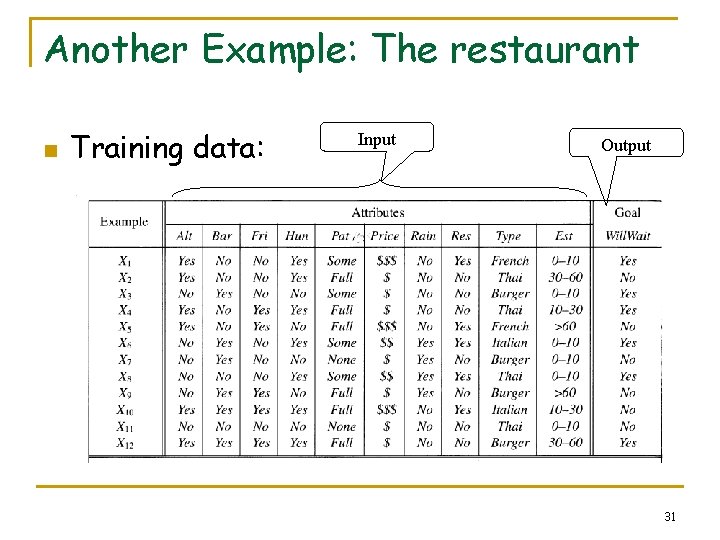

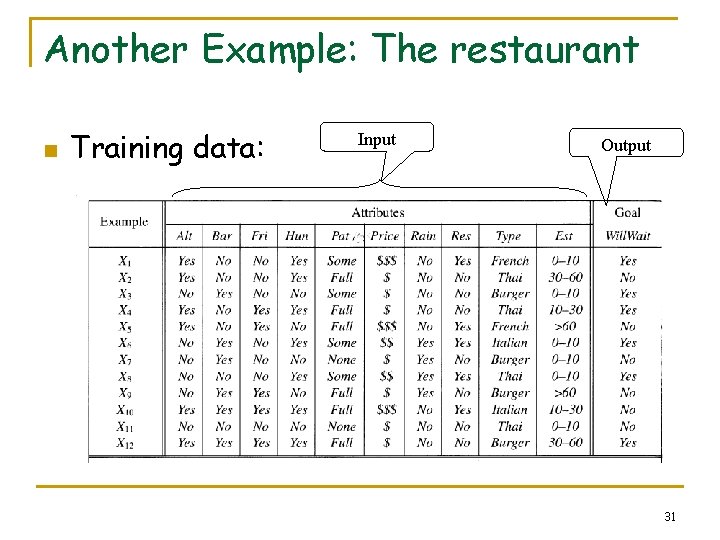

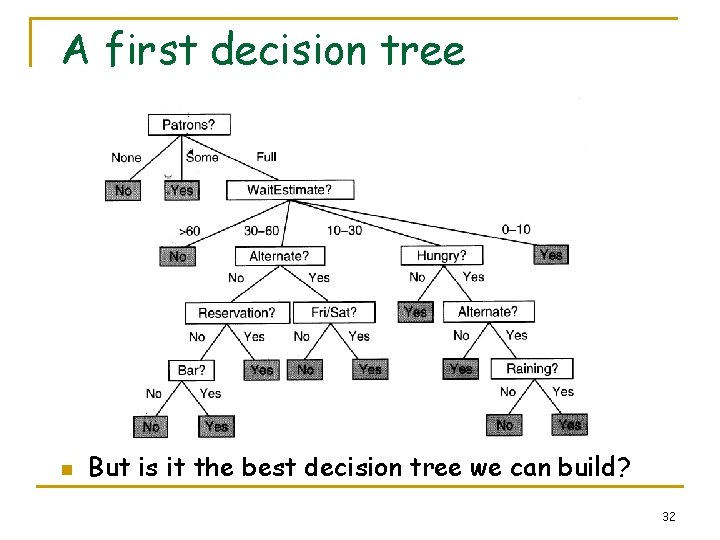

Another Example: The restaurant
- Training data (input and output):

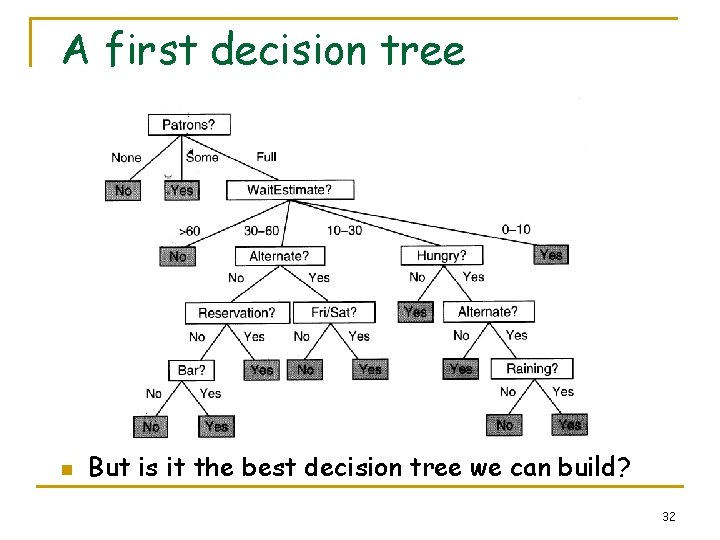

A first decision tree
- But is it the best decision tree we can build?

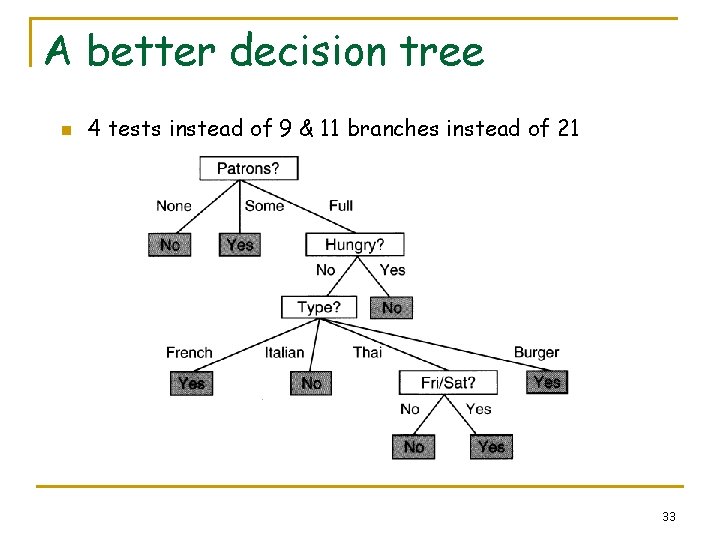

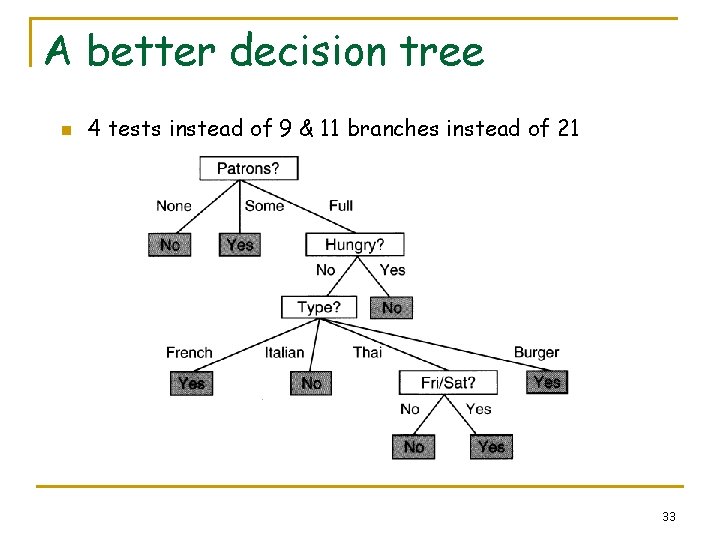

A better decision tree
- 4 tests instead of 9 & 11 branches instead of 21

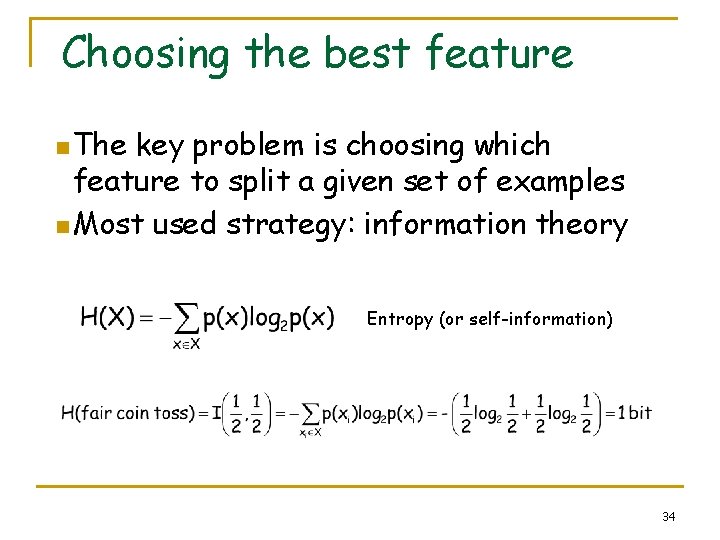

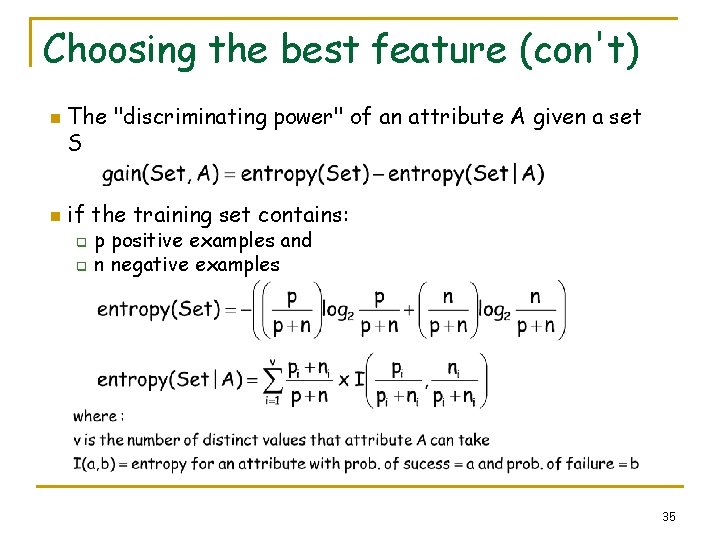

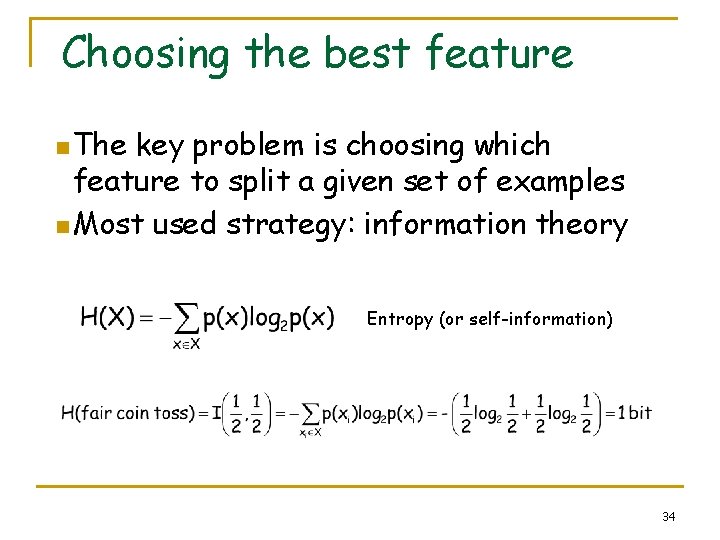

Choosing the best feature
- The key problem is choosing which feature to split a given set of examples on
- Most used strategy: information theory
- Entropy (or self-information)

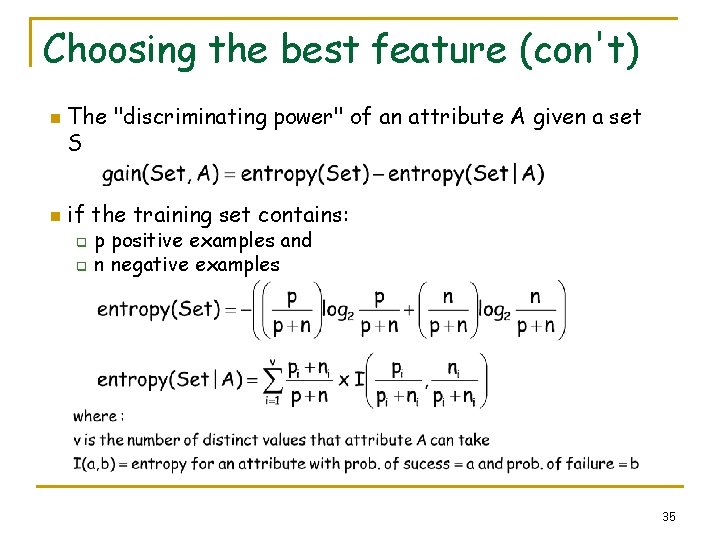

Choosing the best feature (cont'd)
- The "discriminating power" of an attribute A given a set S
- if the training set contains:
  - p positive examples and
  - n negative examples

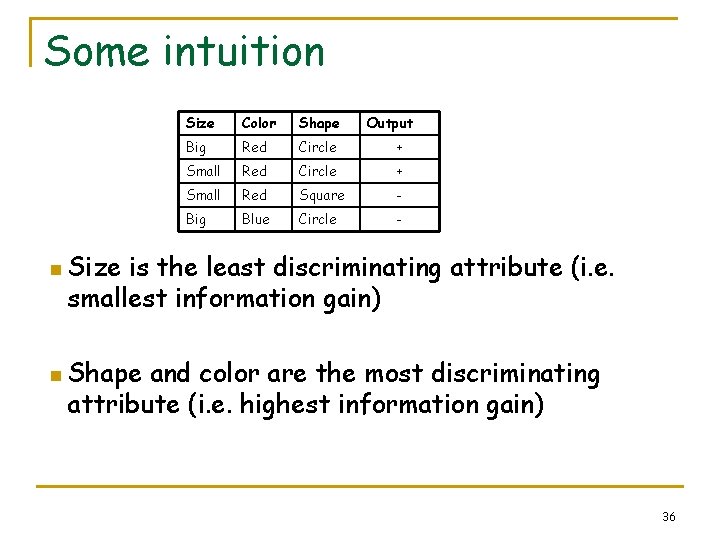

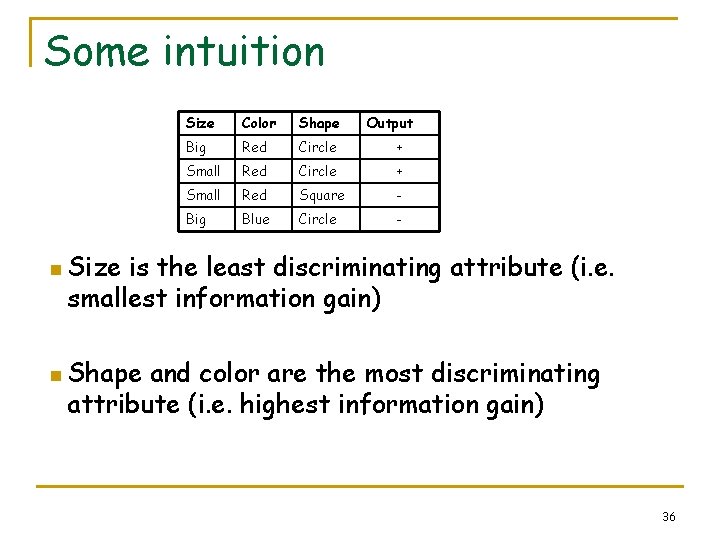

Some intuition

| Size | Color | Shape | Output |
|---|---|---|---|
| Big | Red | Circle | + |
| Small | Red | Circle | + |
| Small | Red | Square | - |
| Big | Blue | Circle | - |

- Size is the least discriminating attribute (i.e. smallest information gain)
- Shape and color are the most discriminating attributes (i.e. highest information gain)

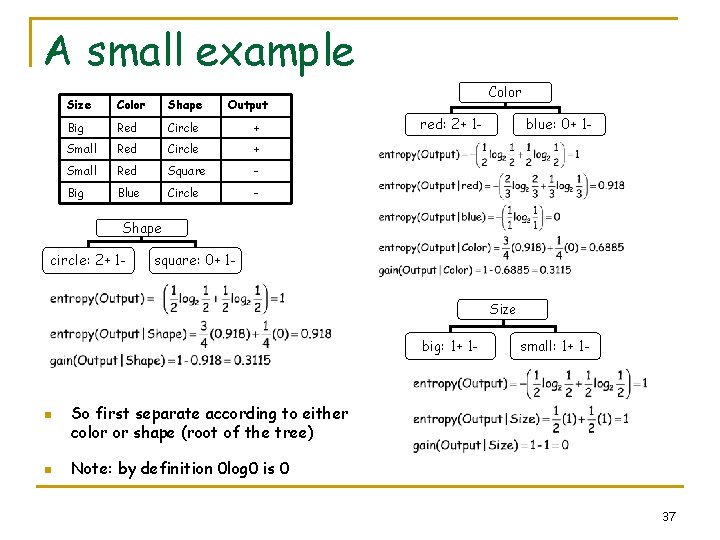

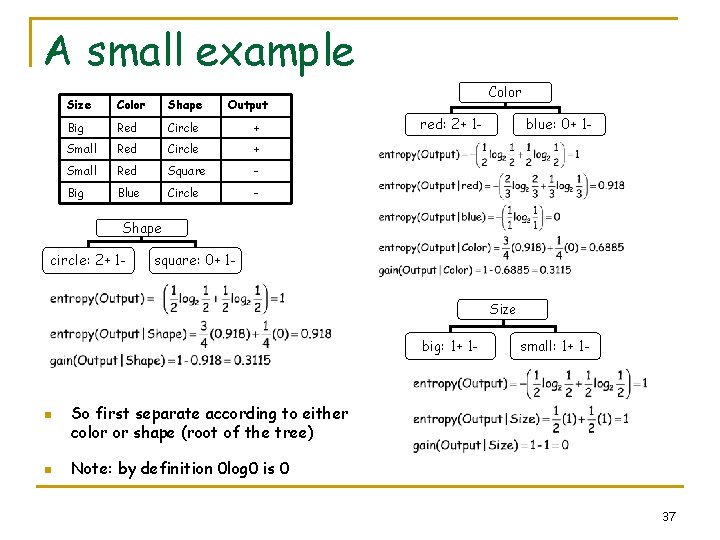

A small example

| Size | Color | Shape | Output |
|---|---|---|---|
| Big | Red | Circle | + |
| Small | Red | Circle | + |
| Small | Red | Square | - |
| Big | Blue | Circle | - |

- Color: red: 2+ 1-, blue: 0+ 1-
- Shape: circle: 2+ 1-, square: 0+ 1-
- Size: big: 1+ 1-, small: 1+ 1-
- So first separate according to either color or shape (root of the tree)
- Note: by definition 0 log 0 is 0
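The comparison can be checked numerically. This is a sketch using the usual entropy and information-gain definitions (assumed here, since the slide's formulas are only available as images); each attribute is described by the (positive, negative) counts listed above.

```python
import math

def entropy(pos, neg):
    """Binary entropy in bits of a set with `pos` positive and `neg` negative examples."""
    total = pos + neg
    h = 0.0
    for count in (pos, neg):
        if count:                      # by definition 0 log 0 is 0
            p = count / total
            h -= p * math.log2(p)
    return h

def information_gain(splits):
    """splits: one (pos, neg) pair per value of the attribute."""
    pos = sum(p for p, _ in splits)
    neg = sum(n for _, n in splits)
    total = pos + neg
    remainder = sum((p + n) / total * entropy(p, n) for p, n in splits)
    return entropy(pos, neg) - remainder

gains = {
    "color": information_gain([(2, 1), (0, 1)]),   # red, blue
    "shape": information_gain([(2, 1), (0, 1)]),   # circle, square
    "size":  information_gain([(1, 1), (1, 1)]),   # big, small
}
print(gains)
```

Color and shape each gain about 0.31 bits, while size gains nothing, which matches the choice of color or shape for the root.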

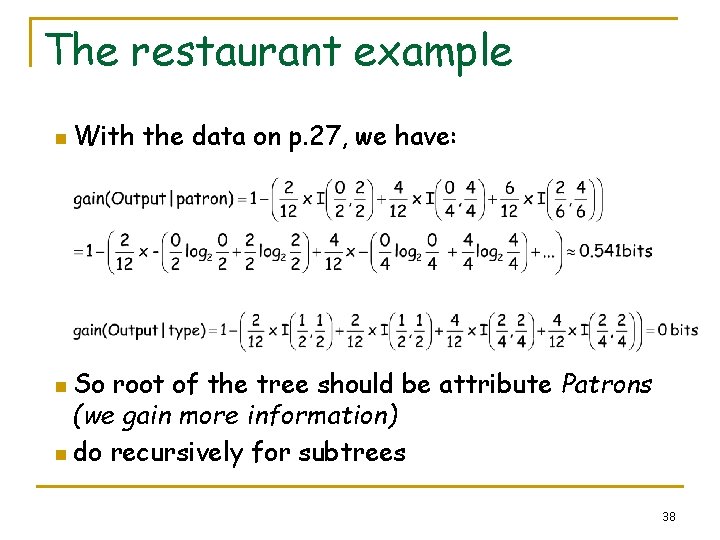

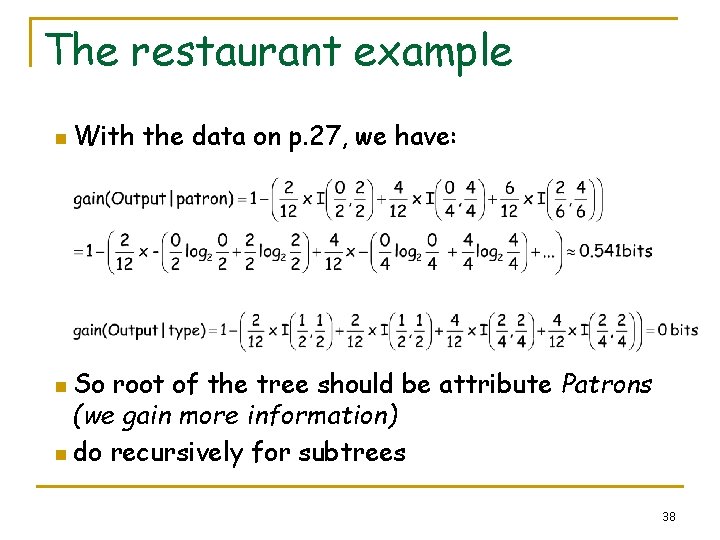

The restaurant example
- With the data on p. 27, we have:
- So root of the tree should be attribute Patrons (we gain more information)
- do recursively for subtrees

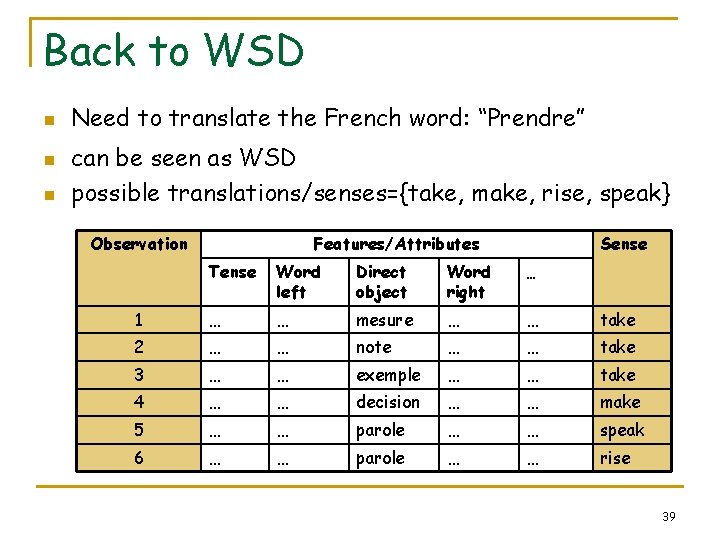

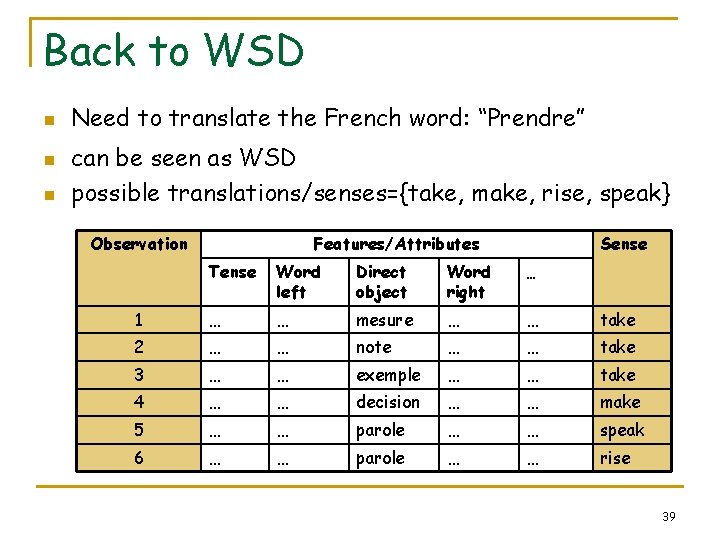

Back to WSD
- Need to translate the French word: “Prendre”
- can be seen as WSD
- possible translations/senses = {take, make, rise, speak}

| Observation | Tense | Word left | Direct object | Word right | … | Sense |
|---|---|---|---|---|---|---|
| 1 | … | … | mesure | … | … | take |
| 2 | … | … | note | … | … | take |
| 3 | … | … | exemple | … | … | take |
| 4 | … | … | decision | … | … | make |
| 5 | … | … | parole | … | … | speak |
| 6 | … | … | parole | … | … | rise |

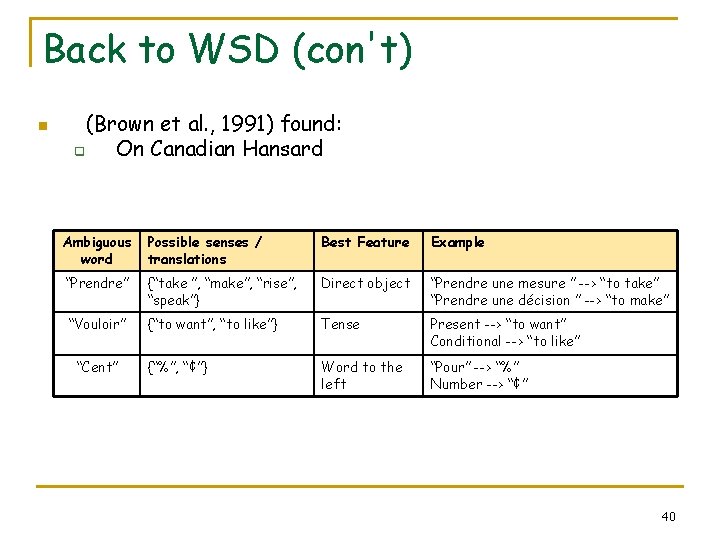

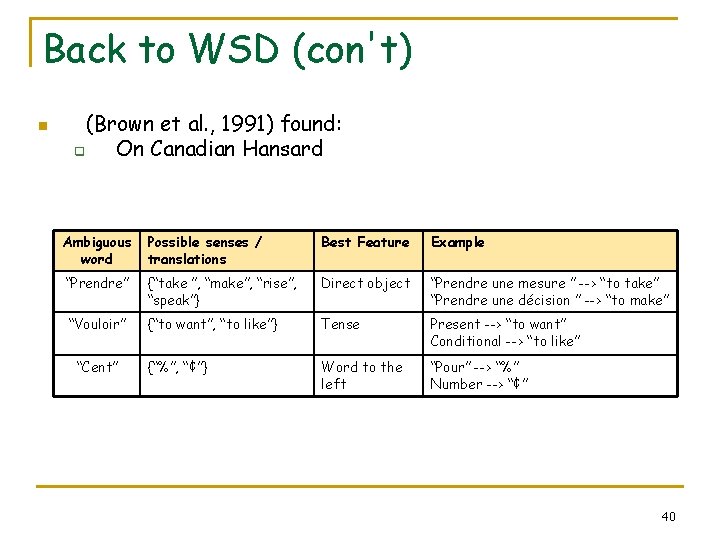

Back to WSD (cont'd)
- (Brown et al., 1991) found, on the Canadian Hansard:

| Ambiguous word | Possible senses / translations | Best feature | Example |
|---|---|---|---|
| “Prendre” | {“take”, “make”, “rise”, “speak”} | Direct object | “Prendre une mesure” --> “to take”; “Prendre une décision” --> “to make” |
| “Vouloir” | {“to want”, “to like”} | Tense | Present --> “to want”; Conditional --> “to like” |
| “Cent” | {“%”, “¢”} | Word to the left | “Pour” --> “%”; Number --> “¢” |

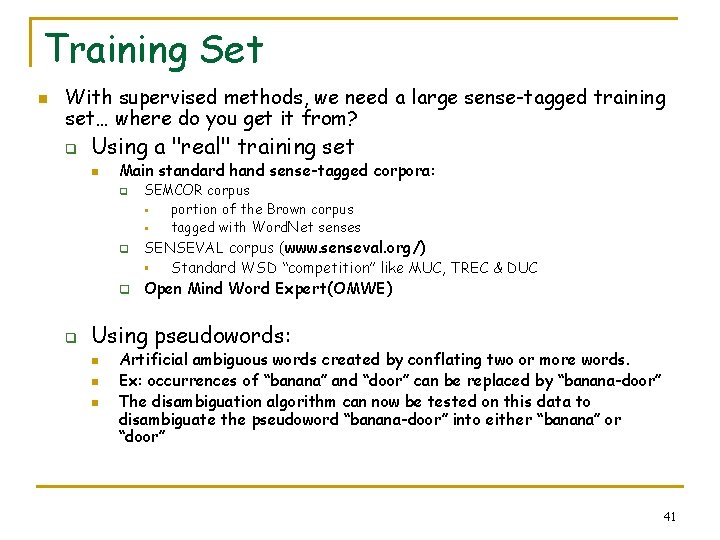

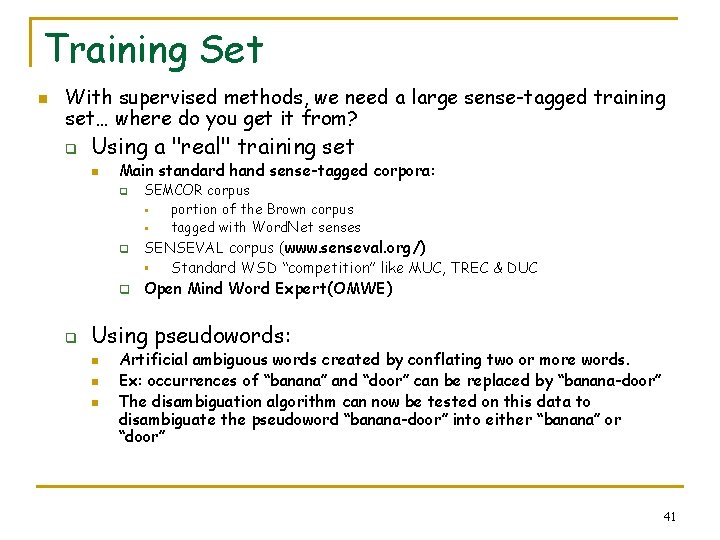

Training Set
- With supervised methods, we need a large sense-tagged training set… where do you get it from?
- Using a "real" training set
  - Main standard hand sense-tagged corpora:
    - SEMCOR corpus
      - portion of the Brown corpus
      - tagged with WordNet senses
    - SENSEVAL corpus (www.senseval.org/)
      - Standard WSD “competition” like MUC, TREC & DUC
    - Open Mind Word Expert (OMWE)
- Using pseudowords:
  - Artificial ambiguous words created by conflating two or more words.
  - Ex: occurrences of “banana” and “door” can be replaced by “banana-door”
  - The disambiguation algorithm can now be tested on this data to disambiguate the pseudoword “banana-door” into either “banana” or “door”
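Building pseudoword test data can be sketched as below. The function name and the sample sentence are hypothetical; the idea is only that the original word is kept aside as the gold answer while the text shows the conflated form.

```python
def make_pseudoword_corpus(tokens, words=("banana", "door")):
    """Replace every occurrence of the given words by one pseudoword
    and remember the original word as the gold-standard 'sense'."""
    pseudoword = "-".join(words)
    conflated, gold = [], []
    for token in tokens:
        if token in words:
            conflated.append(pseudoword)
            gold.append(token)         # the answer a WSD system should recover
        else:
            conflated.append(token)
    return conflated, gold

tokens = "she ate a banana near the door".split()
conflated, gold = make_pseudoword_corpus(tokens)
print(conflated)
print(gold)
```

The gold list comes for free from the original text, so no manual sense tagging is needed to evaluate on this data.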

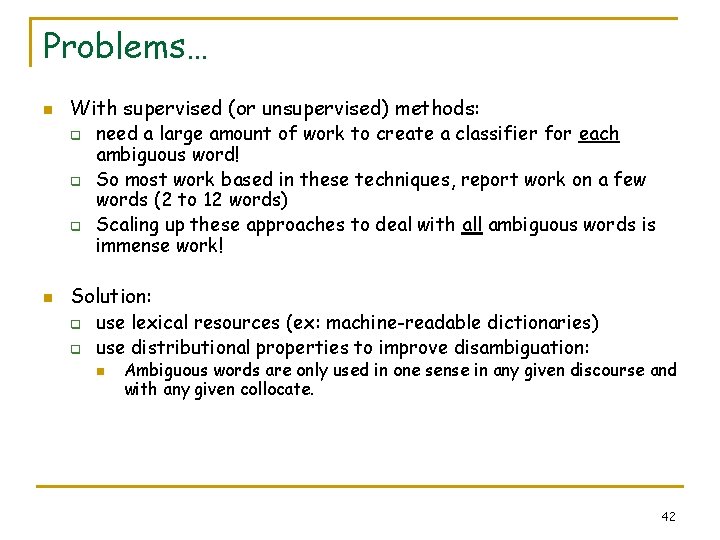

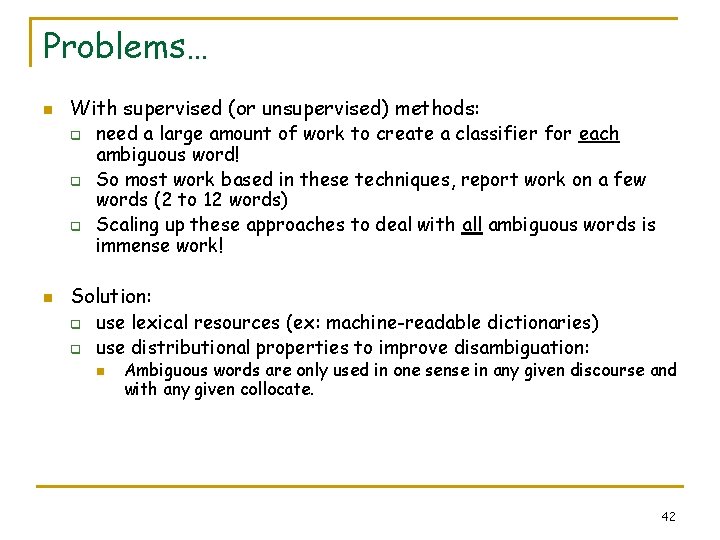

Problems…
- With supervised (or unsupervised) methods:
  - need a large amount of work to create a classifier for each ambiguous word!
  - So most work based on these techniques reports results on only a few words (2 to 12 words)
  - Scaling up these approaches to deal with all ambiguous words is immense work!
- Solution:
  - use lexical resources (ex: machine-readable dictionaries)
  - use distributional properties to improve disambiguation:
    - Ambiguous words are only used in one sense in any given discourse and with any given collocate.

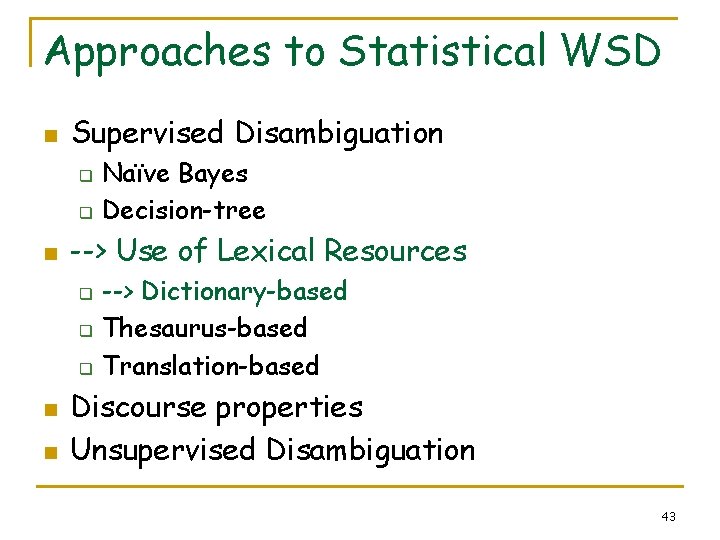

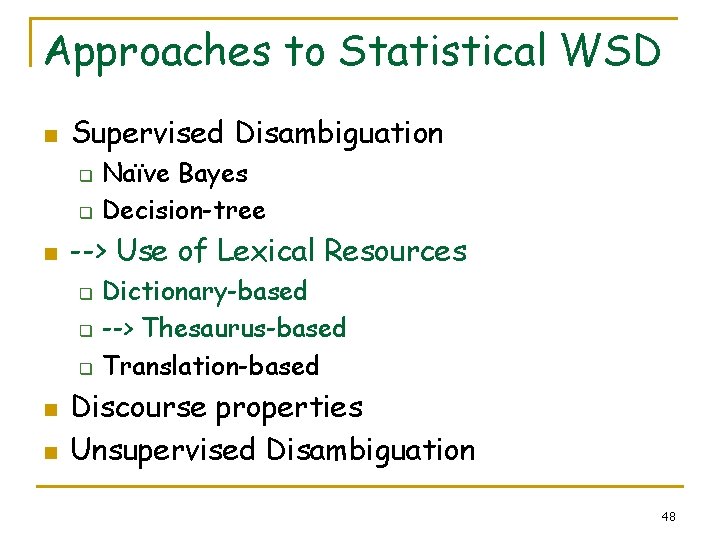

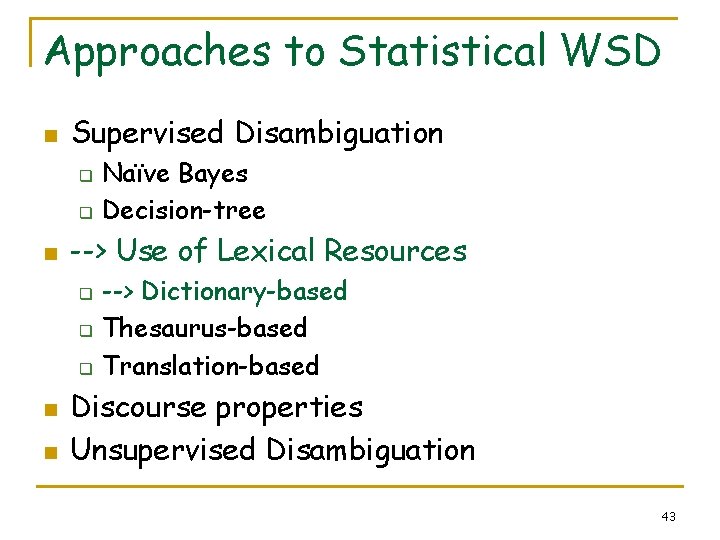

Approaches to Statistical WSD
- Supervised Disambiguation
  - Naïve Bayes
  - Decision-tree
- --> Use of Lexical Resources
  - --> Dictionary-based
  - Thesaurus-based
  - Translation-based
- Discourse properties
- Unsupervised Disambiguation

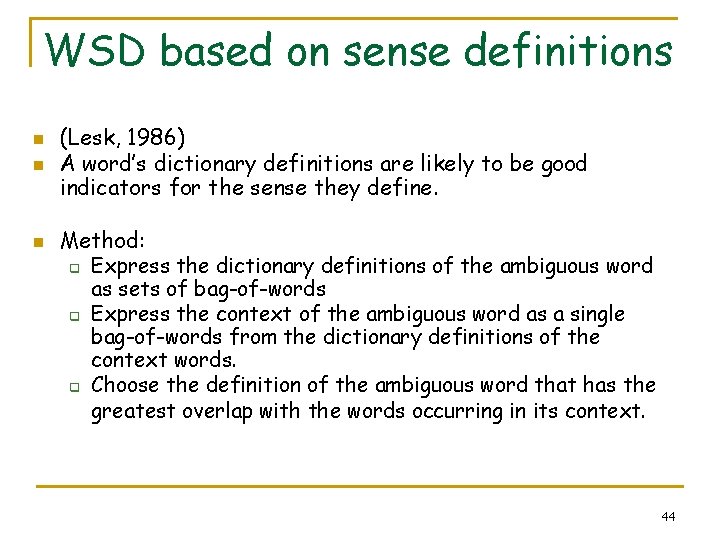

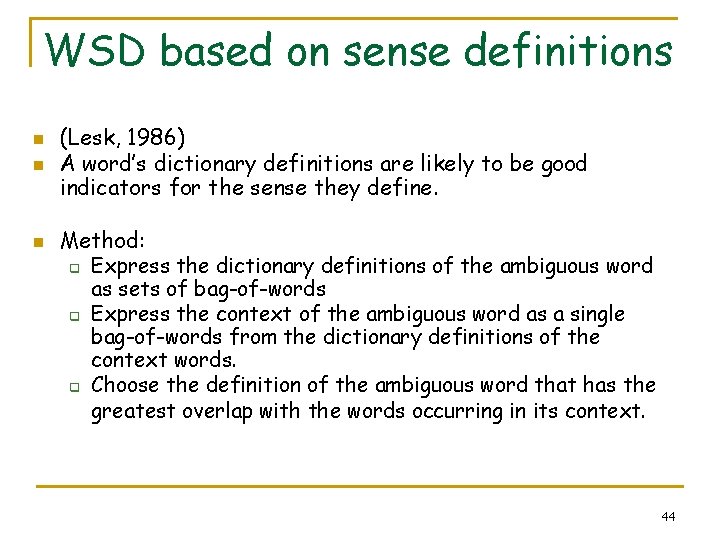

WSD based on sense definitions
- (Lesk, 1986)
- A word’s dictionary definitions are likely to be good indicators for the sense they define.
- Method:
  - Express each dictionary definition of the ambiguous word as a bag-of-words
  - Express the context of the ambiguous word as a single bag-of-words from the dictionary definitions of the context words.
  - Choose the definition of the ambiguous word that has the greatest overlap with the words occurring in its context.

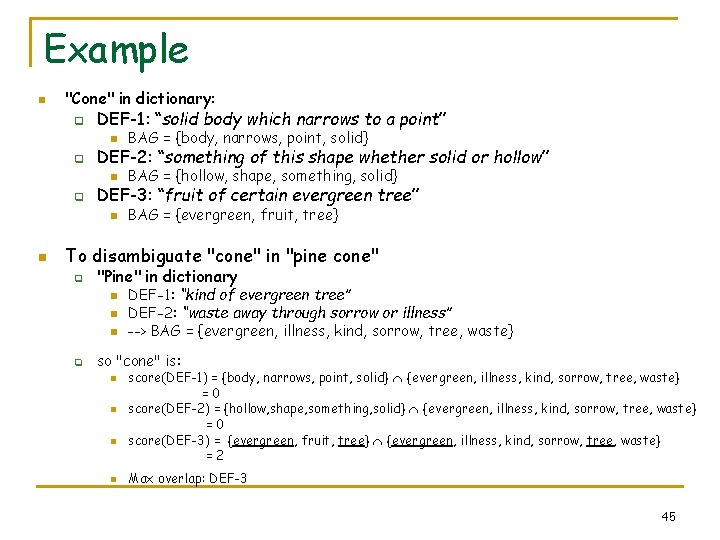

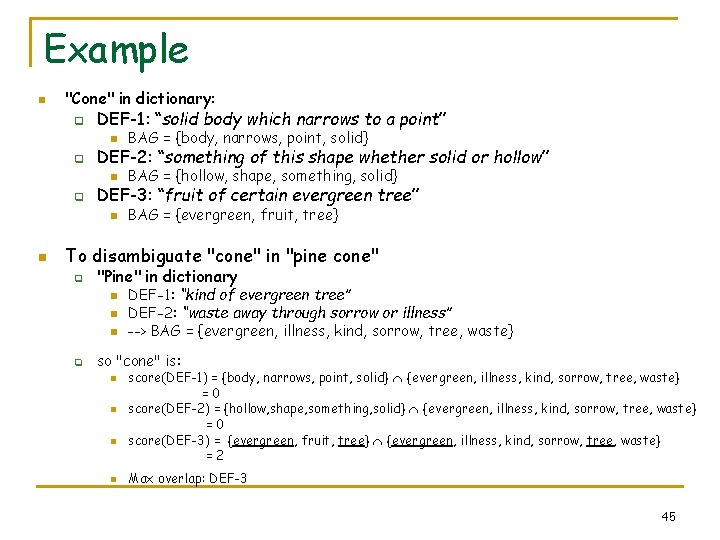

Example
- "Cone" in dictionary:
  - DEF-1: “solid body which narrows to a point” --> BAG = {body, narrows, point, solid}
  - DEF-2: “something of this shape whether solid or hollow” --> BAG = {hollow, shape, something, solid}
  - DEF-3: “fruit of certain evergreen tree” --> BAG = {evergreen, fruit, tree}
- To disambiguate "cone" in "pine cone"
  - "Pine" in dictionary
    - DEF-1: “kind of evergreen tree”
    - DEF-2: “waste away through sorrow or illness”
    - --> BAG = {evergreen, illness, kind, sorrow, tree, waste}
  - so "cone" is:
    - score(DEF-1) = |{body, narrows, point, solid} ∩ {evergreen, illness, kind, sorrow, tree, waste}| = 0
    - score(DEF-2) = |{hollow, shape, something, solid} ∩ {evergreen, illness, kind, sorrow, tree, waste}| = 0
    - score(DEF-3) = |{evergreen, fruit, tree} ∩ {evergreen, illness, kind, sorrow, tree, waste}| = 2
    - Max overlap: DEF-3

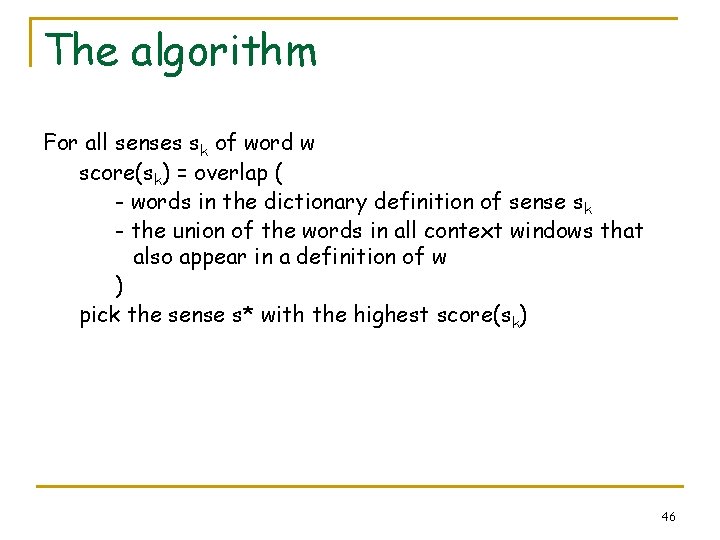

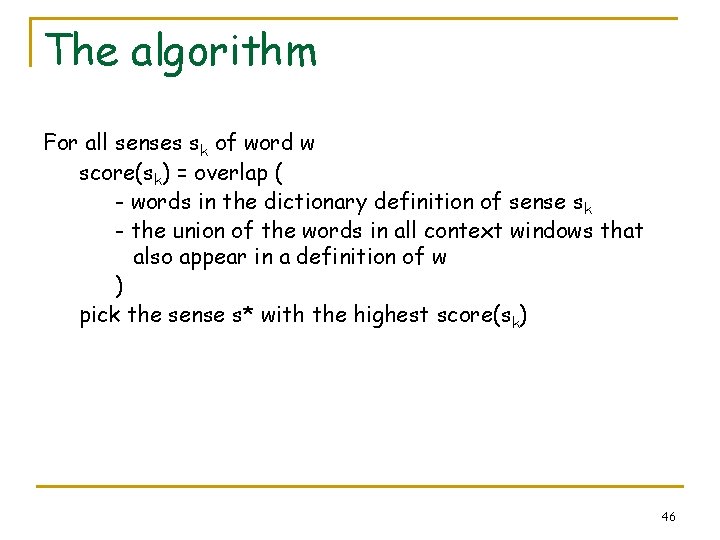

The algorithm

    for all senses sk of word w
        score(sk) = overlap(
            words in the dictionary definition of sense sk,
            the union of the words in all context windows that also appear in a definition of w )
    pick the sense s* with the highest score(sk)
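A minimal sketch of this overlap scoring, run on the "pine cone" bags from the preceding example. The bags are taken as given (stop words already removed); the function name `lesk` is an assumption of this sketch.

```python
def lesk(sense_bags, context_bag):
    """Score each sense by the size of the overlap between its definition bag
    and the context bag, and return the best sense with all scores."""
    scores = {sense: len(bag & context_bag) for sense, bag in sense_bags.items()}
    best = max(scores, key=scores.get)
    return best, scores

cone_senses = {
    "DEF-1": {"body", "narrows", "point", "solid"},
    "DEF-2": {"hollow", "shape", "something", "solid"},
    "DEF-3": {"evergreen", "fruit", "tree"},
}
# Bag built from both dictionary definitions of the context word "pine".
pine_bag = {"evergreen", "illness", "kind", "sorrow", "tree", "waste"}

best, scores = lesk(cone_senses, pine_bag)
print(best, scores)
```

Only DEF-3 shares words ("evergreen" and "tree") with the definitions of "pine", so it is selected.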

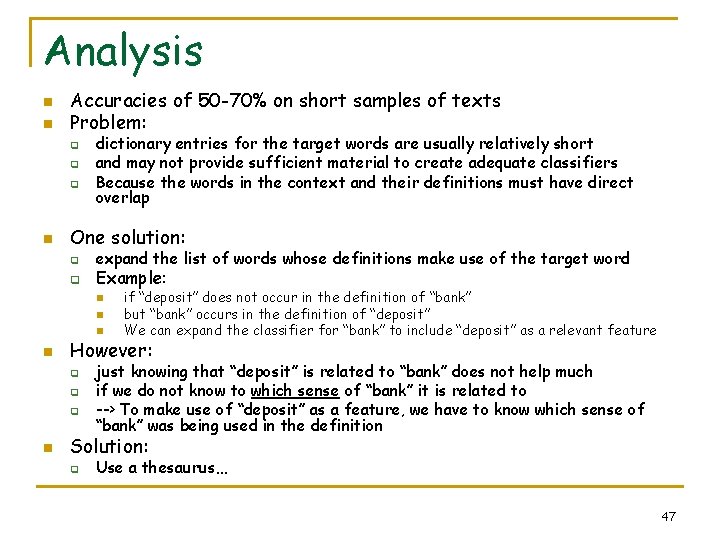

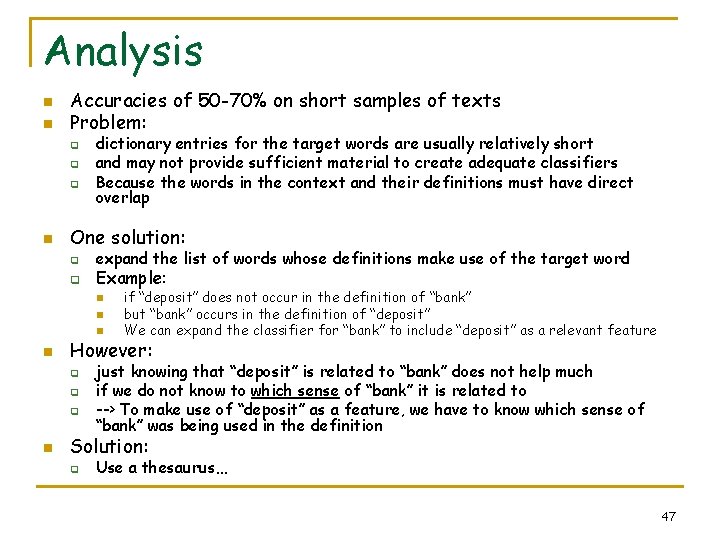

Analysis
- Accuracies of 50-70% on short samples of texts
- Problem:
  - dictionary entries for the target words are usually relatively short
  - and may not provide sufficient material to create adequate classifiers
  - because the words in the context and their definitions must have direct overlap
- One solution:
  - expand the list of words with those whose definitions make use of the target word
  - Example:
    - if “deposit” does not occur in the definition of “bank”
    - but “bank” occurs in the definition of “deposit”
    - we can expand the classifier for “bank” to include “deposit” as a relevant feature
- However:
  - just knowing that “deposit” is related to “bank” does not help much if we do not know which sense of “bank” it is related to
  - --> To make use of “deposit” as a feature, we have to know which sense of “bank” was being used in the definition
- Solution:
  - Use a thesaurus…

Approaches to Statistical WSD
- Supervised Disambiguation
  - Naïve Bayes
  - Decision-tree
- --> Use of Lexical Resources
  - Dictionary-based
  - --> Thesaurus-based
  - Translation-based
- Discourse properties
- Unsupervised Disambiguation

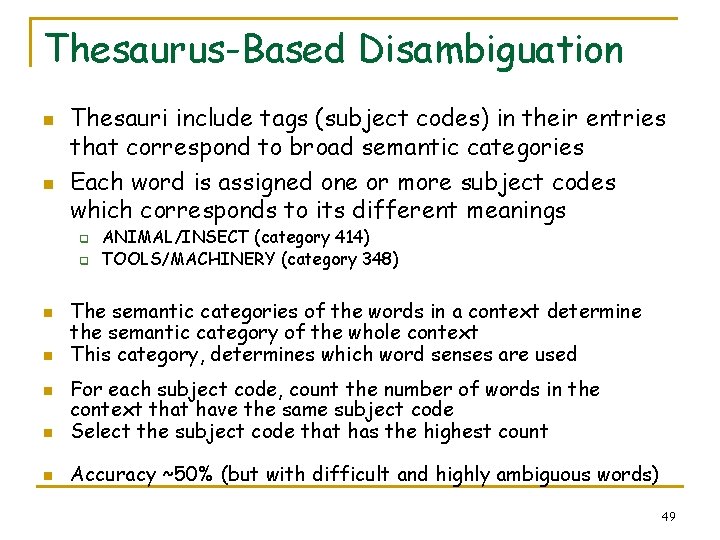

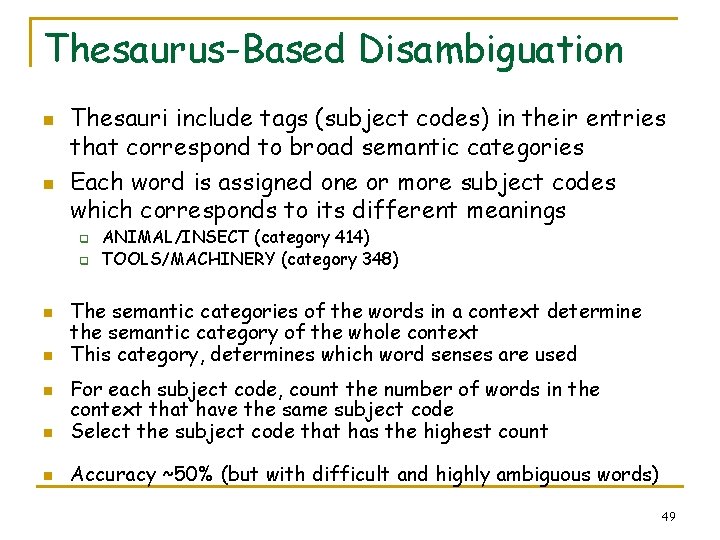

Thesaurus-Based Disambiguation
- Thesauri include tags (subject codes) in their entries that correspond to broad semantic categories
- Each word is assigned one or more subject codes which correspond to its different meanings
  - ANIMAL/INSECT (category 414)
  - TOOLS/MACHINERY (category 348)
- The semantic categories of the words in a context determine the semantic category of the whole context
- This category determines which word senses are used
- For each subject code, count the number of words in the context that have the same subject code
- Select the subject code that has the highest count
- Accuracy ~50% (but with difficult and highly ambiguous words)
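The counting step can be sketched as follows. The mini-thesaurus `subject_codes`, the category names assigned to each word and the function name are hypothetical and serve only to illustrate the procedure.

```python
from collections import Counter

def thesaurus_disambiguate(target_codes, context_words, subject_codes):
    """For each subject code of the target word, count the context words
    that carry the same code; return the code with the highest count."""
    counts = Counter()
    for word in context_words:
        for code in subject_codes.get(word, ()):
            if code in target_codes:
                counts[code] += 1
    if not counts:
        return None                    # no evidence in this context
    return counts.most_common(1)[0][0]

# Hypothetical mini-thesaurus: word -> set of subject codes.
subject_codes = {
    "guitar": {"MUSIC"},
    "band": {"MUSIC"},
    "trout": {"ANIMAL/INSECT"},
}
bass_codes = {"MUSIC", "ANIMAL/INSECT"}
context = ["the", "guitar", "and", "band", "caught", "trout"]
chosen = thesaurus_disambiguate(bass_codes, context, subject_codes)
print(chosen)
```

Two context words carry MUSIC and one carries ANIMAL/INSECT, so the MUSIC sense of "bass" is selected.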

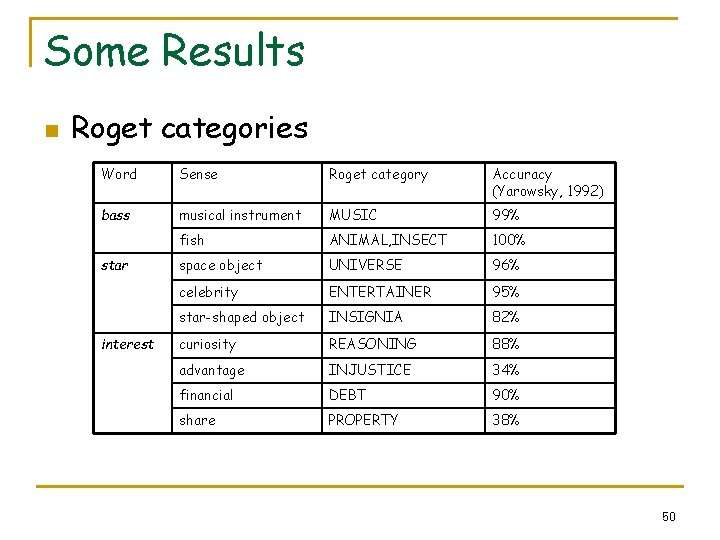

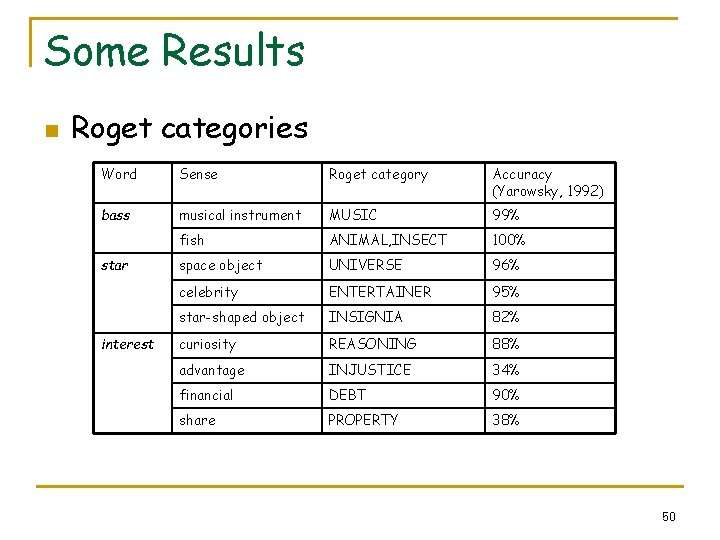

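Some Results
- Roget categories, accuracy from (Yarowsky, 1992)

| Word | Sense | Roget category | Accuracy |
|---|---|---|---|
| bass | musical instrument | MUSIC | 99% |
| bass | fish | ANIMAL, INSECT | 100% |
| star | space object | UNIVERSE | 96% |
| star | celebrity | ENTERTAINER | 95% |
| star | star-shaped object | INSIGNIA | 82% |
| interest | curiosity | REASONING | 88% |
| interest | advantage | INJUSTICE | 34% |
| interest | financial | DEBT | 90% |
| interest | share | PROPERTY | 38% |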

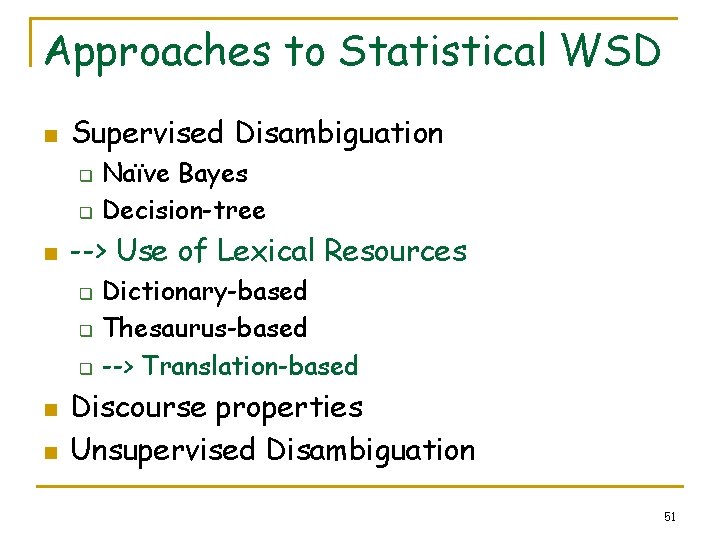

Approaches to Statistical WSD
- Supervised Disambiguation
  - Naïve Bayes
  - Decision-tree
- --> Use of Lexical Resources
  - Dictionary-based
  - Thesaurus-based
  - --> Translation-based
- Discourse properties
- Unsupervised Disambiguation

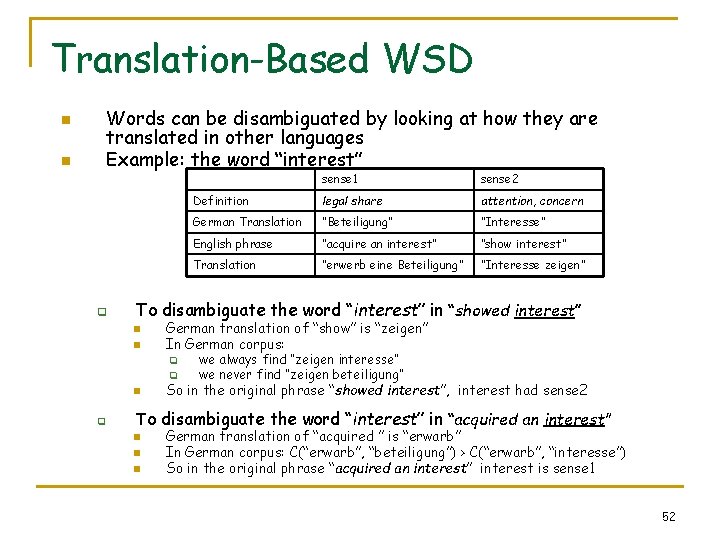

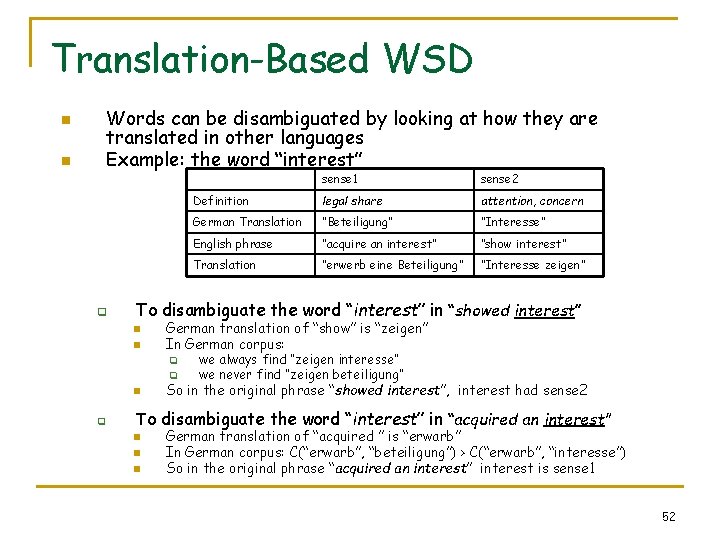

Translation-Based WSD
- Words can be disambiguated by looking at how they are translated in other languages
- Example: the word “interest”

| | sense 1 | sense 2 |
|---|---|---|
| Definition | legal share | attention, concern |
| German translation | “Beteiligung” | “Interesse” |
| English phrase | “acquire an interest” | “show interest” |
| Translation | “erwerb eine Beteiligung” | “Interesse zeigen” |

- To disambiguate the word “interest” in “showed interest”
  - German translation of “show” is “zeigen”
  - In German corpus:
    - we always find “zeigen interesse”
    - we never find “zeigen beteiligung”
  - So in the original phrase “showed interest”, interest had sense 2
- To disambiguate the word “interest” in “acquired an interest”
  - German translation of “acquired” is “erwarb”
  - In German corpus: C(“erwarb”, “beteiligung”) > C(“erwarb”, “interesse”)
  - So in the original phrase “acquired an interest”, interest is sense 1
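The count comparison C(verb, noun) can be sketched as below. The co-occurrence counts are invented for illustration and do not come from any real German corpus; the function name is also hypothetical.

```python
def translation_sense(verb_translation, sense_by_noun, cooccurrence):
    """Pick the sense whose target-language noun co-occurs most often
    with the translated verb in the target-language corpus."""
    best_noun = max(
        sense_by_noun,
        key=lambda noun: cooccurrence.get((verb_translation, noun), 0),
    )
    return sense_by_noun[best_noun]

# Candidate German translations of "interest" and the sense each one signals.
sense_by_noun = {"beteiligung": "sense 1", "interesse": "sense 2"}

# Hypothetical counts C(verb, noun); illustrative values only.
cooccurrence = {
    ("erwarb", "beteiligung"): 12,
    ("erwarb", "interesse"): 1,
    ("zeigen", "interesse"): 9,
}

acquired = translation_sense("erwarb", sense_by_noun, cooccurrence)
showed = translation_sense("zeigen", sense_by_noun, cooccurrence)
print(acquired, showed)
```

Missing pairs are treated as a count of zero, which corresponds to "we never find" in the corpus.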

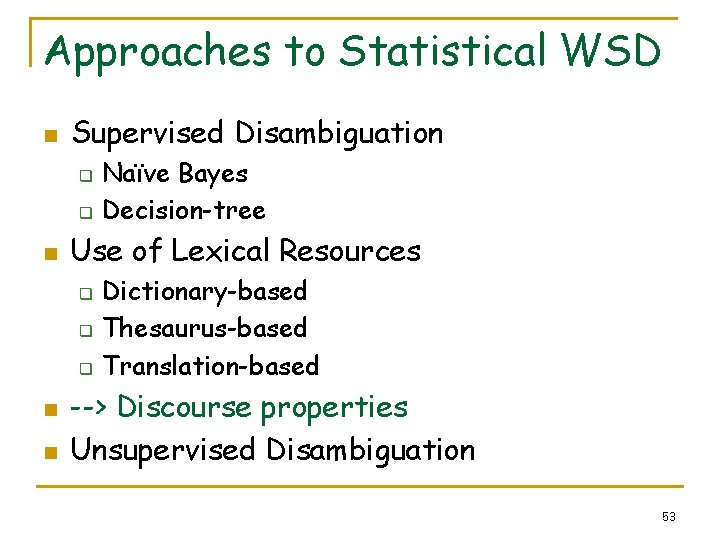

Approaches to Statistical WSD
- Supervised Disambiguation
  - Naïve Bayes
  - Decision-tree
- Use of Lexical Resources
  - Dictionary-based
  - Thesaurus-based
  - Translation-based
- --> Discourse properties
- Unsupervised Disambiguation

Discourse Properties
- (Yarowsky, 1995)
- So far, all methods have considered each occurrence of an ambiguous word separately…
- But…
  - One sense per discourse
    - One document --> one sense
  - One sense per collocation
    - Select some nearby words that give very strong clues … i.e. select words of a collocation <-> sense of target word
- (Yarowsky, 1995) shows a reduction of error rate by 27% when using the discourse constraint!
  - i.e. assign the majority sense of the discourse to all occurrences of the target word
- we can combine these 2 heuristics
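The discourse constraint (assign the majority sense of the discourse to all occurrences) can be sketched as a post-processing step over the senses a classifier predicted within one document. The function name and the sense labels are hypothetical.

```python
from collections import Counter

def one_sense_per_discourse(predicted_senses):
    """Relabel every occurrence in a document with the document's majority sense."""
    if not predicted_senses:
        return []
    majority = Counter(predicted_senses).most_common(1)[0][0]
    return [majority] * len(predicted_senses)

# Hypothetical per-occurrence predictions for one word in one document.
predictions = ["plant-living", "plant-factory", "plant-living"]
relabelled = one_sense_per_discourse(predictions)
print(relabelled)
```

The single outlier prediction is overridden by the sense that dominates the document.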

Approaches to Statistical WSD
- Supervised Disambiguation
  - Naïve Bayes
  - Decision-tree
- Use of Lexical Resources
  - Dictionary-based
  - Thesaurus-based
  - Translation-based
- Discourse properties
- --> Unsupervised Disambiguation

Unsupervised Disambiguation
- Disambiguate word senses:
  - without supporting tools such as dictionaries and thesauri
  - without a labeled training text
- Without such resources, we cannot really identify/label the senses
  - i.e. cannot say bank-1 or bank-2
  - we do not even know the different senses of a word!
- But we can:
  - Cluster/group the contexts of an ambiguous word into a number of groups
  - discriminate between these groups without actually labeling them

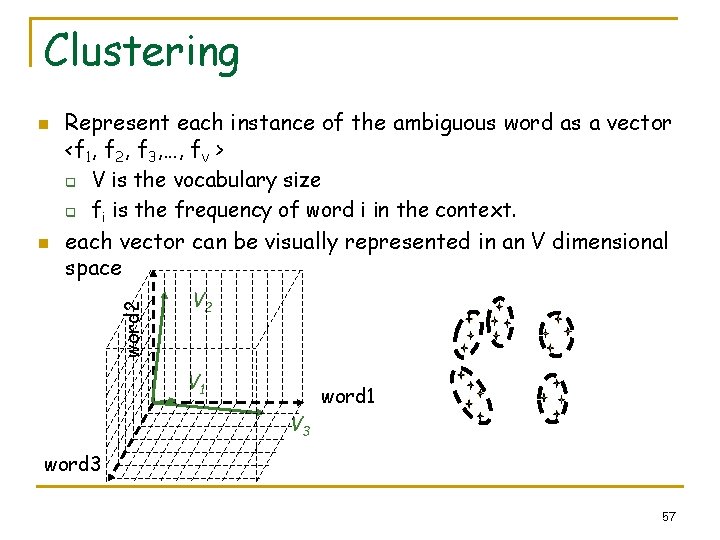

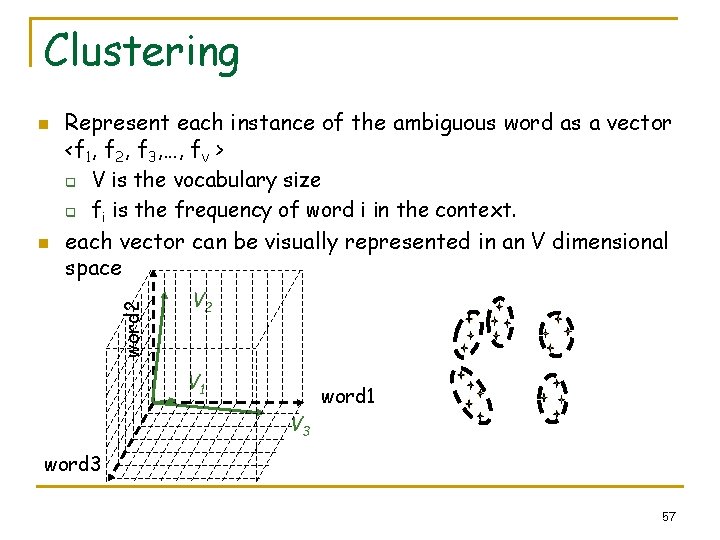

Clustering
- Represent each instance of the ambiguous word as a vector <f1, f2, f3, …, fV>
  - V is the vocabulary size
  - fi is the frequency of word i in the context.
- each vector can be visually represented in a V-dimensional space

[Figure: context vectors V1, V2, V3 plotted in a space with axes word 1, word 2, word 3]

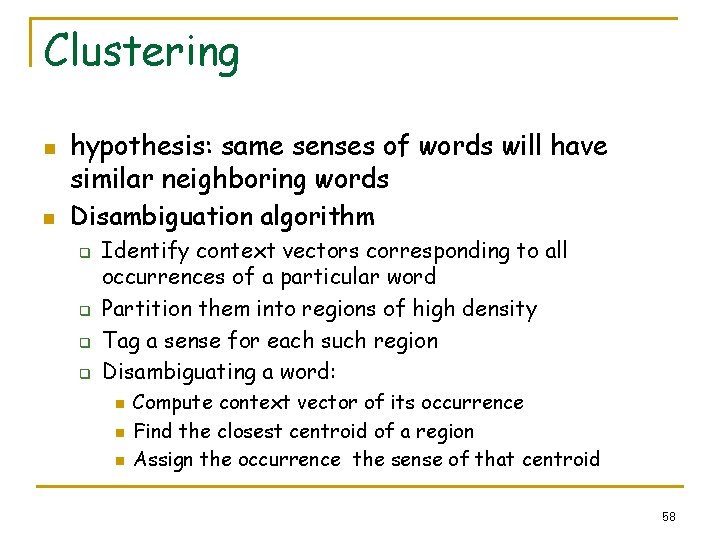

Clustering
- hypothesis: same senses of words will have similar neighboring words
- Disambiguation algorithm
  - Identify context vectors corresponding to all occurrences of a particular word
  - Partition them into regions of high density
  - Tag a sense for each such region
- Disambiguating a word:
  - Compute context vector of its occurrence
  - Find the closest centroid of a region
  - Assign the occurrence the sense of that centroid
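The last three steps (compute the context vector, find the closest centroid, assign its sense) can be sketched as follows. The sketch assumes the clustering has already been done and uses Euclidean distance, which is one possible choice; the group names and vectors are made up.

```python
import math

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def nearest_sense(vector, centroids):
    """Return the group whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda group: math.dist(vector, centroids[group]))

# Hypothetical clusters of context vectors (3-word vocabulary for readability).
clusters = {
    "group-A": [[2, 0, 1], [3, 0, 0]],
    "group-B": [[0, 2, 0], [0, 3, 1]],
}
centroids = {group: centroid(vectors) for group, vectors in clusters.items()}
assigned = nearest_sense([1, 0, 0], centroids)
print(centroids, assigned)
```

The groups stay unlabeled ("group-A", "group-B"): the method discriminates between senses without naming them.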

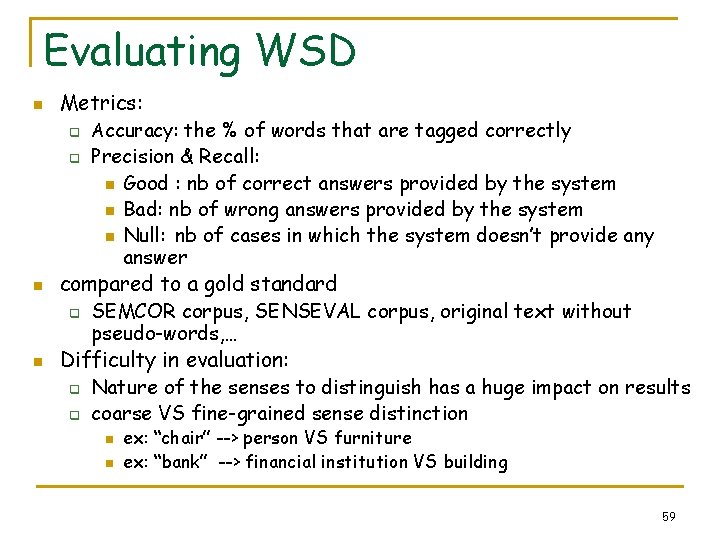

Evaluating WSD
- Metrics:
  - Accuracy: the % of words that are tagged correctly
  - Precision & Recall:
    - Good: nb of correct answers provided by the system
    - Bad: nb of wrong answers provided by the system
    - Null: nb of cases in which the system doesn’t provide any answer
- compared to a gold standard
  - SEMCOR corpus, SENSEVAL corpus, original text without pseudo-words, …
- Difficulty in evaluation:
  - Nature of the senses to distinguish has a huge impact on results
  - coarse VS fine-grained sense distinction
    - ex: “chair” --> person VS furniture
    - ex: “bank” --> financial institution VS building
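A sketch of how precision and recall are usually computed from the Good / Bad / Null counts. The formulas are the common definitions (precision over attempted answers, recall over all cases) and are assumed here, since the slide's own formulas are only available as images.

```python
def precision_recall(good, bad, null):
    """good: correct answers, bad: wrong answers, null: cases left unanswered."""
    attempted = good + bad
    total = good + bad + null
    precision = good / attempted if attempted else 0.0
    recall = good / total if total else 0.0
    return precision, recall

# Hypothetical counts for one evaluation run.
precision, recall = precision_recall(good=60, bad=20, null=20)
print(precision, recall)
```

A system that answers only when it is confident raises precision at the cost of recall, which is why both numbers are reported.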

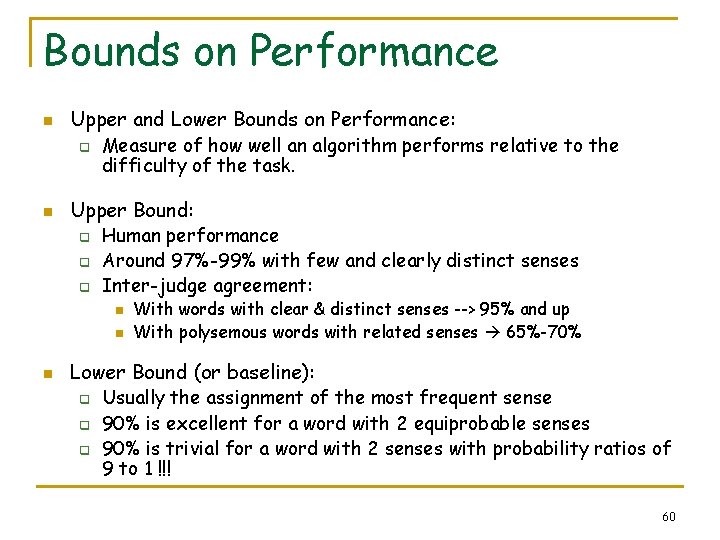

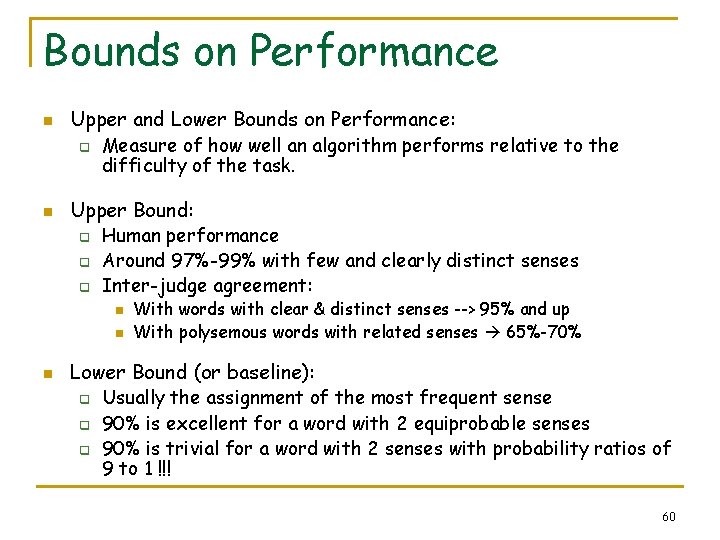

Bounds on Performance
- Upper and Lower Bounds on Performance:
  - Measure of how well an algorithm performs relative to the difficulty of the task.
- Upper Bound:
  - Human performance
  - Around 97%-99% with few and clearly distinct senses
  - Inter-judge agreement:
    - With words with clear & distinct senses --> 95% and up
    - With polysemous words with related senses --> 65%-70%
- Lower Bound (or baseline):
  - Usually the assignment of the most frequent sense
  - 90% is excellent for a word with 2 equiprobable senses
  - 90% is trivial for a word with 2 senses with probability ratios of 9 to 1 !!!
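The most-frequent-sense baseline can be sketched as below; the function name and the sense labels are hypothetical. With a 9-to-1 sense distribution the baseline alone already reaches 90%.

```python
from collections import Counter

def most_frequent_sense_baseline(train_senses, test_senses):
    """Always predict the most frequent training sense; return it with its test accuracy."""
    most_frequent = Counter(train_senses).most_common(1)[0][0]
    correct = sum(1 for sense in test_senses if sense == most_frequent)
    return most_frequent, correct / len(test_senses)

# Hypothetical word with 2 senses in a 9 to 1 ratio.
train = ["s1"] * 9 + ["s2"]
test = ["s1"] * 9 + ["s2"]
baseline_sense, baseline_accuracy = most_frequent_sense_baseline(train, test)
print(baseline_sense, baseline_accuracy)
```

Any WSD system for this word has to beat 0.9 to show that it learned something beyond the sense distribution.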

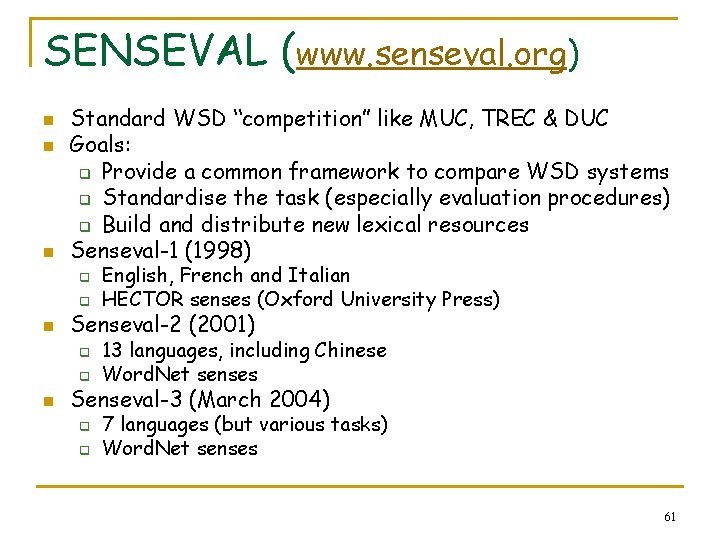

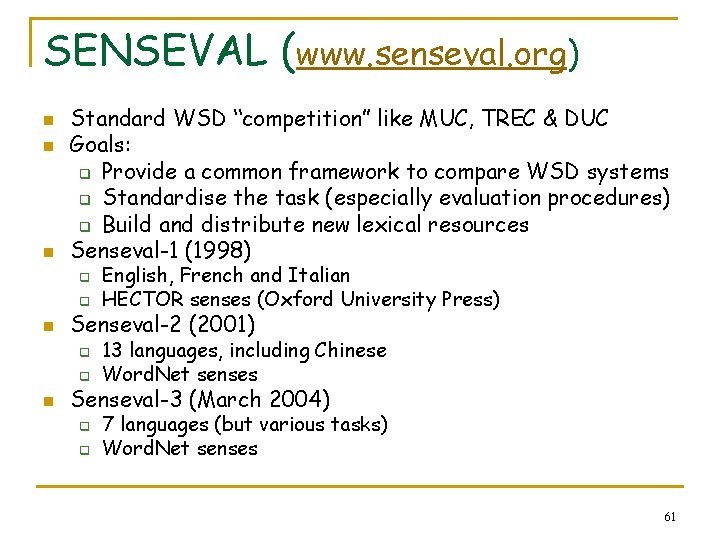

SENSEVAL (www.senseval.org)
- Standard WSD “competition” like MUC, TREC & DUC
- Goals:
  - Provide a common framework to compare WSD systems
  - Standardise the task (especially evaluation procedures)
  - Build and distribute new lexical resources
- Senseval-1 (1998)
  - English, French and Italian
  - HECTOR senses (Oxford University Press)
- Senseval-2 (2001)
  - 13 languages, including Chinese
  - WordNet senses
- Senseval-3 (March 2004)
  - 7 languages (but various tasks)
  - WordNet senses

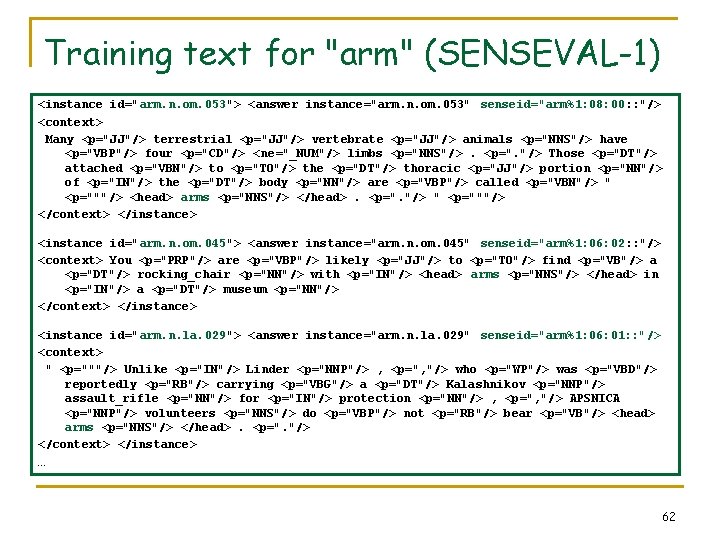

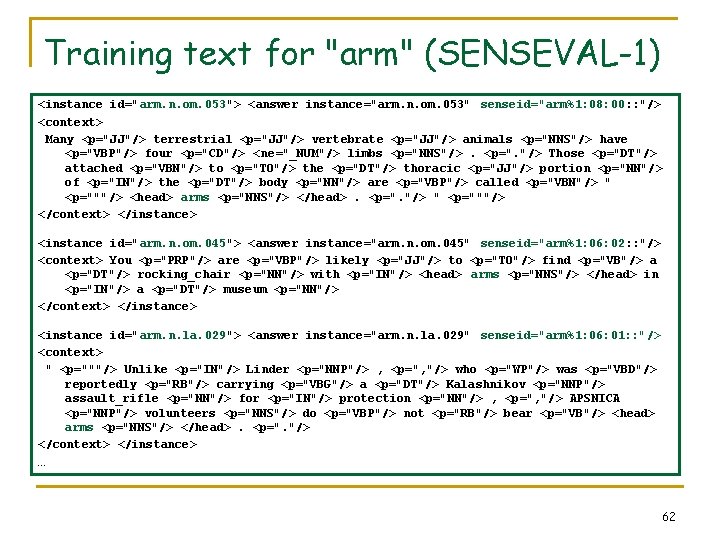

Training text for "arm" (SENSEVAL-1)

    <instance id="arm.n.om.053">
    <answer instance="arm.n.om.053" senseid="arm%1:08:00::"/>
    <context>
    Many <p="JJ"/> terrestrial <p="JJ"/> vertebrate <p="JJ"/> animals <p="NNS"/> have <p="VBP"/> four <p="CD"/> < ne="_NUM"/> limbs <p="NNS"/>. <p="."/> Those <p="DT"/> attached <p="VBN"/> to <p="TO"/> the <p="DT"/> thoracic <p="JJ"/> portion <p="NN"/> of <p="IN"/> the <p="DT"/> body <p="NN"/> are <p="VBP"/> called <p="VBN"/> " <p="""/> <head> arms <p="NNS"/> </head>. <p="."/> " <p="""/>
    </context>
    </instance>
    <instance id="arm.n.om.045">
    <answer instance="arm.n.om.045" senseid="arm%1:06:02::"/>
    <context>
    You <p="PRP"/> are <p="VBP"/> likely <p="JJ"/> to <p="TO"/> find <p="VB"/> a <p="DT"/> rocking_chair <p="NN"/> with <p="IN"/> <head> arms <p="NNS"/> </head> in <p="IN"/> a <p="DT"/> museum <p="NN"/>
    </context>
    </instance>
    <instance id="arm.n.la.029">
    <answer instance="arm.n.la.029" senseid="arm%1:06:01::"/>
    <context>
    " <p="""/> Unlike <p="IN"/> Linder <p="NNP"/> , <p=","/> who <p="WP"/> was <p="VBD"/> reportedly <p="RB"/> carrying <p="VBG"/> a <p="DT"/> Kalashnikov <p="NNP"/> assault_rifle <p="NN"/> for <p="IN"/> protection <p="NN"/> , <p=","/> APSNICA <p="NNP"/> volunteers <p="NNS"/> do <p="VBP"/> not <p="RB"/> bear <p="VB"/> <head> arms <p="NNS"/> </head>. <p="."/>
    </context>
    </instance>
    …

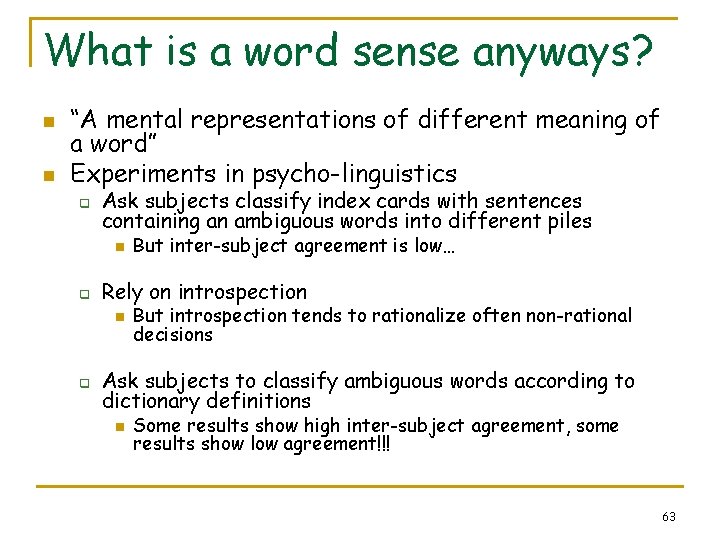

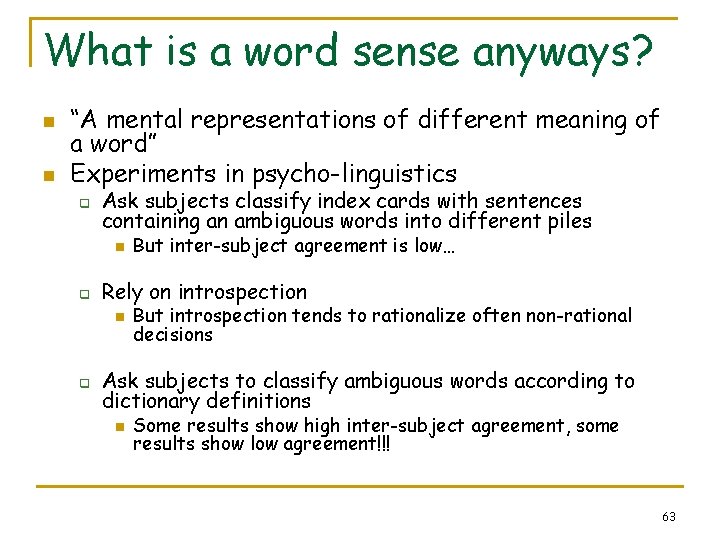

What is a word sense anyways?
- “A mental representation of the different meanings of a word”
- Experiments in psycho-linguistics
  - Ask subjects to classify index cards with sentences containing an ambiguous word into different piles
    - But inter-subject agreement is low…
  - Rely on introspection
    - But introspection tends to rationalize often non-rational decisions
  - Ask subjects to classify ambiguous words according to dictionary definitions
    - Some results show high inter-subject agreement, some results show low agreement!!!