
COMP 7330/7336 Advanced Parallel and Distributed Computing
Parallel Computer Architecture
Dr. Xiao Qin, Auburn University
http://www.eng.auburn.edu/~xqin
xqin@auburn.edu
Some slides are adapted from Dr. David E. Culler, U.C. Berkeley

What is Parallel Architecture?
• A parallel computer is a collection of processing elements that cooperate to solve large problems fast
• Some broad issues:
– Resource allocation
– Data access, communication, and synchronization
– Performance and scalability

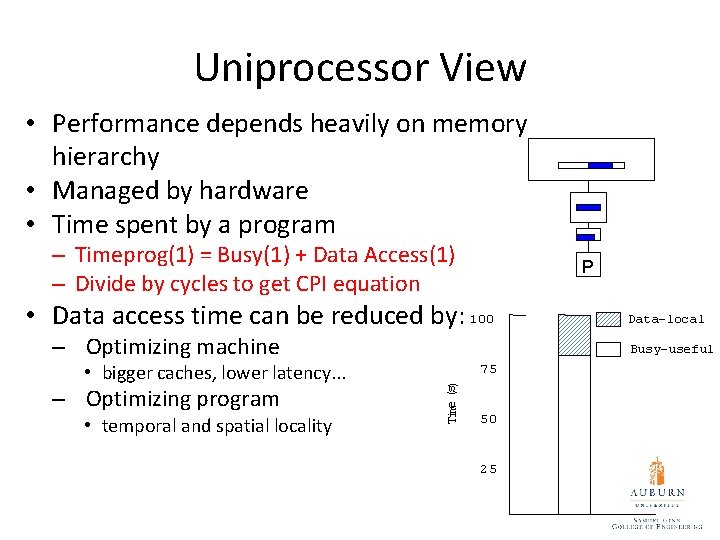

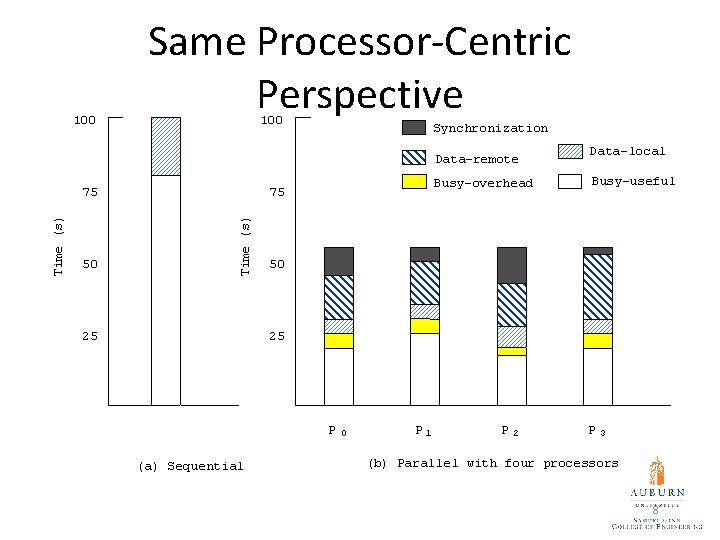

Uniprocessor View
• Performance depends heavily on the memory hierarchy
• Managed by hardware
• Time spent by a program
– $\text{Time}_{\text{prog}}(1) = \text{Busy}(1) + \text{Data Access}(1)$
– Divide by cycles to get CPI equation
• Data access time can be reduced by:
– Optimizing the machine: bigger caches, lower latency...
– Optimizing the program: temporal and spatial locality
[Figure: bar of execution time in seconds (0 to 100) for a single processor P, split into Busy-useful and Data-local]
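The decomposition above can be sketched numerically. This is a minimal illustration, not taken from the slides: the function names, the parameter values, and the simple stall model (memory references per instruction × miss rate × miss penalty) are all assumptions chosen to show how a lower miss rate shrinks the data-access term.

```python
def cpi(busy_cpi, mem_refs_per_instr, miss_rate, miss_penalty_cycles):
    """Cycles per instruction = busy cycles + data-access stall cycles.

    The stall model here is an assumed simplification: every cache miss
    costs a fixed penalty and hits cost nothing extra.
    """
    data_access_cpi = mem_refs_per_instr * miss_rate * miss_penalty_cycles
    return busy_cpi + data_access_cpi


def program_time_s(instr_count, cpi_value, clock_hz):
    """Execution time in seconds for a given instruction count and clock rate."""
    return instr_count * cpi_value / clock_hz


# Hypothetical workload: 0.3 memory references per instruction, 100-cycle miss penalty.
baseline = cpi(busy_cpi=1.0, mem_refs_per_instr=0.3, miss_rate=0.05, miss_penalty_cycles=100)
# Same machine, but the program (or a bigger cache) cuts the miss rate to 2%.
improved = cpi(busy_cpi=1.0, mem_refs_per_instr=0.3, miss_rate=0.02, miss_penalty_cycles=100)

print(f"{baseline:.2f} {improved:.2f}")  # 2.50 1.60
```

Under these assumed numbers the busy term stays at 1.0 cycle per instruction while the data-access term falls from 1.5 to 0.6, which is the effect both "optimizing machine" and "optimizing program" aim for.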

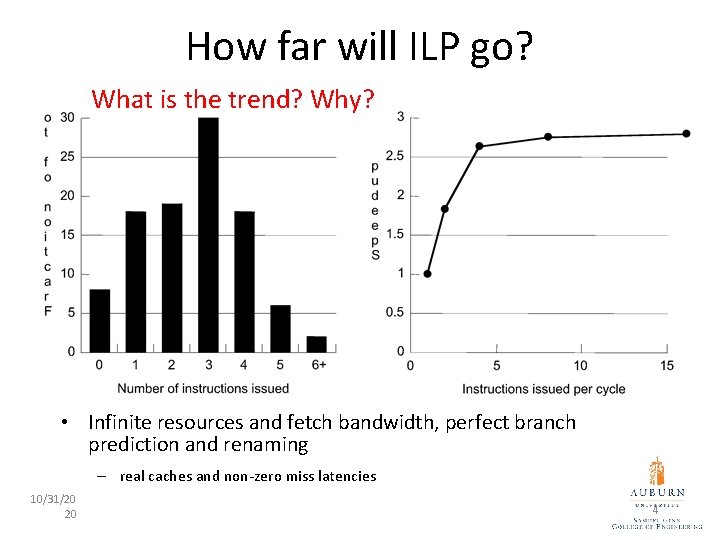

How far will ILP go? What is the trend? Why?
• Infinite resources and fetch bandwidth, perfect branch prediction and renaming
– Real caches and non-zero miss latencies

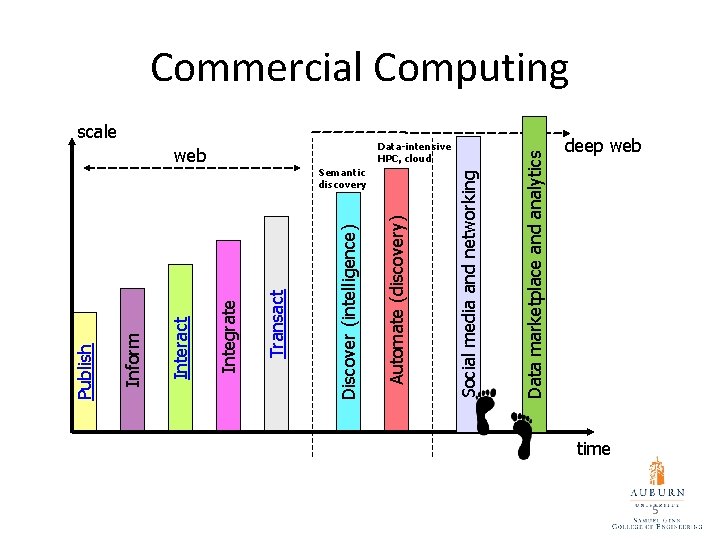

Commercial Computing
[Figure: growth of commercial computing in scale over time. Stages: Publish, Inform, Interact, Integrate, Transact, Discover (intelligence), Automate (discovery). Labeled regions: web, deep web, social media and networking, data marketplace and analytics, data-intensive HPC and cloud, semantic discovery]

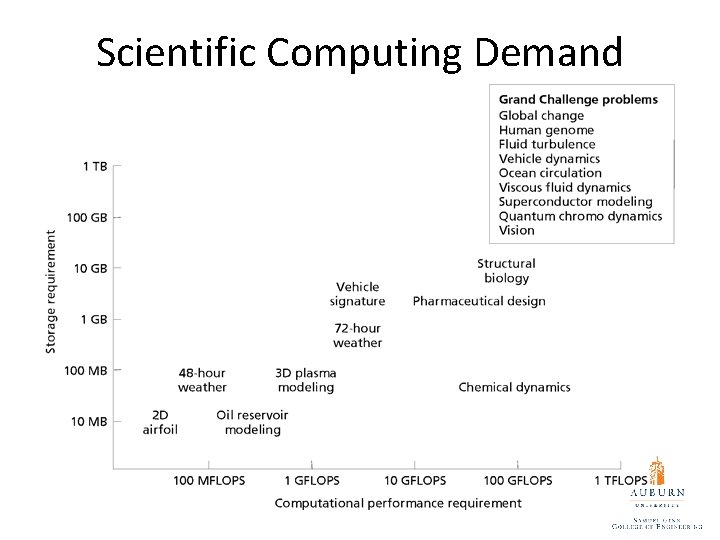

Scientific Computing Demand

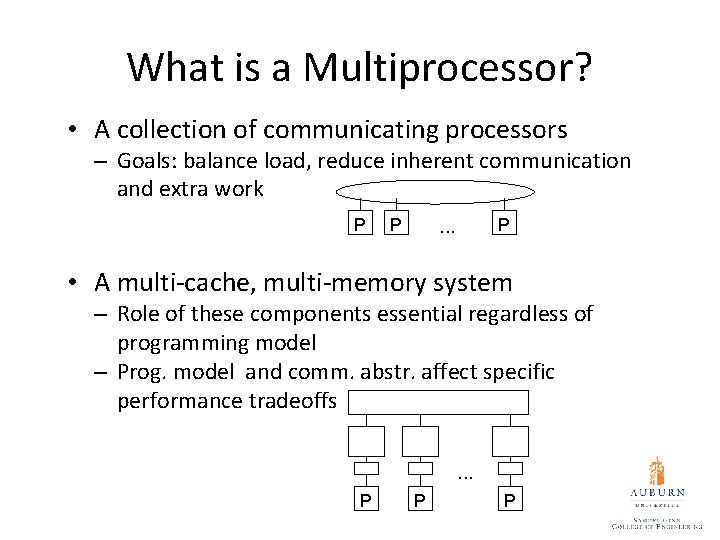

What is a Multiprocessor?
• A collection of communicating processors
– Goals: balance load, reduce inherent communication and extra work
• A multi-cache, multi-memory system
– Role of these components is essential regardless of programming model
– Programming model and communication abstraction affect specific performance tradeoffs

Same Processor-Centric Perspective
[Figure: execution time breakdown in seconds (0 to 100). (a) Sequential: one processor P. (b) Parallel with four processors: P0, P1, P2, P3. Components: Busy-useful, Busy-overhead, Data-local, Data-remote, Synchronization]
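The per-processor breakdown in this figure can be expressed as a small sketch. The component names follow the figure labels; every number below is hypothetical, and the rule that the parallel run finishes when the slowest processor finishes is an assumption of this sketch.

```python
# Time components named in the figure; the sequential case uses only the
# first and third, the parallel case can use all five.
COMPONENTS = ("busy_useful", "busy_overhead", "data_local",
              "data_remote", "synchronization")


def processor_time(breakdown):
    """Total time one processor spends, summed over all components."""
    return sum(breakdown.get(name, 0) for name in COMPONENTS)


def parallel_time(per_processor):
    """Assumed model: the run ends when the slowest processor ends."""
    return max(processor_time(b) for b in per_processor)


# Hypothetical sequential run: 100 s in total.
sequential = {"busy_useful": 60, "data_local": 40}

# Hypothetical four-processor run: the useful work is split evenly, but
# overhead, remote data access, and synchronization are new costs.
parallel = [
    {"busy_useful": 15, "busy_overhead": 3, "data_local": 8, "data_remote": 4, "synchronization": 5},
    {"busy_useful": 15, "busy_overhead": 2, "data_local": 10, "data_remote": 6, "synchronization": 2},
    {"busy_useful": 15, "busy_overhead": 4, "data_local": 12, "data_remote": 2, "synchronization": 2},
    {"busy_useful": 15, "busy_overhead": 1, "data_local": 10, "data_remote": 3, "synchronization": 6},
]

print(processor_time(sequential), parallel_time(parallel))  # 100 35
```

With these made-up values, four processors take 35 s rather than the ideal 25 s; the 10 s gap per processor is the sum of the three components that do not exist in the sequential run.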

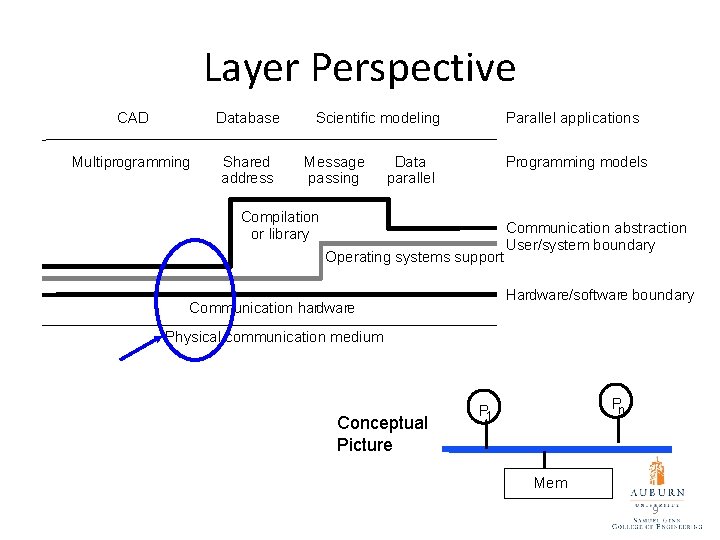

Layer Perspective
• Parallel applications: CAD, database, scientific modeling
• Programming models: multiprogramming, shared address, message passing, data parallel
• Communication abstraction (user/system boundary): compilation or library, operating systems support
• Hardware/software boundary
• Communication hardware
• Physical communication medium
[Figure: conceptual picture of processors P1 ... Pn and memory (Mem)]

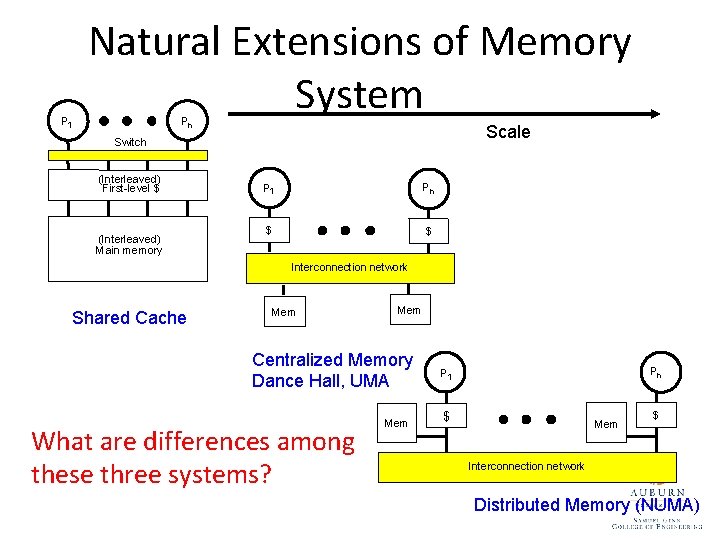

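Natural Extensions of Memory System
• Shared cache: P1 ... Pn connect through a switch to an (interleaved) first-level $ and (interleaved) main memory
• Centralized memory (dance hall, UMA): P1 ... Pn, each with its own $, reach the memory modules (Mem) through an interconnection network
• Distributed memory (NUMA): each of P1 ... Pn has its own $ and its own Mem, joined by an interconnection network
[Figure: the three organizations drawn along an arrow labeled Scale]
What are the differences among these three systems?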

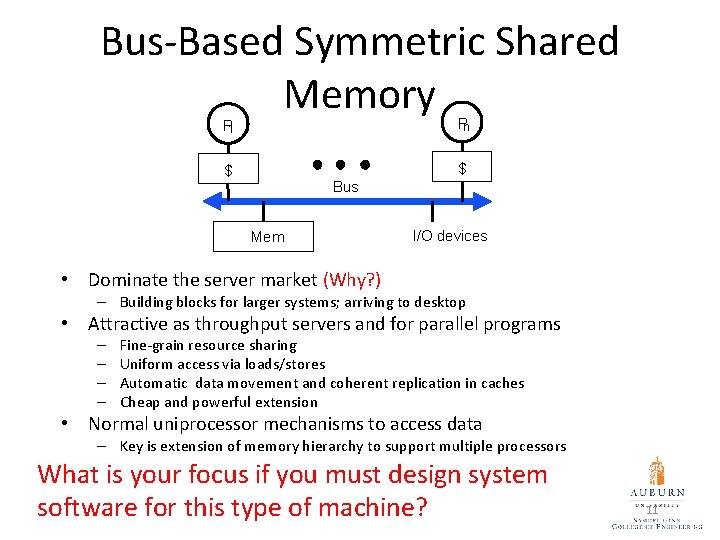

Bus-Based Symmetric Shared Memory
[Figure: P1 ... Pn, each with a cache ($), share a bus with memory (Mem) and I/O devices]
• Dominate the server market (Why?)
– Building blocks for larger systems; arriving on the desktop
• Attractive as throughput servers and for parallel programs
– Fine-grain resource sharing
– Uniform access via loads/stores
– Automatic data movement and coherent replication in caches
– Cheap and powerful extension
• Normal uniprocessor mechanisms to access data
– Key is extension of memory hierarchy to support multiple processors
What is your focus if you must design system software for this type of machine?

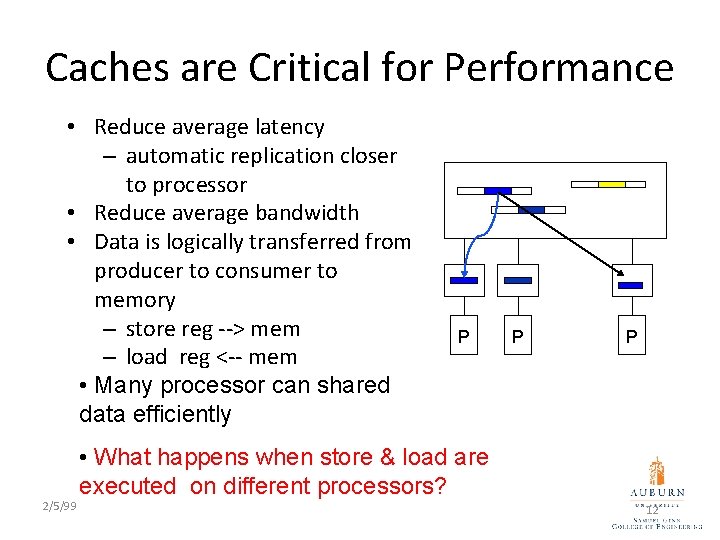

Caches are Critical for Performance
• Reduce average latency
– Automatic replication closer to processor
• Reduce average bandwidth demand
• Data is logically transferred from producer to consumer to memory
– store reg --> mem
– load reg <-- mem
• Many processors can share data efficiently
• What happens when store and load are executed on different processors?

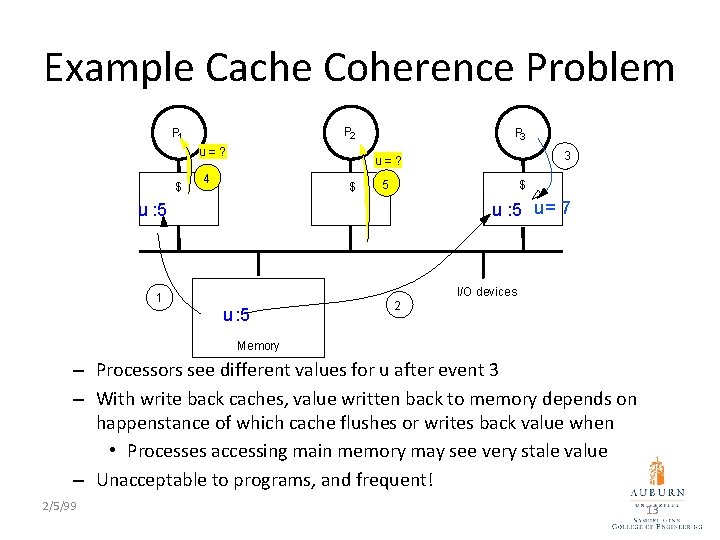

Example Cache Coherence Problem
[Figure: processors P1, P2, P3, each with a cache ($), share a bus with memory and I/O devices. Memory holds u: 5. Events are numbered 1 to 5: copies of u: 5 are cached, event 3 writes u = 7, and later reads are marked u = ?]
– Processors see different values for u after event 3
– With write-back caches, the value written back to memory depends on which cache happens to flush or write back its value, and when
• Processes accessing main memory may see a very stale value
– Unacceptable to programs, and frequent!
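The problem on this slide can be reproduced with a toy model. The sketch below assumes private write-back caches with no coherence protocol at all; the class names are hypothetical, and the exact event order (P1 reads, P3 reads, P3 writes u = 7, P1 reads, P2 reads) is an assumption, since only "event 3 is the write" can be recovered from the slide text.

```python
class Memory:
    """Shared main memory, modeled as a dictionary of address -> value."""
    def __init__(self, initial):
        self.data = dict(initial)


class WriteBackCache:
    """Private write-back cache with NO coherence protocol (toy model)."""
    def __init__(self, memory):
        self.memory = memory
        self.lines = {}      # locally cached address -> value
        self.dirty = set()   # addresses modified locally, not yet written back

    def read(self, addr):
        if addr not in self.lines:                  # miss: fetch from memory
            self.lines[addr] = self.memory.data[addr]
        return self.lines[addr]                     # hit: may be stale

    def write(self, addr, value):
        self.lines[addr] = value                    # update only the local copy
        self.dirty.add(addr)

    def flush(self, addr):
        if addr in self.dirty:                      # write back the dirty line
            self.memory.data[addr] = self.lines[addr]
            self.dirty.discard(addr)


mem = Memory({"u": 5})
p1, p2, p3 = WriteBackCache(mem), WriteBackCache(mem), WriteBackCache(mem)

p1.read("u")          # event 1: P1 caches u = 5
p3.read("u")          # event 2: P3 caches u = 5
p3.write("u", 7)      # event 3: P3 writes u = 7 in its own cache only
seen = {
    "P1": p1.read("u"),   # event 4: hit on the stale cached copy
    "P2": p2.read("u"),   # event 5: miss, but memory still holds the old value
    "P3": p3.read("u"),   # the writer sees its own update
}
print(seen)  # {'P1': 5, 'P2': 5, 'P3': 7}

p3.flush("u")         # memory now holds 7, yet P1 and P2 keep their stale copies
print(mem.data["u"], p1.read("u"), p2.read("u"))  # 7 5 5
```

In this model nothing invalidates or updates the other caches, so even after the write-back reaches memory, P1 and P2 continue to read 5. A coherence protocol exists to rule out exactly this outcome.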

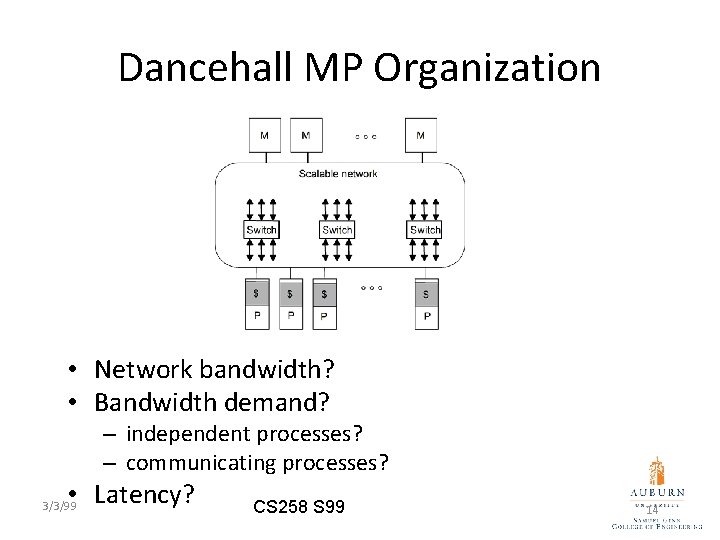

Dancehall MP Organization
• Network bandwidth?
• Bandwidth demand?
– Independent processes?
– Communicating processes?
• Latency?

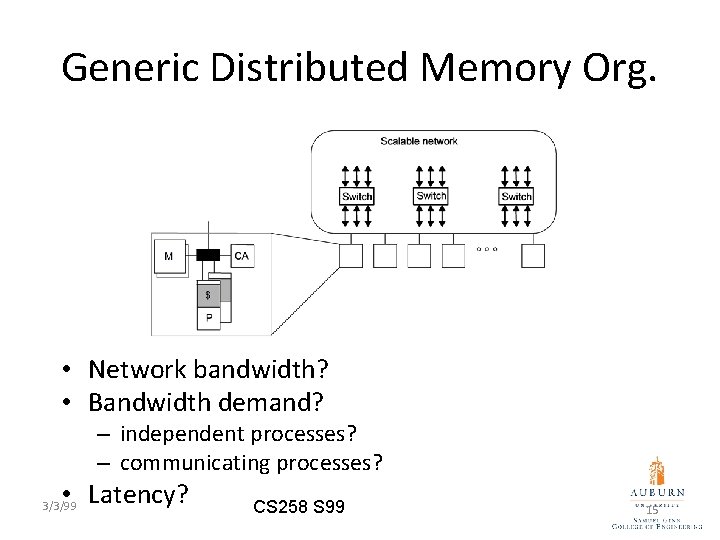

Generic Distributed Memory Org.
• Network bandwidth?
• Bandwidth demand?
– Independent processes?
– Communicating processes?
• Latency?

Speedup
• $\text{Speedup}(p \text{ processors}) = \dfrac{\text{Performance}(p \text{ processors})}{\text{Performance}(1 \text{ processor})}$
• For a fixed problem size (input data set), performance = 1/time
• $\text{Speedup}_{\text{fixed problem}}(p \text{ processors}) = \dfrac{\text{Time}(1 \text{ processor})}{\text{Time}(p \text{ processors})}$
How to measure performance?
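As a quick check that the two definitions agree for a fixed problem size, here is a minimal sketch. The function names and the timings (120 s on one processor, 20 s on eight) are hypothetical.

```python
import math


def speedup_from_performance(perf_p, perf_1):
    """Speedup(p) = Performance(p processors) / Performance(1 processor)."""
    return perf_p / perf_1


def speedup_fixed_problem(time_1, time_p):
    """For a fixed problem size: Speedup(p) = Time(1) / Time(p)."""
    return time_1 / time_p


# Hypothetical measurements for the same input data set.
time_1 = 120.0   # seconds on 1 processor
time_8 = 20.0    # seconds on 8 processors

by_time = speedup_fixed_problem(time_1, time_8)
# With performance defined as 1/time, the performance ratio gives the same answer.
by_perf = speedup_from_performance(1.0 / time_8, 1.0 / time_1)

print(by_time)                        # 6.0
print(math.isclose(by_time, by_perf)) # True
```

A speedup of 6 on 8 processors is below the ideal of 8; the definitions say nothing about why, only how to measure it.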

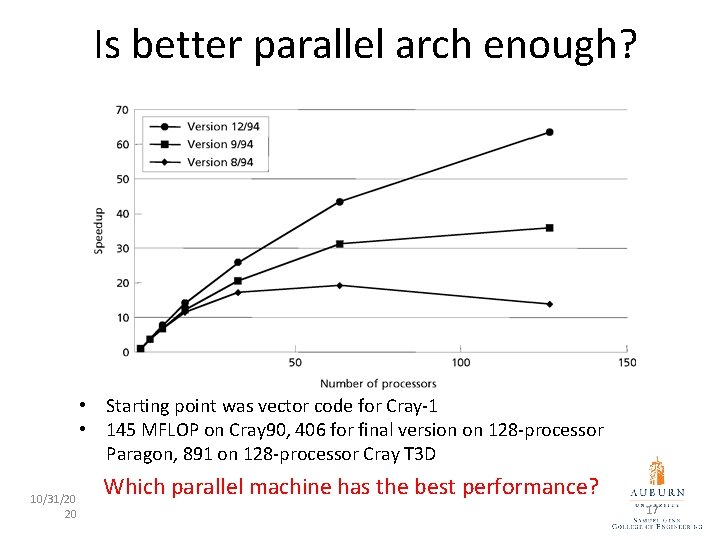

Is a better parallel architecture enough?
• Starting point was vector code for Cray-1
• 145 MFLOP on Cray 90, 406 for final version on 128-processor Paragon, 891 on 128-processor Cray T3D
Which parallel machine has the best performance?

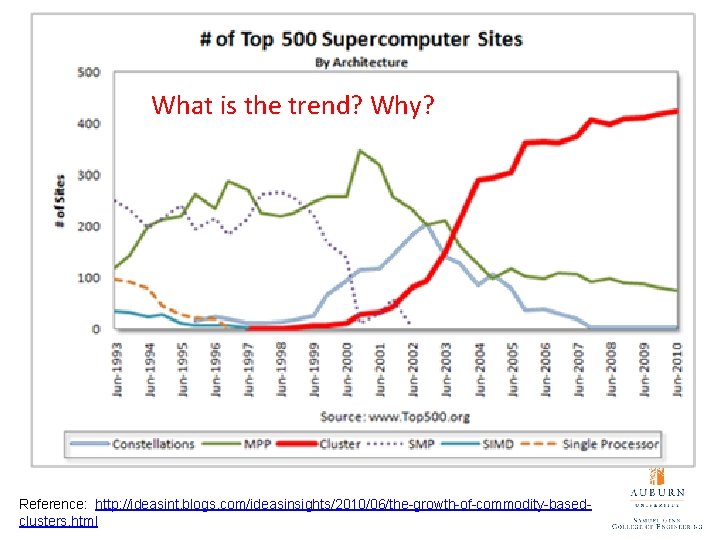

What is the trend? Why?
Reference: http://ideasint.blogs.com/ideasinsights/2010/06/the-growth-of-commodity-basedclusters.html
