Community Diagnostics Package CDP Zeshawn Shaheen Computer Scientist

- Slides: 14

Community Diagnostics Package (CDP)
Zeshawn Shaheen, Computer Scientist
December 6, 2018
LLNL-PRES-763342-DRAFT
This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under contract DE-AC52-07NA27344. Lawrence Livermore National Security, LLC

Contents
§ Introduction
§ Features
§ Current Implementations
§ Future Work

Introduction
§ Diagnostics compare test data to reference data.
  — Reference data are often observational data.
  — Ex: model vs. obs, model vs. model, etc.
§ Metrics are calculated and used to tune the model.
§ New model data are generated and run through the diagnostics again.

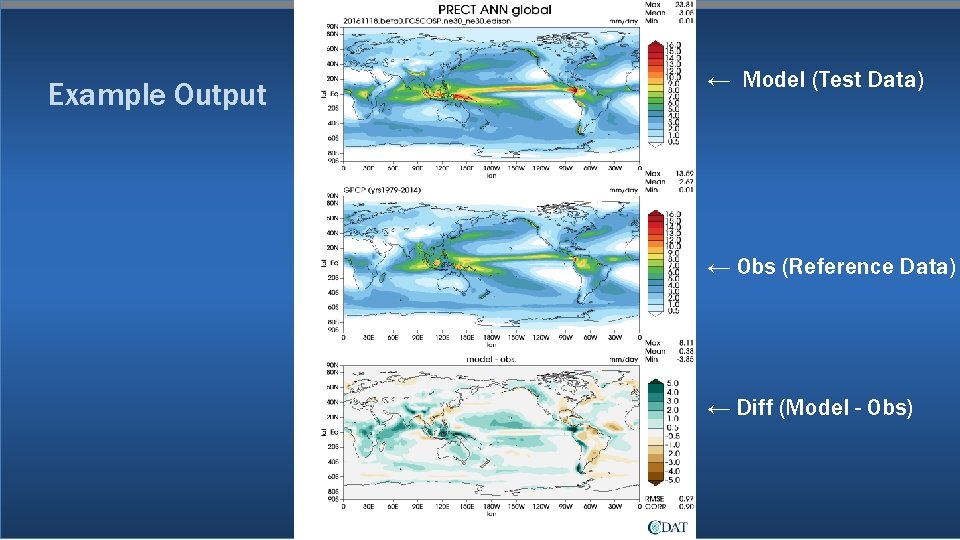

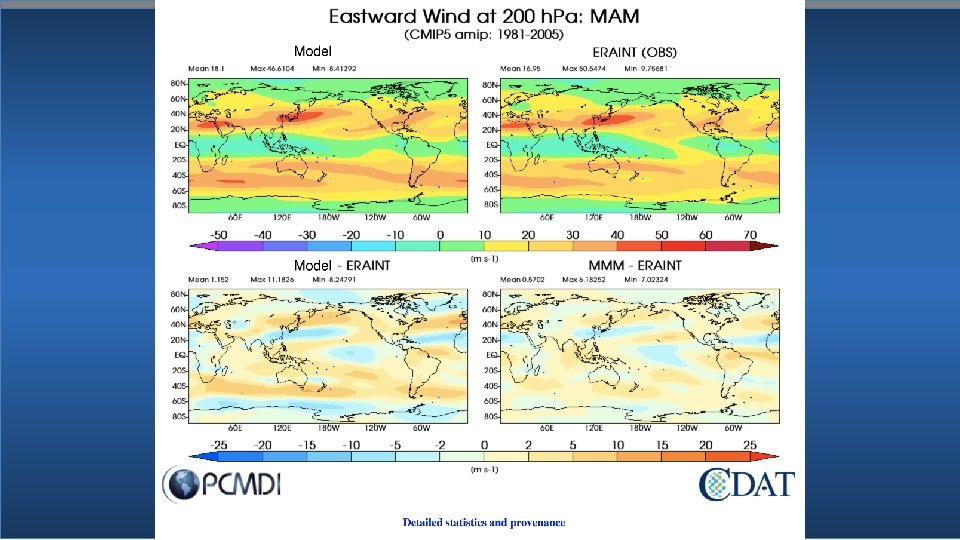

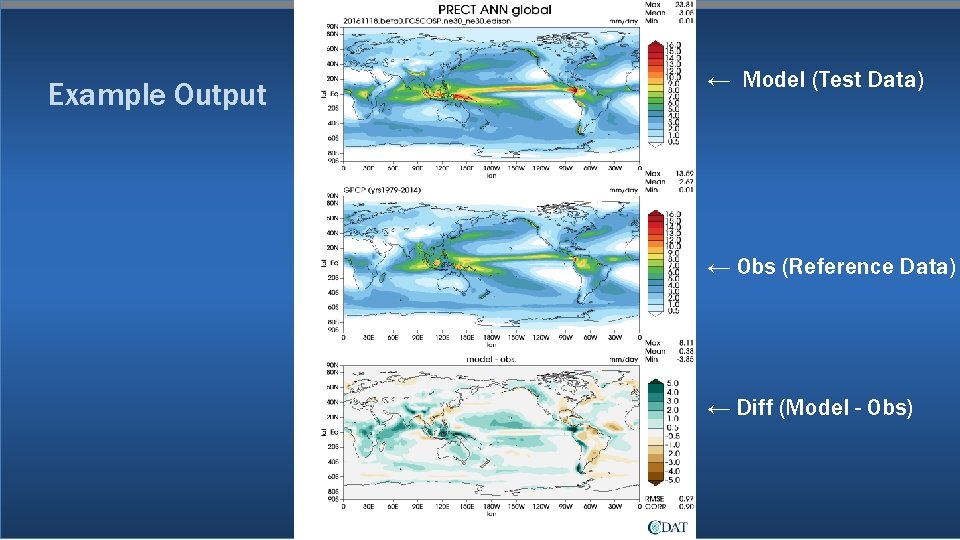

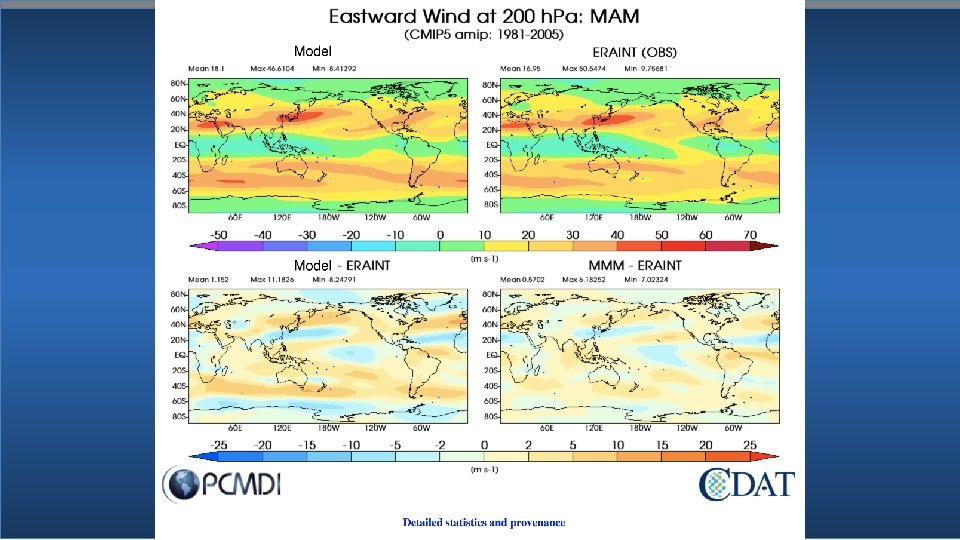

Example Output
← Model (Test Data)
← Obs (Reference Data)
← Diff (Model - Obs)
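
The three panels above (test, reference, difference) and the metrics derived from them can be sketched in a few lines. This is a minimal illustration, not CDP code: the tiny 2x2 grids, the function names `difference`, `mean_bias`, and `rmse`, and the unweighted averaging are all assumptions made for the example. Real diagnostics would typically weight grid cells by area.

```python
import math

def difference(test, reference):
    """Element-wise test minus reference for two same-shaped 2-D grids."""
    return [[t - r for t, r in zip(t_row, r_row)]
            for t_row, r_row in zip(test, reference)]

def mean_bias(diff):
    """Unweighted mean of the difference field (assumption: no area weights)."""
    values = [v for row in diff for v in row]
    return sum(values) / len(values)

def rmse(diff):
    """Unweighted root-mean-square of the difference field."""
    values = [v for row in diff for v in row]
    return math.sqrt(sum(v * v for v in values) / len(values))

# Made-up numbers standing in for model and observational fields.
model = [[2.0, 3.0], [4.0, 5.0]]
obs = [[1.0, 3.0], [5.0, 5.0]]

diff = difference(model, obs)
print(diff)             # [[1.0, 0.0], [-1.0, 0.0]]
print(mean_bias(diff))  # 0.0
print(rmse(diff))
```

The example also shows why more than one metric is useful: the positive and negative errors cancel in the mean bias, while the RMSE still reports the mismatch.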

Features
§ Framework for creating diagnostics packages. Included functionality:
  — Reading parameters.
  — Loading data.
  — Scheduling multi-processed runs.
  — Computing the metrics.
  — Tracking the provenance.
§ Goal: Unify the operation and structure of disparate diagnostics packages.
  — Operation: User input.
  — Structure: Driver.
§ User Input:
  — Parser, customizable with Common Input Arguments (CIA).
  — Parameter files (py, cfg).
§ Driver: Main diagnostics code.

Features – User Input
§ User input: Take in a set of parameters.
  — Parameters:
    • Paths to model/obs data, variables, regions, etc. to run diagnostics on.
  — Via py files (single parameter object), or combined with cfg files (multiple parameter objects).
    • Ex: e3sm_diags -p params.py -d diags.cfg
  — Command line arguments as well.
    • Ex: e3sm_diags --vars PRECT --seasons DJF MAM ...
§ Common Input Arguments:
  — Allow the parameters used to be changed dynamically via an input JSON file.
  — Mirrors functionality of argparse without reinstalling.
  — Goals:
    • Allow for many diagnostics packages to interoperate using the same parameters.
    • Introduce conventions for input parameters for diagnostic packages.
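
The idea of defining command line arguments in a JSON file, so they can change without touching installed code, can be sketched with the standard library alone. This is an illustration under assumptions: the JSON layout, the `TYPES` lookup, the `build_parser` helper, and the program name `my_diags` are hypothetical and do not reproduce the actual CIA schema.

```python
import argparse
import json

# Hypothetical argument definitions; the real CIA JSON schema may differ.
CIA_JSON = """
{
  "--vars":        {"nargs": "+", "default": [], "help": "Variables to run on"},
  "--seasons":     {"nargs": "+", "default": ["ANN"], "help": "Seasons to run on"},
  "--num_workers": {"type": "int", "default": 1, "help": "Worker processes"}
}
"""

# JSON cannot hold Python callables, so type names are mapped to them here.
TYPES = {"int": int, "float": float, "str": str}

def build_parser(definitions):
    """Create an argparse parser from a dict of flag -> add_argument options."""
    parser = argparse.ArgumentParser(prog="my_diags")
    for flag, options in definitions.items():
        options = dict(options)  # copy so the input dict is left untouched
        if "type" in options:
            options["type"] = TYPES[options["type"]]
        parser.add_argument(flag, **options)
    return parser

parser = build_parser(json.loads(CIA_JSON))
args = parser.parse_args(["--vars", "PRECT", "--seasons", "DJF", "MAM"])
print(args.vars, args.seasons, args.num_workers)  # ['PRECT'] ['DJF', 'MAM'] 1
```

Because the parser is built at run time, two packages that load the same JSON file accept the same flags, which is the interoperability goal stated above.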

Features – Driver
§ Main diagnostics code.
  — Given a set of parameters, run diags based on them.
§ Easy data parallelization:
  — PMP:
    • Given a driver and parameters, generate a bash script to run in parallel.
  — E3SM Diagnostics:
    • Uses API to parallelize drivers.
    • User just provides num_workers parameter.
§ Dask for data parallelism:
  — Dask bag, runs with multiprocessing scheduler in a round-robin fashion.
  — Idea: custom approach with ProcessPoolExecutor.
    • Allow for caching variables read in via files.
    • Cache expensive computation: calculating the climatology.
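
The "custom approach" idea, a process pool plus caching of expensive reads, might look roughly like the sketch below. It uses `concurrent.futures.ProcessPoolExecutor` and `functools.lru_cache` from the standard library. Everything else is an assumption for illustration: `load_climatology`, `driver`, `run_diags`, and the parameter keys are hypothetical names, and the "climatology" is a placeholder number rather than real file I/O.

```python
from concurrent.futures import ProcessPoolExecutor
from functools import lru_cache

@lru_cache(maxsize=None)
def load_climatology(path, season):
    """Stand-in for an expensive read-and-average step (hypothetical)."""
    # A real driver would open `path` and compute the seasonal mean here.
    return sum(ord(c) for c in path + season) % 100

def driver(parameter):
    """Run one diagnostic for one parameter set and return a result record."""
    test = load_climatology(parameter["test_path"], parameter["season"])
    ref = load_climatology(parameter["ref_path"], parameter["season"])
    return {"variable": parameter["variable"], "diff": test - ref}

def run_diags(driver_func, parameters, num_workers=1,
              executor_cls=ProcessPoolExecutor):
    """Map the driver over all parameter sets; results keep the input order."""
    with executor_cls(max_workers=num_workers) as executor:
        return list(executor.map(driver_func, parameters))
```

A caller would build a list of parameter dicts and invoke `run_diags(driver, parameters, num_workers=4)`, ideally under an `if __name__ == "__main__":` guard so the script also works on platforms that spawn worker processes. Note that each worker process holds its own cache, so the saving appears when one worker handles several parameter sets that share the same files.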

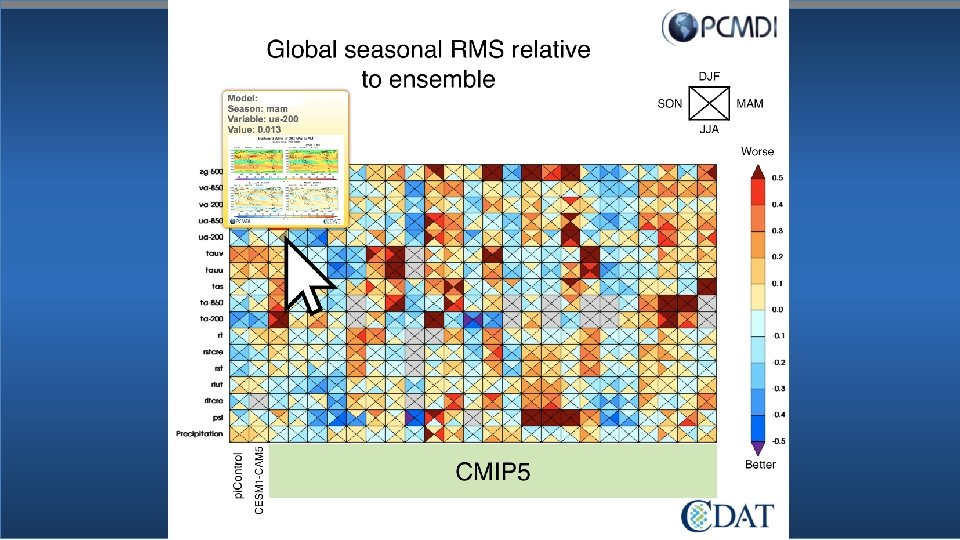

Current Implementations - PMP
§ PCMDI Metrics Package.
  — PCMDI: Program for Climate Model Diagnosis and Intercomparison.
§ Peter Gleckler, Charles Doutriaux, Ji-woo Lee, Paul Durack, & Zeshawn Shaheen.
§ Compare results from many climate models with many different observations using various metrics.
  — Emphasis on large to global-scale metrics.
§ Science-driven approach: Scientists drive the development, with aid from software engineers.
§ Current/Future plans:
  — ENSO diags, regional monsoon precipitation and diurnal cycle of precipitation metrics.
  — Interactive results/plots.

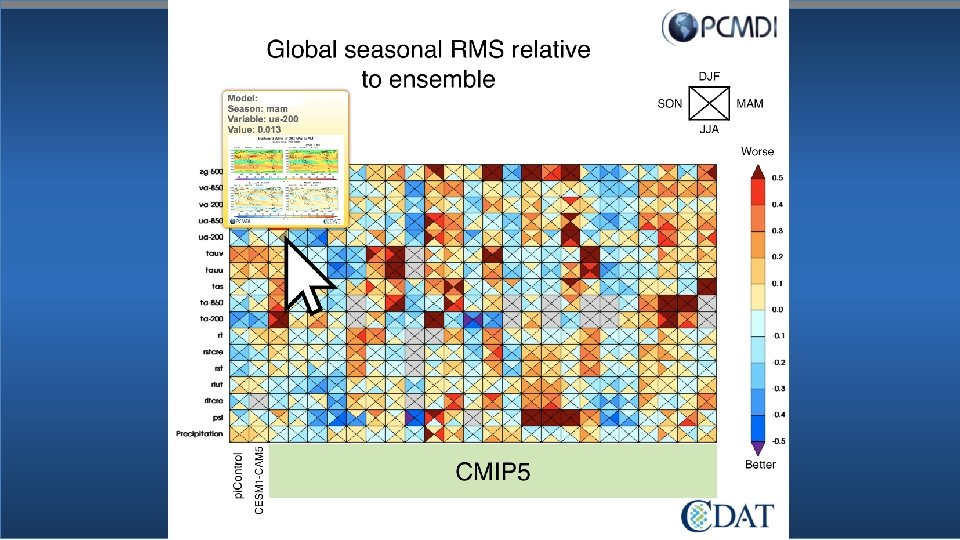


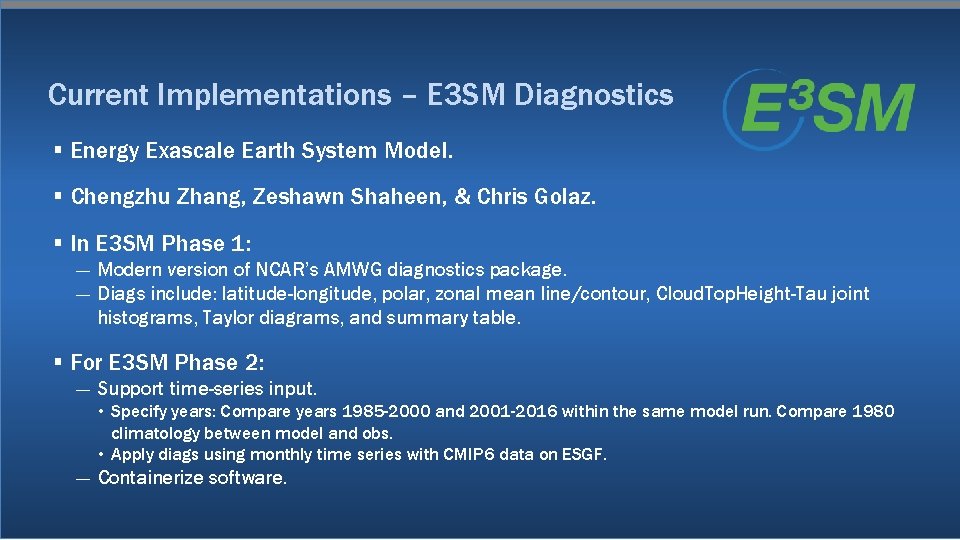

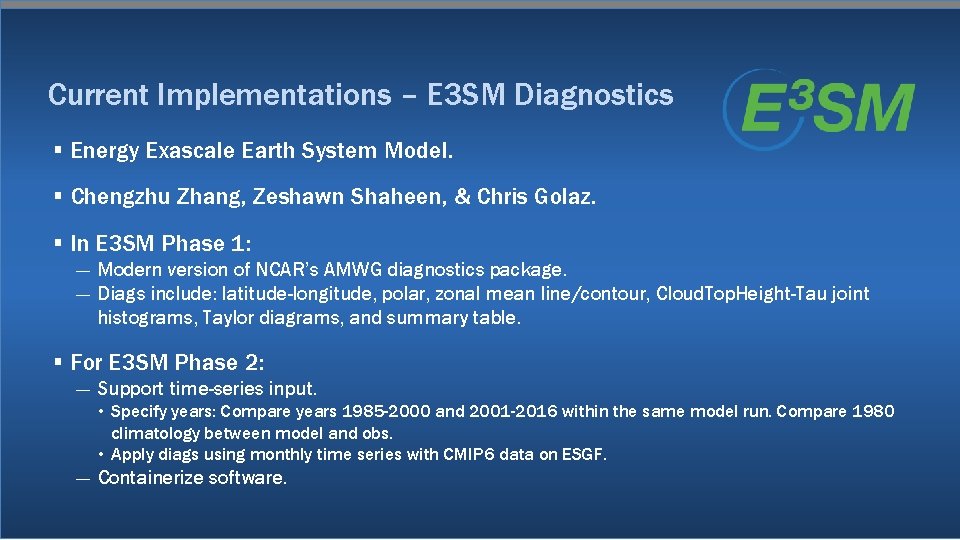

Current Implementations – E3SM Diagnostics
§ E3SM: Energy Exascale Earth System Model.
§ Chengzhu Zhang, Zeshawn Shaheen, & Chris Golaz.
§ In E3SM Phase 1:
  — Modern version of NCAR’s AMWG diagnostics package.
  — Diags include: latitude-longitude, polar, zonal mean line/contour, CloudTopHeight-Tau joint histograms, Taylor diagrams, and summary table.
§ For E3SM Phase 2:
  — Support time-series input.
    • Specify years: Compare years 1985-2000 and 2001-2016 within the same model run. Compare 1980 climatology between model and obs.
    • Apply diags using monthly time series with CMIP6 data on ESGF.
  — Containerize software.

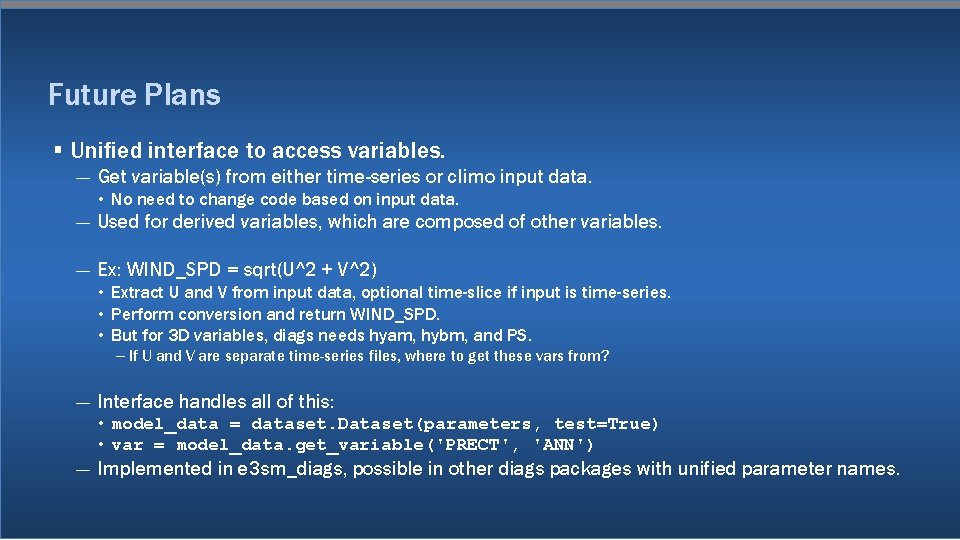

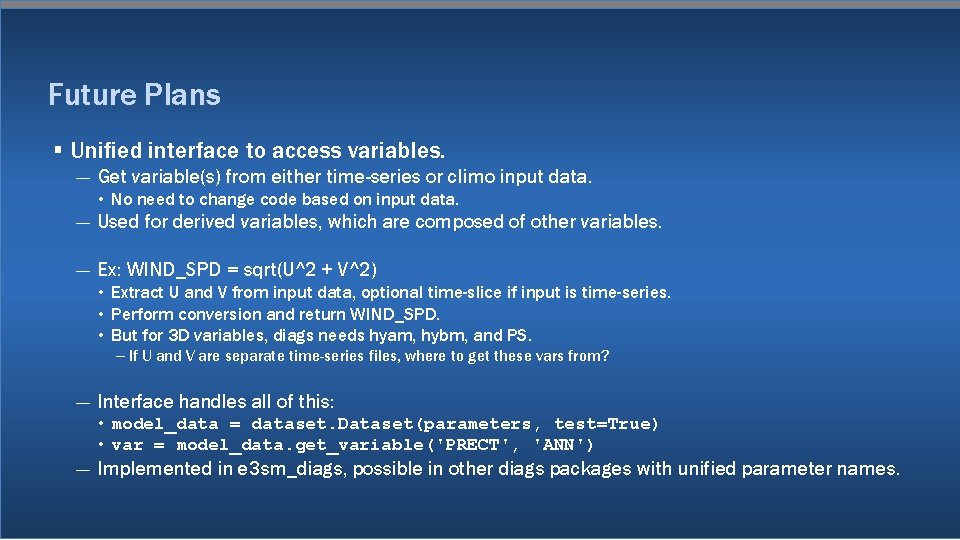

Future Plans
§ Unified interface to access variables.
  — Get variable(s) from either time-series or climo input data.
    • No need to change code based on input data.
  — Used for derived variables, which are composed of other variables.
  — Ex: WIND_SPD = sqrt(U^2 + V^2)
    • Extract U and V from input data, optional time-slice if input is time-series.
    • Perform conversion and return WIND_SPD.
    • But for 3D variables, diags needs hyam, hybm, and PS.
      – If U and V are separate time-series files, where to get these vars from?
  — Interface handles all of this:
    • model_data = dataset.Dataset(parameters, test=True)
    • var = model_data.get_variable('PRECT', 'ANN')
  — Implemented in e3sm_diags, possible in other diags packages with unified parameter names.
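
To make the unified-interface idea concrete, here is a toy sketch of a `get_variable` call that works the same way for climo and time-series input and resolves derived variables such as WIND_SPD. It is not the e3sm_diags `Dataset` class: the constructor arguments, the `DERIVED` registry, the `SEASON_MONTHS` table, and the use of one scalar per month instead of gridded fields are all assumptions for illustration. It also derives WIND_SPD from seasonal means of U and V and ignores the extra fields (hyam, hybm, PS) needed for 3D variables.

```python
import math

# Month indices (0 = January) that make up each season.
SEASON_MONTHS = {"ANN": range(12), "DJF": (11, 0, 1), "JJA": (5, 6, 7)}

# Hypothetical registry: derived variable -> (source variables, conversion).
DERIVED = {"WIND_SPD": (("U", "V"), lambda u, v: math.sqrt(u**2 + v**2))}

class Dataset:
    """Toy stand-in for the slide's interface; not the e3sm_diags class."""

    def __init__(self, climo=None, timeseries=None):
        self.climo = climo or {}            # {var: {season: value}}
        self.timeseries = timeseries or {}  # {var: [12 monthly values]}

    def _read(self, var, season):
        """Return a seasonal mean from whichever input type holds `var`."""
        if var in self.climo:
            return self.climo[var][season]
        months = SEASON_MONTHS[season]
        return sum(self.timeseries[var][m] for m in months) / len(months)

    def get_variable(self, var, season):
        """Return `var` for `season`, deriving it from other variables if needed."""
        if var in self.climo or var in self.timeseries:
            return self._read(var, season)
        if var not in DERIVED:
            raise KeyError(f"{var} is neither in the input data nor derivable")
        sources, convert = DERIVED[var]
        return convert(*(self.get_variable(s, season) for s in sources))

# The calling code is identical for both kinds of input data.
climo_data = Dataset(climo={"U": {"ANN": 3.0}, "V": {"ANN": 4.0}})
ts_data = Dataset(timeseries={"U": [3.0] * 12, "V": [4.0] * 12})
print(climo_data.get_variable("WIND_SPD", "ANN"))  # 5.0
print(ts_data.get_variable("WIND_SPD", "ANN"))     # 5.0
```

The design choice worth noting is that the driver never inspects the input type; only `_read` knows whether a value came from a precomputed climatology or was averaged from a time series.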

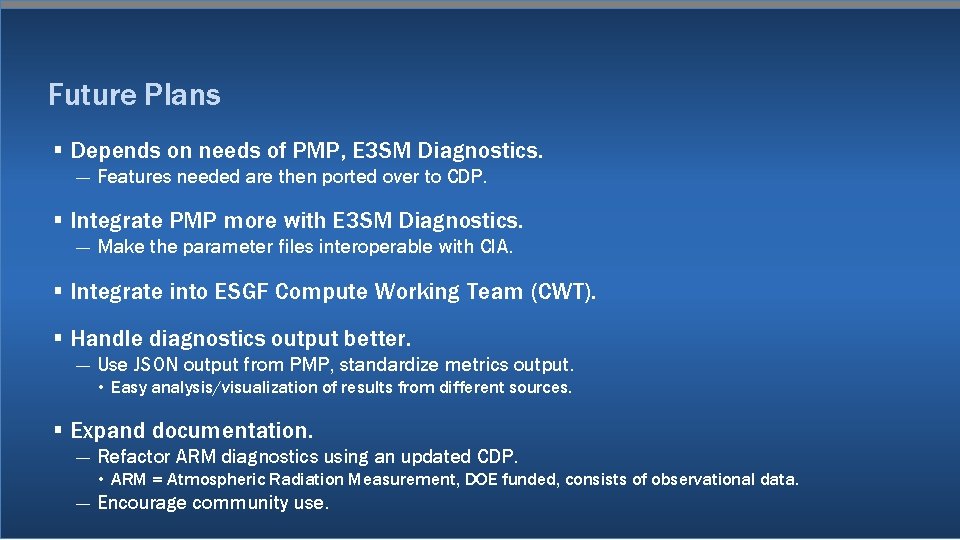

Future Plans
§ Depends on needs of PMP, E3SM Diagnostics.
  — Features needed are then ported over to CDP.
§ Integrate PMP more with E3SM Diagnostics.
  — Make the parameter files interoperable with CIA.
§ Integrate into ESGF Compute Working Team (CWT).
§ Handle diagnostics output better.
  — Use JSON output from PMP, standardize metrics output.
    • Easy analysis/visualization of results from different sources.
§ Expand documentation.
  — Refactor ARM diagnostics using an updated CDP.
    • ARM = Atmospheric Radiation Measurement, DOE funded, consists of observational data.
  — Encourage community use.