Common Practices for Managing Small HPC Clusters Supercomputing

- Slides: 57

Common Practices for Managing Small HPC Clusters, Supercomputing 12

roger.bielefeld@cwru.edu, david@uwm.edu

Small HPC BoF @ Supercomputing

- 2012 Survey Instrument: tinyurl.com/smallHPC
- Fourth annual BoF: https://sites.google.com/site/smallhpc/
- Discussing 2011 and 2012 survey results…

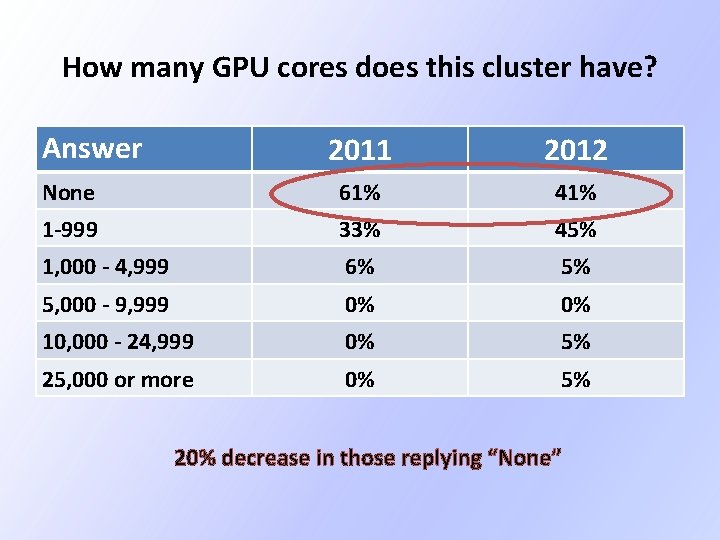

GPUs

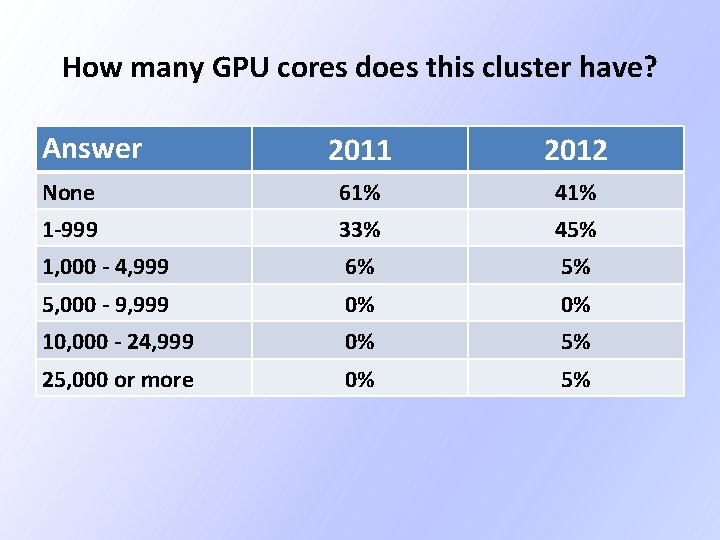

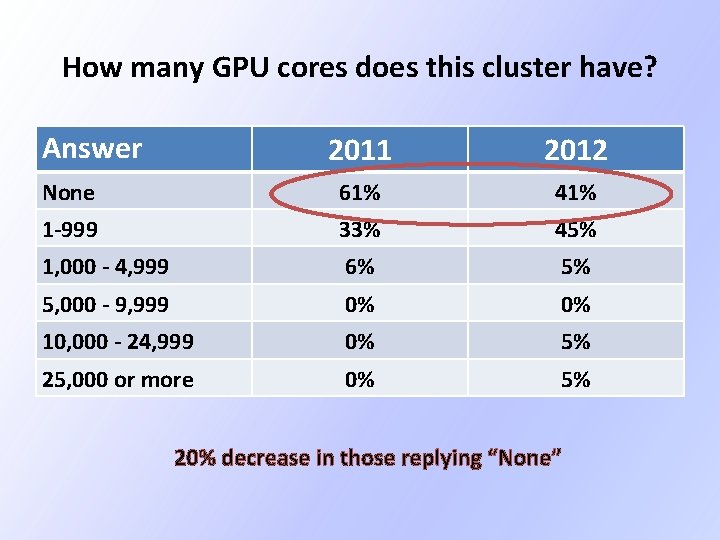

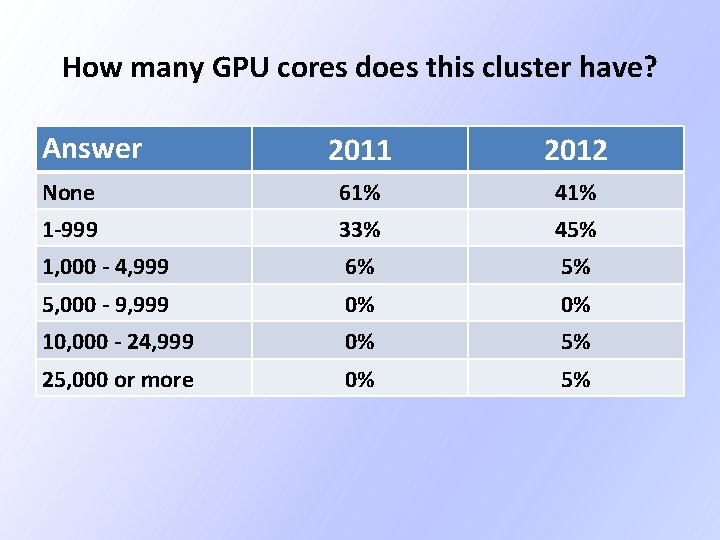

How many GPU cores does this cluster have?

| Answer | 2011 | 2012 |
| --- | --- | --- |
| None | 61% | 41% |
| 1 - 999 | 33% | 45% |
| 1,000 - 4,999 | 6% | 5% |
| 5,000 - 9,999 | 0% | 0% |
| 10,000 - 24,999 | 0% | 5% |
| 25,000 or more | 0% | 5% |

20 percentage point decrease in those replying “None”
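The drop in “None” responses from 61% to 41% can be read two ways: as a difference in percentage points, or as a change relative to the 2011 value. The short sketch below shows both calculations. The function names are illustrative only and are not part of the survey materials.

```python
def pct_point_change(before: float, after: float) -> float:
    """Difference between two percentages, in percentage points."""
    return after - before


def relative_change(before: float, after: float) -> float:
    """Change relative to the starting percentage, expressed in percent."""
    return (after - before) / before * 100.0


# Share of respondents reporting no GPU cores (from the survey table)
none_2011, none_2012 = 61.0, 41.0

print(pct_point_change(none_2011, none_2012))            # -20.0
print(round(relative_change(none_2011, none_2012), 1))   # -32.8
```

Read this way, the “None” share fell by 20 percentage points, which is roughly a one-third drop relative to 2011.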

Hardware Configuration

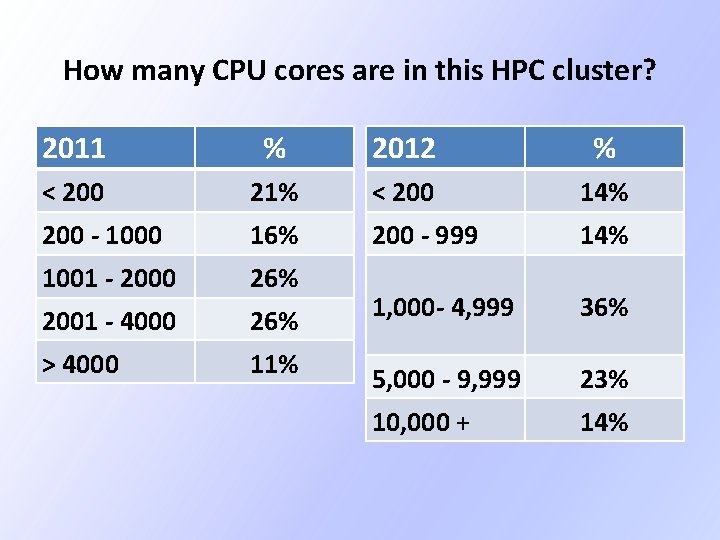

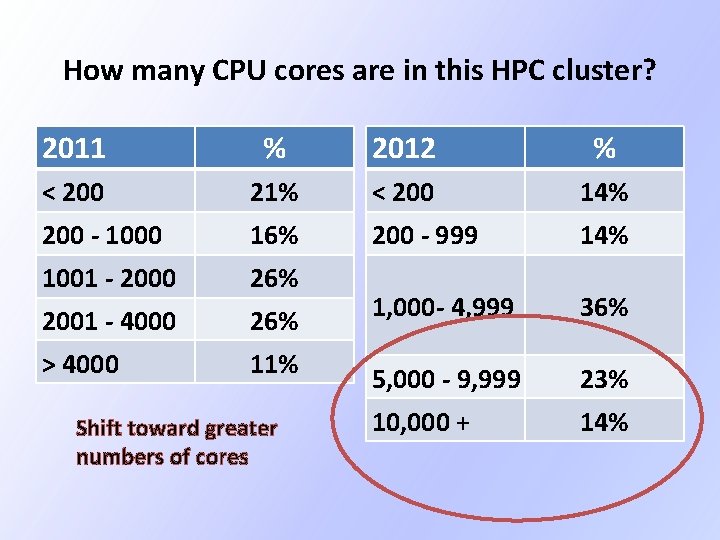

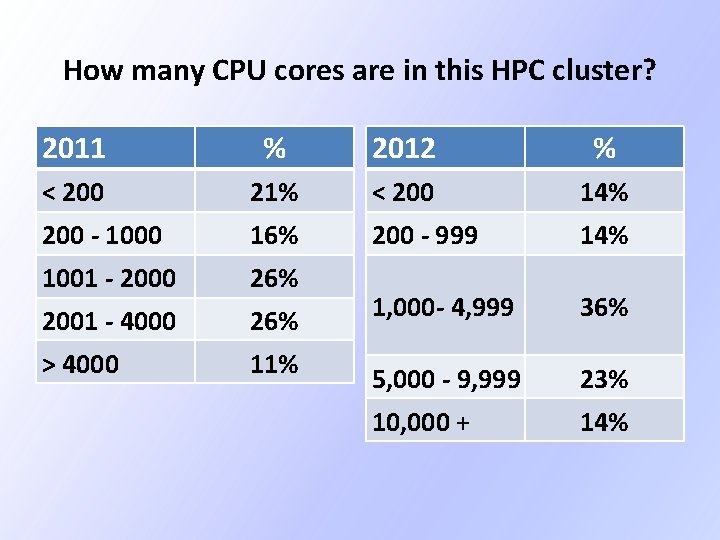

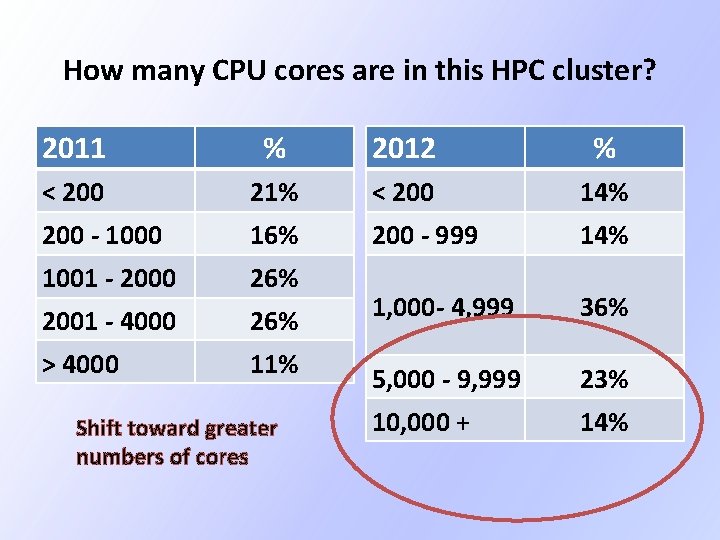

How many CPU cores are in this HPC cluster?

| 2011 | % | 2012 | % |
| --- | --- | --- | --- |
| < 200 | 21% | < 200 | 14% |
| 200 - 1000 | 16% | 200 - 999 | 14% |
| 1001 - 2000 | 26% | 1,000 - 4,999 | 36% |
| 2001 - 4000 | 26% | 5,000 - 9,999 | 23% |
| > 4000 | 11% | 10,000+ | 14% |

Shift toward greater numbers of cores
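Because the 2011 and 2012 surveys used different bins, one rough way to compare them is to add up the share of clusters at or above about 1,000 cores in each year. The sketch below does that with the reported percentages. The cut-off and the helper name `share` are assumptions made for illustration, and the two years' bin edges differ slightly (1001 versus 1,000).

```python
# Reported percentages per bin, copied from the survey slide
cores_2011 = {
    "< 200": 21, "200 - 1000": 16, "1001 - 2000": 26,
    "2001 - 4000": 26, "> 4000": 11,
}
cores_2012 = {
    "< 200": 14, "200 - 999": 14, "1,000 - 4,999": 36,
    "5,000 - 9,999": 23, "10,000+": 14,
}


def share(dist: dict, bins: list) -> int:
    """Sum the percentages of the selected bins."""
    return sum(dist[b] for b in bins)


large_2011 = share(cores_2011, ["1001 - 2000", "2001 - 4000", "> 4000"])
large_2012 = share(cores_2012, ["1,000 - 4,999", "5,000 - 9,999", "10,000+"])

print(large_2011, large_2012)  # 63 73
```

The 2012 column sums to 101% because of rounding in the reported figures, so the comparison is approximate.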

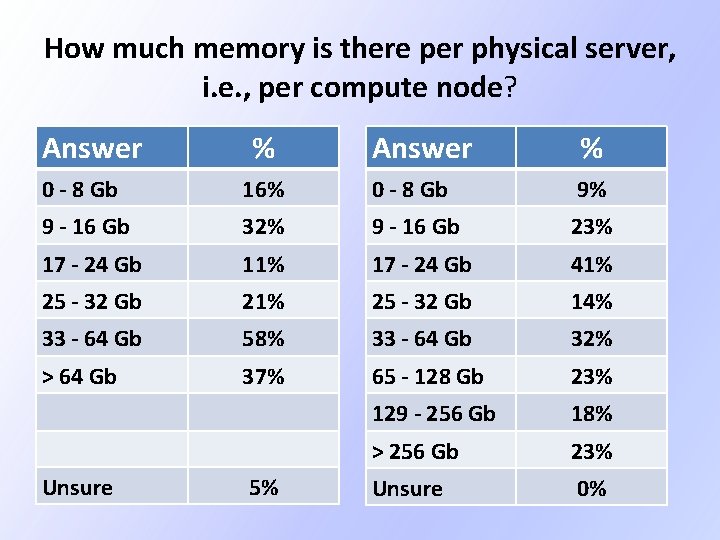

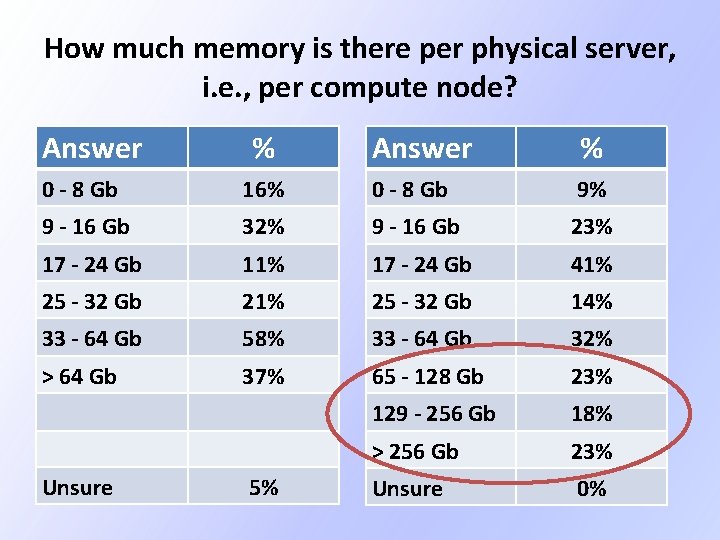

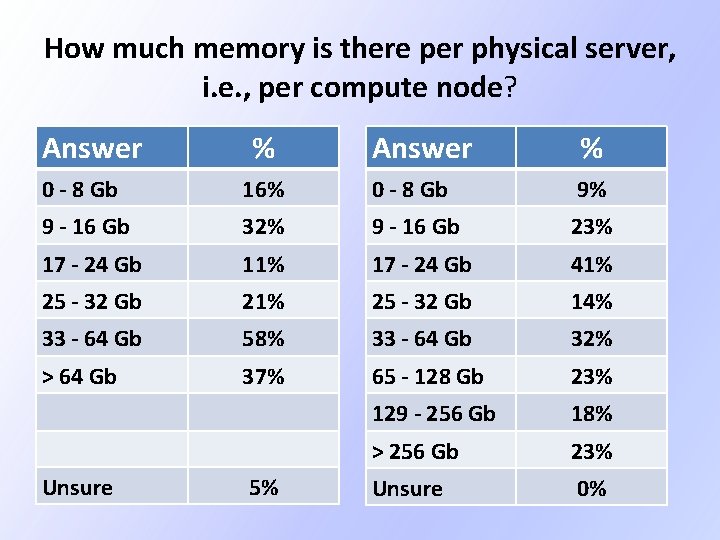

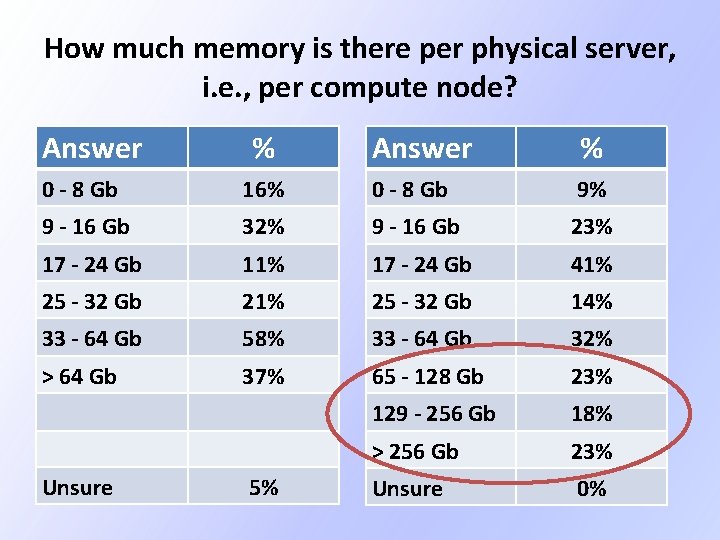

How much memory is there per physical server, i.e., per compute node?

| Answer | % | Answer | % |
| --- | --- | --- | --- |
| 0 - 8 GB | 16% | 0 - 8 GB | 9% |
| 9 - 16 GB | 32% | 9 - 16 GB | 23% |
| 17 - 24 GB | 11% | 17 - 24 GB | 41% |
| 25 - 32 GB | 21% | 25 - 32 GB | 14% |
| 33 - 64 GB | 58% | 33 - 64 GB | 32% |
| > 64 GB | 37% | 65 - 128 GB | 23% |
| | | 129 - 256 GB | 18% |
| | | > 256 GB | 23% |
| Unsure | 0% | Unsure | 5% |

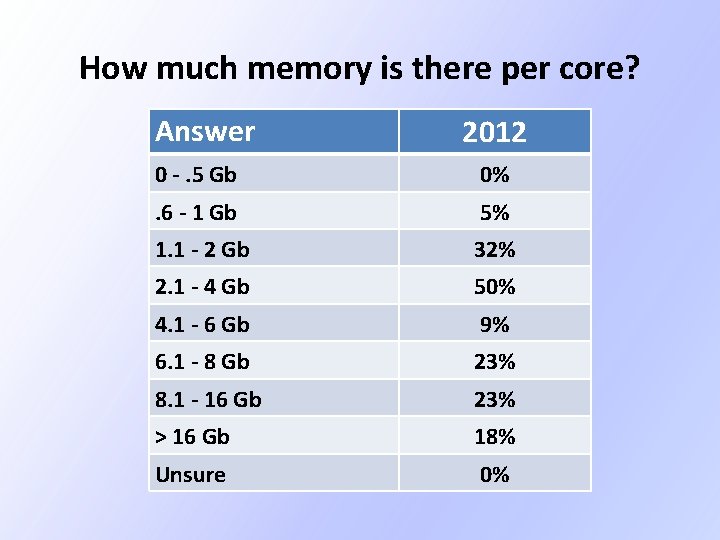

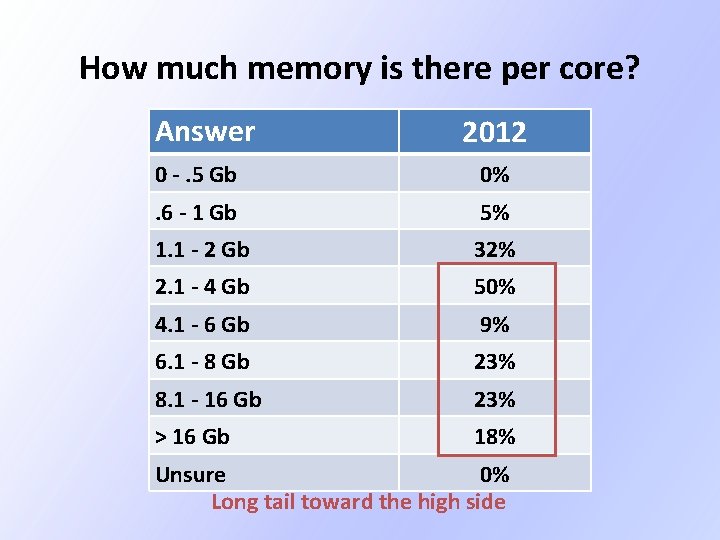

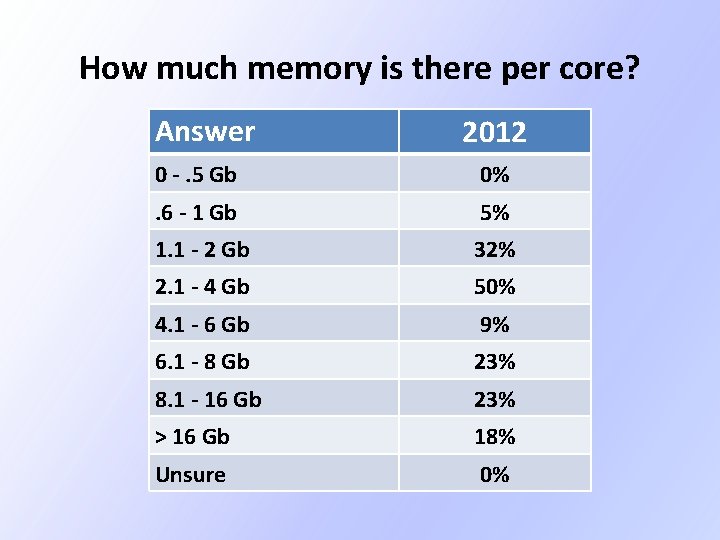

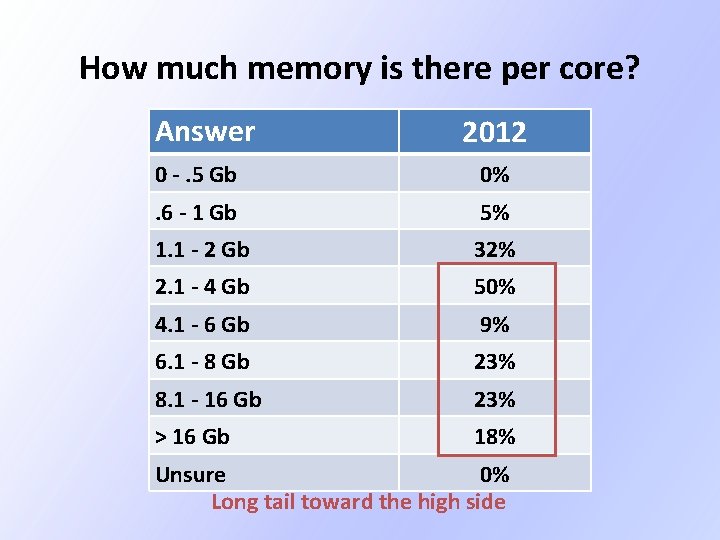

How much memory is there per core?

| Answer | 2012 |
| --- | --- |
| 0 - 0.5 GB | 0% |
| 0.6 - 1 GB | 5% |
| 1.1 - 2 GB | 32% |
| 2.1 - 4 GB | 50% |
| 4.1 - 6 GB | 9% |
| 6.1 - 8 GB | 23% |
| 8.1 - 16 GB | 23% |
| > 16 GB | 18% |
| Unsure | 0% |

Long tail toward the high side
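Memory per core is node memory divided by the number of cores in the node. The sketch below computes that ratio and maps it to the survey's answer bins. The node sizes are hypothetical examples rather than survey data, the function names are illustrative, and values that fall between two bin edges (such as 0.55 GB) are assigned to the next bin up as a simplifying assumption.

```python
def memory_per_core_gb(node_memory_gb: float, cores_per_node: int) -> float:
    """Node memory divided evenly across the node's cores."""
    if cores_per_node <= 0:
        raise ValueError("cores_per_node must be positive")
    return node_memory_gb / cores_per_node


# (upper bound in GB, label) for each survey bin, in ascending order
SURVEY_BINS = [
    (0.5, "0 - 0.5 GB"),
    (1.0, "0.6 - 1 GB"),
    (2.0, "1.1 - 2 GB"),
    (4.0, "2.1 - 4 GB"),
    (6.0, "4.1 - 6 GB"),
    (8.0, "6.1 - 8 GB"),
    (16.0, "8.1 - 16 GB"),
]


def survey_bin(gb_per_core: float) -> str:
    """Return the label of the first bin whose upper bound covers the value."""
    for upper, label in SURVEY_BINS:
        if gb_per_core <= upper:
            return label
    return "> 16 GB"


# Hypothetical nodes: 64 GB with 16 cores, and 256 GB with 8 cores
print(survey_bin(memory_per_core_gb(64, 16)))   # 2.1 - 4 GB
print(survey_bin(memory_per_core_gb(256, 8)))   # > 16 GB
```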

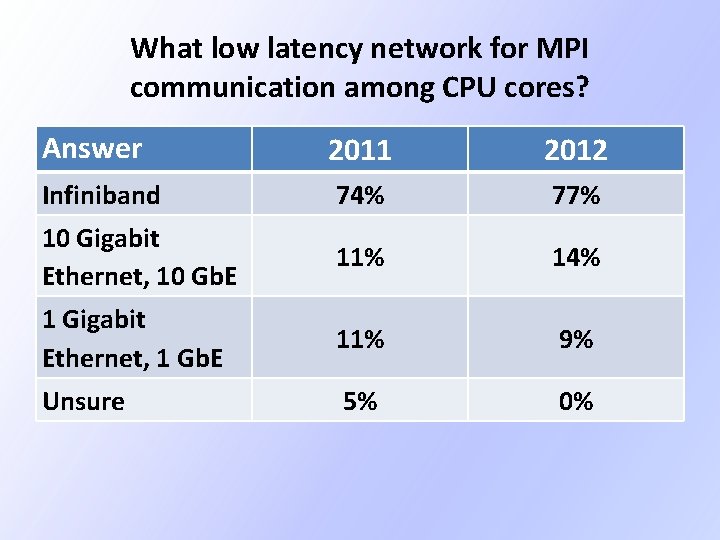

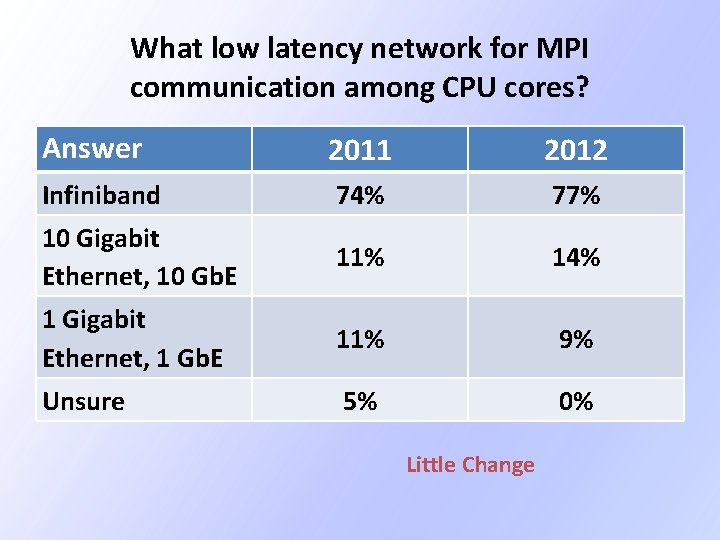

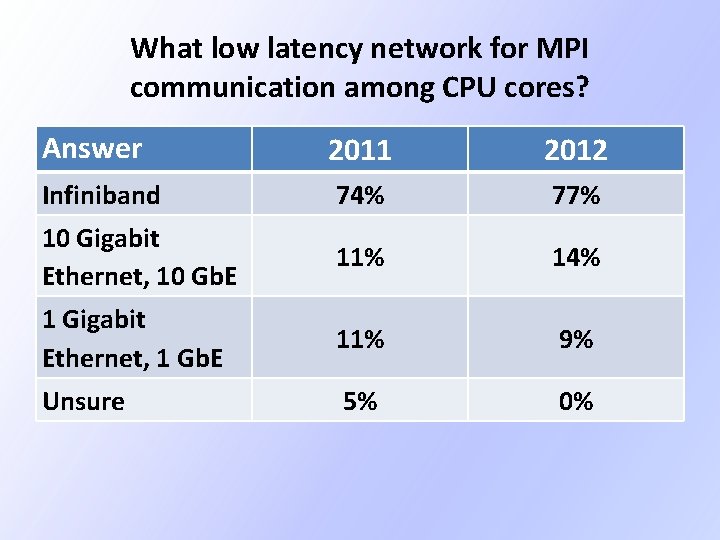

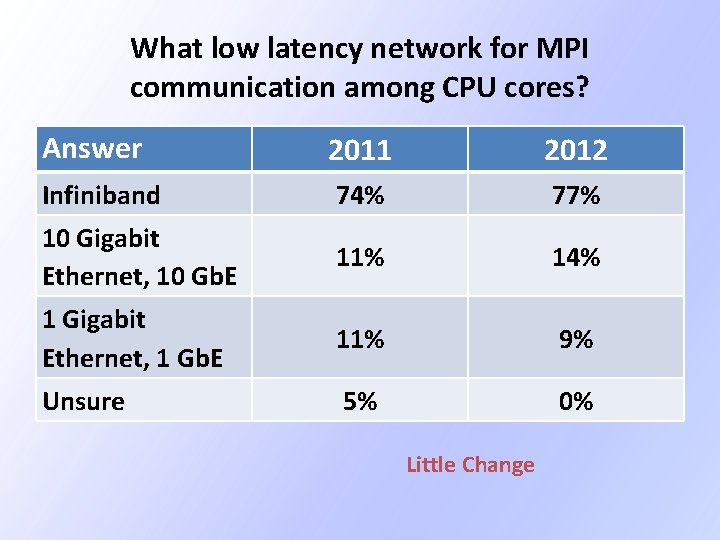

What low latency network for MPI communication among CPU cores?

| Answer | 2011 | 2012 |
| --- | --- | --- |
| InfiniBand | 74% | 77% |
| 10 Gigabit Ethernet, 10 GbE | 11% | 14% |
| 1 Gigabit Ethernet, 1 GbE | 11% | 9% |
| Unsure | 5% | 0% |

Little change

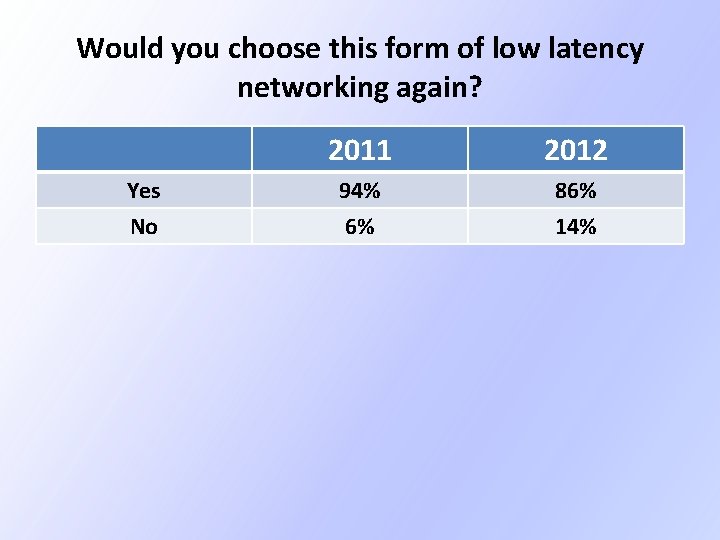

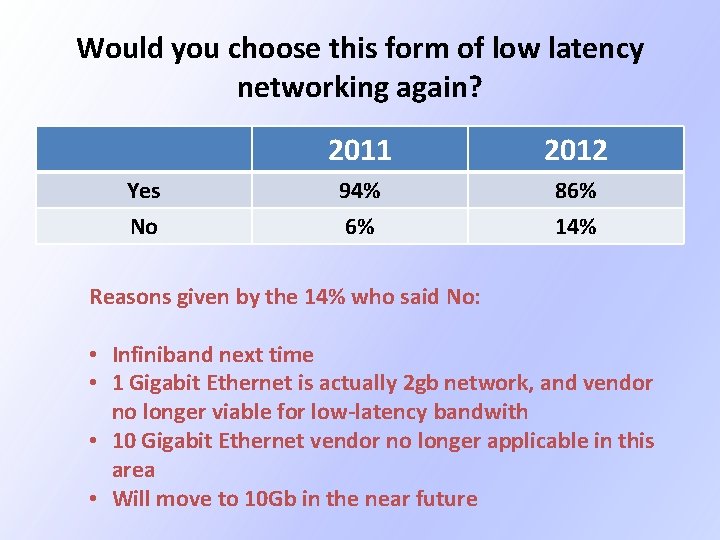

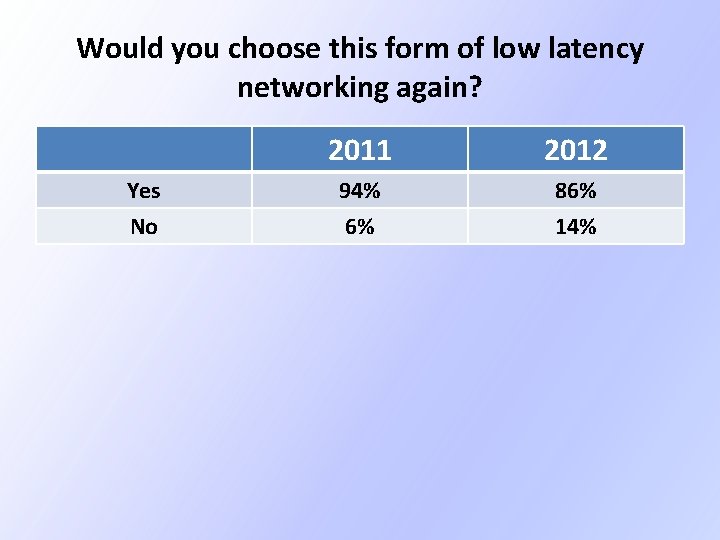

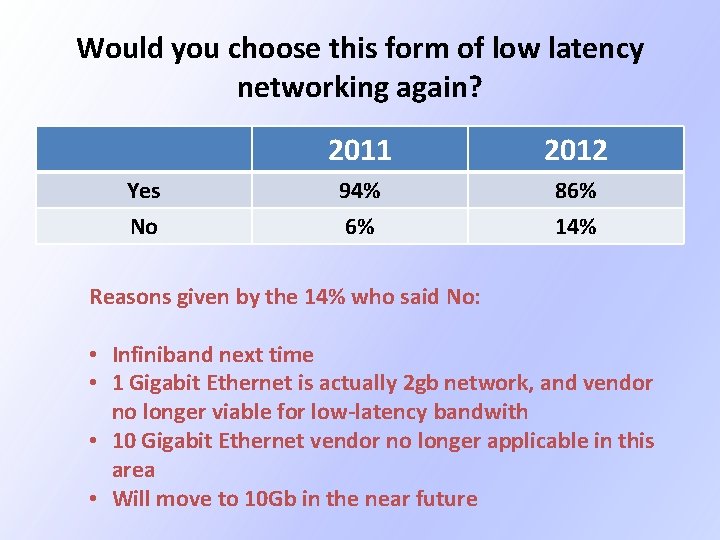

Would you choose this form of low latency networking again?

| Year | Yes | No |
| --- | --- | --- |
| 2011 | 94% | 6% |
| 2012 | 86% | 14% |

Reasons given by the 14% who said No:

- InfiniBand next time
- 1 Gigabit Ethernet is actually 2 gb network, and vendor no longer viable for low-latency bandwidth
- 10 Gigabit Ethernet vendor no longer applicable in this area
- Will move to 10 Gb in the near future

Scheduler Questions

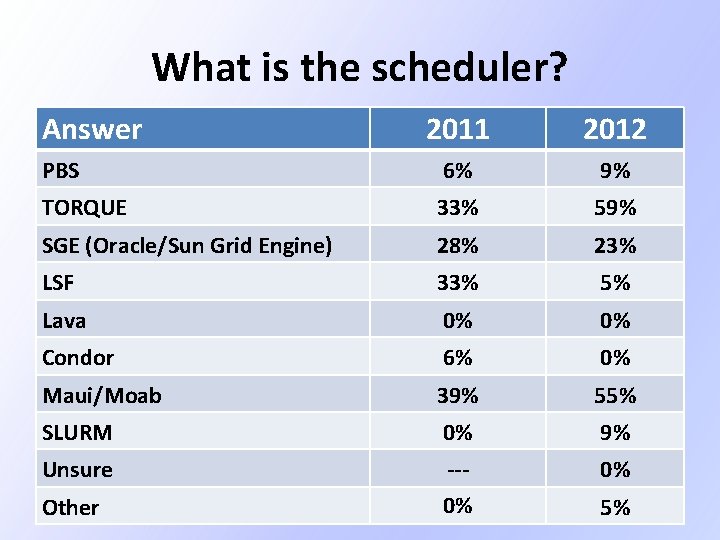

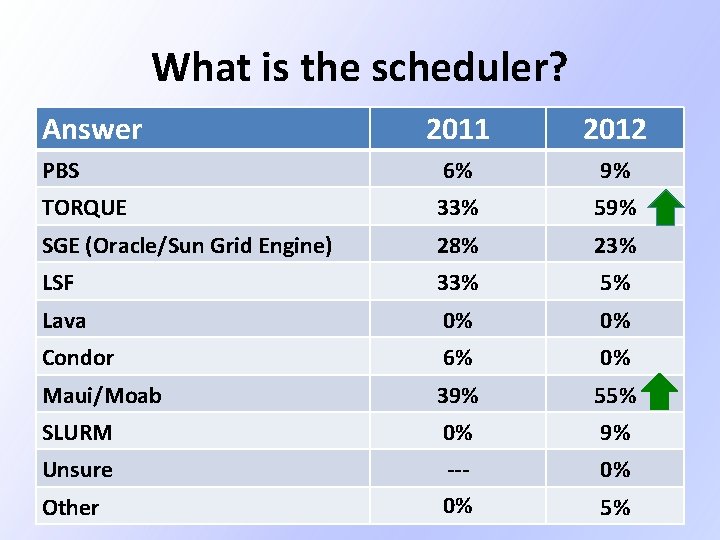

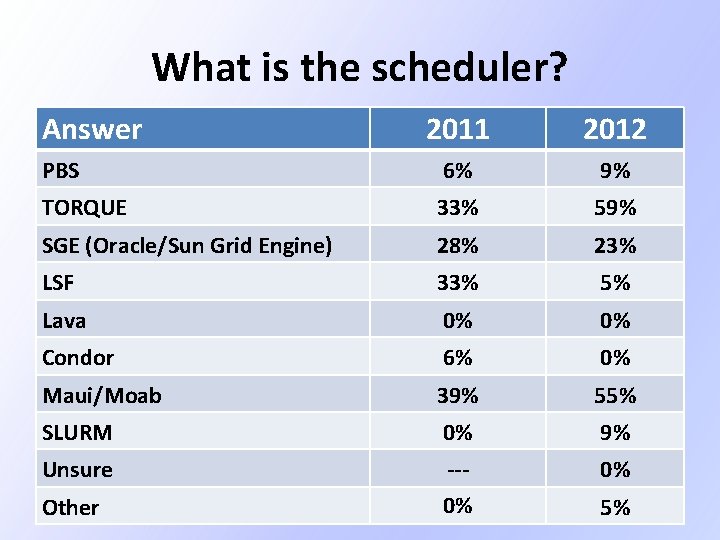

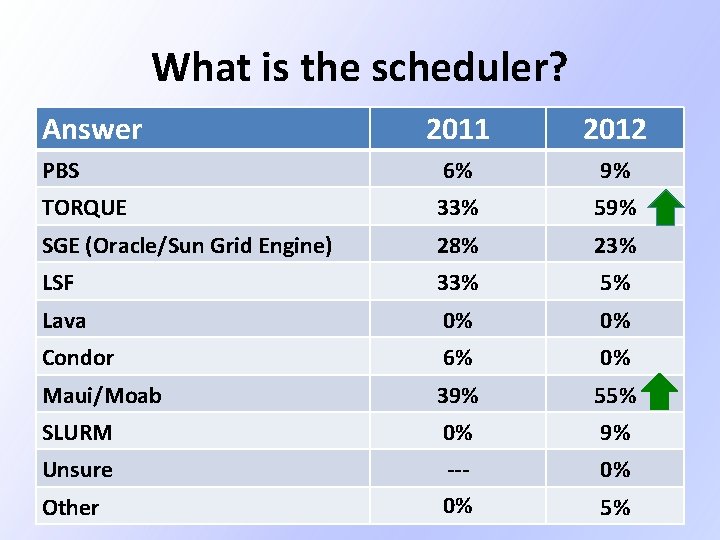

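What is the scheduler?

| Answer | 2011 | 2012 |
| --- | --- | --- |
| PBS | 6% | 9% |
| TORQUE | 33% | 59% |
| SGE (Oracle/Sun Grid Engine) | 28% | 23% |
| LSF | 33% | 5% |
| Lava | 0% | 0% |
| Condor | 6% | 0% |
| Maui/Moab | 39% | 55% |
| SLURM | 0% | 9% |
| Unsure | --0% | 0% |

Other: 5%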

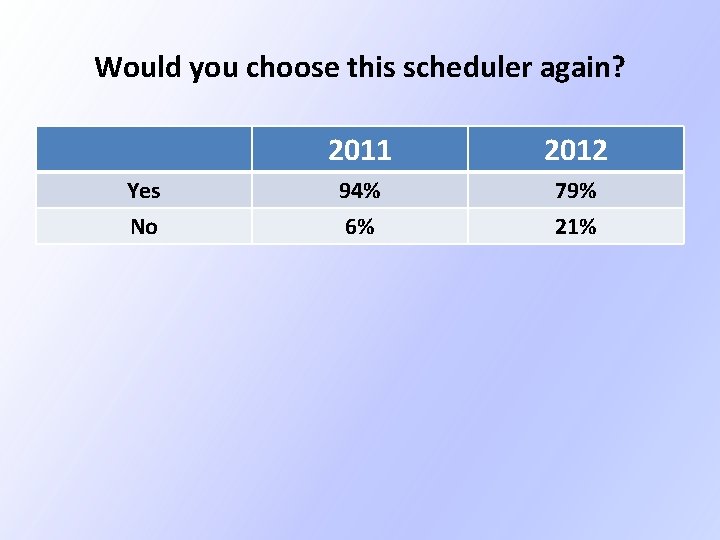

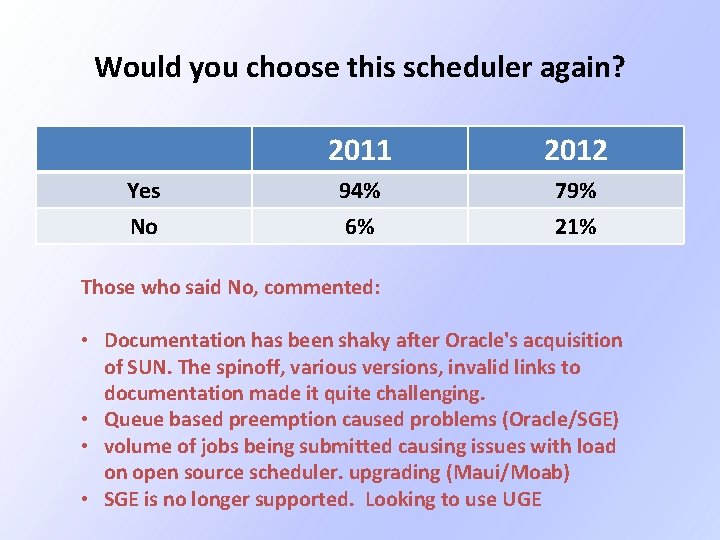

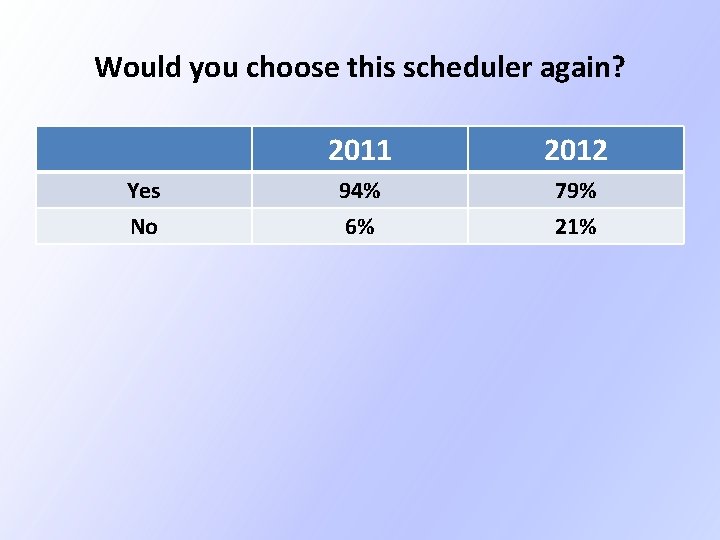

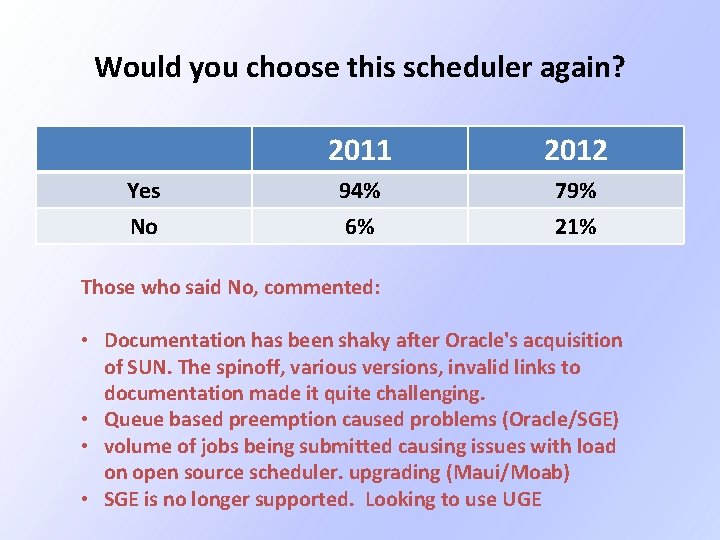

Would you choose this scheduler again?

| Year | Yes | No |
| --- | --- | --- |
| 2011 | 94% | 6% |
| 2012 | 79% | 21% |

Those who said No commented:

- Documentation has been shaky after Oracle's acquisition of Sun. The spinoff, various versions, and invalid links to documentation made it quite challenging.
- Queue-based preemption caused problems (Oracle/SGE)
- Volume of jobs being submitted causing issues with load on open source scheduler. Upgrading (Maui/Moab)
- SGE is no longer supported. Looking to use UGE

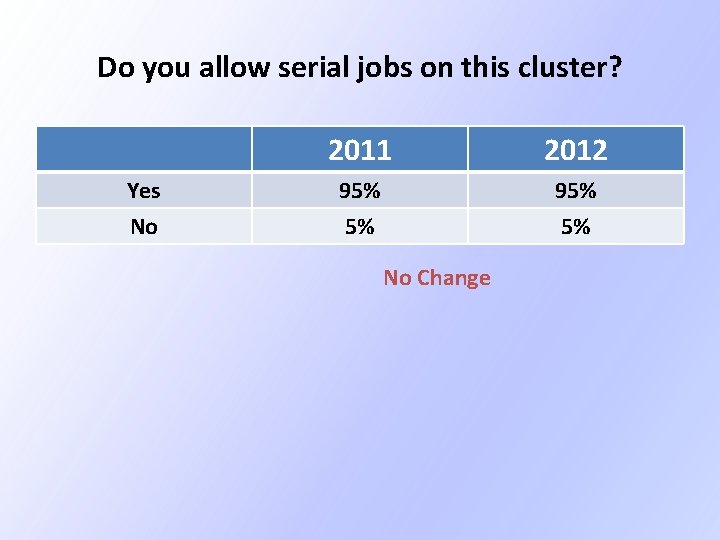

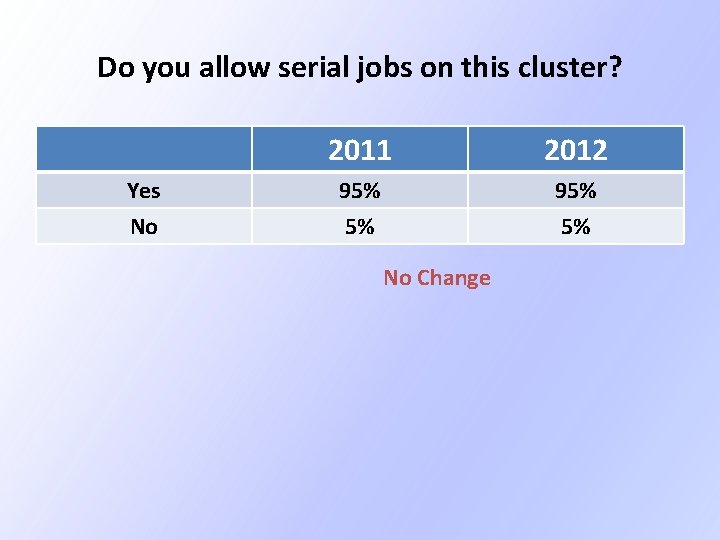

Do you allow serial jobs on this cluster?

| Yes | No |
| --- | --- |
| 95% | 5% |

No change between 2011 and 2012

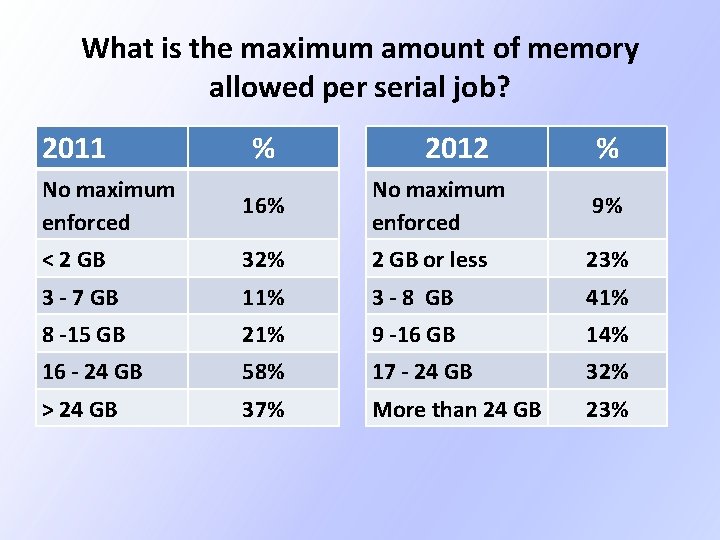

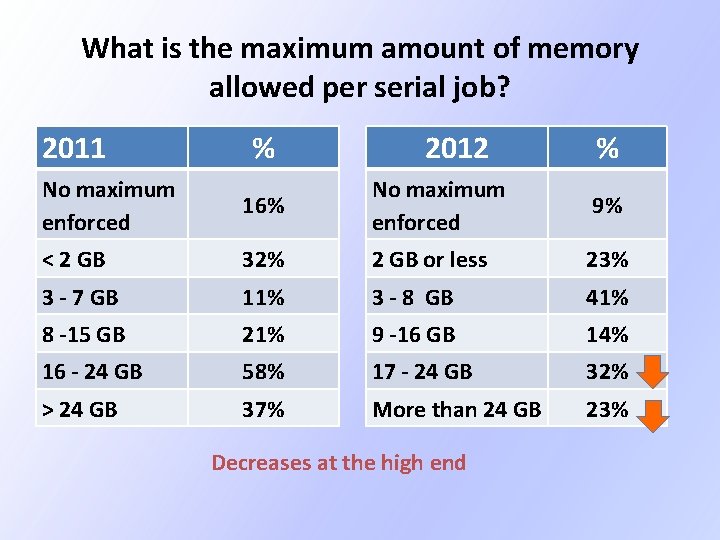

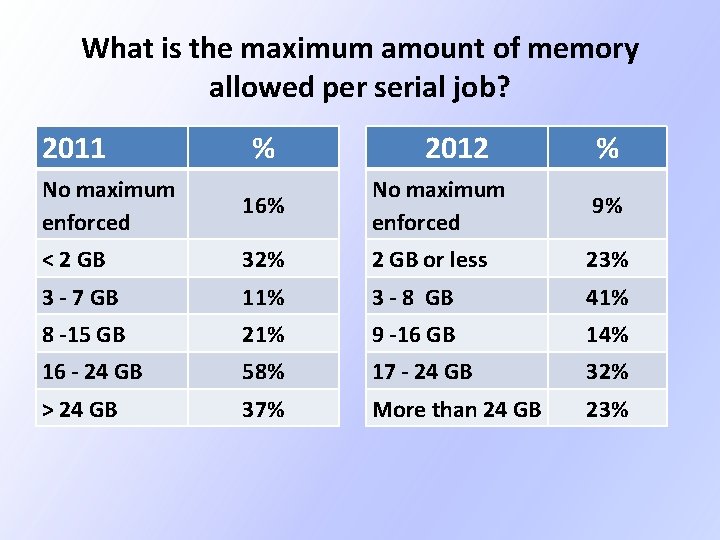

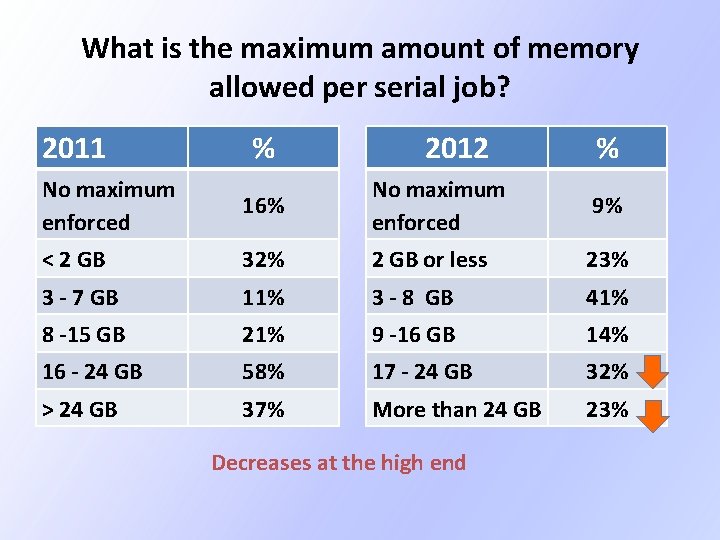

What is the maximum amount of memory allowed per serial job?

| 2011 | % | 2012 | % |
| --- | --- | --- | --- |
| No maximum enforced | 16% | No maximum enforced | 9% |
| < 2 GB | 32% | 2 GB or less | 23% |
| 3 - 7 GB | 11% | 3 - 8 GB | 41% |
| 8 - 15 GB | 21% | 9 - 16 GB | 14% |
| 16 - 24 GB | 58% | 17 - 24 GB | 32% |
| > 24 GB | 37% | More than 24 GB | 23% |

Decreases at the high end

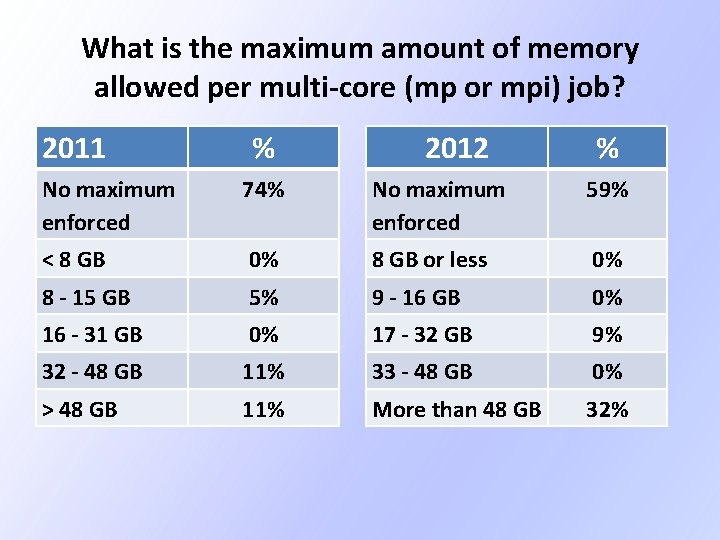

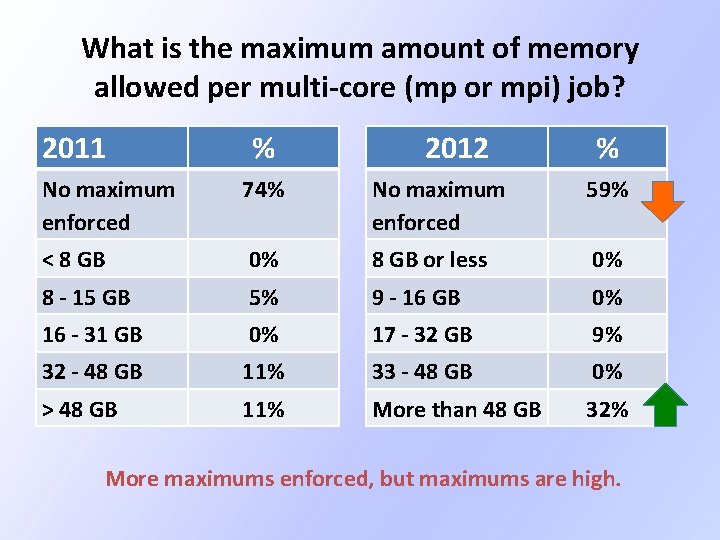

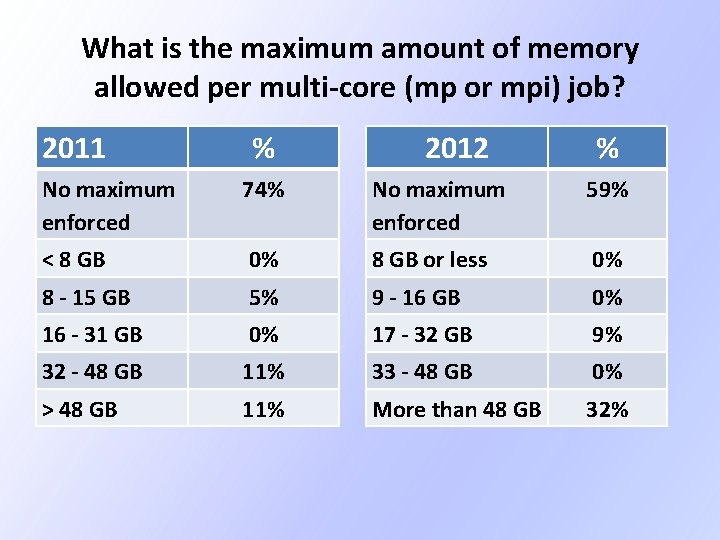

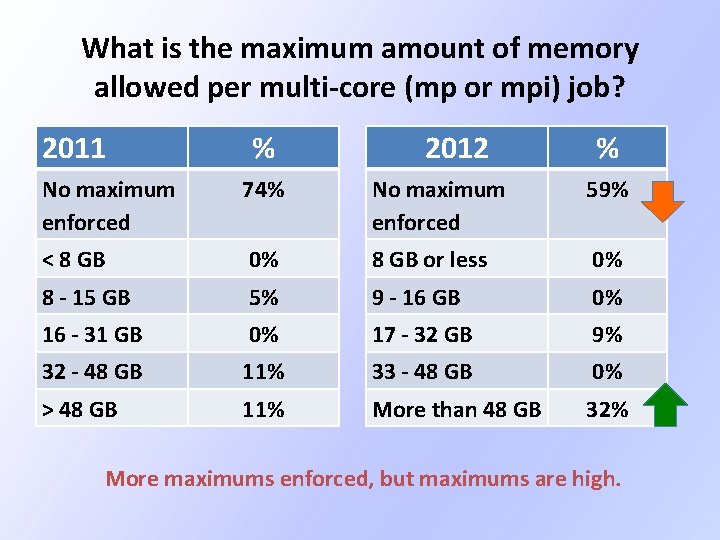

What is the maximum amount of memory allowed per multi-core (mp or mpi) job?

| 2011 | % | 2012 | % |
| --- | --- | --- | --- |
| No maximum enforced | 74% | No maximum enforced | 59% |
| < 8 GB | 0% | 8 GB or less | 0% |
| 8 - 15 GB | 5% | 9 - 16 GB | 0% |
| 16 - 31 GB | 0% | 17 - 32 GB | 9% |
| 32 - 48 GB | 11% | 33 - 48 GB | 0% |
| > 48 GB | 11% | More than 48 GB | 32% |

More maximums enforced, but maximums are high.

Storage

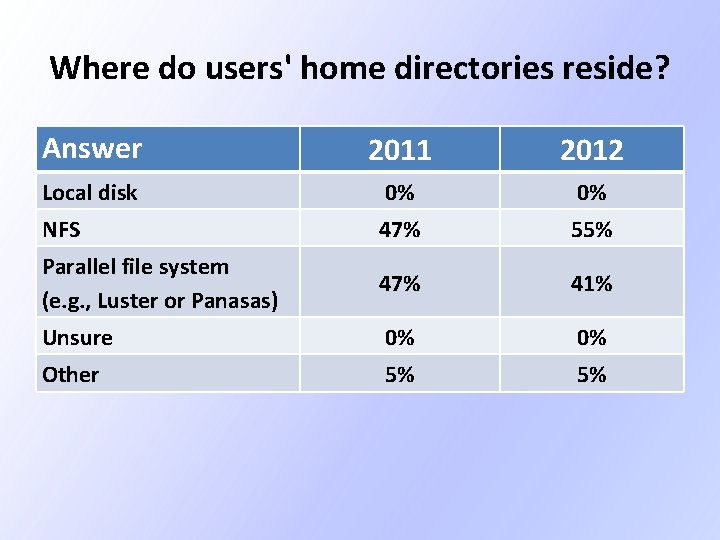

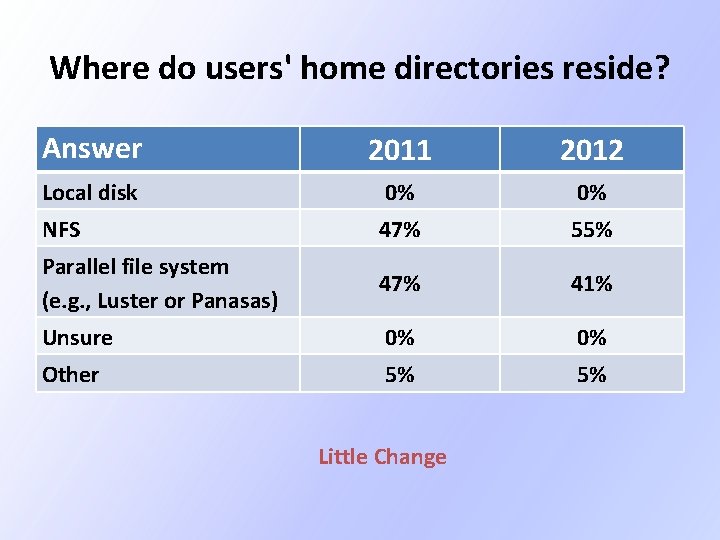

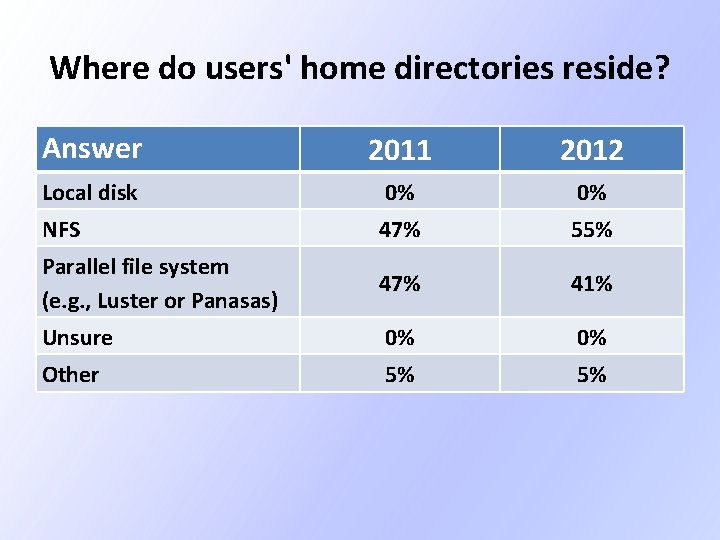

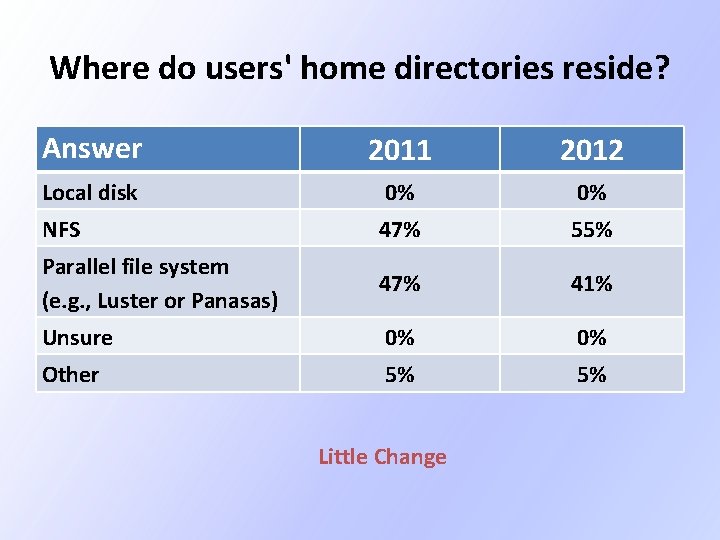

Where do users' home directories reside?

| Answer | 2011 | 2012 |
| --- | --- | --- |
| Local disk | 0% | 0% |
| NFS | 47% | 55% |
| Parallel file system (e.g., Lustre or Panasas) | 47% | 41% |
| Unsure | 0% | 0% |
| Other | 5% | 5% |

Little change

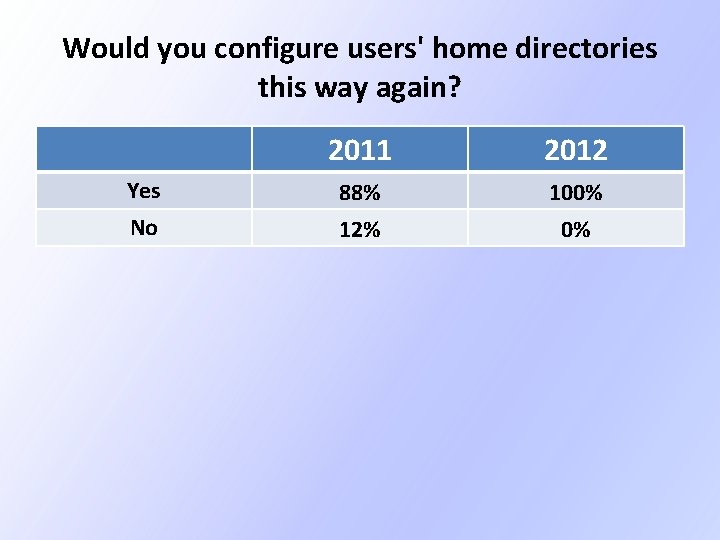

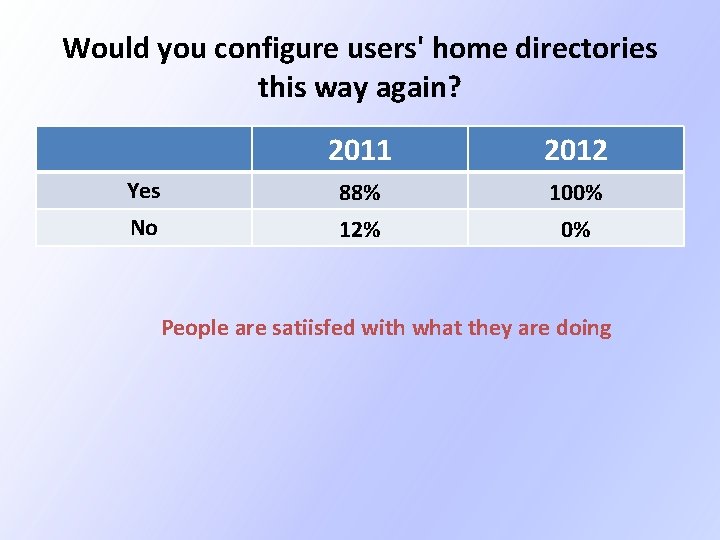

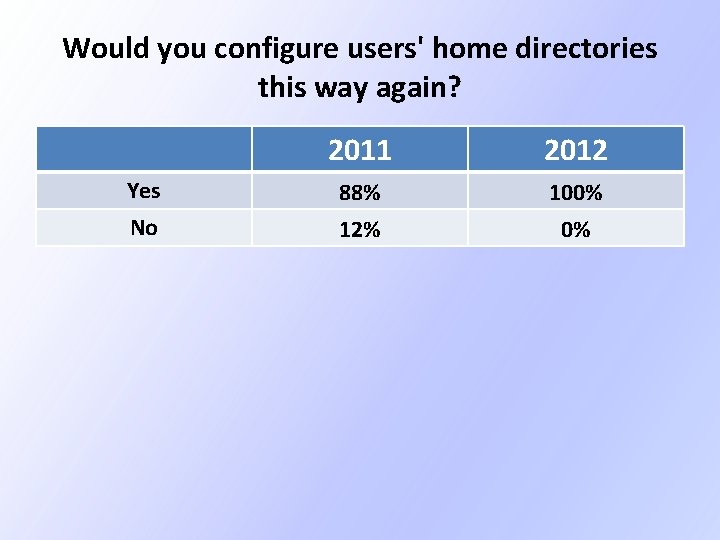

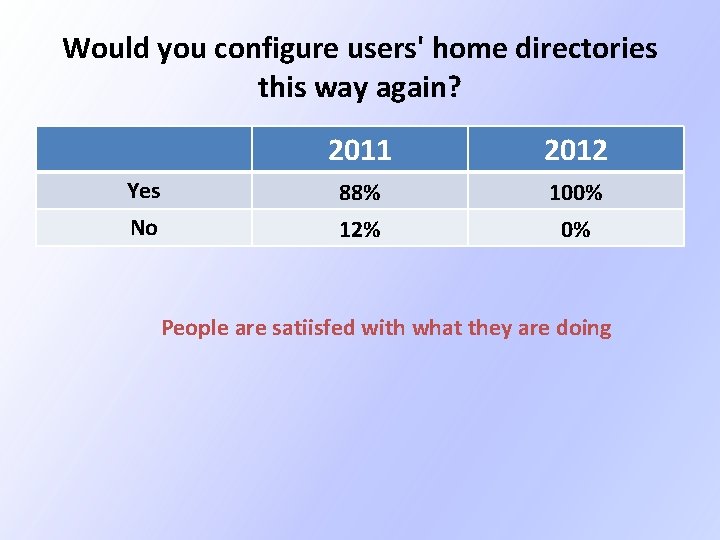

Would you configure users' home directories this way again?

| Answer | 2011 | 2012 |
| --- | --- | --- |
| Yes | 88% | 100% |
| No | 12% | 0% |

People are satisfied with what they are doing

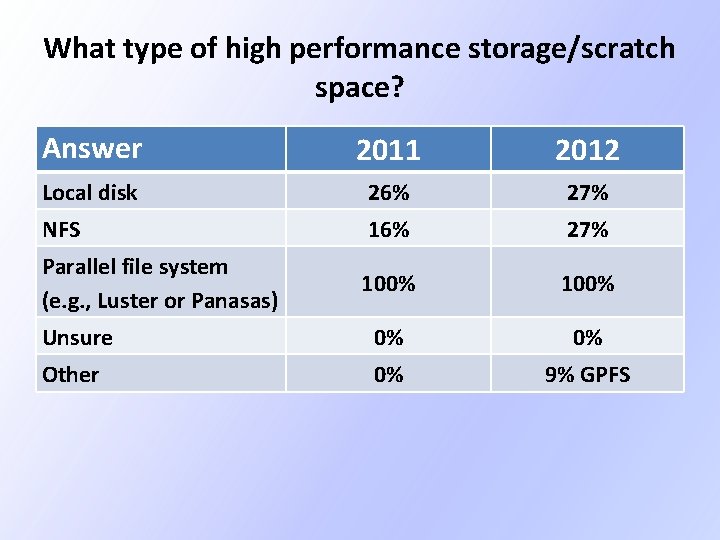

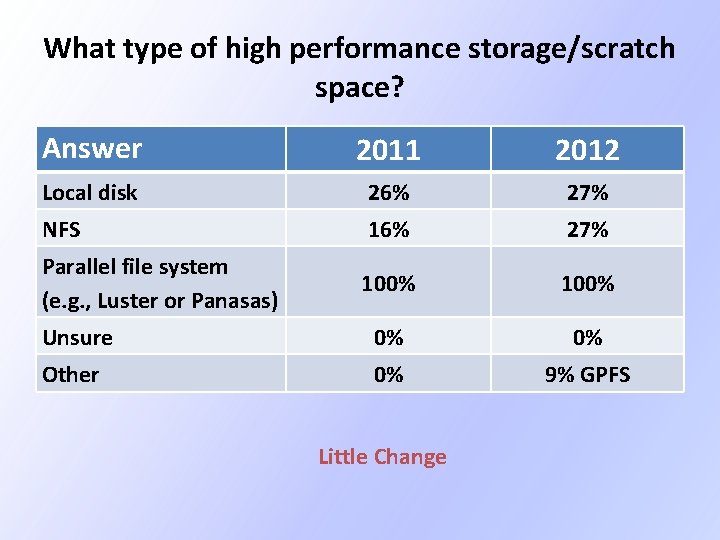

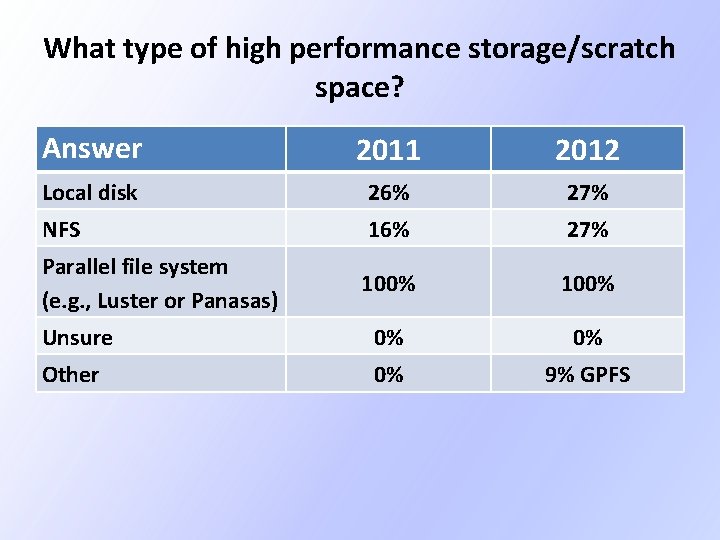

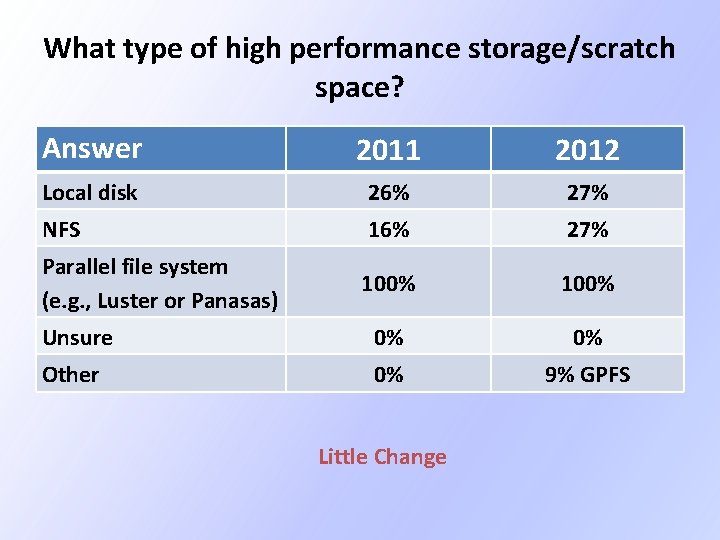

What type of high performance storage/scratch space?

| Answer | 2011 | 2012 |
| --- | --- | --- |
| Local disk | 26% | 27% |
| NFS | 16% | 27% |
| Unsure | 0% | 0% |
| Other | 0% | 9% (GPFS) |

Parallel file system (e.g., Lustre or Panasas): 100%

Little change

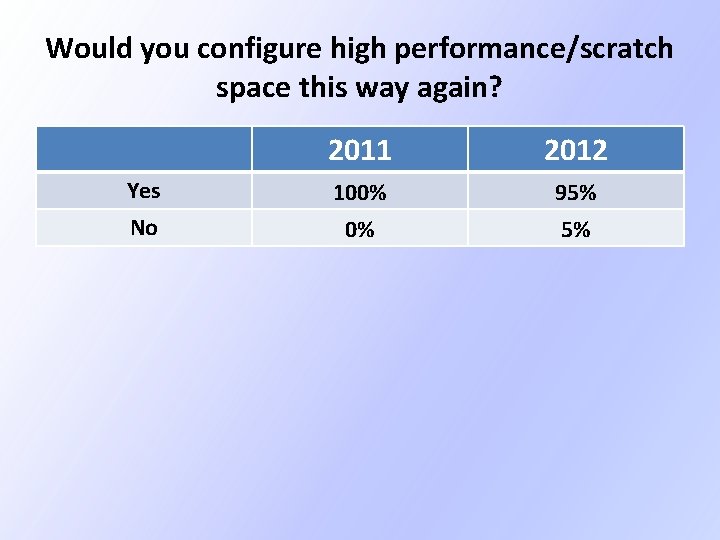

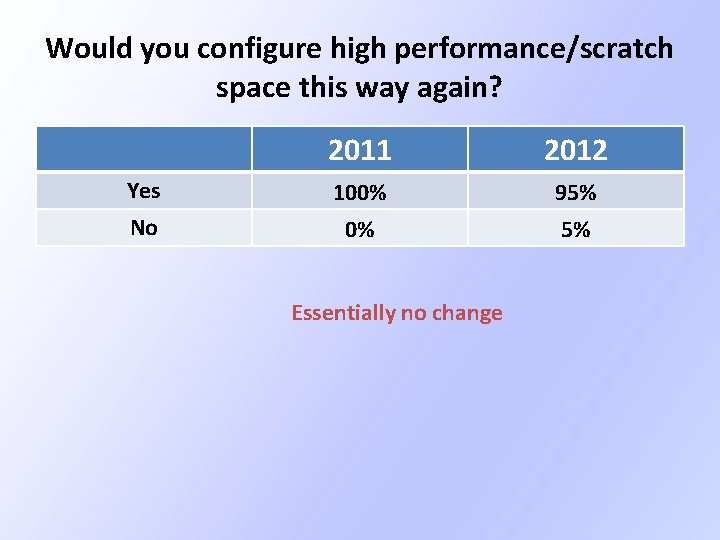

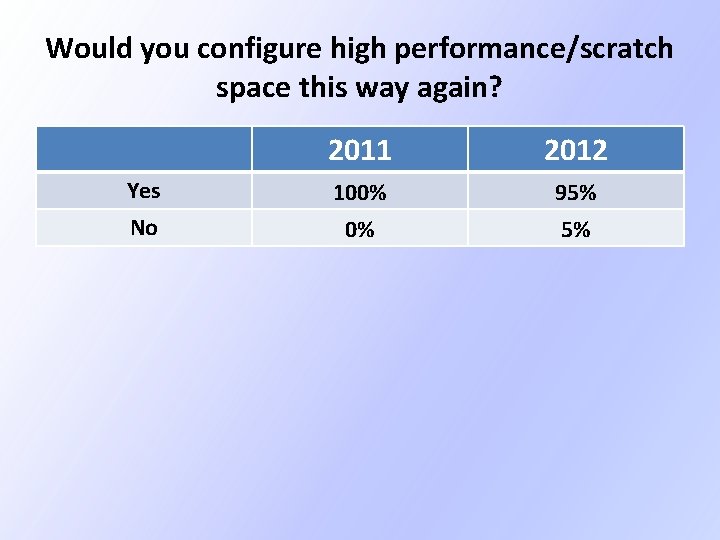

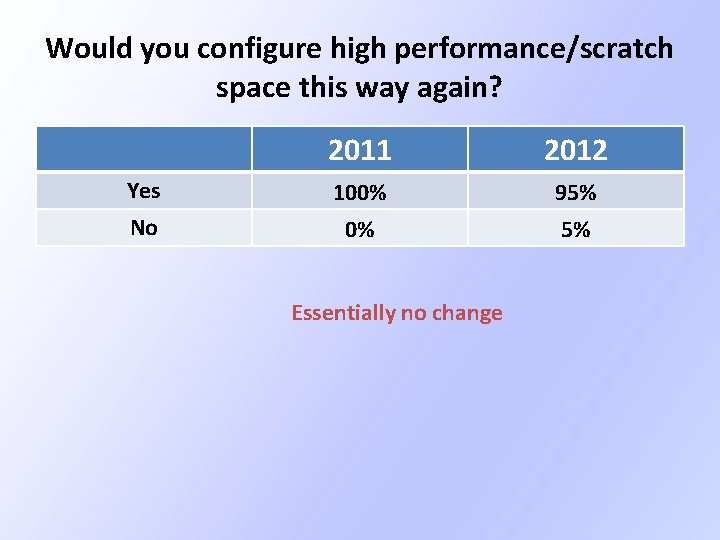

Would you configure high performance/scratch space this way again?

| Answer | 2011 | 2012 |
| --- | --- | --- |
| Yes | 100% | 95% |
| No | 0% | 5% |

Essentially no change

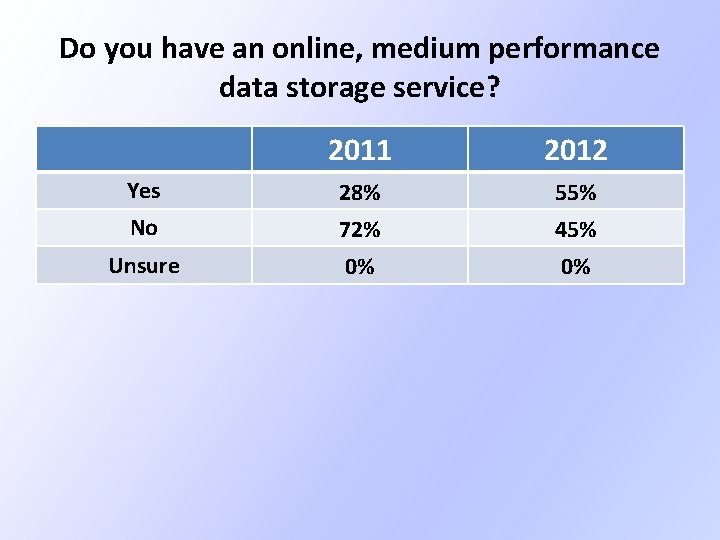

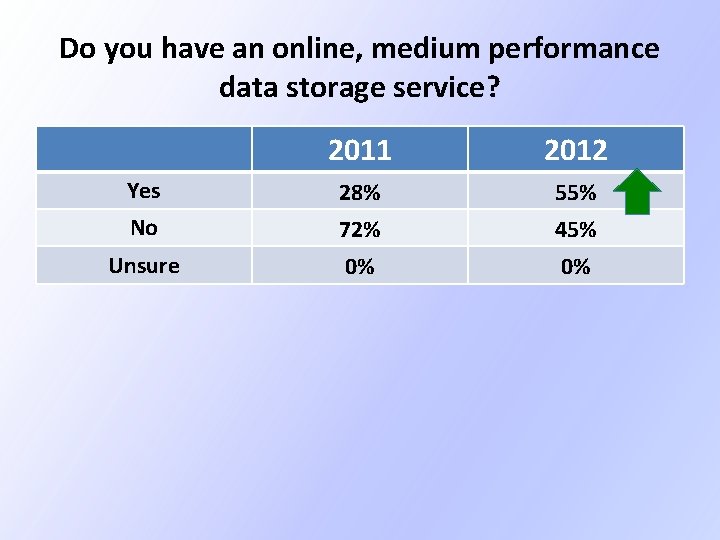

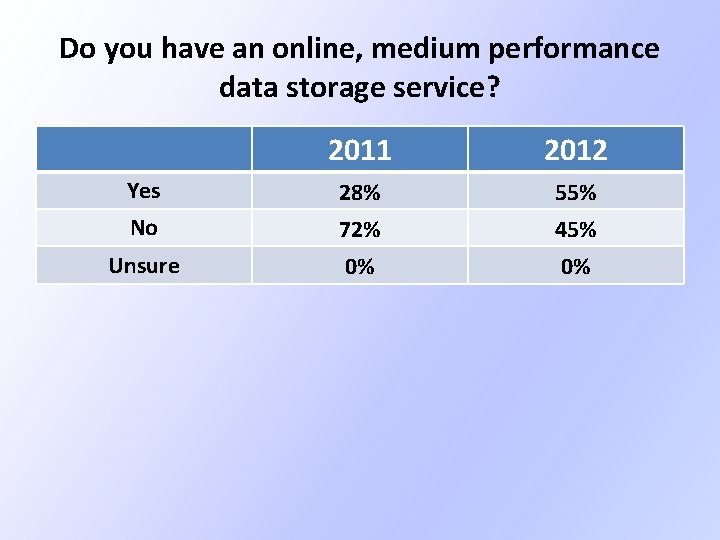

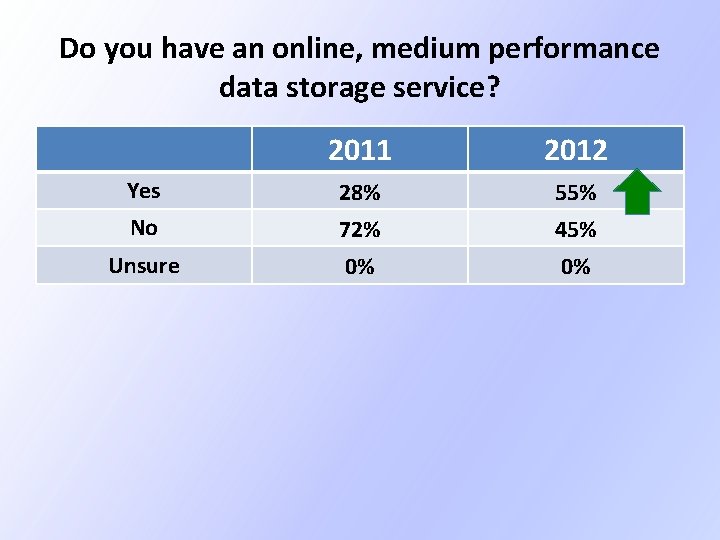

Do you have an online, medium performance data storage service?

| Answer | 2011 | 2012 |
| --- | --- | --- |
| Yes | 28% | 55% |
| No | 72% | 45% |
| Unsure | 0% | 0% |

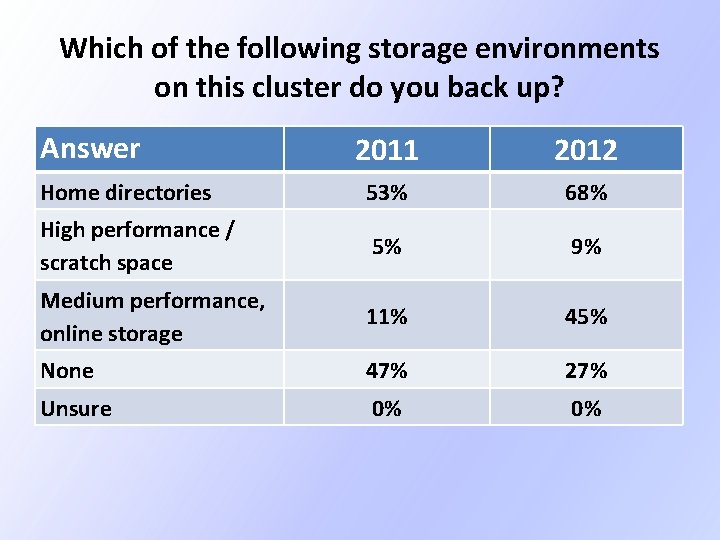

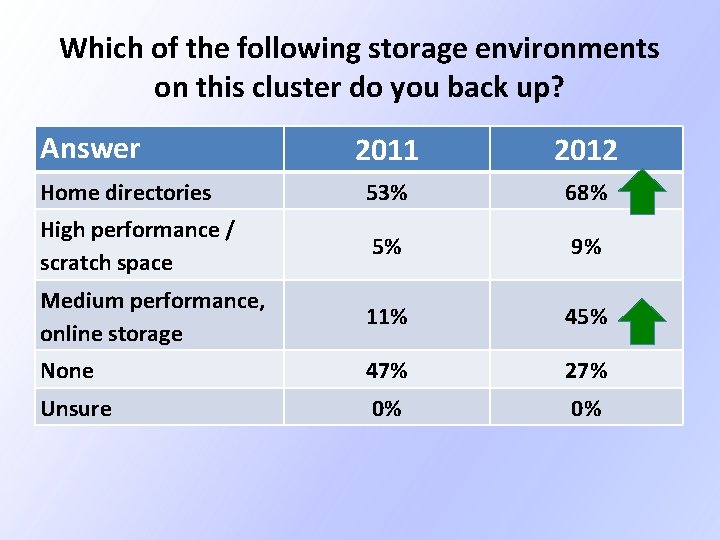

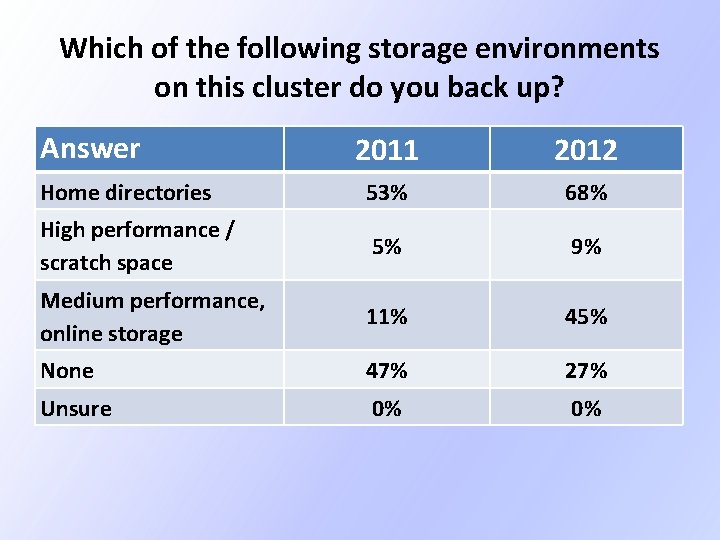

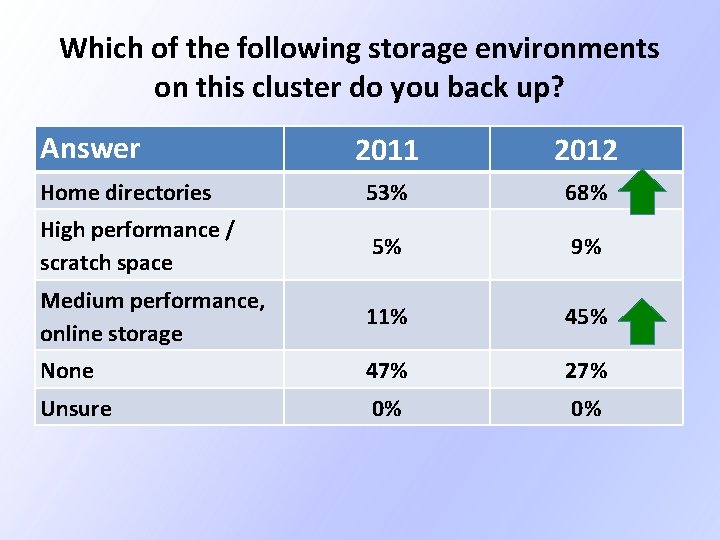

Which of the following storage environments on this cluster do you back up?

| Answer | 2011 | 2012 |
| --- | --- | --- |
| Home directories | 53% | 68% |
| High performance / scratch space | 5% | 9% |
| Medium performance, online storage | 11% | 45% |
| None | 47% | 27% |
| Unsure | 0% | 0% |

Current Directions

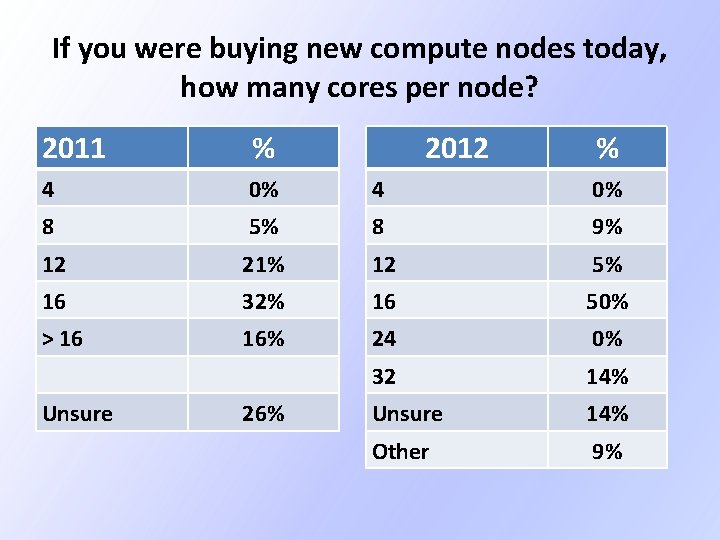

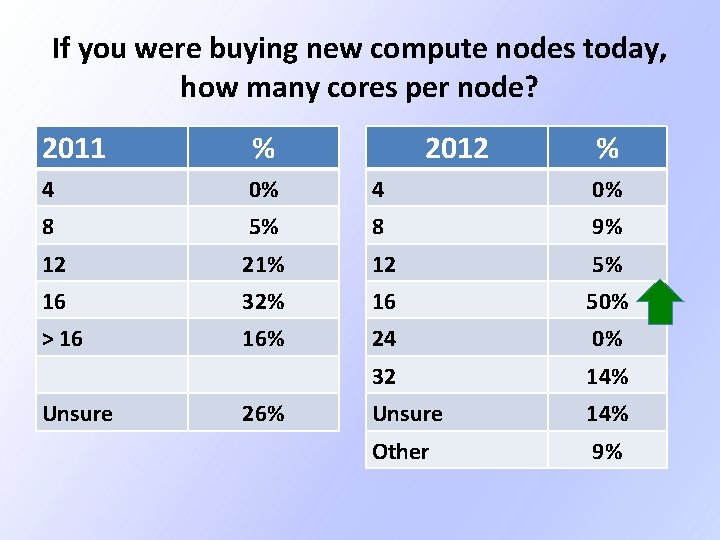

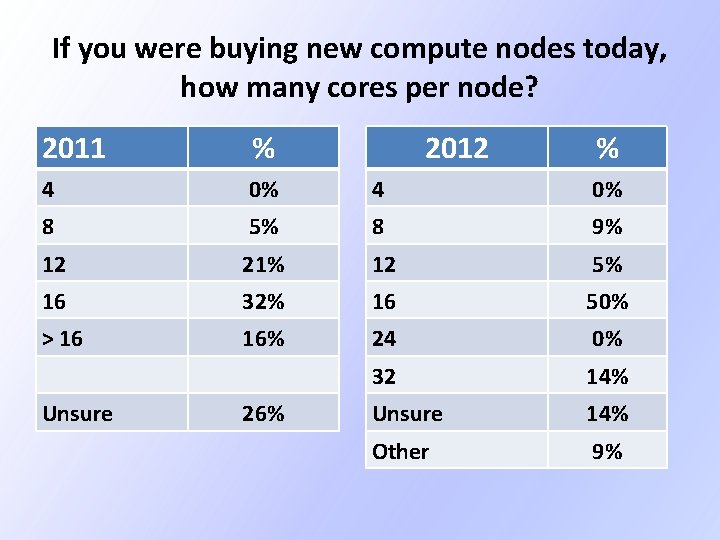

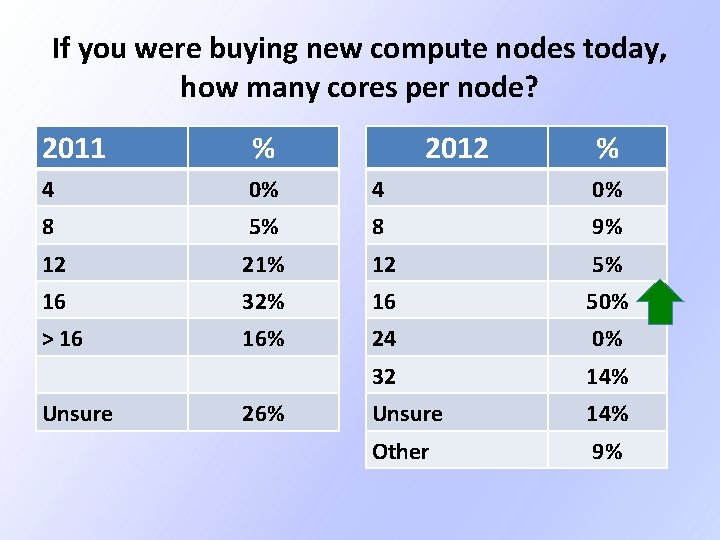

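If you were buying new compute nodes today, how many cores per node?

| 2012 | % | 2011 | % |
| --- | --- | --- | --- |
| 4 | 0% | 8 | 5% |
| 8 | 9% | 12 | 21% |
| 12 | 5% | 16 | 32% |
| 16 | 50% | > 16 | 16% |
| 24 | 0% | Unsure | 26% |
| 32 | 14% | | |
| Unsure | 14% | | |
| Other | 9% | | |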

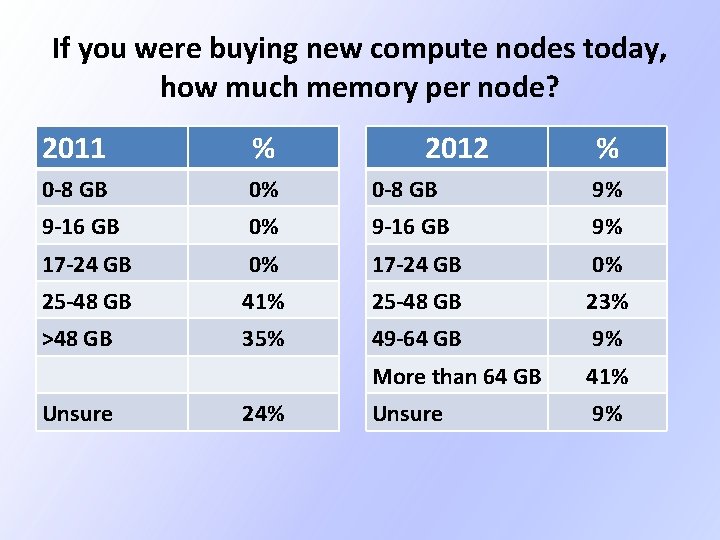

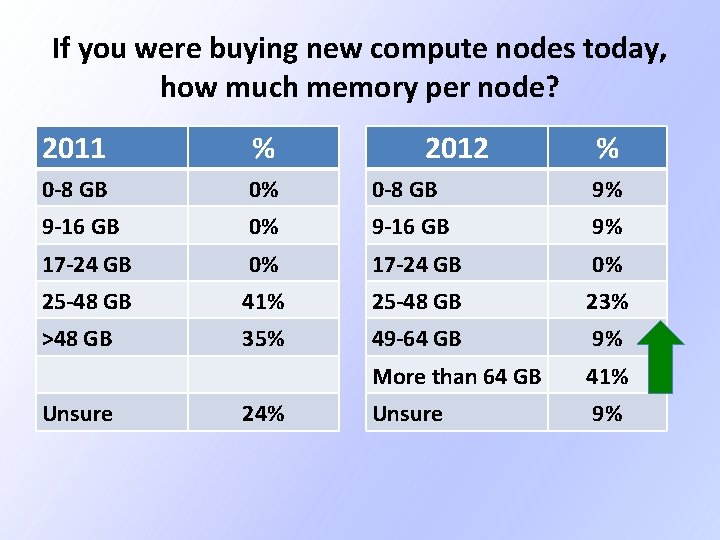

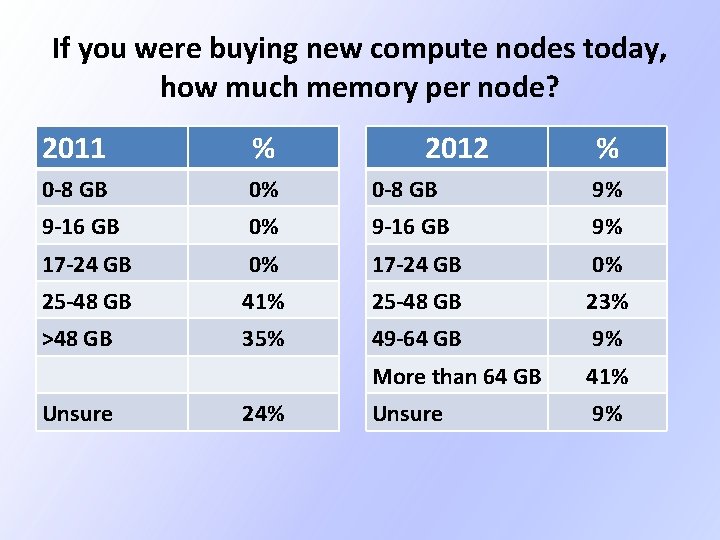

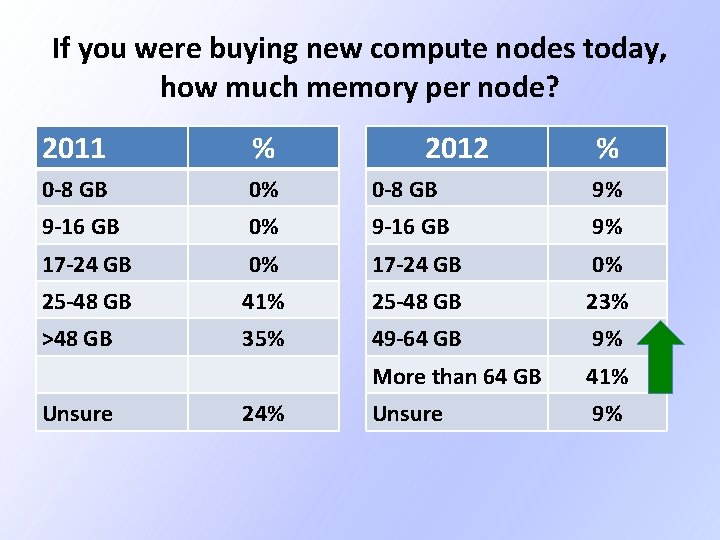

If you were buying new compute nodes today, how much memory per node?

| 2011 | % | 2012 | % |
| --- | --- | --- | --- |
| 0 - 8 GB | 0% | 0 - 8 GB | 9% |
| 9 - 16 GB | 0% | 9 - 16 GB | 9% |
| 17 - 24 GB | 0% | | |
| 25 - 48 GB | 41% | 25 - 48 GB | 23% |
| > 48 GB | 35% | 49 - 64 GB | 9% |
| | | More than 64 GB | 41% |
| Unsure | 24% | Unsure | 9% |

Staffing

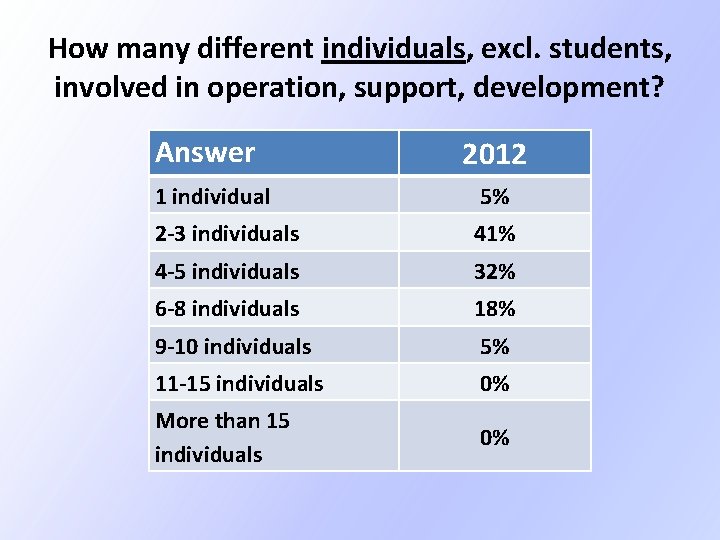

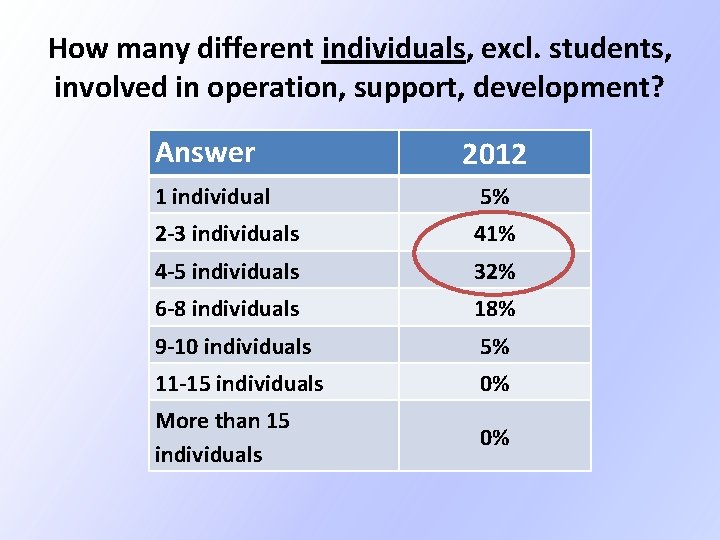

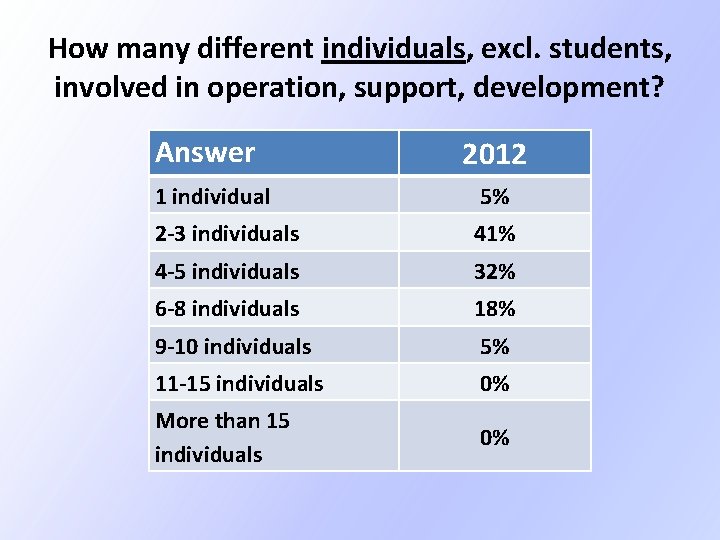

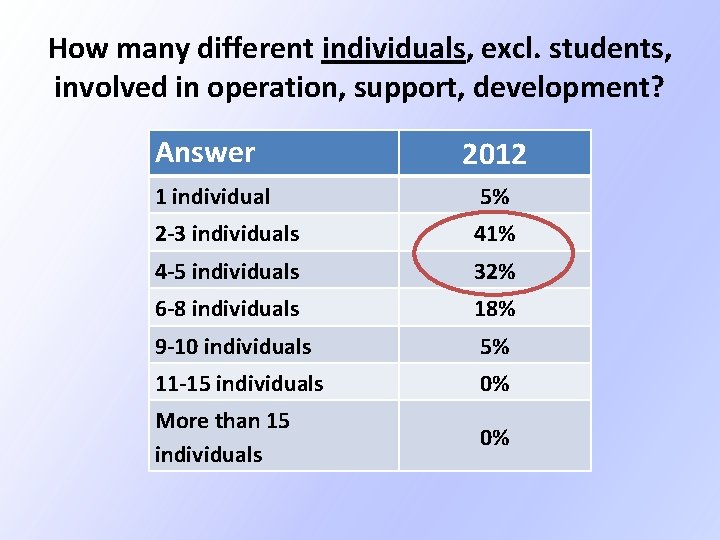

How many different individuals, excl. students, involved in operation, support, development?

| Answer | 2012 |
| --- | --- |
| 1 individual | 5% |
| 2 - 3 individuals | 41% |
| 4 - 5 individuals | 32% |
| 6 - 8 individuals | 18% |
| 9 - 10 individuals | 5% |
| 11 - 15 individuals | 0% |
| More than 15 individuals | 0% |

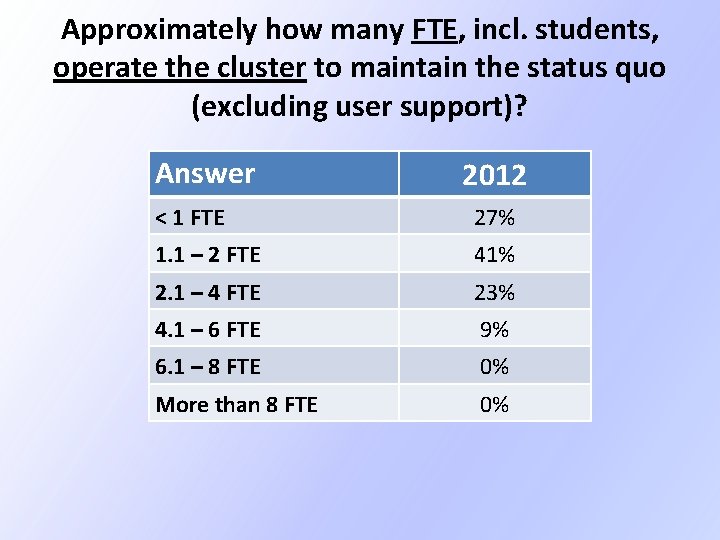

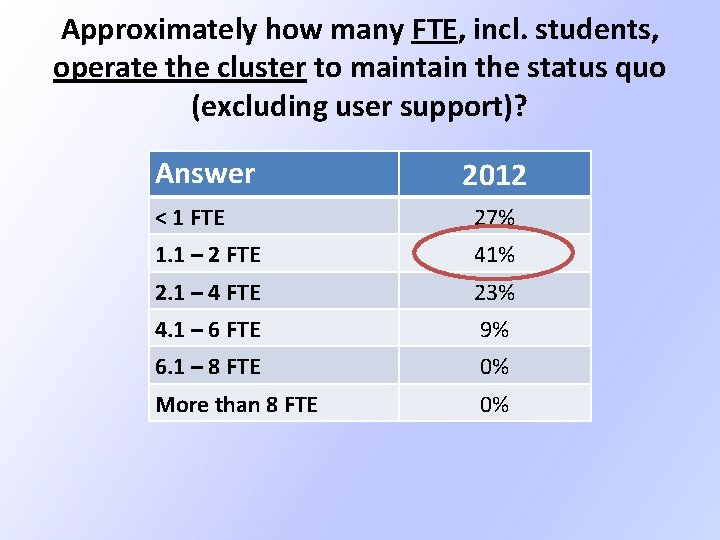

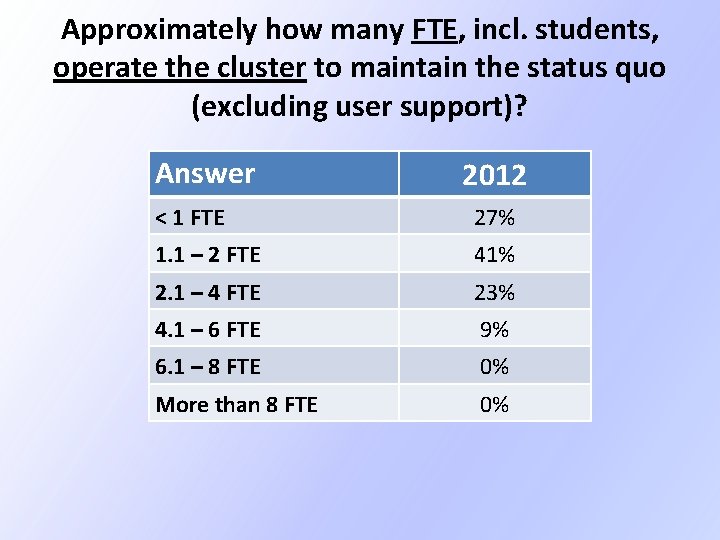

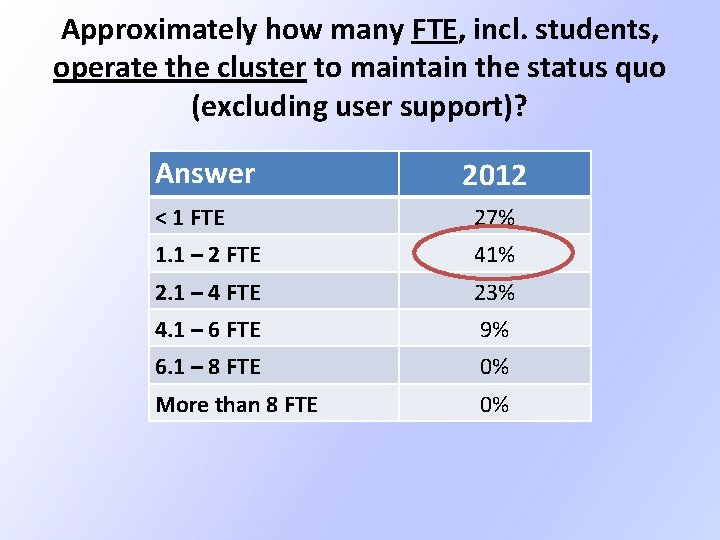

Approximately how many FTE, incl. students, operate the cluster to maintain the status quo (excluding user support)?

| Answer | 2012 |
| --- | --- |
| < 1 FTE | 27% |
| 1.1 - 2 FTE | 41% |
| 2.1 - 4 FTE | 23% |
| 4.1 - 6 FTE | 9% |
| 6.1 - 8 FTE | 0% |
| More than 8 FTE | 0% |

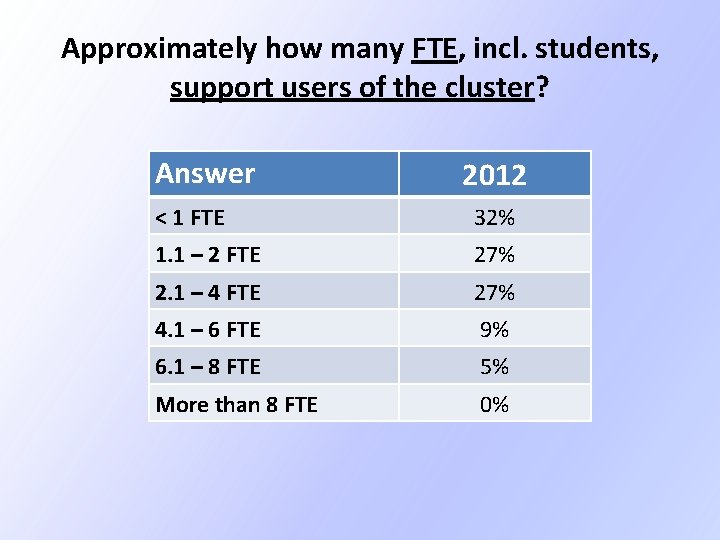

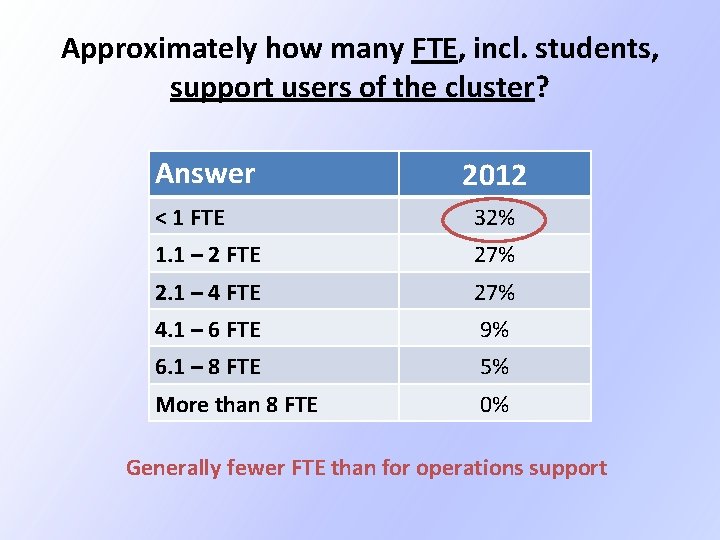

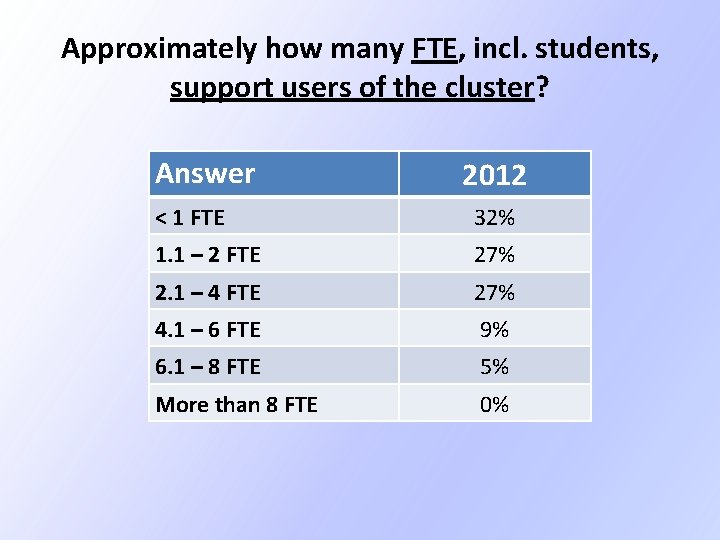

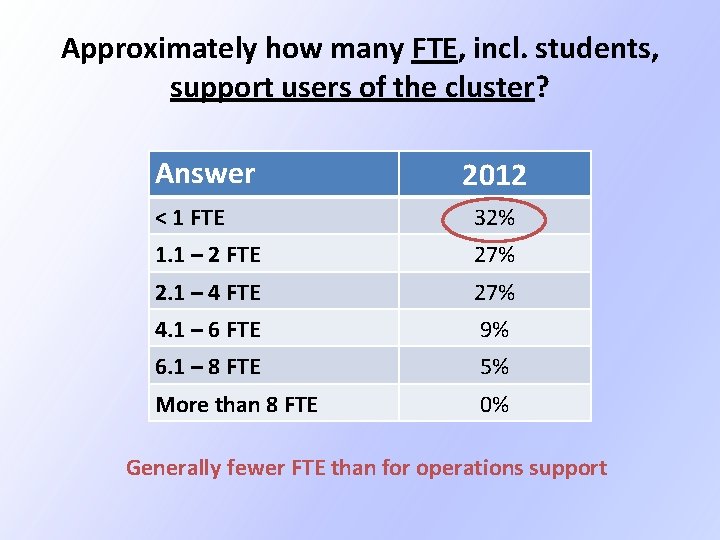

Approximately how many FTE, incl. students, support users of the cluster?

| Answer | 2012 |
| --- | --- |
| < 1 FTE | 32% |
| 1.1 - 2 FTE | 27% |
| 2.1 - 4 FTE | 27% |
| 4.1 - 6 FTE | 9% |
| 6.1 - 8 FTE | 5% |
| More than 8 FTE | 0% |

Generally fewer FTE than for operations support

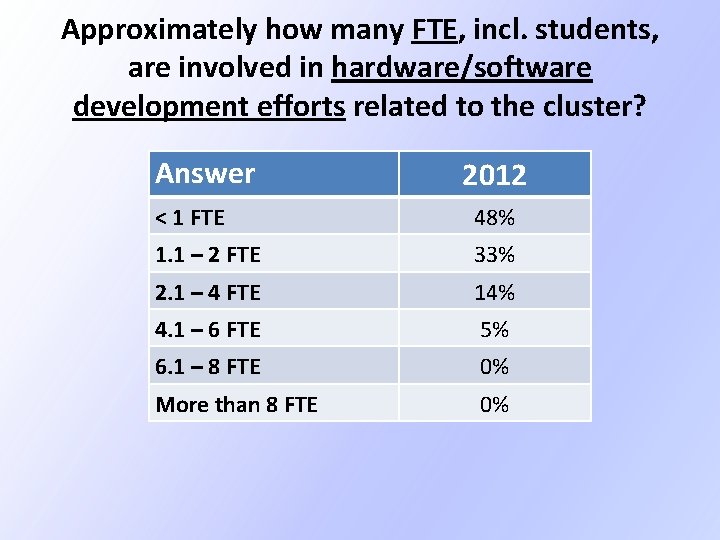

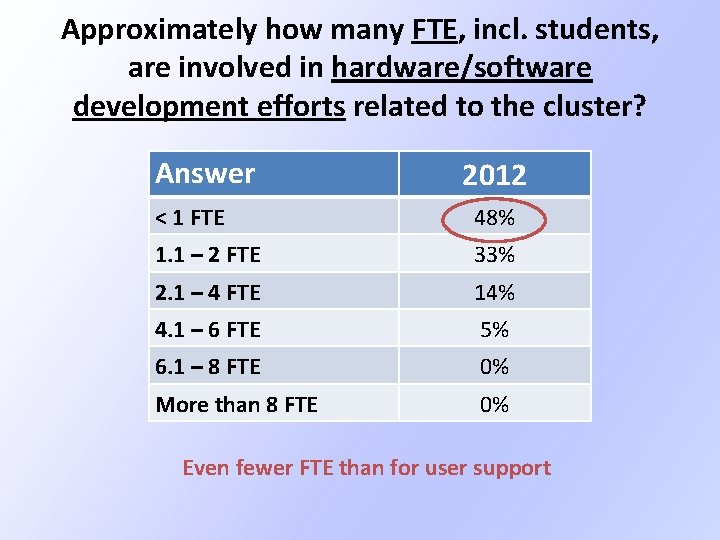

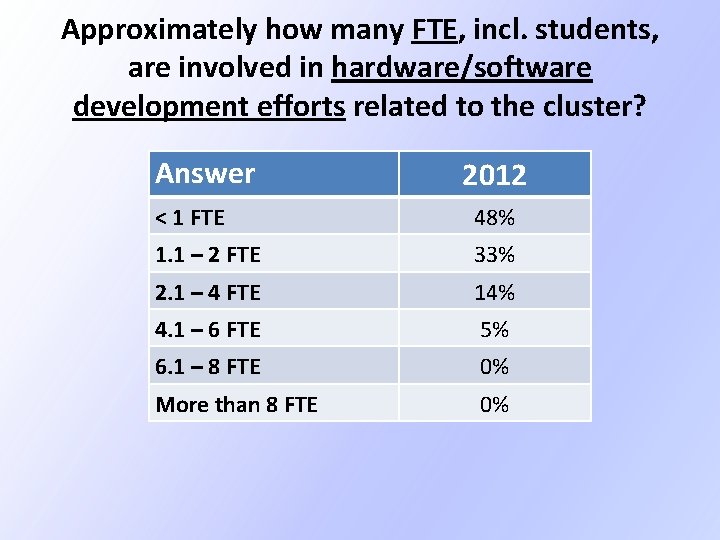

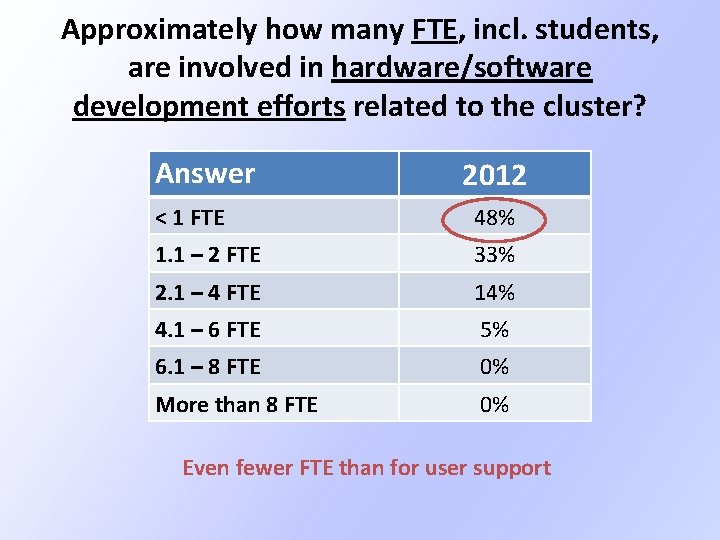

Approximately how many FTE, incl. students, are involved in hardware/software development efforts related to the cluster?

| Answer | 2012 |
| --- | --- |
| < 1 FTE | 48% |
| 1.1 - 2 FTE | 33% |
| 2.1 - 4 FTE | 14% |
| 4.1 - 6 FTE | 5% |
| 6.1 - 8 FTE | 0% |
| More than 8 FTE | 0% |

Even fewer FTE than for user support

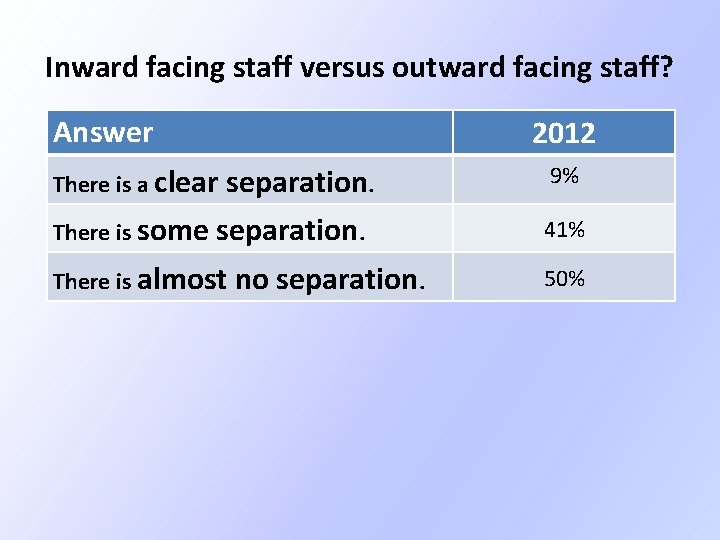

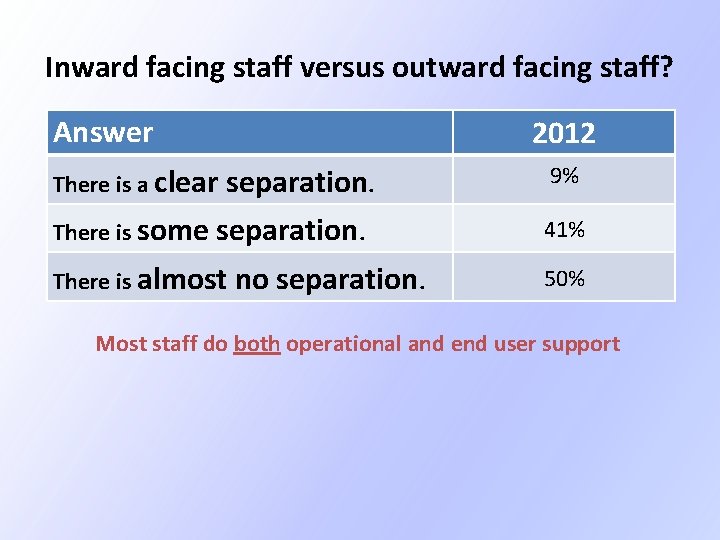

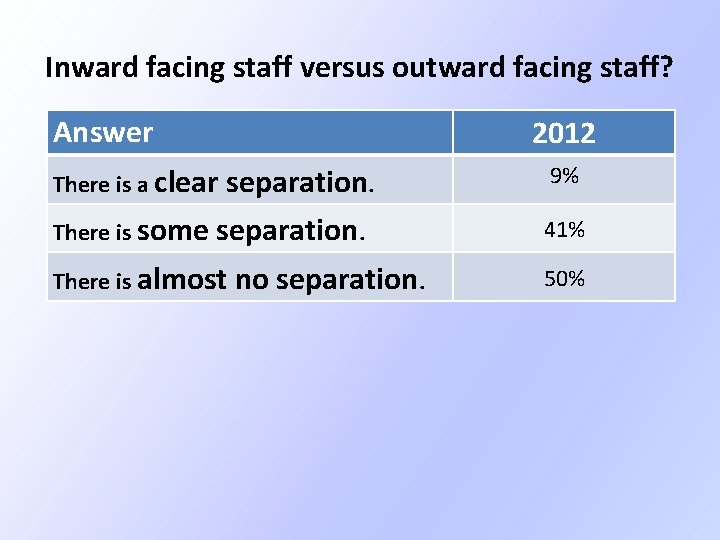

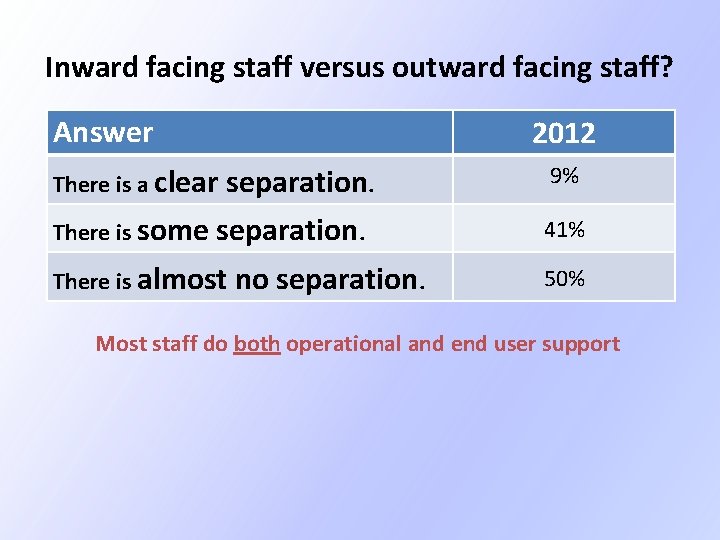

Inward facing staff versus outward facing staff?

| Answer | 2012 |
| --- | --- |
| There is a clear separation. | 9% |
| There is some separation. | 41% |
| There is almost no separation. | 50% |

Most staff do both operational and end user support

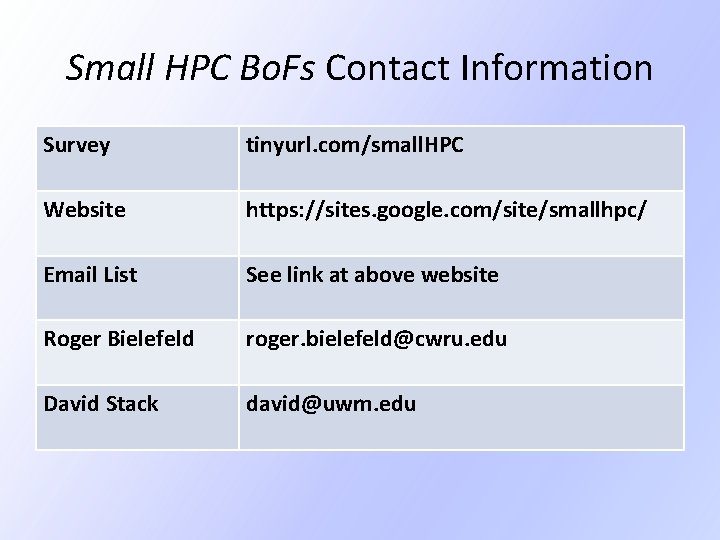

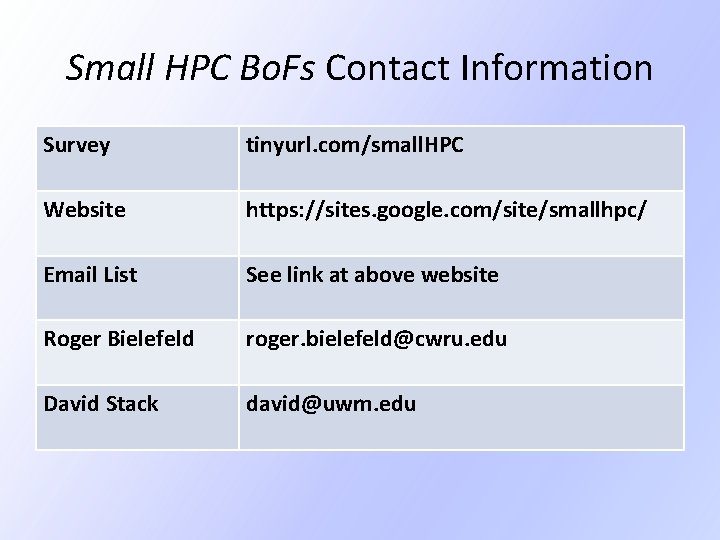

Small HPC BoFs Contact Information

- Survey: tinyurl.com/smallHPC
- Website: https://sites.google.com/site/smallhpc/
- Email List: See link at above website
- Roger Bielefeld: roger.bielefeld@cwru.edu
- David Stack: david@uwm.edu