Comment Spam Identification, Eric Cheng & Eric Steinlauf


- Slides: 47


What is comment spam?

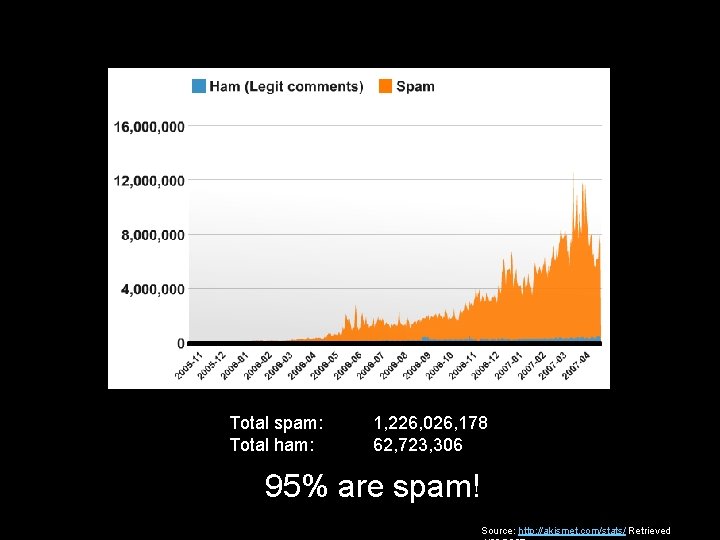

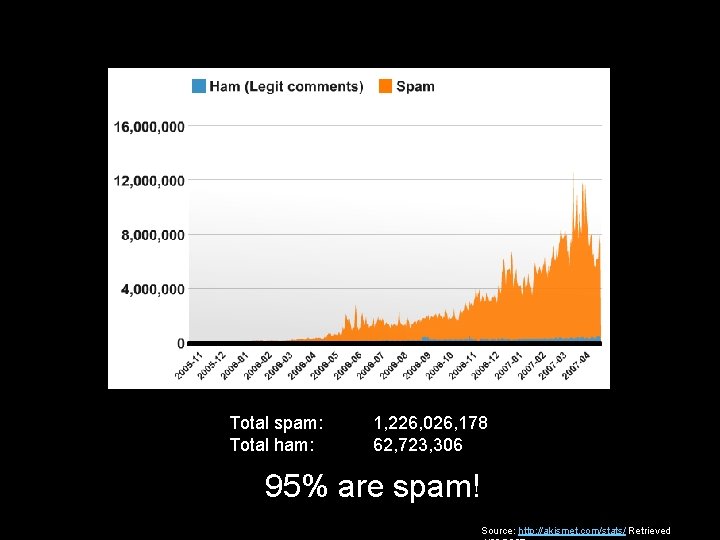

Total spam: 1,226,026,178; total ham: 62,723,306. 95% are spam! Source: http://akismet.com/stats/ Retrieved
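The 95% figure follows directly from the two totals. A quick check in Python (the variable names are ours, not Akismet's):

```python
# Akismet totals quoted on the slide (retrieval date not given there).
total_spam = 1_226_026_178
total_ham = 62_723_306

# Share of all classified comments that were spam.
spam_fraction = total_spam / (total_spam + total_ham)
print(f"{spam_fraction:.1%}")
```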

Countermeasures

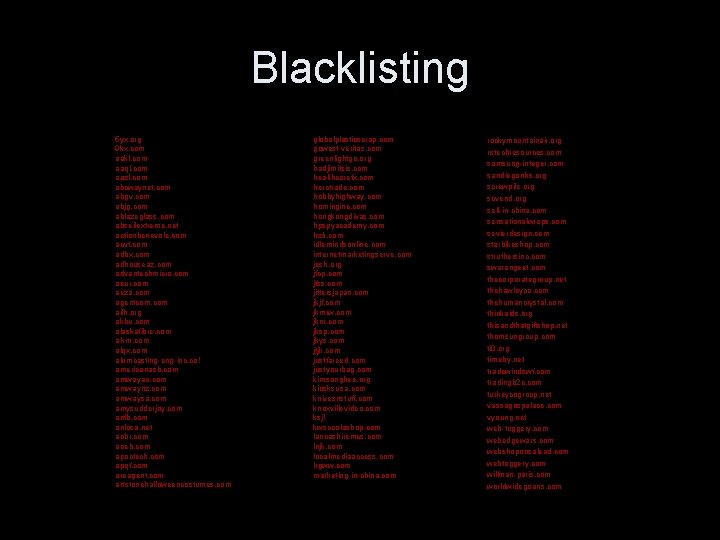

Blacklisting: 5yx.org, 9kx.com, aakl.com, aaql.com, aazl.com, abcwaynet.com, abgv.com, abjg.com, ablazeglass.com, abseilextreme.net, actionbenevole.com, acvt.com, adbx.com, adhouseaz.com, advantechmicro.com, aeur.com, aeza.com, agentcom.com, ailh.org, akbu.com, alaskafibre.com, alkm.com, alqx.com, alumcasting-eng-inc.co!, americanasb.com, amwayau.com, amwaynz.com, amwaysa.com, amysudderjoy.com, anfb.com, anlusa.net, aobr.com, aoeb.com, apoctech.com, apqf.com, areagent.com, artstonehalloweencostumes.com, globalplasticscrap.com, gowest-veritas.com, greenlightgo.org, hadjimitsis.com, healthcarefx.com, herctrade.com, hobbyhighway.com, hominginc.com, hongkongdivas.com, hpspyacademy.com, hzlr.com, idlemindsonline.com, internetmarketingserve.com, jesh.org, jfcp.com, jfss.com, jittersjapan.com, jkjf.com, jkmrw.com, jknr.com, jksp.com, jkys.com, jtjk.com, justfareed.com, justyourbag.com, kimsanghee.org, kiosksusa.com, knivesnstuff.com, knoxvillevideo.com, ksj!, kwscuolashop.com, lancashiremcs.com, lnjk.com, localmediaaccess.com, lrgww.com, marketing-in-china.com, rockymountainair.org, rstechresources.com, samsung-integer.com, sandiegonhs.org, screwpile.org, scvend.org, sell-in-china.com, sensationalwraps.com, sevierdesign.com, starbikeshop.com, struthersinc.com, swarangeet.com, thecorporategroup.net, thehawleyco.com, thehumancrystal.com, thinkaids.org, thisandthatgiftshop.net, thomsungroup.com, ti0.org, timeby.net, tradewindswf.com, tradingb2c.com, turkeycogroup.net, vassagospalace.com, vyoung.net, web-toggery.com, webedgewars.com, webshoponsalead.com, webtoggery.com, willman-paris.com, worldwidegoans.com
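Blacklisting usually means rejecting a comment that links to a known spam domain. A minimal sketch, assuming the blacklist holds bare domain names like those above and that links appear as http(s) URLs; `BLACKLIST` and `blacklisted_domains` are hypothetical names, and matching subdomains as well is our own choice:

```python
import re
from urllib.parse import urlparse

# A few entries from the blacklist above; a real list would be much longer.
BLACKLIST = {"5yx.org", "9kx.com", "aakl.com", "webtoggery.com"}

# http(s) URLs, stopping at whitespace, angle brackets, or quotes.
URL_RE = re.compile(r"https?://[^\s<>'\"]+", re.IGNORECASE)


def blacklisted_domains(comment):
    """Return the set of blacklisted domains linked from a comment."""
    hits = set()
    for url in URL_RE.findall(comment):
        host = (urlparse(url).hostname or "").lower()
        for domain in BLACKLIST:
            # Match the domain itself or any subdomain of it.
            if host == domain or host.endswith("." + domain):
                hits.add(domain)
    return hits
```

A comment would then be rejected whenever the returned set is non-empty.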

Captchas: "Completely Automated Public Turing test to tell Computers and Humans Apart"

Other ad-hoc/weak methods

- Authentication / registration
- Comment throttling
- Disallowing links in comments
- Moderation

Our Approach – Naïve Bayes

- Statistical
- Adaptive
- Automatic
- Scalable and extensible
- Works well for spam e-mail

Naïve Bayes

P(A|B) = P(B|A) ∙ P(A) / P(B)

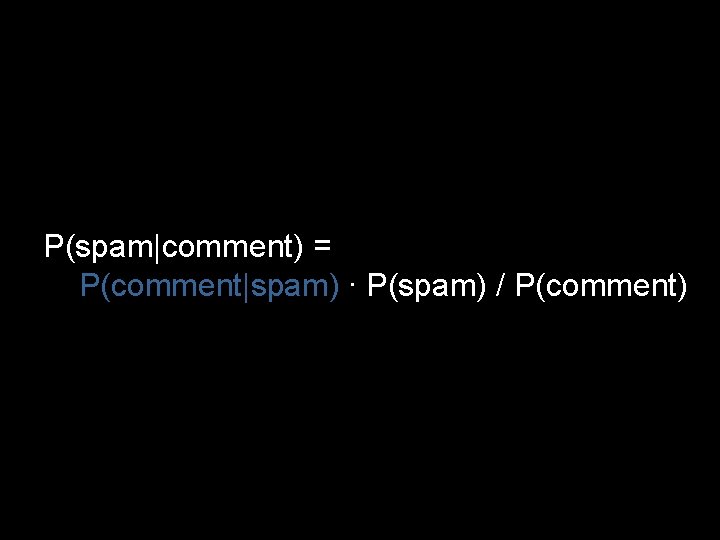

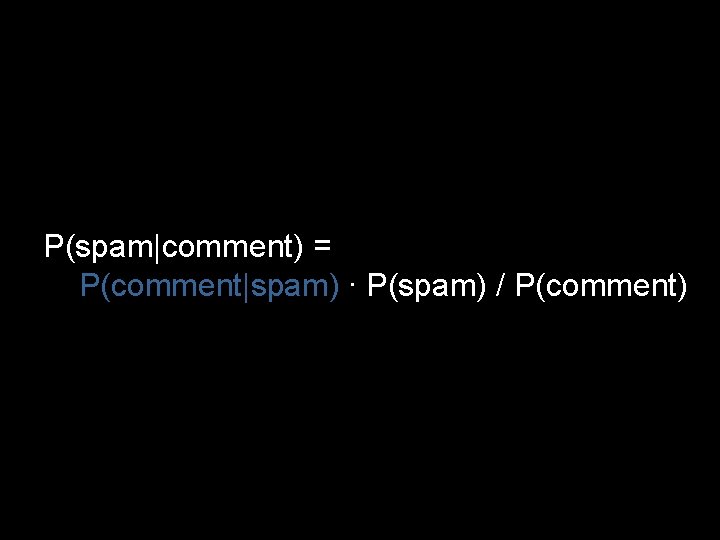

P(spam|comment) = P(comment|spam) ∙ P(spam) / P(comment)


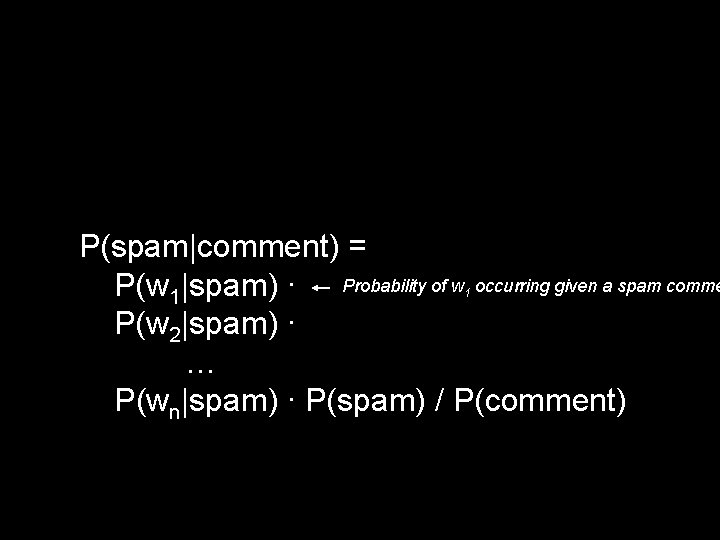

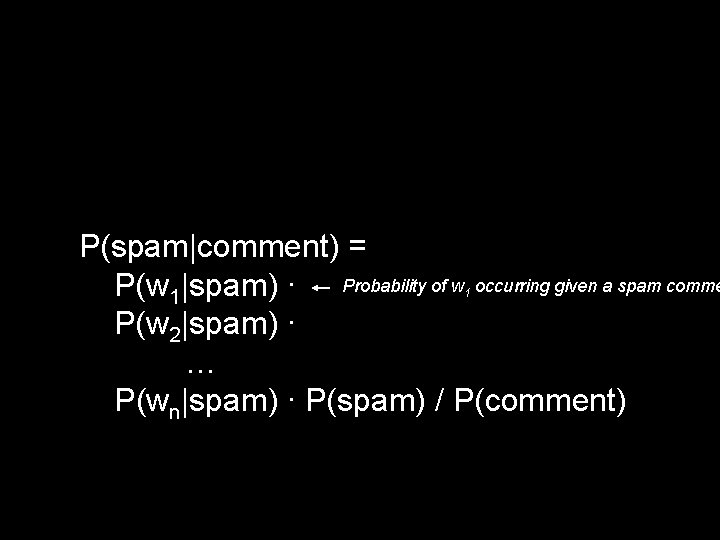

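$$P(\text{spam}\mid\text{comment}) = \frac{P(w_1\mid\text{spam}) \cdot P(w_2\mid\text{spam}) \cdots P(w_n\mid\text{spam}) \cdot P(\text{spam})}{P(\text{comment})}$$

Naïve assumption: the words $w_1, \dots, w_n$ of the comment occur independently given the class, so $P(\text{comment}\mid\text{spam})$ becomes a product. Each $P(w_i\mid\text{spam})$ is the probability of $w_i$ occurring given a spam comment.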

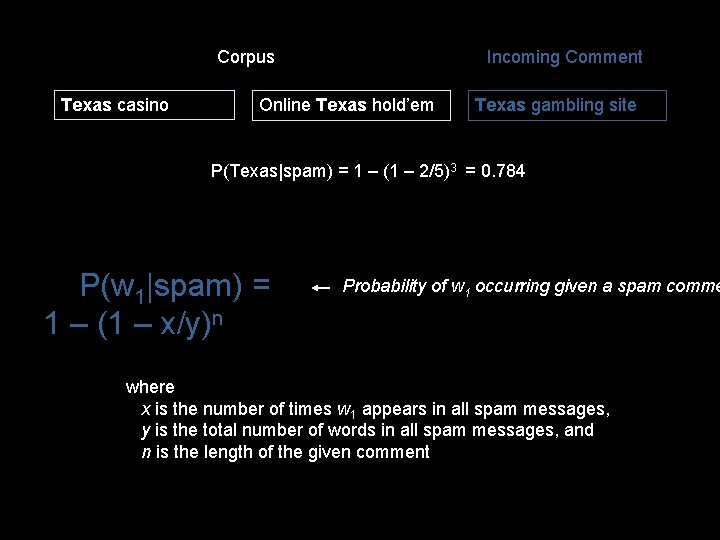

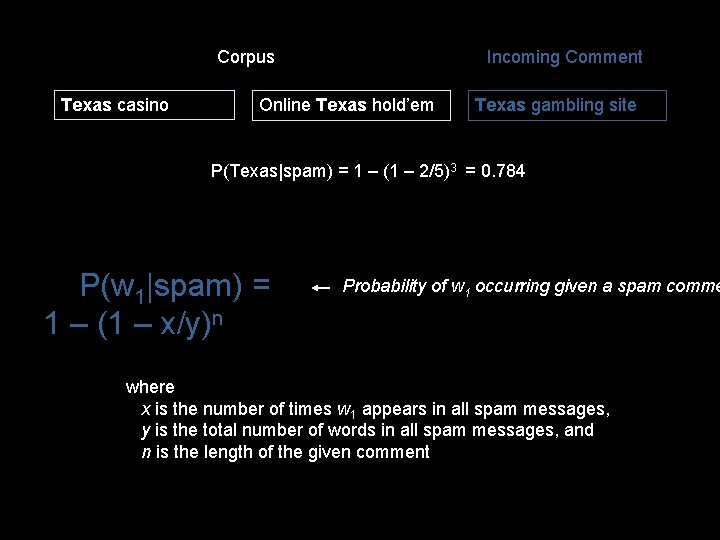

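Example. Corpus (spam comments): "Texas casino", "Online Texas hold’em". Incoming comment: "Texas gambling site".

$$P(\text{Texas}\mid\text{spam}) = 1 - (1 - 2/5)^3 = 0.784$$

In general, the probability of $w_1$ occurring given a spam comment is

$$P(w_1\mid\text{spam}) = 1 - (1 - x/y)^n$$

where $x$ is the number of times $w_1$ appears in all spam messages, $y$ is the total number of words in all spam messages, and $n$ is the length of the given comment. Here $x/y$ is the chance that a single word drawn from the spam corpus is $w_1$, so $1 - (1 - x/y)^n$ is the chance that at least one of the comment's $n$ words is $w_1$.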
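The per-word estimate can be checked against the Texas example. A minimal sketch, assuming whitespace tokenization and case folding for this toy corpus (the deck's actual separator is a configuration option); `p_word_given_class` is a hypothetical helper:

```python
from collections import Counter


def p_word_given_class(word, class_comments, n):
    """P(word | class) = 1 - (1 - x/y)**n, the formula from the slide.

    x: occurrences of `word` in all comments of the class
    y: total number of words in all comments of the class
    n: length (in words) of the incoming comment
    """
    counts = Counter(w for c in class_comments for w in c.lower().split())
    x = counts[word.lower()]
    y = sum(counts.values())
    return 1 - (1 - x / y) ** n


spam_corpus = ["Texas casino", "Online Texas hold’em"]
incoming = "Texas gambling site"
p = p_word_given_class("Texas", spam_corpus, n=len(incoming.split()))
print(round(p, 3))  # 0.784
```

Note that a word never seen in the class (x = 0) gets probability 0, which would zero out the whole product and make its logarithm undefined; the slides don't say how unseen words are handled, so some form of smoothing would be needed in practice.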

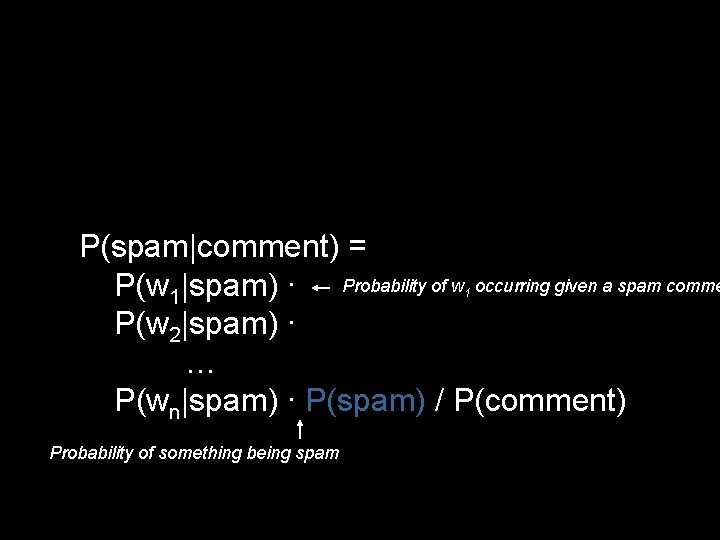

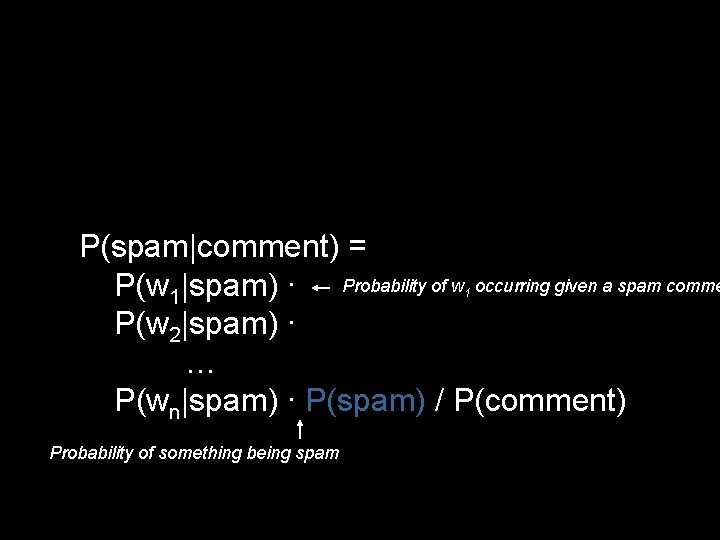


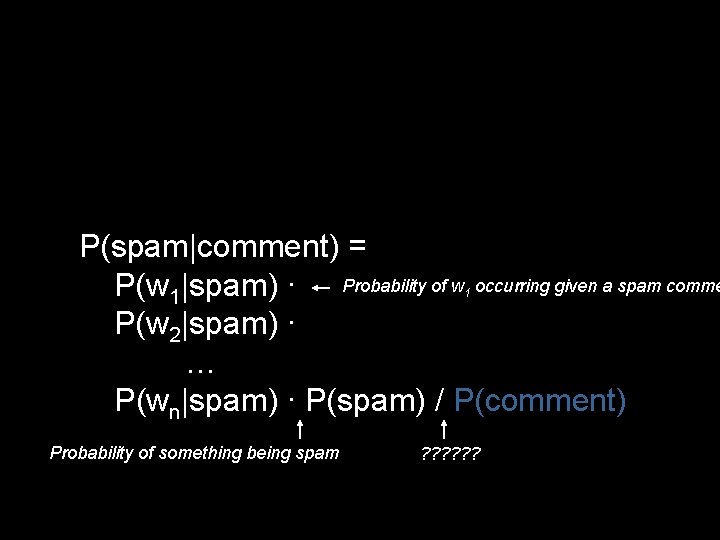

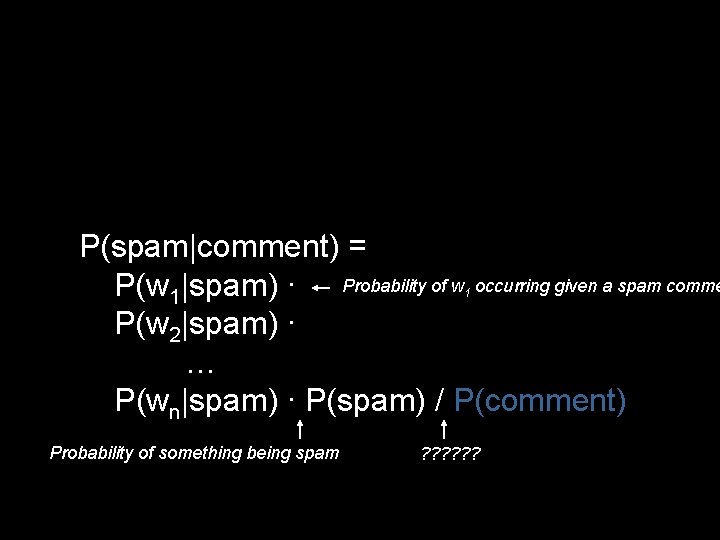


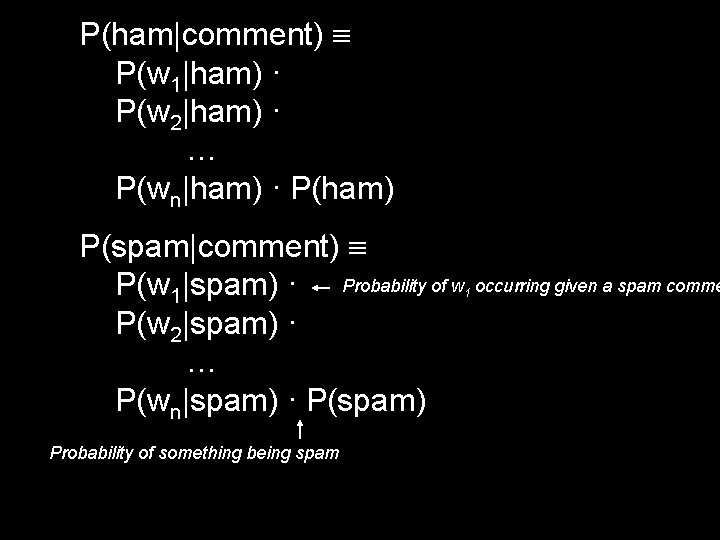

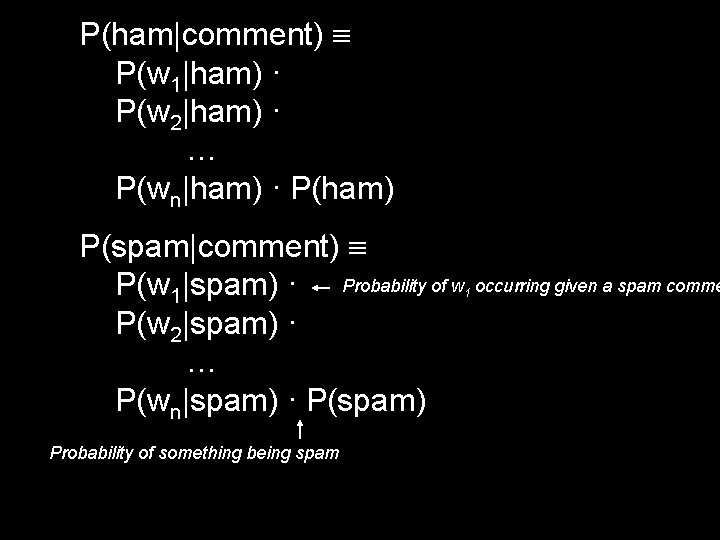

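$$P(\text{ham}\mid\text{comment}) = \frac{P(w_1\mid\text{ham}) \cdot P(w_2\mid\text{ham}) \cdots P(w_n\mid\text{ham}) \cdot P(\text{ham})}{P(\text{comment})}$$

$$P(\text{spam}\mid\text{comment}) = \frac{P(w_1\mid\text{spam}) \cdot P(w_2\mid\text{spam}) \cdots P(w_n\mid\text{spam}) \cdot P(\text{spam})}{P(\text{comment})}$$

Here $P(w_i\mid\text{spam})$ is the probability of $w_i$ occurring given a spam comment, $P(\text{spam})$ is the probability of something being spam, and $P(\text{comment})$ is unknown (???).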

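Since $P(\text{comment})$ is the same in both expressions, it can be dropped when comparing them:

$$P(\text{ham}\mid\text{comment}) \propto P(w_1\mid\text{ham}) \cdot P(w_2\mid\text{ham}) \cdots P(w_n\mid\text{ham}) \cdot P(\text{ham})$$

$$P(\text{spam}\mid\text{comment}) \propto P(w_1\mid\text{spam}) \cdot P(w_2\mid\text{spam}) \cdots P(w_n\mid\text{spam}) \cdot P(\text{spam})$$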

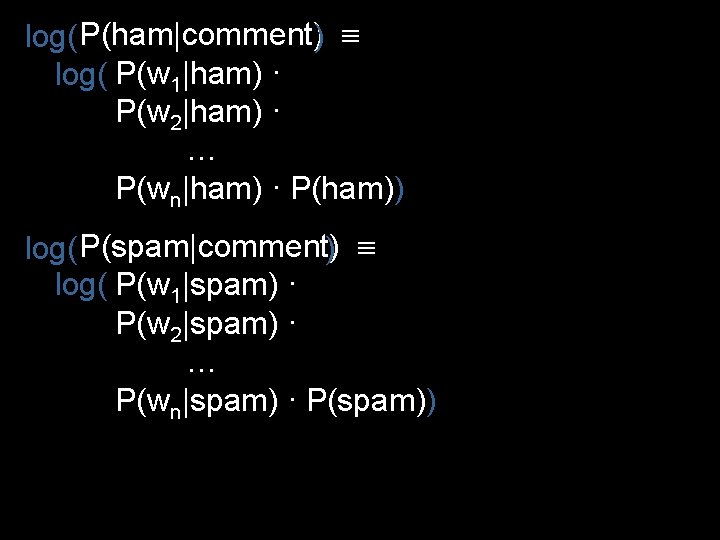

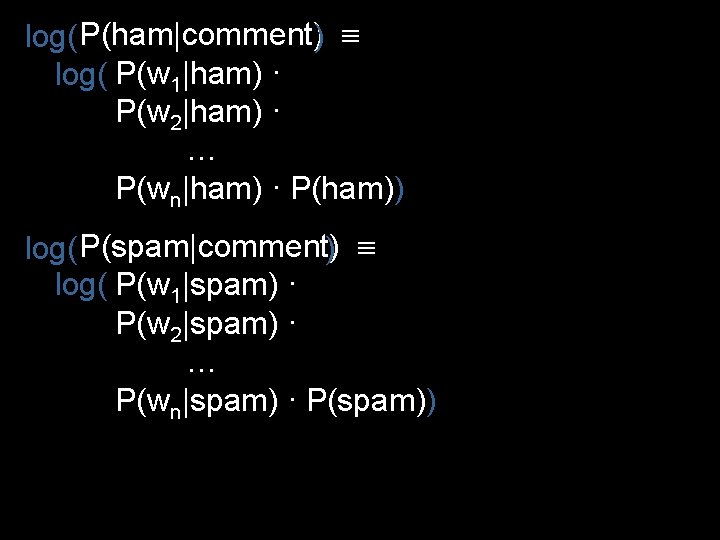

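$$\log P(\text{ham}\mid\text{comment}) = \log\big(P(w_1\mid\text{ham}) \cdot P(w_2\mid\text{ham}) \cdots P(w_n\mid\text{ham}) \cdot P(\text{ham})\big) - \log P(\text{comment})$$

$$\log P(\text{spam}\mid\text{comment}) = \log\big(P(w_1\mid\text{spam}) \cdot P(w_2\mid\text{spam}) \cdots P(w_n\mid\text{spam}) \cdot P(\text{spam})\big) - \log P(\text{comment})$$

The logarithm turns each product into a sum:

$$\log P(\text{ham}\mid\text{comment}) = \log P(w_1\mid\text{ham}) + \log P(w_2\mid\text{ham}) + \cdots + \log P(w_n\mid\text{ham}) + \log P(\text{ham}) - \log P(\text{comment})$$

$$\log P(\text{spam}\mid\text{comment}) = \log P(w_1\mid\text{spam}) + \log P(w_2\mid\text{spam}) + \cdots + \log P(w_n\mid\text{spam}) + \log P(\text{spam}) - \log P(\text{comment})$$

The $-\log P(\text{comment})$ term is identical for both classes, so it cancels whenever the two scores are compared. Summing logarithms also avoids the floating-point underflow that a product of many small probabilities would cause.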
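Scoring in log space can be sketched as follows. It assumes per-word probabilities have already been estimated; the two tables below are made-up numbers for illustration, the prior 0.67 mirrors the share of spam in the corpus described under Implementation, and `log_score` and its `floor` parameter are hypothetical:

```python
import math


def log_score(words, p_word, prior, floor=1e-6):
    """log P(w1|c) + ... + log P(wn|c) + log P(c) for one class c.

    The common -log P(comment) term is omitted because it is the same
    for both classes. `floor` is a hypothetical stand-in probability for
    words with no estimate (the slides do not say how these are handled).
    """
    total = math.log(prior)
    for w in words:
        total += math.log(p_word.get(w, floor))
    return total


# Hypothetical per-word probabilities, for illustration only.
p_spam = {"texas": 0.784, "gambling": 0.3, "site": 0.2}
p_ham = {"texas": 0.05, "gambling": 0.01, "site": 0.1}

words = "texas gambling site".split()
spam_score = log_score(words, p_spam, prior=0.67)
ham_score = log_score(words, p_ham, prior=0.33)
print("spam" if spam_score > ham_score else "ham")  # spam
```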

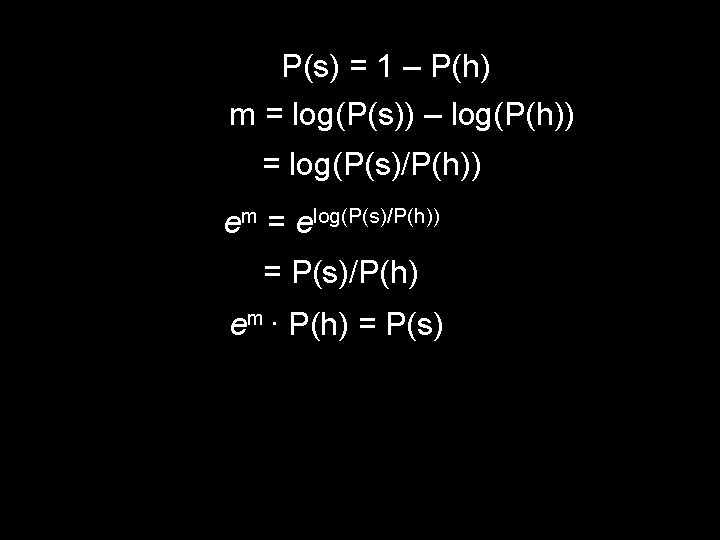

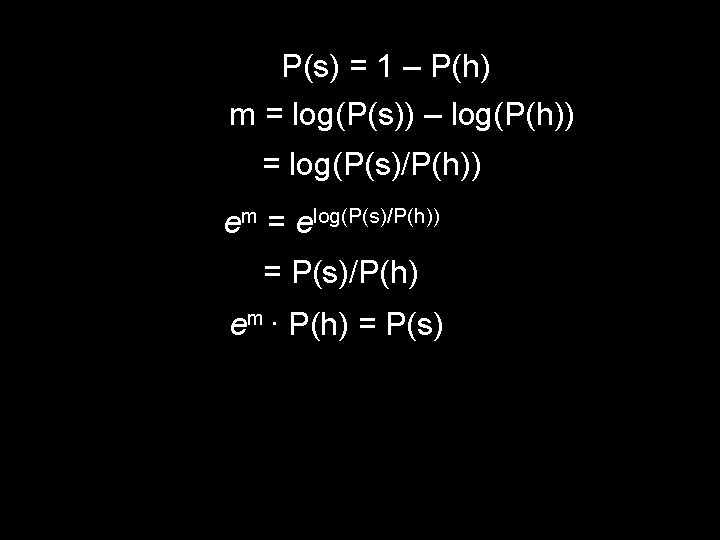

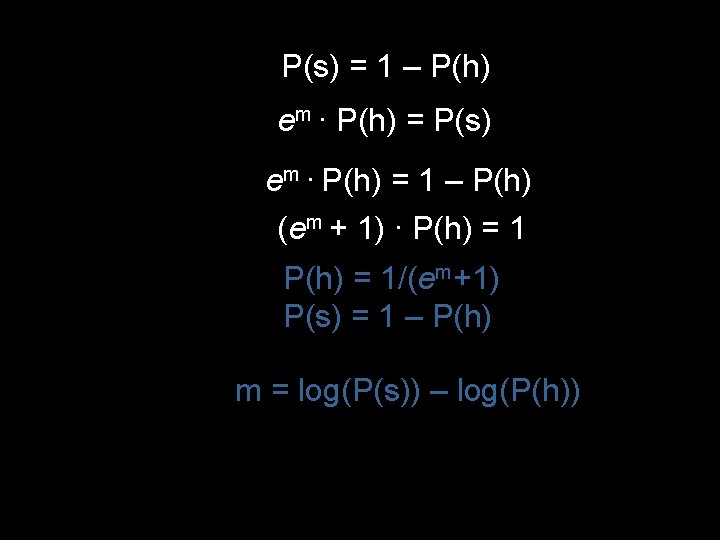

Fact: P(spam|comment) = 1 − P(ham|comment). Abuse of notation: P(s) = P(spam|comment), P(h) = P(ham|comment).

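$$P(s) = 1 - P(h)$$

$$m = \log P(s) - \log P(h) = \log\frac{P(s)}{P(h)}$$

$$e^m = e^{\log(P(s)/P(h))} = \frac{P(s)}{P(h)}$$

$$e^m \cdot P(h) = P(s)$$

(Here $\log$ is the natural logarithm, so that $e^{\log x} = x$.)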

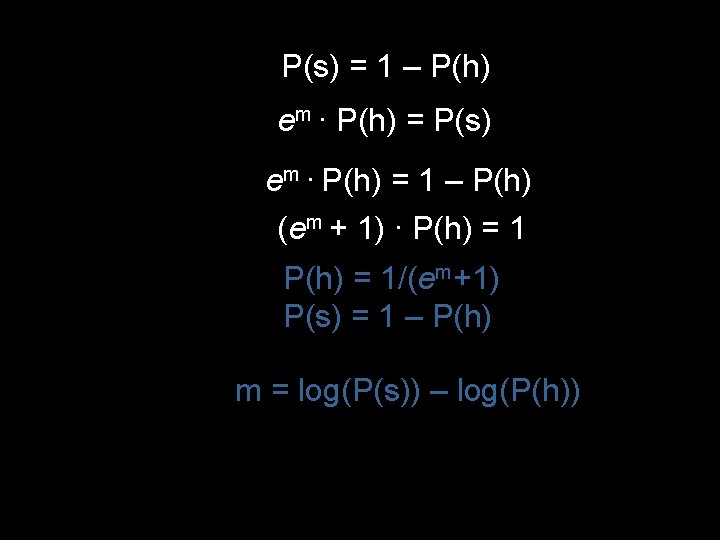

Substituting $P(s) = 1 - P(h)$ into $e^m \cdot P(h) = P(s)$:

$$e^m \cdot P(h) = 1 - P(h)$$

$$(e^m + 1) \cdot P(h) = 1$$

$$P(h) = \frac{1}{e^m + 1}$$

where, as before, $P(s) = 1 - P(h)$ and $m = \log P(s) - \log P(h)$.

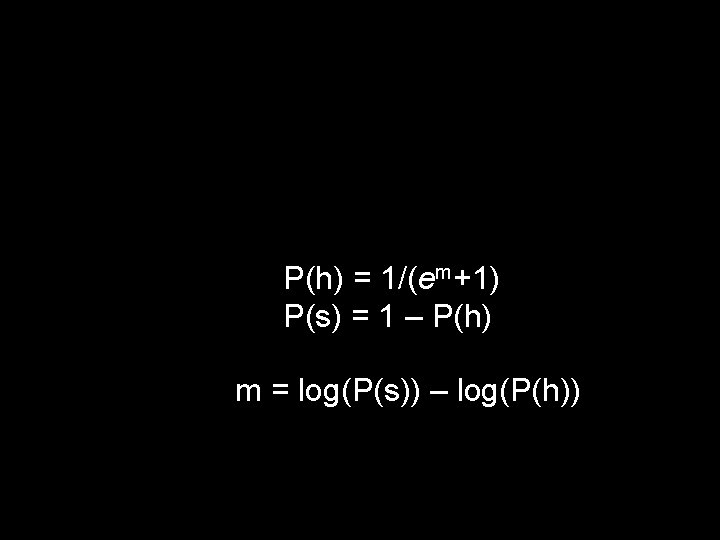

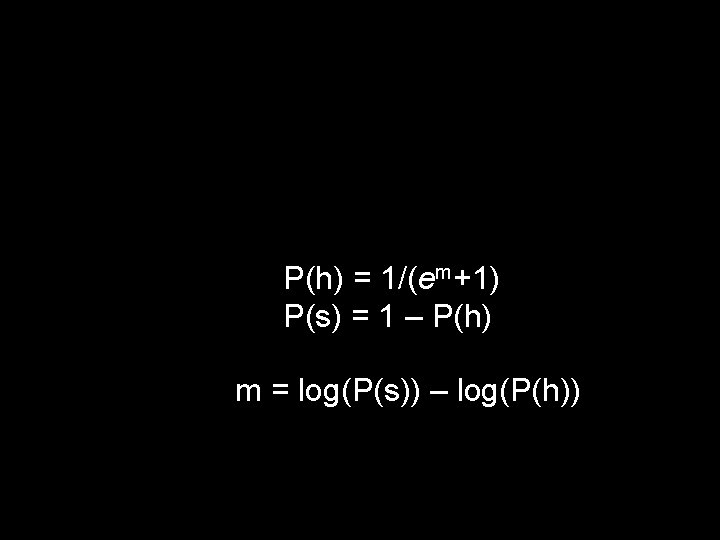

$$P(h) = \frac{1}{e^m + 1}, \qquad P(s) = 1 - P(h), \qquad m = \log P(s) - \log P(h)$$

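$$P(\text{ham}\mid\text{comment}) = \frac{1}{e^m + 1}, \qquad P(\text{spam}\mid\text{comment}) = 1 - P(\text{ham}\mid\text{comment})$$

$$m = \log P(\text{spam}\mid\text{comment}) - \log P(\text{ham}\mid\text{comment})$$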
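Turning the two log scores into a probability follows the formulas above. Because the common $-\log P(\text{comment})$ term cancels in $m$, the scores may omit it. Computing $e^m$ directly can overflow when the scores differ by several hundred, so this minimal sketch (the function name is hypothetical) uses the algebraically equivalent form $1/(1 + e^{-m})$ when $m \ge 0$:

```python
import math


def spam_probability(log_spam, log_ham):
    """P(spam|comment) = 1 - 1/(e^m + 1), with m = log_spam - log_ham."""
    m = log_spam - log_ham
    # 1 - 1/(e^m + 1) equals e^m/(e^m + 1) equals 1/(1 + e^-m); pick the
    # form whose exponent is non-positive so math.exp cannot overflow.
    if m >= 0:
        return 1.0 / (1.0 + math.exp(-m))
    e = math.exp(m)
    return e / (1.0 + e)
```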

In practice, just compare log(P(ham|comment)) with log(P(spam|comment)) and label the comment as spam if the latter is larger.

Implementation

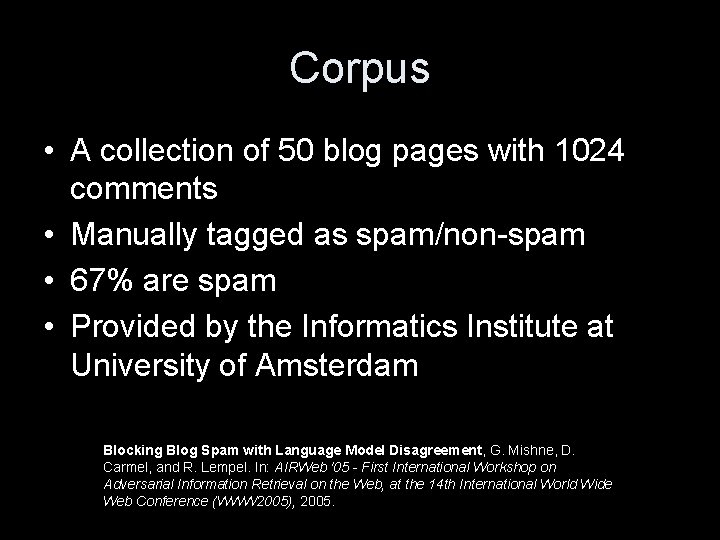

Corpus

- A collection of 50 blog pages with 1024 comments
- Manually tagged as spam/non-spam
- 67% are spam
- Provided by the Informatics Institute at the University of Amsterdam

G. Mishne, D. Carmel, and R. Lempel. "Blocking Blog Spam with Language Model Disagreement." In: AIRWeb '05, First International Workshop on Adversarial Information Retrieval on the Web, at the 14th International World Wide Web Conference (WWW 2005), 2005.

Most popular spam words

| Word | Spam | Ham |
|---|---|---|
| casino | 0.999918 | 0.000082076 |
| betting | 0.999879 | 0.000120513 |
| texas | 0.999813 | 0.000187148 |
| biz | 0.999776 | 0.000223708 |
| holdem | 0.999738 | 0.000262111 |
| poker | 0.999551 | 0.000448675 |
| pills | 0.999527 | 0.000473407 |
| pokerabc | 0.999506 | 0.000493821 |
| teen | 0.999455 | 0.000544715 |
| online | 0.999455 | 0.000544715 |
| bowl | 0.999437 | 0.000562555 |
| gambling | 0.999437 | 0.000562555 |
| sonneries | 0.999353 | 0.000647359 |
| blackjack | 0.999346 | 0.000653516 |
| pharmacy | 0.999254 | 0.000745723 |

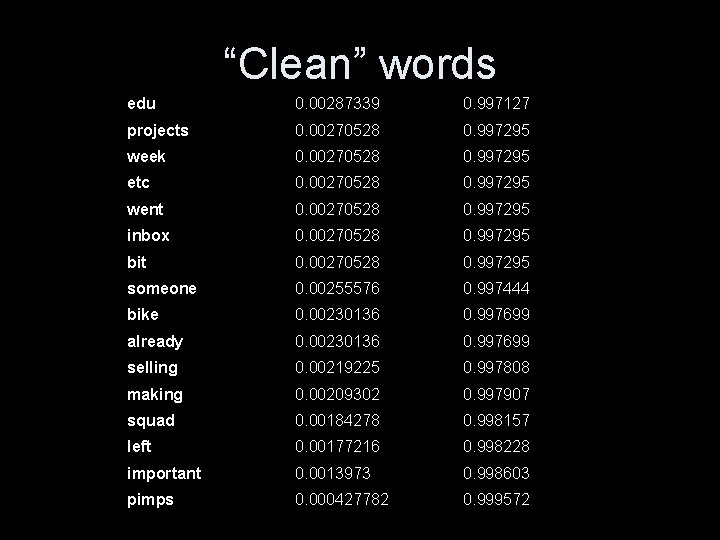

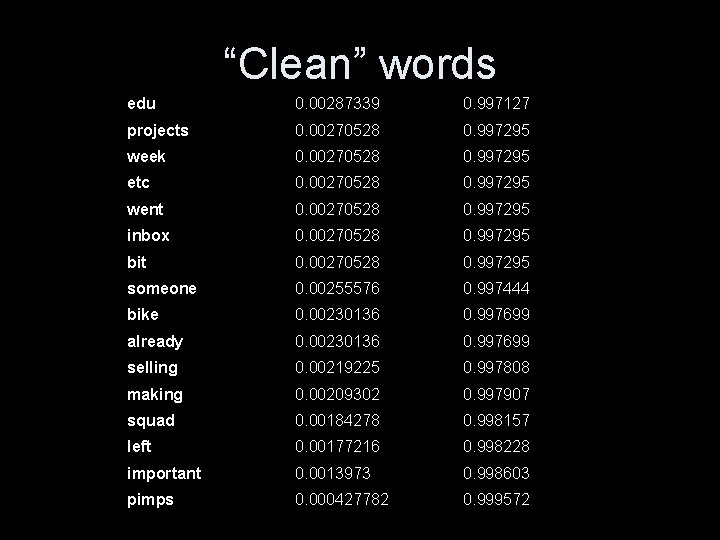

“Clean” words

| Word | Spam | Ham |
|---|---|---|
| edu | 0.00287339 | 0.997127 |
| projects | 0.00270528 | 0.997295 |
| week | 0.00270528 | 0.997295 |
| etc | 0.00270528 | 0.997295 |
| went | 0.00270528 | 0.997295 |
| inbox | 0.00270528 | 0.997295 |
| bit | 0.00270528 | 0.997295 |
| someone | 0.00255576 | 0.997444 |
| bike | 0.00230136 | 0.997699 |
| already | 0.00230136 | 0.997699 |
| selling | 0.00219225 | 0.997808 |
| making | 0.00209302 | 0.997907 |
| squad | 0.00184278 | 0.998157 |
| left | 0.00177216 | 0.998228 |
| important | 0.0013973 | 0.998603 |
| pimps | 0.000427782 | 0.999572 |

Implementation

- Corpus parsing and processing
- Naïve Bayes algorithm
- Randomly select 70% for training, 30% for testing
- Stand-alone web service
- Written entirely in Python
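The random 70/30 split might look like this. A sketch assuming the tagged corpus is a list of (text, label) pairs; `split_corpus` is a hypothetical helper, and the optional seed is there only to make runs reproducible:

```python
import random


def split_corpus(comments, train_fraction=0.7, seed=None):
    """Randomly split tagged comments into training and test sets."""
    shuffled = list(comments)  # copy, so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical (text, label) pairs standing in for the 1024 tagged comments.
corpus = [(f"comment {i}", "spam" if i % 3 else "ham") for i in range(1024)]
train, test = split_corpus(corpus, seed=42)
print(len(train), len(test))  # 717 307
```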

It’s showtime!

Configurations

- Separator used to tokenize comment
- Inclusion of words from header
- Classify based only on most significant words
- Double count non-spam comments
- Include article body as non-spam example
- Boosting

![Minimum Error Configuration](https://slidetodoc.com/presentation_image_h/185abbcce479bf9814e5ceb651d36fab/image-39.jpg)

Minimum Error Configuration

- Separator: `[^a-z<>]+`
- Header: Both
- Significant words: All
- Double count: No
- Include body: No
- Boosting: No
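Tokenizing with the minimum-error separator can be sketched as below. The character class contains only lowercase letters, so this sketch assumes comments are lowercased first. The slides don't say why `<` and `>` are kept; here they simply stay attached to neighbouring letters, so HTML tag fragments end up inside tokens, while digits and other punctuation act as separators. The names `SEPARATOR` and `tokenize` are hypothetical:

```python
import re

# Separator from the minimum-error configuration: any run of characters
# other than lowercase letters and angle brackets.
SEPARATOR = re.compile(r"[^a-z<>]+")


def tokenize(text):
    """Lowercase (assumed), split on SEPARATOR, and drop empty strings."""
    return [tok for tok in SEPARATOR.split(text.lower()) if tok]


print(tokenize("Texas hold'em <b>poker</b>, 100% online!"))
```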

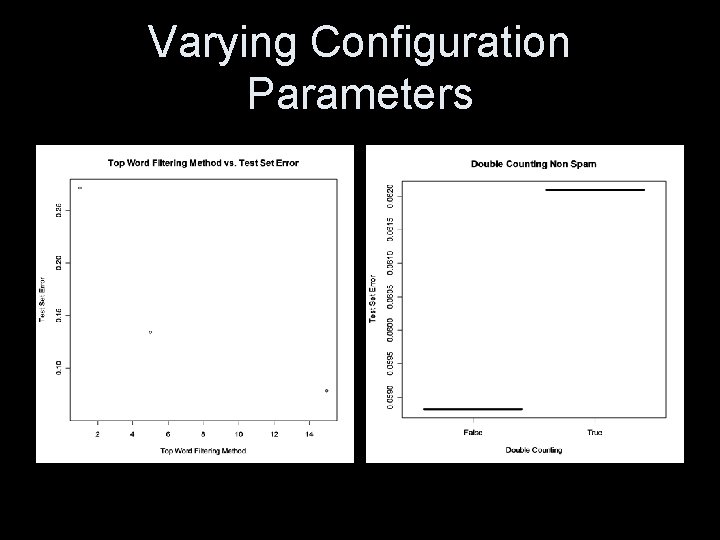

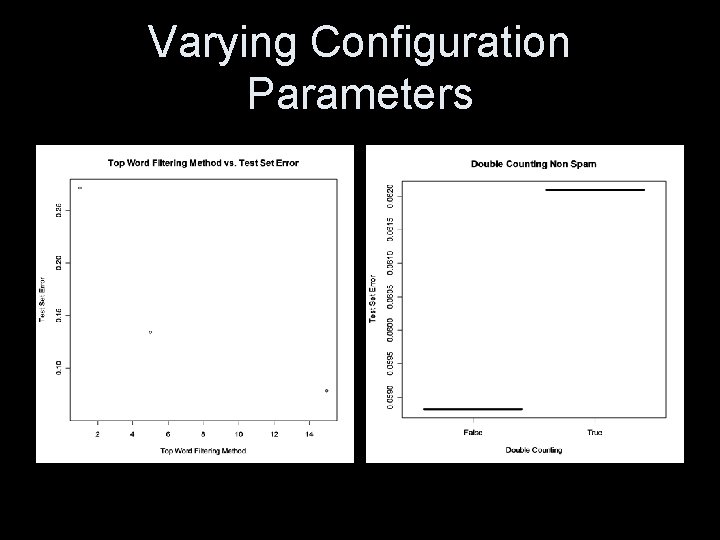

Varying Configuration Parameters


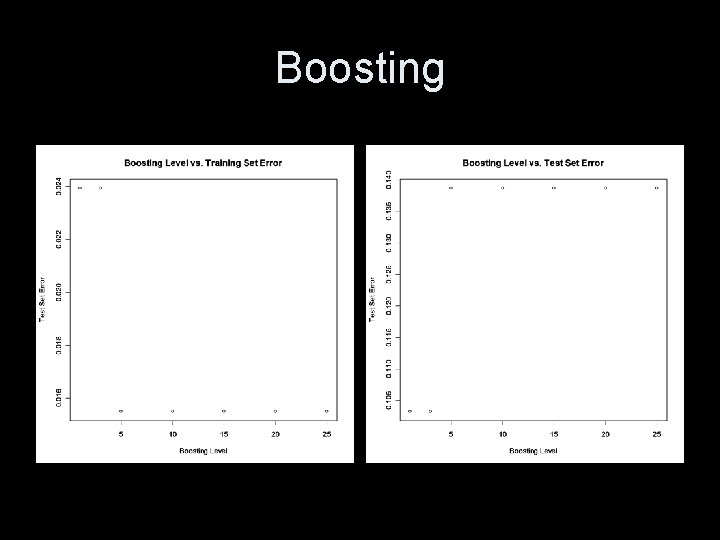

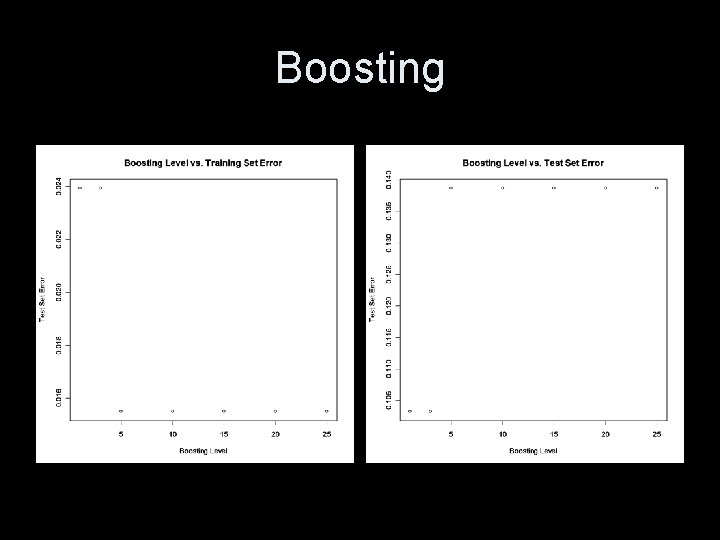

Boosting: Naïve Bayes is applied repeatedly to the data, producing a weighted majority model.

    bayesModels = empty list
    weights = vector(1)
    for i in 1 to M:
        model = naiveBayes(examples, weights)
        error = computeError(model, examples)
        weights = adjustWeights(examples, weights, error)
        bayesModels[i] = [model, error]
        if error == 0: break
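The boosting loop leaves computeError, adjustWeights and the final weighted-majority vote unspecified. The sketch below fills them in with the AdaBoost.M1 rules as an assumption, not necessarily what the authors did; the helper signatures are hypothetical and take a list of per-example correct/incorrect flags instead of the raw examples:

```python
import math


def compute_error(weights, correct):
    """Weighted fraction of misclassified training examples."""
    wrong = sum(w for w, ok in zip(weights, correct) if not ok)
    return wrong / sum(weights)


def adjust_weights(weights, correct, error):
    """AdaBoost.M1-style update (assumed): scale the weights of correctly
    classified examples by beta = error / (1 - error), then renormalise,
    so the next round concentrates on the examples that were missed."""
    if error == 0 or error >= 0.5:
        # Perfect or too-weak model: AdaBoost.M1 stops instead of updating.
        return list(weights)
    beta = error / (1.0 - error)
    new = [w * beta if ok else w for w, ok in zip(weights, correct)]
    total = sum(new)
    return [w / total for w in new]


def weighted_majority(models, comment):
    """Combine (predict, error) pairs; each votes with weight log(1/beta)."""
    votes = {"spam": 0.0, "ham": 0.0}
    for predict, error in models:
        if error == 0:
            return predict(comment)  # a perfect model decides on its own
        votes[predict(comment)] += math.log((1.0 - error) / error)
    return max(votes, key=votes.get)
```

With this update, lower-error rounds get larger votes, which is what makes the combined model a weighted rather than a simple majority.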

Boosting

Future work (or what we did not do)

Data Processing

- Follow links in comment and include words in target web page
- More sophisticated tokenization and URL handling (handling $100,000...)
- Word stemming

Features

- Ability to incorporate incoming comments into corpus
- Ability to mark comment as spam/non-spam
- Assign more weight to page content
- Adjust probability table based on page content, providing content-sensitive filtering

Comments? No spam, please.