Collapsed Variational Dirichlet Process Mixture Models Kenichi Kurihara


Collapsed Variational Dirichlet Process Mixture Models
Kenichi Kurihara, Max Welling and Yee W. Teh
Published at IJCAI 07
Discussion led by Qi An

Outline
• Introduction
• Four approximations to DP
• Variational Bayesian Inference
• Optimal cluster label reordering
• Experimental results
• Discussion

Introduction
• The Dirichlet process (DP) is suitable for many density estimation and data clustering applications.
• Gibbs sampling is not efficient enough to scale to large problems.
• The truncated stick-breaking approximation is formulated in the space of explicit, non-exchangeable cluster labels.

Introduction
• This paper
  – proposes an improved VB algorithm based on integrating out the mixture weights
  – compares the stick-breaking representation against the finite symmetric Dirichlet approximation
  – maintains an optimal ordering of cluster labels in the stick-breaking VB algorithm

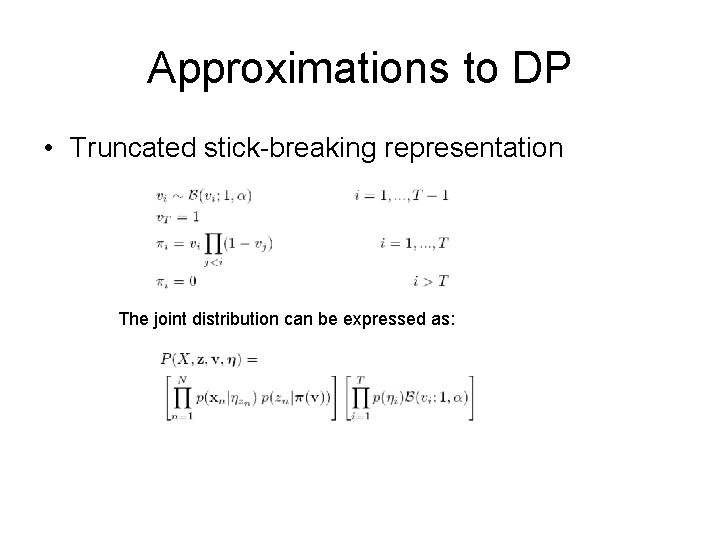

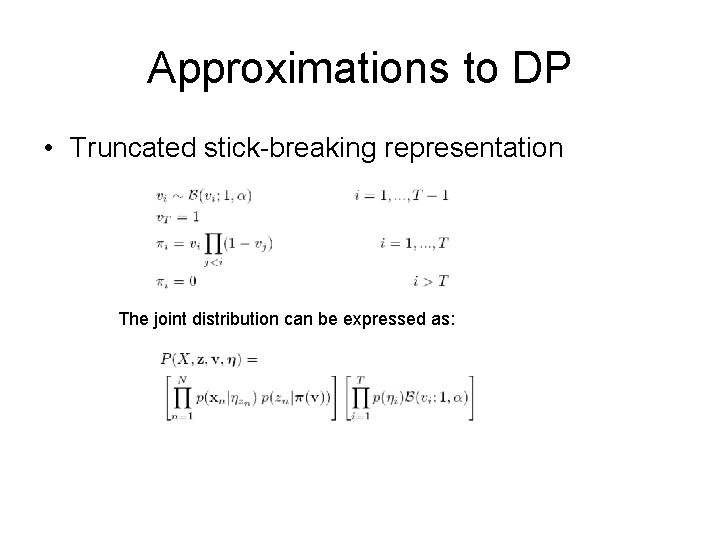

Approximations to DP
• Truncated stick-breaking (TSB) representation
The joint distribution can be expressed as:

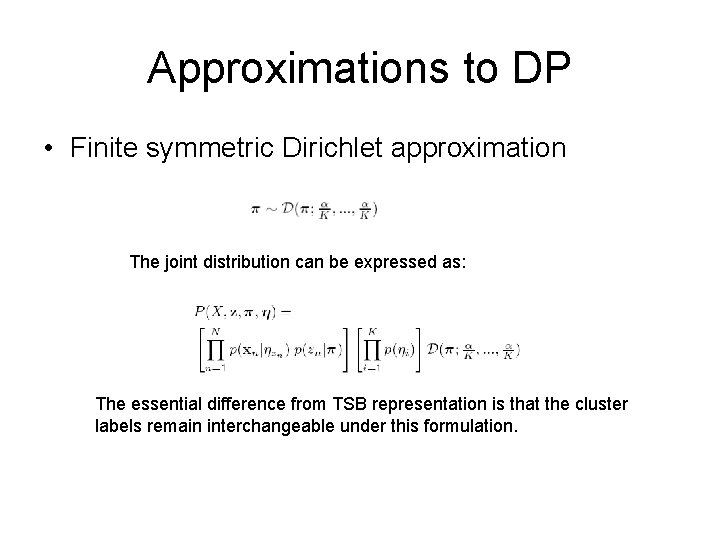

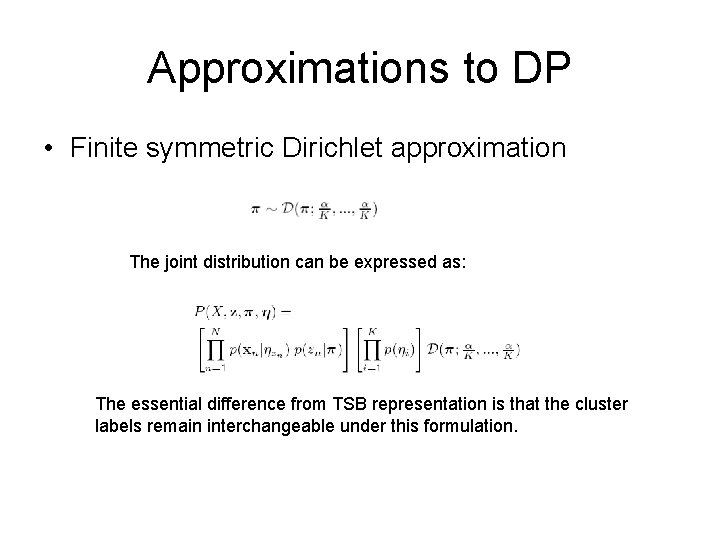
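The slide's own equation is not recoverable from the transcript. As a reference point, the standard form of a truncated stick-breaking mixture can be written as below. The notation is an assumption, not taken from the slide: n indexes the N data points, i indexes the T clusters, η_i are the cluster parameters, and v_i are the stick lengths with v_T fixed to 1.

```latex
\begin{aligned}
v_i &\sim \mathrm{Beta}(1, \alpha), \quad i = 1, \dots, T-1, \qquad v_T = 1, \\
\pi_i(v) &= v_i \prod_{j<i} (1 - v_j), \\
p(x, z, v, \eta) &= \Bigl[\prod_{n=1}^{N} p(x_n \mid \eta_{z_n})\, \pi_{z_n}(v)\Bigr]
                    \Bigl[\prod_{i=1}^{T} p(\eta_i)\Bigr]
                    \Bigl[\prod_{i=1}^{T-1} \mathrm{Beta}(v_i; 1, \alpha)\Bigr].
\end{aligned}
```

Because π_i depends on the sticks v_1, ..., v_i in order, swapping two cluster labels changes the prior probability of an assignment.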

Approximations to DP
• Finite symmetric Dirichlet (FSD) approximation
The joint distribution can be expressed as shown below. The essential difference from the TSB representation is that the cluster labels remain interchangeable under this formulation.

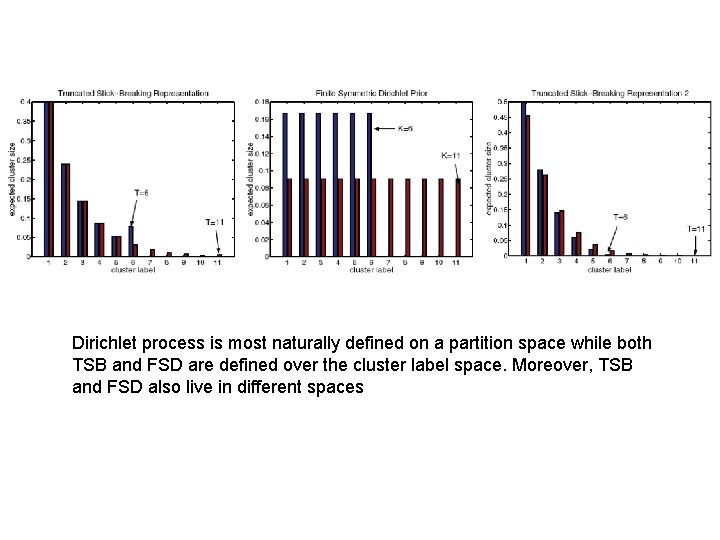

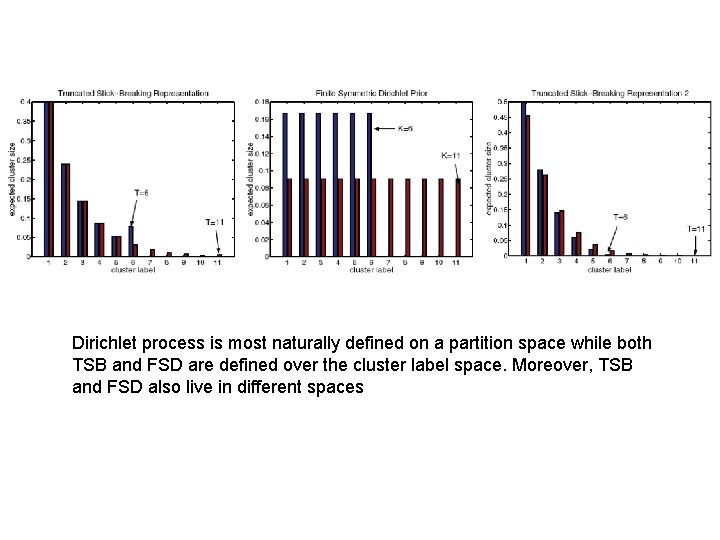
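The slide's own equation is not recoverable from the transcript. A standard way to write a finite mixture with a symmetric Dirichlet prior on the weights is sketched below. The notation is an assumption: n indexes the N data points, K is the number of clusters, and η_i are the cluster parameters.

```latex
\begin{aligned}
\pi &\sim \mathrm{Dirichlet}\bigl(\tfrac{\alpha}{K}, \dots, \tfrac{\alpha}{K}\bigr), \\
p(x, z, \pi, \eta) &= \Bigl[\prod_{n=1}^{N} p(x_n \mid \eta_{z_n})\, \pi_{z_n}\Bigr]
                      \Bigl[\prod_{i=1}^{K} p(\eta_i)\Bigr]
                      \mathrm{Dirichlet}\bigl(\pi; \tfrac{\alpha}{K}, \dots, \tfrac{\alpha}{K}\bigr).
\end{aligned}
```

The prior treats all K components identically, so permuting the cluster labels leaves the joint probability unchanged.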

The Dirichlet process is most naturally defined on a partition space, while both TSB and FSD are defined over the cluster label space. Moreover, TSB and FSD also live in different spaces.

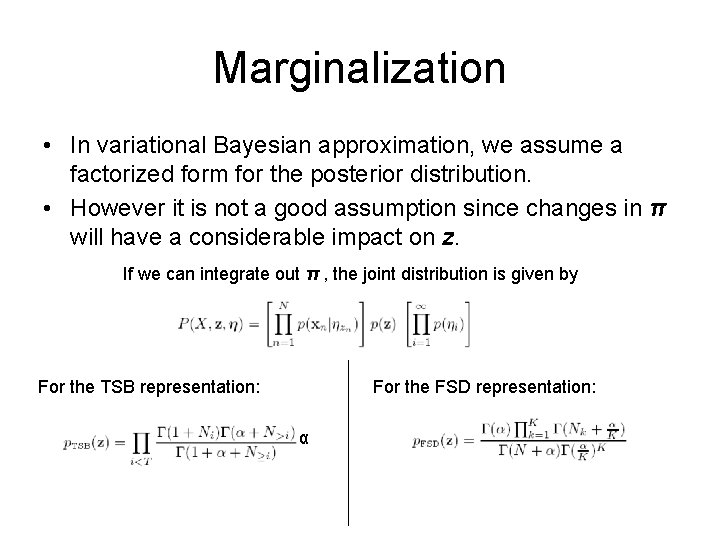

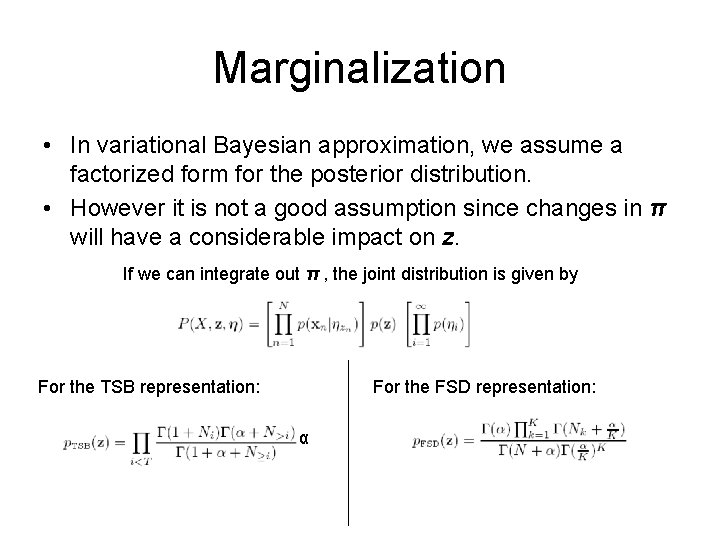

Marginalization
• In the variational Bayesian approximation, we assume a factorized form for the posterior distribution.
• However, this is not a good assumption, since changes in π have a considerable impact on z.
If we integrate out π, the joint distribution is given by the expressions below, first for the TSB representation and then for the FSD representation.

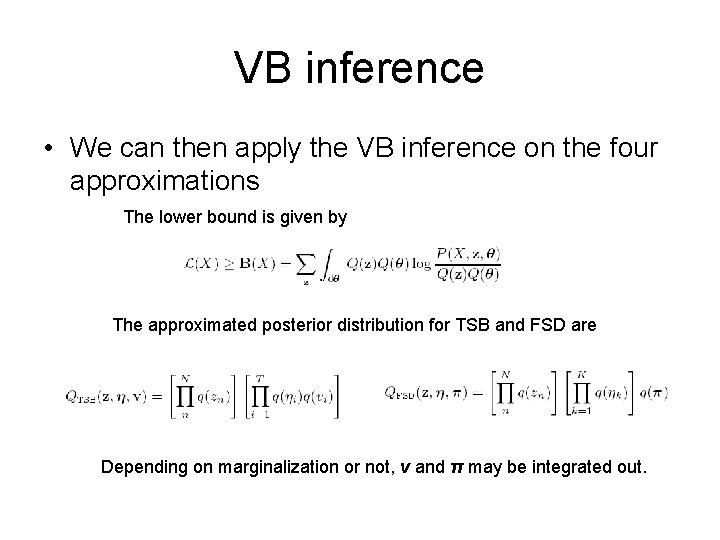

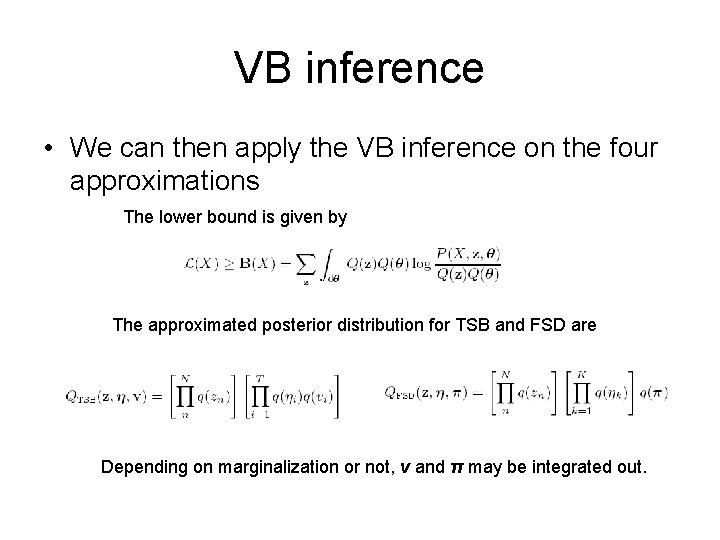
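The effect of integrating out the weights can be checked numerically. The sketch below is not taken from the slides. It uses the closed forms that follow from Beta and Dirichlet conjugacy, under the assumptions that the TSB sticks are Beta(1, α) with the last stick fixed to 1 and that the FSD weights are Dirichlet(α/K, ..., α/K). All function names are hypothetical.

```python
import math
from itertools import product


def cluster_counts(z, num_clusters):
    """Count how many points carry each label 0..num_clusters-1."""
    counts = [0] * num_clusters
    for label in z:
        counts[label] += 1
    return counts


def log_p_z_tsb(z, T, alpha):
    """log p(z) for truncated stick-breaking with the sticks integrated out.

    Assumes v_i ~ Beta(1, alpha) for i < T and v_T = 1, so each stick
    contributes alpha * G(1 + N_i) * G(alpha + N_{>i}) / G(1 + alpha + N_{>=i}).
    """
    counts = cluster_counts(z, T)
    log_prob = 0.0
    n_geq = len(z)  # points with label >= i
    for i in range(T - 1):
        n_i = counts[i]
        n_gt = n_geq - n_i  # points with label > i
        log_prob += (math.log(alpha) + math.lgamma(1 + n_i)
                     + math.lgamma(alpha + n_gt)
                     - math.lgamma(1 + alpha + n_geq))
        n_geq = n_gt
    return log_prob


def log_p_z_fsd(z, K, alpha):
    """log p(z) for a symmetric Dirichlet(alpha/K) prior, weights integrated out."""
    counts = cluster_counts(z, K)
    log_prob = math.lgamma(alpha) - math.lgamma(len(z) + alpha)
    for n_k in counts:
        log_prob += math.lgamma(n_k + alpha / K) - math.lgamma(alpha / K)
    return log_prob


def total_mass(log_p, num_points, num_clusters, alpha):
    """Sum p(z) over every assignment; a sanity check that should give 1."""
    return sum(math.exp(log_p(list(z), num_clusters, alpha))
               for z in product(range(num_clusters), repeat=num_points))
```

Evaluating both functions on `[0, 0, 1]` and on the relabeled `[1, 1, 0]` illustrates the difference between the two label spaces: the FSD value is unchanged by the relabeling, while the TSB value is not.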

VB inference
• We can then apply VB inference to the four approximations (TSB and FSD, each with or without marginalization).
The lower bound and the approximate posterior distributions for TSB and FSD are given below. Depending on whether marginalization is used, v and π may be integrated out.

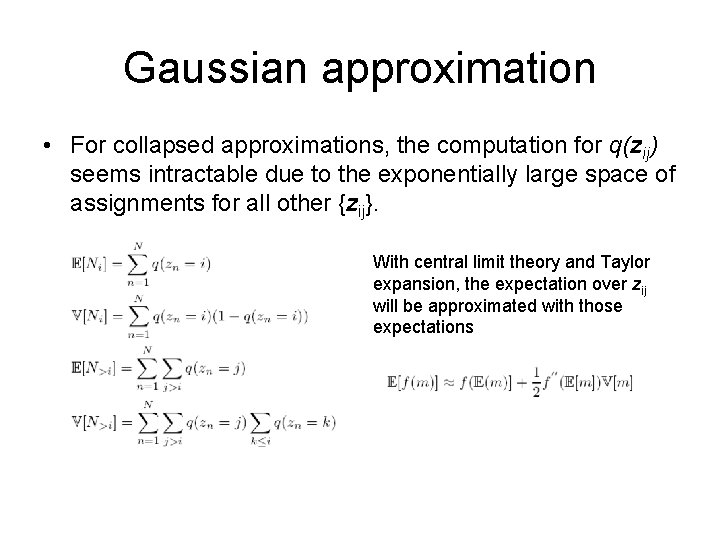

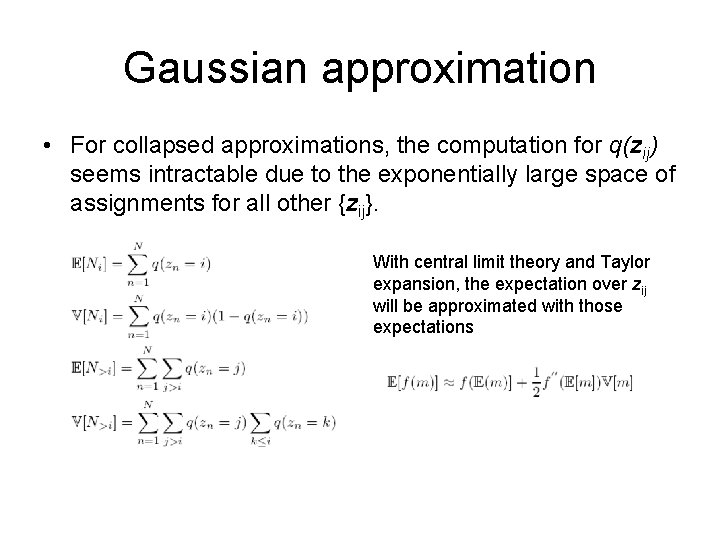
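For reference, the generic variational lower bound and the fully factorized posteriors that match the description above can be written as follows. This is a sketch in assumed notation rather than a reproduction of the slide: W stands for all hidden variables, n indexes data points, and i indexes clusters.

```latex
\begin{aligned}
\log p(x) &\ge \mathbb{E}_{q(W)}\bigl[\log p(x, W)\bigr] - \mathbb{E}_{q(W)}\bigl[\log q(W)\bigr], \\
\text{TSB:}\quad q(z, \eta, v) &= \prod_{n} q(z_n) \prod_{i} q(\eta_i)\, q(v_i), \\
\text{FSD:}\quad q(z, \eta, \pi) &= \Bigl[\prod_{n} q(z_n)\Bigr] \Bigl[\prod_{i} q(\eta_i)\Bigr] q(\pi), \\
\text{collapsed:}\quad q(z, \eta) &= \prod_{n} q(z_n) \prod_{i} q(\eta_i).
\end{aligned}
```

In the collapsed variants the factors q(v_i) or q(π) disappear because those variables have been integrated out of the model before the bound is formed.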

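Gaussian approximation
• For the collapsed approximations, computing q(zij) appears intractable because of the exponentially large space of assignments for all other {zij}.
• Under the factorized posterior, each cluster count is a sum of independent Bernoulli variables, so by the central limit theorem it is approximately Gaussian. A second-order Taylor expansion around the mean then approximates the required expectations using only the mean and variance of the counts.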
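The quality of this kind of approximation can be checked on a small example. The sketch below is illustrative and not from the slides. It takes f(N) = log(α + N) as a representative term (an assumption about the form of the terms involved), models the count N as a sum of independent Bernoulli variables, and compares the exact expectation with the second-order approximation E[f(N)] ≈ f(E[N]) + ½ f''(E[N]) Var[N]. Function names are hypothetical.

```python
import math


def poisson_binomial_pmf(probs):
    """Exact distribution of a sum of independent Bernoulli(p) variables."""
    pmf = [1.0]
    for p in probs:
        new = [0.0] * (len(pmf) + 1)
        for k, mass in enumerate(pmf):
            new[k] += mass * (1.0 - p)   # this variable is 0
            new[k + 1] += mass * p       # this variable is 1
        pmf = new
    return pmf


def exact_expected_log(probs, alpha):
    """E[log(alpha + N)] computed exactly, in O(len(probs)^2) time."""
    pmf = poisson_binomial_pmf(probs)
    return sum(mass * math.log(alpha + k) for k, mass in enumerate(pmf))


def second_order_expected_log(probs, alpha):
    """Second-order Taylor approximation of E[log(alpha + N)] around E[N]."""
    mean = sum(probs)
    var = sum(p * (1.0 - p) for p in probs)
    # f(m) = log(alpha + m), so f''(m) = -1 / (alpha + m)^2
    return math.log(alpha + mean) - var / (2.0 * (alpha + mean) ** 2)
```

The approximation needs only the sums of q and q(1 - q) over data points, which avoids enumerating joint assignments.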

Optimal cluster label reordering
• For the TSB representation, the prior assumes a certain ordering of the clusters.
• The authors claim that the optimal relabelling of the clusters is obtained by sorting the clusters in decreasing order of size.
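A minimal sketch of such a relabelling step is given below. It assumes, beyond what the slide states, that cluster size is measured by the expected count under q(z) and that the responsibilities are stored as a list of rows, one per data point. The function name is hypothetical.

```python
def reorder_by_expected_size(resp):
    """Permute cluster columns so expected sizes are in decreasing order.

    resp[n][k] is q(z_n = k). Returns the permuted responsibilities and the
    permutation `order`, where new column j corresponds to old column order[j].
    """
    num_clusters = len(resp[0])
    sizes = [sum(row[k] for row in resp) for k in range(num_clusters)]
    # sorted() is stable, so clusters of equal size keep their relative order.
    order = sorted(range(num_clusters), key=lambda k: -sizes[k])
    reordered = [[row[k] for k in order] for row in resp]
    return reordered, order
```

The same permutation would also have to be applied to any per-cluster variational parameters so that labels stay consistent across the model.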

Experimental results


Discussion
• There is very little difference between variational Bayesian inference in the reordered stick-breaking representation and the finite mixture model with symmetric Dirichlet priors.
• Label reordering is important for the stick-breaking representation.
• Variational approximations are much more efficient computationally than Gibbs sampling, with almost no loss in accuracy.