Collaborative Filtering: Recommender Systems


Recommender Systems
§ Systems that recommend items (e.g. books, movies, CDs, web pages, newsgroup messages) to users, based on examples (historical data) of choices made earlier by other users

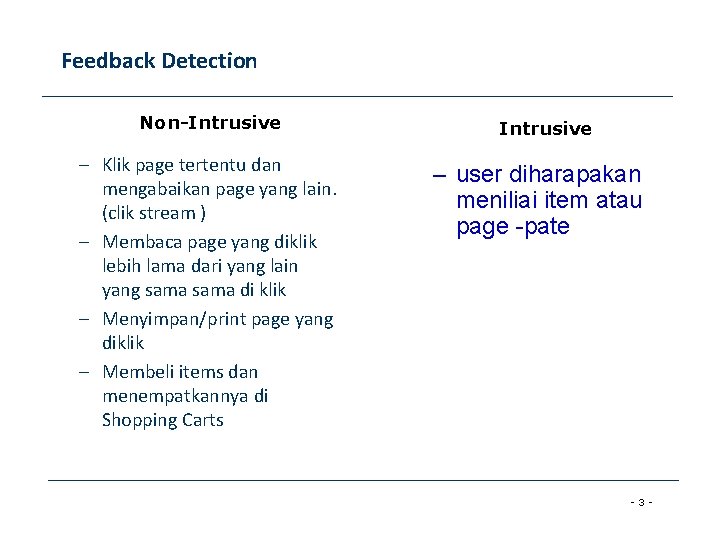

Feedback Detection
§ Non-intrusive
  – Clicking certain pages while ignoring others (click stream)
  – Reading a clicked page for longer than other clicked pages
  – Saving or printing a clicked page
  – Buying items or placing them in a shopping cart
§ Intrusive
  – The user is asked to rate items or pages explicitly

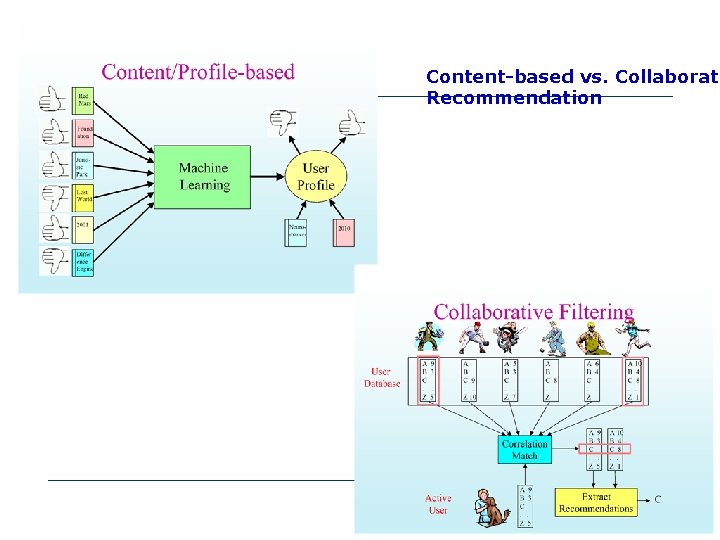

Content-based vs. Collaborative Recommendation

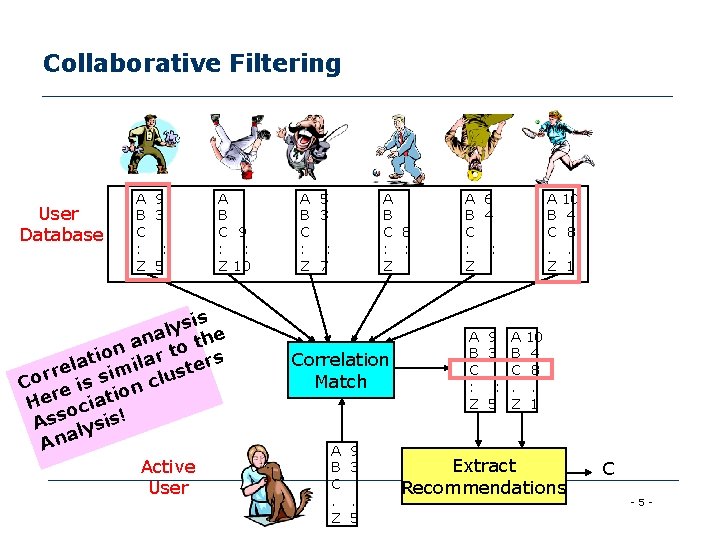

Collaborative Filtering
[Figure: the active user's ratings are matched by correlation against the ratings stored in a user database; recommendations (here, item C) are extracted from the best-matching users.]

Item-User Matrix
§ The input to the collaborative filtering algorithm (k-NN) is an m × n matrix in which rows are users and columns are items
  – Analogous to a term-document matrix (items play the role of terms, users the role of documents)
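As a rough illustration of this input format, the sketch below builds a small user-item matrix from (user, item, rating) triples. The function name `build_rating_matrix` and the toy triples are hypothetical, and representing a missing rating as `None` is just one possible convention.

```python
def build_rating_matrix(triples):
    """Build an m x n user-item matrix from (user, item, rating) triples.

    Rows are users and columns are items, both in order of first
    appearance; cells without a rating are None.
    """
    users, items = [], []
    for user, item, _ in triples:
        if user not in users:
            users.append(user)
        if item not in items:
            items.append(item)
    matrix = [[None] * len(items) for _ in users]
    for user, item, rating in triples:
        matrix[users.index(user)][items.index(item)] = rating
    return users, items, matrix


# Hypothetical toy data, not taken from the slides.
triples = [
    ("Alice", "Item 1", 5),
    ("Alice", "Item 2", 3),
    ("User 1", "Item 1", 3),
    ("User 1", "Item 3", 2),
]
users, items, matrix = build_rating_matrix(triples)
```

Real rating matrices are very sparse, so a dense list of lists like this is only practical for small examples.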

§ User-based nearest-neighbor collaborative filtering
§ Item-based nearest-neighbor collaborative filtering

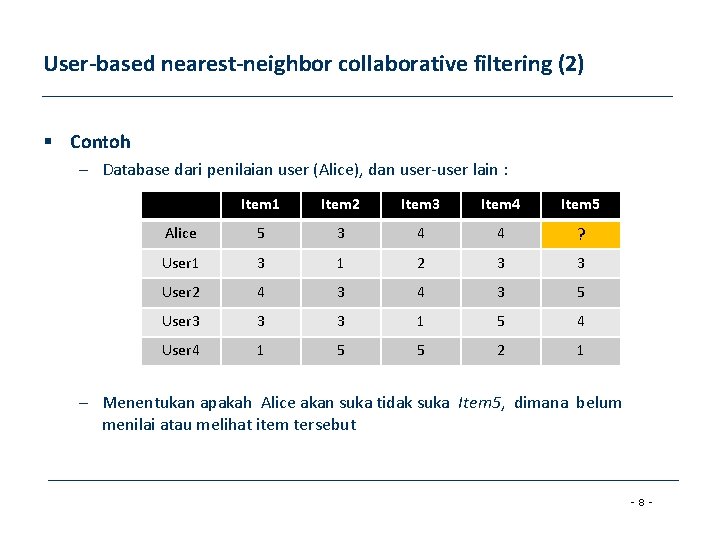

User-based nearest-neighbor collaborative filtering (2)
§ Example
  – A database of ratings by the active user (Alice) and by other users:

| | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 |
|---|---|---|---|---|---|
| Alice | 5 | 3 | 4 | 4 | ? |
| User 1 | 3 | 1 | 2 | 3 | 3 |
| User 2 | 4 | 3 | 4 | 3 | 5 |
| User 3 | 3 | 3 | 1 | 5 | 4 |
| User 4 | 1 | 5 | 5 | 2 | 1 |

  – Decide whether Alice will like or dislike Item 5, which she has not yet rated or seen

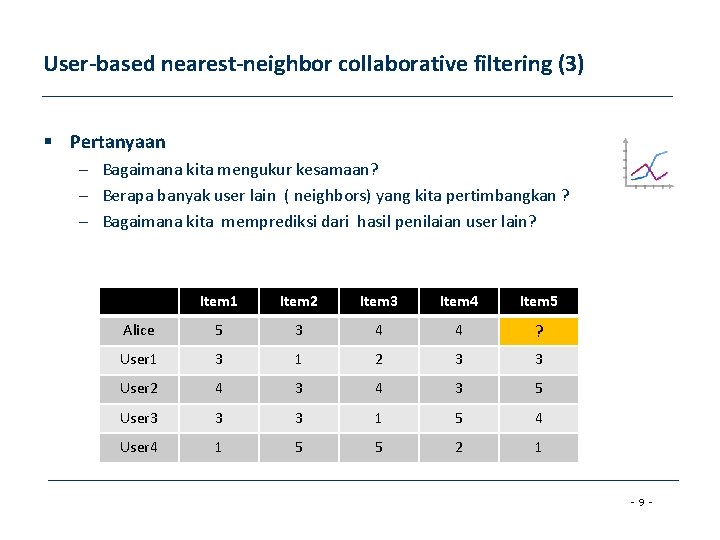

User-based nearest-neighbor collaborative filtering (3)
§ Questions
  – How do we measure similarity?
  – How many other users (neighbors) should we consider?
  – How do we generate a prediction from the neighbors' ratings?

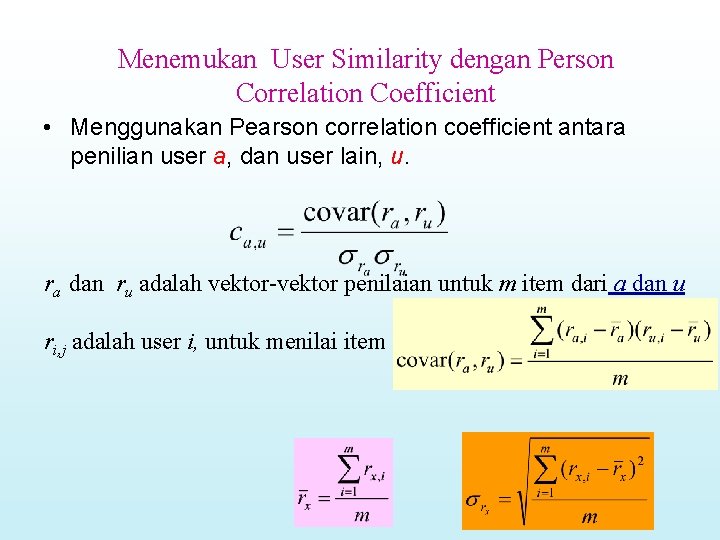

Finding User Similarity with the Pearson Correlation Coefficient
• Use the Pearson correlation coefficient between the ratings of the active user a and another user u.
• $r_a$ and $r_u$ are the rating vectors of a and u for the m items rated by both.
• $r_{i,j}$ is user i's rating for item j.
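As a minimal sketch of this similarity measure, the Python below computes the Pearson correlation over co-rated items for the running example (Alice and Users 1 to 4). It assumes the common form $w_{a,u} = \frac{\sum_{i}(r_{a,i}-\bar{r}_a)(r_{u,i}-\bar{r}_u)}{\sqrt{\sum_{i}(r_{a,i}-\bar{r}_a)^2}\,\sqrt{\sum_{i}(r_{u,i}-\bar{r}_u)^2}}$ with means taken over the co-rated items. The names `RATINGS` and `pearson` are illustrative, and the row used for User 2 (4, 3, 4, 3, 5) is an assumption.

```python
from math import sqrt

# Ratings from the running example; Alice has not rated Item5.
# Assumption: User2's row is taken to be 4, 3, 4, 3, 5.
RATINGS = {
    "Alice": {"Item1": 5, "Item2": 3, "Item3": 4, "Item4": 4},
    "User1": {"Item1": 3, "Item2": 1, "Item3": 2, "Item4": 3, "Item5": 3},
    "User2": {"Item1": 4, "Item2": 3, "Item3": 4, "Item4": 3, "Item5": 5},
    "User3": {"Item1": 3, "Item2": 3, "Item3": 1, "Item4": 5, "Item5": 4},
    "User4": {"Item1": 1, "Item2": 5, "Item3": 5, "Item4": 2, "Item5": 1},
}


def pearson(a, u, ratings=RATINGS):
    """Pearson correlation between users a and u over their co-rated items."""
    common = [i for i in ratings[a] if i in ratings[u]]
    m = len(common)
    if m < 2:
        return 0.0  # not enough overlap to estimate a correlation
    mean_a = sum(ratings[a][i] for i in common) / m
    mean_u = sum(ratings[u][i] for i in common) / m
    dev_a = [ratings[a][i] - mean_a for i in common]
    dev_u = [ratings[u][i] - mean_u for i in common]
    num = sum(x * y for x, y in zip(dev_a, dev_u))
    den = sqrt(sum(x * x for x in dev_a)) * sqrt(sum(y * y for y in dev_u))
    return num / den if den else 0.0


similarities = {u: pearson("Alice", u) for u in RATINGS if u != "Alice"}
```

With these inputs the similarities to Alice come out at roughly 0.85, 0.71, 0.00 and -0.79 for Users 1 to 4.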

Neighbor Selection
• For the active user a, a set of other users is selected as input to the prediction.
• The selection is based on the similarity weights $w_{a,u}$.
• Select all users (neighbors) whose similarity weight exceeds a given threshold.

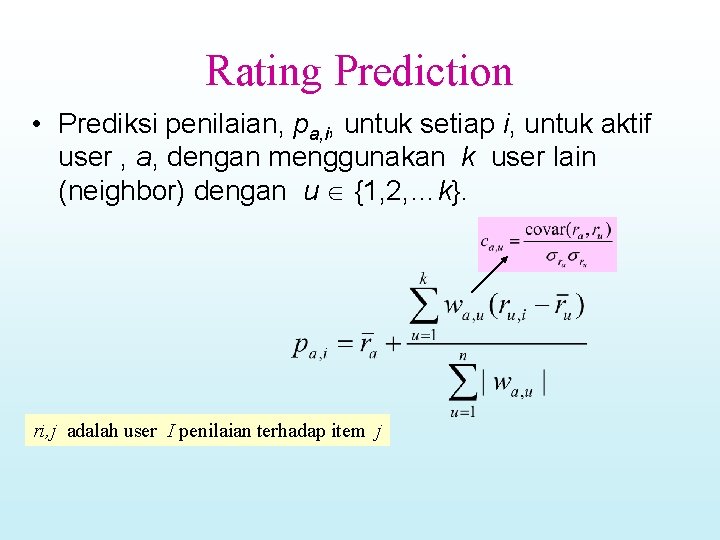

Rating Prediction
• Predict a rating $p_{a,i}$ for each item i for the active user a, using the k selected neighbors $u \in \{1, 2, \ldots, k\}$.
• $r_{i,j}$ is user i's rating for item j.
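A minimal sketch of such a prediction, assuming the widely used mean-centered weighted sum $p_{a,i} = \bar{r}_a + \frac{\sum_{u} w_{a,u}\,(r_{u,i} - \bar{r}_u)}{\sum_{u} |w_{a,u}|}$. The function name `predict` and the tuple layout are illustrative, and the input values are taken from the running example under the assumption that Users 1 and 2 are Alice's two nearest neighbors.

```python
def predict(active_mean, neighbors):
    """Predict a rating for the active user.

    `neighbors` is a list of (weight, rating, mean) tuples, one per
    neighbor who rated the target item: the similarity weight, the
    neighbor's rating of the item, and the neighbor's mean rating.
    """
    norm = sum(abs(w) for w, _, _ in neighbors)
    if norm == 0:
        return active_mean  # no usable neighbors: fall back to the mean
    offset = sum(w * (r - mean) for w, r, mean in neighbors)
    return active_mean + offset / norm


# Assumed inputs: Alice's mean rating is 4; the two neighbors have
# weights 0.85 and 0.70, rated Item 5 with 3 and 5, and have mean
# ratings 2.4 and 3.8.
prediction = predict(4.0, [(0.85, 3, 2.4), (0.70, 5, 3.8)])
```

Under these assumptions the predicted rating for Item 5 is about 4.87, so the item would be recommended to Alice.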

Improving the similarity measure and prediction function
– Use "significance weighting": reduce the weight linearly when the number of co-rated items is low
– Give more weight to neighbors whose similarity is close to 1
– Select neighbors (other users) using a threshold or a fixed cutoff value

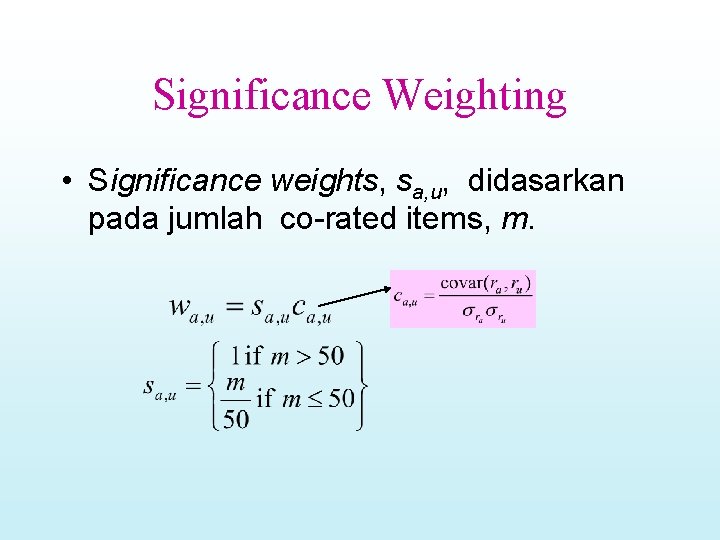

Significance Weighting
• Significance weights $s_{a,u}$ are based on the number of co-rated items, m.

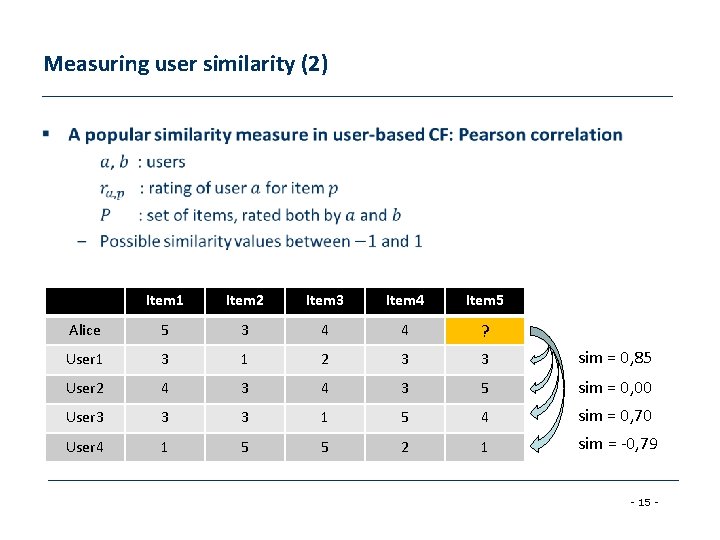
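One way to realize this idea is sketched below: the similarity is scaled linearly by the number of co-rated items m until a cutoff is reached. The cutoff of 50 is an assumption (a value often quoted in the literature, not confirmed by the text here), and both function names are illustrative.

```python
def significance_weight(m, cutoff=50):
    """Factor in [0, 1] that grows linearly with the number of
    co-rated items m and is capped at 1 once m reaches `cutoff`."""
    if m < 0 or cutoff <= 0:
        raise ValueError("m must be >= 0 and cutoff must be > 0")
    return min(m, cutoff) / cutoff


def weighted_similarity(similarity, m, cutoff=50):
    """Similarity devalued by the significance weight."""
    return significance_weight(m, cutoff) * similarity


# With only 4 co-rated items, a raw similarity of 0.85 is devalued heavily.
devalued = weighted_similarity(0.85, 4)
```

The effect is that a high correlation computed from only a handful of shared items counts for much less than the same correlation computed from many shared items.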

Measuring user similarity (2)

| | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 | sim |
|---|---|---|---|---|---|---|
| Alice | 5 | 3 | 4 | 4 | ? | |
| User 1 | 3 | 1 | 2 | 3 | 3 | 0.85 |
| User 2 | 4 | 3 | 4 | 3 | 5 | 0.70 |
| User 3 | 3 | 3 | 1 | 5 | 4 | 0.00 |
| User 4 | 1 | 5 | 5 | 2 | 1 | -0.79 |

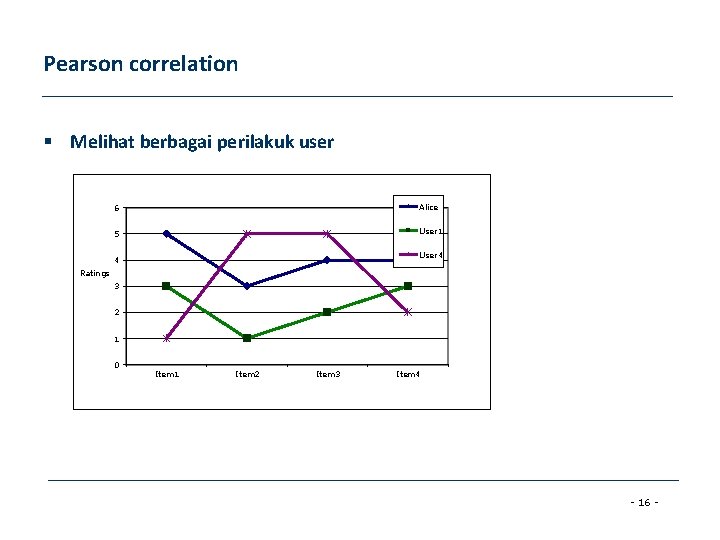

Pearson correlation
§ Takes differences in users' rating behavior into account
[Figure: line chart of ratings (y-axis, 0 to 6) for Item 1 to Item 4 (x-axis), with one series each for Alice, User 1 and User 4.]

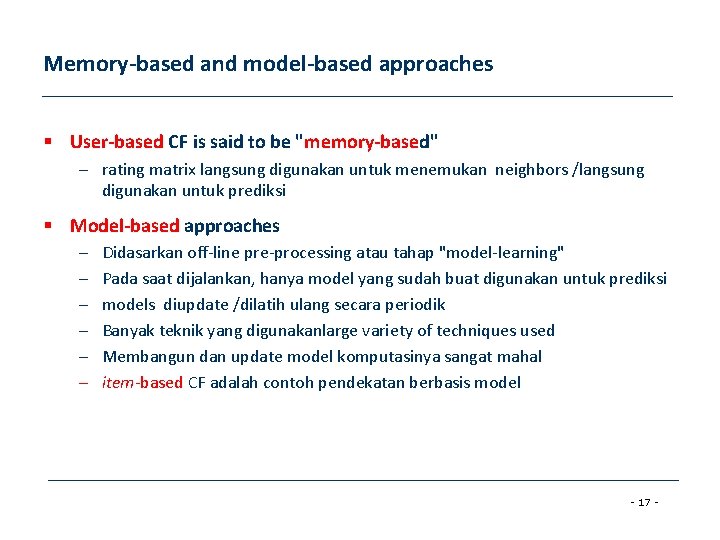

Memory-based and model-based approaches
§ User-based CF is said to be "memory-based"
  – The rating matrix is used directly to find neighbors and to make predictions
§ Model-based approaches
  – Based on an offline pre-processing or "model-learning" phase
  – At run time, only the learned model is used to make predictions
  – Models are updated or retrained periodically
  – A large variety of techniques is used
  – Building and updating the model can be computationally expensive
  – Item-based CF is an example of a model-based approach

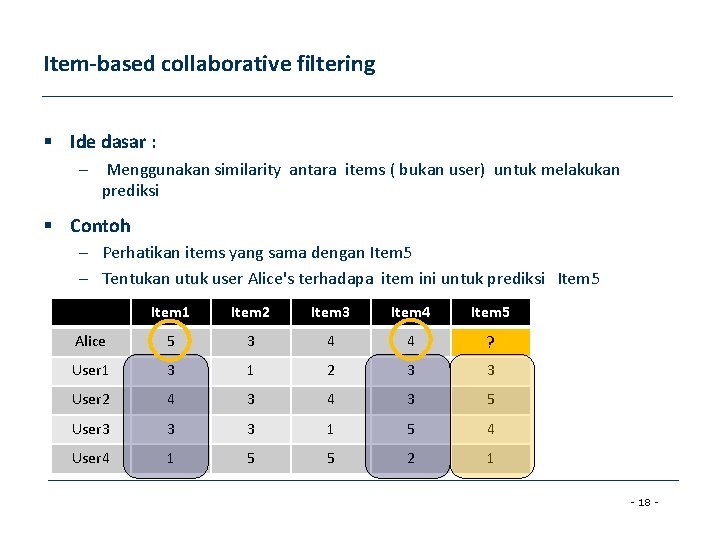

Item-based collaborative filtering
§ Basic idea:
  – Use the similarity between items (not users) to make predictions
§ Example
  – Look for items that are similar to Item 5
  – Use Alice's ratings for these items to predict her rating for Item 5

| | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 |
|---|---|---|---|---|---|
| Alice | 5 | 3 | 4 | 4 | ? |
| User 1 | 3 | 1 | 2 | 3 | 3 |
| User 2 | 4 | 3 | 4 | 3 | 5 |
| User 3 | 3 | 3 | 1 | 5 | 4 |
| User 4 | 1 | 5 | 5 | 2 | 1 |

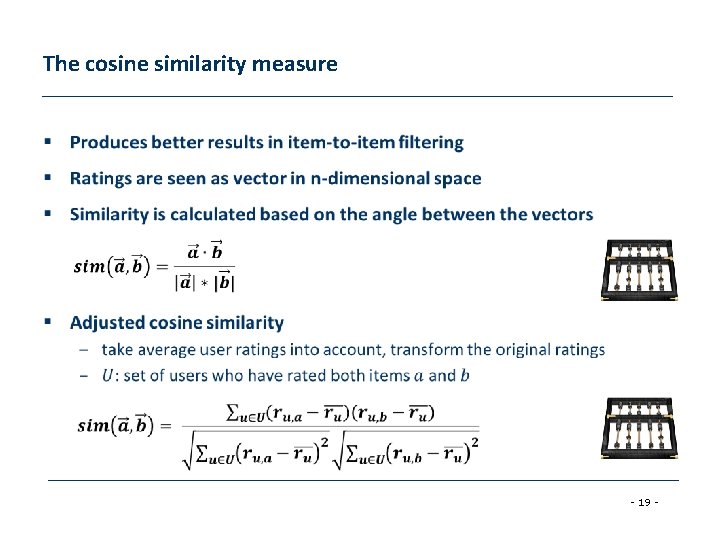

The cosine similarity measure

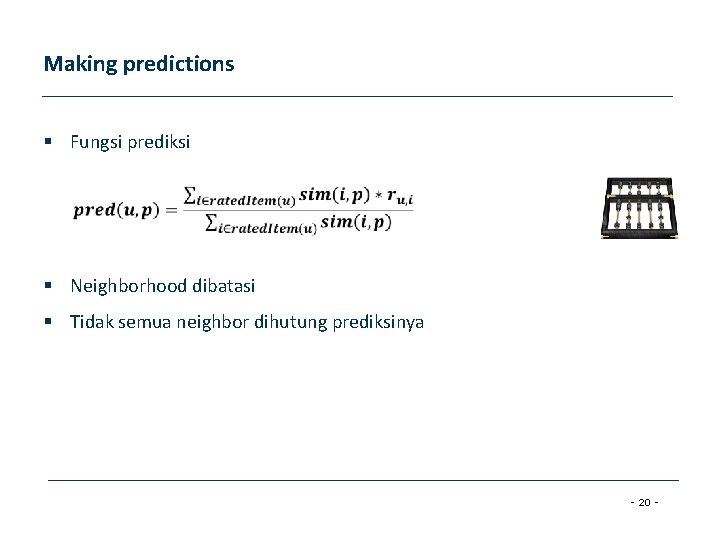
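As a small sketch of the measure, the code below computes the plain cosine of the angle between two item rating vectors, $\mathrm{sim}(\vec{a}, \vec{b}) = \frac{\vec{a} \cdot \vec{b}}{|\vec{a}|\,|\vec{b}|}$. The vectors hold the ratings of Users 1 to 4 for Item 1 and Item 5 from the running example (User 2's values are assumed), and the function name is illustrative.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two equally long rating vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Ratings by Users 1 to 4 (User 2's values, 4 and 5, are an assumption).
item1 = [3, 4, 3, 1]
item5 = [3, 5, 4, 1]
similarity = cosine_similarity(item1, item5)
```

Plain cosine ignores that users rate on different scales; a common variant (adjusted cosine) subtracts each user's mean rating first.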

Making predictions
§ Prediction function
§ The neighborhood size is limited
§ Not all neighbors are taken into account for the prediction

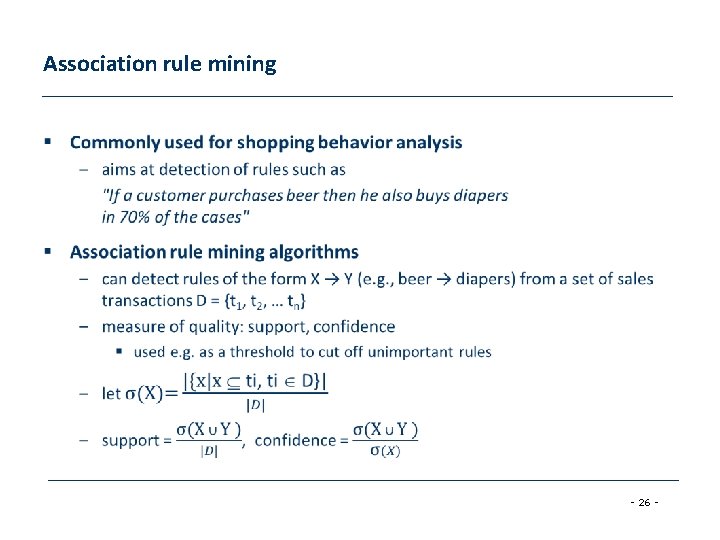
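A hedged sketch of an item-based prediction with a limited neighborhood follows. It assumes the usual weighted average $\mathrm{pred}(u, p) = \frac{\sum_{i} \mathrm{sim}(i, p)\, r_{u,i}}{\sum_{i} \mathrm{sim}(i, p)}$ over the k items most similar to the target, and uses plain cosine similarity between item columns. The names and the choice k = 2 are illustrative, and User 2's row is an assumption.

```python
from math import sqrt

# Running example; assumption: User2's row is 4, 3, 4, 3, 5.
RATINGS = {
    "Alice": {"Item1": 5, "Item2": 3, "Item3": 4, "Item4": 4},
    "User1": {"Item1": 3, "Item2": 1, "Item3": 2, "Item4": 3, "Item5": 3},
    "User2": {"Item1": 4, "Item2": 3, "Item3": 4, "Item4": 3, "Item5": 5},
    "User3": {"Item1": 3, "Item2": 3, "Item3": 1, "Item4": 5, "Item5": 4},
    "User4": {"Item1": 1, "Item2": 5, "Item3": 5, "Item4": 2, "Item5": 1},
}


def item_similarity(ratings, item_a, item_b):
    """Plain cosine similarity over users who rated both items."""
    pairs = [(r[item_a], r[item_b]) for r in ratings.values()
             if item_a in r and item_b in r]
    dot = sum(x * y for x, y in pairs)
    norm = sqrt(sum(x * x for x, _ in pairs)) * sqrt(sum(y * y for _, y in pairs))
    return dot / norm if norm else 0.0


def predict_item_based(ratings, user, target, k=2):
    """Weighted average of the user's ratings for the k items most
    similar to `target`; returns None if no prediction is possible."""
    scored = sorted(
        ((item_similarity(ratings, item, target), rating)
         for item, rating in ratings[user].items() if item != target),
        reverse=True,
    )[:k]
    total = sum(sim for sim, _ in scored)
    if total <= 0:
        return None
    return sum(sim * rating for sim, rating in scored) / total


prediction = predict_item_based(RATINGS, "Alice", "Item5", k=2)
```

Here the two items most similar to Item 5 are Item 1 and Item 4, so the prediction is a weighted average of Alice's ratings 5 and 4.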

Model-based approaches
– Matrix factorization techniques, statistics
  § singular value decomposition, principal component analysis
– Association rule mining
  § compare: shopping basket analysis
– Probabilistic models
  § clustering models, Bayesian networks, probabilistic Latent Semantic Analysis
– Various other machine learning approaches
§ Costs of pre-processing
– Usually not discussed
– Incremental updates possible?

§ Basic idea: build a model offline to speed up online prediction
§ Singular Value Decomposition is used to reduce the dimensionality of the rating matrix
§ Constant time to make recommendations
§ A popular approach in IR…

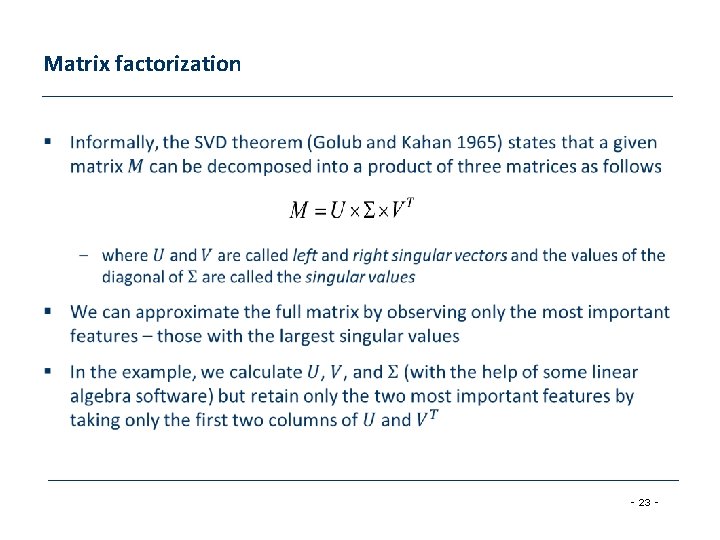

Matrix factorization

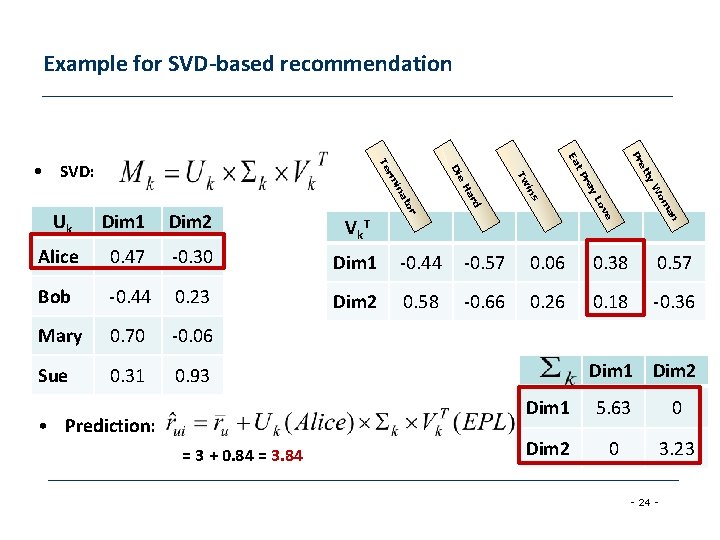

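Example for SVD-based recommendation
• SVD: $M_k = U_k \times \Sigma_k \times V_k^T$

$U_k$:

| | Dim 1 | Dim 2 |
|---|---|---|
| Alice | 0.47 | -0.30 |
| Bob | -0.44 | 0.23 |
| Mary | 0.70 | -0.06 |
| Sue | 0.31 | 0.93 |

$V_k^T$:

| | Terminator | Die Hard | Twins | Eat Pray Love | Pretty Woman |
|---|---|---|---|---|---|
| Dim 1 | -0.44 | -0.57 | 0.06 | 0.38 | 0.57 |
| Dim 2 | 0.58 | -0.66 | 0.26 | 0.18 | -0.36 |

$\Sigma_k$:

| | Dim 1 | Dim 2 |
|---|---|---|
| Dim 1 | 5.63 | 0 |
| Dim 2 | 0 | 3.23 |

• Prediction: $\hat{r}_{ui} = \bar{r}_u + U_k(\text{Alice}) \times \Sigma_k \times V_k^T(\text{Eat Pray Love}) = 3 + 0.84 = 3.84$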

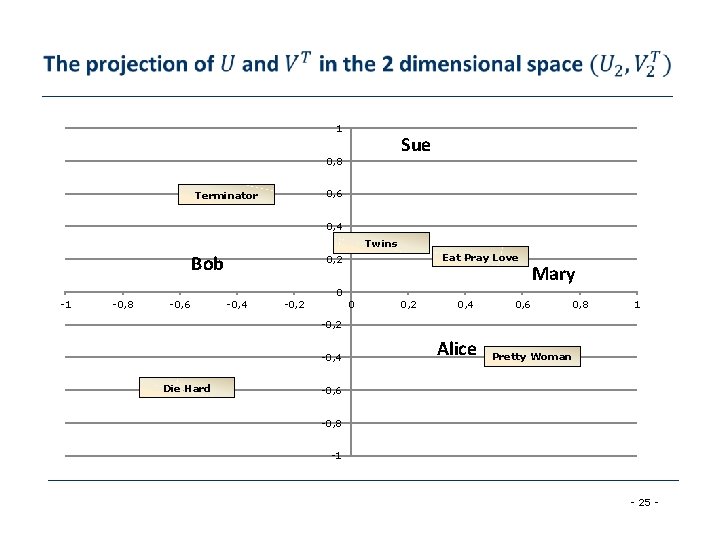
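The prediction step can be checked with a short sketch that multiplies the truncated factors directly. It assumes the prediction is for Alice and "Eat Pray Love", that Alice's mean rating is 3, and that the columns of $V_k^T$ correspond to Terminator, Die Hard, Twins, Eat Pray Love and Pretty Woman in that order. The variable and function names are illustrative.

```python
# Truncated SVD factors from the example (two latent dimensions).
U_K = {
    "Alice": (0.47, -0.30),
    "Bob": (-0.44, 0.23),
    "Mary": (0.70, -0.06),
    "Sue": (0.31, 0.93),
}
SIGMA_K = (5.63, 3.23)  # diagonal of the singular value matrix
# Assumed column order of V_k^T, stored here as one vector per movie.
V_K = {
    "Terminator": (-0.44, 0.58),
    "Die Hard": (-0.57, -0.66),
    "Twins": (0.06, 0.26),
    "Eat Pray Love": (0.38, 0.18),
    "Pretty Woman": (0.57, -0.36),
}


def predict(user, item, user_mean):
    """user_mean + U_k(user) x Sigma_k x V_k^T(item)."""
    offset = sum(u * s * v for u, s, v in zip(U_K[user], SIGMA_K, V_K[item]))
    return user_mean + offset


prediction = predict("Alice", "Eat Pray Love", 3.0)
```

With the rounded factors shown, the offset comes out at about 0.83, which agrees with the 0.84 on the slide up to rounding.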

[Figure: users (Alice, Bob, Mary, Sue) and movies (Terminator, Die Hard, Twins, Eat Pray Love, Pretty Woman) plotted in the two-dimensional latent space; both axes run from -1 to 1.]

Association rule mining

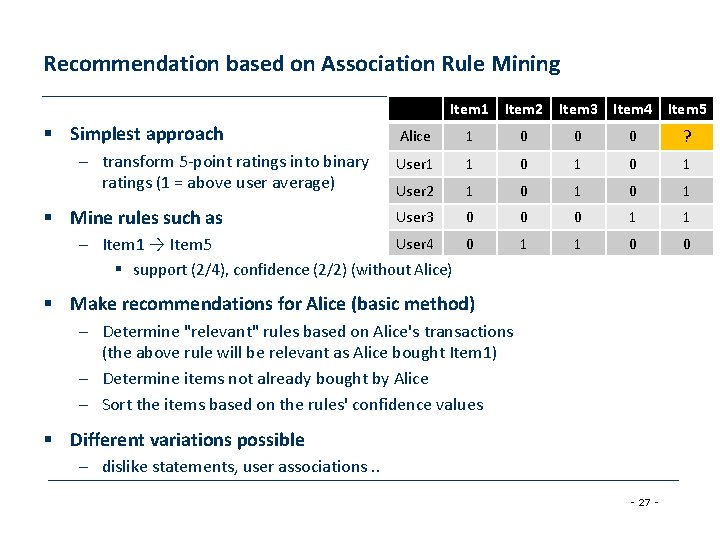

Recommendation based on Association Rule Mining
§ Simplest approach
  – Transform 5-point ratings into binary ratings (1 = above user average)

| | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 |
|---|---|---|---|---|---|
| Alice | 1 | 0 | 0 | 0 | ? |
| User 1 | 1 | 0 | 0 | 1 | 1 |
| User 2 | 1 | 0 | 1 | 0 | 1 |
| User 3 | 0 | 0 | 0 | 1 | 1 |
| User 4 | 0 | 1 | 1 | 0 | 0 |

§ Mine rules such as
  – Item 1 → Item 5
  – support (2/4), confidence (2/2) (without Alice)
§ Make recommendations for Alice (basic method)
  – Determine "relevant" rules based on Alice's transactions (the above rule will be relevant as Alice bought Item 1)
  – Determine items not already bought by Alice
  – Sort the items based on the rules' confidence values
§ Different variations possible
  – dislike statements, user associations, ...

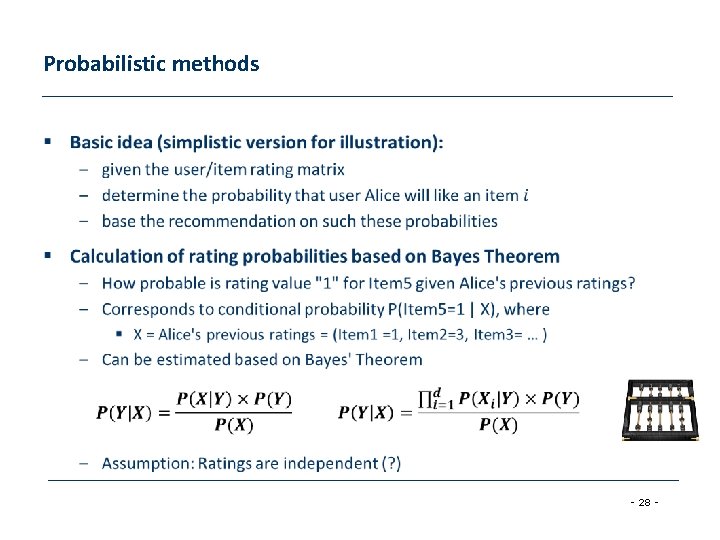
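The support and confidence figures for the rule Item 1 → Item 5 can be reproduced with a short sketch. It binarizes the 5-point ratings of Users 1 to 4 (1 = above that user's average) and counts co-occurrences. The function names are illustrative, and User 2's row (4, 3, 4, 3, 5) is an assumption.

```python
# 5-point ratings without Alice; assumption: User2's row is 4, 3, 4, 3, 5.
RATINGS = {
    "User1": {"Item1": 3, "Item2": 1, "Item3": 2, "Item4": 3, "Item5": 3},
    "User2": {"Item1": 4, "Item2": 3, "Item3": 4, "Item4": 3, "Item5": 5},
    "User3": {"Item1": 3, "Item2": 3, "Item3": 1, "Item4": 5, "Item5": 4},
    "User4": {"Item1": 1, "Item2": 5, "Item3": 5, "Item4": 2, "Item5": 1},
}


def binarize(ratings):
    """Map each rating to 1 if it is above that user's average, else 0."""
    binary = {}
    for user, row in ratings.items():
        average = sum(row.values()) / len(row)
        binary[user] = {item: int(r > average) for item, r in row.items()}
    return binary


def rule_stats(transactions, lhs, rhs):
    """Support and confidence of the rule lhs -> rhs."""
    n = len(transactions)
    lhs_count = sum(1 for t in transactions.values() if t[lhs])
    both = sum(1 for t in transactions.values() if t[lhs] and t[rhs])
    support = both / n if n else 0.0
    confidence = both / lhs_count if lhs_count else 0.0
    return support, confidence


binary = binarize(RATINGS)
support, confidence = rule_stats(binary, "Item1", "Item5")
```

Under these assumptions the rule has support 2/4 and confidence 2/2, matching the figures quoted for the example.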

Probabilistic methods

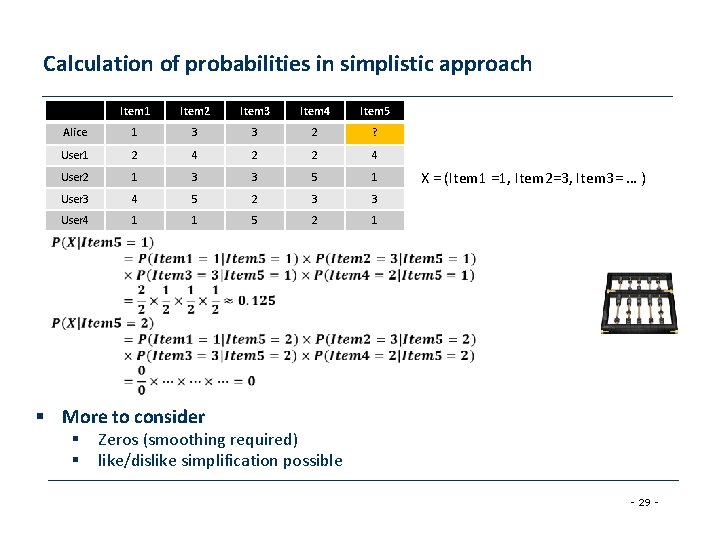

Calculation of probabilities in simplistic approach

| | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 |
|---|---|---|---|---|---|
| Alice | 1 | 3 | 3 | 2 | ? |
| User 1 | 2 | 4 | 2 | 2 | 4 |
| User 2 | 1 | 3 | 3 | 5 | 1 |
| User 3 | 4 | 5 | 2 | 3 | 3 |
| User 4 | 1 | 1 | 5 | 2 | 1 |

X = (Item 1 = 1, Item 2 = 3, Item 3 = … )
§ More to consider
  – Zeros (smoothing required)
  – like/dislike simplification possible

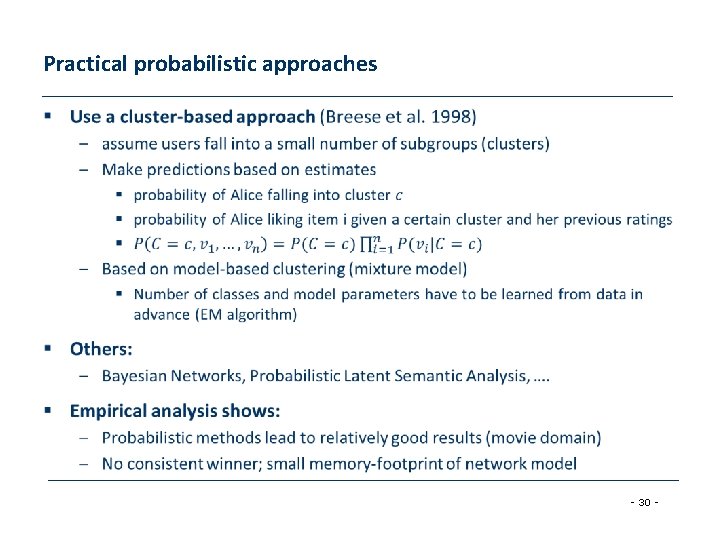
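One plausible reading of the simplistic calculation is a naive Bayes estimate: for each possible value of Item 5, multiply the conditional probabilities of Alice's observed ratings, assuming the items are conditionally independent. The sketch below follows that reading without smoothing; the function names are illustrative.

```python
# Rating rows (Item 1 .. Item 5) of the other users, from the table.
ROWS = [
    [2, 4, 2, 2, 4],  # User 1
    [1, 3, 3, 5, 1],  # User 2
    [4, 5, 2, 3, 3],  # User 3
    [1, 1, 5, 2, 1],  # User 4
]
ALICE = [1, 3, 3, 2]  # Alice's ratings for Item 1 .. Item 4


def likelihood(rows, x, target_value):
    """P(X | Item 5 = target_value) under conditional independence."""
    matching = [r for r in rows if r[-1] == target_value]
    if not matching:
        return 0.0  # value never observed; smoothing would avoid this zero
    p = 1.0
    for j, xj in enumerate(x):
        p *= sum(1 for r in matching if r[j] == xj) / len(matching)
    return p


def scores(rows, x, values=(1, 2, 3, 4, 5)):
    """Unnormalized posterior P(X | Item 5 = v) * P(Item 5 = v) per value."""
    result = {}
    for v in values:
        prior = sum(1 for r in rows if r[-1] == v) / len(rows)
        result[v] = likelihood(rows, x, v) * prior
    return result


posterior = scores(ROWS, ALICE)
best = max(posterior, key=posterior.get)
```

For Item 5 = 1 the likelihood is 2/2 × 1/2 × 1/2 × 1/2 = 0.125, while every other value gets a zero, which illustrates why smoothing is needed on data this sparse.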

Practical probabilistic approaches

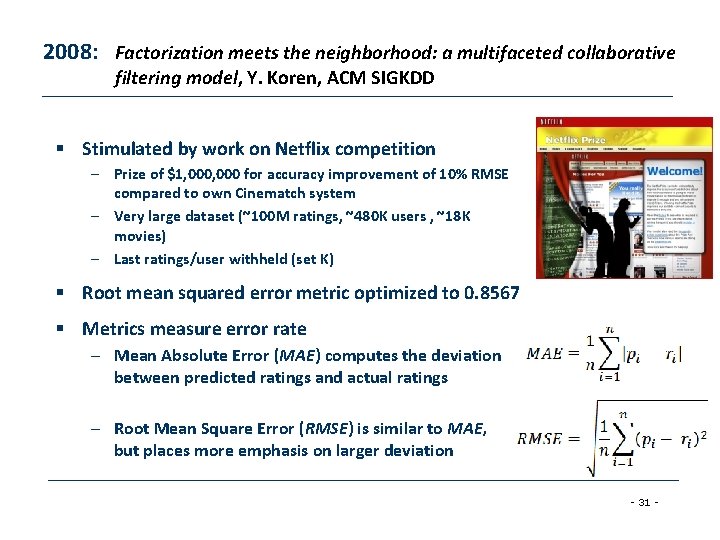

2008: Factorization meets the neighborhood: a multifaceted collaborative filtering model, Y. Koren, ACM SIGKDD
§ Stimulated by work on the Netflix competition
  – Prize of $1,000,000 for an accuracy improvement of 10% in RMSE compared to Netflix's own Cinematch system
  – Very large dataset (~100M ratings, ~480K users, ~18K movies)
  – Last ratings per user withheld (set K)
§ Root mean squared error metric optimized to 0.8567
§ Metrics measure error rate
  – Mean Absolute Error (MAE) computes the deviation between predicted ratings and actual ratings
  – Root Mean Square Error (RMSE) is similar to MAE, but places more emphasis on larger deviations
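The two error metrics can be sketched in a few lines; the sample predictions and actual ratings below are hypothetical and serve only to show how RMSE penalizes the one large error more strongly than MAE does.

```python
from math import sqrt


def mae(predicted, actual):
    """Mean Absolute Error: average absolute deviation."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


def rmse(predicted, actual):
    """Root Mean Square Error: square root of the mean squared deviation."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(predicted))


# Hypothetical predictions and withheld ratings.
predicted = [4.0, 3.0, 5.0, 2.0]
actual = [5.0, 3.0, 4.0, 4.0]
mae_value = mae(predicted, actual)
rmse_value = rmse(predicted, actual)
```

Here the errors are -1, 0, 1 and -2, giving an MAE of 1.0 and an RMSE of about 1.22.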

Summarizing recent methods
§ Recommendation is concerned with learning from noisy observations (x, y), where $f(x) = \hat{y}$ has to be determined such that $\sum (\hat{y} - y)^2$ is minimal.
§ A huge variety of different learning strategies has been applied to estimate f(x)
  – Non-parametric neighborhood models
  – MF models, SVMs, neural networks, Bayesian networks, …
