Collaborative Competitive Filtering: Learning recommender using context of user choices
Shuang Hong Yang, Hongyuan Zha (Georgia Tech)
Bo Long, Alex Smola, Zhaohui Zheng (Yahoo! Labs)

Outline
• Motivation
• Collaborative filtering
• Collaborative competitive filtering
• Experiments
• Summary & Discussions

Too many options to choose from?
• Information filter (e.g., recommender system): captures the user's preferences in order to:
  – Identify what you truly like
  – Avoid what you dislike

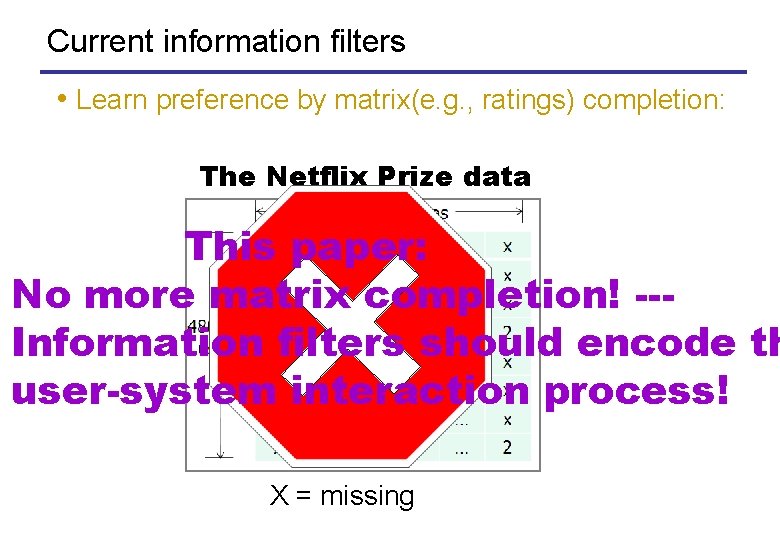

Current information filters
• Learn preferences by completing a matrix (e.g., of ratings)
  – Example: the Netflix Prize data (X = missing entry)
• This paper: no more matrix completion! Information filters should encode the user-system interaction process.

User-system interaction
• A typical interaction with a recommender system:
  – the user visits the recommender system (e.g., visits a website)
  – the system offers a subset of options out of a huge inventory
  – the user chooses one of the offers and takes action
• Context of user choice: the user makes a choice (indicating preference) in the context of an offer set, rather than the whole inventory

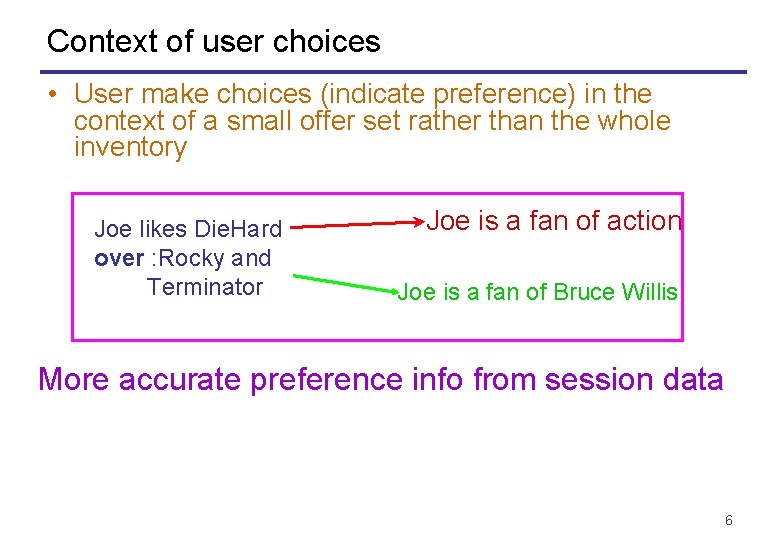

Context of user choices
• Users make choices (indicating preference) in the context of a small offer set rather than the whole inventory
• Joe likes Die Hard over Rocky and Terminator
  – Joe is a fan of action
  – Joe is a fan of Bruce Willis
• More accurate preference information from session data

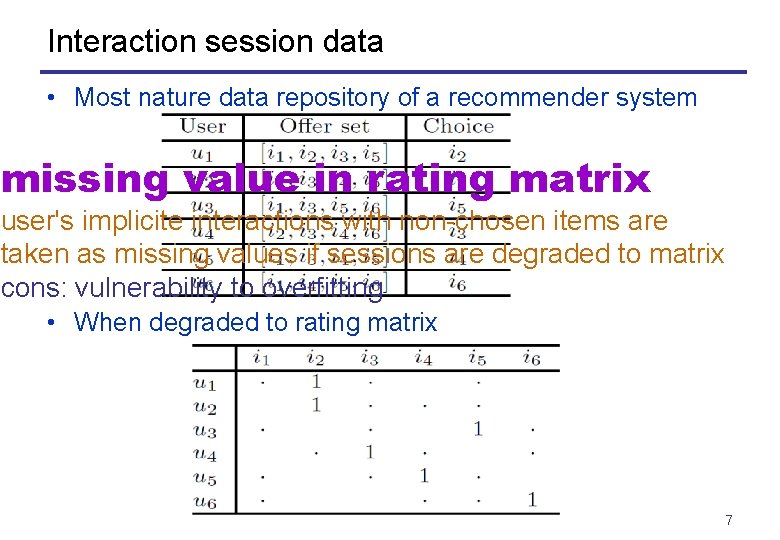

Interaction session data
• The most natural data repository of a recommender system
• When degraded to a rating matrix:
  – the user's implicit interactions with non-chosen items are taken as missing values
  – cons: vulnerability to overfitting

Key insight
• No more matrix completion! Information filters should encode the user-system interaction process.
  1. Users make choices (indicating preference) in the context of a small offer set rather than the whole inventory
  2. Matrix completion does not fully leverage the session data and is hence vulnerable to overfitting

Outline
• Motivation
• Collaborative filtering
• Collaborative competitive filtering
• Experiments
• Summary & Discussions

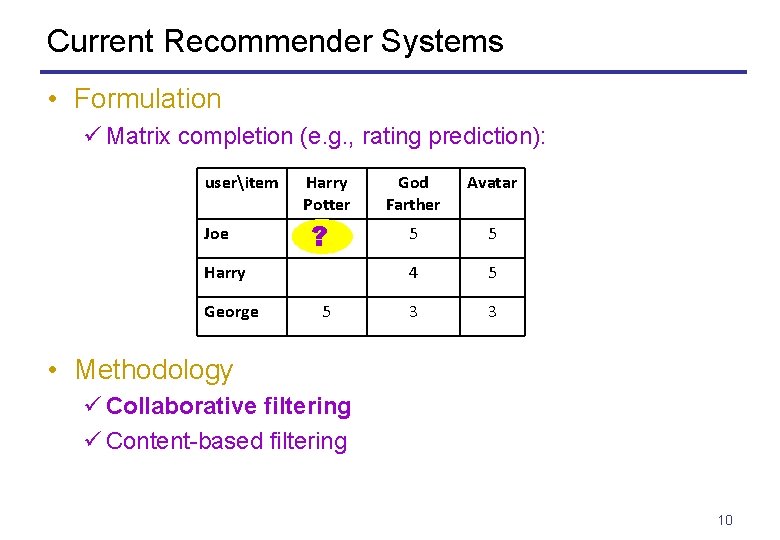

Current Recommender Systems
• Formulation
  – Matrix completion (e.g., rating prediction): a user-item rating matrix with users Joe, Harry, and George, items Harry Potter, Godfather, and Avatar, and one unknown rating (?) to be predicted
• Methodology
  – Collaborative filtering
  – Content-based filtering

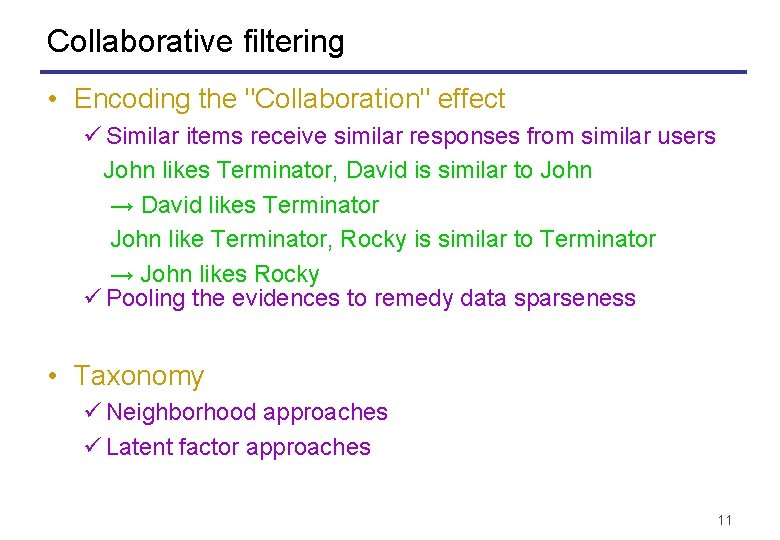

Collaborative filtering
• Encoding the "collaboration" effect
  – Similar items receive similar responses from similar users
  – John likes Terminator, David is similar to John → David likes Terminator
  – John likes Terminator, Rocky is similar to Terminator → John likes Rocky
  – Pooling the evidence to remedy data sparseness
• Taxonomy
  – Neighborhood approaches
  – Latent factor approaches

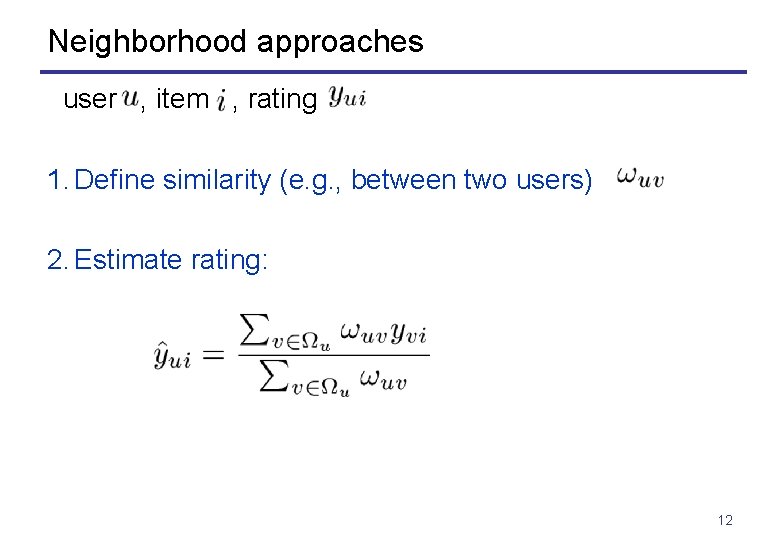

Neighborhood approaches
• Notation: user u, item i, rating r_ui
  1. Define a similarity (e.g., between two users)
  2. Estimate the rating from the ratings of similar users

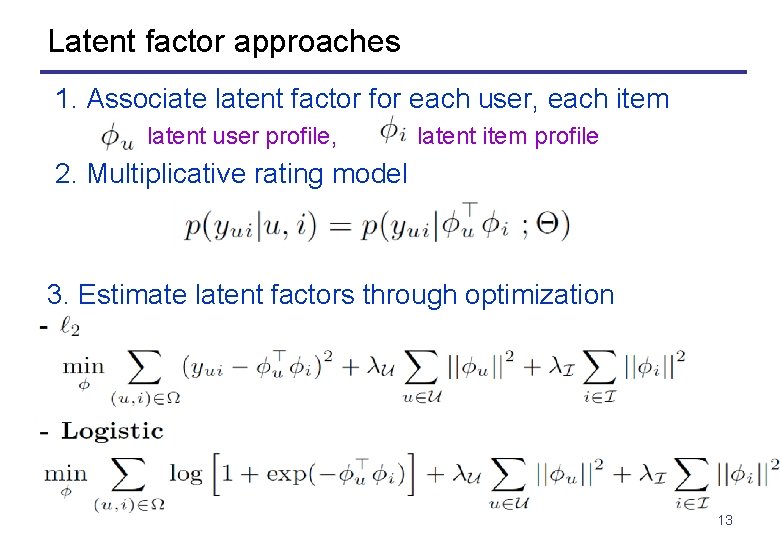
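
The two steps can be sketched as a user-based k-nearest-neighbor predictor. The slide's own formulas are in the image, so the cosine similarity, the similarity-weighted average, the function names, and the toy matrix below are all assumptions chosen for illustration, not the authors' definitions.

```python
import numpy as np

def cosine_on_overlap(R, mask, a, b):
    """Step 1: cosine similarity of users a and b over items both have rated."""
    both = mask[a] & mask[b]
    if not both.any():
        return 0.0
    x, y = R[a, both], R[b, both]
    denom = np.linalg.norm(x) * np.linalg.norm(y)
    return float(x @ y / denom) if denom > 0 else 0.0

def predict_rating(R, mask, u, i, k=2):
    """Step 2: similarity-weighted average of the ratings u's k nearest neighbors gave item i."""
    neighbors = [v for v in range(R.shape[0]) if v != u and mask[v, i]]
    sims = np.array([cosine_on_overlap(R, mask, u, v) for v in neighbors])
    order = np.argsort(-sims)[:k]          # indices of the k most similar neighbors
    weights = sims[order]
    if weights.sum() <= 0:
        return float(R[mask].mean())       # no usable neighbor: fall back to the global mean
    ratings = np.array([R[neighbors[j], i] for j in order])
    return float(weights @ ratings / weights.sum())

# Hypothetical 3 users x 3 items matrix; 0 marks a missing rating.
R = np.array([[5, 3, 0],
              [5, 3, 4],
              [1, 5, 2]], dtype=float)
mask = R > 0
estimate = predict_rating(R, mask, u=0, i=2, k=1)
```

With k=1 the estimate simply copies the rating of the single most similar user, which is user 1 in this toy matrix.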

Latent factor approaches
  1. Associate a latent factor with each user and each item: a latent user profile and a latent item profile
  2. Multiplicative rating model
  3. Estimate the latent factors through optimization

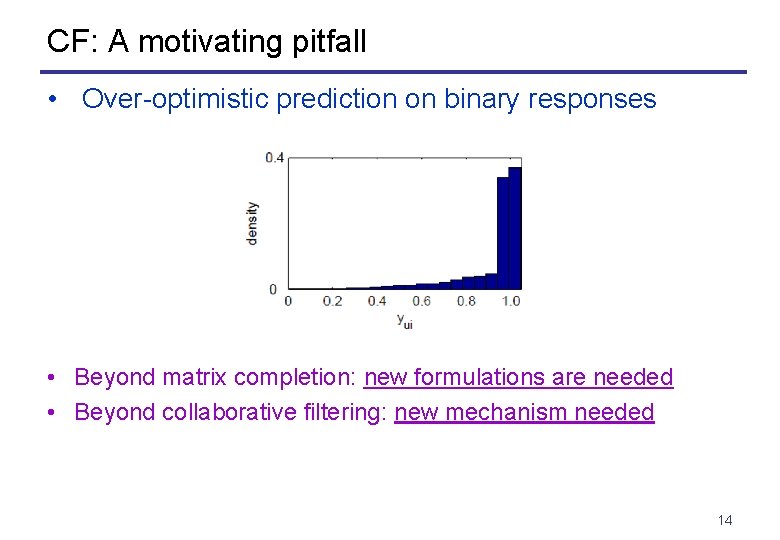
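
A minimal sketch of the three steps, assuming a plain inner-product rating model with squared error and L2 regularization, fitted by stochastic gradient descent. The slide's formulas are in the image, so the loss, the hyperparameters, and the names `fit_mf` and `rmse` are illustrative assumptions.

```python
import numpy as np

def fit_mf(triples, n_users, n_items, dim=2, lr=0.02, reg=0.01, epochs=500, seed=0):
    """Fit user factors P and item factors Q so that P[u] @ Q[i] approximates r."""
    rng = np.random.default_rng(seed)
    P = 0.1 * rng.standard_normal((n_users, dim))   # step 1: latent user profiles
    Q = 0.1 * rng.standard_normal((n_items, dim))   # step 1: latent item profiles
    for _ in range(epochs):
        for u, i, r in triples:
            err = r - P[u] @ Q[i]                   # step 2: multiplicative rating model
            pu = P[u].copy()                        # keep the old value for the item update
            P[u] += lr * (err * Q[i] - reg * P[u])  # step 3: gradient step on the user factor
            Q[i] += lr * (err * pu - reg * Q[i])    # step 3: gradient step on the item factor
    return P, Q

def rmse(triples, P, Q):
    """Root-mean-square error of the model on observed (user, item, rating) triples."""
    return float(np.sqrt(np.mean([(r - P[u] @ Q[i]) ** 2 for u, i, r in triples])))

# Hypothetical observed ratings: (user, item, rating).
triples = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 5.0), (1, 1, 3.0),
           (1, 2, 4.0), (2, 0, 1.0), (2, 1, 5.0), (2, 2, 2.0)]
P0, Q0 = fit_mf(triples, 3, 3, epochs=0)   # untrained factors, for comparison
P, Q = fit_mf(triples, 3, 3)
```

Only observed entries enter the loss; every unobserved entry is ignored, which is the matrix-completion view the deck argues against.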

CF: A motivating pitfall
• Over-optimistic prediction on binary responses
• Beyond matrix completion: new formulations are needed
• Beyond collaborative filtering: new mechanisms are needed

Outline
• Motivation
• Collaborative filtering
• Collaborative competitive filtering
• Experiments
• Summary & Discussions

An overview
• Directly modeling session data
• Encode the collaboration effect:
  – Similar users have similar preferences toward similar items
• Encode the competitive effect:
  – Items compete for the attention of users

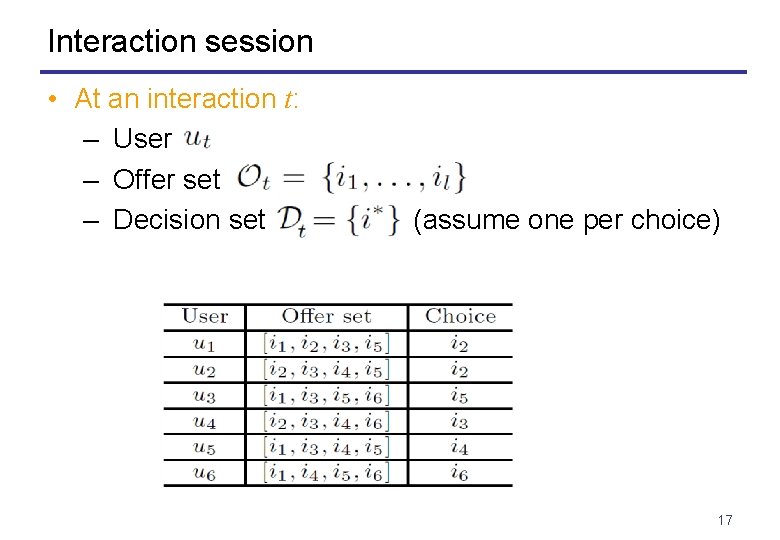

Interaction session
• At an interaction t:
  – User
  – Offer set
  – Decision set (assume a single chosen item per session)

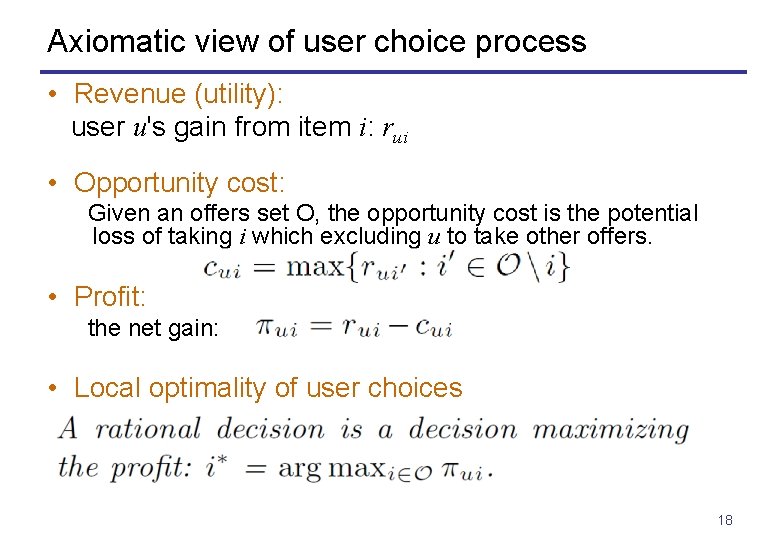
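
A session record can be sketched as a small data structure. The class name `Session`, its fields, and the two helper methods are hypothetical; the example reuses the Joe session from the earlier slide to contrast what a rating matrix keeps with what the session context keeps.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple

@dataclass(frozen=True)
class Session:
    """One interaction: a user, the offer set shown, and the single chosen item."""
    user: str
    offers: FrozenSet[str]
    chosen: str

    def __post_init__(self):
        if self.chosen not in self.offers:
            raise ValueError("the chosen item must be one of the offers")

    def as_matrix_entries(self) -> Dict[Tuple[str, str], int]:
        """Matrix view: only the chosen item is recorded; non-chosen offers become missing."""
        return {(self.user, self.chosen): 1}

    def pairwise_preferences(self) -> List[Tuple[str, str]]:
        """Session view: the chosen item is preferred over every other offer."""
        return [(self.chosen, j) for j in sorted(self.offers) if j != self.chosen]

joe = Session(user="Joe",
              offers=frozenset({"Die Hard", "Rocky", "Terminator"}),
              chosen="Die Hard")
```

The matrix view yields one entry for this session, while the session view yields two comparisons, one per non-chosen offer.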

Axiomatic view of the user choice process
• Revenue (utility): user u's gain from item i, r_ui
• Opportunity cost: given an offer set O, the opportunity cost is the potential loss from taking i, which excludes u from taking the other offers
• Profit: the net gain, i.e., revenue minus opportunity cost
• Local optimality of user choices

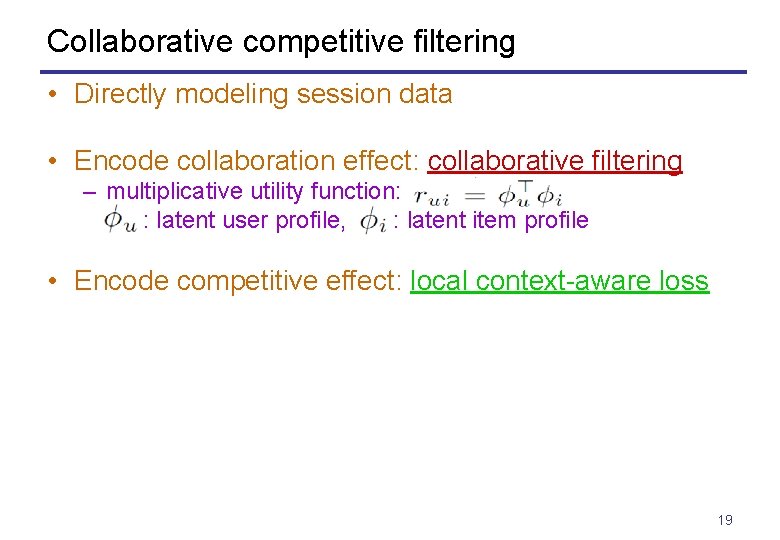
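
The four notions can be written out as follows. The slide's formulas are in the image, so this is a reconstruction under assumptions: the symbols c_ui (opportunity cost), π_ui (profit), and i* (chosen item) are introduced here, and the opportunity cost is taken to be the best utility among the other offers.

```latex
\begin{align*}
  c_{ui}   &= \max_{j \in O \setminus \{i\}} r_{uj}
            && \text{(opportunity cost of taking } i \text{)} \\
  \pi_{ui} &= r_{ui} - c_{ui}
            && \text{(profit: revenue minus opportunity cost)} \\
  \pi_{u i^*} \ge 0
  \;&\Longleftrightarrow\;
  r_{u i^*} \ge \max_{j \in O \setminus \{i^*\}} r_{uj}
            && \text{(local optimality of the chosen item } i^* \text{)}
\end{align*}
```

Under this reading, the constraint only compares items inside the same offer set O, which is what makes the optimality local.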

Collaborative competitive filtering
• Directly modeling session data
• Encode the collaboration effect: collaborative filtering
  – multiplicative utility function of a latent user profile and a latent item profile
• Encode the competitive effect: local context-aware loss

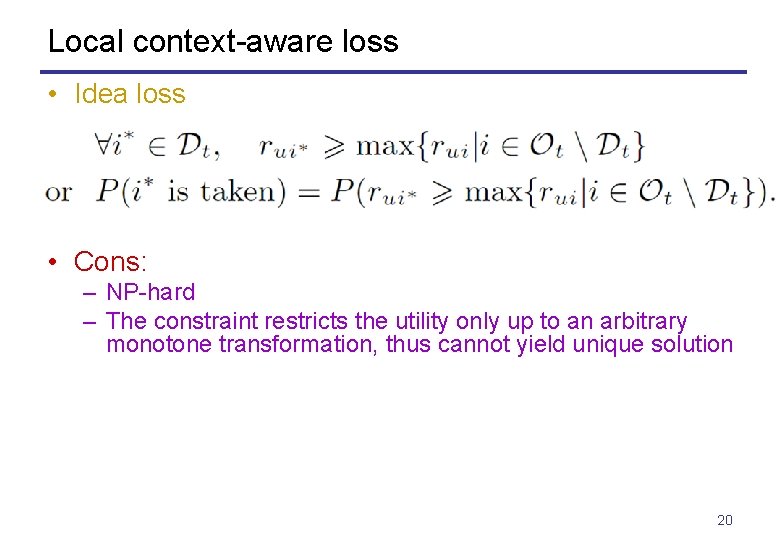

Local context-aware loss
• Ideal loss
• Cons:
  – NP-hard
  – The constraint determines the utility only up to an arbitrary monotone transformation, so it cannot yield a unique solution

Local context-aware loss
• Surrogate loss functions
  – Softmax (multinomial logit)
  – Hinge (bundle pairwise)

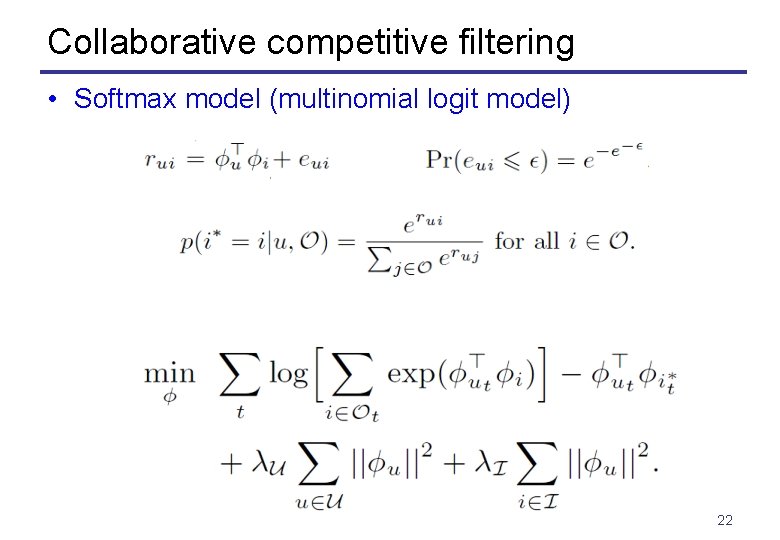

Collaborative competitive filtering
• Softmax model (multinomial logit model)

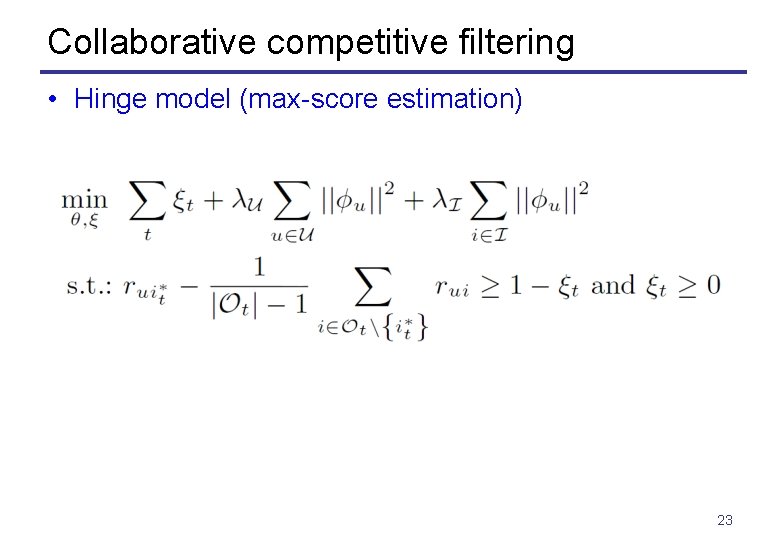
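
A minimal sketch of a multinomial logit loss for one session, assuming the utility is the inner product of the latent user and item profiles and that the choice probability is a softmax restricted to the offer set. The function name and arguments are hypothetical, and regularization is left out.

```python
import numpy as np

def softmax_session_loss(phi_u, Phi_offers, chosen):
    """Negative log-likelihood of the chosen offer under a softmax over the offer set.

    phi_u      : (dim,) latent user profile
    Phi_offers : (n_offers, dim) latent profiles of the offered items
    chosen     : row index (into Phi_offers) of the item the user picked
    """
    scores = Phi_offers @ phi_u                         # utilities of the offered items only
    scores = scores - scores.max()                      # shift for numerical stability
    log_probs = scores - np.log(np.exp(scores).sum())   # log-softmax within the offer set
    return float(-log_probs[chosen]), np.exp(log_probs)

phi_u = np.array([1.0, 0.0])
Phi_offers = np.array([[3.0, 0.0], [1.0, 0.0], [0.0, 0.0]])
loss, probs = softmax_session_loss(phi_u, Phi_offers, chosen=0)
```

The normalization runs over the offers of this session only, not over the whole inventory, so items compete with the alternatives that were actually shown.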

Collaborative competitive filtering
• Hinge model (max-score estimation)

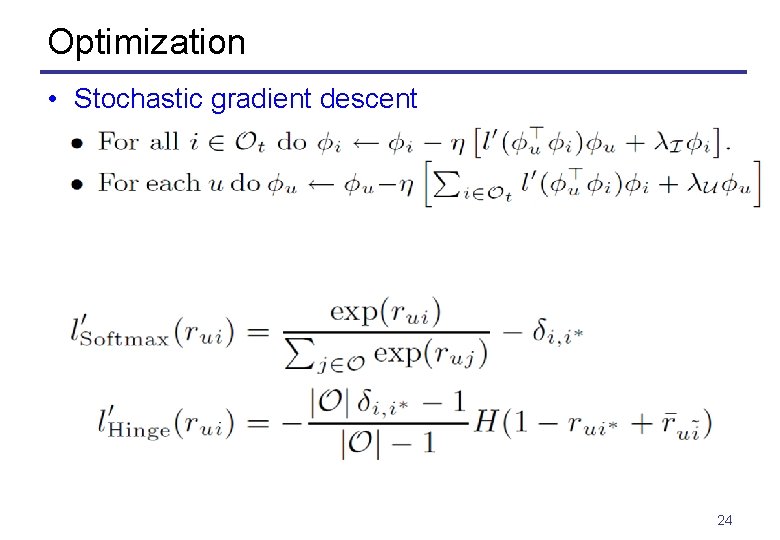
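
The exact hinge formula is in the slide image and is not recoverable from the text, so the sketch below offers two plausible readings rather than the authors' definition: the chosen item must beat either the average ("bundle") or the maximum of the non-chosen offers by a margin. The function name, the `aggregate` switch, and the unit margin are assumptions.

```python
import numpy as np

def hinge_session_loss(phi_u, Phi_offers, chosen, aggregate="mean", margin=1.0):
    """Hinge loss on the gap between the chosen offer and the non-chosen offers.

    aggregate="mean" compares against the average non-chosen utility,
    aggregate="max" compares against the best non-chosen utility.
    """
    scores = Phi_offers @ phi_u
    rest = np.delete(scores, chosen)                 # utilities of the non-chosen offers
    if rest.size == 0:
        return 0.0                                   # a single offer carries no comparison
    rival = rest.mean() if aggregate == "mean" else rest.max()
    return float(max(0.0, margin - (scores[chosen] - rival)))

phi_u = np.array([1.0, 0.0])
Phi_offers = np.array([[3.0, 0.0], [1.0, 0.0], [0.0, 0.0]])   # utilities 3, 1, 0
loss_best_mean = hinge_session_loss(phi_u, Phi_offers, chosen=0)
loss_mid_mean = hinge_session_loss(phi_u, Phi_offers, chosen=1)
loss_mid_max = hinge_session_loss(phi_u, Phi_offers, chosen=1, aggregate="max")
```

The loss is zero once the chosen item clears its rivals by the margin, so confidently ranked sessions stop contributing gradient.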

Optimization
• Stochastic gradient descent
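
One stochastic gradient step on a single session might look as follows, assuming the softmax loss over the offer set with inner-product utilities and L2 regularization. The update rule is standard; the function name, learning rate, and regularization weight are illustrative assumptions.

```python
import numpy as np

def sgd_step(P, Q, user, offers, chosen, lr=0.1, reg=0.01):
    """Update P[user] and the offered rows of Q in place; return the pre-update loss.

    offers : list of distinct item ids shown in the session
    chosen : item id (one of offers) that the user picked
    """
    offers = np.asarray(offers)
    scores = Q[offers] @ P[user]
    p = np.exp(scores - scores.max())
    p /= p.sum()                                   # choice probabilities within the offer set
    is_chosen = offers == chosen
    loss = float(-np.log(p[is_chosen][0]))
    g = p - is_chosen                              # gradient of the loss w.r.t. the scores
    grad_user = Q[offers].T @ g + reg * P[user]
    grad_items = np.outer(g, P[user]) + reg * Q[offers]
    P[user] -= lr * grad_user
    Q[offers] -= lr * grad_items
    return loss

rng = np.random.default_rng(0)
P = 0.1 * rng.standard_normal((2, 3))              # 2 users, 3 latent dimensions
Q = 0.1 * rng.standard_normal((4, 3))              # 4 items
losses = [sgd_step(P, Q, user=0, offers=[0, 2, 3], chosen=2) for _ in range(200)]
```

Only the rows of the items that were offered are touched in each step, which keeps the per-session cost proportional to the offer-set size.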

Optimization
• Large-scale implementation
  – distributed optimization with averaging (decomposing the objective/data w.r.t. users) on Hadoop
  – feature hashing

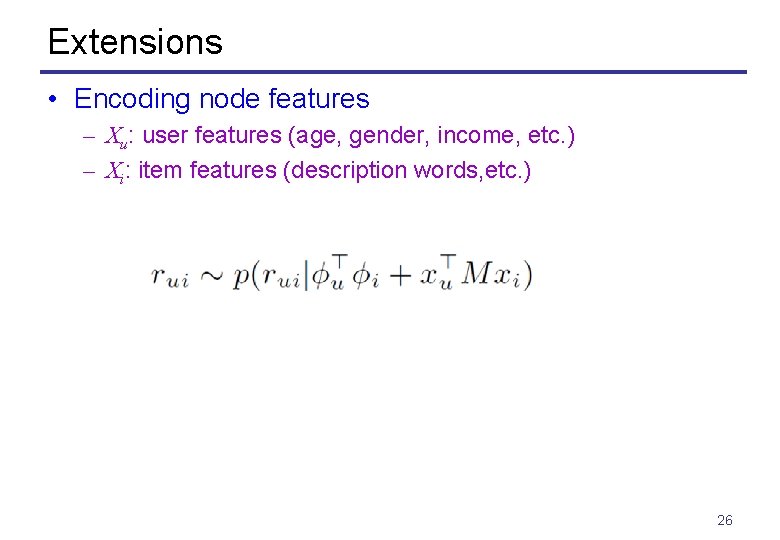
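
The slide names the two techniques without detail, so the following is a generic sketch, not the authors' Hadoop implementation: identifiers are hashed into a fixed-size parameter table, and models trained on separate user shards are combined by averaging. All names here are hypothetical.

```python
import hashlib
import numpy as np

def hashed_index(key, n_buckets):
    """Map an arbitrary string key to a bucket in [0, n_buckets) deterministically."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "little") % n_buckets

class HashedFactors:
    """Latent factors stored in a fixed-size table; colliding keys share a row."""
    def __init__(self, n_buckets, dim, seed=0):
        rng = np.random.default_rng(seed)
        self.table = 0.1 * rng.standard_normal((n_buckets, dim))

    def lookup(self, kind, ident):
        # Prefixing with the kind keeps user and item ids in separate key spaces.
        return self.table[hashed_index(f"{kind}:{ident}", len(self.table))]

def average_models(tables):
    """Combine parameter tables trained on different user shards by plain averaging."""
    return np.mean(np.stack(tables), axis=0)

factors = HashedFactors(n_buckets=1024, dim=8)
row = factors.lookup("user", "joe")
merged = average_models([np.ones((4, 2)), 3 * np.ones((4, 2))])
```

Hashing bounds memory regardless of how many users or items appear, at the price of occasional collisions; averaging works here because every shard uses the same table layout.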

Extensions
• Encoding node features
  – Xu: user features (age, gender, income, etc.)
  – Xi: item features (description words, etc.)

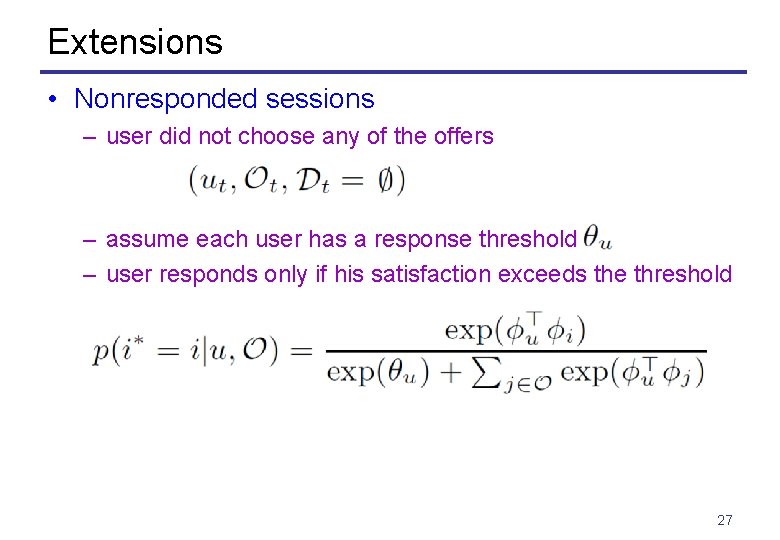

Extensions
• Non-responded sessions
  – the user did not choose any of the offers
  – assume each user has a response threshold
  – the user responds only if their satisfaction exceeds the threshold

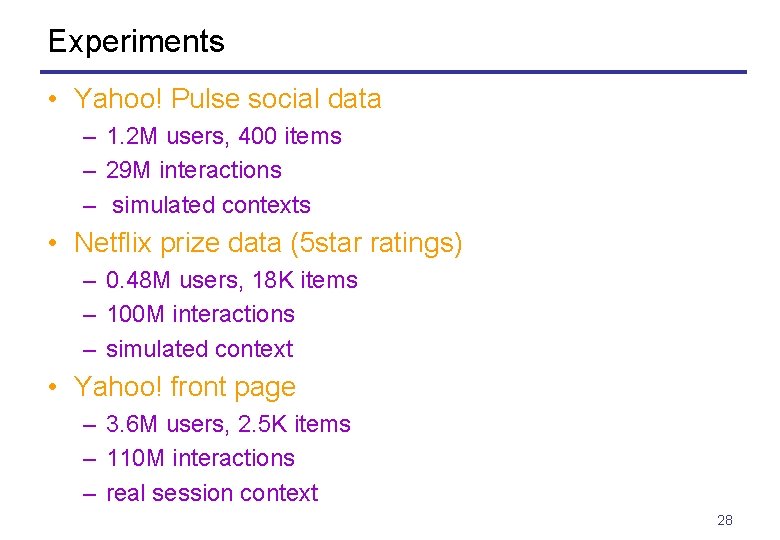
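
One way to express the threshold idea within the softmax model is to treat "no response" as an extra alternative whose utility is the user's threshold. This is an assumed formulation for illustration; the slide's own formula is in the image, and the symbol θ_u for the threshold is introduced here.

```latex
\begin{align*}
  p(i \mid u, O) &=
    \frac{\exp(r_{ui})}{\exp(\theta_u) + \sum_{j \in O} \exp(r_{uj})},
    \qquad i \in O, \\
  p(\text{no response} \mid u, O) &=
    \frac{\exp(\theta_u)}{\exp(\theta_u) + \sum_{j \in O} \exp(r_{uj})}.
\end{align*}
```

When every offered utility falls well below θ_u, the no-response probability dominates, which matches the bullet that a user responds only if satisfaction exceeds the threshold.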

Experiments
• Yahoo! Pulse social data
  – 1.2M users, 400 items
  – 29M interactions
  – simulated contexts
• Netflix Prize data (5-star ratings)
  – 0.48M users, 18K items
  – 100M interactions
  – simulated contexts
• Yahoo! front page
  – 3.6M users, 2.5K items
  – 110M interactions
  – real session contexts

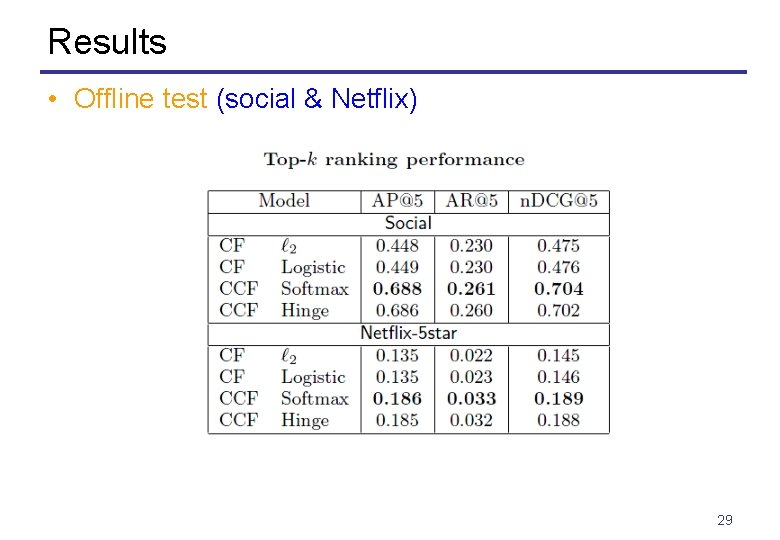

Results
• Offline test (social & Netflix)

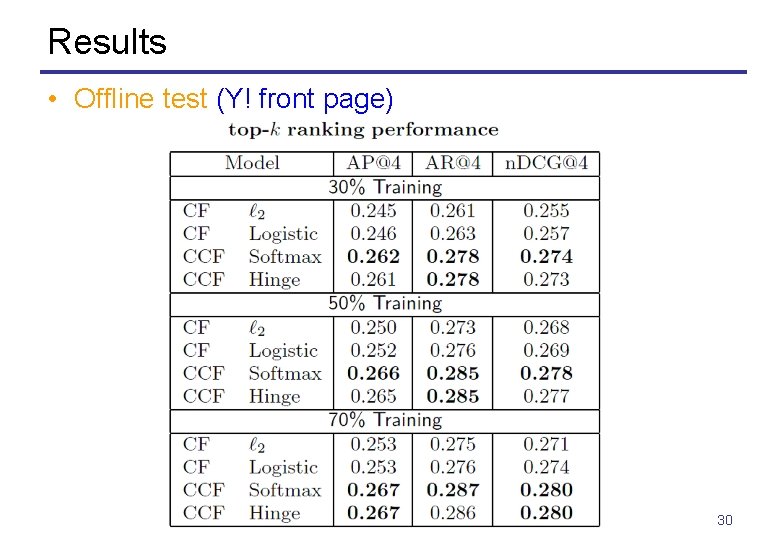

Results
• Offline test (Y! front page)

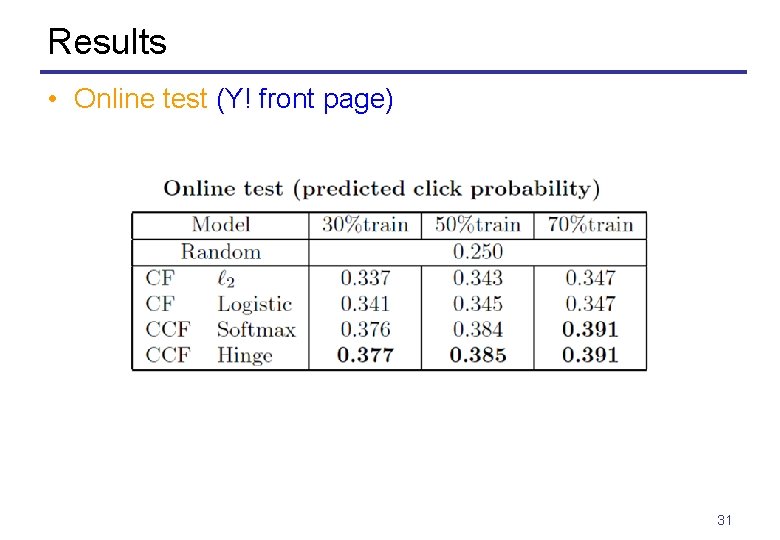

Results
• Online test (Y! front page)

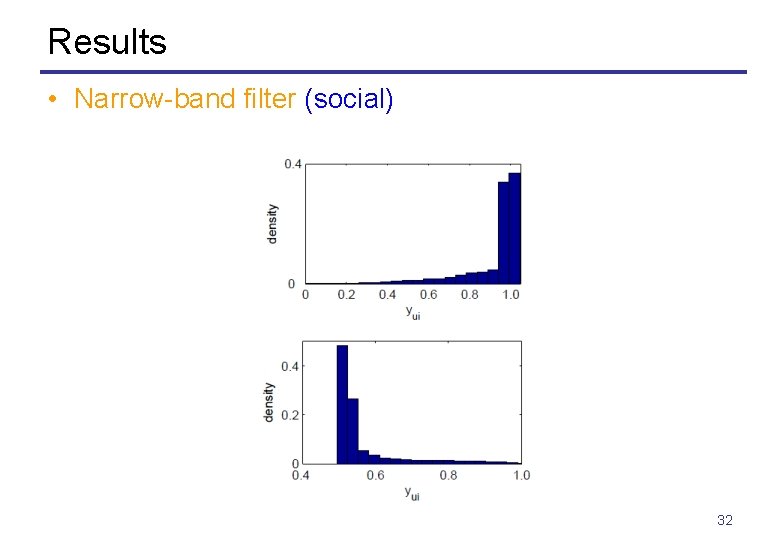

Results
• Narrow-band filter (social)

Summary
• Information filtering beyond matrix completion: modeling user-system interaction sessions
  – Placing user choices into context → more accurate preference modeling
  – Remedies the data sparsity issue
• Local context-aware loss
  – softmax (multinomial logit)
  – hinge (bundle pairwise)
• Significant improvements
  – offline top-k ranking
  – online click-through rate

Thanks! Any comments would be appreciated!

- Slides: 34