
Collaborative Assessment: Using Balanced Scorecard to Measure Performance and Show Value
Liz Mengel, Johns Hopkins University
Vivian Lewis, McMaster University
Northumbria Conference (August 25, 2011)

Session Overview
1. The ARL Balanced Scorecard Project
2. Measures Commonality
3. Synergies with ARL Statistics Program
4. Ongoing Research Project

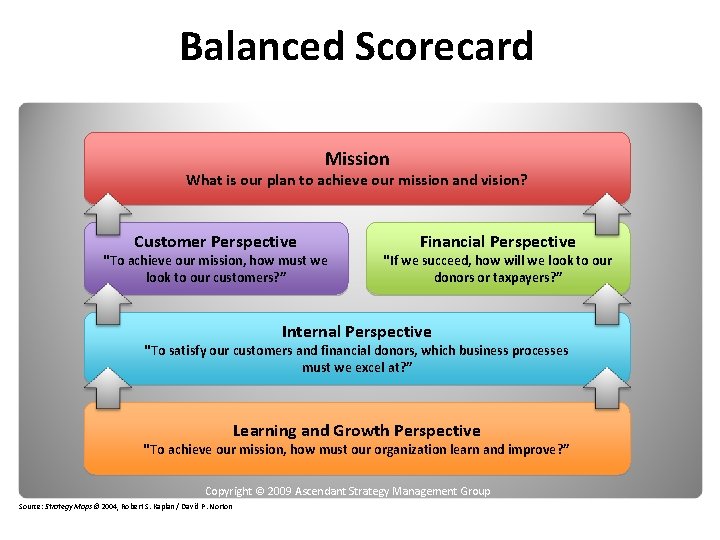

Balanced Scorecard
Mission: What is our plan to achieve our mission and vision?
• Customer Perspective: "To achieve our mission, how must we look to our customers?"
• Financial Perspective: "If we succeed, how will we look to our donors or taxpayers?"
• Internal Perspective: "To satisfy our customers and financial donors, which business processes must we excel at?"
• Learning and Growth Perspective: "To achieve our mission, how must our organization learn and improve?"
Copyright © 2009 Ascendant Strategy Management Group. Source: Strategy Maps © 2004, Robert S. Kaplan / David P. Norton

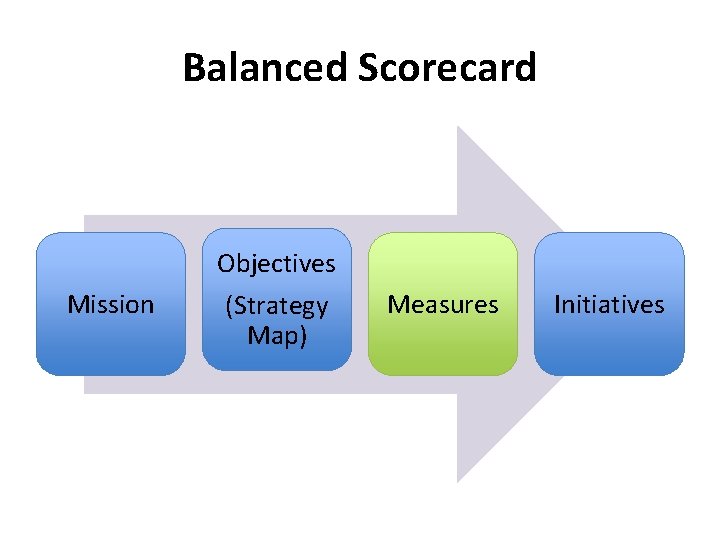

Balanced Scorecard: Mission, Objectives (Strategy Map), Measures, Initiatives

ARL Balanced Scorecard Project
• Explore the suitability of the BSC for academic research libraries
• Encourage cross-library collaboration
• Explore whether common objectives and metrics emerge

ARL Balanced Scorecard Timeline
• 2009: ARL call for participants
• 2010: Training, initial implementation
• 2011
  – Implementation, refinement, research (Cohort #1)
  – ARL call for Cohort #2

Cohort 1 Common Objectives (figures in parentheses: libraries sharing the objective, out of four)
• Financial Perspective objective: Secure funding for operational needs (4/4)
• Customer Perspective objective: Provide productive, user-centered spaces, both virtual and physical (4/4)
• Learning and Growth Perspective objective: Develop workforces that are productive, motivated, and engaged (4/4)
• Internal Processes Perspective objective: Promote library resources, services, and value (3/4)

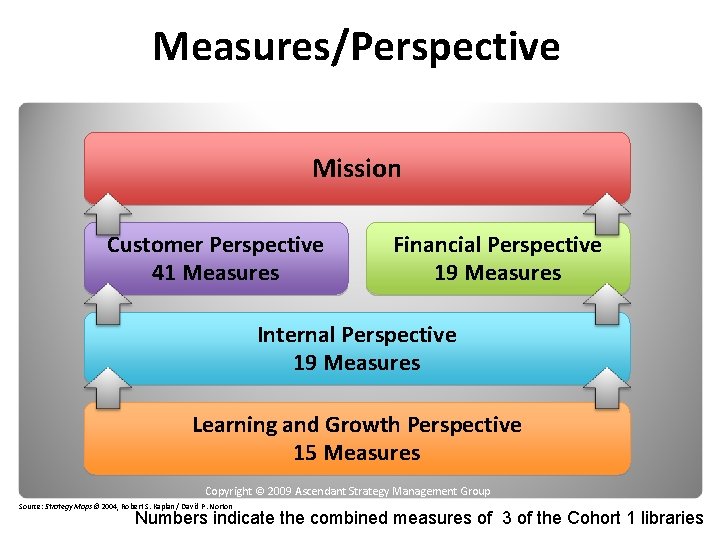

Measures per Perspective
• Customer Perspective: 41 measures
• Financial Perspective: 19 measures
• Internal Perspective: 19 measures
• Learning and Growth Perspective: 15 measures
Numbers indicate the combined measures of three of the Cohort 1 libraries (94 measures in total).
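The four perspective counts on this slide sum to 94, the denominator behind the Year 1 percentages that follow (9/94 and 22/94). A minimal Python sketch of that tally is below; the dictionary only restates the slide's figures, and the per-perspective shares it prints are derived arithmetic, not figures reported by the presenters.

```python
# Illustrative tally of the slide's measure counts. The counts are copied
# from the slide; the percentage shares are derived, not reported figures.
MEASURES_BY_PERSPECTIVE: dict[str, int] = {
    "Customer": 41,
    "Financial": 19,
    "Internal": 19,
    "Learning and Growth": 15,
}


def perspective_shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each perspective's share of all measures, in percent."""
    total = sum(counts.values())
    return {name: 100 * n / total for name, n in counts.items()}


if __name__ == "__main__":
    total = sum(MEASURES_BY_PERSPECTIVE.values())
    print(f"Total measures: {total}")  # 41 + 19 + 19 + 15 = 94
    for name, share in perspective_shares(MEASURES_BY_PERSPECTIVE).items():
        print(f"{name:<20} {share:5.1f}%")
```

The Customer Perspective alone accounts for roughly 44% of the combined measures, which is consistent with its prominence on the strategy map.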

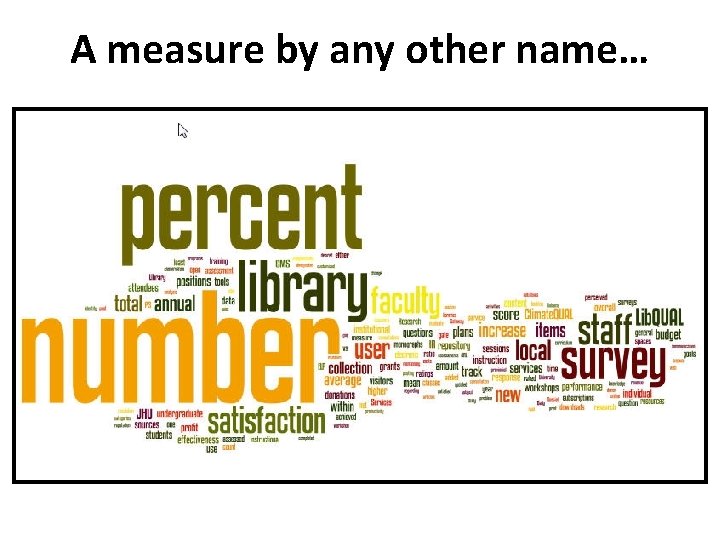

A measure by any other name…

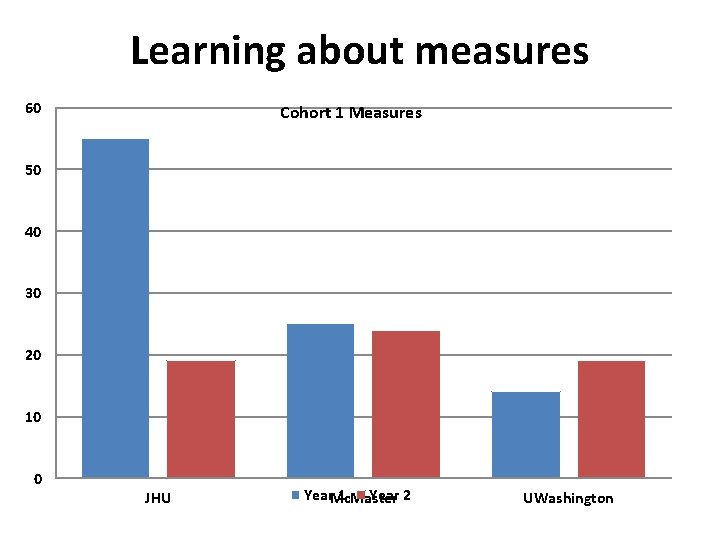

Learning about measures (chart: number of Cohort 1 measures in Year 1 and Year 2 for JHU, McMaster, and UWashington)

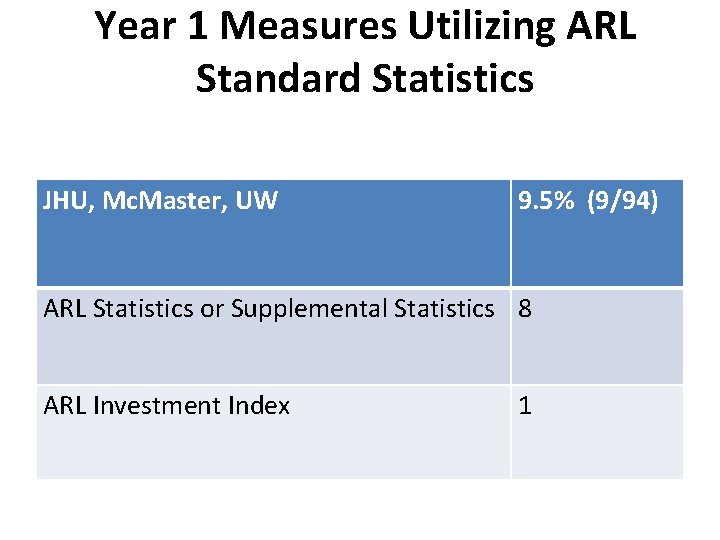

Year 1 Measures Utilizing ARL Standard Statistics (JHU, McMaster, UW): 9.5% (9/94)
• ARL Statistics or Supplemental Statistics: 8
• ARL Investment Index: 1

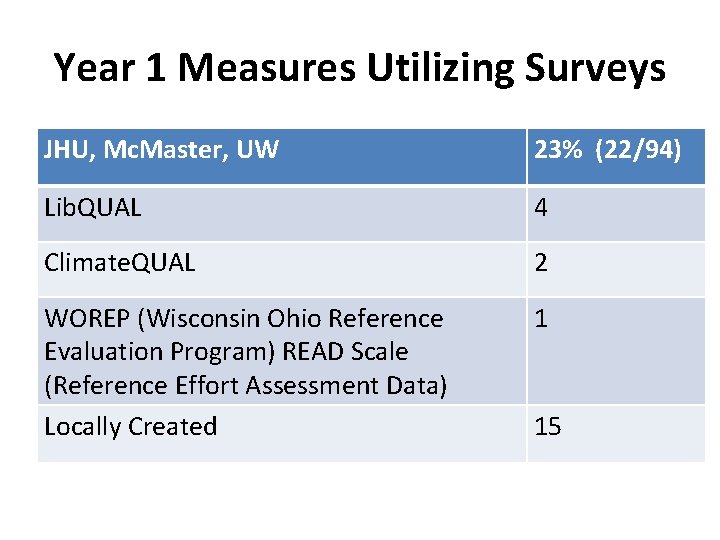

Year 1 Measures Utilizing Surveys (JHU, McMaster, UW): 23% (22/94)
• LibQUAL: 4
• ClimateQUAL: 2
• WOREP (Wisconsin Ohio Reference Evaluation Program) and READ Scale (Reference Effort Assessment Data): 1
• Locally created: 15

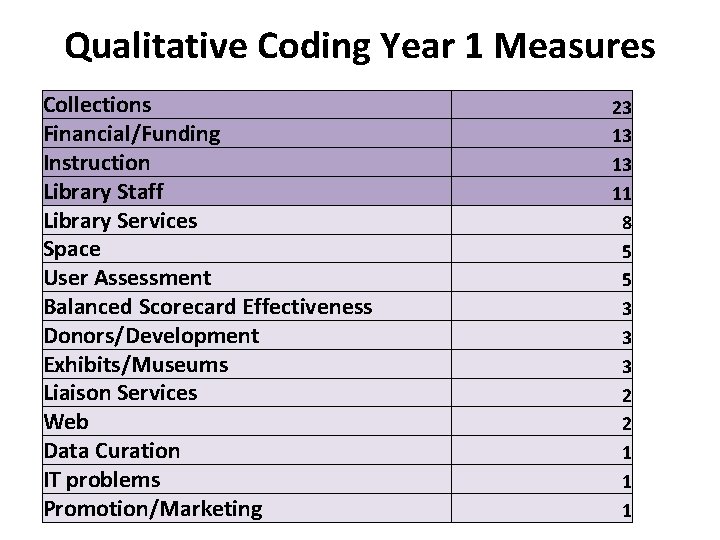

Qualitative Coding of Year 1 Measures (number of measures per theme)
• Collections: 23
• Financial/Funding: 13
• Instruction: 13
• Library Staff: 11
• Library Services: 8
• Space: 5
• User Assessment: 5
• Balanced Scorecard Effectiveness: 3
• Donors/Development: 3
• Exhibits/Museums: 3
• Liaison Services: 2
• Web: 2
• Data Curation: 1
• IT problems: 1
• Promotion/Marketing: 1

Fluidity
• Many iterations before measures are “finalized”
• McMaster example
• The refinement process is exhausting!

The Impact of Collaboration
• Reviewed each other's slates
• Borrowed formulas, approaches to gathering data, etc.
• Benefits for Cohort 1:
  – time
  – credibility
  – benchmarking

Research Objective
Explore two options for furthering the concept of collaborative scorecard development:
• an inventory of all measures in use
• a common set of core measures

Research Questions
Perceived Usefulness
1. Will ARL scorecard planning teams perceive a standardized set of core measures, or an inventory of measures in use, as helpful to their local implementations?
2. Of the two options, which is perceived as more helpful?
Measure Adoption
3. Were measures added, deleted, or changed as a result of the set-sharing activity?

Why is this important?
• To facilitate scorecard implementation at local sites (save time, share expertise…)
• To facilitate inter-university comparisons
• To define common and distinctive capacities

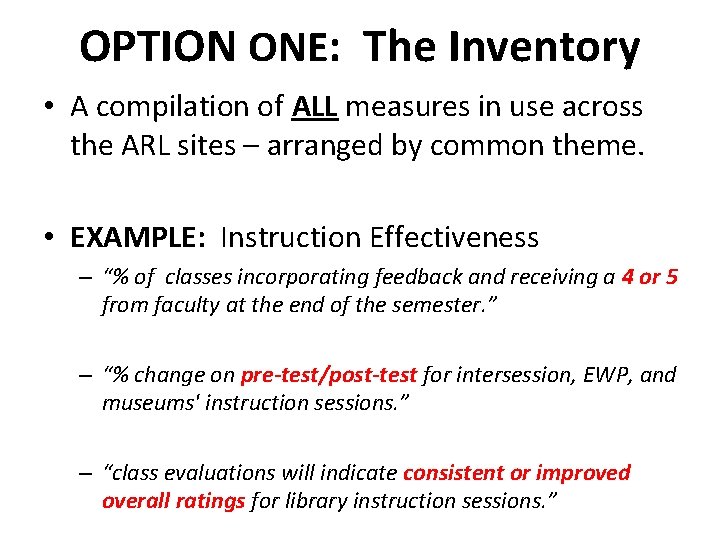

OPTION ONE: The Inventory
• A compilation of ALL measures in use across the ARL sites, arranged by common theme.
• EXAMPLE: Instruction Effectiveness
  – “% of classes incorporating feedback and receiving a 4 or 5 from faculty at the end of the semester.”
  – “% change on pre-test/post-test for intersession, EWP, and museums' instruction sessions.”
  – “class evaluations will indicate consistent or improved overall ratings for library instruction sessions.”

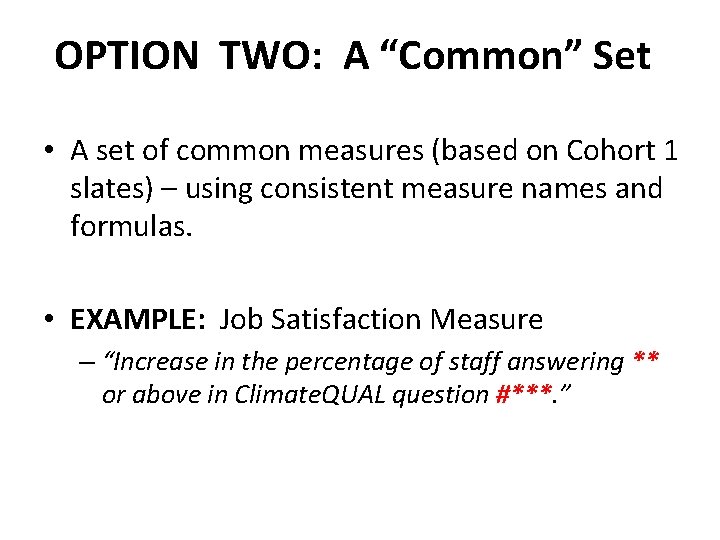

OPTION TWO: A “Common” Set
• A set of common measures (based on Cohort 1 slates) using consistent measure names and formulas.
• EXAMPLE: Job Satisfaction Measure
  – “Increase in the percentage of staff answering ** or above in ClimateQUAL question #***.”
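To show what a consistent measure formula could look like in practice, here is a minimal Python sketch of the job satisfaction measure, assuming responses to the chosen survey question are coded as integers on an ordinal scale. The function names, the threshold, and the sample responses are hypothetical: the slide deliberately leaves the threshold ("**") and the ClimateQUAL question number ("#***") unspecified, and nothing here reflects the actual ClimateQUAL instrument.

```python
from collections.abc import Sequence


def pct_at_or_above(responses: Sequence[int], threshold: int) -> float:
    """Percentage of responses scored at or above `threshold`."""
    if not responses:
        raise ValueError("no survey responses supplied")
    hits = sum(1 for r in responses if r >= threshold)
    return 100 * hits / len(responses)


def change_in_pct(before: Sequence[int], after: Sequence[int], threshold: int) -> float:
    """Percentage-point change between two survey administrations.

    A positive value is the "increase" the measure looks for.
    """
    return pct_at_or_above(after, threshold) - pct_at_or_above(before, threshold)


if __name__ == "__main__":
    # Hypothetical responses to one survey question, coded as integers.
    year_1 = [2, 3, 4, 5, 3]
    year_2 = [4, 4, 5, 3, 5]
    THRESHOLD = 4  # hypothetical stand-in for the slide's unspecified "**"
    print(change_in_pct(year_1, year_2, THRESHOLD))  # 40.0
```

Fixing the formula this way, with only the threshold and question left as parameters, is what would let sites report the same measure and compare results.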

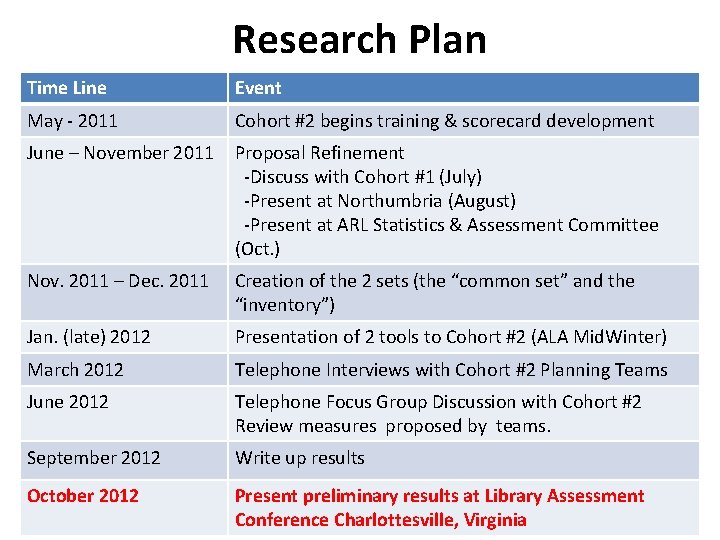

Research Plan Timeline
• May 2011: Cohort #2 begins training and scorecard development
• June to November 2011: Proposal refinement
  – Discuss with Cohort #1 (July)
  – Present at Northumbria (August)
  – Present at ARL Statistics & Assessment Committee (October)
• November to December 2011: Creation of the two sets (the “common set” and the “inventory”)
• Late January 2012: Presentation of the two tools to Cohort #2 (ALA Midwinter)
• March 2012: Telephone interviews with Cohort #2 planning teams
• June 2012: Telephone focus group discussion with Cohort #2; review of measures proposed by teams
• September 2012: Write up results
• October 2012: Present preliminary results at the Library Assessment Conference, Charlottesville, Virginia

Vivian Lewis, lewisvm@mcmaster.ca
Liz Mengel, emengel@jhu.edu
