Cognitive Models of Decision Making

Dr. Thomas Busey
Indiana University, Bloomington, IN
busey@iu.edu

Hypothetical Proficiency Tests
Four examiners take a very rigorous proficiency test:
• 100 total comparisons
  – 40 mated
  – 60 non-mated
• They are asked to use whatever decision thresholds they would apply in casework.
• Ground truth is known for all comparisons.

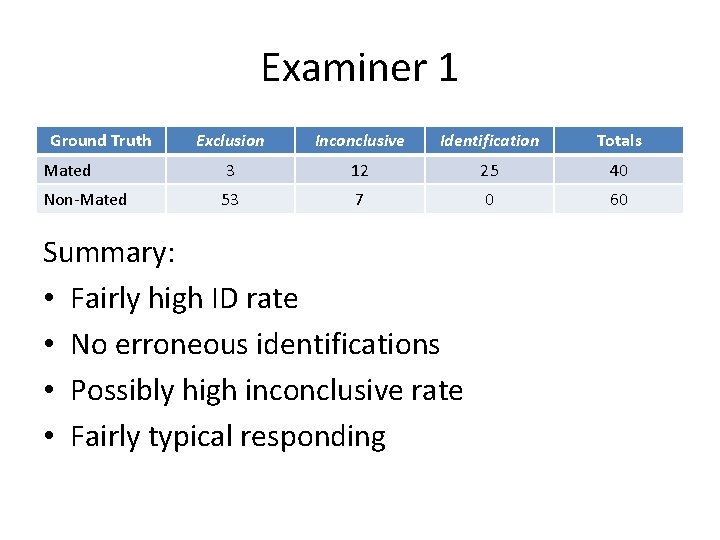

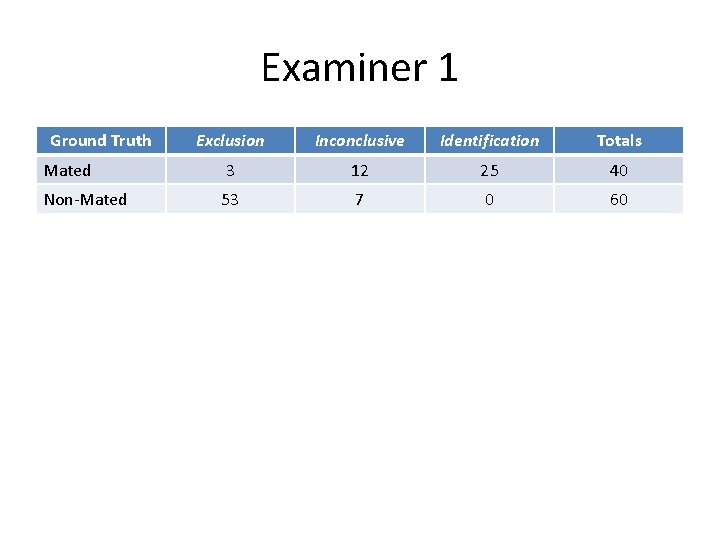

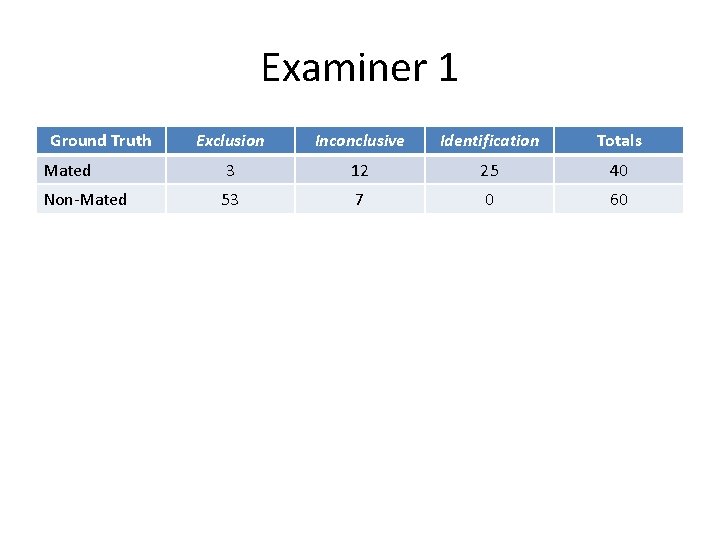

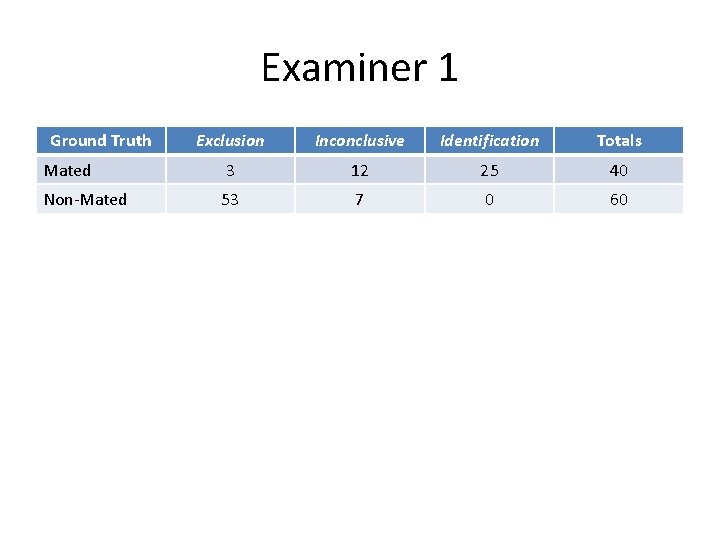

Examiner 1

| Ground Truth | Exclusion | Inconclusive | Identification | Totals |
|---|---|---|---|---|
| Mated | 3 | 12 | 25 | 40 |
| Non-Mated | 53 | 7 | 0 | 60 |

Summary:
• Fairly high ID rate
• No erroneous identifications
• Possibly high inconclusive rate
• Fairly typical responding

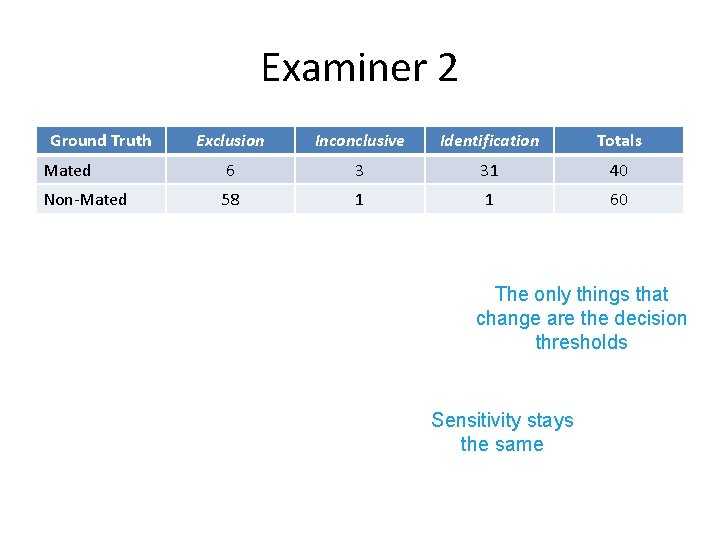

Examiner 2

| Ground Truth | Exclusion | Inconclusive | Identification | Totals |
|---|---|---|---|---|
| Mated | 6 | 3 | 31 | 40 |
| Non-Mated | 58 | 1 | 1 | 60 |

Summary:
• Very high ID rate
• One erroneous identification
• Very low inconclusive rate
• Better or worse than Examiner 1?

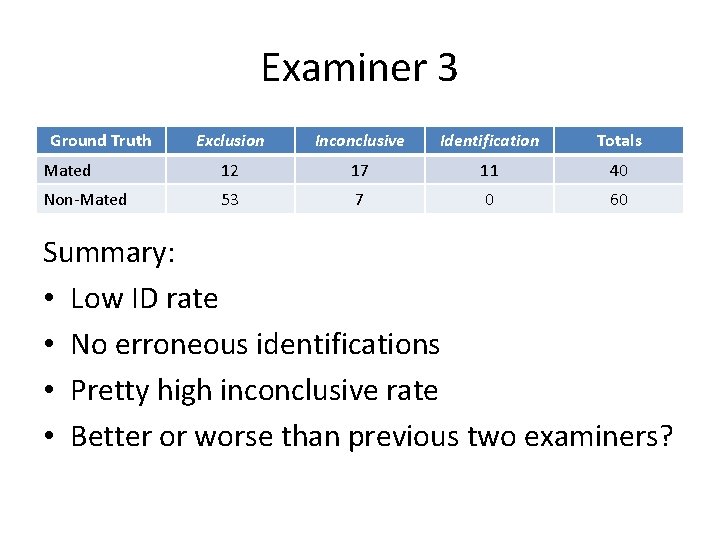

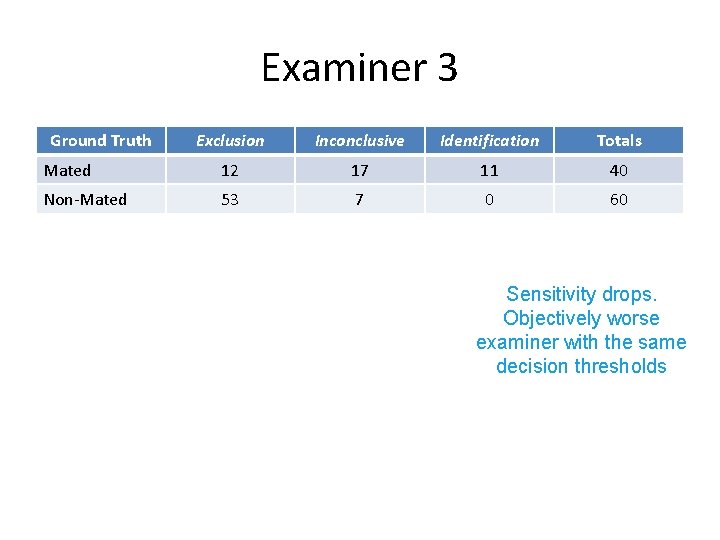

Examiner 3

| Ground Truth | Exclusion | Inconclusive | Identification | Totals |
|---|---|---|---|---|
| Mated | 12 | 17 | 11 | 40 |
| Non-Mated | 53 | 7 | 0 | 60 |

Summary:
• Low ID rate
• No erroneous identifications
• Pretty high inconclusive rate
• Better or worse than the previous two examiners?

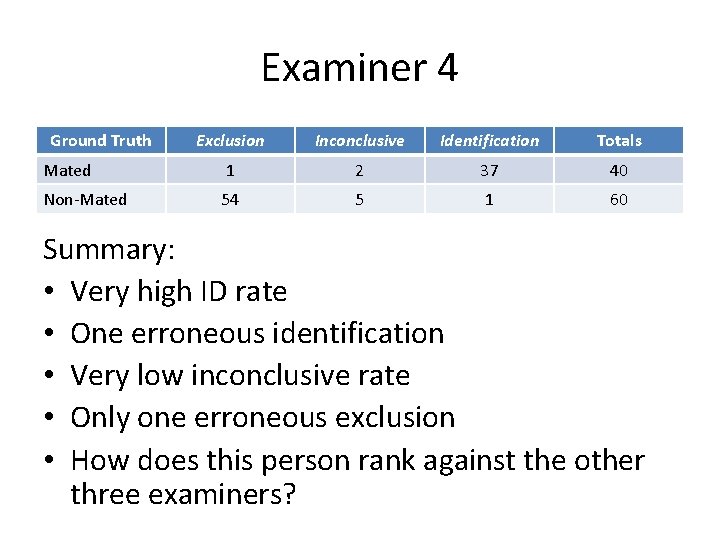

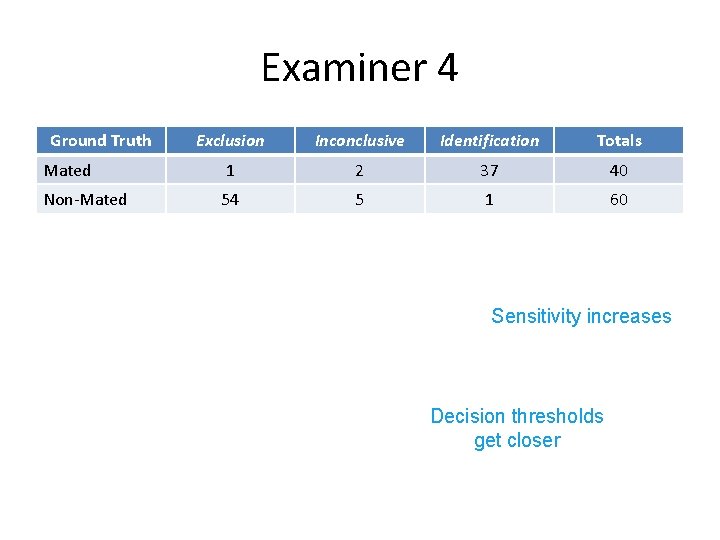

Examiner 4

| Ground Truth | Exclusion | Inconclusive | Identification | Totals |
|---|---|---|---|---|
| Mated | 1 | 2 | 37 | 40 |
| Non-Mated | 54 | 5 | 1 | 60 |

Summary:
• Very high ID rate
• One erroneous identification
• Very low inconclusive rate
• Only one erroneous exclusion
• How does this person rank against the other three examiners?

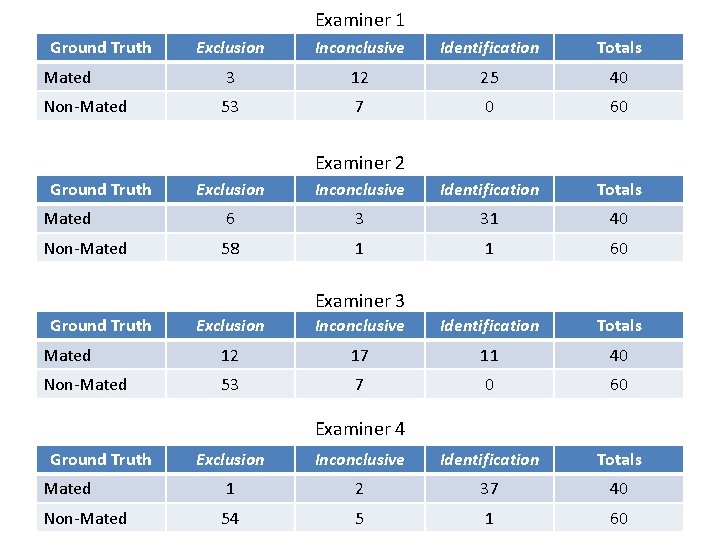

All four examiners (counts out of 40 mated and 60 non-mated comparisons)

| Examiner | Mated: Exclusion | Mated: Inconclusive | Mated: Identification | Non-Mated: Exclusion | Non-Mated: Inconclusive | Non-Mated: Identification |
|---|---|---|---|---|---|---|
| 1 | 3 | 12 | 25 | 53 | 7 | 0 |
| 2 | 6 | 3 | 31 | 58 | 1 | 1 |
| 3 | 12 | 17 | 11 | 53 | 7 | 0 |
| 4 | 1 | 2 | 37 | 54 | 5 | 1 |
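One way to see the problem is to reduce each table to the few cells people usually look at. The minimal Python sketch below does that; the rate names (`correct_id_rate` and so on) are our own labels, not part of the original slides. No single examiner comes out best on every rate, which is exactly why a model is needed.

```python
# Counts from the four hypothetical proficiency tests:
# (exclusion, inconclusive, identification) for mated and non-mated pairs.
EXAMINERS = {
    1: {"mated": (3, 12, 25), "nonmated": (53, 7, 0)},
    2: {"mated": (6, 3, 31), "nonmated": (58, 1, 1)},
    3: {"mated": (12, 17, 11), "nonmated": (53, 7, 0)},
    4: {"mated": (1, 2, 37), "nonmated": (54, 5, 1)},
}


def summarize(counts):
    """Return the handful of cells people usually focus on, as proportions."""
    m_excl, m_inc, m_id = counts["mated"]
    n_excl, n_inc, n_id = counts["nonmated"]
    n_mated = m_excl + m_inc + m_id
    n_nonmated = n_excl + n_inc + n_id
    return {
        "correct_id_rate": m_id / n_mated,
        "erroneous_exclusion_rate": m_excl / n_mated,
        "erroneous_id_rate": n_id / n_nonmated,
        "inconclusive_rate": (m_inc + n_inc) / (n_mated + n_nonmated),
    }


for examiner, counts in EXAMINERS.items():
    rates = summarize(counts)
    print(examiner, {name: round(value, 3) for name, value in rates.items()})
```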

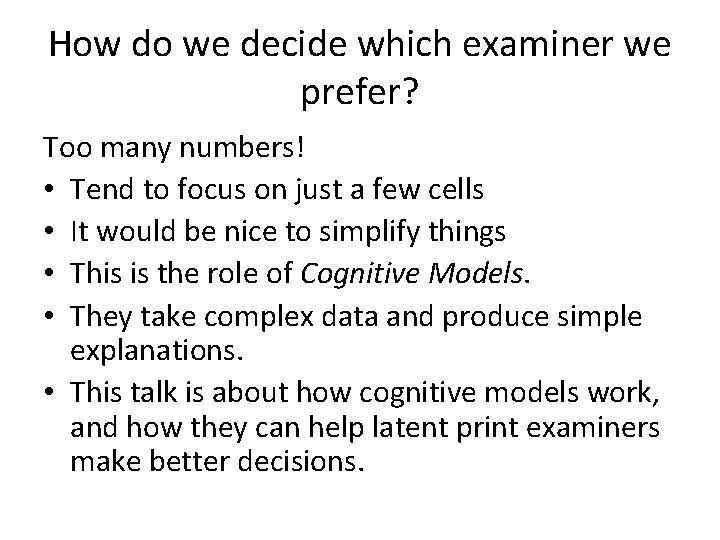

How do we decide which examiner we prefer?
Too many numbers!
• We tend to focus on just a few cells.
• It would be nice to simplify things.
• This is the role of cognitive models: they take complex data and produce simple explanations.
• This talk is about how cognitive models work, and how they can help latent print examiners make better decisions.

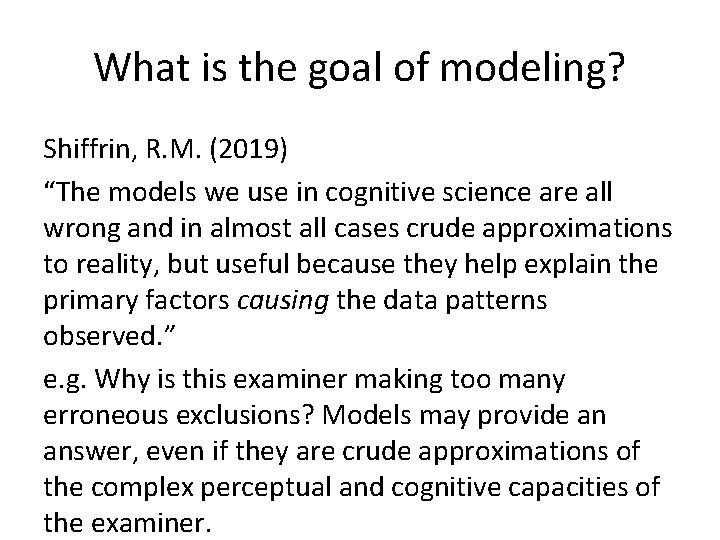

What is the goal of modeling?
Shiffrin, R. M. (2019): “The models we use in cognitive science are all wrong and in almost all cases crude approximations to reality, but useful because they help explain the primary factors causing the data patterns observed.”
For example: why is this examiner making too many erroneous exclusions? Models may provide an answer, even if they are crude approximations of the complex perceptual and cognitive capacities of the examiner.

Other types of models and frameworks
What is a model? Some examples:
• Mario
• SWGFAST graph
• Critical number of points
• FRStat
• Signal Detection Theory

Super Mario
• Gravity, hit detection and friction are all modeled correctly.
• But in real physics you shouldn’t be able to change direction in the air, and Mario can.
• Lots of other factors are left out (e.g., perspective).
• Nintendo models just enough of the real world to make the game intuitive and interesting.
• The ‘physics’ engine is the set of rules that govern game play. That’s the model (but not a model of decision making).

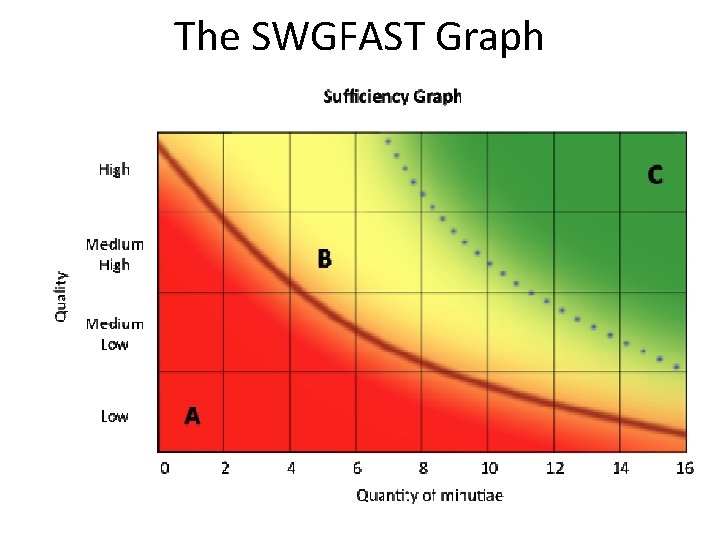

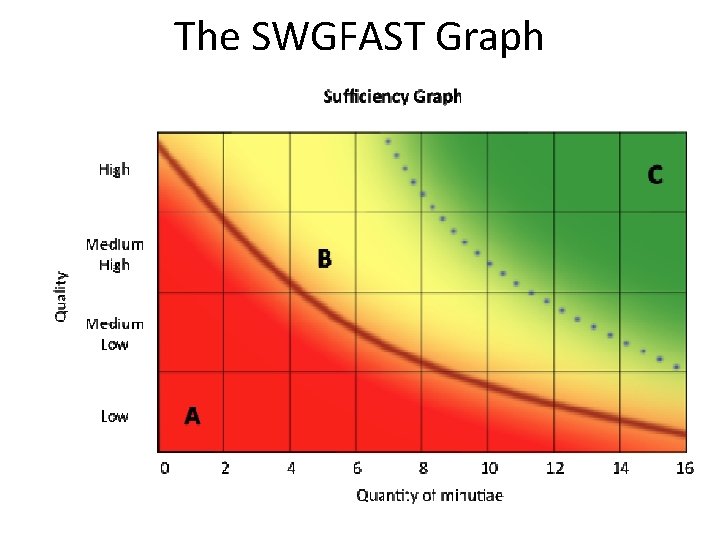

The SWGFAST Graph

• This graph does posit the causal factors (quantity and quality, as they determine sufficiency).
• It makes specific claims about how much quantity and quality are required to determine sufficiency.
• This is a model!
• Is it a useful model? Only if we can validate it…
• It also doesn’t account for errors. Where are erroneous identifications on this graph?


The SWGFAST Graph
• Help John Vanderkolk validate this model.
• Sign-up sheets are going around.
• Sign up if you are a latent print examiner eligible to testify in your home country.

The 12-point standard
• You need 12 ‘matching’ ‘points’ in order to make an ID.
• This is also a model (it specifies causal factors), although it doesn’t specify what ‘matching’ or ‘points’ are.
• It also doesn’t account for errors; instead, the 12-point criterion is set so high that the probability of an erroneous identification is presumably low (barring large database searches).

FRStat method
• Computes a likelihood ratio that captures the strength of evidence.
• Doesn’t make decisions, although it is part of the decision-making process.
• Not a model of the examination process by itself, but an incredibly useful measurement tool.

How do models work?
Shiffrin, R. M. (2019): “Causal models have two major components: Those representing the primary causal factors and those that tune the model for the exact paradigm and setting of the experiment(s) modeled.”
For example: gravity should make Mario eventually come back to earth (gravity is a causal factor of falling). Tuning the model: how fast does Mario fall?

How do models work?
Shiffrin, R. M. (2019)
For example: what is the relation between quality and quantity? Can you get by with less quality if you have more quantity?
• Causal factors: quantity and quality (maybe specificity as well?)
• Parameters: how much of each is required for sufficiency.

Problems with all of these models so far
• They don’t help us understand how errors occur, or the tradeoffs if you were to change your decision threshold.
• They don’t allow us to determine which of our four examiners is the best.
• We need a model that can help here: Signal Detection Theory to the rescue.

A Model of Decision Making: Signal Detection Theory
Example: Put down your phones for a moment. Lesley gave me one number at random from the conference list. Did your phone just vibrate?

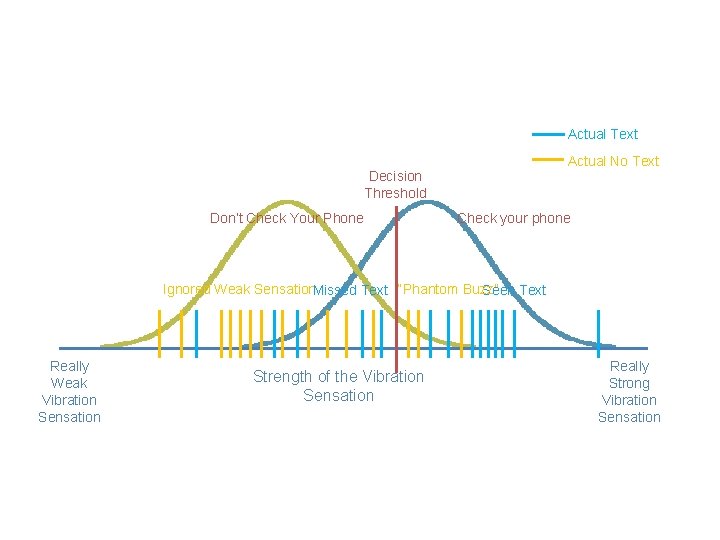

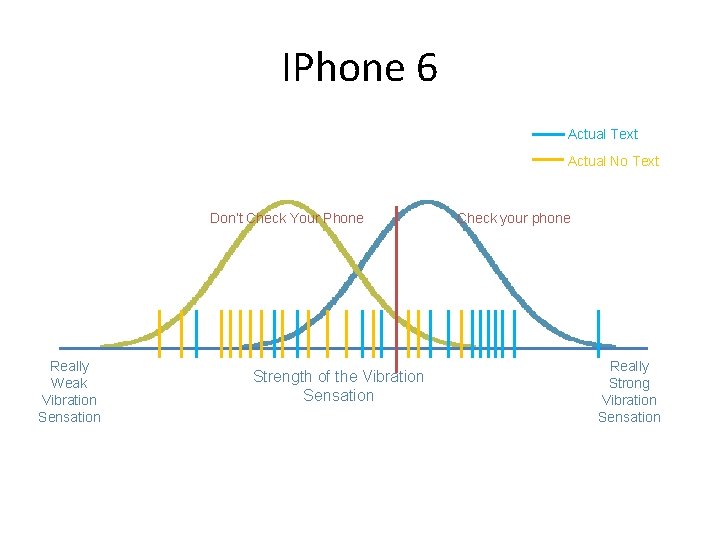

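Figure: two distributions along the “Strength of the Vibration Sensation” axis, from really weak to really strong: one for Actual No Text and one for Actual Text. A decision threshold divides the axis into “Don’t check your phone” and “Check your phone”, giving four outcomes:
• No text, don’t check: ignored weak sensation
• No text, check: “phantom buzz”
• Text, don’t check: missed text
• Text, check: seen text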
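The phone example can be written down directly. This is a minimal sketch assuming the equal-variance Gaussian version of signal detection theory: the sensation is N(0, 1) when no text arrived and N(d′, 1) when one did, and you check your phone whenever the sensation exceeds a criterion. The values d′ = 2.0 and criterion = 1.0 are arbitrary illustrations.

```python
from statistics import NormalDist


def outcome_probabilities(d_prime, criterion):
    """Probabilities of the four phone outcomes under equal-variance Gaussian SDT.

    Sensation strength is N(0, 1) with no text and N(d_prime, 1) with a text;
    you check the phone when the sensation exceeds `criterion`.
    """
    no_text = NormalDist(0.0, 1.0)
    text = NormalDist(d_prime, 1.0)
    return {
        "seen_text": 1.0 - text.cdf(criterion),                  # hit
        "missed_text": text.cdf(criterion),                      # miss
        "phantom_buzz": 1.0 - no_text.cdf(criterion),            # false alarm
        "ignored_weak_sensation": no_text.cdf(criterion),        # correct rejection
    }


probs = outcome_probabilities(d_prime=2.0, criterion=1.0)
print({name: round(p, 3) for name, p in probs.items()})
```

With the criterion halfway between the two means, misses and phantom buzzes are equally likely; moving the criterion trades one for the other.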

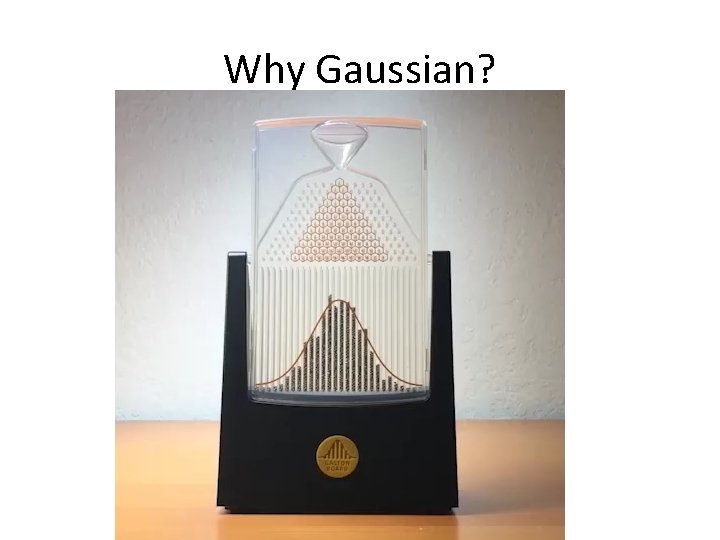

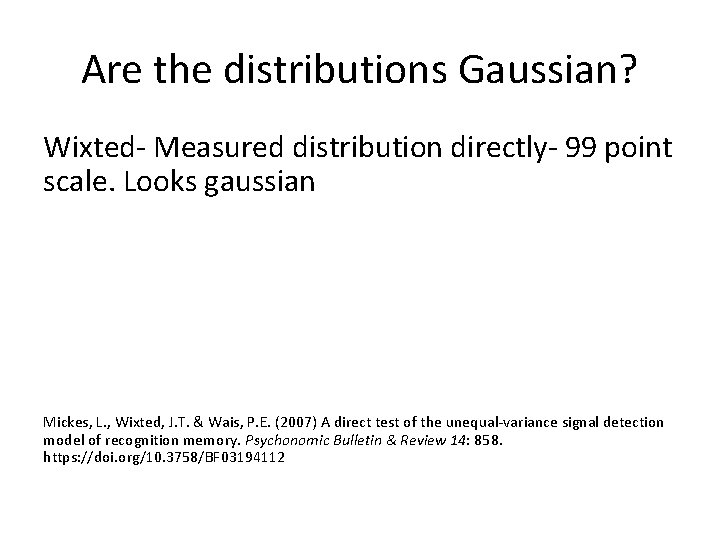

Why Gaussian?

Are the distributions Gaussian?
• Wixted and colleagues measured the distribution directly using a 99-point scale; it looks Gaussian.
Mickes, L., Wixted, J. T., & Wais, P. E. (2007). A direct test of the unequal-variance signal detection model of recognition memory. Psychonomic Bulletin & Review, 14, 858. https://doi.org/10.3758/BF03194112

iPhone 6
Figure: the Actual No Text and Actual Text distributions along the strength-of-vibration-sensation axis (really weak to really strong), with the decision threshold separating “Don’t check your phone” from “Check your phone”.

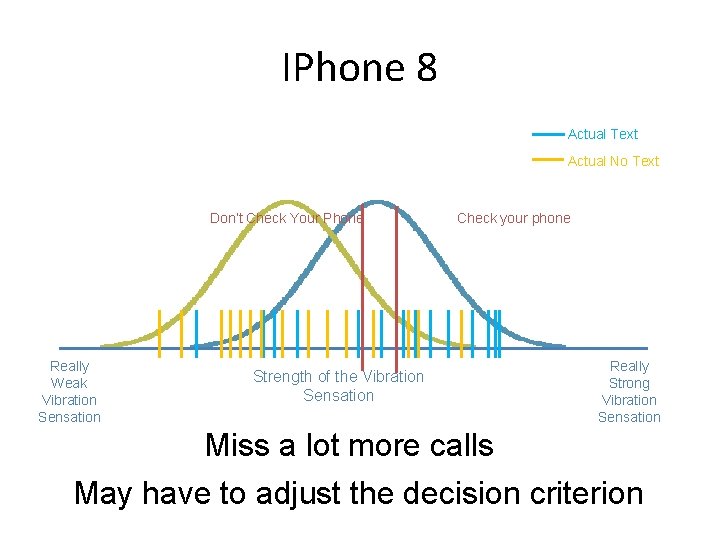

iPhone 8
Figure: the same two distributions and decision threshold for an iPhone 8.
• You miss a lot more texts.
• You may have to adjust the decision criterion.
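If the newer phone simply produces a weaker sensation (our assumption; the slide only says you miss more), that corresponds to a smaller d′ with the no-text distribution unchanged. A minimal sketch with hypothetical d′ values and criteria: at a fixed criterion the miss rate rises while the phantom-buzz rate stays the same, and lowering the criterion recovers hits at the cost of more phantom buzzes.

```python
from statistics import NormalDist


def hit_and_false_alarm(d_prime, criterion):
    """P(check | text) and P(check | no text) under equal-variance Gaussian SDT."""
    hit = 1.0 - NormalDist(d_prime, 1.0).cdf(criterion)
    false_alarm = 1.0 - NormalDist(0.0, 1.0).cdf(criterion)
    return hit, false_alarm


# Hypothetical sensitivities: a strong buzz (older phone) and a weaker one (newer phone).
OLD_PHONE_D, NEW_PHONE_D = 2.5, 1.5
CRITERION = 1.25

old_hit, old_fa = hit_and_false_alarm(OLD_PHONE_D, CRITERION)
new_hit, new_fa = hit_and_false_alarm(NEW_PHONE_D, CRITERION)
print(f"same criterion: misses {1 - old_hit:.2f} -> {1 - new_hit:.2f}, "
      f"phantom buzzes {old_fa:.2f} -> {new_fa:.2f}")

# Lowering the criterion on the newer phone recovers hits but adds phantom buzzes.
adj_hit, adj_fa = hit_and_false_alarm(NEW_PHONE_D, 0.75)
print(f"lower criterion: misses {1 - adj_hit:.2f}, phantom buzzes {adj_fa:.2f}")
```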

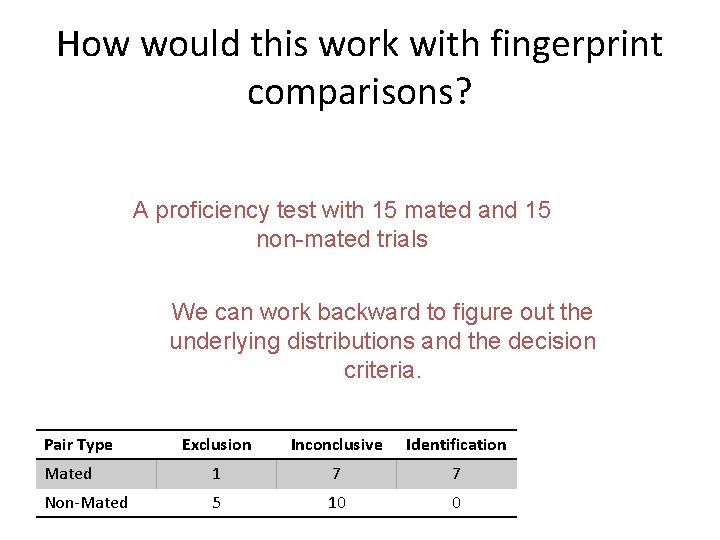

How would this work with fingerprint comparisons?
A proficiency test with 15 mated and 15 non-mated trials. We can work backward to figure out the underlying distributions and the decision criteria.

| Pair Type | Exclusion | Inconclusive | Identification |
|---|---|---|---|
| Mated | 1 | 7 | 7 |
| Non-Mated | 5 | 10 | 0 |

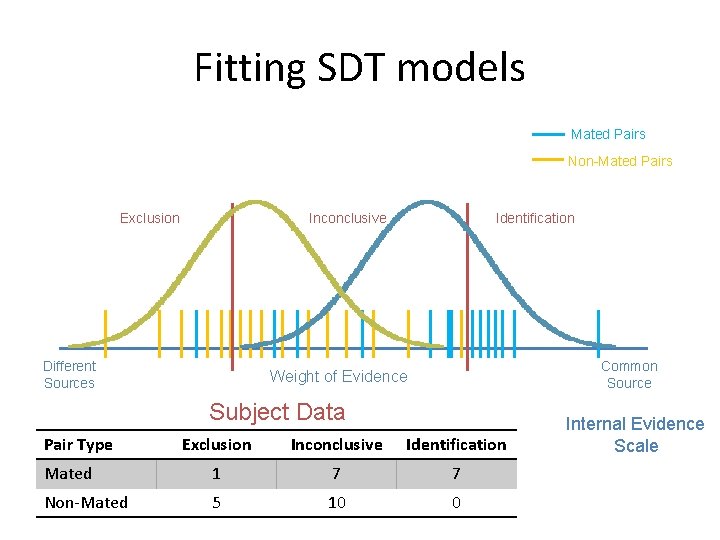

Fitting SDT models
Figure: distributions for Mated Pairs and Non-Mated Pairs along an internal evidence scale (weight of evidence, from Different Sources to Common Source), divided by two thresholds into Exclusion, Inconclusive, and Identification regions.
We can summarize the collection of mated and non-mated evidence values by Gaussian distributions.

Fitting SDT models
Subject data:

| Pair Type | Exclusion | Inconclusive | Identification |
|---|---|---|---|
| Mated | 1 | 7 | 7 |
| Non-Mated | 5 | 10 | 0 |

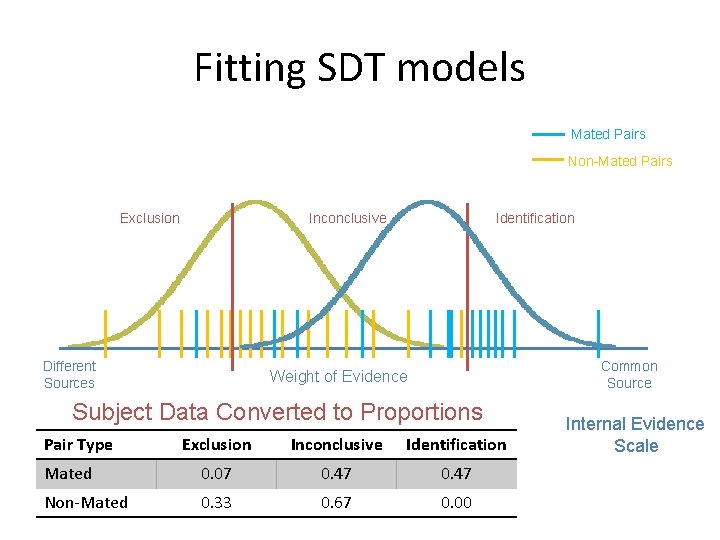

Fitting SDT models
Subject data converted to proportions:

| Pair Type | Exclusion | Inconclusive | Identification |
|---|---|---|---|
| Mated | 0.07 | 0.47 | 0.47 |
| Non-Mated | 0.33 | 0.67 | 0.00 |

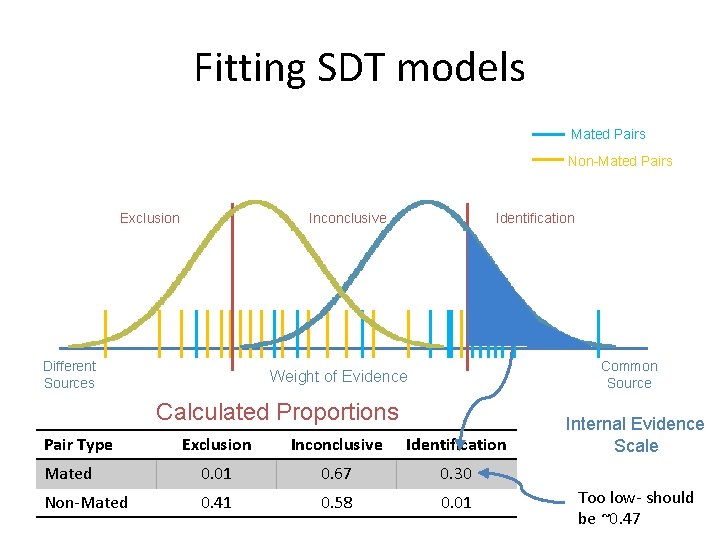

Fitting SDT models
Calculated (model-predicted) proportions:

| Pair Type | Exclusion | Inconclusive | Identification |
|---|---|---|---|
| Mated | 0.01 | 0.67 | 0.30 |
| Non-Mated | 0.41 | 0.58 | 0.01 |

The predicted identification rate for mated pairs (0.30) is too low; it should be about 0.47.
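The calculated proportions are the areas of each Gaussian that fall between the thresholds. A minimal sketch, assuming equal-variance Gaussians with the non-mated mean at 0, the mated mean at d′, and two thresholds `c_excl` and `c_id` on the internal evidence scale; the parameter values are a hypothetical first guess, not the ones behind the slide.

```python
from statistics import NormalDist

STD = NormalDist()  # standard normal; non-mated evidence ~ N(0, 1)


def predicted_proportions(d_prime, c_excl, c_id):
    """Predicted (exclusion, inconclusive, identification) proportions.

    Non-mated evidence ~ N(0, 1) and mated evidence ~ N(d_prime, 1).
    Evidence below c_excl -> Exclusion; above c_id -> Identification;
    anything in between -> Inconclusive.
    """
    def categorize(mean):
        p_excl = STD.cdf(c_excl - mean)      # area left of the exclusion threshold
        p_id = 1.0 - STD.cdf(c_id - mean)    # area right of the ID threshold
        return (p_excl, 1.0 - p_excl - p_id, p_id)

    return {"mated": categorize(d_prime), "nonmated": categorize(0.0)}


observed_counts = {"mated": (1, 7, 7), "nonmated": (5, 10, 0)}
observed = {k: tuple(n / sum(v) for n in v) for k, v in observed_counts.items()}

# Hypothetical first guess at the parameters.
guess = predicted_proportions(d_prime=2.0, c_excl=0.0, c_id=2.5)
for pair_type in ("mated", "nonmated"):
    print(pair_type,
          "observed", [round(p, 2) for p in observed[pair_type]],
          "predicted", [round(p, 2) for p in guess[pair_type]])
```

With this guess the predicted mated identification rate is again too low, so the parameters need adjusting.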

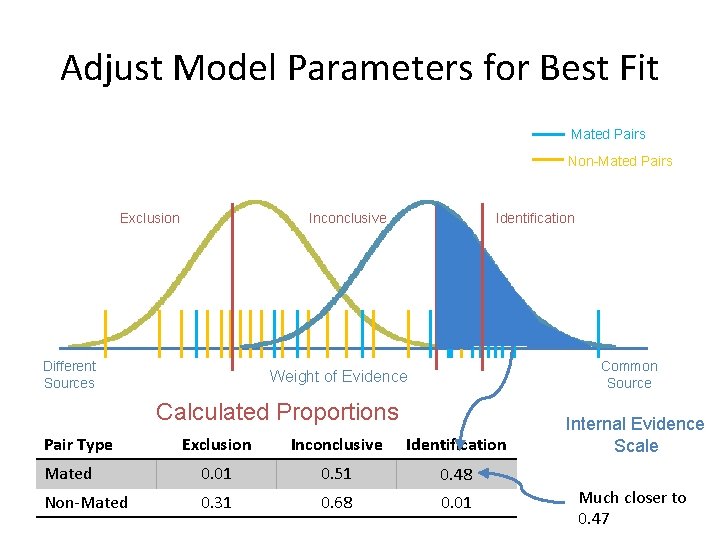

Adjust Model Parameters for Best Fit
Calculated proportions:

| Pair Type | Exclusion | Inconclusive | Identification |
|---|---|---|---|
| Mated | 0.01 | 0.51 | 0.48 |
| Non-Mated | 0.31 | 0.68 | 0.01 |

The predicted mated identification rate (0.48) is now much closer to the observed 0.47.
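Adjusting the parameters by hand works for a demonstration, but the search can be automated. The sketch below maximizes the multinomial likelihood of the observed counts with a simple compass search (nudge one parameter at a time, halve the step when nothing improves). This is a stand-in chosen to keep the example dependency-free; it is not necessarily how the fits on these slides were produced, and its best-fitting proportions need not match the table above exactly.

```python
from math import log
from statistics import NormalDist

STD = NormalDist()  # non-mated evidence ~ N(0, 1)


def predicted_proportions(d_prime, c_excl, c_id):
    """(exclusion, inconclusive, identification) proportions; mated ~ N(d_prime, 1)."""
    def categorize(mean):
        p_excl = STD.cdf(c_excl - mean)
        p_id = 1.0 - STD.cdf(c_id - mean)
        return (p_excl, 1.0 - p_excl - p_id, p_id)

    return {"mated": categorize(d_prime), "nonmated": categorize(0.0)}


def neg_log_likelihood(params, counts):
    """Multinomial negative log-likelihood of the observed counts."""
    d_prime, c_excl, c_id = params
    if c_id <= c_excl:  # thresholds must stay in order
        return float("inf")
    predicted = predicted_proportions(d_prime, c_excl, c_id)
    nll = 0.0
    for pair_type, observed in counts.items():
        for n, p in zip(observed, predicted[pair_type]):
            nll -= n * log(max(p, 1e-12))  # guard against log(0)
    return nll


def fit_sdt(counts, start=(1.0, 0.0, 1.0), step=0.5, tol=1e-5):
    """Compass search: nudge one parameter at a time, keep any improvement,
    halve the step when no nudge helps."""
    best = list(start)
    best_nll = neg_log_likelihood(best, counts)
    while step > tol:
        improved = False
        for i in range(len(best)):
            for delta in (step, -step):
                trial = best.copy()
                trial[i] += delta
                trial_nll = neg_log_likelihood(trial, counts)
                if trial_nll < best_nll:
                    best, best_nll, improved = trial, trial_nll, True
        if not improved:
            step /= 2
    return tuple(best)


counts = {"mated": (1, 7, 7), "nonmated": (5, 10, 0)}
d_prime, c_excl, c_id = fit_sdt(counts)
fitted = predicted_proportions(d_prime, c_excl, c_id)
print(f"d' = {d_prime:.2f}, exclusion threshold = {c_excl:.2f}, ID threshold = {c_id:.2f}")
for pair_type, props in fitted.items():
    print(pair_type, [round(p, 2) for p in props])
```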

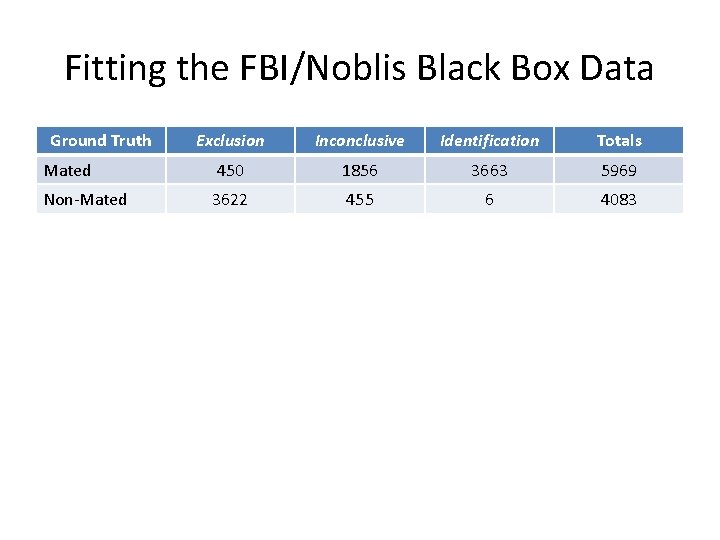

Fitting the FBI/Noblis Black Box Data

| Ground Truth | Exclusion | Inconclusive | Identification | Totals |
|---|---|---|---|---|
| Mated | 450 | 1856 | 3663 | 5969 |
| Non-Mated | 3622 | 455 | 6 | 4083 |
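With thousands of responses, one quick check is to estimate d′ separately at each threshold. Under the equal-variance Gaussian model, z-transforming the proportion of responses below a threshold gives that threshold's position relative to each distribution's mean, so the difference between the non-mated and mated z-scores estimates d′. This is a sketch of a standard SDT calculation, not the analysis reported for the Black Box study. If the two estimates disagree noticeably, that hints the equal-variance assumption is only approximate (compare the unequal-variance model tested by Mickes, Wixted & Wais).

```python
from statistics import NormalDist

Z = NormalDist().inv_cdf  # proportion -> z-score

# FBI/Noblis Black Box counts: (exclusion, inconclusive, identification)
mated = (450, 1856, 3663)
nonmated = (3622, 455, 6)


def cumulative_below(counts):
    """Proportion of responses falling below each of the two thresholds."""
    total = sum(counts)
    below_excl = counts[0] / total                # responded Exclusion
    below_id = (counts[0] + counts[1]) / total    # did not respond Identification
    return below_excl, below_id


m_excl, m_id = cumulative_below(mated)
n_excl, n_id = cumulative_below(nonmated)

# Non-mated ~ N(0, 1), mated ~ N(d', 1): for a threshold c,
# Z(P_nonmated(x < c)) = c and Z(P_mated(x < c)) = c - d'.
d_from_exclusion = Z(n_excl) - Z(m_excl)
d_from_identification = Z(n_id) - Z(m_id)
print(f"d' at exclusion threshold:      {d_from_exclusion:.2f}")
print(f"d' at identification threshold: {d_from_identification:.2f}")
```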

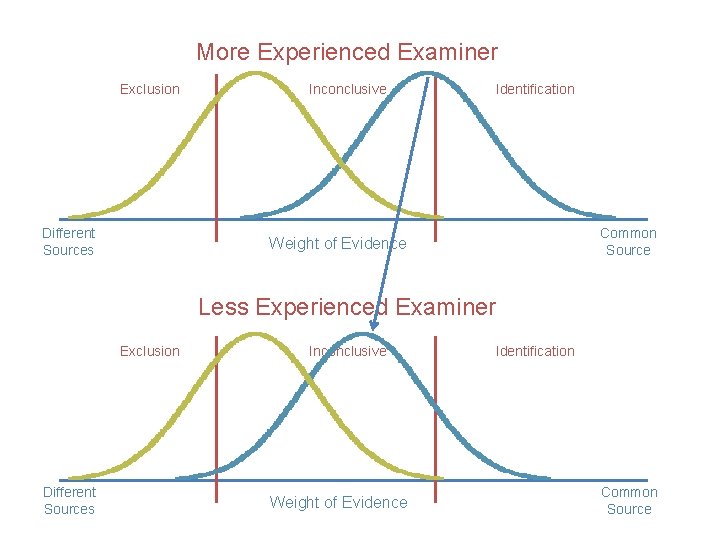

Figure: fitted distributions for a More Experienced Examiner and a Less Experienced Examiner, each showing Exclusion, Inconclusive, and Identification regions along the weight-of-evidence axis (from Different Sources to Common Source).

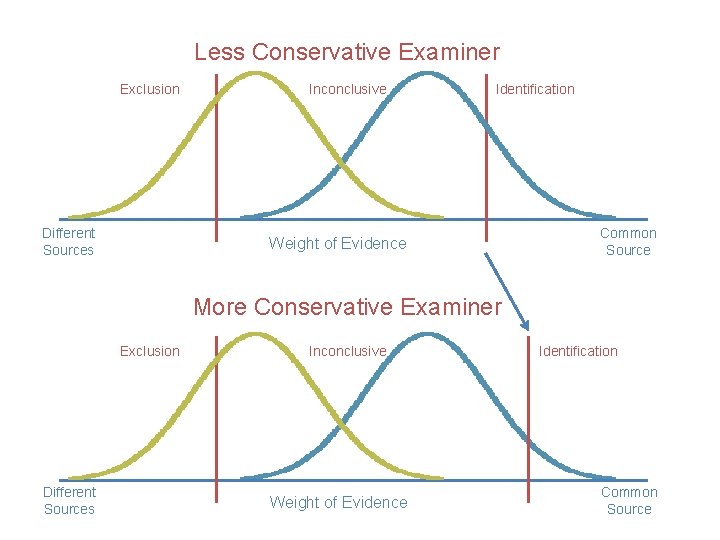

Figure: fitted distributions for a Less Conservative Examiner and a More Conservative Examiner, each showing Exclusion, Inconclusive, and Identification regions along the weight-of-evidence axis (from Different Sources to Common Source).

What happens if you change the decision criterion?
Dynamic demonstration with points instead of curves: http://go.iu.edu/2bgj

Which of our four examiners is best?
• Correct responses and errors have a complex relationship.
  – Making fewer inconclusive responses increases correct identifications, but potentially also erroneous identifications.
• Signal detection theory separates a subject’s ability from their decision thresholds.

Examiner 1 vs. Examiner 2

| Examiner | Ground Truth | Exclusion | Inconclusive | Identification | Totals |
|---|---|---|---|---|---|
| 1 | Mated | 3 | 12 | 25 | 40 |
| 1 | Non-Mated | 53 | 7 | 0 | 60 |
| 2 | Mated | 6 | 3 | 31 | 40 |
| 2 | Non-Mated | 58 | 1 | 1 | 60 |

• The only things that change are the decision thresholds.
• Sensitivity stays the same.

Examiner 1 vs. Examiner 3

| Examiner | Ground Truth | Exclusion | Inconclusive | Identification | Totals |
|---|---|---|---|---|---|
| 1 | Mated | 3 | 12 | 25 | 40 |
| 1 | Non-Mated | 53 | 7 | 0 | 60 |
| 3 | Mated | 12 | 17 | 11 | 40 |
| 3 | Non-Mated | 53 | 7 | 0 | 60 |

• Sensitivity drops.
• Examiner 3 is objectively worse, with the same decision thresholds.

Examiner 1 vs. Examiner 4

| Examiner | Ground Truth | Exclusion | Inconclusive | Identification | Totals |
|---|---|---|---|---|---|
| 1 | Mated | 3 | 12 | 25 | 40 |
| 1 | Non-Mated | 53 | 7 | 0 | 60 |
| 4 | Mated | 1 | 2 | 37 | 40 |
| 4 | Non-Mated | 54 | 5 | 1 | 60 |

• Sensitivity increases.
• The decision thresholds get closer together.

Examiner Summary
• Examiner 3 is objectively the worst.
  – Lower sensitivity, same decision thresholds as #1
• Examiners 1 and 2 have the same sensitivity, but different decision thresholds.
• Examiner 4 is objectively the best.
  – Higher sensitivity, closer decision thresholds
• This assumes that both kinds of errors are about equally bad, and that mated and non-mated pairs occur about equally often.
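A rough way to reproduce this ranking is to fit the same equal-variance Gaussian model to each examiner's table and compare the fitted d′ values. The sketch below uses a simple maximum-likelihood compass search; it is an illustrative stand-in, not the fitting tool linked in this talk, and with only 100 trials per examiner (and several empty cells) the estimates are noisy.

```python
from math import log
from statistics import NormalDist

STD = NormalDist()

# (exclusion, inconclusive, identification) counts from the four hypothetical tests
EXAMINERS = {
    1: {"mated": (3, 12, 25), "nonmated": (53, 7, 0)},
    2: {"mated": (6, 3, 31), "nonmated": (58, 1, 1)},
    3: {"mated": (12, 17, 11), "nonmated": (53, 7, 0)},
    4: {"mated": (1, 2, 37), "nonmated": (54, 5, 1)},
}


def neg_log_likelihood(params, counts):
    """Multinomial negative log-likelihood of an equal-variance Gaussian SDT model."""
    d_prime, c_excl, c_id = params
    if c_id <= c_excl:
        return float("inf")
    nll = 0.0
    for pair_type, mean in (("mated", d_prime), ("nonmated", 0.0)):
        p_excl = STD.cdf(c_excl - mean)
        p_id = 1.0 - STD.cdf(c_id - mean)
        probs = (p_excl, 1.0 - p_excl - p_id, p_id)
        for n, p in zip(counts[pair_type], probs):
            nll -= n * log(max(p, 1e-12))  # guard against log(0)
    return nll


def fit_sdt(counts, start=(1.0, 0.0, 1.0), step=0.5, tol=1e-5):
    """Compass search for the maximum-likelihood (d', c_excl, c_id)."""
    best = list(start)
    best_nll = neg_log_likelihood(best, counts)
    while step > tol:
        improved = False
        for i in range(3):
            for delta in (step, -step):
                trial = best.copy()
                trial[i] += delta
                trial_nll = neg_log_likelihood(trial, counts)
                if trial_nll < best_nll:
                    best, best_nll, improved = trial, trial_nll, True
        if not improved:
            step /= 2
    return tuple(best)


fits = {examiner: fit_sdt(counts) for examiner, counts in EXAMINERS.items()}
for examiner, (d_prime, c_excl, c_id) in fits.items():
    print(f"Examiner {examiner}: d' = {d_prime:.2f}, "
          f"exclusion threshold = {c_excl:.2f}, ID threshold = {c_id:.2f}")
```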

You can fit your own data! http://go.iu.edu/2bho
• Which examiners are better at separating mated from non-mated pairs (regardless of their thresholds)?
  – Look at the separation between the curves.
• Are some examiners ‘decision averse’?
  – You can see this as widely spaced decision thresholds.

How can signal detection theory help us?
• Measure sensitivity and decision thresholds
  – These can depend on each other.
  – More experienced examiners might want different thresholds.
• Understand the tradeoffs that occur with different thresholds
• Conceptualize the values of different outcomes
• Confront the issue of likelihoods vs. posteriors
  – Before you look at a print, how likely is it to be a mated pair? (prior probability)

Tradeoffs with Different Thresholds
• Shifting your decision threshold affects both correct identifications and erroneous identifications.
• They trade off in very complex ways; they do not trade off 1-to-1.
• But the model quantifies the tradeoff for you.
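The non-1-to-1 tradeoff follows from the shapes of the two distributions. In the sketch below (hypothetical d′ = 2.5, equal-variance Gaussians), the ID threshold is raised in equal steps; each step gives up some correct identifications and avoids some erroneous ones, and the ratio of the two grows as the threshold moves further into the tail of the non-mated distribution.

```python
from statistics import NormalDist

STD = NormalDist()
D_PRIME = 2.5  # hypothetical sensitivity


def id_rates(c_id, d_prime=D_PRIME):
    """Correct-ID rate on mated pairs and erroneous-ID rate on non-mated pairs."""
    correct = 1.0 - STD.cdf(c_id - d_prime)
    erroneous = 1.0 - STD.cdf(c_id)
    return correct, erroneous


thresholds = [1.5, 2.0, 2.5, 3.0, 3.5]
ratios = []
for i in range(len(thresholds) - 1):
    cor0, err0 = id_rates(thresholds[i])
    cor1, err1 = id_rates(thresholds[i + 1])
    lost_correct = cor0 - cor1      # correct IDs given up per mated pair
    avoided_errors = err0 - err1    # erroneous IDs avoided per non-mated pair
    ratios.append(lost_correct / avoided_errors)
    print(f"ID threshold {thresholds[i]} -> {thresholds[i + 1]}: "
          f"lose {lost_correct:.3f} correct IDs, avoid {avoided_errors:.4f} erroneous IDs, "
          f"ratio {ratios[-1]:.1f}")
```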

Application: Validating an Expanded Conclusion Scale
• Traditional scale:
  – Exclusion
  – Inconclusive
  – Identification
• Possible expanded scale:
  – Exclusion
  – Support for Different Sources
  – Inconclusive
  – Support for Common Sources
  – Identification

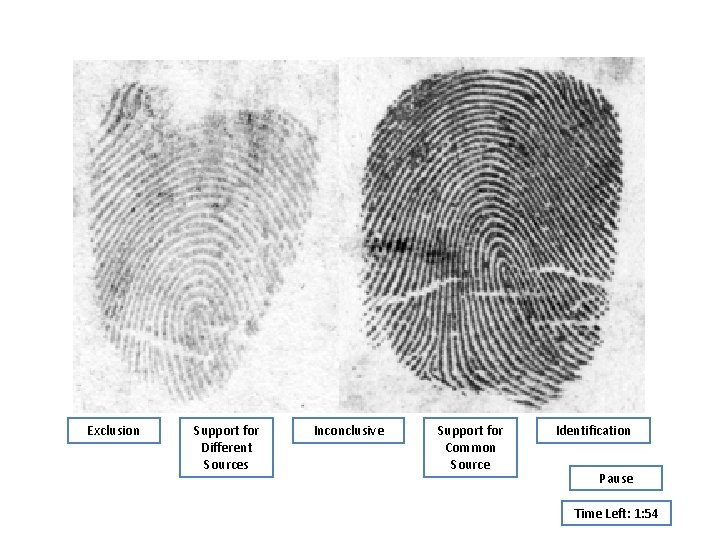

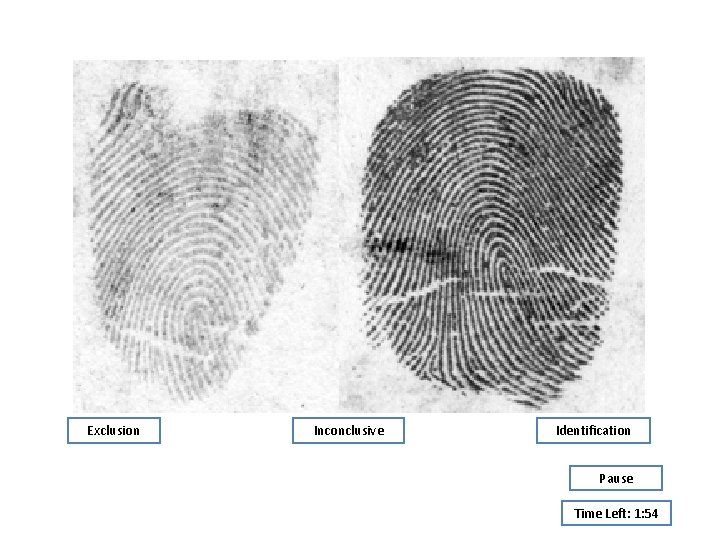

Study Details
• Each examiner did 60 trials
  – 30 mated and 30 non-mated
  – Half were with the traditional scale and half were with the expanded scale.
  – On each trial they knew which scale they would use.
  – Trials were capped at 3 minutes; only 10% of trials hit this cap. Trials could be paused, at which point the image was hidden.

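Screenshot: five-conclusion response screen with buttons for Exclusion, Support for Different Sources, Inconclusive, Support for Common Source, and Identification, plus a Pause button and a countdown timer (Time Left: 1:54).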

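Screenshot: three-conclusion response screen with buttons for Exclusion, Inconclusive, and Identification, plus a Pause button and a countdown timer (Time Left: 1:54).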

Primary Research Questions
• Is an expanded conclusion scale objectively better or worse than the traditional scale? (We have a statistical definition of ‘better’.)
• Does the meaning of the Identification conclusion change when more categories are added to the conclusion scale?
• Based on our subjective evaluation of the utility or value to the justice system of the various outcomes, do we prefer one scale over the other?

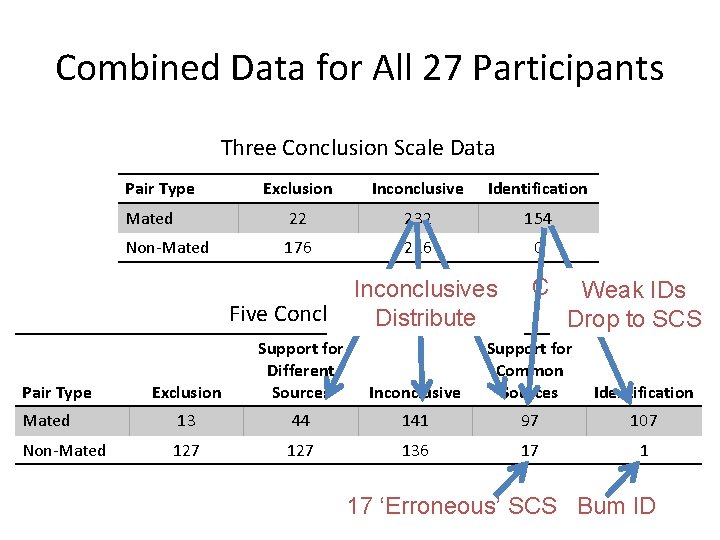

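Combined Data for All 27 Participants

Three-conclusion scale:

| Pair Type | Exclusion | Inconclusive | Identification |
|---|---|---|---|
| Mated | 22 | 232 | 154 |
| Non-Mated | 176 | 226 | 0 |

Five-conclusion scale:

| Pair Type | Exclusion | Support for Different Sources | Inconclusive | Support for Common Sources | Identification |
|---|---|---|---|---|---|
| Mated | 13 | 44 | 141 | 97 | 107 |
| Non-Mated | 127 | 127 | 136 | 17 | 1 |

• Correct IDs drop; weak IDs drop to Support for Common Sources.
• Inconclusives distribute into the new categories.
• 17 ‘erroneous’ Support for Common Sources conclusions on non-mated pairs
• One bum (erroneous) ID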
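The pooled counts can be checked and summarized with a few lines of Python. The category totals add up to 27 participants × 60 trials = 1620 trials, and the correct-ID rate drops from the three- to the five-conclusion scale. The tuple layout is our own shorthand for the slide's columns.

```python
# Pooled counts for all 27 participants from the slide.
THREE_SCALE = {
    "mated": (22, 232, 154),              # Exclusion, Inconclusive, Identification
    "nonmated": (176, 226, 0),
}
FIVE_SCALE = {
    "mated": (13, 44, 141, 97, 107),      # Excl, Support Diff., Inconcl., Support Common, ID
    "nonmated": (127, 127, 136, 17, 1),
}


def id_rates(scale):
    """Identification rate (last category) for mated and non-mated pairs."""
    return {pair: counts[-1] / sum(counts) for pair, counts in scale.items()}


total_trials = sum(sum(c) for s in (THREE_SCALE, FIVE_SCALE) for c in s.values())
three, five = id_rates(THREE_SCALE), id_rates(FIVE_SCALE)
print("total trials:", total_trials)  # 27 participants x 60 trials = 1620
print(f"correct-ID rate: {three['mated']:.3f} (3-scale) vs {five['mated']:.3f} (5-scale)")
print(f"erroneous-ID rate: {three['nonmated']:.4f} (3-scale) vs {five['nonmated']:.4f} (5-scale)")
```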

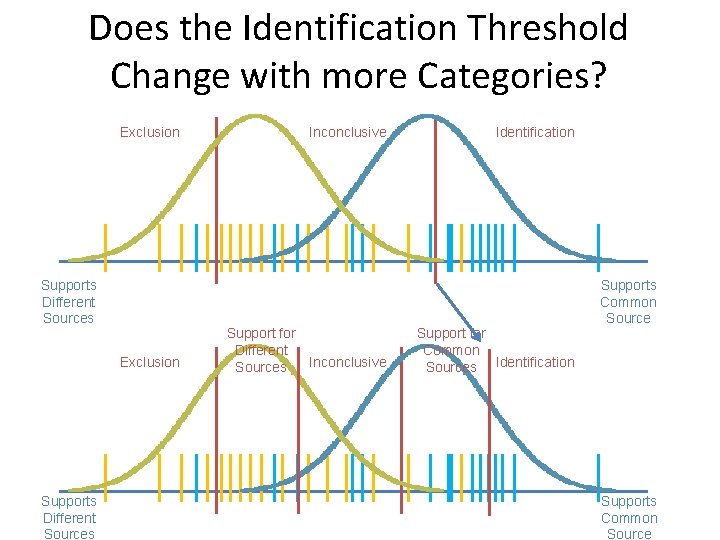

Does the Identification Threshold Change with More Categories?
Figure: decision thresholds for the three-conclusion scale (Exclusion, Inconclusive, Identification) compared with the five-conclusion scale (Exclusion, Supports Different Sources, Inconclusive, Supports Common Source, Identification).

Modeling Individual Participants
• Strong evidence for a shift in the Identification threshold: out of 27 participants, 21 had an identification threshold shifted to the right (i.e., more conservative) in the five-conclusion scale relative to the three-conclusion scale (exact probability is 0.0029).
• Examiners redefine what they mean by an Identification when given more categories in the scale (they become more conservative).
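Assuming the reported probability is an exact one-sided sign test (under the null hypothesis, each participant is equally likely to shift left or right), it can be reproduced from the binomial distribution. The sketch gives about 0.003, consistent with the reported 0.0029.

```python
from math import comb


def sign_test_upper_tail(successes, n, p=0.5):
    """Exact one-sided binomial tail P(X >= successes) for X ~ Binomial(n, p)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(successes, n + 1))


# 21 of 27 participants shifted their ID threshold to the right.
p_value = sign_test_upper_tail(21, 27)
print(f"one-sided p = {p_value:.4f}")
```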

SDT is a framework for asking questions
• Erroneous identifications are bad.
• Erroneous exclusions are bad.
• An inconclusive decision might not be bad, but a lot of them might be.
• To figure out where the decision thresholds should be, we have to understand the tradeoffs as well as the value of the different outcomes.
• SDT forces us to think in these terms. It’s not enough to measure thresholds; we need to place them correctly.
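Where a threshold should go can be made concrete for a single yes/no decision (say, Identification vs. not). Under the equal-variance Gaussian model, the threshold that maximizes expected value is where the likelihood ratio equals β = [P(non-mated) / P(mated)] × [(value of a correct rejection − value of a false alarm) / (value of a hit − value of a miss)], which works out to c = ln β / d′ + d′/2. The payoff values below are hypothetical, not recommendations.

```python
from math import log


def optimal_threshold(d_prime, p_mated, value_hit, value_miss,
                      value_correct_rejection, value_false_alarm):
    """Expected-value-maximizing threshold for a yes/no decision
    under equal-variance Gaussian SDT (non-mated ~ N(0,1), mated ~ N(d',1))."""
    prior_odds_against = (1.0 - p_mated) / p_mated
    payoff_ratio = ((value_correct_rejection - value_false_alarm)
                    / (value_hit - value_miss))
    beta = prior_odds_against * payoff_ratio   # likelihood ratio required to say "mated"
    return log(beta) / d_prime + d_prime / 2.0


# Symmetric payoffs and equal base rates put the threshold halfway between the means.
print(optimal_threshold(2.0, 0.5, 1, 0, 1, 0))    # 1.0
# Hypothetical payoffs that make an erroneous ID much more costly move it right.
print(optimal_threshold(2.0, 0.5, 1, 0, 1, -9))   # ≈ 2.15
```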

SDT forces us to think about the likelihood of a mated pair
• Any discussion of optimal decision threshold placement requires not only the values of different outcomes, but also how likely mated pairs are in your lab’s casework.
• SDT provides the framework to start asking these questions.
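The role of the base rate can be sketched with Bayes' rule: posterior odds = likelihood ratio × prior odds. Assuming the same equal-variance Gaussian model (non-mated N(0, 1), mated N(d′, 1)), the likelihood ratio at evidence value x is exp(d′x − d′²/2). The evidence value and priors below are hypothetical; the point is that identical evidence yields very different posteriors depending on how common mated pairs are.

```python
from math import exp


def posterior_mated(x, d_prime, prior_mated):
    """P(mated | evidence x) via Bayes' rule, assuming non-mated ~ N(0, 1)
    and mated ~ N(d_prime, 1) on the internal evidence scale."""
    likelihood_ratio = exp(d_prime * x - d_prime**2 / 2.0)  # f_mated(x) / f_nonmated(x)
    prior_odds = prior_mated / (1.0 - prior_mated)
    posterior_odds = likelihood_ratio * prior_odds
    return posterior_odds / (1.0 + posterior_odds)


# Same evidence, different (hypothetical) base rates of mated pairs in casework.
for prior in (0.5, 0.1, 0.01):
    print(f"prior {prior}: posterior {posterior_mated(x=2.0, d_prime=2.0, prior_mated=prior):.3f}")
```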

Limitations of SDT
Assumes:
• All of the evidence from a comparison can be distilled down to a single unidimensional value along a strength-of-evidence scale.
• The distributions are Gaussian (normal).
  – Not exactly correct: they are likely skewed, with less data in the tails than a Gaussian curve.
  – Might be close enough to understand the tradeoff as a decision threshold is changed.
• Changes in decision thresholds over time get reflected as decreased sensitivity.
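The last point can be illustrated with a simulation: if the threshold drifts from trial to trial, that threshold noise is indistinguishable from evidence noise, and the usual estimate z(hit) − z(false alarm) comes out smaller than the true d′. With Gaussian drift of standard deviation σ, the expected estimate is d′/√(1 + σ²). The parameter values are hypothetical.

```python
import random
from statistics import NormalDist

Z = NormalDist().inv_cdf


def apparent_d_prime(true_d, criterion, criterion_sd, n_trials=100_000, seed=1):
    """Estimate d' as z(hit rate) - z(false-alarm rate) for a simulated observer
    whose yes/no threshold jitters from trial to trial."""
    rng = random.Random(seed)
    hits = false_alarms = 0
    for _ in range(n_trials):
        # Mated trial: evidence ~ N(true_d, 1), threshold drawn fresh each trial.
        if rng.gauss(true_d, 1.0) > criterion + rng.gauss(0.0, criterion_sd):
            hits += 1
        # Non-mated trial: evidence ~ N(0, 1).
        if rng.gauss(0.0, 1.0) > criterion + rng.gauss(0.0, criterion_sd):
            false_alarms += 1
    return Z(hits / n_trials) - Z(false_alarms / n_trials)


stable = apparent_d_prime(2.0, criterion=1.0, criterion_sd=0.0)
drifting = apparent_d_prime(2.0, criterion=1.0, criterion_sd=1.0)
print(f"stable threshold:   d' ~ {stable:.2f}")
print(f"drifting threshold: d' ~ {drifting:.2f} (theory: {2.0 / (1 + 1.0**2) ** 0.5:.2f})")
```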

Limitations of SDT
• It is falsifiable: the assumptions can be tested.
• SDT is a model of the decision process. It does not explain how things like quality, quantity, and specificity combine to produce a single value on the decision axis.
• But more complex models could do this!

High Level Takeaways
• All models are wrong.
• Some models are less wrong than others.
• Models help us reduce complex datasets into simple, readily interpretable parameters.
  – Better examiners have higher sensitivity, regardless of their decision thresholds.
  – Some examiners are more conservative than others.

High Level Takeaways
• Validation requires ground-truthed images.
• If your model doesn’t account for errors, it isn’t a model of human decision making. It might be a framework.
• Your lab should create a set of ground-truthed images and start fitting SDT models to determine where your sensitivity and decision thresholds are.
