CODING, MANIPULATION CHECKS, POST-EXPERIMENTAL INTERVIEWS AND OTHER WAYS

- Slides: 14

CODING, MANIPULATION CHECKS, POST-EXPERIMENTAL INTERVIEWS …AND OTHER WAYS QUALITATIVE DATA SNEAK INTO YOUR LIFE
By: Lauren Boyatzi, November 28, 2011

WHAT IS QUALITATIVE RESEARCH?
Please take a minute…
- Subjective
- Atheoretical
- Unable to show causation
- Lack of generalizability
- Little-to-no a priori knowledge
- Naturalistic approach
- Time-consuming/difficult

WHAT IS QUALITATIVE RESEARCH?
There is a “wide consensus that qualitative research is a naturalistic, interpretative approach concerned with understanding the meanings which people attach to phenomena (actions, decisions, beliefs, values, etc.) within their social worlds” (Snape & Spencer, 2003, p. 3, in “Qualitative Research Practice: A Guide for Social Science Students and Researchers,” edited by Ritchie & Lewis).

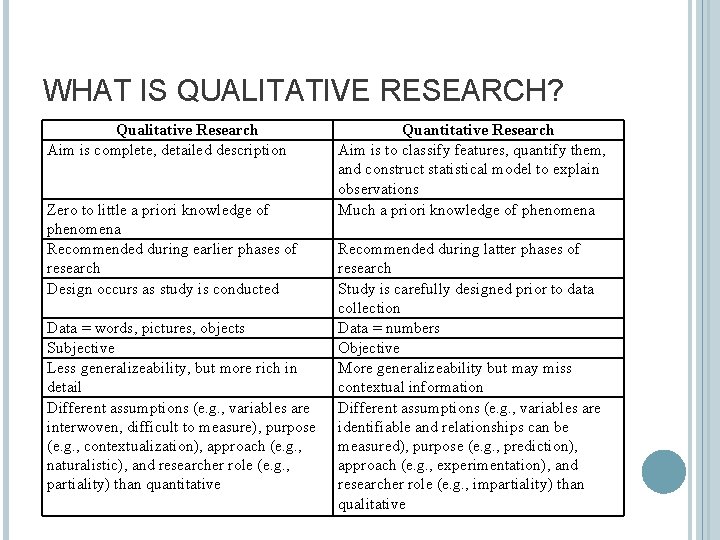

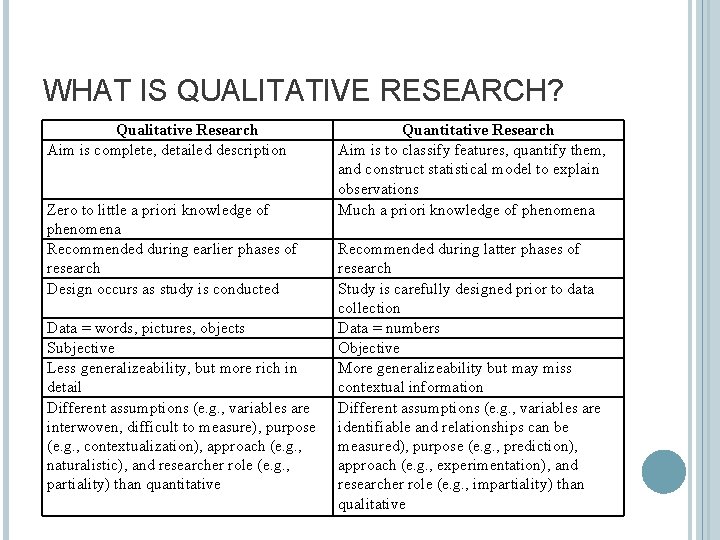

WHAT IS QUALITATIVE RESEARCH?

| | Qualitative research | Quantitative research |
|---|---|---|
| Aim | Complete, detailed description | Classify features, quantify them, and construct statistical models to explain observations |
| A priori knowledge of phenomena | Zero to little | Much |
| Recommended phase of research | Earlier phases | Later phases |
| Design | Occurs as the study is conducted | Carefully designed prior to data collection |
| Data | Words, pictures, objects | Numbers |
| Stance | Subjective | Objective |
| Generalizability | Less generalizable, but richer in detail | More generalizable, but may miss contextual information |
| Assumptions | E.g., variables are interwoven and difficult to measure | E.g., variables are identifiable and relationships can be measured |
| Purpose | E.g., contextualization | E.g., prediction |
| Approach | E.g., naturalistic | E.g., experimentation |
| Researcher role | E.g., partiality | E.g., impartiality |

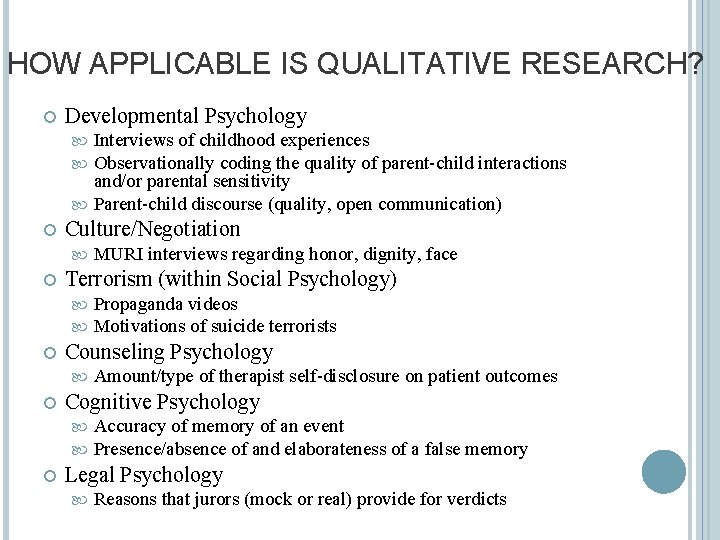

HOW APPLICABLE IS QUALITATIVE RESEARCH?
- Developmental Psychology
  - Interviews of childhood experiences
  - Observationally coding the quality of parent-child interactions and/or parental sensitivity
  - Parent-child discourse (quality, open communication)
- Culture/Negotiation
  - MURI interviews regarding honor, dignity, face
- Terrorism (within Social Psychology)
  - Propaganda videos
  - Motivations of suicide terrorists
- Counseling Psychology
  - Amount/type of therapist self-disclosure on patient outcomes
- Cognitive Psychology
  - Accuracy of memory of an event
  - Presence/absence and elaborateness of a false memory
- Legal Psychology
  - Reasons that jurors (mock or real) provide for verdicts

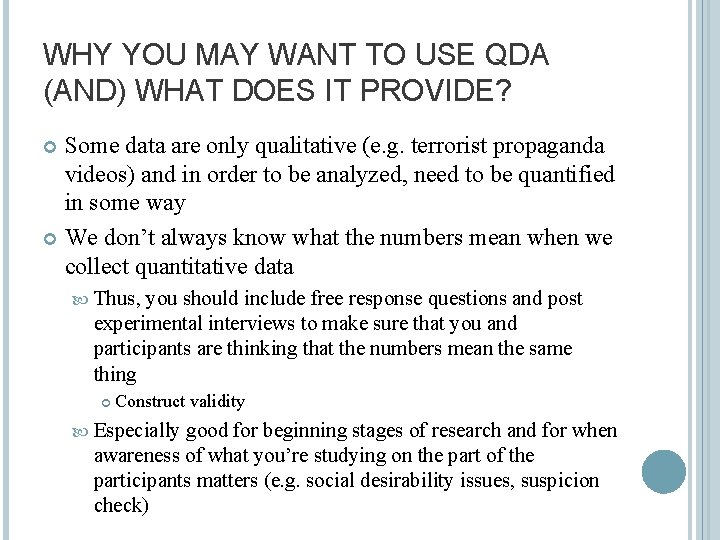

WHY YOU MAY WANT TO USE QDA (QUALITATIVE DATA ANALYSIS), AND WHAT DOES IT PROVIDE?
- Some data are only qualitative (e.g., terrorist propaganda videos) and, in order to be analyzed, need to be quantified in some way
- We don’t always know what the numbers mean when we collect quantitative data
  - Thus, you should include free-response questions and post-experimental interviews to make sure that you and your participants agree on what the numbers mean
  - This supports construct validity
- Especially good for the beginning stages of research and for cases in which participants’ awareness of what you’re studying matters (e.g., social desirability issues, suspicion checks)

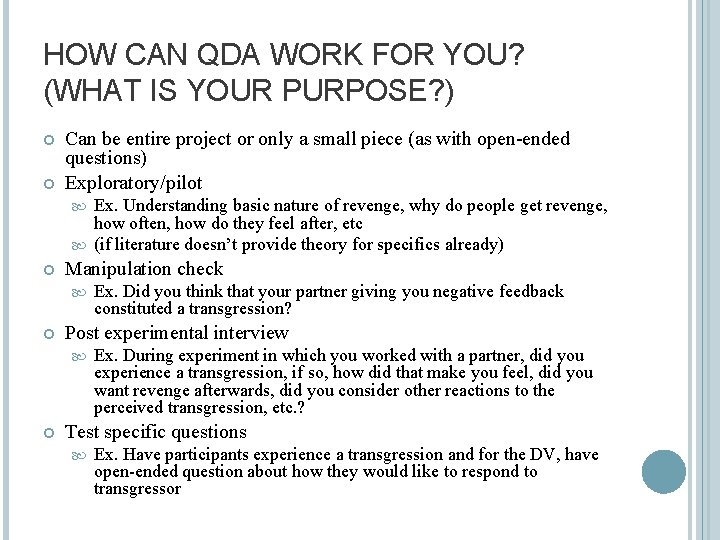

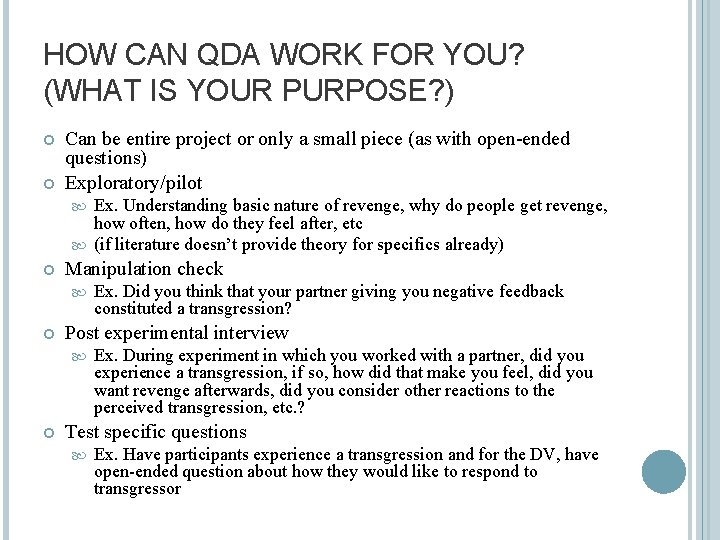

HOW CAN QDA WORK FOR YOU? (WHAT IS YOUR PURPOSE?)
- Can be the entire project or only a small piece (as with open-ended questions)
- Exploratory/pilot
  - Ex. Understanding the basic nature of revenge: why people seek revenge, how often, how they feel afterward, etc. (if the literature doesn’t already provide theory for the specifics)
- Manipulation check
  - Ex. Did you think that your partner giving you negative feedback constituted a transgression?
- Post-experimental interview
  - Ex. During the experiment in which you worked with a partner, did you experience a transgression? If so, how did that make you feel? Did you want revenge afterward? Did you consider other reactions to the perceived transgression?
- Test specific questions
  - Ex. Have participants experience a transgression and, for the DV, ask an open-ended question about how they would like to respond to the transgressor

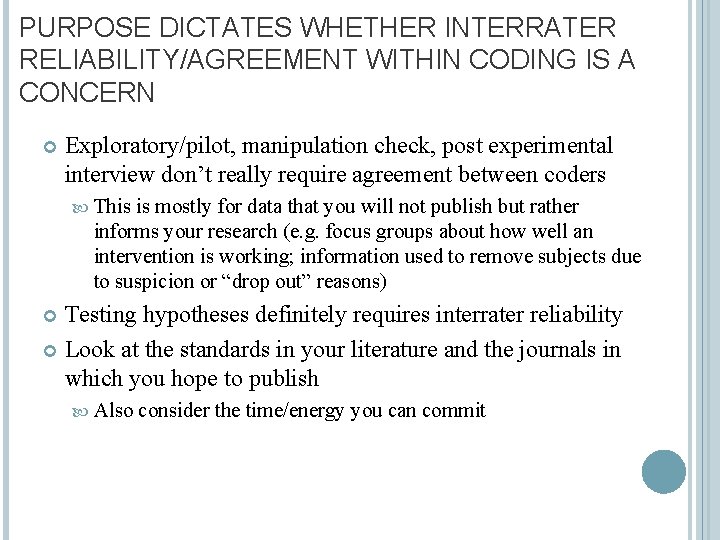

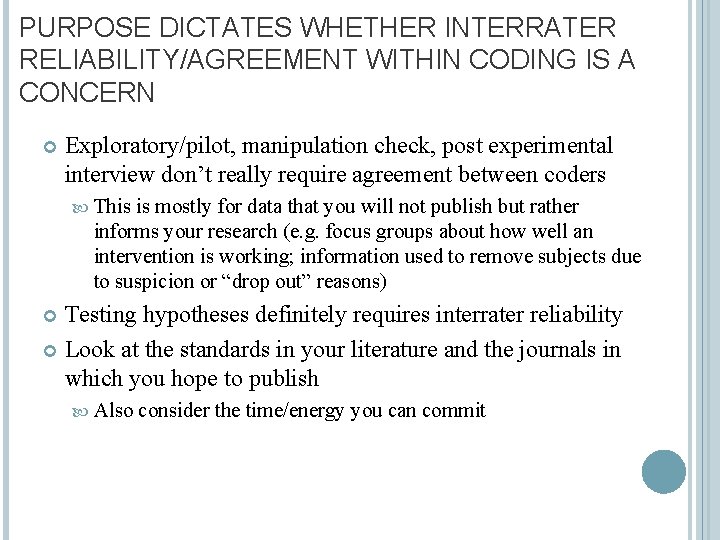

PURPOSE DICTATES WHETHER INTERRATER RELIABILITY/AGREEMENT WITHIN CODING IS A CONCERN
- Exploratory/pilot, manipulation check, and post-experimental interview data don’t really require agreement between coders
  - These are mostly data that you will not publish but that inform your research (e.g., focus groups about how well an intervention is working; information used to remove participants due to suspicion or “drop-out” reasons)
- Testing hypotheses definitely requires interrater reliability
  - Look at the standards in your literature and in the journals in which you hope to publish
  - Also consider the time/energy you can commit

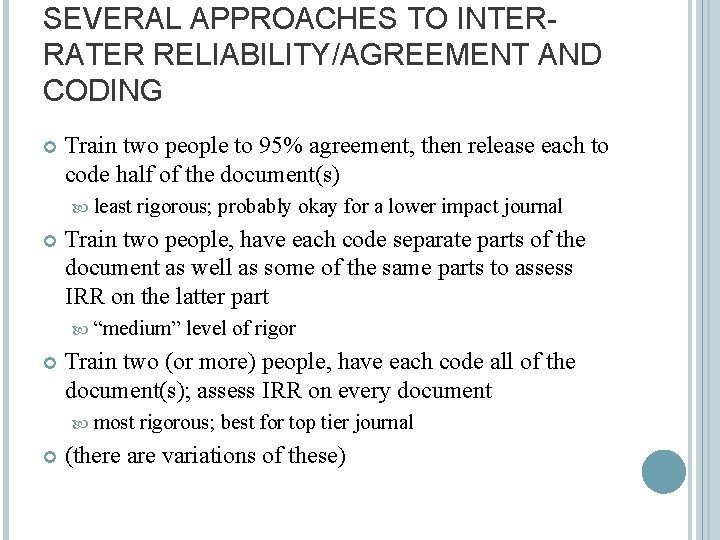

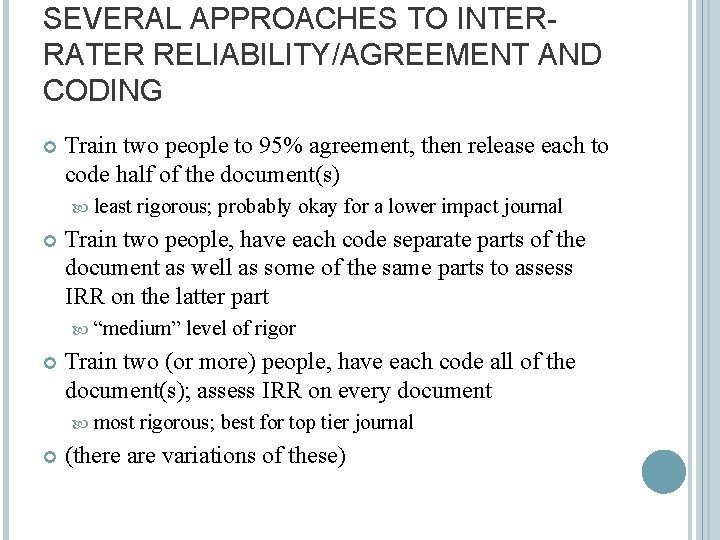

SEVERAL APPROACHES TO INTERRATER RELIABILITY/AGREEMENT AND CODING
- Train two people to 95% agreement, then release each to code half of the document(s)
  - Least rigorous; probably okay for a lower-impact journal
- Train two people, and have each code separate parts of the document as well as some of the same parts; assess IRR on the shared parts
  - “Medium” rigor
- Train two (or more) people, and have each code all of the document(s); assess IRR on every document
  - Most rigorous; best for a top-tier journal
(There are variations of these.)
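The “medium” design above can be sketched in Python as follows. The sketch assumes each document has an ID and that a randomly chosen fraction of documents is double-coded so IRR can be computed on that shared set. The function name `assign_documents` and the 20% default overlap are illustrative assumptions, not a published standard; use whatever overlap your literature expects.

```python
import random


def assign_documents(doc_ids, overlap_fraction=0.2, seed=0):
    """Hypothetical helper for the "medium" design: a random shared subset is
    coded by both coders (used to compute IRR), and the remaining documents
    are split between the two coders.

    overlap_fraction is an illustrative default, not a published standard.
    """
    docs = list(doc_ids)
    random.Random(seed).shuffle(docs)  # fixed seed so the assignment is reproducible
    n_shared = round(len(docs) * overlap_fraction)
    shared, rest = docs[:n_shared], docs[n_shared:]
    half = len(rest) // 2
    coder_a = shared + rest[:half]
    coder_b = shared + rest[half:]
    return shared, coder_a, coder_b


documents = [f"interview_{i:02d}" for i in range(10)]
shared, coder_a, coder_b = assign_documents(documents)
print(len(shared), len(coder_a), len(coder_b))  # 2 6 6
```

With `overlap_fraction=1.0` both coders receive every document (the most rigorous design); with `overlap_fraction=0.0` the documents are simply split in half (the least rigorous design, after training to criterion).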

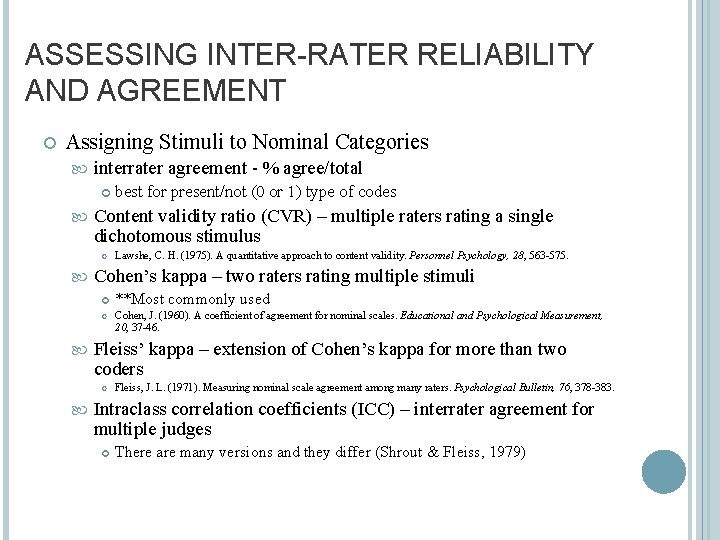

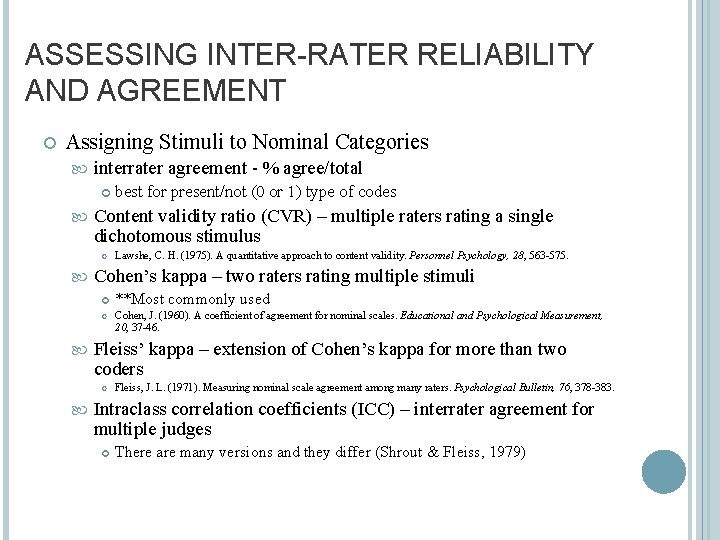

ASSESSING INTER-RATER RELIABILITY AND AGREEMENT
Assigning Stimuli to Nominal Categories
- Interrater agreement: % agreement = number of agreements / total number of stimuli coded
- Content validity ratio (CVR): multiple raters rating a single dichotomous stimulus
  - Lawshe, C. H. (1975). A quantitative approach to content validity. Personnel Psychology, 28, 563-575.
- Cohen’s kappa (most commonly used): two raters rating multiple stimuli; best for present/absent (0 or 1) types of codes
  - Cohen, J. (1960). A coefficient of agreement for nominal scales. Educational and Psychological Measurement, 20, 37-46.
- Fleiss’ kappa: extension of Cohen’s kappa to more than two coders
  - Fleiss, J. L. (1971). Measuring nominal scale agreement among many raters. Psychological Bulletin, 76, 378-383.
- Intraclass correlation coefficients (ICC): interrater agreement for multiple judges
  - There are many versions, and they differ (Shrout & Fleiss, 1979)
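A minimal Python sketch of the nominal-category indices listed above, using their standard formulas: percent agreement; Cohen’s kappa, (p_o - p_e) / (1 - p_e), where p_e is chance agreement from each coder’s marginal proportions; Fleiss’ kappa for more than two coders; and Lawshe’s CVR, (n_e - N/2) / (N/2). The function names and input layouts (one code per stimulus per coder; a stimulus-by-category count table for Fleiss’ kappa) are assumptions made for illustration, and the coder data are hypothetical.

```python
from collections import Counter


def percent_agreement(codes_a, codes_b):
    """Share of stimuli on which two coders assigned the same category."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("need two non-empty code lists of equal length")
    agreements = sum(a == b for a, b in zip(codes_a, codes_b))
    return agreements / len(codes_a)


def cohens_kappa(codes_a, codes_b):
    """Cohen's (1960) kappa for two coders: (p_o - p_e) / (1 - p_e)."""
    n = len(codes_a)
    p_observed = percent_agreement(codes_a, codes_b)
    count_a, count_b = Counter(codes_a), Counter(codes_b)
    # Chance agreement: product of the coders' marginal proportions, summed over categories.
    p_expected = sum((count_a[c] / n) * (count_b[c] / n) for c in set(count_a) | set(count_b))
    if p_expected == 1:
        raise ValueError("kappa is undefined when both coders use one identical category")
    return (p_observed - p_expected) / (1 - p_expected)


def fleiss_kappa(counts):
    """Fleiss' (1971) kappa. counts[i][j] = number of coders who placed
    stimulus i in category j; every stimulus needs the same number of coders."""
    n_stimuli = len(counts)
    n_coders = sum(counts[0])
    if n_coders < 2 or any(sum(row) != n_coders for row in counts):
        raise ValueError("each stimulus must be rated by the same number (>= 2) of coders")
    # Per-stimulus agreement: share of coder pairs that agree on that stimulus.
    p_i = [(sum(x * x for x in row) - n_coders) / (n_coders * (n_coders - 1)) for row in counts]
    p_bar = sum(p_i) / n_stimuli
    # Overall proportion of all assignments that fall in each category.
    p_j = [sum(row[j] for row in counts) / (n_stimuli * n_coders) for j in range(len(counts[0]))]
    p_e = sum(p * p for p in p_j)
    if p_e == 1:
        raise ValueError("kappa is undefined when every assignment is in one category")
    return (p_bar - p_e) / (1 - p_e)


def content_validity_ratio(n_essential, n_raters):
    """Lawshe's (1975) CVR for one item: (n_e - N/2) / (N/2)."""
    half = n_raters / 2
    return (n_essential - half) / half


coder_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]  # hypothetical present (1) / absent (0) codes
coder_2 = [1, 0, 0, 0, 1, 0, 1, 1, 1, 0]
print(percent_agreement(coder_1, coder_2))  # 0.8
print(round(cohens_kappa(coder_1, coder_2), 3))  # 0.6
```

For reported analyses, results can be cross-checked against `sklearn.metrics.cohen_kappa_score` and `statsmodels.stats.inter_rater.fleiss_kappa`. ICCs are left out here because, as noted above, the many versions differ.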

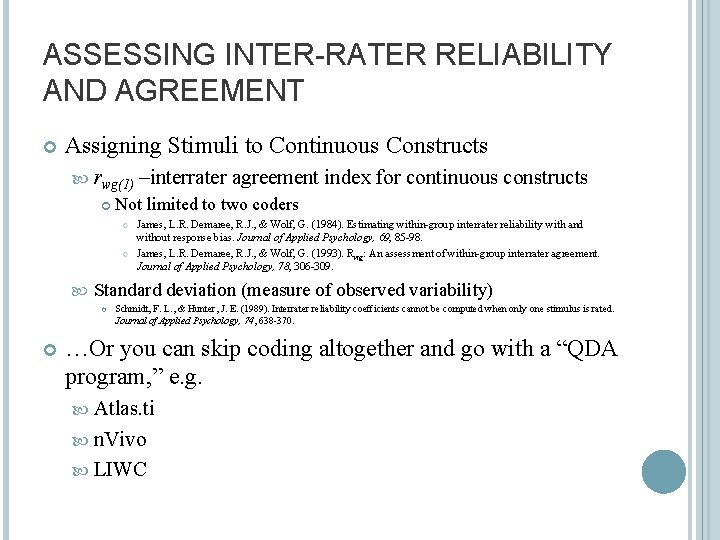

ASSESSING INTER-RATER RELIABILITY AND AGREEMENT
Assigning Stimuli to Continuous Constructs
- rwg(1): not limited to two coders
  - James, L. R., Demaree, R. J., & Wolf, G. (1984). Estimating within-group interrater reliability with and without response bias. Journal of Applied Psychology, 69, 85-98.
  - James, L. R., Demaree, R. J., & Wolf, G. (1993). rwg: An assessment of within-group interrater agreement. Journal of Applied Psychology, 78, 306-309.
- Standard deviation (a measure of observed variability): interrater agreement index for continuous constructs
  - Schmidt, F. L., & Hunter, J. E. (1989). Interrater reliability coefficients cannot be computed when only one stimulus is rated. Journal of Applied Psychology, 74, 368-370.
- …Or you can skip coding altogether and go with a “QDA program,” e.g., Atlas.ti, NVivo, LIWC
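The two continuous-construct indices above can be sketched in Python as follows. rwg(1) compares the observed variance of several judges’ ratings of one stimulus with the variance expected if they had responded at random. This sketch assumes a uniform (“rectangular”) null distribution, whose variance for an A-point scale is (A² - 1) / 12. Other null distributions are possible, which is what the “with and without response bias” in James et al. (1984) refers to. The function names are hypothetical.

```python
from statistics import stdev, variance


def rwg_single_item(ratings, n_scale_points):
    """rwg(1) (James, Demaree, & Wolf, 1984) for one stimulus rated by two or
    more judges on a single item with an A-point response scale.

    Observed (sample) variance is compared with the variance expected under
    an assumed uniform null distribution: (A**2 - 1) / 12.
    """
    if len(ratings) < 2:
        raise ValueError("need ratings from at least two judges")
    expected_variance = (n_scale_points ** 2 - 1) / 12
    return 1 - variance(ratings) / expected_variance


def sd_agreement(ratings):
    """Standard deviation of the judges' ratings: smaller means more agreement."""
    return stdev(ratings)


judges = [4, 4, 5, 4, 5]  # hypothetical ratings of one stimulus on a 5-point scale
print(round(rwg_single_item(judges, 5), 2))  # 0.85
print(round(sd_agreement(judges), 2))  # 0.55
```

rwg(1) equals 1 when all judges give the same rating. It can fall below 0 when judges disagree more than the uniform null predicts.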

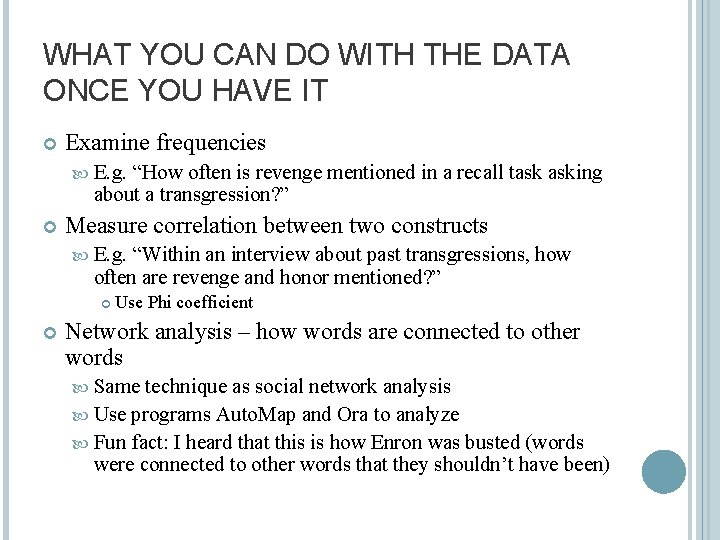

WHAT YOU CAN DO WITH THE DATA ONCE YOU HAVE IT
- Examine frequencies
  - E.g., “How often is revenge mentioned in a recall task asking about a transgression?”
- Measure the correlation between two constructs
  - E.g., “Within an interview about past transgressions, how often are revenge and honor mentioned together?”
  - Use the phi coefficient
- Network analysis: how words are connected to other words
  - Same technique as social network analysis
  - Use the programs AutoMap and ORA to analyze
  - Fun fact: I heard that this is how Enron was busted (words were connected to other words that they shouldn’t have been)
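Once each transcript has been coded for the presence or absence of each construct, the three analyses above can be sketched in Python. The data layout (one set of codes per transcript) and the function names are assumptions. The co-occurrence count is a simple document-level stand-in for network analysis. It is not a description of how AutoMap or ORA build their networks.

```python
from collections import Counter
from itertools import combinations
from math import sqrt

# Hypothetical coded data: one set of codes per transcript or recall response.
transcripts = [
    {"revenge", "honor"},
    {"revenge"},
    {"honor", "revenge"},
    {"forgiveness"},
]


def code_frequencies(docs):
    """Number of documents in which each code appears at least once."""
    return Counter(code for doc in docs for code in set(doc))


def phi_coefficient(docs, code_x, code_y):
    """Phi for two present/absent codes, from the 2x2 table over documents."""
    n11 = sum(code_x in d and code_y in d for d in docs)
    n10 = sum(code_x in d and code_y not in d for d in docs)
    n01 = sum(code_x not in d and code_y in d for d in docs)
    n00 = sum(code_x not in d and code_y not in d for d in docs)
    denominator = sqrt((n11 + n10) * (n01 + n00) * (n11 + n01) * (n10 + n00))
    if denominator == 0:
        raise ValueError("phi is undefined when a code is present in all or no documents")
    return (n11 * n00 - n10 * n01) / denominator


def cooccurrence_edges(docs):
    """Weighted edge list: number of documents containing each pair of codes."""
    edges = Counter()
    for doc in docs:
        for pair in combinations(sorted(set(doc)), 2):
            edges[pair] += 1
    return edges


print(code_frequencies(transcripts)["revenge"])  # 3
print(round(phi_coefficient(transcripts, "revenge", "honor"), 3))  # 0.577
print(cooccurrence_edges(transcripts)[("honor", "revenge")])  # 2
```

The edge list can be exported to a dedicated network-analysis tool, where the codes become nodes and the counts become edge weights.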

CONCLUSIONS
- Both qualitative and quantitative research can have the same methodological rigor (and the same assumptions, purpose, approach, researcher role/objectivity, etc.)
- What you do differently (or the same) depends on your purpose and research question
- Qualitative data collection and analysis are for everyone!
  - Improves research by making it richer/stronger
  - Can be used in the field and in the lab, regardless of area/topic

IMPORTANT POINTS FROM DISCUSSION
- Interrater reliability should be assessed at the level at which you draw your conclusions
- You can/should assess coder “drifting,” which can occur:
  - If one coder drifts from the manual and from the other coders
  - If two coders are reliable with each other but drift from the manual
- Qualitative data can provide compelling examples (e.g., quotes or video) for your papers/talks
- You can/should publish your coding manual so that other researchers in your field understand your criteria for different constructs
  - This may alleviate simple interpretation disagreements
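One way to check for both kinds of drift is to have coders periodically code a batch that has also been coded strictly from the manual (a hypothetical “master key”). You then compare each coder with the key and with each other. The Python sketch below assumes that workflow. The batch layout, the function names, and the 0.80 threshold are illustrative assumptions, and percent agreement could be swapped for kappa.

```python
from itertools import combinations


def agreement(codes_a, codes_b):
    """Percent agreement between two equal-length lists of codes."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("need two non-empty code lists of equal length")
    return sum(a == b for a, b in zip(codes_a, codes_b)) / len(codes_a)


def drift_report(batches, threshold=0.80):
    """For each batch, compare every coder with the master key and with each other.

    batches: list of {"key": [...], "coders": {name: [...]}}, where "key" is a
    hypothetical master coding made by following the coding manual.
    threshold: illustrative cut-off, not a published standard.
    """
    report = []
    for index, batch in enumerate(batches):
        key, coders = batch["key"], batch["coders"]
        vs_key = {name: agreement(codes, key) for name, codes in coders.items()}
        vs_each_other = {
            (a, b): agreement(coders[a], coders[b])
            for a, b in combinations(sorted(coders), 2)
        }
        # Low agreement with the key signals drift from the manual,
        # even when the coders still agree with each other.
        flags = [f"{name} drifting from manual" for name, value in vs_key.items() if value < threshold]
        flags += [f"{a} and {b} disagree" for (a, b), value in vs_each_other.items() if value < threshold]
        report.append({"batch": index, "vs_key": vs_key, "vs_each_other": vs_each_other, "flags": flags})
    return report


manual_key = [1, 0, 1, 0, 1]
batches = [
    {"key": manual_key, "coders": {"A": [1, 0, 1, 0, 1], "B": [1, 0, 1, 0, 1]}},
    # Later batch: A and B still agree with each other but have both drifted from the manual.
    {"key": manual_key, "coders": {"A": [0, 1, 1, 0, 0], "B": [0, 1, 1, 0, 0]}},
]
for entry in drift_report(batches):
    print(entry["batch"], entry["flags"])
```

The second batch illustrates the case that agreement between coders alone would miss: their IRR is perfect, yet both are flagged against the manual.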