CNN / AlexNet

Sungjoon Choi

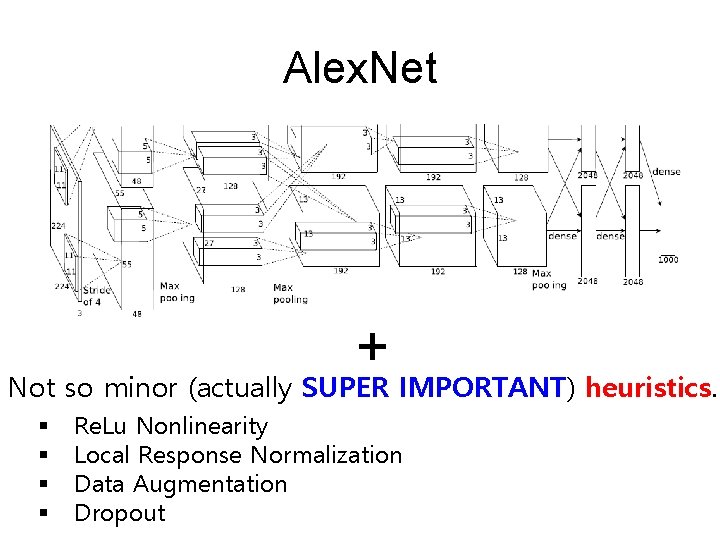

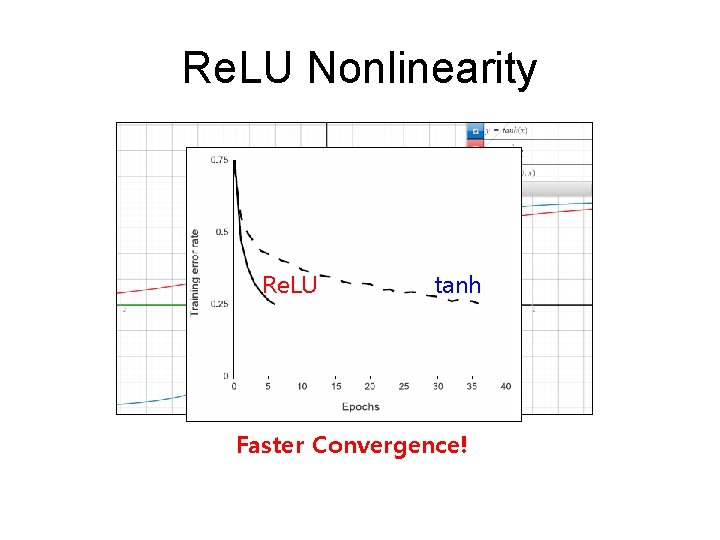

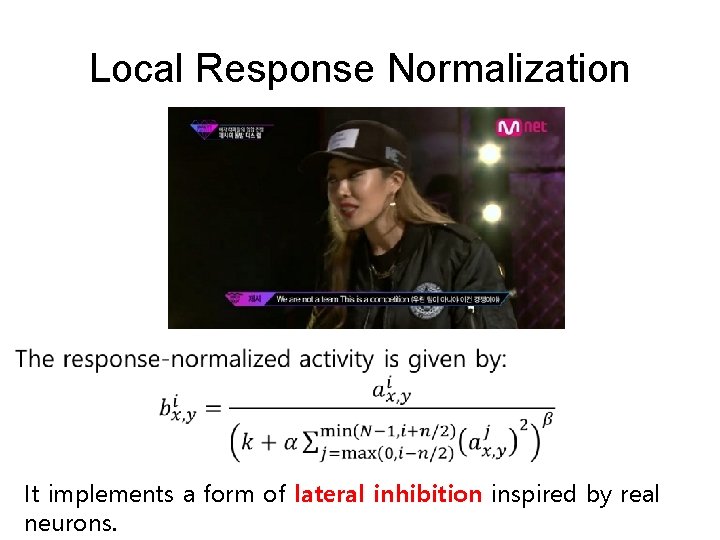

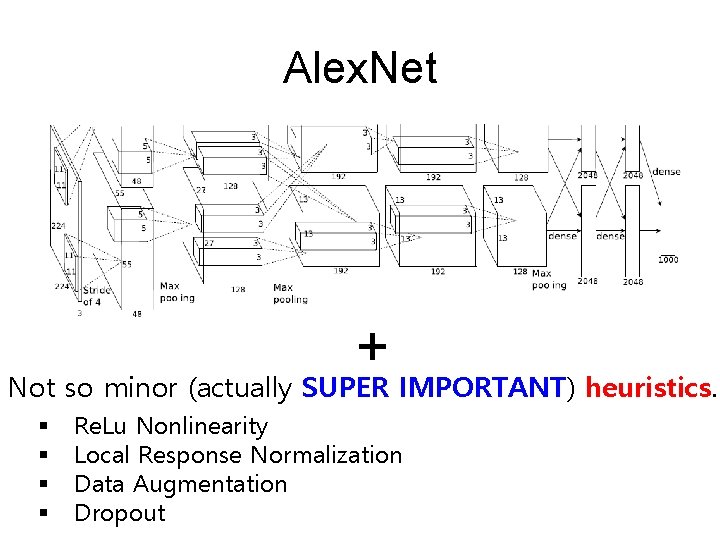

AlexNet

Not-so-minor (actually SUPER IMPORTANT) heuristics:

- ReLU nonlinearity
- Local response normalization
- Data augmentation
- Dropout

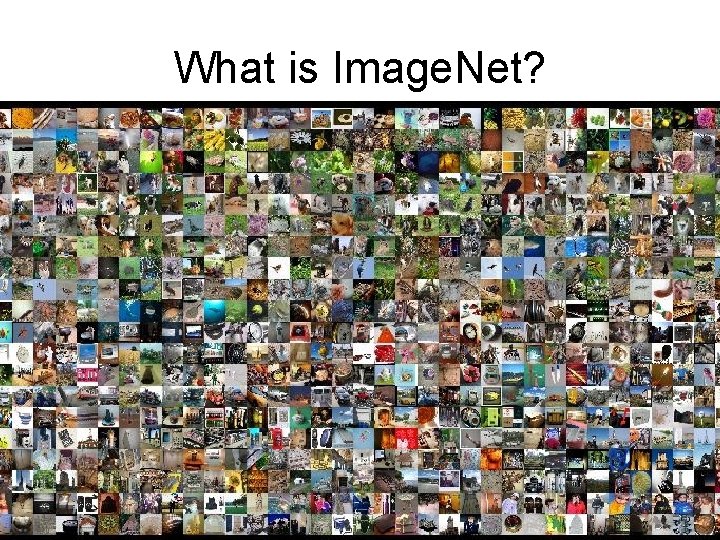

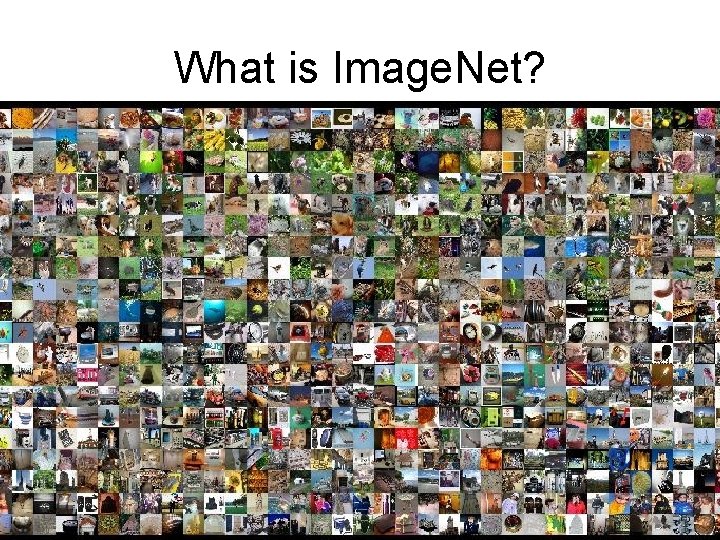

What is ImageNet?

ILSVRC 2010: ImageNet Large Scale Visual Recognition Challenge (ILSVRC). It uses a subset of ImageNet with roughly 1M images in 1K categories.

Are these all just cats? (Of course, some are super cute!) These are all different categories! (Egyptian, Persian, Tiger, Siamese, and Tabby cat)

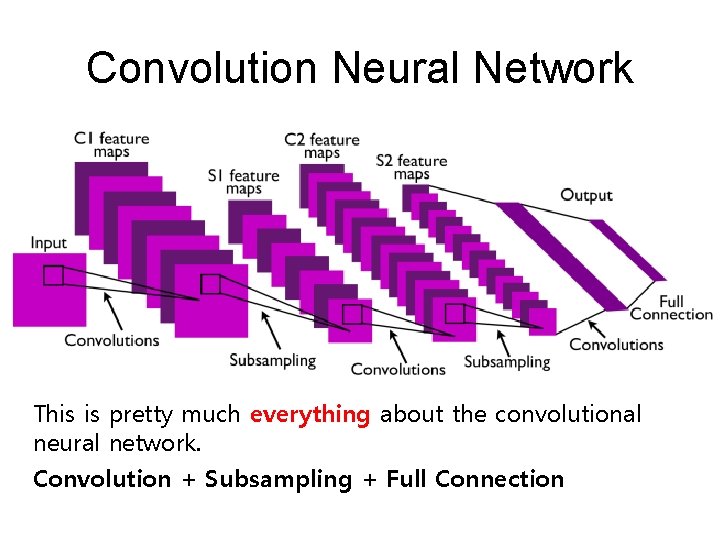

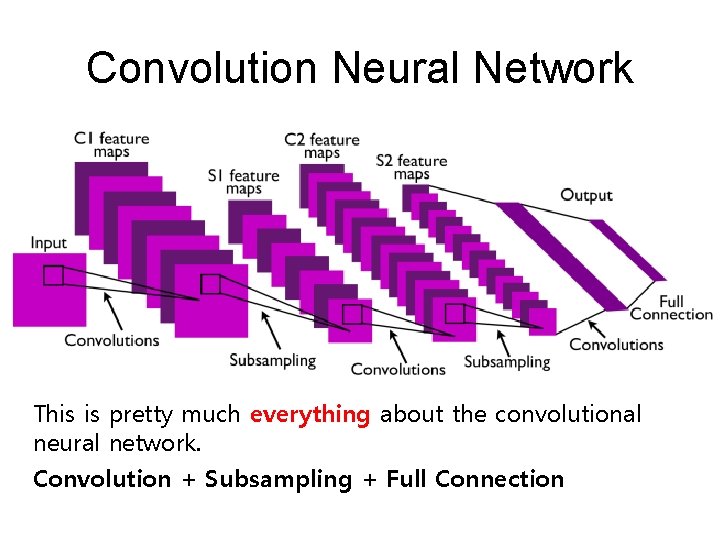

Convolutional Neural Network

This is pretty much everything about the convolutional neural network: Convolution + Subsampling + Full Connection

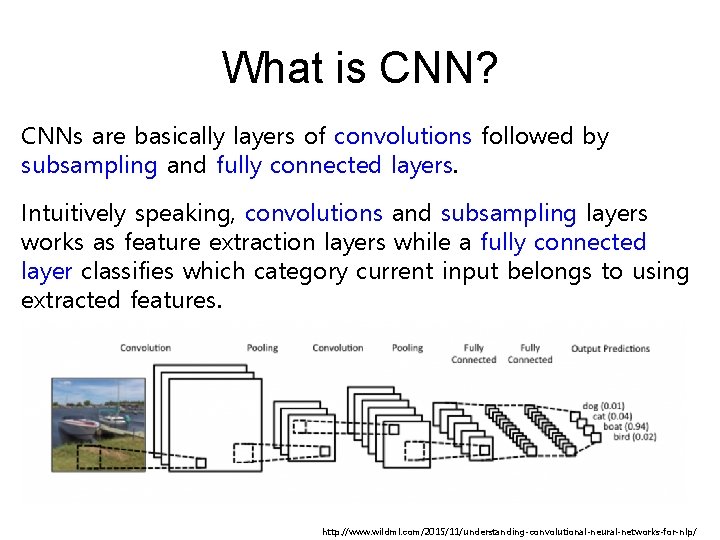

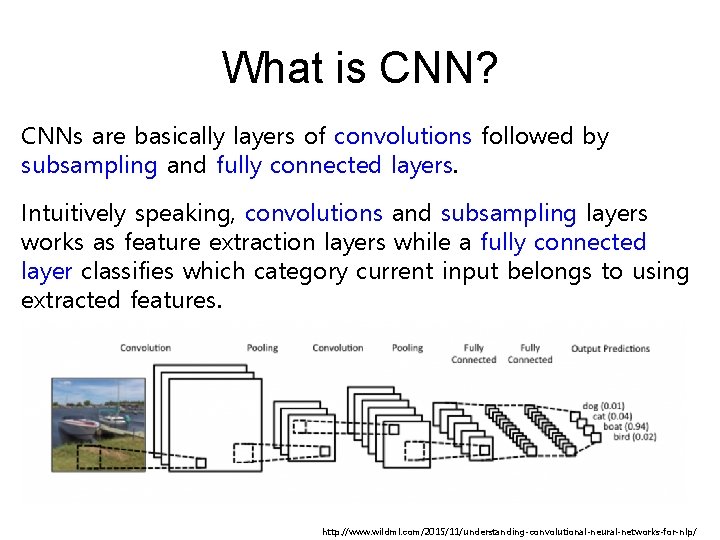

What is CNN?

CNNs are basically layers of convolutions followed by subsampling and fully connected layers. Intuitively speaking, the convolution and subsampling layers work as feature extraction layers, while a fully connected layer uses the extracted features to classify which category the current input belongs to.

http://www.wildml.com/2015/11/understanding-convolutional-neural-networks-for-nlp/

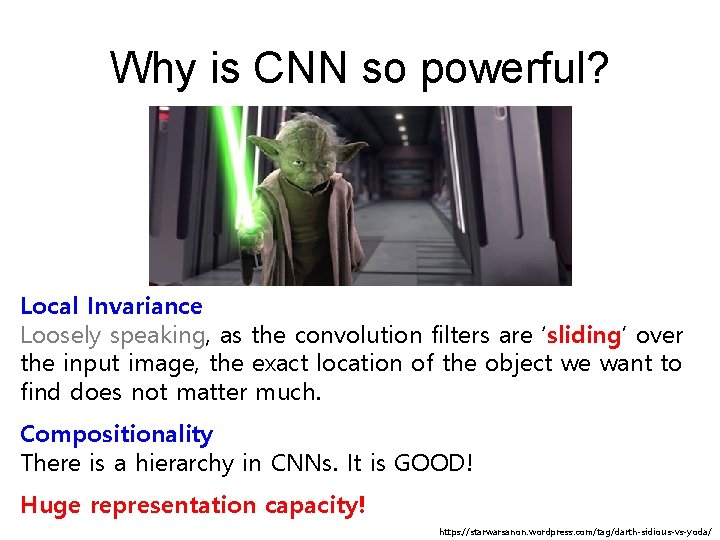

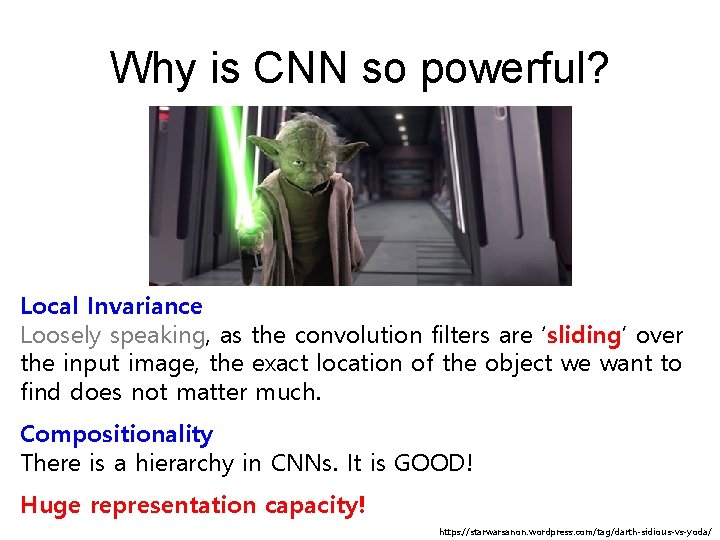

Why is CNN so powerful?

- Local invariance: Loosely speaking, as the convolution filters are 'sliding' over the input image, the exact location of the object we want to find does not matter much.
- Compositionality: There is a hierarchy in CNNs. It is GOOD! Huge representation capacity!

https://starwarsanon.wordpress.com/tag/darth-sidious-vs-yoda/

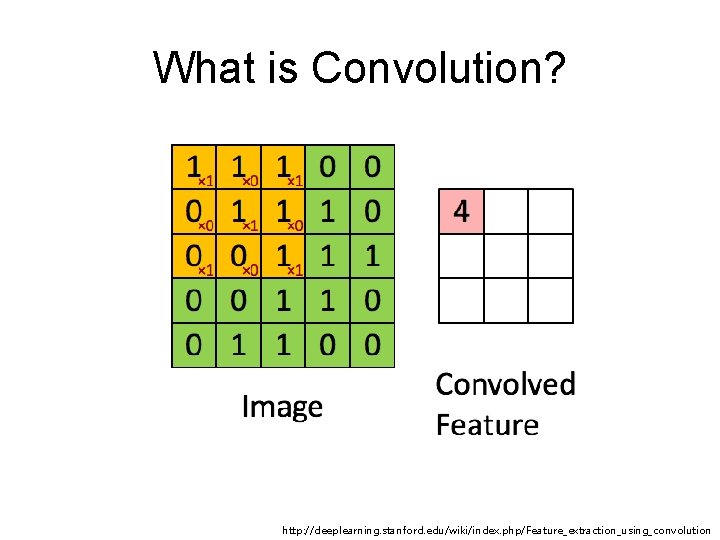

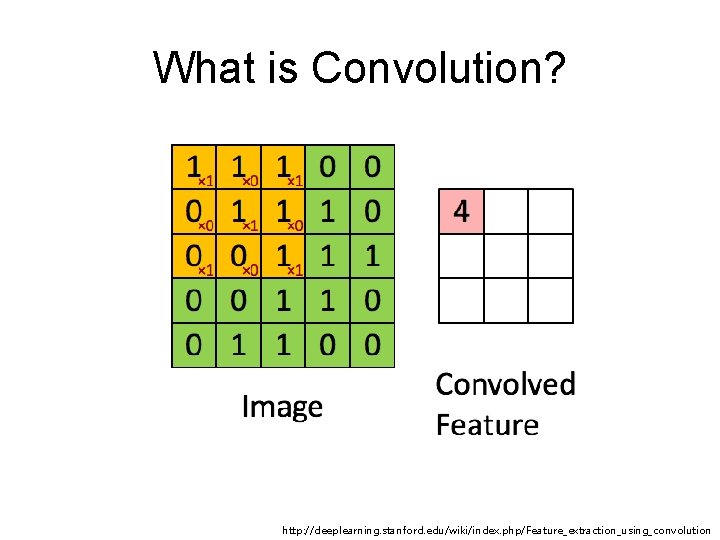

What is Convolution?

http://deeplearning.stanford.edu/wiki/index.php/Feature_extraction_using_convolution

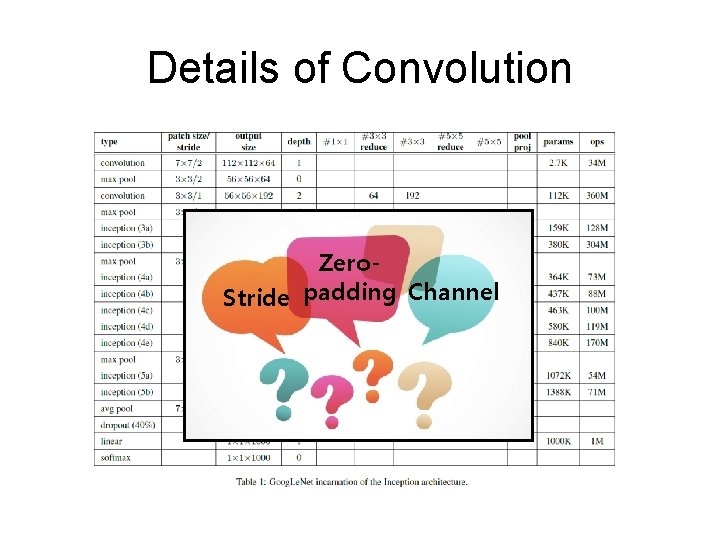

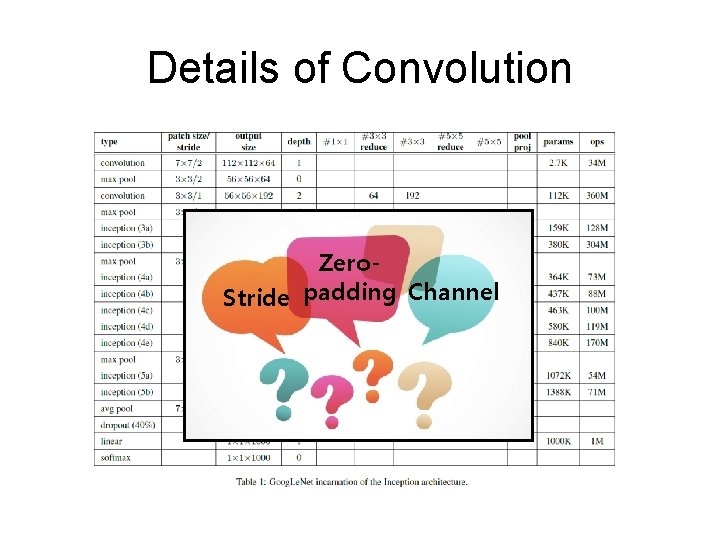

Details of Convolution

- Zero-padding
- Stride
- Channel

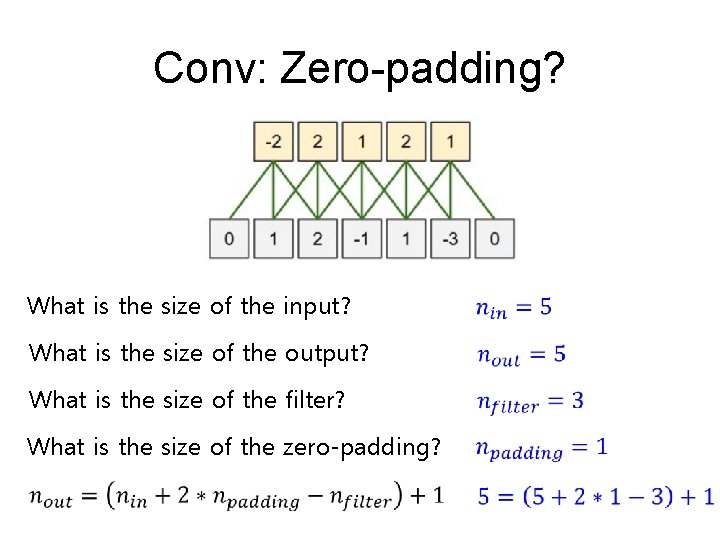

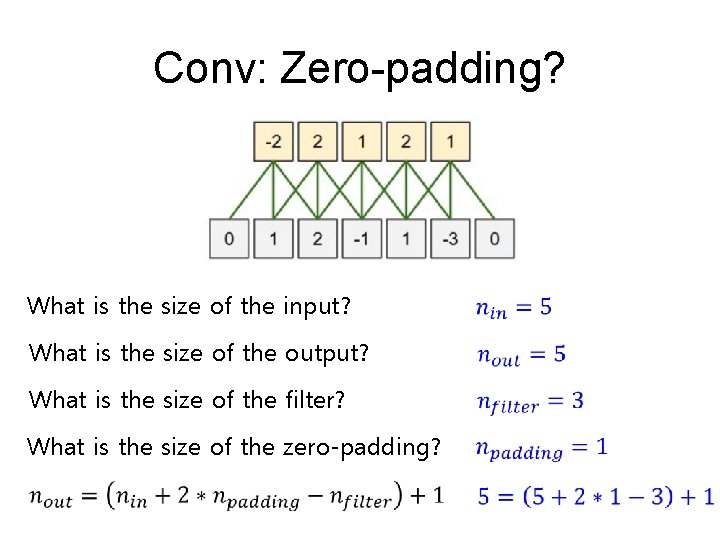

Conv: Zero-padding?

- What is the size of the input?
- What is the size of the output?
- What is the size of the filter?
- What is the size of the zero-padding?
- Stride?

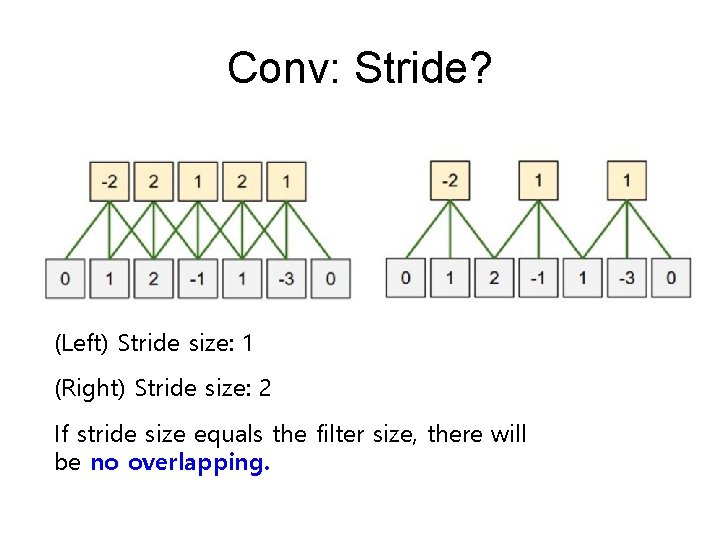

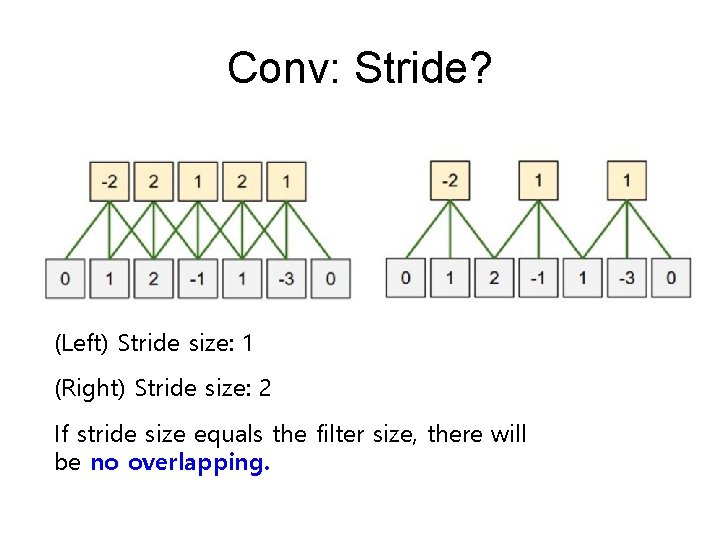

Conv: Stride?

(Left) Stride size: 1. (Right) Stride size: 2. If the stride size equals the filter size, the filter positions do not overlap.
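The questions about input, output, filter, zero-padding, and stride sizes are tied together by one standard relation along each spatial dimension: output = floor((input + 2 * padding - filter) / stride) + 1. The helper below is only a sketch of that relation; the function name `conv_output_size` and the example numbers are illustrative and not taken from the slides.

```python
def conv_output_size(input_size, filter_size, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension.

    `padding` is the number of zeros added on EACH side of the input.
    """
    span = input_size + 2 * padding - filter_size
    if span < 0:
        raise ValueError("filter is larger than the padded input")
    return span // stride + 1


# Illustrative values (hypothetical, chosen for this sketch):
print(conv_output_size(4, 3))             # no padding, stride 1 -> 2
print(conv_output_size(4, 3, padding=1))  # one zero on each side -> 4
print(conv_output_size(4, 2, stride=2))   # stride == filter size -> 2
```

In the last call the stride equals the filter size, so the two filter positions cover disjoint parts of the input.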

![Conv: Channel](https://slidetodoc.com/presentation_image_h/2e2052f57823a2d32575976fffbb6785/image-13.jpg)

Conv: Channel

- Input: [batch, in_height, in_width, in_channels]
- Filter: [filter_height, filter_width, in_channels, out_channels]
- Example input: [batch, in_height=4, in_width=4, in_channels=3]
- Example filter: [filter_height=3, filter_width=3, in_channels=3, out_channels=7]

![Conv: Channel, number of parameters](https://slidetodoc.com/presentation_image_h/2e2052f57823a2d32575976fffbb6785/image-14.jpg)

Conv: Channel

- Input: [batch, in_height=4, in_width=4, in_channels=3]
- Filter: [filter_height=3, filter_width=3, in_channels=3, out_channels=7]

What is the number of parameters in this convolution layer?
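One way to check the answer is to build tensors with exactly these shapes and count the filter entries. The sketch below uses a naive NumPy convolution (strictly a cross-correlation, as is usual in deep learning) with stride 1 and no zero-padding; both of those settings, and the helper name `conv2d_valid`, are assumptions made for illustration. Biases are not counted here; if the layer has one bias per output channel, add 7.

```python
import numpy as np


def conv2d_valid(x, w):
    """Naive convolution with stride 1 and no zero-padding.

    x: [batch, in_height, in_width, in_channels]
    w: [filter_height, filter_width, in_channels, out_channels]
    """
    batch, in_h, in_w, in_c = x.shape
    f_h, f_w, w_in_c, out_c = w.shape
    assert in_c == w_in_c, "input and filter channel counts must match"
    out_h, out_w = in_h - f_h + 1, in_w - f_w + 1
    out = np.zeros((batch, out_h, out_w, out_c))
    for i in range(out_h):
        for j in range(out_w):
            # Patch under the filter: [batch, f_h, f_w, in_c]
            patch = x[:, i:i + f_h, j:j + f_w, :]
            # Sum over height, width, and input channels -> [batch, out_c]
            out[:, i, j, :] = np.tensordot(patch, w, axes=([1, 2, 3], [0, 1, 2]))
    return out


x = np.random.rand(1, 4, 4, 3)   # [batch, in_height=4, in_width=4, in_channels=3]
w = np.random.rand(3, 3, 3, 7)   # [filter_height=3, filter_width=3, in_channels=3, out_channels=7]
y = conv2d_valid(x, w)

num_weights = w.size             # 3 * 3 * 3 * 7
print(y.shape)                   # (1, 2, 2, 7)
print(num_weights)               # 189
```

The count depends only on the filter shape, not on the input height or width: the same 189 weights are reused at every spatial position.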

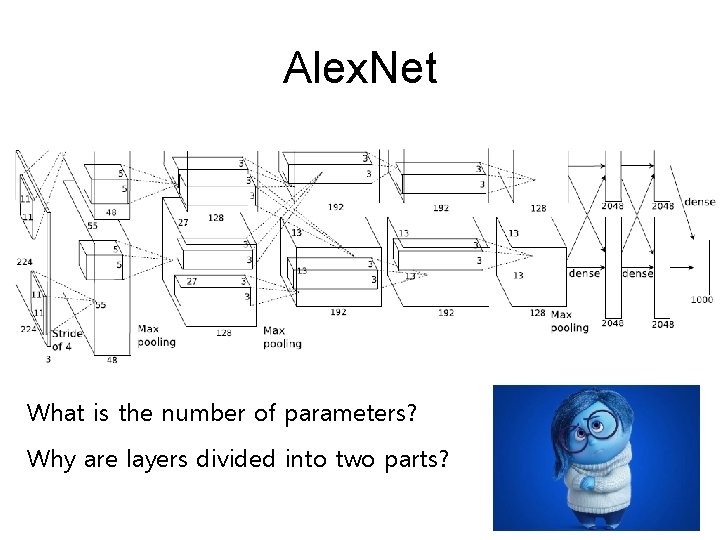

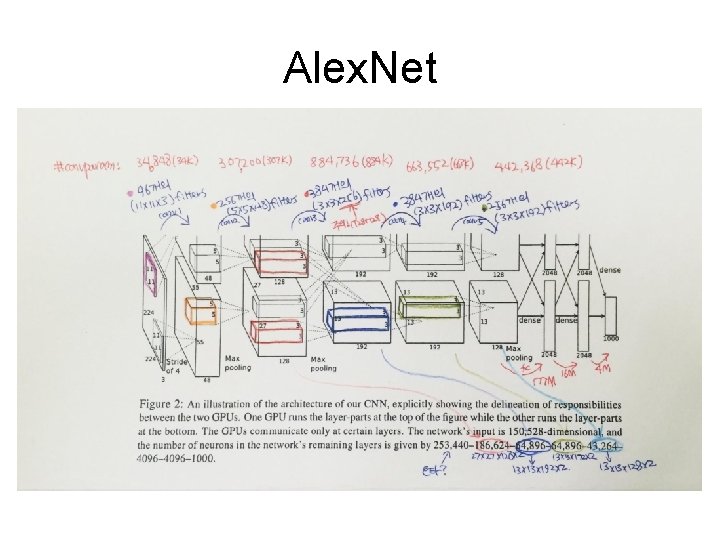

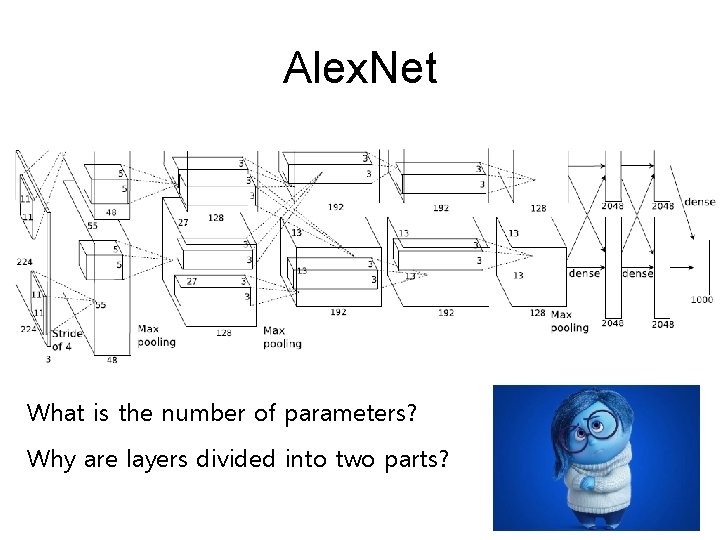

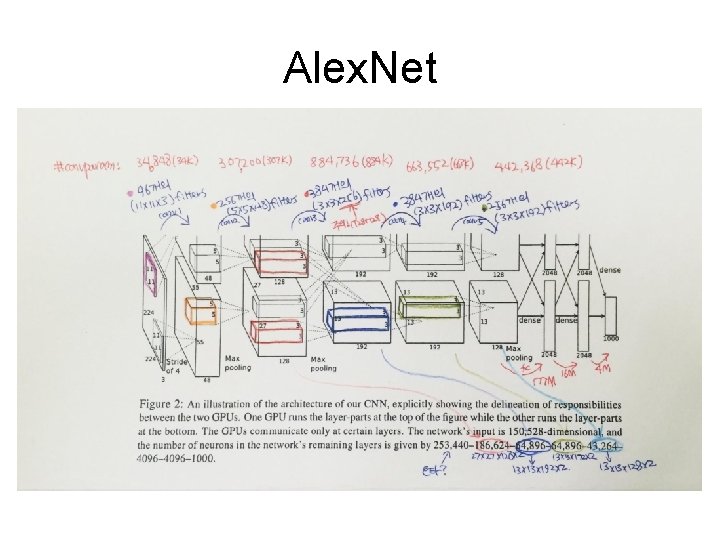

AlexNet

- What is the number of parameters?
- Why are layers divided into two parts?

AlexNet

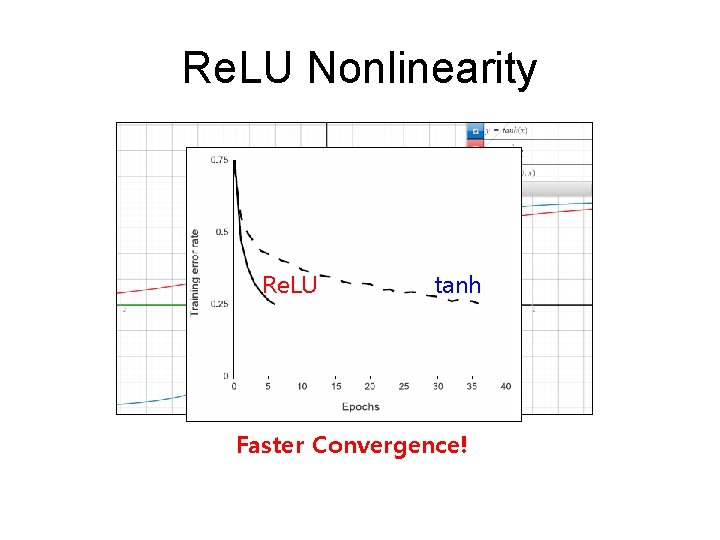

ReLU Nonlinearity

ReLU vs. tanh: faster convergence!
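A commonly cited reason for the faster convergence is that ReLU does not saturate for positive inputs, while tanh does. The sketch below compares the two derivatives at a few hand-picked inputs; the input values are arbitrary, and the snippet only illustrates saturation, not a training-speed measurement.

```python
import numpy as np


def relu(x):
    """ReLU: max(0, x), applied element-wise."""
    return np.maximum(0.0, x)


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return (x > 0).astype(float)


def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2, which shrinks toward 0 as |x| grows."""
    return 1.0 - np.tanh(x) ** 2


x = np.array([-4.0, -1.0, 0.5, 4.0])
print("relu      :", relu(x))
print("relu grad :", relu_grad(x))
print("tanh grad :", tanh_grad(x))
```

For the large positive input the ReLU gradient stays at 1, whereas the tanh gradient is close to zero, so less error signal flows back through a saturated tanh unit.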

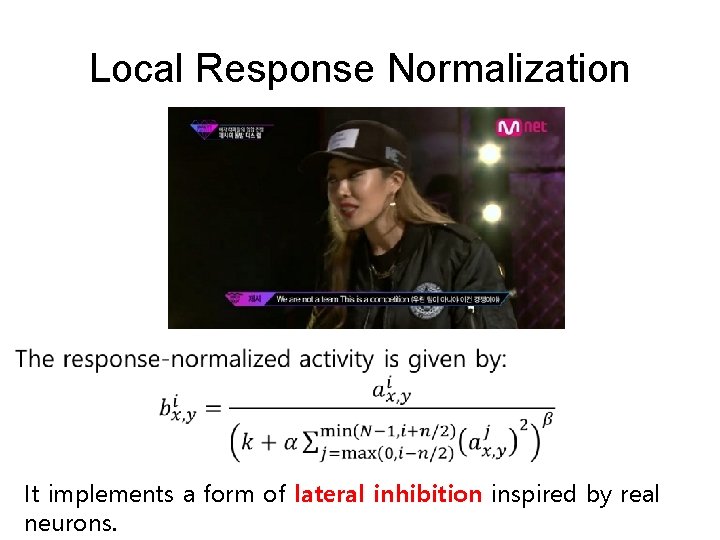

Local Response Normalization

It implements a form of lateral inhibition inspired by real neurons.
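As a rough sketch of what this looks like in code: each activation is divided by a term that grows with the squared activations of neighboring channels at the same spatial position, so a strong response suppresses its neighbors. The defaults below (n=5, k=2, alpha=1e-4, beta=0.75) are the values reported in the AlexNet paper; the function name and the [height, width, channels] layout are choices made for this illustration.

```python
import numpy as np


def local_response_norm(a, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Normalize each channel by its n neighboring channels.

    a: activations with shape [height, width, channels]
    b[..., i] = a[..., i] / (k + alpha * sum_j a[..., j]^2) ** beta,
    where j runs over the channels within n // 2 of channel i.
    """
    num_channels = a.shape[-1]
    squared = a ** 2
    b = np.empty_like(a, dtype=float)
    half = n // 2
    for i in range(num_channels):
        lo = max(0, i - half)                 # clip the window at the first channel
        hi = min(num_channels - 1, i + half)  # clip the window at the last channel
        denom = (k + alpha * squared[..., lo:hi + 1].sum(axis=-1)) ** beta
        b[..., i] = a[..., i] / denom
    return b


a = np.random.rand(6, 6, 8)
b = local_response_norm(a)
print(b.shape)  # (6, 6, 8)
```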

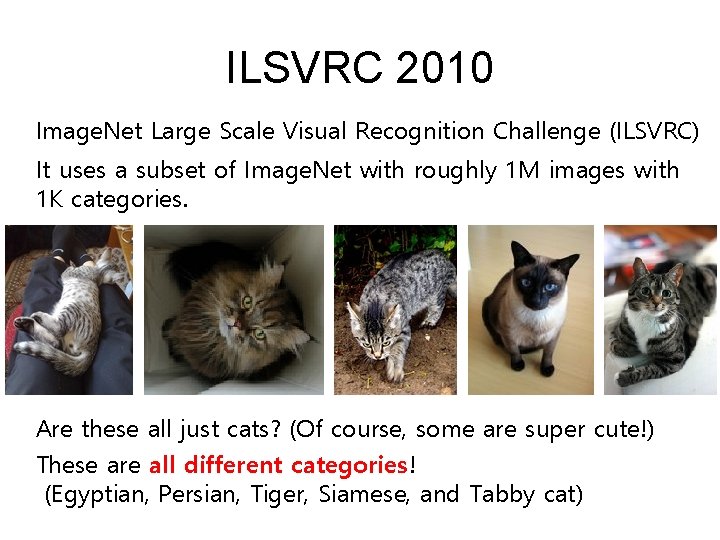

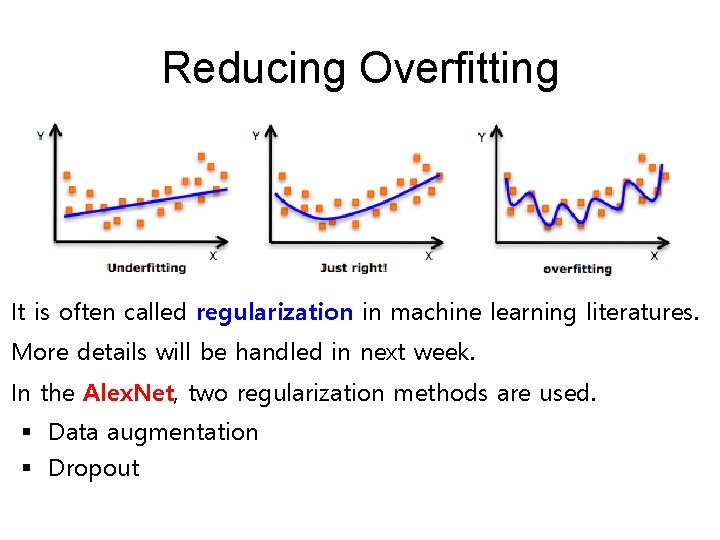

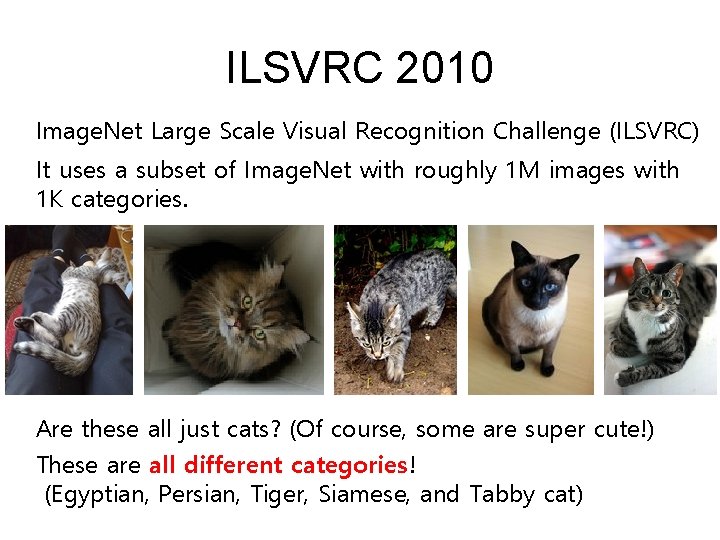

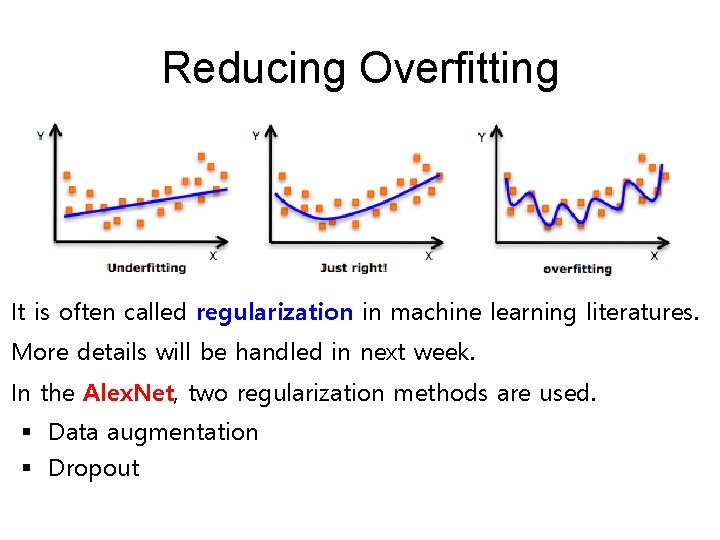

Reducing Overfitting

This is often called regularization in the machine learning literature. More details will be covered next week. In AlexNet, two regularization methods are used:

- Data augmentation
- Dropout

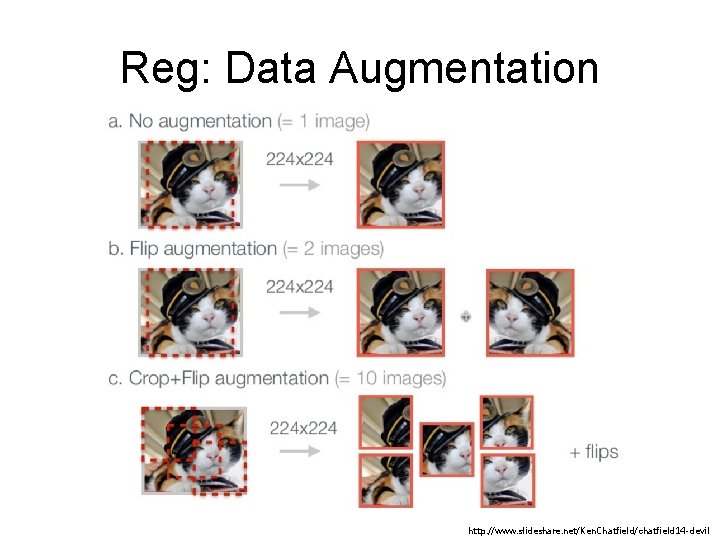

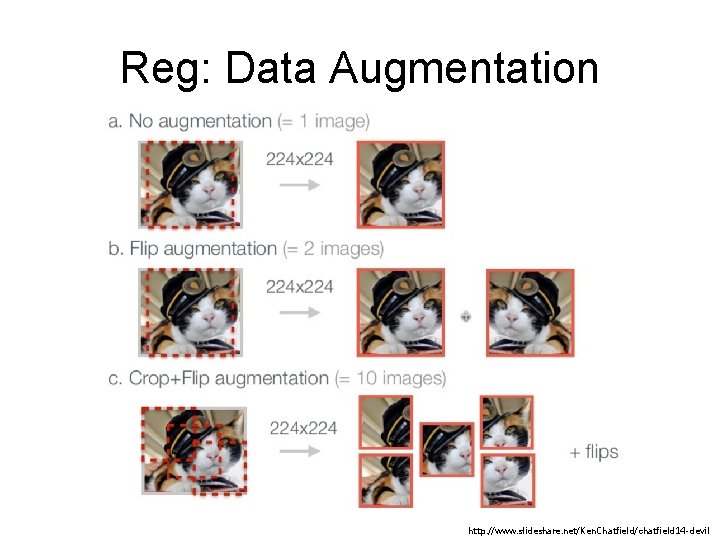

Reg: Data Augmentation

http://www.slideshare.net/KenChatfield/chatfield14-devil

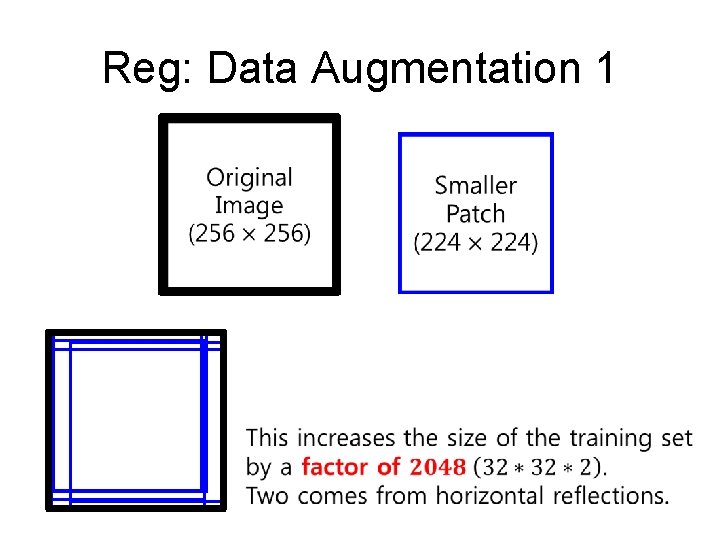

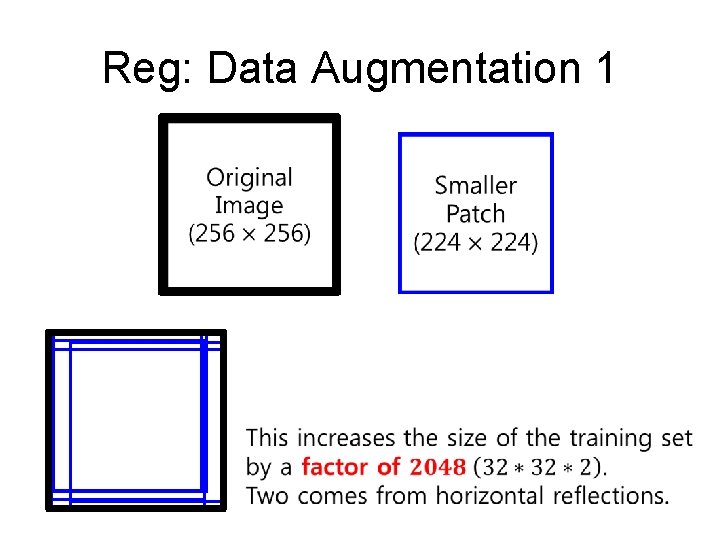

Reg: Data Augmentation 1

Reg: Data Augmentation 2

Color variation

Probabilistically, no two patches will be the same during the training phase! (a factor of infinity!)
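The two augmentation slides can be sketched roughly as follows, assuming the first refers to the random crops and horizontal reflections described in the AlexNet paper and the second to its PCA-based color shift. The function names are hypothetical, and the PCA here is computed on a single image for brevity, whereas the paper computes it over the RGB values of the whole training set.

```python
import numpy as np


def random_crop_and_flip(image, crop_size, rng):
    """Take a random square patch and mirror it horizontally half of the time."""
    h, w, _ = image.shape
    top = rng.integers(0, h - crop_size + 1)
    left = rng.integers(0, w - crop_size + 1)
    patch = image[top:top + crop_size, left:left + crop_size, :]
    if rng.random() < 0.5:
        patch = patch[:, ::-1, :]  # horizontal reflection
    return patch


def pca_color_shift(image, rng, std=0.1):
    """Shift all pixels along the principal components of the RGB values.

    Simplification for this sketch: the PCA uses only this image's pixels.
    """
    pixels = image.reshape(-1, 3)
    cov = np.cov(pixels, rowvar=False)      # 3 x 3 covariance of R, G, B
    eigvals, eigvecs = np.linalg.eigh(cov)  # columns of eigvecs are the components
    alphas = rng.normal(0.0, std, size=3)   # fresh random draw on every call
    shift = eigvecs @ (alphas * eigvals)    # one RGB offset for the whole image
    return image + shift


rng = np.random.default_rng(0)
image = rng.random((256, 256, 3))           # stand-in for a 256 x 256 RGB image
patch = pca_color_shift(random_crop_and_flip(image, 224, rng), rng)
print(patch.shape)  # (224, 224, 3)
```

Because the color coefficients are drawn from a continuous distribution each time, two augmented patches are essentially never identical, which is the point of the "factor of infinity" remark.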

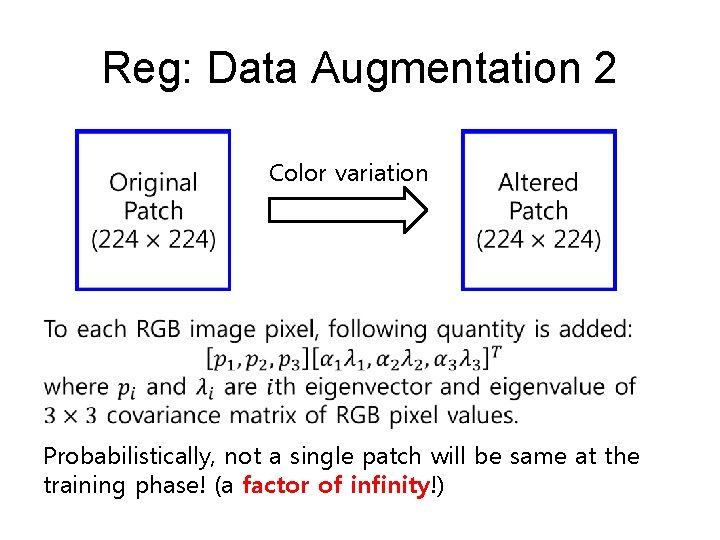

![Reg: Dropout](https://slidetodoc.com/presentation_image_h/2e2052f57823a2d32575976fffbb6785/image-23.jpg)

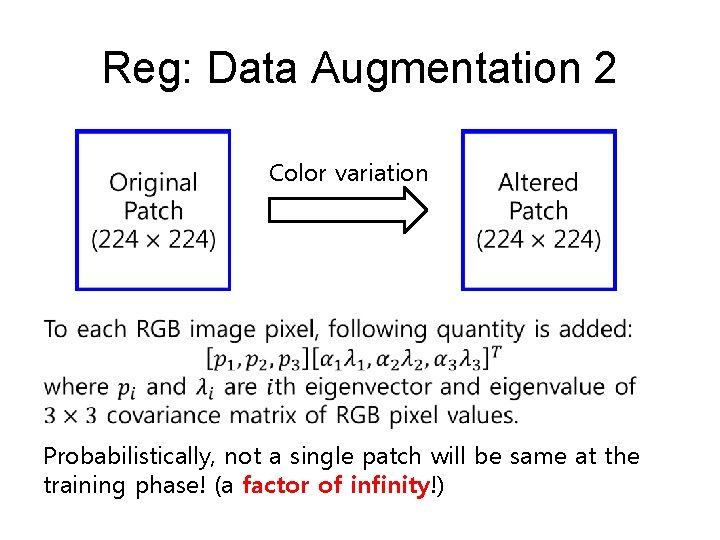

Reg: Dropout

Original dropout [1] sets the output of each hidden neuron to zero with a certain probability. In this paper, at test time, they simply multiply the outputs by 0.5.

[1] G. E. Hinton, N. Srivastava, A. Krizhevsky, I. Sutskever, and R. R. Salakhutdinov. Improving neural networks by preventing co-adaptation of feature detectors. arXiv, 2012.

http://www.eddyazar.com/the-regrets-of-a-dropout-and-why-you-should-drop-out-too/
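A minimal sketch of this scheme, assuming a drop probability of 0.5: during training each hidden output is zeroed at random, and at test time all units are kept but their outputs are scaled by 0.5 so the expected activation matches. The function names are illustrative only. Many current libraries instead use "inverted" dropout, which rescales during training; that variant is not shown here.

```python
import numpy as np


def dropout_train(activations, rng, drop_prob=0.5):
    """Training time: zero each unit independently with probability drop_prob."""
    keep_mask = rng.random(activations.shape) >= drop_prob
    return activations * keep_mask


def dropout_test(activations, drop_prob=0.5):
    """Test time: keep every unit, scale outputs by the keep probability."""
    return activations * (1.0 - drop_prob)


rng = np.random.default_rng(0)
hidden = np.ones(10000)

train_out = dropout_train(hidden, rng)
test_out = dropout_test(hidden)

print(train_out.mean())  # close to 0.5: about half of the units are zeroed
print(test_out.mean())   # 0.5
```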