CMU SCS Roadmap Introduction Motivation Part1 Graphs P

- Slides: 98

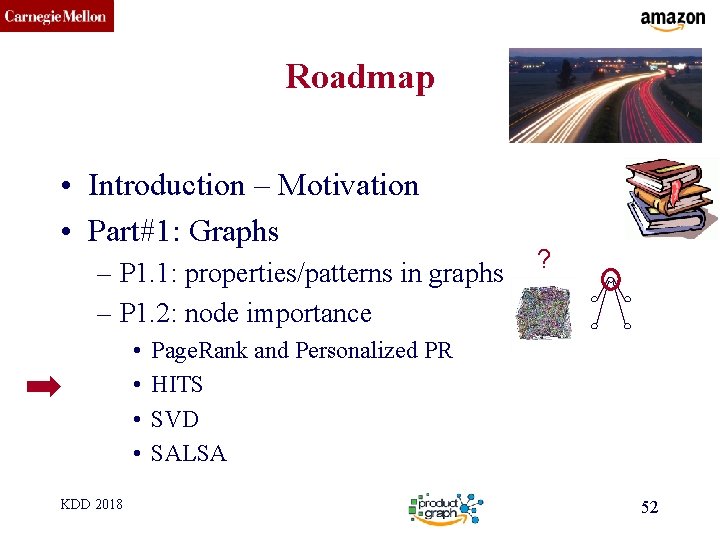

CMU SCS, KDD 2018

Roadmap

- Introduction
  - Motivation
- Part#1: Graphs
  - P1.1: properties/patterns in graphs
  - P1.2: node importance
  - P1.3: community detection
  - P1.4: fraud/anomaly detection
  - P1.5: belief propagation

Roadmap

- Introduction
  - Motivation
- Part#1: Graphs
  - P1.1: properties/patterns in graphs
  - P1.2: node importance
    - PageRank and Personalized PR
    - HITS (SVD)
    - SALSA

‘Recipe’ structure:

- Problem definition
- Short answer/solution
- LONG answer: details
- Conclusion/short answer

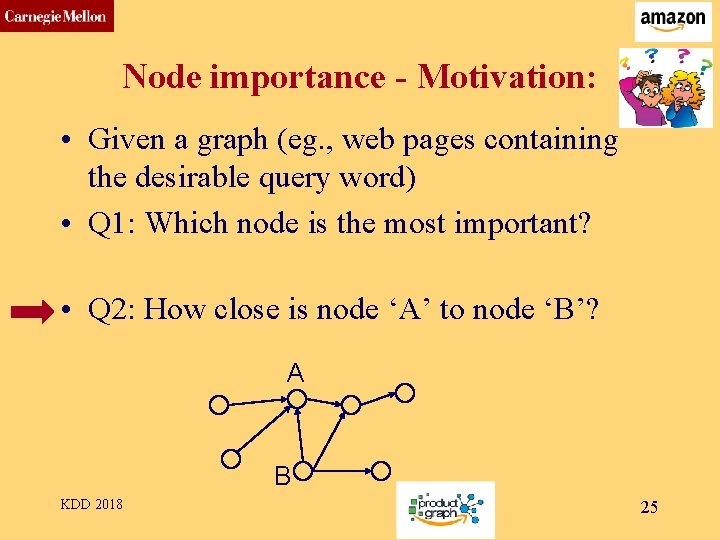

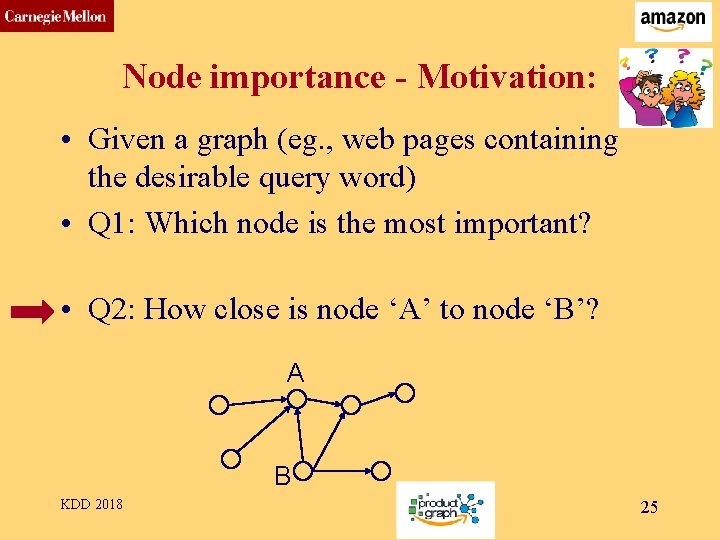

Node importance - Motivation:

- Given a graph (e.g., web pages containing the desirable query word)
- Q1: Which node is the most important?
- Q2: How close is node ‘A’ to node ‘B’?

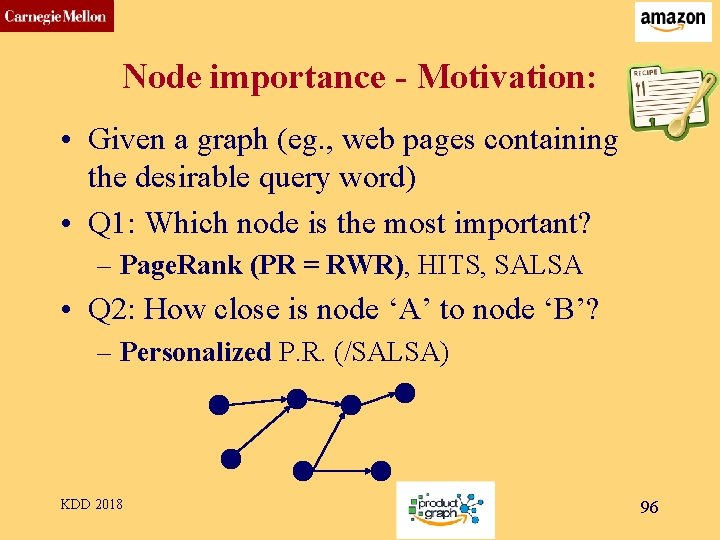

Node importance - Motivation:

- Given a graph (e.g., web pages containing the desirable query word)
- Q1: Which node is the most important?
  - PageRank (PR = RWR), HITS, SALSA
- Q2: How close is node ‘A’ to node ‘B’?
  - Personalized P.R. (/SALSA)

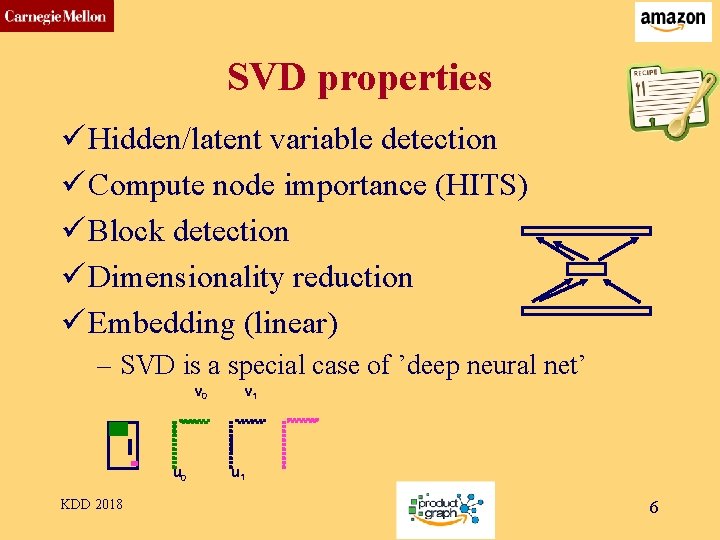

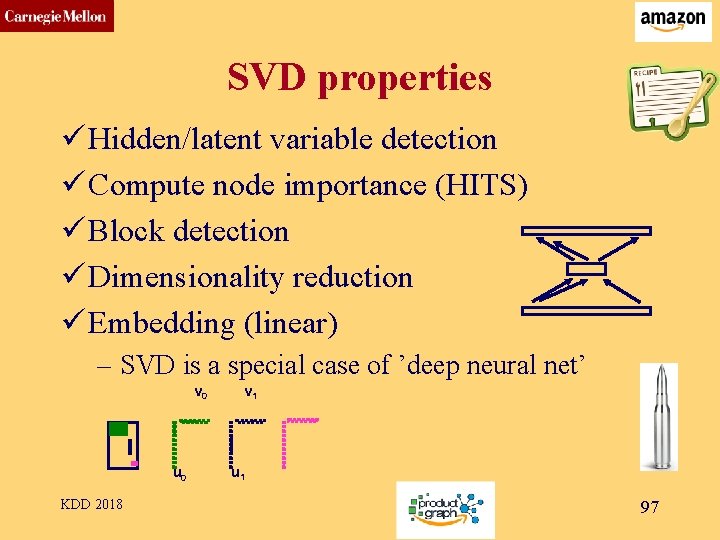

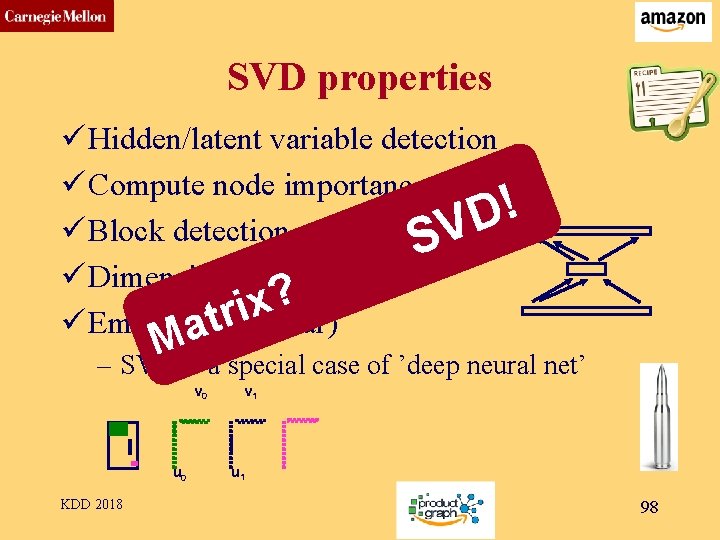

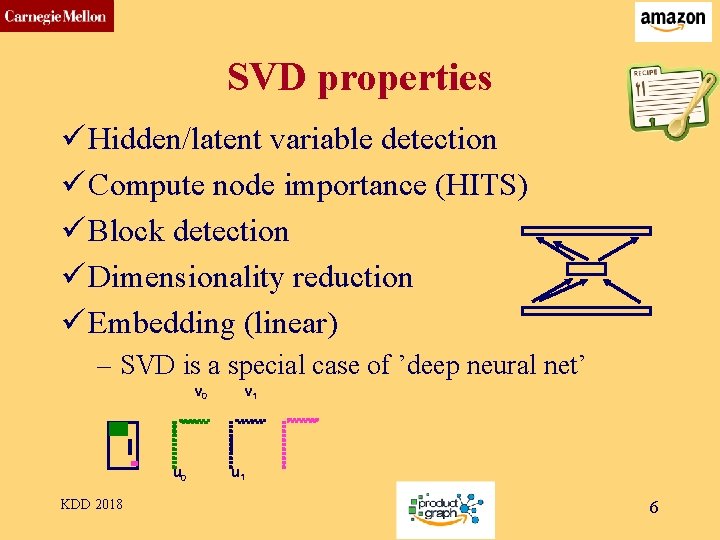

SVD properties

- ✓ Hidden/latent variable detection
- ✓ Compute node importance (HITS)
- ✓ Block detection
- ✓ Dimensionality reduction
- ✓ Embedding (linear): SVD is a special case of a ‘deep neural net’

(Figure labels: v0, u0, v1, u1.)

Roadmap

- Introduction
  - Motivation
- Part#1: Graphs
  - P1.1: properties/patterns in graphs
  - P1.2: node importance
    - PageRank and Personalized PR
    - HITS
    - SALSA

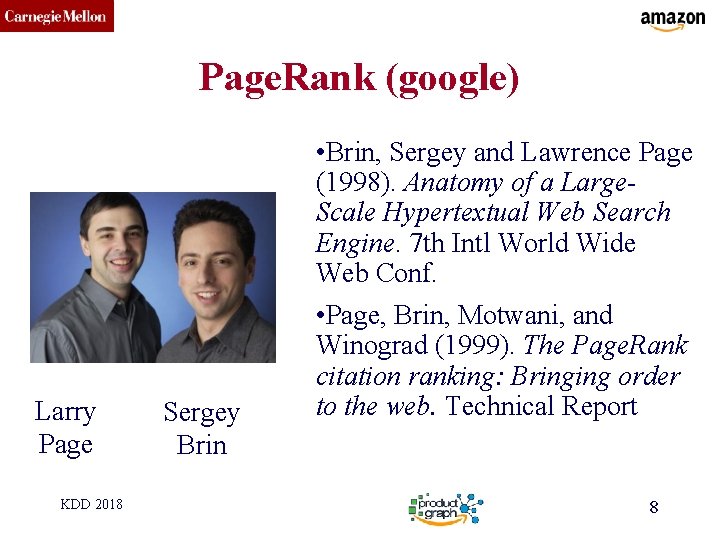

PageRank (Google): Larry Page and Sergey Brin

- Brin, Sergey and Lawrence Page (1998). The Anatomy of a Large-Scale Hypertextual Web Search Engine. 7th Intl. World Wide Web Conf.
- Page, Brin, Motwani, and Winograd (1999). The PageRank citation ranking: Bringing order to the web. Technical Report.

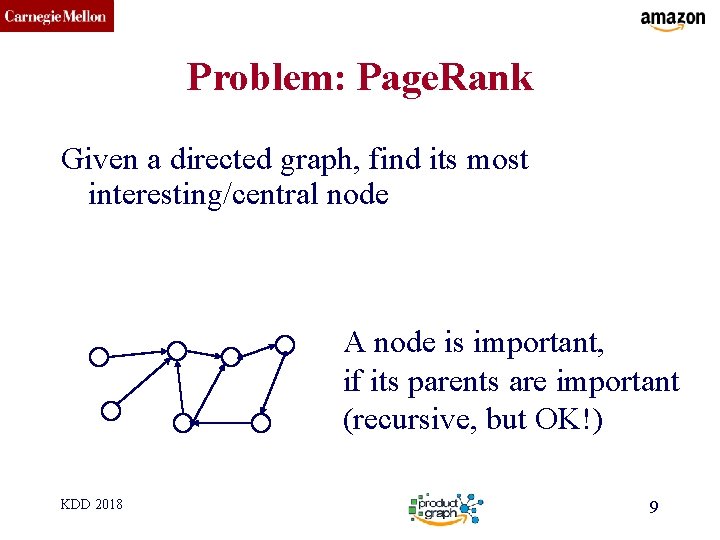

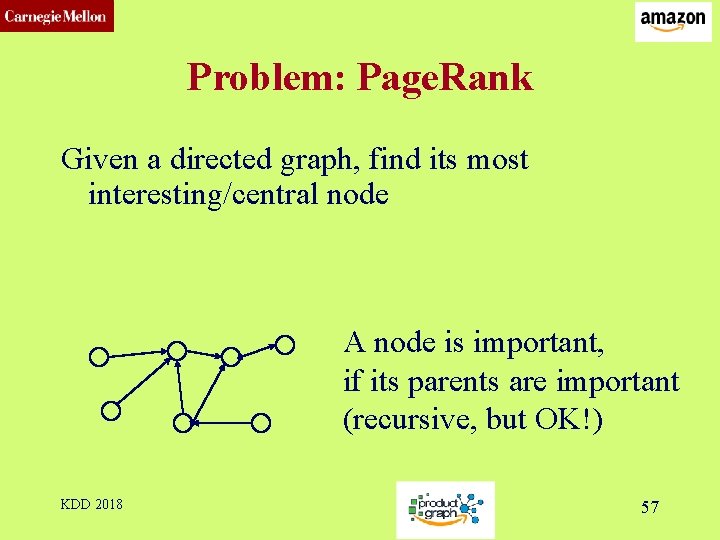

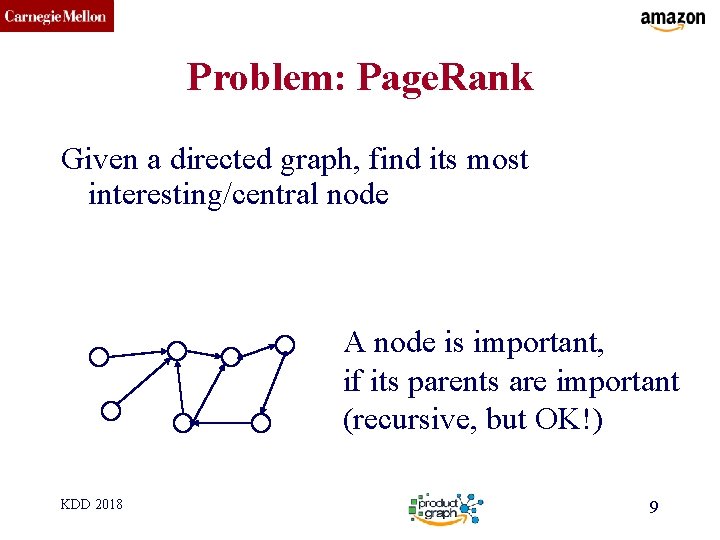

Problem: PageRank

Given a directed graph, find its most interesting/central node.

A node is important if its parents are important (recursive, but OK!).

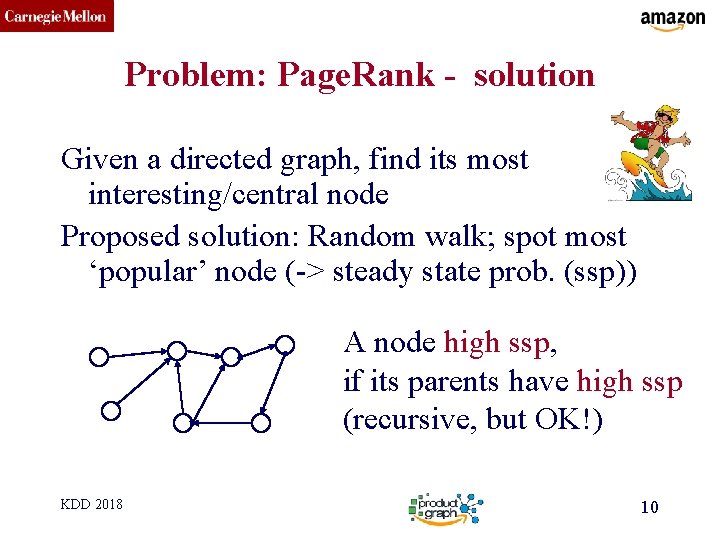

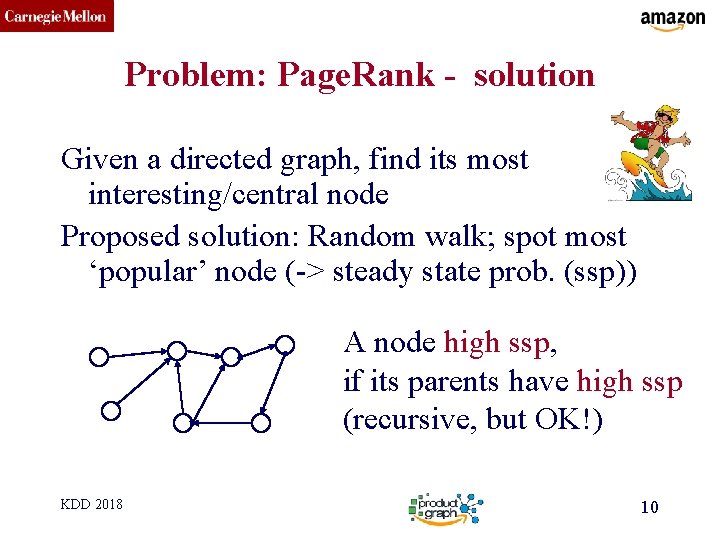

Problem: PageRank - solution

Given a directed graph, find its most interesting/central node.

Proposed solution: random walk; spot the most ‘popular’ node (-> steady-state probability (ssp)).

A node has high ssp if its parents have high ssp (recursive, but OK!).

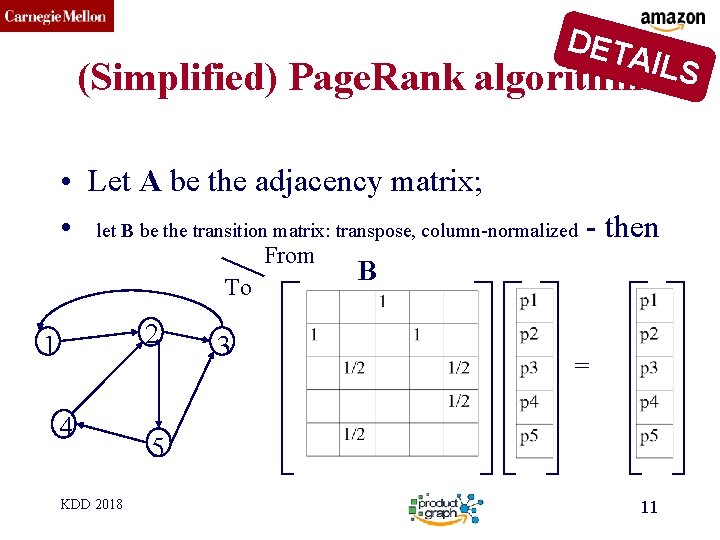

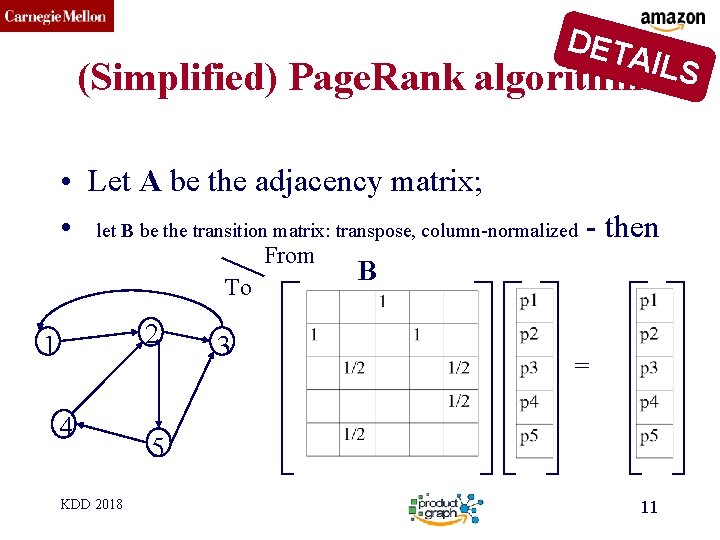

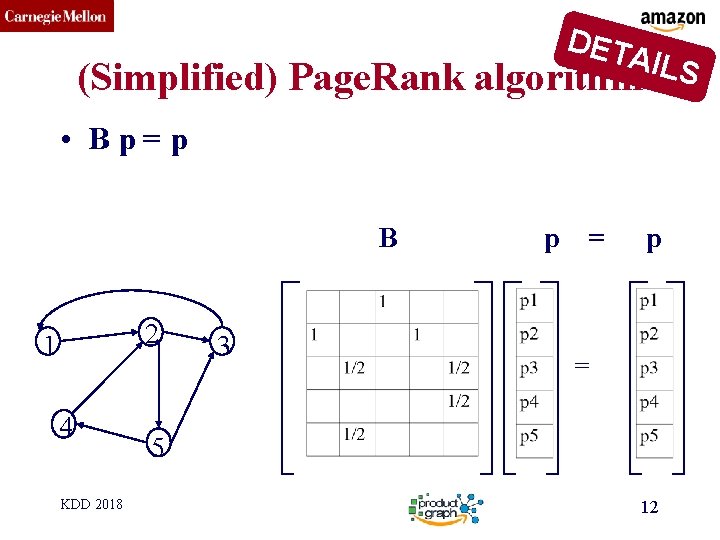

(Simplified) PageRank algorithm (DETAILS)

- Let A be the adjacency matrix (rows: ‘from’, columns: ‘to’);
- let B be the transition matrix: the transpose of A, column-normalized.

(Figure: example graph with nodes 1-5 and its matrix B.)

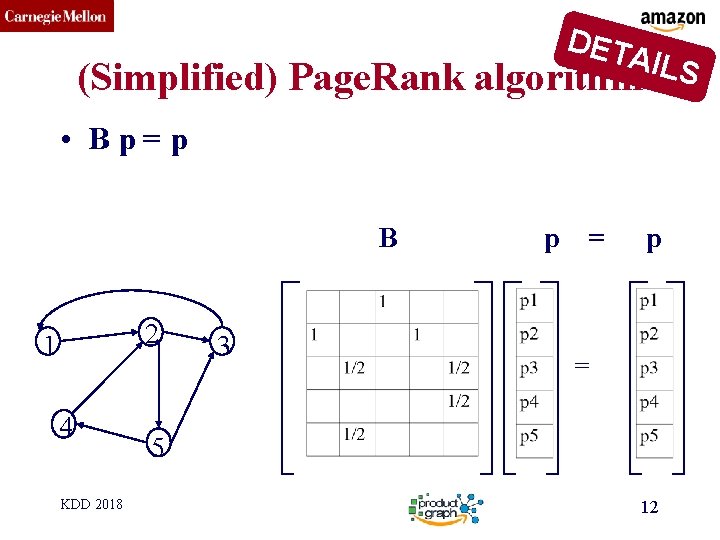

(Simplified) PageRank algorithm (DETAILS)

- $B p = p$

(Figure: example graph with nodes 1-5, its matrix B, and the vector p.)

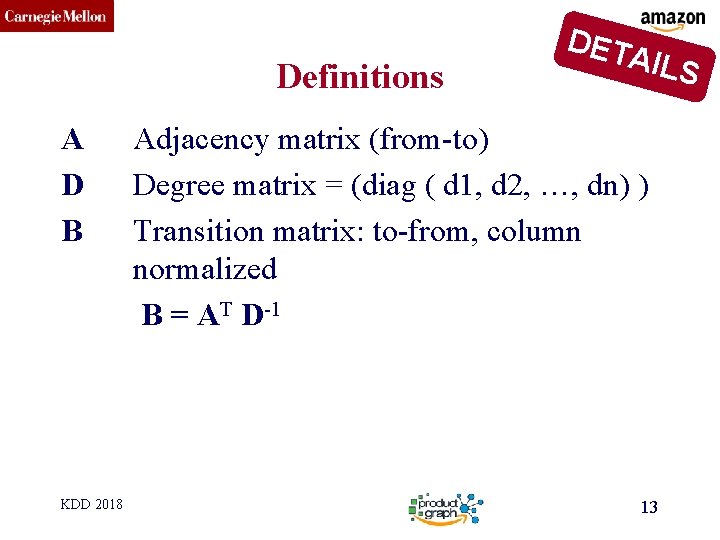

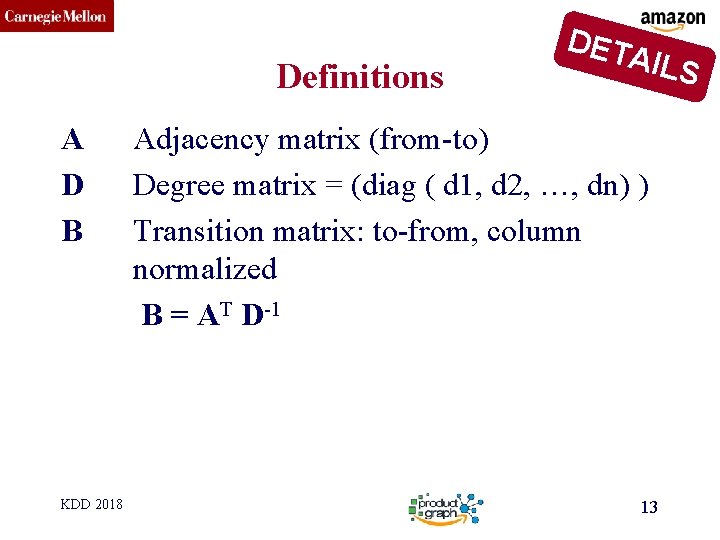

Definitions (DETAILS)

- A: adjacency matrix (from-to)
- D: degree matrix, $D = \mathrm{diag}(d_1, d_2, \ldots, d_n)$
- B: transition matrix (to-from, column-normalized), $B = A^T D^{-1}$
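As a minimal sketch of these definitions, the NumPy snippet below builds A, D, and B for a small graph. The five-node edge list is a hypothetical example (it is not the graph drawn on the slides), and the sketch assumes every node has at least one out-link so that D is invertible.

```python
import numpy as np

# Hypothetical 5-node directed graph (0-indexed); NOT the graph from the slides.
edges = [(0, 1), (1, 2), (2, 0), (2, 3), (3, 4), (4, 0)]
n = 5

# Adjacency matrix, from-to convention: A[i, j] = 1 if there is an edge i -> j.
A = np.zeros((n, n))
for src, dst in edges:
    A[src, dst] = 1.0

# Degree matrix built from out-degrees (assumes no node has out-degree 0).
D = np.diag(A.sum(axis=1))

# Transition matrix B = A^T D^{-1}: B[j, i] is the probability of moving i -> j.
B = A.T @ np.linalg.inv(D)

print(B.sum(axis=0))  # each column of B sums to 1
```

Node 2 has two out-links in this example, so column 2 of B holds two entries of 0.5.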

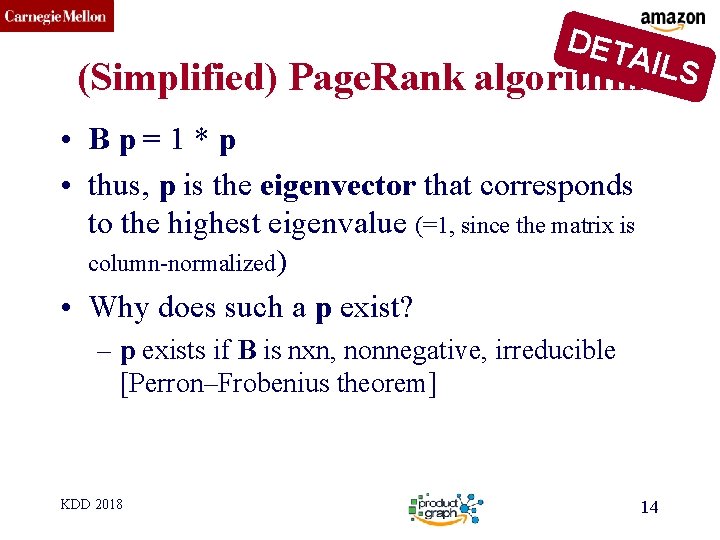

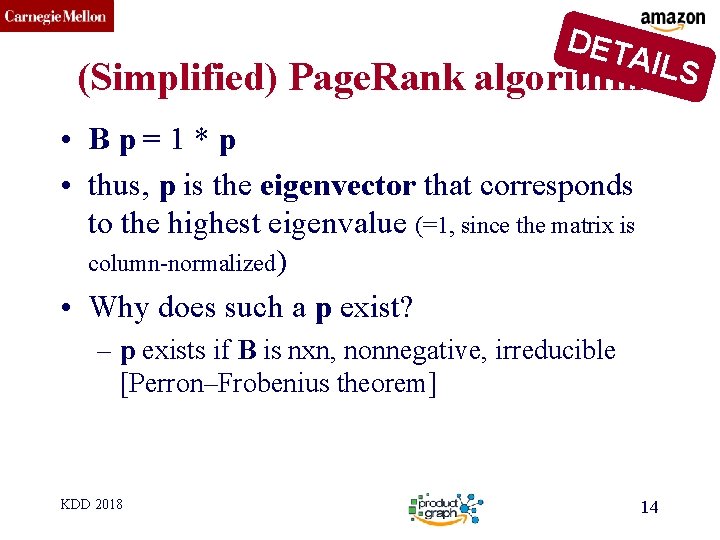

(Simplified) PageRank algorithm (DETAILS)

- $B p = 1 \cdot p$
- Thus, p is the eigenvector that corresponds to the highest eigenvalue (= 1, since the matrix is column-normalized).
- Why does such a p exist?
  - p exists if B is $n \times n$, nonnegative, and irreducible [Perron–Frobenius theorem].
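The eigenvector view can be checked numerically. The sketch below is an illustration under assumptions, not the slides' own example: it uses a hypothetical strongly connected, aperiodic five-node graph, runs plain power iteration, and compares the result with the leading eigenvector from `np.linalg.eig`.

```python
import numpy as np

# Hypothetical strongly connected, aperiodic graph (0-indexed nodes).
edges = [(0, 1), (1, 2), (2, 0), (2, 3), (3, 4), (4, 0)]
n = 5
A = np.zeros((n, n))
for src, dst in edges:
    A[src, dst] = 1.0

# B = A^T D^{-1}: broadcasting divides column i of A^T by out-degree d_i.
B = A.T / A.sum(axis=1)

# Power iteration: repeatedly apply B to a probability vector.
p = np.full(n, 1.0 / n)
for _ in range(1000):
    p = B @ p

# Cross-check: eigenvector of B for the largest eigenvalue (which is 1).
eigvals, eigvecs = np.linalg.eig(B)
lead = np.argmax(eigvals.real)
v = eigvecs[:, lead].real
v = v / v.sum()          # rescale so the entries sum to 1

print(eigvals[lead].real, np.allclose(p, v))
```

For this particular graph the steady state can also be solved by hand from $Bp = p$: nodes 0, 1, 2 get probability 1/4 each and nodes 3, 4 get 1/8 each.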

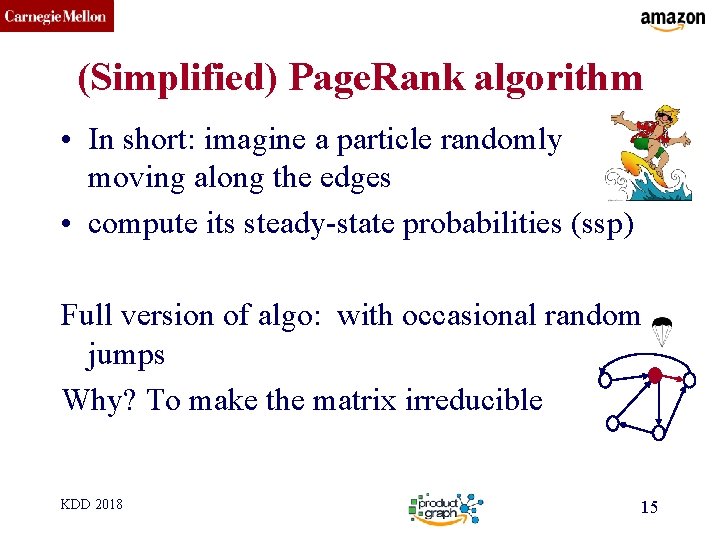

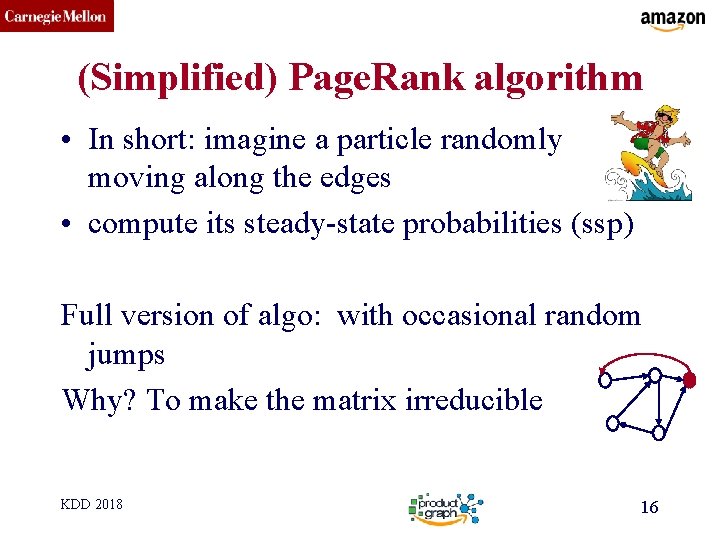

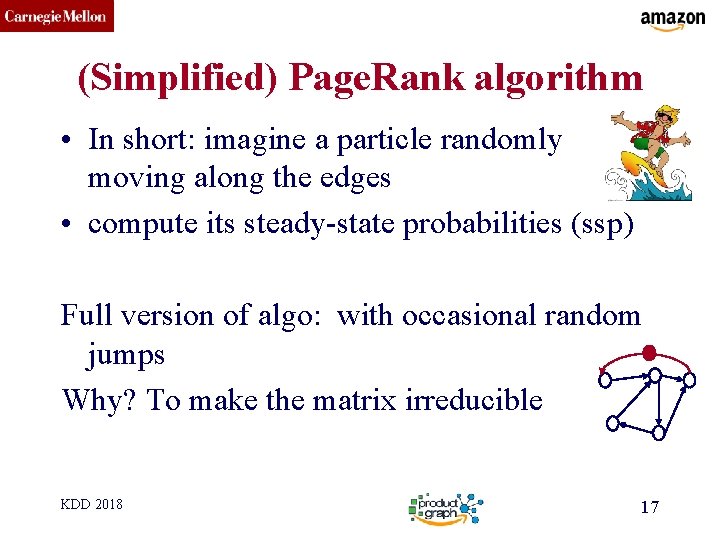

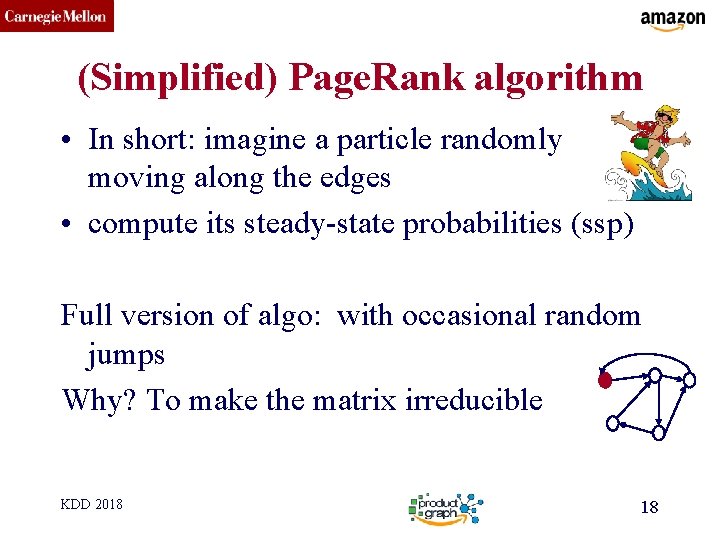

(Simplified) PageRank algorithm

- In short: imagine a particle randomly moving along the edges
- compute its steady-state probabilities (ssp)

Full version of the algorithm: with occasional random jumps. Why? To make the matrix irreducible.


(Simplified) PageRank algorithm

- In short: imagine a particle randomly moving along the edges
- compute its steady-state probabilities (ssp)

PageRank = PR = Random Walk with Restarts = RWR = random surfer

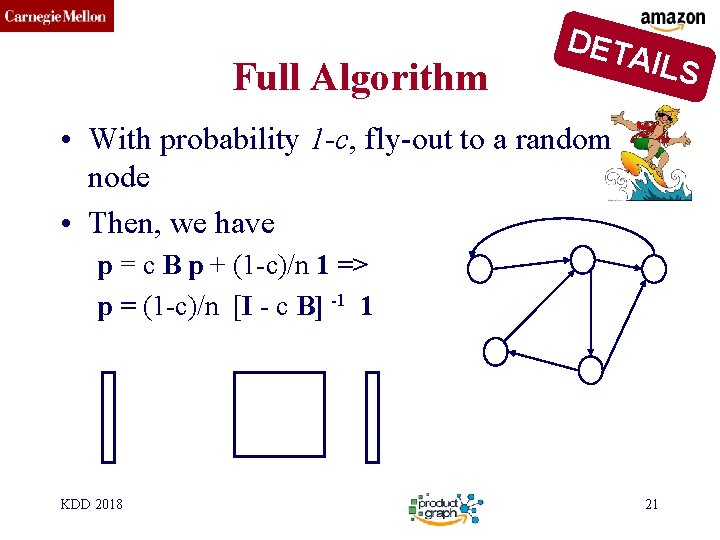

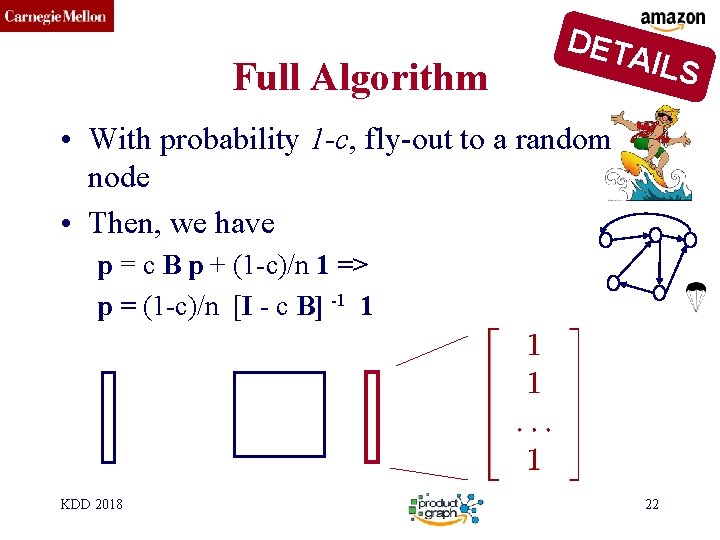

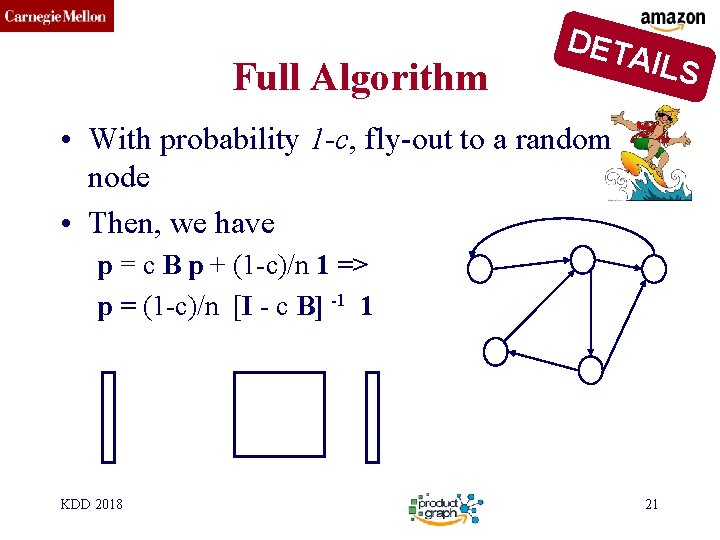

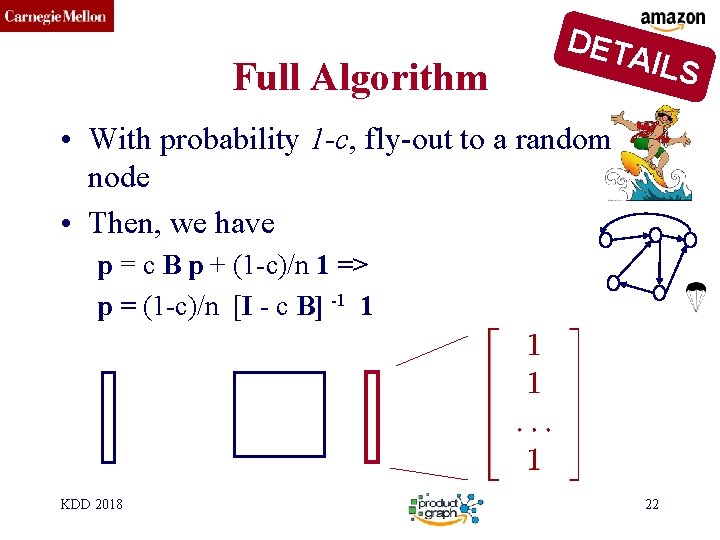

Full algorithm (DETAILS)

- With probability $1-c$, fly out to a random node.
- Then, we have

$$p = c B p + \frac{1-c}{n}\mathbf{1} \;\Rightarrow\; p = \frac{1-c}{n}\,[I - cB]^{-1}\mathbf{1}$$
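The two forms of the full algorithm can be compared on a toy example. This is a minimal sketch: the graph is hypothetical and `c = 0.85` is an assumed value (the slides leave c unspecified). It solves the closed form with a linear solve rather than an explicit inverse, and checks it against the fixed-point iteration.

```python
import numpy as np

# Hypothetical 5-node graph (0-indexed); every node has an out-link.
edges = [(0, 1), (1, 2), (2, 0), (2, 3), (3, 4), (4, 0)]
n = 5
A = np.zeros((n, n))
for src, dst in edges:
    A[src, dst] = 1.0
B = A.T / A.sum(axis=1)       # column-normalized transition matrix

c = 0.85                      # assumed value; 1 - c is the fly-out probability
ones = np.ones(n)

# Closed form: p = (1 - c)/n * [I - c B]^{-1} 1, via a linear solve.
p_closed = (1 - c) / n * np.linalg.solve(np.eye(n) - c * B, ones)

# Iterative form: repeat p <- c B p + (1 - c)/n * 1 until it settles.
p_iter = np.full(n, 1.0 / n)
for _ in range(200):
    p_iter = c * (B @ p_iter) + (1 - c) / n * ones

print(np.allclose(p_closed, p_iter), p_closed.sum())
```

On large graphs the iterative form is the usual choice, since it only needs sparse matrix-vector products.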


Notice:

- PageRank ~ in-degree
- (and HITS, SALSA, also: ~ in-degree)


Node importance - Motivation:

- Given a graph (e.g., web pages containing the desirable query word)
- Q1: Which node is the most important?
- Q2: How close is node ‘A’ to node ‘B’?

(Figure labels: A, B.)

Personalized P.R.

- Taher H. Haveliwala. 2002. Topic-sensitive PageRank. (WWW '02). 517-526. http://dx.doi.org/10.1145/511446.511513
- Page L., Brin S., Motwani R., and Winograd T. (1999). The PageRank citation ranking: Bringing order to the web. Technical Report.

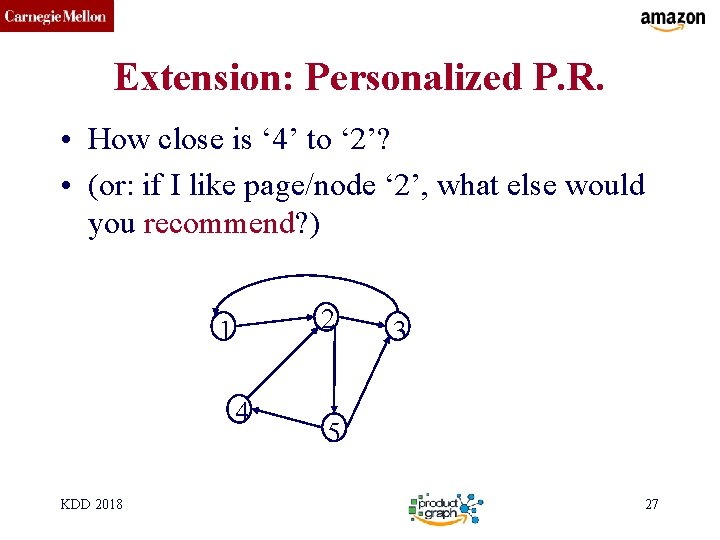

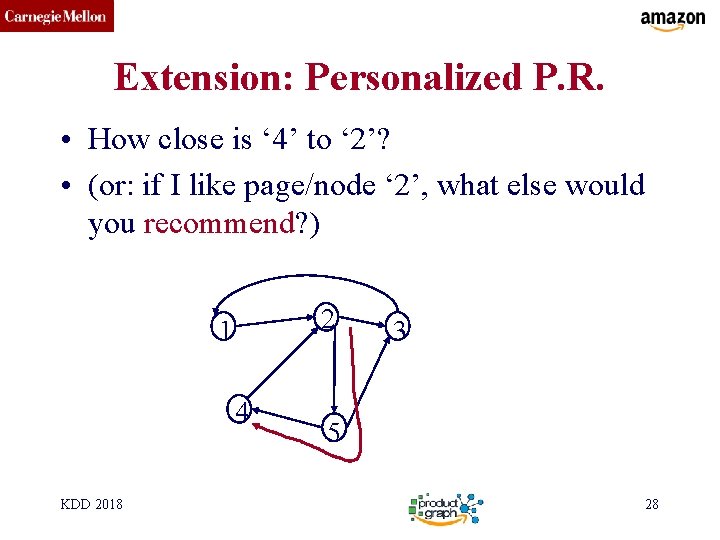

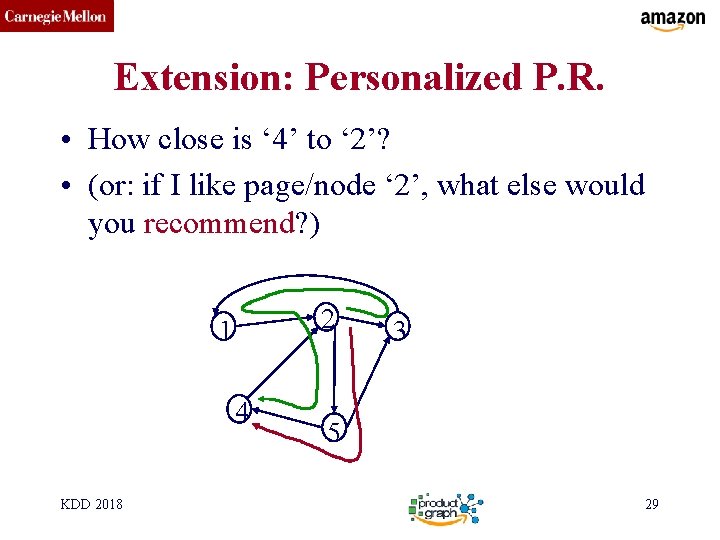

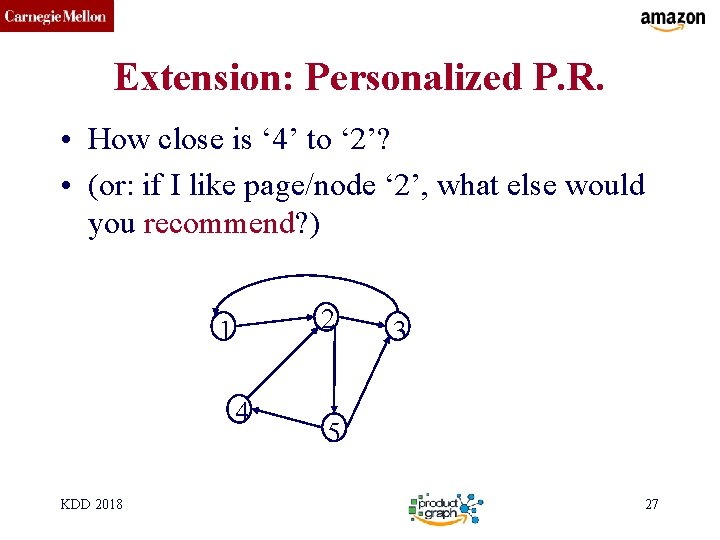

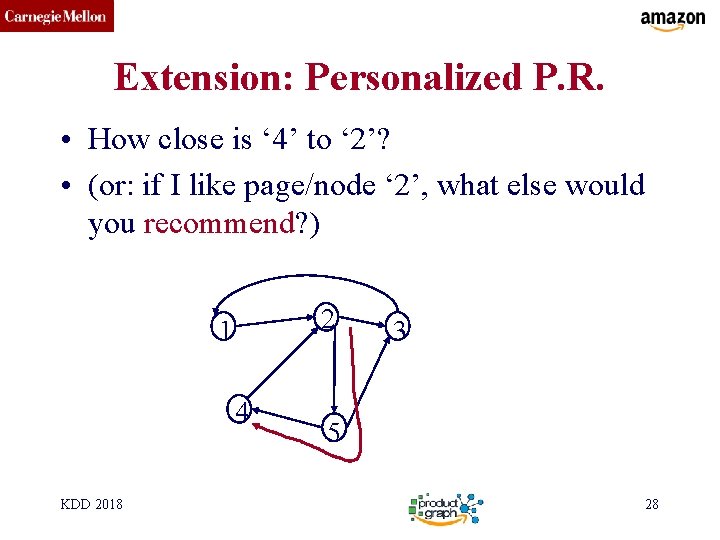

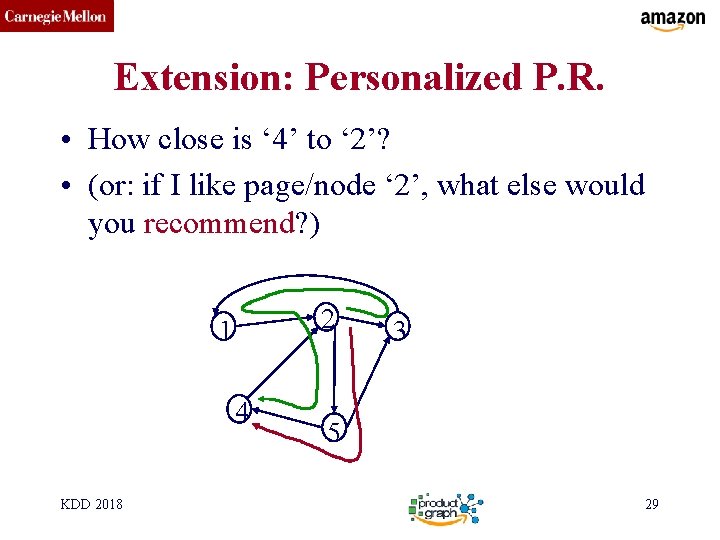

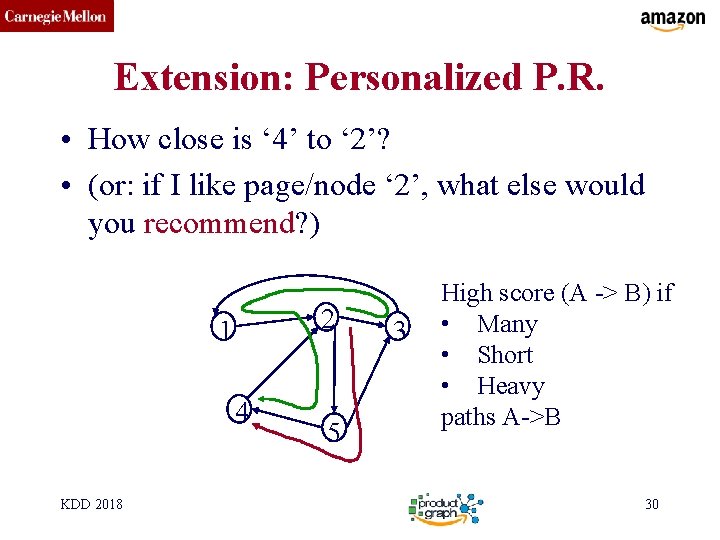

Extension: Personalized P.R.

- How close is ‘4’ to ‘2’?
- (or: if I like page/node ‘2’, what else would you recommend?)

(Figure: example graph with nodes 1-5.)


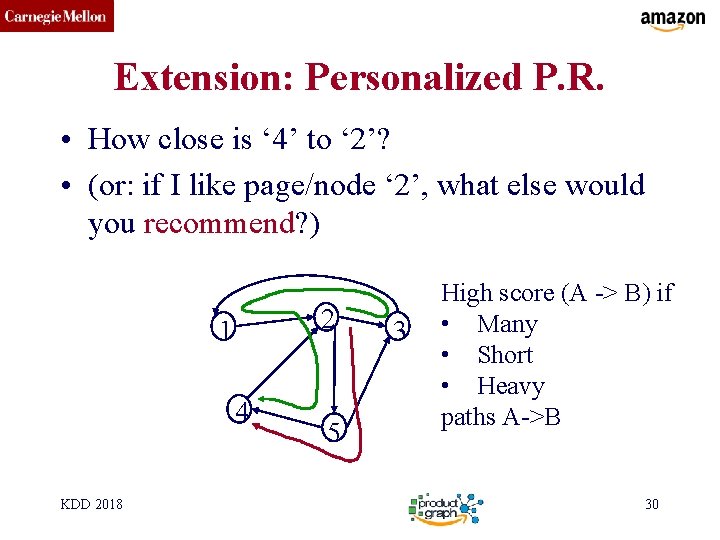

Extension: Personalized P.R.

- How close is ‘4’ to ‘2’?
- (or: if I like page/node ‘2’, what else would you recommend?)

High score (A -> B) if there are

- many,
- short,
- heavy

paths A -> B.
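One way to see why many, short, heavy paths give a high score is to expand the closed-form solution as a series. This is a sketch that assumes the standard random-walk-with-restart form; the symbols $e_s$ (indicator vector of the start node s) and t (the target node) are notation introduced here, while the matrix $B$ and the constant $c$ are as in the PageRank slides.

```latex
p \;=\; (1-c)\,[I - cB]^{-1} e_s \;=\; (1-c) \sum_{k=0}^{\infty} c^{k} B^{k} e_s
```

The entry of $B^k e_s$ at node t adds up the probabilities of all length-k paths from s to t. More paths contribute more terms, heavier edges make each term larger, and shorter paths are damped less by the factor $c^k$. The series converges because $B$ is column-normalized and $c < 1$.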

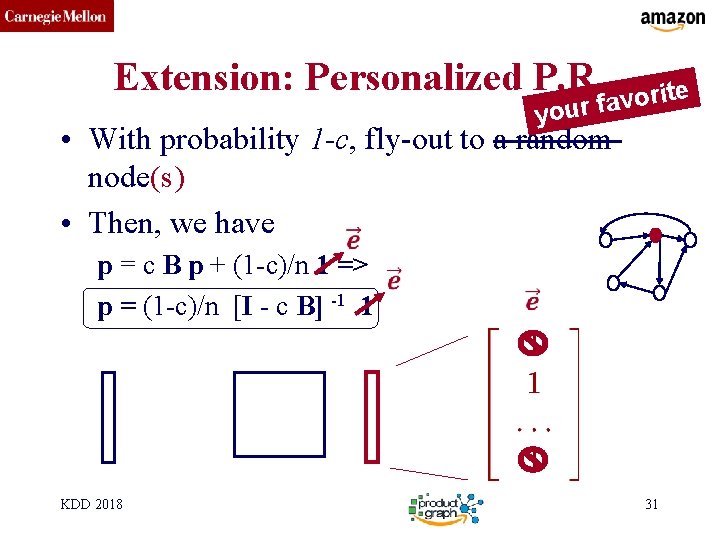

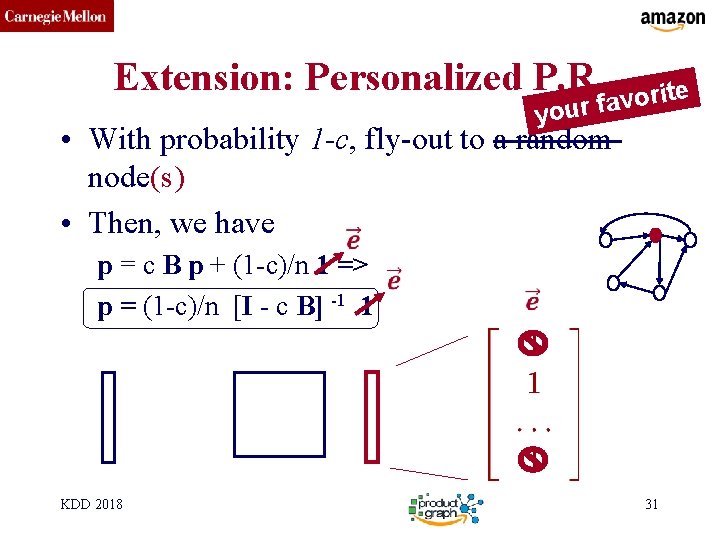

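Extension: Personalized P.R.

- With probability $1-c$, fly out to your favorite node(s), instead of a random node.
- Then, we have

$$p = c B p + \frac{1-c}{n}\mathbf{1} \;\Rightarrow\; p = \frac{1-c}{n}\,[I - cB]^{-1}\mathbf{1}$$

with the uniform vector $\frac{1}{n}\mathbf{1}$ replaced by a restart vector that is nonzero only on the favorite node(s).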

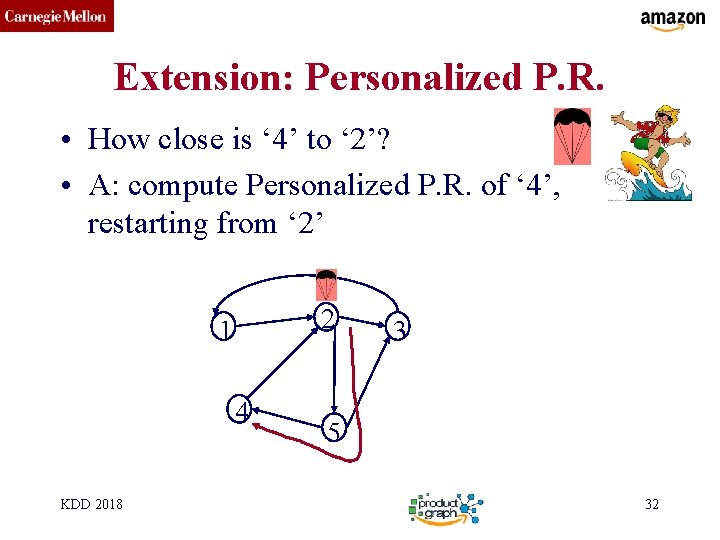

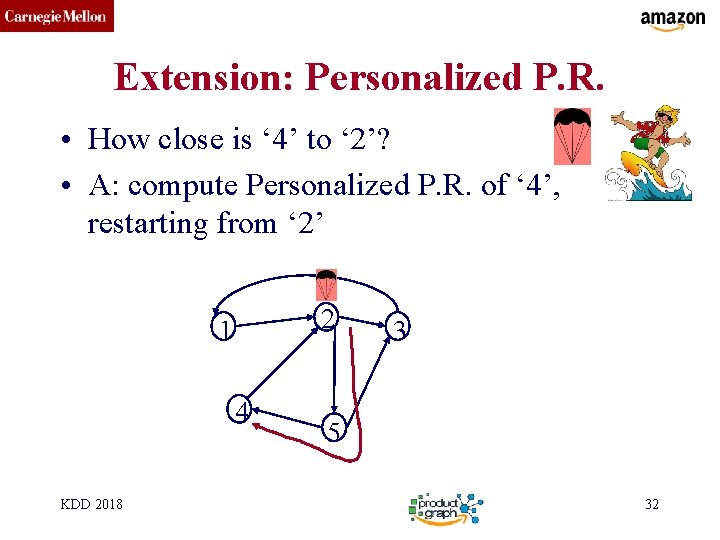

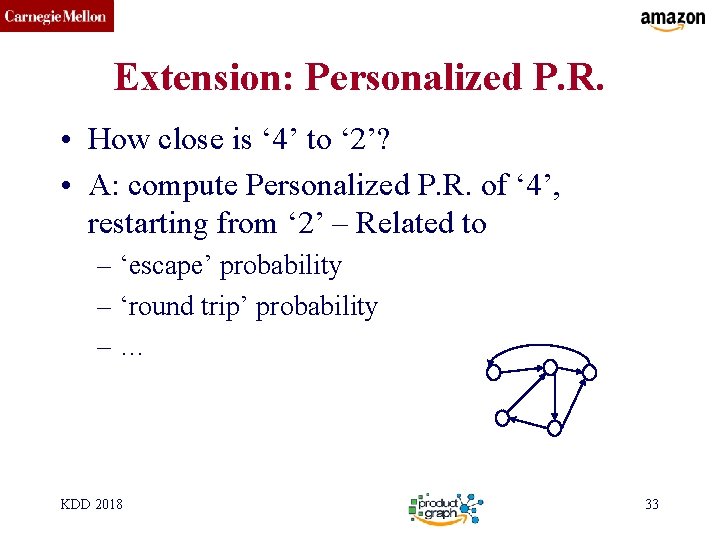

Extension: Personalized P.R.

- How close is ‘4’ to ‘2’?
- A: compute the Personalized P.R. of ‘4’, restarting from ‘2’

(Figure: example graph with nodes 1-5.)
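A proximity query of this kind can be sketched as follows. The graph, the value `c = 0.85`, and the 0-indexed node ids `query = 2` and `target = 4` are all assumptions made for illustration; they do not correspond to the nodes ‘2’ and ‘4’ drawn on the slide. The only change from plain PageRank is that the restart vector puts all its mass on the query node.

```python
import numpy as np

# Hypothetical 5-node graph (0-indexed); not the slide's graph.
edges = [(0, 1), (1, 2), (2, 0), (2, 3), (3, 4), (4, 0)]
n = 5
A = np.zeros((n, n))
for src, dst in edges:
    A[src, dst] = 1.0
B = A.T / A.sum(axis=1)        # column-normalized transition matrix

c = 0.85                       # assumed; 1 - c is the restart probability
query, target = 2, 4           # hypothetical node ids

# Restart vector: all restart mass on the query node.
e = np.zeros(n)
e[query] = 1.0

# Personalized PageRank: p = c B p + (1 - c) e, solved in closed form.
p = (1 - c) * np.linalg.solve(np.eye(n) - c * B, e)

score = p[target]              # proximity of `target` with respect to `query`
print(score)
```

Sorting the entries of `p` in decreasing order would give a ranking of nodes to recommend to someone who likes the query node.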

Extension: Personalized P.R.

- How close is ‘4’ to ‘2’?
- A: compute the Personalized P.R. of ‘4’, restarting from ‘2’
  - Related to:
    - ‘escape’ probability
    - ‘round trip’ probability
    - …

Roadmap

- Introduction
  - Motivation
- Part#1: Graphs
  - P1.1: properties/patterns in graphs
  - P1.2: node importance
    - PageRank and Personalized PR
      - Fast computation - ‘Pixie’
    - HITS
    - SALSA

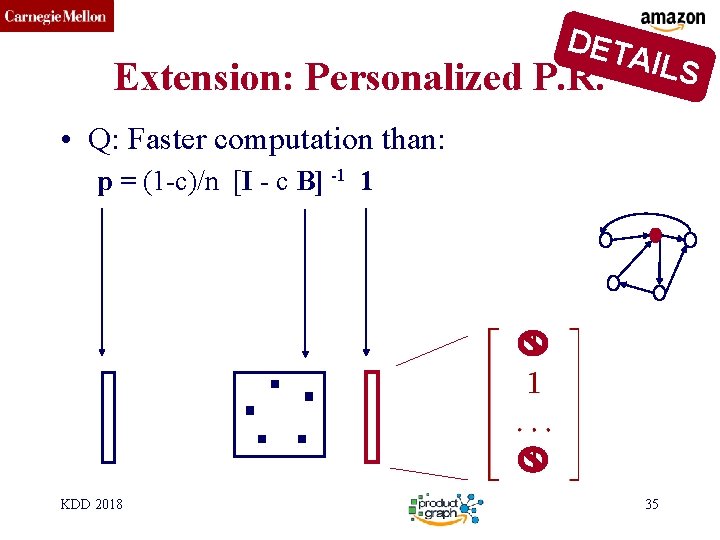

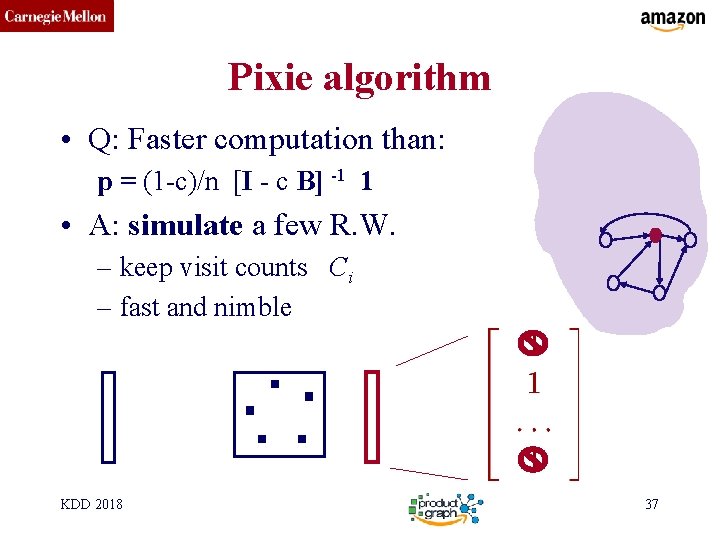

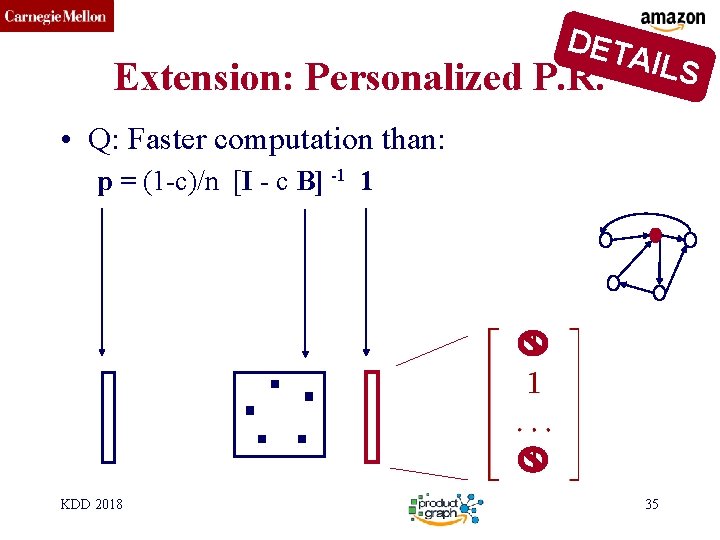

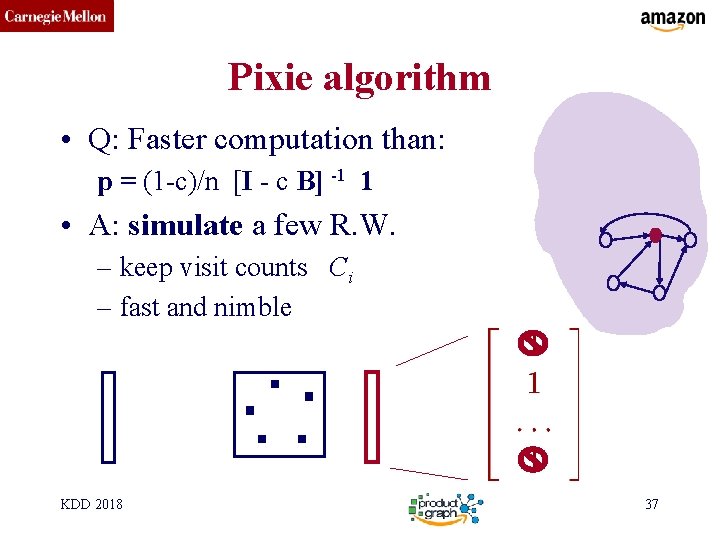

Extension: Personalized P.R. (DETAILS)

- Q: Faster computation than $p = \frac{1-c}{n}\,[I - cB]^{-1}\mathbf{1}$?

Pixie algorithm

Chantat Eksombatchai, Pranav Jindal, Jerry Zitao Liu, Yuchen Liu, Rahul Sharma, Charles Sugnet, Mark Ulrich, Jure Leskovec: Pixie: A System for Recommending 3+ Billion Items to 200+ Million Users in Real-Time. WWW 2018: 1775-1784. https://dl.acm.org/citation.cfm?doid=3178876.3186183

Pixie algorithm

- Q: Faster computation than $p = \frac{1-c}{n}\,[I - cB]^{-1}\mathbf{1}$?
- A: simulate a few random walks (R.W.)
  - keep visit counts $C_i$
  - fast and nimble
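The idea of replacing the matrix inversion with simulated walks can be sketched as below. This is a simplified Monte Carlo illustration, not the Pixie system itself (which includes further refinements described in the paper). The function name `visit_counts`, the adjacency list, and the parameter values are all hypothetical.

```python
import numpy as np

def visit_counts(adj, query, n_steps=20_000, c=0.85, seed=0):
    """Simulate one long random walk with restarts and count node visits.

    adj   : list of out-neighbor lists (hypothetical graph, 0-indexed)
    query : node the walk restarts from
    c     : probability of following an edge; 1 - c is the restart probability
    """
    rng = np.random.default_rng(seed)
    counts = np.zeros(len(adj), dtype=int)
    node = query
    for _ in range(n_steps):
        if rng.random() < c:
            # Follow a uniformly chosen out-edge of the current node.
            node = adj[node][rng.integers(len(adj[node]))]
        else:
            # Restart at the query node.
            node = query
        counts[node] += 1
    return counts

# Hypothetical graph: 0->1, 1->2, 2->0, 2->3, 3->4, 4->0.
adj = [[1], [2], [0, 3], [4], [0]]
counts = visit_counts(adj, query=2)
estimate = counts / counts.sum()   # approximates personalized PageRank
print(estimate)
```

The normalized counts approximate the personalized PageRank vector for the query node, and the approximation tightens as `n_steps` grows.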

Personalized PageRank algorithm


Roadmap

- Introduction
  - Motivation
- Part#1: Graphs
  - P1.1: properties/patterns in graphs
  - P1.2: node importance
    - PageRank and Personalized PR
      - Fast computation - ‘Pixie’
      - Other applications
    - HITS
    - SALSA

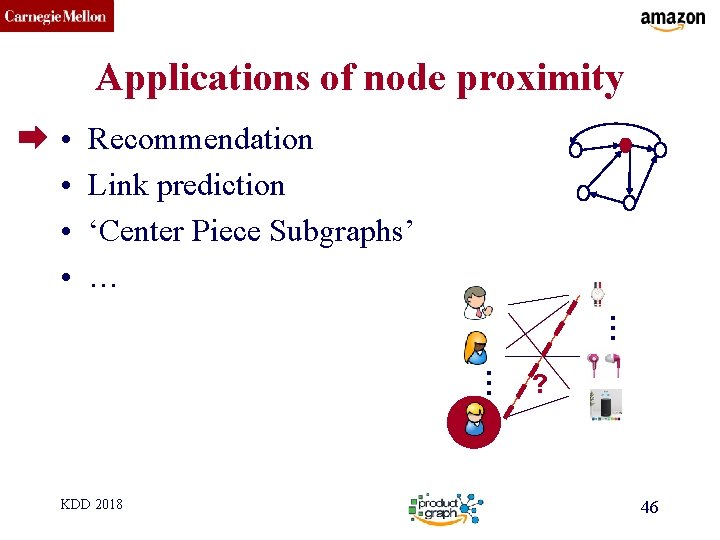

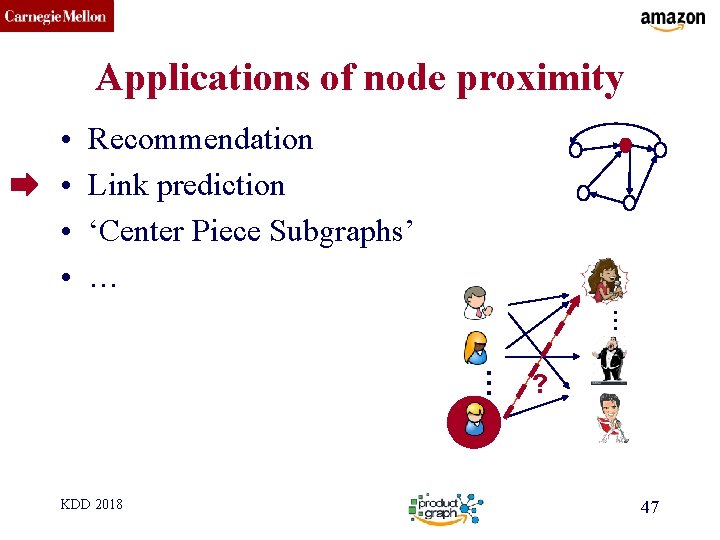

Applications of node proximity

- Recommendation
- Link prediction
- ‘Center Piece Subgraphs’
- …


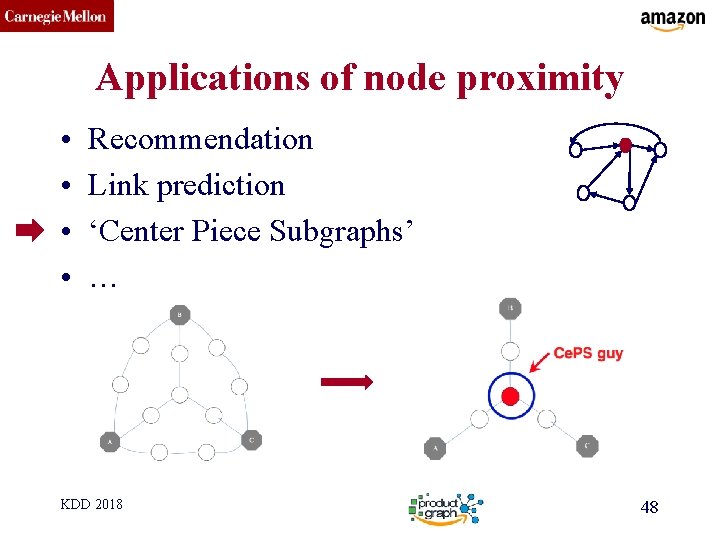

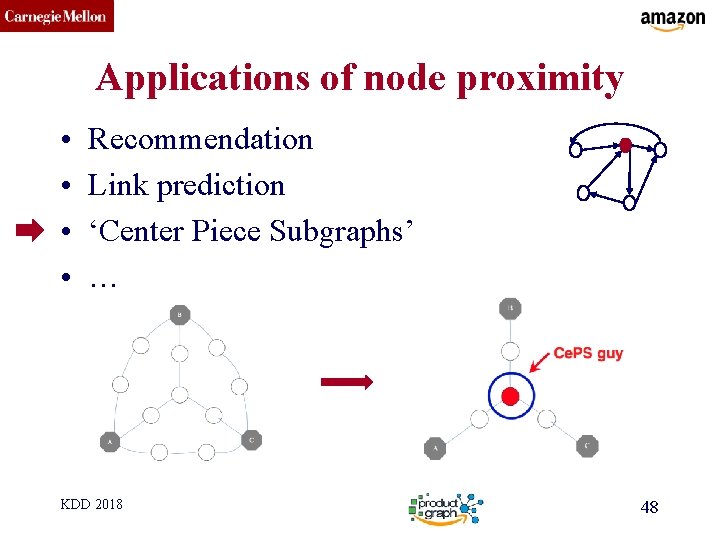

CMU SCS Applications of node proximity • • Recommendation Link prediction ‘Center Piece Subgraphs’ … KDD 2018 48

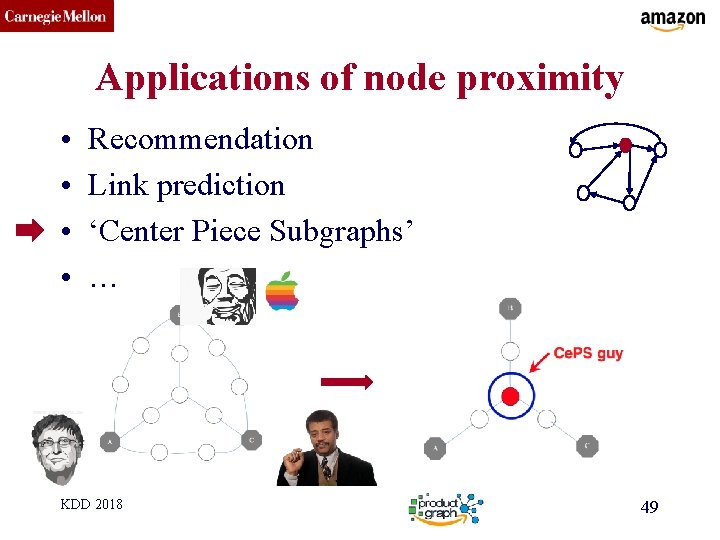

CMU SCS Applications of node proximity • • Recommendation Link prediction ‘Center Piece Subgraphs’ … KDD 2018 49

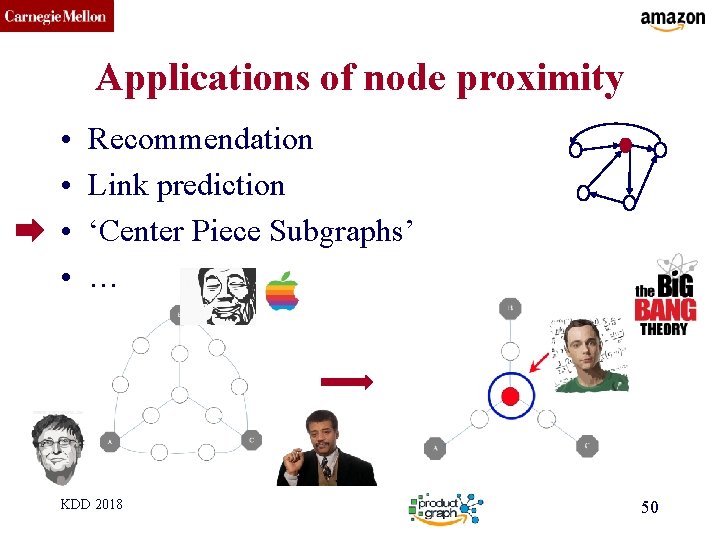

CMU SCS Applications of node proximity • • Recommendation Link prediction ‘Center Piece Subgraphs’ … KDD 2018 50

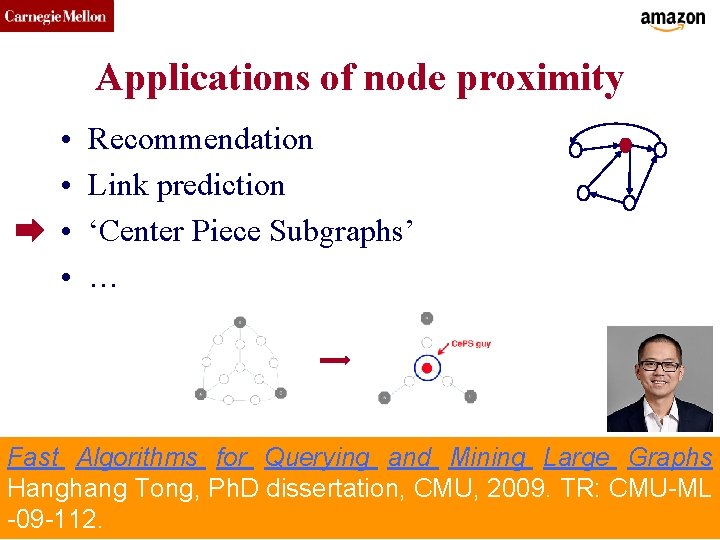

Applications of node proximity

- Recommendation
- Link prediction
- ‘Center Piece Subgraphs’
- …

Fast Algorithms for Querying and Mining Large Graphs. Hanghang Tong, Ph.D. dissertation, CMU, 2009. TR: CMU-ML-09-112.

Roadmap

- Introduction
  - Motivation
- Part#1: Graphs
  - P1.1: properties/patterns in graphs
  - P1.2: node importance
    - PageRank and Personalized PR
    - HITS
    - SVD
    - SALSA

Kleinberg’s algo (HITS)

Kleinberg, Jon (1998). Authoritative sources in a hyperlinked environment. Proc. 9th ACM-SIAM Symposium on Discrete Algorithms.

Recall: problem definition

- Given a graph (e.g., web pages containing the desirable query word)
- Q1: Which node is the most important?

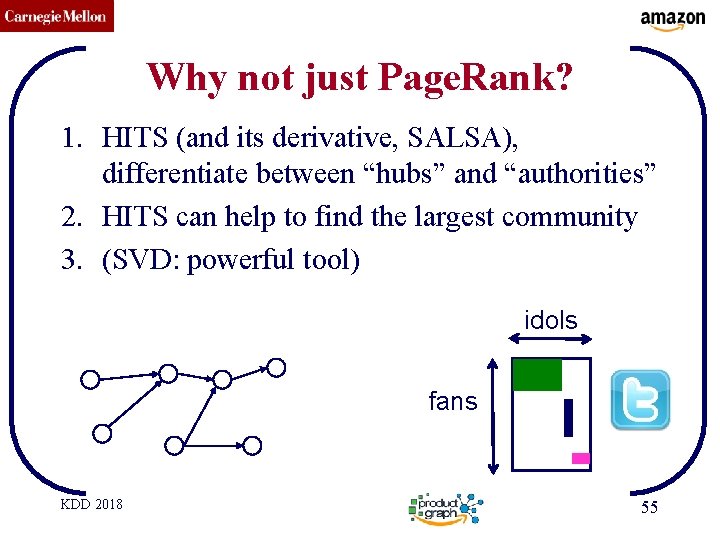

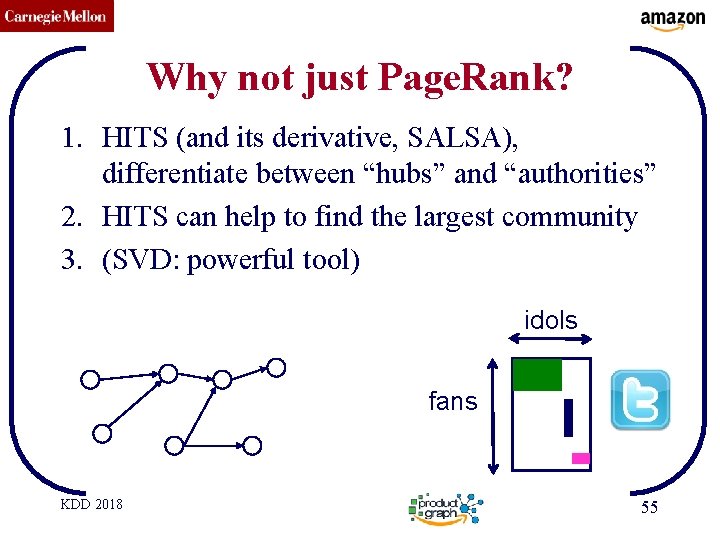

Why not just PageRank?

1. HITS (and its derivative, SALSA) differentiate between “hubs” and “authorities”
2. HITS can help to find the largest community
3. (SVD: powerful tool)

(Figure labels: idols, fans.)

Kleinberg’s algorithm

- Problem definition: given the web and a query,
- find the most ‘authoritative’ web pages for this query


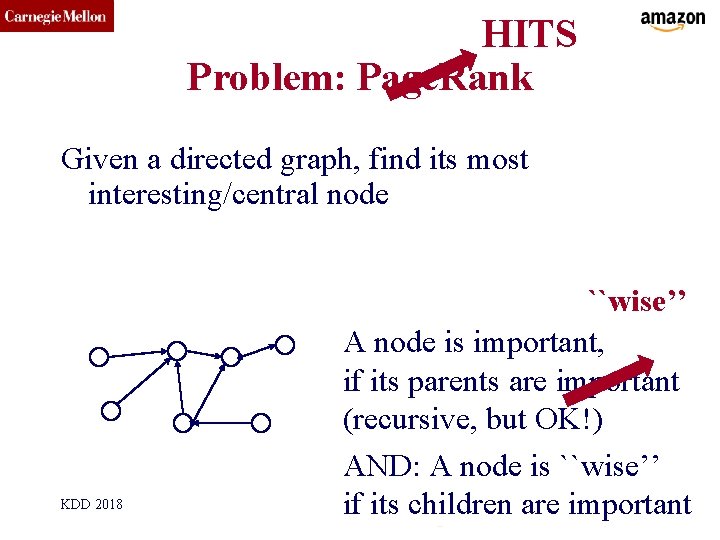

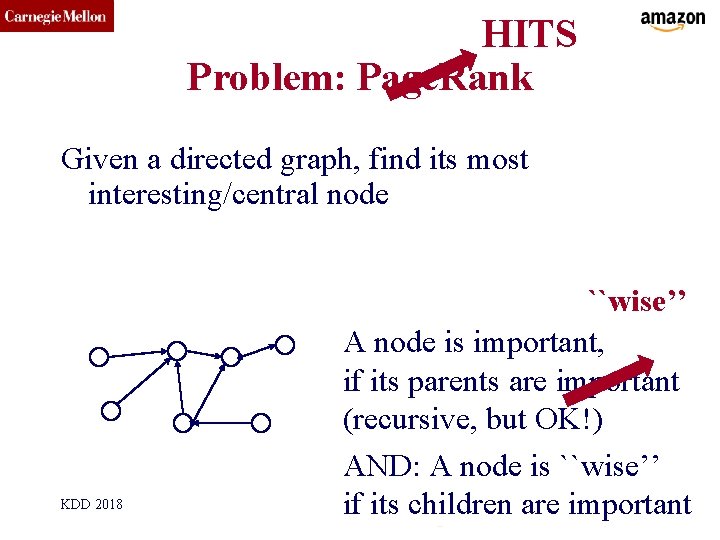

Problem: HITS (adapting the PageRank problem)

Given a directed graph, find its most interesting/central node.

A node is important if its parents are “wise” (recursive, but OK!), AND a node is “wise” if its children are important.

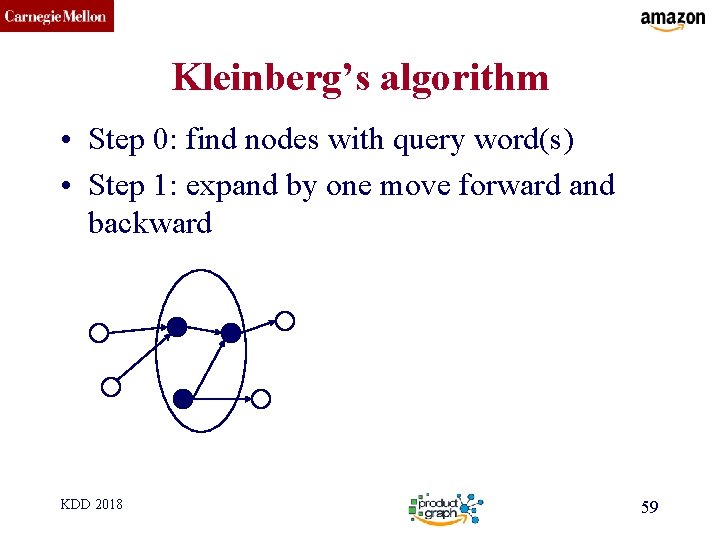

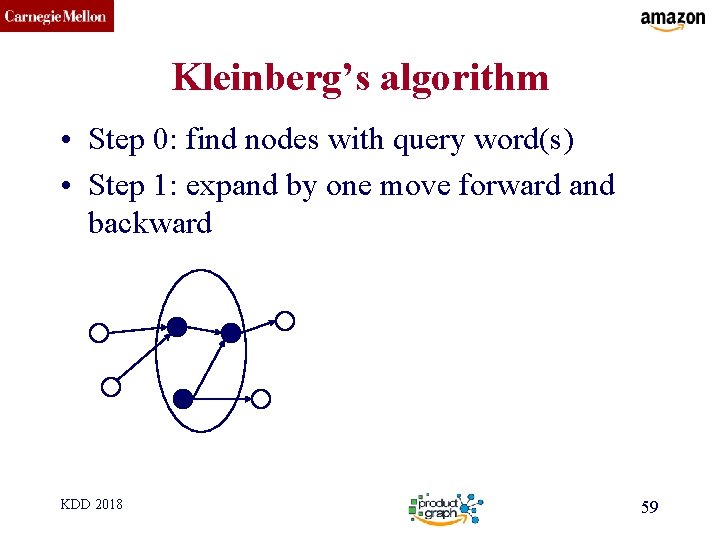

Kleinberg’s algorithm

- Step 0: find nodes with query word(s)
- Step 1: expand by one move forward and backward
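Step 1 can be sketched in a few lines. The helper name `base_set` and the toy edge list are hypothetical; the root set stands in for the Step 0 result (the nodes that contain the query words).

```python
def base_set(edges, root):
    """Expand a root set by one move forward and one move backward.

    edges : iterable of (src, dst) pairs of a directed graph
    root  : nodes matching the query (the Step 0 result)
    """
    root = set(root)
    expanded = set(root)
    for src, dst in edges:
        if src in root:
            expanded.add(dst)   # one move forward: pages the root points to
        if dst in root:
            expanded.add(src)   # one move backward: pages pointing to the root
    return expanded

# Toy example: node 1 is the only query match.
edges = [(1, 2), (3, 1), (4, 5)]
expanded = base_set(edges, {1})
print(sorted(expanded))
```

Here node 2 is reached by the forward move and node 3 by the backward move, while nodes 4 and 5 stay outside the expanded set.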

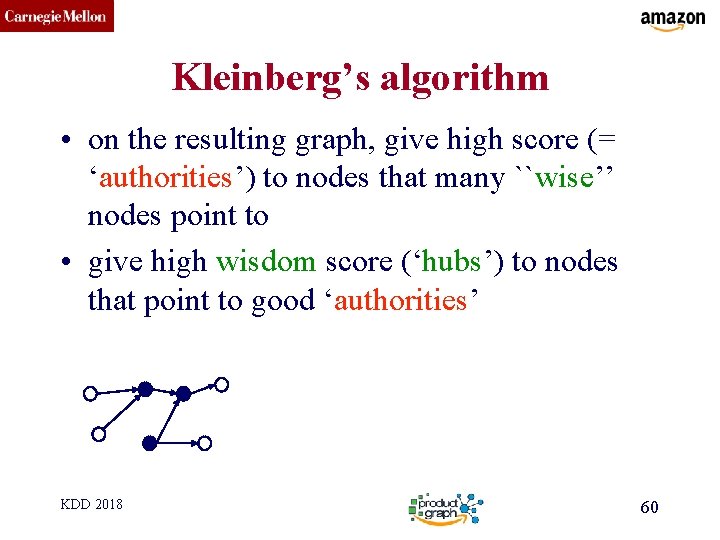

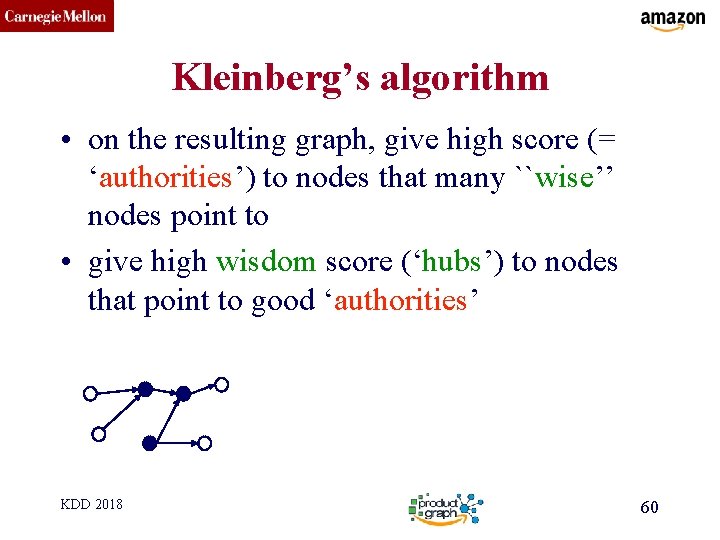

Kleinberg’s algorithm

- On the resulting graph, give a high score (= ‘authorities’) to nodes that many “wise” nodes point to;
- give a high wisdom score (‘hubs’) to nodes that point to good ‘authorities’.

(Figure: hubs pointing to authorities.)

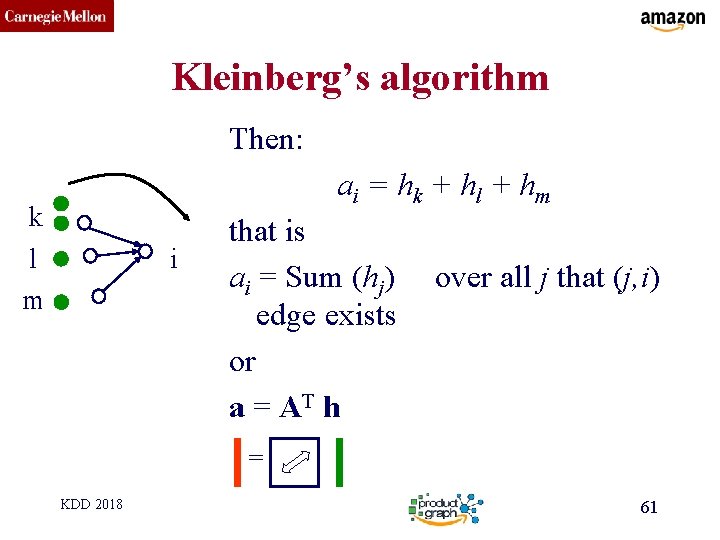

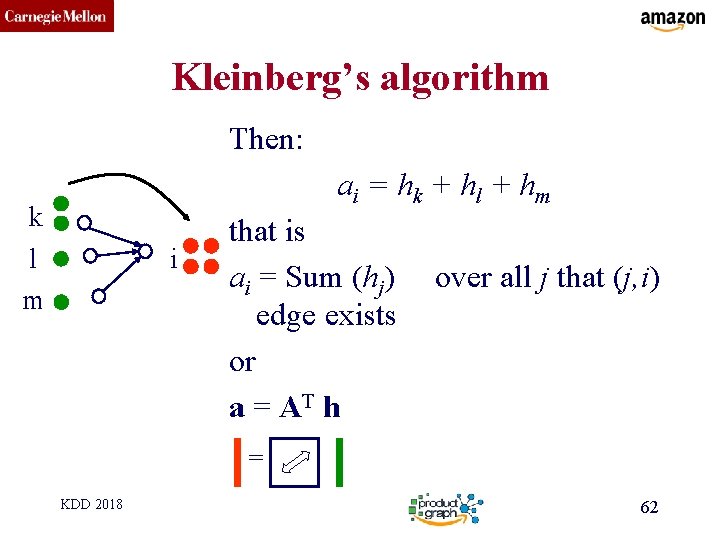

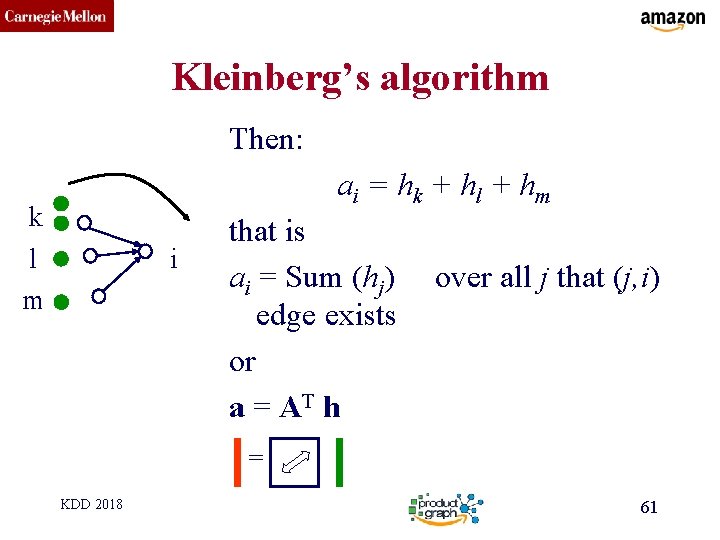

Kleinberg’s algorithm

Then, for a node i that nodes k, l, m point to:

$$a_i = h_k + h_l + h_m$$

that is, $a_i = \sum_j h_j$ over all j such that edge (j, i) exists, or

$$a = A^T h$$


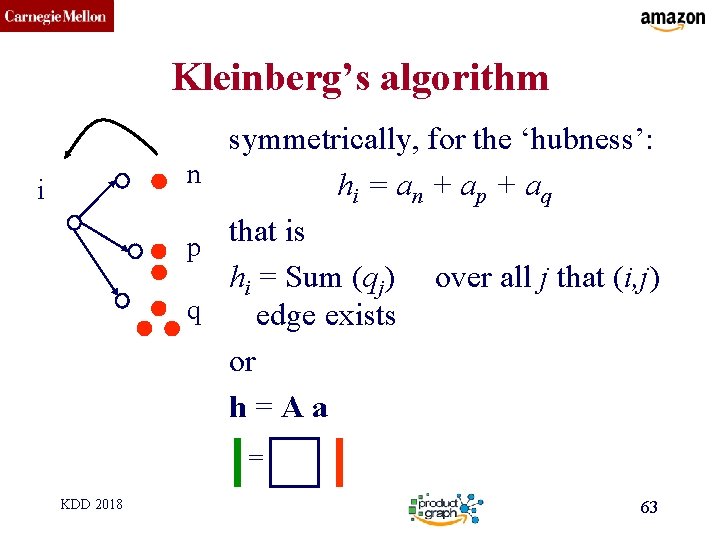

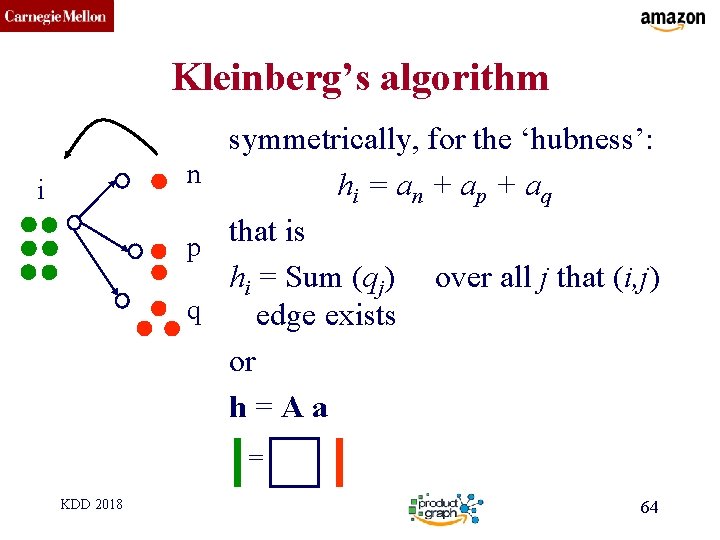

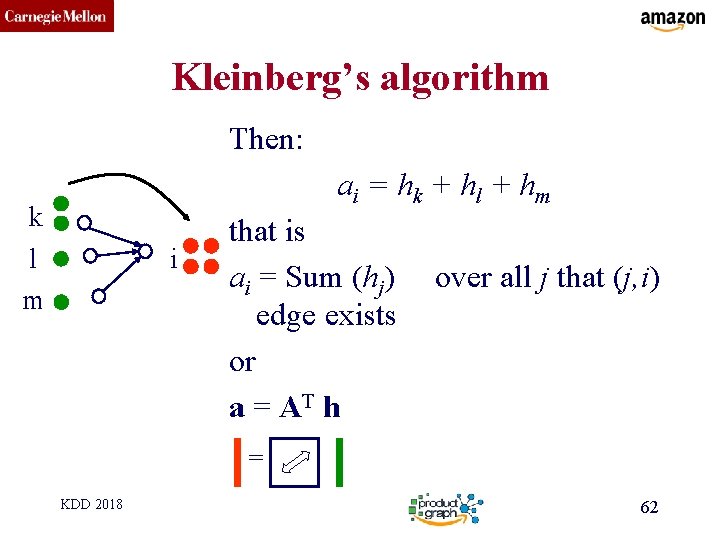

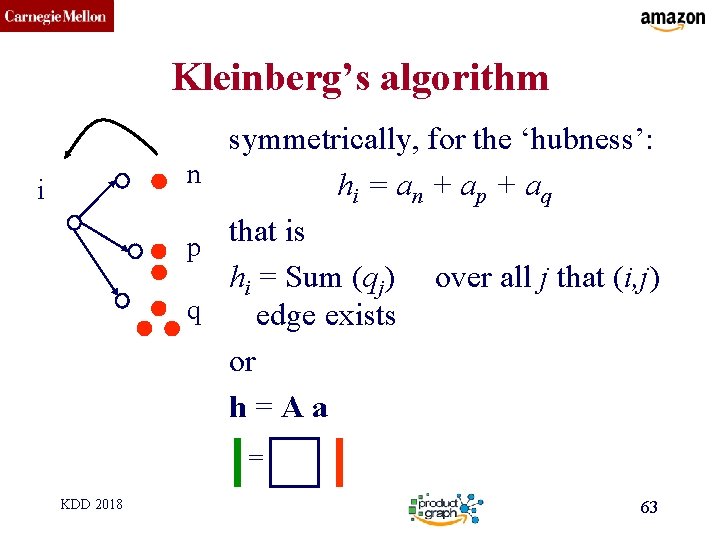

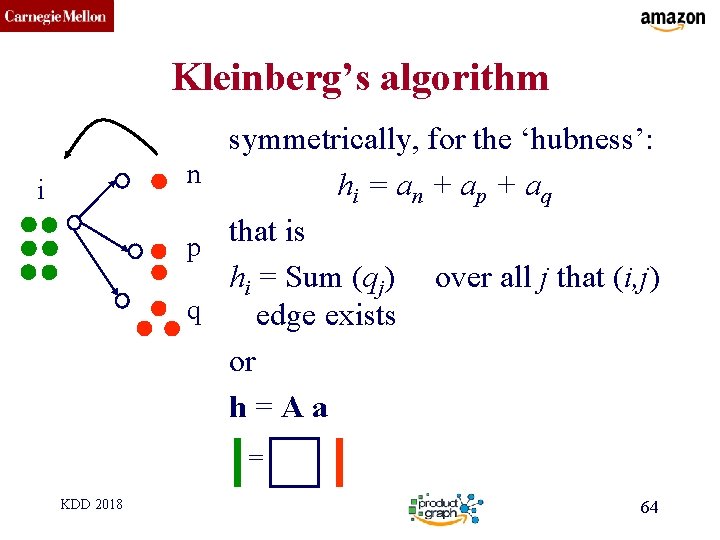

Kleinberg’s algorithm

Symmetrically, for the ‘hubness’ of a node i that points to nodes n, p, q:

$$h_i = a_n + a_p + a_q$$

that is, $h_i = \sum_j a_j$ over all j such that edge (i, j) exists, or

$$h = A a$$


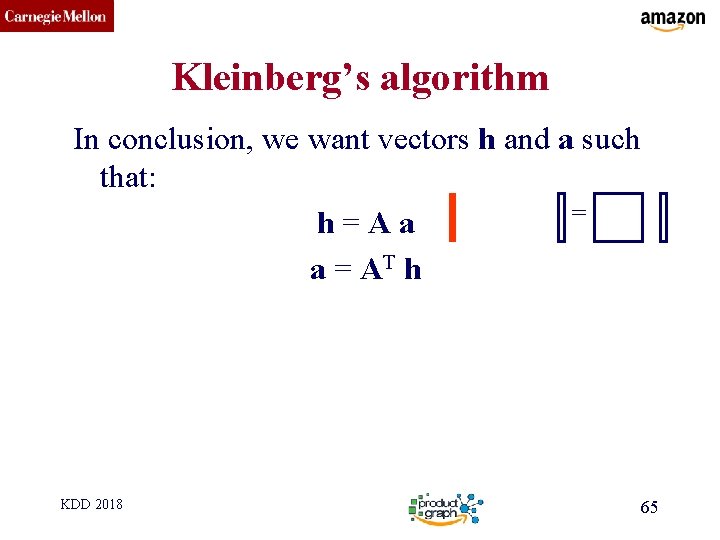

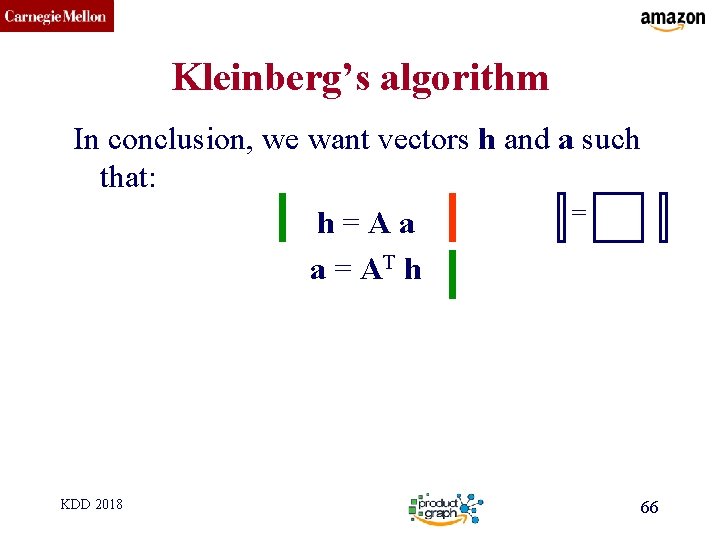

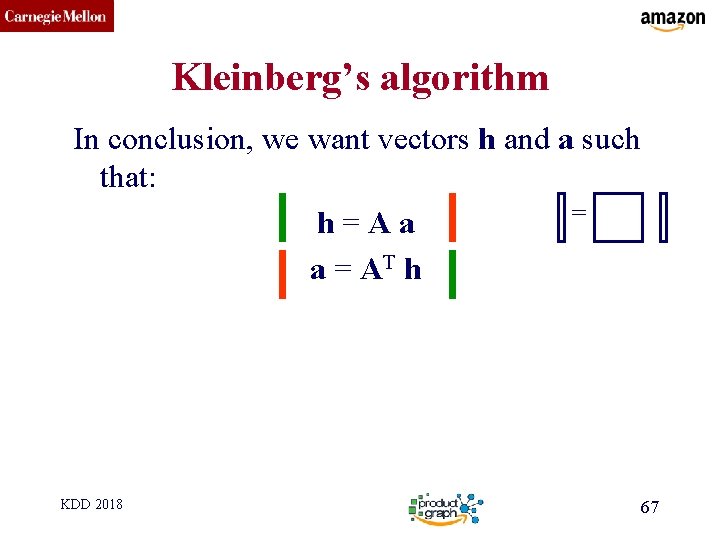

Kleinberg’s algorithm

In conclusion, we want vectors h and a such that:

$$h = A a$$

$$a = A^T h$$

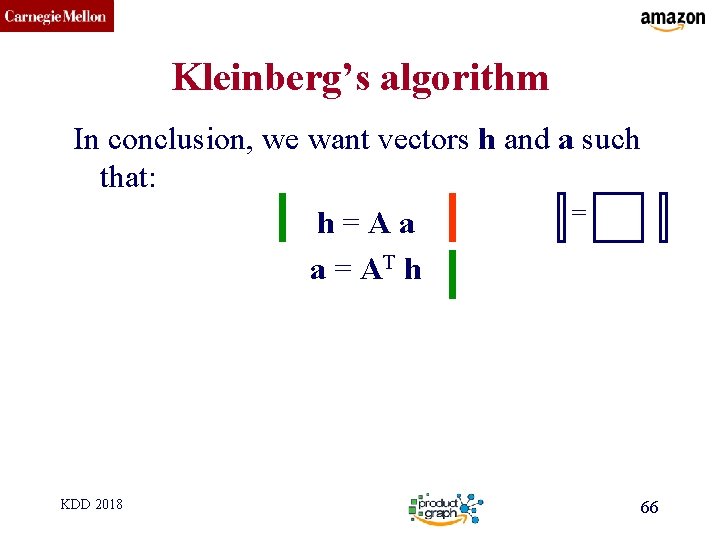

CMU SCS Kleinberg’s algorithm In conclusion, we want vectors h and a such that: = h = A a a = AT h KDD 2018 66

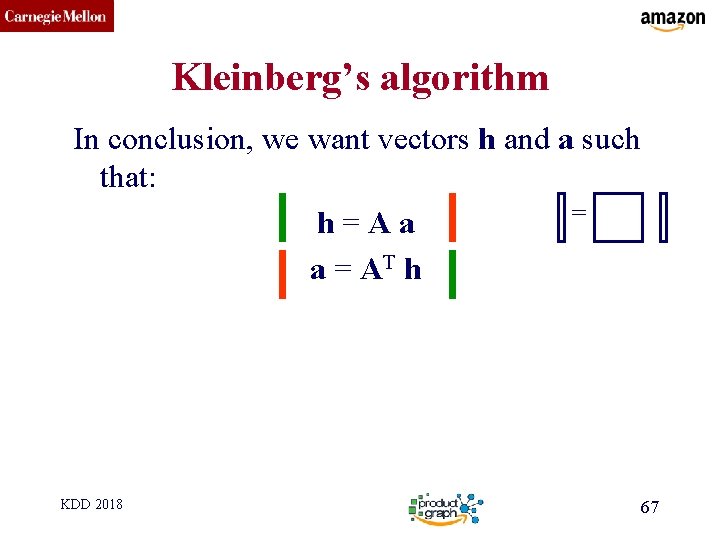

CMU SCS Kleinberg’s algorithm In conclusion, we want vectors h and a such that: = h = A a a = AT h KDD 2018 67

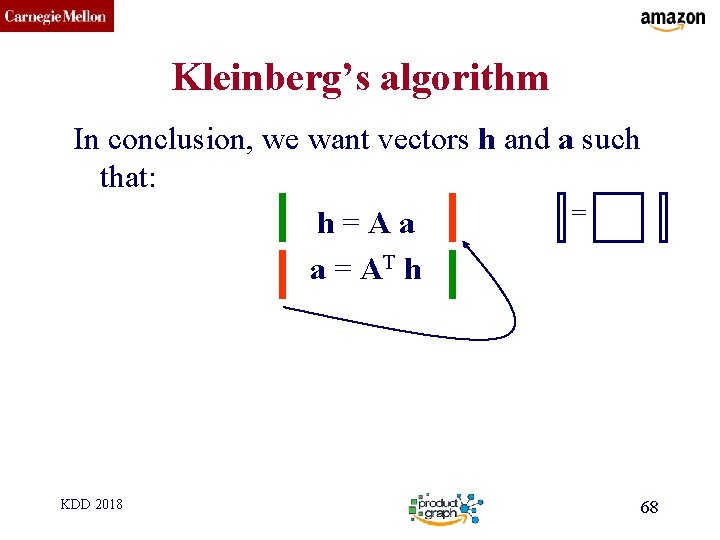

CMU SCS Kleinberg’s algorithm In conclusion, we want vectors h and a such that: = h = A a a = AT h KDD 2018 68

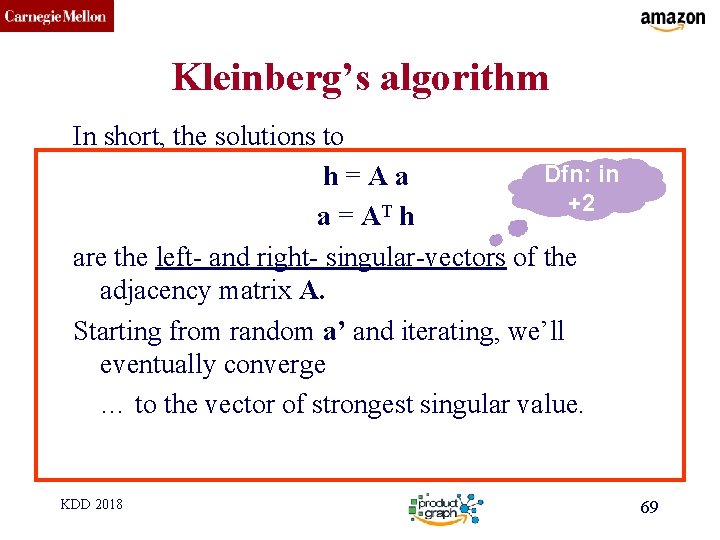

Kleinberg’s algorithm

In short, the solutions to

$$h = A a$$

$$a = A^T h$$

are the left- and right-singular vectors of the adjacency matrix A (definition: in +2 slides).

Starting from a random a’ and iterating, we’ll eventually converge to the vector of the strongest singular value.
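The convergence claim can be checked on a toy matrix. This sketch rests on assumptions: the 4x4 adjacency matrix is hypothetical, and the per-iteration normalization to unit length is a common convention that the slides do not spell out. It iterates the two update rules from a random start and compares the result with the leading singular vectors from `np.linalg.svd`.

```python
import numpy as np

# Hypothetical adjacency matrix (from-to): one larger block plus one stray edge.
A = np.array([[1, 1, 1, 0],
              [1, 1, 1, 0],
              [1, 1, 0, 0],
              [0, 0, 0, 1]], dtype=float)

rng = np.random.default_rng(0)
a = rng.random(A.shape[1])          # random positive start for authority scores

for _ in range(100):
    h = A @ a                       # hub update:       h = A a
    a = A.T @ h                     # authority update: a = A^T h
    h = h / np.linalg.norm(h)       # rescale to unit length (assumed convention)
    a = a / np.linalg.norm(a)

# Leading singular vectors; signs are arbitrary, so compare absolute values.
U, s, Vt = np.linalg.svd(A)
print(np.allclose(h, np.abs(U[:, 0])), np.allclose(a, np.abs(Vt[0])))
```

In this example all the score mass ends up on the larger block and the stray node pair gets zero, which is the ‘fixation on the largest group’ behavior that SALSA later addresses.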

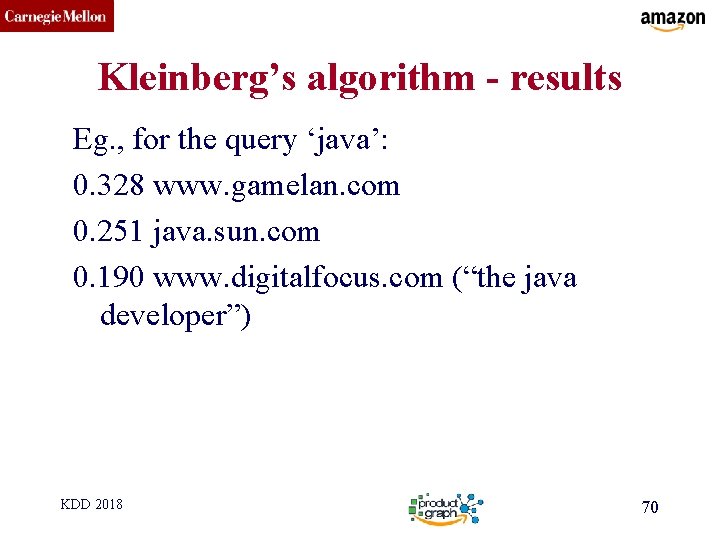

Kleinberg’s algorithm - results

E.g., for the query ‘java’:

- 0.328 www.gamelan.com
- 0.251 java.sun.com
- 0.190 www.digitalfocus.com (“the java developer”)


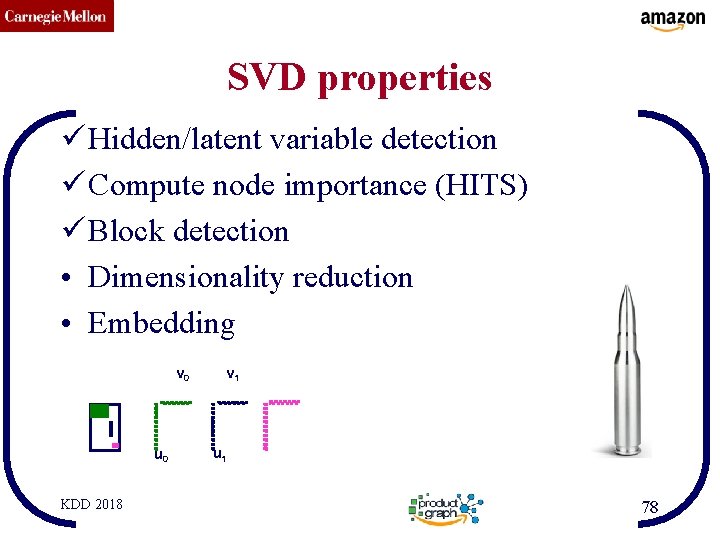

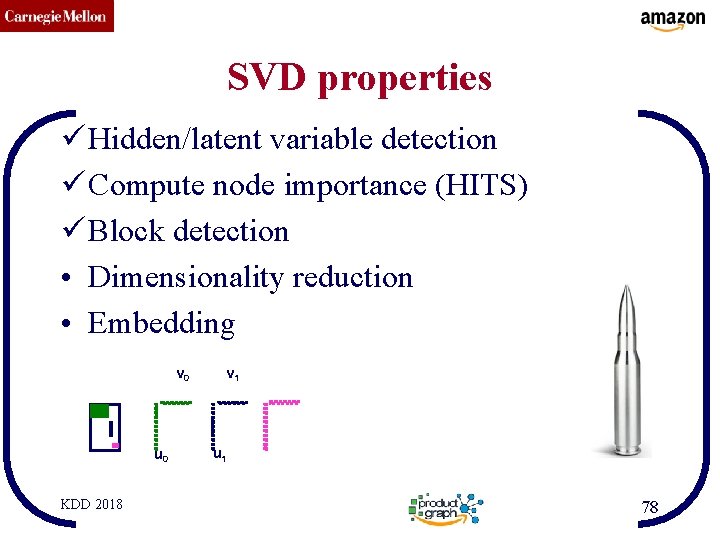

SVD properties

- Hidden/latent variable detection
- Compute node importance (HITS)
- Block detection
- Dimensionality reduction
- Embedding

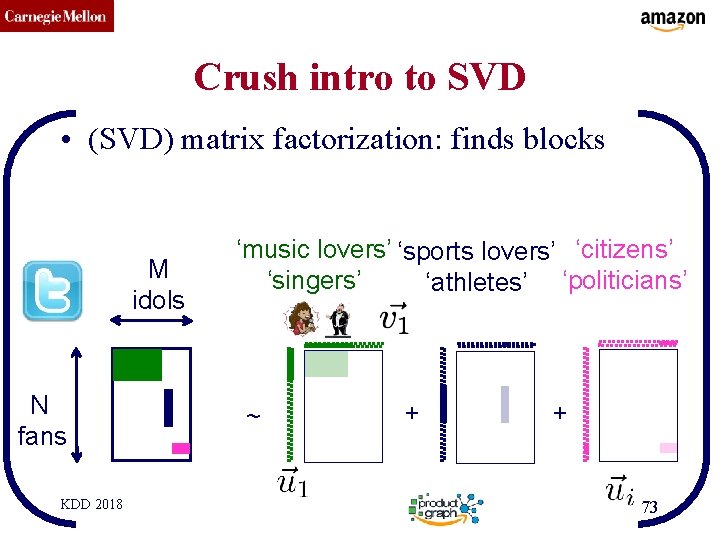

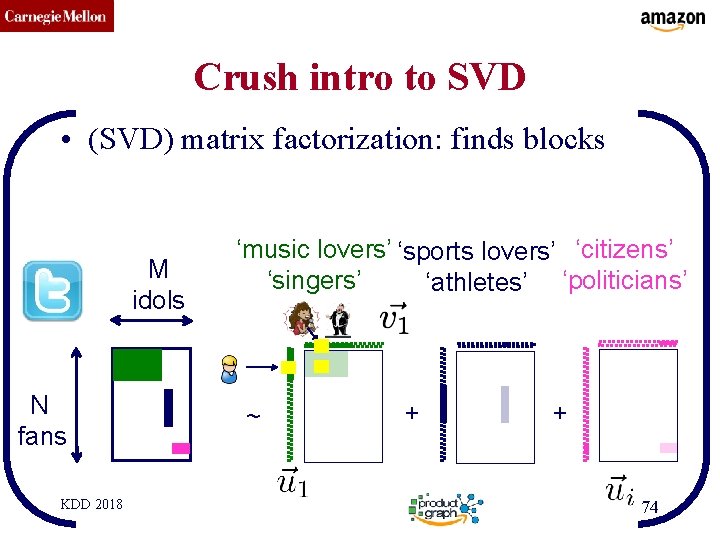

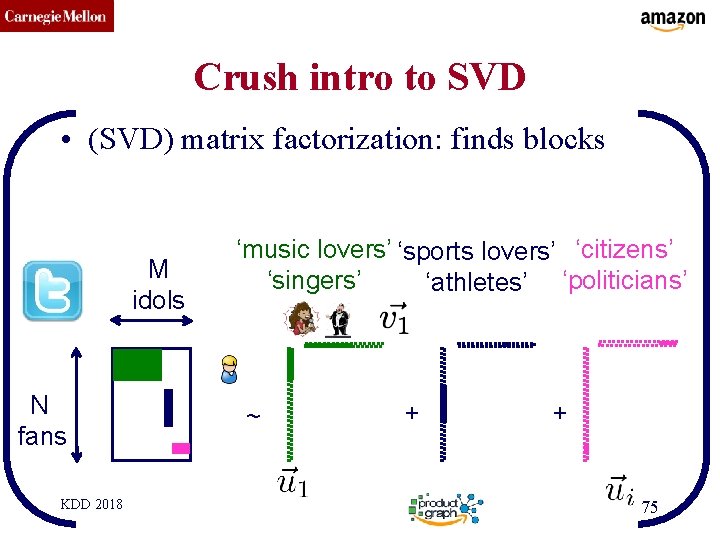

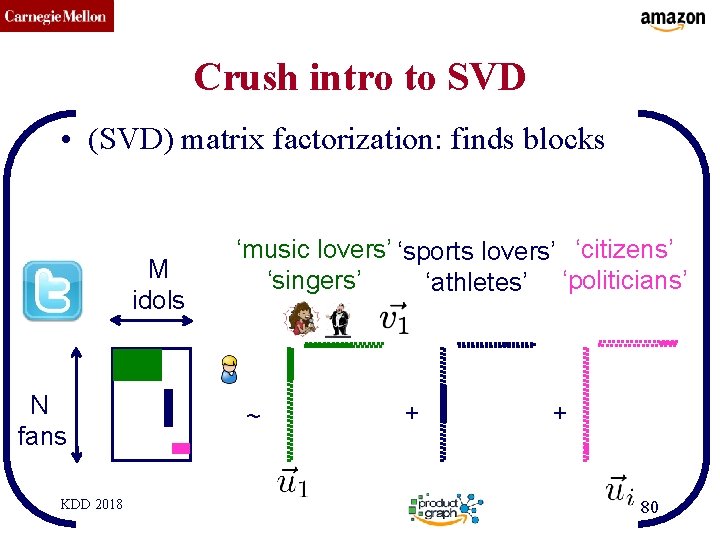

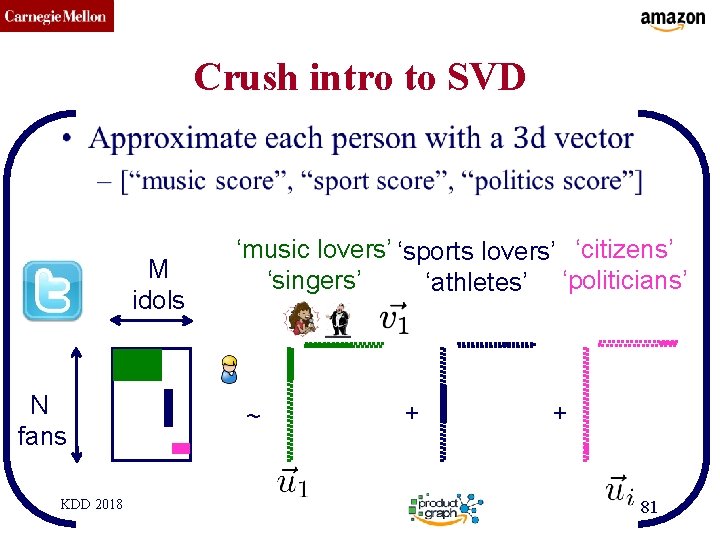

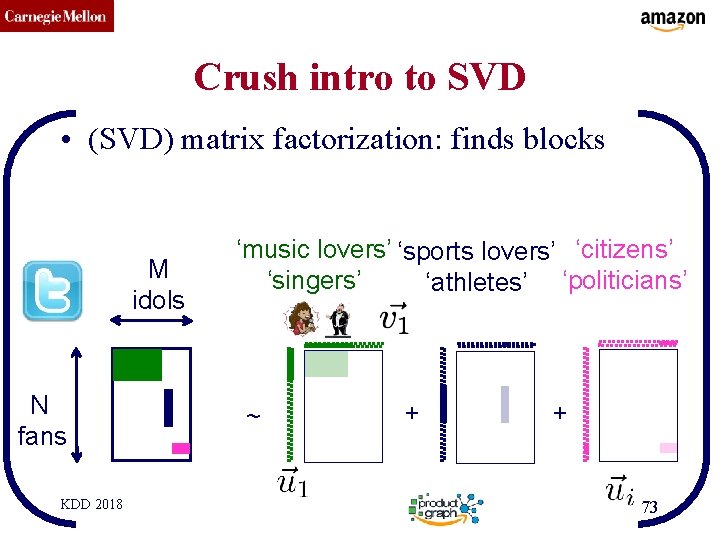

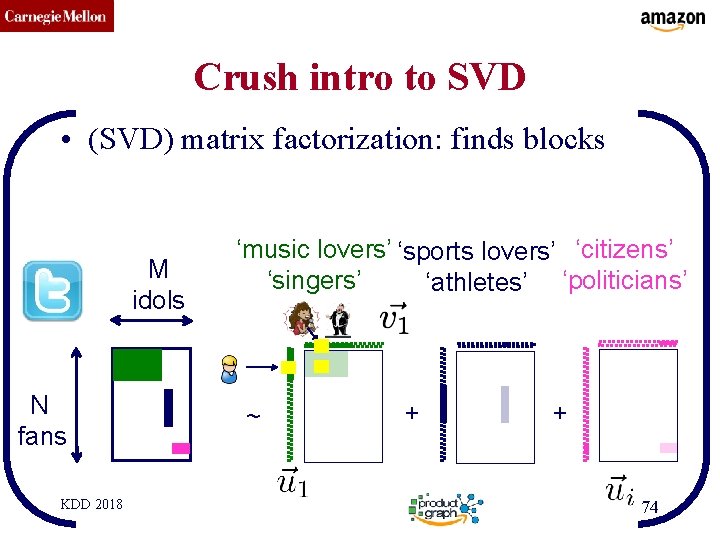

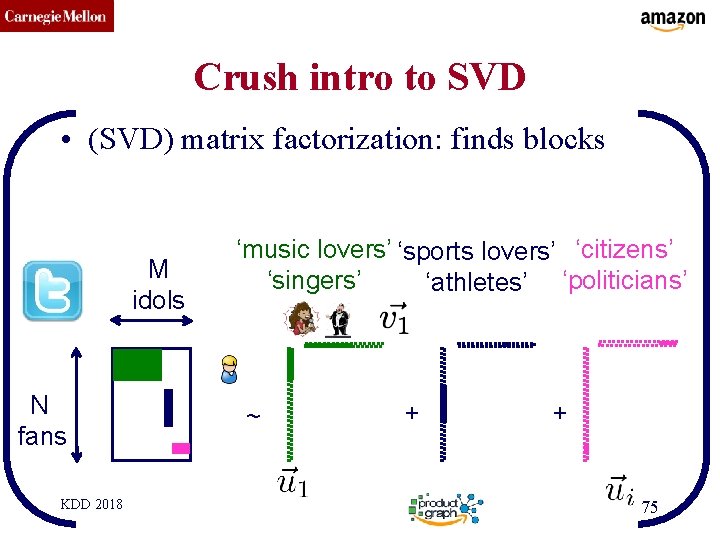

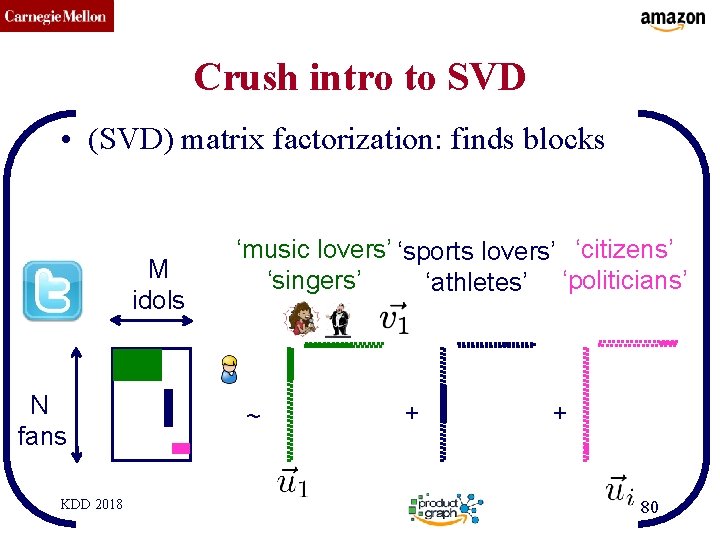

Crash intro to SVD

- (SVD) matrix factorization: finds blocks

(Figure: an N fans × M idols matrix approximated (~) as the sum of three blocks: ‘music lovers’ × ‘singers’, ‘sports lovers’ × ‘athletes’, ‘citizens’ × ‘politicians’.)


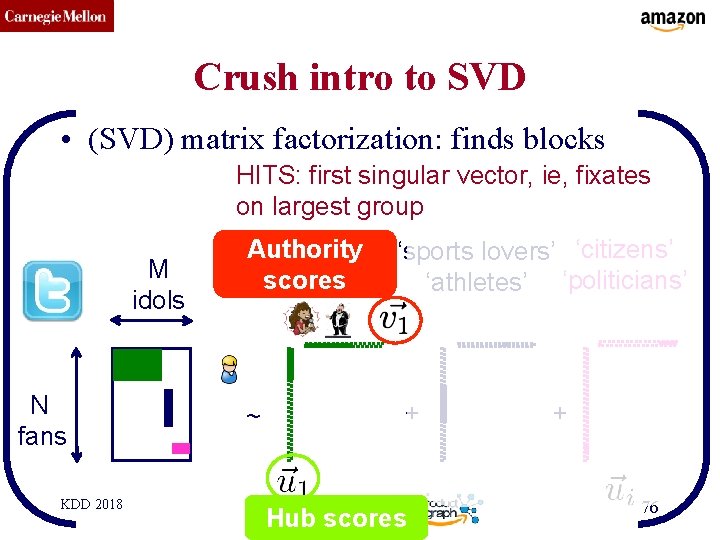

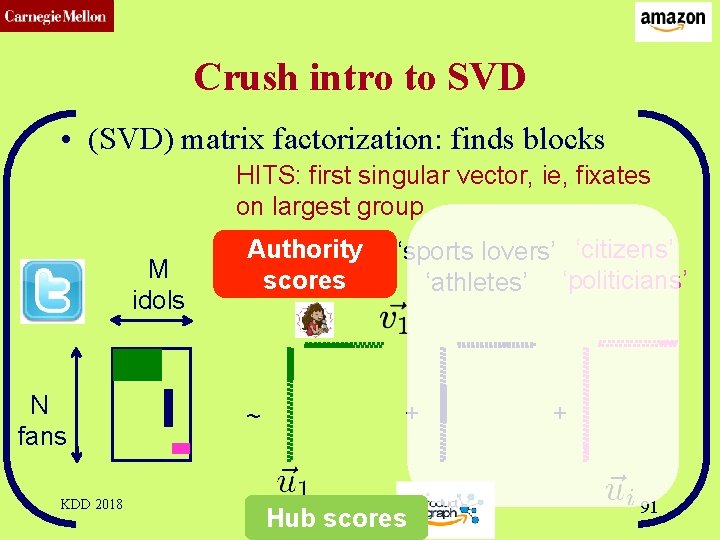

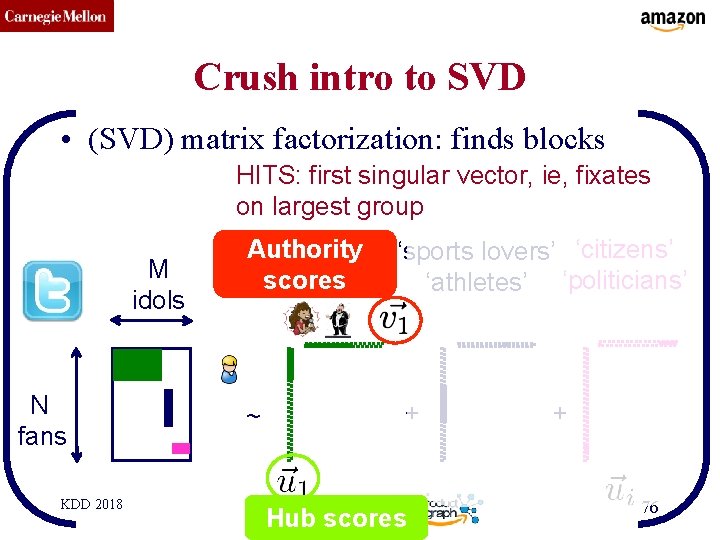

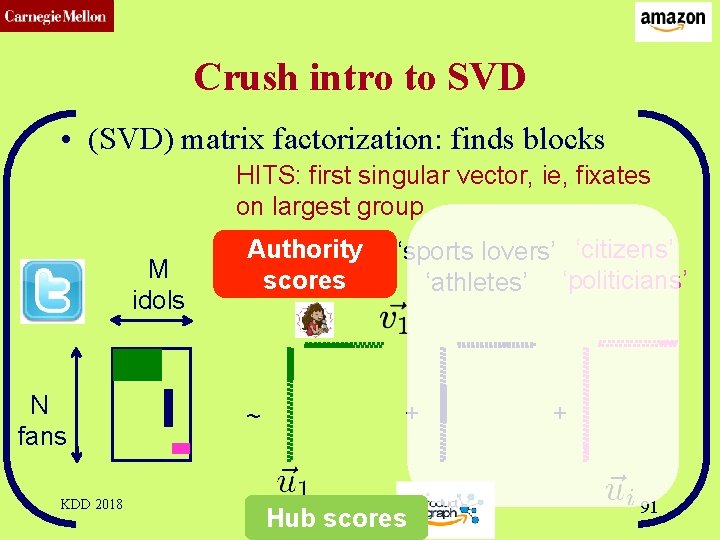

Crash intro to SVD

- (SVD) matrix factorization: finds blocks
- HITS: first singular vector, i.e., fixates on the largest group

(Figure: the same N fans × M idols block decomposition, annotated with ‘hub scores’ and ‘authority scores’.)
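The block-finding behavior can be reproduced on a synthetic matrix. The sketch below assumes an idealized, perfectly block-diagonal fans × idols matrix with hypothetical block sizes. Real data is noisier, so the blocks show up only approximately there.

```python
import numpy as np

# Hypothetical 9 fans x 7 idols matrix with three all-ones blocks.
A = np.zeros((9, 7))
A[0:4, 0:3] = 1.0   # 'music lovers'  x 'singers'     (largest block)
A[4:7, 3:5] = 1.0   # 'sports lovers' x 'athletes'
A[7:9, 5:7] = 1.0   # 'citizens'      x 'politicians'

U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Fans and idols with nonzero weight in the first singular vector pair.
fans_in_top_block = np.flatnonzero(np.abs(U[:, 0]) > 1e-8)
idols_in_top_block = np.flatnonzero(np.abs(Vt[0]) > 1e-8)

print(s[:3])                # one singular value per block, largest block first
print(fans_in_top_block)    # rows of the largest block only
print(idols_in_top_block)   # columns of the largest block only
```

Each all-ones block of size r × q contributes one singular value $\sqrt{rq}$, so the first singular vector pair (the HITS hub and authority scores) covers only the largest block.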

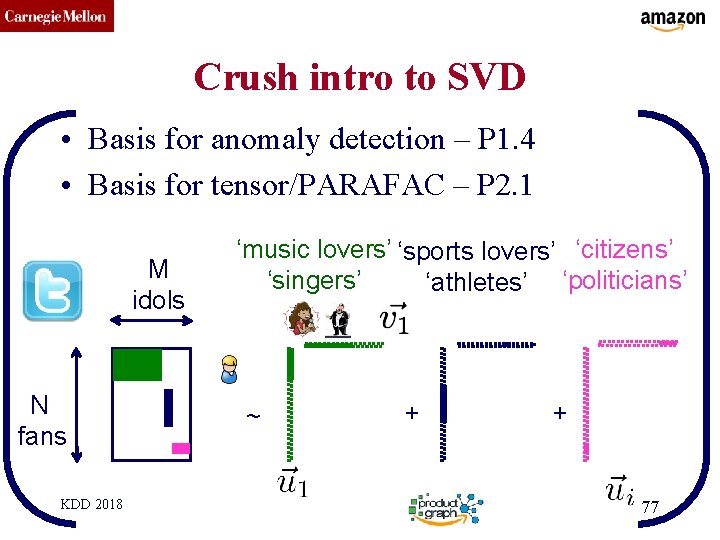

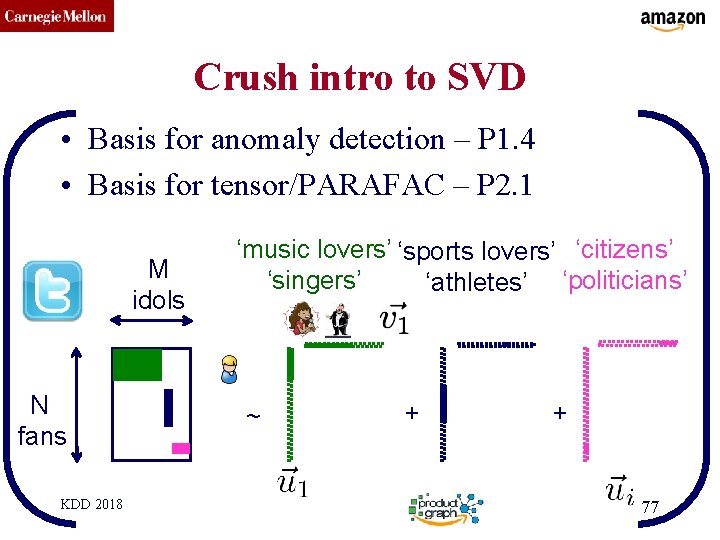

Crash intro to SVD

- Basis for anomaly detection: P1.4
- Basis for tensor/PARAFAC: P2.1

(Figure: the same N fans × M idols block decomposition.)

SVD properties

- ✓ Hidden/latent variable detection
- ✓ Compute node importance (HITS)
- ✓ Block detection
- Dimensionality reduction
- Embedding

(Figure labels: v0, u0, v1, u1.)

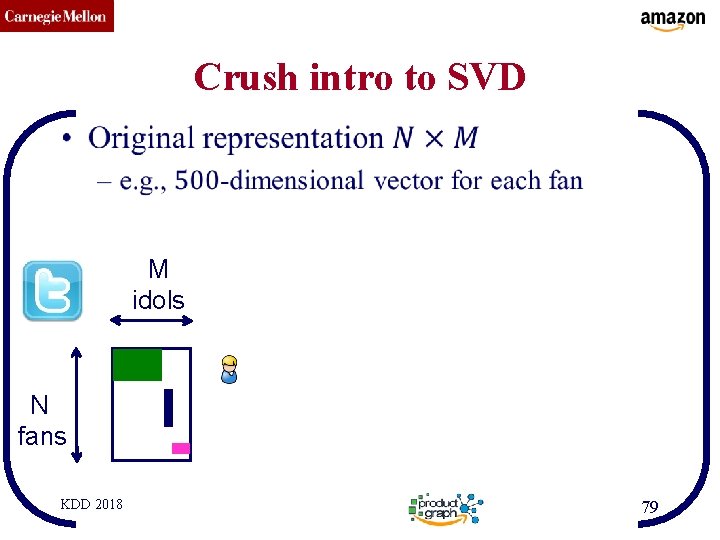

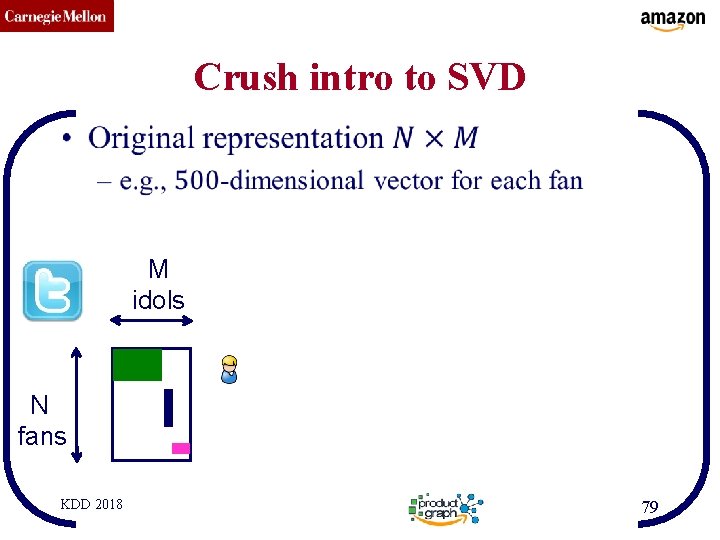

Crash intro to SVD

(Figure: the N fans × M idols matrix.)

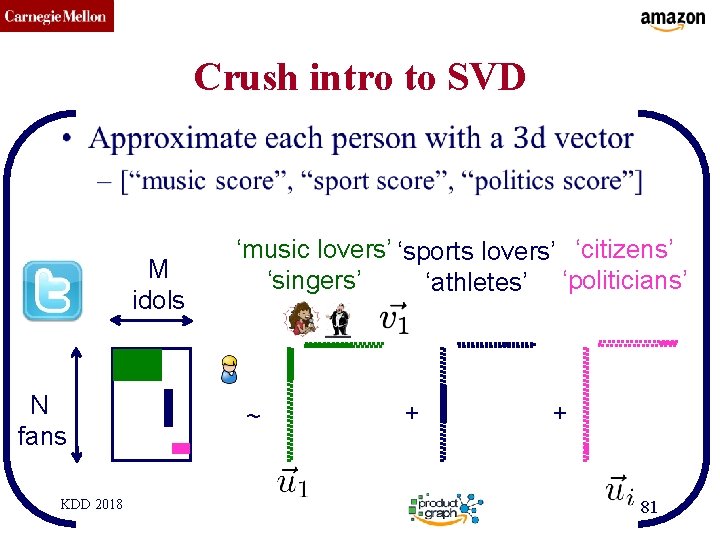


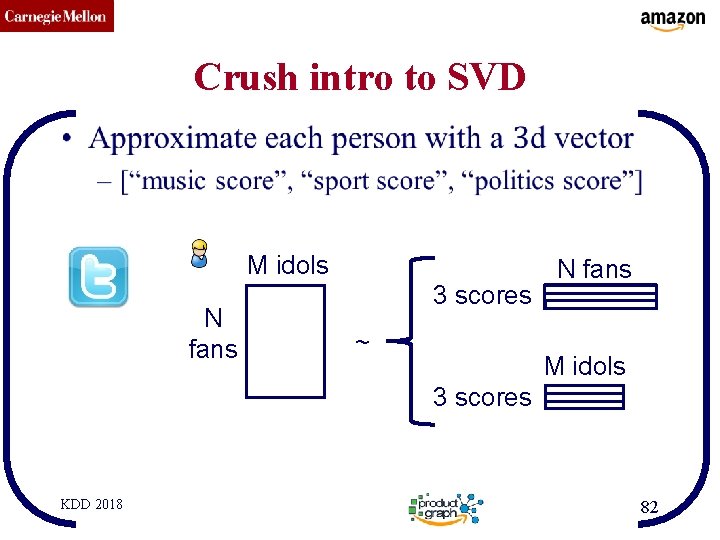

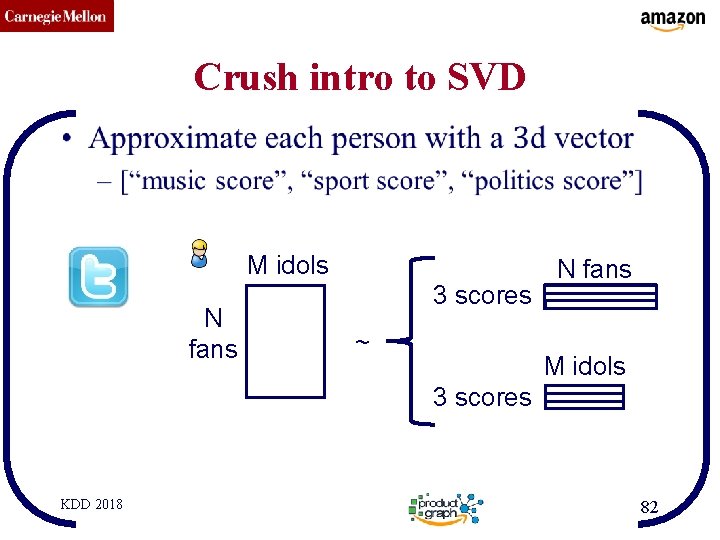

Crash intro to SVD

(Figure: the N fans × M idols matrix approximated (~) by the product of an N fans × 3 scores matrix and a 3 scores × M idols matrix.)

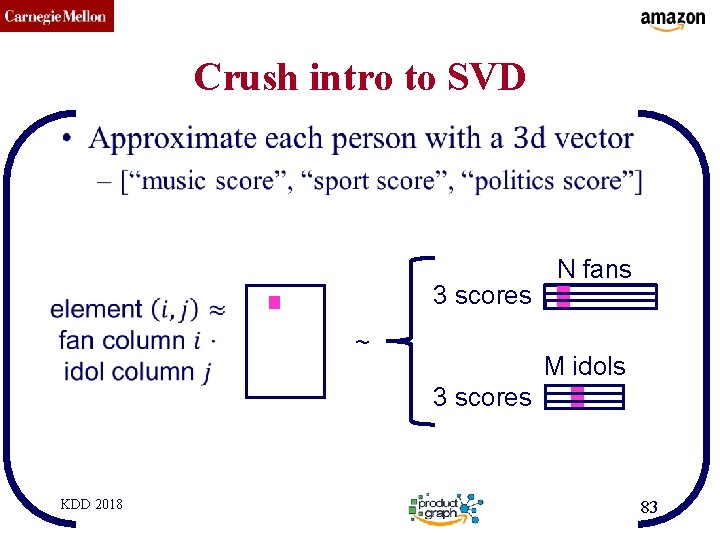

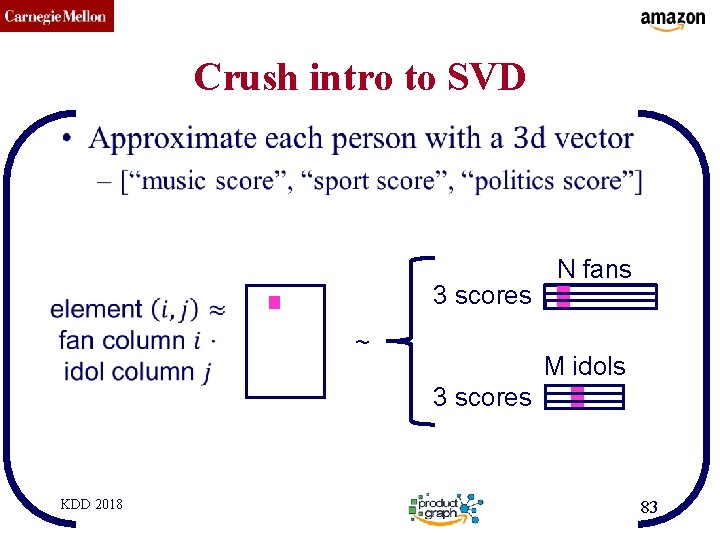


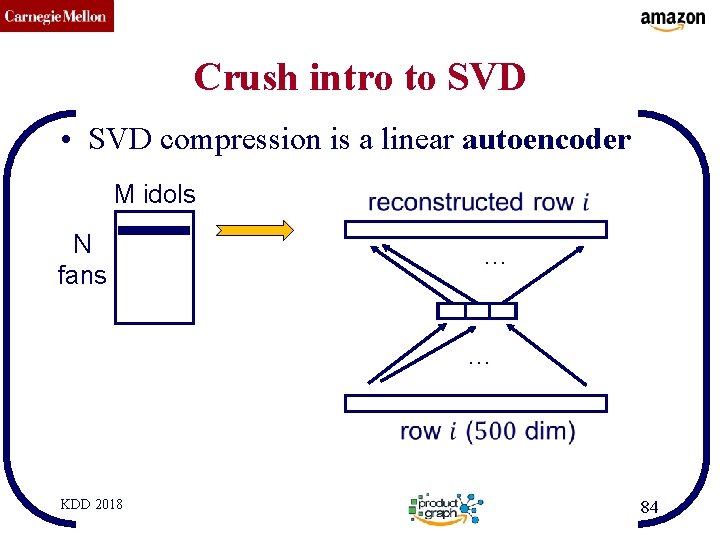

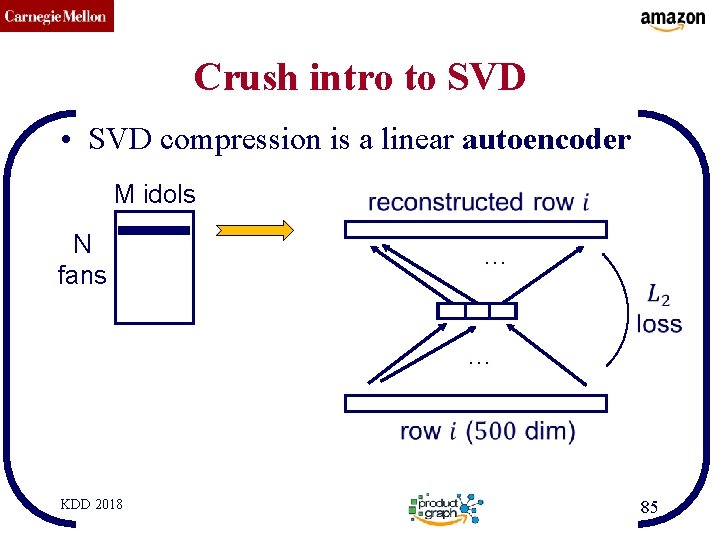

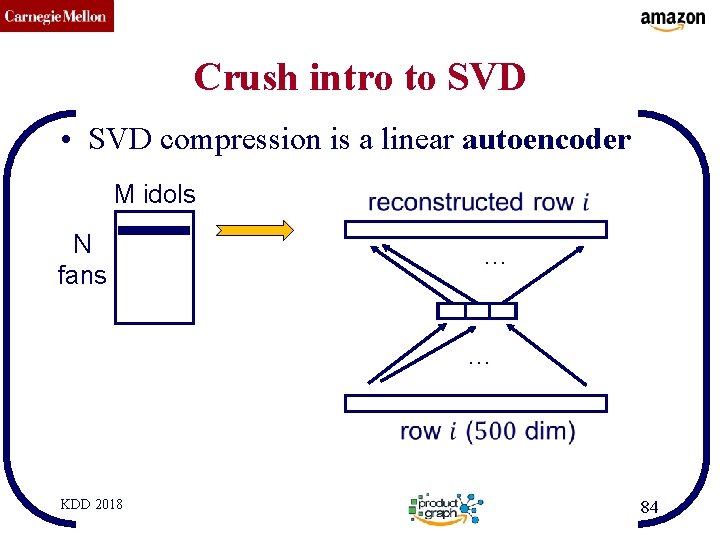

Crash intro to SVD

- SVD compression is a linear autoencoder

(Figure labels: M idols, N fans.)

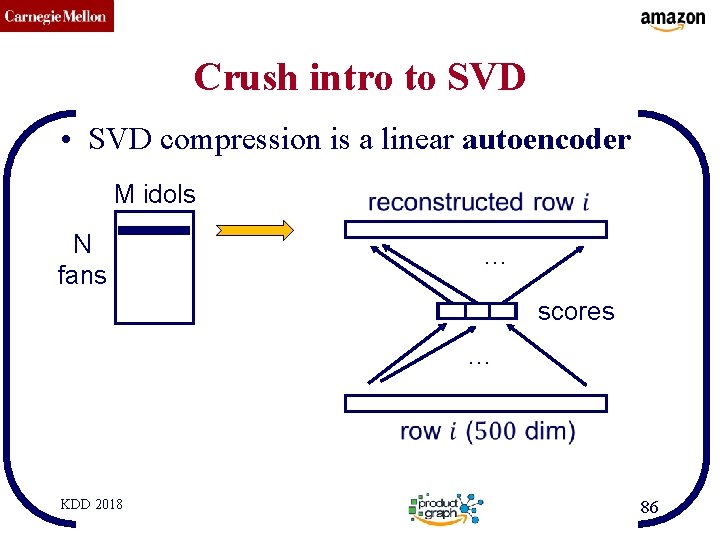

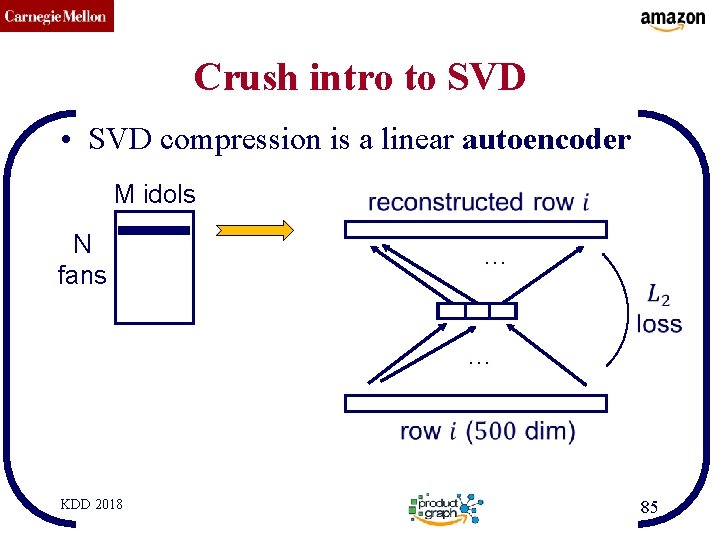


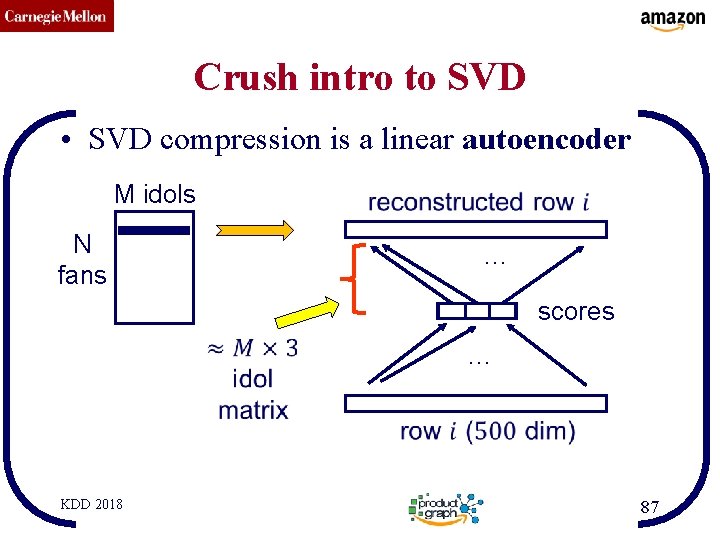

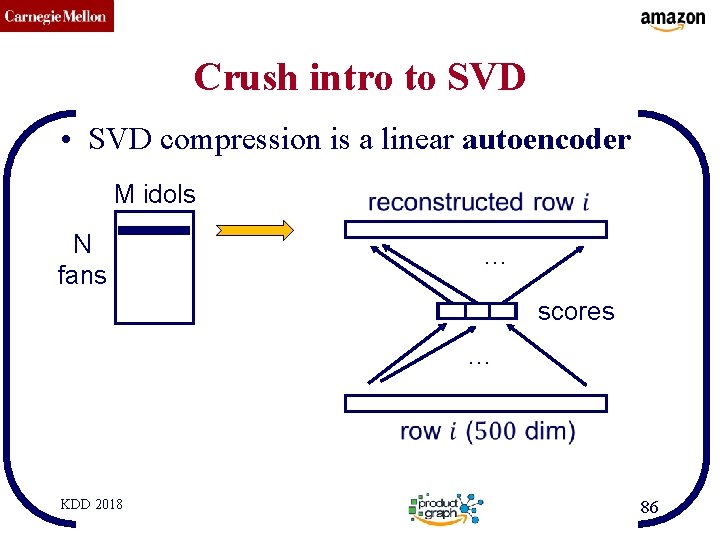

Crash intro to SVD

- SVD compression is a linear autoencoder

(Figure labels: M idols, N fans, scores.)

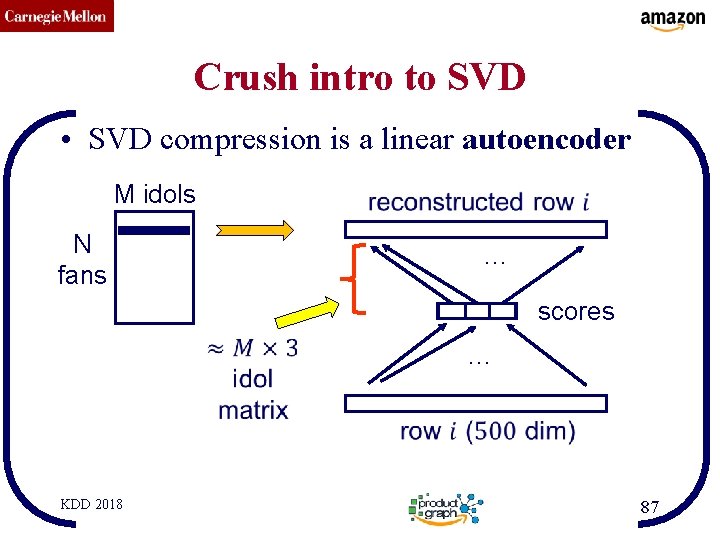


SVD properties

- ✓ Hidden/latent variable detection
- ✓ Compute node importance (HITS)
- ✓ Block detection
- ✓ Dimensionality reduction
- ✓ Embedding (linear): SVD is a special case of a ‘deep neural net’

(Figure labels: v0, u0, v1, u1.)
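The ‘linear autoencoder’ reading of SVD can be made concrete with a small sketch. The data matrix is random and hypothetical, and `k = 2` is an assumed number of latent scores. The encoder projects each fan's row onto the top-k right singular vectors and the decoder maps the k scores back to idol space.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.random((6, 4))        # hypothetical N=6 fans x M=4 idols matrix
k = 2                         # number of latent scores kept (assumed)

U, s, Vt = np.linalg.svd(A, full_matrices=False)
V_k = Vt[:k].T                # M x k: top-k right singular vectors as columns

codes = A @ V_k               # encoder: linear map from M idols to k scores
A_hat = codes @ V_k.T         # decoder: linear map from k scores back to M idols

# The reconstruction coincides with the rank-k truncated SVD of A.
A_k = (U[:, :k] * s[:k]) @ Vt[:k]
error = np.linalg.norm(A - A_hat)   # Frobenius norm of the residual
print(np.allclose(A_hat, A_k), error)
```

Both maps are plain matrix multiplications with no nonlinearity. The reconstruction error equals the root of the sum of the squared discarded singular values.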


SALSA

R. Lempel and S. Moran. SALSA: the stochastic approach for link-structure analysis. ACM Trans. Inf. Syst. 19, 2 (April 2001), 131-160.

Goal: avoid HITS’ fixation on the largest block.


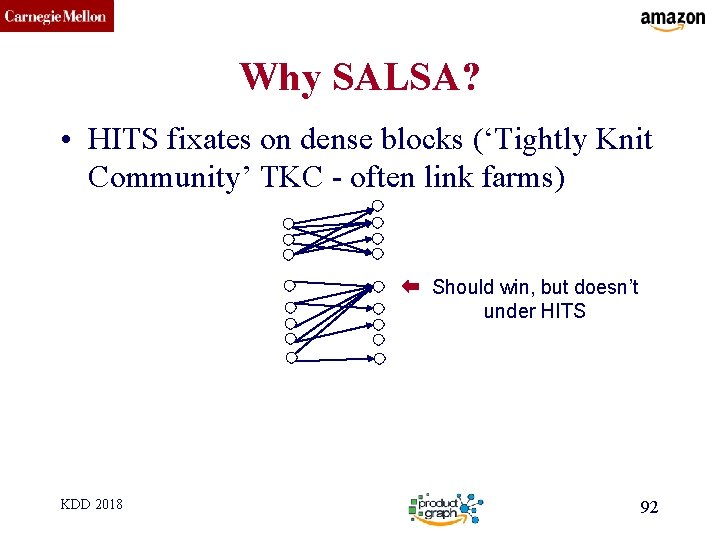

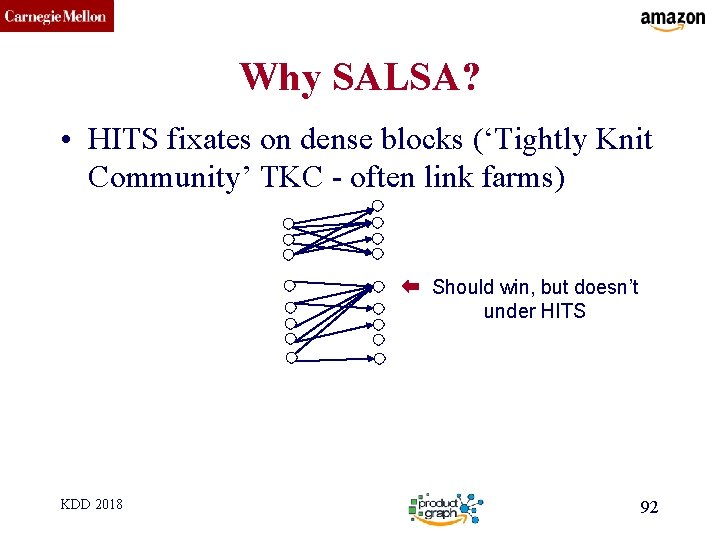

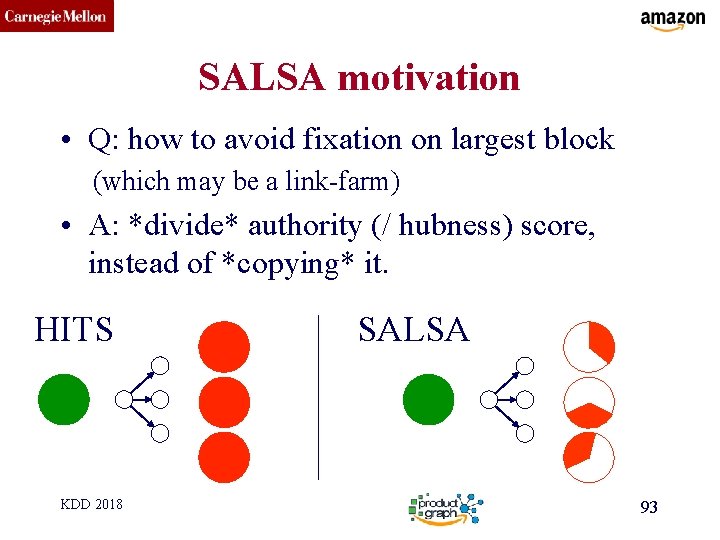

Why SALSA?

- HITS fixates on dense blocks (‘Tightly Knit Community’, TKC; often link farms)

(Figure annotation: a node that should win, but doesn’t under HITS.)

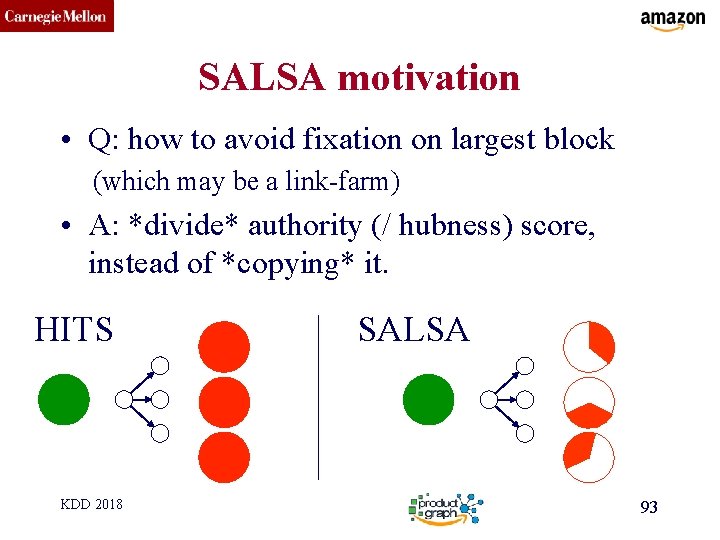

SALSA motivation

- Q: how to avoid fixation on the largest block (which may be a link farm)?
- A: *divide* the authority (/hubness) score, instead of *copying* it.

(Figure: HITS vs. SALSA.)

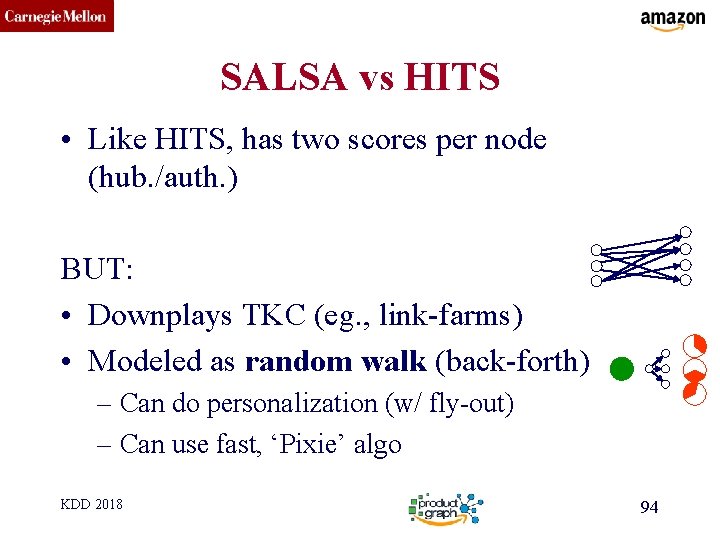

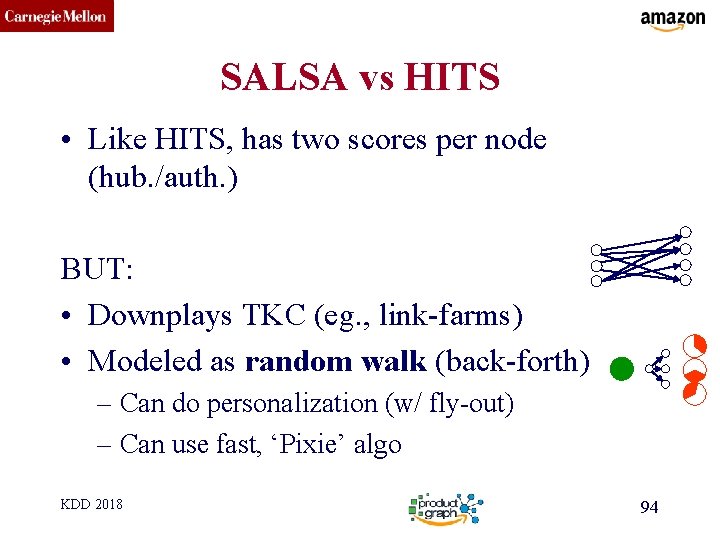

SALSA vs HITS

- Like HITS, has two scores per node (hub/authority)

BUT:

- Downplays TKC (e.g., link farms)
- Modeled as a random walk (back and forth)
  - Can do personalization (with fly-out)
  - Can use the fast ‘Pixie’ algorithm

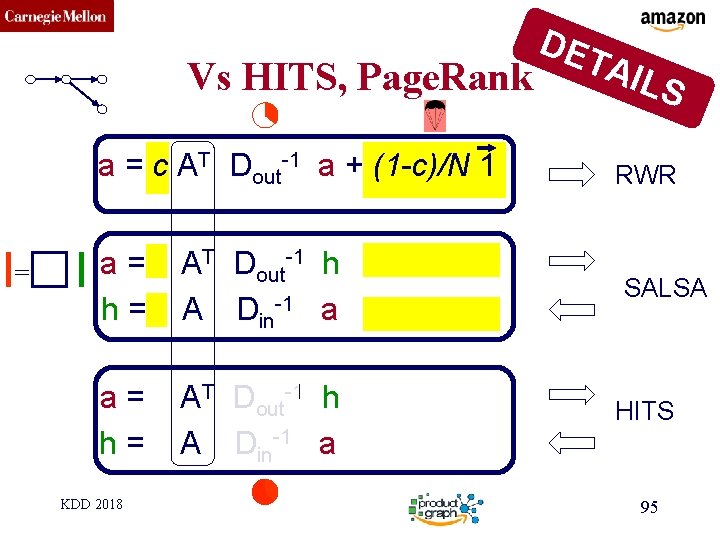

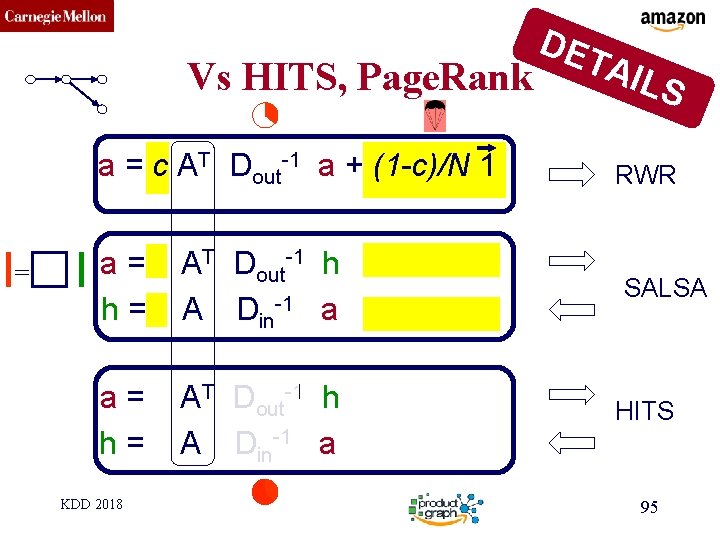

Vs HITS, PageRank (DETAILS)

- RWR: $a = c\,A^T D_{out}^{-1}\,a + \frac{1-c}{N}\mathbf{1}$
- SALSA: $a = A^T D_{out}^{-1}\,h$, $\; h = A\,D_{in}^{-1}\,a$
- HITS: $a = A^T h$, $\; h = A a$
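The SALSA updates can be sketched as a back-and-forth iteration in which each score is divided by a degree before it is passed along. The example makes assumptions: the matrix is a hypothetical 4 hubs × 3 authorities link matrix with no empty rows or columns (so both degree divisions are defined), and the link structure is connected.

```python
import numpy as np

# Hypothetical link matrix: rows are hubs, columns are authorities.
A = np.array([[1, 1, 0],
              [1, 0, 1],
              [1, 1, 1],
              [0, 0, 1]], dtype=float)

d_out = A.sum(axis=1)     # out-degree of each hub
d_in = A.sum(axis=0)      # in-degree of each authority

a = np.full(A.shape[1], 1.0 / A.shape[1])   # uniform starting authority scores
for _ in range(100):
    h = A @ (a / d_in)        # h = A D_in^{-1} a:    authorities divide their score
    a = A.T @ (h / d_out)     # a = A^T D_out^{-1} h: hubs divide their score

print(a, d_in / d_in.sum())
```

Because scores are divided rather than copied, the total mass stays at 1. On this connected example the authority scores settle at the normalized in-degrees, in line with the earlier remark that SALSA scores are roughly proportional to in-degree.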

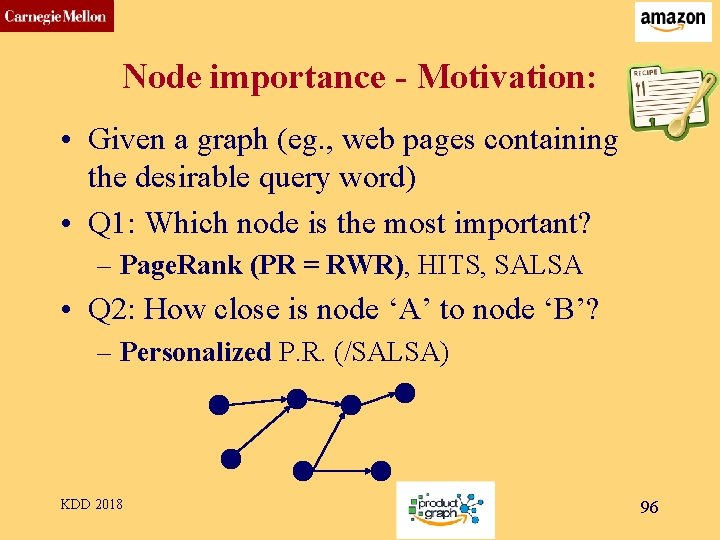

Node importance - Motivation:

- Given a graph (e.g., web pages containing the desirable query word)
- Q1: Which node is the most important?
  - PageRank (PR = RWR), HITS, SALSA
- Q2: How close is node ‘A’ to node ‘B’?
  - Personalized P.R. (/SALSA)

SVD properties

- ✓ Hidden/latent variable detection
- ✓ Compute node importance (HITS)
- ✓ Block detection
- ✓ Dimensionality reduction
- ✓ Embedding (linear): SVD is a special case of a ‘deep neural net’

Matrix? SVD!

(Figure labels: v0, u0, v1, u1.)