CMS T1/T2 Estimates: CMS perspective

- Slides: 14

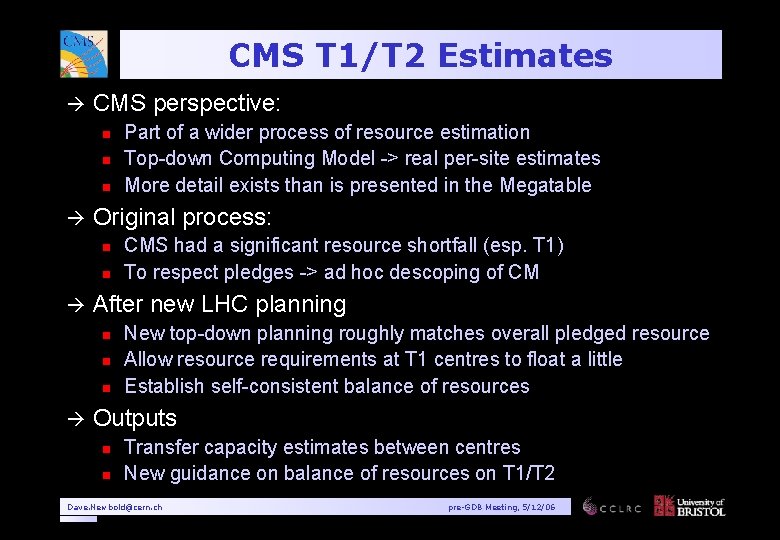

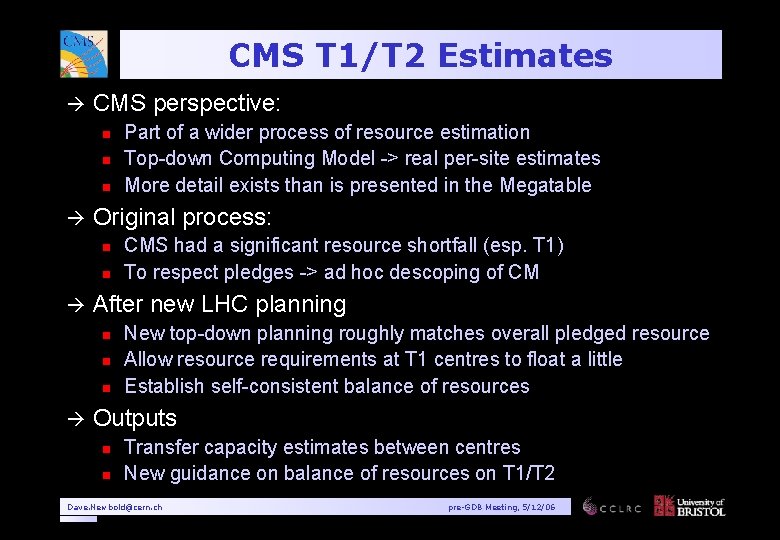

CMS T1/T2 Estimates

- CMS perspective:
  - Part of a wider process of resource estimation
  - Top-down Computing Model -> real per-site estimates
  - More detail exists than is presented in the Megatable
- Original process:
  - CMS had a significant resource shortfall (esp. T1)
  - To respect pledges -> ad hoc descoping of the Computing Model
- After new LHC planning:
  - New top-down planning roughly matches overall pledged resource
  - Allow resource requirements at T1 centres to float a little
  - Establish self-consistent balance of resources
- Outputs:
  - Transfer capacity estimates between centres
  - New guidance on balance of resources at T1/T2

Dave.Newbold@cern.ch, pre-GDB Meeting, 5/12/06

Inputs: CMS Model

- Data rates, event sizes:
  - Trigger rate: ~300 Hz (450 MB/s)
  - Sim to real ratio is 1:1 (though not all full simulation)
  - RAW (sim): 1.5 (2.0) MB/evt; RECO (sim): 250 (400) kB/evt
  - All AOD is 50 kB/evt
- Data placement:
  - RAW/RECO: one copy across all T1s, disk1tape1
  - Sim RAW/RECO: one copy across all T1s, on tape with 10% disk cache
    - How is this expressed in the diskXtapeY formalism?
    - Is this formalism in fact appropriate for resource questions…?
  - AOD: one copy at each T1, disk1tape1
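The per-tier data rates follow directly from trigger rate × event size. The sketch below is a minimal illustration, assuming decimal units (1 MB = 1000 kB) and one record per tier for every triggered event; `EVENT_SIZE_MB` and `tier_rate_mb_s` are hypothetical names, not part of any CMS tool.

```python
# Hypothetical helper: per-tier output rate = trigger rate x event size.
TRIGGER_RATE_HZ = 300.0  # ~300 Hz from the slide

# Per-event sizes in MB (decimal units) for real data; sim values noted for reference.
EVENT_SIZE_MB = {
    "RAW": 1.5,    # sim RAW: 2.0 MB/evt
    "RECO": 0.25,  # 250 kB/evt; sim RECO: 400 kB/evt
    "AOD": 0.05,   # 50 kB/evt for all AOD
}


def tier_rate_mb_s(tier: str, trigger_rate_hz: float = TRIGGER_RATE_HZ) -> float:
    """Return the output rate in MB/s for one data tier."""
    return trigger_rate_hz * EVENT_SIZE_MB[tier]


if __name__ == "__main__":
    for tier in EVENT_SIZE_MB:
        print(f"{tier}: {tier_rate_mb_s(tier):.0f} MB/s")
```

Running it prints 450, 75 and 15 MB/s for RAW, RECO and AOD; the RAW figure matches the 450 MB/s quoted on the slide.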

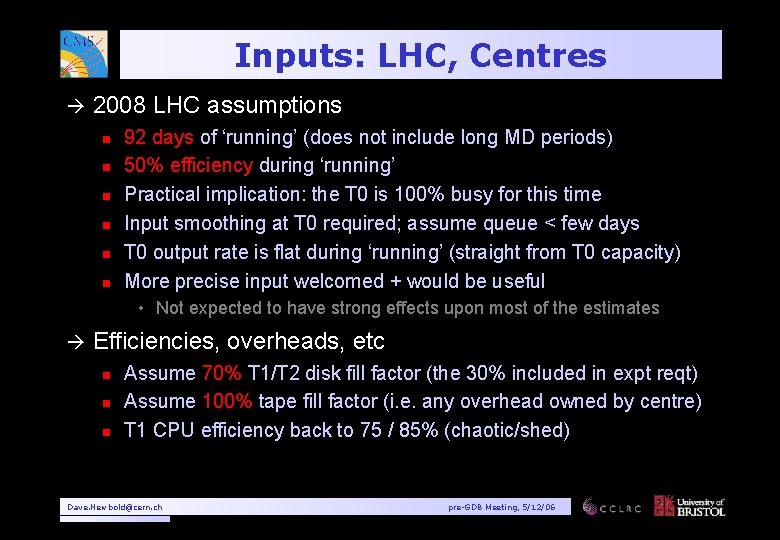

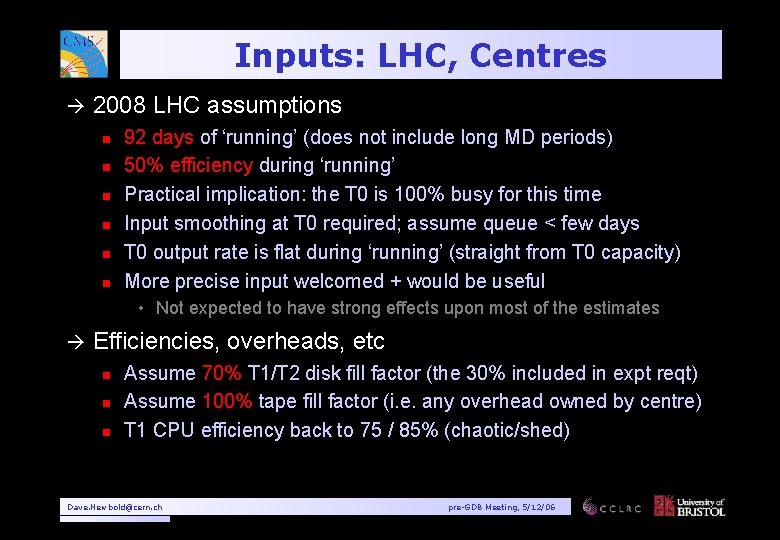

Inputs: LHC, Centres

- 2008 LHC assumptions:
  - 92 days of ‘running’ (does not include long MD periods)
  - 50% efficiency during ‘running’
    - Practical implication: the T0 is 100% busy for this time
    - Input smoothing at T0 required; assume queue < few days
  - T0 output rate is flat during ‘running’ (straight from T0 capacity)
  - More precise input welcomed and would be useful
    - Not expected to have strong effects upon most of the estimates
- Efficiencies, overheads, etc.:
  - Assume 70% T1/T2 disk fill factor (the 30% is included in the expt reqt)
  - Assume 100% tape fill factor (i.e. any overhead is owned by the centre)
  - T1 CPU efficiency back to 75/85% (chaotic/scheduled)
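To show how these assumptions combine, here is a minimal Python sketch. It assumes that "T0 output is flat" means the trigger-rate output averaged over the whole running period (i.e. scaled by the 50% efficiency), that 1 TB = 10^6 MB, and that CPU efficiency simply divides the useful work; all function names are hypothetical.

```python
# Hypothetical sketch of the 2008 LHC and centre assumptions.
SECONDS_PER_DAY = 86_400
RUNNING_DAYS = 92        # 'running' period, excluding long MD periods
LHC_EFFICIENCY = 0.5     # efficiency during 'running'
DISK_FILL_FACTOR = 0.7   # usable fraction of installed T1/T2 disk
CPU_EFFICIENCY = {"chaotic": 0.75, "scheduled": 0.85}


def live_seconds(days: float = RUNNING_DAYS, efficiency: float = LHC_EFFICIENCY) -> float:
    """Seconds of effective data taking in the running period."""
    return days * SECONDS_PER_DAY * efficiency


def flat_t0_output_mb_s(trigger_output_mb_s: float, efficiency: float = LHC_EFFICIENCY) -> float:
    """T0 output rate if input is smoothed evenly over the running period (assumption)."""
    return trigger_output_mb_s * efficiency


def volume_tb(rate_mb_s: float, seconds: float) -> float:
    """Data volume in TB (1 TB = 1e6 MB) written at rate_mb_s for the given time."""
    return rate_mb_s * seconds / 1e6


def installed_disk_tb(stored_tb: float, fill_factor: float = DISK_FILL_FACTOR) -> float:
    """Installed disk needed so that stored data fills only the fill-factor fraction."""
    return stored_tb / fill_factor


def cpu_capacity(useful_cpu: float, workload: str = "scheduled") -> float:
    """CPU capacity needed to deliver useful_cpu at the assumed efficiency."""
    return useful_cpu / CPU_EFFICIENCY[workload]


if __name__ == "__main__":
    raw_tb = volume_tb(450.0, live_seconds())
    print(f"Flat T0 RAW output: {flat_t0_output_mb_s(450.0):.0f} MB/s")
    print(f"RAW for the year: {raw_tb:.0f} TB")
```

With the slide's numbers this gives a flat 225 MB/s of RAW out of the T0 and roughly 1.8 PB of RAW for the year; 70 TB of stored data corresponds to 100 TB of installed disk at the 70% fill factor.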

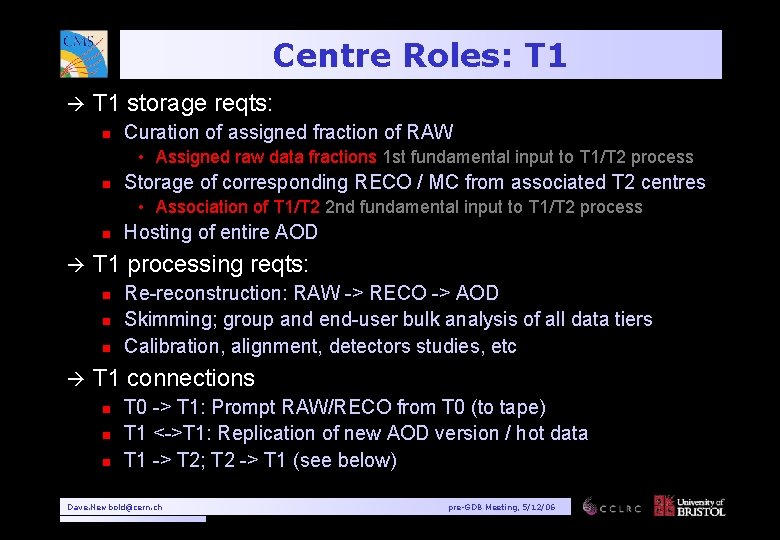

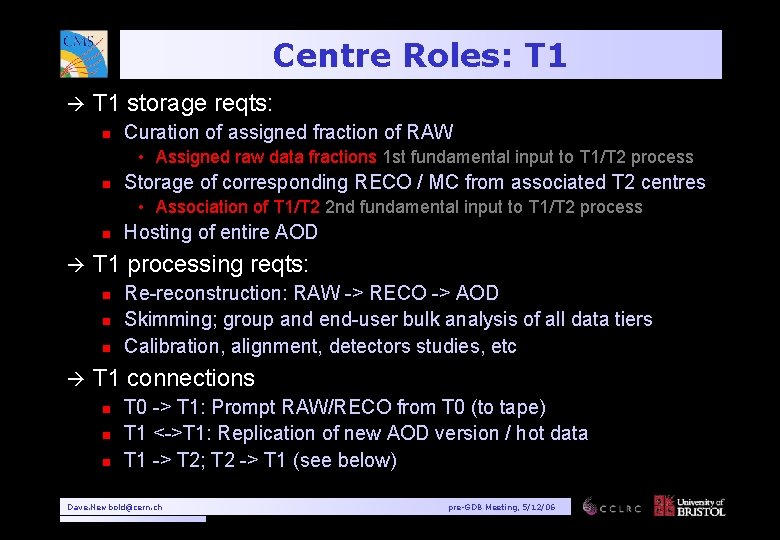

Centre Roles: T1

- T1 storage reqts:
  - Curation of assigned fraction of RAW
    - Assigned RAW data fractions: 1st fundamental input to T1/T2 process
  - Storage of corresponding RECO / MC from associated T2 centres
    - Association of T1/T2: 2nd fundamental input to T1/T2 process
  - Hosting of entire AOD
- T1 processing reqts:
  - Re-reconstruction: RAW -> RECO -> AOD
  - Skimming; group and end-user bulk analysis of all data tiers
  - Calibration, alignment, detector studies, etc.
- T1 connections:
  - T0 -> T1: Prompt RAW/RECO from T0 (to tape)
  - T1 <-> T1: Replication of new AOD version / hot data
  - T1 -> T2; T2 -> T1 (see below)
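The T1 storage roles can be read as a simple sum. The sketch below is an illustrative reading, not the Megatable calculation: it assumes the hosted volume is the assigned fraction of RAW plus the matching RECO, plus MC from associated T2s, plus one full AOD copy, ignoring the diskXtapeY split. Site names and volumes are hypothetical.

```python
# Hypothetical sketch: total volume hosted at one T1, before any diskXtapeY split.
from typing import Dict


def check_raw_fractions(fractions: Dict[str, float], tol: float = 1e-9) -> None:
    """Assigned RAW fractions across all T1s should cover the data exactly once."""
    total = sum(fractions.values())
    if abs(total - 1.0) > tol:
        raise ValueError(f"RAW fractions sum to {total}, expected 1.0")


def t1_hosted_tb(raw_fraction: float, total_raw_tb: float, total_reco_tb: float,
                 total_aod_tb: float, assoc_t2_mc_tb: float) -> float:
    """Volume (TB) hosted at a T1 given its assigned RAW fraction."""
    custodial = raw_fraction * (total_raw_tb + total_reco_tb)  # curated RAW + matching RECO
    return custodial + assoc_t2_mc_tb + total_aod_tb           # + associated-T2 MC + full AOD copy


if __name__ == "__main__":
    fractions = {"T1_A": 0.5, "T1_B": 0.3, "T1_C": 0.2}  # hypothetical sites
    check_raw_fractions(fractions)
    print(t1_hosted_tb(fractions["T1_C"], 1000.0, 200.0, 50.0, 100.0))
```

With these made-up inputs a T1 holding 20% of the RAW hosts about 390 TB, of which the full AOD copy is the same at every T1.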

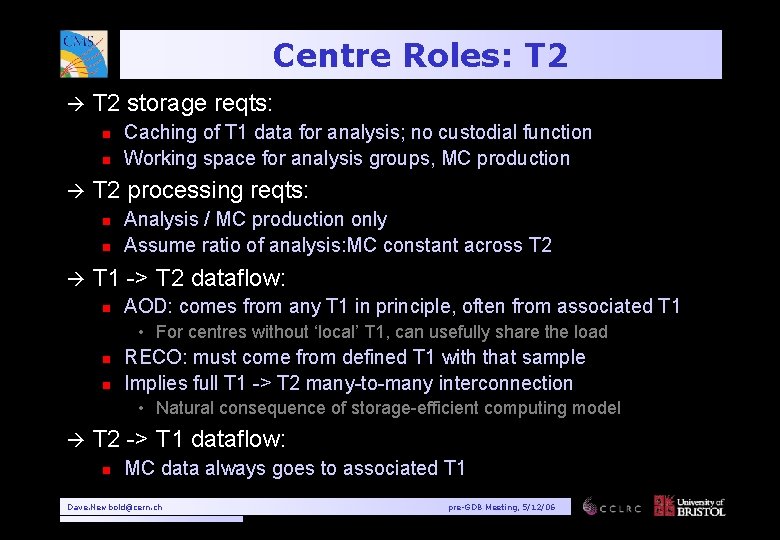

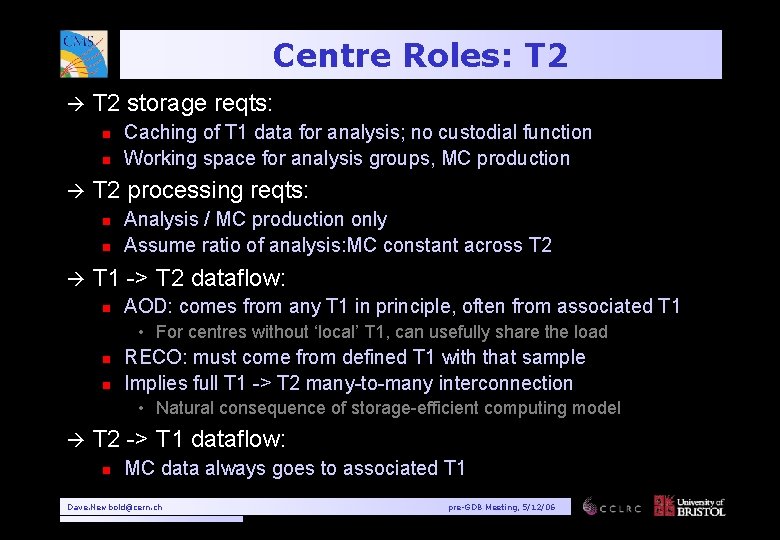

Centre Roles: T2

- T2 storage reqts:
  - Caching of T1 data for analysis; no custodial function
  - Working space for analysis groups, MC production
- T2 processing reqts:
  - Analysis / MC production only
  - Assume ratio of analysis:MC constant across T2s
- T1 -> T2 dataflow:
  - AOD: comes from any T1 in principle, often from associated T1
    - For centres without ‘local’ T1, can usefully share the load
  - RECO: must come from defined T1 with that sample
  - Implies full T1 -> T2 many-to-many interconnection
    - Natural consequence of storage-efficient computing model
- T2 -> T1 dataflow:
  - MC data always goes to associated T1
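The dataflow rules above amount to a small routing function. In this minimal sketch (function, site and sample names are hypothetical), RECO has exactly one source, AOD can come from any T1 with the associated T1 preferred, and MC always goes to the associated T1. Because RECO for a given sample lives at a single T1, any T2 may need to reach any T1, which is where the many-to-many interconnection comes from.

```python
# Hypothetical sketch of the T1 <-> T2 dataflow rules; site and sample names are made up.
from typing import Dict, List


def t1_sources(tier: str, sample: str, t2: str,
               associated_t1: Dict[str, str],
               reco_holder: Dict[str, str],
               all_t1s: List[str],
               has_local_t1: bool = True) -> List[str]:
    """Candidate source T1s, in preference order, for a T1 -> T2 transfer."""
    if tier == "RECO":
        # RECO is held at one defined T1 only, so there is a single source.
        return [reco_holder[sample]]
    if tier == "AOD":
        if not has_local_t1:
            # Every T1 hosts the full AOD: a T2 without a 'local' T1 can spread the load.
            return list(all_t1s)
        # Otherwise prefer the associated T1, keeping the others as fallbacks.
        assoc = associated_t1[t2]
        return [assoc] + [t1 for t1 in all_t1s if t1 != assoc]
    raise ValueError(f"no T1 -> T2 rule for tier {tier!r}")


def mc_destination(t2: str, associated_t1: Dict[str, str]) -> str:
    """T2 -> T1: MC output always goes to the associated T1."""
    return associated_t1[t2]


if __name__ == "__main__":
    t1s = ["T1_A", "T1_B", "T1_C"]
    assoc = {"T2_X": "T1_A", "T2_Y": "T1_B"}
    reco = {"sample1": "T1_C"}
    print(t1_sources("RECO", "sample1", "T2_X", assoc, reco, t1s))  # ['T1_C']
    print(t1_sources("AOD", "sample1", "T2_X", assoc, reco, t1s))   # ['T1_A', 'T1_B', 'T1_C']
```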

T1/T2 Associations

- NB: These are working assumptions in some cases
- Stream “allocation” ~ available storage at centre

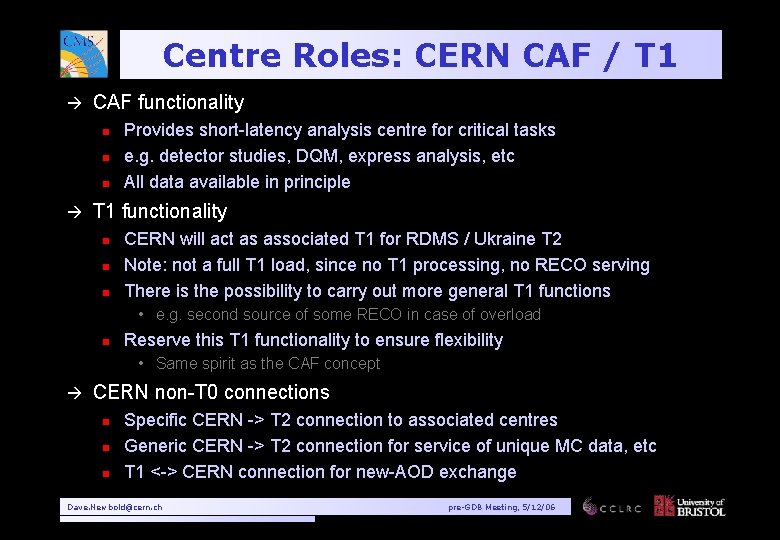
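Reading "allocation ~ available storage" as simple proportionality gives the sketch below. This is an assumption: the actual association table may include rounding or manual adjustments. The centre names and capacities are hypothetical.

```python
# Hypothetical sketch: stream "allocation" proportional to available storage.
from typing import Dict


def allocate_by_storage(storage_tb: Dict[str, float]) -> Dict[str, float]:
    """Return each centre's fraction of the streams, proportional to its storage."""
    total = sum(storage_tb.values())
    if total <= 0:
        raise ValueError("total storage must be positive")
    return {site: tb / total for site, tb in storage_tb.items()}


if __name__ == "__main__":
    print(allocate_by_storage({"T1_A": 300.0, "T1_B": 100.0}))  # {'T1_A': 0.75, 'T1_B': 0.25}
```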

Centre Roles: CERN CAF / T1

- CAF functionality:
  - Provides short-latency analysis centre for critical tasks
  - e.g. detector studies, DQM, express analysis, etc.
  - All data available in principle
- T1 functionality:
  - CERN will act as associated T1 for RDMS / Ukraine T2
  - Note: not a full T1 load, since no T1 processing, no RECO serving
  - There is the possibility to carry out more general T1 functions
    - e.g. second source of some RECO in case of overload
  - Reserve this T1 functionality to ensure flexibility
    - Same spirit as the CAF concept
- CERN non-T0 connections:
  - Specific CERN -> T2 connection to associated centres
  - Generic CERN -> T2 connection for service of unique MC data, etc.
  - T1 <-> CERN connection for new-AOD exchange

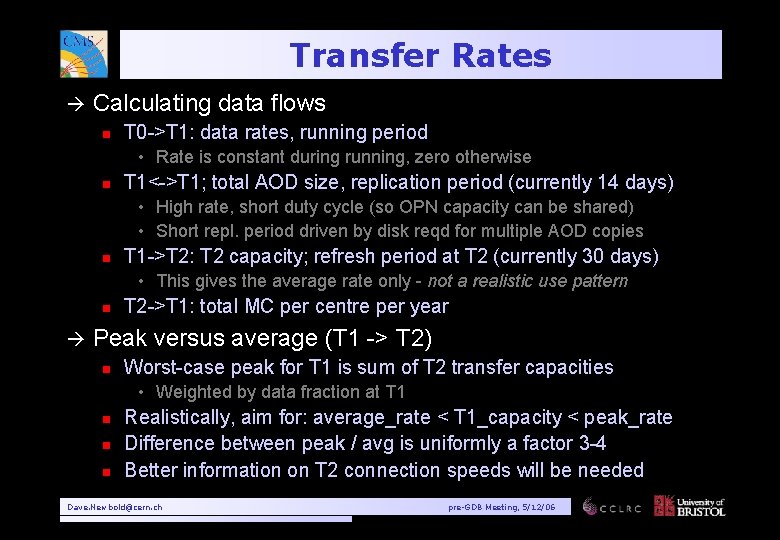

Transfer Rates

- Calculating data flows:
  - T0 -> T1: data rates, running period
    - Rate is constant during running, zero otherwise
  - T1 <-> T1: total AOD size, replication period (currently 14 days)
    - High rate, short duty cycle (so OPN capacity can be shared)
    - Short replication period driven by disk required for multiple AOD copies
  - T1 -> T2: T2 capacity; refresh period at T2 (currently 30 days)
    - This gives the average rate only, not a realistic use pattern
  - T2 -> T1: total MC per centre per year
- Peak versus average (T1 -> T2):
  - Worst-case peak for T1 is sum of T2 transfer capacities
    - Weighted by data fraction at T1
  - Realistically, aim for: average_rate < T1_capacity < peak_rate
  - Difference between peak/avg is uniformly a factor 3-4
  - Better information on T2 connection speeds will be needed
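Each flow above is a volume divided by a period, and the peak is a weighted sum of T2 link speeds. A minimal sketch under stated assumptions: 1 TB = 10^6 MB, a 365-day year for MC, and each T2 described by a hypothetical (link speed in MB/s, fraction of its data held at this T1) pair. Function names are illustrative only.

```python
# Hypothetical sketch of the average and peak transfer-rate estimates (1 TB = 1e6 MB).
from typing import Iterable, Tuple

SECONDS_PER_DAY = 86_400


def rate_mb_s(volume_tb: float, period_days: float) -> float:
    """Average rate (MB/s) needed to move volume_tb within period_days."""
    return volume_tb * 1e6 / (period_days * SECONDS_PER_DAY)


def t1_t1_rate(total_aod_tb: float, replication_days: float = 14) -> float:
    """T1 <-> T1: replicate the full AOD within the replication period."""
    return rate_mb_s(total_aod_tb, replication_days)


def t1_t2_avg_rate(t2_capacity_tb: float, refresh_days: float = 30) -> float:
    """T1 -> T2: refresh the T2 cache once per refresh period (average only)."""
    return rate_mb_s(t2_capacity_tb, refresh_days)


def t2_t1_rate(mc_tb_per_year: float) -> float:
    """T2 -> T1: total MC per centre per year, spread evenly over the year."""
    return rate_mb_s(mc_tb_per_year, 365)


def t1_peak_rate(t2_links: Iterable[Tuple[float, float]]) -> float:
    """Worst case: every T2 pulls at full link speed, weighted by the fraction
    of its data held at this T1."""
    return sum(link_mb_s * fraction for link_mb_s, fraction in t2_links)


def capacity_in_band(avg: float, capacity: float, peak: float) -> bool:
    """Planning target from the slide: average_rate < T1_capacity < peak_rate."""
    return avg < capacity < peak
```

For example, replicating a hypothetical 1209.6 TB of AOD within 14 days needs about 1000 MB/s, while refreshing a hypothetical 259.2 TB T2 cache every 30 days averages about 100 MB/s. The short replication period keeps the T1 <-> T1 duty cycle low, which is why the slide notes that OPN capacity can be shared.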

Outputs: Rates

- Units are MB/s
- These are raw rates: no catchup (x2?), no overhead (x2?)
  - Potentially some large factor to be added
  - A common understanding is needed
- FNAL T2-out-avg is around 50% US, 50% external
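The raw rates would be scaled multiplicatively to get a provisioned rate. This sketch uses the slide's tentative "x2?" values as defaults purely for illustration; they are not agreed factors. The split helper mirrors the FNAL 50% US / 50% external example. All names are hypothetical.

```python
# Hypothetical sketch: turn a raw average rate into a provisioned rate.
# The x2 defaults are the slide's tentative "x2?" values, not agreed numbers.
from typing import Dict


def provisioned_rate_mb_s(raw_mb_s: float, catchup: float = 2.0, overhead: float = 2.0) -> float:
    """Raw rate (MB/s) scaled by catch-up and overhead factors."""
    return raw_mb_s * catchup * overhead


def split_rate(rate_mb_s: float, shares: Dict[str, float]) -> Dict[str, float]:
    """Split a rate between destinations, e.g. {'US': 0.5, 'external': 0.5}."""
    return {dest: rate_mb_s * share for dest, share in shares.items()}
```

With both factors at 2, a raw 100 MB/s becomes 400 MB/s, which shows why a common understanding of these factors matters.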

Outputs: Capacities

- “Resource” from a simple estimate of relative unit costs
  - CPU : Disk : Tape at 0.5 : 1.5 : 0.3 (à la B. Panzer)
  - Clearly some fine-tuning left to do
  - But is a step towards a reasonably balanced model
  - Total is consistent with top-down input to CRRB, by construction
- Storage classes are still under study
  - Megatable totals are firm, but diskXtapeY categories are not
  - This may be site-dependent (also, details of cache)
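The relative unit costs turn a CPU/disk/tape mix into a single "resource" figure. A minimal sketch, assuming that each weight applies per unit of whatever CPU, disk and tape units the Megatable uses (the slide does not state them); names are hypothetical.

```python
# Hypothetical sketch of the relative-unit-cost weighting for CPU : Disk : Tape.
# Assumption: each weight applies per Megatable unit of that resource.
from typing import Dict

UNIT_COST = {"cpu": 0.5, "disk": 1.5, "tape": 0.3}


def resource_cost(cpu: float, disk: float, tape: float,
                  weights: Dict[str, float] = UNIT_COST) -> float:
    """Single 'resource' figure: weighted sum of CPU, disk and tape amounts."""
    return weights["cpu"] * cpu + weights["disk"] * disk + weights["tape"] * tape


def cost_shares(cpu: float, disk: float, tape: float,
                weights: Dict[str, float] = UNIT_COST) -> Dict[str, float]:
    """Fraction of the total cost carried by each resource type."""
    total = resource_cost(cpu, disk, tape, weights)
    return {
        "cpu": weights["cpu"] * cpu / total,
        "disk": weights["disk"] * disk / total,
        "tape": weights["tape"] * tape / total,
    }
```

Comparing `cost_shares` across centres is one way to check whether a site's CPU/disk/tape mix is reasonably balanced, which is the purpose the slide gives for the weighting.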

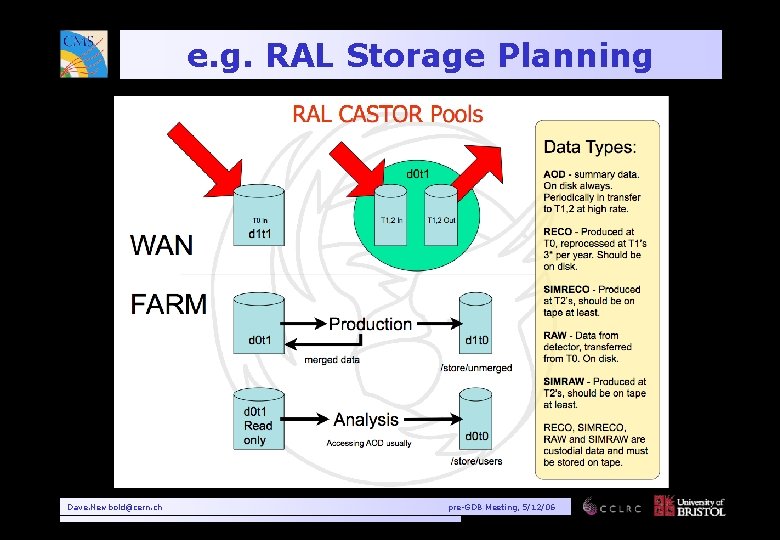

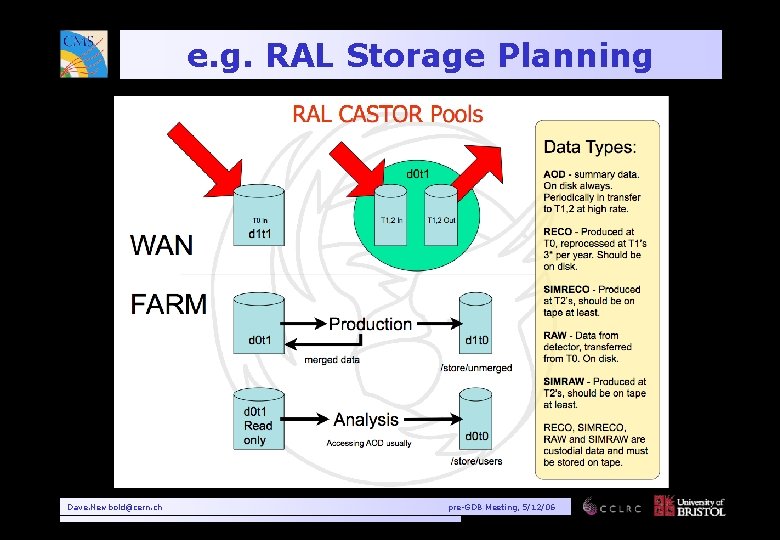

e.g. RAL Storage Planning

Comments / Next Steps?

- T1/T2 process:
  - Has been productive and useful; exposed many issues
- What other information is useful for sites?
  - Internal dataflow estimates for centres (-> cache sizes, etc.)
  - Assumptions on storage classes, etc.
  - Similar model estimates for 2007 / 2009+
  - Documentation of assumed CPU capacities at centres
- What does CMS need?
  - Feedback from sites (not overloaded with this so far)
  - Understanding of site ramp-up plans, resource balance, network capacity
  - Input on realistic LHC schedule, running conditions, etc.
  - Feedback from providers on network requirements
- Goal: detailed self-consistent model for 2007/8
  - Based upon real / guaranteed centre, network capacities…
  - Gives at least an outline for ramp-up at sites, global experiment
  - Much work left to do…

Backup: Rate Details

Backup: Capacity Details