CMS Data Management and CMS Monitoring (emphasis on T2 perspective)

- Slides: 46

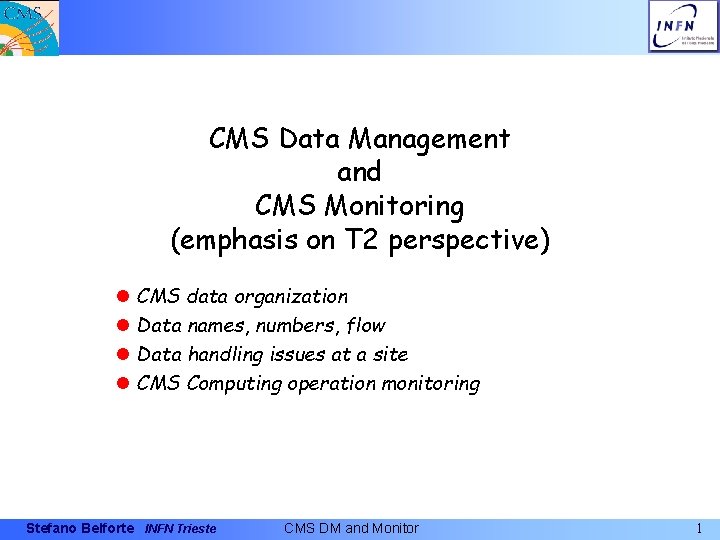

CMS Data Management and CMS Monitoring (emphasis on T2 perspective)

- CMS data organization
- Data names, numbers, flow
- Data handling issues at a site
- CMS Computing operation monitoring

Stefano Belforte INFN Trieste

Data building block: The Event

- Two protons collide at the center of the CMS detector, and millions of electronic channels collect data
- Yellow lines (tracks), e.g., are added later on during data processing

The Event

- At the core of the experiment data is “The Event”
- A numerical representation of one proton-proton collision at LHC
  - As seen through the CMS detector
  - Sort of a digital picture of the collision
- There are many events: Datasets
- There are many data in one event
  - Data inside each event are described as objects
  - Event data are written in root format (outside CERN at least)
- As data are processed, event content changes: Data Tiers
  - Events with similar content (same objects) are said to belong to the same data Tier (more in next slides)

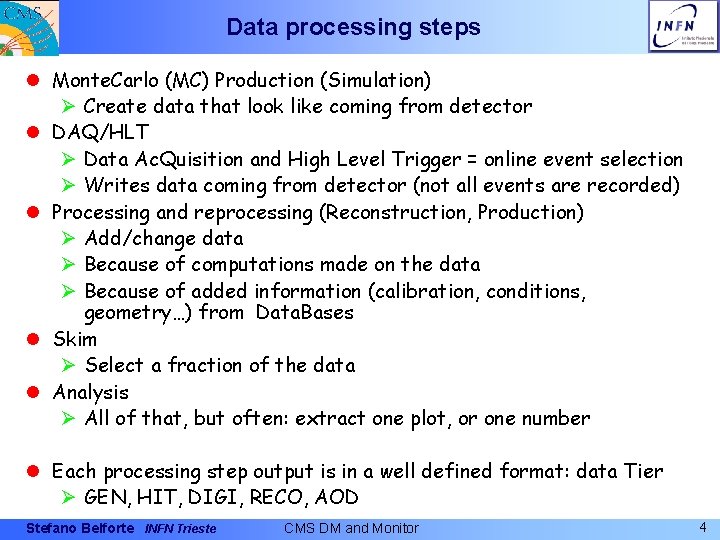

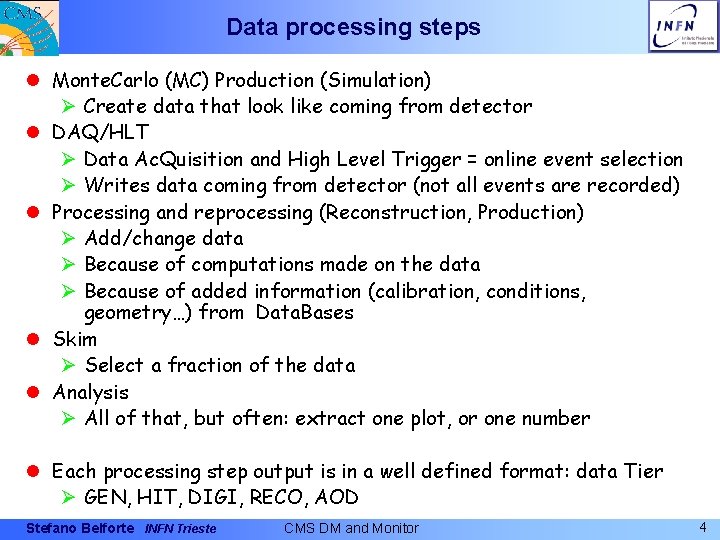

Data processing steps

- MonteCarlo (MC) Production (Simulation)
  - Create data that look like they come from the detector
- DAQ/HLT
  - Data AcQuisition and High Level Trigger = online event selection
  - Writes data coming from the detector (not all events are recorded)
- Processing and reprocessing (Reconstruction, Production)
  - Add/change data
  - Because of computations made on the data
  - Because of added information (calibration, conditions, geometry…) from DataBases
- Skim
  - Select a fraction of the data
- Analysis
  - All of that, but often: extract one plot, or one number
- Each processing step output is in a well defined format: data Tier
  - GEN, HIT, DIGI, RECO, AOD

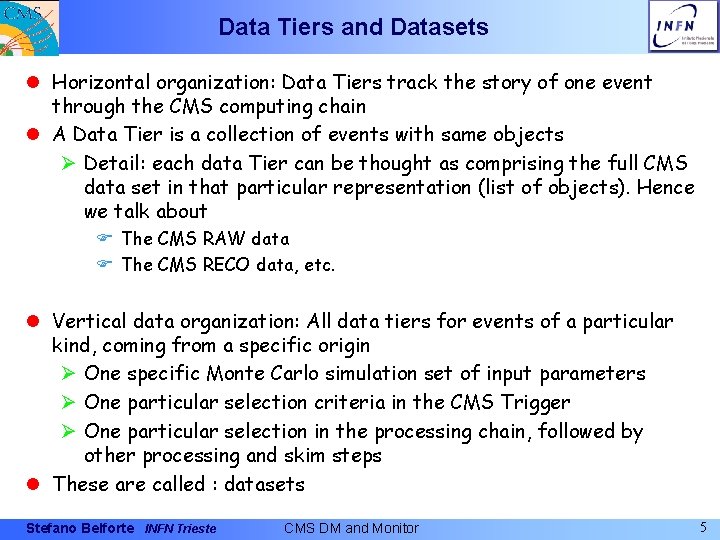

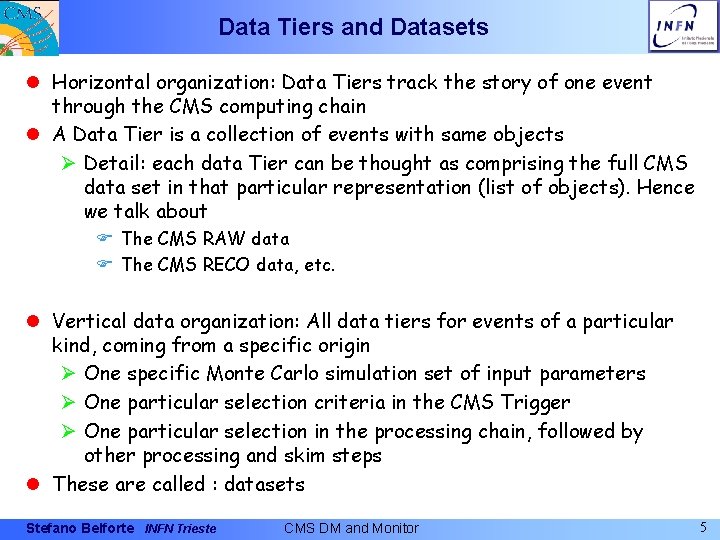

Data Tiers and Datasets

- Horizontal organization: Data Tiers track the story of one event through the CMS computing chain
- A Data Tier is a collection of events with the same objects
  - Detail: each data Tier can be thought of as comprising the full CMS data set in that particular representation (list of objects). Hence we talk about
    - The CMS RAW data
    - The CMS RECO data, etc.
- Vertical data organization: all data tiers for events of a particular kind, coming from a specific origin
  - One specific set of Monte Carlo simulation input parameters
  - One particular selection criterion in the CMS Trigger
  - One particular selection in the processing chain, followed by other processing and skim steps
- These are called: datasets

Examples (names you may sort of see)

- Datasets: Simulation Z-to-ee, HLT 4-leptons, HLT Jet-1200, Simulation JetSim1200
- Processing steps and the data Tiers they handle:
  - MC Production at Tier 2: GEN, HITS, DIGI
  - Copy to Tier 1: GEN, HITS, DIGI
  - DAQ/HLT at CMS (P5): RAW
  - Prompt Reco at Tier 0: RECO, AOD
  - Reprocessing at Tier 1: RECO, AOD
  - Skim at Tier 1: RECO, AOD
  - Copy to Tier 2: RECO, AOD
  - RAW, RECO, AOD

CMS data management

- There are data (events) (KB~MB: size driven by physics)
  - 1 PB/year = 10^12 KB ~ 10^9 events
- Event data are in files (GB: size driven by DM convenience)
  - 10^6 files/year
  - CMS catalogs list files, not events, nor objects
- Files are grouped in Fileblocks (TB: size driven by DM convenience)
  - 10^3 Fileblocks/year
  - CMS data management moves Fileblocks
    - i.e. tracks data location only at the Fileblock level
- Fileblocks are grouped in Datasets (TB: size driven by physics)
  - Datasets are large (100 TB) or small (0.1 TB)
  - Datasets are not too many: 10^3 Datasets (after years of running)
- CMS catalog (DBS) lists all Datasets and their contents, relationships, provenance and associated metadata
- CMS Data Location Service (DLS) lists the location of all Fileblocks
- DBS+DLS: central catalogs at CERN, no replica foreseen currently
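The orders of magnitude on this slide can be cross-checked with a few lines of arithmetic. The sketch below uses only the yearly totals quoted above and assumes decimal units (1 PB = 10^12 KB); the variable names are illustrative.

```python
# Yearly totals quoted on the slide (decimal units assumed).
yearly_volume_kb = 10**12      # 1 PB/year expressed in KB
events_per_year = 10**9
files_per_year = 10**6
fileblocks_per_year = 10**3

# Derived averages.
avg_event_kb = yearly_volume_kb / events_per_year            # KB per event
avg_file_gb = yearly_volume_kb / files_per_year / 10**6      # GB per file
avg_block_tb = yearly_volume_kb / fileblocks_per_year / 10**9  # TB per Fileblock
events_per_file = events_per_year // files_per_year
files_per_block = files_per_year // fileblocks_per_year

print(f"average event size: {avg_event_kb:.0f} KB")
print(f"average file size:  {avg_file_gb:.0f} GB ({events_per_file} events/file)")
print(f"average Fileblock:  {avg_block_tb:.0f} TB ({files_per_block} files/block)")
```

The averages come out at about 1 MB per event, 1 GB per file and 1 TB per Fileblock, in line with the KB~MB, GB and TB scales stated on the slide.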

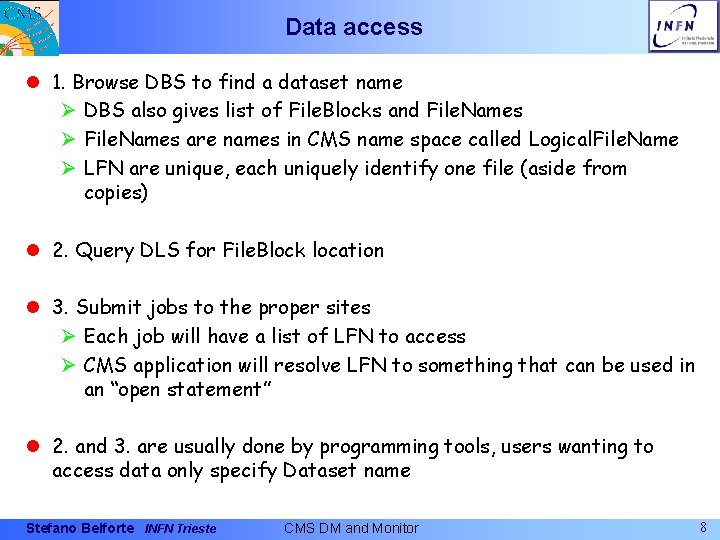

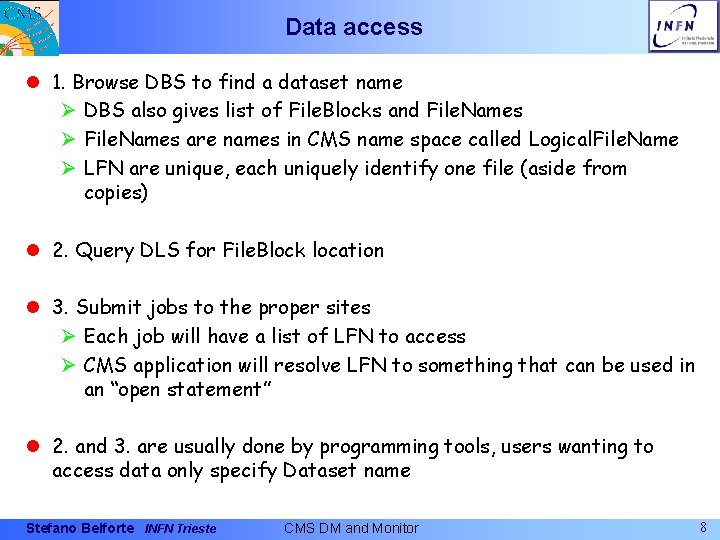

Data access

- 1. Browse DBS to find a dataset name
  - DBS also gives the list of FileBlocks and FileNames
  - FileNames are names in the CMS name space, called LogicalFileName (LFN)
  - LFNs are unique: each identifies exactly one file (aside from copies)
- 2. Query DLS for FileBlock location
- 3. Submit jobs to the proper sites
  - Each job will have a list of LFNs to access
  - The CMS application will resolve each LFN to something that can be used in an “open statement”
- Steps 2. and 3. are usually done by programming tools; users wanting to access data only specify the Dataset name
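The three steps can be sketched as follows. This is a minimal illustration, not the real DBS or DLS interface: the two dictionaries are hypothetical in-memory stand-ins for the catalogs, and the dataset, block, site and file names are invented for the example.

```python
# Hypothetical stand-in for DBS: dataset name -> {FileBlock -> list of LFNs}.
DBS = {
    "/Zee/Sim/RECO": {
        "/Zee/Sim/RECO#block1": [
            "/store/production/Zee/reco_1.root",
            "/store/production/Zee/reco_2.root",
        ],
        "/Zee/Sim/RECO#block2": ["/store/production/Zee/reco_3.root"],
    }
}

# Hypothetical stand-in for DLS: FileBlock -> sites hosting a copy.
DLS = {
    "/Zee/Sim/RECO#block1": ["T2_Example_A", "T1_Example_B"],
    "/Zee/Sim/RECO#block2": ["T1_Example_B"],
}


def plan_jobs(dataset, dbs=DBS, dls=DLS):
    """Return one job per FileBlock, sent to a site that hosts that block."""
    jobs = []
    for block, lfns in dbs[dataset].items():   # step 1: DBS lists blocks and LFNs
        sites = dls.get(block, [])             # step 2: DLS gives block locations
        if not sites:
            continue                           # block not available anywhere
        # step 3: the job goes where the data are (first hosting site here)
        jobs.append({"site": sites[0], "lfns": list(lfns)})
    return jobs


for job in plan_jobs("/Zee/Sim/RECO"):
    print(job["site"], job["lfns"])
```

Picking the first hosting site is an arbitrary choice made for brevity; a real submission tool would also weigh site load and availability.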

In practice: files on servers

- A Tier 2 will manage various kinds of data
  - They appear as a set of file blocks from specific datasets
- MC production output
  - Intermediate small files at job output (unmerged)
  - Merged files, O(GB), for transfer to Tier 1
- Data for analysis users
  - Skims from larger datasets at Tier 1 (all kinds of tiers)
  - Data for/from local users, from processing of those
- Some data will have a backup (on tape) at Tier 1/0
- Some will not (MC output before transfer, users' data)
- A Tier 2 may (or may not) want to use different resources (for space, performance, reliability) for different data
  - How does a Tier 2 know which file is of which kind?

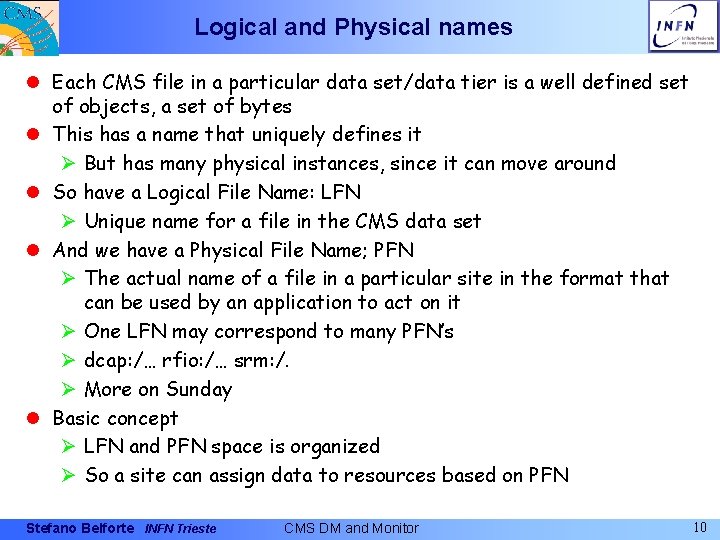

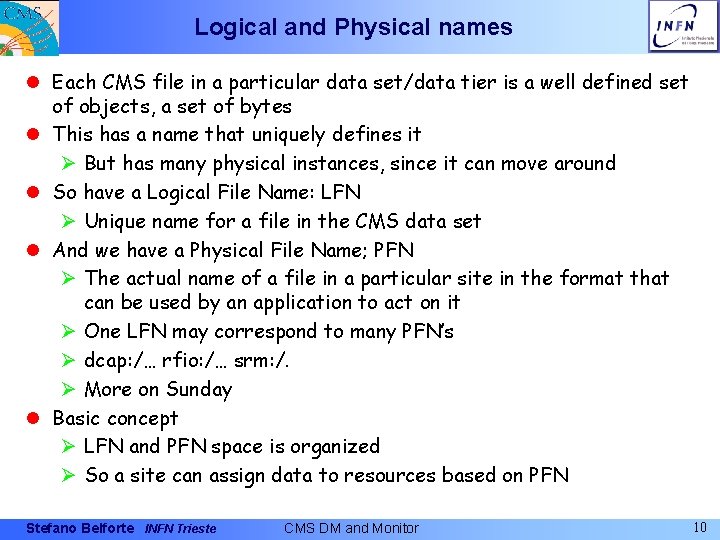

Logical and Physical names

- Each CMS file in a particular data set/data tier is a well defined set of objects, a set of bytes
- This has a name that uniquely defines it
  - But it has many physical instances, since it can move around
- So we have a Logical File Name: LFN
  - Unique name for a file in the CMS data set
- And we have a Physical File Name: PFN
  - The actual name of a file at a particular site, in the format that can be used by an application to act on it
  - One LFN may correspond to many PFNs
  - dcap:/… rfio:/… srm:/.
  - More on Sunday
- Basic concept
  - LFN and PFN space is organized
  - So a site can assign data to resources based on PFN

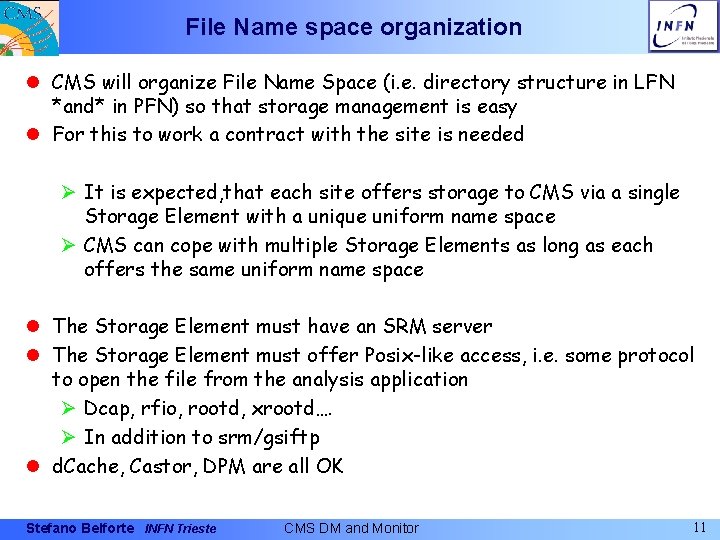

File Name space organization

- CMS will organize the File Name Space (i.e. the directory structure in LFN *and* in PFN) so that storage management is easy
- For this to work, a contract with the site is needed
  - It is expected that each site offers storage to CMS via a single Storage Element with a unique, uniform name space
  - CMS can cope with multiple Storage Elements as long as each offers the same uniform name space
- The Storage Element must have an SRM server
- The Storage Element must offer Posix-like access, i.e. some protocol to open the file from the analysis application
  - Dcap, rfio, rootd, xrootd…
  - In addition to srm/gsiftp
- dCache, Castor, DPM are all OK

File Names organization

- CMS organizes all its data in a unique, hierarchical LFN space
  - All data live in subdirectories of this common name space
  - This name space organization is the one visible in DBS
- CMS guarantees that the number of files in each directory is limited
  - Therefore the CMS LFN name space can be trivially mapped to the physical name space of any specific Storage Element
  - See Trivial File Catalog tutorial on
- CMS will use the leftmost directory/ies of the name space to separate sets of data that may need to be handled differently at sites, as far as physical location (tape, disk, other) is concerned. Examples:
  - /store/unmerged/.. used for temporary outputs that need to be merged into larger files before moving/storing; well suited for disk-only storage
  - /store/production/... used for final production output, to be saved on tape at Tier 1's
- Each site will then map those branches of the LFN tree to a specific SE (or a piece of one) according to the desired policy and local technicalities
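A prefix-based LFN to PFN mapping of the kind described above can be sketched as below. This is an illustration of the idea only, not the actual Trivial File Catalog format: the rule table, the host name `se.example.org` and the storage paths are all assumptions made for the example.

```python
# Hypothetical site policy: LFN branch -> local storage area.
# Longer prefixes are more specific and are tried first.
SITE_RULES = [
    ("/store/unmerged/", "/pnfs/example.org/disk-only/unmerged/"),
    ("/store/", "/pnfs/example.org/tape-backed/"),
]


def lfn_to_pfn(lfn, protocol="dcap", host="se.example.org", rules=SITE_RULES):
    """Map a CMS LFN to a site-specific PFN by replacing its leading branch."""
    for prefix, target in sorted(rules, key=lambda r: len(r[0]), reverse=True):
        if lfn.startswith(prefix):
            return f"{protocol}://{host}{target}{lfn[len(prefix):]}"
    raise ValueError(f"no rule matches LFN {lfn!r}")


print(lfn_to_pfn("/store/unmerged/job42/out.root"))
print(lfn_to_pfn("/store/production/Zee/reco_1.root", protocol="rfio"))
```

Because the mapping depends only on the leading directories, the site needs no per-file catalog: the same rule resolves every file in a branch, and the same LFN yields a different PFN for each access protocol.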

Data transfer

- Data is transferred file-by-file
- But the CMS data transfer tool (PhEDEx) is optimized to deal with datasets
- Moving a dataset (or a portion of it) implies interaction with the CMS data catalogs and may last days or weeks, hence the need to cope with failures and down times
- CMS's PhEDEx tool builds on top of single file transfer tools for this
- Site data managers will interact with PhEDEx, requesting datasets to be copied locally, or declaring data created locally as being available for transfer to other sites
  - The Dataset name from DBS will be used
- PhEDEx will take care of interacting with DBS and DLS as needed

Data flows: output

- MC Simulation data are created at Tier 2 and stay there only for a brief time. The final destination is one Tier 1, selected because of its capacity to offer custodial storage for them
  - not because of proximity/affiliation
  - required bandwidth is small, no problem to reach any T1
- The data rate out of a Tier 2 depends on the amount of data generated per unit of time (i.e. CPU), usually a few MB/sec (e.g. ~4 for US T2's)
  - steady trickle of data out from the site
- Which data are produced will be under the control of central CMS operations; the site provides resources but does not control which job is sent to the site

Data flows: input

- What data to import is largely under site control
- See the discussion about the Tier 2 role in the previous talk
- How much to import is a combination of local users' needs and disk capacity
- In general, transfers will be initiated by a person responsible for data management at the site, who asks PhEDEx to replicate locally a given (fraction of a) dataset, i.e. a list of Fileblocks
- New data are not required every day
- But when they are required for local analysis, tomorrow is not too soon
  - Input traffic has peaks and comes in bursts
  - Will try to saturate the network for a while, then stop
  - A few MB/sec average, but up to 100 MB/sec peak
- Different data may have to come from different T1's
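To see why input traffic is bursty, it helps to compare how long one import takes at the peak rate and at an average-like rate. The sketch below assumes a hypothetical 1 TB request and decimal units (1 TB = 10^6 MB); the 100 MB/sec peak is the figure from the slide, while 4 MB/sec is used only as a stand-in for "a few MB/sec".

```python
def transfer_hours(size_tb, rate_mb_per_s):
    """Hours needed to move size_tb terabytes at a sustained rate in MB/sec."""
    size_mb = size_tb * 1e6          # decimal units: 1 TB = 10^6 MB
    return size_mb / rate_mb_per_s / 3600


size_tb = 1.0                        # assumed size of the requested data
print(f"at 100 MB/sec: {transfer_hours(size_tb, 100):.1f} hours")
print(f"at   4 MB/sec: {transfer_hours(size_tb, 4):.1f} hours")
```

Under these assumptions the import takes under three hours at the peak rate but almost three days at 4 MB/sec, which is why a site that wants data "tomorrow" needs the network saturated for a short time rather than a steady trickle.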

Data flows summary

- PhEDEx will manage most of the data flow
  - Tutorial on Sunday
- PhEDEx relies on FTS and SRM
  - Tutorial on Saturday
- Input bandwidth is much higher than output
  - Beware of competition with data access from running jobs
  - There may be site-specific issues that require site-specific solutions, depending on the actual hardware configuration
- CMS can tune operations so that as many transfers as possible have the “best connected Tier 1” at the other end, but this will not satisfy all needs
- A Tier 2 will need to move data to/from many Tier 1's
- More details of flows (work in progress)
  - http://lcg.web.cern.ch/LCG/documents/Megatable161106.xls

Summary on Data Management

- Structured data
- Well defined name space for files
- Sites can map CMS data to various hardware, to comply with preferred policies for space allocation, robustness, performance
- A uniform name space must be offered locally
- No local file catalog needed
- CMS's own products (DBS, DLS, PhEDEx) focus on the TB scale (Fileblocks, datasets)
- Grid solutions underneath deal with single files at the GB scale
  - FTS, SRM, gsiFtp
- CMS tools and applications work with all standard SRM servers currently deployed
  - dCache, Castor, DPM

Monitor

- Monitor the data locations
  - Which data does a site host?
- Monitor the data transfers, data flows
  - Which data should a site receive/send?
  - Are data moving? How well?
- Monitor the running applications
  - Which jobs are running at a site?
  - How long do they wait, run?
  - Are they successful?
  - Which data do they access?
  - How much data do they read?

Monitor the data location

- Integrated DBS/DLS view
- Click on a file block name to get the file list
- More info on Sunday
- Computer readable format as well

Monitor the data transfers

- Layered set of tools
  - PhEDEx: moves CMS datasets
    - rich set of web pages and graphs
    - more features and new web site in development
  - FTS: moves files, some retries, hides SRM details, implements access policies on “site A to site B” traffic
    - no general monitoring tool yet
    - see FTS tutorial tomorrow
  - SRM/gsiftp: low level single file transfer tool
    - http://Gridview.cern.ch monitoring based on gridFtp logs
    - does not cover all sites in practice at present

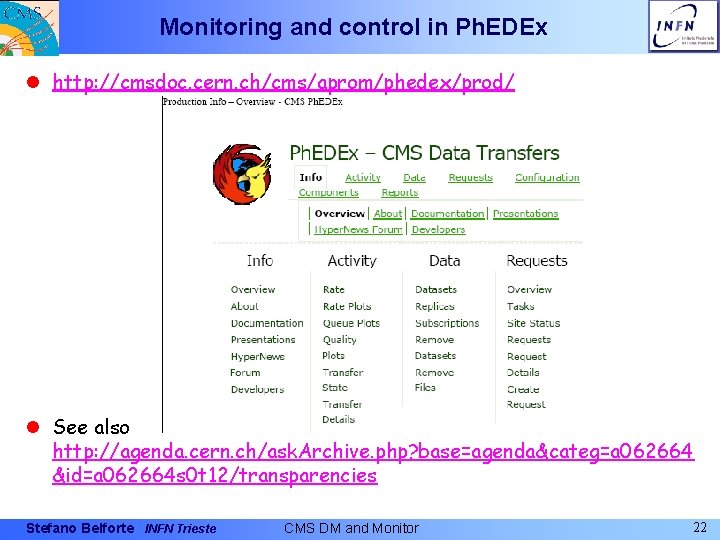

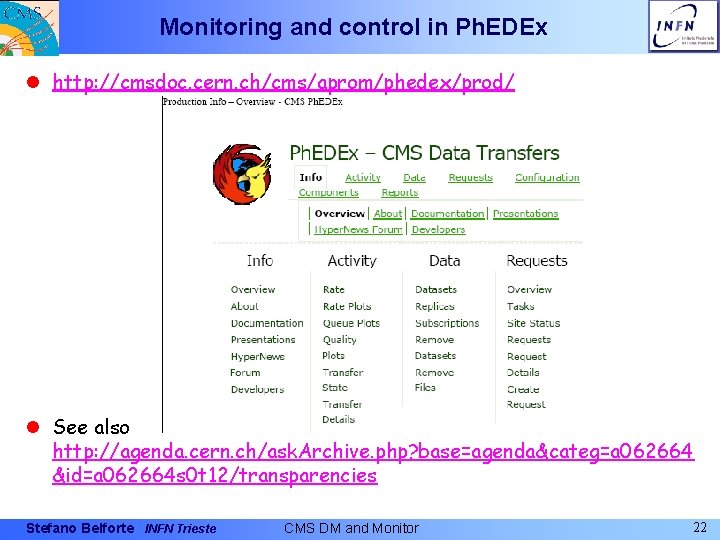

Monitoring and control in PhEDEx

- http://cmsdoc.cern.ch/cms/aprom/phedex/prod/
- See also http://agenda.cern.ch/askArchive.php?base=agenda&categ=a062664&id=a062664s0t12/transparencies

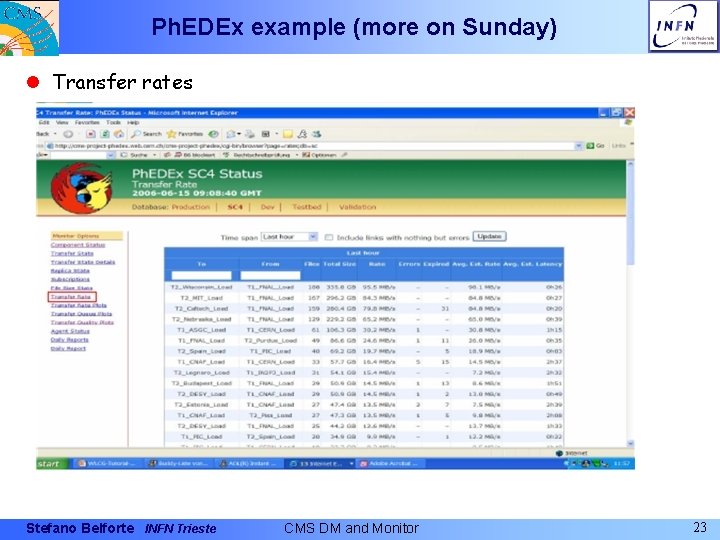

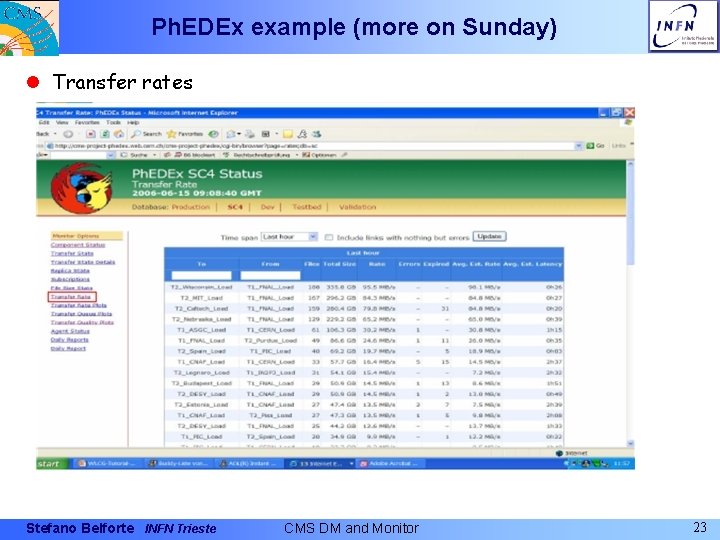

PhEDEx example (more on Sunday)

- Transfer rates

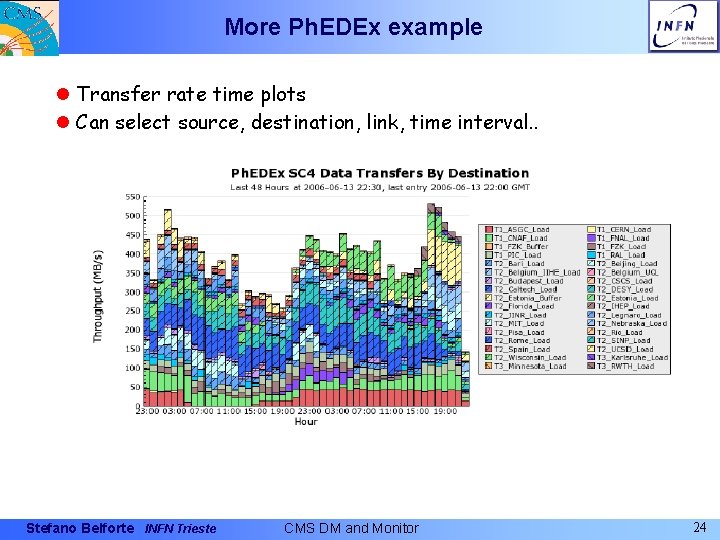

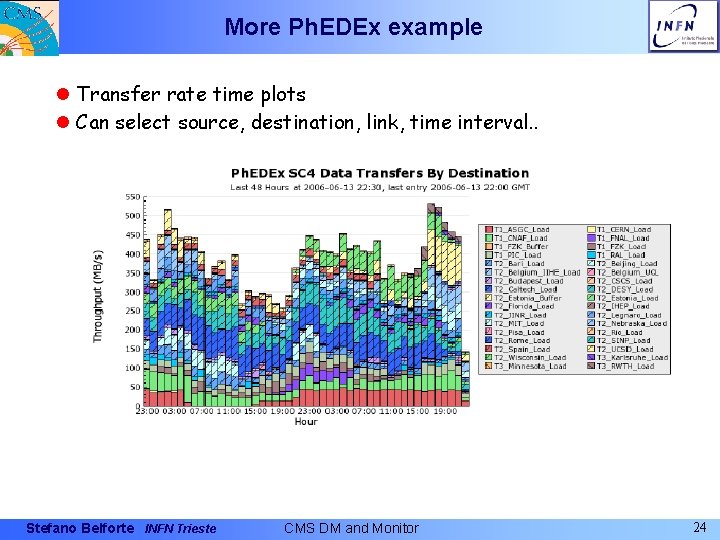

More PhEDEx example

- Transfer rate time plots
- Can select source, destination, link, time interval...

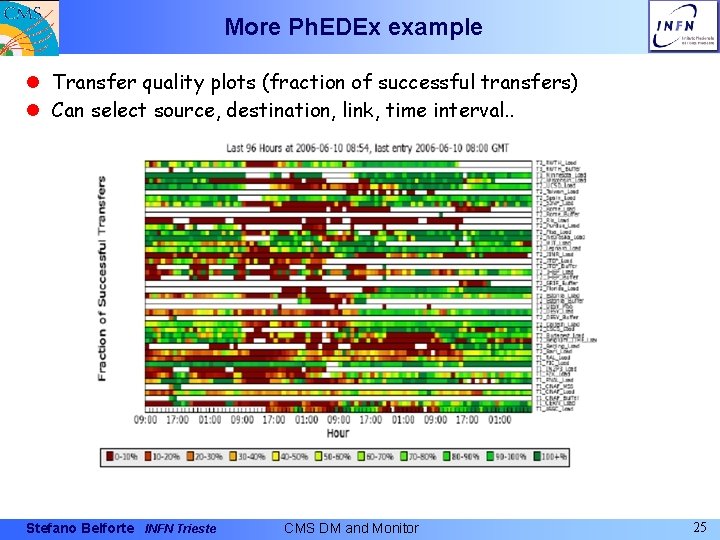

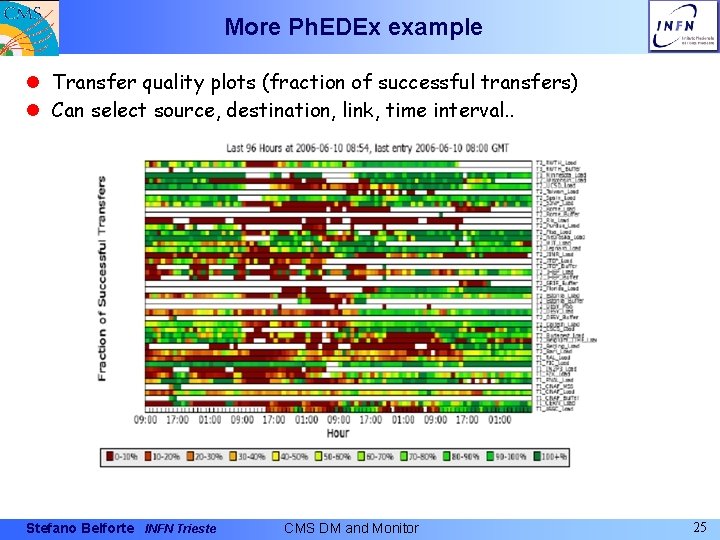

More PhEDEx example

- Transfer quality plots (fraction of successful transfers)
- Can select source, destination, link, time interval...

Monitoring CMS jobs

- There is site monitoring and grid monitoring
- CMS adds its own tool for application monitoring
  - Correlate/aggregate information based on application-specific information that the site or grid does not know
    - which data does the job access?
    - is this real production or a test? A user's analysis or an organized physics group activity?
    - was the application (CMS SW) successful? Why?
- Strategy
  - Instrument the job to report about itself at submission, execution and completion times
  - Via hooks in job management tools (Crab, ProductionAgent)
  - Via hooks in the job wrapper
  - Collect all data in a central Database
  - Have an interactive “dig-in” browser and static plots
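The strategy above (jobs report about themselves at each stage, and the reports are aggregated centrally) can be sketched as follows. This is not the Dashboard's actual schema or API: the in-memory list stands in for the central database, and the field names, site names and exit codes are assumptions made for illustration.

```python
from collections import defaultdict

central_db = []  # stand-in for the central database collecting job reports


def report(job_id, stage, **info):
    """Hook called by the job tools or wrapper at submission, execution, completion."""
    central_db.append({"job": job_id, "stage": stage, **info})


def success_rate_by_site(records):
    """Fraction of completed jobs per site whose application exited with code 0."""
    counts = defaultdict(lambda: [0, 0])          # site -> [successful, completed]
    for rec in records:
        if rec["stage"] != "completion":
            continue
        counts[rec["site"]][1] += 1
        if rec["exit_code"] == 0:
            counts[rec["site"]][0] += 1
    return {site: ok / total for site, (ok, total) in counts.items()}


# Three hypothetical jobs reporting about themselves.
report(1, "submission", activity="analysis", dataset="/Zee/Sim/RECO")
report(1, "completion", site="T2_Example_A", exit_code=0)
report(2, "completion", site="T2_Example_A", exit_code=1)
report(3, "completion", site="T1_Example_B", exit_code=0)

print(success_rate_by_site(central_db))
```

Since every record carries application-level fields such as the activity or dataset, the same table can be aggregated by user, task or data accessed, which is information that site or grid monitoring alone does not have.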

CMS Dashboard

- http://arda-dashboard.cern.ch/cms/
- Explore around, there are quite a few useful things
- Next slides show examples from “Job Monitoring” links
- See also Michael Ernst's tutorial at June's T2 Workshop: http://agenda.cern.ch/askArchive.php?base=agenda&categ=a062664&id=a062664s0t12/transparencies

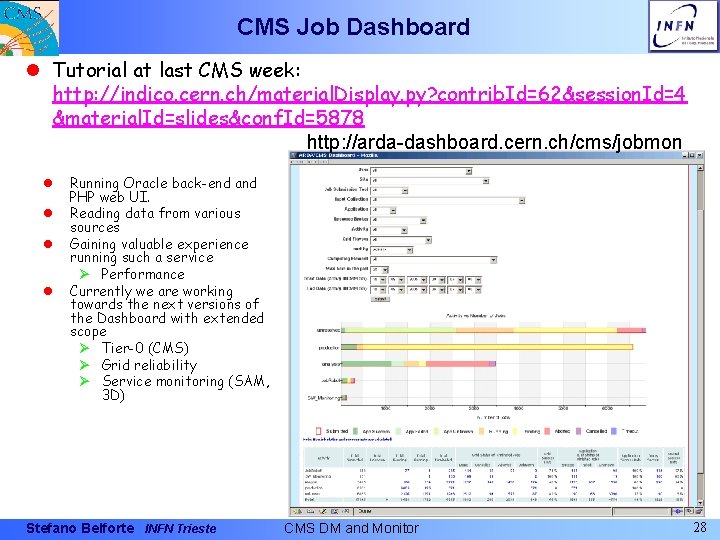

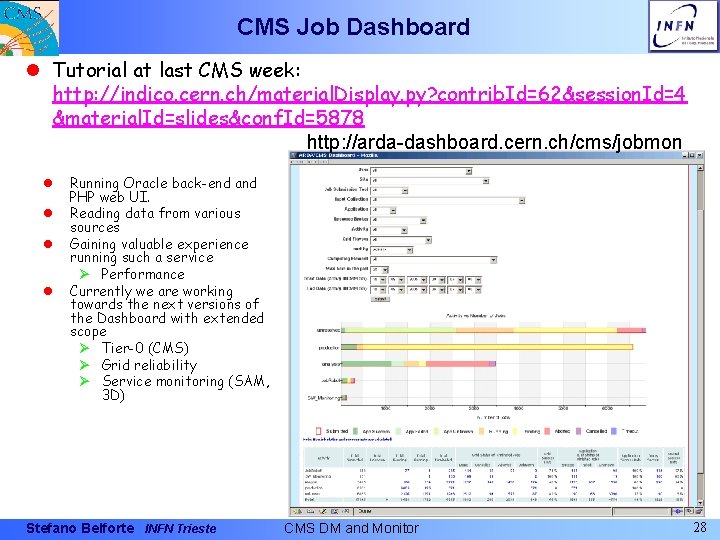

CMS Job Dashboard

- Tutorial at last CMS week: http://indico.cern.ch/materialDisplay.py?contribId=62&sessionId=4&materialId=slides&confId=5878
- http://arda-dashboard.cern.ch/cms/jobmon
- Running Oracle back-end and PHP web UI. Reading data from various sources
- Gaining valuable experience running such a service
  - Performance
- Currently we are working towards the next versions of the Dashboard with extended scope
  - Tier-0 (CMS)
  - Grid reliability
  - Service monitoring (SAM, 3D)

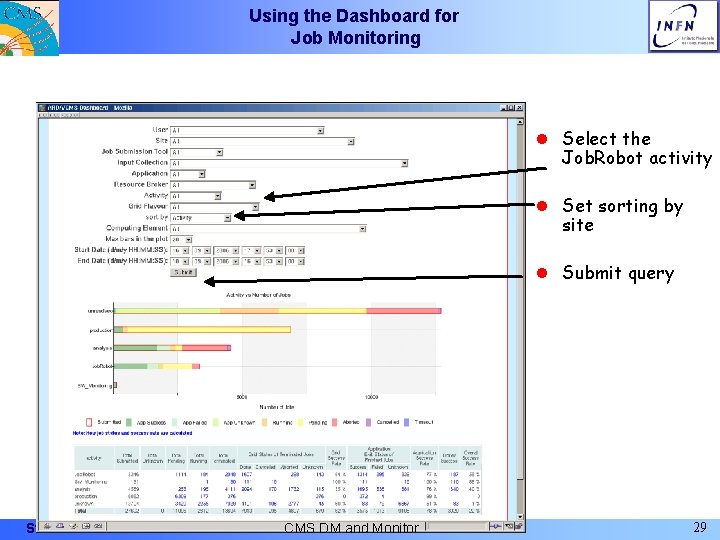

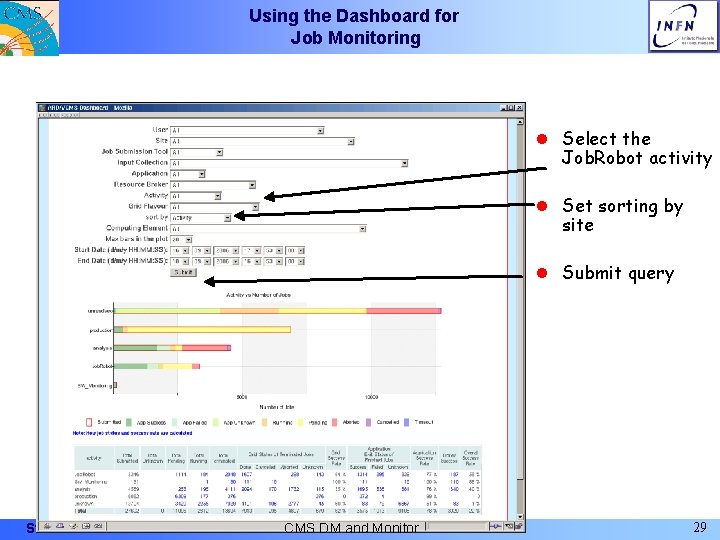

Using the Dashboard for Job Monitoring

- Select the JobRobot activity
- Set sorting by site
- Submit query

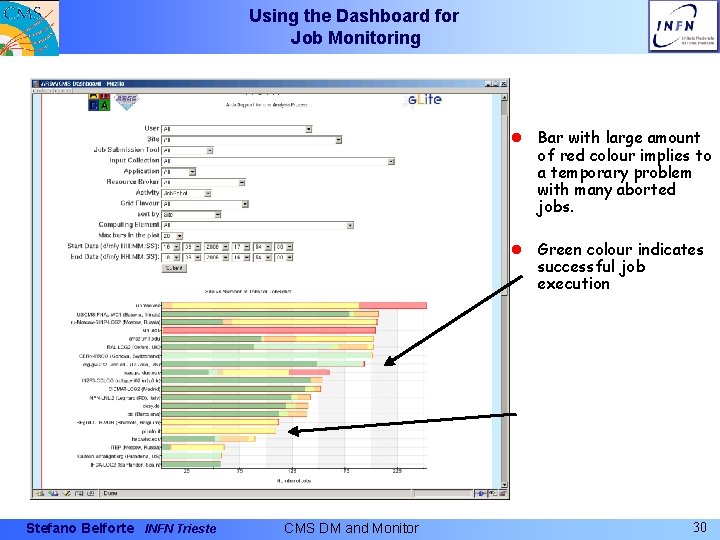

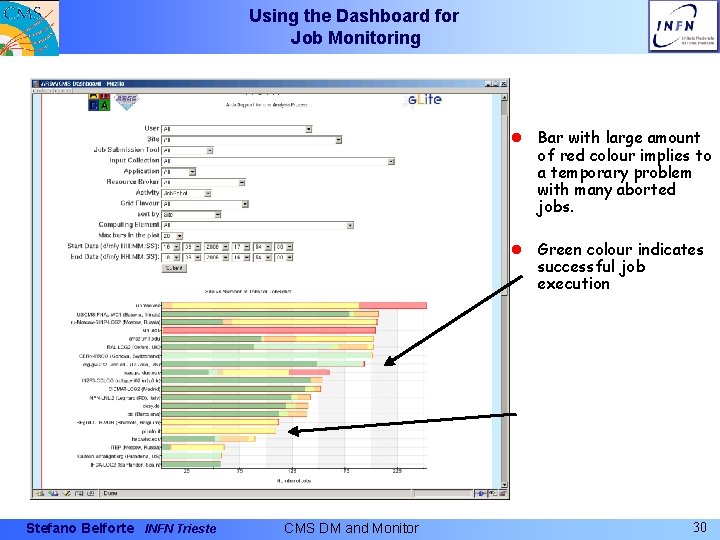

Using the Dashboard for Job Monitoring

- A bar with a large amount of red indicates a temporary problem with many aborted jobs
- Green indicates successful job execution

Using the Dashboard for Job Monitoring

- Can tell if jobs failed before or after execution start, and when
- Labels on the screenshot: Grid job id, Submission time, Task name

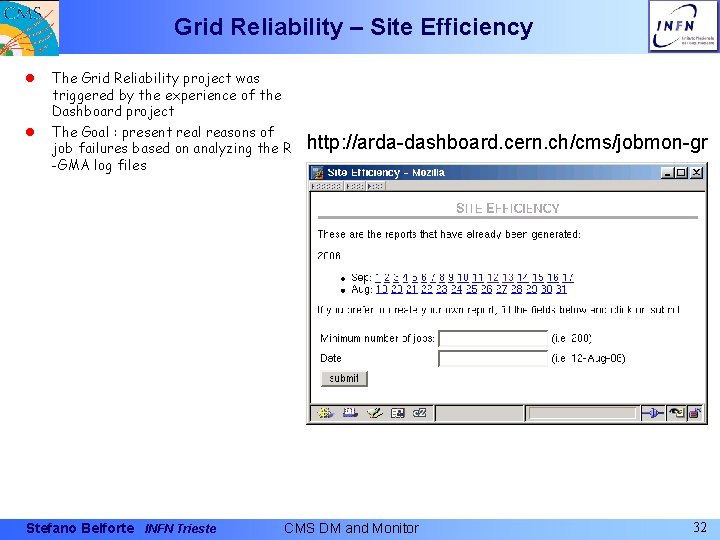

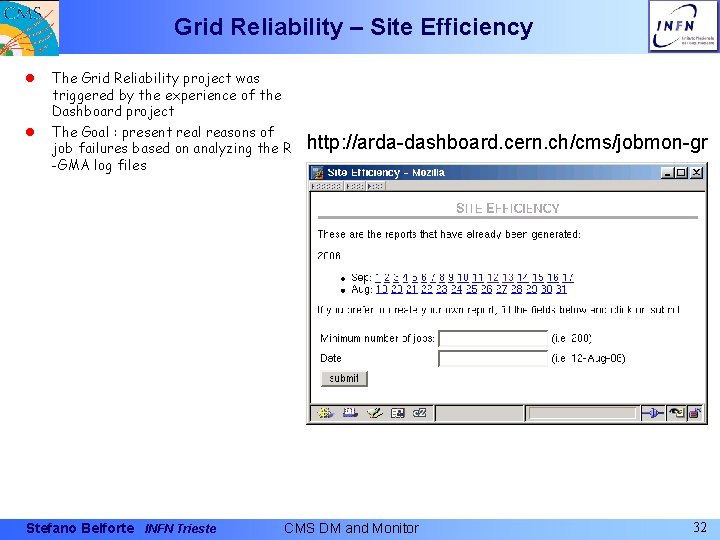

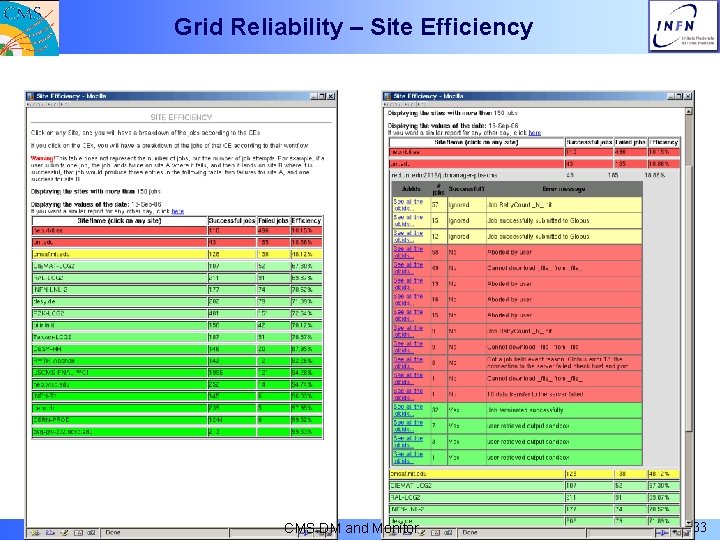

Grid Reliability – Site Efficiency

- The Grid Reliability project was triggered by the experience of the Dashboard project
- The goal: present the real reasons for job failures, based on analyzing the R-GMA log files
- http://arda-dashboard.cern.ch/cms/jobmon-gr

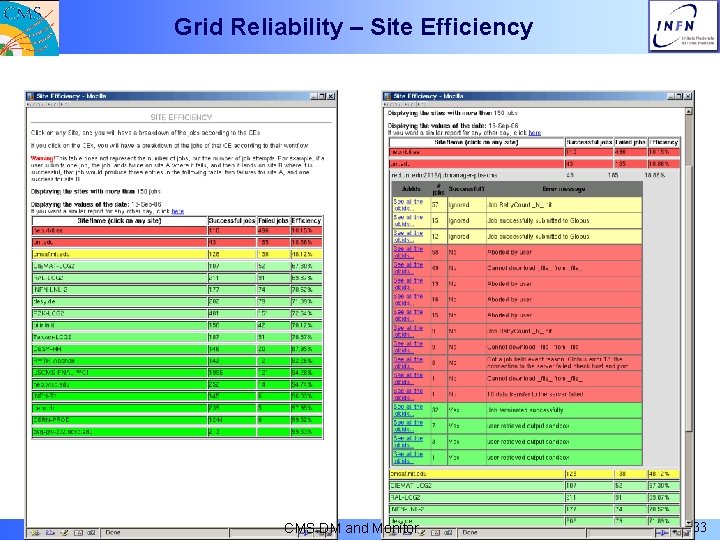

Grid Reliability – Site Efficiency

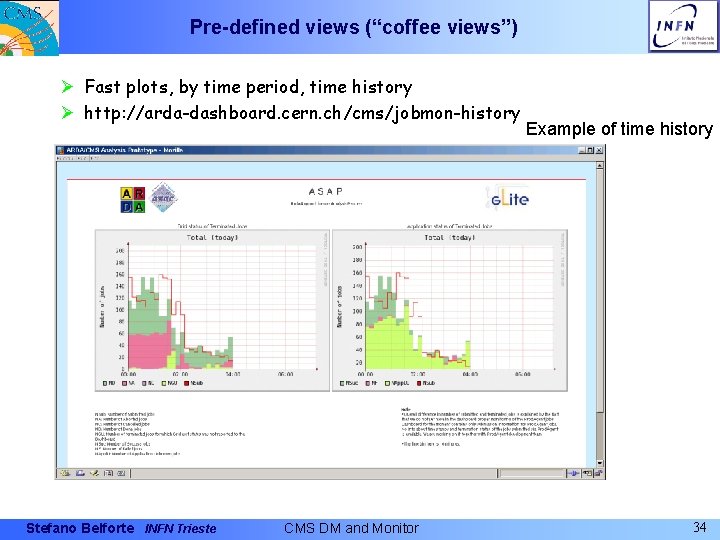

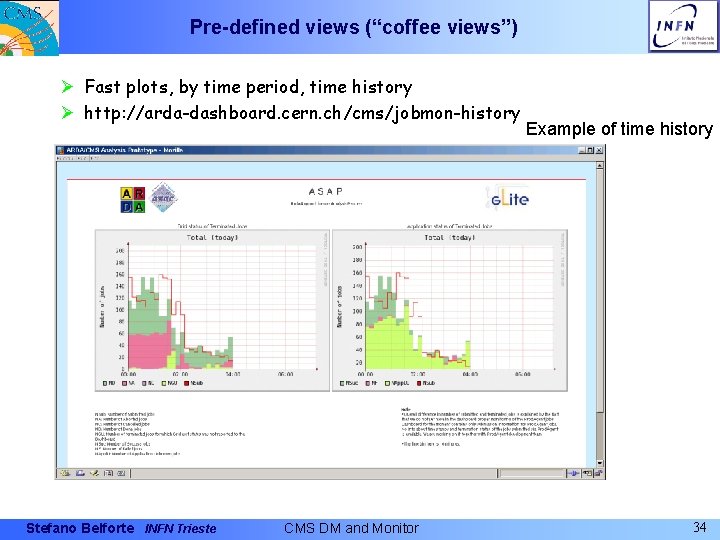

Pre-defined views (“coffee views”)

- Fast plots, by time period, time history
- http://arda-dashboard.cern.ch/cms/jobmon-history
- Example of time history

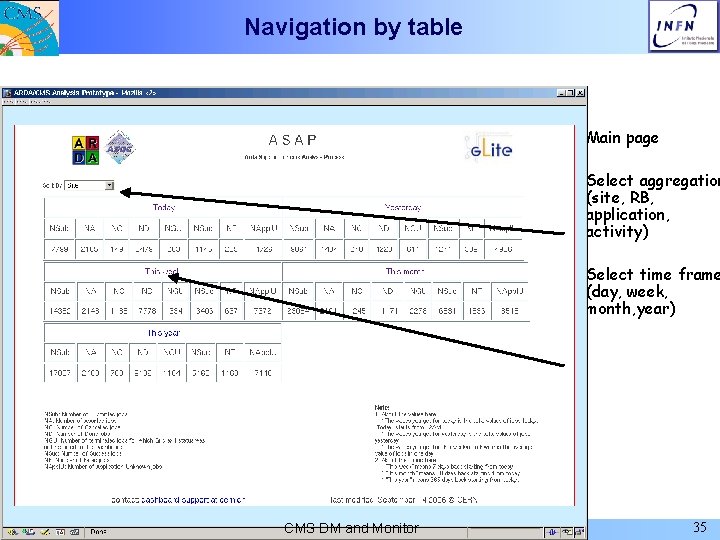

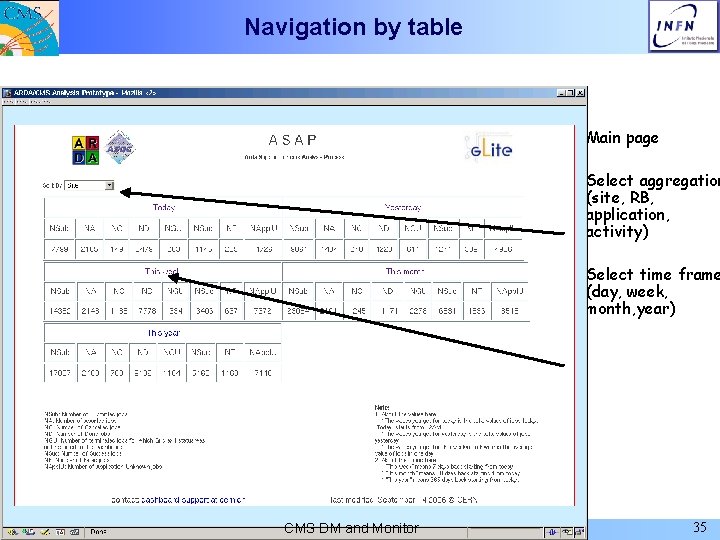

Navigation by table

- Main page
- Select aggregation (site, RB, application, activity)
- Select time frame (day, week, month, year)

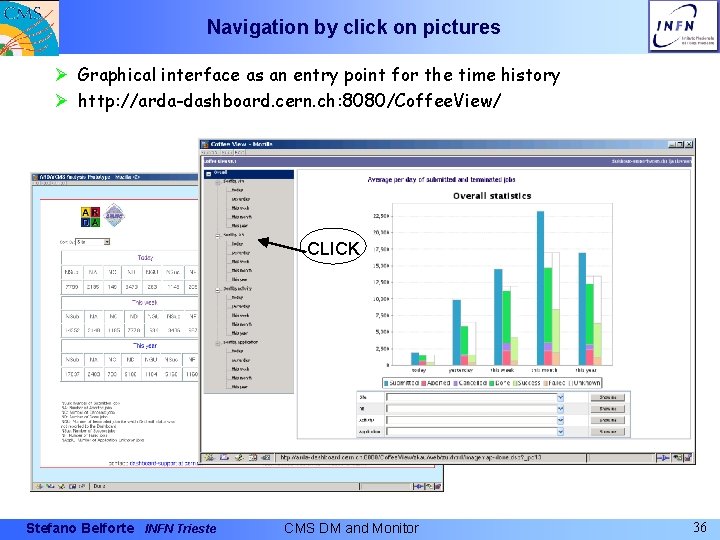

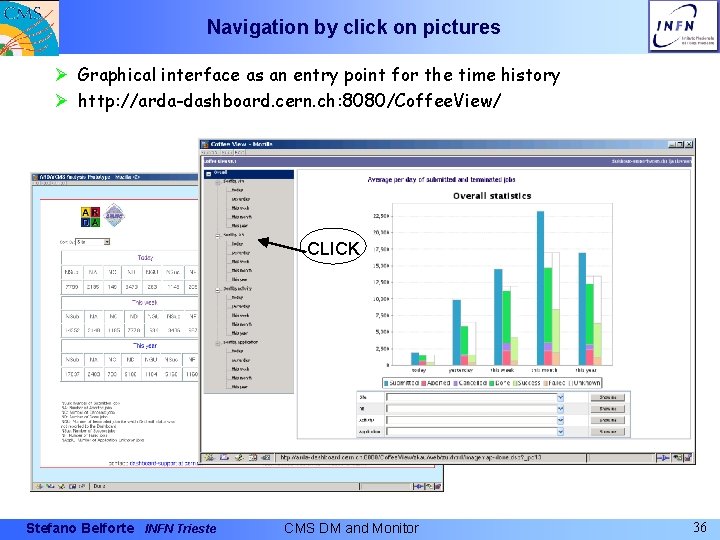

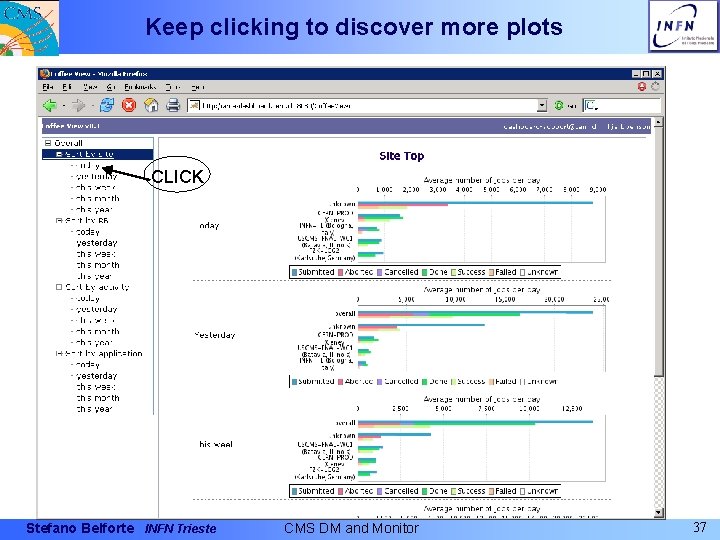

Navigation by click on pictures

- Graphical interface as an entry point for the time history
- http://arda-dashboard.cern.ch:8080/CoffeeView/

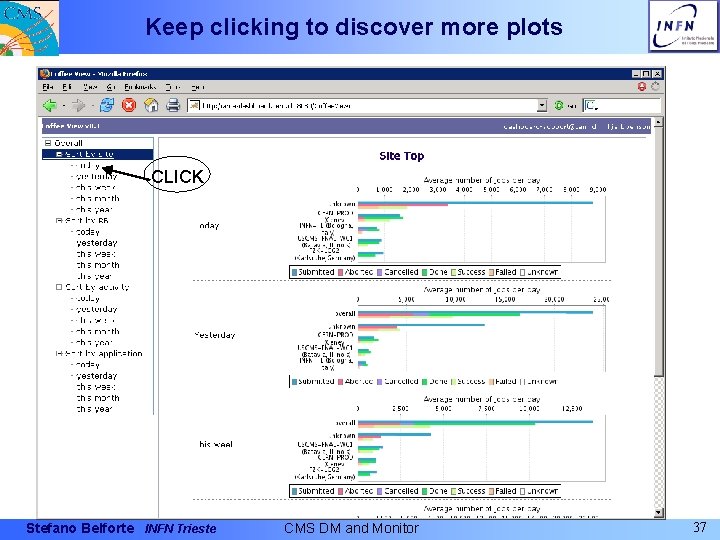

Keep clicking to discover more plots

Jobs waiting and running times

- Per site
- Per application type
- Per user (group)

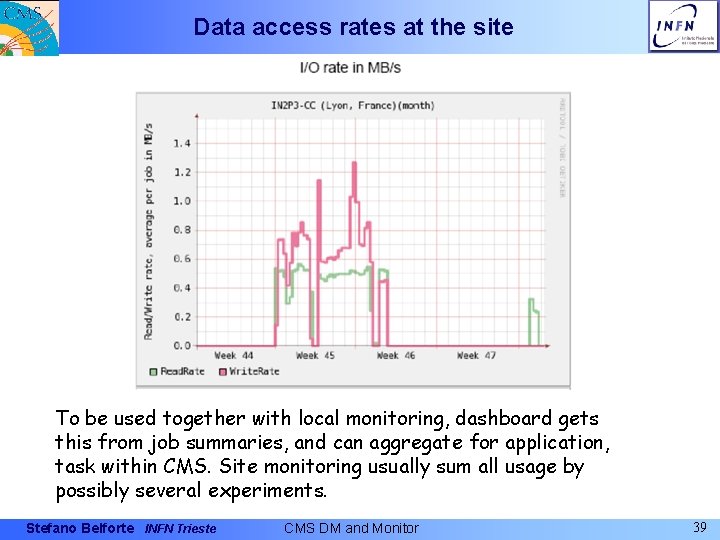

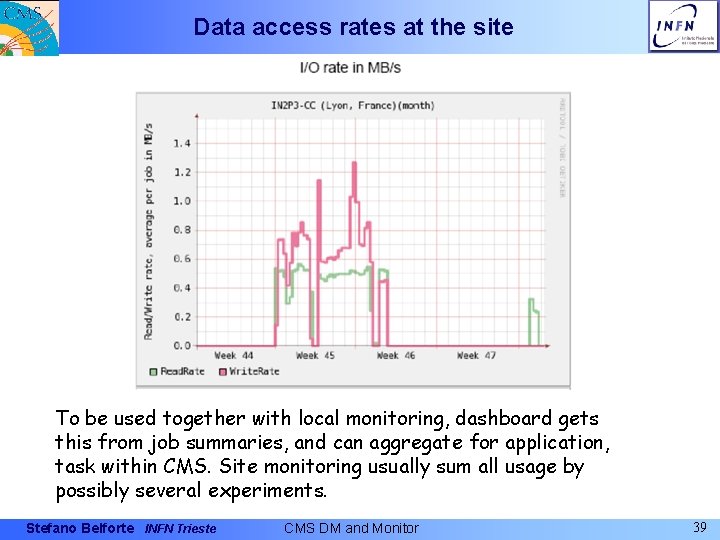

Data access rates at the site

To be used together with local monitoring: the dashboard gets this from job summaries and can aggregate by application or task within CMS. Site monitoring usually sums all usage, possibly by several experiments.

Summary on monitoring

- When one needs to understand a complicated system (a Tier 2 is complicated enough), there is never too much information
- Therefore CMS develops internal monitoring tools to complement more standard fabric monitoring tools
- PhEDEx and Job Dashboard monitoring allow one to look at things from the CMS perspective, aggregating information that makes sense with respect to CMS operations
  - CMS datasets
  - CMS applications, tasks
  - CMS users, groups

Conclusion

- Questions?

Spares

- Spare slides follow

Tiered Architecture

- Tier-0:
  - Accepts data from DAQ
  - Prompt reconstruction
  - Data archive and distribution to Tier-1's
- Tier-1's:
  - Real data archiving
  - Re-processing
  - Skimming and other data-intensive analysis tasks
  - Calibration
  - MC data archiving
- Tier-2's:
  - User data Analysis
  - MC production
  - Import skimmed datasets from Tier-1 and export MC data
  - Calibration/alignment

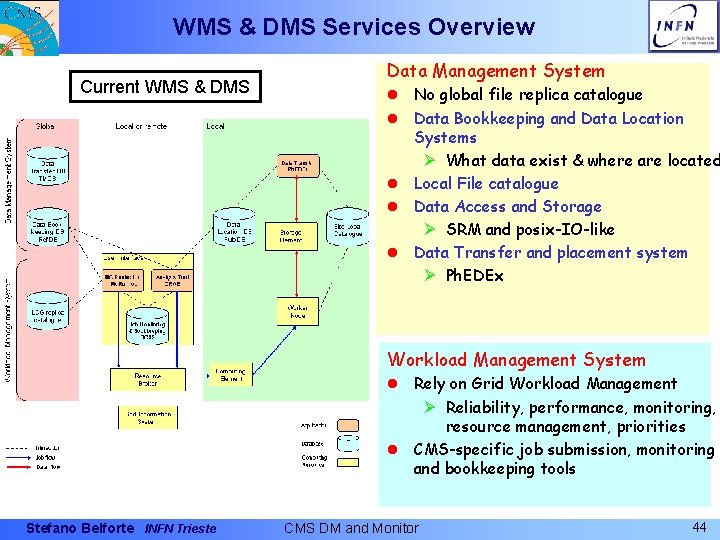

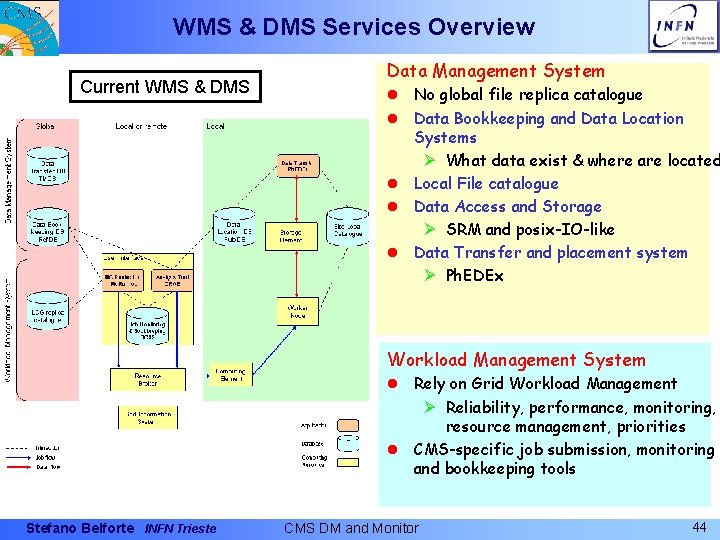

WMS & DMS Services Overview: current WMS & DMS

- Data Management System
  - No global file replica catalogue
  - Data Bookkeeping and Data Location Systems
    - What data exist and where they are located
  - Local File catalogue
  - Data Access and Storage
    - SRM and posix-IO-like
  - Data Transfer and placement system
    - PhEDEx
- Workload Management System
  - Rely on Grid Workload Management
    - Reliability, performance, monitoring, resource management, priorities
  - CMS-specific job submission, monitoring and bookkeeping tools

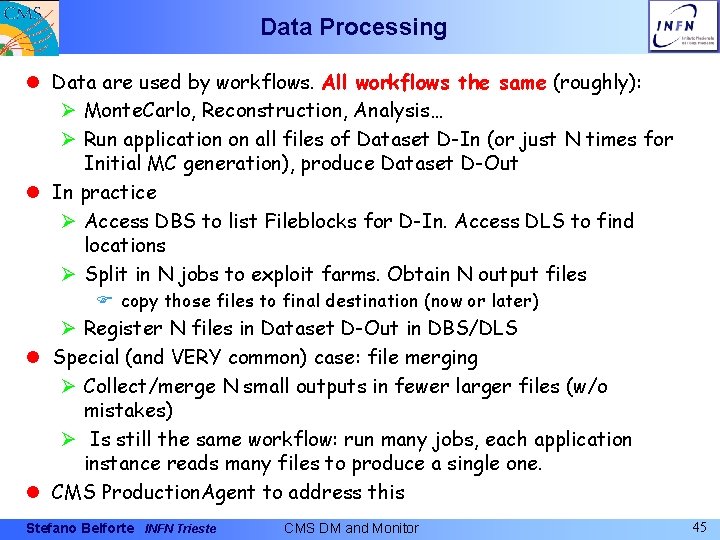

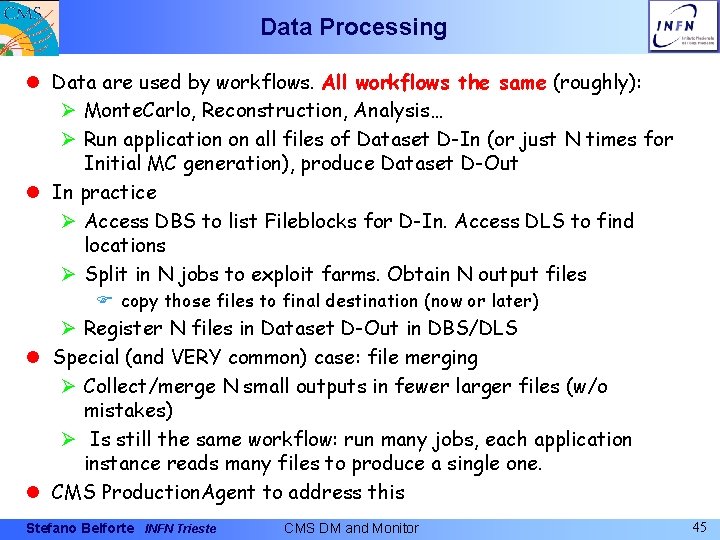

Data Processing

- Data are used by workflows. All workflows are (roughly) the same:
  - MonteCarlo, Reconstruction, Analysis…
  - Run the application on all files of Dataset D-In (or just N times for initial MC generation), produce Dataset D-Out
- In practice
  - Access DBS to list Fileblocks for D-In. Access DLS to find locations
  - Split in N jobs to exploit farms. Obtain N output files
    - copy those files to the final destination (now or later)
  - Register N files in Dataset D-Out in DBS/DLS
- Special (and VERY common) case: file merging
  - Collect/merge N small outputs into fewer, larger files (w/o mistakes)
  - It is still the same workflow: run many jobs, each application instance reads many files to produce a single one
- CMS ProductionAgent to address this
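The split and merge steps of this generic workflow can be sketched as below. This is not how ProductionAgent is implemented: the function names, file names and sizes are hypothetical, and the merge planner uses a simple greedy grouping chosen for brevity.

```python
def split_into_jobs(files, n_jobs):
    """Distribute the input files of D-In round-robin over at most n_jobs jobs."""
    n_jobs = max(1, min(n_jobs, len(files)))
    return [files[i::n_jobs] for i in range(n_jobs)]


def plan_merges(outputs, target_mb):
    """Group (name, size_mb) outputs, in order, into merge jobs of about target_mb.

    Every small output lands in exactly one group, so no file is lost or
    merged twice.
    """
    groups, current, current_mb = [], [], 0
    for name, size_mb in outputs:
        if current and current_mb + size_mb > target_mb:
            groups.append(current)            # close the group before it overflows
            current, current_mb = [], 0
        current.append(name)
        current_mb += size_mb
    if current:
        groups.append(current)
    return groups


inputs = ["f1.root", "f2.root", "f3.root", "f4.root", "f5.root"]
print(split_into_jobs(inputs, 2))

small_outputs = [("a.root", 400), ("b.root", 400), ("c.root", 400), ("d.root", 900)]
print(plan_merges(small_outputs, target_mb=1000))
```

Each merge group is itself just another job that reads many files and writes one, which is the point the slide makes: merging reuses the same workflow machinery as any other processing step.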

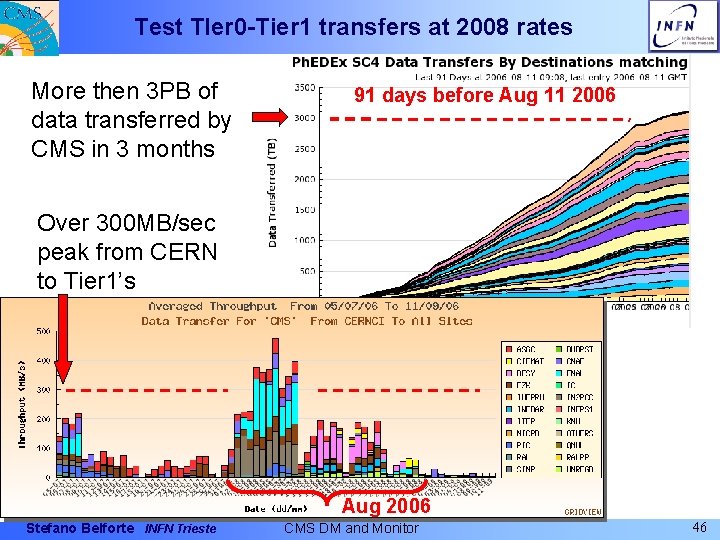

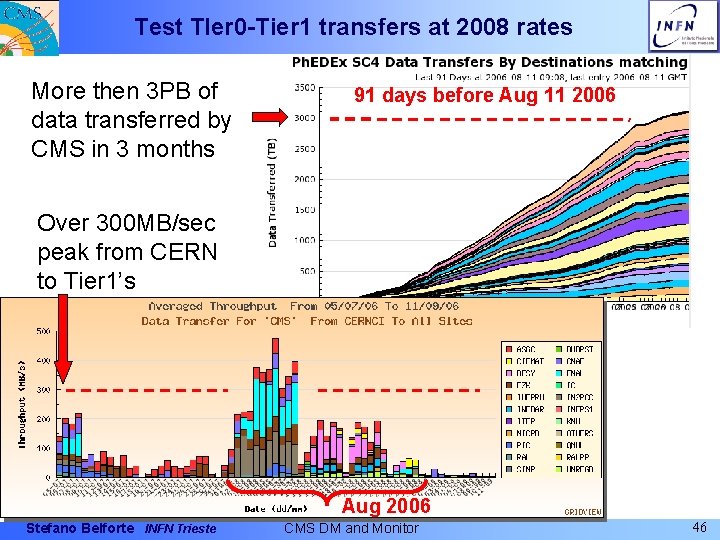

Test Tier 0-Tier 1 transfers at 2008 rates

- More than 3 PB of data transferred by CMS in 3 months (91 days before Aug 11 2006)
- Over 300 MB/sec peak from CERN to Tier 1's (Aug 2006)
