CLUSTERING ON GPU: CONCURRENCY USING CUDA STREAMS

Bei Wang
Weekly Tracking Meeting, Oct 18, 2019

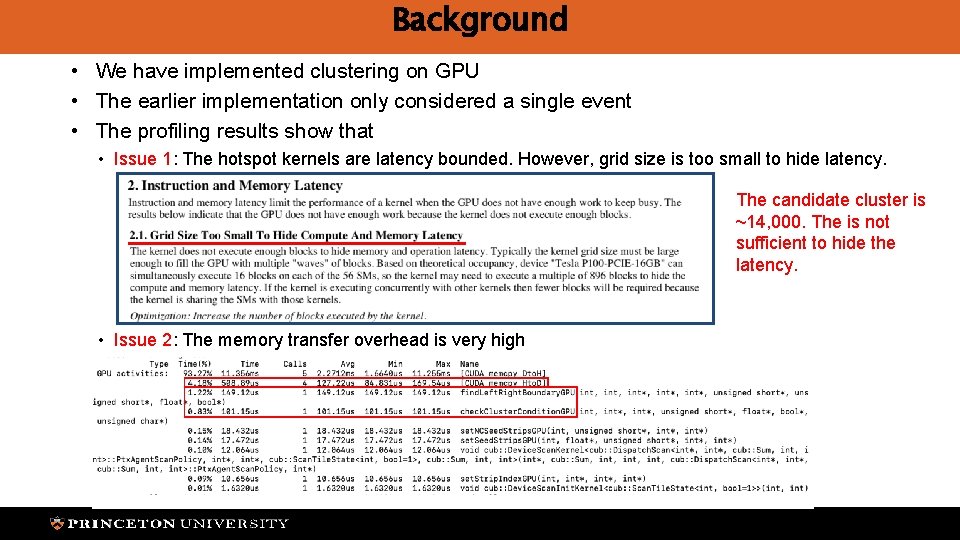

Background
• We have implemented clustering on GPU.
• The earlier implementation only considered a single event.
• The profiling results show two issues:
  • Issue 1: The hotspot kernels are latency bound, but the grid is too small to hide that latency. A single event yields only ~14,000 candidate clusters, which is not enough parallel work to hide the latency.
  • Issue 2: The memory transfer overhead is very high.
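To make Issue 1 concrete, the sketch below counts thread blocks under assumptions that are not on the slide: one thread per candidate cluster and a hypothetical block size of 256 threads. A single event then gives only 55 blocks, whereas a GPU usually needs several resident blocks on each streaming multiprocessor to hide memory latency. If each event launches its own grid and the kernels of several events actually overlap (the ideal case for one stream per event), the blocks in flight add up.

```python
CANDIDATES_PER_EVENT = 14_000  # ~14,000 candidate clusters per event (from the slide)
THREADS_PER_BLOCK = 256        # hypothetical block size, not taken from the slide


def grid_size(n_items, threads_per_block=THREADS_PER_BLOCK):
    """Thread blocks needed to give one thread to each work item (ceiling division)."""
    return (n_items + threads_per_block - 1) // threads_per_block


def blocks_in_flight(n_events, n_items=CANDIDATES_PER_EVENT):
    """Total blocks if each event launches its own grid and all grids overlap (ideal case)."""
    return n_events * grid_size(n_items)


for n_events in (1, 4, 16):
    print(f"{n_events:2d} event(s): {blocks_in_flight(n_events)} blocks")
```

Whether 55 blocks is "too small" depends on the GPU's SM count and occupancy, which this sketch does not model.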

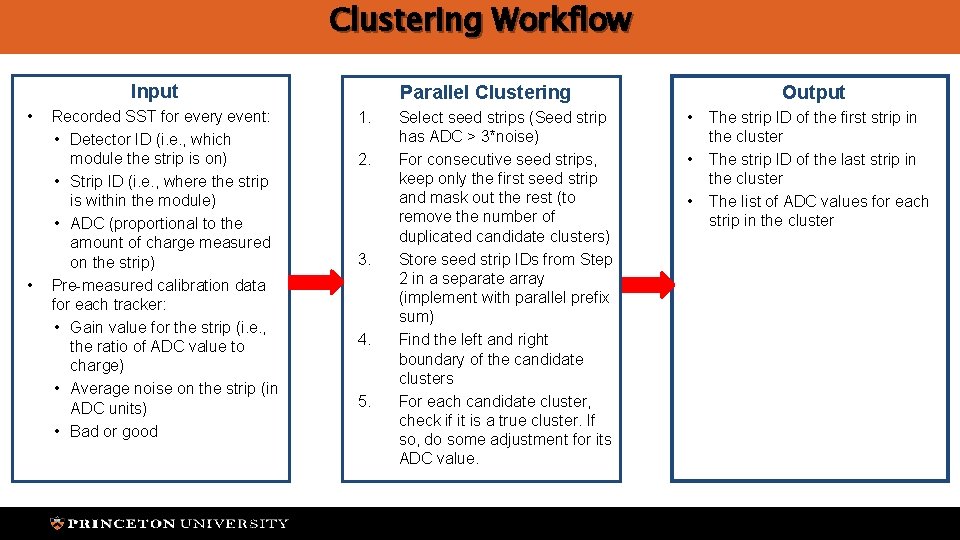

Clustering Workflow

Input
• Recorded SST data for every event:
  • Detector ID (i.e., which module the strip is on)
  • Strip ID (i.e., where the strip is within the module)
  • ADC (proportional to the amount of charge measured on the strip)
• Pre-measured calibration data for each tracker strip:
  • Gain value for the strip (i.e., the ratio of ADC value to charge)
  • Average noise on the strip (in ADC units)
  • Whether the strip is bad or good

Parallel Clustering
1. Select seed strips (a seed strip has ADC > 3 × noise).
2. For consecutive seed strips, keep only the first seed strip and mask out the rest (to reduce the number of duplicated candidate clusters).
3. Store the seed strip IDs from Step 2 in a separate array (implemented with a parallel prefix sum).
4. Find the left and right boundaries of the candidate clusters.
5. For each candidate cluster, check whether it is a true cluster. If so, apply an adjustment to its ADC values.

Output
• The strip ID of the first strip in the cluster
• The strip ID of the last strip in the cluster
• The list of ADC values for each strip in the cluster
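Steps 1 to 4 can be sketched sequentially in Python, written so that each list comprehension plays the role of a kernel with one thread per strip. Several details are assumptions, not taken from the slide: the strips of one module are stored densely and indexed by strip ID, bad strips never seed or join a cluster, and a neighbor strip joins a cluster when its ADC exceeds 2 × noise (the hypothetical `NEIGHBOR_CUT`). The slide does not specify the true-cluster test or the ADC adjustment of step 5, so the sketch stops at the candidate clusters.

```python
from itertools import accumulate

SEED_CUT = 3.0      # seed strip: ADC > 3 * noise (from the slide)
NEIGHBOR_CUT = 2.0  # hypothetical: a neighbor joins the cluster if ADC > 2 * noise


def find_candidate_clusters(adc, noise, bad):
    """Steps 1-4 for the strips of ONE module, stored densely by strip ID (assumed).

    Returns a list of (first_strip, last_strip, adc_values) tuples.
    """
    n = len(adc)

    # Step 1 (one thread per strip): flag seed strips; bad strips never seed (assumed).
    seed = [not bad[i] and adc[i] > SEED_CUT * noise[i] for i in range(n)]

    # Step 2 (one thread per strip): keep only the first seed of each consecutive run.
    keep = [seed[i] and (i == 0 or not seed[i - 1]) for i in range(n)]

    # Step 3: an exclusive prefix sum of the keep flags gives each kept seed its
    # output slot; on the GPU this is a parallel scan, accumulate() stands in for it.
    flags = [int(k) for k in keep]
    slots = [0] + list(accumulate(flags))[:-1]
    seed_ids = [0] * sum(flags)
    for i in range(n):              # scatter: each kept seed writes its own slot
        if keep[i]:
            seed_ids[slots[i]] = i

    # Step 4 (one thread per candidate): walk left and right from the seed while the
    # neighboring strip is good and passes the (hypothetical) neighbor cut.
    def joins(j):
        return not bad[j] and adc[j] > NEIGHBOR_CUT * noise[j]

    clusters = []
    for s in seed_ids:
        left, right = s, s
        while left > 0 and joins(left - 1):
            left -= 1
        while right < n - 1 and joins(right + 1):
            right += 1
        clusters.append((left, right, adc[left:right + 1]))
    return clusters


adc = [0, 5, 40, 35, 6, 0, 0, 25, 3, 0]
noise = [2.0] * len(adc)
bad = [False] * len(adc)
print(find_candidate_clusters(adc, noise, bad))
```

In this input, strips 2 and 3 are both seeds, so step 2 keeps only strip 2, and the walk in step 4 still absorbs strip 3. Two seeds separated by a non-seed strip that passes the neighbor cut would still grow into the same candidate, so in this sketch step 2 reduces but does not fully eliminate duplicates.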

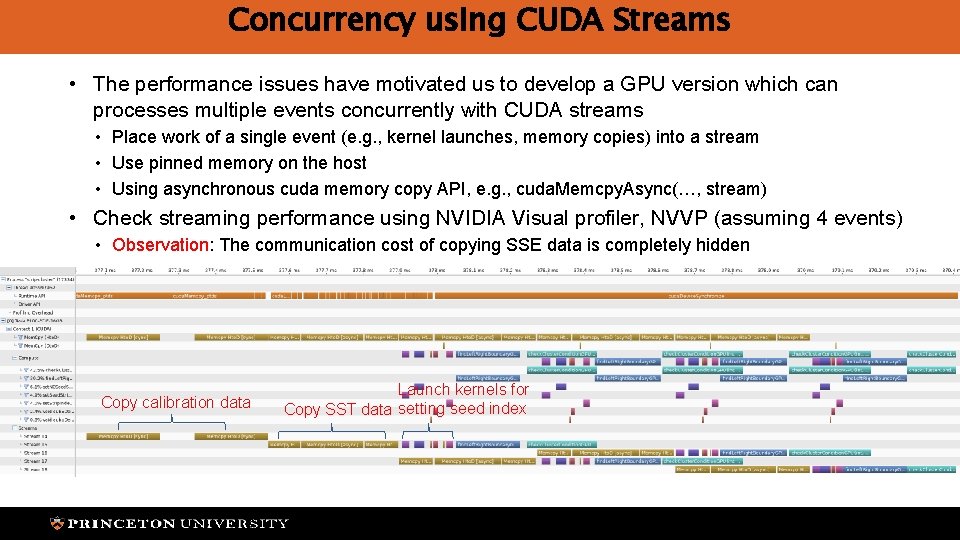

Concurrency using CUDA Streams
• These performance issues motivated us to develop a GPU version that processes multiple events concurrently with CUDA streams:
  • Place the work of a single event (e.g., kernel launches, memory copies) into its own stream
  • Use pinned memory on the host
  • Use the asynchronous CUDA memory copy API, e.g., cudaMemcpyAsync(…, stream)
• Check the streaming performance using the NVIDIA Visual Profiler, NVVP (assuming 4 events)
• Observation: The communication cost of copying the SST data is completely hidden
(NVVP timeline labels: Copy calibration data; Copy SST data; Launch kernels for setting seed index)
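Why the SST copies can disappear from the timeline can be read off an idealized two-stage pipeline model. The assumptions are ours, not the slide's: each event does one host-to-device copy taking `copy_t` and then runs its kernels taking `kernel_t`; copies of different events serialize on the copy engine, kernels of different events serialize on the SMs, and the calibration copy and any device-to-host copies are ignored. When `copy_t <= kernel_t`, only the first event's copy stays exposed.

```python
def serial_time(n_events, copy_t, kernel_t):
    """No overlap: each event copies its SST data, then runs its kernels."""
    return n_events * (copy_t + kernel_t)


def pipelined_time(n_events, copy_t, kernel_t):
    """Idealized overlap with one stream per event (assumed model).

    Copies serialize on the copy engine and kernels serialize on the SMs, but the
    copy for event i+1 runs while the kernels of event i execute.
    """
    if n_events == 0:
        return 0
    return copy_t + (n_events - 1) * max(copy_t, kernel_t) + kernel_t


n, copy_t, kernel_t = 4, 1.0, 3.0   # 4 events as on the slide; timings are hypothetical
print("serial   :", serial_time(n, copy_t, kernel_t))
print("pipelined:", pipelined_time(n, copy_t, kernel_t))
```

With these hypothetical timings the model gives 16 units serial versus 13 pipelined, i.e., copy_t + n × kernel_t. The model says nothing about the measured NVVP numbers.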

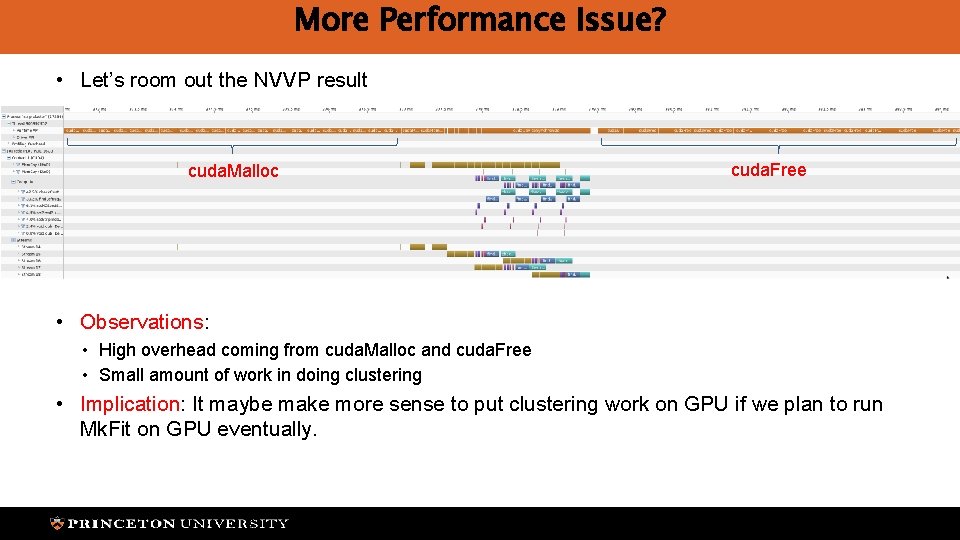

More Performance Issues?
• Let’s zoom out on the NVVP result (timeline labels: cudaMalloc, cudaFree)
• Observations:
  • High overhead coming from cudaMalloc and cudaFree
  • The clustering itself is a small amount of work
• Implication: It may make more sense to put the clustering work on the GPU if we plan to run MkFit on the GPU eventually.

- Slides: 5