Clustering Change Detection Using Normalized Maximum Likelihood Coding


So Hirai (The University of Tokyo; currently NTT DATA Corp.) and Kenji Yamanishi (The University of Tokyo). WITMSE 2012, Amsterdam, Netherlands. Presented at KDD 2012 on Aug. 13.

Contents
- Problem setting
- Significance
- Proposed algorithm: sequential dynamic model selection with NML (normalized maximum likelihood) coding
- How to compute the NML code-length for Gaussian mixtures
- Experimental results
- Marketing applications
- Conclusion

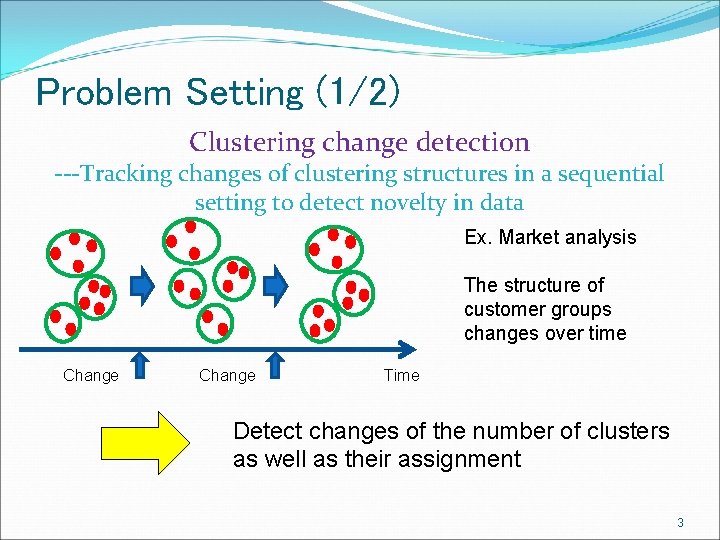

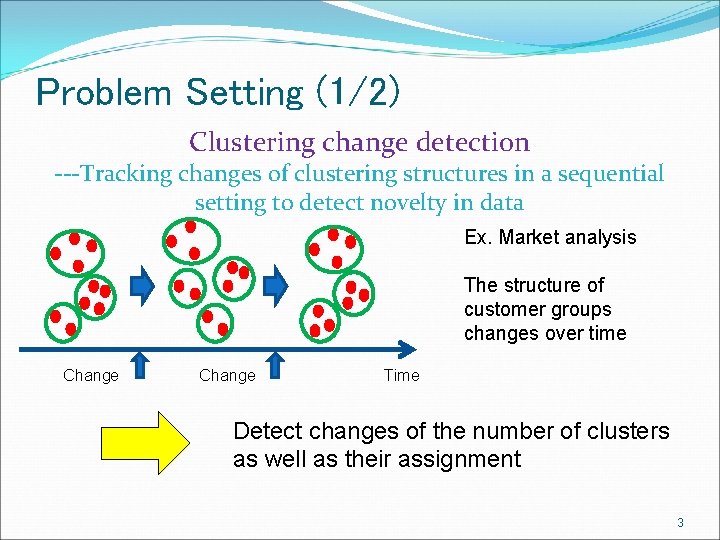

Problem Setting (1/2)
Clustering change detection: tracking changes of clustering structures in a sequential setting to detect novelty in data.
Example: market analysis, where the structure of customer groups changes over time.
Goal: detect changes in the number of clusters as well as in the assignment of data to clusters.

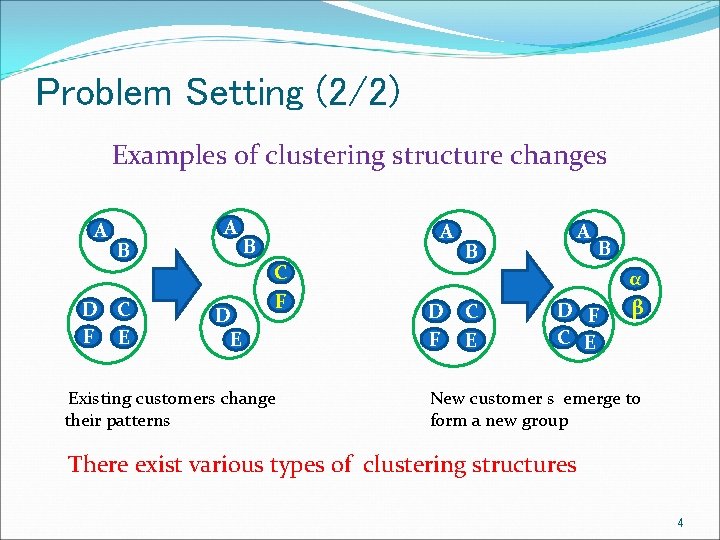

Problem Setting (2/2)
Examples of clustering structure changes (figure: customers A-F regrouped over time, with new customers α and β):
- Existing customers change their patterns.
- New customers emerge to form a new group.
There exist various types of changes in clustering structures.

![Related works on clustering change detection](https://slidetodoc.com/presentation_image_h/95d4b2d6e15dd9276068ecbc898893c6/image-5.jpg)

Related works on the clustering change detection issue:
- Evolutionary clustering [Chakrabarti et al., 2006]
- Hypothesis testing approach [Song and Wang, 2005]
- Kalman filter approach [Krempl et al., 2011]
- GraphScope [Sun et al., 2007]
- Variational Bayes approach [Sato, 2001]

Significance
- A novel clustering change detection algorithm. Key ideas:
  - Sequential dynamic model selection (sequential DMS)
  - The NML (normalized maximum likelihood) code-length as the criterion, with the first formulae for the NML code-length of Gaussian mixture models
- Empirical demonstration of its superiority over existing methods, shown using artificial data sets
- Demonstration of its validity in market analysis, shown using real beer consumption data sets

Sequential Dynamic Model Selection Algorithm

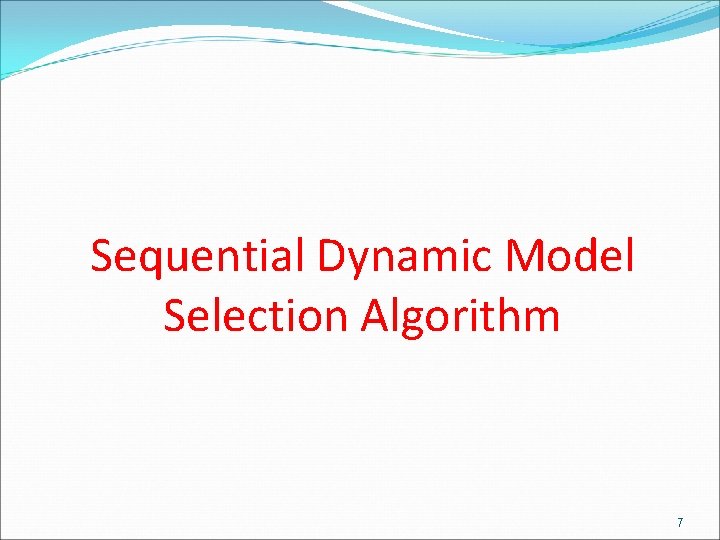

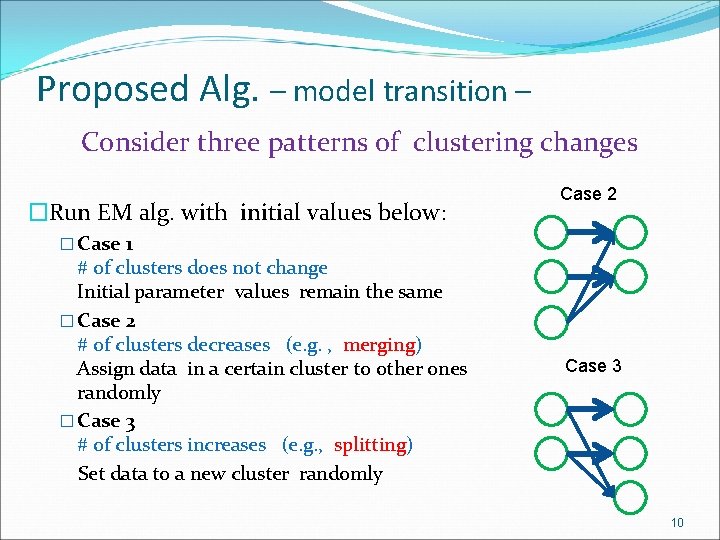

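Proposed Alg.: background of DMS
Dynamic Model Selection (DMS) [Yamanishi and Maruyama, 2007]
- Batch DMS criterion: minimize the total code-length, i.e., the code-length of the data sequence plus the code-length of the model sequence, with respect to the model sequence:

$$\min_{K_1,\dots,K_T} \sum_{t=1}^{T} \Big[ L(x_t \mid K_t) + L(K_t \mid K_{t-1}) \Big]$$

- This extends the MDL (Minimum Description Length) principle [Rissanen, 1978] from the selection of a single model to the selection of a model sequence.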
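Because the batch criterion is a sum of per-time terms plus a term that couples only consecutive models, it can be minimized exactly by dynamic programming over the model sequence (Viterbi style). A minimal sketch, assuming the per-time code-lengths `data_len[t][k]` and a switching cost `trans_len(j, k)` are already available; both are hypothetical inputs, not defined on the slides, and the code-length of the first model is ignored:

```python
from typing import Callable, List, Sequence


def batch_dms(data_len: Sequence[Sequence[float]],
              trans_len: Callable[[int, int], float]) -> List[int]:
    """Model index sequence minimizing sum_t data_len[t][k_t] + trans_len(k_{t-1}, k_t).

    data_len[t][k]  : code-length of the data at time t under model k (hypothetical input)
    trans_len(j, k) : code-length of switching from model j to model k (hypothetical input)
    """
    T, M = len(data_len), len(data_len[0])
    cost = list(data_len[0])          # best total code-length of a path ending in model k
    back: List[List[int]] = []        # back-pointers for t = 1..T-1
    for t in range(1, T):
        new_cost, ptr = [], []
        for k in range(M):
            # Best predecessor j for model k at time t.
            j_best = min(range(M), key=lambda j: cost[j] + trans_len(j, k))
            new_cost.append(cost[j_best] + trans_len(j_best, k) + data_len[t][k])
            ptr.append(j_best)
        cost = new_cost
        back.append(ptr)
    # Trace the optimal path backwards from the best final model.
    k = min(range(M), key=lambda i: cost[i])
    path = [k]
    for ptr in reversed(back):
        k = ptr[k]
        path.append(k)
    return path[::-1]
```

With `data_len = [[1, 5], [1, 5], [5, 1], [5, 1]]` and a switching cost of 2, the sketch returns `[0, 0, 1, 1]`: one switch is cheaper than coding the last two points with the poorly fitting model.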

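Proposed Alg.: sequential DMS
Sequential dynamic model selection (SDMS) algorithm
- At each time t, given the data x_t, sequentially select the number of clusters K_t and the cluster assignment Z_t as

$$(K_t, Z_t) = \arg\min_{K,\,Z} \Big[ L_{\mathrm{NML}}(x_t, Z; K) + L(K \mid K_{t-1}) \Big]$$

  where the first term is the code-length for data clustering (computed with NML, normalized maximum likelihood, coding) and the second is the code-length for the transition of the clustering structure.
- This is a sequential variant of the DMS criterion [Yamanishi and Maruyama, 2007].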

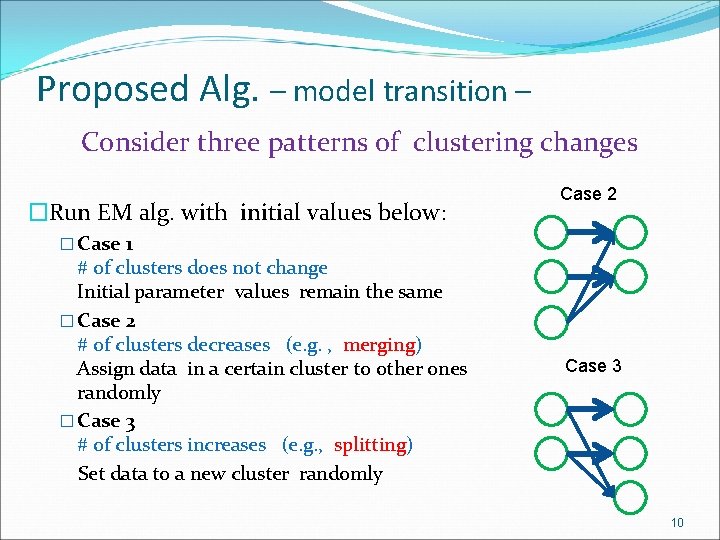
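Unlike the batch criterion, the sequential variant commits to K_t at each step. A minimal sketch of that loop, assuming (as on the model-transition slides) that K moves only to a neighbor. Here `data_len(t, k)` is a hypothetical stand-in for the NML code-length after fitting a k-cluster model at time t (for example by EM), and `trans_len` is a hypothetical transition code-length; in the actual method the latter may also depend on the past model sequence:

```python
from typing import Callable, List


def sequential_dms(num_steps: int, k_init: int,
                   data_len: Callable[[int, int], float],
                   trans_len: Callable[[int, int], float],
                   k_max: int) -> List[int]:
    """At each t, pick K_t from {K_{t-1}-1, K_{t-1}, K_{t-1}+1} minimizing the total code-length."""
    ks: List[int] = []
    k_prev = k_init
    for t in range(num_steps):
        # Neighbors of the previous K, kept inside the allowed range 1..k_max.
        candidates = [k for k in (k_prev - 1, k_prev, k_prev + 1) if 1 <= k <= k_max]
        k_t = min(candidates, key=lambda k: data_len(t, k) + trans_len(k_prev, k))
        ks.append(k_t)
        k_prev = k_t
    return ks
```

Restricting the candidates to neighbors keeps each step to three model fits, at the price of needing several steps to track a jump of more than one cluster.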

Proposed Alg.: model transition
Consider three patterns of clustering changes, and run the EM algorithm with the following initial values:
- Case 1: the number of clusters does not change. The initial parameter values remain the same.
- Case 2: the number of clusters decreases (e.g., merging). The data in a certain cluster are randomly assigned to the other clusters.
- Case 3: the number of clusters increases (e.g., splitting). Randomly chosen data are assigned to a new cluster.
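The three initializations can be expressed as operations on the previous cluster labels, from which initial EM parameters would then be estimated. A minimal sketch; the function name, the choice of which cluster to dissolve, and the fraction of points moved when splitting are all assumptions, not taken from the slides:

```python
import random
from typing import List


def init_labels(prev_labels: List[int], k_prev: int, case: str,
                rng: random.Random) -> List[int]:
    """Initial labels (0..K-1) for EM at time t, built from the labels at time t-1."""
    labels = list(prev_labels)
    if case == "same":    # Case 1: K unchanged, start from the previous assignment
        return labels
    if case == "merge":   # Case 2: K decreases, dissolve one randomly chosen cluster
        if k_prev < 2:
            raise ValueError("cannot merge a single cluster")
        gone = rng.randrange(k_prev)
        others = [c for c in range(k_prev) if c != gone]
        labels = [rng.choice(others) if c == gone else c for c in labels]
        return [c - 1 if c > gone else c for c in labels]   # relabel to 0..k_prev-2
    if case == "split":   # Case 3: K increases, move random points to new cluster k_prev
        frac = 1.0 / (k_prev + 1)                            # assumed fraction of points moved
        return [k_prev if rng.random() < frac else c for c in labels]
    raise ValueError(f"unknown case: {case!r}")
```

In this sketch a split may, by chance, move no points at all. A real implementation would guard against an empty new cluster before running EM.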

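Proposed Alg.: code-length for transition
- Model transition probability distribution: suppose that K transits only to its neighbors (K-1, K, or K+1).
- Employ the Krichevsky-Trofimov (KT) estimate [Krichevsky and Trofimov, 1981] for the transition probability.
- The code-length of the model transition is the negative logarithm of the estimated transition probability.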
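A minimal sketch of such a transition code-length. The parameterization is an assumption, since the slide does not show it: K stays with probability 1 - α and moves to each neighbor with probability α/2, where α is the KT estimate (count + 1/2)/(n + 1) computed from the number of past changes. Boundary effects at K = 1 are ignored:

```python
import math


def transition_code_length(k_prev: int, k_new: int, n_changes: int, n_steps: int) -> float:
    """Code-length (nats) of moving from k_prev to k_new.

    Assumed model: stay with probability 1 - alpha, move to each neighbor with
    probability alpha / 2, where alpha is the Krichevsky-Trofimov estimate from
    n_changes changes observed among n_steps past transitions.
    """
    if abs(k_new - k_prev) > 1:
        raise ValueError("K may only move to a neighbor")
    alpha = (n_changes + 0.5) / (n_steps + 1.0)   # KT estimate of the change probability
    p = 1.0 - alpha if k_new == k_prev else alpha / 2.0
    return -math.log(p)
```

Because the KT estimate adds 1/2 to the change count, a change never has zero probability, so its code-length stays finite even after a long run without changes.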

How to Compute the NML Code-Length for Gaussian Mixtures

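Criteria: NML code-length
- Model (Gaussian mixture model): $f(x; \theta, K) = \sum_{k=1}^{K} \pi_k\, \mathcal{N}(x; \mu_k, \Sigma_k)$
- NML (normalized maximum likelihood) code-length:

$$L_{\mathrm{NML}}(x^n; K) = -\log f(x^n; \hat\theta(x^n), K) + \log \mathcal{C}_n(K), \qquad \mathcal{C}_n(K) = \int f(y^n; \hat\theta(y^n), K)\, dy^n$$

  where $\hat\theta$ is the maximum likelihood estimator and $\mathcal{C}_n(K)$ is the normalization term.
- NML gives the shortest code-length in the sense of Shtarkov's minimax criterion [Shtarkov, 1987].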
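The definition is easiest to see on a discrete model, where the integral becomes a finite sum. A minimal sketch using the Bernoulli (binary) model as a stand-in for the GMM; the function names are illustrative:

```python
import math
from typing import Sequence


def bernoulli_normalizer(n: int) -> float:
    """C_n: sum over all binary sequences of length n of their maximized likelihood."""
    total = 0.0
    for k in range(n + 1):                  # k = number of ones; ML estimate is k / n
        p = k / n if n > 0 else 0.0
        # Python evaluates 0.0 ** 0 as 1.0, matching the convention 0^0 = 1.
        total += math.comb(n, k) * p ** k * (1 - p) ** (n - k)
    return total


def bernoulli_nml_code_length(x: Sequence[int]) -> float:
    """-log f(x; theta_hat(x)) + log C_n in nats, for a non-empty binary sequence x."""
    n, k = len(x), sum(x)
    p = k / n
    log_ml = (k * math.log(p) if k else 0.0) + ((n - k) * math.log(1 - p) if n - k else 0.0)
    return -log_ml + math.log(bernoulli_normalizer(n))
```

For n = 2, C_2 = 1 + 2(1/2)(1/2) + 1 = 2.5, so the sequence 01 gets NML probability 0.25/2.5 = 0.1. The NML probabilities of all sequences of a given length sum to one, which is the role of the normalization term.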

For Continuous Data
- Normalization term: $\mathcal{C}_n(K) = \int f(y^n; \hat\theta(y^n), K)\, dy^n$
- For continuous data, the integral ranges over the whole data domain.
- Problems:
  - NML for a Gaussian distribution: the normalization term diverges.
  - NML for a mixture distribution: the normalization term is computationally intractable because of combinatorial difficulties.

For Continuous Data (Example)
- For the one-dimensional Gaussian distribution with known variance σ², the normalization term $\mathcal{C}_n = \int_{\mathbb{R}^n} f(x^n; \hat\mu(x^n))\, dx^n$ diverges.

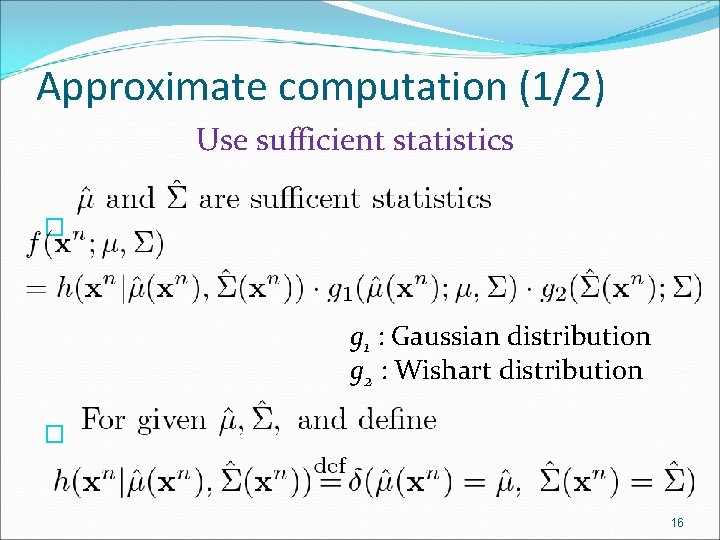

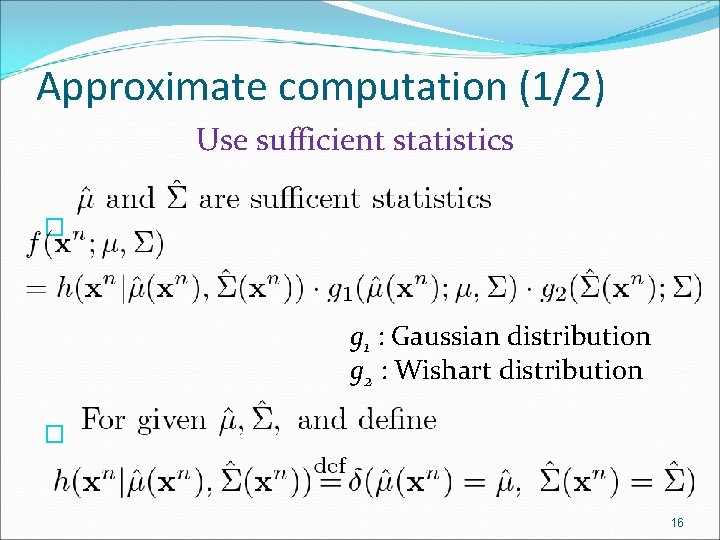
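The divergence can be seen through the sufficient statistic $\bar x$ (standard facts, written in this section's notation, for one-dimensional data with known variance σ²). The likelihood factors as

$$f(x^n;\mu) = g(\bar x;\mu)\, h(x^n \mid \bar x), \qquad g(\bar x;\mu) = \sqrt{\frac{n}{2\pi\sigma^2}}\exp\!\Big(-\frac{n(\bar x-\mu)^2}{2\sigma^2}\Big),$$

where $g$ is the density of $\bar x \sim \mathcal{N}(\mu, \sigma^2/n)$ and the conditional density $h$ does not depend on μ and integrates to one. Since $\hat\mu(x^n) = \bar x$,

$$\mathcal{C}_n = \int_{\mathbb{R}^n} f(x^n;\hat\mu(x^n))\,dx^n = \int_{\mathbb{R}} g(\bar x;\bar x)\,d\bar x = \int_{\mathbb{R}} \sqrt{\frac{n}{2\pi\sigma^2}}\,d\bar x = \infty.$$

Restricting the data so that $|\bar x| \le R$ gives the finite value $\mathcal{C}_n = 2R\sqrt{n/(2\pi\sigma^2)}$, which depends on the bound R.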

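Approximate computation (1/2)
Use sufficient statistics: the Gaussian likelihood factors through the sufficient statistics $(\hat\mu, \hat\Sigma)$, where
- g1: Gaussian distribution (for the mean estimate $\hat\mu$)
- g2: Wishart distribution (for the covariance estimate $\hat\Sigma$)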

Criteria: NML for GMM
Efficiently computing an approximate variant of the NML code-length for a GMM [Hirai and Yamanishi, 2011]:
- Restrict the range of data so that the MLE lies in a bounded range specified by hyper-parameters.
- The normalization term then does not diverge.
- However, it still depends heavily on the hyper-parameters.

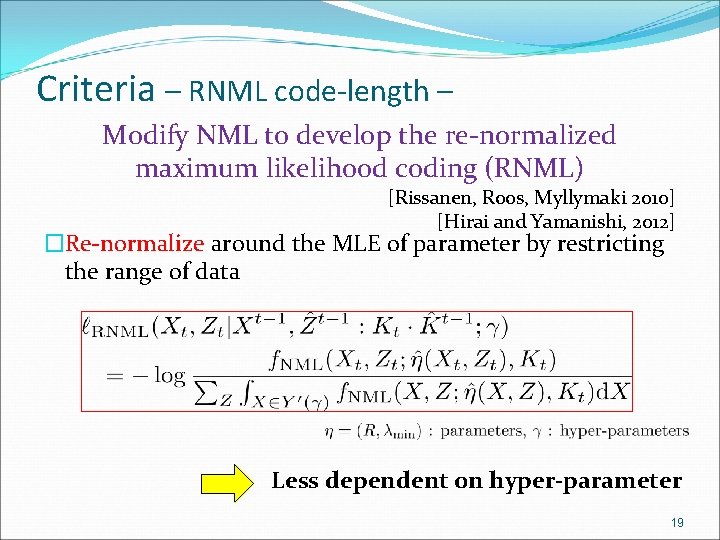
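The dependence on the hyper-parameters is already visible in the simplest case. A minimal sketch for a single one-dimensional Gaussian with known variance, assuming the restriction is |mean| ≤ R; this is an illustration, not the GMM formula on the slides:

```python
import math
from typing import Sequence


def restricted_gaussian_normalizer(n: int, sigma2: float, R: float) -> float:
    """C_n for N(mu, sigma2) with sigma2 known, data restricted so that |mean(x)| <= R."""
    return 2.0 * R * math.sqrt(n / (2.0 * math.pi * sigma2))


def restricted_gaussian_nml(x: Sequence[float], sigma2: float, R: float) -> float:
    """NML code-length (nats, a density code-length) of x under the restriction |mean(x)| <= R."""
    n = len(x)
    mean = sum(x) / n
    if abs(mean) > R:
        raise ValueError("sample mean outside the restricted range")
    neg_log_ml = (0.5 * n * math.log(2 * math.pi * sigma2)
                  + sum((xi - mean) ** 2 for xi in x) / (2 * sigma2))
    return neg_log_ml + math.log(restricted_gaussian_normalizer(n, sigma2, R))
```

Changing R from 1 to 10 adds exactly log 10 nats to every code-length. When the hyper-parameters are shared by competing models whose normalizers scale differently, as with different numbers of clusters, such terms can shift which model is selected, which motivates the re-normalization on the next slides.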

NML
The normalization term is calculated as follows, where n is the number of data and m is the dimension of the data:

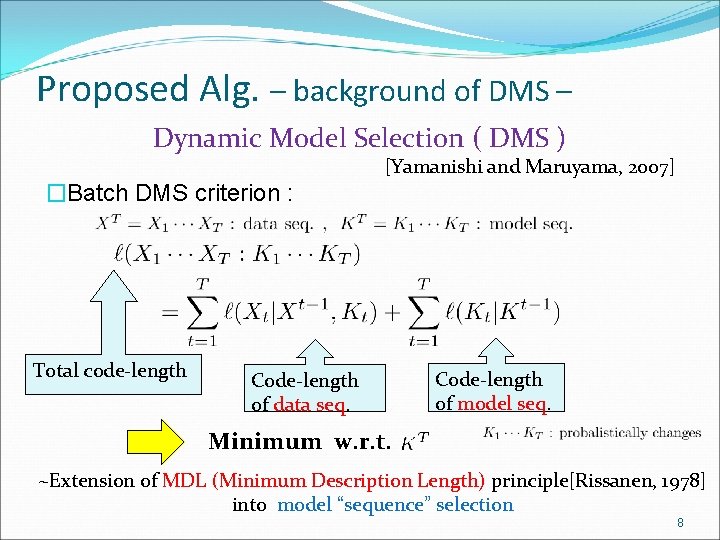

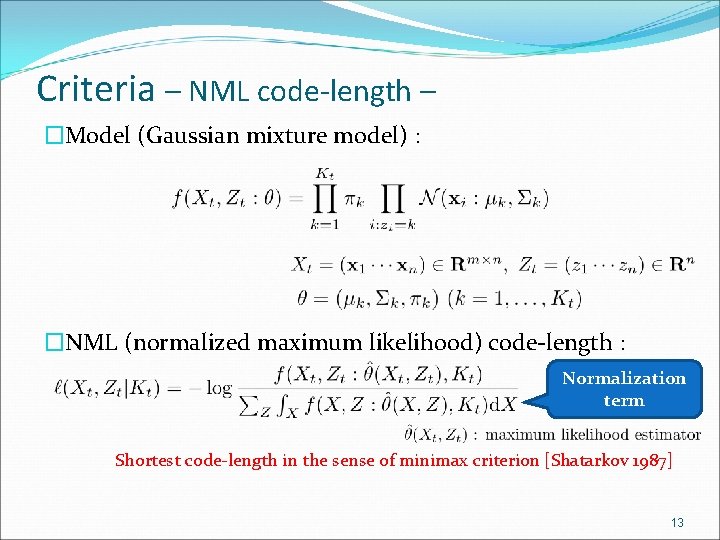

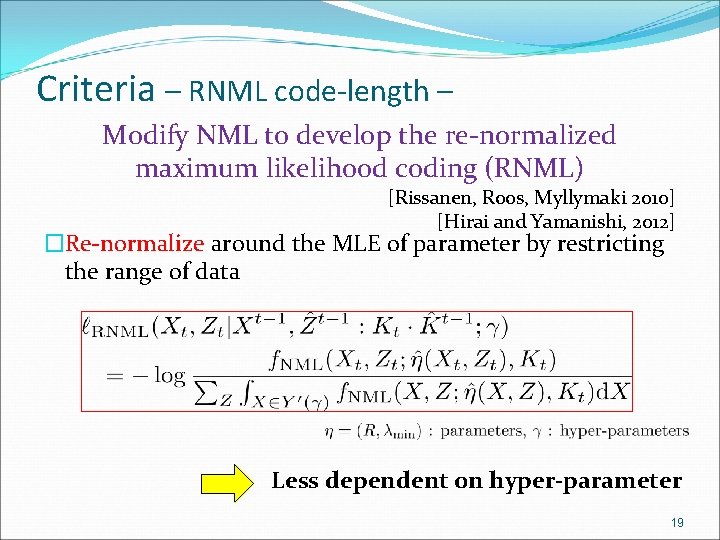

Criteria: RNML code-length
Modify NML to develop the re-normalized maximum likelihood (RNML) coding [Rissanen, Roos, and Myllymäki, 2010] [Hirai and Yamanishi, 2012]:
- Re-normalize around the MLE of the hyper-parameters by restricting the range of data.
- The result is less dependent on the hyper-parameters.

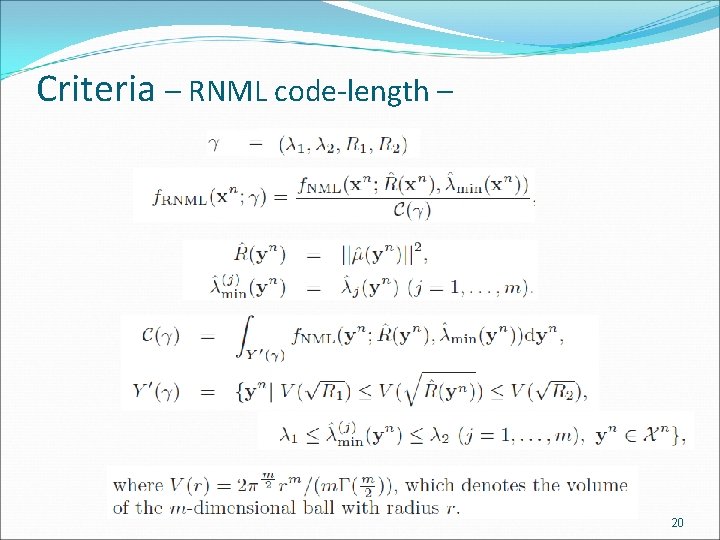

Criteria: RNML code-length

![RNML code-length theorem (Hirai and Yamanishi, 2012)](https://slidetodoc.com/presentation_image_h/95d4b2d6e15dd9276068ecbc898893c6/image-21.jpg)

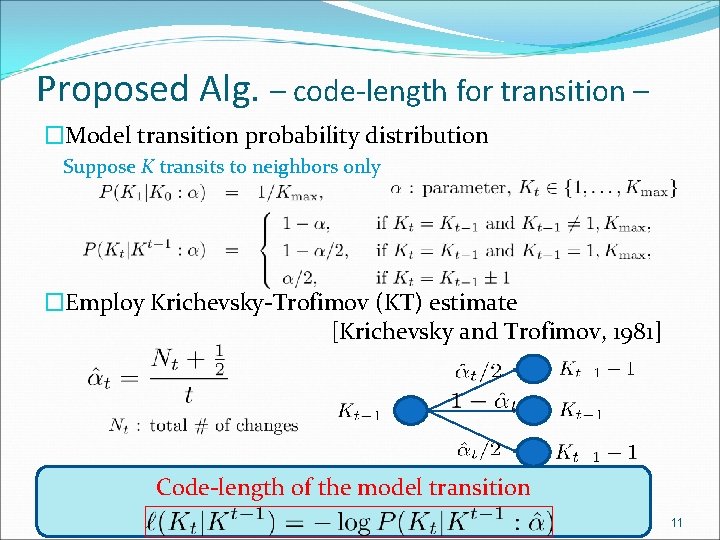

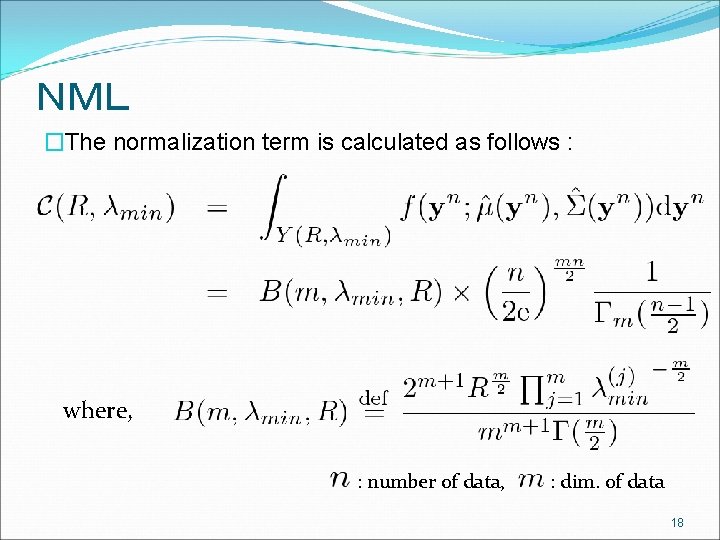

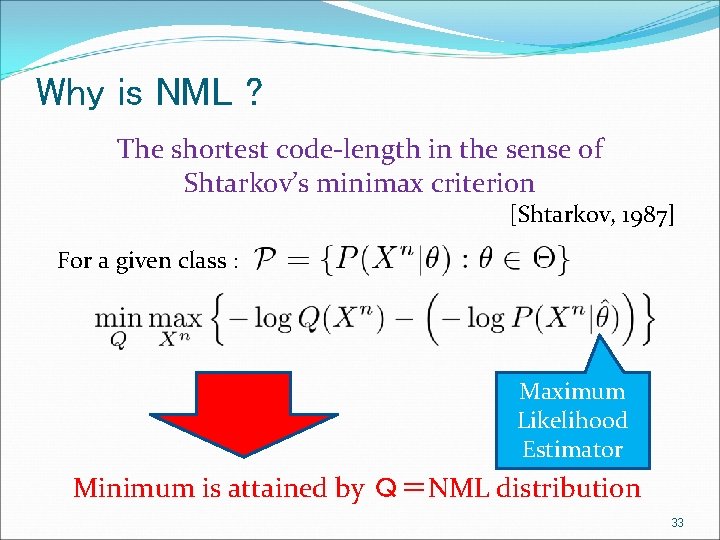

RNML code-length
Theorem [Hirai and Yamanishi, 2012]: the RNML code-length for a GMM is calculated as follows.
Problem: computing its normalization term directly is computationally costly.

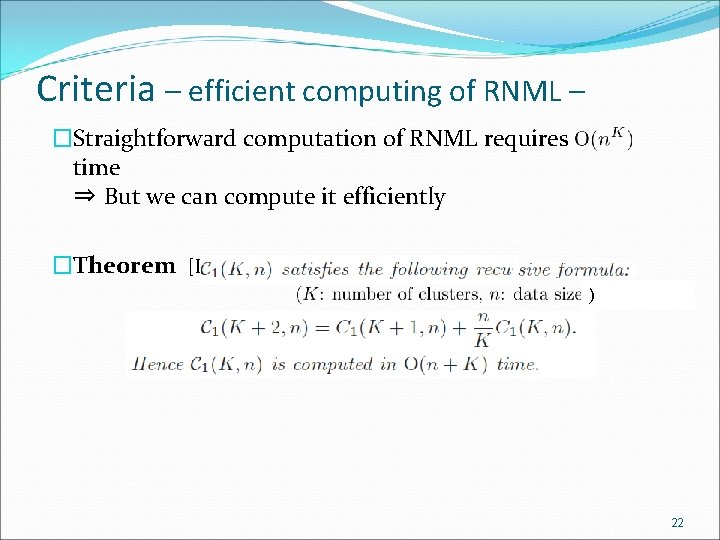

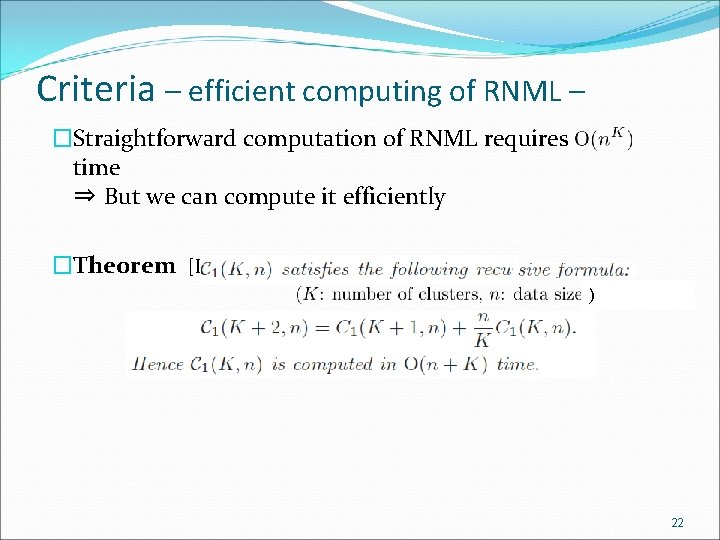

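Criteria: efficient computing of RNML
- Straightforward computation of RNML is time-consuming, but it can be computed efficiently.
- Theorem [Kontkanen and Myllymäki, 2007]: the normalization term of the multinomial distribution satisfies the recursion

$$\mathcal{C}(K+2, n) = \mathcal{C}(K+1, n) + \frac{n}{K}\,\mathcal{C}(K, n)$$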

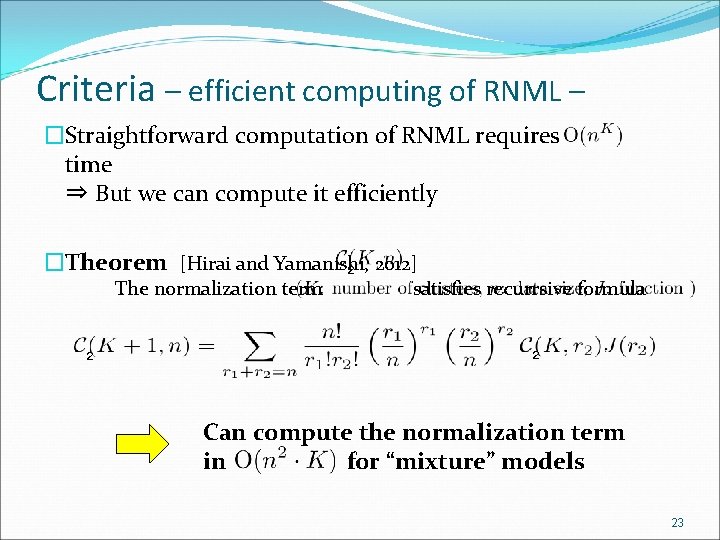
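The gain from the recursion is easy to check numerically. A minimal sketch comparing the Kontkanen-Myllymäki recursion, which needs O(K) steps once C(2, n) is known, with the brute-force sum over all count vectors (h_1, ..., h_K) summing to n, whose number of terms grows like n^(K-1); function names are illustrative:

```python
import math


def multinomial_normalizer(K: int, n: int) -> float:
    """C(K, n) via C(K+2, n) = C(K+1, n) + (n / K) C(K, n), for n >= 1."""
    c1 = 1.0                                             # C(1, n) = 1
    c2 = sum(math.comb(n, h) * (h / n) ** h * ((n - h) / n) ** (n - h)
             for h in range(n + 1))                      # C(2, n): binomial normalizer
    if K == 1:
        return c1
    prev, cur = c1, c2
    for k in range(1, K - 1):                            # produces C(k + 2, n)
        prev, cur = cur, cur + n / k * prev
    return cur


def multinomial_normalizer_bruteforce(K: int, n: int) -> float:
    """Sum over (h_1..h_K), sum = n, of n!/prod(h_k!) * prod (h_k / n)^h_k."""
    def go(k: int, remaining: int) -> float:
        if k == K:
            return 1.0 if remaining == 0 else 0.0
        return sum((h / n) ** h / math.factorial(h) * go(k + 1, remaining - h)
                   for h in range(remaining + 1))
    return math.factorial(n) * go(0, n)
```

For example, both functions give C(3, 2) = 4.5.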

Criteria: efficient computing of RNML
- Straightforward computation of RNML is time-consuming, but it can be computed efficiently.
- Theorem [Hirai and Yamanishi, 2012]: the normalization term satisfies a recursive formula, so it can be computed efficiently for "mixture" models.
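One generic way to organize such a recursion is to add one component at a time, convolving over how many data points the new component receives. A minimal sketch, assuming the mixture normalizer has the form of a sum over counts (h_1, ..., h_K) of the multinomial weight times a per-component normalizer `c1(h)`; `c1` is a hypothetical input, and the exact recursion in Hirai and Yamanishi (2012) may differ in form:

```python
import math
from typing import Callable, List


def mixture_normalizer(K: int, n: int, c1: Callable[[int], float]) -> float:
    """Sum over (h_1..h_K), sum = n, of n!/prod(h_k!) * prod (h_k/n)^h_k * prod c1(h_k).

    Dynamic programming over components: O(K n^2) operations instead of a sum
    over all count vectors.
    """
    # D[m] = normalizer of the components added so far on m data points.
    D: List[float] = [c1(m) for m in range(n + 1)]
    for _ in range(2, K + 1):
        new_D: List[float] = []
        for m in range(n + 1):
            total = 0.0
            for r in range(m + 1):       # r points to the old components, m - r to the new one
                w = math.comb(m, r) * (r / m) ** r * ((m - r) / m) ** (m - r) if m > 0 else 1.0
                total += w * D[r] * c1(m - r)
            new_D.append(total)
        D = new_D
    return D[n]
```

With `c1 = lambda r: 1.0` the sketch reduces to the multinomial normalizer, e.g. 2.5 for K = 2, n = 2. For a GMM, `c1` would be a restricted normalizer of a single Gaussian component.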

Experimental Results: Artificial Data and Market Analysis

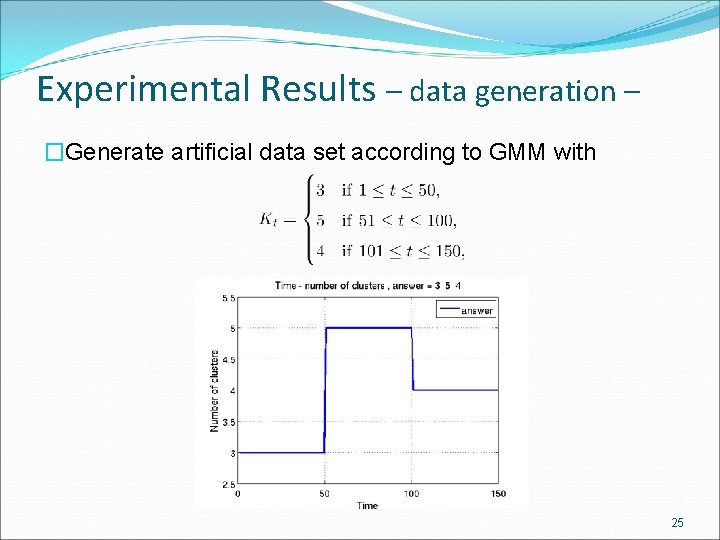

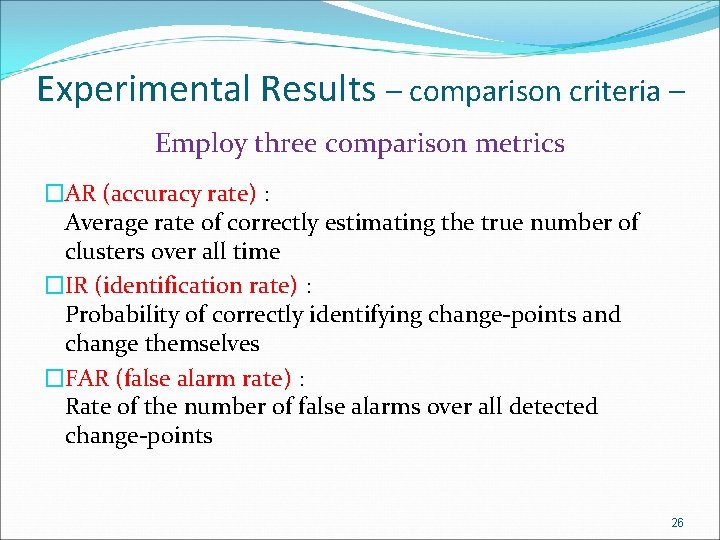

Experimental Results: data generation
- Generate artificial data sets according to a GMM with the following settings:

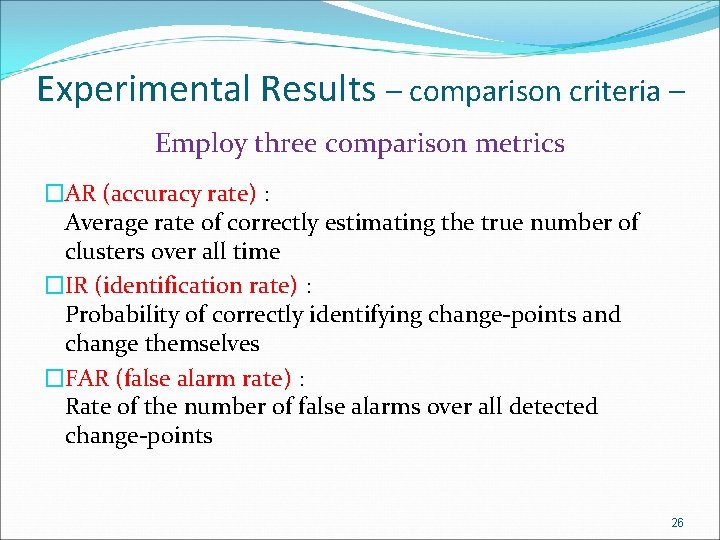
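A data stream with a clustering change can be simulated along these lines. A minimal sketch with hypothetical one-dimensional parameters (two clusters, one of which splits in two at `change_at`); the actual settings of the experiments are those on the slide:

```python
import random
from typing import List, Sequence, Tuple

Component = Tuple[float, float, float]   # (weight, mean, std) of a 1-D Gaussian component


def sample_gmm(components: Sequence[Component], size: int, rng: random.Random) -> List[float]:
    """Draw `size` points: pick a component by weight, then sample from its Gaussian."""
    weights = [w for w, _, _ in components]
    picks = rng.choices(range(len(components)), weights=weights, k=size)
    return [rng.gauss(components[i][1], components[i][2]) for i in picks]


def make_stream(T: int, change_at: int, size_per_step: int, seed: int = 0) -> List[List[float]]:
    """Hypothetical setting: 2 clusters before change_at, 3 clusters (a split) afterwards."""
    rng = random.Random(seed)
    before = [(0.5, -5.0, 1.0), (0.5, 5.0, 1.0)]
    after = [(0.5, -5.0, 1.0), (0.25, 3.0, 1.0), (0.25, 7.0, 1.0)]
    return [sample_gmm(before if t < change_at else after, size_per_step, rng)
            for t in range(T)]
```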

Experimental Results: comparison criteria
Employ three comparison metrics:
- AR (accuracy rate): the average rate of correctly estimating the true number of clusters over all time.
- IR (identification rate): the probability of correctly identifying change-points and the changes themselves.
- FAR (false alarm rate): the ratio of the number of false alarms to the number of all detected change-points.
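AR and FAR follow directly from their definitions. A minimal sketch, assuming change-points are defined only by changes in the number of clusters and must match the true change time exactly; IR is omitted because the matching rule for "the changes themselves" is not specified here:

```python
from typing import Sequence, Set


def change_points(ks: Sequence[int]) -> Set[int]:
    """Times t at which the number of clusters differs from that at t-1."""
    return {t for t in range(1, len(ks)) if ks[t] != ks[t - 1]}


def accuracy_rate(true_ks: Sequence[int], est_ks: Sequence[int]) -> float:
    """AR: fraction of time steps at which the estimated number of clusters is correct."""
    return sum(a == b for a, b in zip(true_ks, est_ks)) / len(true_ks)


def false_alarm_rate(true_ks: Sequence[int], est_ks: Sequence[int]) -> float:
    """FAR: detected change-points that are not true change-points, over all detected ones."""
    detected = change_points(est_ks)
    if not detected:
        return 0.0
    return len(detected - change_points(true_ks)) / len(detected)
```

For a true sequence 2, 2, 3, 3 and an estimate 2, 3, 3, 3, AR is 0.75 and FAR is 1.0: the only detected change comes one step early and counts as a false alarm.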

Experimental Results: artificial data
Our algorithm with RNML detected the true change-points and identified the true number of clusters with higher probability than AIC and BIC.
Results (columns: RNML, AIC, BIC):
- AR: 0.903, 0.135
- IR: 0.380, 0.005, 0.020
- FAR: 0.260, 0.020, 0.718
AIC: Akaike's information criterion [Akaike, 1974]. BIC: Bayesian information criterion [Schwarz, 1978].
Figure: average number of clusters over time.

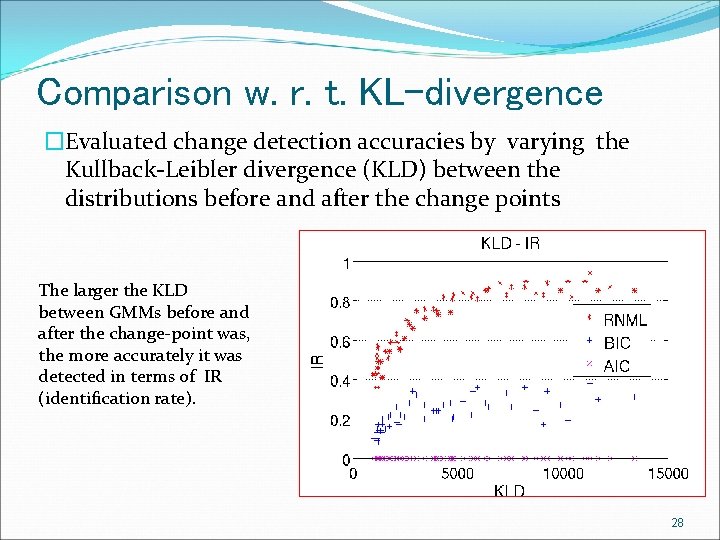
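For reference, the baselines in their textbook form. A minimal sketch assuming a GMM with full covariance matrices, whose free parameters are K - 1 mixing weights, Km mean entries and Km(m+1)/2 covariance entries; the maximized log-likelihood (e.g. from EM) is an input, and the exact variant used in the experiments is not stated on the slides:

```python
import math


def gmm_num_params(K: int, m: int) -> int:
    """Free parameters of a K-component, m-dimensional GMM with full covariances."""
    return (K - 1) + K * m + K * m * (m + 1) // 2


def aic(log_likelihood: float, K: int, m: int) -> float:
    """AIC = -2 log L + 2 * (number of parameters)."""
    return -2.0 * log_likelihood + 2.0 * gmm_num_params(K, m)


def bic(log_likelihood: float, K: int, m: int, n: int) -> float:
    """BIC = -2 log L + (number of parameters) * log n."""
    return -2.0 * log_likelihood + gmm_num_params(K, m) * math.log(n)
```

Both penalties grow only linearly in the parameter count, whereas the NML normalization term measures the complexity of the model class directly.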

Comparison w.r.t. KL-divergence
- We evaluated change detection accuracy while varying the Kullback-Leibler divergence (KLD) between the distributions before and after the change-points.
- The larger the KLD between the GMMs before and after a change-point, the more accurately the change was detected in terms of IR (identification rate).

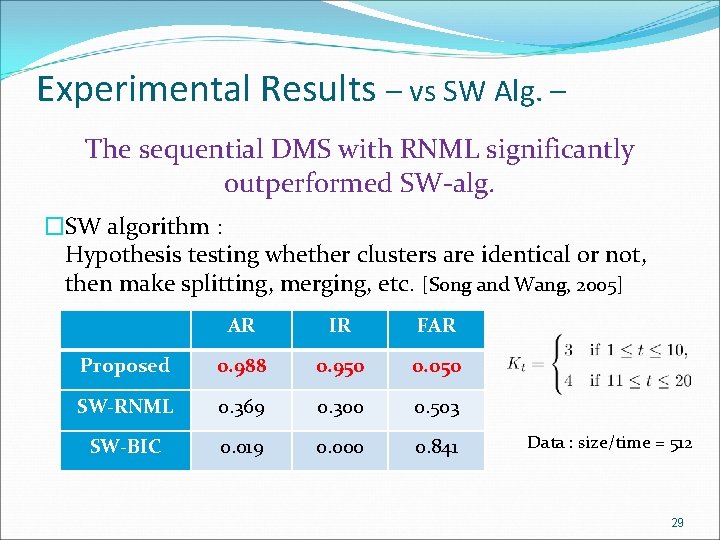
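The KLD between two GMMs has no closed form, so it is usually estimated numerically. A minimal sketch using Monte Carlo sampling from the first mixture, for one-dimensional GMMs; how the KLD was computed in the experiments is not stated, so this is an assumed method:

```python
import math
import random
from typing import Sequence, Tuple

Component = Tuple[float, float, float]   # (weight, mean, std) of a 1-D Gaussian component


def gmm_logpdf(x: float, comps: Sequence[Component]) -> float:
    """log sum_k w_k N(x; mu_k, s_k^2), computed with log-sum-exp for numerical stability."""
    logs = [math.log(w) - 0.5 * ((x - mu) / s) ** 2 - math.log(s * math.sqrt(2 * math.pi))
            for w, mu, s in comps]
    top = max(logs)
    return top + math.log(sum(math.exp(v - top) for v in logs))


def kl_gmm_mc(p: Sequence[Component], q: Sequence[Component],
              samples: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of KL(p || q) = E_p[log p(x) - log q(x)]."""
    rng = random.Random(seed)
    idx = rng.choices(range(len(p)), weights=[w for w, _, _ in p], k=samples)
    xs = [rng.gauss(p[i][1], p[i][2]) for i in idx]
    return sum(gmm_logpdf(x, p) - gmm_logpdf(x, q) for x in xs) / samples
```

As a sanity check, for single Gaussians N(0, 1) and N(1, 1) the estimate approaches the closed-form value (0 - 1)²/2 = 0.5.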

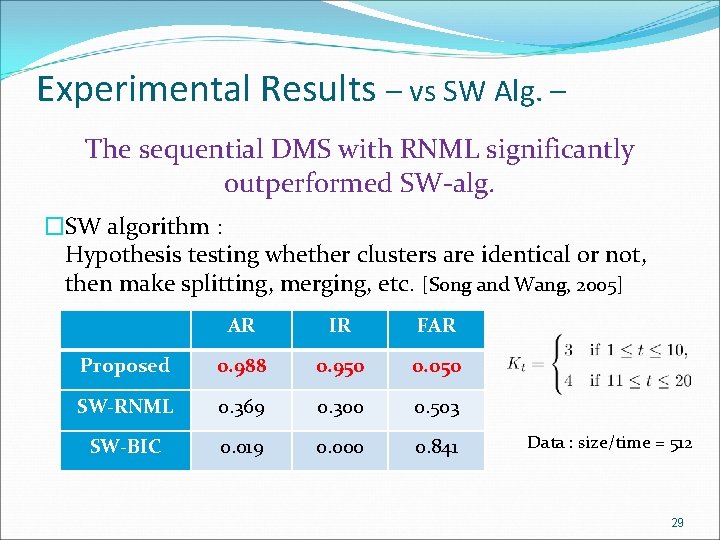

Experimental Results: vs. SW alg.
The sequential DMS with RNML significantly outperformed the SW algorithm.
- SW algorithm: tests hypotheses on whether clusters are identical or not, then performs splitting, merging, etc. [Song and Wang, 2005]

| | AR | IR | FAR |
|---|---|---|---|
| Proposed | 0.988 | 0.950 | 0.050 |
| SW-RNML | 0.369 | 0.300 | 0.503 |
| SW-BIC | 0.019 | 0.000 | 0.841 |

Data: size/time = 512

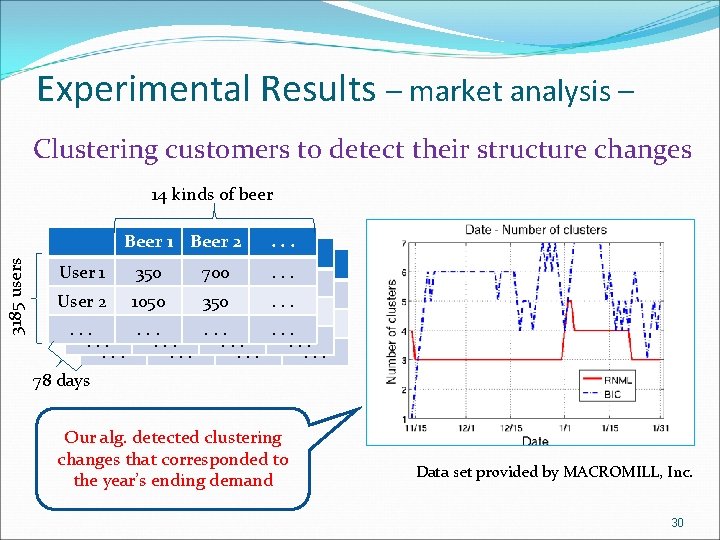

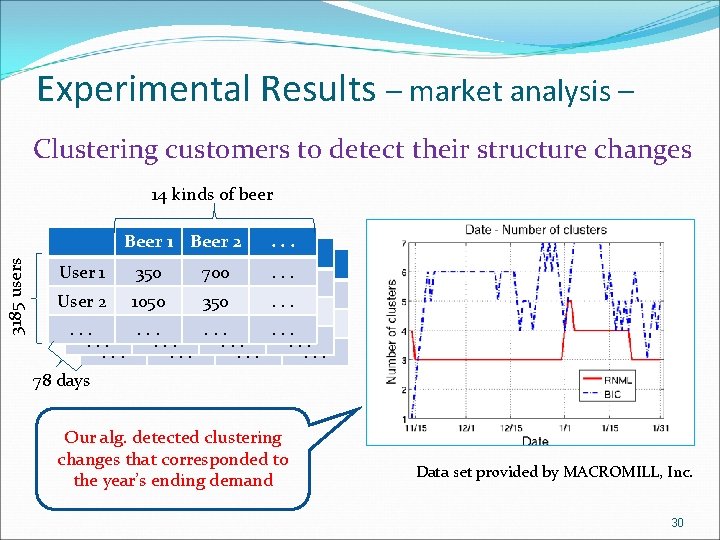

Experimental Results: market analysis
Clustering customers to detect changes in their structure.
- Data: purchase volumes of 14 kinds of beer by 3185 users over 78 days, arranged as a user × beer matrix (e.g., User 1: 350 of Beer 1 and 700 of Beer 2; User 2: 1050 and 350).
- Our algorithm detected clustering changes that corresponded to year-end demand.
- Data set provided by MACROMILL, Inc.

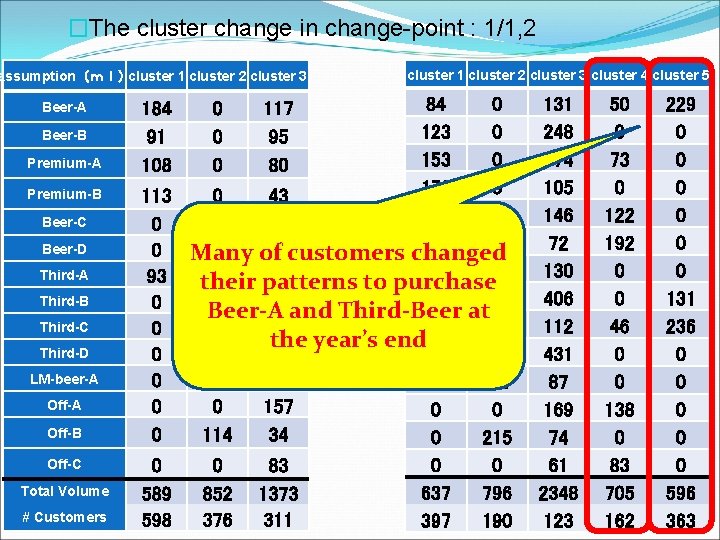

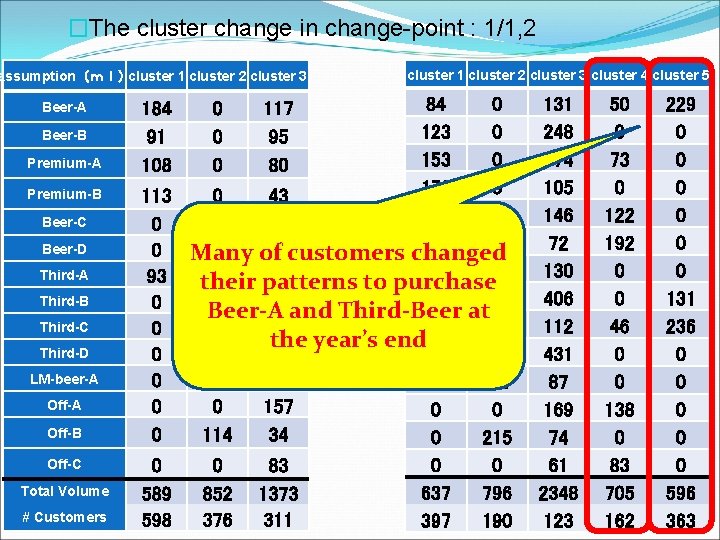

Table: the cluster change at the change-point (1/1, 2). For two clusterings (clusters 1-3 and clusters 1-5), the table lists consumption (ml) of Beer-A, Beer-B, Premium-A, Premium-B, Beer-C, Beer-D, Third-A, Third-B, Third-C, Third-D, LM-beer-A, Off-B and Off-C, together with total volume and number of customers per cluster.
Many customers changed their patterns to purchase … and Third-Beer-A at the year's end.

Conclusion
- Proposed the sequential DMS algorithm to address the clustering change detection issue.
- Key ideas:
  - Sequential dynamic model selection based on the MDL principle
  - The use of the NML code-length as the criterion, and its efficient computation
- On artificial data, it detected cluster changes significantly more accurately than AIC/BIC-based methods and the existing statistical-test-based method.
- Tracking changes of group structures leads to understanding changes of market structures.

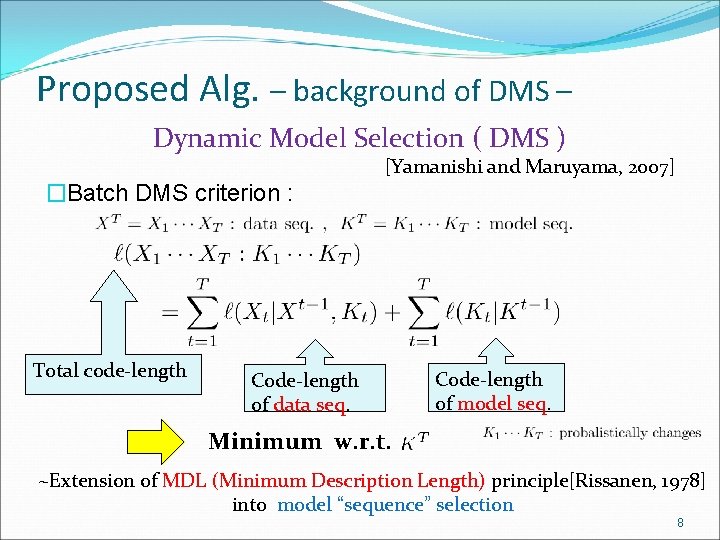

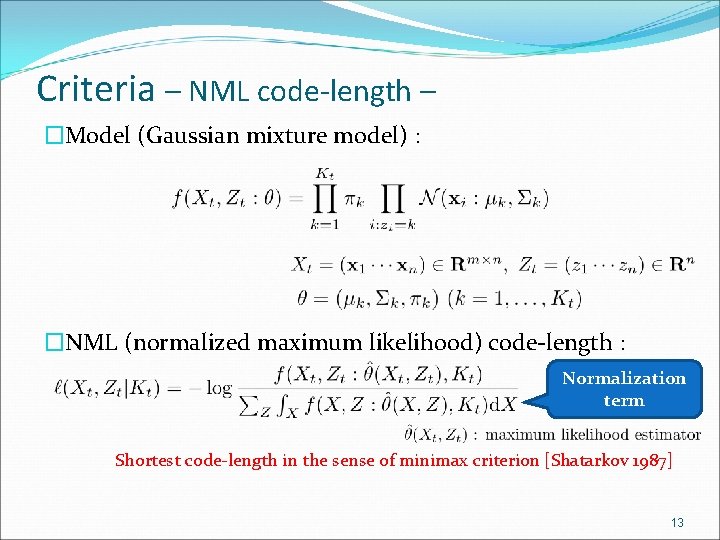

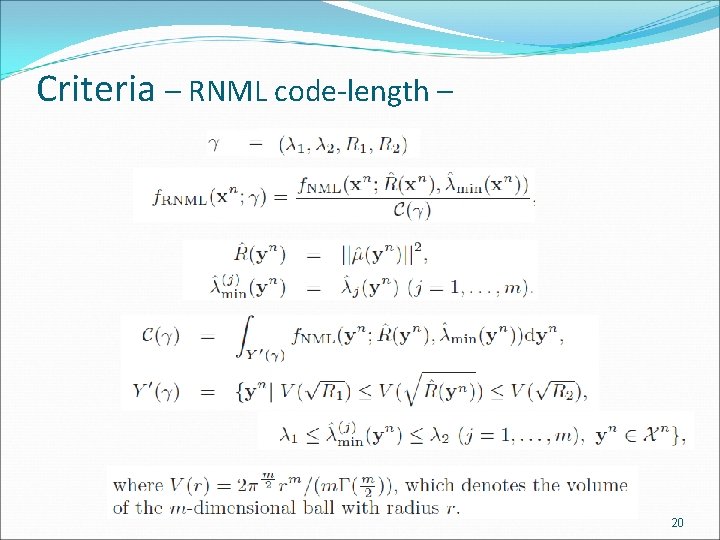

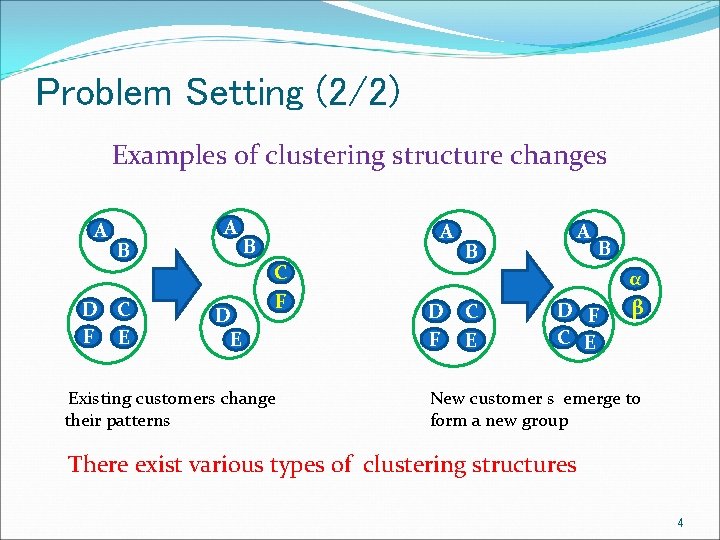

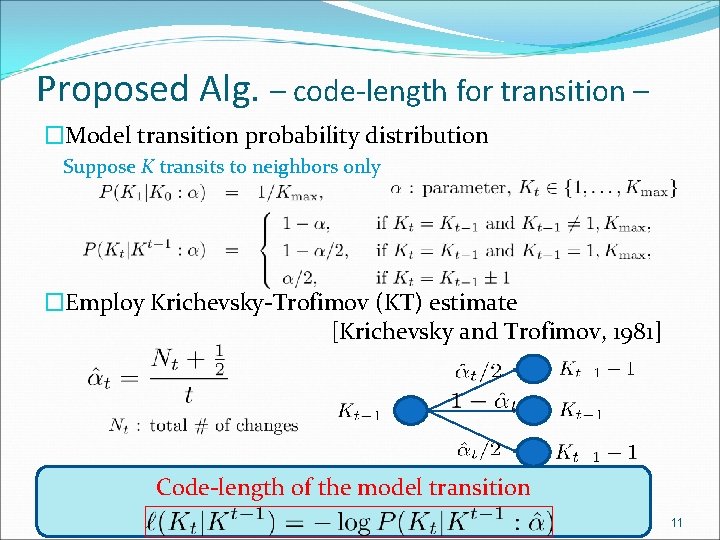

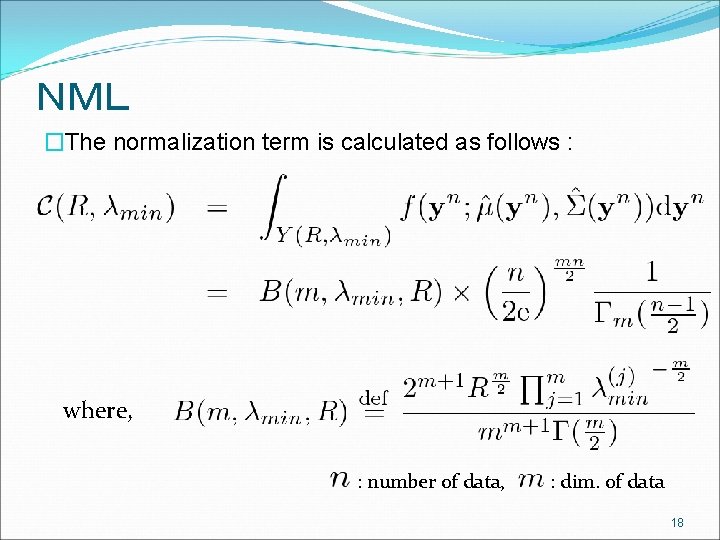

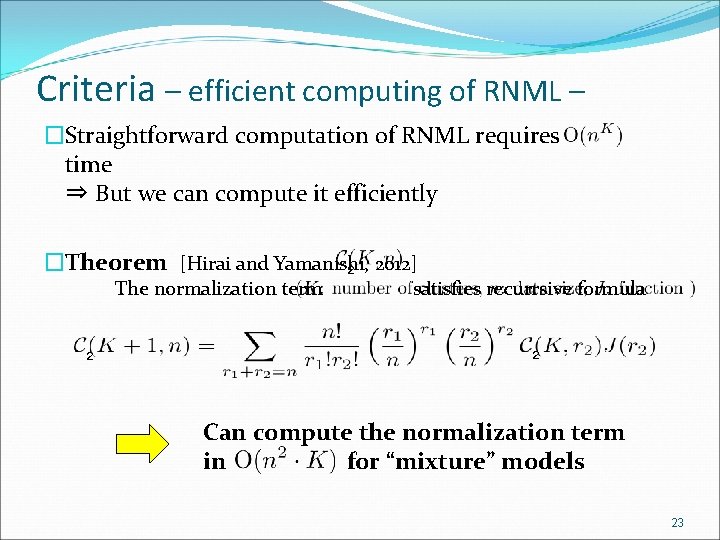

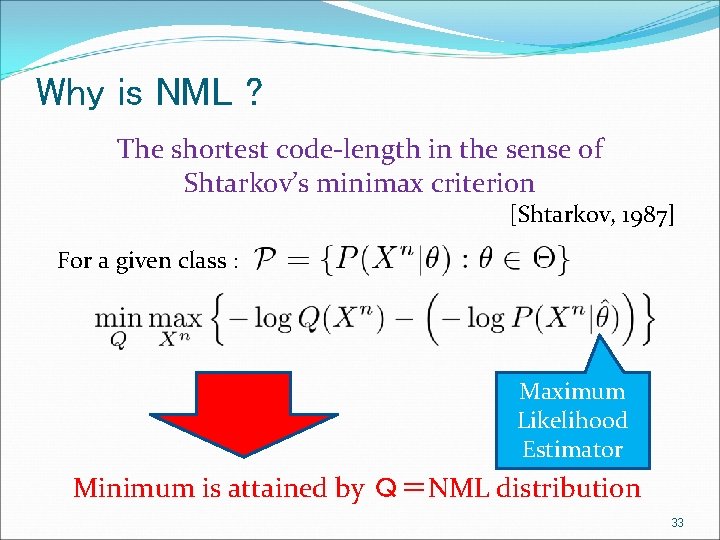

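Why NML?
NML gives the shortest code-length in the sense of Shtarkov's minimax criterion [Shtarkov, 1987]. For a given model class with maximum likelihood estimator $\hat\theta$, consider

$$\min_{Q} \max_{x^n} \log \frac{f(x^n; \hat\theta(x^n))}{Q(x^n)}$$

The minimum is attained by Q = the NML distribution.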
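The minimax property can be checked on the Bernoulli model, where all sequences can be enumerated. A minimal sketch comparing the worst-case regret of NML with that of the Krichevsky-Trofimov (KT) mixture, the Bayes mixture with a Beta(1/2, 1/2) prior; both regrets depend on a sequence only through its number of ones k:

```python
import math


def max_regret_nml_vs_kt(n: int):
    """Worst-case regret (nats) over binary sequences of length n, for NML and for KT."""
    def log_ml(k: int) -> float:      # log maximized Bernoulli likelihood with k ones
        return sum(c * math.log(c / n) for c in (k, n - k) if c > 0)

    log_c = math.log(sum(math.comb(n, k) * math.exp(log_ml(k)) for k in range(n + 1)))

    def log_nml(k: int) -> float:     # NML probability: maximized likelihood / C_n
        return log_ml(k) - log_c

    def log_kt(k: int) -> float:      # KT probability: Gamma(k+1/2)Gamma(n-k+1/2) / (pi * n!)
        return (math.lgamma(k + 0.5) + math.lgamma(n - k + 0.5)
                - math.log(math.pi) - math.lgamma(n + 1))

    nml = max(log_ml(k) - log_nml(k) for k in range(n + 1))
    kt = max(log_ml(k) - log_kt(k) for k in range(n + 1))
    return nml, kt, log_c
```

The NML regret equals log C_n for every sequence (for n = 2, log 2.5), and for n ≥ 2 the KT mixture has a strictly larger worst-case regret, as Shtarkov's result predicts.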

![Restricting the range of data for Shtarkov's minimax criterion (Shtarkov, 1987)](https://slidetodoc.com/presentation_image_h/95d4b2d6e15dd9276068ecbc898893c6/image-34.jpg)

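Restrict the range of data for Shtarkov's minimax criterion [Shtarkov, 1987]
For a given model class, restrict the range of data, i.e., take the maximum in Shtarkov's criterion only over a restricted data set $\mathcal{Y}$:

$$\min_{Q} \max_{x^n \in \mathcal{Y}} \log \frac{f(x^n; \hat\theta(x^n))}{Q(x^n)}$$

In this sense, we change Shtarkov's minimax criterion itself.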

Comparison with non-parametric Bayes
- Sequential dynamic model selection works better than non-parametric Bayes (infinite HMM, etc.) [Sakurai and Yamanishi, "Comparison of Dynamic Model Selection with Infinite HMM for Statistical Model Change Detection", to appear in ITW 2012]