Clustering: Are there clusters of similar cells?

- Slides: 24

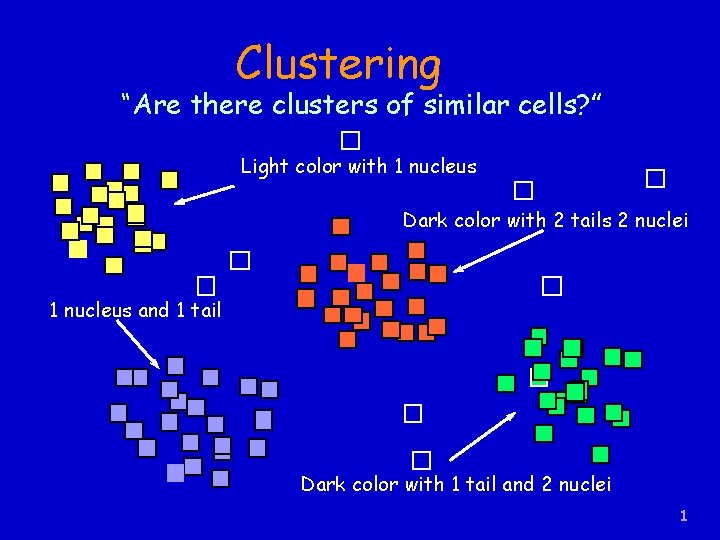

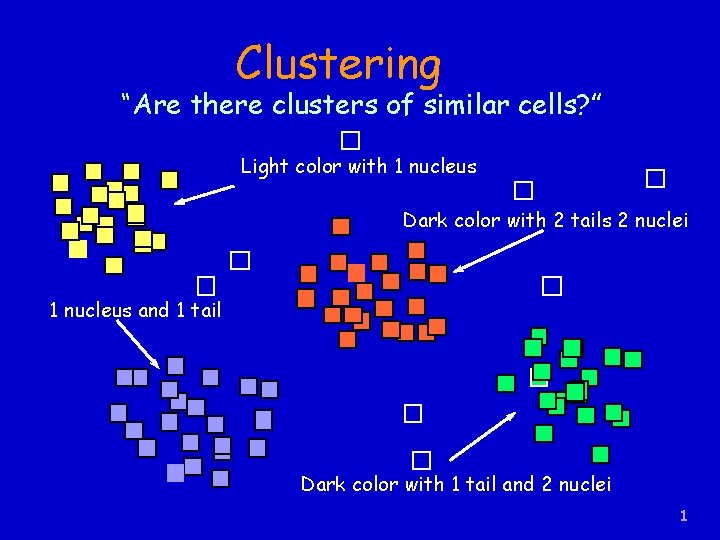

Clustering

“Are there clusters of similar cells?”

- Light color with 1 nucleus
- Dark color with 2 tails, 2 nuclei
- 1 nucleus and 1 tail
- Dark color with 1 tail and 2 nuclei

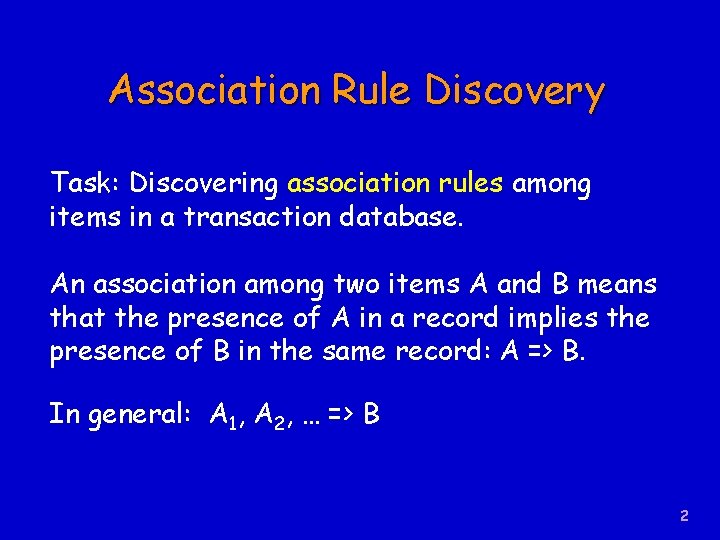

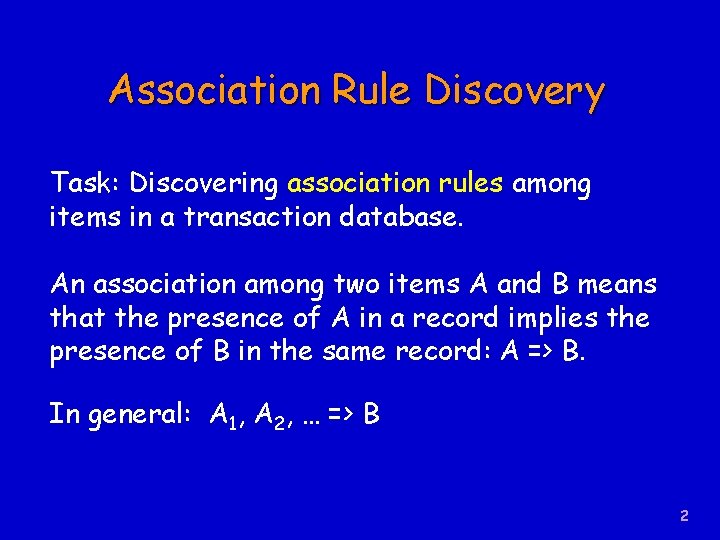

Association Rule Discovery

Task: discover association rules among items in a transaction database.

An association between two items A and B means that the presence of A in a record implies the presence of B in the same record: A => B.

In general: A1, A2, … => B

Association Rule Discovery

“Are there any associations between the characteristics of the cells?”

- If color = light and # nuclei = 1 then # tails = 1 (support = 12.5%; confidence = 50%)
- If # nuclei = 2 and Cell = Cancerous then # tails = 2 (support = 25%; confidence = 100%)
- If # tails = 1 then Color = light (support = 37.5%; confidence = 75%)

Many Other Data Mining Techniques

- Genetic Algorithms
- Rough Sets
- Bayesian Networks
- Text Mining
- Statistics
- Time Series

Lecture 1: Overview of KDD

1. What is KDD and why?
2. The KDD process
3. KDD applications
4. Data mining methods
5. Challenges for KDD

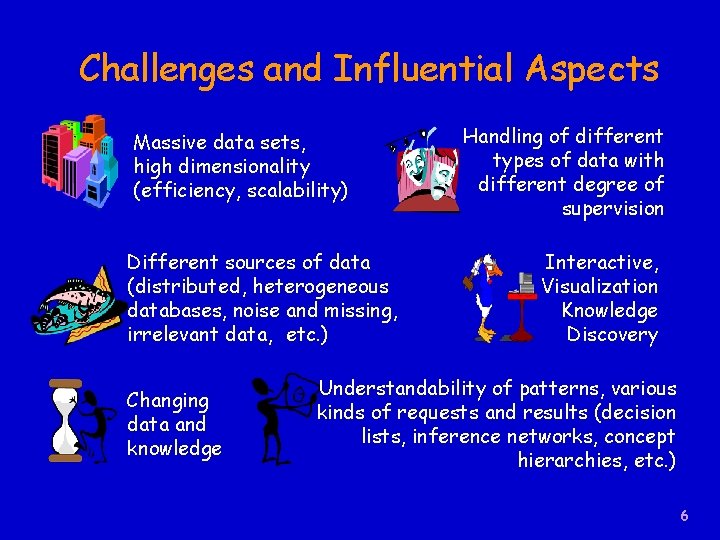

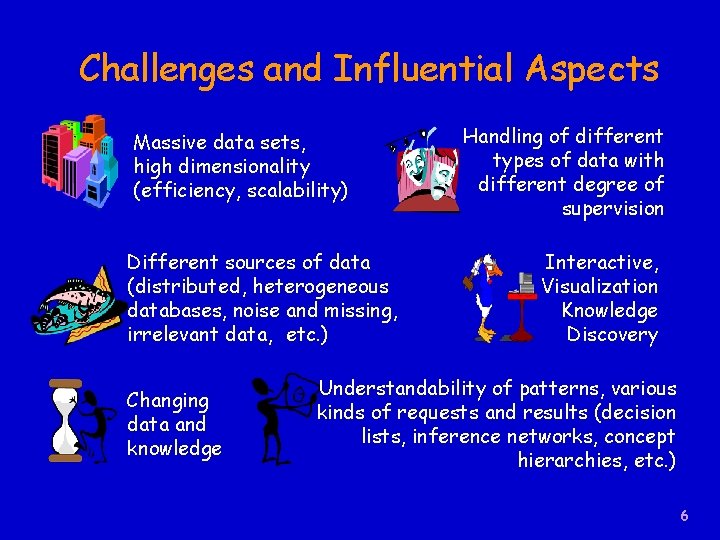

Challenges and Influential Aspects

- Massive data sets, high dimensionality (efficiency, scalability)
- Different sources of data (distributed, heterogeneous databases; noisy, missing, and irrelevant data; etc.)
- Changing data and knowledge
- Handling of different types of data with different degrees of supervision
- Interactive, visual knowledge discovery
- Understandability of patterns, various kinds of requests and results (decision lists, inference networks, concept hierarchies, etc.)

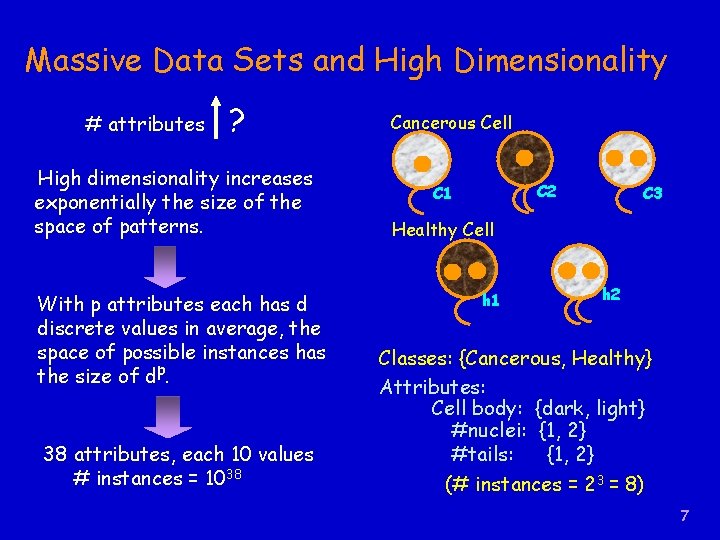

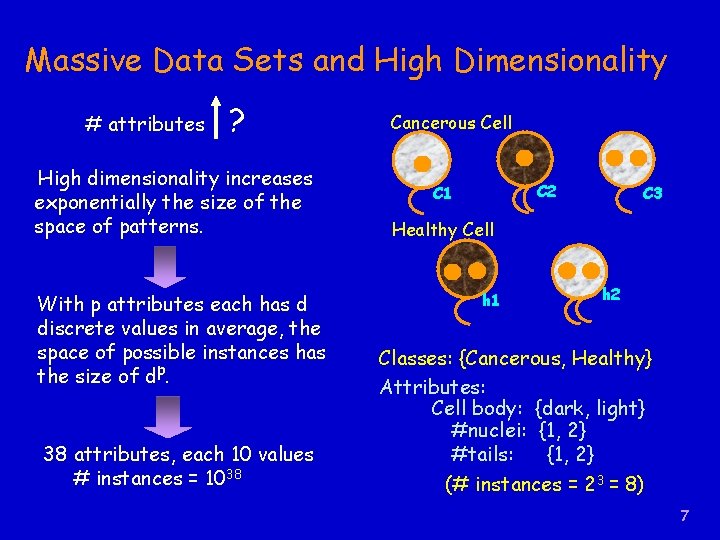

Massive Data Sets and High Dimensionality

High dimensionality exponentially increases the size of the space of patterns. With p attributes, each having d discrete values on average, the space of possible instances has size $d^p$.

- 38 attributes with 10 values each: # instances = $10^{38}$
- Cell example (cancerous cells C1, C2, C3; healthy cells h1, h2):
  - Classes: {Cancerous, Healthy}
  - Attributes: cell body: {dark, light}; #nuclei: {1, 2}; #tails: {1, 2}
  - # instances = $2^3 = 8$
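The instance-space arithmetic on this slide can be checked with a short sketch. The function name `instance_space_size` is hypothetical; the code simply multiplies the number of values of each attribute, which reduces to $d^p$ when every attribute has d values.

```python
from math import prod

def instance_space_size(values_per_attribute):
    """Number of distinct instances when attribute i can take
    values_per_attribute[i] discrete values."""
    return prod(values_per_attribute)

# Cell example: body color, #nuclei, #tails, each with 2 values.
cell_space = instance_space_size([2, 2, 2])

# 38 attributes with 10 values each.
large_space = instance_space_size([10] * 38)

print(cell_space)               # 8
print(large_space == 10 ** 38)  # True
```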

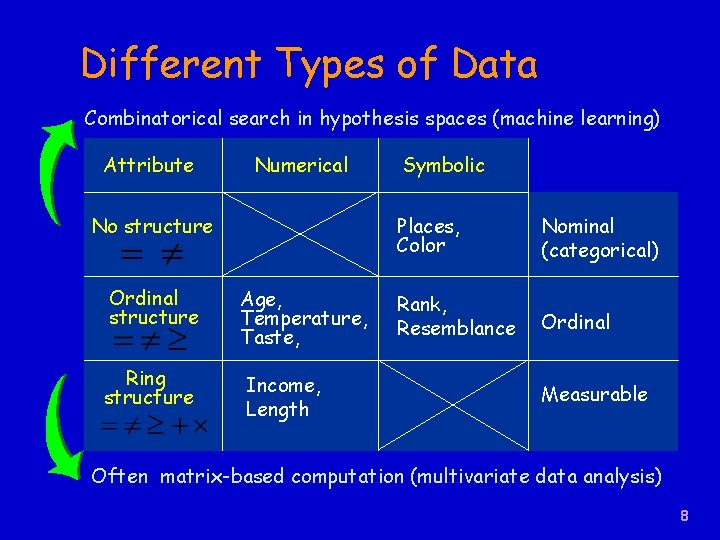

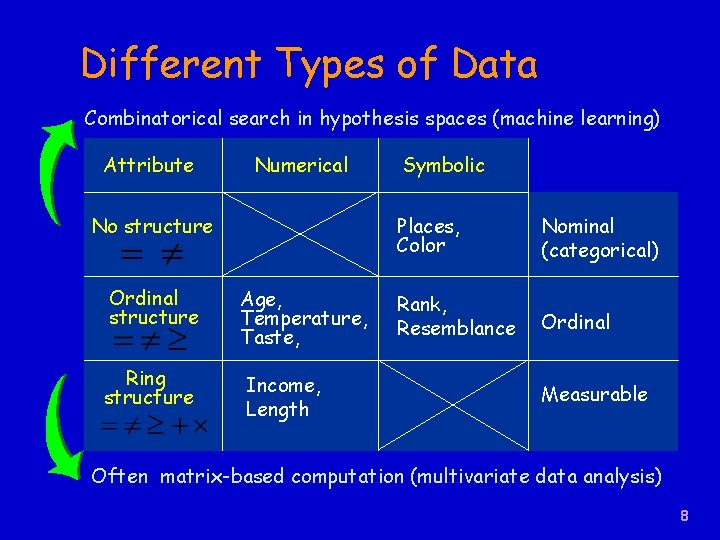

Different Types of Data

Attributes are either numerical or symbolic:

- Nominal (categorical), no structure: Places, Color
- Ordinal, ordinal structure: Rank, Resemblance
- Measurable, ring structure: Age, Temperature, Taste, Income, Length

Typical processing styles:

- Combinatorial search in hypothesis spaces (machine learning)
- Often matrix-based computation (multivariate data analysis)

Brief introduction to lectures

- Lecture 1: Overview of KDD
- Lecture 2: Preparing data
- Lecture 3: Decision tree induction
- Lecture 4: Mining association rules
- Lecture 5: Automatic cluster detection
- Lecture 6: Artificial neural networks
- Lecture 7: Evaluation of discovered knowledge

Lecture 2: Preparing Data

- As much as 80% of KDD is about preparing data, and the remaining 20% is about mining.
- Content of the lecture:
  1. Data cleaning
  2. Data transformations
  3. Data reduction
  4. Software and case studies
- Prerequisite: none in particular, though some understanding of statistics is expected.

Data Preparation

The design and organization of data, including the setting of goals and the composition of features, is done by humans. There are two central goals for the preparation of data:

- To organize data into a standard form that is ready for processing by data mining programs.
- To prepare features that lead to the best data mining performance.

Lecture 3: Decision Tree Induction

- One of the most widely used KDD classification techniques for supervised data.
- Content of the lecture:
  1. Decision tree representation and framework
  2. Attribute selection
  3. Pruning trees
  4. Software (C4.5, CABRO) and case studies
- Prerequisite: nothing special

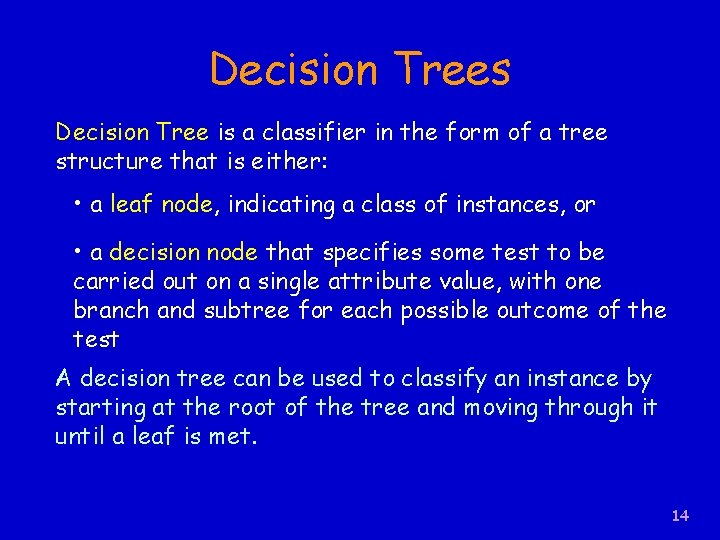

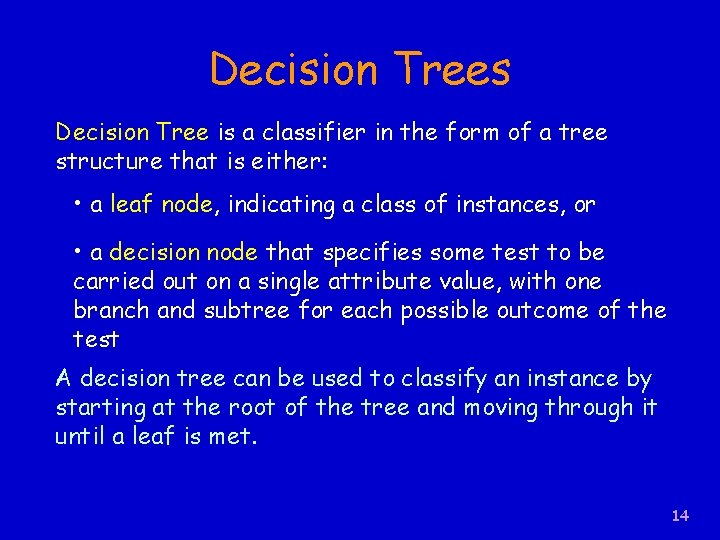

Decision Trees

A decision tree is a classifier in the form of a tree structure in which each node is either:

- a leaf node, indicating a class of instances, or
- a decision node that specifies some test to be carried out on a single attribute value, with one branch and subtree for each possible outcome of the test.

A decision tree can be used to classify an instance by starting at the root of the tree and moving through it until a leaf is reached.

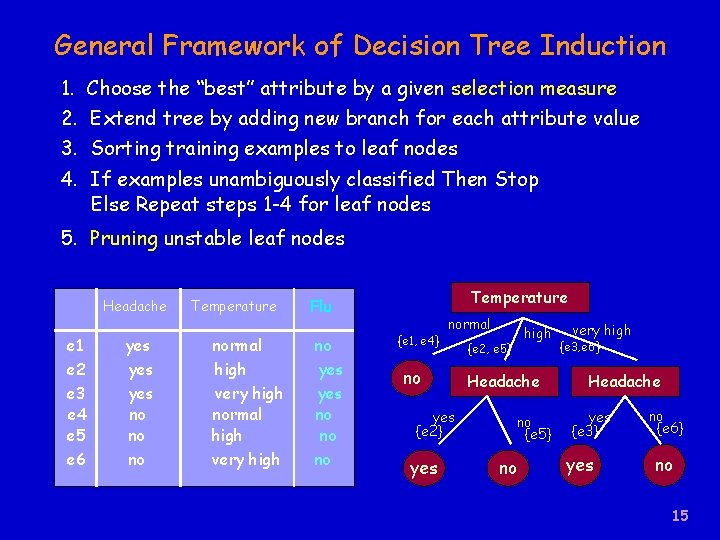

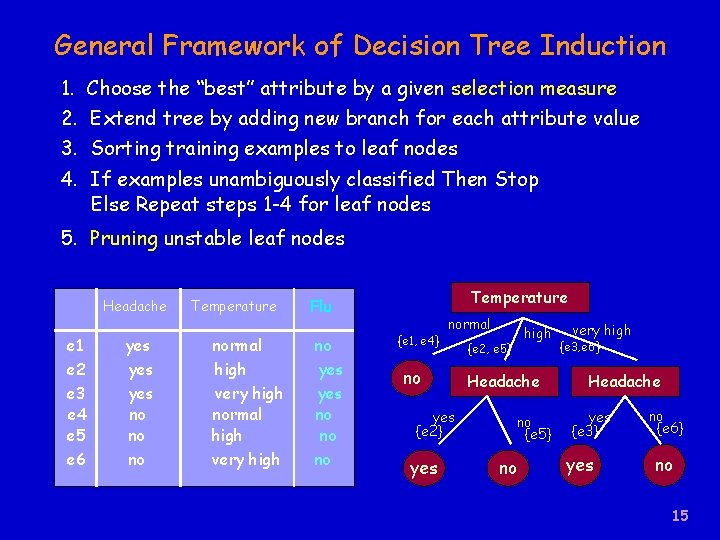

General Framework of Decision Tree Induction

1. Choose the “best” attribute by a given selection measure.
2. Extend the tree by adding a new branch for each attribute value.
3. Sort the training examples to the leaf nodes.
4. If the examples are unambiguously classified, stop; otherwise repeat steps 1-4 for the leaf nodes.
5. Prune unstable leaf nodes.

Example: six training examples e1 to e6, described by Headache (yes, no) and Temperature (normal, high, very high), with class Flu (yes, no). The induced tree:

- Temperature = normal: {e1, e4}, Flu = no
- Temperature = high: {e2, e5}, test Headache
  - Headache = yes: {e2}, Flu = yes
  - Headache = no: {e5}, Flu = no
- Temperature = very high: {e3, e6}, test Headache
  - Headache = yes: {e3}, Flu = yes
  - Headache = no: {e6}, Flu = no
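Steps 1 to 4 of the framework can be sketched as a recursive procedure. This is a minimal sketch under stated assumptions, not the method used to draw the slide's tree: information gain is assumed as the selection measure, the pruning step is left out, the names `induce`, `entropy`, and `information_gain` are hypothetical, and the Headache values for e1 and e4 are assumed because they are not recoverable from the tree.

```python
from collections import Counter
from math import log2

def entropy(examples, target):
    counts = Counter(e[target] for e in examples)
    total = len(examples)
    return -sum(c / total * log2(c / total) for c in counts.values())

def information_gain(examples, attribute, target):
    """Assumed selection measure: entropy reduction after splitting."""
    total = len(examples)
    remainder = 0.0
    for value in {e[attribute] for e in examples}:
        subset = [e for e in examples if e[attribute] == value]
        remainder += len(subset) / total * entropy(subset, target)
    return entropy(examples, target) - remainder

def induce(examples, attributes, target, measure=information_gain):
    labels = {e[target] for e in examples}
    if len(labels) == 1:                 # step 4: unambiguous, stop
        return labels.pop()
    if not attributes:                   # nothing left to test: majority class
        return Counter(e[target] for e in examples).most_common(1)[0][0]
    best = max(attributes, key=lambda a: measure(examples, a, target))  # step 1
    rest = [a for a in attributes if a != best]
    branches = {}
    for value in sorted({e[best] for e in examples}):   # step 2: one branch per value
        subset = [e for e in examples if e[best] == value]  # step 3: sort examples
        branches[value] = induce(subset, rest, target, measure)
    return (best, branches)

# Flu examples e1..e6; headache of e1 and e4 is an assumption.
flu_examples = [
    {"headache": "yes", "temperature": "normal", "flu": "no"},      # e1
    {"headache": "yes", "temperature": "high", "flu": "yes"},       # e2
    {"headache": "yes", "temperature": "very high", "flu": "yes"},  # e3
    {"headache": "no", "temperature": "normal", "flu": "no"},       # e4
    {"headache": "no", "temperature": "high", "flu": "no"},         # e5
    {"headache": "no", "temperature": "very high", "flu": "no"},    # e6
]

tree = induce(flu_examples, ["headache", "temperature"], "flu")
print(tree)
```

With information gain on this data the root test is Headache rather than Temperature, so the resulting tree has a different shape from the one on the slide while still classifying all six examples correctly. The choice of selection measure determines which tree is grown.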

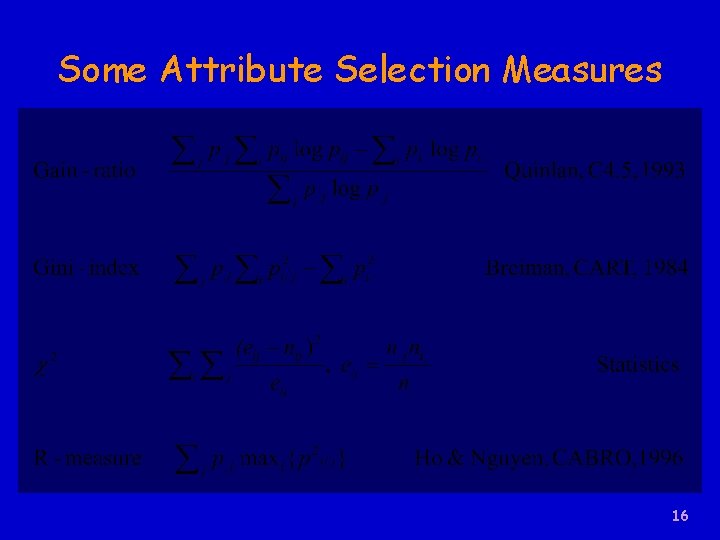

Some Attribute Selection Measures

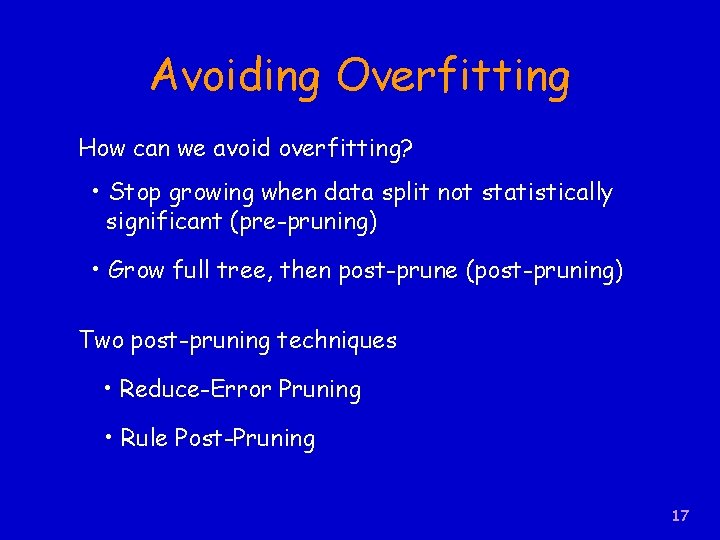

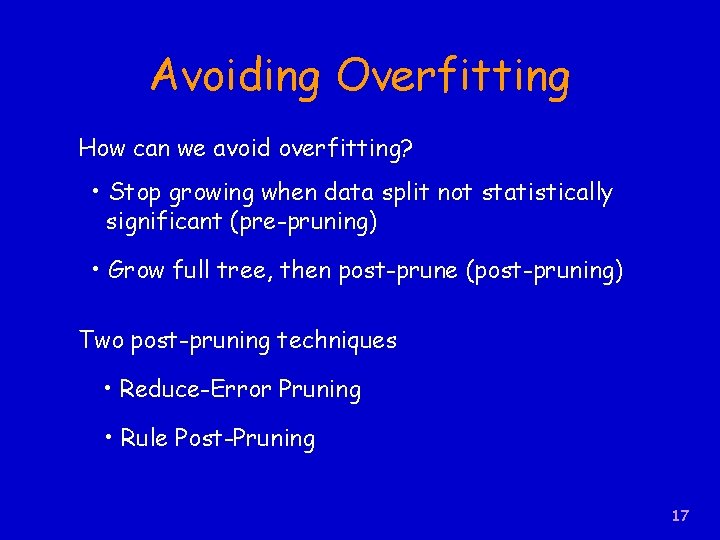

Avoiding Overfitting

How can we avoid overfitting?

- Stop growing the tree when a data split is not statistically significant (pre-pruning)
- Grow the full tree, then prune it back (post-pruning)

Two post-pruning techniques:

- Reduced-Error Pruning
- Rule Post-Pruning

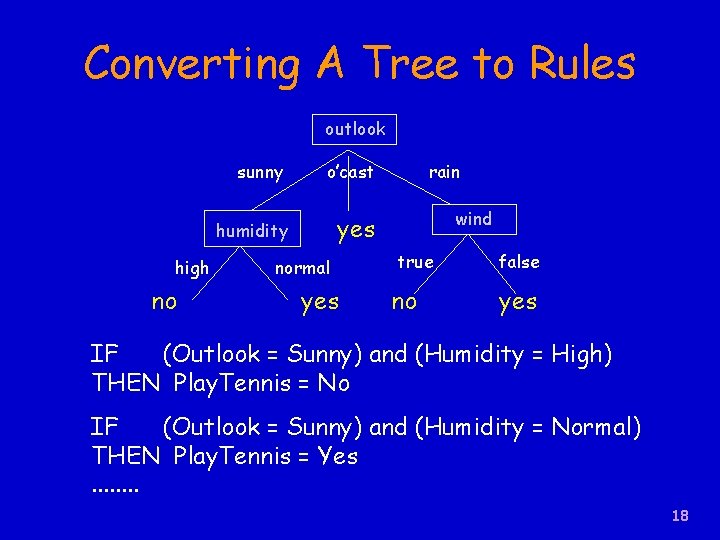

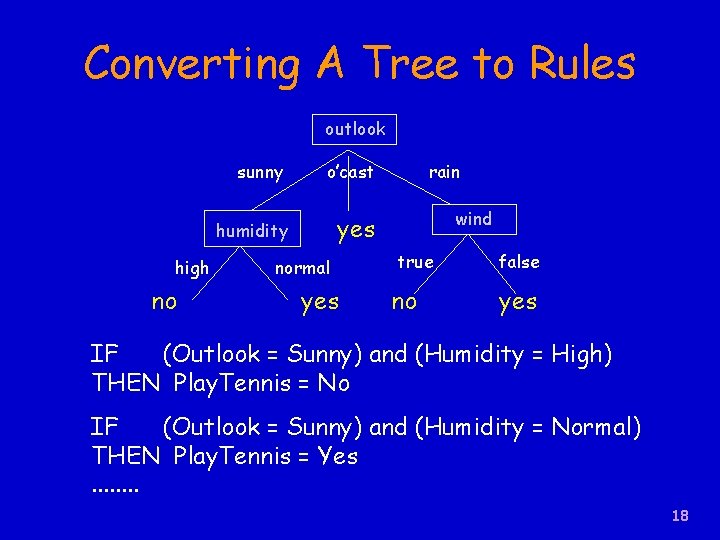

Converting A Tree to Rules

The tree:

- outlook = sunny: test humidity
  - humidity = high: no
  - humidity = normal: yes
- outlook = o’cast: yes
- outlook = rain: test wind
  - wind = true: no
  - wind = false: yes

The rules:

- IF (Outlook = Sunny) and (Humidity = High) THEN PlayTennis = No
- IF (Outlook = Sunny) and (Humidity = Normal) THEN PlayTennis = Yes
- ...
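One way to read this conversion is that every root-to-leaf path becomes one rule. The sketch below enumerates those paths; the tuple-and-dictionary tree encoding and the names `tree_to_rules` and `format_rule` are assumptions for illustration, and the attribute values are written as strings to match the slide's labels.

```python
def tree_to_rules(tree, target="PlayTennis", conditions=()):
    """Return one (conditions, (target, label)) pair per root-to-leaf path."""
    if isinstance(tree, str):                      # leaf: emit a finished rule
        return [(list(conditions), (target, tree))]
    attribute, branches = tree
    rules = []
    for value, subtree in branches.items():
        rules += tree_to_rules(subtree, target, conditions + ((attribute, value),))
    return rules

def format_rule(rule):
    conditions, (target, label) = rule
    body = " and ".join(f"({a} = {v})" for a, v in conditions)
    return f"IF {body} THEN {target} = {label}"

play_tennis_tree = ("Outlook", {
    "Sunny": ("Humidity", {"High": "No", "Normal": "Yes"}),
    "Overcast": "Yes",
    "Rain": ("Wind", {"True": "No", "False": "Yes"}),
})

rules = [format_rule(r) for r in tree_to_rules(play_tennis_tree)]
for line in rules:
    print(line)
```

The tree has five leaves, so five rules are produced; the first two match the rules written out on the slide.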

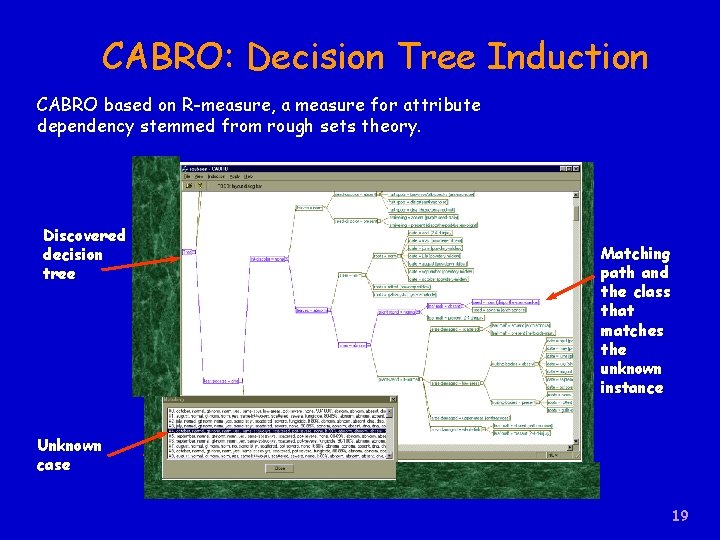

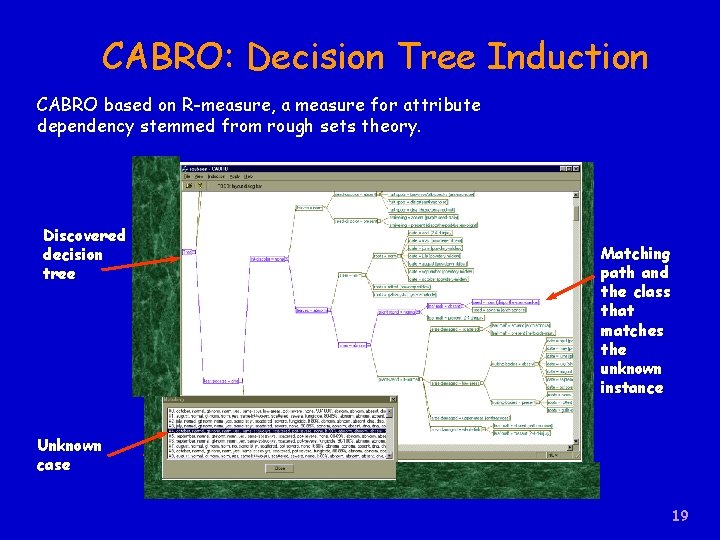

CABRO: Decision Tree Induction

CABRO is based on the R-measure, a measure of attribute dependency that stems from rough set theory.

Screenshot labels: discovered decision tree; unknown case; matching path and the class that matches the unknown instance.

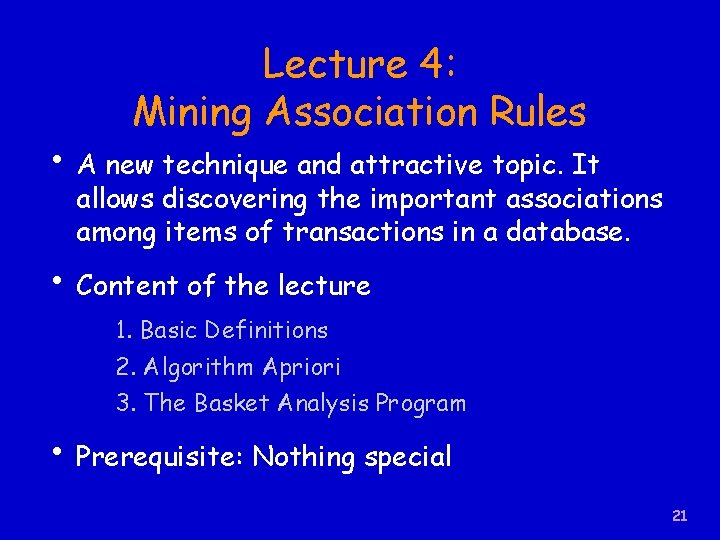

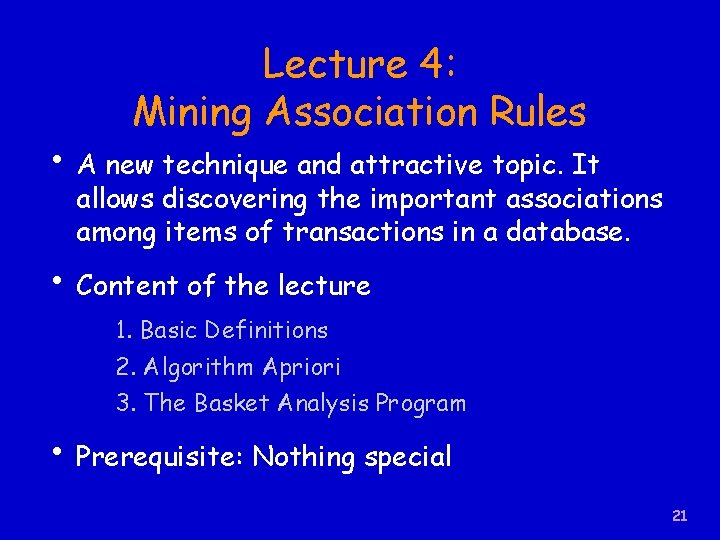

Lecture 4: Mining Association Rules

- A new technique and an attractive topic. It allows discovering the important associations among items of transactions in a database.
- Content of the lecture:
  1. Basic definitions
  2. Algorithm Apriori
  3. The Basket Analysis Program
- Prerequisite: nothing special

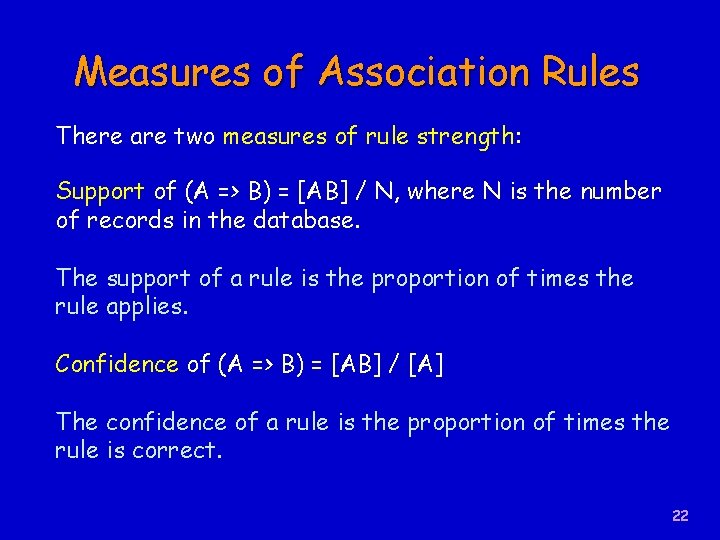

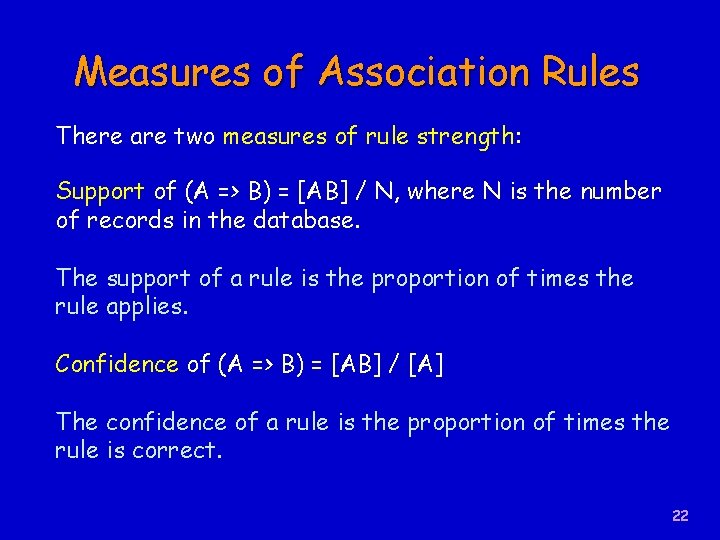

Measures of Association Rules

There are two measures of rule strength. Here [X] denotes the number of records that contain X, and N is the number of records in the database.

- Support of (A => B) = [AB] / N. The support of a rule is the proportion of times the rule applies.
- Confidence of (A => B) = [AB] / [A]. The confidence of a rule is the proportion of times the rule is correct.
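The two measures can be computed directly from a list of transactions. The sketch below uses the four-transaction database D from the Apriori illustration in these slides; the function names `count`, `support`, and `confidence` are hypothetical.

```python
def count(transactions, items):
    """[X]: number of records that contain every item in X."""
    items = set(items)
    return sum(1 for t in transactions if items <= t)

def support(transactions, a, b):
    """Support of (A => B) = [AB] / N."""
    return count(transactions, set(a) | set(b)) / len(transactions)

def confidence(transactions, a, b):
    """Confidence of (A => B) = [AB] / [A]; assumes A occurs at least once."""
    return count(transactions, set(a) | set(b)) / count(transactions, a)

database = [
    {"A", "C", "D"},       # TID 100
    {"B", "C", "E"},       # TID 200
    {"A", "B", "C", "E"},  # TID 300
    {"B", "E"},            # TID 400
]

print(support(database, {"B"}, {"E"}))     # 0.75
print(confidence(database, {"B"}, {"E"}))  # 1.0
print(support(database, {"C"}, {"A"}))     # 0.5
```

The rule C => A has the same support as A => C but a lower confidence, since C appears in three records and only two of them also contain A.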

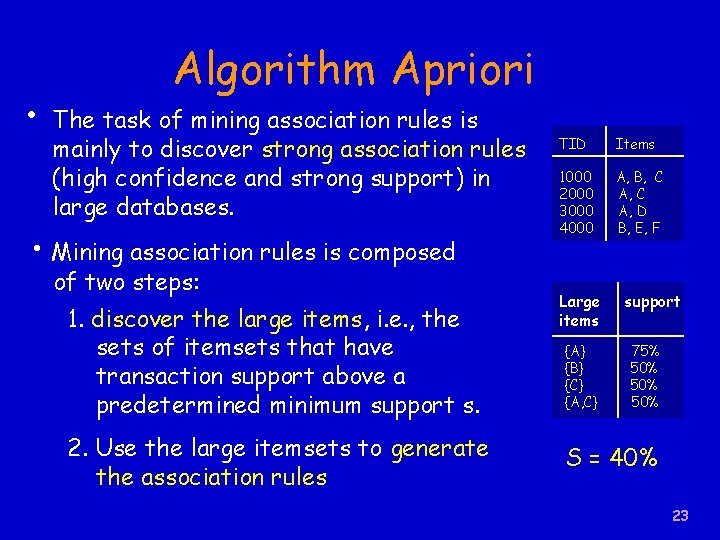

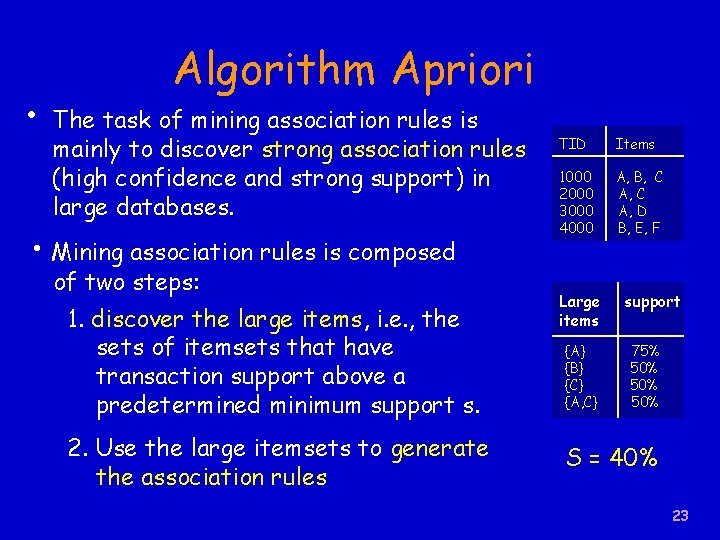

Algorithm Apriori

- The task of mining association rules is mainly to discover strong association rules (high confidence and strong support) in large databases.
- Mining association rules is composed of two steps:
  1. Discover the large itemsets, i.e., the itemsets whose transaction support is above a predetermined minimum support s.
  2. Use the large itemsets to generate the association rules.

Example with minimum support S = 40%:

| TID | Items |
|---|---|
| 1000 | A, B, C |
| 2000 | A, C |
| 3000 | A, D |
| 4000 | B, E, F |

| Large itemset | Support |
|---|---|
| {A} | 75% |
| {B} | 50% |
| {C} | 50% |
| {A, C} | 50% |
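The second step, generating rules from the large itemsets, can be sketched as follows. The function name `generate_rules` and the minimum confidence of 0.8 are assumptions for illustration. The counts are taken from the slide's supports with N = 4; the count of 2 for {A, C} is inferred from the 40% minimum support together with the 50% support of {C}.

```python
from itertools import combinations

def generate_rules(itemset_counts, min_confidence):
    """itemset_counts maps each large itemset (frozenset) to its count.
    Every subset of a large itemset is itself large, so its count is present."""
    rules = []
    for itemset, n in itemset_counts.items():
        if len(itemset) < 2:
            continue
        for size in range(1, len(itemset)):
            for antecedent in combinations(sorted(itemset), size):
                a = frozenset(antecedent)
                conf = n / itemset_counts[a]   # [AB] / [A]
                if conf >= min_confidence:
                    rules.append((a, itemset - a, conf))
    return rules

# Counts out of N = 4 transactions (see the assumptions above).
large_itemsets = {
    frozenset({"A"}): 3,
    frozenset({"B"}): 2,
    frozenset({"C"}): 2,
    frozenset({"A", "C"}): 2,
}

strong_rules = generate_rules(large_itemsets, min_confidence=0.8)
for a, b, conf in strong_rules:
    print(sorted(a), "=>", sorted(b), conf)  # ['C'] => ['A'] 1.0
```

Only C => A passes the threshold: A => C has confidence 2/3 because A occurs in three transactions and only two of them contain C.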

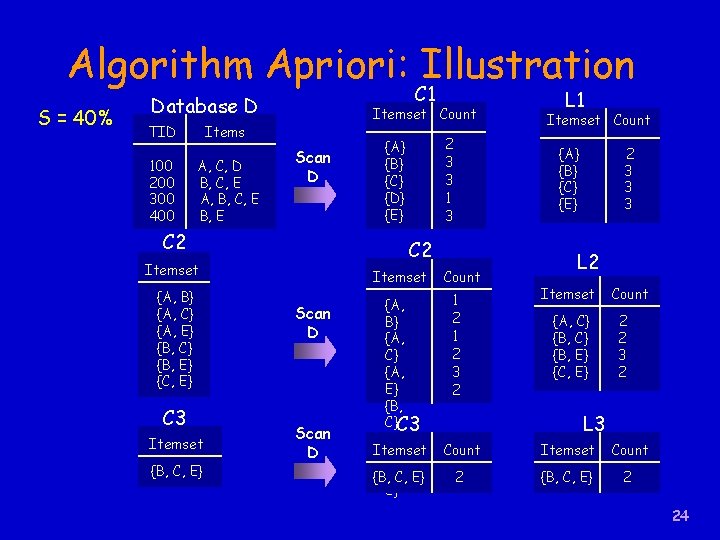

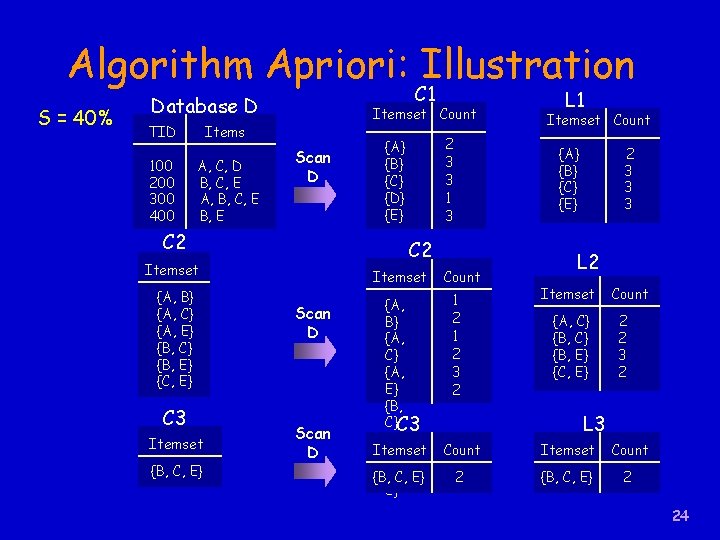

Algorithm Apriori: Illustration (S = 40%)

Database D:

| TID | Items |
|---|---|
| 100 | A, C, D |
| 200 | B, C, E |
| 300 | A, B, C, E |
| 400 | B, E |

Scan D to count the candidate 1-itemsets C1:

| Itemset | Count |
|---|---|
| {A} | 2 |
| {B} | 3 |
| {C} | 3 |
| {D} | 1 |
| {E} | 3 |

L1: {A} 2, {B} 3, {C} 3, {E} 3

Scan D to count the candidate 2-itemsets C2:

| Itemset | Count |
|---|---|
| {A, B} | 1 |
| {A, C} | 2 |
| {A, E} | 1 |
| {B, C} | 2 |
| {B, E} | 3 |
| {C, E} | 2 |

L2: {A, C} 2, {B, C} 2, {B, E} 3, {C, E} 2

Scan D to count the candidate 3-itemsets C3: {B, C, E} 2

L3: {B, C, E} 2
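The level-wise search in this illustration can be sketched as below. This is a minimal, unoptimized sketch of the candidate-generation idea (join the large (k-1)-itemsets, prune candidates that have a non-large subset, then scan the database to count), assuming the function name `apriori` and an in-memory list of transactions.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return [L1, L2, ...], each a dict mapping a large itemset to its count."""
    min_count = min_support * len(transactions)
    items = sorted({i for t in transactions for i in t})
    candidates = [frozenset([i]) for i in items]   # C1
    levels = []
    k = 1
    while candidates:
        # Scan D: count every candidate k-itemset.
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        large = {c: n for c, n in counts.items() if n >= min_count}
        if not large:
            break
        levels.append(large)
        k += 1
        # Join step: unions of two large itemsets that have exactly k items.
        joined = {a | b for a in large for b in large if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must itself be large.
        candidates = [
            c for c in joined
            if all(frozenset(s) in large for s in combinations(c, k - 1))
        ]
    return levels

database = [
    {"A", "C", "D"},       # TID 100
    {"B", "C", "E"},       # TID 200
    {"A", "B", "C", "E"},  # TID 300
    {"B", "E"},            # TID 400
]

levels = apriori(database, min_support=0.4)
for k, large in enumerate(levels, start=1):
    print(f"L{k}:", {"".join(sorted(s)): n for s, n in large.items()})
```

With four transactions, a minimum support of 40% means an itemset must occur in at least two of them. The prune step is what removes {A, B, C} and {A, C, E} before the third scan, because {A, B} and {A, E} are not large.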