Cluster Optimisation using Cgroups Gang Qin Gareth Roy

- Slides: 22

Cluster Optimisation using Cgroups

Gang Qin, Gareth Roy, David Crooks, Sam Skipsey, Gordon Stewart and David Britton

Prof. David Britton, GridPP Project Leader, University of Glasgow. ACAT 2016, 18th Jan 2016.

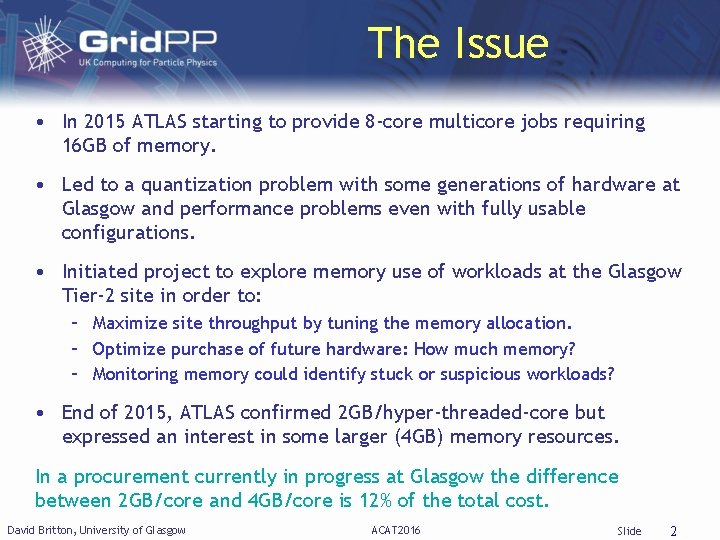

The Issue

- In 2015, ATLAS started to provide 8-core multicore jobs requiring 16 GB of memory.
- This led to a quantization problem with some generations of hardware at Glasgow, and to performance problems even on configurations where all cores could be used.
- We initiated a project to explore the memory use of workloads at the Glasgow Tier-2 site in order to:
  - Maximize site throughput by tuning the memory allocation.
  - Optimize the purchase of future hardware: how much memory is needed?
  - Find out whether monitoring memory could identify stuck or suspicious workloads.
- At the end of 2015, ATLAS confirmed 2 GB per hyper-threaded core but expressed an interest in some larger-memory (4 GB) resources. In a procurement currently in progress at Glasgow, the difference between 2 GB/core and 4 GB/core is 12% of the total cost.

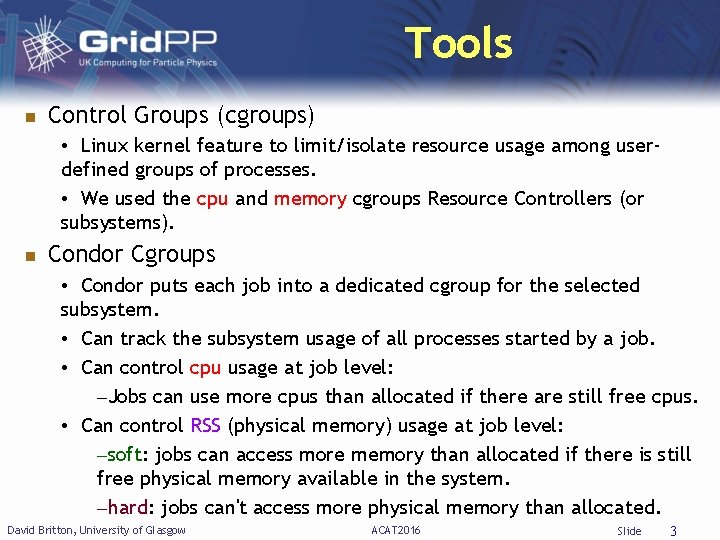

Tools

Control Groups (cgroups)
- A Linux kernel feature to limit and isolate resource usage among user-defined groups of processes.
- We used the cpu and memory cgroup resource controllers (also called subsystems).

Condor cgroups
- Condor puts each job into a dedicated cgroup for the selected subsystems.
- It can track the subsystem usage of all processes started by a job.
- It can control CPU usage at the job level:
  - Jobs can use more CPUs than allocated if there are still free CPUs.
- It can control RSS (physical memory) usage at the job level:
  - soft: jobs can access more memory than allocated if there is still free physical memory available in the system.
  - hard: jobs cannot access more physical memory than allocated.
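The per-job accounting described above can be read directly from the cgroup filesystem. The sketch below assumes a cgroup v1 hierarchy with the memory and cpuacct controllers mounted under /sys/fs/cgroup; the per-job directory layout that Condor creates is not given on the slide, so the paths passed in are placeholders. Note that `memory.max_usage_in_bytes` counts page cache as well as RSS, so it is an upper bound on the RSS peak.

```python
from pathlib import Path

# cgroup v1 memory-controller files: result key -> control file name
MEMORY_FILES = {
    "hard_limit_bytes": "memory.limit_in_bytes",
    "soft_limit_bytes": "memory.soft_limit_in_bytes",
    "max_usage_bytes": "memory.max_usage_in_bytes",  # peak usage, includes page cache
}


def read_int(path: Path) -> int:
    """Read a single integer value from a cgroup control file."""
    return int(path.read_text().strip())


def job_usage(memory_cg: Path, cpuacct_cg: Path) -> dict:
    """Collect memory limits, peak memory and CPU time for one job's cgroup.

    memory_cg and cpuacct_cg are the job's directories in the memory and
    cpuacct hierarchies, e.g. /sys/fs/cgroup/memory/<job cgroup> (placeholder).
    """
    stats = {key: read_int(memory_cg / name) for key, name in MEMORY_FILES.items()}
    # cpuacct.usage is the cumulative CPU time of all tasks in the cgroup, in ns
    stats["cpu_seconds"] = read_int(cpuacct_cg / "cpuacct.usage") / 1e9
    return stats
```

Calling `job_usage` periodically for every running job is one way to fill a usage database; the sampling interval trades time resolution against load on the system doing the collection.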

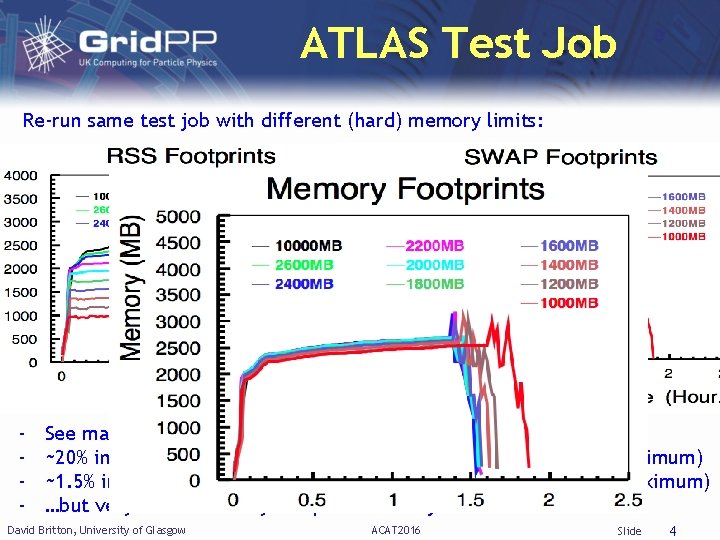

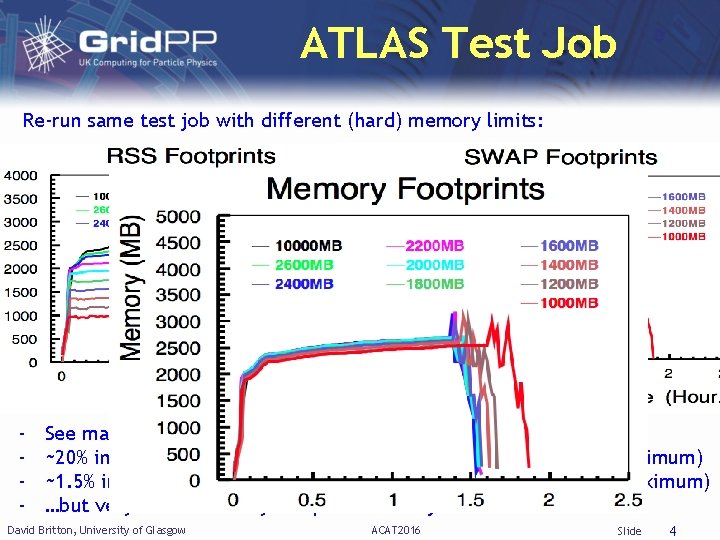

ATLAS Test Job

Re-running the same test job with different (hard) memory limits:
- The maximum memory needed is 2700 MB.
- ~20% increase in run time when RSS is limited to 1000 MB (37% of maximum).
- ~1.5% increase in run time when RSS is limited to 2000 MB (~74% of maximum).
- …but this job has a very linear memory footprint.
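A test like this can be scripted by writing each hard limit into a cgroup before starting the job and timing the run. This is a minimal sketch assuming a cgroup v1 memory hierarchy, root privileges on a real system, and that "MB" means MiB; `set_hard_limit`, `run_limited` and `fraction_of_peak` are hypothetical helper names, not part of Condor.

```python
import os
import subprocess
import time
from pathlib import Path

MIB = 1024 * 1024


def set_hard_limit(memory_cg: Path, limit_mb: int) -> None:
    """Set a hard memory limit on a cgroup v1 memory controller directory."""
    (memory_cg / "memory.limit_in_bytes").write_text(str(limit_mb * MIB))


def run_limited(memory_cg: Path, limit_mb: int, cmd: list[str]) -> float:
    """Run cmd inside memory_cg under a hard limit; return wall time in seconds."""
    set_hard_limit(memory_cg, limit_mb)

    def join_cgroup() -> None:
        # Runs in the child between fork and exec: move the child into the cgroup
        (memory_cg / "cgroup.procs").write_text(str(os.getpid()))

    start = time.monotonic()
    subprocess.run(cmd, check=True, preexec_fn=join_cgroup)
    return time.monotonic() - start


def fraction_of_peak(limit_mb: float, peak_mb: float) -> float:
    """Limit expressed as a fraction of the job's unconstrained peak memory."""
    return limit_mb / peak_mb
```

With the slide's numbers, `fraction_of_peak(1000, 2700)` is about 0.37 and `fraction_of_peak(2000, 2700)` about 0.74.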

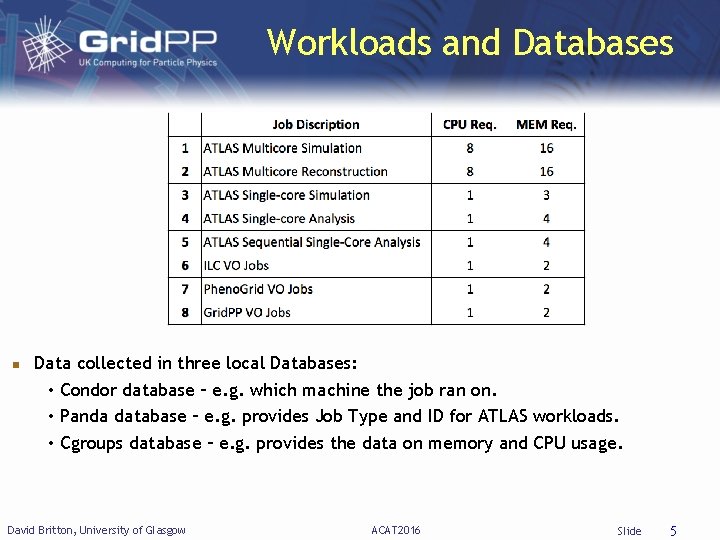

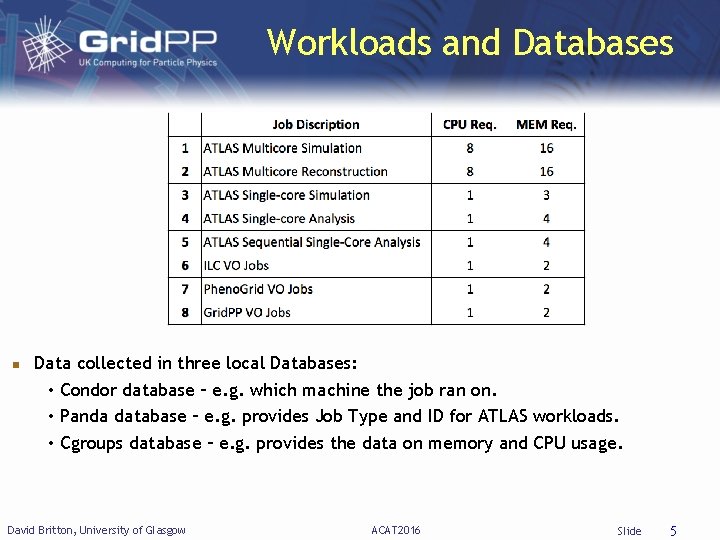

Workloads and Databases

Data were collected in three local databases:
- Condor database: e.g. which machine the job ran on.
- Panda database: e.g. the job type and ID for ATLAS workloads.
- Cgroups database: e.g. the memory and CPU usage data.
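Combining the three sources amounts to joining them on shared job identifiers. The sketch below uses SQLite with deliberately minimal, hypothetical schemas (table and column names are illustrative; the real databases and the way Condor jobs map to Panda IDs will differ) to summarise peak memory per job type.

```python
import sqlite3

# Hypothetical minimal schemas; the real Condor, Panda and cgroups databases differ.
SCHEMA = """
CREATE TABLE condor  (condor_id TEXT PRIMARY KEY, panda_id INTEGER, machine TEXT);
CREATE TABLE panda   (panda_id INTEGER PRIMARY KEY, job_type TEXT);
CREATE TABLE cgroups (condor_id TEXT PRIMARY KEY, max_rss_mb REAL, cpu_seconds REAL);
"""

# Join the three sources on their shared job IDs and summarise peak memory per type
QUERY = """
SELECT p.job_type, COUNT(*) AS n_jobs,
       AVG(g.max_rss_mb) AS mean_peak_mb, MAX(g.max_rss_mb) AS max_peak_mb
FROM condor AS c
JOIN panda   AS p ON p.panda_id  = c.panda_id
JOIN cgroups AS g ON g.condor_id = c.condor_id
GROUP BY p.job_type
ORDER BY p.job_type
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a database and create the demo tables."""
    con = sqlite3.connect(path)
    con.executescript(SCHEMA)
    return con


def peak_memory_by_type(con: sqlite3.Connection) -> list[tuple]:
    """Rows of (job_type, n_jobs, mean_peak_mb, max_peak_mb)."""
    return con.execute(QUERY).fetchall()
```

Keeping the `machine` column in the join would also allow the same summary per hardware generation.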

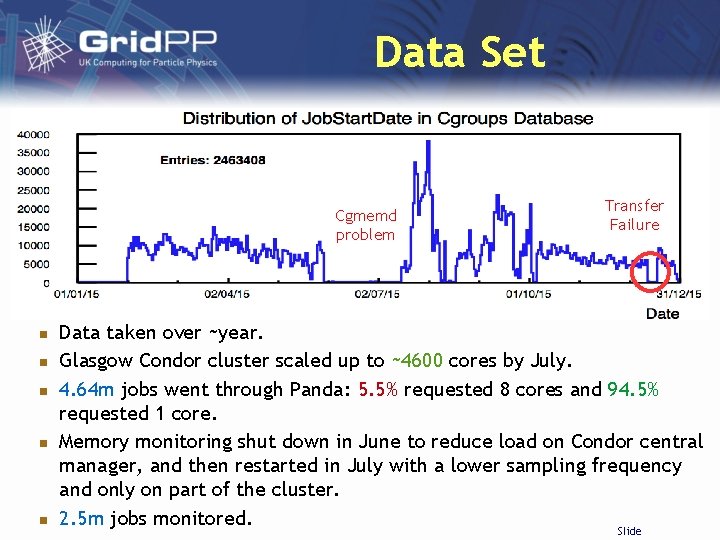

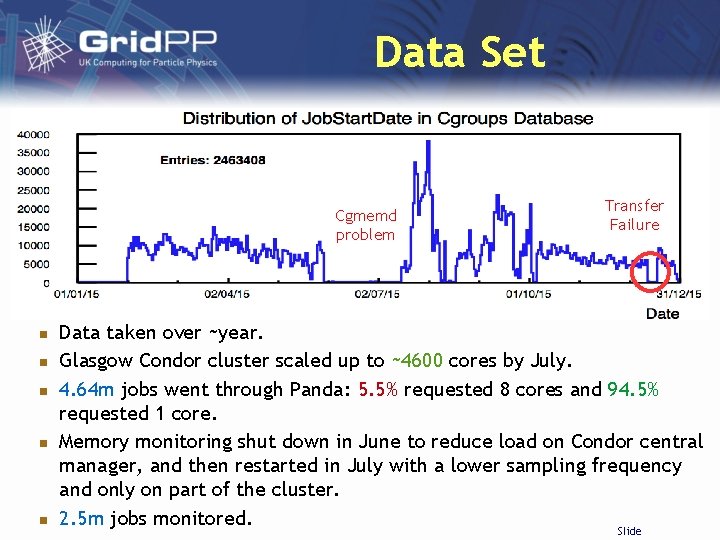

Data Set

[Figure annotations: "Cgmemd problem", "Transfer Failure"]

- Data were taken over ~1 year.
- The Glasgow Condor cluster scaled up to ~4600 cores by July.
- 4.64 m jobs went through Panda: 5.5% requested 8 cores and 94.5% requested 1 core.
- Memory monitoring was shut down in June to reduce the load on the Condor central manager, then restarted in July with a lower sampling frequency and on only part of the cluster.
- 2.5 m jobs were monitored.
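A quick check of what these percentages mean in job counts, using the slide's rounded totals (so the results are approximate). Percentages are kept as integer per-mille values to avoid floating-point rounding.

```python
TOTAL_JOBS = 4_640_000      # jobs that went through Panda (rounded on the slide)
MONITORED_JOBS = 2_500_000  # jobs with cgroup memory data (rounded)

multicore_jobs = TOTAL_JOBS * 55 // 1000    # 5.5% requested 8 cores
singlecore_jobs = TOTAL_JOBS * 945 // 1000  # 94.5% requested 1 core
monitored_fraction = MONITORED_JOBS / TOTAL_JOBS

print(f"8-core jobs:     {multicore_jobs:,}")          # 255,200
print(f"1-core jobs:     {singlecore_jobs:,}")         # 4,384,800
print(f"monitored share: {monitored_fraction:.0%}")    # 54%
```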

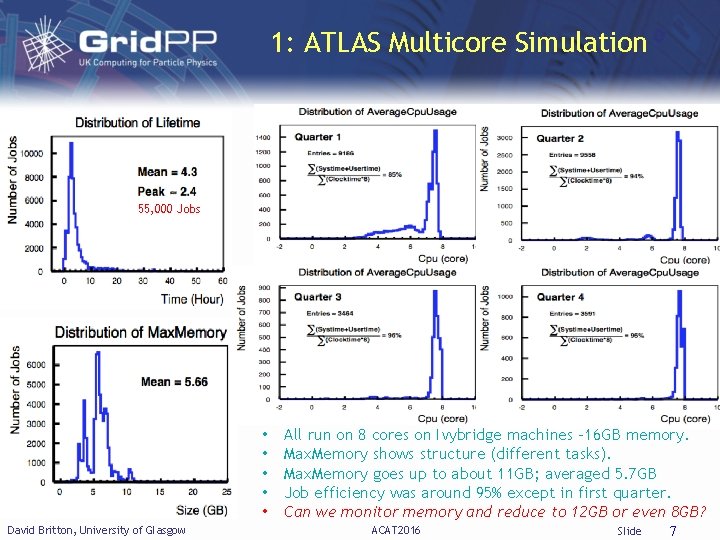

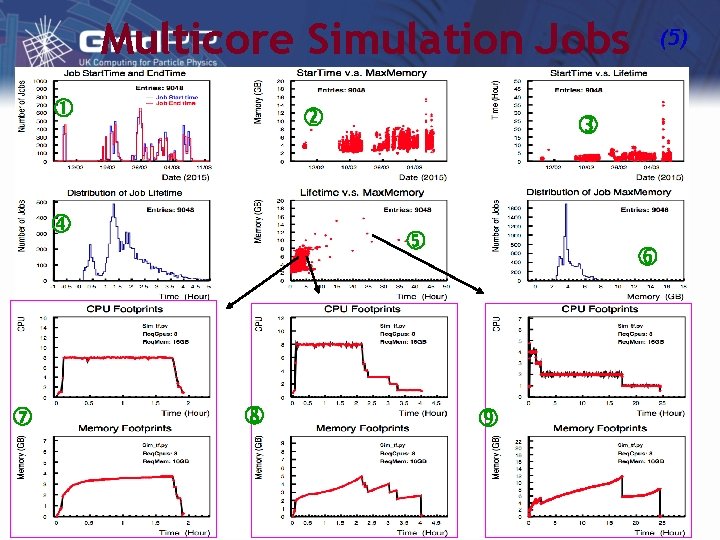

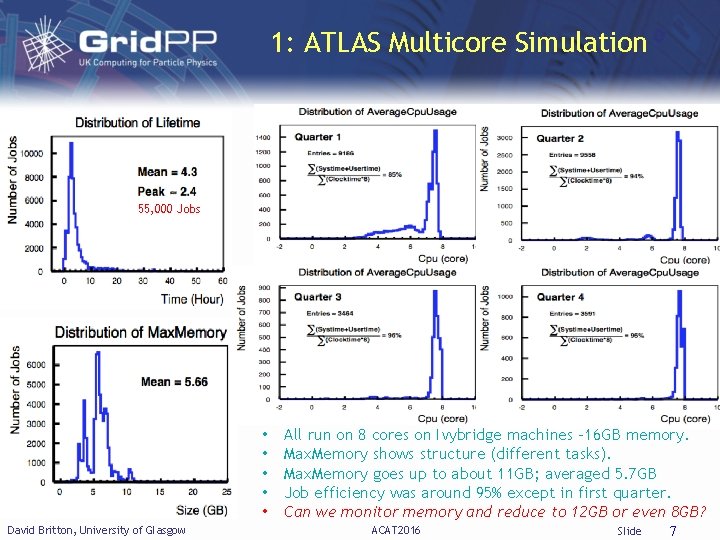

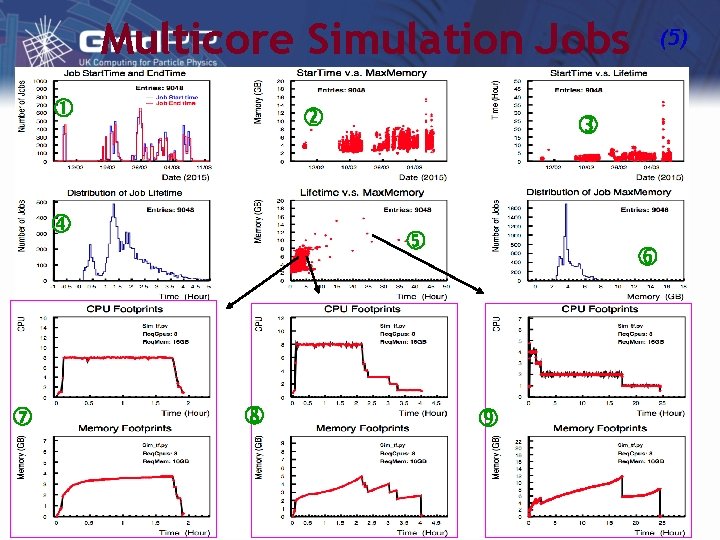

1: ATLAS Multicore Simulation (55,000 jobs)

- All ran on 8 cores on Ivybridge machines with 16 GB of memory.
- Max. memory shows structure (different tasks).
- Max. memory goes up to about 11 GB; the average is 5.7 GB.
- Job efficiency was around 95%, except in the first quarter.
- Could we monitor memory and reduce the request to 12 GB, or even 8 GB?
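Job efficiency is not defined on the slide; a common convention at grid sites, assumed here, is CPU time divided by wall time multiplied by the number of allocated cores. For example, an 8-core job that accumulates 76 core-hours of CPU in 10 hours of wall time has an efficiency of 0.95.

```python
def job_efficiency(cpu_seconds: float, wall_seconds: float, cores: int) -> float:
    """CPU efficiency = CPU time / (wall time x allocated cores)."""
    if wall_seconds <= 0 or cores <= 0:
        raise ValueError("wall_seconds and cores must be positive")
    return cpu_seconds / (wall_seconds * cores)


# 8-core job: 76 core-hours of CPU over 10 hours of wall time
print(job_efficiency(76 * 3600, 10 * 3600, 8))
```

Under this definition, a multicore job with serial phases (idle cores while one thread works) shows reduced efficiency even if it is not stuck.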

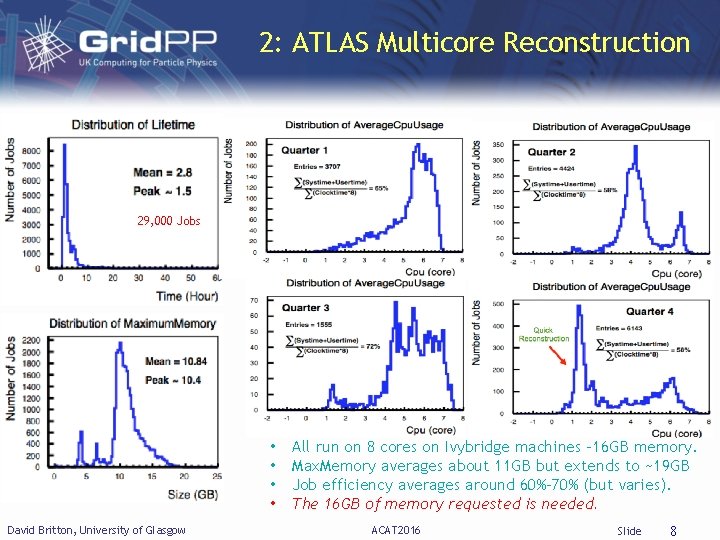

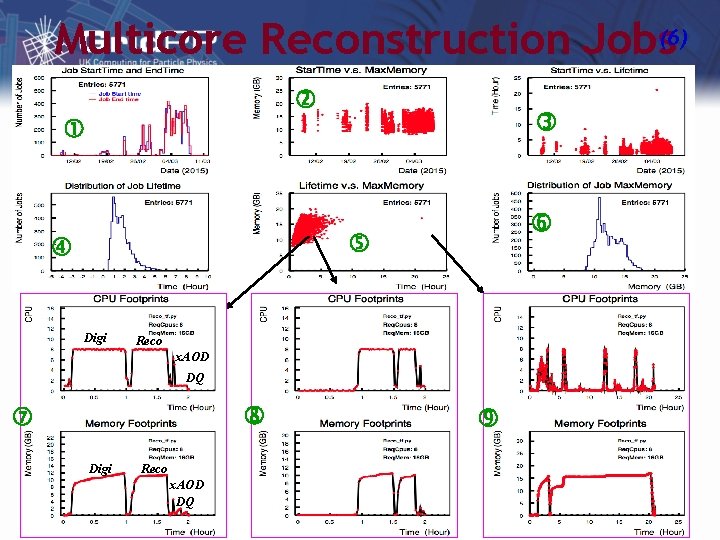

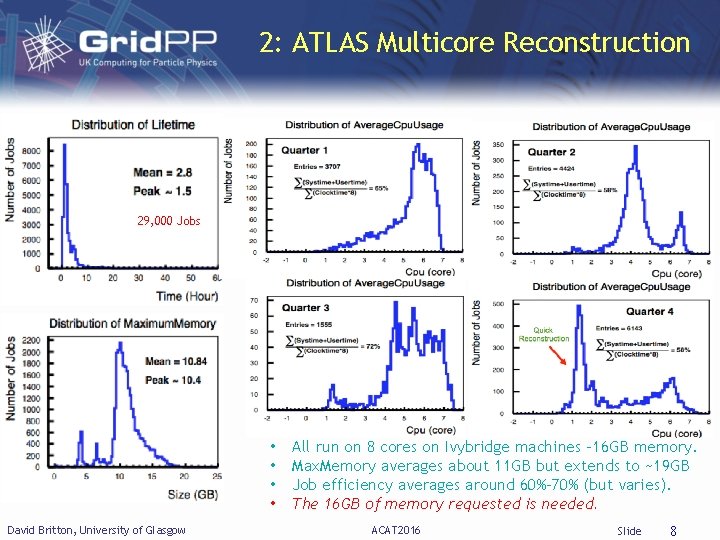

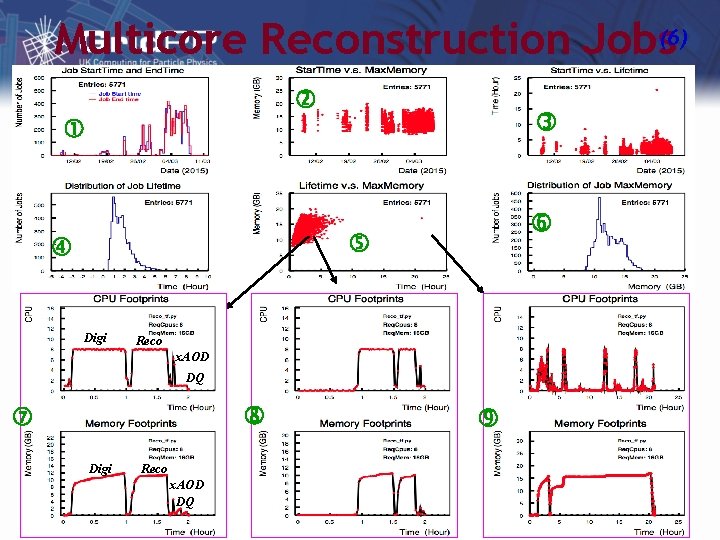

2: ATLAS Multicore Reconstruction (29,000 jobs)

- All ran on 8 cores on Ivybridge machines with 16 GB of memory.
- Max. memory averages about 11 GB but extends to ~19 GB.
- Job efficiency averages around 60% to 70% (but varies).
- The 16 GB of memory requested is needed.

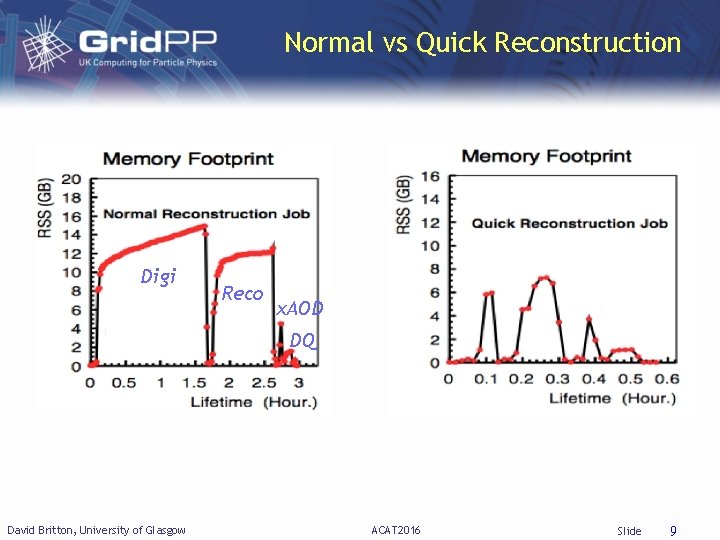

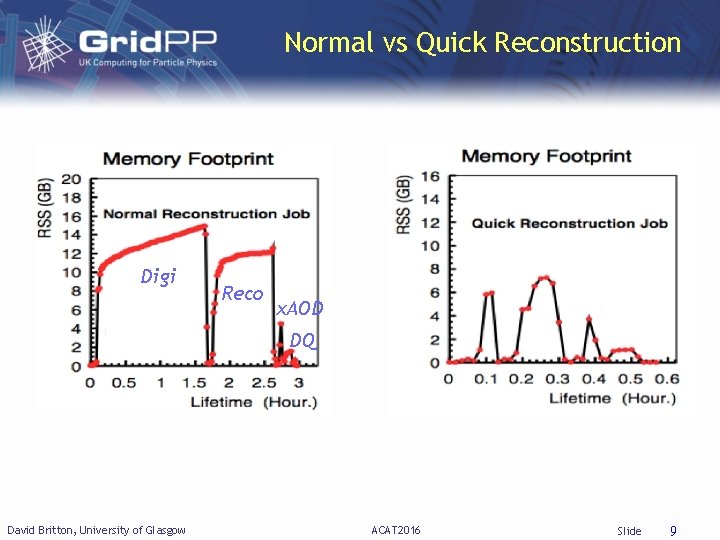

Normal vs Quick Reconstruction

[Figure: processing stages labelled Digi, Reco, xAOD, DQ]

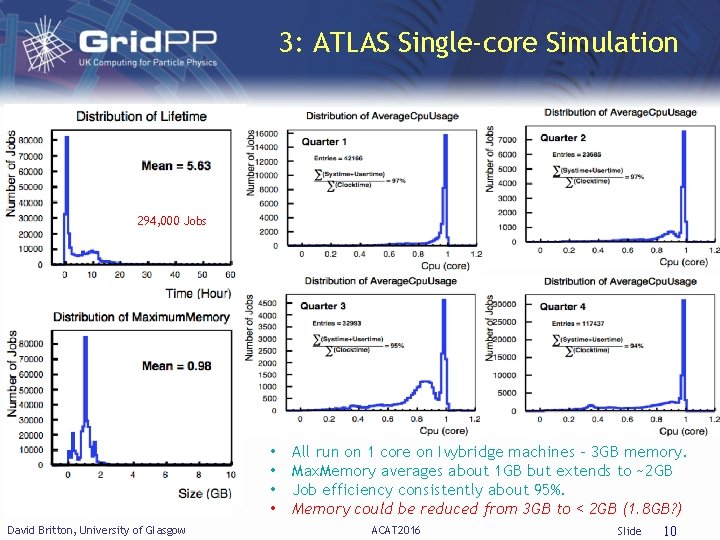

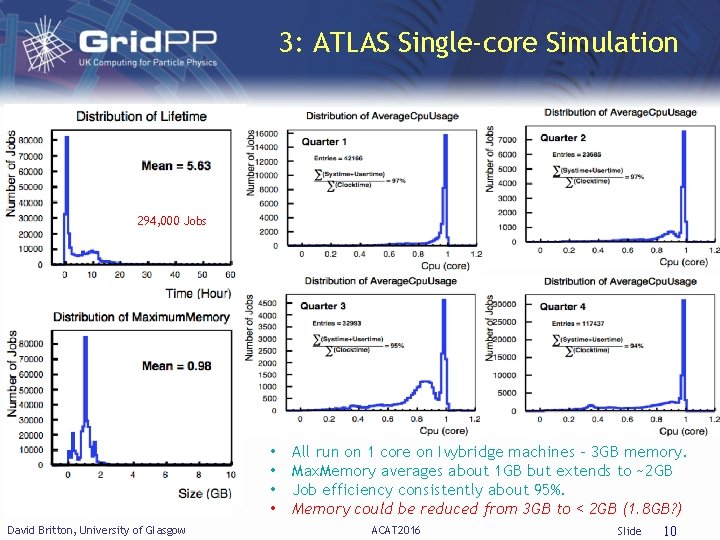

3: ATLAS Single-core Simulation (294,000 jobs)

- All ran on 1 core on Ivybridge machines with 3 GB of memory.
- Max. memory averages about 1 GB but extends to ~2 GB.
- Job efficiency is consistently about 95%.
- Memory could be reduced from 3 GB to < 2 GB (1.8 GB?).
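One way to turn a max. memory distribution into a memory request, assumed here rather than taken from the slides, is to pick a high quantile of per-job peak memory, add headroom and round up. The quantile `q`, the `headroom` factor and the 0.1 GB rounding step are hypothetical parameters.

```python
import math


def nearest_rank_quantile(values: list[float], q: float) -> float:
    """Nearest-rank quantile: smallest value with at least a fraction q of the data at or below it."""
    if not values or not 0.0 < q <= 1.0:
        raise ValueError("need non-empty values and 0 < q <= 1")
    ordered = sorted(values)
    rank = math.ceil(q * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def suggest_request_gb(peaks_gb: list[float], q: float = 0.98,
                       headroom: float = 1.1, step_gb: float = 0.1) -> float:
    """Quantile of peak memory, times headroom, rounded up to a multiple of step_gb."""
    target = nearest_rank_quantile(peaks_gb, q) * headroom
    # round() strips float noise (e.g. 1.8 / 0.1 -> 18.000000000000004) before ceil
    steps = math.ceil(round(target / step_gb, 9))
    return round(steps * step_gb, 10)
```

A lower `q` trades more jobs exceeding their request (tolerable under a soft limit) for more jobs fitting on each node.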

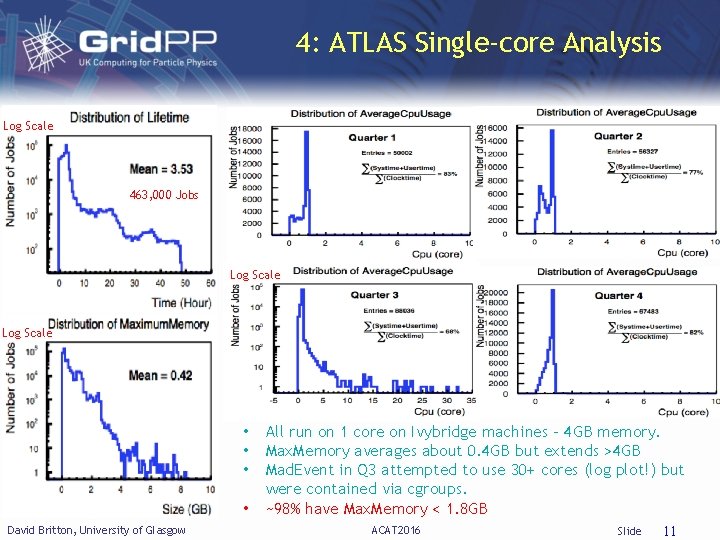

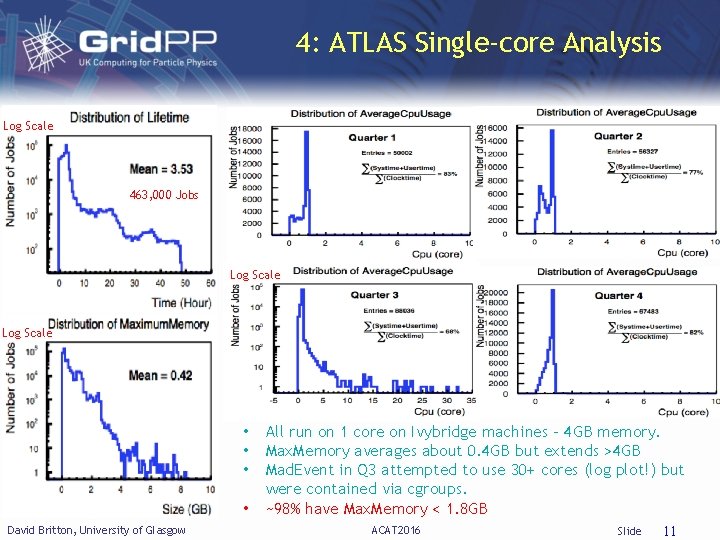

4: ATLAS Single-core Analysis (463,000 jobs; plots on log scale)

- All ran on 1 core on Ivybridge machines with 4 GB of memory.
- Max. memory averages about 0.4 GB but extends beyond 4 GB.
- MadEvent jobs in Q3 attempted to use 30+ cores (note the log scale) but were contained by cgroups.
- ~98% have Max. memory < 1.8 GB.
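The containment follows from how the cgroup cpu controller shares CPU time: its weights (cpu.shares in cgroup v1) only matter when CPUs are contended, so a job may spread onto idle cores, but on a busy node it gets roughly its weighted share however many threads it starts. The sketch below is a simplified water-filling model of that behaviour, not the kernel's CFS scheduler; giving each job a weight proportional to its requested cores is an assumption here.

```python
def share_cpus(weights: dict[str, float], demand: dict[str, float],
               total_cpus: float) -> dict[str, float]:
    """Work-conserving proportional sharing (water-filling model).

    weights: relative CPU weight per job (assumed proportional to requested cores)
    demand:  how many CPUs each job could keep busy (e.g. its thread count)
    """
    alloc = {job: 0.0 for job in weights}
    active = {job for job in weights if demand[job] > 0}
    remaining = float(total_cpus)
    while active and remaining > 1e-9:
        total_weight = sum(weights[j] for j in active)
        offers = {j: remaining * weights[j] / total_weight for j in active}
        # Jobs whose remaining demand fits within their offer are fully served
        satisfied = {j for j in active if demand[j] - alloc[j] <= offers[j]}
        if not satisfied:
            # Every remaining job wants more: split what is left by weight and stop
            for j in active:
                alloc[j] += offers[j]
            break
        for j in satisfied:
            remaining -= demand[j] - alloc[j]
            alloc[j] = demand[j]
        active -= satisfied
    return alloc
```

In this model, on a 32-CPU node running 31 single-core jobs plus one single-core job that tries to keep 30 cores busy (all with weight 1), the greedy job ends up with 1 CPU; on an otherwise idle node the same job would get all 30.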

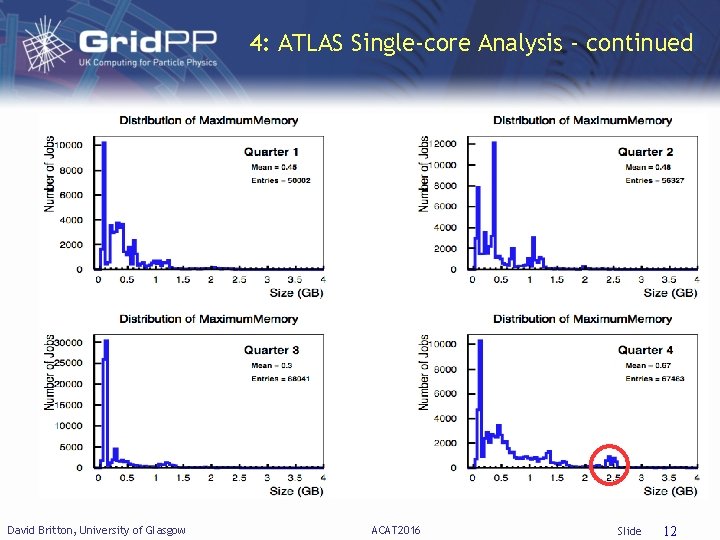

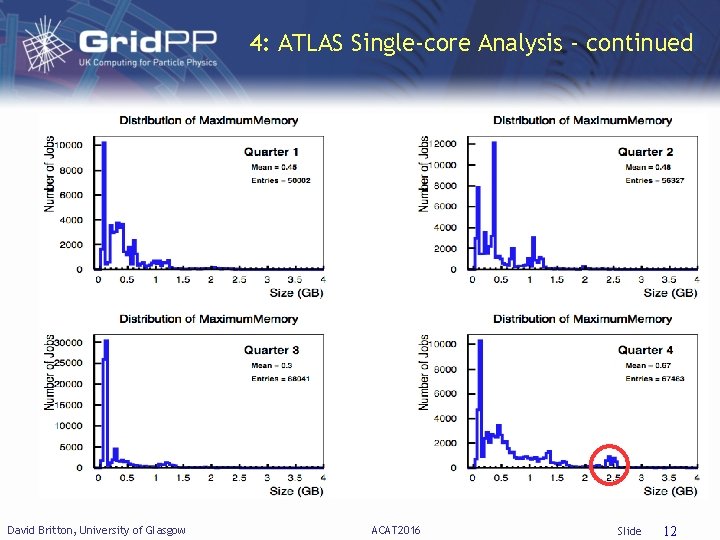

4: ATLAS Single-core Analysis (continued)

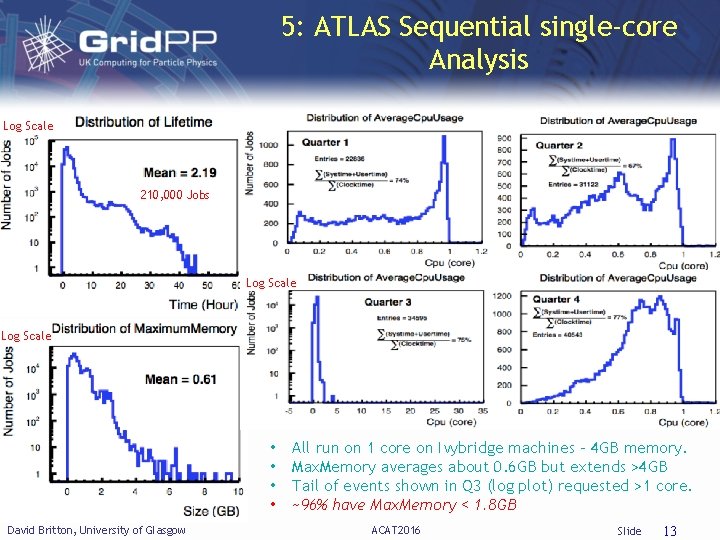

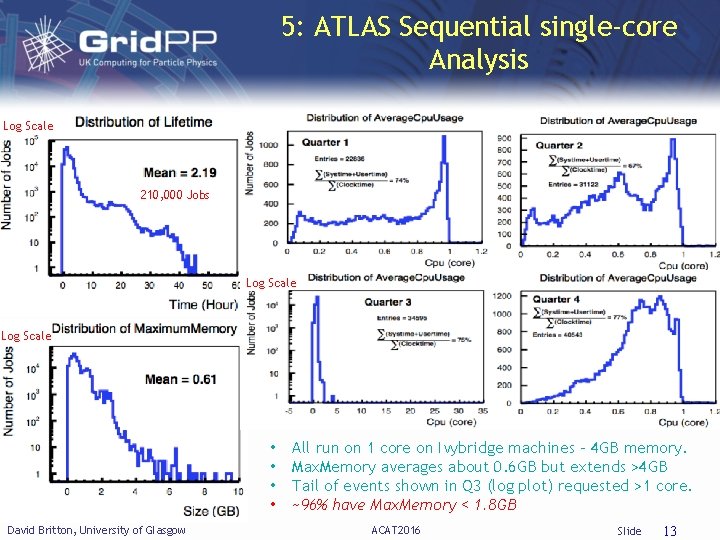

5: ATLAS Sequential Single-core Analysis (210,000 jobs; plots on log scale)

- All ran on 1 core on Ivybridge machines with 4 GB of memory.
- Max. memory averages about 0.6 GB but extends beyond 4 GB.
- The tail of events shown in Q3 (log plot) requested > 1 core.
- ~96% have Max. memory < 1.8 GB.
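Statements such as "~96% have Max. memory < 1.8 GB" are points on the empirical distribution of per-job peak memory. A small helper, assuming a hypothetical record layout of (job type, peak in GB), computes that fraction for each job type so candidate thresholds can be compared.

```python
from collections import defaultdict


def fraction_below_by_type(records: list[tuple[str, float]],
                           threshold_gb: float) -> dict[str, float]:
    """Fraction of jobs of each type whose max. memory is below threshold_gb."""
    below: defaultdict[str, int] = defaultdict(int)
    total: defaultdict[str, int] = defaultdict(int)
    for job_type, peak_gb in records:
        total[job_type] += 1
        if peak_gb < threshold_gb:
            below[job_type] += 1
    return {t: below[t] / total[t] for t in sorted(total)}
```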

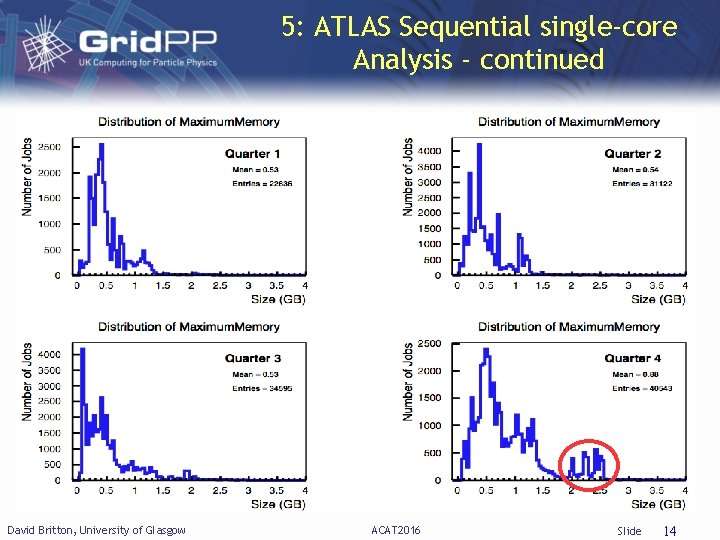

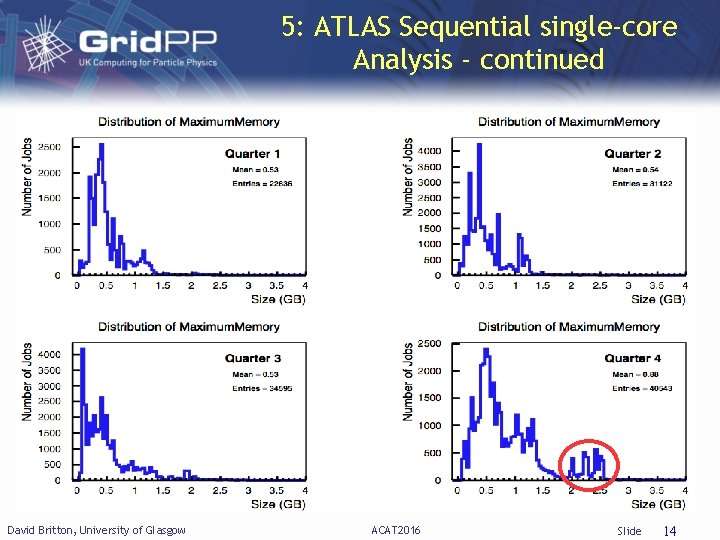

5: ATLAS Sequential Single-core Analysis (continued)

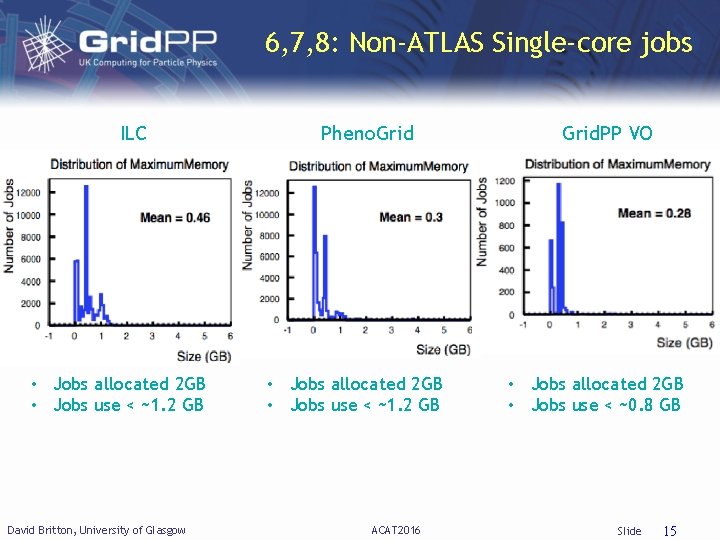

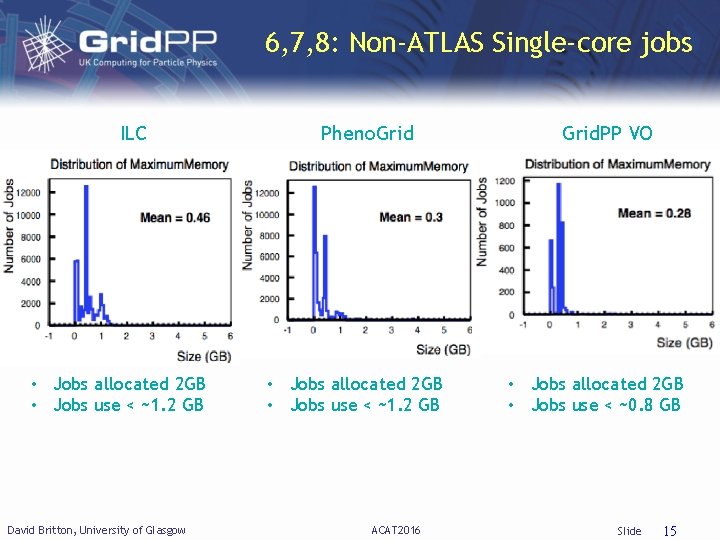

6, 7, 8: Non-ATLAS Single-core Jobs

| VO | Memory allocated | Memory used |
|---|---|---|
| ILC | 2 GB | < ~1.2 GB |
| PhenoGrid | 2 GB | < ~1.2 GB |
| GridPP VO | 2 GB | < ~0.8 GB |

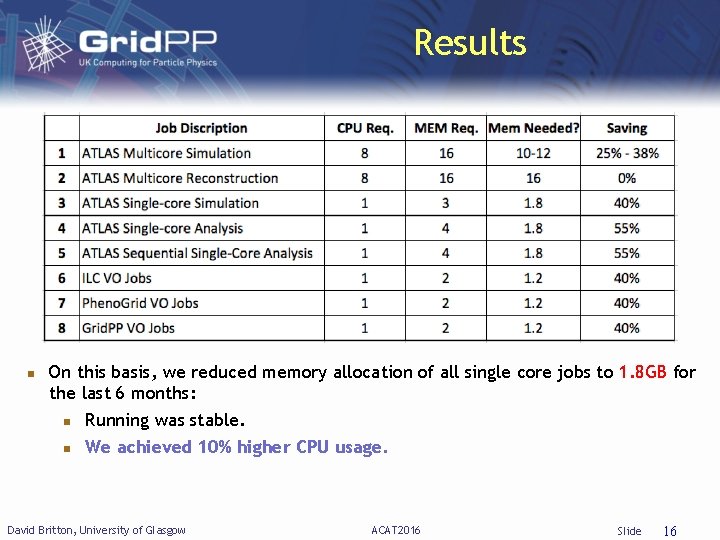

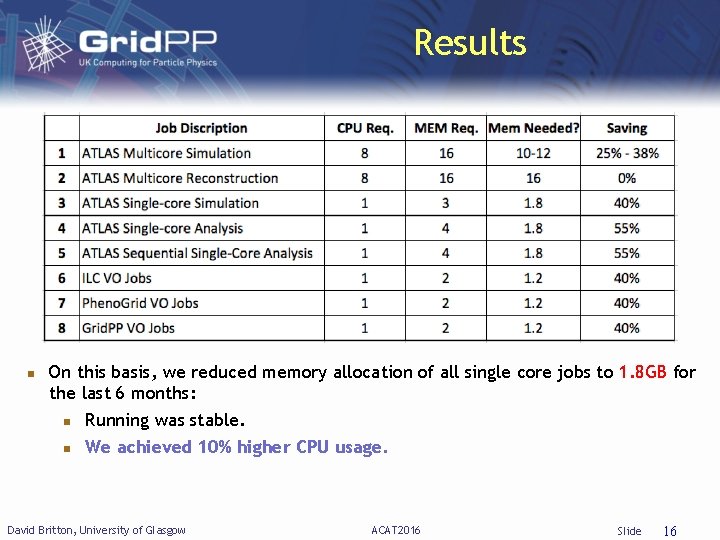

Results

On this basis, we reduced the memory allocation of all single-core jobs to 1.8 GB for the last 6 months:
- Running was stable.
- We achieved 10% higher CPU usage.

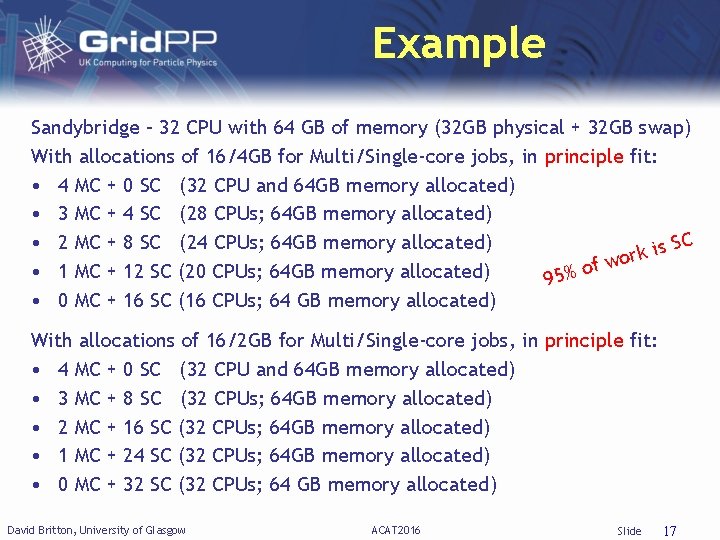

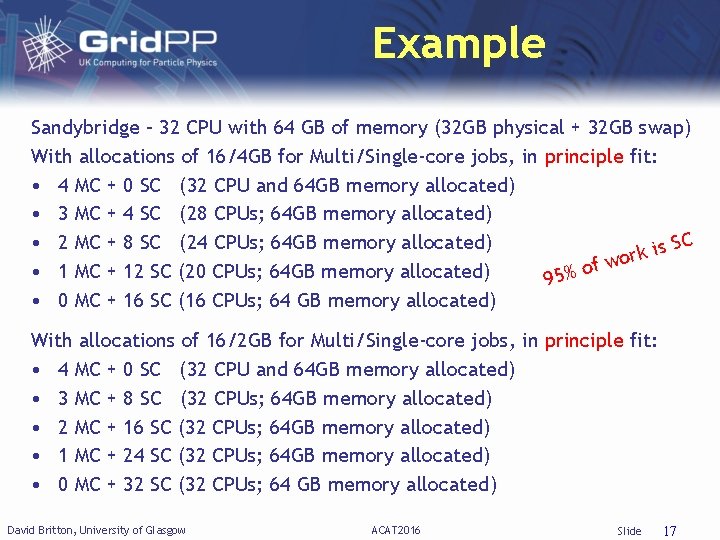

Example

Sandybridge: 32 CPUs with 64 GB of memory (32 GB physical + 32 GB swap).

With allocations of 16/4 GB for multicore (MC)/single-core (SC) jobs, in principle we can fit:
- 4 MC + 0 SC (32 CPUs; 64 GB memory allocated)
- 3 MC + 4 SC (28 CPUs; 64 GB memory allocated)
- 2 MC + 8 SC (24 CPUs; 64 GB memory allocated)
- 1 MC + 12 SC (20 CPUs; 64 GB memory allocated)
- 0 MC + 16 SC (16 CPUs; 64 GB memory allocated)

Slide annotation: 95% of work is SC.

With allocations of 16/2 GB for MC/SC jobs, in principle we can fit:
- 4 MC + 0 SC (32 CPUs; 64 GB memory allocated)
- 3 MC + 8 SC (32 CPUs; 64 GB memory allocated)
- 2 MC + 16 SC (32 CPUs; 64 GB memory allocated)
- 1 MC + 24 SC (32 CPUs; 64 GB memory allocated)
- 0 MC + 32 SC (32 CPUs; 64 GB memory allocated)
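The two lists of packings on this slide can be generated by a short enumeration: for each number of multicore jobs, fill the remaining cores with single-core jobs until either CPUs or memory run out. A minimal sketch, assuming 8 cores per MC job, 1 core per SC job, and that swap counts towards the 64 GB as on the slide; `packings` is a hypothetical helper name.

```python
def packings(total_cpus: int, total_mem_gb: float, mc_cores: int = 8,
             mc_mem_gb: float = 16, sc_mem_gb: float = 4) -> list[tuple[int, int, int]]:
    """Return (n_mc, n_sc, cpus_used) for each possible number of MC jobs."""
    rows = []
    max_mc = int(min(total_cpus // mc_cores, total_mem_gb // mc_mem_gb))
    for n_mc in range(max_mc, -1, -1):
        free_cpus = total_cpus - n_mc * mc_cores
        free_mem = total_mem_gb - n_mc * mc_mem_gb
        # SC jobs are limited by whichever runs out first: cores or memory
        n_sc = min(free_cpus, int(free_mem // sc_mem_gb))
        rows.append((n_mc, n_sc, n_mc * mc_cores + n_sc))
    return rows


for n_mc, n_sc, used in packings(32, 64, sc_mem_gb=4):
    print(f"{n_mc} MC + {n_sc} SC ({used} CPUs)")
```

With `sc_mem_gb=2` every row uses all 32 CPUs, which is the quantization effect the slide illustrates: the memory-per-SC-job setting decides how many cores can be filled.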

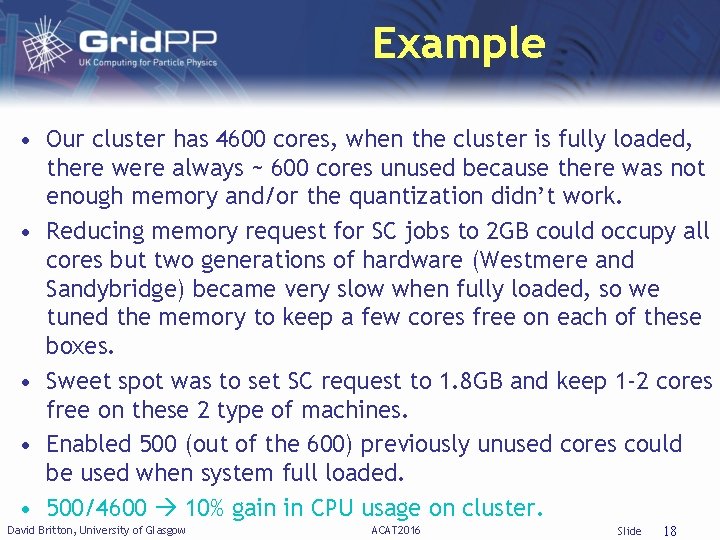

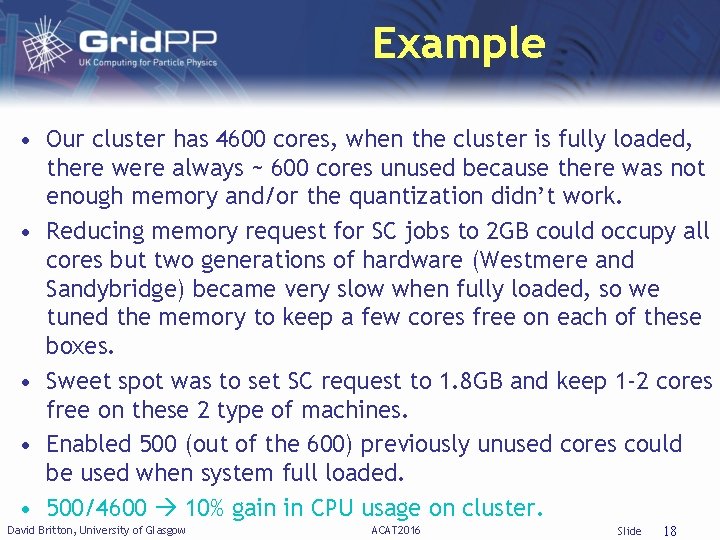

Example

- Our cluster has 4600 cores. When the cluster was fully loaded, there were always ~600 cores unused because there was not enough memory and/or the quantization did not work.
- Reducing the memory request for SC jobs to 2 GB could occupy all cores, but two generations of hardware (Westmere and Sandybridge) became very slow when fully loaded, so we tuned the memory to keep a few cores free on each of these boxes.
- The sweet spot was to set the SC request to 1.8 GB and keep 1 to 2 cores free on these two types of machine.
- This allowed 500 of the 600 previously unused cores to be used when the system was fully loaded.
- 500/4600 ≈ 10.9%, i.e. a gain of roughly 10% in CPU usage on the cluster.
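A quick check of the arithmetic, using the slide's rounded figures. The quoted gain is the recovered cores as a share of the whole cluster; the last line, the gain relative to the cores that were already busy, is an extra view added here rather than a figure from the slide.

```python
TOTAL_CORES = 4600
IDLE_BEFORE = 600   # cores left unused on a full cluster before tuning (approx.)
RECOVERED = 500     # of those, cores brought into use after tuning

busy_before = TOTAL_CORES - IDLE_BEFORE   # 4000
busy_after = busy_before + RECOVERED      # 4500

print(f"utilisation before: {busy_before / TOTAL_CORES:.1%}")  # 87.0%
print(f"utilisation after:  {busy_after / TOTAL_CORES:.1%}")   # 97.8%
print(f"gain vs all cores:  {RECOVERED / TOTAL_CORES:.1%}")    # 10.9%
print(f"gain vs busy cores: {RECOVERED / busy_before:.1%}")    # 12.5%
```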

Future Plans

- If ATLAS were to produce two separate types of multicore jobs (simulation and reconstruction), we could try reducing the memory for simulation jobs from 16 GB to 12 GB (?).
- Develop a memory monitoring system so that we can:
  - Set more fine-grained and more dynamic limits.
  - Spot stuck or suspicious workloads.
  - Provide feedback to ATLAS on memory requirements in real time so that memory requests can be adjusted (benefiting all sites).
- Stick to 2 GB per hyper-threaded core in the current procurement, and spend the 12% saved on additional CPU rather than extra memory.
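A monitoring system of the kind proposed could start from simple per-job rules evaluated on periodic samples. The sketch below flags a job whose memory exceeds its allocation, and a job that has used almost no CPU over a recent window (a possible stuck workload). The `Sample` layout, thresholds, window length and flag names are hypothetical choices, not values from this study.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t_seconds: float    # time of the sample
    cpu_seconds: float  # cumulative CPU time of the job (e.g. cpuacct.usage / 1e9)
    rss_gb: float       # resident memory at sample time


def flag_job(samples: list[Sample], cores: int, alloc_gb: float,
             window_s: float = 3600.0, min_cpu_fraction: float = 0.05) -> list[str]:
    """Return warning flags for one job from its time-ordered samples."""
    flags: list[str] = []
    if not samples:
        return flags
    last = samples[-1]
    if last.rss_gb > alloc_gb:
        flags.append("over_memory")
    # Latest sample taken at least window_s before the newest one, if any
    reference = None
    for s in samples:
        if s.t_seconds <= last.t_seconds - window_s:
            reference = s
    if reference is not None:
        elapsed = last.t_seconds - reference.t_seconds
        used = last.cpu_seconds - reference.cpu_seconds
        # Very little CPU over the window relative to the cores allocated
        if used < min_cpu_fraction * elapsed * cores:
            flags.append("possibly_stuck")
    return flags
```

Per-job-type summaries of the same samples could then be fed back to the experiment, as the plan above suggests, rather than only being used for local alarms.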

Backup Slides

Multicore Simulation Jobs (5)

Multicore Reconstruction Jobs (6)

[Figure: processing stages labelled Digi, Reco, xAOD, DQ]