Cluster Computing with DryadLINQ

Mihai Budiu, MSR-SVC. PARC, May 8, 2008

Acknowledgments (MSR-SVC and ISRC-SVC): Michael Isard, Yuan Yu, Andrew Birrell, Dennis Fetterly, Ulfar Erlingsson, Pradeep Kumar Gunda, Jon Currey

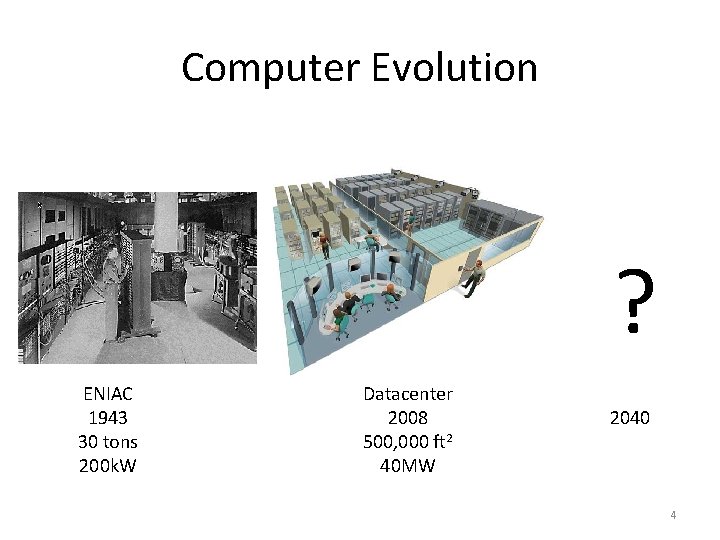

Computer Evolution? 1961, 2008, 2040

Computer Evolution? ENIAC (1943): 30 tons, 200 kW. Datacenter (2008): 500,000 ft², 40 MW. 2040: ?

2040

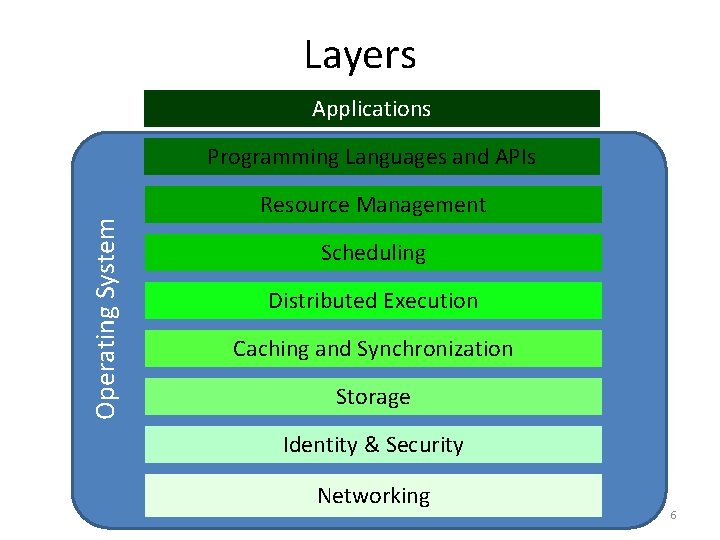

Layers (figure): Applications, Operating System, Programming Languages and APIs, Resource Management, Scheduling, Distributed Execution, Caching and Synchronization, Storage, Identity & Security, Networking

Pieces of the Global Computer

This Work

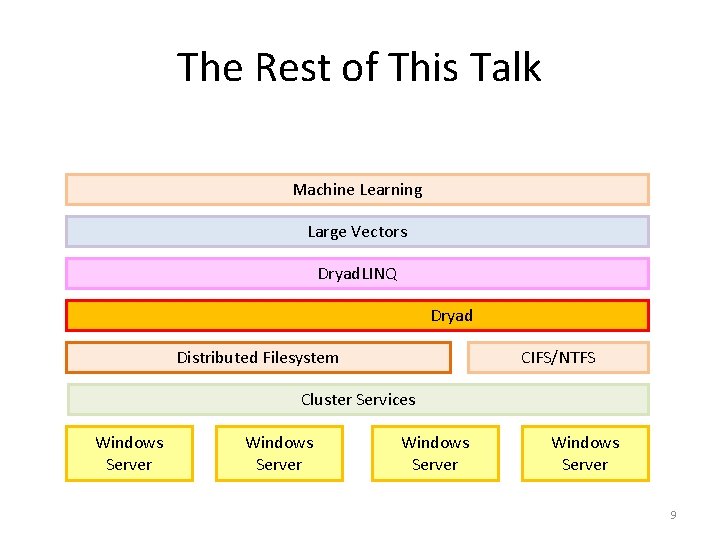

The Rest of This Talk (software stack, top to bottom): Machine Learning, Large Vectors, DryadLINQ, Dryad, Distributed Filesystem, CIFS/NTFS, Cluster Services, Windows Server

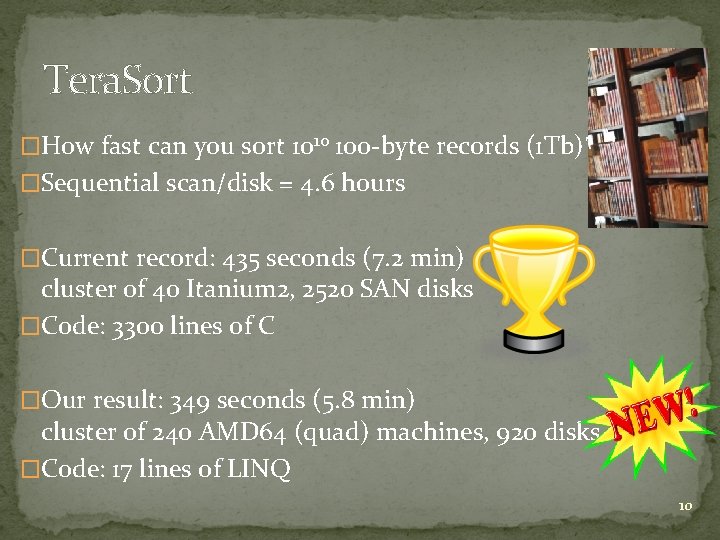

TeraSort • How fast can you sort 10¹⁰ 100-byte records (1 TB)? • Sequential scan/disk = 4.6 hours • Current record: 435 seconds (7.2 min), cluster of 40 Itanium 2, 2,520 SAN disks • Code: 3,300 lines of C • Our result: 349 seconds (5.8 min), cluster of 240 AMD64 (quad) machines, 920 disks • Code: 17 lines of LINQ
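
A quick check of the slide's arithmetic. The per-disk scan rate below is derived here from the 4.6-hour figure; it is not stated on the slide.

```latex
\begin{aligned}
\text{data size} &= 10^{10} \times 100\ \text{B} = 10^{12}\ \text{B} = 1\ \text{TB} \\
\text{implied sequential rate} &\approx \frac{10^{12}\ \text{B}}{4.6 \times 3600\ \text{s}} \approx 60\ \text{MB/s} \\
\text{improvement over the record} &= \frac{435\ \text{s}}{349\ \text{s}} \approx 1.25
\end{aligned}
```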

Outline • Introduction • DryadLINQ • Building on DryadLINQ

Outline • Introduction • Dryad – deployed since 2006 – many thousands of machines – analyzes many petabytes of data/day • DryadLINQ • Building on DryadLINQ

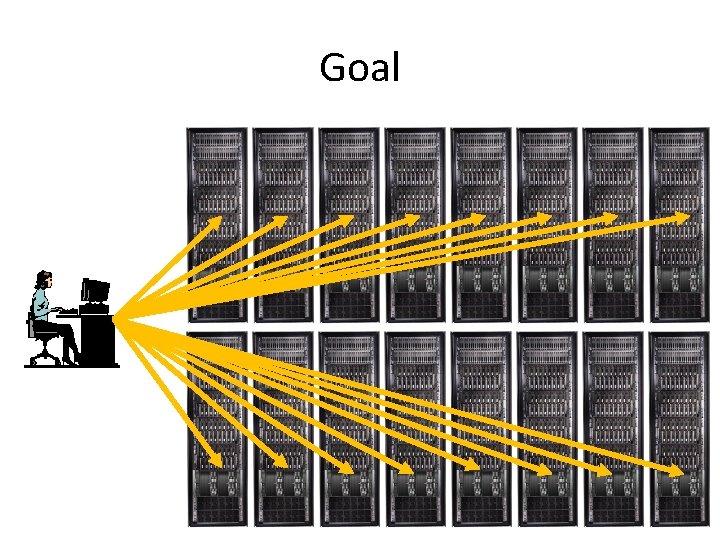

Goal

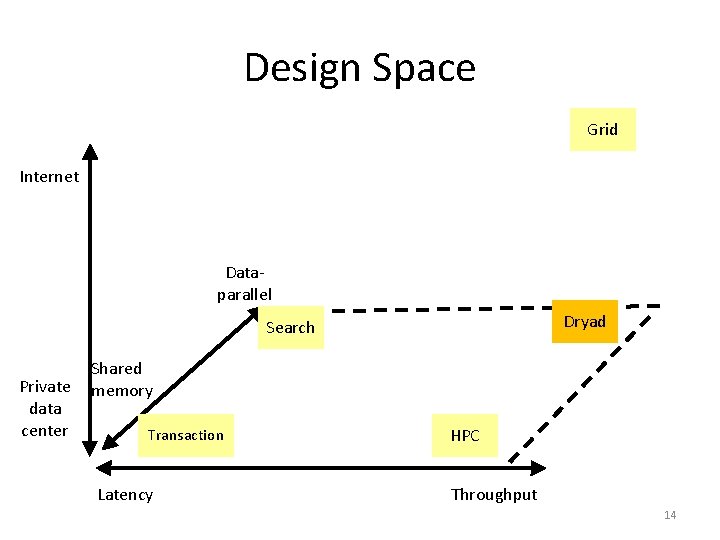

Design Space (figure labels): Grid, Internet, Data-parallel (Dryad), Search, Private data center, Shared memory, Transaction, Latency, HPC, Throughput

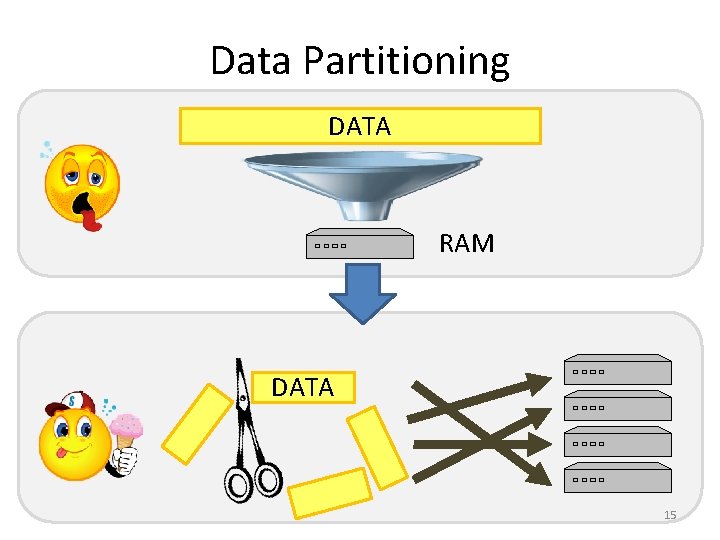

Data Partitioning (figure labels: DATA, RAM, DATA)

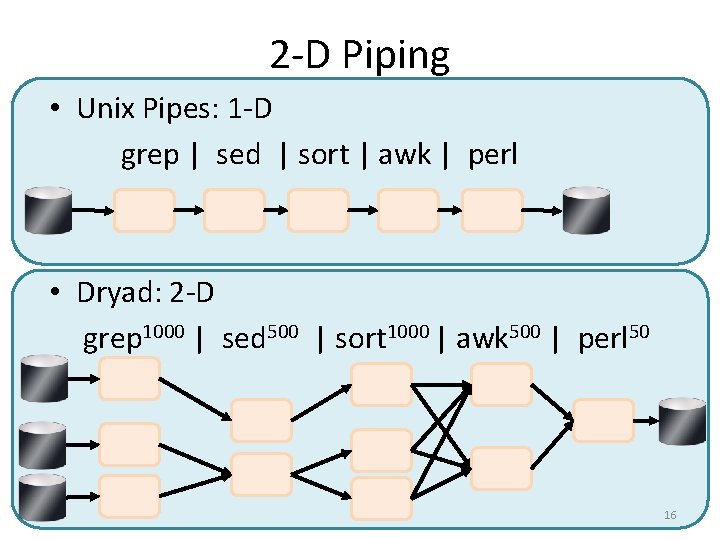

2-D Piping • Unix pipes, 1-D: grep | sed | sort | awk | perl • Dryad, 2-D: grep (1000) | sed (500) | sort (1000) | awk (500) | perl (50), where each number is how many instances of that stage run in parallel

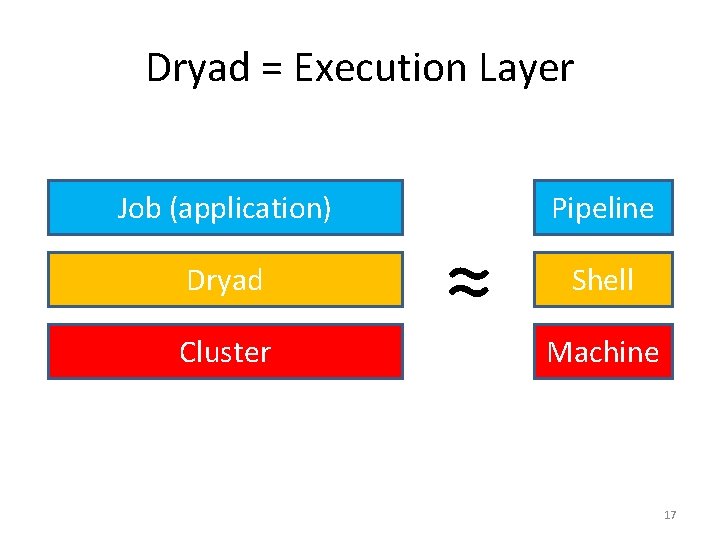

Dryad = Execution Layer: Job (application) / Dryad / Cluster ≈ Pipeline / Shell / Machine (a job runs on Dryad across a cluster much as a pipeline runs in a shell on one machine)

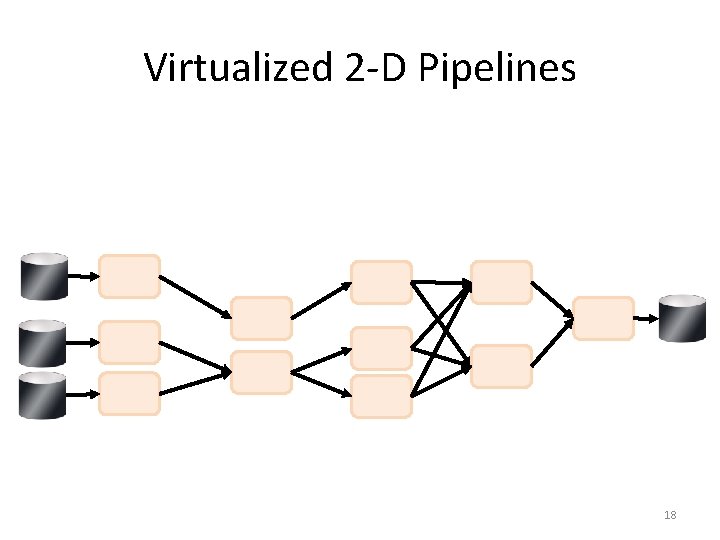

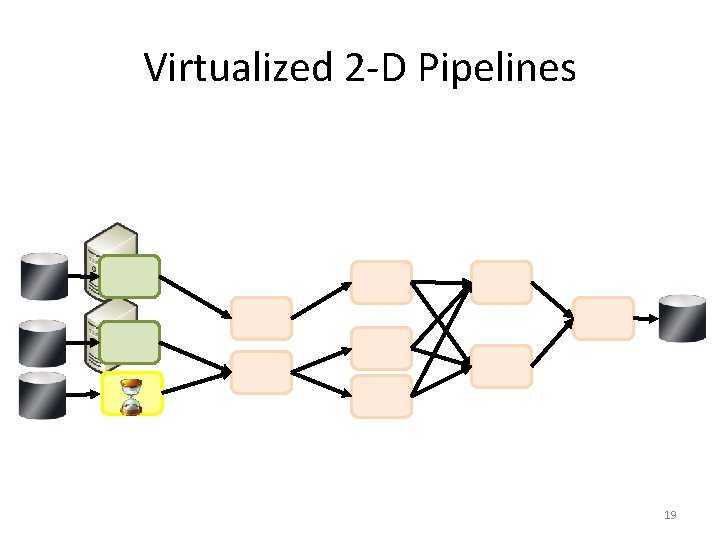

Virtualized 2-D Pipelines

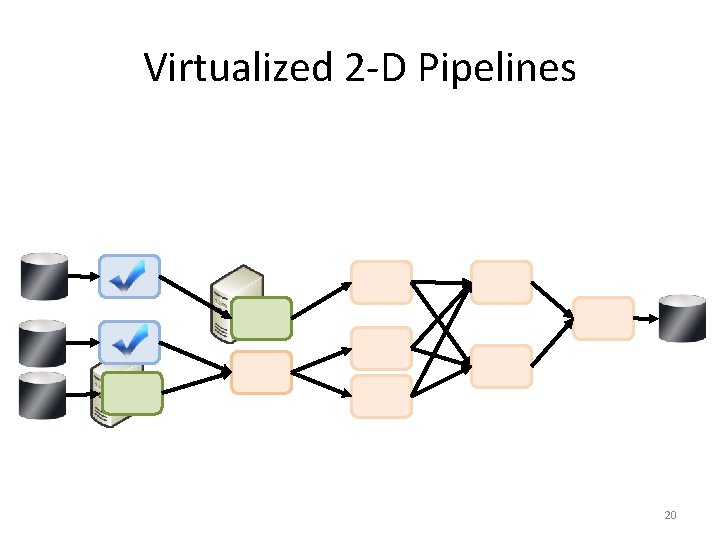

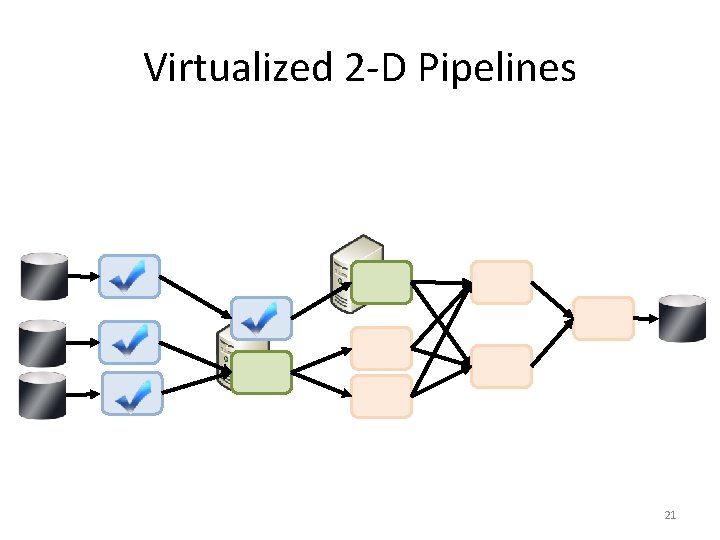

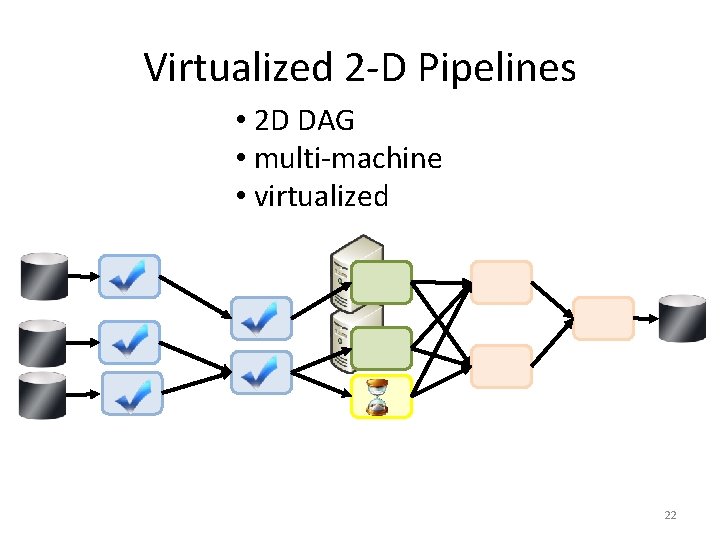

Virtualized 2-D Pipelines • 2-D DAG • multi-machine • virtualized

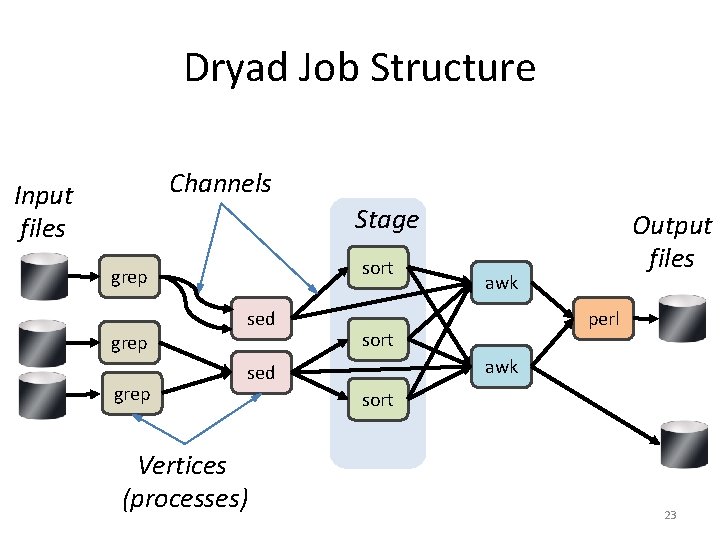

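Dryad Job Structure (figure): input files feed a graph of vertices (processes) such as grep, sed, sort, awk, and perl; vertices running the same program form a stage, vertices are connected by channels, and the final stage writes the output files.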

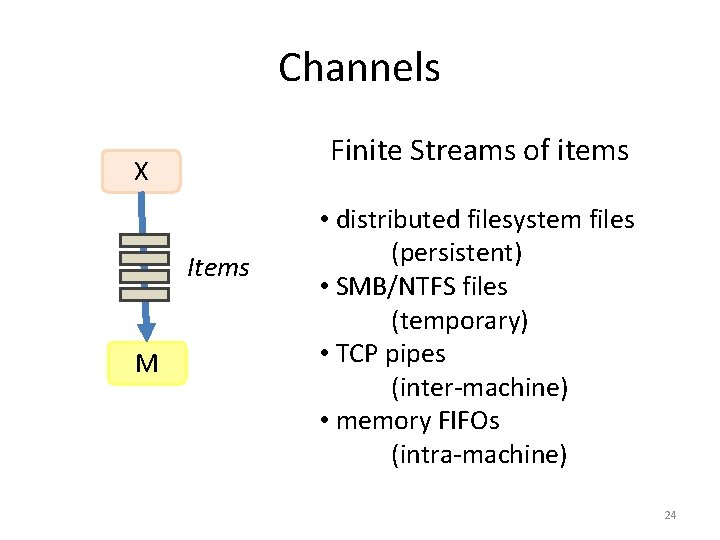

Channels: finite streams of items (figure: vertex X sends items to vertex M) • distributed filesystem files (persistent) • SMB/NTFS files (temporary) • TCP pipes (inter-machine) • memory FIFOs (intra-machine)
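
The channel abstraction can be illustrated with its simplest case, an intra-machine memory FIFO. This is a minimal sketch that assumes .NET's `BlockingCollection<T>` as the buffer. `FifoChannel<T>`, `Write`, `Close`, and `ReadAll` are hypothetical names, not Dryad's channel API. The writer appends items and then marks end-of-stream. The reader drains items until the stream ends, which is what makes the channel a finite stream.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical in-memory FIFO channel: a bounded, finite stream of items
// between two vertices on the same machine.
public sealed class FifoChannel<T>
{
    private readonly BlockingCollection<T> _queue;

    public FifoChannel(int capacity) => _queue = new BlockingCollection<T>(capacity);

    // Writer side: blocks while the buffer is full.
    public void Write(T item) => _queue.Add(item);

    // Writer side: marks end-of-stream; no further items may be written.
    public void Close() => _queue.CompleteAdding();

    // Reader side: yields items in FIFO order until the writer has closed
    // the channel and the buffer is empty.
    public IEnumerable<T> ReadAll() => _queue.GetConsumingEnumerable();
}
```

File, TCP, and distributed-filesystem channels would need different backing stores but could keep the same write, close, and read contract.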

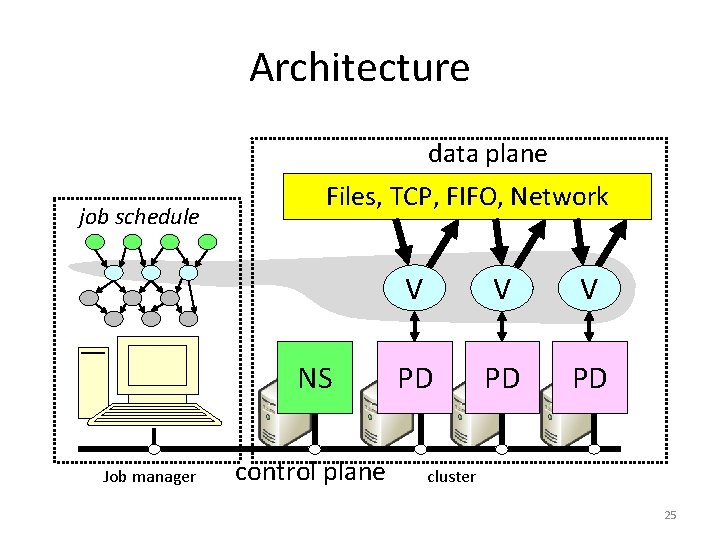

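Architecture (figure): the job manager holds the job schedule and, over the control plane, works with the name server (NS) and the per-machine process daemons (PD) to run vertices (V) on the cluster; vertices exchange data over the data plane through files, TCP, FIFOs, and the network.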

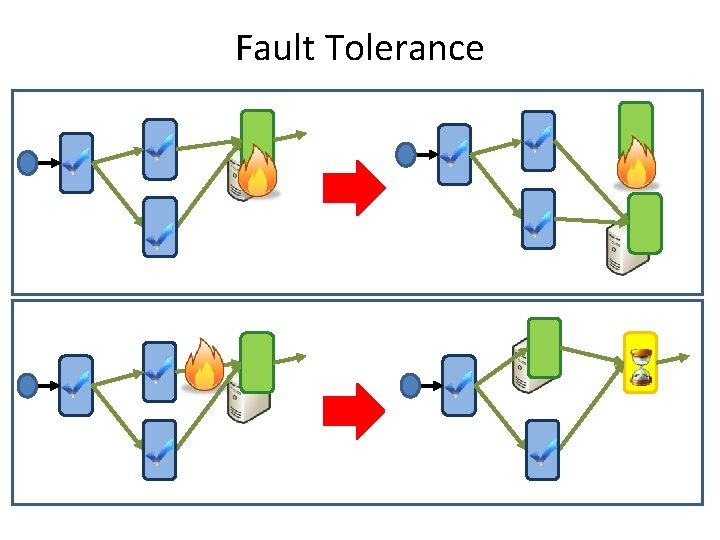

Fault Tolerance

![Dynamic Graph Rewriting](http://slidetodoc.com/presentation_image_h2/11a2abad1b780539b24dc34bb64b3846/image-27.jpg)

Dynamic Graph Rewriting (figure): completed vertices X[0], X[1], X[3]; slow vertex X[2]; duplicate vertex X'[2]. Duplication policy = f(running times, data volumes)
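
The slide describes the duplication policy only as a function of running times and data volumes. Below is one plausible instance of such a policy. It is an assumption, not Dryad's actual rule. It estimates the stage's throughput from its completed vertices and predicts each running vertex's time from its input size. Any vertex that has run longer than a hypothetical `slack` factor times that prediction is flagged for duplication. `VertexStats` and `DuplicationPolicy` are illustrative names.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical per-vertex statistics for one stage.
public record VertexStats(string Name, bool Completed, double Seconds, long InputBytes);

public static class DuplicationPolicy
{
    // Returns the running vertices that look like stragglers.
    public static List<string> PickDuplicates(IEnumerable<VertexStats> stage, double slack = 2.0)
    {
        var all = stage.ToList();

        // Throughput (bytes/second) of every completed vertex, sorted.
        var rates = all.Where(v => v.Completed && v.Seconds > 0)
                       .Select(v => v.InputBytes / v.Seconds)
                       .OrderBy(r => r)
                       .ToList();
        if (rates.Count == 0) return new List<string>(); // nothing to compare against yet

        // Median throughput (upper median when the count is even).
        double medianRate = rates[rates.Count / 2];

        // Flag running vertices whose elapsed time exceeds slack x predicted time.
        return all.Where(v => !v.Completed)
                  .Where(v => v.Seconds > slack * (v.InputBytes / medianRate))
                  .Select(v => v.Name)
                  .ToList();
    }
}
```

Scaling the prediction by input size keeps a vertex that is slow only because it received more data from being mistaken for a straggler.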

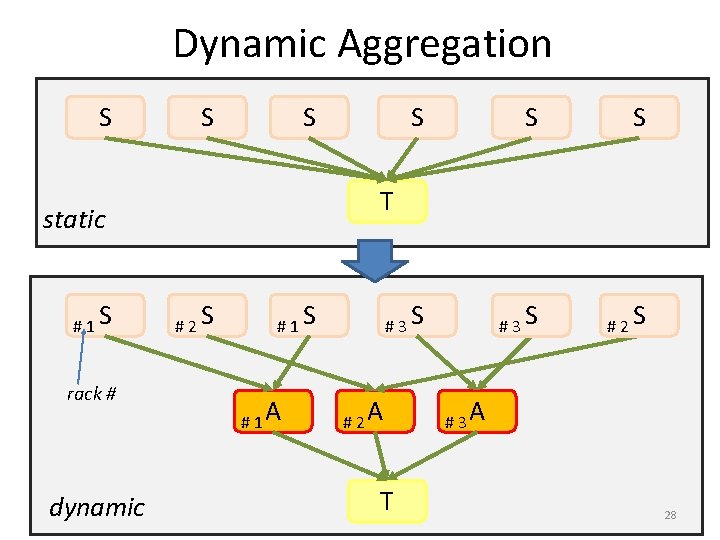

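Dynamic Aggregation (figure): in the static plan, every source vertex S sends its output directly to the aggregator T; in the dynamic plan, the sources are grouped by rack (#1, #2, #3), one aggregation vertex A is inserted per rack, and the A vertices feed T.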
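
The rack-level refinement depends on the aggregation being associative and commutative, so that partial results can be combined in any grouping. This minimal sketch assumes a sum as the aggregation and uses hypothetical `Source` and `RackAggregation` types. It checks that the dynamic plan computes the same total as the static plan while sending only one partial result per rack on to T.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical source vertex: the rack it runs on and its partial value.
public record Source(int Rack, long Value);

public static class RackAggregation
{
    // Static plan: every source sends its value straight to T.
    public static long StaticPlan(IEnumerable<Source> sources) =>
        sources.Sum(s => s.Value);

    // Dynamic plan: one aggregation vertex A per rack sums that rack's values,
    // and T sums the per-rack results. Returns the total and how many partial
    // results reach T (one per rack).
    public static (long Total, int MessagesToT) DynamicPlan(IEnumerable<Source> sources)
    {
        var perRack = sources.GroupBy(s => s.Rack)
                             .Select(g => g.Sum(s => s.Value)) // work done by rack g's A vertex
                             .ToList();
        return (perRack.Sum(), perRack.Count);
    }
}
```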

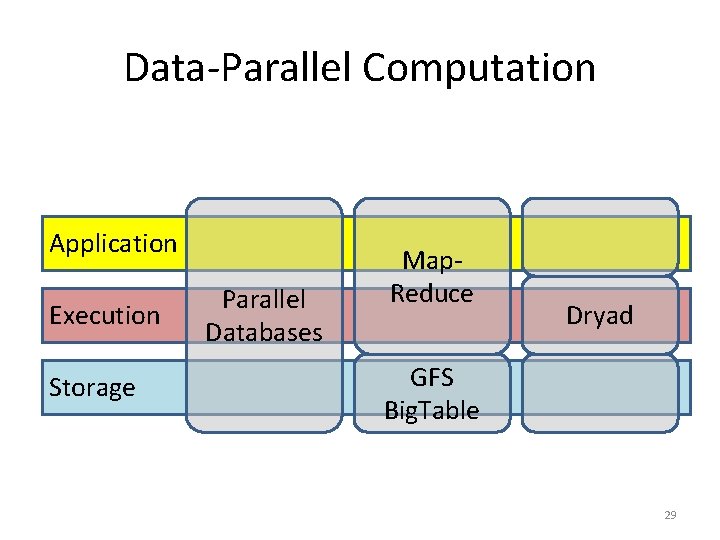

Data-Parallel Computation (figure): layers Application, Execution, Storage; example systems Parallel Databases, MapReduce, Dryad, GFS, BigTable

Outline • Introduction • DryadLINQ • Building on Dryad

DryadLINQ, layered on Dryad

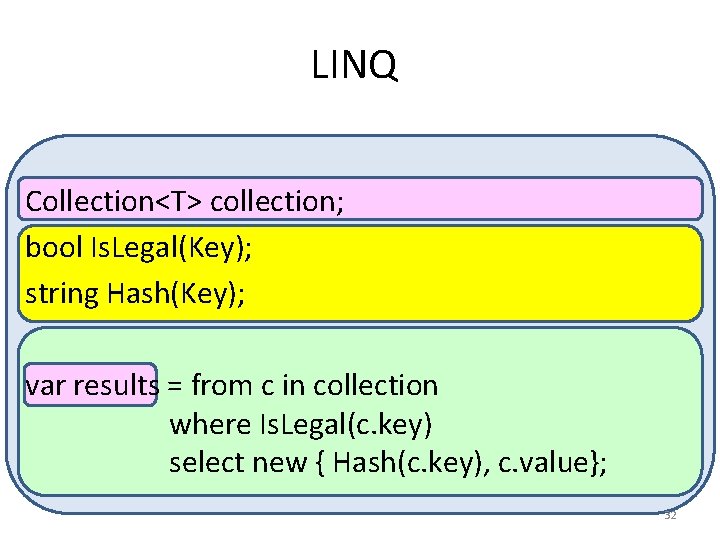

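LINQ

    // Slide shorthand: declarations only, bodies omitted
    Collection<T> collection;
    bool IsLegal(Key k);
    string Hash(Key k);

    // Keep legal keys; project each to its hash and its value
    var results = from c in collection
                  where IsLegal(c.key)
                  select new { hash = Hash(c.key), c.value };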
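
You can try the query shape without a cluster, because the same `from`/`where`/`select` expression also runs under LINQ to Objects. In this sketch, the element type `Entry` and the bodies of `IsLegal` and `Hash` are hypothetical stand-ins for the slide's user-defined helpers. The projection uses a named tuple instead of an anonymous type so that the method can return it.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical element type; the slide leaves the collection's element type open.
public record Entry(string Key, int Value);

public static class LinqExample
{
    // Hypothetical predicate: a key is "legal" if it is non-empty.
    public static bool IsLegal(string k) => !string.IsNullOrEmpty(k);

    // Hypothetical toy hash: sum of character codes mod 97, as a string.
    public static string Hash(string k) => (k.Sum(ch => (int)ch) % 97).ToString();

    public static List<(string HashedKey, int Value)> Run(IEnumerable<Entry> collection)
    {
        // Same query shape as the slide, evaluated locally.
        var results = from c in collection
                      where IsLegal(c.Key)
                      select (HashedKey: Hash(c.Key), Value: c.Value);
        return results.ToList();
    }
}
```

Under DryadLINQ the same expression would be compiled into a distributed job rather than evaluated in-process.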

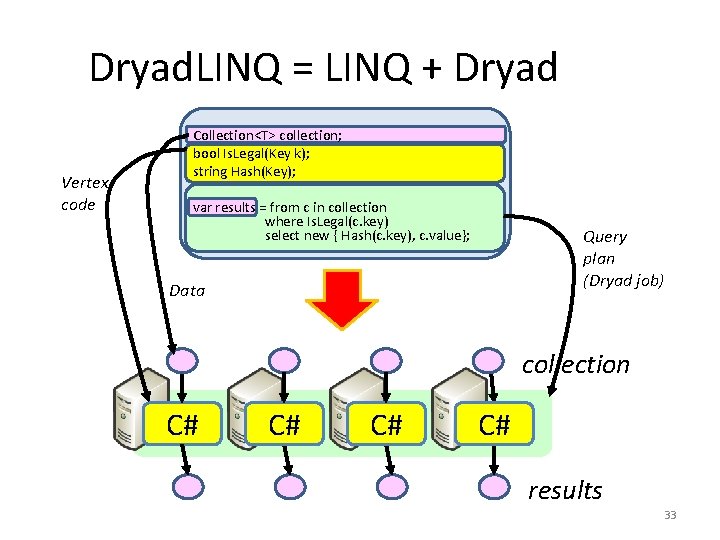

DryadLINQ = LINQ + Dryad (figure): the C# query from the LINQ slide becomes a query plan (a Dryad job) plus C# vertex code; the job runs over the data collection and produces C# results.

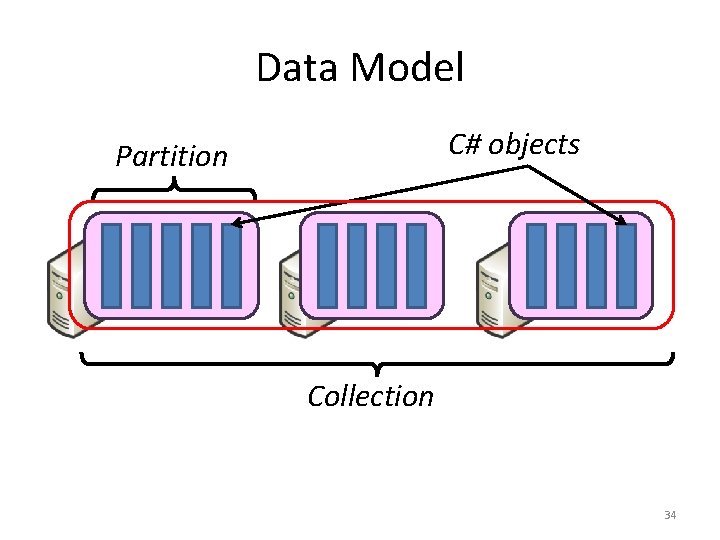

Data Model (figure): a collection of C# objects, divided into partitions

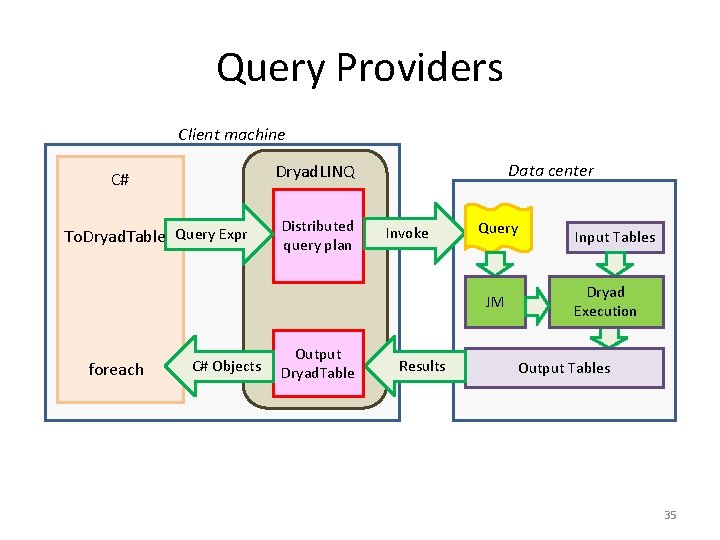

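Query Providers (figure): on the client machine, a C# program builds a query expression over input DryadTables and calls ToDryadTable; DryadLINQ compiles the query into a distributed query plan and invokes it as a Dryad job (through the job manager, JM) in the data center; Dryad execution reads the input tables and writes the output tables; a foreach over the output DryadTable returns the results to the client as C# objects.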

Demo

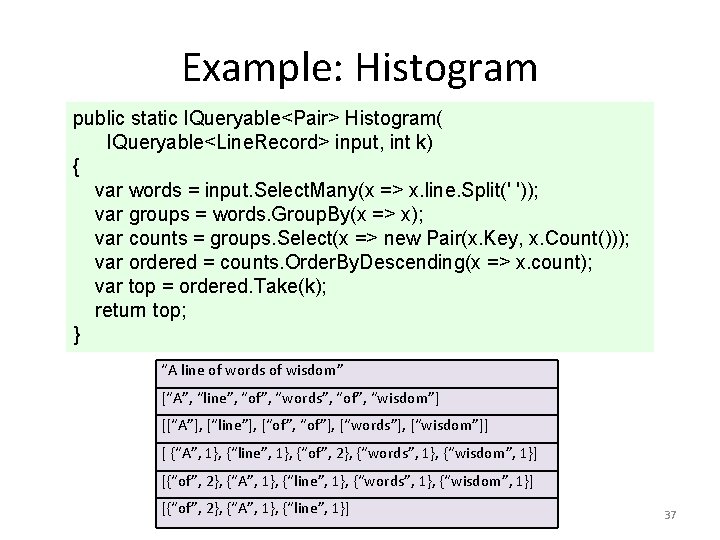

Example: Histogram

    public static IQueryable<Pair> Histogram(IQueryable<LineRecord> input, int k)
    {
        var words = input.SelectMany(x => x.line.Split(' '));
        var groups = words.GroupBy(x => x);
        var counts = groups.Select(x => new Pair(x.Key, x.Count()));
        var ordered = counts.OrderByDescending(x => x.count);
        var top = ordered.Take(k);
        return top;
    }

Trace for one input line, with k = 3:

    "A line of words of wisdom"
    SelectMany:  ["A", "line", "of", "words", "of", "wisdom"]
    GroupBy:     [["A"], ["line"], ["of", "of"], ["words"], ["wisdom"]]
    Select:      [{"A", 1}, {"line", 1}, {"of", 2}, {"words", 1}, {"wisdom", 1}]
    OrderByDescending, Take:  [{"of", 2}, {"A", 1}, {"line", 1}]
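
The histogram operators can be run locally with LINQ to Objects. This sketch assumes plain strings in place of the slide's `LineRecord` and a hypothetical `Pair` record with `Word` and `Count` fields. On the slide's example line with k = 3, it produces the same result as the slide's trace.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical result type: a word and its number of occurrences.
public record Pair(string Word, int Count);

public static class LocalHistogram
{
    // Same operator chain as the slide's Histogram, over in-memory strings.
    public static List<Pair> Histogram(IEnumerable<string> lines, int k)
    {
        var words = lines.SelectMany(line => line.Split(' '));
        var groups = words.GroupBy(w => w);            // groups appear in first-seen order
        var counts = groups.Select(g => new Pair(g.Key, g.Count()));
        // OrderByDescending is a stable sort, so ties keep first-seen order.
        var ordered = counts.OrderByDescending(p => p.Count);
        return ordered.Take(k).ToList();
    }
}
```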

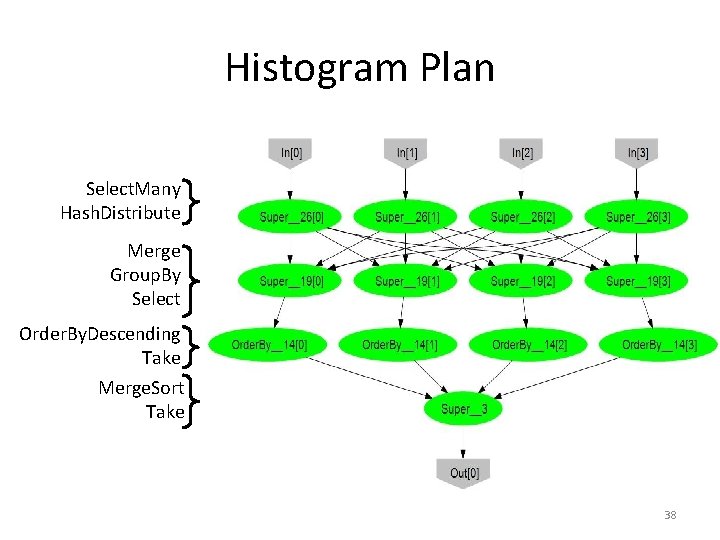

Histogram Plan (figure): SelectMany → HashDistribute → Merge → GroupBy → Select → OrderByDescending → Take → MergeSort → Take
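
The plan applies `Take` twice, once per partition after hash distribution and once after the final merge. The local `Take(k)` is safe because hash distribution sends every occurrence of a word to the same partition, so the per-partition counts are already final. Below is a single-machine sketch of this two-phase top-k. It assumes a hypothetical `StableHash`, since `string.GetHashCode` is randomized per process in .NET Core. `OrderByDescending` stands in for the distributed `MergeSort`.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class HistogramPlan
{
    public static List<(string Word, int Count)> TopK(IEnumerable<string> lines, int k, int buckets)
    {
        var partitions = lines.SelectMany(l => l.Split(' '))
            .GroupBy(w => StableHash(w) % buckets)           // HashDistribute + Merge
            .Select(part => part.GroupBy(w => w)               // GroupBy within a partition
                .Select(g => (Word: g.Key, Count: g.Count()))  // Select
                .OrderByDescending(p => p.Count)
                .Take(k)                                        // local top k
                .ToList());

        return partitions.SelectMany(p => p)
                         .OrderByDescending(p => p.Count)      // stand-in for MergeSort
                         .Take(k)                               // global top k
                         .ToList();
    }

    // Hypothetical deterministic string hash, forced non-negative.
    private static int StableHash(string s)
    {
        int h = 17;
        foreach (char c in s) h = unchecked(h * 31 + c);
        return h & int.MaxValue;
    }
}
```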

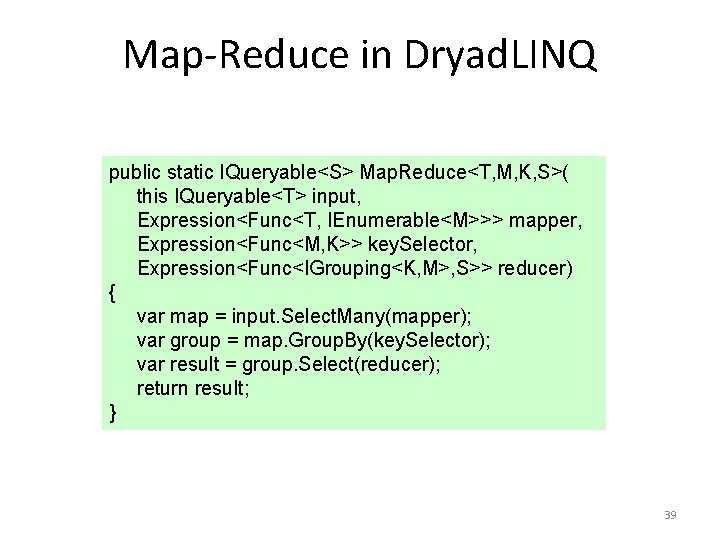

Map-Reduce in DryadLINQ

    public static IQueryable<S> MapReduce<T, M, K, S>(
        this IQueryable<T> input,
        Expression<Func<T, IEnumerable<M>>> mapper,
        Expression<Func<M, K>> keySelector,
        Expression<Func<IGrouping<K, M>, S>> reducer)
    {
        var map = input.SelectMany(mapper);
        var group = map.GroupBy(keySelector);
        var result = group.Select(reducer);
        return result;
    }
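
For experiments outside DryadLINQ, the same operator chain works over `IEnumerable<T>` with ordinary delegates instead of expression trees. `LocalMapReduce` is a hypothetical helper, not part of DryadLINQ.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LocalMapReduce
{
    // In-memory analogue of the slide's MapReduce: SelectMany, GroupBy, Select.
    public static IEnumerable<S> MapReduce<T, M, K, S>(
        this IEnumerable<T> input,
        Func<T, IEnumerable<M>> mapper,
        Func<M, K> keySelector,
        Func<IGrouping<K, M>, S> reducer)
    {
        var map = input.SelectMany(mapper);
        var group = map.GroupBy(keySelector);
        return group.Select(reducer);
    }
}
```

Usage, as a word count: `new[] { "a b", "b c b" }.MapReduce(line => line.Split(' '), w => w, g => (Word: g.Key, Count: g.Count()))` yields one (word, count) pair per distinct word.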

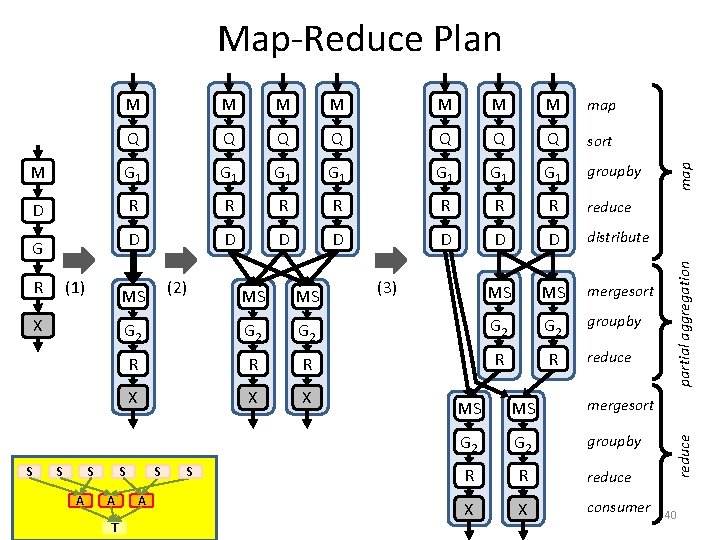

Map-Reduce Plan (figure): stage (1) runs map (M), sort (Q), groupby (G1), reduce (R), and distribute (D) on each input partition; stage (2) performs partial aggregation with mergesort (MS), groupby (G2), and reduce (R), with aggregation vertices (A) inserted dynamically; stage (3) applies a final mergesort (MS), groupby (G2), and reduce (R) before the consumer (X).

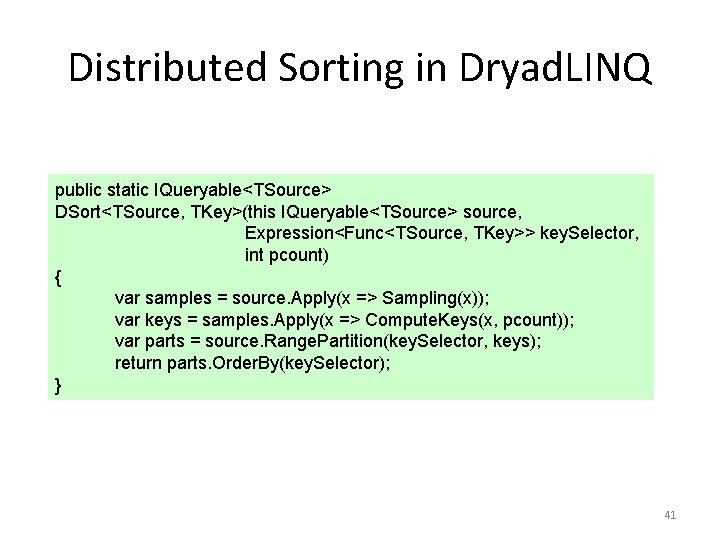

Distributed Sorting in DryadLINQ

    public static IQueryable<TSource> DSort<TSource, TKey>(
        this IQueryable<TSource> source,
        Expression<Func<TSource, TKey>> keySelector,
        int pcount)
    {
        var samples = source.Apply(x => Sampling(x));               // sample the input
        var keys = samples.Apply(x => ComputeKeys(x, pcount));      // range boundaries for pcount partitions
        var parts = source.RangePartition(keySelector, keys);       // route records by key range
        return parts.OrderBy(keySelector);                          // sort each partition
    }
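
The DSort steps (sample, compute range keys, range-partition, sort each partition) can be sketched on one machine. The slide does not show the bodies of `Sampling` and `ComputeKeys`. This sketch assumes a sampling rule that keeps every `sampleEvery`-th record and evenly spaced splitters in their place. `RangeSort` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class RangeSort
{
    public static List<T> DSort<T, K>(IReadOnlyList<T> source, Func<T, K> key,
                                      int pcount, int sampleEvery = 10)
        where K : IComparable<K>
    {
        if (source.Count == 0) return new List<T>();

        // 1. Sample every sampleEvery-th key and sort the (small) sample.
        var sample = source.Where((x, i) => i % sampleEvery == 0)
                           .Select(key)
                           .OrderBy(s => s)
                           .ToList();

        // 2. pcount - 1 evenly spaced splitters; partition p holds keys in
        //    [splitters[p - 1], splitters[p]).
        var splitters = Enumerable.Range(1, pcount - 1)
                                  .Select(i => sample[i * sample.Count / pcount])
                                  .ToList();

        // 3. Range-partition every record (linear scan over splitters for clarity).
        var parts = Enumerable.Range(0, pcount).Select(_ => new List<T>()).ToList();
        foreach (var x in source)
        {
            K k = key(x);
            int p = 0;
            while (p < splitters.Count && k.CompareTo(splitters[p]) >= 0) p++;
            parts[p].Add(x);
        }

        // 4. Sort each partition locally; the partitions are already in key order.
        return parts.SelectMany(part => part.OrderBy(key)).ToList();
    }
}
```

Every key in partition p is smaller than every key in partition p + 1, so concatenating the locally sorted partitions gives a globally sorted result. The sample only affects how evenly the partitions are balanced.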

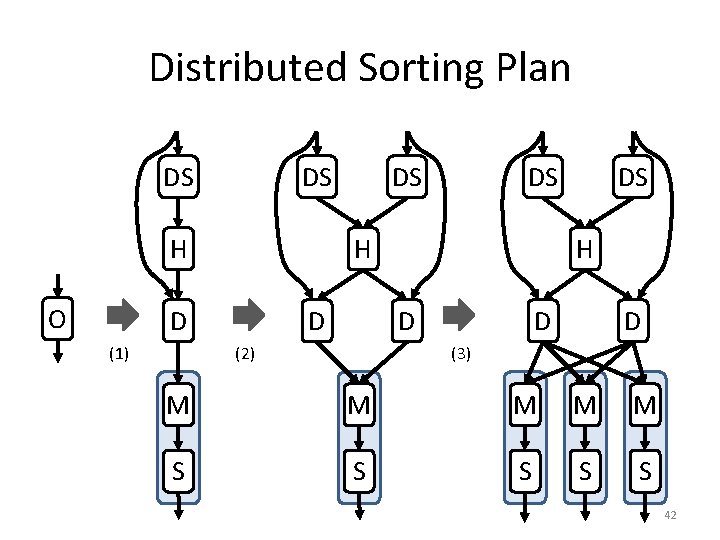

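Distributed Sorting Plan (figure, stages (1) to (3)): sampling (DS) and histogram (H) vertices compute the range boundaries, distribute (D) vertices range-partition the data, and each output partition is merged (M) and sorted (S).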

Outline • Introduction • DryadLINQ • Building on DryadLINQ

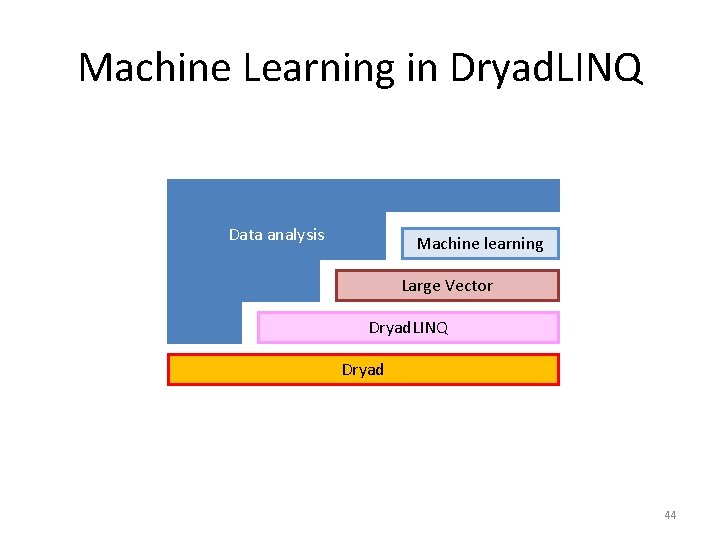

Machine Learning in DryadLINQ (software stack, top to bottom): Data analysis, Machine learning, Large Vector, DryadLINQ, Dryad

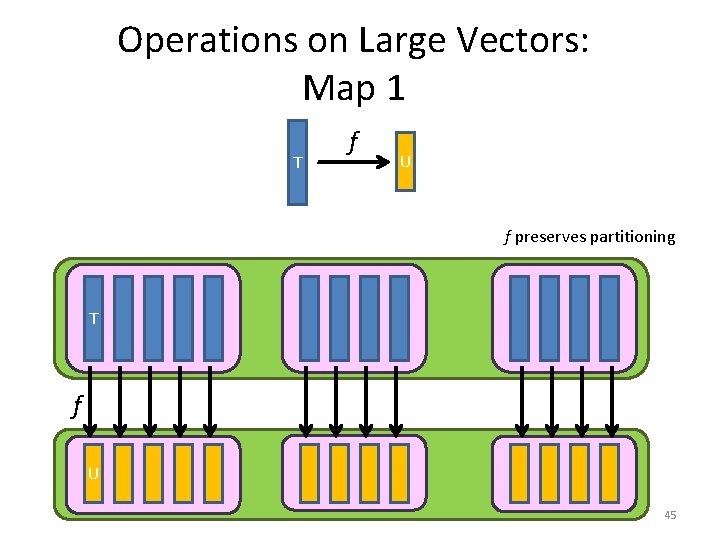

Operations on Large Vectors: Map 1 (figure: f is applied to each partition of vector T, producing the matching partition of U); f preserves partitioning

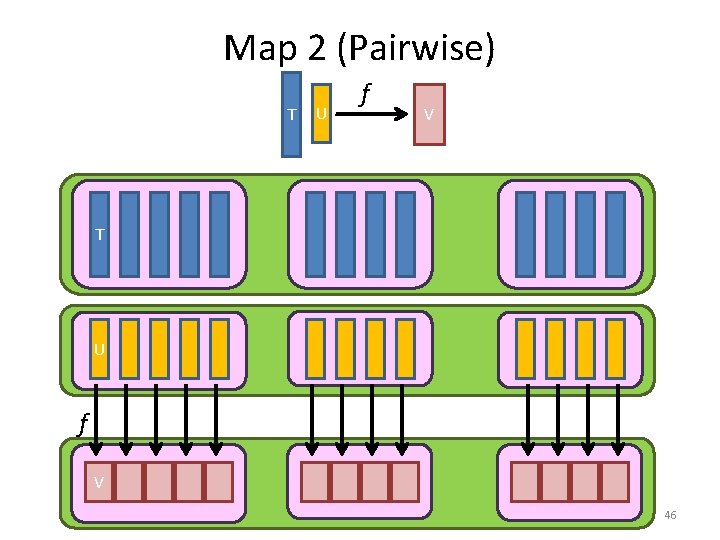

Map 2 (Pairwise) (figure: f combines corresponding partitions of T and U into V)

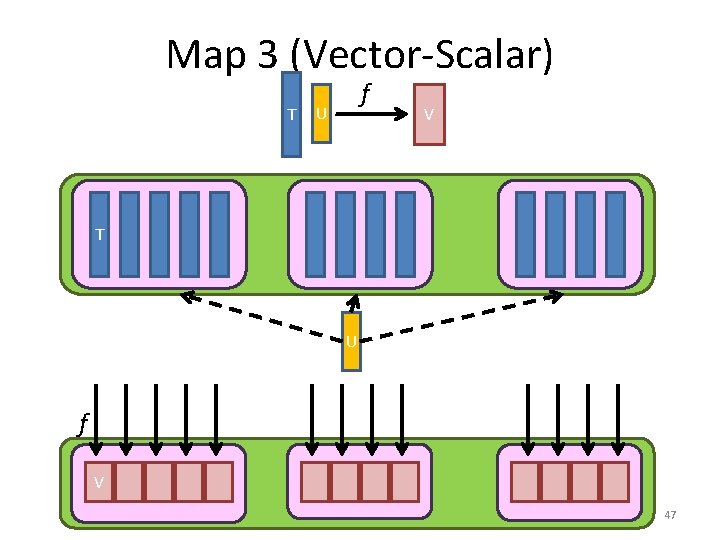

Map 3 (Vector-Scalar) (figure: f combines each partition of vector T with the scalar U, producing V)

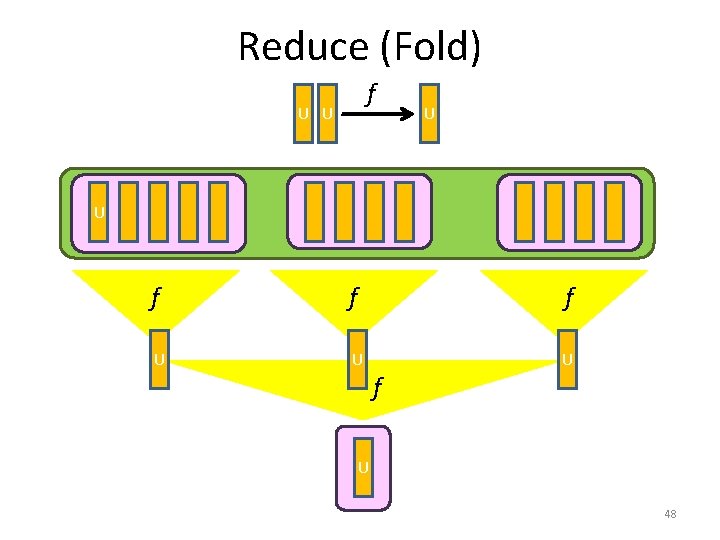

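Reduce (Fold) (figure: f folds each partition into a partial result U, and further applications of f combine the partial results into a single U)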
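
The four vector operations can be modeled in memory as a list of partitions. `PVector<T>` and its method names are hypothetical stand-ins for the library's `PartitionedVector<T>`, not its real API. The two-level `Fold` matches a sequential fold only when f is associative.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in for a partitioned vector.
public sealed class PVector<T>
{
    public IReadOnlyList<IReadOnlyList<T>> Parts { get; }

    public PVector(IReadOnlyList<IReadOnlyList<T>> parts) => Parts = parts;

    // Map 1: apply f to every element; the partitioning is preserved.
    public PVector<U> Map<U>(Func<T, U> f) =>
        new PVector<U>(Parts.Select(p => (IReadOnlyList<U>)p.Select(f).ToList()).ToList());

    // Map 2 (pairwise): combine matching elements of two identically partitioned vectors.
    public PVector<V> MapPairwise<U, V>(PVector<U> other, Func<T, U, V> f) =>
        new PVector<V>(Parts.Zip(other.Parts, (a, b) => (IReadOnlyList<V>)a.Zip(b, f).ToList()).ToList());

    // Map 3 (vector-scalar): combine every element with one broadcast scalar.
    public PVector<V> MapScalar<U, V>(U scalar, Func<T, U, V> f) =>
        Map(x => f(x, scalar));

    // Reduce (fold): fold each non-empty partition locally, then fold the partial
    // results. Assumes f is associative and at least one partition is non-empty.
    public T Fold(Func<T, T, T> f) =>
        Parts.Where(p => p.Count > 0).Select(p => p.Aggregate(f)).Aggregate(f);
}
```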

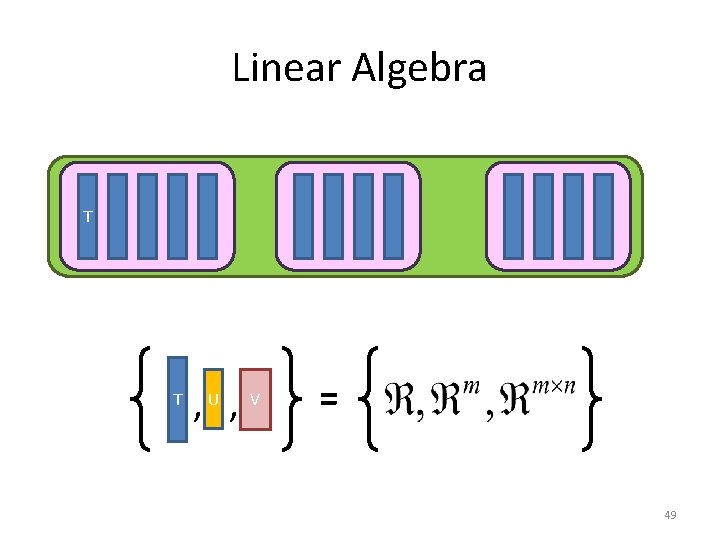

Linear Algebra (figure: T, U, V)

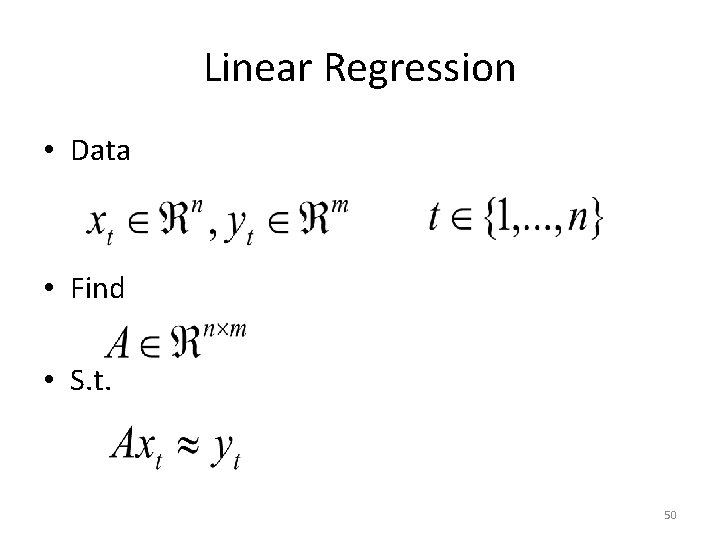

Linear Regression • Data: pairs (xₜ, yₜ) with xₜ ∈ ℝⁿ, yₜ ∈ ℝᵐ • Find: a matrix A ∈ ℝ^(m×n) • s.t. A xₜ ≈ yₜ for all t (least squares)

![Analytic Solution](http://slidetodoc.com/presentation_image_h2/11a2abad1b780539b24dc34bb64b3846/image-51.jpg)

Analytic Solution (figure): input partitions X[0], X[1], X[2] and Y[0], Y[1], Y[2]; Map computes X×Xᵀ and Y×Xᵀ per partition; Reduce sums each (Σ); then A = [Σ Y×Xᵀ] × [Σ X×Xᵀ]⁻¹
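
The slide's formula is the standard least-squares normal equation. The following short derivation assumes that the objective is the sum of squared errors, which the slide does not state explicitly, and that Σ xₜxₜᵀ is invertible:

```latex
\begin{aligned}
E(A) &= \sum_{t} \lVert y_t - A x_t \rVert^2 \\
\frac{\partial E}{\partial A} &= -2 \sum_{t} (y_t - A x_t)\, x_t^{\top} = 0 \\
\Rightarrow\quad A \Big(\sum_{t} x_t x_t^{\top}\Big) &= \sum_{t} y_t x_t^{\top} \\
\Rightarrow\quad A &= \Big(\sum_{t} y_t x_t^{\top}\Big)\Big(\sum_{t} x_t x_t^{\top}\Big)^{-1}
\end{aligned}
```

Both sums split over partitions (Σₜ = Σ over partitions of Σ within each partition), which is why each one becomes a per-partition Map followed by a Σ Reduce.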

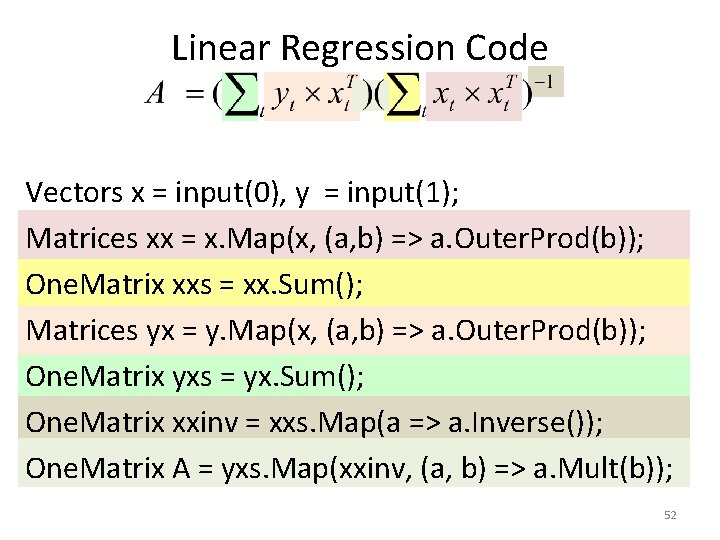

Linear Regression Code

    Vectors x = input(0), y = input(1);
    Matrices xx = x.Map(x, (a, b) => a.OuterProd(b));    // x xᵀ per element
    OneMatrix xxs = xx.Sum();                            // Σ x xᵀ
    Matrices yx = y.Map(x, (a, b) => a.OuterProd(b));    // y xᵀ per element
    OneMatrix yxs = yx.Sum();                            // Σ y xᵀ
    OneMatrix xxinv = xxs.Map(a => a.Inverse());         // (Σ x xᵀ)⁻¹
    OneMatrix A = yxs.Map(xxinv, (a, b) => a.Mult(b));   // A = (Σ y xᵀ)(Σ x xᵀ)⁻¹
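
Here is a runnable sketch of the same computation using plain `double[,]` matrices. The helpers are hypothetical stand-ins for `OuterProd`, `Sum`, `Inverse`, and `Mult`. To keep the example short, the inverse is hard-coded for 2×2 matrices, so `Fit` assumes two-dimensional inputs xₜ.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LinearRegression
{
    // Outer product a * b^T.
    static double[,] Outer(double[] a, double[] b)
    {
        var m = new double[a.Length, b.Length];
        for (int i = 0; i < a.Length; i++)
            for (int j = 0; j < b.Length; j++)
                m[i, j] = a[i] * b[j];
        return m;
    }

    // Element-wise matrix sum.
    static double[,] Add(double[,] p, double[,] q)
    {
        var r = new double[p.GetLength(0), p.GetLength(1)];
        for (int i = 0; i < r.GetLength(0); i++)
            for (int j = 0; j < r.GetLength(1); j++)
                r[i, j] = p[i, j] + q[i, j];
        return r;
    }

    // Matrix product p * q.
    static double[,] Mult(double[,] p, double[,] q)
    {
        int n = p.GetLength(0), k = p.GetLength(1), m = q.GetLength(1);
        var r = new double[n, m];
        for (int i = 0; i < n; i++)
            for (int j = 0; j < m; j++)
                for (int l = 0; l < k; l++)
                    r[i, j] += p[i, l] * q[l, j];
        return r;
    }

    // Closed-form inverse; only 2x2 matrices are handled in this sketch.
    static double[,] Inverse2x2(double[,] a)
    {
        double det = a[0, 0] * a[1, 1] - a[0, 1] * a[1, 0];
        if (Math.Abs(det) < 1e-12) throw new InvalidOperationException("singular matrix");
        return new double[,] { {  a[1, 1] / det, -a[0, 1] / det },
                               { -a[1, 0] / det,  a[0, 0] / det } };
    }

    // A = (sum_t y_t x_t^T)(sum_t x_t x_t^T)^-1, mirroring the slide's Map/Sum/Inverse/Mult.
    public static double[,] Fit(IReadOnlyList<double[]> x, IReadOnlyList<double[]> y)
    {
        double[,] xxs = x.Select(a => Outer(a, a)).Aggregate((p, q) => Add(p, q));
        double[,] yxs = y.Zip(x, (a, b) => Outer(a, b)).Aggregate((p, q) => Add(p, q));
        return Mult(yxs, Inverse2x2(xxs));
    }
}
```

For x = (1, 0), (0, 1), (1, 1) and y = 2, 3, 5 (that is, y = 2x₁ + 3x₂), `Fit` returns A = [2, 3].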

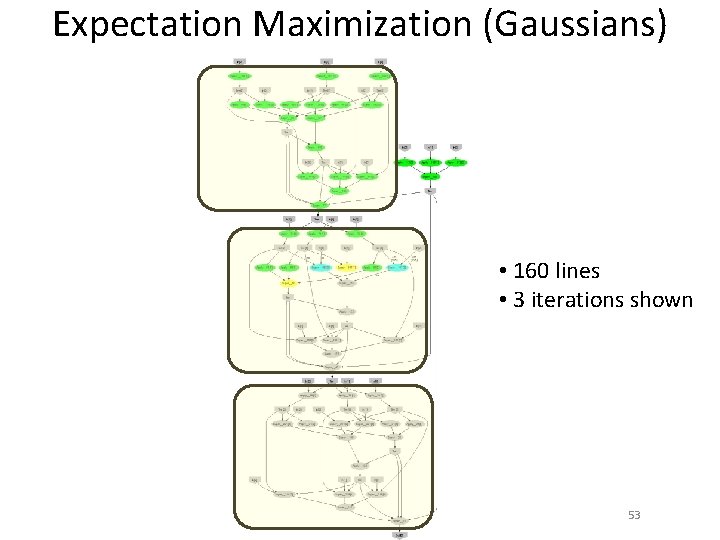

Expectation Maximization (Gaussians) • 160 lines • 3 iterations shown

Conclusions • Dryad = distributed execution environment • Application-independent (semantics oblivious) • Supports a rich software ecosystem – relational algebra, Map-reduce, LINQ • DryadLINQ compiles LINQ to Dryad • C# objects and declarative programming • .NET and Visual Studio for parallel programming

Backup Slides

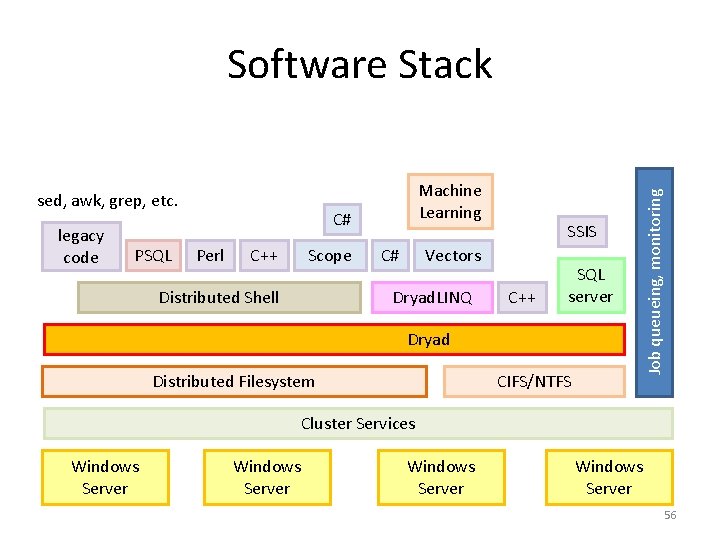

Software Stack (figure, roughly top to bottom): sed, awk, grep, etc.; legacy code; PSQL; Machine Learning; C#; Perl; C++; Scope; Distributed Shell; SSIS; Vectors; DryadLINQ; SQL Server; Dryad; Distributed Filesystem; CIFS/NTFS; job queueing, monitoring; Cluster Services; Windows Server

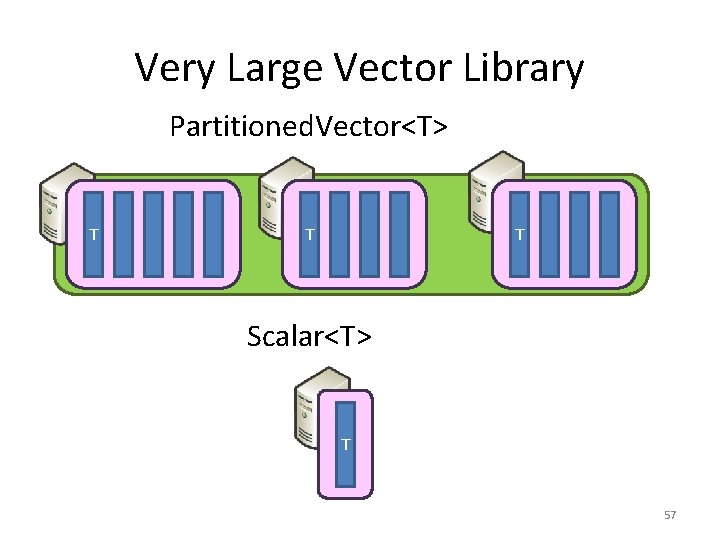

Very Large Vector Library: PartitionedVector&lt;T&gt; (a vector split into partitions of T) and Scalar&lt;T&gt; (a single T)

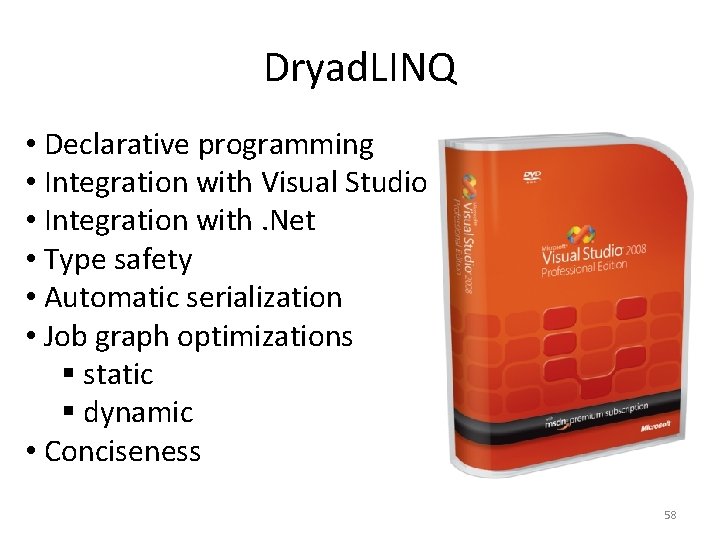

DryadLINQ • Declarative programming • Integration with Visual Studio • Integration with .NET • Type safety • Automatic serialization • Job graph optimizations – static – dynamic • Conciseness

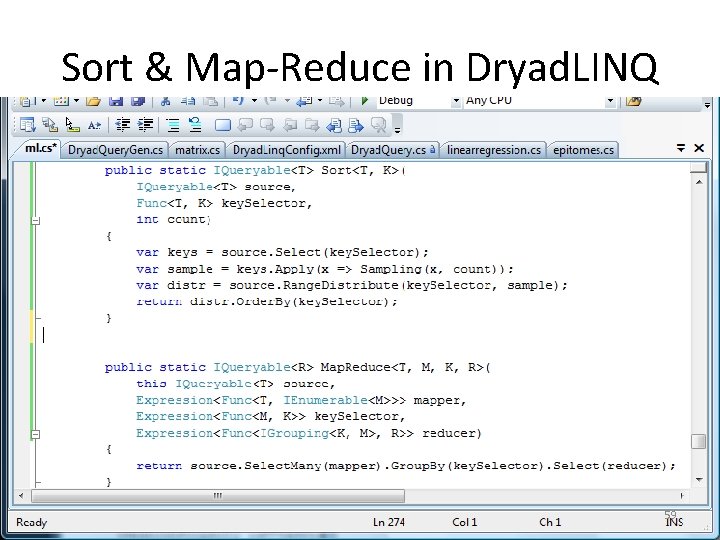

Sort & Map-Reduce in DryadLINQ

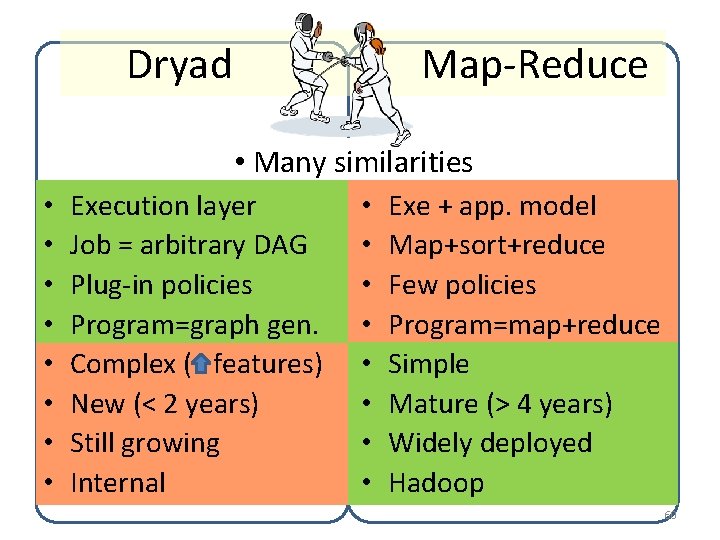

Dryad vs. Map-Reduce: many similarities

| Dryad | Map-Reduce |
|---|---|
| Execution layer | Execution + application model |
| Job = arbitrary DAG | Map + sort + reduce |
| Plug-in policies | Few policies |
| Program = graph generator | Program = map + reduce |
| Complex (features) | Simple |
| New (< 2 years) | Mature (> 4 years) |
| Still growing | Widely deployed |
| Internal | Hadoop |

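PLINQ

    public static IEnumerable<TSource> DryadSort<TSource, TKey>(
        IEnumerable<TSource> source,
        Func<TSource, TKey> keySelector,
        IComparer<TKey> comparer,
        bool isDescending)
    {
        // Sort in parallel on the local cores, honoring the requested direction
        return isDescending
            ? source.AsParallel().OrderByDescending(keySelector, comparer)
            : source.AsParallel().OrderBy(keySelector, comparer);
    }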

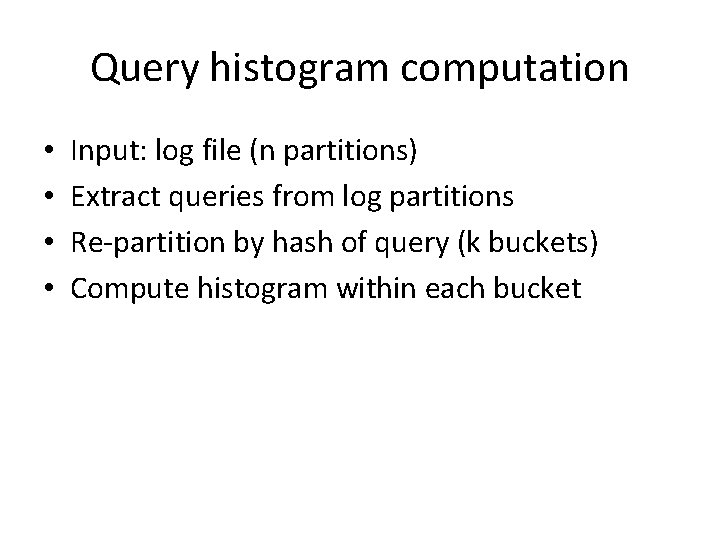

Query histogram computation • Input: log file (n partitions) • Extract queries from log partitions • Re-partition by hash of query (k buckets) • Compute histogram within each bucket

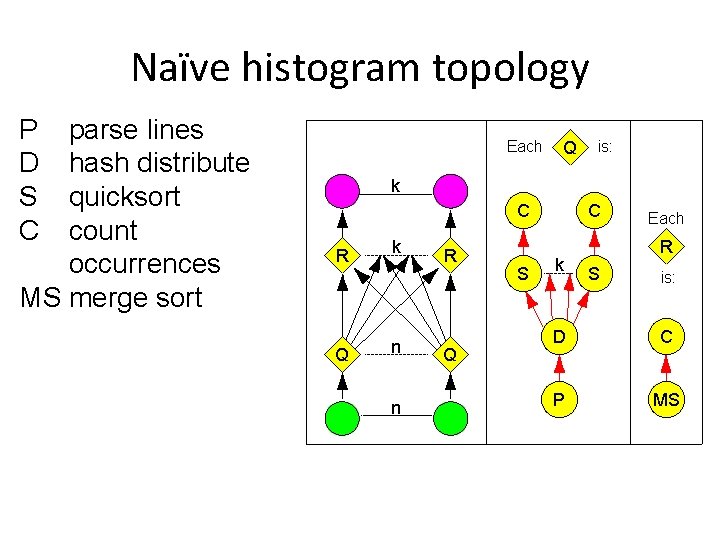

Naïve histogram topology (figure): n vertices Q each send output to all k vertices R. Legend: P = parse lines, D = hash distribute, S = quicksort, C = count occurrences, MS = merge sort. Each Q is composed of P, S, C, and D; each R is composed of MS and C.

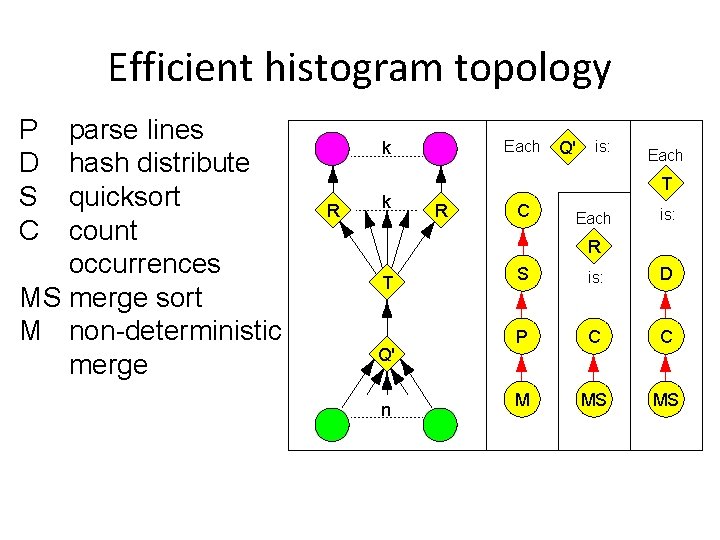

Efficient histogram topology (figure): n vertices Q' feed an intermediate layer of T vertices, which feed k vertices R. Legend as in the naïve topology (P, D, S, C, MS), plus M = non-deterministic merge; each Q', T, and R vertex is composed from these primitives.

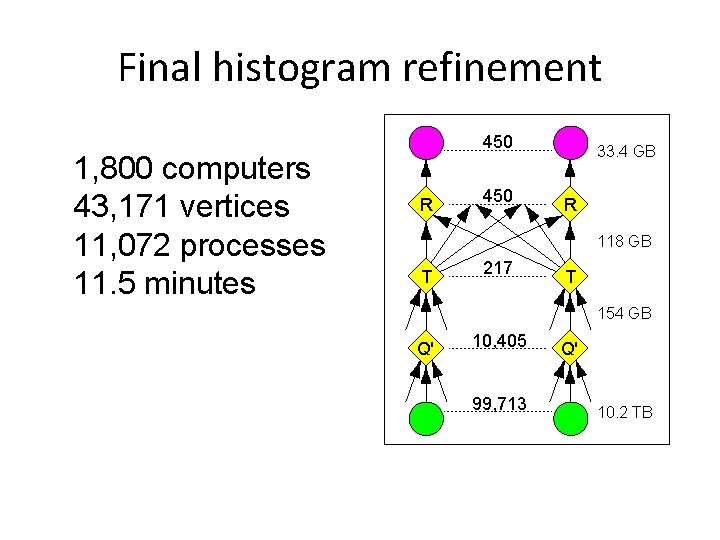

Final histogram refinement (figure): 1,800 computers, 43,171 vertices, 11,072 processes, 11.5 minutes. Figure labels, from input to output: 10.2 TB read by Q' (labels 99,713 and 10,405); 154 GB from Q' to T (217 T); 118 GB from T to R (450 R); 33.4 GB output.

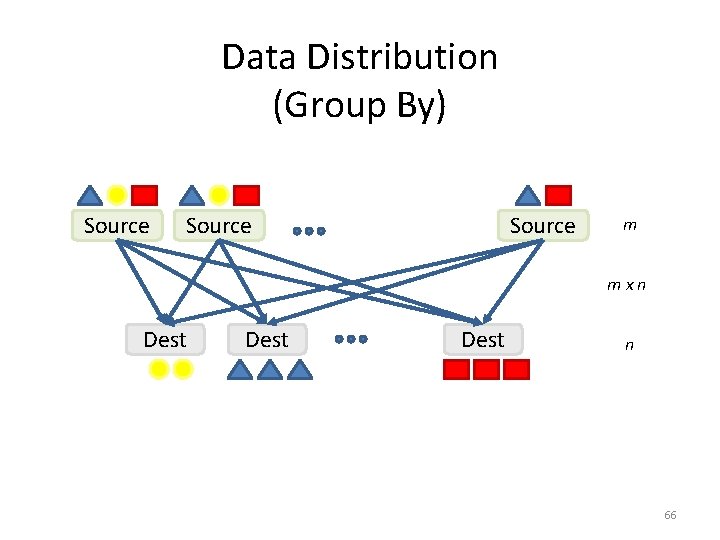

Data Distribution (Group By) (figure): m source vertices each send data to all n destination vertices, giving m × n channels
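
Each of the m sources splits its output into n buckets, with one channel per (source, destination) pair. This minimal sketch assumes integer keys and a toy `key mod n` hash. `HashDistribution`, `Distribute`, and `Gather` are hypothetical names.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class HashDistribution
{
    // Returns an m x n grid of channels: channels[s, d] holds the items that
    // source s sends to destination d.
    public static List<int>[,] Distribute(IReadOnlyList<IReadOnlyList<int>> sources, int n)
    {
        int m = sources.Count;
        var channels = new List<int>[m, n];
        for (int s = 0; s < m; s++)
        {
            for (int d = 0; d < n; d++) channels[s, d] = new List<int>();
            foreach (int item in sources[s])
                channels[s, ((item % n) + n) % n].Add(item); // toy hash: key mod n, non-negative
        }
        return channels;
    }

    // Destination d reads its channel from every source, in source order.
    public static List<int> Gather(List<int>[,] channels, int d) =>
        Enumerable.Range(0, channels.GetLength(0)).SelectMany(s => channels[s, d]).ToList();
}
```

The bucket depends only on the key, so every record with a given key reaches the same destination. A distributed GroupBy relies on exactly this property.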

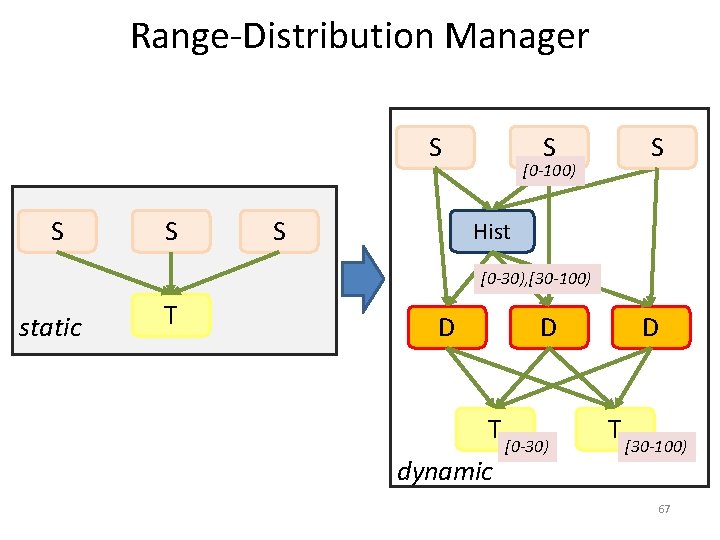

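Range-Distribution Manager (figure): sources S produce keys in [0, 100); in the static plan the distributor (D) boundaries are unknown ([0, ?) and [?, 100)); in the dynamic plan a histogram vertex (Hist) fixes the split at [0, 30) and [30, 100), and the D vertices route data to the consumers T accordingly.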

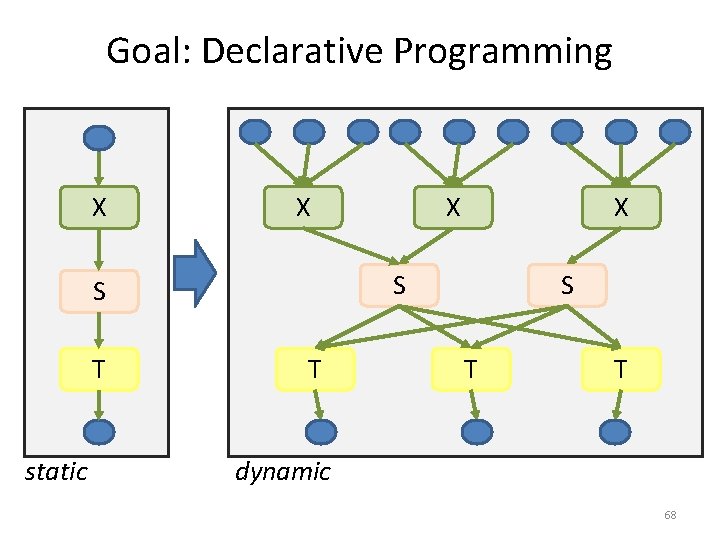

Goal: Declarative Programming (figure: static and dynamic plans over X, S, and T vertices)

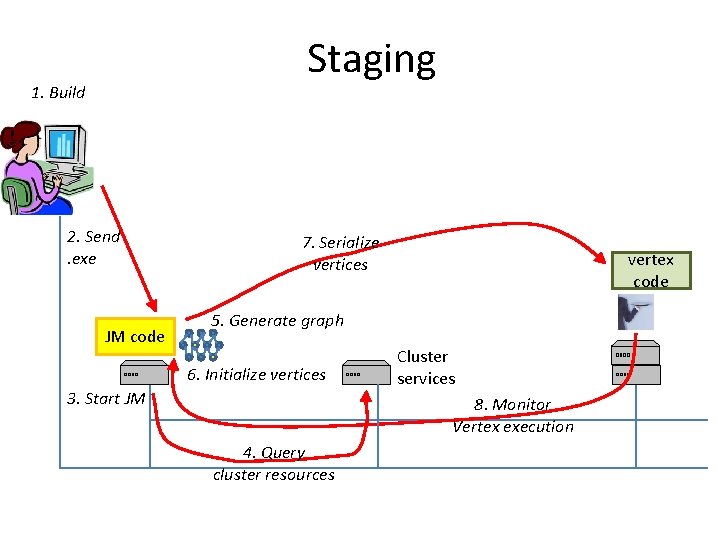

Staging (figure; labels: JM code, vertex code, cluster services): 1. Build; 2. Send .exe; 3. Start JM; 4. Query cluster resources; 5. Generate graph; 6. Initialize vertices; 7. Serialize vertices; 8. Monitor vertex execution

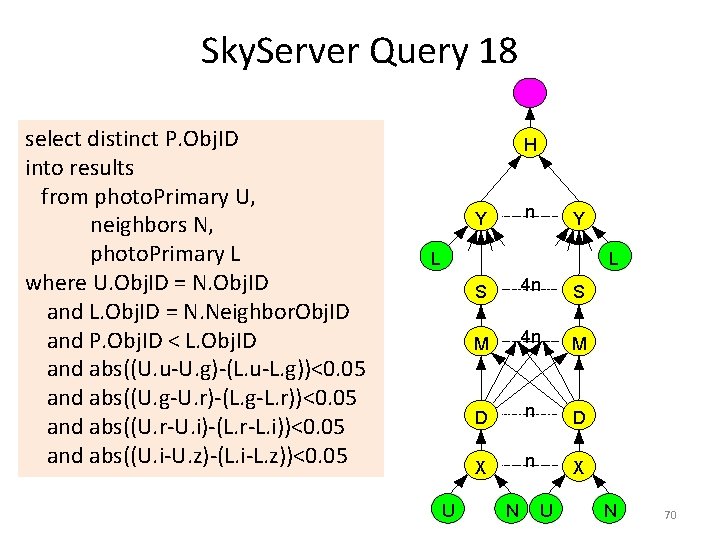

SkyServer Query 18

    select distinct U.ObjID
    into results
    from photoPrimary U, neighbors N, photoPrimary L
    where U.ObjID = N.ObjID
      and L.ObjID = N.NeighborObjID
      and U.ObjID < L.ObjID
      and abs((U.u-U.g)-(L.u-L.g)) < 0.05
      and abs((U.g-U.r)-(L.g-L.r)) < 0.05
      and abs((U.r-U.i)-(L.r-L.i)) < 0.05
      and abs((U.i-U.z)-(L.i-L.z)) < 0.05

Dryad plan (figure): stages X, D, M, S, Y, and H over inputs U, N, and L; most stages have n vertices, while M and S have 4n.

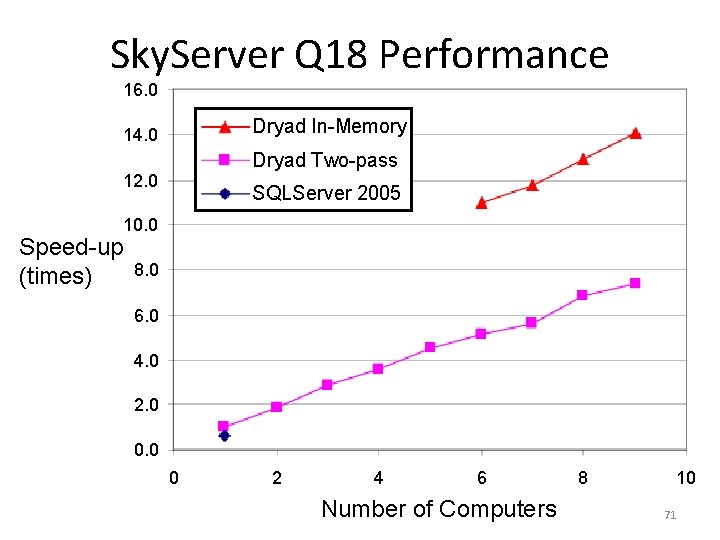

SkyServer Q18 Performance (figure): speed-up (times) vs. number of computers for Dryad In-Memory, Dryad Two-pass, and SQLServer 2005